Image credit:

Cover - Vincent Ng, James Ruse Agricultural High School

Inside cover - Minalu de Graaf, Lambton High School

NSW Department of Education

In my role as Coordinator of the Science, TAS, Mathematics and STEM secondary curriculum teams, I am well positioned to see how groundbreaking the Science Extension course is and the positive impact it has on students in NSW schools. That impact is evident in the work presented in this volume of the Journal of Science Extension Research.

The Science Extension course really is a game changer in our science classrooms. Its innovations range from science content such as the history and philosophy of science, data science and ethics, to being the first HSC course to be examined online and the first Stage 6 science course to incorporate a student research project as a substantial part of the course mark. A further innovation is the opportunity students have to work on projects that transcend the syllabus.

In December 2015, the Education Council presented a case for change through its National STEM School Education Strategy 2016 - 2026, which noted that over the following five years employment was predicted to increase in professional, scientific and technical services by 14 per cent and in health care by almost 20 per cent. The strategy called for a renewed national focus on STEM in school education as critical to ensuring that all young Australians are equipped with the STEM skills and knowledge they will need to succeed.

Building on this, the Office of Australia's Chief Scientist, in its 2020 report 'Australia's STEM Workforce', highlighted that Australians who have studied science, technology, engineering and mathematics (STEM) are helping to solve the problems of the future.

Evidence of how young people in our schools are contributing to solving the problems of the present and future can be found in Volume 2 of The Journal of Science Extension Research. From health challenges like the efficacy of treatments for Crohn's Disease, to environmental challenges such as the impact of microplastics on seafood and technological challenges like the effectiveness of sound absorbing materials, through to disproving misconceptions around black holes, students in NSW public schools are proving that the Science Extension course is preparing them to meet these future workforce needs for STEM professionals.

PEO STEM Coordinator -

Equally important to the development of the necessary STEM skills and knowledge in students is the role of the Science Extension teachers and professional mentors. As students meet the demands of the course while preparing to work as researchers, the support that teachers and mentors provide is critical to their success, not only in Science Extension but also in their future after school.

I commend the efforts of the student, teacher and mentor teams that have resulted in the selection of the reports in the journal, all of which are of the highest quality. It will be exciting to see the direction taken by future students of Science Extension with the support of their teachers and mentors. Will they investigate how we can meet electricity demand and generation needs, adapt to the changing climate, integrate AI safely and appropriately into society and optimise healthcare for ageing populations? Time will tell, and I look forward to seeing what the following future STEM professionals will discover.

I am honoured to introduce this 2nd volume of The Journal of Science Extension Research. The NSW Science Extension syllabus is a world-leading program for secondary science education, as the quality of the articles in this volume makes evident. The content covers a wide range of relevant topics that clearly presented exciting challenges to the students taking part in the research.

The invention of the World-Wide Web at CERN sparked an unprecedented communication revolution around the globe. Everyone everywhere suddenly had access to everything, with instant communication at their fingertips. While the advantages were predictable, its use by bad actors seeking fame or financial or political gain blindsided many of us. It can pose a serious risk to the mindset of young students, even when they have been raised in this environment. Nobody is immune.

This is why research opportunities, such as the Science Extension syllabus, play such an important role. By pursuing challenging scientific investigation into areas that pique their interest, these young scientists develop skills and learn valuable lessons. This pertains not only to their specific topics, but also the process that brings them their results.

Conversation is prevalent about the need for humanity to find common ground to solve our problems. Yet, science has provided this common ground for thousands of years. When students make a measurement, then remake the measurement, and finally call on others to check the measurement - they learn to throw out their preconceived opinions and to objectively interpret their results. It is through this process that these students learn to separate signal from background. To identify what is true.

It is this relentless pursuit of truth that is common to all of the articles presented in this fascinating volume, and a lesson these students will keep with them for life. I highly commend the journal for giving these bright minds an opportunity to share this lesson with the rest of us. Enjoy.

The Science 7-12 curriculum team acknowledges the incredible efforts of Science Extension teachers in inspiring, guiding and mentoring their students to complete their scientific research projects. Despite the novelty and innovativeness of the syllabus, those teachers spared no effort to nurture their students' scientific curiosity and engage them in conducting authentic scientific inquiry. As a result, their students have experienced new heights of academic and scientific achievements in their research journeys.

We acknowledge the following teachers whose students' reports appear in this publication:

• Seher Aslaner, Lurnea High School

• Ritu Bhamra, Normanhurst Boys High School

• Andrew Corcoran, Boorowa Central School

• Carina Dennis, James Ruse Agricultural High School

• Wade Fairclough, Cherrybrook Technology High School

• Marina Gulline, Willoughby Girls High School

• Ann Hanna, Menai High School

• Scott Hollingsworth, Coffs Harbour Senior College

• Kurt Nicholson, Lambton High School

• Joelle Rodrigues, Blacktown Girls High School

• Tim Smith, St Ives High School

• Joshua Westerway, Ulladulla High School

To all NSW Department of Education schools, we thank you for your sustained efforts in achieving excellence in science education.

The Science Extension syllabus calls upon science teachers to develop students' capacity to 'work scientifically'. But what does it mean to truly work like a scientist? We know what it doesn't mean: it isn't memorising the periodic table and repeating well-trodden textbook practical experiments that work without fail within the time frame of a typical science lesson. Rather, it often means leaping into the unknown, exploring endless angles of investigation to try to make inroads into a challenging line of inquiry, with absolutely no guarantee of 'success' at the end. To quote the ever-quotable Einstein: 'failure is success in progress'. The Science Extension project helps students to re-frame prior conceptions of failure and challenge as being the nexus where much of the richest learning happens - and certainly where many great scientific insights occur.

While helping students develop resilience as they work scientifically presents challenges to all Science Extension teachers, it is perhaps felt more acutely by teachers working with high potential and gifted (HPG) students. Many HPG students are drawn to the Science Extension course by virtue of being self-motivated learners who value the independence and choices that the course offers. They may also be accustomed to achieving consistent success in other science courses and external scientific competitions, and therefore struggle with uncertainty. In my fourth year teaching the course in a specialist school setting for HPG learners, it has been humbling to reflect that some of my students' greatest successes (including those attaining state ranks) are those whose projects ostensibly 'failed', in terms of not generating the 'Eureka' moment they had so keenly hoped for at the outset. The Science Extension course is fortunate to be supported by a syllabus that provides students with a solid foundation in the historical, theoretical and applied conceptual frameworks around uncertainty and error. It is also supported by the emphasis on students being mentored by scientists who have the 'lived experience' of managing uncertainty and bias within their day-to-day careers working scientifically. Many of my students have established strong connections with their mentors, so much so that some have been inspired to completely re-think their career and tertiary interests. They have spoken of their Science Extension experience in interviews for university and scholarship applications, which not surprisingly have resulted in positive outcomes, as their infectious enthusiasm and intellectual commitment to the pursuit of inquiry, as evidenced in their work in Science Extension, are readily recognised across many sectors. This reflects the value of the course as a viable and demonstrable stepping stone to career progression across a range of disciplines, and particularly in STEM-related fields.

Arguably one of the greatest challenges for schools offering Science Extension is resourcing all the opportunities that the course offers. My school is in the fortunate position to have had course enrolments increase, but this has brought its own challenges in terms of managing the intensive demands of student projects, including individualised teacher supervision and feedback, provision of resources and materials, and access to mentors. At a whole-school level, having to think strategically and creatively about how best to resource materials and equipment to support Science Extension investigations has had a 'knock on' effect of benefitting the large cohorts of students enrolled in Stage 6 science courses at the school. However, it is increasingly challenging to resource the teacher time for highly individualised guidance on student projects, particularly those requiring supervision of practical experiments. The finite resource of mentors also means that some students may not be able to access the rich mentoring partnership that is a unique aspect of the course, which distinguishes it from other extension courses and is invaluable in terms of career education linkage. As the course cohort numbers expand with interest, which is testament to the quality of the syllabus assessment of student outcomes, and most importantly to the science teachers who implement the course, there is a risk of inequity at both a local and state level in terms of adequate resourcing. The collaborative spirit and creative insights of the Science Extension teaching community are best placed to offer solutions on how to manage resource demands while not limiting the creativity and ingenuity of students who have been inspired through this course to become the next generation of scientists.

As I reflect on the incredible adventure that has been teaching the Science Extension course, it is my immense pleasure and privilege to welcome you to the 2nd volume of The Journal of Science Extension Research, a showcase of our students' scientific research and achievements.

I think back fondly to my first class of seven students in 2019, a bustling hive of excitement and potential that had not yet, in over a decade of schooling, been given the opportunity to be fully unleashed. I remember introducing the course and helping my students brainstorm their first ideas from their areas of passion. First ideas became literature searches, and these further evolved into big questions, and I was so impressed - it's unbelievable what our students will dare to do or ask when given the opportunity. This for me has been the absolute highlight of the Science Extension course. Each year, I am blown away by the diversity of topics that have fascinated my students and by their innovation in designing experiments from rudimentary resources.

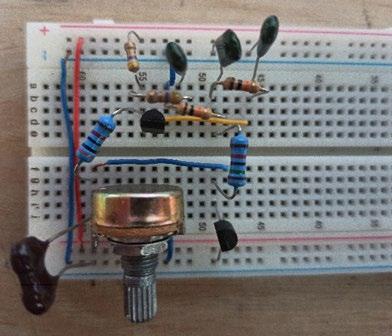

I have loved supporting them from the sidelines as they took ownership of their own scientific research, contacting scientists to discuss their papers, mentors to seek out subject-specific advice or access to more equipped research labs. Those who have taught the course will know that our students have continually pushed the boundaries of what we had thought would be possible. Who could have envisaged that public school students would be so adeptly conducting research into cancer? Or the impact of bushfires on frogs? Or the chaotic behaviour in circuits? But this is where we are today, a testament to the power of public education done right. I am so proud of all our students and their individual journeys. I am certain that as at my school, for every piece of finished and polished research, there were many highs and lows - we joke in my class that we've yet to avoid tears each year. But what a way to learn and to experience real science. Our Science Extension students are better equipped for scientific careers, and in fact for life, for having persisted and succeeded.

For those teachers considering the dive into Science Extension, I wholeheartedly recommend it - you will never experience a subject as fulfilling or rewarding and you will learn so so much. My advice for you is to start small and build up; your students will lead the way. Our first projects were based on our Year 11 depth study investigations, our students developing their ideas and questions from their piqued interests. With each year, our students have become increasingly sophisticated in their research as they 'stand on the shoulders of the giants' before them. This, in my view, is one of the most significant benefits of the journal we present here, an opportunity to expose students to what is possible. Enjoy!

This edition includes research reports from Science Extension students who completed the course in 2022. All reports are the work of students studying in NSW public schools. They have been supported by their teachers and schools, and in some cases, external mentors.

Running Away from Cancer: An investigation into the dynamic metabolism of cancer cells under an increase in extracellular lactate concentration

This experiment aimed to investigate how a cumulative increase in extracellular lactate affects the ability of a cancer cell line to switch from aerobic glycolysis to oxidative phosphorylation. It was found that when the total lactate injected into the cancerous cell line was 15mM and 20mM, there was a significant increase in oxygen consumption rate compared to basal measurements. This suggests that an increase in extracellular lactate does cause cancer cells to shift to an oxidative phenotype in vitro. However, further investigations involving a larger sample size and in vivo models are pivotal in assessing the role of lactate, and potentially exercise, in the metabolic processes of cancer.

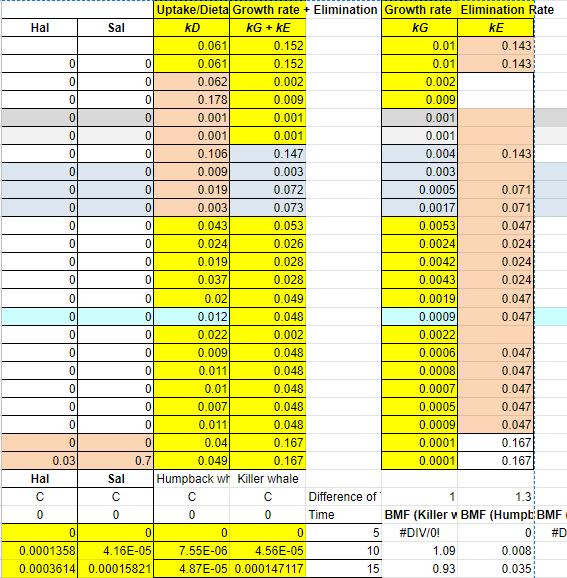

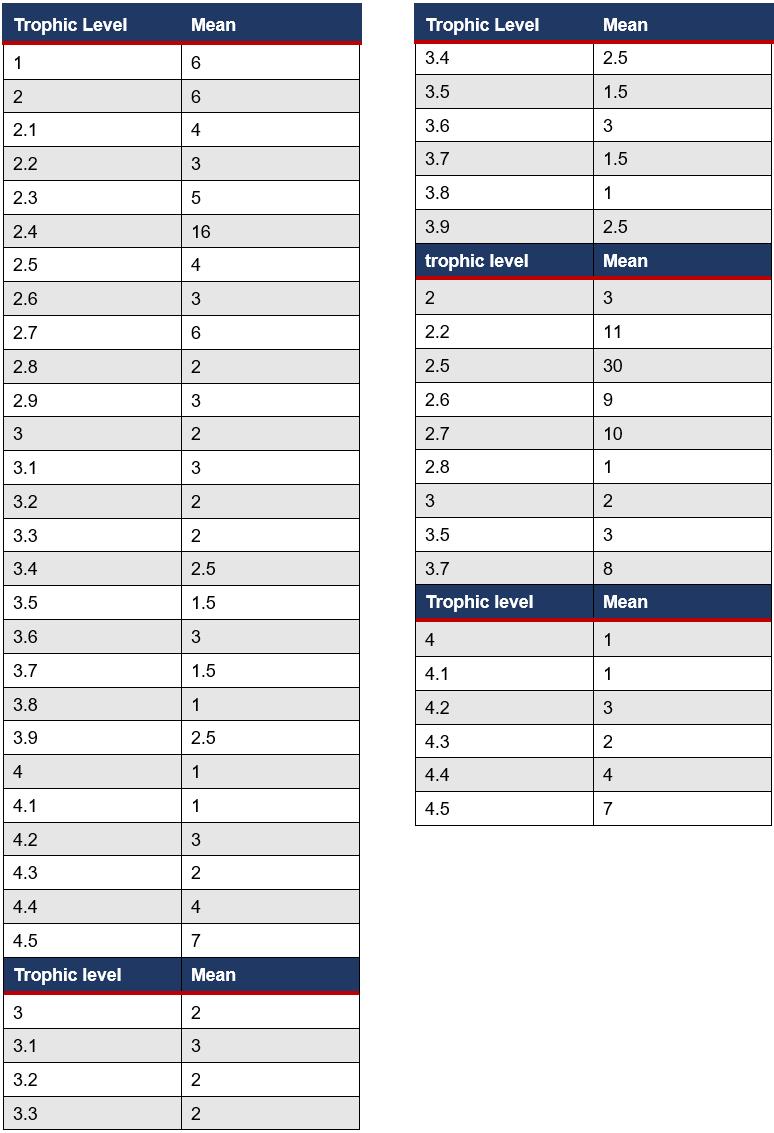

Secondary data was examined to determine if there is a relationship between the trophic level of ocean species and their bioaccumulation of microplastics. Results show that lower trophic levels are more susceptible to microplastic consumption. This suggests that if humans want to avoid microplastic consumption, they should avoid filter feeders (mussels, oysters, scallops etc.), deposit feeders (bass, eels, crabs etc.) and grazers (lobsters, shrimp etc.).

Flynn Croaker - Coffs Harbour Senior College pp 34-48

An investigation into the effect of temperature, light availability and chlorophyll concentration on the rate of photosynthesis in plants was undertaken. Higher temperatures, light intensity and chlorophyll concentration were found to increase the rate of photosynthesis.

49-61

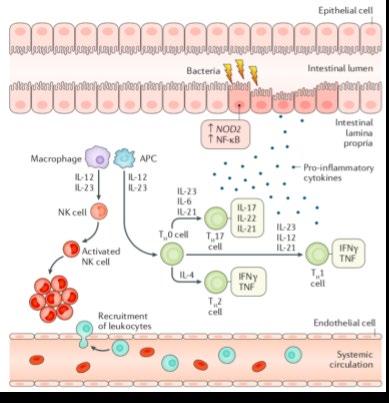

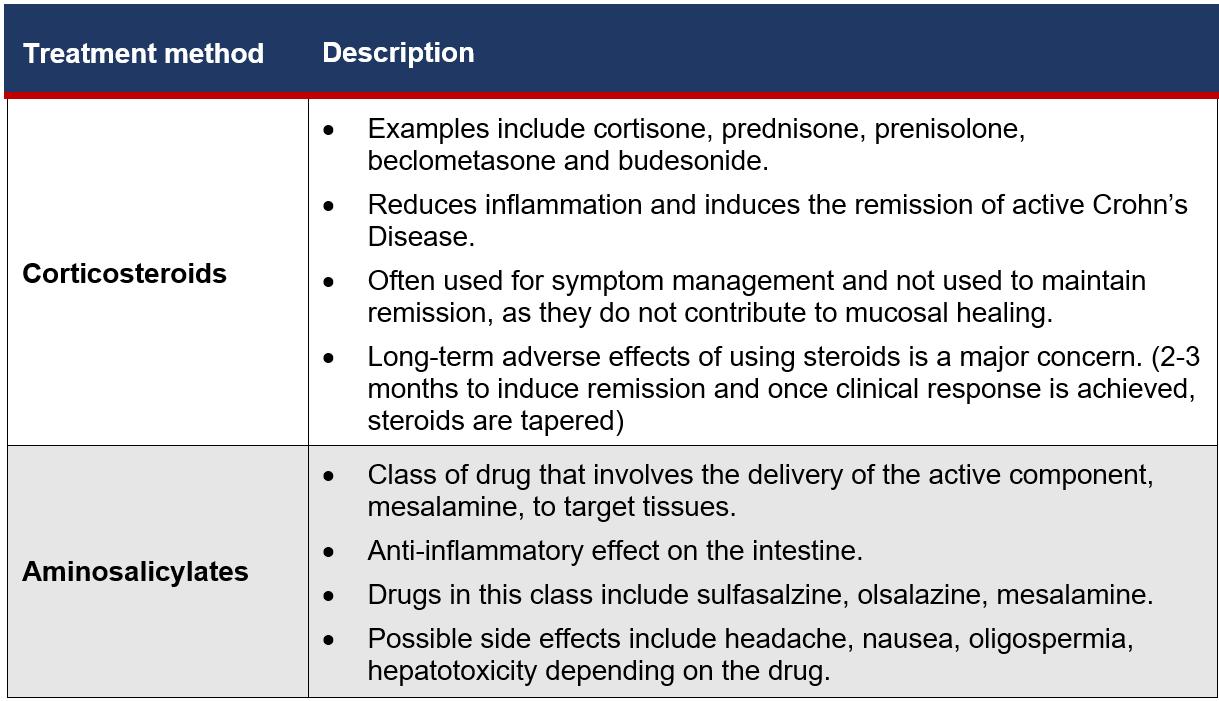

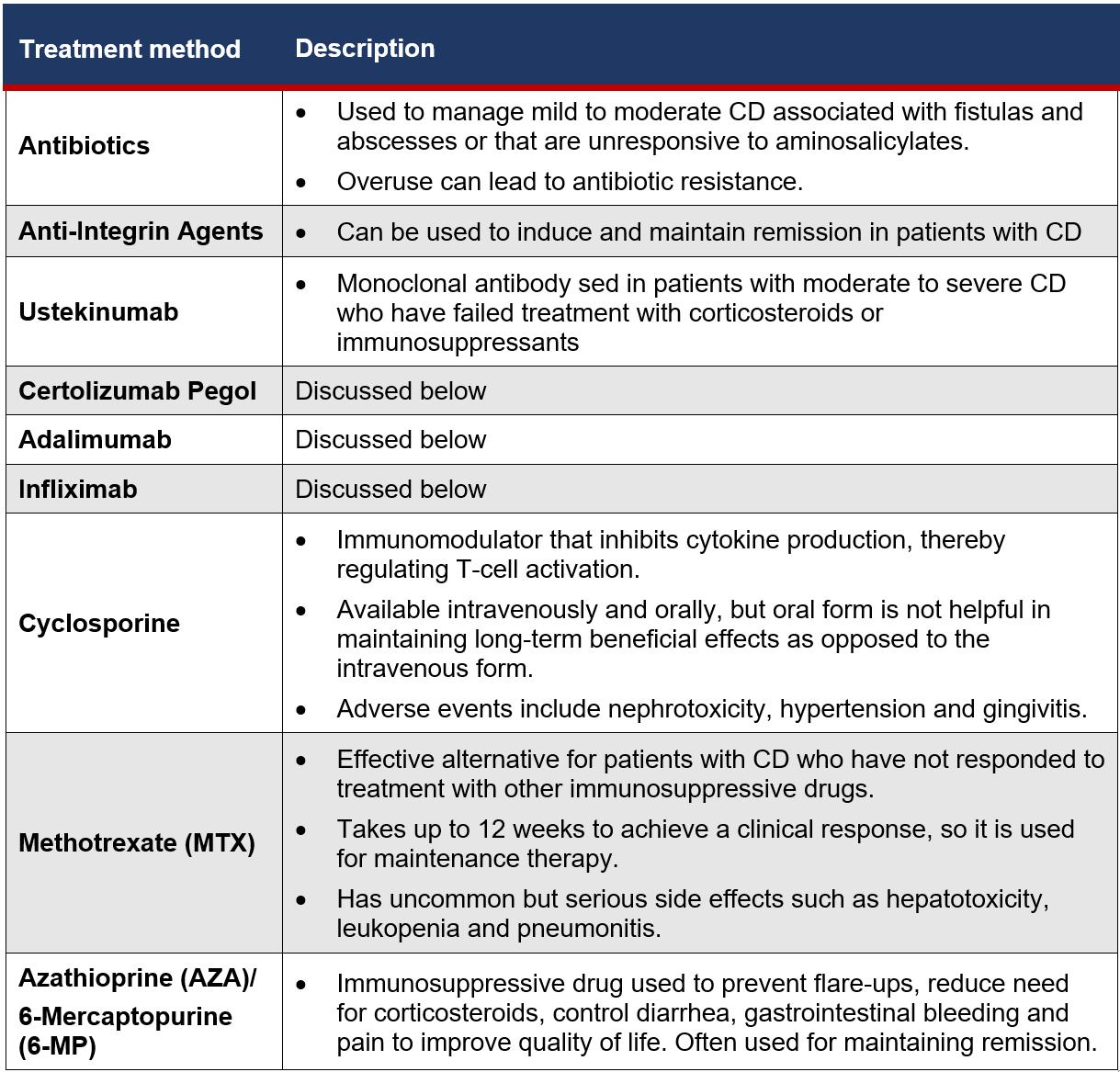

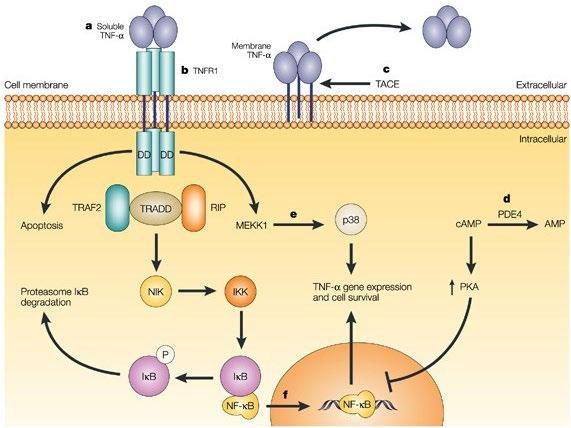

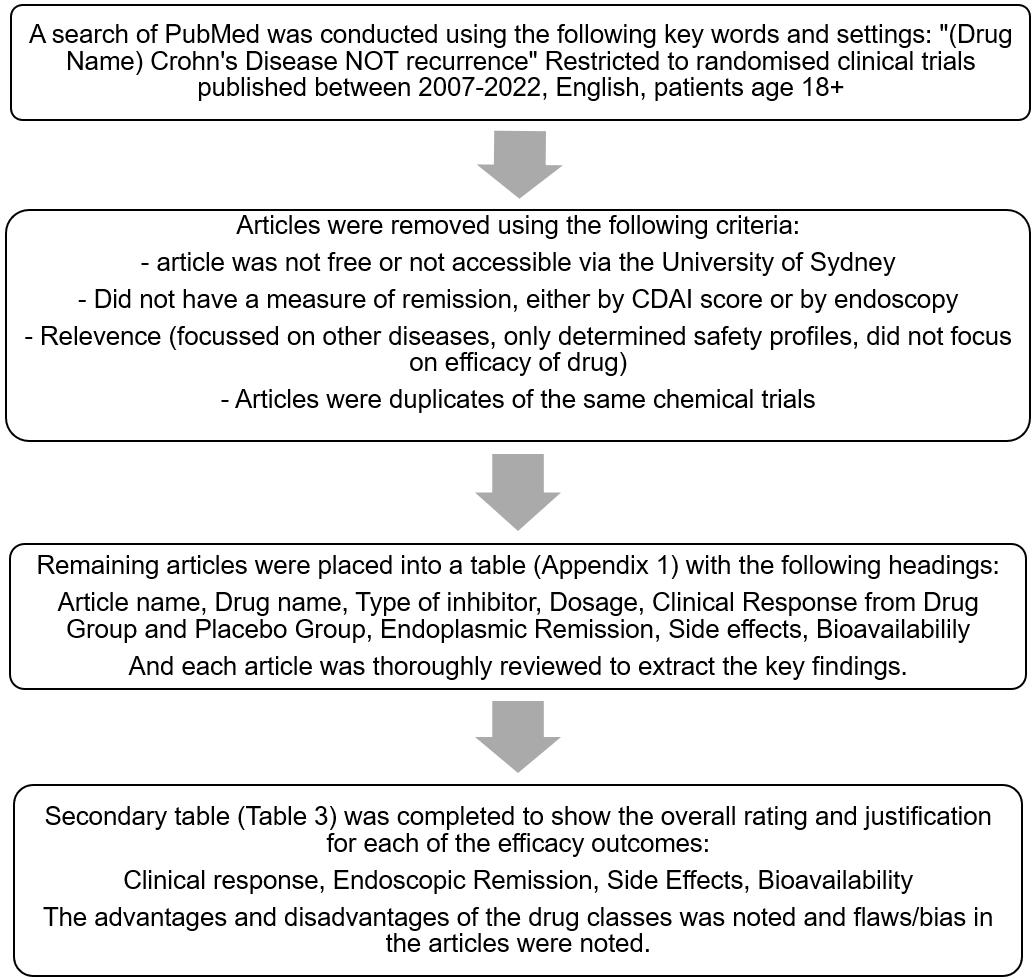

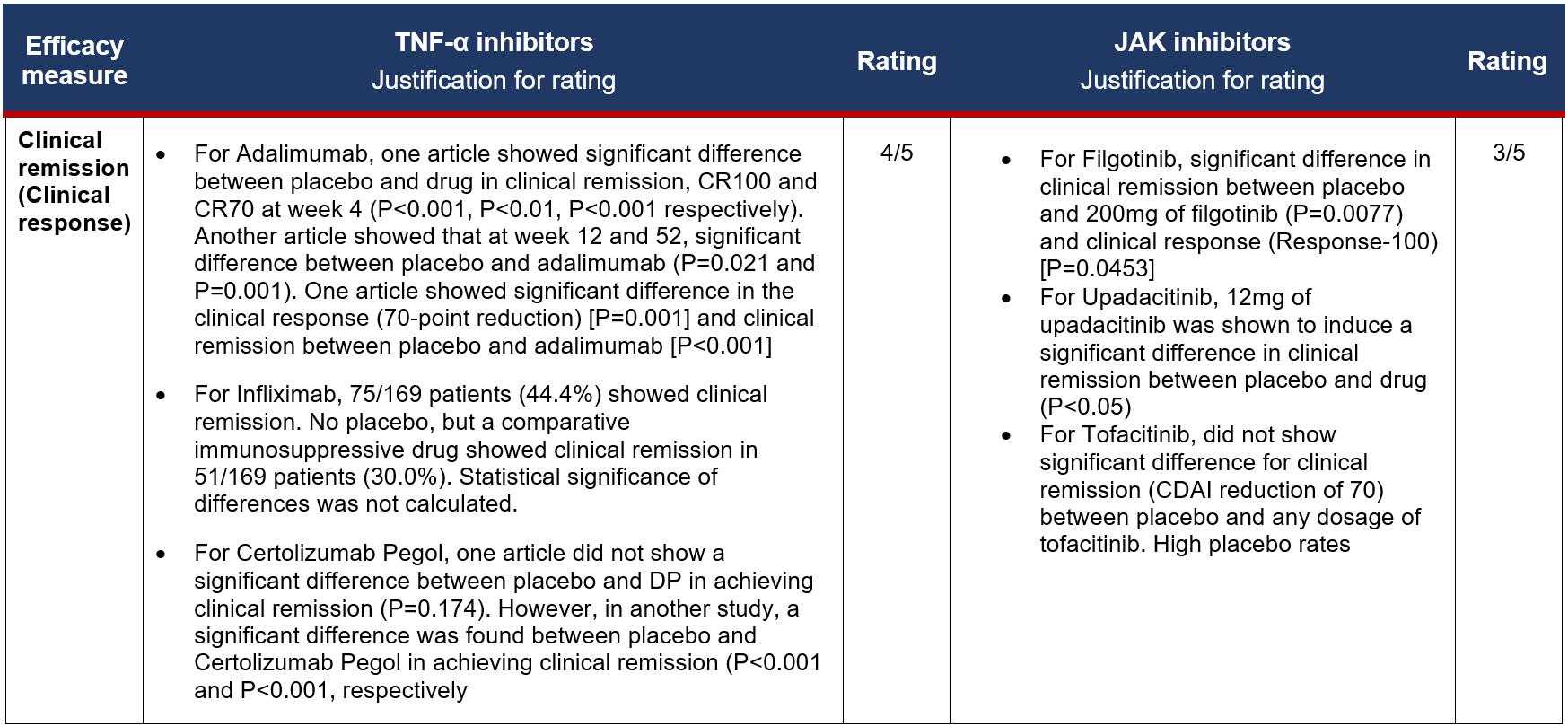

Sarah Arnold - Menai High School pp 19-33

This study investigated and compared the efficacy of TNF-α inhibitors, the standard treatment for Crohn's Disease, with JAK inhibitors, an emerging field of biologics. It was found that some JAK inhibitors did not induce a significant difference in clinical or endoscopic remission, and the clinical development of one was discontinued. They therefore failed to contend with TNF-α inhibitors, which were reliable for safety and for clinical and endoscopic remission. This study is significant as it provides CD patients and healthcare professionals with a comparison between the two classes of inhibitors to assist them with their treatment options.

62-84

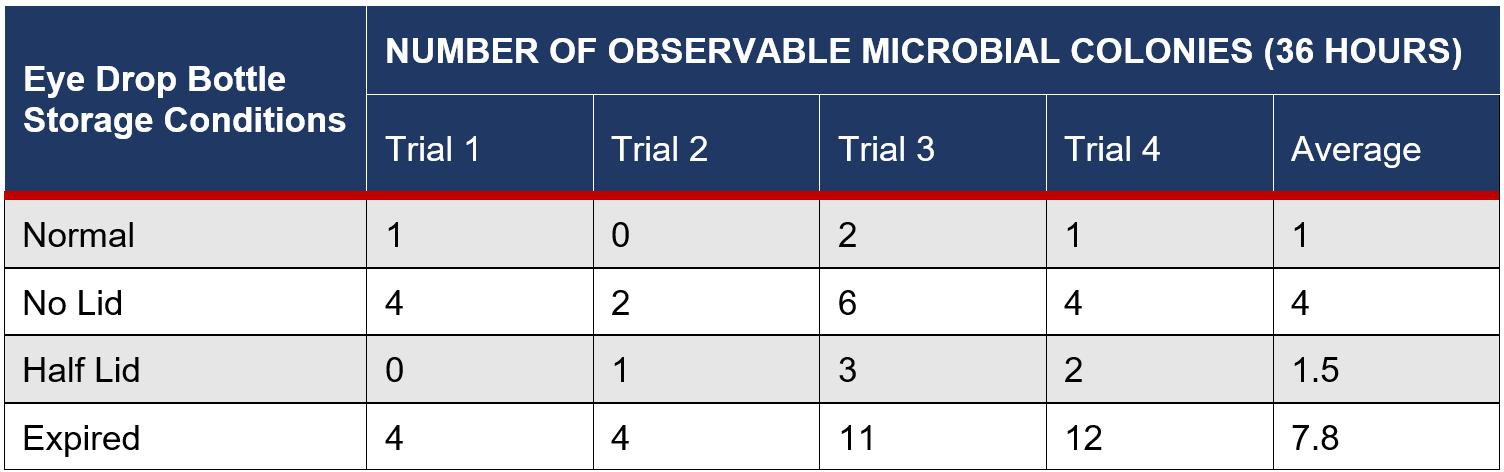

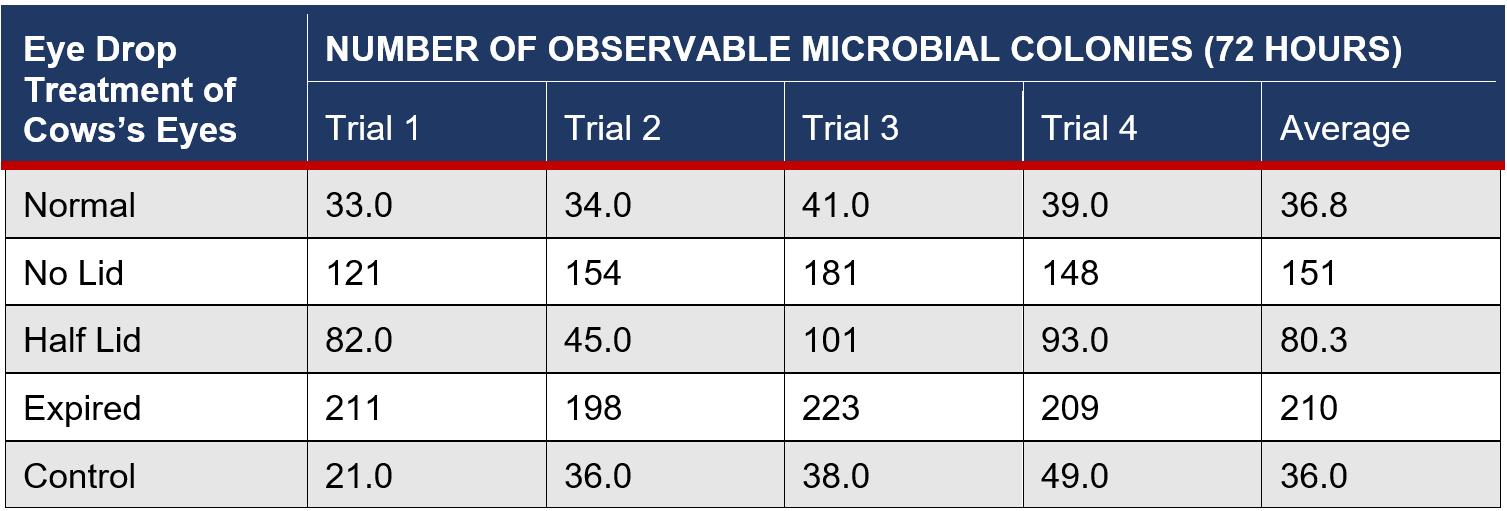

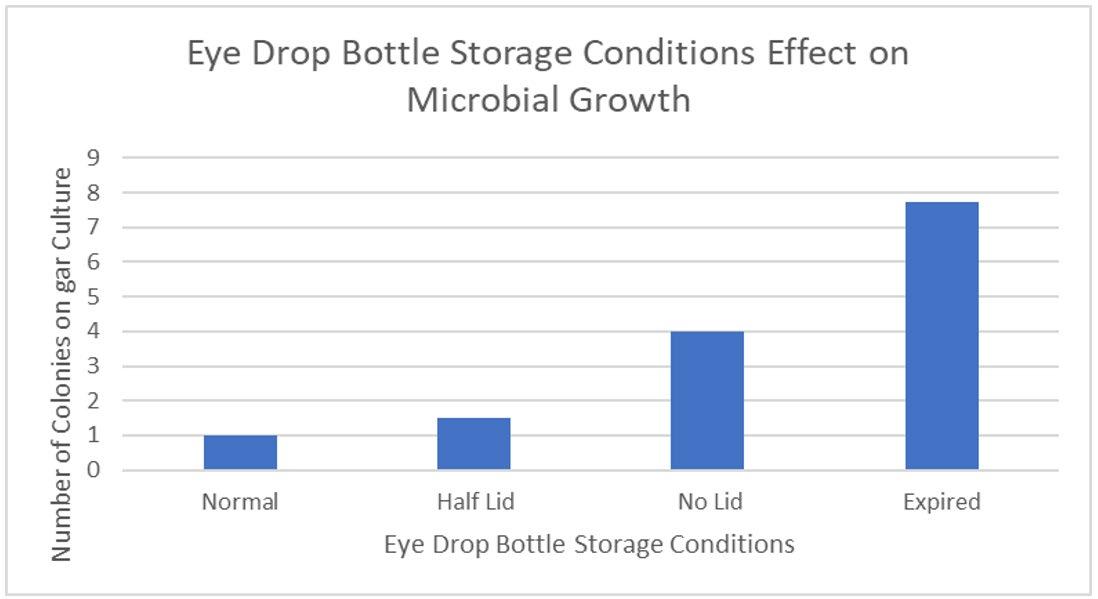

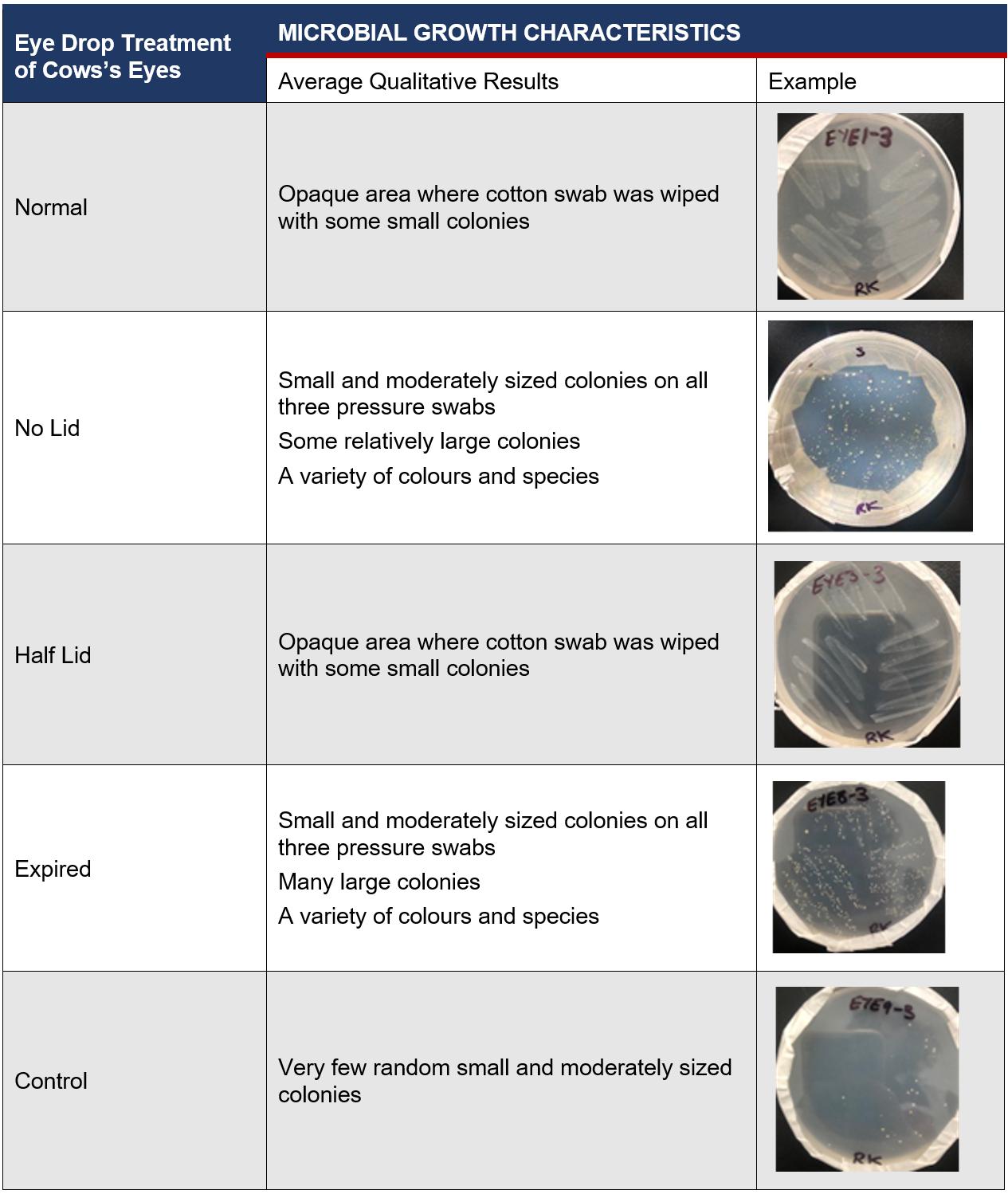

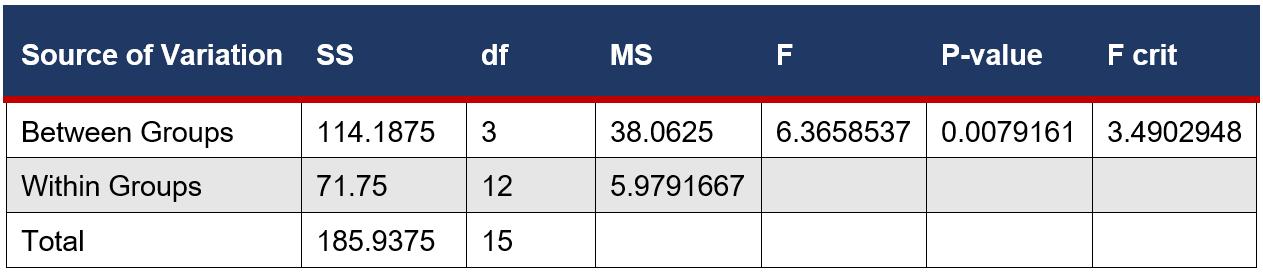

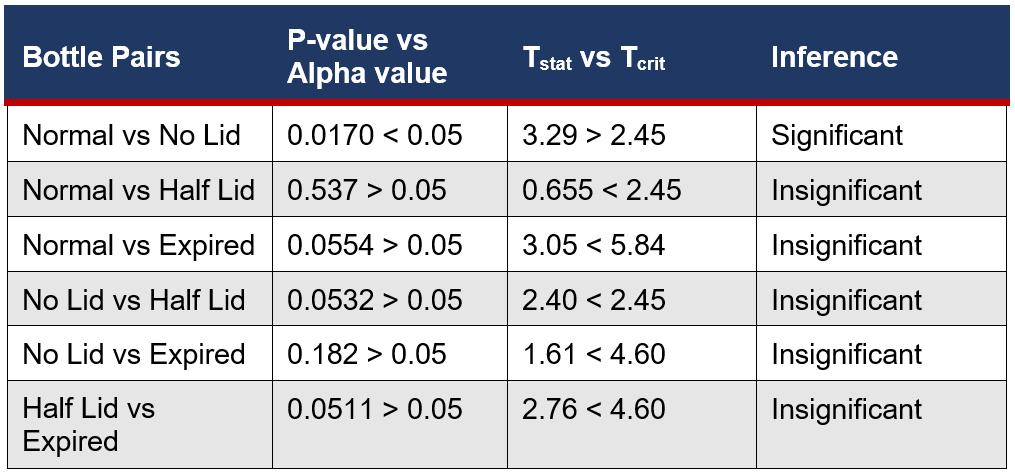

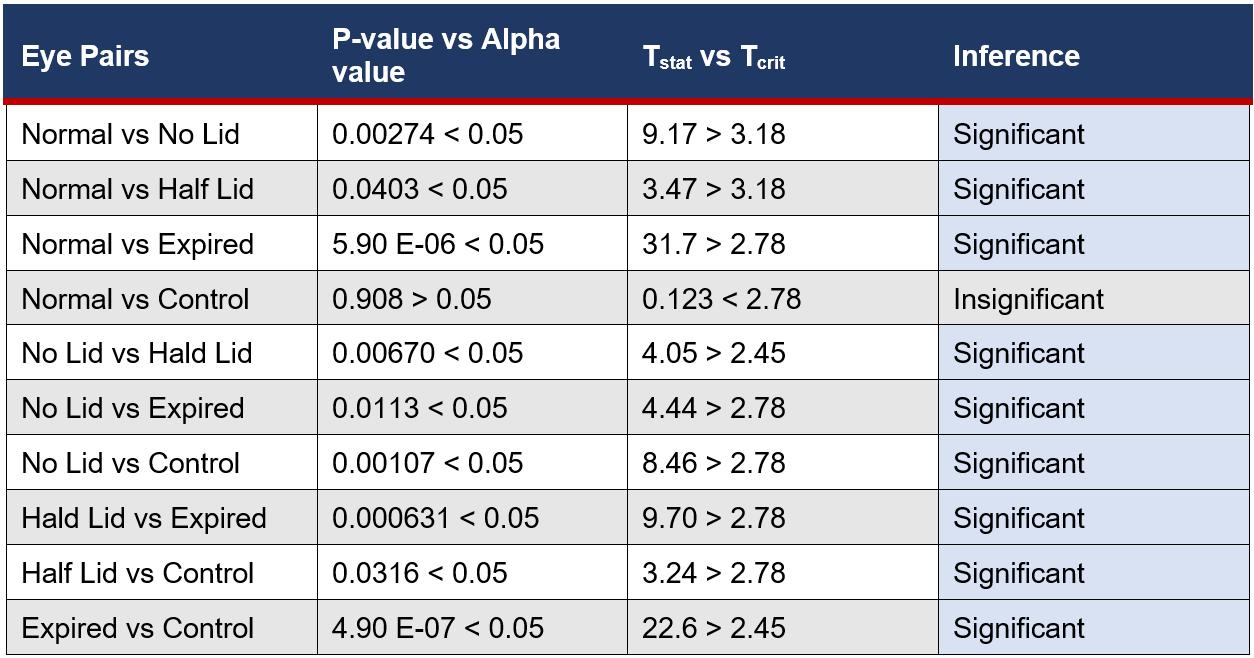

This study aimed to investigate how differing storage conditions of over-the-counter eye lubricants affected the microbial contamination on eye surfaces. It was found that there was a very strong positive correlation between storage conditions and microbial growth on the eye surface. This shows that the correct storage of eye drops is important in preventing or limiting microbial growth on the patient's eye surface.

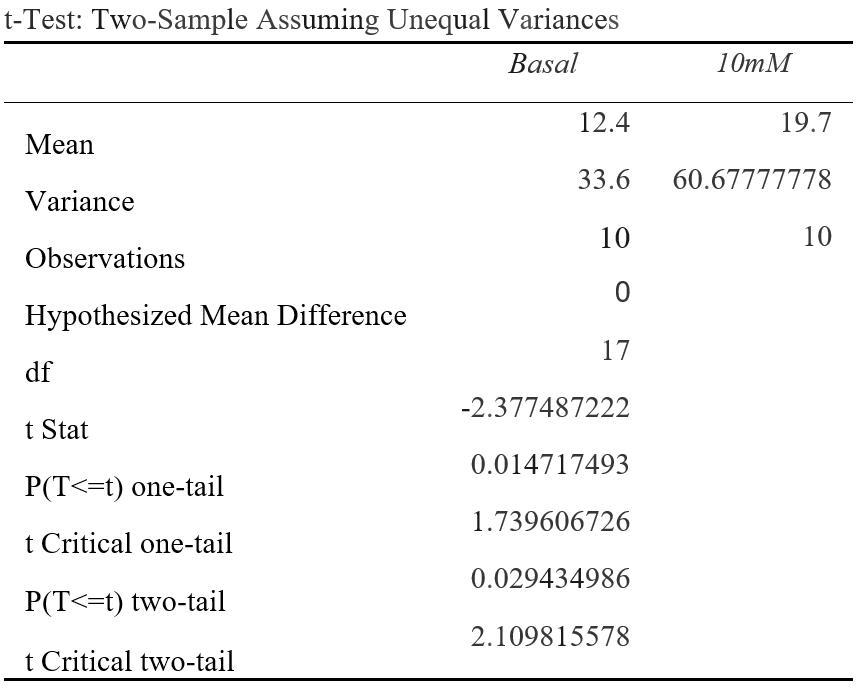

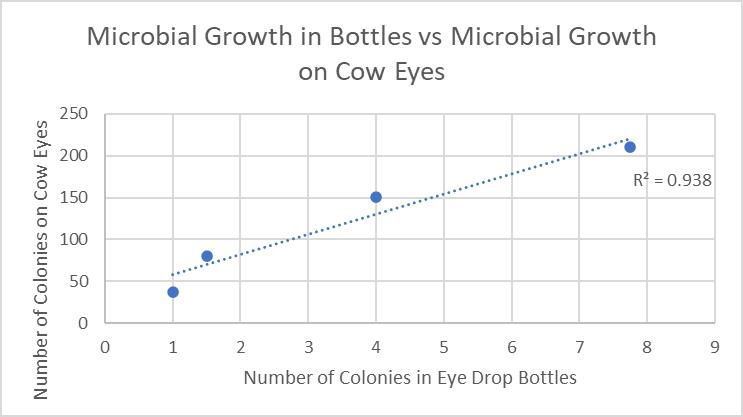

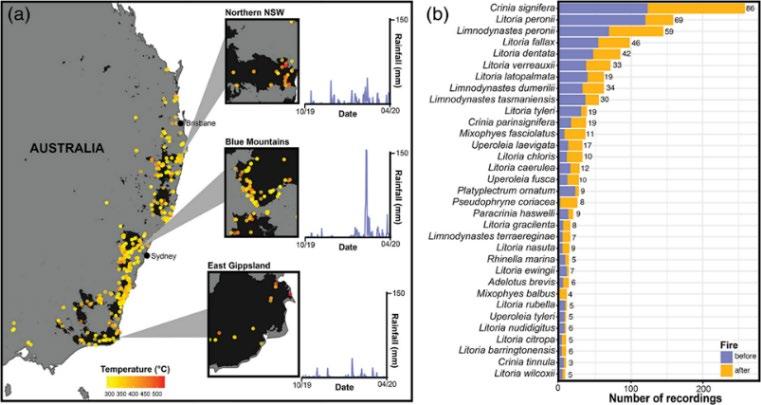

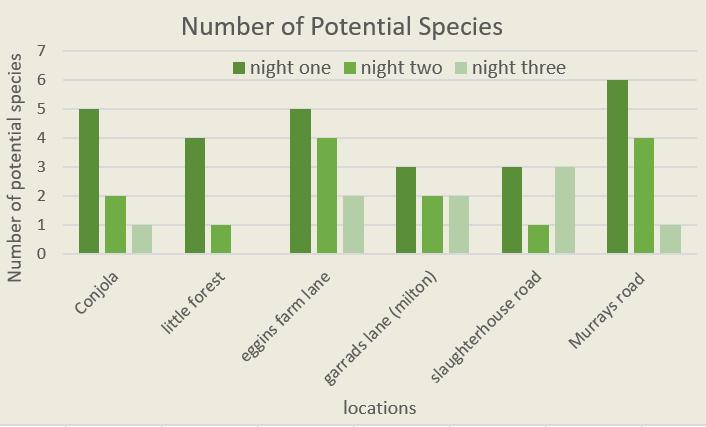

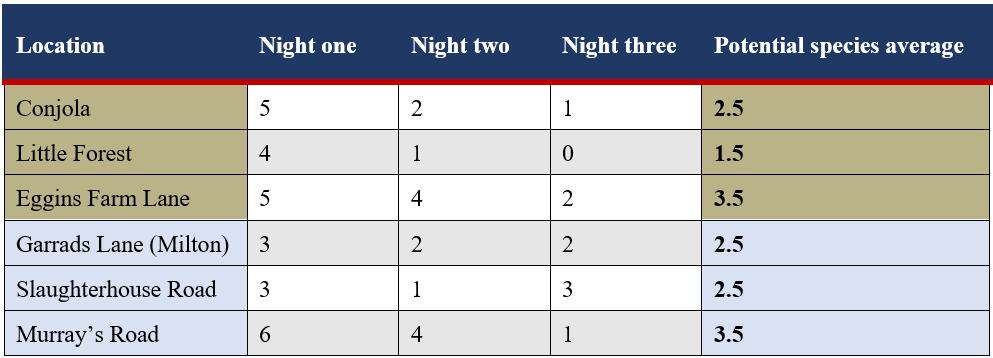

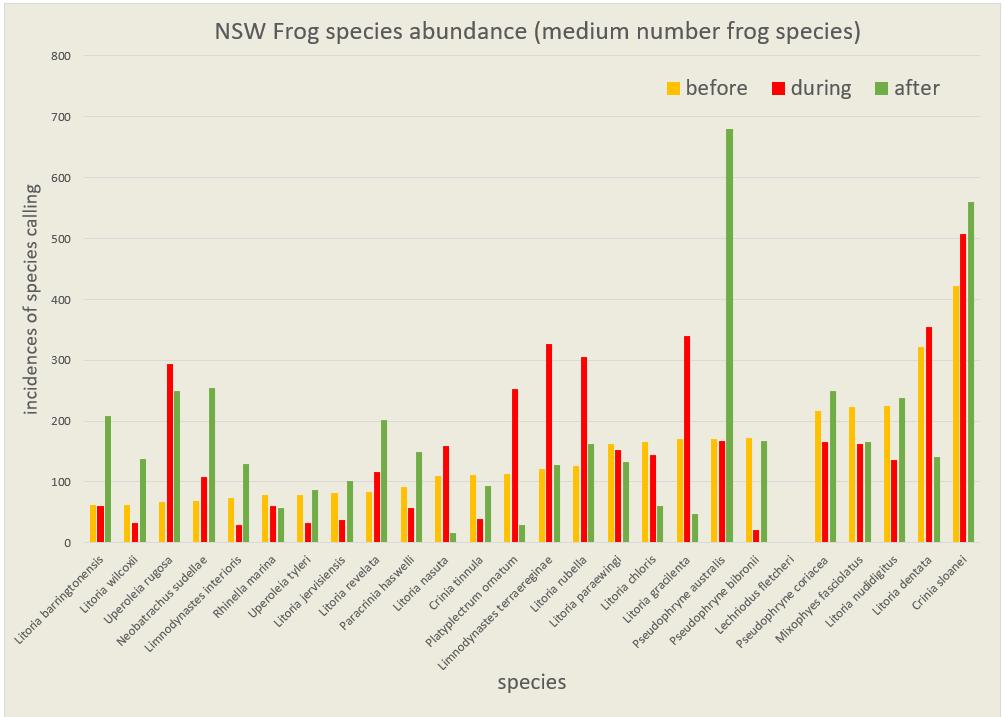

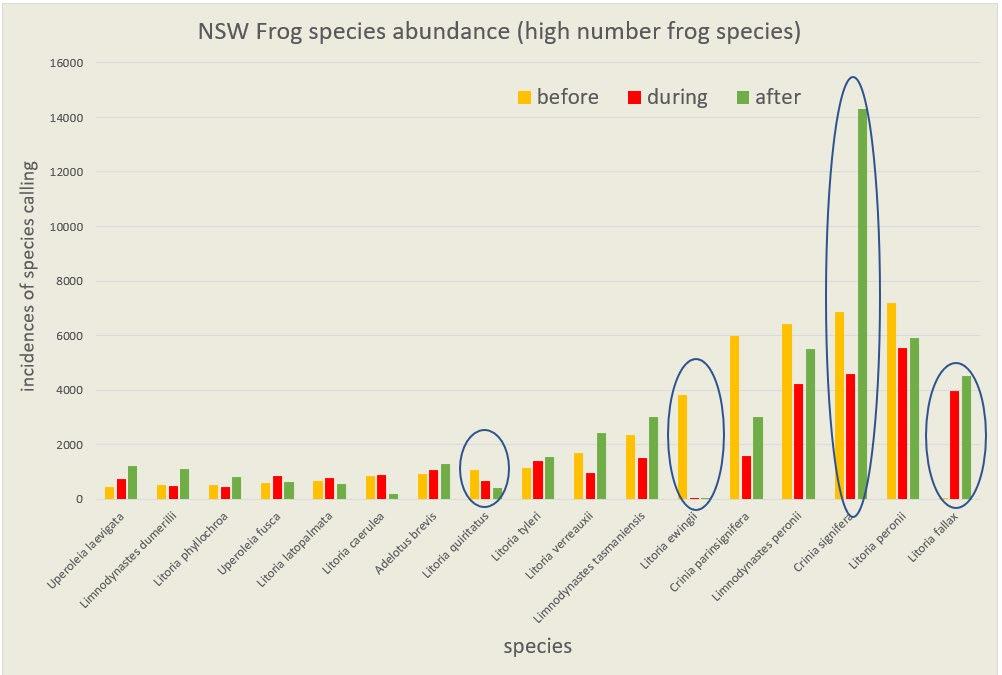

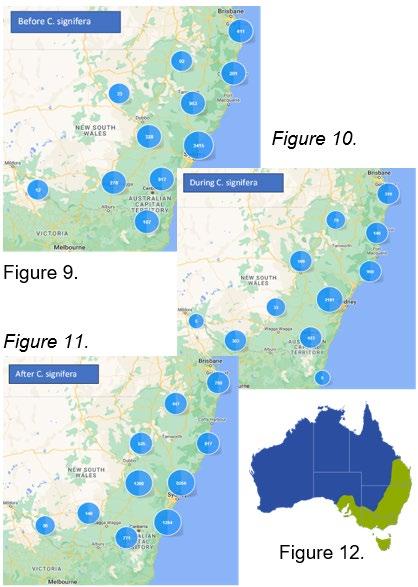

This paper investigates the impacts of the Currowan bushfires on the distribution and populations of frog species with reference to both intimate localised sites and the entirety of NSW. The findings concluded that all species' recordings decreased during the fire period, and many increased after the fire period. The impact of fires on frog species is scarcely researched, and further research would be highly beneficial for frog conservation and future predictions in light of climate change.

101-113

Chaemin Joseph Kim - James Ruse Agricultural High School pp

Rosie Kerruish - Willoughby Girls High School pp 85-100

This study examines how the composition of the shrub layer within revegetation plantings affects species richness and abundance of birds. While areas with high shrub density did not have higher bird abundance, the investigation did show that bird abundance increased with increasing tree density. It is therefore important to maintain existing areas of established and mature native trees for the benefit of birds.

pp 114-124

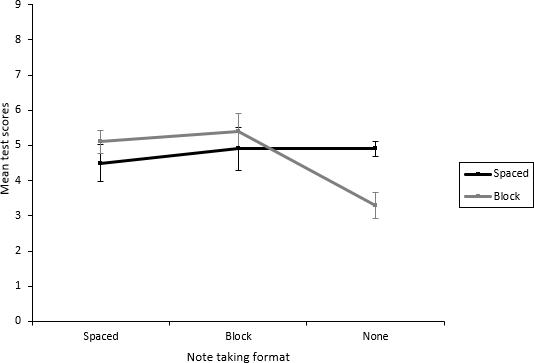

In this study the effect of paragraphing and note taking format on the recall of information was investigated. The results showed that the presence of notes was significant and the interaction between notes and textual format, varied through paragraphing, was also significant. This holds practical value for improving the study habits of students, for taking down key information from presentations, speeches and interviews, and for improving recall to drive more informed decision making in workplaces.

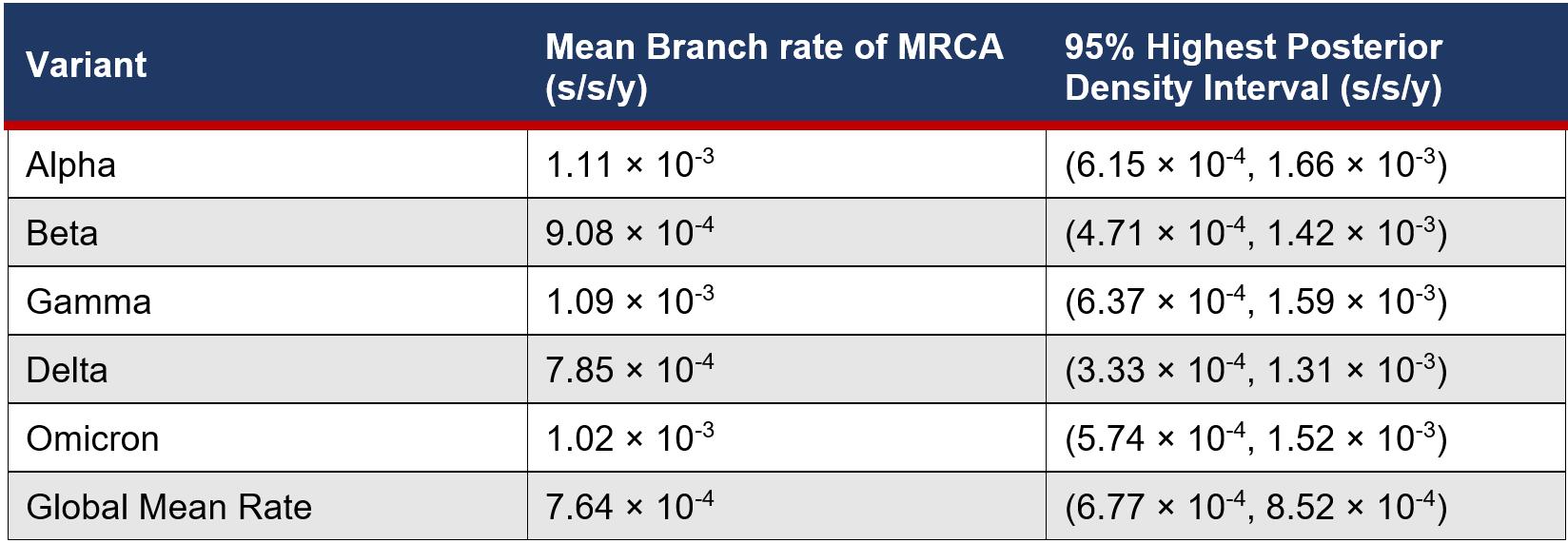

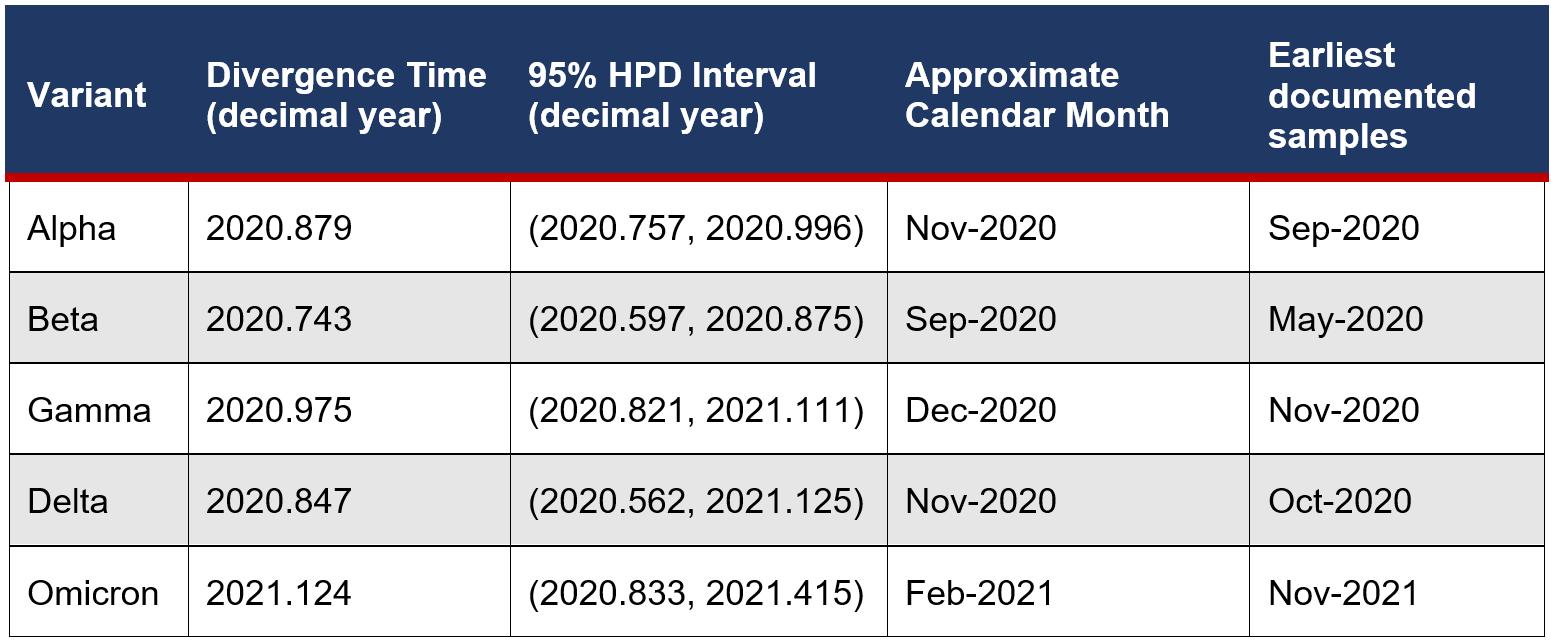

An investigation of how the evolutionary rate of SARS-CoV-2 has varied throughout the pandemic shows that there is a long, but temporary, period of rate acceleration around 1.5 times above the mean rate in the Alpha, Gamma and Omicron variants. These results reflect the importance of large genomic datasets built upon global genetic surveillance efforts in understanding the evolutionary dynamics of SARS-CoV-2, which allow for informed public health decisions.

pp 134-144

The Journal of Science Extension Research 2023

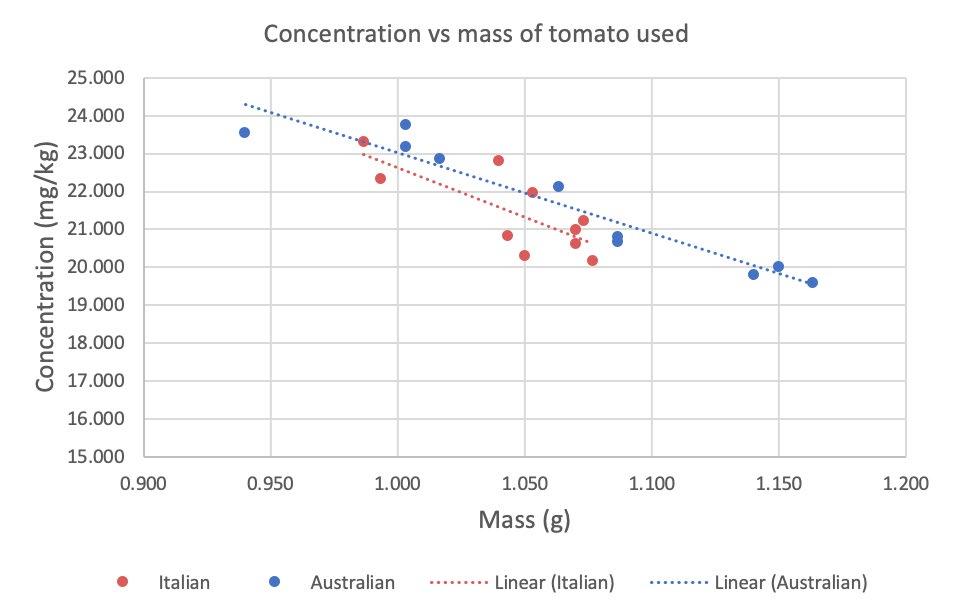

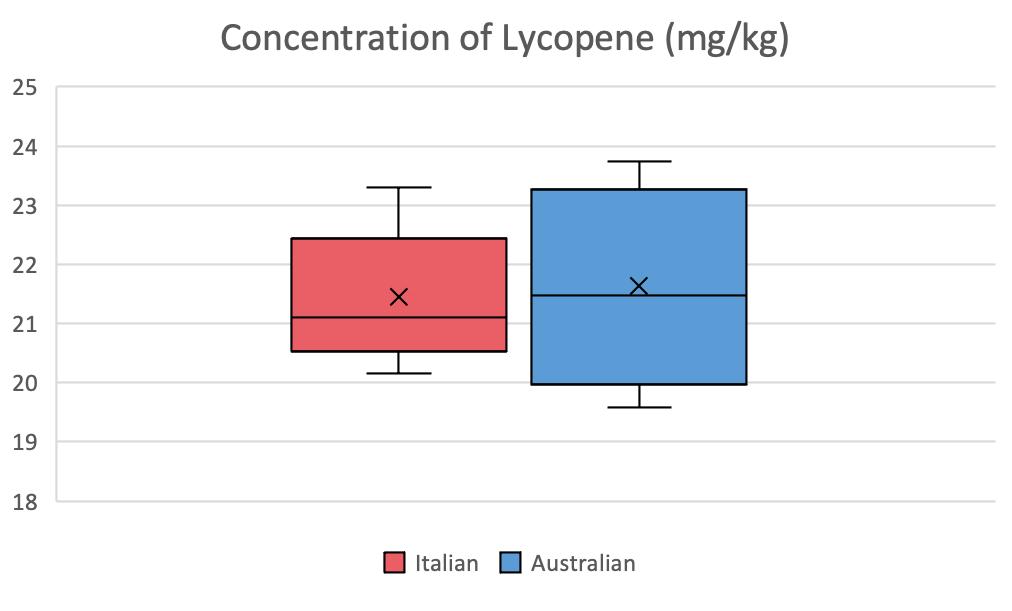

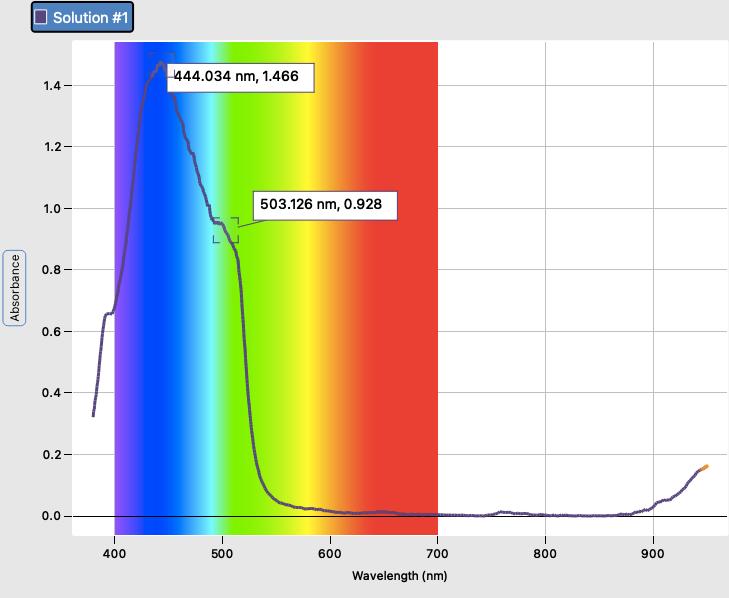

This study aimed to determine if there is a difference in the concentration of lycopene, a powerful antioxidant, in tinned tomatoes from Australia and Italy. The lycopene concentration of canned tomatoes from Italy and Australia was not significantly different. Consuming foods and meals made with tomatoes sourced locally or from Italy will provide the same amount of lycopene and thus the same antioxidant properties and health benefits.

pp 145-155

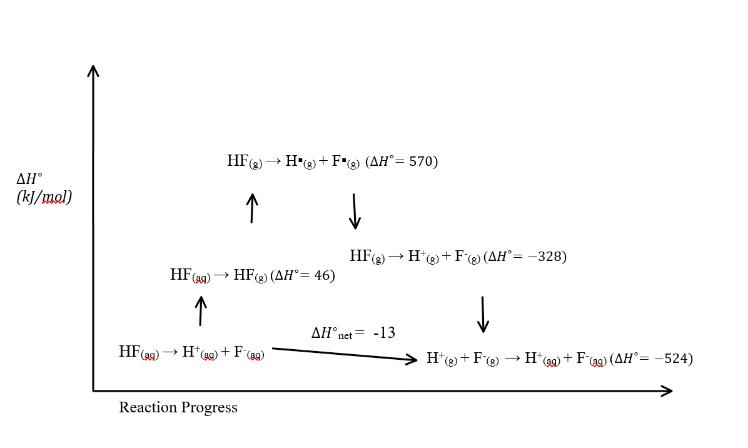

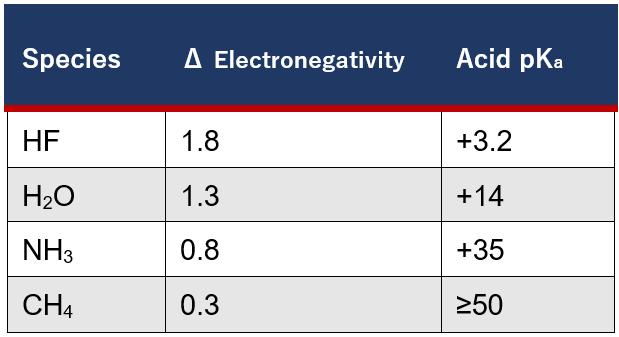

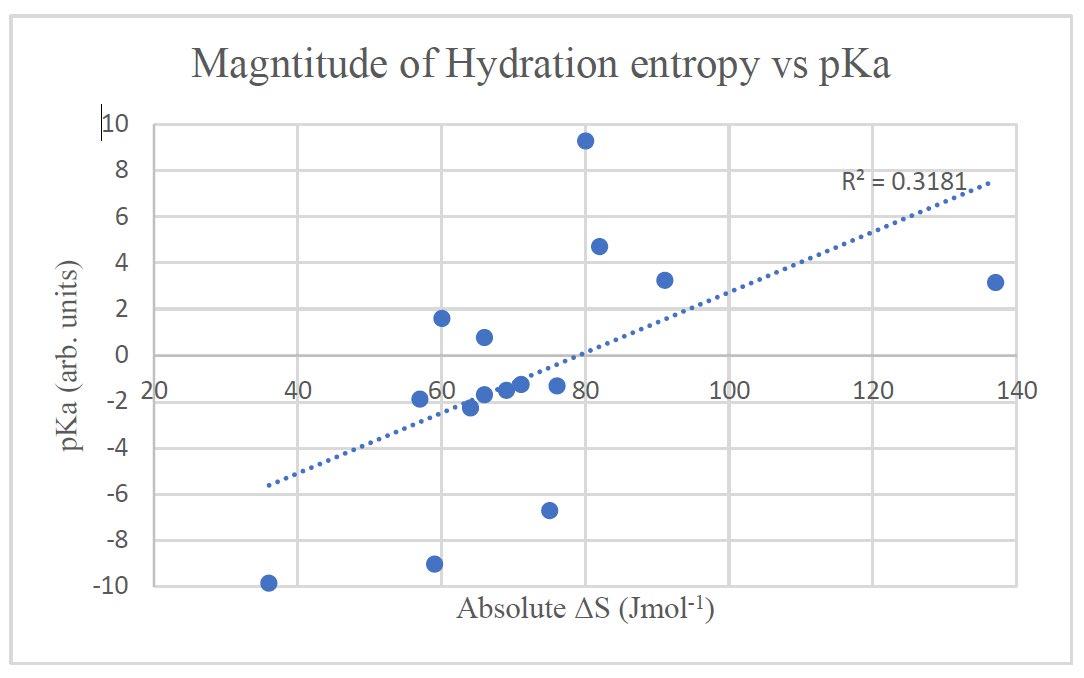

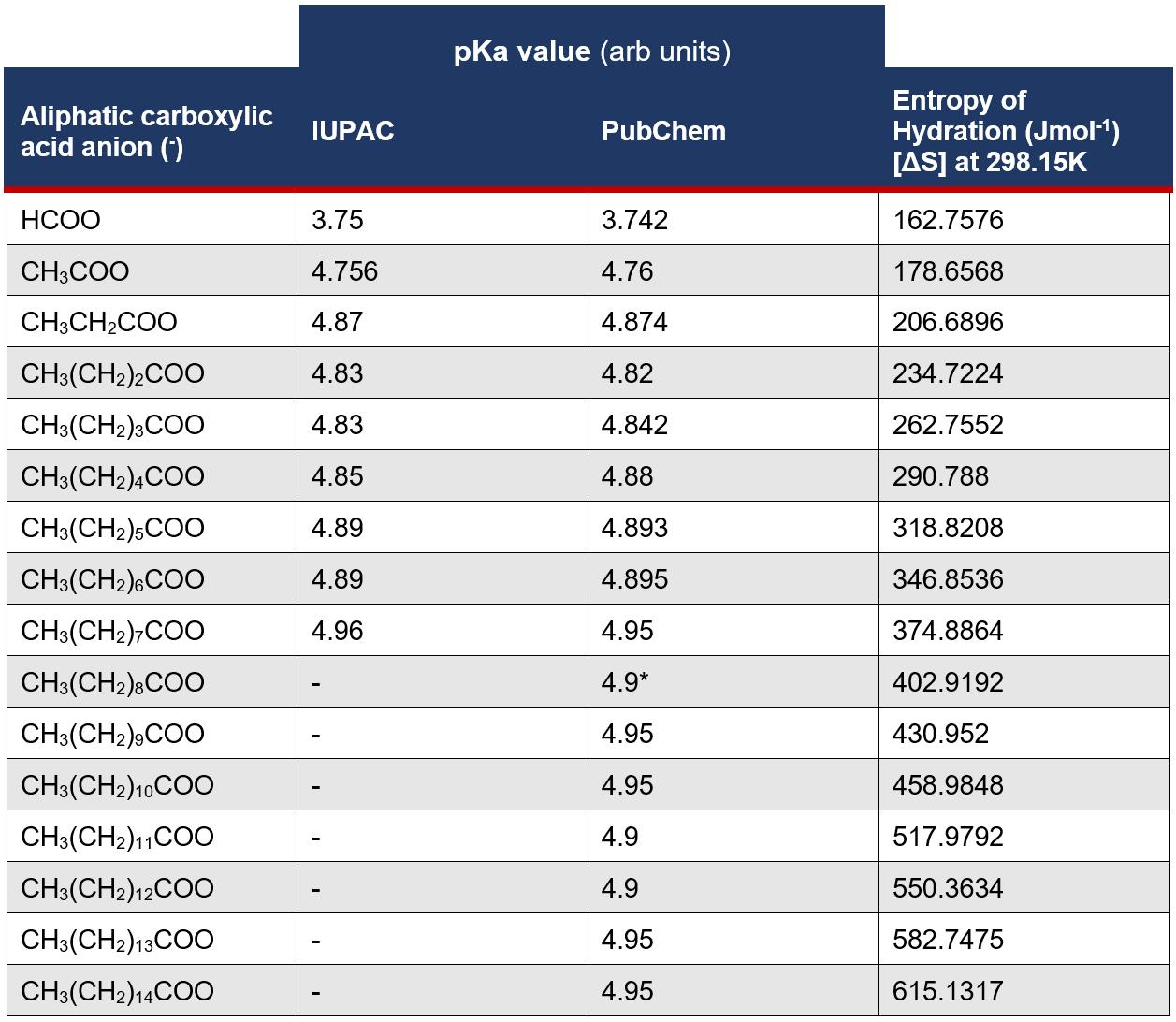

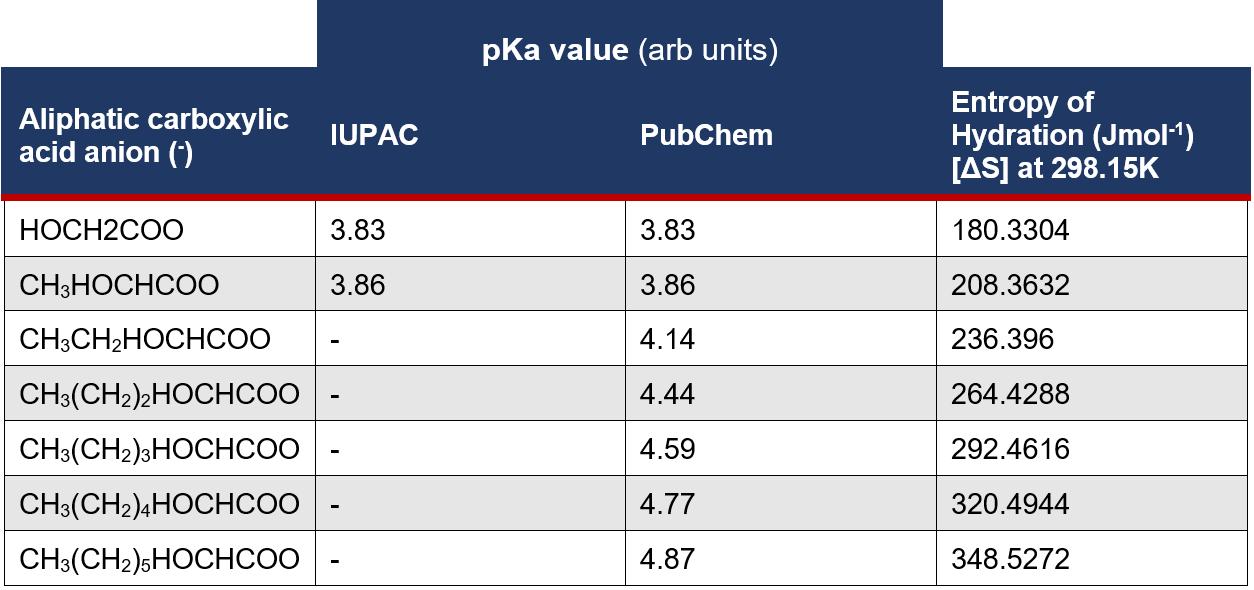

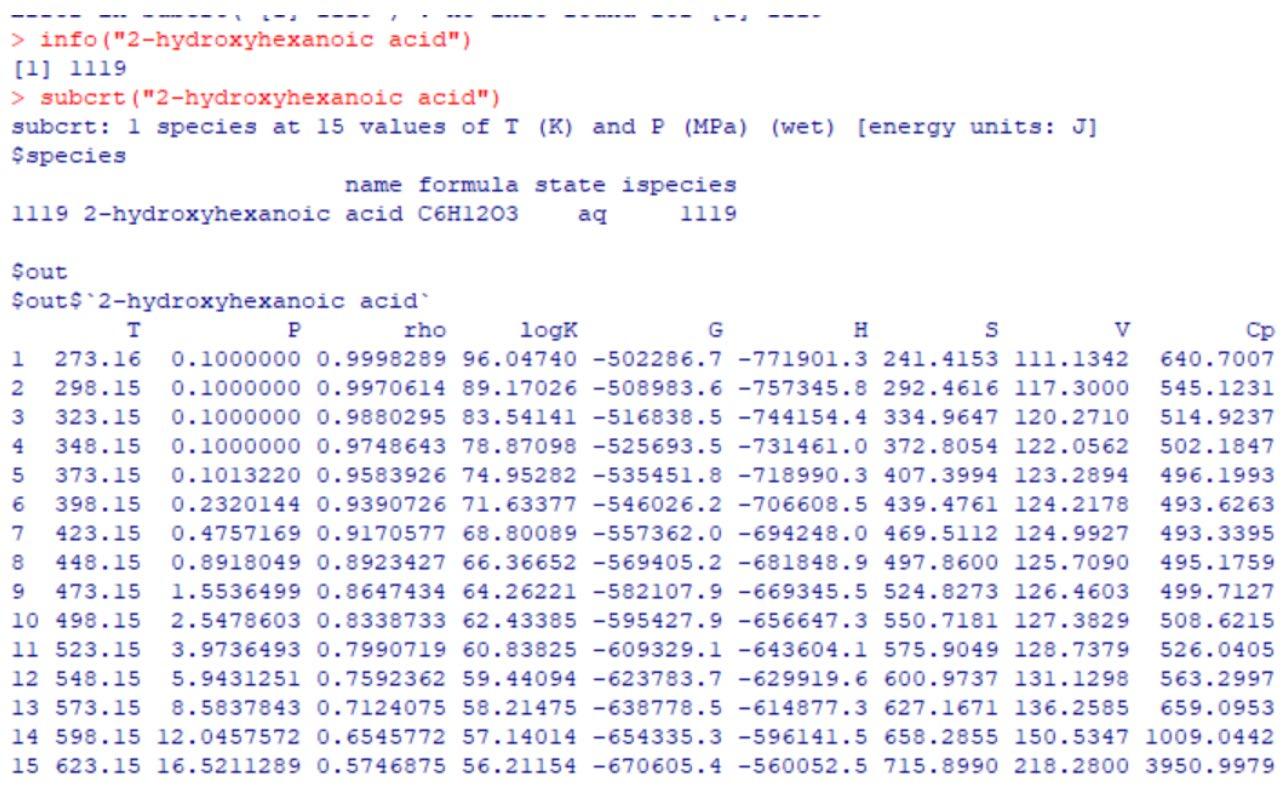

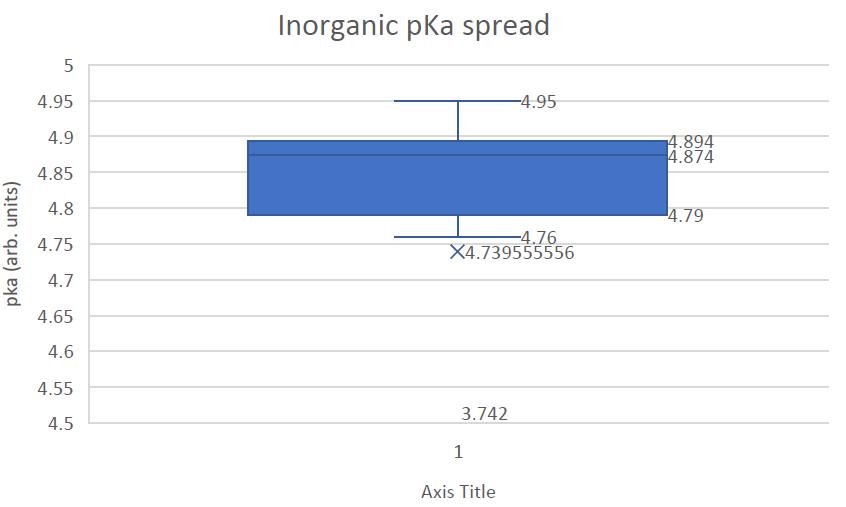

The anomalous weak-acid nature of hydrofluoric acid has sparked scientific discourse for nearly a century, with the most recent explanation attributing its lack of ionisation to entropic factors. This report extends the theorised principle by establishing causation between the hydration entropy of a wide array of monoprotic acid anions and their tendency to ionise in an aqueous system. It was found that an increased hydration entropy of monoprotic anions is directly related to a decreased tendency of the acid to ionise in an aqueous solution.

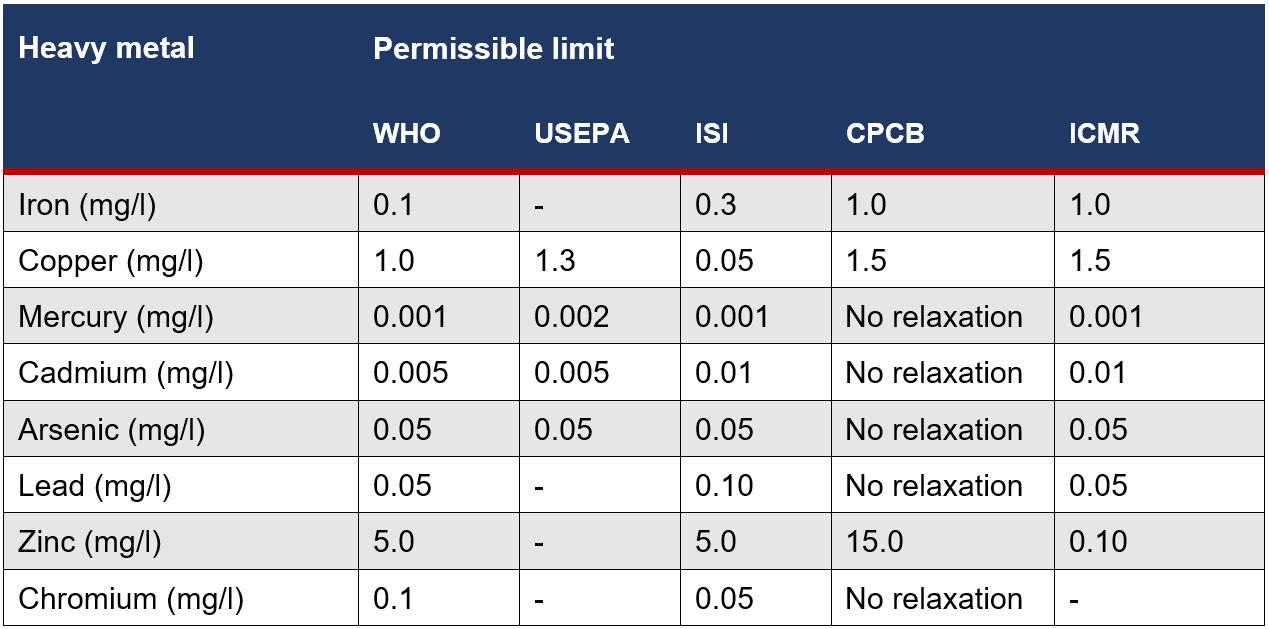

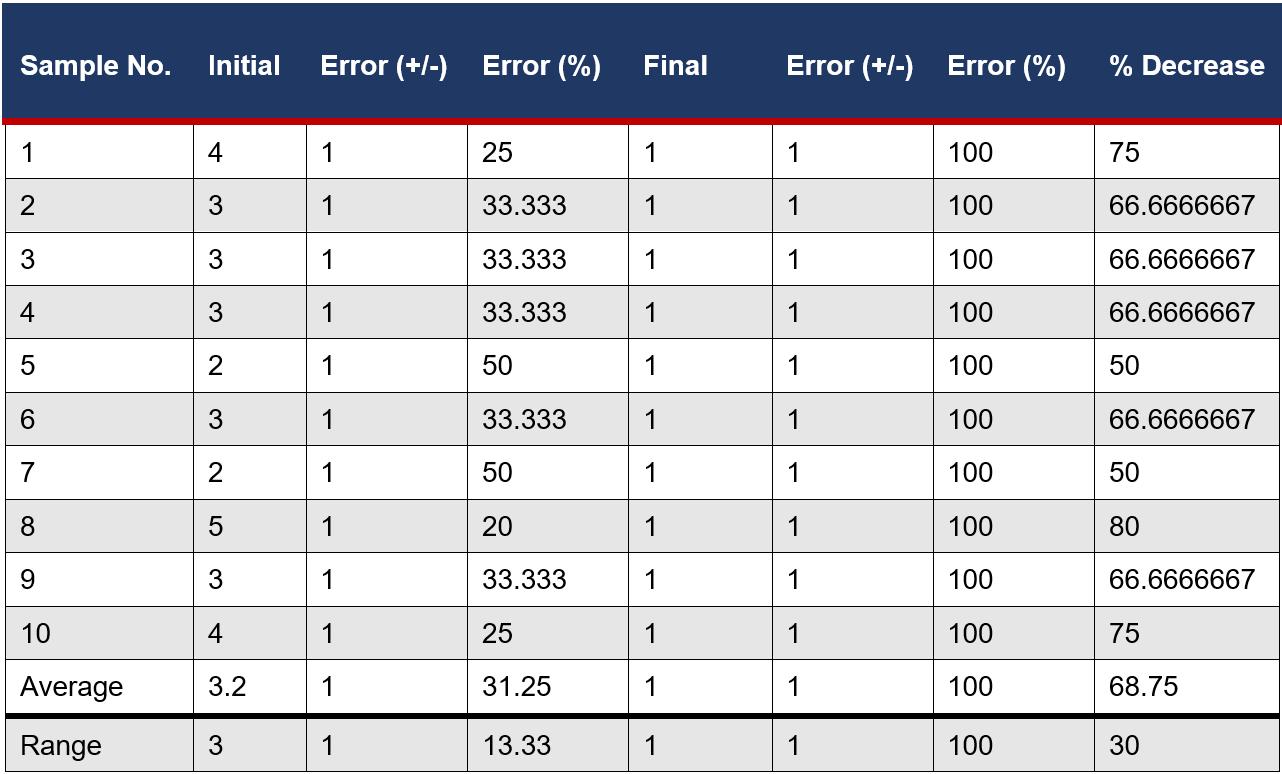

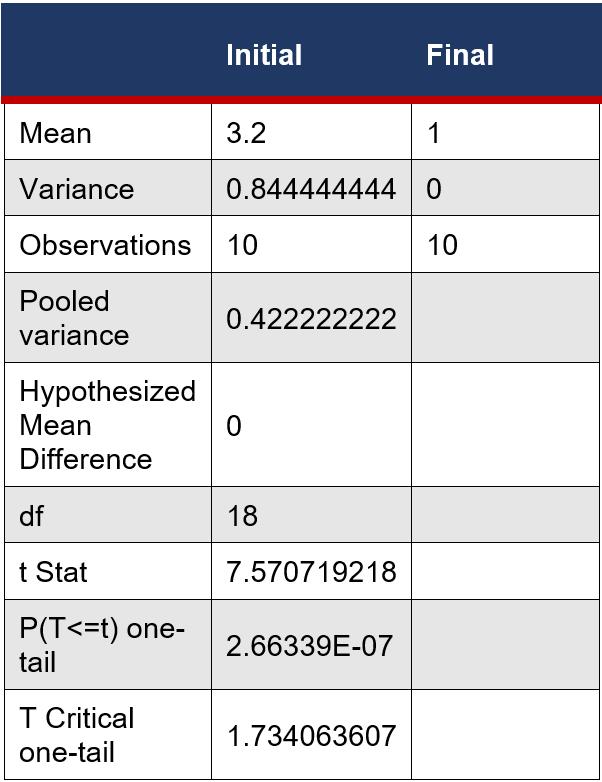

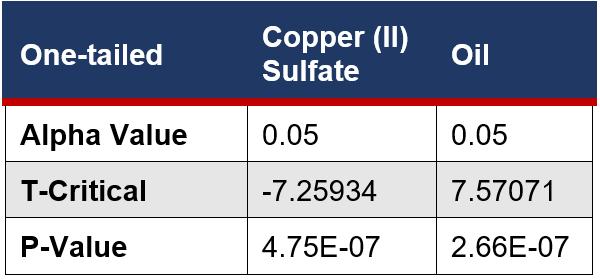

This investigation explored the detoxifying and filtrating properties of Typha orientalis, a wetland plant, in the removal of copper(II) sulfate and cooking oil from water, to simulate pollutants found in Sydney Harbour. This experiment provided quantitative and qualitative data to support the application of Typha orientalis in the filtration of polluted water in the real world, ultimately to reach a level of contaminants deemed safe for consumption by the World Health Organisation.

pp 172-189

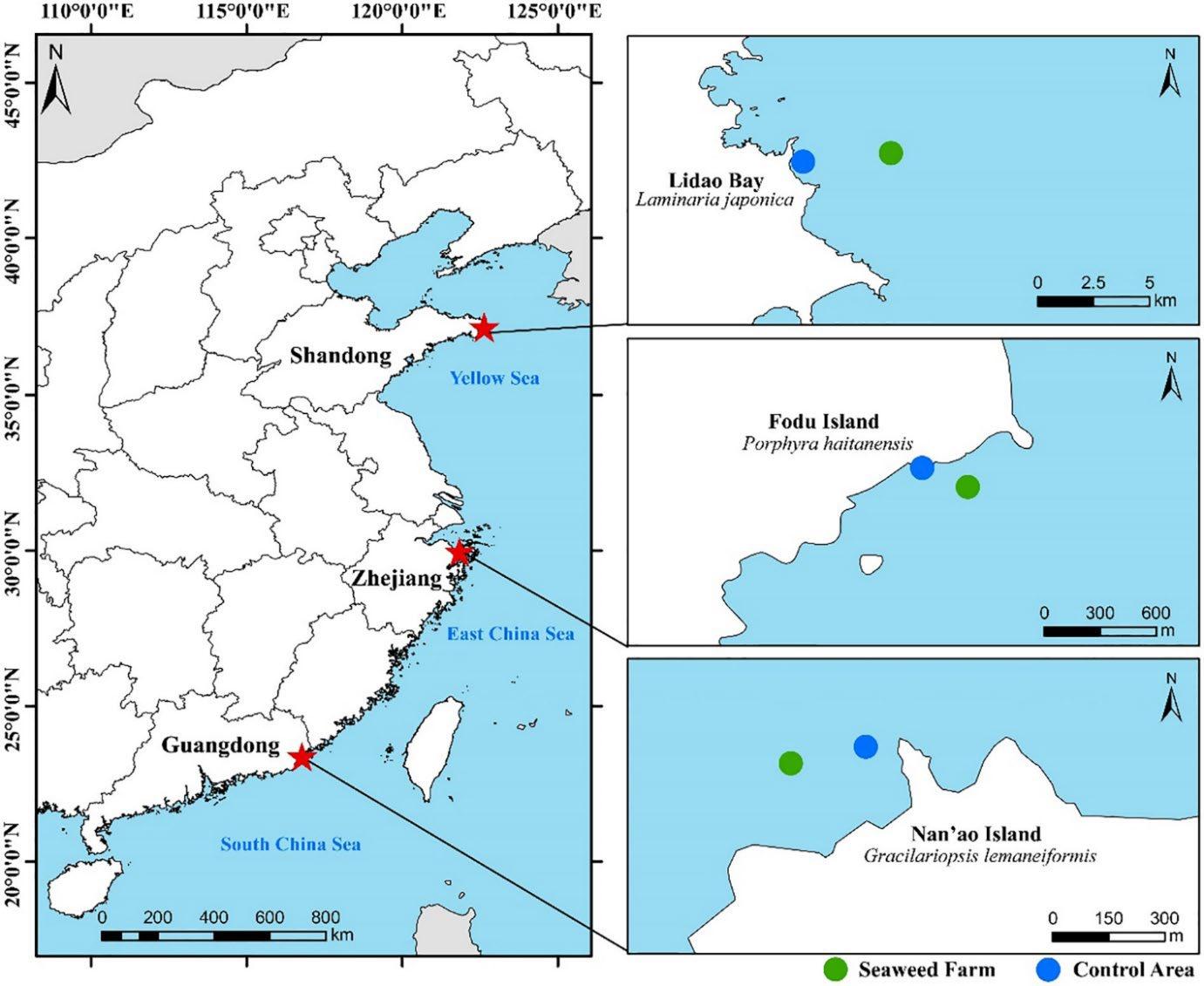

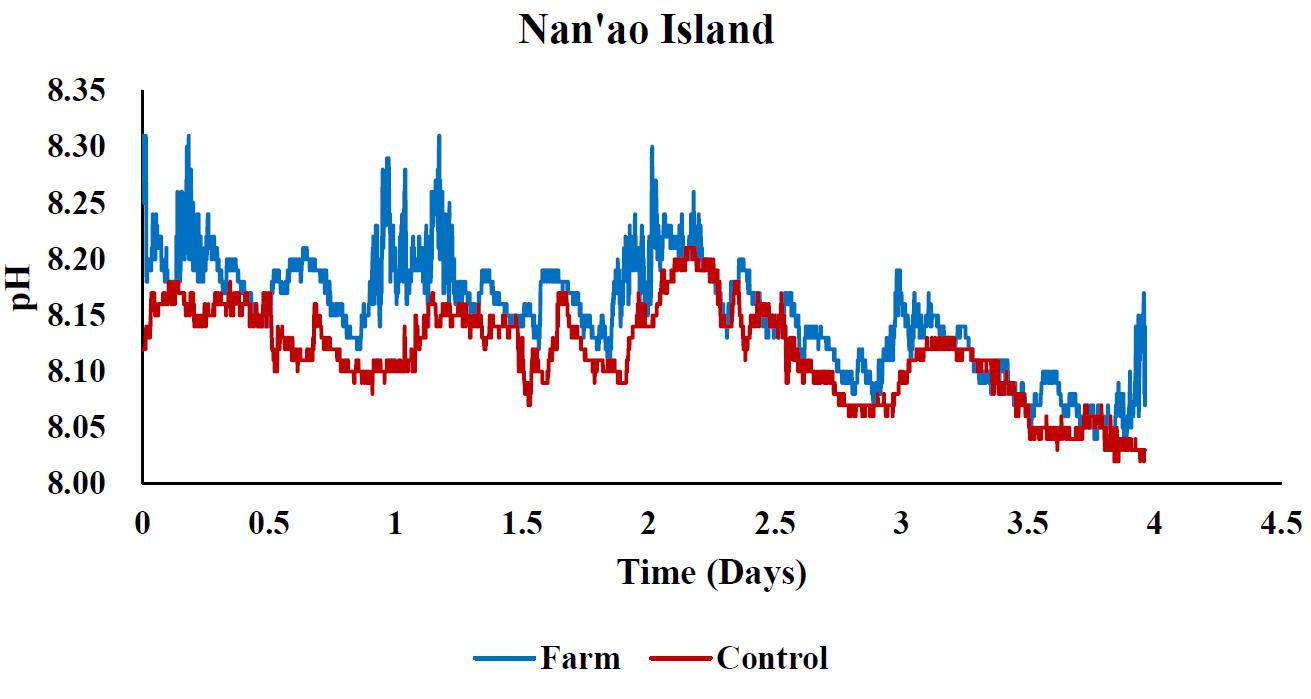

This meta-study used secondary sourced data to test whether seaweed farms had the ability to locally mitigate ocean acidification. It was found that the pH of seaweed farms was higher than that of the control sites without seaweed farms. Ocean acidification causes calcifying organisms' skeletons to become susceptible to dissolution, so any activity that could reduce ocean acidification would be beneficial. However, more long-term studies need to be undertaken to determine how long-lasting and how widespread the effects of the seaweed farms are.

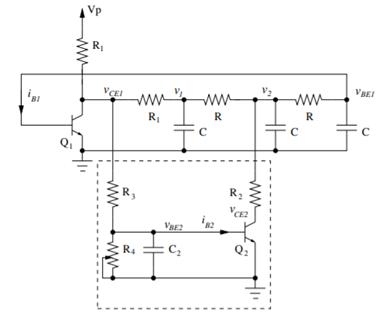

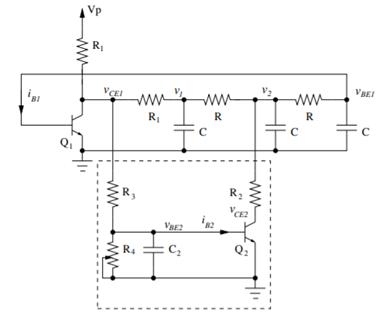

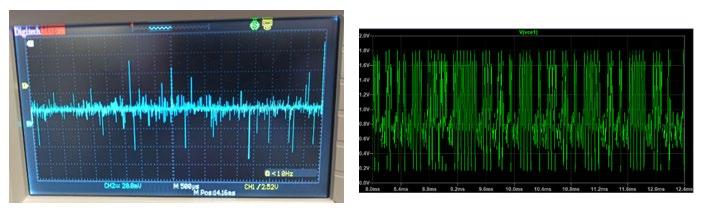

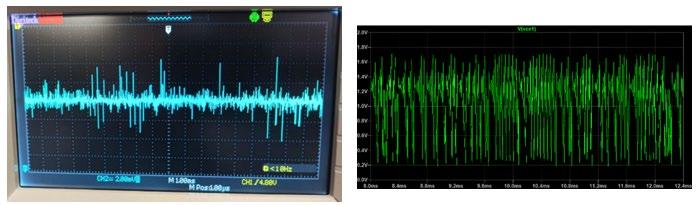

The claims of chaotic behaviour in a circuit were investigated using both in situ and in silico solutions. The validity of the simulation was confirmed as chaotic behaviour was observed in the in situ circuit. The proposed chaotic circuit used cheap and readily available parts; hence, there is scope for its implementation in areas such as robotics or encryption, where chaos greatly increases efficiency and effectiveness.

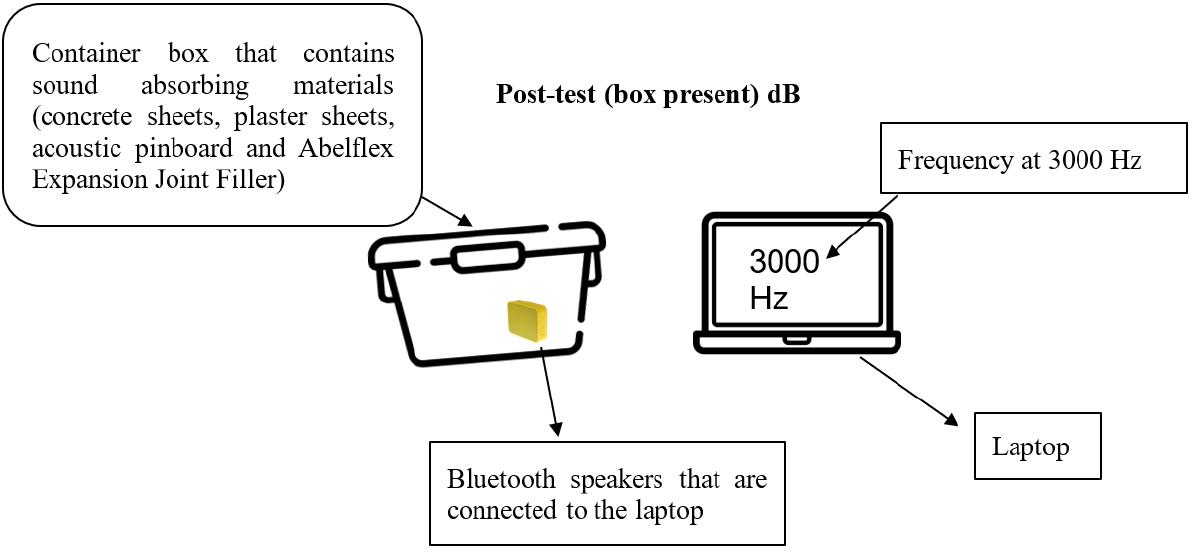

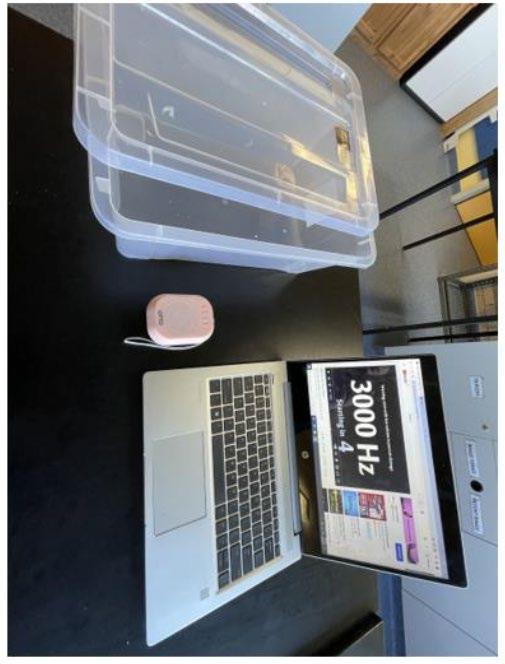

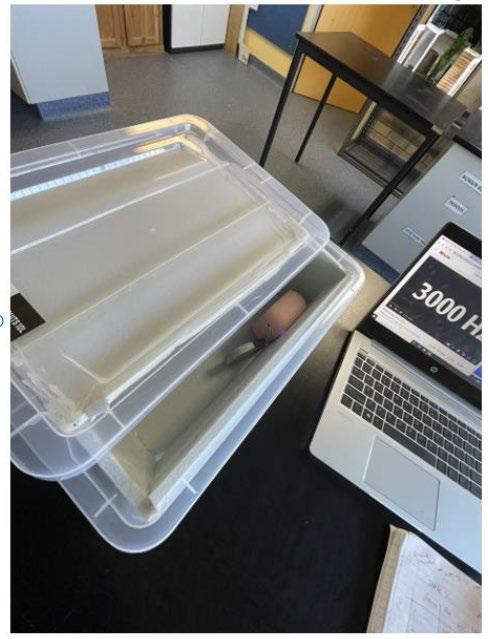

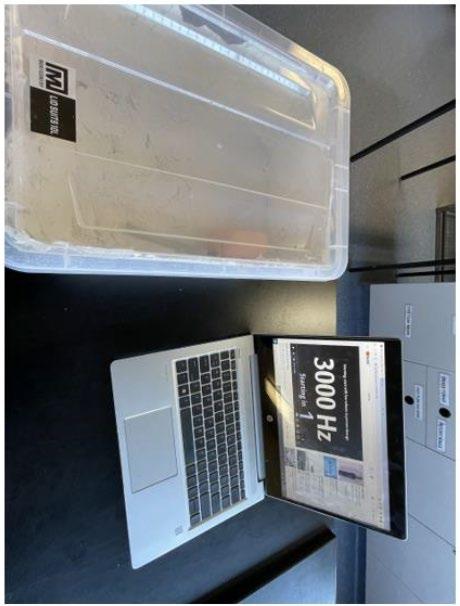

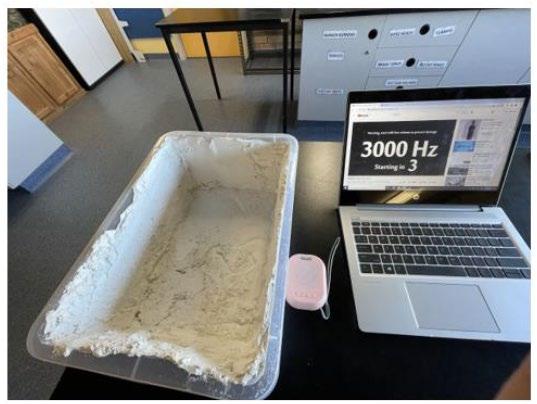

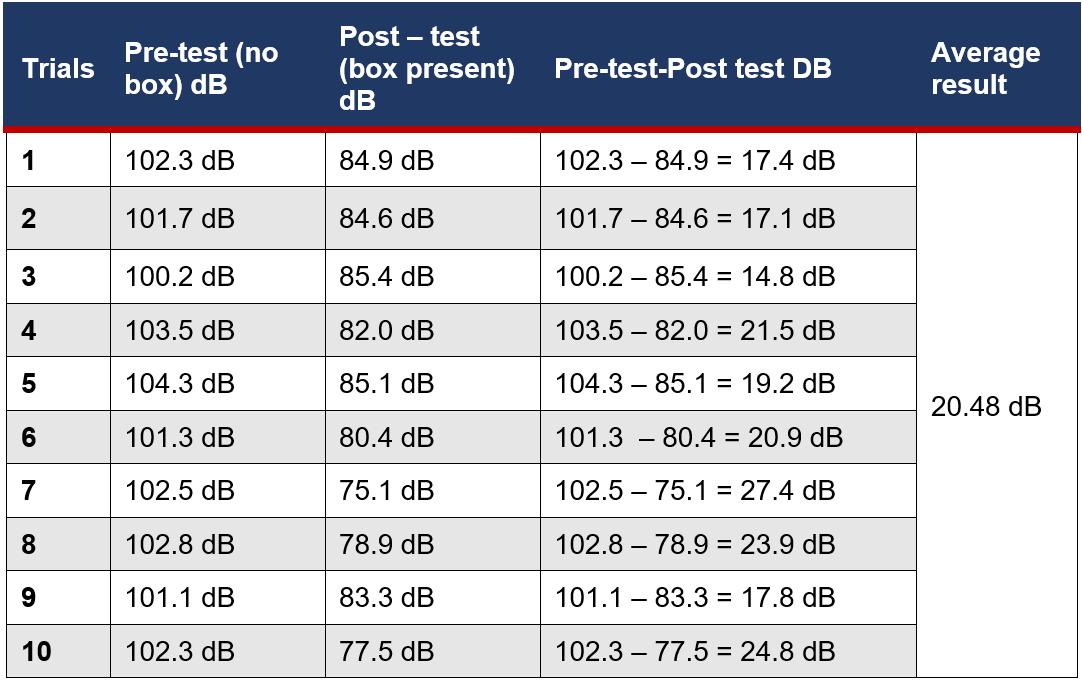

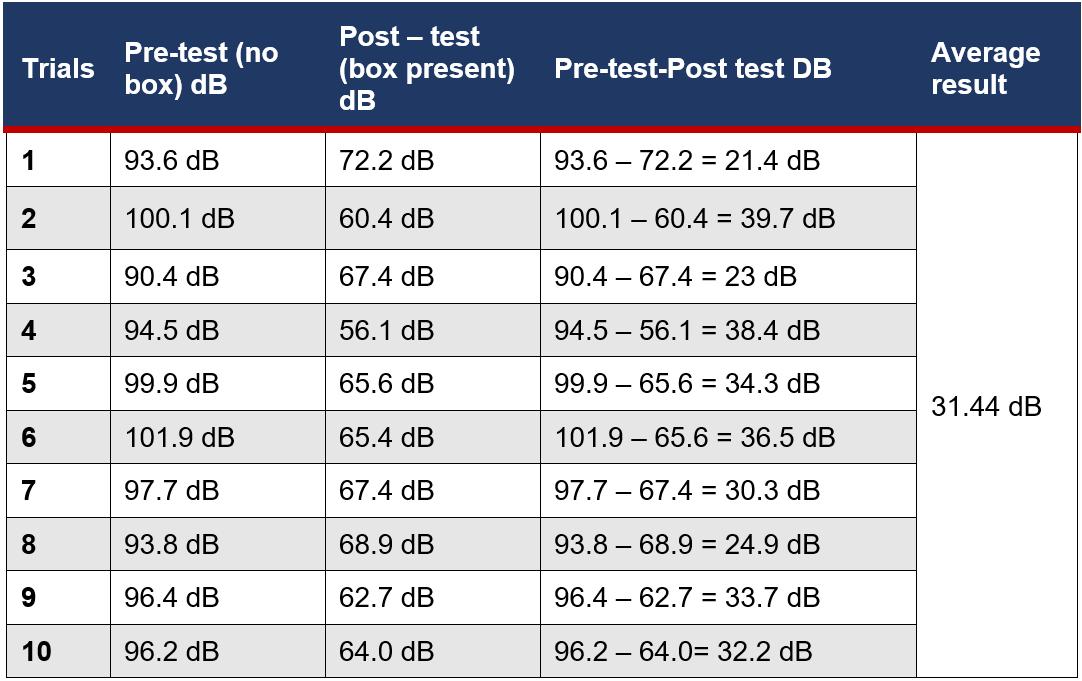

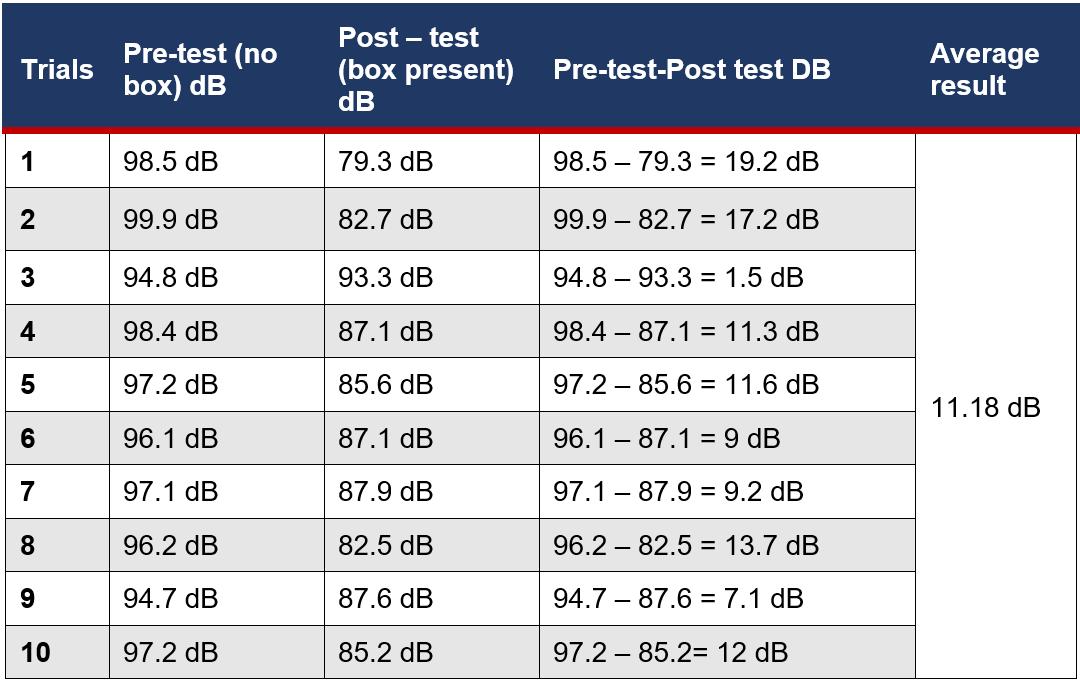

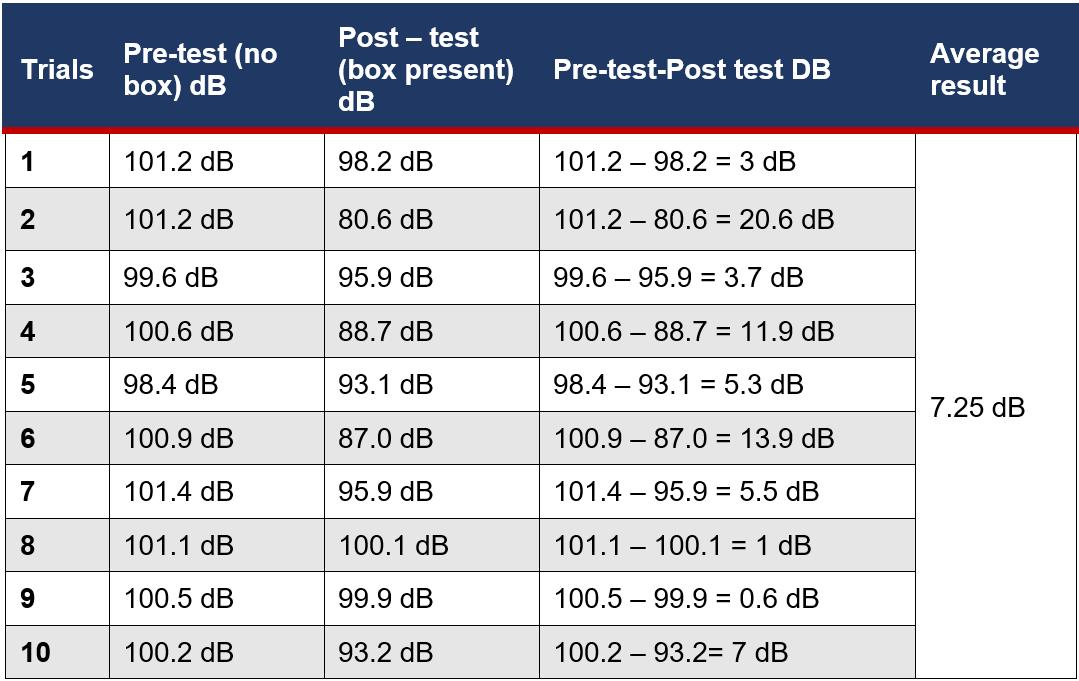

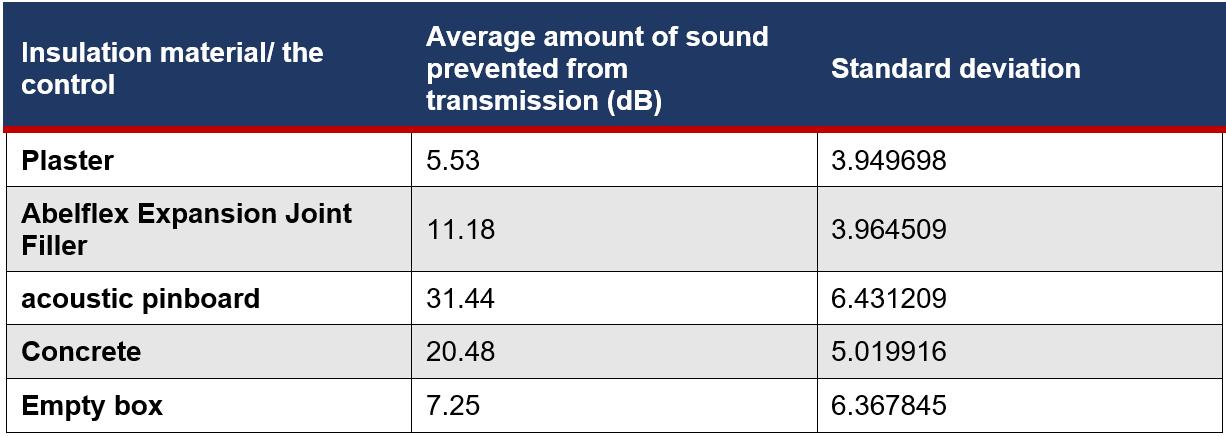

This investigation aimed to determine which sound absorbing material (concrete sheets, plaster sheets, acoustic pinboard and Abel flex Expansion Joint Filler) prevents transmission of sound when tested at a frequency of 3000 Hz. Acoustic pinboard was found to be the most effective in preventing sound transmission. This product would be most useful for controlling sound levels for environments like offices or sound rooms.

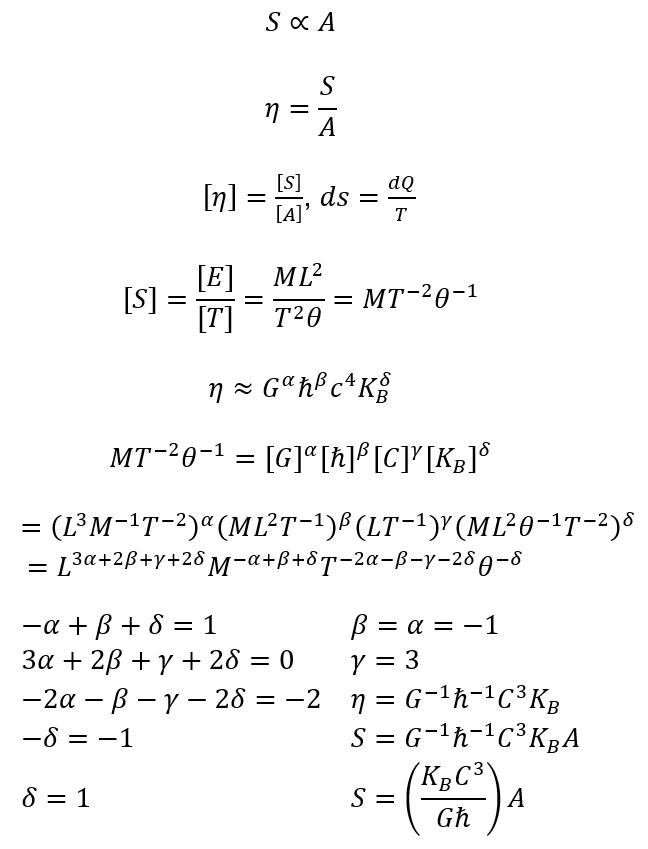

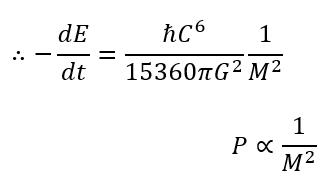

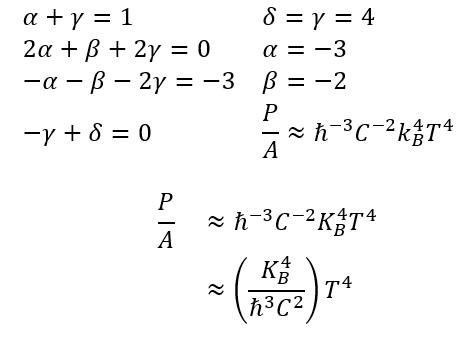

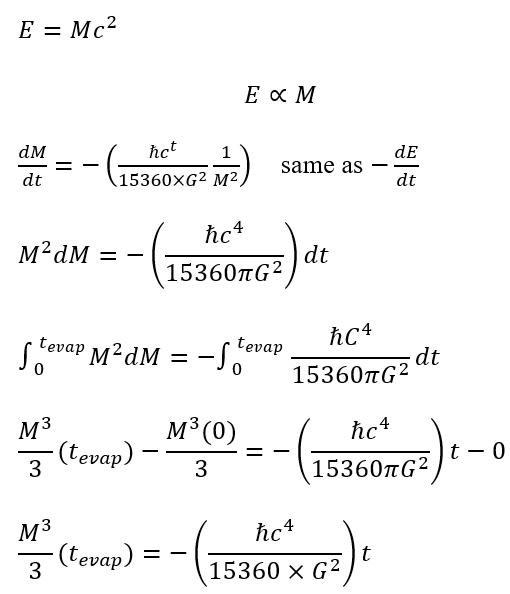

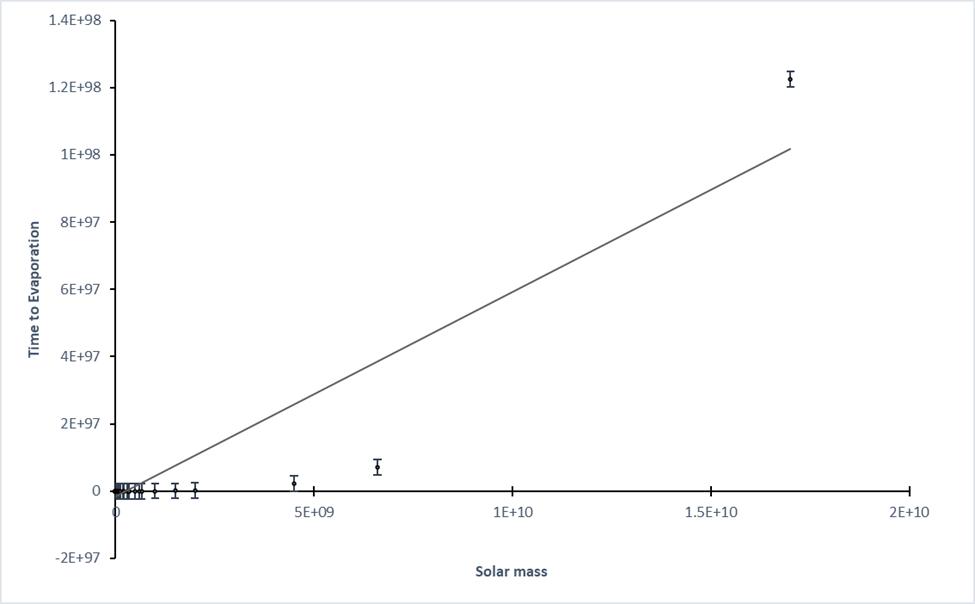

This derivation of Hawking's equations using dimensional analysis and observing the relationship between the variables of mass and time refutes the common misconception that a microscopic black hole produced in a supercollider can expand and absorb matter until it grows to the size of a cosmic black hole. This area of research can lead to new discoveries and the development of technologies that will advance our knowledge and understanding of the universe.

pp 228-239

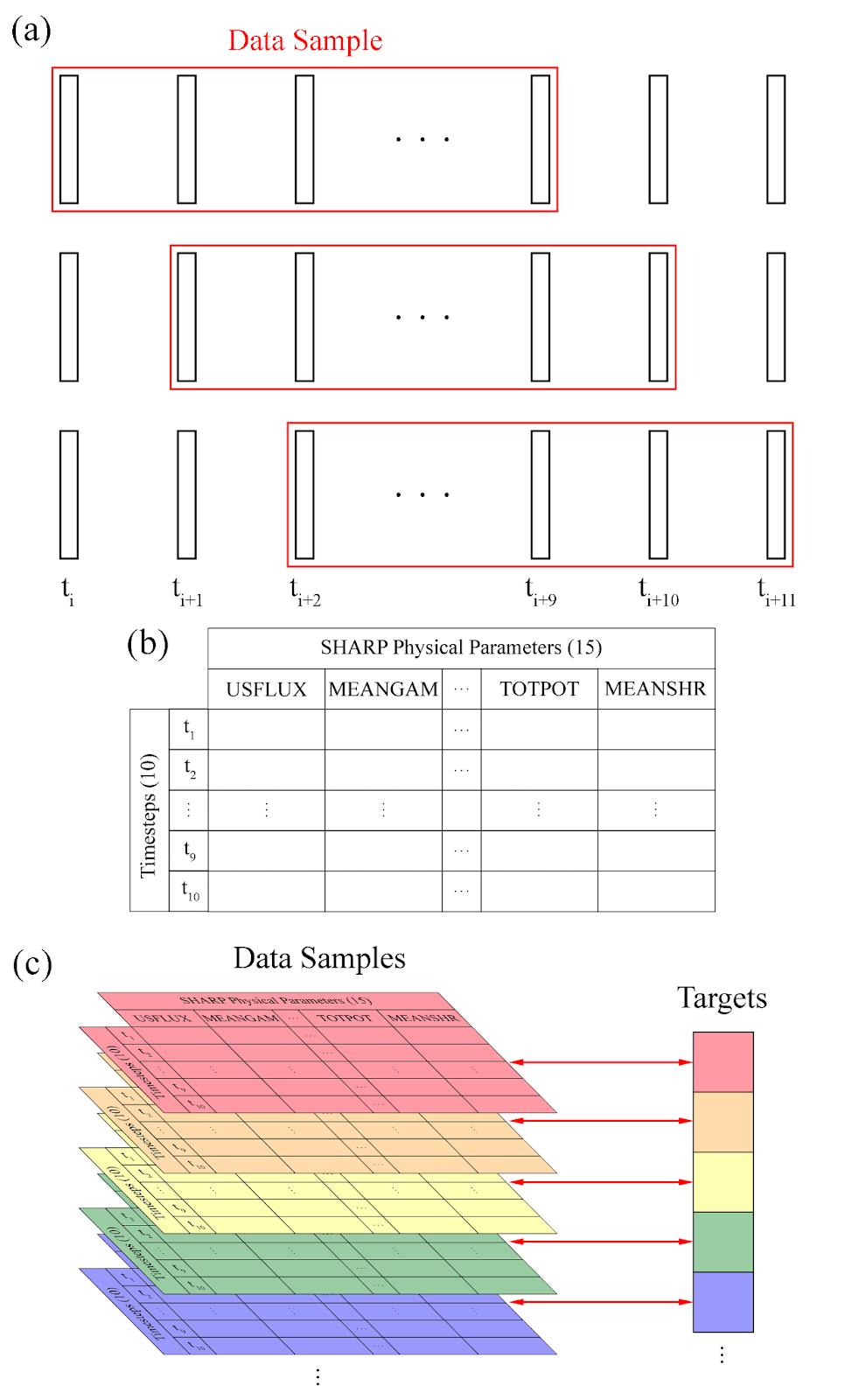

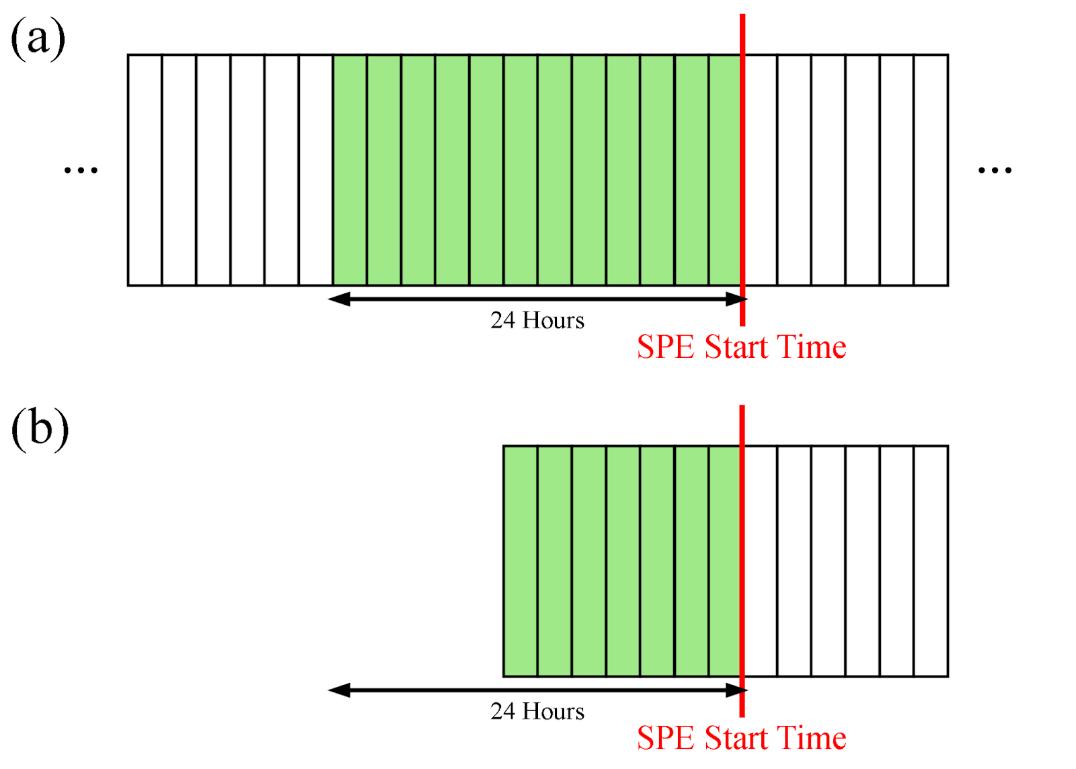

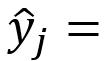

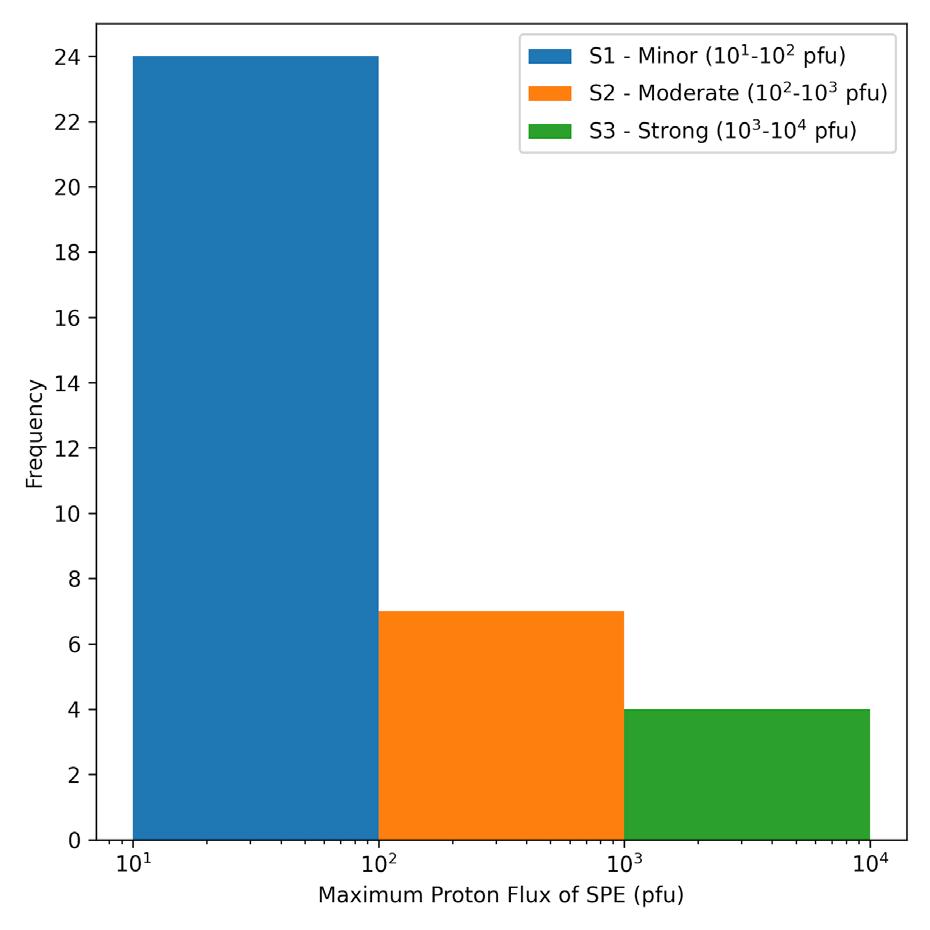

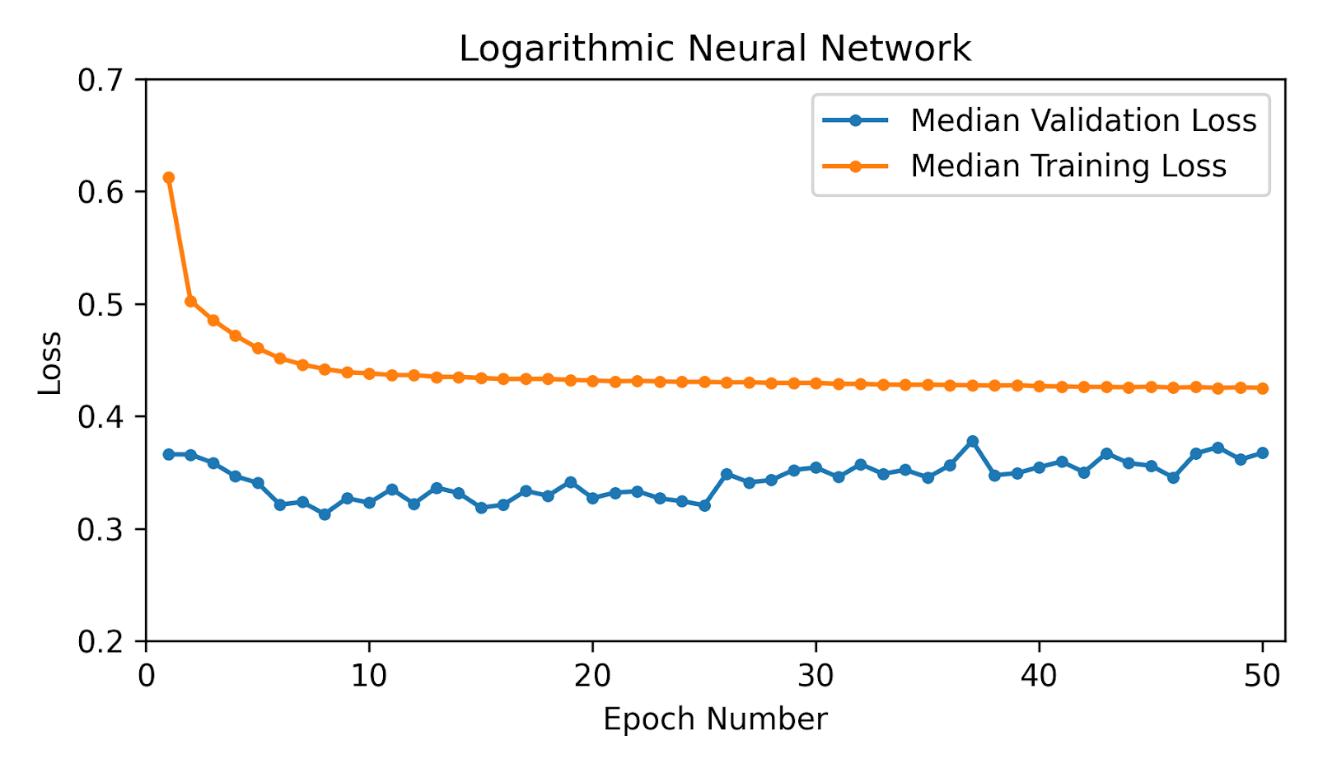

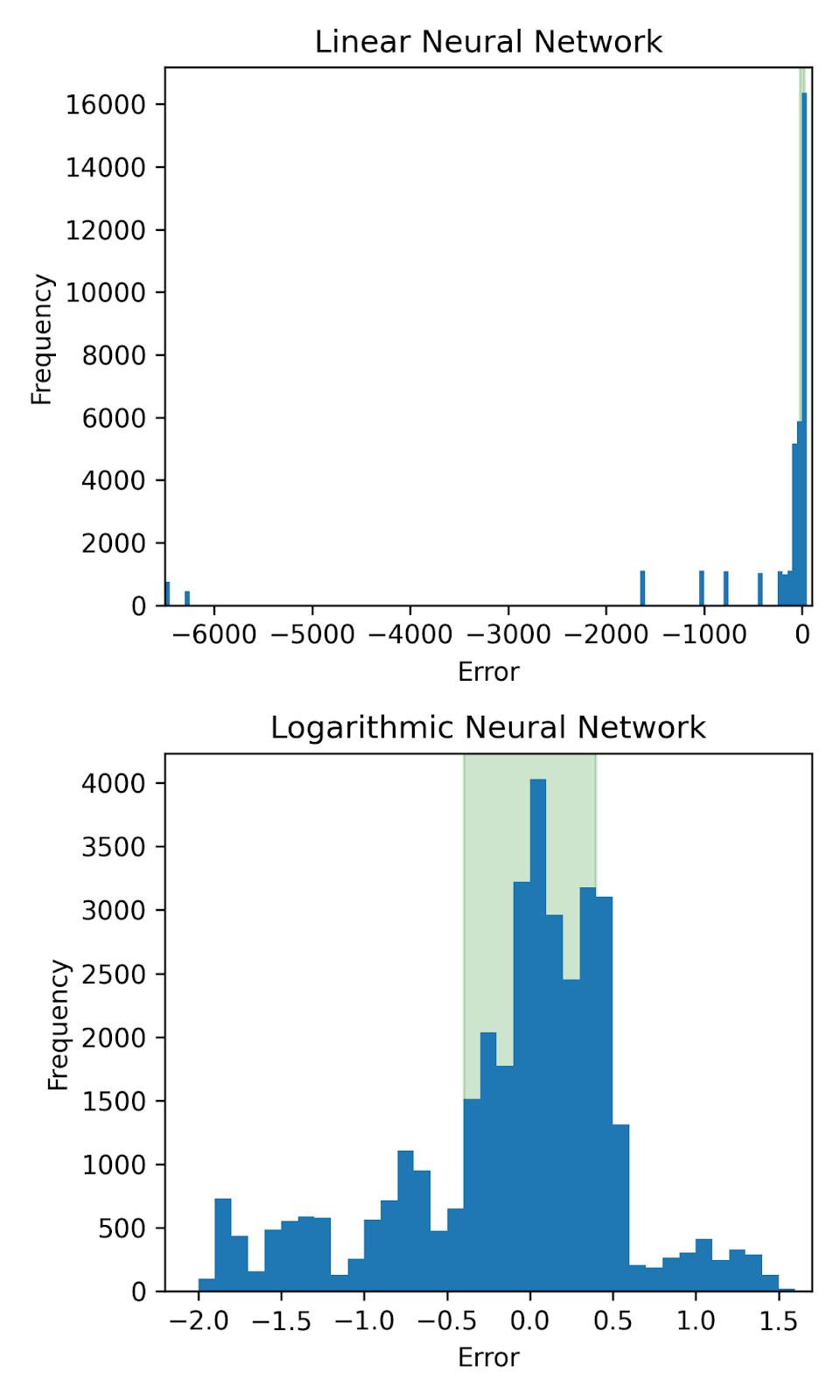

This study aimed to create and evaluate a neural network using vector magnetic data to predict the maximum proton flux at Earth caused by a future solar proton event (SPE). By analysing the ratio of underestimations to overestimations and comparing the error of the predictions made by these neural networks to the corresponding errors for baseline models with no skill, it was evident that neither neural network would be accurate enough to be used in a real-life scenario to alert authorities to a strong SPE likely to impact technologies and humans.

pp 240-256

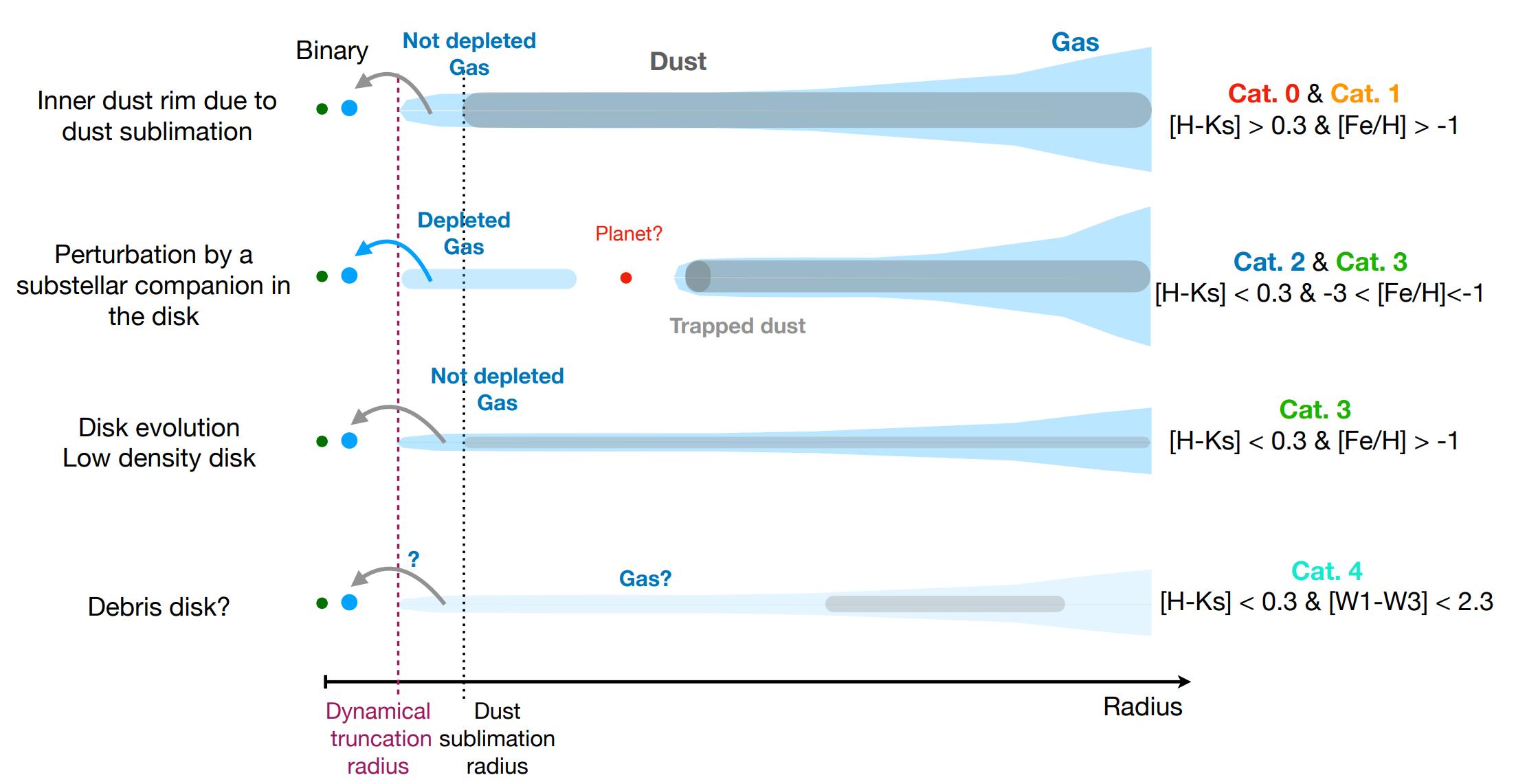

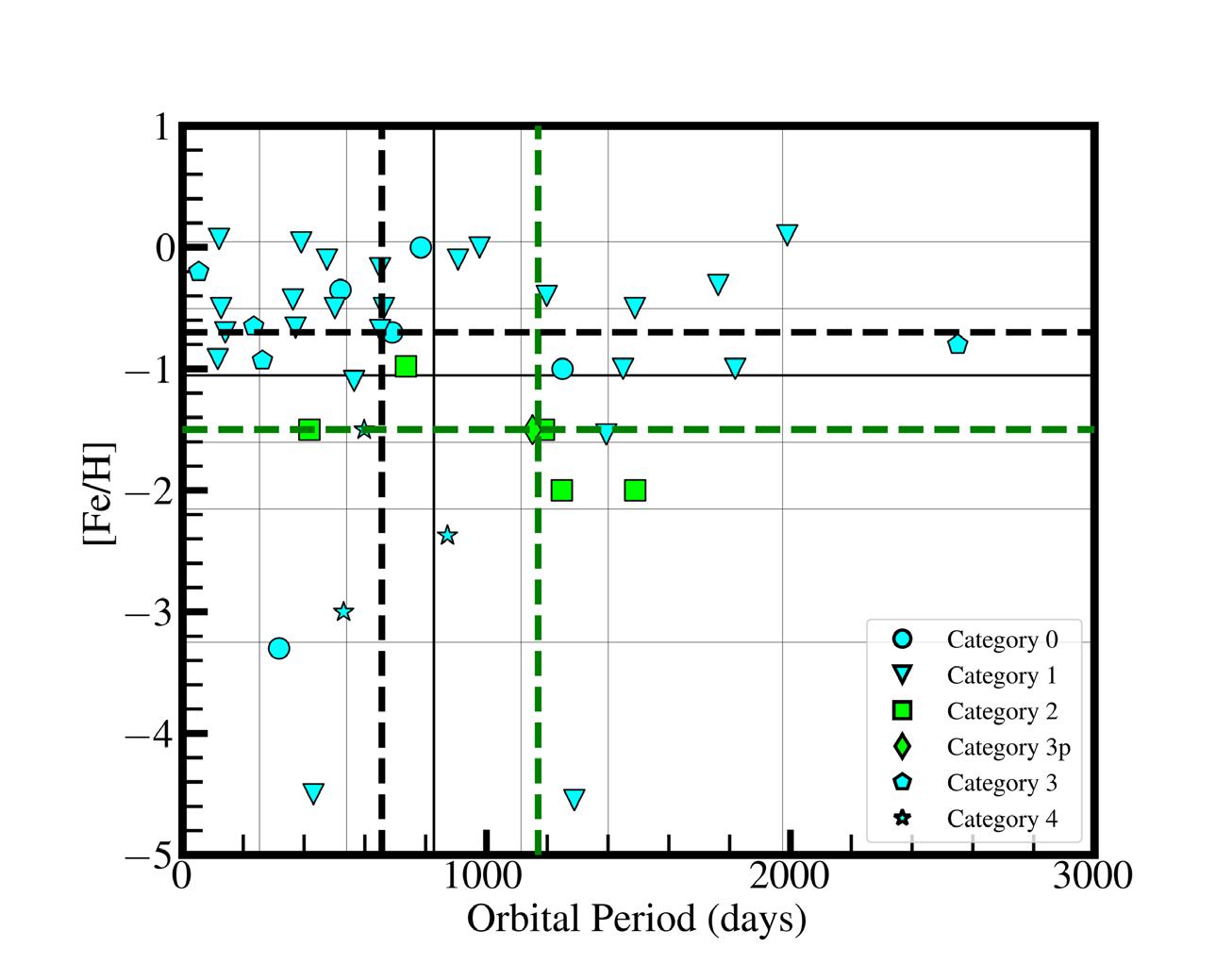

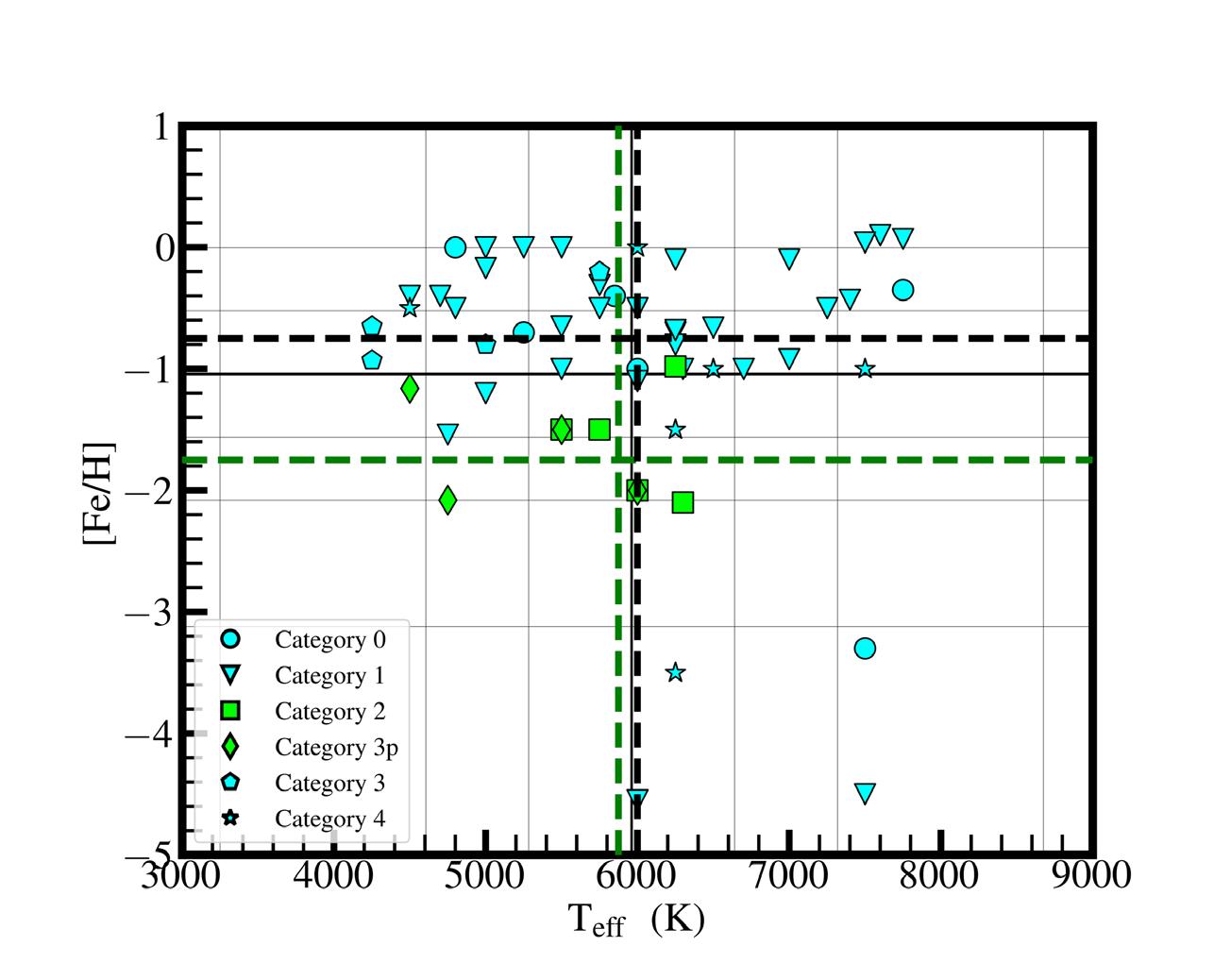

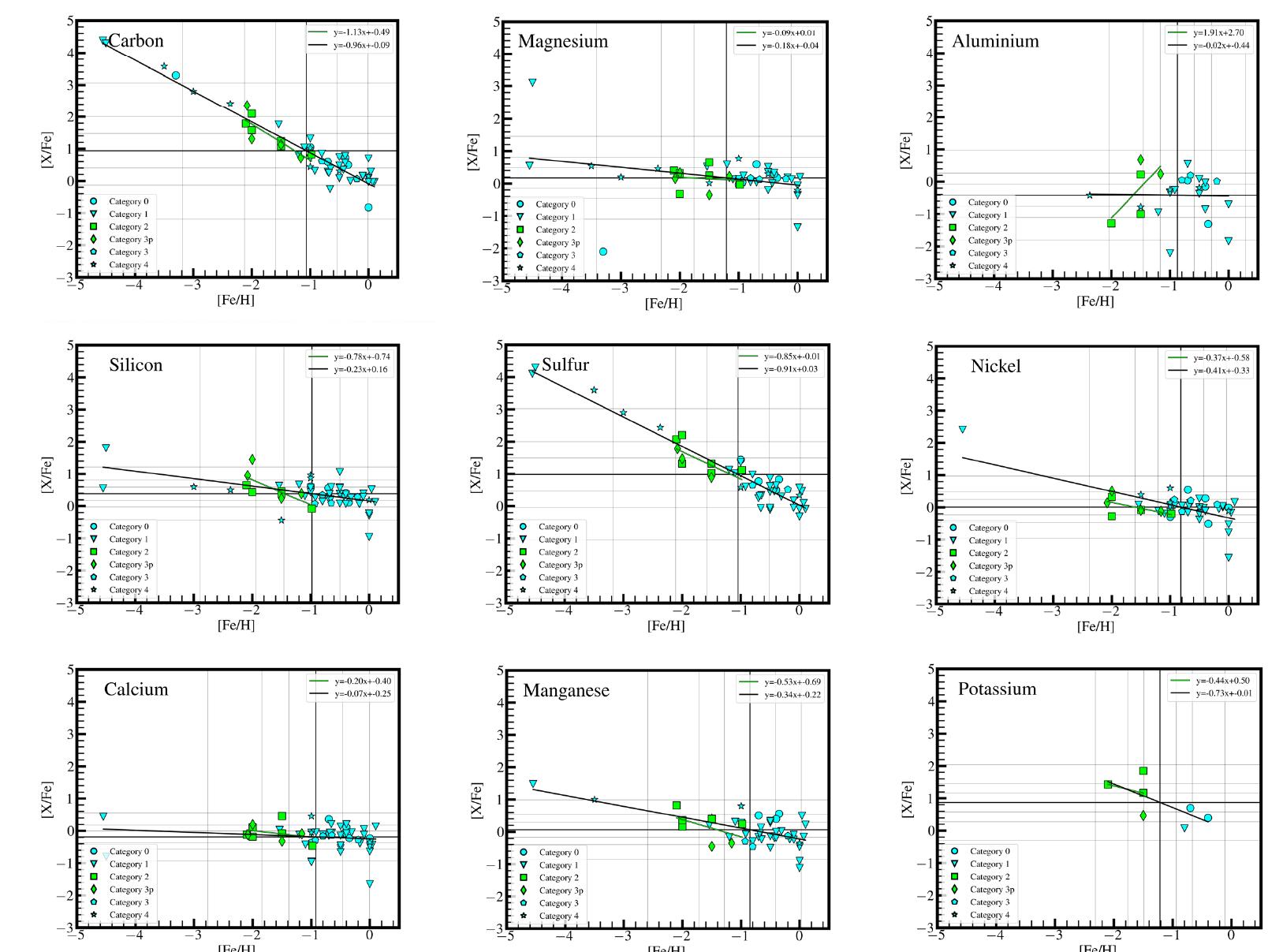

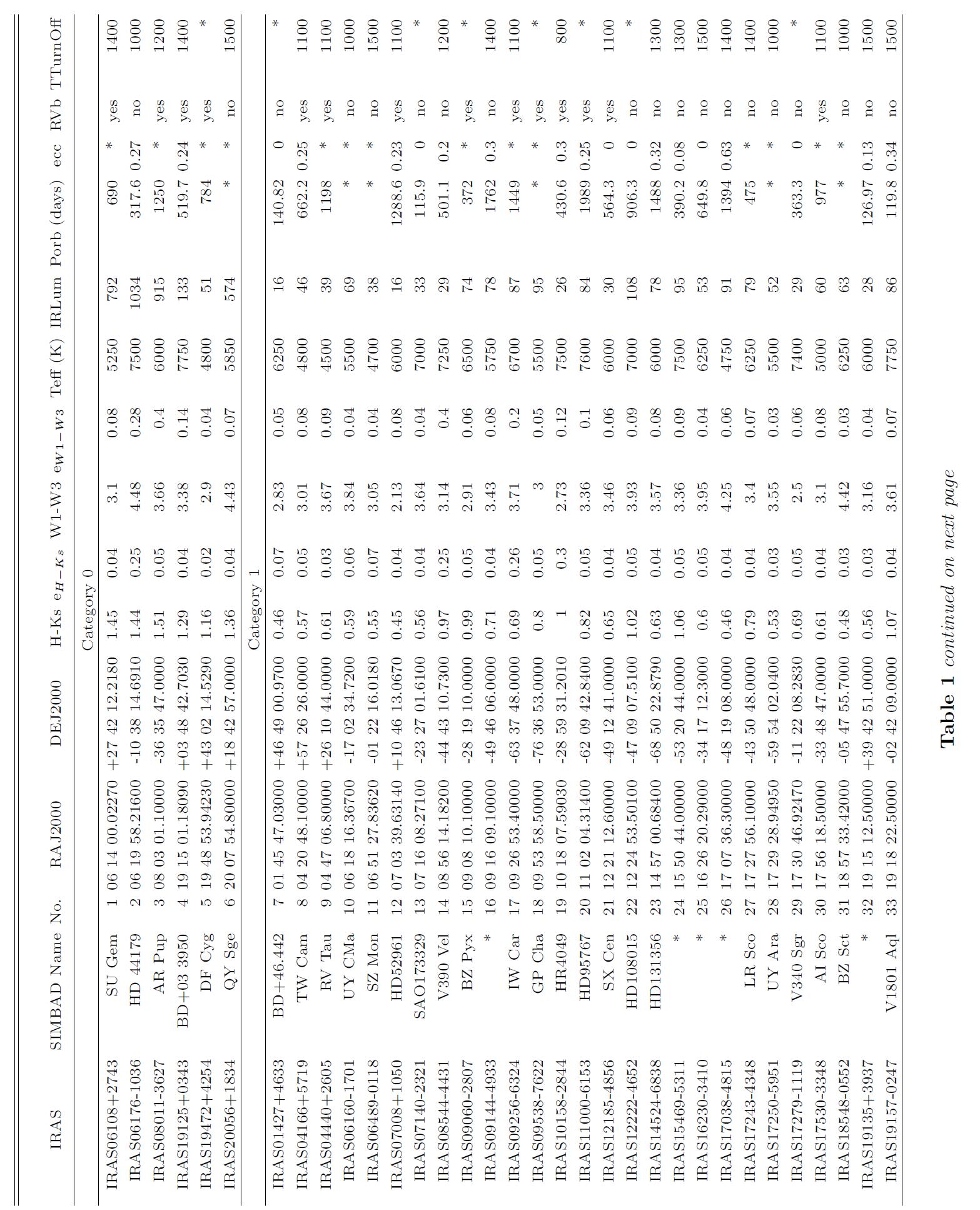

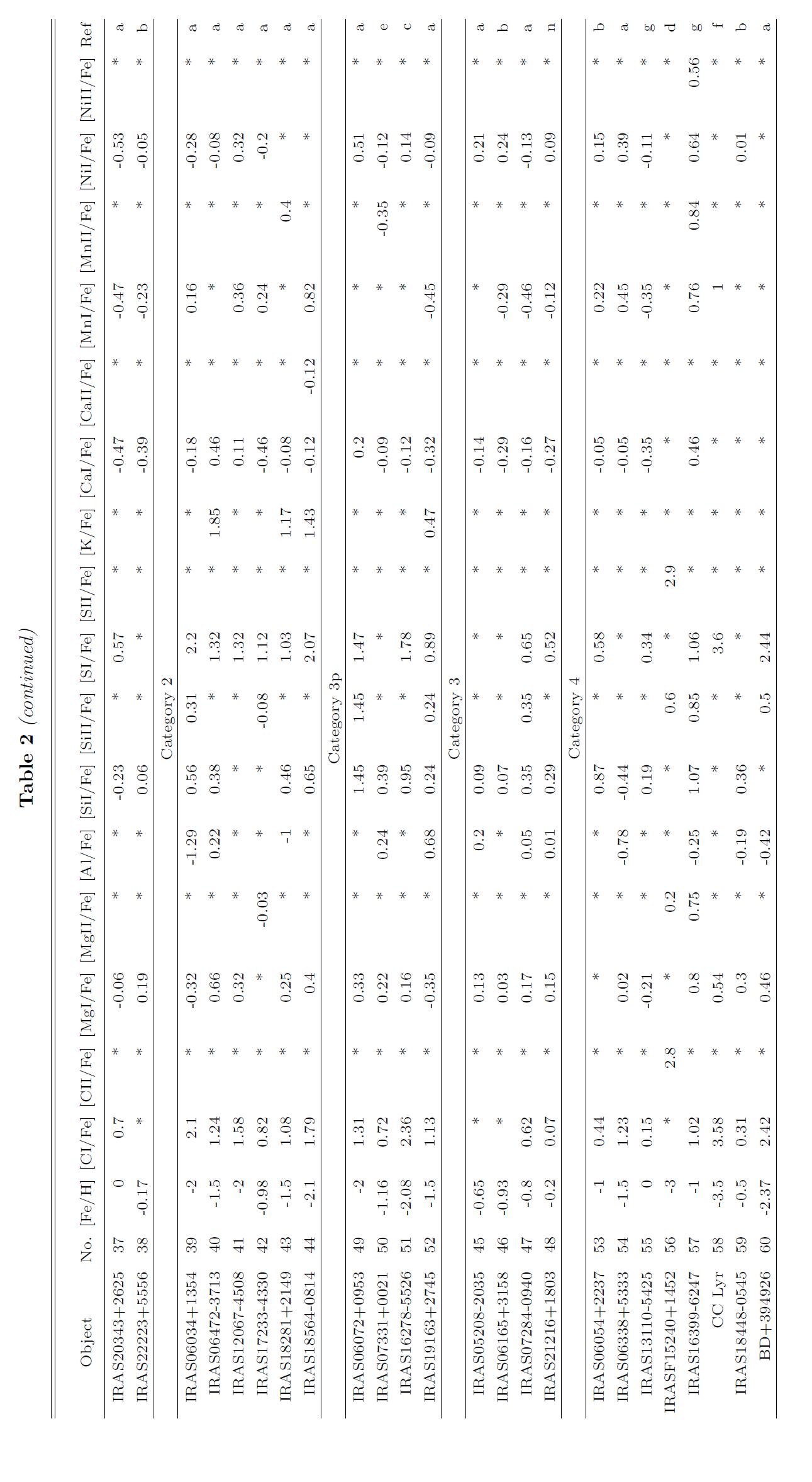

This work aims to analyse whether properties such as chemical abundances of the post-Asymptotic Giant Branch (post-AGB) star may correlate with planet occurrence established by previous photometric analysis. Planet-containing galactic post-AGB binaries were found to exhibit higher median elemental abundances but lower metallicities ([Fe/H]) than the population

The post-AGB sample disobeys the PMC that characterises 1st-generation planet forming stars, likely due to the very different mechanisms behind disk and planet formation, but further research is needed to determine the exact processes involved.

pp 257-276

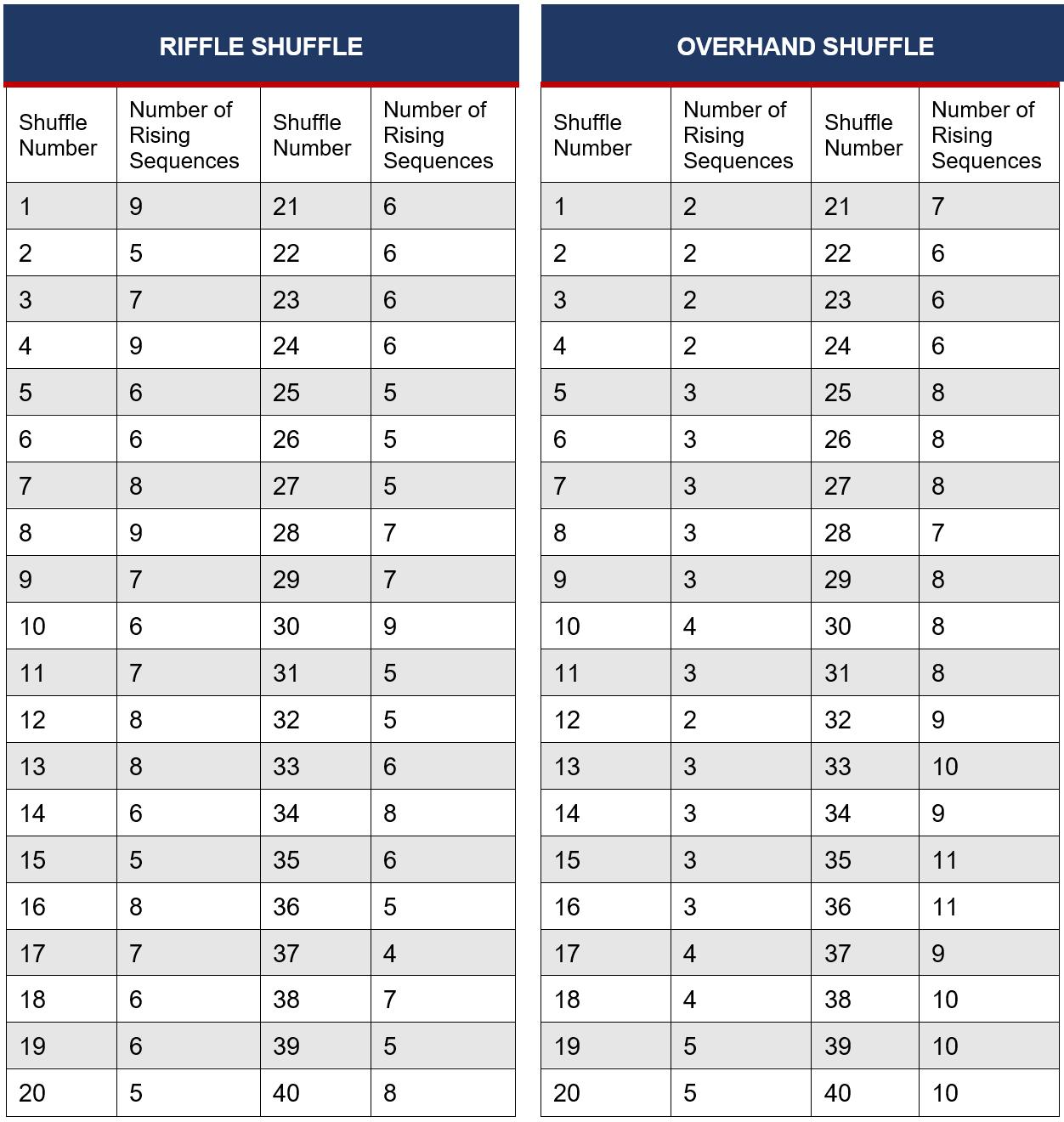

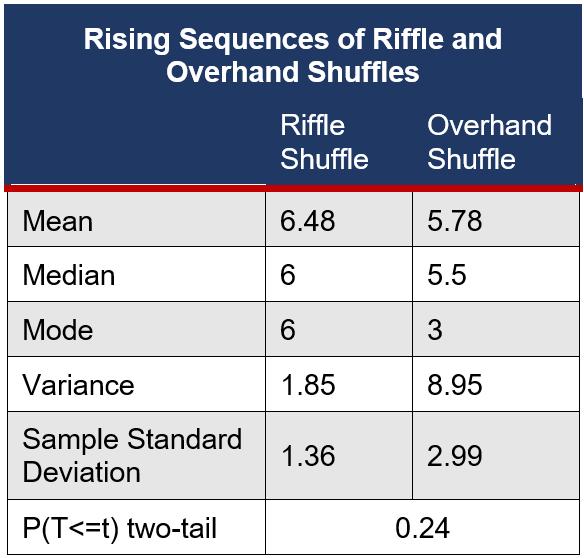

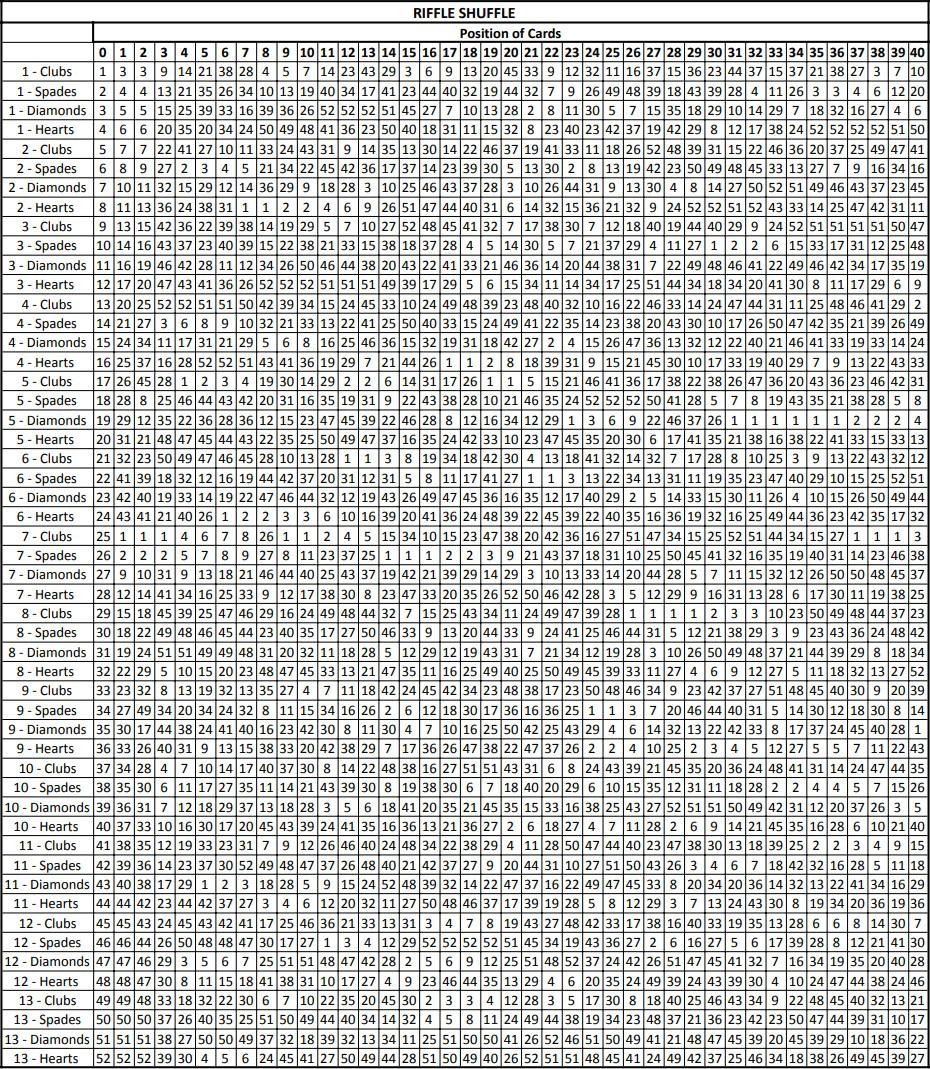

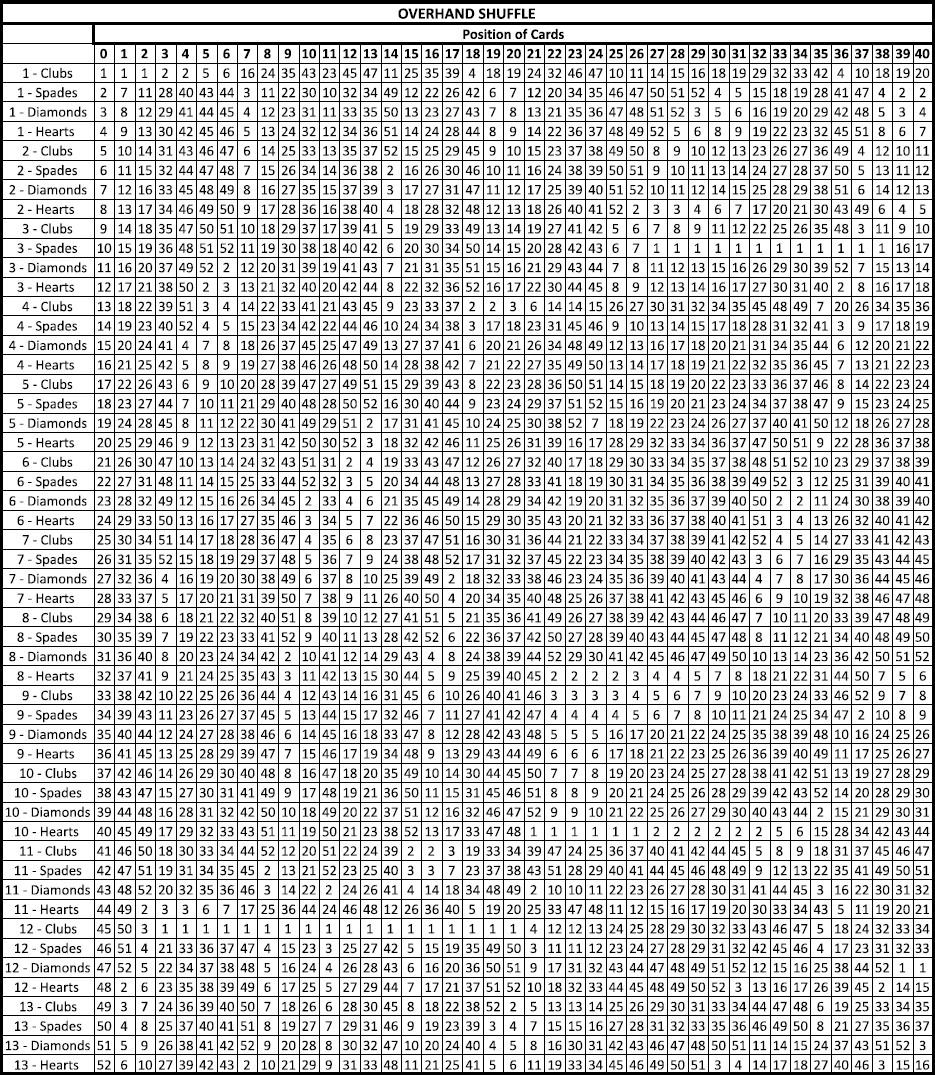

An experimental study examined the number of rising sequences of the riffle and overhand shuffling strategies. No significant difference was found between the two strategies. Future directions for experimentation could compare the randomness of the riffle and overhand manual shuffling practices with that of a mechanical shuffle.

277-288

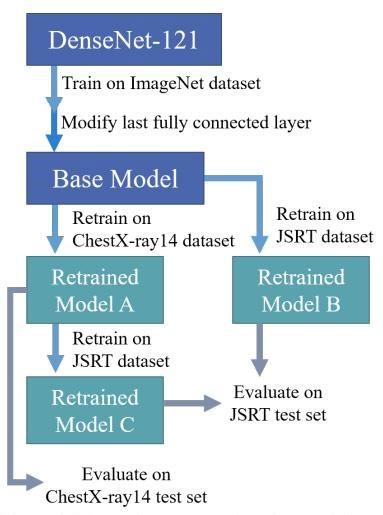

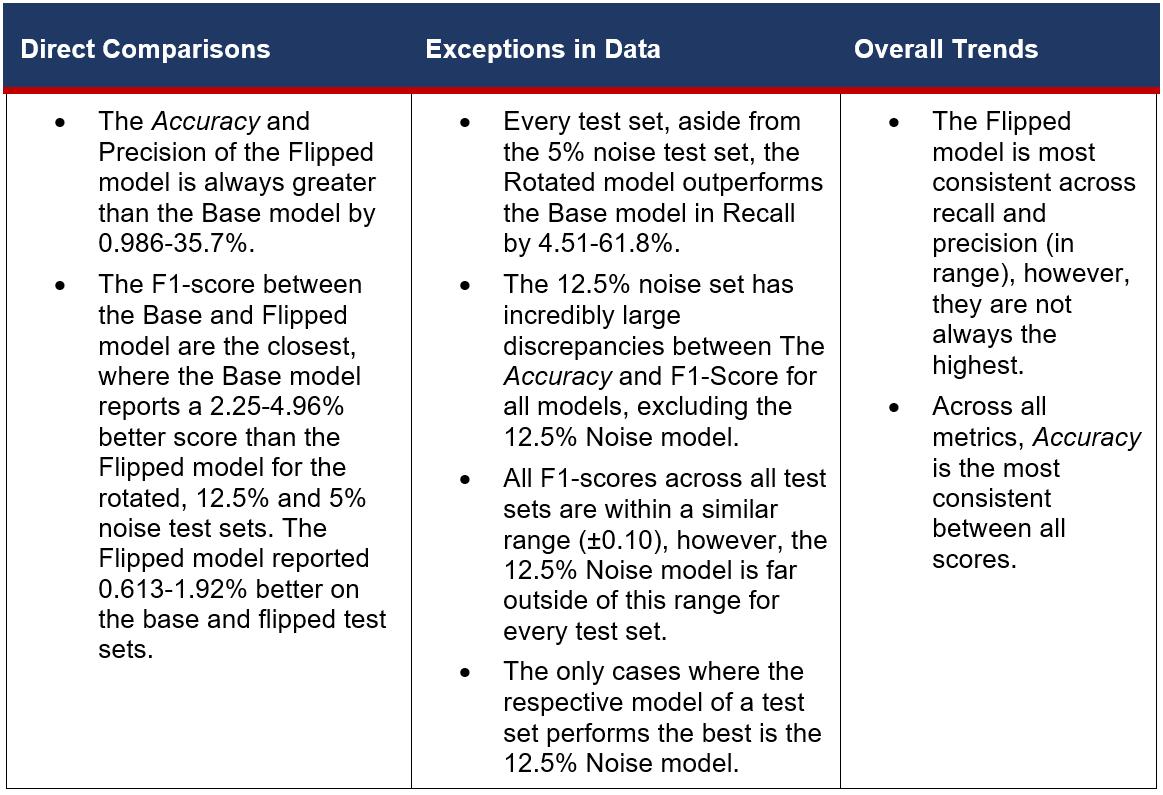

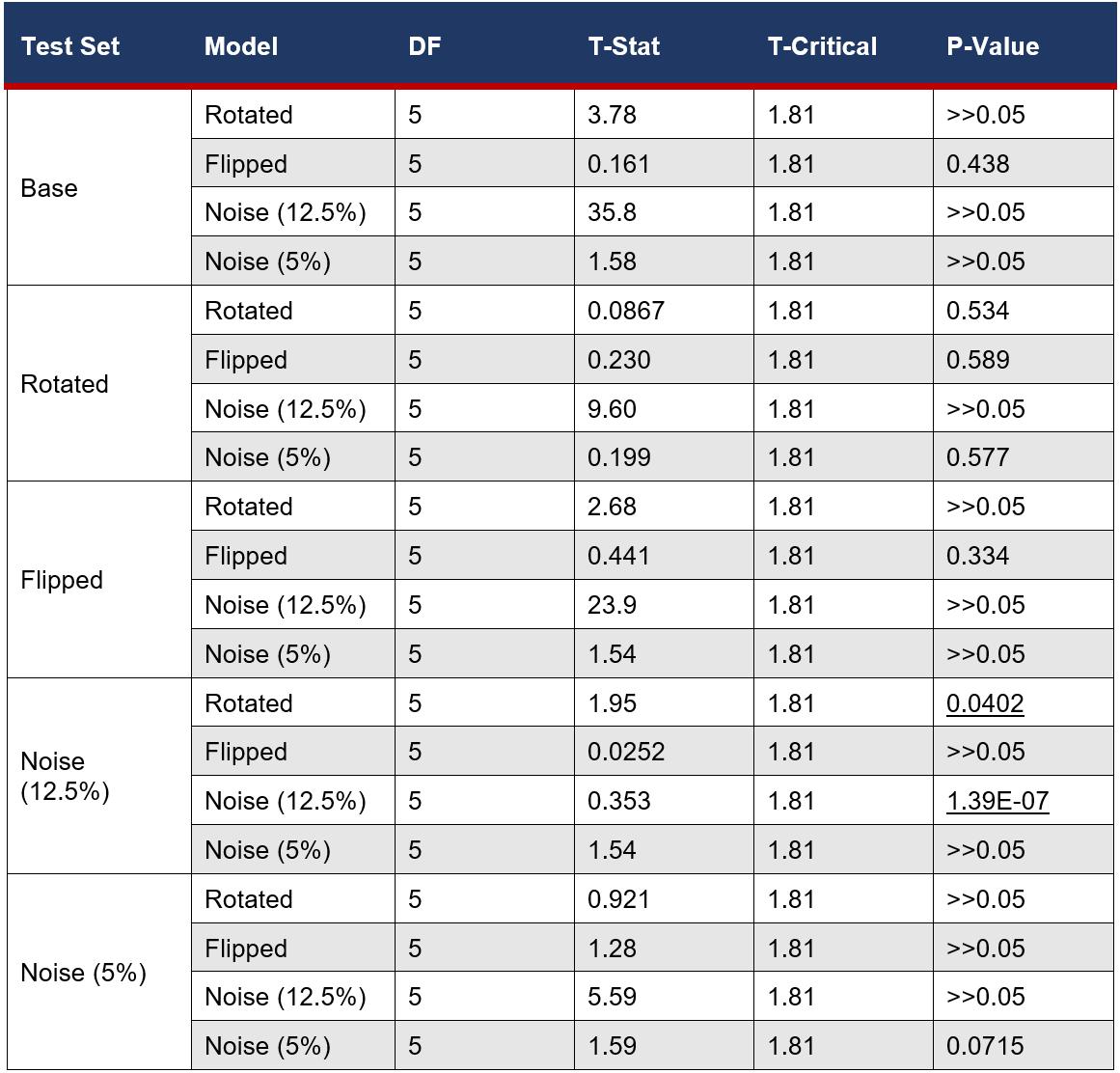

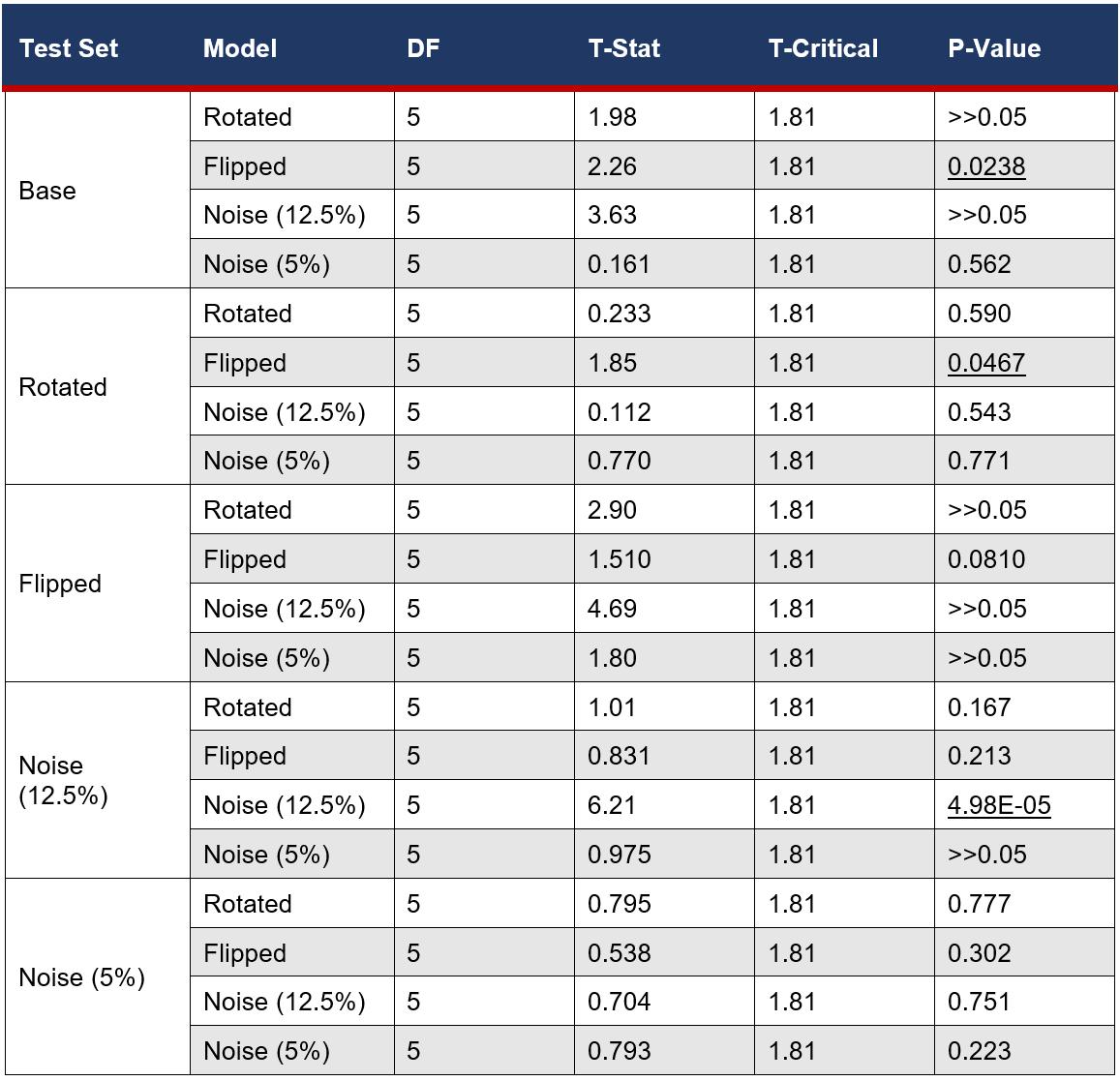

The performance of multi-stage transfer learning (MSTL) on a lung segmentation task within adverse conditions was investigated. It was concluded that most of the retrained models likely experienced covariate shifts, with the exception of models trained on flipped datasets. This investigation gives insight into the thresholds of models trained on small datasets to perform under adverse conditions, adding to the knowledge base required to successfully integrate deep learning (DL) into the medical workflow.

A distinctive hallmark of cancer cells is a high glucose uptake and lactate production regardless of oxygen availability, known as the Warburg Effect. Emerging studies suggest the Warburg Effect could be counteracted by increasing extracellular lactate concentration, which could occur during anaerobic exercise; however, research is scarce. This experiment aimed to investigate how a cumulative increase in extracellular lactate affects the ability of a cancer cell line to switch from aerobic glycolysis to oxidative phosphorylation (OXPHOS) by measuring extracellular acidification rate (ECAR) and oxygen consumption rate (OCR) respectively, using advanced Seahorse XF technology. It was found that when the total lactate injected into the cancerous cell line was 15mM and 20mM, there was a significant increase in OCR compared to basal measurements, where P=0.00301 and P=0.000686 respectively. This suggests that an increase in extracellular lactate does cause cancer cells to shift to an oxidative phenotype in vitro. However, further investigations involving a larger sample size and in vivo models are pivotal in assessing the role of lactate, and potentially exercise, in the metabolic processes of cancer.

Cancer is the leading cause of death worldwide, responsible for approximately 10 million deaths in 2020 (WHO, 2021). Additionally, it is estimated that a quarter of adults worldwide do not achieve sufficient amounts of physical exercise (Mathewson, 2018). Alarmingly, emerging studies suggest a link between these global issues, indicating that lactate resulting from single bouts of exercise may have a direct effect on tumour intrinsic factors (Dethlefsen, 2017, Hofmann, 2018).

Noncancerous cells rely primarily on oxidative phosphorylation (OXPHOS) to generate approximately 70% of their ATP for cellular processes. One fuel for OXPHOS is pyruvate, the end product of the enzymatic breakdown of glucose, known as glycolysis (Ristow, 2006). Under aerobic conditions, pyruvate is transported to the mitochondria where it is oxidised to acetyl-CoA. Acetyl-CoA is further combined with oxaloacetate to initiate the tricarboxylic acid (TCA) cycle, leading to OXPHOS and resulting in the synthesis of ATP energy (Zheng, 2012).

Discovered by Otto Warburg (Warburg et al., 1927), a hallmark of cancerous cells is an accelerated glycolytic metabolism that converts glucose to lactate rather than proceeding through OXPHOS, even under fully oxygenated conditions (San-Millán and Brooks, 2017). This theory has been supported by various studies (Fadaka et al., 2017, Hirschhaeuser et al., 2011, Jose et al., 2010, Ruiz et al., 2009, San-Millán and Brooks, 2017, Zheng, 2012) and is known as the Warburg Effect or aerobic glycolysis. This phenomenon has remained a mystery across scientific literature, as glycolysis appears inefficient for cancer cells, yielding only 2 ATP molecules, compared to 40 ATP molecules generated by OXPHOS (Fadaka et al., 2017). Consequently, cancerous cells demand a high glucose consumption to maintain homeostasis (Hanahan & Weinberg, 2011).
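The scale of that glucose demand follows directly from the two yields quoted above. The short Python sketch below works through the arithmetic; the per-glucose yields are the figures cited from Fadaka et al. (2017), while the ATP demand of 1000 molecules and the function name `glucose_needed` are hypothetical values chosen only for illustration.

```python
# ATP yield per glucose molecule, as quoted in the text (Fadaka et al., 2017).
ATP_PER_GLUCOSE_GLYCOLYSIS = 2
ATP_PER_GLUCOSE_OXPHOS = 40


def glucose_needed(atp_demand: float, atp_per_glucose: float) -> float:
    """Return the number of glucose molecules needed to meet an ATP demand."""
    return atp_demand / atp_per_glucose


# Hypothetical ATP demand, not a measured value.
atp_demand = 1000

glycolysis_glucose = glucose_needed(atp_demand, ATP_PER_GLUCOSE_GLYCOLYSIS)
oxphos_glucose = glucose_needed(atp_demand, ATP_PER_GLUCOSE_OXPHOS)
fold_increase = glycolysis_glucose / oxphos_glucose

print(f"Glucose needed via glycolysis: {glycolysis_glucose:.0f}")
print(f"Glucose needed via OXPHOS: {oxphos_glucose:.0f}")
print(f"Fold increase in glucose demand: {fold_increase:.0f}")
```

Under these quoted yields, a cell relying on glycolysis alone would need roughly twenty times as much glucose as one relying on OXPHOS for the same ATP output, whatever the absolute demand.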

Whilst previous literature accepts that the Warburg Effect is a consequence of defects in cellular respiration, oncogenic alterations, and an overexpression of glycolytic enzymes and metabolite transporters (Hirschhaeuser et al., 2011), the underlying mechanisms of the Warburg Effect in cancer cells have been unknown for nearly a century. This may be partially due to an unparalleled focus on genomic techniques in cancer research over recent decades, which has resulted in a neglected understanding of cancer metabolism (Hofmann, 2018). However, it was recently proposed that the purpose of the Warburg Effect is solely lactate production, known as lactagenesis (San-Millán and Brooks, 2017), implying a role for lactate beyond that of a waste product. During lactagenesis, pyruvate is reduced to lactate, catalysed by the enzyme lactate dehydrogenase (LDH) (Xie et al., 2014), and this reaction is reversible (Mishra and Banerjee, 2019). However, limited studies have accounted for the reversible nature of this equilibrium reaction with regard to the underlying mechanisms of the Warburg Effect.

Building on Warburg’s model, the Reverse Warburg Effect proposes that not all cancer cells undergo aerobic glycolysis, but rather lactate is shuttled from cancer-associated fibroblasts (CAFs) via monocarboxylate transporters (MCTs) and used as an energy source (Wilde et al., 2017). This is supported by the findings that tumours are not exclusively hypoxic, but rather contain aerobic regions which receive shuttled lactate from other glycolytic cancer cells (Semenza, 2008). The Reverse Warburg Effect induces localised lactic acidosis in the tumour microenvironment (TME), causing an accumulation of lactate (Siska, 2020) and, due to the high ionisation of lactic acid, a consequent decrease in pH which may favour metastasis, angiogenesis and immunosuppression (de la Cruz-Lopez et al., 2019) and potentially chemoresistance (Brown et al., 2019). However, it is important to note that cancers are extremely heterogeneous with individual metabolic features (Zheng, 2012, Semenza, 2008), potentially limiting the application of the Reverse Warburg Effect.

It has also been outlined that the transport of lactate into cancer cells through MCTs is dependent on a concentration gradient (Hofmann, 2018; Payen et al., 2019) in order to avoid intracellular acidification (Brown et al., 2019). In 2018, Hofmann further hypothesised that if the blood lactate concentration surrounding the tumour can be increased, for example through exercise, this could inhibit the shuttling of lactate and hence the process of the Reverse Warburg Effect. However, Hofmann noted that there is extremely limited research on the mechanisms by which single bouts of exercise act on cancer.

The shift of energy metabolism from OXPHOS to aerobic glycolysis has now been widely accepted as a quintessential feature of cancer (Hanahan & Weinberg, 2011). In 2016, Wu investigated whether cancer cells can revert from the Warburg Effect to OXPHOS when induced by TME pressures. Wu’s results showed that without lactic acidosis, glycolysis and OXPHOS provided 23.7% - 52.2% and 47.8% - 76.3% of total ATP generated, respectively, whilst with lactic acidosis, glycolysis and OXPHOS provided 5.7% - 13.4% and 86.6% - 94.3% of total ATP generated, respectively. This suggested that lactic acidosis could revert cancer cells from the Warburg to the OXPHOS phenotype. Furthermore, it has been demonstrated that when 4T1 cancer cell lines were induced with lactic acidosis, the cells showed a non-glycolytic phenotype characterised by a high oxygen consumption rate relative to glycolytic rate, negligible lactate production and efficient incorporation of glucose into cellular mass, revealing the dual metabolic nature of cancer cells (Xie et al., 2014).

However, Wu’s study induced the switch between glycolysis and OXPHOS using inhibitors (oligomycin, FCCP, rotenone/antimycin A), and no research appears to have stimulated this metabolic switch by increasing extracellular lactate. The study conducted by Wu in 2016 was also the first to quantitatively measure such a metabolic switch and only studied cell lines in two conditions (lactic acidosis and a control group). Therefore, this metabolic switch has not been investigated under varying concentrations of lactate as a means of modelling the accumulation of lactate seen in anaerobic exercise. This metabolic switch was also hypothesised by San-Millán and Brooks in 2017, who suggested that aerobic exercise could help counteract the switch to a glycolytic metabolism in cancer cells by creating epigenetic responses that restore oxidative phenotypes; however, no such experiments appear to have been conducted.

It has been further suggested that if glycolysis could be inhibited in cancer cells, OXPHOS could be restored (Zheng, 2012). This is supported by the increasing number of studies which reveal that lactate released by glycolysis and/or CAFs is not discharged as cellular waste, but rather is taken up by oxygenated tumour cells as energy fuel. It has been proposed that this occurs as lactate is converted to pyruvate by LDH, after which the pyruvate enters the mitochondria and undergoes OXPHOS (Zheng, 2012). However, few studies investigate the implications of adding the product, i.e. lactate, to this equilibrium as a potential mechanism by which lactic acidosis causes the apparent shift from glycolysis to OXPHOS. It was noted that circulating lactate levels are critical in dictating the status of the LDH equilibrium, and Wu and colleagues concluded in 2014 that the absence of net lactate generation was due to pyruvate being generated by glycolysis at the same rate as it was removed into the TCA cycle (Xie et al., 2014). However, these concepts are mechanistic explanations of the Warburg Effect which have yet to be justified by experiment.

Despite a history of lactate being defined as only a waste product, research over the last decade has revealed that lactate produced by exercise is an active metabolite, moving between cells and capable of being oxidised as a fuel (Philp et al., 2005). Currently, there is limited research on the intrinsic effects of exercise on cancer cell metabolism. Exercise unquestionably has a role in regulating metabolic processes, but how this consequently affects tumour growth and metastatic rate is not currently mechanistically understood (Hojman, 2018). A study by McTiernan suggested physical activity may be linked to cancer prevention through exercise-dependent reductions in cancer risk factors, including sex hormones, insulin-like growth factor (IGF) and inflammatory markers, and through improved immune function. Large inconsistencies in current research regarding the ideal exercise dose for cancer management, with reference to intensity, duration, frequency and type, hinder compelling conclusions (Ashcraft, 2016).

Therefore, cancer cell research demands quantitative over qualitative studies to meet the increasing prevalence of disease. Lactate holds promise to play a role in cellular properties beyond purely that of a waste product (San-Millán & Brooks, 2017). Investigations into the role of lactate on cancer cells in vitro could therefore provide further insight into the potential benefits, or risks, of exercising for cancer patients.

Will increased extracellular lactate concentrations in a cancerous cell line cause a change in the extracellular acidification rate (ECAR) and oxygen consumption rate (OCR)?

Scientific Hypothesis:

The cancerous cell line exposed to increased lactate concentrations is expected to increase in OCR and decrease in ECAR. This is supported by studies which propose that lactic acidosis can revert glycolytic cancer cells to a dominant oxidative phenotype (Dethlefsen, 2017, Hofmann, 2018, Nijsten & van Dam, 2009, San-Millán & Brooks, 2017, Wu et al., 2016).

The investigation consisted of measuring extracellular acidification rate (ECAR) and oxygen consumption rate (OCR) in a cancerous cell line 4T1 (ATCC) at differing concentrations of sodium lactate using the novel Seahorse Extracellular Flux 24 (XF) analyser (Seahorse Bioscience). Sodium lactate was injected to the medium at cumulative increments from 0mM to 20mM (0mM, 5mM, 10mM, 15mM, 20mM) through integrated injection ports. In the control sample, a buffered serum, without lactate, was injected at the same concentrations and increments as the lactate group. This technology was specifically selected due to its dual ability to provide an indication of glycolysis and OXPHOS by measuring ECAR and OCR respectively, through real time measurements of changing pH and oxygen concentrations in the extracellular medium.

Glycolysis was determined through measurements of the ECAR of the surrounding cell media, which is caused by the excretion of lactate per unit of time after its conversion from pyruvate in cells, altering pH (Wu et al., 2006). However, since the lactate injected into the cell line also has acidic properties, it will inevitably reduce the validity of glycolysis measurements by altering pH levels in a way that may not reflect the metabolism of the cells. This justifies the selection of the Seahorse technology, which can also measure oxygen consumption rate; this is calculated from oxygen concentration (Plitzko et al., 2017) and therefore will not be influenced by lactate injections.

Prior to the assay, the cells were stored in a biosafety cabinet II at 37°C and 5% CO2 to effectively replicate conditions of the human body. Both cell lines were cultured in a low glucose Dulbecco’s modified Eagle’s medium (DMEM) containing 10% fetal bovine serum (FBS) as well as other nutrients to control cell growth. 10 wells of the Seahorse XF 24 well microplate were each filled with approximately 10 000 cells of the cancerous 4T1 cell line, and the 10 remaining wells were likewise filled and allocated as the control. 4 wells were left blank as a control for the machine, and the cells were left in the biosafety cabinet (37°C, 5% CO2) overnight. Prior to the assay the next morning, the cancerous cells were examined under the microscope to check for any abnormalities (Appendix 1).

The microplate was placed into the Seahorse XF and 3 basal measurements of ECAR and OCR were recorded with 0mM lactate. The assay then recorded 15 measurements for each of the 20 wells, recording ECAR and OCR at each time increment. Between each measurement the cells were mixed during a 5 minute cycle. Lactate concentration was cumulatively increased and 3 measurements were recorded for each concentration increment in each well. The same procedure was completed for the control, with the buffer solution injected.
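The measurement schedule described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic (five cumulative lactate levels, three readings each, fifteen readings per well); the function and constant names are hypothetical and are not part of the Seahorse software.

```python
# Illustrative sketch only: maps each of the 15 measurement cycles to the
# cumulative lactate concentration described in the method.
CONCENTRATIONS_MM = [0, 5, 10, 15, 20]  # 0 mM is the basal condition
MEASUREMENTS_PER_STEP = 3               # three readings per concentration


def build_schedule(concentrations=CONCENTRATIONS_MM,
                   per_step=MEASUREMENTS_PER_STEP):
    """Return a list of (measurement_number, cumulative_lactate_mM) pairs."""
    schedule = []
    measurement = 1
    for conc in concentrations:
        for _ in range(per_step):
            schedule.append((measurement, conc))
            measurement += 1
    return schedule


if __name__ == "__main__":
    for number, conc in build_schedule():
        print(f"measurement {number:2d}: {conc:2d} mM lactate")
```

The first three entries correspond to the basal readings at 0mM, and each subsequent group of three follows one cumulative injection.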

Ethical and biosafety considerations were addressed by conducting the experiment at the Garvan Institute of Medical Research, where the hazardous cancerous cell lines were stored in a biosafety cabinet II under appropriate conditions and protocols.

Results and analysis:

Null hypothesis H0:

As extracellular lactate cumulatively increases, the extracellular acidification rate and oxygen consumption rate will not change, as extracellular lactate concentration has no influence on cancer cell metabolism.

Alternative hypothesis H1:

As extracellular lactate cumulatively increases, the extracellular acidification rate and oxygen consumption rate will change, as extracellular lactate concentration has an influence on cancer cell metabolism.

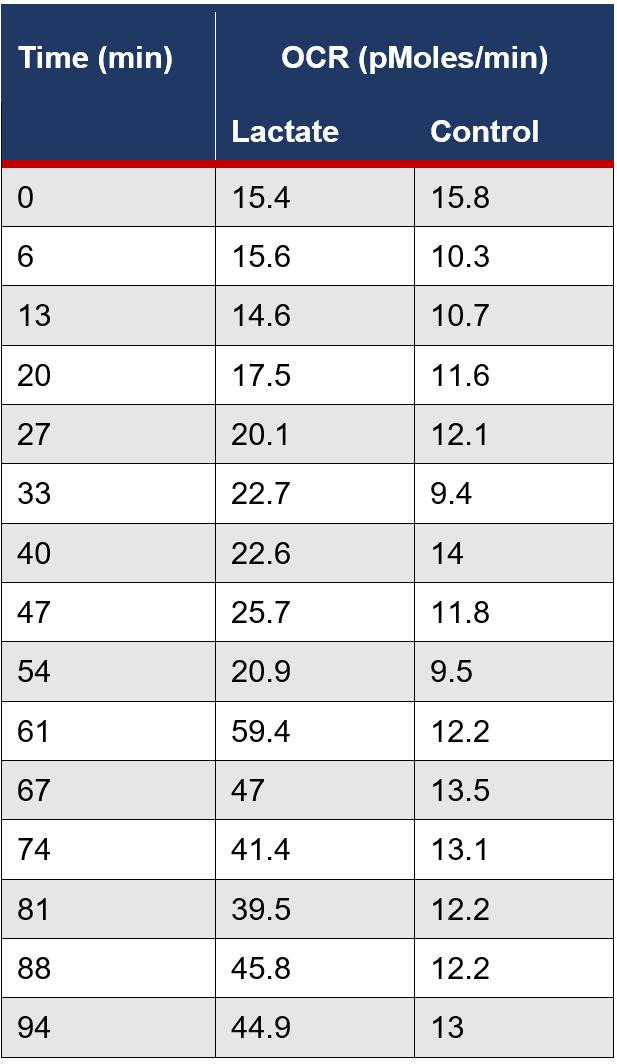

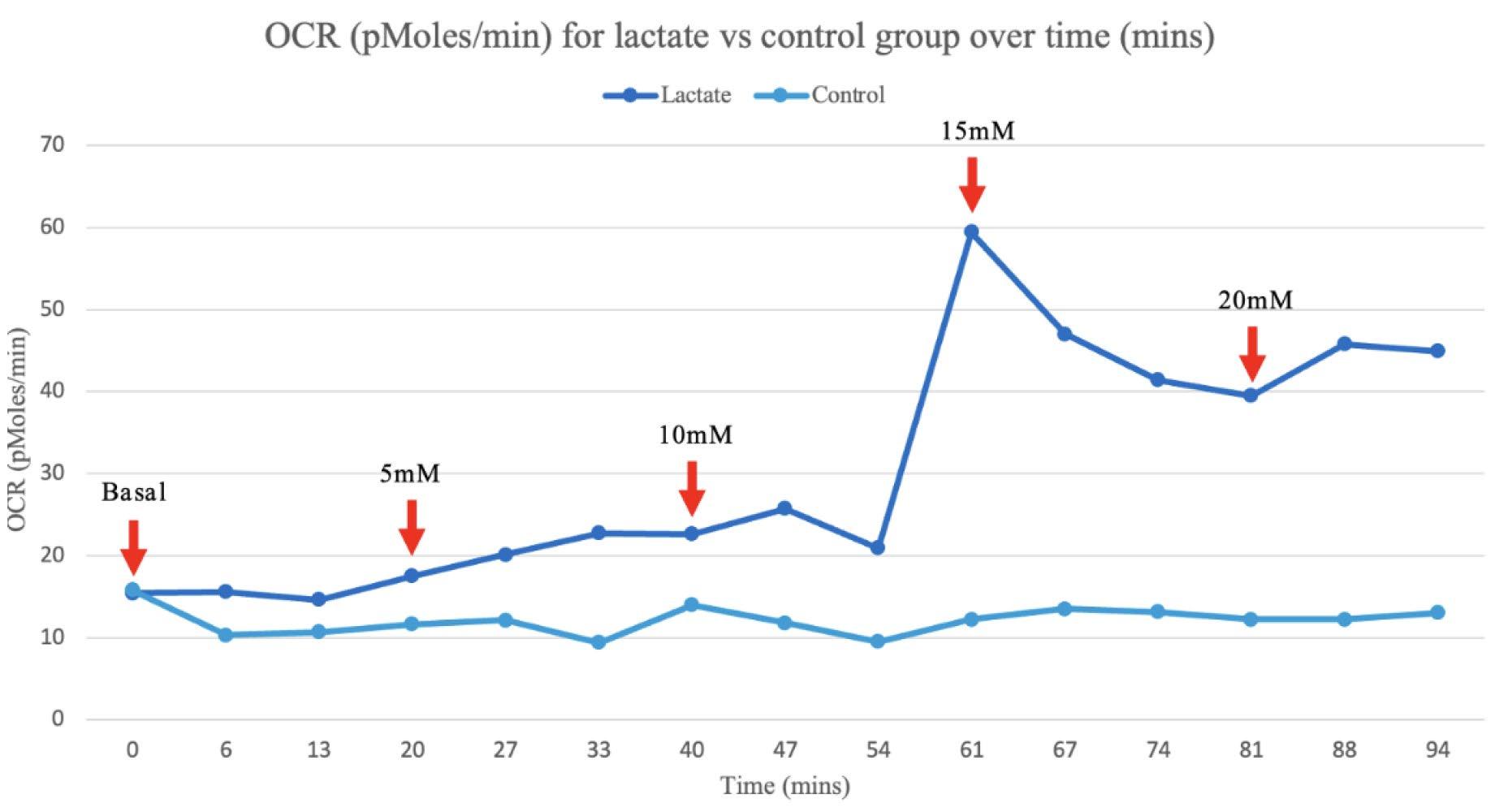

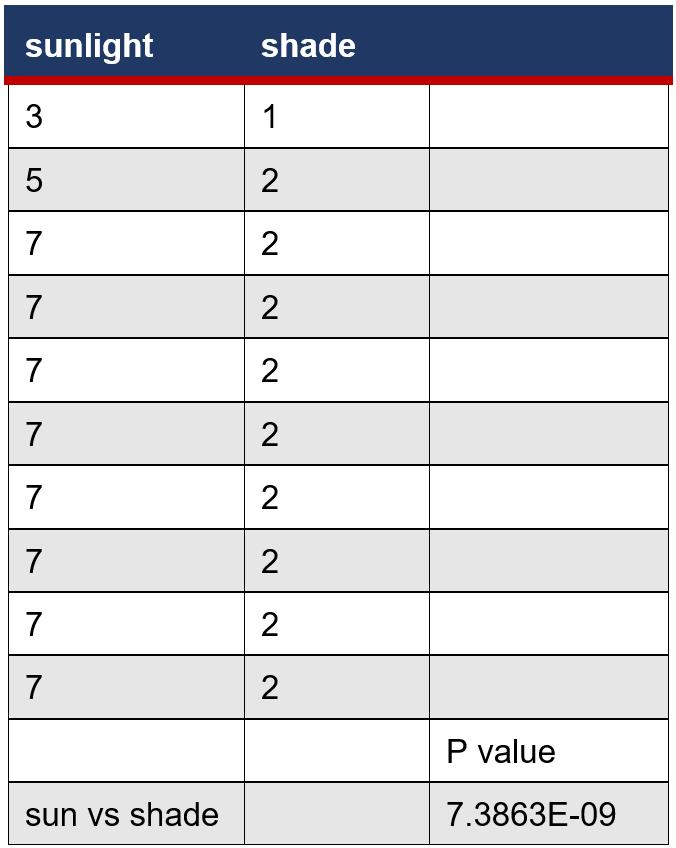

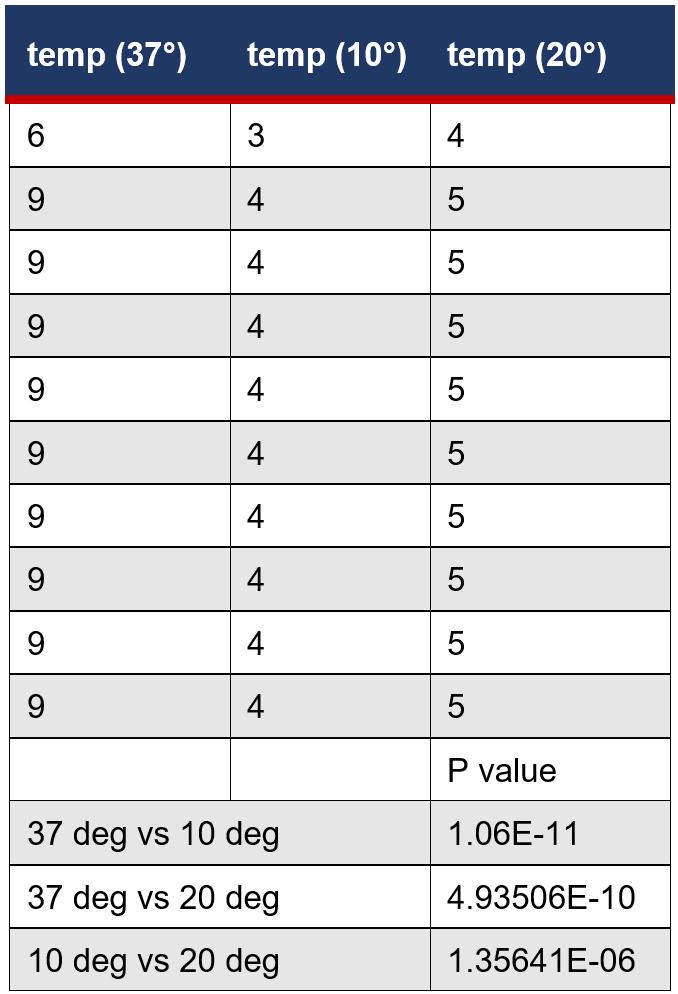

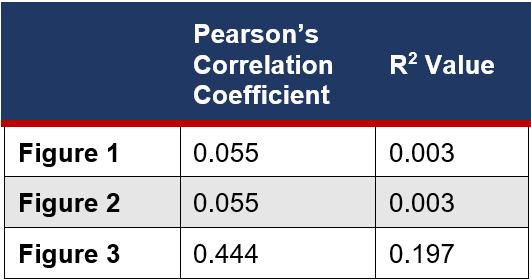

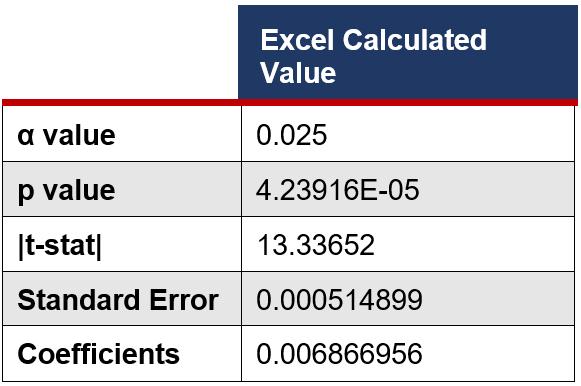

Unpaired Student's t-tests were applied to determine whether there was a statistically significant difference between each increment of lactate (5mM, 10mM, 15mM, 20mM) and the basal measurements, for both ECAR and OCR. The significance level was set at alpha = 0.05, meaning any comparison with p > 0.05 was deemed not significant. The same t-tests were conducted for the control with the addition of a buffered solution. All p values for the control were not significant (p > 0.05) and ranged from p=0.40 to p=0.98. Significant differences were measured at 15mM (Figure 1A) and 20mM (Figure 1B) for OCR, and at 5mM (Figure 2A), 10mM (Figure 2B) and 20mM (Figure 2C) for ECAR.
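As a minimal sketch of the kind of test described above, the following Python code computes an unpaired, equal-variance Student's t-test with a two-tailed p-value using only the standard library. The function names and the example readings are hypothetical; the numbers are invented for illustration and are not the study's data. The p-value is obtained by numerically integrating the t density, which is an implementation choice made here, not something stated in the report.

```python
import math
from statistics import mean, variance


def t_pdf(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    coeff = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coeff * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t_stat, df, steps=10_000):
    """Two-tailed p-value from Simpson's rule over [0, |t|] (steps must be even)."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 <= T <= |t|)
    return max(0.0, 1 - 2 * central_area)


def unpaired_t_test(a, b):
    """Equal-variance unpaired t-test; returns (t statistic, df, two-tailed p)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t_stat = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))
    return t_stat, df, two_tailed_p(t_stat, df)


if __name__ == "__main__":
    # Hypothetical OCR readings (pMoles/min), for illustration only.
    basal = [14.1, 15.0, 14.8, 14.3, 14.9]
    lactate_15mm = [40.2, 42.5, 41.0, 43.1, 40.8]
    t_stat, df, p = unpaired_t_test(basal, lactate_15mm)
    print(f"t = {t_stat:.2f}, df = {df}, significant at 0.05: {p < 0.05}")
```

A comparison is treated as significant when the returned p-value is below the chosen alpha of 0.05.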

Statistical analysis of lactate group for OCR measurements:

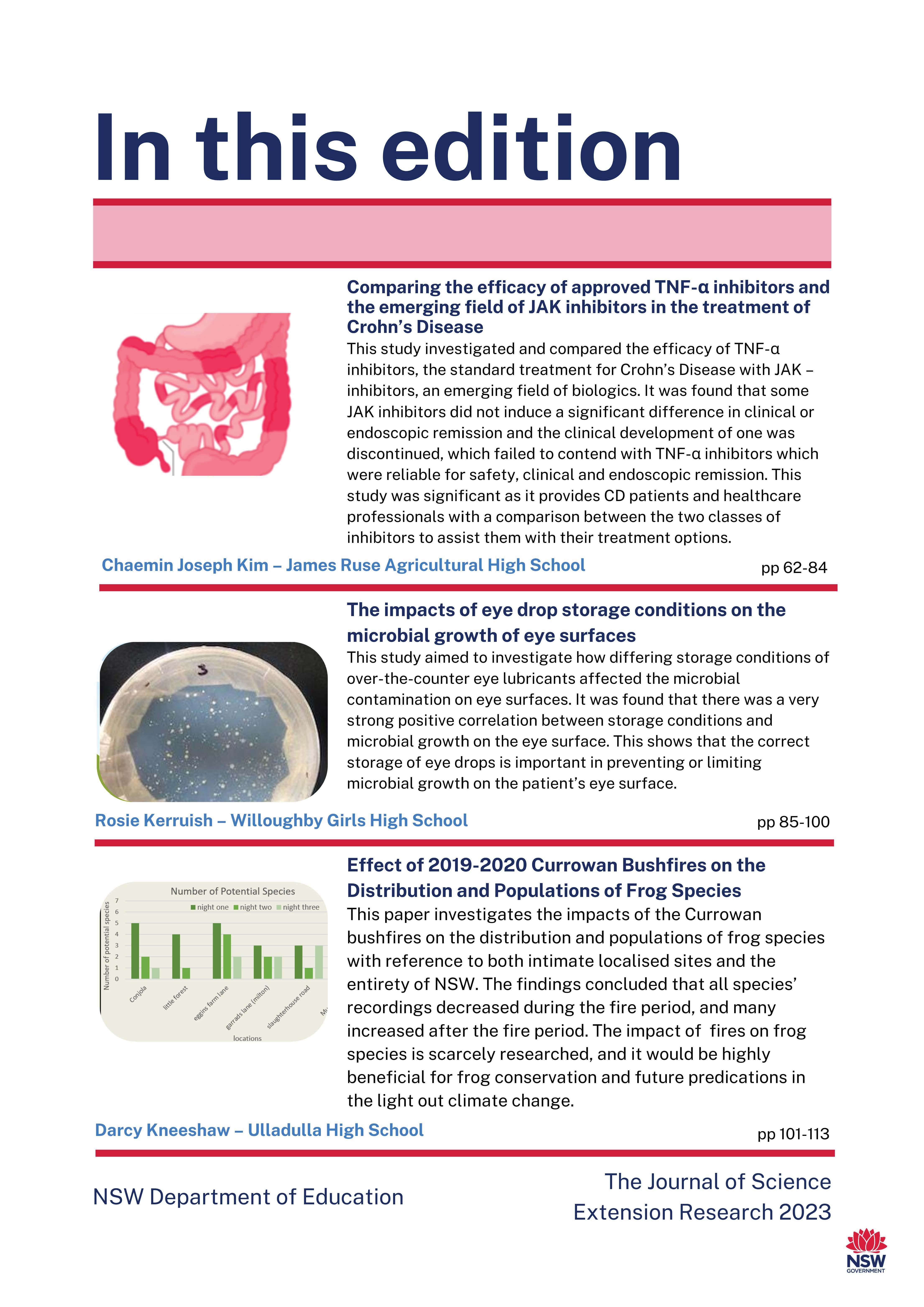

Figure 1B: P(T<=t) two-tail = 0.000686 (P<0.05) OCR (pMoles/min) was measured at a basal rate (13mins) and when 20mM was injected to the cell line (94mins). The difference between the average OCR for cancer cells at basal rates compared to 20mM were statistically significant as the results were 0.00686% due to chance. This suggests that 20mM of lactate could affect OCR.

Statistical analysis of lactate group for ECAR measurements:

Figure 1A: P(T<=t) two-tail = 0.00301 (P<0.05) OCR (pMoles/min) was measured at a basal rate (13mins) and when 15mM was injected to the cell line (74mins). The difference between the average OCR for cancer cells at basal rates compared to 15mM were statistically significant as the results were 0.0301% due to chance. This suggests that 15mM of lactate could affect OCR.

Figure 2A: P(T<=t) two-tail = 0.0128 (P<0.05) ECAR (mpH/min) was measured at a basal rate (13mins) and when 5mM was injected to the cell line (33mins). The difference between the average ECARfor cancer cells at basal rates compared to 5mM were statistically significant as the results were 1.28% due to chance. This suggests that 5mM of lactate could affect ECAR.

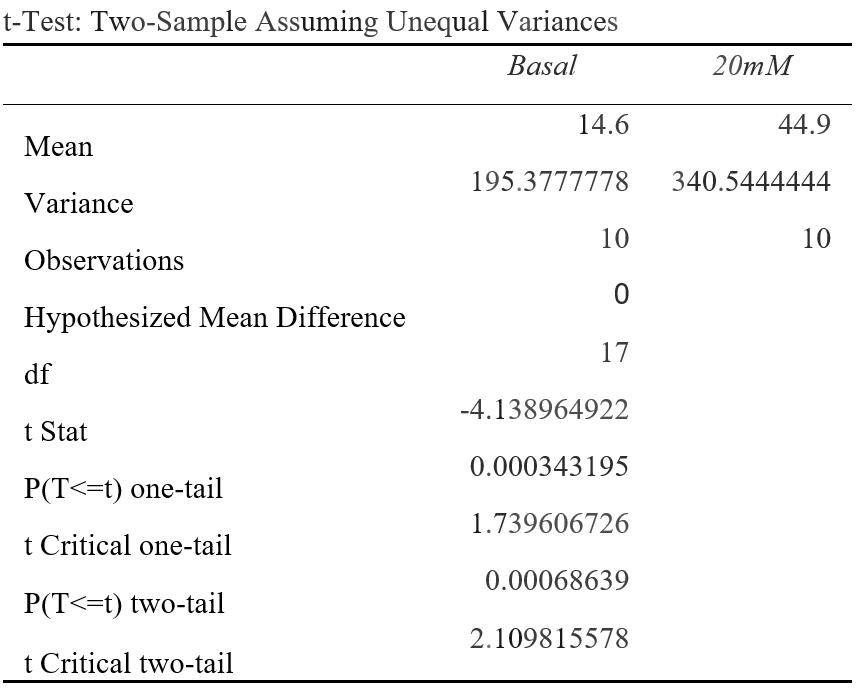

Figure 2B: P(T<=t) two-tail= 0.0294 (P<0.05) ECAR (mpH/min) was measured at a basal rate (13mins) and when 1 0mM was injected to the cell line (54mins). The difference between the average ECAR for cancer cells at basal rates compared to 10mM were statistically significant as the results were 2.94% due to chance. This suggests that 10mM of lactate could affect ECAR.

Descriptive statistical analysis:

Descriptive analysis for OCR measurements:

Figure 2C: P(T<=t) two-tail= 0.0337 (P<0.05) ECAR (mpH/min) was measured at a basal rate (13mins) and when 20mM was injected to the cell line (94mins). The difference between the average ECAR for cancer cells at basal rates compared to 20mM were statistically significant as the results were 3.37% due to chance. This suggests that 20mM of lactate could affect ECAR.

Figure 4A: The average OCR measurements were calculated for the lactate and control group for each time interval during the assay.

Figure 4B: The average OCR measurements for the lactate and control group were graphed for each recorded time interval. The red arrows signify the point at which 5mM of lactate was cumulatively injected.

Descriptive analysis for ECAR measurements:

Figure 5A: The average ECAR measurements were calculated for the lactate and control group for each time interval during the assay.

Discussion:

The t-tests conducted reveal that lactate has a significant effect on ECAR and OCR at various concentrations when compared to basal measurements. For the independent group of cancerous cells, significant differences (p<0.05) between the mean basal rate and the mean rate at a set concentration (5mM, 10mM, 15mM or 20mM) suggested that lactate affected either ECAR or OCR measurements, as differences this large would be expected less than 5% of the time if lactate had no effect. This occurred at 15mM (p=0.00301) and 20mM (p=0.000686) for OCR measurements (Figure 1A and B), and at 5mM (p=0.0128), 10mM (p=0.0294) and 20mM (p=0.0337) for ECAR measurements (Figure 2A, B and C). For the control group of cancerous cells, which were injected with a buffer serum without lactate, all p-values were high, ranging from p=0.404 to p=0.975. This indicates that there were most likely no confounding variables significantly influencing the results, and that lactate was the source of change in the experiment. Therefore, the null hypothesis that lactate does not change the ECAR and OCR measurements in a cancerous cell line can be rejected in favour of the alternative hypothesis.

An average of the independent samples indicated ECAR measurements increased over the 5 minute cycle period following an increase in lactate concentration at 13, 40, 61 and 81 minutes, then returned to a similar rate, despite the cumulative increase in extracellular lactate concentration (Figure 5B). This is most likely due to the acidic properties of the sodium lactate added causing anomalous results as ECAR measurements are dependent on pH, evident in the significant increase in ECAR at 5 and 10mM increments (Figure 2A and B).

Figure 5B: The average ECAR measurements for the lactate and control group were graphed for each recorded time interval. The red arrows signify the point at which 5mM of lactate was cumulatively injected.

However, the OCR measurements, which are calculated from moles rather than pH, suggest that as lactate concentration increases OCR also increases, evident in the spike in OCR in the independent group after 54 minutes when compared to the control (Figure 4B).

The evident switch in the cancerous cell line to a more oxidative metabolism once the accumulated lactate injected reached 15mM and greater corresponds with emerging literature in the field and reiterates the importance of lactate in understanding cancer metabolism (Wu et al., 2016). The significant increase in OCR in the independent group at 15mM (p=0.00301) from 14.6 to 41.4 pMoles/min and at 20mM (p=0.000686) from 14.6 to 44.9 pMoles/min provides a point at which lactate causes a metabolic switch from aerobic glycolysis to OXPHOS, extending current scientific literature (Wu et al., 2016).

Current literature proposes that glycolytic cancer cells are able to sustain their metabolism through types of lactate shuttling including the Reverse Warburg Effect, metabolic symbiosis and vascular endothelial growth (Kooshki et al., 2021), as not all cancer cells necessarily produce lactate (Semenza, 2008). The increase in oxidative metabolism from 15mM of lactate could therefore be due to disruption of the concentration gradient required to transport lactate through MCTs, causing the cancer cells to instead use lactate as a fuel for oxidation, potentially indicated by the plateau in OCR from 61 minutes onwards (Figure 4B). The increase in OCR at 15mM could also be attributed to an increase in lactate favouring the oxidation of lactate to pyruvate via LDH, resulting in pyruvate being used as fuel for OXPHOS (Xie et al., 2014). The increase in OCR could also be due to the increase in extracellular lactate decreasing the efficacy with which glucose can diffuse through GLUT transporters in glycolytic cells, creating an environment where glycolytic cancer cells adapt by increasing OCR.

Key limitations and future directions for scientific research:

The in vitro nature of the experiment was beneficial for providing quantitative data on a cellular level for the effects of lactate on cell metabolism; however, it is limited in providing a thorough understanding of the role of lactate in vivo. The variation across each individual assay (Appendix 2) highlights the need for further replications, so that results with a large variance can be analysed to mirror the diversity of ways heterogeneous cancer cells may react under experimental conditions. Despite being highly advanced, the Seahorse XF technology is limited because cells could have broken off during the mixing phase of each cycle of the assay, impacting the cell count and the reliability of the results. The Seahorse XF results also indicated 0 values for either ECAR or OCR on various occasions (Appendix 2), which further limits the validity of the results, as it is questionable whether the cells are ever at a state of 0. Using lactate as the independent variable, which affects pH measurements, also impacts the findings, as no noticeable trend was observed in the ECAR measurements. This could further explain why there was an insignificant Pearson's correlation coefficient (r=-0.114) between the two measurements (Appendix 3).
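For readers unfamiliar with the statistic, the following is a minimal sketch of how a Pearson's correlation coefficient between paired ECAR and OCR readings could be computed. The function name and the example values are hypothetical and do not reproduce the data in Appendix 3.

```python
import math


def pearson_r(xs, ys):
    """Pearson's correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return covariance / (spread_x * spread_y)


if __name__ == "__main__":
    # Hypothetical paired readings, for illustration only.
    ecar = [12.0, 18.5, 11.2, 17.9, 12.4]
    ocr = [14.6, 20.1, 30.3, 41.4, 44.9]
    print(f"r = {pearson_r(ecar, ocr):.3f}")
```

Values of r close to 0, such as the r=-0.114 reported above, indicate little linear association between the two measurements.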

Whilst the experiment provided insight to the role of lactate on a cellular level, it cannot be concluded that this directly mirrors the role of exercise, which also produces lactate, on cancer cells. Whilst the concentrations of lactate in the experiment mirror the concentration produced during exercise, further research is required to understand how lactate is transported to tumours from a bodily perspective to assess the accuracy of this experiment as a potential model. Further experimentation should be conducted with a larger sample size over multiple occasions, as well as comparing the results with a noncancerous cell line to further scientific understanding.

Investigations into quantitative, in vivo experiments for the role of lactate on glycolysis and OXPHOS will also be beneficial in order to understand the role of single bouts of exercise on tumour intrinsic factors.

Conclusion:

A clear increase in OCR when the lactate injected accumulated to 15mM and 20mM, where P=0.00301 and P=0.000686 respectively, indicates a significant link between the concentration of extracellular lactate and the metabolic profile of cancer cells in vitro. Therefore, despite the limited validity of the ECAR measurements, which arises because the added lactate potentially affects the pH readings, it can still be established that an increase in lactate does result in cancer cells switching to a more oxidative phenotype, and the null hypothesis can be rejected in favour of the alternative hypothesis. Hence, these results suggest that lactate could revert the Warburg Effect in cancer cells by potentially disrupting the concentration gradient required for cells to shuttle lactate intercellularly to sustain such a highly glycolytic metabolism. It is critical that further investigations explore the effects of lactate on cancer cells from an in vivo perspective, in order to determine the effects of single bouts of lactate on cancer cell metabolism, as a means of providing a foundation for the possible mechanisms by which exercise affects cancer.

I would like to express my gratitude to Dr Andy Philip and Garvan Institute of Medical Research for conducting the experiment and providing the Seahorse XF experimental data. I would also like to thank my teacher Ann Hanna for guidance with the data analysis, and my mentor Clara Zwack from the University of Sydney for their support with contacting academics. I would also like to extend my appreciation to my peer Emily Cliff and chemistry teacher Zoe Liley for their feedback regarding the scientific report.

References:

Ashcraft, K. A., Peace, R. M., Betof, A. S., Dewhirst, M. W., & Jones, L. W. (2016). Efficacy and Mechanisms of Aerobic Exercise on Cancer Initiation, Progression, and Metastasis: A Critical Systematic Review of In Vivo Preclinical Data. Cancer Research, 76(14), 4032–4050. https://doi.org/10.1158/0008-5472.can-16-0887

Brown, T. P., & Ganapathy, V. (2020). Lactate/GPR81 signaling and proton motive force in cancer: Role in angiogenesis, immune escape, nutrition, and Warburg phenomenon. Pharmacology & Therapeutics, 206, 107451.

https://doi.org/10.1016/j.pharmthera.2019.107451

de la Cruz-López, K. G., Castro-Muñoz, L. J., Reyes-Hernández, D. O., García-Carrancá, A., & Manzo-Merino, J. (2019). Lactate in the Regulation of Tumor Microenvironment and Therapeutic Approaches. Frontiers in Oncology, 9.

https://doi.org/10.3389/fonc.2019.01143

Dethlefsen, C., Pedersen, K. S., & Hojman, P. (2017). Every exercise bout matters: linking systemic exercise responses to breast cancer control. Breast Cancer Research and Treatment, 162(3), 399–408.

https://doi.org/10.1007/s10549-017-4129-4

Fadaka, A., Ajiboye, B., Ojo, O., Adewale, O., Olayide, I., & Emuowhochere, R. (2017). Biology of glucose metabolization in cancer cells. Journal of Oncological Sciences, 3(2), 45–51.

https://doi.org/10.1016/j.jons.2017.06.002

Hanahan, D., & Weinberg, Robert A. (2011). Hallmarks of cancer: the next generation. Cell, 144(5), 646–674.

https://doi.org/10.1016/j.cell.2011.02.013

Hirschhaeuser, F., Sattler, U. G. A., & Mueller-Klieser, W. (2011). Lactate: A Metabolic Key Player in Cancer. Cancer Research, 71(22), 6921–6925.

https://doi.org/10.1158/0008-5472.can-11-1457

Hofmann, P. (2018). Cancer and Exercise: Warburg Hypothesis, Tumour Metabolism and High-Intensity Anaerobic Exercise. Sports, 6(1), 10.

https://doi.org/10.3390/sports6010010

Hojman, P., Gehl, J., Christensen, J. F., & Pedersen, B. K. (2018). Molecular Mechanisms Linking Exercise to Cancer Prevention and Treatment. Cell Metabolism, 27(1), 10–21.

https://doi.org/10.1016/j.cmet.2017.09.015

Kooshki, L., Mahdavi, P., Fakhri, S., Akkol, E. K., & Khan, H. (2021). Targeting lactate metabolism and glycolytic pathways in the tumor microenvironment by natural products: A promising strategy in combating cancer. BioFactors.

https://doi.org/10.1002/biof.1799

Mathewson, T. (2018, October 30). More than 1 in 4 people across the world don’t get enough exercise, study says. Global Sports Matters.

https://globalsportmatters.com/health/2018/10/30/over-1-in-4-people-across-theworld-dont-getenough-exercise-studysays/

Mishra, D., & Banerjee, D. (2019). Lactate Dehydrogenases as Metabolic Links between Tumor and Stroma in the Tumor Microenvironment. Cancers, 11(6), 750.

https://doi.org/10.3390/cancers11060750

Payen, V. L., Mina, E., Van Hée, V. F., Porporato, P. E., & Sonveaux, P. (2020). Monocarboxylate transporters in cancer. Molecular Metabolism, 33, 48–66.

https://doi.org/10.1016/j.molmet.2019.07.006

Philp, A., Macdonald, A. L., & Watt, P. W. (2005). Lactate – a signal coordinating cell and systemic function. Journal of Experimental Biology, 208(24), 4561–4575. https://doi.org/10.1242/jeb.01961

Plitzko, B., Kaweesa, E. N., & Loesgen, S. (2017). The natural product mensacarcin induces mitochondrial toxicity and apoptosis in melanoma cells. Journal of Biological Chemistry, 292(51), 21102–21116.

https://doi.org/10.1074/jbc.m116.774836

Ristow, M. (2006). Oxidative metabolism in cancer growth. Current Opinion in Clinical Nutrition and Metabolic Care, 9(4), 339–345.

https://doi.org/10.1097/01.mco.0000232892.43921.98

San-Millán, I., & Brooks, G. A. (2016). Reexamining cancer metabolism: lactate production for carcinogenesis could be the purpose and explanation of the Warburg Effect. Carcinogenesis, bgw127.

https://doi.org/10.1093/carcin/bgw127

Semenza, G. L. (2008). Tumor metabolism: cancer cells give and take lactate. Journal of Clinical Investigation

https://doi.org/10.1172/jci37373

Siska, P. J., Singer, K., Evert, K., Renner, K., & Kreutz, M. (2020). The immunological Warburg effect: Can a metabolic‐tumor‐stroma score (MeTS) guide cancer immunotherapy? Immunological Reviews, 295(1), 187–202. https://doi.org/10.1111/imr.12846

Warburg, O. (1927). THE METABOLISM OF TUMORS IN THE BODY. The Journal of General Physiology, 8(6), 519–530.

https://doi.org/10.1085/jgp.8.6.519

Wilde, L., Roche, M., Domingo-Vidal, M., Tanson, K., Philp, N., Curry, J., & Martinez-Outschoorn, U. (2017). Metabolic coupling and the Reverse Warburg Effect in cancer: Implications for novel biomarker and anticancer agent development. Seminars in Oncology, 44(3), 198–203.

https://doi.org/10.1053/j.seminoncol.2017.10.004

World Health Organization. (2022, February 3). Cancer. World Health Organization. https://www.who.int/news-room/fact-sheets/detail/cancer

Wu, H., Ying, M., & Hu, X. (2016). Lactic acidosis switches cancer cells from aerobic glycolysis back to dominant oxidative phosphorylation. Oncotarget, 7(26).

https://doi.org/10.18632/oncotarget.9746

Wu, M., Neilson, A., Swift, A. L., Moran, R., Tamagnine, J., Parslow, D., Armistead, S., Lemire, K., Orrell, J., Teich, J., Chomicz, S., & Ferrick, D. A. (2007). Multiparameter metabolic analysis reveals a close link between attenuated mitochondrial bioenergetic function and enhanced glycolysis dependency in human tumor cells. American Journal of Physiology-Cell Physiology, 292(1), C125–C136.

https://doi.org/10.1152/ajpcell.00247.2006

Xie, J., Wu, H., Dai, C., Pan, Q., Ding, Z., Hu, D., Ji, B., Luo, Y., & Hu, X. (2014). Beyond Warburg effect – dual metabolic nature of cancer cells. Scientific Reports, 4(1). https://doi.org/10.1038/srep04927


Zheng, J. (2012). Energy metabolism of cancer: Glycolysis versus oxidative phosphorylation (Review). Oncology Letters, 4(6), 1151–1157.

https://doi.org/10.3892/ol.2012.928

1: The Seahorse Bioscience XF Training Manual states that cells should be examined prior to assay to:

• Confirm cell health, morphology, seeding uniformity and purity (no contamination)

• Ensure cells are adhered, and no gaps present

• Make sure no cells are plated in the background correction wells

2: Experimental data provided by the Garvan Institute of Medical Research

OCR data:

Flynn Croaker Coffs Harbour Senior College


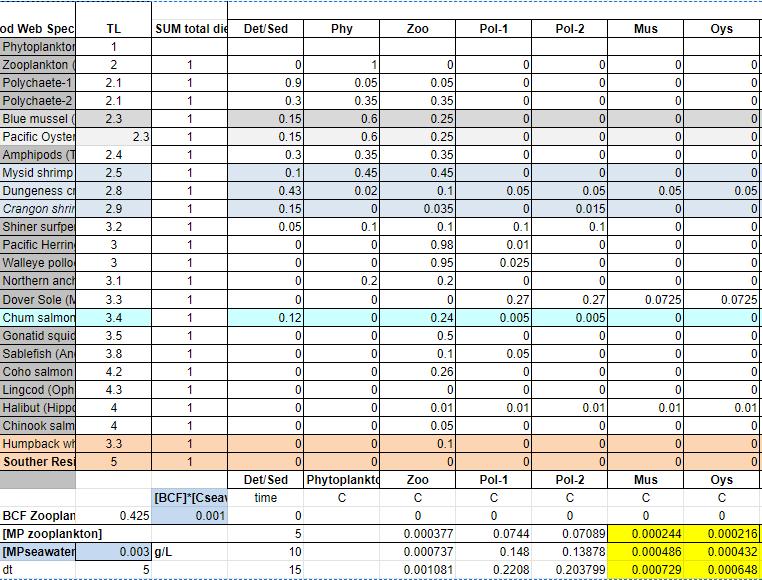

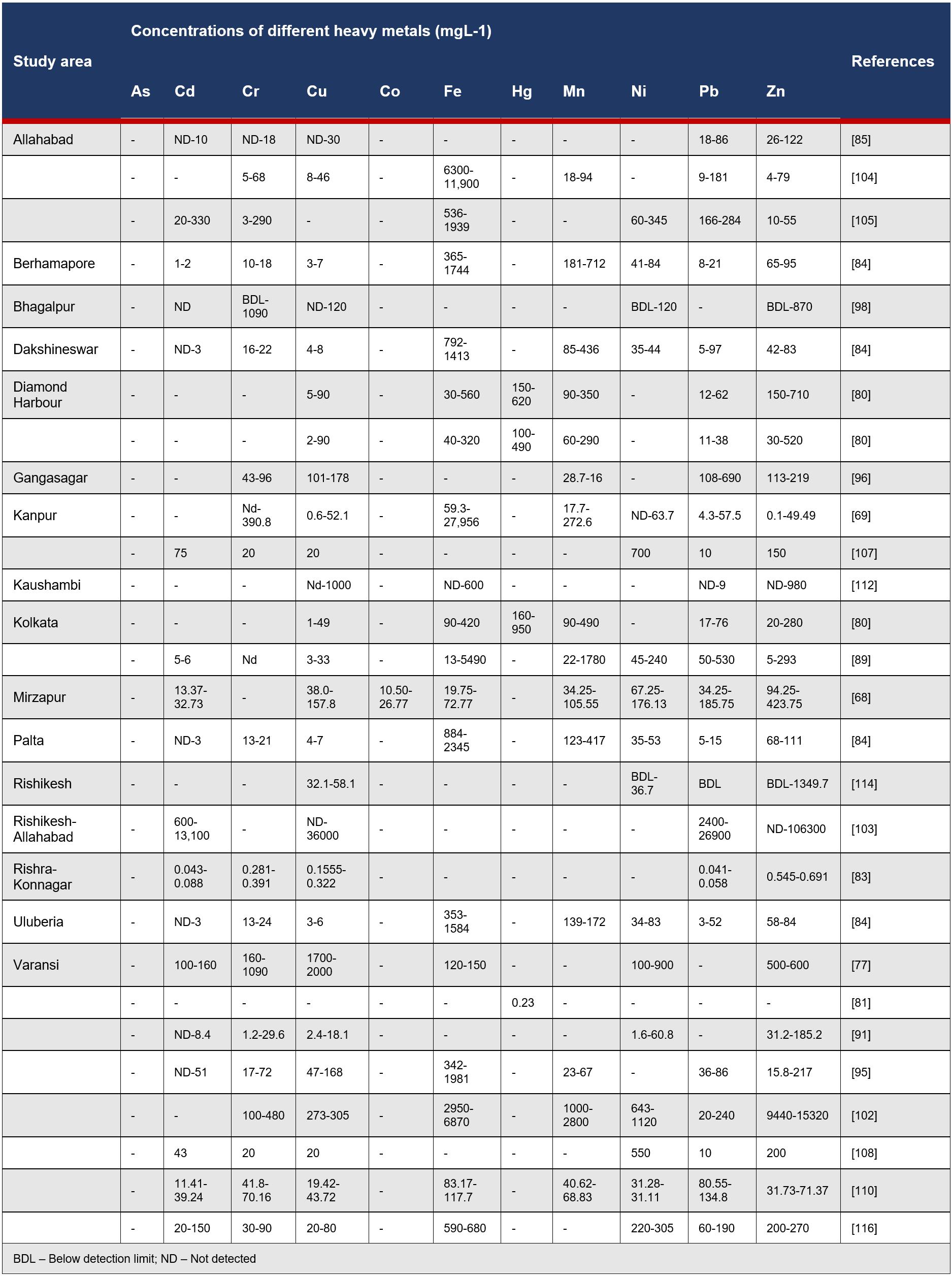

The increasing amount of microplastics (MPs) in the ocean raises concerns regarding the bioaccumulation of harmful and toxic chemicals which build up in seafood species. This meta-analysis examined current data and surveyed the available information to determine whether trophic level makes sea creatures that are commonly eaten by humans more susceptible to MPs and the chemicals associated with them which bioaccumulate. The results showed that there are discrepancies in the amount of information available; however, there is a good amount of evidence that species at lower trophic levels are more susceptible to MP consumption and the toxic chemicals that accumulate. More laboratory-based experiments on the topic would be needed to reach more certain conclusions.

Microplastic Identification

Microplastics are small pieces of plastic that are less than 5mm in diameter. These can be either primary or secondary pieces. Primary MPs are small particles created for commercial use; this category also encompasses microfibres that have rubbed off clothing and other materials. Secondary MPs are plastic pieces that have broken down from a larger piece of plastic. This breakdown is caused by environmental factors, usually ocean waves and the sun’s radiation. Since these particles are small, they are quite frequently mistaken for food throughout the marine food chain. As these MPs break down further, they can give off toxic chemicals which can be harmful to these animals.

Seafood Identification

Seafood is defined here as any sea creature which is known to be eaten by any general population regardless of the circumstances, e.g. as a cultural food or a nation's traditional food.

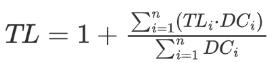

Trophic levels depend upon what a species eats. It can be obtained from

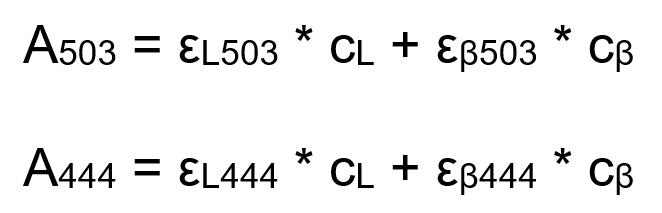

Figure 1. Some detected microplastics (MPs) in seafood species’ muscles from the Persian Gulf. The scale bar represents 250 μm. (Akhbarizadeh, Moore & Keshavarzi 2019)stable isotopes analyses, trophic ecosystem models, or stomach content analyses. As an example, a fish consuming 50% herbivorous-zooplankton (trophic level 2) and 50% zooplanktoneating fish (trophic level 3) would have a trophic level of 3.5. Trophic levels (TL) can be calculated from where n is the number of species or groups of species in the diet, DCi is the proportion of the diet consisting of species i, and TLi is the trophic level of species i. Thus, using dietary data, the trophic level of the predator is determined by adding 1.0 to the average trophic level of all the organisms that it eats. (Yodzi, Reichle & Trites 2017)
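The trophic level calculation described above can be sketched as a short Python function. The function name and the input format (a list of diet-fraction and prey-trophic-level pairs) are assumptions made for this illustration.

```python
def trophic_level(diet):
    """Trophic level of a predator from its diet.

    diet: list of (diet_fraction, prey_trophic_level) pairs, where the
    fractions are proportions of the diet and must sum to 1.
    """
    total_fraction = sum(fraction for fraction, _ in diet)
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("diet fractions must sum to 1")
    # 1.0 plus the diet-weighted average trophic level of the prey.
    return 1.0 + sum(fraction * prey_tl for fraction, prey_tl in diet)


if __name__ == "__main__":
    # The worked example from the text: half trophic level 2, half trophic level 3.
    print(trophic_level([(0.5, 2), (0.5, 3)]))
```

With the worked example from the text (50% of the diet at trophic level 2 and 50% at trophic level 3), the weighted average prey level is 2.5 and the predator's trophic level is 3.5.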

The trophic magnification factor (TMF) was calculated mathematically based on the relationship between trophic level (TL) and the concentration of contaminants. TMF was calculated using the following equation:

$$\mathrm{TMF} = e^{b}$$

Where b is the slope of the equation below:

$$\ln C_{\mathrm{biota}} = a + (b \times \mathrm{TL})$$

If the TMF of a contaminant is below 1, the contaminant is not biomagnified (Won et al. 2018; Akhbarizadeh, Moore & Keshavarzi 2019).
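As a hedged illustration of the TMF calculation, the sketch below fits the log-linear regression by ordinary least squares and exponentiates the slope. The function names and the data points are hypothetical; the example data are synthetic and were generated so that the slope reproduces a TMF of 0.72, they are not taken from any of the reviewed studies.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def trophic_magnification_factor(trophic_levels, concentrations):
    """TMF = e^b, where b is the slope of ln(C_biota) against trophic level."""
    ln_concentrations = [math.log(c) for c in concentrations]
    _, slope = fit_line(trophic_levels, ln_concentrations)
    return math.exp(slope)


if __name__ == "__main__":
    # Synthetic data, for illustration only: ln C = 1.0 + ln(0.72) * TL.
    levels = [2.0, 2.5, 3.0, 3.5, 4.0]
    concentrations = [math.exp(1.0 + math.log(0.72) * tl) for tl in levels]
    tmf = trophic_magnification_factor(levels, concentrations)
    print(f"TMF = {tmf:.2f}, biomagnified: {tmf > 1}")
```

A negative slope b gives a TMF below 1, which corresponds to contaminant concentrations falling as trophic level rises.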

Research question: Do MPs and their respective chemicals cause bioaccumulation more within seafood of lower trophic levels?

Alternative Hypothesis: Seafood species of lower trophic levels will be more susceptible to the bioaccumulation of MP chemicals.

Null hypothesis: Seafood species of higher trophic levels will be more susceptible to the bioaccumulation of MP chemicals.

This study presents a meta-analysis and systematic review of the global scientific literature, analysing data on MP contaminants within specific aquatic species. The literature was screened through a rigorous framework [Fig 1]. A thorough search was conducted to find literature regarding the bioaccumulation of MPs within seafood species of different trophic levels. The search was not limited to a specific search platform and was finalised in August 2022, covering the years 2012 to 2022. The search included the following terms: microplastics, bioaccumulation, fish, effects, toxic and impacts. If a source fitted this model, its data was scrutinised and isolated so that the study-specific information could be located. For the meta-analysis, the amount of data had to be sufficient to support the study, and each source also had to meet a level of validity, which was assessed through its study level and risk of bias [Fig 2]. Any literature which did not sufficiently satisfy these requirements was not included. Sources also had to provide a deeper level of conceptual conclusions and analysis within their results and have a clear, descriptive research method. Additional resources were acquired through the bibliographies of the literature already selected. Once the relevant resources were acquired, the data relevant to the specific research area was extracted and consolidated, and the quality of the studies was assessed. The collected data was then evaluated to highlight the extent of between-study inconsistency (heterogeneity). The results were pooled to calculate summary measures and the measure of effect, expanding the analysis to a macro-study scale. These summaries produced an overall regression for the data, indicating the general trend. The findings were then interpreted and used to provide recommendations in the discussion for future work regarding the bioaccumulation of MPs within seafood species.
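The method above does not state which statistics were used to quantify heterogeneity or to pool results. As an assumption for illustration only, the sketch below uses a common choice: an inverse-variance fixed-effect pooled estimate together with Cochran's Q and the I² statistic. The function names and example numbers are hypothetical.

```python
def pooled_fixed_effect(effects, variances):
    """Inverse-variance weighted (fixed-effect) pooled estimate."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    return pooled, weights


def heterogeneity(effects, variances):
    """Return (pooled estimate, Cochran's Q, I-squared as a percentage)."""
    pooled, weights = pooled_fixed_effect(effects, variances)
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I-squared is the share of total variation attributed to between-study
    # differences; it is truncated at zero when Q is below its degrees of freedom.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, q, i_squared


if __name__ == "__main__":
    # Hypothetical study effect sizes and their variances, for illustration only.
    study_effects = [0.30, 0.55, 0.10, 0.42]
    study_variances = [0.02, 0.05, 0.03, 0.04]
    pooled, q, i2 = heterogeneity(study_effects, study_variances)
    print(f"pooled = {pooled:.3f}, Q = {q:.3f}, I^2 = {i2:.1f}%")
```

Studies with smaller variances receive larger weights, so more precise studies contribute more to the pooled summary measure.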

Figure 1.

with graphical data extraction from study figures. Fit Model module applies MonteCarlo error propagation approach to fit complex datasets to model of interest. Prior to further analysis, reviewers have opportunity to manually curate and consolidate data from all sources. Prepare Data module imports datasets from a spreadsheet into MATLAB in a standardized format. Heterogeneity, Metaanalysis and Meta-regression modules facilitate meta-analytic synthesis of data. (Mikolajewicz 2019)

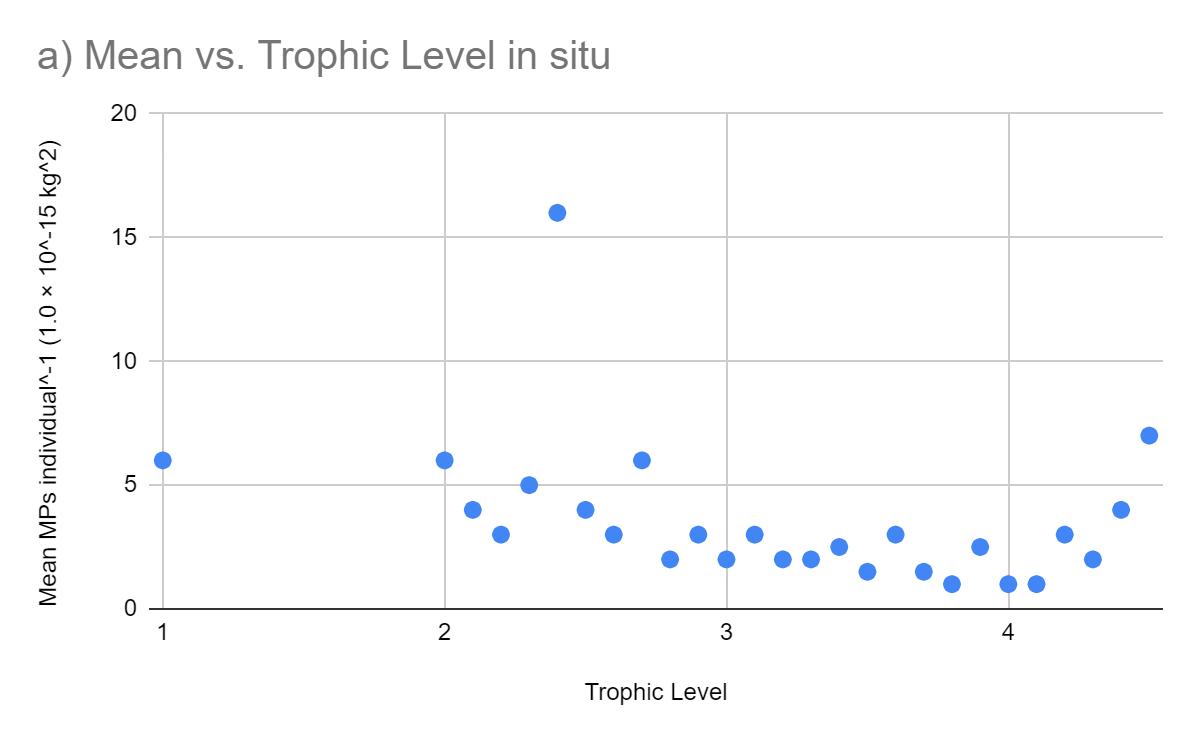

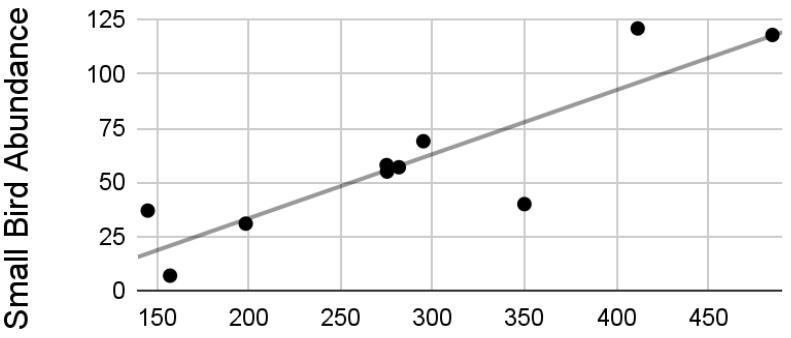

[Figure 1] suggests that there is a significant correlation between the

General framework of MetaLab. The Data Extraction module assists Figure 2. Proposed Framework of the validity through a process of considering both risk of bias and level of research. (Mikolajewicz 2019)amount of microplastic intake that occurs as trophic levels change. This graph proposes that seafood of a higher trophic level will be subject to less microplastic intake, making them less susceptible to bioaccumulation. This may be because the higher a sea creature's trophic level, the less likely they are to be directly affected by the lower trophic level’s accidental consumption of MPs mistaking them for food. The Portunus armatus remains an outlier and this may be because of the foods in its diet which are mostly made up of smaller organisms which cannot accumulate a lot of MPs. This puts forward that this diet is a not particularly prevalent occurrence, some organisms throughout all trophic levels which are made an anomaly due to their diets. The results within the trophic magnification factor (TMF) calculation suppose that the contaminants were not biomagnified throughout the food web because the TMF was below 1, causing no development of bioaccumulation within the animals of higher trophic levels (TMF=0.72). This study put forward that biomagnification was not occurring in their studied organisms through their diet as the Biomagnification factor (BMF) < 1.
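As an illustration of how a TMF below 1 arises, the sketch below uses one common definition, in which log10 of the MP concentration is regressed against trophic level and the TMF is taken as 10 raised to the slope. Whether the cited study used exactly this formulation is an assumption, and the concentrations are hypothetical values constructed to reproduce a TMF of 0.72, not measurements from the Persian Gulf.

```python
import math


def trophic_magnification_factor(trophic_levels, concentrations):
    """Return 10**slope of the least-squares fit of log10(concentration) on trophic level."""
    log_conc = [math.log10(c) for c in concentrations]
    n = len(trophic_levels)
    mean_x = sum(trophic_levels) / n
    mean_y = sum(log_conc) / n
    sxx = sum((x - mean_x) ** 2 for x in trophic_levels)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(trophic_levels, log_conc))
    slope = sxy / sxx
    return 10 ** slope


# Hypothetical data: concentration falls by a factor of 0.72 per trophic level.
levels = [2.0, 3.0, 4.0]
concentrations = [10.0 * 0.72 ** (level - 2.0) for level in levels]

tmf = trophic_magnification_factor(levels, concentrations)
print(f"TMF = {tmf:.2f}")  # a value below 1 indicates trophic dilution, not magnification
```

Under this definition, a TMF above 1 would mean concentrations rise with trophic level (biomagnification), while a TMF below 1 means they fall.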

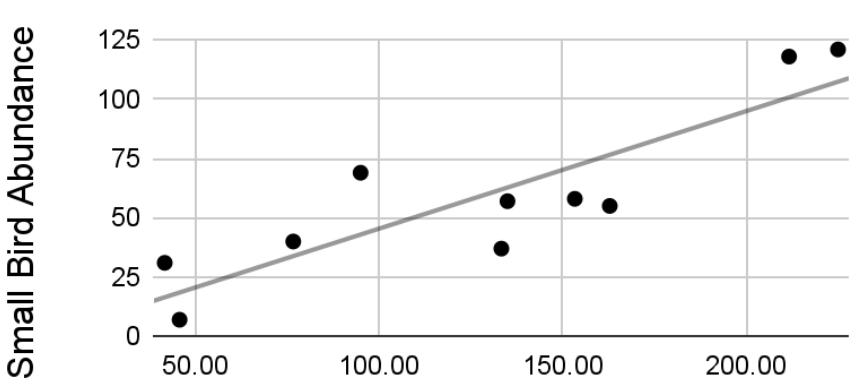

This data proposes that, on average, the most MPs are found within seafood from trophic levels 2-3 and 5. Level 1 is almost ruled out completely, as it is composed of microorganisms that break down the food they consume, making them less susceptible to bioaccumulation. There is a very significant difference between the results recorded in situ and in the laboratory. The laboratory results show a much higher number of MPs per individual, most likely because the controlled environment allows changes in MP bioaccumulation to be observed directly; [Fig 2. D] again reaffirms that most MP bioaccumulation occurs within seafood around trophic level 2.5. In situ, it is a matter of chance whether a species consumes a higher number of MPs; however, there is a clear distinction between the means [Fig 3. a) and b)] across all the trophic levels, supporting the theory that more bioaccumulation takes place around levels 2-3 and 5. This random intake in situ is the cause of the many outliers, which cannot be correlated with trophic level. The randomness is controlled in the laboratory, where the results show fewer outliers except at trophic level 2, potentially because organisms at that level are more susceptible to mistaking MPs for food based on their diet.

Figure 1. Relationship between MPs and trophic level of studied organisms from the Persian Gulf. (Akhbarizadeh, Moore & Keshavarzi 2019)

[Figure 3] immediately shows the lack of data from levels 2 to 3, even though a large number of studies still encounter MP chemical bioaccumulation. The significant difference between 2.8 and 2.9 is most likely due to the lack of results, so the data may not be accurate. There does not at first appear to be a specific trend in the chemical confirmations; however, there is a notable spike at trophic level 2.5, an area which has been of interest in other studies too, being the largest spike in MP intake, bioaccumulation and chemical accumulation. If more studies were done on levels 2-3, it would be assumed that the results would fluctuate similarly. There would, however, most likely be a slightly lower average, because organisms at lower trophic levels are more likely to live shorter lives, not allowing for as much accumulation of toxic chemicals from MPs.

Figure 2. Body burden of bioaccumulated microplastics (individual⁻¹) estimated for different trophic levels, based on reports for marine species (a) collected in situ from levels 1 to 4.5 and (b) exposed in laboratory experiments from levels 2 to 3.7. Trophic levels have been grouped to a single decimal place, e.g. level 4.2 includes 4.21 to 4.29. (Miller, Hamann & Kroon 2020)
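The grouping of trophic levels to a single decimal place, as described for the Miller, Hamann & Kroon (2020) figures, can be sketched as follows. This is a minimal illustration that assumes values are truncated (so 4.21 to 4.29 all fall in level 4.2) and that group means are then compared; the records are hypothetical, and the function names are introduced here for illustration only.

```python
from collections import defaultdict
from decimal import Decimal, ROUND_DOWN


def bin_trophic_level(level):
    """Truncate a trophic level to one decimal place (e.g. 4.29 -> 4.2)."""
    # Decimal avoids binary floating-point surprises when truncating.
    return Decimal(str(level)).quantize(Decimal("0.1"), rounding=ROUND_DOWN)


def mean_by_level(records):
    """Average MPs per individual within each one-decimal trophic level group."""
    groups = defaultdict(list)
    for level, mp_count in records:
        groups[bin_trophic_level(level)].append(mp_count)
    return {lvl: sum(vals) / len(vals) for lvl, vals in sorted(groups.items())}


# Hypothetical (trophic level, MPs per individual) records.
records = [(2.51, 4.0), (2.58, 6.0), (3.0, 2.0), (4.21, 1.0), (4.29, 3.0)]

means = mean_by_level(records)
for lvl, mean in means.items():
    print(f"level {lvl}: mean {mean:.1f} MPs per individual")
```

Groups that contain only one or two records, as with the sparsely studied levels 2 to 3, produce means that are sensitive to single outliers, which is why differences between neighbouring levels should be read with caution.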

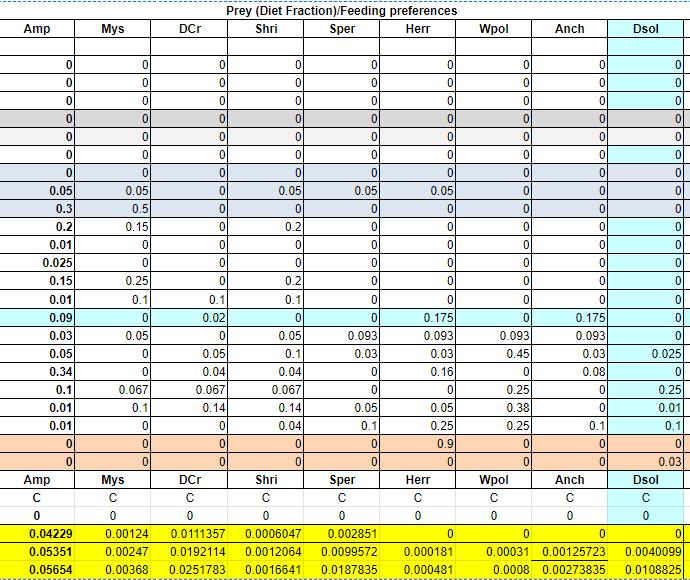

Within low abiotic concentrations, there is a constant downward trend, which implies that the higher the trophic level, the fewer MPs an organism contains. As the simulation goes on, some sea creatures move up the trophic levels. This increase in level lowers the amount of bioaccumulation that takes place, again reaffirming the previous studies, whose data showed a substantial increase in bioaccumulation and MP intake at around level 2.5. An interesting feature of this data is that as time goes on, the trend steepens [fig 5 C-D] and then flattens out with a lack of correlation, suggesting that in the end all species will bioaccumulate the same number of MPs over the long term. In the high abiotic simulation, this trend does not remain [fig. 6]. Instead, there is a much stronger correlation at the start of the simulation than in the longer term, which has no significant direction or correlation and appears to be quite random. This flat ending, with no clear direction in the data over a longer time, also suggests that over the long term the species will have roughly the same amount of MP bioaccumulation. The randomness of this in situ study suggests that the correlation could be stronger if given the right data set.

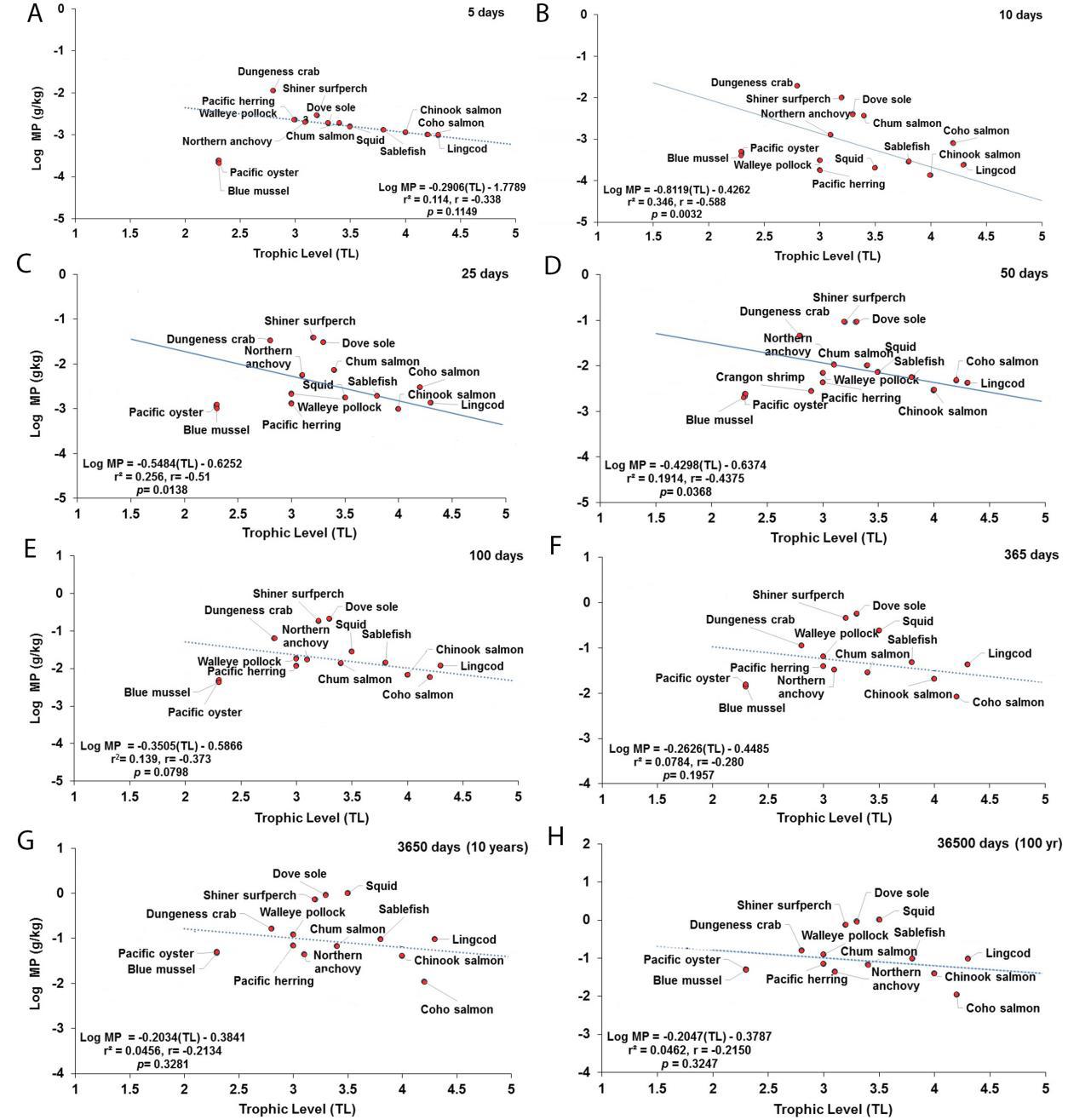

Figure 3. Frequency of microplastic (MP) chemicals confirmed in comparison to the number of studies done. Reporting on studies with marine species collected in situ. Data has been organised by trophic level, which are grouped to a single decimal place, i.e. level 4.2 includes 4.21 to 4.29. (Miller, Hamann & Kroon 2020)

Figure 5. Projections of the apparent trophic magnification factor (TMF) as a function of predicted concentration of microplastics (MPs) versus trophic level (TL) in the cetaceans’ food web of the Northeastern Pacific for simulations under a scenario of low abiotic concentrations (scenario 1: seawater = [0.003 particles/L]; and sediment = [0.266 g/kg dw]) at: (A) 5 days (lack of significant relationship); (B) 10 days (regression line indicates a strong negative, significant relationship); (C) 25 days (regression line indicates a moderate and negative, significant relationship); (D) 50 days (regression line indicates a weak and negative, significant relationship); (E) 100 days (lack of significant relationship); (F) 365 days or 1 year (lack of significant relationship); (G) 3650 days or 10 years (lack of significant relationship); and (H) 36500 days or 100 years (the dotted line indicates the slope direction and a negative positive trend, but lack of a significant relationship). (Alava 2020)

Figure 6. Projections of the apparent trophic magnification factor (TMF) as a function of predicted concentration of microplastics (MPs) versus trophic levels (TL) in the cetaceans’ food web of the Northeastern Pacific for simulations under a scenario of high abiotic concentrations (scenario 3: seawater = [0.04 g/L]; and sediment = [111 g/kg dw]) at: (A) 5 days (the regression shows lack of significant relationship); (B) 10 days (regression line indicates a negative, significant relationship); (C) 25 days (dotted line indicates a negative trend but not a significant relationship); (D) 50 days (dotted line indicates a negative trend, but lack of significant relationship); (E) 100 days (dotted line indicates a negative trend, but lack of significant relationship); (F) 365 days or 1 year (lack of significant relationship); (G) 3650 days or 10 years (lack of significant relationship); and (H) 36500 days or 100 years (lack of significant relationship). (Alava 2020)

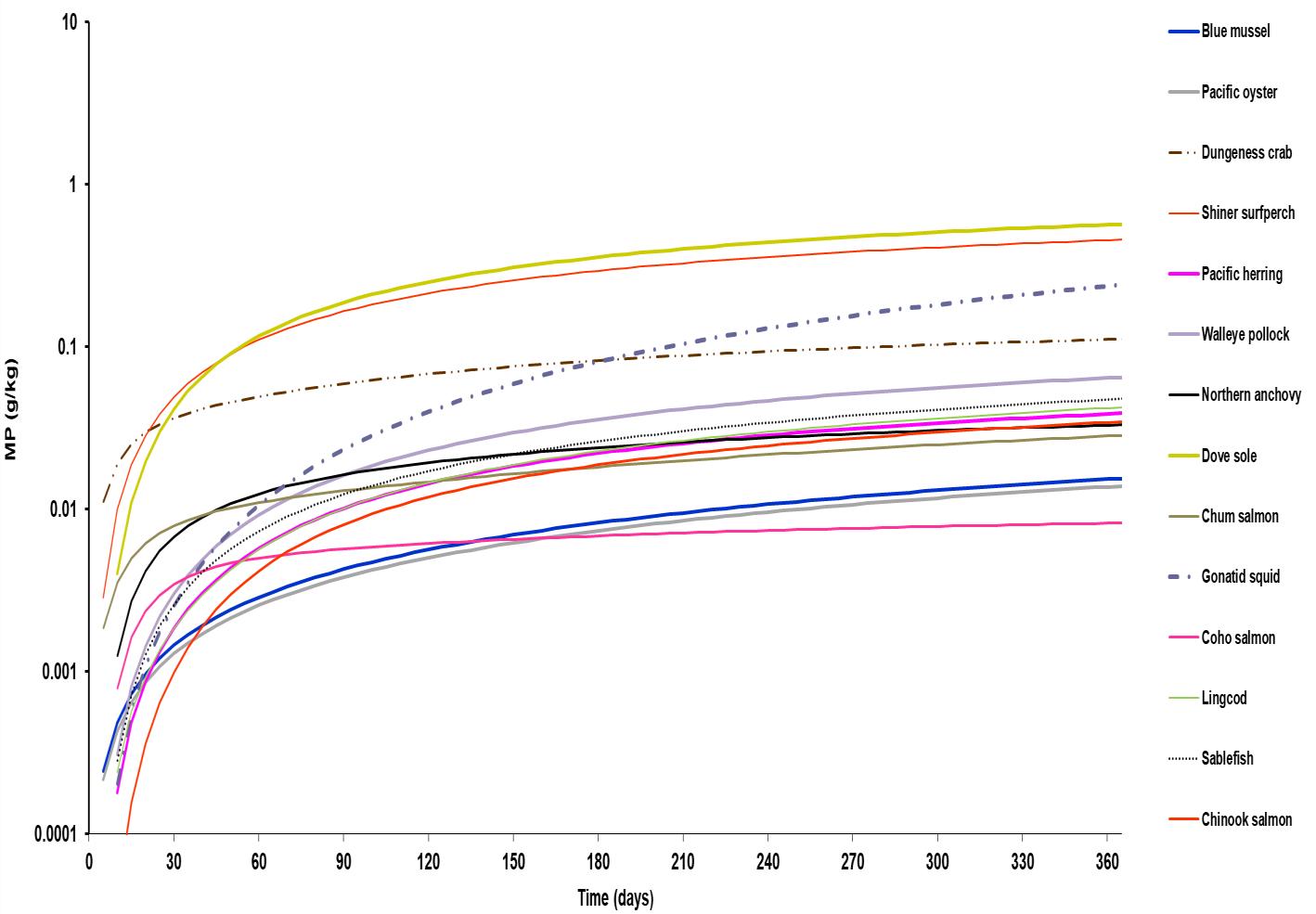

This logarithmic data set provides insight into when the most MPs are consumed within different seafood species. Certain species, such as the gonatid squid, increase their intake of MPs as they get older. In comparison, the Dungeness crab shows very little increase in accumulated MPs over time, plateauing from around 120 days. This may suggest that the Dungeness crab learns to tell the difference between MPs and real food early in its lifespan. This is surprising, as it sits at a trophic level of around 2.7, placing it near the usually spiking 2.5 trophic level. There is no specific weight of MPs that all species reach at the end of the simulation, as potentially suggested in [Figs 5 and 6]; instead, this graph suggests that there is quite a wide spread in the closing MP weights. At around 30 days there is a very similar and strong correlation between all the seafood species and their MP weight, all sitting around 0.005 g/kg.

The data ultimately differs considerably between studies. This is because of the randomness of in situ sampling and the lack of data collected in laboratories. Several studies confirm that more bioaccumulation takes place in levels 2-3; conversely, one study supports there being more accumulation of MPs within levels 3-4.5. That study also explores the number of papers that have been written on the different trophic levels and highlights a disproportionately small number of studies looking at lower levels, which makes its data on the percentage of chemical confirmations potentially inaccurate. In summary, the data studied indicates that the seafood species around trophic level 2.5 are the most susceptible to MP accumulation; however, there is not enough data from controlled environments to be certain.

This supports the hypothesis, given that the study suggests that fish of lower trophic levels are more susceptible to the bioaccumulation of MP chemicals. These results suggest that if humans want to take the utmost measures to avoid MP consumption, they should avoid filter feeders (mussels, oysters, scallops etc.), deposit feeders (bass, eels, crabs etc.) and grazers (lobsters, shrimp etc.). The limitations of the study stem from the lack of research done in specific areas. These include the lack of studies on lower trophic levels and the lack of studies done in controlled environments such as laboratories, which would eliminate the randomness of the data and give clearer and more concise results, allowing for more accuracy and reliability. Further study would require a deeper understanding of the nature of MP bioaccumulation within all trophic levels, and more studies would need to be done in a laboratory setting with more controlled variables. This is the future direction for this area of research because the number of MPs the species are exposed to can be limited by the people conducting the research, whereas in situ exposure varies depending on where the species are. However, conducting a study in a laboratory could affect the species' eating habits and thus change the outcome of the final data. If this were the case, the gaps in the in situ data on lower trophic level seafood species would simply need to be filled. This study could be applied to give a deeper insight into which seafood is the healthiest to eat in terms of containing the fewest MP chemicals and MPs themselves. From this research, that appears to be food from higher trophic levels, as they have shown less MP bioaccumulation.

This report is an important step, as it not only highlights the conclusions that can be drawn from existing studies but also identifies where a lack of data is holding back further development of this area of study. The studies reviewed mostly targeted marine organisms in general rather than species that humans eat, meaning their research may have missed more in-depth seafood-specific data, as it was not what they were looking for. There is also the limitation that some of the figures used are based on simulations built upon calculations rather than real-world data. There is a seemingly larger focus on the biomagnification of MPs within food chains in marine ecosystems than on bioaccumulation. This data could potentially be reanalysed to draw further conclusions regarding bioaccumulation rather than biomagnification. A lack of correlation in some results is most likely due to the random nature of in situ environments, which may by chance show no correlation between MPs and trophic levels in one study and a strong correlation in another. Neither would be “wrong”; however, this means a vast number of studies need to be done to average the data and gain a deeper analysis of the whole study area. This is why the suggested laboratory testing would be more efficient, as it can collect more specific results which are not limited by chance. This study can not only make people aware of the seafood which is the least likely to contain MPs, but can also allow companies to target fish markets which are less susceptible to MP bioaccumulation, making their products more appealing.

The findings suggest that overall, there is a spike in MP bioaccumulation at trophic level 2.5. Generally, there is a higher MP intake at levels 2-3; however, due to the randomness of the in situ setting, it is much harder to measure because of a lack of controllable variables in terms of how many MPs are introduced to different species. This information demonstrates an acceptance of the alternative hypothesis: seafood species of lower trophic levels will be more susceptible to the bioaccumulation of MP chemicals. A study evaluating the existing data on MP consumption, in terms of the percentage of chemical confirmations within species, demonstrated that there was a significant shortfall in the amount of research on MP consumption for seafood species in trophic levels 2-2.9, while there was a comfortable amount of study on levels 3-4.5. This posed the question of whether the data and analysis were accurate. This is hard to judge, as the data comes from a random environment rather than from a laboratory, where significantly more variables could be controlled. In situ environments provide far less explainable results than laboratory tests, where the experimenter can target variables and isolate the causes of different effects; in situ, the cause could be one of many unknown factors. Ultimately, far more research is needed both in situ and in laboratories, specifically targeting the different trophic levels without leaving gaps in which levels are tested, and targeting seafood species rather than general marine organisms, as this allows a further development of understanding which can be implemented to improve human health and quality of life.

The author would like to acknowledge and thank all the sources which have contributed to the final conclusions of this study.

1. Akhbarizadeh, R, Dobaradaran, S, Nabipour, I, Tajbakhsh, S, Darabi, AH & Spitz, J 2020, ‘Abundance, composition, and potential intake of microplastics in canned fish’, Marine Pollution Bulletin, vol. 160, p. 111633.