International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 07 | Jul 2025 www.irjet.net p-ISSN: 2395-0072

Promod Kumar B M 1 , Shruthi V P 2

1 Assistant Professor, Department of Computer Science and Engineering, P.E.S. College of Engineering, Mandya, Karnataka, India

2 Department of Computer Science and Engineering, P.E.S. College of Engineering, Mandya, Karnataka, India.

Abstract - In the era of digital transformation, the evolution of data engineering ecosystems has fundamentally reshaped how organizations manage, process, and analyze data. This research examines the transition from traditional on-premises Relational Database Management Systems (RDBMS) to modern, cloud-native architectures, with a specific focus on India's growing influence as a global IT and data engineering hub. The study shows how cloud-based platforms empower enterprises to unlock the full potential of their data, fostering real-time analytics, automation, and innovation. Leveraging cutting-edge technologies such as Microsoft PowerApps, Azure Data Lake Storage (ADLS) Gen2, Azure Data Factory (ADF), Databricks, Power BI, and Artificial Intelligence/Machine Learning (AI/ML), this project builds an end-to-end, scalable, secure, and highly efficient data pipeline. The pipeline provides robust data ingestion, transformation, and visualization. It also incorporates advanced data governance techniques, including dynamic and static data masking, encryption at rest, and role-based access control (RBAC), to safeguard Personally Identifiable Information (PII) and support regulatory compliance with frameworks such as GDPR and RBI guidelines. Using the Indian banking sector as a case study (a sector undergoing rapid digital adoption), the research evaluates the economic and technological impact of cloud migration. Key benefits identified include significant gains in operational efficiency, reduced infrastructure costs, improved scalability and elasticity, enhanced data security, and enriched customer experiences through data-driven decision-making. Migration also enables seamless integration of disparate data sources, fostering unified analytics and intelligent automation through AI/ML model deployment within Databricks notebooks.
Moreover, the study highlights how the democratization of cloud technologies levels the playing field for small and medium enterprises (SMEs), allowing them to compete on a global scale with enterprise-grade capabilities. Challenges such as data silos, processing latency, regulatory compliance, and legacy system compatibility are addressed through modular architecture, containerization, CI/CD pipelines, and metadata-driven orchestration in ADF.

Key Words: Cloud Migration, RDBMS, Data Engineering, Azure Databricks, ADLS, Data Masking, Banking Sector, AI/ML Integration

1. INTRODUCTION

The rapid evolution of data engineering ecosystems has transformed the landscape of enterprise data management. As businesses increasingly rely on data for strategic decision-making, the limitations of traditional Relational Database Management Systems (RDBMS) have become more pronounced. RDBMS platforms have long served as the backbone of data storage and management, but they often struggle with scalability, data silos, performance bottlenecks, and a lack of support for real-time analytics and big data processing. These constraints have catalyzed a global shift toward cloud-based data platforms that offer elasticity, pay-as-you-go pricing models, seamless integration capabilities, and high availability.

Cloud computing has emerged as a foundational enabler for modern data engineering, allowing organizations to construct resilient, scalable, and cost-effective data pipelines.

Cloud platforms such as Microsoft Azure provide a comprehensive suite of services, ranging from storage (Azure Data Lake Storage Gen2) and compute (Databricks) to orchestration (Azure Data Factory) and visualization (Power BI), that collectively support the end-to-end lifecycle of data engineering. These services are further enhanced by native integration with AI/ML tools and frameworks, enabling businesses to operationalize advanced analytics models for real-time insights.

India, recognized globally for its robust IT capabilities and a growing ecosystem of cloud professionals, is uniquely positioned to lead this digital revolution. The country's extensive talent pool, supportive policy frameworks (e.g., the Digital India initiative), and widespread digital adoption have accelerated cloud migration across sectors. Among these sectors, the banking, financial services, and insurance (BFSI) industry stands out as a prime candidate because of the vast volume and sensitivity of the data it handles. Banks need agile and secure data architectures that adapt to evolving business needs, from real-time transaction processing and loan approvals to regulatory reporting and personalized customer engagement.

This research explores the design and implementation of a modern, cloud-native data engineering framework tailored to the Indian banking sector. The proposed solution leverages PowerApps for business process automation, Azure Data Lake Storage (ADLS) Gen2 for scalable and secure data storage, Databricks for large-scale data transformation and machine learning workflows, and Power BI for rich, interactive analytics. Azure Data Factory orchestrates the entire data pipeline with metadata-driven workflows and error-handling capabilities.

A critical aspect of the solution is data privacy and compliance. Banks must comply with stringent global and regional regulations. These include the General Data Protection Regulation (GDPR), the Reserve Bank of India (RBI) cybersecurity guidelines, and India's Personal Data Protection Bill (PDPB), which has since been withdrawn and replaced by the Digital Personal Data Protection (DPDP) Act, 2023. This research emphasizes advanced data masking techniques, including dynamic and static masking, tokenization, and role-based access controls, to protect Personally Identifiable Information (PII) and prevent unauthorized data exposure. These techniques obfuscate or anonymize sensitive information such as account numbers, PAN, Aadhaar details, and customer names during analytics and reporting, while preserving the data's utility for business insights.

Furthermore, the integration of AI/ML models developed and deployed using Databricks adds a predictive layer to the system, enabling use cases such as fraud detection, credit risk scoring, and churn prediction. The scalable nature of the cloud environment ensures that these models can be trained and retrained efficiently on large datasets with minimal infrastructure overhead.

In summary, this research presents a comprehensive and future-proof data engineering architecture that not only modernizes legacy systems but also empowers banks to harness the full value of their data assets. The proposed solution serves as a blueprint for digital transformation in the BFSI domain and holds significant relevance for other industries facing similar challenges in data scalability, security, and compliance.

2. LITERATURE SURVEY

[1] Capgemini Research Institute, Cloud Adoption in the Banking Sector. Capgemini, 2022.

This study provides a comprehensive overview of the increasing adoption of cloud technologies within the banking and financial services sector. The research outlines key drivers behind this transformation, including the need for enhanced scalability, operational agility, improved data security, and cost efficiency. It emphasizes how cloud solutions help banks respond to changing customer expectations and competitive pressures through rapid deployment of digital services. The study also identifies challenges related to the migration of legacy infrastructure, particularly around data integration, vendor lock-in, and ensuring compliance with stringent regulatory frameworks like Basel III and GDPR.

Disadvantage: The research predominantly centers on Tier 1 and multinational financial institutions. It offers limited insight into the cloud adoption barriers faced by small- and medium-sized banks, such as budget constraints, limited cloud expertise, and integration complexity with legacy systems.

[2] Oliver Wyman, Economic Impact of Cloud Computing in India. Oliver Wyman, 2021.

This report analyses the macroeconomic benefits of cloud computing across India's digital ecosystem. It estimates that the adoption of cloud technologies could contribute approximately 8% to India's GDP by 2026, driven by enhanced productivity, digital innovation, and the growth of emerging technologies like AI and IoT.

Disadvantage: While economically insightful, the study lacks granular technical details on the implementation of cloud infrastructure in banking environments. It does not address operational challenges such as data migration, architectural complexity, and cloud security enforcement.

[3] Gartner Research, Data Engineering Ecosystem Evolution, 2023.

This research outlines the transition from legacy on-premises data architectures to modern, cloud-native data engineering platforms. The study underscores the increasing role of technologies such as Azure Data Lake Storage Gen2, Databricks, and Power BI in enabling scalable, modular, and high-performance data pipelines. It also elaborates on the importance of data democratization, real-time processing, and metadata-driven data orchestration in modern enterprises.

Disadvantage: The publication lacks detailed use cases and provides no hands-on implementation examples or best practices from real-world deployments, making it less actionable for technical practitioners.

[4] McKinsey & Company, AI/ML in Banking: Fraud Detection and Risk Assessment, 2022.

This report delves into the growing role of Artificial Intelligence and Machine Learning in transforming the banking sector. It discusses how ML algorithms are used for real-time fraud detection, credit risk scoring, loan approval automation, and customer segmentation. The report stresses the necessity of integrating AI/ML workflows into cloud-based data platforms to leverage real-time insights and predictive analytics at scale.

Disadvantage: Although insightful on business use cases, the study does not explore the technical complexity of implementing and maintaining AI/ML models in a production-grade cloud infrastructure. Topics like model versioning, data drift monitoring, MLOps, and cloud resource optimization are not addressed.

[5] National Institute of Standards and Technology (NIST), Special Publication 800-122, Guide to Protecting the Confidentiality of Personally Identifiable Information (PII), 2010.

This publication presents a framework for protecting Personally Identifiable Information (PII) in cloud environments. It outlines key data protection methods, including Static Data Masking (SDM), Dynamic Data Masking (DDM), Tokenization, and Format-Preserving Encryption (FPE). The guidelines emphasize the trade-off between data usability and privacy, advocating role-based access control, encryption at rest, and data anonymization strategies in compliance with global regulations such as GDPR, HIPAA, and India's PDP Bill.

Disadvantage: While comprehensive in its coverage of masking techniques, the document does not explore integration strategies with advanced analytics or AI/ML pipelines, where masked or anonymized data may reduce model accuracy or lead to training inconsistencies.

3. METHODOLOGY

The proposed system transitions from a traditional RDBMS to a cloud-native data engineering ecosystem, integrating cutting-edge services and tools including PowerApps, Azure Data Lake Storage Gen2 (ADLS Gen2), Azure Databricks, and Power BI. This architecture is designed to overcome the challenges of legacy systems by ensuring scalability, security, real-time analytics, and regulatory compliance, all of which are critical to modern banking infrastructure.

Key Components & Features:

1. Scalability with ADLS Gen2 and Azure Databricks

ADLS Gen2: Provides hierarchical namespace storage on top of Azure Blob Storage. It enables massive-scale ingestion of structured, semi-structured, and unstructured data with optimized throughput and cost-effective storage tiers (Hot, Cool, Archive).

Azure Databricks: Built on Apache Spark, it supports distributed computing for big data pipelines. It enables parallel processing, Delta Lake support, and auto-scaling clusters for on-demand resource provisioning.

Use Case: Easily process hundreds of millions of banking transactions per day across regions without downtime or performance degradation.

2. Cost Efficiency via Serverless and Pay-as-You-Go

Cloud-native components operate under elastic resource allocation, so compute and storage are billed according to actual usage rather than fixed, pre-provisioned capacity.

Databricks supports job clusters and automated job scheduling, eliminating the need for persistent infrastructure.

Impact: Reduces capital expenditure (CapEx) and operational expenditure (OpEx), while improving cost forecasting and resource utilization.

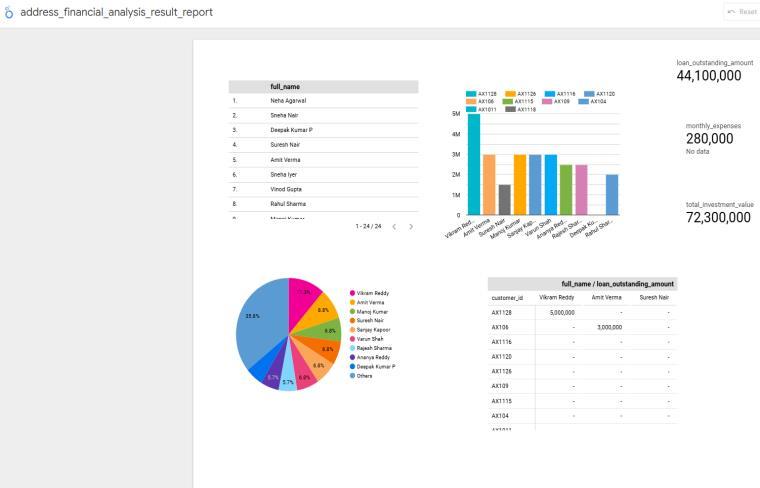

3. Real-Time Analytics & AI-Driven Insights

Power BI enables seamless visualization of banking KPIs such as credit risk scores, loan defaults, fraud detection alerts, and customer segmentation.

ML Models on Databricks allow for streaming analytics, predictive modeling, and integration with tools like Azure Machine Learning or MLflow for experimentation and model lifecycle management.

Example: Real-time fraud detection by scoring transactions as they flow through the pipeline using trained ML models.
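The real-time scoring step described above can be sketched in plain Python. This is a minimal illustration, not the project's model. The feature set, the coefficients in `WEIGHTS`, and the 0.5 threshold are hypothetical placeholders for a model that would actually be trained, for example in a Databricks notebook and tracked with MLflow. The sketch shows only the core idea: each transaction is scored as it arrives and flagged when its probability crosses a threshold.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple

@dataclass(frozen=True)
class Transaction:
    transaction_id: str
    amount: float          # transaction amount (INR)
    is_international: bool
    hour: int              # local hour of day, 0-23

# Hypothetical coefficients for illustration only; a real model would be
# trained on historical (masked) data and loaded from a model registry.
WEIGHTS = {"bias": -6.0, "log_amount": 0.5, "international": 2.0, "night": 1.5}
THRESHOLD = 0.5  # probability at or above which a transaction is flagged

def fraud_probability(txn: Transaction) -> float:
    """Logistic-regression-style score: sigmoid of a weighted feature sum."""
    z = (WEIGHTS["bias"]
         + WEIGHTS["log_amount"] * math.log1p(txn.amount)
         + WEIGHTS["international"] * float(txn.is_international)
         + WEIGHTS["night"] * float(txn.hour < 6))
    return 1.0 / (1.0 + math.exp(-z))

def score_stream(txns: Iterable[Transaction]) -> Iterator[Tuple[str, float, bool]]:
    """Score each transaction as it arrives, without buffering the stream."""
    for txn in txns:
        p = fraud_probability(txn)
        yield txn.transaction_id, p, p >= THRESHOLD

incoming = [Transaction("T1", 500.0, False, 14), Transaction("T2", 200000.0, True, 2)]
for txn_id, prob, flagged in score_stream(incoming):
    print(txn_id, round(prob, 3), flagged)
```

Keeping the scoring function pure (no I/O) makes it easy to test outside the cluster. A streaming job could then apply the same logic to each micro-batch.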

4. Security and Compliance

RBAC (Role-Based Access Control) ensures that only authorized users can access sensitive datasets, based on roles (e.g., Compliance Officer, Data Analyst, Risk Manager).

Data Encryption: Customer data such as Aadhaar numbers and account details is encrypted both at rest (Azure Storage encryption) and in transit (TLS 1.2+).

Multi-Factor Authentication (MFA) and Azure Active Directory (now Microsoft Entra ID) integration safeguard login credentials and access.

Auditing & Logging: Activities are tracked via Azure Monitor and Databricks audit logs, which are essential for forensic analysis and compliance.

Compliance: Aligned with standards such as GDPR, RBI IT Guidelines, and PCI-DSS.
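The RBAC rule described above can be sketched as follows: access is granted by role, denied by default, and every decision is logged for auditing. The role names come from the text. The dataset names and the `POLICY` table are hypothetical. In the Azure design, this enforcement would normally sit in the platform itself (for example, Azure role assignments and ADLS Gen2 access control lists) rather than in application code.

```python
from enum import Enum
from typing import Dict, FrozenSet, List, Set

class Role(Enum):
    COMPLIANCE_OFFICER = "ComplianceOfficer"
    DATA_ANALYST = "DataAnalyst"
    RISK_MANAGER = "RiskManager"

# Hypothetical policy: the datasets each role may read.
POLICY: Dict[Role, FrozenSet[str]] = {
    Role.COMPLIANCE_OFFICER: frozenset({"transactions_raw", "customers_pii", "audit_logs"}),
    Role.DATA_ANALYST: frozenset({"transactions_masked"}),
    Role.RISK_MANAGER: frozenset({"transactions_masked", "credit_scores"}),
}

def can_read(roles: Set[Role], dataset: str) -> bool:
    """Deny by default; allow if any of the user's roles grants the dataset."""
    return any(dataset in POLICY.get(role, frozenset()) for role in roles)

def authorize(user: str, roles: Set[Role], dataset: str, audit_log: List[str]) -> bool:
    """Check access and record every decision, allowed or not, for auditing."""
    allowed = can_read(roles, dataset)
    audit_log.append(f"user={user} dataset={dataset} allowed={allowed}")
    return allowed

log: List[str] = []
print(authorize("analyst01", {Role.DATA_ANALYST}, "customers_pii", log))
print(log)
```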

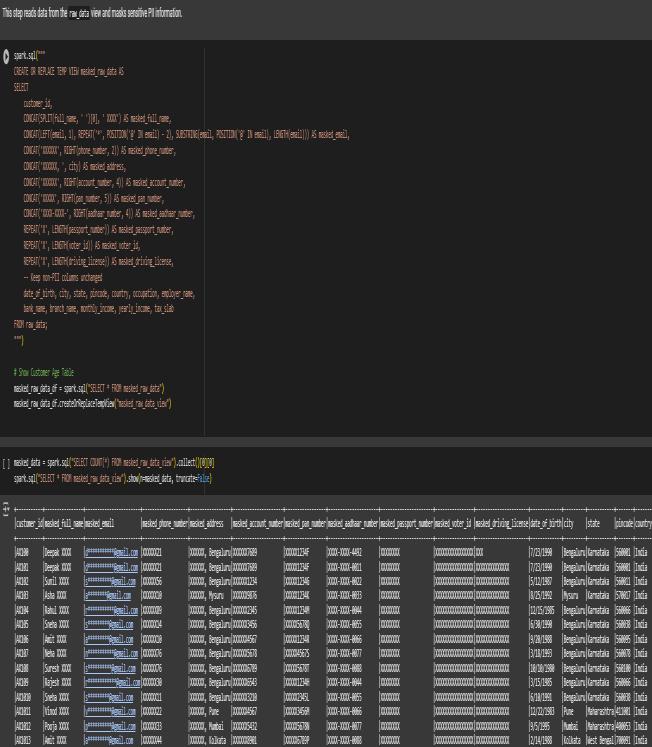

5. Integrated Data Masking for Privacy Preservation Model

Static Data Masking (SDM): Applied to backup or non-production environments for PII redaction (e.g., replacing real PAN numbers with dummy values).

Dynamic Data Masking (DDM): Used for production analytics where role-based views are necessary. For example, a data analyst may see masked account numbers, while a financial controller sees the full data.

Integration with AI/ML: Because the masking is deterministic (the same input always maps to the same masked value), masked datasets retain referential integrity. This allows model training without exposing real PII.
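A minimal sketch of dynamic data masking as described above: the stored record is never changed, and masking is applied at read time based on the caller's role. The `AccountRecord` fields, the masking formats, and the `UNMASKED_ROLES` set are assumptions for illustration. Conceptually, this resembles the way Azure SQL Database's dynamic data masking reveals real values only to principals granted the UNMASK permission.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class AccountRecord:
    customer_name: str
    account_number: str
    balance: float

# Hypothetical: roles allowed to see unmasked values.
UNMASKED_ROLES = {"FinancialController"}

def mask_tail(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Hide all but the last `visible` characters, e.g. '123456789012' -> '********9012'."""
    if len(value) <= visible:
        return mask_char * len(value)
    return mask_char * (len(value) - visible) + value[-visible:]

def view_for(role: str, record: AccountRecord) -> AccountRecord:
    """Apply masking at read time; the stored record is never modified."""
    if role in UNMASKED_ROLES:
        return record
    return replace(record,
                   customer_name=record.customer_name[:1] + "***",
                   account_number=mask_tail(record.account_number))

stored = AccountRecord("Ravi Kumar", "123456789012", 1500.0)
print(view_for("DataAnalyst", stored))
print(view_for("FinancialController", stored))
```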

3.1 FLOWCHART

Data Collection: PowerApps captures real-time banking transaction data, storing it in SharePoint.

Cloud Migration: Azure Data Factory (ADF) ingests structured and semi-structured data into ADLS Gen2.

Data Processing & Transformation: Databricks processes raw data, performs ETL operations, applies data masking (SDM/DDM/FPE), and enhances data security.

AI/ML Integration: AI/ML models analyse masked transaction patterns for fraud detection and risk assessment.

Data Storage & Distribution: Processed data is stored in ADLS Gen2, enabling seamless access for business applications.

Visualization & AI: Power BI dashboards and AI-driven insights support data-driven decision-making.

[PowerApps] > [Azure Data Factory] > [ADLS Gen2] > [Azure Databricks] (ETL/ELT, ML Models, Masking) > [Delta Lake] > [Power BI Dashboards]
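The metadata-driven orchestration that ADF performs in this flow can be illustrated with a small Python simulation. A control table lists the sources, and one generic loop copies each enabled entry, recording failures without stopping the rest. In ADF itself, this pattern is usually built from a Lookup activity that feeds a ForEach running a Copy activity. The `SourceConfig` fields, source names, and `copy_fn` callback below are hypothetical stand-ins, not ADF APIs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class SourceConfig:
    name: str            # logical source name (hypothetical)
    target_path: str     # destination folder in the lake (hypothetical layout)
    enabled: bool = True

def run_pipeline(control_table: List[SourceConfig],
                 copy_fn: Callable[[SourceConfig], int]) -> Dict[str, str]:
    """Iterate over the control table, like a ForEach over a metadata lookup.

    copy_fn performs one copy and returns the row count. A failure in one
    source is recorded and does not stop the remaining sources.
    """
    status: Dict[str, str] = {}
    for cfg in control_table:
        if not cfg.enabled:
            status[cfg.name] = "skipped"
            continue
        try:
            rows = copy_fn(cfg)
            status[cfg.name] = f"succeeded ({rows} rows)"
        except Exception as exc:  # record the error and move on to the next source
            status[cfg.name] = f"failed: {exc}"
    return status

def demo_copy(cfg: SourceConfig) -> int:
    """Fake copy step used only to exercise the loop."""
    if cfg.name == "branches":
        raise IOError("source unreachable")
    return 42

control = [SourceConfig("transactions", "raw/transactions/"),
           SourceConfig("branches", "raw/branches/"),
           SourceConfig("legacy_loans", "raw/legacy_loans/", enabled=False)]
print(run_pipeline(control, demo_copy))
```

Adding a new source then means adding a row to the control table rather than building a new pipeline, which is the main appeal of the metadata-driven design.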

3.2 Data Masking Implementation:

Static Data Masking (SDM): Irreversibly anonymizes PII in non-production environments (e.g., replacing actual customer names with pseudonyms).

Dynamic Data Masking (DDM): Restricts real-time exposure of sensitive fields (e.g., displaying credit card numbers as ****-****-****-1234 to unauthorized users).

Format-Preserving Encryption (FPE): Encrypts data while retaining its original format (e.g., encrypting the PAN ABCDE1234F to XYZWQ5678R, which is still five letters, four digits, and one letter).

Tokenization: Replaces sensitive data with non-sensitive tokens (e.g., substituting actual account numbers with tokens in analytics workflows).

Compliance: Ensures alignment with GDPR, RBI's data localization norms, and SOC 2 standards.
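To make the FPE idea concrete, the sketch below encrypts a PAN into another string of the same AAAAA9999A shape and decrypts it back. It is a toy modular-addition Feistel network written for illustration. It belongs to the same family as the NIST SP 800-38G FF1/FF3-1 modes but is not an implementation of them, and it has not been analysed for security. A production system should use a vetted FF1/FF3-1 library or a managed service. The key shown is a placeholder.

```python
import hashlib
import hmac
import re
from typing import Tuple

PAN_PATTERN = re.compile(r"[A-Z]{5}[0-9]{4}[A-Z]")   # AAAAA9999A
LETTERS = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
LETTER_SPACE = 26 ** 6   # the six letter positions (0-4 and 9)
DIGIT_SPACE = 10 ** 4    # the four digit positions (5-8)
ROUNDS = 8

def _round_value(key: bytes, rnd: int, half: int, modulus: int) -> int:
    """Keyed round function: HMAC-SHA256 over (round, half), reduced mod modulus."""
    msg = bytes([rnd]) + half.to_bytes(8, "big")
    return int.from_bytes(hmac.new(key, msg, hashlib.sha256).digest(), "big") % modulus

def _split(pan: str) -> Tuple[int, int]:
    """Encode the six letters as a base-26 integer and the four digits as an integer."""
    if not PAN_PATTERN.fullmatch(pan):
        raise ValueError("expected PAN format AAAAA9999A")
    letters = 0
    for ch in pan[:5] + pan[9]:
        letters = letters * 26 + LETTERS.index(ch)
    return letters, int(pan[5:9])

def _join(letters: int, digits: int) -> str:
    """Inverse of _split: rebuild the AAAAA9999A string."""
    chars = []
    for _ in range(6):
        letters, r = divmod(letters, 26)
        chars.append(LETTERS[r])
    text = "".join(reversed(chars))
    return text[:5] + f"{digits:04d}" + text[5]

def encrypt_pan(key: bytes, pan: str) -> str:
    left, right = _split(pan)
    for rnd in range(ROUNDS):
        if rnd % 2 == 0:   # even rounds update the letter half from the digit half
            left = (left + _round_value(key, rnd, right, LETTER_SPACE)) % LETTER_SPACE
        else:              # odd rounds update the digit half from the letter half
            right = (right + _round_value(key, rnd, left, DIGIT_SPACE)) % DIGIT_SPACE
    return _join(left, right)

def decrypt_pan(key: bytes, token: str) -> str:
    left, right = _split(token)
    for rnd in reversed(range(ROUNDS)):   # undo the rounds in reverse order
        if rnd % 2 == 0:
            left = (left - _round_value(key, rnd, right, LETTER_SPACE)) % LETTER_SPACE
        else:
            right = (right - _round_value(key, rnd, left, DIGIT_SPACE)) % DIGIT_SPACE
    return _join(left, right)

key = b"demo-key-not-for-production"
token = encrypt_pan(key, "ABCDE1234F")
print(token, decrypt_pan(key, token))
```

Each round changes only one half and depends only on the other half, so the round can be undone by subtracting the same keyed value. This is why decryption works without the round function itself being invertible.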

4. IMPLEMENTATION

This project aims to demonstrate the feasibility and functionality of a robust data engineering ecosystem, conceptually mirroring the architecture of a production-grade cloud solution such as those built on Microsoft Azure. Such an Azure-based system typically requires significant investment, especially for a preliminary proof of concept, so we have opted to use readily available Google tools for this prototype model.

This approach allows us to:

Minimize Initial Costs: Demonstrate the core data flow and functionality without incurring substantial upfront expenses.

Rapidly Prototype and Iterate: Quickly build and test the data pipeline, allowing for agile adjustments and refinement.

Focus on Conceptual Understanding: Emphasize the logical flow of data, transformation processes, and analytical outputs, rather than the specifics of a particular vendor's platform.

Provide Proof of Concept: Provide a functional demonstration of the proposed system before any large-scale investments are made.

It is important to note that this prototype, while functionally representative, is not intended to be a production-ready system. Google tools, while powerful for prototyping, have limitations in scalability, performance, and security compared to enterprise-grade cloud platforms like Azure.

Specifically, we have replaced the following Azure components with their Google equivalents:

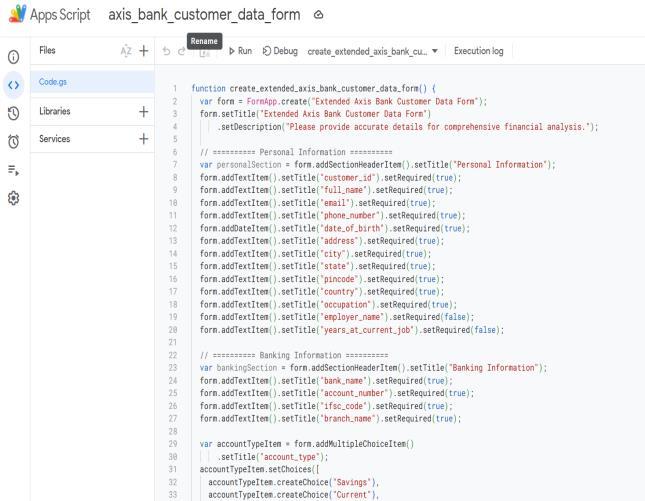

PowerApps: Replaced with Google Forms, enhanced with Google Apps Script for custom functionality.

Azure Data Lake Storage Gen2 (ADLS Gen2): Replaced with Google Sheets for initial data viewing and as a simulated data lake storage.

Azure Databricks: Replaced with Google Colab, leveraging PySpark and Spark SQL for data processing and masking.

Power BI: Replaced with Looker Studio for data visualization and analysis.

This approach allows us to demonstrate the core concepts of data ingestion, processing, masking, and visualization using a cost-effective and accessible toolset. The insights gained from this prototype will serve as a foundation for future development and implementation in a production environment, potentially using Azure or a similar platform.

Key Points of the Prototype Approach:

Purpose of the Prototype: It is a proof of concept, not a production system.

Cost-Effectiveness: Google tools avoid the licensing and usage costs of the Azure stack.

Conceptual Representation: The prototype demonstrates the overall architecture.

Limitations: Google tools have limitations compared to enterprise-grade solutions.

Future Scalability: The prototype will guide future production deployments.

Why Use Google Tools Instead of Paid Software?

1. Cost Savings:

o Azure services (PowerApps, ADLS Gen2, Databricks, Power BI) are enterprise-grade but come with significant costs, especially for large-scale deployments.

o Google tools (Forms, Sheets, Colab, Looker Studio) are free for basic use, making them ideal for prototyping and proof-of-concept projects.

2. Accessibility and Ease of Use:

o Google tools have a low barrier to entry. They are user-friendly and require minimal setup.

o Colab provides a hosted Python environment in which PySpark can be installed with pip, eliminating the need for complex local installations.

3. Rapid Prototyping:

o Google tools allow for quick iteration and development. You can rapidly build and test your data pipeline without waiting for procurement or complex configurations.

4. Learning and Experimentation:

o Google tools provide a safe and cost-effective environment to learn and experiment with data engineering concepts.

o You can explore different data processing and visualization techniques without financial risk.

5. Collaboration:

o Google tools facilitate easy collaboration. You can share forms, sheets, and Colab notebooks with team members.

6. Resource Constraints:

o For individual projects or smaller companies, the cost of the Azure ecosystem could be prohibitive.

7. Proof of Concept:

o Before investing heavily in Azure, it is best to provide the business with a proof of concept.

Limitations of Google Tools:

Scalability: Google Sheets and Colab have limitations in handling massive datasets.

Performance: Colab's performance may not match dedicated cloud services like Databricks.

Security: Google tools may not meet the security requirements for highly sensitive data.

Features: Paid tools offer more advanced features and integrations.

Enterprise Support: Google tools do not offer the level of enterprise support that a paid Azure service provides.

In essence, Google tools are excellent for prototyping and learning, but paid cloud platforms like Azure are better suited for production environments that require scalability, performance, and robust security.

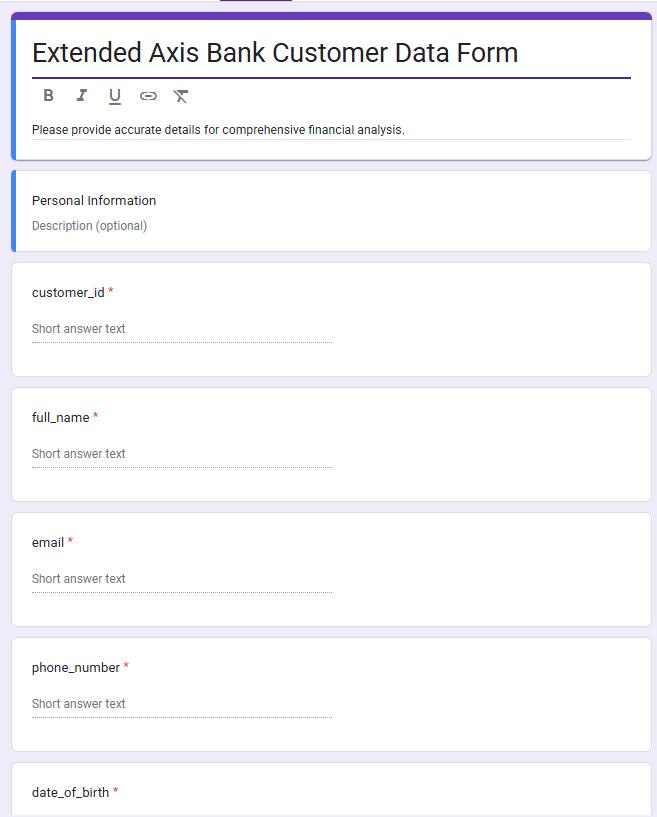

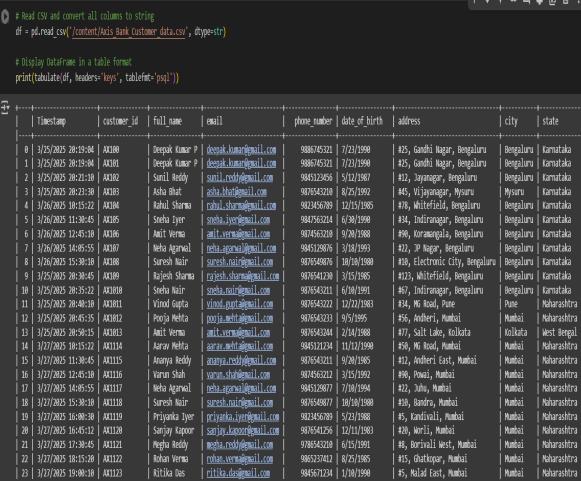

4.1 Phase 1: Data Collection (Google Forms & Scripting)

1. Define Data Schema (Elaborated):

o Data Dictionary: Create a data dictionary listing all fields, data types, validation rules, and descriptions. This helps maintain consistency and clarity.

o Data Integrity: Consider data integrity constraints. For example:

TransactionID should be unique. Implement checks or validations for uniqueness.

CustomerID should reference an existing customer. (You can simulate this using a dropdown with predefined customer IDs.)

Amount should be a positive number.

TransactionType should be limited to predefined values.

o Data Sensitivity: Identify sensitive data fields that will require masking (e.g., CustomerID, and potentially Location or Merchant).

o Sections: Use form sections to organize questions logically. For example, use one section for customer information and another for transaction details.

o Conditional Logic: Implement conditional logic to show or hide questions based on user responses. For instance, show "Merchant" only if TransactionType is "Debit".

o Descriptions: Add detailed descriptions to questions to provide context and instructions.

o Required Fields: Mark essential fields as "required" to ensure data completeness.

o External Data Lookup: If you need to populate data from external sources (e.g., a customer database), use Google Apps Script to fetch data and populate form fields.

o Real-time Validation: Use Google Forms' response validation rules (e.g., number ranges or regular expressions) to give users immediate feedback on invalid input.

o Automated Notifications: Send email notifications upon form submission.

o Data Logging: Log form submission details to a separate sheet for auditing purposes.

o Error Handling: Implement robust error handling to prevent script failures.

4. Testing (Thorough):

o Edge Cases: Test with various edge cases, such as:

Invalid data types.

Missing data.

Boundary values (e.g., zero amounts).

Long text inputs.

o Performance: Test with a large number of simulated submissions to assess the form's performance.

o User Experience: Evaluate the form's usability and make adjustments as needed.
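The integrity constraints from step 1 and the edge cases from step 4 can be checked together with a small validator. In the prototype, these checks would live in Google Forms validation and Apps Script (JavaScript). They are sketched here in Python, as they might be re-run on the exported responses in the Colab stage. The field names follow the schema above. `ALLOWED_TYPES` (only "Debit" appears in the text) and `KNOWN_CUSTOMERS` are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

ALLOWED_TYPES = {"Credit", "Debit"}          # hypothetical predefined values
KNOWN_CUSTOMERS = {"C001", "C002", "C003"}   # stands in for the customer dropdown

def validate_submissions(rows: List[Dict[str, Optional[str]]]) -> List[Tuple[int, str]]:
    """Return (row_index, problem) pairs; an empty list means every row passed."""
    problems: List[Tuple[int, str]] = []
    seen_ids = set()
    for i, row in enumerate(rows):
        txn_id = row.get("TransactionID")
        if not txn_id:                                   # missing or empty
            problems.append((i, "missing TransactionID"))
        elif txn_id in seen_ids:                         # uniqueness check
            problems.append((i, f"duplicate TransactionID {txn_id}"))
        else:
            seen_ids.add(txn_id)
        if row.get("CustomerID") not in KNOWN_CUSTOMERS:  # referential check
            problems.append((i, "unknown CustomerID"))
        try:
            amount = float(row.get("Amount"))  # TypeError for None, ValueError for text
        except (TypeError, ValueError):
            problems.append((i, "Amount is not a number"))
        else:
            if not math.isfinite(amount) or amount <= 0:  # rejects 0, negatives, nan, inf
                problems.append((i, "Amount must be a positive finite number"))
        if row.get("TransactionType") not in ALLOWED_TYPES:
            problems.append((i, "invalid TransactionType"))
    return problems

responses = [
    {"TransactionID": "T1", "CustomerID": "C001", "Amount": "250.00", "TransactionType": "Debit"},
    {"TransactionID": "T1", "CustomerID": "C009", "Amount": "0", "TransactionType": "Debit"},
]
for row_index, problem in validate_submissions(responses):
    print(row_index, problem)
```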

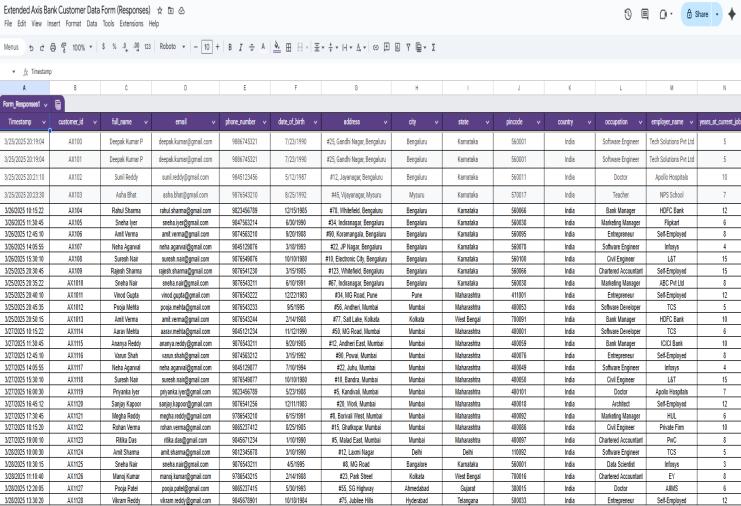

4.2 Phase 2: Data Storage and Initial View (Google Sheets)

1. Google Sheet as Data View (Detailed):

o Data Organization:

Use data filters to quickly sort and filter data.

Apply conditional formatting to highlight important data points.

Freeze header rows and key columns for easy navigation.

o Data Validation:

Use Google Sheets' data validation features to enforce data integrity.

Create dropdown lists for predefined values.

Set data type restrictions.

o Data Relationships (Simulated):

If you are simulating related tables, use VLOOKUP or INDEX/MATCH functions to create relationships between sheets.

Create a separate sheet for customer data and use VLOOKUP to retrieve customer information based on CustomerID.

2. Organization (Advanced):

o Named Ranges: Use named ranges to make formulas more readable and maintainable.

o Data Slicers: Use data slicers to create interactive filters.

o Data Grouping: Group related columns for better organization.

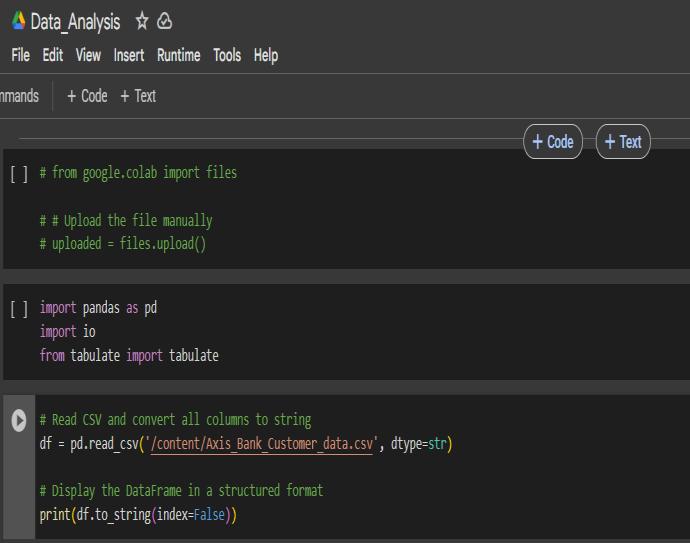

4.3 Phase 3: Data Processing and Masking (Google Colab & PySpark)

Google Colab Setup (Detailed):

o Environment Setup: Ensure that your Colab runtime is configured for Python 3.

o Dependency Management: Use requirements.txt to manage dependencies.

o Version Control (Simulated): If you are working with a team, use Google Drive versioning to track changes.

Connect to Google Sheets (Robust):

o Service Accounts: Use Google Cloud service accounts for more secure authentication.

o Error Handling: Implement error handling for network issues and API errors.

o Retry Logic: Add retry logic to handle transient errors.
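The retry logic above can be sketched as a small helper with exponential backoff and jitter. Which exceptions count as transient depends on the client library used to read the sheet. `ConnectionError` and `TimeoutError` are placeholders, and `sleep` is injectable so the helper can be tested without waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")

def with_retries(fn: Callable[[], T],
                 attempts: int = 4,
                 base_delay: float = 1.0,
                 retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(); on a transient error wait base_delay * 2**(n-1) plus jitter and retry.

    Errors not listed in retry_on propagate immediately; the last transient
    error is re-raised once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronized retries
    raise AssertionError("unreachable")
```

For example, if the gspread library is used, a read could be wrapped as `with_retries(lambda: worksheet.get_all_records())`, with that library's API error type added to `retry_on`. This is an assumption about the setup, not part of the original prototype.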

Initialize Spark (Configured):

o Spark Configuration: Configure Spark settings for optimal performance.

o Logging: Configure Spark logging to capture error messages.

Pandas to Spark DataFrame (Efficient):

o Explicit Schema: Define the schema explicitly rather than relying on Spark's schema inference, which can be inefficient and may assign unexpected types.

Data Processing (Comprehensive):

o Data Quality Checks: Implement data quality checks to identify and handle data errors.

o Data Enrichment: Enrich data with external sources (e.g., using APIs).

o Window Functions: Use Spark window functions for advanced analytics.

o User-Defined Functions (UDFs): Create UDFs for complex data transformations.
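As an example of the window functions mentioned above, the sketch computes three per-customer features often used in fraud analysis: a running transaction count, a running total, and the time since the previous transaction. It is plain Python so it runs anywhere. In PySpark, the same columns would come from `row_number()`, `sum()` and `lag()` over `Window.partitionBy("CustomerID").orderBy("Timestamp")`, with `rowsBetween(Window.unboundedPreceding, Window.currentRow)` for the running total. The `Timestamp` field name is an assumption.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Optional

def add_window_features(txns: List[dict]) -> List[dict]:
    """Per-customer features ordered by time, computed in one pass.

    txn_seq          ~ row_number() over (partition by CustomerID order by Timestamp)
    running_amount   ~ sum(Amount) over the same window, from the first row to the current row
    secs_since_prev  ~ Timestamp minus lag(Timestamp), in seconds (None for the first row)
    """
    ordered = sorted(txns, key=lambda t: (t["CustomerID"], t["Timestamp"]))
    seq: Dict[str, int] = defaultdict(int)
    total: Dict[str, float] = defaultdict(float)
    prev_ts: Dict[str, datetime] = {}
    out = []
    for t in ordered:
        cid = t["CustomerID"]
        seq[cid] += 1
        total[cid] += t["Amount"]
        prev = prev_ts.get(cid)
        gap: Optional[float] = (t["Timestamp"] - prev).total_seconds() if prev is not None else None
        prev_ts[cid] = t["Timestamp"]
        out.append({**t, "txn_seq": seq[cid], "running_amount": total[cid], "secs_since_prev": gap})
    return out

sample = [
    {"CustomerID": "C1", "Timestamp": datetime(2025, 7, 1, 10, 0), "Amount": 100.0},
    {"CustomerID": "C2", "Timestamp": datetime(2025, 7, 1, 9, 0), "Amount": 50.0},
    {"CustomerID": "C1", "Timestamp": datetime(2025, 7, 1, 10, 5), "Amount": 25.0},
]
for row in add_window_features(sample):
    print(row["CustomerID"], row["txn_seq"], row["running_amount"], row["secs_since_prev"])
```

A short gap between transactions combined with a fast-growing running total is the kind of signal a fraud model can use. This is why such window features are typically computed before training.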

Data Masking (Advanced):

o Parameterization: Parameterize masking functions for flexibility.

o Consistent Masking: Ensure consistent masking across different datasets.

o Masking Metadata: Store masking metadata (e.g., masking rules) for auditing.

o Tokenization Mapping: Store token mapping in a database or file for secure management.
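The four masking practices above can be combined in one small class:

- Rules are parameters stored as data and exported as masking metadata.
- Tokens are derived with a keyed HMAC, so the same input always maps to the same token across datasets.
- A token map is kept for authorized re-identification.

Everything here (the rule names, the `TKN_` prefix, the JSON export) is a hypothetical design for illustration. The token map is as sensitive as the raw data, and the HMAC key would belong in a secret store such as Azure Key Vault rather than in the notebook.

```python
import hashlib
import hmac
import json
from typing import Dict

# Hypothetical rule set kept as data so it can be versioned and audited:
# column name -> masking method.
MASKING_RULES: Dict[str, str] = {"CustomerID": "token", "AccountNumber": "token", "Location": "redact"}

class Masker:
    def __init__(self, key: bytes, rules: Dict[str, str]):
        self._key = key                       # secret; keep it outside the notebook
        self.rules = dict(rules)
        self.token_map: Dict[str, str] = {}   # token -> original value (sensitive!)

    def tokenize(self, column: str, value: str) -> str:
        """Deterministic: the same column/value pair always yields the same token,
        so joins on the tokenized column still work across datasets."""
        digest = hmac.new(self._key, f"{column}:{value}".encode("utf-8"), hashlib.sha256).hexdigest()
        token = "TKN_" + digest[:16]
        self.token_map[token] = value
        return token

    def mask_row(self, row: Dict[str, str]) -> Dict[str, str]:
        """Return a masked copy of the row; columns without a rule pass through."""
        masked = dict(row)
        for column, method in self.rules.items():
            if column not in masked:
                continue
            if method == "token":
                masked[column] = self.tokenize(column, masked[column])
            elif method == "redact":
                masked[column] = "REDACTED"
            else:
                raise ValueError(f"unknown masking method {method!r} for column {column}")
        return masked

    def export_mapping(self, path: str) -> None:
        """Write the rules (masking metadata) and the token map for controlled re-identification."""
        with open(path, "w", encoding="utf-8") as fh:
            json.dump({"rules": self.rules, "tokens": self.token_map}, fh, indent=2)

masker = Masker(b"demo-key-not-for-production", MASKING_RULES)
print(masker.mask_row({"CustomerID": "C001", "Amount": "250", "Location": "Mandya"}))
```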

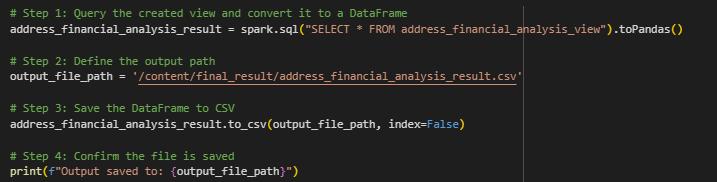

Save Output (Flexible):

o Partitioning: Partition the output data for efficient querying.

o Data Formats: Use optimized data formats like Parquet.

o Google Drive Integration: Save data to Google Drive folders for organization.
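Partitioning for efficient querying can be illustrated with the `key=value` folder naming that Spark's `DataFrameWriter.partitionBy` writes (for example, `df.write.partitionBy("txn_date").parquet(path)`). The plain-Python sketch below computes those folder names and shows partition pruning, where a date-range query opens only the matching folders. The `TxnDate` field and the base path are hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List

def partition_path(base: str, table: str, day: date) -> str:
    """Folder for one day's data using key=value (Hive-style) naming."""
    return f"{base.rstrip('/')}/{table}/txn_date={day.isoformat()}"

def group_by_partition(base: str, table: str, rows: List[dict]) -> Dict[str, List[dict]]:
    """Bucket rows by the partition folder they would be written to."""
    groups: Dict[str, List[dict]] = defaultdict(list)
    for row in rows:
        groups[partition_path(base, table, row["TxnDate"])].append(row)
    return dict(groups)

def partitions_to_scan(paths: List[str], start: date, end: date) -> List[str]:
    """Partition pruning: keep only folders whose date falls in [start, end].

    ISO dates (YYYY-MM-DD) compare correctly as plain strings.
    """
    lo, hi = start.isoformat(), end.isoformat()
    return [p for p in sorted(paths) if lo <= p.rsplit("=", 1)[1] <= hi]

rows = [{"TxnDate": date(2025, 7, 1), "Amount": 10},
        {"TxnDate": date(2025, 7, 1), "Amount": 5},
        {"TxnDate": date(2025, 7, 2), "Amount": 7}]
groups = group_by_partition("drive/curated/", "transactions", rows)
print(partitions_to_scan(list(groups), date(2025, 7, 2), date(2025, 7, 31)))
```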

4.4 Phase 4: Data Visualization (Looker Studio)

1. Connect to Data Source (Reliable):

o Data Refresh: Configure data refresh schedules.

o Data Blending: Blend data from multiple sources.

2. Create Visualizations (Interactive):

o Drill-down Reports: Create drill-down reports for detailed analysis.

o Calculated Fields: Use calculated fields for custom metrics.

o Interactive Filters: Create interactive filters and slicers.

o Custom Visualizations: Explore custom visualizations.

3. Analysis (Insightful):

o Trend Analysis: Analyze data trends over time.

o Comparative Analysis: Compare data across different dimensions.

o Anomaly Detection: Use Looker Studio features such as calculated fields and conditional formatting to highlight anomalies.

o Storytelling: Create data stories to communicateinsightseffectively.
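Looker Studio is mainly used here for display. One option is to compute an anomaly flag upstream in the Colab stage and expose it as a field that charts, filters, or conditional formatting can use. The sketch below uses the modified z-score of Iglewicz and Hoaglin, 0.6745 * (x - median) / MAD, with a 3.5 cutoff. On short series this is more robust than a mean/standard-deviation z-score, because the outlier itself does not inflate the spread. Using daily totals as input is an assumption.

```python
from statistics import median
from typing import Dict

def flag_anomalies(daily_totals: Dict[str, float], threshold: float = 3.5) -> Dict[str, bool]:
    """Flag days whose modified z-score, 0.6745 * (x - median) / MAD, exceeds threshold."""
    values = list(daily_totals.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)   # median absolute deviation
    if mad == 0:
        # At least half the values equal the median; treat any other value as unusual.
        return {day: v != med for day, v in daily_totals.items()}
    return {day: abs(0.6745 * (v - med) / mad) > threshold
            for day, v in daily_totals.items()}

totals = {"2025-07-01": 100.0, "2025-07-02": 102.0, "2025-07-03": 98.0, "2025-07-04": 101.0,
          "2025-07-05": 99.0, "2025-07-06": 100.0, "2025-07-07": 500.0}
print([day for day, is_anomaly in flag_anomalies(totals).items() if is_anomaly])
```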

5. CONCLUSION

This project serves as a compelling demonstration of the profound impact that strategic cloud migration, coupled with advanced data masking techniques, can have on revolutionizing India's IT infrastructure and fostering a secure, data-driven ecosystem. In an era where data is the lifeblood of modern enterprises, particularly within the highly regulated banking sector, the ability to balance data utility with stringent privacy requirements is paramount.

The core strength of the proposed system lies in its comprehensive approach to data security, integrating a suite of masking technologies: Static Data Masking (SDM), Dynamic Data Masking (DDM), and Tokenization. By meticulously implementing these techniques, the system achieves a critical objective: enabling secure data sharing for advanced analytics while adhering to data privacy regulations and standards such as GDPR, RBI's data localization norms, and SOC 2. This multi-layered security framework not only mitigates the risks associated with data breaches but also fosters a culture of trust and transparency. Both are essential for building robust customer relationships and maintaining regulatory compliance.

The banking sector case study presented within this project provides a tangible illustration of how masked data can retain its intrinsic analytical value. Specifically, the system demonstrates the feasibility of leveraging masked transaction data for AI/ML-driven fraud detection and risk assessment. By employing sophisticated machine learning models on anonymized datasets, financial institutions can effectively identify and mitigate fraudulent activities without compromising customer privacy. This delicate balance between privacy preservation and innovation is a testament to the system's design and its potential to unlock new frontiers in data-driven decision-making.

Furthermore, this research underscores the compelling economic and technical alignment of cloud adoption with India's strategic objectives. By embracing cloud-based solutions, organizations can achieve significant cost efficiencies, enhance scalability, and accelerate innovation cycles. The pay-as-you-go model offered by cloud providers, coupled with the ability to dynamically scale resources, allows businesses to optimize their IT spending and invest in core competencies. The integration of advanced analytics tools, such as Power BI and Azure Databricks, empowers decision-makers with real-time insights, enabling them to respond swiftly to market dynamics and customer needs.

Beyond the immediate benefits to individual organizations, this project highlights India's potential to emerge as a global leader in the IT landscape. By fostering a culture of innovation and embracing cutting-edge technologies, India can attract foreign investment, stimulate economic growth, and create high-skilled jobs. The development of robust data protection frameworks, such as the one proposed in this project, is crucial for building trust and attracting international partnerships.

In conclusion, this project serves as a blueprint for organizations seeking to modernize their IT infrastructure while prioritizing data security and regulatory compliance. By leveraging the power of cloud computing and advanced data masking techniques, India can unlock new opportunities for innovation and position itself as a frontrunner in the global digital economy. The insights gleaned from this research have far-reaching implications, not only for the banking sector but also for a wide range of industries seeking to harness the transformative potential of data-driven decision-making. The future of India's IT landscape hinges on its ability to embrace these technologies and cultivate a secure, innovative, and data-centric ecosystem.

REFERENCES

1. Statista – “IT industry in India - statistics & facts.” (Provides general market data and growth trends)

2. Reuters – “India cenbank plans 2025 launch of cloud services.” (Focuses on regulatory changes and cloud adoption in finance)

3. Capgemini – “91% of banks and insurers have initiated cloud adoption.” (Illustrates industry-wide cloud adoption trends)

4. Oliver Wyman – “Future of cloud and its economic impact.” (Analyzes the broader economic benefits of cloud computing)

5. Wikipedia – “Unified Payments Interface (UPI).” (Contextualizes the Indian digital payments landscape)

6. NIST Special Publication 800-122 – “Guide to Protecting the Confidentiality of Personally Identifiable Information (PII).” (Provides foundational guidance on data masking and PII protection)

7. Microsoft Azure Documentation – “Data Masking in Azure SQL Database and Azure Synapse Analytics.” (Specific guidance on Azure's data masking capabilities)

Cloud Computing and Data Lakehouse:

8. Armbrust, M., Fox, A., Griffith, R., Joseph, A. D., Katz, R., Konwinski, A., ... & Zaharia, M. (2010). A view of cloud computing. Communications of the ACM, 53(4). (Seminal paper on cloud computing concepts)

9. Zaharia, M., Chowdhury, M., Franklin, M. J., Shenker, S., & Stoica, I. (2010). Spark: Cluster computing with working sets. USENIX HotCloud. (Explains the foundation of Apache Spark, central to Databricks)

10. Databricks. (n.d.). What is a Data Lakehouse? (Official Databricks resource defining the lakehouse architecture)

11. Microsoft Azure Documentation - Azure Data Lake Storage Gen2 (Official Microsoft Documentation)

12. Microsoft Azure Documentation - Azure Databricks Documentation (Official Microsoft Documentation)

13. Microsoft Azure Documentation - Azure Data Factory Documentation (Official Microsoft Documentation)

Data Security and Privacy:

14. Schneier, B. (1996). Applied cryptography: protocols, algorithms, and source code in C. (Classic cryptography text, relevant to FPE and encryption)

15. Cavoukian, A. (2009). Privacy by design: The 7 foundational principles. (Framework for embedding privacy into system design)

16. GDPR (General Data Protection Regulation). (2016). Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC. (The official GDPR text)

17. RBI (Reserve Bank of India). (2018). Storage of Payment System Data. (RBI guidelines on data localization)

18. ISO/IEC 27001:2022. Information security, cybersecurity and privacy protection: Information security management systems: Requirements. (International standard for information security management)

19. CCPA (California Consumer Privacy Act). (2018). (California's data privacy law)

20. HIPAA (Health Insurance Portability and Accountability Act). (1996). (U.S. health data privacy law, if applicable to health-related banking data)

21. FIPS 140-2. Security Requirements for Cryptographic Modules. (U.S. government standard for cryptographic modules)

AI/ML and Data Analytics:

22. Bishop, C. M. (2006). Pattern recognition and machine learning. (Fundamental text on machine learning algorithms)

23. Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. (Comprehensive book on deep learning)

24. Microsoft Azure Documentation - Power BI Documentation (Official Microsoft Documentation)

25. MLflow Documentation (Official Documentation for the MLflow platform)

26. Han, J., Kamber, M., & Pei, J. (2011). Data mining: concepts and techniques. (Textbook on data mining principles)

27. Provost, F., & Fawcett, T. (2013). Data science for business. (Focuses on the application of data science in business contexts)

Indian IT and Financial Sector:

28. NASSCOM (National Association of Software and Services Companies). (Various reports and publications). (Provides industry insights and data on the Indian IT sector)

29. IBA (Indian Banks' Association). (Various reports and publications). (Provides information on the Indian banking sector)

30. MeitY (Ministry of Electronics and Information Technology, India). (Various reports and publications). (Provides information on Indian government IT policies)