Facial Recognition CNN - Final Submission Notebook

Introduction / Summary

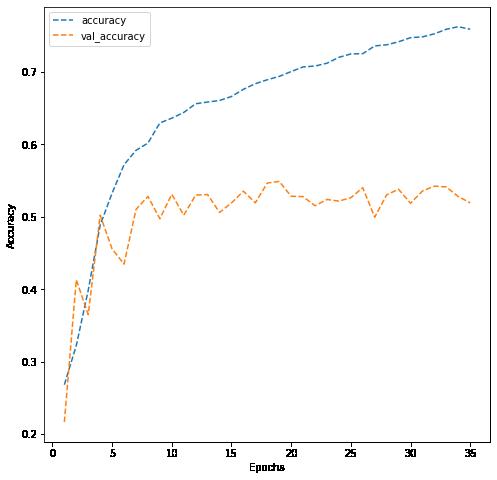

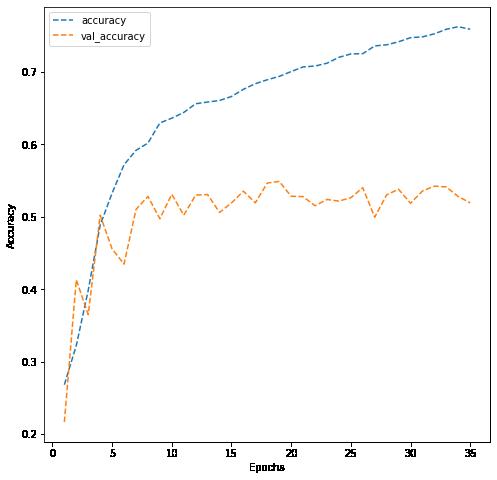

As referenced in the Milestone 1 notebook, the intent of this project is to train a model to classify facial expressions from the provided images, and to test that model. The motivation is to provide a computer vision algorithm as a front end for emotion-based applications, such as social media, mental health, insider threat security, talent development and management, and driver behavior. Our original goal was a model that would generalize at up to 90% accuracy. However, after several experiments with model parameters and hyperparameters, early stopping, and different data augmentation techniques, the model did not yield noticeably improved results. One technique I was unable to execute was K-fold cross-validation. I believe it could have noticeably improved performance, because I attribute the model's inability to exceed roughly 55% validation accuracy to the limited quantity and quality of the data.

During the process, I also experimented with a four-layer convolutional model based on a paper by Tanoy Debnath, Md. Mahfuz Reza, Anichur Rahman, Amin Beheshti, Shahab S. Band, and Hamid Alinejad-Rokny. That model continued to learn over 70 epochs. However, it was unable to exceed the 55% validation threshold and did not improve the F1 scores. It also performed much worse at identifying the 'sad' class.

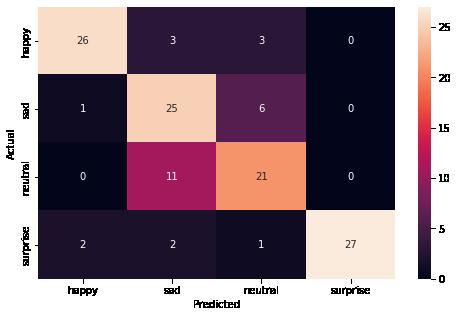

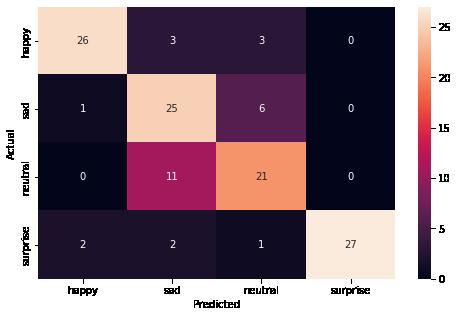

Overall, 'model3', used within this notebook, produced an accuracy of 0.77 on the 128-image test set, with F1 scores of 0.85 and 0.92 for the 'happy' and 'surprise' expressions respectively. It consists of six convolutional layers followed by a flattening layer, three fully connected layers, and a classification layer. At this point, my recommendation is to train the model on far more samples, especially from the 'sad' and 'neutral' expression classes.

My final conclusion is that the model learns well and could generalize well given more data. My suggestion would be to continue training the model on increasingly large data sets. Depending on the application, I would also provide a front-end design for continuous learning.

The Data

10/19/22, 9:40 AMJeffDavis_Final_Submission_Facial_RecognitionCNN (1) Page 1 of 17file:///Users/jeffreydavis/Desktop/Applied%20Data%20Science/Project…ings%20/JeffDavis_Final_Submission_Facial_RecognitionCNN%20(1).html

In [ ]:

    # Mounting Google Drive to access the data
    from google.colab import drive
    drive.mount('/content/drive')

Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount("/content/drive", force_remount=True).

In [ ]:

    # Data manipulation and visualization libraries
    import os
    import zipfile
    import fileinput

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    import seaborn as sns

    # Importing deep learning libraries
    from tensorflow.keras.preprocessing.image import load_img, img_to_array
    from tensorflow.keras.preprocessing.image import ImageDataGenerator
    from tensorflow.keras.layers import Dense, Input, Dropout, GlobalAveragePooling2D
    # Layers used to build model3
    from tensorflow.keras.layers import Conv2D, BatchNormalization, LeakyReLU, MaxPooling2D, Flatten
    from tensorflow.keras.models import Model, Sequential
    from tensorflow.keras.optimizers import Adam, SGD, RMSprop

In [ ]:

    # Storing the path of the data file from Google Drive
    path = '/content/drive/MyDrive/Colab_Notebooks/Applied_Data_Science/Deep_Learning/Facial_emotion_images.zip'

    # The data is provided as a zip file, so extract it into the working directory
    with zipfile.ZipFile(path, 'r') as zip_ref:
        zip_ref.extractall()

In [ ]:

    # Image size in pixels and the extracted data folder
    picture_size = 48
    folder_path = "Facial_emotion_images/"

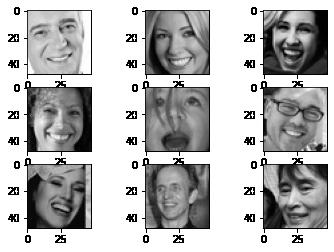

In [ ]:

    expression = ['happy', 'sad', 'neutral', 'surprise']

    plt.figure(4)
    for i in expression:
        # Show nine sample training images for each expression in a 3 x 3 grid
        for x in range(1, 10, 1):
            plt.subplot(3, 3, x)
            img = load_img(folder_path + "train/" + i + "/" + os.listdir(folder_path + "train/" + i)[x],
                           target_size = (picture_size, picture_size))
            plt.imshow(img)

        print('Expression:', i)
        plt.show()

Expression: happy


Expression: sad

Expression: neutral

Expression: surprise

In [ ]: # Getting count of images in each folder within our training path

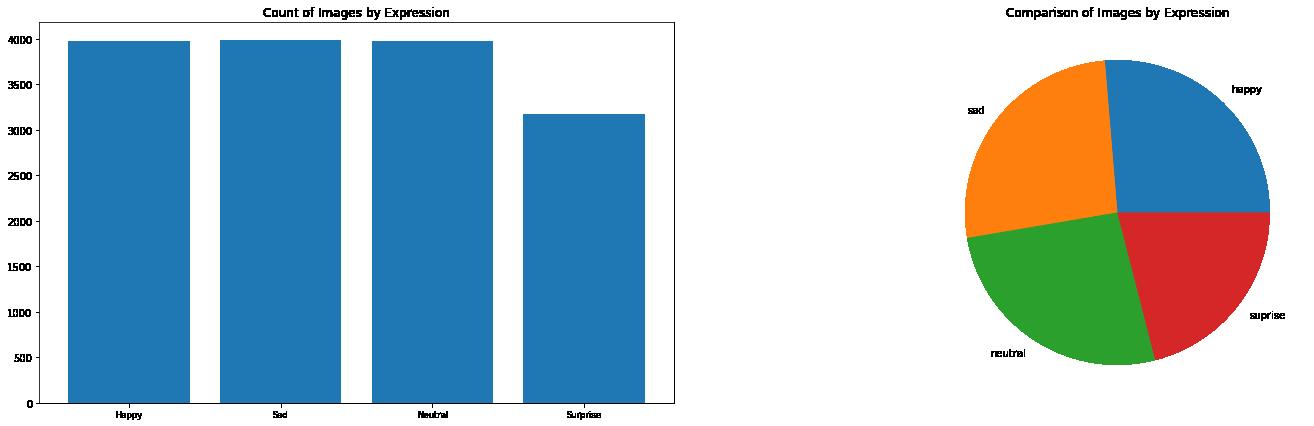

num_happy = len(os.listdir(folder_path + "train/happy"))

print("Number of images in the class 'happy': ", num_happy)

num_sad = len(os.listdir(folder_path+ "train/sad"))

print("Number of images in the calss 'sad': ",num_sad)

num_neutral = len(os.listdir(folder_path+ "train/neutral")) print("Number of images in the calss 'neutral': ",num_neutral)

num_surprise = len(os.listdir(folder_path+ "train/surprise"))

print("Number of images in the calss 'surprise': ",num_surprise)

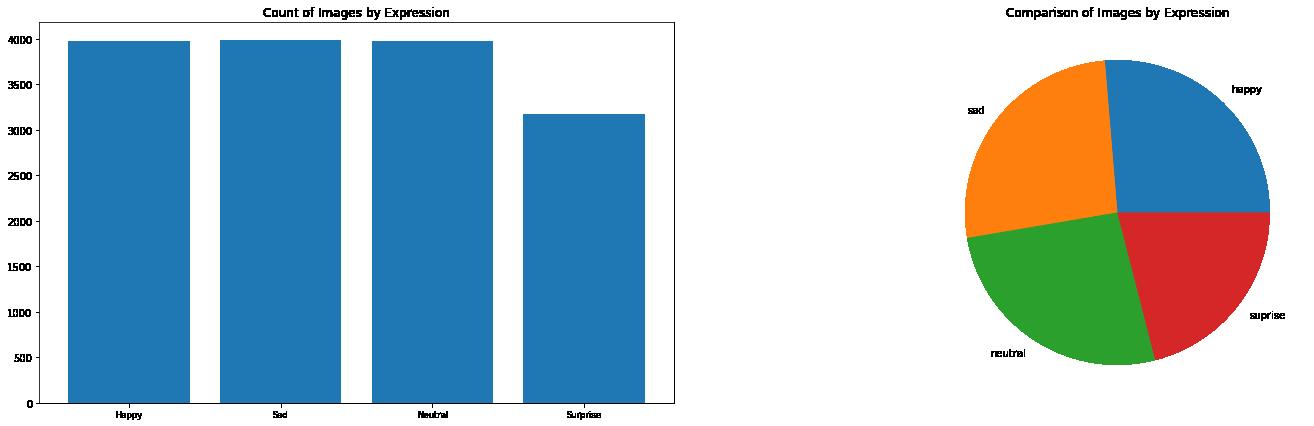

Number of images in the class 'happy': 3976

Number of images in the calss 'sad': 3982

Number of images in the calss 'neutral': 3978

Number of images in the calss 'surprise': 3173
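The four near-identical counting steps above can be folded into one loop. The sketch below uses a hypothetical helper, `count_images_per_class`, which is not part of the original notebook. It assumes one subfolder per class and skips hidden files such as `.DS_Store`, which `os.listdir` would otherwise count as images.

```python
import os


def count_images_per_class(root, classes):
    """Return {class_name: number of non-hidden files} for each class subfolder of root."""
    counts = {}
    for name in classes:
        class_dir = os.path.join(root, name)
        # Ignore hidden files (e.g. .DS_Store) so they are not counted as images
        files = [f for f in os.listdir(class_dir) if not f.startswith('.')]
        counts[name] = len(files)
    return counts


# Hypothetical usage against the extracted training folder:
# count_images_per_class("Facial_emotion_images/train", ['happy', 'sad', 'neutral', 'surprise'])
```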


In [ ]:

    # Code to plot the class distribution as a bar chart and a pie chart
    data = {'Happy': num_happy, 'Sad': num_sad, 'Neutral': num_neutral, 'Surprise': num_surprise}
    df = pd.Series(data)
    gs = plt.GridSpec(1, 2)
    plt.figure(figsize = (25, 7))

    # Bar chart: number of training images per expression
    plt.subplot(gs[0, 0])
    plt.bar(range(len(df)), df.values, align = 'center')
    plt.xticks(range(len(df)), df.index.values, size = 'small')
    plt.title('Count of Images by Expression')

    # Pie chart: each expression's share of the training images
    plt.subplot(gs[0, 1])
    my_labels = ['happy', 'sad', 'neutral', 'surprise']
    plt.pie([num_happy, num_sad, num_neutral, num_surprise], labels = my_labels)
    plt.title('Comparison of Images by Expression')
    plt.show()


Note:

The pictures are all in grayscale, which is also the color mode used for the final model, although there was little difference in results between RGB and grayscale. Also note that the images shown do not vary much in skin tone. However, key facial features are not in fixed positions within the frame.
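One plausible reason RGB and grayscale performed similarly: if the source files are single-channel, loading them as RGB simply copies the gray value into three identical channels. In that case the network receives no extra information. The NumPy sketch below illustrates this under that assumption. It uses the ITU-R 601-2 luma weights that Pillow applies when converting RGB to mode 'L', and a random 48 x 48 array stands in for a real face image.

```python
import numpy as np

# ITU-R 601-2 luma weights (used by Pillow for RGB -> 'L' conversion); they sum to 1.0
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def gray_to_rgb(gray):
    """Replicate a (H, W) grayscale array into (H, W, 3) with identical channels."""
    return np.stack([gray, gray, gray], axis=-1)


def rgb_to_gray(rgb):
    """Weighted sum of the R, G, B channels, giving a (H, W) luma image."""
    return rgb @ LUMA_WEIGHTS


rng = np.random.default_rng(0)
gray = rng.integers(0, 256, size=(48, 48)).astype(np.float64)  # stand-in for one 48x48 face

rgb = gray_to_rgb(gray)
# All three channels are identical, so the "color" version adds no new information
print(np.array_equal(rgb[..., 0], rgb[..., 1]) and np.array_equal(rgb[..., 1], rgb[..., 2]))
# Converting back recovers the original image (up to rounding)
print(np.allclose(rgb_to_gray(rgb), gray))
```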

Regarding the size and representation of the data: it was clear from the beginning that data augmentation would be necessary. From looking at the data, we suspected that 'sad' and 'neutral' would be difficult to differentiate, although an effective model might still be possible. The difference in representation across the classes seems small. 'Surprise' has 3,173 training images, compared with roughly 3,980 for each of the other three classes.
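If the roughly 20% shortfall of 'surprise' images ever needs correcting, one common option is inverse-frequency class weights. The formula is n_samples / (n_classes * count), the same one scikit-learn uses for `class_weight='balanced'`. The sketch below applies it to the training counts reported above, keyed by class index in the order used for `train_set` (happy, sad, neutral, surprise). The resulting dict could be passed to Keras as `model3.fit(..., class_weight = weights)`. This is an optional adjustment, not something the original notebook did.

```python
# Training-set image counts reported earlier, in train_set's class order
counts = {'happy': 3976, 'sad': 3982, 'neutral': 3978, 'surprise': 3173}


def balanced_class_weights(counts):
    """Inverse-frequency weights n_samples / (n_classes * count), keyed by class index."""
    n_samples = sum(counts.values())
    n_classes = len(counts)
    return {idx: n_samples / (n_classes * n) for idx, n in enumerate(counts.values())}


weights = balanced_class_weights(counts)
for (name, _), (idx, w) in zip(counts.items(), weights.items()):
    print(f"{idx} {name:<9} weight = {w:.3f}")
```

Under-represented classes get weights above 1, so each of their images counts slightly more in the loss.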

Reviewing and Augmenting the Data

Data Augmentation:

Using ImageDataGenerator to flip, change brightness, shear, and rescale.
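To make these augmentation settings concrete, the NumPy sketch below approximates three of them on a single 48 x 48 grayscale array:

- a random horizontal flip;
- a brightness factor drawn from a range;
- the `1./255` rescale.

It is an illustration, not Keras's implementation: shear is omitted, and Keras applies brightness through Pillow. It also shows a risk in the notebook's `brightness_range = (0., 2.)` setting. Because the lower bound is 0.0, a factor near 0 can turn a face almost completely black.

```python
import numpy as np


def augment(img, rng, brightness_range=(0.0, 2.0), rescale=1.0 / 255):
    """Approximate flip + brightness + rescale on a (H, W) image with 0-255 pixel values."""
    out = img.astype(np.float64)
    # horizontal_flip: mirror left/right with probability 0.5
    if rng.random() < 0.5:
        out = out[:, ::-1]
    # brightness_range: scale intensities by a random factor, clipped to the 0-255 range
    factor = rng.uniform(*brightness_range)
    out = np.clip(out * factor, 0, 255)
    # rescale: map 0-255 to 0-1 (done last here)
    return out * rescale, factor


rng = np.random.default_rng(42)
face = rng.integers(0, 256, size=(48, 48))  # stand-in for one training image
augmented, factor = augment(face, rng)
print(f"brightness factor {factor:.2f}, pixel range {augmented.min():.2f}-{augmented.max():.2f}")

# A factor of 0, allowed by a (0., 2.) range, makes every pixel 0
dark, _ = augment(face, rng, brightness_range=(0.0, 0.0))
print(dark.max())
```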


In [ ]:

    batch_size = 32
    img_size = 48

    # Training data: random horizontal flips, brightness changes and shear, plus rescaling to [0, 1]
    datagen_train = ImageDataGenerator(horizontal_flip = True,
                                       brightness_range = (0., 2.),
                                       rescale = 1./255,
                                       shear_range = 0.3)

    train_set = datagen_train.flow_from_directory(folder_path + "train",
                                                  target_size = (img_size, img_size),
                                                  color_mode = 'grayscale',
                                                  batch_size = batch_size,
                                                  class_mode = 'categorical',
                                                  classes = ['happy', 'sad', 'neutral', 'surprise'],
                                                  shuffle = True)

    # Note: the validation and test generators apply the same random augmentation as training,
    # so their scores vary between runs; rescaling alone is the usual choice for evaluation data.
    # They are also created without `classes`, so their label indices follow alphanumeric folder order.
    datagen_validation = ImageDataGenerator(horizontal_flip = True,
                                            brightness_range = (0., 2.),
                                            rescale = 1./255,
                                            shear_range = 0.3)

    validation_set = datagen_validation.flow_from_directory(folder_path + "validation",
                                                            target_size = (img_size, img_size),
                                                            color_mode = 'grayscale',
                                                            batch_size = batch_size,
                                                            class_mode = 'categorical',
                                                            shuffle = True)

    datagen_test = ImageDataGenerator(horizontal_flip = True,
                                      brightness_range = (0., 2.),
                                      rescale = 1./255,
                                      shear_range = 0.3)

    test_set = datagen_test.flow_from_directory(folder_path + "test",
                                                target_size = (img_size, img_size),
                                                color_mode = 'grayscale',
                                                batch_size = batch_size,
                                                class_mode = 'categorical',
                                                shuffle = True)

Found 15109 images belonging to 4 classes.

Found 4977 images belonging to 4 classes.

Found 128 images belonging to 4 classes.
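One detail worth checking in the cell above: `train_set` is given an explicit `classes` list, while `validation_set` and `test_set` are not. Per the Keras documentation for `flow_from_directory`, when `classes` is omitted, the classes are inferred from the subfolder names and mapped to label indices in alphanumeric order. The plain-Python sketch below compares the two mappings under that documented rule; it does not call Keras itself.

```python
# Explicit order passed to flow_from_directory for train_set
train_classes = ['happy', 'sad', 'neutral', 'surprise']
# Inferred order when `classes` is omitted: subfolder names sorted alphanumerically
inferred_classes = sorted(train_classes)

train_index = {name: i for i, name in enumerate(train_classes)}
inferred_index = {name: i for i, name in enumerate(inferred_classes)}

for name in train_classes:
    status = "same" if train_index[name] == inferred_index[name] else "DIFFERENT"
    print(f"{name:<9} train index {train_index[name]}  inferred index {inferred_index[name]}  {status}")
```

If the mappings differ, the validation labels for 'sad' and 'neutral' would not line up with the indices the model was trained on. That mismatch could lower the measured `val_accuracy`. Passing the same `classes` list to every `flow_from_directory` call keeps them aligned. The evaluation cell at the end of the notebook does recreate `test_set` with an explicit `classes` list.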


With respect to ImageDataGenerator: I did attempt other data augmentation techniques, such as changing image parameters in for loops, but this used so much RAM that the VM stopped working. None of the changes, whether made with existing Keras techniques or directly in code, improved the model. As discussed in the introduction, I also attempted K-fold cross-validation, a well-documented technique for making better use of limited data. However, I had difficulty combining the training and validation files and executing K-fold within the current code.
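The difficult step described above can be sketched in plain Python: pool the training and validation files, then split them into K folds. This is a minimal outline, not the author's code; the function names and the toy file list are hypothetical. It works as follows:

1. Pool (path, label) pairs from both folders.
2. Deal each class's files round-robin into K folds, so every fold keeps the class balance (a simple stratified split).
3. Yield train and validation index lists for each fold.

Each fold's file lists could then feed a Keras generator such as `ImageDataGenerator.flow_from_dataframe`, with a fresh model trained per fold.

```python
import random
from collections import defaultdict


def stratified_k_folds(labels, k, seed=0):
    """Assign each sample index to one of k folds, keeping class proportions similar per fold."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal this class's samples round-robin so each fold gets an equal share
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


def k_fold_splits(labels, k, seed=0):
    """Yield (train_indices, val_indices) for each of the k folds."""
    folds = stratified_k_folds(labels, k, seed)
    for i in range(k):
        val_idx = sorted(folds[i])
        train_idx = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        yield train_idx, val_idx


# Hypothetical pooled file list: training and validation images combined
samples = ([(f"train/happy/{i}.jpg", "happy") for i in range(4)]
           + [(f"validation/happy/{i}.jpg", "happy") for i in range(2)]
           + [(f"train/sad/{i}.jpg", "sad") for i in range(4)]
           + [(f"validation/sad/{i}.jpg", "sad") for i in range(2)])
labels = [label for _, label in samples]

for fold, (train_idx, val_idx) in enumerate(k_fold_splits(labels, k=3)):
    val_labels = [labels[i] for i in val_idx]
    print(f"fold {fold}: {len(train_idx)} train, {len(val_idx)} val, val classes {sorted(set(val_labels))}")
```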

In [ ]:

    no_of_classes = 4

    model3 = Sequential()

    # 1st Conv Block (input: 48 x 48 grayscale images with one channel, as in the summary below)
    model3.add(Conv2D(64, (2, 2), padding = 'same', activation = 'relu', input_shape = (48, 48, 1)))
    model3.add(BatchNormalization())
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(MaxPooling2D(2, 2))
    model3.add(Dropout(rate = 0.2))

    # 2nd Conv Block
    model3.add(Conv2D(128, (2, 2), padding = 'same', activation = 'relu'))
    model3.add(BatchNormalization())
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(MaxPooling2D(2, 2))
    model3.add(Dropout(rate = 0.2))

    # 3rd Conv Block
    model3.add(Conv2D(512, (2, 2), padding = 'same', activation = 'relu'))
    model3.add(BatchNormalization())
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(MaxPooling2D(2, 2))
    model3.add(Dropout(rate = 0.2))

    # 4th Conv Block
    model3.add(Conv2D(512, (2, 2), padding = 'same', activation = 'relu'))
    model3.add(BatchNormalization())
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(MaxPooling2D(2, 2))
    model3.add(Dropout(rate = 0.2))

    # 5th Conv Block
    model3.add(Conv2D(256, (2, 2), padding = 'same', activation = 'relu'))
    model3.add(BatchNormalization())
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(MaxPooling2D(2, 2))
    model3.add(Dropout(rate = 0.2))

    # 6th Conv Block (MaxPooling2D(1, 1) leaves the 1 x 1 spatial size unchanged)
    model3.add(Conv2D(512, (2, 2), padding = 'same', activation = 'relu'))
    model3.add(BatchNormalization())
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(MaxPooling2D(1, 1))
    model3.add(Dropout(rate = 0.2))

    # Flattening layer
    model3.add(Flatten())

    # First fully connected layer
    model3.add(Dense(256))
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(BatchNormalization())
    model3.add(Dropout(rate = 0.2))

    # Second fully connected layer
    model3.add(Dense(512))
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(BatchNormalization())
    model3.add(Dropout(rate = 0.2))

    # Third fully connected layer
    model3.add(Dense(64))
    model3.add(LeakyReLU(alpha = 0.2))
    model3.add(BatchNormalization())
    model3.add(Dropout(rate = 0.2))

    # Classification layer: one softmax output per expression
    model3.add(Dense(no_of_classes, activation = 'softmax'))

    model3.summary()

    Model: "sequential_8"
    ___________________________________________________________________________
    Layer (type)                                  Output Shape           Param #
    ===========================================================================
    conv2d_32 (Conv2D)                            (None, 48, 48, 64)     320
    batch_normalization_60 (BatchNormalization)   (None, 48, 48, 64)     256
    leaky_re_lu_60 (LeakyReLU)                    (None, 48, 48, 64)     0
    max_pooling2d_32 (MaxPooling2D)               (None, 24, 24, 64)     0
    dropout_32 (Dropout)                          (None, 24, 24, 64)     0
    conv2d_33 (Conv2D)                            (None, 24, 24, 128)    32896
    batch_normalization_61 (BatchNormalization)   (None, 24, 24, 128)    512
    leaky_re_lu_61 (LeakyReLU)                    (None, 24, 24, 128)    0
    max_pooling2d_33 (MaxPooling2D)               (None, 12, 12, 128)    0
    dropout_33 (Dropout)                          (None, 12, 12, 128)    0
    conv2d_34 (Conv2D)                            (None, 12, 12, 512)    262656
    batch_normalization_62 (BatchNormalization)   (None, 12, 12, 512)    2048
    leaky_re_lu_62 (LeakyReLU)                    (None, 12, 12, 512)    0
    max_pooling2d_34 (MaxPooling2D)               (None, 6, 6, 512)      0
    dropout_34 (Dropout)                          (None, 6, 6, 512)      0
    conv2d_35 (Conv2D)                            (None, 6, 6, 512)      1049088
    batch_normalization_63 (BatchNormalization)   (None, 6, 6, 512)      2048
    leaky_re_lu_63 (LeakyReLU)                    (None, 6, 6, 512)      0
    max_pooling2d_35 (MaxPooling2D)               (None, 3, 3, 512)      0
    dropout_35 (Dropout)                          (None, 3, 3, 512)      0
    conv2d_36 (Conv2D)                            (None, 3, 3, 256)      524544
    batch_normalization_64 (BatchNormalization)   (None, 3, 3, 256)      1024
    leaky_re_lu_64 (LeakyReLU)                    (None, 3, 3, 256)      0
    max_pooling2d_36 (MaxPooling2D)               (None, 1, 1, 256)      0
    dropout_36 (Dropout)                          (None, 1, 1, 256)      0
    conv2d_37 (Conv2D)                            (None, 1, 1, 512)      524800
    batch_normalization_65 (BatchNormalization)   (None, 1, 1, 512)      2048
    leaky_re_lu_65 (LeakyReLU)                    (None, 1, 1, 512)      0
    max_pooling2d_37 (MaxPooling2D)               (None, 1, 1, 512)      0
    dropout_37 (Dropout)                          (None, 1, 1, 512)      0
    flatten_8 (Flatten)                           (None, 512)            0
    dense_36 (Dense)                              (None, 256)            131328
    leaky_re_lu_66 (LeakyReLU)                    (None, 256)            0
    batch_normalization_66 (BatchNormalization)   (None, 256)            1024
    dropout_38 (Dropout)                          (None, 256)            0
    dense_37 (Dense)                              (None, 512)            131584
    leaky_re_lu_67 (LeakyReLU)                    (None, 512)            0
    batch_normalization_67 (BatchNormalization)   (None, 512)            2048
    dropout_39 (Dropout)                          (None, 512)            0
    dense_38 (Dense)                              (None, 64)             32832
    leaky_re_lu_68 (LeakyReLU)                    (None, 64)             0
    batch_normalization_68 (BatchNormalization)   (None, 64)             256
    dropout_40 (Dropout)                          (None, 64)             0
    dense_39 (Dense)                              (None, 4)              260
    ===========================================================================
    Total params: 2,701,572
    Trainable params: 2,695,940
    Non-trainable params: 5,632
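The shapes and parameter counts in the summary follow from a few standard rules:

- A Conv2D layer has (kernel_h * kernel_w * in_channels + 1) * filters parameters.
- A Dense layer has (inputs + 1) * units parameters.
- BatchNormalization has four per channel. Gamma and beta are trainable; the moving mean and moving variance are not.
- MaxPooling2D with the default 'valid' padding maps a side of n to (n - pool) // pool + 1.

The plain-Python sketch below, written for model3's specific layer list, recomputes the totals as a check on the arithmetic. It is not a Keras API.

```python
# model3's layer settings, as listed in the summary above
CONV_FILTERS = [64, 128, 512, 512, 256, 512]   # six Conv2D blocks, each with a 2x2 kernel
POOL_SIZES = [2, 2, 2, 2, 2, 1]                # MaxPooling2D pool size per block (last is 1x1)
DENSE_UNITS = [256, 512, 64, 4]                # three hidden Dense layers + softmax classifier


def conv_params(k, c_in, filters):
    # k*k*c_in weights per filter, plus one bias per filter
    return (k * k * c_in + 1) * filters


def dense_params(n_in, units):
    # one weight per input per unit, plus one bias per unit
    return (n_in + 1) * units


def model3_counts(side=48, channels=1, k=2):
    trainable = non_trainable = 0
    for filters, pool in zip(CONV_FILTERS, POOL_SIZES):
        trainable += conv_params(k, channels, filters)  # 'same' padding keeps the side length
        trainable += 2 * filters                        # BatchNormalization gamma and beta
        non_trainable += 2 * filters                    # BatchNormalization moving mean and variance
        channels = filters
        side = (side - pool) // pool + 1                # 'valid' max pooling with stride = pool
    n_in = side * side * channels                       # Flatten
    for i, units in enumerate(DENSE_UNITS):
        trainable += dense_params(n_in, units)
        if i < len(DENSE_UNITS) - 1:                    # hidden Dense layers are followed by BatchNormalization
            trainable += 2 * units
            non_trainable += 2 * units
        n_in = units
    return side, trainable, non_trainable


side, trainable, non_trainable = model3_counts()
print(f"final spatial side: {side}")
print(f"trainable: {trainable:,}  non-trainable: {non_trainable:,}  total: {trainable + non_trainable:,}")
```

The spatial side shrinks 48, 24, 12, 6, 3, 1. As a result, the fifth and sixth blocks see a 3 x 3 and then a 1 x 1 feature map, and their 2 x 2 kernels mostly cover zero padding.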

With respect to checking the model with early stopping: it stopped training early on some of my other models and helped limit overfitting. However, out of six different models, none reached a validation accuracy above 56%, including variants with different dropout parameters and different total numbers of trainable parameters.
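Keras's `EarlyStopping` callback stops once the monitored value has gone `patience` epochs without improving. The plain-Python sketch below replays a simplified version of that rule (minimizing, with `min_delta = 0`) on the `val_loss` values from the model3 training run logged in this notebook. It also finds the epoch with the best `val_accuracy`, the quantity monitored by the notebook's `ModelCheckpoint` callback. The patience values 5 and 10 are illustrative choices, not settings from the original experiments.

```python
# val_loss and val_accuracy for epochs 1-35, copied from the model3 training log
val_loss = [1.4125, 1.2499, 1.2843, 1.0906, 1.0823, 1.1193, 1.0570, 0.9897, 0.9981, 0.9630,
            1.0571, 1.0133, 0.9805, 1.0900, 0.9690, 1.0082, 1.0081, 1.0151, 0.9839, 0.9956,
            0.9988, 1.0305, 1.0637, 1.0359, 1.0635, 1.0595, 1.0358, 1.0697, 1.0414, 1.0789,
            1.0483, 1.0889, 1.0790, 1.1118, 1.1415]
val_accuracy = [0.2168, 0.4131, 0.3651, 0.5023, 0.4557, 0.4348, 0.5101, 0.5282, 0.4975, 0.5312,
                0.5023, 0.5300, 0.5308, 0.5061, 0.5192, 0.5355, 0.5194, 0.5467, 0.5489, 0.5284,
                0.5280, 0.5154, 0.5240, 0.5218, 0.5264, 0.5403, 0.4995, 0.5304, 0.5381, 0.5188,
                0.5357, 0.5425, 0.5413, 0.5280, 0.5194]


def early_stopping(losses, patience):
    """Return (best_epoch, stop_epoch), 1-based, for a minimized metric with min_delta = 0."""
    best, best_epoch, wait = float('inf'), 0, 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(losses)


for patience in (5, 10):
    best_epoch, stop_epoch = early_stopping(val_loss, patience)
    print(f"patience={patience}: best val_loss at epoch {best_epoch}, training stops after epoch {stop_epoch}")

best_acc_epoch = max(range(len(val_accuracy)), key=val_accuracy.__getitem__) + 1
print(f"best val_accuracy {val_accuracy[best_acc_epoch - 1]:.4f} at epoch {best_acc_epoch}")
```

On this run, `val_loss` bottoms out at epoch 10 while training accuracy keeps climbing. This is the overfitting pattern early stopping is meant to catch.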


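In [ ]:

    from tensorflow.keras.callbacks import ModelCheckpoint, ReduceLROnPlateau, CSVLogger

    epochs = 35
    steps_per_epoch = train_set.n // train_set.batch_size
    validation_steps = validation_set.n // validation_set.batch_size

    # Checkpoint model3's weights to model3.h5 based on val_accuracy (higher is better, hence mode = 'max')
    checkpoint = ModelCheckpoint("model3.h5",
                                 monitor = 'val_accuracy',
                                 save_weights_only = True,
                                 mode = 'max',
                                 verbose = ...)    # value (and any later arguments) truncated in the export

    # Multiply the learning rate by 0.1 when val_loss stops improving
    reduce_lr = ReduceLROnPlateau(monitor = 'val_loss',
                                  factor = 0.1,
                                  patience = ...)  # value (and any later arguments) truncated in the export

    callbacks = [checkpoint, reduce_lr]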

In [ ]:

    model3.compile(optimizer = Adam(learning_rate = 0.001),
                   loss = 'categorical_crossentropy',
                   metrics = ['accuracy'])

In [ ]:

    history = model3.fit(x = train_set,
                         validation_data = validation_set,
                         epochs = epochs)  # any further arguments were cut off in the exported notebook

    Epoch 1/35
    473/473 [==============================] - 21s 39ms/step - loss: 1.5351 - accuracy: 0.2683 - val_loss: 1.4125 - val_accuracy: 0.2168
    Epoch 2/35
    473/473 [==============================] - 18s 38ms/step - loss: 1.3769 - accuracy: 0.3225 - val_loss: 1.2499 - val_accuracy: 0.4131
    Epoch 3/35
    473/473 [==============================] - 18s 38ms/step - loss: 1.2517 - accuracy: 0.3987 - val_loss: 1.2843 - val_accuracy: 0.3651
    Epoch 4/35
    473/473 [==============================] - 18s 38ms/step - loss: 1.1355 - accuracy: 0.4886 - val_loss: 1.0906 - val_accuracy: 0.5023
    Epoch 5/35
    473/473 [==============================] - 18s 39ms/step - loss: 1.0602 - accuracy: 0.5328 - val_loss: 1.0823 - val_accuracy: 0.4557
    Epoch 6/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.9916 - accuracy: 0.5720 - val_loss: 1.1193 - val_accuracy: 0.4348
    Epoch 7/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.9481 - accuracy: 0.5918 - val_loss: 1.0570 - val_accuracy: 0.5101
    Epoch 8/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.9305 - accuracy: 0.6018 - val_loss: 0.9897 - val_accuracy: 0.5282
    Epoch 9/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.8837 - accuracy: 0.6296 - val_loss: 0.9981 - val_accuracy: 0.4975
    Epoch 10/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.8686 - accuracy: 0.6362 - val_loss: 0.9630 - val_accuracy: 0.5312
    Epoch 11/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.8473 - accuracy: 0.6441 - val_loss: 1.0571 - val_accuracy: 0.5023
    Epoch 12/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.8302 - accuracy: 0.6562 - val_loss: 1.0133 - val_accuracy: 0.5300
    Epoch 13/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.8188 - accuracy: 0.6585 - val_loss: 0.9805 - val_accuracy: 0.5308
    Epoch 14/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.8190 - accuracy: 0.6607 - val_loss: 1.0900 - val_accuracy: 0.5061
    Epoch 15/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.7998 - accuracy: 0.6662 - val_loss: 0.9690 - val_accuracy: 0.5192
    Epoch 16/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.7744 - accuracy: 0.6761 - val_loss: 1.0082 - val_accuracy: 0.5355
    Epoch 17/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.7622 - accuracy: 0.6838 - val_loss: 1.0081 - val_accuracy: 0.5194
    Epoch 18/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.7501 - accuracy: 0.6893 - val_loss: 1.0151 - val_accuracy: 0.5467
    Epoch 19/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.7475 - accuracy: 0.6939 - val_loss: 0.9839 - val_accuracy: 0.5489
    Epoch 20/35
    473/473 [==============================] - 17s 37ms/step - loss: 0.7379 - accuracy: 0.7006 - val_loss: 0.9956 - val_accuracy: 0.5284
    Epoch 21/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.7242 - accuracy: 0.7070 - val_loss: 0.9988 - val_accuracy: 0.5280
    Epoch 22/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.7099 - accuracy: 0.7082 - val_loss: 1.0305 - val_accuracy: 0.5154
    Epoch 23/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.6984 - accuracy: 0.7121 - val_loss: 1.0637 - val_accuracy: 0.5240
    Epoch 24/35
    473/473 [==============================] - 18s 38ms/step - loss: 0.6922 - accuracy: 0.7202 - val_loss: 1.0359 - val_accuracy: 0.5218
    Epoch 25/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.6832 - accuracy: 0.7249 - val_loss: 1.0635 - val_accuracy: 0.5264
    Epoch 26/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.6738 - accuracy: 0.7253 - val_loss: 1.0595 - val_accuracy: 0.5403
    Epoch 27/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.6559 - accuracy: 0.7360 - val_loss: 1.0358 - val_accuracy: 0.4995
    Epoch 28/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.6531 - accuracy: 0.7375 - val_loss: 1.0697 - val_accuracy: 0.5304
    Epoch 29/35
    473/473 [==============================] - 18s 37ms/step - loss: 0.6380 - accuracy: 0.7417 - val_loss: 1.0414 - val_accuracy: 0.5381
    Epoch 30/35
    473/473 [==============================] - 18s 39ms/step - loss: 0.6249 - accuracy: 0.7474 - val_loss: 1.0789 - val_accuracy: 0.5188
    Epoch 31/35
    473/473 [==============================] - 18s 39ms/step - loss: 0.6240 - accuracy: 0.7484 - val_loss: 1.0483 - val_accuracy: 0.5357
    Epoch 32/35
    473/473 [==============================] - 20s 43ms/step - loss: 0.6069 - accuracy: 0.7527 - val_loss: 1.0889 - val_accuracy: 0.5425
    Epoch 33/35
    473/473 [==============================] - 19s 40ms/step - loss: 0.6068 - accuracy: 0.7590 - val_loss: 1.0790 - val_accuracy: 0.5413
    Epoch 34/35
    473/473 [==============================] - 18s 39ms/step - loss: 0.5921 - accuracy: 0.7625 - val_loss: 1.1118 - val_accuracy: 0.5280
    Epoch 35/35
    473/473 [==============================] - 18s 39ms/step - loss: 0.6015 - accuracy: 0.7590 - val_loss: 1.1415 - val_accuracy: 0.5194

In [ ]:

    dict_hist = history.history

    # Epoch numbers 1 to 35 for the x-axis
    list_ep = [i for i in range(1, 36)]

    # Training vs. validation accuracy per epoch
    plt.figure(figsize = (8, 8))
    plt.plot(list_ep, dict_hist['accuracy'], ls = '--', label = 'accuracy')
    plt.plot(list_ep, dict_hist['val_accuracy'], ls = '--', label = 'val_accuracy')
    plt.ylabel('Accuracy')
    plt.xlabel('Epochs')
    plt.legend()
    plt.show()


In [ ]:

    from sklearn.metrics import classification_report
    from sklearn.metrics import confusion_matrix

    # Recreate the test generator with a batch size of 128, so one next() call returns all 128 test images
    test_set = datagen_test.flow_from_directory(folder_path + "test",
                                                target_size = (img_size, img_size),
                                                color_mode = 'grayscale',
                                                batch_size = 128,
                                                class_mode = 'categorical',
                                                classes = ['happy', 'sad', 'neutral', 'surprise'],
                                                shuffle = True)
    test_images, test_labels = next(test_set)

    # Predict with the chosen model, then convert probabilities and one-hot labels to class indices
    pred = model3.predict(test_images)
    pred = np.argmax(pred, axis = 1)
    y_true = np.argmax(test_labels, axis = 1)

    # Printing the classification report
    print(classification_report(y_true, pred))

    # Plotting the heatmap of the confusion matrix
    cm = confusion_matrix(y_true, pred)
    plt.figure(figsize = (8, 5))
    sns.heatmap(cm, annot = True, fmt = '.0f',
                xticklabels = ['happy', 'sad', 'neutral', 'surprise'],
                yticklabels = ['happy', 'sad', 'neutral', 'surprise'])
    plt.ylabel('Actual')
    plt.xlabel('Predicted')
    plt.show()

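Found 128 images belonging to 4 classes.

                  precision    recall  f1-score   support

               0       0.90      0.81      0.85        32
               1       0.61      0.78      0.68        32
               2       0.68      0.66      0.67        32
               3       1.00      0.84      0.92        32

        accuracy                           0.77       128
       macro avg       0.80      0.77      0.78       128
    weighted avg       0.80      0.77      0.78       128

Class indices 0 to 3 correspond to happy, sad, neutral, and surprise, following the `classes` order used for the test set.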
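For reading the report: each f1-score is the harmonic mean of that row's precision and recall. The macro average is the unweighted mean over the four classes; with 32 test images per class, the weighted average coincides with it. The sketch below recomputes both from the rounded values printed above, so small differences in the second decimal are expected. For example, surprise works out to about 0.91 from the rounded 1.00 and 0.84, against 0.92 in the report.

```python
# Rounded per-class values from the classification report (index 0-3 = happy, sad, neutral, surprise)
precision = [0.90, 0.61, 0.68, 1.00]
recall = [0.81, 0.78, 0.66, 0.84]


def f1(p, r):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    return 0.0 if p + r == 0 else 2 * p * r / (p + r)


f1_scores = [f1(p, r) for p, r in zip(precision, recall)]
# Macro average: plain mean over classes for precision, recall and F1
macro = [sum(values) / len(values) for values in (precision, recall, f1_scores)]

for name, score in zip(['happy', 'sad', 'neutral', 'surprise'], f1_scores):
    print(f"{name:<9} f1 = {score:.3f}")
print("macro avg precision/recall/f1 = " + ", ".join(f"{m:.3f}" for m in macro))
```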
