East Asia


The state of play in China’s data center market

Despite the unsettling situation in China, the country looks set to see more stabilization in its data center landscape which so far has suffered from a mismatch in demand and supply.

By Jan Yong Shanghai

When Bain Capital-backed Chindata announced in August that it planned to invest RMB 24 billion (US$3.3 billion) to build three hyperscale AI data centers with 1.2GW total IT capacity in the Ningxia region, north-central China, it raised quite a few eyebrows. This followed recent news that the country was planning to build massive data center campuses or “megaplexes” in the Xinjiang region in western China.

A group of Bloomberg journalists, skeptical of the claim, went there and saw lots of construction under way, but wondered whether the developers would be able to obtain the 115,000 advanced Nvidia chips they were seeking. Without these chips, the data centers would probably end up idle, like many others before them. Chindata’s entry into the region with a massive investment has changed the perception somewhat.

Oversupply meets underdemand

China’s data center market is known to be saturated with empty data centers due to weak demand, regional overbuilding and speculative investment. The building boom began in 2022 when the government launched an ambitious infrastructure project called “Eastern Data, Western Computing”. Data centers would be built in the western regions such as Xinjiang, Qinghai and Inner Mongolia, where energy costs and labor are cheaper, in order to feed the huge energy demands from the eastern megacities.

At least 7,000 data centers had been registered as of August, according to government data. Last year alone saw a tenfold increase in state investment, with 24.7 billion yuan (US$3.4 billion) spent, compared to more than 2.4 billion yuan in 2023. Up to August this year, about 12.4 billion yuan (half of 2024’s total) had been invested.
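As a quick sanity check, the ratios behind these investment figures can be recomputed directly. The sketch below uses only the amounts quoted above. The variable names are illustrative, and the 2023 figure is treated as exactly 2.4 billion yuan even though the source says "more than".

```python
# State investment figures quoted in the article, in billions of yuan.
invest_2023 = 2.4          # "more than 2.4 billion"; treated as exact here (assumption)
invest_2024 = 24.7
invest_2025_to_aug = 12.4

# How many times larger 2024 spending was than 2023 spending.
growth_multiple = invest_2024 / invest_2023

# Spending in the first eight months of 2025 as a share of the 2024 total.
share_of_2024 = invest_2025_to_aug / invest_2024

print(f"2024 vs 2023: {growth_multiple:.1f}x")
print(f"Jan-Aug 2025 as a share of 2024: {share_of_2024:.0%}")
```

The results, roughly a tenfold jump and about half of last year's total, are consistent with the way the figures are described.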

New projects continue to be built in western regions, especially in Xinjiang, driven by low electricity costs, government incentives, national network node designations and expectations that government and state-owned firms would fill up the space, observes a China-based analyst. “However, market fragmentation, lack of professional operators, and immature capital exit mechanisms, despite emerging REITs and insurance investments, hinder sustainable growth,” adds the analyst, who declined to be named.

Furthermore, the idea of building data centers in remote western provinces lacks economic justification. “The lower operating costs had to be viewed against degradation in performance and accessibility,” Charlie Chai, an analyst with 86Research, told Reuters.

But there is more to the situation than meets the eye, according to Professor PS Lee, Head of Mechanical Engineering at the National University of Singapore (NUS), who said, “The oversupply is concentrated in legacy, low-density, air-cooled capacity (often in western and central regions) that’s cheap to power but poor for latency-sensitive or AI-training workloads. At the same time, there is a shortage of AI data centers capable of large-scale training and fast inference.”

“In terms of the specs, the underutilized halls typically house less than 10 kW/rack, are air-cooled and often come with modest backbone connectivity. New AI campuses target 80–150 kW/rack today, moving to 200 kW+ with direct-to-chip or rear-door liquid cooling, larger MV blocks, heat reuse, and high-performance fabrics,” he adds.
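To put those rack densities in perspective, here is a back-of-envelope sketch of how many racks a given IT load would occupy at each density. It borrows the 1.2GW figure from the Chindata announcement purely as a sample load. The function name is illustrative, and real halls would not be filled uniformly at a single density.

```python
import math

def racks_needed(it_capacity_mw: float, kw_per_rack: float) -> int:
    """Number of racks needed to host an IT load at a uniform per-rack density."""
    if kw_per_rack <= 0:
        raise ValueError("kw_per_rack must be positive")
    # Convert MW to kW, then round up to whole racks.
    return math.ceil(it_capacity_mw * 1000 / kw_per_rack)

sample_load_mw = 1200  # 1.2GW, used only as an illustrative load

for density in (10, 80, 150, 200):
    print(f"{density:>3} kW/rack -> {racks_needed(sample_load_mw, density):,} racks")
```

The same load that would need 120,000 legacy 10 kW racks fits in 8,000 to 15,000 racks at 80–150 kW, which is why the two kinds of capacity are not interchangeable.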

Even so, the rush to build across the country has created an unprecedented oversupply, evidenced by over 100 cancellations in the past 18 months. These were driven mainly by growing fears among the local governments that had financed the buildings that they might not see any profit.

This explains why the Chinese government has recently decided to set up a national cloud and compute marketplace to resell underutilized capacity as well as implement selective approvals of AI data centers. New data centers since March 20 have to comply with more conditions such as providing a power purchase agreement and a minimum utilisation ratio. Local governments are also banned from participating in small-sized data center projects. This would help solve the mismatch situation, the government hopes.

Private sector bets big despite challenges

Despite the unsettling and very fast evolving situation in China, the private sector still has full confidence in the future of China’s AI and the infrastructure backbone supporting it.

For example, Alibaba has committed to spend 380 billion yuan (US$53 billion) over three years to develop AI infrastructure while an additional 50 billion yuan in subsidies will be used to stimulate domestic demand.

“These investments are evidence of our optimism in the strong potential in the Chinese market which is the largest in the world. China’s LLM is developing very rapidly and we foresee the demand for AI leading to huge innovations in AI applications,” Toby Xu, Alibaba’s CFO, told Xinhua, China’s official news agency.

NUS’ Lee sees the investment as a bold, market-making move that can pay off depending on its execution. “It aligns Alibaba’s Qwen stack with commerce and logistics, and should lock in platform advantages. Given the national misallocation hangover, capital expenditure must be targeted or the investment risks adding ‘good megawatts in the wrong place’.”

On the AI front, China initially lagged behind the US, especially after the 2022 emergence of ChatGPT, which reset global AI paradigms. Chinese firms had to rapidly align with large language model (LLM) frameworks but companies like DeepSeek and Alibaba’s Qwen have since narrowed the gap with models that surpass American models in various metrics on global rankings. DeepSeek is now at its third iteration and very much improved, while Alibaba’s Qwen is not far behind, both being placed at the top on reasoning, coding, and math in crowdsourced rankings. The staggering cost-efficiency of the models is due to “clever training recipes”, in Lee’s opinion.

The hardware hurdle

But despite the strong software and talent advantages, China faces a critical hardware bottleneck: no domestic entity has assembled a GPU cluster exceeding 100,000 units, compared to U.S. projects like OpenAI’s Stargate, which boasts 400,000. This severely limits large-scale model training.

This also explains why China reportedly needs more than 115,000 Nvidia chips for its massive AI data center campuses that are currently being constructed in the western regions. But with the Chinese government recently discouraging its companies from using Nvidia chips, it’s very likely that these data centers would use more local AI chips until the situation stabilizes.

A China-based analyst says that while domestic players like Huawei are making significant progress, challenges remain in production capacity and full-stack ecosystem maturity. The shift towards domestic chips is framed not as a forced decoupling but as a natural commercial and strategic preference, provided local alternatives are competitive in performance, cost, and scalability.

NUS professor Lee reckons that domestic chips such as Huawei Ascend are good enough for many training and inference tasks and hence would likely anchor sovereign workloads, while heterogeneous fleets and a CUDA-lite pathway would dominate in the commercial sector. Gray supply would decline due to tighter enforcement but won’t disappear completely in the near future. According to Lee, there is apparently a repair ecosystem in Shenzhen that refurbishes up to hundreds of smuggled AI GPUs per month at CN¥10K–CN¥20K (US$1,400–US$2,800) per unit!

Contrary to what the market thinks about the impact of US chip export restrictions on China, the NUS academic feels that they are actually a positive thing for China in the long term, as they encourage self-reliance, an independent AI ecosystem and supply-chain sovereignty. In the near term, some pain can be expected and progress will slow down as developers get weaned off CUDA and Nvidia. But the pragmatic ones will adopt a dual-track approach, using Ascend for sensitive work and Nvidia where allowed.

What lies ahead

The NUS professor believes that AI utilization will gradually increase amid higher occupancy of AI-grade data centers, while generic builds will slow down due to a more selective approval process. There will be selective west–east interconnect investments, as well as clearer design convergence, meaning liquid-first, heat-reuse-by-design, bigger MV blocks, and fabric-aware campus planning. The national marketplace will begin to clear some stranded capacity, although latency and fabric limits remain.

On the other hand, the China-based analyst feels that the sector will see continuing intense competition with limited recovery prospects in the next six to 12 months due to ongoing project deliveries and sluggish demand. But with policy corrections underway, including construction bans in non-core regions, the industry will be on a path towards long-term stabilization, albeit slowly.

Beneath the waves: China’s bold bet on underwater data centers

Underwater data centers come with many advantages but can they replace land-based data centers?

By Jan Yong

In June, China launched its first offshore wind-powered underwater data center (UDC) off the coast of Shanghai. With Hainan having already witnessed the successful commercial deployment of a UDC, what’s new about this project?

The difference this time is that this project, known as the Shanghai Lingang UDC project, is an upgraded 2.0 version powered by an offshore wind farm. According to the developer, Shanghai Hicloud Technology Co., Ltd (Hailanyun), this makes the facility “greener and more commercially competitive.” Hailanyun is a leading Chinese UDC specialist that also built the Hainan UDC.

A UDC is a data center that keeps all the servers and other related facilities in a sealed pressure-resistant chamber, which is then lowered underwater onto the seabed or on a platform close to shore. Power supply and internet connections are relayed through submarine composite cables.

Su Yang, general manager of Hicloud, told Xinhua, China’s official news agency, that the Lingang project design draws on the company’s experience with its Hainan project, which to date has had “zero server failure and no on-site maintenance [was] needed”.

The Hainan UDC has been hailed as a success in China – it claims to have current computing capacity equivalent to “30,000 high-end gaming PCs working simultaneously, completing in one second what would take a standard computer a year to accomplish”. An additional module allows it to handle 7,000 DeepSeek queries per second, apparently.

Despite some criticism, the track record of underwater data centers has been largely positive. Microsoft, credited with pioneering the world’s first underwater data center through Project Natick, has effectively confirmed that it is abandoning the project. It has, however, somewhat cryptically said it “worked well” and that it is “logistically, environmentally, and economically practical.”

There are several similar installations planned by American startups, namely Subsea Cloud and NetworkOcean, but nothing concrete has come out of those yet. Though some observers have voiced scepticism about the Lingang project, Hicloud is unfazed and plans to commit an initial investment of 1.6 billion yuan (about US$222.7 million) to the two-phase project.

Proof-of-concept

The Lingang UDC’s first phase, a 2.3 MW demonstration facility due to be operational in September, is more of a proof-of-concept project, serving as a real-time laboratory for monitoring the various impacts of using offshore wind energy to power a UDC. If successful, it will scale to a second phase with a capacity of 24 MW and a power usage effectiveness (PUE) below 1.15, with over 97 per cent of its power generated from offshore wind farms.
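PUE is the ratio of total facility power to the power delivered to IT equipment, so the phase-two targets translate into rough power figures. The sketch below assumes the 24 MW refers to IT load, which the source does not state. It uses the 1.15 ceiling and 97 per cent wind share as quoted, and the function name is illustrative.

```python
def total_facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE (PUE = total / IT)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue

it_load_mw = 24.0   # assumption: the quoted 24 MW is IT load
pue_ceiling = 1.15  # quoted upper bound for phase two
wind_share = 0.97   # quoted share of power from offshore wind

total_mw = total_facility_power_mw(it_load_mw, pue_ceiling)
overhead_mw = total_mw - it_load_mw   # cooling, power conversion and other non-IT load
wind_mw = total_mw * wind_share

print(f"Total facility power: {total_mw:.1f} MW")
print(f"Non-IT overhead:      {overhead_mw:.1f} MW")
print(f"Supplied by wind:     {wind_mw:.1f} MW")
```

Under these assumptions, no more than about 3.6 MW would go to anything other than the servers themselves.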


According to Hicloud, the system, consisting of 198 server racks, can deliver enough computing power to train a large language model in just one day.

Anchored 10 km off Shanghai, in the Lin-gang Special Area of China (Shanghai) Pilot Free Trade Zone, the facility uses seawater to cool its servers. Cooling typically accounts for 30–40 per cent of total data center power consumption, so with cooling being practically free, this shaves 30–40 per cent off its electricity bill compared to its land-based counterparts.
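The cooling share can be related to PUE with a simple calculation. The sketch below makes a deliberately crude assumption that cooling is the only non-IT load, so a cooling share of total power maps directly to an implied PUE. Real facilities also lose power in conversion and lighting, so this is a lower bound on the land-based figure rather than a measurement.

```python
def implied_pue(cooling_share: float) -> float:
    """PUE implied when cooling takes `cooling_share` of total power
    and everything else is IT load (a simplifying assumption)."""
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    # total = IT + cooling, and cooling = share * total, so IT = (1 - share) * total.
    return 1 / (1 - cooling_share)

for share in (0.30, 0.40):
    print(f"cooling share {share:.0%} -> implied PUE {implied_pue(share):.2f}")
```

A cooling share of 30–40 per cent corresponds to a PUE of roughly 1.43–1.67 under this simplification, compared with the sub-1.15 target quoted for the Lingang project.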

Additionally, the facility draws almost 97 per cent of its power from offshore wind, which represents a massive saving on grid electricity. It operates a closed-loop water circulation system in which seawater is channelled through radiator-equipped racks. Hence, it does not require freshwater resources or traditional chillers. This again cuts power consumption as well as carbon emissions.

Another advantage is that the sealed vessel can easily be filled with nitrogen gas after purging the oxygen. In a zero-oxygen atmosphere, equipment lasts longer, so server failures are minimized or practically eliminated. Microsoft demonstrated this with Project Natick, although that was only a two-year, single-module experiment.

As noted by an observer, most IT equipment today probably has a lifespan of only three to five years due to technical obsolescence, so it hardly matters whether the equipment could last 10 or 20 years. Moreover, the higher operations, maintenance, and deployment costs at sea would offset some of the savings.

If there is server failure, it just means having to use a floating barge with an appropriate crane system to lift the capsule out to deal with the failure. Or, in some instances, it might require marine salvage teams and specialized vessels.

Adverse impacts

More critically, opponents have pointed out that heat emissions from UDCs could cause lasting damage to marine ecosystems, such as bleaching coral reefs and killing off some species of marine life. Moreover, the long-term effect is still unknown. Some environmentalists are adamant that if more data centers are placed underwater, the cumulative impact, no matter how small, would contribute to global warming.

“Even if the vast ocean can dissipate this heat efficiently, and even if the temperature change is minimal and localised, it would still have an impact because the heat has to go somewhere,” notes a green activist.

“It could even bring invasive species and affect ecological balance,” says James Rix, JLL’s Head of Data Centre and Industrial, Malaysia and Indonesia. Rix also ponders whether it’s the right thing to do “even if it works”, due to the adverse impact on the environment.

Another issue is the potential outages should a natural disaster occur. “When it comes to disaster recovery, there is no emergency power generation for underwater facilities, such as an underwater generator,” he reflects.

Hicloud has countered with trial data showing that the heat emitted has never raised the surrounding water temperature by more than one degree Celsius, the threshold above which it is believed there will be some impact on the surrounding marine life. Other issues raised include noise pollution, electromagnetic interference, biofouling and the effect of corrosion.

Swim or sink?

If successful, the implications are massive as it could become a model for the next generation of data centers with sustainability and high-performance computing deeply embedded. It has the potential to solve the most pressing issues currently facing land-based counterparts: scarcity of land, power and water, plus the problem of massive heat generated by high-density servers.

Mainstream adoption could even give a boost to current coastal data center hubs constrained by land shortage, such as Japan, Hong Kong and Singapore. This could place China at the forefront of green computing infrastructure, although countries like South Korea and Japan have also announced plans to explore UDCs.

The fact is, while early results look promising, long-term performance across different locations still needs more evidence. And there is the serious environmental impact to consider. A more likely scenario, even if the Lingang UDC proves successful, would be UDCs operating alongside land data centers. Challenges remain: marine regulations, environmental laws, long-term maintenance costs, security, and scalability. Independent oversight will be essential whether or not UDCs become mainstream.


Unearthing the need for quake-resistant designs

Johor’s recent earthquake might unearth a real need for low-risk seismic regions to incorporate quake resistance into their data center design.

A recent magnitude 4.1 quake with its epicentre in Segamat, a small town in Johor, could portend the need to prepare for the next one. After all, who knows where the next epicentre will be, when it will strike, or how intense it will be? Experts have already warned that an earthquake of magnitude 5.0 or higher could happen at any time in West Malaysia. That level would cause serious damage to property and even casualties. Sedenak in Kulai, which lies 130 kilometers south of the Segamat epicentre, is the fastest-growing region for data center buildouts in Southeast Asia. An epicentre close enough would have catastrophic consequences.

As PS Lee, Professor and Head of Mechanical Engineering, National University of Singapore, said: “In areas like Singapore and Malaysia that have little seismic activities, data centers should still incorporate safety measures. This is because tremors that originate from earthquakes in Sumatra can often be felt in both countries.”

Following the Segamat quake, it is becoming imperative for mission-critical buildings like data centers, which house millions or even billions of dollars’ worth of compute equipment, to consider protecting their buildings and assets from earthquake damage, regardless of whether the jurisdictions mandate it.

The bare minimum

The bare minimum would involve the following, according to a booklet published by the Institute for Catastrophic Loss Reduction (ICLR) in May 2024. Most of the recommendations are fairly basic, such as ensuring most equipment is seismically rated and anchored, as well as braced to prevent sideways swaying during an earthquake.

Relevant items would include computer racks; all mechanical, electrical, and plumbing (MEP) equipment; raised floors; suspended pipes and HVAC equipment. In particular, battery racks should be strongly anchored, braced against sidesway in both directions, with restraint around all sides and foam spacers between batteries.

During and after an earthquake, there will be extended electricity failure, so emergency generators and an uninterruptible power supply (UPS) should be seismically installed, with two weeks of refuelling planned. As water supply will also be interrupted, consider closed-loop cooling rather than evaporative cooling.

For even better preparedness, Lee advocates several additional measures, such as specifying seismic-qualified kit for critical plant; preparing post-event inspection and restart checklists, along with Earthquake Early Warning (EEW)-informed SOPs where accessible; and conducting periodic drills.

Different requirements for high-risk regions

It’s a different story however in earthquake-prone countries like the Philippines and Indonesia. There, earthquake-resistant design should be mandatory, especially near mapped faults, advises Lee. A practical stack, according to him, would include siting the data center at a safe place, away from rupture, liquefaction, and tsunami exposure; elevating critical floors; and diversifying power and fiber corridors.

For the structure, base isolation for critical halls should be employed. Base isolation is a technique that separates a structure from its foundation using flexible bearings, such as rubber and steel pads, to absorb earthquake shock and reduce swaying. In addition, use buckling-restrained braces (BRBs) or self-centering systems to control drift. Rack isolation should be applied for mission-critical rows, while for non-structural items, ensure full anchorage and bracing, large-stroke loops, seismic qualification for plant, and protection of day-tanks and bulk storage against slosh.

To ensure continuity of network operations, a data center should employ multi-region architecture, EEW-triggered orchestration, and rehearsed failovers. These are the basics that could determine whether a data center emerges from an earthquake with little damage or is rendered completely unusable.

By Jan Yong

Can AI help?

With artificial intelligence being applied widely now in many industrial spaces, can AI or digital twins help to predict when and where the next earthquake will occur, and its intensity? Yes, according to the NUS professor, but only as a decision support.

Real-time sensor networks feed data into digital models of the building - digital twins - that reflect actual structural behaviour. These models help assess equipment-level movement, simulate scenarios before construction, and help make decisions based on how quickly operations can resume after an event.

In addition, AI could help choose and tune the design while still meeting code requirements and keeping peer review in the loop. Underlying that, AI could be instructed to prioritise capex according to avoided downtime and SLA risk reduction. In short, AI and digital twins could help significantly during the design stage by simulating all the options in order to help make the best decision.

Beyond basics

Beyond the basic methods such as base isolation, tie-downs, seismic anchorage, reinforced walls, flexible materials, and floating piles, NUS’ Lee came up with a list of some other methods that could be applied, sometimes concurrently.

In low-damage structural systems, one can apply self-centering rocking frames or walls. Typically post-tensioned, these allow structures to rock during an earthquake and then return to their original position through the use of high-strength tendons. Energy-dissipating fuses are incorporated – these help reduce residual drift, supporting faster re-occupancy post-earthquake. Supporting these are buckling-restrained braces which help to dissipate energy under both tension and compression, effectively controlling lateral drift.

To reduce building shaking during earthquakes, tuned viscous or mass dampers, and viscoelastic couplers are typically used. These systems work quietly within the structure to minimize shaking and are often paired with base isolation or added stiffness to further reduce movement during seismic events. Where equipment operation is critical, isolation strategies such as rack-level isolators, like ball-and-cone or rolling pendulum units, are applied for the most sensitive server racks. Another option is to install isolated raised-floor slabs that protect entire server areas from floor movement. These can be done at a lower cost than isolating the whole building.

At the ground level, addressing soil instability is crucial, especially the risk of liquefaction. Techniques such as deep soil mixing, stone columns, and compaction grouting help stabilize weak soils. Foundation systems like pile-rafts are designed to spread loads while reinforcing retaining walls and utility pathways. When seismic isolation is employed, expansion joints or moats are built with enough clearance to accommodate large movements, while utilities such as pipes, electrical bus ducts, and fiber cables are fitted with flexible connections to avoid breaking at the isolation boundary.

By linking into earthquake early warning (EEW) systems, buildings can respond automatically seconds before the earthquake movement arrives. Actions like shutting off fuel and water lines, starting backup generators, moving elevators to the nearest floor, or shifting workloads in IT systems could make the difference between survival and failure.
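How such an automated response might be organised can be sketched in a few lines. Everything in this example is hypothetical: the action names echo the list above, but the completion times, the priorities, and the assumption that actions run in parallel are placeholders, not figures from any real EEW integration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    name: str
    seconds_to_complete: float  # hypothetical estimate, not measured data
    priority: int               # lower number = more urgent

# Hypothetical playbook; a real one would come from rehearsed SOPs.
PLAYBOOK = [
    Action("shut_off_fuel_and_water_lines", 3.0, 1),
    Action("park_elevators_at_nearest_floor", 8.0, 2),
    Action("start_backup_generators", 10.0, 3),
    Action("shift_workloads_to_other_region", 30.0, 4),
]

def plan_actions(warning_seconds: float, playbook=PLAYBOOK) -> list[str]:
    """Return, in priority order, the actions that can finish before shaking arrives.

    Actions are assumed to run in parallel, so each one only has to fit
    inside the warning window on its own.
    """
    ordered = sorted(playbook, key=lambda action: action.priority)
    return [a.name for a in ordered if a.seconds_to_complete <= warning_seconds]

print(plan_actions(10.0))
```

With a ten-second warning, this toy planner would trigger the first three actions and skip the workload shift. A production system would more likely start the migration anyway and accept a partial result.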

Lee adds that the winning stack for high-hazard and near-fault metro locations would be to combine base isolation with a low-damage lateral system and non-structural measures. The data center also has the option to apply rack isolation for the most critical bays.

Examples of how successful methods saved the day

The methods employed as described earlier have been proven to work over the years, NUS’ Lee says. Some examples include the following:

• Base-isolated facilities in Japan: Friction-pendulum or laminated-rubber isolation, often with supplemental oil dampers, have kept white spaces intact through major earthquakes (including the 2011 M9.1), with building displacements staying within moat allowances and no significant IT damage reported.

• Retrofit cases in California: Base-isolated, friction-pendulum retrofits of mission-critical buildings have seen benign in-building accelerations during real events and preserved operability.

• Rack-level isolation deployments (Japan/US): Ball-and-cone platforms protecting long rows of cabinets showed markedly reduced rack accelerations and prevented topple or failure in documented earthquakes.

• Advanced fab/DC campuses in Taiwan: Post-1999 programmes combined equipment anchorage, foundation and ground improvements, and operational protocols; later M7+ events produced limited equipment damage and rapid restart.

Where AI workloads meet sustainable design

Here’s what a data center built for AI and sustainability looks like.

By Paul Mah

What if you could design a modern data center from scratch? In an era of explosive infrastructure growth and diverse workloads spanning enterprises, cloud platforms, and AI applications, what would that facility look like?

Founded in 2021, Empyrion Digital has expanded rapidly with the announcement of new developments across South Korea, Japan, Taiwan, and Thailand. The company’s newly launched KR1 Gangnam Data Center in South Korea demonstrates how modern facilities can meet evolving standards for scalability, performance, and sustainability.

Inside South Korea’s newest AI-ready facility

Empyrion Digital’s greenfield KR1 Gangnam Data Center in South Korea officially opened in July this year. The 29.4MW facility was purpose-built to support hyperscalers and enterprises requiring low-latency, high-density infrastructure for AI and cloud computing.

KR1 marks the first data center built in Gangnam – South Korea’s economic hub – in over a decade. Several factors created this gap: an informal moratorium targeting data centers and competition from other industries for limited power from a grid operating near capacity. Gangnam’s prohibitive land costs also played a part in discouraging data centers there.

The nine-story facility offers 30,714 square meters of colocation space. Floors are designed with 15 kN/m² loading capacity to support liquid cooling equipment necessary for the latest GPU systems for AI workloads. Crucially, it was designed to withstand magnitude 7.0 earthquakes, critical given South Korea’s dozens of annual tremors and occasional moderate quakes.
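For readers more used to thinking in kilograms, the floor loading figure can be converted with a one-line calculation. This sketch uses standard gravity and treats the load as uniformly distributed. The function name is illustrative.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, conventional standard value

def kn_per_m2_to_kg_per_m2(load_kn_per_m2: float) -> float:
    """Convert a distributed floor load from kN/m^2 to the equivalent mass in kg/m^2."""
    return load_kn_per_m2 * 1000 / STANDARD_GRAVITY

print(f"{kn_per_m2_to_kg_per_m2(15):.0f} kg per square meter")
```

A rating of 15 kN/m² works out to roughly 1.5 tonnes on every square meter of floor.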

KR1’s AI readiness shows in its eight-meter ceiling heights, providing ample space for liquid cooling pipework. According to Empyrion Digital, selected data halls are already fitted with direct-to-chip (DTC) cooling piping, while others are ready for conversion as needed. Standard IT power runs 10kW per rack, with higher densities available on request.

Modular infrastructure allows integration with rear-door heat exchangers and direct-to-chip liquid cooling systems. Taken together, KR1 is a modern data center that is strategically located for hyperscalers, content delivery networks, and enterprise businesses.

Sustainable by design

KR1’s environmental approach extends beyond standard efficiency metrics. Technical specifications aside, the facility incorporates various green features throughout. This includes the use of eco-friendly construction materials, rainwater management systems, and rooftop solar panels.

Within data halls, fan wall systems, which are recognized for being energy efficient, handle air cooling requirements. Most notably, the facility uses StatePoint Liquid Cooling (SPLC) technology for its cooling infrastructure. The SPLC was specifically developed for sustainable, high-performance data center cooling.

SPLC delivers measurable improvements in thermal performance, water efficiency, and energy consumption. The system combines a liquid-to-air membrane exchanger with a closed-loop design. Water evaporates through a membrane separation layer to produce cooling without excessive mechanical intervention. This approach minimizes both energy requirements and water consumption compared to traditional cooling methods.

The Gangnam facility demonstrates what a modern data center designed from scratch looks like today. Flexibility, sustainability, and AI readiness aren’t add-on features but fundamental design principles. Empyrion Digital’s KR1 shows that meeting infrastructure demands while maintaining environmental responsibility requires integrating both priorities from the ground up, not treating them as competing goals.

Photo: Empyrion Digital

Is Your Business Ready to Take the Spotlight? Get in touch with us today at media@w.media

Scan to watch our exclusive interviews with Industry leaders.

With a readership of top decision-makers across APAC, we’ll ensure your message reaches the right audience. Our Offerings Include:

• Magazine – Online & Offline

• Interviews - In person & Virtual

• Editorial content

• Newsletter Features

• Digital Ad Campaigns

• Annual APAC Cloud and Datacenter Awards

Our Community at a Glance:

• 80,000+ Subscribers across APAC

• 70,000+ Monthly Website Visits

• 33,000+ Social Media Followers

• 16,000+ Industry Decision-Makers Engaged

Here are three ways you can get involved:

1.

Nomination Submission (Opens until July 31st)

You can nominate individuals, teams and initiatives pushing boundaries under 3 main themes: Projects, Planet, People and 16 categories. Multiple entries per organization are welcome.

Scan to submit (free entry) and give your work the recognition it deserves!

2.

Sponsorship Opportunities

We are welcoming sponsors with limited slots available!

Don’t miss this opportunity to be in the spotlight throughout our award promotion and at our highly anticipated Gala Ceremony, where 200+ C-level executives from top companies will join.

3.

Early Bird: To guarantee a seat at our black-tie ceremony event, grab a ticket at a discounted price now!

Get more information via: https://w.media/awards/#tickets Contact us: awards@w.media for any further inquiries

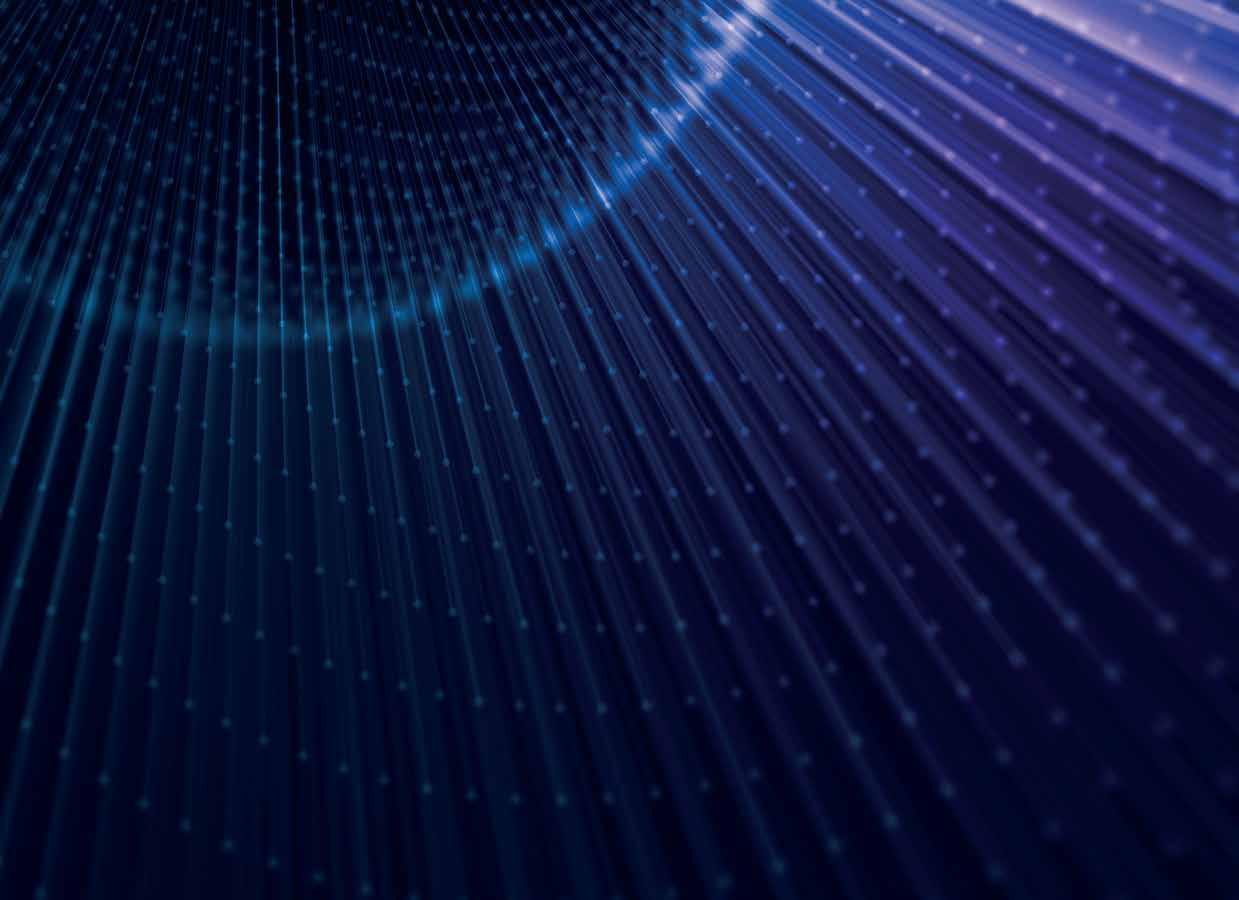

Here’s what transpired at KRCDC 2025!

If you missed w.media’s Korea Cloud & Datacenter Convention (KRCDC) 2025, here’s the lowdown on all that transpired.

Over 1,000 digital infrastructure and technology professionals including C-suite executives, business leaders, key buyers, architects, engineers and consultants attended the KRCDC this year.

The atmosphere was abuzz with excitement, as w.media brought together some of the biggest names in South Korea’s digital infrastructure industry to discuss the most pressing issues and concerns facing the sector. The day-long event was held at COEX, Seoul on September 19, 2025.

Topics of our power-packed panel discussions ranged from data center investment trends across Northeast Asia to multi-tenant data center design, modular data centers, and energy efficiency and sustainability challenges.

“It was a privilege to join industry leaders at KRCDC 2025 for a timely discussion on Korea’s digital future. Our panel highlighted how the AI-era shift from air-cooling to advanced liquid and hybrid systems is redefining efficiency and sustainability. I argued that real progress means building flexible, future-ready facilities and adopting sustainability measures that go beyond PUE alone,” said Ben Bourdeau, Global Chief Technology Officer, AGP Data Centres. “Korea’s land constraints and emerging renewables market will push toward creative reuse of legacy assets. That challenge could drive the kind of practical innovation needed as next-generation energy solutions mature.”

Another attendee Mozan Totani, SVP, ADA Infrastructure, said, “Through the conference and the panel discussions, I was pleased to see that people in the data center industry are extremely passionate about improving energy efficiency and minimizing environmental impact, even as AI is set to bring significant productivity gains that will improve our lives. We also discussed how we can work together to support sustainability. It was truly a great conference.”

KRCDC also saw a series of technology presentations on subjects ranging from AI data center infrastructure, to design and build innovations, to liquid cooling for AI, to flow solutions for data centers, and much more.
