What Are the Medicolegal Challenges of Implementing AI in Spine Surgery Care?

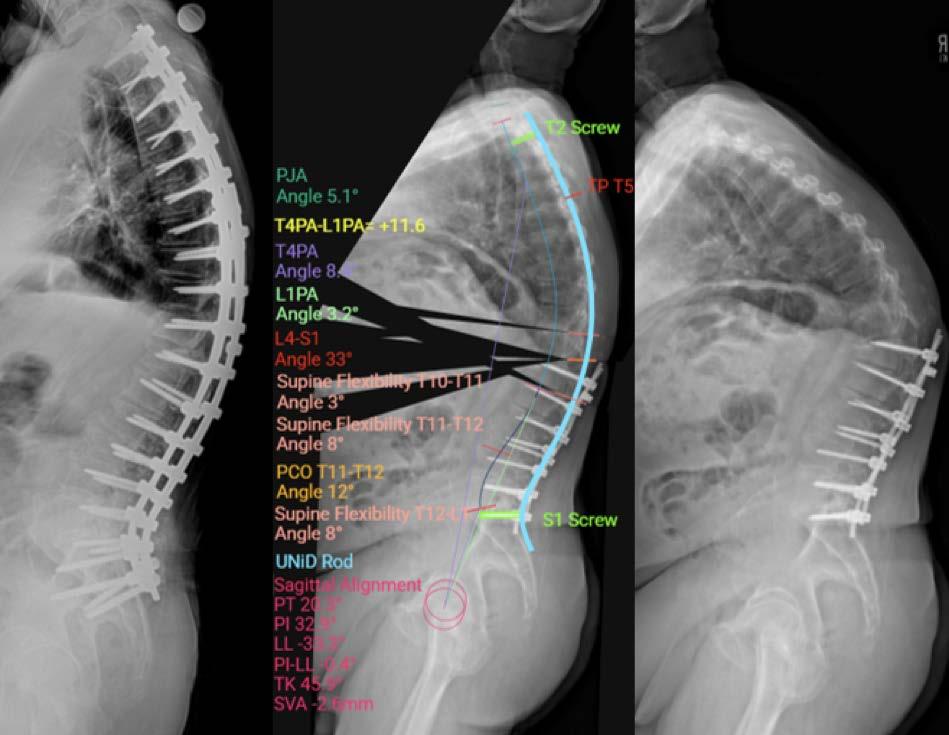

Artificial intelligence (AI) is being integrated into spine surgery, promising enhanced diagnostic power through tools for tasks such as imaging analysis, prediction of surgical outcomes, and guiding robotic surgery systems.1–3 For example, the first FDA-approved AI device in spine surgery—Medtronic’s UNiD Spine Analyzer, which was cleared in 2022—leverages a machine learning (ML) algorithm trained on thousands of cases to assist with surgical planning for complex spinal reconstructions4 (Figure 1). An AI app developed by Li et al demonstrated the ability to accurately and reliably measure the Cobb angle of the main curvature in scoliosis patients.5 These recent advancements highlight the increasing potential of AI to improve precision in surgical measurements and planning.

However, despite these promising developments, the implementation of AI into spine surgery care has brought numerous medicolegal challenges and ethical dilemmas that preclude rapid, unchecked integration. New issues surrounding liability, regulatory compliance, data bias, and informed consent are key challenges that must be addressed by spine surgeons and healthcare institutions alike.

This article provides an academic overview of the key medicolegal issues associated with the implementation of AI in spine surgery and discusses the questions these innovative technologies have raised. A rigorous understanding of these challenges is critical for spine surgeons to ethically and legally navigate AI’s potential to improve spine patient care.

Ethical Considerations for Medical Applications

Informed Consent for the Usage of AI

Spine surgeons have a duty to obtain informed consent in virtually all facets of surgical care. When introducing novel AI-driven technologies into clinical care, disclosing the nature of the AI tool alongside its known risks, benefits, limitations, and alternative treatment options is of utmost importance. Communicating this information clearly is critical for achieving rigorous patient consent and requires physicians to possess relevant expertise.6

Complexities intrinsic to AI can complicate this process. A notable example is the “black box phenomenon,” wherein machine learning (ML) algorithms make predictions that are difficult to attribute to specific input parameters, obscuring the rationale behind clinical decision-making. As a result, spine surgeons may be unable to explain to a patient how AI generated a particular diagnosis or treatment recommendation.7 To help address this issue, AI systems are increasingly being developed with “saliency maps,” which identify the factors that contribute most to the predicted diagnosis or treatment recommendation.8 Additionally, members of the medical community have called for increased training requirements for AI devices to ensure physician literacy and responsible usage.9

Data Accessibility and Security Concerns

ML models have demonstrated their effectiveness in analyzing large datasets to provide insight into different areas of spine surgery research. For example, ML has allowed for the identification of age, laboratory parameters, and different comorbidities as predictors of mortality for patients with epidural abscesses and spinal metastases.10–12 ML-driven modeling in spine surgery has also revealed associations between the risk of adverse events, such as infections, and factors such as Medicaid recipiency and insurance type.13–15 However, the development of these ML algorithms is constrained by the limited availability of large, diverse datasets with rigorous bias mitigation, standardization, and quality control.16 Furthermore, the siloing of database development by disjointed entities that seek to retain control of their data can prevent systematic sharing, limiting broad accessibility and hindering the development of ML algorithms in spine care.17 These datasets are also exposed to security threats when they are shared between collaborators, which gives hackers an opportunity to exploit vulnerabilities.18

Federated learning (FL) offers a potential solution to these issues by keeping data decentralized as a safeguard. Because models are trained locally and only model parameters are transferred, patient data remain secure while retaining their utility. FL can further combat hacking concerns by leveraging secure aggregation, which allows multiple parties to combine their contributions without directly exposing them to one another. FL can also apply the distributed Gaussian mechanism as another layer of security, adding noise so that the details of individual data points are protected.17
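The federated workflow described above can be illustrated with a minimal sketch. It is not the method of any cited study: the three "sites", the synthetic data, the linear model, and the function names (`local_update`, `privatize`, `federated_round`) are all assumptions made for illustration. Each site trains on its own data and shares only a clipped parameter update, optionally perturbed with Gaussian noise. The noise level here is not calibrated to any formal privacy guarantee, and secure aggregation is not implemented.

```python
import numpy as np

def local_update(global_w, X, y, lr=0.1, steps=20):
    """Train a linear model on one site's private data, starting from the global weights."""
    w = global_w.copy()
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def privatize(delta, clip_norm, noise_std, rng):
    """Clip the update's norm, then add Gaussian noise (illustrative, uncalibrated)."""
    scale = min(1.0, clip_norm / (np.linalg.norm(delta) + 1e-12))
    return delta * scale + rng.normal(0.0, noise_std, size=delta.shape)

def federated_round(global_w, sites, rng, clip_norm=1.0, noise_std=0.0):
    """One round of federated averaging: only parameter updates leave each site."""
    total = sum(len(y) for _, y in sites)
    aggregate = np.zeros_like(global_w)
    for X, y in sites:
        delta = local_update(global_w, X, y) - global_w
        # Weight each site's update by its share of the total sample size.
        aggregate += (len(y) / total) * privatize(delta, clip_norm, noise_std, rng)
    return global_w + aggregate

# Hypothetical data: three institutions drawing from the same underlying relationship.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
sites = []
for n in (40, 60, 50):
    X = rng.normal(size=(n, 2))
    y = X @ true_w + rng.normal(scale=0.01, size=n)
    sites.append((X, y))

global_w = np.zeros(2)
for _ in range(50):
    global_w = federated_round(global_w, sites, rng)
print("estimated weights:", global_w)
```

In this sketch the raw `X` and `y` arrays never leave the loop body that represents a site; only the weighted parameter update is added to the aggregate. Passing a nonzero `noise_std` trades some accuracy for additional protection of individual records.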

Algorithmic Bias

AI/ML algorithms can inherit biases from the data on which they are trained, which can result in uneven performance across different patient populations. Because the final AI/ML product is inherently dependent on the data it is fed, discrimination can be unintentionally built in if the dataset is constructed without appropriate bias mitigation. Given the demonstrable data quality issues among entities constructing datasets, including inconsistency, incompleteness, and lack of standardization, balanced and well-documented data are critical.19,20 If certain ethnic groups are not well represented or documented within a dataset, and are therefore excluded from model training as not statistically robust, the resulting model can be biased in ways that preclude its confident application to those excluded groups. This can perpetuate healthcare inequalities among commonly excluded populations.20–22 Faulty guidance in spine surgery care can lead to catastrophic complications, including lifelong disability and death. Ensuring generalizability for underrepresented populations is imperative.
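One simple way to surface the uneven performance described above is to report a model's metrics separately for each patient subgroup rather than only in aggregate. The sketch below is a minimal illustration under stated assumptions: the labels, predictions, group names, and the `min_n` threshold of 30 are hypothetical and are not drawn from any cited study.

```python
from collections import defaultdict

def subgroup_report(y_true, y_pred, groups, min_n=30):
    """Summarize accuracy and sensitivity per subgroup and flag small subgroups."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "tp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        c["pos"] += int(truth == 1)
        c["tp"] += int(truth == 1 and pred == 1)
    report = {}
    for group, c in counts.items():
        report[group] = {
            "n": c["n"],
            "accuracy": c["correct"] / c["n"],
            # Sensitivity is undefined when a subgroup has no positive cases.
            "sensitivity": c["tp"] / c["pos"] if c["pos"] else None,
            # Flag subgroups too small for the estimates to be trusted.
            "underpowered": c["n"] < min_n,
        }
    return report

# Hypothetical example: group B is both smaller and less well served by the model.
y_true = [1] * 20 + [0] * 20 + [1] * 5 + [0] * 5
y_pred = [1] * 18 + [0] * 2 + [0] * 20 + [1] * 2 + [0] * 3 + [0] * 5
groups = ["A"] * 40 + ["B"] * 10

report = subgroup_report(y_true, y_pred, groups)
for group, metrics in report.items():
    print(group, metrics)
```

A gap in sensitivity between groups, or an "underpowered" flag, does not by itself prove bias, but it indicates where a model should not yet be applied with confidence.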

Legal Challenges and Responsibility in AI Implementation

Who Assumes Liability?

Despite the numerous promising applications of AI in spine surgery care, responsible usage is warranted to minimize the legal challenges that will naturally arise. This need is heightened by the higher rates of malpractice litigation faced by spine surgeons relative to other types of physicians.23

Liability, however, is difficult to ascribe to a single party in a health system that spans AI, physician, and patient. Device manufacturers are also stakeholders in the ethical question of who takes responsibility in the event of an adverse outcome. Establishing malpractice requires both a breach in the duty of care and a deviation from the standard of care. Legally, this negligence is difficult to characterize because adverse events may arise from a failure in AI programming, physician supervision, or actions of the physician or algorithm itself.24 Abràmoff et al suggested that for autonomous AI, which functions independently of physician supervision, the product creators should assume liability. Conversely, for devices designed with assistive AI, which allow physicians to supervise AI outputs, they suggested that physicians be held liable.25 Because of this difficulty in ascribing liability in a multifactorial system, strong documentation of cleared indications and adverse-event risks should be provided alongside rigorous investigation of reported adverse events to ensure that physician responsibilities are clearly specified.22,26

Risk Management

Spine surgeons must stay informed on developments in the literature, following research landmarks and critically evaluating new technologies put forth by private companies. Rather than relying on private companies and technology manufacturers to ensure the reliability and generalizability of their products, surgeons should take steps to achieve a functional understanding of AI-driven spine technologies. Employing health systems may consider credentialing, seminars, or other forms of training to ensure basic literacy in AI-driven technology, especially for spine surgeons seeking to integrate these technologies into clinical practice.

As previously mentioned, the performance of AI models is oftentimes only as strong as the data on which they were trained. Thus, there must be a responsibility on behalf of healthcare systems and spine surgeons alike to rigorously evaluate the construction of these models to ensure their thoroughness and applicability. This responsibility, however, can only be actualized by transparency from the manufacturers and developers of these models, specifically with regard to methodological processes, data processing and sourcing, and relevant fiduciary relationships. By collaborating top-down from manufacturer to health system to spine surgeon, AI/ML products can be more confidently integrated into clinical care.

Conclusion

The integration of AI/ML into spine surgery care holds demonstrable promise across diverse applications in surgical planning, image interpretation, and more. However, the excitement associated with this surge in innovation must be tempered with responsible application and navigation of the ethical and legal challenges that arise alongside it. Issues surrounding informed consent, model bias, data security, and liability underscore the need for surgeons to stay informed on these rapidly developing technologies. Technology manufacturers need to remain transparent in their development processes to ensure collaboration and confidence. Although AI’s potential for improving medical care cannot be ignored, physicians’ ethical commitments to their patients’ safety and health must be maintained. Only with these commitments intact, with critical evaluation of new technologies and literature, and with transparency from manufacturers can AI/ML technologies be safely and responsibly implemented in a new, burgeoning era of spine care.

References

1. Rasouli JJ, Shao J, Neifert S, et al. Artificial intelligence and robotics in spine surgery. Glob Spine J. 2021;11(4):556-564.

2. Goedmakers CMW, Pereboom LM, Schoones JW, et al. Machine learning for image analysis in the cervical spine: systematic review of the available models and methods. Brain Spine. 2022;2:101666.

3. Scheer JK, Osorio JA, Smith JS, et al. Development of validated computer-based preoperative predictive model for proximal junction failure (PJF) or clinically significant PJK with 86% accuracy based on 510 ASD patients with 2-year follow-up. Spine. 2016;41(22):E1328-E1335.

4. Bottini M, Ryu SJ, Terander AE, et al. The ever-evolving regulatory landscape concerning development and clinical application of machine intelligence: practical consequences for spine artificial intelligence research. Neurospine. 2025;22(1):134-143.

5. Li H, Qian C, Yan W, et al. Use of artificial intelligence in Cobb angle measurement for scoliosis: retrospective reliability and accuracy study of a mobile app. J Med Internet Res. 2024;26:e50631.

6. Schiff D, Borenstein J. How should clinicians communicate with patients about the roles of artificially intelligent team members? AMA J Ethics. 2019;21(2):E138-E145.

7. Chan B. Black-box assisted medical decisions: AI power vs ethical physician care. Med Health Care Philos. 2023;26(3):285-292.

8. Challen R, Denny J, Pitt M, et al. Artificial intelligence, bias and clinical safety. BMJ Qual Saf. 2019;28(3):231-237.

9. Char DS, Shah NH, Magnus D. Implementing machine learning in health care—addressing ethical challenges. N Engl J Med. 2018;378(11):981-983.

10. Karhade AV, Thio QCBS, Ogink PT, et al. Predicting 90-day and 1-year mortality in spinal metastatic disease: development and internal validation. Neurosurgery. 2019;85(4):E671-E681.

11. Shah AA, Karhade AV, Bono CM, et al. Development of a machine learning algorithm for prediction of failure of nonoperative management in spinal epidural abscess. Spine J. 2019;19(10):1657-1665.

12. Karhade AV, Thio QCBS, Ogink PT, et al. Development of machine learning algorithms for prediction of 30-day mortality after surgery for spinal metastasis. Neurosurgery. 2019;85(1):E83-E91.

13. Kim JS, Merrill RK, Arvind V, et al. Examining the ability of artificial neural networks machine learning models to accurately predict complications following posterior lumbar spine fusion. Spine. 2018;43(12):853-860.

14. Goyal A, Ngufor C, Kerezoudis P, et al. Can machine learning algorithms accurately predict discharge to nonhome facility and early unplanned readmissions following spinal fusion? Analysis of a national surgical registry. J Neurosurg Spine. 2019;31(4):568-578.

15. Han SS, Azad TD, Suarez PA, et al. A machine learning approach for predictive models of adverse events following spine surgery. Spine J. 2019;19(11):1772-1781.

16. Wang F, Casalino LP, Khullar D. Deep learning in medicine—promise, progress, and challenges. JAMA Intern Med. 2019;179(3):293.

17. Shahzad H, Veliky C, Le H, et al. Preserving privacy in big data research: the role of federated learning in spine surgery. Eur Spine J. 2024;33(11):4076-4081.

18. Saravi B, Hassel F, Ülkümen S, et al. Artificial intelligence-driven prediction modeling and decision making in spine surgery using hybrid machine learning models. J Pers Med. 2022;12(4):509.

19. Khera R, Butte AJ, Berkwits M, et al. AI in medicine—JAMA’s focus on clinical outcomes, patient-centered care, quality, and equity. JAMA. 2023;330(9):818.

20. MacIntyre MR, Cockerill RG, Mirza OF, et al. Ethical considerations for the use of artificial intelligence in medical decision-making capacity assessments. Psychiatry Res. 2023;328:115466.

21. Vedantham S, Shazeeb MS, Chiang A, et al. Artificial intelligence in breast X-ray imaging. Semin Ultrasound CT MRI. 2023;44(1):2-7.

22. Bazoukis G, Hall J, Loscalzo J, et al. The inclusion of augmented intelligence in medicine: a framework for successful implementation. Cell Rep Med. 2022;3(1):100485. doi:10.1016/j.xcrm.2021.100485

23. Rynecki ND, Coban D, Gantz O, et al. Medical malpractice in orthopedic surgery: a Westlaw-based demographic analysis. Orthopedics. 2018;41(5):e615-e620.

24. Mezrich JL. Demystifying medico-legal challenges of artificial intelligence applications in molecular imaging and therapy. PET Clin. 2022;17(1):41-49.

25. Abràmoff MD, Tobey D, Char DS. Lessons learned about autonomous AI: finding a safe, efficacious, and ethical path through the development process. Am J Ophthalmol. 2020;214:134-142.

26. Chung CT, Lee S, King E, et al. Clinical significance, challenges and limitations in using artificial intelligence for electrocardiography-based diagnosis. Int J Arrhythmia. 2022;23(1):24.

Contributors:

Swapna Vaja, BS

Vincent Federico, MD

Nathan J. Lee, MD

From Midwestern Orthopedics at Rush in Chicago, Illinois.