International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

Harsh M. Desai 1 , Abhishek S. Ghodase2 , Gaurang D. Ushir3 , Megha Patil4

123UG Student, School of Mechatronics Engineering, Symbiosis Skills and Professional University, Maharashtra, India

4Senior Assistant Professor, School of Mechatronics Engineering, Symbiosis Skills and Professional University, Maharashtra, India ***

Abstract - Especially in gaming situations where immersive and simple control techniques are widely appreciated, hand gesture detection has evolved into a major breakthrough in improving human-computer interaction. This work is to design and construct a Python hand gesture based control system for gaming. Using machine learning more specifically, convolutional neural networks, the system seeks to classify motions from real time video input. To increase model accuracy, a varied collection of often-used gaming gestures, swipes, taps, and rotationswasgatheredandpreprocessed.

Key Words: Hand Gesture detection, Human-Computer Interaction (HCI), Gaming Control System, Real-Time VideoProcessing, GestureClassification, AlternativeGame Controllers

1.INTRODUCTION

The gaming landscape has changed forever in the past several years with the advance of how players interact with their games. To make the experience more fluid, intuitive, and immersive, the approach in gaming has changed. One of the more interesting breakthroughs is handgesturerecognitionallowingplayerstocontrol their games purely by natural hand gestures which is a more organicwaytointeractwiththevideogame.

This approach gives us a fresh and dynamic way to connect with virtual environments and potentially changing the way we experience digital entertainment. In this research we explore the potential of gesture based gaming by developing a hand gesture recognition system using Python. Thanks to Python flexibility and the wide range of tools it offers for computer vision and machine learning it provides as the perfect platform for building andexperimentingwithsuchinteractivesystems.

Hand gesture recognition for gaming applications has attracted many researchers and developers over the last several years. We outline and establish datasets relatedto hand gesture gaming, while highlighting studies that look atPython-basedapproachesandfulfillingtheusers

Multiple studies has showed various techniques for hand gesture recognition, including traditional computer vision methods and deep learning approach. Techniques such as Haar cascades, Histogram of Oriented Gradients (HOG), and Convolutional Neural Networks (CNNs) have been mostlyusedfordetectinghandgesturesfromvideoinput.

Python has many libraries and frameworks that support thedevelopmentofhandgesturerecognitionsystems.The most widely used tool is OpenCV, an open-source library which allows you to process video input and perform computervisiontechniques.Fortrainingandrunningdeep learning models, there are popular frameworks such as TensorFlowandPytorch.

In a study by M.C. Parashar et al (2020), the researchers developed a real-time hand gesture recognition system using OpenCV and TensorFlow for controlling gaming applications. The authors showed that Python-based tools facilitate the development of gesture recognition systems whichprovideanaccessibleanduser-friendlyexperience.

Access to quality-datasets is paramount for the training and evaluation of hand gesture recognition models. A few publicly accessible datasets include multiple gesturing examplesandhandposestoservethesepurposes.

TheAmericanSignLanguage(ASL)datasetdevelopedbyJ. Deng et al. (2018) has become the dataset of choice for researchers investigating hand gesture recognition, as it contains a multitude of hand gesture images related to a givenASLsign.Thishasprovidedremarkableresourcesfor training and appraising hand gesture recognition algorithms.

Moreover,theChaLearnLookingatPeople(LAP)provides video footage of hand gestures across varied situations, yielding a wide and challenging dataset for gauging the performanceofgesturerecognition.

Python’ssimplicityandreadabilityarestrongattributesfor fast prototyping and developing hand gesture gaming applications.Theexpressivesyntaxandfunctionalityofthe libraries available in Python allow rapid implementation anditerationsongesturerecognitionalgorithms.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

PythonoffersstraightforwardintegrationwithOpenCV,the Open-Source Computer Vision Library, which is a great utility for processing video input and programming computer vision algorithms. OpenCV offers many ways to capture hand gesture data, preprocessing it, and general utilities to facilitate further inference and discern the data accurately.

With widely used libraries including TensorFlow, Pytorch, and scikit-learn all provided in Python, developers have easy access to the tools needed to train and deploy machine learning gesture recognition models. In conjunction with deep learning approaches such as convolutional neural networks (CNNs), developers can achievehighratesofaccuracywhenutilizingvideoinputto recognizeandclassifyhandgestures.Furtherresearchmay primarilycenteronnewalgorithmstoaddresstheseissues and may consider new paradigms of interaction and integrating gesture recognition into gaming experiences frontually

The execution engine for Python and it's optimized librariesisefficientenoughtoprocesshandgesturedatain realtime.Realtimeprocessingneedslowlatencyandhigh framerateforinteractivegesturerecognitionapplications. By using multithreading and GPU acceleration, we were abletotakeinuseofmultiplecores.

With Python having inherent cross-platform capabilities, hand gesture gaming applications built with Python can run seamlessly on different operating systems without altering code. This provides users with widely available compatibilityonmanydevicesandplatforms.

Python leverages engaged developer and research communities, as well as the ability to create or contribute to open source projects, to provide developers with large communities to share code snippets on forums and have developer community assistance to illuminate your problem while also avoiding the challenges developers have to conquer when adapting hand-gesture gaming technologies through evolving collaborations or shared experience.

One of the exciting aspects of Python for hand gesture gaming is the ability to utilize popular game engines such as Unity and Unreal Engine. By integrating hand gestures directlyintogamingprojectsviaPython,developersgaina powerfulwaytocreatesparksofdynamismthatutilizethe movementsofplayersadheringtotheirmovements.

Hand gesture gaming created in Python can refer the opportunity to improve accessibility and inclusivity in the global gaming space. Gesture interfaces designed to experience gaming when people either can not or do experience through other means recognizing alternative types of input based on natural movements of the body

making it available for many approaches that relate to historicindividualexperienceandmovement.

Python is a convenient language for development that providesareasonableopportunitytoexperiencemoderate performancewithamplelibraryecosystemstorealizehand gesturegaming.Developerscanleveragethesimplicityand dynamism from gesture recognition to create more immersiveandinteractivegamingexperienceswhilefreely allowing additional engagement and interactivity with handgestures.

Python is fundamentally designed as modular; the structure allows developers to reuse code and functionalities while developing new features with little effort to adapt supervised algorithms to users' specific gaming needs. By aspiration, developers adhere gesture recognitionsystemstothegenreandplatformofgaming.

The available options in hand gesture gaming development, including an array of visualization and debugging tools are robust with Python. Visualization libraries, such as Matplotlib and Plotly, can be used to visualize gesture data and model performance, and IDEs and debugging tools, such as PyCharm and Jupyter Notebook, also have advanced debugging capabilities to inspectandaddressissueseffectively.

Python allows the developers to use the hand gesture recognition input along with other input modalities, such asvoicecommands,touchscreensormotioncontrollers,to makeanengagingandimmersivegamingexperience.Using multiple input modalities will allow developers to make immersive gameplay dynamics and allow them to select touchinput,motioninput,oracombinationofboth.

Python based hand gesture gaming experiences can be modifiedandpersonalizedveryquicklytofituniquewants andneedsfromeachgamerseparately.Developerscanadd user profiles to implement gesture modifecations and adoupt changes in difficulty to allow players the option to personalizetheexperiencetobestsuittheiruniqueneeds.

The scalability and performance optimization of Python enabledeveloperstoeffectivelyscalehandgesturegaming solutions, and manage high volume of data and complex interactions. Developers canemploy different methods for maximising performance and responsiveness in a high demand gaming environment, such as parallel processing, algorithmoptimization,andhardware-acceleration.

Educational and Research Options:Handgesturegaming solutions based on Python offer valuable educational and research opportunities to studentes, academics, and hobbyists who are interested in computer vision, machine learning, and human-computer interaction. Students and other learners can explore gesture recognition algorithms

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

and/orapplytheirresearchintothereal-worldusinghand gesturegaming.

Commercial Viability and Market Potential: Hand gesture gaming solutions based on Python readily offer commercial viability and widely open up market opportunities, including monetisation options such as game purchases, in game purchases, sponsorship, and subscription models. Current market demand for immersive and interactivity gaming solutions to address thechallengesinhealth.

2. Literature review

This team designed a hand gesture recognition system to allow immersive interaction within virtual reality. Using image processing techniques such as Camshift, LucasKanade and Haar-like features, this hand gesture recognition systemcould detect, track and recognize hand gestures in real-time, improving the user's experienceand usabilityinfunctionssuchasgamingorvirtualinteractions suchassignlanguage[1]

This project involves designing a webcam-based hand gesture recognition system for controlling video games. Thisproductaddressesaccessibilityforuserswithphysical disabilities. It involves real-time image processing and gesture detection using OpenCV. The system is affordable and customizable and adaptable to multiple gaming genres[2]

This study details a side-by-side comparison between the Leap Motion, Kinect, and RealSense devices for use in hand-gaming controls. In this study, the researcher assessed the performance, comfort and the overall user experienceforeachdevice.TheresultsindicatedthatLeap Motion and Kinect had superior performance than RealSense with Leap Motion identified as the media speakers' most preferred device. Overall, the results suggest the importance ofassessing these devices priorto useforthedevelopmentofsensiblegames[3]

This paper presents HandReha, a webcam-based, gamebased wrist rehabilitation system built around hand gesture recognition. We explored a deep learning-based approach to detecting pre-defined therapeutic level gestures for controlling a 3D game avatar. Our user-study indicates that HandReha is an enjoyable and easy-to-use systemandwefeelconfidentinassertingthatitcanbean effective platform for rehabilitation.This study compares Leap Motion, Kinect, and RealSense for gesture-based gaming, assessing performance, comfort, and user experience. Results show Leap Motion and Kinect outperformedRealSense,withLeapMotionbeingthemost effective. The findings offer valuable guidance for game designersinselectingandintegratinggesturedevices[4]

This study compares Leap Motion, Kinect, and RealSense forgesture-basedgaming,assessingperformance,comfort, anduserexperience.ResultsshowLeapMotionandKinect outperformedRealSense,withLeapMotionbeingthemost effective. The findings offer valuable guidance for game designersinselectingandintegratinggesturedevices[5]

Thispaperpresentsa3Drehabilitationgamecontrolledby sEMG signals using a deep learning model for real-time hand gesture recognition. It uses data from the Myo armbandandaConv-GRUarchitecturetoclassifygestures. The system showed promising results in live testing and user studies, offering a low-cost, AI-driven rehab solution[6].

Thisstudyexploresusingdatagloveswithminimalsensors perfingerforaccurate,real-timehandgesturerecognition ingaming.Comparedtovision-basedmethods,datagloves offer greater flexibility and responsiveness. The results showeffectivecontrol ofin-gameactions,supportingtheir useinhuman-machineinteraction[7]

This study presents an interactive game using real-time skeleton-basedhandgesturerecognitionforrehabilitation, improvinghand-eyecoordinationinpatients.Alightweight residual graph convolutional architecture is used for gesture recognition. User tests showed improved performance in the game, supporting the system's effectivenessforrehabilitation[8].

The Hand Gesture Recognition Gaming Control System allows users to control gameplay through intuitive hand movements, eliminating traditional input devices. Using computer vision and machine learning, it translates gestures into in-game commands for actions like movement and weapon selection. The system offers an immersive and accessible experience, particularly for playerswithphysicaldisabilities[9]

This paper presents a gesture-based game controlled by hand movements using Computer Vision, specifically employingtheHaarCascadeclassifierforgesturedetection andrecognition.Thesystemenablesuserstointeractwith the game "Flying Wing Mario" through simple hand gestures, enhancing the immersive experience. The approach is adaptable to various games, integrating the virtualandrealworlds[10]

Hand gesture-driven gaming uses computer vision techniques like OpenCV and deep learning to track and interpret hand movements for inclusive, immersive gaming. This technology supports therapy, rehabilitation, and recovery, enabling people with varying physical abilities to participate. The paper reviews recent advancementsingesture-basedgamingsystems[11]

This article explores the integration of speech and hand gesture recognition in computer games to enhance

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

gameplay. It discusses key challenges in developing multimodal interfaces for human-computer interaction. The paper surveys current approaches to address these challengesingamingapplications[12]

Hand gesture data is collected using a webcam or depth sensor device capable of capturing real-time video input. The dataset is annotated with ground truth labels corresponding to different hand gestures, such as swipes, taps, and rotations. To enhance the diversity and robustness of the dataset, data augmentation techniques suchasrotation,scaling,andflippingmaybeapplied.

3.2

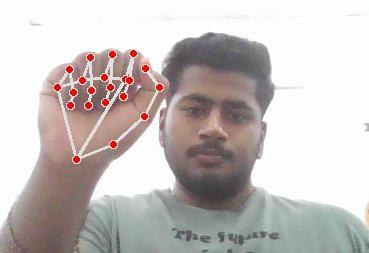

The raw video frames are pre-processed to enhance contrast,removenoise,andextractrelevantfeaturesusing techniques such as filtering, thresholding, and edge detection.Hand regions are detected within each frame using methods such as skin color segmentation, contour analysis, or machine learning-based approaches.Once the hand regions are detected, tracking algorithms are employedtoestimate thehand's position,orientation,and movementovertime.

Eitherhandcraftedfeaturesorfeatureextractorsbasedon deep learning principles are used to extract meaningful (discriminative) information from the processed hand images. A number of models that can be used in the trainingphase,includingCNN'srecurrentneuralnetworks (RNNs) or even a hybrid architecture are then trained basedontheannotateddatatorecognizeandclassifyhand gestures. Hyperparameter tuning applying regularization strategies and selecting of an optimization algorithm are employed to enhance the performance and generalization ofthemodel.

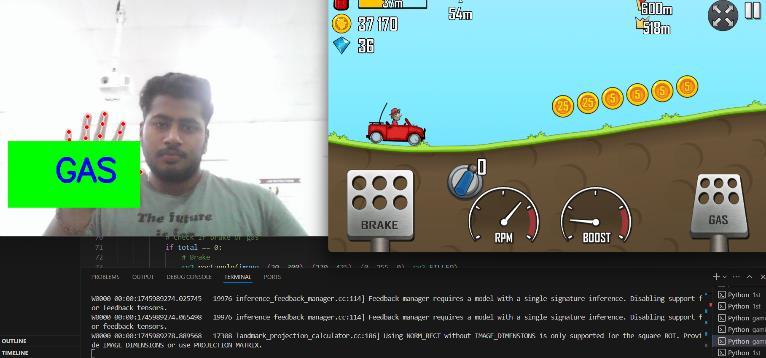

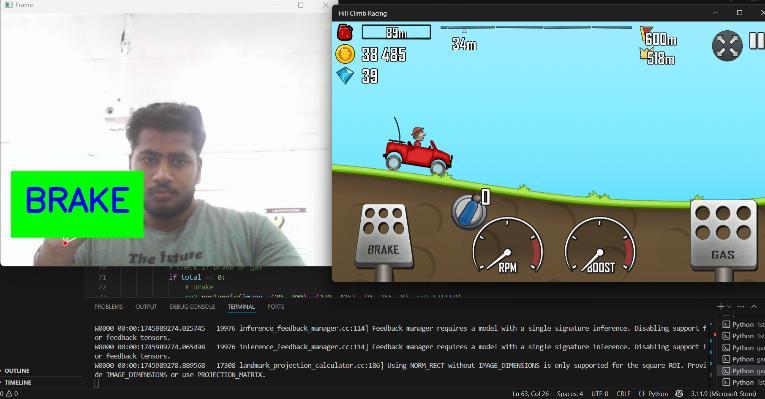

The trained hand gesture recognition model is integrated intoUnity,apopulargameengine,ifdesired,usingplugins or using the provided scripting APIs.Hand gestures are mapped to specific game actions, e.g., character movement, object manipulation, or menu selection, and may happen in monitored space according to pre-defined mappings,e.g.,userbased,oracross-trackedsystems,e.g., if/then statements, etc.The integrated system in Unity, now may facilitate real-time interactions between hand gestures and virtual environments, enabling players to controlgameelementswiththeirnaturalhandgestures.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

The accuracy and robustness of the trained model are measured by assessment metrics e.g. classification accuracy, precision, recall rate, and F1-score using a separate test dataset. The latency and responsiveness of thesystemareestablishedbymeasuringthetimetakento recognize gestures and the time taken to perform subsequent game actions. User testing and feedback sessions are conducted to gain unique insights regarding the usability and user experience and user satisfaction of thesystem

Haar cascade classifiers are a method for recognizing objectsorpatternsinpictures.Inthecaseofhandgesture gaming, Haar cascade classifiers can be trained to detect certain hand gestures by mapping the shapes of pixel intensity. OpenCV will train Haar cascade classifiers to detect hand gestures based on features Background subtraction algorithms work by inputing a sequence of images and determining what the foreground objects are in the sequence by eliminating the background from each image. This is beneficial to recognize moving pixels that are closely conceived to the background, i.e. a moving handinthevideofeed.Backgroundsubtractionalgorithms canalsobeusedasapreprocessingstagetoidentifyhand gestures. Looking ahead, the future of hand gesture gaming using Python can have huge potential and opportunities for further innovations and refinements in hand gesture recognition accuracy and system responsiveness.Continuedresearchanddevelopmentinto hand gesture gaming will be important for overcoming problemsthatexist,forexploringnewtypesofinteraction andrealizingthefullpotentialofhandgesturegamingasa change agent in the gaming and entertainment industry. The control signals are the main output of a hand gesture gaming system, and they are used to interact with the game. These signals include commands to control the gameenvironment,forexample,ahandgestureindicating

a move to the character, commands to select an option fromthemenu,andothercontextualactions.Forexample, a hand gesture that conveys a swipe motion to the right contributes to outputting a command to move the character in a rightward direction. Many hand gesture gaming systems provide users visual feedback as an indicationthatauser'sgestureshavebeenrecognizedand registered by the system. Typically visual feedback encompasseshighlightingthehandregiondetectedbythe application,gesturerecognitionfeedbackintextform,and animatedscreencontentthatrespondstotheusergesture. In contrast to visual feedback, some hand gesture gaming systems may provide auditory feedback to the user. Auditory feedback usually means that specific gestures will output sound effects, guidance to the user via a voice prompt, or sound feedback related to music that can changedynamicallydependingontheactionstakenbythe player.Manyhandgesturegamingsystemsusesometype of progress tracking and scoring system to give feedback on user performance. Scoring may involve tallying points based on gestures that were done successfully. Progress tracking may be used to indicate how far a player has progressed through searching features or levels or may keep track of what achievements the player has acquired throughtheirgamingexperience.

The output from the hand gesture gaming system may contain updates indicating either a change in the position orcharacteristicsofagameobjectorprovideanupdateto the user interface, or it may provide a way of altering the level of challenge presented to the player based on how the player is performing. If the hand gesture gaming system encounters a situation where it cannot determine whatgestureisbeingperformedbytheuserorifthehand gesture gaming system experiences an error while processing user input it may provide users with error messagesoralerts.

Python's simple syntax and rich set of libraries allows developerstorapidlyprototype,implement,anditerateon hand gesture gaming systems. Libraries such as OpenCV and TensorFlow make it easier for developers to create algorithms and programs for hand gesture based games where functionality is provided out of the box for computer vision (OpenCV) or machine learning (TensorFlow) tasks. Python is a versatile language with numerous capabilities, Python can be used across any handgesturegaming systemthedevelopers wouldliketo build. Developers can easily extend their hand gesture gaming ideas to other platforms such as desktop-based implementations, mobile-based implementations, or even web-based implementations. Python can greatly increase accessibilityinhandgesturegaminginthatplayerscanbe provided with another more natural and intuitive input meanscomparedtocontrollersorkeyboards.Playerswith physical disabilities or mobility impairments that want to

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 05 | May 2025 www.irjet.net p-ISSN: 2395-0072

play with the controller could use their hands to control the game with hand movements in a more natural way. A pipeline of implementation of the practical ideas, algorithms, and games mechanics tested by developers andusingwhatworksallowsforfasteriterationofahandgesturebasedgamingcomponents.

To sum up, Python hand gesture gaming is a vibrant and evolving field with the potential to reshape how we play and engage with games. Through accurate recognition systems inspired by Python, creators can apply a multi disciplinary method combining computer vision machine learning and gaming technologies to process hand gestures. This powerful combination of digital technologies has the prospect to create immersive and straightforward gaming experiences that are freeing and excitingplayerstoembarkonnew,virtualadventures.

The impacts of Python hand gesture gaming reach well beyondentertainment.Byembracinginclusionandaccess hand gesture systems have the power to overcome physicalbarriersandprovideengagementthroughgaming experiences that are meaningful for everyone. Through attentiontousercentereddesignandcohesiveintegration handgesturegamingcanbeanagentofsocialinclusionby allowing players to interact and access virtual worlds unlikeanyother.

[1] Rautaray, S. S., & Agrawal, A. (2011, December). Interaction with virtual game through hand gesture recognition. In2011 International Conference on Multimedia, Signal Processing and Communication Technologies(pp. 244-247). IEEE.Rautaray, S. S., & Agrawal,A.(2011,December).Interactionwithvirtual game through hand gesture recognition. In2011 International Conference on Multimedia, Signal Processing and Communication Technologies(pp. 244-247).IEEE.

[2] Le, N. V., Qarmout, M., Zhang, Y., Zhou, H., & Yang, C. (2021, December). Hand gesture recognition system for games. In2021 IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE)(pp. 1-6).IEEE.

[3] Khalaf, A. S., Alharthi, S. A., Dolgov, I., & Toups Dugas, P. O. (2019, November). A comparative study of hand gesture recognition devices in the context of game design. InProceedings of the 2019 ACM international conference on interactive surfaces and spaces(pp. 397-402).

[4] Farahanipad, F., Nambiappan, H. R., Jaiswal, A., Kyrarini,M.,&Makedon,F.(2020,June).HAND-REHA:

dynamic hand gesture recognition for game-based wrist rehabilitation. InProceedings of the 13th ACM International Conference on PErvasive Technologies RelatedtoAssistiveEnvironments(pp.1-9).

[5] Khalaf, A. S., Alharthi, S. A., Alshehri, A., Dolgov, I., & Toups Dugas, P. O. (2020, July). A comparative study of hand-gesture recognition devices for games. InInternational Conference on Human-Computer Interaction(pp. 57-76). Cham: Springer International Publishing.

[6] Nasri, N., Orts-Escolano, S., & Cazorla, M. (2020). An semg-controlled 3d game for rehabilitation therapies: Real-time time hand gesture recognition using deep learningtechniques.Sensors,20(22),6451.

[7] Schade, A., Schulz, J., Nguyen, V., Scheunert, C., Bodenstedt, S., Nguyen, G. T., ... & Fitzek, F. H. (2023, March). On the advantages of hand gesture recognition with data gloves for gaming applications. In2023 IEEE International Conference on Pervasive Computing and Communications Workshops and otherAffiliatedEvents(PerComWorkshops)(pp.313315).IEEE.

[8] Chen,J.,Zhao,S.,Meng,H.,Cheng,X.,&Tan,W.(2022). An interactive game for rehabilitation based on realtime hand gesture recognition.Frontiers in Physiology,13,1028907.

[9] Sharma, A., Verma, L., Kaur, H., Modgil, A., & Soni, A. (2024, May). Hand Gesture Recognition Gaming Control System: Harnessing Hand Gestures and Voice Commands for Immersive Gameplay. In2024 International Conference on Emerging Innovations andAdvancedComputing(INNOCOMP)(pp.101-107). IEEE.

[10] Singhvi,S.,Gupta,N.,&Satapathy,S.M.(2022).Virtual GamingUsingGestureRecognitionModel.InAdvances in Distributed Computing and Machine Learning: Proceedings of ICADCML 2021(pp. 114-124). SpringerSingapore.

[11] Sophiya, E., & Reddy, S. S. (2024, March). Hand gesture-driven gaming for effective rehabilitation and improved quality of life-a review. In2024 5th International Conference on Innovative Trends in InformationTechnology(ICITIIT)(pp.1-6).IEEE.

[12] Liu, J., & Kavakli, M. (2010, July). A survey of speechhand gesture recognition for the development of multimodal interfaces in computer games. In2010 IEEE International Conference on Multimedia and Expo(pp.1564-1569).IEEE.