International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 07 | July 2025 www.irjet.net p-ISSN: 2395-0072


1Department of MCA, P.E.S College of Engineering, Mandya, Karnataka, India

2Department of CS&E, P.E.S College of Engineering, Mandya, Karnataka, India

Abstract - In the rapidly evolving landscape of digital communication, threats like social engineering have become a serious challenge. Social engineering attacks, particularly those conducted through telephonic conversations and text messages, aim to manipulate individuals into revealing sensitive personal, financial, or security-related information. These attacks bypass traditional technical security measures by exploiting human psychology. The rise of fake calls, scam messages, and voice spoofing has necessitated the development of intelligent systems capable of detecting such malicious attempts in real time.

This project presents a comprehensive system for the detection and classification of telephonic social engineering attacks, combining both textual message data and telephonic audio recordings. The system uses machine learning and deep learning models to classify text messages as either spam (social engineering attempt) or non-spam, and audio files as either fake (synthetic/manipulated) or real (genuine voice).

Key Words: Machine learning, deep learning, genetic algorithm, LSTM, TF-IDF, KNN, SVM

1. INTRODUCTION

Social engineering is a psychological manipulation technique wherein attackers deceive individuals into revealing confidential information. Unlike traditional hacking, which requires technical expertise, social engineering relies on exploiting human emotions such as fear, urgency, curiosity, or trust. These attacks often come in the form of fraudulent phone calls, messages, or emails. For instance, a caller may impersonate a bank representative and ask for OTPs, PIN numbers, or account details. In other cases, automated fake voices powered by AI technology may attempt to sound convincing enough to bypass voice authentication systems.

Given this context, there is a dire need to develop intelligent systems that can help detect and prevent these manipulative attacks. This project, titled "AI-DRIVEN SOCIAL ENGINEERING AWARENESS TRAINING MODEL", is a step towards addressing this critical issue. It aims to build a detection system that can classify inputs, whether in text or audio format, as either genuine or suspicious.

The solution is two-fold: one part deals with text message analysis using natural language processing (NLP) and machine learning (ML), while the second part processes audio files using MFCC-based feature extraction and deep learning models such as LSTM (Long Short-Term Memory) and CNN (Convolutional Neural Networks). Text classification is based on converting message content into numerical vectors using TF-IDF and then feeding them to classifiers like Logistic Regression and SVM. For voice detection, MFCC features help in capturing speech characteristics, which are then used to train the neural network models to distinguish between real and fake (AI-generated or mimicked) audio.

[1] In an early study, Breda et al. (2017) emphasized the psychological manipulation at the core of social engineering, where attackers exploit human trust, curiosity, and urgency to gain access to sensitive information. Although the study thoroughly discussed real-world incidents and human vulnerabilities, it lacked the development of technical or automated solutions to mitigate these attacks.

[2] Expanding on this foundation, Salahdine and Kaabouch (2019) conducted a comprehensive survey categorizing social engineering methods, such as phishing, baiting, and pretexting. Their work was pivotal in identifying behavioural triggers and proposing countermeasures like access control and employee training. However, their approach remained reactive and did not suggest intelligent, real-time AI systems for proactive detection.

[3] With the growing role of social media in daily communication, Naz et al. (2024) explored the risks of social engineering through platforms like Facebook and Twitter. The study showcased how attackers use publicly available information to engineer convincing scams and impersonation. Despite its depth in social media-focused threats, the work lacked any integration of speech-based attack vectors or voice-based detection systems.

[4] In a more implementation-oriented study, Deepak Singh Malik (2023) analysed phone-based scams and proposed machine learning-based anomaly detection as a potential solution. His recommendation to combine user profiling and voice analysis laid the groundwork for multi-modal security systems. However, the study lacked concrete implementation or experimental validation of the proposed models.


[5] A holistic view of the landscape was offered by Tejal Rathod et al. (2025), who presented a layered AI-based approach combining voice authentication, NLP, and sentiment analysis for combating deepfake-enabled social engineering. They proposed a real-time fraud scoring system but acknowledged the lack of scalable, low-cost solutions for widespread public deployment, highlighting a key opportunity for this project.

[6] In the audio domain, Ameer Hamza (2022) focused on detecting deepfake speech using MFCC feature extraction with machine learning classifiers like SVM and Random Forest. His models achieved over 95% accuracy in classifying synthetic audio. However, they were trained on narrowly scoped datasets and lacked multilingual or real-time capabilities.

[7] Addressing linguistic diversity, Omair Ahmad (2025) investigated deepfake detection in Urdu using LSTM and CNN networks. His study achieved over 98% accuracy and emphasized the need for regional language datasets. This work highlights the importance of multilingual training, particularly for applications in countries like India.

[8] Further contributions by Hamza et al. (2022) reinforced the value of MFCC and delta features in deepfake detection, stressing the role of robust training under varied acoustic conditions. However, their models still struggled with generalization across datasets and languages, underscoring the need for scalable solutions.

[9] Almutairi and Elgibreen (2022) reviewed a range of audio deepfake detection techniques, including FFT, ResNet, and GAN-based methods. Their survey discussed current challenges like compressed audio detection, adversarial attacks, and lack of explainable AI in this domain. While comprehensive, the study did not propose or test any unified platform combining both text and audio detection.

[10] Ezer Osei Yeboah-Boateng and Priscilla Mateko Amanor (2024), in "Phishing, Smishing & Vishing: An Assessment of Threats against Mobile Devices", conducted exploratory interviews with around 20 mobile users to assess their awareness of and behaviour toward phishing, vishing (voice phishing), and SMS-based phishing (smishing). They also highlighted a taxonomy of persuasive ("alluring" or "decoying") words used in mobile phishing attacks to inform user awareness.

3.

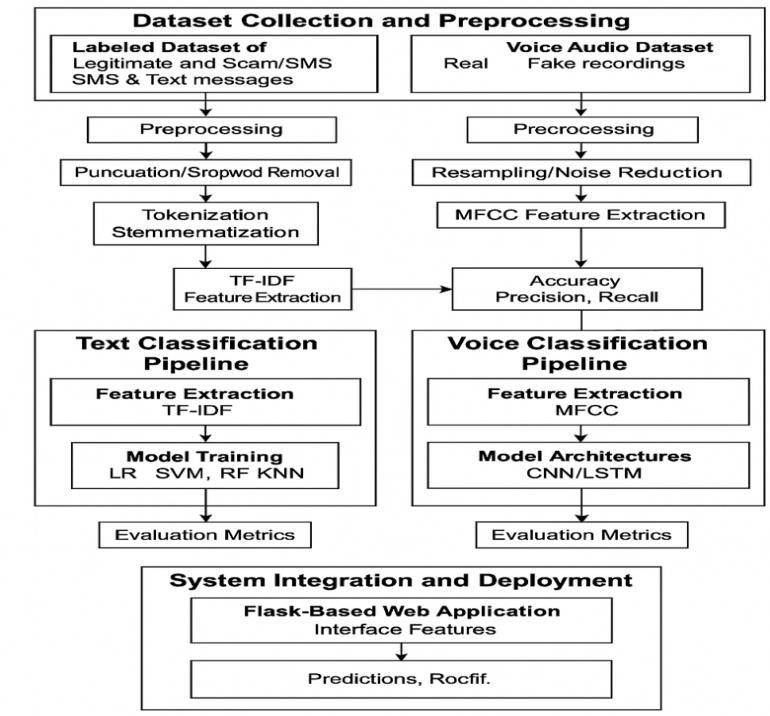

The proposed system aims to detect and classify telephonic social engineering attacks by analysing both textual messages and voice-based calls using a dual-modality machine learning and deep learning framework. This hybrid approach enhances the robustness of the detection mechanism by combining natural language processing techniques with audio signal processing. The methodology is organized into several key phases: dataset collection and preprocessing, text message classification, voice call classification, and integration through a web-based interface.

To begin with, two distinct datasets are prepared: one for textual data and the other for voice recordings. The text message dataset is composed of labelled samples categorized as either legitimate messages or scam/spam messages, including phishing attempts, impersonation, fraudulent banking requests, and OTP (One-Time Password) scams. These messages are collected from publicly available repositories, open-source SMS datasets, and manually curated samples. To prepare the text for model training, several preprocessing steps are applied to ensure data uniformity and enhance learning. Each message is converted to lowercase to eliminate case sensitivity. Punctuation marks, symbols, and irrelevant characters are removed. Stop words (commonly occurring words that carry minimal semantic load) are filtered out. Next, tokenization is applied, which splits the message into individual words or tokens. Finally, stemming or lemmatization is employed to reduce words to their base forms, thereby consolidating similar terms and reducing dimensionality. Once cleaned, the textual data is vectorized using TF-IDF (Term Frequency-Inverse Document Frequency). This technique transforms text into numerical representations that reflect the importance of terms relative to a document and the overall corpus, capturing the contextual weight of each word. This sparse matrix of TF-IDF features becomes the input to the machine learning classifiers.
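The preprocessing and vectorization steps described above can be illustrated with a minimal, standard-library-only sketch. It is not the authors' implementation: the stop-word list, the sample messages, and the function names `preprocess` and `tfidf` are assumptions made for illustration, stemming/lemmatization is omitted, and the plain formula tf × log(N/df) is used, whereas library implementations often apply a smoothed variant.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; the paper does not list its stop words).
STOP_WORDS = {"a", "an", "the", "is", "to", "your", "you", "of", "and", "for", "at"}


def preprocess(message: str) -> list[str]:
    """Lowercase, replace punctuation/symbols with spaces, tokenize, drop stop words."""
    lowered = message.lower()
    cleaned = re.sub(r"[^a-z0-9\s]", " ", lowered)
    return [tok for tok in cleaned.split() if tok not in STOP_WORDS]


def tfidf(corpus: list[list[str]]) -> list[dict[str, float]]:
    """Return one sparse {term: weight} vector per tokenized document."""
    n_docs = len(corpus)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for doc in corpus for term in set(doc))
    vectors = []
    for doc in corpus:
        counts = Counter(doc)
        vec = {}
        for term, count in counts.items():
            tf = count / len(doc)                     # term frequency within the message
            idf = math.log(n_docs / doc_freq[term])   # rarer terms get a larger weight
            vec[term] = tf * idf
        vectors.append(vec)
    return vectors


# Hypothetical sample messages, not taken from the paper's dataset.
messages = [
    "URGENT: Share your OTP to unblock your account!",
    "Are we meeting for lunch at noon?",
    "Your account is blocked, share OTP now",
]
tokens = [preprocess(m) for m in messages]
vectors = tfidf(tokens)
```

In this toy corpus, "urgent" appears in only one message and therefore receives a higher weight than "otp", which appears in two; this is the sense in which TF-IDF reflects a term's importance relative to the corpus.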


Simultaneously, a voice audio dataset is curated containing two categories: real voice recordings, which represent genuine human speech from legitimate calls, and fake recordings, which include AI-generated (deepfake) voices, mimicry attempts, or pre-recorded scam messages. The dataset is compiled from open-source speech repositories, telephony datasets, and synthetic voice samples created using text-to-speech (TTS) tools. Each audio file undergoes a series of preprocessing steps to normalize and extract meaningful features. The files are first converted to mono channel and resampled at 16 kHz to standardize frequency. Silence trimming is performed to eliminate pauses, and noise reduction techniques are applied to remove ambient disturbances. The core feature extraction process involves computing Mel-Frequency Cepstral Coefficients (MFCC), which represent the short-term power spectrum of sound and are widely used in speech recognition. Typically, 13 to 40 MFCCs are extracted per frame. These coefficients effectively capture the timbral and tonal qualities of human speech. To ensure uniform input size, feature vectors are padded or truncated to a fixed length. In some cases, MFCCs are visualized as spectrogram images for CNN-based models.
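The padding/truncation step can be sketched as follows. This is an illustrative sketch only: the function name `pad_or_truncate`, the zero-padding choice, and the target length of 5 frames are assumptions, and the MFCC frames are stand-in values rather than coefficients computed from real audio.

```python
def pad_or_truncate(frames: list[list[float]], target_len: int, n_mfcc: int) -> list[list[float]]:
    """Force a (num_frames x n_mfcc) MFCC sequence to exactly target_len frames.

    Longer sequences are cut at the end; shorter ones are zero-padded at the end.
    """
    clipped = [list(frame) for frame in frames[:target_len]]
    padding = [[0.0] * n_mfcc for _ in range(target_len - len(clipped))]
    return clipped + padding


# Stand-in MFCC sequences with 13 coefficients per frame (values are placeholders).
short_clip = [[0.1] * 13 for _ in range(3)]
long_clip = [[0.2] * 13 for _ in range(8)]

fixed_short = pad_or_truncate(short_clip, target_len=5, n_mfcc=13)
fixed_long = pad_or_truncate(long_clip, target_len=5, n_mfcc=13)
```

Both outputs have the same 5 × 13 shape, which is what allows clips of different durations to be batched into a single model input.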

The text classification module leverages traditional machine learning algorithms to categorize input messages as either Spam (social engineering attempt) or Non-Spam (benign message). The pre-processed TF-IDF feature matrix serves as input to a suite of classifiers, each evaluated for its predictive accuracy and generalizability. The models trained include Logistic Regression (LR), Support Vector Machine (SVM), Random Forest (RF), and K-Nearest Neighbours (KNN). Logistic Regression is selected for its simplicity, interpretability, and effectiveness in binary classification. SVM is chosen for its ability to handle high-dimensional data and non-linear decision boundaries using kernel tricks. Random Forest is employed due to its ensemble-based robustness and resistance to overfitting, especially in noisy datasets. KNN is used to assess performance based on similarity measures, albeit with higher computational cost during inference. The dataset is split into 80% training and 20% testing, and k-fold cross-validation is used to validate results. Models are evaluated using standard metrics: accuracy, precision, recall, F1-score, and confusion matrix. The best-performing model (typically SVM or RF) is saved using joblib or pickle for later deployment.
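For readers unfamiliar with the evaluation metrics named above, the following sketch shows how accuracy, precision, recall, and F1-score are derived from the four cells of a binary confusion matrix. The labels and predictions are made-up values for illustration, not results from this study, and the helper names `confusion_counts` and `scores` are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive="spam"):
    """Return (tp, fp, fn, tn), treating `positive` as the class of interest."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive and truth != positive:
            fp += 1
        elif pred != positive and truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def scores(tp, fp, fn, tn):
    """Compute the standard metrics, returning 0.0 where a denominator is zero."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels for eight test messages.
y_true = ["spam", "spam", "spam", "ham", "ham", "ham", "ham", "ham"]
y_pred = ["spam", "spam", "ham", "spam", "ham", "ham", "ham", "ham"]

counts = confusion_counts(y_true, y_pred)
metrics = scores(*counts)
```

Reporting precision and recall alongside accuracy matters for spam detection because a missed scam (false negative) and a blocked legitimate message (false positive) carry different costs.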

The voice analysis module employs deep learning architectures, specifically Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM) networks, to distinguish between real and fake audio samples. Two parallel model architectures are designed to explore performance trade-offs. In the CNN-based model, the MFCC features are treated as 2D spectrogram images. The architecture includes 2–3 convolutional layers activated with ReLU, followed by max pooling layers to down-sample feature maps and extract spatial patterns. These are then flattened and passed through fully connected (dense) layers, ending with a softmax activation function for binary classification. This model is optimized using the Adam optimizer and the categorical cross-entropy loss function.
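The output layer and loss named above can be made concrete with a small numerical sketch. It shows only the softmax activation and the categorical cross-entropy for a two-class (real/fake) output; the logit values are arbitrary, the class ordering is an assumption, and no convolutional layers are modelled here.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw output scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot: list[float], probs: list[float], eps: float = 1e-12) -> float:
    """Negative log-probability assigned to the true class."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


# Arbitrary logits for the two classes, assumed here to be [real, fake].
probs = softmax([2.0, 0.5])

loss_if_real = categorical_cross_entropy([1.0, 0.0], probs)  # true class matches the larger logit
loss_if_fake = categorical_cross_entropy([0.0, 1.0], probs)  # true class is the one the model doubts
```

The loss is small when the model places most of its probability on the true class and grows as that probability shrinks, which is the signal the optimizer uses to update the network weights.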

In the LSTM-based model, MFCC features are treated as temporal sequences, which allows the model to capture dependencies over time. The architecture includes one or more LSTM layers capable of remembering long-term dependencies, followed by dense layers with dropout regularization to prevent overfitting. The final layer uses softmax for classification. Training is conducted with early stopping, learning rate scheduling, and batch normalization to enhance stability and convergence. Both models are trained using the same 80/20 train-test split, and model checkpoints are saved in .h5 format for Keras/TensorFlow deployment. Evaluation is conducted using metrics such as accuracy, ROC-AUC, F1-score, and confusion matrix.
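Early stopping, mentioned above, can be sketched independently of any deep learning framework. The paper does not report its patience setting, so the value of 3, the `min_delta` parameter, the function name `early_stopping_epoch`, and the validation-loss sequence below are all assumptions for illustration.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 3, min_delta: float = 0.0) -> int:
    """Return the epoch index at which training would stop.

    Training stops once the validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs. If that never happens, the
    index of the last epoch is returned.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(val_losses) - 1


# Hypothetical validation losses: improvement stalls after epoch 2.
losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]
stop_at = early_stopping_epoch(losses, patience=3)
```

With these values training halts at epoch 5, before the later improvement at epoch 6 is seen; this illustrates the trade-off in choosing the patience value.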

To provide seamless interaction for end-users, both modules are integrated into a unified web application using the Flask framework. The web interface offers two primary functionalities: 1) a text input box for message classification, and 2) a file upload form for voice classification. Upon submission, the Flask backend routes the request to the appropriate model. For text messages, the input is preprocessed, vectorized with TF-IDF, and fed into the serialized ML model to return a prediction label and confidence score. For audio files, MFCCs are extracted on the fly, and the corresponding deep learning model classifies the input as either Real or Fake, also displaying a confidence level. Users can listen to the uploaded audio through an integrated audio playback feature.

The frontend is developed using HTML, CSS, and Bootstrap, ensuring responsiveness and ease of use across devices. The backend handles real-time inference, completing predictions in under three seconds for both modalities. All models are stored in appropriate formats: .pkl or .joblib for text classifiers, and .h5 for deep learning audio models. The application architecture is modular, supporting future expansion for multilingual detection, real-time streaming analysis, and integration with external APIs for fraud alerts.

The proposed system was evaluated on two primary tasks: classification of social engineering text messages and detection of fake versus real audio calls. The goal was to assess the performance of machine learning and deep learning models on relevant datasets using standard evaluation metrics such as accuracy, precision, recall, F1-score, and confusion matrix. The results from both classification modules are presented and interpreted below.

4.1 Text Classification Results

A dataset of labelled SMS messages, including phishing, impersonation, and genuine messages, was pre-processed and transformed using TF-IDF. Four machine learning models were trained: Logistic Regression (LR), Support Vector Machine (SVM), Random Forest (RF), and K-Nearest Neighbours (KNN).

Table 4.1 Text Classification Result

Discussion – Text Models:

SVM outperformed other models, particularly in detecting borderline spam messages that mimic real communication.

Logistic Regression and Random Forest offered slightly lower but still competitive performance, with Random Forest showing better robustness on noisy inputs.

KNN, though intuitive, lagged in accuracy due to its reliance on distance metrics, which are less effective in sparse TF-IDF vectors.

4.2 Voice Classification Results

For the voice classification module, a dataset containing genuine and fake voice samples (including deepfake and synthetic speech) was pre-processed using MFCC feature extraction. Two deep learning models, Convolutional Neural Network (CNN) and Long Short-Term Memory (LSTM), were trained and evaluated.

Discussion – Voice Models:

Snapshot 5.2 Text prediction page

The LSTM model demonstrated superior performance, particularly in capturing temporal speech dynamics critical for detecting manipulated audio.

CNN performed slightly lower but remained a strong contender due to its strength in spatial pattern recognition over MFCC spectrograms.

ROC-AUC scores of 0.96 and 0.97 for CNN and LSTM respectively indicate excellent class separability.

Misclassifications in CNN were mostly attributed to short-duration or heavily compressed audio clips, where spectral resolution is low.
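To clarify what a ROC-AUC score measures, the sketch below computes it directly from its pairwise definition: the probability that a randomly chosen fake sample receives a higher "fake" score than a randomly chosen real sample, with ties counted as half. The labels and scores are made-up values, not outputs of the models evaluated here, and the function name `roc_auc` is hypothetical.

```python
def roc_auc(labels: list[int], scores: list[float]) -> float:
    """Pairwise ROC-AUC. labels: 1 = fake (positive), 0 = real (negative)."""
    pos = [score for label, score in zip(labels, scores) if label == 1]
    neg = [score for label, score in zip(labels, scores) if label == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


# Made-up model outputs: predicted probability that each clip is fake.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
auc = roc_auc(labels, scores)
```

A value of 1.0 means every fake clip is scored above every real clip, while 0.5 corresponds to random ranking. The quadratic pairwise loop is used for clarity; sorting-based formulations are preferable for large test sets.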

Table 4.2 Voice Classification Result


6. CONCLUSION

The growing sophistication of social engineering attacks, particularly those exploiting human vulnerabilities through telephonic calls and fraudulent text messages, demands the development of intelligent, automated detection systems. This paper presented a dual-modality AI-based system capable of detecting social engineering threats in both text and voice communication channels. The proposed framework integrates Natural Language Processing (NLP), classical Machine Learning (ML), and Deep Learning (DL) techniques to address both message-based phishing and audio-based impersonation attempts, including deepfake speech.

For textual classification, models such as Support Vector Machine (SVM), Logistic Regression, Random Forest, and K-Nearest Neighbours were evaluated using TF-IDF features. Among them, SVM consistently delivered superior performance, achieving over 96% accuracy in identifying scam messages. On the auditory front, a robust pipeline using Mel-Frequency Cepstral Coefficients (MFCC) was implemented, followed by CNN and LSTM models for deepfake voice detection. LSTM proved more effective, reaching an accuracy of 96.2% due to its temporal sensitivity to speech patterns.

The system was successfully deployed as a Flask-based web application, offering users an accessible platform to input messages or upload voice recordings for real-time classification. The application demonstrated strong responsiveness and usability, with prediction times under three seconds and a clear, user-friendly interface. This confirms the feasibility of deploying such a system in real-world environments like call centres, banking, government, and telecom sectors.

[1] Breda, Filipe & Barbosa, Hugo & Morais, Telmo. (2017). Social Engineering and Cyber Security. 4204-4211. 10.21125/inted.2017.1008.

[2] Salahdine, Fatima & Kaabouch, Naima. (2019). Social Engineering Attacks: A Survey. Future Internet. 11. 10.3390/fi11040089.

[3] Naz, Anam & Sarwar, Madiha & Kaleem, Muhammad & Mushtaq, Muhammad & Rashid, Salman. (2024). A comprehensive survey on social engineering-based attacks on social networks. International Journal of Advanced and Applied Sciences. 11. 139-154. 10.21833/ijaas.2024.04.016.

[4] Deepak Singh Malik. Social Engineering Attacks and their mitigation solutions. https://ijaem.net/issue_dcp/Social%20Engineering%20Attacks%20and%20their%20mitigation%20solutions.pdf

[5] Tejal Rathod, Nilesh Kumar Jadav, Sudeep Tanwar, Abdulatif Alabdulatif, Deepak Garg, Anupam Singh. A comprehensive survey on social engineering attacks, countermeasures, case study, and research challenges. Information Processing & Management, Volume 62, Issue 1, 2025, 103928, ISSN 0306-4573. https://doi.org/10.1016/j.ipm.2024.103928.

[6] Ameer Hamza. Deepfake Audio Detection via MFCC Features Using Machine Learning. IEEE Access. Date of publication 21 December 2022. 10.1109/ACCESS.2022.3231480.

[7] Omair Ahmad. Deepfake Audio Detection for Urdu Language Using Deep Neural Networks. IEEE Access. Date of publication 19 May 2025. 10.1109/ACCESS.2025.3571293.

[8] Hamza, Ameer & Javed, Abdul Rehman & Iqbal, Farkhud & Kryvinska, Natalia & Almadhor, Ahmad & Jalil, Zunera & Borghol, Rouba. (2022). Deepfake Audio Detection via MFCC Features Using Machine Learning. IEEE Access. PP. 1-1. 10.1109/ACCESS.2022.3231480.


[9] Almutairi, Z.; Elgibreen, H. A Review of Modern Audio Deepfake Detection Methods: Challenges and Future Directions. Algorithms 2022, 15, 155. https://doi.org/10.3390/a15050155

[10] Ezer Osei Yeboah-Boateng & Priscilla Mateko Amanor (2024). Phishing, Smishing & Vishing: An Assessment of Threats against Mobile Devices.

Lavanya K M received a Bachelor's degree in Computer Applications from the University of Mysore, India, and is currently pursuing an MCA at P.E.S College of Engineering, Mandya.

Dr H.P Mohan Kumar obtained MCA, MSc (Tech) and PhD degrees from the University of Mysore, India, in 1998, 2009 and 2015 respectively. He is working as a Professor in the Dept. of CS&E, P.E.S. College of Engineering, Mandya, Karnataka, India. His areas of interest are computer vision, video analysis and data mining.