• Resprep SPE & Q-sep QuEChERS

• PFAS Delay Columns

• Raptor & Force LC Columns

• PFAS Reference Materials

• Ultra-Clean Resin for Air Analyses

• ASE Cells & Parts

• Filters and vials

Access expert support and tailored PFAS testing solutions for U.S. EPA, ISO, ASTM methods, and more, at www.restek.com/PFAS

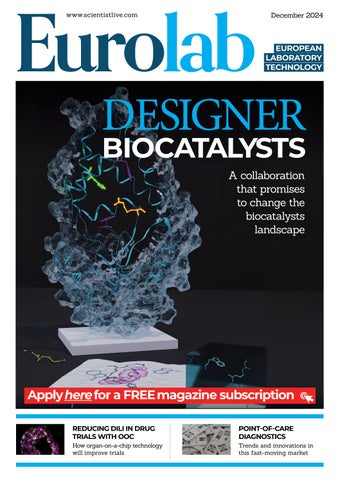

We have a bumper issue for you this December, with the majority of articles focusing on new techniques and technologies within the sector. The cover story, Designer biocatalysts, looks at an interesting collaboration between the Manchester Institute of Biotechnology and a leading computational biologist that will see traditional ways of developing enzymes for industry transformed with the help of computational methods and big data. A piece from CN Bio (page 28) titled 'How OOC technology can reduce DILI in drug development' explores organ-on-a-chip technology’s role in reducing drug-induced liver damage during trials. Another blue-skies piece, written by Rory O’Keefe from Europlaz (page 32), provides insight into global trends and innovations in point-of-care equipment.

Cloud computing plays a key role in data sharing and increasing speed to market for pharmaceutical companies. 'Cloud cover' on page 26 illustrates how cloud technologies can be used to ensure quality in pharma medicine. Readers who are interested in data management might also be interested in 'Too much data' (page 33), based on a report from data analytics company Phesi claiming that many trials are not discriminating enough with the data they're using.

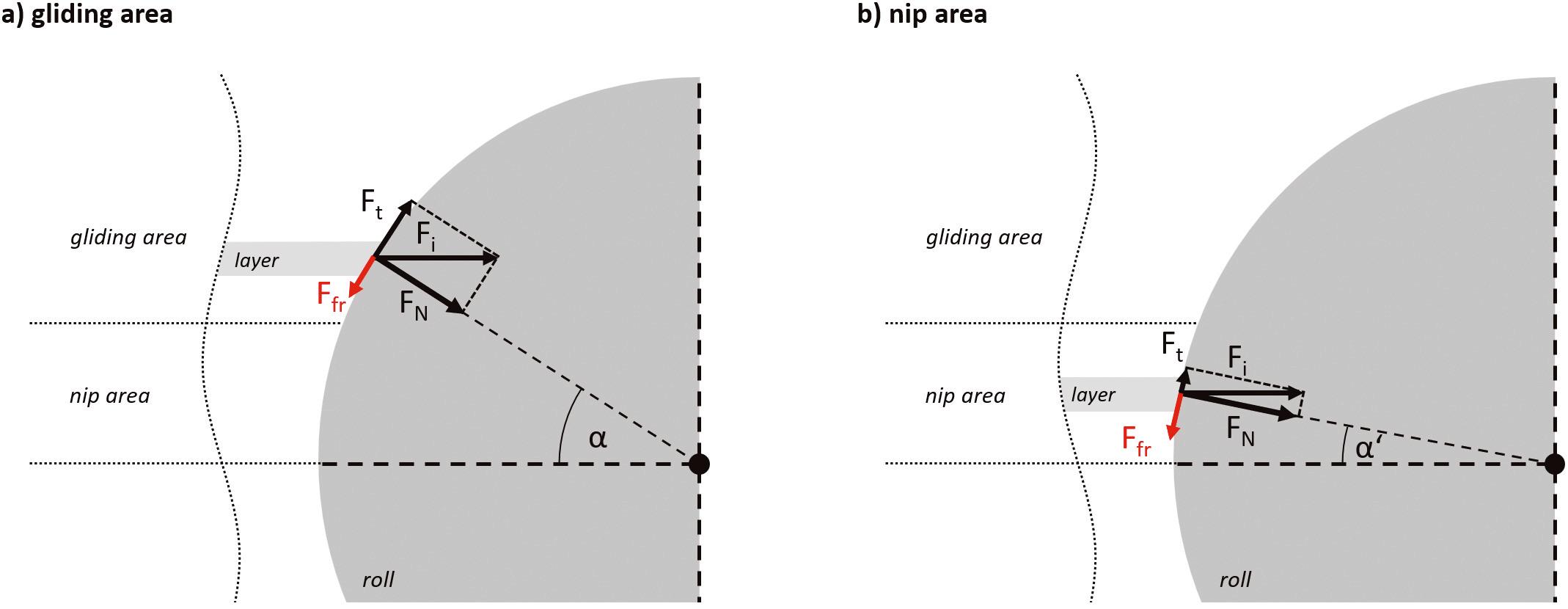

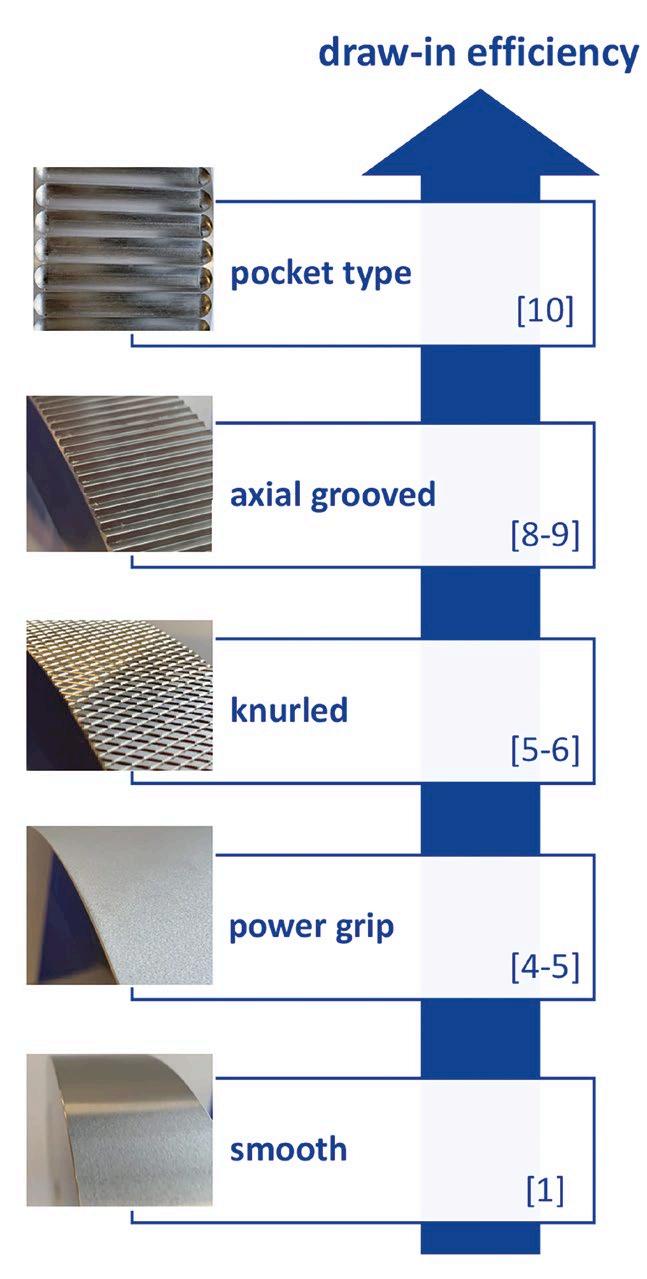

Regular readers will be interested to read the third and final instalment in the 'Understanding the nip angle' series from Gerteis Maschinen on page 42.

A bumper piece from Restek on page 36 provides in-depth insight into ultra-short chain PFAS and their impact on human health.

Nicola Brittain, Editor

Protecting sensitive cleanroom devices from ESD

Small-scale sampling

Effective sampling techniques in cleanrooms

Microplate market examined

A deep dive on the reasons for a recent surge in demand

Innovations in cannabinoid research

Accelerating drug discovery with microplate readers

OOC and reducing DILI in drug development

OOC’s role in streamlining drug research and manufacturing to reduce costs

Designer biocatalysts

An innovative collaboration that promises to change the biocatalyst landscape

POC diagnostics – an overview

Insight into global trends in this fast-moving market

Too much data

How the overcollection of data adds months to clinical trials

Advances in 3D imaging

A recent collaboration will pave the way for 3D imaging

C1-C10 PFAS into biomonitoring

How ultra-short chain PFAS can be integrated into biomonitoring methods

PUBLISHER

Jerry Ramsdale

EDITOR

Nicola Brittain nbrittain@setform.com

STAFF WRITER

Saskia Henn shenn@setform.com

DESIGN

Dan Bennett, Jill Harris

HEAD OF MARKETING

Shona Hayes shayes@setform.com

HEAD OF PRODUCTION

Luke Wikner production@setform.com

BUSINESS MANAGER

John Abey +44 (0)207 062 2559

SALES MANAGER

Darren Ringer +44 (0)207 062 2566

ADVERTISEMENT EXECUTIVES

John Davis, Iain Fletcher, Paul Maher

Remarkable innovations

Cybersecurity threats and solutions facing the biotech industry

Dry granulation: understanding the nip angle

The third part in this series on the nip angle

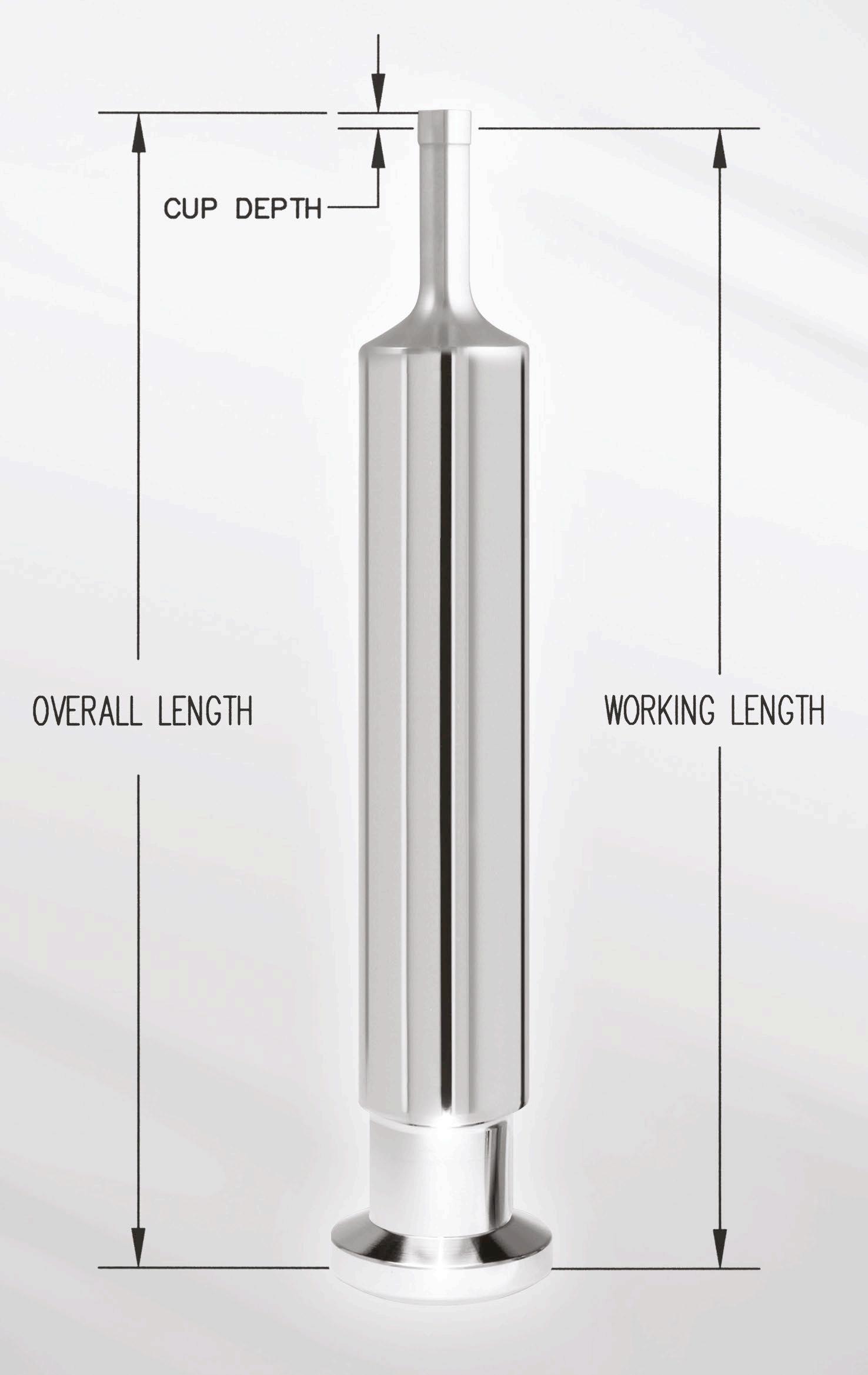

The right tools

The role of tooling specifications in tablet manufacturing

CHROMATOGRAPHY

Optical solution for hydrogen testing

High brightness technology that can help test hydrogen for contaminants

Under the microscope

Exploring microscopy and current trends in battery technology

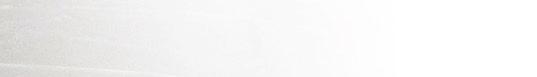

Filtech

A ‘must visit’ show reviewed

Setform’s international magazine for scientists is published twice annually and distributed to senior professionals throughout the world. Other titles in the company portfolio focus on Process, Design, Transport, Oil & Gas, Energy and Mining engineering.

The publishers do not sponsor or otherwise support any substance or service advertised or mentioned in this book; nor is the publisher responsible for the accuracy of any statement in this publication. ©2024. The entire content of this publication is protected by copyright, full details of which are available from the publishers. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the copyright owner.

Setform Limited, 6, Brownlow Mews, London, WC1N 2LD, United Kingdom

+44 (0)207 253 2545

A collaboration that will transform the sector

Linkam Scientific Instruments has launched updates to its specialist CMS196 cryo-stage product. The CMS196V now supports cryo-correlative light and electron microscopy (cryo-CLEM) and allows researchers to investigate samples at cryogenic temperatures below -195 °C. The latest updates include an improved user interface featuring a touch panel and joystick, and an encoded and motorised XY stage allowing highly precise automated mapping of sample grids. The new product also features different sample holders and interface options for the newly interchangeable optical bridge for imaging.

For more information visit: www.linkam.co.uk/cms196

Lab informatics platform Sapio Sciences has signed a co-marketing agreement with Waters Corporation to integrate with its Waters Connect software. The companies claim this will improve data integrity, workflow efficiency, and laboratory productivity. As laboratory-based organisations expand and handle increasing numbers of samples, integrating liquid chromatography mass spectrometry (LC-MS) software with a Laboratory Information Management System (LIMS) should make laboratory operations more efficient, with more streamlined workflows, fewer data silos, enhanced compliance with regulatory standards, and the elimination of manual data entry and transcription errors.

For more information visit: www.sapiosciences.com

Introducing Unity, the world’s first combined Backscattered Electron and X-ray (BEX) imaging detector.

Accelerate your journey to scientific discovery with instant microstructural and chemical images, acquired simultaneously with the Unity detector.

Find out how we’re making sophisticated sample analyses simpler and faster than ever before.

In the dynamic environment of modern laboratories, the quality of water used is a fundamental factor that can significantly influence the accuracy and reliability of experimental results. Whether it’s for sensitive assays, reagent preparation, or equipment sterilisation, laboratories require water that meets specific purity standards. Laboratory technicians and managers are continually seeking solutions that not only deliver high-quality water but also integrate seamlessly into their daily workflow.

One common challenge laboratories face is the need for both Type 1 ultrapure water for critical applications and Type 2 water for general laboratory tasks. Managing multiple water purification systems to meet these requirements can be cumbersome, expensive, and space-consuming. The Purite Fusion benchtop water purification unit addresses this issue by providing dual-quality water from a single, compact system, integrating smoothly into laboratory operations and instilling confidence in its users.


Laboratory workflows are intricate and demand precision. On any given day, a technician might perform high-sensitivity techniques like high-performance liquid chromatography (HPLC), polymerase chain reactions (PCR), or cell culture experiments, all of which require Type 1 ultrapure water. Simultaneously, they may need Type 2 water for less critical tasks such as preparing buffers and reagents, sample dilution or rinsing glassware.

The Purite Fusion unit is engineered to meet these diverse needs without disrupting the laboratory’s workflow. It delivers up to 2 l/min of Type 1 ASTM 18.2 MΩ·cm ultrapure water at the point of use (POU), ensuring immediate and volumetric access when precision is paramount. For general laboratory applications, Type 2 water is readily available from the bib tap of the integrated 20 litre tank or of an optional external 50 or 100 litre storage tank. This dual availability from a single unit frees valuable bench space, and technicians no longer need to manage consumables and maintenance contracts for different systems, thereby reducing OPEX and avoiding the use of higher-cost water for less critical applications.

Laboratories depend on consistent water quality. High maintenance and consumable costs can limit a lab’s ability to properly support its water purification system, which in turn can impair the efficacy of the unit and the reliability of results. The Purite Fusion unit is designed to tackle these challenges effectively:

1. Consistent high-quality water supply: The Fusion unit’s comprehensive purification process ensures a reliable supply of both Type 1 and Type 2 water direct from a potable water supply using treatment methods including:

• Carbon pre-treatment to remove chlorine and organic compounds

• Reverse osmosis (RO) membranes to eliminate particulates and ionic contaminants

• Primary deionisation

• Polishing deionisation with high-grade nuclear resin

• Ultrafiltration to remove any remaining particles, microorganisms, endotoxins, RNase and DNase

• UV photo-oxidation at 185nm for total organic carbon (TOC) reduction

• LED UV disinfection at the dispense point to ensure water remains contaminant-free.

This meticulous process guarantees that the water meets the stringent purity requirements necessary for accurate and reproducible results, giving laboratory personnel the confidence they need in their daily tasks.

2. Reduced maintenance and operational costs: Choosing a water purification system extends beyond its immediate functionality; it encompasses the assurance of consistent performance and dependable support. The Purite Fusion unit is constructed with an emphasis on reliability, durability, and longevity, ensuring it stands up to the rigorous demands of daily laboratory use.

3. Space optimisation and workflow efficiency: Space is a valuable commodity in any laboratory. The compact benchtop design of the Fusion ‘IT’ saves precious workspace by combining dual-quality water production and a 20 litre Integrated Tank (IT) in one unit with a footprint of only 440mm x 560mm. This consolidation simplifies laboratory layouts and streamlines workflows, as all water purity needs are met through a single system. Furthermore, the system can be expanded with an optional remote dispense point (Type 1), which can be located up to five metres away from the main unit to serve a second location. The footprint of the remote dispenser is just 228mm x 305mm.

The Fusion unit’s design and performance directly contribute to building confidence among laboratory technicians and managers by:

• Ensuring repeatability: Consistent water quality leads to reproducible results, a cornerstone of credible scientific work.

• Simplifying operations: With fewer consumables and maintenance requirements, the unit reduces the complexity of laboratory operations.

• Providing robustness: The system’s durable construction minimises the risk of unexpected failures, reducing downtime and maintaining productivity.

• Satisfying various usage demands: The Purite Fusion 160 and 320 models deliver RO permeate to the storage tank at 17.6 and 36 l/h respectively, at a feed water temperature of 15 °C, offering performance options to meet the lab’s usage needs.
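As a rough illustration of what the quoted permeate rates mean in practice, the sketch below estimates how long each model would take to fill an empty storage tank. It uses only the flow rates and tank sizes quoted in this article; the function name is hypothetical, and the calculation assumes that no water is drawn off during filling and that the permeate rate stays at its 15 °C figure, neither of which will hold exactly in a working laboratory.

```python
# Rough fill-time estimate for the Type 2 storage tank.
# Flow rates (l/h at 15 °C feed water) and tank sizes are the figures quoted
# in the article; everything else here is an illustrative assumption.

RO_PERMEATE_L_PER_H = {"Fusion 160": 17.6, "Fusion 320": 36.0}
TANK_VOLUMES_L = (20, 50, 100)  # integrated tank and optional external tanks


def fill_time_hours(tank_volume_l: float, permeate_l_per_h: float) -> float:
    """Hours to fill an empty tank, assuming no water is drawn off meanwhile."""
    if tank_volume_l <= 0 or permeate_l_per_h <= 0:
        raise ValueError("volume and flow must be positive")
    return tank_volume_l / permeate_l_per_h


if __name__ == "__main__":
    for model, rate in RO_PERMEATE_L_PER_H.items():
        for volume in TANK_VOLUMES_L:
            hours = fill_time_hours(volume, rate)
            print(f"{model}: {volume} l tank fills in about {hours:.1f} h")
```

Comparing the result with a lab's expected daily Type 2 consumption gives a first indication of which model and tank size would be appropriate.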

In an era where precision and reliability are paramount, the Purite Fusion unit is a comprehensive solution to the water purification challenges faced by modern laboratories. By delivering dual-quality water from a single, space-saving unit, it integrates well into daily workflows, enhancing efficiency and simplifying operations, according to the company.

• Manufactured from non-toxic high grade LDPE

• Shatterproof and safe to use

• Inert to prevent sample contamination

• Responsive control for ease-of-use & reliable performance

• Low-affinity surface for complete sample delivery

• Available sterile or non sterile to suit your application

• No mould release agents used during manufacturing

Inline degassing is the silent guardian of precision and reliability in fluidic systems. Installation of an inline degasser (see Figure 1) will drastically diminish the concentration of dissolved gases in the liquids passing through. This reduces variations, improves baseline stability, shortens startup times, and ensures more consistent results. By reducing the concentration of dissolved gases beyond the level where outgassing can occur, bubble formation will not be an issue despite changes in temperature, pressure, or compositions of the liquid managed throughout the flow path.

Degassers are important components in laboratory analysis equipment for liquid chromatography (including HPLC and UHPLC), ion chromatography and mass spectrometry. Machines for semiconductor manufacturing or assembly will also typically deliver more consistent results with a degasser included in the fluid path. The same is true for instruments in immunology, haematology, and in vitro diagnostics.

An inline degasser assembly contains a purpose-designed vacuum pump connected to one or several degassing chambers through which the liquid with dissolved gases flows. Inside the degassing chamber (see Figure 2) there is an inert gas-permeable membrane that must be compatible with the liquid to be degassed. A control board with a vacuum sensor keeps the vacuum at a constant level to minimise fluctuations in degassing performance and wear on the vacuum pump.

Figure 1. Example of a stand-alone inline degasser from the DEGASi family, suitable for aqueous liquids and organic solvents at flow rates from 25 µL/min up to 1000 mL/min, depending on model and configuration

Modern inline degassers incorporated into instruments that handle liquids with high precision and accuracy are, fortunately, essentially maintenance-free. However, there are a few things to keep in mind to avoid shortening the lifetime of your inline degasser. Firstly, one should use a degassing chamber compatible with the solvents that will be used. Organic solvents such as hexane, heptane, toluene, tetrahydrofuran (THF), and dichloromethane (DCM) typically demand specially designed degassing chambers. These degassing chambers are often labelled GPC to highlight their suitability in gel permeation chromatography, but they are equally suitable for normal phase and flash chromatography applications where such organic solvents are also employed. In addition, there are degassing chambers explicitly designed for hexafluoroisopropanol (HFIP) that are required when this aggressive organic solvent is used. As with any fluidic component, it is advised not to leave liquid inside your degassing chamber when it is disconnected from use. This is especially important when there are salts or buffer components dissolved in the liquid, since they may precipitate. Precipitates will block the flow path and are notoriously problematic to wash out again. In addition, buffered aqueous solutions exposed to open air may constitute an attractive environment for microbial growth, which can constrict the flow path.

Finally, it is strongly recommended to always draw solutions through the degassing chambers by suction, rather than pumping or pushing liquids through them. The internal gas-permeable membrane material, which allows gases to penetrate while blocking liquids, is designed to withstand a certain pressure difference. That limit may accidentally be exceeded if liquids are pumped too fast into the degassing chamber, which may cause irreparable damage.

Should a degasser chamber malfunction, the cause is most often either use with incompatible organic solvents or a blocked flow path. If this happens, the chamber needs to be replaced. Fortunately, this is a straightforward and quick procedure in most systems. Note, however, that in cases where there has been a leakage from the fluidic line into the vacuum line, there is a high risk that the vacuum sensor on the control board has been impaired. In these situations, there are often so many damaged components that one should consider exchanging the entire degasser.

Since modern inline degassers are highly robust equipment with a long lifetime, there is a risk that if their internal vacuum pumps eventually fail, the original part is no longer available from the manufacturer. The stepper-motor-driven vacuum pumps, with sensor and control boards to ensure constant vacuum, come in a range of variants for different degasser assemblies. These pumps may differ in capacity for various flow rate ranges, or in the vacuum setpoint, or in the control board design, or in the mounting orientation. Conveniently, Biotech Fluidics maintains an online vacuum pump replacement finder [1] that facilitates exchange of obsolete pumps and control boards in inline degassers from many different brands.

Figure 2. Schematic sketch of a degassing chamber containing a gas permeable Systec AF tubular membrane, connected to a vacuum pump with constant vacuum controller

Inline degassers are robust pieces of equipment that require minimal maintenance to continuously safeguard precision in fluidic systems by reducing dissolved gases and eliminating troubles from bubbles. With proper handling, the user can ensure that their degasser will keep performing for many years, and if it eventually fails, replacement of parts will be a simple procedure.

[1] https://biotechfluidics.com/products/degassing-debubbling/degasi-inline-degassers/degasser-spare-parts/

Anders Grahn, Chairman & Founder, Biotech Fluidics AB, Onsala, Sweden; Dr. Fritiof Pontén, CEO, Biotech Fluidics AB, Onsala, Sweden

systems for Type I, II and III water. Suitable for applications from glasswashing and reagent makeup through to UPW for histology diagnostics and DNA sequencing. Benchtop units through to bespoke system design for centralised systems.

Water activity is a critical parameter in product development across various industries, particularly in food, pharmaceuticals, and cosmetics. It refers to the energy status of water in a product, which can impact chemical reactions, physical properties, and the growth of microorganisms. Unlike moisture content, which measures the total water in a product, water activity focuses on the energy of water, which influences the product’s stability and overall quality. Understanding and controlling water activity is essential for creating products that are safe, durable, and appealing to consumers.

One of the most significant roles of water activity in product development is its impact on microbial growth. Microorganisms require a certain energy of water to maintain their internal turgor pressure, which is necessary for normal functioning. By controlling water activity, manufacturers can inhibit microbial growth, thereby enhancing product safety.

Water activity also plays a crucial role in the chemical stability of products. Many chemical reactions are influenced by the energy of water. By adjusting water activity, developers can either slow down or accelerate these reactions, depending on the desired product characteristics. A well-balanced water activity level can ensure that a product retains its intended flavour, appearance, and nutritional profile throughout its shelf life. Similarly, in pharmaceuticals, maintaining optimal water activity can prevent the degradation of active ingredients, ensuring the product remains effective until its expiration date.

Water activity affects not only the safety and stability of products but also their sensory attributes, particularly texture. In food products, for instance, water activity influences whether a product is perceived as crunchy, chewy, soft, or dry. A slight change in water activity can alter the texture significantly, impacting the consumer’s sensory experience and satisfaction.

There may be a tendency to think of water activity as only a QA test to be done on a finished product. However, incorporating water activity testing into product development can improve efficiency and help avoid costly mistakes. For example, if the impact of water activity is considered from the onset of product development, an ideal water activity can be identified and then passed on to production as the release specification for the product. If the product is made to that specification, there will be a significant reduction in waste or failed product. In addition, wasteful reformulation of an already released product to meet a necessary water activity is avoided.
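A minimal sketch of how a release specification of this kind might be applied on the production side is shown below. The target value, tolerance and batch readings are hypothetical, chosen purely for illustration and not taken from the article; the only fixed fact used is that water activity is expressed on a scale from 0 to 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReleaseSpec:
    """Water activity window handed from development to production."""

    target_aw: float
    tolerance: float

    def accepts(self, measured_aw: float) -> bool:
        """True when a measured water activity falls inside the window."""
        if not 0.0 <= measured_aw <= 1.0:
            raise ValueError("water activity must lie between 0 and 1")
        return abs(measured_aw - self.target_aw) <= self.tolerance


# Hypothetical specification and batch readings, for illustration only.
spec = ReleaseSpec(target_aw=0.55, tolerance=0.03)
batches = {"batch-A": 0.54, "batch-B": 0.61}

if __name__ == "__main__":
    for name, aw in batches.items():
        decision = "release" if spec.accepts(aw) else "hold"
        print(f"{name}: aw = {aw:.2f} -> {decision}")
```

Fixing the window during development means the same check can be run on every batch, rather than discovering an unsuitable water activity after release.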

Water activity should also be considered when making decisions about product packaging. It is important that packaging acts as a moisture barrier that prevents changes in product water activity owing to exposure to abusive storage conditions. Modelling can be used to determine the appropriate packaging that will sufficiently restrict water activity changes while avoiding costly over-packaging.

In summary, tracking water activity during product development helps to set release specifications, identify external factors that could alter the water activity, and choose packaging that will ensure the product is safely delivered to the consumer. Thus, water activity remains a cornerstone of successful product development, shaping the characteristics and longevity of countless products in the market.

Mockups, often made from wood or cardboard, can help mitigate installation problems further down the line. Saskia Henn reports

During the implementation of a new aseptic filling line, adequate preparation is necessary to prevent

Implementing a new aseptic filling line can be daunting, since everything from the layout of the cleanroom to the height of the operators can influence how well your contamination control management system works.

A recent webinar titled ‘The Successful Implementation of a New Aseptic Filling Line’ outlined how difficult it can be, as well as how technicians can prevent issues further down the line.

The webinar was hosted by Mark Hallworth, the pharmaceutical manager for PMS, and Marc Machauer, global OEM coordinator for the company. As Hallworth explained, although risk assessment can ensure that regulatory requirements are met, this process often occurs at the end of filling line implementation once it is hard to change anything – such an assessment is therefore not enough to mitigate operational problems.

Rather than relying on risk assessment, operators should ensure they prepare adequately for the build before it goes ahead because once a design is complete, it is often replicated and sold to pharma companies that must adapt their own process to the machine. It can be difficult to make a specialised product work for someone else.

Hallworth recommends that lab operators invest time and resources into a facility right from the beginning and that they create an adequate mock-up. This will make it less likely that something will need to be retrofitted.

Machauer explained that he often sees filling lines that need amendments once they are installed, as well as the problems that result.

“Many times, we get pulled in after a machine is installed and the customer has come to us, and now we have to make it work somehow,” he said.

“It is expensive and time consuming to resolve the issue from this standpoint.”

This is why the mock-up phase is so important. The physical representation of a machine provides a realistic idea of what the final product will look like. Mock-ups are commonly made virtually or from wood, cardboard and foam core, allowing designers to quickly make changes as needed.

This type of testing can help operators understand what the risks are, where the airflow patterns are and what changes need to occur prior to installation.

Hallworth said: “A process study for each phase of the machine will give us many sample points that reflect the best risk within that environment.”

During the mock-up phase, operators of different heights can reach into the machine to pick up microbial plates and test if they work smoothly. This simulation testing can also be applied to automated equipment, such as robotic arms.

“It’s always better to do a wood model where you can put in some real components and you have a real reach,” said Machauer.

An accurate mock-up can prevent problems leading to operational shutdown later on, such as on-site modifications, measurements not adding up or problems with the physical space where the filling line sits.

“We’ve got this whole quality by design strategy. Rather than trying to measure for quality at the end, we’ve got to push that quality forward into the design and mock-up phase,” said Hallworth.

www.pmeasuring.com

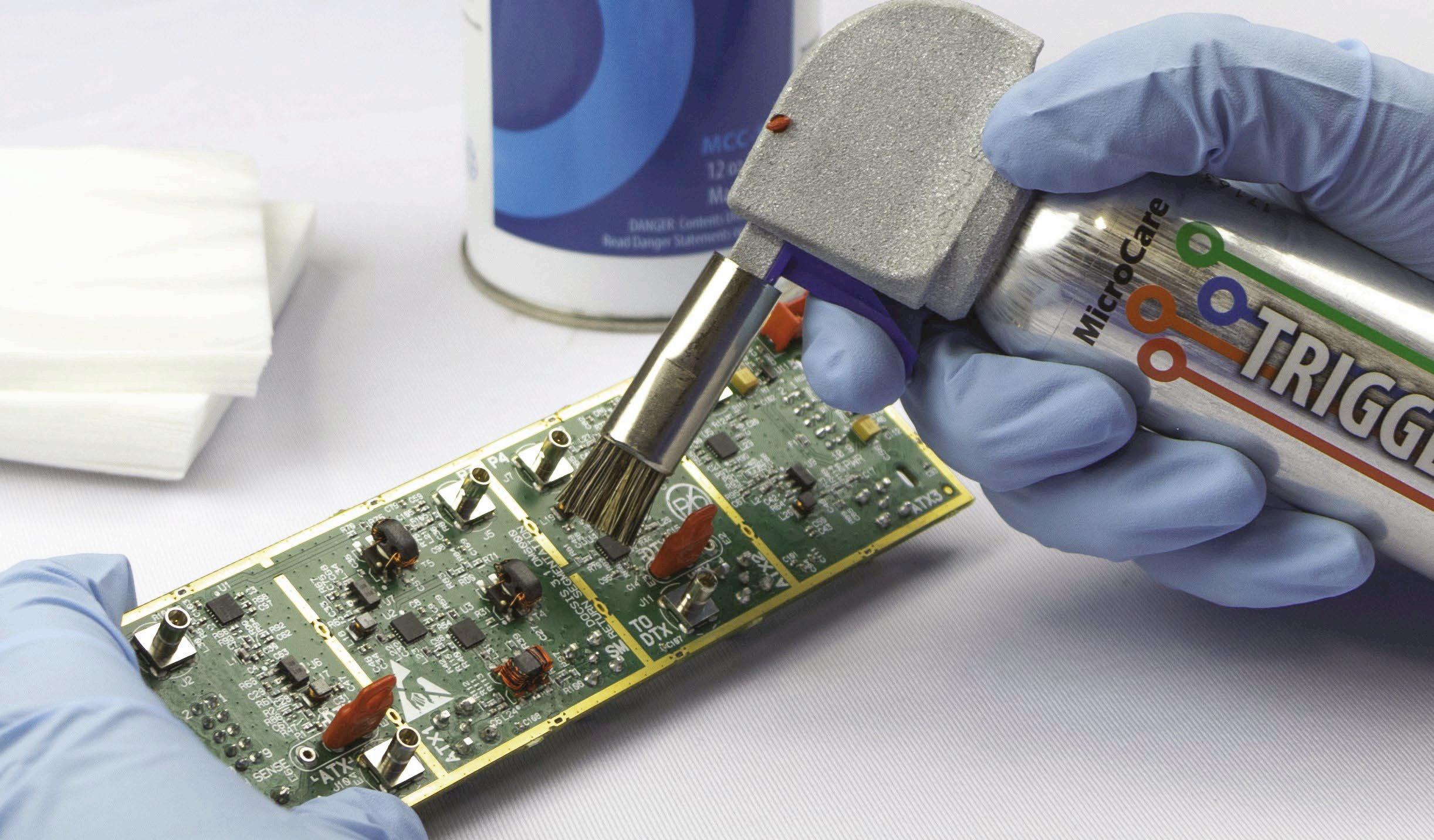

Cleanrooms present unique challenges for ESD control

Elizabeth Norwood, a senior chemist from MicroCare, explores how to protect sensitive cleanroom devices while ensuring they maintain quality and functionality

Electronic medical device manufacturers face the ongoing challenge of maintaining product reliability and performance. Electrostatic discharge (ESD) poses a serious threat across all production settings, particularly in cleanrooms. Sensitive devices like pacemakers, insulin pumps, imaging systems, and patient monitors are especially at risk owing to their intricate electronics. As these technologies become more compact and sophisticated, the potential for ESD-related damage increases. To address this, manufacturers must implement robust protection measures while adhering to strict regulatory requirements to ensure product quality and functionality.

Electrostatic discharge occurs when two objects with differing electrostatic potentials come into proximity or contact, resulting in a rapid transfer of electrons. Even minor ESD events can have severe consequences in manufacturing, particularly for sensitive electronic components such as Printed Circuit Board Assemblies (PCBAs), which are integral to many modern medical devices.

ESD-related failures in medical devices typically take two forms: catastrophic failure and latent damage. Catastrophic failure results in immediate and detectable malfunction, often found during manufacturing or initial quality control procedures. Latent damage, however, poses a more complex challenge. Devices affected by latent ESD damage may pass initial tests but experience premature failure in the field, potentially compromising patient safety and the manufacturer’s reputation.

While essential for maintaining sterility and precision in medical device production, cleanrooms present unique challenges for ESD control. Low humidity levels, necessary to inhibit microbial growth, can increase the risk of static build-up. Even though cleanroom garments are designed to prevent contamination, friction from personnel movement can still generate static charges. Routine activities like walking across the cleanroom floor can create static potentials, sometimes reaching thousands of volts if ESD control measures are not effectively managed.

Furthermore, many cleanroom surfaces and equipment are constructed from insulating materials that can accumulate static charges.

To address these challenges, medical device manufacturers should implement a comprehensive, multifaceted approach to ESD control. This strategy begins with personnel management. All staff working in the cleanroom should use anti-static wrist straps or heel grounders connected to verified ground points. These devices continuously dissipate static charges that accumulate on the human body. ESD-safe footwear and clothing, including cleanroom-compatible garments manufactured from static-dissipative materials, further mitigate the risk of charge generation and accumulation.

Environmental modifications play a crucial role in ESD mitigation. Conductive flooring or floor mats should be installed to dissipate static charges effectively. Humidity levels should be precisely regulated, typically kept between 40% and 70%, while temperatures should be controlled between 18-22°C (64-72°F) to balance static reduction with other cleanroom requirements. All work surfaces, equipment, and tools should be properly grounded, creating a network that safely channels static charges away from sensitive components.

Material handling procedures are equally important in ESD prevention. PCBAs and components should be transported and stored in ESD-safe containers and packaging. In areas where non-conductive materials that cannot be grounded must be used, ionisation systems can help to neutralise static charges.
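The humidity and temperature ranges quoted above lend themselves to a simple automated check. The sketch below is a minimal illustration only: the function and constant names are hypothetical and do not come from any monitoring product, and a real cleanroom system would also need sensor handling, logging and alarm management.

```python
# Ranges quoted in the article: 40-70% relative humidity and 18-22 °C.
# All names below are illustrative assumptions.
HUMIDITY_RANGE_PCT = (40.0, 70.0)
TEMPERATURE_RANGE_C = (18.0, 22.0)


def celsius_to_fahrenheit(temp_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def environment_alerts(humidity_pct: float, temp_c: float) -> list[str]:
    """Return one message for each reading outside its quoted range."""
    alerts = []
    low_rh, high_rh = HUMIDITY_RANGE_PCT
    low_t, high_t = TEMPERATURE_RANGE_C
    if not low_rh <= humidity_pct <= high_rh:
        alerts.append(
            f"humidity {humidity_pct:.0f}% outside {low_rh:.0f}-{high_rh:.0f}%"
        )
    if not low_t <= temp_c <= high_t:
        alerts.append(
            f"temperature {temp_c:.1f} °C ({celsius_to_fahrenheit(temp_c):.1f} °F) "
            f"outside {low_t:.0f}-{high_t:.0f} °C"
        )
    return alerts


if __name__ == "__main__":
    for reading in [(55.0, 20.0), (32.0, 23.5)]:
        print(reading, environment_alerts(*reading) or "within range")
```

Low humidity is the reading to watch most closely here, since the article notes that dry cleanroom air increases the risk of static build-up.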

Cleaning and maintenance protocols are integral to ongoing ESD control. Effective cleaning in PCBA assembly is not just about removing contaminants but also about preventing ESD. Some cleaning fluids can generate significant static charges, worsening the ESD risk rather than mitigating it. For example, certain flux removers can produce static as high as 12,000 volts owing to friction when dispensed from aerosol cans. To address this, manufacturers should use specially engineered static-dissipating tools that attach to the aerosol can and limit the static generated to just over 50 volts.

In addition, regular use of pre-saturated ESD-dissipating cleaning wipes can significantly reduce static build-up. These wipes effectively remove contaminants like fingerprints and grease without leaving lint, debris or static charges. They should be used in straight lines with overlapping strokes to ensure thorough coverage. It’s advisable to choose wipes with low or no alcohol content to avoid drying out surfaces and causing damage.

An ESD-safe controlled flux remover dispensing system is another tool that helps to manage ESD and improve cleaning outcomes. This system allows technicians to apply flux remover in a controlled manner, reducing waste and ensuring that the cleaning process does not introduce further static. Brushes attached to these systems can help clean under surface-mounted components more effectively while also grounding the cleaning tool to eliminate any charge build-up.


The effectiveness of ESD control measures relies on proper implementation by personnel, meaning comprehensive training programmes should be set up. All staff working in or entering the cleanroom should undergo regular ESD awareness training.

Manufacturers should conduct periodic audits of ESD control practices and equipment to ensure ongoing effectiveness and verify compliance with regulations.

Effective ESD control is critical in the cleanroom manufacturing of medical devices. By minimising ESD-related damage, manufacturers significantly enhance the long-term reliability of their devices. This reduces costs associated with warranty claims, rework, and product recalls.

MicroCare is a precision cleaning solutions company based in the US.

A paper written by Mark Hallworth from PMS explores sampling techniques in cleanrooms and associated controlled environments

The following article is an excerpt from the paper ISO/TR 14644-21:2023 Cleanrooms and Associated Controlled Environments: Airborne Particle Sampling Techniques, written by industry expert and ISO 14644 Technical Committee member Mark Hallworth of Particle Measuring Systems. It is an essential guide designed to help cleanroom operators and technicians refine their airborne particle sampling methods. The full paper is complete with specific requirements, explanatory diagrams and a decision tree.

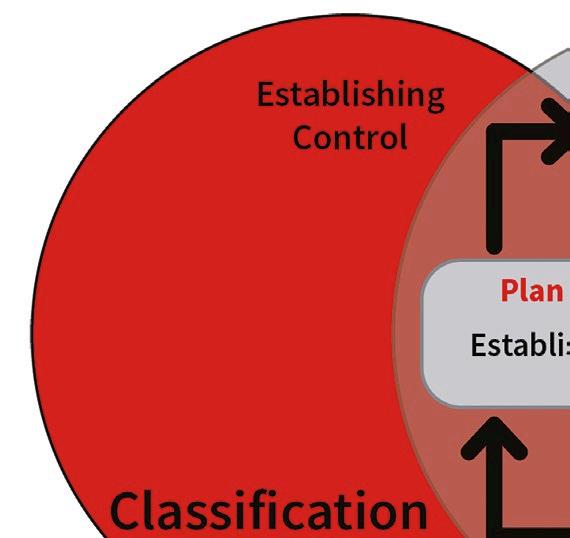

This technical report (TR21) was developed in response to updates in the EU GMP Annex 1 regulation regarding sterile medicinal product manufacturing. It provides a comprehensive discussion on classification and monitoring strategies for maintaining cleanroom air quality and minimising particle contamination.

Cleanrooms are controlled environments where airborne particle concentration must be continuously monitored to meet standards, such as ISO 14644, required by regulatory bodies. ISO/TR 14644-21 highlights the importance of accurately detecting and measuring smaller particles, which also pose significant contamination risks.

ISO/TR 14644-21 identifies three primary areas of concern when sampling airborne particles:

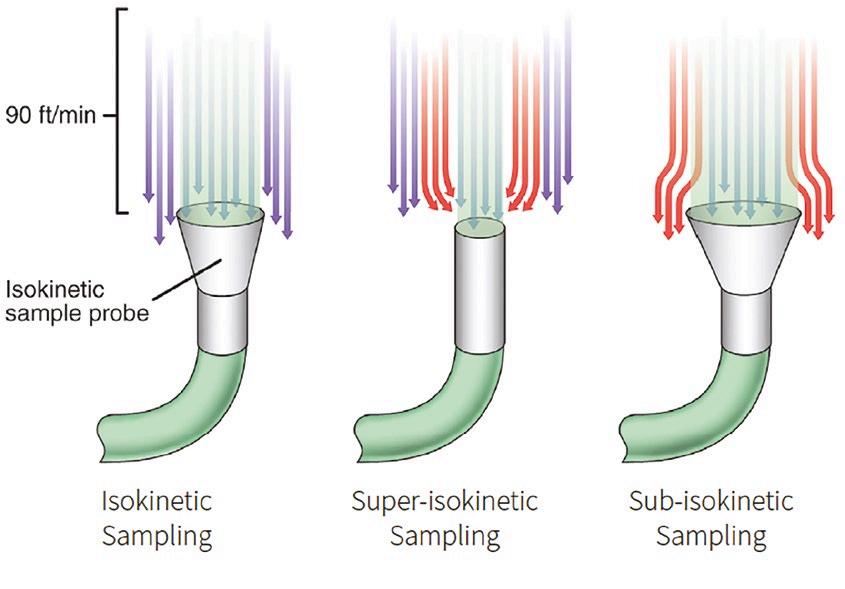

1. Sampling errors: These errors occur when the sample taken does not accurately represent the total air volume in the cleanroom. ISO/TR 14644-21 stresses the importance of isokinetic sampling, where the air velocity at the sample inlet matches the room’s airflow. If this balance is not achieved, particles might be under- or over-sampled, leading to inaccurate results.

2. Sample measurement errors: These errors stem from the equipment used in particle counting. Light Scattering Aerosol Particle Counters (LSAPCs) are the most commonly used instruments. Managing particle coincidence and the impact of optical contamination, and calibrating LSAPCs accurately in line with ISO 21501-4, are crucial for reliable results.

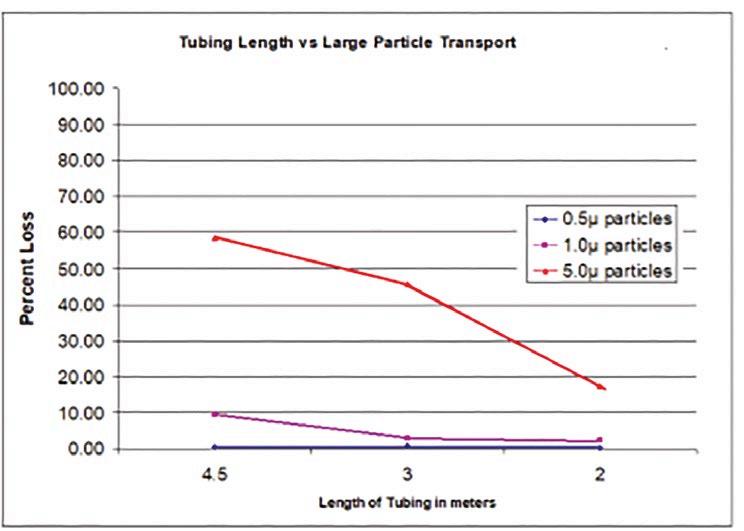

3. Sample transportation errors: When using tubing to transport air samples to a particle counter, particle losses can occur due to tubing bend sedimentation, electrostatic attraction, and Brownian motion. ISO/TR 14644-21 gives specific advice for keeping tubing lengths short and using smooth-bore materials to minimise these losses.
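The isokinetic condition described in point 1 can be illustrated with a short calculation. The sketch below sizes a circular probe inlet so that its face velocity equals the room air velocity, using the relation area = flow / velocity. The flow rate (28.3 L/min) and air velocity (0.45 m/s) are illustrative assumptions and are not taken from ISO/TR 14644-21; the function names are hypothetical.

```python
import math


def isokinetic_inlet_diameter_m(flow_l_per_min: float, air_velocity_m_s: float) -> float:
    """Diameter (m) of a circular inlet whose face velocity equals the room air velocity."""
    if flow_l_per_min <= 0 or air_velocity_m_s <= 0:
        raise ValueError("flow rate and air velocity must be positive")
    flow_m3_s = flow_l_per_min / 1000.0 / 60.0   # L/min -> m^3/s
    area_m2 = flow_m3_s / air_velocity_m_s       # A = Q / v
    return math.sqrt(4.0 * area_m2 / math.pi)    # circular inlet: A = pi * d^2 / 4


def inlet_velocity_m_s(flow_l_per_min: float, diameter_m: float) -> float:
    """Face velocity (m/s) at a circular inlet of the given diameter."""
    area_m2 = math.pi * diameter_m ** 2 / 4.0
    return flow_l_per_min / 1000.0 / 60.0 / area_m2


# Assumed example values, not figures from the technical report.
SAMPLE_FLOW_L_MIN = 28.3
ROOM_VELOCITY_M_S = 0.45

diameter = isokinetic_inlet_diameter_m(SAMPLE_FLOW_L_MIN, ROOM_VELOCITY_M_S)
print(f"Isokinetic inlet diameter: {diameter * 1000:.1f} mm")
print(f"Check, inlet velocity: {inlet_velocity_m_s(SAMPLE_FLOW_L_MIN, diameter):.2f} m/s")
```

A probe narrower than this would draw air faster than the surrounding flow, and a wider one more slowly; either mismatch is the imbalance that the report associates with under- or over-sampling.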

ISO/TR 14644-21:2023 offers valuable guidance for both cleanroom classification and monitoring, with a focus on minimising errors in airborne particle sampling. By understanding and addressing the potential sources of error in particle sampling and transportation, cleanroom operators can better maintain control over their environments, ensuring compliance with regulatory standards and minimising contamination risk.

Microplate readers have evolved because of technological breakthroughs that have improved their features and broadened their range of applications

The recent market expansion has been driven by a need for high throughput screening in genomics and drug discovery, according to a recent report

Microplate readers are essential laboratory instruments used to analyse one or more parameters within microplates, such as absorbance, fluorescence, or luminescence signals. They are used for a variety of laboratory tasks, including quantifying protein, gene expression or metabolic processes; evaluating enzyme reactions; detecting diseases in cells; performing end point tasks; performing kinetic analyses; and detecting and measuring antibodies, proteins or antigens in biological samples.

Microplate readers work by detecting and quantifying light signals produced by liquid samples. They use different detection modes and a standard curve to determine experimental values, while the multiwell plates that are integral to the reader allow many experiments to be performed at once.
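As an illustration of how a standard curve turns a raw signal into an experimental value, the following minimal sketch fits a straight line to a set of standards and reads an unknown sample off that line. The concentrations and absorbance values are invented for the example, and a linear fit is an assumption; some assays need a non-linear fit instead.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def concentration_from_signal(signal, slope, intercept):
    """Invert the standard curve to estimate concentration from a measured signal."""
    return (signal - intercept) / slope


# Hypothetical standards: known concentrations (mg/mL) and measured absorbance.
standard_conc = [0.0, 0.25, 0.5, 1.0, 2.0]
standard_abs = [0.05, 0.175, 0.30, 0.55, 1.05]

slope, intercept = fit_line(standard_conc, standard_abs)

# Hypothetical unknown sample read in the same plate.
unknown_abs = 0.425
unknown_conc = concentration_from_signal(unknown_abs, slope, intercept)
print(f"slope={slope:.3f}, intercept={intercept:.3f}, unknown={unknown_conc:.2f} mg/mL")
```

In practice the standards and unknowns sit in different wells of the same plate, so one read provides both the curve and the sample values.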

A report released in November 2024 by research company Transparency Market Research predicts that the global microplate reader market will see a compound annual growth rate of 7.0% to reach US$834.4m by 2031 (the market was valued at US$461.3m in 2022).
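The relationship between these figures can be checked with the standard compound annual growth rate formula. The sketch below assumes both valuations are in millions of US dollars and that growth is counted over the nine years from 2022 to 2031; the report may define its forecast period differently, which would explain a small gap between the implied rate and the quoted 7.0%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.07 means 7%)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the article; the unit (US$ millions) is an assumption.
VALUE_2022 = 461.3
VALUE_2031 = 834.4
YEARS = 2031 - 2022

implied_rate = cagr(VALUE_2022, VALUE_2031, YEARS)
projected_at_7pct = project(VALUE_2022, 0.07, YEARS)

print(f"Implied CAGR 2022-2031: {implied_rate * 100:.1f}%")
print(f"2031 value at exactly 7.0% per year: {projected_at_7pct:.1f}")
```

Under these assumptions the implied rate comes out at roughly 6.8%, close to the headline figure.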

This surge will be driven by the requirement for high-throughput screening in genomics and drug discovery, as well as increased demand in life sciences research and diagnostics.

Microplate readers have evolved because of technological breakthroughs that have improved their features and broadened their range of applications. Modern technology integration has helped to increase the versatility and effectiveness of these instruments. Advancements currently include multi-mode detection capabilities. Furthermore, increased sensitivity and signal-to-noise ratios have been made possible by sophisticated detectors, better light sources, and precise optics. All of these present profitable prospects for market growth.

According to the report, the market for clinical trials is likely to grow at around 10% annually, creating a substantial need for advanced laboratory equipment. In 2022, the single-mode readers segment dominated the market. Dedicated instruments are increasingly needed for applications requiring specialised measurements, including enzyme assays, cell viability tests, or the detection of certain biomolecules, owing to their precision and accuracy. Measurements with higher sensitivity and specificity are possible thanks to single-mode readers, which are frequently tailored for a specific detection technique.

Single-mode microplate readers use only one detection technology (for example absorbance or nucleic acid detection) and are the best choice when it is clear that the instrument will be used for just one application. Such tasks are typically fairly long-term and block the microplate reader for other measurements, meaning one mode is sufficient. Examples of such studies include microbial growth monitoring, which takes one or more days, or fluorescence detection in thioflavin T experiments, which can take up to a week.

Multi-mode readers are needed when a laboratory wants to measure several properties, for example enzyme activity in combination with the quantity of DNA and proteins in a specimen. Lab technicians who currently plan applications based on one detection mode, but think they may want to change in the future, may want to consider a model that can be upgraded to a multi-mode machine.

Multi-mode instruments are needed when a laboratory wants to measure enzyme activities and quantity of DNA and proteins

All the major companies in the field are incorporating the aforementioned developments in the microplate reader industry into their products in order to remain competitive. The following companies are well-known participants in the global microplate reader market:

• BMG LabTech (for more insight into the company’s work, see ‘Innovations in cannabis research’ on page 22)

• Bio-Rad Laboratories

• Merck KGaA

• Thermo Fisher Scientific

• Dynex Technologies Inc

• Grifols, S.A.

• Tecan Group Ltd

• Monobind

The report from Transparency Market Research found that North America leads the global industry. The region’s market dynamics are being driven by the presence of major companies and improved infrastructure in research laboratories. Two key drivers of the increase in demand are the rise in the number of clinical trials and the sizable government funding of current R&D projects.

The pharmaceutical and biotechnology industries using the technology are also located mostly in the US, according to the report, and the need for microplate readers is driven by ongoing innovation and expansion in drug research and screening.

In order to bolster their positions, major companies in the industry are increasingly focused on partnerships, collaborations, mergers, and the introduction of new products; and those observing the industry can expect to see an uptick in activity of this sort in the years to come.

For more information visit: www.transparencymarketresearch.com/microplate-readers-market.html

The PHERAstar FSX with its advanced assay stability function enables temperature control between 18°C and 45°C, ensuring stable measuring conditions

Barry Whyte from BMG Labtech discusses how microplate readers could help accelerate drug discovery

Interest in cannabinoid research is growing as scientists look for new ways to advance drug discovery and development for different diseases. This field of study holds promise for new treatments for cancer, neurological disorders, pain management and other conditions. In this context, researchers need new approaches to investigate modes of action, ensure the safety of cannabinoid substances, and help develop novel drugs with reduced side effects.

Microplate readers can support a wide variety of analyses associated with cannabinoid research. The use of high-throughput methods offers time savings, reduced costs, and can help meet the growing demand for scientific analyses. Here, we look at some of the ways microplate readers can provide added value in cannabinoid research.

Cannabinoids are a diverse group of naturally occurring or synthetic chemical compounds that fall into three main categories: endocannabinoids are produced in the human body and are responsible for the regulation and control of functions like learning and memory; phytocannabinoids are plant-derived compounds that can partly bind to the same receptors; and synthetic cannabinoid receptor agonists (SCRAs) are made in the laboratory.

Microplate readers are often used to detect phytocannabinoids or their metabolites in medical samples or in forensics. For this purpose, immunoassays such as ELISAs serve as methods for quantification. Chemotyping of plants may also help identify the preferred botanical extracts for use or identify cannabis brought across geographic regions illegally. [1,2]

Researchers want to understand the pharmacology of naturally occurring and synthetic cannabinoids at the receptor level to aid drug discovery. Microplate-based methods using fluorescence polarisation, bioluminescence resonance energy transfer (BRET) or time-resolved fluorescence resonance energy transfer (TR-FRET) enable the sensitive and reliable detection of receptor binding as well as the identification of downstream signal cascades.

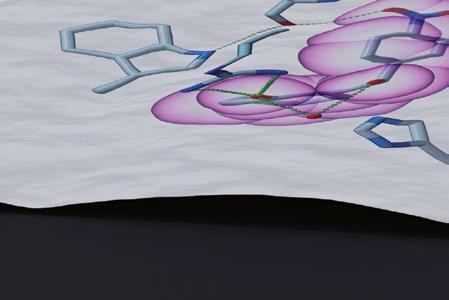

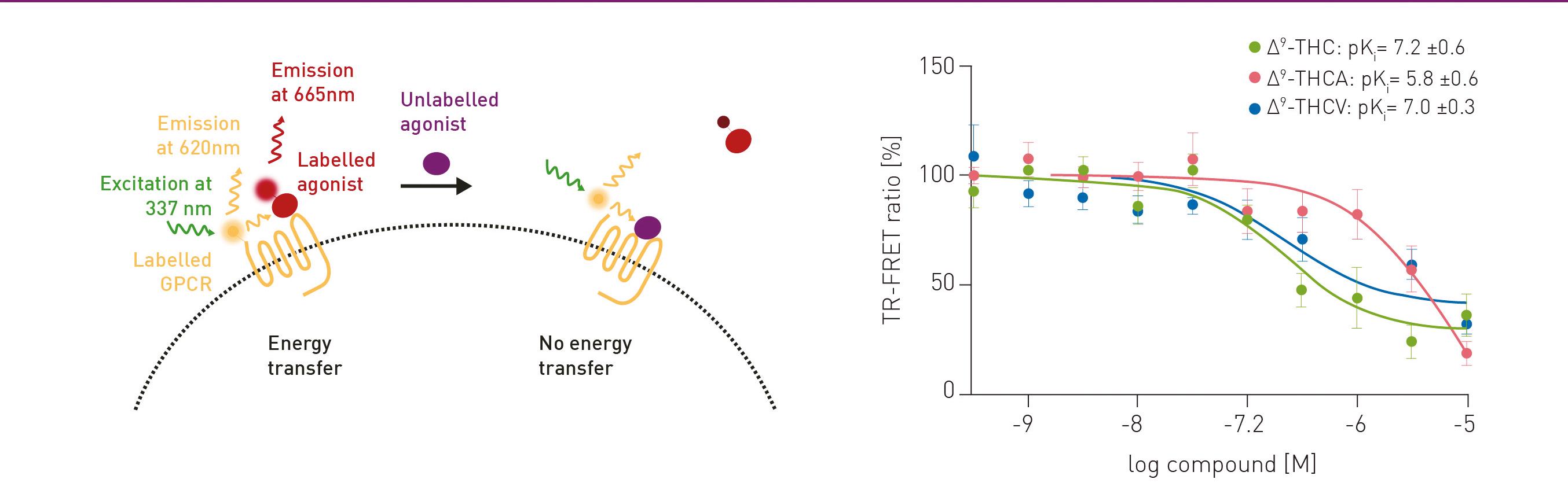

In the following example, TR-FRET was used to study the differential binding of natural cannabinoids to the cannabinoid receptor 1 (CB1). The fluorescent molecule CELT-335 acts as a ligand for CB1. CB1 was additionally labelled with terbium to detect the interaction. When the fluorescent agonist binds to the receptor, energy is transferred from terbium to CELT-335, which consequently emits light at 665 nm in addition to the 620 nm emission derived from the terbium (figure 1A). In competition with 100 nM CELT-335, the binding affinity of 0-10 µM of the phytocannabinoids Δ9-THC, Δ9-THCA and Δ9-THCV was monitored. The TR-FRET ratio was determined using BMG LABTECH’s PHERAstar FSX.
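A TR-FRET ratio is commonly calculated as acceptor emission divided by donor emission, here the 665 nm signal over the 620 nm signal. The sketch below shows that arithmetic with invented count values; the function name, the optional scale factor and the numbers are assumptions for illustration and do not reproduce the instrument software or the study data.

```python
def tr_fret_ratio(acceptor_665: float, donor_620: float, scale: float = 1.0) -> float:
    """Ratio of acceptor (665 nm) to donor (620 nm) emission, optionally scaled."""
    if donor_620 <= 0:
        raise ValueError("donor signal must be positive")
    return scale * acceptor_665 / donor_620


# Hypothetical raw counts for two wells.
tracer_only = tr_fret_ratio(acceptor_665=5000, donor_620=20000)       # tracer bound to receptor
with_competitor = tr_fret_ratio(acceptor_665=2000, donor_620=20000)   # tracer partly displaced

print(f"Tracer only:     {tracer_only:.3f}")
print(f"With competitor: {with_competitor:.3f}")
```

A competing unlabelled ligand displaces the fluorescent tracer, so less energy reaches the acceptor and the ratio falls, which is the basis for reading binding affinity from a competition curve.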

The binding affinities were similar for ∆9-THC and ∆9-THCV with pKi values of 7.2 ± 0.6 and 7.0 ± 0.3, respectively (figure 1B). The affinity for ∆9-THCA was considerably lower with a pKi of 5.8 ± 0.6.
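Because pKi is the negative base-10 logarithm of the inhibition constant Ki (in mol/L), the reported values can be converted into concentrations to make the difference in affinity easier to read. The sketch below uses only the central pKi values quoted above and ignores the stated uncertainties; the function name is hypothetical.

```python
def pki_to_ki_nm(pki: float) -> float:
    """Convert pKi (= -log10 of Ki in mol/L) to Ki in nanomolar."""
    return 10.0 ** (-pki) * 1e9


# Central pKi values quoted in the text (uncertainties omitted).
reported_pki = {"d9-THC": 7.2, "d9-THCV": 7.0, "d9-THCA": 5.8}

ki_nm = {name: pki_to_ki_nm(value) for name, value in reported_pki.items()}
for name, value in ki_nm.items():
    print(f"{name}: Ki of about {value:.1f} nM")

# How much weaker THCA binds than THC, as a ratio of Ki values.
fold_weaker = ki_nm["d9-THCA"] / ki_nm["d9-THC"]
print(f"d9-THCA binds about {fold_weaker:.0f}-fold more weakly than d9-THC")
```

On this scale the central values correspond to roughly 63 nM, 100 nM and 1.6 µM, so a difference of 1.4 pKi units represents an approximately 25-fold difference in Ki.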

The Simultaneous Dual Emission (SDE) feature on the PHERAstar FSX allows both emission signals (from donor and acceptor) to be measured simultaneously. In addition to its high sensitivity and reliability, where even the smallest changes in receptor-ligand interactions can be measured, SDE results in further time savings and more robust data.

SCRAs were originally developed for scientific research or as therapeutic agents. Unfortunately, they have emerged as drugs of abuse and are associated with serious health risks including death.[3] Little is known about the detailed toxicology and pharmacology of SCRAs.[4] However, details of their mode of action are needed to reduce the risks they pose and pave the way for their use as therapeutic agents.

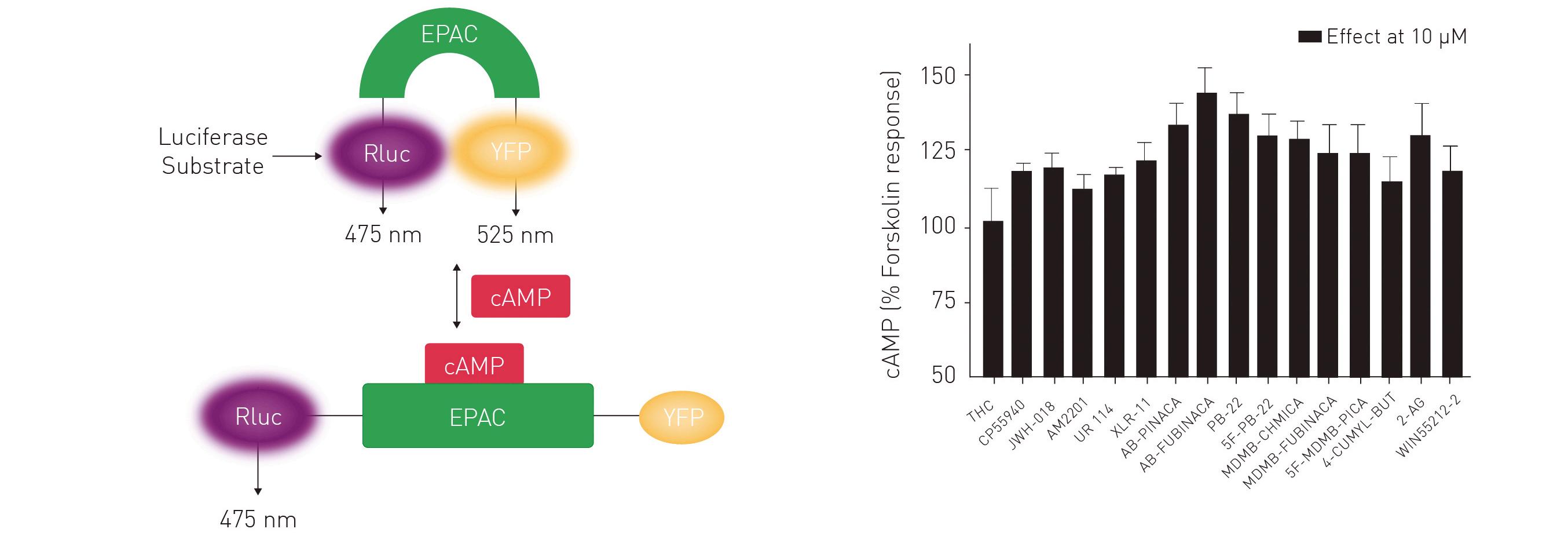

To study whether a selection of cannabinoids signal via the Gαi or Gαs subunit of the CB1 receptor, cAMP, a downstream second messenger of the Gαs-stimulated cascade, was analysed in the following study. Binding of a receptor agonist to CB1 (a G-protein coupled receptor) may lead to dissociation of the trimeric G-protein complex and the release of the subunits into the cytoplasm, initiating various signalling pathways and cellular responses. cAMP levels were quantified with a BRET-based CAMYEL biosensor. The sensor is composed of an EPAC protein, a Renilla luciferase (Rluc) and a yellow fluorescent protein (YFP). Upon binding of cAMP by EPAC, Rluc and YFP are spatially separated owing to a conformational change. This reduces the BRET signal, which is generated in the absence of cAMP owing to the proximity of Rluc and YFP (figure 2A). The overall levels of cAMP generated confirm that cannabinoid receptor agonists have significantly different efficacies in the activation of the Gαs pathway (figure 2B).

Again, the high detection speed enabled the study of several potential compounds in parallel in a kinetic approach. The PHERAstar FSX also offers compatibility with other automation solutions, which makes it an ideal choice for high-throughput applications in all reading modes.

More information on cannabinoid research is available in this blog post or you can email applications@bmglabtech.com with questions.

1. DOI: 10.1093/jat/bkab107

2. DOI: 10.1007/s10681-015-1585-y

3. DOI: 10.1056/NEJMp1505328

4. DOI: 10.3389/fpsyt.2020.00464

For more information visit: www.bmglabtech.com

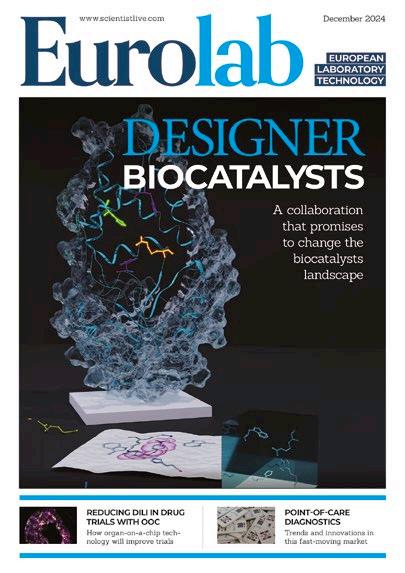

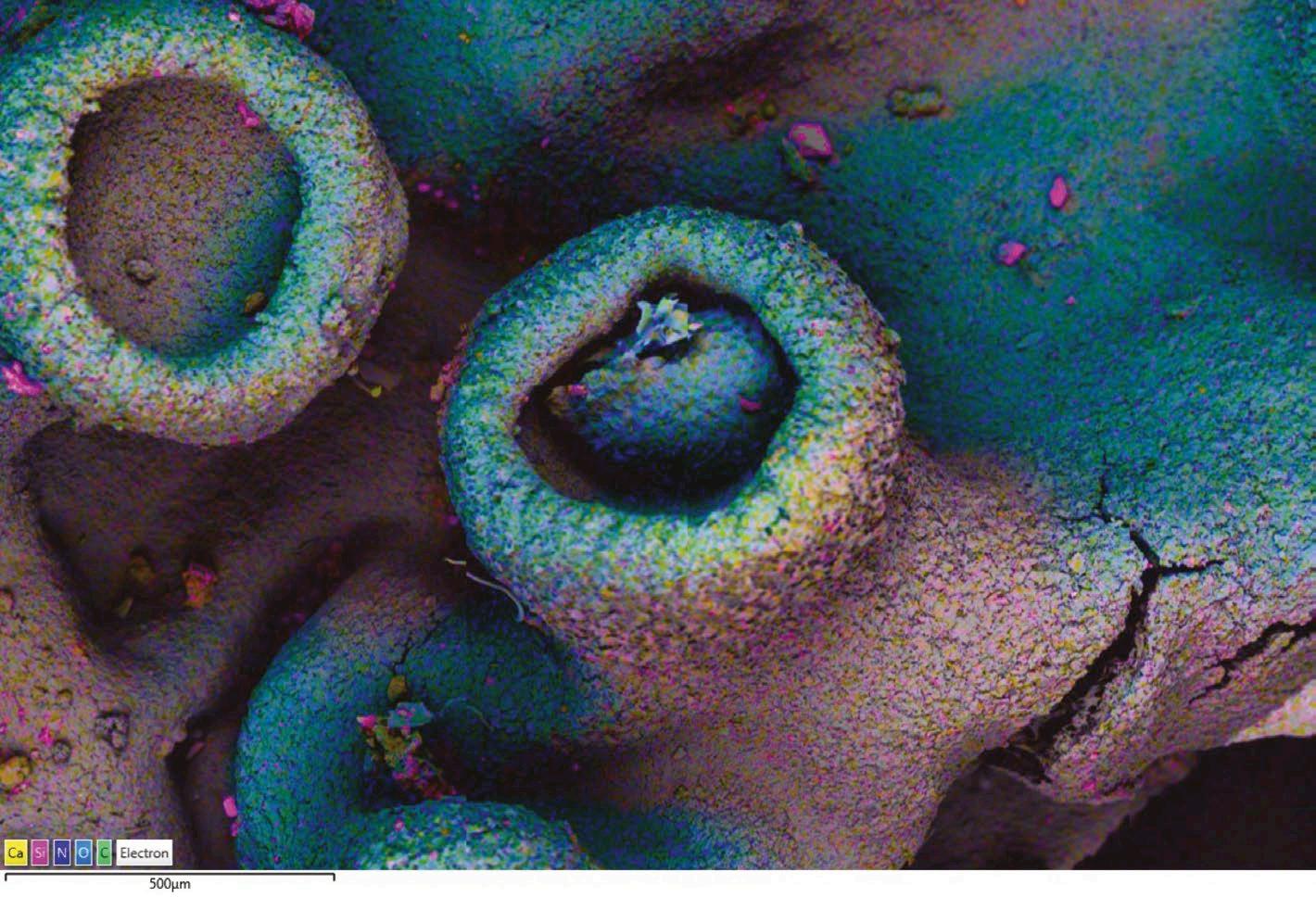

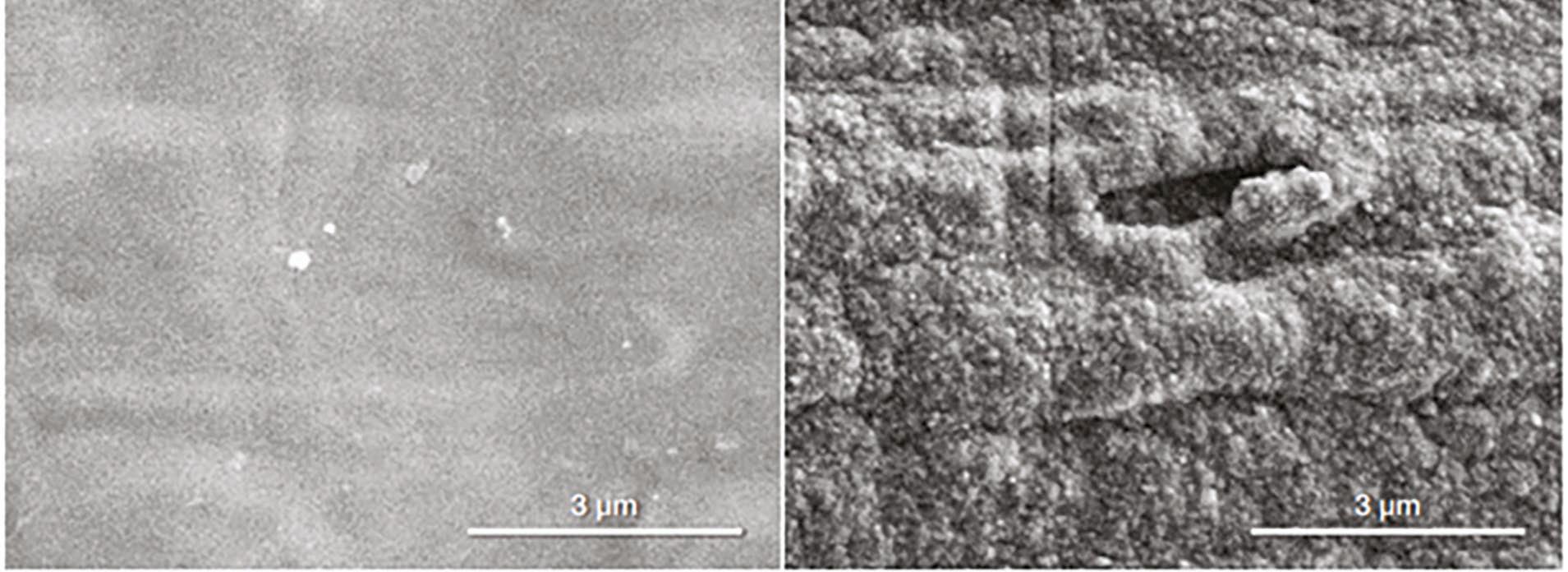

The most common modality used in scanning electron microscopy (SEM) is secondary electron (SE) imaging, which detects lower energy electrons emitted from the sample surface owing to interactions with the SEM electron beam. SE imaging provides information about sample topography.

Backscattered electron (BSE) imaging, on the other hand, detects higher energy electrons scattered back from deeper within the sample. BSE imaging reveals sample composition by atomic number contrast, with heavier elements appearing brighter.

BEX imaging combines BSE sensors with X-ray sensors placed below the objective lens. The X-ray detectors collect characteristic X-ray emissions that are generated by the sample when irradiated by the SEM electron beam. The X-ray signal is processed to identify and assign colours to the detected elements, which are then combined with the BSE signal to create a final image. BEX imaging provides more information about sample composition and elemental distribution in the same acquisition time as SE or BSE imaging.

Compared with an Energy Dispersive Spectroscopy (EDS) detector, a BEX imaging system offers a higher solid angle, enabling X-ray information collection at normal imaging speeds. The position of the X-ray sensors near the objective lens eliminates shadowing effects and allows for sample investigation at various working distances.

The Unity detector, recognised as one of the top microscopy innovations of 2024 by the Microscopy Today Innovation Awards, combines two types of sensors within a single detector head. It integrates backscattered electron (BSE) sensors and X-ray sensors to provide comprehensive imaging with atomic number and elemental data. The detector is designed for daily imaging, offering flexibility in working distance, the ability to capture data from challenging sample topography, a wide field of view, and compatibility with variable pressure mode for non-conductive samples. The Unity detector delivers reliable and instant imaging results supported by advanced technology and software integration.

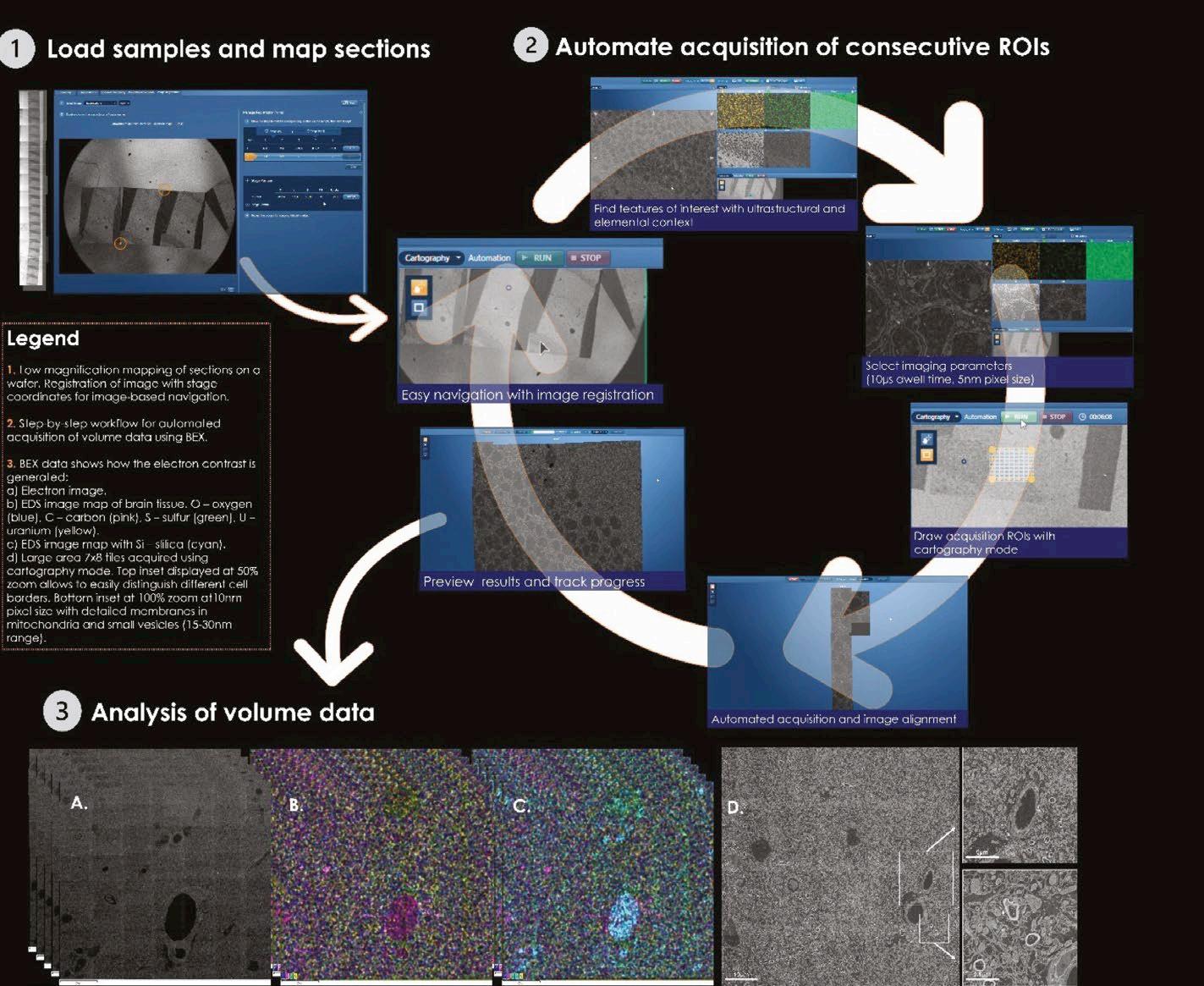

The Volume EM Technology Forum is a great opportunity for researchers and scientists to learn about the latest advances in volume electron microscopy (vEM). This year, the conference featured presentations from leading experts in the field, as well as technical presentations, posters, panel discussions, and hardware and software demos. Attendees also had the opportunity to network with other researchers and scientists, and to learn about new products and services from exhibitors.

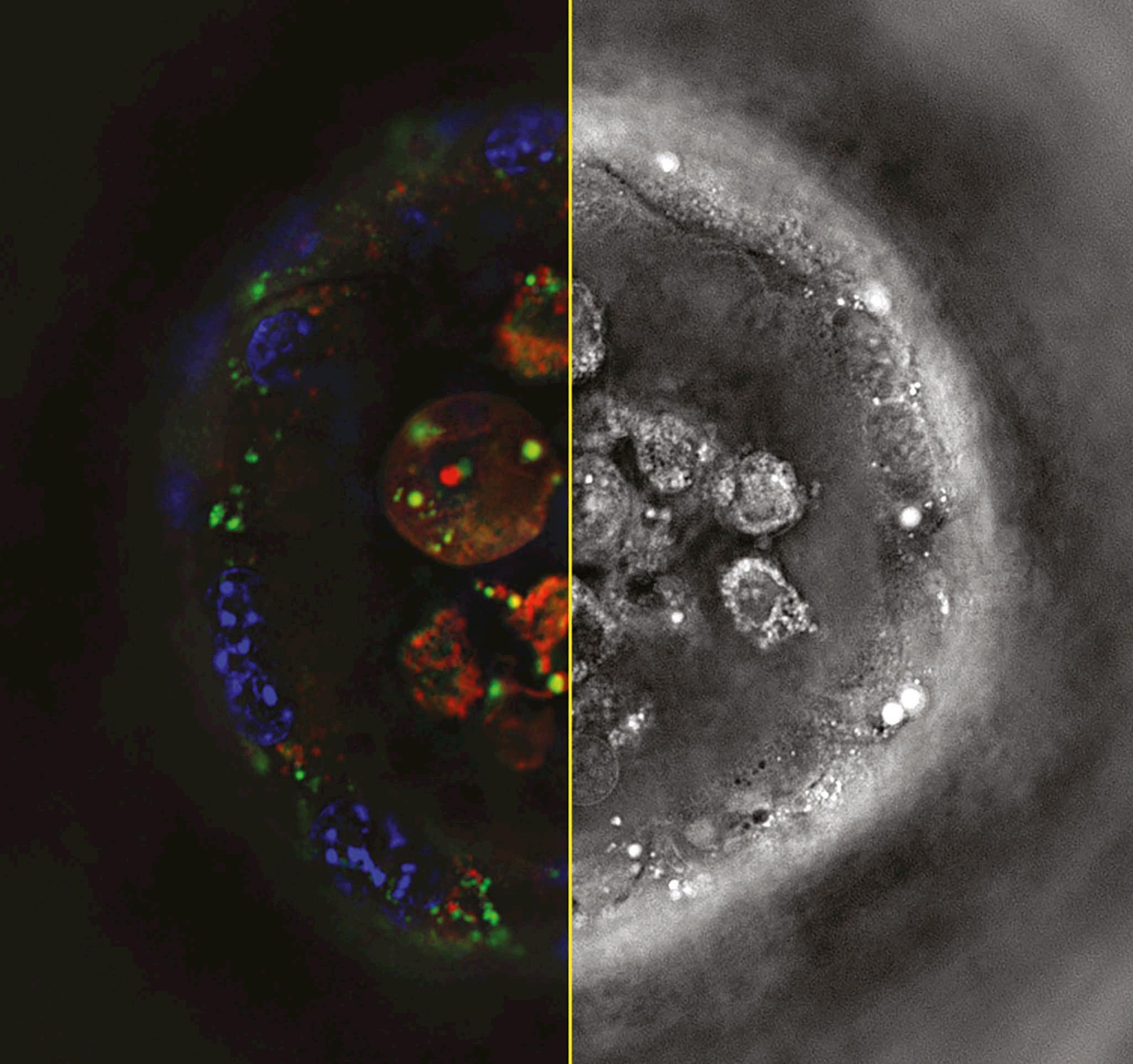

At this year’s event (21st October 2024 – 24th October 2024), the Oxford Instruments team presented a demo of our BEX detector, Unity, where we connected remotely to the Oxford Instruments Innovation Centre, a state-of-the-art facility at the High Wycombe site. We showcased the advantages of BEX imaging for biological and non-biological samples, especially for vEM applications, where the speed and flexibility of BEX for different specimens is key (Figure 1).

With Unity Cartography mode, Oxford Instruments achieved high-resolution chemical mapping of entire biological samples. This workflow would previously have taken several hours to complete (even overnight) and can now be achieved within minutes with BEX imaging, enabling higher throughput and less beam damage for sensitive samples such as cells and tissues (Figure 2).

BEX opens new possibilities for vEM by collecting elemental and ultrastructural information simultaneously, without added imaging time. In the example in Figure 3, BEX cartography was used to achieve high-resolution images and fast mapping of array tomography brain slices. Large areas were imaged with a relatively short beam dwell time (10 µs), which reduces beam damage, drift and resin charging. Elemental information was acquired simultaneously and provided chemical differentiation that can be used to further distinguish between sample features, opening the possibility of improving subsequent segmentation of data. BEX also provided information about stain distribution, which was quantified by the EDS detector. Being able to measure the amount of stain taken up by the sample improves the team’s ability to make direct comparisons between samples, enabling them to optimise sample preparation techniques.
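To give a sense of scale for the dwell time, the time needed to scan one image tile is approximately the number of pixels multiplied by the per-pixel dwell time. The sketch below applies that arithmetic to the 10 µs dwell time mentioned above; the tile size and tile count are assumptions chosen for illustration, not parameters from the demonstration, and scan overheads such as stage moves are ignored.

```python
def tile_time_s(width_px: int, height_px: int, dwell_s: float) -> float:
    """Approximate scan time for one tile: pixels x dwell time (overheads ignored)."""
    return width_px * height_px * dwell_s


DWELL_S = 10e-6              # 10 microseconds, as quoted in the text
TILE_W, TILE_H = 4096, 4096  # assumed tile size in pixels
N_TILES = 12                 # assumed number of tiles in a large-area map

per_tile = tile_time_s(TILE_W, TILE_H, DWELL_S)
whole_map_min = per_tile * N_TILES / 60.0

print(f"One tile:  {per_tile:.1f} s")
print(f"{N_TILES} tiles: {whole_map_min:.1f} min")
```

Because the time scales linearly with dwell time, a tenfold longer dwell would lengthen the same map tenfold, which is why short dwell times matter for large-area imaging.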

In this Q&A Robert Gaertner from Veeva Systems explores how cloud-based platforms are helping to both ensure quality in pharma manufacturing and support the shift to personalised medicine

GIVEN THAT QUALITY IS SO IMPORTANT IN HEALTHCARE, WHAT CHALLENGES DO BIOPHARMAS CURRENTLY FACE IN MAINTAINING THE NECESSARY STANDARDS?

The pharmaceutical industry faces many challenges and maintaining quality is one of them. Patients must trust that medicines are safe, meet high standards, and comply with regulations. With personalised medicine and other modern therapies, this becomes even more challenging. Unlike traditional medicines distributed through pharmacies, personalised therapies require extra layers of quality control. A good example is the public discussion around vaccines during the COVID-19 pandemic. People knew some vaccines had to be refrigerated, but they likely didn’t understand the extensive effort required. You can produce a high-quality medicine, but for it to remain safe and effective, you must monitor it until it reaches the patient.

HOW DOES VEEVA HELP BIOPHARMACEUTICAL COMPANIES MAINTAIN QUALITY?

There has been a significant shift in recent years. In the past, companies manufactured and tested medicines in-house. Now, manufacturers must pay more attention to the quality of their suppliers, and logistics play a growing role as more of the work is outsourced. While some companies specialise in logistics, the manufacturer remains responsible. This shift requires solutions that not only manage internal business processes but also integrate the entire supply chain, including partners. That’s why cloud-based, industry-specific solutions like Veeva Vault Quality have become critical. Cloud technologies play a key role in maintaining quality because companies need to communicate with suppliers, partners, and even patients, in real time. This requires easily accessible, secure, and traceable data, all while staying compliant with regulations like GDPR.

WHERE DO CONNECTED CLOUD SOLUTIONS HELP THE MOST, PARTICULARLY WITH TRANSPARENCY?

Here’s an example: when a raw material is produced in another country, the quality decision requires approval based on the data the company receives. If this data is handled on paper, it causes delays. Cloud platforms provide real-time insights and ensure data integrity. With cloud solutions, everyone in the supply chain can access the same data simultaneously, reducing redundancies and improving decision-making.

HOW DOES VEEVA HELP KEEP DATA ENTRY CLEAN AND SECURE?

You have to look at the entire supply chain. In production, for example, some machines have sensors that provide data relevant for quality decisions. Another example is patient complaint management. If a patient experiences side effects, a doctor or healthcare professional enters this data manually. Ideally, they only need to enter it once, and the system ensures secure entry. Artificial intelligence can help ensure data is entered correctly, and quality checks can occur as soon as data is available. Connected cloud technologies, like those from Veeva, also bring together different data sources to maintain quality control.

HAS VEEVA DEVELOPED A STREAMLINED WAY TO ACHIEVE THIS?

Yes. Veeva’s Quality applications are scalable and easy to access online. Companies can define which groups of people need convenient access to the database, and we can configure the system accordingly.

LOOKING TO THE FUTURE, WHAT CHALLENGES COULD THE INDUSTRY BETTER ADDRESS IN THE NEXT THREE TO FIVE YEARS, POSSIBLY WITH AI?

There’s a constant tension between efficiency and compliance. Regulations must be met, but at what cost? The key for the pharmaceutical industry is balancing GxP compliance with streamlining quality systems to improve both cost-efficiency and quality outcomes. The focus should shift from managing quality issues to preventing them. AI can help predict potential risks and prevent them before they occur. Additionally, the definition of a pharmaceutical product will evolve. We often think of medicine as a pill or physical product, but future therapies will be more complex and personalised. Homecare and clinical trials are moving from hospitals to patients’ homes, where wearables will report data. This brings new quality challenges, such as qualifying devices and validating processes, which are easier to manage with cloud solutions.

WHAT DOES THE INDUSTRY EXPECT FROM VEEVA IN THIS REGARD?

We develop our solutions in collaboration with our customers. We aim to understand their challenges and work with our experts to develop innovative solutions that align with their needs. It’s a combination of customer input and our strategic vision, alongside regulatory requirements, particularly in the area of quality.

Drug-induced liver injury (DILI) is a common cause of drug withdrawal during late drug development as well as post-approval. The liver, a primary site of drug metabolism, is particularly susceptible to drug-induced injury. However, DILI can occur through several pathways, each involving different mechanisms.

Intrinsic DILI is generally caused when a drug, or its metabolites, causes damage at high doses. It poses the least risk and is the easiest to predict using traditional approaches owing to its dose-dependency and early onset. Traditional in vitro and in vivo animal studies are less able to predict more complex indirect or idiosyncratic effects that are latent in onset. The former is generally mechanistically related to the pharmacodynamics of the drug, causing immune system or metabolic effects, with some dose-dependency. The latter is more unpredictable and is often driven by genetic predisposition or underlying disease. These risks can pass undetected through development and early clinical trials, causing financial and reputational losses upon attrition. This raises the question: how can preclinical workflows be modernised to reduce DILI risk?

The safety toxicology toolbox requires modernisation through humanisation to understand how DILI is induced. Identifying potential issues earlier provides an opportunity to perform exploratory investigations to unlock the mechanism behind the cause. Previously, unlocking the cause has proven difficult as maintaining the key phenotypic and functional attributes of primary human hepatocytes is notoriously challenging. Now there is a path forward that offers the potential to recover good drugs by engineering out the flaws, terminating programs before the clinic, or delivering the foresight to manage liabilities and proceed with caution.

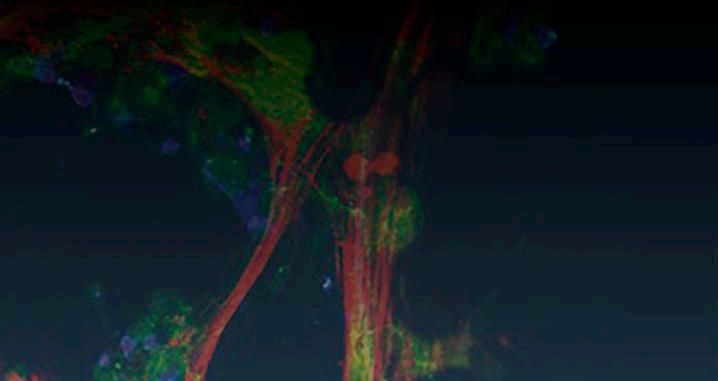

Liver-on-a-chip technology, also known as liver microphysiological systems, offers a sophisticated platform for studying DILI with greater accuracy and relevance to human physiology. These advanced in vitro new approach methodologies (NAMs) replicate the complex 3D structure of the liver, including the arrangement of primary human hepatocytes and non-parenchymal cells, which are crucial for maintaining liver function and tissue-specific inflammatory responses. They are cultured under perfusion to simulate the liver’s microenvironment, including blood flow and shear stress, which are essential for promoting high metabolic activity and prolonged culture longevity to discover latent effects.

The company has created a webinar that aims to help people reduce their DILI risk

Liver-on-a-chip technology delivers deep mechanistic insights into the pathways through which drugs induce liver injury, such as oxidative stress, mitochondrial dysfunction, steatosis, dysregulation of bile acid synthesis or transport and, importantly, immune-mediated damage, helping to identify more indirect or idiosyncratic DILI events [1,2,3,4]. Additionally, by incorporating genetic variations and the presence or absence of common diseases such as metabolic disorders, these models can help to identify factors that increase susceptibility.

CN Bio’s PhysioMimix DILI assay utilises liver-on-a-chip technology to deliver exceptional performance, according to the company, as exemplified in a study using reference compounds from the IQ MPS Consortium DILI validation set and human-specific gene therapies (antisense oligonucleotides), which are less suited to animal testing. The assay, cultured using PhysioMimix OOC Systems, delivered 100% sensitivity, 85% accuracy, and 100% precision, measuring six different biomarkers to produce a ‘signature of hepatotoxicity’, identifying hepatotoxicants that passed traditional in vitro tests [5].

CN Bio’s approach has been recognised by the US FDA CDER (Centre for Drug Evaluation and Research) group, which cited superior performance versus standard approaches in the first publication between the organ-on-a-chip (OOC) provider and the regulator [1]. Importantly, both the highly metabolically active hepatocytes and the immune-competent Kupffer cells in the PhysioMimix assay were shown to be crucial in identifying hepatotoxic risk.

Recent technological advances have increased the assay’s throughput, enabling its use within lead optimisation, in addition to investigative toxicology, to justify the progression of promising drugs into in vivo studies by providing go/no-go or re-engineer decisions at the tipping point between discovery and development.

The process of discovering and developing drugs is inefficient and costly. Change is required to reduce attrition rates and improve return on investment. OOC technology is becoming a lab essential because it provides the capacity to flag more liabilities than before and better inform next-step decisions [6]. CN Bio aims to help clients future-proof their safety toxicology workflows, starting with DILI research, to help them reduce the cost of, and time spent on, projects.

1. https://doi.org/10.1111/cts.12969

2. https://doi.org/10.1016/j.tiv.2022.105540

3. https://doi.org/10.1016/j.tiv.2017.09.012

4. https://doi.org/10.1124/dmd.116.074005

5. https://cn-bio.com/resource/human-liver-microphysiological-system-for-predicting-the-drug-induced-liver-toxicity-of-differing-drug-modalities/

6. https://doi.org/10.1038/s41573-022-00633-x

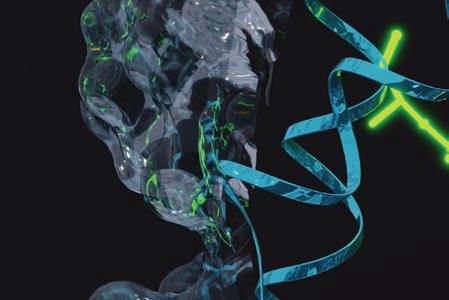

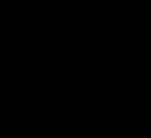

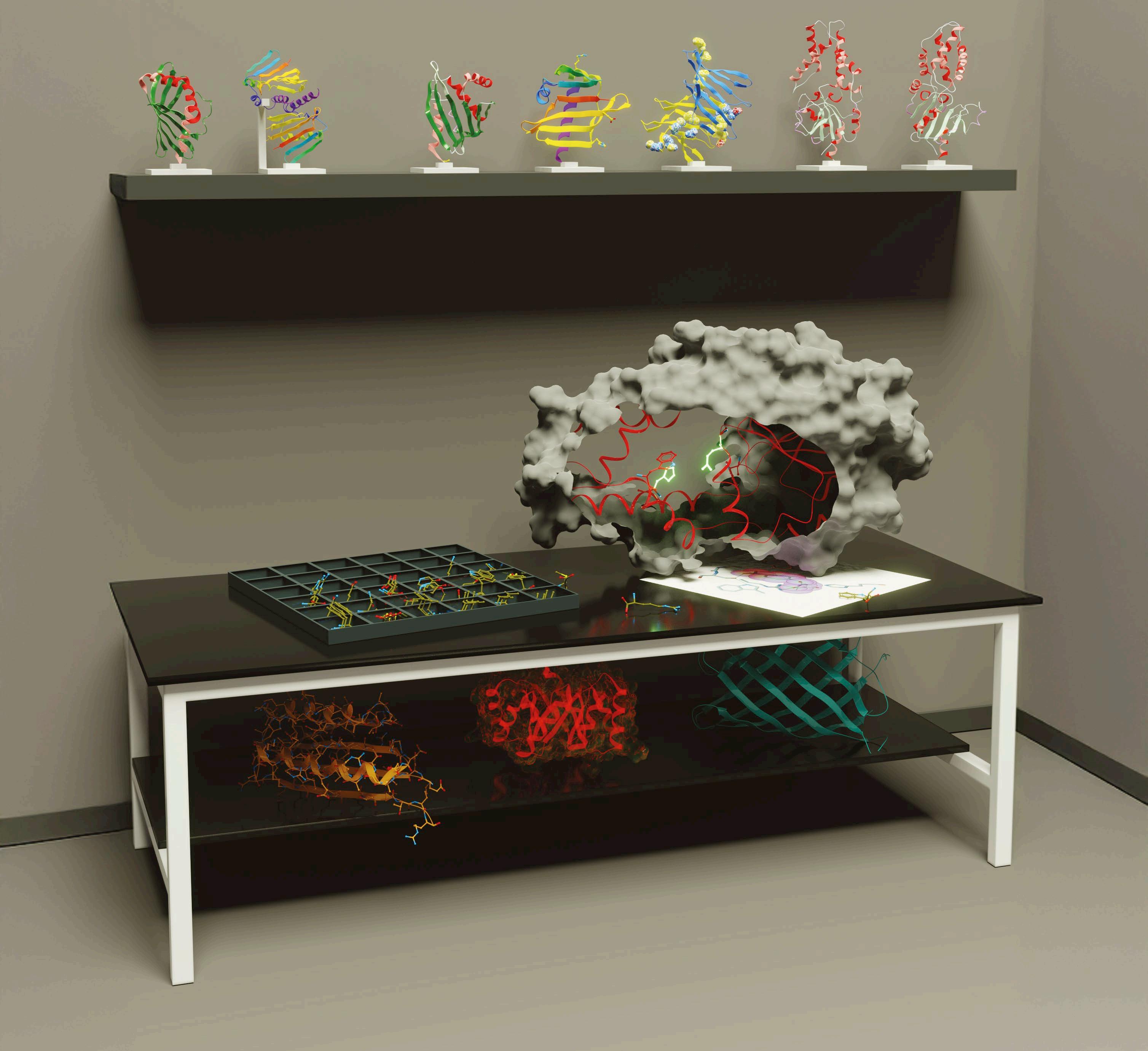

A collaboration between a team of enzyme engineers and a leading computational biologist promises to transform the industrial biocatalysis landscape, Nicola Brittain reports

Researchers at the Manchester Institute of Biotechnology (MIB) and the Institute for Protein Design at the University of Washington recently received funding of £1.2m to set up an initiative called the International Centre for Enzyme Design, which looks set to transform the design and engineering of enzymes for industrial applications.

The project, launched in November and set to run for an initial four years, is a partnership between a group of academics at the University of Manchester, UK, and an international partner, Professor David Baker, a renowned computational biologist based at the University of Washington. The consortium will use deep learning and computational techniques to create sustainable, industrial biocatalysts in a timely and cost-effective way.

Eurolab caught up with Anthony Green, director of the MIB, and Sarah Lovelock, a senior lecturer in the MIB, two of the lead researchers in the partnership, to find out what the project will mean for business, the environment, and enzyme scientists more widely.

Green explained that the programme brings together leading computational and experimental teams to create a step-change in the speed of biocatalyst development. The methods developed will also allow the development of new families of enzymes with valuable activities that are unknown in nature.

The Manchester-based team of scientists currently make use of experimental enzyme engineering techniques such as ‘directed evolution’ to create new biocatalysts for use in the chemical industry. This process aims to expedite the natural evolution of biological molecules and systems through iterative rounds of gene diversification and library screening. Green said: “The process is extremely powerful, but it’s also expensive, time consuming and requires specialist infrastructure.”

The new collaboration, and the resulting incorporation of advanced deep-learning methods for protein design pioneered by the Baker lab, will accelerate the enzyme development pipeline and potentially provide access to enzyme functions that are currently unavailable.

The enzymes developed will be used for the sustainable manufacture of pharmaceuticals, including new therapeutic modalities, alongside other applications such as bioremediation (to help break down environmental pollutants), recycling of plastics, synthesis of flavours and fragrances, and production of bio-based fuels and commodity chemicals from renewable feedstocks.

The idea of designing new enzymes computationally has been around for decades, but the deep learning tools that have been developed recently offer a sufficiently high degree of accuracy, predictability of output and speed to make the researchers optimistic that the reliable design of efficient enzymes is within reach. These outcomes are likely to have wide-ranging impacts across the chemical industry, especially in the pharmaceutical sector for which time to market is extremely important.

Similarly, the process is much less computationally resource-heavy than earlier methods of computational protein design, making it less costly and time-consuming.

Lovelock says: “Although the activity of enzymes generated by computational methods may initially be lower than with natural enzymes, we can take advantage of the data generated to iteratively refine our design protocols, leading to improved success rates and higher catalytic efficiencies straight from the computer.

“Experimental engineering will remain an important aspect of the process in the short-medium term, but over time we hope to reduce the need for extensive experimental engineering campaigns.”

The enzymes developed can be used for the sustainable manufacture of pharmaceuticals, including new therapeutic modalities

As stated, the move from high-throughput experimental engineering of enzymes to a computational design process based on deep learning will save time and money. However, there are other key benefits, according to Green. He explains that the findings will be shared widely via academic journals, allowing more people in the field to carry out their own enzyme design campaigns. Over time the methods are likely to become more user-friendly, allowing non-specialists to work in the field and bring in new ideas. Green also expects that this will allow scientists like himself to devote their time to other aspects of their work.

Designing enzymes from scratch could also lead to an increase in the number of reactions that are accessible to the field of biocatalysis. “Designing enzymes computationally will allow access to new types of chemistry that we wouldn’t be able to access otherwise,” Lovelock says. “In this way we will maximize the impact that biocatalysis can have on the development of a greener and more sustainable chemical industry.”

Rory O’Keefe from Europlaz provides insight into global trends and innovations in this fast-moving market

As a specialist in medical device manufacturing, Europlaz is often at the front end of the latest product developments to hit the UK and global diagnostics marketplace. Commercial director at the company, Rory O’Keefe, believes the following changes in the point-of-care sector are destined to transform future clinical pathways.

Research recently published by Nova One Advisor indicates that the global point-of-care (PoC) diagnostics market reached US$44.25bn in 2023. In ten years it is expected to virtually double to US$80.75bn[1]. Much of this growth is being driven by rapid technological advances in non-invasive products, self-testing and digitally connected devices, coupled with an aging global population and the rising prevalence of chronic diseases.
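As a rough check on those figures, the implied compound annual growth rate can be worked out directly. The sketch below assumes the forecast runs ten years from the 2023 base. That horizon and the function name `implied_cagr` are illustrative assumptions, not details taken from the Nova One Advisor report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.05 = 5%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the article, in US$ billions.
start_bn = 44.25   # 2023
end_bn = 80.75     # forecast
years = 10         # assumed horizon: ten years from the 2023 base

growth_multiple = end_bn / start_bn
cagr = implied_cagr(start_bn, end_bn, years)

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Implied CAGR: {cagr:.1%}")
```

On these assumptions the market grows roughly 1.82-fold, which corresponds to about 6.2% a year.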

There are some well-established practices in this field. For example, PoC tests are carried out at bedsides, on wards, in community settings or at home, often using portable devices that support timely clinical decisions.

“PoC is far from new. Pregnancy and glucose over-the-counter self-tests have been around for decades. However, the pandemic demonstrated just how convenient PoC tests were for rapid results and population-scale testing.

“For remote communities where medical infrastructures are limited, PoC tests are invaluable. We are now seeing the development of digital PoC devices which can provide contextual information on results to patients. This information can then be shared with healthcare professionals for further analysis, without them being physically present,” notes O’Keefe. This reduces the burden on healthcare systems, while simultaneously speeding up the time it takes to get a result and treat conditions appropriately.

This transition from acute intervention to diagnostic technologies centered on wellness and prevention is being replicated across the world.

The three technologies that clinicians anticipate will most improve the efficiency and effectiveness of diagnostics in the next three to five years are telehealth (83%), AI (75%) and biosensors (74%), according to one Deloitte survey.[2] Two-thirds of clinicians believe that these disruptive technologies will mean diagnostics will look ‘a great deal’ or ‘totally’ different in 6-10 years’ time.

Previous deterrents to PoC diagnostics, for instance expensive hardware, are no longer such big barriers to market access.

One company revolutionising the way people access diagnosis and treatment for major diseases, according to O’Keefe, is Cambridge-based digital diagnostics platform PocDoc. The company offers an app-based technology platform combining proprietary microfluidic assay tests, powered by its cloud-based applied AI diagnostics (HUESnap).

PocDoc’s Healthy Heart Check has been rolled out across the UK from Edinburgh down to Cornwall via pharmacies, the NHS and other healthcare providers. Healthy Heart Check screenings have also been run in underserved UK healthcare communities and at high footfall locations, including football stadiums.

PocDoc’s technology provides quantitative, multi-marker, clinical grade diagnostics using a bio-sensing approach that can be conducted across multiple settings, including at home. The company holds multiple patents, with a number pending.

PocDoc’s product development director Lucia Curson says: “Compared with conventional lateral flow tests, the PocDoc technology stack represents a huge leap forward in the field. It combines microfluidics, biochemistry, engineering, applied AI and integration with the healthcare system’s digital infrastructure, all through the same product experience.”

[1] https://www.novaoneadvisor.com/report/point-of-care-diagnostics-market

[2] https://www2.deloitte.com/uk/en/pages/life-sciences-and-healthcare/articles/future-of-diagnostics.html

Fuel therapeutic innovation with TriLink’s industry-leading nucleic acid products, available in Europe now through VWR

Through a new partnership with VWR, the delivery channel of Avantor, TriLink Biotechnologies’ catalog of nucleic acid technologies and capping reagents is now more accessible to customers in Europe. Explore a comprehensive series of products, including:

• CleanCap® cap analogs – Achieve over 95% capping efficiency and high protein expression using a streamlined manufacturing process

• Catalog mRNAs – Choose from three unique designs over 10 different genes, all capped with a CleanCap® cap analog

• IVT enzymes – Discover IVT enzymes, including CleanScribe™ RNA Polymerase for up to 85% dsRNA reduction without compromising yield, quality, or purity

• Modified and unmodified NTPs – Over 150 modified and unmodified nucleotides offered as catalog and custom options

See how TriLink’s cutting-edge offerings can advance your work, from discovery-stage research to commercial manufacturing.

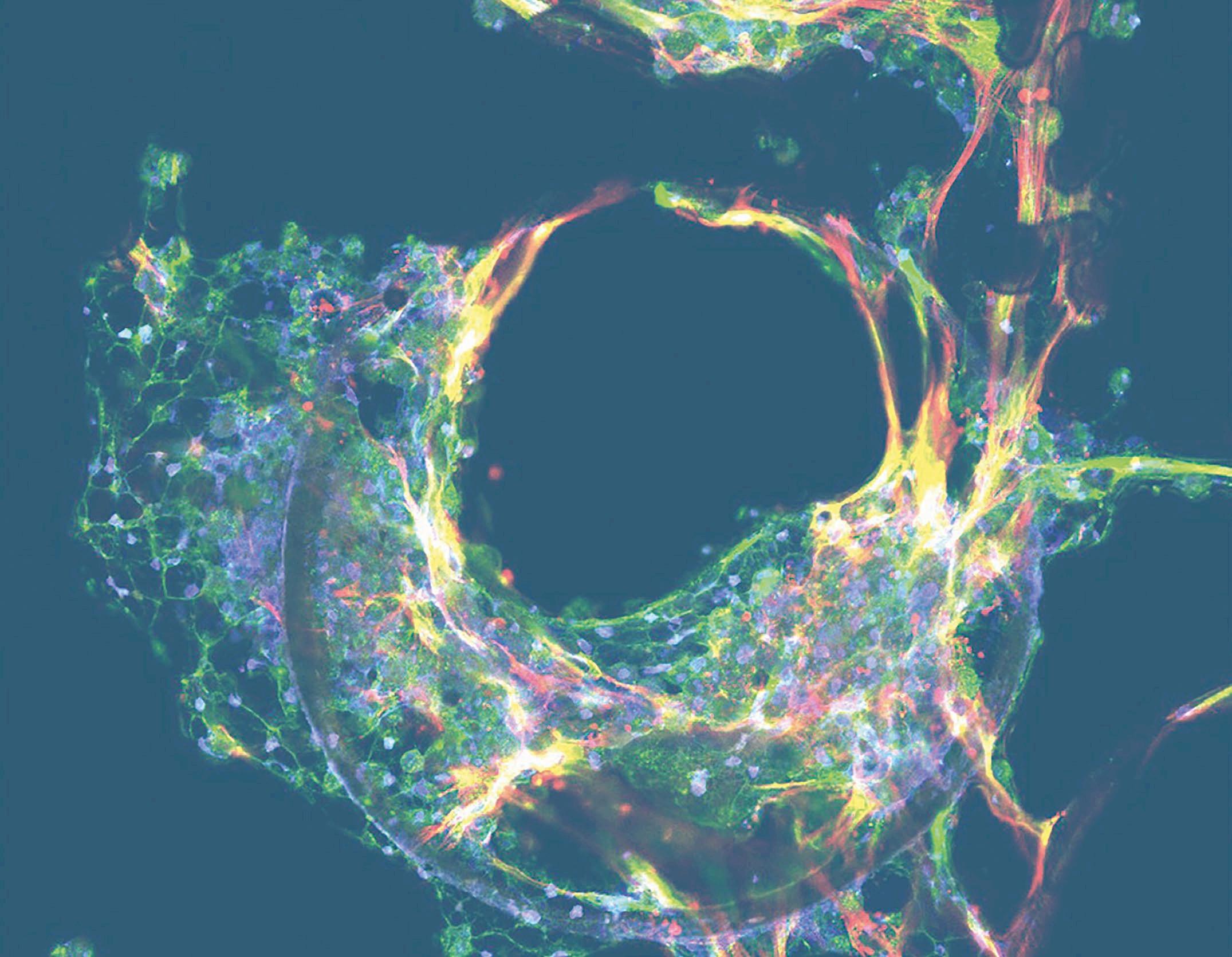

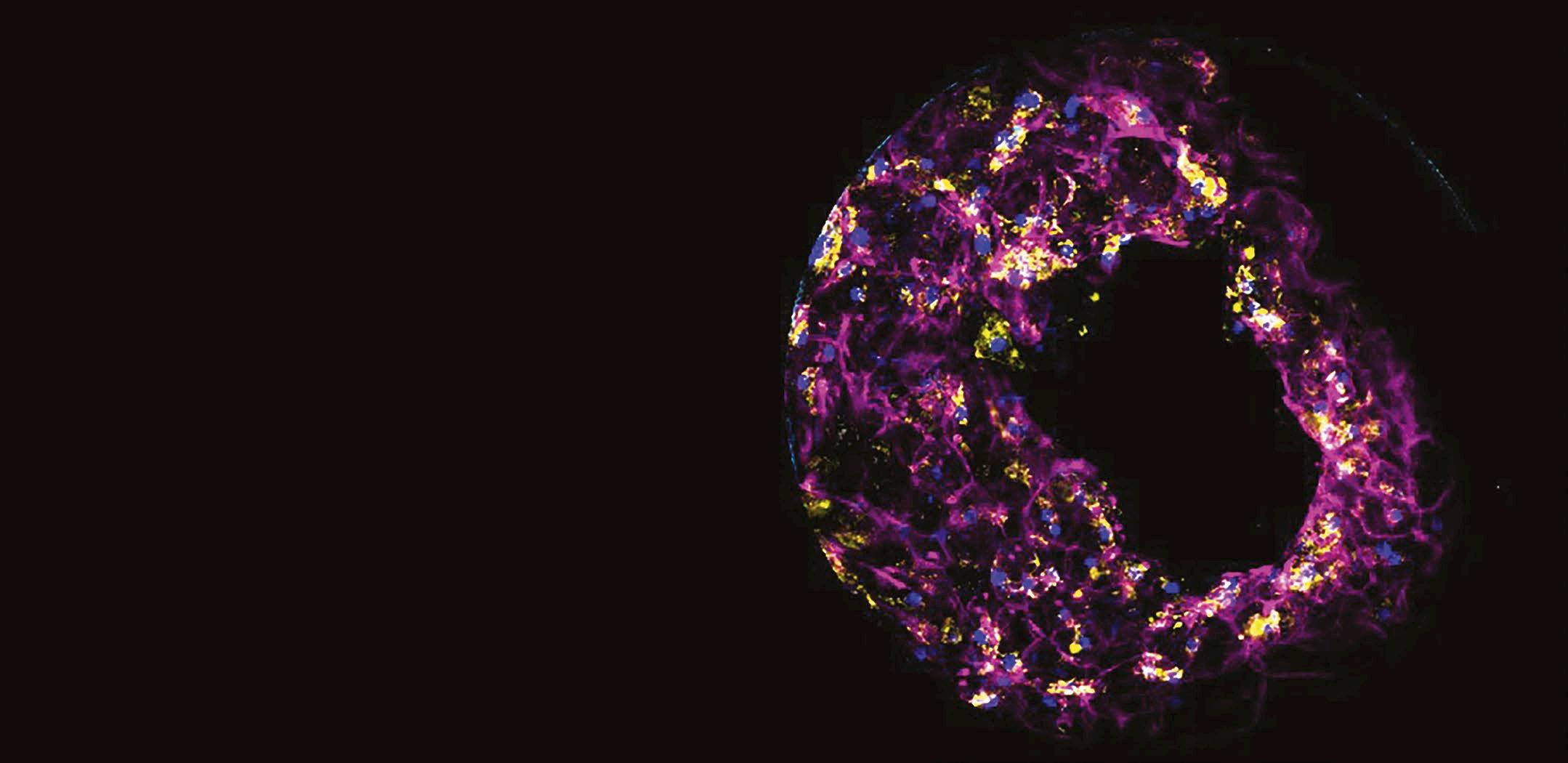

A partnership between leading technology companies promises to provide a next generation multimodal imaging platform

The HT-X1 Plus offers high resolution 3D imaging and stability

CrestOptics, a manufacturer of high-end microscopy solutions, has partnered with Tomocube, a leader in holotomography (HT) technology, to develop a next-generation multimodal imaging platform, the HT-X1 Plus.

By combining the expertise of both companies, this platform integrates CrestOptics’ spinning disk confocal technology with Tomocube’s latest HT innovation, allowing users to leverage high-resolution, 3D holotomographic imaging alongside fluorescence-based detection. This enables advanced and precise label-free biophysical imaging, according to a spokesperson for CrestOptics. Biophysical imaging uses physics-based methods to study biological systems and the interactions between cells and molecules. It provides insight into living matter, which can help with disease treatment, and is therefore a powerful tool in life science research.

The HT-X1 Plus offers high-resolution, 3D imaging and stability, according to CrestOptics. The platform is compatible with various imaging plates and powered by the TomoAnalysis software, which provides researchers with tools for detailed quantitative analysis and imaging across a wide range of applications.

The product provides imaging of multi-layered specimens with improved illumination optics and advanced image reconstruction algorithms. It uses a high-spec camera with a field of view four times larger and a significantly shorter acquisition time than comparable products. It has been designed for use with high-throughput phenotypic screening of cells and organoids. The correlative imaging capabilities, incorporating an sCMOS-based fluorescence module, enable integration of molecular studies with single-cell-resolution 3D images.

The product extends the reach of holotomography to a broad array of specimens, including dense organoids, tissue sections, and fast-moving microorganisms. The product enables acquisition of label-free, high-resolution 3D holotomography data and highly sensitive, precise 3D fluorescence data.

CrestOptics’ product is the first commercially available spinning disc confocal holotomographic instrument enabling advanced 3D imaging with fluorescence-based detection, according to the company.

CrestOptics is a specialist in spinning disc confocal microscopy (SDCM). This approach is an alternative to laser scanning confocal microscopy (LSCM) and typically affords more detailed images. Rather than a single pinhole, an SDCM has hundreds of pinholes arranged in spirals on an opaque disk, which rotates at high speed. As the disk spins, the pinholes scan across the sample in rows, building up an image. The product will help researchers routinely perform challenging live-imaging experiments for extended periods of time. The multi-beam spinning method offers not only high-speed imaging but also significantly reduced photobleaching and phototoxicity. This gentle illumination combined with advanced optical sectioning makes the spinning disc confocal tool a standard for 3D live cell imaging, according to the company.

The product also uses Tomocube’s holotomographic imaging solution, which illuminates a live cell with low-power visible light from multiple illumination angles and then measures the phase delay of the transmitted light. The technique uses the measured refractive index as imaging contrast, allowing visualisation of 3D living cells and tissues without labelling or staining. Because its high spatial resolution can capture subcellular organelles, users have increasingly sought to pair it with confocal fluorescence capabilities to design multiplex imaging workflows.
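To illustrate why phase delay can stand in for a stain, the sketch below applies the standard textbook relation for light crossing a uniform object: the delay equals 2π × (refractive index difference) × thickness ÷ wavelength. It shows only this single-path relation, not Tomocube’s multi-angle 3D reconstruction. The refractive indices, thickness and wavelength are hypothetical values chosen for illustration.

```python
import math


def phase_delay_rad(n_sample: float, n_medium: float,
                    thickness_um: float, wavelength_um: float) -> float:
    """Phase delay (radians) of light crossing a uniform object.

    delta_phi = 2 * pi * (n_sample - n_medium) * thickness / wavelength
    """
    return 2 * math.pi * (n_sample - n_medium) * thickness_um / wavelength_um


# Hypothetical values, for illustration only (not instrument specifications).
n_cell = 1.370        # assumed mean refractive index of a cell
n_medium = 1.337      # assumed refractive index of the culture medium
thickness_um = 5.0    # assumed cell thickness along the light path
wavelength_um = 0.532 # assumed visible illumination wavelength

delay = phase_delay_rad(n_cell, n_medium, thickness_um, wavelength_um)
print(f"Phase delay: {delay:.2f} rad")
```

With these assumed numbers, a refractive index difference of only 0.033 produces a delay of just under 2 radians, which is how an unstained cell becomes measurable against its medium.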

By combining these two non-invasive imaging modalities, the HT-X1 Plus affords more complete insights into molecular distribution within samples. Additionally, the integrated platform provides precision alignment of HT and fluorescence-based imaging datasets, allowing researchers to easily study molecular and phenotypic data in tandem and generate more powerful insights across biophysical imaging applications.

Dr Alessandra Scarpellini, chief commercial officer, CrestOptics, says: “The integrated platform offers more complete insights into the molecular and phenotypic data from 3D cells and tissues, without requiring invasive methods, labelling or staining.”

Dr Sumin Lee, VP of customer development at Tomocube, said: “Collaborating with CrestOptics marks a major milestone in our commitment to meeting the needs of our customers. We’ve seen a growing demand for a solution that combines the label-free imaging of holotomography with the precision of fluorescence-based microscopy. The HT-X1 Plus provides unprecedented resolution and sensitivity for 3D biophysical imaging applications.”

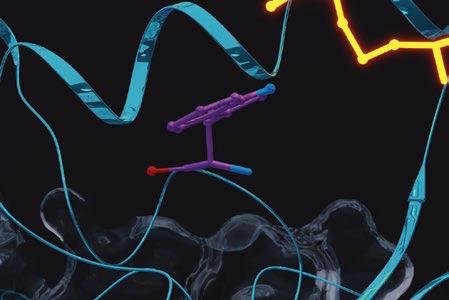

Restek’s Shun-Hsin Liang (PhD) provides a more comprehensive understanding of how ultra-short chain PFAS can be integrated into biomonitoring methods

While concerns about adverse human health effects from long-chain per- and polyfluoroalkyl substances (PFAS) have led to increased biomonitoring, less is known about the potential toxicity of ultrashort-chain PFAS, which are ubiquitous and can occur at very high levels. Since both human plasma and serum are widely used for biomonitoring longer-chain PFAS, developing methods that include shorter-chain PFAS is critical to gaining a more comprehensive understanding of PFAS exposure and human health. The workflow presented here was developed for the simultaneous analysis of C1 to C10 perfluoroalkyl carboxylic and sulfonic acids, along with four alternative PFAS, in human plasma and serum.

PFAS-free human plasma and serum were not found during screening tests, so charcoal-stripped fetal bovine serum (FBS) was used as the blank matrix for method verification because it did not contain any of the target analytes, except TFA. To confirm the method’s suitability for real-world samples, NIST SRM 1950 (human plasma) and NIST SRM 1957 (human serum), which contain known concentrations of short-chain and long-chain PFAS, were also tested. Both SRMs contained PFOA, PFNA, PFDA, PFHxS, and PFOS, and NIST SRM 1957 also contained PFHpA. Calibration standards were prepared in reverse osmosis (RO) purified water with 1x phosphate-buffered saline (PBS) added to better match the sample matrices. RO water (generated at Restek) was used to prepare the standards and mobile phases because our testing revealed that it was devoid of PFAS contamination, except for barely detectable TFA.

To assess accuracy and precision, FBS was fortified at 0.4, 2, 10, and 30 ppb with non-labeled PFAS and isotopically labeled 13C-TFA, which served as a surrogate for the determination of TFA recovery. The fortified FBS samples were mixed with a quantitative internal standard (QIS) working solution containing five isotopically labeled PFAS and an extracted internal standard (EIS) working solution. A single-step protein precipitation was performed in clean polypropylene HPLC vials to minimise background PFAS contamination. The NIST SRMs were prepared using the same procedure.
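Accuracy and precision in a fortification study of this kind are commonly reported as percent recovery and percent relative standard deviation (%RSD). The sketch below shows those two calculations on hypothetical replicate results for a single analyte at the 2 ppb level. The replicate values and function names are illustrative assumptions, not data or procedures from the application note.

```python
from statistics import mean, stdev


def percent_recovery(measured_ppb: float, spiked_ppb: float) -> float:
    """Accuracy: measured concentration as a percentage of the spiked amount."""
    return measured_ppb / spiked_ppb * 100


def percent_rsd(values: list[float]) -> float:
    """Precision: sample standard deviation as a percentage of the mean."""
    return stdev(values) / mean(values) * 100


# Hypothetical replicate measurements (ppb) for one analyte spiked at 2 ppb.
spiked_ppb = 2.0
measured_ppb = [1.90, 2.10, 2.00]

recoveries = [percent_recovery(m, spiked_ppb) for m in measured_ppb]
mean_recovery = mean(recoveries)
rsd = percent_rsd(measured_ppb)

print(f"Mean recovery: {mean_recovery:.1f}%")
print(f"%RSD: {rsd:.1f}%")
```

The calculation only works if the blank matrix contributes none of the analyte. That is why the method tracks TFA recovery through the 13C-TFA surrogate, since the FBS blank already contained TFA.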

Gradient LC-MS/MS analysis was performed using an Ultra IBD analytical column (3 µm; 100 mm x 2.1 mm; cat.# 9175312) because its polar-embedded stationary phase provides strong retention of polar compounds, even in the presence of the various salts, electrolytes, and buffers that are inherently found in plasma and serum. A PFAS delay column (cat.# 27854) was installed between the pump mixer and the injector to prevent coelution of any instrument-related PFAS with target analytes in the sample. Complete sample preparation and analytical method details, as well as additional discussion, are available in the full application note, which can be accessed at www.restek.com by entering ‘CFAN4273-UNV’ in the Resources Hub search.

Figure 1: Analysis of a 10 ppb PFAS Standard

All analytes exhibited recovery values within the range of 82.3 – 115% across the three fortification levels

[Figure 1 peak labels: ADONA, 9Cl-PF3ONS, 11Cl-PF3OUdS, TFA, TFMS, PFPrA, PFEtS (C2), PFBA (C4), PFPrS (C3), PFPeA (C5), PFBS (C4), PFPeS (C5), PFHxA (C6), PFHxS (C6), PFHpS (C7), PFHpA (C7), PFOS (C8), PFOA (C8), PFNS (C9), PFDS (C10), PFNA (C9), PFDA (C10)]

RESULTS AND DISCUSSION

As shown in Figure 1, strong retention and good separation of early eluting, ultrashort-chain PFAS were obtained under reversed-phase conditions using the polar-embedded Ultra IBD column. More importantly, the extensive retention of the ultrashort-chain PFAS, especially for the first-eluted analyte (TFA), led to reduced matrix interferences.