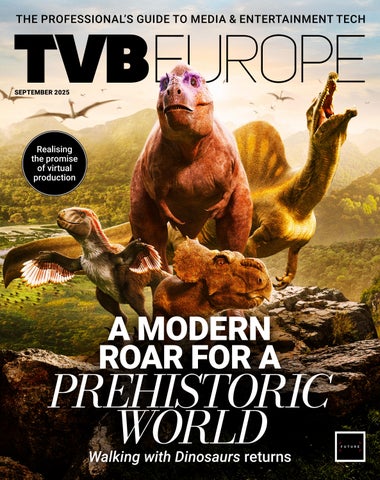

Realising the promise of virtual production

Walking with Dinosaurs returns

In the run-up to IBC Show, I’m often asked the same question: which themes are you tracking ahead of the show? Sometimes I find it hard to answer because of the sheer number of different subjects we cover in both TVBEurope’s Daily newsletter and each of our magazine issues. But this year, there are definitely a few that stand out.

Everyone has been talking about artificial intelligence for the past 18 months, and it shows no sign of slowing down. Of course, I know that AI is not new to the media and entertainment industry; it’s generative AI that is shaking things up and generating the most headlines. In a way, it’s almost helping to democratise things like video generation, maybe even helping young people see a way into both the production and technology sides of the industry. I’ll let you decide what to make of that.

I’m also hearing a lot about the Media eXchange Layer (MXL), part of the Dynamic Media Facility reference architecture developed by the EBU. It aims to enable interoperable exchange of media between traditional broadcast and professional AV software. I would definitely recommend checking out the July/August issue of TVBEurope for a proper deep dive into the subject. But what I am seeing is media tech vendors starting to talk about it a lot more. Sometimes when that happens, vendors get excited about something that their customers take longer to adopt—cloud and IP being good examples. But I feel that having an organisation like the EBU helping to push MXL will bring broadcasters on board much faster.

“Virtual production doesn’t have to include a huge LED screen; you can use the phone in your pocket to create amazing backdrops”

Virtual production isn’t new; it’s been around for a few years now, but again I feel like traditional broadcasters are becoming more interested in it. I always say virtual production went mainstream when Coronation Street used it back in 2022. Now we’re seeing it employed on shows like Doctor Who, Good Omens and Brassic. Broadcasters are realising the potential of virtual production and the benefits it offers. It also doesn’t have to include a huge LED screen in a building on an industrial estate somewhere; you can use the phone in your pocket to create amazing backdrops. It’s even being used by corporate entities to enhance their content, and is also finding its way into visual podcasts. You’ll find examples of all three in the following pages. Those are just three of the themes I’ve been talking about ahead of IBC2025. I’m sure you’ve probably been talking about completely different areas of innovation. That’s the great joy of this industry: there’s always something to talk about. All I can say is, make sure you’re signed up to TVBEurope’s Daily newsletter to stay up to date with all that’s going on in this dynamic, ever-changing world.

JENNY PRIESTLEY, CONTENT DIRECTOR @JENNYPRIESTLEY

FOLLOW US

X.com: TVBEUROPE / Facebook: TVBEUROPE1 / Bluesky: TVBEUROPE.COM

Content Director: Jenny Priestley jenny.priestley@futurenet.com

Senior Content Writer: Matthew Corrigan matthew.corrigan@futurenet.com

Graphic Designers: Cliff Newman, Steve Mumby

Production Manager: Nicole Schilling

Contributors: David Davies, Helen Dugdale, Kevin Emmott, Kevin Hilton, Graham Lovelace, Matt Stagg

Cover image: Image courtesy of BBC Studios, Lola Post Production, Getty Images

Publisher TVBEurope/TV Tech, B2B Tech: Joseph Palombo joseph.palombo@futurenet.com

Account Director: Hayley Brailey-Woolfson hayley.braileywoolfson@futurenet.com

SUBSCRIBER CUSTOMER SERVICE

To subscribe, change your address, or check on your current account status, go to www.tvbeurope.com/subscribe

ARCHIVES

Digital editions of the magazine are available to view on ISSUU.com. Recent back issues of the printed edition may be available; please contact customerservice@futurenet.com for more information.

LICENSING/REPRINTS/PERMISSIONS

TVBE is available for licensing. Contact the Licensing team to discuss partnership opportunities. Head of Print Licensing Rachel Shaw licensing@futurenet.com

SVP, MD, B2B Amanda Darman-Allen

VP, Global Head of Content, B2B Carmel King

MD, Content, Broadcast Tech Paul McLane

Global Head of Sales, B2B Tom Sikes

Managing VP of Sales, B2B Tech Adam Goldstein

VP, Global Head of Strategy & Ops, B2B Allison Markert

VP, Product & Marketing, B2B Andrew Buchholz

Head of Production US & UK Mark Constance

Head of Design, B2B Nicole Cobban

By Matt Stagg, founder and consultant, MTech Sport

Virtual production is no longer a niche or high-end technique. Broadcasters, sports organisations, and creators are now using real-time tools to deliver high-quality content faster, more consistently, and without traditional overheads.

Green screens, camera tracking, and real-time engines are making content workflows more flexible and accessible. From matchday studios to branded social campaigns, virtual production is quickly becoming part of the everyday production toolkit. What used to need a Hollywood soundstage now fits in the corner of a club media room.

Virtual production blends live action with real-time graphics. It works with LED walls or green screens, using camera tracking to align virtual backgrounds with real-world movement. Broadcasters and content teams now use compact virtual production setups to build reusable environments that support everything from live programming to fast-turnaround digital content. Virtual scenes can be reused, modified quickly, and adapted across formats without the delays of physical set changes.

With the pressure to produce more content across more platforms, virtual production helps deliver speed, flexibility, and consistency while keeping control of production quality. Visual identity can be locked in and reused, even when producing from different locations or with remote contributors.

Virtual production also solves problems around physical space and set availability. It reduces the need for repeated set builds and allows a single asset to be adapted for different audiences. For sport and live-event teams working to tight schedules, it can be the difference between making content and missing the window.

There is also a clear sustainability benefit. Reducing travel, set construction, and on-site logistics lowers the environmental impact of production. As broadcasters and brands face rising pressure to hit sustainability targets, virtual production offers a way to cut emissions while improving output—and saving budget in the process.

Sports clubs are using virtual production for interviews, tactical segments, media days, and sponsor content. A basic green-screen setup with camera tracking and a real-time engine can serve multiple teams and outputs, from digital match previews to studio clips for international feeds. Broadcasters are turning to virtual environments to add flexibility and consistency to daily production. Studio-based formats such as magazine shows, discussion panels, and hybrid news-sport formats benefit from adaptable sets that match brand identity and allow for quick format changes. Creators and branded content teams are using similar workflows. Virtual production allows them to film different scenes in a single session and deliver tailored assets for multiple platforms with a consistent look and feel. This is especially valuable when turnaround is tight or when clients demand location-style output without travel.

Virtual production is often misunderstood as expensive or overly technical. In reality, many teams are running small-footprint setups with green screen, basic lighting, camera tracking, and real-time rendering. It does not require an LED wall, VFX supervisor, or Hollywood-grade facility. The tools are becoming more intuitive, and training is more accessible. Roles like virtual studio operator and real-time content designer are now common within traditional broadcast teams.

The technology is also helping to bring in a new generation of creators. Tools like Unreal and Unity are widely used in gaming, animation, and interactive design, meaning many younger professionals are already using them. This opens the door for a more diverse workforce and new creative approaches. When paired with experienced broadcast operators and editorial teams, these creators are building a stronger pipeline that reflects how content is being made today.

Virtual production is shifting from a specialist tool set to shared infrastructure. Cloud-based control, remote production, and AI-driven automation will all continue to influence how these environments are created and used. Graphics, lighting, and data feeds can already be updated in real time. Virtual production will soon be more deeply connected to editorial and commercial workflows. Broadcasters and rightsholders who build this capability now will be ready to respond faster, scale production intelligently, and take advantage of new commercial formats. In short, virtual production won’t just support the next wave of content; it will shape it.

Virtual production is not a trend. It is already transforming how content is made and delivered, giving teams more control, creative flexibility, and production consistency while reducing cost and complexity. From sports clubs to entertainment studios to digital-first creators, the same tools are now in play, and those who adopt early will not just keep up, they will lead.

By Graham Lovelace, AI strategist, writer and keynote speaker

Google stole a march on its AI rivals in May by launching a video generator that introduced sound. Not just music and sound effects, but dialogue, too, with near-perfect lip-sync. Within hours of its release, the first Veo 3 outputs lit up social media with AI-generated street interviews (more “faux pops” than vox pops) and a chilling fake news reel on YouTube labelled It’s time for the thing that everyone feared: deepfakes looking and sounding as real as genuine live news.

Shortly afterwards, advertising legend Sir Martin Sorrell urged agencies to move from “time-based to output-based compensation” as 30-second commercials that previously required months and cost millions would now “take days and cost thousands”, thanks, of course, to AI. Speaking on the sidelines at Cannes Lions, Sir Martin said AI would transform copywriting and visual production.

US audiences watching the NBA Finals in June got a glimpse of that transformation—a totally wild AI-generated TV ad that delighted its client Kalshi, the online prediction market, and startled its creator, video producer PJ “Ace” Accetturo. Why the surprise? That Accetturo had been hired “to make the most unhinged” TV ad possible, and that a TV network had approved it. Accetturo blogged how he’d turned Kalshi’s rough ideas into prompts, using Google’s AI assistant Gemini, then fed those prompts to Veo 3.

Hundreds of Veo 3 generations resulted in 15 usable clips. The project took two days and cost $2,000. Anyone can do this, right? “You still need experience to make it look like a real commercial,” he said. “I’ve been a director 15+ years, and just because something can be done quickly doesn’t mean it’ll come out great.”

A month later, Netflix co-CEO Ted Sarandos admitted generative AI had been used for the first time in one of its original TV series. Sarandos told analysts that AI was used to simulate a building collapse in the making of the hit Argentine sci-fi series The Eternaut. “Using AI-powered tools, they were able to achieve an amazing result with remarkable speed and in fact, that visual effects sequence was completed 10 times faster than it could have been completed with traditional VFX tools and workflows,” said Sarandos.

“We remain convinced that AI represents an incredible opportunity to help creators make films and series better, not just cheaper.”

We don’t know which AI model was used, but soon after Sarandos’ revelation, Bloomberg reported that Netflix had been trialling Runway AI’s generative tools. The same report claimed Disney was also testing Runway’s technology, though none of the parties wanted to comment.

Their reticence is understandable. Memories of the 2023 Hollywood actors’ and writers’ strikes are still raw. The long-running disputes centred on the potential for AI to replace artists by scanning their faces and creating digital replicas, as well as to substitute writers with AI prompts generating scripts. The studios made major concessions to creatives, but now those hard-won victories feel more like a temporary truce. The temptation on the part of studios to at least dabble with AI that, by the week, becomes more powerful and more cost-effective, is so great we’re bound to see a re-run of those labour battles.

The other tightrope TV and film producers are walking is the degree to which they want to be seen to be on the right side of AI’s ethical debate.

Video generators from the major AI developers were largely trained on material scraped from the web without the consent of rightsholders. Runway is being sued in the US by a group of artists who accuse it of infringing on their copyright. But Runway has another approach: training video models on a studio’s archive. That bespoke arrangement is at the heart of the company’s landmark deal with Lionsgate Studio, struck last year.

TV and film studios can now take a bigger leap along the ethical path. In July, AI start-up Moonvalley released Marey, the first fully licensed AI video generator for professional filmmakers, with around 80 per cent of its training footage coming from indie creators and agencies. While Marey has been trained on a smaller dataset, its architect says he’s overcoming the shortfall by using better technology.

TV and film studios now have three options: dirty data, bespoke data, clean data. The question is no longer when they will embrace AI, since they’re doing it now, but how? Will they do it in a way that respects creators, or replaces them?

Graham charts the global impacts of generative AI on human-made media at grahamlovelace.substack.com

By Carrie Wootten, co-founder, Global Media and Entertainment Talent Manifesto

It seems crazy that when I attended NAB Show earlier in the year, On Air was only really a concept. Fast forward a few months, and it’s now on track to become the world’s largest global student-led broadcast, with giants of the media industry such as AWS and ITV Studios supporting the project and 17 universities across six continents taking part, all of which are challenged with producing one hour of live content that will be streamed to a global audience via YouTube.

What has been striking over the last few months is how quickly partners, whether education or industry, have come on board for this project. And it has made me ask, why has it resonated so strongly?

Bridging talent and industry

At its core, On Air is about connection. Between students and industry. Between education and opportunity.

At a time when headlines are filled with talk of AI replacing entry-level roles, it’s no wonder young people are feeling uncertain about their future in media. Many of today’s students are entering the workforce with uncertainty. They’ve come through disrupted education experiences during the Covid-19 pandemic and are graduating into a rapidly evolving sector. AI, automation, remote workflows and structural shifts are all changing the shape of the media industry, and with them, the types of roles available. While some companies are making cuts, others are scaling up. Start-ups are innovating fast. Entire disciplines, from production to post, are being reshaped. All of them need talent with the right skills to futureproof their operations.

We need diverse talent entering this global industry now more than ever. Students won’t just be entering a job role, but a global, networked industry built around innovation and change. They won’t be focused on a local business, but a sector that spans every part of the globe, with jobs and career paths available for them to grasp and adapt with.

This is what On Air was built to support. It offers students practical experience, global exposure, and a network that starts before their first job does. At the same time, it provides the industry with an early window into the talent shaping tomorrow.

Bringing education and industry closer together has always been beneficial, and as the industry adapts to new skill demands, education must do the same. Collaboration is vital, and that’s exactly what On Air is designed to support. The benefits for students are both immediate and long-lasting. As well as broadcast-ready experience, participants gain production credits, creative confidence, and international exposure, all before graduation. They’ll be building networks with peers from around the world and connecting with some of the biggest names in the sector. With over 500 students predicted to be involved in On Air, this initiative empowers them to start building international networks from day one, and gives companies the chance to support and engage with emerging talent that’s ready for the future.

In addition to this, we are also offering students personal branding training, sustainability workshops, access to major trade shows like IBC and NAB Show, and opportunities to contribute to the OTTRED app and community. It’s an extraordinary chance to become fully immersed in the media and entertainment technology ecosystem from the outset.

On Air is proudly delivered by the Global Media and Entertainment Talent Manifesto, which I co-founded in 2023 to address mounting skills challenges across the sector. This project is one example of what can happen when we stop talking about the talent pipeline and start building real bridges.

We believe On Air has the potential to become a repeatable model for how industry and education can collaborate—not just as a one-off. With advertising and sponsorship opportunities still open, and the livestream staying online post-event, the channel will also become an enduring showcase of student work, accessible to employers and educators worldwide. It’s a practical way to build skills, visibility and confidence in the people who will shape the future of our industry, and a chance for us all to play a more proactive role in supporting them.

To find out more or get involved, visit: mediatalentmanifesto.com/on-air

“Collaboration is vital—and that’s exactly what On Air is designed to support”

By Mathieu Mazerolle, director of product—new technology, Foundry

Virtual production has progressed significantly since LED walls first captured the imagination of filmmakers with their promise of real-time, in-camera visual effects (VFX). Over the past few years, studios and vendors have adopted a spectrum of techniques ranging from simple 2D plates to full 3D environments created using game engines. But as both the technology and practices mature, a middle ground built on the 2.5D techniques established by VFX over the years is emerging as the most viable approach, poised to best realise the promise of virtual production.

Real-time 3D environments offer the broadest flexibility, but the cost, quality, performance, and complexity demands of supporting them are often prohibitive. Using 2D plates is simpler and more cost-effective while also offering higher quality, but the lack of depth means that the technique is only applicable to a narrow set of use cases.

In contrast, 2.5D techniques use photographic content projected onto geometry, creating parallax and depth while maintaining the highest image quality, and doing so without the computational overhead or production demands of full 3D. It’s a creative sweet spot where many productions are finding the control, quality, and flexibility they need.
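To make the parallax point concrete, here is a minimal sketch assuming a simple pinhole camera model; it is an illustration only, not how any particular tool works. The function name, layer names, depths, and focal length are all hypothetical. It estimates how far photographic cards placed at different depths would shift on screen when the camera moves sideways, which is the depth cue a single flat 2D plate cannot provide.

```python
def parallax_shift_px(camera_move_m: float, depth_m: float, focal_length_px: float) -> float:
    """Approximate horizontal image shift, in pixels, of a point at depth_m
    when a pinhole camera translates sideways by camera_move_m."""
    if depth_m <= 0:
        raise ValueError("depth_m must be positive")
    # Pinhole projection: x = f * X / Z, so a sideways move of t shifts x by f * t / Z.
    return focal_length_px * camera_move_m / depth_m


# Hypothetical scene: photographic layers placed at assumed distances (metres).
layers = {
    "rooftop ledge": 5.0,
    "mid-distance towers": 200.0,
    "far skyline": 2000.0,
}
focal_length_px = 2000.0  # assumed focal length expressed in pixels
camera_move_m = 0.5       # assumed sideways camera move

shifts = {
    name: parallax_shift_px(camera_move_m, depth, focal_length_px)
    for name, depth in layers.items()
}

for name, shift in shifts.items():
    print(f"{name}: {shift:.2f} px")
```

Under these assumed numbers the near layer moves hundreds of times further across the frame than the far skyline, so only a small amount of geometry is needed to sell the depth.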

Take, for example, the rooftop sunset scene in VFX Oscar nominee The Batman. The Gotham skyline was created from photography of New York, stylised and enhanced with just enough 2.5D geometry to deliver the right amount of perspective and parallax. The result: a striking, cinematic backdrop with a fraction of the complexity and cost of a fully modelled city. This approach ensured the production remained efficient and manageable, and delivered a spectacular end result.

The creative advantages of 2.5D are clear. It offers high-resolution imagery based on real photography, with believable parallax and depth cues as the camera moves. But 2.5D techniques were previously difficult to achieve on set, largely because the tools and technologies were cobbled together from other industries and not built with filmmaking in mind. At Foundry, we serve VFX artists who drive innovative techniques and live at the intersection of technology and artistry. Our goal is to accelerate their craft with tools that empower them to create breathtaking imagery. Tools like Nuke Stage are designed specifically to bring VFX sensibilities into virtual production. Instead of treating LED walls and on-set visualisation as a separate virtual production pipeline, Nuke Stage integrates them into established VFX pipelines.

Artists can now fine-tune imagery on set using live compositing to bridge the virtual and physical elements. This approach enables productions to work with seasoned VFX studios and artists during filming, empowering supervisors who excel at capturing convincing digital imagery in-camera and maximising both quality and believability.

By integrating tools like Nuke Stage into 2.5D workflows, VFX artists effectively become virtual art departments, with the ability to prep, preview, and refine scenes while also contributing creatively on set. This stands in contrast to the more technically-focused workflows often seen in “brain bar” operations on typical LED wall shoots. The result is fewer iterations, greater consistency, and the integration of pre- and post-production thinking. In doing so, it fulfils the original promise of virtual production: to streamline creative decision-making and lower costs by placing skilled artists closer to the point of capture.

At a time when studios are under pressure to deliver more with less, these efficiencies matter. Virtual production, once seen as a bleeding-edge experiment, can be a practical tool in the filmmaker’s kit—and an efficient one in the eyes of producers and department heads. In many ways, virtual production is entering a more mature phase. And as the technology settles from dizzying hype to sustainable reality, it’s becoming clear that VFX professionals are central to making it work.

The early excitement around LED walls, game engines, and media servers generated plenty of buzz, but not all of it led to better filmmaking. Today, successful virtual productions blend creative disciplines, build on the foundations of established VFX practices, and understand how to make image-based content truly shine.

Tools like Nuke Stage are helping to usher in this new phase. By giving artists precise control over 2.5D environments, integrated colour pipelines, and the ability to work directly with photographic assets using established compositing tools, we’re bridging the gap between traditional VFX and real-time production.

It’s not about replacing one workflow with another. It’s about building a continuum where artists can choose the right level of dimensionality for the job.

Virtual production doesn’t need to be all-or-nothing. And it doesn’t need to mean reinventing the wheel. With the right tools and the right mindset, we can turn the promise of virtual production into a practical, creative, and sustainable reality.

TVBEurope’s newsletter is your free resource for exclusive news, features and information about our industry. Here are some featured articles from the last few weeks…

Aiming to blend cutting-edge technologies such as immersive camera systems with insights into performance data, OBS will shift to a fully IP and IT-based infrastructure for the Games. Cloud-integrated, virtualised workflows will be enabled across venues with key focus areas including AI-driven content processing, increased use of 5G and wireless systems for agile camera operations and sustainable practices across the entire event.

The streamer has revealed some of the technology behind its move into live content.

BUILDING THE SOUND OF BUILDING THE BAND

Sound supervisor Oliver Waton talks exclusively to TVBEurope about his work on Netflix’s new music series, and why Shure’s new Nexadyne microphones turned out to be the perfect fit.

As the EBU marks its 75th year, Jenny Priestley sits down with director-general Noel Curran to discuss its enduring mission and the critical challenges facing broadcasters today

While the core mandate of the European Broadcasting Union (EBU), to defend and promote public service media, remains steadfast, the organisation itself has undergone a dramatic transformation since it first launched in 1950. “It started out as a very old-fashioned international organisation,” explains Noel Curran, the EBU’s director-general. “Now, it’s a more dynamic, agile organisation providing a much broader range of services, and it’s much more vocal.”

The EBU’s importance extends beyond public service media, serving as a critical unifier for the entire European broadcasting industry. “Within Europe, what we are realising more and more is, while we think we’re big, we’re actually quite small,” says Curran. This awareness has helped foster a growing imperative for collaboration, not just among public service broadcasters but also with the commercial sector.

“We will always have flashpoints and things we disagree on, but I think in Europe we are beginning to realise that there is a common challenge which is significantly greater than us in size, and that we need to work closer together,” he states.

This shared understanding of an external threat, particularly around the scale and influence of big tech, is a driving force behind the EBU’s collaborative efforts as it aims to act as a crucial facilitator, opening doors for communication and cooperation that would otherwise be difficult to achieve.

Looking back at the EBU’s biggest achievement over its 75-year history, Curran points to the organisation’s unwavering commitment to defending and promoting public-service media. Beyond that, the EBU has been crucial in fostering an environment in which leaders, often insular due to the intense national pressures they face, can look beyond their borders.

“As the head of a public service media organisation, you’re in the middle of everything, usually storms and controversies, and you can become a bit insular,” he says. “The EBU’s achievement is to open people’s minds to what is happening elsewhere, collaborate, communicate, learn, and work together. I think that’s very special.”

A challenging political and technological landscape

Since his appointment in 2017, Curran’s role has mirrored the EBU’s own expanding scope, as it offers a much broader range of services, encompassing content, technology, AI, and legal aspects, all of which have impacted his responsibilities. The relationship with technology and big tech, particularly with the rise of AI, has become a significantly larger part of the EBU’s work.

Perhaps the most notable change has been the increase in political pressure on public-service media across Europe (and beyond). “When I joined, 40 to 50 per cent of my travel was to do with policy, either in relation to political parties or regulation,” Curran states. “Now the vast majority of my travel is to meet governments and regulators, as well as to help broadcasters who are in some difficulties and facing political pressure.”

The EBU also actively works with a wide array of international organisations, from the World Broadcasting Union and the United Nations to commercial broadcasters and journalism advocacy groups like Reporters Without Borders. “It’s one of the most fascinating elements of the job for me, but it also shows how organisations want to work together more and more. How do we achieve scale of impact? That’s why we’re talking to each other much more than we did previously.”

In recent years, broadcasters have been widening their impact and saving costs by sharing content, pooling research and development, collaborating on innovative projects, and undertaking co-productions. This collective approach also provides a “much stronger voice when it is a unified voice in Brussels and even nationally,” says Curran.

Asked about the main challenges facing public-service broadcasters today, he cites increasing political pressure and the overwhelming influence of big tech. “We see that right around Europe, and that is problematic and dangerous.”

Regarding big tech, he explains, “we need to work with them, but also we need to find ways of making sure that what they do is not detrimental to public service media and to culture and business in Europe.”

Despite these challenges, public service media maintains a critical advantage: “Trust is our lifeblood. It’s core to what we do. In 91 per cent of European countries, public service media is the most trusted media, and we shouldn’t lose sight of that or be complacent about it. We need to show our independence, impartiality, transparency and be prepared to put our hands up when we make mistakes.

“Infallibility is never a virtue that I have claimed for public service media. We will make mistakes, and it’s important that we own those mistakes and learn from them, but we shouldn’t feel that public service media has fallen off some trust cliff. It hasn’t.”

Even in times of crisis, younger audiences turn to public service media, demonstrating that trust is earned and must never be taken for granted. “If you’re complacent, you lose it, and it’s very hard to get it back when you lose it,” Curran warns.

The EBU’s role in technology development continues to be important in a world where change, particularly in areas like generative AI, is moving at a spectacular pace. It serves as a vital reference point for members, allowing them to understand what others are doing, learn from successes and failures, and find opportunities for collaboration. “A lot of our members feel they’re too small to really have an impact,” Curran says. “So I think all of those things just show how important it is to have a central reference point, and that’s the EBU.”

While the EBU isn’t a regulator, it plays a crucial role in shaping the technological landscape by working on approaches and standards. It also advocates for key issues like prominence and transparency, helping regulators craft effective policies.

The EBU Academy is a great example of this commitment, with its School of AI seeing extraordinary success since its launch last year, with more than 1,000 individuals completing the programme. “Training is the absolute bedrock of that for us and for all our members,” says Curran. It’s about shared experiences across the members on a range of different topics.

“EBU Academy is growing in terms of impact and influence, and I fully support that. I see it developing even further in the years ahead.”

Talking of the future, Curran’s current seven-year term as director-general is due to end in 2028. While he stresses he has no plans to step down, he does have aspirations for the future of the organisation.

“I would hope that the EBU will have strengthened public service media by helping the members transform themselves, and by bringing the members together,” he says.

“The EBU has changed fundamentally as an organisation,” Curran continues. “We are much more agile, much more responsive, much more dynamic. We offer a much broader range of services, and that’s down to everybody in the EBU who has instituted that change.”

While Curran says it’s “a bit early for me on the legacy front,” his vision for a stronger, more collaborative, and resilient public service media in Europe is clear.

Broadley Studios uses Brainstorm’s InfinitySet with Unreal Engine to enable clients to film in any virtual world they can imagine

For a few years now, virtual production has been a common technique in film, broadcast, and live events worldwide. The industry consensus is that virtual production is here to stay and that it responds to the profound changes the digital age has driven in content creation. Broadcasters and production companies are aware of the opportunities virtual production brings to reduce their carbon footprint and to improve content quality and creative flexibility.

With real-time rendering, augmented reality, and LED volume stages now accessible to a broader range of creators, the industry has reached a turning point. No longer confined to big-budget studios, virtual production is now powering everything, from independent films and news broadcasts to corporate presentations and branded content. Helping shape this future is Broadley Studios, a London-based virtual production facility known not only for its cutting-edge Brainstorm technology but also for its commitment to sustainable production practices.

Broadley has built a reputation for making high-end virtual production technology both accessible and adaptable. The studio uses Brainstorm’s InfinitySet with Unreal Engine 5.3 in combination with a versatile chroma set to enable clients to film in any virtual world they can imagine without leaving the building.

The increasing popularity of virtual production has enabled companies like Broadley to take on a significant role in the filmmaking and broadcast industry because of the technology’s ability to notably cut time and economic resources. Unlike traditional filmmaking methods, virtual production leverages real-time rendering technologies, LED volume stages, chroma sets, and sophisticated motion capture systems to bring creative visions to life with precision and flexibility, and it can even make them indistinguishable from reality.

“Our virtual production setup, headed by Brainstorm’s InfinitySet, allows us to put together high-quality productions in an insanely short period of time,” says Richard Landy, managing director of Broadley Studios. “Productions like the video clip for Oliver Andrew’s single Saviour used a hybrid approach that allowed us to plan, shoot, and composite the entire video in just two days, with fantastic results.”

At Broadley Studios, this flexibility and precision are delivered through a fast-paced, real-time pipeline that prioritises creativity, efficiency, and environmental responsibility. With an agile approach to virtual production, Broadley offers content creators the chance to work within photorealistic virtual environments without the high carbon footprint of location shoots. Whether enabling remote shoots or powering fast-paced productions like podcasts or live shows, this flexibility supports a diverse array of creative projects.

Elevating the holiday spirit with the Jonas Brothers

This last holiday season, Jimmy Fallon’s Holiday Seasoning Spectacular featured over 10 artists and celebrities, and although the special was primarily filmed in a studio in New York, Broadley Studios was brought in by the showrunners to contribute a key segment to the show with a unique scene featuring the Jonas Brothers, Jimmy Fallon, and LL Cool J.

The shoot required three motion-tracked cameras, each with its own render engine running on Brainstorm’s InfinitySet, which allowed Broadley to produce precise, high-quality footage that would align with the production values of NBC’s festive special. The result was a realistic, immersive scene that matched the show’s festive aesthetic and blended seamlessly with the on-location footage.

Virtual production and podcasting

When Al Arabiya News decided to launch their flagship football podcast, The Dressing Room, they turned to Broadley Studios to create a cutting-edge production that matched the energy and ambition of the show. Hosted by football legends Joe Cole, Wayne Bridge, and Carlton Cole, the weekly series dives into global football stories, blending expert analysis with humour.

At the core of the production’s virtual workflow is Brainstorm’s InfinitySet, enabling a high-end, real-time set-up that delivers stunning visuals on a tight weekly schedule.

Combined with Unreal Engine 5.3, the system runs five motion-tracked cameras and render engines, capturing dynamic multi-angle shots with smooth movement inside a custom-designed virtual environment created specifically for the podcast.

The Dressing Room has launched across major platforms, including Spotify, Apple Podcasts, and Al Arabiya News’ website, where it has quickly gained traction with football fans. The visually striking virtual production has elevated the podcast, setting it apart in a highly competitive market.

How virtual production is transforming content creation

Virtual productions like The Dressing Room, Jimmy Fallon’s Holiday Seasoning Spectacular, and many others not only deliver high-quality results, they also help reduce costs, enhance industry sustainability, and open new creative horizons for filmmakers.

With a variety of local and international clients like NBC Universal, BBC Studios, Al Arabiya, and Novo Nordisk, the team at Broadley Studios has experience in both mixed and fully virtual productions, empowering creatives to choose the best path for each project while maintaining sustainability and budget goals.

As the technology continues to evolve, its impact on the industry is sure to grow, setting new standards for creativity, efficiency, and sustainability in visual storytelling. Broadley Studios, supported by Brainstorm’s virtual production tools, offers a clear example of how innovation can meet responsibility, and how the future of storytelling can be both beautiful and green.

“Virtual production isn’t just transforming how we tell stories; it’s showing that high-quality content can be made faster, greener, and more affordably,” says Landy. “At Broadley Studios, we’re proving that innovation and sustainability can go hand in hand.”

Walking with Dinosaurs was one of the first TV natural history series to focus on dinosaur life using state-of-the-art visual effects. Following its return to screens, Kevin Hilton looks at how a new selection of dinosaurs was created using modern CG animation techniques and creative sound design.

Compared to how long ago prehistoric creatures lived, the 26 years since the BBC natural history documentary series Walking with Dinosaurs was first shown is a relatively short time. But in that intervening period, the visual effects technology that re-created the likes of Tyrannosaurus, Iguanodon, Stegosaurus, and Diplodocus has evolved significantly, and that has allowed today’s VFX to realise a fresh cast of terrible lizards for a rebooted series.

The new series comprises six hour-long episodes, each focusing on not just one dinosaur species but a specific individual that existed in a particular location and how it interacted with its family and the other creatures there. Also part of the story is footage of experts unearthing fossils that helped unlock the story. Backgrounds were filmed on location, with members of the visual effects team from Lola Post on-site so they could get a feel for the surroundings and how they would place their animated creations in them.

“The film crew scouted for locations that had similar vegetation to where the dinosaurs lived, although it is different today,” comments executive VFX supervisor Rob Harvey. “For example, the Cretaceous apparently didn’t have grass, and certain trees and flowers didn’t exist. We found a location that got a lot of the way there and then had to use props of the correct scale for the camera operator to frame on. For a Raptors sequence, one of our supervisors ran around the forest dressed in a blue suit. We’d be shooting empty plates the whole time and then imagining what the creatures would be doing within those, although it was all based on storyboards or previsualisation.”

Prehistoric animals are now well-established on screen thanks to the Jurassic Park/World franchise and TV documentaries such as Dinosaurs—The Final Day with David Attenborough (2022), for which Lola Post also provided effects. Because of this, Harvey says, the team had to ensure the creatures in the new Walking with Dinosaurs were as convincing as possible. “There have been many creature shows now and the audience expectation is for quality,” he says. “The trick was trying to do something that worked within the budget and schedule but also looked good and told the stories.”

To do this, the Lola VFX artists used established animation and modelling systems such as Autodesk Maya and SideFX Houdini, as well as Unreal Engine. “I think it was the first time we’d used Unreal Engine at previz and then produced the entire background and environment,” Harvey says. Among the sequences created were the heavily armoured Gastonia fighting the vicious Utahraptors and the immense Spinosaurus in the forests of ancient Morocco. “For the underwater Spino, we transferred the Houdini creature into an Unreal environment and rendered it there. It sped things up and gave us freedom with lighting and atmosphere. It was a real groundbreaker.”

While there is a degree of suspension of disbelief on the part of viewers, who know dinosaurs no longer exist, the creatures still had to fit and move believably in the real filmed locations. This delicate balance was struck during the colour grade, performed at ENVY Post Production, along with the online edit and sound mix. Senior colourist Sonny Sheridan says part of his job was to make the creatures sit in the backgrounds and create a sense of reality. “There was a lot of softening involved and contrast came into play massively because we really needed the dinosaurs to bed into the plates,” he explains. “It was about creating a world for each story. The experts were leading us but for me it was about making the worlds believable, where the dinosaurs sat in them and felt real.”

The images were further finessed during the online edit by editor Adam Grant. “My primary focus was working on the final plates, ensuring any objects that didn’t belong in the time period were removed, stripping it back to prehistoric times,” he says. “In some instances, I was painting in layers such as sand and dirt to build up the environment.”

The ongoing study of fossils, particularly those discovered more recently, has given palaeontologists a better idea of what dinosaurs looked like and how they moved. There is still some interpretation and speculation in this, but not as much as there is for another important element of a TV production: how the creatures sounded. “In the last ten years there have been huge leaps in the knowledge based on re-examining fossils and different ideas about how the dinosaurs might appear and certainly how they sounded,” comments Jonny Crew, sound designer and editor at Wounded Buffalo Sound Studios.

Crew has previous experience creating “dinosaur vocalisations” from his work on the 2022 series Prehistoric Planet. The key to creating noises that may be like how these long-silent creatures could have sounded, he explains, is to use source material as close to them as possible. “Anything that’s a descendant or related to some of the species,” he says. “The wider brief was no mammals, we had to stick to birds and reptiles. But those are much smaller, so you’ve got to use them as a starting point and beef things up to make it sound more impressive. There were some interesting behavioural notes from the palaeontologists, who think that, for example, the Albertosaurus was a social reptile that made [quieter] bonding noises, which was an interesting challenge.”

Based in Bristol, Wounded Buffalo is a specialist in natural history programming and has built up an extensive library of animal sounds, which provided the basis for many of the creatures in the series.

A dinosaur new to Crew, and one that generated some excitement when it was announced it would feature in the series, was the sail-backed, crocodile-snouted, mostly aquatic Spinosaurus.

“The main note from the producers was that this was a crocodilian character, so I used a lot of crocodile and alligator sounds, both for the adult and its babies,” he says. “The story was about the Spino Dad and his brood, and I worked with the producer on getting the right sounds for the kids. I pitched the effects down and then brightened them back up again but kept the depth by adding lots of bass and resonance to give a sense of scale, because these were pretty big.” Among the plug-ins Crew employed were the Waves Aphex Vintage Aural Exciter and Sonnox Oxford Inflator.

Another plug-in, The Cargo Cult’s Envy, was used on the Foley work by Arran Mahoney, supervising sound editor and re-recording mixer at Mahoney Audio Post.

“It allowed us to extract the performance envelope, including the dynamics, transients and rhythmic flow, of our original Foley and apply it to new textures,” he explains. “For example, we could ‘borrow’ a well-synced pass on wet clay to simulate a muddy surface, and map it onto other materials, such as stone, foliage, and water. We recorded footstep performances using a variety of materials, everything from sand and gravel to thick mud and heavy cloth, which were selected based on the terrain and weight class of each dinosaur.”
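The general idea Mahoney describes, lifting the loudness contour of one recording and imposing it on another, can be sketched in a few lines of Python. This is only an illustration of envelope transfer using a basic one-pole envelope follower; it is not a description of how the plug-in works internally. The function names, the `attack` and `release` values, and the sample data are all assumptions made for the example.

```python
import math


def envelope(signal, attack=0.5, release=0.05):
    """One-pole envelope follower: rises fast on transients, decays slowly."""
    env, level = [], 0.0
    for sample in signal:
        target = abs(sample)
        # Use the faster coefficient while the level is rising.
        coeff = attack if target > level else release
        level += coeff * (target - level)
        env.append(level)
    return env


def apply_envelope(texture, env):
    """Shape a sustained texture with the dynamics of another performance."""
    return [t * e for t, e in zip(texture, env)]


# Hypothetical "performance": silence, one sharp footstep, then silence.
performance = [0.0] * 4 + [1.0, 0.6, 0.3, 0.1] + [0.0] * 4
# Hypothetical sustained "texture" standing in for a different surface.
texture = [math.sin(0.9 * n) for n in range(len(performance))]

env = envelope(performance)
shaped = apply_envelope(texture, env)
print([round(e, 3) for e in env])
```

The shaped texture stays silent until the footstep lands and then fades with it, which is the "borrowed" timing the quote refers to.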

The Foley and Dino vocalisations were mixed into the overall soundtrack by Bob Jackson, senior dubbing mixer at ENVY. This was something of a return to the past for Jackson, who worked on the original Walking with Dinosaurs. “It was a stereo production back then,” he recalls. “This time I mixed the different dinosaur environments in 5.1, consisting of maybe 40 tracks, but did produce a stereo mix-down for editing. We started work in May 2024, with each of the six shows set in a different time period, millions of years apart.”

With no location sound for the dinosaur scenes, Jackson used tracks from ENVY’s “enormous” effects library. These he mixed together with the music by Ty Unwin and narration from actor Bertie Carvel on an Avid S6 desk into a Pro Tools digital audio workstation.

“I’d decided quite early on that the dinosaur scenes would be 5.1 and the ones at the dig with the experts would go down to stereo, so the audience would be subconsciously brought back to the present day,” he says. “Ty’s music was constant but we went to some lengths to make the effects come through.”

And come through they do, not perhaps in the big, blockbuster style of the Jurassic Park films, but in a believable way to work with the visual effects that have given life to long-extinct creatures.

The Sony PXW-Z300 is the world’s first camcorder to support recording of authenticity information in video.

For news in particular, content authenticity is no longer a theoretical concern—it’s an urgent, growing challenge for broadcasters, news organisations, and audiences alike. As synthetic content becomes more sophisticated and widespread, particularly across social media platforms, the industry is grappling with the implications of fake or manipulated imagery entering trusted channels.

Navigating a sea of synthetic content

From deepfakes to AI-generated imagery, the volume of synthetically created content has exploded. While generative technologies can unlock creative potential, they simultaneously pose a critical threat to trust in journalism and media, a trust already partially eroded in the age of social media. Editorial teams are now under pressure not only to assess content for editorial value but also to explain to their viewers why it can be trusted, outlining its provenance and the changes it might have gone through. It is a situation that many organisations are tackling head-on.

This new reality has created multiple challenges, as illustrated by many news stories over the past few years. Newsrooms must be able to evaluate the authenticity of incoming and user-generated footage, especially if it is to be featured within their own channels. They need to be prepared to prove the inauthenticity of media falsely attributed to their brand. And, most importantly, they must be able to stand behind the provenance of their own output—especially in an era when misinformation can go viral in seconds.

Erosion of trust: what’s at stake

Audiences rely on trusted media brands to inform them about the world. When that trust is compromised, even by accident, the reputational damage can be significant and lasting. In an environment where misinformation spreads rapidly and often elicits strong emotional responses, media organisations risk losing credibility if they unwittingly publish manipulated footage. Conversely, hesitation to publish while verifying footage can result in competitors breaking news faster, albeit maybe less responsibly.

The stakes are high. In a climate of increasing scepticism and polarisation, audiences are more likely to question what they see, even from reputable sources. Broadcasters are increasingly finding that it’s no longer enough to be accurate—they must prove it. And that means rethinking the relationship between capture and delivery to ensure that what viewers see can be verifiably traced back to the original event.

Industry-wide collaboration

Recognising the scale of the challenge, leading industry players have moved to build consensus around content authenticity. Initiatives like the Coalition for Content Provenance and Authenticity (C2PA), where Sony has been part of the Steering Committee since 2022, are working to create open technical standards that define how authenticity metadata can be captured, stored, and validated across multi-vendor workflows.

These standards represent a major step forward, not just technologically but culturally. The implementation of cryptographically secure metadata, embedded directly into video files, gives media organisations the possibility of building end-to-end verification into their production pipelines. When universally adopted, this would allow content to pass between organisations in a tamper-evident digital “wrapper,” clearly identifying its origin and any modifications.
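To make the idea of a digital “wrapper” concrete, here is a minimal Python sketch of binding a content hash, an origin and an edit history together so that any later change can be detected. It is not the C2PA format: real C2PA manifests are signed with an asymmetric private key backed by a certificate chain, whereas this sketch substitutes a keyed hash (HMAC) so that it runs with the standard library alone. The key, the field names and the `camera-001` identifier are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical device key. HMAC stands in for the asymmetric signature
# a real provenance workflow would use; this is an assumption of the sketch.
DEVICE_KEY = b"demo-key-not-for-production"


def make_manifest(media: bytes, origin: str, edits: list) -> dict:
    """Bind a content hash, origin and edit history under one tag."""
    claim = {
        "content_sha256": hashlib.sha256(media).hexdigest(),
        "origin": origin,
        "edits": edits,
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    tag = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "tag": tag}


def verify(media: bytes, manifest: dict) -> bool:
    """Return True only if neither the claim nor the media has changed."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["tag"]):
        return False  # the claim itself was altered
    actual = hashlib.sha256(media).hexdigest()
    return hmac.compare_digest(actual, manifest["claim"]["content_sha256"])


footage = b"\x00\x01 example video bytes"
manifest = make_manifest(footage, origin="camera-001", edits=[])

print(verify(footage, manifest))         # True: untouched footage
print(verify(footage + b"x", manifest))  # False: footage was modified
```

Changing either the footage or any field of the claim makes verification fail, which is the property that lets a receiving organisation check where a file came from and whether it has been altered since.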

A game-changer for video authentication: the PXW-Z300

The PXW-Z300 camcorder applies a digital signature to each video file at the moment of capture. For broadcasters, this changes the equation. In a fast-moving news cycle, knowing the provenance of a piece of content in this way, from the moment it is shot, means it can be processed, verified, included in the news item, and aired with greater confidence and speed. For legal teams, the verifiable chain-of-custody this signature provides could offer a powerful safeguard against disputes over provenance.

The Z300 thus becomes more than just a tool for recording. Combined with Sony’s connected ecosystem and secure cloud platforms, this camera represents a cornerstone of next-generation media workflows.

The work by Sony and the wider industry is far from done. Video poses additional challenges that still images do not, from larger file sizes and synchronised audio to complex post-production workflows.

But the progress made with the Z300 proves that these hurdles are surmountable.

Sony is now collaborating with broadcasters, technology vendors and standards organisations to implement C2PA-compliant metadata throughout the production and distribution pipeline.

Sony’s role: enabling trust from lens to viewer

Sony has long been a trusted partner to news organisations and media companies around the world. As a member of C2PA and a key participant in IBC’s high-profile Accelerator Project on content provenance, Sony is deeply invested in helping the industry with the tools and infrastructure it needs to adapt.

Sony’s leadership in still-image authenticity, particularly through the Alpha series of cameras, laid the foundation for the work in video verification. These cameras were among the first to support C2PA standards, embedding metadata such as who created the content, when and where it was shot, and what devices were used, all recorded directly in the image file.

Building on this foundation, Sony has now extended authenticity workflows to video with the launch of the PXW-Z300, the world’s first camcorder to embed digital signatures directly into video files, enabling content authentication to address the evolving needs of the content creation industry. This groundbreaking development marks a significant evolution in broadcast journalism and professional video production.

Projects such as the IBC Accelerator Stamping Your Content, which Sony and major broadcasters are part of, are also helping to showcase the work being done on content provenance.

Trust in media can no longer be taken for granted. But it can be rebuilt—through technology, transparency, and collaboration. By embedding authenticity into the very fabric of visual storytelling, Sony and its partners are offering a way forward in an uncertain digital age.

At this year’s IBC, Sony invites media professionals to see the PXW-Z300 in action and discover how authenticity can once again become the foundation of public trust. With C2PA-compliant technology now a reality, the journey from camera to screen can become not just a path of content, but a chain of trust.

Come and see the new Z300 on the Sony stand in Hall 13, and learn more about authenticity in the IBC Accelerator Zone at stand 14.A21.

Betfred’s Nifty 50 employs a virtual backdrop to deliver an engaging experience for viewers

In the fast-moving world of broadcast television, standing out is about more than good content: it is about delivering an experience that captivates, feels real, and keeps audiences engaged from start to finish. The evolution from traditional green screens to Extended Reality (xR) and high-performance LED walls has opened a new era for broadcasters, where dynamic visual storytelling meets reliability and cinematic quality.

Betfred, a family-owned brand with over 50 years in the betting and gaming industry, is committed to “delivering a fair, safe, and enjoyable experience”. While continuing to grow and innovate, the company remains true to its core values of integrity, innovation, and customer satisfaction. As part of a major refurbishment at their UK headquarters in Birchwood, Warrington, the company commissioned a 5m by 2m hi-tech studio for their new Nifty 50 lottery game. The game required a virtual backdrop to deliver an engaging experience for viewers.

The existing Betfred TV production setup, including a traditional studio with a simple grey backdrop, was functional but lacked scale, flexibility, and visual appeal. The aim was to introduce live presenters for the first time, create a more interactive atmosphere, and give the Nifty 50 draw the polished energy of a television broadcast, all while remaining within the footprint of the current space.

To realise this vision, Betfred turned to d&b solutions, a company that provides cutting-edge solutions for live events, corporate clients, and broadcasters. Working closely with Betfred from the initial design phase through to installation and commissioning, d&b solutions provided a complete end-to-end service. The centrepiece of the new studio is a 4.9m-by-1.8m LED wall, installed and calibrated by d&b solutions and driven by Brompton Technology’s Tessera LED video-processing platform. This combination offers both the flexibility to transform the studio environment at a moment’s notice and the image quality required for broadcast.

Unlike green screens, which require post-production compositing and careful lighting to avoid spill, LED backdrops deliver instant realism. The set looks and feels real to the presenters, which in turn makes their delivery more natural. With xR environments, broadcasters can make a small studio appear like a grand stadium, bustling trading floor, or sweeping cityscape, all without the costs or logistics of physical location shoots. For Betfred TV, this means rapid set changes to match programming needs, greater creative freedom for producers, and a much richer viewing experience for audiences.

Central to the upgrade is the role of Brompton’s Tessera processing, which ensures that the LED wall delivers consistent, flicker-free performance across all camera angles. For live television, reliability is non-negotiable; a single dropped frame or colour mismatch can undermine audience confidence.

The Tessera platform brings precise colour reproduction, synchronisation between LED refresh rates and camera shutters, and the benefits of High Dynamic Range (HDR), producing deep contrast, vibrant colour, and cinematic detail that stand up under studio lighting. This level of performance gives Betfred TV a consistent, high-quality output day after day, even under the demands of live production.

For Betfred’s presenters, the change has been transformative. Instead of imagining a backdrop and timing their performance to cues, they can now interact with the visual environment as if it were a physical set. This immediacy not only improves delivery but also creates a stronger connection with the audience.

For viewers, the difference is equally striking: the flat, static backgrounds of the past have been replaced with immersive visuals that bring energy, depth, and a heightened sense of occasion to the Nifty 50 draw.

The success of the project lies in the collaborative approach between Betfred, d&b solutions, and Brompton Technology. d&b solutions took responsibility for the complete technical delivery, ensuring that every element of the installation met the requirements of a fast-paced broadcast environment. Brompton Technology, as a trusted supplier, provided the processing expertise that enabled the LED wall to perform flawlessly on camera. The result is a studio that is as robust as it is flexible, capable of supporting both Nifty 50 and any future programming Betfred chooses to produce.

This transformation reflects a broader shift within the industry. Smaller studios are now capable of producing content that rivals big-budget productions, thanks to advances in LED technology and video processing. The combination of efficient space usage, rapid set changes, and broadcast-grade visuals is redefining what is possible, making high-quality production accessible without the overheads of larger facilities.

For Betfred, the investment has delivered more than just a new look. It has created a platform for ongoing innovation, allowing the brand to evolve its in-house broadcasting and explore new formats with confidence. For d&b solutions, the project demonstrates how integration expertise, combined with high-performance technology, can deliver lasting value for clients in the broadcast sector. And for Brompton Technology, it is a showcase of how Tessera processing can enable studios of any size to achieve uncompromising image quality.

With more LED elements planned for its studio, Betfred TV is continuing to build on its commitment to innovation. The upgraded facility has already set a new benchmark for what can be achieved in a compact broadcast space, and its impact is clear in the energy and engagement of the on-air product. In a media landscape where audience attention is harder than ever to capture and keep, Betfred TV’s transformation shows how the right combination of vision, integration expertise, and advanced processing technology can turn a studio into a powerful storytelling tool.

Ultimately, it is about more than just looking good on camera. It is about creating an environment where technology fades into the background, and the story, whether it’s a game draw, a news segment, or an entertainment show, takes centre stage.

And thanks to LED processing, that story has never looked better.

In today’s live sport production landscape, SMPTE ST 2110 has become the de facto norm. Nearly every new project, whether a greenfield build or a facility refresh, starts with IP. That said, SDI remains an essential part of various operations, so many organisations are navigating the transition to IP with hybrid deployments, either by creating IP “islands” within an otherwise SDI-based environment or by encapsulating legacy SDI workflows within an IP core. This multi-path approach reflects a simple reality: facilities must balance the business-driving flexibility and scalability that IP brings against the practical reality that much of their existing SDI equipment remains adequate for today’s needs.

For facilities with established SDI infrastructures or tight budgets, maintaining the status quo eliminates the need for retraining, replacing control panels, and overhauling existing workflows. But that doesn’t mean media companies should put IP entirely on hold, as gateway technologies allow for incremental modernisation without a full rip-and-replace.

The IP advantage

From a performance standpoint, IP becomes the dominant option once a facility approaches the limits of traditional SDI routing—a 1Kx1K matrix. Even at smaller sizes, perhaps all the way down to 200x200, IP has become the better approach from the standpoints of cost, flexibility, and time to implement.

There’s also a long-term benefit to IP: future readiness. For 3G HD facilities with no plans for UHD or high-density routing, SDI may still be suitable. But if plans include a future move to UHD and 4K workflows, where each stream demands 12Gbps, SDI limitations and workarounds become impractical and costly, making the deployment of a native IP infrastructure economically sound.
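The jump in bandwidth from HD to UHD is easy to check with some back-of-envelope arithmetic. The Python sketch below estimates the active-picture bit rate of an uncompressed stream, assuming 10-bit 4:2:2 sampling (an average of 20 bits per pixel) at 60 frames per second, and then counts how many streams would fit on a single network link. The 100Gbps link and the 10 per cent headroom are illustrative assumptions, not figures from the article, and the per-stream rates of 3Gbps and 12Gbps are the nominal SDI interface rates, which include blanking as well as picture data.

```python
def active_video_gbps(width, height, fps, bits_per_pixel=20):
    """Active-picture bit rate in Gbit/s (10-bit 4:2:2 averages 20 bits/pixel)."""
    return width * height * fps * bits_per_pixel / 1e9


def streams_per_link(link_gbps, stream_gbps, headroom_pct=10):
    """Whole streams that fit on a link after reserving some headroom."""
    usable = link_gbps * (100 - headroom_pct) / 100
    return int(usable // stream_gbps)


hd = active_video_gbps(1920, 1080, 60)
uhd = active_video_gbps(3840, 2160, 60)

print(f"HD active video:  {hd:.2f} Gbit/s")   # about 2.49
print(f"UHD active video: {uhd:.2f} Gbit/s")  # about 9.95

# Assumed 100Gbps link, using nominal interface rates per stream.
print(streams_per_link(100, 3))   # 3G HD streams  -> 30
print(streams_per_link(100, 12))  # 12G UHD streams -> 7
```

Each UHD stream carries four times the picture data of an HD stream, so the same link carries roughly a quarter as many signals, which is why capacity planning changes so sharply once UHD is on the roadmap.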

Another powerful advantage of IP in live sport production is virtualisation. SDI relies on a point-to-point architecture with a cable connecting one device to another. In an IP environment, however, signal and transport are decoupled, with video treated as data packets rather than waveforms. Any signal can be routed anywhere—from on-prem appliances to virtualised environments in a data centre or fully cloud-native platforms.

The ability to extend the virtualised model into the cloud is a game-changer for remote production. Cameras remain at the venue, but switching, graphics, and replay occur entirely in the cloud, allowing technical directors, graphics teams, and replay operators to work from anywhere via centralised systems, using browsers or remote applications.

These cloud-native advantages are also reshaping playout. Functions like master control, switching sources, aligning audio, inserting graphics, and regionalising feeds can be executed in a virtualised, software-based environment. However, cloud infrastructure comes with ongoing costs, making it less economical for 24/7/365 channels. Where robust IT infrastructure already exists, running core services on-prem, while leveraging the cloud for overflow, disaster recovery, or rapid channel deployment, is often the most cost-effective approach.

Occasional-use scenarios further highlight the value of cloud-based infrastructure. For a short-term event, like a multi-week sport broadcast, spinning up cloud capacity offers better economics than a permanent investment in hardware. The same applies to redundancy: cloud-based disaster recovery provides a scalable, on-demand alternative for business continuity that reduces waste and simplifies maintenance.
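The trade-off between always-on channels and occasional-use events comes down to a break-even calculation. The Python sketch below compares a pay-per-hour cloud model with an up-front hardware purchase. Every figure in it (the purchase price, the hourly running cost, the cloud rate, and the event length) is a made-up placeholder chosen only to show the shape of the comparison; real quotes would need to be substituted.

```python
def cloud_cost(hours, hourly_rate):
    """Pay-as-you-go: cost scales directly with hours used."""
    return hours * hourly_rate


def on_prem_cost(hours, capex, hourly_running):
    """Owned hardware: up-front purchase plus power, space and support."""
    return capex + hours * hourly_running


def break_even_hours(capex, hourly_running, hourly_rate):
    """Hours of use beyond which owning becomes cheaper than renting."""
    if hourly_rate <= hourly_running:
        return None  # renting never costs more per hour, so no crossover
    return capex / (hourly_rate - hourly_running)


# Hypothetical placeholder figures, in any single currency.
CAPEX, RUNNING, CLOUD_RATE = 150_000, 5, 30

event_hours = 4 * 7 * 12      # four-week event, 12 hours a day
always_on_hours = 24 * 365    # one year of a 24/7 channel

print(break_even_hours(CAPEX, RUNNING, CLOUD_RATE))  # 6000.0 hours
print(cloud_cost(event_hours, CLOUD_RATE),
      on_prem_cost(event_hours, CAPEX, RUNNING))      # 10080 vs 151680
print(cloud_cost(always_on_hours, CLOUD_RATE),
      on_prem_cost(always_on_hours, CAPEX, RUNNING))  # 262800 vs 193800
```

With these placeholder numbers, the short event sits far below the break-even point and favours the cloud, while the always-on channel passes it within the first year and favours owned infrastructure.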

In live sport production, latency gets between the producer and the action. Milliseconds can mean the difference between staying ahead of the moment or falling behind it.

A camera feeding directly into a switcher, then to playout, and out through transmission—all over copper or fibre—represents one of the fastest possible signal paths. It’s a tightly integrated chain that remains difficult to match with cloud-based or virtualised workflows, which introduce latency at various points from contribution to the final mile. This is a problem set that is rapidly being addressed, with technologies such as JPEG XS and other low-latency codecs.

This also points to perhaps the greatest benefit of the move towards IP technologies: the ability for the broadcast and sport production segments to tap into the much larger global IT industry and leverage the investments made into that economy around scalability, security, and performance.

Major rebuilds don’t happen every year, so planning for future requirements should be part of any significant facility transformation. While UHD and HDR might not be on today’s menu, the call could come at any time, and the facility infrastructure must be able to adapt without requiring another costly rip and re-do.

The scalability and flexibility of IP at the core, coupled with the extensibility of virtualised and cloud computing, positions production and playout facilities to be ready for whatever comes next.

In 7 Wonders of the World with Bettany Hughes, the historian and adventurer goes on a journey across three continents to investigate the world’s first travel bucket list. In a unique collaboration with Snapchat’s AR Studio Paris, viewers can use their phones to scan an on-screen QR code, bringing to life each of the ancient sites in a virtual, immersive experience.

Where did the idea for 7 Wonders of the World come from?

Shula Subramaniam, series producer, SandStone Global: Bettany Hughes dedicated almost 10 years to her book of the same name, and upon its release, we knew instantly it would make a fantastic series. The core idea was to go beyond the familiar list: many people have heard of the 7 Wonders list, but they often don’t know the original sites or that ancient tourists and travellers actually journeyed to visit them, recognising them as the most significant human achievements of their era. Given that most of these Wonders no longer stand and Bettany had already spent years researching and consulting with experts and archaeologists for her book, we saw a clear path to use stunning graphics to accurately illustrate all the detail from the book. This combination of travel, surprising historical revelations, and deep archaeological exploration was the perfect formula for a new series our viewers would absolutely love.

Did you always plan to include the AR experience?

SS: At SandStone, our core mission revolves around connecting with people, delivering authentic and accessible global storytelling to our audiences. With evolving TV viewing habits, particularly the rise of on-demand content and second-screen usage among younger generations, we’re continuously exploring digital platforms to engage viewers while maintaining the high quality of our broadcast projects.

For 7 Wonders specifically, we saw an incredible opportunity to use our detailed graphics to offer an additional layer to the viewing experience. Our collaboration with the Snapchat team was perfectly timed. By combining their cutting-edge AR technology with our compelling storytelling, we could bring these ancient Wonders directly into people’s living rooms in a whole new way. This approach not only deepens engagement for our viewers but also helps us reach a digital-first audience who could come across the experience on Snapchat, ultimately drawing them to the series. Together, our teams wanted to demonstrate how AR can enhance traditional media and enrich cultural content.

How does the AR experience work alongside the TV series?

Antoine Gilbert, Snap Paris AR Studio senior manager: The beauty of AR is that it allows you to bring a new layer of creativity to traditional experiences. AR transforms how we learn about the world today, from cultural experiences to art and history in a more engaging, immersive experience. This is especially true for 7 Wonders of the World with Bettany Hughes. It’s really easy to access the experience; viewers are prompted during each episode to explore an experience that brings the 7 Wonders to life in a beautiful, virtual experience. For example, the Pyramid of Giza experience kicks off with a map slowly expanding on the floor as it reveals the location of the Wonder and its surroundings in Egypt, including the Mediterranean Sea and the Nile. Once the map is fully revealed, a golden pyramid will start to form with mysterious sounds and visuals of a structure being physically created. Once it is fully formed, people see the famous British landmark, Big Ben, alongside the Pyramid so they can get a sense of the scale of this incredible site when compared to a popular landmark. The experience ends with a small pop-up, which reveals the name of the Wonder and the era it was built in.

Can you explain the process behind recreating the Wonders?

AG: Let me start by explaining the mission of our AR Studio Paris. In 2021, we opened our AR studio to raise awareness of the potential of augmented reality and to show how it can impact cultural, entertainment, and education sectors. Since then, our AR Studio Paris has worked on some of the most incredible AR experiences, from partnering with Daft Punk for the launch of its new album, to working with the Louvre and National Portrait Gallery to enhance the museum experience. AR is transforming how we experience culture.

Here is where it gets a bit technical. For this series, our talented team of developers, 3D, visual, and concept artists worked closely with SandStone to develop the 3D model of the 7 Wonders for the AR experience from the visual effects they had created with Flow Postproduction for the series. Once the 3D model was created, we developed visual and sound effects to help create a compelling virtual experience. This was a fun discovery process as we played around with a few effects, working closely with SandStone to make sure the look and feel of the AR experience complemented the series. The AR experience was developed on our software called Lens Studio, which lets anyone easily develop an AR experience. Thousands of developers use it to create AR experiences and lenses for Snapchat and our AR glasses (Spectacles).

What were your biggest achievements?

AG: It’s been great to partner with a historian like Bettany Hughes to bring her research to life in AR. Her expertise and insights on the Wonders really helped us bring these historical discoveries to life for millions of people who were able to travel virtually to moments in time to explore, learn, and see the Wonders.

How faithful a representation is each end result? Was there any element of ‘guesswork’ involved?

SS: Accuracy was paramount for us, and the detective story of what these Wonders actually looked like is the backbone narrative of the series. Bettany spent a decade on her book, so we had a wealth of research to hand, but it was crucial for us to visit all the sites and get access to the archaeological teams and experts who dedicate their time to understanding these Wonders. We meticulously drew upon every available piece of evidence (ancient texts, archaeological findings, and the latest scholarly consensus) and fed this into every iteration of the models our graphics team, Flow Postproduction, produced.

While some elements require informed academic reconstruction due to the passage of time, it’s not guesswork but careful interpretation of fragments and evidence, and we’re really proud of the results. What often pops up online if you just search these Wonders can be quite different from the historical truth, so we’re thrilled to be putting our Wonders out there.

What were the biggest challenges you faced throughout the production?

AG: The beauty of the 7 Wonders is that they are truly breathtaking sights with so much rich history. Imagine trying to bring the beauty of these Wonders to life in an immersive experience: we wanted to ensure that the experience we created would help people understand the scale and the detail of each Wonder, and create the feeling of excitement and awe that would be felt from seeing them in real life. This is where the magic of AR comes in, as it’s a powerful creative tool to bring moments to life.

Our second challenge was more technical. It’s easier to develop 3D models for TV than in AR, as there are some technology challenges between TV and mobile which our team had to work through to ensure the 3D models shown in the series on TV were as accurate as possible in AR. While these challenges stretched the thinking of our AR Studio team, we were able to work through them successfully to create an AR experience that truly reflects the beauty of the 7 Wonders.

Do you expect to update the experience in the future?

AG: It’s too early to say what we might add to the experience in the future; however, as the pace of technology continues to accelerate, particularly with artificial intelligence, we’re able to supercharge our technical capability to create more quality AR experiences.

What did you learn about augmented reality while working on the project?

AG: We are grateful for the opportunity to work on this project. It’s another great example of how AR can completely transform how we experience and learn about the world around us. From helping people travel to Egypt’s Old Kingdom to check out the Giza Pyramids to seeing the beautiful Hanging Gardens of Babylon, millions of people around the world will be able to experience the 7 Wonders from their homes.

Grass Valley returns to IBC this September with major new innovations covering every stage of the media production workflow. The company will demonstrate end-to-end solutions that empower media organisations around the world, setting the pace for industry transformation.

“For Grass Valley, IBC is more than a trade show: as a global leader, it’s an annual opportunity to engage with customers and partners, notably across EMEA, and showcase the latest innovations in the GV Media Universe (GVMU),” says Grass Valley CEO Jon Wilson. “It’s a key event in our calendar, and we’re excited to share our continuing growth story in Amsterdam.”

Grass Valley arrives in Amsterdam on the back of impressive organic growth, including strong year-over-year gains in bookings and revenue across its product portfolio.

The company’s commitment to innovation is helping to drive this momentum, alongside the rapid adoption of its AMPP ecosystem (with bookings up over 120 per cent YoY), and the continued success of core products such as the newly updated Karrera and K-Frame VXP production switchers, and the refreshed LDX 100 series camera range, including the new LDX 180 Super 35 system camera.

“Our strong organic growth is setting the pace for industry transformation, driven by continuous innovation in our GVMU hardware and software offerings, powered by AMPP.

“Stand 9.A01 will show how our open-platform approach, together with our expanding partner ecosystem, is leading a media revolution,” adds Wilson.

Visitors will be able to see the company’s LDX 180 camera, first launched in April and making its European debut at IBC2025.

Boasting an in-house-developed 10K Super 35 Xenios imager, the new camera combines true cinematic depth-of-field with the speed and precision required for live production, giving media companies the ability to redefine storytelling with native UHD output in unparalleled quality.

“The LDX 180 is built for premium live production where cinematic storytelling meets broadcast speed, delivering unmatched UHD quality for high-end sport, entertainment, concerts, and studio shows. Built on the LDX 100 platform, it integrates natively with the wider camera chain, for a shared shading solution, and consistent look and feel no matter the shot, giving directors creative freedom and greater emotional depth.”

The company is also unveiling the new LDX C180 compact version of the camera at IBC2025. Designed for Steadicam and PTZ operation, the C180 empowers content creators to achieve new levels of dynamic and dramatic camera angles to elevate their visual storytelling at the speed of live. Featuring the same in-house-developed 10K Super 35 Xenios imager as its flagship sibling LDX 180, the new compact offering captures true cinematic depth-of-field while being purpose-built for the fast-paced realities of live production environments.

Elsewhere, Grass Valley will be showcasing the K-Frame VXP production switcher and Karrera V2 control panels. The agile, virtualised systems are designed for production teams working in space- and budget-conscious environments and have become the trusted foundation for hybrid and software-based operations, transforming the way many forward-thinking companies work.

Also set to make their debut at IBC2025 are the company’s newest networking solutions, which combine high-density FPGA processing with the flexibility of virtualisation and software-defined workflows across hybrid compute platforms, all managed through a single control layer.

All of the solutions integrate seamlessly into SDI, IP, cloud, or hybrid setups via standard formats, flexible APIs, and GV Alliance partner integrations, allowing customers to adopt at their own pace, extend asset life, and unify operations under AMPP without disruption.

GVMU’s ability to orchestrate the entire production chain, from first cue to final cut, will be demonstrated by major new developments in integrated replay and end-to-end production automation. Alongside upgrades to the LiveTouch X replay system and the Framelight X ingest and content management platform, Grass Valley will showcase how the core capabilities of AMPP OS are providing tightly integrated workflows that reduce effort, increase flexibility, and deliver measurable efficiency.

The company will also showcase its instantly deployable playout and FAST offerings that provide media organisations with the tools to launch, adapt, and scale with ease. These solutions are developed in close collaboration with key GV Alliance partners.

Once again, Grass Valley will be hosting its annual GV Forum, which takes place on Thursday, 11th September, at the Rosarium, Amstelpark. Wilson will be joined by the company’s leadership team to provide insights on strategic industry perspectives and updates on the latest innovations in software-defined technology. “We’ll be sharing our business outlook, unveiling new product innovations, and offering insights and approaches to the leading technology trends shaping the industry. Attendees will also hear from our customers, including members of the GVx Council, on how they are adapting to the realities of hybrid production.”