MASTERING BUILDING SAFETY COMPLIANCE

WITH SYMETRI, YOUR DIGITAL PARTNER FOR BUILDING SAFETY

Want to get your building applications approved with confidence? Join our session with The Property Smart Group at Digital Construction Week to learn how!

4th June | 12:30 - 13:00

editorial

MANAGING EDITOR

GREG CORKE greg@x3dmedia.com

CONSULTING EDITOR

MARTYN DAY martyn@x3dmedia.com

CONSULTING EDITOR

STEPHEN HOLMES stephen@x3dmedia.com

advertising

GROUP MEDIA DIRECTOR

TONY BAKSH tony@x3dmedia.com

ADVERTISING MANAGER

STEVE KING steve@x3dmedia.com

U.S. SALES & MARKETING DIRECTOR

DENISE GREAVES denise@x3dmedia.com

subscriptions MANAGER

ALAN CLEVELAND alan@x3dmedia.com

accounts

CHARLOTTE TAIBI charlotte@x3dmedia.com

FINANCIAL CONTROLLER

SAMANTHA TODESCATO-RUTLAND sam@chalfen.com

AEC Magazine is available FREE to qualifying individuals. To ensure you receive your regular copy please register online at www.aecmag.com

about

AEC Magazine is published bi-monthly by X3DMedia Ltd, 19 Leyden Street, London, E1 7LE, UK

T. +44 (0)20 3355 7310

F. +44 (0)20 3355 7319

© 2025 X3DMedia Ltd

Industry news 6

Cityweft brings spatial context into CAD/BIM, Dassault re-packages Catia for AEC teams, Maxon targets architects with real-time viz, plus lots more

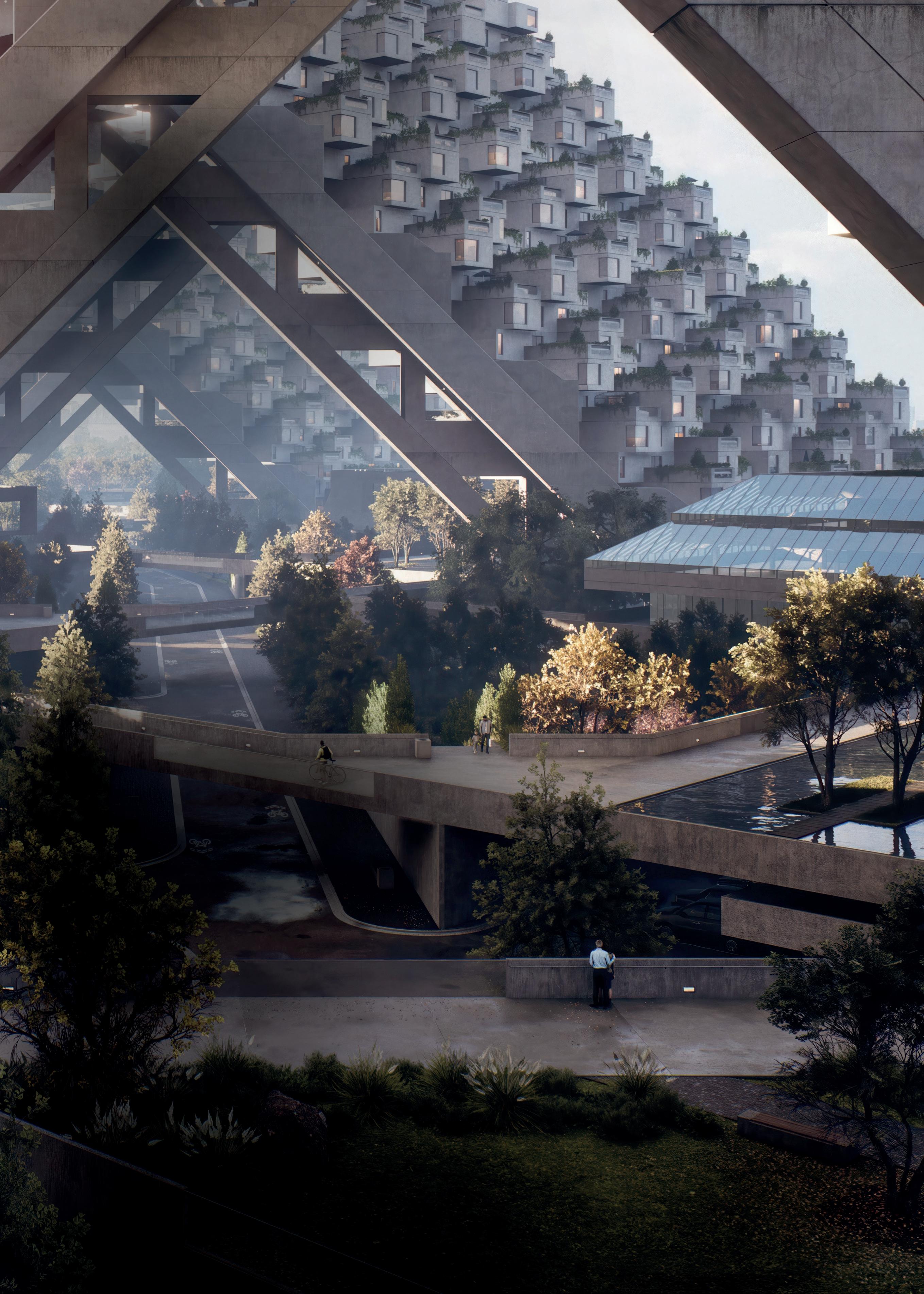

Dimensional shift: 2D to 3D and back 12

New technologies are emerging to transform 2D drawings into models, as well as generating drawings automatically from 3D model data

What’s on at NXT BLD and NXT DEV 18

We highlight the key themes - from AI and BIM 2.0 to pioneering tech perspectives from the biggest AEC firms

AI and design culture (part 1) 26

How is AI shaping architectural design? Keir Regan-Alexander explores the opportunities and tensions between creativity and computation

AI and the future of arch viz 32

AI is streamlining viz workflows, pushing realism to new heights, and unlocking new creative possibilities

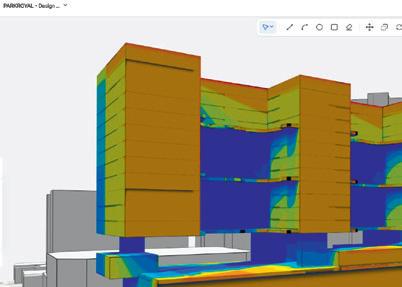

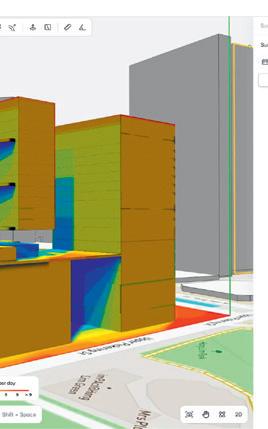

Foster + Partners Cyclops 36

Foster + Partners, renowned for its in-house software development team, is giving away one of its custom tools for free — an environmental analysis plug-in

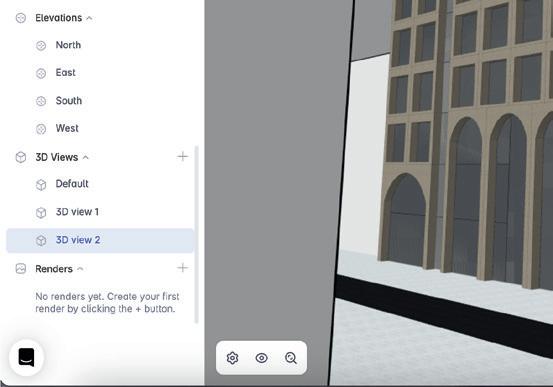

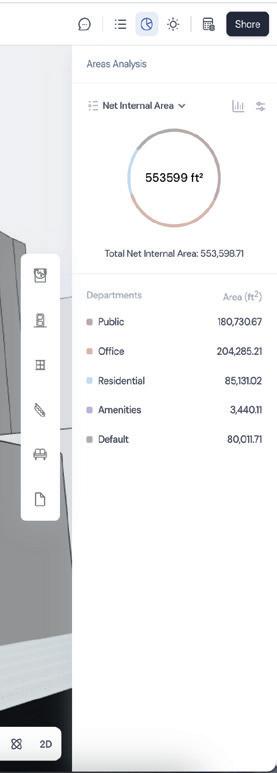

Snaptrude reimagined 39

Snaptrude, the original BIM 2.0 player, is now adding AI discipline-centric knowledge to each phase

Why no unicorns in construction? 43

Despite AEC’s digital potential, start-ups still struggle. Tal Friedman outlines a strategy to break through

HP ZBook Ultra G1a / Ryzen AI Max Pro 46

It’s not often a mobile workstation comes along that truly rewrites the rulebook, but this 14-incher does just that

Cityweft brings tuned spatial context into CAD/BIM tools

Cityweft, a new web platform aimed at architects, urban designers, property developers and planners, launched this month.

The Cityweft platform enables users to create ‘high-quality’ customisable 3D models of cities and sites that can be used for early-stage design in CAD and BIM authoring tools such as SketchUp, Revit, Archicad and Rhino.

“Context modelling should be fast, accurate, and built for the way architects and AEC professionals actually work,” said Cityweft CEO Alexander Groth.

Cityweft transforms disparate real-world datasets – such as 3D buildings, terrain, and infrastructure data – into ‘unified’ CAD-editable 3D geometry. Each mesh layer is generated separately for ‘easy customisation’.

Through the web platform, users can find city models and data from around the world (e.g. OpenStreetMap, Google Open Buildings, Microsoft ML Buildings and Esri Community Buildings), preview and customise them directly on the platform, and then export to Rhino, SketchUp, GLTF, OBJ, STL and IFC, or connect via API.

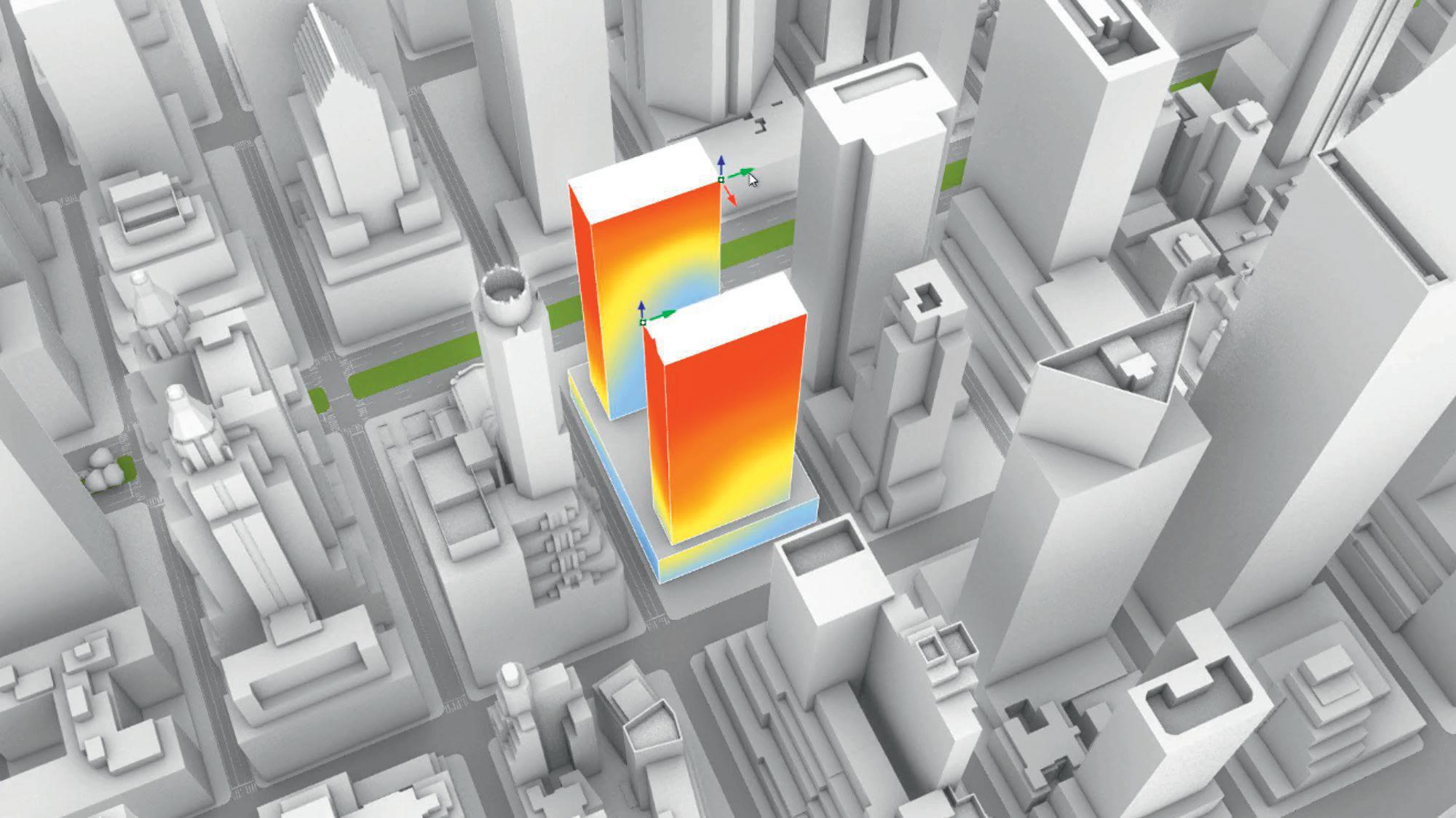

According to the company, in contrast to other solutions that use simple extrusion geometry, Cityweft’s advanced geometry processing and proprietary algorithms produce complex and accurate geometry including close to 20 roof types. Higher quality building models not only aid design but can help deliver more reliable sunlight analysis in tools like Autodesk Forma.

Cityweft will be presenting at NXT BLD in London on 11 June 2025.

■ www.cityweft.com

Dassault re-packages Catia for AEC teams

Dassault Systèmes (DS) has launched a new Catia software bundle tailored specifically for the AEC sector, offering price reductions to encourage wider adoption among AEC firms dealing with complex geometry and digital fabrication workflows. Prices start at $4,500 per user, per year.

The AEC Special Offer packages Catia’s powerful modelling and design tools with additional applications for scripting and 2D drafting. The bundle includes the full desktop version of Catia, with cloud-based data management via the 3DExperience platform. It supports simultaneous collaboration, advanced geometry, BIM capabilities, and scripting via the Visual Script Designer.

Also included is DraftSight Premium, the DWG-based 2D drafting tool, which connects to the 3DExperience cloud and supports IFC and Revit model imports for 2D documentation.

DS will be at NXT BLD and NXT DEV on 11-12 June. See page 34 for more info.

■ www.3ds.com/store/catia-for-aec

Autodesk boosts Revit graphics

Autodesk is in the process of updating the Revit graphics engine, with the launch of Accelerated Graphics, a tech preview for Revit 2026, which comes with limited features. According to the company, with Accelerated Graphics users will experience a significant navigation performance improvement in 3D and 2D views. To make this possible Autodesk has implemented new techniques that use the GPU as efficiently as possible. According to the developers, users do not need an expensive graphics card for the Accelerated Graphics Tech Preview to work well, but having one with more memory would allow them to work more efficiently with larger models.

■ www.autodesk.com

Data portability for BIM 360 and ACC

Symetri has launched Naviate Nebula, a cloud-to-cloud solution for transferring files from multiple projects in BIM 360 and Autodesk Construction Cloud (ACC) to other BIM 360 or ACC projects. Folders and files are transferred with version history and attributes. Naviate aims to address three key cloud-to-cloud data transfer issues: regional transfer of data between local hubs in the US, EU and Australia; automating time-consuming and manual processes by setting up automated workflows and schedules; and limiting the risk of losing data or working with the wrong files thanks to continuous backups on ongoing projects from project collaborations.

■ www.naviate.com

Maxon targets architects with real-time rendering plug-in

Maxon is aiming to put its rendering tech into the hands of more architects and designers with a new ‘real-time’ visualisation plug-in for Vectorworks – with support for other CAD and BIM tools to follow.

“We’re giving architects a powerful yet intuitive tool that elevates their work visually, without adding complexity, so their designs can be expressed as intended with clarity and emotion,” said David McGavran, CEO of Maxon.

Users of the new rendering solution will get access to ‘intuitive controls’ seamlessly integrated within the Vectorworks BIM environment, along with smart asset libraries.

They will be able to move from real-time previews to stunning final renders with ‘seamless’ export to Cinema 4D and Redshift. According to Maxon, this will allow them to create more advanced architectural visualisations, including procedural animations and simulations.

“Our new partnership with Maxon addresses a significant challenge faced by AEC professionals: the need for a real-time rendering solution that seamlessly integrates with Vectorworks and evolves alongside it,” said Vectorworks senior director of rendering, Dave Donley.

■ www.maxon.net

Intel packs more memory into entry GPUs

Intel has unveiled the Arc Pro B50 (16 GB) and Arc Pro B60 (24 GB), two new professional desktop GPUs built on its Xe2 ‘Battlemage’ architecture, featuring Intel Xe Matrix Extensions (XMX) AI cores and ray tracing units.

Compared to the previous-generation PCIe Gen 4 ‘Alchemist’-based Arc Pro A50 (6 GB) and A60 (12 GB), the new PCIe Gen 5 GPUs offer a big performance uplift and increase in memory. On paper, this makes the Arc Pro B50 and B60 much better equipped to handle more demanding workflows, including larger viz scenes in applications like D5 Render and Twinmotion, and AI tools such as Stable Diffusion.

The Intel Arc Pro B50 is a low-profile, dual-slot graphics card with a total board power of 70W, which makes it compatible with small form factor (SFF) and micro workstations. Priced at $299 MSRP, the Arc Pro B50 competes directly with the Nvidia RTX A1000 (8 GB) — but, as Intel highlights, it offers double the memory.

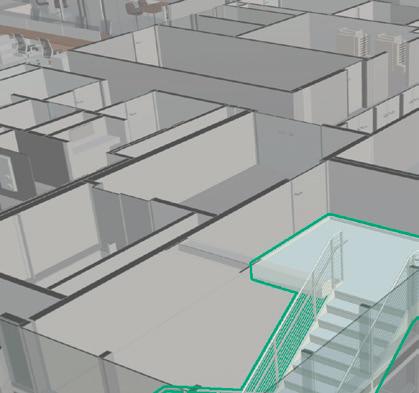

The Arc Pro B60 is a full-sized board with 120W – 200W of total board power. Learn more at aecmag.com

■ www.intel.com/arcpro

Procore doubles down on BIM

Procore is beefing up the BIM capabilities of its cloud-based construction management platform, with the acquisitions of Novorender, a viz platform for viewing huge BIM models, and FlyPaper, makers of Sherlock, a Navisworks plugin for BIM coordination.

Novorender is billed as one of the world’s fastest 3D model viewers. The streaming platform is designed to federate terabytes of BIM/GIS data in the browser, with a view to making 3D construction data available to everyone in the field.

FlyPaper is a long-time Procore partner and its technology is already used by Procore customers. Once FlyPaper is integrated, Procore customers will have access to automated 3D design coordination, clash detection, and collaboration to help improve predictability, reduce rework costs, and bolster construction site safety.

■ www.procore.com

Allplan boosts structural workflows

Allplan 2025-1 includes several structural design focused workflow improvements designed to enhance integration between the BIM authoring software and Bimplus, the cloud-based collaboration platform.

The Frilo BIM-Connector is now fully integrated into Bimplus, so engineers can access 3D model data directly from Allplan. Also, with Structural Analysis Format (SAF) integration, Allplan now offers direct imports into Bimplus.

■ www.allplan.com

ROUND UP

Gauzilla Pro

Gauzilla Pro is a new Gaussian splatting web editor that allows for real-time and photorealistic 3D/4D rendering and editing of scenes reconstructed from phone and drone videos. The software can be used to track construction progress by creating a ‘4D time lapse’.

Founder Yoshiharu Sato will be speaking at NXT BLD / DEV on 11-12 June

■ www.gauzilla.xyz

AI partnership

The Nemetschek Group has announced a partnership with Google Cloud which will allow Nemetschek to use Google Cloud’s advanced AI and cloud technologies at a group-level. One of Nemetschek’s BIM authoring brands, Graphisoft, already uses Google Cloud for its collaboration platform BIMcloud

■ www.nemetschek.com

Highway maintenance

Bentley Systems has introduced a new asset analytics capability that takes imagery insights from Google Maps to ‘rapidly detect and analyse’ roadway conditions. The new capability is born from its Blyncsy product offering which applies AI to crowdsourced imagery for automated highway asset detection and inspection

■ www.bentley.com

Faro acquisition

Ametek is to acquire all outstanding shares of common stock of reality modelling company Faro Technologies. The transaction values Faro at approximately $920 million. The transaction is expected to be completed in the second half of 2025

■ www.faro.com

Bluesky acquired

Woolpert, a global specialist in geospatial services, has acquired Bluesky International, an aerial survey firm that largely serves the UK market, specialising in aerial imaging, LiDAR, 3D modelling, vegetation, and renewables mapping

■ www.bluesky-world.com

Going underground

A new AR solution that combines Pix4D’s 3D mapping technology with underground utility mapping software ProStar PointMan helps construction and utility professionals visualise and manage buried infrastructure

■ www.pix4d.com ■ www.pointman.com

Chaos Envision launches for immersive presentations

Following its beta launch last year, Chaos has released Chaos Envision, a real-time storytelling tool that helps architects and designers turn 3D models into immersive, cinematic presentations.

Chaos Envision can bring multiple 3D components into its collaborative environment. It accepts content from any application that hosts Enscape or V-Ray and can import common industry formats, so users ‘don’t have to worry about scene prep or data loss’. All lighting, materials and assets from their original CAD or Enscape design will carry over.

Users can add entourage with Chaos Cosmos, and through a direct integration with the Chaos Anima engine, drag-and-drop ‘hyper-realistic 4D people and crowds’ with AI-enhanced behaviour into scenes, and then direct their movement by assigning them a path. Paths can also be applied to other objects and cameras for more cinematic looks. Chaos Envision also supports variation-based animation to help designers depict sun studies, construction phasing or to cycle through design options.

Meanwhile, Chaos has also announced a range of ‘affordable’ industry-specific suites for architectural design, architectural visualisation, and media & entertainment.

■ www.chaos.com

Twinmotion 2025.1.1, the latest release of the real-time rendering software from Epic Games, supports Nvidia DLSS 4, a suite of neural rendering technologies that uses AI to boost 3D performance.

Epic Games shows that when DLSS 4 is enabled in Twinmotion it can render almost four times as many frames per second (FPS) as when DLSS is set to off. DLSS 4 uses a technology called Multi Frame Generation, an evolution of Single Frame Generation, which was introduced in DLSS 3.

Single Frame Generation uses the AI Tensor cores on Nvidia GPUs to interpolate one synthetic frame between every two traditionally rendered frames, improving performance by reducing the number of frames that need to be rendered by the GPU.

Multi Frame Generation extends this approach by using AI to generate up to three frames between each pair of rendered frames, further increasing frame rates. The technology is only available on Nvidia’s new Blackwell-based RTX GPUs.
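The arithmetic behind those frame-rate claims can be sketched in a few lines. This is an idealised illustration only: it assumes generated frames cost nothing to produce and ignores any other DLSS feature, so real-world gains will differ. The function name and the 30 FPS baseline are hypothetical.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Idealised display frame rate when AI inserts extra frames.

    Assumption: each traditionally rendered frame is followed by a fixed
    number of AI-generated frames, and generating them is free.
    """
    if generated_per_rendered < 0:
        raise ValueError("generated_per_rendered must be non-negative")
    return rendered_fps * (1 + generated_per_rendered)


baseline = 30.0  # hypothetical rendered frame rate, not a measured figure

print(displayed_fps(baseline, 0))  # frame generation off
print(displayed_fps(baseline, 1))  # Single Frame Generation: one extra frame
print(displayed_fps(baseline, 3))  # Multi Frame Generation: up to three extra
```

Under these assumptions the ceiling for Multi Frame Generation is four displayed frames for every rendered one, which is consistent with the ‘almost four times’ figure quoted above.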

■ www.twinmotion.com

Lenovo updates AMD-based mobile workstations

Lenovo has updated its AMD-based mobile workstation line up with the launch of the ThinkPad P14s Gen 6 AMD and ThinkPad P16s Gen 4 AMD. Both laptops feature the ‘Strix Point’ AMD Ryzen AI Pro 300 Series processor with integrated AMD Radeon 890M graphics and a 50 TOPS NPU for AI workloads.

Starting at 1.39kg and 16.13mm thick, the Lenovo ThinkPad P14s Gen 6 AMD is the thinnest and lightest mobile workstation in Lenovo’s portfolio. It comes with a choice of 14-inch displays up to the 500 nit 2.8K OLED (2,880 x 1,800). Meanwhile, the Lenovo ThinkPad P16s Gen 4 AMD starts at 1.71kg and comes with a choice of 16-inch displays up to the 400 nit WQUXGA OLED (3,840 x 2,400).

Both laptops are built for CAD and BIM workloads and offer up to the AMD Ryzen AI 9 HX PRO 370 processor with 12 cores, 24 threads and a Max Boost Clock of up to 5.1 GHz. There’s support for up to 96 GB of DDR5 (5600MT/s) memory which can be dynamically allocated between the CPU and GPU.

■ www.lenovo.com

Sensat and Transcend forge integration

Sensat, a visualisation platform designed to bring real-world context to infrastructure projects, has formed a partnership with Transcend, a generative-design engine for water, wastewater, and power facilities.

The two companies have developed an optimised workflow designed to automate early-stage engineering in Transcend, then stream ‘highly detailed’ conceptual BIM models into Sensat for contextual review, with any feedback then pushed back into Transcend. Everything is synchronised through Autodesk Construction Cloud.

Severn Trent Water, one of the UK’s largest water and wastewater service providers, has piloted the workflow on its Westwood Brook treatment-plant project.

■ www.sensat.com ■ www.transcendinfra.com

OpenSpace Air consolidates reality data

OpenSpace, a specialist in 360° reality capture and AI-powered analytics for the construction sector, has announced OpenSpace Air as part of its core subscription.

OpenSpace Air enables construction teams to consolidate all reality data – from drones, 360° cameras, mobile phones, and laser scanners – into a ‘comprehensive visual record’ accessible from a single platform.

■ www.openspace.ai/products/air

Topcon launches CR-H1 scanner

Topcon has announced the CR-H1, a handheld reality capture solution that uses PIX4Dcatch, a software tool which runs on iPhones with integrated LiDAR scanners, and uses photogrammetry to create full-colour 3D point clouds.

The iPhone connects to Topcon’s HiPer CR receiver, enabling the application to collect georeferenced images. The receiver and iPhone are both mounted on a specialised handle designed and manufactured by Topcon, so users can capture point clouds without a tripod.

The solution is designed for use across multiple disciplines, including utilities and subsurface mapping, construction verification and earthworks, civil engineering and site verification.

■ www.topconpositioning.com

Blink by Faro combines viz and automation

Faro Blink is a new 3D reality capture solution designed to combine visualisation with automated workflows via the Faro Sphere XG Digital Reality Platform.

“Blink is a ground-breaking innovation designed to break down the barriers to 3D data, facilitating better insights from job sites through straightforward and user-friendly workflows,” said Faro President and CEO Peter J. Lau. “By automating complex tasks and prioritising simplicity, we’ve developed a cost-effective solution that enables anyone, regardless of their expertise, to achieve professional-quality data insights.”

■ www.faro.com

From 2D to 3D and back

In this magazine 2D often gets overlooked, despite it being the primary dimension for AEC deliverables. But, as Martyn Day reports, new technologies are emerging to transform 2D drawings into models, as well as to generate drawings automatically from 3D model data

Widespread adoption of 3D technology and the growth of BIM expertise have transformed AEC – but the most important output for any firm is still documentation, and more specifically, the production of 2D drawings.

Before the arrival of BIM, CAD represented a way to accelerate workflows, a speedy alternative to manual drafting. It supported quick drawing, fast editing and some automation. Early dedicated AEC applications (such as the UK-developed AutoCAD AEC) provided still more acceleration, since AutoCAD came with a raft of blocked symbols, layering conventions, hatching and linetype styles. Now, we thought, we’re cooking on gas!

Then along came BIM, insisting we all model buildings in 3D and, as a by-product of this process, derive 2D line takeoffs from the geometry in order to fast-track the production of drawings. If we changed the design (in other words, the 3D model), the drawing would automatically update. BIM front-loaded the design system with more work and more decisions to be made, but at the same time, it helped improve understanding of a design, as well as offering renderings and analysis capabilities. It also fostered an explosion in the output of drawings.

Drawings are not going away – but there is certainly a movement focused on the mass-automation of their creation. Some believe they might be eliminated altogether, with the model becoming the deliverable instead. At AEC Magazine, we don’t think that will ever happen, given that in more advanced sectors such as automotive and aerospace, drawings are still produced, even in situations where fabrication is highly automated around models.

That said, a great deal of effort is dedicated to the automation of drawings, with companies including SWAPP, Graebert and Evolvelab (now part of Chaos) all developing automated BIM to 2D drawing tools.

Both Graebert and Evolvelab will be at NXT DEV in London on 12 June and Graebert’s auto drawing capabilities will be demonstrated by BIM 2.0 start-up Qonic, which has integrated them into its own product – but more on that later.

Old drawings

But let’s not get ahead of ourselves. While it’s true that most new builds over the last four decades and more have likely been created through 2D CAD or BIM, what about all the buildings that were built before the emergence of the large digital design footprint?

These buildings are documented mostly on paper, or perhaps digitised to images, once archived through raster scanning. With refurbishment becoming an increasingly attractive option for building owners faced with sustainability regulations, wouldn’t it be a good idea to be able to automate BIM from 2D?

Long-held drawing standards and defined 2D symbolic representation give intelligent systems a language that can help 2D drawings be translated into a 3D model. Dimensions and markings can also assist in driving accuracy, in situations where perhaps the drawings are inaccurate, stretched or left askew following scanning.

Several software firms are working on building a bridge between dumb 2D drawings and intelligent 3D models. This means old drawings might be rapidly repurposed as 3D models for digital twins, layouts for facilities management, or as a baseline for refurbishment.

In the last edition we looked at how new start-up Higharc has developed an AI algorithm that can take hand-drawn sketches and convert them into fully detailed BIM (www.tinyurl.com/AEC-higharc).

In the case of Higharc, the AI recognises external and internal walls, doors and windows, and their dimensions, and can identify anchored floors in its American timber frame housing expert system.

This capability was created not to show off how well AI could perform this task, but because Higharc had a real problem it needed to solve. The type of companies that Higharc typically sells to are house building firms with limited in-house digital skills.

Higharc’s powerful cloud-based 3D design system models timber frame housing at fabrication-level detail and connects to ERP systems to generate costings, based on the design the customer has chosen.

Considering its impressive capabilities, Higharc is easy to learn, even where employees aren’t that familiar with CAD or digital design tools. The system’s drawing AI enables experienced builders who are more comfortable with hand-sketching layouts to get involved in the digital design process. It takes dumb lines and substitutes them with intelligent walls and building components that are construction-accurate and that link to the system’s ERP. Aimed at a pretty specific target customer group, Higharc may not be a 2D-to-3D solution for the masses, but it’s an interesting representation of what is enabled when AI is applied to 2D sketches.

2D-to-3D for the masses

If you search, you will find several applications that support the conversion of 2D to 3D for BIM. There’s WiseBIM, Plans2BIM, and usBIM.planAI by ACCA.

In fact, all three systems are related, since they are all powered by the 2D-to-3D technology built by Paris-based software developer WiseBIM. This uses AI to convert 2D images to Revit and IFC objects.

In addition, a new Sweden-based startup called BIMify also uses AI and supports Revit and IFC workflows. Although BIMify claims Archicad support, this is via IFC for now, while its Revit support is far more tightly integrated with the native format.

For this article we spoke with both WiseBIM and BIMify to discover what’s possible today. We also got a sneak peek of BIM software startup Qonic’s forthcoming auto drawing capability.

Conclusion (see page 16)

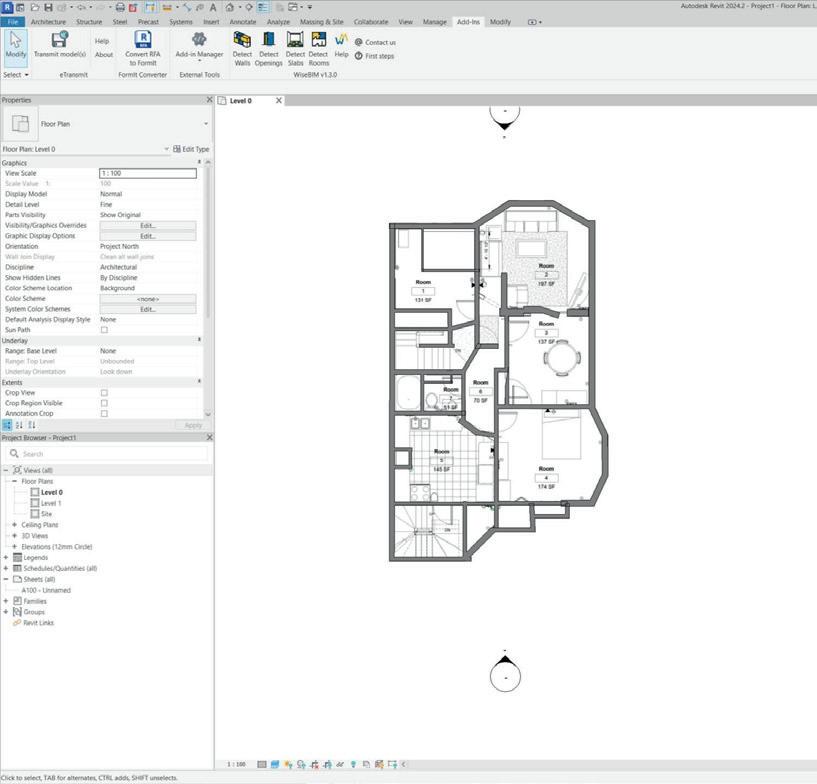

WiseBIM - 2D-to-3D conversion for IFC and Revit

Last summer, the AEC Magazine web server almost reached a meltdown when we ran a story on Paris-based AEC software company, WiseBIM. News of the developer’s in-Revit 2D-to-3D application attracted tens of thousands of views.

In fact, WiseBIM has been leading the charge in 2D-to-3D conversion for some time, having begun its journey around 2017. Before releasing a version that works within Revit, WiseBIM promoted itself under various names, including Plans2BIM, and built its online presence across multiple platforms. Its tools were also licensed by Italian BIM software developer ACCA.

The primary use case for WiseBIM is projects that involve existing buildings, for renovation, facility management, maintenance and digital twin initiatives.

The company’s core technology relies on AI algorithms that work on pixel data from 2D plans, such as PDFs, PNGs, JPEGs, DWGs and DXFs. The software automatically identifies building elements from raster or vector input. The process involves importing the plan, setting the scale, running the AI detection for specific elements (walls, openings, slabs, roofs, columns, beams, furniture, text and so on), and then allowing users to review, edit and correct the generated model.

While initial versions attempted ‘all at once’ detection, the current approach allows for more piecemeal detection of elements (walls, then openings, and so on). This has been a deliberate choice by the developers, as Tristan Garcia, co-founder and CEO of WiseBIM, explains. “It’s actually better this way. AI does make mistakes, but now users can correct these in the workflow.”

There are two flavours of the application: one that works inside Revit, and one that operates standalone as a web service (Plans2BIM). Obviously, the Revit solution is all about delivering RVT components, while the web version aims to convert 2D to standard IFC components for generic reuse. The online variant allows for the creation and assembly of multiple floors into a single building model.

In Revit, users can specify Revit families for detected elements to ensure consistency in the generated BIM model. As Garcia explained, “In our latest version, you can specify what family you are looking for, which means, because it’s using pixel-level identification, that if in your plan, the internal walls are ten centimetres thick, you can say, ‘Okay, every wall that you identify that is between nine centimetres and 11 centimetres is a ten-centimetre wall.’ This helps the AI deliver homogeneous output, and model those walls in the same family.”

WiseBIM supports multiple export formats, including IFC, DXF, and CSV/XLSX (quantity take-offs). A specific JSON format is also available for development purposes. Users can add properties (for example, materials) to building elements, such as indicating that a wall is made of concrete, and all kinds of properties can be added, such as thermal coefficients.

WiseBIM’s origins can be traced back to a patented thesis at a French research centre initially developed for delivering thermal simulation. The company has a team of nine, who are predominantly technical.

Their advice to improve the chances of a successful output is to focus on the quality of the 2D plan. This significantly impacts the accuracy of the AI detection. A minimum resolution of 100 pixels per metre is recommended. Removing noise and cropping irrelevant parts of the drawing (like legends) can also improve results. If you leave in the title block, lines around it may be identified as walls. So there is some pre-preparation required to clean up drawings.
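The 100 pixels per metre guideline is easy to check before uploading a scan. The following is a minimal sketch, assuming you know the real-world width that the plan image covers; the function names and the example dimensions are hypothetical and are not part of WiseBIM’s software.

```python
MIN_PIXELS_PER_METRE = 100  # recommendation quoted in the article


def pixels_per_metre(image_width_px: int, real_width_m: float) -> float:
    """Resolution of a plan image relative to the real-world width it covers."""
    if real_width_m <= 0:
        raise ValueError("real_width_m must be positive")
    return image_width_px / real_width_m


def meets_recommended_resolution(image_width_px: int, real_width_m: float) -> bool:
    """True when the scan reaches the recommended minimum resolution."""
    return pixels_per_metre(image_width_px, real_width_m) >= MIN_PIXELS_PER_METRE


# Hypothetical example: a plan covering 40 m of building width.
print(meets_recommended_resolution(3000, 40.0))  # 75 px/m, below the guideline
print(meets_recommended_resolution(6000, 40.0))  # 150 px/m, above the guideline
```

Note that cropping legends and title blocks does not change the pixels-per-metre figure, only the area the AI has to interpret; a low-resolution scan would need to be rescanned rather than cropped.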

In the translation process, certain information, such as wall height or sill height, is often not explicitly present in 2D plans. This will need to be manually set by the user during configuration.
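User-supplied configuration of this kind, together with the family-matching rule Garcia describes (walls measured between nine and 11 centimetres treated as ten-centimetre walls), can be pictured as a small set of rules applied to each detected wall. The sketch below is illustrative only: the class, the family name and the 2.5 m default height are assumptions, not WiseBIM’s actual data model or API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class WallFamilyRule:
    family_name: str   # hypothetical family label
    nominal_cm: float  # thickness the family is modelled at
    min_cm: float      # lower bound of measured thickness to match
    max_cm: float      # upper bound of measured thickness to match


def match_family(measured_cm: float,
                 rules: Sequence[WallFamilyRule]) -> Optional[WallFamilyRule]:
    """Return the first rule whose range contains the measured thickness."""
    for rule in rules:
        if rule.min_cm <= measured_cm <= rule.max_cm:
            return rule
    return None


DEFAULT_WALL_HEIGHT_M = 2.5  # assumed user-entered value; not present in a 2D plan


def build_wall(measured_cm: float,
               rules: Sequence[WallFamilyRule],
               height_m: float = DEFAULT_WALL_HEIGHT_M) -> dict:
    """Snap a detected wall to a family and attach the user-set height."""
    rule = match_family(measured_cm, rules)
    return {
        "family": rule.family_name if rule else "unmatched",
        "thickness_cm": rule.nominal_cm if rule else measured_cm,
        "height_m": height_m,
    }


rules = [WallFamilyRule("Interior wall 10 cm", 10.0, 9.0, 11.0)]
print(build_wall(9.4, rules))   # snapped to the ten-centimetre family
print(build_wall(20.0, rules))  # outside every range, left for user review
```

Leaving out-of-range walls unmatched, rather than forcing them into the nearest family, mirrors the review-and-correct step the article describes.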

Currently focused on architectural elements, the company plans to tackle the more complex task of converting structural and MEP drawings next year.

Plans2BIM is priced at €49 per month or €299 per year. The Revit add-on is $29 per month or $249 per year.

Huge interest in this technology points to significant demand for automating the conversion of existing building data into BIM models – particularly for renovation, facility management and digital twin initiatives. WiseBIM and Plans2BIM offer a compelling AI-powered solution for converting 2D plans to BIM.

While the AI’s accuracy sometimes requires user intervention, the iterative editing process and customisable features still deliver a time-saving solution over the manual alternative. There could be some rework of the standalone user interface, but it seems to work well in Revit as an add-in.

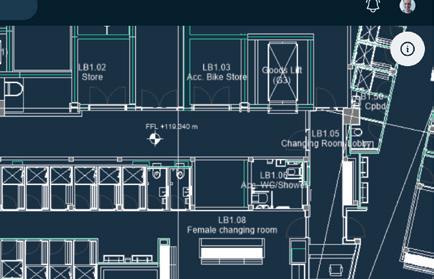

WiseBIM AI for Revit: Detect wall 2D (top) and 3D (above)

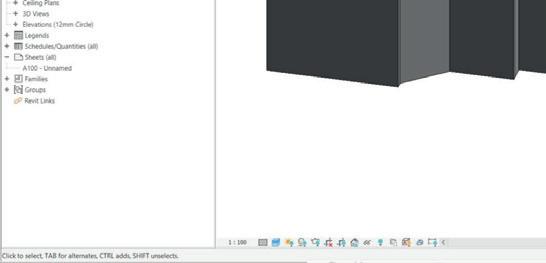

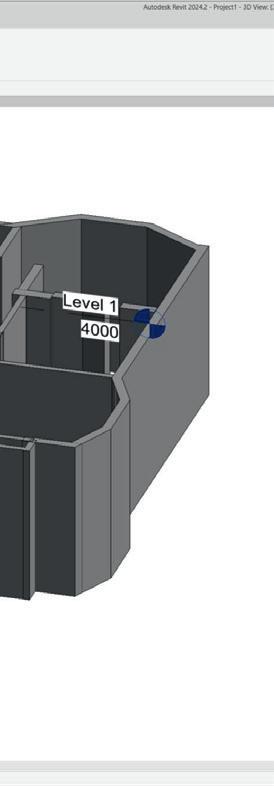

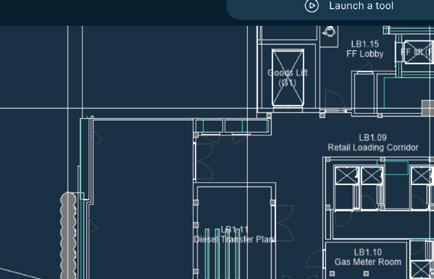

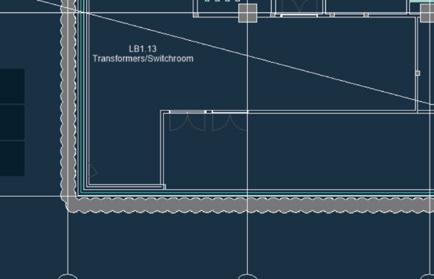

BIMify: 2D drawings and point clouds into BIM models

I first heard the term ‘BIMify’ used in relation to a feature in BricsCAD that converted dumb 3D geometry into intelligent BIM components. Now, we have a new service called BIMify, from a totally unrelated company, which is applying AI to convert dumb 2D drawings and point clouds into BIM models, mainly Revit.

Based in Sweden, BIMify is the brainchild of Aleksandar Balicevac and the result of five years of research and experimentation into reliable conversion technologies. As mentioned, BIMify is a service, rather than a software that you buy. It’s built on Balicevac’s AI code and a number of key Autodesk Forge (APS) components. While the BIMify website claims support for Archicad, this is currently via IFC, while Revit is native RVT.

BIMify takes a ‘factory-like’ approach, using machine learning and AI to batch process files, so that it’s possible to ‘feed in’ individual floors of a building and get a fully assembled Revit model out the other end. It’s even possible to have this model use your own family of parts. That means you can go from six dumb floor plan drawings to a fully editable RVT model in minutes.

Balicevac also tells us that the company has clients that are moving from other BIM systems to Revit and are using their system to convert old projects, as IFC does not give them editable Revit geometry. This means taking 2D out to get 3D native RVT files, which is an extremely interesting workflow.

BIMify supports various input formats – DWG, DXF, point cloud, PDF, image – and can output in RVT or IFC. While focusing on architecture currently, the company plans to expand into other disciplines and its team is developing features for model maintenance and seamless integration with other industry systems.

Balicevac says he is primarily focusing on Europe to start, where he estimates there are 20-25 billion square metres of buildings, of which some 95% were built pre-BIM and will need digitising sooner or later.

As to the accuracy of the AI, he seems supremely confident of his system. As it’s working, the AI is checked against building rules, leading to what he calls ‘engineering intelligence’ – knowing standard wall thicknesses, for example, or the minimum areas for spaces like bathrooms, which improves the confidence level of the AI. It can recognise interior and exterior walls, doors, windows, slabs and so on.

The BIMify workflow

The process begins with a user providing input data for their building, specifying the type of model needed on the platform. This includes defining the purpose-based specification (for example, for space management, reconstruction, or detailed design) and the desired level of detail (LoD).

Users describe their building (type, gross area, number of levels, level heights) and assign the input 2D files to the correct levels. They can also select the desired output format. Critically, users can specify their own Revit template and families to be used in the generated model. The platform provides an instant price quote based on the building size and specification.

Automated generation uses the company’s deep learning and AI to read the input files. Machines go through the drawing or scan to identify objects like walls, doors, windows, curtain walls, slabs, plumbing fixtures, columns, and rooms. The AI aims to determine object types and their locations (and is being developed to add increased granularity, such as single/double/triple windows).

Engineering intelligence is applied, with building rules used to validate and refine the AI output, as previously described.

Balicevac claims 100% accuracy here, which, if true, would certainly distinguish this tool from many other AI tools. He says that BIMify “avoids heuristic methods that rely on identifying spaces first, instead processing the full drawing (or parts) to identify objects directly.”

Some elements cannot currently be modelled automatically. These include stairs, railings, roofs, vertical openings and custom specification details, and require manual work by BIMify’s in-house team.

The model undergoes a standardised, semi-automated quality assurance step. This ensures the delivered model is complete and meets the specified quality standards. The manual completion and QA process also provides direct feedback to the development team to improve the automation algorithms.

We find that most AI developers are very protective of their ‘secret sauce’. They like to keep quiet about how they achieve what they do, and BIMify is more tight-lipped than most.

However, the company is clearly using Autodesk APS components to build these native Revit models with real-world families, such as the Forge viewer, data exchange and, I’m guessing, Revit.io (a headless Revit in the cloud). This is probably reassuring to Revit users, but may make it hard for BIMify to tackle native Archicad, as there is no equivalent to Revit.io.

BIMify makes big claims about output accuracy. Talking with Balicevac, you get the sense that he really knows what he’s doing with AI and ML. He’s put code in place to keep the AI in check with real-world engineering constraints. Autodesk’s approach with Forge is certainly advantageous here, but additional formats will be harder to achieve. Either way, BIMify is certainly an interesting firm to follow.
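To make the idea of keeping AI output in check with engineering constraints more concrete, here is a minimal Python sketch of how detected objects might be tested against simple building rules before being accepted into a model. It is an illustration only: BIMify has not published its method, and the `Detection` structure, the threshold values and the `triage` function are all assumptions invented for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Illustrative thresholds only: hypothetical values, not BIMify's actual rules.
WALL_THICKNESS_RANGE_MM = (50.0, 600.0)
MIN_ROOM_AREA_M2 = {"bathroom": 2.0}


@dataclass
class Detection:
    """One object proposed by a recognition model (hypothetical structure)."""
    kind: str                             # "wall" or "room" in this sketch
    label: str                            # e.g. "interior", "bathroom"
    confidence: float                     # model confidence, 0.0 to 1.0
    thickness_mm: Optional[float] = None  # walls only
    area_m2: Optional[float] = None       # rooms only


def rule_violations(d: Detection) -> List[str]:
    """Return the building rules this detection breaks (empty list if none)."""
    problems = []
    if d.kind == "wall" and d.thickness_mm is not None:
        low, high = WALL_THICKNESS_RANGE_MM
        if not low <= d.thickness_mm <= high:
            problems.append(f"wall thickness {d.thickness_mm} mm outside {low}-{high} mm")
    if d.kind == "room" and d.area_m2 is not None:
        minimum = MIN_ROOM_AREA_M2.get(d.label)
        if minimum is not None and d.area_m2 < minimum:
            problems.append(f"{d.label} area {d.area_m2} m2 below minimum {minimum} m2")
    return problems


def triage(
    detections: List[Detection], min_confidence: float = 0.8
) -> Tuple[List[Detection], List[Detection]]:
    """Accept detections that are confident and rule-compliant; flag the rest."""
    accepted, needs_review = [], []
    for d in detections:
        if d.confidence >= min_confidence and not rule_violations(d):
            accepted.append(d)
        else:
            needs_review.append(d)
    return accepted, needs_review


detections = [
    Detection("wall", "interior", 0.95, thickness_mm=100.0),
    Detection("wall", "interior", 0.97, thickness_mm=5.0),   # implausibly thin
    Detection("room", "bathroom", 0.90, area_m2=1.0),        # implausibly small
    Detection("room", "bathroom", 0.50, area_m2=4.0),        # low confidence
]
accepted, needs_review = triage(detections)
print(len(accepted), "accepted;", len(needs_review), "flagged for manual review")
```

In a sketch like this, anything that fails a rule is routed to a person rather than silently corrected, which mirrors the manual completion and QA steps described in the workflow.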


Cover story

Qonic: Autodrawings

In 2024, Ghent-based developer Qonic launched its BIM 2.0 platform. On the face of it, the first iteration is a cloud-based common data environment (CDE), capable of handling massive BIM models that far surpass Revit’s loading capability. It offers a really simple interface for filtering and interrogating BIM data, with intuitive sectioning and a frame rate that approaches computer-game level. Underneath, there’s a solid modelling engine that supports highly accurate editing of geometry and is aimed at the junction where architectural BIM meets construction BIM. In short, this was the starting point of what looks set to be a rapid and exciting adventure in software development.

Qonic is beefing up its platform. For the purpose of this article, we are going to focus on just one of the new introductions - and that’s the long-promised Autodrawings function.

With this goal in mind, Qonic has licensed Graebert’s Kudo DWG technology, which now comes with some autodrawing capability. It’s taking this base layer and building a powerful integration so that Qonic can ingest huge, multi-disciplinary models and quickly output 2D general assembly drawings.

When the technology was shown to me, I got the message that while the AEC industry generally recognises the necessity of drawings, executives at Qonic feel that their importance may dwindle in the future as model-based workflows become more prevalent, not to mention more accessible to a broader audience.

While I tend to agree with this idea in theory, I know from experience that it’s not always the case in practice. Our sister publication, DEVELOP3D, has plenty of readers that use Catia, Solidworks, Siemens NX and Inventor, for example, and even when they manufacture parts directly from CAD models, the production of drawings is still mandated for them. But that’s a discussion for another time.

In Qonic, the drawing generation process utilises the rich data and structure within the Qonic 3D model – derived from IFC or enriched from RVT – to produce intelligent and well-annotated 2D outputs. Graebert’s server-side automation and browser-based viewing of drawings, using its Kudo technology, is key to this new, combined cloud functionality.

Qonic’s drawing generation is primarily a one-directional output. Changes to dimensions, tags or other annotations must be made in the 3D model, which then triggers an update to the drawing. Qonic has no intention to create a full 2D editor that directly modifies the 3D model.

The automated generation works a bit like Hypermodels in Bentley iModel, with drawings generated automatically from defined section planes and templates, with the potential for scheduling this process (for example, it might take the form of nightly updates). The output is fully vectorised, allowing for export to formats including DWG and PDF. Drawings can be viewed directly within the Qonic environment, with basic annotation supported, such as adding dimensions and moving tags. A mechanism to flag outdated annotations following model updates is planned for the future.

Many firms struggle to get BIM models exactly how they want them, and resort to ‘fixing it in the drawing’. Here, Qonic is empowering users to fix inaccuracies in the model due to performance capabilities and provides automation tools for propagating changes, rather than relying on manual fixes in 2D drawings. Initially, the focus is on producing general arrangement (GA) drawings with the goal of extending the capability to more detailed construction and design delivery drawings in the future. It’s also worth pointing out that many firms have their own internal visual styles for general arrangement drawings. Qonic will enable configurations to cater to most firms’ visual tastes for wall styles, openings and other content.

The drawing functionality will likely form part of the paid subscription options and not appear in the free version of Qonic. Pricing is not expected to be based on tokenisation or usage, although these pricing structures are used by some competitors.

Drawing generation capabilities are now in active development. The company already has working prototypes and is hoping to release the software later this year. The Qonic team will appear on the main stage at NXT BLD (London, 11 June) demonstrating this new functionality and will also be offering attendees a chilled-out exhibition space in which to relax and talk to the team.

Conclusion (from page 12)

While many people are still waiting around for new 3D BIM tools, it’s clear that development work is increasing our options, when it comes to what capabilities we can buy and how we will work in future. Drawing capabilities are a big part of that picture.

The companies developing 2D-to-3D capabilities are heavily focused on using machine learning and AI to perform this task, together with rules and configurations. It’s our understanding that Graebert’s technology is predominantly linear processing without any AI, but in the future, we fully expect to see some machine learning used in the production of auto drawings, as they scale from general arrangement (GA) to Level 400. The key takeaway here is that dumb 2D drawings won’t stay dumb for long. If a drawing is an accurate plan, then there are simple ways to digitise that information and convert it to 3D. As for autodrawings, it’s clear that this June at NXT BLD, there will be real productivity benefits to witness first-hand.
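As a footnote to the Qonic section, the planned mechanism for flagging outdated annotations after a model update can be pictured with a short Python sketch. This is one possible approach under stated assumptions, not Qonic’s or Graebert’s implementation: the model is represented as a plain dictionary of element properties, and `fingerprint`, `Annotation`, `annotate` and `find_outdated` are hypothetical names made up for illustration.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List


def fingerprint(element: dict) -> str:
    """Stable hash of an element's properties (hypothetical model representation)."""
    payload = json.dumps(element, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class Annotation:
    """A drawing annotation that remembers the element state it was made against."""
    text: str
    element_id: str
    element_fingerprint: str


def annotate(model: Dict[str, dict], element_id: str, text: str) -> Annotation:
    """Create an annotation tied to the current state of a model element."""
    return Annotation(text, element_id, fingerprint(model[element_id]))


def find_outdated(model: Dict[str, dict], annotations: List[Annotation]) -> List[Annotation]:
    """Return annotations whose element has changed or no longer exists."""
    outdated = []
    for note in annotations:
        element = model.get(note.element_id)
        if element is None or fingerprint(element) != note.element_fingerprint:
            outdated.append(note)
    return outdated


# The model stays the single source of truth; drawings only read from it.
model = {
    "wall-1": {"type": "wall", "length_mm": 4000},
    "door-7": {"type": "door", "width_mm": 900},
}
notes = [
    annotate(model, "wall-1", "4000"),
    annotate(model, "door-7", "D07"),
]

model["wall-1"]["length_mm"] = 4500   # an edit made in the 3D model
stale = find_outdated(model, notes)
print([note.element_id for note in stale])
```

The point of the sketch is the direction of travel: the annotation never writes back to the model, it only records what it last saw, so a nightly regeneration could flag anything that no longer matches.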

Unlocking New Creative Workflows with AI

Architects and designers have long embraced technology to work smarter, faster, and more creatively. Since its introduction to the industry, AI has emerged as a powerful force, reshaping how teams optimise processes, brainstorm ideas, and deliver results. This article explores AI’s impact, how it transforms processes, and the new workflows it has created in architecture.

AI’s impact on architecture

AI’s significance in architecture is thanks to its ability to process large amounts of data, automate time-consuming tasks, help with quick design exploration, and optimise image quality. It’s useful across various stages of the architectural design process, from early-stage planning and conceptualisation to construction.

According to a survey conducted by Architizer and Chaos on the state of architectural visualisation in 2025, excitement around AI experimentation is up by 20% compared to 2024. Larger firms are more enthusiastic about it; however, smaller firms and freelancers are also finding their own creative approaches.

The same survey reveals that people’s use of AI differs depending on the kind of firm they work for. For instance, large and small firms are using AI to help produce traditional, still renderings quickly and more economically, whereas medium-sized firms are using AI to experiment and discover new creative processes.

The benefits of implementing AI

Increased efficiency: One major way AI is transforming architectural workflows is by accelerating time-consuming tasks. AI tools can handle these processes with impressive efficiency, from generating preliminary floor plans to evaluating structural feasibility and refining spatial organisation.

Enhanced creativity: Generative AI technologies push the boundaries of traditional architectural design. The mix of human creativity and machine intelligence unlocks the potential for new ideas and aesthetics that are unique and functional. It also introduces architects and designers to creative solutions they may not have considered independently. With a single prompt, you can quickly explore different forms, layouts, and styles.

Visualisation quality: AI tools can sharpen and upscale images, often without sacrificing performance. From reducing noise in renders to simulating atmospheric lighting, you can have realistic and presentation-ready images in no time. This level of quality can help communicate technical insight with emotional clarity, ensuring clients and stakeholders understand the vision you are trying to convey.

Better client communication: AI-powered tools can facilitate transparent and effective communication between you and your clients by offering more immersive, comprehensive ways to present ideas. They can also interpret vague client feedback and translate it into actionable design suggestions, helping align expectations early in the process and reducing costly revisions later on.

AI-powered visualisation workflows

Chaos Veras is an AI-powered visualisation app for leading modelling tools like Revit and SketchUp. It uses your 3D model geometry as a substrate for creativity and inspiration, making it ideal for ideation and pre-design.

Ideation and pre-design are phases where fast decision-making is crucial, and design quality cannot be sacrificed. Veras helps minimise the gap during these stages by allowing you to generate fast AI-rendered concepts that you can share with colleagues and clients. This makes early design feedback quicker and easier.

The Chaos AI Enhancer allows you to elevate the quality of your visualisations within Chaos Enscape, an industry-leading real-time rendering and VR plug-in. It leverages AI to improve people and vegetation assets, enhancing the realism of your visualisations.

Realistic assets bring a project to life, and the Chaos AI Enhancer allows you to export better-looking assets straight from Enscape. As it’s a feature and not a third-party solution, using it means you don’t have to leave your design application, giving you a streamlined visualisation workflow.

New ways to design and visualise

Enscape and Veras are popularly used together for an enhanced architectural visualisation workflow. This combination gives you a more robust workflow and eliminates the need for multiple disconnected tools.

Users benefit from faster iteration cycles. The integrated workflow lets you explore a number of different design ideas without locking anything into your BIM/CAD model too early. It also offers a richer canvas for enhancement, which allows you to produce more realistic and immersive output.

Hanns-Jochen Weyland of Störmer Murphy and Partners, an award-winning architectural practice based in Hamburg, Germany, shares, “Over a year ago, we began exploring AI tools to speed up our workflows and were excited to discover Veras, a solution specifically designed for AEC that seamlessly integrates with host platforms. Veras is now our go-to for initial ideation before transitioning to renderings in Enscape. This powerful combination accelerates concept development and ensures reliable outcomes.”

Supercharge your designs with Chaos

AI is shaping new ways to work within architecture. By streamlining the design process, it contributes to more polished, functional, and cost-effective solutions, allowing architects and designers to redirect their energy toward designing and solving complex challenges.

Chaos is a global leader in design and visualisation technology, providing world-class visualisation solutions that help you share ideas, optimise workflows, and create immersive experiences.

Visit www.Chaos.com to learn more

AI-rendered in Veras

Shaping the future of AEC

With NXT BLD and NXT DEV fast approaching, we put the spotlight on the key themes of our two-day event—from AI and BIM 2.0 to pioneering technology perspectives from some of the biggest firms in AEC

AEC leaders

Pioneer perspectives

A core element of NXT BLD is the insight shared by leading AEC firms and heads of design technology, who present the real-world workflows and innovations shaping their firms. This year’s line-up includes industry heavyweights from Heatherwick Studio, Perkins&Will, Foster + Partners, HOK, Bouygues, and Buro Happold — with more high-profile names to be announced very soon.

At NXT DEV, many of these same design technology leaders return to help steer the conversation—offering guidance, feedback, and insight to ensure that the next generation of tools directly address the challenges and needs faced by today’s AEC professionals.

City Hall / London Foster + Partners

Francis Crick Institute London / HOK

AI

Artificial thinking

Artificial Intelligence (AI) and machine learning are now impossible to ignore. The pace of advancement is exponential - what seemed like a distant possibility just a year ago has already been surpassed.

Soon, every firm will have access to AI tools as capable as the world’s best software developers, profoundly impacting how software is created and how AEC firms interact with their data.

At NXT BLD, expect thought-provoking presentations from leading voices in this space, including Martha Tsigkari, Head of Applied R+D at Foster + Partners; Keir Regan-Alexander of Arka.Works (see page 26); and Sean Young of Nvidia. You’ll also get a first look at cutting-edge AI-powered tools shaping the future of design and AEC collaboration, including Finch3D, Consigli and Tektome.

Meanwhile, NXT DEV will take the conversation even further, hosting a deep-dive panel discussion with AI experts to explore the real-world implications of these technologies across the AEC landscape. Nvidia will also explore how the AEC industry is rapidly shifting from AI curiosity to AI action.

Automatic for the people

This month’s cover feature explores the automation of 2D - from converting 2D drawings to 3D models to generating automated documentation directly from BIM (see page 12). At NXT BLD and NXT DEV, you’ll see live demonstrations of new tools from EvolveLAB (now part of Chaos), Qonic, and Graebert, showcasing both current capabilities and upcoming features. As these technologies mature, they promise significant productivity gains by reducing the time spent on manual drawing production. They also open the door to more efficient workflows, with fewer high-cost software licences required for documentation tasks.

Martha Tsigkari Head of Applied R+D Foster + Partners

Veras by Chaos AI-powered visualisation

Relighting Hamburg scene with Midjourney v7: Courtesy of Keir Regan-Alexander (Arka.Works)

Let’s get down to business

Investing in software and infrastructure has always been key to driving business value—but with the rise of AI, data lakes, open systems, and productivity tools like autonomous drawings, the return on investment is becoming even more tangible. NXT BLD and NXT DEV offer a unique opportunity to gain insight into these developments, connect with innovators, and see real-world solutions that can directly impact your bottom line.

One standout session will focus on how to negotiate effectively with software vendors and resellers, offering a behind-the-scenes look at how pricing models, metrics, and deal structures actually work.

BIM 2.0

The new power generation

AEC Magazine has long championed the evolution of next-generation BIM tools - alternatives to the traditional, file-based desktop modelling systems that have dominated the industry for decades. This year marks a milestone: for the first time, many of the innovators we’ve followed from early development now have real products in the market.

At NXT BLD, the main stage will host a back-to-back showcase of these groundbreaking platforms: Arcol, Qonic, Motif, Snaptrude, and Hypar — all demonstrating new capabilities and sharing their visions for the future of BIM.

Building a full-featured competitor to something as mature as Revit, now 25 years old, is no small feat. These tools are evolving in phases—some still focused on early-stage or conceptual modelling—but the goal is clear: to deliver comprehensive BIM 2.0 platforms, designed for cloud-native workflows, real-time collaboration, and entirely new ways of working.

Alongside a global lineup of startups, there’ll be presentations from major AEC software developers including Autodesk, Bentley Systems, and Graphisoft. Each will showcase new technologies and never-before-seen applications. The energy and innovation driven by emerging startups is clearly influencing the broader industry, inspiring established vendors to accelerate their own development and push the boundaries of what’s possible in AEC.

Motif

Qonic

Snaptrude

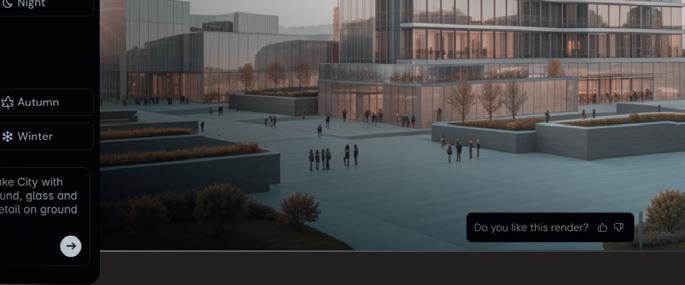

Reality modelling

Reality check

Capturing buildings and terrain once meant hiring a surveyor with a total station - but the landscape has changed dramatically. Technologies like LiDAR and photogrammetry paved the way, and now cutting-edge methods such as NeRFs and Gaussian Splatting are pushing accuracy and realism even further. Add to that the rapid evolution of mobile phones, SLAM scanners, drones, and advanced reality capture software, and it’s clear we’re entering a new era of digital site capture.

NXT BLD will feature speakers from across the industry, while at NXT DEV, don’t miss a dedicated panel session hosted by Robert Klaschka, where the future of reality capture will take centre stage.

Gauzilla: 4D construction time-lapse with Gaussian Splats

Geospatial & city context

Geo whiz

GIS and BIM have long been on a path toward convergence - and that future is finally taking shape. Thanks to new tools, growing industry collaboration, and open-source initiatives, integrating GIS data into everyday workflows is becoming faster, easier, and more accessible than ever. Advances in game engine technology, hardware, and graphics now make it possible to explore highly detailed, city-scale models in real time, while AI is unlocking powerful new ways to analyse environmental conditions.

At NXT BLD, a special group session hosted by Esri will spotlight the latest innovations in this space, along with exciting new startups helping to shape the future of geoBIM.

Open source & data lakes

Open all hours

A recurring theme at NXT BLD has been the push for industry-owned open data lakes. AEC firms increasingly want full ownership of their project data - without being locked into paying software vendors to access and manage it. This issue has become even more urgent with the rise of AI, as firms recognise the immense value their data holds for software companies.

This year at NXT BLD / DEV, expect major announcements on new developments in this space - advancing the vision of open, accessible, and AI-ready project data.

Digital fabrication / automation

Machine made

Digital transformation in AEC goes far beyond modelling and documentation. The real opportunity lies in connecting design systems with modern, digitised manufacturing - enabling seamless machine-to-machine communication. Achieving this demands a fundamental rethink of current processes. Increasingly, firms are turning to modular construction and kit-of-parts strategies to drive efficiency and scalability in the next generation of buildings and infrastructure.

Don’t miss an exclusive NXT DEV panel discussion exploring the current state of this rapidly evolving space and what it means for the future of design and delivery.

Power play

AEC workflows are evolving at pace, making high-performance workstations more important than ever. We’ll explore how firms can best prepare for the rising demands of AI, visualisation, reality modelling, and other compute-intensive workflows.

A thought-provoking panel of experts at NXT DEV will tackle a variety of topics including AI and sustainability. With AI still in its early stages of adoption, firms must consider how to scale their compute infrastructure — balancing desktop, mobile, datacentre, and cloud. At the same time, rising performance demands bring increased energy consumption. How can firms unlock the full potential of AI while keeping sustainability in focus?

Meanwhile, at NXT BLD, Lenovo’s Mike Leach will offer insight in his presentation, ‘Navigating AI in AECO: balancing visionary potential with real-world practice’, while delegates can get hands-on with Lenovo’s latest Intel and Nvidia-powered workstations built for tomorrow’s AEC challenges.

Digital discovery

The exhibition brings together the very latest technologies for AEC - think browser-based BIM, advanced AI, spatial data integration, visualisation and next-gen collaborative platforms.

You’ll also find brand new workstation hardware from Lenovo, Nvidia, and Intel, built to power the most demanding AEC workflows, including AI.

It’s a rich mix of rising stars and industry veterans — each helping to redefine what’s possible in AEC, and driving our industry into exciting new digital territory.

The BIM 2.0 startups will be out in force and, for the first time ever, you’ll be able to meet Snaptrude, Motif, Qonic and Arcol under one roof, offering hands-on demos of their commercial platforms and fresh approaches to digital design and collaboration.

Innovation from established players will be equally strong. Expect major updates from Autodesk, Graphisoft, and Esri, as well as a brand-new suite of AEC-focused software bundles from Dassault Systèmes, the developer of Catia (see page 34).

And that’s just the beginning. Check out the full exhibitor line up below.

NXT BLD

■ www.nxtbld.com/sponsors-2025

NXT DEV

■ www.nxtdev.build/sponsors-2025

AI & design culture (part 1)

As AI tools rapidly evolve, how are they shaping the culture of architectural design? Keir Regan-Alexander, director of Arka.Works, explores the opportunities and tensions at the intersection of creativity and computation — challenging architects to rethink what it means to truly design in the age of AI

An awful lot has been happening recently in the AI image space, and I’ve written and rewritten this article about three times to try and account for everything. Every time I think it’s done, there seems to be another release that moves the needle. That’s why this article is in two parts; first I want to look at recent changes from Gemini and GPT-4o and then, in the July / August edition, take a deeper dive into Midjourney V7 and give a sense of how architects are using these models.

I’ll start by describing all the developments and conclude by speculating on what I think it means for the culture of design.

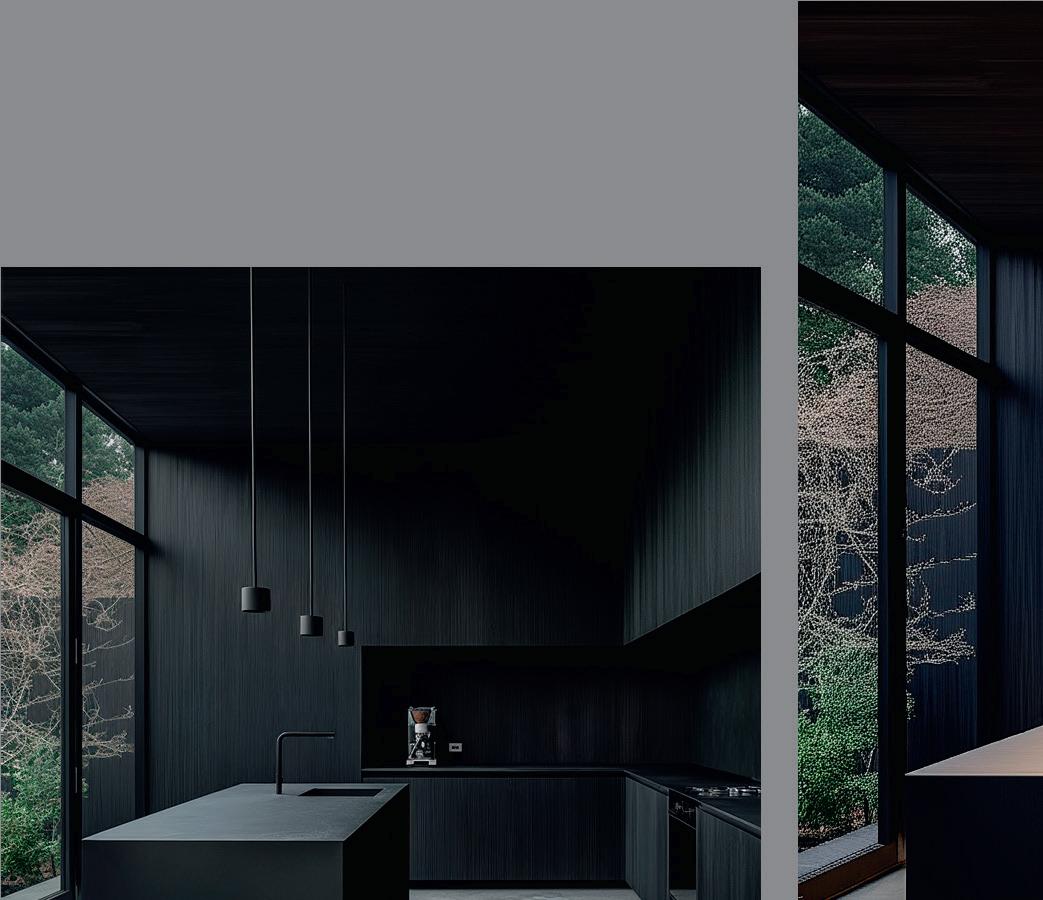

Right off the bat, let’s look at exactly what we’re talking about here. In figure 1 you’ll see a conceptual image for a modern kitchen, all in black. This was created with a text prompt in Midjourney. After that I put the image into Gemini 2.0 (inside Google AI Studio) and asked it:

“Without changing the time of day or aspect ratio, with elegant lighting design, subtly turn the lights (to a low level) on in this image - the pendant lights and strip lights over the counter.”

Why is this extraordinary?

Well, there is no 3D model for a start. But look closer at the light sources and shadows. The model knew where exactly to place the lights. It knows the difference between a pendant light and a strip light and how they diffuse light. Then it knows where to cast the multi-directional shadows and also that the material textures of each surface would have diffuse, reflective or caustic illumination qualities.

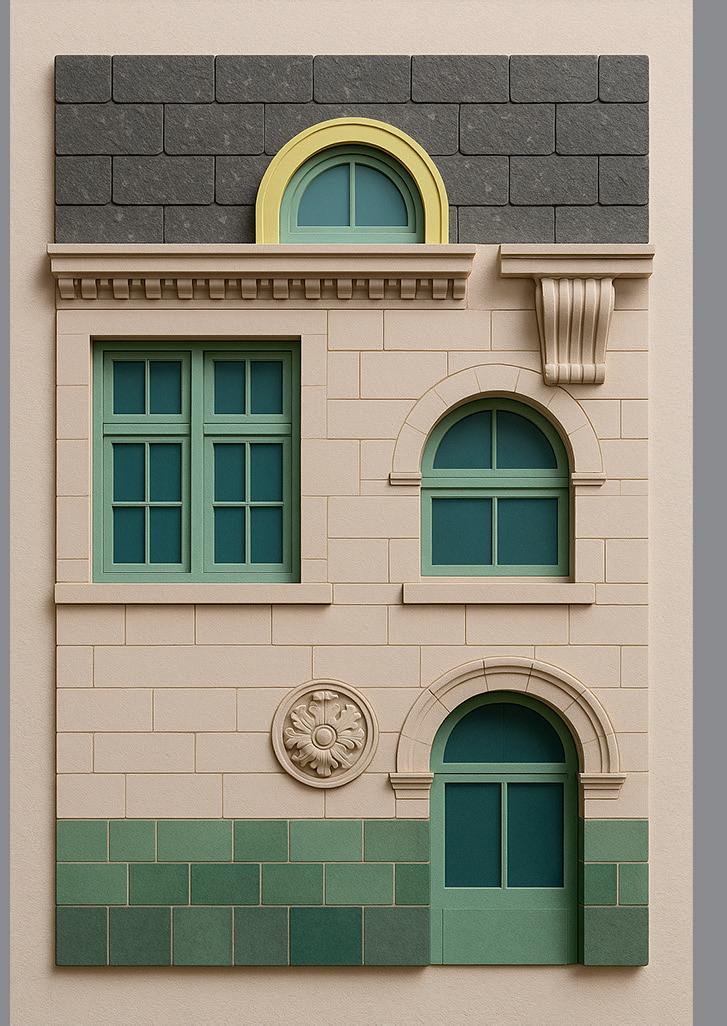

Here’s another one (see figure 2). This time I’m using GPT-4o in Image Mode.

“Create an image of an architectural sample board based on the building facade design in this image”

Why is this one extraordinary?

Again, no 3D model and with only a couple of minor exceptions, the architectural language of specific ornamentation, materials, colours and proportion have all been very well understood. The image is also (in my opinion) very charming. During the early stages of design projects, I have always enjoyed looking at the local “Architectural Taxonomy” of buildings in context and this is a great way of representing it. If someone in my team had made these images in practice I would have been delighted and happy for them to be included in my presentations and reports without further amendment.

Figure 1: (Left) an image used as input (created in Midjourney). (Right) an image returned from Gemini that precisely followed my text-based request for editing

A radical redistribution of skills

There is a lot of hype in AI which can be tiresome, and I always want to be relatively sober in my outlook and to avoid hyperbole.

You will probably have seen your social media feeds fill with depictions of influencers as superhero toys in plastic wrappers, or maybe you’ve observed a sudden improvement in someone’s graphic design skills and surprisingly judicious use of fonts and infographics … that’s all GPT-4o Image Mode at work.

So, despite the frenzy of noise, the surges of insensitivity towards creatives and the abundance of Studio Ghibli IP infringement surrounding this release - in case it needs saying just one more time - in the most conservative of terms, this is indeed a big deal.

The first time you get a response from these new models that far exceeds your expectations, it will shock you and you will be filled with a genuine sense of wonder. I imagine the reaction feels similar to that of the first humans to see a photograph in the early 19th century - it must have seemed genuinely miraculous and inexplicable. You feel the awe and wonder, then you walk away and you start to think about what it means for creators, for design methods … for your craft … and you get a sinking feeling in your stomach. For a couple of weeks after trying these new models for the first time I had a lingering feeling of sadness with a bit of fear mixed in.

I think this feeling was my brain finally registering the hammer dropping on a long-held hunch; that we are in an entirely new industry whether we like it or not and even if we wanted to return to the world of creative work before AI, it is impossible. Yes, we can opt to continue to do things however we choose, but this new method now exists in the world and it can’t be put back in the box.

I’ll return to this internal conflict again in my conclusion. If we set aside the emotional reaction for a moment, the early testing I’ve been doing in applying these models to architectural tasks suggests that, in both cases, the latest OpenAI and Google releases could prove to be “epoch-defining” moments for architects and for all kinds of creatives who work in the image and video domains.

This is because the method of production and the user experience is so profoundly simple and easy compared to existing practices, that the barrier for access to image production in many, many realms has now come right down.

‘‘ These techniques are so accessible in nature that we should expect to see our clients briefing us with ever-more visual material. We therefore need to not be afraid or shocked when they do ’’

Again, we may not like to think about this from the perspective of having spent years honing our craft, yet the new reality is right in front of us and it’s not going anywhere. These new capabilities from image models can only lead to a permanent change in the working relationship between the commissioning client and the creative designer, because the means of production for graphical and image production have been completely reconfigured. In a radical act of forced redistribution, the access to sophisticated skill sets is now being packaged up by the AI companies to anyone who pays the licence fee.

What has not become distributed (yet) is wise judgement, deep experience in delivery, good taste, entirely new aesthetic ideas, emotional human insight, vivid communication and political diplomacy; all attributes that come with being a true expert and practitioner in any creative and professional realm.

These are qualities that for now remain inalienable and should give a hint at where we have to focus our energies in order to ensure we can continue to deliver our highest value for our patrons, whomever they may be. For better or worse, soon they will have the option to try and do things without us.

Figure 2: (Left) a photograph taken in London on my ride home (building on Blackfriars Road). (Right) GPT-4o’s response to my request, a charming mock up of a model sample board of the facade

Chat-based image creation & editing

For a while, attempting to produce or edit images within chat apps has produced only sub-standard results. The likes of “Dall-E” which could be accessed only within otherwise text-based applications had really fallen behind and were producing ‘instantly AI identifiable images’ that felt generic and cheesy. Anything that is so obviously AI created (and low quality) means that we instantly attribute a low value to it.

As a result, I was seeing designers flock instead to more sophisticated options like Midjourney v6.1 and Stable Diffusion SDXL or Flux, where we can be very particular about the level of control and styling and where the results are often either indistinguishable from reality or indistinguishable from human creations. In the last couple of months that dynamic has been turned upside down; people can now achieve excellent imagery and edits directly with the chat-based apps again.

The methods that have come before, such as MJ, SD and Flux, are still remarkable and highly applicable to practice, but they all require a fair amount of technical nous to get consistent and repeatable results. I have found through my advisory work with practices that having a technical solution isn’t what matters most; it’s having it packaged up and made enjoyable enough to use that it’s able to change rigid habits.

A lesser tool with a great UX will beat a more sophisticated tool with a bad UX every time.

These more specialised AI image methods aren’t going away, and they still represent the most ‘configurable’ option, but text-based image editing is a format that anyone with a keyboard can do, and it is absurdly simple to perform.

More often than not, I’m finding the results are excellent and suitable for immediate use in project settings. If we take this idea further, we should also assume that our clients will soon be putting our images into these models themselves and asking for their ideas to be expressed on top…

We might soon hear our clients saying: “Try this with another storey”, “Try this but in a more traditional style”, “Try this but with rainscreen fibre cement cladding”, “Try this but with a cafe on the ground floor and move the entrance to the right”, “Try this but move the windows and make that one smaller”...

You get the picture.

Again, whether we like this idea or not (and I know architects will shudder even thinking of this), when our clients receive the results back from the model, they are likely to be similarly impressed with themselves, and this can only lead to a change in briefing methods and working dynamics on projects.

To give a sense of what I mean exactly, in figure 3 over the page I’ve included an example of a new process we’re starting to see emerge whereby a 2D plan can be roughly translated into a 3D image using 4o in Image Mode. This process is definitely not easy to get right consistently (the model often makes errors) and also involves several prompting steps and a fair amount of nuance in technique. So far, I have also needed to follow up with manual edits.

Despite those caveats, we can assume that in the coming months the models will solve these friction points too. I saw this idea first validated by Amir Hossein Noori (co-founder of the AI Hub) and while I’ve managed to roughly reproduce his process, he gets full credit for working it out and explaining the steps to me - suffice to say it’s not as simple as it first appears!

Conclusion: the big leveller

1. Client briefing will change

My first key conclusion from the last month is that these techniques are so accessible in nature that we should expect to see our clients briefing us with ever-more visual material. We therefore need to not be afraid or shocked when they do.

(Left) Image produced in Midjourney using a technique called ‘moodboards’. (Right) Image produced in GPT-4o Image Mode with a simple text prompt

I don’t expect this shift to happen overnight, and I also don’t think all clients will necessarily want to work in this way. Over time, though, it’s reasonable to expect it to become much more prevalent, particularly among clients who are already inclined to make sweeping aesthetic changes when briefing on projects.

Takeaway: As clients decide they can exercise greater design control through image editing, we need to be clearer than ever on how our specialisms are differentiated and to be able to better explain how our value proposition sets us apart. We should be asking: what are the really hard and domain-specific niches that we can lean into?

2. Complex techniques will be accessible to all

Next, we need to reconsider technical hurdles as being a ‘defensive moat’ for our work. The most noticeable trend in the last couple of years is that the things that appear profoundly complicated at first often go on to become much simpler to execute later. As an example, a few months ago we had to use ComfyUI (a complex node-based interface for using Stable Diffusion) for ‘re-lighting’ imagery. This method remains optimal for control, but now for many situations we could just make a text request and let the model work out how to solve it directly. Let’s extrapolate that trend and assume that, as a generalisation, the harder things we do will gradually become easier for others to replicate.

Muscle memory is also a real thing in the workplace; it’s often so much easier to revert to the way we’ve done things in the past. People will say “Sure it might be better or faster with AI, but it also might not - so I’ll just stick with my current method”. This is exactly the challenge that I see everywhere and the people who make progress are the ones who insist on proactively adapting their methods and systems.

The major challenge I observe for organisations through my advisory work is that behavioural adjustments to working methods when you’re under stress or a deadline are the real bottleneck. The idea here is that while a ‘technical solution’ may exist, change will only occur when people are willing to do something in a new way. I do a lot of work now on “applied AI implementation” and engagement across practice types and scales. I see again and again that there are pockets of technical innovation and skills with certain team members, but I also see that it’s not being translated into actual changes in the way people do things across the broader organisation. This has a lot to do with access to suitable training, but also with a lack of awareness that improving working methods is much more about behavioural incentives than it is about ‘technical solutions’.

“In a radical act of forced redistribution, access to sophisticated skill sets is now being packaged up by the AI companies for anyone who pays the licence fee”

There is an abundance of new groundbreaking technology now available to practices, maybe even too much - we could be busy for a decade with the inventions of the last couple of years alone. But in the next period, the real difference maker will not be technical, it will be behavioural. How willing are you to adapt the way you’re working and try new things? How curious is your team? Are they being given permission to experiment? This could prove a liability for larger practices and make smaller, more nimble practices more competitive.

Takeaway: Behavioural change is the biggest hurdle. As the technical skills needed for the ‘means of creative production’ become more accessible to all, the challenge for practices in the coming years may not be all about technical solutions, it will be more about their willingness and ability to adjust behaviour and culture. The teams who succeed won’t be the people who have the most technically accomplished solutions, more likely it will be those who achieve the most widespread and practical adaptations of their working systems.

(Left) Image produced in Midjourney (Right) Gemini has changed the cladding to dark red standing seam zinc and also changed the season to spring. The mountains are no longer visible but the edit is extremely high quality.

3. Shifting culture of creativity

I’ve seen a whole spectrum of reactions towards Google and OpenAI’s latest releases and I think it’s likely that these new techniques are causing many designers a huge amount of stress as they consider the likely impacts on their work. I have felt the same apprehension many times too. I know that a number of ‘crisis meetings’ have taken place in creative agencies for example, and it is hard for me to see these model releases as anything other than a direct threat to at least a portion of their scope of creative work.

This is happening to all industries, not least computer science; after all, LLMs can write exceptional code too. From my perspective, it’s certainly coming for architecture as well, and if we are to maintain the architect’s central role in design and placemaking, we need to shift our thinking and current approach or our moat will gradually be eroded too.

The relentless progression of AI technology cares little about our personal career goals and business plans, and when we consider the sense of inevitability of it all, I’m left with a strong feeling that the best strategy is actually to run towards the opportunities that change brings, even if that means feeling uncomfortable at first.

Among the many posts I’ve seen celebrating recent developments from thought leaders and influencers seeking attention and engagement, I can see a cynical thread emerging ... of (mostly) tech and sales people patting themselves on the back for having “solved art”.

The posts I really can’t stand are the cavalier ones that actually seem to rejoice at the idea of not needing creative work anymore, salivating at the budget savings they will make ... they seem to think you can just order “creative output” off a menu and that these new image models are a cure for some kind of long held frustration towards creative people.

Takeaway: The model “output” is indeed extraordinarily accomplished and produced quickly, but creative work is not something that is “solvable”; it either moves you or it doesn’t, and design is similar: we try to explain objectively what great design quality is, but it’s hard. Certainly it fits the brief, yes, but the intangible and emotional reasons are more powerful and harder to explain. We know it when we see it.

While AIs can exhibit synthetic versions of our feelings, for now they represent an abstracted shadow of humanness - it is a useful imitation for sure and I see widespread applications in practice, but in the creative realm I think it’s unlikely to nourish us in the long term. The next wave of models may begin to ‘break rules’ and explore entirely new problem spaces and when they do I will have to reconsider this perspective.

We mistake the mastery of a particular technique for creativity and originality, but the thing about art is that it comes from humans who’ve experienced the world, felt the emotional impulse to share an authentic insight and cared enough to express themselves using various mediums. Creativity means making something that didn’t exist before.

That essential impulse, the genesis, the inalienably human insight and direction, is still, for me, everything. As we see AI creep into more and more creative realms (like architecture) we need to be much more strategic about how we value the specifically human parts, and for me that means ceasing to sell our time and instead learning to sell our value.

In the next edition I will be looking in depth at Midjourney and how it’s being used in practice. I’ll also be looking specifically at the latest release (V7) in more detail. Until then, thanks for reading.

A multistep process that uses the 3D view from the previous study and applies material and colour ideas from the palette to produce interior look and feel options. Left image produced in GPT-4, all other images produced in Midjourney v7 (AW)

Keir Regan-Alexander is director at Arka Works, a creative consultancy specialising in the Built Environment and the application of AI in architecture. He will be speaking at AEC Magazine’s NXT BLD (www.nxtbld.com) in London on 11 June Catch

(Left) An example plan of an apartment (AI Hub), with a red arrow denoting the camera position. (Right) A render produced with GPT-4o Image Mode (produced by Arka Works)


AI and the future of arch viz

Tudor Vasiliu, founder of architectural visualisation studio Panoptikon, explores the role of AI in arch viz, streamlining workflows, pushing realism to new heights, and unlocking new creative possibilities without compromising artistic integrity

AI is transforming industries across the globe, and architectural visualisation (let’s call it ‘Arch Viz’) is no exception. Today, generative AI tools play an increasingly important role in an arch viz workflow, empowering creativity and efficiency while maintaining the precision and quality expected in high-end visuals.

In this piece I will share my experience and best practices for how AI is actively shaping arch viz by enhancing workflow efficiency, empowering creativity, and setting new industry standards.

Streamlining workflows with AI

AI, we dare say, has proven not to be a bubble or a simple trend, but a proper productivity driver and booster of creativity. Our team at Panoptikon and others in the industry leverage generative AI tools to the maximum to streamline processes and deliver higher-quality results.

Tools like Stable Diffusion, Midjourney and Krea.ai transform initial design ideas or sketches into refined visual concepts. Platforms like Runway, Sora, Kling, Hailuo or Luma can do the same for video. With these platforms, designers can enter descriptive prompts or reference images, generating early-stage images or videos that help define a project’s look and feel without lengthy production times.

This capability is especially valuable for client pitches and brainstorming sessions, where generating multiple iterations is critical. Animating a still image is possible with the tools above just by entering a descriptive prompt, or by manipulating the camera in Runway.ml.

Sometimes, clients find themselves under pressure due to tight deadlines or external factors, while studios may also be fully booked or working within constrained timelines. To address these challenges, AI offers a solution for generating quick concept images and mood boards, which can speed up the initial stages of the visualisation process.

In these situations, AI tools provide a valuable shortcut by creating reference images that capture the mood, style, and thematic direction for the project. These AI-generated visuals serve as preliminary guides for client discussions, establishing a strong visual foundation without requiring extensive manual design work upfront.

Although these initial images aren’t typically production-ready, they enable both the client and visualisation team to align quickly on the project’s direction.

Once the visual direction is confirmed, the team shifts to standard production techniques to create the final, high-resolution images that accurately showcase the full range of technical specifications that outline the design. While AI expedites the initial phase, the final output meets the high-quality standards expected for client presentations.

Dynamic visualisation