Building Information Modelling (BIM) technology for Architecture, Engineering and Construction


Ticking away your rights? In a data-centric AI world, what should we do when software EULAs go rogue?

Autodesk AEC evolves

Forma expands into detailed design

Welcome to neural CAD

Autodesk shows its AI hand

From pixels to prompts

Chaos blending AI with traditional viz

editorial

MANAGING EDITOR

GREG CORKE greg@x3dmedia.com

CONSULTING EDITOR

MARTYN DAY martyn@x3dmedia.com

CONSULTING EDITOR

STEPHEN HOLMES stephen@x3dmedia.com

advertising

GROUP MEDIA DIRECTOR

TONY BAKSH tony@x3dmedia.com

ADVERTISING MANAGER

STEVE KING steve@x3dmedia.com

U.S. SALES & MARKETING DIRECTOR

DENISE GREAVES denise@x3dmedia.com

subscriptions

MANAGER

ALAN CLEVELAND alan@x3dmedia.com

accounts

CHARLOTTE TAIBI charlotte@x3dmedia.com

FINANCIAL CONTROLLER

SAMANTHA TODESCATO-RUTLAND sam@chalfen.com

AEC Magazine is available FREE to qualifying individuals. To ensure you receive your regular copy please register online at www.aecmag.com

about

AEC Magazine is published bi-monthly by X3DMedia Ltd, 19 Leyden Street, London, E1 7LE, UK

T. +44 (0)20 3355 7310

F. +44 (0)20 3355 7319

© 2025 X3DMedia Ltd

Autodesk targets BIM with Forma Building Design, Construction robot printer links to CAD, Nvidia launches compact GPUs, plus lots more

Egnyte puts AEC Agents to work, AI drives electrical design, Snaptrude targets early stage design, and lots more

Chaos is blending AI with traditional viz, rethinking how architects explore, present and refine ideas

Most architects overlook software small print, but today’s EULAs are redefining ownership, data rights and AI use — shifting power from users to vendors

Autodesk has presented live, production-ready tools, giving customers a clear view of how AI could soon reshape workflows

Register your details to ensure you get a regular copy: register.aecmag.com

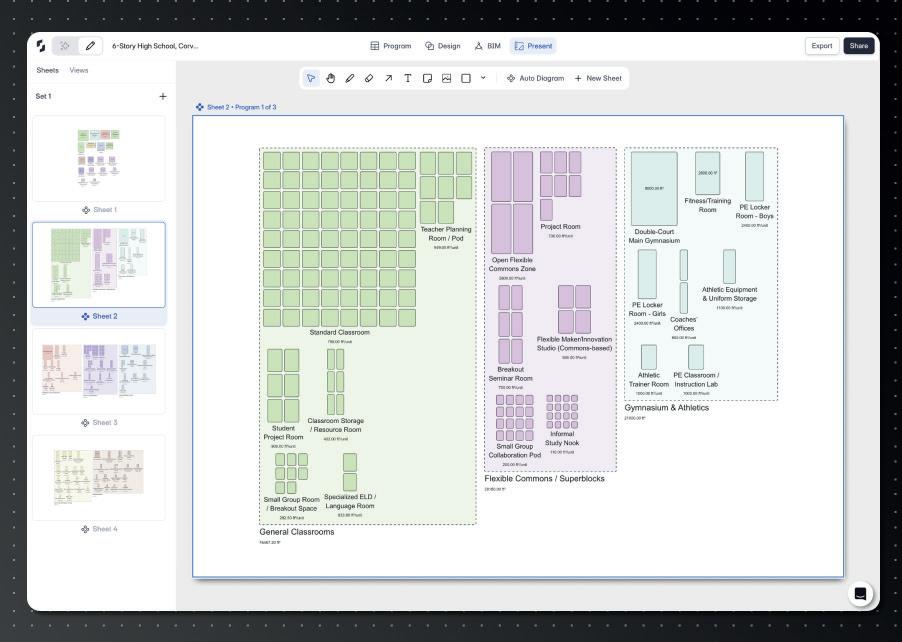

Forma is finally expanding beyond its early-stage design roots with a brand-new product focused on detailed design

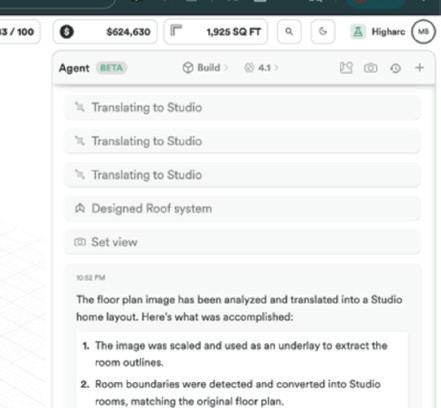

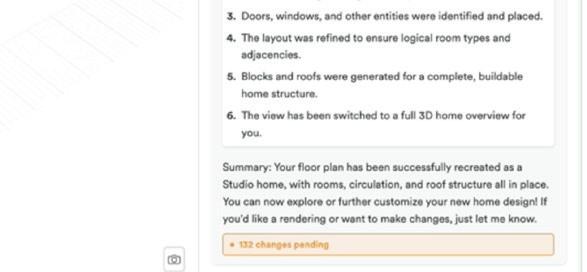

We spoke with Snaptrude CEO Altaf Ganihar about the AI capabilities coming soon to the collaborative design tool

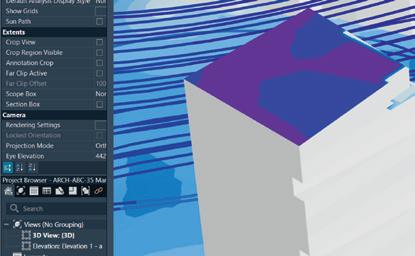

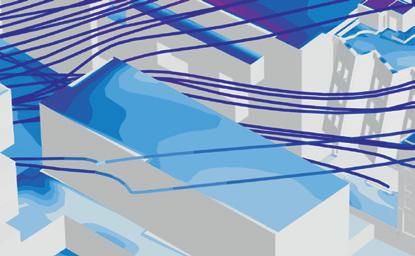

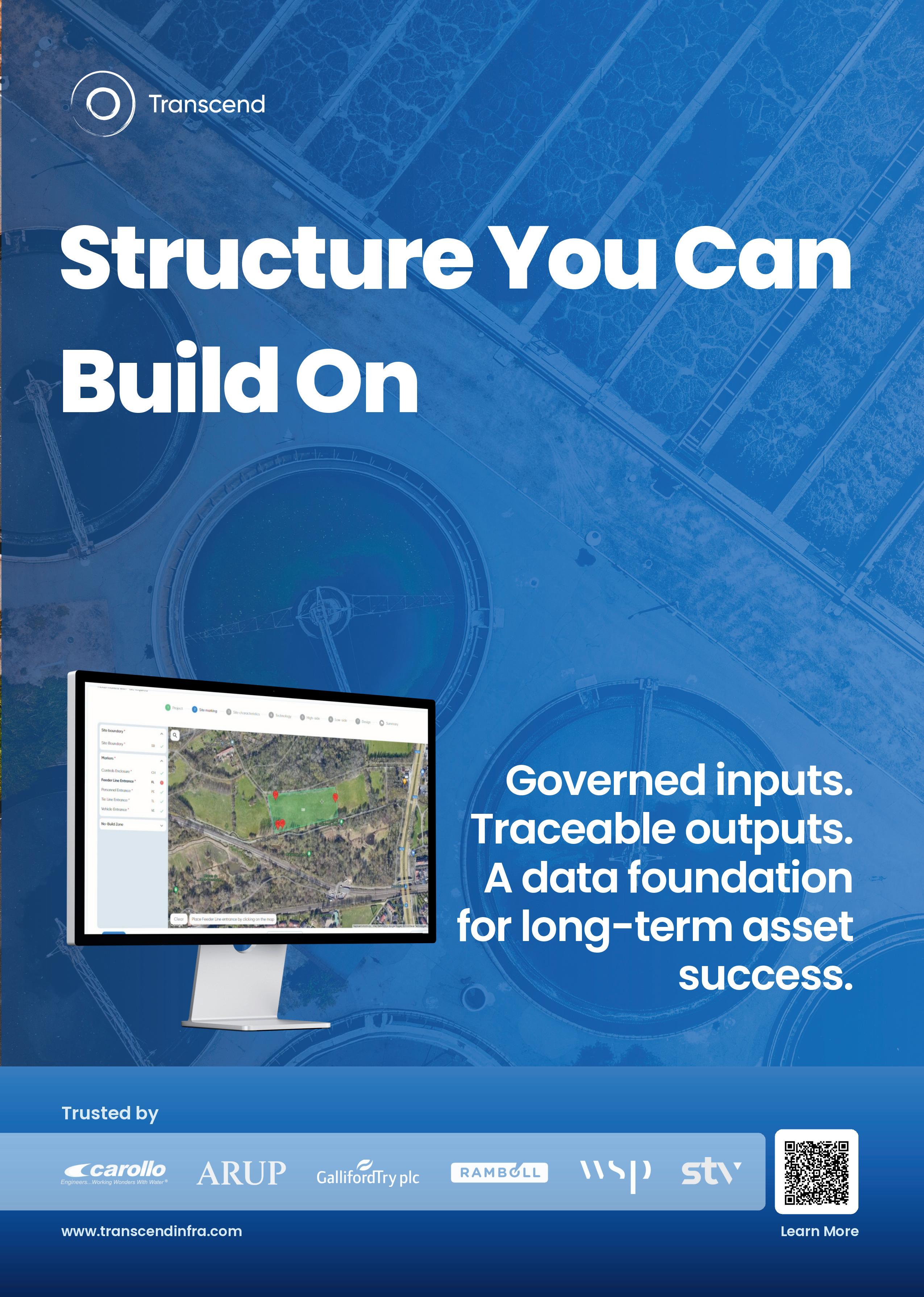

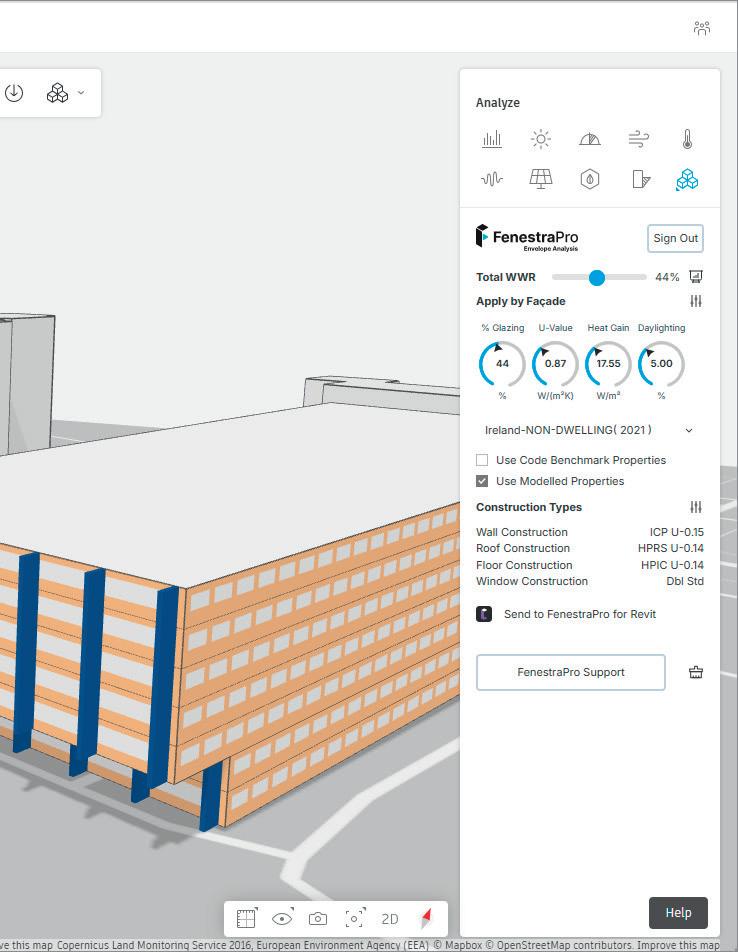

This façade design optimisation tool works with Revit and Forma to help create sustainable, detailed designs

Martyn Day explores how the Vectorworks product set is evolving under new CEO Jason Pletcher

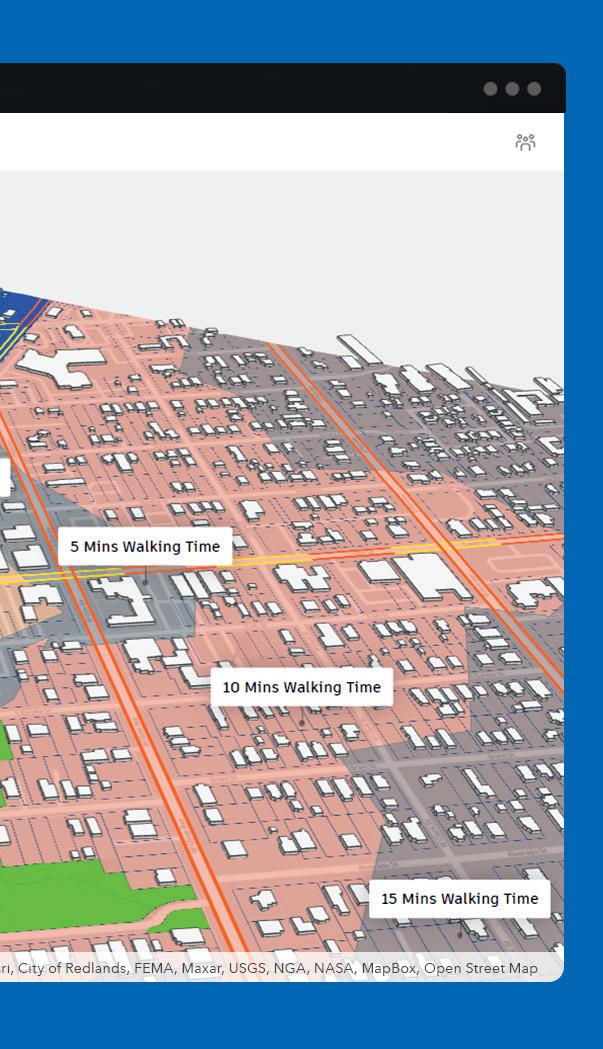

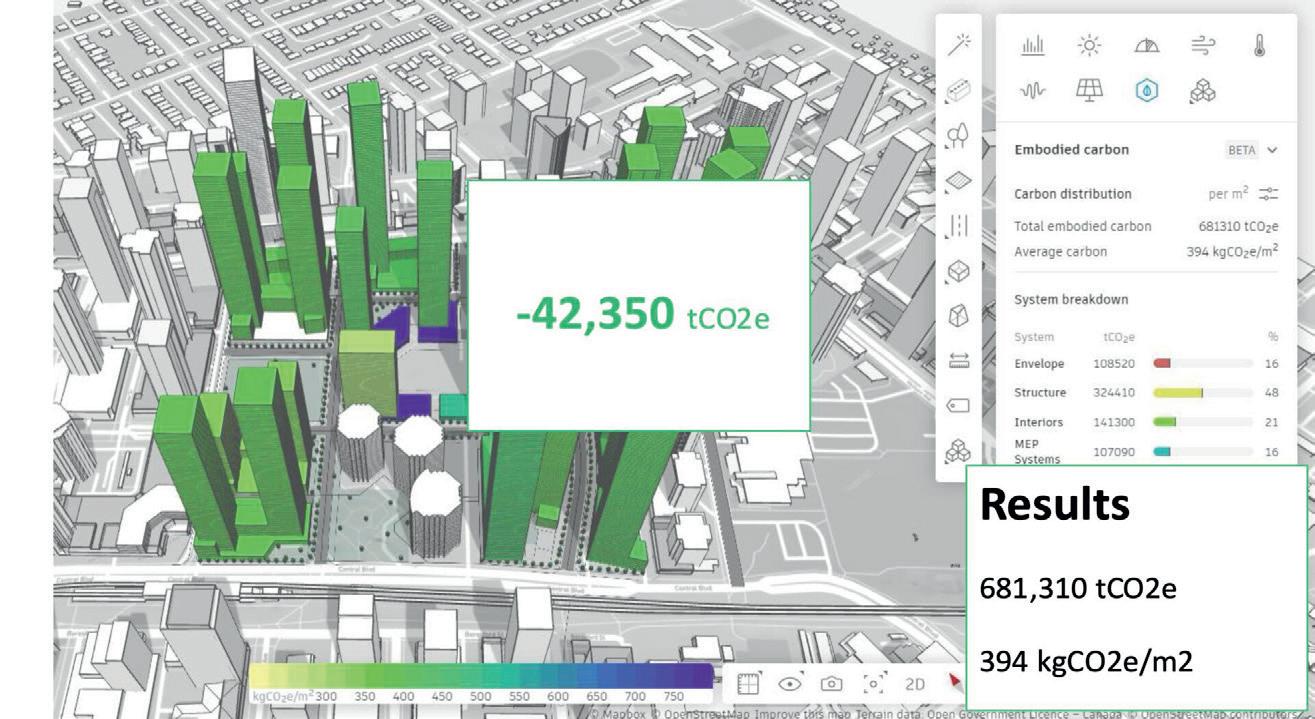

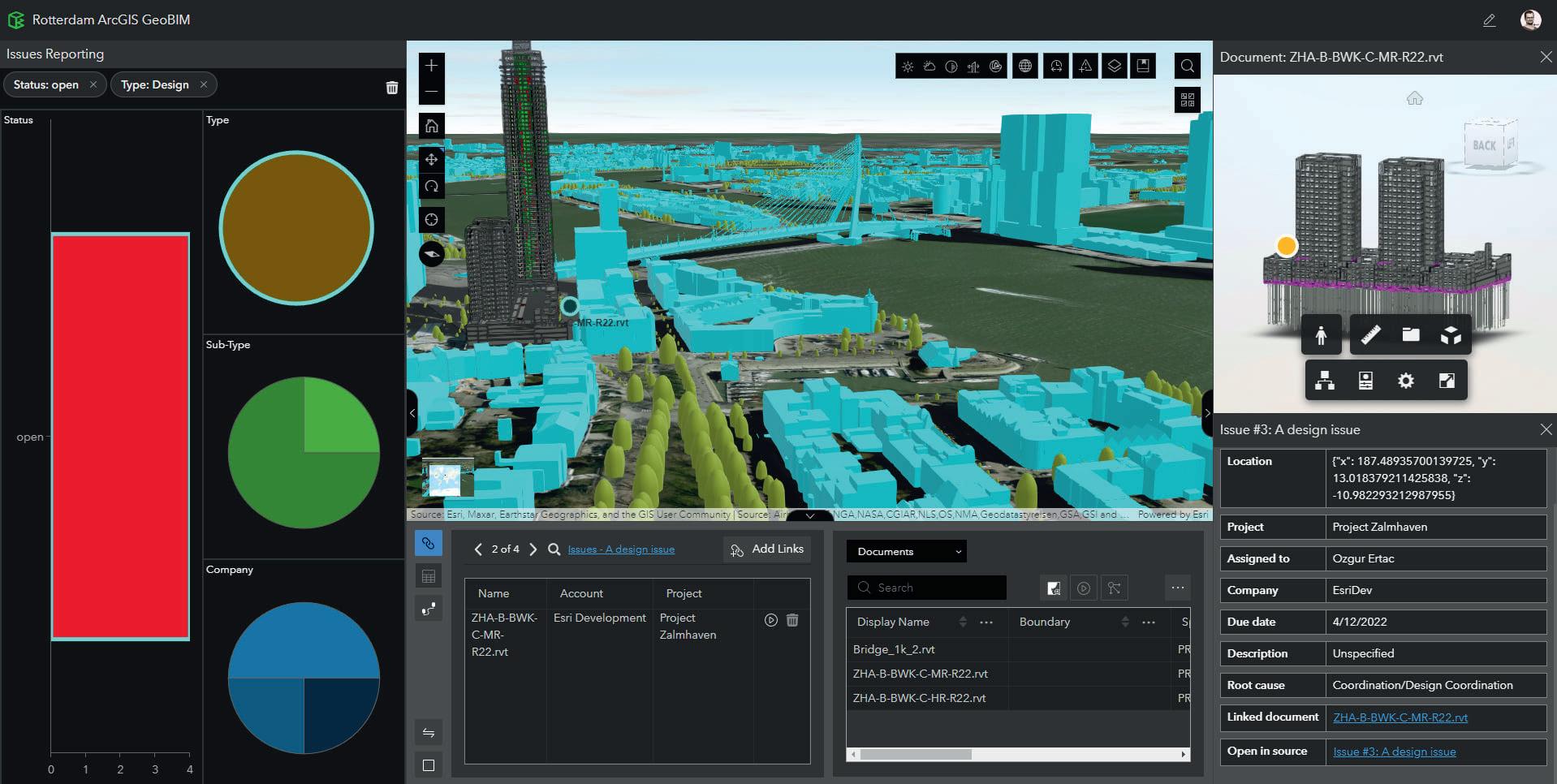

We caught up with Esri’s Marc Goldman to discuss the geospatial company’s focus on BIM integration

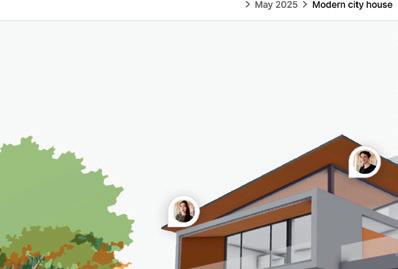

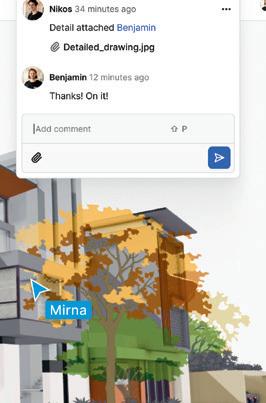

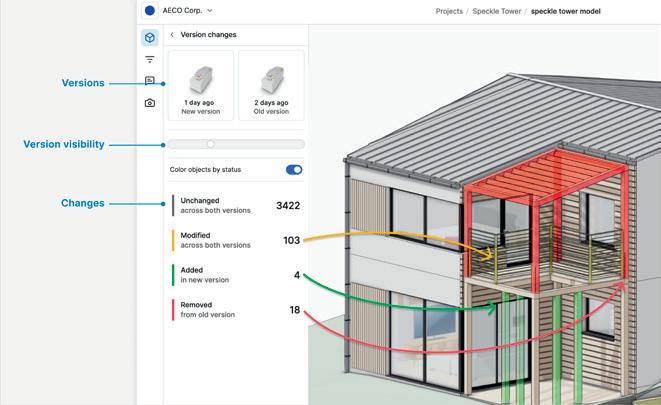

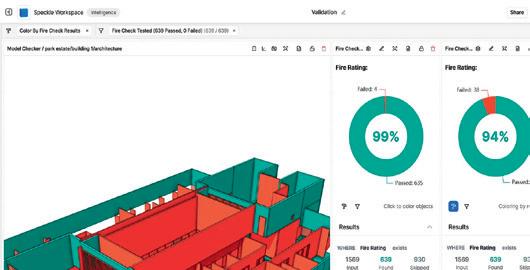

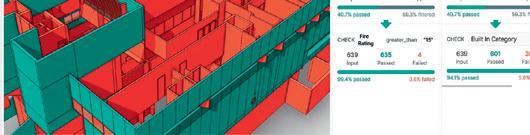

Speckle CEO Dimitrie Stefanescu shares five examples of how the collaborative platform can benefit AEC workflows

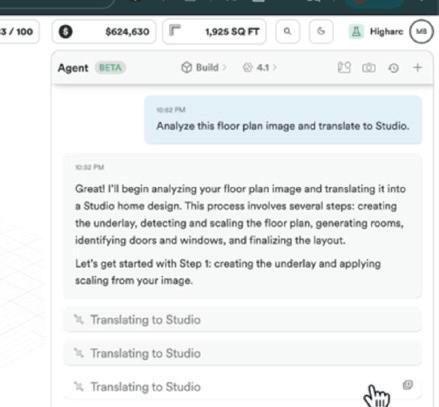

There may well come a time when AI will take a sketch or basic idea and design the entire building

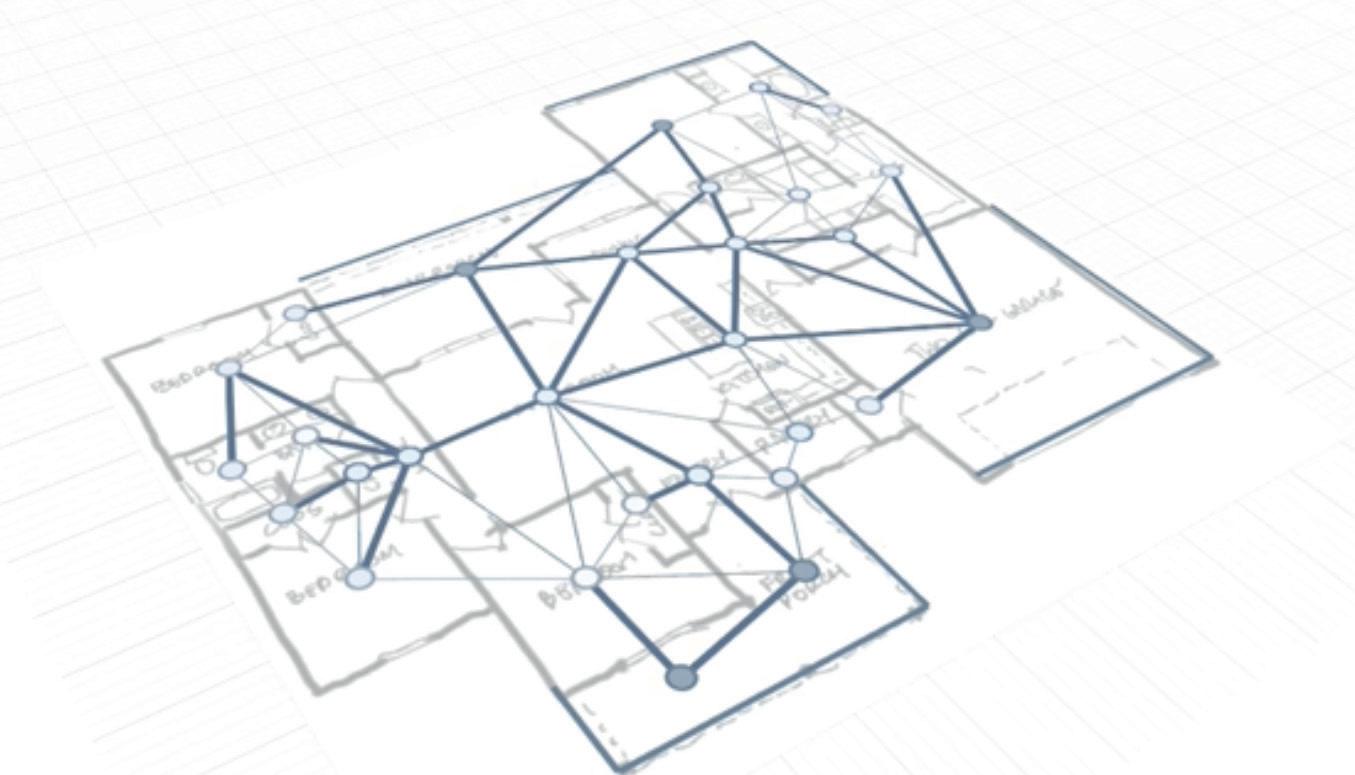

Remap believes that the key to better buildings may lie in the ability of firms to create better tools for themselves

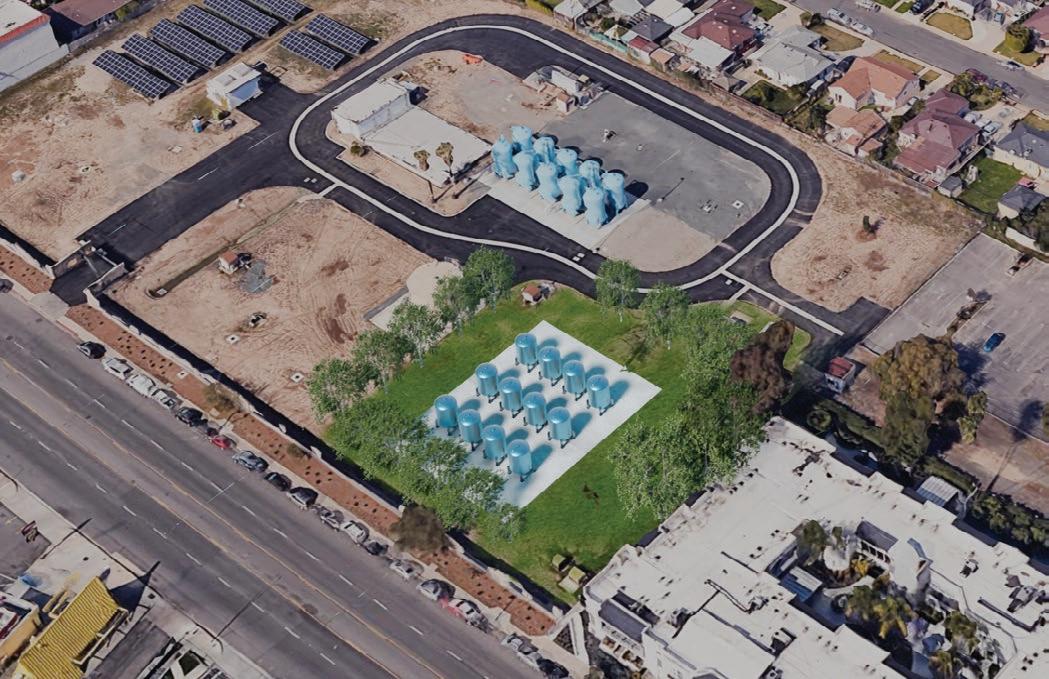

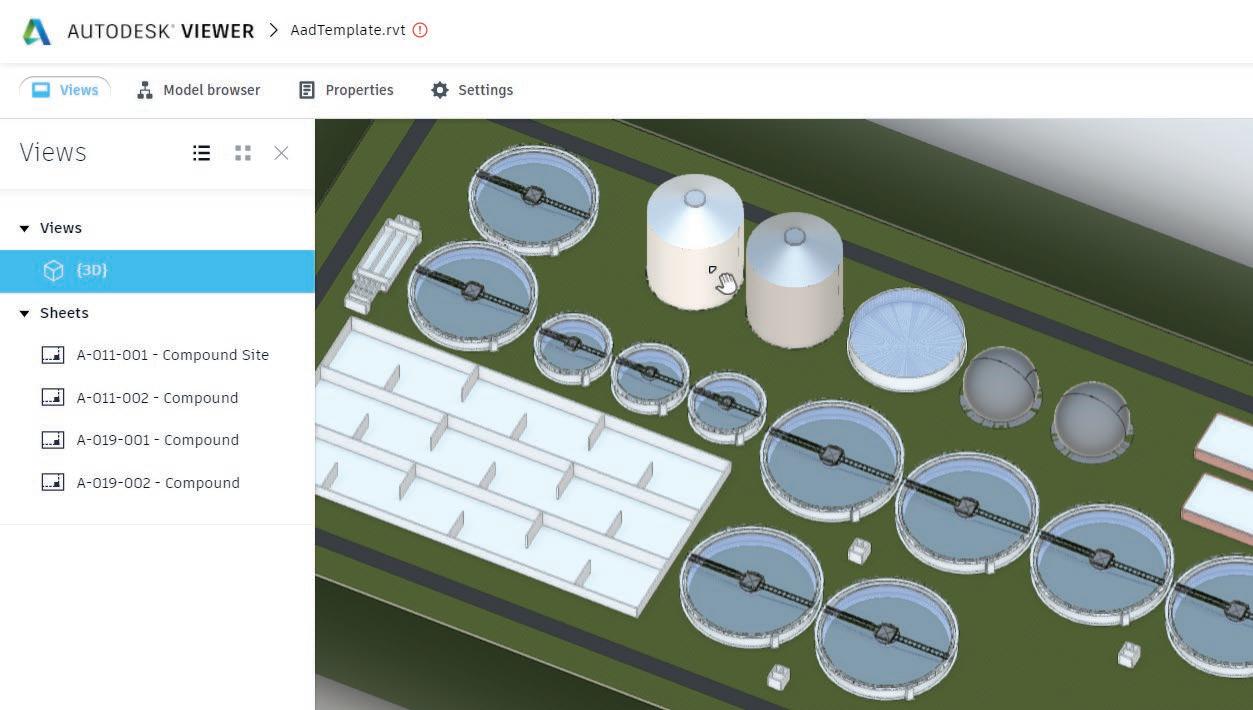

Transcend is looking to bring new efficiencies to the design of water, wastewater and power infrastructure

A two-pronged approach to technology deployment is enabling the timely detection of leaks on water networks

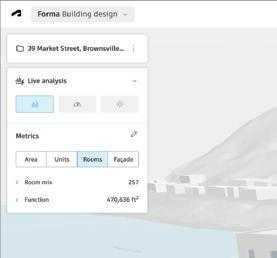

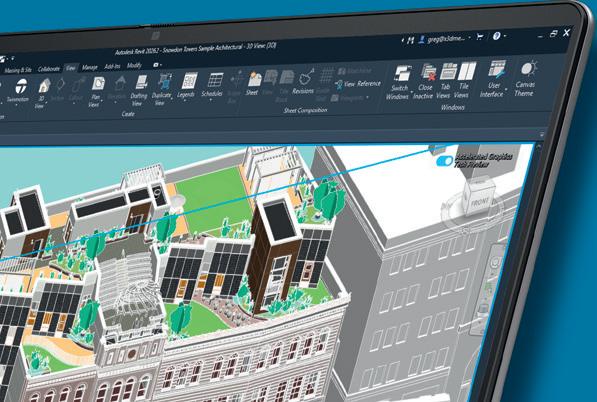

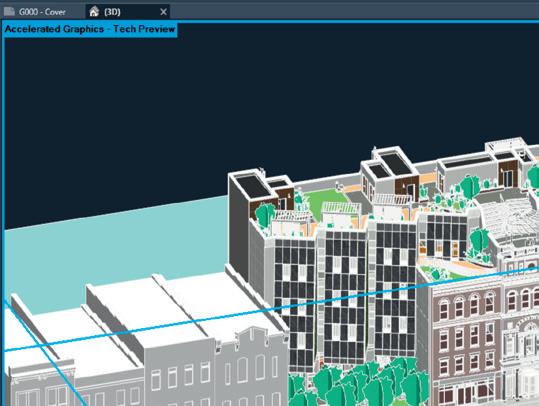

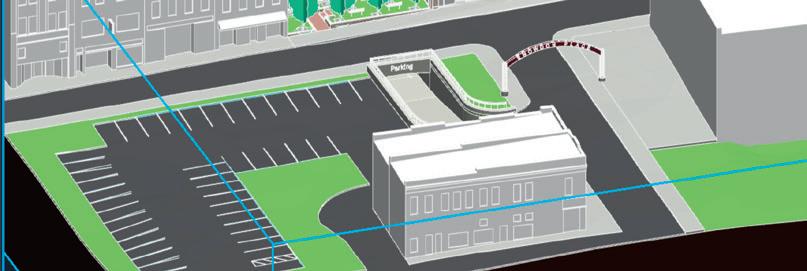

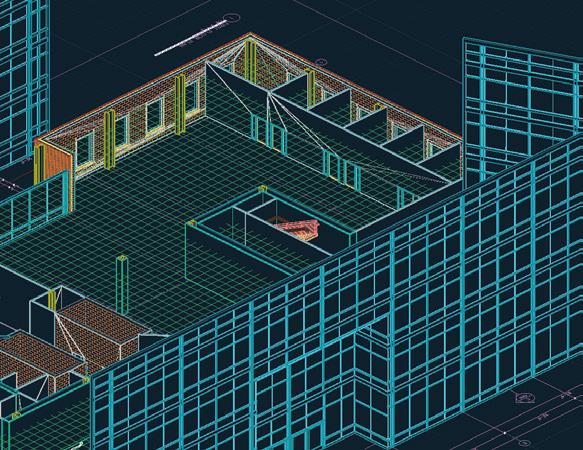

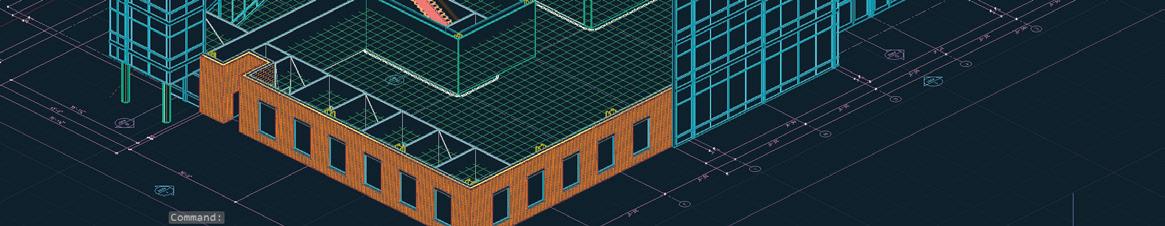

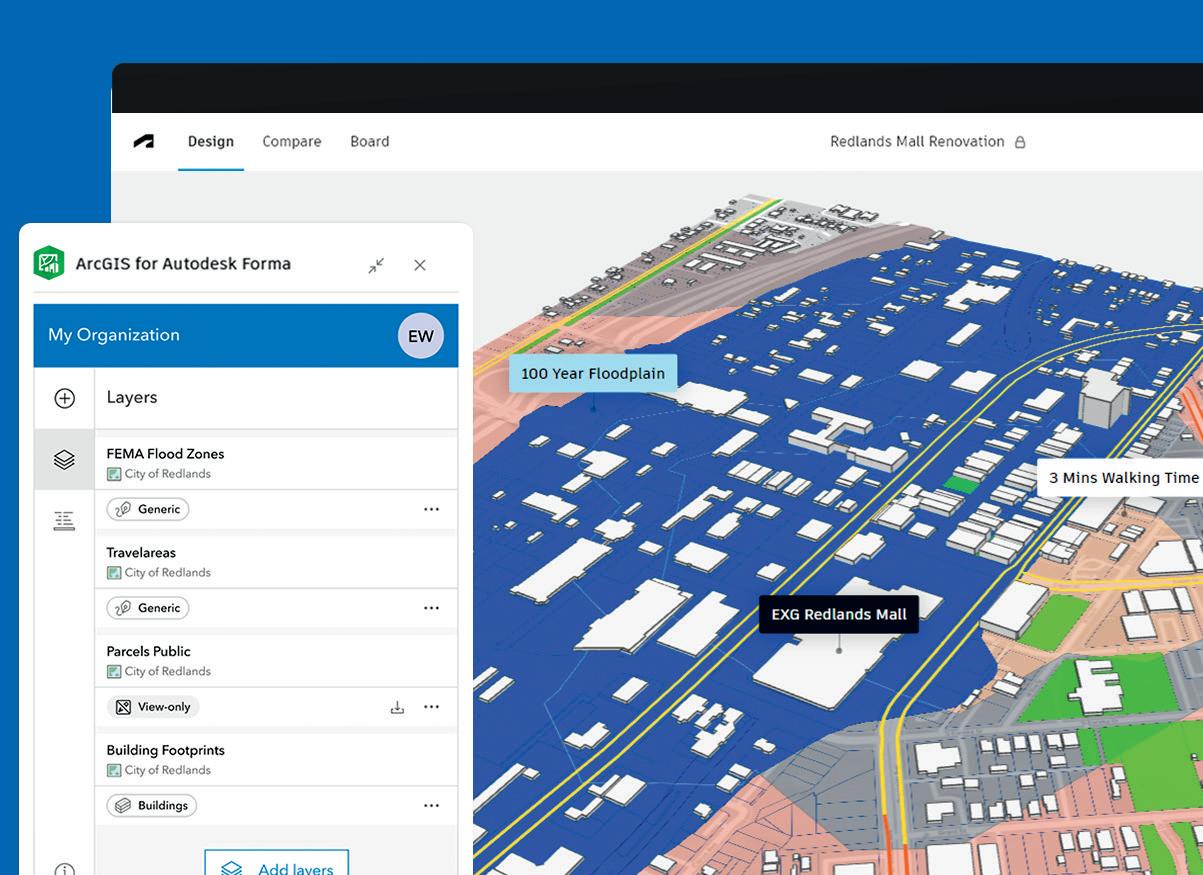

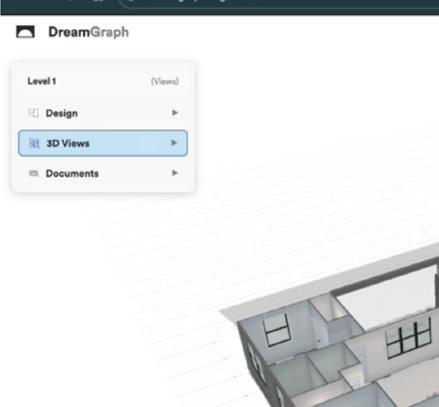

Autodesk is reshaping its AEC design software portfolio, introducing a new suite of Forma solutions, while making desktop products like Revit more deeply connected to the Forma industry cloud.

At Autodesk University, the company unveiled a new product, Forma Building Design, and announced that its existing early-stage planning tool, Autodesk Forma, will be rebranded as Forma Site Design.

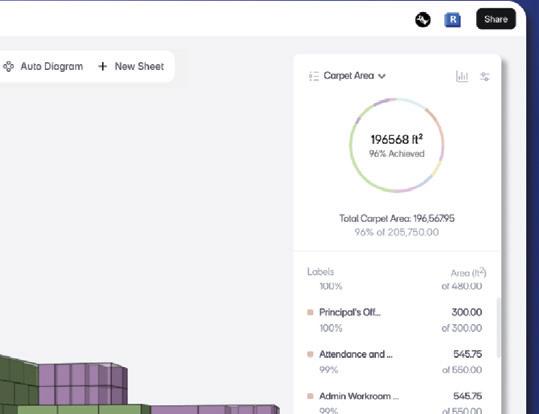

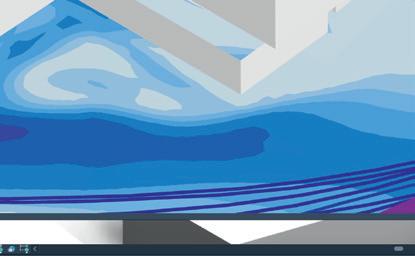

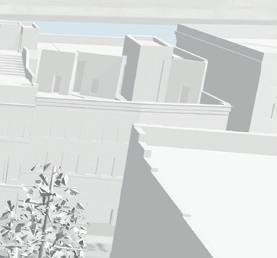

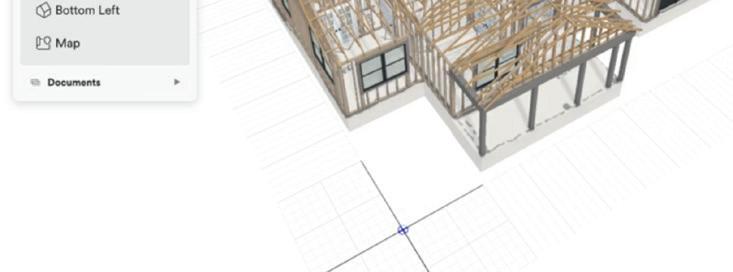

Launching in beta in the coming months, Forma Building Design is billed as an easy-to-use detailed building design solution, offering ‘BIM-level’ LOD 200 detail, ‘AI-powered’ automated design tools, and integrated analysis capabilities.

Nicolas Mangon, VP of AEC industry strategy, explained that, with Forma Building Design, users will be able to check facades, explore interior layouts, and optimise performance with carbon and daylight metrics.

“Forma Building Design is just the first of many new Forma solutions that will support a broader range of industries and project phases, all powered by AI,” he said.

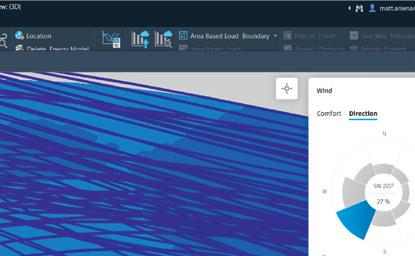

Meanwhile, Autodesk is continuing to develop its desktop design tools while working to bridge the gap between desktop and cloud-native workflows. The company announced that Revit will be the first official Forma Connected Client — a designation for desktop products deeply integrated with the Forma industry cloud.

Revit users will be able to utilise shared, granular data and Forma’s cloud capabilities, such as environmental analyses, directly within the desktop tool, without the need for exports, imports or rework. Turn to page 30 to learn more.

■ www.autodesk.com/forma

Leica Geosystems has launched Leica BLK360 SE Essentials, a new ‘cost-effective’ solution designed to make reality capture more accessible to smaller AEC firms. Priced at £12,500 (€14,000), it bundles a Leica BLK360 SE laser scanner with a one-year subscription to Leica Cyclone Field 360 laser scanning software, and PinPoint software for turning scan data into usable 3D models.

According to Leica, the solution is designed for new users across AEC, design, and trades who previously couldn’t justify the investment in 3D scanning.

The Leica BLK360 SE is a lightweight, compact imaging laser scanner that produces 2D and 3D models with ‘one-button operation’. It is said to capture exactly the same quality of data as the original BLK360, but takes a little longer to scan.

According to Leica, in under a minute, users can complete a 360-degree scan, accurate to 40 millimetres from 10 metres away. The four-camera system captures 104-megapixel HDR images to add detailed visual context to every model.

■ www.leica-geosystems.com

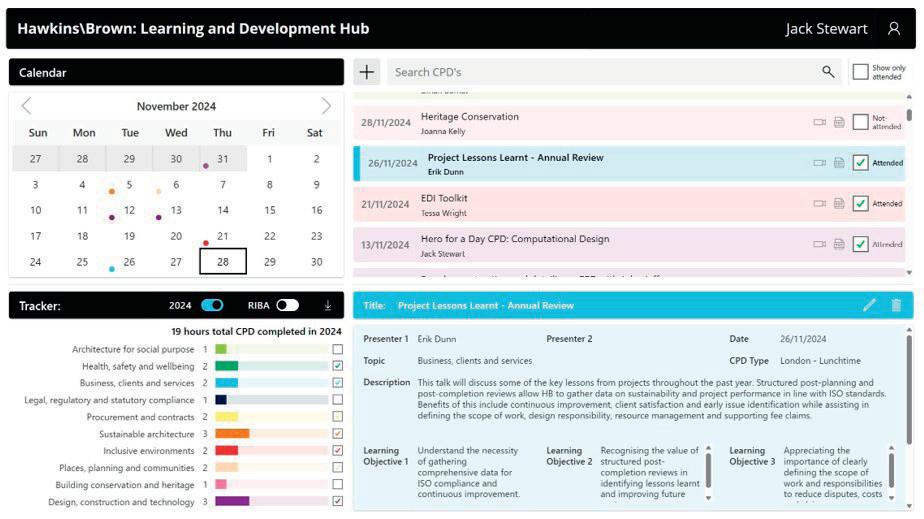

BW: Workplace Experts, a UK-based fit-out and refurbishment contractor and design-and-build consultancy, has partnered with NavLive, a specialist in AI-driven 3D scanning for construction, to give its teams faster, more reliable site data and strengthen its ability to compete for tenders.

BW has already deployed NavLive across a range of projects in London and Europe, from heritage refurbishments to modern workplaces.

In one project, BW used NavLive to survey a multi-storey commercial property in Mayfair. The team completed a full walkthrough in under 15 minutes, capturing a 3D point cloud and elevation views that enabled architects to produce a full Revit drawing in just two hours.

Looking ahead, BW and NavLive are exploring how the technology could integrate with robotics and automation, from robotic dogs to emerging humanoid platforms.

■ www.navlive.ai

Looq AI has announced that its photogrammetric data capture platform is now compatible with Trimble Business Center software.

The integration enables engineering professionals to utilise ‘survey-grade’ georeferenced, ground-classified point clouds and orthomosaic images from the Looq Platform directly within Trimble’s desktop workflows.

Looq AI combines handheld photogrammetry with computer vision algorithms to deliver high-resolution spatial data.

■ www.looq.ai

We improve the way that built environment organisations work using our knowledge of design, technology and construction.

We’re helping industry leading organisations use technology to work more effectively.

And we can help you to do the same through…

Consulting

We spend time understanding how you operate and develop solutions for you – kind of like tuning an engine!

We deliver software applications or toolkits that solve particular problems or create specific experiences.

We help deliver a specific project or package of work that can benefit from digital engineering.

Remap are a London-based digital transformation and software development consultancy providing technology services to the built environment industry.

Services

Digital transformation: Building on ten years’ experience in the industry, supporting organisations to get the most out of emerging technologies.

Computational BIM & Design: Parametric design tools, automated workflows, and custom Revit add-in development.

2D/3D application development: Bespoke software development of desktop and web applications using both 2D and 3D technologies.

Design to construction solutions: As showcased at Here East, AJ100 Building of the Year winner, using technology to streamline the process from design team to contractors.

Vioso has launched Exaplan, an AEC visualisation solution that enables the presentation of 1:1 scale CAD-based floor plans in real time. Users can navigate through the projected plan in ‘true scale’ regardless of the size of the physical room.

■ www.vioso.com

Buildots’ AI construction progress tracking technology is being used to support the delivery of the National Rehabilitation Centre, a £105 million NHS project by Integrated Health Projects, a joint venture between Vinci Building and Sir Robert McAlpine ■ www.buildots.com

Tekla PowerFab 2025i, the latest release of the software suite for managing steel fabrication, offers improved production planning and forecasting, as well as a new integration with Trimble ProjectSight to help optimise construction project management workflows

■ www.tekla.com

A new 3D model viewer for Viewpoint Field View has enhanced model-based workflows in the construction site software. Teams can now more effectively capture information, allowing forms, checklists and tasks to be raised against the 3D model

■ www.viewpoint.com

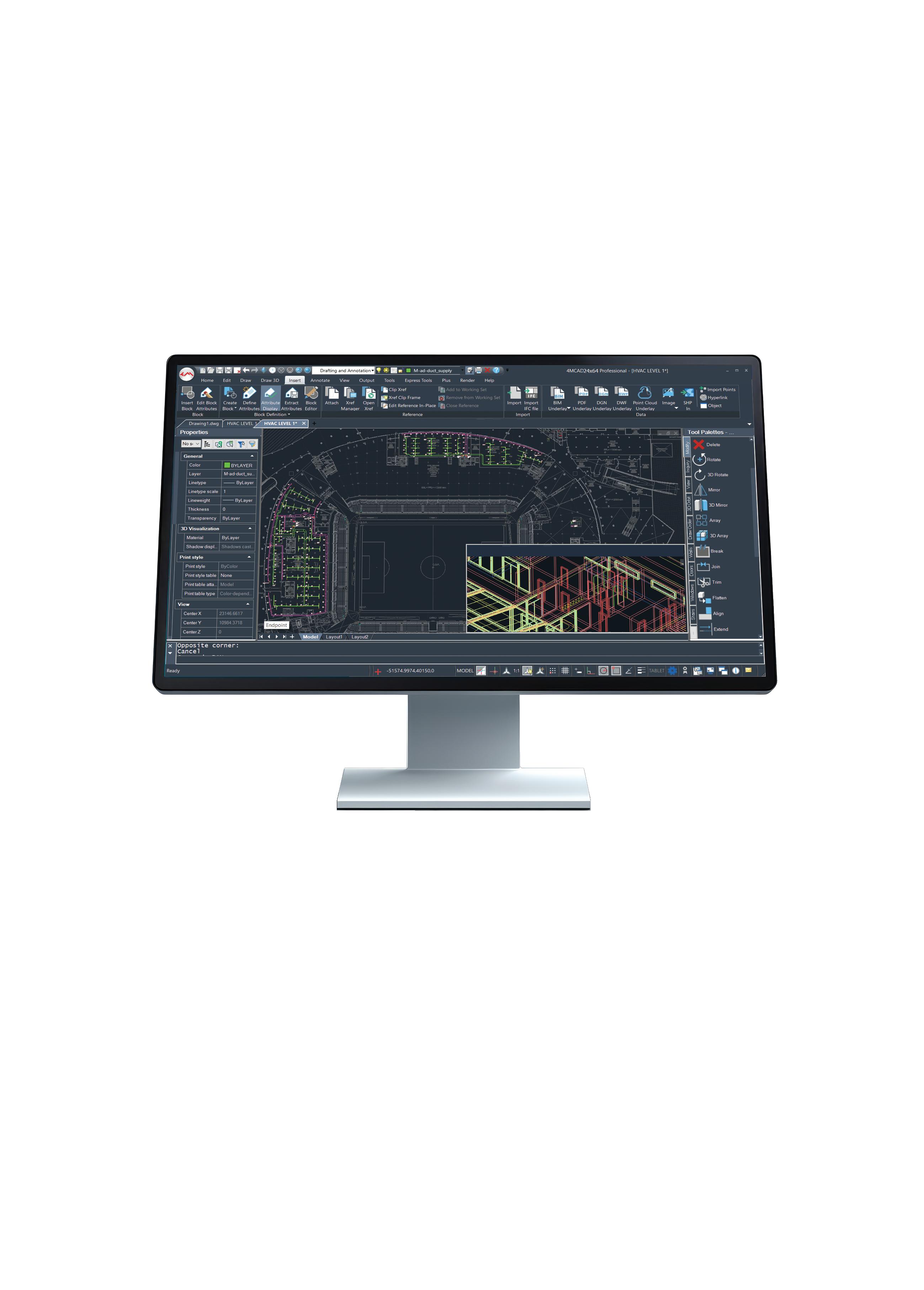

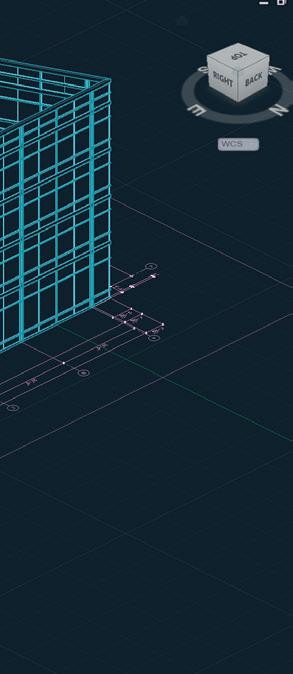

Trimble has launched Stabicad for AutoCAD Mechanical in the UK. The software is designed to enhance and streamline MEP design workflows by bridging the gap between traditional CAD drafting and ‘intelligent modelling’ ■ www.mep.trimble.com

Reality capture data platform NavVis Ivion has added Hide Overlap, a new feature designed to help those working with multiple scan datasets. The smart cleanup tool automatically detects and removes lower-quality points among overlapping scan data, selecting only the best data from each scan to produce a ‘clean, streamlined’ point cloud

■ www.navvis.com/ivion
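NavVis has not published how Hide Overlap decides which points to keep. The general idea of overlap cleanup can still be shown roughly. The Python sketch below sorts points into voxels. Wherever several scans land in the same voxel, it keeps only the points from the scan with the highest mean quality. The `Point` type, the per-point `quality` score and the 5 cm `voxel_size` are hypothetical assumptions for illustration. They are not NavVis parameters.

```python
from collections import defaultdict
from typing import NamedTuple


class Point(NamedTuple):
    x: float
    y: float
    z: float
    scan_id: str
    quality: float  # hypothetical per-point score (higher is better), e.g. derived from range


def hide_overlap(points, voxel_size=0.05):
    """Illustrative overlap cleanup: in each voxel shared by several scans,
    keep only the points from the scan with the best mean quality."""
    # voxel index -> scan_id -> points from that scan inside the voxel
    voxels = defaultdict(lambda: defaultdict(list))
    for p in points:
        key = (int(p.x // voxel_size), int(p.y // voxel_size), int(p.z // voxel_size))
        voxels[key][p.scan_id].append(p)

    kept = []
    for by_scan in voxels.values():
        # Pick the scan whose points in this voxel have the highest average quality
        best = max(by_scan.values(), key=lambda pts: sum(q.quality for q in pts) / len(pts))
        kept.extend(best)
    return kept


if __name__ == "__main__":
    cloud = [
        Point(0.01, 0.01, 0.01, "scan_A", 0.9),  # overlaps with scan_B in one voxel
        Point(0.02, 0.02, 0.02, "scan_B", 0.4),
        Point(1.00, 1.00, 1.00, "scan_B", 0.4),  # only scan_B covers this voxel
    ]
    print([p.scan_id for p in hide_overlap(cloud)])
```

A production tool would probably weigh other factors too, such as incidence angle or point density. This sketch does not model them.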

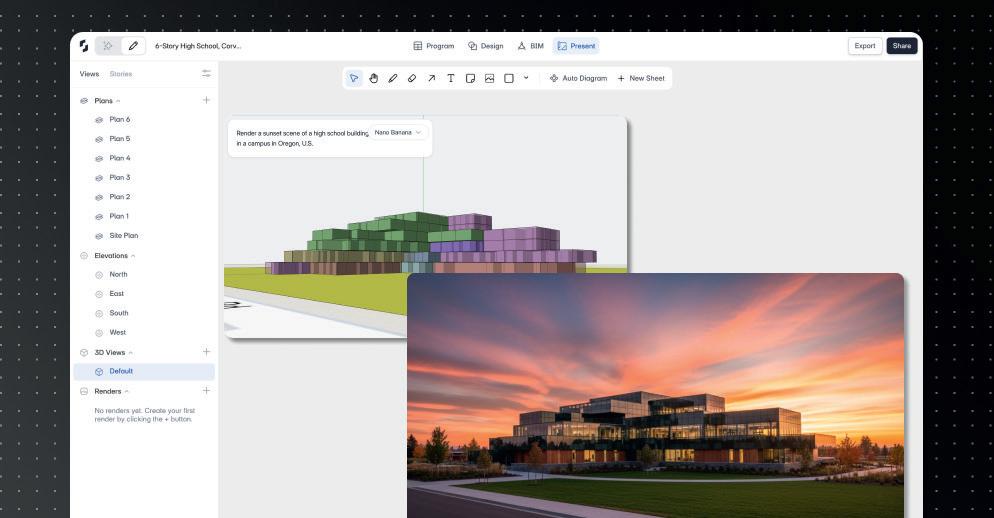

Veras 3.0, the latest release of the AI-powered viz software from Chaos, includes a new image-to-video generation capability designed to transform static renderings into ‘dynamic animations’ through simple prompts.

It builds on the software’s original capabilities, which enable designers to take 3D models and images and quickly create AI-rendered designs and style variations. Image-to-video generation in Veras allows designers to pan and zoom cameras, animate weather, and change the time of day with ‘just a few clicks.’ Once the look is determined, motion can be added to the scene through vehicles and digital people, turning still images into ‘immersive, moving stories.’

Veras was one of the first AI viz tools to be designed specifically for AEC, integrating natively with Revit, Rhino, SketchUp and other 3D software. It was originally developed by EvolveLAB, which Chaos acquired in 2024.

■ www.chaos.com/veras

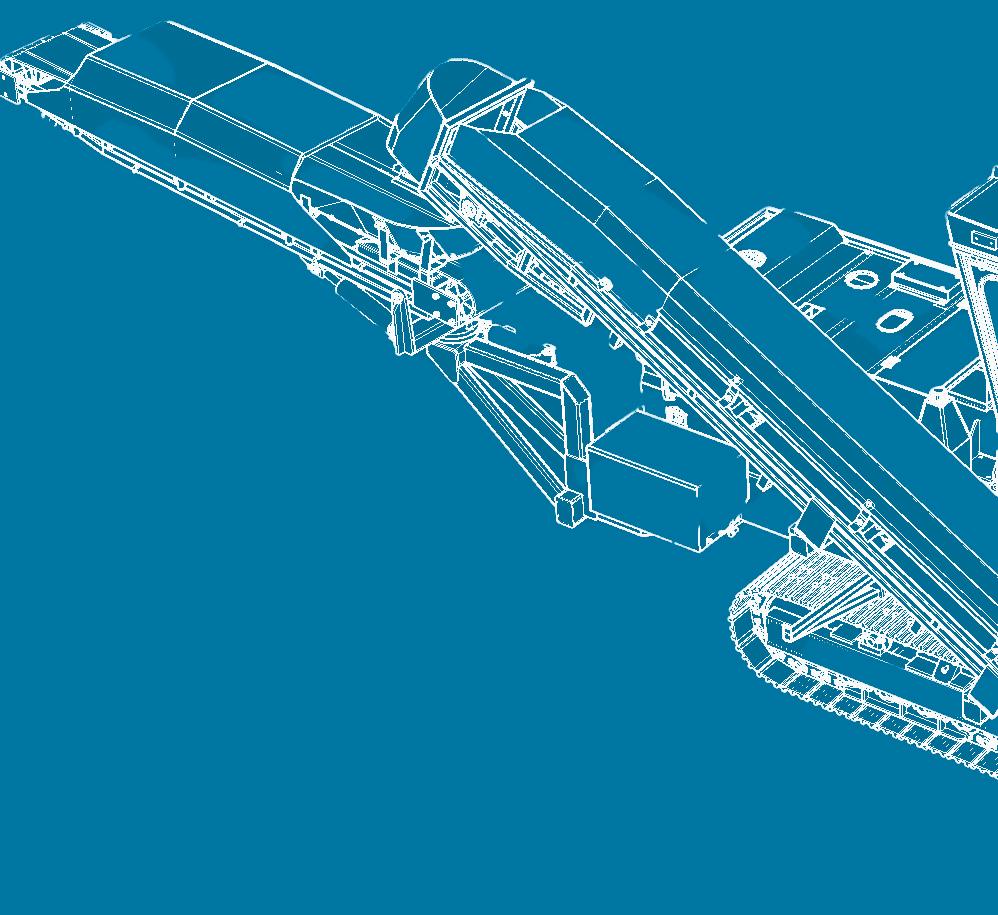

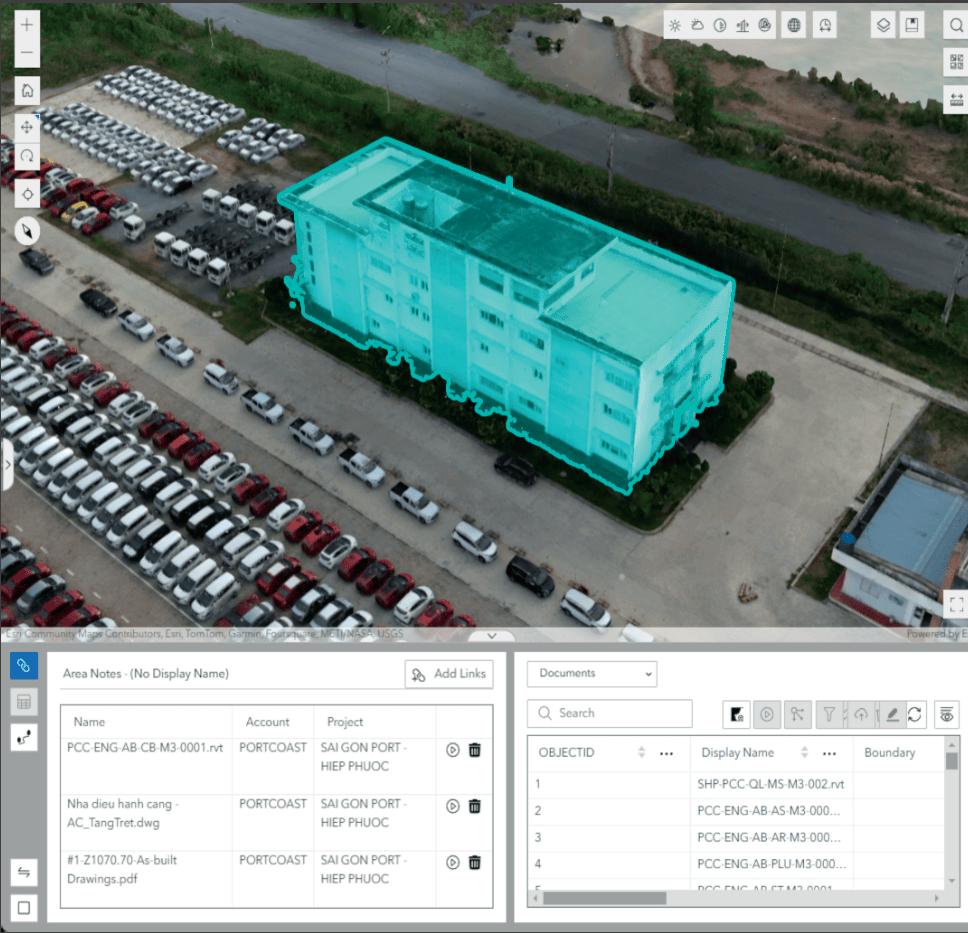

Bentley Systems is offering new resources to help developers use its iTwin Platform APIs within Cesium, the 3D geospatial visualisation technology that Bentley acquired in September 2024. The move is intended to streamline integration between infrastructure digital twins and large-scale geospatial applications.

The iTwin Platform provides a set of open-core APIs for creating and managing digital twins — data-rich virtual models used across the lifecycle of infrastructure assets.

Cesium is known for its 3D globe and mapping technology and for originating the 3D Tiles open standard, widely adopted for streaming large-scale 3D datasets.

Bentley now provides tutorials and example workflows that demonstrate how iTwin APIs can be used inside CesiumJS applications. Developers can, for instance, combine geospatial context streamed from Cesium ion with engineering design data managed in Bentley iTwin, and visualise both in a single environment.

The integration reflects Bentley’s strategy following the Cesium acquisition: to bring together detailed engineering models with scalable geospatial visualisation under a single umbrella, while continuing to support open standards. For infrastructure owners, operators, and developers, the alignment is designed to reduce duplication of effort when linking project data to broader geographic settings.

The iTwin–Cesium connection is particularly relevant for organisations that need to situate infrastructure data within a regional or national context, such as utilities, transportation agencies, and government bodies.

■ www.bentley.com ■ www.cesium.com

Nvidia has announced two new low-profile workstation GPUs, the Nvidia RTX Pro 4000 Blackwell SFF Edition and the Nvidia RTX Pro 2000 Blackwell, designed to accelerate a range of professional workloads, including CAD, visualisation, simulation and AI.

Both are expected to appear in small form factor and micro workstations later this year, including the HP Z2 Mini G1i and Lenovo ThinkStation P3 Ultra SFF.

The Nvidia RTX Pro 4000 SFF and RTX Pro 2000 feature fourth-generation RT Cores and fifth-generation Tensor Cores, and offer lower power consumption in half the size of a traditional GPU.

Compared to its predecessor, the RTX 4000 SFF Ada, Nvidia claims the RTX Pro 4000 Blackwell SFF delivers up to 2.5× faster AI performance, 1.7× higher ray tracing performance, and 1.5× more bandwidth, all while maintaining the same 70-watt maximum power draw. It also gets a memory boost, increasing from 20 GB of GDDR6 to 24 GB of GDDR7.

Meanwhile, compared to the RTX 2000 Ada, the RTX Pro 2000 Blackwell is said to deliver up to 1.6× faster 3D modelling, 1.4× faster CAD performance, and 1.6× faster rendering. It also promises a 1.4× improvement in AI image generation and a 2.3× boost in AI text generation. Memory has been increased as well, rising from 16 GB of GDDR6 to 20 GB of GDDR7.

■ www.nvidia.com

Lenovo has redesigned its flagship mobile workstation, the ThinkPad P16, with a new Gen 3 edition that is thinner and lighter than its Gen 2 predecessor and draws less power, now with a 180W PSU.

The 16-inch pro laptop features the latest ‘Arrow Lake’ Intel Core Ultra 200HX series processors (up to 24 cores and 5.5 GHz) and a choice of Nvidia graphics up to the RTX Pro 5000 Blackwell Generation (24 GB) Laptop GPU.

Lenovo has also rolled out updates across its wider Intel-based mobile workstation portfolio. The ThinkPad P1 Gen 8, ThinkPad P16v Gen 3, ThinkPad P14s i Gen 6, and ThinkPad P16s i Gen 4 are all powered by more energy-efficient Intel Core Ultra 200H processors (up to 16 cores, 5.4 GHz) and feature less powerful Nvidia RTX Pro Blackwell GPUs, up to the RTX Pro 2000 Blackwell (8 GB).

While they share core components, the models are differentiated by design and positioning: the ThinkPad P1 Gen 8 is Lenovo’s premium thin-and-light mobile workstation, the ThinkPad P16v Gen 3 is pitched as a more affordable alternative, while the ThinkPad P14s i Gen 6 and ThinkPad P16s i Gen 4 combine thin-and-light designs with a GPU best suited to mainstream CAD and BIM workflows.

■ www.lenovo.com

UK-based subscription workstation platform Computle has secured a £500k pre-seed investment from technology veteran Mark Boost, who takes a minority stake in the company.

The funding will support Computle’s development of its remote workstation service for creative, architecture, and engineering teams.

Founded in 2020 by technology architect Jake Elsley, Computle claims to deliver 30–50% cost savings compared to alternative solutions. Unlike virtualised remote workstation solutions, Computle provides each user with a dedicated workstation, accessed over a 1:1 connection. Every custom-built blade workstation includes its own CPU, GPU, NVMe storage, and RAM.

■ www.computle.com

Creative ITC has launched a new virtual cloud desktop solution, purpose-built for the AEC sector, that it claims delivers the full power of a high-end in-office workstation to architects, wherever they work.

The Virtual Cloud Desktop Pod (VCDPod) is purpose-built for processor- or graphics-intensive design, visualisation, simulation, and modelling applications. With VCDPod, AEC professionals use a dedicated laptop, thin client or iPad to log in to a virtual cloud desktop that offers ‘single tenant’ access to high-end computing resources. The service uses dedicated Lenovo workstations.

■ www.creative-itc.com

Bluebeam has acquired Firmus AI, a specialist in pre-construction design review and risk analysis. The move will see Firmus’ technology integrated into Bluebeam’s document review and markup workflows, extending the company’s focus into AI-assisted risk management

■ www.bluebeam.com

PMSPACE.AI is a new AI-powered platform for real-estate developers, operators and stakeholders designed to transform construction management through predictive analytics, intelligent automation, and a unified data foundation

■ www.pmspace.ai

ChatAEC is a new platform designed to help developers, engineers, and planners quickly find zoning and site requirements. Users can enter a parcel address or Assessor’s Parcel Number (APN) and ask questions like “Can I build multifamily here?” or “What are the setback requirements?”

■ www.chataec.ai

Allsite.ai, an AI-powered design platform built for civil engineering, has expanded into the North American market. The software uses AI Agents to automate grading, roading, drainage, and utility design, producing surface-ready designs for AutoCAD Civil 3D that meet local standards

■ www.allsite.ai

V-Ray 7 for 3ds Max, Update 2, the latest release of the production-quality renderer from Chaos, introduces two new AI features: AI Material Generator can create materials from a real-world photo, while AI Enhancer can automatically enhance and upscale scenes

■ www.chaos.com

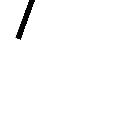

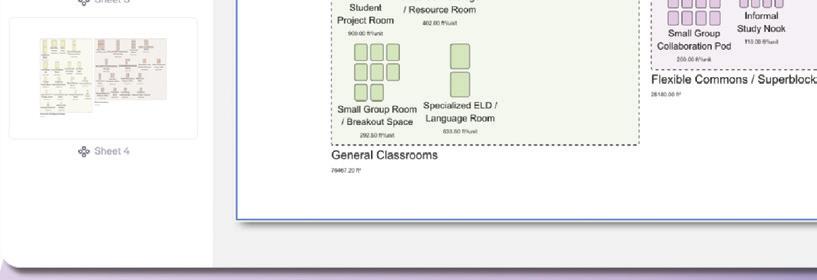

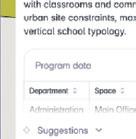

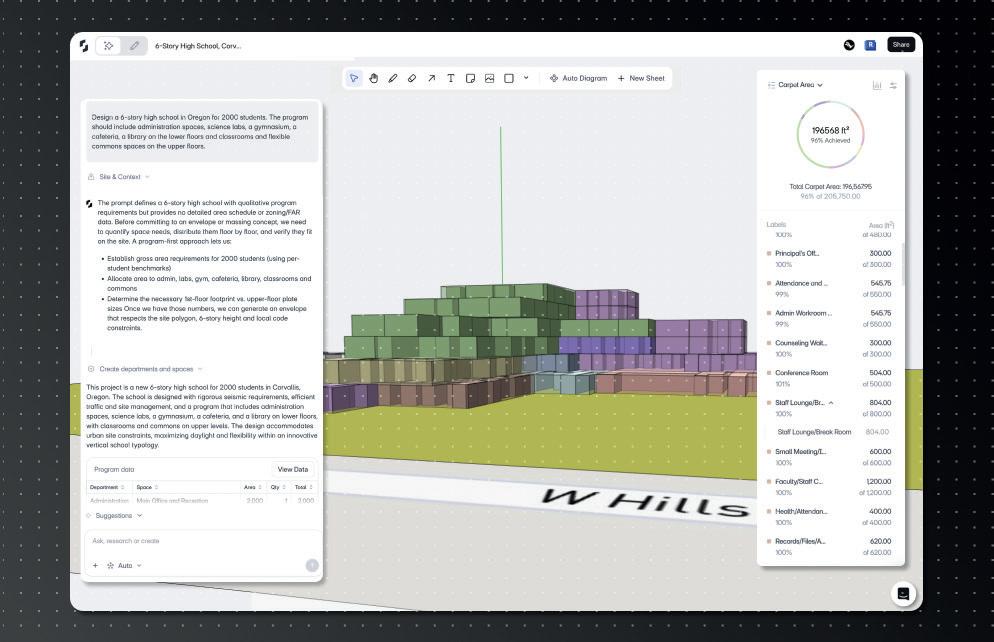

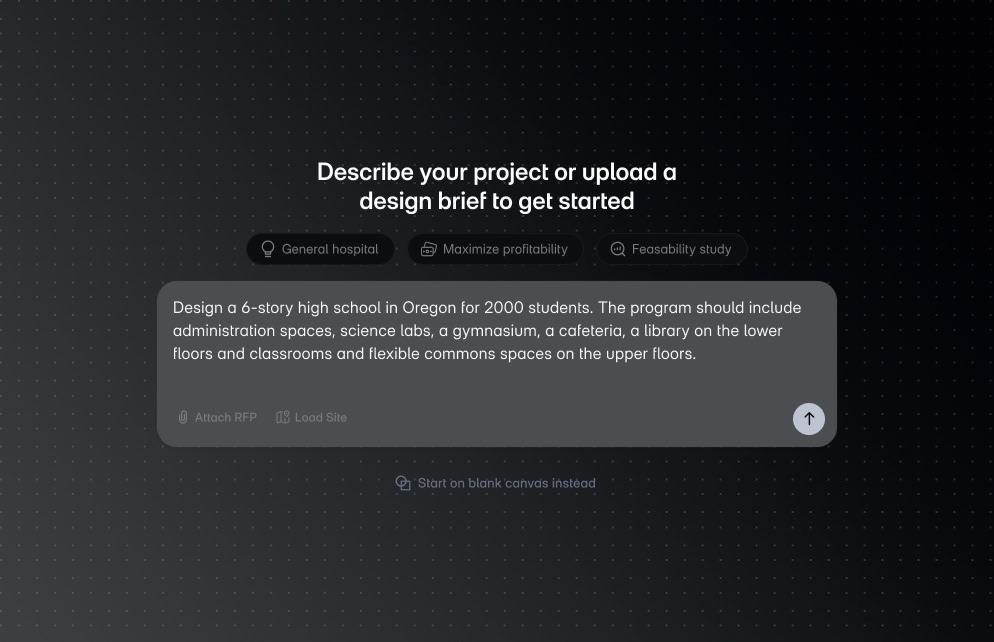

Snaptrude has announced that the next major release of its collaborative building design platform will bring AI directly into the early stages of design.

With Snaptrude’s ‘AI stack’ users can simply describe a building type, the site, and the intended use, while the software automatically generates a layout with the right spaces, providing an ‘intelligent starting point’ for manual design.

Snaptrude also includes a new built-in AI research capability, designed to help architects save hours by not having to dig through codes, guidelines, and precedent studies before they can start shaping a design. Snaptrude’s AI can analyse building codes and ADA requirements, and benchmark space standards and costs. Meanwhile, Snaptrude has enhanced its ‘AI-powered inspiration tools’ – in other words, its AI renderers. Users now have a wider choice of AI models, including Nano Banana for ‘super-fast image generation for quick iterations’ and Veo 3 for ‘smooth & realistic’ video generation. For more on Snaptrude AI see page 37.

■ www.snaptrude.com

Egnyte has embedded its first ‘secure, domain-specific’ AI agents within its platform to target some of the most time-consuming and costly parts of the AEC process, from bid to completion.

The Specifications Analyst and Building Code Analyst are designed to extract details from large specification files and quickly deliver AI guidance for building code compliance.

“These tools enable customers to take advantage of the power of AI without having to move their data and potentially expose it to security, compliance, and governance risks,” said Amrit Jassal, co-founder and CTO, Egnyte. “The AEC industry relies heavily on complex, content-intensive documents to make informed decisions throughout the project lifecycle, and a single error in a spec sheet or misinterpretation of a building code can lead to significant project delays and cost overruns. These AEC AI agents fundamentally reduce project risk and help firms to deliver better, more profitable outcomes.”

According to Egnyte, the Specifications Analyst allows users to transform specification documents of any size, singly or in combination, into source data that delivers fast and useful answers.

Meanwhile, the Building Code Analyst is designed to consolidate disparate codebooks into a unified source of truth. Egnyte explains that the agent enables users to quickly find, compare, and check code requirements across relevant codebooks and produce consistent, useful AI-powered answers.

■ www.egnyte.com
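Egnyte has not said how its agents index specifications. A common way to make long documents answerable is a two-step pattern. First, split the text into chunks and retrieve the chunks most relevant to a question. Then an AI model composes an answer from them. The minimal Python sketch below shows only the retrieval step. Simple keyword overlap stands in, as an assumption, for the embedding-based search a real system would more likely use. The function names and chunk size are hypothetical.

```python
import re
from collections import Counter


def chunk(text, size=50):
    """Split a long document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def tokens(text):
    """Lower-case alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question, passage):
    """Count the passage tokens (with repeats) that also appear in the question."""
    query_terms = set(tokens(question))
    counts = Counter(tokens(passage))
    return sum(n for term, n in counts.items() if term in query_terms)


def top_chunks(question, chunks, n=1):
    """Return the n chunks with the highest keyword-overlap score."""
    return sorted(chunks, key=lambda c: score(question, c), reverse=True)[:n]


if __name__ == "__main__":
    spec = [
        "Section 08 doors shall have a fire rating of 60 minutes",
        "Section 09 finishes interior paint shall be low VOC",
    ]
    print(top_chunks("What fire rating do the doors need?", spec))
```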

Autodesk has introduced neural CAD, a new category of 3D generative AI foundation models coming to Forma and Fusion, which the company says will “completely reimagine the traditional software engines that create CAD geometry” and “automate 80 to 90% of what you [designers] typically do.”

Unlike general-purpose large language models (LLMs) such as ChatGPT, Gemini, and Claude, neural CAD models are trained on professional design data, enabling them to reason at both a detailed geometry level and at a systems and industrial process level – exploring ideas like efficient machine tool paths or standard building floorplan layouts.

According to Mike Haley, senior VP of research, Autodesk, neural CAD models are trained on the typical patterns of how people design, using a combination of synthetic data and customer data. “They’re learning from 3D design, they’re learning from geometry, they’re learning from shapes that people typically create, components that people typically use, patterns that typically occur in buildings.”

Autodesk says that in the future, customers will be able to customise the neural CAD foundation models, by tuning them to their organisation’s proprietary data and processes.

Autodesk has so far presented two types of neural CAD models: ‘neural CAD for geometry’, which will be used in Fusion and ‘neural CAD for buildings’ for Forma.

In Forma, Autodesk explains that architects will be able to ‘quickly transition’ between early design concepts and more detailed building layouts and systems with the software ‘autocompleting’ repetitive aspects of the design.

■ www.autodesk.com

Augmenta has enhanced its Augmenta Construction Platform (ACP), a fully agentic design environment capable of automating electrical raceway routing and coordination. According to the Toronto-based firm, the new updates make the product substantially easier and faster to use, and enable it to generate designs that more closely meet strict project requirements.

New features include enhanced schedule creation capabilities, intelligent routing guidance, and improved solution inspection tools.

“Today’s ACP is a direct result of our close collaboration with our clients on the front lines — the electrical contractors and engineers who are racing to meet unprecedented demand,” said Aaron Szymanski, co-founder and CPO of Augmenta. “This platform is everything they need to cut their BIM modeling time in half and take on more work.”

■ www.augmenta.ai
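Augmenta has not published its routing engine, and its agentic system handles far more constraints than any toy example. This sketch illustrates only the core problem of routing a raceway around obstructions. The Python code runs a breadth-first search over a hypothetical 2D occupancy grid, where 1 marks an obstruction such as a duct or beam and 0 marks free space. It returns a path with the fewest grid steps. The grid model and the `route` function are assumptions made for illustration.

```python
from collections import deque


def route(grid, start, goal):
    """Shortest 4-connected path from start to goal on an occupancy grid
    (1 = obstruction, 0 = free), or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}  # visited cells -> the cell we came from
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the predecessor links back to the start, then reverse
            path = []
            while cell is not None:
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in prev:
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    ceiling_zone = [
        [0, 0, 0],
        [1, 1, 0],  # a duct blocks the direct run
        [0, 0, 0],
    ]
    print(route(ceiling_zone, (0, 0), (2, 0)))
```

A real raceway router would also need to weigh bends, fill limits, clearances and coordination with other trades. This sketch does not model any of those.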

OpenSpace has introduced AI Autolocation, a new Spatial AI technology that it claims will give every smartphone a real-time indoor positioning capability without the need for specialist hardware, such as Bluetooth beacons. The company believes that as the system matures, powered by ongoing machine learning, it will ultimately exceed GPS-level accuracy.

AI Autolocation works by comparing real-time sensor readings from a smartphone with sensor maps generated from pre-existing 360° captures created on the OpenSpace platform.

The adaptive system progressively refines its location estimates even as the jobsite itself changes over time.

Looking to the future, OpenSpace claims AI Autolocation is laying the groundwork for advanced Spatial AI agents that will enable proactive issue detection and resolution by flagging issues in specific zones and suggesting immediate actions. The agents will also provide ‘executive-level insights’, with AI-powered summaries derived from visual and spatial data delivering clear, actionable guidance.

■ www.openspace.ai
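OpenSpace has not disclosed how AI Autolocation matches live readings to its sensor maps. A classic technique with the same shape is fingerprint-based positioning. It stores a sensor ‘fingerprint’ for each known location, then estimates a live position from the closest matches. The Python sketch below uses k-nearest-neighbour matching on Euclidean distance. The fingerprint values, the (x, y) coordinates and `k` are hypothetical, and this is not presented as OpenSpace’s method.

```python
import math


def locate(reading, fingerprint_map, k=2):
    """Estimate an (x, y) position by averaging the k mapped locations whose
    stored fingerprints are closest (Euclidean distance) to the live reading.

    fingerprint_map: list of ((x, y), fingerprint) pairs built from earlier captures.
    """
    ranked = sorted(fingerprint_map, key=lambda entry: math.dist(reading, entry[1]))
    nearest = ranked[:k]
    x = sum(pos[0] for pos, _ in nearest) / len(nearest)
    y = sum(pos[1] for pos, _ in nearest) / len(nearest)
    return x, y


if __name__ == "__main__":
    # Hypothetical fingerprints (e.g. magnetometer readings) at three surveyed spots
    survey = [
        ((0.0, 0.0), (10.0, 0.0, 5.0)),
        ((5.0, 0.0), (20.0, 1.0, 5.0)),
        ((10.0, 0.0), (30.0, 2.0, 5.0)),
    ]
    print(locate((21.0, 1.0, 5.0), survey, k=2))
```

OpenSpace says its system keeps up with a changing jobsite. Doing that would also mean updating the fingerprint map over time, which this sketch does not attempt.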

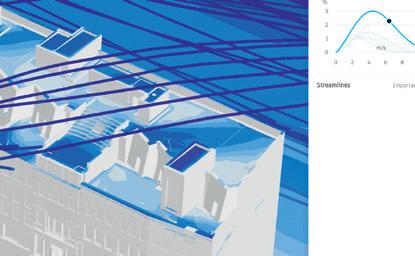

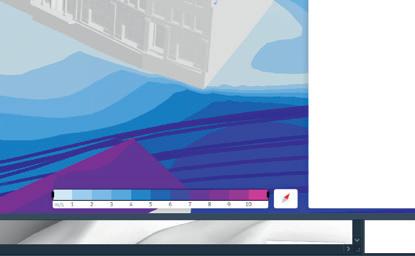

Chaos is blending generative AI with traditional visualisation, rethinking how architects explore, present and refine ideas using tools like Veras, Enscape, and V-Ray, writes Greg Corke

From scanline rendering to photorealism, real-time viz to real-time ray tracing, architectural visualisation has always evolved hand in hand with technology.

Today, the sector is experiencing what is arguably its biggest shift yet: generative AI. Tools such as Midjourney, Stable Diffusion, Flux, and Nano Banana are attracting widespread attention for their ability to create compelling, photorealistic visuals in seconds — from nothing more than a simple prompt, sketch, or reference image.

The potential is enormous, yet many architectural practices are still figuring out how to properly embrace this technology, navigating practical, cultural, and workflow challenges along the way.

The impact on architectural visualisation software as we know it could be profound. But generative AI also presents a huge opportunity for software developers.

Like some of its peers, Chaos has been gradually integrating AI-powered features into its traditional viz tools, including Enscape and V-Ray. Earlier this year, however, it went one step further by acquiring EvolveLAB and its dedicated AI rendering solution, Veras.

“Basically, [it takes] an image input for your project, then generates a five second video using generative AI,” explains Bill Allen, director of products, Chaos. “If it sees other things, like people or cars in the scene, it’ll animate those,” he says.

This approach can create compelling illusions of rotation or environmental activity. A sunset prompt might animate lighting changes, while a fireplace in the scene could be made to flicker. But there are limits. “In generative AI, it’s trying to figure out what might be around the corner [of a building], and if there’s no data there, it’s not going to be able to interpret it,” says Allen.

Chaos is already looking at ways to solve this challenge of showcasing buildings from multiple angles. “One of the things we think we could do is take multiple shots, one shot from one angle of the building and another one, and then you can interpolate,” says Allen.

Model behaviour

Veras allows architects to take a simple snapshot of a 3D model or even a hand-drawn sketch and quickly create ‘AI-rendered’ images with countless style variations. Importantly, the software is tightly integrated with CAD / BIM tools like SketchUp, Revit, Rhino, Archicad and Vectorworks, and offers control over specific parts within the rendered image.

With the launch of Veras 3.0, the software’s capabilities now extend to video, allowing designers to generate short clips featuring dynamic pans and zooms, all at the push of a button.

Veras uses Stable Diffusion as its core ‘render engine’. As the generative AI model has advanced, newer versions of Stable Diffusion have been integrated into Veras, improving both realism and render speed, and allowing users to achieve more detailed and sophisticated results.

“We’re on render engine number six right now,” says Allen. “We still have render engines four, five and six available for you to choose from in Veras.”

But Veras does not necessarily need to be tied to a specific generative AI model. In theory it could evolve to support Flux, Nano Banana or whatever new or improved model variant may come in the future.

However, as Allen points out, the choice of model isn’t just down to the quality of the visuals it produces. “It depends on what you want to do,” he says. “One of the reasons that we’re using Stable Diffusion right now instead of Flux is because we’re getting better geometry retention.”

One thing that Veras doesn’t yet have out of the box is the ability for customers to train the model using their own data, although, as Allen admits, “That’s something we would like to do.”

In the past, Chaos has used LoRAs (Low-Rank Adaptations) to fine-tune the AI model for certain customers in order to accurately represent specific materials or styles within their renderings.

Roderick Bates, head of product operations, Chaos, imagines that demand for fine-tuning will go up over time, but says there might be other ways to get the desired outcome. “One of the things that Veras does well is that you can adjust prompts, you can use reference images and things like that to kind of hone in on style.”
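LoRAs, mentioned above, are cheap enough to use per customer because of how they work. Low-rank adaptation freezes a pretrained weight matrix $W_0$ and learns only a small update built from two thin matrices. The standard formulation is shown below. Chaos has not said how it configures its LoRAs, so the 768 × 768 layer size and rank $r = 8$ are hypothetical values, chosen only to show the arithmetic.

```latex
\begin{aligned}
W &= W_0 + \Delta W = W_0 + \tfrac{\alpha}{r}\,BA,
  \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d,k) \\
\text{trainable parameters} &= r(d + k) \quad \text{versus} \quad dk \text{ for full fine-tuning} \\
d = k = 768,\; r = 8:\quad r(d + k) &= 8 \times 1536 = 12{,}288 \quad \text{versus} \quad 768^2 = 589{,}824 \;(\approx 2.1\%)
\end{aligned}
```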

While Veras experiments with generative creation, Chaos is also exploring how AI can be used to refine output from its established viz tools using a variety of AI post-processing techniques.

Chaos AI Upscaler, for example, enlarges render output by up to four times while preserving photorealistic quality. This means scenes can be rendered at lower resolutions (which is much quicker), then at the click of a button upscaled to add more detail.
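The time saving comes from rendering far fewer pixels. The phrase ‘up to four times’ could refer to each image dimension or to the total pixel count. The Python sketch below assumes the former, a per-axis factor. In that case a 4× upscale means rendering only 1/16 of the final pixels. Render time does not scale exactly with pixel count, so treat this as a rough, hypothetical estimate rather than a Chaos figure.

```python
def rendered_pixels(target_width, target_height, upscale_factor):
    """Pixels that must actually be rendered when the output is enlarged by
    `upscale_factor` along each axis (assumption: per-axis, not per-area)."""
    return (target_width // upscale_factor) * (target_height // upscale_factor)


if __name__ == "__main__":
    full = 3840 * 2160  # 4K UHD target
    base = rendered_pixels(3840, 2160, 4)  # rendered at 960 x 540
    print(f"{base:,} rendered pixels vs {full:,} ({full // base}x fewer)")
```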

While AI upscaling technology is widely available – both online and in generic tools like Photoshop – Chaos AI Upscaler benefits from being accessible at the click of a button inside viz tools like Enscape that architects already use. Bates points out that if an architect uses another tool for this process, they must download the rendered image first, then upload it to another place, which fragments the workflow. “Here, it’s all part of an ecosystem,” he explains, adding that it also avoids the need for multiple software subscriptions.

Chaos is also applying AI in more intelligent ways, harnessing data from its core viz tools. Chaos AI Enhancer, for example, can improve rendered output by refining specific details in the image. This is currently limited to humans and vegetation, but Chaos is looking to extend this to building materials.

“You can select different genders, different moods, you can make a person go from happy to sad,” says Bates, adding that all of this can be done through a simple UI.

There are two major benefits: first, you don’t have to spend time searching for a custom asset that may or may not exist and then have to re-render; second, you don’t need highly detailed 3D asset models to achieve the desired results, which would normally require significant computational power, or may not even be possible in a tool like Enscape.

The real innovation lies in how the software applies these enhancements. Instead of relying on the AI to interpret and mask off elements within an image, Chaos brings this information over from the viz tool directly. For example, output from Enscape isn’t just a dumb JPG: each pixel carries ‘voluminous metadata’, so the AI Enhancer automatically knows that a plant is a plant, or a human is a human. This makes selections both easy and accurate.
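Chaos has not published the format of the metadata Enscape attaches to each pixel. The point here is why per-pixel labels make selection trivial compared with asking an AI to segment a flat image. The Python sketch below assumes a hypothetical label buffer holding one class name per pixel. From it, the sketch derives a selection mask and a bounding box for one class. The buffer layout and class names are illustrative assumptions.

```python
def class_mask(label_buffer, target):
    """Binary mask (1 = selected) of every pixel tagged with the `target` class."""
    return [[1 if label == target else 0 for label in row] for row in label_buffer]


def bounding_box(mask):
    """(top, left, bottom, right) of the selected pixels, or None if nothing is selected."""
    hits = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)


if __name__ == "__main__":
    # Tiny 3 x 3 'render' where each pixel carries an object class
    labels = [
        ["sky", "sky", "plant"],
        ["wall", "plant", "plant"],
        ["person", "wall", "wall"],
    ]
    plants = class_mask(labels, "plant")
    print(plants, bounding_box(plants))
```

No guesswork is involved: the selection is an exact lookup, which is why label-driven masks can be both easy and accurate.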

As it stands, the workflow is seamless: a button click in Enscape automatically sends the image to the cloud for enhancement.

But there’s still room for improvement. Currently, each person or plant must be adjusted individually, but Chaos is exploring ways to apply changes globally within the scene.

Chaos AI Enhancer was first introduced in Enscape in 2024 and is now available in Corona and V-Ray 7 for 3ds Max, with support for additional V-Ray integrations coming soon.

Materials

Chaos is also extending its application of AI into materials, allowing users to generate render-ready materials from a simple image. “Maybe you have an image from an existing project, maybe you have a material sample you just took a picture of,” says Bates. “With the [AI Material Generator] you can generate a material that has all the appropriate maps.”

Initially available in V-Ray for 3ds Max, the AI Material Generator is now being rolled out to Enscape.

In addition, a new AI Material Recommender can suggest assets from the Chaos Cosmos library, using text prompts or visual references to help make it faster and easier to find the right materials.

Chaos is uniquely positioned within the design visualisation software landscape. Through Veras, it offers powerful one-click AI image and video generation, while tools like Enscape and V-Ray use AI to enhance classic visualisation outputs. This dual approach gives Chaos valuable insight into how AI can be applied across the many stages of the design process, and it will be fascinating to see how ideas and technologies start to cross-pollinate between these tools.

A deeper question, however, is whether 3D models will always be necessary. “We used to model to render, and now we render to model,” replies Bates, describing how some firms now start with AI images and only later build 3D geometry.

“Right now, there is a disconnect between those two workflows, between that pure AI render and modelling workflow - and those kind of disconnects are inefficiencies that bother us,” he says.

For now, 3D models remain indispensable. But the role of AI — whether in speeding up workflows, enhancing visuals, or enabling new storytelling techniques — is growing fast. The question is not if, but how quickly, AI will become a standard part of every architect’s viz toolkit.

■ www.chaos.com

contractually restrict you from applying them in one of the most strategically valuable ways: training your own AI models.

I know many of the more progressive AEC firms that attend our NXT BLD event are training their own in-house AI based on their Revit models and Revit-derived DWGs and PDFs. With no caveats or carve-outs for customers, they potentially now have the Sword of Damocles hanging over their data. As worded, the broad use of the word ‘output’ could theoretically even apply to an Industry Foundation Classes (IFC) file exported from Revit, as it’s an output from Autodesk’s product stack, which could mean you are not even allowed to train AI on an open standard!

Legally, the company has not taken your intellectual property; instead, it may have ring-fenced its permitted uses, in a very specific way. This creates what I’d characterise as a “legal DRM moat” around customer data.


Autodesk potentially positions itself as the arbiter of how your data can be exploited, leaving you in possession of your files but without full freedom to decide their fate. The fine print ensures Autodesk maintains leverage over emerging AI workflows, even while telling customers their data still belongs to them. And the one place where this restriction doesn’t apply is within Autodesk’s cloud ecosystem, now called Autodesk Platform Services (APS). Only last month at Autodesk University, Autodesk was demonstrating AI training on data within the Autodesk cloud.

When Nathan Miller, a digital design strategist and developer from Proving Ground, discovered these limitations, he ran a series of posts on LinkedIn (www.tinyurl.com/Miller-Terms). Prior to this, none of the AEC firms we had spoken with for this article had any awareness of it, despite the Terms of Service being published seven years ago.

Some commentators also speculated that such clauses could serve to pre-empt potential misuse of design data by large AI firms. However, Autodesk’s 2018 publication date predates the current wave of generative AI, suggesting the clause was originally framed more broadly as an IP-protection measure against anything challenging Autodesk’s hold on its customers. 2018 was long before these major AI players were a potential threat.

While the topic certainly attracted plenty of comment, the only Autodesk-related person to add their thoughts to the LinkedIn post was Aaron Wagner of reseller Arkance. He commented: “I don’t think the common interpretation is accurate to the spirit of that clause. Your data is your data and the way you use it is under your own discretion. Of course, you should always seek legal counsel to refine any grey areas.

“This statement to me reads that the clause is from a standpoint of Autodesk wanting to protect its products from being reverse engineered and hold themselves free of liability of sharing private information, but model element authors can still freely use AI/ML to study their own data / designs and improve them.”

Following an early draft of this article, Autodesk responded, “This clause was written to prevent the use of AI/ML technology to reverse engineer Autodesk’s IP or clone Autodesk’s product functionalities, a common protection for software companies. It does not broadly restrict our users’ ability to use their IP or give Autodesk ownership to our users’ content.”

The rationale behind this clause is open to interpretation. Autodesk maintains that its intent is to protect intellectual property and ensure AI use occurs within secure, governed environments. Some industry observers worry that the breadth could inadvertently chill legitimate customer innovation, despite Autodesk’s stated intent.

Buro Happold’s Winfield shared her perspective: “Contract interpretation is generally not impacted by spirit of a clause - if the drafting is clear, it is not changed by the assertion of a different intention? Unless there are contradictions in other clauses and copyright law then it all needs to be read together and squared up to be interpreted in a workable way? It may be the ‘outputs’ in the clause needs to qualify / clarify its intentions, if different from the seemingly clear drafting of read alone?”

The short solution to this would be for Autodesk to refine the language in its Terms of Use and not have such an implied broad restriction on customers creating their own trained AIs on their own design data, irrespective of the software that produced it.

There is a lot of daylight between what Autodesk claims to be its intent and the plain language of what is written. If the intent is to stop reverse engineering of Autodesk AI IP, then why not state that clearly?

The reverse engineering of its products and services is already covered quite extensively in section 13 (Autodesk Proprietary Rights) of its General Terms. The references to machine learning, AI, data harvesting and APIs are all in addition to this.

Knock-on risks for consultants

Winfield also points out that Autodesk’s broad claims over “outputs” may have knock-on consequences for customer–client agreements. Most design and consultancy contracts require the consultant to warrant that deliverables are original and fully owned by them. If a vendor asserts ownership rights through its licence terms, that warranty could be undermined. The risk goes further: consultancy agreements often contain indemnities, requiring the designer to protect the client against copyright breaches or claims. If a software vendor were to allege ownership or misuse under its EULA, a client might look to recover damages from the consultant. This creates a potential double exposure — liability to the vendor, and liability to the client.

EULA vs Terms of Use: what’s the difference?

At first glance, a EULA (End User Licence Agreement) and Terms of Use can look like the same thing. In practice, they operate at different levels — and together form the legal framework that governs how customers engage with software and cloud services.

The EULA is the traditional licence agreement tied to desktop software. It explains that you do not own the software itself, only the right to use it under certain conditions. Typical clauses cover installation limits, restrictions on copying or reverse-engineering, and confirmation that the software is licensed, not sold.

The Terms of Use apply more broadly to online services, platforms, APIs and cloud tools. They include acceptable use rules, data storage and sharing conditions, API restrictions, and often a right for the vendor to change the terms unilaterally.

One unresolved issue is how to interpret contradictions. If the EULA states ‘you own your work’ but the Acceptable Use Policy restricts what you can do with that work, and neither agreement specifies which takes precedence, which clause governs? In practice, customers may only discover the answer in the event of a dispute — an unsettling prospect for firms relying on predictable rights.

The interpretation that this was a sweeping restriction on AI training using any output from Autodesk software has not gone unnoticed by major customers. Autodesk already has a reputation for running compliance audits and issuing fines when licence breaches are discovered, so the presence of this clause in an updated, binding contract has raised alarm.

The fear was simple: if the restriction exists, it can be enforced. Several design IT directors have already told their boards that, on a strict reading of Autodesk’s updated terms, their firms are probably now out of compliance – not for piracy, but for training their own AI models, on their own project data.

Some of the commenters on Miller’s original LinkedIn post reported that they raised the issue with Autodesk execs in meetings. By and large these execs had not heard of the EULA changes and said they would go and find out more information.

Other vendors’ EULAs do include clauses about AI training on data, but these always appear to relate to protecting IP or preventing reverse engineering of commercial software, not broad prohibitions.

Adobe has explicit rules around its Firefly generative AI features: the company’s Generative AI User Guidelines forbid customers from using any Firefly-generated output to train other AI or machine learning models. However, in product-specific terms, Adobe defines “Input” and “Output” as your content and extends the same protections to both.

Graphisoft has so far left customer data largely unconstrained in terms of AI use. Bentley Systems sits somewhere in between, allowing AI outputs for your use but prohibiting their use in building competing AI systems. The standard Allplan EULA / licence terms do not appear to contain blanket prohibitions on using output for AI training.

Meanwhile, Autodesk’s wording has no caveats or carve-outs for customers’ data, just what appears to be a blanket restriction on AI training using outputs from its software, combined with an exception for its own cloud ecosystem. This appears to effectively grant the company a monopoly over how design data can fuel AI. Customers are free to create, but if they wish to train internal AI on their own project history, the contract could shut the door — unless that training happens inside Autodesk’s APS environment. The effect is to funnel innovation into Autodesk’s platform, where the company retains commercial leverage.

This mirrors tactics used in other industries. Social media platforms, for example, restrict third-party scraping to ensure AI training occurs only within their walls – although in that instance the third party would be using data it does not own.

In finance, regulators have intervened to stop institutions from controlling both infrastructure and the datasets flowing through them. Europe’s Digital Markets Act directly targets such gatekeeping, while US antitrust agencies are scrutinising restrictive contract terms that entrench platform dominance.

For the AEC sector, the potential impact of the restrictions in Autodesk’s Acceptable Use Policy is clear: it risks concentrating AI innovation inside Autodesk’s ecosystem, raising barriers for independent development and narrowing customer choice.

As the industry moves into an era defined by artificial intelligence and machine learning, customer content has become more than just the product of design work; it has become the raw material for training and insight.

BIM and CAD models are no longer viewed solely as deliverables for projects, but as vast datasets that can be mined for patterns, efficiencies, and predictive value. This is why software vendors increasingly frame customer content as “data goods” rather than private work.

With so much of the design process shifting to cloud-based platforms, vendors are in a powerful position to influence, and often restrict, how those datasets can be accessed and reused.

The old mantra that “data is the new oil” captures this shift neatly: just as oil companies controlled not only the drilling but also the refining and distribution, software firms now want to control both the pipelines of design data and the AI refineries that turn it into intelligence. What used to be customer-owned project history is being reconceptualised as a strategic asset for software vendors themselves, and EULAs and Terms of Use are the contractual tools that allow them to lock down that value.

How Autodesk might enforce an AI training ban is an open question. Traditional licence audits can detect unlicensed installs or overuse, but proving that a customer has trained an AI on Autodesk outputs is far more complex. That said, Autodesk file formats (DWG, RVT, etc.) do contain unique structural fingerprints that could, in theory, be detected in a trained model’s weights or outputs – for example, if an AI consistently reproduces proprietary layering systems, metadata tags, or parametric structures unique to Autodesk tools.

Autodesk could also monitor API usage patterns: large-scale systematic exports or conversions may signal that datasets are being harvested for training. Another possible avenue is watermarking — embedding invisible markers in outputs that survive export and could later be detected.

APIs, APS and developers

Autodesk is also making significant changes to other areas of its business – changes that could have a big impact on those that develop or use complementary software tools. Autodesk’s API and Autodesk Platform Services (APS) ecosystem has long been central to the company’s success, enabling customers and commercial third parties to extend tools like Autodesk Revit and Autodesk Construction Cloud (ACC).

But what was once a relatively open environment is now being reshaped into a monetised, tightly governed platform — with serious implications for customers and developers.

Nathan Miller of Proving Ground points out that virtually every practice he has worked with relies on open-source scripts, third-party add-ins, or in-house extensions. These are the utilities that make Autodesk’s software truly productive. By introducing broad restrictions and fresh monetisation barriers, Autodesk risks eroding the very ecosystem that helped drive its dominance.

The most visible change is the shift of APS into a metered, consumption-based service. Previously bundled into subscriptions, APIs will now incur line-item costs for common tasks such as model translations, batch automations and dashboard integrations.

A capped free tier remains, but high-value services like Model Derivative, Automation and Reality Capture will now be billed per use. For firms, this means operational budgets must now account for API spend, with the risk of projects stalling mid-delivery if quotas are exceeded or unexpected charges triggered.
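In practice, metering turns API use into a project line item to be tracked like any other cost. The following is a minimal sketch of that bookkeeping, assuming, purely for illustration, a flat per-call price for each service. Real APS billing may be structured quite differently, and the `ApiBudget` class, its service keys and all prices are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ApiBudget:
    """Tracks metered API spend for one project against a fixed allowance.

    All prices are hypothetical and held in whole cents to avoid
    floating-point rounding; they are not Autodesk's actual rates.
    """
    allowance_cents: int
    rates_cents: dict              # hypothetical price per call, keyed by service
    spent_cents: int = 0
    log: list = field(default_factory=list)

    def charge(self, service: str, calls: int = 1) -> bool:
        """Record usage if it fits the remaining allowance; refuse it if not."""
        cost = self.rates_cents[service] * calls
        if self.spent_cents + cost > self.allowance_cents:
            return False           # would overrun: refuse so it is caught up front
        self.spent_cents += cost
        self.log.append((service, calls, cost))
        return True

    @property
    def remaining_cents(self) -> int:
        return self.allowance_cents - self.spent_cents


# Hypothetical prices for two of the services named above.
project = ApiBudget(
    allowance_cents=10_000,
    rates_cents={"model_derivative": 150, "automation": 400},
)
print(project.charge("model_derivative", 20))  # True  (3,000 cents)
print(project.charge("automation", 15))        # True  (6,000 cents)
print(project.charge("automation", 5))         # False (would reach 11,000)
print(project.remaining_cents)                 # 1000
```

Refusing a charge before it is incurred, rather than discovering the overrun on an invoice, is one way a team could avoid the mid-delivery stall described above.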

Autodesk has also tightened authentication rules. All integrations must be registered with APS and use Autodesk-controlled OAuth scopes. These scopes, which define the exact permissions an app has, can be added, redefined or retired by Autodesk — improving security, but also centralising control over what kinds of applications are permitted.

Perhaps the most profound change is not technical, but contractual. Firms can still create internal tools for their own use. But turning those into commercial products — or even sharing them with peers — now requires Autodesk’s explicit approval. The line between “internal tool” and “commercial app” is no longer a matter of technology but of contract law. Innovation, once free to circulate, is now fenced in.

This changing landscape for software development is not unique to Autodesk. Dassault Systèmes (DS), which is strong in product design, manufacturing, automotive, and aerospace, has sparked controversy by revising its agreements with third-party developers for its Solidworks MCAD software. DS is demanding developers hand over 10% of their turnover along with detailed financial disclosures (www.tinyurl.com/solidworks-10). Small firms fear such terms could make their businesses unviable.

Across the CAD/BIM sector, ecosystems are being re-engineered into revenue streams. What were once open pipelines of user-driven innovation are narrowing into gated conduits, designed less to empower customers than to deliver shareholder returns.

The stakes are high for both customers and developers. For customers, the greatest risk is losing meaningful control over their design history. Project files, BIM models and CAD data are no longer just records of completed work; they are the foundation for future AI-driven workflows. If licence agreements prevent firms from using their own outputs to train AI, they forfeit the ability to build unique, in-house intelligence from their past projects. The value of their data, arguably their most strategic asset, is redirected into the vendor’s ecosystem. The result is growing dependence: firms must rely on vendor tools, AI models and pricing, with fewer options to innovate independently or move their data elsewhere.

For software developers, the risks are equally severe. Independent vendors and in-house innovators who once built add-ons or utilities to extend core CAD/BIM platforms now face new costs and restrictions. Revenue-sharing models, such as Dassault Systèmes’ 10% royalty scheme, threaten commercial viability, especially for smaller firms. When API use is metered and distribution fenced in by contract, ecosystems shrink. Innovation slows, customer choice narrows, and vendor lock-in grows.

AI is the existential threat vendors don’t want to admit. Smarter systems could slash the number of licences needed on a project, deliver software on demand, and let firms build private knowledge vaults more valuable than off-the-shelf tools. Vendors see the danger: EULAs are now their defensive moat, crafted to block customers from using their own data to fuel AI. The fine print isn’t just about compliance — it’s about making sure disruption happens on the vendor’s terms, not those of the customer.

This trajectory is not inevitable. Customers and developers can push back. Large firms, government bodies and consortia hold leverage through procurement. They can demand carve-outs that preserve ownership of outputs and guarantee the right to train AI. Developers, too, can resist punitive revenue-sharing schemes and press for fairer terms. Only collective action will ensure innovation remains in the hands of the wider AEC community, not locked in vendor boardrooms.

Autodesk’s Acceptable Use Policy (AUP) appears to ban AI/ML training on any “output” from its software unless done within Autodesk’s APS cloud. This could include models, drawings, exports, even IFCs.

Why it matters

Customers risk losing the ability to train internal AI on their own design history. Strict licence audits mean firms could be flagged non-compliant even without intent.

Legal experts warn the AUP’s broad claims over “outputs” may conflict with copyright law, which in many jurisdictions gives authors automatic ownership of their creations.

Consultants could face knock-on risks if client contracts require them to warrant full ownership of deliverables — raising potential indemnity exposure.

Autodesk gains leverage by funnelling AI innovation into its paid ecosystem.

The big picture

This move mirrors gatekeeping strategies in other tech sectors, where platforms wall off data to consolidate control. Regulators in the EU (Digital Markets Act, Data Act) and US antitrust bodies are increasingly scrutinising such practices.

The tightening of EULAs and developer agreements is not happening in a vacuum. In Europe, new regulations like the Digital Markets Act (DMA) and the Data Act could directly challenge these practices. The DMA targets “gatekeepers” that restrict competition, while the Data Act enshrines customer rights to access and use data they generate, including for AI training. Clauses banning firms from training AI on their own outputs may sit uncomfortably with these principles.

In the US, antitrust law is less settled but moving in the same direction. The FTC has signalled increased scrutiny of contract terms that suppress competition, and restrictions such as Autodesk’s AI-output ban or Solidworks’ 10% developer royalty could draw attention.

For customers and developers, this creates negotiating leverage. Large firms, government clients, and consortia can push for carve-outs citing regulatory rights, while developers may resist punitive revenue-sharing as disproportionate. Yet smaller players face a harder reality: challenging vendors risks losing access to platforms that underpin longstanding businesses.

What changed?

Autodesk has overhauled Autodesk Platform Services (APS): APIs are now metered, consumption-based, and gated by stricter terms. While firms can still build internal tools, sharing or commercialising scripts now requires Autodesk’s explicit approval.

Why it matters

Independent developers face new costs and quotas for integrations that were once bundled into subscription fees.

In-house teams must now budget for API usage, turning process automation into an ongoing operational cost.

Quota limits mean projects risk disruption if thresholds are unexpectedly exceeded mid-delivery.

The contractual line between “internal tool” and “commercial app” is now defined by Autodesk, not developers.

Innovation that once flowed freely into the wider ecosystem is fenced in, with Autodesk deciding what can be shared.

The big picture

Across the CAD/BIM sector, developer ecosystems are being monetised and restricted to generate shareholder returns. What were once open innovation pipelines are narrowing into vendor-controlled platforms, threatening the independence of smaller developers and reducing customer choice.

A Bill of Rights?

With so many software firms busily updating their business models, EULAs and terms, the one group standing still and taking the full force of this wave is customers. A constructive way forward could be the creation of a Bill of Rights for AEC software customers — a simple but powerful framework that customers could insist their vendors sign up to and be held accountable against. The goal is not to hobble innovation, but to ensure it happens on a foundation of fairness and trust, so that customers know the next ‘we have updated our EULA’ notice will not transgress a set of core concepts.

At its heart we’re suggesting five core principles:

Data Ownership - a statement that customers own what they create; vendors cannot claim control of drawings, models, or project data through the fine print.

AI Freedom - guarantees that firms may use their own outputs to train internal AI systems, preserving the ability to innovate independently rather than relying solely on vendor-driven tools.

Developer Fairness - ensures that APIs remain open, with transparent and non-punitive revenue models that allow third-party ecosystems to thrive.

Transparency - requires vendors to clearly disclose when and how customer data is used in their own AI training or analytics.

Portability - commits vendors to interoperability and open standards, so that customers are never locked into one ecosystem against their will.

Such a Bill of Rights would not prevent Autodesk, Bentley Systems, Nemetschek, Trimble and others from building profitable AI services or new subscription tiers. But it would establish clear boundaries: vendors innovate and capture value, but not at the expense of customer autonomy. For customers, developers, and ultimately the built environment itself, this would restore balance and accountability in a market where the fine print has become as important as the software itself.

AEC Magazine is now working with a group of customers, developers and software vendors to see how this could be shaped in the coming months.

EULAs are no longer obscure boilerplate legalese, tucked at the end of an installer. They have become the front line in a new battle, not over software piracy, but over who controls the data, workflows, and ecosystems that shape the future of design.

In my view, as currently worded, Autodesk’s clause could be interpreted as a prohibition on AI training, although this may be counter to Autodesk’s intentions with regard to customer ‘outputs’. Furthermore, Dassault Systèmes’ demand for a slice of developer revenues illustrates just how quickly the ground is shifting. Contracts are no longer just protective wrappers around software; they are strategic levers which can be used to lock in customers and monetise ecosystems.

This should concern everyone in AEC. Customers risk losing the ability to use their own project history to innovate, while mature developers face sudden, new revenue-sharing models that could undermine entire businesses. Left unchallenged, the result will be less competition, less innovation, and greater dependency on a handful of large vendors whose first loyalty is to shareholders, not users.

The only path forward I see is collective action. Customers and developers must push back, demand transparency, insist on long-term contractual safeguards, and possibly unite around a shared Bill of Rights for AEC software. The question is no longer academic: in the age of AI, do you own your tools and your data — or does your vendor own you?

In response to this article, D5 Render provided the following statement:

We fully understand and share the community’s concerns regarding data rights in the evolving field of AI. We remain committed to maintaining clear and fair agreements that protect user rights while fostering innovation.

Our Terms of Service (publicly available at www.d5render.com/service-agreement) do not claim any ownership or perpetual usage rights over user-generated content, including AI-rendered images. On the contrary, Section 6 of our Terms of Service explicitly states that users “retain rights and ownership of the Content to the fullest extent possible under applicable law; D5 does not claim any ownership rights to the Content.”

When users upload content to our services, D5 is granted only a non-exclusive, purpose-limited operational license, which is a standard clause in most cloud-based software products. This license merely allows us to technically operate, maintain, and improve the service. D5 will never use user content as training data for the Services or for developing new products or services without users’ express consent.

As for liability, Sections 8 and 9 of our Terms of Service are standard in the software industry. They are designed to protect D5 from risks arising from user-uploaded content that infringes on third-party rights. These clauses are not intended to transfer the liability of D5’s own actions to users.

In the fast-moving world of modern work, time is precious, and every second lost is an opportunity missed. Whether it’s waiting for large project files to load, struggling with multiple applications slowing your system down, or dealing with clunky collaboration tools, these bottlenecks add up quickly. They disrupt the flow of ideas, delay important decisions, and make everyday tasks feel like a constant battle with technology.

But this doesn’t have to be the case. Lenovo mobile workstations are designed to break free from the constraints of traditional laptops. These latest devices, such as Lenovo’s newly launched ThinkPad P14s / P16s powered by AMD Ryzen™ processors, are built for professionals who need more than just a basic device. They’re tailored to handle the complex workflows that define modern work, where data, collaboration, and creative tasks often collide.

Let’s consider a common scenario: project managers juggling multiple applications, everything from Primavera P6 and SAP to Teams, emails, and spreadsheets. Each application demands memory, processing power, and attention. But what happens when your laptop can’t handle the load? It starts freezing, stalling, and you’re left waiting. ThinkPad P series are built to eliminate this problem. Equipped with high-speed memory, these workstations allow you to run multiple heavy applications at once, without the system crashing or slowing down. Whether it’s tracking a project or responding to urgent emails, you’ll experience smooth multitasking with no interruptions. With Unified Memory Architecture, your machine intuitively allocates system resources to handle demanding tasks, so you don’t have to worry about your computer holding you back.

The Unified Memory Architecture also benefits professionals with 3D rendering and visualization needs, where slow model edits or long render times can halt creativity. You need great graphics memory to tackle the demanding graphical tasks. The latest Lenovo mobile workstations ensure there is no more waiting for renders to finish or materials to load, just a continuous, uninterrupted workflow that lets you focus on the creative process.

AI Collaboration: Seamless Teamwork Across Borders

In today’s remote-first world, collaboration is at the heart of almost every workflow. Yet, the challenges of managing team members across different locations, software tools, and time zones are significant. Whether you’re handling data analysis, designing 3D models, or simply working on a multimedia project, it’s easy to feel overwhelmed when your system struggles to keep up with multiple apps running simultaneously. The latest AI-powered mobile workstations from Lenovo, like ThinkPad P14s and P16s powered by AMD Ryzen™ processors, solve this by ensuring smooth multitasking and collaboration. Equipped with 50+ TOPS NPU for AI-driven performance, these workstations accelerate real-time on-device AI tasks, from data processing to virtual meetings. This ensures smooth collaboration even when working with large, complex files. Whether it’s editing 4K video, reviewing models, or sharing your screen during a client presentation, these machines can handle the load without delays. You’ll experience better, faster teamwork, all while benefiting from real-time security and adaptive power management, keeping you productive on the go.

These workstations don’t just eliminate lag and delays; they transform your workflow into something fluid, something that feels natural. Collaboration tools are more intuitive, creative processes are less interrupted, and even data analysis is faster. It’s like having a team that anticipates your next move—empowering you to do more, faster, and with less frustration.

In the past, workstations were specialized tools used only by engineers, designers, or 3D professionals. But as the demands of work have shifted, the definition of what a power user needs has expanded. Today, professionals in data analysis, project management, and even content creation face AI-assisted workflows that require more than a typical laptop can provide. AI-powered workstations offer solutions to these challenges, delivering productivity and real-time processing that can handle even the most complex tasks.

Whether you’re tracking resources, analyzing data, or rendering designs, these workstations provide the power and flexibility you need to stay productive and efficient. No longer is it about whether you can get by with a laptop—it’s about whether your device can keep up with your growing workflow demands. As AI continues to reshape industries, those who are equipped with the right tools will stay ahead of the curve.

Is your current machine holding you back? Evaluate your device with the self-assessment tool to see if it meets today’s demands, or if an upgrade is needed for future workflows.

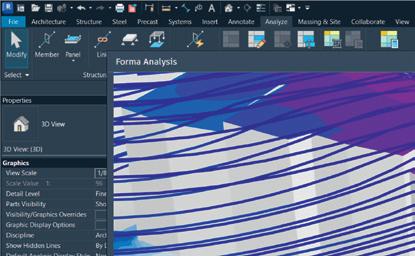

Autodesk’s AI story has matured. While past Autodesk University events focused on promises and prototypes, this year Autodesk showcased live, production-ready tools, giving customers a clear view of how AI could soon reshape workflows across design and engineering, writes Greg Corke

At AU 2025, Autodesk took a significant step forward in its AI journey, extending far beyond the slide-deck ambitions of previous years.

During CEO Andrew Anagnost’s keynote, the company unveiled brand-new AI tools in live demonstrations using pre-beta software. It was a calculated risk, particularly in light of recent high-profile hiccups from Meta, but the reasoning was clear: Autodesk wanted to show that it has tangible, functional AI technology and that it will be available for customers to try soon.

The headline development is ‘neural CAD’, a completely new category of 3D generative AI foundation models that Autodesk says could automate up to 80–90% of routine design tasks, allowing professionals to focus on creative decisions rather than repetitive work. The naming is very deliberate, as Autodesk tries to differentiate itself from the raft of generic AEC-focused AI tools in development.

neural CAD AI models will be deeply integrated into BIM workflows through Autodesk Forma, and product design workflows through Autodesk Fusion. They will ‘completely reimagine the traditional software engines that create CAD geometry.’

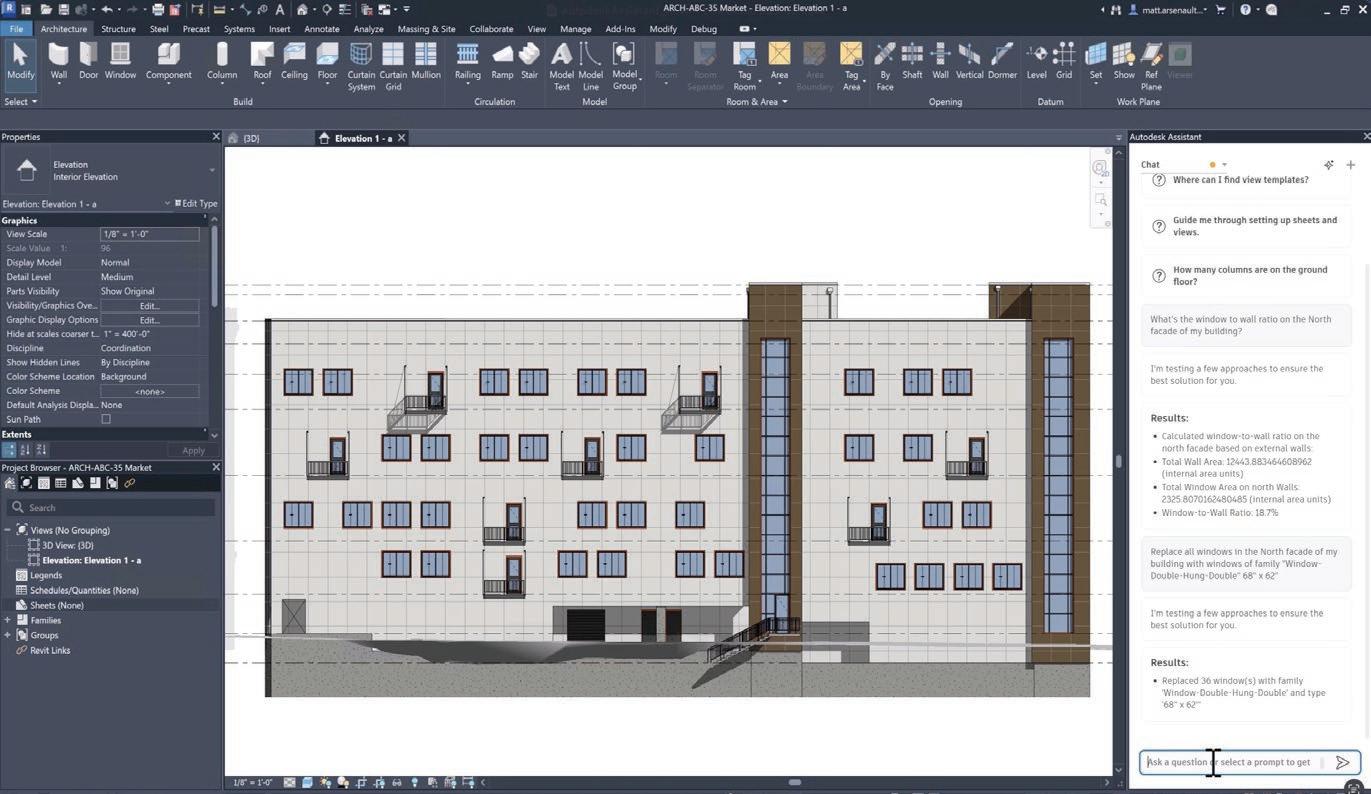

Autodesk is also making big AI strides in other areas. Autodesk Assistant is evolving beyond its chatbot product support origins into a fully agentic AI assistant that can automate tasks and deliver insights based on natural-language prompts.

Big changes are also afoot in Autodesk’s AEC portfolio – developments that will have a significant impact on the future of Revit.

The big news was the release of Forma Building Design, a brand-new tool for LoD 200 detailed design. Autodesk also announced that its existing early-stage planning tool, Autodesk Forma, will be rebranded as Forma Site Design, and that Revit will gain deeper integration with the Forma industry cloud, becoming Autodesk’s first Connected client. You can learn more about these developments in the article on page 30.

neural CAD marks a fundamental shift in Autodesk’s core CAD and BIM technology. As Anagnost explained, “The various brains that we’re building will change the way people interact with design systems.”

Unlike general-purpose large language models (LLMs) such as ChatGPT and Claude, or AI image generation models

Autodesk has so far presented two types of neural CAD models: ‘neural CAD for geometry’, which is being used in Fusion and ‘neural CAD for buildings’, which is being used in Forma.

For Fusion, there are two AI model variants, as Tonya Custis, senior director, AI research, explained, “One of them generates the whole CAD model from a text prompt. It’s really good for more curved surfaces, product use cases. The second one, that’s for more prismatic sort of shapes. We can do text prompts, sketch prompts and also what I call geometric prompts. It’s more of like an auto complete, like you gave it some geometry, you started a thing, and then it will help you continue that design.”

On stage, Mike Haley, senior VP of research, demonstrated how neural CAD for geometry could be used in Fusion to automatically generate multiple iterations of a new product, using the example of a power drill.

“Just enter the prompts or even drawing and let the CAD engines start to produce options for you instantly,” he said. “Because these are first class CAD models, you now have a head start in the creation of any new

It’s important to understand that the AI doesn’t just create dumb 3D geometry – neural CAD also generates the history and sequence of Fusion commands required to create the model. “This means you can make edits as if you modelled it yourself,” he said. Meanwhile, in the world of BIM, Autodesk is using

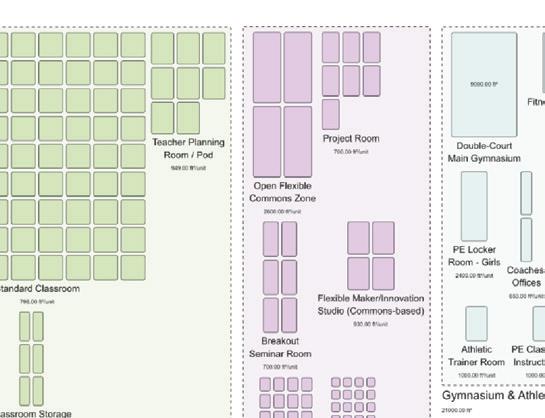

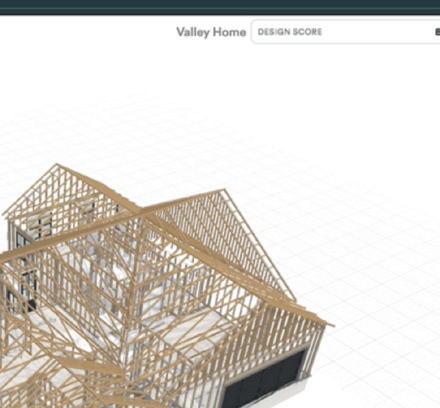

neural CAD to extend the capabilities of Forma Building Design to generate BIM elements.

The current aim is to enable architects to ‘quickly transition’ between early design concepts and more detailed building layouts and systems with the software ‘autocompleting’ repetitive aspects of the design.

Instead of geometry, ‘neural CAD for buildings’ focuses more on the spatial and physical relationships inherent in buildings as Haley explained. “This foundation model rapidly discovers alignments and common patterns between the different representations and aspects of building systems.

“If I was to change the shape of a building, it can instantly recompute all the internal walls,” he said. “It can instantly recompute all of the columns, the platforms, the cores, the grid lines, everything that makes up the structure of the building. It can help recompute structural drawings.”

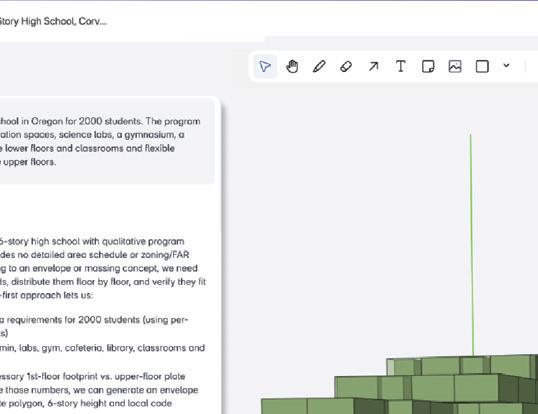

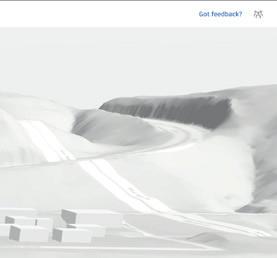

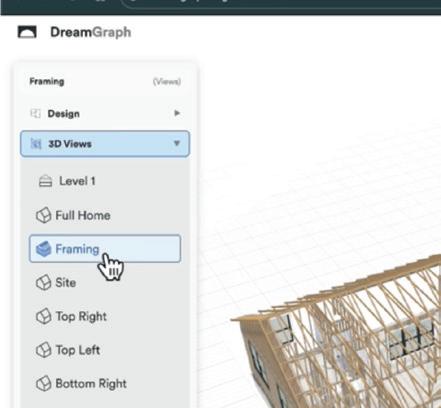

At AU, Haley demonstrated ‘Building Layout Explorer’, a new AI-driven feature coming to Forma Building Design. He presented an example of an architect exploring building concepts with a massing model, “As the architect directly manipulates the shape, the neural CAD engine responds to these changes, auto generating floor plan layouts,” he said.

But, as Haley pointed out, for the system to be truly useful the architect needs to have control over what is generated, and therefore be able to lock down certain elements, such as a hallway, or to directly manipulate the shape of the massing model.

“The software can re-compute the locations and sizes of the columns and create an entirely new floor layout, all while honouring the constraints the architect specified,” he said.

Of course, it’s still very early days for neural CAD and, in Forma, ‘Building Layout Explorer’ is just the beginning. Haley alluded to expanding to other disciplines within AEC, “Imagine a future where the software generates additional architectural systems like these structural engineering plans or plumbing, HVAC, lighting systems and more.”

In the future, neural CAD in Forma will also be able to handle more complexity, as Custis explains. “People like to go between levels of detail, and generative AI models are great for that because they can translate between each other. It’s a really nice use case, and there will definitely be more levels of detail. We’re currently at LoD 200.”

The training challenge

neural CAD models are trained on the typical patterns of how people design. “They’re learning from 3D design, they’re learning from geometry, they’re learning from shapes that people typically create, components that people typically use, patterns that typically occur in buildings,” said Haley.

In developing these AI models, one of the biggest challenges for Autodesk has been the availability of training data. “We don’t have a whole internet source of data like any text or image models, so we have to sort of amp up the science to make up for that,” explained Custis.

For training, Autodesk uses a combination of synthetic data and customer data. Synthetic data can be generated in an ‘endless number of ways’, said Custis, including a ‘brute force’ approach using generative design or simulation.

Customer data is typically used later on in the training process. “Our models are trained on all data we have permission to train on,” said Amy Bunszel, EVP, AEC.

But customer data is not always perfect, which is why Autodesk also commissions designers to model things for them, generating what chief scientist Daron Green describes as gold standard data. “We want things that are fully constrained, well annotated to a level that a customer wouldn’t [necessarily] do, because they just need to have the task completed sufficiently for them to be able to build it, not for us to be able to train against,” he said.

Autodesk plans to improve and expand the models. “These are foundation models, so the idea is we train one big model and then we can task adapt it to different use cases using reinforcement learning, fine tuning. There’ll be improved versions of these models, but then we can adapt them to more and more different use cases,” said Custis.

In the future, customers will be able to customise the neural CAD foundation models by tuning them to their organisation’s proprietary data and processes. This could be sandboxed, so that no data is incorporated into the global training set unless the customer explicitly allows it.

“Your historical data and processes will be something you can use without having to start from scratch again and again, allowing you to fully harness the value locked away in your historical digital data, creating your own unique advantages through models that embody your secret source or your proprietary methods,” said Haley.

When Autodesk first launched Autodesk Assistant, it was little more than a natural language chatbot to help users get support for Autodesk products.

Now it’s evolved into what Autodesk describes as an ‘agentic AI partner’ that can automate repetitive tasks and help ‘optimise decisions in real time’ by combining context with predictive insights.

Autodesk demonstrated how, in Revit, Autodesk Assistant could be used to quickly calculate the window-to-wall ratio on a particular façade, then replace all the windows with larger units. The important thing to note here is that everything is done through natural language prompts, without the need to click through multiple menus and dialogue boxes.
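Window-to-wall ratio is simply the total window (glazed) area on a façade divided by that façade’s gross wall area. The plain-Python sketch below, using made-up façade and window dimensions, shows the arithmetic behind the Revit demo and how swapping in larger units changes the result. It is only an illustration: it does not use the Revit API and is not how Autodesk Assistant computes the ratio internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """A rectangular window opening (dimensions are illustrative)."""
    width_m: float
    height_m: float

    @property
    def area(self) -> float:
        return self.width_m * self.height_m


def window_to_wall_ratio(windows: list[Window], wall_area_m2: float) -> float:
    """Total glazed area divided by gross façade wall area (openings included)."""
    if wall_area_m2 <= 0:
        raise ValueError("wall area must be positive")
    glazed = sum(w.area for w in windows)
    if glazed > wall_area_m2:
        raise ValueError("glazed area exceeds wall area")
    return glazed / wall_area_m2


# Hypothetical façade: 30 m long x 12 m high, with twelve 1.5 m x 2.0 m windows
facade_area = 30.0 * 12.0
windows = [Window(1.5, 2.0) for _ in range(12)]
print(f"WWR before: {window_to_wall_ratio(windows, facade_area):.1%}")

# Replace every window with a larger, equally hypothetical 2.4 m x 2.0 m unit
larger = [Window(2.4, 2.0) for _ in windows]
print(f"WWR after:  {window_to_wall_ratio(larger, facade_area):.1%}")
```

In the demo, Assistant performs both steps (measure, then replace) from a single natural-language request. The sketch just makes explicit the quantity being reported.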

Autodesk Assistant can also help with documentation in Revit, making it easier to use drawing templates, populate title blocks and automatically tag walls, doors and rooms. While this doesn’t yet rival the auto-drawing capabilities of Fusion, when asked about bringing similar functionality to Revit, Bunszel noted, “We’re definitely starting to explore how much we can do.”

Autodesk also demonstrated how Autodesk Assistant can be used to automate manual compliance checking in AutoCAD, a capability that could be incredibly useful for many firms.

“You’ll be able to analyse a submission against your drawing standards and get results right away, highlighting violations and layers, lines, text and dimensions,” said Racel Amour, head of generative AI, AEC.

Meanwhile, in Civil 3D it can help ensure civil engineering projects comply with regulations for safety, accessibility and drainage. “Imagine if you could simply ask the Autodesk Assistant to analyse my model and highlight the areas that violate ADA regulations and give me suggestions on how to fix it,” said Amour.

So how does Autodesk ensure that Assistant gives accurate answers? Anagnost explained that it takes into account the context inside the application and the context of the work that users do.

“If you just dumped Copilot on top of our stuff, the probability that you’re going to get the right answer is just a probability. We add a layer on top of that that narrows the range of possible answers.”

“We’re building that layer to make sure that the probability of getting what you want isn’t 70%, it’s 99.99 something percent,” he said.

“This feels like a pivotal moment in Autodesk’s AI journey, as the company moves beyond ambitions and experimentation into production-ready AI that is deeply integrated into its core software.”

While each Autodesk product will have its own Assistant, the foundation technology has also been built with agent-to-agent communication in mind, the idea being that one Assistant can ‘call’ another Assistant to automate workflows across products and, in some cases, industries.

“It’s designed to do three things: automate the manual, connect the disconnected, and deliver real time insights, freeing your teams to focus on their highest value work,” said CTO Raji Arasu.

In the context of a large hospital construction project, Arasu demonstrated how a general contractor, manufacturer, architect and cost estimator could collaborate more easily through natural language in Autodesk Assistant. She showed how teams across disciplines could share and sync select data between Revit, Inventor and Power BI, and manage regulatory requirements more efficiently by automating routine compliance tasks. “In the future, Assistant can continuously check compliance in the background. It can turn compliance into a constant safeguard, rather than just a one-time step process,” she said.

Arasu also showed how Assistant can support IT administration: setting up projects, guiding managers through configuring Single Sign-On (SSO), assigning Revit access to multiple employees, creating a new project in Autodesk Construction Cloud (ACC), and even generating software usage reports with recommendations for optimising licence allocation.

Agent-to-agent communication is being enabled by Model Context Protocol (MCP) servers and Application Programming Interfaces (APIs), including the AEC data model API, that tap into Autodesk’s cloud-based data stores. APIs will provide the access, while Autodesk MCP servers will orchestrate and enable Assistant to act on that data in real time.

As MCP is an open standard that lets AI agents securely interact with external tools and data, Autodesk will also make its MCP servers available for third-party agents to call.

All of this will naturally lead to an increase in API calls, which were already up 43% year on year even before AI came into the mix. To pay for this, Autodesk is introducing a new usage-based pricing model for customers with product subscriptions. As Arasu explains, “You can continue to access these select APIs with generous monthly limits, but when usage goes past those limits, additional charges will apply.”

But this has raised understandable concerns among customers about the future, including potential cost increases and whether these could ultimately limit design iterations.

Autodesk is designing its AI systems to assist and accelerate the creative process, not replace it. The company stresses that professionals will always make the final decisions, keeping a human firmly in the loop, even in agent-to-agent communications, to ensure accountability and design integrity.
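Autodesk has not published the size of its monthly API allowances or its overage rates. To show how an ‘included allowance plus overage’ model behaves, and why customers worry it could limit design iterations, here is a minimal Python sketch. Every figure in it (allowance, rate, calls per iteration) is a made-up placeholder, and the function names are hypothetical.

```python
def monthly_api_charge(calls: int, included_calls: int, rate_per_1000: float) -> float:
    """Overage charge for one month under a hypothetical allowance model.

    Calls up to the included allowance are free; calls beyond it are
    billed pro rata at rate_per_1000 per thousand calls.
    """
    if calls < 0 or included_calls < 0 or rate_per_1000 < 0:
        raise ValueError("inputs must be non-negative")
    overage_calls = max(0, calls - included_calls)
    return overage_calls / 1000 * rate_per_1000


def iterations_within_allowance(included_calls: int, calls_per_iteration: int) -> int:
    """How many full design iterations fit inside the free allowance."""
    if calls_per_iteration <= 0:
        raise ValueError("calls_per_iteration must be positive")
    return included_calls // calls_per_iteration


# Placeholder figures only: these are NOT Autodesk's actual limits or prices
INCLUDED_CALLS = 100_000
RATE_PER_1000 = 5.00

for usage in (80_000, 130_000):
    charge = monthly_api_charge(usage, INCLUDED_CALLS, RATE_PER_1000)
    print(f"{usage:,} calls -> overage charge {charge:.2f}")

# If each AI-driven design iteration triggered 250 API calls (hypothetical)
print(iterations_within_allowance(INCLUDED_CALLS, 250), "iterations before charges apply")
```

A real scheme may meter different APIs differently or bundle usage as credits. The point of the sketch is only that, once the allowance is exceeded, cost scales with every extra automated iteration.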