2024 Showcase Exploring the Cutting-Edge Research of The University of Tulsa Ph.D. Students

Cyber

2024 Showcase Exploring the Cutting-Edge Research of The University of Tulsa Ph.D. Students

Cyber

The Cyber Fellows initiative brings together a diverse group of talented Ph.D. students from various programs, all united by their passions for pushing the boundaries of knowledge in their respective fields, cyber security research projects, and entrepreneurship. The exceptional academic papers authored by these dedicated scholars offer a glimpse into the depth and breadth of their research.

Each paper represents the culmination of months, if not years, of tireless effort, intellectual curiosity, and unwavering commitment to advancing their disciplines. These students have worked with their esteemed faculty advisors, whose guidance and expertise have been instrumental in shaping the direction and quality of their research.

From cutting-edge advancements in cybersecurity and artificial intelligence to groundbreaking insights in data science and network systems, the papers showcased here demonstrate the immense potential of these scholars. Their work contributes to the academic community and holds the promise of real-world applications that can transform industries and improve lives.

As you explore the abstracts and delve into the full papers, you will be struck by the originality of their ideas, the rigor of their methodologies, and the clarity of their presentations. Each paper is a testament to the power of academic inquiry and the importance of nurturing the next generation of researchers.

We invite you to join us in celebrating the achievements of these remarkable Cyber Fellows. Please take a moment to appreciate the dedication and brilliance that shines through in their work. We encourage you to engage with their research, ask questions, and share your thoughts. Who knows? You may discover the next groundbreaking idea and ignite a spark of inspiration to engage with a Cyber Fellow in the entrepreneurial spirit to help build their ideas into successful companies.

Let us embark on this exploration of knowledge and innovation. We hope you will find the papers as captivating and thought-provoking as we have. Thank you for being a part of this celebration of academic excellence.

Cameron Alred and Joshua Schultz

BRAT Research Group

Dept. of Mechanical Engineering, University of Tulsa

Traditional servomotors have served the field of robotics well for decades. They are powerful and precise, relatively affordable, and are well-understood. However, as the field of biomimetic and soft robotics advances, there has been increasing interest in actuators that mimic the impressive capabilities of vertebrate skeletal muscles. Muscles are appropriately sized to their role, they are robust against damage, and they are able to elastically store energy and even modulate their mechanical impedance. The first two of these benefits are directly owed to the fact that muscle tissue is composed of cells. The body contains the genetic information to produce several different types of muscle cell, and grows more or less cells as needed, whether for the tiny muscles in the face or the large, powerful muscles in the legs. Furthermore, when cells die or are damaged, the many other undamaged fibers of cells continue to function. Minor muscle damage does not lead to immobilization, only pain, soreness, and fatigue. Thus, in order to capture the full benefits of muscles, we may take inspiration from the cellular nature of muscle and construct actuators which are made of many discrete interconnected actuation units. Ueda et al. provide a good overview of this field in their 2017 book, in which they are referred to as cellular actuators. These discrete muscle-like actuators promise many of the inherent advantages of skeletal muscle. However, they are far from straightforward to model and control: the more units are connected together, the more complex and high-order the dynamical behavior of the overall actuator becomes. Thus, in order to make this type of actuator function, we must first solve this modeling and control challenge.

This challenge is multifaceted. There are numerous ways in which contractile actuation units can be connected together – they can be placed in series chains, parallel bundles, some combination thereof, and possibly cross-linked. For every new configuration of units, a new dynamic input-output response is produced. Thus, it is not sufficient to produce one plant model and develop a controller for it – a new control algorithm must be generated for a given number and connectivity arrangement of units, in addition to the control integration into the robot or system itself. Thus, the goal is not to develop a single controller, but a controller generator – a function that takes the dynamics of an actuation unit and the configuration of some number of them as input, and utilizes the calculated actuator dynamics to produce a controller as output. Also, for a given level of contraction, there are multiple possible distributions of contraction across the various actuation units.

The other aspect of developing discrete muscle-inspired actuators is the hardware itself. There have been several approaches to this studied – from pneumatic muscles to shape memory alloys. Each

offers its unique advantages and drawbacks. Our approach, first published by Mathijssen et al. in 2015, is to utilize binary solenoids as the primary contractile element. Solenoids possess the advantage of requiring less power to maintain their contracted state after contraction occurs; once the plunger has made magnetic contact with the back, they can drop to a lower “holding” current. They also respond nearly instantaneously and require only an electrical power source to operate, and lack the need for pneumatic compressors and valves (as air muscles do) or time to heat up and cool down (as SMAs do). The design considerations are instead the magnetic performance (how efficiently the electromagnet functions) and thermal performance (keeping the solenoids cool under load). Because they are either on or off, and aren’t inherently elastic, they must be combined in series with an elastic element (a spring), forming a “motor unit.” These motor units may be arranged in parallel with one another to allow quantized contraction level, achieved by energizing different numbers of solenoids. Thus, more force is developed at a given strain the more solenoids are energized because activating the solenoids changes the effective resting length of the elastic element. This design also has the potential for variable-impedance behavior - because the solenoid plungers transition from rigid attachment (coil energized) to a damping action (de-energized/sliding), the system behavior changes a function of the pattern of activation across the discrete units that comprise an actuator. This behavior will factor into the development of controllers, but it is not yet understood what impact this has on control, and how it could be exploited.

The goal of our research is to develop the field of discrete muscle-inspired actuators by modelling their behavior in a flexible way that is agnostic of configuration or number, to develop and test a smart controller generator, and to validate this modeling and control strategy on improved prototype hardware.

Cameron Alred is a lifelong Tulsa resident who received his BS in mechanical engineering from the University of Tulsa in 2020. He joined the first cohort of the TU Cyber Fellows program and began his PhD work that same year, and is currently pursuing his PhD in mechanical engineering. In 2021, he worked as a robotics intern with the US Army Corps of Engineers ERDC. His research surrounds muscleinspired actuators, and his interests include bio-inspired robotics and the biology of movement and sensation, as well as robotic prosthesis and augmentation.

Dr. Schultz has been the director of the Biological Robotics At Tulsa research group since 2013. His research interests are in all aspects of robot motion and control. This includes grasping and manipulation, legged locomotion, and actuators that are inspired by muscle physiology. He tends to approach problems in this area from the standpoint of how they are accomplished in the bodies of humans and animals.

Dr. Schultz received his Ph.D. from Georgia Institute of Technology in 2012 and was a postdoctoral researcher at Istituto Italiano di Tecnologia before joining The University of Tulsa.

Mathijssen, G., Schultz, J., Vanderborght, B., & Bicchi, A. (2015). A muscle-like recruitment actuator with modular redundant actuation units for soft robotics. Robotics and Autonomous Systems, 74, 40–50. https://doi.org/10.1016/j.robot.2015.06.010

Ueda, J., Schultz, J. A., & Asada, H. H. (2017). Cellular Actuators: Modularity and Variability in Muscleinspired Actuation. In Cellular Actuators: Modularity and Variability in Muscle-inspired Actuation. Elsevier Inc.

About Cameron

Professional Experience Preference

Working Location Preference

Internships / RA / TA / Work History

Jacob Regan and Dr. Mahdi Khodayar

Tandy School of Computer Science

The University of Tulsa

Seth Hastings

The University of Tulsa seth-hastings@utulsa.edu

Corey Bolger

The University of Tulsa corey-bolger@utulsa.edu

Abstract Authentication logs can be helpful to Security Operations Centers (SOCs), but they are often messy, reporting details more relevant to system configurations than user experiences and spreading information on a single authentication session across multiple entries. This paper presents a method for converting raw authentication logs into user-centered “event logs” that exclude non-interactive sessions and capture critical aspects of the authentication experience. This method is demonstrated using real data from a university spanning three semesters. Event construction is presented along with several examples to demonstrate the utility of event logs in the context of a SOC. Authentication success rates are shown to widely vary, with the bottom 5% of users failing more than one third of authentication events. A proactive SOC could utilize such data to assist struggling users. Event logs can also identify persistently locked out users. 2.5% of the population under study was locked out in a given week, indicating that interventions by SOC analysts to reinstate locked -out users could be manageable. A final application of event logs can identify problematic applications with above average authentication failure rates that spike periodically. It also identifies lapsed applications with no successful authentications, which account for over 50% of unique applications in our sample.

A Security Operations Center (SOC) serves as the “nerve center” of an organization’s cybersecurity efforts. It should receive inputs from multiple sources, be sensitive to stimuli that may signal danger, and present the organization with a comprehensive representation of its environment. The primary functions range from monitoring, assessing, and defending against cyber threats, to surveillance of networks, servers, applications

Philip Shumway

The University of Tulsa philip-shumway@utulsa.edu

Tyler Moore

The University of Tulsa tylermoore@utulsa.edu

and users. This enables the SOC to identify pain points, potential vulnerabilities, and areas for improvement.

As such, a SOC is heavily limited by the quality of its inputs, i.e., its data sources. Many tools are utilized to develop and leverage data sources, such as Security Information and Event Management (SIEM) systems, Intrusion Detection Systems (IDS), vulnerability management tools, and other analytical tools. These systems work together to enable SOCs to detect, investigate, and respond to issues at speed.

Workshop on SOC Operations and Construction (WOSOC) 2024

1 March 2024, San Diego, CA, USA ISBN 979-8-9894372-3-8 https://dx.doi.org/10.14722/wosoc.2024.23xxx www.ndsssymposium.org

One primary data source for SOCs is authentication logs. Controlling who uses a given service or application, and in what capacity, is key to both proper security and functionality. Many organizations have deployed single sign-on (SSO) services such as Microsoft Azure AD (now Azure Entra AD) to streamline their users’ authentication experience.

Currently, authentication logs are used to investigate user and application issues, as well as sources for systems that generate alerts of suspicious activities [13], [9]. For example, SOC analysts can identify potential account takeovers when logs indicate login attempts from an unexpected country or high frequency failures.

Increasingly, artificial intelligence (AI) and machine learning (ML) models are employed to flag anomalies, promising to reduce time to detection. However, Zhao et al [14] identify several limitations including difficulty dealing with complex abnormal log patterns, poor interpretability of alerts, and lack of domain knowledge.

Traditional monitoring involves engineers examining logs and writing keyword and regular expression based rules for detection. This method is growing more challenging as the number of components and variety of logs increases, resulting in noisy datasets that require extensive domain knowledge to interpret, with new and updated service components producing ever diversifying log messages. While AI and ML systems can offer sensitivity to abnormality, they struggle with interpretation: engineers might be alerted that a given state is anomalous, but it is unclear why something is an anomaly, and what a “normal” pattern would look like. Raw authentication logs are noisy. They have not been created with easy interpretability in mind. A single login attempt often generates dozens of log entries, each apparently disconnected from another. Wading through that mess, either manually or with an automated system, can be problematic.

In this paper, we describe a process to construct interpretable, user-centric “event logs” from raw authentication logs that reduce noise, eliminate redundant entries, and combine entries into discrete user experiences. This event-focused dataset can be implemented in several ways: as an input for an IDS that allows for more interpretable alerts, as a more straightforward dataset for investigation that lowers the bar for domain knowledge, and as a means of generating performance metrics that enable proactive identification of struggling users or applications.The paper is organized as follows. Section II reviews related work. Section III describes the method for distilling raw authentication logs into distinct events. Section IV explores examples of how event data can be leveraged. Finally, we conclude in Section V.

Prior work incorporating authentication logs falls into a few broad categories. First, a small group of usability research on multi-factor authentication (MFA), some of which has used authentication logs to measure adoption rates and basic counts of errors associated with MFA use[11] [3] [10]. The most relevant examples being from Reynolds et al., who tracked users through a 90 day MFA adoption period at a university. They introduced “recovery time”, defined as the time between a failed login attempt and the next successful login for a given user [12]. They also performed some basic data cleaning, including removing duplicate log entries and malformed logs. Note that they used an individual log row as the unit for analysis, and did not use aggregation of log entries.

Our next and larger body of work uses authentication logs to create metrics and derivatives to directly identify

insider threats and profile groups of users with similar behaviors. Recently, Sonneveld et al. published a study examining the non-intrusive security relevant information available to an SOC [13]. Through examining which resources users accessed, and when they were accessing them, they were able to identify each “ITAdmin” user. Using similar measures, they clustered users and tested deviation from cluster baselines as a potential indicator of insider threats. Carnegie Mellon’s synthetic “Insider Threat” data set was used to test their methodology. Using this data set, they correctly detected 80% of insider threats in the ITAdmin group [6]. Intuitively, having high cluster consistency is key to getting a consistent measure for deviation; however, when they applied the clustering methodology to realworld data, consistency was cut in half. They attribute this partially to the much higher granularity of the real world data compared to the synthetic data. For other work clustering users, see Garchery and Freeman [5] [4].

Third, there is similar research that focuses on indicators of compromise or impersonation rather than insider threats, again using authentication logs to derive relevant metrics and measures. Liu et al. [7] created a behavior-based model to detect compromise using only two features: consecutive failures and login time of day. Their low computation-cost probabilistic model showed a good true positive to false positive trade off with high accuracy and low false positive ratio. They used a realworld private dataset of 4 million logs, and state that it contains no authentication compromises. This paper is of particular interest to us due to the unique way they construct derivative authentication “events” as their unit of analysis, rather than using individual log entries as atomic units. The authors aggregated raw log rows into series of 0-n failures prior to a success; series that don’t result in success are discarded. The maximum gap between a failed log row and the following success is not stated. The resulting “events” do not include failures, and some “events” may span time periods longer than the user’s interaction. See Bian et al [2] for similar work using to identify lateral movement.

Finally, we note the work of Alahmadi [1], who surveyed SOC practitioners investigating analysts perspectives on security alerts. They report an excessive number of alerts experienced across organizations, which contributes to analyst fatigue and human error. This is exacerbated by the low interpretability of the alerts being generated. These findings, in combination with Zhao et al. [14] who found that log data was used in over 30% of incident diagnoses, with indicators that this portion would be larger if the logs had greater interpretability, suggest the potential benefit that could accompany more interpretable logs and alerts.

Using data obtained through the University of Tulsa IT department, and approved for analysis by the

Institutional Review Board (IRB), we developed a process to capture user authentication “events” from raw authentication logs. We define an event as:

The occurrences reflected in log data that are directly experienced by a user, beginning when an authentication to a particular application is initiated, and terminated upon the eventual success, or abandonment of the authentication attempt.

By filtering sign-in logs to events directly experienced by the user, we can construct event-based metrics of usage and performance while reducing noise and increasing interpretability. In this section we provide an overview of the process to translate authentication logs to events, followed by a description of each step, and concluded with a description of the resulting events.

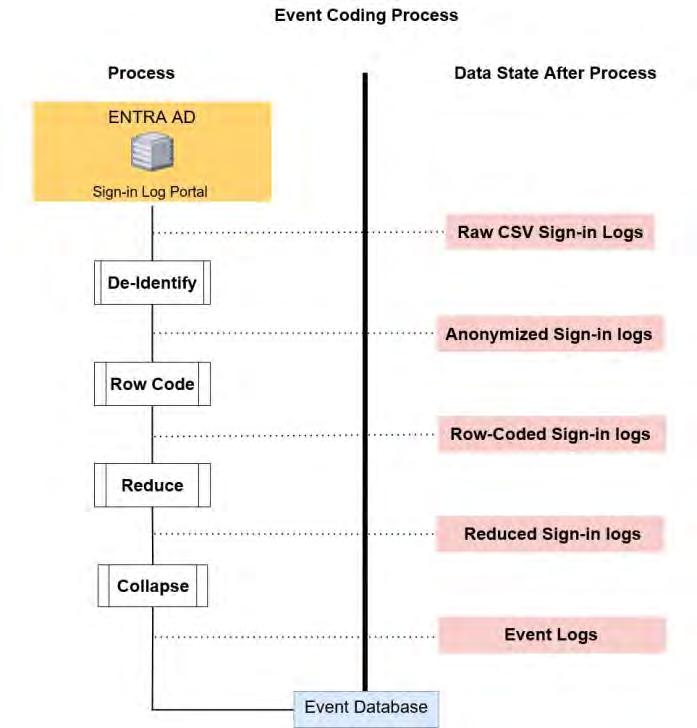

Before we dive into details of the process, we first give a high level example in Figure 1. The steps are:

1) De-Identify: These logs are first stripped of four direct identifiers which are replaced by the “Participant ID” attribute1

2) Row Code: Each row is assigned one of 46 “Row Codes” which captures both the overall success or failure result and detail about the action performed. This row code is the backbone of the encoding system, and will be explained in greater detail in IIIC.

3) Reduce: Several helper attributes are added, such as “event number” to indicate which “event” a particular authentication entry is associated with. An attribute tracking if a password is entered is added by cross referencing an entry’s “RequestID” with its entry(s) in the “authDetails” files. These attributes are used in combination with the row code to produce the “interactive” attribute. Duplicates and known or suspected malicious entries are removed.

4) Collapse: Finally, we create a derivative data set by aggregating the key attributes from all rows for a given event, tracking the number and type of errors encountered, the form of MFA used, etc. This yields a smaller data set comprised of rows with 20 attributes, each row describing a complete authentication attempt to a particular application. The final set of attributes is easily adjusted based on the attributes available in the raw data.

The author’s university utilizes Microsoft Entra AD for authentication. Data is first collected through the Entra AD portal, which presents the sign-in logs broken down into six categories. The first four categories are interactive and noninteractive sign-in logs and their corresponding interactive and non-interactive “auth details” files. The final two categories are called “Application logs” and “MSISignins”; these refer to authentications by service principals, and authentications by an Azure Managed Identity, respectively. Interactive logs are defined by Microsoft as those sign-ins where “a user provides an authentication factor, such as a password, a response through an MFA app, a biometric factor, or a QR code”.

To investigate user experience, we ignore the application and managed identity logs, as they are not indicative of human interaction. Microsoft’s labeling of interactive and noninteractive may be helpful in some cases; however, it does not strictly adhere to the definition

1 This step is only necessary in a research context where the users remain anonymous to the researcher.

of interactivity we use in this paper. For example, we want visibility into the errors that occur prior to or following presentation of an authentication factor. Thus, both files and their associated authentication details are downloaded. A single log entry contains 44 attributes

TABLE I RAW AZURE AD SIGNIN LOG ATTRIBUTES

Category Attributes

Direct ID User, User ID, Username, Sign-in Identifier

Device Device ID, Operating System, Browser

Connection IP Address, Location, Latency

Connection IP (seen by resource)

Session Info Date (UTC), Application, Application ID

Session Info Resource, Resource ID, Resource Tenant ID

Session Info Home Tenant ID, Home Tenant Name, Request ID

Session Info Correlation ID, Cross Tenant Access Type

Session Info Incoming Token Type, Unique Token Identifier

Session Info Client app, Client Credential Type

Session Info Autonomous System Number, Token Issuer Type

Session Info Incoming Token Type, Token Issuer Name

User

Provenance User Type, Compliant, Managed, Join Type

Authentication Info Authentication Protocol, Conditional Access

Authentication

Result Info Status, Sign-in Error Code, Failure Reason

Result Info MFA Result, MFA Auth Method, MFA Auth Detail and describes a single system interaction. A small period of user interaction can generate several to dozens of log entries per minute, many of which may represent backend processes that users don’t directly experience within an authentication attempt. The process described below is implemented on Entra AD logs, but is designed to be generalize to other sources of authentication logs.

Table I summarizes the attributes, which we have split into 7 broad categories. Direct ID attributes identify the specific user, which are immediately removed and replaced with a unique user number. Device and Connection attributes detail the network connection and device characteristics. Session Info attributes comprise the bulk of the data, including the name and ID of the application and resource being used, token information, client application, and so on. Redundant attributes will be dropped in processing, such as alpha-numeric “ID” fields like “Resource ID”; as “Resource” is retained, which is the name of the Resource. The “Request ID” field is always retained, as it is the unique key linking a particular log item with other associated data in the Azure AD system. The User Provenance category includes information about a particular user’s account, such as their user type (member or guest) and join type (Azure AD Registered, Azure AD Joined, Hybrid Azure AD Joined). The smallest category is Authentication Info. Relevant attributes include “Authentication Requirement”, which indicates if the authentication requires single or multi-factor authentication, and “Conditional Access”, which indicates any conditional access policies that were applied and the result. Finally, the Result Info category includes details about the authentication attempt and result.

The “Status” attribute has one of three values: Failure, Interrupted, and Success. Note that many “Failure” results are not caused by improper user action, and “Interrupted” results often do not tangibly disturb the user experience. The “Sign-in error code” attribute contains a numerical error code when an error is present, which is true for any entry that is not labeled “Sucesss”. This error code is the key attribute used to assign

row codes for non-pass rows. The “Failure reason” attribute contains a description of the error code result when an error is present, and detailed descriptions of errors and remediation are available from Microsoft on their website [8]. There are three MFA-related fields: “MFA result” provides a text description of the authentication result; “MFA auth method” contains the type of MFA used when applicable, and “MFA auth detail”, which may contain a phone number associated with the MFA with the last two digits revealed. The last field is a Boolean “Flagged for review”, which is only true when an admin flags a user account.

Adding a row code enables us to distill the 44 attributes included in raw log instances to a minimal expression. Thus, a set of 46 row codes were created to capture critical information about an authentication attempt’s result. There are two broad results that a single entry can indicate: Pass (Success), or Fail, indicated by the attempt concluding in an entry marked “Failure” or “Interrupted” in the “Result” field of raw sign-in logs. A selection of row codes can be seen in Table II and Table III below.

Nine categories of logs were identified that indicate authentication has passed as seen in Table II. These 9 categories are variations of 3 basic results: Token Successes, Remembered Device Successes, and MFA Successes. Token Successes are split between single and multi-factor authentications, and all multi-factor authentications that are not token-related are either a primary form of MFA such as Text message, OATH, etc. or fulfilled through remembered device. Six row codes capture the various forms of MFA Successes, and two capture the remaining single factor successes.

The remaining row codes are used for entries that do not indicate an authentication pass, and we group these 36 row codes into 3 primary categories of errors: Interrupts, User Errors, and Configuration Errors.

Interrupts occur when the “Failure” (or Interruption) reported is not a true failure, it is a redirect or part of the intended authentication flow. In our user-centric paradigm, this means the user is not met with an error message, they do not experience a failure. One example is Row Code #9: Token Failure: it is not an error in the sense that the user or application had an issue; rather, it is an expected part of a token’s life-cycle. When this Token Failure error occurs, a user has entered their password and asserts a token that would otherwise satisfy the second factor requirement, but that token is invalid for one of many reasons. The user experiences this as being directed to their MFA prompt screen after inputting their password. This is a typical use case and not experienced as failure or extra delay. “Interrupts” do not detract from typical user experience.

The key difference between user and configuration errors is the agency of the user to resolve the error. Any error that was either directly caused by the user, or is within the user’s power to resolve, is considered a user error. 8 row codes are used for the user errors. For example, row code #27 indicates a user initiated a multifactor sign in but never provided the second factor, and row code #26 indicates a user input an incorrect password.

An additional 8 row codes are used for configuration errors, which includes transient errors. Row Code #18 is a good example, wherein a user tries to authenticate to an application, but is denied because their account has no associated role in the application. The error message presented indicates that an administrator must give the user access, it can not be dynamically requested, making this an error outside direct control of the user. Finally, we have codes that capture behavior identified by Azure AD as malicious, and a catchall for uncategorized errors. We now describe the process of creating these row codes, beginning with non-pass entries. Three of the co-authors, two with high domain knowledge and one with low domain knowledge, independently inspected log samples encompassing each unique “Sign-in Error Code” present in the dataset. Co-authors labeled each error code with one of four categories: Interrupt, User Error, Configuration Error, or Hacking Error. Each error was

considered alongside all available documentation and examples of the error appearing in the data. Krippendorff’s alpha was 0.73 considering all three raters, and 0.86 for the two raters with high domain knowledge. Majority opinion was sufficient for all but one of 127 unique error codes labeled, and each labeling was reviewed and confirmed by the authors. Labeled errors were then grouped into row codes by similar themes within each category of error. These processes yielded the final set of 36 error groupings, which were then given integer representations

TABLE IV EVENT LOG ATTRIBUTES

Attribute Category Comments

Direct ID User Participant ID

Device OS String

Device Browser String

Connection IP Address Alpha-numeric

Session Info Event# Int

Session Info Application String

Session Info Service String

Session Info ClientApp String

Session Info Start DateTime

Session Info End DateTime

Auth Info MFA Type String

Auth Info AuthReq. Single/Multi-Factor

Result Info Result Success/Failure

Result Info Detail Result Details

Result Info Password Entries Int

Result Info Elapsed Elapsed Time in Seconds

Result Info TA Time Away in Minutes

Result Info UEs User Errors Count

Result Info IEs Int. Errors Count

Result Info CEs Config Errors Count

Result Info Error Codes Int List of Errors beginning after the 10 “Pass” row codes. In total, 127 distinct sign-in error codes from the logs were mapped to 36 row codes. This is best explained using examples.

1) Row Code 11: There are 2 error codes that indicate MFA Completion is required. They redirect the user to use their second factor for the authentication, “Sign-in Error Code” 50074 and 50076.

2) Row Code 18: error codes 50105 and 50177 both describe a user who has not been granted specific access to an application, and is classified as a configuration error. This is distinct from a user who is dynamically requesting access to an application, which is classified as an interrupt, as it is an intended step in the authentication cycle, not the result of incorrect permissions or any failure.

There are four steps taken to reduce the authentication logs after row coding. Here, we note that the focus of this paper and the authors’ related research has been on measuring and characterizing legitimate use. As such, we discard known and suspected malicious authentication attempts when constructing events. First, we discard logs from non-standard user agents including POP and IMAP, and logs categorized as “Hacking Errors”, such as those with row code #34: “Blocked for Malicious IP”, as these attempts are unlikely to be from legitimate users

interacting with our applications. Second, we discard logs from authentication attempts made to “API” resources, which are not indicative of interactive user authentication, as these are authentications performed by some user-side application to access a third party resource. Third, we discard duplicate logs, defined as logs with identical attributes occurring within one second of each other. Finally, we also discard any logs whose row codes are not labeled as interactive, which is a subattribute of our row codes. These reductions ensure we have non-redundant data that focuses on legitimate, interactive user behaviors and experiences.

Returning to our definition, we define an event as:

The occurrences reflected in log data that are directly experienced by a user, beginning when an authentication to a particular application is initiated, and terminated upon the eventual success, or abandonment of the authentication attempt.

Each event captures the number of errors encountered before eventual success or failure, as well as the type of errors involved, time spent on an attempted authentication, and the type of authentication used. Since these characteristics are reflected in the row codes outlined above, tracking their occurrence in events is straightforward.

Events are constructed by aggregating rows with the same “Event Number”. This number is created by first sorting entries by user and datetime, and setting a boolean “New Event” to TRUE if the gap between the current entry and prior entry exceeds 90 seconds. A cumulative sum is run on the “New Event” attribute to assign an event number to each log. In an enterprise environment without SSO implementation, a second condition is introduced: the successful completion of an authentication. In our SSO environment, once an authentication succeeds, any subsequent authentications to related sites will be non-interactive and fulfilled by the token presented by the user, resulting in no authentication interaction.

By defining events in this manner, we are flexible enough to accommodate situations where the user initiates multiple applications simultaneously. For example, a user might first be prompted for MFA on their desktop Outlook client. If that fails, a user could authenticate using a web-based interface instead. For our purposes, this is treated as a single event when occurrin g in close temporal proximity, which is effective for our enterprise environment in which there are many different applications which can be satisfied by completing authentication in any one service. The resulting “event” provides a clear indication of overall success, the application used, MFA Type, time spent, count and

2 TA is similar to “recovery time” reported by [12], which captures the time between a failure and the next success.

classification of errors, and provides the error codes associated with the errors to enable user and population metrics.

“Events” are comprised of the 21 attributes listed in Table IV. The first attributes tell us who authenticated, the system they used to do so, and total time elapsed. We also retain authentication information (MFA type and whether one or two factors were required). The final 9 attributes capture relevant details about the authentication experience by aggregating the observed row codes for log entries in the event. Note that a user can experience one or more errors, from misconfigurations to failed passwords or MFA prompts, before ultimately succeeding in the authentication. Such impediments are reflected in the other fields, such as the “Password Entries” attribute that tracks the number of times the user input their password during the authentication event. The “Elapsed” attribute is calculated by the difference between the first and

V SAMPLE

last rows in a sequence that collapses into an event. Because there is no indicator in the raw sign-in logs when a Multifactor prompt is initiated, this measure captures the extra time spent due to errors and interruptions in the authentication process. Time Away (TA) measures the gap in time between a failed authentication event and the next attempted login 2 . The final attributes tally the number of User, Interrupt, and Configuration Errors experienced during the authentication event.

Table V illustrates the “event” log with example events. Event #3 shows a simple failure with a single “Configuration Error” (CE). A “Time Away” of 4 minutes is listed, indicating that 4 minutes elapsed before the next successful authentication, event #4. Event #4: App-based MFA was used to successfully sign into the Azure Portal on a Windows device after a single “User Error” (UE), an invalid password entry. The authentication process took 16 seconds after initiation, significantly longer than that observed by [10], which is likely a consequence of the failed password entry. Event #6 offers another example of a simple success with no errors that takes 0 seconds after initiation to complete. This zero second time reflects the complete lack of friction in the event, as we do not know when the user started to input their password, use MFA, etc; we only know when the user hit ENTER or otherwise

imitated the authentication. By breaking down authentication logs into discrete user-centric events, we can provided meaningful insight into the user experience and application health, as we demonstrate next.

For this section of the paper, we utilize a subset of collected data that centers around three semesters: Spring and Fall of 2022 and Spring of 2023. These slices include one week prior to the first day of class and end one week after the semester concludes; January 8th through May 17th for the spring semesters, and August 13th to December 19th for the Fall. After filtering for users that had at least one successful authentication, we are left with 1.7m events across 7,419 users, an average of 77 authentication events per user, per semester.

The examples discussed in this section demonstrate the utility of user-focused event aggregates and their derivatives. A proactive SOC may directly utilize some of these capabilities beyond the standard authentication log use cases of alert diagnosis and incident response. For example, the detection of lapsed applications discussed in section IV-C could be used to reduce threat surfaces by retiring unused applications. As we consider the utility of an event-based approach to authentication logs in a SOC, we begin by examining the basic unit of analysis, the event, before moving on to derivative measures. As we see in Table V, each event reports success or failure, the time elapsed, the form of MFA used, types of errors encountered, and application being authenticated to. The most straightforward measure then, is failure rate, the complement of success rate.

An intuitive way to examine failure rates is by error content: does error type impact the user experience differently? We anticipate that errors caused by users are both more common and more easily resolved; passwords can be re-entered, MFA can be properly completed, etc. We find that over 80% of users who encounter a configuration error will never succeed when they experience a configuration error, and 94% of events containing a configuration error end in failure. Conversely, we find that only 7% of users who encounter user errors will never succeed when they experience a user error, and only 56% of events with user errors conclude in failure. While configuration errors are clearly more difficult to resolve, they are also less common. 93% of users experience user errors, while only 27% of users experience configuration errors. This confirms our expectation that user errors are both more common and more easily resolved.

Examining the cumulative distribution function (CDF) plots in Figure 2, the majority of our 7,305 valid users experience a very low failure rate. Mean failure rate is 8%, with the 10% worst users failing over 20% of authentications, and the 10% best users fail only 0.4%. The failure rate increases substantially for our worst users

when we examine those who ever experience configuration errors, plotted here in red. The 10% best users fail only 1.5% of authentication events, whereas the 10% worst fail over 30% of authentications. The bottom 5% fail an astounding 47% of authentication attempts. We can take away a few lessons from these distributions for utilizing event data in a SOC. First, configuration errors may be worth investigating, as they reliably trigger failures through no fault of the user. Second, relatively few users fail frequently, and it may be beneficial to target efforts at assisting these struggling users.

Creating derivative metrics lends greater utility, such as the ability to identify locked-out users. An alert prompted by a lockout metric might trigger automated assistance, which in turn could forestall help tickets and issue early alerts for developer issues that cause service interruptions.

To construct this measure, we first add helper variables to our event dataset: we add a “consecutive failures” and “hours away” attribute to each event. Next, we set a variable “lockout” to true when consecutive failures is greater than one and time away exceeds twelve hours. Each week is summarized by the longest lockout experienced for each user.

Figure 3 shows the number of users locked out for each week of the semesters. The average number of users locked out for more than 12 hours, each week in the semester, was approximately 2.5% (152 of 6017) of the total. If we filter this for lockouts over 24 hours in duration, it shifts to 105 users per week, or 1.7% of our users.

Next we plot another set of CDFs, this time examining the duration of lockouts. As configuration errors affect failure rates more than user errors, we plot Figure 4 with a series of mixed errors in black, and a series with only configuration errors in red. Across three semester, we observe 8350 lockouts for 2656 unique users, which is 36% of our total user base. We note that nearly 93% of lockouts were associated with both user and configuration

errors, and the mean ratio of CEs to UEs for those lockouts was 3.7. Over 6% of lockouts only had configuration errors, and less than 1% only had user errors. Lockouts commonly persist beyond 12 hours, with a median lockout duration of 43 hours, and the 90th percentile being locked out for over 193 hours, or 8 days. Lockout times begin to diverge based on error composition after the 24 hour mark and are longer when caused by configuration errors.

Lockouts happen often enough to benefit from proactive investigation and resolution, but they are uncommon enough to not overwhelm analysts. Moreover, since lockouts can persist for a long time, steps to eliminate them sooner would bring substantial value.

Maintaining the security and performance of enterprise applications is a key function of a SOC. Applications that are unused and/or not associated with any successful authentications present a security risk; these applications are more likely to lapse into unsafe states, and misuse may be harder to detect. In our data, we observe 689 unique applications across three semesters, 348 of which never show a successful authentication. In our organization, over 50% of applications can be easily identified and classified as lapsed, and may be de-

Locked Out Users Per Week

3 We do not currently posses a master list of applications for our organization, and can only detect applications with at least one authentication

commissioned to increase security. 3 These lapsed applications may otherwise persist for long periods of time, as we observe in the bar chart 6, which shows the number of valid and invalid applications per semester.

The next utility is early identification of struggling applications. Using the most recent semester, SP23, we first filter out the lapsed applications with no record of successful authentications. This results in a median success rate of 95% percent, closely matching our median user success rate for that semester of 94%. The mean success rate per application is somewhat lower, at 76%, indicating that some of our highly used applications have lower success rates. Examining the 20 most used applications, which in our data incur an average of 140 unique users per week, we plot the per application success rate over time to observe struggling applications. We define a struggling application as an application experiencing a success rate 50% below its mean success rate across the semester. In Figure 5 we report the lagging top 20 applications per week in the SP22 semester.

As one might expect, the top applications usually perform well, but it is not uncommon for one or a few to be lag-

Application Counts by Semester

5. Lapsed and Active Applications per Period

Struggling Top 20 Applications

6. Lagging Top Applications per Week

ging. Taking a specific application as an example, Microsoft Teams had a fairly low mean success rate of 53% in the SP23 semester, compared to 78% in the SP22 semester. Our “Lagging” metric flags a per-day success rate of under 10% on the last day of week 9, pointing to acute issues with the application. The graph in Figure 7 shows the downward trend of weekly success rate and its impact on the success rate across all applications in the following weeks. Early identification of such issues is key in reducing the impact of lagging applications on an organization.

In this paper we described a process to distill raw authentication logs into more meaningful events, then applied that methodology to real-world data. The process utilized was designed to incorporate a level of domain knowledge to improve the utility of raw logs, but be broad enough to generalize to other sources of authentication logs. These examples are simple demonstrations of the type of utility the events provide, such as identifying struggling users and lapsed applications.

We contend that the event view developed for the analysis has the potential to improve SOC analysts’ performance by

Fig. 7. Success Rate of Microsoft Teams vs All Applications

providing a human readable summary of a user ’ s experience that collapses numerous otherwise difficult to read log entries. This enables cybersecurity teams to quickly assess the state of a user’s authentication and note changes in usage and performance patterns when investigating alerts. Finally, this new unit of analysis allows for the creation of event-based metrics that can better capture subtleties of authentication usage and performance. Future work is planned to develop and deploy an event-based dashboard in the university SOC. This will help to evaluate the measures and incorporate feedback from real-world usage.

This paper’s primary goal was to describe and demonstrate a methodology for constructing userfocused authentication event logs. We attempt to filter out entries that do not reflect user interaction, but some events are inevitably missed. There is ongoing work by coauthors to utilize this event data in a diary study tracking users’ authentication experiences. This should provide a valuable opportunity to validate the approach and examine if the events as constructed match the users’ perceived experience.

In future research, we could apply event-based authentication logs to user clustering in a system that detects malicious activity. It is possible that our eventbased log method would remove noise that may have contributed the lack of cluster consistency found by [13] in their 2023 study, and “tune” our tools to the input we’re most interested in. Our approach also introduced derivative measures that embed a baseline of domain knowledge, such as distinctions between user errors and configuration errors, which can help differentiate two behaviors or experiences that might otherwise appear similar.

The authors thank Sal Aurigemma and Bradley Brummel for their feedback and acknowledge support from Tulsa Innovation Labs via the Cyber Fellows Initiative.

[1] B. A. Alahmadi, L. Axon, and I. Martinovic, “99% false positives: A qualitative study of SOC analysts’ perspectives on security alarms,” in 31st USENIX Security Symposium (USENIX Security 22) . Boston, MA: USENIX Association, Aug. 2022, pp. 2783–2800. [Online]. Available: https://www.usenix.org/conference/ usenixsecurity22/presentation/alahmadi

[2] H. Bian, T. Bai, M. A. Salahuddin, N. Limam, A. A. Daya, and R. Boutaba, “Uncovering Lateral Movement Using Authentication Logs,” IEEE Transactions on Network and Service Management , vol. 18, no. 1, pp. 1049–1063, Mar. 2021, conference Name: IEEE Transactions on Network and Service Management.

[3] J. Colnago, S. Devlin, M. Oates, C. Swoopes, L. Bauer, L. Cranor, and N. Christin, “It’s not actually that horrible: Exploring adoption of twofactor authentication at a university,” 04 2018, pp. 1–11.

[4] D. Freeman, S. Jain, M. Duermuth, B. Biggio, and G. Giacinto, “Who Are You? A Statistical Approach to Measuring User Authenticity,” in

Proceedings 2016 Network and Distributed System Security Symposium San Diego, CA: Internet Society, 2016. [Online]. Available: https://www.ndss-symposium.org/wpcontent/uploads/2017/ 09/who-are-you-statistical-approachmeasuring-user-authenticity.pdf

[5] M. Garchery and M. Granitzer, “Identifying and Clustering Users for Unsupervised Intrusion Detection in Corporate Audit Sessions,” in 2019 IEEE International Conference on Cognitive Computing (ICCC). Milan, Italy: IEEE, Jul. 2019, pp. 19–27. [Online]. Available: https://ieeexplore.ieee.org/document/8816990/

[6] B. Lindauer, “Insider Threat Test Dataset,” 9 2020.

[Online]. Available: {{https://kilthub.cmu.edu/articles/dataset/Insider Threat Test Dataset/12841247}}

[7] M. Liu, V. Sachidananda, H. Peng, R. Patil, S. Muneeswaran, and M. Gurusamy, “Log-off: A novel behavior based authentication compromise detection approach,” in 2022 19th Annual International Conference on Privacy, Security Trust (PST), 2022, pp. 1–10.

[8] Microsoft. (2024) Error documentation. [Online]. Available: https: //login.microsoftonline.com/error

[9] G. Pannell and H. Ashman, “Anomaly Detection over User Profiles for Intrusion Detection,” Proceedings of the 8th Australian Information Security Mangement Conference, vol. Edith Cowan University, p. 30th November 2010, 2010, medium: PDF Publisher: Security Research Institute (SRI), Edith Cowan University. [Online]. Available: http://ro.ecu.edu.au/ism/94

[10] K. Reese, “Evaluating the usability of two-factor authentication,” 2018.

[11] K. Reese, T. Smith, J. Dutson, J. Armknecht, J. Cameron, and K. Seamons, “A usability study of five two-factor authentication methods,” in Proceedings of the Fifteenth USENIX Conference on Usable Privacy and Security, ser. SOUPS’19. USA: USENIX Association, 2019, p. 357–370.

[12] J. Reynolds, N. Samarin, J. D. Barnes, T. Judd, J. Mason, M. Bailey, and S. Egelman, “Empirical measurement of systemic 2fa usability,” in USENIX Security Symposium, 2020.

[13] J. J. Sonneveld, “Profiling users by access behaviour using data available to a security operations center,” Jan. 2023, publisher: University of Twente. [Online]. Available: https://essay.utwente.nl/94221/

[14] N. Zhao, H. Wang, Z. Li, X. Peng, G. Wang, Z. Pan, Y. Wu, Z. Feng, X. Wen, W. Zhang, K. Sui, and D. Pei, “An empirical investigation of practical log anomaly detection for online service systems,” Proceedings of the 29th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Fo undations of Software Engineering, pp. 1404–1415, Aug. 2021, conference Name: ESEC/FSE ’21: 29th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering ISBN: 9781450385626 Place: Athens Greece Publisher: ACM. [Online]. Available: https://dl.acm.org/doi/10.1145/3468264.3473933

REGRESSION (C‐NPDR) FEATURE SELECTION FOR CORRELATION

PREDICTORS WITH APPLICATION TO A RESTING‐STATE FMRI

Student Author

Elizabeth Kresock (Computer Science PhD Candidate) Advisor

Dr. Brett McKinney

Additional Authors

Elizabeth Kresock1, Bryan Dawkins2, Henry Luttbeg1, Yijie (Jamie) Li1, Rayus Kuplicki3, B. A.McKinney1,4

1Tandy School of Computer Science, The University of Tulsa, Tulsa, OK 74104

2SomaLogic Operating Company, Boulder, CO 80301

3Laureate Institute for Brain Research, Tulsa, OK 74136

4Department of Mathematics, The University of Tulsa, Tulsa, OK 74104

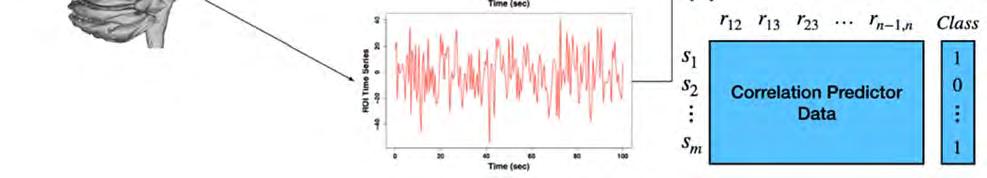

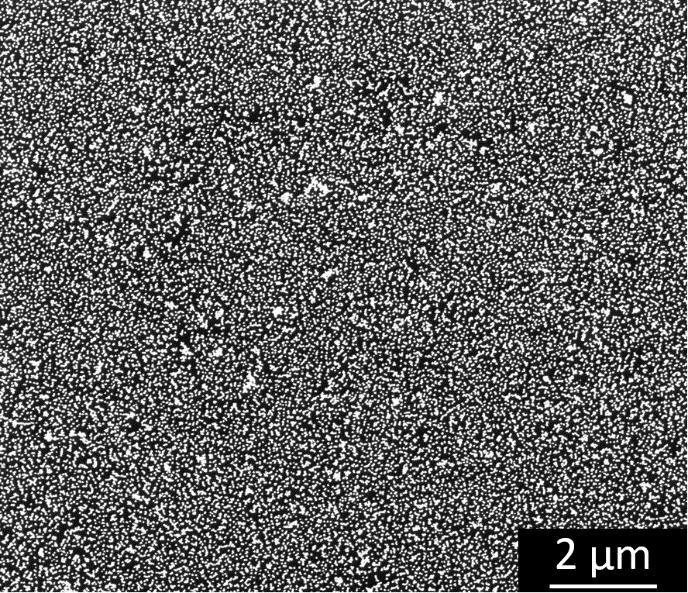

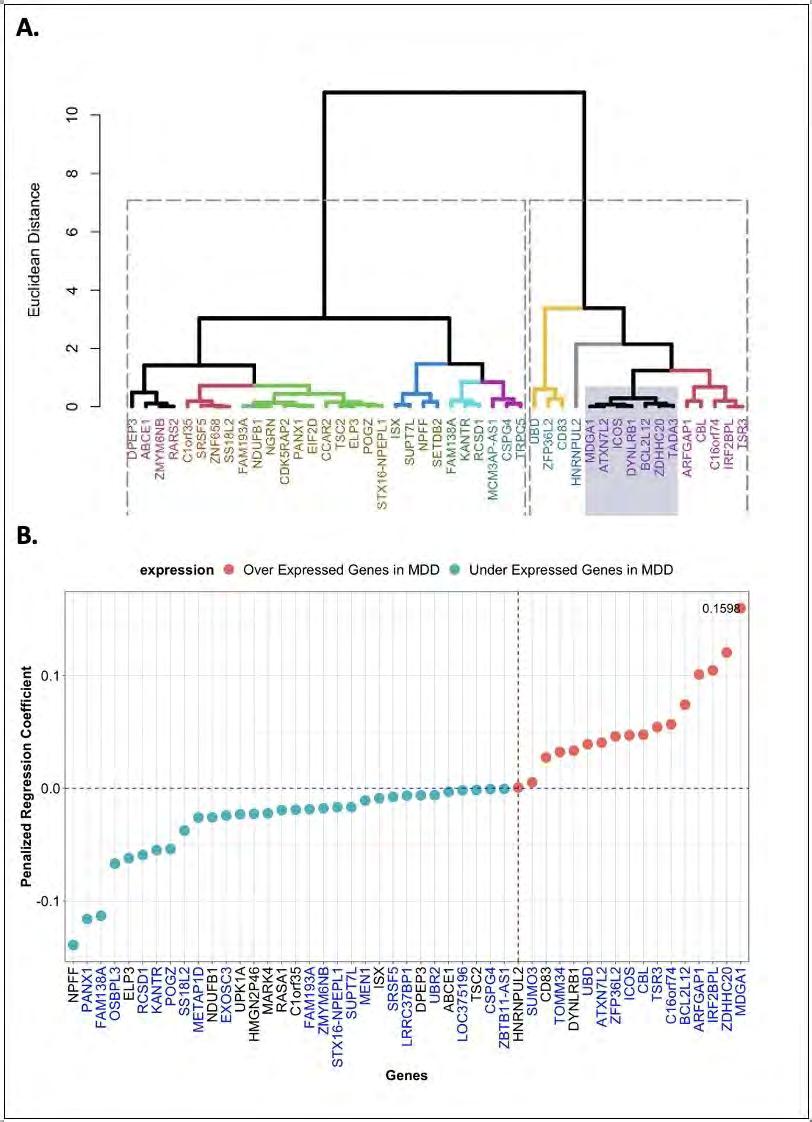

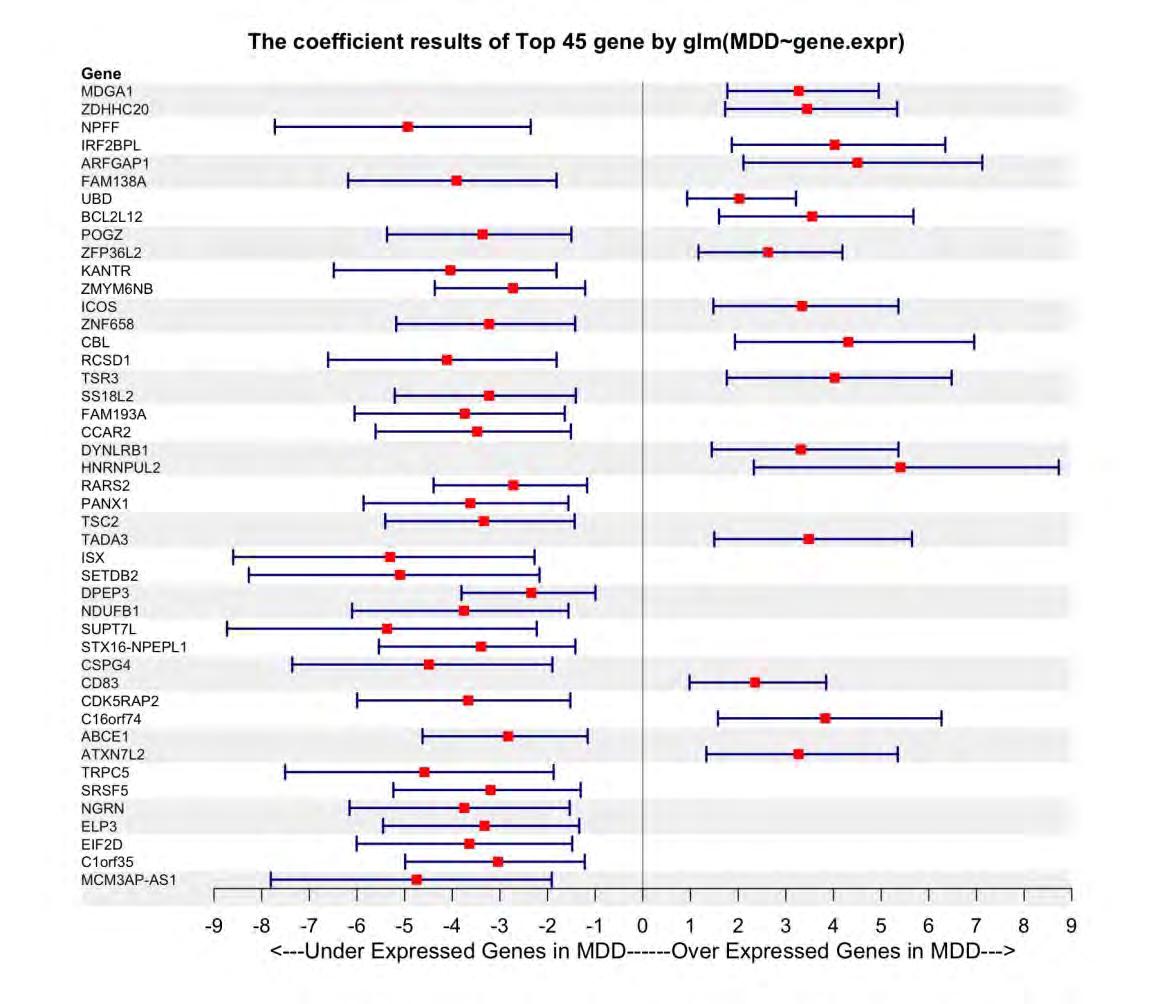

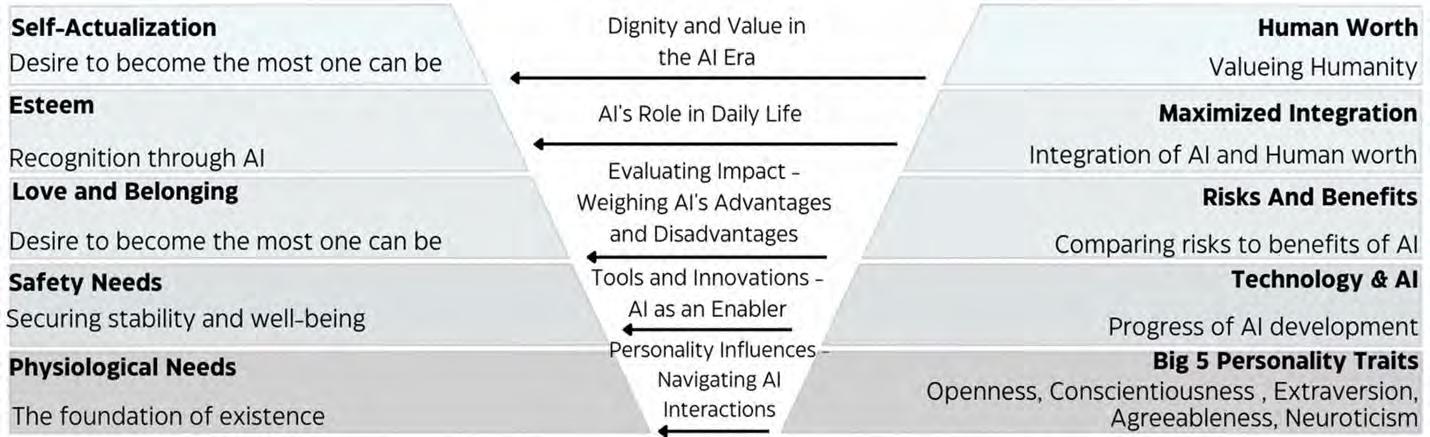

Nearest-neighbor projected-distance regression (NPDR) is a metric-based machine learning feature selection algorithm that uses distances between samples and projected differences between variables to identify variables or features that may interact to affect the prediction of complex outcomes. Typical bioinformatics data consists of separate variables of interest like genes or proteins. Contrastingly, resting-state functional MRI (rs-fMRI) data is composed of time-series for a set of brain Regions of Interest (ROIs) for each subject. These within-brain time-series can be transformed into correlations between pairs of ROIs, and these pairs can be used as input variables of interest for feature selection. Straightforward feature selection would return the most significant pairs of ROIs; however, it would be beneficial to know the importance of individual ROIs. We extend NPDR to compute the importance of individual ROIs from correlation-based features. We present correlation-difference and centrality-based versions of NPDR. The centrality-based NPDR can be coupled with any centrality method and with importance scores other than NPDR, such as random forest importance. We develop a new simulation method using random network theory to generate artificial correlation data predictors with differences in correlation that affect class prediction. We compare feature selection methods based on detecting functional simulated ROIs, and we apply the new centrality NPDR approach to a restingstate fMRI study of major depressive disorder (MDD) and healthy controls. We determine that the brain regions that are the most interactive in MDD patients include the middle temporal gyrus, the inferior temporal gyrus, and the dorsal entorhinal cortex.

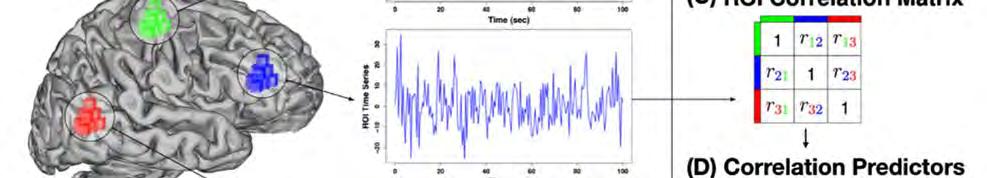

Figure 1: Illustration of resting-state fMRI data used for machine learning feature selection. Regions of interest (ROIs) are made up of groups of voxels within the brain. Three ROIs (a) are used for illustration (green, blue, and red cubes/voxels), but the number of ROIs is typically on the order of 200. Each voxel has an associated time series, which are averaged within ROIs to create the green, red and blue time series (b). From these time-series, pairwise ROI correlations are calculated and stored in a matrix for each subject (c). The upper triangle of each subject’s correlation matrix can be stretched into a sample vector, si, to form rows of a dataset (d), where the predictors (columns) are ROI-ROI correlations.

Figure 2: Analysis methods for rs-fMRI data with correlation-based features and a class variable. On the left, correlation-diff-NPDR (Eq. 2) can directly rank the importance of ROIs using P-values or penalized regression coefficients. On the right, centrality-NPDR (C-NPDR, Eq. 1) and centrality random forest (c-rf) rank the importance of pairs of ROIs, and then centralities of the resulting ROI-ROI networks are used to rank the importance of individual ROIs.

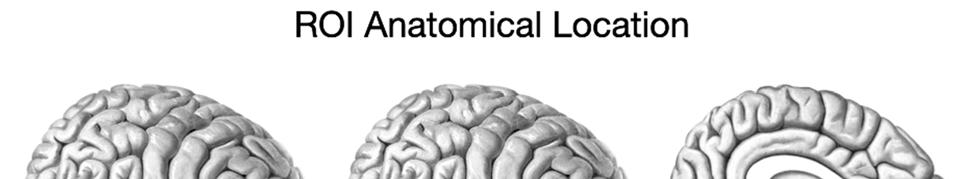

Figure 3: Brain diagrams that represent the anatomical location of some of the most significant individual ROIs yielded using the Brainnetome Atlas. From left to right we have ROI 84, ROI 95, and ROI 115. Located on the left hemisphere of the brain is ROI 84, the middle temporal gyrus, which is critical for semantic memory processing, visual perception, and language processing [17]. Also on the left hemisphere of the brain is ROI 95, the inferior temporal gyrus, which processes visual information in the field of vision and is involved with memory [18]. Located on the right hemisphere of the brain is ROI 115, the entorhinal cortex, which is involved in working memory, navigation, and the perception of time [19]. The image for the entorhinal cortex is a bisection of the brain to reveal where the entorhinal cortex appears in the right hemisphere.

Elizabeth is passionate about artificial intelligence and innovation! Elizabeth graduated from the University of San Diego with majors in Math and Computer Science. Now she is 1 of 37 Cyber Fellows (and one of only four women) that were selected for a full tuition scholarship and stipend to pursue a PhD in Computer Science at the University of Tulsa (TU). Elizabeth’s dissertation topic is machine learning (ML) algorithms that analyze which brain regions have increased activity in patients with major depressive disorder in comparison to healthy control patients. She is on track to finish her PhD in May 2024. Elizabeth is also 1 of 500 international students selected to be an Amazon AWS Machine Learning Scholar! This scholarship allowed her to complete Udacity certifications for AI Programming with Python and ML Fundamentals, which taught machine learning techniques, such as neural networks and image classification.

Elizabeth combines her computer science interests with her hobby of content creation. Through her YouTube channel “Liz Victoria”, she posts educational videos about artificial intelligence technology, advice for people interested in computer science, and her life as a PhD student. She has been able to monetize these platforms and form partnerships with businesses, and now she is working with mentors to develop her own entrepreneurial ideas. Elizabeth is the founder of “Pigment of Imagination”, a technological product that combines makeup and AI-generated art! She has gained insights into how to create products that solve problems, and she has practiced these skills by participating in business pitch competitions. Additionally, Elizabeth is the president of a student-run entrepreneurship club at TU called the JOLT Creativity Club!

About Dr. Brett McKinney

Brett McKinney, Ph.D., is a theoretical physicist and professor of computer science who specializes in the development of machine learning (ML) and AI algorithms for problems in the biomedical and physical sciences. He has made important contributions to methods that detect networks of interacting variables for accurate and interpretable ML models. He applies these explainable ML methods in close collaboration with researchers in neuroscience, immunology, geochemistry and astrobiology. He has also developed theoretical approaches to understand the foundations of quantum mechanics, such as the role of the dimensionality of space on many-body systems like Bose-Einstein condensates.

Dr. McKinney is a professor in the Tandy School of Computer Science with a joint appointment in the Department of Mathematics. He is a native Tulsan who did his undergraduate work summa cum laude in mathematics and physics at The University of Tulsa where he was a member of Phi Beta Kappa and the honors program. He did his graduate work at the University of Oklahoma, obtaining a Ph.D. in theoretical physics, followed by a postdoctoral fellowship in biomathematics and computational biology at the

Vanderbilt University Medical Center. Before joining TU, he was an assistant professor of genetics at the University of Alabama Birmingham School of Medicine.

[1]

M. P. Van Den Heuvel and H. E. Hulshoff Pol, “Exploring the brain network: A review on resting-state fMRI functional connectivity,” European Neuropsychopharmacology, vol. 20, no. 8, pp. 519–534, Aug. 2010, doi: 10.1016/j.euroneuro.2010.03.008.

[2]

R. Salvador, J. Suckling, M. R. Coleman, J. D. Pickard, D. Menon, and E. Bullmore, “Neurophysiological Architecture of Functional Magnetic Resonance Images of Human Brain,” Cerebral Cortex, vol. 15, no. 9, pp. 1332–1342, Sep. 2005, doi: 10.1093/cercor/bhi016.

[3]

A. Iraji et al., “The connectivity domain: Analyzing resting state fMRI data using feature-based data-driven and model-based methods,” NeuroImage, vol. 134, pp. 494–507, Jul. 2016, doi: 10.1016/j.neuroimage.2016.04.006.

[4]

M. G. Preti, T. A. Bolton, and D. Van De Ville, “The dynamic functional connectome: State-of-the-art and perspectives,” NeuroImage, vol. 160, pp. 41–54, Oct. 2017, doi: 10.1016/j.neuroimage.2016.12.061.

B. B. Biswal et al., “Toward discovery science of human brain function,” Proc. Natl. Acad. Sci. U.S.A., vol. 107, no. 10, pp. 4734–4739, Mar. 2010, doi: 10.1073/pnas.0911855107.

[5]

[6]

S. M. Smith et al., “Network modelling methods for FMRI,” NeuroImage, vol. 54, no. 2, pp. 875–891, Jan. 2011, doi: 10.1016/j.neuroimage.2010.08.063.

[7]

K. R. A. Van Dijk, M. R. Sabuncu, and R. L. Buckner, “The influence of head motion on intrinsic functional connectivity MRI,” NeuroImage, vol. 59, no. 1, pp. 431–438, Jan. 2012, doi: 10.1016/j.neuroimage.2011.07.044.

[8]

X.-N. Zuo and X.-X. Xing, “Test-retest reliabilities of resting-state FMRI measurements in human brain functional connectomics: A systems neuroscience perspective,” Neuroscience & Biobehavioral Reviews, vol. 45, pp. 100–118, Sep. 2014, doi: 10.1016/j.neubiorev.2014.05.009.

[9]

A. Fornito, A. Zalesky, and M. Breakspear, “Graph analysis of the human connectome: Promise, progress, and pitfalls,” NeuroImage, vol. 80, pp. 426–444, Oct. 2013, doi: 10.1016/j.neuroimage.2013.04.087.

[10]

L. Fan et al., “The Human Brainnetome Atlas: A New Brain Atlas Based on Connectional Architecture,” Cereb. Cortex, vol. 26, no. 8, pp. 3508–3526, Aug. 2016, doi: 10.1093/cercor/bhw157.

J. Zhuo, L. Fan, Y. Liu, Y. Zhang, C. Yu, and T. Jiang, “Connectivity Profiles Reveal a Transition Subarea in the Parahippocampal Region That Integrates the Anterior Temporal–Posterior Medial Systems,” J. Neurosci., vol. 36, no. 9, pp. 2782–2795, Mar. 2016, doi: 10.1523/JNEUROSCI.1975-15.2016. [12]

A. Al-Zubaidi, A. Mertins, M. Heldmann, K. Jauch-Chara, and T. F. Münte, “Machine Learning Based Classification of Resting-State fMRI Features Exemplified by Metabolic State (Hunger/Satiety),” Frontiers in Human Neuroscience, vol. 13, 2019, Accessed: Feb. 05, 2024. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fnhum.2019.00164 [13]

J. D. Álvarez, J. A. Matias-Guiu, M. N. Cabrera-Martín, J. L. Risco-Martín, and J. L. Ayala, “An application of machine learning with feature selection to improve diagnosis and classification of neurodegenerative disorders,” BMC Bioinformatics, vol. 20, no. 1, p. 491, Oct. 2019, doi: 10.1186/s12859-019-3027-7.

[14]

C. Shi, J. Zhang, and X. Wu, “An fMRI Feature Selection Method Based on a Minimum Spanning Tree for Identifying Patients with Autism,” Symmetry, vol. 12, no. 12, p. 1995, Dec. 2020, doi: 10.3390/sym12121995

[15]

T. T. Le, B. A. Dawkins, and B. A. McKinney, “Nearest-neighbor Projected-Distance Regression (NPDR) for detecting network interactions with adjustments for multiple tests and confounding,” Bioinformatics, vol. 36, no. 9, pp. 2770–2777, May 2020, doi: 10.1093/bioinformatics/btaa024.

[16]

L. Breiman, “Random Forests,” Machine Learning, vol. 45, no. 1, pp. 5–32, 2001, doi: 10.1023/A:1010933404324.

[17]

D. Loh, “Middle temporal gyrus | Radiology Reference Article | Radiopaedia.org,” Radiopaedia. Accessed: Feb. 05, 2024. [Online]. Available: https://radiopaedia.org/articles/middle-temporal-gyrus

[18]

D. Loh, “Inferior temporal gyrus | Radiology Reference Article | Radiopaedia.org,” Radiopaedia. Accessed: Feb. 05, 2024. [Online]. Available: https://radiopaedia.org/articles/inferior-temporal-gyrus [19]

F. Gaillard, “Entorhinal cortex | Radiology Reference Article | Radiopaedia.org,” Radiopaedia. Accessed: Feb. 05, 2024. [Online]. Available: https://radiopaedia.org/articles/entorhinal-cortex

[20]

C. Ma et al., “Resting-State Functional Connectivity Bias of Middle Temporal Gyrus and Caudate with Altered Gray Matter Volume in Major Depression,” PLoS ONE, vol. 7, no. 9, p. e45263, Sep. 2012, doi: 10.1371/journal.pone.0045263.

[21]

T. Onitsuka et al., “Middle and Inferior Temporal Gyrus Gray Matter Volume Abnormalities in Chronic Schizophrenia: An MRI Study,” AJP, vol. 161, no. 9, pp. 1603–1611, Sep. 2004, doi: 10.1176/appi.ajp.161.9.1603.

[22]

I. B. Kim and S.-C. Park, “Neural Circuitry–Neurogenesis Coupling Model of Depression,” IJMS, vol. 22, no. 5, p. 2468, Feb. 2021, doi: 10.3390/ijms22052468.

[23]

Y. Du, Z. Fu, and V. D. Calhoun, “Classification and Prediction of Brain Disorders Using Functional Connectivity: Promising but Challenging,” Front Neurosci, vol. 12, p. 525, Aug. 2018, doi: 10.3389/fnins.2018.00525.

[24]

Y. Chen, W. Zhao, S. Yi, and J. Liu, “The diagnostic performance of machine learning based on resting-state functional magnetic resonance imaging data for major depressive disorders: a systematic review and metaanalysis,” Front Neurosci, vol. 17, p. 1174080, Sep. 2023, doi: 10.3389/fnins.2023.1174080.

[25]

V. D. Calhoun and N. de Lacy, “Ten Key Observations on the Analysis of Resting-state Functional MR Imaging Data Using Independent Component Analysis,” Neuroimaging clinics of North America, vol. 27, no. 4, p. 561, Nov. 2017, doi: 10.1016/j.nic.2017.06.012.

[26]

S. E. Joel, B. S. Caffo, P. C. van Zijl, and J. J. Pekar, “On the relationship between seed-based and ICA-based measures of functional connectivity,” Magn Reson Med, vol. 66, no. 3, pp. 644–657, Sep. 2011, doi: 10.1002/mrm.22818.

[27]

B. A. Dawkins, T. T. Le, and B. A. McKinney, “Theoretical properties of distance distributions and novel metrics for nearest-neighbor feature selection,” PLOS ONE, vol. 16, no. 2, p. e0246761, Feb. 2021, doi: 10.1371/journal.pone.0246761.

[28]

T. A. Victor et al., “Tulsa 1000: a naturalistic study protocol for multilevel assessment and outcome prediction in a large psychiatric sample,” BMJ Open, vol. 8, no. 1, p. e016620, Jan. 2018, doi: 10.1136/bmjopen-2017016620.

[29]

E. T. Rolls, C.-C. Huang, C.-P. Lin, J. Feng, and M. Joliot, “Automated anatomical labelling atlas 3,” NeuroImage, vol. 206, p. 116189, Feb. 2020, doi: 10.1016/j.neuroimage.2019.116189.

Jack Tilbury LinkedIn

Jack - Tilbury@utulsa.edu

JackTilbury Google Scholar

● Security P roduct Manager

● Security,Risk & Resilient Strategy Consulting

● Security An alystOperations

● Research S cientist

Working Location Preference

Tulsa Area, Florida, Chicago, Washington, Remote

Internships / RA / TA / Work History

Year Position

2022Present Doctoral Researcher

20202022 Product Manager

University of Tulsa

Flash Mobile Vending & The Delta, South Africa

2019 Assistant Lecturer and Teaching Assistant Rhodes University

2018 Software Implementation Analyst Saratoga, Cape Town

JackTilburyisasecond-yearPh.D.studentatTheUniversityofTulsa.Heobtained hisresearch-basedMCominInformationSystemsfromRhodesUniversity,South Africa,in2019.JackwasawardedafullscholarshiptopursuehisMaster'sdegree, whichexploredthePropTechindustry–conductingrealestatetransactionsthrough blockchaintechnology. HegraduatedatthetopofhisInformationSystemshonors classasheobtainedaBusinessScienceundergraduatedegree.Sincearrivingin Tulsa,JackhasattendedandpublishedattheAnnualSecurityConferenceandhas beeninvitedtopresentattheUNESCOSafetyConference.Hisresearchincludes informationsystems,behavioralinformationsecurity,andautomateddecisionaidsin securityoperationcenters.BeforestartinghisPh.D.research,Jackworkedasa ProductManagerintheITindustryattwosoftwaredevelopmentcompanies.

JackworkedfortwoyearsasaProductManagerwithexperienceinstart-upand corporateenvironments,buildingandlaunchingmultipleproductstomarket.Jackis eagertocontributetothedevelopmentofsecurityproductseitherthroughleading productteams,engagingwithproductcustomers,orconductingproductresearch.

Compliance Graph Analysis Techniques for Identifying Violation Correction Priorities and Schemes, Predicting Future Violations, and Determining Risk

Noah L. Schrick and Dissertation Committee:

Peter J. Hawrylak, Chair

Stephen Flowerday

John Hale

Brett A. McKinney

Mauricio Papa

Compliance graphs are graphical representations of a system or set of systems and their statuses and relationships toward compliance and regulation standings. These graphs are similar to attack graphs, and are directed, acyclic graphs generated through exhaustive means. Each change to a system or set of systems is performed individually, with all possible permutations fully examined and represented in the resulting graph. These graphs are useful tools to determin e if a system or set of systems is at risk of falling out of compliance, and illustrate any and all possible routes to a violation. Though visualization of these graphs and manual analysis can undercover useful information about necessary or desired changes to a system or set of systems, there is an abundance of additional information that can be uncovered with further, in-depth analysis tailored toward specific goals. This work presents analysis techniques for compliance graphs to identify violation correction priorities and schemes, predict future violations, and determine risk. These techniques were employed on compliance graphs generated in the automotive, healthcare, and energy sectors to highlight the versatility and robustness of these methods. The example graphs range in size and connectivity, and each graph examines its compliance standing with local, private, and federal regulations for its sector, each with varying degrees in severity and violation repercussion. The analysis techniques are adaptive and multifaceted, and are intended to function alongside a sector’s regulatory requirements, as well as any user-defined requirements.

Compliance graphs are an alternate form of attack graphs, utilized specifically for examining compliance and regulation statuses of systems. Like attack graphs, compliance graphs can be used to determine all ways that systems may fall out of compliance or violate regulations, or highlight the ways in which violations are already present. These graphs are notably useful for cyber-physical systems due to the increased need for compliance. As the authors of [1], [2], and [3] discuss, cyber-physical systems have seen greater usage, especially in areas such as critical infrastructure and Internet of Things. The challenge of cyber-physical systems lies not only in the dema nd for cybersecurity of these systems, but also the concern for safe, stable, and undamaged equipment. The industry in which these devices are used can lead to additional compliance guidelines that must be followed, increasing the complexity required for examining compliance statuses. Compliance graphs ar e promising tools that can aid in minimizing the overhead caused by these systems and the regulations they must follow.

Governance, Risk, and Compliance (GRC) Officers assist groups or organizations with preventing or mitigating incurred costs as a result of a violation of a mandate. With the wide array of mandates that organizations may need to follow regarding health or personally identifiable information (PII), specific

industry standards such as FinCEN [4], FDA QSR [5], NERC-CIP [6], internal standards, or equipment maintenance schedules to avoid voiding a warranty, it becomes increasingly difficult for GRC Officers to manage and track all mandate statuses. In addition, organizations rapidly and frequently bring changes into environments with new software, new equipment, new products, new contracts, or new processes. Each of these changes propagates additional change, all of which may affect the standing in regard to a compliance or regulation mandate. Rather than manual compliance checks, compliance graphs can be automatically generated, and analysis can be conducted on the resulting graph to aid in decision-making and visualization.

The semantics of compliance graphs are simila r to that of attack graphs, but with a few differences regarding the information at each state. While security and compliance statuses are related, the information that is analyzed in compliance graphs is focused less on certain security properties, but is expanded to also examine administrative policies and properties of systems. Since compliance and regulation is broad and can vary by industry and appl ication, the information to analyze can range from safety regulations, maintenance compliance, or any other regulatory compliance, including internal company standards. However, the graph structure of compliance graphs is identical to that of attack graphs, where edges represent a modification to the system, and nodes represent all current information in the system.

Compliance graphs begin with a root node that contains all the current information of the system or set of systems. From this initial root state, all assets in the system are examined to see if any single modification can be made, where a modification can include a change in system policy, security settings, or standing in relation to a compli ance or regulatory mandate. If a modification can be made, an edge is drawn from the previous state to a new state that includes all of the previous state’s information, but now reflects the change in the system. This edge is labeled to reflect which change was made to the system. This process is exhaustively repeated, where all system properties are examined, all modification options are fully enumerated, all permutations are examined, and all changes to a system are encoded into their own independent states, where these states are then individually analyzed through the process.

After a compliance graph has been generated and obtained, it is useful to analyze the graph to identify characteristics or planning procedures for the environment. Though visualization can help identify immediate concerns or simple solutions, in-depth analysis can uncover information about an environment that can suggest correction schemes, uncover hidden or hard-to-find problems, or give insight on planning for maintenance or mitigation of future violations. Analysis of graphs range in technique and results based on what information is desired. This work implements analysis techniques of compliance graphs tailored toward answering or addr essing the needs present in most environments. This work designs, implements, and examines the use of analysis techniques that are compatible across industries and can be used in various application settings.

The objectives of this work are to design and implement compliance graph analysis techniques for:

• Determining a numerical risk factor of a current environment given a compliance graph.

• Predicting trends and likelihoods of future violations for an environment given a compliance graph.

• Identifying violation correction priority in an environment given a compliance graph.

• Presenting violation correction schemes for an en vironment with known violations given a compliance graph.

• Presenting violation correction mitigation scheme s for an environment with expected or predicted future violations given a compliance graph.

[1] J. Hale, P. Hawrylak, and M. Papa, “Compliance Method for a Cyber-Physical System.” U.S. Patent Number 9,471,789, Oct. 18, 2016.

[2] N. Baloyi and P. Kotz´e, “Guidelines for Data Privacy Compliance: A Focus on Cyberphysical Systems and Internet of Things,” in SAICSIT ’19: Proceedings of the South African Institute of Computer Scientists and Information Technologists 2019, (Skukuza South Africa), Association for Computing Machinery, 2019.

[3] E. Allman, “Complying with Compliance: Blowing it off is not an option.,” ACM Queue, vol. 4, no. 7, 2006.

[4] “Financial Crimes Enforcement Network, Title 31 U.S.C. 310,” 2010. Available: https://www.govinfo.gov/content/pkg/USCODE-2010-title31/html/USCODE-2010-title31-subtitleIchap3-subchapI-sec310.htm.

[5] Food and Drug Administration, “Quality System Regulations,” 1996. Federal Register: Volume 61, Number 195. 1996 [Online]. Available: https://www.fda.gov/scienceresearch/clinical-trials-and-humansubject-protection/quality-system-regulations.

[6] Federal Energy Regulatory Commission, “Critical Infrastructure Protection Reliability Standard CIP,” 2020. 85 FR 8161. 2020 [Online]. Available: https://www.federalregister.gov/documents/2020/02/13/2020-02173/critical-infrastructure-protectionreliability-standard-cip-012-1-cyber-securitycommunications.

About Cameron

Professional Experience Preference

●

●

Working Location Preference

Internships / RA / TA / Work History

Timothy Flavin

The University of Tulsa Timmy-Flavin@utulsa.edu

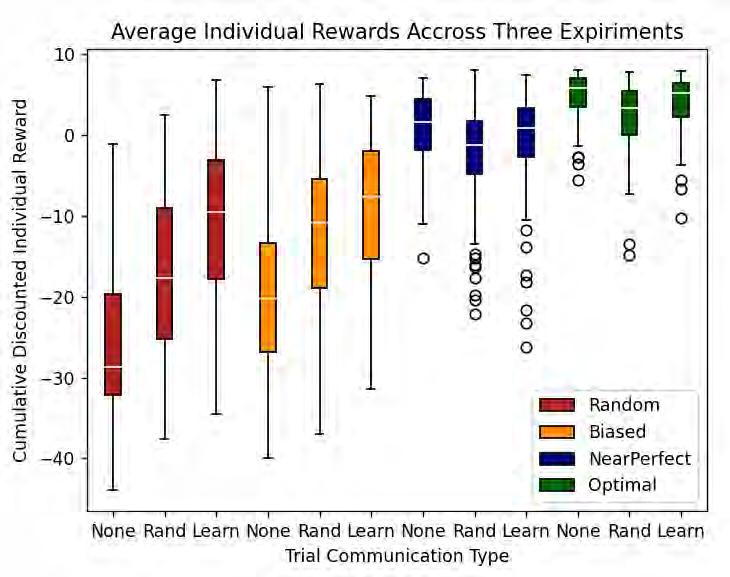

Abstract – This abstract briefly summarizes a sequence of projects with the goal of Producing Machine learning models for real world scenarios where reliability and human compatibility dominate. The most recent project, a multi-agent coordination algorithm called MATCH [2], allows for teams of artificial agents to dynamically learn a meritocracy-based command structure that allows them to approximate the performance of the best agent in the group. This algorithm allows cooperative AI to ignore bad actors while deferring to more experienced teammates. Our ongoing project in collaboration with the TU AI/ML club, jokingly referred to as Ultron, is a penetration testing LLM which is aligned with a reinforcement signal of compute resources the AI can accumulate via bash scripting and access to common tools. Our earliest project in the sequence, ML Democracy [1] is a method to combine an arbitrary list of machine learning models into a bagged ensemble so that the failure modes of each individual model may be avoided through pruning while the most capable models vote on classification.

Index Terms – Ensemble Learning, Human-AI, LLM, Penetration Testing, Reinforcement Learning Introduction