Customize3DForm

Introducing human subjectivity to objective objects using text-based generative pipeline

Karla Saldana Ochoa1 and Wei-Chun Cheng2

1University of Florida, College of Design, Construction and Planning, School of Architecture 1480 Inner Rd, Gainesville, FL 32611, USA

2Carnegie Mellon University, College of Fine Arts, School of Architecture 4919 Frew St, Pittsburgh, PA 15213, USA

1ksaldanaochoa@ufl.edu , 0000-0001-6092-4908 2jimmyche@andrew.cmu.edu, 0009-0008-3974-3094

Abstract. This paper introduces Customize3DForm, an ML-assisted system for creating a customizable 3D form generator that iteratively adjusts specific model elements based on text input. The system integrates a parametric generator in Rhino Grasshopper, a Gazepoint eye tracker, and a web-based interface to collect subjective feedback. A dataset of 3D models paired with text descriptions is created from designer input, guided by questions about peculiar, appealing, or reasonable elements. Eye tracking supports precise data collection by identifying focal areas during interaction. A distinctive feature of Customize3DForm is its ability to adjust individual model elements rather than entire models, enabling rapid iterative modifications. Model elements are labeled with descriptors reflecting design characteristics (e.g., beautiful, ugly) and formal attributes (e.g., strong, light). The system supports early conceptual exploration by translating designers' abstract qualitative concepts into 3D spatial forms. By leveraging culturally influenced design languages, Customize3DForm fosters collaboration between artificial and human intelligence. This synergy helps designers create adaptable, structurally robust 3D architectural projects and reshapes early-stage design workflows through dynamic interaction with the generator.

Keywords. Machine Learning, Generative Design, Computational Pedagogy, Human-Centered Design

1. Introduction

Recent advancements in information technology have transformed machines from simple mechanical tools into advanced intelligent agents. This evolution has reshaped interactions between humans and machines into complex relationships characterized by cooperation, teamwork, and collaboration. Powered by artificial intelligence, deep learning, and related technologies, machines now support humans in complex decision-making tasks (Rahwan et al., 2019; Duan et al., 2019; Haesevoets et al., 2021). In these collaborations, humans lead as decision-makers, while machines act as automated assistants that enhance rather than replace human effort. We argue that for collaboration to be effective, machines must not only solve problems but also actively contribute to the partnership. To improve human-machine collaboration, we propose a dynamic approach that uses machines to augment and refine human design decisions, together with communication interfaces that support the early design stages of form finding, space definition, and scale resolution.

K.S. OCHOA AND W. CHENG

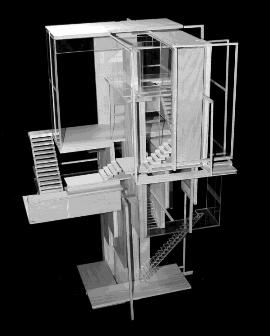

This paper used an undergraduate core design studio project (first semester of the second year), Door, Window, Stair, as the testing 3D design space. Door, Window, Stair is an abstract, conceptual 3D design exercise in which students design a cultural artifact by extracting 2D and 3D parti diagrams; these diagrams become a design language that students use to generate designs by analogy (Figure 1). Within well-defined constraints, doors are spatial connectors between two spaces; windows are visual connectors, apertures, and openings; and stairs are vertical connectors between levels. Students explore the concepts of boundary, transparency, spatial configuration, and connectivity from the diagram and transform them into 3D space with physical materials such as wood, plexiglass, and stick assemblages (Figure 2). The exercise trains students to translate between natural language descriptions and forms: how to make a description concrete, how to represent a concept, and how to realize it in physical 3D form.

2. State of the Art: 3D AI and Generative Algorithms

In architectural design, generative methods are effective tools for iterating design options within predefined boundaries. In the current state of the art, three prominent generative methods have been identified in architectural applications: (1) rule-based, (2) control-based, and (3) probability-based. Unlike 2D generative AI models, which can modify selected areas of a generated output (Lugmayr et al., 2022), current generative 3D AI mostly iterates design options by generating new designs from randomized rules, parameters, or datasets within a predefined design space.

CUSTOMIZE3DFORM:

INTRODUCING HUMAN SUBJECTIVITY TO OBJECTIVE OBJECTS USING TEXTBASED GENERATIVE PIPELINE

Current research in 3D generative AI primarily focuses on creating 3D objects from textual descriptions (Bai & Li, 2024). This field has significantly advanced AI's ability to interpret detailed verbal instructions and transform them into complex three-dimensional shapes. However, we view the generative process as evolutionary. Present methods, which regenerate the entire form, often fail to preserve essential aspects of a particular model and therefore fall short of designers' expectations. Hence, this paper proposes a method for customizing and regenerating specific parts of a 3D model rather than the entire structure. This approach offers a new perspective on AI's role in design and challenges the conventional use of AI merely for optimization, promoting it instead as a tool that enhances creativity and supports varied human-centric solutions.

3. Methodology

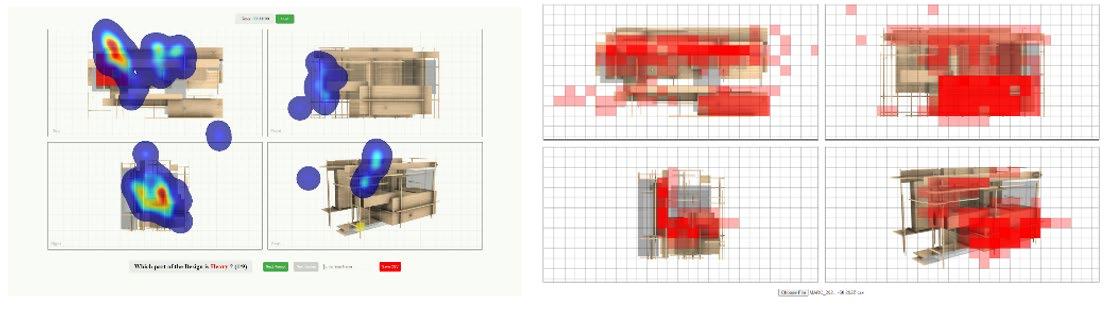

We followed a three-step methodology. First, we generated a dataset of 3D geometries from a control-based generative parametric model defined by specific design criteria. Second, we collected human feedback as text labels using eye tracking and a web-based interface with clickable grid cells (Figure 3). This second step combines two data-gathering techniques that capture two resolutions of human reaction to the text prompt and the 3D form, providing a granular examination of the generated 3D form. Third, we expanded the labeled dataset using a cluster-then-label (CL) approach. CL uses an unsupervised clustering algorithm, Self-Organizing Maps (SOM), trained on the formal features of the forms, to autonomously propagate labels to unlabeled neighboring forms, creating a pool of design options to query (Figure 4).

Figure 4. The pipeline of Customize3DForm. Users' operations are colored in blue to differentiate them from the computational process. This workflow involves two main parts: data collection and data matching.

3.1. GENERATION OF DESIGN FORM AND DATA CURATION

To increase the diversity of the elements produced by the parametric generator, we implemented a five-layer randomness system using the Populate 3D component in Grasshopper. Each layer introduces a specific level of control and receives its own incremental integer seed, so that element spawning locations are reproducible across layers. For example, the first layer uses a seed value of 1, the second layer 2, and so forth, allowing all layers to be managed through a single number slider. The first layer of randomness determines the initial points within a bounding box at which box elements are generated. The second layer controls the placement of planks, while the third and fourth layers manage both the positioning and rotation of vertical and horizontal elements, respectively. The fifth layer introduces a ratio control that makes a specified percentage of randomly selected elements transparent. To validate the proposed pipeline, we generated ten unique designs from the parametric generator (Figure 5).
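A minimal Python sketch of the five-layer seeding scheme follows. Grasshopper's Populate 3D is replaced here by uniform random sampling in an axis-aligned box, and the element counts, bounding-box size, rotation range, and 30% transparency ratio are hypothetical values, not parameters reported in this paper. The point it illustrates is that deriving every layer's seed from one slider value makes a design fully reproducible.

```python
import random
from dataclasses import dataclass


@dataclass
class Element:
    kind: str                  # "box", "plank", "vertical", or "horizontal"
    position: tuple            # (x, y, z) spawn point
    rotation_deg: float = 0.0  # rotation set by layers 3 and 4
    transparent: bool = False  # set by layer 5


def populate_3d(rng, count, bbox_min, bbox_max):
    """Uniform random points in an axis-aligned box (a stand-in for Populate 3D)."""
    return [tuple(rng.uniform(lo, hi) for lo, hi in zip(bbox_min, bbox_max))
            for _ in range(count)]


def generate_design(seed, bbox_min=(0, 0, 0), bbox_max=(4, 4, 10),
                    n_boxes=8, n_planks=6, n_vertical=5, n_horizontal=5,
                    transparency_ratio=0.3):
    # One RNG per layer, seeded seed, seed + 1, ..., seed + 4, so a single
    # slider value drives all five layers.
    rngs = [random.Random(seed + layer) for layer in range(5)]
    # Layer 1: spawn points for box elements.
    elements = [Element("box", p) for p in populate_3d(rngs[0], n_boxes, bbox_min, bbox_max)]
    # Layer 2: plank placement.
    elements += [Element("plank", p) for p in populate_3d(rngs[1], n_planks, bbox_min, bbox_max)]
    # Layers 3 and 4: position and rotation of vertical and horizontal elements.
    elements += [Element("vertical", p, rngs[2].uniform(0, 360))
                 for p in populate_3d(rngs[2], n_vertical, bbox_min, bbox_max)]
    elements += [Element("horizontal", p, rngs[3].uniform(0, 360))
                 for p in populate_3d(rngs[3], n_horizontal, bbox_min, bbox_max)]
    # Layer 5: make a fixed share of randomly chosen elements transparent.
    k = round(transparency_ratio * len(elements))
    for element in rngs[4].sample(elements, k):
        element.transparent = True
    return elements
```

With these defaults, `generate_design(1)` always returns the same 24 elements, 7 of them transparent; moving the slider to 2 yields a different design.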

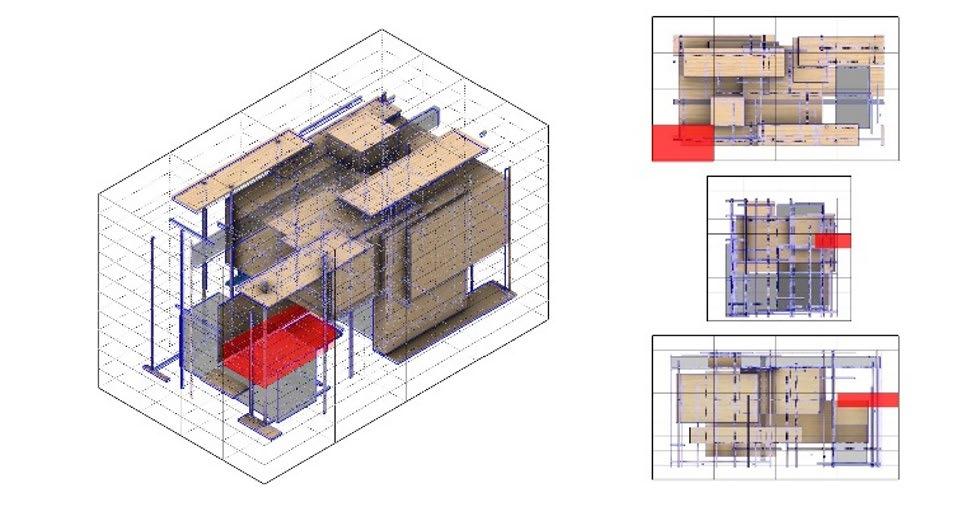

Each design was dissected into parts using cellular bounding boxes, which were used to slice the model and to create a template for the web interface used to collect human feedback (Figure 6).


The division resolution is user-defined; in this paper, we use a grid of 4 × 4 × 10 cellular boxes as a demonstration. Consequently, each design has 160 parts, and the full dataset of 3D elements contains 1,369 parts (Figure 7).
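The cellular subdivision can be sketched as follows, assuming an axis-aligned bounding box and a flat cell index in which x varies fastest, then y, then z (an ordering we chose for illustration; the paper does not specify one). Points lying on the maximum faces of the box are clamped into the last cell so that every vertex receives an index.

```python
from itertools import product

DIVISIONS = (4, 4, 10)  # cells along x, y, z, as in the demonstration above


def flat_index(i, j, k, divisions=DIVISIONS):
    """Flatten (i, j, k) cell coordinates; x varies fastest (assumed ordering)."""
    nx, ny, _ = divisions
    return (k * ny + j) * nx + i


def cell_boxes(bbox_min, bbox_max, divisions=DIVISIONS):
    """Split a bounding box into cell boxes, keyed by flat index."""
    size = [(hi - lo) / n for lo, hi, n in zip(bbox_min, bbox_max, divisions)]
    boxes = {}
    for i, j, k in product(*(range(n) for n in divisions)):
        lo = tuple(m + c * s for m, c, s in zip(bbox_min, (i, j, k), size))
        hi = tuple(l + s for l, s in zip(lo, size))
        boxes[flat_index(i, j, k, divisions)] = (lo, hi)
    return boxes


def cell_of_point(p, bbox_min, bbox_max, divisions=DIVISIONS):
    """Return the flat index of the cell containing point p."""
    idx = []
    for v, lo, hi, n in zip(p, bbox_min, bbox_max, divisions):
        t = (v - lo) / (hi - lo)
        # Clamp so points on the max face fall into the last cell.
        idx.append(min(max(int(t * n), 0), n - 1))
    return flat_index(*idx, divisions)
```

For a 4 × 4 × 10 box, `cell_boxes` returns 160 cells, and the point (1.5, 0.5, 2.5) falls in cell 33, whose bounds are (1, 0, 2) to (2, 1, 3).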

3.2. WEB-BASED INTERFACE DESIGN AND TEXT PROMPT DEFINITION

The web interface provides four viewports for examining the design options: Top, Left, Front, and Perspective. Each viewport is divided into a 20 × 12 grid of cells that record the designer's feedback to the text prompt questions located at the bottom of the interface. A timer records when each cell is clicked; this duration can be further translated into a measure of the designer's confidence. In addition, a screen-based Gazepoint GP3 HD eye tracker (150 Hz sampling rate, 0.5–1.0° visual-angle accuracy, compatible with displays of 24″ or smaller) runs simultaneously with the web interface to capture the designer's instant, intuitive responses (Figure 8). The GP3 is one of the most widely used screen-based eye-tracking systems in research (Gazepoint, 2024).
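Mapping a click or gaze sample to a viewport grid cell, and converting gaze samples into dwell time per cell, can be sketched as below. We assume (hypothetically) that the 20 × 12 grid means 20 columns by 12 rows, that coordinates are pixels relative to the viewport's top-left corner, and that gaze samples arrive at the GP3 HD's 150 Hz rate with no dropped samples; the function names are illustrative.

```python
from collections import Counter

COLS, ROWS = 20, 12   # assumed: 20 columns x 12 rows per viewport
SAMPLE_RATE_HZ = 150  # GP3 HD sampling rate


def to_cell(x, y, width, height, cols=COLS, rows=ROWS):
    """Map a viewport-local pixel coordinate to (column, row), or None if outside."""
    if not (0 <= x < width and 0 <= y < height):
        return None
    return int(x * cols / width), int(y * rows / height)


def dwell_time_per_cell(gaze_samples, width, height):
    """Seconds of gaze per cell, assuming one sample every 1/150 s."""
    counts = Counter()
    for x, y in gaze_samples:
        cell = to_cell(x, y, width, height)
        if cell is not None:
            counts[cell] += 1
    return {cell: n / SAMPLE_RATE_HZ for cell, n in counts.items()}
```

In an 800 × 480 pixel viewport, 150 samples at (10, 10) and 75 samples at (790, 470) give one second of dwell on cell (0, 0) and half a second on cell (19, 11). Clicks can be mapped with the same `to_cell` function.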


The text prompts were informed by a multidimensional approach that describes both the functional utility and the aesthetic appeal of the 3D assemblies. We implemented three assessment strategies: Kansei engineering (Nagamachi, 1995), vocabulary from architectural competitions (Guo et al., 2023), and the Creative Product Semantic Scale (Batey, 2012). From Kansei engineering, we selected adjectives such as "Heavy - Light" and "Strong - Weak," which offer insight into perceived use by distinguishing robustness from agility. Adjectives sourced from architectural competitions, such as "Modular - Eclectic" and "Vertical - Horizontal," emphasize adaptability and spatial orientation, which are crucial for architectural harmony. Complementing these, aesthetic appreciation, expressed through Kansei adjectives such as "Elegant - Not Elegant" and "Stylish - Not Stylish" together with attributes such as "Natural - Artificial" and "Unique - Common" from architectural contexts, provides a broad vocabulary for assessing the formal representation of the 3D assemblies. Finally, the Creative Product Semantic Scale's "Usual - Unusual" captures innovative potential that transcends conventional norms. Together, these adjectives form a holistic framework for evaluating the 3D assemblies in terms of both function and formal representation, supporting a granular examination of the generated 3D forms (Figure 9).
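Encoding the adjective pairs as bipolar scales makes contradictory labels detectable: a label and the opposite pole of the same scale contradict each other. The dictionary below lists only the pairs named in this section; the grouping keys and helper names are our illustrative choices, not part of the authors' interface.

```python
# Bipolar adjective pairs named in the text, grouped by source.
SCALES = {
    "Kansei engineering": [("Heavy", "Light"), ("Strong", "Weak"),
                           ("Elegant", "Not Elegant"), ("Stylish", "Not Stylish")],
    "Architectural competitions": [("Modular", "Eclectic"), ("Vertical", "Horizontal"),
                                   ("Natural", "Artificial"), ("Unique", "Common")],
    "Creative Product Semantic Scale": [("Usual", "Unusual")],
}


def build_opposites(scales):
    """Map every label to the opposite pole of its scale."""
    opposites = {}
    for pairs in scales.values():
        for a, b in pairs:
            opposites[a] = b
            opposites[b] = a
    return opposites


OPPOSITES = build_opposites(SCALES)


def contradicts(label_a, label_b):
    """True when the two labels are opposite poles of the same scale."""
    return OPPOSITES.get(label_a) == label_b
```

Here `contradicts("Heavy", "Light")` is true, while "Heavy" and "Strong" sit on different scales and can co-occur.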

3.3. RAPID AUTONOMOUS LABELING WITH SELF-ORGANIZING MAP ALGORITHM

To efficiently assign text labels to all 3D parts, we employed a cluster-then-label approach that reduces the number of parts students need to label. Each label is propagated to all similar parts within the same cluster, which significantly reduces the labeling workload for students and makes the process faster. We used an unsupervised machine learning algorithm, the Self-Organizing Map (SOM), which combines clustering and dimensionality reduction. SOM performs a nonlinear transformation that maps high-dimensional data into a low-dimensional space (usually two or three dimensions) while preserving the topology of the original high-dimensional space. Topology preservation ensures that data points that are similar in the high-dimensional space remain close in the low-dimensional space and are therefore placed in the same cluster. This low-dimensional space is represented by a planar grid with a fixed number of nodes, known as a map (Figure 10). SOM has been widely used in architectural design (Saldana Ochoa et al.; Zifeng et al.) and effectively visualizes high-dimensional databases. The SOM grid size was 10 × 10, and training ran for one million epochs until the response layer reached a stable configuration. SOM enhances transparency by clustering similar data points, reducing dimensionality, visualizing design spaces, and identifying outliers, which helps designers understand complex relationships and make more informed design decisions.
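The SOM training loop can be sketched in NumPy as follows. This is a minimal, generic online SOM, not the authors' implementation: the uniform random initialization, linear decay of learning rate and neighborhood radius, Gaussian neighborhood, and default of 5,000 iterations (instead of one million epochs) are our assumptions for illustration.

```python
import numpy as np


def best_matching_unit(weights, x):
    """Return (row, col) of the node whose weight vector is closest to x."""
    dists = np.linalg.norm(weights - x, axis=-1)
    r, c = np.unravel_index(np.argmin(dists), dists.shape)
    return int(r), int(c)


def train_som(data, rows=10, cols=10, iterations=5000, lr0=0.5, seed=0):
    """Train a rows x cols SOM on data (n_samples x n_features); return the weight grid."""
    rng = np.random.default_rng(seed)
    n, d = data.shape
    sigma0 = max(rows, cols) / 2.0
    # Initialize node weights uniformly within each feature's data range.
    weights = rng.uniform(data.min(axis=0), data.max(axis=0), size=(rows, cols, d))
    # Map coordinates of every node, shape (rows, cols, 2).
    grid = np.stack(np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1)
    for t in range(iterations):
        frac = t / iterations
        lr = lr0 * (1.0 - frac)                   # learning rate decays linearly
        sigma = max(sigma0 * (1.0 - frac), 0.5)   # neighborhood radius shrinks
        x = data[rng.integers(n)]                 # one random training sample
        bmu = best_matching_unit(weights, x)
        # Gaussian neighborhood around the BMU, measured on the 2D map grid.
        grid_d2 = np.sum((grid - np.array(bmu)) ** 2, axis=-1)
        h = np.exp(-grid_d2 / (2.0 * sigma ** 2))
        # Pull every node toward x in proportion to its neighborhood value.
        weights += lr * h[..., None] * (x - weights)
    return weights
```

Each map node then acts as a cluster: parts whose feature vectors share a best-matching unit are treated as formally similar, which is what allows labels to be propagated within a cluster.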

To cluster the 3D parts by their formal characteristics, we calculated Higher-Order Statistics (HOS) on the nodes of each 3D part. HOS describe a distribution beyond its mean and variance, capturing properties of the design features, such as asymmetry and tailedness, that do not depend on the order in which the nodes are listed. For a sample of n observations, one can calculate up to n moments; in our case, we calculated the first four (arithmetic mean, standard deviation, skewness, and kurtosis). This approach identifies and clusters parts with similar formal qualities, facilitating the efficient assignment of text labels to the 3D parts within each cluster (Figure 10). Each cluster contains multiple forms organized by the similarity of the observed features (Figure 11).
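A minimal sketch of the four-moment feature vector, assuming (our assumption) that the "nodes" of a part are its vertex coordinates and that moments are computed separately along x, y, and z, giving a 12-dimensional vector per part. Kurtosis here is the non-excess (Pearson) form, and setting skewness and kurtosis to zero on an axis with no spread is also our choice.

```python
import numpy as np


def hos_features(vertices):
    """Mean, std, skewness, kurtosis of vertex coordinates per axis -> 12-D vector."""
    v = np.asarray(vertices, dtype=float)       # shape (n_nodes, 3)
    mean = v.mean(axis=0)
    std = v.std(axis=0)                         # population std (ddof=0)
    safe_std = np.where(std > 0, std, 1.0)      # avoid division by zero on flat axes
    z = (v - mean) / safe_std
    skew = np.where(std > 0, (z ** 3).mean(axis=0), 0.0)
    kurt = np.where(std > 0, (z ** 4).mean(axis=0), 0.0)  # non-excess kurtosis
    return np.concatenate([mean, std, skew, kurt])
```

Stacking these vectors for all parts yields the input matrix for SOM training; standardizing each column first is common practice so that no single moment dominates the distance.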


After autonomous labeling with SOM, some parts carried contradictory labels, reflecting the qualitative nature of the user inputs. We reconciled them with a straightforward label-occurrence analysis: a simple term-frequency calculation converted the frequency of each label into a corresponding weight. This weight represents the perceived importance or dominance of a label and can subsequently be used to rank or evaluate forms by labeling frequency.
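The cluster-then-label propagation and the term-frequency weighting can be sketched in plain Python. The sketch assumes each part has already been assigned to a SOM node (any hashable node identifier); the `threshold` parameter is a hypothetical stand-in for the label acceptance threshold mentioned in the Results and Discussion, and all function names are illustrative.

```python
from collections import Counter, defaultdict


def propagate_labels(node_of_part, user_labels):
    """Give every part the pooled label counts of all labeled parts in its SOM node.

    node_of_part: {part_id: node}; user_labels: {part_id: [label, ...]}
    """
    node_counts = defaultdict(Counter)
    for part, labels in user_labels.items():
        node_counts[node_of_part[part]].update(labels)
    return {part: Counter(node_counts[node]) for part, node in node_of_part.items()}


def label_weights(counts, threshold=0.0):
    """Term-frequency weights; labels below the threshold are rejected."""
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items() if n / total >= threshold}


def rank_parts(part_counts, label):
    """Parts ordered by the weight of `label` (ties broken by part id)."""
    scored = [(label_weights(c).get(label, 0.0), part) for part, c in part_counts.items()]
    return [part for w, part in sorted(scored, key=lambda s: (-s[0], s[1])) if w > 0]
```

A contradiction such as two "Heavy" votes against one "Light" vote is thus resolved softly (weights 2/3 and 1/3) rather than discarded, and a threshold of 0.5 would keep only "Heavy".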

3.4. ITERATING DESIGN WITH SEARCH ENGINE-BASED GENERATOR

Data matching requires four layers of data linkage and conversion: (1) the parameters of the associated form; (2) the eye-tracking coordinates with semantic labels; (3) the clicked cells with semantic labels; and (4) the cellular grid index. Cross-matching these four layers yields an exponentially large library of subjectivity-described forms that can be retrieved from human input through natural language processing. After data collection and matching, Customize3DForm can generate design proposals from a user-defined descriptive query. In this paper, we present a UI control panel that performs this search and form generation (Figure 12). This pipeline could be further integrated with natural language processing, replacing the current UI control panel with plain natural language text input.
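A brute-force search over the matched library, restricted to the cells a designer chooses to regenerate, can be sketched as follows. The `PartRecord` schema, the additive scoring of label weights, and the tie-break toward the lower seed are hypothetical choices for illustration; the sketch shows how part-level customization differs from regenerating the whole model, not how the authors' UI control panel is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class PartRecord:
    design_seed: int                                   # layer 1: parameters of the source design
    cell_index: int                                    # layer 4: cellular grid index
    label_weights: dict = field(default_factory=dict)  # layers 2-3: weighted labels from gaze and clicks


def score(record, query):
    """Sum of the record's weights for the queried labels (additive scoring is assumed)."""
    return sum(record.label_weights.get(label, 0.0) for label in query)


def customize(library, base_seed, cells_to_edit, query):
    """Keep base_seed in untouched cells; brute-force the best part for each edited cell."""
    result = {}
    for cell in sorted({r.cell_index for r in library}):
        if cell not in cells_to_edit:
            result[cell] = base_seed              # preserve the designer's existing part
            continue
        candidates = [r for r in library if r.cell_index == cell]  # linear scan
        best = max(candidates, key=lambda r: (score(r, query), -r.design_seed))
        result[cell] = best.design_seed
    return result
```

Because every edited cell scans the whole candidate list, the cost grows linearly with library size, which is the efficiency limitation of brute-force search noted in the Results and Discussion.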

4. Results and Discussion

This project demonstrated an approach that integrates a text-guided 3D generator for customizing parts of an initial 3D model, a method based on subjective human input, and a series of AI methods that guide the design output. Figure 12 shows the final result of the proposed 3D generator. However, the approach has limitations. (a) The text labels were insufficient and occasionally contradictory; to address this, we preprocessed the labels and defined a threshold for accepting or rejecting a text label to ensure consistency. (b) The generative algorithm relies on brute-force search, which is functional but limited in efficiency and scalability.


Despite these limitations, we identify several directions for future research. First, diversifying the training data could significantly enhance the robustness and accuracy of the algorithm. A diversified dataset could also be used to train deep-learning generative models, moving beyond brute-force search toward more sophisticated and efficient algorithms. Additionally, an interface that collects more comprehensive data, including verbal descriptions of the 3D models, would allow researchers to take a holistic approach to understanding human perception of space and would provide data to further improve the accuracy and usability of our generative design tools.

5. Conclusion

This article showcases the capabilities of Customize3DForm as a powerful tool for architects and designers, streamlining the design process and offering personalized, responsive solutions tailored to users' needs and preferences. A key challenge identified is that students in the early stages of design education may not be able to fully evaluate every aspect of a generated 3D design. To address this, we propose an iterative feedback loop that allows for incremental refinement of the design through multiple interactions. This method enables students to focus on specific design elements, such as visual aesthetics or the functionality of components (e.g., doors, windows, stairs), within their current understanding. By breaking down the evaluation into manageable, focused tasks, students can provide meaningful feedback without needing to assess the entire design in depth.

Additionally, we propose incorporating peer review to broaden feedback collection. Peer review would involve students evaluating and discussing each other's designs, allowing them to refine and critique based on subjective preferences and shared design principles. This collaborative approach fosters a learning environment that is crucial for design education.

For future research, user studies or case studies demonstrating how designers interact with the system would provide practical validation of its effectiveness. Quantitative metrics or qualitative feedback on the system's impact on the design process could further strengthen the evaluation.

Looking ahead, we aim to fully integrate the workflow into the Rhino Grasshopper environment, encompassing data preparation, labeling, and natural language processing, and replacing the current UI control panel with a purely text-based input system. For example, users could type "create a curved surface" to generate a 3D form with a curved surface. This development would make the tool more intuitive and accessible, enabling users to generate complex 3D forms through simple, conversational interactions.


Attribution

ChatGPT (OpenAI, 2024) was used to improve flow. Grammarly (Grammarly Inc., 2024) was used to correct errors in spelling and grammar.

References

Rahwan, I., Cebrián, M., Obradovich, N., Bongard, J., Bonnefon, J.-F., Breazeal, C., Crandall, J. W., et al. (2019). Machine behaviour. Nature, 568(7753), 477–486. https://doi.org/10.1038/s41586-019-1138-y

Duan, Y., Edwards, J. S., & Dwivedi, Y. K. (2019). Artificial intelligence for decision making in the era of big data: Evolution, challenges and research agenda. International Journal of Information Management, 48, 63–71. https://doi.org/10.1016/j.ijinfomgt.2019.01.021

Haesevoets, T., De Cremer, D., Dierckx, K., & Van Hiel, A. (2021). Human-machine collaboration in managerial decision making. Computers in Human Behavior, 119, 106730. https://doi.org/10.1016/j.chb.2021.106730

Lugmayr, A., Danelljan, M., Romero, A., Yu, F., Timofte, R., & Van Gool, L. (2022). RePaint: Inpainting using denoising diffusion probabilistic models. In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/cvpr52688.2022.01117

Poole, B., Jain, A., Barron, J. T., & Mildenhall, B. (2022). DreamFusion: Text-to-3D using 2D diffusion. arXiv. https://doi.org/10.48550/arXiv.2209.14988

Sanghi, A., Zhou, Y., & Bowler, B. P. (2021). Efficiently imaging accreting protoplanets from space: Reference star differential imaging of the PDS 70 planetary system using the HST/WFC3 archival PSF library. arXiv. https://doi.org/10.48550/arXiv.2112.10777

Bai, S., & Li, J. (2024). Progress and prospects in 3D generative AI: A technical overview including 3D human. arXiv. https://doi.org/10.48550/arXiv.2401.02620

Nagamachi, M. (1995). Kansei engineering: A new ergonomic consumer-oriented technology for product development. International Journal of Industrial Ergonomics, 15(1), 3–11. https://doi.org/10.1016/0169-8141(94)00052-5

Batey, M. (2012). The measurement of creativity: From definitional consensus to the introduction of a new heuristic framework. Creativity Research Journal, 24(1), 55–65. https://doi.org/10.1080/10400419.2012.649181

Ochoa, K. S., & Guo, Z. (2019). A framework for the management of agricultural resources with automated aerial imagery detection. Computers and Electronics in Agriculture, 162, 53–69. https://doi.org/10.1016/j.compag.2019.03.028

Huang, L.-S. (2014, April). Pedagogical synergies: Integrating digital into design. Conference paper, University of Florida School of Architecture.

OpenAI. (2024). ChatGPT (Model: GPT-4; temperature: 0.7) [Large language model]. https://openai.com/chatgpt/

Grammarly Inc. (2024). Grammarly: AI writing and grammar checker app (Version 14.1198.0) [Large language model]. https://app.grammarly.com/

Gazepoint. (2024). GP3 eye-tracker. Retrieved from https://www.gazept.com