Point clouds and conventional tools to represent large-scale architectural projects

Karla Saldana Ochoa

saldana@arch.ethz.ch

Chair of Computer Aided Architectural Design (CAAD), Department of Architecture

Eidgenössische Technische Hochschule Zürich (ETH Zürich)

Abstract

Visualizations play a significant role as decision-making tools in planning processes. Today, perspective representations (3D renders) are commonly used. However, we argue that for depth, size, and detail to be perceived, a “virtuality” (intensity, immersion, and interactivity) has to be addressed. Technologies such as Virtual Reality (VR) create this “virtuality”, enabling users not only to view the digital environment in 3D but also to look around and experience the space.

In this research, we propose a pipeline to visualize large-scale landscape projects using laser-scanned point cloud models, with virtual reality as the medium of communication. The pipeline combines five software packages: RiScan Pro, Rhino, Cinema 4D, CloudCompare, and Unity.

The method is applied to one case study that joins two representations: an interactive point cloud model and specific 360-degree renders. The resulting experience allows users to visit the site repeatedly and to view it from angles impossible in the real world, giving them a better understanding of the project.

Keywords

Point cloud, visualization, virtual reality, new methodology, architecture visualizations.

1. Introduction

From ancient drawings to today’s computer animations, numerous types of visual representation can be used to illustrate a project (Figure 01). According to Olaf Schroth in his Ph.D. thesis (Schroth, 2010), immersion (which depends on motion, sound, and sensing) is necessary for the perception of depth and, consequently, for understanding the landscape.

“Visualizations are capable of modifying people’s perceptions and perhaps motivate their actions” (Sheppard et al., 2007). According to Heim in his book Virtual Realism, visual representations communicate a “virtuality”, a term describing the factors that virtual environments share with real environments. According to Heim, these factors depend on information intensity, immersion, and interactivity.

Heim defines intensity as the amount of detail with which objects are represented in the visualization. He describes immersion as the sensation of “being in” the environment, a sensation that can be influenced by the use of motion and sound. Finally, he describes interactivity as the means that allows users to manipulate the visualization. Together, these components create a “virtuality”: in contrast to static images, which communicate only part of the picture, a visualization that includes them can communicate the proposal more effectively.

Researchers are currently developing tools that provide this sensation of virtuality (Lange, 2002; Motta, 2014; Girot, 2017). The most widely used is virtual reality (VR), which enables users not only to view the digital environment in 3D but also to look around and experience the scene.

2. State of the art

Over the past centuries, visualizations of the real world have been used to communicate ideas, and they have been an essential resource for architects to sell their projects. This practice reached its apogee in the Renaissance, when artists developed new techniques and methods of composition and aesthetic effect to formulate perspective and emphasize architectural forms. Their objective was to create art that respected proportions and closely resembled reality (Motta, 2014). From the fourteenth century onward, artists and architects used this technique to emphasize their work. Realism remains a concern for most designers today. Visualization tools facilitate communication between the architect and society and increase the veracity and transparency of the design; they become the common ground between planners, politicians, and the public (Schroth, 2010). Visualizations are intended to help communicate the proposal to society.

Only in the last few years have sophisticated computer-based technological innovations allowed architects to work in three and four dimensions (Lange, 2002). One of the most significant problems with these visualizations, which rely on traditional polygon surface modeling, is simulating landscape at high fidelity, a task that is often difficult because it consumes significant time and resources.

For the past 20 years, engineers have been using a technology that addresses this problem: point cloud models. Point clouds are the digital representation of a set of three-dimensional coordinates that precisely measure the environment; they can be compared to geographic coordinates, with X and Y giving the horizontal position (akin to latitude and longitude) and Z the elevation (Figure 02).
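To make this definition concrete, here is a minimal Python sketch, assuming coordinates in metres in a local project system: a point cloud is nothing more than a list of (x, y, z) triples, and simple queries such as the extent of the captured area work directly on the raw points. The function names and sample values are hypothetical.

```python
from typing import List, Tuple

# A point is an (x, y, z) triple; a point cloud is simply a list of points.
Point = Tuple[float, float, float]


def bounding_box(cloud: List[Point]) -> Tuple[Point, Point]:
    """Return the (minimum corner, maximum corner) of the axis-aligned box."""
    xs, ys, zs = zip(*cloud)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def extent(cloud: List[Point]) -> Point:
    """Size of the captured area along X, Y and Z (same units as the input)."""
    (x0, y0, z0), (x1, y1, z1) = bounding_box(cloud)
    return x1 - x0, y1 - y0, z1 - z0


if __name__ == "__main__":
    # Hypothetical sample: three points in metres, Z being the elevation.
    cloud = [(0.0, 0.0, 412.5), (12.0, 3.5, 418.0), (6.2, 9.1, 409.75)]
    print(extent(cloud))  # (12.0, 9.1, 8.25)
```

A real scan holds millions of such triples, often with extra per-point attributes such as color or intensity, but the underlying structure is the same.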

Different devices can be used to obtain point cloud models, such as terrestrial laser scanners, airborne laser scanners (to capture cities and landscapes), and aerial images processed with photogrammetry (to produce 3D point clouds and digital surface models from a bird’s-eye view) (Richter & Döllner, 2014). Keeping the scan in point form has several advantages: the file sizes are much smaller, and the porosity of the point cloud makes it possible to see through walls and surfaces, accessing “hidden” spaces and unique views of the site (Han et al., 2017). The ability of a point cloud model to represent vast areas in three dimensions makes it particularly suitable for describing large-scale projects.

Platforms such as Sketchfab make it possible to display models online and support point cloud files. Several architects and artists already use point clouds to visualize their projects. One example is Leif Sylvester (Figure 03, a), who presents his work as part of an educational project carried out in collaboration with KEA Copenhagen School of Design and Technology. Another example is the work of Philipp Urech (Figure 03, b, c, d), who uses simulation to communicate these virtual sites and helps viewers recognize landscapes from a subjective viewpoint.

Figure 03. Each example represents point clouds in a different way. In case a), the artist highlights the sculpture and the wall beside it and depicts the surroundings in grayscale. In example b), the scan was taken at night so that the street lights would be part of the scan; the lamps give the points a blue and grayscale coloring. In example c), the scan was meant to be interactive, giving the user key points where extra information was displayed. In case d), the points represent the intensity of light reflected by the scanned surface.

The Chair of Prof. Christophe Girot at the Institute of Landscape Architecture, together with its MediaLab/LVML, has been experimenting with these models for more than ten years. According to Girot, this tool is suitable for landscape architects because:

“The site model itself became a piece of workable terrain that could be tested according to local constraints. The data sets enable the landscape architect to determine precisely the point of contact between the artificially engineered topography from the natural.” (Girot, 2016)

One representative project is the Gotthard Landscape (Figure 04), which generates a digital model of the entire Gotthard region and shows how accessible point cloud models are. The point cloud model can be considered a dataset to which new data can be added; these data can come not only from laser scanners but also from other methods.

As described above, all these examples show the versatility of point cloud technology. A 3D model that represents reality exactly gives the flexibility to start the design process knowing that every change will be placed and performed at its exact location.

The aesthetics of a point cloud model can be understood through the oscillation between novelty and familiarity (Vanderbilt, 2016). What we see in a point cloud is millions of points representing reality (familiarity) and the space in between: the void, the absence of things (novelty). What makes a point cloud a unique visualization is this abstract representation of the world.

The present research aims to preserve this abstraction while still communicating details. To achieve this, we propose a method that combines two representations: an abstract point cloud 12 km long and specific 360-degree renders.

3. Methodology

The visualization of 3D point clouds can be adapted to different objectives: to show the captured environment, to illustrate structures, or to communicate simulation and analysis results. Different rendering techniques and color schemes can be used to achieve this: photorealistic rendering with the original surface color, non-photorealistic rendering, rendering based on category data of object classes, colorization based on attributes from simulation and analysis, or a combination of these (Richter & Döllner, 2014).
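As an illustration of the attribute-based option, consider a minimal sketch assuming each point carries a scalar reflectance intensity (as in Figure 03, d): the attribute is normalised over the cloud and mapped to a grey level. Linear min-max scaling is a choice made for this example, not a prescribed scheme, and the function name is hypothetical.

```python
from typing import List, Tuple


def intensity_to_gray(intensities: List[float]) -> List[Tuple[int, int, int]]:
    """Map each point's intensity linearly to an 8-bit grey RGB color.

    The lowest intensity in the cloud becomes black (0) and the highest
    becomes white (255); a cloud with constant intensity is drawn mid-grey.
    """
    lo, hi = min(intensities), max(intensities)
    span = hi - lo
    colors = []
    for value in intensities:
        t = (value - lo) / span if span > 0 else 0.5
        g = round(t * 255)
        colors.append((g, g, g))
    return colors


if __name__ == "__main__":
    print(intensity_to_gray([0.0, 50.0, 100.0]))
    # [(0, 0, 0), (128, 128, 128), (255, 255, 255)]
```

The same pattern applies to other attributes, such as simulation results, by swapping the grey ramp for another color map.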

To achieve the objective of this work, we use photorealistic rendering that displays the original surface colors, following the steps below.

3.1. Scan the site and register the point clouds.

A single scan may contain several million 3D points, but no single scanning location can capture all surfaces of a site, so scans were taken from multiple locations. We used a RIEGL VZ-1000 terrestrial laser scanner (TLS), together with a calibrated Nikon D700 camera mounted on top, to obtain the point cloud model, and RiScan Pro to register the different scans of the site.
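Registration expresses every scan position in one common coordinate system. The sketch below assumes that the pose of each scan position has already been estimated, for instance by the registration software, and simplifies it to a rotation about the vertical axis plus a translation, as for a levelled terrestrial scanner. It only shows how such poses are applied and the scans merged; it is not how RiScan Pro works internally, and the names and pose values are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Pose = Tuple[float, Point]  # (rotation about z in degrees, translation)


def to_project_frame(scan: List[Point], yaw_deg: float, offset: Point) -> List[Point]:
    """Rotate a scan about the vertical (z) axis, then translate it."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    ox, oy, oz = offset
    return [(c * x - s * y + ox, s * x + c * y + oy, z + oz) for x, y, z in scan]


def merge_scans(scans: Dict[str, List[Point]], poses: Dict[str, Pose]) -> List[Point]:
    """Bring every scan position into one coordinate system and concatenate."""
    merged: List[Point] = []
    for name, points in scans.items():
        yaw, offset = poses[name]
        merged.extend(to_project_frame(points, yaw, offset))
    return merged


if __name__ == "__main__":
    # Hypothetical: the same local point seen from two scan positions.
    scans = {"pos1": [(1.0, 0.0, 0.0)], "pos2": [(1.0, 0.0, 0.0)]}
    poses = {"pos1": (0.0, (0.0, 0.0, 0.0)), "pos2": (90.0, (10.0, 0.0, 0.0))}
    print(merge_scans(scans, poses))
```

A full registration uses a general 3D rotation; the vertical-axis case keeps the example short.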

3.2. Create the new point cloud objects for the proposal.

The proposal was modeled in Rhino using its tools for creating polygonal meshes. Each object was then assigned to a layer and exported as an OBJ file, and these objects were imported into Cinema 4D. There we used the Krakatoa plugin, which is specifically designed to process and render billions of particles,¹ to transform every surface into points.
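Krakatoa performs this conversion inside Cinema 4D. To show the underlying idea independently of any plugin, here is a minimal sketch of one common way to turn a mesh into points: area-weighted uniform random sampling of its triangles. This is a generic, swapped-in technique, not Krakatoa's actual algorithm; the function names are hypothetical, and the OBJ reader handles only `v` and `f` records with positive indices.

```python
import math
import random
from typing import List, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def parse_obj(text: str) -> List[Triangle]:
    """Read 'v' and 'f' records from OBJ text; polygons are fan-triangulated."""
    verts: List[Vec] = []
    tris: List[Triangle] = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            x, y, z = (float(p) for p in parts[1:4])
            verts.append((x, y, z))
        elif parts[0] == "f":
            # "f 1/1/1 2/2/2 3/3/3" -> keep only the (1-based) vertex index
            idx = [int(p.split("/")[0]) - 1 for p in parts[1:]]
            for i in range(1, len(idx) - 1):
                tris.append((verts[idx[0]], verts[idx[i]], verts[idx[i + 1]]))
    return tris


def triangle_area(a: Vec, b: Vec, c: Vec) -> float:
    """Half the length of the cross product of two edge vectors."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def sample_surface(tris: List[Triangle], n: int, seed: int = 0) -> List[Vec]:
    """Draw n points uniformly distributed over the whole mesh surface."""
    rng = random.Random(seed)
    # Larger triangles are picked proportionally more often ...
    areas = [triangle_area(*t) for t in tris]
    picks = rng.choices(tris, weights=areas, k=n)
    points: List[Vec] = []
    for a, b, c in picks:
        # ... and the square root keeps points uniform inside each triangle.
        s, r = math.sqrt(rng.random()), rng.random()
        w0, w1, w2 = 1.0 - s, s * (1.0 - r), s * r
        points.append(tuple(w0 * a[i] + w1 * b[i] + w2 * c[i] for i in range(3)))
    return points
```

For example, `sample_surface(parse_obj(obj_text), 100_000)` turns a layer exported from Rhino into 100,000 surface points; the point count per object is a design decision, discussed in the next step.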

3.3. Render the points.

Next, materials were assigned to the point clouds, which were exported as PTS files. We imported these into CloudCompare to convert them to the XYZ format, and the XYZ files were then imported into Unity.² In Unity, we used an asset called Point Cloud Viewer and Tools, which displays the points in the platform. A critical aspect of the process is deciding which objects should be highlighted. Because Unity can currently visualize only 20 million points per scene, we recommend assigning more points to the objects that compose the most significant gestures of the proposal and fewer points to those that are far away or less essential.
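The format conversion and the point budget can be illustrated with a minimal Python sketch. It assumes PTS files in the common layout of a point-count line followed by `x y z intensity r g b` rows, and it writes XYZ as `x y z r g b` without a header; the exact columns expected by the Unity asset may differ. The importance weights, the proportional allocation with largest-remainder rounding, and the random decimation are illustrative choices, not part of the original pipeline, and all function names are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Row = Tuple[float, float, float, int, int, int]  # x y z r g b


def read_pts(text: str) -> List[Row]:
    """Parse PTS text: a point-count line, then 'x y z intensity r g b' rows."""
    rows: List[Row] = []
    for line in text.strip().splitlines()[1:]:  # skip the point-count header
        if not line.strip():
            continue
        x, y, z, _intensity, r, g, b = line.split()
        rows.append((float(x), float(y), float(z), int(r), int(g), int(b)))
    return rows


def to_xyz(rows: List[Row]) -> str:
    """Write one 'x y z r g b' line per point, with no header."""
    return "\n".join(" ".join(str(v) for v in row) for row in rows) + "\n"


def allocate_budget(weights: Dict[str, float], budget: int) -> Dict[str, int]:
    """Split a total point budget among objects in proportion to weight."""
    total = sum(weights.values())
    exact = {name: budget * w / total for name, w in weights.items()}
    alloc = {name: int(v) for name, v in exact.items()}
    # Largest-remainder rounding so the shares add up to the budget exactly.
    leftover = budget - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - alloc[name], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc


def decimate(rows: List[Row], keep: int, seed: int = 0) -> List[Row]:
    """Randomly keep at most `keep` points of one object."""
    if keep >= len(rows):
        return list(rows)
    return random.Random(seed).sample(rows, keep)
```

For example, `allocate_budget({"terrain": 1, "pavilion": 3}, 20_000_000)` gives 5,000,000 points to the terrain and 15,000,000 to the pavilion. An object with fewer points than its share simply keeps all of them; this sketch does not redistribute the surplus.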

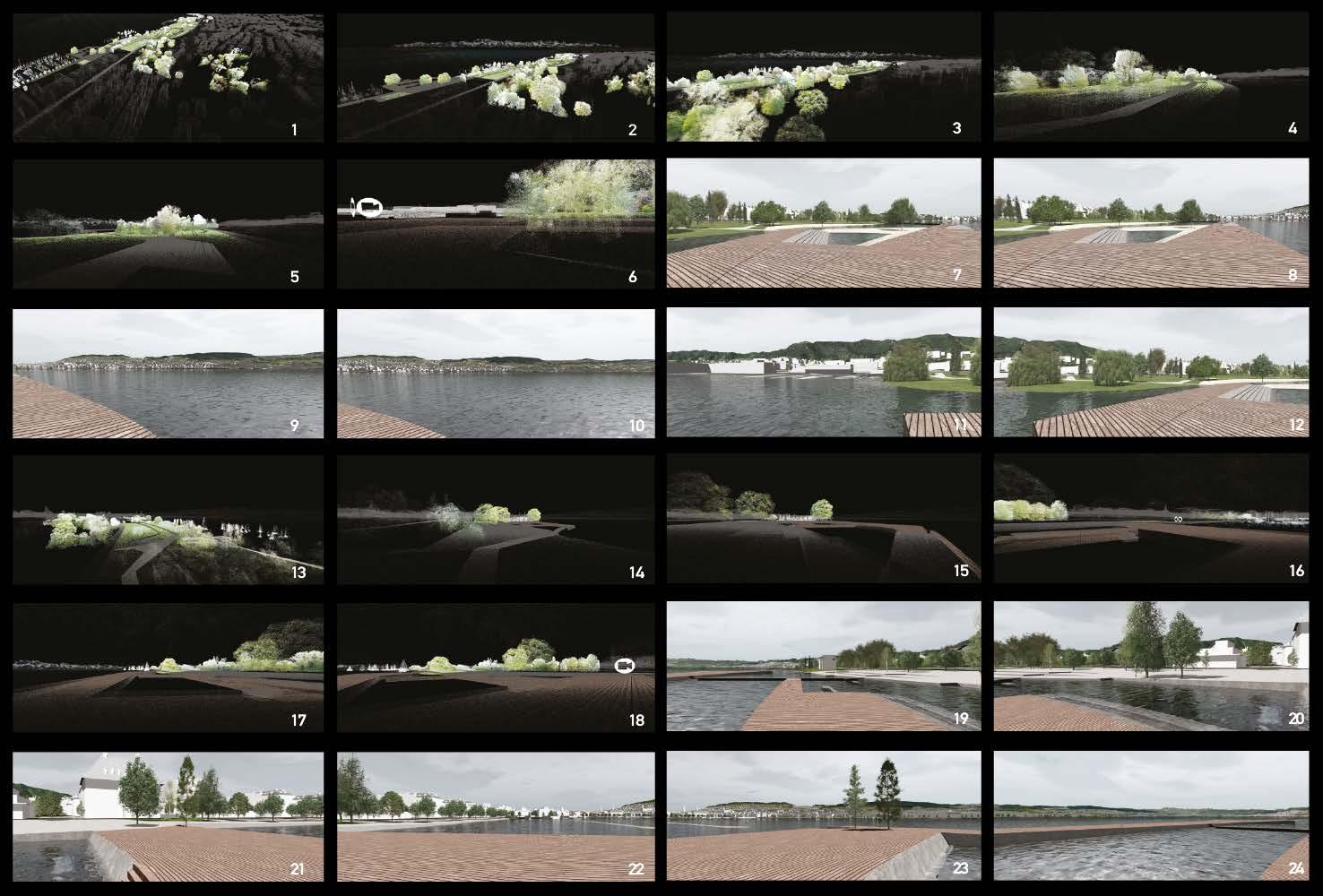

3.4. Generate the 360-degree renders and display the model on a virtual reality platform.

Because of its singular character, a point cloud model sometimes causes details of the project to be misunderstood; some details are not displayed because of the model’s transparency. To address this issue, we propose an interactive point cloud model combined with 360-degree renders of specific places.

1 www.thinkboxsoftware.com/krakatoa

2 A flexible and powerful development platform for creating games and interactive applications

The 360-degree renders were produced in Cinema 4D. The process starts by applying textures to the surfaces, then setting up lights and cameras. Cinema 4D already provides a 360-degree camera, which captures a distorted image covering all angles of the scene; these images were imported into Unity as new scenes. Unity allows scenarios to be created as in a video game. The imported point clouds were viewed through cameras that let users move freely. We selected different scenarios of the design to be displayed in different scenes, together with the 360-degree renders. These scenes are activated by triggers in the form of icons, which allow the user to switch between levels (point cloud models or 360-degree renders). In this way, users interact with the site as if they were virtually there, using their natural senses of sight and sound (Figure 05).
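The distorted image from all angles is, in the usual case, an equirectangular panorama, in which each pixel column corresponds to a horizontal viewing angle and each row to a vertical one. Assuming that projection, and a y-up, +z-forward axis convention chosen for this example, the following minimal sketch shows how a viewing direction in the headset maps back to a pixel of the render. The function name is hypothetical; game engines typically perform this lookup in a shader when a panorama is displayed around the viewer.

```python
import math
from typing import Tuple


def direction_to_pixel(d: Tuple[float, float, float],
                       width: int, height: int) -> Tuple[float, float]:
    """Map a non-zero view direction to (column, row) in an equirectangular image.

    Axes: y is up and +z is the forward direction at the image centre.
    Columns span 360 degrees of yaw, rows span 180 degrees of pitch.
    """
    x, y, z = d
    norm = math.sqrt(x * x + y * y + z * z)
    yaw = math.atan2(x, z)        # -pi..pi, 0 = straight ahead
    pitch = math.asin(y / norm)   # -pi/2 (down) .. pi/2 (up)
    col = (yaw + math.pi) / (2 * math.pi) * width
    row = (math.pi / 2 - pitch) / math.pi * height
    return col, row
```

Under this convention, on a 4096 × 2048 render the direction straight ahead lands in the centre of the image at (2048, 1024), and looking straight up lands on the top row. The 2:1 aspect ratio follows from the 360 by 180 degree coverage.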

4. Discussion and Conclusion

In comparison to the projects mentioned above, this methodology is a novel approach to visualizing architectural projects. The combination of an interactive point cloud model and specific 360-degree renders allows users to visualize the complete site, creating an experience in which they can repeatedly visit the place and view it from angles otherwise impossible in the real world. The 360-degree renders help users better understand the details of the intervention: its materials, textures, shadows, and the atmosphere of the proposal.

The objective of this work was to develop a novel approach to representing a landscape architecture project, which requires a large-scale representation together with the subtle gestures of the proposal; the proposed method achieved this objective. One limitation found in the process was the restriction on the number of points displayed in a single Unity scene (20 million). If this restriction no longer exists in the future, the objects can be populated with more points and the representation will be less transparent, while still keeping the abstract quality that characterizes it.

5. Outlook

This technology is opening new fields of research that were previously unthinkable, such as simulation with point clouds, analysis of complex terrain, humanitarian response to natural catastrophes, digital fabrication, and the visualization of large-scale areas.

For future work, it is important to emphasize that this technology is changing every day. In the coming years we will probably be able to scan an object or a site precisely with a mobile phone, making the process even faster and saving time for design and for successful communication with the client. This will lead to architecture platforms with more user-friendly support for point cloud models, and the workflow between modeling and visualizing will become more fluid.

6. References

Andersont, D. (2016). Real Data in Virtual Worlds. MIT Open Documentary Lab. Massachusetts. https://www.youtube.com/watch?v=9yGx3uexFuw&t=84s

Barnes, A., Simon, K., & Wiewel, A. (2014). From Photos to Models. Strategies for using digital photogrammetry in your project. Spar Cast-Ail.

Bishop, I. D., & Rohrmann, B. (2003). Subjective responses to simulated and real environments: A comparison. Landscape and Urban Planning, 65(4), 261–277. https://doi.org/10.1016/S0169-2046(03)00070-7

Duguet, F., & Drettakis, G. (2004). Flexible point-based rendering on mobile devices. IEEE Computer Graphics and Applications, 24(4), 57–63. https://doi.org/10.1109/MCG.2004.5

Galler, C., Kratzig, S., Warren-Kretzschmar, B., & Von Haaren, C. (2014). Integrated approaches in digital/interactive landscape planning. Digital Landscape Architecture, 70–81. Retrieved from http://dla2014.ethz.ch/talk_pdfs/DLA_2014_1_Galler.pdf

Girot, C. (2014). Topology: On Sensing and Conceiving Landscape. Harvard GSD. Massachusetts. https://www.youtube.com/watch?v=xCy-uS-A1iI&t=16s

Girot, C. (2017). Point Cloud Modeling the Alpine Landscape. TEDx. Zurich. https://www.youtube.com/watch?v=REfBWEJh1rk&t=120s

Girot, C., & Imhof, D. (Eds.). (2016). Thinking the Contemporary Landscape. Zürich.

Girot, C. (2017.1). Point cloud landscape - research in technology and landscape perceptions. http://girot.arch.ethz.ch/research/point-cloud-landscape

Han, X. F., Jin, J. S., Wang, M. J., Jiang, W., Gao, L., & Xiao, L. (2017). A review of algorithms for filtering the 3D point cloud. Signal Processing: Image Communication, 57, 103–112. https://doi.org/10.1016/j.image.2017.05.009

Hayek, U. W. (2016). Exploring Issues of Immersive Virtual Landscapes for Participatory Spatial Planning Support. Journal of Digital Landscape Architecture, 1, 100–108. https://doi.org/10.14627/537612012.

Hori, Y., & Ogawa, T. (2017). Visualization of the Construction of ancient roman buildings in Ostia using point cloud data. ISPRS - International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, XLII-2/W3, 345–352. https://doi.org/10.5194/isprsarchives-XLII-2-W3-345-2017

Lange, E. (2002). Visualization in Landscape Architecture and Planning: Where we have been, where we are now and where we might go from here. Landscape Architecture, (1803), 1–11.

Lange, E., & Hehl-Lange, S. (2006). Integrating 3D Visualisation in Landscape Design and Environmental Planning. Gaia, 15(3), 195–199.

Lin, E. (2016). Point Clouds as a Representative and Performative for Landscape Architecture: A Case Study of the Ciliwung River in Jakarta, Indonesia. Doctoral thesis, ETH Zürich.

McGrory, H. (2015). Data Science, Creativity, and VR. O’Reilly. New York. https://www.youtube.com/watch?v=tawTE2tUQ5o

Motta, C. (2014). The Birth of Perspective. https://useum.org/Renaissance/Perspective

Richter, R., & Döllner, J. (2014). Concepts and techniques for integration, analysis and visualization of massive 3D point clouds. Computers, Environment and Urban Systems, 45, 114–124. https://doi.org/10.1016/j.compenvurbsys.2013.07.004

Schroth, O. (2010). From Information to Participation: Interactive Landscape Visualization as a Tool for Collaborative Planning, 223. https://doi.org/10.3929/ethz-a-005572844

Shaw, M. (2015). How ScanLAB Projects Creates 3D Laser Ghosts of Earth’s Hidden Spaces. WIRED. London. https://www.youtube.com/watch?v=QGTSHwYjLXo&t=2s

Sheppard, S. R. J., Shaw, A., Flanders, D., & Burch, S. (2007). Can Visualisation Save the World? Lessons for Landscape Architects from Visualizing Local Climate Change. Proceedings of Digital Design in Landscape Architecture, (Ipcc), 20.

Spielhofer, R., Fabrikant, S., Vollmer, M., Rebsamen, J., Grêt-Regamey, A., & Hayek, U. (2017). 3D Point Clouds for Representing Landscape Change. Journal of Digital Landscape Architecture, 206–213. https://doi.org/10.3929/ethz-b-000171222

Tang, P., Huber, D., Akinci, B., Lipman, R., & Lytle, A. (2010). Automatic reconstruction of as-built building information models from laser-scanned point clouds: A review of related techniques. Automation in Construction, 19(7), 829–843. https://doi.org/10.1016/j.autcon.2010.06.007

Vanderbilt, T. (2016). The secret of taste: why we like what we like. https://www.theguardian.com/science/2016/jun/22/secret-of-taste-why-we-like-what-we-like

Zilliacus, A. (2016). ArchDaily: 10 Models Which Show the Power of Point Cloud Scans, As Selected by Sketchfab. http://www.archdaily.com/798877/10-models-which-show-thepower-of-point-cloud-scans-as-selected-by-sketchfab