Contents Foreword Society Overviews CVD and Mental illness - Aneesa Kumar Genetics and Schizophrenia - Anisha Tripathi Contagious Vaccines - Harsha Pendyala What is an Antibiotic? - Teodor Wator The environmental impact of current nuclear reactors and whether we will ever create a perfect source of energy - Aaditya Nandwani Titan – The “Earth-like” moon - Nuvin Wickramasinghe Maxwell’s Theory of ElectromagnetismSamuel Rayner

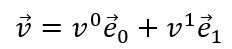

Relativity and the Mathematics behind it - Aaditya Nandwani Why Laplace’s demon doesn’t work - Bright Lan 4 5 8 Academic Journal 2023 - 2 12 17 21 24 29 34 43 50

General

The Shrouded History of Women in STEM

- Jake Leedham

How

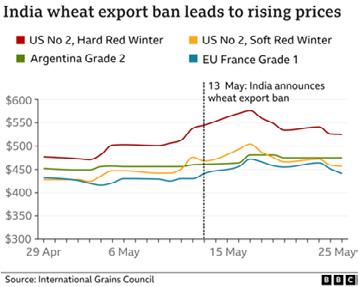

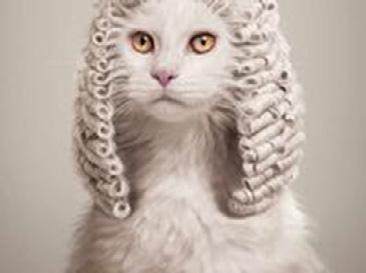

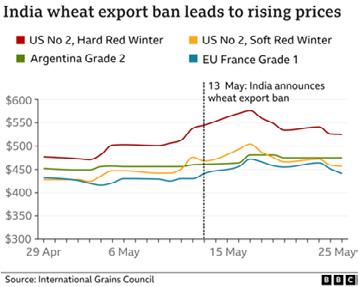

Harmony - Benjamin Dakshy Just Watch Me - Habibah Choudhry Cats in the Courtroom - Joe Davies Covid, Ukraine, and the ensuing food crisis - Will Lawson

Imperfect

has conflict shaped medicine?Rohan Chivali Vengeful violence – to what extent is it justified? - Sophie Kerr Depp v. Heard; Courtroom Case or Societal Struggle? - Nayat Menon 3 - Academic Journal 2023 53 55 58 60 63 66 69 71

Foreword

It has been a great privilege to put together and edit this year’s edition of The Academic Journal. Over the years I have read many articles from various journals, so the standard has always been set high. Reading the articles included this year, I was delighted by how intriguing and eye-opening they are. From mental health and medicine to the environment, mathematical theories and current global affairs, this journal really does have everything. This is a credit to the diversity of interests and strengths among students at this prestigious school. It also shows the strength of the school’s Societies: their high attendance rates, and the baton of student leadership being passed on year after year as they continue to strive for interesting talks and debates. I would like to thank all the authors for the time and effort that went into these pieces, and also the Society Presidents for the work they have done to produce their own Journals, which I would highly recommend exploring for even more outstanding articles. Please do enjoy, and I hope this may spur you on to find your own questions to research and write about in the future.

George O’Connor Academic Journal Editor


Society Overview

Natural Sciences:

“The Natural Sciences Society is one of the oldest communities at St Olave’s, where we explore the fascinating workings of the world we live in. The society sessions involve a diverse range of topics that explore the boundaries of Natural Sciences, incorporating ideas from a multitude of other disciplines such as space physics, medicine, psychology, and more. As a result, we host bespoke and creative sessions that capture the curious nature of our members. We also publish the jam-packed Natural Sciences Journal, which collates many fantastic articles from passionate students across the school. We run every Tuesday lunchtime, so join the NatSci Teams to receive news about our upcoming talks and opportunities (MS Teams code: dasys9e). We hope to see you there!”

Islamic Society:

Islamic Society runs every Friday at 1pm for congregational ‘Jummuah’ prayers, providing a space for Muslim students at St Olave’s to feel comfortable practising their faith and to get to know people across different year groups. The prayers are led by a different person each week, who chooses an interesting topic to talk about before the prayer (called a Khutbah!). In the past, we have had talks on ‘Dealing with Anxiety’, ‘The Importance of Charity’ and ‘Handling Your Emotions’, among many more. However, ISoc is not just limited to praying! So far, we’ve run many charitable events, such as an Ice Lolly Sale in Summer 2022 to raise money for Palestine, the first ever Inter-Faith Iftar event, which raised over £2K for Bromley Food Bank, and a Krispy Kreme Doughnut Sale to raise money for Young Minds.


Hindu Society:

Hindu Society is a community for the Hindus at this school. You don’t have to be very religious to participate: previous members have ranged from not very religious to very religious. It is a safe space for the sixth form to explore ideas related to Hinduism and to celebrate Hindu festivals together. All in all, we promise to be a very fun society, running large events like Sixth Form Diwali, with dances and food, as well as debates, such as on the significance of religion alongside science.

Physics and Engineering Society:

Interested in physics and engineering? Physics and Engineering Society is a place where anyone can talk about or demonstrate any area of physics that interests them. We have talks on all areas of physics – astrophysics, quantum mechanics, aerospace engineering, particle physics, theoretical physics, relativity, mechanical and electrical engineering and so much more! We have also had demonstrations of various engineering projects, such as a wind tunnel model, and external speakers talking about studying physics at and beyond university. We also have an annual society journal, giving you an opportunity to write about what interests you most in the world of physics (there are copies available in the science department if you want to have a look!). We run in S8 every Friday lunchtime (a fun way to end the week!). To join the society Team, use the code g1zth51.

Medics society:

Medics Society at St Olave’s is the biggest and arguably most flourishing society there is. Our aim is to build a medical community within our school, encouraging, supporting and driving all our aspiring medics towards a fulfilling future career. We recognise this isn’t an easy road to traverse alone, so Medics Society provides a plethora of experiences to aid with the application process, from debates, discussions and student-led presentations to EVEN external speakers such as consultant doctors or current university students. Medics Society is the perfect place to gain some insight into medicine, and we look forward to seeing more and more people join our medic family!!!

Chemistry Society:

If you are intrigued by the world that surrounds us and what we can do with it, then the exciting talks, practicals and demonstrations (for the really dangerous stuff!) at Chem Soc are what you need. From the science behind luminescence to that of poison, or perhaps how to make your own artificial diamonds, we’ve got it all. See you on Thursdays at 1pm, every week in S2!

Classics Society:

Classics Society is open to all on Tuesdays at 1.10 in room 23. Every week we gather together to learn more about the ancient world through talks, activities, videos, quizzes and debates. No prior knowledge is required, just a willingness to get excited about myths and monsters, language and literature, history and heartbreak (looking at you, Catullus). We’re a relaxed and welcoming space, so everyone is welcome to follow in Horace’s footsteps and ‘sapere audere’ (dare to think!)

Space society:

Whether you are intrigued by the complex science behind space or by its vastness and beauty, Space Society is the perfect place for scientists and humanities students alike to discuss everything related to space. We have talks, discussions and competitions, and our favourite talks have ranged from Fermi’s Paradox (the puzzle of why, if aliens are likely to exist, we have found no evidence of them) to the maths behind rocket science. Space Society runs on Monday lunchtime in S11 and is open to all year groups.

Afro-Caribbean Society:

Welcome to Afro-Caribbean Society! Come along to learn more about African and Caribbean culture and talk about your own experiences. With heated debates, informative talks, and entertaining games and quizzes, there is always something fun going on at ACS. We welcome students of all races and backgrounds.


The Increased Risk of Cardiovascular Complications due to Mental Illnesses

Aneesa Kumar


Introduction

Mental health disorders are characterised by clinically significant disturbances in an individual’s cognition, emotional regulation or behaviour, and are usually associated with distress or impairment in key areas of functioning. These illnesses range from the most common disorders, anxiety and depression, to others such as anorexia, post-traumatic stress disorder (PTSD) and schizophrenia.

For centuries, a relationship between mind and body has been postulated, and findings from various epidemiological studies have shown the impact of depression, trauma, anxiety and stress on the physical body, including the cardiovascular system. As such, damage to the cardiovascular system caused by mental distress can be regarded as a contributing risk factor for heart disease. According to the World Health Organisation’s World Health Statistics 2022, some of the most common behavioural and metabolic risk factors for non-communicable diseases (NCDs) such as cardiovascular disease (CVD), e.g. substance abuse and hypertension, are often linked to poor mental health, particularly mentally induced stress. A recent study by Rossom, R.C. et al. (2022), led by a senior research investigator in behavioural health, also found the estimated 30-year risk of CVD to be significantly higher among individuals with serious mental illnesses: 25%, compared with 11% for those without a serious mental illness.
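To put the two percentages from Rossom et al. (2022) in context, the short Python sketch below works out the relative risk (how many times higher the risk is) and the absolute risk difference. The function names are illustrative only, and the calculation uses just the two rounded percentages quoted in the text, not the study’s underlying data.

```python
# Estimated 30-year CVD risk quoted in the text (Rossom et al., 2022), in percent.
RISK_WITH_SMI = 25     # people with a serious mental illness
RISK_WITHOUT_SMI = 11  # people without a serious mental illness


def relative_risk(risk_exposed: float, risk_unexposed: float) -> float:
    """How many times higher the risk is in the exposed group."""
    return risk_exposed / risk_unexposed


def risk_difference(risk_exposed: float, risk_unexposed: float) -> float:
    """Absolute gap between the two risks, in the same units as the inputs."""
    return risk_exposed - risk_unexposed


rr = relative_risk(RISK_WITH_SMI, RISK_WITHOUT_SMI)
rd = risk_difference(RISK_WITH_SMI, RISK_WITHOUT_SMI)
print(f"Relative risk: {rr:.2f}")                   # Relative risk: 2.27
print(f"Risk difference: {rd} percentage points")   # Risk difference: 14 percentage points
```

Reporting both numbers is a deliberate choice: the relative risk (about 2.3 times) conveys how strong the association is, while the 14-percentage-point difference conveys how many extra people might be affected.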

Despite extensive research demonstrating a clear relationship between mental health and cardiovascular disease, patients with coronary disease, myocardial infarction, heart failure or arrhythmias are rarely assessed for psychological distress or mental illness, either as a contributor to or as a result of the cardiovascular disorder.

Correlation between depression and negative lifestyle habits

Clinical depression is a common but serious mood disorder, estimated to affect 5% of adults globally. It is also recognised by the WHO as a major contributor to the overall global burden of disease, being one of the most prevalent mental health disorders. Patients with depression have shown increased platelet reactivity, decreased heart rate variability and increased proinflammatory markers, all of which are risk factors for CVD. As with other mood or stress-related disorders, depression may also result in increased cardiac reactivity and heightened levels of cortisol, putting unwanted stress on the cardiovascular system. Over time, these physiological effects can lead to calcium build-up in the arteries, metabolic disease and heart disease.

However, the greatest risk of developing CVD from depression comes from physical inactivity caused by fatigue, accompanied by the adoption of new, unhealthy habits. These changes in behaviour often include smoking and/or excessive alcohol consumption, a poor diet, lack of exercise and failure to adhere to medical advice, all of which are classed as CVD risk factors. In fact, after high blood pressure (which can itself be affected by such lifestyle habits), smoking, poor diets high in cholesterol and/or lipids, and little regular exercise are the leading causes of CVD.

Figure 1: Diagram illustrating the cyclical nature of heart disease and depression (Vigerust, D., 2021)

As shown in Figure 1, the relationship between the negative lifestyle habits associated with depression and the development of CVD is also cyclical: CVD creates further emotional distress, which can then increase the risk of an adverse cardiac event, such as a blood clot or myocardial infarction (heart attack), in patients already diagnosed with heart disease.

Physiological distress and anxiety

Unsurprisingly, anxiety disorders are the most prevalent mental illnesses worldwide, often occurring alongside other mental disorders such as schizophrenia, eating disorders and depression. Furthermore, although anxiety disorders are by definition chronic, anxiety and other negative emotions such as anger, fear, grief and severe emotional distress (at least one of which people with a mental illness will experience) trigger what is referred to as the “stress response”, which can have a major impact on the cardiovascular system.

The “stress response” is triggered when mentally induced stress is detected by the limbic system and a distress signal is sent to the hypothalamus, which then sends its own signals through the autonomic nervous system to the medulla of the adrenal gland. The adrenal gland then releases adrenaline, a catecholamine hormone, which acts alongside the sympathetic nervous system to produce the “fight-or-flight” response. Consequently, both pulse and blood pressure increase temporarily and the arteries constrict, which may cause myocardial infarction or induce cardiac irregularities, including atrial fibrillation, tachycardia and even sudden death. Repeated temporary increases in blood pressure may also lead to plaque disruption, resulting in myocardial infarction or strokes, and, in patients with a weakened aorta, such as those with an aortic aneurysm or survivors of an aortic dissection, may lead to aortic dissection or rupture.

As the initial surge of adrenaline subsides, the hypothalamus then activates the second component of the stress response system, known as the hypothalamic pituitary adrenal (HPA) axis (see Figure 2).

The HPA axis is activated by chronic stress, causing the hypothalamus to stimulate the secretion of adrenocorticotropic hormone (ACTH) from the pituitary gland. ACTH then stimulates the adrenal gland to release another stress hormone, cortisol. The resulting high cortisol levels increase platelet activation and aggregation, which can lead to atherosclerosis. Elevated cortisol may also cause high blood glucose levels, high blood pressure and inflammation, damaging the blood vessels.


Figure 2: Diagram illustrating effects of stress on hormone levels and the resultant impact on the cardiovascular system (Nemeroff, C. & Goldschmidt-Clermont, P., 2012)

Eating disorders and cardiac issues

Cardiovascular complications of eating disorders are extremely common and can be very serious. Anorexia nervosa, in particular, can be detrimental to the heart, with heart damage being the leading reason for hospitalisation in people with this eating disorder.

Cardiac deaths caused by arrhythmias account for approximately 50% of deaths among patients with anorexia. This is because anorexia involves self-starvation and intense weight loss, which not only deny the body the nutrients it needs to function but also force it to slow down to conserve energy. The heart thus becomes smaller and weaker as it loses cardiac mass; as the deteriorating heart muscle forms larger chambers and weaker walls, it becomes more difficult to circulate blood at a healthy rate. Consequently, bradycardia (an abnormally slow heart rate of less than 60 bpm) and hypotension (low blood pressure, under 90/60 mmHg) are extremely common in such individuals. Patients with anorexia may also experience sharp pains beneath the sternum, which could be a symptom of mitral valve prolapse caused by the loss of cardiac muscle mass and can improve with weight gain. However, chest pain may also be a more serious sign of congestive heart failure, which can only improve with proper treatment.

In other eating disorders, such as bulimia nervosa, the biggest cardiac risk is arrhythmia due to an electrolyte abnormality, such as low serum potassium or magnesium. This electrolyte imbalance is caused by purging and is another factor that can put the individual at risk of heart failure. Binge eating disorder carries these same risks as well as those associated with obesity, such as hypercholesterolaemia (high blood cholesterol), hypertension (high blood pressure) and diabetes.

Antidepressant therapy and cardiovascular considerations

Interestingly, in previous years there has been more concern about the cardiac complications that may arise from taking antidepressants, such as selective serotonin reuptake inhibitors (SSRIs), than about the cardiovascular risks of mental illnesses themselves. However, a cohort study of 238,963 patients aged 20 to 64 years with a first diagnosis of depression between 1st January 2000 and 31st July 2011 assessed the associations between different antidepressant treatments and cardiovascular complications (Coupland, C. et al., 2016). It found no evidence that SSRIs are linked with an increased risk of arrhythmia, stroke or transient ischaemic attack in people diagnosed with depression. Furthermore, contrary to widespread belief, there was also no evidence that citalopram is associated with arrhythmia, even at high doses. Instead, the study found some indication of a reduced risk of myocardial infarction with some SSRIs, particularly fluoxetine.

Conclusion

Mental distress and mental illnesses are real and can be associated with severe cardiovascular consequences. According to Von Korff, M.R. et al. (2016), as cited by Stein, D.J. et al. (2019), the odds ratios in the World Mental Health Surveys for the association of heart disease with mental health disorders were 2.1 for mood disorders, such as major depressive disorder or bipolar disorder, and 2.2 for anxiety disorders. These strong associations, and the inter-related causal mechanisms linking mental health disorders with CVD and many other NCDs, argue for a joint approach to care. Treatment of mental disorders should ideally incorporate attention to physical health and health behaviours, with this parallel focus beginning as early in the course of the mental disorder as possible, as primary prevention of NCDs such as CVD. It is also arguable that mental-physical comorbidity would be better addressed by an early focus on the physical health of those with mental disorders than by a later focus on the mental health of those with chronic physical conditions.
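The odds ratios quoted above compare the odds of heart disease in people with and without a given mental disorder. As a rough illustration, the Python sketch below computes an odds ratio from a 2×2 table. The function name is illustrative, and the counts are invented purely so that the result matches the 2.1 reported for mood disorders; they are not data from the World Mental Health Surveys.

```python
def odds_ratio(exposed_cases: int, exposed_non_cases: int,
               unexposed_cases: int, unexposed_non_cases: int) -> float:
    """Odds ratio for a 2x2 table.

    'Exposed' = has the mental disorder; 'case' = has heart disease.
    """
    odds_exposed = exposed_cases / exposed_non_cases
    odds_unexposed = unexposed_cases / unexposed_non_cases
    return odds_exposed / odds_unexposed


# Hypothetical counts, chosen only for illustration (NOT survey data):
# 21 of 121 people with a mood disorder have heart disease,
# 10 of 110 people without one have heart disease.
print(round(odds_ratio(21, 100, 10, 100), 2))  # 2.1
```

An odds ratio of 2.1 means the *odds* of heart disease are roughly doubled. This is not quite the same as the probability being doubled; the two measures only approximate each other when the condition is uncommon.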

References

Bremner, J.D., Campanella, C., Khan, Z., Shah, M., Hammadah, M., Wilmot, K., Al Mheid, I., Lima, B.B., Garcia, E.V., Nye, J., Ward, L., Kutner, M.H., Raggi, P., Pearce, B.D., Shah, A.J., Quyyumi, A.A. & Vaccarino, V. (2018) Brain Correlates of Mental Stress-Induced Myocardial Ischemia. Psychosomatic Medicine. 80 (6), 515–525. Available from: doi:10.1097/PSY.0000000000000597

Bucciarelli, V., Caterino, A.L., Bianco, F., Caputi, C.G., Salerni, S., Sciomer, S., Maffei, S. & Gallina, S. (2020) Depression and cardiovascular disease: The deep blue sea of women’s heart. Trends in Cardiovascular Medicine. 30 (3), 170–176. Available from: doi:10.1016/j.tcm.2019.05.001

Centers for Disease Control and Prevention. (2020) Heart Disease and Mental Health Disorders. Available from: https://www.cdc.gov/heartdisease/mentalhealth.htm [Accessed 22nd August 2022]

Chaddha, A., Robinson, E.A., Kline-Rogers, E., Alexandris-Souphis, T. & Rubenfire, M. (2016) Mental Health and Cardiovascular Disease. The American Journal of Medicine. 129 (11), 1145–1148. Available from: doi:10.1016/j.amjmed.2016.05.018

Coupland, C., Hill, T., Morriss, R., Moore, M., Arthur, A., Hippisley-Cox, J. et al. (2016) Antidepressant use and risk of cardiovascular outcomes in people aged 20 to 64: cohort study using primary care database. BMJ. 352 (8050). Available from: doi:10.1136/bmj.i1350

Mayor, S. (2017) Patients with severe mental illness have greatly increased cardiovascular risk, study finds. BMJ. 357. Available from: doi:10.1136/bmj.j2339

Nemeroff, C. & Goldschmidt-Clermont, P. (2012) Heartache and heartbreak – the link between depression and cardiovascular disease. Nature Reviews Cardiology. 9 (9), 526-539. Available from: doi:10.1038/nrcardio.2012.91

Northwestern Medicine. (n.d.) Disordered Eating and Your Heart. Available from: https://www.nm.org/healthbeat/healthy-tips/anorexia-and-your-heart [Accessed 22nd August 2022]

Pozuelo, L. (2019) Depression & Heart Disease. Available from: https://my.clevelandclinic.org/health/diseases/16917-depression--heart-disease [Accessed 22nd August 2022]

Rossom, R.C., Hooker, S.A., O’Connor, P.J., Crain, A.L. & Sperl‐Hillen, J.M. (2022) Cardiovascular Risk for Patients With and Without Schizophrenia, Schizoaffective Disorder, or Bipolar Disorder. Journal of the American Heart Association. 11 (6). Available from: doi:10.1161/JAHA.121.021444

Rudnick, C. (2014) Cardiovascular Complications of Eating Disorders. Available from: https://www.mccallumplace.com/about/blog/cardiovascular-complications-eating-disorders/ [Accessed 22nd August 2022]

Stein, D.J., Benjet, C., Gureje, O., Lund, C., Scott, K.M., Poznyak, V. et al. (2019) Integrating mental health with other non-communicable diseases. BMJ. 364. Available from: doi:10.1136/bmj.l295

Vigerust, D. (2021) Can Depression Have A Negative Effect On Heart Health?. Available from: https://www.imaware.health/blog/depression-and-heart-health [Accessed 23rd August 2022]

World Health Organisation. (2022) World health statistics 2022: monitoring health for the SDGs, sustainable development goals. Available from: https://www.who.int/data/gho/publications/world-health-statistics [Accessed 23rd August 2022]


Genetics and Schizophrenia

Anisha Tripathi

What is schizophrenia?

The term schizophrenia means ‘split mind’ and describes a detachment between the different functions of the mind, so that thoughts become disconnected and coordination between emotional, cognitive and volitional (acting based on your own will) processes becomes weaker. It affects approximately 24 million people, or 1 in 300 people (0.32%), worldwide. It most often becomes apparent during late adolescence and tends to occur earlier in men than in women.

Symptoms

• Hallucinations

• Delusions

• Losing interest in everyday activities

• Wanting to avoid people

• Disorganised thinking (speech)

• Abnormal motor behaviour

Is schizophrenia genetic?

By pooling data from many studies carried out between 1920 and 1987, the American psychologist Irving Gottesman was able to show that the risk of schizophrenia increases from approximately 1% in the general population to nearly 50% in the offspring of two schizophrenic patients and in identical twins of schizophrenics. The concordance of schizophrenia between monozygotic (identical) twins is 31 percentage points higher than that between dizygotic (fraternal) twins, strongly suggesting a hereditary factor in schizophrenia. If the condition were purely genetic, however, the concordance between monozygotic twins would be 100%. Furthermore, Gottesman noted in his review that 89% of patients have parents who are not schizophrenic, 81% have no affected first-degree relatives, and 63% show no family history of the disorder whatsoever. However, the reliability of these statistics is limited, since family members may be unaware of, or unwilling to disclose, this information due to the stigma around mental illness.

One reason why the concordance between monozygotic twins is only around 50% may be epigenetic influences, which alter gene expression through DNA methylation and histone modification. Evidence supporting a role for epigenetic effects comes from a study that found increased methylation of the dopamine D2 receptor gene (a receptor linked to schizophrenia) in a male without schizophrenia, compared with his monozygotic twin brother and another sibling, both of whom had schizophrenia.


Figure 1: Rates of schizophrenia among relatives of schizophrenic patients (1991)

Why is it so difficult to identify the genes causing schizophrenia?

Restriction fragment length polymorphism (RFLP) analysis has been used to find where the gene for a specific disease lies on a chromosome. It has helped to identify the faulty gene in several hereditary disorders, such as Huntington’s disease, muscular dystrophy and cystic fibrosis. Schizophrenia, however, has a complex pattern of inheritance. Evidence from genetic studies suggests that several genes may be responsible, each with a small effect. These genes interact with each other and with environmental factors to influence a person’s susceptibility to schizophrenia, yet none of them is either necessary or sufficient to cause it. The great difficulty for genetic studies of schizophrenia is that there is no clear pattern of inheritance.

A recent study undertaken by Cardiff University analysed DNA from 76,755 people with schizophrenia and 243,649 people without the condition, and identified 120 genes likely to contribute to the disorder. Although large numbers of genetic variants are involved in schizophrenia, the study showed that they are concentrated in genes expressed in neurons, pointing to these cells as the most important site of pathology. The findings also suggest that abnormal neuron function in schizophrenia affects many brain areas, which could explain its diverse symptoms, including hallucinations, delusions and problems with thinking clearly.

Schizophrenia and the Brain

The brain contains four ventricles, two lateral ventricles and a third and a fourth ventricle, which are filled with cerebrospinal fluid. Enlarged cerebral ventricles are found in 80% of individuals with schizophrenia. The mechanisms that lead to this ventricular enlargement are unknown, although it is thought to be linked to the deletion of a region on chromosome 22, which increases the risk of developing schizophrenia approximately 30-fold in humans.

Brain scans undertaken by the UK Medical Research Council found higher levels of activity in part of the brain’s immune system in schizophrenia patients than in healthy volunteers. Microglia are the immune cells of the brain and regulate brain development, the maintenance of neuronal networks and injury repair. A chemical dye that sticks to microglia was injected into 56 people to record their microglial activity. The scans showed that microglial activity was highest in those with schizophrenia and also raised in those at high risk of developing the condition.

It is thought that the microglia may sever the wrong connections in the brain, leaving it wired incorrectly. This fits with the symptoms of the illness, as patients often make unusual connections between the things happening around them and mistake their own thoughts for voices outside their head.

Environmental factors

Stress

When under stress, the adrenal glands release the hormone cortisol. Cortisol has been shown to damage nerve cells in the hippocampus. Excessive cortisol production, damage to the hippocampus and impaired memory are all common in patients with schizophrenia, and research has shown that these patients have smaller hippocampal volumes than people without the disease. Such stress can be brought about by events such as bereavement, losing one’s job or home, divorce, or physical, sexual and emotional abuse.

Figure 2: Ventricles of the brain

Figure 3: Brain scans show higher levels of microglia activity (orange) in people with schizophrenia.

Figure 4: The location of the hippocampus in the brain.

Substance abuse

Dopamine is a neurotransmitter that the brain releases in response to pleasurable activities such as eating. It boosts mood, motivation and attention and helps to regulate movement, learning and emotional responses. Most drugs work by interfering with neurotransmission in the brain, and this interference can happen in many ways. Drugs that stimulate receptors are called agonists. In large doses, amphetamine can cause hallucinations and delusions because it acts as an (indirect) dopamine agonist: it acts on the axon terminals of dopamine-containing neurons, causing the synapse to be flooded with this neurotransmitter. This over-stimulation of the dopamine receptors results in hallucinations and delusions.

Cannabis use among schizophrenic patients is associated with greater severity of psychotic symptoms as well as earlier and more frequent relapses. The Edinburgh High Risk Study, conducted between 1994 and 2004, aimed to determine the features that distinguish high-risk individuals who go on to develop schizophrenia from those who do not. It found that in genetically predisposed individuals, high cannabis use is associated with the development of psychotic symptoms. This suggests that schizophrenia results from an interaction between genetic and environmental factors.

Ketamine, Phencyclidine (PCP) and ecstasy have also been reported to induce hallucinations, delusions, and paranoia.

Obstetric complications

A 2018 study published in the journal ‘Nature Medicine’ showed that serious obstetric complications such as pre-eclampsia (high blood pressure during pregnancy), asphyxia (lack of oxygen during birth) and premature labour can increase the risk of developing schizophrenia fivefold in a child who is genetically predisposed to the condition. This appears to be because these complications ‘turn on’ genes in the placenta that have been associated with schizophrenia. The placenta is composed of foetal and maternal tissue and is vital to the well-being of the foetus.

Nutritional factors

A lack of certain micronutrients and general nutritional deprivation increase the risk of schizophrenia. The Dutch Famine study of 1998 found that rates of schizophrenia doubled among individuals conceived during the famine, who were therefore exposed to nutritional deprivation in early foetal development. Further studies in 2001 found evidence that low birth weight can be associated with schizophrenia.

Treatments for schizophrenia

Before antipsychotic drugs were discovered in the 1950s, a common treatment for schizophrenia, introduced in the early 1930s, was insulin coma therapy. This involved giving the patient increasingly large doses of the hormone insulin, which lowers the blood sugar concentration enough to produce a coma. The patient was then kept in a comatose state for an hour, after which they were brought back to consciousness with a warm sugar solution or an intravenous injection of glucose. This resulted in remission of symptoms for a period that varied from several months to a couple of years. Insulin coma therapy is rarely used today due to the very high risk of a prolonged coma from which the patient cannot be brought out using the usual methods.

Electroconvulsive therapy (ECT) was initially used to treat schizophrenia, but over the years its use in schizophrenia has become limited. Before ECT, a general anaesthetic and a muscle relaxant are given to the patient to restrict movement during the procedure. Electrodes are then placed at precise locations on the patient’s head, and breathing, heart rate and blood pressure are monitored throughout. A small electric current is passed from the electrodes into the brain, triggering a seizure that lasts roughly a minute. ECT causes changes in the patient’s brain chemistry and can thereby improve a patient’s catatonic symptoms.

Antipsychotic drugs

As mentioned earlier, drugs that stimulate the dopamine system produce the psychotic states most like schizophrenia. Drugs that block dopamine receptors in the brain can therefore successfully treat schizophrenic symptoms. Antipsychotic drugs bind to dopamine receptors without stimulating them, preventing the receptors from being stimulated by dopamine. This reduced stimulation of the dopamine system reduces the severity of hallucinations and delusions in people with schizophrenia. Despite this, there is still no conclusive evidence that schizophrenic symptoms are caused by an excess of brain dopamine, although post-mortem brain studies have revealed increased densities of dopamine D2 receptors (the main target of most antipsychotic drugs).

Other treatments for schizophrenia include admission to a psychiatric ward in a hospital. Under the Mental Health Act 1983 (as amended in 2007), people who are at risk of harming themselves or others can be compulsorily detained in hospital. Therapies such as cognitive behaviour therapy, family therapy and arts therapy are also prescribed.

To conclude, it is undeniable that genetic factors play a significant role in the onset of schizophrenia. However, the evidence suggests that the condition arises from a combination of environmental and genetic factors, with environmental factors having a larger effect when the individual is genetically predisposed. Overall, research into the genes responsible for schizophrenia, and into the mechanisms behind its neurological effects, remains largely inconclusive, so there is still a long way to go before a potential cure for this illness is found.

References

NHS (2021). Symptoms - Schizophrenia. [online] nhs.uk. Available at: https://www.nhs.uk/mental-health/conditions/schizophrenia/symptoms/. [accessed 21/07/2022]

Figure 1: Schizophrenia.com. (2019). Schizophrenia.com - Schizophrenia Genetics and Heredity. [online] Available at: http://www.schizophrenia.com/research/hereditygen.htm [accessed 21/07/2022]

Karki, G. (2017). Restriction fragment length polymorphism (RFLP): principle, procedure and application. [online] Online Biology Notes. Available at: https://www.onlinebiologynotes.com/restriction-fragment-length-polymorphism-rflp-principle-procedure-application/ [accessed 21/07/2022]

Cardiff University. (2022). Biggest study of its kind implicates specific genes in schizophrenia. [online] Available at: https://www.cardiff.ac.uk/news/view/2616522-biggest-study-of-its-kind-implicates-specific-genes-in-schizophrenia [accessed 05/08/2022]


Figure 5: The efficacy of antipsychotic drugs depends on their ability to block dopamine receptors. The smaller the concentration of drug that inhibits the release of dopamine by 50% (IC50), the smaller the effective clinical dose.

Figure 2: www.simplypsychology.org. (n.d.). Brain Ventricles: Anatomy, Function, and Conditions. [online] Available at: https://www.simplypsychology.org/brain-ventricles.html [accessed 21/07/2022]

Figure 3: Gallagher, J. (2015). Immune clue to preventing schizophrenia. BBC News. [online] 16 Oct. Available at: https://www.bbc.co.uk/news/health-34540363 [accessed 21/07/2022]

Figure 4: clipartkey.com. (n.d.). Brain Amygdala Hippocampus Prefrontal Cortex, Free Transparent Clipart - ClipartKey. [online] Available at: https://www.clipartkey.com/view/xwowRm_brain-amygdala-hippocampus-prefrontal-cortex/ [accessed 05/08/2022]

CNN, M.L. (2018). Pregnancy complications might ‘turn on’ schizophrenia genes. [online] CNN. Available at: https://edition.cnn.com/2018/05/30/health/schizophrenia-genes-pregnancy-placenta-study/index.html [accessed 21/07/2022]

Picker, J. (2005). The Role of Genetic and Environmental Factors in the Development of Schizophrenia. [online] Psychiatric Times. Available at: https://www.psychiatrictimes.com/view/role-genetic-and-environmental-factors-development-schizophrenia. [accessed 21/07/2022]

Grover, S., Sahoo, S., Rabha, A. and Koirala, R. (2018). ECT in schizophrenia: a review of the evidence. Acta Neuropsychiatrica, 31(03), pp.115–127. doi:10.1017/neu.2018.32 [accessed 21/07/2022]

Figure 5: Frith, C. and Johnstone, E. (2003). Schizophrenia: A Very Short Introduction. Oxford: Oxford University Press.


Contagious Vaccines

Harsha Pendyala

The history of vaccines

Vaccines have been humanity’s most effective weapon against the spread of viruses for over two centuries, and our saving grace in the midst of many devastating epidemics. The polio vaccine, for example, introduced in 1955, has reduced the incidence of polio by over 99%, to just 6 cases worldwide in 2021 (Louten, 2016).

The beginnings of vaccines date back to the smallpox epidemics. At the time, variolation (the intentional inoculation of an individual with virulent material) was the method of choice against smallpox. Doctors had noticed that inoculating a patient with ground-up smallpox scabs or fluid from smallpox pustules infected them with a much milder form of the disease and gave them immunity when they were exposed to it later. This was a very effective method, but it had two major drawbacks: the inoculated virus could still be transmitted to others after variolation, and the method was not flawless, as 2-3% of those inoculated died (History of Smallpox, 2022). However, a viable alternative was soon discovered. Edward Jenner, a physician conducting research in a small village, noticed that milkmaids who had been infected with cowpox (a virus similar to smallpox but much milder) did not catch smallpox. Connecting this with his knowledge of variolation, he theorised that inoculating people with cowpox could give them immunity to smallpox. When he tested this on the young James Phipps, the method worked and protected the boy from smallpox (Louten, 2016). This was the first vaccine, created in 1796, and it quickly caught on in the medical community as an alternative to variolation that did not share its disadvantages. Fast-forward to the present day and vaccines have not changed much since the first smallpox vaccine, apart from changes that have broadened the range of infectious diseases they can protect against, and despite how revolutionary they were, they still have some major fundamental disadvantages.
As the COVID-19 vaccination programmes illustrated, suppressing a pandemic this way is very expensive: the UK’s vaccination programme cost more than £8bn over the 2019-2022 period (Global Justice Now, 2022). This was mostly because the vaccines were made and sold by private companies, in some cases with large profit margins, although manufacturing and planning costs were also a large part of the total. With each vaccine dose costing just under £5 to produce and being sold for considerably more (Global Justice Now, 2022), it is clear that our current vaccine technology has major shortcomings.

What are contagious vaccines and how were they developed?

The possibility of a contagious vaccine promises a better solution, with a faster rollout and lower costs than the traditional alternative. The first idea for a contagious vaccine came in 1999 from veterinarian José Manuel Sánchez-Vizcaino, who was faced with the insurmountable task of trapping and vaccinating an entire population of wild rabbits, which are notorious for breeding quickly (Craig, 2022). He simply could not keep up with the rate of population increase, while the viruses, on the other hand, ravaged the population with relative ease. The rabbits were dying faster than they could be vaccinated, so he needed a much faster method that could keep pace with the spread of infection. Hence the first contagious vaccine was born: a hybrid vaccine against rabbit haemorrhagic disease and myxomatosis. His team cut a gene out of the rabbit haemorrhagic disease virus and inserted it into the genome of a mild strain of the myxoma virus, which causes myxomatosis. The vaccine would trigger an immune response against both viruses, and because the myxoma strain was so mild, the rabbits’ immune systems would easily overpower it. Although the virus had been weakened and modified to carry the rabbit haemorrhagic disease gene, Sánchez-Vizcaino hypothesised that it was still similar enough to the original disease-causing myxoma virus to spread among wild rabbits (Maclachlan et al., 2017).

Figure 1 - an artist’s interpretation of Edward Jenner’s conversation with the milkmaids (What’s the real story about the Milkmaid and the smallpox vaccine? 2018)

A proof-of-concept field test was then carried out on a sample of 147 wild rabbits, 50% of which were infected with the vaccine virus. The results were positive: the percentage of tagged rabbits carrying antibodies against rabbit haemorrhagic disease and myxomatosis rose from 50% to 56% over a 32-day period (Maclachlan et al., 2017). This may not seem like a significant difference, since a 6-percentage-point rise among 147 rabbits corresponds to only about 9 animals. However, the test was done on an island that neither virus had yet reached, so the only way the rabbits could have gained the antibodies was through the vaccine. Moreover, the tagged rabbits were only a small fraction of those living on the island, so the vaccine had probably spread to far more than 9 rabbits over the month. This initial test showed great promise for the technology. However, the EMA (European Medicines Agency) noted technical issues with the vaccine’s safety evaluation and asked the team to sequence the myxoma genome, which had not been done before. As a result, the concept was dropped, and the team’s funding was cut over concerns that the EMA would never approve such a technology (Craig, 2022). After this, research into self-spreading vaccines went largely dormant. Pharmaceutical companies were not interested in investing in research and development for a technology that, by design, would reduce its own profit margins and was unlikely to be approved for use.
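The estimate of about 9 rabbits comes from simple arithmetic. The sketch below assumes, as the text implies, that the 50% and 56% figures both refer to the same sample of 147 tagged rabbits; the function name is hypothetical.

```python
def extra_rabbits_with_antibodies(sample_size, start_pct, end_pct):
    """Animals implied by a rise in the percentage carrying antibodies, rounded to a whole rabbit."""
    return round(sample_size * (end_pct - start_pct) / 100)


# 6% of 147 is 8.82, which rounds to 9 rabbits.
print(extra_rabbits_with_antibodies(147, 50, 56))
```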

The revival of contagious vaccine research

Figure 2 - An image of the myxoma virus (taken by a transmission electron microscope) (Myxomatosis 2023)

Figure 3 - An image of cytomegalovirus (taken by a transmission electron microscope) (TEM images of our virus-like particles 2020)

However, in recent years there has been renewed interest in, and funding for, this line of research, inspired by the devastation caused by zoonotic disease epidemics (such as Lassa fever in West Africa). A new class of viral vectors, cytomegaloviruses (CMVs), is being used to create self-spreading vaccines, and these have significant advantages over the myxoma vectors used previously (Varrelman et al., 2022). They raise hopes that contagious vaccines might one day be approved for use in reservoir populations, to help loosen the tight grip zoonotic diseases have on many countries. CMVs are better suited because they infect a host for life and induce strong immune responses without causing severe disease. They are also highly species-specific: for example, the CMV that spreads among Mastomys natalensis, the rodent species that spreads Lassa fever, cannot infect any animal other than M. natalensis (Craig, 2022). This eases the ethical concern that a vaccine virus might mutate and jump into humans, where, unlike with wild animal populations, informed consent is essential before vaccinating. Field tests using CMVs have also been carried out with promising results, and extensive mathematical modelling has been used to predict how long a vaccine of this sort would take to reduce pathogen incidence in reservoir populations by 95%. If the technology works as expected, releasing a Lassa fever vaccine could reduce transmission among rodents by 95% in less than a year, significantly reducing the predicted annual death toll of around 5,000 people and possibly, given enough time, eliminating the disease altogether (Varrelman et al., 2022).
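Varrelman et al. (2022) used detailed epidemiological models. Purely to make “a 95% reduction in less than a year” concrete, the sketch below assumes something much simpler: that incidence falls exponentially at a constant rate k, so that I(t) = I0·e^(-kt) and a 95% reduction takes ln(20)/k. This is an illustrative assumption, not the paper’s model, and the function names and the rate of 0.3 per month are hypothetical.

```python
import math


def time_to_reduction(rate_per_month, reduction=0.95):
    """Months for incidence I(t) = I0 * exp(-k * t) to fall by the given fraction."""
    return math.log(1 / (1 - reduction)) / rate_per_month


def min_rate_for_deadline(months, reduction=0.95):
    """Smallest constant decay rate k (per month) that achieves the reduction within `months`."""
    return math.log(1 / (1 - reduction)) / months


print(f"k needed for 95% within 12 months: {min_rate_for_deadline(12):.3f} per month")
print(f"time for 95% at k = 0.3 per month: {time_to_reduction(0.3):.1f} months")
```

Under this assumption, reaching a 95% reduction within 12 months needs a decay rate of at least about 0.25 per month.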

How realistic is this technology?

The technology clearly shows huge potential, but these predictions are ultimately only predictions, and they depend on the technology working exactly as modelled. Many believe technology should always be developed and tested from a pessimist’s point of view, making sure that every problem that could arise has been addressed so that its release is not a catastrophic failure. This principle has been applied in many fields, such as AI, but it is especially important here, because self-spreading vaccines would be released into ecosystems that we do not fully understand. Many experts warn that too little is known about zoonotic disease transmission and viral evolution to predict accurately what might happen if a self-spreading vaccine were released into the wild, and what the consequences for an ecosystem could be.

History shows that using and modifying viruses for human purposes can have devastating effects on ecosystems. For instance, a man in France intentionally released the myxoma virus in 1952 to keep rabbits out of his garden, but in the process he devastated France’s rabbit population, wiping out 90% of its rabbits within just 2 years (Maclachlan et al., 2017). Furthermore, almost 70 years later the same myxoma virus began killing wild hares, having combined with another poxvirus in a way that allowed it to jump species (Maclachlan et al., 2017). Many experts cite this evidence and warn that we cannot accurately predict, even with mathematical models, what problems might arise from releasing a self-spreading vaccine. When natural ecosystems and animal populations are involved, many say the stakes are simply too high to justify using such a vaccine at all.

Furthermore, the ethical and social issues surrounding a programme like this are daunting, as most experts in the field accept that a self-spreading vaccine could never be used on human populations, because universal informed consent could never be achieved. Another problem that accompanies all research into dangerous technology is that, even if such a vaccine would never be approved by medical regulators and the technology were banned, unregulated research could still take place underground, and in the wrong hands it could wreak havoc. The potential scale of devastation is enormous, since the process of making such a vaccine bears an uncanny resemblance to the creation of a bioweapon capable of causing global pandemics. Even Bárcena, a scientist who was part of Sánchez-Vizcaino’s original research group, changed his view of self-spreading vaccines after seeing how previous strategies involving the intentional release of viruses had unforeseen consequences, citing the evidence that the myxoma virus had combined with another poxvirus, enabling it to jump species (Craig, 2022).

This ethical argument, however, is an age-old one that accompanies the discovery of any new technology. At its core is the question of whether the potential risks a technology poses are worth taking to reap the benefits it could bring to mankind. Some of the riskiest technologies, such as AI, are still being allowed to develop because millions of people consider them the next step in human evolution and believe the benefits outweigh the risks. The same logic can be applied to contagious vaccines, and the conclusion most people reach is that, although the technology might never be used because of its many problems, it should still be developed in case we ever need it.
Alec Redwood expresses this excellently: “it’s better to have something in the cupboard that can be used and is mature if we need it than let’s just not do this research because it’s too dangerous, to me, that makes no sense at all” (Craig, 2022).

Reference list

Craig, J., 2022. The controversial quest to make a ‘contagious’ vaccine. [online] Science. Available at: <https://www.nationalgeographic.com/science/article/the-controversial-quest-to-make-a-contagious-vaccine> [Accessed 1 October 2022].

Varrelman, T., Remien, C., Basinski, A., Gorman, S., Redwood, A. and Nuismer, S., 2022. Quantifying the effectiveness of betaherpesvirus-vectored transmissible vaccines. Proceedings of the National Academy of Sciences, 119(4).

Louten, J., 2016. ScienceDirect. Clinical Microbiology Newsletter, 38(13), p.109.

Centers for Disease Control and Prevention, 2022. History of Smallpox. [online] Available at: <https://www.cdc.gov/smallpox/history/history.html#:~:text=Jenner%20took%20material%20from%20a,but%20Phipps%20never%20developed%20smallpox.> [Accessed 1 October 2022].

Global Justice Now, 2022. Pfizer’s £2 billion NHS rip-off could pay for nurses’ pay rise SIX TIMES over - Global Justice Now. [online] Available at: <https://www.globaljustice.org.uk/news/pfizers-2billion-nhs-rip-off-could-pay-for-nurses-pay-rise-six-times-over/> [Accessed 1 October 2022].

Maclachlan, N., Dubovi, E., Barthold, S., Swayne, D. and Winton, J., 2017. Fenner’s veterinary virology. 5th ed. Amsterdam: Elsevier/Academic Press, pp.157-174.

Brink, S. (2018) What’s the real story about the Milkmaid and the smallpox vaccine?, NPR. Available at: https://www.npr.org/sections/goatsandsoda/2018/02/01/582370199/whats-the-real-story-about-the-milkmaid-and-the-smallpox-vaccine (Accessed: March 13, 2023).

Myxomatosis (2023) Wikipedia. Wikimedia Foundation. Available at: https://en.wikipedia.org/wiki/Myxomatosis (Accessed: March 13, 2023).

Shore, J. (2020) TEM images of our virus-like particles, The Native Antigen Company. Available at: https://thenativeantigencompany.com/tem-images-of-our-virus-like-particles/ (Accessed: March 13, 2023).


What is an antibiotic?

Teodor Wator


[1] An antibiotic is any substance that inhibits the growth and replication of a bacterium or kills it. Antibiotics are a type of antimicrobial designed to target bacterial infections within the body. This makes them different from the other main kinds of antimicrobials widely used today:

• Antiseptics are used to sterilise surfaces of living tissue when the risk of infection is high.

• Disinfectants are non-selective antimicrobials used on non-living surfaces, killing a wide range of micro-organisms, including bacteria.

Of course, bacteria are not the only microbes that can be harmful to us. Fungi and viruses can also be a danger to humans, and they are targeted by antifungals and antivirals, respectively. Only substances that target bacteria are called antibiotics, whereas antimicrobial is a term for anything that inhibits or kills microbial cells, including antibiotics, antifungals, antivirals and chemicals such as antiseptics. Most antibiotics used today are produced in laboratories, but they are often based on compounds scientists have found in nature. Some microbes, for example, produce substances specifically to kill other nearby bacteria, gaining an advantage when competing for food, water or other limited resources. However, some microbes only produce antibiotics in the laboratory.

WHY ARE ANTIBIOTICS IMPORTANT?

[1] The introduction of antibiotics into medicine revolutionised the way infectious diseases were treated. Between 1945 and 1972, average human life expectancy jumped by eight years, with antibiotics used to treat infections that were previously likely to kill patients. Today, antibiotics are one of the most common classes of drugs used in medicine and make possible many of the complex surgeries that have become routine around the world. If we ran out of effective antibiotics, modern medicine would be set back by decades. Relatively minor surgeries, such as appendectomies, could become life-threatening, as they were before antibiotics became widely available. Antibiotics are sometimes given to a limited number of patients before surgery to ensure that they do not contract infections from bacteria entering open wounds. Without this precaution, the risk of blood poisoning (sepsis) would be much higher, and many of the more complex surgeries doctors now perform might not be possible.

PRODUCTION

Fermentation

[3] Industrial microbiology can be used to produce antibiotics by fermentation, in which the source microorganism is grown in large containers (100,000–150,000 litres or more) containing a liquid growth medium. Oxygen concentration, temperature, pH and nutrients are closely controlled. Because antibiotics are secondary metabolites, produced mainly once the culture has stopped growing rapidly rather than during rapid growth, the population size must be controlled very carefully to ensure that maximum yield is obtained before the cells die. Once the process is complete, the antibiotic must be extracted and purified to a crystalline product. This is easier to achieve if the antibiotic is soluble in an organic solvent; otherwise, it must first be removed by ion exchange, adsorption or chemical precipitation.

Penicillin

Semi-synthetic

A common form of antibiotic production today is semi-synthetic production, which combines natural fermentation with laboratory work to get the most out of the antibiotic. This can mean improving the efficacy of the drug itself, the amount of antibiotic produced, or its potency; which of these is the aim depends on the drug being produced and how it will ultimately be used. An example of semi-synthetic production involves the drug ampicillin. A beta-lactam antibiotic like penicillin, ampicillin was developed by adding an amino group (NH2) to the R group of penicillin.[2] This additional amino group gives ampicillin a broader spectrum of activity than penicillin. Methicillin is another derivative of penicillin and was discovered in the late 1950s;[3] the key difference between penicillin and methicillin is the addition of two methoxy groups to the phenyl group.[4] These methoxy groups allow methicillin to be used against penicillinase-producing bacteria that would otherwise be resistant to penicillin.

WHAT ARE THEY MADE OF?

The compounds that make up the fermentation broth are the primary raw materials required for antibiotic production. This broth is an aqueous solution containing all of the ingredients necessary for the proliferation of microorganisms. Typically, it contains a carbon source such as molasses or soy meal, both of which supply sugars. These materials are needed as a food source for the organisms. Nitrogen is also needed in the metabolic cycles of organisms, so an ammonium salt is typically added. In addition, trace elements needed for the proper growth of the antibiotic-producing organisms, such as phosphorus, sulfur, magnesium, zinc, iron and copper, are introduced through water-soluble salts. To prevent foaming during fermentation, anti-foaming agents such as lard oil, octadecanol and silicones are used.

WHAT ARE DIFFERENT ANTIBIOTICS PRODUCED BY?

• [4] Some antibiotics are produced naturally by fungi; for example, cephalosporins are produced by Acremonium chrysogenum.

• Geldanamycin is produced by Streptomyces hygroscopicus.

• Erythromycin is produced by what was called Streptomyces erythreus and is now known as Saccharopolyspora erythraea.

• Streptomycin is produced by Streptomyces griseus.

• Tetracycline is produced by Streptomyces aureofaciens.

• Vancomycin is produced by Streptomyces orientalis, now known as Amycolatopsis orientalis.


DOES SILVER MAKE ANTIBIOTICS MORE EFFECTIVE?

[2] Bacteria have a weakness: silver. Silver has been used to fight infection for thousands of years, and because it can disrupt bacteria, it could help to deal with the thoroughly modern scourge of antibiotic resistance.

Silver, in the form of dissolved ions, attacks bacterial cells in two main ways: it makes the cell membrane more permeable, and it interferes with the cell’s metabolism, leading to the overproduction of reactive, and often toxic, oxygen compounds. Both mechanisms could be exploited to make modern antibiotics more effective against resistant bacteria.

Many antibiotics are thought to kill their targets by producing reactive oxygen compounds, and when boosted with a small amount of silver the drugs could kill between 10 and 1,000 times as many bacteria. The increased membrane permeability also allows more antibiotics to enter the bacterial cells, which may overwhelm the resistance mechanisms that rely on pushing the drug back out.

References

[1] https://microbiologysociety.org/members-outreach-resources/outreach-resources/antibiotics-unearthed/antibiotics-and-antibiotic-resistance/what-are-antibiotics-and-how-do-they-work.html

[2] https://www.nature.com/articles/nature.2013.13232

[3] http://www.madehow.com/Volume-4/Antibiotic.html

[4] https://en.wikipedia.org/wiki/Production_of_antibiotics

The environmental impact of current nuclear reactors and whether we will ever create a perfect source of energy

Aaditya Nandwani

In December 1951, news broke of the first-ever nuclear reactor capable of producing electricity. Since then, nuclear energy has been considered by many to be an excellent replacement for fossil fuels: it produces far more energy per kilogram of fuel and releases no carbon dioxide during operation. Recent world events, such as the tragic bushfires that ravaged Australia in 2020, the melting of glaciers in Iceland and, most recently, a heatwave in which temperatures in England reached 40.3 degrees Celsius, show that we must take drastic steps to slow down climate change, including finding cleaner sources of energy. This article sheds light on both the widely known and the lesser-known consequences of nuclear fission reactors, and asks whether it is possible to find a completely green source of energy.

How do Nuclear Fission reactors work?

Understanding the impact of these reactors requires an understanding of what they do and how they do it. They rely on unstable nuclei. In radioactive decay, an unstable nucleus releases radiation in order to become more stable. A nucleus can be unstable for several reasons, such as an excess or shortage of protons or neutrons, which leaves the forces within the nucleus unbalanced (the case we will focus on is an excess). Nuclear fission is the process in which a neutron strikes a large nucleus, causing it to split into 2 smaller nuclei and release 2 or 3 neutrons along with large amounts of energy. If a neutron produced in this reaction strikes another nucleus, that nucleus splits as well, resulting in a chain reaction. One of the most famous equations ever written is E = mc², which states that mass and energy are equivalent: energy equals mass times the speed of light squared. Because c² is so large, a very small mass corresponds to a large amount of energy. This principle is critical in fission reactions: if one measured the mass of the products and neutrons after a fission reaction, one would find it slightly less than the mass of the original nucleus and neutron. This difference, called the mass defect, is the mass that has been converted into energy, which is then harnessed.

Figure 1.1: A simple diagram illustrating the chain reaction of fission

Within a nuclear reactor:

Nuclear reactors generate electricity in a similar way to fossil-fuel power stations: the energy released is used to heat water and produce steam, which turns a turbine that in turn rotates a generator. Such reactors consist of 4 main components: fuel rods, control rods, a moderator and a coolant, each of which has a distinct function in producing this energy.

The components:

The fuel rods contain the uranium-235 nuclei and are surrounded by the moderator. When a neutron is absorbed by a uranium-235 nucleus, it produces a uranium-236 nucleus, which is unstable and therefore splits. The moderator plays an important role here, as it slows the neutrons from roughly 20,000 km/s (about 2 × 10^7 m/s) to around 2,200 m/s, which increases the probability that a neutron is absorbed by a uranium-235 nucleus and so makes a successful fission reaction more likely. The moderator, most commonly ordinary (light) water, although heavy water and graphite are also used, is carefully chosen: it must not absorb many neutrons, only slow them down considerably. The control rods are the most crucial parts of the reactor, as they control the rate of the fission reactions. When they are raised, more neutrons reach the fuel, so the rate of fission is greater; however, an uncontrolled rate of fission can be dangerous. When the control rods are lowered, they absorb neutrons, reducing the number of neutron collisions with the fuel and therefore the rate of fission. The electricity output is lower, but the reactor is safer. There is therefore always a fine balance between keeping the rate of reaction high enough to meet financial goals and keeping it low enough to ensure the safety of the workers. Finally, the coolant carries the thermal energy produced away from the core and uses it to produce steam, which drives the turbine and generator where the electricity is produced.
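Two ideas in this description can be checked with short calculations. The sketch below, under simplifying assumptions, (1) converts a neutron’s kinetic energy into speed using the non-relativistic formula v = √(2E/m), taking about 2 MeV as a typical fission-neutron energy and 0.0253 eV for a thermal neutron, and (2) models the chain reaction with a multiplication factor k, the average number of neutrons from one fission that go on to cause another fission. Raising the control rods pushes k up and lowering them pushes it down. Real reactor kinetics also depend on delayed neutrons, which this toy model ignores, and the function names and starting population are hypothetical.

```python
import math

NEUTRON_MASS_KG = 1.675e-27  # approximate rest mass of a neutron
EV_TO_J = 1.602e-19          # approximate joules per electronvolt


def neutron_speed(kinetic_energy_ev):
    """Non-relativistic speed v = sqrt(2E / m) of a neutron with the given kinetic energy."""
    return math.sqrt(2 * kinetic_energy_ev * EV_TO_J / NEUTRON_MASS_KG)


def neutron_population(k, generations, start=1000.0):
    """Neutron count in each generation if every neutron leads to k new neutrons on average."""
    counts = [start]
    for _ in range(generations):
        counts.append(counts[-1] * k)
    return counts


print(f"fast neutron (2 MeV): {neutron_speed(2e6):.2e} m/s")
print(f"thermal neutron (0.0253 eV): {neutron_speed(0.0253):.0f} m/s")

# k > 1: supercritical (rods raised too far); k = 1: critical (steady output);
# k < 1: subcritical (rods lowered, the chain reaction dies away).
for k in (1.05, 1.00, 0.95):
    final = neutron_population(k, 50)[-1]
    print(f"k = {k:.2f}: about {final:.0f} neutrons after 50 generations")
```

The first calculation gives roughly 2 × 10^7 m/s for a 2 MeV neutron and about 2,200 m/s for a thermal neutron; the second shows the neutron population growing when k > 1, staying level at k = 1 and dying away when k < 1, which is the balance the control rods maintain.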

The impact on the environment of Fission reactors:

Nuclear reactor accidents:

Nuclear accidents are catastrophic events that release significant amounts of radioactive material into the surrounding environment, causing the tragic destruction of marine and land ecosystems. Despite the numerous safety features in place, accidents such as Chernobyl have still taken place (mainly due to a combination of human error and flaws in the reactor’s design), and they have had a profound impact. This section focuses on the effects on agriculture and farming, on lakes and, finally, on plants and animals.

After the Chernobyl accident, the amount of radioactive iodine in the soil reached an all-time high. Although this level fell after the accident (because of factors such as the wind dispersing these isotopes and the short half-life of iodine-131, about 8 days), it still had a profound effect. For example, iodine levels in people’s thyroid glands increased, resulting in hyperthyroidism. The accident also affected the reproductive abilities of trees, especially those in the exclusion zone. Water bodies were heavily contaminated with radioactive caesium, iodine and strontium, and there were serious concerns about the accumulation of radioactive caesium in aquatic food webs, as the amount of caesium-137 in fish reached a peak. Although these levels eventually decreased, in certain lakes in Russia the levels of caesium remain high, as shown by the persistently high levels of radioactive caesium in fish. The Chernobyl accident also had disastrous effects on human health and other wildlife. Workers at the plant received high doses of gamma radiation (2-20 Gy), which caused acute radiation syndrome, an illness resulting from exposure to large doses of penetrating ionising radiation (which can pass through the skin to reach sensitive organs and cause cell death); for some of them it was fatal. Furthermore, increased uptake of radioactive iodine by the thyroid (from the milk of cows that had grazed on contaminated grass) meant that ionising radiation caused mutations, and therefore thyroid cancer. Finally, the Chernobyl accident caused visible deformations in many animals in the exclusion zone.

Figure 1.2: Diagram showing the parts of the nuclear fission reaction vessel

Figure 2.1: A graph showing the number of thyroid cancer cases in children in Belarus and Ukraine over time. The number of cases increased in both countries, but rose more steeply in Belarus.

Mining for Uranium

Obtaining uranium involves mining and processing, both of which have a severe effect on the environment. There are several types of mining, such as underground mining, in which vertical shafts and tunnels are dug and the uranium ore is broken up and brought to the surface. Mining requires a great deal of energy, which is often provided by burning fossil fuels, releasing CO2 and other greenhouse gases into the environment. Processing the uranium ore by leaching also has a considerable impact, mainly because of the waste products it creates. The mill tailings (the waste left over after processing) contain radioactive elements such as radium, which decays to produce radioactive radon gas. There have been particular concerns within the scientific community about the effects of radon gas on the environment, and especially on human health. As explained in an article published by Stanford University, radon gas has been linked to lung cancer: radon decays into radioactive decay products which become stuck in the lining of the lungs and continue to decay, releasing ionising radiation; this can eventually lead to cancer by mutating the DNA in these cells. Furthermore, radon is easily carried away from the mill tailings by the wind, and because the long-lived radium in the tailings keeps producing it for thousands of years, mill tailings have to be controlled safely for long periods of time.

Environmental issues concerning nuclear reactors:

Nuclear waste:

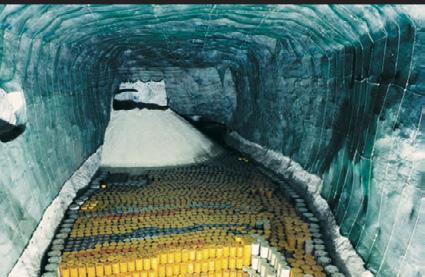

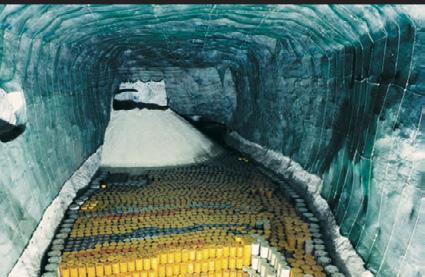

Nuclear waste is another prominent problem, because of its effects on the environment and the difficulty of disposing of and storing it. Numerous ways of storing nuclear waste have been proposed, such as deep underground or in the oceans. However, if a sealed container were damaged, highly radioactive elements with long half-lives could leak out. The ionising radiation they release could cause genetic mutations and even the death of plants and animals. Because waste nuclei such as strontium-90 have long half-lives, they decay slowly, so the waste remains radioactive, and keeps releasing dangerous ionising radiation into the environment, for a very long time. Nuclear waste also has adverse effects on human health. For example, caesium-137, a soluble radioactive isotope, may be released as a waste product. It can be absorbed into the body’s tissues, including the reproductive organs, where it decays, emitting beta particles and high-energy gamma photons. This radiation can damage these organs and impair reproductive ability. Moreover, it is widely known that the ionising radiation released by such isotopes can cause cancer.
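The link between half-life and how long waste stays hazardous can be made concrete. Since N/N0 = (1/2)^(t / half-life), the time for a nuclide to fall to a fraction f of its original amount is t = half-life × log2(1/f). The sketch below assumes standard half-lives of about 28.8 years for strontium-90 and about 30.1 years for caesium-137; the 0.1% threshold is an arbitrary illustrative choice, not a regulatory limit, and the function name is hypothetical.

```python
import math

# Assumed half-lives in years (standard reference values)
HALF_LIVES_YEARS = {
    "strontium-90": 28.8,
    "caesium-137": 30.1,
}


def time_to_fraction(half_life: float, fraction: float) -> float:
    """Time for a nuclide to decay to `fraction` of its initial amount.

    From N/N0 = (1/2) ** (t / half_life), so t = half_life * log2(1 / fraction).
    The result is in the same units as half_life.
    """
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    return half_life * math.log2(1 / fraction)


if __name__ == "__main__":
    for isotope, t_half in HALF_LIVES_YEARS.items():
        years = time_to_fraction(t_half, 0.001)  # illustrative threshold: 0.1% remaining
        print(f"{isotope}: about {years:.0f} years to fall to 0.1%")
```

Falling to 0.1% takes about ten half-lives, which for both isotopes means roughly three centuries of secure storage.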


Figure 2.2: An image of mill tailings from Uranium extraction

Figure 2.3: An image showing the storage of nuclear waste.

What is nuclear fusion and could we generate electricity from this?

Nuclear fusion is the process in which two light nuclei fuse to form a heavier, more stable nucleus, releasing energy. This is the process that takes place at the centre of stars; the energy it releases maintains the outward pressure (from hot gas and radiation) that balances the inward gravitational force, keeping the star stable. Like fission, fusion works on the principle of mass-energy equivalence. If you measured the masses of the two smaller nuclei, their sum would be greater than the mass of the products formed; this difference is called the mass defect. By E = mc², this mass has been converted into energy, which is released as the nuclei become more tightly bound. At present, no fusion reactor has produced electricity on a commercial scale, although there have been big strides in the technology. There are two main designs of fusion reactor: magnetic confinement reactors and inertial confinement reactors.
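As a worked example of the mass-defect argument, the sketch below computes the energy released by the deuterium-tritium reaction D + T → He-4 + n. The atomic masses (in unified atomic mass units, u) and the conversion 1 u ≈ 931.494 MeV are standard reference values assumed here rather than taken from the article; using atomic rather than nuclear masses works because the electrons balance on both sides. The function name is hypothetical.

```python
# Assumed standard atomic masses in unified atomic mass units (u)
MASS_U = {
    "deuterium": 2.01410178,
    "tritium": 3.01604928,
    "helium-4": 4.00260325,
    "neutron": 1.00866492,
}
MEV_PER_U = 931.494  # energy equivalent of 1 u via E = mc^2, in MeV


def energy_released_mev(reactants: list[str], products: list[str]) -> float:
    """Energy released in a reaction: the mass lost (mass defect) times c^2."""
    mass_before = sum(MASS_U[name] for name in reactants)
    mass_after = sum(MASS_U[name] for name in products)
    mass_defect = mass_before - mass_after  # in u; positive means energy is released
    return mass_defect * MEV_PER_U


if __name__ == "__main__":
    q = energy_released_mev(["deuterium", "tritium"], ["helium-4", "neutron"])
    print(f"D + T -> He-4 + n releases about {q:.1f} MeV")
```

The mass lost is only about 0.019 u, yet it corresponds to roughly 17.6 MeV per reaction, the value commonly quoted for D-T fusion.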

How close are we to a viable nuclear fusion reactor?

A viable nuclear fusion reactor would release more energy in the fusion reaction than is put in to drive it. Several promising designs have been announced, and scientists hope that by 2040 we will have such a reactor which is economically viable and operational.
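The viability condition can be expressed as a gain factor, Q = (energy released by fusion) / (energy put in to heat the plasma), with Q > 1 meaning more energy comes out than goes in. The function names and example numbers below are hypothetical; the 0.65 case simply mirrors the 65% figure discussed later in the article.

```python
def fusion_gain(energy_out_mj: float, energy_in_mj: float) -> float:
    """Gain factor Q: fusion energy released divided by heating energy supplied."""
    if energy_in_mj <= 0:
        raise ValueError("energy input must be positive")
    return energy_out_mj / energy_in_mj


def is_net_energy_positive(energy_out_mj: float, energy_in_mj: float) -> bool:
    """True when the reaction releases more energy than it took to drive it (Q > 1)."""
    return fusion_gain(energy_out_mj, energy_in_mj) > 1.0


if __name__ == "__main__":
    # Hypothetical example values in megajoules
    for out, inp in [(6.5, 10.0), (15.0, 10.0)]:
        q = fusion_gain(out, inp)
        print(f"out={out} MJ, in={inp} MJ -> Q={q:.2f}, net gain: {is_net_energy_positive(out, inp)}")
```

Even Q > 1 by this definition ignores losses in turning heat into electricity and in running the plant, so a commercial reactor would need a considerably higher gain.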

A major difficulty in building a fusion reactor is holding the fuel in place. These reactors work by heating deuterium and tritium (isotopes of hydrogen) to such high temperatures that they form a plasma, a “soup” of ions and free electrons. However, the plasma cannot touch the walls of its container, as it would damage them. To hold the plasma in place, a magnetic confinement reactor uses strong magnetic fields: because the ions and electrons in the plasma are electrically charged, the magnetic fields exert forces on them that guide their motion and keep the plasma in a fixed position, away from the walls. A magnetic confinement reactor works in the following way:
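To see why magnetic fields can hold the plasma, note that a charged particle moving perpendicular to a magnetic field feels a force F = qvB at right angles to its motion, so it travels in a circle of radius r = mv/(qB). The sketch below assumes a deuterium ion with 10 keV of kinetic energy in a 5 T field; both values are illustrative assumptions of the rough order found in tokamaks, not figures from the article, and the function name is hypothetical.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19   # C
DEUTERON_MASS = 3.3435837768e-27      # kg
JOULES_PER_KEV = 1.602176634e-16      # 1 keV in J


def gyroradius(mass_kg: float, charge_c: float, kinetic_energy_j: float, field_t: float) -> float:
    """Radius of the circular path of a charged particle moving perpendicular to a field.

    Non-relativistic: v = sqrt(2E/m), and setting qvB = mv^2/r gives r = mv / (qB).
    """
    speed = math.sqrt(2 * kinetic_energy_j / mass_kg)
    return mass_kg * speed / (charge_c * field_t)


if __name__ == "__main__":
    # Illustrative assumptions: a 10 keV deuteron in a 5 tesla field
    r = gyroradius(DEUTERON_MASS, ELEMENTARY_CHARGE, 10 * JOULES_PER_KEV, 5.0)
    print(f"Gyroradius: {r * 1000:.1f} mm")
```

A radius of a few millimetres, compared with a vessel metres across, is why the ions stay tied to the field lines instead of drifting straight into the walls.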

1. Inside the reactor, an accelerator fires a stream of high-energy neutral particles into the plasma. This stream heats the deuterium and tritium to very high temperatures.

2. The plasma is contained within a tokamak, a doughnut-shaped vessel. Magnetic field coils, together with a transformer that drives an electric current through the plasma, produce magnetic fields which squeeze the plasma so that the deuterium and tritium fuse to produce helium (and a neutron).

3. The blanket modules absorb the heat produced in the fusion reaction and transfer it to a heat exchanger, where water is converted into steam. The steam drives a turbine, which in turn rotates the generator, producing electricity.
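Step 3 works because most of the fusion energy is carried by the neutron, which has no charge and so escapes the magnetic field to deposit its energy in the blanket. Assuming the reactants are effectively at rest and using non-relativistic momentum conservation, the two products share the roughly 17.6 MeV released in inverse proportion to their masses. The masses below are assumed standard values, and the function name is hypothetical.

```python
# Assumed masses in unified atomic mass units (u)
ALPHA_MASS_U = 4.0015   # helium-4 nucleus
NEUTRON_MASS_U = 1.0087
D_T_ENERGY_MEV = 17.6   # energy released per D-T reaction (commonly quoted value)


def split_energy(total_mev: float, mass_a: float, mass_b: float) -> tuple[float, float]:
    """Share kinetic energy between two products emitted back to back from rest.

    Equal and opposite momenta p give E = p^2 / (2m), so each particle's
    share is proportional to the *other* particle's mass.
    """
    total_mass = mass_a + mass_b
    energy_a = total_mev * mass_b / total_mass
    energy_b = total_mev * mass_a / total_mass
    return energy_a, energy_b


if __name__ == "__main__":
    alpha_mev, neutron_mev = split_energy(D_T_ENERGY_MEV, ALPHA_MASS_U, NEUTRON_MASS_U)
    print(f"Helium nucleus: {alpha_mev:.1f} MeV, neutron: {neutron_mev:.1f} MeV")
```

This gives roughly 3.5 MeV to the charged helium nucleus, which stays in the plasma and helps keep it hot, and about 14 MeV to the neutron, so around four-fifths of the energy reaches the blanket.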

Inertial confinement is a newer, experimental approach which physicists are testing; so far, however, magnetic confinement is the more established method. In one such experiment, 192 laser beams are fired into a small cylinder called a hohlraum, which contains a tiny capsule of deuterium and tritium fuel. The lasers heat the inner walls of the hohlraum, which then emit X-rays; these X-rays strike the fuel capsule and cause it to implode. This is meant to recreate the high-pressure, high-temperature conditions at the centre of stars, causing the deuterium and tritium nuclei to fuse and form helium.

Other promising designs are also being developed, such as SPARC, which is also a magnetic confinement tokamak but would be much smaller and could be built in a shorter timeframe (potentially allowing viable fusion to be achieved by 2025).


Figure 3.1: A tokamak: a torus-shaped vessel that uses magnetic fields to shape and squeeze the plasma.

How would our planet benefit from the production of viable nuclear fusion?

One benefit is that fusion power plants would release no carbon dioxide. In 1900, carbon dioxide made up about 0.03% of the atmosphere; by 2020 this had risen to about 0.041%. Such numbers may not seem significant, but they are deeply worrying in the context of global temperature. Higher greenhouse gas levels cause an enhanced greenhouse effect: infrared radiation emitted by the Earth’s surface is absorbed by these gases and re-emitted in all directions, including back towards the surface, instead of escaping into space. This raises the Earth’s average temperature and drives climate change. Its drastic effects on our environment have been seen recently, for example in the melting of glaciers, which contributes to rising sea levels, the flooding of coastal areas and the disruption of ecosystems. Energy from nuclear fusion would avoid these emissions. Fusion also does not produce the long-lived, highly radioactive waste of fission reactors, which emits alpha, beta and gamma radiation (all three of which are destructive forms of ionising radiation). Furthermore, per kilogram of fuel, nuclear fusion releases about four times as much energy as nuclear fission, allowing more electricity to be produced in fusion reactors.
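The “four times” comparison holds per unit mass of fuel rather than per reaction, since a single uranium-235 fission releases more energy than a single D-T fusion. The sketch assumes the commonly quoted figures of about 200 MeV per U-235 fission (236 u of reacting mass, counting the neutron that triggers it) and about 17.6 MeV per D-T fusion (about 5 u of reacting mass); the function name is hypothetical.

```python
# Assumed, commonly quoted energy releases and reacting masses
FISSION_MEV = 200.0       # per uranium-235 fission
FISSION_MASS_U = 236.0    # U-235 plus the neutron that triggers it
FUSION_MEV = 17.6         # per deuterium-tritium fusion
FUSION_MASS_U = 5.0       # deuterium (about 2 u) plus tritium (about 3 u)


def energy_per_mass(energy_mev: float, mass_u: float) -> float:
    """Energy released per unit mass of reacting fuel, in MeV per atomic mass unit."""
    return energy_mev / mass_u


if __name__ == "__main__":
    fission = energy_per_mass(FISSION_MEV, FISSION_MASS_U)
    fusion = energy_per_mass(FUSION_MEV, FUSION_MASS_U)
    print(f"Fission: {fission:.2f} MeV/u, fusion: {fusion:.2f} MeV/u")
    print(f"Fusion releases about {fusion / fission:.1f} times more energy per unit mass")
```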

What limitations will we face in the development of nuclear fusion?

The main difficulty in producing a viable fusion reactor has been the ratio of energy output to energy input, i.e. making sure the energy released is more than the energy taken in (the record for a magnetic confinement reactor being only about 65%). The challenges being faced are:

- Initiating a burning plasma (a plasma that is kept hot mainly by its own fusion reactions) requires heating it to temperatures higher than those at the core of the Sun, which demands technologies that are still being developed.

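- Parts of the reactor, such as its superconducting magnets, must be kept near absolute zero while sitting close to plasma hotter than the core of the Sun. If the walls of the reaction vessel and the blanket modules are not adequately cooled and protected, they can be damaged, which could force the facility to be decommissioned.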

In conclusion, the stark reality of the destructive nature of climate change has led us to re-evaluate the way we live, especially our energy consumption. Efforts to reduce energy consumption, such as turning off the lights when leaving a room, help to slow the rate at which the problem is growing, but more radical approaches are needed to reverse it. Current forms of energy production, such as burning fossil fuels, clearly have severe effects on the environment, and so does nuclear fission. Although nuclear fusion is not perfect (recent articles indicate that the radioactive waste produced when neutrons collide with the blanket requires careful disposal), it is the best option we have, and many scientists are optimistic that it can help us reverse climate change and sustain this beautiful, unique planet for generations to come.


Figure 3.2: An image showing the melting of glaciers, a catastrophic effect of climate change.

Titan – The “Earth-like” moon

Nuvin Wickramasinghe

Titan is Saturn’s largest moon and the second largest natural satellite in the solar system, behind Jupiter’s Ganymede. Since its discovery in 1655, Titan has proved to be one of our solar system’s most bizarre satellites, especially because its atmosphere, surface and structure share similarities with Earth’s.

Rivers, lakes, sand dunes, canyons, a weather cycle and an atmosphere are all found on Titan. Although these features are made of different materials from those on our planet, they are all Earth-like features found on this mysterious moon (Waldek, 2022).

Titan appears in the popular film “Star Trek” (2009), in which the USS Enterprise comes out of warp in the large moon’s atmosphere to ambush enemy ships attacking Earth. It also appears in TV shows such as “Futurama” and “Eureka” and in the famous anime “Cowboy Bebop” (NASA, 2022a). The iconic supervillain Thanos was also born on Titan in the original Marvel comics, although the moon there looks vastly different (Aaron & Bianchi, 2013). In the films, by contrast, his home is a fictional planet called Titan in a distant star system.

Timeline of major discoveries on Titan

On March 25th 1655, the astronomer Christiaan Huygens discovered this bizarre moon. In 1944, Gerard Kuiper discovered that the moon had an atmosphere, something extraordinary for a moon. He did this by detecting methane when he passed sunlight reflected from Titan through a spectrometer (Kuiper, 1944). In 1979, Pioneer 11 confirmed astronomers’ predictions about Titan’s temperature and mass. Following this, Voyager 1 (1980) photographed the somewhat orange body we know Titan to be today. It also revealed that Titan’s atmosphere is primarily nitrogen (like Earth’s), while also containing methane and other hydrocarbons (NASA, 2022b).

The Cassini-Huygens mission was a joint effort by NASA and ESA (the European Space Agency). From 2004 to 2017, NASA’s Cassini spacecraft orbited Saturn, collecting data on the planet and its moons. On 14 January 2005, ESA’s Huygens probe landed on Titan, returning the first pictures from its surface and furthering our understanding of the large moon. The entire operation was a huge success: the combined Cassini-Huygens spacecraft was one of the largest interplanetary spacecraft ever built, and Huygens made the farthest landing from Earth ever achieved (ESA, n.d. a).


Figure 1: Six images of Titan that incorporate 13 years of data collected by NASA’s Cassini spacecraft (Waldek, 2022).

Building on the success of Cassini-Huygens, NASA plans to launch the Dragonfly spacecraft in 2027, following the discoveries of water and an Earth-like atmosphere on Titan. Scientists hope that this mission will help them study how life may have begun, since Titan shares many features with the young Earth. Dragonfly will sample and examine the structures and chemicals there, hopefully gaining invaluable information about life as we know it, and as we don’t (The Planetary Society, n.d.).

Size, orbit and formation

Titan has a radius of about 2,575 km (nearly 50% wider than the Earth’s Moon) and a mass 1.8 times that of the Earth’s Moon. It takes 15 days and 22 hours to complete one full orbit of Saturn (NASA, 2022c).

How Titan came into existence is also quite unusual. Some moons, such as Neptune’s Triton, formed elsewhere in the solar system and were then captured by a planet’s gravitational pull. Others formed from the debris of impacts, as in the widely accepted theory that the Earth’s Moon formed when the Earth collided with a smaller object called Theia. Other moons are believed to have been born in circumplanetary discs, which surround young planets while an infant star is still forming its planetary system. Generally, large moons that form from the dusty material in these discs spiral towards the planet because of drag from the surrounding gas and dust. However, Yuri Fujii and Masahiro Ogihara, of the Department of Physics at Nagoya University and the National Astronomical Observatory of Japan, created a model using updated information about circumplanetary discs. The model pointed to a “safety zone” for larger moons, where high-pressure gas, combined with a large distance from the planet, pushes the moon outwards; this balances the forces acting on it and prevents Titan from being pulled into Saturn. Once the disc disappears, this movement of Titan towards or away from Saturn stops. The idea that Titan formed during the creation of the solar system is supported by data on Titan’s nitrogen isotope ratio collected by the Huygens probe. This ratio resembles that of material in the Oort cloud, a collection of icy objects that formed around the same time as the solar system, implying that Titan was created during the formation of the planetary system (Dartnell, 2020; Leman, 2020).
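The orbital period of 15 days and 22 hours quoted above can be checked with Kepler’s third law, T = 2π√(a³/GM). The sketch assumes Titan’s mean orbital distance from Saturn of about 1,221,870 km and Saturn’s gravitational parameter GM ≈ 3.7931 × 10¹⁶ m³ s⁻² (standard reference values not given in the article), and treats Titan’s own mass as negligible compared with Saturn’s; the function name is hypothetical.

```python
import math

SATURN_GM = 3.7931187e16            # m^3 s^-2, assumed reference value
TITAN_SEMI_MAJOR_AXIS = 1.22187e9   # m (about 1,221,870 km), assumed reference value


def orbital_period_s(semi_major_axis_m: float, gm: float) -> float:
    """Kepler's third law for a small body orbiting a much more massive one."""
    return 2 * math.pi * math.sqrt(semi_major_axis_m ** 3 / gm)


if __name__ == "__main__":
    period = orbital_period_s(TITAN_SEMI_MAJOR_AXIS, SATURN_GM)
    days, remainder = divmod(period, 86400)
    hours = int(remainder // 3600)  # whole hours, rounded down
    print(f"Titan's orbital period: {int(days)} days {hours} hours ({period / 86400:.2f} days)")
```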