For those of a certain vintage, brought up on a diet of novels set in Victorian public schools, scholarship is a rather quaint, old-fashioned concept. Scholarship is associated with row upon row of dusty, leather-bound editions of obscure textbooks, immaculately laid out in a rather grandiose, oak-panelled library. Scholarship is synonymous with ageing, rather austere schoolmasters sporting tweed blazers, elbow patches and half-moon spectacles. Scholarship is elitist and rooted in weighty tomes.

The Latin inscription in School Room, AUT DISCE AUT DISCEDE (Either learn or leave), would simply seem to reinforce this impression of scholarship.

And yet this impression could not be further from the truth at the RGS in the 21st century. Our recent inspection highlighted scholarship as one of the School’s two Significant Strengths: “pupils develop a breadth of knowledge and enthusiasm for scholarship… This strong academic culture leads to pupils who readily engage in critical thinking and deep learning and display intellectual curiosity.” If you were looking for evidence, then this edition of The Journal could not be a better illustration of how vibrant students’ learning and research is. Far from an outdated concept, scholarship at the RGS is dynamic and cutting edge, relevant and exciting, enriching and innovative, irrespective of where a student’s passion may lie.

I would like to take this opportunity to thank Mrs Tarasewicz, our Head of Scholarship, for her hard work and inspiration in compiling this impressive publication; Mrs Webb for designing and producing it; and, most of all, all those students who have contributed articles which are the result of so much passion, research and reflection. Scholarship - an outdated concept? Nothing could be further from the truth!

Dr JM Cox Headmaster

Welcome to this year’s edition of The Journal.

One of the joys of working in education is that no two days are the same. Nonetheless, every year I am delighted anew by the variety and sheer calibre of the ILA (Independent Learning Assignment) and ORIS (Original Research in Science) projects that our Sixth Form students produce. So high was the quality of submissions this year that we had to introduce an additional stage in the shortlisting process when selecting students to take part in the ILA/ORIS Presentation Evening, at which the top students have the chance to compete for the coveted ILA and ORIS awards. It is the work of this carefully selected shortlist of students that has been included in this publication, and it gives me the greatest pleasure to share such a diverse, fascinating and frankly outstanding collection of essays with you.

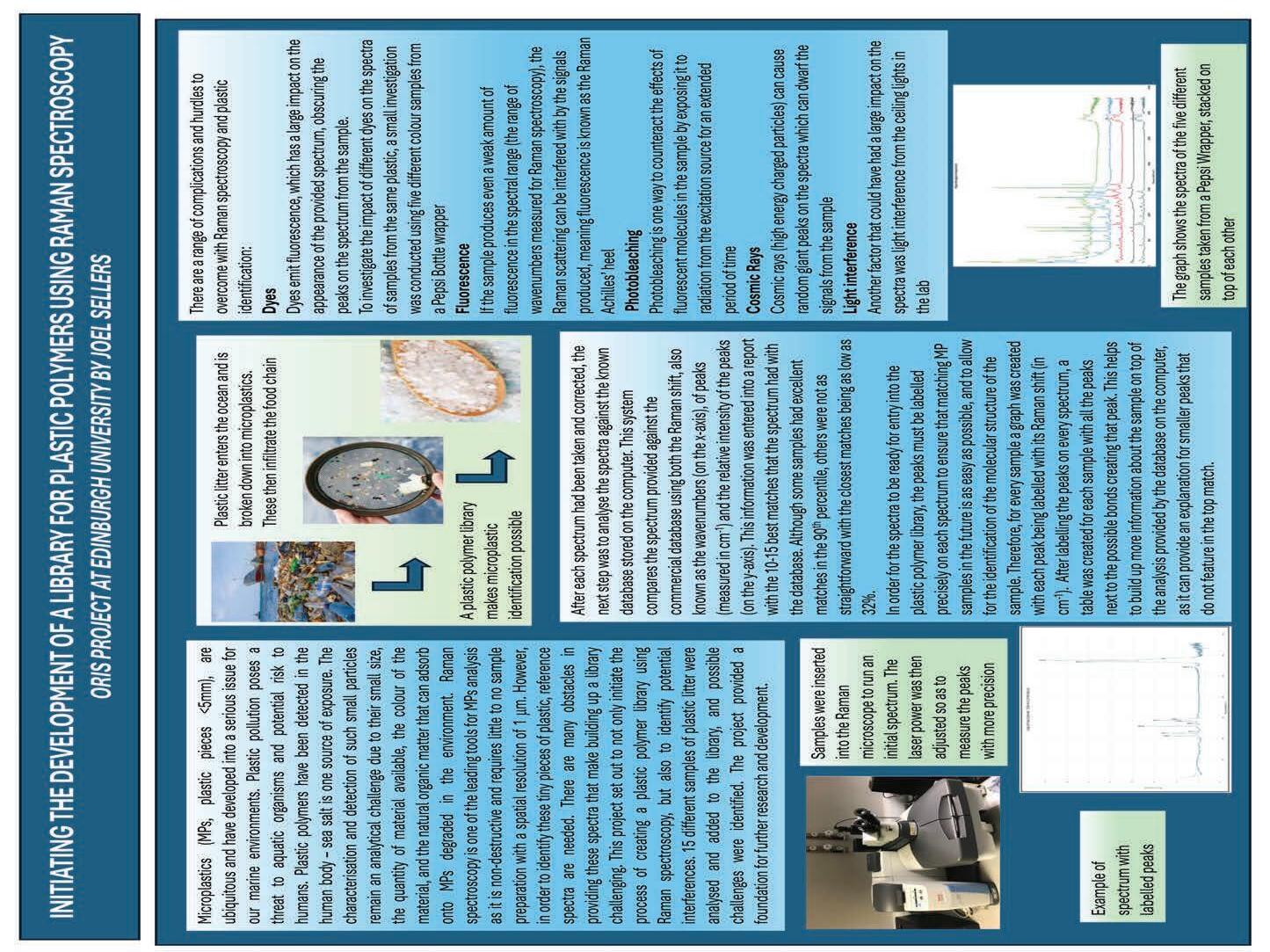

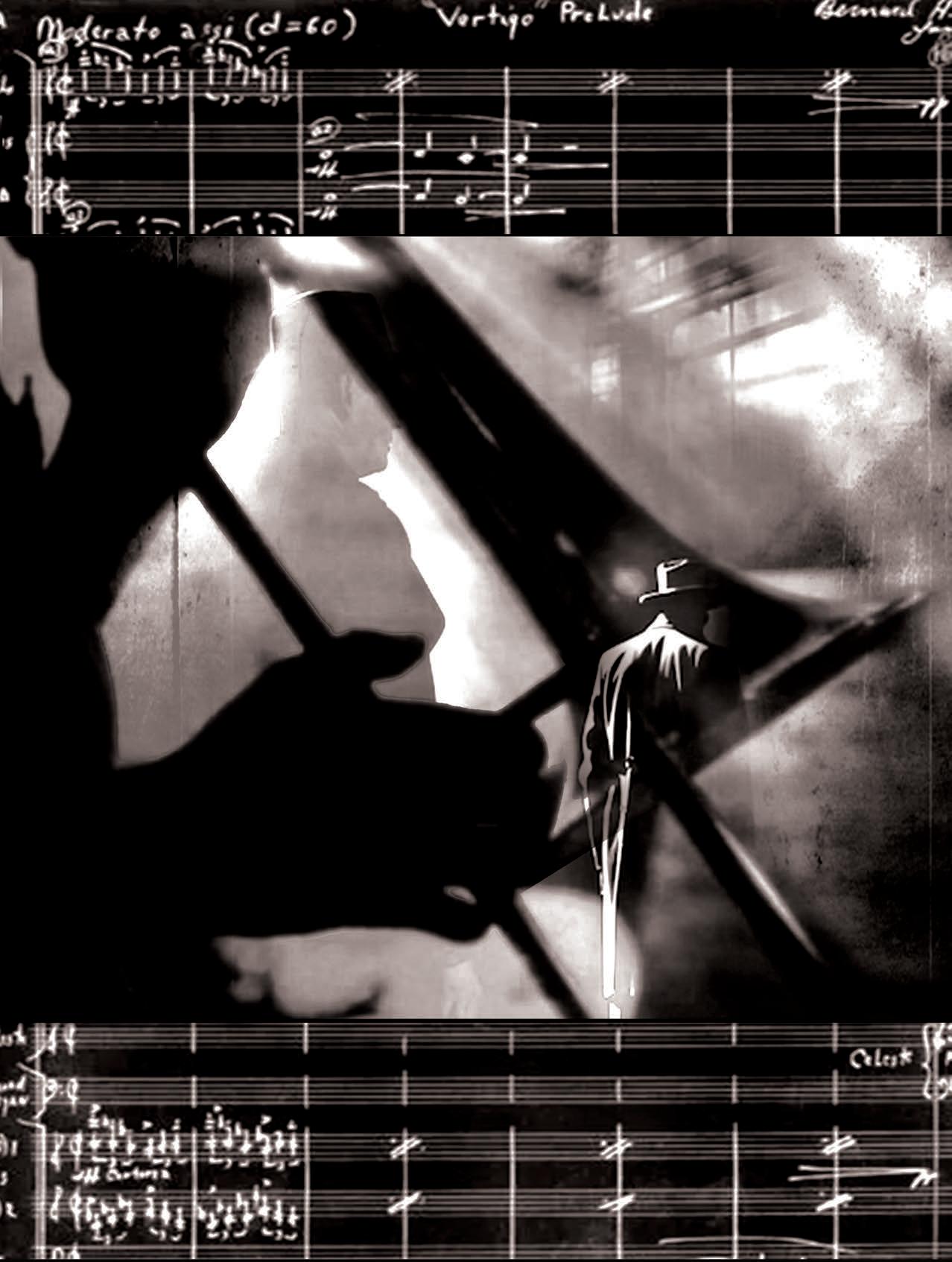

As you will find in exploring the pages of this magazine, the eventual winners of the ILA and ORIS awards were Ruvin Meda in the ILA Arts and Humanities category, Thomas Dowson in the ILA STEM category and Joel Sellers in the ORIS category, which was recognised separately for the first time this year. Special mention should be made of Ruvin because his full ILA cannot be included in the way that the others have been. This is because Ruvin’s ILA was, in fact, a film music score! Those of us at the ILA/ORIS Presentation Evening had the delight of listening to this, whilst watching the film that it accompanied, which was truly magical. Not only did Ruvin win our internal ILA award, but his composition was also recognised externally, securing second place in the Guildhall School of Music & Drama Release 2024 competition.

The calibre of work that the students produce for their ILA and ORIS projects indicates the strength and value of this programme. It therefore gives me great pleasure to report that this year, for the first time, we have also launched a Junior ILA (ILA JNR), open to all students in the Third and Fourth Forms. These younger students will each be supported by a Sixth Form mentor as they undertake a piece of independent research - perhaps their first piece of truly independent research - on a topic of their own choosing. We have been absolutely delighted by student uptake and look forward to sharing their work with an audience of friends and families at the Junior ILA Celebration Event at the start of the Trinity Term.

As last year, my particular thanks go to Georgina Webb, our Partnerships and Publications Assistant, for her flair and precision in producing such an eye-catching edition of The Journal. My thanks also go to Peter Dunscombe and Wai-Shun Lau for their continued support with running the ILA and ORIS programmes.

I very much hope that you enjoy the selection of projects that we have drawn together for you. Happy reading!

Mrs HE Tarasewicz Head of Scholarship

With thanks to: Mr Dunscombe, Mr Lau, Mrs Farthing and all of the ILA supervisors for their support with the ILA and ORIS programmes.

THOMAS DOWSON WINNER OF STEM

WILLIAM BAYNE

This Independent Learning Assignment (ILA) was short-listed for the ILA/ORIS Presentation Evening.

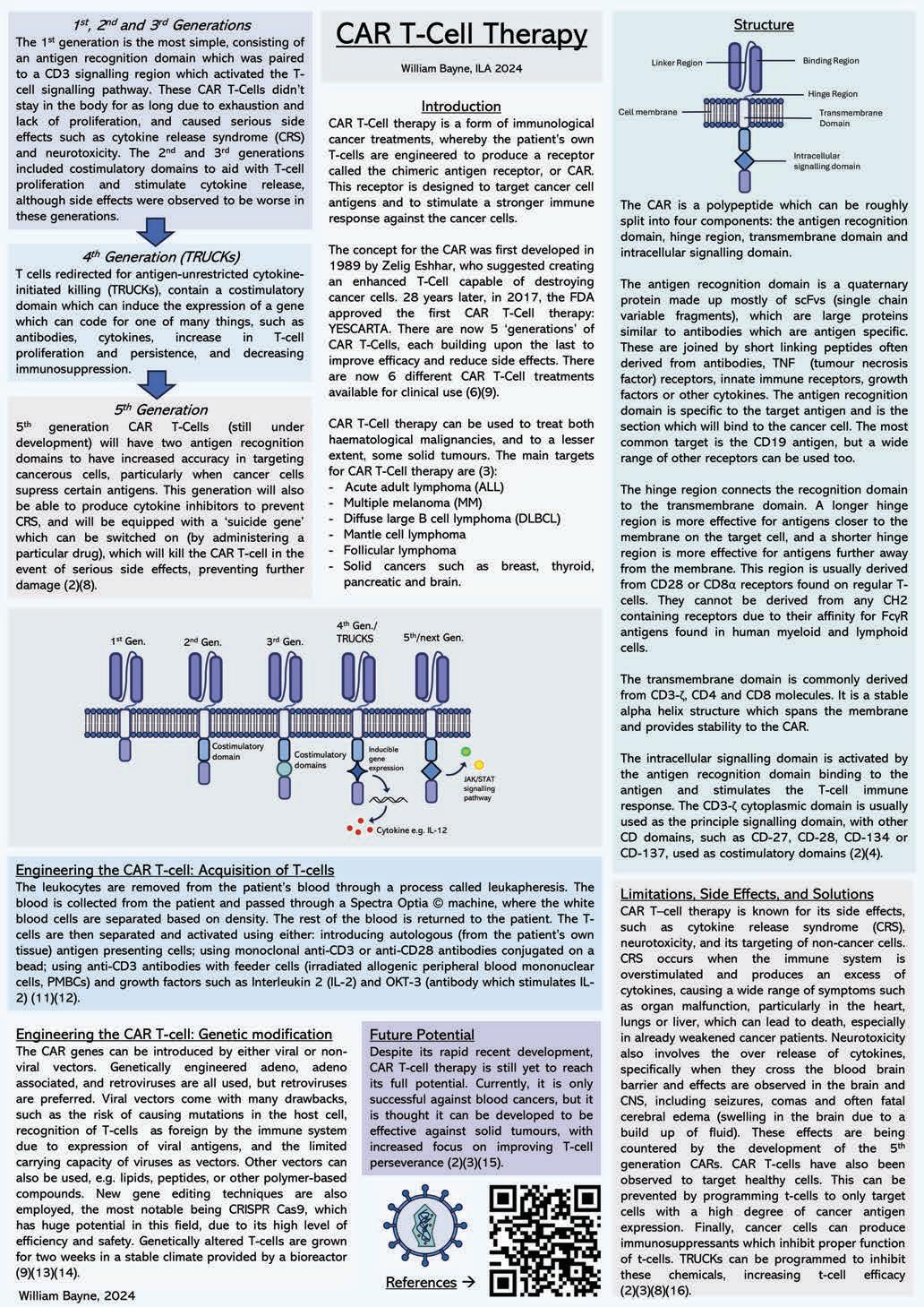

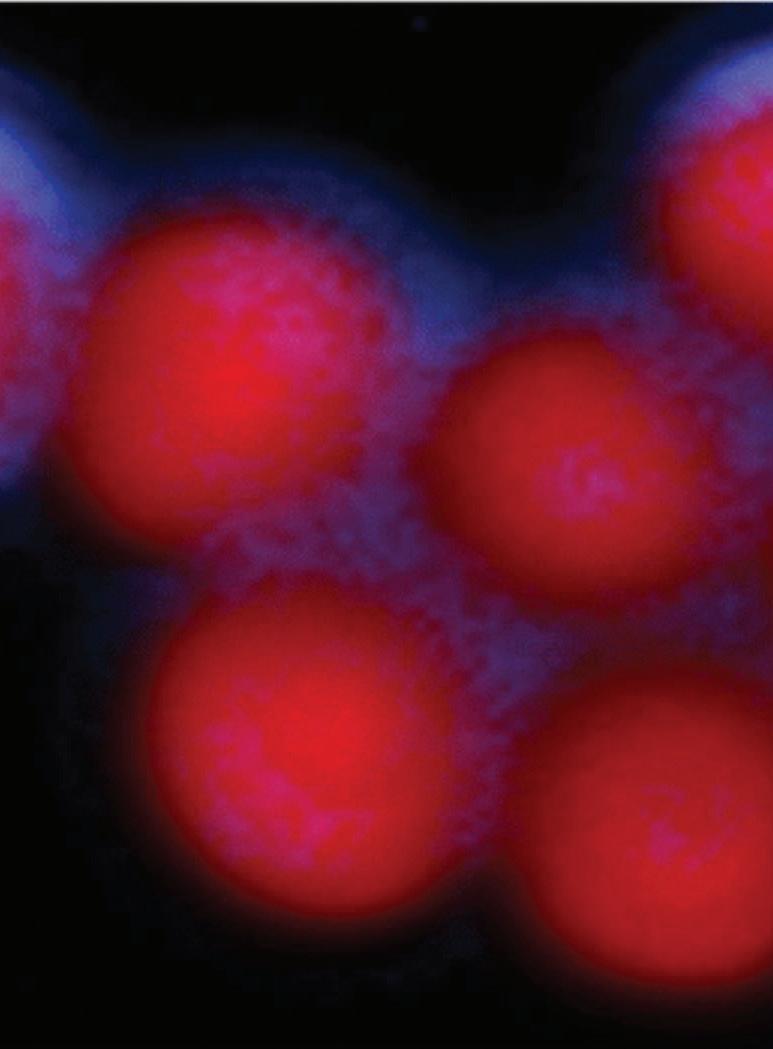

Immunotherapy is a relatively cutting-edge form of cancer treatment, having only been approved for use in the last 7 years. Unlike other common treatments for cancer which generally employ the tactic of directly killing or removing large numbers of cells from the tumour, immunotherapy works in a more subtle way. As the name suggests, it involves aiding the immune system in fighting and killing the cancer cells. This can be done in many ways, from utilising immune checkpoint inhibitors, to creating a vaccine which will specifically target cancerous cells, or creating genetically enhanced white blood cells specifically designed to stimulate an immune response against cancer cells - CAR (Chimeric Antigen Receptor) T-cell therapy.1

The first research into this type of treatment began in the 1980s, with scientists such as Zelig Eshhar suggesting the first plans for creating an enhanced T-cell capable of destroying cancer cells in 1989. However, it was not until 2017 that the FDA approved the first CAR T-cell treatments for clinical use: Kymriah,5 followed later that year by YESCARTA.9 Since 1989, the chimeric antigen receptor has come a long way, with five recognised 'generations' of CARs, each more effective and with fewer side effects than the last.

CAR T-cell treatment is used to treat a wide range of cancer types, both haematological malignancies and some solid tumours,3 although different types of CAR T-cells are used for different types of cancer, due to the absence or presence of certain antigens on different cancers and the specificity required from the receptor to bind to the antigen.2 There are currently six CAR T-cell treatments available, which are used for the treatment of multiple cancers: acute lymphoblastic leukaemia (ALL), multiple myeloma (MM), diffuse large B cell lymphoma (DLBCL), mantle cell lymphoma and follicular lymphoma,15 and some solid cancers such as pancreatic, brain, breast and thyroid cancer.23

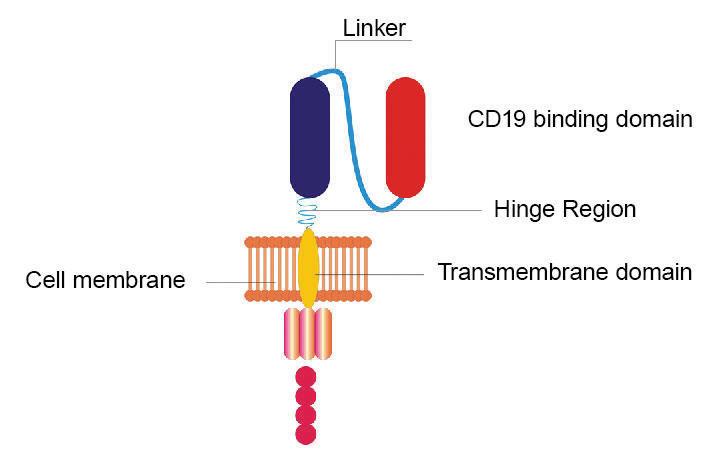

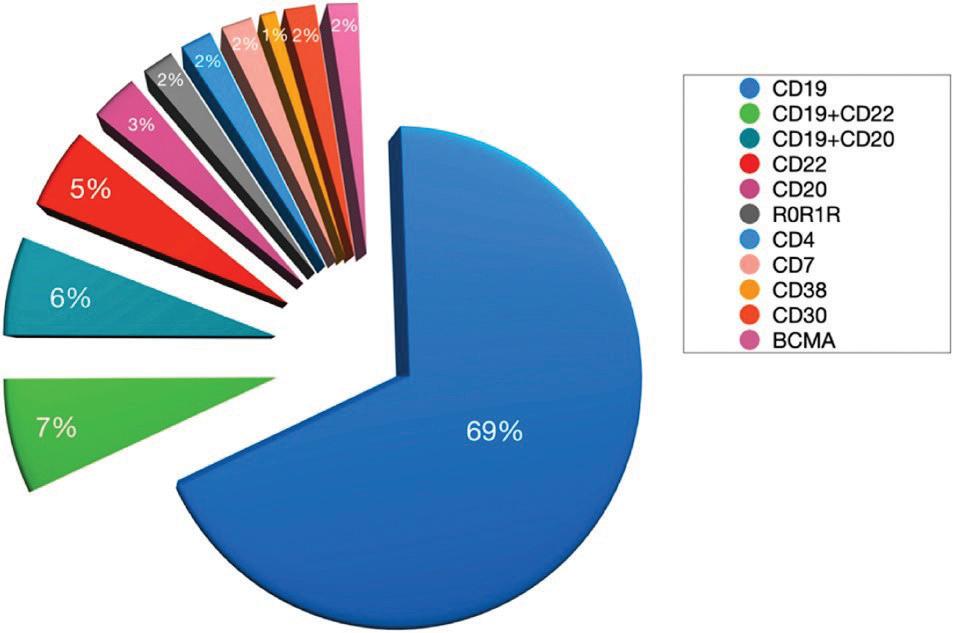

The chimeric antigen receptor (CAR) is a polypeptide made up of multiple components: the antigen recognition domain, the hinge region, the transmembrane domain, and the endodomain.4 The antigen recognition domain is found on the outside and is specific to a particular antigen found on a cancerous cell. It has a quaternary structure made up of single chain variable fragments (scFvs), which are large antigen specific proteins similar to antibodies. These are connected by short linking peptide chains, and are often derived from antibodies, although they can also be obtained from structures such as TNF receptors, innate immune receptors, growth factors or other cytokines.4 It is this section which will recognise and bind to the cancer cell and activate the immune response. The most common target antigen is CD19, but there is a wide range of possible antigens which could be targeted.2

The hinge (or spacer) region is found between the antigen recognition domain and the cell surface membrane on the T cell. It can vary in length: a longer hinge is preferable for binding regions that are closer to the membrane on the target cell, as it lends more flexibility to the antigen recognition domain, whereas shorter hinge regions are more desirable for antigens with a binding region which is further away from the cell surface membrane. The spacer region is commonly derived from CD28 or CD8α receptors (found on regular T-cells). However, it cannot be derived from Immunoglobulin G or any other CH2-containing receptors due to their affinity for FcγR antigens found on human myeloid and lymphoid cells, which would cause them to bind these cells with many harmful effects.4

The transmembrane domain, as the name suggests, bridges the cell surface membrane of the T-cell and is derived from a wide range of molecules, including, but not limited to, CD3-ζ, CD4 and CD8. It is a stable alpha-helix structure which spans the membrane and stabilises the CAR.4

Finally: the intracellular signalling domain or endodomain. This section, found within the cytoplasm and attached to the transmembrane domain, is responsible for activating the T-cell when the extracellular antigen recognition domain is activated by an antigen. The CD3-ζ cytoplasmic domain is used for the principal signalling domain, with other CD domains used as co-stimulatory domains, which are required for CAR T-cells to become activated. Commonly used co-stimulatory domains include CD-27, CD-28, CD-134 and CD-137.4

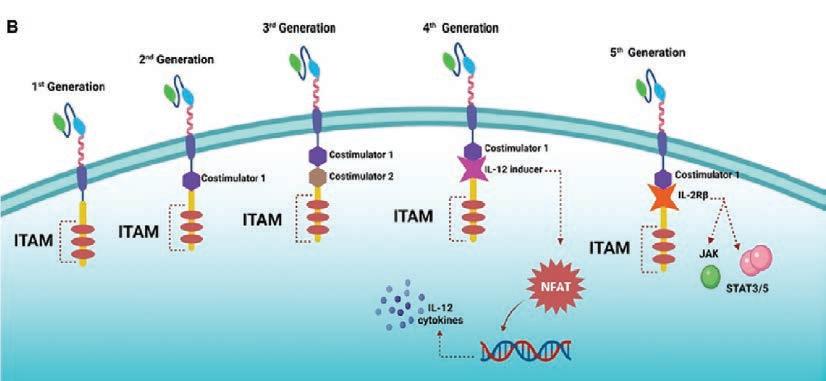

The first generation of CAR T-cells was developed in the 1990s and was the most basic form of CAR T-cells. The CAR was composed of an antigen recognition domain derived from an antibody, which would activate the T-cell receptor signalling pathway (CD3-ζ) when coupled with the target antigen and cause an immune response. However, first generation CARs were less adept at staying in the body for a prolonged period, due to T-cell exhaustion and a lower rate of proliferation, and had limited success against solid tumours and against some forms of cancer which lack the target antigen. They were also observed to produce some serious side effects, such as cytokine release syndrome and neurotoxicity, due to a reaction from the immune system against the engineered cells.2/8 In the second and third generations, costimulatory domains were added to aid with T-cell action, by stimulating cytokine release and causing further T-cell proliferation, although more side effects (such as cytokine release syndrome) were observed in the third generation due to a higher production rate of cytokines by the T-cell.2

Fourth generation CAR T-cells are also called T cells redirected for antigen-unrestricted cytokine-initiated killing, or TRUCKs. TRUCK CARs contain the extracellular antigen recognition domain and the two intracellular signalling domains (one for T-cell activation and one co-stimulatory for cytokine release and T-cell proliferation), as with the second generation. The fourth generation are also engineered with an inducible gene expression system which becomes active upon recognition of the antigen. This gene expression system can be engineered to code for many different cytokines or antibodies with a range of functions, such as increasing T-cell proliferation, aiding with T-cell persistence or overcoming immunosuppression. These TRUCKs have much more success in killing tumour cells due to their ability to improve the cytotoxicity of the T-cells and increase the number of immune cells at the cancer site, as well as their invaluable ability to decrease immunosuppression caused by the cancerous cells.2

The most advanced CAR T-cell therapy currently under development, the fifth generation of CAR T-cells, has many modifications which will allow it to fight cancer with amplified efficiency, strength and safety. One improvement is that the fifth generation will have two antigen recognition domains instead of only one. This means that it is much less likely for the CAR T-cell to falsely recognise other cells as cancer cells if they contain the same antigen. It also means that if the cancer cells become resistant to the T-cell by reducing the number of the target antigens present on the cell surface, the CAR T-cell will still bind via the other receptor, thereby reducing the effects of resistance to the therapy by the cancer cells. This also means that these CAR T-cells can bind to a larger number of cancer cells as they have a wider target range of antigens, so they can kill cancer cells at a faster rate. The fifth generation also has an improved signalling domain which means the CAR T-cell can act with a more potent ability to recognise and attack cancer cells, due to signalling domains with an increased emphasis on cytokine production and T-cell survival and proliferation.2

Fifth generation CAR T-cells are also equipped with tools to help prevent side effects common in earlier generations. They are engineered with the ability to produce cytokine inhibitors which can help control cytokine release syndrome which is a common but sometimes fatal side effect of CAR T-cell therapy.2 They also have the ability to 'commit suicide' in the case of excessive and dangerous side effects. The CAR T-cells can be equipped with one of many methods of suicide such as a suicide gene, which would contain the code for a destructive enzyme such as herpes simplex virus thymidine kinase, or small molecule assisted shutoff (SMASh) where a viral protease and a protease inhibitor are bound to the CAR in a position to cleave the CAR and destroy the cell.8 In all cases for a suicide mechanism, the system will be activated by a particular drug given to the patient in the event of CAR T-cell over expression, making the process much safer.

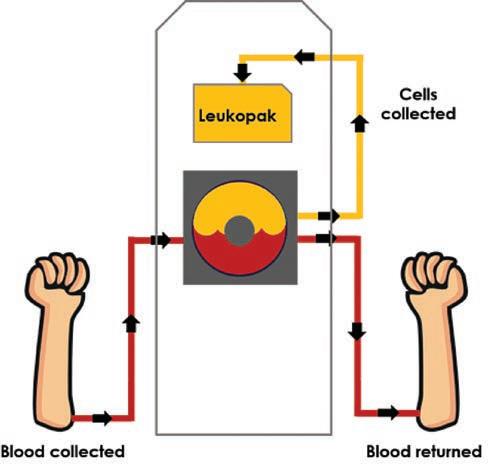

Blood is removed from the patient and the leukocytes (white blood cells) are removed from the blood using a Spectra Optia © machine, which separates the white blood cells based on their density, and the rest of the blood is returned to the patient.9/10 This process is called leukapheresis. The desired T-cells are then separated and activated by one of several methods: introducing autologous (from the patient’s own tissue) antigen presenting cells; using beads containing anti-CD3 and anti-CD28 monoclonal antibodies; or using anti-CD3 antibodies alongside feeder cells (irradiated allogeneic peripheral blood mononuclear cells, PBMCs) and growth factors, such as Interleukin 2 and OKT-3 (a monoclonal antibody which stimulates IL-2 and aids with T-cell proliferation).11/12

The CAR genes are inserted into the T-cells by either viral or non-viral vectors. Viral vectors are preferable because of their high rate of transfer of genetic material into the target cells. The CAR gene is inserted into the viral genome and the viral enzymes allow it to integrate the gene into the genome of the T-cells.

Genetically engineered retroviruses, adenoviruses and adeno-associated viruses are used for this, with retroviruses the preferred type. However, viral vectors have their drawbacks: the insertion of the gene into the host cell genome can lead to mutations which have the potential to cause the formation of a tumour; the virus can also cause the T-cell to be recognised as a foreign cell by the patient’s immune system, leading to an undesired immune response; due to the small size of viruses, only smaller, less complex genes can be used, which restricts the potential for CAR T-cell therapy using viral vectors; and the viruses can also find it difficult to produce high concentrations of genetically engineered cells.9

Non-viral vectors are also used, due to their unlimited carrying capacity and non-infectiousness. Vectors include lipid, peptide, and polymer-based compounds, alongside naked DNA and minicircle DNA. These generally work by chemically aligning themselves with a part of the DNA molecule through hydrophobic or hydrophilic interactions, at which point the DNA section is inserted into the genome.14

This section of CAR T-cell therapy has also improved with the development of new genetic engineering methods such as CRISPR Cas9, which has huge potential for the reduction of current limitations of CAR T-cell therapy due to its accessibility and simplicity, not to mention improved safety compared to viral vectors.13 It is also much more effective at allowing for the introduction of higher numbers of genetically engineered T-cells (i.e. a higher concentration of T-cells which have been genetically engineered) to the patient, and hence a stronger and more efficient treatment. Once the CAR T-cells are created, they are allowed to grow for two weeks in a bioreactor system so they can multiply to a very large number. They are delicate cells, however, so they require a stable microclimate to grow in, which can be provided by certain types of bioreactors.9

Although CAR T-cell therapy has come a very long way since it was first conceived in 1989, it still has not reached its full potential. Currently, T-cell therapies are only successful against certain haematological cancers such as ALL (adult acute lymphoblastic leukaemia), MM (multiple myeloma), DLBCL (diffuse large B cell lymphoma), mantle cell lymphoma and follicular lymphoma, and some solid tumours such as pancreatic, breast, thyroid and brain cancers.15/23 Despite this, it is thought that CAR T-cell therapy can be developed far enough to be effective against more types of solid tumours,3 especially with the rapid advancements constantly being made to increase the stamina of T-cells in fighting the cancer cells. Often in patients undergoing CAR T-cell therapy, T-cells can become exhausted, but this issue is reduced with each generation of CARs.2 CAR T-cell therapy can also suffer from resistance to the treatment by cancer cells, where the target antigen becomes less expressed, reducing the effect of the CAR T-cell. This can be combated by introducing a second antigen receptor region to the CAR so that the T-cell has a second region to bind to if the cancer cell downplays the expression of one antigen, increasing its efficacy.

CAR T-cell therapy is also known for its side effects, such as cytokine release syndrome, neurotoxicity and targeting of non-cancer cells, to name a few. Cytokine release syndrome (CRS) occurs due to overstimulation of the immune system to produce too many cytokines (cell signalling molecules), resulting in a wide range of symptoms, from inflammation to organ malfunction, particularly in the heart, lungs and liver, which in many cases (especially in already weakened cancer patients) can lead to death. Neurotoxicity also occurs due to an increase in cytokine production, specifically when the blood-brain barrier is crossed and adverse effects are observed in the brain and CNS, including seizures, comas and often fatal cerebral edema (swelling in the brain due to a build-up of fluid).16 Both these side effects are already starting to be dealt with in the 5th generation CAR T-cells, which can both inhibit cytokines in the event of CRS and be easily 'switched off' if the side effects are very serious.8 CAR T-cells have also been seen to attack some healthy body cells which can often display the same antigens as cancer cells, which leads to many harmful effects. This effect can be reduced by programming the T-cells to recognise cells with a higher degree of antigen expression, as would be found in the tumour cell but not in regular body cells.3 Finally, there is the problem of immunosuppressants released by the cancer cells, which prevent the proper function of the T-cells. This can be combated by producing CAR T-cells which are resistant to such chemicals, as was developed to a certain degree in the fourth generation.2

The past thirty-five years have seen a dramatic development in the field of immunotherapy, with five separate generations of CAR T-cells developed, and six treatments currently approved by the FDA. Each generation of CARs brings a new weapon to the fight against cancer, whether that be the ability to increase T-cell numbers more rapidly, inhibit immunosuppressants, or increase the safety of the patient during the treatment by managing the side effects. The process for creating these CAR T-cells is also evolving constantly, with the development of CRISPR technology helping this process become much faster, safer and more efficient. With advancements in the biomedical industry occurring at such a high rate, I would expect to see a dramatic increase in the success of this type of therapy in the near future.

Appendix A: Abbreviations and definitions (in order of appearance)

• CAR: chimeric antigen receptor.

• Chimeric: coded for by more than one different gene.

• CD (followed by a number): cluster of differentiation, a term given to a cell surface antigen or group of antigens, and the receptors which would bind to them.

• CH: constant heavy chain.

Appendix B: Other cancer treatments

• Chemotherapy uses drugs which will usually inhibit particular stages in the cell cycle, thereby preventing cancer cells (cells undergoing uncontrolled mitosis) from undergoing mitosis, preventing growth of the malignancy or tumour. However, despite the fact that these drugs can be targeted to the cancer cells, they can still damage other cells in the body, causing severe side effects. Radiotherapy uses high-energy ionising radiation, such as X-rays, to kill cancer cells. However, this is a destructive process which can easily damage other body cells. Surgery is also commonly used to treat cancer but is often difficult, sometimes unsuccessful, and not possible for many types of cancer, e.g. haematological malignancies.

Appendix C: Immunoglobulin G, CH2 and delocalised attack

• Immunoglobulin G (IgG) contains CH2 receptors which will bind to FcγR antigens present on myeloid and lymphoid cells. Myeloid cells include macrophages, megakaryocytes, granulocytes and erythrocytes, while lymphoid cells include T-cells, B-cells and natural killer cells6. These cells will be targeted by the CAR T-cells as well as the cancerous cells, leading to a decreased frequency of attack on the cancer cells by the CAR T-cells, and therefore decreased effectiveness of the treatment.

There have been in vivo studies to show that by removing the CH2 from the IgG the delocalisation of attack of CARs can be reduced7, but the most effective way of reducing this is to just use other sources for the hinge region.

Appendix D: The use of viral vectors in the genetic engineering of T-cells

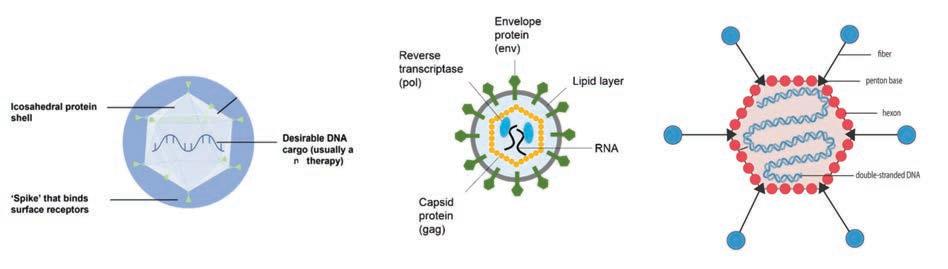

• Three main types of viral vectors are used for gene transfer to T-cells: retroviruses, adenoviruses and adeno-associated viruses. They all have a similar structure but are differentiated by the form of genetic material which is contained by the virus. Retroviruses contain a portion of single stranded RNA19, which is made into DNA inside the host cell using the virus’ enzymes, ready to be integrated into the host genome. Adenoviruses do not store genetic information as RNA but rather as double stranded DNA20, thereby eliminating the need for a conversion from RNA to DNA in the host cell. Adeno-associated viruses (AAVs) are subtly different in that they contain single stranded DNA instead of double stranded DNA21.

Figure D: Left to right: structure of a retrovirus19, an adenovirus20 and an adeno-associated virus21 (simplified)

In all cases, the original viral genetic material is removed from the virus and replaced with the desired genetic material. This is to prevent viral replication inside the T-cells, which would likely result in cell death.

Appendix E: Side effects: cytokine release syndrome (CRS) and neurotoxicity in more detail

• CRS is a common side effect of CAR T-cell therapy and is characterised by a high release of cytokines into the blood. This can cause mild to serious side effects, from flu- or fever-like symptoms to severe hypoxia and capillary leakage, with many more symptoms also reported22. Although the exact mechanism for CRS is poorly understood, certain aspects of the syndrome can be derived from the symptoms and the levels of certain cytokines found in CRS patients. For example, IL-6 (among others) is likely to result from activation of the endothelial cells and results in an immune response targeting these cells. This can lead to capillaries leaking and low blood pressure (hypotension)22. The cause of some other symptoms of CRS could also be derived in a similar way.

In most cases, neurotoxicity following CAR T-cell therapy is a result of CRS and is characterised by decreased integrity of the blood-brain barrier. Like CRS, the symptoms range from mild to serious, from slight confusion or aphasia (difficulties speaking or understanding others speaking) to seizures and cerebral edema (swelling due to fluid build-up)22.

Appendix F: Costs associated with CAR T-cell therapy compared to other treatments

• An important factor to consider is the high cost to success ratio compared to other forms of cancer treatment. CAR T-cell therapy can cost as much as $500,000 for some patients17, compared to a 6-month course of chemotherapy which is estimated to cost around $27,00018, and comes with little guarantee of a side effect free treatment, with the highest CRS rate being 95% for one particular treatment17. This raises the question of whether this form of treatment is really worth the cost.

1. National Cancer Institute (no date) Immunotherapy for Cancer - NCI. Available at: https://www.cancer.gov/about-cancer/treatment/types/immunotherapy (Accessed: June 4, 2024).

2. Wang, C. et al. (2023) CAR-T cell therapy for hematological malignancies: History, status and promise, Heliyon, 9 (11).

3. Alnefaie, A. et al. (2022) Chimeric Antigen Receptor T-Cells: An Overview of Concepts, Applications, Limitations, and Proposed Solutions, Frontiers in Bioengineering and Biotechnology, 10.

4. Ahmad, U. et al. (2022) Chimeric antigen receptor T cell structure, its manufacturing, and related toxicities; A comprehensive review, Advances in Cancer Biology - Metastasis, 4.

5. Novartis (2017) Novartis receives first ever FDA approval for a CAR-T cell therapy, Kymriah (TM) (CTL019), for children and young adults with B-cell ALL that is refractory or has relapsed at least twice | Novartis. Available at: https://www.novartis.com/news/ media-releases/novartis-receives-first-ever-fda-approval-car-t-celltherapy-kymriahtm-ctl019-children-and-young-adults-b-cell-allrefractory-or-has-relapsed-least-twice (Accessed: June 6, 2024).

6. Kondo, M. (2010) Lymphoid and myeloid lineage commitment in multipotent hematopoietic progenitors, Immunological reviews, 238 (1), p.37.

7. Pastrana, B., Nieves, S., Li, W., Liu, X. and Dimitrov, D.S. (2020). Developability assessment of an isolated CH2 immunoglobulin domain, Analytical chemistry, 933, pp.1342-1351.

8. Andrea, A.E., Chiron, A., Bessoles, S. and Hacein-Bey-Abina, S. (2020). Engineering next-generation CAR-T cells for better toxicity management, International journal of molecular sciences, 21(22), p.8620.

9. Zhang, C., Liu, J., Zhong, J.F. and Zhang, X. (2017). Engineering CAR-T cells. Biomarker research, 5, pp.1-6.

10. Thompson, J. (2020). What is leukapheresis? Caltag Medsystems. Available at: https://www.caltagmedsystems.co.uk/information/what-is-leukapheresis/ (Accessed: 12 June 2024).

11. Jin, C., Yu, D., Hillerdal, V., Wallgren, A., Karlsson-Parra, A. and Essand, M., (2014). Allogeneic lymphocyte-licensed DCs expand T cells with improved antitumor activity and resistance to oxidative stress and immunosuppressive factors. Molecular Therapy-Methods & Clinical Development, 1.

12. Schwab, R., Crow, M.K., Russo, C. and Weksler, M.E. (1985). Requirements for T cell activation by OKT3 monoclonal antibody: role of modulation of T3 molecules and interleukin 1. Journal of immunology (Baltimore, Md.: 1950), 135(3), pp.1714-1718.

13. Dimitri, A., Herbst, F. and Fraietta, J.A. (2022). Engineering the next-generation of CAR T-cells with CRISPR-Cas9 gene editing. Molecular cancer, 21(1), p.78.

14. Ramamoorth, M. and Narvekar, A. (2015). Non viral vectors in gene therapy-an overview. Journal of clinical and diagnostic research: JCDR, 9(1), p.GE01.

15. Cancer Research UK (2024) Blood cancers | Cancer Research UK. Available at: https://www.cancerresearchuk.org/about-cancer/blood-cancers (Accessed: June 12, 2024).

16. Gust, J., Ponce, R., Liles, W.C., Garden, G.A. and Turtle, C.J. (2020). Cytokines in CAR T cell–associated neurotoxicity. Frontiers in Immunology, 11, p.577027.

17. Choi, G., Shin, G. and Bae, S., 2022. Price and prejudice? The value of chimeric antigen receptor (CAR) T-cell therapy. International Journal of Environmental Research and Public Health, 19(19), p.12366.

18. Geng, C. (2023) Cost of Chemotherapy: What to expect and financial help, Medical News Today. Available at: https://www.medicalnewstoday.com/articles/chemotherapy-cost (Accessed 19 June 2024)

19. Ulbrich, M. et al. (2014). The Viral Vector. Available at https://2014.igem.org/Team:Freiburg/Project/The_viral_vector (Accessed 19 June 2024)

20. Applied Biological Materials (No date). The Adenovirus System. Available at: https://info.abmgood.com/adenovirussystem-introduction (Accessed 19 June 2024)

21. Blair, P. (No date). Snapshot: what are adeno associated viruses (AAV)? Available at https://www.ataxia.org/scasourceposts/snapshot-what-are-adenoassociated-viruses-aav/ (Accessed 19 June 2024)

22. Freyer, C.W. and Porter, D.L. (2020). Cytokine release syndrome and neurotoxicity following CAR T-cell therapy for hematologic malignancies. Journal of Allergy and Clinical Immunology, 146(5), pp.940-948.

23. Jogalekar, M.P., Rajendran, R.L., Khan, F., Dmello, C., Gangadaran, P. and Ahn, B.C. (2022). CAR T-Cell-Based gene therapy for cancers: new perspectives, challenges, and clinical developments. Frontiers in immunology, 13.

This Independent Learning Assignment (ILA) won the ILA prize in the STEM category

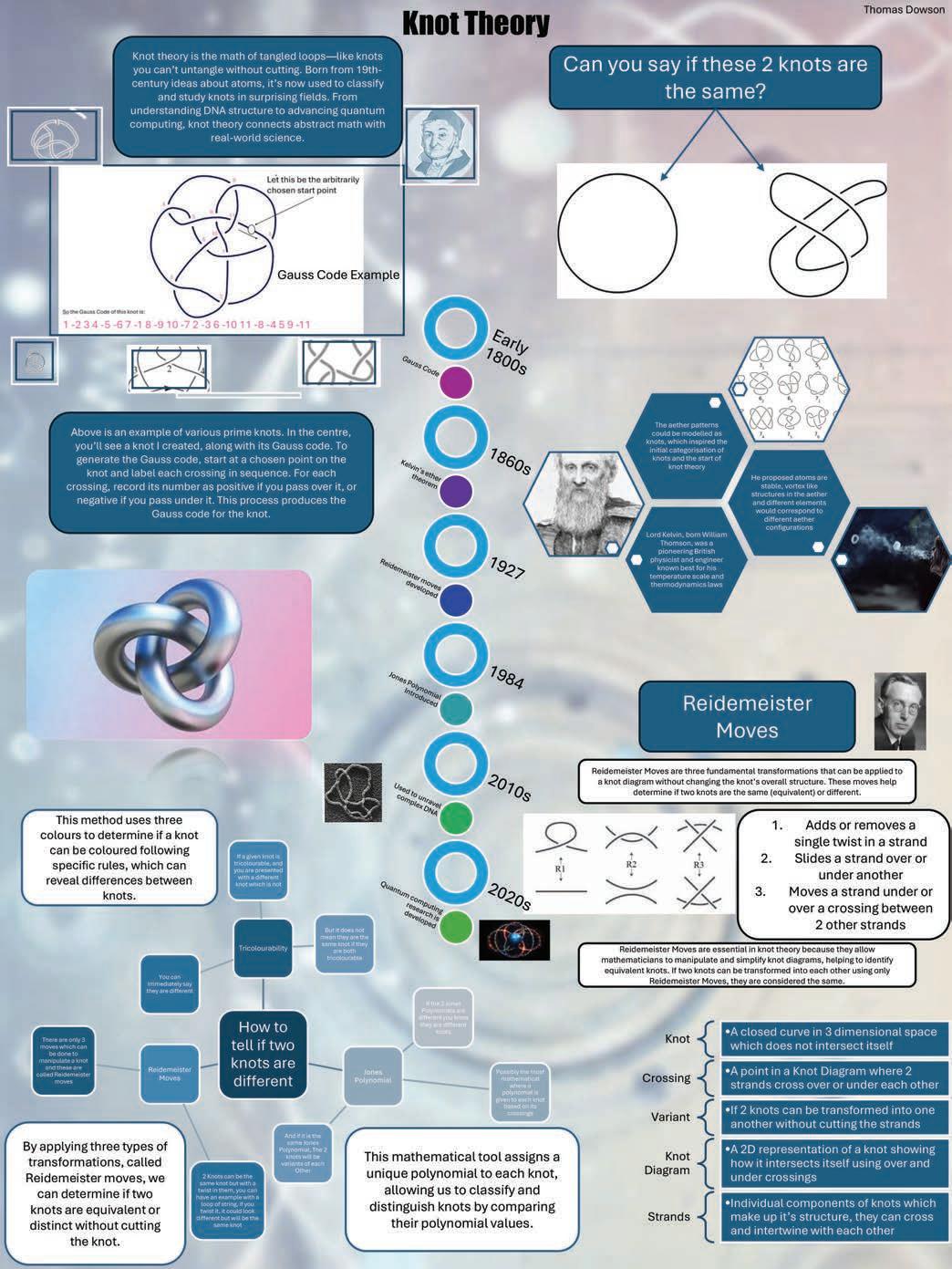

A knot is a simple yet complex object, which can be found almost anywhere in the world. If you have ever been climbing I’m sure you can appreciate the power a knot has. Can a knot be more than just a rope used as a safety measure for people who can’t climb?

If we look at a knot, surely there must be some way of describing it. The dictionary definition states:

"A join made by tying together the ends of a piece or pieces of string, rope, cloth, etc".

In comes knot theory, a constantly developing branch of mathematics and physics, where advances are constantly being made in the quantum branch, with new knot variants and invariants being discovered and quantum fields and gravity being developed as a consequence. Although quantum might seem unfamiliar to many, knot theory finds applications across various fields of science. Both computer science and mathematics feature specialized branches dedicated to the study of knots.

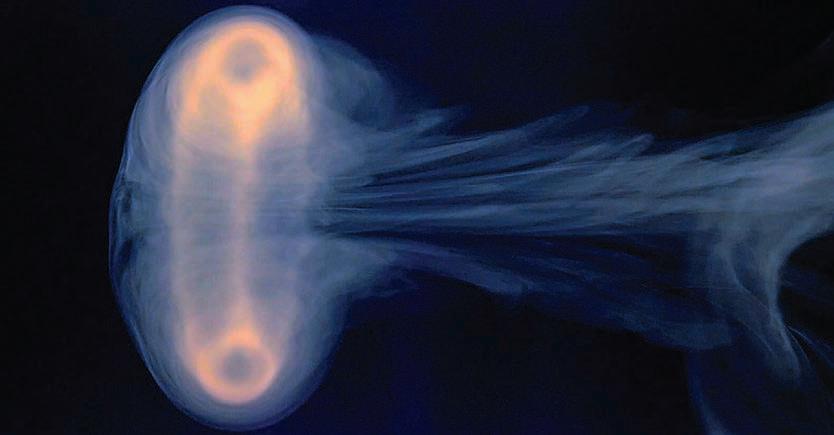

Knot theory was never actually intended for certain branches of quantum fields or even mathematics, but rather for chemistry. In the latter half of the 19th century, Lord Kelvin suggested that atoms would consist of knotted rings of an ether-like substance, where different elements had different knots. This idea came to mind after watching smoke rings floating in the air, a usual sight given the pipes which were commonplace at the time. It was hoped that this theory would enlighten scientists on how different elements absorb and emit light at different wavelengths. The early proof, in 1860, came from the spectral lines visible from each element. Spectral lines are produced because each element has its own energy levels, and so produces photons of distinct energies which appear as colours at distinct wavelengths. Knot theory would hopefully explain why each element has a unique ‘fingerprint’ of spectral lines. Sadly, while these theoretical possibilities sounded logical, when tested they did not end up being the case. Even though he was not the first person to take a leap forward into the mathematics of knots, Kelvin was the first person to aim to tabulate these findings.Sil06

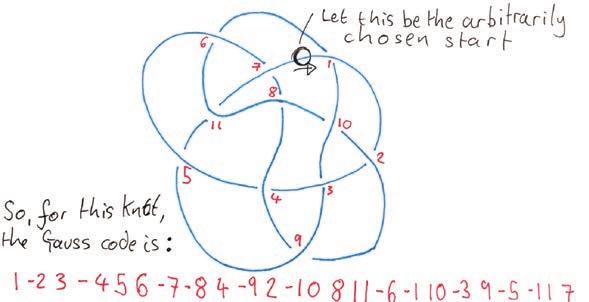

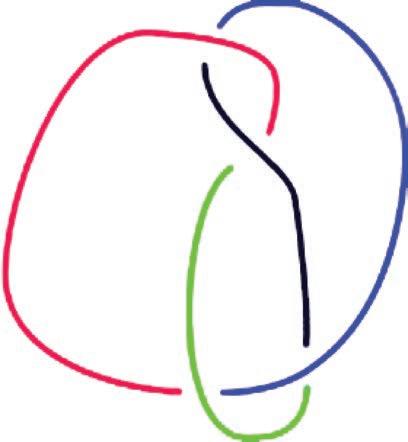

The first person to make progress on the mathematics behind knots was Carl Friedrich Gauss in the early 1800s. His early theories involved the following idea.

1. Arbitrarily choose a starting point on the knot.

2. Imagine you are walking along the knot, labelling the first crossing (a point on a knot where two strands overlap) as 1, and any new crossings you reach as 2, 3, and so on, until you get back to where you started.

3. To record the Gauss code of the knot, walk along the knot again, this time noting down each crossing as you reach it: if your strand goes over another, record the crossing number as a positive value, and if it goes under another, record it as a negative value.

An example of the Gauss code can be seen in figure 2: Extended Gauss Code.
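The recording rule in step 3 can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the trefoil code used is one commonly quoted form rather than one taken from the figures in this article.

```python
def is_valid_gauss_code(code):
    """Check the bookkeeping rule of a signed Gauss code.

    Each crossing label 1..n must appear exactly twice: once as +k
    (the walk passes over) and once as -k (the walk passes under).
    """
    n, remainder = divmod(len(code), 2)
    if remainder or n == 0:
        return False
    expected = list(range(-n, 0)) + list(range(1, n + 1))
    return sorted(code) == expected


def crossing_count(code):
    """Number of crossings in the diagram described by the code."""
    return len(code) // 2


# Assumed example: a commonly quoted Gauss code for a trefoil diagram.
TREFOIL = [1, -2, 3, -1, 2, -3]

print(is_valid_gauss_code(TREFOIL), crossing_count(TREFOIL))
```

A code that passes this check is not guaranteed to describe a real knot diagram; the check only confirms that every crossing has been walked once over and once under.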

taken place. This creates a new and more complex knot which combines the properties of the two individual knots.

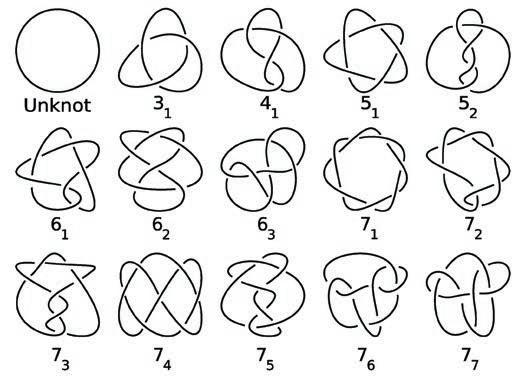

All of the Prime knots can be seen below up to the seventh crossing but if you expand this list up to the sixteenth crossing you get 1701936 different prime knots. Lic81

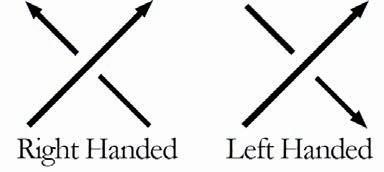

However, Gauss never realised the potential this branch of mathematics he had discovered held and left his research with the extended Gauss code, which was able to further describe the construction of the knot by giving the positive and negative values depending on the nature of the crossing. The nature of the crossing being if when it passes underneath or over, whether or ‘knot’ it is a right handed crossing or a left handed one. This is shown in figure 3.

3: Depicting the two forms of crossings

This can lead to a more complex code for each given knot but with each code being more complex, the benefit gained is when analysing the code, it is possible to recreate the knot to a more exact form than with the simple Gauss code.Bre06

As Knot theory developed, certain key concepts were definied, namely being prime knots and variants which are still key to modern day developments in knot theory.

A prime knot holds a status similar to prime numbers.

This is a knot which can not be decomposed into any simpler knots the knot sum process. The knot sum process is where you take to knots and by cutting each knot open at any point and then joining the ends to any other knot where the same process has

Figure 4:

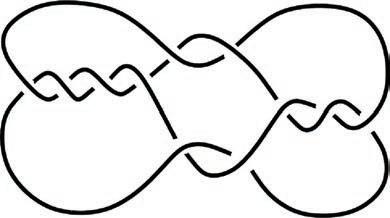

Variants are the distinct shapes that one can create by distorting a particular knot. For example, take any knot, for instance the trefoil. By altering the way it twists or loops, or even adding in new twists and loops, you can get the same basic knot in a different form. Many of these variants can look drastically different, though they are all topologically the same as the starting knot.

A similar idea appears in fractions, where 2/3 is the same as 4/6. These are variants of each other, but we know that the two fractions are the same. The same principle applies in knot theory, where the unknot is the same as the Goeritz unknot despite looking completely different [Wu92].

As another example, each time you take a simple knot and add just one extra loop or twist, you generate a new variant of that original knot. Variants, in this way, give mathematicians a tool for understanding all the possible configurations that a single knot can assume. This teaches mathematicians about the fundamental properties of knots and how they compare to each other [PW10].

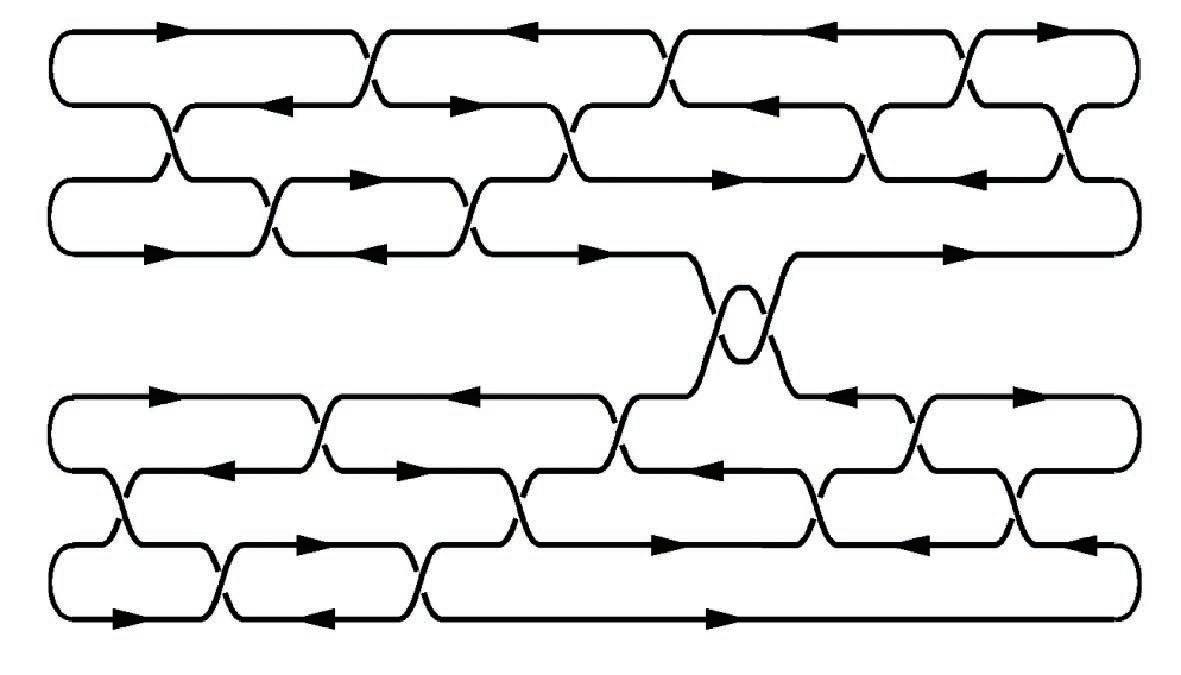

In 1927, the basis of knot theory as it is known today was discovered. Kurt Reidemeister wanted to prove that knots exist that are distinct from the unknot. The unknot can be thought of as a single loop with no crossings or, alternatively, a slack elastic band. He went on to develop a series of three moves which can be carried out without changing the underlying topology of the knot. These moves are local transformations, which are as follows:

Figure 7: Depicting the 3 Reidemeister moves

With the development of these moves, he was able to identify whether one knot is distinct from another. However, at this stage, this required many hours of trial and error.

Reidemeister used these moves, which do not change the underlying topology of the knot, to rigorously prove that there are knots that are different from the unknot. This may sound trivial, but it opened up the world of knot theory for future mathematicians to develop [Tra83].

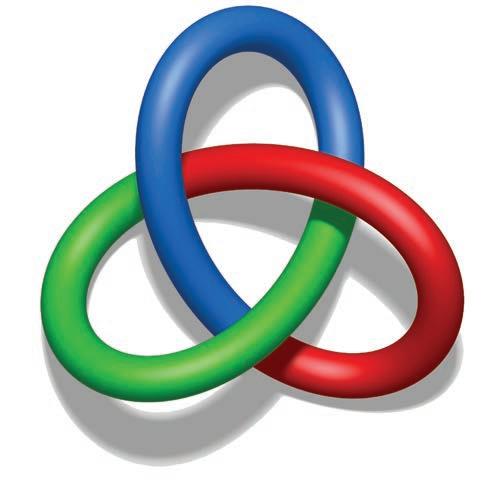

Theories began developing from knot diagrams towards other ways to differentiate knots. A knot diagram is the simplified representation of a knot drawn on a single plane, in which each strand of the knot is represented as a continuous curve that is broken where it passes under another strand. One such way of differentiating knots is tricolourability. A section of a knot runs from the point where the knot goes under one crossing to the point where it next goes under a crossing. A knot is tricolourable if each section can be given one of three colours so that at least two colours are used and, at every crossing, the three sections that meet are either all the same colour or all different colours. This is shown in figure 5, where each section of the knot is coloured using red, green, and blue [Alm12].

There are knots that are not tricolourable, such as the figure-eight knot. The reason tricolourability is so useful is that it serves as an invariant for distinguishing distinct knots. This is because the Reidemeister moves, as stated earlier, do not change the topology of the knot, and each of the three Reidemeister moves preserves tricolourability. If one diagram of a knot is tricolourable, all other variants of that knot will be tricolourable as well [BH15].

This makes tricolourability a very useful technique for comparing knots. This simple process aids in categorizing knots and in developing the subject of knot theory, while also allowing people to easily see a visual difference without having to do complicated mathematics [Azr12].

Figure 9: Example of a non-tricolourable figure of eight knot
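As a rough illustration, tricolourability can be tested by brute force. The sketch below assumes a knot is given as a signed Gauss code (positive for over, negative for under), splits it into sections, and tries every colouring. The function names and the two Gauss codes are assumptions chosen for this example, not taken from the article's figures.

```python
from itertools import product


def crossings_from_gauss(code):
    """Return (over, under_in, under_out) section indices for each crossing.

    Section k starts just after the k-th undercrossing in the code and
    ends at the next undercrossing.
    """
    n = len(code)
    unders = [i for i, c in enumerate(code) if c < 0]
    m = len(unders)
    over_section = {}
    for k, start in enumerate(unders):
        i = (start + 1) % n
        while code[i] > 0:  # every overpass on the way belongs to section k
            over_section[code[i]] = k
            i = (i + 1) % n
    return [(over_section[-code[pos]], (k - 1) % m, k)
            for k, pos in enumerate(unders)]


def is_tricolourable(code):
    """Try every colouring of the sections with colours 0, 1 and 2."""
    crossings = crossings_from_gauss(code)
    for colours in product(range(3), repeat=len(crossings)):
        if len(set(colours)) < 2:
            continue  # at least two colours must be used
        # Three colours are all equal or all different exactly when
        # their sum is a multiple of 3.
        if all((colours[o] + colours[a] + colours[b]) % 3 == 0
               for o, a, b in crossings):
            return True
    return False


TREFOIL = [1, -2, 3, -1, 2, -3]                # assumed example code
FIGURE_EIGHT = [-1, 2, -3, 1, -4, 3, -2, 4]    # assumed example code

print(is_tricolourable(TREFOIL), is_tricolourable(FIGURE_EIGHT))
```

Trying all colourings takes 3 to the power of the number of sections, so this approach only suits small diagrams.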

The Jones polynomial is a relatively new discovery in the realm of knot theory which assigns a polynomial to each knot or link, from which they can be sorted into different categories. The polynomial was discovered by Vaughan Jones in the 1980s and has become one of the most powerful tools in modern knot theory [Jon05]. To understand the Jones polynomial better, it is important to recall what a knot diagram is. A diagram is nothing but a series of crossings: at each crossing, one of the ‘threads’ goes over or under another ‘thread’. With this at our disposal we can compute the Jones polynomial, V(t), for a given knot. For one of the simplest knots, the trefoil, V(t) = t + t³ - t⁴ (its mirror image has the same polynomial with t replaced by 1/t). Here t is simply a formal variable with no value of its own; what matters is the pattern of powers and coefficients, which is worked out from the crossings of the diagram and comes out the same for every diagram of the same knot. If we compare this to the unknot, which has a Jones polynomial of V(t) = 1, we can clearly see that V(t) = t + t³ - t⁴ and V(t) = 1 are different [Jon14]. This is the power of the Jones polynomial: it is able to tell apart knots that, at first glance, look exactly the same but have different Jones polynomials. It is likely that two knots with the same Jones polynomial are the same topological knot, but further checks are needed to be certain. To summarise, imagine the Jones polynomial as a signature for each knot. This allows mathematicians to tell knots apart and to examine them more easily [Big02].

Now that we can somewhat understand the way a knot is created and categorised, how could what seems to be a purely theoretical concept save a life?

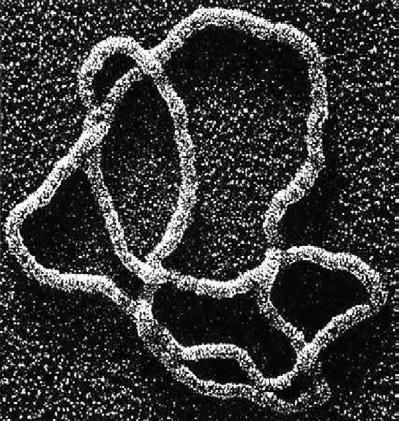

Take a look at biology, specifically at the structure of DNA, the classic double helix. To create human life, the amount of data that must be stored on DNA is massive, requiring the strands of DNA to be up to 2 m long. To sustain human life, this DNA needs to be constantly produced, and as it is produced these long strands inevitably get knotted up, just as cables left in a box inevitably get knotted up. Developments have been made recently in which a robot is able to autonomously analyse and untangle a knot. This uses the principles of knot theory to predict what will happen when a certain action is performed [VSK+22]. Using these principles, modern scientists are able to analyse strands of DNA and, where required, unknot segments or, in other cases, create knots. By recreating natural knots in the DNA which may have been broken, certain functions can be restored. As shape is important for binding sites in the cell, damaged DNA may be life-threatening, and this new research can help save lives by fixing this DNA or creating new strands [LJ15].

Quantum computing is what the world is moving towards as we require computers to handle larger and more complicated questions. Quantum computing is based on qubits that can exist in many states at once, allowing these computers to deal with many complex problems at the same time. One prominent proposed use of this technology is breaking encryption methods. There are two big problems: it is very expensive, with a lot of hardware required, and qubits are easily disturbed by their environment [Col06].

The topological quantum computer aims to solve these problems using braided anyons. This significantly reduces the likelihood of errors forming, and certain calculations can be done in polynomial time rather than exponential time. However, precise manipulation is required when dealing with anyons, and only recently have there been experiments indicating that they exist. Further developments are needed to understand their structure and how to layer them in computers [MMM+18].

Using knot theory in the building of quantum computers creates a loop: knot theory is being used to build computers which can in turn be used to find new variants for knot theory, developing the subject while making technological advances.

Knots are more than just objects in the physical world; they are the subject of a complicated study in both mathematics and science. The initial hypotheses of people like Kelvin and Gauss have developed into the sophisticated discipline that is knot theory. The tools we need are given to us by the Jones polynomial and the Reidemeister moves, which allow us to classify and manipulate knots.

From this abstract branch of mathematics, modern uses extend into many areas, for example biology and chemistry, but most of all quantum computing, where knot theory is key to developing more advanced ideas. As more research develops, further links with knot theory are being made, which is why the study of knot theory is so important to our future.

1. [Alm12] Manuela Almeida. Knot theory. Estados Unidos, 2012.

2. [Azr12] M Azram. Knots and colorability. Australian Journal of Basic and Applied Sciences, 6(2):76–79, 2012.

3. [BH15] Danielle Brushaber and McKenzie Hennen. Knot tricolorability. 2015.

4. [Big02] Stephen Bigelow. A homological definition of the Jones polynomial. Geometry & Topology Monographs, 4:29–41, 2002.

5. [Bir93] Joan S Birman. New points of view in knot theory. Bulletin of the American Mathematical Society, 28(2):253–287, 1993.

6. [Bre06] Felix Breuer. Gauss codes and thrackles. PhD thesis, Citeseer, 2006.

7. [Col06] Graham P Collins. Computing with quantum knots. Scientific American, 294(4):56–63, 2006.

8. [DF87] MJ Dunwoody and RA Fenn. On the finiteness of higher knot sums. Topology, 26(3):337–343, 1987.

9. [Jon05] Vaughan F.R. Jones. The Jones polynomial. University of California, 2005.

10. [Jon14] Vaughan Jones. The Jones polynomial for dummies. University of California, Berkeley, 2014.

11. [Lic81] WB Lickorish. Prime knots and tangles. Transactions of the American Mathematical Society, 267(1):321–332, 1981.

12. [LJ15] Nicole CH Lim and Sophie E Jackson. Molecular knots in biology and chemistry. Journal of Physics: Condensed Matter, 27(35):354101, 2015.

13. [Man18] Vassily Olegovich Manturov. Knot theory. CRC Press, 2018.

14. [MK96] Kunio Murasugi and Bohdan Kurpita. Knot theory and its applications. Springer, 1996.

15. [MMM+18] D Melnikov, A Mironov, S Mironov, A Morozov, and An Morozov. Towards topological quantum computer. Nuclear Physics B, 926:491–508, 2018.

16. [PW10] Peter Pagin and Dag Westerståhl. Compositionality I: Definitions and variants. Philosophy Compass, 5(3):250–264, 2010.

17. [Sil06] Daniel S Silver. Knot theory’s odd origins: The modern study of knots grew out of an attempt by three 19th-century Scottish physicists to apply knot theory to fundamental questions about the universe. American Scientist, 94(2):158–165, 2006.

18. [Tra83] Bruce Trace. On the Reidemeister moves of a classical knot. Proceedings of the American Mathematical Society, 89(4):722–724, 1983.

19. [VSK+22] Vainavi Viswanath, Kaushik Shivakumar, Justin Kerr, Brijen Thananjeyan, Ellen Novoseller, Jeffrey Ichnowski, Alejandro Escontrela, Michael Laskey, Joseph E Gonzalez, and Ken Goldberg. Autonomously untangling long cables. arXiv preprint arXiv:2207.07813, 2022.

20. [Wu92] FY Wu. Knot theory and statistical mechanics. Reviews of Modern Physics, 64(4):1099, 1992.

DANIEL HUGHES

Analysing the band structure of bulk and monolayer Transition Metal Dichalcogenides including Janus MXY structures

This Original Research in Science (ORIS) project was short-listed for the ILA/ ORIS Presentation Evening.

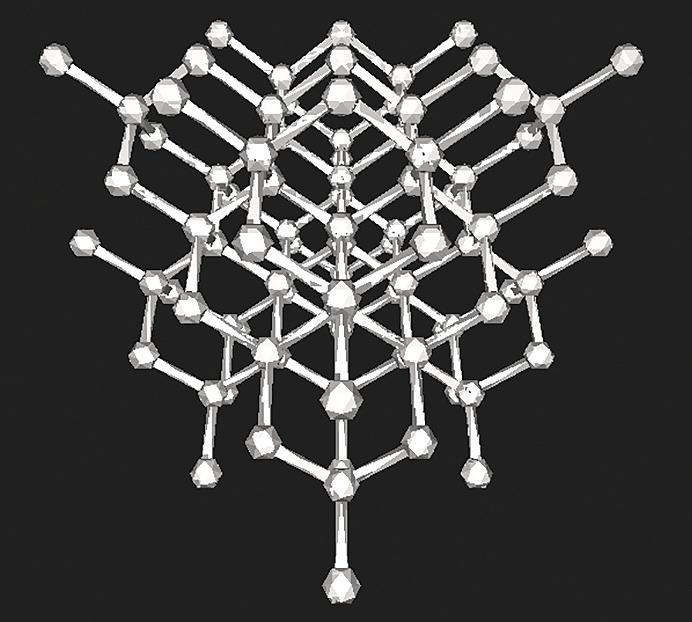

A crystal is a solid material in which the component atoms are arranged in a definite, periodic pattern. A solid is crystalline if it has long-range order - once the positions of an atom and its neighbours are known at one point, the place of each atom is known precisely throughout the crystal. A basic concept in crystal structures is the unit cell. It is the smallest unit of volume that permits identical cells to be stacked together to fill all space. By repeating the pattern of the unit cell over and over in all directions, the entire crystal can be constructed. This regular repetition of the unit cell makes the crystal

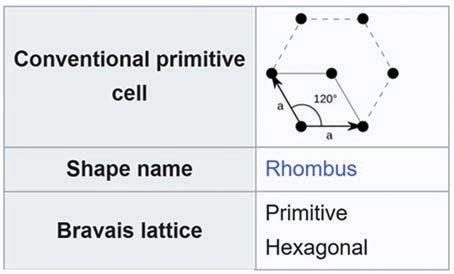

a periodic structure. The structure of all crystals can be described in terms of a lattice, with each atom or group of atoms replaced by a point in space forming a crystal lattice with the same geometrical properties as the crystal. In 1848, the French physicist, Auguste Bravais, identified 5 lattices from which all possible cases in 2-dimensional space can be represented and 14 possible lattice structures in 3-dimensional space. A fundamental aspect of any Bravais lattice is that, for any choice of direction, the lattice appears exactly the same from each of the discrete lattice points when looking in that chosen direction.

Mathematically, the unit cells of a Bravais Lattice are specified according to six lattice parameters which are the relative lengths of the cell edges (a, b, c) and the angles between them (α, β, γ), where α is the angle between b and c, β is the angle between a and c, and γ is the angle between a and b. The lengths of the cell edges are typically measured in angstrom (Å) - a unit of length that is equal to one ten-billionth of a meter, or 0.1 nanometres.
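To show how the six lattice parameters pin down a unit cell, the short sketch below computes the cell volume from them using the standard general formula for a parallelepiped. The function name and the example numbers are illustrative assumptions, not data from this project.

```python
import math


def unit_cell_volume(a, b, c, alpha_deg, beta_deg, gamma_deg):
    """Volume of a unit cell from edge lengths and angles in degrees.

    The result is in cubic angstroms when a, b and c are in angstroms.
    """
    cos_a, cos_b, cos_g = (math.cos(math.radians(angle))
                           for angle in (alpha_deg, beta_deg, gamma_deg))
    return a * b * c * math.sqrt(
        1 - cos_a**2 - cos_b**2 - cos_g**2 + 2 * cos_a * cos_b * cos_g
    )


# A cubic cell and a hexagonal cell (a = b, gamma = 120 degrees).
print(unit_cell_volume(1.0, 1.0, 1.0, 90, 90, 90))
print(unit_cell_volume(3.0, 3.0, 6.0, 90, 90, 120))
```

For a hexagonal cell the formula reduces to a²c multiplied by √3/2, which is why only a and c are needed to describe it.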

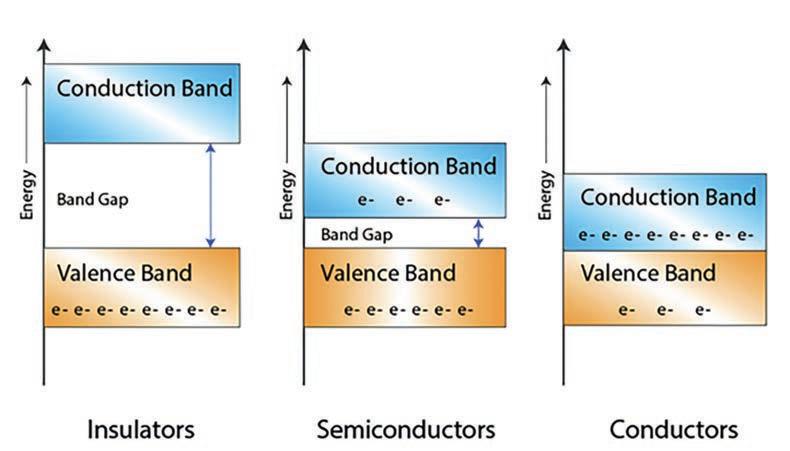

Band Theory is a fundamental model in solid-state physics that has been developed to describe the behaviour of electrons in a periodic potential, such as that found in a crystal lattice, and the electronic properties of crystalline materials.

In an atom, electrons occupy discrete energy levels, often referred to as atomic orbitals (in real space an orbital corresponds to the regions around an atom where there is a high probability of finding an electron). Band theory extends this concept to explain the behaviour of electrons in a solid material and it is most commonly applied to a crystalline solid. When atoms come together to form a solid, their atomic orbitals overlap. This overlap causes the energy levels of the orbitals to split, forming a continuous band of energy levels. These bands are called energy bands.

The arrangement of these bands and their occupation by electrons (which fill the levels starting from the lowest energy, with at most two electrons per state) determine the electrical conductivity of a material:

● Conduction band: This is the lowest-energy band that is empty or only partially filled. If there are available energy states in this band, electrons can move freely, making the material a good conductor.

● Valence band: This is the highest-energy band that is filled with electrons.

● Band gap: This is the energy gap (if any) between the valence and conduction bands. If the valence band is fully occupied, the conduction band is completely empty and the energy gap is large, the material is an insulator. If the gap is smaller, the material is a semiconductor. If there is no energy gap and the highest-energy occupied band is partially filled, the material is a metal.
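The three cases above amount to a simple decision rule, sketched below. The 4 eV boundary between a semiconductor and an insulator is an assumption made for this illustration (there is no sharp dividing line), and the sample gap values are made up rather than taken from the materials studied here.

```python
def classify_material(band_gap_ev, insulator_threshold_ev=4.0):
    """Classify a material by the size of its band gap in eV.

    insulator_threshold_ev is an assumed, conventional cut-off, not a
    physical constant.
    """
    if band_gap_ev <= 0:
        return "metal"
    if band_gap_ev < insulator_threshold_ev:
        return "semiconductor"
    return "insulator"


# Made-up gap values for illustration only.
for gap in (0.0, 1.2, 6.0):
    print(gap, classify_material(gap))
```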

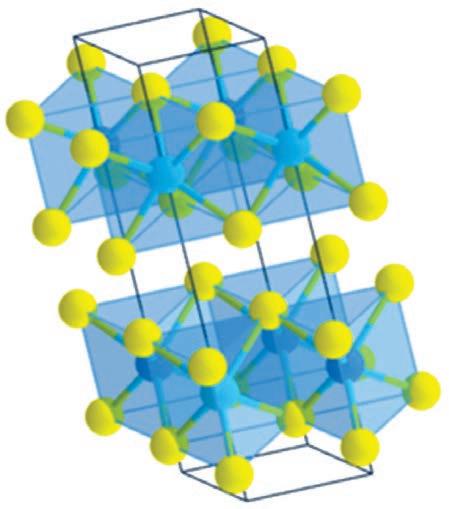

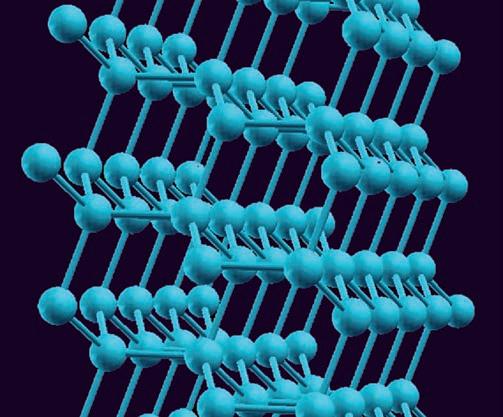

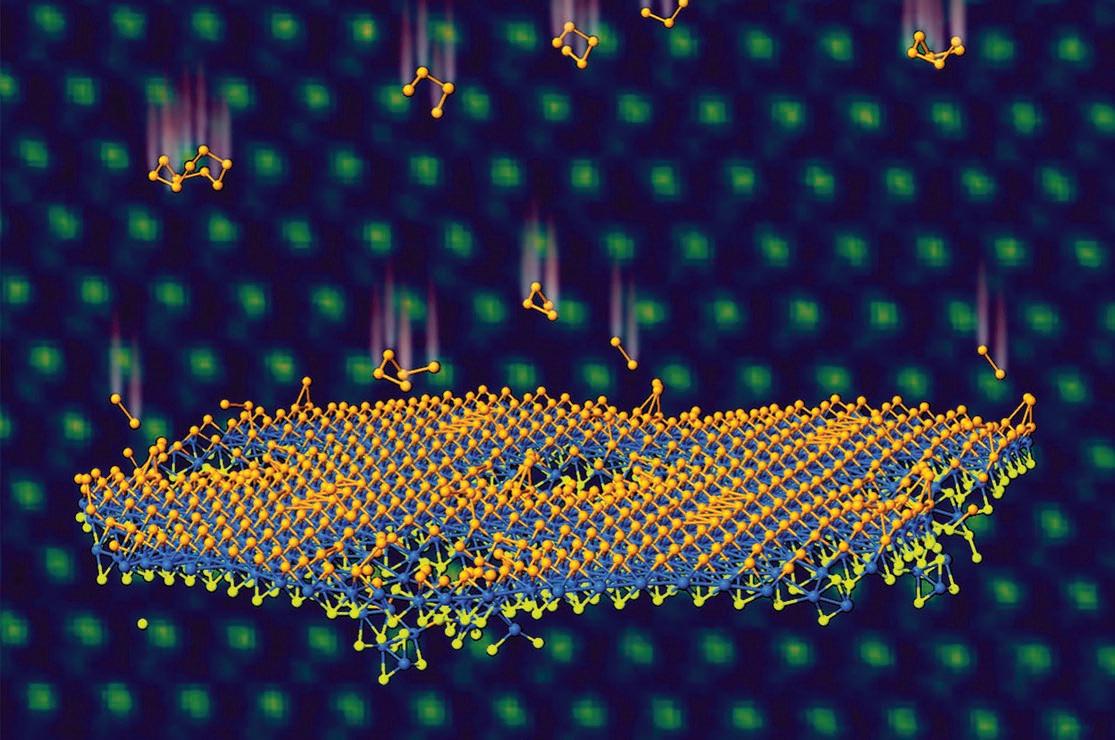

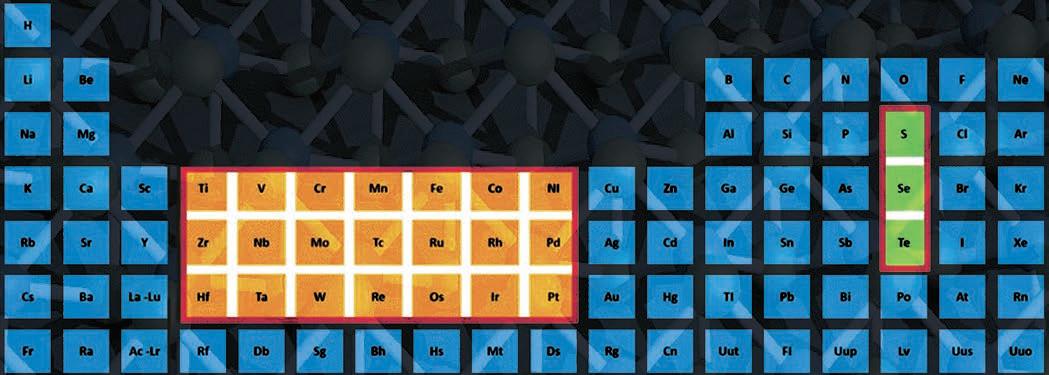

Transition metal dichalcogenides (TMDs) are a class of layered materials with regular crystal structures, that can be described by the general formula MX2, in which a layer of transition metal (M) is sandwiched between two layers of chalcogen atoms (X). Such 'sandwiches' are then stacked on top of each other, analogous to the stacking of graphene layers in graphite. TMDs can be found as bulk multilayer 3D materials (like graphite) or 2D mono-layer materials (like graphene). This research explores the properties of both of these forms.

TMDs have garnered interest because as a group they display a wide range of electronic properties (for example, some are semiconductors and some are metals; most are superconductors at sufficiently low temperatures), leading to a large potential range of applications including energy harvesting, sensing and nano-scale actuators.

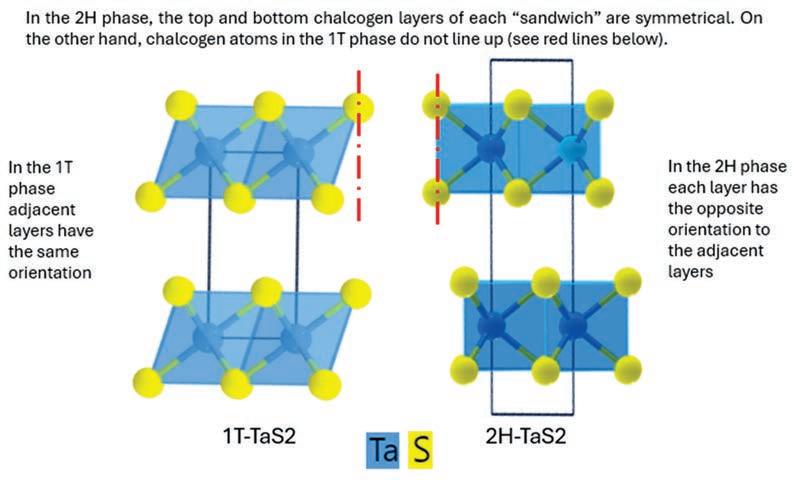

TMDs can also exist in several crystallographic phases, notably the 2H and 1T phases which this research focuses on. Whilst they have the same chemical formula, phases differ by the way in which atoms are arranged within and between layers. Just like with liquid water and ice, in given conditions (e.g. pressure, temperature), one phase is the most stable although several phases can coexist in the same crystal. For comparison, consider graphite and diamond which are different crystalline phases of carbon: both can exist in ambient conditions although graphite is the more stable phase.

Both phases consist of layers of transition metals and chalcogens. In each layer, atoms of the same element are arranged in equilateral triangles and each layer of transition metals is sandwiched between two layers of chalcogens. In the 2H phase, the top and bottom chalcogen layers of each 'sandwich' are oriented in the same direction relative to each other. When a 3D model of the 'sandwich' is viewed in the x or y direction the chalcogen atoms appear to be aligned with each other such that a line drawn parallel to the z axis of the unit cell would pass through both.

On the other hand, chalcogen atoms in the 1T phase do not line up when viewed in the x or y direction. Instead, the topmost and bottommost chalcogen layers of the 'sandwich' are oriented in opposite directions (rotated by 180 degrees, which swaps the orientation of the equilateral triangles). Additionally, in the 2H phase, each 'sandwich' is stacked with the opposite orientation to the previous one whereas each 'sandwich' in the 1T phase is stacked with the same orientation.

Source: ball and stick model from materials project

In the 2H phase, each layer has the opposite orientation to the adjacent layers. Therefore, the smallest repeatable unit must contain two layers (as opposed to the 1T unit cell which has one layer). As a result, the unit cell of the 2H phase consists of 6 atoms whereas the unit cell of the 1T phase consists of 3 atoms, and the 2H phase has double the number of bands.

In this research I will investigate TaS2 and TaSe2. These materials both have interesting electronic and optical properties and under certain conditions can exhibit superconductivity. They are being considered for a range of uses; currently their most common use is in the production of lithium-ion batteries where they can increase their efficiency and lifetime.

I will also investigate MoS2 which is the most widely used transition metal dichalcogenide (TMD) material because it is relatively abundant and can be produced at a relatively low cost. MoS2 has found applications in fields like electronics, energy storage, catalysis, and sensors. Its layered structure allows the layers to slide over each other with minimal friction, making it an excellent material for reducing wear and tear in mechanical components and it is widely used as a lubricant in the automotive and aviation industries.

Furthermore, I also explore a subset of TMDs known as Janus MXY structures (the name refers to the Roman god Janus, who has two faces). In particular, I will be analysing the structure of the not yet synthesised Janus structure TaSeS and comparing its electronic properties with the aforementioned materials under the same conditions. The Janus MXY structure differs from other TMDs in that the top and bottom layers of the sandwich consist of different chalcogens. For instance, in TaSeS one layer consists of the chalcogen selenium and the other of sulphur. In such Janus materials, the difference in the number of electrons in S and Se leads to the appearance of electric polarization and piezoelectricity.

I hypothesise that the properties of TaSeS would be well approximated by an average of TaSe2 and TaS2.

Aims:

The aim of this research project was twofold:

1. To model the band structures of several transition metal dichalcogenides (TaS2, TaSe2 and MoS2) to compare the impact of differences in symmetry, chemistry and dimensionality on the electronic properties of a material.

2. To predict the possible crystal structure and electronic bands of a new, not yet synthesized material, Janus TaSeS to analyse the impact of mixing of S and Se atoms.

I will make comparisons between:

● Symmetry: materials with the same chemistry but different phase

● Chemistry: the same phase but different chemistry

● Dimensionality: the same phase and chemistry but different dimensionality (three-dimensional bulk material vs a single, effectively two-dimensional, 'sandwich').

In order to analyse the electronic properties of these materials, I will plot a band structure graph describing the energy levels electrons can take with energy on the y-axis and momentum on the x-axis. In its simplest form, the band structure of a material can be thought of as consisting of a block of valence and conduction bands separated at the Fermi energy and perhaps also by a band gap (as illustrated in the diagram).


At zero Kelvin the valence band is filled and the conduction band is empty; however, as more energy is added, some electrons may be promoted to the conduction band depending on the size of the band gap. If there is no band gap the material is metallic, and many electrons are promoted to the conduction band even if little energy is provided. If the band gap is sufficiently small, then the material is a semiconductor, and some electrons are promoted to the conduction band using relatively little energy. If the gap is too large, electrons are not promoted to the conduction band even if moderate energy is provided, and the material is an insulator.
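One way to picture how temperature promotes electrons is the Fermi-Dirac distribution, which gives the probability that a state at a given energy is occupied. It is not discussed in the text above and is brought in here only as a standard illustration; the function name and the sample energies are assumptions.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV per kelvin


def occupation(energy_ev, fermi_ev, temperature_k):
    """Fermi-Dirac probability that a state at energy_ev is occupied."""
    if temperature_k == 0:
        # At absolute zero the distribution is a sharp step.
        if energy_ev < fermi_ev:
            return 1.0
        if energy_ev > fermi_ev:
            return 0.0
        return 0.5
    x = (energy_ev - fermi_ev) / (K_B_EV * temperature_k)
    if x > 700:  # exp(x) would overflow; the occupation is effectively zero
        return 0.0
    return 1.0 / (math.exp(x) + 1.0)


# States 0.1 eV and 1.0 eV above the Fermi energy at room temperature.
print(occupation(0.1, 0.0, 300), occupation(1.0, 0.0, 300))
```

The state that is only 0.1 eV above the Fermi energy is far more likely to be occupied than the one 1.0 eV above it, which mirrors the difference between a small and a large band gap.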

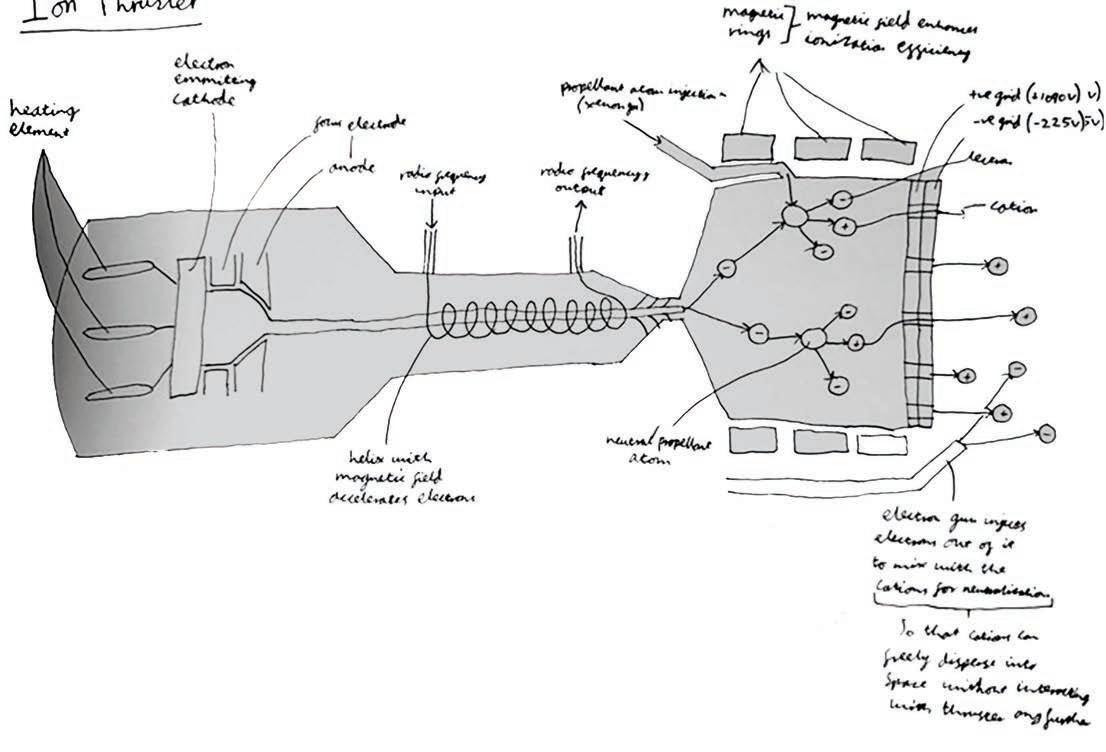

During the first phase of the project, I spent some time reading background material and learning to use Quantum Espresso (QE). My Project Sponsor, Dr Marcin Mucha-Kruczyński, provided me with a number of articles about research projects relating to TMDs which provided an excellent background to the project, and I also referred to a number of other texts and online resources. A study of the mathematics underlying Band Theory, given its complexity, was outside the scope of this project.

QE is an open-source collection of programs which was developed by a large international collaboration of researchers, primarily from institutions in Italy and the United States. It is based on Density Functional Theory (DFT) and uses plane waves together with so-called pseudopotentials to enable calculations of the electronic structures of materials, simulation of complex molecular systems and prediction of material properties. DFT is a computational quantum mechanical modelling method grounded in the Schrödinger equation, which is itself the fundamental equation of Quantum Mechanics that describes the wave-like behaviour of atomic and sub-atomic particles.

QE makes it possible to carry out very complicated calculations within a reasonable amount of time without access to significant computing power. In order to be able to run QE on my laptop I created a Linux Virtual Machine (an independent space on my Windows-based computer that would run the Linux Ubuntu operating system) and downloaded the Quantum Mobile VM image (which had QE preinstalled) into this VM.

Initially I learned to run pre-prepared material files available from online tutorials to gain familiarity with the software before moving on to learn how to write these files myself and where to obtain the input data.

Following the preparation phase I undertook the field work during a two-week trip to the University of Bath. I met regularly with Dr Marcin Mucha-Kruczyński during this period to discuss progress and learning points that came up as the project progressed.

The first stage for each material whose band structure I was plotting was to find the relevant information about the crystal structure and unit cell of that material. The data I needed to carry out the calculations in QE were:

● the Bravais lattice index,

● cell constants a and c,

● the number of atoms in the unit cell, and

● atomic positions within the unit cell.

Since all the TMDs I researched had a hexagonal lattice structure, their unit cells were all prisms with a rhombus-shaped base, and as such the only cell constants I needed were a and c, since the a and b side lengths are the same.

Source: Wikipedia
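As a sketch of what a and c determine, the code below builds the three lattice vectors of a hexagonal cell and converts a position given as fractions of those vectors into ordinary Cartesian coordinates. The vector orientation follows the convention QE documents for Bravais lattice index 4; the function names and the example numbers are illustrative assumptions.

```python
import math


def hexagonal_lattice_vectors(a, c):
    """Lattice vectors of a hexagonal cell (a = b, 120 degrees between them)."""
    return [
        (a, 0.0, 0.0),
        (-a / 2.0, a * math.sqrt(3) / 2.0, 0.0),
        (0.0, 0.0, c),
    ]


def crystal_to_cartesian(fractional, vectors):
    """Convert fractional (crystal) coordinates to Cartesian coordinates."""
    return tuple(
        sum(fractional[i] * vectors[i][j] for i in range(3)) for j in range(3)
    )


# Placeholder cell constants in angstroms, not values from this project.
vectors = hexagonal_lattice_vectors(3.0, 6.0)
print(crystal_to_cartesian((1 / 3, 2 / 3, 0.25), vectors))
```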

I also needed to find a pseudopotential file for each element involved. A pseudopotential is used as an approximation that provides a simplified description of complex systems; only the chemically active valence electrons (those in the outermost shell which interact with other atoms) are dealt with explicitly, while the core electrons are 'frozen', being considered together with the nuclei.

In selecting the type of pseudopotential to use I opted for scalar relativistic ones which, unlike the full relativistic versions, do not take account of spin-orbit coupling (the interaction between an electron's spin and its motion). This simplification reduced the time taken to run each calculation on my laptop. I also tried to run some calculations using full relativistic pseudopotentials; however, these calculations took too long to provide a complete set of results within the time frame of the project. Additionally, using scalar relativistic pseudopotentials meant that it was quicker and easier to debug the input files.

I was able to find most of the data set out above at The Materials Project website. The Materials Project is a multi-institution, multi-national effort that uses supercomputers (including, for example, the National Energy Research Scientific Computing Center in Berkeley, California which is part of the U.S. Department of Energy) to compute the properties of all inorganic materials and provide the data for researchers free of charge. The aim of the project is to reduce the time needed to invent new materials through focusing laboratory work on compounds that already show the most promise computationally.

The use of supercomputers enables the prediction of many properties of new materials before those materials are ever synthesised in the lab. However, the data I needed for 2H-TaSeS (which has not yet been synthesised in the lab) was not yet available in The Materials Project so I had to carry out an additional calculation for this material as explained below.

Fermi energy is a fundamental quantity in solid-state physics that represents the highest energy level occupied by an electron at absolute zero temperature. In band theory, the position of the Fermi energy relative to the band structure determines whether a material is a metal, insulator, or semiconductor.

In order to calculate the Fermi energy for each material I needed to create and run the SCF file in QE.

Self-Consistent Field (SCF) is a computational method used in QE to approximate the electronic structure of molecules and atoms.

Key input data for this included:

● the Bravais lattice index (which is 4 for hexagonal structures),

● cell constants a and c in Angstrom,

● atomic positions (in Crystal Coordinates),

● number of atoms.

I also needed to specify which elements made up the TMD and for each element I specified its atomic mass as well as a relevant pseudo-potential file. The SCF calculation also required several other parameters not directly related to the TMD itself. For instance, I had to set the Kinetic Energy Cutoff for wavefunctions. Kinetic Energy Cutoff determines the maximum kinetic energy of the plane waves used to represent the electronic wavefunctions. Selecting a higher value generally leads to more accurate results but also increases the computational cost significantly.

I tested a range of values for this cutoff and chose a value of 680 eV because it provided an optimal trade-off between accuracy of results and length of calculation time.

Having created the SCF input file for each material, I then passed it to pw.x to run the SCF calculation. pw.x is one of the core programme modules in QE. It primarily performs plane-wave SCF calculations by iteratively solving the Kohn-Sham equations to determine the ground-state electronic structure of a system. The Kohn-Sham equations are a set of equations used to simplify the complex problem of understanding how electrons behave in atoms and molecules. Ground-state electronic structure refers to the arrangement of electrons in a system at its lowest possible energy state (its most stable state). As part of the output, pw.x provided a value for the Fermi energy of each material.
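To give a feel for what such an input file contains, here is a minimal sketch that assembles the text of a pw.x SCF input in Python. It is not the file used in this project: the lattice constants, atomic positions, k-point grid, smearing settings, folder names and pseudopotential file names are all placeholder assumptions. pw.x expects the cutoff in Rydberg, so the 680 eV chosen above is converted first (roughly 50 Ry).

```python
RY_IN_EV = 13.605693  # one Rydberg expressed in electronvolts


def build_scf_input(prefix, a, c, species, positions, ecut_ev,
                    k_grid=(12, 12, 1)):
    """Return the text of a pw.x SCF input for a hexagonal (ibrav = 4) cell.

    species   -- list of (symbol, atomic mass, pseudopotential file name)
    positions -- list of (symbol, x, y, z) in crystal coordinates
    ecut_ev   -- wavefunction cutoff in eV
    """
    lines = [
        "&CONTROL",
        "  calculation = 'scf'",
        f"  prefix = '{prefix}'",
        "  pseudo_dir = './pseudo'",   # hypothetical folder name
        "  outdir = './tmp'",          # hypothetical folder name
        "/",
        "&SYSTEM",
        "  ibrav = 4",
        f"  A = {a}",
        f"  C = {c}",
        f"  nat = {len(positions)}",
        f"  ntyp = {len(species)}",
        f"  ecutwfc = {ecut_ev / RY_IN_EV:.1f}",
        "  occupations = 'smearing'",  # assumed settings for a metal
        "  smearing = 'gaussian'",
        "  degauss = 0.02",
        "/",
        "&ELECTRONS",
        "  conv_thr = 1.0d-8",
        "/",
        "ATOMIC_SPECIES",
    ]
    lines += [f"  {sym} {mass} {pseudo}" for sym, mass, pseudo in species]
    lines.append("ATOMIC_POSITIONS crystal")
    lines += [f"  {sym} {x:.6f} {y:.6f} {z:.6f}" for sym, x, y, z in positions]
    lines.append("K_POINTS automatic")
    lines.append("  " + " ".join(str(k) for k in k_grid) + " 0 0 0")
    return "\n".join(lines) + "\n"


# Placeholder values for a three-atom 1T-type cell (illustration only).
example = build_scf_input(
    prefix="tas2_1t",
    a=3.36, c=5.90,
    species=[("Ta", 180.948, "Ta.UPF"), ("S", 32.06, "S.UPF")],
    positions=[("Ta", 0.0, 0.0, 0.0),
               ("S", 1 / 3, 2 / 3, 0.25),
               ("S", 2 / 3, 1 / 3, 0.75)],
    ecut_ev=680.0,
)
print(example)
```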

Since Quantum Espresso is designed to run calculations for three-dimensional materials by default, in order to model monolayers, I had to adjust the parameters of the unit cell to provide an approximation for a monolayer within the multilayer simulation. I did this by increasing the cell constant 'c' which lengthened the ‘z’ axis of the unit cell, thus increasing the distance between layers. As the distance between layers increases, the interaction between them decreases, so by sufficiently increasing c I could minimise the interaction between layers and in effect simulate the properties of a single monolayer.

However, since I was using relative coordinates for the atomic positions within the unit cell, this meant that the absolute distance between the atoms in each layer also increased which would cause erroneous results. To fix this, I needed to scale z positions of the atoms within the unit cell so that the absolute distance between the atoms within each layer stayed the same. Since I was doubling the value of c for each monolayer calculation, this involved halving the z coordinates of the atomic positions and applying an offset where appropriate. This ensured that the layer itself would remain unchanged even though the distance between the layers increased.
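The rescaling described above can be sketched as follows. The function name and the example coordinates are assumptions for illustration; the layer is squeezed about the middle of the cell, which for a doubled c is the same as halving each z coordinate and adding an offset of 0.25.

```python
def rescale_layer(z_fractions, c_old, c_new, z_centre=0.5):
    """Rescale fractional z coordinates when the cell height changes.

    The atoms are scaled about z_centre so that the absolute spacing
    between them (fraction multiplied by c) stays the same.
    """
    scale = c_old / c_new
    return [z_centre + (z - z_centre) * scale for z in z_fractions]


# Made-up layer: three atoms spaced 1.5 angstroms apart in a 6 angstrom cell.
old_z = [0.25, 0.5, 0.75]
new_z = rescale_layer(old_z, c_old=6.0, c_new=12.0)
print(new_z)                       # fractional positions in the taller cell
print([z * 12.0 for z in new_z])   # absolute heights in angstroms
```

The spacing between neighbouring atoms is 1.5 angstroms both before and after, while the empty space above and below the layer has grown.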


The input data on the atomic structure required to calculate the Fermi energy (as outlined above) was available in the Materials Project for each of the materials I studied other than 2H-TaSeS. TaSeS is a material which has not yet been synthesised in a laboratory.

In order to calculate the atomic structure of 2H-TaSeS I used the VC-relax calculation in QE which adjusts the size and shape of the unit cell to find the most energetically favourable configuration. VC-relax can be used to determine the equilibrium lattice parameters and atomic positions of a material:

1. Initial Structure: The calculation starts with an initial guess for the unit cell parameters.

2. Relaxation: The software iteratively adjusts the volume and cell parameters while minimizing the total energy of the system.

3. Convergence: The calculation continues until a converged structure is reached, meaning the forces on the atoms are negligible and the lowest energy (and most stable) configuration of the structure has been found.

Before performing the band structure calculations, I first had to determine a set of k-points that I would keep the same throughout my calculations to ensure comparable band structure plots for different materials.

To perform calculations efficiently, a finite set of special points in the Brillouin zone, known as k-points, are sampled rather than calculating the electronic structure at every point in the Brillouin zone.

Since I was going to compare the bulk and monolayer forms of each TMD, I decided to use 2D k-points only (as the monolayer forms have no 3D k-points), setting the z component (which represents the third dimension) of each to zero.

To generate a set of k-points for the calculations, I used the SeeK-path tool from the Materials Cloud website (an open-source resource designed for materials researchers) to help visualise the Brillouin zone and generate a range of values connecting the Γ (Gamma), M and K high-symmetry points (relevant to the 2D hexagonal lattice).

● Γ (Gamma): Centre of the Brillouin zone

● K: Located at the corners of the hexagon

● M: Midpoints of the edges of the hexagon

Having selected a set of k-points using the Materials Cloud tool, I then created and ran a Python script to plot them on a graph to provide a visual check (against the expected output shape that was sketched out by my Project Sponsor) before confirming my selection.
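The script itself is not reproduced here. As a rough sketch of the idea, the Python below builds a path through the high-symmetry points by linear interpolation. The fractional coordinates used for M and K are the ones commonly quoted for a hexagonal lattice and are an assumption (they depend on the lattice-vector convention), as are the function name and the number of points per segment.

```python
# High-symmetry points of the 2D hexagonal Brillouin zone in fractional
# (reciprocal-lattice) coordinates. The z component is fixed at zero.
# These values are assumed; they depend on the lattice-vector convention.
HIGH_SYMMETRY = {
    "G": (0.0, 0.0, 0.0),
    "M": (0.5, 0.0, 0.0),
    "K": (1.0 / 3.0, 1.0 / 3.0, 0.0),
}


def k_path(labels, points_per_segment=20):
    """Linearly interpolate between consecutive high-symmetry points."""
    path = [HIGH_SYMMETRY[labels[0]]]
    for start, end in zip(labels, labels[1:]):
        a, b = HIGH_SYMMETRY[start], HIGH_SYMMETRY[end]
        for i in range(1, points_per_segment + 1):
            t = i / points_per_segment
            path.append(tuple(a[j] + t * (b[j] - a[j]) for j in range(3)))
    return path


path = k_path(["G", "M", "K", "G"])
print(f"{len(path)} k-points, first {path[0]}, last {path[-1]}")
```

Plotting the x and y components of `path` with any plotting library should trace a closed triangle from the centre of the hexagon to an edge midpoint, on to a corner and back, which is the kind of shape a visual check would look for.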

I then created a new input file with similar inputs to the SCF input file as well as the specific set of k-points. I then ran this file using Pw.x specifying that a band structure calculation should commence.

Using the output files from these calculations, I then ran the Bands.x QE module to produce band structure data in a format that could be plotted using the Plotband.x QE module.

Prior to the start of the fieldwork I had two Teams calls with my Project Sponsor, Dr Marcin Mucha-Kruczyński, to discuss the scope of the project and some of the background to the underlying key physics concepts I would be working with.

During the first phase of the project, prior to starting the fieldwork, I spent some time reading background material and learning to use Quantum Espresso (QE). This preparation phase was spread over a number of months but in total amounted to around two weeks' full-time work.

Following the preparation phase, I undertook the fieldwork during a two-week trip to the University of Bath (29 July to 9 August 2024). I met regularly with Dr Marcin Mucha-Kruczyński during this period to discuss progress and learning points that came up as the project progressed.

For each material, I plotted a band structure along the path connecting the high-symmetry points Gamma, M and K and returning to Gamma. I set the Fermi energy as the 'zero' reference value for each graph and used a y-axis range of ±2 eV to provide a closer view of the bands around the Fermi energy. This is the energy range relevant to the physics of electronic transport.
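The referencing and windowing described above amount to a simple shift and filter. The sketch below uses hypothetical band energies and a hypothetical Fermi energy; it is not the plotting code used in the project, which relied on the QE modules.

```python
def shift_to_fermi(bands, fermi_energy):
    """Measure every band energy relative to the Fermi energy."""
    return [[energy - fermi_energy for energy in band] for band in bands]


def bands_in_window(bands, half_width=2.0):
    """Keep bands with at least one point within +/- half_width of zero."""
    return [band for band in bands
            if any(abs(energy) <= half_width for energy in band)]


# Hypothetical band energies (eV) at two k-points, and a hypothetical
# Fermi energy. Real values would come from the QE output files.
raw_bands = [[-7.0, -6.5], [-4.2, -3.1], [-2.9, -2.0]]
fermi_energy = -3.6

shifted = shift_to_fermi(raw_bands, fermi_energy)
visible = bands_in_window(shifted)
print(f"{len(visible)} of {len(shifted)} bands fall inside the plotted window")
```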

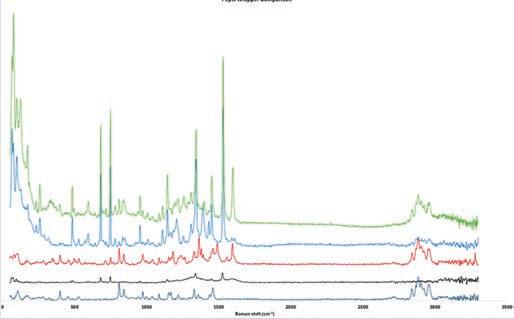

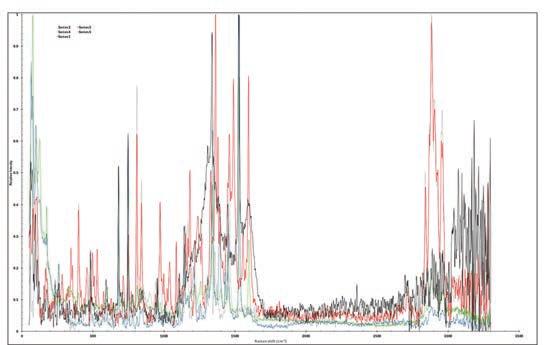

In these graphs, the conduction bands are at the top and may cross the Fermi energy (indicated with a horizontal dashed line). Where a band does cross it, the material is a conductor. The valence bands are in the lower half of the graph. I produced 16 band structure graphs showing the 1T and 2H phases in both 2D (monolayer) and 3D (bulk) form for each TMD that I investigated.

The number of bands, how close together they are, whether they are steep or flat, and the number of times a conduction band crosses the Fermi energy are all examples of factors which provide information about the electronic properties of a material. Consider, for example, applying a voltage to a material, which provides energy to the electrons. The electrons can only take advantage of this energy if there are higher energy states that they can move into. Knowledge of the band structure near the Fermi energy level is therefore crucial for modelling or predicting properties of a material such as electronic transport. A detailed consideration and measurement of the impact of these factors is beyond the scope of this project. In the analysis below I have made high-level reference to these factors where they are relevant but have largely focused my comments on the interaction of the conduction bands with the Fermi energy level.

In both the 1T and 2H phases of the 2D forms of TaSe2, TaS2 and TaSeS (the Janus material), one conduction band crossed the Fermi energy line, indicating that they are all conductors and that the change in phase did not affect this core characteristic. The graphs of the 2D forms show a degree of similarity; however, the slope of the conduction band is steeper in the 1T phase, whilst in the 2H phase the conduction band crosses or touches the Fermi energy level one additional time. Overall, a larger part of the conduction band seems to be occupied (that is, lies below the Fermi energy) in the 1T phase than in the 2H phase.


Both the 1T and 2H bulk (3D) forms of TaSe2, TaS2 and TaSeS are conductors. However, in the 2H phase two bands cross the Fermi energy level whereas only one band crosses it in the 1T phase. This difference is probably due to the unit cell of the 2H phase containing two layers, compared with one for the 1T phase. The number of atoms considered in the 2H calculation was therefore double that considered in the 1T calculation, doubling the number of bands plotted.

Whilst the TaSe2, TaS2 and TaSeS TMDs I investigated are all metallic in both phases, MoS2 changed from a conductor in the 1T phase (with three bands crossing the Fermi energy level) to a semiconductor in the 2H phase, with no bands crossing the Fermi energy level and a small band gap. This was the same in both bulk and monolayer forms of MoS2.
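The distinction drawn here between a conductor and a semiconductor can be expressed as a short check on the band energies. The sketch below assumes energies measured relative to the Fermi energy (so that it sits at zero) and uses hypothetical data; the function names are illustrative and the project itself assessed the graphs by eye.

```python
def count_crossings(band):
    """Number of times a band changes sign along the k-path."""
    return sum(1 for a, b in zip(band, band[1:]) if a * b < 0.0)


def crossing_bands(bands):
    """Indices of bands with energies both below and above the Fermi level."""
    return [i for i, band in enumerate(bands) if min(band) < 0.0 < max(band)]


def band_gap(bands):
    """Gap between the highest occupied and lowest empty band (None if metallic)."""
    if crossing_bands(bands):
        return None
    occupied = [max(band) for band in bands if max(band) <= 0.0]
    empty = [min(band) for band in bands if min(band) >= 0.0]
    if not occupied or not empty:
        return None
    return min(empty) - max(occupied)


# Hypothetical band energies in eV, relative to the Fermi energy.
metal_like = [[-1.5, -1.2, -1.4], [-0.4, 0.3, -0.2], [1.0, 1.4, 1.2]]
semiconductor_like = [[-1.0, -0.6, -0.8], [0.6, 0.9, 0.5]]

for name, bands in [("metal-like", metal_like),
                    ("semiconductor-like", semiconductor_like)]:
    print(name, "crossing bands:", crossing_bands(bands), "gap:", band_gap(bands))
```

Here the first data set has one band that crosses the Fermi level twice, so no gap is reported, while the second has no crossing bands and a finite gap.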

In their 1T phases, in both monolayer and bulk form, TaSe2, TaS2 and TaSeS were very similar, each with one band of similar shape crossing the Fermi energy level. This suggests that changing the chalcogen atom has little impact on the electronic band structure in the 1T phase.

More differences could be seen within the 1T phase when the metal was changed, from 2D 1T-TaS2 to 2D 1T-MoS2. Whilst both are conductors, in 1T-MoS2 three bands cross the Fermi energy level six times, whereas in 1T-TaS2 one band crosses twice. This suggests that in the 1T phase, changing the metal making up the TMD had a more significant impact on its electronic structure than changing the chalcogen.

In the 2H phase of TaSe2, TaS2 and TaSeS, changing the chalcogen also has a limited impact and the bands remain similar for the three materials. However, in 2D TaSeS the conduction band crosses the Fermi energy level between the K and Gamma high-symmetry points (whereas in the other two materials it only touches it), and in 3D TaSeS the 'top' conduction band only crosses twice (in the other two materials the top line crosses four times).

Changing the metal in the 2H phase (e.g. 2D 2H-TaS2 to 2D 2H-MoS2) had an even more significant impact than in the 1T phase, changing the material from a metal to a semiconductor. The greater impact of changing the transition metal than the chalcogen arises because the former modifies the number of valence electrons involved (it requires moving horizontally in the periodic table). This changes the number of electrons that must be distributed in the bands and so shifts the position of the Fermi energy level. In contrast, the chalcogen atoms have the same number of valence electrons (they are in the same column of the periodic table), so little change is observed.
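The electron-counting argument can be made concrete with a small worked example. The sketch below counts outer-shell valence electrons per formula unit using the periodic-table groups (Ta in group 5, Mo in group 6, S and Se in group 16). It is a simplification: the pseudopotentials used in an actual calculation may treat additional inner-shell electrons as valence, so the absolute numbers there can be larger.

```python
# Outer-shell valence electrons, taken from the periodic-table group:
# s + d electrons for the metals, s + p electrons for the chalcogens.
VALENCE_ELECTRONS = {"Ta": 5, "Mo": 6, "S": 6, "Se": 6}


def electrons_per_formula_unit(metal, chalcogens):
    """Total valence electrons in one MX2 formula unit."""
    return VALENCE_ELECTRONS[metal] + sum(VALENCE_ELECTRONS[x] for x in chalcogens)


counts = {
    "TaS2": electrons_per_formula_unit("Ta", ["S", "S"]),
    "TaSe2": electrons_per_formula_unit("Ta", ["Se", "Se"]),
    "TaSeS": electrons_per_formula_unit("Ta", ["Se", "S"]),
    "MoS2": electrons_per_formula_unit("Mo", ["S", "S"]),
}

for material, count in counts.items():
    print(f"{material}: {count} valence electrons per formula unit")
```

Swapping S for Se leaves the count unchanged across the three tantalum compounds, whereas replacing Ta with Mo adds one electron per formula unit, which is the change that moves the Fermi energy relative to the bands.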


When comparing the 3D bulk and 2D monolayer band structure graphs for the 1T phase of TaS2, TaSe2 and TaSeS, there is little variation in the bands crossing the Fermi energy level. However, for the 2H phase, the number of bands crossing the Fermi energy in the monolayer form is halved: the two bands crossing the Fermi energy level for the bulk form are replaced by a single band that appears to be roughly an average of the two. This is because the 2H unit cell in the 3D form consists of two layers whereas the monolayer calculation was adjusted to reduce the interaction between layers, simulating the properties of a single layer only.

In its bulk form, the conduction band minimum of 2H-MoS2 is approximately halfway between the K and Gamma high-symmetry points and the valence band maximum is at the Gamma point, resulting in a band gap of approximately 1.1 eV. The monolayer form of MoS2 differs noticeably from its bulk form: whilst the valence band maximum is still at the Gamma point, it is at a lower energy than in the bulk form. Furthermore, the shape of the conduction band is different such that the global minimum is at the K high-symmetry point, resulting in a larger band gap of approximately 1.5 eV.

In my analysis of the results, I observed that changing the chalcogen atom of the TMD has less impact than changing the metal atom on the electronic band structure of the TMD. I also observed that changing phase impacts the properties of TMDs differently depending on their chemical structure as there was a significant change in MoS2 but not in TaS2. Additionally, changing dimensionality did not have a large impact in the 1T phase but had a larger impact in the 2H phase due to the presence of two layers within the unit cell.

Overall, I can conclude that the mixing of S and Se atoms to produce the hypothetical Janus material TaSeS results in a material whose electronic band structure can be approximated by a combination of TaSe2 and TaS2 with a smooth change between the properties of the two rather than a sudden and more drastic change in band structure.

Transition metal dichalcogenides are a group of materials in which a layer of transition metal atoms is sandwiched between two layers of chalcogen atoms. Such 'sandwiches' are then stacked on top of each other. I use the multi-purpose nanoscale materials simulation software Quantum Espresso to model the band structures of several transition metal dichalcogenides: TaS2 (tantalum disulphide), TaSe2 (tantalum diselenide) and MoS2 (molybdenum disulphide). This selection of materials allows me to compare the impact of differences in symmetry, chemistry and dimensionality on the electronic properties of a material. I also use Quantum Espresso to predict the possible crystal structure and electronic bands of a new, as yet unsynthesised material, Janus TaSeS (tantalum selenium sulphide), in which one chalcogen layer of each sandwich consists of S atoms and the other of Se atoms. My findings show that the mixing of S and Se atoms to produce TaSeS results in a material with properties well approximated by the average of those of TaSe2 and TaS2.

I would like to thank my supervisor Dr Marcin Mucha-Kruczyński for making this ORIS project possible. His generous support, guidance and teaching allowed me to explore some fascinating aspects of condensed matter physics. The passion that he shows for the subject is inspirational and I am very grateful to have had this opportunity. I would also like to thank Mr Lau for overseeing the ORIS project and providing me with constructive advice particularly during the early stages of the project.


Example 2D SCF file

    &CONTROL
      calculation = 'scf',
      prefix = 'tas2', ! 2H-TaS2 2D
      outdir = './tmp/'
      pseudo_dir = '../pseudos/'
      verbosity = 'high'
    /
    &SYSTEM
      ibrav = 4 ! https://next-gen.materialsproject.org/materials/mp-1984?formula=TaS2
      A = 3.34 ! lattice constant a
      C = 25.10 ! c=12.55, a=3.34 angstroms -- set c=25.1 for 2D
      nat = 6
      ntyp = 2
      ecutrho = 400
      ecutwfc = 50
      occupations = 'smearing'
      smearing = 'cold'
      degauss = 1.4699723600d-02
    /
    &ELECTRONS
      conv_thr = 1.2000000000d-09
      electron_maxstep = 80
      mixing_beta = 4.0000000000d-01
    /
    ATOMIC_SPECIES
    S 32.065 s_pbesol_v1.4.uspp.F.UPF
    Ta 180.94788 ta_pbesol_v1.uspp.F.UPF
    ATOMIC_POSITIONS crystal ! 2D
    Ta 0.0000000000 0.0000000000 0.1250000000
    Ta 0.0000000000 0.0000000000 0.6250000000
    S 0.3333333300 0.6666666700 0.0632663900
    S 0.3333333300 0.6666666700 0.1867336100
    S 0.6666666600 0.3333333300 0.5632663900
    S 0.6666666700 0.3333333300 0.6867336100
    K_POINTS automatic
    11 11 3 0 0 0

Example 3D SCF file

    &CONTROL
      calculation = 'scf',
      prefix = 'tas2', ! 2H-TaS2
      outdir = './tmp/'
      pseudo_dir = '../pseudos/'
      verbosity = 'high'
    /
    &SYSTEM
      ibrav = 4 ! https://next-gen.materialsproject.org/materials/mp-1984?formula=TaS2
      A = 3.34 ! lattice constant a
      C = 12.55 ! c=12.55, a=3.34 angstroms
      nat = 6
      ntyp = 2
      ecutrho = 400
      ecutwfc = 50
      occupations = 'smearing'
      smearing = 'cold'
      degauss = 1.4699723600d-02
    /
    &ELECTRONS
      conv_thr = 1.2000000000d-09
      electron_maxstep = 80
      mixing_beta = 4.0000000000d-01
    /
    ATOMIC_SPECIES