QUASAR #163

INFORMING AND ADVANCING OUR MEMBERS

THE ROLE OF QUALITY ASSURANCE IN PHARMACEUTICAL R&D

HOME SUPPLY – A PATIENT-CENTRIC APPROACH TO DIGITALLY MITIGATE DTP RISKS

30 PAGES OF ARTICLES

ROCHE/RQA COLLABORATION: IMPACT OF THE CERTIFICATE IN DATA BASICS eLEARNING IN THE PHARMACEUTICAL INDUSTRY, INCLUDING THE LAUNCH OF THE IMPALA CONSORTIUM

DID THE AUDIT GO SMOOTHLY? FOR SPONSORS, CLINICAL SITES AND AUDITORS

ANALYSIS OF THE EMA DRAFT GUIDANCE ‘GUIDELINE ON COMPUTERISED SYSTEMS AND ELECTRONIC DATA IN CLINICAL TRIALS’

KEEPING YOU POSTED REGULATIONS AND GUIDELINES P38

THE LATEST COURSES P43

www.therqa.com MAY 2023 | ISSN 1463-1768

CHATS WITH CHATGPT


ARTICLES

6 Analysis of the EMA Draft Guidance ‘Guideline on Computerised Systems and Electronic Data in Clinical Trials’ Mario E. Corrado, Marianna Esposito, Laura Monico, Anna Piccolboni, Massimo Tomasello

10 Roche/RQA Collaboration: Impact of the Certificate in Data Basics eLearning in the Pharmaceutical Industry, Including the Launch of the IMPALA Consortium Sharon Havenhand, Tim Menard

14 The Role of Quality Assurance in Pharmaceutical R&D Nigel Crossland

SUBMITTING AN ARTICLE

If you would like to submit an article for a future edition of Quasar on a topic you feel would be of interest to our membership, please contact the editor: editor@therqa.com Visit www.therqa.com for guidelines on article submission.

NEXT EDITION AUGUST 2023

CARBON CAPTURE QUASAR #162

353 8.84

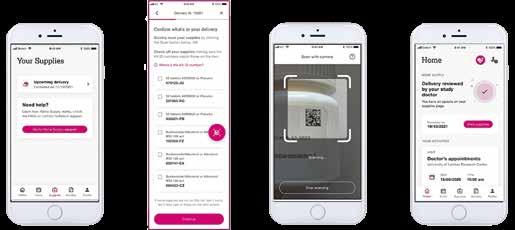

18 Did the Audit go Smoothly? For Sponsors, Clinical Sites and Auditors Wenjing Zhu

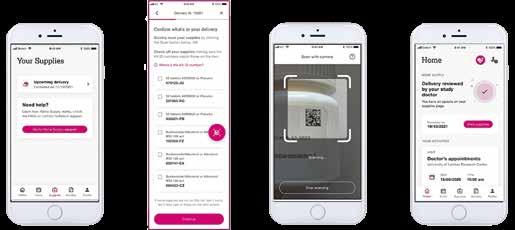

22 Home Supply – A Patient-Centric Approach to Digitally Mitigate DTP Risks Fatemeh Jami, Richard Knight, Marcus Wajngarten

30 Chats with ChatGPT Melvyn Rapprecht

Quasar is published four times a year and articles can be submitted at any time. If you are interested, please email editor@therqa.com.

The final copy deadlines are as follows:

| Final copy date | Publication |
|---|---|
| 12th June 2023 | August 2023 |
| 11th September 2023 | November 2023 |
| 11th December 2023 | February 2024 |
| 11th March 2024 | May 2024 |

To connect with the RQA just follow these links:

LinkedIn: https://uk.linkedin.com/company/rqa

Facebook: www.facebook.com/researchqualityassociation

Twitter: https://twitter.com/The_RQA

YouTube: www.youtube.com/user/researchqualityass

Vimeo: https://vimeo.com/therqa/videos

This publication is a service offered by RQA to its members and RQA cannot and does not guarantee that the information is complete.

RQA shall not be responsible for any errors or omissions contained in this publication and reserves the right to make changes without notice.

The information provided by third parties is provided ‘as is’ and RQA assumes no responsibility for the content. In no way is RQA liable for any damages whatsoever and in particular RQA shall not be liable for special, indirect, consequential or incidental damages or damages for lost profits, loss of revenue arising out of any information contained in this publication.


REGULARS

4 Welcome

5 Update

38 Keeping You Posted – Regulations and Guidelines: GCP, Animal and Veterinary Products

43 Calendar

FEATURES

36 10 Questions to…

37 How Did You Get into QA?

Dear Members,

When I sat down to write this message for Quasar, my thoughts turned to the recent past… and to what the future of RQA might hold. Whilst much effort in recent years has been focused on adapting how RQA functions to meet the challenges of the COVID pandemic (hopefully now behind us), we can now look ahead to what RQA offers its members and customers.

At the recent RQA Board meeting, we reflected on the structure of RQA, with a particular focus on the Board, the Management Committee and the Committees, and on how we collectively drive the future direction and efforts of RQA in serving its members and customers. My conclusion, following this reflection, was that as an association we need to be more coordinated in our approach to the information, services and products we provide to members in an ever-changing regulatory environment. In addition, the scope of RQA and of its members’ interests continues to evolve through the emergence of new technology, the ever-expanding breadth of science practised and the greater globalisation of RQA’s member and customer base.

Despite many recent initiatives such as the Volunteer Programme, the Community Hub and remote training courses, to mention just a few, all of which have been successful in delivering products and services valued by members and customers, the management structure has changed little.

A few years ago, the RQA Board Chair was elected every two years and Board members were elected every three years. In addition to these elected Board members, the Chair of each committee also served on the Board. Together they formed the Board of Directors, which met approximately four times a year to fulfil its corporate responsibilities and agree on the direction and strategy for RQA. However, this structure was extremely fragile: every Board meeting involved over 15 members, and the individuals attending changed regularly.

To address this fragility, the Board was reconfigured through a change to the RQA constitution, agreed by the members, so that it comprises seven elected RQA members. From those elected, the Chair of the Board is appointed by the Board members each year.

The Association’s structure includes a Board of Directors, which oversees the operational performance and drives the direction of RQA, a Management Committee (comprising Board members and the Chair of each committee) and several focused committees, including GCP, GLP, Animal Health, IT and Pharmacovigilance, bearing in mind that all these individuals give their input and time voluntarily.

The RQA Office does a magnificent job in providing the mechanisms by which the products and services are delivered to the members and customers.

The current committee structure was introduced in the early days of QAG and provided focused groups to explore the different areas of interest of the membership. These committees formed ‘silos of interest’ and worked well when each RQA member had a single focus of interest. Today, however, members’ interests no longer divide along these lines; for example, IT cuts across all areas of regulatory compliance and is of interest to most members.

All of this raises the question: is the current operating structure of RQA capable of ensuring that the Association continues to provide the level of information, products and services required by members and customers in the present work and regulatory environment?

In the coming months the Board, working closely with Committee Chairs and Committees, with input and direction from the RQA Office, will rethink and reshape the makeup of the RQA. The objective is to ensure the products and services provided by RQA meet the current and future needs of members and customers and the scope of services continues to adapt to those requirements – doing all this while maintaining influence and input to applicable global standards and regulations.

I will provide updates on the progress of the organisational restructuring in future issues of Quasar.

Kindest regards,

Tim

Tim Stiles
chair@therqa.com


7TH GLOBAL QA CONFERENCE

Along with the Society of Quality Assurance (SQA) and Japan Society of Quality Assurance (JSQA), RQA is a co-organiser of the Global QA Conference (GQAC), held every three years.

The 7th Global QA Conference in March 2023 – hosted by SQA – was held at the Gaylord National Resort and Convention Centre in National Harbour, Maryland. The Convention Centre, encased in a monumental 19-storey glass atrium, stands on the banks of the Potomac River, just a stone’s throw from Washington, D.C. The venue is possibly the largest and most impressive convention centre I’ve ever visited, boasting almost 2000 bedrooms and over 94 meeting spaces – the largest holding 10,000 guests (a meeting space, not a bedroom).

SQA delivers a Quality College – a week of intense learning – twice yearly. The GQAC sat alongside the Quality College, offering SQA members a broad choice of learning and networking opportunities. The conference ran for two and a half days across several topic-driven streams. It was interspersed with meetings of Chapters, Speciality Sections and National Societies, creating a constant buzz of activity from dawn until dusk.

RQA, as usual, was offered the opportunity to exhibit, which we gladly accepted – a chance to connect with QA specialists, mainly from the US, and discover their professional needs. There were around 40 exhibitors, primarily consultants and auditors, plus the five societies – SQA, JSQA, KSQA (Korea), GQMA (Germany), and RQA. The visitors to the RQA stand were mainly interested in learning and membership; we’d taken a handout to briefly explain what RQA offers and it was exceptionally well received. We also had RQA USB cables and mouse mats to give away to enquirers.

The opening plenary featured a warm welcome from the Programme Committee Chairs, the SQA President and representatives of the co-host partners, and an address by the keynote speaker, David Lim, a.k.a. ‘Mr Everest’, who conquered the world’s highest mountain twice, the second time with a permanent disability in both legs. David’s address was humorous, heart-warming and highly motivational.

SQA made good use of the available networking time by planning events in social settings. We used these opportunities to meet RQA members, collaborators, sponsors, exhibitors and colleagues, old and new. It was good to see everyone; I had never before experienced such enormous benefits from networking.

The RQA session, scheduled for 10:30am on Wednesday, focused on Learning and Development Trends, with a panel of experts comprising Carl Lummis, Zillery Fortner, Yasser Farooq, Richard Crossland and Miland Nadgouda. The discussion was lively and stimulating, with around 60-70 attendees and a steady flow of excellent points, questions and responses from all sides.

At international conferences, we always take the opportunity to meet other national societies and discuss membership-related matters, including any opportunities for collaboration. This year, the meeting room seemed busier than ever, with representatives from SEGCIB (Spain), GQMA (Germany), SARQA (Sweden), SOFAQ (France), KSQA (Korea), ICSQA (India), JSQA (Japan), RQA and SQA. Each representative introduced themselves and their organisation, explaining what they had done during and after the pandemic. It was clear that some societies had struggled to maintain their support to members; for purely voluntary organisations, this cannot have been easy in such challenging times.

JSQA and RQA regularly meet at conferences that both organisations attend. The topics discussed are mainly around collaboration and have, in the past, led to the publication of booklets, for example. The agreement between JSQA and RQA includes an annexe on the relationship between the JSQA GCP Division and the RQA GCP Committee. We agreed that this document, which has existed for around four years, should be reviewed, and that both parties should consider similar annexes for GLP and GPvP. The RQA Community Hub will also be considered as a way to facilitate these collaborative activities and relationships.

A key part of the closing plenary was a regulator Q&A session chaired by SQA President Cheryl McCarthy. The regulators included:

• Martijn Baeten, GLP Inspector, Sciensano, Belgium

• Chrissy Cochran, Director, US FDA

• Anne Johnson, District Director PHI-DO, DHHS/FDA

• Francisca Liem, Director, GLP Program, US EPA

• Thomas Lucotte, Inspection des Essais et des Vigilances, France

• Michael McGuinness, Head of GLP & Laboratories, MHRA

• Eric Pittman, Program Division Director, US FDA

• Naoyuki Yasuda, Director, MHLW, Japan.

A set of 16 questions had been prepared and the panellists took turns answering them as appropriate. Additionally, the regulators were asked to share interesting experiences, which were largely entertaining.

The closing address was mainly congratulatory; the Programme Committee Chairs, the SQA President and representatives from the co-host partners thanked all involved, including the attendees, for their support. And then, the big announcement… the next Global QA Conference.

While I received the commemorative GQAC flag for the next GQAC, Carl announced that the 8th Global QA Conference in 2026 will be held in London! Who knew?

Anthony Wilkinson, Director of Operations


ANALYSIS OF THE EMA DRAFT GUIDANCE ‘GUIDELINE ON COMPUTERISED SYSTEMS AND ELECTRONIC DATA IN CLINICAL TRIALS’

We are all aware that clinical trials are increasingly going digital. Gone are the days when the words ‘source documents’ brought to mind a bundle of scribbled pages. No nostalgia or regrets. Today the data life cycle involves several structured computerised systems of increasing complexity, from local devices to delocalised cloud applications.

Massimo Tomasello

Anna Piccolboni

Laura Monico

Marianna Esposito

Mario E. Corrado


The COVID-19 pandemic has further accelerated this process and boosted the digitisation of clinical trials, introducing new challenges such as remote monitoring and remote inspections. Still, it is not all rosy. The digital environment can be difficult to understand. With paper, the source data were usually easy to locate; with digital systems, identifying the ‘source data’ is much harder. Compliance with GCP principles such as ALCOAC+ can also be challenging.

With perfect timing, in June 2021 EMA opened the public consultation on the draft ‘Guideline on computerised systems and electronic data in clinical trials’. The document was released by the GCP Inspectors Working Group and is therefore meant to represent the current expectations of EMA inspectors. This did not come out of the blue. In recent years the European inspectors have published several Q&As on topics related to computerised systems, showing that inspectors’ attention in this area is high (and that inspection findings are probably common). The document is intended to replace the 2010 EMA ‘Reflection paper on expectations for electronic source data and data transcribed to electronic data collection tools in clinical trials’. While the old reflection paper was only 13 pages long and narrow in scope, the new guideline is an impressive 47-page document, highly detailed and demanding. As the inspectors wrote: ‘Development of and experience with such systems has progressed. A more up to date guideline is needed’.

The guideline delivers on this promise: the updated document now covers ‘current hot topics’ such as electronic Clinical Outcome Assessment (eCOA), electronic Patient Reported Outcome (ePRO), electronic Informed Consent (eIC), cloud systems and Artificial Intelligence (AI). It is addressed to sponsors, CROs and investigators, but also to other parties such as software vendors. Particular attention is given to the migration and transfer of data across different systems and to the requirements for audit trails and audit trail review. After the introduction, scope and legal and regulatory background, the guideline summarises the principles and key concepts of computerised systems in clinical trials. ‘Electronic source data’ is precisely defined as ‘the first obtainable permanent data from an electronic data generation/capture’, and examples of common but incorrect identification of source data are provided. Detailed requirements for computerised systems follow, and a full chapter is dedicated to electronic data (and audit trails) and the challenges of managing them throughout their life cycle. Finally, five annexes set out detailed requirements on topics such as contracts, validation, user management, security and specific types of systems. An outline of the guideline structure is provided in Figure 1.

The Italian Group of Quality Assurance in Research (GIQAR), part of the Italian Society of Pharmaceutical Medicine (SIMeF, https://simef.it) has recently established a working group on GCP and computerised systems in clinical trials.

Our team analysed the draft guideline and sent EMA comments, requests for clarification and suggestions on different aspects of the document. From this analysis we identified several topics that will clearly pose new challenges for sponsors and CROs and, above all, for clinical sites. We summarise them in the following points:

NEW COMPUTERISED SYSTEMS IN SCOPE

Compared to the 2010 EMA reflection paper, additional computerised systems used in clinical trials are included in the scope of the guideline, such as:

• Applications used by trial participants on their own devices (‘bring your own device’, BYOD)

• Tools that automatically capture data related to transit and storage temperatures for IMP or clinical samples

• eTMFs

• Electronic Informed Consents

• Interactive Response Technologies (IRT)

• Portals for supplying information from the sponsor to the sites

• Computerised systems implemented by the sponsor that hold, manage and/or analyse data relevant to the clinical trial, e.g. Clinical Trial Management Systems (CTMS), pharmacovigilance databases, statistical software, document management systems and central monitoring software

• Artificial intelligence (AI) used in clinical trials.

FIGURE 1: OUTLINE OF EMA DRAFT GUIDELINE ON COMPUTERISED SYSTEMS AND ELECTRONIC DATA IN CLINICAL TRIALS

EMA GUIDELINE ON COMPUTERISED SYSTEMS AND ELECTRONIC DATA IN CLINICAL TRIALS

EXECUTIVE SUMMARY

1. INTRODUCTION

2. SCOPE

3. LEGAL AND REGULATORY BACKGROUND

4. PRINCIPLES AND DEFINITION OF KEY CONCEPTS

5. COMPUTERISED SYSTEMS

6. ELECTRONIC DATA

GLOSSARY AND ABBREVIATIONS

ANNEX 1: CONTRACTS

ANNEX 2: COMPUTERISED SYSTEMS VALIDATION

ANNEX 3: USER MANAGEMENT

ANNEX 4: SECURITY

ANNEX 5: REQUIREMENTS RELATED TO SPECIFIC TYPES OF SYSTEMS, PROCESSES AND DATA


EXTENT AND RESPONSIBILITIES FOR COMPUTERISED SYSTEM VALIDATION

The guideline clearly requires that the computerised systems used in clinical trials and within its scope are validated throughout their entire life cycle. However, the extent of validation required for each system is not entirely clear, and no specific instructions are provided. The guideline also clarifies that the investigator is ultimately responsible for the validation of the computerised systems implemented by the investigator’s institution, just as the sponsor is for all the remaining systems used in each clinical trial. While sponsors and CROs are generally accustomed to validation concepts and techniques and have specific procedures and resources in place, this requirement may be challenging for clinical sites. Indeed, investigators/institutions will need dedicated personnel and/or consultants to validate their systems, plan periodic reviews and implement change control processes. Finally, the guideline requires that, in the event of a regulatory inspection, the validation documentation for all systems in scope is made available to inspectors on request in a timely manner, irrespective of whether it is provided by the responsible party, a CRO or the system vendor.

ELECTRONIC DATA TRANSMISSION AND eSOURCE DATA IDENTIFICATION

The transmission of electronic data should be described, together with a dedicated diagram, including information on the data transfer, format, origin and destination, the parties accessing the data, the timing of the transfer and any actions that might be applied to the data, for example validation, reconciliation, verification and review. This also applies when data are captured by an electronic device and temporarily stored in the device’s local memory before being uploaded to a central server: this data transfer should be validated, and the data are considered source data only once they are permanently stored on the server.
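A minimal sketch of how the validated device-to-server transfer described above might be checked, written in Python purely for illustration. The `checksum` function, the `CentralServer` class and the use of a SHA-256 hash over a canonical JSON payload are our own assumptions, not requirements taken from the guideline; the sketch only shows the server confirming that a record arrived unchanged before treating it as permanently stored, and therefore as source data.

```python
import hashlib
import json
from dataclasses import dataclass, field


def checksum(record: dict) -> str:
    """SHA-256 of a canonical JSON form, so device and server hash identical bytes."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class CentralServer:
    """Hypothetical central server; 'stored' holds only permanently saved records."""
    stored: dict = field(default_factory=dict)

    def receive(self, record_id: str, record: dict, sent_checksum: str) -> bool:
        # Reject the upload if the record changed between device memory and server
        if checksum(record) != sent_checksum:
            return False
        self.stored[record_id] = (dict(record), sent_checksum)
        return True

    def is_source(self, record_id: str) -> bool:
        # Data held only in device memory are not yet source data
        return record_id in self.stored


# Usage: the device computes the checksum before upload; the server re-checks it
server = CentralServer()
diary_entry = {"participant": "P-001", "pain_score": 4, "time_utc": "2023-05-01T08:30:00Z"}
sent = checksum(diary_entry)
print(server.receive("entry-1", diary_entry, sent))  # True
print(server.is_source("entry-1"))                  # True
```

A checksum only demonstrates that the bytes were not altered in transit; validating the transfer in the guideline’s sense would also cover completeness, timing and error handling.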

Certain source data may be recorded directly into the eCRF, and the same is true for electronic tools that collect patient data directly, such as eCOA and ePRO tools (e.g. electronic diaries), wearables, laboratory equipment and ECGs. These data should be accompanied by metadata about the device used (e.g. device version, device identifiers, firmware version, last calibration, data originator and a UTC time stamp for events).
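As an illustration only, the device metadata listed above could travel with each reading as a structured record. The `DeviceReading` fields and the `metadata_problems` check below are hypothetical names chosen for this sketch; the guideline lists the kinds of metadata expected but does not prescribe any data format.

```python
from dataclasses import dataclass, fields
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DeviceReading:
    """One reading plus the device metadata named in the guideline's examples."""
    value: float
    device_id: str
    device_version: str
    firmware_version: str
    last_calibration: datetime
    data_originator: str
    event_time_utc: datetime


def metadata_problems(reading: DeviceReading) -> list:
    """Return metadata gaps; an empty list means nothing obvious is missing."""
    problems = []
    for f in fields(reading):
        if getattr(reading, f.name) in (None, ""):
            problems.append(f"missing {f.name}")
    ts = reading.event_time_utc
    # Naive datetimes (no offset) or non-zero offsets are not UTC time stamps
    if isinstance(ts, datetime) and ts.utcoffset() != timedelta(0):
        problems.append("event_time_utc is not expressed in UTC")
    return problems


reading = DeviceReading(
    value=72.0,
    device_id="WEAR-0042",
    device_version="2.1",
    firmware_version="1.4.3",
    last_calibration=datetime(2023, 1, 15, tzinfo=timezone.utc),
    data_originator="participant P-001",
    event_time_utc=datetime(2023, 5, 1, 8, 30, tzinfo=timezone.utc),
)
print(metadata_problems(reading))  # []
```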

All such electronically captured source data must be precisely identified in the study protocol. The guideline clearly states that any data generated/captured and transferred to the sponsor or CRO that are not specified in the protocol or other study-related documents will be considered GCP non-compliant. ePRO data should not be kept on servers under the sponsor’s exclusive control until the end of the study; they must be made available to the investigator in a timely manner, since he/she is responsible for the oversight of safety and of the compliance of trial participants’ data.

CONTROL OF DATA AND MANAGEMENT OF DYNAMIC DATA

The sponsor should never have exclusive control of data entered in a computerised system. The investigator should be able to download a certified copy of the data at any time. Moreover, after a database is decommissioned, the investigator should receive a certified copy of the data entered at the site, including metadata such as the audit trail, and the file provided should capture all the dynamic aspects of the original. This means that static formats of dynamic data (e.g. PDF copies containing fixed/frozen data that allow no interaction) will not be considered adequate. In addition, before the investigator’s read-only access is revoked, he/she should be able to review the certified copy received against the original database to confirm that they correspond exactly. However, the guideline remains rather vague about the expectations for this review and about where and how it should be documented.

Finally, the integrity of data must be preserved, together with their dynamic features, throughout their life cycle: after a database is decommissioned, it must remain possible to restore it to a fully functional status, including its dynamic features, for example for inspection purposes. Long-term retention of data in a fully functional status appears technically and economically challenging, and hardly feasible given the retention period (at least 25 years) required by Regulation (EU) No. 536/2014 on clinical trials on medicinal products for human use.
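The guideline leaves open how the investigator’s review of the certified copy against the original database should be performed. One possible approach, sketched here under our own assumptions, is to compare record-level fingerprints of the two exports and list anything missing, extra or changed. The record keys and the `compare_copy` helper are hypothetical, and a real review would also need to cover the dynamic features discussed above.

```python
import hashlib
import json


def fingerprint(row: dict) -> str:
    """Hash a canonical serialisation so key order does not affect the result."""
    text = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def compare_copy(original: dict, copy: dict) -> dict:
    """Compare two exports keyed by a record identifier (e.g. site/subject/form/field)."""
    missing = sorted(k for k in original if k not in copy)
    extra = sorted(k for k in copy if k not in original)
    changed = sorted(
        k for k in original
        if k in copy and fingerprint(original[k]) != fingerprint(copy[k])
    )
    return {"missing": missing, "extra": extra, "changed": changed,
            "identical": not (missing or extra or changed)}


original = {
    "S01/P-001/VS/SBP": {"value": 120, "audit": ["created 2023-02-01", "edited 2023-02-03"]},
    "S01/P-001/VS/DBP": {"value": 80, "audit": ["created 2023-02-01"]},
}
certified_copy = {
    "S01/P-001/VS/SBP": {"value": 120, "audit": ["created 2023-02-01"]},  # audit entry lost
    "S01/P-001/VS/DBP": {"value": 80, "audit": ["created 2023-02-01"]},
}
print(compare_copy(original, certified_copy))
```

Because the audit trail is hashed together with the value, the SBP record is reported as changed even though its current value matches, which reflects the point that metadata belong to the certified copy too.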

AUDIT TRAIL AND AUDIT TRAIL REVIEW

As mentioned, the guideline places strong emphasis on audit trails. The extension of the ALCOAC principles to ALCOAC+, with the addition of the ‘traceable’ requirement, clearly shows where the focus lies, and the guideline explicitly requires that all changes be documented in the metadata.

The guideline provides detailed requirements on audit trail content: it should include all information on changes in local memory, changes made through queries and edit check results, extractions for internal reporting and statistical analysis, and access logs. Even the exceptional case in which a system administrator is forced to deactivate the audit trail should be recorded in the audit trail itself.
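To make the last point concrete, here is a minimal, illustrative audit trail in which switching logging off is itself an audit event. The `AuditTrail` class is hypothetical, and the hash chaining (each entry storing the hash of the previous one, so that later edits to history can be detected) is our own design choice rather than something the guideline specifies.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(entry: dict) -> str:
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only, hash-chained log (hypothetical design for illustration)."""

    def __init__(self):
        self.entries = []
        self.enabled = True

    def _append(self, user: str, action: str, detail: str) -> None:
        entry = {
            "time_utc": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "detail": detail,
            "prev": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = _digest(entry)  # covers every field above, including 'prev'
        self.entries.append(entry)

    def record(self, user: str, action: str, detail: str) -> None:
        # While disabled, changes go unrecorded, which is why the gap must be visible
        if self.enabled:
            self._append(user, action, detail)

    def disable(self, admin: str, reason: str) -> None:
        self._append(admin, "AUDIT_TRAIL_DISABLED", reason)  # logged before switching off
        self.enabled = False

    def enable(self, admin: str, reason: str) -> None:
        self.enabled = True
        self._append(admin, "AUDIT_TRAIL_ENABLED", reason)

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = ""
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev"] != prev or _digest(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True


trail = AuditTrail()
trail.record("investigator-01", "UPDATE", "AE term corrected")
trail.disable("sysadmin", "emergency database maintenance")
trail.record("investigator-01", "UPDATE", "not captured")
trail.enable("sysadmin", "maintenance complete")
print([e["action"] for e in trail.entries])
# ['UPDATE', 'AUDIT_TRAIL_DISABLED', 'AUDIT_TRAIL_ENABLED']
print(trail.verify())  # True
```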

The guideline then sets out requirements for audit trail review, specifying that ‘the entire audit trail should be available as an exported dynamic data file in order to allow for identification of systematic patterns or concerns in data across trial participants, sites etc...’. This audit trail analysis should focus on:

• Missing data

• Data manipulation

• Abnormal data

• Outliers

• Implausible dates and times

• Incorrect data processing

• Unauthorised access

• Malfunctions

• Direct data capture not performed as planned.
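Some of the review areas listed above lend themselves to simple automated screening of an exported audit trail. The sketch below covers only three of them (unauthorised access, implausible dates and times, and deletions that may create missing data); the `AuditEntry` fields, the authorised-user list and the study start date are assumptions made for illustration, and anything flagged would still need human review.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditEntry:
    user: str
    site: str
    field_name: str
    old_value: str
    new_value: str
    timestamp: datetime  # timezone-aware, UTC


def screen(entries, authorised_users, study_start, now: Optional[datetime] = None):
    """Return (index, finding) pairs for entries that warrant a closer look."""
    now = now or datetime.now(timezone.utc)
    findings = []
    for i, e in enumerate(entries):
        if e.user not in authorised_users:
            findings.append((i, "unauthorised access"))
        if e.timestamp > now or e.timestamp < study_start:
            findings.append((i, "implausible date/time"))
        if e.old_value and not e.new_value:
            findings.append((i, "value deleted (possible missing data)"))
    return findings


utc = timezone.utc
entries = [
    AuditEntry("inv-01", "S01", "SBP", "120", "125", datetime(2023, 2, 1, tzinfo=utc)),
    AuditEntry("temp-user", "S01", "SBP", "125", "180", datetime(2023, 2, 2, tzinfo=utc)),
    AuditEntry("inv-01", "S02", "DBP", "80", "", datetime(2024, 1, 1, tzinfo=utc)),
]
for finding in screen(entries, {"inv-01"}, datetime(2023, 1, 1, tzinfo=utc),
                      now=datetime(2023, 6, 1, tzinfo=utc)):
    print(finding)
```

Patterns such as data manipulation or outliers across sites would need statistical methods on top of rule-based checks like these.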

The questions that arise are therefore: do we have the resources to review the audit trail in the depth required? Are end-users appropriately trained and qualified for this type of analysis? Will this be technically achievable in a user-friendly way?


TRAINING

The guideline reinforces the staff qualification requirements of ICH E6 (R2), explicitly requiring training on applicable legislation and guidelines for everyone involved in developing, building and managing trial-specific computerised systems, such as staff at a contract organisation providing eCRF, IRT or ePRO systems, trial-specific configuration, and management of the system during the conduct of the clinical trial.

The main concern is whether technical providers are always up to date with, and aware of, all applicable legislation and guidelines. Such vendors often supply systems to different industrial sectors, not only the pharmaceutical industry. Therefore, while they are technically skilled and should be well aware of Software Development Life Cycle requirements, they may need more specific, documented knowledge of GCP and clinical requirements.

Regarding the competence of investigators and site staff, the guideline states that ‘all training should be documented, and the records retained in the appropriate part of the Investigator Site File/sponsor TMF’. It clearly indicates that investigators should be trained in navigating the audit trail of their own data so that they can review changes, and that this training must be documented. However, the guideline does not clarify whether non-study-specific training (on systems used in multiple studies, such as CTMS or the PV database) and training on IT security and the management of serious breaches should also be retained in the ISF/TMF.

SECURITY

By widening the range of computerised systems in scope and involving new stakeholders, the guideline indirectly introduces new requirements for the parties involved, such as clinical sites. For instance, a controlled SOP is required for defining and documenting security incidents, rating their criticality and implementing CAPAs. Our question here is: do clinical sites have a quality system organised well enough to support these activities? The list of required security measures includes:

• Antivirus software

• Task manager monitoring

• Regular penetration testing

• Intrusion attempts detection system

• Effective system for detecting any unusual activity from a user (e.g. excessive file downloads, copying or moving or backend data changes).
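The last measure in the list, detecting unusual user activity such as excessive file downloads, can be approached with a rolling-window count per user. This is a minimal sketch under our own assumptions: the `DownloadMonitor` class is hypothetical, and the one-hour window and limit of 50 downloads are placeholder values, not figures from the guideline.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class DownloadMonitor:
    """Flag a user whose downloads within a rolling window exceed a limit."""

    def __init__(self, window: timedelta = timedelta(hours=1), limit: int = 50):
        self.window = window
        self.limit = limit
        self.events = defaultdict(deque)  # user -> timestamps inside the window

    def record(self, user: str, when: datetime) -> bool:
        """Register one download; return True if the user is now over the limit."""
        q = self.events[user]
        q.append(when)
        # Discard downloads older than the window (events assumed to arrive in time order)
        while q and when - q[0] > self.window:
            q.popleft()
        return len(q) > self.limit


monitor = DownloadMonitor(window=timedelta(minutes=10), limit=3)
start = datetime(2023, 5, 1, 9, 0)
alerts = [monitor.record("user-7", start + timedelta(minutes=i)) for i in range(5)]
print(alerts)  # [False, False, False, True, True]
```

In practice an alert like this would feed into the documented incident process rather than act on its own.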

As stated above, while sponsors and CROs are quite used to working in a highly regulated environment, clinical sites and computerised system vendors may need additional resources, in terms of employees and/or consultants, to fully satisfy the above requirements.

CONCLUSION

The new guideline provides direction to sponsors, CROs, investigators and other parties involved in the design, conduct and reporting of clinical trials on the management of computerised systems and clinical data. It does not really introduce new concepts, but it finally clarifies inspectors’ expectations in several compliance areas. It offers a fresh, modern view of new and emerging technologies (e.g. wearables, AI, cloud) and establishes a solid basis for supporting and enforcing compliance by providers and sites.

Nevertheless, our analysis shows that not all the requirements are clear and that some of them are technically very challenging (e.g. retaining data while preserving their dynamic state). A great deal of work will be needed to achieve full compliance when the guideline comes into force, especially for trials already ongoing. In addition, some requirements could be very complex for clinical sites and will require a completely new approach.

Electronic systems and data are here to stay, and it is important that compliance requirements are clear and feasible. As stated, GIQAR has requested several clarifications on the document, and we assume that EMA must have received many more questions and suggestions from other stakeholders in the pharmaceutical industry and perhaps from investigators. We hope that EMA will take them into account and respond with a clear, workable final guideline.

Article first printed in the Journal of the Society of Pharmaceutical Medicine.

PROFILES

Mario has 23 years of experience in clinical research. During his career he has worked as a Clinical Monitor, Project Leader and Auditor, and in Quality Assurance. Mario leads the Quality Assurance Unit of the CROs Alliance Group. For seven years he has been one of the coordinators of the GCP working group of the Italian Group of Quality Assurance in Research (GIQAR).

Marianna has over 22 years of professional experience in the pharmaceutical environment, with extensive experience in GCP/GCLP/GVP/CSV compliance and Quality Assurance (QA). She has wide knowledge of ICH guidelines, European pharmacovigilance regulations, GCP, GCLP (WHO), 21 CFR Part 11, EU Annex 11, ALCOA+ data integrity principles, GAMP 5 and the main FDA guidance documents for industry. Since 2000 Marianna has been working for PQE, where she has developed wide expertise in clinical and PV auditing, QMS implementation and computer systems validation projects. She is currently the GCP Compliance Operation Manager at PQE Group.

Laura has a Master’s Degree in Pharmaceutical Chemistry and Technology and gained her first experience at Fidia Farmaceutici S.p.A., where she held the role of Corporate R&D Quality & Compliance Coordinator and gained experience in GCP and GVP compliance, especially in vendor management and the validation of computerised systems used in clinical studies and pharmacovigilance processes.

Anna has a degree in pharmacy and more than 30 years’ experience in pharmaceutical companies, including the role of Head of QA for GLP, GCP and GVP. During her career she has also worked in project management and scientific information. Anna is currently a freelance GxP auditor, coordinator of the Italian Group of Research QA (GIQAR) and Vice-President of the Italian Society of Pharmaceutical Medicine (SIMeF).

Massimo is GLP/GCP Senior Specialist and Auditor at Chiesi Farmaceutici. He has 19 years’ experience in research quality assurance across the GLP and GCP areas. Massimo started working as a QA Auditor in a toxicology test facility in 2004 and in 2016 joined Chiesi, where he is responsible for preclinical quality assurance activities and is involved in implementing and maintaining the GCP quality system in R&D projects.


ROCHE/RQA COLLABORATION: IMPACT OF THE CERTIFICATE IN DATA BASICS eLEARNING IN THE PHARMACEUTICAL INDUSTRY, INCLUDING THE LAUNCH OF THE IMPALA CONSORTIUM


Tim Menard

Sharon Havenhand

On 21st April 2021, Roche and the Research Quality Association launched a new industry collaboration to bring RQA members the Certificate in Data Basics eLearning course, part of Roche’s Data Analytics University.

IMPACT

Initial end-of-course survey feedback from quality professionals showed a positive response to the eLearning:

• 97% said they will prioritise implementing the course concepts into their work

• 88% said that the eLearning will help them improve their work outcomes

• Overall, 96% of RQA members would recommend this course to their colleagues.

Building on this, we contacted RQA members who had completed the course to hear what impact the training has had on their work and what changes they have made as a result.

The course was designed to equip quality professionals with:

• The basic analytical skills and knowledge they need to interpret and use data effectively

• An understanding of the challenges of data processing and analysis

• Knowledge of the different statistical methods used to interpret and analyse data

• Practical experience of applying statistical methods to a simplified data set

• Critical judgement when it comes to data, statistics and visualisations.

The self-paced course, which takes approximately three hours to complete, includes a final self-assessment knowledge check which, when passed, enables participants to collect three CPD points. Since the launch in 2021, a total of 284 RQA members have enrolled in the course, with 152 completing the training to date. Based on the demographic characteristics of current RQA members, just under half (44%) of those likely to take the training have either enrolled or completed it so far.
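As a quick check on the enrolment figures above, the completion rate among members who enrolled can be calculated directly. The snippet below is only a sketch of that arithmetic, using the 284 enrolled and 152 completed figures from the text; it does not attempt to reproduce the 44% reach figure, which depends on demographic data not given in the article.

```python
# Figures as reported in the article (enrolments since the April 2021 launch).
enrolled = 284
completed = 152

# Share of enrolled members who have finished the course so far.
completion_rate = completed / enrolled
print(f"Completion rate: {completion_rate:.1%}")
```

This prints a completion rate of 53.5%: slightly more than half of the members who enrolled had finished the course at the time of writing.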

With that in mind, Roche and the RQA began assessing what impact the Certificate in Data Basics eLearning has had in the pharmaceutical industry since its launch and how we can broaden our reach with this eLearning to other quality professionals across industry.

Taking this course has helped me with supporting my clients in preparing for inspections, especially with reference to the statistics part of inspections as it has given me a better understanding of the terminology and principles of data handling.

Helen Powell from HYP Pharma Ltd, Pharmacovigilance and GCP QA Consultancy

The course provided me with a good revision of basic statistics and is a helpful reference point for me when auditing clinical lab data.

Gail Todd, Associate Director LabsQA, AstraZeneca

I applied what I learned towards the assessment of R and its use in data analytics at Flatiron. I was able to partner with the Quantitative Sciences (QS) team to develop and implement a risk-based framework. The course provided me with the basics which I was able to use in discussions with the QS team to further my knowledge about data analytics.

Manan Patel, Manager, Integrated Quality Management, Flatiron

Other feedback said that the course helped ‘give non-data scientists an understanding of how data analytics works’ and that respondents ‘really enjoyed putting the insights learnt into practice’.

Carl Lummis, Events, Marketing, Publications and Online Learning Manager at RQA, summarises: “The eLearning course has helped bring a stronger, more diverse perspective to Data Basics for RQA members. This initiative has given all members involved a new opportunity for growth in this area.”

The insights derived from the data collected indicate that the eLearning course is being used by a mixed group of people for varying activities, that most quality professionals who took it were making or had made changes as a result, and that those changes were having a positive impact. In conclusion, the data suggest that the course concepts are indeed making an impact in the industry. Roche aims to be an industry leader in this space and, with the support of the RQA, has made great inroads towards achieving this.

BROADENING OUR REACH

To build on the great response to, and uptake of, the course by RQA members, Roche is now looking to reach a wider, global audience of quality professionals across the industry, outside the RQA. As a result, Roche and the RQA have collaborated to release the first three parts of the Certificate in Data Basics eLearning free to non-members*. The courses, which are available on the RQA website, are:

• Introduction: Welcome to the Course

• Chapter 1 – Demystifying Analytics – Part 1

• Chapter 1 – Demystifying Analytics – Part 2

These short, self-directed courses give quality professionals a basic introduction to data analytics and a good platform to build upon. We hope to inspire non-members and spark their interest in continuing to learn and benefiting from training as RQA members have. In turn, Roche will achieve its objective of broadening its reach to other quality professionals across the industry.


LAUNCH OF IMPALA CONSORTIUM

Continuing on the theme of data analytics, Tim Menard shares his insights into the newly launched Inter-Company Quality Analytics Industry group (IMPALA) and what that means for RQA Members.

WHAT IS THE INTER-COMPANY QUALITY ANALYTICS INDUSTRY GROUP (IMPALA)?

The Inter coMPany quALity Analytics (IMPALA) consortium was initially established in July 2019 as an informal group of biopharmaceutical organisations with the common goal of sharing knowledge and better understanding the opportunities for applying advanced analytics in QA.

IMPALA’s mission is to transform the biopharmaceutical clinical quality assurance process in the Good Clinical Practice and Good Pharmacovigilance Practice areas by using advanced analytics and by promoting the adoption of this new approach and its associated methodologies by key industry stakeholders (e.g. pharma quality professionals, health authorities), to assure safe use, thereby building patient trust, accelerating approvals and ultimately benefiting patients globally.

The IMPALA consortium will provide the strategic focus for working across the biopharmaceutical ecosystem to develop, and gain industry-wide consensus for, improved QA using advanced analytics and best practices.

To achieve this, several Work Product Teams have been established and are working on focused projects to advance the consortium’s objectives. Current members include Astellas, Bayer, Biogen, Boehringer Ingelheim, Bristol Myers Squibb, Johnson & Johnson, Merck KGaA, Merck & Co., Novartis, Pfizer, Roche and Sanofi.

WHAT WOULD RQA MEMBERS WANT TO KNOW ABOUT IT?

IMPALA is committed to developing open-source products (i.e. co-developing open-source tools such as analytics packages, templates and methodologies), meaning that once these work products are released, they are accessible beyond IMPALA members. Our current work products cover audit scheduling, data integrity, anomaly detection in audit trails and quality briefs; more information on these can be found on our website.

HOW CAN RQA MEMBERS GET INVOLVED?**

Some RQA members may remember that IMPALA ran an interactive session, ‘How can we leverage data analytics for clinical quality and accelerate drug development?’, at the RQA International QA Conference on 10th November 2022. The IMPALA panel engaged with the audience to define, discuss and gather business requirements for data analytics use cases in clinical quality. RQA members can continue to get involved in the discussions, as IMPALA plans to attend the next RQA conference in November 2023 for another interactive session. More information will follow.

On 26th June 2023, as part of the DIA Global Annual Meeting in Boston, MA, IMPALA will present:

1. The vision of IMPALA and examples of IMPALA’s quality analytics products.

2. Ongoing feedback from health authorities on the quality analytics methods.

3. Guidance on how sponsors and regulators can join IMPALA.

RQA members are welcome to attend (a fee applies); more information can be found on the DIA website: www.diaglobal.org

WHERE CAN RQA MEMBERS FIND OUT MORE INFORMATION?

To keep up to date on the latest news, RQA members can follow IMPALA’s LinkedIn page: www.linkedin.com/company/intercompany-quality-analytics. They can also head over to IMPALA’s website, which has a wealth of information on work products, publications and upcoming events: https://impala-consortium.org/

PROFILES

Sharon is currently a Principal Quality Solutions Lead at Roche, responsible for leading and delivering the Data Analytics Learning Strategy for her function. She has been working in the pharmaceutical industry since 2006, holding a variety of positions in Quality Assurance from Lead International Clinical Auditor to Quality Assurance Specialist, gaining extensive experience across the GxP disciplines. Sharon holds a Diploma in Learning and Development and is an ILM Accredited Advanced Professional Trainer.

Tim is currently Head of Quality Data Science at Roche. He started his industry career in 2009 in PV, in a range of operational and strategic roles that took him to live in various parts of the world. Tim joined Roche as an auditor and dived into the ‘magic world of analytics’ around 2017. He has led the PDQ Data Science Team at Roche since 2018; his team creates and implements data-driven solutions that help to understand, detect early or predict clinical quality issues.


* To take the remainder of the eLearning course, non-members will need to sign up to become RQA members.

**Please note that, at this time, only biopharma organisations are eligible for membership of IMPALA. Biopharma sponsors can apply to join via the IMPALA website.


Nigel Crossland

THE ROLE OF QUALITY ASSURANCE IN PHARMACEUTICAL R&D

It would be unreasonable to expect patients to agree to take drugs for which there was no proof of effectiveness or relative safety. The idea of taking drugs merely on unsubstantiated trust belongs to the past and is not acceptable in the modern age of scientific evaluation.


As pharmaceutical products are manufactured, licensed, sold, prescribed and used worldwide, it is essential that their quality, efficacy and safety are proven and that supporting documentation is made available to the respective national regulatory authorities for approval and licensing purposes. Achieving this requires consistent and disciplined systems of project management, involving research scientists, manufacturing chemists, data managers and analysts, monitoring personnel, report writers, clinicians and volunteer subjects. The facilities in which these projects are conducted typically include preclinical and clinical laboratories, manufacturing plant, data processing and office accommodation.

Pharmaceuticals (referred to as products throughout this article) encompass drugs (e.g. the birth control pill), medicines (e.g. anti-cancer agents) and devices, including engineered technologies and biosynthetics (e.g. pacemakers and wound-healing dressings). The starting point for most products is an idea based on further development of, and modifications to, the chemical structures of existing compounds; the copying of a naturally occurring material noted to have potential pharmacological activity; or simply serendipity. For medical devices, it is more a case of developing the mechanical or physiological capabilities of the product. Initial testing, which may include in vitro, microbial or biochemical models, may lead, after many months or years of effort, to a product that can then be tested in a clinical setting involving human volunteers.

The results of these studies will, it is hoped, lead to a candidate product that, after extensive further evaluation, can be presented to the licensing authorities. Along this tortuous journey, however, the product is very carefully monitored for clinical efficacy as well as for any indication of untoward effects. Formulation development, manufacturing consistency and whether the product could be developed economically are also assessed.

When dealing with generic products, as opposed to those still protected under the terms of licence, it must be appreciated that manufacturers are still required to submit their version (copy) to detailed testing to show that it possesses similar pharmacological activity to the original, i.e. that it is bioequivalent. Such clinical trials involve healthy volunteers receiving both the original and the generic versions of the product, with blood samples taken and chemically analysed for concentrations of the active substance over time, i.e. pharmacokinetic analysis.
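To make the pharmacokinetic comparison more concrete, the sketch below computes two exposure measures commonly compared in bioequivalence work, the area under the plasma concentration-time curve (AUC, here by the linear trapezoidal rule) and the peak concentration (Cmax), for a reference and a generic product. The sampling times and concentrations are hypothetical values invented for illustration only; a real assessment would compare these measures across all volunteers using statistical confidence intervals, which is not shown here.

```python
# Hypothetical single-volunteer data (illustration only): sampling times (h)
# and plasma concentrations (ng/mL) after dosing with each product.
times = [0.0, 1.0, 2.0, 4.0, 8.0]
reference = [0.0, 10.0, 8.0, 4.0, 1.0]
generic = [0.0, 9.0, 8.0, 4.0, 1.0]


def auc_trapezoid(t, c):
    """Area under the concentration-time curve by the linear trapezoidal rule."""
    return sum((t[i + 1] - t[i]) * (c[i] + c[i + 1]) / 2 for i in range(len(t) - 1))


auc_ref = auc_trapezoid(times, reference)
auc_gen = auc_trapezoid(times, generic)
cmax_ref, cmax_gen = max(reference), max(generic)

# Generic-to-reference ratios of the two exposure measures.
print(f"AUC ratio:  {auc_gen / auc_ref:.3f}")
print(f"Cmax ratio: {cmax_gen / cmax_ref:.3f}")
```

With these made-up numbers the generic's AUC is 35 against 36 for the reference and its Cmax is 9 against 10, giving ratios of about 0.97 and 0.90.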


To survive this labyrinthine process and achieve success, systems are developed and put in place to standardise and document work procedures: projects are defined, those in charge are identified, routine procedures are documented as Standard Operating Procedures (SOPs), equipment records are maintained, reagents and consumables are recorded, job responsibilities are defined and staff training and competency records are established, with all study records properly maintained and retention and archiving performed as a final step. Such rigorous performance of work procedures and the resulting collection of records are collectively referred to as Quality Assurance (QA) systems, and this article attempts to provide the reader with an informative summary of the subject.

QA covers all aspects of the project, rather than the series of in-process checks performed as part of quality control (QC). QA is managed by an entirely separate group of professionals who can provide directional advice as well as extensive and thorough audit scrutiny. QA comprises the systems and processes in place to ensure that work is performed, and data are generated, in compliance with Good Practice, including the use of SOPs, data reporting, staff training and, in the case of clinical trials, procedures for ethical conduct.

To confirm whether standards are being maintained, QA audits are conducted by specialists with extensive training and experience in evaluating research processes. QA audits of clinical research assess compliance with GCP standards, providing assurance that the data and reported results are credible and accurate, and that the rights, integrity and confidentiality of trial subjects are protected. Audits are authorised by senior management following formal request procedures, and their findings are reported through confidential systems. It is notable that QA auditors focus on the questions rather than starting with the answers, and this helps in dealing with the myriad issues needing detailed scrutiny. In this way, confirmation is sought of whether particular pieces of work, including equipment and facilities, have been or are of a satisfactory standard. Audits can assess compliance with expected and approved practice at each and every stage of a research project. Both project-specific elements, such as clinical study records and test results, and non-project-specific elements, such as staff qualifications, training records and facilities, can be examined and evaluated.

Audit reports are prepared and supplied to the senior management of the testing organisation, who are expected to ensure that any deficiencies identified are corrected following review and acceptance by the facility.

An audit cannot examine everything, and nor is it a repeat of in-process QC procedures; instead, it examines how QC was performed, by whom and how this was recorded. Rather than looking for compliance, a typical audit concentrates on non-compliance; in other words, are there any problems? An experienced auditor should quickly see, for instance, whether work procedures were not standardised, whether the study protocol (plan) was not being followed, and whether there was incompetence, facility deficiencies, inadequate record keeping or a failure to adhere to the principles of ethics.

It was in preclinical research and safety testing that deficiencies first came to the attention of regulators (especially the FDA in the USA), where it was thought that companies were not doing enough to safeguard people from the effects of drugs such as thalidomide (the USA largely avoided the problem because the licensing authority presciently withheld a licence for the drug). Thalidomide was originally licensed for over-the-counter (OTC) sale in Germany in July 1956. It took five years for the drug, taken by pregnant women, to be linked to the severe deformities observed at birth in their offspring (Lancet, William McBride, 1961), forcing governments and medical authorities to review their pharmaceutical licensing policies. As a result, changes were made to the way drugs were marketed, tested and approved in the UK and across the world. One key change was that pharmaceutical products intended for human use could no longer be approved purely on the basis of animal testing. In addition, trials of substances marketed to pregnant women had to provide evidence that they were safe for use in pregnancy.

During the clinical research phases of testing and evaluation, the candidate product is administered to volunteers: non-therapeutic trials involve healthy subjects, while therapeutic trials involve patients. In Phase I trials, which include first-time-in-human studies, special care is taken to deal with potentially dangerous untoward events; this includes medically qualified staff and arrangements for emergency admission to an associated or nearby ICU. To ensure ethical standards are maintained, signed consent procedures are conducted at the start and all records are handled with strict confidentiality. Trial conduct is monitored by trained QC personnel who are independent of the clinical team. Care is taken to ensure that volunteers are identified only by a coded identification number, so that no volunteer’s identifying details are released to the sponsoring pharmaceutical company. Applicable regulations and standards include the Medicines for Human Use (Clinical Trials) Regulations 2004 (as amended), Regulation (EU) No 536/2014 on clinical trials on medicinal products for human use, and the Declaration of Helsinki; please see the Medicines and Healthcare products Regulatory Agency (MHRA) website for details.

Quality in the manufacture, formulation, packaging and labelling of pharmaceutical products is governed by the GMP regulations. Label design and the text of the patient information leaflet are under the tight control of the regulatory function within the manufacturer’s offices.

Before clinical trials are initiated, approval must be sought from the relevant authority, which in the UK is the MHRA. Prior approval must also be sought from an independent Ethics Committee, made up of medical professionals, legal experts and lay members of the public, whose sole responsibility is to ensure that the rights, protection and safety of human trial subjects are upheld. This is achieved using ethical standards based on the World Medical Association’s Declaration of Helsinki (1989, 3rd revision). The starting point is an approved, study-specific consent form which, before the trial starts, the volunteer is asked (without coercion) to sign in the presence of a physician, to show that they understand the nature of the study and their own personal responsibilities while in the trial.

Details of each clinical research study should be described and defined in a written study protocol, which essentially answers the questions ‘what, why, when, how, where and who’. It lists who is in charge, the study’s objectives, the identity of the experimental product, its formulation details, a description of the methodology and where the study will be performed.


QA conduct within preclinical laboratories is customarily defined by the standards of GLP. During the clinical phases, laboratories fulfilling analytical duties as part of a clinical study (e.g. clinical chemistry, cytology and haematology), which are often in hospitals if not in independent facilities, customarily have their fitness for purpose confirmed before study initiation. This commonly comprises reviewing the laboratory accreditation certificate and filing it in the study file. It should be noted, however, that independent QA audits against MHRA-approved standards of GCLP provide more meaningful support for the quality of laboratories, especially in various other regions of the world. GCLP is similar to GLP but with the additional requirements of patient confidentiality, anonymity and enhanced data integrity, which are essential prerequisites under the rules of GCP.

Routine clinical and technical procedures undertaken as part of a study should preferably be written up as Standard Operating Procedures (SOPs); otherwise, details of the procedures must be described in the study protocol or in study-specific records. In all cases, such methodologies should be version controlled, dated and bear an approval signature; any modifications of, or deviations from, routine procedures should also be fully documented and signed off by the senior scientist in charge. Any departure from protocol methodology must also be explained and fully documented as part of study records.

Data management, record maintenance and final storage are integral components of any enterprise involving the research and development of pharmaceuticals. These may be paper or electronic records, including statistical data sheets, or biosamples and slides. The purpose of keeping such records is to ensure that sufficient detailed information is available after the study to understand, and even reconstruct, how the study was undertaken if a questionable result needs to be investigated at some future point, as well as to provide documented evidence that the study complied with all good practice requirements.

Analytical laboratories illustrate how comprehensive such records are: original result printouts are collected, together with staff lists, SOPs, equipment details and calibration records, including records of the internal and experimental quality control standardisation reagents.

The safety of products, especially new ones, is taken very seriously by the industry. The monitoring of untoward events in people receiving treatment (pharmacovigilance) is a legal requirement for all pharmaceutical companies, and all new reports are examined by medically qualified personnel. During the clinical research stages, any such event is considered carefully and strongly influences whether human exposure to a new drug in development continues.

During a GCP audit of a clinical trial, every trial subject should be accounted for, including a detailed examination of all ‘dropouts’. Under such scrutiny, it is believed that any significant issues, such as gross errors, fraud or clinical wrongdoing, will inevitably be found. Far more common instances of non-compliance, however, include contravention of standardised procedures or of planned events detailed in the study protocol, inadequate training, documentation failings and facility limitations.

My own audit experience produced the following noteworthy and significant findings:

• Unworkable emergency procedures and equipment in a Phase 1 clinical laboratory

• Falsification of Case Record Forms (CRF) in an attempt to hide an earlier improper inclusion of a particular patient who violated the trial’s exclusion criteria

• Design and usage of paediatric informed consent forms that prevented accurate recording of parent/guardian names

• Unbeknown to the sponsoring pharmaceutical company, the responsible therapeutic study physician, contrary to the trial’s inclusion criteria, was treating an additional patient with a reclaimed trial product

• Totally improper standards for the design, maintenance and use of a first time in human (Phase 1) test product formulation facility

• An analytical laboratory that was found to be totally unsatisfactory despite possessing a dossier of accreditation certificates

• Trial monitoring in a cancer study that improperly relied on nurse-supplied summary records rather than on checks against source clinical records.

It is sometimes too easy to forget the fundamental questions that should be asked when assessing product research and development:

• Were the test formulations actually consumed by the volunteers and is there written proof of this?

• Was the study ethical?

• Are the source records believable, complete, dated and signed?

• If records are electronic, were these same standards adhered to?

• Did the trial monitor actually carry out full and acceptable data verification?

• Were all staff trained and approved for the work they performed?

• Are there accountability records showing proof of receipt, storage and usage of experimental products, including comparators and placebos?

• Are the data and reports believable?

Without QA audits and objective assessments, how do we know that the quality achieved was good enough?

Based on an article written by Nigel Crossland and originally published in the Biomedical Scientist (the journal of the Institute of Biomedical Science).

PROFILE

Nigel has international experience developing and evaluating R&D working practices during a career spent in pharmaceutical companies, laboratories and hospitals.


DID THE AUDIT GO SMOOTHLY? FOR SPONSORS, CLINICAL SITES AND AUDITORS

In China, the number of registered clinical studies has increased significantly in recent years, from 939 in 2015 to 3318 in 2022 (data source: www.chinadrugtrials.org.cn). The increase, which arose from the boom in new drug development in China, mainly followed the issue of China Food and Drug Administration (CFDA) announcement #117 of 2015 (‘the announcement’ for short). (The CFDA has since been reorganised as the National Medical Products Administration (NMPA).)
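The scale of this growth can be summarised as a fold increase and an implied average annual growth rate. This is a minimal sketch using only the two registration counts quoted above, and it assumes smooth compound growth between 2015 and 2022, which the year-by-year figures (not given here) may well not follow.

```python
# Registered clinical studies in China, as quoted from www.chinadrugtrials.org.cn.
start_year, start_count = 2015, 939
end_year, end_count = 2022, 3318

years = end_year - start_year
fold_increase = end_count / start_count
# Compound annual growth rate that would take start_count to end_count in `years`.
cagr = fold_increase ** (1 / years) - 1

print(f"{fold_increase:.2f}x increase over {years} years")
print(f"Implied average annual growth: {cagr:.1%}")
```

The result is roughly a 3.5-fold increase, equivalent to average growth of just under 20% a year; the compound-rate formula is used only as a convenient summary, not as a claim that registrations grew evenly.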

Wenjing Zhu


One thing became clear with the issue of the announcement: more sponsors and medical institutions would be expected to have Investigator Site Audits (ISAs) throughout the clinical study period, and auditing was becoming an essential process in their Quality Management System (QMS). Consequently, the number of auditors in pharmaceutical companies and Clinical Research Organisations (CROs) (both referred to as ‘sponsors’ in this article), as well as audit consultants and consulting companies, increased, springing up like mushrooms.

Sponsors with mature systems and experienced medical institutions/clinical sites generally supported the audit requirement, since auditing was one process that helped to improve study quality. However, in this booming market there were also sponsors and medical institutions that were new to auditing, had little knowledge of the process and placed all kinds of unreasonable requirements on auditors. Moreover, many new auditors entered the market to meet demand but had little idea how to satisfy these various requirements. This article therefore describes some problems in current ISA activities in China, as well as their potential causes. I hope it helps to answer questions or resolve queries from sponsors, medical institutions and auditors who have met similar situations, and sheds light on conducting ISAs.

Irrespective of whether the auditor was from the sponsor’s staff or a consulting company, there were circumstances in which a significant number of Source Document Reviews (SDRs) and/or Source Data Verifications (SDVs) were required during the audit. In some cases, the number exceeded 50% of the study, e.g. reviewing more than four subjects when only six patients were enrolled, and the requirement came from both the sponsor and the medical institution.


Understandably, the more source documents and data were reviewed, the more findings were likely to be detected, which might make whoever raised the requirement feel safer. However, was it necessary to perform a high-percentage review to identify a finding? Would such a comprehensive review not waste the sponsor’s human and financial resources? In a clinical study, the site may or may not perform a 100% Quality Control (QC) check of all documents and data. However, the monitor would usually review 100% of the source documents and data (and even where the monitoring plan required less than 100%, the percentage was still high for sites in China), covering the data essential to the study’s primary and secondary endpoints and overall quality, including the data entered in the eCRF (electronic Case Report Form).

So, when the auditor had to perform a high percentage of SDR and/or SDV, the audit was more likely to become another QC check than a sample-based audit. A sample-based audit is similar to an investigation: a well-prepared sample selection can cover the most critical documents and data that need to be considered, and by reviewing that sample the auditor was likely to detect any existing or potential finding. By combining the severity and frequency of a finding, the required action became apparent and the auditor and sponsor could agree on its classification (minor, major or critical). From the number of minor, major and critical findings detected during the audit, the sponsor could then form a judgement of the study quality at the audited site.

Supplementary document and data review generally provided additional cases of an existing finding rather than detecting new types of finding; for example, more cases of inaccurate eCRF data entry or of source documentation problems from the same sub-investigator. In rare circumstances a new serious finding was detected, such as the site having randomised an ineligible subject. When this happened in only one subject after several had been reviewed, the finding was still unlikely to be classified as critical, and it usually did not change the sponsor’s overall assessment of study quality at the site. When serious findings resulted from a system deficiency, they were typically detected when the auditor performed an SDR for the first or second subject. Critical and/or major findings usually mean that the same problem affects a high percentage of records, so the corrective action should not only rectify what was reported in the audit report but also include a comprehensive check of other documents and data of the same type that were not reviewed during the audit, correcting any defects the additional check reveals. Thus, if the corrective action was effective, all defects, whether listed in the audit report or not, should have been corrected, and the site staff could determine the study quality and take improvement actions during the corrective action stage. A high-percentage document and data review during an audit effectively meant that the auditors were doing part of the study team’s work, finding more cases of one finding; this should have been undertaken by the study team as part of the Corrective and Preventive Action (CAPA) stage, and it did nothing to improve the study team’s capability or sense of responsibility for managing quality.

Take an everyday example: imagine someone wanted to check whether all the chicken eggs sold in Store A were tasty, whatever their size (small, medium or large). To check, they might choose two or three eggs of each size; they would not buy every egg in the store. If none of the chosen eggs were as tasty as expected, they could conclude that the eggs sold in Store A were not tasty. But if only a tiny percentage were not tasty, they would know that the eggs sold in Store A were generally tasty. If one egg tasted really bad but the others in the batch were fine, they might buy another batch to confirm. They could trust their conclusion without testing every egg because the eggs they chose were representative of the rest. Of course, with sufficient money and resources, buying and tasting all the eggs is still possible, but then there would be no eggs left to sell (a situation outside the scope of this article). Nevertheless, I hope this example explains why a prepared sample selection, rather than a high-percentage review, is sufficient in an ISA.

The second problem was minimal cooperation from the sponsor. To conduct an ISA, the auditor must have full knowledge of the study, including all requirements for the clinical site (and for monitoring activity, when applicable) defined in the study protocol, pharmacy manual, laboratory manual and any other relevant manuals or procedural documents. They also need a general picture of the site staff and the study status at the site, gained by reviewing site documents such as the delegation log and monitoring reports. To prepare and conduct the audit, the auditor also needs access to the systems used in the clinical study, such as the electronic Trial Master File (eTMF) and the Electronic Data Capture (EDC) system, and, of course, sufficient time for preparation.

Once they had all the information, the auditor would have a clearer picture of what was to be reviewed, who was to be interviewed, what needed special attention, which queries needed resolving during the audit and which samples should be selected for review. The auditor would not need to spend audit time collecting information and making judgements, leaving more time to review what was critical to study quality at the site, to dig out more information to better understand the study procedures, and to identify the actual finding rather than the superficial issue(s). Without that information at the preparation stage, the auditor had to use valuable audit time to obtain it, which increased the risk that the selected sample was not representative, or that too little time was left to review the selected samples thoroughly or to dig out more information relating to a finding. For example, one auditor found that, for some visits of the subjects they reviewed, the Investigational Medicinal Product (IMP) quantity recorded in the subject medical record was inconsistent with the actual amount reported. With enough time, the auditor could have checked how the accountability log was recorded:

• Was the actual returned quantity consistent with the dispensing and dosing quantity?

• How was the subject IMP return procedure performed?

Then it would be clear whether this was a recording problem or an IMP management problem. If, however, the auditor has to spend too much audit time on work that could have been completed during audit preparation, there may be no time left to dig out the necessary information, leaving the audit finding unclear.

It’s like someone going shopping; some people like to prepare a shopping list before going to the supermarket and others just go without a list.



People who shop with a list can find everything on it. People who shop without a list, on the other hand, have to decide what to buy as they wander around the supermarket; there is a high chance they will forget something they need and only realise when they get home: ‘Oh no, I forgot to buy eggs!’ Minimal cooperation from the sponsor is like shopping without a list. For example, there were cases where the sponsor did not fully support the audit: they did not provide the necessary study or site documents/information to the auditor, did not provide necessary access (e.g. EDC access) even after the audit had begun, or provided the information immediately before the audit, leaving no time for review.

It was hard to understand why the sponsor would not support the audit, since they were paying for it (whether the auditor came from the sponsor or a consulting company) and were responsible for study quality. Moreover, the auditor was independent of the study team and could, from a third-party viewpoint, discover potential or existing problems that the study team had not detected. If the sponsor did not support the audit, wouldn’t conducting it simply waste money and resources, as its benefit would be minimal?

In some of my audits, the monitor or Project Manager (PM) of the audited study was greatly concerned about the finding classification, because the number of major or critical findings in a study was included in their Key Performance Indicators (KPIs), regardless of whether the problem lay with the site or the monitor. With this information, I understood that it might not be the sponsor that was failing to support the audit, but a study team member. The sponsor appeared unsupportive because more support would likely lead to more findings being detected, which might indirectly affect workload and finances.

So, in this kind of circumstance, the sponsor must consider whether they want the truth about study quality or just peace of mind.

Minimal cooperation from clinical sites was quite common. Site staff interviews are an essential part of an ISA. By interviewing site staff, the auditor can obtain valuable information about the study procedures at the site, determine whether staff members are familiar with their study responsibilities, verify whether the interviewee’s account is consistent with what was recorded, and discuss questions/queries with site staff to resolve them and confirm existing findings. However, in some ISAs, the PI and the site staff interviewed were unwilling to answer questions and eager to end the interview (not because of a busy work schedule); sometimes they did not respond when the auditor was trying to clarify queries or was summarising the audit findings. When the auditor received no response to a question/query, it was likely to turn into a finding.

For example, Subject A signed two sets of Informed Consent Forms (ICFs), and the signatures were so different that the auditor suspected the two ICFs had not been signed by the same person. Had this been discussed between the auditor and site staff, they might have clarified whether the two ICFs really were signed by different people or whether the same person simply had two different signatures, and supporting documents could have been provided. Without that discussion, however, it turned into a finding.

There were also situations where the sponsor or clinical site did not want the auditor to write a finding in the audit report. The audit report is a way to reflect the audit activity and to show the sponsor the study status at the site without the sponsor being on-site. By reviewing the audit report, the sponsor and clinical site can see what problems exist or need improvement. Without an accurate record of all the findings, several risks arise: a dispute between the auditor and sponsor if the regulatory authority later detects the issues the auditor found; the sponsor and/or clinical site forgetting to resolve the findings; or the problems being resolved in an unconventional (non-GCP) way, which creates a higher risk to study quality at the site.

One possible reason was that the site or sponsor was worried that the audit findings would be reviewed by regulatory authorities. Such a review is unusual, because ICH E6 (R2) 5.19.3(d) clearly states, ‘To preserve the independence and value of the audit function, the regulatory authority(ies) should not routinely request the audit reports. Regulatory authority(ies) may seek access to an audit report on a case-by-case basis when evidence of a serious GCP non-compliance exists, or in the course of legal proceedings’. Where serious GCP non-compliance exists, the purpose of reviewing the audit report is to verify whether the sponsor fulfilled its responsibilities during the study. If reviewing audit reports became routine during regulatory inspections, rather than being limited to cases of serious GCP non-compliance, no sponsor or clinical site would dare to conduct an audit knowing that the worst case might have to be presented. Moreover, the QMS would be incomplete without audits, contradicting the GCP requirement. So the sponsor or site should not worry that the audit report could be reviewed during an inspection.

Recording the finding in the audit report was also a sure way to remind the sponsor and/or site to resolve the problem. When the inspector noted a resolved critical finding, the audit report, together with the CAPA, proved that the sponsor/site had noted the problem and had taken corrective and preventive actions to avoid recurrence at other sites and/or in future studies, which gave the inspector a positive impression.

Sponsors or hospitals sometimes made other unusual requests; whatever the situation, it made completing the audit more challenging for the auditor. It was therefore important for the auditor to know the reason (or potential reason) for such abnormal situations: perhaps the sponsor was inexperienced in auditing procedures, the sponsor or investigator lacked confidence in the study, they took some minor findings too seriously, or they had a concern they had not expressed. Once the reason was known or declared, the auditor could determine which regulations, guidance and criteria to refer to, and what words or actions might eliminate or reduce the sponsor’s or investigator’s concerns.

If there was no improvement despite the auditor’s efforts, what else should the auditor do? Personally, I would just get on with the audit. But, importantly, always inform the sponsor about the abnormal situation, its potential impact and what the auditor had done. This could protect the auditor, the sponsor and the clinical site.

PROFILE

Wenjing has worked in clinical QA for a CRO company since 2012 and now works at Zigzag Associates Ltd. She has seven years of clinical study auditing experience, covering phase I, phase II and phase III clinical studies. Wenjing has worked with both global and local Chinese pharmaceutical companies and has conducted investigator site audits in hospitals of various sizes in China.


HOME SUPPLY – A PATIENT-CENTRIC APPROACH TO DIGITALLY MITIGATE DTP RISKS

Decentralised Clinical Trials (DCTs) became the default approach during the COVID-19 pandemic. Adoption was fast and furious, ensuring that patients could access the treatments they had been promised without having to complete in-person visits. This led regulators to introduce flexibilities that allowed remote or virtual settings (home health nurses, wearables, eConsenting, etc.).

Marcus Wajngarten

Richard Knight



Fatemeh Jami

Prior to this, there was no regulatory endorsement of conducting clinical trial tasks away from the site. Furthermore, sponsors and clinical trial practitioners were often sceptical about adopting the alternative approach without clear guidance from regulators. The rapid adoption of DCTs comes with risk, as the use of services from entrepreneurial DCT vendors with limited knowledge of how GCP and data privacy laws apply has grown exponentially in a short period of time. This is a new area for the industry, unlike, for example, the established oversight relationships between sponsors and CROs. 2021 saw a continued rise in the adoption of DCT elements, as growing feedback and data supported the value DCTs bring to patients and to diversity in trials. Regulators released information that can help steer us towards compliance without compromising patient safety and data integrity. We have now had time to pause and reflect and, as an industry, we have been granted an opportunity to look within our clinical trial (CT) world and ask whether DCTs are actually a viable route for delivering CTs.

This view has certainly evolved since 2021, with a steady stream of new and updated regulatory viewpoints from a broad range of regulators, most recently the multi-stakeholder meeting organised by Accelerating Clinical Trials in the EU (ACT EU), where experts from the research community shared their knowledge, experience and perspectives.