Journal of Automation, Mobile Robotics and Intelligent Systems

A peer-reviewed quarterly focusing on new achievements in the following fields:

• Fundamentals of automation and robotics • Applied automatics • Mobile robots control • Distributed systems • Navigation

• Mechatronic systems in robotics • Sensors and actuators • Data transmission • Biomechatronics • Mobile computing

Editor-in-Chief

Janusz Kacprzyk (Polish Academy of Sciences, Łukasiewicz-PIAP, Poland)

Advisory Board

Dimitar Filev (Research & Advanced Engineering, Ford Motor Company, USA)

Kaoru Hirota (Japan Society for the Promotion of Science, Beijing Office)

Witold Pedrycz (ECERF, University of Alberta, Canada)

Co-Editors

Roman Szewczyk (Łukasiewicz-PIAP, Warsaw University of Technology, Poland)

Oscar Castillo (Tijuana Institute of Technology, Mexico)

Marek Zaremba (University of Quebec, Canada)

Executive Editor

Typesetting

PanDawer, www.pandawer.pl

Webmaster

Piotr Ryszawa (Łukasiewicz-PIAP, Poland)

Ministry of Science and Higher Education Republic of Poland

Editorial Office

ŁUKASIEWICZ Research Network

– Industrial Research Institute for Automation and Measurements PIAP Al. Jerozolimskie 202, 02-486 Warsaw, Poland (www.jamris.org) tel. +48-22-8740109, e-mail: office@jamris.org

The reference version of the journal is e-version. Printed in 100 copies.

Katarzyna Rzeplinska-Rykała, e-mail: office@jamris.org (Łukasiewicz-PIAP, Poland)

Associate Editor


Piotr Skrzypczynski (Poznan University of Technology, Poland)

Statistical Editor

Małgorzata Kaliczyńska (Łukasiewicz-PIAP, Poland)


Editorial Board:

Chairman – Janusz Kacprzyk (Polish Academy of Sciences, Łukasiewicz-PIAP, Poland)

Plamen Angelov (Lancaster University, UK)

Adam Borkowski (Polish Academy of Sciences, Poland)

Wolfgang Borutzky (Fachhochschule Bonn-Rhein-Sieg, Germany)

Bice Cavallo (University of Naples Federico II, Italy)

Chin Chen Chang (Feng Chia University, Taiwan)

Jorge Manuel Miranda Dias (University of Coimbra, Portugal)

Andries Engelbrecht (University of Pretoria, Republic of South Africa)

Pablo Estévez (University of Chile)

Bogdan Gabrys (Bournemouth University, UK)

Fernando Gomide (University of Campinas, Brazil)

Aboul Ella Hassanien (Cairo University, Egypt)

Joachim Hertzberg (Osnabrück University, Germany)

Evangelos V. Hristoforou (National Technical University of Athens, Greece)

Ryszard Jachowicz (Warsaw University of Technology, Poland)

Tadeusz Kaczorek (Białystok University of Technology, Poland)

Nikola Kasabov (Auckland University of Technology, New Zealand)

Marian P. Kazmierkowski (Warsaw University of Technology, Poland)

Laszlo T. Kóczy (Szechenyi Istvan University, Gyor and Budapest University of Technology and Economics, Hungary)

Józef Korbicz (University of Zielona Góra, Poland)

Krzysztof Kozłowski (Poznan University of Technology, Poland)

Eckart Kramer (Fachhochschule Eberswalde, Germany)

Rudolf Kruse (Otto-von-Guericke-Universität, Germany)

Ching-Teng Lin (National Chiao-Tung University, Taiwan)

Piotr Kulczycki (AGH University of Science and Technology, Poland)

Andrew Kusiak (University of Iowa, USA)

Publisher:

ŁUKASIEWICZ Research Network – Industrial Research Institute for Automation and Measurements PIAP


Articles are reviewed, excluding advertisements and descriptions of products. If in doubt about the proper edition of contributions, for copyright and reprint permissions please contact the Executive Editor.



Publishing of “Journal of Automation, Mobile Robotics and Intelligent Systems” – the task financed under contract 907/P-DUN/2019 from funds of the Ministry of Science and Higher Education of the Republic of Poland allocated to science dissemination activities.

Mark Last (Ben-Gurion University, Israel)

Anthony Maciejewski (Colorado State University, USA)

Krzysztof Malinowski (Warsaw University of Technology, Poland)

Andrzej Masłowski (Warsaw University of Technology, Poland)

Patricia Melin (Tijuana Institute of Technology, Mexico)

Fazel Naghdy (University of Wollongong, Australia)

Zbigniew Nahorski (Polish Academy of Sciences, Poland)

Nadia Nedjah (State University of Rio de Janeiro, Brazil)

Dmitry A. Novikov (Institute of Control Sciences, Russian Academy of Sciences, Russia)

Duc Truong Pham (Birmingham University, UK)

Lech Polkowski (University of Warmia and Mazury, Poland)

Alain Pruski (University of Metz, France)

Rita Ribeiro (UNINOVA, Instituto de Desenvolvimento de Novas Tecnologias, Portugal)

Imre Rudas (Óbuda University, Hungary)

Leszek Rutkowski (Czestochowa University of Technology, Poland)

Alessandro Saffiotti (Örebro University, Sweden)

Klaus Schilling (Julius-Maximilians-University Wuerzburg, Germany)

Vassil Sgurev (Bulgarian Academy of Sciences, Department of Intelligent Systems, Bulgaria)

Helena Szczerbicka (Leibniz Universität, Germany)

Ryszard Tadeusiewicz (AGH University of Science and Technology, Poland)

Stanisław Tarasiewicz (University of Laval, Canada)

Piotr Tatjewski (Warsaw University of Technology, Poland)

Rene Wamkeue (University of Quebec, Canada)

Janusz Zalewski (Florida Gulf Coast University, USA)

Teresa Zielinska (Warsaw University of Technology, Poland)


Volume 14, N° 1, 2020

DOI: 10.14313/JAMRIS/1-2020

Contents

Controller Area Network Standard for Unmanned Ground Vehicles Hydraulic Systems in Construction Applications

Piotr Szynkarczyk, Józef Wrona, Adam Bartnicki

DOI: 10.14313/JAMRIS/1-2020/1

Application of an Artificial Neural Network for Planning the Trajectory of a Mobile Robot

Marcin Białek, Patryk Nowak, Dominik Rybarczyk

DOI: 10.14313/JAMRIS/1-2020/2

Timber Wolf Optimization Algorithm for Real Power Loss Diminution

Kanagasabai Lenin

DOI: 10.14313/JAMRIS/1-2020/3

Multi-Agent System Inspired Distributed Control of a Serial-Link Robot

S. Soumya, K. R. Guruprasad

DOI: 10.14313/JAMRIS/1-2020/4

Path Planning Optimization and Object Placement Through Visual Servoing Technique for Robotics Application

Sumitkumar Patel, Dippal Israni, Parth Shah

DOI: 10.14313/JAMRIS/1-2020/5

Fuzzy Logic Controller With Fuzzylab Python Library and the Robot Operating System for Autonomous Mobile Robot Navigation

Eduardo Avelar, Oscar Castillo, José Soria

DOI: 10.14313/JAMRIS/1-2020/6

Toward the Best Combination of Optimization with Fuzzy Systems to Obtain the Best Solution for the GA and PSO Algorithms Using Parallel Processing

Fevrier Valdez, Yunkio Kawano, Patricia Melin

DOI: 10.14313/JAMRIS/1-2020/7

Exploring Random Permutations Effects on the Mapping Process for Grammatical Evolution

Blanca Verónica Zúñiga, Juan Martín Carpio, Marco Aurelio Sotelo-Figueroa, Andrés Espinal, Omar Jair Purata-Sifuentes, Manuel Ornelas, Jorge Alberto Soria-Alcaraz, Alfonso Rojas

DOI: 10.14313/JAMRIS/1-2020/8

Single Spiking Neuron Multi-Objective Optimization for Pattern Classification

Carlos Juarez-Santini, Manuel Ornelas-Rodriguez, Jorge Alberto Soria-Alcaraz, Alfonso Rojas-Domínguez, Hector J. Puga-Soberanes, Andrés Espinal, Horacio Rostro-Gonzalez

DOI: 10.14313/JAMRIS/1-2020/9

Application of Agglomerative and Partitional Algorithms for the Study of the Phenomenon of the Collaborative Economy Within the Tourism Industry

Juan Manuel Pérez-Rocha, Jorge Alberto Soria-Alcaraz, Rafael Guerrero-Rodriguez, Omar Jair Purata-Sifuentes, Andrés Espinal, Marco Aurelio Sotelo-Figueroa

DOI: 10.14313/JAMRIS/1-2020/10

Research Trends on Fuzzy Logic Controller for Mobile Robot Navigation: A Scientometric Study

Somiya Rani, Amita Jain, Oscar Castillo

DOI: 10.14313/JAMRIS/1-2020/11

Optimization of Convolutional Neural Networks Using the Fuzzy Gravitational Search Algorithm

Yutzil Poma, Patricia Melin, Claudia I. González, Gabriela E. Martínez

DOI: 10.14313/JAMRIS/1-2020/12

Controller Area Network Standard for Unmanned Ground Vehicles Hydraulic Systems in Construction Applications

Submitted: 15th January 2020; accepted: 30th March 2020

Piotr Szynkarczyk, Józef Wrona, Adam Bartnicki

DOI: 10.14313/JAMRIS/1-2020/1

Abstract: Unmanned vehicles occupy more and more space in the human environment. Mobile robots, being a significant part thereof, must meet high technological requirements in order to satisfy the needs of the end user. The main focus of end users, both in civil and in so-called “defense and security” areas within the broadly defined segments of the construction industry, should be on the safety and efficiency of unmanned vehicles. This creates requirements for their drive and control systems, which are supported, among others, by vision, communication and navigation systems. The specific design of the manipulators used to fulfill construction tasks is also important. Control technologies are among the critical technologies in the efforts to meet these requirements. This paper presents test stations for testing control systems and a remote control system for work tools in the teleoperator function using the CAN bus, as well as vehicles that use hydrostatic drive systems based on the Controller Area Network (CAN) standard. The paper examines the potential for using a CAN bus in the control systems of modern unmanned ground vehicles that can be used in construction, and the limitations that could prevent its full use. It is crucial for potential users of unmanned vehicles in construction industry applications to know whether their specific requirements, based on the tasks typical in construction [9], can be fulfilled when using the CAN bus standard.

Keywords: CAN bus, control systems, unmanned ground vehicles – mobile robots, hydrostatic drive systems, construction equipment

1. Introduction

Unmanned construction systems can be used to perform emergency countermeasure work and restoration work at disaster sites, but also to increase safety at ordinary construction sites. Unmanned construction was used in civil engineering work for the first time in Japan in 1969, when an underwater bulldozer was used to excavate and move deposited soil during emergency restoration work at the Toyama Bridge, which had been blocked by the Joganji River disaster [9]. There were also some concepts to implement remote-controlled systems in manned platforms [6].

The work carried out can be categorized as emergency work and restoration work [9]. This makes it possible to define the principal types of hazardous tasks typical in construction for which unmanned vehicles could be used. The following works can be specified [9]:

– rock removal work (excavation, loading, transporting);

– structure demolition and removal work (crushing and pulverizing concrete and cutting steel reinforcing bars, loading and transporting the products);

– large sandbag placing work (transporting and placing);

– concrete block work (removing obstructions, leveling ground, placing);

– temporary road work (cutting, filling and compaction);

– erosion and sediment control dam work (excavation, embanking, backfilling, compaction, pouring concrete);

– watercourse work (excavation, pouring concrete, placing foot protection blocks);

– tree felling work (cutting, stumping, transporting);

– Reinforced Cement Concrete (RCC) work (transporting, spreading and leveling, compaction, spraying, laitance removal);

– ready-mix concrete work (installing form materials, pouring and compacting concrete);

– soil form work (excavation, loading, transporting, removing form materials).

The specificity of the tasks performed by today’s unmanned ground vehicles, and the ability to use them for hazardous construction tasks, create demanding requirements for their drive, control, communication and navigation systems. The basic requirement for the drive systems discussed in this paper is to provide high mobility and control precision in the conduct of reconnaissance and rescue missions, as well as to achieve high power and torque for the actuators of work tools.

The progressive development of hydraulic components (their reliability and susceptibility to control) is the reason why hydrostatic drive systems are increasingly used in the drive systems of modern unmanned land platforms. Such systems offer both very good traction parameters of the vehicle and sufficiently large forces for its work tools, which is crucial in the case of construction machinery. An advantage of such solutions is the relatively long operating time of these platforms, limited only by the capacity of their fuel tanks (supplying combustion engines), as opposed to robots driven by electric propulsion systems, whose working time is limited by the capacity of battery cells. At the present stage of cell technology development, restoring readiness for reuse takes significantly longer than refuelling. Full utilization of the potential of these drive systems is only possible where modern control systems are introduced. With reference to the principal categories of work described in [9], this paper presents two examples of unmanned ground vehicles intended for construction tasks such as rock removal work (loading, transporting), structure demolition, large sandbag placing work (transporting and placing), and some concrete block and tree felling work.

Robert Bosch GmbH commenced its development of a Controller Area Network (CAN) in 1983. A Controller Area Network (CAN bus) is generally a vehicle bus standard designed to allow microcontrollers and devices to communicate with each other in applications without a host computer [7]. Unlike a traditional network such as USB or Ethernet, CAN does not send large blocks of data point-to-point from node A to node B under the supervision of a central bus master [8]. In this paper the main focus is on the applications of CAN systems for controlling hydraulic drives of modern unmanned ground vehicles for use in construction.
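Because there is no central bus master, CAN nodes that start transmitting at the same time resolve bus access among themselves through bitwise arbitration on the message identifier: a dominant bit (0) overrides a recessive bit (1), so the frame with the lowest identifier wins. The following Python sketch illustrates this rule for 11-bit standard identifiers. The identifiers used are hypothetical and are not taken from the systems described in this paper.

```python
def arbitrate(identifiers, id_bits=11):
    """Return the identifier that wins CAN bitwise arbitration.

    Identifiers are sent most significant bit first. The bus acts as a
    wired-AND: if any node sends a dominant bit (0), the bus reads 0.
    A node that sent a recessive bit (1) but reads 0 stops transmitting.
    """
    contenders = set(identifiers)
    if not contenders:
        raise ValueError("at least one identifier is required")
    for bit in range(id_bits - 1, -1, -1):
        # Bit each remaining node puts on the bus at this position.
        sent = {ident: (ident >> bit) & 1 for ident in contenders}
        bus_level = min(sent.values())  # dominant 0 overrides recessive 1
        contenders = {i for i in contenders if sent[i] == bus_level}
        if len(contenders) == 1:
            break
    return contenders.pop()


# Hypothetical identifiers for three nodes transmitting simultaneously.
winner = arbitrate([0x120, 0x0A5, 0x3FF])
print(hex(winner))
```

In this model the winner is always the numerically smallest identifier, which is why time-critical messages are usually assigned low identifiers.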

Bosch first developed the CAN controller technology for use in a vehicle network in 1985 [3]. The CAN technology was officially introduced in 1986 at the Society of Automotive Engineers (SAE) Congress in Detroit, Michigan. The first commercial CAN solutions appeared on the market in 1987, produced by Intel and Philips [3]. In 1991, Bosch released version 2.0 of CAN. The advent of a new technology for the control of hydraulic components, the CAN-bus system in its mobile version, opened new, long-awaited opportunities in the field of tool and work process control in machines equipped with hydrostatic drive systems. While descriptions of applications of this technology for drives other than hydraulic are available [1,2,3], knowledge about the applications of CAN systems for controlling hydraulic drives is limited.

For this reason, a control system for an unmanned land platform based on the CAN bus standard has been developed. This paper presents the results of an analysis addressing the research question: what are the opportunities and limitations of using such a standard in hydrostatic drive systems for unmanned construction machinery? The research is intended to help determine whether the use of CAN-bus systems is indeed feasible in such machinery.

2. Test Station for Hydrotronic Tests of Drive Trains Operating in the CAN-bus System

For the purposes of identifying the limitations and possibilities of implementing CAN-bus technology in mobile robot control systems and modern engineering machinery, two test stations were built at the Institute of Robots and Machine Design, Faculty of Mechanical Engineering, Military University of Technology (Figs. 1, 2). At these stations, actuators of the hydrostatic drive system were tested, controlled by hydraulic distributors equipped with electronic modules cooperating with the CAN bus.

Fig. 1. Test stations for testing control systems using the CAN bus: 1 – hydraulic valve with electronic CAN-bus modules, 2 – remote control desktop, 3 – hydraulic power unit, 4 – work tools

The basic elements of the stations are five-working-port hydraulic valves (1 – Fig. 1), which allow the control of all movements of the work tools. They consist of single PVG32-type sections and operate in the LS (Load Sensing) system. The movement of the hydraulic valve spools is controlled via PVED-CC electronic modules, which are designed to work using the CAN-bus protocol. The PVED-CC Series 4 is an electrohydraulic actuator that consists of an electronic part and a solenoid H-bridge. It transforms a command signal transmitted on a CAN bus into a hydraulic action by applying pressure acting on the end of the spool [11]. The individual coils are activated either using the joysticks of the remote control panel (2 – Fig. 1), which generate analogue and digital control signals, or from the test station for testing the remote control system for work tools in the teleoperator function based on the CAN bus (Fig. 2). In the latter case, the vision system provides a panoramic view of the surroundings, and a camera mounted in the manipulator holder (a – Fig. 2) allows for the identification and analysis of picked objects.
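The path from a joystick deflection to a command message for a valve module, as described above, can be illustrated with a short Python sketch. The payload layout used here (one byte for the valve section number followed by a signed 16-bit spool setpoint in per mille) is purely hypothetical, chosen for illustration, and is not the message format of the PVED-CC modules or of the PLUS+1 system. The only property taken from the CAN standard is that a classical CAN data field holds at most 8 bytes.

```python
import struct

MAX_CAN_PAYLOAD = 8  # data bytes in a classical CAN frame


def encode_spool_command(section, joystick):
    """Pack a joystick deflection into a hypothetical command payload.

    section  -- valve section number, 0..255 (assumed addressing scheme)
    joystick -- deflection in the range -1.0..1.0; values outside are clamped
    """
    if not 0 <= section <= 255:
        raise ValueError("section must fit in one byte")
    clamped = max(-1.0, min(1.0, joystick))
    setpoint = round(clamped * 1000)  # per mille of full spool travel
    payload = struct.pack("<Bh", section, setpoint)  # little-endian, no padding
    assert len(payload) <= MAX_CAN_PAYLOAD
    return payload


def decode_spool_command(payload):
    """Inverse of encode_spool_command, as a receiving node might apply it."""
    section, setpoint = struct.unpack("<Bh", payload)
    return section, setpoint / 1000


frame_data = encode_spool_command(2, 0.5)
print(frame_data.hex(), decode_spool_command(frame_data))
```

Clamping on the transmitting side keeps an out-of-range joystick reading from being interpreted as a valid setpoint by the receiver.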

Fig. 3 presents the scheme of the main control desktop as a component of the remote control station, while Fig. 4 presents the scheme of the platform on-board control system.

Besides the tests of functionality and effectiveness of manipulator operations, measurements of its maximum load for different manipulator lift ranges were made at the test station (Fig. 5). The measurement system is based on a CL-14d force sensor (1 – Fig. 5) equipped with a CL 71 amplifying system (ZEPWN) (2 – Fig. 5). Additionally, the load force was measured using a 9016 APU-20-2-U2 dynamometer (3 – Fig. 5). The test results were recorded with the ESAM TRAVELLER Plus data acquisition system.

Fig. 5. Test station for measurement of load force of engineering support robot manipulator (description in the content of the text above)

The values of manipulator load were recorded both for different manipulator lift ranges and for different configurations at the same lift range. Fig. 6 presents exemplary manipulator configurations for a lift range of 2100 mm. The test results are presented in Fig. 7.

These results confirmed the capability of the manipulator to lift a load of 500 N at the maximum lift range of 4700 mm, and a load of 13 kN at a lift range of 2100 mm with the optimal manipulator configuration.

These tests made it possible to define the maximum manipulator loads from the point of view of its kinematics and the parameters of the hydrostatic drive line controlled with the use of the Controller Area Network standard.

Mutual communication between elements of the control system is carried out using microcontrollers of the PLUS+1 system. As described in [12], CAN-based PLUS+1 microcontrollers are the brains behind intelligent vehicle control and can be swiftly programmed to the specific requirements of a vehicle.

Fig. 2. Test station for testing the remote control system for work tools in the function of teleoperator (a), based on the CAN bus of Engineering support robot EOD/IED “Marek”

Fig. 3. Scheme of control desktop of the remote control station

Fig. 4. Scheme of platform on-board control system

3. Unmanned Land Platform Controlled Based on a CAN Bus

As part of research into the possibility of using the CAN bus to control unmanned construction vehicles, a light, high-mobility unmanned vehicle with a hydrostatic drive and a hydraulic coupling was developed. The vehicle turns by changing the relative angle between its two parts, which are connected by the coupling (Fig. 8). The high mobility of the vehicle is provided by flexible crawler tracks, which are driven independently by four gerotor motors. The vehicle is controlled by hydraulic valves equipped with electronic modules operating in the CAN system, which allow both the speed of the vehicle and its direction of movement to be changed. The main drive unit of the vehicle (a combustion engine) and the hydrostatic drive system make it possible for the vehicle to be equipped with additional work tools utilizing the hydraulic energy of the operating fluid or electricity generated by on-board sources of energy. It is therefore possible to mount all kinds of self-levelling platforms, manipulators, cutting saws, hydraulic cutters, etc. on the vehicle. All such components can also be adapted for remote control.
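The relationship between the articulation angle of a two-part vehicle and its turning radius can be estimated with a simple low-speed kinematic model. The sketch below assumes a rigid two-body vehicle turning without slip, which is only a rough approximation for a tracked platform, and the dimensions used are hypothetical rather than those of the vehicle in Fig. 8. With a the distance from the coupling to the front axle centre, b the distance from the coupling to the rear axle centre, and γ the articulation angle, the radii are R_front = (b + a·cos γ) / sin γ and R_rear = (a + b·cos γ) / sin γ.

```python
import math


def articulated_turn_radii(front_len_m, rear_len_m, articulation_deg):
    """Turning radii of the front and rear axle centres of an articulated vehicle.

    front_len_m      -- distance from the coupling to the front axle centre
    rear_len_m       -- distance from the coupling to the rear axle centre
    articulation_deg -- angle between the two body parts (0 = straight)

    Assumes steady low-speed turning with no slip.
    """
    gamma = math.radians(articulation_deg)
    if not 0.0 < gamma < math.pi:
        raise ValueError("articulation angle must be between 0 and 180 degrees")
    s, c = math.sin(gamma), math.cos(gamma)
    r_front = (rear_len_m + front_len_m * c) / s
    r_rear = (front_len_m + rear_len_m * c) / s
    return r_front, r_rear


# Hypothetical dimensions: both parts 1.5 m from coupling to axle, 40 degree angle.
r_front, r_rear = articulated_turn_radii(1.5, 1.5, 40.0)
print(f"front: {r_front:.2f} m, rear: {r_rear:.2f} m")
```

For a symmetric vehicle (a = b) both radii reduce to a / tan(γ/2), so a larger articulation angle or a shorter body gives a tighter turn.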

Tests will be conducted on this platform to obtain more data for quantitative analysis. They will also form part of the validation and verification tests for simulation models of the platform and its drive line components equipped with electronic modules operating in the CAN system.

4. CAN-Bus in Remote-Controlled Engineering Robots for the Modern Battlefield

Two other designs that use the CAN bus in drive control and work tool control systems are the engineering support robots Boguś and Marek (Figs. 9 and 10). Boguś is designed to perform tasks related to the supply of field work crews operating in difficult-to-access areas. It can also act as a carrier of various types of reconnaissance systems or systems for construction tasks such as rock removal work (loading, transporting), structure demolition, large sandbag placing work (transporting and placing), and some concrete block and tree felling work. The robot is built as a two-part structure, with a hydraulic coupling connecting the two parts. The power unit is a turbocharged diesel engine with a power of about 60 kW; the drive system is 10x10 (first part 6x6, second part 4x4); the transmission is mechanical and hydrostatic with an interaxial lock. The robot has independent hydro-pneumatic suspension with lockable front wheels and a lever-articulated turn system.

Fig. 6. Exemplary manipulator configurations for lift range of 2100 mm

Fig. 7. The graph of engineering support robot manipulator load

Fig. 8. Unmanned land platform with control based on a CAN: 1 – hydraulic valve, 2 – electronic CAN-bus modules

The time of operation has been determined to be not less than 8–10 hours. With the use of the two-part system with a specially designed hydraulic coupling it is possible to obtain a turning radius of about 4 m. This solution makes it possible to clear ditches with a width of 1 m and hills with a slope of 45°. A hydraulic fork lift mounted on the second part is designed for self-loading and self-unloading of objects (e.g. a standard pallet sized 2.5x1 m) with a weight up to 2000 kg, from storage level and from trucks.

The main dimensions of the robot are: length 7–8 m, width approx. 2.1 m, while the basic parameters are: curb weight approx. 4000 kg, load capacity 2000 kg + 1000 kg, maximum speed 30–40 km/h.

“Marek” (Fig. 10) is a 6-wheeled vehicle with a weight of approx. 3200 kg powered by a turbocharged diesel engine with a power of approximately 60 kW. A 6x6 drive system with independent hydro-pneumatic suspension was proposed as part of this solution. Adequate traction properties have been achieved by using a hydrostatic drive system with a side lock and an interaxial lock, in which each wheel is independently driven by a gerotor motor. The side turn system makes the vehicle very manoeuvrable and allows turning with a zero turning radius, around the vertical axis of the robot. The robot is equipped with a manipulator with a special gripper and an openwork bucket loader. Work tools of the machine are also driven using the hydrostatic drive system.

Fig. 9. Engineering support robot “Boguś”

Fig. 10. Engineering support robot EOD/IED “Marek”

The kinematics of the manipulator and its design are to provide the ability to pick from a roadside ditch or from a cavity in the ground:

– a load up to 250 kg and with a diameter of 600 mm (e.g. a barrel, aerial bombs, artillery ammunition, missiles);

– small objects with a diameter of less than 100 mm (e.g. grenades);

– prefabricated concrete elements, debris and boulders weighing up to 250 kg;

– steel and wood structural elements (rods, sections, beams, boards, timber).

As in the case of the “Boguś” robot, “Marek” is designed to perform tasks related to the supply of field work crews operating in difficult-to-access areas. It can also perform construction tasks such as rock removal work (loading, transporting), structure demolition, large sandbag placing work (transporting and placing), and some concrete block and tree felling work.

In addition to the manipulator, the robot is equipped with a lift and loader tools with a quick coupler for attaching various tools. The main tool is an openwork bucket capable of digging loose soil (category I and II) and separating from it objects with a minimum diameter under 50 mm, lifting or turning over bulky objects, digging out subsurface objects, anchoring (in order to increase the forces achieved by the manipulator), and serving as a support to increase robot stability. With the lift, the robot is to be capable of moving (lifting, pushing) concrete blocks, cave-ins, tree trunks, barriers, steel trusses, etc. with a mass of up to 2000 kg. The proposed design solutions ensure 8–10 operating hours of the robot.

In both cases, the drive systems of the vehicles are controlled using a CAN bus based on the elements of the PLUS+1 system. Independent drivers are also used in the ride, work tool, hydraulic coupling and active hydropneumatic suspension control systems. The use of hydraulic gerotor motors designed to work on a CAN bus has made it much easier to control them in terms of obtaining the maximum values of torque on individual wheels, and the process of controlling the hydropneumatic suspension ensured continuous contact of drive wheels of the vehicle with the ground.

Another example could be the Expert robot [15]. A potential application of the Expert robot is the inspection of critical infrastructure, including dangerous places resulting from earthquakes or other disasters. It was also used to set explosives in a building destined for demolition. Expert was designed for use in small spaces where the larger Inspector robot [10] would not be able to enter. Such confined spaces include small rooms in buildings.

The robot is powered from gel batteries permitting operation for 3 to 8 hours, or through a cable from 230 V mains. Expert features a significant operating range of the arm, almost 3 m. The manipulator has six degrees of freedom plus the clamp of gripper jaws, each step being independent. The robot is equipped with six cameras, four of them on the mobile base of the robot. Two cameras are placed on front tracks and look in opposite directions to the sides; their location over the ground changes with the setting of the tracks.

Other examples are Ibis/RMF [13] and RMI [14], shown in Fig. 11. The first is a robot with a six-wheeled chassis and independent drive of each wheel, which allows it to operate in challenging and varied terrain. IBIS® is a fast robot (10 km/h). The special design of the mobile base suspension ensures optimum wheel contact with the ground. A manipulator with an extendable arm ensures a large reach (over three meters) and a high range of motion in each plane. The manipulator can be equipped with different tools, including construction tools. The second, PIAP RMI® (Mobile Robot for Intervention), is a tracked vehicle that can replace or assist humans in the most dangerous tasks. Its dimensions and drive system allow it to carry out activities both indoors and in difficult field conditions, performing different tasks including construction.

Fig. 11. Two PIAP robots performing construction tasks: a) Ibis/RMF with hydraulic cutter, b) RMI turning off the valve to demonstrate wrist infinite rotation functionality

5. Testing Methodology

Hydraulic systems can be tested using both the quantitative method [4] and the qualitative method [5]. At this stage, the results obtained from tests conducted with the assumed methodology allow a qualitative analysis, although some quantitative results are presented in Fig. 7. Further quantitative results will be the subject of the next stage of tests.

These test methods were developed to answer the research question: whether a CAN bus can be used for the control systems of modern unmanned ground vehicles, and whether certain limitations prevent its full use, with the focus on quantitative rather than qualitative analysis.

The test subjects were:

– two test stations (Figs. 1,2), at which actuators for the hydrostatic drive system were tested, controlled with the use of hydraulic valves equipped with electronic modules cooperating with the CAN bus;

– light high-mobility unmanned vehicle with a hydraulic coupling, equipped with a hydrostatic drive (Fig. 8);

– engineering support robots Boguś (Fig. 9) and Marek (Fig. 10).

It was assumed that the tests would focus on issues relating to the areas of both the possibilities and limitations resulting from the use of systems based on the CAN standard.

The abovementioned subjects were tested in order to obtain analytical material for such research areas as:

– requirements for systems based on the components of the CAN standard;

– operational reliability of both the actuator elements and control;

– susceptibility of unmanned land vehicles to control;

– precision of control and system diagnosis;

– creating complex, multifunctional control systems;

– creating complex, multifunctional diagnostic systems;

– implementation of CAN systems for autonomous unmanned land platforms;

– application of CAN standard components in existing mobile robot solutions;

– costs.

Based on the results of tests carried out on real objects qualitative analytical tests were carried out, the

Tab. 1. Analysis of possibilities for the use of CAN bus in control systems for mobile robots

No. | Possibility | Description of possibility
1 | Operational reliability where used for both actuators in hydrostatic drive systems and their control systems | The CAN bus is a solution with very high operational fail-safety, reliability and low interference potential. RED CAN used as a closure of the bus ensures improved reliability in the case of partial failure of the bus.
2 | Susceptibility to control, including remote control | CAN actuators enable their easy configuration and interference in control characteristics.
3 | Precision of control – particularly important for tasks requiring high precision, e.g. identification, picking and neutralization of hazardous charges | Control procedures generated by CAN controllers bring a new quality – an innovative approach to control logic based on intuitive control.
4 | Creating complex, multifunctional control systems | The application of the CAN technology enables the implementation of a multi-processor, multi-controller process.
5 | Creating complex, multimetric diagnostic systems | The use of the CAN technology makes it possible to implement a multi-processor, multi-controller process supported through the use of CAN sensors capable of self-calibration (intuitive control system). It helps diagnose hydrostatic drive systems and their control systems.
6 | Implementation of CAN systems for autonomous unmanned land platforms | The complexity of the structures of autonomous systems enforces the implementation of the CAN technology. In their control systems there is an increasing number and volume of transmitted information. Given the current technological possibilities for its transmission to and from the system, the use of the CAN technology enables the follow-up of the control system and actuating system. Placing on the market intuitive programming systems for controllers generating control signals to the CAN bus also results in the emergence of friendly, intuitive user interfaces (Human-Machine Interface, HMI).
7 | Flexible design | Application of the CAN technology allows the introduction of modular design, in which even the user can make changes to the configuration (e.g. add or remove equipment of the vehicle fitted with CAN-bus nodes). The design can also be extended with functionalities which were initially unforeseen.
8 | Cost reduction | The costs of application of professional systems of connectors and cables often add up to considerable amounts. The use of CAN significantly reduces the number of cables.

No. | Limitation | Description of limitation
1 | Need to change the approach to the design and construction of systems with the use of control systems based on the CAN bus | Implementation of control systems based on the CAN bus requires unmanned vehicles to be equipped with actuators designed to be controlled by control components operating based on the concept of CAN systems. This process should take place as early as the design phase.
2 | Adapting existing unmanned land vehicles for the use of the CAN bus | The use of CAN system components gives rise to the need to redesign both the control system and the actuator system.
3 | Need to change the concept of diagnosing the status of the robot | The introduction of systems to diagnose the status of a robot based on the CAN standard requires appropriate sensors and specialized diagnostic equipment.
4 | Costs | A higher price of components made in the CAN standard as compared to the previously used components.

results of which have been tabulated and discussed, indicating both the possibilities and limitations of the use of systems operating in the CAN standard.

Then conclusions were formulated focusing on answering the research question posed in this paper.

6. Test Results and Analysis

The analytical research was based on the results of tests carried out in accordance with the developed methodology, using the two testing stations and three unmanned ground vehicles indicated in the previous section and focusing on the research areas identified therein. The results are given in Tables 1 and 2.

Table 1 identifies six areas of possible use of CAN bus in control systems for mobile robots.

The analysis shows that the CAN bus is a solution with very high operational fail-safety, high reliability and low interference potential. The use of the Controller Area Network (CAN) protocol analyzer (RED CAN) as a closure of the bus improves reliability in the case of partial bus failure, which is very important for the continuity of control, particularly remote control. Earlier developments of this technology had problems with obtaining a real-time response to information, in particular information with a lower priority [2]. Currently, CAN is an asynchronous serial communication protocol compliant with ISO 11898 (11898-1 [3]), widely used due to its real-time operation, reliability and compatibility with other devices [1] including, as was noted in the course of the testing in question, components of hydrostatic drive systems and control systems of unmanned ground vehicles. Higher-layer protocols include DeviceNet, CANopen and J1939 [3]. CAN actuators currently used for these applications therefore allow easy configuration and modification of control characteristics.
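The remark about lower-priority information can be illustrated with the bitwise arbitration used on a CAN bus, where a 0 bit is dominant and the frame with the numerically lowest identifier therefore wins access to the bus. The following Python sketch is only an illustration under simplifying assumptions (standard 11-bit identifiers, all frames queued at the same instant, no new frames arriving); the function names are hypothetical and do not come from the tested systems.

```python
def arbitrate(pending_ids):
    """Return the identifier that wins bitwise arbitration among distinct 11-bit CAN IDs.

    A 0 bit is dominant on the bus, so the numerically lowest identifier wins.
    """
    contenders = set(pending_ids)
    for bit in range(10, -1, -1):  # identifiers are sent most significant bit first
        # The bus carries a dominant 0 if any contender transmits 0 at this bit.
        bus_level = min((i >> bit) & 1 for i in contenders)
        # Nodes that sent a recessive 1 but read back a dominant 0 withdraw.
        contenders = {i for i in contenders if (i >> bit) & 1 == bus_level}
    (winner,) = contenders
    return winner


def transmission_order(pending_ids):
    """Order in which queued frames reach the bus if no new frames arrive."""
    remaining = set(pending_ids)
    order = []
    while remaining:
        winner = arbitrate(remaining)
        order.append(winner)
        remaining.remove(winner)
    return order
```

In this simplified model a frame with a high identifier value is sent only after every lower-valued frame, which is why low-priority information may wait longest on a heavily loaded bus.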

In the case of unmanned ground vehicles, susceptibility to control and precision of control are particularly important for tasks requiring high precision, e.g. dealing with hazardous materials on project sites or inspecting critical infrastructure, including dangerous places resulting from earthquakes or other disasters. In the tested vehicles with a hydraulic drive system, the vehicle is controlled using hydraulic distributors equipped with electronic modules operating in the CAN system, which allow easy configuration and modification of control characteristics. In addition, control procedures generated by CAN controllers bring a new quality by allowing an innovative approach to control logic based on intuitive control.

The ability to create multifunctional control systems and multimetric diagnostic systems is essential when using the CAN technology for autonomous unmanned land platforms. Their control systems transmit an increasing number and volume of information. Given the current technological possibilities for its transmission to and from the system, the use of the CAN technology enables the follow-up of the control system and actuating system. Placing on the market intuitive programming systems for controllers generating control signals to the CAN bus also results in the emergence of friendly, intuitive user interfaces (Human-Machine Interface, HMI). It would also not be possible to develop the subsequent levels of autonomy without the ability to implement a multi-processor, multi-controller process supported by CAN sensors capable of self-calibration (intuitive control system). The use of the CAN technology also helps diagnose hydrostatic drive systems and their control systems.

Table 2 identifies four areas of limitations for the use of the CAN bus in control systems for mobile robots.

The results show that most of the limitations stem from the need to change the philosophy of designing new solutions for unmanned ground vehicles, in this case hydrostatic drive systems and their control systems based on the CAN systems concept. When this technology is applied to existing mobile robots, on the other hand, the use of CAN system components gives rise to the need to redesign both the control system and the actuator system. In both cases, it is necessary to change the concept of diagnosing the status of the robot. This requires appropriate sensors and specialized diagnostic equipment, but it is very important, especially when achieving higher levels of autonomy of the vehicles.

Tab. 2. Analysis of limitations to the use of CAN bus in control systems for mobile robots

The last of the analyzed areas was the cost of implementing this technology for unmanned ground vehicles. At the current stage of development and application of this technology in the field of hydrostatic drive systems and their control systems for mobile robots, the higher price of components made in the CAN standard as compared to the previously used components can give rise to some budgetary constraints.

7. Conclusion

Many areas of the possibilities and limitations of the use of the CAN technology for hydrostatic drive systems and control systems of unmanned ground vehicles overlap with other areas of application of this technology. However, unmanned ground vehicles are characterized by a specificity resulting from the range of their tasks and how they are executed.

The developed methodology made it possible to carry out the analysis needed to answer the research question: whether a CAN bus can be used for the control systems of modern unmanned ground vehicles. As a result of the analytical research, the possibilities and limitations have been determined. The latter do not prevent the use of this technology for hydrostatic drive systems and thereby for the control systems of mobile robots with this kind of drive.

The need for precise control of the new generation of engineering machines, the possibility of interfering with their control procedures online and the need for continuous diagnostics of work processes are now becoming a global standard. Meeting this standard, however, requires knowledge of the capabilities and limitations of the existing systems. This knowledge is currently possessed by the few companies working on new technologies in this area, and there are no scientific publications on this subject. The use of the new control technology, the CAN bus system, for the implementation of technological tasks by today’s unmanned ground vehicles can significantly affect both the efficiency of their work processes and operator comfort when using remote control. Therefore, identifying these problems, learning about the possibilities and limitations of controlling hydrotronic systems in the CAN-bus system of mobile robots, and determining the possibilities for the use of the CAN-bus technology for the remote control of work tools have allowed us to implement state-of-the-art drive systems for unmanned ground vehicles, guaranteeing the high quality of their performed technological tasks as well as the safety of these tasks in hazardous areas.

This is the purpose of vehicles and machines with hydrostatic drive, controlled based on the CAN bus. The conducted research shows that it is possible to use the CAN bus to control the drive and work tools of unmanned vehicles. Its results to date in such research areas as: requirements for systems based on the components of the CAN standard, operational reliability of both the actuator elements and control, susceptibility of unmanned land vehicles to control, precision of control and system diagnosis, creating complex, multifunctional control and diagnostic systems, implementation of CAN systems for autonomous unmanned land platforms, application of CAN standard components in existing mobile robot solutions and costs have made it possible to carry out a qualitative analysis of possibilities and limitations for the use of the CAN bus in control systems for mobile robots.

The next phase of research, in which unmanned platforms will be equipped with sensors to measure the parameters resulting from the defined methodology, will allow a quantitative analysis. It should answer the next research question: what are the quantitative differences between using classical components and control systems for vehicles with a hydrostatic drive system, and applying drive system and vehicle control components based on hydraulic valves equipped with electronic modules operating in the CAN system. A future approach will also involve carrying out tests of manned vehicles in which remote control systems have been implemented [6]. These tests will also be part of the Validation & Verification tests of simulation models, both of some platforms described in this paper and of their drive line components controlled based on a CAN bus.

AUTHORS

Piotr Szynkarczyk – ŁUKASIEWICZ Research Network – Industrial Research Institute for Automation and Measurements PIAP, Al. Jerozolimskie 202, 02-486 Warsaw, Poland.

Józef Wrona* – Military University of Technology (WAT), ul. gen. Sylwestra Kaliskiego 2, 00-908 Warsaw, Poland, e-mail: jozef.wrona@wat.edu.pl.

Adam Bartnicki – Military University of Technology (WAT), ul. gen. Sylwestra Kaliskiego 2, 00-908 Warsaw, Poland.

*Corresponding author

REFERENCES

[1] S. K. Gurram and J. M. Conrad, “Implementation of CAN bus in an autonomous all-terrain vehicle”. In: 2011 Proceedings of IEEE Southeastcon, 2011, 250–254, DOI: 10.1109/SECON.2011.5752943.

[2] W. Baek, S. Jang, H. Song, S. Kim, B. Song and D. Chwa, “A CAN-based Distributed Control System for Autonomous All-Terrain Vehicle (ATV)”, IFAC Proceedings Volumes, vol. 41, no. 2, 2008, 9505–9510, DOI: 10.3182/20080706-5-KR-1001.01607.

[3] V. D. Kokane and S. B. Kalyankar, “Implementation of the CAN Bus in the Vehicle Based on ARM 7”, IJRET: International Journal of Research in Engineering and Technology, vol. 4, no. 1, 2015, 29–31.

[4] “Introduction to Quantitative Research Methods”. M. T. Smith, https://people.kth.se/~maguire/courses/II2202/ii2202_intro_to_quantitative_methods_2012_MTS_Lecture5a.pdf. Accessed on: 2020-05-28.

[5] “Introduction to Qualitative Research”. www.blackwellpublishing.com/content/BPL_Images/Content_store/Sample_chapter/9780632052844/001-025[1].pdf. Accessed on: 2020-05-28.

[6] A. Bartnicki, M. J. Łopatka, L. Śnieżek, J. Wrona and A. M. Nawrat, “Concept of Implementation of Remote Control Systems into Manned Armoured Ground Tracked Vehicles”. In: Innovative Control Systems for Tracked Vehicle Platforms, 2014, 19–37, DOI: 10.1007/978-3-319-04624-2_2.

[7] “History of the CAN technology”, CAN in Automation (CiA), www.can-cia.org/can-knowledge/can/can-history. Accessed on: 2020-06-19.

[8] S. Corrigan, Introduction to the Controller Area Network (CAN), Application Report, SLOA101B, Texas Instruments, 2002.

[9] Y. Ban, “Unmanned Construction System: Present Status and Challenges”. In: Proceedings of the 19th ISARC, 2002, 241-246, DOI: 10.22260/ISARC2002/0038.

[10] “INSPECTOR: robot for inspection and intervention”. Industrial Research Institute for Automation and Measurements PIAP, http://antiterrorism.eu/wp-content/uploads/inspector-en.pdf. Accessed on: 2020-05-28.

[11] “PVED-CC Series 4 Electrohydraulic Actuator Technical Information Manual”. Danfoss, https://assets.danfoss.com/documents/DOC152886483924/DOC152886483924.pdf. Accessed on: 2020-05-28.

[12] “PLUS+1® MC microcontrollers”. Danfoss, https://www.danfoss.com/en/products/electronic-controls/dps/plus1-controllers/plus1-mc-microcontrollers/#tab-overview. Accessed on: 2020-05-28.

[13] “IBIS®: robot for pyrotechnic operations and reconnaissance”. Industrial Research Institute for Automation and Measurements PIAP, http://antiterrorism.eu/wp-content/uploads/ibis-en.pdf. Accessed on: 2020-05-28.

[14] “RMI: mobile robot for intervention”. Industrial Research Institute for Automation and Measurements PIAP, http://antiterrorism.eu/wp-content/uploads/piap-rmi-en.pdf. Accessed on: 2020-05-28.

[15] “EXPERT: neutralizing and assisting robot”. Industrial Research Institute for Automation and Measurements PIAP, http://antiterrorism.eu/wp-content/uploads/expert-en.pdf. Accessed on: 2020-05-28.

Application of an Artificial Neural Network for Planning the Trajectory of a Mobile Robot

Submitted: 08th November 2019; accepted: 30th January 2020

Marcin Białek, Patryk Nowak, Dominik Rybarczyk

DOI: 10.14313/JAMRIS/1-2020/2

Abstract: This paper presents the application of a neural network to the task of planning a mobile robot trajectory. The first part contains a review of the literature on the orientation of mobile robots and an overview of the application of artificial neural networks in robotics. These sections specify devices and approaches for collecting data about a mobile robot’s environment. In addition, the principle of operation and the use of artificial neural networks in trajectory planning tasks are presented. The second part focuses on the mobile robot, which was designed in a 3D environment and printed from PLA material. The main onboard logical unit is an Arduino Mega. The control system consists of 8-bit microcontrollers and a 13 Mpix camera. The third part describes the system’s positioning capability using data from the accelerometer and magnetometer, with an overview of data filtration and a study of the artificial neural network implementation used to recognize given trajectories. The last chapter contains a summary with conclusions.

Keywords: artificial neural network, mobile robot, machine vision

1. Introduction

The idea of artificial neural networks (ANN) was taken from natural neurons, which are the basic elements of the nervous system of living organisms, including humans. Neural networks have become the subject of research in various specialties and fields of science, which keep discovering newer and more creative forms of their use. Researchers around the world are developing ANN capabilities, striving to achieve efficiency comparable to that of living organisms. The number of neurons used is the main comparative scale in these studies. However, unlike their real counterparts, artificial neurons do not only transfer signals but also allow their processing, e.g. by making calculations. The ANN’s ability to carry out its tasks is determined by the learning process. The combination of ANN issues and mobile robotics is one of the main currents in the development of modern mechatronics. Planning the trajectory of a mobile robot using an ANN requires addressing two issues.

The first is the network itself, which is to recognize the given trajectory. The second is a robot that maps it, which must determine its location in space in a specific way, while avoiding obstacles.

1.1. Orientation of Mobile Robots in Space

Autonomous navigation of mobile robots is one of the main problems faced by their designers, mainly because it is difficult to define in an unspecified area. To accomplish this task, the mobile robot must be equipped with sensors that collect data about the environment and its location. Their selection depends on the tasks the robot will perform, so the required positioning accuracy and the type of obstacles, which are mainly characterized by a specific geometry or color, must be known. Low-cost solutions are based on ultrasonic sensors (US). The main disadvantages of such systems are the restrictions on the angle at which the sound wave falls on the surface of the detected obstacle and the dependence on the material of which it is made [1]. Nevertheless, they are one of the basic types of sensors used in mobile robots, especially for obstacle avoidance [2] and indoor tasks [3]. It is also possible to track ultrasonic beacons in real time [4], with the robot acting either as a transmitter looking for receivers or as a receiver itself. The second popular device is the infrared (IR) sensor. The characteristic features of obstacles force adjustment of the recognition strategy. A rational approach is to use both ultrasonic and infrared sensors, because their detection capabilities complement each other [5]. In small mobile robots, the basic environment detection system includes elements such as ultrasonic sensors, infrared sensors, cameras and microphones [6,7]. The Global Positioning System (GPS) locates receivers by determining their distance from the satellites (at least three) within whose range they are located. It provides data in a quasi-spherical coordinate system (because the Earth is not an ideal sphere), either as geodetic coordinates (geodetic latitude, longitude and ellipsoidal height) or as geographical coordinates (longitude and latitude) [8].
GPS is designed to locate objects on a larger scale of displacements, expressed in kilometers (square kilometers). That is why it works well with vehicles covering considerable distances in a relatively short time, which move at a much higher speed than mobile robots and can at the same time predict their location by projecting the vehicle’s direction of movement onto a road map. Robot displacements are much smaller and therefore require greater accuracy [3].

LiDAR sensors are used to create maps of the environment. They detect objects by measuring the travel time of laser pulses, which is proportional to the distance from the source. Examples include determining the position of a robot by measuring distance and angle relative to another robot from raw data provided by two laser rangefinders in a 2D plane [9]; positioning and orienting a mobile robot in 3D space with a laser head that measures position relative to photoelectric reference points deployed in the room [10]; and using an industrial laser navigation system to collect information about the distance between the rotating measuring head and markers located on the perimeter of the laser beam plane in the robot’s area of operation [11]. Practical applications of LiDAR technology include autonomous navigation of an agricultural robot without access to GPS-based solutions [12] and mapping of the environment through its hybrid representation together with robot localization [13].

One of the popular devices used to navigate mobile robots is the Microsoft Kinect, an accessory for the Xbox game console [14]. It is a vision-based system. Kinect is a common tool for navigating a mobile robot: it enables the robot to avoid obstacles while supplying data, e.g. to an artificial neural network that handles environment recognition and decides which path to choose [14, 15]. An additional advantage of vision systems is the availability of algorithms for simultaneous localization and map building, such as SLAM (Simultaneous Localization and Mapping) [16] and related solutions such as S-PTAM (SLAM – Parallel Tracking and Mapping) [17]. Solutions for locating robots in a confined space include those based on all kinds of mutual radio communication between mobile robots or reference points. Simple location methods using a local wireless network rely on determining the Euclidean distance between the sampled signal vector and references stored in a database [18]. Another use of a wireless network is to locate an object by measuring signal strength at defined access points using compressive sampling theory [19], which enables effective reconstruction of signals from a small amount of data [20]. The navigation methods considered so far are based on different ways of obtaining location data. As previously noted, popular location and orientation systems such as GPS are less effective indoors. In turn, solutions based on defined reference points limit the robot to operating only in the space covered by them. Especially indoors, they give way to solutions based on information from magnetometers, accelerometers and gyroscopes [21]. The data obtained by these sensors can mutually compensate for their errors [22], and in some cases inertial navigation may be valuable [23]. It is based on continuous assessment of the object’s position relative to the initial position using data from inertial sensors (such as a gyroscope and accelerometer). Last but not least is the approach of controlling the motor drives in accordance with the terrain [24].
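The Euclidean-distance method mentioned above for wireless networks [18] can be sketched as a nearest-neighbour lookup. The Python example below is only a hedged illustration: the function name, the place labels and the signal-strength values are hypothetical and are not taken from the cited work.

```python
import math


def locate(sample, fingerprints):
    """Return the label of the stored reference vector closest to the sample.

    sample       -- signal-strength vector measured at the unknown position
    fingerprints -- dict mapping a place label to its stored reference vector
    """
    return min(fingerprints, key=lambda label: math.dist(sample, fingerprints[label]))


# Hypothetical database: signal strength (dBm) from three access points.
reference_db = {
    "door": (-40.0, -70.0, -80.0),
    "window": (-75.0, -45.0, -60.0),
}
estimated_place = locate((-42.0, -68.0, -79.0), reference_db)
```

`math.dist` requires Python 3.8 or newer; the quality of such an estimate depends entirely on how densely the reference vectors were collected.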

1.2. Artificial Neural Networks

Artificial neural networks have been developed intensively over the years, among other things in the form of open-source projects. TensorFlow, released by Google in November 2015, is currently one of the most frequently used open-source libraries. It is written largely in C++. Moreover, it can use a GPU (Graphics Processing Unit) for calculations, which significantly speeds them up. Another advantage of this software is its ability to run on less powerful devices such as the Raspberry Pi or smartphones. ANNs are very often used for all sorts of image classification. The neural networks presented at the International Conference on New Trends in Information Technology [25] are a good example. They can recognize both Arabic script and faces. The architecture of the text recognition network is based on four hidden layers, and its diagram is shown in Fig. 1.

This network was trained on numbers from zero to ten using 60,000 samples. The image fed to the input had a size of 28x28 pixels, which gives a total of 784 pixels for each sample. The number of pixels determines the number of network input neurons shown in Fig. 1. The learning algorithm was based on backpropagation of the network response error. The network designed in this way recognizes the images given on its input with an accuracy of 98.46%, which is a high result for OCR. The second network described in that article is a Convolutional Neural Network (CNN). The architecture of this network is shown in Fig. 2.

Fig. 1. Network architecture for text recognition [25]

Fig. 2. Convolutional neural network architecture for face recognition [25]

The network shown in Fig. 2 has eight convolutional layers, four pooling layers, two fully connected layers and an output layer. ReLU was used as the activation function in all layers except the output layer, where the sigmoid function was used. The training database for the CNN consisted of photos of 10 different students, 50 photos per student. The pictures were taken in different orientations and then reduced in resolution to 90x160 pixels. The photos prepared in this way were used in the CNN learning process. The results obtained are shown in Fig. 3. The graph shows that after 11 learning epochs the network recognized a given face with about 80% accuracy, after 31 epochs with about 90%, and after 41 epochs with about 98%, changing only slightly during further learning.

An MLP deep learning network was used to recognize digits from the MNIST database, which contains 60,000 handwritten digits [26]. The network consisted of an input layer with one neuron per image pixel, five hidden layers with 2500, 2000, 1500, 1000 and 500 neurons, respectively, and an output layer of 10 neurons. Backpropagation was used to train the network, with the hyperbolic tangent as the activation function. In this configuration the network achieved very good results, with an error of only 0.35%.
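To give a sense of the scale of this architecture, the number of trainable parameters can be estimated from the layer sizes alone. The Python sketch below assumes 28x28-pixel inputs (the standard MNIST image size) and one bias per neuron; both assumptions, as well as the function name, are illustrative and are not stated in [26].

```python
def mlp_parameter_count(layer_sizes):
    """Count the weights and biases of a fully connected network."""
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])  # assumption: one bias per non-input neuron
    return weights + biases


# Input layer (assumed 28 x 28 = 784 pixels), five hidden layers, 10 outputs.
layers = [28 * 28, 2500, 2000, 1500, 1000, 500, 10]
total_parameters = mlp_parameter_count(layers)  # 11,972,510
```

Under these assumptions the network has roughly twelve million parameters, most of them in the connections between the first three hidden layers.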

2. Control System

The built-in system allows one of three robot driving modes to be chosen using the RC equipment: remote control, object tracking and ANN pattern recognition. The robot control system is divided into two parts. The first part consists of all the elements of the mobile robot and includes sensors, a 13 Mpix camera and microcontroller applications for collecting and viewing information (Fig. 4). The second part is the launcher application on a Windows PC (Fig. 5). The application contains the algorithms of an artificial neural network with a multilayer perceptron architecture. The artificial neural network analyses the image sent from a mobile phone camera and on this basis sends tasks to the microcontroller via a UART interface.

[Labels recovered from Figs. 4 and 5: 8-bit microcontroller; Xiaomi Redmi 4X; Li-pol battery 7.4V 1350mAh 2S 30C; step-down module 1.3V-23V 5A; PC; ultrasonic sensor HC-SR04; accelerometer MPU-6050; magnetometer HMC5883L; DC motor driver DRV8835; DC motor Dagu DG02S-1P; data flows: camera data; control signals in object tracking and trajectory recognition using ANN]

Fig. 5. Block diagram of the overall operation of the PC – robot – RC apparatus

3. Mobile Robot Movement

3.1. Data Filtration

As described in Section 2, the robot is equipped with an accelerometer (MPU6050) and a magnetometer (HMC5883L). The data collected by these two sensors make it possible to determine the displacement and direction of robot movement. An accelerometer measures linear or angular acceleration along each axis of the three-dimensional coordinate system: X, Y, Z [27]. In this case the accelerometer measures linear acceleration, which is converted into displacement by double integration [28]. Measurements are recorded for only one axis because the robot’s movement is considered in its own coordinate system (Fig. 6).
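The double integration mentioned above can be sketched numerically. The following Python example uses the trapezoidal rule, which is an assumption made for illustration; the paper does not state which integration scheme runs on the robot, and the function name is hypothetical.

```python
def integrate_displacement(accelerations, dt):
    """Estimate displacement (m) from acceleration samples (m/s^2) taken every dt seconds.

    The samples are integrated twice with the trapezoidal rule, starting from
    rest: acceleration -> velocity -> displacement.
    """
    velocity = 0.0
    displacement = 0.0
    previous = accelerations[0]
    for current in accelerations[1:]:
        new_velocity = velocity + 0.5 * (previous + current) * dt
        displacement += 0.5 * (velocity + new_velocity) * dt
        velocity = new_velocity
        previous = current
    return displacement
```

Because every error in the acceleration signal is integrated twice, even a small constant bias grows quadratically with time in the displacement estimate, which is why the calibration and filtering of the raw readings matter so much.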

The calibration of the magnetometer is based on data from the MPU6050 accelerometer [29]. This is extremely important because devices of this type are burdened with an error when the system is tilted relative to the XY plane. Thanks to the accelerometer, it is possible to obtain information about the angular inclination within a range of 45º. The compensation procedure determines the position of the devices relative to each other and therefore adjusts the calibration offset. Without the offset, the readings may be elliptical instead of circular, so in some ranges the angle will increase much faster and in others more slowly. The accelerometer and magnetometer are prone to high noise and to external factors such as vibrations and slight tilting. It is therefore necessary to filter the data provided.

Fig. 3. Graph of CNN recognition capabilities [25]

Fig. 4. Placement of robot components

Fig. 7. Implementation of acceleration data filtration: a) run with a zero cut-off factor equal to 0.18 (1.2 s), b) run with a factor equal to 0.24 (2 s)

Linear acceleration cannot be filtered using Kalman or complementary filters, which, on the other hand, can be used for angular acceleration. Fig. 7a shows three characteristics. The blue one represents raw data recorded by the accelerometer during 1 s of straight driving with 0.2 s of braking. There is a sudden increase in acceleration in the first phase of movement, as a result of powering the motors, overcoming the friction of the wheels against the ground and setting the wheels in motion (Fig. 7a – section 1). At a later stage, its value oscillates in the range of 1-2 m/s² until the braking procedure starts after one second (Fig. 7a – section 2). Then the acceleration is negative and the robot’s movement ends at 1.2 s (Fig. 7a – section 3). In the range of 1.2-1.5 seconds, an acceleration value of approximately 1.4 m/s² is visible (Fig. 7a – section 4). This is the zero reference, which must be taken into account in the calibration and filtration process because of the geometry of the surface on which the robot is operating. First, 10 measurement samples are averaged; the table in which these data are stored is each time supplemented with the newest acceleration reading and reduced by the oldest one. The data filtered at this stage are shown in Fig. 7a in green. The surface geometry is measured by collecting 50 samples of accelerometer readings at a standstill at 8 ms intervals and then calculating their arithmetic mean. Calibrating the acceleration value at standstill determines the quality of the robot displacement calculation. Without calibration, the acceleration values would be excessive and the numerical integration would give erroneous results.
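The two averaging steps described above, a sliding window of 10 samples and a standstill reference computed from 50 samples, can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the firmware running on the robot's microcontroller; the class and function names are hypothetical.

```python
from collections import deque


def standstill_offset(samples):
    """Arithmetic mean of accelerometer readings collected while the robot is stationary."""
    return sum(samples) / len(samples)


class MovingAverage:
    """Sliding-window mean: each new reading replaces the oldest one in the window."""

    def __init__(self, window=10):
        self.buffer = deque(maxlen=window)  # the oldest sample is dropped automatically

    def update(self, value):
        self.buffer.append(value)
        return sum(self.buffer) / len(self.buffer)
```

In use, `standstill_offset` would be called once on the 50 calibration readings, and `MovingAverage.update` once per new accelerometer reading while driving. Until the window is full, this sketch averages only the samples received so far, which is one of several possible start-up behaviours.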

The known acceleration value at standstill is used to compensate the readings while driving. An additional, experimentally determined zero cut-off value of 0.18 reduces noise by creating a deadband. Thanks to this procedure, an acceleration of 0 is clearly registered, and the system is less susceptible to interference (Fig. 7a, orange line). Fig. 7b shows a ride of approximately 2 s with a zero cut-off value of 0.24. The blue line presents the raw data read from the accelerometer and the orange one the data after filtration. Increasing the zero cut-off factor caused a significant decrease in acceleration compared to the raw data. Such reduced values would cause incorrect displacement estimation.
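The deadband described above can be written as a short function. The Python sketch below assumes that the standstill offset is subtracted first and that the cut-off is then compared with the magnitude of the corrected value, in the same units as the acceleration; this order of operations and the function name are assumptions, as the paper does not give the exact implementation.

```python
def apply_deadband(acceleration, offset, cutoff=0.18):
    """Subtract the standstill offset and zero out values inside the deadband."""
    corrected = acceleration - offset
    if abs(corrected) < cutoff:
        return 0.0  # treated as noise around zero
    return corrected
```

A wider deadband, such as the 0.24 used in Fig. 7b, suppresses more noise but also discards more genuine acceleration, which is consistent with the underestimated values reported for that setting.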

The magnetic meridian direction (magnetic heading) is determined on the basis of the Earth’s magnetic field value in two vectors. The magnetometer reads vectors in the X and Y axes. The direction in radians is calculated by calculating the arc tangent of the two variables mentioned above. Magnetometers are very susceptible to the presence of ferromagnetics, which are hidden in the form of pipes arranged in the ground of the room, or other elements present in the laboratory. The global obstacle to the universal use of magnetometers is the need to take into account the diverse position of the Earth’s magnetic pole, which clearly does not follow changes in the geographical pole. Magnetometer data is used to orient the robot in a given direction. It is therefore beneficial to keep the indications as real as possible with adequate stability. Averaging

Fig. 6. The robot’s coordinate system (xR, yR)

Fig. 7.

averaged samples were used. The second noticeable aspect is that the robot reaches a comparable final angle value with the initial one. Driving over a distance of 90 cm over an uneven surface shows a lot of noise (Fig. 8c). Signal filtration at such indications is very difficult. In addition, the problem of uneven operation of all four engines is illustrated. The initial direction is around 148 °. After traveling 90 cm, the robot is positioned at an angle of about 133°. This gives about 15 cm of discrepancy over a length of less than a meter.

Fig. 8. Measurement of α angle with a magnetometer: a) when the robot is stationary, b) dynamic rotation 10°-120°-10°, c) straight travel – 90 cm

many samples, therefore, becomes counterproductive, because it would introduce a considerable delay relative to the robot’s physical movements. Positioning does not require an accuracy of tenths or hundredths of a degree, because the tested robot is not able to reach a position with such precision. Initial filtration can therefore be achieved by storing the magnetometer data in an integer variable rather than a floating-point one (Fig. 8a, blue and orange characteristics). The second stage of filtration averages three samples, which acts as a low-pass filter. In this way, relatively stable readings are obtained for controlling the robot (Fig. 8a, green course). Fig. 8b shows the course during dynamic rotation of the robot from the indicated position of 10° to 120° and back. As can be seen, filtration helps to suppress the noise resulting from the rapid movement of the robot. An example is the smoothing of the rapid change of readings in the range of 0.2-0.3 s, while the filtered data are not noticeably delayed in relation to the original signal. This type of problem could arise if too many

Fig. 9. Implementation of the trajectory of a square with a side length of 50 cm (blue line – the expected shape, red line – the actual shape): a) starting point, b) first 50 cm pass with a 90° turn, c) second 50 cm pass with a 90° turn, d) third pass 50 cm with a 90° turn, e) fourth pass 50 cm with a 90° turn – return to the starting point

Fig. 9 shows the execution of a 500 mm square trajectory. The starting point is marked in Fig. 9a. A card with a digit was placed in front of the robot for recognition by the neural network. After the recognition process, the robot drives forward over the specified distance. Then a 90-degree rotation is made (Fig. 9b). These two operations are repeated three more times (Fig. 9c, d), with the robot finishing the journey at the starting position (Fig. 9e).

The shape of the resulting figure is clearly distorted. It is influenced by many factors discussed earlier in this work, with a detailed analysis of specific components. The gradient of the ground has a negative effect on the accelerometer, which is calibrated at standstill. A change of gradient along the robot’s path causes erroneous readings that are difficult to filter out. The error accumulates because the ride and the turn must be performed four times. Another problem is the stress introduced when mounting the motors to the frame. Tightly fitted elements cause the wheels to lose alignment. At constant speed, this can cause the robot to drift to either side, in addition to the error resulting from uneven operation of the motors. Although the Authors compensate for it for each pair of motors (by adjusting the PWM duty cycle separately for the motors on the right and left), supplying them with the same voltage does not guarantee repeatable rotational speeds.
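How a small heading error on each pass accumulates over the four sides of the square can be illustrated with a simple dead-reckoning sketch. The constant per-side drift used here is a hypothetical error model chosen for illustration; it is not derived from the measurements reported in the paper.

```python
import math


def drive_square(side_cm=50.0, turn_deg=90.0, drift_deg_per_side=0.0, sides=4):
    """Return the (x, y) vertices of the path obtained by dead reckoning."""
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for _ in range(sides):
        heading += drift_deg_per_side  # hypothetical heading error on each pass
        x += side_cm * math.cos(math.radians(heading))
        y += side_cm * math.sin(math.radians(heading))
        path.append((x, y))
        heading += turn_deg  # nominal 90-degree turn
    return path


def closure_error(path):
    """Distance between the first and the last point of the path."""
    (x0, y0), (x1, y1) = path[0], path[-1]
    return math.hypot(x1 - x0, y1 - y0)
```

With zero drift the path closes at the starting point; any nonzero drift leaves a gap between the start and end points, because each error shifts all subsequent sides.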

3.2. ANN Recognition

For the task of recognizing the trajectory of the robot’s movement, the Authors decided to use a feedforward neural network. Networks of this type are known in the literature as MLP (Multilayer Perceptron), because the network is made up of successive layers: the input, hidden and output layers. The network architecture is shown in Fig. 10.

A characteristic feature of this type of ANN is the way subsequent neurons are connected: each neuron of a preceding layer is connected to each neuron of the next layer. In this way, a single neuron at the network input influences all neurons of the subsequent layers. As a result, every neuron at the network input is equally important, which is an undoubted advantage. The disadvantage of this solution is the large number of weights that must be calculated and stored. As can be seen from the network architecture, in the case of image recognition a single pixel is the input for the corresponding input layer neuron. For images with a resolution of, e.g., 80x120, the neural network needs 9600 neurons at the input. The Authors decided that digits from 0 to 9 will be

recognized and used to plan the appropriate trajectory of the mobile robot. There are 10 such digits, hence the multilayer perceptron needs 10 neurons in the output layer to work properly. Each output neuron is responsible for classifying the corresponding pattern in the form of an image.
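The mapping between an image, the input layer and the output layer can be sketched as follows. The representation of the image as a list of rows with 8-bit grayscale values, the scaling to the range 0-1 and the function names are assumptions made for illustration.

```python
def image_to_input(image, width=80, height=120):
    """Flatten a grayscale image (rows of 0-255 values) into one input per pixel."""
    if len(image) != height or any(len(row) != width for row in image):
        raise ValueError("unexpected image size")
    return [pixel / 255.0 for row in image for pixel in row]


def one_hot(digit, num_classes=10):
    """Target vector with a single active output neuron for the given digit."""
    target = [0.0] * num_classes
    target[digit] = 1.0
    return target


def decode_output(outputs):
    """Recognized digit: index of the output neuron with the strongest response."""
    return max(range(len(outputs)), key=outputs.__getitem__)
```

For an 80x120 image, image_to_input returns a vector of 9600 values, one for each input neuron, and decode_output picks one of the 10 output neurons.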

Legend to the system structure diagram (Fig. 11): 1 – Wi-Fi wireless communication, 2 – UART communication, 3 – PWM signal

The block diagram in Fig. 11 shows how the movement of the mobile robot is planned. The key element in this task is the multilayer perceptron. Due to the relatively large size of the network and the necessary image analysis, it was decided to use a computer as the calculation unit for the network. The network that meets the conditions for recognizing digits from 0 to 9 at a resolution of 80x120 pixels is a multilayer perceptron with an architecture of 9600-705-10. The number of neurons at the input is determined by the image resolution. The number of neurons at the output results from the number of classified patterns, in our case the digits. The number of neurons in the hidden layer was determined on the basis of research, the results of which are presented in Section 4 of this work. The Authors decided to use the sigmoid function as the neuron activation function.
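The size of a 9600-705-10 network and a forward pass through a fully connected sigmoid network can be sketched as below. The bias terms, the random weight initialization range and the slope parameter of the sigmoid are assumptions made for illustration; the paper specifies only the layer sizes and the type of activation function.

```python
import math


def count_weights(layers):
    """Number of connection weights in a fully connected network (biases excluded)."""
    return sum(n_in * n_out for n_in, n_out in zip(layers, layers[1:]))


def sigmoid(x, slope=1.0):
    """Numerically stable sigmoid 1 / (1 + exp(-slope * x))."""
    z = slope * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init_weights(layers, rng):
    """One row of weights per neuron; the last entry of each row is a bias (assumed)."""
    return [[[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]
            for n_in, n_out in zip(layers, layers[1:])]


def forward(weights, inputs, slope=1.0):
    """Propagate the inputs layer by layer and return the output activations."""
    activations = list(inputs)
    for layer in weights:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + row[-1], slope)
            for row in layer
        ]
    return activations
```

For the architecture given above, count_weights([9600, 705, 10]) evaluates to 6,775,050 connection weights, which illustrates why the calculations were moved to a computer.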

4. ANN Examination

Because the architecture of artificial neural networks mirrors the operation of human neural cells, they are among the best tools for solving classification tasks. Classification of digits, animals, vegetables and fruits, road signs, faces and many other objects is known in the literature. In this work, it was decided to use the neural network to classify an image of a digit and then, depending on the recognized digit, drive the mobile robot along the appropriate trajectory. The Authors claim that machine vision based on artificial neural networks is unrivaled in comparison with other image classification algorithms. The principle of classification and operation of the network used in this work, i.e. MLP (Multilayer Perceptron), is very similar in all these cases. Multilayer

Fig. 10. Artificial neural network architecture [30]

Fig. 11. Block diagram of system

perceptrons differ in the number of layers, neurons, activation functions and parameters such as the learning coefficient or the function parameters determining the shape of the curve. In designing such a network, the most difficult task is choosing the right learning rate, curve shape and number of neurons in the hidden layer. The most common way of selecting these parameters is trial and error, relying on existing networks or on one's own experience in neural network design. The work focuses on network architectures with one hidden layer. This kind of multilayer perceptron was used because, in the design of the neural network, we strive not only to reduce the error of the network response, but also to keep the number of neurons in the hidden layer as small as possible. This is because oversizing this layer increases the number of calculations, and thus the time needed to train the MLP. Because the MLP is used to determine the trajectory of the mobile robot, it was decided to carry out tests to determine the number of hidden layer neurons depending on the number of neurons in the input and output layers. The Authors did not find in the literature a formula for estimating the number of hidden layer neurons needed to teach a network specific patterns.

patterns, which affects the number of output neurons, or a change in the resolution of the analysed image, which affects the number of neurons in the input layer. Images analysed by neural networks usually have low resolutions to limit the time needed for learning. An example is a network trained on the MNIST database, which contains 60,000 handwritten digits with a resolution of 28x28 pixels; in the case of the target network presented in this work, the resolution is 120x180 pixels. The MLP learning time shown in the literature example in Fig. 15 is, in the optimal case, 114 hours for a resolution of 29x29 pixels. Research on networks with a lower resolution than the target 120x180 therefore saved from a few hundred to several thousand hours in selecting the appropriate number of neurons in the hidden layer. In addition, the research made it possible to estimate the values of other parameters, i.e. the shape of the sigmoid curve used as the activation function and the learning rate. The research began with small artificial neural networks; in subsequent iterations, the numbers of neurons in the hidden and input layers were increased as the resolution of the analysed image grew. Sample results are shown in the following illustrations.

Fig. 14 shows the extent to which the learning factor affects the learning speed of the artificial neural network. With a learning factor of 0.6, the network reached the assumed error rate after 75 learning epochs. When the value of this coefficient was 0.5, the network needed 94 learning epochs.

The research aims to develop a formula that will determine the number of neurons in the hidden layer. This will allow easy redesign of the neural network in the event of a change in the number of classified

Figs. 13 to 17 show the learning progress of the neural network for varying learning coefficients and varying slope parameters of the sigmoid curve. The impact of these parameters is significant. For small values, the artificial neural network showed no learning progress, whereas for values that were too large the network learned chaotically. Analyzing the graphs of learning progress, we can see that both the learning rate and the coefficient of the sigmoid curve

Fig. 12. Architecture of tested networks

Fig. 13. Architecture 300-15-5, angle parameter of the activation function curve 0.2

Fig. 14. Architecture 300-15-5, angle parameter of the activation function curve 0.3

angle affect the learning speed. Appropriate selection of these parameters makes it possible to optimize the learning process of the artificial neural network.
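The influence of the learning rate and the sigmoid slope on the number of learning epochs can be explored with a small experiment of the kind sketched below. The sketch assumes online backpropagation with a squared-error criterion and a sigmoid of the form 1 / (1 + exp(-slope * x)); the paper does not state the training algorithm, so these choices, as well as all function names, are illustrative only.

```python
import math
import random


def sigmoid(x, slope):
    z = slope * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_mlp(patterns, targets, n_hidden, learning_rate, slope, max_epochs=500, seed=0):
    """Train a one-hidden-layer MLP online; return the mean squared error per epoch."""
    rng = random.Random(seed)
    n_in, n_out = len(patterns[0]), len(targets[0])
    # Each row holds the incoming weights of one neuron; the last entry is a bias.
    w_hid = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_out = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]
    history = []
    for _ in range(max_epochs):
        squared_error = 0.0
        for x, t in zip(patterns, targets):
            # Forward pass.
            h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + row[-1], slope) for row in w_hid]
            y = [sigmoid(sum(w * hi for w, hi in zip(row, h)) + row[-1], slope) for row in w_out]
            squared_error += sum((ti - yi) ** 2 for ti, yi in zip(t, y))
            # Backward pass: the sigmoid derivative is slope * f * (1 - f).
            d_out = [(ti - yi) * slope * yi * (1.0 - yi) for ti, yi in zip(t, y)]
            d_hid = [slope * hj * (1.0 - hj) * sum(d_out[k] * w_out[k][j] for k in range(n_out))
                     for j, hj in enumerate(h)]
            # Weight updates (gradient descent on the squared error).
            for k, row in enumerate(w_out):
                for j in range(n_hidden):
                    row[j] += learning_rate * d_out[k] * h[j]
                row[-1] += learning_rate * d_out[k]
            for j, row in enumerate(w_hid):
                for i in range(n_in):
                    row[i] += learning_rate * d_hid[j] * x[i]
                row[-1] += learning_rate * d_hid[j]
        history.append(squared_error / (len(patterns) * n_out))
    return history


def epochs_to_reach(history, target_error):
    """First epoch (1-based) at which the error falls below the target, or None."""
    for epoch, error in enumerate(history, start=1):
        if error < target_error:
            return epoch
    return None
```

Calling train_mlp with the same patterns and seed but different values of learning_rate or slope, and comparing the results of epochs_to_reach, gives the kind of comparison shown in the learning progress graphs; the epoch counts obtained this way depend on the data and initialization and need not match those reported in the paper.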

Fig. 15. Architecture 300-15-5, angle parameter of the activation function curve 0.4

Fig. 16. Architecture 300-15-5, angle parameter of the activation function curve 0.5

Fig. 17. Architecture 300-15-5, angle parameter of the activation function curve 0.6

Fig. 18. Architecture 1200-50-5, angle parameter of the activation function curve 0.5

Fig. 19. Architecture 1200-75-5, angle parameter of the activation function curve 0.5

Fig. 20. Architecture 1200-100-5, angle parameter of the activation function curve 0.5

In the first part, the Authors analysed the effect of the learning rate and the slope parameter of the sigmoid activation function of each neuron. Figs. 18 to 20, in turn, show how the number of neurons in the hidden layer affects the learning process. During the study, it was also noticed that increasing the number of neurons in the hidden layer caused an increase in

learning speed. However, this effect saturated: with a further increase in the number of neurons in the hidden layer, the multilayer perceptron did not learn any faster. Hence, network design is not a simple process.

Fig. 21. Trend line graph generated using Excel software for data obtained from networks with architectures with 10 neurons on the output

Based on the tests performed, the Authors proposed a graph showing the number of hidden layer neurons as a function of the resolution of the classified image. The results are presented in Fig. 21.

5. Conclusion

The implementation of artificial neural networks in mobile robot navigation and machine vision is of crucial value for the development of modern robotics. A captured image can simultaneously allow the robot to avoid obstacles, follow a marker and obtain information for navigation. Artificial intelligence methods, including the multilayer perceptron described in the paper, are well suited to this type of task. An example application of the proposed system is an autonomous mobile trolley in a warehouse: the robot controller scans a label placed on the cargo and then follows the assigned trajectory to deliver it to its destination point.

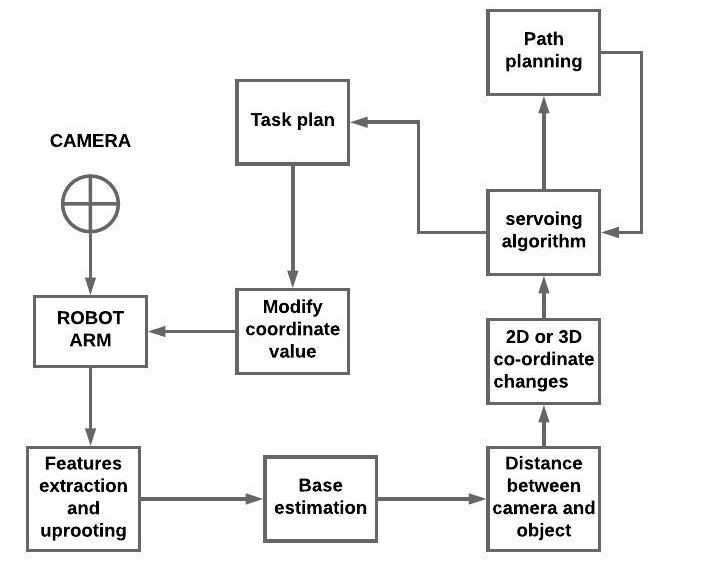

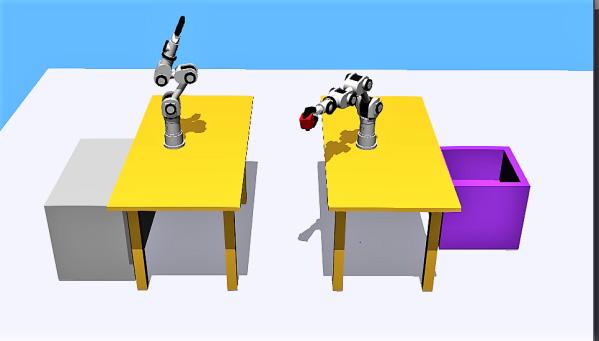

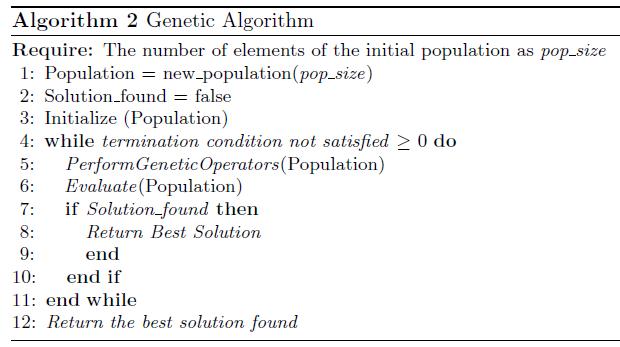

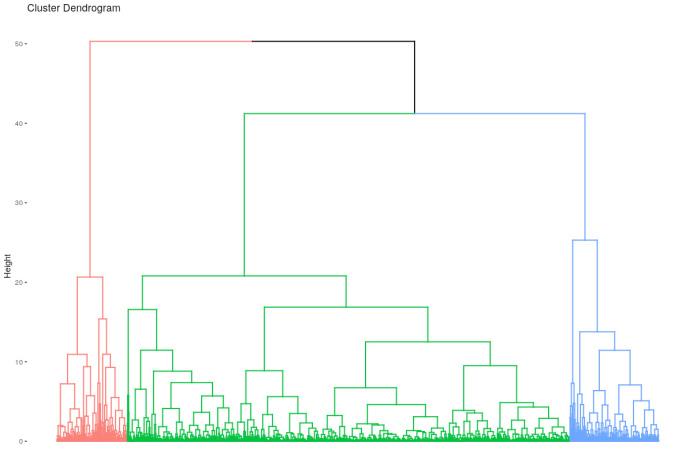

The designed network produced the expected results in terms of path recognition. The algorithm can classify a given digit with a certainty above 97% for digits written by a person whose handwriting was included in the training database. To improve recognition, the database would need to be supplemented with samples from a larger number of people.