BROAD STREET SCIENTIFIC

The North Carolina School of Science and Mathematics – Durham Journal of Student STEM Research

VOLUME 13 | 2023-24

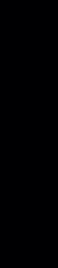

Front Cover

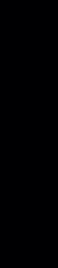

Caught by NASA’s SDO satellite, the Sun’s “smile” is actually made up of coronal holes. These dark spots, while invisible to the naked eye, can be seen through UV light and appear when areas of the Sun are less heated and dense than their surrounding plasma.

Credit: NASA’s Solar Dynamics Observatory

Biology Section

This image depicts a Cleopatra (Gonepteryx cleopatra) butterfly resting on a Rudbeckia hirta flower. The left side shows the butterfly and flower under UV light, while the right side shows them in the visible light spectrum.

Credit: (C) Dr. Klaus Schmitt, Weinheim Germany uvir.eu used with permission of the artist

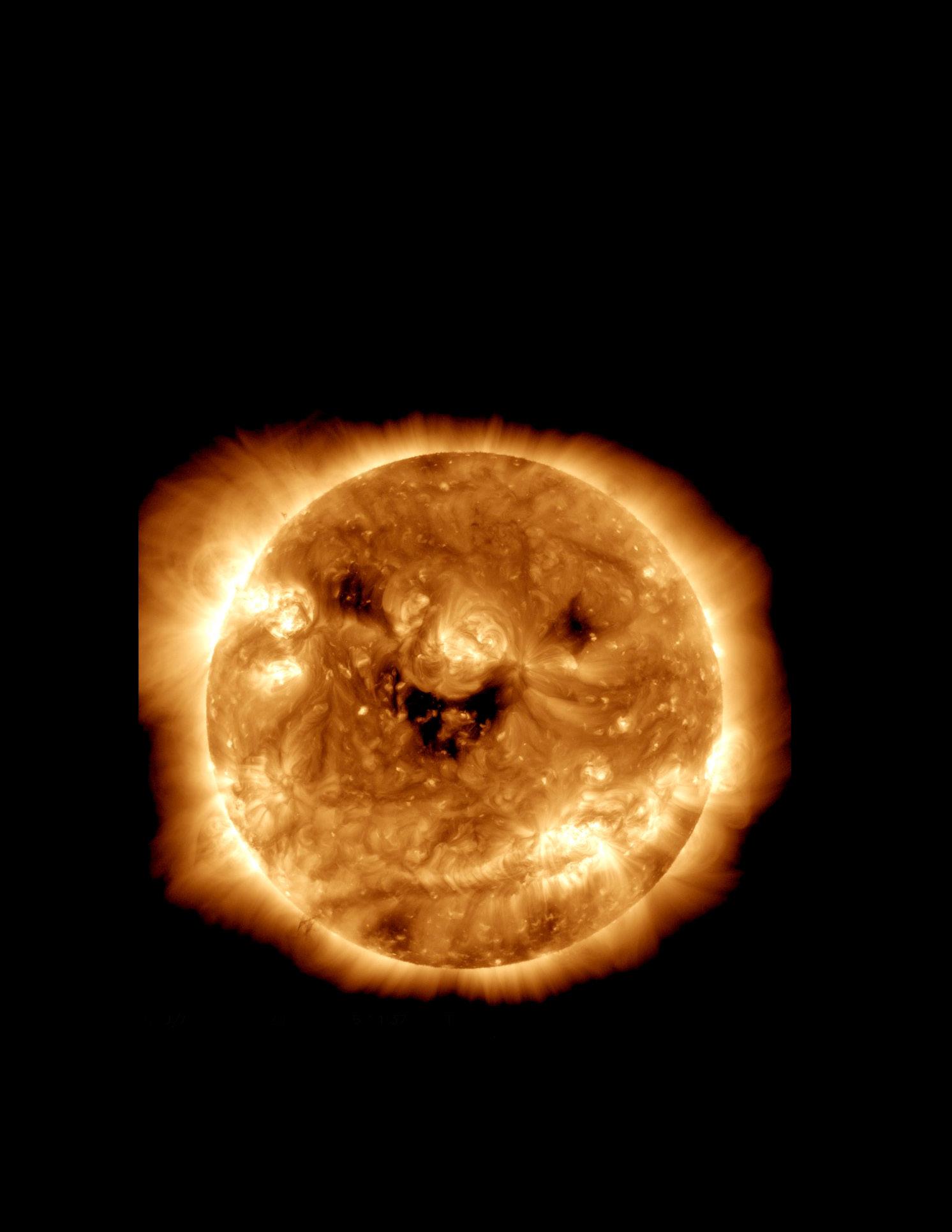

Chemistry Section

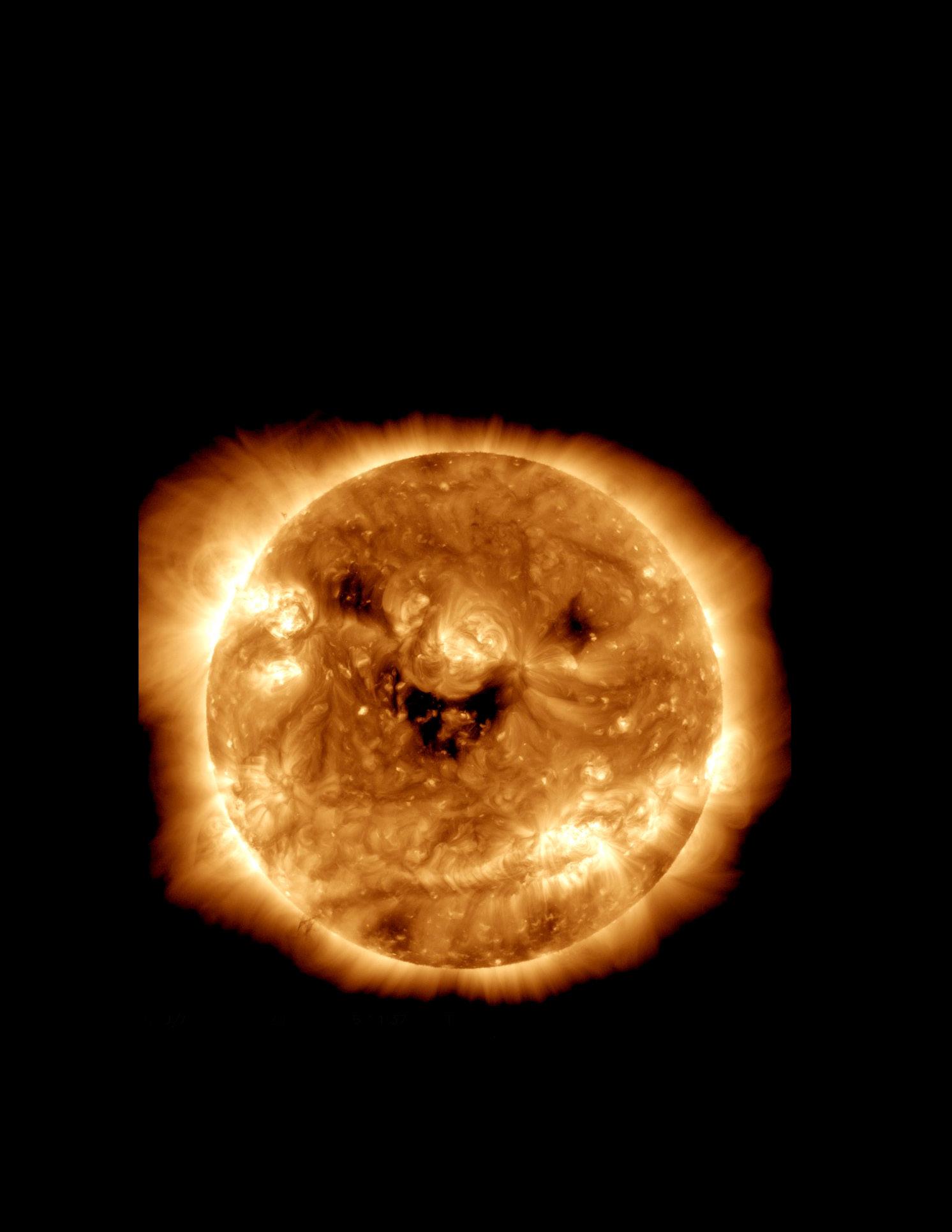

The “Fantasy Rock” is composed of multiple minerals from the Taseq Slope in Greenland. Typically, the rock consists of tugtupite, sodalite, chkalovite, and analcime. Under UV light, the composition of these minerals produces bright, fluorescent colors.

Credit: (C) www.minershop.com used with permission

Engineering Section

These miniature UV LED lights are popular in recreational projects for their versatility. Practical uses of UV light include disinfection, sterilization, and forensics.

Credit: (C) adafruit.com used with permission

Mathematics and Computer Science Section

This image shows a white rose photographed under UV light; it appears pink and has glowing white spots. The petals of the rose follow the Fibonacci sequence, where a new set grows between the spaces of the previous set.

Credit: Craig P. Burrows https://cpburrows.com/

Physics Section

The Hubble Space Telescope took this picture of Jupiter in the UV spectrum. The famous Great Red Spot, which appears blue in UV light due to high altitude particles absorbing light at these wavelengths, is large enough to swallow the Earth.

Credit: NASA / ESA / Hubble / M. Wong, University of California, Berkeley / Gladys Kober, NASA & Catholic University of America

7 Essay: Is It Possible to Use GPS to Combat Climate Change?

TERESA FANG, 2025

9 Photography: Grog

CHARLOTTE GOEBEL, 2025

La Fortuna Waterfall

VINCENT SHEN, 2025

10 Reduction of the Abundance of Antibiotic-Resistant Bacteria (ARB) in Contaminated Soil using Modified Biochar

EMILY ALAM, 2024

20 Identification of Single-Nucleotide Polymorphisms Related to the Phenotypic Expression of Drought Tolerance in Oryza Sativa

REVA KUMAR, 2024

26 Analyzing the Effect of Inhibiting Combinations of Protein Kinases on the Progression of Alzheimer’s Disease in Caenorhabditis Elegans

GAURI MISHRA, 2024

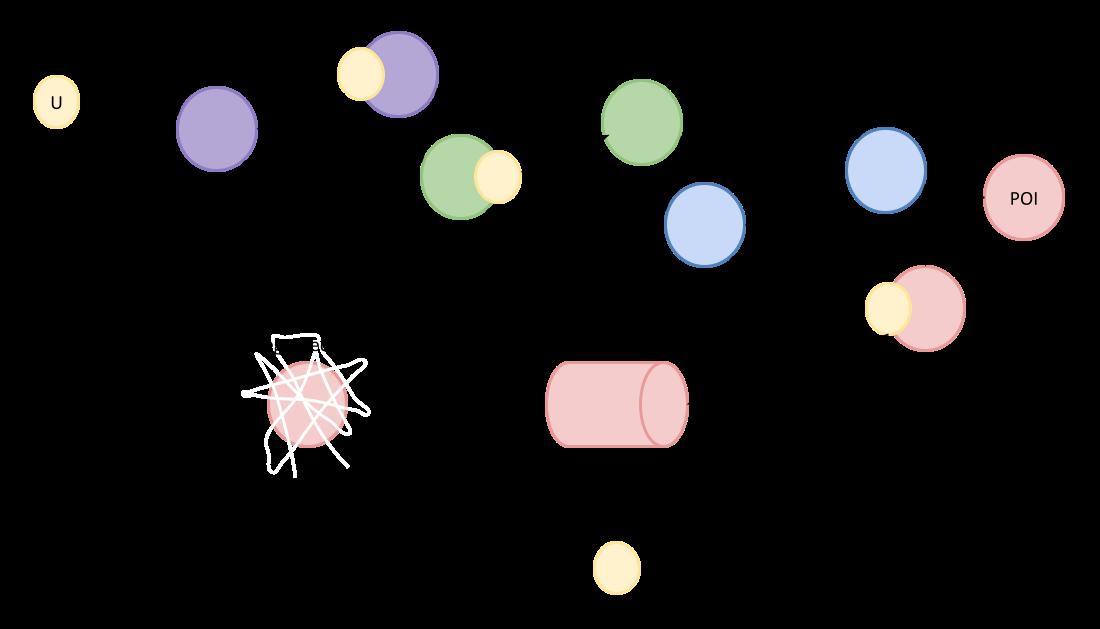

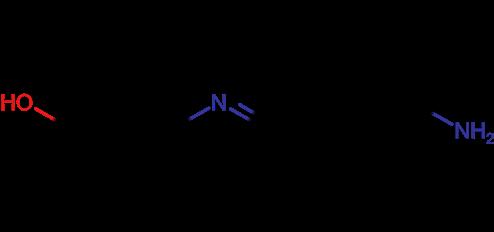

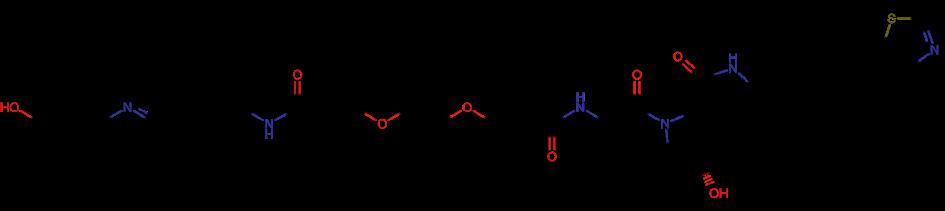

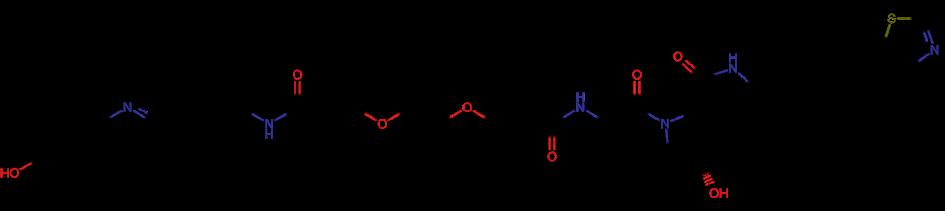

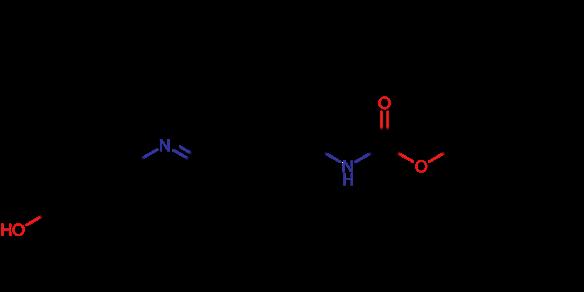

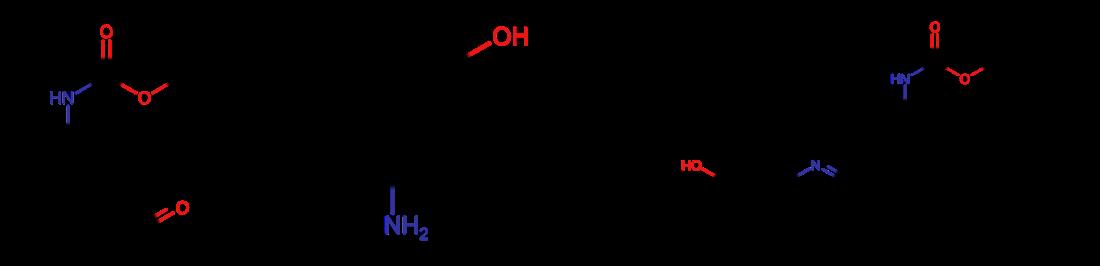

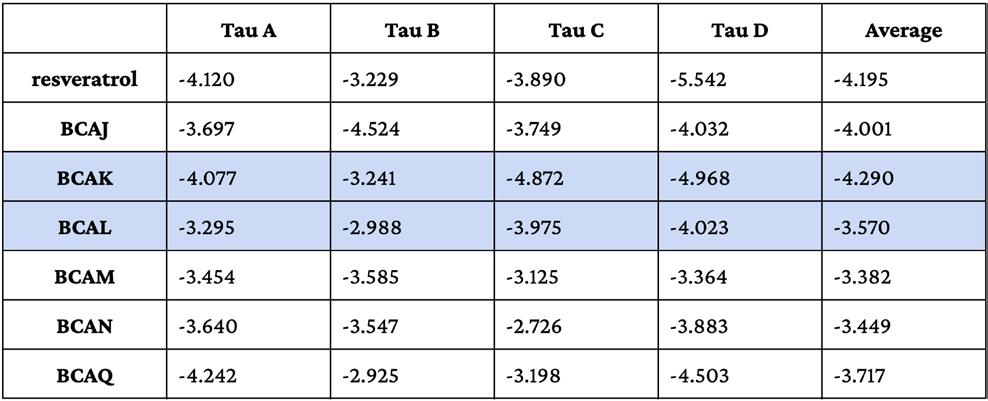

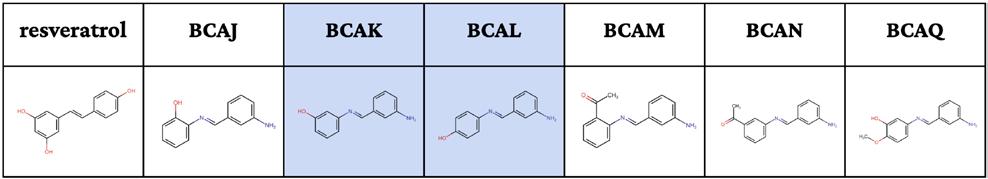

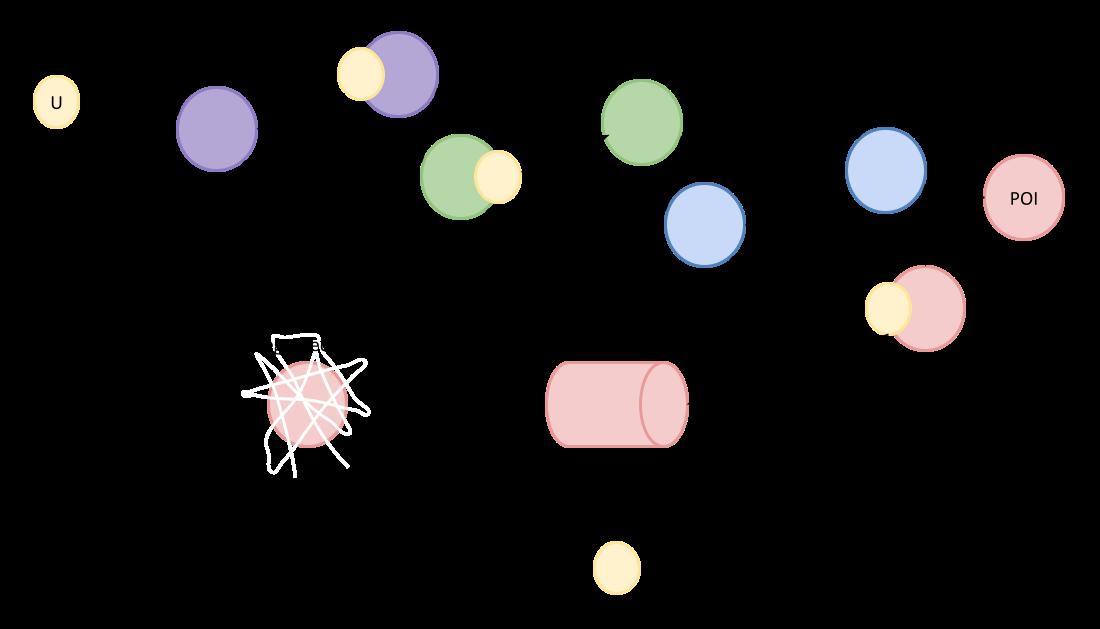

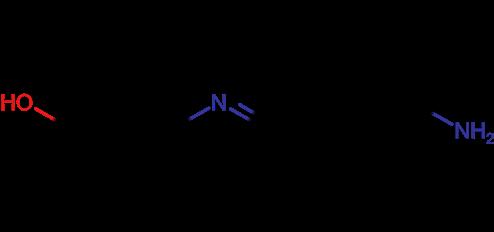

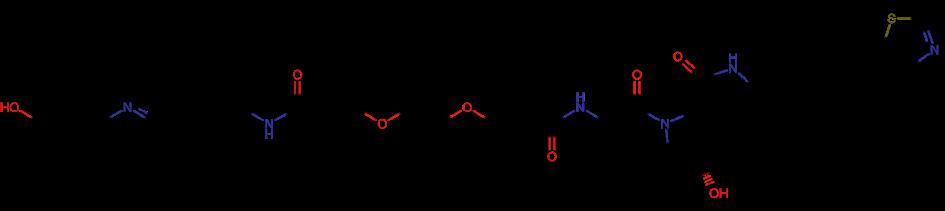

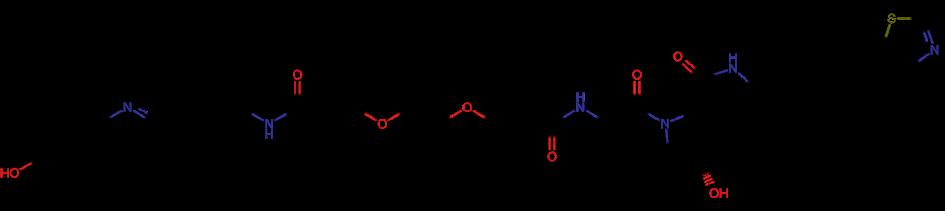

35 Design and Synthesis of a PROTAC Molecule and a CLIPTAC Molecule for the Treatment of Alzheimer's Disease by p-Tau Degradation through the Ubiquitin Proteasome System

OLIVIA AVERY, 2024

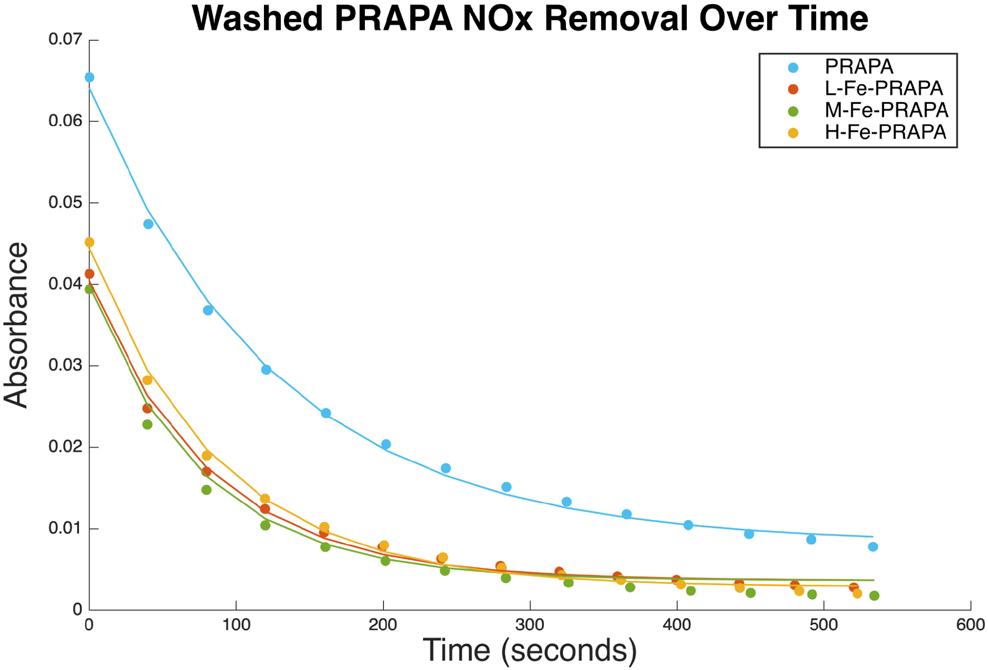

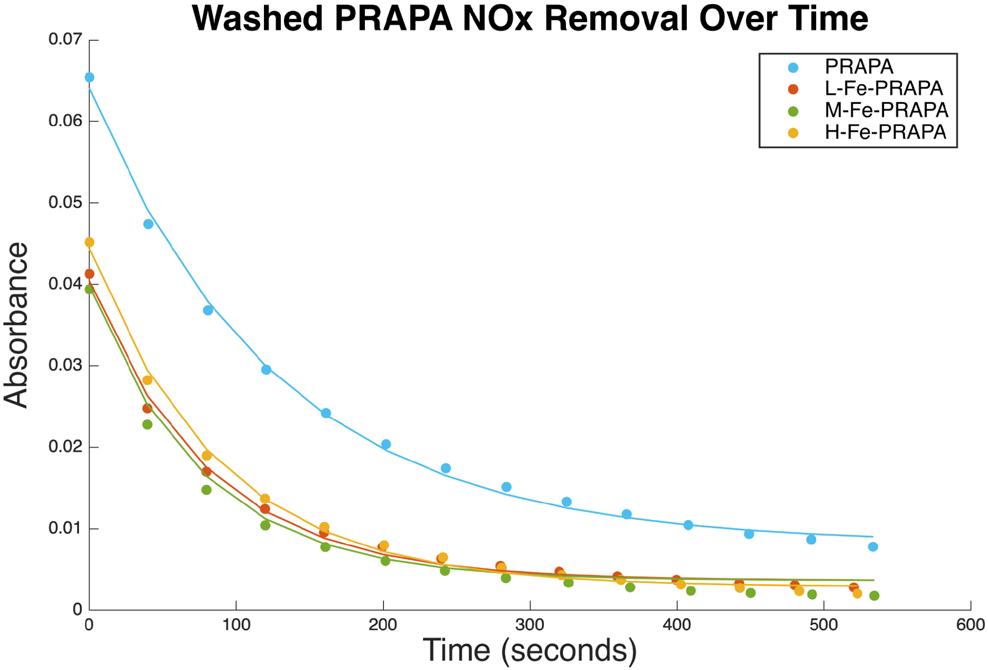

45 Analyzing NOx Removal Efficiency and Washing Resistance of Iron Oxide Decorated g-C3N4 Nanosheets Attached to Recycled Asphalt Pavement Aggregate

EMMIE ROSE, 2024

Biology

TABLE of CONTENTS

4 Letter from the Chancellor

5 Words from the Editors

6 Broad Street Scientific Staff

Chemistry

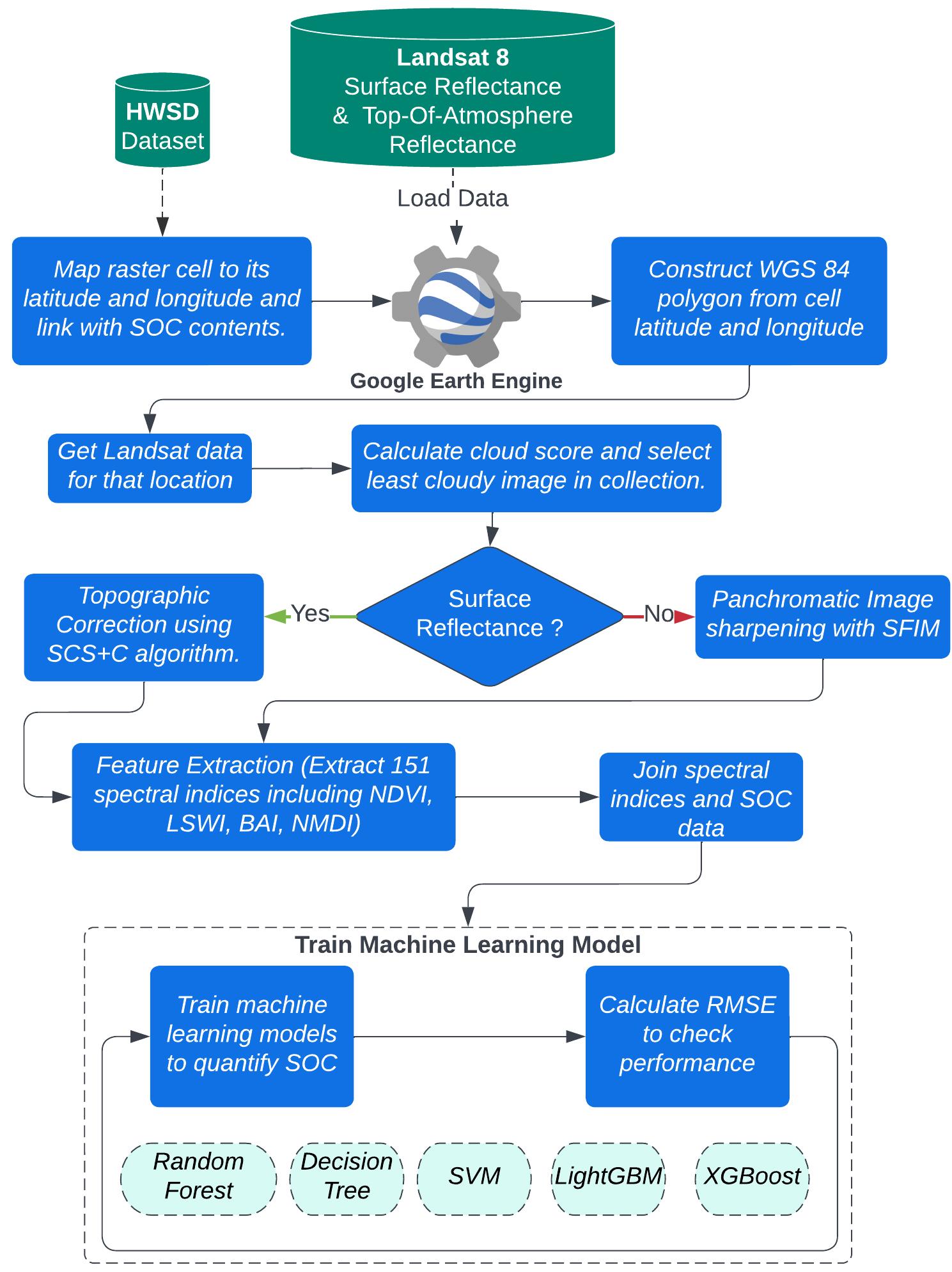

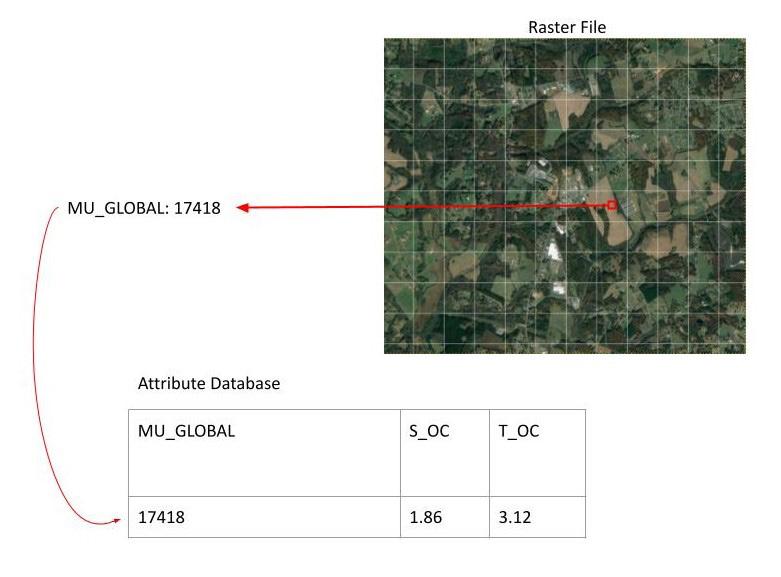

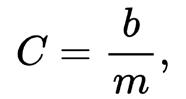

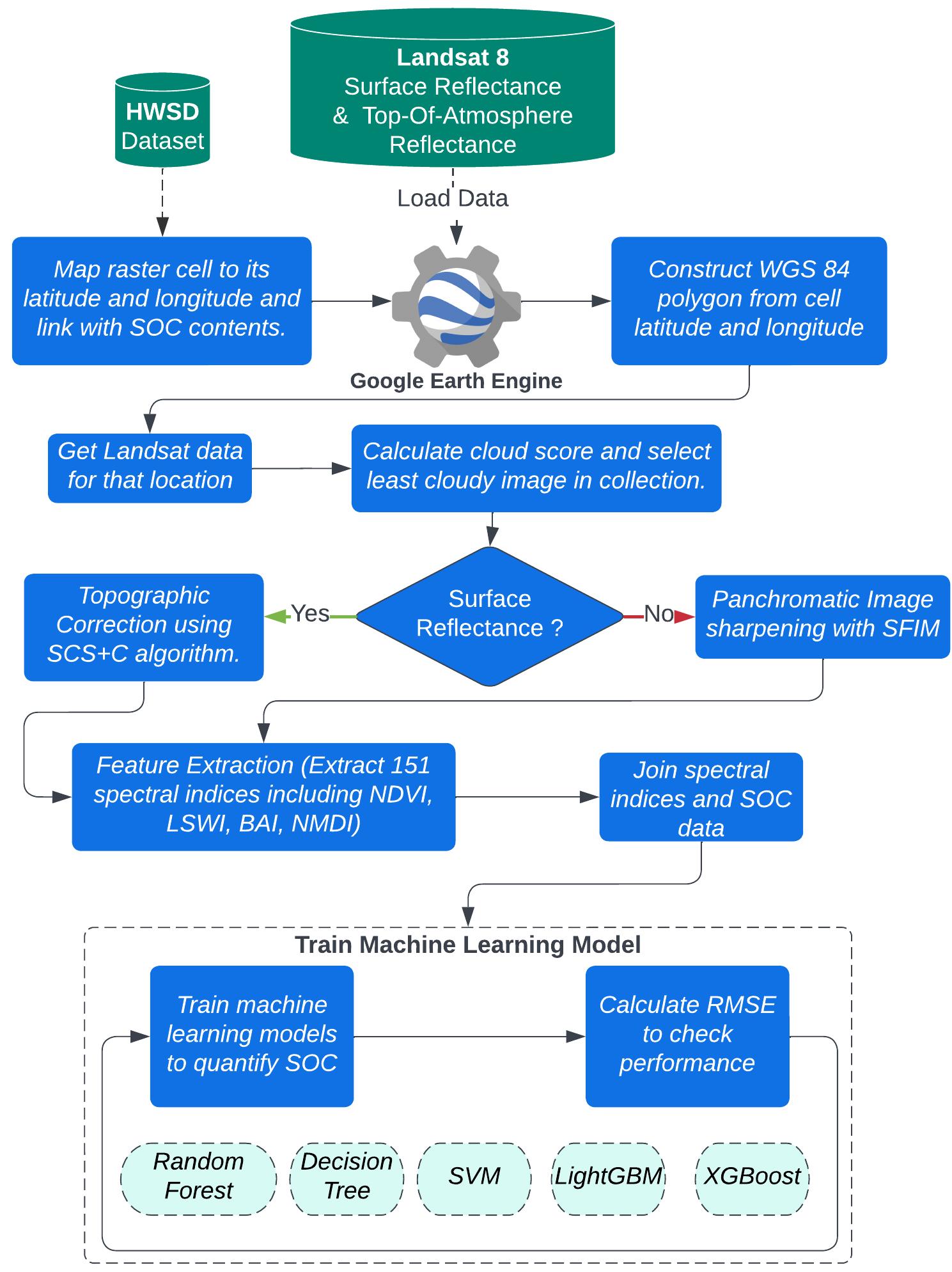

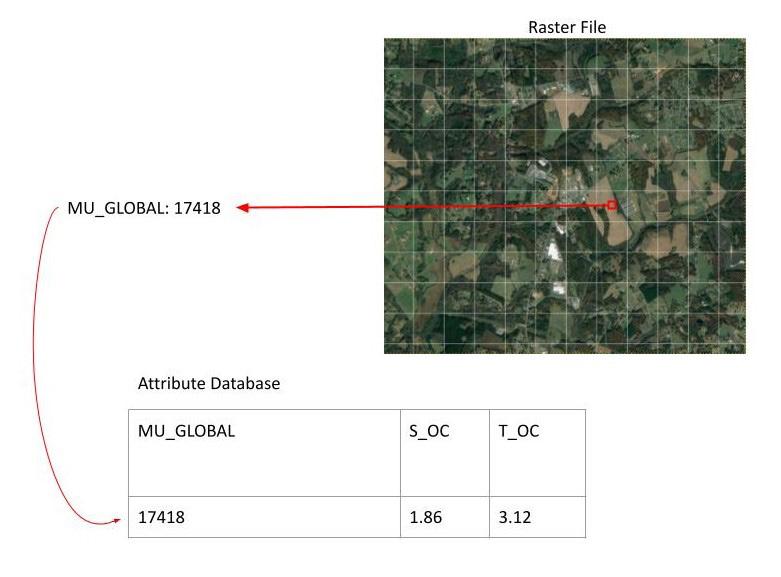

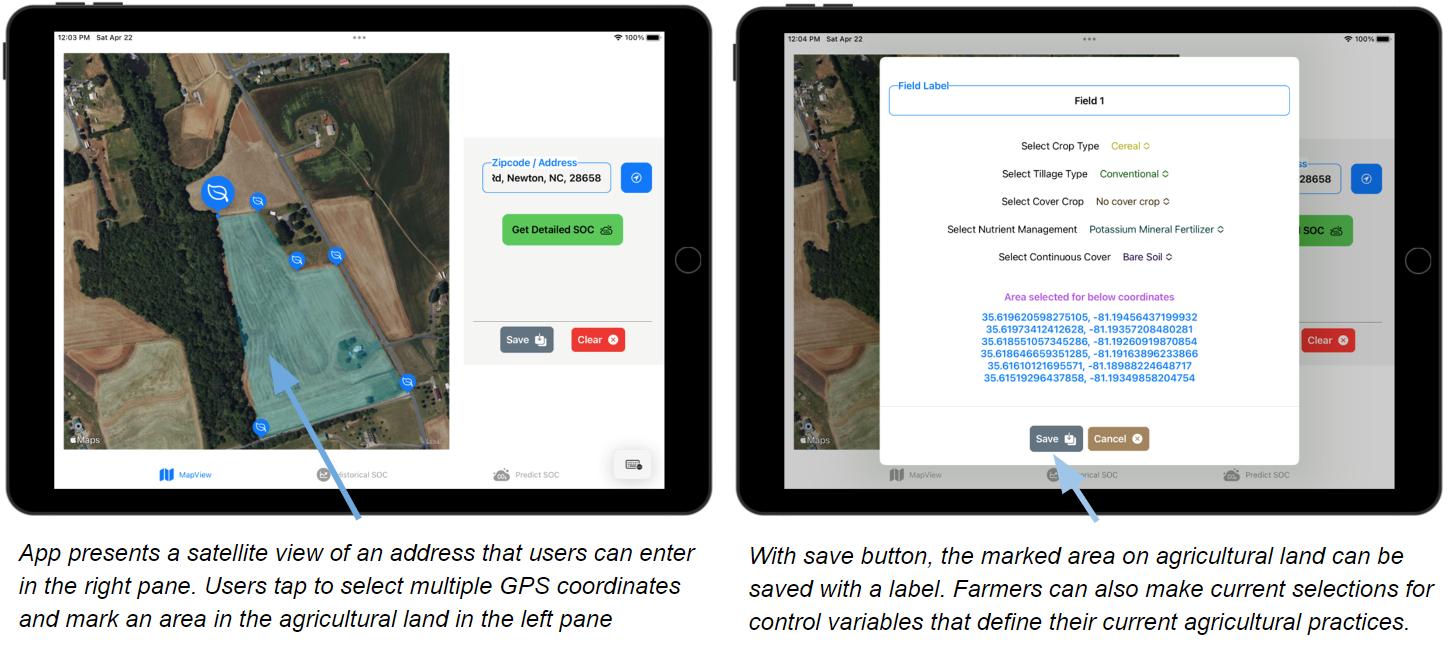

51 Mapping Soil Organic Carbon Using Multispectral Satellite Imagery and Machine Learning

REYANSH BAHL, 2024 ONLINE

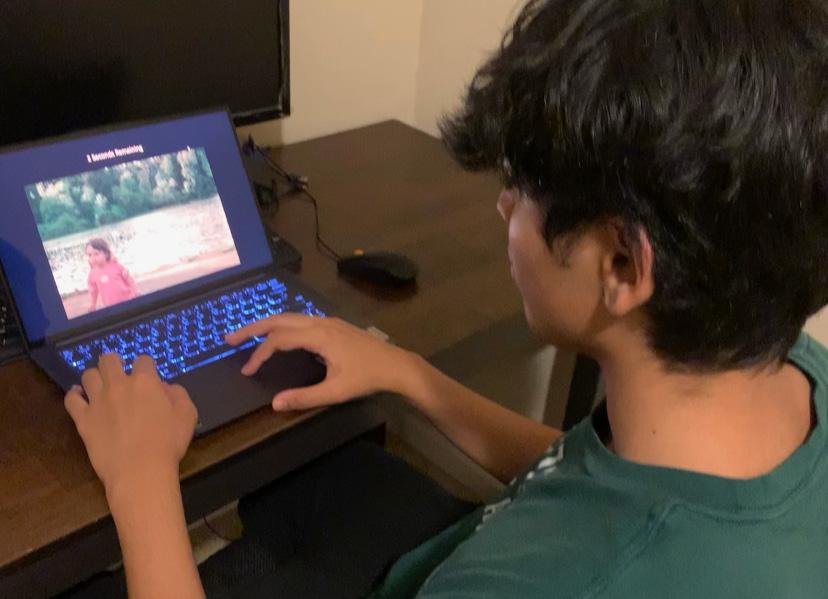

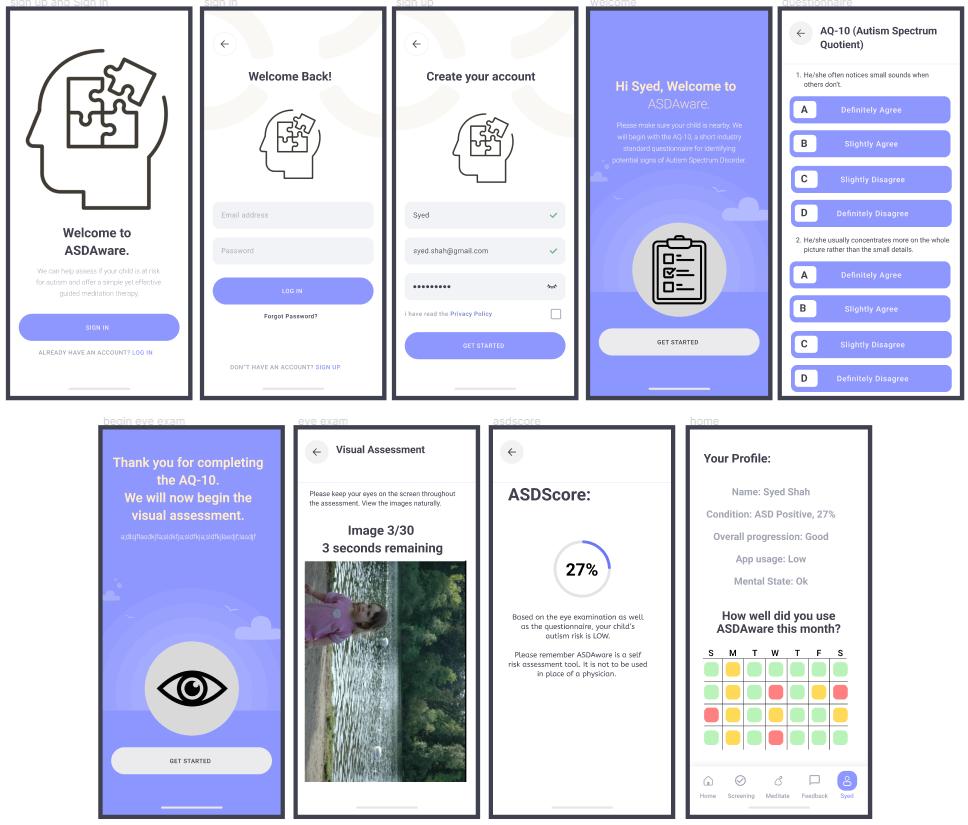

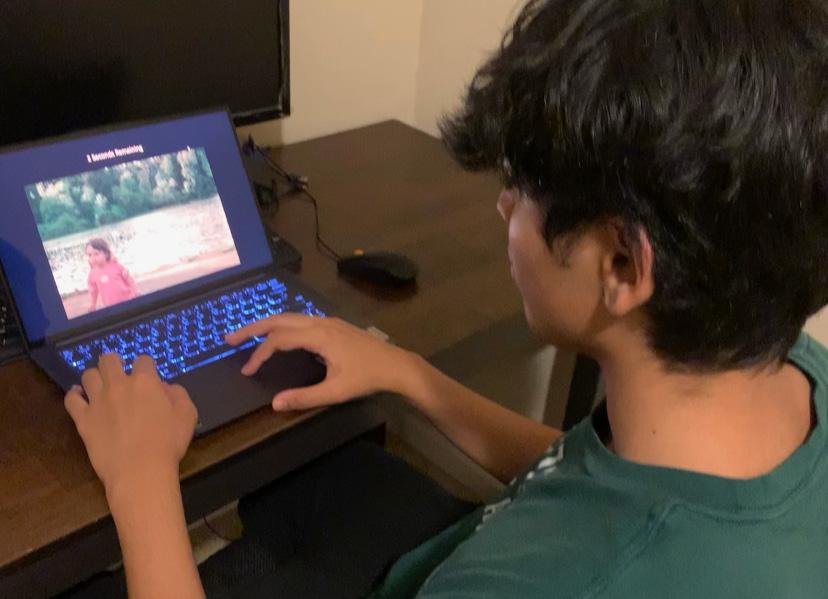

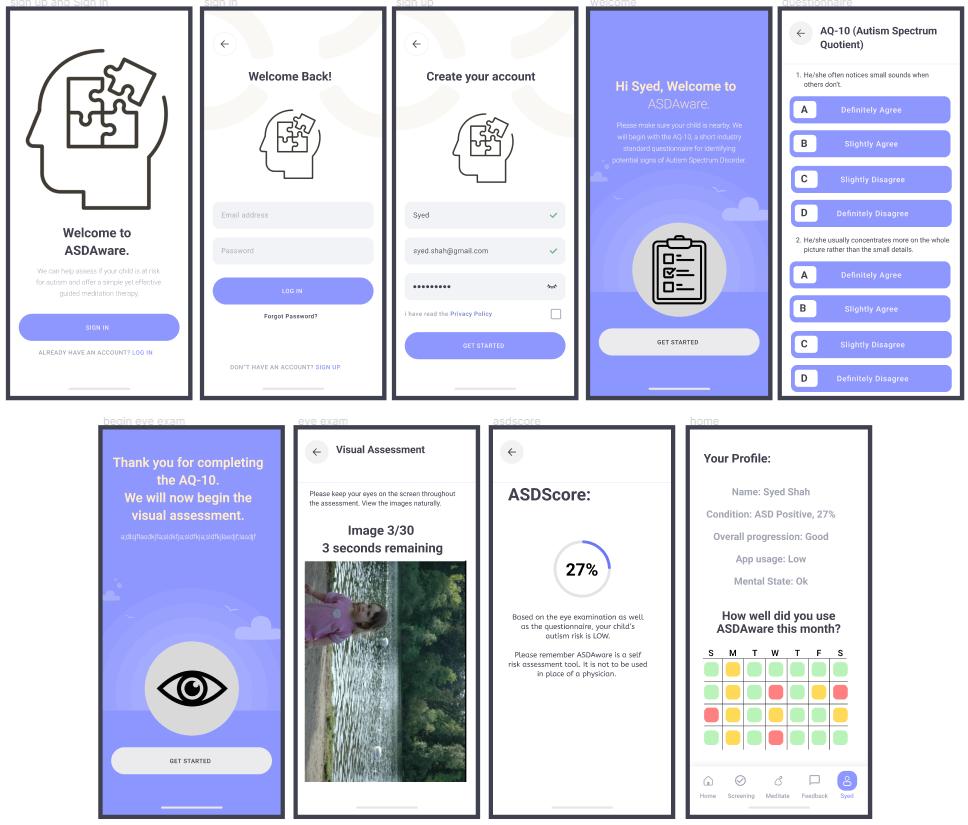

58 ASDAware: A Low-Cost Eye Tracking-Based Machine Learning Model for Accurate Autism Spectrum Disorder Risk Assessment in Children

ISHAN GHOSH AND SYED SHAH, 2024 ONLINE

Mathematics and Computer Science

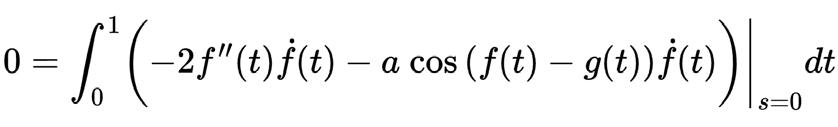

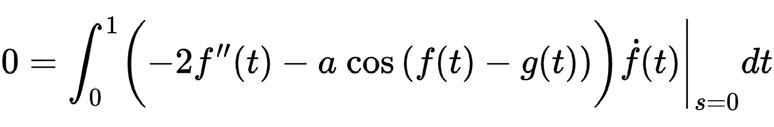

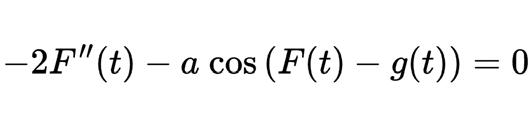

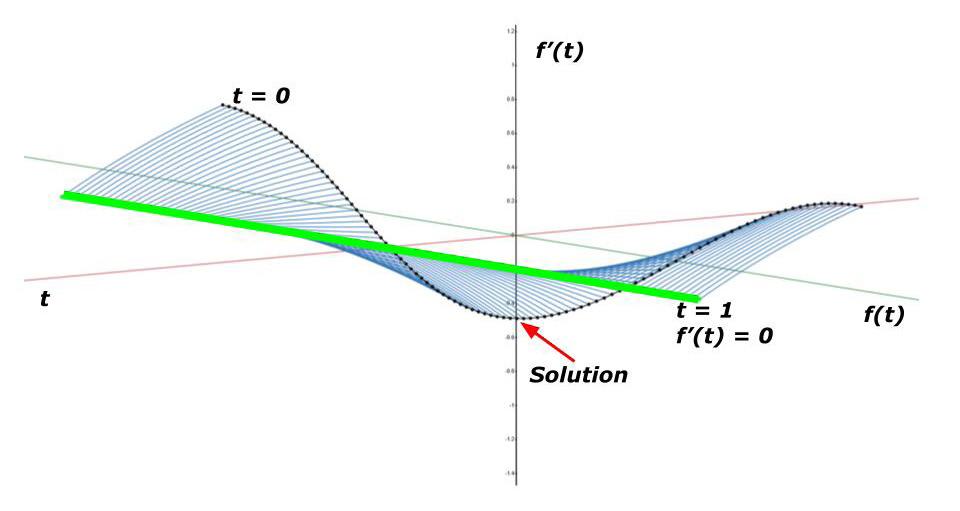

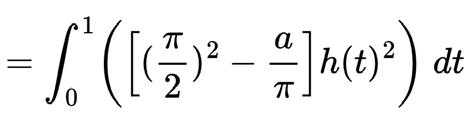

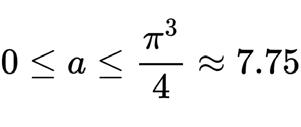

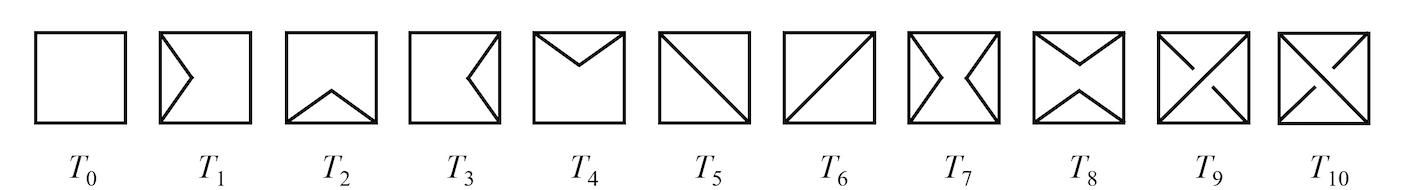

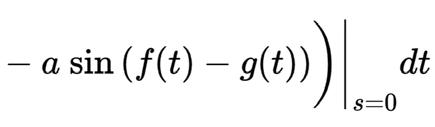

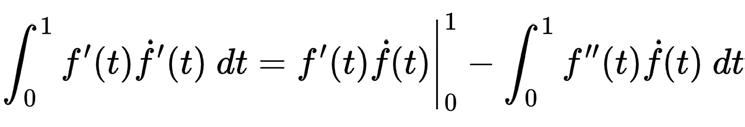

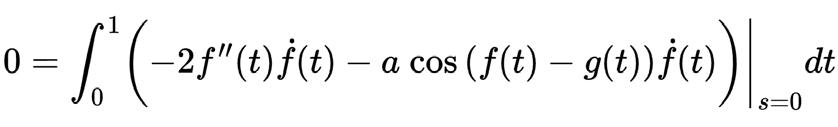

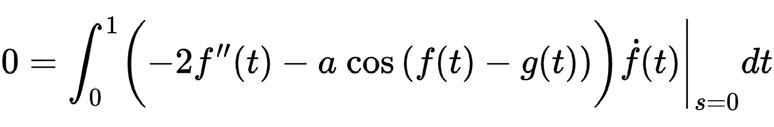

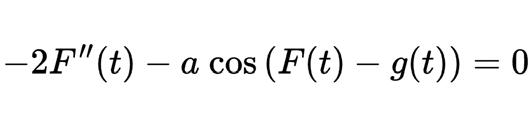

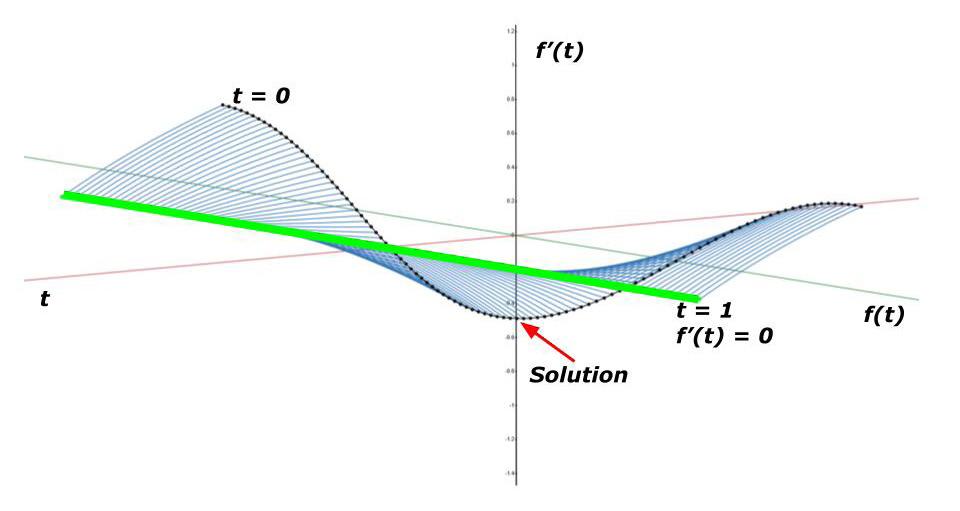

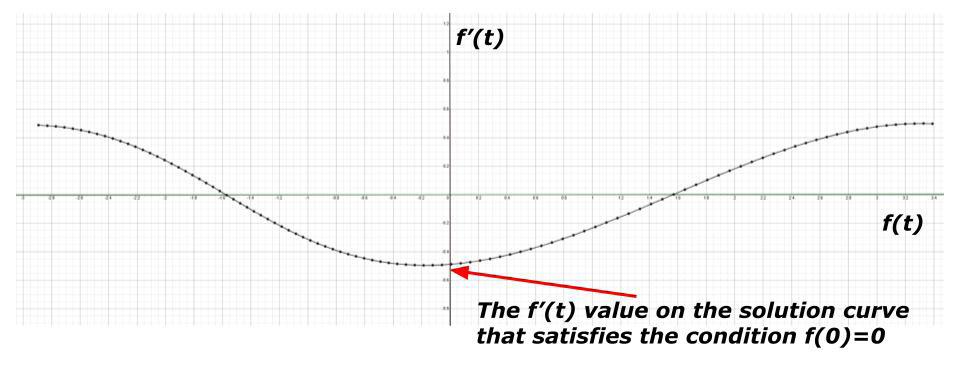

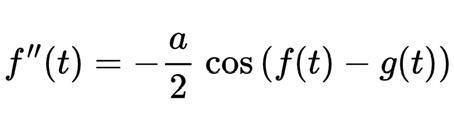

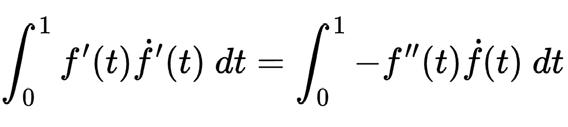

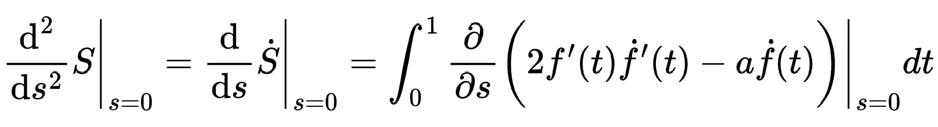

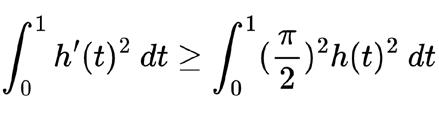

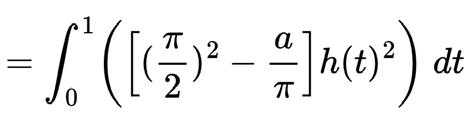

65 A Problem in Game Theory and Calculus of Variations

CHRISTOPHER BOYER 2024, GRACE LUO 2025, AND SIDDHARTH PENMETSA 2024

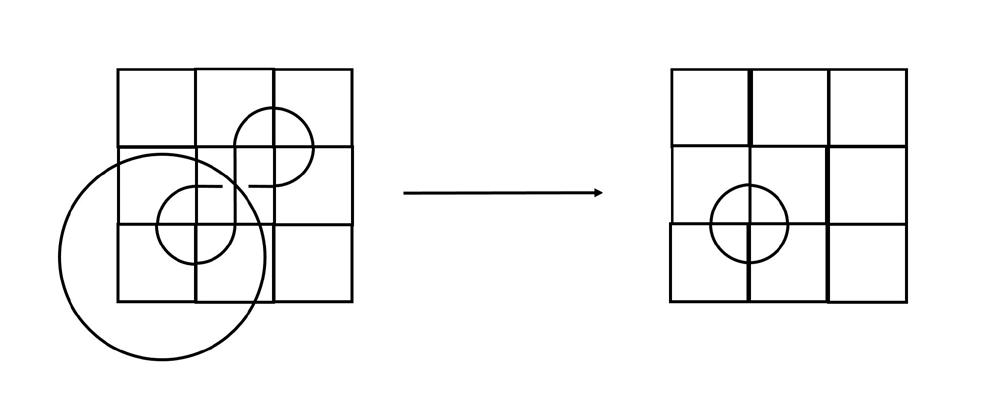

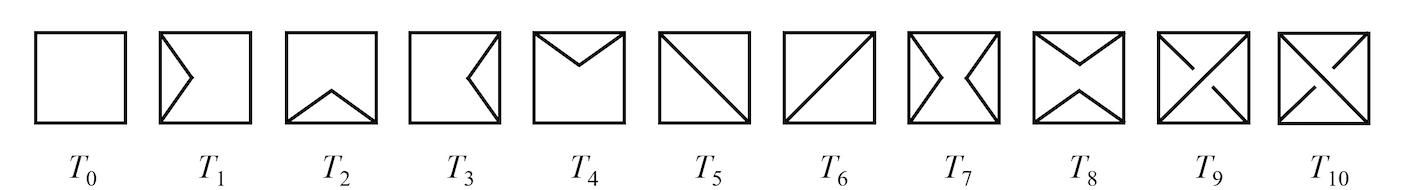

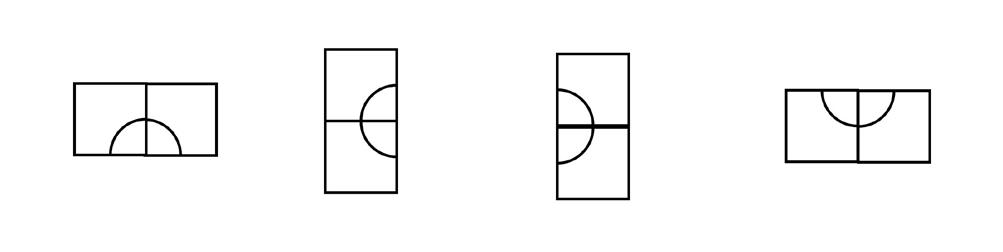

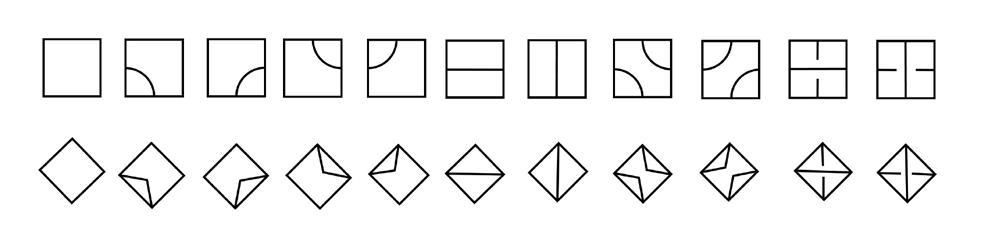

74 On Mosaic Invariants of Knots

VINCENT LIN, 2024

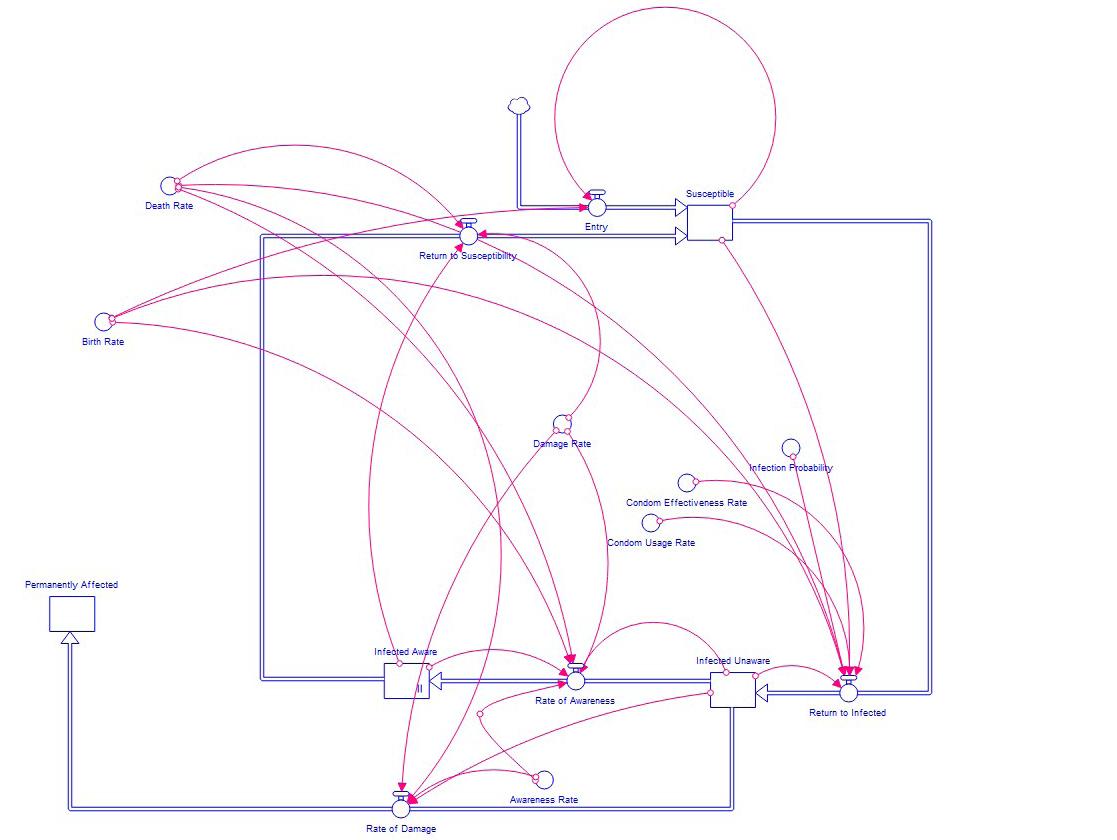

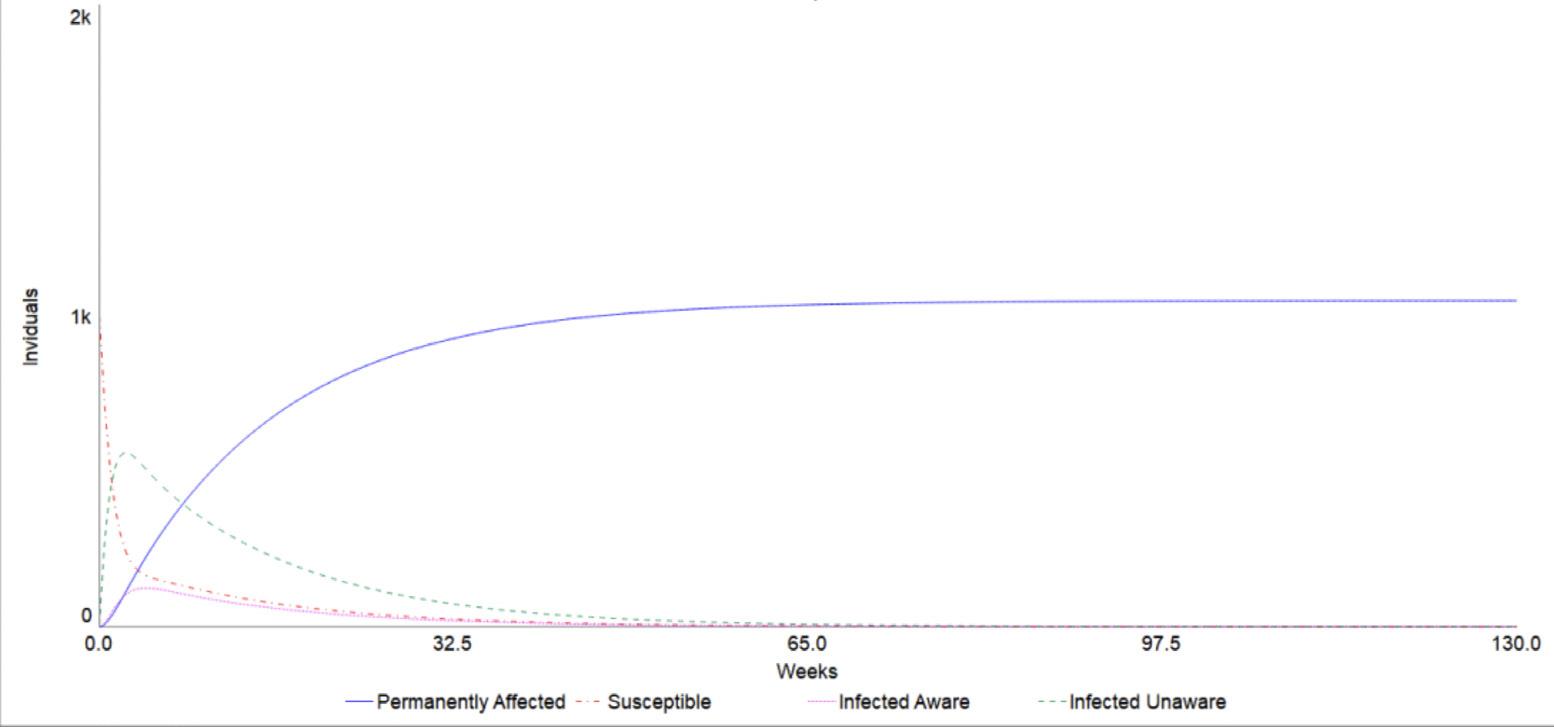

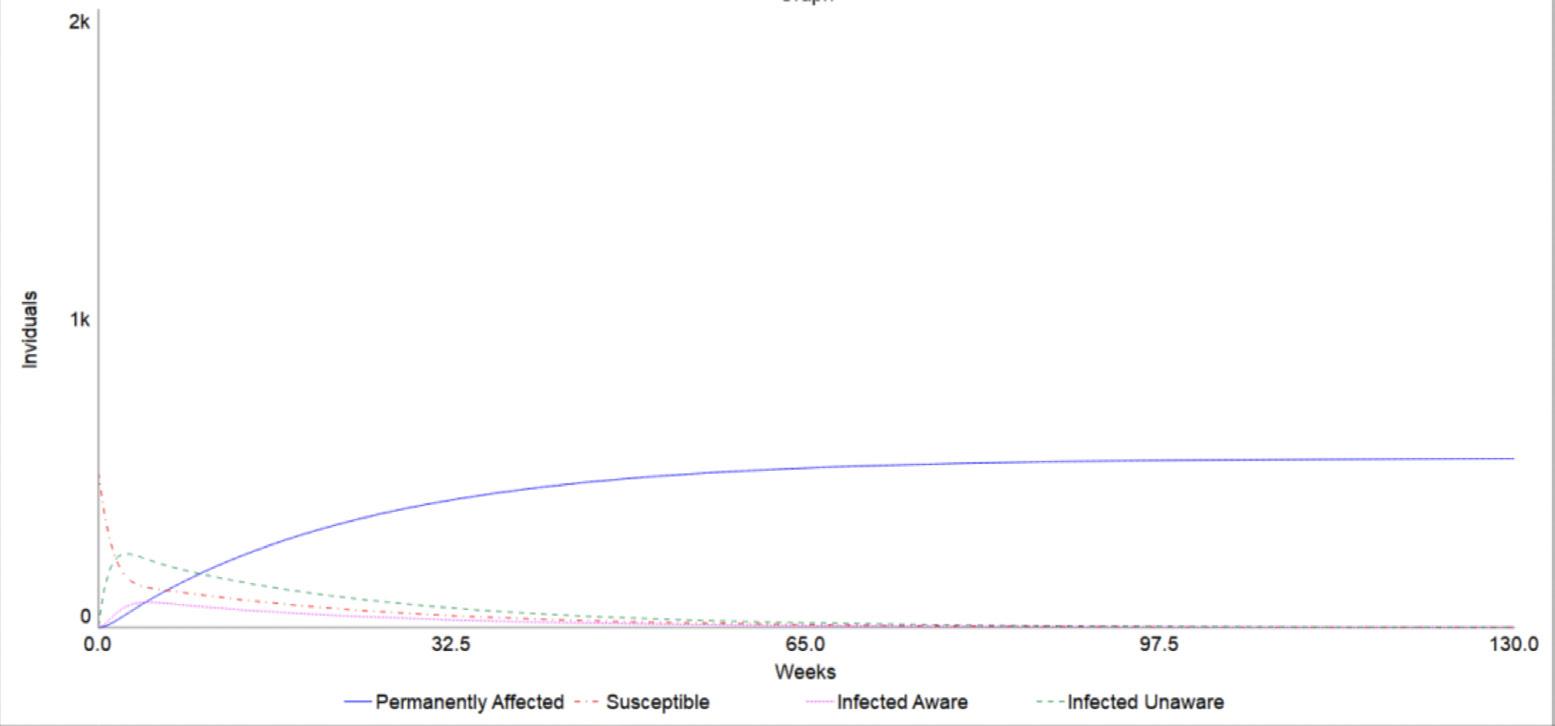

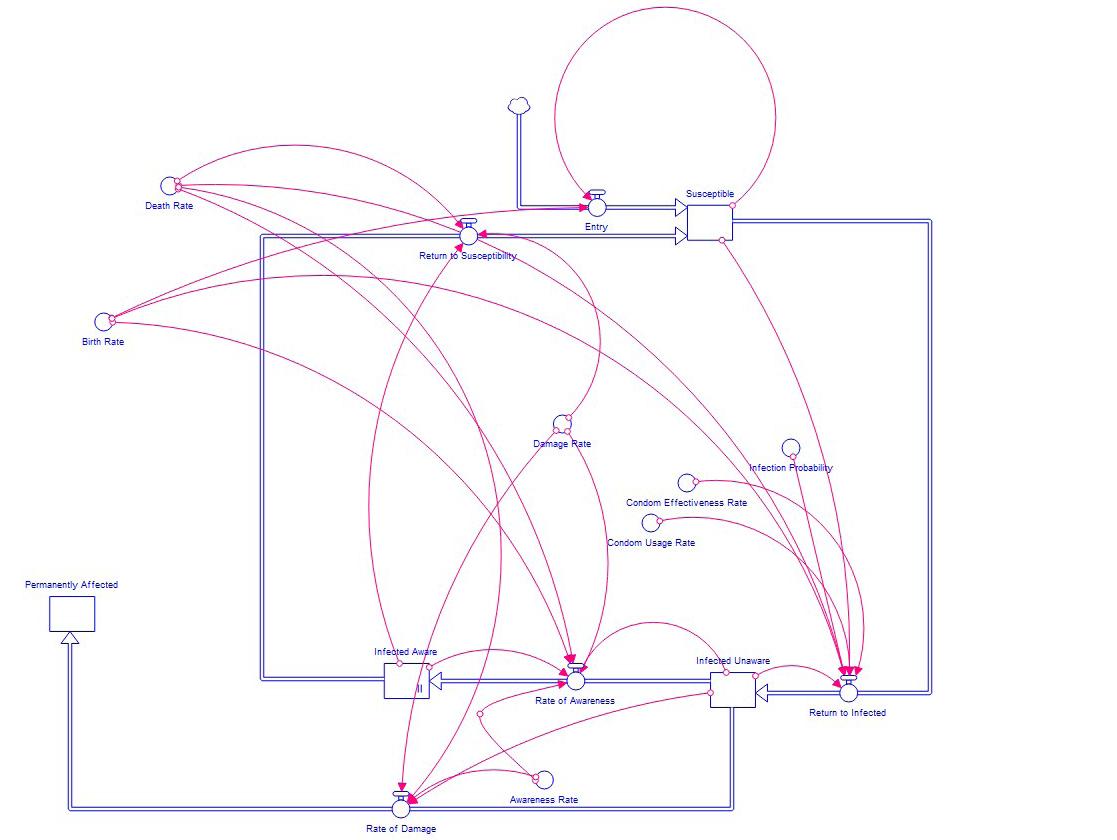

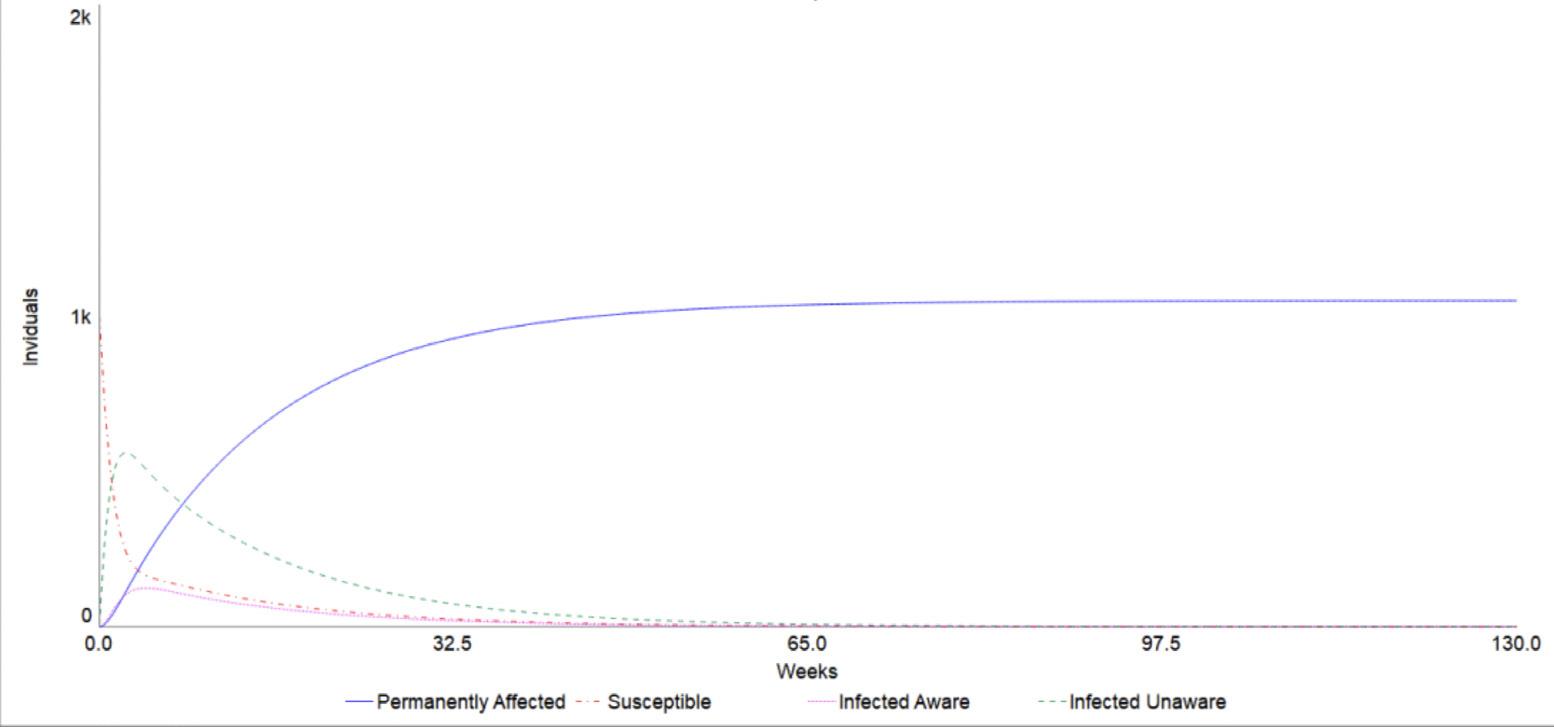

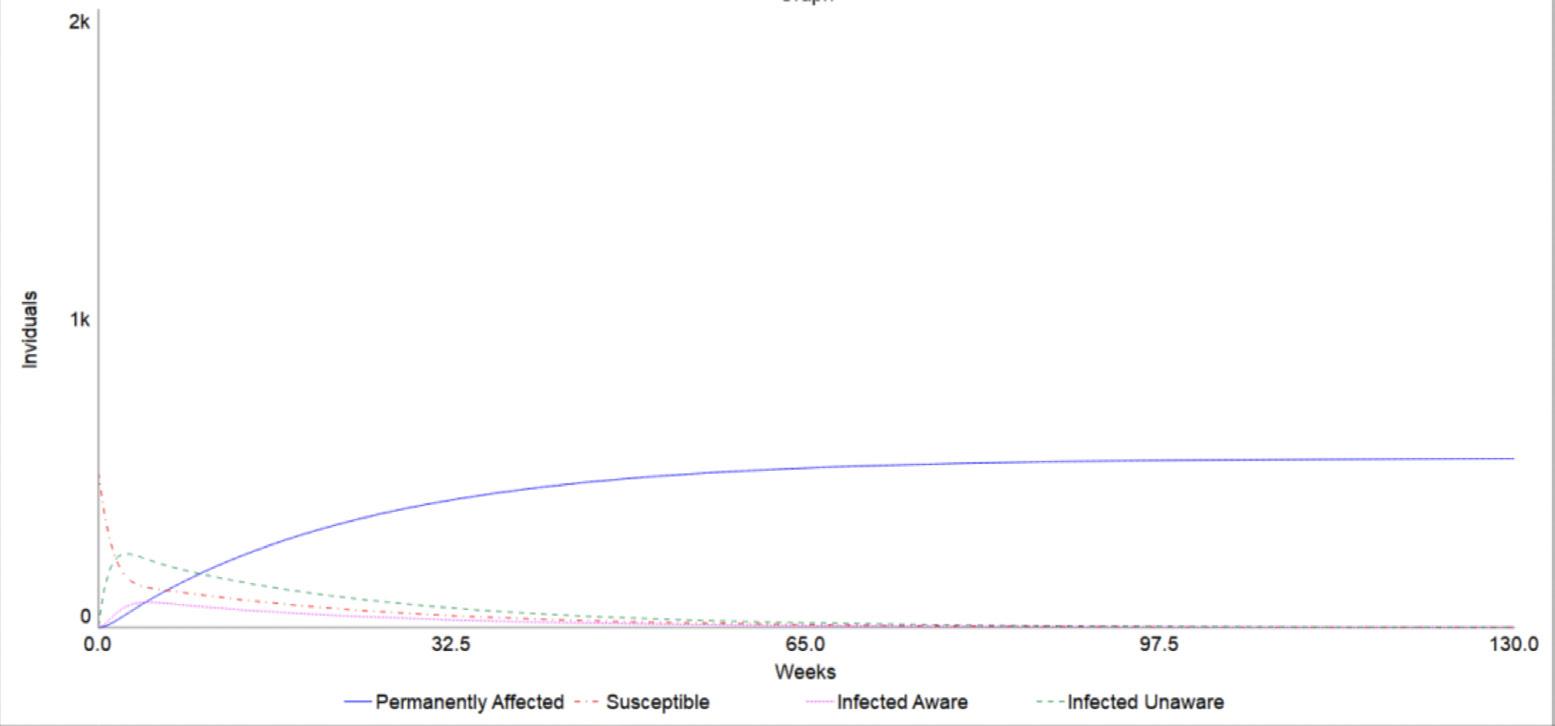

82 Computational Model of Gonorrhea Transmission in Rural Populations

DIYA MENON, 2025 ONLINE

Physics

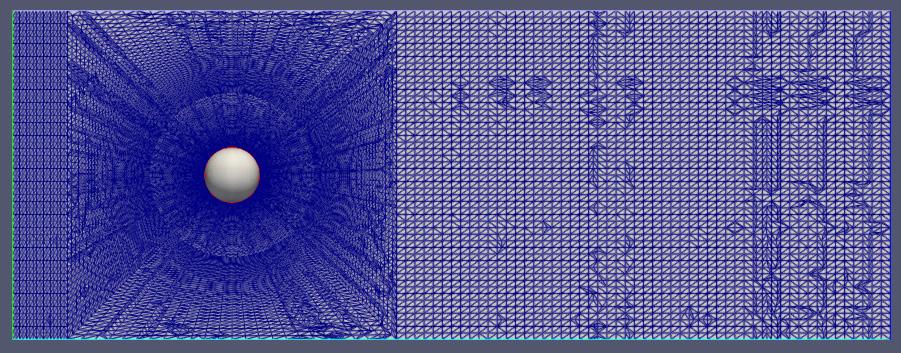

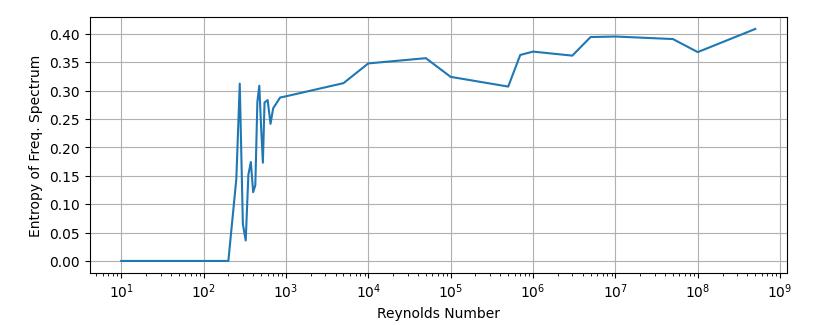

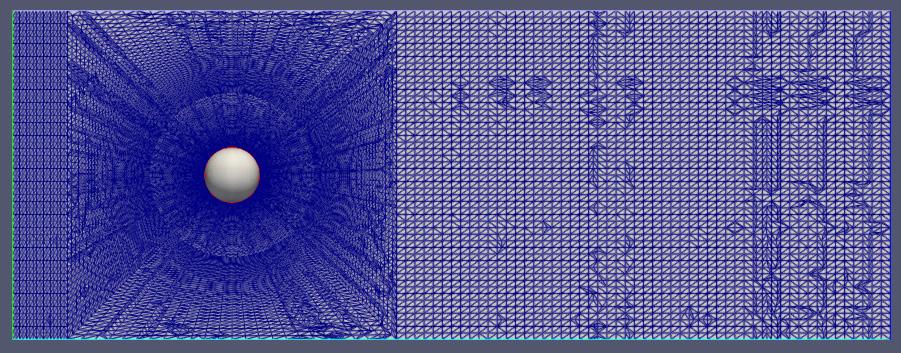

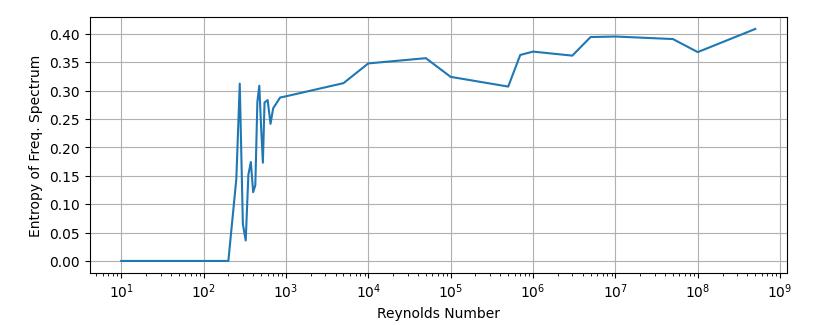

90 Using Spectral Entropy as a Measure of Chaos to Quantify the Transition from Laminar to Turbulent Flow

MATTHEW LEE, 2024

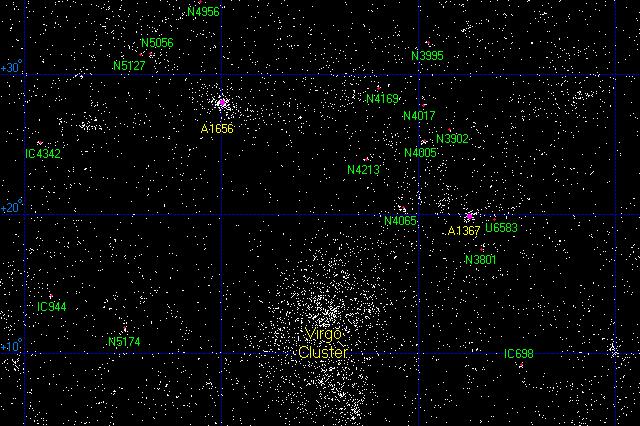

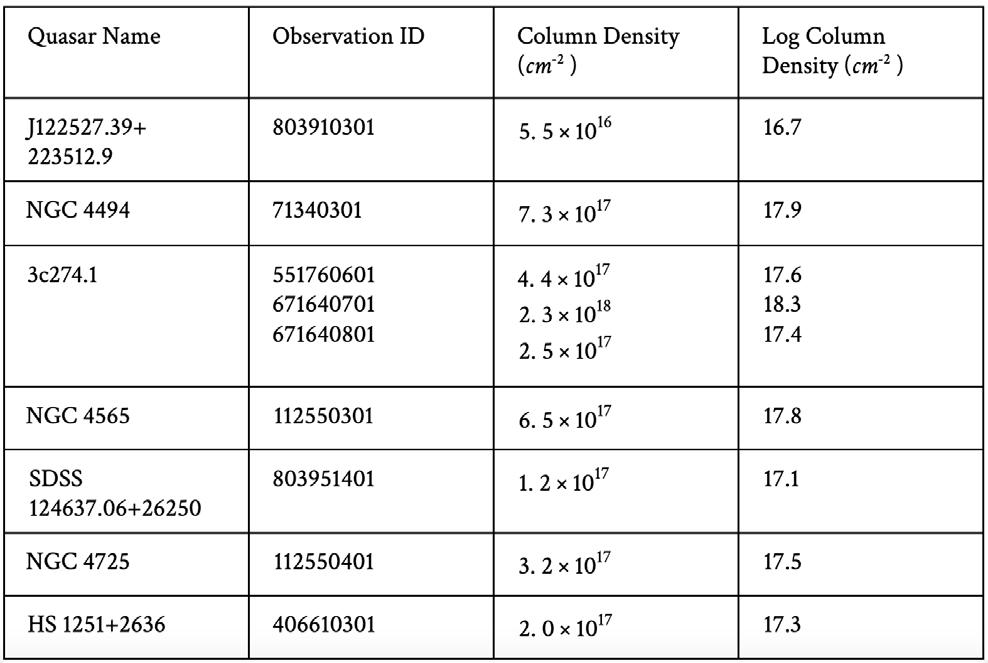

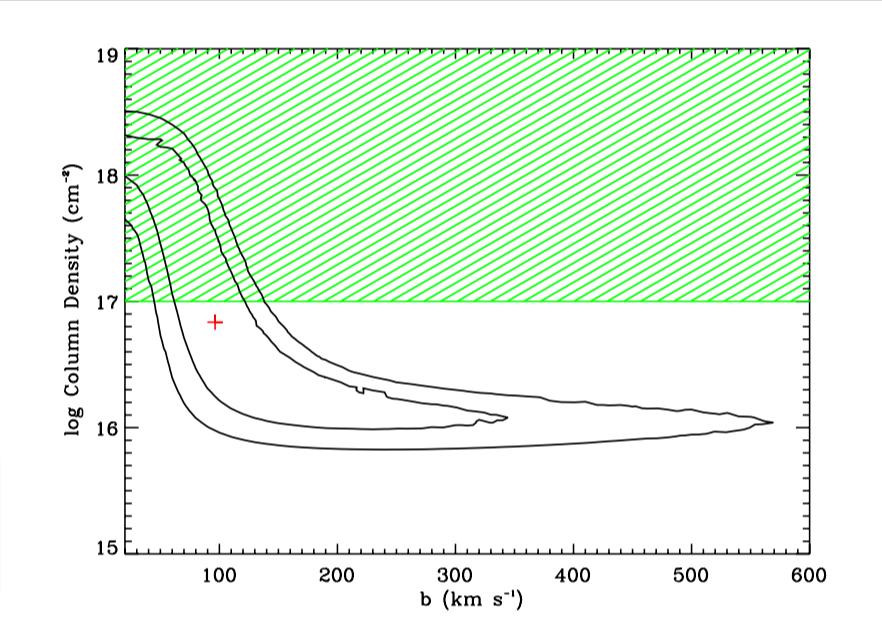

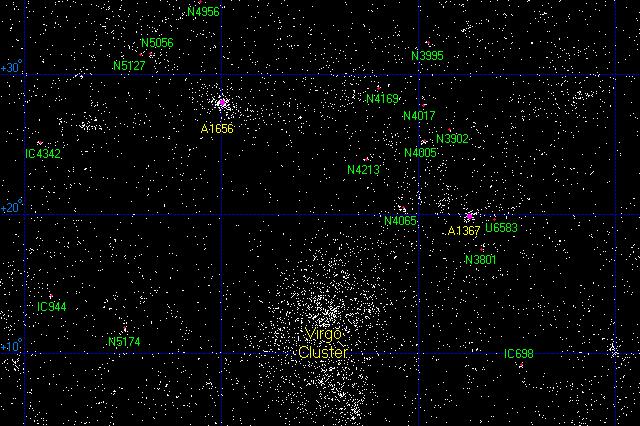

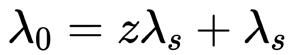

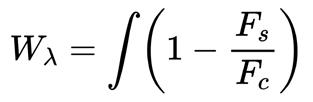

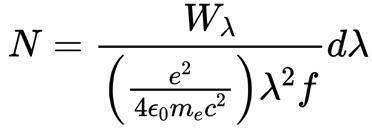

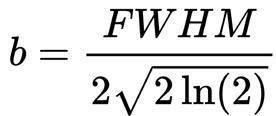

97 Detection of the Warm-Hot Intergalactic Medium Between the Coma and Leo Clusters

LIKHITA TINGA, 2024

Featured Article

103 An Interview with Dr. Richard McLaughlin

Engineering

LETTER from the CHANCELLOR

“It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts.”

~Sir Arthur Conan Doyle

I am proud to introduce the thirteenth edition of the North Carolina School of Science and Mathematics’ (NCSSM) scientific journal, Broad Street Scientific. Each year students at NCSSM conduct significant scientific research, and Broad Street Scientific is a student-led and student-produced showcase of some of the impressive research being done by students on our Durham campus.

For more than four decades now we have provided research opportunities for NCSSM students from all across North Carolina. I am proud of how these opportunities have helped inspire many of our alumni to research and innovate, creating new companies and novel approaches to tackle some of our world’s most challenging problems. The research reflected in this journal highlights just a sample of the incredible work NCSSM students are doing today, building their foundation as the next generation of scientists and innovators who will undoubtedly change our state and the world for the better in the years to come.

Opened in 1980, NCSSM was the nation’s first public residential high school where students study a specialized curriculum emphasizing science and mathematics. Teaching students to do research and providing them with opportunities to conduct high-level research in biology, chemistry, physics, computational science, engineering and computer science, mathematics, humanities, and the social sciences is a critical component of NCSSM’s mission to educate academically talented students to become state, national, and global leaders in science, technology, engineering, and mathematics. More than eighty percent of NCSSM students conduct research in their two years at NCSSM. I am grateful for our talented NCSSM faculty and mentors at institutions across our state who provide these incredible opportunities for students, on two campuses and in our online program, to learn research methods and conduct high quality research.

Each edition of Broad Street Scientific features some of the best research students conduct at NCSSM under the guidance of our outstanding faculty and in collaboration with researchers at major universities and other research institutions. For thirty-nine years, NCSSM has showcased student research through our annual Research Symposium each spring and at major research competitions such as the Regeneron Science Talent Search and the International Science and Engineering Fair. Amazing things happen when you bring talented student researchers together with incredible faculty, which was highlighted this year as ten NCSSM students were named Regeneron Scholars. The publication of this journal provides another opportunity to share with the broader community the outstanding research being conducted by NCSSM students.

I would like to thank all of the students and faculty involved in producing Broad Street Scientific, particularly faculty sponsors Dr. Jonathan Bennett and Dr. Michael Falvo and senior editors Phoebe Chen, Keyan Miao, and Jane Shin. Explore and enjoy!

4 | 2023-2024 | Broad Street Scientific

Dr. Todd Roberts Chancellor

WORDS from the EDITORS

Welcome to the Broad Street Scientific, NCSSM Durham’s official journal of student research in science, technology, engineering, and mathematics. This past year has been one of significant change as we reworked the publication process and outlined our AI policy as we saw the rise of AI technology. These improvements in our 13th edition allow us to continue showcasing exceptional research, innovative ideas, and collaboration within the students of North Carolina School of Science and Mathematics. As you turn these pages, you will find investigations ranging from disease transmission models to galaxy clusters, with each sharing the same goal of advancing our understanding of the world.

Addressing these developments has required a new perspective, which is reflected in our theme for this year, ultraviolet. Nature contains hidden stories, from the smiling sun to fluorescing flowers, yet it takes research and the development of new technologies to perceive what our eyes cannot. And yet simply seeing is not enough: in an age where we are more divided than ever and AI image generation is blurring the lines between real and not, the truth is more elusive than ever. Ultraviolet reminds us that we must keep an open mind and embrace diversity, celebrating the opportunities brought by combining different perspectives.

We would like to thank the faculty, staff, and administration of NCSSM, particularly Chancellor Dr. Todd Roberts, Dean of Science Dr. Amy Sheck, and Director of Mentorship and Research Dr. Sarah Shoemaker. They continue to support and nurture a stimulating academic environment that encourages motivated students to apply their interests towards solving real-world problems. For the next generation of young people who will no doubt change the world, NCSSM serves as a nurturing environment of passion and determination. We extend special thanks to Dr. Jonathan Bennett and Dr. Michael Falvo for their invaluable support and guidance throughout the publication process. Lastly, we would like to acknowledge Dr. Richard McLaughlin, Professor of Mathematics at UNC Chapel Hill, for speaking with us about his experience in fluid dynamics research and imparting important advice to young scientists so that we may each define our unique perspectives and shape the world around us.

Jane Shin, Keyan Miao, and Phoebe Chen

Editors-in-Chief


BROAD STREET SCIENTIFIC STAFF

Editors-in-Chief

Phoebe Chen, 2024 Durham

Keyan Miao, 2024 Durham

Jane Shin, 2024 Durham

Publication Editors

Snigdha Agasthyaraju, 2024 Online

Advika Arun, 2025 Durham

Jessily Chen, 2024 Durham

Florence Cheung, 2025 Durham

Kelly Fung, 2025 Durham

Paisley Holland, 2025 Durham

Biology Editors

Aadi Kucheria, 2025 Durham

Jiah Lee, 2025 Durham

Skyler Qu, 2025 Durham

Marcella Willett, 2024 Online

Chemistry Editors

Cathy Deng, 2025 Durham

Nishanth Gaddam, 2025 Online

Nikhil Vemuri, 2025 Durham

Engineering Editors

Caroline Downs, 2025 Durham

Adrian Tejada, 2025 Durham

Mathematics and Computer Science Editors

Physics Editors

Vishnu Vanapalli, 2025 Durham

Markandeya Yalamanchi, 2025 Durham

Teresa Fang, 2025 Durham

Ian Suh, 2025 Durham

Faculty Advisors

Dr. Jonathan Bennett

Dr. Michael Falvo


IS IT POSSIBLE TO USE GPS TO COMBAT CLIMATE CHANGE?

Teresa Fang

Teresa Fang was selected as the winner of the 2024 Broad Street Scientific Essay Contest. Her award included the opportunity to interview Dr. Richard McLaughlin, Professor of Mathematics at the University of North Carolina at Chapel Hill.

Despite the Global Positioning System (GPS) being well known, few are aware of an alternative use of its signals. Aside from providing directions to the nearest café or restaurant, GPS can revolutionize remote sensing, serving as a tool to predict natural disasters and combat climate change.

GPS is a tool for remote sensing. At its core, remote sensing is communication through electromagnetism. It relies on two things: a transmitter and a receiver. A satellite’s transmitter will encode a message through various bands of electromagnetic frequencies using modulation, changing data into waves [1]. A receiver will then pick those up and transform the waves back into analytical data. Depending on factors like distance apart, size of antennas, types of electromagnetic transmission, bandwidth, or limiting power, different kinds of data can be measured and transmitted.

At present, GPS consists of a network of 31 broadcasting satellites orbiting Earth at an altitude of around 20,000 km. While normal radar satellites have both their receiver and transmitters on the satellites, GPS has receivers on the ground, listening and interpreting microwave satellite transmission [1]. This means that at any point on the Earth’s surface, you are in the range of at least four satellites tracking the intersection of three physical dimensions, along with time, through trilateration. In an everyday example, your phone is the receiver to those four satellites’ transmitters to pinpoint your real-time location on Earth.
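The positioning step described above can be sketched in code. This is a minimal illustration, not how any particular receiver is implemented: it assumes four satellites at known positions and measured pseudoranges (true distance plus a receiver clock error expressed in metres), and solves for the three position coordinates and the clock term by Newton iteration. The satellite coordinates, the receiver position, and the function names are all hypothetical values chosen for the example.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def locate(sats, pseudoranges, iterations=20):
    """Newton iteration for receiver (x, y, z) and clock bias, all in metres.

    Model: pseudorange_i = distance(receiver, sat_i) + bias.
    Starts from Earth's centre with zero bias.
    """
    est = [0.0, 0.0, 0.0, 0.0]
    for _ in range(iterations):
        jac, resid = [], []
        for sat, rho in zip(sats, pseudoranges):
            dist = math.dist(est[:3], sat)
            # Partial derivatives of the modelled pseudorange
            jac.append([(est[k] - sat[k]) / dist for k in range(3)] + [1.0])
            resid.append(rho - (dist + est[3]))
        step = solve_linear(jac, resid)
        est = [e + s for e, s in zip(est, step)]
    return est

# Hypothetical geometry (metres), for illustration only
sats = [
    (26.6e6, 0.0, 0.0),
    (15.0e6, 20.0e6, 8.0e6),
    (15.0e6, -18.0e6, 10.0e6),
    (17.0e6, 2.0e6, -20.0e6),
]
true_pos, true_bias = (6.371e6, 0.0, 0.0), 3000.0
rhos = [math.dist(s, true_pos) + true_bias for s in sats]
est = locate(sats, rhos)
print("estimated position (m):", est[:3], "clock bias (m):", est[3])
```

Four satellites are needed because there are four unknowns: three coordinates plus the receiver's clock error, which is the "time" dimension mentioned above.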

But what makes GPS an ideal tool to revolutionize remote sensing? After all, GPS is just a type of microwave radar system with a different scattering geometry than other systems, and microwave radar has been used for years to detect changes in Earth’s surface.

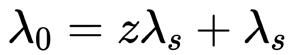

GPS reflectometry is one of three techniques with untapped potential for studying and combating climate change [2]. The other two are occultation, which measures the vertical gradient of the atmospheric refractive index, and scatterometry, which scatters signals off targets like water to measure wind properties [3][4]. GPS signals are circularly polarized L-band signals (1-2 GHz range, at wavelengths of 19 or 24 cm specifically for GPS), which means they are unaffected by cloud cover and sunlight, making it easier to distinguish strong from weak reflections off surfaces with large changes in dielectric constant, such as wet soil or sea ice [5]. This allows researchers to measure changes in the frequency, amplitude, or phase of the interference pattern produced by a reflecting surface and so collect pinpointed data quickly.
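The quoted wavelengths follow directly from the carrier frequencies through wavelength = c / f. The short check below assumes the standard published GPS carrier frequencies, L1 at 1575.42 MHz and L2 at 1227.60 MHz, which are not stated in the essay itself.

```python
C = 299_792_458.0  # speed of light in m/s (exact by SI definition)

# Standard GPS carrier frequencies (assumed here; not given in the text)
GPS_CARRIERS_HZ = {"L1": 1575.42e6, "L2": 1227.60e6}

def wavelength_cm(frequency_hz):
    """Free-space wavelength in centimetres for a given frequency in Hz."""
    return C / frequency_hz * 100.0

for name, freq in GPS_CARRIERS_HZ.items():
    print(f"{name}: {wavelength_cm(freq):.1f} cm")
```

Both carriers fall inside the 1-2 GHz L-band, with wavelengths of roughly 19 cm and 24 cm.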

But rather than just having satellites track climate change, satellites may contribute to “solving” climate change through the bidirectional reflectance distribution function (BRDF). The BRDF $f_r(\omega_i, \omega_r)$ describes a surface’s reflected energy in terms of incoming and reflected radiance [6]. Once measured for a known surface, the BRDF can be applied at a much larger scope to image a larger surface, such as Earth. By adding more variables to the BRDF, we can potentially evaluate the parameters of a climate change model, i.e. with a multivariable function $f_r(\omega_i, \omega_r, T, V_w, V_c, M_s)$, where $T$ represents temperature, $V_w$ represents wind speed, $V_c$ represents water current speed, $M_s$ represents soil moisture, etc.
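For reference, the usual radiometric definition of the BRDF is given below. The symbols are standard textbook notation rather than the essay's own: $\omega_i$ and $\omega_r$ are the incoming and reflected directions, $L_i$ and $L_r$ the incoming and reflected radiance, $E_i$ the irradiance, and $\theta_i$ the angle between the incoming direction and the surface normal. The extended function is only the essay's proposal, so the second line is a notational sketch, not an established model.

```latex
% Standard two-direction BRDF: reflected radiance per unit incident irradiance
f_r(\omega_i, \omega_r)
  = \frac{\mathrm{d}L_r(\omega_r)}{\mathrm{d}E_i(\omega_i)}
  = \frac{\mathrm{d}L_r(\omega_r)}{L_i(\omega_i)\,\cos\theta_i\,\mathrm{d}\omega_i}

% Proposed extension: the same ratio, parameterized by environmental state
f_r(\omega_i, \omega_r, T, V_w, V_c, M_s)
  = \frac{\mathrm{d}L_r(\omega_r;\, T, V_w, V_c, M_s)}{L_i(\omega_i)\,\cos\theta_i\,\mathrm{d}\omega_i}
```

Read this way, fitting the extended function to observed reflections amounts to estimating the environmental parameters that best explain the measured ratio of reflected to incident signal.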

GPS signals can provide the necessary information, in the form of microwaves, to model such a function. As a pioneering technique, GPS reflectometry is a promising approach to measuring and modeling hydrologic features, such as icebergs or Arctic sea ice [7]. As a tool to combat climate change, these satellites give us an overhead and underwater view to make models, find melt rates, and potentially find ways to offset rising sea levels in the long run. While normal radar satellites have difficulty measuring ice thickness, GPS signals have wavelengths long enough to penetrate the ice cover. If we can also measure the levels of greenhouse gases in the atmosphere, we could evaluate the correlation of greenhouse gases with the rate of sea ice melting and develop predictive models using the multivariable BRDF to mimic both short-term and long-term climate changes.

Although several other remote sensing techniques and instruments can retrieve the same information, the GPS reflection technique is cost-effective. GPS reflectometry recycles signals that already exist in the ionosphere, so there is no need for additional satellites or expensive microwave transmitters, such as the passive radiometers and active radars that NASA and ESA have been launching. For example, NASA’s eight-satellite CYGNSS constellation cost $152 million to study Earth’s hydrology, while ESA’s two-satellite Sentinel-1 constellation cost $385 million for the same purpose [8][9].

GPS reflectometry may be an avenue to revolutionize future satellite missions, as this technique is relatively cheap and can provide data complementary to existing data, filling gaps in our knowledge on select aspects. Launching constellations of GPS instruments would be interesting, as such constellations have a high temporal resolution, that is, a short repeat period. With multiple satellites collecting data over a location at the same time, frequently updated data are readily available, making it easier to create both predictive models and near-real-time maps. More accurate forecasting means people in affected communities can have more time to evacuate in times of disaster. As more research is done on GPS reflectometry, finding new ways of dealing with climate change may be less of a distant dream and more of an innovative reality.

[1] Schauer, K. (2020, October 6). Space Communications: 7 Things You Need to Know. NASA. https://www.nasa.gov/missions/tech-demonstration/space-communications-7-things-you-need-to-know/

[2] Baier, M. (n.d.). GPS occultation, reflectometry and scatterometry (GORS) receiver technology based on COTS as quintessential instrument for future tsunami detection system. GPS Reflectometry. https://www.gitews.de/en/gps-technology/gps-reflectometry/

[3] Eyre, J. R. (2008, June). An introduction to GPS radio occultation and its use in numerical weather prediction. In Proceedings of the ECMWF GRAS SAF workshop on applications of GPS radio occultation measurements (Vol. 1618).

[4] Center for Ocean-Atmospheric Prediction Studies (COAPS). (n.d.). Scatterometry - Overview. Scatterometry & Ocean Vector Winds. https://www.coaps.fsu.edu/scatterometry/about/overview.php

[5] Chew, C. (2022). Water makes its mark on GPS signals. Physics Today, 75(2), 42–47. https://doi.org/10.1063/PT.3.4941

[6] Wynn, C. (2015, October 7). A basic introduction to BRDF-based lighting. Princeton University. https://www.cs.princeton.edu/courses/archive/fall06/cos526/tmp/wynn.pdf

[7] Schild, K. M., Sutherland, D. A., Elosegui, P., & Duncan, D. (2021). Measurements of iceberg melt rates using high‐resolution GPS and iceberg surface scans. Geophysical Research Letters, 48(3). https://doi.org/10.1029/2020gl089765

[8] Harrington, J. D. (2023, July 26). NASA selects low cost, High Science Earth Venture Space System. NASA. https://www.nasa.gov/news-release/nasa-selects-low-cost-high-science-earth-venture-space-system/

[9] Clark, S. (2014, April 2). Europe’s Earth Observing System ready for liftoff. Soyuz launch report. https://spaceflightnow.com/soyuz/vs07/140402preview/


PHOTOGRAPHY: GROG & LA FORTUNA WATERFALL

Charlotte Goebel & Vincent Shen

Charlotte Goebel and Vincent Shen were selected as the winners of the 2024 Broad Street Scientific Photo Contest. Their awards included the opportunity to have their photographs featured in the 2024 volume of the Broad Street Scientific.

Grog (Green Frog) by Charlotte Goebel

This American bullfrog (Lithobates catesbeianus) resides in a calm pond inlet of the Blue Ridge Mountains. Native to eastern North America, American bullfrogs are a globally invasive species, often destroying native amphibian populations. The rapidly multiplying bullfrogs exemplify the challenges of preserving natural habitats in a globalized world.

La Fortuna Waterfall by Vincent Shen

I captured this photograph of the 70-meter-high La Fortuna Waterfall in northwestern Costa Rica after a steep, sloping hike down. The dark, stratovolcanic rocks filter the plunging water above, which serves as the town of La Fortuna’s water supply.


REDUCTION OF THE ABUNDANCE OF ANTIBIOTIC-RESISTANT BACTERIA (ARB) IN CONTAMINATED SOIL USING MODIFIED BIOCHAR

Emily Alam

Abstract

This research investigates the efficacy of modified biochar in mitigating the proliferation of antibiotic-resistant bacteria (ARB) in contaminated soil, focusing on samples from Oak Grove Farm, Wallace, NC. Soil bacteria were isolated and tested for resistance to multiple antibiotics. Experimental groups included pristine biochar and biochar modified with alkaline (KOH) or acidic (H₃PO₄) treatments. Results demonstrate a significant reduction in ARB abundance with modified biochar treatments compared to the control, with acidic modification proving the most effective. This study suggests that modified biochar has a promising outlook in reducing the transmission of antibiotics, antibiotic-resistant genes (ARGs), and ARB, offering a sustainable solution to combat antibiotic resistance in soil environments.

1. Introduction

Antibiotic treatment is the most common medicine prescribed to combat infection. However, as the list of infectious diseases and illnesses that rely on antibiotics as their primary treatment continues to grow, it becomes a less reliable course of treatment [1]. The misuse and overuse of antibiotics imperil their efficiency against pathogenic bacteria growth and reproduction, resulting in antibiotic resistance.

In several developing countries worldwide, generic over-the-counter antibiotics are available without prescription and through unregulated supply chains [2]. As described by Willis and Chandler, “the lack of infrastructure due to the poor economy, corruption and low preparedness in many low-income and middle-income countries has led to inadequate attention to preventive measures, such as water, sanitation and hygiene, leading to the high burden of infectious diseases” [3]. Poverty is a significant root factor of the antibiotic misuse that results in resistance, which has a disproportionately adverse impact on socioeconomically disadvantaged populations. This poses a significant challenge in disease management as more drug-resistant bacterial strains emerge. Antibiotic resistance is an urgent global public health threat: in 2019 it directly killed at least 1.27 million people worldwide and was associated with nearly 5 million deaths. In the U.S. alone, 2.8 million people acquire an antibiotic-resistant infection each year, and more than 35,000 people die [4].

The global proliferation of antibiotic resistance is caused by several significant factors, such as overpopulation, enhanced global migration, increased use of antibiotics in clinics and animal production, selection pressure, poor sanitation, wildlife spread, and poor sewage disposal systems. Researchers cannot maintain the pace of antibiotic discovery in the face of emerging resistant pathogens. Persistent failure to develop or discover new antibiotics and non-judicious use of antibiotics are the predisposing factors associated with the emergence of antibiotic resistance [5]. Resistant strains of bacteria are responsible for significant clinical and economic losses, particularly in developing nations. Crucial medical advancements such as organ transplants, cancer treatment, neonatal care, and complex surgeries might not have been possible without effective antibiotic treatment to control bacterial infections. Globally, antibiotic resistance continues to worsen, giving rise to more bacterial mutations and strains. According to analysts at the Research and Development (RAND) Corporation, a US nonprofit organization, a worst-case scenario may develop in which the world is left without any potent antimicrobial agent to treat bacterial infections. In this situation, the global economic burden would be about $120 trillion ($3 trillion per annum), which is approximately equal to the total existing annual budget of US health care [6].

As shown in Figure 1 [7], environmental factors and stressors are significant drivers of antibiotic resistance. In the environment, antibiotic residues can induce the development of abundant antibiotic resistance genes (ARGs) in bacterial colonies and mobile genetic elements. Effluent discharge encompasses a broad range of sources, including wastewater treatment plants, excess waste from hospitals, agricultural practices, pollutants, and runoff into soil environments. This discharge affects soil antibiotic-resistant bacteria (ARB), as soil plays an increasingly important role in the evolution and spread of antibiotic resistance in humans and animals. The continuous emergence of soil contamination and pollution poses new challenges for soil remediation, recovery, and conservation.

Figure 1. Influencing factors of antibiotic resistance genes (ARGs) and antibiotic-resistant bacteria (ARB) [7].

Over the past decades, antibiotic consumption has drastically increased [8]. A recent study predicted that, in the absence of policy intervention, global consumption of antibiotics in 2030 could be 200% higher than in 2015. Raw water from antibiotics industries and effluents from treated wastewater containing antibiotic residues, ARB, and ARGs are discharged into the soil through irrigation [9]. Reusing treated wastewater effluents in agricultural soil causes serious contamination of that soil with ARB and ARGs [10]. Environmental stressors that amplify antibiotic resistance within soil ecosystems are a significant public health concern. Tetracycline (tet) and sulfonamide (sul) are antibiotics found in the environment that drive the rapid evolution of antibiotic-resistance genes. Tetracycline and sulfonamide resistance genes are the most frequently identified ARGs in livestock and agricultural waste found in soil contaminated by antibiotic residues. This has caused an increase in the abundance of ARGs over several decades [8].

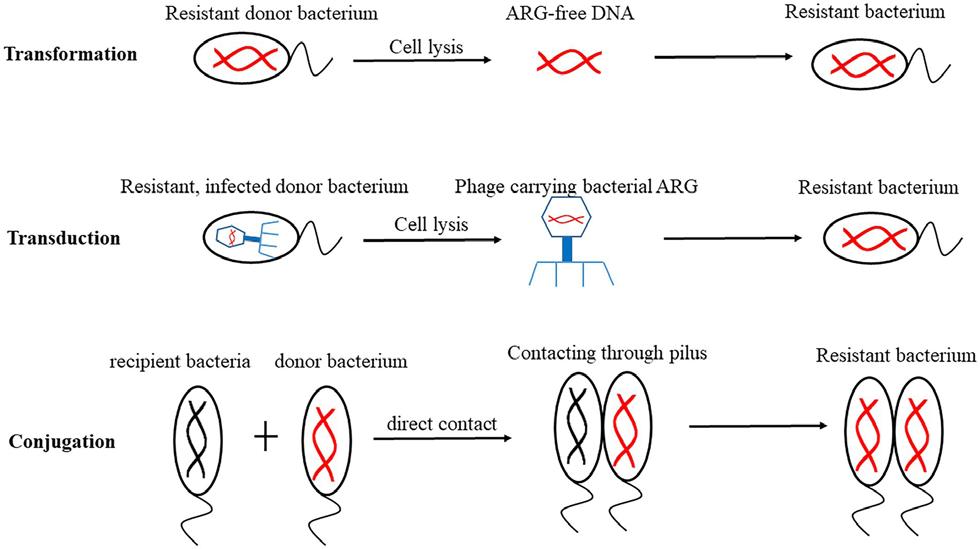

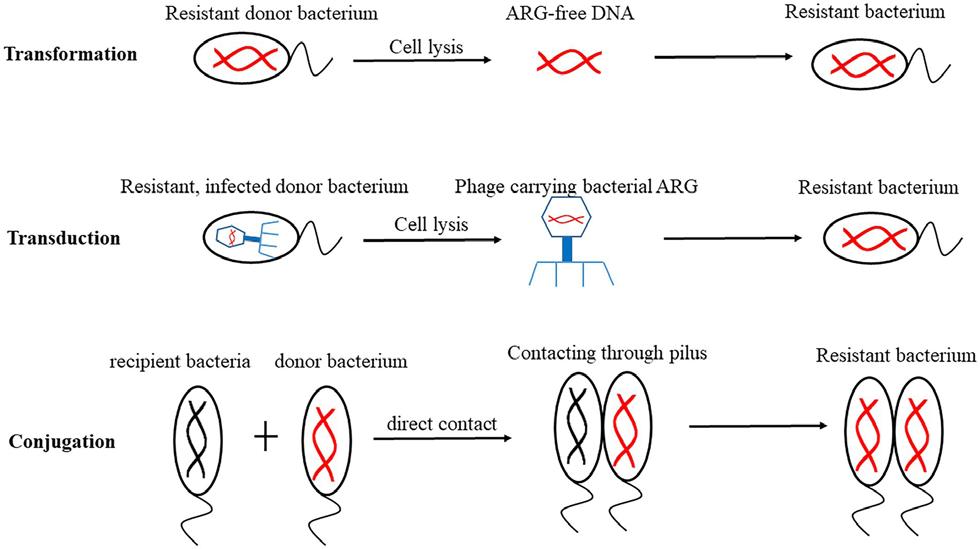

Figure 2. Mechanisms of horizontal gene transfer (HGT) [7].

Figure 2 [7] demonstrates how horizontal and vertical gene transfer mechanisms play a vital role in transferring ARGs and ARB. Existing antibiotics continually exert selective pressures that cause lasting and often permanent changes in the bacterial community. This raises the likelihood that spontaneous bacterial DNA mutations create drug resistance, and it promotes horizontal gene transfer (HGT) among bacterial communities. Mobile genetic elements (MGEs) serve microbes by trafficking adaptive traits between species and strains, thereby inducing the uncontrolled spread of ARGs [11].

The study, “Antibiotic Resistance Genes (ARGs) in Agricultural Soils from the Yangtze River Delta, China”, [12] provided valuable insights into the prevalence and diversity of antibiotic resistance genes (ARGs) in agricultural soils. This study highlighted the environmental stressors’ impact on the presence of ARGs, particularly emphasizing the potential role of manure and manure-amended soils in contributing to ARGs in agricultural environments. Most antibiotics used in human medicine have been isolated and originated from soil microorganisms. Therefore, the soil is a potential reservoir of antibiotic-resistance genes and bacteria. The presence of antibiotics in the ground has promoted the development of antibiotic resistance mechanisms in antibiotic-producing and non-producing bacteria [13]. Additionally, the significant impact of farm soil on agricultural practices, particularly the overuse of antibiotics in livestock and crop production, has heightened the proliferation of ARGs and ARB.

A study found that most antibiotic residue in livestock is not fully metabolized and hence is released, together with its transformation products, into the environment in feces and urine. In fact, a considerable percentage (30–90%) of the antibiotic administered to a given animal for veterinary purposes can be directly excreted in the urine and feces [14]. Animal manure is commonly applied to soil as organic fertilizer to improve crop yield and soil biological properties. Unfortunately, the agronomic application of manure can also lead to the emergence and dissemination of ARB and ARGs in the amended agricultural soil and, subsequently, in the food crops grown for human consumption [15]. Contaminated agricultural soils can therefore carry ARGs and ARB to the humans and livestock that feed on the crops. For example, mcr-1 polymyxin resistance genes were initially found in animals and meat and then detected in food samples and human intestinal flora [16], indicating that ARGs were transmitted from animals to humans. It is also reported that the outbreak of quinolone-resistant Campylobacter infections in the United States was caused by human consumption of chicken.

Many innovative ideas have been researched to find the best way to remove antibiotics, ARGs, and ARB from the environment. The ultimate goal is to find an environmentally sustainable, cost-saving method that avoids secondary pollution from the removal treatment itself. Our experiment focuses on one of the most efficient removal methods, biochar, a charcoal-like substance that is made by burning organic material from agricultural and forestry wastes using a specific process to reduce contamination and safely store carbon. As the materials burn, they release little to no contaminating fumes. Raw biochar is typically produced by heating organic materials such as plant residues or wood in a low-oxygen environment, a process known as pyrolysis. The energy or heat created during pyrolysis can be captured and used as a form of clean energy. Biochar is far more efficient at converting carbon into a stable form and is more sanitary than other forms of charcoal [8].

Biochar has been proven to be a highly efficient, sustainable adsorbent because of its abundant surface functional groups and relatively large surface area. Therefore, it can potentially eliminate or reduce the abundance of antibiotics, ARGs, and pathogenic microorganisms such as ARB in the environment [18]. Using biochar in contaminated soils reduces the bioaccessibility and bioavailability of the contaminants, hence reducing their biological and environmental toxicity. This also reduces contaminant-induced co-selection pressure. Biochar can also repress HGT and reduce ARG bacterial hosts, lessening bioavailability, which is favorable for diminishing antibiotic residues in compost and soil [8]. The most common type of biochar is pristine biochar, the original pure form that has not undergone any chemical or physical modification. Its adsorption mechanism is efficient enough to handle the pollutants currently found in contaminated soils. Modified biochar is designed to improve on this adsorption performance through various physical and chemical modification methods. In modified biochar, the specific surface area was increased by 210.6% and the adsorption capacity by 87.1% compared to pristine biochar. Li et al. stated that the adsorption efficiency of biochar etched with an inorganic acid solution was 46% higher than that of pristine biochar.

The objective of our study is to investigate whether chemically modified biochar can significantly reduce the abundance of ARB found in environmentally contaminated soil. We hypothesize that applying biochar chemically modified with either an acid or an alkali will significantly reduce the colony count of antibiotic-resistant bacteria and increase the zone of inhibition, indicating reduced bacterial growth.

2. Methods

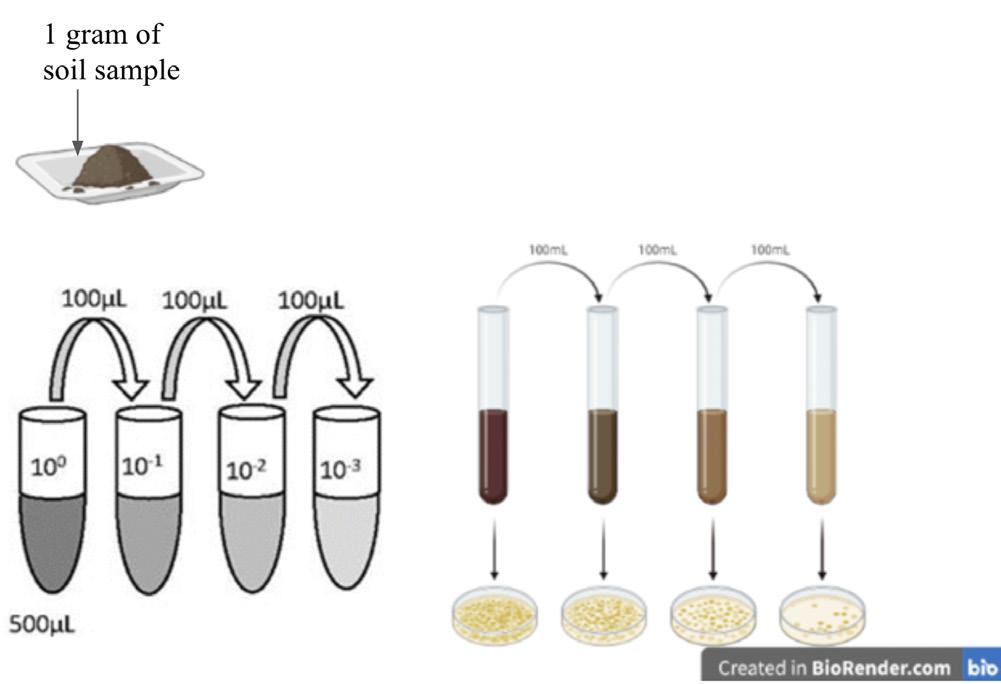

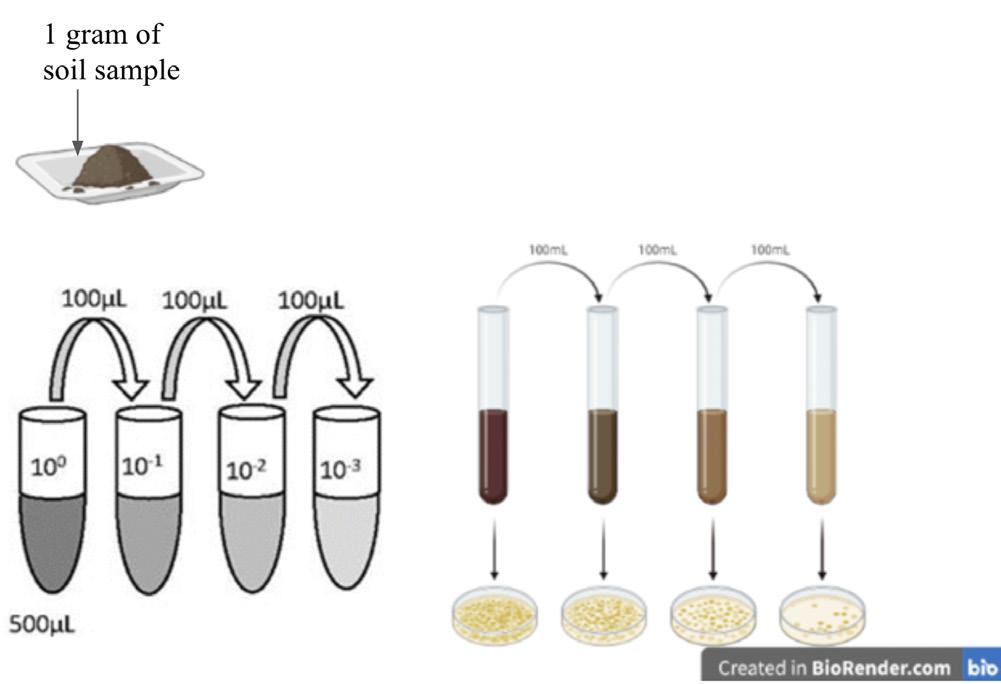

As seen in Figure 3, we collected 15 quarts of surface soil from 5 areas across North Carolina that represent different environments and are exposed to different environmental stressors.

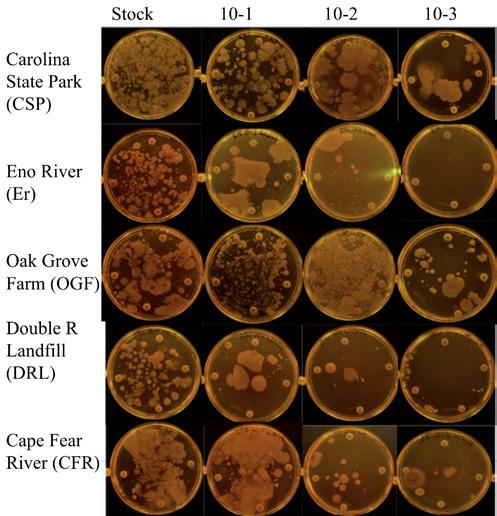

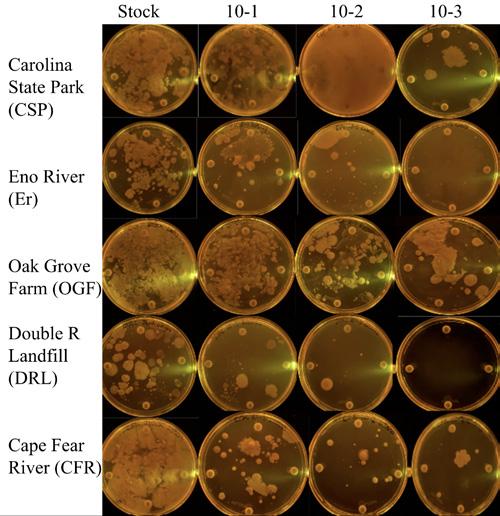

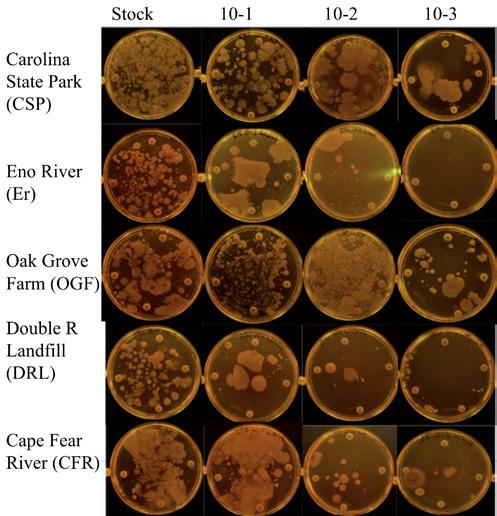

In our preliminary experiments, as shown in Figure 4, we cultured bacteria from each soil sample through a series of serial dilutions in glass test tubes (stock, 10⁻¹, 10⁻², and 10⁻³), using 1 gram of soil as the starting material, and then plated them onto LB broth agar plates. The 40 LB broth agar plates were divided into two sets, with four different antibiotic disks randomly assigned to each set. The antibiotic disks used were Penicillin, Streptomycin, Neomycin, Tetracycline, Erythromycin, Chloramphenicol, Kanamycin, and Novobiocin [20], each containing either 10 mcg or 30 mcg of antibiotic. Plates were incubated at 37 °C for 48 hours.
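The dilution series described above can be sketched in a few lines of Python. This is only an illustration of how ten-fold serial dilutions relate to the original stock; the plated volume and the colony count in the example are hypothetical values and are not taken from this study.

```python
from fractions import Fraction

def dilution_factors(steps, fold=10):
    """Cumulative dilution of the stock (step 0) and each serial dilution after it."""
    return [Fraction(1, fold ** i) for i in range(steps + 1)]

def cfu_per_ml(colonies, dilution, plated_volume_ml):
    """Back-calculate colony-forming units per mL of the undiluted stock."""
    return colonies / (dilution * plated_volume_ml)

# Stock, 10^-1, 10^-2, and 10^-3, as in the preliminary experiment.
factors = dilution_factors(3)
for label, factor in zip(["stock", "10^-1", "10^-2", "10^-3"], factors):
    print(label, float(factor))

# Hypothetical example: 42 colonies counted on the 10^-2 plate, 0.1 mL plated.
estimate = cfu_per_ml(42, factors[2], Fraction(1, 10))
print(int(estimate), "CFU per mL of stock (hypothetical numbers)")
```

Exact fractions are used so that the dilution factors do not pick up floating-point rounding error.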

Figure 3. Soil sample collection across North Carolina. (Top) Photos by author, satellite images from Google Earth. (Bottom) Modified from NC General Assembly map.

12 | 2023-2024 | Broad Street Scientific BIOLOGY

Figure 4. General methods, culturing of antibiotic-resistant soil bacteria (created by the author using BioRender).

Figure 5. Modified acidic and base biochar treatment methods (diagram created by author).

We used pristine biochar derived from softwood and tree trimmings, which we chemically modified with an acid (H₃PO₄) and an alkali (KOH) to enhance its physicochemical adsorption properties [21]. Roughly 50 grams of biochar were soaked in a 30% solution of either H₃PO₄ or KOH for 24 hours (Figure 5) to provide enough material for the biochar treatment application (Figure 6). After soaking, the biochar was carefully separated from each solution by draining it through funnel caps lined with filter paper. To ensure the removal of any residual acid, the biochar was rinsed with distilled water three times. Afterwards, the modified biochar was placed in a drying oven set at 105 °C for an additional 24 hours.

Figure 6. Biochar treatment application (image from author).

A total of 200 conical tubes were prepared and divided into four treatment groups: 1) N/A biochar (no biochar) as the control group; 2) pristine biochar that has not gone through any chemical modification; 3) acid-modified biochar (H₃PO₄); and 4) alkaline-modified biochar (KOH). Forty conical tubes were dedicated to each of the five soil samples, and within each soil sample, 10 conical tubes were allocated to each biochar treatment as replicates.
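The tube allocation described above is a full factorial layout, and the counts can be checked with a short sketch. Only Oak Grove Farm is named in the text; the other four site labels below are hypothetical placeholders.

```python
from collections import Counter
from itertools import product

# Only "Oak Grove Farm" is named in the text; the other labels are placeholders.
soils = ["Oak Grove Farm", "Site B", "Site C", "Site D", "Site E"]
treatments = [
    "no biochar",
    "pristine biochar",
    "acid-modified biochar (H3PO4)",
    "alkaline-modified biochar (KOH)",
]
REPLICATES = 10

# One record per conical tube: every soil x treatment x replicate combination.
tubes = [
    {"soil": soil, "treatment": treatment, "replicate": rep}
    for soil, treatment, rep in product(soils, treatments, range(1, REPLICATES + 1))
]

per_soil = Counter(tube["soil"] for tube in tubes)
per_cell = Counter((tube["soil"], tube["treatment"]) for tube in tubes)

print(len(tubes), "tubes in total")
print(per_soil["Oak Grove Farm"], "tubes per soil sample")
print(per_cell[("Oak Grove Farm", "pristine biochar")], "tubes per soil and treatment")
```

Generating the layout this way makes it easy to confirm that 5 soils, 4 treatments, and 10 replicates account for all 200 tubes.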

Each conical tube was filled with soil up to a volume of 35 mL. Only a small portion, specifically 2% of the recommended amount of biochar, was added to each tube. This amount was determined by the weight of the soil in each tube, with approximately 0.185 grams of biochar added per tube. This controlled setup kept the biochar’s influence on the soil samples consistent across all groups. The aim was to create an environment that promoted the integration and stability of the biochar treatment within the soil samples in the conical tubes. This extended exposure to biochar may have influenced the genetic interactions and mobility of mobile genetic elements (MGEs), reducing the likelihood of horizontal gene transfer (HGT). This approach aimed to provide a more comprehensive evaluation of the long-term impact of biochar in inhibiting HGT within the bacterial community, yielding valuable insights into the treatment’s efficacy before the soil samples were retested.

In our preliminary experiments, which did not include replicates, we obtained data from all soil samples, antibiotic disks, and serial dilutions. It became evident that the presence of fungi significantly impaired the accuracy of colony counting and the determination of zones of inhibition. Visual examination of the plate images (Figure 7) showed that Oak Grove Farm soil exhibited consistent resistance across all experimental groups, irrespective of the type of antibiotic disk or serial dilution. As a result, we prioritized Oak Grove Farm soil and a subset of the antibiotics for further testing.


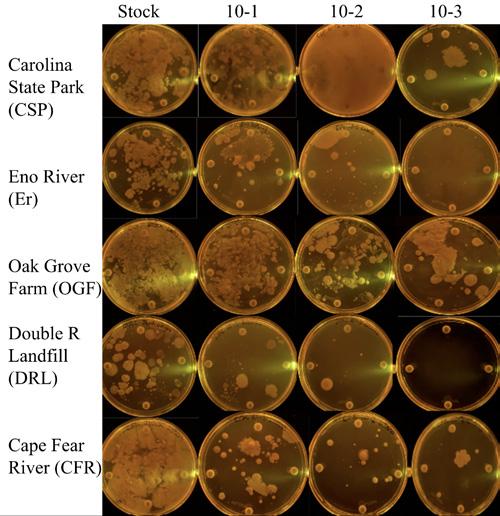

LB broth agar plates were each inoculated with soil cultures derived from the conical tubes that were initially set up. In response to the preliminary data, we limited our serial dilutions to the stock and the 10⁻¹ dilution, because the 10⁻² and 10⁻³ dilutions had yielded limited results.

To prevent the potential growth of fungal contaminants during plate preparation, we introduced 0.125 grams of antifungal powder into a 500 mL flask that already contained 5 grams of LB broth agar powder and 250 mL of distilled water. This mixture was then autoclaved before pouring the agar plates.

Furthermore, the number of antibiotic disks tested was reduced from eight to four: Penicillin, Tetracycline, Kanamycin, and Novobiocin. This selection was based on the preliminary data, which indicated that resistance to these antibiotics was lowest, making them the most appropriate candidates for testing on the Oak Grove soil samples. These antibiotics are also widely used to combat bacterial infections.

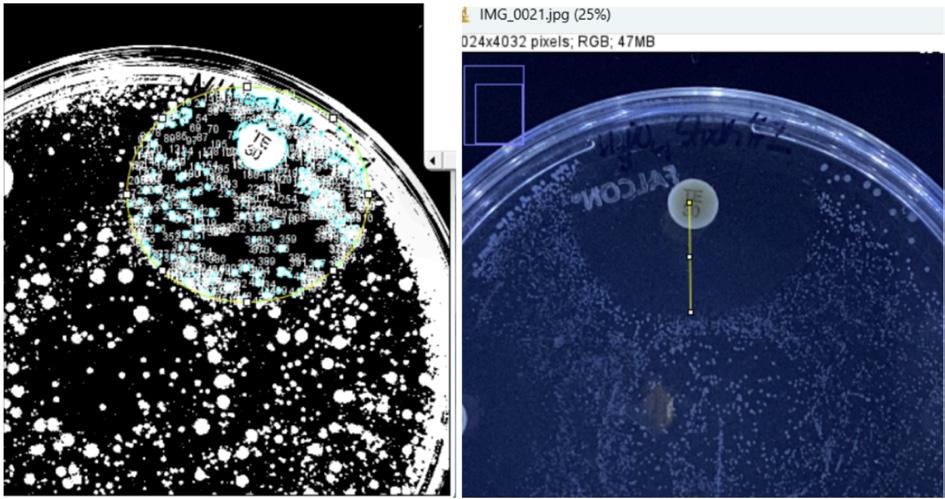

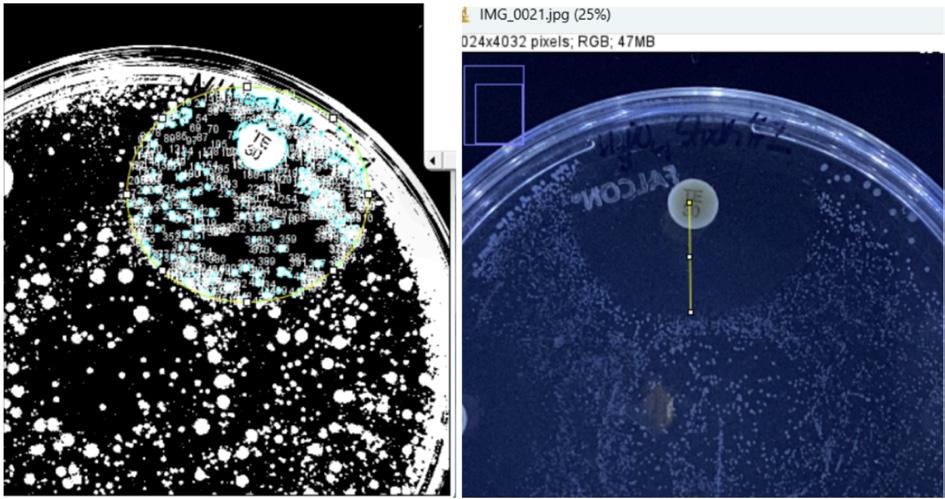

We used ImageJ software to analyze the data, as shown in Figure 8. To determine the zone of inhibition (ZOI) of each antibiotic disk, we measured the radius from the center of the disk to the edge of the ZOI in millimeters (mm). Before taking measurements, we set an appropriate scale from the plate measurement to ensure precise data. ZOI analysis could only be conducted for Tetracycline (TE-30) and Kanamycin (K-30), which produced measurable zones, unlike Penicillin (P-10) and Novobiocin (NB-10), which produced no measurable zone across the majority of the data.
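The scale-setting and radius measurement can be illustrated with a minimal sketch of the underlying arithmetic. This is not ImageJ code; the 100 mm reference length and all pixel coordinates are hypothetical values chosen for the example.

```python
import math

def mm_per_pixel(known_length_mm, known_length_px):
    """Image scale derived from a reference object of known size (e.g. the plate)."""
    return known_length_mm / known_length_px

def zoi_radius_mm(disk_center_px, zoi_edge_px, scale_mm_per_px):
    """Distance from the disk center to the edge of the zone of inhibition, in mm."""
    dx = zoi_edge_px[0] - disk_center_px[0]
    dy = zoi_edge_px[1] - disk_center_px[1]
    return math.hypot(dx, dy) * scale_mm_per_px

# Hypothetical calibration: a 100 mm reference spans 2000 pixels in the photo.
scale = mm_per_pixel(100.0, 2000.0)

# Hypothetical pixel coordinates for one disk center and one point on its ZOI edge.
radius = zoi_radius_mm((500, 500), (620, 660), scale)
print(f"scale = {scale:.3f} mm/px, ZOI radius = {radius:.1f} mm")
```

Calibrating every photograph against a known length keeps radii comparable across plates photographed at slightly different distances.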

To quantify resistant bacterial colonies, we used the threshold function within ImageJ to make the colonies more visible, then counted them systematically after circling the boundary of the ZOI.

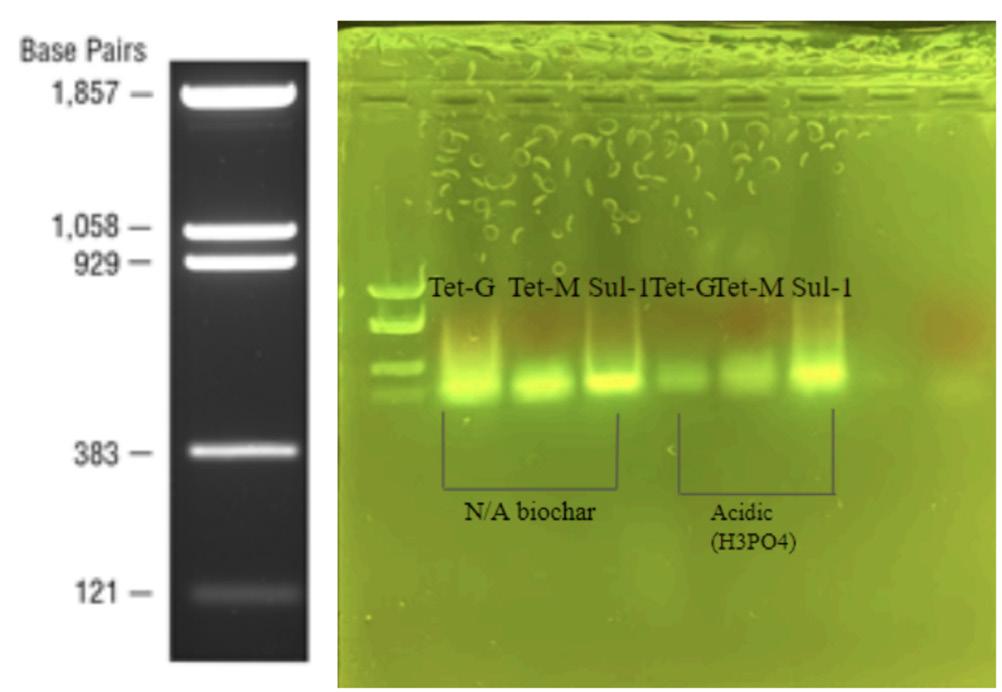

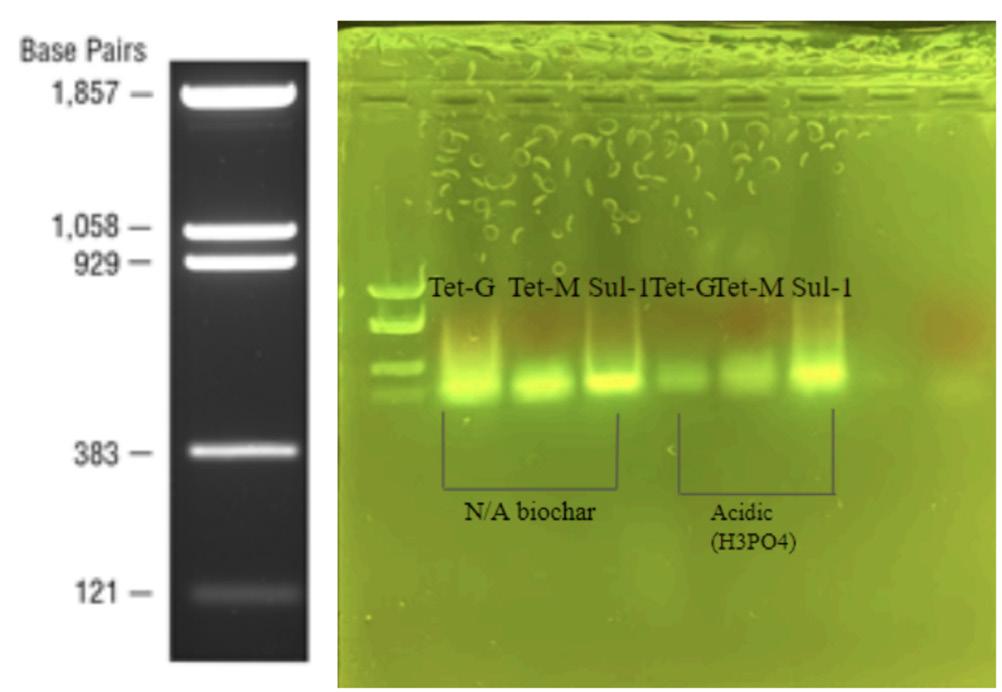

DNA extraction and resistance genes

We conducted a DNA and PCR analysis focusing on three distinct types of antibiotic resistance genes (ARGs), using the DNeasy PowerSoil Pro kit [20] to isolate DNA from the Oak Grove farm soil. Tetracycline G and M genes were chosen due to their relevance to the ongoing testing of tetracycline in our resistant bacteria analysis. We additionally tested the presence of Sulfonamide-1 due to its abundance within the agricultural waste and soil pollution caused by antibiotic residues, which can lead to an increased prevalence of ARGs.

We used primers that target some of the most abundant ARGs: Tetracycline-G (Tet-G), Tetracycline-M (Tet-M), and Sulfonamide-1 (Sul-1). These primers allowed us to pinpoint and analyze specific ARGs, contributing to a deeper understanding of antibiotic resistance in agricultural soils influenced by environmental factors [22][23].

Duplicate PCR reactions were carried out for each gene, and ultrapure water was used as a control to ensure the accuracy of the PCR results. The PCR reaction consisted of 7.5 μL of 10× PCR buffer, 8 μL of dNTPs (10 mM), 1.2 μL of Taq DNA polymerase, and 67.8 μL of double-distilled H₂O [11]. We ran the same PCR program for all three resistance gene primers: initial denaturation for 5 min at 95 °C; 40 cycles of 15 seconds at 95 °C, 30 seconds at the 56 °C annealing temperature, and 30 seconds at 72 °C; and a final 5 min at 72 °C. The products were then held at 4 °C for 15 hours [24]. Afterwards, we ran gel electrophoresis, with a mixture consisting of SYBR Premix Ex Taq™ (TaKaRa), 4.6 μL of double-distilled water, 0.3 mL of the 50× ROX reference dye, 0.3 μL of the forward primer (10 mM), 0.3 μL of the reverse primer (10 mM), and 2 μL of the template DNA, to identify whether our resistance genes were present in our soil.
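As a quick check on the thermocycling program described above, the programmed hold time can be added up in a short sketch. The step names and the data structure are illustrative choices; ramp times between temperatures and the final 4 °C hold are not included.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Step:
    name: str
    temp_c: int
    seconds: int

# Temperatures and durations as stated in the text.
INITIAL = Step("initial denaturation", 95, 5 * 60)
CYCLE = [
    Step("denaturation", 95, 15),
    Step("annealing", 56, 30),
    Step("extension", 72, 30),
]
FINAL = Step("final extension", 72, 5 * 60)
N_CYCLES = 40

def programmed_seconds():
    """Total programmed hold time, excluding ramping and the 4 °C hold."""
    per_cycle = sum(step.seconds for step in CYCLE)
    return INITIAL.seconds + N_CYCLES * per_cycle + FINAL.seconds

total = programmed_seconds()
print(f"{total // 60} min of programmed hold time")
```

Actual run time on a thermocycler will be longer, because the instrument needs time to ramp between temperatures.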

These ARG analyses were carried out on both no-biochar (N/A biochar) and acid-modified biochar samples. We included the acidic biochar because earlier results indicated that it was the most efficient at reducing the abundance of ARB. These selected samples allowed for a focused examination of the impact of biochar modifications on ARGs associated with soil, particularly in the context of agricultural waste and its contribution to soil pollution.

Figure 7. Antibiotic resistance profiling: preliminary data (diagram created by author).

Figure 8. Quantitative analysis of bacterial colonies and inhibition zone (created by the author using ImageJ software).

Figure 9. Antibiotic resistance profiling, inoculating the testing samples with bacterial cultures derived from soil colonies collected at Oak Grove Farm (diagram created by author).

3. Results

Antibiotic Resistance Profiling

For the preliminary data presented in Figure 7, we tested two sets of plates for all soil samples. The eight antibiotic disks were divided between the two sets, with the same four disks used within each set across all dilutions, from stock to 10⁻³. As shown in Figure 9, after introducing the antifungal treatment, we were able to accurately count colonies and measure zones of inhibition using only four antibiotics, consistently positioned in the same locations.

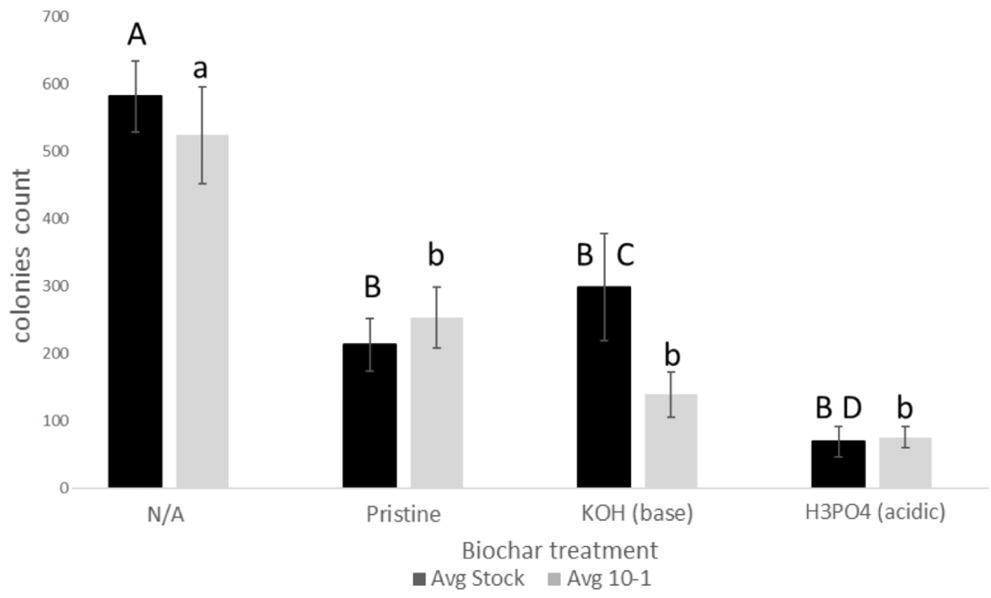

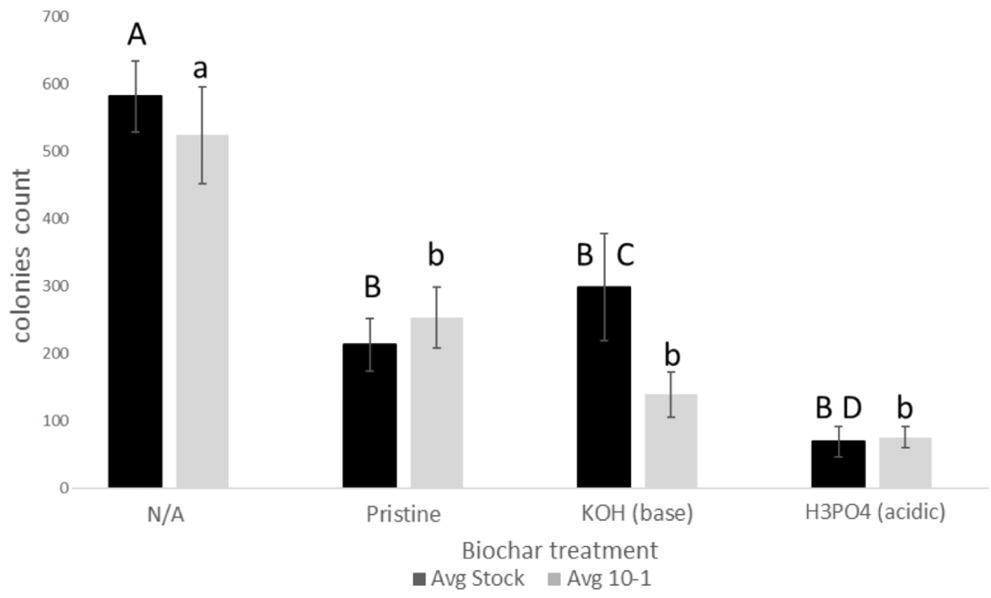

Antibiotic resistance profiling showed that modified biochar significantly (p < 0.01) reduced colony counts for the Tetracycline (TE-30), Kanamycin (K-30), and Novobiocin (NB-10) disks in both the stock and 10⁻¹ groups (Fig 10, Fig 11, Fig 13). For the TE-30 stock, the alkaline biochar treatment performed significantly better than the acidic treatment (p < 0.05). For the Penicillin (P-10) disk, modified biochar also significantly reduced colony counts compared to N/A biochar in both the stock and 10⁻¹ groups (Fig 12). The P-10 stock showed consistent significance (p < 0.01), whereas the P-10 10⁻¹ group remained significant only at p < 0.05 when compared to the N/A biochar treatment as opposed to the acidic biochar treatment. Pristine and alkaline biochar treatments were also significantly better than the acidic biochar treatment, with p-values of p < 0.01 and p < 0.05 for all four drugs.
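The figure captions report ANOVA results with DF = 3, which is consistent with a one-way ANOVA across the four treatment groups. The sketch below shows how such an F statistic is computed from scratch. The colony counts are invented for illustration and are not data from this study, and the software actually used for the analysis is not stated in the text.

```python
from statistics import mean

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical colony counts for the four treatment groups (not study data).
counts = {
    "no biochar": [30, 34, 32, 36],
    "pristine": [18, 20, 22, 19],
    "acid-modified": [12, 15, 14, 13],
    "alkaline-modified": [10, 12, 11, 13],
}
f_stat, df_b, df_w = one_way_anova(list(counts.values()))
print(f"F = {f_stat:.2f}, DF = {df_b} (between), {df_w} (within)")
```

A significant F statistic only says that at least one group differs; pairwise comparisons such as those marked by letters in the figures require a separate post hoc test.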

Fig 10. Tetracycline (TE-30). The addition of biochar reduces the number of colonies (ANOVA, F = 16.69 and 18.1, DF = 3, P < 0.001, for stock and 10⁻¹ respectively). Capital letters indicate differences between stock solutions. Lowercase letters indicate differences between 10⁻¹ dilutions. Error bars represent standard error. A vs. B, p < .01; C vs. D, p < .05.

Fig 11. Kanamycin (K-30). The addition of biochar reduces the number of colonies (ANOVA, F = 29.57 and 38.3, DF = 3, P < 0.001, for stock and 10⁻¹ respectively). Capital letters indicate differences between stock solutions. Lowercase letters indicate differences between 10⁻¹ dilutions. Error bars represent standard error. A vs. B, p < .01.

Fig 12. Penicillin (P-10). The addition of biochar reduces the number of colonies (ANOVA, F = 16.91 and 21.3, DF = 3, P < 0.001, for stock and 10⁻¹ respectively). Capital letters indicate differences between stock solutions. Error bars represent standard error. A vs. B, p < .01.

Fig 13. Novobiocin (NB-10). The addition of biochar reduces the number of colonies (ANOVA, F = 12.65 and 38.3, DF = 3, P < 0.001, for stock and 10⁻¹ respectively). Capital letters indicate differences between stock solutions. Lowercase letters indicate differences between 10⁻¹ dilutions. Error bars represent standard error. A vs. B, p < .01.

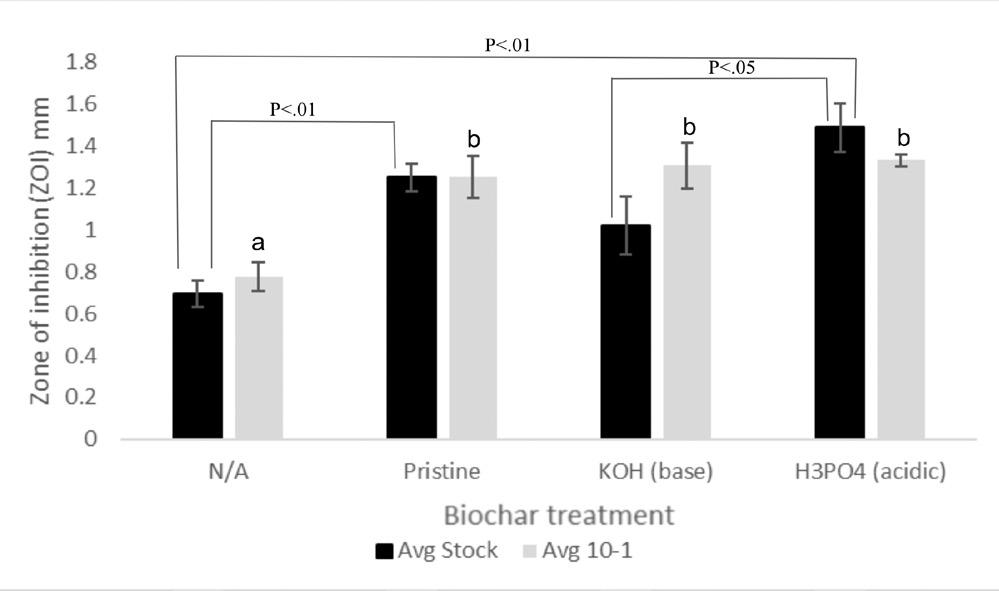

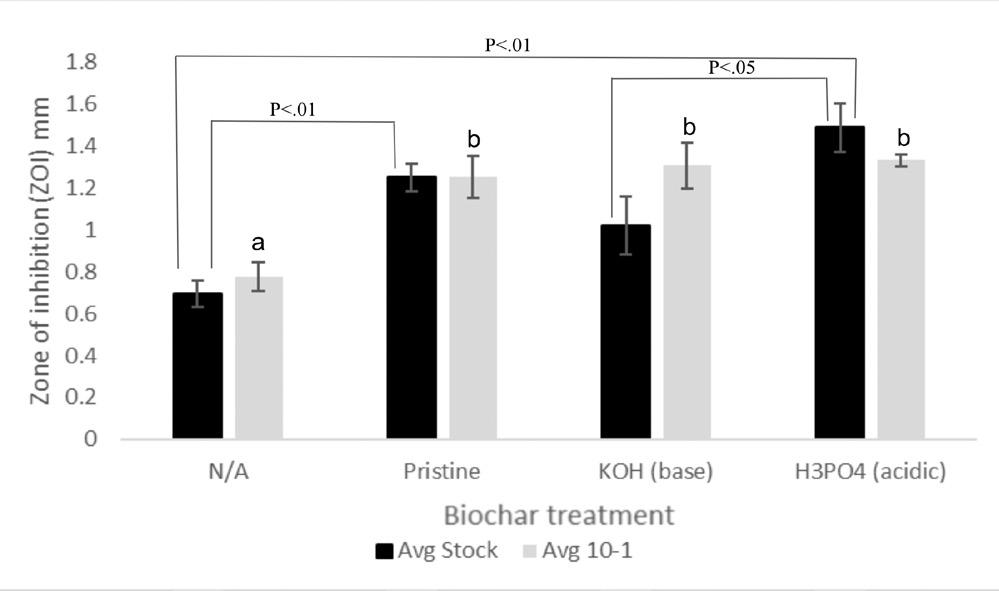

Additionally, the antibiotic resistance profiling indicates a significant increase in the zone of inhibition with the addition of modified biochar compared to N/A biochar, specifically in the Tetracycline (TE-30) and Kanamycin (K-30) 10⁻¹ groups, consistently at p < 0.01 (Fig 14 and Fig 15).

However, for both the TE-30 and K-30 stock groups, the N/A biochar treatment did not differ significantly from the alkaline biochar treatment, although it did differ significantly from the acidic biochar treatment.

Fig 14. Tetracycline (TE-30). The addition of biochar increases the zone of inhibition (ANOVA, F = 9.84 and 15.06, DF = 3, P < 0.001, for stock and 10⁻¹ respectively). Lowercase letters indicate differences between 10⁻¹ dilutions. Error bars represent standard error. A vs. B, p < .01.

Fig 15. Kanamycin (K-30). The addition of biochar increases the zone of inhibition (ANOVA, F = 11.48 and 9.79, DF = 3, P < 0.001, for stock and 10⁻¹ respectively). Lowercase letters indicate differences between 10⁻¹ dilutions. Error bars represent standard error. A vs. B, p < .01.

After running our gel electrophoresis, the data analysis revealed the presence of all three antibiotic-resistance genes in the N/A biochar and acidic biochar samples (Fig 16). To determine the size of the bands, a pBR322 DNA-BstNI Digest was utilized as a ladder for base pair differentiation along with our primers.


Figure 16. DNA analysis of antibiotic resistance genes (amplicon sizes: Tet-G, 133 bp; Tet-M, 171 bp; Sul-1, 158 bp).

4. Conclusion and Future Directions

This experiment demonstrated that modified biochar significantly reduces the abundance of antibiotic-resistant bacteria (ARB) in contaminated soil from agricultural environments. Our preliminary data indicated that Oak Grove Farm soil consistently exhibited elevated resistance levels and smaller zones of inhibition across all tested antibiotics. This led us to focus on Oak Grove Farm when testing the various biochar treatment groups.

Resistance to Penicillin (P-10) and Novobiocin (NB-10) persisted under every biochar treatment, with no increase in the zone of inhibition (ZOI), despite the fact that Penicillin was considered the “first wonder drug” and is one of the most commonly used antibiotics globally for numerous bacterial infections. Novobiocin is commonly used as an alternative to penicillins against penicillin-resistant infections; however, the decreasing effectiveness of both antibiotics over time exemplifies the impact of evolution on bacterial resistance. Overall, after a comprehensive analysis of the data and graphs, modified biochar significantly reduced the abundance of ARB, as shown by the colony counts and ZOI measurements, which are indicative of bacterial growth and resistant bacterial colonies.

Among the biochar treatments applied, the acidic biochar treatment consistently outperformed the others, demonstrating significant results across all graphs. This observation strongly suggests that modified biochar can significantly enhance adsorption capacity and mitigate the co-selection pressure exerted by contaminants such as antibiotic residues. This can help repress horizontal gene transfer (HGT) and reduce the presence of antibiotic resistance genes (ARGs) within bacterial hosts, thus further reducing bioavailability.

These results are significant, as modified biochar appears to be a promising solution for future environmental sustainability practices. Modified biochar is also a cost-effective and environmentally friendly approach that has the potential to prevent the secondary pollution that commonly results from alternative removal techniques such as ozonation. This approach can be incorporated into routine agricultural practices and livestock management to reduce the emergence and dissemination of ARB and ARGs within agricultural soils and the environment, which are recognized reservoirs for antibiotic residues. Ultimately, this has significant implications for food crops grown for human consumption, considering the potential for ARGs to persist due to evolutionary processes.

However, this consistency held only until the DNA analysis revealed the presence of antibiotic resistance genes (ARGs) in both the N/A and acidic biochar samples. This raises the question of whether, despite the significant reduction in the abundance of antibiotic-resistant bacteria (ARB) achieved by modified biochar in contaminated soil, mobile genetic elements may linger within bacterial communities and continue to facilitate horizontal gene transfer in the soil environment. This aspect of our results calls for further discussion and research into alternative modification methods and techniques for effectively mitigating both antibiotic resistance and the persistence of antibiotic resistance genes. There are several promising avenues for future research. Due to time constraints and availability, we were not able to complete the culturing of all soil samples, which would have entailed 400 plates to accommodate both stock and 10⁻¹ dilutions. In addition, the antibiotic disks that were included in our preliminary data but not in our final tests remain unexplored.

Looking ahead, our objective is to expand the experiment to the rest of our soil samples and antibiotics. Specifically, we will assess whether the results differ significantly from our findings for Oak Grove Farm soil. Notably, we have already prepared the experimental setups for the remaining conical tubes to carry out these tests.

In addition, we want to use 16S rRNA gene sequencing to look more closely at the antibiotic resistance genes within our soil samples. Previous research has shown that the abundance of tetracycline (tet) genes in soils worldwide varies between 10⁻⁶ and 10⁻² gene copies per 16S rRNA gene copy [22] [23]. Given that the sul and tet resistance genes were often detected in manure or manure-amended soils [12], application of manure fertilizer and wastewater irrigation could be the main anthropogenic sources of ARGs for agricultural soil environments [24]. However, current limitations in equipment prevented us from conducting this sequencing. Nevertheless, this research has the potential to provide valuable insights in the future.

This study offers numerous directions for future considerations. Researching further into soil remediation, recovery, and conservation on a broader scale in an environmental context has the potential to expand understanding of antibiotic resistance and its mitigation in soil environments.

5. Acknowledgments

Thank you to my mentor Dr. Mallory for always believing in me and her constant guidance supporting me throughout my research project. Also thank you to Dr. Monahan, Dr. Sheck, Dr. Maitê, Glaxo lab, my dad, who has always been my biggest advocate, Keith Hairr, the farmer who gave me his soil, and my Research in Biology peers with whom I made lifelong friends and made this experience enjoyable. I couldn’t have done this without all their support, truly.

Funding: Glaxo, NCSSM Foundation, Burroughs Wellcome Fund

6. References

[1] Llor, C. and Bjerrum, L. (2014). Antimicrobial resistance: Risk associated with antibiotic overuse and initiatives to reduce the problem. Therapeutic Advances in Drug Safety, 5(6), 229–241. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4232501/

[2] Okeke, I. N., Laxminarayan, R., Bhutta, Z. A., Duse, A. G., Jenkins, P., O’Brien, T. F., Pablos-Mendez, A., and Klugman, K. P. (2005). Antimicrobial resistance in developing countries. Part I: recent trends and current status. The Lancet. Infectious Diseases, 5(8), 481–493. https://www.sciencedirect.com/science/article/pii/S1473309905701894

[3] Denyer Willis, L. and Chandler, C. (2019). Quick fix for care, productivity, hygiene and inequality: reframing the entrenched problem of antibiotic overuse. BMJ Global Health, 4(4), e001590. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6703303/

[4] CDC. (2019, March 8). Antibiotic / Antimicrobial Resistance. Centers for Disease Control and Prevention. https://www.cdc.gov/drugresistance/index.html

[5] Nathan, C. (2004). Antibiotics at the crossroads. Nature, 431(7011), 899–902. https://www.nature.com/articles/431899a

[6] Gould, I. M., and Bal, A. M. (2013). New antibiotic agents in the pipeline and how they can help overcome microbial resistance. Virulence, 4(2), 185–191. https://doi.org/10.4161/viru.22507

[7] Front Microbiol. (2022). 13: 976657. NIH. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9539525/figure/fig1/

[8] Zhuang, M., Achmon, Y., Cao, Y., Liang, X., Chen, L., Wang, H., Siame, B. A., and Leung, K. Y. (2021). Distribution of antibiotic resistance genes in the environment. Environmental Pollution, 285, 117402. https://www.sciencedirect.com/science/article/pii/S0269749121009842

[9] Guo, Y., Liu, M., Liu, L., Liu, X., Chen, H.-H., and Yang, J. (2018). The antibiotic resistome of free-living and particle-attached bacteria under a reservoir cyanobacterial bloom. 117, 107–115. https://www.sciencedirect.com/science/article/pii/S0160412018300886

[10] Kumar, A. and Pal, D. (2018). Antibiotic resistance and wastewater: Correlation, impact and critical human health challenges. Journal of Environmental Chemical Engineering, 6(1), 52–58. https://www.sciencedirect.com/science/article/pii/S2213343717306176

[11] Chen, H. and Zhang, M. (2013). Occurrence and removal of antibiotic resistance genes in municipal wastewater and rural domestic sewage treatment systems in eastern China. Environment International, 55, 9–14. https://www.sciencedirect.com/science/article/pii/S0160412013000391

[12] Qiao, M., Ying, G.-G., Singer, A. C., and Zhu, Y.-G. (2018). Review of antibiotic resistance in China and its environment. Environment International, 110, 160–172. https://www.sciencedirect.com/science/article/pii/S0160412017312321

[13] Aminov, R. I. (2009). The role of antibiotics and antibiotic resistance in nature. Environmental Microbiology, 11(12), 2970–2988. https://pubmed.ncbi.nlm.nih.gov/19601960/

[14] Kumar, K., C. Gupta, S., Chander, Y., and Singh, A. K. (2005, January 1). Antibiotic Use in Agriculture and Its Impact on the Terrestrial Environment. ScienceDirect; Academic Press. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3587239/


[15] Peng, S.-A., Feng, Y., Wang, Y., Guo, X., Chu, H., and Lin, X. (2017). Prevalence of antibiotic resistance genes in soils after continually applied with different manure for 30 years. Journal of Hazardous Materials, 340, 16–25. https://pubmed.ncbi.nlm.nih.gov/28711829

[16] Han, B., Ma, L., Yu, Q., Yang, J., Su, W., Hilal, M. G., Li, X., Zhang, S., and Li, H. (2016). The source, fate and prospect of antibiotic resistance genes in soil: A review. Frontiers in Microbiology, 13. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9539525/

[17] Holt, J., Yost, M., Creech, E., McAvoy, D., and Allen, N. (2021, April). Biochar Impacts on Crop Yield and Soil Water Availability. extension.usu.edu. https://extension.usu.edu/crops/research/biochar-impacts-on-crop-yield-and-soil-water-availability

[18] Sanganyado, E. and Gwenzi, W. (2019). Antibiotic resistance in drinking water systems: Occurrence, removal, and human health risks. Science of the Total Environment, 669, 785–797. https://pubmed.ncbi.nlm.nih.gov/30897437/

[19] Li, S., Zhang, C., Li, F., Hua, T., Zhou, Q., and Ho, S.-H. (2021). Technologies towards antibiotic resistance genes (ARGs) removal from aquatic environment: A critical review. Journal of Hazardous Materials, 411, 125148. https://www.sciencedirect.com/science/article/pii/S0304389421001114

[20] Carolina Biological. (2019). https://www.carolina.com/

[21] Wu, N., Qiao, M., Zhang, B., Cheng, W.-D., and Zhu, Y.-G. (2010). Abundance and Diversity of Tetracycline Resistance Genes in Soils Adjacent to Representative Swine Feedlots in China. Environmental Science and Technology, 44(18), 6933–6939. https://doi.org/10.1021/es1007802

[22] Ji, X., Shen, Q., Liu, F., Ma, J., Xu, G., Wang, Y., and Wu, M. (2012). Antibiotic resistance gene abundances associated with antibiotics and heavy metals in animal manures and agricultural soils adjacent to feedlots in Shanghai; China. Journal of Hazardous Materials, 235–236. https://www.sciencedirect.com/science/article/pii/S0304389412007716

[23] Wang, S., Gao, B., Zimmerman, A. R., Li, Y., Ma, L., Harris, W. G., and Migliaccio, K. W. (2015). Removal of arsenic by magnetic biochar prepared from pinewood and natural hematite. Bioresource Technology, 175, 391–395. https://www.sciencedirect.com/science/article/pii/S0960852414015363

[24] Luo, Y., Mao, D., Rysz, M., Zhou, Q., Zhang, H., Xu, L., and J. J. Alvarez, P. (2010b). Trends in Antibiotic Resistance Genes Occurrence in the Haihe River, China. Environmental Science and Technology, 44(19), 7220–7225. https://doi.org/10.1021/es100233w

[25] Bartholomew, M.J., Hollinger, K., and Vose, D. (2003) Characterizing the risk of antimicrobial use in food animals: fluoroquinolone-resistant campylobacter from consumption of chicken. Microbial Food Safety in Animal Agriculture: Current Topics. Ames, IA: Iowa State Press, 293–301.

[26] Godwin, P. M., Pan, Y., Xiao, H., and Afzal, M. T. (2019). Progress in Preparation and Application of Modified Biochar for Improving Heavy Metal Ion Removal From Wastewater. Journal of Bioresources and Bioproducts, 4(1), 31–42. https://doi.org/10.21967/jbb.v4i1.180

[27] Sun, J., Jin, L., He, T., Wei, Z., Liu, X., Zhu, L., and Li, X. (2020). Antibiotic resistance genes (ARGs) in agricultural soils from the Yangtze River Delta, China. Science of the Total Environment, 740, 140001. https://www.sciencedirect.com/science/article/pii/S004896972033521X

[28] Singer, A. C., Shaw, H., Rhodes, V., and Hart, A. (2016). Review of Antimicrobial Resistance in the Environment and Its Relevance to Environmental Regulators. Frontiers in Microbiology, 7. https://www.frontiersin.org/articles/10.3389/fmicb.2016.01728/full

[29] Zhou, Z., Liu, J., Zeng, H., Zhang, T., and Chen, X. (2020). How does soil pollution risk perception affect farmers’ pro-environmental behavior? The role of income level. Journal of Environmental Management, 270, 110806. https://www.sciencedirect.com/science/article/pii/S0301479720307374


IDENTIFICATION OF SINGLE-NUCLEOTIDE POLYMORPHISMS RELATED TO THE PHENOTYPIC EXPRESSION OF DROUGHT TOLERANCE IN ORYZA SATIVA

Reva Kumar

Abstract

Environmental stressors driven by climate change have contributed to the depletion of crop yield for major crops like rice. Characteristics such as drought tolerance are critical indicators of stress. We identified genes associated with drought tolerance using several quantitative techniques, including quantitative trait loci (QTL) analyses and genome-wide association studies (GWAS). The QTL analyses used a cross between Curinga and O. rufipogon. The GWAS looked at a dataset of 413 rice varieties and approximately 44,000 single nucleotide polymorphisms (SNPs) for each variety. The dataset contained 37 phenotypes. The goal was to use a kinship matrix as a correction for a GWAS analysis on a specified phenotype and to identify the most significant SNPs. We selected four major phenotypes possibly correlated with drought tolerance: plant height, seed length to width ratio, amylose content, and protein content. The analyses identified several genes local to the SNP region using a genome browser. Current work involves relating phenotype expression under environmental stimuli to orthologous genes in model plants like Arabidopsis thaliana and Zea mays. This study aims to use these genes in understanding the relationship between drought tolerance and gene presence/expression in rice. This study will continue to identify varieties with genetic expressions correlating to high drought tolerance, with further applications to finding varieties that can be cross-bred to produce drought-tolerant rice.

1. Introduction

Drought occurs during an extended imbalance between precipitation and evaporation. With the increasing severity of climate change, historically dry areas are likely to have increased drought occurrences in the coming years. From 2015-2021, extreme dry and wet events in the U.S. occurred four times per year, versus three times in the 15 preceding years. It is predicted that over 50% of the world’s arable land will be affected by drought in the year 2050 [1]. This is a major concern for agriculture. Rice is considered a key crop in global food security, since it is the primary nutrient source for more than three billion people [2]. However, rice is one of the most drought-susceptible plants because it cannot take up much water due to its small root system [3].

As the global population continues to grow, the demand for technologies that enhance crop yield has risen. Environmental stressors, including drought, have contributed to the depletion of crop yield. For rice, there was a 25.4% decline from 1980 to 2017 [4]. Improving the drought tolerance of rice is critical to maintaining global food security.

Because of the drastic effects drought has on plant growth, understanding the genetic expression of rice during drought is crucial for the mitigation of drought stress. Drought tolerance is composed of morphological adaptations, so this study focuses on the phenotypic expression of some morphological features.

Rice is cultivated globally and has a genome with 12 chromosomes encompassing 430 Mbp (mega base pairs). Past literature has identified genes in rice correlated with drought tolerance, but this approach seeks to use several quantitative techniques, including Quantitative Trait Loci (QTL) analyses and Genome Wide Association Studies (GWAS), to identify genes associated with phenotypes related to crop yield. This paper also uses these two quantitative techniques to compare genes observed for plant height, and this is a unique approach compared to past studies [6].

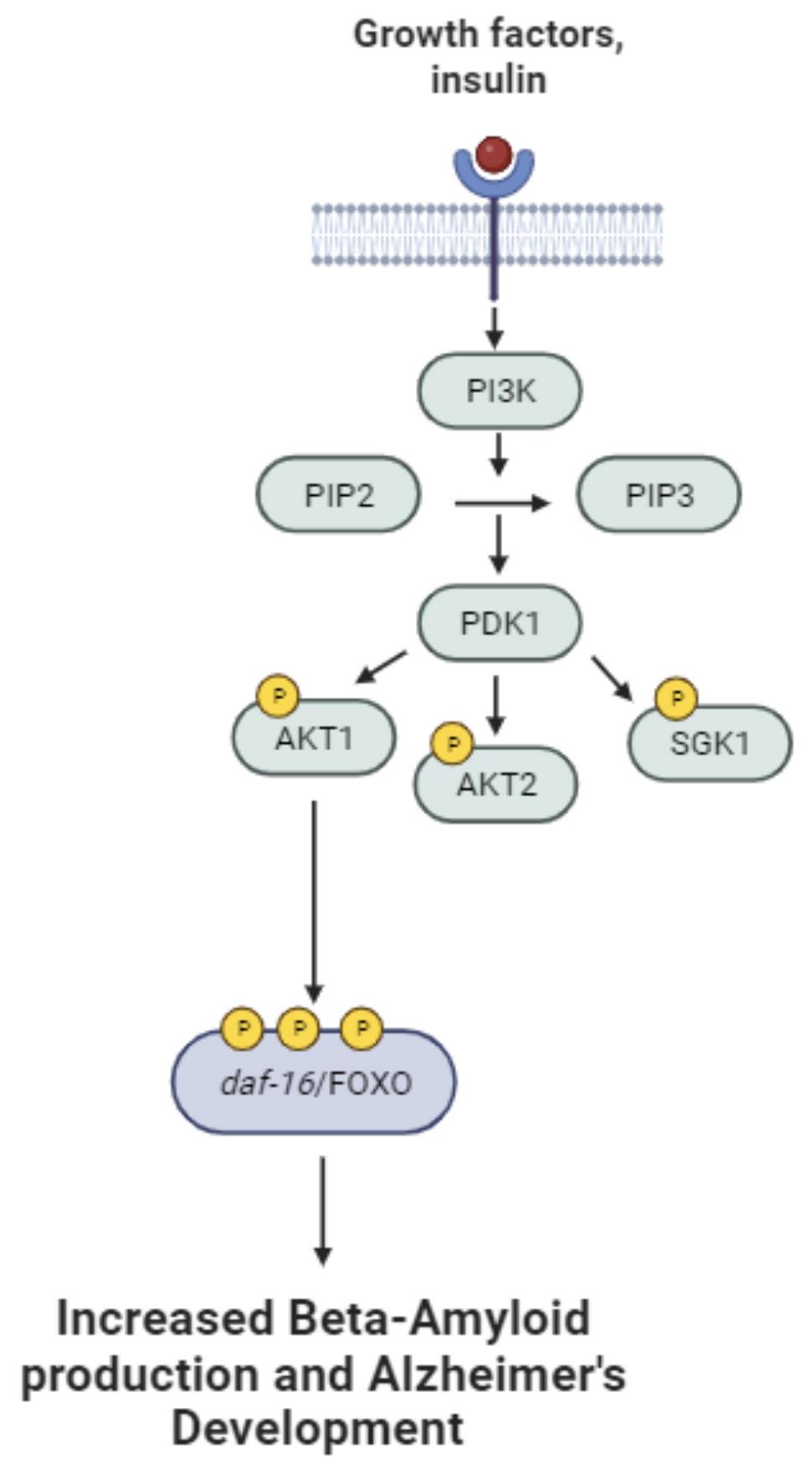

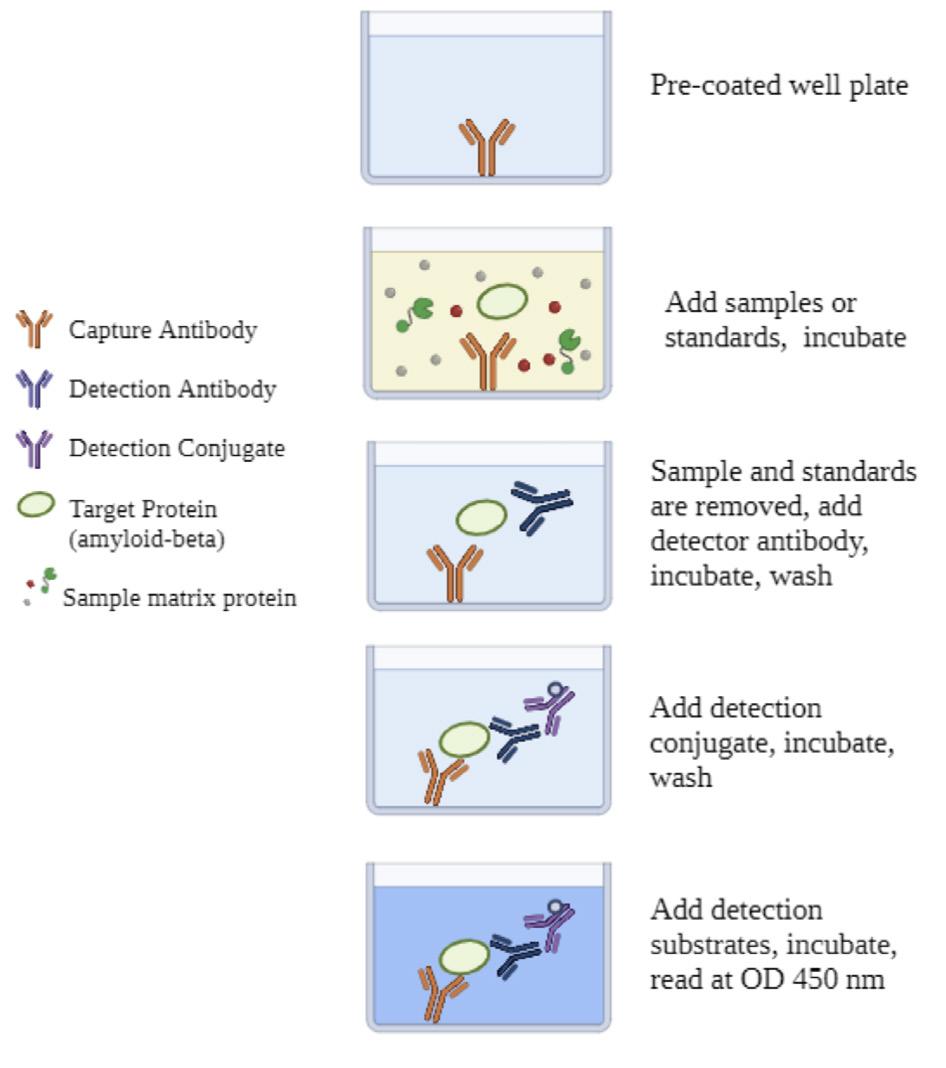

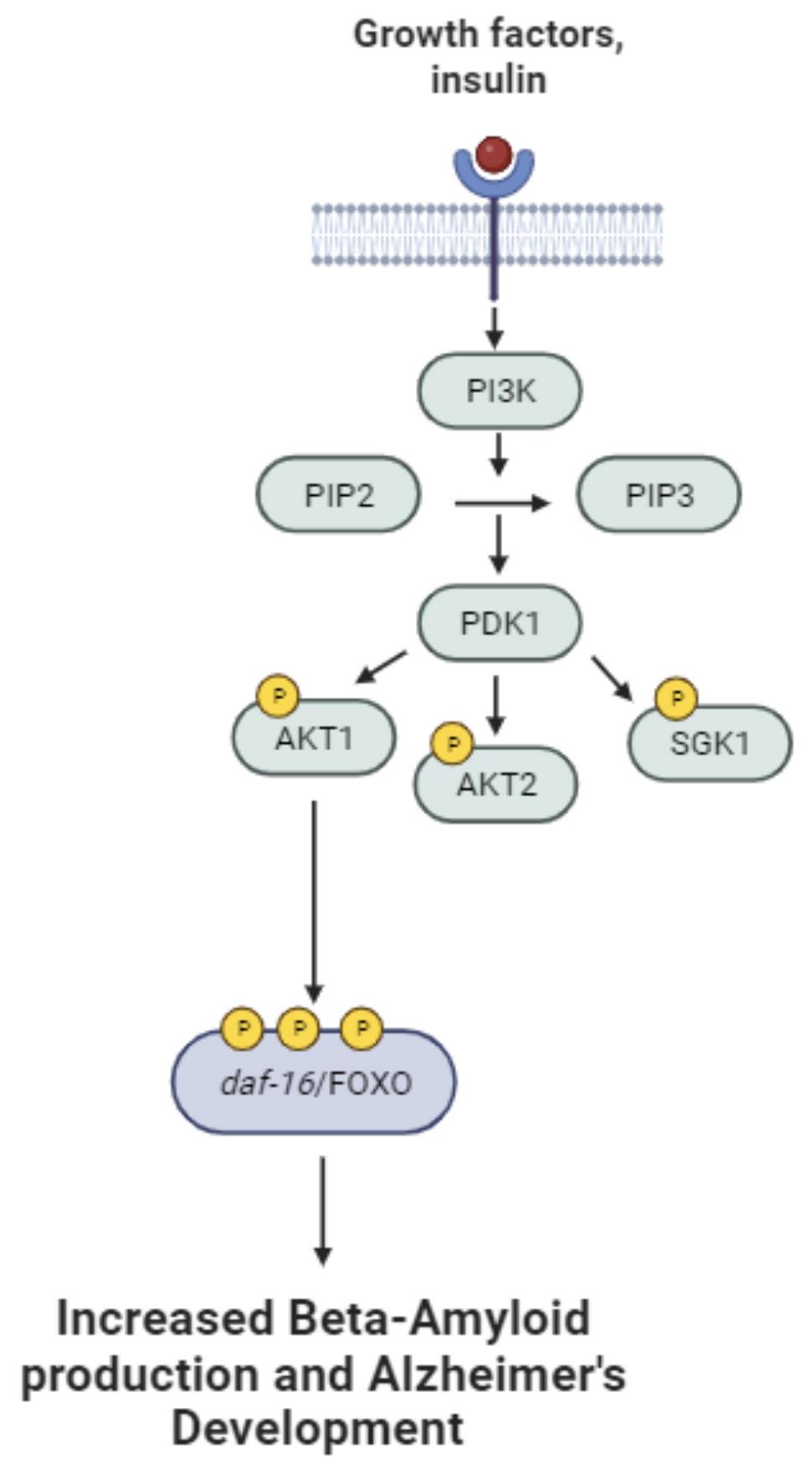

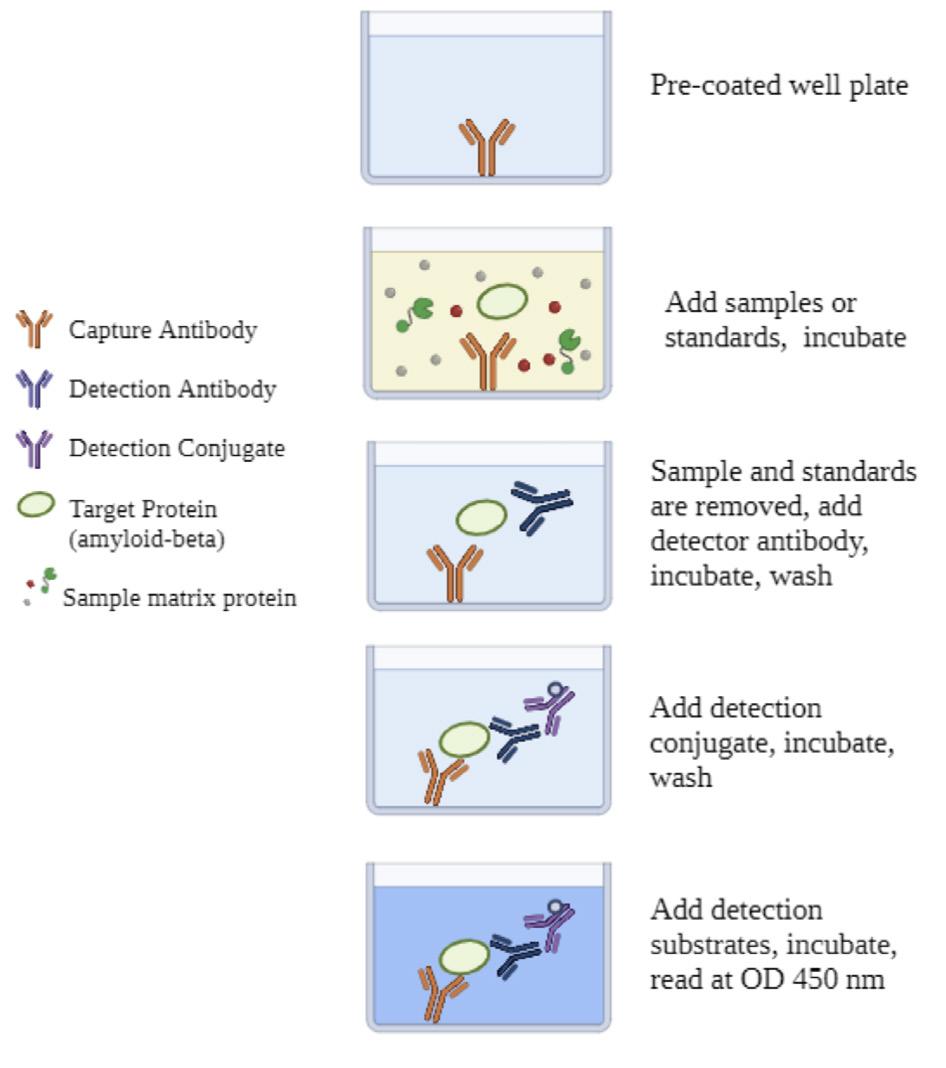

Drought stress is the result of a long-term period of low soil moisture content accompanied by a continuous loss of water through evaporation and transpiration [7]. Thus, to understand drought stress, it is imperative to understand the morphological, physiological, and biochemical responses of rice to stress. Since phenotypes are mainly observed through morphological responses [5], this paper observes how decreased plant height and a reduced number of tillers, also known as shoots, could denote poor yield. Poor yield is determined by attributes such as impaired assimilate partitioning, reduced grain filling, grain weight and size, and death of the plant. Figure 1 shows how drought stress could contribute to morphological responses and correspond to poor yield.

20 | 2023-2024 | Broad Street Scientific BIOLOGY

Though physiological and biochemical responses were not as heavily studied, all three response types could result in poor yield attributes [5]. Furthermore, morphological responses could be related to shoot development and characteristics during early development.

Figure 1: Drought stress influence on morphological, physiological, and biochemical responses of rice [5]

Beyond morphological attributes, various studies have found that traits such as amylose and protein content can also indicate poor yield. Insufficient water supply can reduce carbohydrate synthesis in crops and lower grain and protein yield [8].

Overall, this study analyzes phenotypes with known correlations to environmental stimuli to observe how morphological changes under drought may result from gene functions. Genes at statistically significant loci were examined in genome browsers to find their functions and any drought-tolerance traits. This study addresses three main research questions:

1. Are there genes in rice that are associated with phenotypes related to drought?

2. Can the functions of these genes correlate to drought tolerance?

3. How can these genes be used to identify orthologous genes in other species and draw conclusions for drought tolerance?

2. Computational Approach/Methods

This study used two main quantitative techniques for identifying loci. The first technique is a GenomeWide Association Study (GWAS). Generally, GWAS work to find genes that are associated with phenotypes across the whole genome [9]. Association mapping in this study identifies single-nucleotide polymorphisms (SNPs), or genomic variants at a single location within one nucleotide [10]. If there is a non-zero slope along

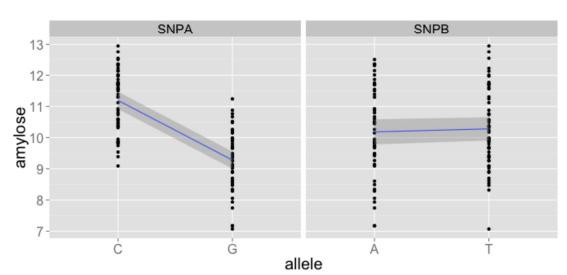

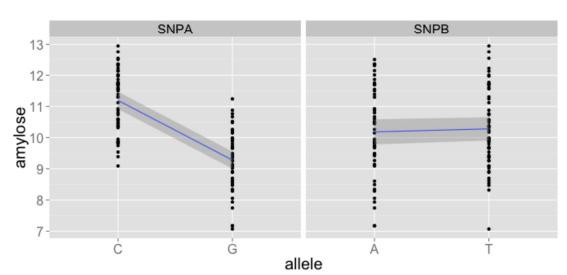

the association between observed data for the same trait, there is an association between a SNP allele and a phenotype. An example of this association is shown in Figure 2.

Figure 2: The non-zero slope for SNPA shows that it is significant because there is a correlation between the allele present and the phenotype observed. The flat line between the alleles of SNPB shows that it is an insignificant SNP. The y-axis shows relative quantities of amylose rather than quantities with units. Two SNPs for amylose are portrayed; for SNPA, the presence of C rather than G corresponds to higher amylose content.
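The slope test behind this kind of association can be sketched in a few lines of Python. This is only an illustration, not the model used in the study (which also corrects for kinship): alleles are coded 0 and 1, the amylose values are invented, and `allele_slope` is a hypothetical helper name.

```python
def allele_slope(alleles, phenotypes):
    """Least-squares slope of phenotype on allele code (0 or 1).

    For a two-allele SNP this equals the difference between the
    mean phenotype of the two allele groups.
    """
    n = len(alleles)
    mean_x = sum(alleles) / n
    mean_y = sum(phenotypes) / n
    sxx = sum((x - mean_x) ** 2 for x in alleles)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(alleles, phenotypes))
    return sxy / sxx


# Invented relative amylose values for six hypothetical rice lines.
amylose = [1.0, 1.0, 1.2, 1.2, 2.0, 2.0]

# Allele codes per line (for example 0 = G, 1 = C); also invented.
snp_a = [0, 0, 0, 0, 1, 1]  # allele 1 lines have higher amylose
snp_b = [0, 1, 0, 1, 0, 1]  # both allele groups have the same mean

slope_a = allele_slope(snp_a, amylose)  # clearly non-zero
slope_b = allele_slope(snp_b, amylose)  # essentially zero
```

A real GWAS would test whether the slope differs from zero given the sample size and population structure; the sketch only shows what a sloped and a flat SNP look like numerically.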

We used a GWAS model with a dataset of 413 rice varieties from 82 countries and 44,000 genetic variants (SNPs) genotyped in each variety. Although there are around 500,000 SNPs among rice varieties, closely linked SNPs are highly correlated because of linkage disequilibrium, so the majority of known SNPs are not necessary for the study. The goal of the GWAS was to identify loci that may indicate the expression of a particular trait. The dataset includes 37 phenotypes, but only those most closely linked to drought tolerance were studied: plant height, seed length to width ratio, amylose content, and protein content.
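The redundancy of closely linked SNPs can be illustrated with the squared correlation between two SNP columns, a common summary of linkage disequilibrium. The sketch below uses invented 0/1 allele codes and a hypothetical helper `r_squared`; it assumes both SNPs vary across the lines (no constant columns) and is not the marker selection procedure used for the dataset.

```python
def r_squared(snp_x, snp_y):
    """Squared Pearson correlation between two SNPs coded as 0/1 per line."""
    n = len(snp_x)
    mean_x = sum(snp_x) / n
    mean_y = sum(snp_y) / n
    sxx = sum((x - mean_x) ** 2 for x in snp_x)
    syy = sum((y - mean_y) ** 2 for y in snp_y)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(snp_x, snp_y))
    return (sxy * sxy) / (sxx * syy)


# Invented allele codes for six hypothetical rice lines.
snp_1 = [0, 0, 1, 1, 0, 1]
snp_2 = [0, 0, 1, 1, 0, 1]  # always inherited together with snp_1
snp_3 = [0, 1, 0, 1, 0, 1]  # only weakly correlated with snp_1

linked = r_squared(snp_1, snp_2)    # perfectly correlated
unlinked = r_squared(snp_1, snp_3)  # low correlation
```

When two SNPs have a squared correlation near 1, genotyping both adds little information, which is why a subset of markers can stand in for the full set.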

Since the dataset was so large, a kinship matrix was used to adjust for population structure, with kinship inferred from the observed genotypic data. A kinship matrix displays the genetic relatedness of organisms, with values ranging from 0 (unrelated organisms) to 1 (identical organisms). Because the pedigree of the varieties is unknown, the kinship matrix in this method is estimated from the SNP data. A LOD score (logarithm of odds) was used to estimate the strength of the association between a marker and an expressed trait. The LOD-score threshold for this data was 5.85, which is significant for plants [11]. After including the kinship matrix in the GWAS, significant SNPs were chosen. The three SNPs with the highest LOD scores at that location were identified as most significant. The specific score used in this study is -log(P), which normalizes the dataset. There is a 95% certainty in this study, with -log(P) used to normalize the data for association rather than for the probability of association by chance [12].
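One simple way to estimate a kinship matrix from SNP data alone is the fraction of markers at which two lines carry the same allele. The sketch below assumes this identity-by-state estimator purely for illustration; the text does not state which estimator was used, and the genotype strings and the helper `ibs_kinship` are invented.

```python
def ibs_kinship(genotypes):
    """Pairwise fraction of SNPs at which two inbred lines share an allele.

    Each genotype is a string with one allele letter per SNP; all
    strings must have the same length. Values range from 0 to 1.
    """
    n = len(genotypes)
    n_snps = len(genotypes[0])
    kinship = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            matches = sum(a == b for a, b in zip(genotypes[i], genotypes[j]))
            kinship[i][j] = matches / n_snps
    return kinship


# Invented genotypes for four hypothetical lines at six SNPs.
lines = [
    "ACGTAC",
    "ACGTAC",  # identical to the first line
    "ACGAAT",  # shares four of six alleles with the first line
    "TGCATG",  # shares none
]
K = ibs_kinship(lines)
```

The resulting matrix is symmetric with ones on the diagonal, matching the 0 to 1 scale described above.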

The second major quantitative technique is a Quantitative Trait Loci (QTL) analysis. QTL analyses identify molecular markers associated with phenotypes, rather than genes or purely genetic loci [6]. The QTL analysis was conducted on a cross-inbred population of Curinga x Oryza rufipogon. The QTL dataset was rather small, incorporating the phenotypes flow, height, tillers, panicles, and pericarp. Panicles are the branching clusters of rice, and the pericarp is the layer, derived from the ovary wall, that surrounds a plant’s seed. Based on the category “Morphological Responses” in Figure 1, tillers and height were chosen as proxies for drought.

Using publicly available rice and plant genome browsers, genes were identified that were nearest to the identified loci. The Rice Genome Annotation Project (RGAP) Browser from the University of Georgia shows all 12 chromosomes with markers along all base pairs. Rice loci were identified, as well as the best orthologous genes/proteins in Arabidopsis thaliana (thale cress) and Zea mays (maize). These were two plants that had genetic similarities of 93.71% and 94.95% to rice, respectively, as seen in Figure 3. Although Triticum aestivum (wheat) had a higher genetic relatedness, it was easier to find orthologous genes in Arabidopsis thaliana and Zea mays because their genomes have been mapped extensively (especially Arabidopsis thaliana). The RGAP individual gene pages show gene ontologies (GO) and their accessions. These include the biological processes that are known as gene functions.

Figure 3: The percent genetic relatedness between Arabidopsis thaliana, Zea mays, Oryza sativa japonica, Oryza sativa indica and Triticum aestivum. These are listed vertically, where the japonica and indica species have 100% relatedness. This image was produced by creating a percent identity matrix on UniProt.
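A percent identity matrix of this kind reports, for each pair of aligned sequences, the share of positions that match. The sketch below is an assumed, simplified definition (matches divided by aligned positions where neither sequence has a gap); the exact formula used by the UniProt alignment tool may differ, and the short sequences are invented.

```python
def percent_identity(seq_a, seq_b):
    """Percent of aligned, gap-free columns at which two sequences match."""
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must come from the same alignment")
    compared = 0
    matches = 0
    for a, b in zip(seq_a, seq_b):
        if a == "-" or b == "-":
            continue  # skip columns where either sequence has a gap
        compared += 1
        if a == b:
            matches += 1
    return 100.0 * matches / compared


def identity_matrix(alignment):
    """Nested dict of pairwise percent identities for named sequences."""
    return {
        name_a: {
            name_b: percent_identity(seq_a, seq_b)
            for name_b, seq_b in alignment.items()
        }
        for name_a, seq_a in alignment.items()
    }


# Invented toy alignment; names and residues are placeholders.
toy_alignment = {
    "species_1": "MKT-AL",
    "species_2": "MKTGAV",
    "species_3": "MKT-AL",
}
matrix = identity_matrix(toy_alignment)
```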

While the RGAP browser did not have extensive details on the ontologies, UniProt, a web-accessed resource, did. It was used to search for known genes from the QTL and GWAS studies and to identify details about the proteins encoded in those genes and their functions. The main ontologies that aligned with the goals of the study were those related to biological processes marking responses to external environmental stimuli, as seen in Figure 4. Ontologies for response to general environmental stress and to heat/temperature stimuli were found.

Figure 4: Gene Ontology accessions with responses to heat stimuli. This image shows blue boxes as biological processes with the black arrows pointing to possible processes that are influenced. The GO accession numbers refer to different possible processes in the rice genes. Each specific number classification is not significant, but the processes they entail are. This diagram was produced by the European Bioinformatics Institute’s gene ontology browser.

While not every gene had extensive information about it, general conclusions were drawn from gene ontologies on how those genes could be affected by external stimuli, with a focus on drought and heat.

3. Results and Discussion

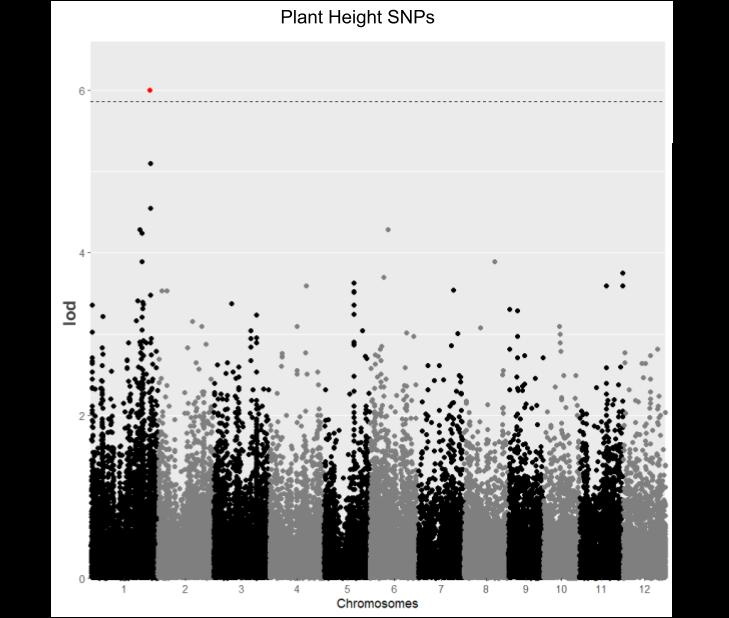

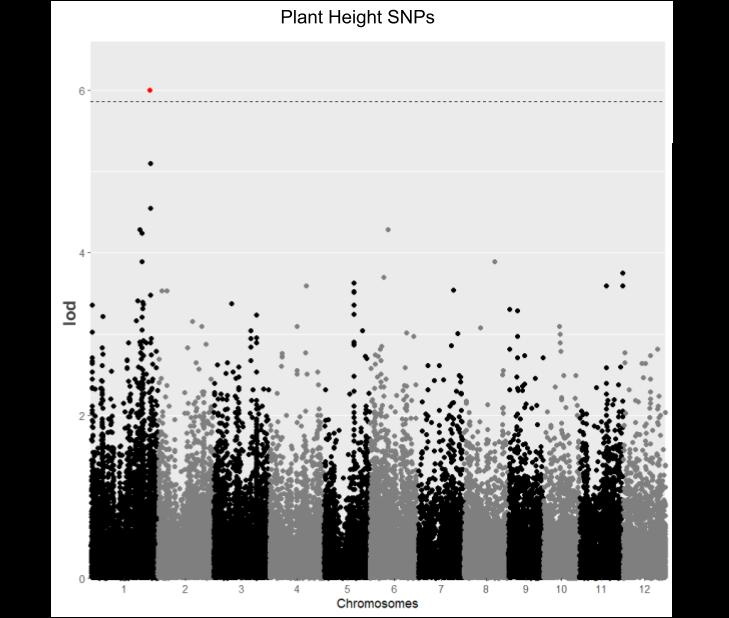

For the plant height phenotype studied using a GWAS, one significant SNP was found, with a LOD score of 5.99 and location of 38111539 bp on Chromosome 1, as shown in Figure 5.
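The comparison of a SNP score against the threshold can be sketched as follows. The code assumes the score is a base-10 negative logarithm of the P value, which is the usual GWAS convention but is written only as -log(P) in the text; the function names are hypothetical.

```python
import math

LOD_THRESHOLD = 5.85  # threshold quoted in the text


def neg_log10(p_value):
    """Convert a P value to the -log10(P) scale (base 10 is an assumption)."""
    return -math.log10(p_value)


def p_from_score(score):
    """Inverse of neg_log10: recover the P value from a score."""
    return 10 ** (-score)


def is_significant(score, threshold=LOD_THRESHOLD):
    """A SNP is kept when its score meets or exceeds the threshold."""
    return score >= threshold


plant_height_score = 5.99  # score reported for the plant height SNP
passes = is_significant(plant_height_score)
threshold_p = p_from_score(LOD_THRESHOLD)  # P value implied by the threshold
```

Under the base-10 assumption, a threshold of 5.85 corresponds to a P value a little above one in a million, so the plant height SNP clears it only narrowly.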


Figure 5: This shows the GWAS analysis adjusted with a kinship matrix for plant height, identifying one significant SNP as shown in red.

The three nearest loci were LOC_Os01g65650, LOC_Os01g65640, and LOC_Os01g65660, as seen in Figure 6. In these identifiers, LOC denotes an identified locus, Os denotes Oryza sativa, the number 01 is the chromosome, and the remaining digits number the gene on that chromosome.
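The naming scheme just described can be decoded mechanically. The following sketch uses a regular expression that accepts the identifier written with either an underscore or a space after LOC; `parse_locus` and the returned field names are hypothetical, chosen only for illustration.

```python
import re

# LOC, a separator, Os, a two-digit chromosome, "g", then a five-digit gene number.
LOCUS_PATTERN = re.compile(r"^LOC[_ ]Os(\d{2})g(\d{5})$")


def parse_locus(locus_id):
    """Split a rice locus identifier into its chromosome and gene number."""
    match = LOCUS_PATTERN.match(locus_id)
    if match is None:
        raise ValueError(f"not a recognised rice locus identifier: {locus_id!r}")
    return {
        "species": "Oryza sativa",
        "chromosome": int(match.group(1)),
        "gene_number": int(match.group(2)),
    }


nearest = [parse_locus(x) for x in
           ("LOC_Os01g65640", "LOC_Os01g65650", "LOC_Os01g65660")]
```

All three loci nearest the plant height SNP parse to chromosome 1, with consecutive gene numbers, which is consistent with their being neighbours on the genome.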

Figure 6: a) Genome Browser showing SNP on the genome with nearby loci. b) LOC_Os01g65650 shown on the genome.

LOC_Os01g65650 was observed to be a protein-coding gene, but little information was available. An orthologous Arabidopsis thaliana gene was identified as AT1G72180. The gene ontology showed that the gene mediates nitrate uptake and regulates shoot system development and the response to osmotic stress. It is possible that this result (plant height correlated with shoot system regulation) could be used as a proxy for results with tillers. This gene is also expressed heavily during the growth stages of the plant. Growth stages involving the root system may be correlated with plant height expression. Since the small root system of rice contributes to its high susceptibility to drought, this gene could be studied more extensively in the future to understand correlations between plant root development and height. Furthermore, osmotic stress could be directly related to drought, due to the imbalance in salinity and important ions in the cell [13]. There is an emphasis on this gene’s functionality in shoot development, a key indicator of healthy plant growth under sufficient hydration.

For the plant height phenotype studied using QTL analysis, a mainscan plot was produced, as shown in Figure 7. The most significant QTL was on Chromosome 2 at position 3.12 cM (centimorgans), with a LOD score of 6.91. Converting this position to base pairs gave a location of 842400 bp, which was then observed in the rice genome browsers (Figure 8).
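The conversion from centimorgans to base pairs can be reproduced with a single scaling factor. The factor below, 270,000 bp per cM, is not stated in the text; it is inferred from the two reported numbers (842400 bp divided by 3.12 cM) and should be read as an assumption. A uniform factor is itself a simplification, since recombination rates vary along a chromosome.

```python
# Inferred from the reported values (842400 bp / 3.12 cM); an assumption.
BP_PER_CM = 270_000


def cm_to_bp(position_cm, bp_per_cm=BP_PER_CM):
    """Approximate physical position (bp) for a genetic position (cM)."""
    return round(position_cm * bp_per_cm)


qtl_position_bp = cm_to_bp(3.12)  # position of the plant height QTL
```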

Figure 7: Mainscan plot showing the QTLs for height along the genome, with a peak at Chromosome 2 indicating a high LOD score and therefore high statistical significance. The blue line represents the LOD threshold of 5.85.

Figure 8: Rice Genome Browser showing location of Chromosome 2 at 842 kbp.

The QTL was observed in gene LOC_Os02g02410, which encodes a DnaK family protein and has an orthologous gene in Arabidopsis thaliana, AT5G42020. A DnaK protein expressed on chromosome 3 was also found, possibly encoding proteins involved in heat stress. It acts as a protein binder in response to heat stress, and there is a possibility that this DnaK protein could be used to target other environmental stressors, like drought. Other GO accessions not identified in the genes so far were GO:0009628 and GO:0006950, which could correspond to drought stress response. Further research may include identifying these accessions in QTLs and finding more phenotypes associated with these biological processes. Furthermore, the GO accession GO:0009408 was present, which is related to heat stimuli response. Using the rice expression database and UniProt, another protein in the gene was identified, Q6Z7B0, a kDa protein involved in the control of seed storage proteins during seed maturation. Because the GWAS-identified plant height gene is protein-coding, it is assumed that this gene plays a role in coding for endoplasmic reticulum stress and is therefore crucial to seed development. Additionally, both are expressed after flowering during seed development, not in mature seeds. Drought can decrease the quality of seeds during early development, thus contributing to poor plant growth [14]. No QTLs were identified for tillers, an additional possible indicator of drought tolerance, possibly due to the lack of data for this phenotype.
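Checking a gene's GO accessions against a list of stress-related terms is a simple set lookup. In the sketch below the three accessions come from the text and the term names are those the Gene Ontology assigns to them; the annotation set for the gene is deliberately limited to the one accession the text reports, and `split_stress_terms` is a hypothetical helper.

```python
# Accessions named in the text, with their Gene Ontology term names.
STRESS_TERMS = {
    "GO:0009408": "response to heat",
    "GO:0006950": "response to stress",
    "GO:0009628": "response to abiotic stimulus",
}


def split_stress_terms(gene_accessions):
    """Return (found, missing) stress terms for one gene's GO accessions."""
    found = {acc: name for acc, name in STRESS_TERMS.items()
             if acc in gene_accessions}
    missing = {acc: name for acc, name in STRESS_TERMS.items()
               if acc not in gene_accessions}
    return found, missing


# Only the accession reported in the text; other annotations are omitted.
annotations = {"LOC_Os02g02410": {"GO:0009408"}}

found, missing = split_stress_terms(annotations["LOC_Os02g02410"])
```

The `missing` terms are the ones flagged above as targets for further research.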

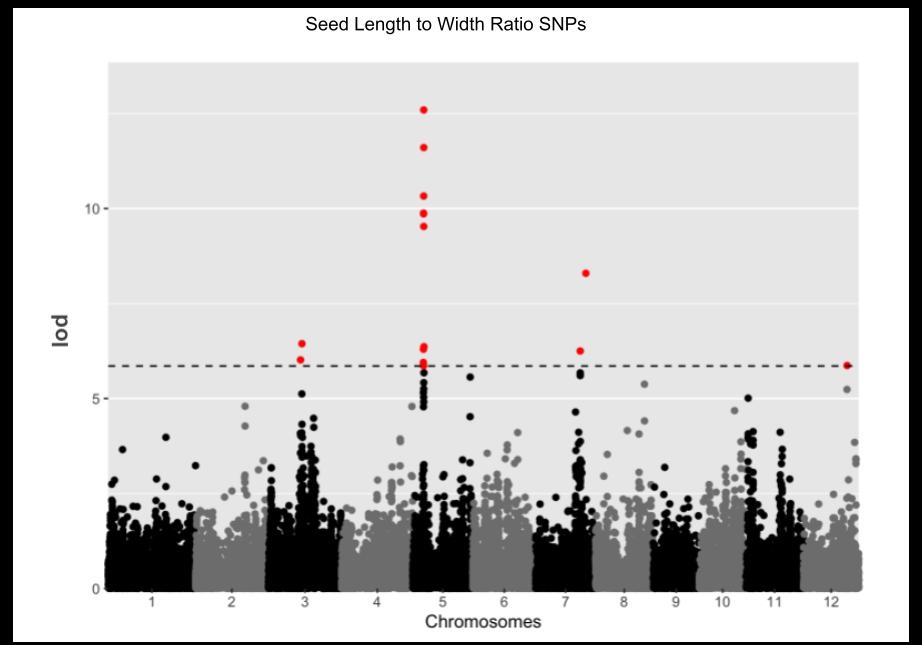

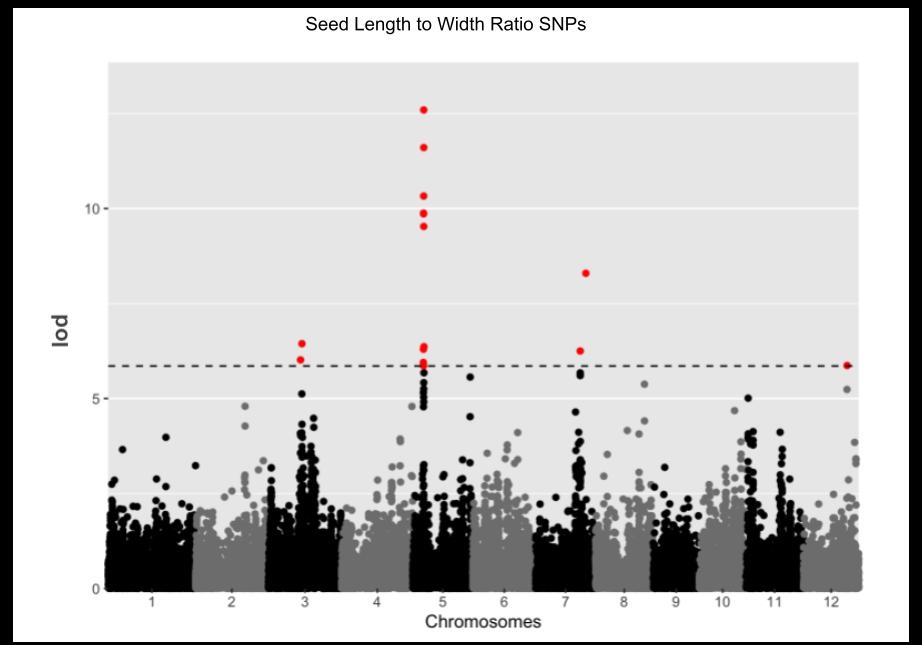

Other phenotypes were observed using GWAS. Seed length to width ratio had a significant SNP on Chromosome 5 at 5425317 bp, with a LOD score of 12.59, as seen in Figure 9. LOC_Os05g09550 was the gene that contained this SNP; it encodes a Der-1-like family containing protein. It is involved in the degradation of proteins in the endoplasmic reticulum, which is similar to the traits observed from the plant height loci.

Figure 9: This shows the GWAS analysis adjusted with a kinship matrix for seed length to width ratio. There is a peak in significant SNPs at chromosome 5, and these genes are all close to each other.

The amylose content phenotype GWAS analysis suggested the gene LOC_Os06g04200, a starch synthase with molecular functions in protein binding and metabolism. Since these traits are mainly correlated with plant regulation and the synthesis of vital nutrients, they could be compromised under drought stress, as they have been in sweet potato [15].

Finally, the protein content phenotype GWAS analysis suggested that the gene LOC_Os06g09880, as seen in Figure 10, contributes to major reproductive and embryonic development. Though this is different from the protein binding and metabolism processes seen with the other phenotypes, it still deals with seed development, suggesting that traits dealing with drought tolerance are often expressed during seed development. Future applications of this research could be to observe these traits as expressed during development, not after plant growth, and in response to environmental stimuli.

4. Conclusion

From both the QTL and GWAS techniques, genes associated with traits that are often responsive to drought stress were found to function in protein content and development as well as in seed development and the early stages of plant growth. This is supported by orthologous genes and GO accessions for the genes for which these were available. Generally, this study shows that there are genes that could be correlated with drought tolerance, and supports the hypothesis that their genetic functions are directly related to responding to environmental stress. Further applications of this study are extensive. More QTLs and SNPs with available gene ontology information could be studied to support the conclusions of this study. We will continue to identify genes that correspond to morphological processes influenced by drought. Additionally, the use of genome browsers to directly identify the genes that correlate with drought tolerance could support the conclusions of this study. Once genes with conclusive applications to drought tolerance are observed, these genes could be isolated in populations of rice, and populations could be bred using marker-assisted selection to produce drought-tolerant varieties of rice.

5. Acknowledgements

This study is supported by the North Carolina School of Science and Mathematics. We would like to thank Mr. Robert Gotwals and Dr. Amy Sheck for making the program available and for their ongoing support. Special thanks are given to these individuals:

1. Dr. Susan McCouch, Professor at the School of Integrative Plant Science Plant Breeding and Genetics Section and Professor of Computational Biology at Cornell University, for help with data acquisition and curation.

2. Dr. Juan Velez, Postdoctoral Fellow at the School of Integrative Plant Science Plant Breeding and Genetics Section at Cornell University, for help with data acquisition and curation.

3. Dr. Julin N. Maloof, Professor of Plant Biology, University of California Davis, for the use of his lab activities on GWAS.

4. Dr. Eli Hornstein of Elysia Creative Biology, and Dr. Bri Edwards, Research Assistant in the Alonso-Stepanova Lab, Plant and Microbial Biology, North Carolina State University, for guidance on navigating rice genome browsers.

Figure 10: Genome browser showing SNPs for protein content.

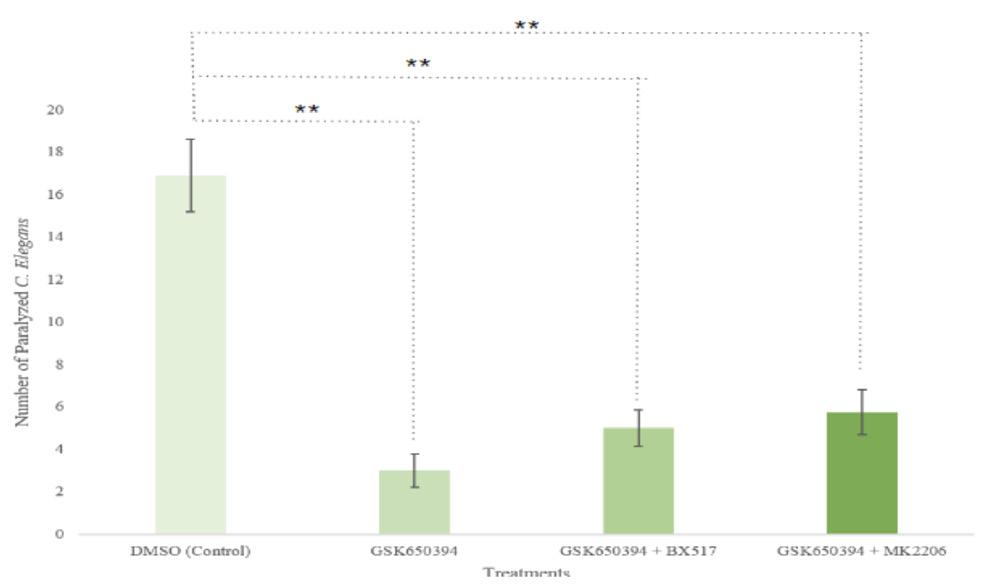

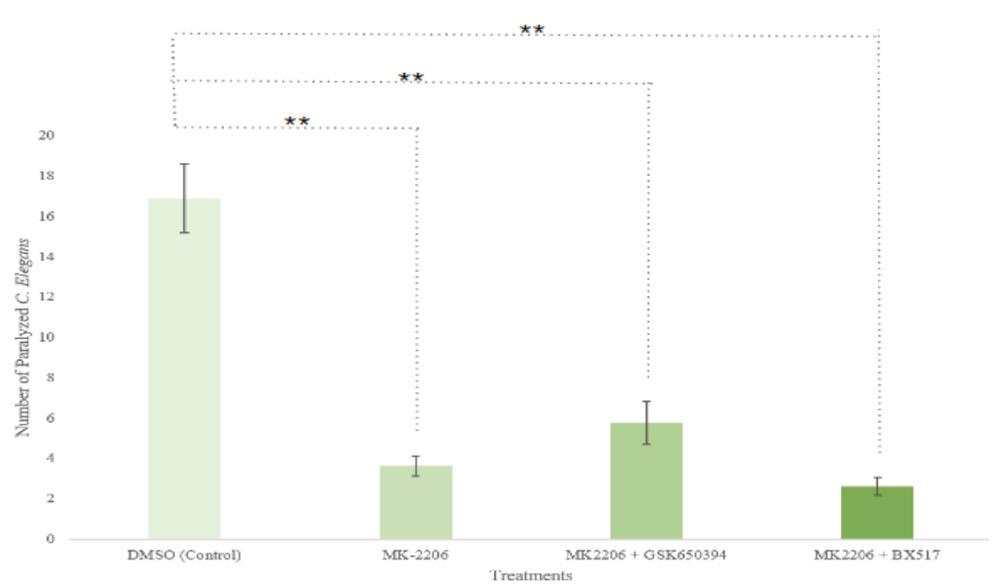

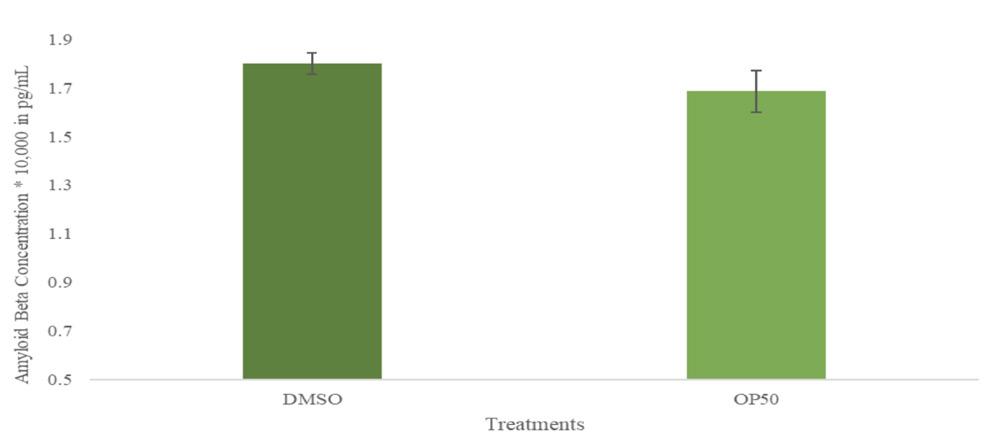

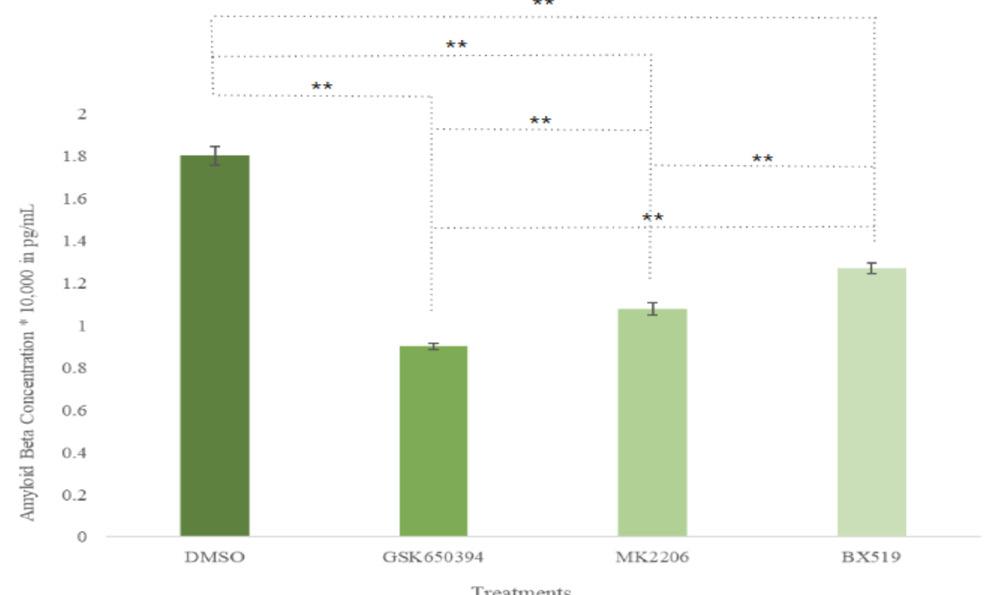

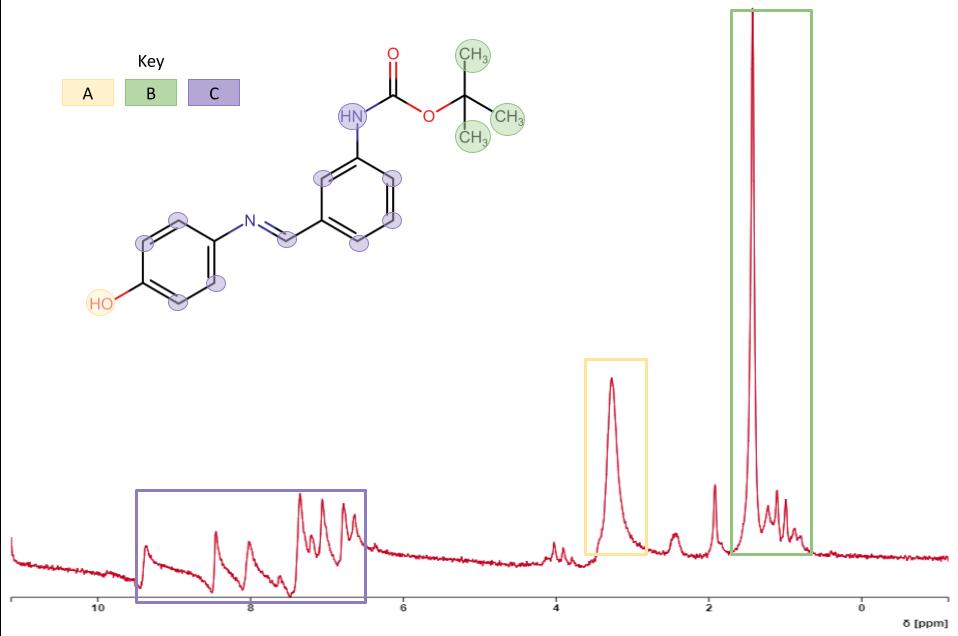

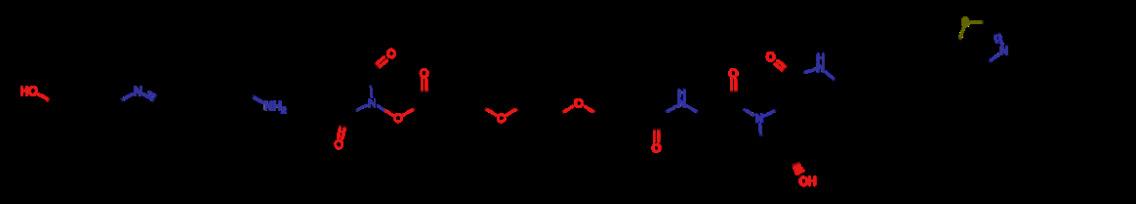

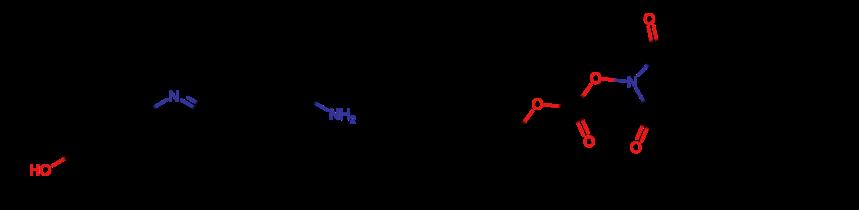

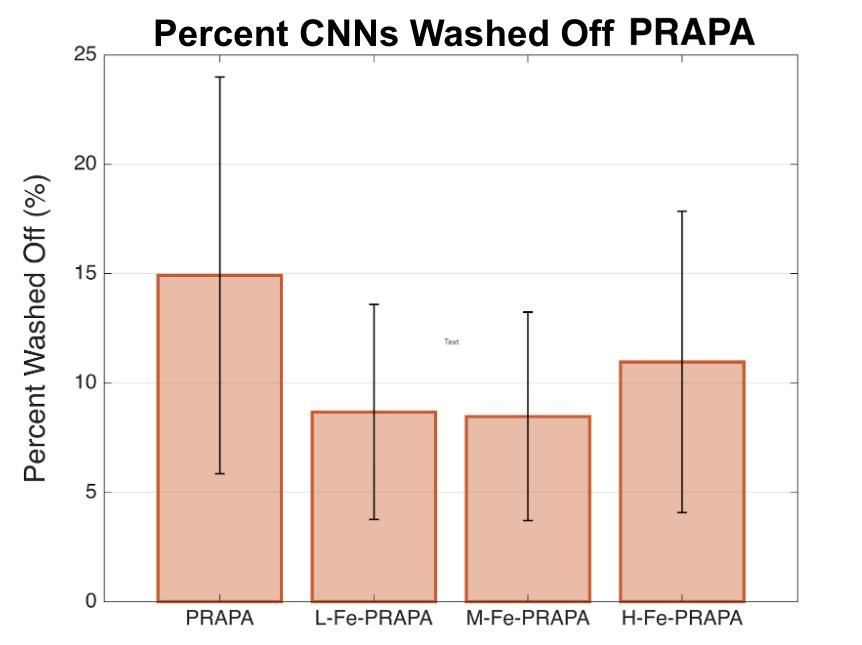

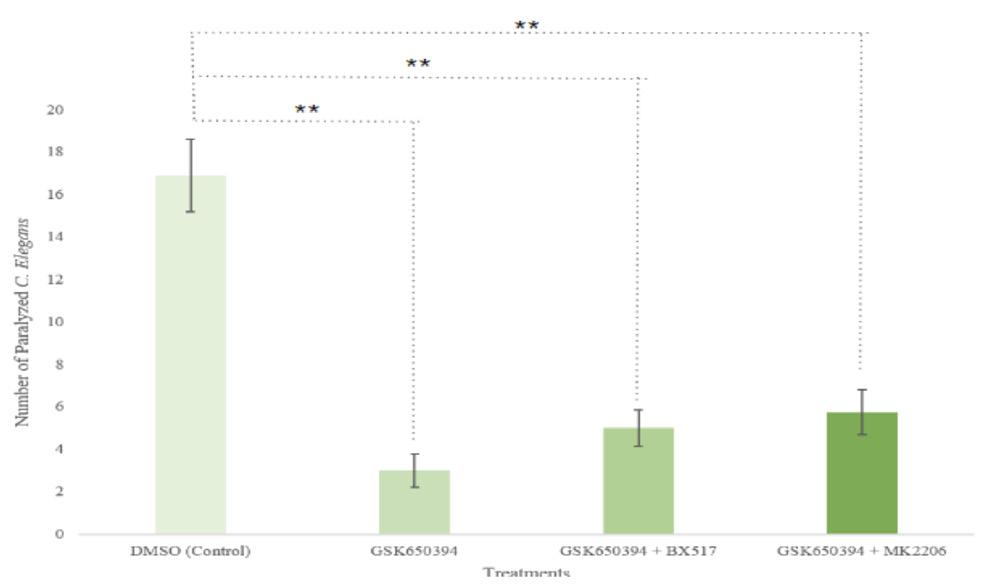

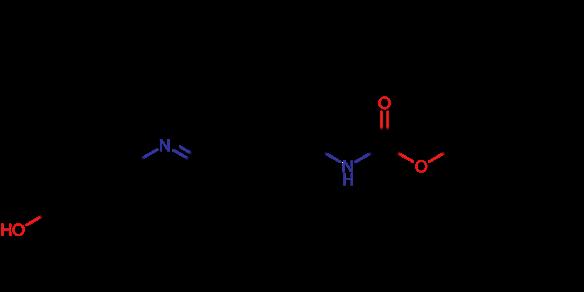

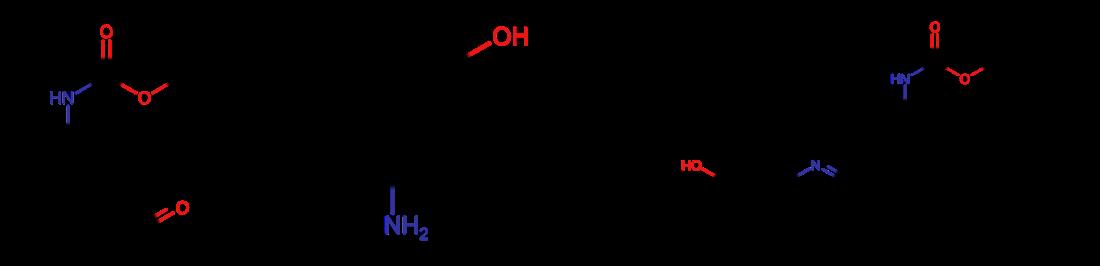

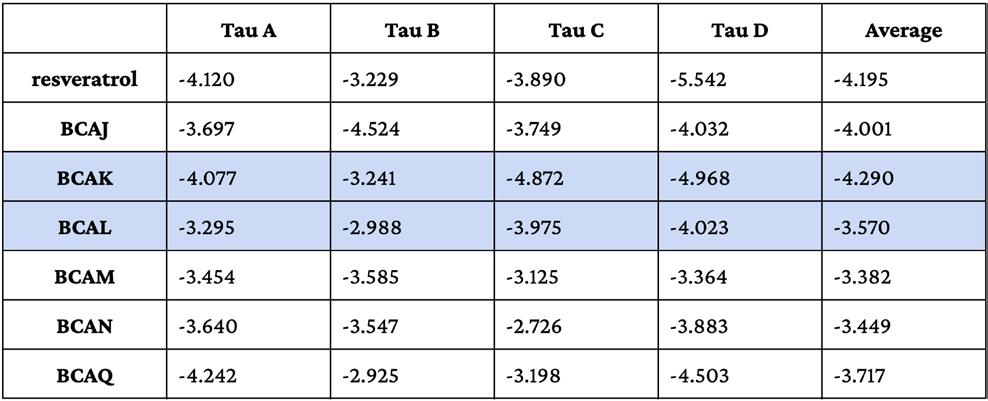

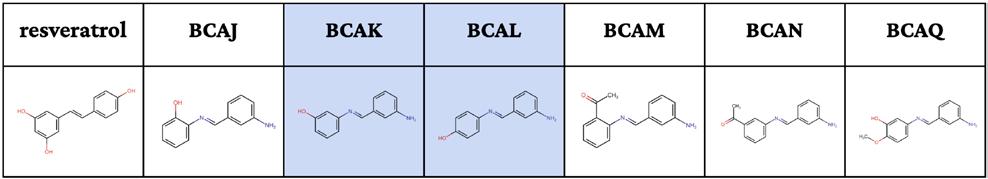

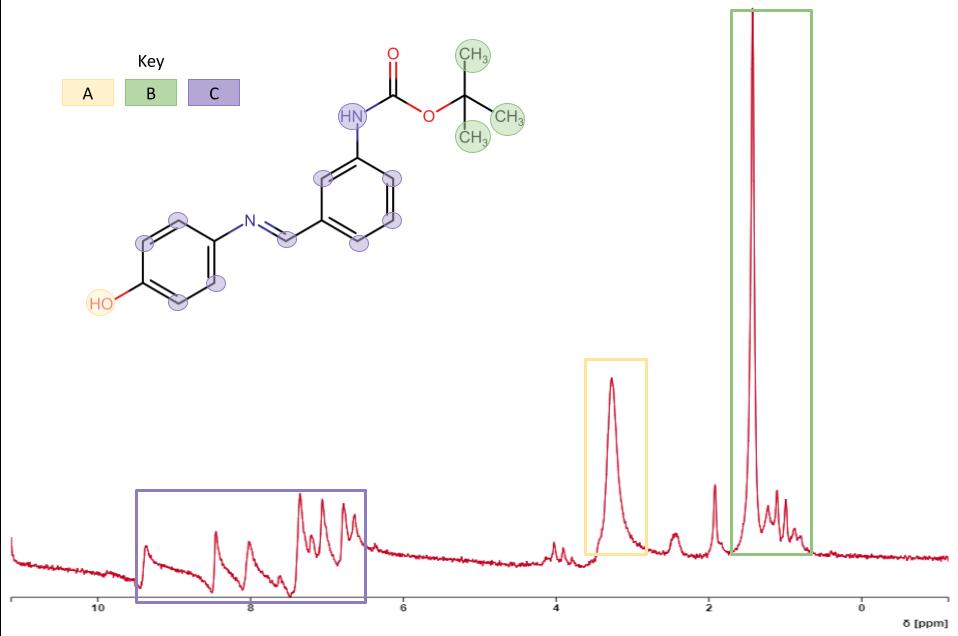

6. References