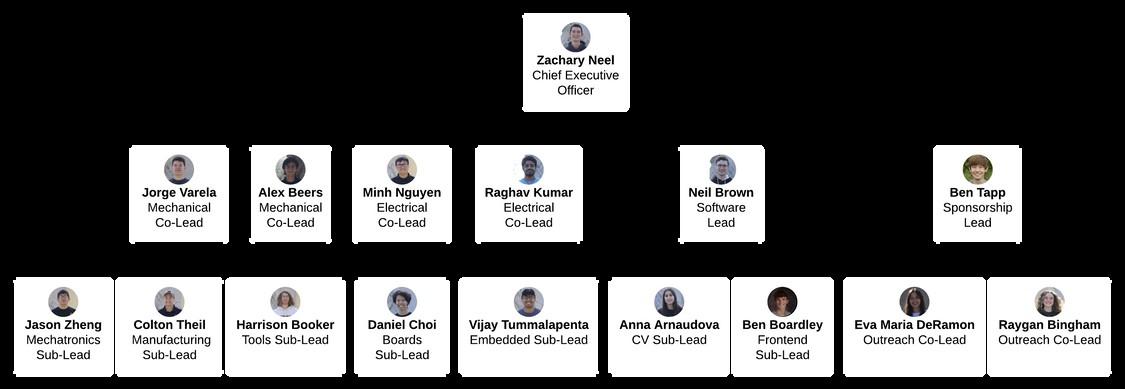

iii Editorial Board

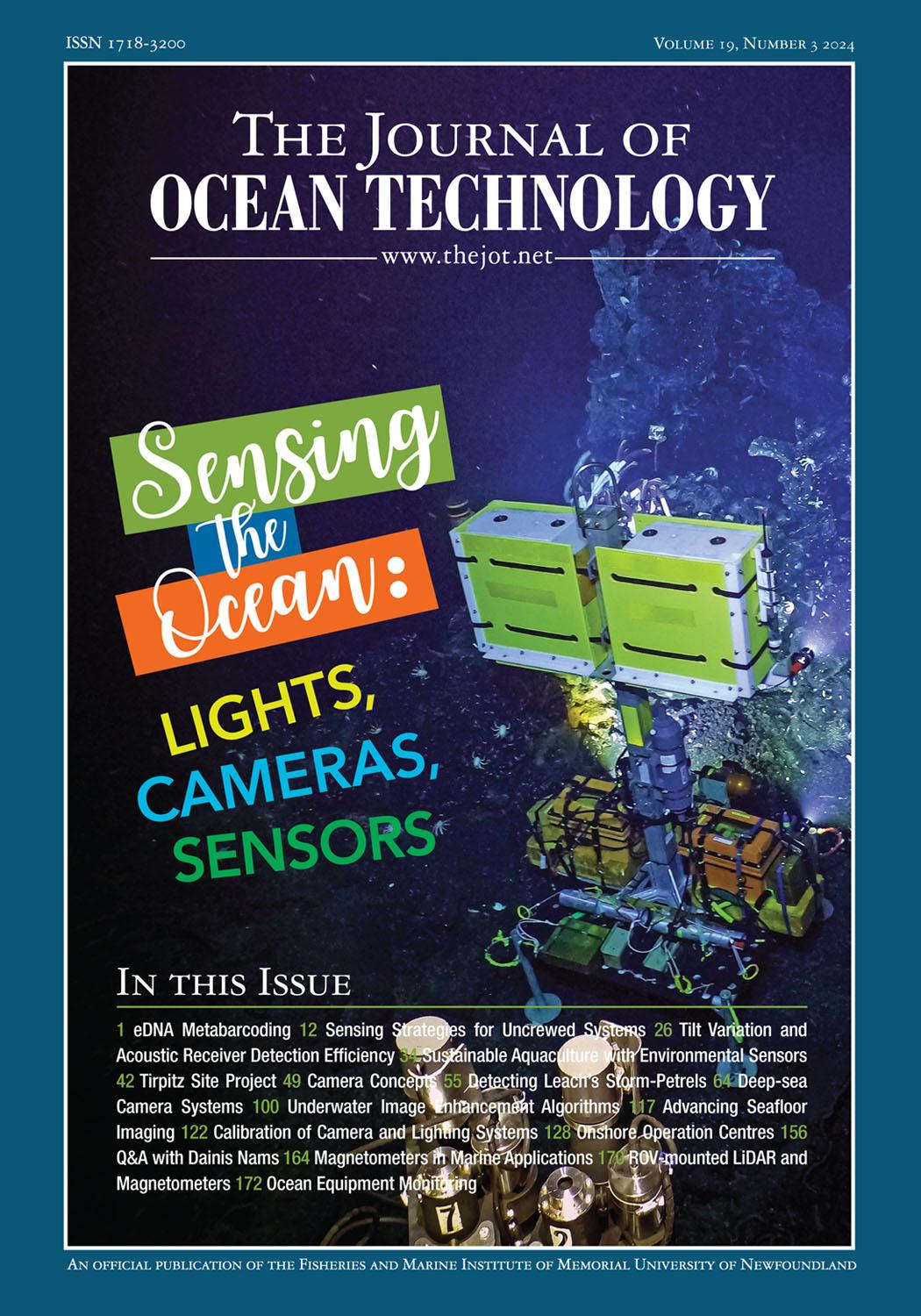

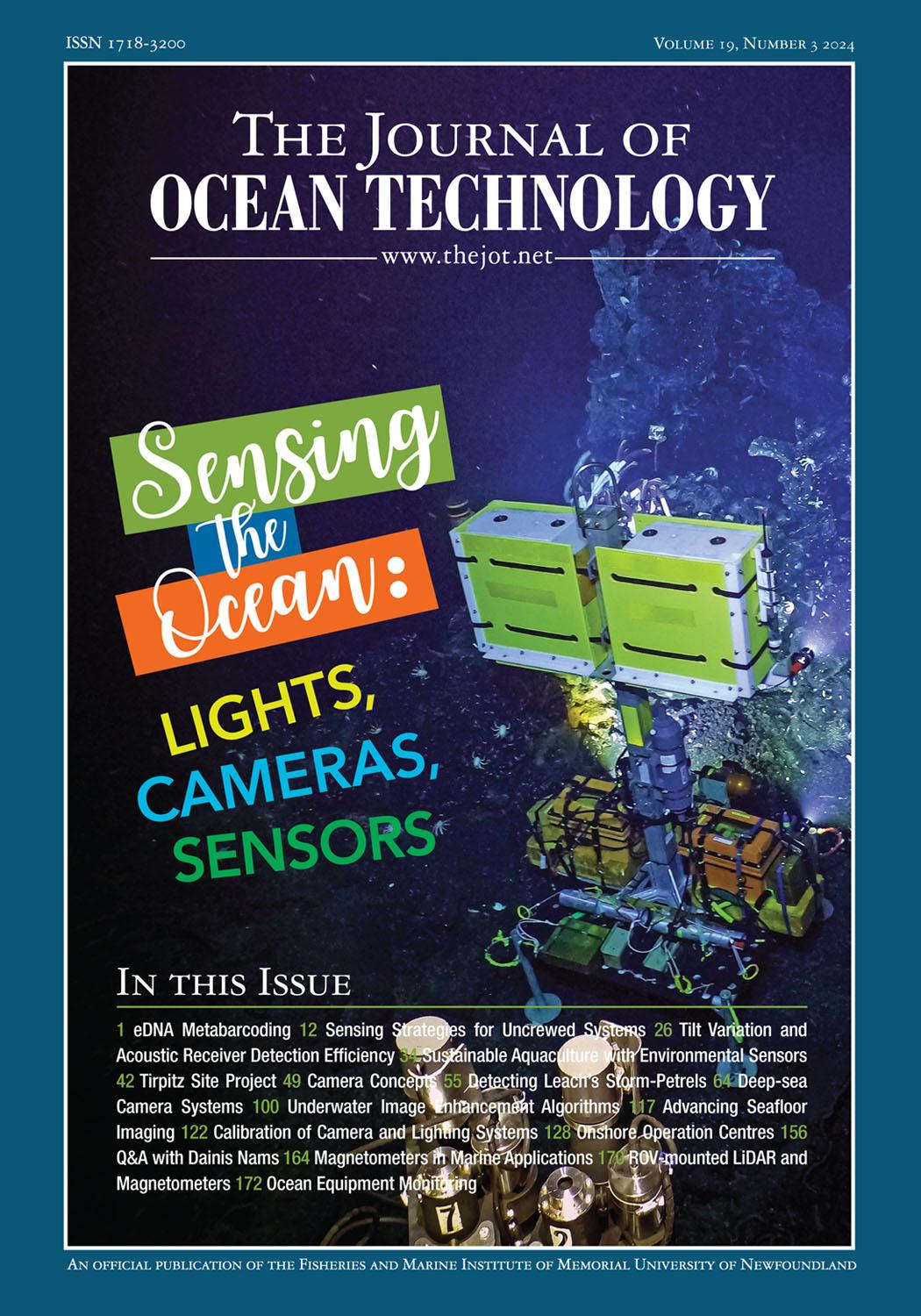

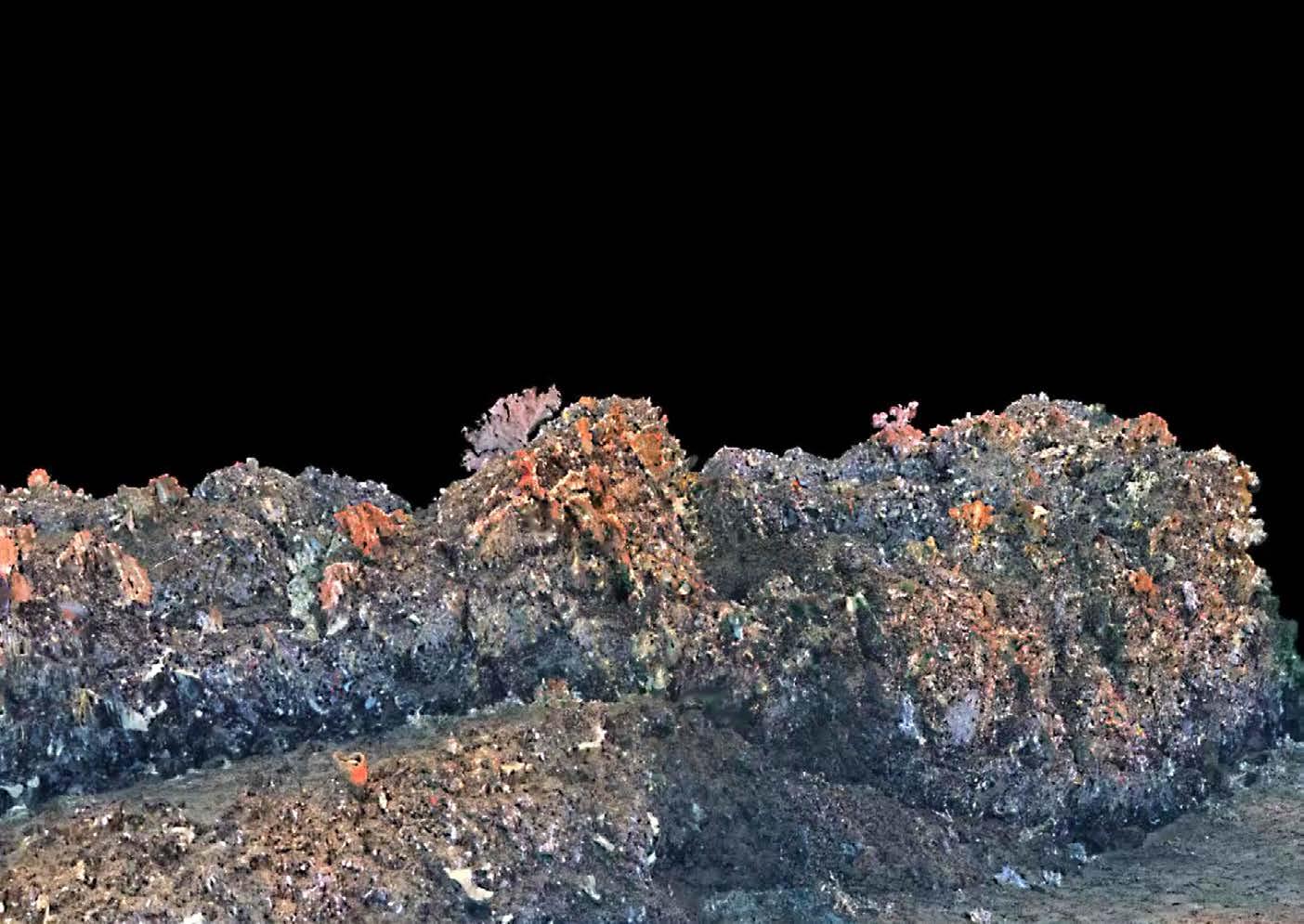

iv On the Cover

v Publishing Schedule and Advertiser’s Index

vi Editor’s Note

1 eDNA Metabarcoding in the Ocean: A Powerful Molecular Sensor in Need of Optimization

Gordon H.R. de Jong, Jonathan A.D. Fisher

Fisheries and Marine Institute

David Côté, Fisheries and Oceans Canada

12 Navigating Turbid Waters: Developing Sensing Strategies for Uncrewed Systems Testing and Proving

Michelle Barnett, Sonardyne International

Patrick Bunday, Vic Grosjean

Australian Institute of Marine Science

26 Off Centre: Tilt Variation and Acoustic Receiver Detection Efficiency in a High Flow Environment

Iago Gradin, Ocean Tracking Network

34 Enhancing Sustainable Aquaculture with Environmental Sensors: A Gateway to Long-term Data Analysis and Improved Practices

Kaeleigh McConnell, Innovasea

42 Arctic Legacy of War: Tirpitz Site Project

Bryan Lintott, UiT The Arctic University of Norway

Gareth Rees, University of Cambridge

49 What Can Camera Concepts Sense for You – that Conventional Sampling Methods do Not?

Niels Madsen, Amanda Irlind, Alex Jørgensen, Karen Ankersen Sønnichsen, Malte Pedersen, Jonathan Eichild Schmidt, Anders Skaarup Johansen, Galadrielle Humblot-Renaux, Thomas B. Moeslund, Nadieh de Jonge, Jeppe Lund Nielsen

Aalborg University

55 Detecting Light-attracted Leach’s Storm-Petrels in the Offshore

Justin So, Kyle d’Entremont, Andrew Peddle WSP Canada Inc.

64 Autonomous, Fixed-focus, High-resolution Deep-sea Camera Systems

Aaron Steiner, Mark Olsson, Stacey Church

DeepSea Power and Light

Eli Perrone, EP Oceanographic

Jon Clouter, Back-Bone Gear Inc.

Daniel J. Fornari, Woods Hole Oceanographic Institution

Victoria Preston, Olin College

Mae Lubetkin, independent researcher

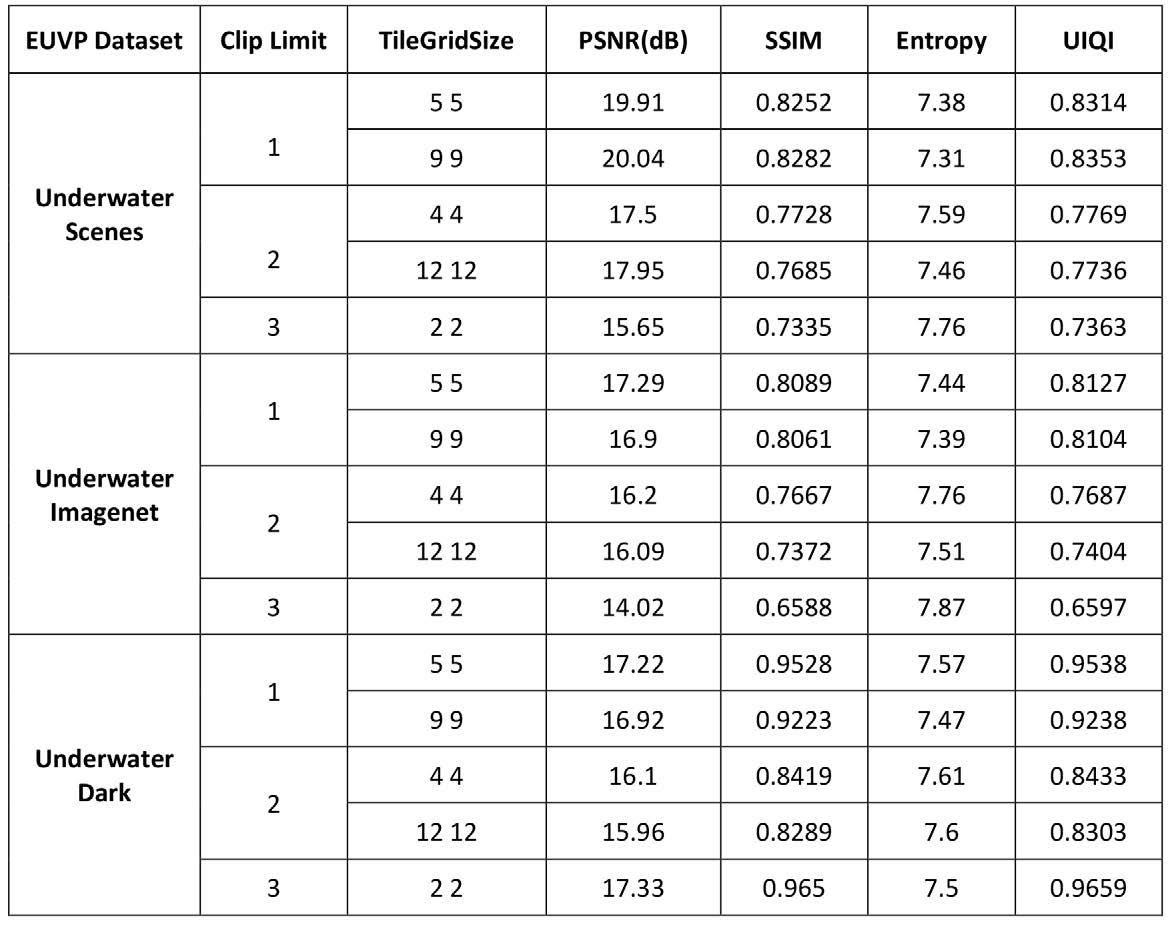

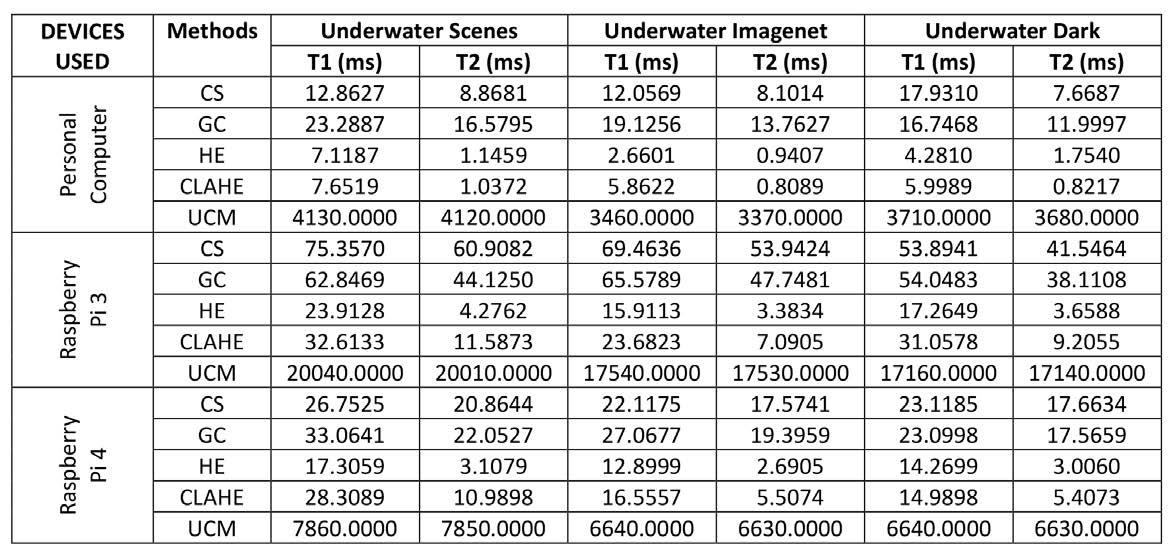

100 Implementing Underwater Image Enhancement Algorithms on Resource Constrained Devices

Arun M., Visvaja K., Vidyha A. Mepco Schlenk Engineering College

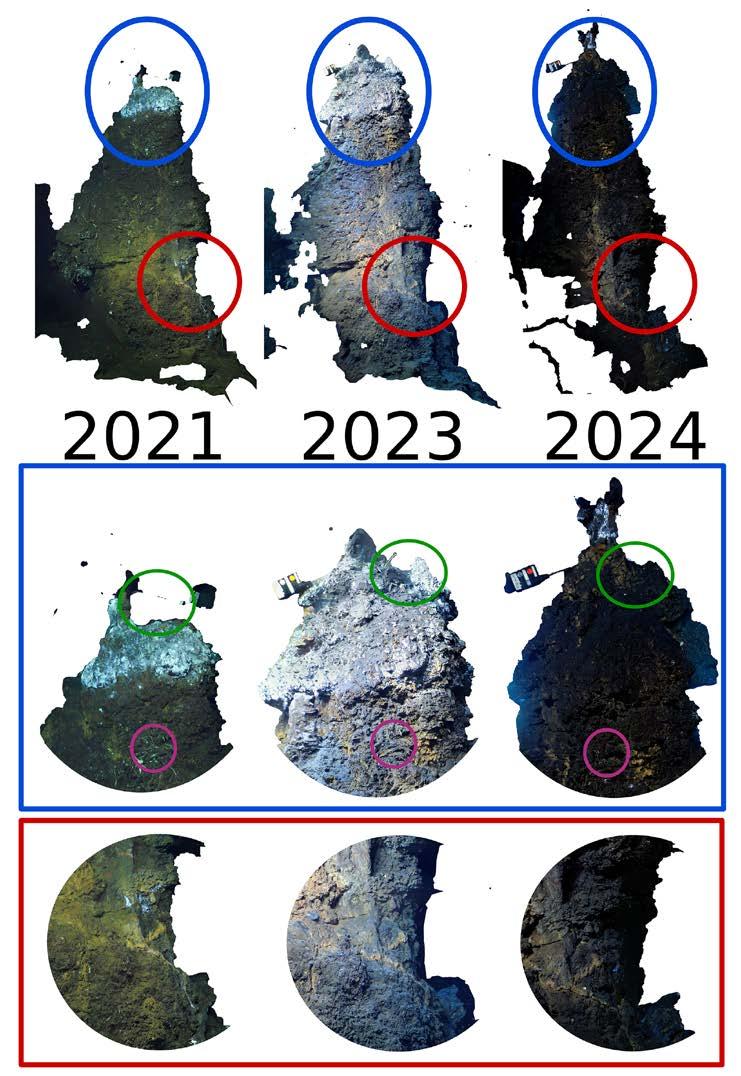

117 Technicalities ... Considerations for Advancing Seafloor Imaging to Enable Long-term Monitoring

Victoria Preston, Olin College

Pushyami Kaveti, Dennis Giaya, Aniket Gupta, Hanumant Singh, Northeastern University

Mae Lubetkin, independent researcher

Timothy M. Shank, Daniel J. Fornari

Woods Hole Oceanographic Institution

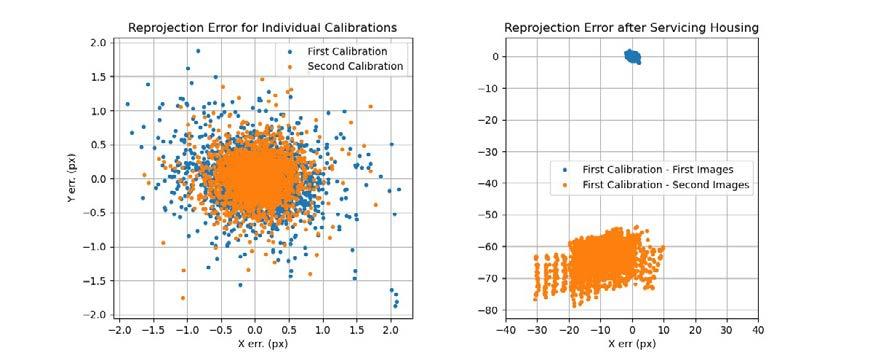

122 Technicalities ... Calibration of Camera and Lighting Systems on Marine Robotic Platforms for Scientific Data Collection

Dennis Giaya, Pushyami Kaveti, Aniket Gupta, Jasen Levoy, Hanumant Singh

Northeastern University

Victoria Preston, Olin College

Daniel J. Fornari, Woods Hole Oceanographic Institution

126 Lodestar … Petros Mathioudakis, Rylan Command

128 Role of Onshore Operation Centre and Operator in Remote Controlled Autonomous Vessels Operation

Muhammad Adnan, Yufei Wang

UiT The Arctic University of Norway

Lokukaluge Prasad Perera

SINTEF Digital

156 Q&A with Dainis Nams

158 Trade Winds … Real-time Marine Weather Data

Dan Reed and Carson Straub

OceanSync Data Solutions

160 Trade Winds … Autonomous and Efficient: Unpacking the Sensor-driven Technology Behind Oshen’s Micro-vessels

Anahita Laverack

Oshen

162 Trade Winds … Exploring Cold-water Coral Ecosystems with Advanced Underwater Optical Sensors: Insights from the Galápagos Expedition

Patricia Sestari

Voyis

164 Inside Out … Magnetometers in Marine Applications

Gorden Konieczek

SENSYS Magnetometers and Survey Solutions

169 Perspective … SubC Imaging

170 Reverberations … Sensing Superpowers: Using ROV-mounted LiDAR and Magnetometers to Reveal the Unseen

Joshua Gillingham, SEAMOR Marine

172 Homeward Bound … Sound Mapping: The Future of Ocean Equipment Monitoring

Emma Carline, Ocean Sonics Ltd.

174 Parting Notes … Gauging the Ocean’s Mood

Edwina Nash

PUBLISHER

Kelley Santos info@thejot.net

MANAGING EDITOR

Dawn Roche Tel. +001 (709) 778-0763 info@thejot.net

ASSISTANT EDITOR

Bethany Randell Tel. +001 (709) 778-0769 bethany.randell@mi.mun.ca

TECHNICAL CO-EDITORS

Dr. David Molyneux Director, Ocean Engineering Research Centre Faculty of Engineering and Applied Science Memorial University of Newfoundland

Dr. Katleen Robert Canada Research Chair, Ocean Mapping School of Ocean Technology Fisheries and Marine Institute

WEBSITE AND DATABASE

Scott Bruce

GRAPHIC DESIGN/SOCIAL MEDIA

Danielle Percy Tel. +001 (709) 778-0561 danielle.percy@mi.mun.ca

FINANCIAL ADMINISTRATION

Michelle Whelan

Dr. Keith Alverson University of Massachusetts USA

Dr. Randy Billard Virtual Marine Canada

Dr. Safak Nur Ertürk Bozkurtoglu Ocean Engineering Department Istanbul Technical University Turkey

Dr. Daniel F. Carlson Institute of Coastal Research Helmholtz-Zentrum Geesthacht Germany

Dr. Dimitrios Dalaklis World Maritime University Sweden

Randy Gillespie Windover Group Canada

S.M. Asif Hossain National Parliament Secretariat Bangladesh

Dr. John Jamieson Dept. Earth Sciences Memorial University Canada

Paula Keener Global Ocean Visions USA

Richard Kelly Centre for Applied Ocean Technology Marine Institute Canada

Peter King University of Tasmania Australia

Dr. Sue Molloy Glas Ocean Engineering Canada

Dr. Kate Moran Ocean Networks Canada Canada

EDITORIAL ASSISTANCE

Paula Keener, Randy Gillespie

Kelly Moret Hampidjan Canada Ltd. Canada

Dr. Glenn Nolan Marine Institute Ireland

Dr. Emilio Notti Institute of Marine Sciences Italian National Research Council Italy

Nicolai von Oppeln-Bronikowski Memorial University Canada

Dr. Malte Pedersen Aalborg University Denmark

Bethany Randell Centre for Applied Ocean Technology Marine Institute Canada

Prof. Fiona Regan School of Chemical Sciences Dublin City University Ireland

Dr. Mike Smit School of Information Management Dalhousie University Canada

Dr. Timothy Sullivan School of Biological, Earth, and Environmental Studies University College Cork Ireland

Dr. Jim Wyse Maridia Research Associates Canada

Jill Zande MATE, Marine Technology Society USA

The Journal of Ocean Technology is a scholarly periodical with an extensive international editorial board comprising experts representing a broad range of scientific and technical disciplines. Editorial decisions for all reviews and papers are managed by Dr. David Molyneux, Memorial University of Newfoundland, and Dr. Katleen Robert, Fisheries and Marine Institute.

The Journal of Ocean Technology is indexed with Scopus, EBSCO, Elsevier, and Google Scholar. Such indexing allows us to further disseminate scholarly content to a larger market; helps authenticate the myriad of research activities taking place around the globe; and provides increased exposure to our authors and guest editors. All content in the JOT is available online in open access format. www.thejot.net

The Journal of Ocean Technology, ISSN 1718-3200, is protected under Canadian Copyright Laws. Reproduction of any essay, article, paper or part thereof by any mechanical or electronic means without the express written permission of the JOT is strictly prohibited. Expressions of interest to reproduce any part of the JOT should be addressed in writing. Peer-reviewed papers appearing in the JOT and being referenced in another periodical or conference proceedings must be properly cited, including JOT volume, number and page(s). info@thejot.net

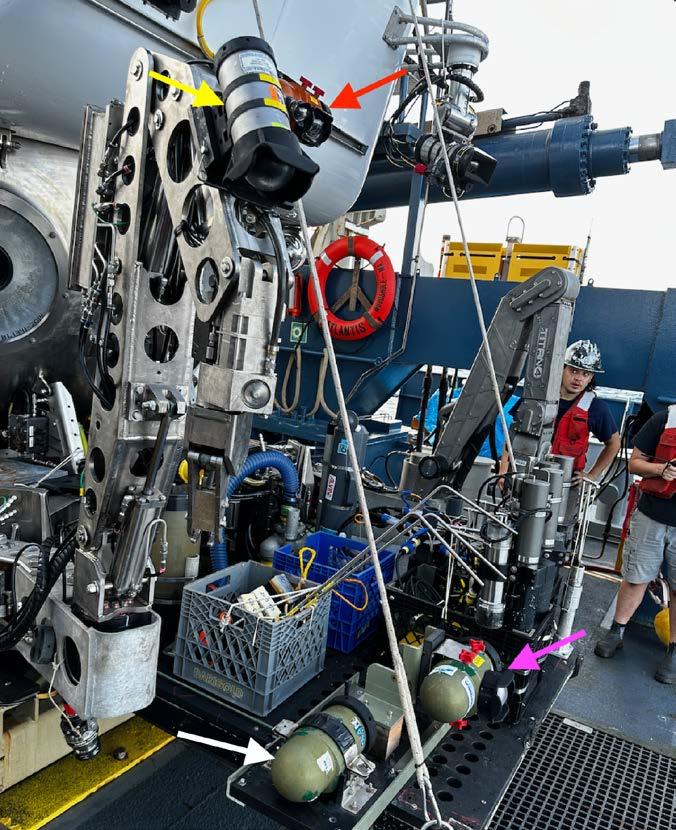

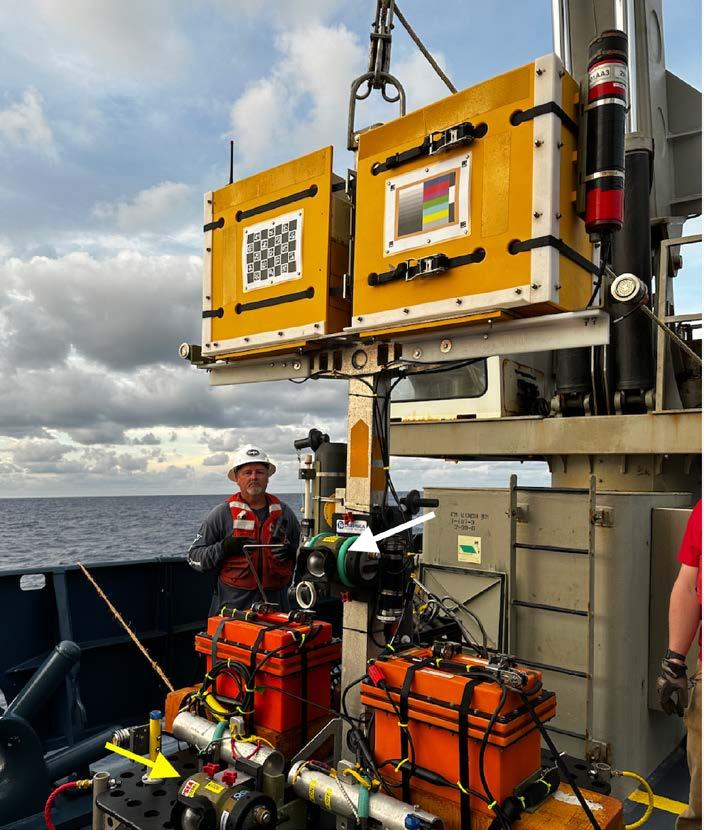

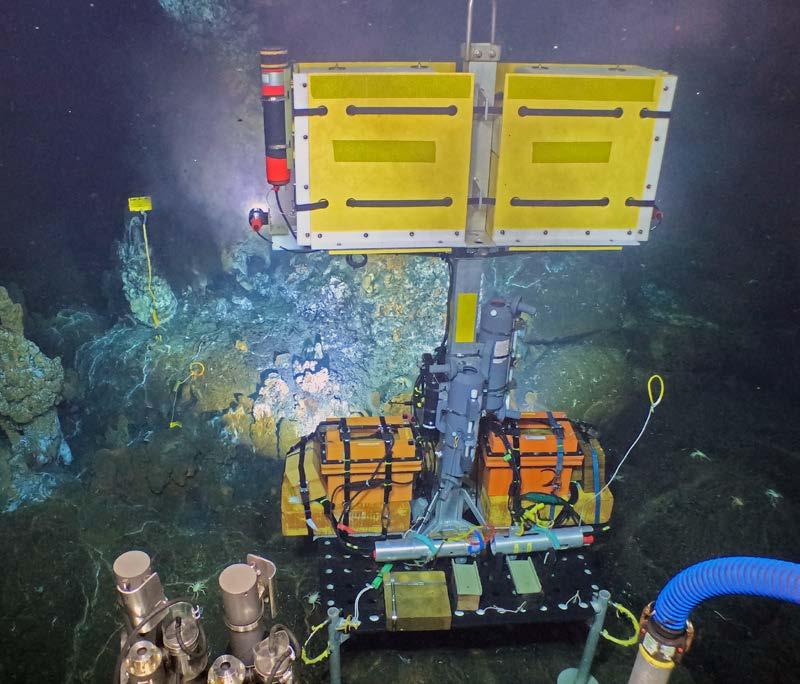

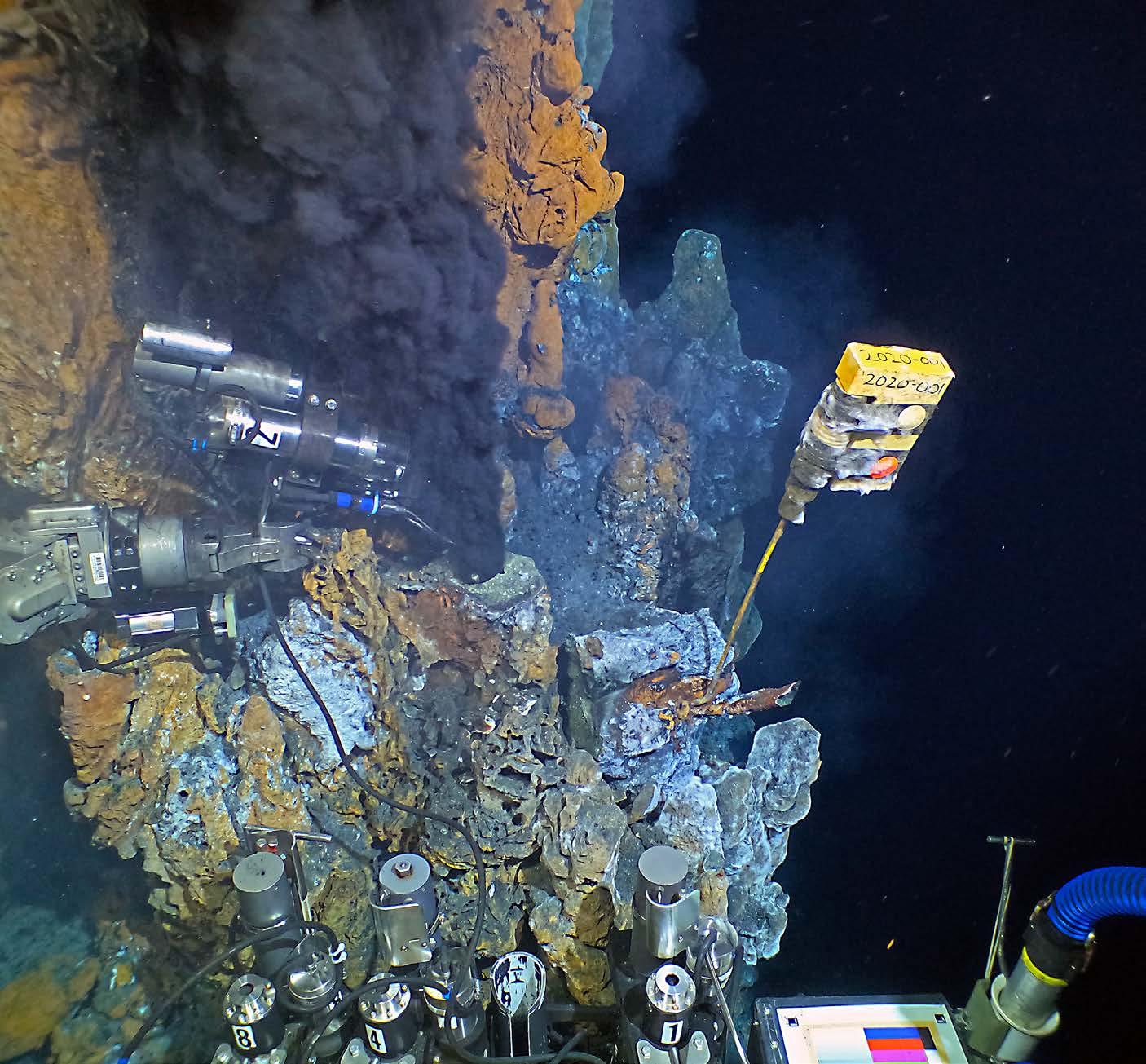

An imaging lander developed by the Woods Hole Oceanographic Institution’s Multidisciplinary Instrumentation in Support of Oceanography (MISO) Facility as deployed in the axial summit trough of the East Pacific Rise near 9°46.8'N at 2,508 m depth, during the AT50-21 expedition in March 2024. The lander uses four DeepSea Sealites™ to illuminate the seafloor and contains two MISO GoPro cameras, one shooting 5.3K video at 30 fps and the other 27MP still images every five seconds. Power to the lighting system is provided by two DeepSea SeaBatteries™, each delivering 24 VDC with ~40 Ah capacity. Two MISO deep-sea switches (small silver cylinders with magnetic reed switches on the lander) provide the ability to turn the lights on/off. The lander is positioned next to a hydrothermal diffuse flow area on a lava rampart near V vent, a high-temperature hydrothermal chimney, as part of an ongoing NSF-sponsored research project to study the run-up to the next volcanic eruption at this fast-spreading mid-ocean ridge axis. The instruments at the middle bottom of the image are isobaric gas-tight samplers, mounted on Alvin’s basket, that are used to sample high-temperature (>350°C) hydrothermal fluids. The image was acquired by an autonomous MISO GoPro camera mounted above the pilot's viewport, shooting 27MP stills every five seconds during Dive 5248 (direction of view is 141°).

The JOT production team invites the submission of technical papers, essays, and short articles based on upcoming themes. Technical papers describe cutting edge research and present the results of new research in ocean technology or engineering, and are no more than 7,500 words in length. Student papers are welcome. All papers are subjected to a rigorous peer-review process. Essays present well-informed observations and conclusions, and identify key issues for the ocean community in a concise manner. They are written at a level that would be understandable by a non-specialist. As essays are less formal than technical papers, they do not include abstracts, listing of references, etc. Typical essay lengths are up to 3,000 words. Short articles are between 400 and 800 words and focus on how a technology works, evolution or advancement of a technology as well as viewpoint/commentary pieces. All content in the JOT is published in open access format, making each issue accessible to anyone, anywhere in the world. Submissions and inquiries should be forwarded to info@thejot.net.

All themes are approached from a Blue Economy perspective.

Winter 2024 Safety first: humans at sea

Spring 2025 Marine tourism

Summer 2025 Ocean monitoring

Fall 2025 Maritime security

Winter 2025 Indigenous use of technology

CIOOS 24, 25

Educational Passages 11

Marine Institute 48, 62, 168

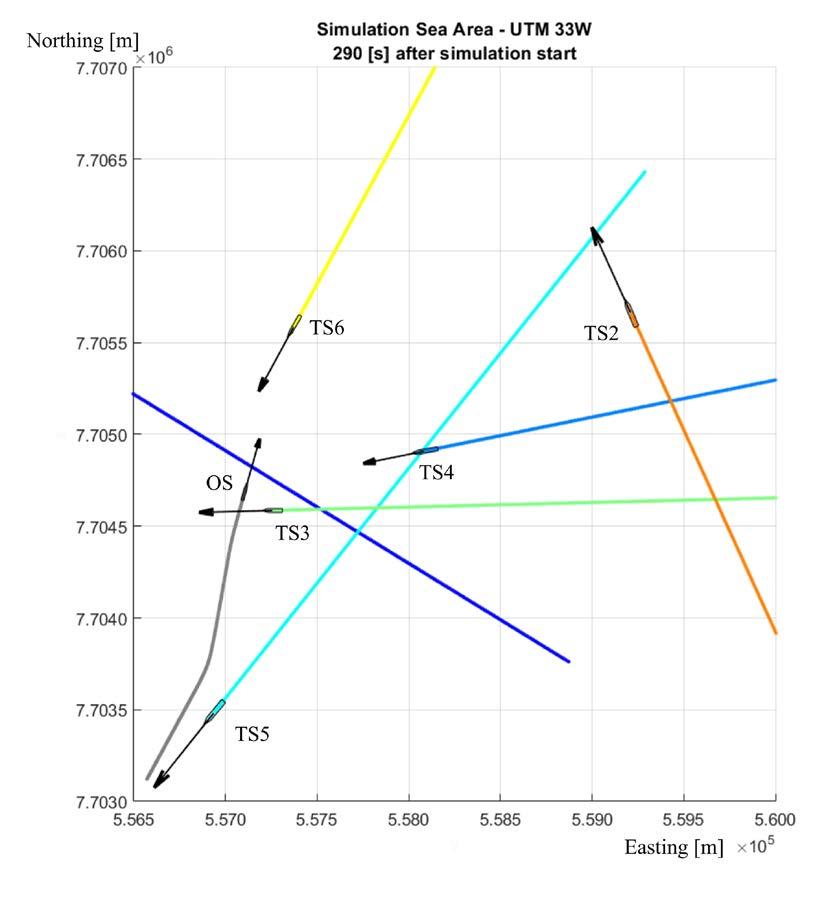

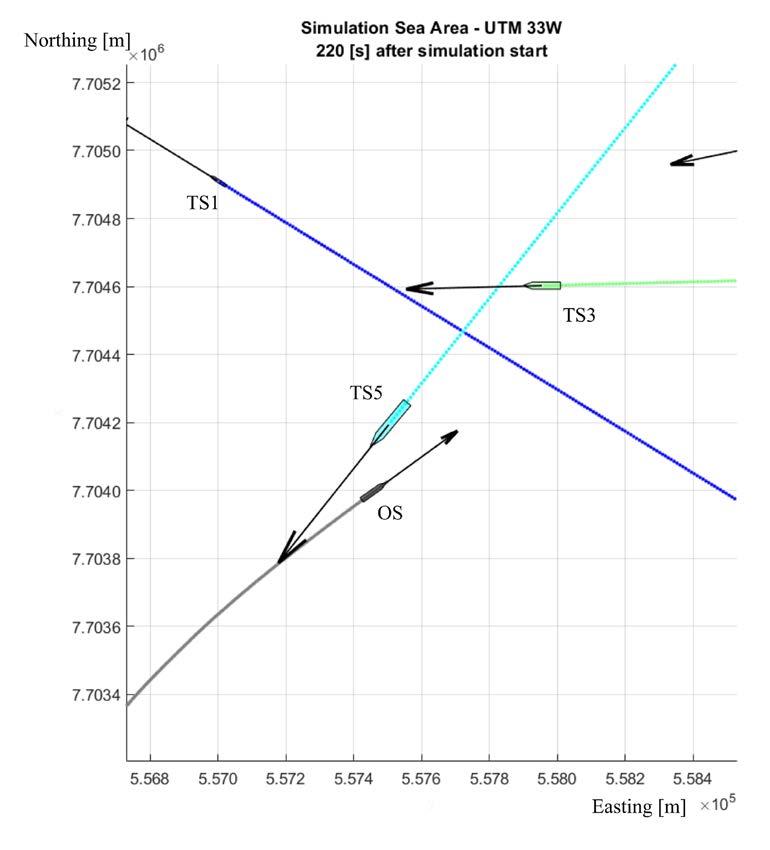

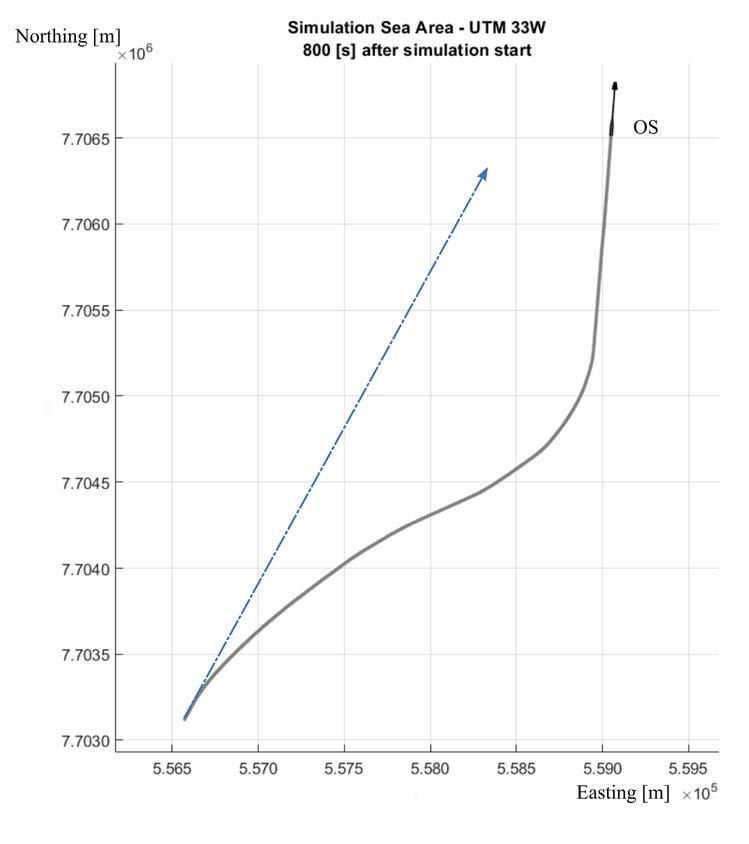

OceansAdvance IBC

SBG Systems 40

Workboat Show IFC

Each issue of the JOT provides a window into important issues and corresponding innovation taking place in a range of ocean sectors – all in an easy-to-read format with full colour, high-resolution graphics and photography.

The Journal of Ocean Technology c/o Marine Institute P.O. Box 4920 155 Ridge Road St. John's, NL A1C 5R3 Canada

+001 (709) 778-0763 info@thejot.net www.thejot.net

It has been over 120 years since the first underwater photographs were captured. French scientist Louis Boutan wanted to bring the visuals of the underwater world he discovered while diving to the surface. He and his colleagues developed not only an underwater camera, but a lighting system and a remote trigger to accompany it. Keep in mind the technical challenges that had to be overcome to illuminate and capture an underwater scene. Not only did the camera’s mechanics have to be kept dry, but at that time, flash photography required a substance that would burn. This innovation speaks to the ingenuity of people dedicated to a goal: if the solution to a problem does not already exist, we will create one.

Underwater photography has come a long way since the time of Boutan, as evidenced by our beautiful cover. The image was taken more than 2,500 m below the surface, in a region of hydrothermal vents on the East Pacific Rise. Such regions of the deep ocean, sometimes looking more like an alien planet than the world we are familiar with, are only available to us through the use of lights, cameras, and sensors.

Cameras and their necessary lights may be some of the oldest sensing technology, but they are being used in many new and novel ways. High-resolution imagery is being used to construct colour-accurate 3D models of underwater structures, be they natural or manmade, to give us a more complete picture of what is going on. This could be used to give biologists a more complete understanding of the ecosystem around a hydrothermal vent, show engineers where corrosion is occurring on subsea infrastructure, or track the deterioration of a shipwreck.

While the imagery captured by underwater cameras is stunning, pictures alone are not enough to tell the whole story. Other sensors are needed to help us understand the marine environment, and we showcase just some of them in this hefty edition of the JOT.

Monitoring sounds underwater is just as old as underwater photography and is similarly being used in new ways. Sophisticated hydrophones are being used to capture not only the natural sounds within the ocean, but also the manmade sounds that contribute to ocean noise pollution. These sounds can even be visualized on top of imagery, producing a “sound map” that shows areas of intensity. While the Bay of Fundy has been inspiring awe since time immemorial, we know very little about how animals navigate its turbulent waters. Acoustic receivers deployed within the passages are gathering new insights as animals with acoustic tags pass through the area.

Collecting eDNA from water samples allows researchers to determine what organisms are living in a region without ever having to witness an individual of a species, which is especially important for elusive or endangered species. This field is still growing in potential, and researchers are working hard to overcome the challenges. Continuing with the theme of things we humans cannot sense without augmentation, magnetometers allow us to detect disturbances in the magnetic field that could lead to finding unexploded ordnance or debris from a shipwreck.

While single data points are better than no data points, continuous monitoring of a system is the best way to learn about what is happening. This allows us to catch changes early and have enough data to make predictions about what could happen in the future. That could mean installing sensors in a fixed location, or equipping various sensors on a vehicle that could be crewed or uncrewed. Whether those sensors are continuously monitoring an aquaculture pen to ensure conditions are optimal for fish growth or collecting data in remote regions of the vast ocean, sensors that collect data 24/7 are of great benefit. Furthermore, those continuously operating sensors can be used to capture data during unexpected or dangerous events, as researchers with the Australian Institute of Marine Science found out when sensors within their ReefWorks test range endured (for the most part) Tropical Cyclone Kirrily.

Just like our own eyes, ears, and nose, sensors allow us to take in information about the surrounding environment. But taking in the information is not the only goal. We need to use that information, process it, to turn it into something useful, like a deeper understanding of how the ocean works, or make decisions necessary to ensure the resources provided by the ocean are used effectively and sustainably.

On its historic voyage 150 years ago, the HMS Challenger investigated the biological structure of the deep sea for the first time. Spanning nearly four years (1872-1876), nearly 69,000 nautical miles, and returning with more than 100,000 samples, the Challenger mission has been celebrated for its unprecedented scale of marine scientific endeavour and for quickly advancing knowledge of ocean processes through the repeated deployment of sensors and collectors.

However, reflecting on both the HMS Challenger mission and progress in oceanography, John Murray (1885) predicted: “This science cannot, from its nature, advance slowly and gradually; it must proceed by strides, which will probably be as far apart in point of time as they are important with respect to discovery.” Murray’s prediction has since been supported by rapid advances in many fields (e.g., acoustics, digitalization, and satellite communications), expanding and improving the suite of available oceanographic sensors towards increasingly optimized data collection.

More recently, strides have been taken to create new survey tools, employing the power of genetic analyses to sense the biodiversity in all areas of the ocean within oceanographic survey programs. Just as the researchers aboard the HMS Challenger had to optimize their equipment to complete their historic expedition, molecular sensors are now at a stage where they must be optimized to complement or perhaps replace some of the more conventional survey methods used today. This essay outlines key terminology and workflows to move towards optimizing environmental DNA analyses.

What is Environmental DNA and Why is it so Useful?

All living things leave traces of DNA that are passed into the environment through substances, including mucus, scales, hair, and feces. Through advances in genetic research, tools and methods have been developed to identify this shed genetic material, called environmental DNA (eDNA). One of the primary methods that uses eDNA is metabarcoding, which relies on high-throughput sequencing to simultaneously detect the many species present within this “eDNA soup.” Metabarcoding uses sequencing technology to identify short DNA segments unique to each species. These segments are called barcodes and are somewhat analogous to a human fingerprint. Detected barcodes can be compared to a reference library that contains barcodes of known species. Therefore, eDNA metabarcoding can scan many barcodes at once and provide a list of the species detected in a sample. Being able to identify many species within different environments, from the deep-sea environments once explored by the HMS Challenger to tide pools, freshwaters, and even land and air, has made eDNA metabarcoding an extremely versatile biodiversity sensor for many different applications.

The immense potential of eDNA metabarcoding for sensing ocean biodiversity is reflected in its rapidly increasing use across diverse environments. In addition to its versatility in different environments, its noninvasive nature makes it ideal for monitoring biodiversity in sensitive environments such as marine protected areas. Additionally, because sampling marine environments requires only seawater or sediment, collection is straightforward and often does not require a high level of expertise or a specialized research vessel (Figure 1). Consequently, this method is easily scalable, allowing users to collect many samples quickly. These attributes, in combination with a world where qualified taxonomists are increasingly scarce, have transformed eDNA metabarcoding into an ideal method for many users. Despite the many positive aspects of eDNA metabarcoding, transforming a water or sediment sample into a species list is a complex set of tasks, which can be unclear to new users.

While eDNA metabarcoding may seem like a single method to new users, in reality, it is a series of methods where each decision can impact the final output. First, sample pre-processing consists of filtering and/or storing samples after they have been collected from the environment. DNA extraction follows: the contents of the filter or sediment (e.g., cells) are broken down into their basic components, unwanted materials and contaminants are removed, and the DNA is purified and collected. After extraction, the DNA desired for sequencing is amplified with genetic markers (a.k.a. primers), where short sections of DNA that have the potential to differentiate species are copied many times using the polymerase chain reaction (PCR). Afterwards, DNA tags are attached to the amplified fragments so that each sequence can be traced back to its sample of origin in later stages of the process; this is known as library preparation. The tagged DNA is then pooled and loaded into a machine for sequencing, which provides the specific order of bases (genetic building blocks of which there are four types) that make up the DNA fragments. Throughout these steps, quality checks are completed to ensure that the DNA is suitable for analysis and has not been contaminated by artificial sources during collection or in the lab. After the DNA has been sequenced, bioinformatic analysis is initiated, where the sequences are separated by the previously added tags. Those tags are then removed, and sequences are filtered, removing everything that does not pass quality control. The remaining sequences are then grouped into unique sequences, each of which can ideally be assigned to a species by comparing it to a reference database of known species sequences. The output is a list of species from the eDNA samples.
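The bioinformatic steps described above (separating sequences by tag, removing the tags, quality filtering, grouping unique sequences, and comparing them to a reference database) can be illustrated with a toy Python sketch. Everything in it is an assumption made for illustration: the tags, barcode sequences, species labels, quality threshold, and function names are invented, and real pipelines use dedicated tools and similarity-based matching rather than exact lookups.

```python
from collections import Counter

# Hypothetical sample tags and reference barcodes, invented for illustration only.
SAMPLE_TAGS = {"ACGT": "station_A", "TGCA": "station_B"}
REFERENCE = {
    "GGATTCCA": "hypothetical species 1",
    "GGCTTACA": "hypothetical species 2",
}


def demultiplex(reads, tags):
    """Separate reads by their leading tag, then strip the tag."""
    tag_len = len(next(iter(tags)))
    by_sample = {name: [] for name in tags.values()}
    for seq, min_quality in reads:
        sample = tags.get(seq[:tag_len])
        if sample is not None:  # reads with unknown tags are discarded
            by_sample[sample].append((seq[tag_len:], min_quality))
    return by_sample


def quality_filter(reads, threshold):
    """Keep only sequences whose quality score meets the threshold."""
    return [seq for seq, min_quality in reads if min_quality >= threshold]


def assign(unique_counts, reference):
    """Assign each unique sequence to a species by exact match (a simplification)."""
    species_reads = Counter()
    for seq, count in unique_counts.items():
        species_reads[reference.get(seq, "unassigned")] += count
    return dict(species_reads)


def run_pipeline(reads, tags=SAMPLE_TAGS, reference=REFERENCE, threshold=20):
    """Return read counts per species for each sample."""
    results = {}
    for sample, sample_reads in demultiplex(reads, tags).items():
        kept = quality_filter(sample_reads, threshold)
        unique_counts = Counter(kept)  # group identical sequences
        results[sample] = assign(unique_counts, reference)
    return results


if __name__ == "__main__":
    toy_reads = [
        ("ACGTGGATTCCA", 30),
        ("ACGTGGATTCCA", 35),
        ("ACGTGGCTTACA", 10),  # fails the quality threshold
        ("TGCAGGCTTACA", 32),
        ("TGCATTTTTTTT", 40),  # no match in the reference
    ]
    print(run_pipeline(toy_reads))
```

Sequences labelled "unassigned" in this sketch correspond to barcodes missing from the reference database.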

Given the potential applications of eDNA, many researchers and organizations have expressed great interest in using this series of methods. As a result of this enthusiasm, new methods are constantly being developed. The multitude of new and complex methods can often lead to new and even experienced users questioning the optimal processes to implement in eDNA monitoring programs. As with many new technologies and methods, eDNA metabarcoding has been scrutinized for many different reasons. These include the potential for false positives, i.e., detecting species that are not present within the ecosystem of study, and false negatives, i.e., not detecting species that are present within the ecosystem of study. These outcomes can be due to contamination, sequencing limitations or biases, or an incomplete reference database. Because of this, some researchers remain skeptical of the results of eDNA metabarcoding analyses – even when similar problems exist for most conventional methods. Optimizing eDNA programs can, however, reduce the number of false positives and negatives and move towards a powerful sensor that researchers can rely on to detect biodiversity in marine environments.

Building on this basic knowledge of eDNA metabarcoding methodology, this section provides a brief overview of decisions and considerations that can be made in developing an eDNA metabarcoding monitoring program. However, before beginning to optimize an eDNA program, users must understand the feasibility of completing this workflow (Figure 2). Processing eDNA for metabarcoding, including DNA extraction, amplification, library preparation, and sequencing, can be expensive if portions of the workflow are outsourced. This cost can be reduced by completing the work in-house, but doing so requires the facilities and the technical expertise.

Sample collection and post-collection sample processing are arguably the most important steps within eDNA metabarcoding, as they cannot be corrected through reanalysis. Therefore, the sampling design should be laid out well before collection begins. Decisions besides study location and sampling depth include sample volume, replicates, filter size and material (if sampling water), preservation methods, and contamination prevention measures. When eDNA metabarcoding was in its infancy, collecting small volumes of environmental samples, such as 250 ml, was deemed sufficient. However, the field has been moving towards collecting larger volumes (i.e., multiple litres) when analyzing eDNA from animals that are more sparsely distributed to obtain a more thorough depiction of the species present. Larger volumes may not always be possible, depending on the availability of at-sea collection containers. In this case, using multiple replicates can also increase the number of species detected via eDNA metabarcoding. Additionally, when collecting seawater, users should consider filtering the sample immediately, before storing it. This limits DNA degradation, as temperature and ultraviolet radiation can quickly destroy DNA in samples. Ideally, filtering samples should be completed in a clean space to prevent contamination (Figure 3). Although this cannot always be achieved depending on vessel capacity, users should consider including blanks (i.e., controls) in the field, in which clean, distilled water in place of eDNA samples is run through the sampling process to identify contaminating sources/species for removal in later processes.
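As a minimal sketch of the two ideas above, the Python below pools detections across replicates and removes anything that also appears in a field blank. The function names and species labels are hypothetical, and treating every species seen in a blank as contamination is an assumed, deliberately strict rule; programs may instead apply read-count thresholds.

```python
def pooled_species(replicates):
    """Return the union of species detected across replicate samples."""
    pooled = set()
    for detected in replicates:
        pooled |= set(detected)
    return pooled


def remove_blank_detections(sample_species, blank_species):
    """Drop species that also appear in a field blank (assumed contamination rule)."""
    return set(sample_species) - set(blank_species)


if __name__ == "__main__":
    # Hypothetical detections from three replicates at one station.
    replicates = [
        {"species 1", "species 2"},
        {"species 2", "species 3"},
        {"species 1", "species 4"},
    ]
    field_blank = {"species 4"}  # detected in distilled water, so treated as a contaminant

    pooled = pooled_species(replicates)
    print(sorted(remove_blank_detections(pooled, field_blank)))
```

No single replicate in this example contains all of the retained species, which is the reason replicates can lengthen the species list.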


When choosing filters, one should consider the size and material of the filter, as both can influence the amount and type of DNA collected. Filters with smaller pore sizes can collect greater concentrations of eDNA but can be easily clogged depending on water volume and turbidity. The same goes for different kinds of filter material. Therefore, users should carefully consider the environment and volume of filtered water when selecting filters. As for storage of filters and/or sediment, freezing them in sterile bags at -20 to -80°C is one of the most common methods. However, as with filtering, a freezer is not always available during at-sea research; in that case, other preservatives can be used.

Laboratory processes and sequencing can begin after sample collection and pre-processing. The first step is DNA extraction, which has been made accessible to many researchers and organizations thanks to the multitude of available commercial kits. This includes commonly used kits for water, sediment, and animal tissue extraction, which can involve mechanical and/or chemical extraction. Kits allow users to choose the optimal extraction protocol for their specific needs. It is also possible to include an extraction blank at this point to identify any contamination that may have occurred during laboratory processes. Lastly, extracts can be archived for long-term storage, allowing the opportunity for future (re)processing (see below).

Choosing the genetic markers is another key choice within the eDNA metabarcoding process, as it determines which species sets are targeted (Figure 4). Each marker has inherent biases. This is because each marker targets a short genetic region, and a single region is incapable of differentiating all species. For example, if the study specifically targets fish, then genetic markers designed to differentiate fish sequences should be chosen. However, users should consider that a single genetic marker cannot detect all species or groups of species and that using combinations of markers better characterizes community structure.

Library preparation and sequencing are closely linked, as the way tags are added to the sequence fragments depends on the sequencing machine used. Sequencing machines are often expensive to purchase, and therefore, this step is often outsourced. There are many different machines from manufacturers such as Illumina, PacBio, and Oxford Nanopore, with each sequencer model offering a different sequencing depth and accuracy. Deeper sequencing allows users to sequence more of the DNA fragments from a sample, which means it has a better chance of capturing rarer species. However, higher sequencing depth comes at a greater cost; therefore, users must decide whether detecting rare species within samples is worth the extra expense. Additionally, if the study goal requires data products quickly for methodological adaptations, there are portable sequencing machines that can allow for immediate sequencing, even in offshore environments. However, these portable sequencers generally have lower sequencing depth and reduced accuracy compared with some sequencers from other manufacturers, potentially missing rare species detections. Therefore, the choice of optimal sequencer should be based on the priorities of the program.
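The trade-off between sequencing depth and rare-species detection can be made concrete with a deliberately simplified model, which is an assumption and not a method from this essay. If each read is treated as an independent draw and a species contributes a fraction f of the amplified DNA, the chance of at least one read from it among N reads is 1 - (1 - f)^N. Real data depart from this because of amplification bias and sequencing error, so the numbers are illustrative only.

```python
import math


def detection_probability(read_fraction, n_reads):
    """Probability of at least one read from a species under independent sampling."""
    if not 0.0 < read_fraction < 1.0:
        raise ValueError("read_fraction must be between 0 and 1 (exclusive)")
    return 1.0 - (1.0 - read_fraction) ** n_reads


def reads_needed(read_fraction, target_probability):
    """Smallest number of reads giving at least the target detection probability."""
    if not 0.0 < read_fraction < 1.0:
        raise ValueError("read_fraction must be between 0 and 1 (exclusive)")
    if not 0.0 < target_probability < 1.0:
        raise ValueError("target_probability must be between 0 and 1 (exclusive)")
    return math.ceil(math.log(1.0 - target_probability) / math.log(1.0 - read_fraction))


if __name__ == "__main__":
    rare_fraction = 1e-4  # hypothetical: one in 10,000 fragments comes from the rare species
    for depth in (1_000, 10_000, 100_000):
        p = detection_probability(rare_fraction, depth)
        print(f"{depth:>7} reads: detection probability {p:.3f}")
    print("reads for 95% detection:", reads_needed(rare_fraction, 0.95))
```

Under these assumptions, moving from thousands to tens of thousands of reads per sample changes a rare species from usually missed to usually detected, which is the cost-versus-detection decision described above.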

While bioinformatics can seem daunting, many pipelines (software suites) have been created for those inexperienced with its software and programming languages. Choosing a pipeline for a particular program can be difficult, but one factor to consider is the operating system available, such as Linux, macOS, or Windows, as some software is limited to specific platforms. Additionally, the choice of reference database can be critical, as this is how the species sequences found in environmental samples are identified. The largest reference database, NCBI’s GenBank, has many sequences of identified species. However, the contents of this database are not verified and can contain misidentified species sequences. Because of this, it is sometimes best to use a curated reference database of verified sequences. This is ideal when users know which species should be found within the system of study. However, one of the major liabilities of eDNA metabarcoding is that reference databases often lack species, as many have yet to be sequenced and added to these databases, a problem that is more prominent for understudied species or environments.

eDNA metabarcoding is most often used for biodiversity assessments using presence/absence data. Using eDNA metabarcoding to quantify species abundance is still in its infancy. However, some studies have been able to correlate eDNA sequence abundances with the local abundance or density of a species. These data have been used for monitoring ecosystems and conservation areas, finding invasive or endangered species, and much more. Many of the studies completed have compared the power of eDNA metabarcoding to other conventional technology, such as trawls and camera surveys, and most have found that eDNA metabarcoding detects greater diversity than the conventional techniques it was compared to, showcasing the capability of eDNA metabarcoding. However, as previously mentioned, eDNA metabarcoding does have limitations, and complementary methods may need to be used strategically alongside it to address study goals. Applications of eDNA continue to expand, such as using eDNA metabarcoding for food web analysis to answer ecological questions, increasing the versatility of this molecular sensor.
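The conversion from sequence read counts to presence/absence data can be sketched as follows. The sample names, species labels, counts, and the minimum-read threshold are all hypothetical; the threshold is an assumed convention for discounting very low read counts, not a value recommended in this essay.

```python
def presence_absence(read_counts, min_reads=1):
    """Convert per-sample read counts into a 0/1 presence/absence table."""
    all_species = sorted({sp for counts in read_counts.values() for sp in counts})
    return {
        sample: {sp: int(counts.get(sp, 0) >= min_reads) for sp in all_species}
        for sample, counts in read_counts.items()
    }


def richness(table):
    """Count the species scored as present in each sample."""
    return {sample: sum(row.values()) for sample, row in table.items()}


if __name__ == "__main__":
    # Hypothetical read counts per species at two stations.
    counts = {
        "station_A": {"species 1": 120, "species 2": 1},
        "station_B": {"species 2": 40},
    }
    table = presence_absence(counts, min_reads=2)
    print(table)
    print(richness(table))
```

Note that the read counts themselves are discarded in this step, consistent with abundance estimation from eDNA still being in its infancy.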

One of the many benefits of eDNA metabarcoding is the ability to reanalyze data at different parts of the workflow. For example, suppose new genetic markers are created. In such a case, DNA extracts, when archived, can be reanalyzed with new genetic markers targeting species that may have been missed. Additionally, as reference libraries are updated, sequences can be re-run through a bioinformatic pipeline to determine if species were missed due to an incomplete reference database. There are few conventional methods that can be reanalyzed as effectively and efficiently as eDNA metabarcoding.
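Re-running stored sequences against an updated reference library can be pictured with a short sketch. The sequences, species label, and function name are hypothetical, and exact-match lookup again stands in for the similarity searches a real pipeline would perform.

```python
def reassign(unassigned_sequences, updated_reference):
    """Re-check previously unassigned sequences against an updated reference library."""
    newly_assigned = {
        seq: updated_reference[seq]
        for seq in unassigned_sequences
        if seq in updated_reference
    }
    still_unassigned = [seq for seq in unassigned_sequences if seq not in updated_reference]
    return newly_assigned, still_unassigned


if __name__ == "__main__":
    # Hypothetical sequences that found no match when the samples were first analyzed.
    archived_unassigned = ["TTTTTTTT", "CCCCAAAA"]
    # Hypothetical later release of the library with one barcode added.
    updated_library = {"TTTTTTTT": "hypothetical species 5"}

    resolved, remaining = reassign(archived_unassigned, updated_library)
    print(resolved)
    print(remaining)
```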

Alongside efforts to optimize eDNA metabarcoding, there has been a large push in the scientific community to standardize eDNA metabarcoding. Standardizing eDNA workflows will enable researchers and organizations to achieve comparable and reproducible data, allowing them to compare datasets from different studies or geographical locations. However, the variety of applications of this technology will create conflicts in achieving both optimal practices and standardization. Standardizing this field may not benefit individual studies as different environments (freshwater vs. marine, coastal vs. offshore vs. estuarine) and objectives, such as species of interest, may require different methods for optimal results that would not conform to a set standard. As methods and tools quickly advance, the standard protocol with current technology may not be ideal in the future. Therefore, standardizing all aspects of the eDNA workflow may not benefit researchers or groups using eDNA metabarcoding.

Widescale standardization may not be feasible, but standardization may still be beneficial within more specific fields. For example, research groups and large organizations can incorporate a common general biodiversity genetic marker in all eDNA metabarcoding programs. Though this will marginally increase the cost of each program, it allows a portion of the data to be compared across different studies/regions using eDNA. Additionally, since laboratory analyses can be repeated on archived material, having standard sample collection and pre-processing methods enables the possibility of creating a standard dataset, if desired. Further, optimal practices can be instilled for studies within a program, such as the use of blanks in both the laboratory and the field and consistent preservation methods. These compromise solutions illustrate how eDNA metabarcoding workflows can be standardized for large-scale programs while maintaining optimal methodologies for individual studies.

One hundred and fifty years after the celebrated HMS Challenger expedition, the quest continues to explore the diversity of life in the deep ocean frontier and across the world’s other ecosystems that are increasingly under threat. eDNA metabarcoding is already a great asset in achieving this goal. However, it requires optimization, as did the methods of past eras. Reflecting on John Murray’s quote, we can see that eDNA technology has advanced, and continues to advance, science in great strides. The immense versatility and accessibility of this molecular sensor will no doubt solidify it as a core survey tool for years to come. Just as the researchers of the HMS Challenger could not have envisioned the ability to detect marine communities from a cup of seawater, there is little doubt that future developments in genetic tools will be beyond our imagination of today.

Gordon H.R. de Jong is a master’s student in fisheries science at the Fisheries and Marine Institute of Memorial University of Newfoundland. Having interests in genomic methodologies, marine ecology, and conservation, his primary aim for his master’s is to understand the potential application of environmental DNA metabarcoding for monitoring Canadian marine conservation areas, specifically focusing on how community composition, determined using environmental DNA, changes across space.

David Côté is a research scientist at Fisheries and Oceans Canada, working within a team devoted to achieving Canada's marine conservation targets. Collaborating closely with Indigenous communities, harvesters, and academic institutions, this team harnesses interdisciplinary synergy to characterize and safeguard biodiversity in some of Canada's most beautiful marine areas.

Dr. Jonathan A.D. Fisher is an associate professor and research chair in marine fisheries ecosystems dynamics at the Centre for Fisheries Ecosystems Research, Fisheries and Marine Institute of Memorial University of Newfoundland. His primary research goals are to understand and quantify how changing environmental conditions and fisheries alter the characteristics and recovery dynamics of marine populations, communities, and ecosystems – with a focus on Newfoundland and Labrador and the eastern Canadian Arctic.

Developing Sensing Strategies for Uncrewed Systems Testing and Proving

by Michelle Barnett, Patrick Bunday, and Vic Grosjean

With the ever-increasing pressures of climate change, population growth, and human use, the state of the world’s ocean is in decline. Yet, by improving our understanding of the ocean and comprehension of these pressures, there is opportunity to drive a healthy, resilient, and productive ocean that benefits human safety, well-being, and prosperity.

To better understand the ocean environment, enhanced ocean observation and measurement is essential. This involves development of next-generation technologies from subsea sensors to autonomous monitoring systems, and the establishment of safe, economical, environmentally sustainable, and efficient ocean observation operations. This means that uncrewed vehicles and intelligent instrumentation have an increasingly significant part to play in ocean measurement campaigns.

Development of next-generation marine technologies includes testing, and as such we are seeing development of subsea test beds in several countries across the world. A prime example of an underwater testing environment is being delivered in Plymouth, UK, by the Smart Sound Connect Subsurface (SSCS) project. Using instrumentation supplied by technology partners Sonardyne International Ltd., the SSCS project is delivering an underwater acoustic communications and navigation network that will link to existing surface assets to facilitate the world’s first ocean-focused 5G proving ground for subsea innovation (Figure 1). Integrated seabed sensor nodes will also provide real-time reporting of

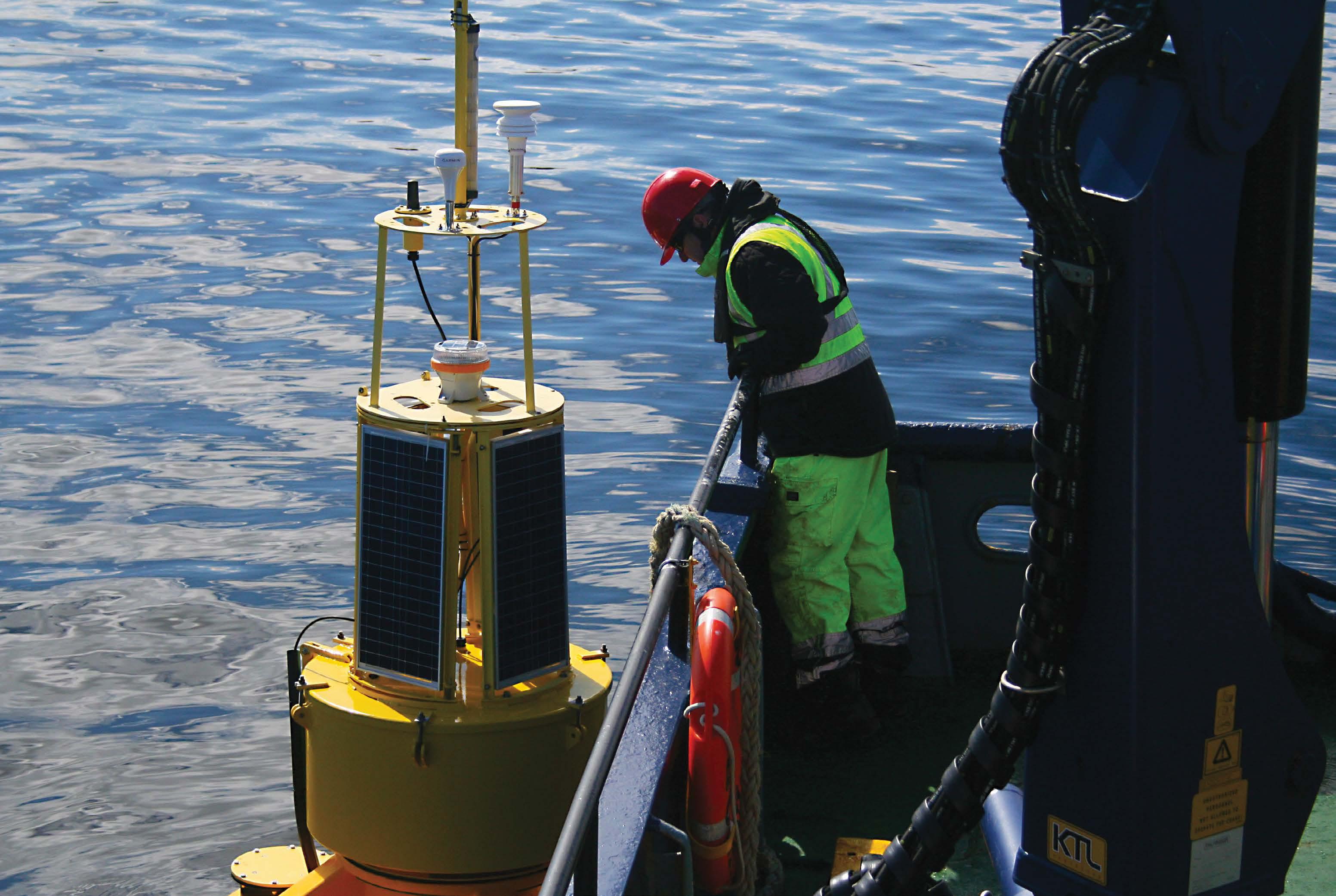

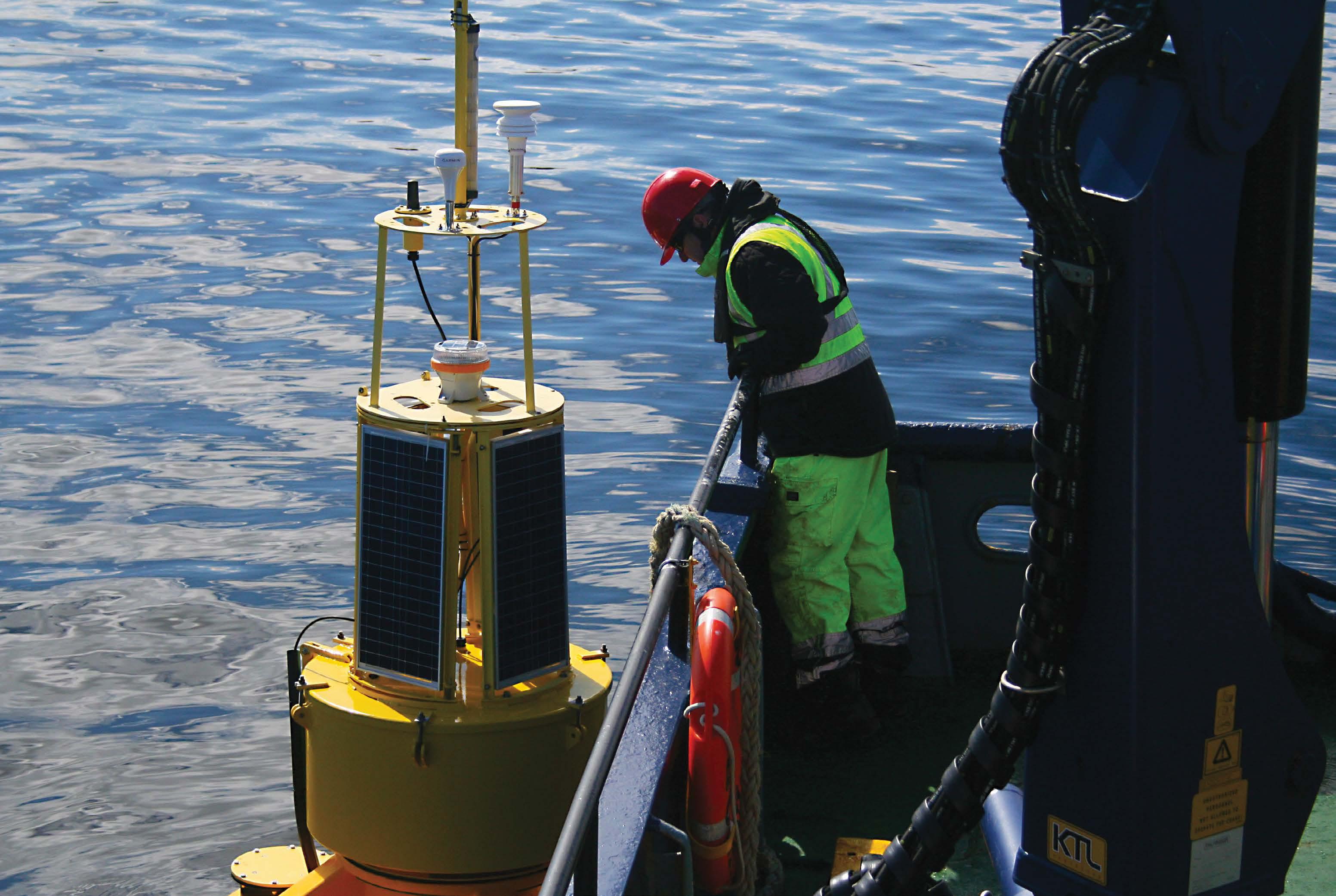

oceanographic parameters (currents, waves, temperature) critical for operational safety and for full calibration of the test facility (Figure 2).

Sonardyne International Ltd. is a global subsea engineering company specializing in the design, manufacture, and supply of acoustic positioning, inertial navigation, acoustic and optical communications, monitoring, wireless control, and autonomous data acquisition products for a diverse range of underwater applications.

Similar to Smart Sound, several other subsea technology testbeds are being developed in temperate and even boreal climates. Indeed, the focus of subsea technology testbed development has been in such climates. Yet, 42% of the world’s ocean is tropical, a marine environment very different to its temperate and boreal counterparts, presenting a whole host of operational challenges, including extreme heat, aggressive biofouling promoted by the warm waters, strong ocean currents, remote expanses, hazardous marine predators, substantial sand shifts, and extreme tropical weather.

One of the first to address the gap presented by the lack of tropical underwater testbeds was the Australian Institute of Marine Science (AIMS) with its ReefWorks test ranges. AIMS, headquartered in Townsville on the northeastern coast of Queensland, Australia, is a world leader in tropical marine

research, providing unique insight into Australia’s tropical waters, and knowledge to develop globally relevant and innovative research solutions. The institute is developing ReefWorks, a sandbox consisting of several authorized test ranges situated within the Great Barrier Reef, which covers approximately 348,000 km². This premier tropical marine test facility provides a flexible, scalable architecture to overcome environmental complexities unique to tropical Australia, allowing for the development of trusted uncrewed marine technologies in tropical waters (Figure 3). At ReefWorks, marine system developers can rigorously test their platforms in real-world conditions to improve and demonstrate technology readiness level to regulators, thus facilitating the transition

from traditional human-centric data acquisition methods to uncrewed marine technologies.

To support its ReefWorks users, AIMS needed to identify an array of integrated real-time technologies for understanding test range conditions to support system testing and proving in the challenging tropical, shallow environment. Specific challenges presented by the ReefWorks environment include warm water, turbidity, currents, shallow water, and sea states that change rapidly with the wind and tide.

To meet these diverse testing requirements, a solution integrating real-time current and water quality information was proposed as an initial proof-of-concept. This solution was to

Figure 4: Deployment map of ReefWorks trial showing deployment locations of instrumentation at influence and control locations. Technologies deployed include Sonardyne Origin 600 ADCPs, YSI DB600 water quality Xylem buoys, turbidity SESA buoys, smart moorings, and Spotter buoys.

bring together recent advancements in real-time underwater communication and machine-assisted data analysis to enable seamless integration of standard communication protocols into oceanographic marine sensing.

Between November 2023 and March 2024, AIMS, with the support of partners including Sonardyne, put this solution into action.

A range of technologies with real-time data capability were extensively trialled, including wave buoys, ADCPs, and water quality multiparameter instruments integrated on floating buoys linked to an Eagle.io cloud-based data visualization platform (Figure 4). The proposed outcomes were to (1) identify technologies able to deliver real-time data that supports development of an autonomous systems test range; and (2) collect data that will support future activities, such as periodic maintenance dredging and sea water pumping for the National Sea Simulator.

Sonardyne was responsible for supplying ADCPs for the proof-of-concept study at the ReefWorks facility, specifically its Origin 600 ADCPs. The Origin 600 is an “all-in-one” unit ADCP with integrated modem and onboard

Edge data processing functionality (Figure 5); the pairing of these two features enables real-time data reporting of critical oceanographic variables, including currents, waves, and temperature, from the seabed to the surface. The Edge functionality works to process data on board the instrument via implementation of a data processing algorithm that outputs an NMEA-format string small enough to be exported over the acoustic modem.
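To illustrate why a compact NMEA-format string suits a low-bandwidth acoustic link, the following minimal sketch packs a few processed values into one NMEA 0183-style sentence and appends the standard XOR checksum. The sentence identifier `PEDGE`, the field order, and the sample values are hypothetical; the actual output format of the Origin 600 Edge algorithm is not described in this article.

```python
from functools import reduce


def nmea_checksum(payload: str) -> str:
    """XOR of every character between '$' and '*', as two uppercase hex digits."""
    return f"{reduce(lambda acc, ch: acc ^ ord(ch), payload, 0):02X}"


def build_sentence(identifier: str, fields: list) -> str:
    """Join the identifier and fields with commas and append the checksum."""
    payload = ",".join([identifier] + [str(f) for f in fields])
    return f"${payload}*{nmea_checksum(payload)}"


def is_valid(sentence: str) -> bool:
    """Recompute the checksum on the receiving side and compare."""
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    payload, _, checksum = sentence[1:].partition("*")
    return nmea_checksum(payload) == checksum.strip().upper()


# Hypothetical summary: current speed (m/s), direction (deg),
# significant wave height (m), water temperature (deg C).
sentence = build_sentence("PEDGE", [0.42, 135, 1.8, 28.6])
print(sentence, len(sentence), "characters")
```

A summary of this kind occupies only a few dozen characters, whereas the raw profile data it is derived from would be far larger, which is the point of processing on board before transmitting.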

Leveraging the capabilities of the Origin 600 ADCP running a currents and waves data processing Edge algorithm, and by integration of a Sonardyne topside “Nano” modem with a YSI DB600 water quality Xylem data buoy (Figure 6), real-time oceanographic data on the range could be obtained. AIMS and Sonardyne collaborated to integrate the data exported via the Nano modem into Eagle.io via the Xylem buoy logger. Modifications to the CRBasic script on the Campbell datalogger facilitated data extraction from the Nano modem attached to the Xylem buoy, enabling real-time data retrieval to Eagle.io.

The Eagle.io dashboard (Figure 7) enabled the observation of oceanographic parameters


Figure 6: Subsea (Sonardyne Origin 600) and topside (Sonardyne “Nano” modem attached to YSI DB600 water quality Xylem buoy) instrumentation set-up for real-time reporting of oceanographic data on the ReefWorks range.

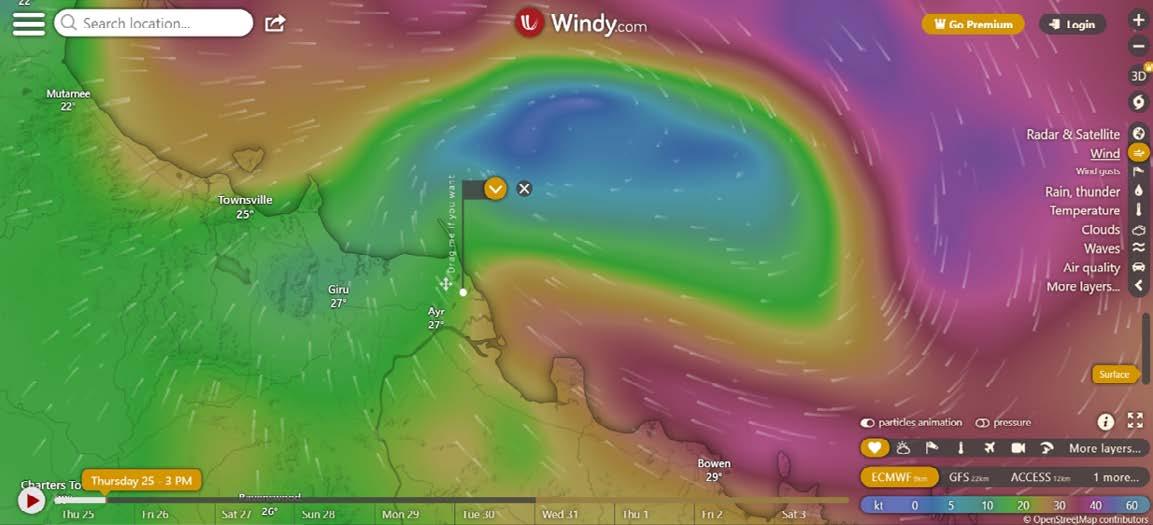

and system statuses over the deployment period, and aided decision-making on test timing and platform selection. The comprehensive collection of data captured encompassed solar and atmospheric conditions, hydrographic data, wave parameters, power metrics, instrument status, and location data. Furthermore, the real-time map displayed on the Eagle.io dashboard tracked asset movement, enabling proactive monitoring and responsive decision-making based on current asset locations and conditions in the field (e.g., anchor slip, mooring failure), with autonomous real-time alerts set up to ensure operational integrity and timely response to anomalies. Specific alerts for instrument malfunction, high turbidity, extreme weather, battery voltage, positioning ringfence, logging, and abnormal sudden turbidity increase were included. Many of these alerts were triggered by the passage of Cyclone Kirrily, which served as a pivotal scenario to assess the resilience of the tested technologies deployed during the cyclone season (Figure 8). The tropical cyclone

challenged the trial by damaging most of the instrument buoys. However, its passage was initially tracked hour by hour from a remote location in real time thanks to the technologies selected for the trial. This in itself is worthy of examination.
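The alert types listed above can be pictured as simple rules evaluated on each new reading. The sketch below is a generic illustration only: it does not use the Eagle.io API, and every threshold, field name, and sample value is an assumption chosen for the example rather than a setting from the trial.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Reading:
    turbidity_ntu: float
    battery_v: float
    east_m: float   # buoy offset east of its deployed position
    north_m: float  # buoy offset north of its deployed position


# All limits below are illustrative assumptions, not values from the trial.
TURBIDITY_MAX_NTU = 50.0
TURBIDITY_JUMP_NTU = 20.0
BATTERY_MIN_V = 11.5
RINGFENCE_RADIUS_M = 30.0


def check_alerts(previous: Reading, current: Reading) -> list[str]:
    """Return the names of every alert rule the current reading trips."""
    alerts = []
    if current.turbidity_ntu > TURBIDITY_MAX_NTU:
        alerts.append("high turbidity")
    if current.turbidity_ntu - previous.turbidity_ntu > TURBIDITY_JUMP_NTU:
        alerts.append("sudden turbidity increase")
    if current.battery_v < BATTERY_MIN_V:
        alerts.append("low battery voltage")
    if hypot(current.east_m, current.north_m) > RINGFENCE_RADIUS_M:
        alerts.append("outside positioning ringfence")
    return alerts


calm = Reading(turbidity_ntu=8.0, battery_v=12.6, east_m=2.0, north_m=-3.0)
storm = Reading(turbidity_ntu=65.0, battery_v=11.2, east_m=140.0, north_m=90.0)
print(check_alerts(calm, storm))
```

A ringfence rule of this kind would flag a buoy whose mooring has failed as soon as its reported position leaves the permitted radius, which is the sort of event the cyclone produced.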

Extreme weather events like tropical cyclones provide critical real-world test scenarios for evaluating the robustness of deployed technologies. The increasing prevalence of such events underscores the need for resilient and adaptive marine technology testing environments. The tests conducted at ReefWorks faced a rigorous weather event during Tropical Cyclone Kirrily, which reached Category 3 at 3pm AEST on January 25, 2024 (Figure 9).

On the approach of the cyclone, some team members relocated to Melbourne to undertake the monitoring of the cyclone in an area not affected by power loss. A support team

remained in the Townsville region, which was already affected by power loss issues. Tropical Cyclone Kirrily made landfall on January 25, 2024, impacting deployed systems in the test zone. Wind and wave activity escalated throughout the day, leading to the loss of contact with the Cape Bowling Green weather station and one ADCP at 1pm. Turbidity levels and wave heights increased at 3pm when the cyclone reached Category 3. Between 4pm and 5pm, the Xylem buoy bungee cable snapped, and the buoys headed towards the rock cliff (Figure 10).

By 7pm, wave heights reached nearly three metres. At 8pm, AIMS lost power on-site resulting in significant data gaps, including the loss of data collected by the Bureau of Meteorology Automatic Weather Station (AWS) located on the wharf. Satellite Iridium-

enabled devices continued to report wave and position data until the connectivity fully stopped overnight.

Cyclone Kirrily revealed critical challenges and insights from deployed systems. Redundancy in communication systems was crucial when 4G connectivity was lost, prompting a shift to redundant instruments with Iridium-enabled satellite links. Larger assets, particularly non-cyclone-proofed buoys, faced heightened risks, underscoring the need for robust and modular infrastructure capable of withstanding extreme conditions. ADCPs deployed at shallower depths were susceptible to sediment burial.

Power outages disrupted data acquisition efforts, affecting systems without uninterruptible power supplies (UPS), especially security cameras. Strategic

placement of these cameras proved essential, with recommendations for elevated installations to enhance coverage and resilience. Accurate asset positioning during deployment emerged as crucial for efficient post-cyclone recovery operations.

Despite the challenges, Eagle.io’s real-time monitoring capabilities were invaluable, though enhancements in data visualization and the use of physical cameras with reliable power

backup are needed. This would particularly help prevent data misinterpretation, such as when the Xylem buoys continued to report turbidity and CTD data the day after the cyclone while lodged on the cliff (Figure 11).

Over the days following the cyclone, service was slowly re-established. Recovery efforts revealed varying states of disrepair among

the equipment: buoys had disappeared, and one ADCP was buried. The few test systems that remained active were significantly weakened and detached or went offline a couple of weeks after the cyclone. The use of scuba divers and helicopters for equipment recovery (before or after the cyclone) was identified as more effective and safer than traditional vessel-crane-based methods for efficient asset retrieval.

Post-cyclone recovery efforts focused on assessing asset displacement and damage. Buoys were all recovered in varying states of disrepair, necessitating refurbishment, upgrades, and recalibration. The loss of an SLB700 buoy highlighted some vulnerabilities in the deployment location strategies.

Lessons from recovery efforts emphasized redundancy in communication systems, robust construction and deployment practices for larger assets, the need for seasonal suitability assessments, and the necessity for UPS systems. Strategic placement of security cameras and accurate asset positioning during deployment emerged as critical for efficient asset recovery. The effectiveness of alternative recovery methods was highlighted, offering lower cost, greater flexibility, and better safety for future operations.

The trial was certainly a success, demonstrating how real-time data from a variety of sensors can be collected, collated, and transmitted, and how such real-time monitoring capability is key for establishing efficient test regimes. This opens the potential for the development of a marine synthetic environment (digital twin), delivering information on a variety of parameters to support the needs of ReefWorks range users, or to support and inform operators of sea water pumping and maintenance dredging activities.

While a success, the trial also faced challenges presented by the shallow, tropical environment, culminating in the extreme weather event, and these are worth exploring

too. We include assessment of the efforts to overcome specific challenges, a closer examination of the impact of the cyclone on real-time subsea to surface communications, and insights gained from the trial.

The first challenge was establishing communications between the Origin modem and the Nano modem mounted on the YSI buoy in one of the most difficult environments for acoustic communications: shallow water, in this case only 6-8 m deep. The strategy for approaching this challenge was to mount the Origin 600 ADCPs on a low-profile frame in line of sight with the Nano modem on the Xylem buoy and adopt a special mooring design using bungees. These bungees helped maintain line of sight by preventing the YSI data buoys from moving far away from the seabed ADCPs; however, they were unable to withstand extreme weather events.

Communications were extensively challenged by the passing of Cyclone Kirrily. The violent weather conditions provided real insights into the most extreme conditions the equipment could face when deployed. The Origin 600 ADCPs continued to log data subsea and could still have been remotely accessed via their integrated modems if the Nano modems on board the Xylem buoys had not been compromised by striking the rocks. This gave important insights into the requirements for surface infrastructure to support the establishment of ReefWorks test ranges.

In addition to presenting a challenge to communications, the shallow water on the ReefWorks inshore test range imposed a requirement for the design and implementation of special low-profile ADCP frames to prevent the YSI DB600 water quality data buoys from hitting the Origin 600 ADCPs at low tide and during deployment. In this effort to overcome one challenge of the ReefWorks environment, another presented itself in the form

of sand and sediment shifting and covering the bedframe, particularly during the cyclone. With sediments having the potential to compromise ADCP data, redesign of the bedframes needs to be considered moving forward.

A final challenge contended with was the intense biofouling prevalent during the warmer months in tropical Queensland, which included adhesion of large barnacles on top of the ADCPs (Figure 12). This prompts adoption of a high-performance anti-foul coating to protect the ADCPs from marine growth and fouling in future.

The ReefWorks trials, buffeted by the challenges of a tropical marine environment, emerged with a roadmap for a robust collaborative testing ecosystem. This ecosystem caters to the diverse needs of global uncrewed system operators and

instrument manufacturers. Innovative subsea technologies, like Sonardyne’s Origin 600 ADCP, with its real-time reporting capabilities, have been shown to have a significant role to play within this ecosystem.

Cyclone Kirrily served as a baptism of fire, revealing the critical need for enhanced communication redundancy, robust infrastructure, and effective real-time data collection. Valuable insights informed the development of more resilient mooring designs and refined sensor performance analyses. Additionally, they bolstered a comprehensive business case for ReefWorks’ continued operation.

In addition, the trial demonstrated the power of ocean monitoring technologies in general to deliver actionable insights, a power that should not be underestimated in our quest to improve our understanding and management of the ocean.

Dr. Michelle Barnett is the business development manager for ocean science at Sonardyne International Ltd. She has been responsible for supporting development of Sonardyne’s ocean science global business since 2021, with a special focus on the Origin Acoustic Doppler Current Profiler (ADCP) instruments. She has a strong academic background in the ocean sciences, culminating in a PhD in marine biochemistry from the University of Southampton funded by the Graduate School of the National Oceanography Centre Southampton.

Patrick Bunday is the operations planner at the Australian Institute of Marine Science (AIMS). He works on long-term planning and strategy at AIMS to ensure the institute’s infrastructure and operations meet future science needs. He works on identifying, implementing, and optimizing utilization of capability to meet forecast science requirements; and has a background across a range of industries in continuous improvement.

Vic Grosjean is the ReefWorks systems engineer at the Australian Institute of Marine Science (AIMS). He specializes in environmental monitoring, ocean instrumentation, and uncrewed systems applications. With a formal qualification in mechatronics engineering and physical oceanography, he has worked over the past 18 years in the ocean and environmental technology fields. He is now setting up ReefWorks 2.0 using state-of-the-art ocean instrumentation, high-accuracy positioning, and high-speed communication across AIMS test ranges.

by Iago Gradin

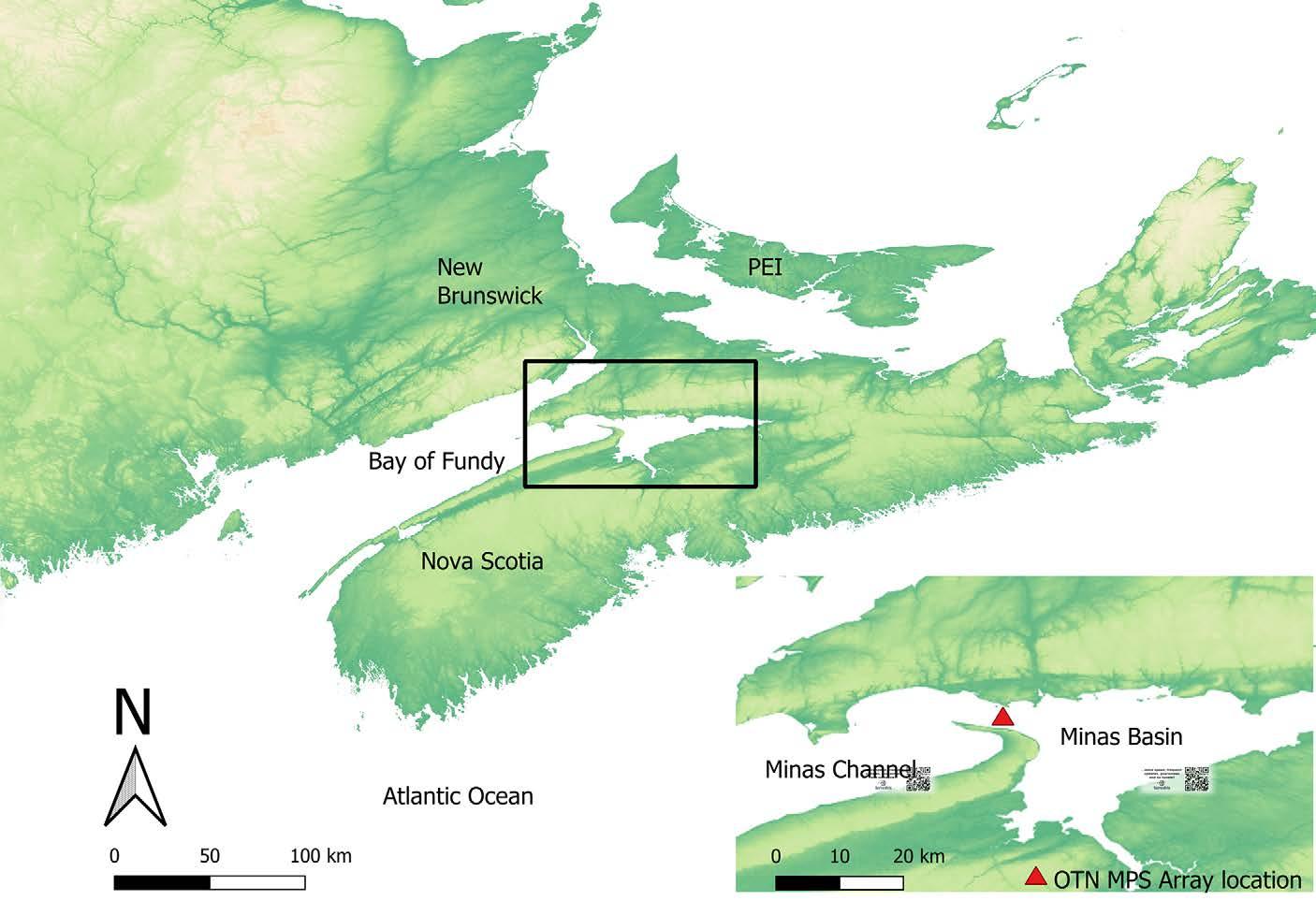

The Bay of Fundy is home to the highest tides in the world. About 160 billion tons of water flow through the Bay on each six-hour tide, equivalent to four times the estimated flow of all freshwater rivers in the world combined.

The Minas Channel connects the Minas Basin to the Bay of Fundy (Figure 1). The channel is 50 km in length, with a width of 20 km in the outer area that reduces to only 5 km in the Minas Passage. Depths range from 50-100 m in the outer channel to more than 150 m in the Minas Passage.

Considered a stressful environment with its extreme tides, turbulent currents, soft substrates, and silt-laden waters, the Minas Basin is home to, and often a migration route for, a variety of economically and ecologically important marine animals. The channels winding through the salt marshes are important nursery and feeding areas for species such as American shad, gaspereau, American eel, Atlantic sturgeon, striped bass, Atlantic salmon, and even white sharks.

The Minas Passage has the strongest currents in the Bay of Fundy, where water speed can reach up to 5 m/s and is thus considered an area of interest for tidal power generation. So far, three commercial attempts to harvest power from the Minas Passage have failed for various reasons. The first attempt was in 2009 and failed just three weeks after the underwater turbine deployment, when all 12 turbine rotor blades were destroyed by tidal flows. The second attempt in 2016 failed when the company went bankrupt, leading it to abandon the project and the turbine underwater.

In 2018, a different concept of harvesting power using a floating in-stream tidal platform was conceived. Being surface-based, rather than seabed-based, the technology is easier and less costly to maintain and repair.

The turbines are mounted on a hydraulic lift and can be independently raised out of the water for routine maintenance, and in case of severe damage, the whole platform can be towed to shore for repairs. The platform was successfully tested and generated power for one year at Grand Passage, a different location in the Bay of Fundy. Despite the promising results, the company could not secure a license to implement the project in the Minas Passage. According to the Department of Fisheries and Oceans, the company failed to provide a proper management program and to demonstrate a lack of negative impact on the many animal species in the area.

Methods and technologies developed to investigate animal usage of a specific area are often expensive, limited in duration, and require constant maintenance to optimally operate. Acoustic telemetry, the use of acoustic transmitters attached to animals to relay

information to stationary, moored receivers, is a powerful tool to understand how animals move and may be using a specific area. This technology is relatively low cost, easy to use, reliable, and able to track individual animals over long periods (e.g., > 10 years).

Since 2010, the Ocean Tracking Network (OTN) has been maintaining an acoustic receiver array (Figure 2) in the Minas Passage composed of 12 stations. Throughout the years, the mooring design has changed several times to try to improve data quality and minimize equipment loss. The present mooring is composed of a 450 kg chain link anchor; ½” stainless steel chain, shackles, and swivels; a Teledyne Benthos R2K Acoustic Transponding Release; a 2.4 m Stretch EM Cable manufactured by EOM Offshore (the hose can stretch up to 6 m under stress and return to its original length); and two flotation devices, a regular 45 cm syntactic

foam Balmoral float, and a DeepWater Buoyancy StableMoor® Mooring Buoy.

Each mooring is equipped with two different receiver models, one Innovasea 69 kHz VR2TX and one HR2 High Residency Receiver. The VR2TX acoustic receiver operates in the Innovasea traditional Pulse Position Modulation (PPM) coded communication system. Tags that operate in this system transmit a series of 8-10 pulses and each “pulse train” represents a unique ID. Each pulse train takes approximately three to five seconds, and for a receiver to decode the ID, all pulses must be heard. The HR2 receiver is capable of decoding 180 kHz tags using both the PPM transmission system as well as the High Residency transmission system, which encodes the complete tag ID within a single pulse that only takes a few milliseconds to transmit.
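The requirement that every pulse in a PPM train be heard can be illustrated with a toy calculation. The sketch below assumes, purely for illustration, that each pulse is received independently with the same probability; real acoustic channels are not that simple, and the model ignores any difference in how 69 kHz and 180 kHz signals propagate. The function name and the sample probabilities are hypothetical.

```python
def decode_probability(pulse_success: float, pulses_required: int) -> float:
    """Chance that every pulse in one transmission is heard (independence assumed)."""
    if not 0.0 <= pulse_success <= 1.0:
        raise ValueError("pulse_success must be between 0 and 1")
    if pulses_required < 1:
        raise ValueError("pulses_required must be at least 1")
    return pulse_success ** pulses_required


for p in (0.99, 0.9, 0.7):
    ppm = decode_probability(p, 8)  # shortest PPM pulse train described above
    hr = decode_probability(p, 1)   # single-pulse High Residency transmission
    print(f"per-pulse {p:.2f}: PPM {ppm:.3f}, HR {hr:.3f}")
```

Under this simplification, a modest drop in per-pulse reception has a much larger effect on a multi-pulse code than on a single-pulse one, which is the motivation for the High Residency design.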

The detection efficiency of any acoustic telemetry array (the relationship between the detection probability and the distance between tag and receiver) varies by receiver model and the environmental conditions. Water temperature, turbidity, and noise are often considered the main driving factors in reducing detection efficiency; however, tilt can play an even greater role, especially in high-flow estuarine environments.

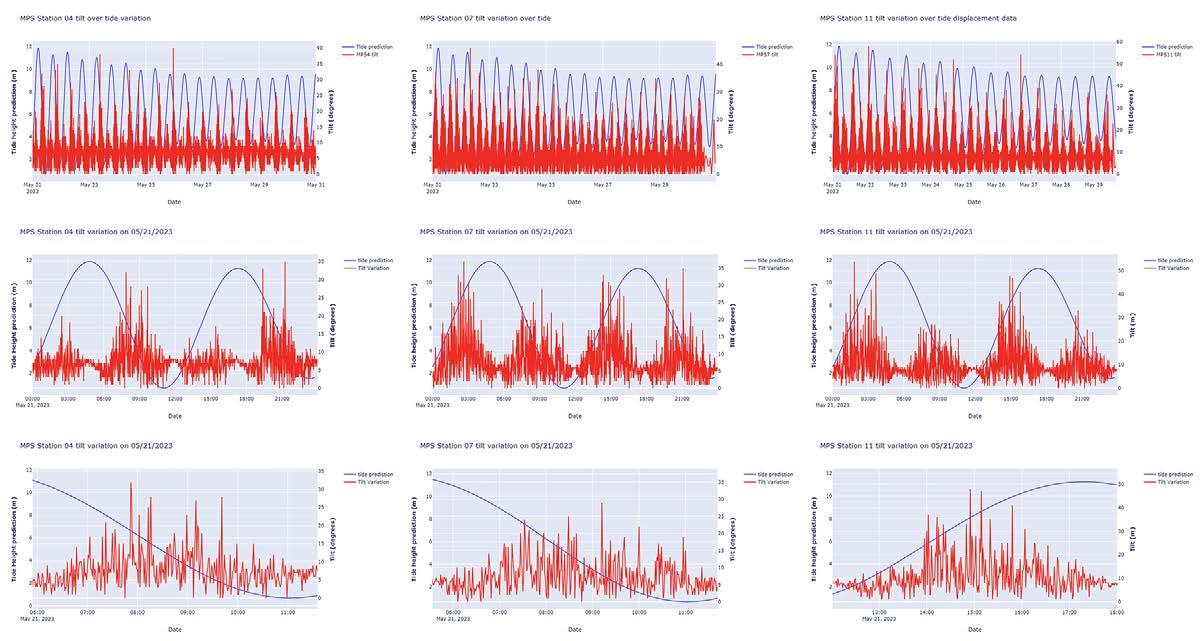

Both acoustic receiver models on the OTN moorings are equipped with auxiliary sensors that collect tilt data. To have a fine-scale assessment of the tilt displacement, the VR2TX receivers were set to fast diagnostic – a mode where the receiver collects tilt data every minute for a 14-day period (May 19 to June 1, 2023).

This collection of high-frequency tilt measurements revealed three different patterns (Figure 3). Stations 1-5 experienced higher tilt displacement when the tides were ebbing, stations 6-8 experienced similar tilt displacement at both periods of the tide, and stations 9-12 demonstrated more tilt

displacement when the tides were flooding. The tilt oscillation happened throughout the whole tide change but was more intense for an average of three hours halfway through the tide cycle. Most importantly, it is evident that the stations were not sustaining the same displacement for more than a few minutes at a time, being constantly knocked down and coming back to their natural vertical position. This pattern was observed at all stations.
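One simple way to sort stations into the three patterns described is to compare mean tilt during ebb with mean tilt during flood. The sketch below is an assumption about how such a grouping could be automated, not the method used in this analysis; the 5-degree tolerance and the sample tilt values are hypothetical.

```python
from statistics import mean

# One tilt sample per minute over the 14-day fast diagnostic period.
SAMPLES_PER_RECEIVER = 14 * 24 * 60


def classify_tilt_pattern(ebb_tilt_deg, flood_tilt_deg, tolerance_deg=5.0):
    """Label a station by the tidal phase with the larger mean tilt.

    tolerance_deg is an illustrative assumption: differences smaller
    than this are treated as similar tilt on both phases.
    """
    difference = mean(ebb_tilt_deg) - mean(flood_tilt_deg)
    if difference > tolerance_deg:
        return "ebb-dominant"
    if difference < -tolerance_deg:
        return "flood-dominant"
    return "similar"


# Hypothetical tilt samples (degrees from vertical) for three stations.
print(classify_tilt_pattern([40, 50, 45], [10, 15, 12]))
print(classify_tilt_pattern([30, 32, 28], [29, 33, 31]))
print(classify_tilt_pattern([8, 12, 10], [42, 48, 45]))
```

Because the moorings were knocked down and recovered within minutes, a mean hides much of the oscillation; a fuller treatment would also look at the spread or the peak tilt within each phase.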

Each receiver also emits its own transmitter code, allowing us to assess the detection range of each receiver unit to its neighbours during the deployment period. Stations were deployed approximately 150 m apart; the expected distances between stations and the expected pings from neighbouring receivers were used to measure changes to each station’s detection range. Maximum detection ranges were observed during slack tide and decreased as the tide started to change. The maximum detection range was 1,650 m for the VR2TX but only 300 m for the HR2. This difference was expected and is likely due to the difference in the frequency and power at which those receivers’ tags operate, and therefore how far the acoustic signal can travel.
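The neighbour-ping approach can be sketched as a ratio of pings heard to pings expected at each multiple of the station spacing. This is a minimal illustration under stated assumptions: the function names, the data layout, and the ping counts are hypothetical, and only the approximate 150 m spacing comes from the text.

```python
STATION_SPACING_M = 150  # approximate spacing stated in the text


def efficiency_by_distance(expected_pings, heard_pings):
    """Map neighbour distance (m) to the fraction of expected pings detected.

    Both arguments map a neighbour offset (1 = adjacent station,
    2 = the next one over, and so on) to a ping count for the same window.
    """
    result = {}
    for offset, expected in expected_pings.items():
        if expected <= 0:
            continue
        result[offset * STATION_SPACING_M] = heard_pings.get(offset, 0) / expected
    return result


def max_detection_range(efficiency):
    """Largest distance with at least one detection, or 0 if nothing was heard."""
    heard = [distance for distance, fraction in efficiency.items() if fraction > 0]
    return max(heard, default=0)


# Hypothetical counts for one receiver during a slack-tide window.
expected = {1: 60, 2: 60, 3: 60}
heard = {1: 54, 2: 21, 3: 0}
slack = efficiency_by_distance(expected, heard)
print(slack, max_detection_range(slack))
```

With stations about 150 m apart, the reported maxima of 300 m and 1,650 m correspond to neighbours 2 and 11 stations away, the latter being the full length of a 12-station line.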

Surprisingly, despite the different transmission methods, both receivers demonstrated extremely limited detection range during the tide change for an average period of three hours (coinciding with the periods of high tilt displacement), with periods where no detections were present in either of the units, depending on the station (Figure 4). Although the HR2 receivers had a few more detections during the high tilt oscillation periods, as might be expected given their ability to resolve a detection in a millisecond, it is concluded that they were not able to maintain their designed functionality once the mooring started to be destabilized during the tide change.

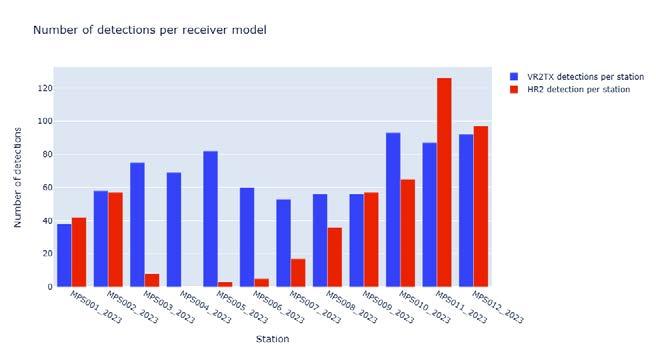

When examining actual animal detections during the analysis period, the VR2TX outperformed the HR2 (Figure 5). The VR2TX had a total of 819 detections from five unique

Figure 3: Tilt variation from Ocean Tracking Network Minas Passage Acoustic Receiver Line stations 04, 07, and 11 plotted over the 15-minute predicted tide variation from Baxter Harbour acquired from the Department of Fisheries and Oceans website.

tags, whereas the HR2 had 513 detections from 14 unique tags. Most importantly, the VR2TX had a representative number of detections from each of the 12 stations of the array, whereas the HR2 had no detections from station MPS04 and very few detections from stations MPS03, MPS05, and MPS06.

The results from this analysis strongly suggest that when the tide is changing and the moorings are constantly destabilized by the force of the moving water, the detection range of both acoustic receiver models is severely affected, despite their different capabilities, and there are periods when the receivers cannot resolve detections. Tilt is likely the main driving factor for this loss in detection capability, especially given the great range of the VR2TX during slack tide.

Other variables that can influence detection range such as temperature, salinity, and turbidity appear to not have as much influence as the tilt since the water at the Bay of Fundy is always turbid and well mixed from top to bottom, so there is almost no chance of stratification (formation of different layers of temperature and salinity).

This cyclical range limitation from extreme tidal currents must be considered when analyzing animal detection data. In areas like Minas Passage, acoustic telemetry data collection may be limited for up to 12 hours a day, which could completely change the conclusions of studies being conducted, especially when less mobile

animals are being studied, including those that depend on tidal stream transport such as American eel through the Minas Passage.
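The figure of up to 12 hours a day is consistent with the numbers given earlier, as the short calculation below shows. It assumes four tides of roughly six hours each per day, taken from the "six-hour tide" mentioned at the start of the article, and the average three-hour period of limited detection range per tide; the variable names are illustrative.

```python
TIDE_DURATION_H = 6           # "each six-hour tide", as stated earlier in the article
REDUCED_RANGE_PER_TIDE_H = 3  # average period of limited detection range per tide

tides_per_day = 24 / TIDE_DURATION_H
limited_hours_per_day = tides_per_day * REDUCED_RANGE_PER_TIDE_H
limited_fraction = limited_hours_per_day / 24

print(f"{limited_hours_per_day:.0f} h per day ({limited_fraction:.0%} of the time)")
# prints "12 h per day (50% of the time)"
```

On these assumptions the array is working at reduced range for about half of every day, so an absence of detections during running tides cannot by itself be read as an absence of animals.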

Furthermore, the PPM-based 69 kHz VR2TX receiver unit seemed to be a more suitable unit for the environmental conditions of the Bay of Fundy than the high residency receivers that have been thought to be more resilient to environmental noise. Despite having the capability to resolve detections more quickly using the HR technology compared to PPM, the HR2 receivers recorded fewer detections in total and per station during this observation period.

To confirm the patterns and the conclusions in this analysis, OTN is adding external loggers to the moorings that can measure tilt and environmental data at fine temporal scales to create a complete time series during the next six-month deployment period and determine whether what was observed during these 14 days is consistent throughout the longer deployment.

Born and raised on the Brazilian coast, Iago Gradin quickly developed a passion for the ocean and marine life, culminating in him pursuing a B.Sc. in oceanography. Throughout his career, he has mainly worked with remote sensing and oceanographic data manipulation. Seeking to further develop his technical skills, he acquired an advanced diploma in ocean technology. Since 2019, Mr. Gradin has been working for the Ocean Tracking Network (OTN) as a field technician, where he is responsible for assembling, deploying, and recovering hundreds of oceanographic moorings. Because of his background in data processing, he takes pleasure in immersing himself in the data world, searching for ways to improve the OTN technical operations and the quality of the data collected.

by Kaeleigh McConnell

As the global population steadily increases, the challenge of meeting rising food demands has become more pressing than ever. Aquaculture, the farming of aquatic organisms, has emerged as a vital component in the quest for global food security. By providing a reliable and sustainable source of seafood, aquaculture can significantly reduce the strain on wild fish populations and contribute to a more balanced and secure food supply.

A key component in the success and sustainability of aquaculture is the implementation of advanced technological solutions designed to enhance the efficiency of operations. A pivotal advancement in the industry is the introduction of underwater environmental sensors, which offer real-time monitoring capabilities and enable farm staff to track vital parameters such as dissolved oxygen (DO), salinity, temperature, and pH. These features are crucial for maintaining optimal conditions in aquaculture environments, ensuring the health and growth of aquatic species. The integration of underwater environmental sensors is instrumental in driving the aquaculture sector towards a more resilient and productive future.

Traditional monitoring methods in aquaculture, such as manual observations and periodic sampling, present several inefficiencies and potential inaccuracies. Manual observations require considerable labour and are susceptible to human error, leading to inconsistencies and unreliable data. Additionally, periodic sampling provides only snapshots of environmental conditions at specific times, failing to capture dynamic changes in aquatic environments between sampling intervals. This limitation can compromise the health and growth of farmed species, as critical changes may go undetected until the next scheduled sampling event, potentially leading to erroneous decision-making due to the lack of a thorough understanding of the conditions.

In contrast, advanced monitoring systems offering continuous and real-time data collection are revolutionizing aquaculture management (Figure 1). Real-time monitoring allows for immediate detection and response to fluctuations, reducing risks associated with delayed reactions, such as disease outbreaks or water quality crises. For instance, sudden drops in DO levels can be quickly identified, prompting immediate mitigation actions such as increasing aeration or adjusting water circulation to prevent stress or mortality in fish.
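The kind of check that turns a continuous DO feed into a prompt for action can be sketched in a few lines. The example below is only an illustration: the 6.0 mg/L threshold, the five-minute interval, the three-reading persistence rule, and the readings themselves are assumptions made for the sketch, not values from any particular farm or sensor product.

```python
from dataclasses import dataclass

# Hypothetical alert threshold in mg/L; real limits depend on species and site.
DO_ALERT_MG_L = 6.0


@dataclass
class Reading:
    minute: int      # minutes since the start of the record
    do_mg_l: float   # dissolved oxygen in mg/L


def first_alert(readings, threshold=DO_ALERT_MG_L, consecutive=3):
    """Return the minute at which DO has stayed below `threshold` for
    `consecutive` readings in a row, or None if that never happens.

    Requiring several consecutive low readings is one simple way to
    avoid reacting to a single noisy sample.
    """
    run = 0
    for r in readings:
        run = run + 1 if r.do_mg_l < threshold else 0
        if run >= consecutive:
            return r.minute
    return None


# Made-up readings taken every five minutes, for illustration only.
values = [8.1, 7.9, 7.6, 6.4, 5.8, 5.5, 5.1, 5.6, 6.3]
readings = [Reading(minute=5 * i, do_mg_l=v) for i, v in enumerate(values)]

alert_minute = first_alert(readings)
print(alert_minute)
```

With readings arriving every few minutes, a sustained drop is flagged within the same hour, whereas a once-daily manual sample could miss it entirely.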

Immediate access to comprehensive data empowers aquaculture operators to implement proactive management practices. This includes adjusting feeding schedules, optimizing water circulation, and managing population density to maintain optimal conditions for fish health and growth. Continuous data collection facilitates a deeper understanding of the aquatic environment over time, allowing managers to analyze trends and patterns. This enhanced understanding supports informed decision-making regarding stocking densities, feed formulations, and overall farm operations, thereby improving the efficiency and sustainability of aquaculture operations and promoting better resource management.

Underwater sensors are revolutionizing aquaculture by providing real-time data on crucial environmental parameters, which enhances decision-making and farm management. These sensors continuously measure metrics such as DO, temperature, salinity, and pH. They detect environmental changes and convert this information into electric signals. Many modern sensors use acoustic wireless telemetry, which sends data through sound waves in the water. The data are then transmitted wirelessly to a cloud-based system, allowing operators to access up-to-date information and make timely adjustments to maintain optimal conditions.

Key sensors used in aquaculture include:

• DO sensors monitor oxygen levels, essential for aquatic respiration. Low DO can cause stress and mortality.

• Temperature sensors track water temperature, which affects metabolic rates and growth. Proper temperature management prevents stress and boosts productivity.

• Salinity sensors measure salt concentration to ensure it stays within the optimal range for the specific species being cultivated.

• pH sensors measure the acidity or alkalinity of the water, which affects nutrient availability and overall health of the aquatic species. Maintaining proper pH levels is critical for preventing stress and ensuring a stable environment.

Underwater environmental sensors provide a unique advantage by enabling the placement of individual sensors in each pen without the need for cables. This setup ensures precise monitoring of key parameters at the pen level. For instance, Innovasea’s aquaMeasure sensors exemplify this approach, providing real-time insights and seamless integration with its cloud-based software, Realfish Pro. This integration leads to better management and sustainability in aquaculture, supporting global food security.

Figure 2: A technician preparing to deploy an aquaMeasure sensor at an aquaculture farm, ensuring precise monitoring of underwater environmental parameters to support sustainable fish farming practices.

The integration of underwater sensors in aquaculture operations offers significant long-term advantages (Figure 2). Continuous monitoring helps farm staff identify trends, patterns, and anomalies over time, leading to more informed and effective management practices. By consistently recording and analyzing environmental parameters, aquaculture managers can gain valuable insights into the factors that influence the health and productivity of their farmed species.

One of the key benefits of long-term data collection is the ability to detect trends and patterns that may not be apparent in short-term observations. For example, monitoring dissolved oxygen levels over several months or years can identify seasonal variations and anticipate periods when additional aeration may be needed. Long-term temperature data can also reveal the impacts of climate change on water conditions, enabling proactive adjustments to mitigate stress on farmed species. This deeper understanding of environmental dynamics allows for the implementation of more effective management strategies, reducing risks and enhancing the overall resilience of aquaculture operations.
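As a rough illustration of how a seasonal pattern might be drawn out of a long DO record, the sketch below averages readings by calendar month and lists the months whose mean falls below a chosen level. The dates, values, and the 6.5 mg/L level are invented for the example; a real analysis would use the farm's own record and species-appropriate limits.

```python
from collections import defaultdict
from statistics import mean


def monthly_means(records):
    """Group (iso_date, value) pairs by 'YYYY-MM' and return the mean per month.

    `records` is an iterable of ('YYYY-MM-DD', float) tuples.
    """
    by_month = defaultdict(list)
    for date, value in records:
        by_month[date[:7]].append(value)
    return {month: mean(vals) for month, vals in sorted(by_month.items())}


def low_months(means, threshold):
    """Return the months whose mean value is below `threshold`."""
    return [month for month, value in means.items() if value < threshold]


# Made-up DO readings in mg/L, two per month, for illustration only.
records = [
    ("2023-06-01", 8.4), ("2023-06-15", 8.0),
    ("2023-07-01", 7.2), ("2023-07-15", 6.8),
    ("2023-08-01", 6.1), ("2023-08-15", 5.9),
    ("2023-09-01", 7.0), ("2023-09-15", 7.4),
]

means = monthly_means(records)
flagged = low_months(means, threshold=6.5)  # hypothetical planning level
print(flagged)
```

Months that are flagged year after year are candidates for scheduling additional aeration in advance.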

Data analytics plays a crucial role in leveraging long-term data to improve production efficiency and optimize resource utilization. Advanced analytical tools can process and interpret the vast amounts of data collected by underwater sensors, providing actionable insights for farm managers. Predictive analytics can forecast future conditions based on historical data, helping plan feeding schedules, optimize stocking densities, and manage water quality more efficiently. Machine learning algorithms can identify correlations between different parameters, such as the relationship between water temperature and growth rates, allowing for fine-tuning of farm practices to maximize yields.
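The simplest version of the temperature and growth relationship mentioned above is a plain Pearson correlation, sketched here with the standard library. This is a basic statistic rather than a machine learning model, and the paired values are invented for illustration; they are not measurements from any farm.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (spread_x * spread_y)


# Made-up weekly means: water temperature (deg C) and growth (% body weight/day).
temperature_c = [8.0, 10.0, 12.0, 14.0, 16.0]
growth_pct_per_day = [0.6, 0.8, 1.1, 1.2, 1.5]

r = pearson(temperature_c, growth_pct_per_day)
print(round(r, 3))
```

A coefficient near +1 or -1 suggests a strong linear association over the sampled range. It does not by itself show causation, and growth typically declines again above a species' optimal temperature, so a single coefficient should be read with care.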

Continuous data collection and analysis can also contribute to mitigating the environmental impacts of aquaculture operations. By closely monitoring key parameters, farm staff can better understand the interactions between different factors, such as water temperature and oxygen levels, which can help to manage resource use more effectively. Early detection of anomalies can prompt timely corrective actions, minimizing potential negative environmental impacts. Long-term data supports compliance with environmental regulations and certification standards, demonstrating the commitment of aquaculture operations to sustainable practices.

The advancement of aquaculture is critical in addressing the growing global demand for sustainable seafood. Traditional monitoring methods, plagued by inefficiencies and potential inaccuracies, fall short in providing the necessary insights to maintain optimal conditions for aquatic species. The integration of continuous and real-time data collection has revolutionized the industry, enabling more responsive and informed management practices. Central to this transformation are underwater environmental sensors, which offer precise monitoring of critical environmental parameters such as dissolved oxygen, temperature, and salinity. These sensors ensure that aquaculture operations can swiftly detect and address changes, safeguarding the health and growth of farmed species.

However, deploying these sensors and interpreting the vast amounts of data they generate come with challenges. Ensuring proper sensor placement, maintenance, and data analysis requires investment and expertise. Despite these hurdles, the opportunities and insights gained through sensor technology are substantial. Continuous monitoring allows farm staff to identify trends, patterns, and anomalies over time, leading to more effective and proactive management strategies. Through data analytics, aquaculture managers can optimize resource utilization, improve production efficiency, and mitigate environmental impacts. Predictive models and machine learning algorithms enhance the ability to foresee and respond to future conditions, ensuring that aquaculture operations remain resilient and productive.

In summary, the integration of real-time data collection, facilitated by underwater sensors, is transforming the aquaculture industry. These technologies not only enhance immediate management capabilities but also provide the long-term insights necessary for sustainable growth. By embracing these advancements, the industry can better meet the rising global demand for seafood while promoting environmental stewardship and resource efficiency. The future of aquaculture is promising, with continuous monitoring technology playing a crucial role in achieving a reliable, sustainable, and resilient seafood supply.

Residing in Halifax, Nova Scotia, Kaeleigh McConnell is a dedicated professional in the aquaculture intelligence division at Innovasea. With a bachelor of science in marine biology from Dalhousie University and certification as a PADI Divemaster, her expertise is well-rounded, merging academic knowledge with hands-on experience. Her keen interest in sustainable fisheries and aquaculture, coastal management, and marine communications highlights her commitment to advancing ocean conservation and management.

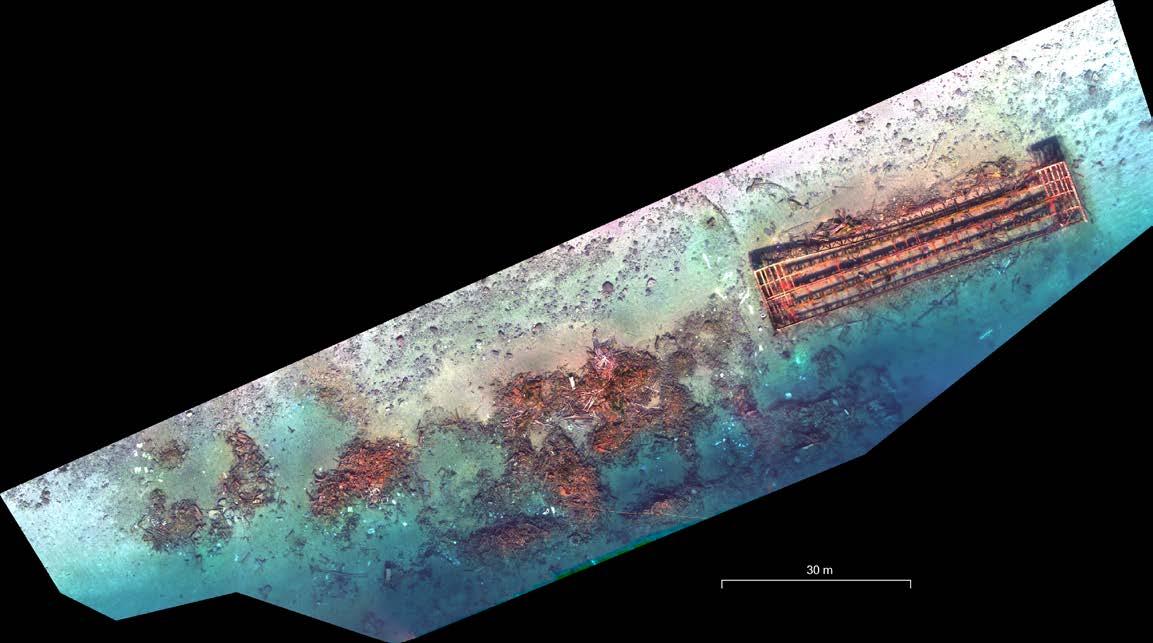

by Bryan Lintott and Gareth Rees

The Tirpitz salvage site is one of many areas in the Arctic where the legacy of war has resulted in contemporary concerns about effects on the biosphere and broader health and safety risks. Decades after the Royal Air Force sank the German battleship Tirpitz in 1944, and a subsequent salvage operation removed the ship’s high-quality steel and operational equipment, hundreds of cubic metres of nonsalvaged items remain on the site, along with widespread environmental contamination of the seabed. Current research on the Tirpitz salvage site, utilizing remote sensing and robotics, is developing innovative methods to map and monitor the site that can be utilized globally in near-shore environments.

The Tirpitz and its sister battleship Bismarck were the pride of the Third Reich’s Kriegsmarine. Among the largest and most powerful battleships ever built, these ships posed a severe risk to Allied shipping convoys. Bismarck’s one sortie into the North Atlantic resulted in the sinking of HMS Hood, the flagship of the Royal Navy, before combined air and surface attacks sank the Bismarck. In response to this sinking, the Tirpitz was ordered to Norway, where it became a “fleet in being.” The potential threat was so severe that even at anchor, the Royal Navy was forced to deploy numerous ships in case the Tirpitz attacked the Arctic convoys, which supplied vital military equipment to the USSR. While at anchor, Tirpitz was repeatedly attacked by the Fleet Air Arm, the Royal Air Force, the Red Army Air Force, and Royal Navy midget submarines. Due to extensive damage from these attacks, the Tirpitz was sailed to Tromsø, designated as a shore battery, and moored in shallow water. This would, it was thought, allow it to settle on the bottom if damaged in an attack. On November 12, 1944, the Royal Air Force’s famous Dam Busters 617 Squadron and 9 Squadron attacked Tirpitz with Tallboy bombs. After direct hits and an internal explosion, the ship – badly damaged on one side – capsized (Figure 1) with the loss of over 900 German lives; the Royal Air Force squadrons returned with no losses. Churchill, Roosevelt, and Stalin commended the mission.

The German military swiftly commenced salvage work, transporting the propellers and some of the hull’s armour-plate steel to Germany. Following the war, the Norwegian government awarded salvage rights to the private company Høvding Skipsopphoggeri, which cut apart the ship in conjunction with Eisen und Metall of Hamburg. High-quality steel and operational equipment were salvaged, but other materials were dumped on the seabed. No environmental protection controls were in place. Over subsequent decades, environmental surveys have shown that the site is still contaminated with hydrocarbons, PCBs, and heavy metals.

The Tirpitz Site Project is utilizing the site for ongoing scientific research, technological development, and environmental monitoring. The project is being undertaken by UiT The Arctic University of Norway’s Institute for Technology and Safety and the Scott Polar Research Institute of the University of Cambridge. In 2025, an updated environmental contamination assessment is planned with the REMARCO EU research consortium (Remediation, Management, Monitoring and Cooperation addressing North Sea UXO, a European project funded by Interreg North Sea).

The Tirpitz Site Project team has begun to survey the site using an innovative method of aerial-based through-water remote sensing (Figure 2). This work has resulted in the first map of the salvage site, which shows the remains of the salvage wharf and debris piles. The map was produced with an airborne robot carrying RGB and multispectral cameras that took images through the water on a calm, sunny day. Hundreds of images were combined, using Pix4D and further processing, to produce an image map showing over 7 hectares of the site for the first time. From these data, measurements accurate to around 2 cm were made to a depth of 5 metres. The

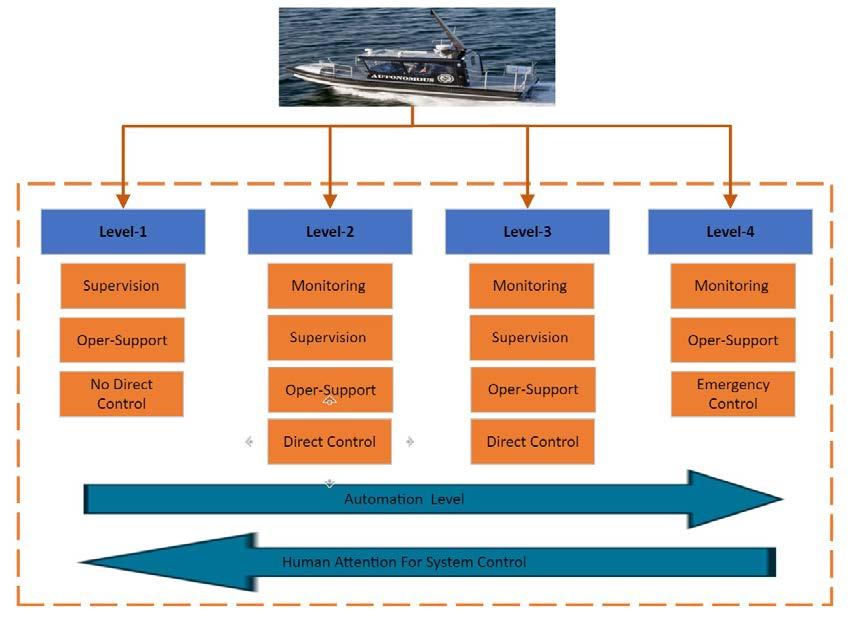

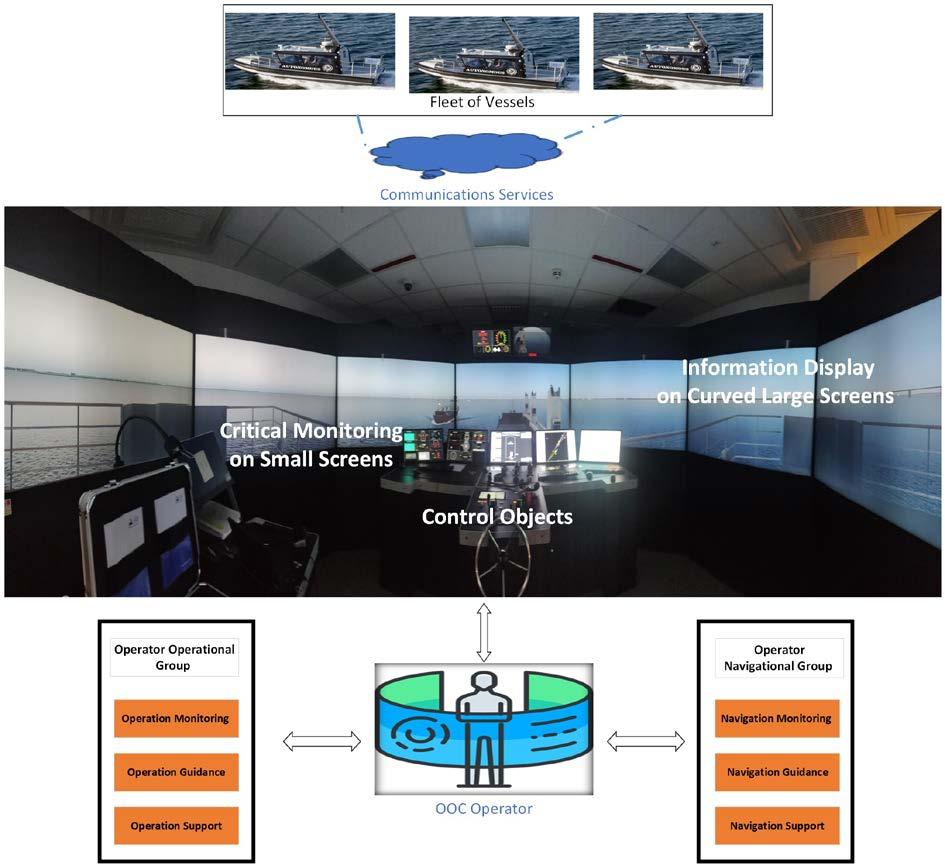

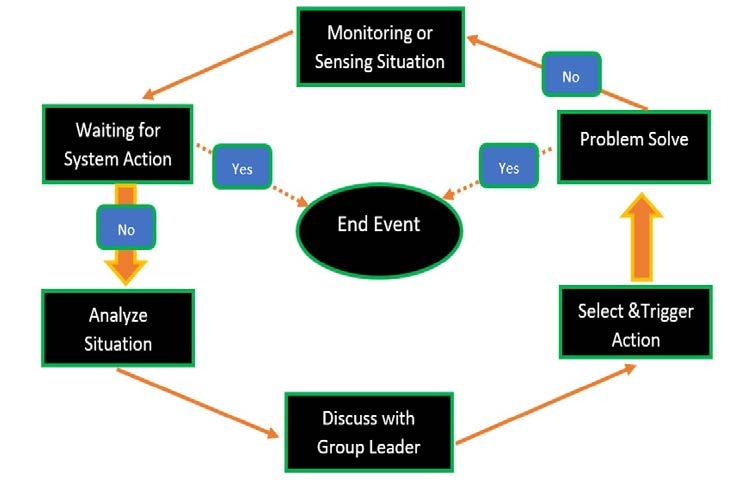

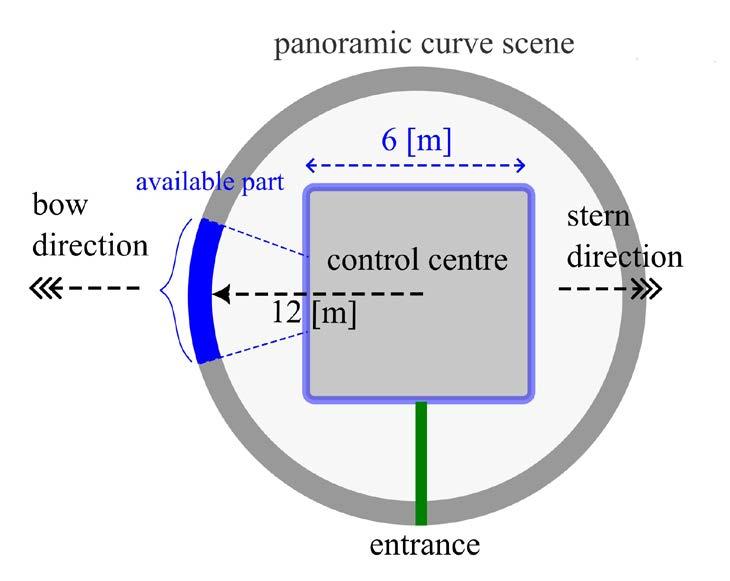

Figure 1: The capsized Tirpitz, May 26, 1945. The adjacent boats are from the German salvage operation.