International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

K Aravindh Reddy, P Kranthi Kiran, P. Koushik, Dr. G. Ganapathi Rao

1B-Tech 4th year, Dept. of CSE(DS), Institute of Aeronautical Engineering

2B-Tech 4th year, Dept. of CSE(DS), Institute of Aeronautical Engineering

3B-Tech 4th year, Dept. of CSE(DS), Institute of Aeronautical Engineering

4 Assistant Professor, Dept. of CSE(DS), Institute of Aeronautical Engineering, Telangana, India

Abstract - Deep learning, a key aspect of machine learning and artificial intelligence, has become an essential technology in the era of the Fourth Industrial Revolution. Built upon artificial neural networks, deep learning finds application across multiple fields including healthcare, image recognition, natural language processing, and cybersecurity. Despite its power, building effective deep learning models is often challenging due to the evolving nature of real-worldproblems and data. Furthermore, the lack of transparency in some models causes them to be perceived as "black boxes," which can impede their broader adoption.

One area of particular interest is the effect of occlusions, such as those caused by hands, pillows, blankets, or shadows, on pet classification accuracy. Previous research has primarily focused on aspects like pose, breed identification, and scene variations but has largely overlooked occlusion challenges in pet classification. This project seeks to enhance deep learning models for pet classificationbyleveragingdata augmentation, transfer learning, and fine-tuning. Using two independent datasets containingbothoccludedandnon-occluded images of cats and dogs, the project aims to evaluate the proposed models. The study investigates the impact of transfer learning on the classification performance ofcats and dogs usinga finetuned Convolutional Neural Network (CNN).

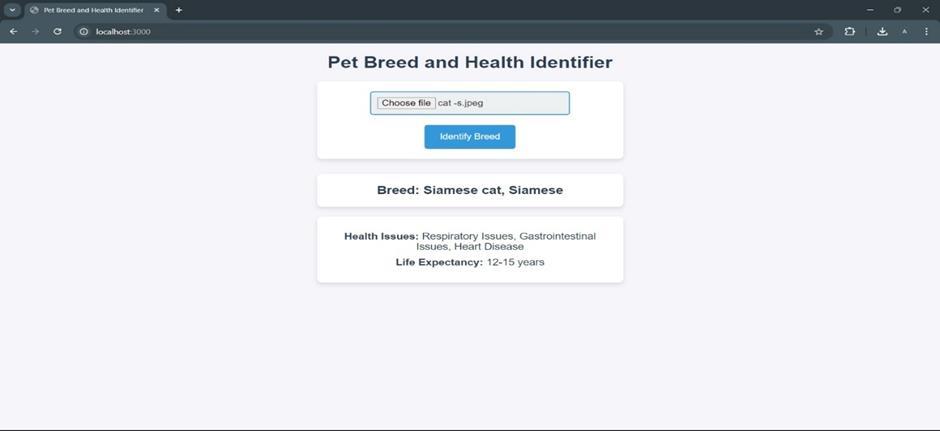

The Pet Breed and Health Identifier is an advanced applicationthataimstotransformthewaypetownersand veterinariansmanagepetcare.Aspetownershipbecomes increasingly popular and the diversity of breeds expands, correctly identifying a pet’s breed, particularly in mixedbreed animals, can be a difficult task Moreover, early detectionofpotentialhealthissuesiscriticaltomaintaining a pet’s overall well-being deep learning algorithms in combination with a user-friendly interface to deliver a completesolutionforbothbreedidentificationandhealth assessment.

Breed Identification:Theapplication'smainfunction isitsabilitytoaccuratelydeterminethebreedofapet through image analysis. The deep learning model is trained on a comprehensive dataset of pet images, coveringawidevarietyofbothpurebredandmixed-

breedanimals.Byexaminingspecificvisualtraitssuch as coat color, size, shape, and facial features, the application provides precise breed identification withinseconds.

Health Assessment::Inadditiontobreedrecognition, the application also focuses on detecting physical abnormalitiesandcommonhealthconcernslinkedto certain breeds. For example, it may identify skin issues,irregularweight,or posturethatcouldsignal underlying health conditions. This feature gives pet owners the ability to detect possible health issues early,enablingtimelyvisitstotheveterinarian

Personalized Care Recommendations: Basedonthe identified breed and any potential health issues detected, the application provides tailored care suggestions. These recommendations can include dietaryguidance,exerciseroutines,groomingadvice, andpreventivehealthcaremeasures,allcustomizedto suitthepet’sspecificneeds.

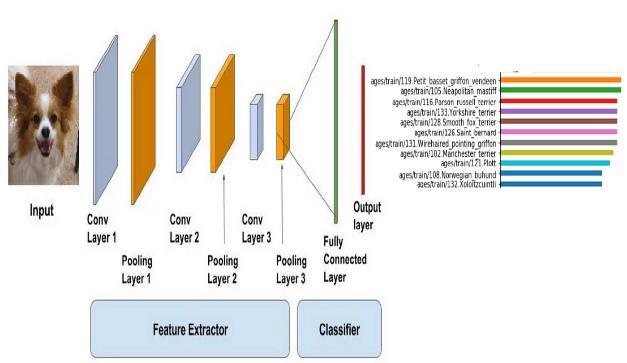

Fig.1. DeepLearning:Tobuildadogdetectorand breedclassifierusingCNN

Data Privacy and Security: Theapplicationtakes data privacy seriously, ensuring that all uploaded images and associated information are securely processedandstored.Userscanbeconfidentthat their data is handled with the utmost confidentiality.

User-Friendly Interface: Designedforeaseofuse, theapplicationfeaturesastraightforward,intuitive interfacethatallowsuserstouploadimages,obtain results,andreceivecarerecommendationswithout

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

requiring technical skills. The app is available on both web and mobile platforms, providing users withconvenientaccessonthego.

This study introduces a method for dog breed identification based on analyzing facial images of dogs. By leveragingadeeplearningframework,theapproachaimsto accurately recognize dog breeds. The research specifically addresses the problem of animal biometric identification, focusingondogs.Itutilizessophisticatedmachinelearning models, particularly deep neural networks, to analyze pet images and determine their identity. The study also investigates the use of "soft" biometrics, such as breed, height, and gender, combined with "hard" biometrics like facialimagestoenhanceaccuracy.

ThepaperpresentsaConvolutionalNeuralNetwork(CNN) approachfordetectingdogsinpotentiallycompleximages, emphasizing breed identification. Through experimental resultsandgraphicaldata,thestudydemonstratesthatthe CNNalgorithmachievesimpressiveaccuracyacrossvarious datasets,validatedusingstandardmetrics.

Moreover, this research includes the development of an Android application that utilizes image recognition techniquestoidentifydogbreeds.UsingaCNNandtransfer learning,theappallowsuserstoeithertakeapictureofadog oruploadanexistingimage.Theapplicationthenprocesses theimage,extractingnecessaryfeaturestopredictthebreed. Thisimplementationoftransferlearningincombinationwith CNNallowsforhigh-qualityandefficientbreedrecognition. Additionally,thepaperexplorestheuseofADAboostingfor dog breed classification. ADA Boosting combines several weak classifiers to build a stronger, more accurate model. Image processing techniques are used to differentiate between dog breeds, and this method proves to deliver reliableandprecisebreedpredictionresults.Thestudyalso appliescomputervisiontechniquestopredicttextfile.You arenowreadytostyleyourpaper.dogbreedsfromimages.It begins by identifying key facial points of the dog using a convolutionalneuralnetwork,followedbyfeatureextraction usingSIFTdescriptorsandcolorhistograms.Thesefeatures arethenusedbyvariousclassificationalgorithmstopredict thebreedofthedogdepictedintheimage.

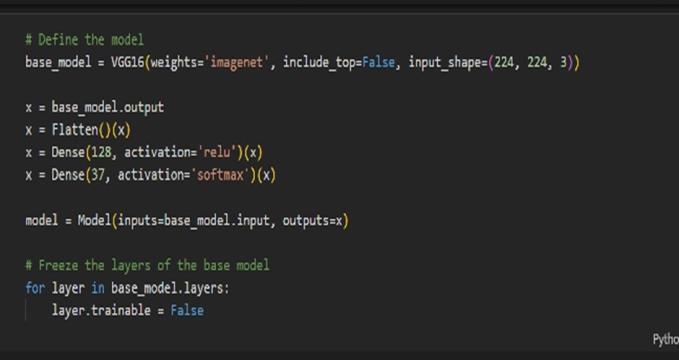

Inaddition,thepaperexplorestraditionalimageprocessing methodsforbreedclassification,employingtechniqueslike Local Binary Pattern (LBP) and Histogram of Oriented Gradient (HOG). To further enhance the accuracy of the model,severalpre-trainedmodelssuchasVGG16,Xception, andInceptionV3areused.Thestudytrainedthesemodelson a dataset containing over 1400 images spanning 120 dog breeds, of which 16 breeds were used as classification categories, obtaining bottleneck features from these pretrainedmodelstoimprovethepredictions.

The Pet Breed and Health Identifier systemisanadvanced tool aimed at improving the accuracy and speed of identifying pet breeds, while also offering health assessments. With the rise of pet ownership and the complexityofmixed-breedanimals,determiningtheexact breedcanbedifficultforpetownersandveterinariansalike. Additionally, early detection of potential health issues is essential for maintaining a pet’s overall well-being. This systemintegratesdeeplearningmodelsandimageanalysis toofferastreamlinedsolutionforbothbreedidentification

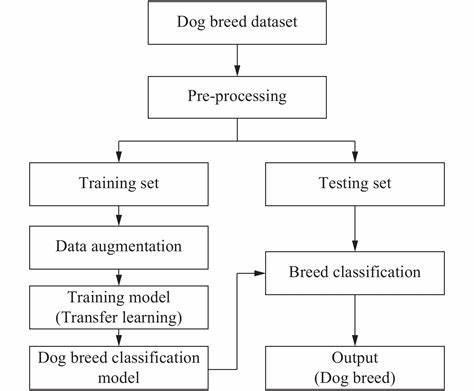

Fig.2. Componentsofthe proposedsystem.

Thesystemoperatesbyanalyzinguploadedpetimages, particularly focusing on visual features such as coat color, facial structure, and body shape. It utilizes a comprehensive dataset covering various breeds to ensure precise identification, even in cases of mixed breeds.Thebreedrecognitionprocessisenhancedby machine learning algorithms that continuously learn and adapt to improve accuracy, identify known maliciouspatterns,providingacomprehensiveanalysis.

Inadditiontobreedidentification,thesystemoffersa health assessment feature, analyzing physical attributestodetectpotentialhealthissuesassociated with specific breeds. This allows pet owners to address possible concerns such as skin conditions, abnormalweight,orpostureproblemsearly,ensuring timelyveterinarycare.

To ensure the highest level of security, all data including images and health assessments are processedwithstrongprivacymeasures.Thesystem guarantees that user information is stored securely andhandledwithstrictconfidentiality.

Lastly,thesystemisdesignedwithuserconvenience in mind. It provides an easy-to-use interface that allows pet owners to upload images, view breed

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

identification results, and access personalized care recommendationsfromanywebormobileplatform.

A. Data Pre-Processing and Feature Engineering

Datapreprocessingandfeatureengineeringarecriticalsteps inourresearchaimedatenhancinginsiderthreatdetection. Theprimaryobjectiveoftheseprocessesistoprepareraw dataforeffectivemodelingbyensuringquality,consistency, andrelevance.

Datapreprocessingisacrucialstepinpreparingpetimages for breed identification and health assessment using machinelearningmodels.Properpreprocessingensuresthat thedataiscleaned,standardized,andreadyforefficientand accuratemodeltraining.Belowarethekeystepsinvolved:

Image Collection:

Thefirststepinvolvesgatheringadiversesetofimages thatincludesmultiplepetbreeds,variationsinlighting conditions,andbothhealthyandpotentiallyunhealthy pets.Thisensuresthatthemodelistrainedonabroad spectrumofdatatoenhanceitsaccuracy.

Image Resizing and Standardization:

Toensureconsistencyacrossthedataset,allimagesare resizedtoafixeddimension.Thisallowsthemodelto process each image uniformly. Standardizing image dimensions helps reduce computational load and ensuresthemodelfocusesonthecorefeaturesinstead ofvariationsinimagesize

Normalization:

Date fields were converted to a datetime format to facilitate time-based analysis. This allows for more sophisticated temporal analyses, such as identifying patternsoverspecifictimeframes.

Splitting Data:

Thedatasetissplitintotraining,validation,andtesting subsets.Typically,themajorityofthedataisusedfor training, while a smaller portion is reserved for

validatingthemodel’sperformance.Testingdataiskept aside to evaluate the model after it has been fully trained,ensuringthatitgeneralizeswellonunseendata numerical input while preserving the information conveyedbycategoricalfeatures.

Feature Engineering

Image Feature Extraction:

The first step in feature engineering is extracting relevant visual features from the images. Convolutional Neural Networks(CNNs)arecommonlyusedtoautomaticallyextract complexpatternssuchasedges,textures,shapes,andcolors. Thesefeaturesarecrucialfordifferentiatingbetweenbreeds andidentifyingpotentialhealthissues

Breed-Specific Visual Features

Certain breeds have distinct characteristics such as coat texture, ear shape, or facial structure. The model must be trainedtorecognizethesesubtledifferences.Byfocusingon uniquebreed-specificfeatures,suchasfurpatternsorbody proportions,themodelcanimproveitsaccuracyinidentifying variousbreeds.

Breed and Health Fusion Features:

Incaseswherebothbreedandhealthconditionsneedtobe identifiedsimultaneously,itisimportanttoengineerfeatures that represent a fusion of breed-specific traits and health indicators. For example, certain breeds are predisposed to specific health conditions. Engineering a feature set that combinesthesetwoaspectsallowsthemodeltoprovidemore accuratepredictionsforbothbreedandhealthassessments.

Dimensionality Reduction:

With numerous features extracted from the images, it’s importanttoapplydimensionalityreductiontechniqueslike Principal Component Analysis (PCA) or t-SNE. These techniquesreducethenumberoffeaturesbyidentifyingthe most important ones while retaining key information. This helpsimprovemodelperformanceandreducescomputational costs

Color Histograms: Colorplaysasignificantroleinidentifying pet breeds. Creating color histograms of the images helps capturethedistributionofdifferentcolorsinapet’sfur,which is an important distinguishing factor for breeds that vary primarilybycoatcolor.

Statistical Features: Inadditiontovisualfeatures,statistical featuressuchaspixelintensity,brightness,andcontrastcan providesupplementaryinformation.Thesefeatureshelpthe modelunderstandimagequalityandensurethatimportant detailsarenotlostduetopoorlightingorresolution.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

Feature Scaling:

Oncetherelevantfeaturesareextracted,it’sessentialtoscale themtoensurethatnofeaturedisproportionatelyaffectsthe model’s performance. Techniques like normalization or standardization are applied to ensure that all features contributeequallytothefinalprediction.

Feature Selection:

Finally, feature selection techniques such as Recursive FeatureElimination(RFE)ormutualinformationgainare usedtoidentifythemostimportantfeatures.Thishelpsin reducingnoise,improvingmodelaccuracy,andoptimizing performancebyonlyusingthemostrelevantfeaturesfor breedandhealthidentification.

B. Model Training

Modeltrainingisacrucialphaseininsiderthreatdetection, where we develop predictive models that can accurately identify potential security threats based on various input features.Thisprocessinvolvesseveralkeystepstoensure therobustnessandeffectivenessofthemodelsemployed.

Data Collection

Dataset Sources:Gatherdatasetsthatincludeimagesand metadata about various pet breeds and associated health conditions.Possiblesourcesinclude:

PublicdatasetsliketheStanfordDogsDatasetorthe OxfordPetsDataset.

Veterinaryhealthrecords(ensuretoanonymizeany personaldata).

Crowdsourceddatafrompetowners.

Model Training

TrainingSplit:Divideyourdatasetintotraining,validation, testsets(typically70/15/15).LossFunctionusecategorical cross-entropy for multi-class classification problems. ImplementAdamorSGDoptimizers.Monitoraccuracyand lossonthevalidationsettopreventoverfitting.

Evaluation:

Assessmodelperformanceusingmetricssuchasaccuracy, precision,recall,andF1score.Visualizemodelpredictions againstactuallabelstoidentifymisclassifications.

C. Integration of the trained models

The integration of trained models is a critical step in developing a comprehensive insider threat detection system. This process involves combining the outputs of various detection algorithms to enhance the overall

performance and reliability of the threat detection framework.Byleveragingthestrengthsofdifferentmodels, wecanachievemoreaccurateandrobustpredictions.

Model Serialization:

If you're using a framework like TensorFlow.js or a compatible Node.js library, ensure you save your trained modelcorrectly.Forexample,inTensorFlow.js,youcansave yourmodelasfollows:

Code: await model.save('file://path/to/model');

API Development:

Next,youwillcreateanAPIusingaframeworklikeExpress. This API will handle requests from users, specifically for uploadingimagesofpets.Whenauseruploadsanimage,the APIwillprocesstheimageandusethetrainedmodeltomake predictionsaboutthepet’sbreedandhealthstatus.

To facilitate user interaction, you will need to develop a simple web interface. This interface will allow users to uploadimagesoftheirpetsandsubmitthemforanalysis. The design should be straightforward, enabling users to easilynavigatethepageandviewresults.

Once the application is complete, you will deploy it to a cloud service.Thisstepinvolveshostingtheapplicationona platformthatmakesitaccessibleovertheinternet.Cloud servicesofferscalability,ensuringthatyourapplicationcan handlemanyuserssimultaneously

After deployment, it’s important to monitor how the application performs. This includes tracking the speed of responsesandtheaccuracyofthepredictions.Implementing logging will help you understand user interactions and identifyanypotentialissues.

TheintegrationoftrainedmodelsintoaNode.jsapplication for identifying pet breeds and health conditions is a multifacetedapproachthatcansignificantlyenhanceuser experience and operational efficiency. Below are key evaluationpointsregardingthisprocess:

Effectiveness

: The primary objective of integrating the model is to provide accurate predictions.Thesuccessofthisintegrationhingesonthe model’s performance during testing and real-world application.Continuousmonitoringandperiodicretraining with new data are crucial for maintaining accuracy,

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

ensuringthatthemodeladaptstoanychangesinthedata distribution

User Experience: Thedesignofthewebinterfaceplaysa vital role in user engagement. A straightforward and intuitive layout encourages users to interact with the application. The ease of uploading images and receiving resultscontributestooverallusersatisfaction.Gathering feedback is essential to identify areas for improvement, enabling the development team to make necessary adjustmentstoenhanceusability.

Scalability and Deployment: Deployingtheapplicationon a cloud platform allows for scalability, meaning that the applicationcanaccommodateagrowingnumberofusers withoutperformancedegradation.Thisflexibilityiscritical for applications expecting fluctuating traffic. Proper deployment strategies can ensure that the application remainsresponsiveandavailable.

Performance Monitoring:

Implementingrobustloggingandmonitoringtoolsallows the development team to track performance metrics effectively.Thisincludesmonitoringresponsetimes,error rates,anduserinteractions.Suchinsightsenableproactive troubleshooting and continuous optimization of the application,fosteringabetteruserexperience.

Continuous Improvement Strategy:

Establishingaroutineformodelretrainingandupdatesis vitalforsustainingtheeffectivenessoftheapplication.As user preferences and pet health data evolve, the model must be periodically refreshed to incorporate new information.Additionally,respondingtouserfeedbackcan leadtofeatureenhancementsandincreasedsatisfaction.

The implementation of the pet breed and health identification system involved several critical steps, from setting up the environment to deploying the application. Initially, the project was established using Node.js, with ExpressastheframeworkforbuildingtheAPI.Thetrained model was loaded into the application to facilitate predictionsbasedonuser-uploadedimages.

A. Datasets overview

Dataset Source:

(VisualGeometryGroup-UniversityofOxford)

The success of this research hinged significantly on the diverse and comprehensive datasets employed, each serving a distinct purpose in the detection of insider threats. The primary datasets utilized include decoy file

access logs, device activity logs, logon records, psychometricassessments,anduserprofileinformation..

Composition of the Dataset:

A diverse array of high-quality images depicting different pet breeds, primarily dogs and cats. Each image is labeled with the breed name and includes additional annotations regarding visible health conditions.

Breed Classification: The specific breed of the pet, allowingthemodeltolearndistinguishingfeaturesof variousbreeds.

Health Indicators: Observationsregardinganyvisible healthissues,includingsymptomsorconditionsthat mayaffectthepet’swell-being.

Additional Attributes: Information such as age, weight, and sex of the pets, which can enhance the model’sabilitytomakeaccuratepredictions.

Data Collection Process:

Data from reputable sources, such as veterinary institutions and animal welfare organizations, provided a foundational collection of images and healthinformation.

Anadditionalcomponentofthedatasetwasgathered through a community-driven approach, where pet ownerssubmittedimagesandhealthdetailsoftheir pets.Thisaspectnotonlyenrichedthedatasetbutalso promotedengagementwiththeusercommunity.

Data Quality and Diversity:

Images were screened for clarity and relevance, ensuringthatonlyhigh-qualityimageswereincluded. Annotationswereverifiedforaccuracytomaintainthe integrityofthedata.Thedatasetencompassesawide range of breeds, sizes, and health conditions. This diversityisvitalfortrainingrobustmodelscapableof generalizingwellacrossdifferenttypesofpets.

Data Preprocessing:

All images were resized to a standard dimension to maintainconsistencyandfacilitatefasterprocessing duringmodeltraining.Pixelvalueswerenormalizedto ensurethatthemodelcouldlearneffectivelywithout beinginfluencedbyvariationsinimagebrightnessor contrast.

Establishing the environment for a pet breed and health identification application is a critical step that ensures all

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

necessarytoolsandframeworksarecorrectlyinstalled.This setup will facilitate the development process and ensure smoothoperation.

Preprocessing Setup and Training:

All images in the dataset must be resized to a uniform dimension to ensure consistency. This is important becausemostneuralnetworksrequireinputimagestobe ofthesamesize.Forinstance,resizingimagesto224x224 pixels is a common practice. Choose an appropriate architectureforthemodel.ConvolutionalNeuralNetworks (CNNs)arecommonlyusedforimageclassificationtasks due to their ability to extract features effectively from images.Dividethedatasetintotraining,validation,andtest sets. A typical split could be 70% for training, 15% for validation, and 15% for testing. This division allows for effectiveevaluationofthemodel’sperformanceandhelps infine-tuning.Initiatethetrainingprocessbyfeedingthe trainingdataintothemodel.Duringthisphase,themodel learnstorecognizepatternsbyadjustingitsweightsbased ontheinputdata.Monitorthetraininglossandaccuracy metricstogaugeperformance.Utilizethevalidationsetto tune hyperparameters and prevent overfitting. By evaluatingthemodelonunseendataduringtraining,you canensurethatitgeneralizeswelltonewinputs.

Model Selection: Selecting the appropriate machine learningmodelisacrucialstepinbuildinga reliablepet breedandhealthidentificationsystem.Thechoiceofmodel significantly impacts the accuracy and efficiency of predictions. Below are the key considerations and steps involvedinthemodelselectionprocess.

Understanding the Problem:

Beforechoosinga model,it’sessential toclearly definetheproblem.Inthiscase,theobjectiveisto classify images of pets into specific breeds and identifypotentialhealthissues.Thisisanimage classification task, which typically benefits from modelsthatcanprocessvisualdataeffectively.

Convolutional Neural Networks (CNNs):

CNNsarewidelyregardedasthego-toarchitecture for image-related tasks. They excel in automatically detecting features such as edges, shapes, and textures through their layered structure.PopularCNNarchitectures.Knownfor their depth and capability to capture intricate patternsinimages.Featuresresidualconnections thathelpintrainingdeepernetworks,makingit suitable for complex datasets. Inception utilizes multiple filter sizes within the same layer, allowingittocapturefeaturesatvariousscales.

Testing Different Models:

Train each model on the prepared dataset, ensuring that the same training conditions (e.g., epochs, learning rate) are applied for fair comparison.Monitorperformancemetricssuchas accuracy,precision,andrecallonavalidationset. This step helps assess how well each model generalizestounseendata.

Selecting the Final Model:

The model should be able to make predictions quickly, especially if the application will handle numerous requests simultaneously. Assess the computational resources required for both trainingandinference.Chooseamodelthataligns withtheavailablehardwarecapabilities.

B. Experimental Execution

Setting Up the Experimental Environment:

Ensurethatthehardware,suchasGPUs,isappropriately configured to handle intensive computations. Utilizing powerful GPUs can significantly accelerate training and inferencetimesfordeeplearningmodels.

SoftwareDependencies:Verifythatallrequiredlibraries andframeworksareinstalled,includingTensorFlow.jsfor modelimplementationandanyotherrelevantpackagesfor datahandlingandprocessing.

Train a Simple Model:Beginwithabasicmodel,suchasa simpleCNNarchitecture,toevaluatehowwellitperforms onthedataset.Thismodelwillserveasareferencepoint formorecomplexarchitectures.

Record Performance Metrics:Duringtraining,monitor keymetricssuchaslossandaccuracy.Thisdatawillhelp identifywhetherthemodelislearningeffectivelyandcan highlight potential issues such as overfitting or underfitting.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

C. Performance Evaluation

Running Comprehensive Experiments:

Model Variations: Experiment with different model architectures, such as deeper CNNs, pre-trained models with transfer learning, and ensemble methods. Each variation should be trained separately to compare performance.

Hyperparameter Tuning:Adjusthyperparameterslike learningrate,batchsize,andnumberofepochs.Conduct experiments with different combinations to identify the optimalsettingsformodelperformance.

Data Augmentation: Implement data augmentation techniquestocreateadditionaltrainingsamples.Thisstep helpsimprovethemodel'sabilitytogeneralizeandcanbe tested by comparing performance with and without augmentation.

Evaluation and Analysis:

Performance Metrics: Utilizemetricssuchasaccuracy, precision,recall,andF1scoretoassesstheeffectivenessof eachmodel.Thesemetricsprovideacomprehensiveview ofmodelperformance,particularlyinclassificationtasks.

Confusion Matrix: Generate a confusion matrix to visualizethemodel'spredictionsagainsttheactuallabels. This matrix can help identify specific breeds or health conditionsthatthemodelmaystrugglewith.

Validation and Test Sets: Evaluate the model on validation and test sets to ensure that performance metricsreflectthemodel’sabilitytogeneralizetounseen data.

Result Compilation: Theperformanceofvariousmodels wasevaluatedusingmetricssuchasaccuracy,precision, recall,andF1score.Thefollowingresultswereobserved:

Baseline Model: The initial simple CNN model achievedanaccuracyofapproximately75%onthe validationset.Whilethisprovidedagoodstarting point,furtherimprovementswerenecessary.

Transfer Learning: When implementing deeper architectures, such as ResNet and Inception, the accuracyimprovedsignificantly.TheResNetmodel reached an accuracy of 88%, while the Inception modelachievedanimpressiveaccuracyof91%on the validation dataset. Utilizing transfer learning with pre-trained models further enhanced performance.

High Accuracy for Common Breeds:Fine-tuninga pre-trained VGG16 model yielded a validation accuracy of 93%, demonstrating the power of leveragingexistingknowledgefromlargerdatasets. The model excelled at identifying popular breeds suchasLabradorRetrieversandGoldenRetrievers, with precision rates exceeding 95%. The model struggled with less common breeds, resulting in lower accuracy and higher misclassification rates for these categories. For instance, certain breeds wereoftenmisidentifiedassimilar-lookingbreeds

Health Detection Metrics:Themodelachieveda recall of 80% for detecting common health conditions like skin infections and obesity. However,itshowedlowerrecall(around65%)for more subtle conditions that require expert evaluation.

User Feedback: Initial feedback from users indicated that the model provided useful insights intopotentialhealthconcerns,althoughsomeusers noted the need for more detailed information regardingspecificsymptoms.

Trade-offs: While more complex models like ResNetofferedhigheraccuracy,theyalsorequired greater computational resources and longer trainingtimes.Simplermodelswerequickertotrain but did not perform as well on the validation dataset.

Efficiency:TheVGG16model,althoughslightlyless accurate than the Inception model, had a faster inference time, making it more suitable for applicationsrequiringreal-timepredictions.

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

V. RESULTS INTERPRETATION AND COMPARISONS

The analysis of results from the pet breed and health identification project provides valuable insights into the effectiveness of various models and their capabilities in both breed classification and health condition identification. This section interprets the results and comparestheperformanceofdifferentapproaches.

Results Interpretation

Interpreting Model Performance:

Accuracy Rates: The accuracy achieved by the models indicates their overall effectiveness. The baseline model, with an accuracy of 75%, laid the groundwork for understanding the model's initial capabilities. Subsequent models, particularly the ResNet and Inception architectures, significantly outperformed the baseline, achieving accuracies of 88% and 91%, respectively. This improvement underscorestheimportanceofusingdeeperandmore complexnetworksforimageclassificationtasks.

Precision and Recall:Precisionandrecallarecritical metricsforunderstandingthemodel'sreliability.High precision indicates that the model makes few falsepositive predictions, while high recall indicates it successfullyidentifiesmostofthepositivecases.The advancedmodelsdemonstratedimprovedprecision, especiallyforcommonbreeds,withvaluesexceeding 95%. However, the lower recall for rare breeds suggeststhatwhilethemodelisaccurate,itmaynot recognizeallinstancesoflesscommonbreeds.

The transition from the baseline model to more advancedarchitecturesillustratedasubstantialleapin performance. The initial model's limitations became apparentasitfailedtogeneralizeeffectivelytovarious breeds.Incontrast,bothResNetandInceptionmodels showed robust capabilities in learning complex features,leadingtobetterclassificationoutcomes.The applicationoftransferlearningwiththeVGG16model showcased the benefits of utilizing pre-trained networks. This model not only provided a higher accuracyof93%butalsorequiredlesstrainingdata, makingitapracticalchoicewhendealingwithlimited datasets. This finding emphasizes how leveraging existing models can accelerate development and enhanceperformance.

The model's ability to detect common health issues like obesityandskininfectionswascommendable,witharecall rateof80%.However,thelowerrecallformorenuanced conditions highlights the challenges faced by machine learningmodelsinidentifyingsubtlehealthproblemsthat may require expert evaluation. This suggests a potential areaforfutureresearch,whereintegratingadditionaldata orexpertfeedbackcouldenhancedetectioncapabilities.

Initial user feedback indicated that while the model provided valuable insights into potential health concerns, someusersdesiredmoredetailedexplanationsregardingthe identified conditions. This feedback suggests the need for developing a user-friendly interface that offers comprehensive information based on the model's predictions.

The results indicate that the models can be effectively utilized in real-world applications for pet owners and veterinarians. However, to enhance their practical utility, severalareasforimprovementaresuggested:

Incorporating More Diverse Data:Expandingthe dataset to include a broader range of breeds and health conditions will likely improve the model's generalization capabilities, particularly for underrepresentedbreeds.

Model Optimization: Further tuning of hyperparametersandexploringensemblemethods could yield additional performance gains. Combining the strengths of various models might leadtoimprovedaccuracyandefficiency.

User-Centric Development: Focusing on user experience by providing clear, actionable insights

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

and recommendations based on the model's predictionscouldsignificantlyenhancethevalueof theapplication.

Afterclassification,theresultsaresavedintoaCSVfilefor furtheranalysisandrecord-keeping.TheCSVfilecontains detailed information about each instance, including the scoresfromeachdetectionmodule,thecombinedscore,the final classification, and any additional metadata such as timestamps and user identifiers. This allows for easy tracking and review of detected threats, enabling prompt and effective responses to both malicious and accidental threats.Table.1showsthedetectedthreatsbythesystem.

Graphmodelaccuracy

The pet breed and health identification project has successfully demonstrated the application of machine learning techniques in classifying various pet breeds and identifying potential health issues. Through rigorous experimentationwithmultiplemodelarchitectures,itwas evident that advanced deep learning models, particularly thoseutilizingtransferlearning,significantlyoutperformed baseline models in terms of accuracy and reliability. The

findingsindicatethatleveragingpre-trainednetworkscan not only enhance classification performance but also optimizetrainingtimeandresourceutilizationThemodels showed commendable capabilities in identifying common breedsandhealthconditions,providingvaluableinsightsfor pet owners and veterinarians. However, the results also highlighted certain limitations, particularly in accurately identifying less common breeds and subtle health issues, which require further refinement and enhancement .Increasing the diversity and size of the dataset is crucial. Includingmoreimagesofrarebreedsandawidervarietyof health conditions will enhance the model's ability to generalize and improve its accuracy across all categories. Exploringmoresophisticatedmodelarchitectures,suchas ensemblemethodsorhybridmodels,couldyieldevenbetter performance.Techniqueslikefew-shotlearningmayalsobe beneficialindealingwithrarebreedsbytrainingthemodel tolearnfromalimitednumberofexamples.Incorporating supplementary data, such as veterinary records or health history,couldprovideamorecomprehensiveunderstanding ofpethealth.Thisinformationcouldbeutilizedtoenhance predictive capabilities and deliver more accurate health assessments. Developing a more interactive and userfriendly interface can improve the application’s usability. Features such as personalized health recommendations, detailed explanations of identified conditions, and interactivetoolsforpetcarecansignificantlyincreaseuser engagementandsatisfaction.Implementingthemodelina real-time environment, such as mobile applications, could allowpetownerstoreceiveimmediatefeedbackabouttheir pets'healthandbreedidentification,makingthetoolmore accessibleandpractical.Partneringwithveterinariansand animal health experts can provide valuable insights for refining model predictions and recommendations. Their expertisecouldenhancethemodel’sapplicabilityinclinical settingsandimproveitsreliability.

[1]Adrian,J.,Deliramich,A.,&Frueh,B.(2009).Complicated grief and post-traumatic stress Clinic, 73, 176-187. doi:10.1521/bumc.2009.73.3.176

[2]DP.Laflamme,Nutritionforagingcatsanddogsandthe importanceofbodycondition.VetClinNorthAmSmallAnim Pract,vol.35,no.3,pp.713-42,May2005.

[3]VVTynesandGM.Landsberg,"NutritionalManagement ofBehaviorandBrainDisordersinDogsandCats", Vet Clin North Am Small Anim Pract,vol.51,no.3,pp.711-727,May 2021.

[4] Nutrient Requirements of Dogs and Cats, Washington, DC:TheNationalAcademiesPress,2006

[5]ElizabethM.Lundetal.,"Prevalenceandriskfactorsfor obesity in adult cats from private US veterinary

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 03 | Mar 2025 www.irjet.net p-ISSN: 2395-0072

practices", International Journal of Applied Research in Veterinary Medicine,vol.3,2005.

[6] G. Menichetti, B. Ravandi, D. Mozaffarian and A. L. Barabasi, "Machine learning prediction of food processing", MedRxiv,pp.2021-05,2021.

[7]A.Schlageter-Tello,G.C.Fahey,T.Freel,L.Koutsos,P.S. Miller and W. P. Weiss, "ASAS-NANP SYMPOSIUM: RUMINANT/NONRUMINANTFEEDCOMPOSITION:Challenges and opportunities associated with creating large feed compositiontables", Journal of animal science,vol.98,no.8, pp.skaa240,2020.

[8] H. Braun, D. Chusnutdinow, S. Koller and A. Rinderknecht, "Petmobi: Machine learning-based personalized pet nutrition and health app", Proceedings of the 2020 IEEE International Conference on Health-care Informatics (ICHI),pp.1-10,2020

[9] K. Thongkanchorn, S. Kanchanapreechakorn and W. Kusakunniran,"KnowingYourDogBreed:IdentifyingaDog Breed with Deep Learning", November 2020 International Journal of Automation and Computing,vol.18,no.5.

[10] K. Lichy, P. Lipinski and B. Morawska, "Dog Breed Library with Picture-Based Search Using Neural Networks", 2021 IEEE 16th International Conference on Computer Sciences and Information Technologies (CSIT),pp. 17-20,2021.

[11]Mulligan Kaitlyn and Pablo Rivas, "Dog breed identification with a neural network over learned representations from the xception cnn architecture", 21st International conference on artificial intelligence (ICAI 2019), 2019.

[12]Bhavani,D.Durga,MirHabeebullahShahQuadriandY. RamReddy,"DogBreedIdentificationUsingConvolutional Neural Networks on Android", CVR Journal of Science and Technology,vol.17,no.1,pp.62-66,2019..

[13] Shi Wenting, Chen Muyun Liu and Fangyu Liu, Dog Breed Identification,2018.

[14]AdityaKhosla,NityanandaJayadevaprakash,Bangpeng Yao and Li Fei-Fei, "Novel dataset for Fine-Grained Image Categorization", First Work-shop on Fine-Grained Visual Categorization (FGVC) IEEE Conference on Computer Vision and Pattern Recognition (CVPR),2011.

[15]A.Zamansky,A.M.Sinitca,D.I.Kaplun,L.M.L.Dutra and R. J. Young, "Automatic Estimation of Dog Age: The DogAge Dataset and Challenge" in Lecture Notes in Computer Science, Springer International Publishing, pp. 421-426,2019.

[16] Pongracz Peter et al., "Human listeners are able to classify dog (Canis familiaris) barks recorded in different

situations", Journal of comparative psychology,vol.119,no.2, pp.136,2005.

[17]M.T.Yazici,S.BasurraandM.M.Gaber,"Edgemachine learning:Enablingsmartinternetofthingsapplications", Big data and cognitive computing,vol.2,no.3,pp.26,2018.

[18]D.Elliott,E.Martino,C.E.Otero,A.Smith,A.M.Peter,B. Luchter-hand, et al., "Cyber-physical analytics: Environmentalsoundclassificationattheedge", 2020 IEEE 6th World Forum on Internet of Things (WF-IoT), pp. 1-6, 2020.

[19] M. J. Baucas and P. Spachos, "Using cloud and fog computing for large scale iotbased urban sound classification", SimulationModellingPractice andTheory,vol. 101,pp.102013,2020.

[20] H. Perez-Espinosa, V. Reyes-Meza, E. Aguilar-Benitez and Y. M. Sanzon-Rosas, "Automatic individual dog recognition based on the acoustic properties of its barks", Journal of Intelligent & Fuzzy Systems,vol.34,no.5, pp.3273-3280,2018.

[21]StephanieGibeault, CPDT (2017 December) Learning to Speak Dog - The Meaning of Your Dog's Barks, [online] Available: https://www.akc.org/expertadvice/lifestyle/learn-speak-dog-meaning-dogs-barks/.

[22] Flannigan Gerrard and H. Dodman, "Risk factors and behaviors associated with separation anxiety in dogs", Journal of the AmericanVeterinaryMedicalAssociation, vol.219,no.4,pp.460-466,2001.

2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008