APPLICATION FOR INDIAN CURRENCY NOTE DETECTION

Sree Ashrit Dande1, Gowtham Reddy Uppunuri2, Dr. Ajay Singh Raghuvanshi1,2UG Student, National Institute of Technology, NIT Raipur, Chhattisgarh, India

Assistant Professor, Dept. of Electronics and Telecommunication Engineering, National Institute of Technology, NIT Raipur, Chhattisgarh, India

Abstract - Indian currency notes, issued by Indian government, are involved in monetary transactions across India. The paper aims to provide an efficient method for detection of Indian currency notes, which is an application of object detection using deep learning.Thedetectionofcurrency notes aids visually impaired people who can’t recognise the monetary value in their possession.Hence,aneffectivemodelis designed in which currency notes are detected and human speech of detected currency note is provided. The notes are first trained using YOLO v5 model and then, the results are validated against validation data. The evaluation metrics are observed and model losses are minimized. Then, a new test data is considered as inference for recognition of classes. The web app is designed using YOLOv5, Flask and web technologies. The web appdetectedIndiancurrencynoteswith bounding box probability greater than 0.80 for all the classes. The labels of each note are detected with bounding box probability greater than 0.90 and labelsarespokenoutclearly using this designed model.

Key Words: Indian Currency notes, YOLO v5 Algorithm, Flask, JavaScript, Evaluation Metrics, Model losses

1. INTRODUCTION

ObjectDetectionistechniqueusedindetectionofreal time objects,inimagesandvideosusingeithermachinelearning ordeeplearningandOpenCV.Objectdetectionisappliedin surveillance, image retrieval, security, Advanced Driver AssistanceSystems(ADAS).Objectdetectionincludesimage classificationandobjectlocalization[1].

Based on WHO statistics, at least 2.2 billion people have a nearordistancevisionimpairment.Theleadingcausesfor visual impairment include uncorrected refractive errors, cataract, diabetic retinopathy, glaucoma and age related visionimpairment[2]

In 2017, National Center for Biotechnology Information (NCBI) reported that 253 million people globally were visually impaired among which 217 million had visual

impairment and remaining were identified as blind [3]. Visuallyimpairedlacktheabilitytointerpretbank notesis very limited and usually depend on others. The aim is to overcome this difficulty and to provide easier currency detection for visually impaired. The currency notes are detectedbasedonfeatureextraction,consideringgeometric sizeandcharacteristictexture[4].

InIndia,IndianCurrencyRecognitionSystemcurrentlyuses CNN and OpenCV based recognition system to detect monetarynotes[5].Thismodelhaslowerdetectionaccuracy than the proposed system which uses YOLOv5 for object detection.

TheproposedsysteminvolvesYOLOv5algorithmtodetect testimages.Themodelistrainedusingtransferlearningand islaterinferencedagainsttestdata.

Aweb basedapplicationisdesignedusingYOLOv5andFlask. Thisappprovideslabelsonthescreenaswellasaudiofileof detected currency note, when a currency note image is uploaded.Theaudiofilecanbelistenedtousingplaybutton provided in the web app. This will not only aid visually impaired, but also, provide easier way to recognize Indian currencynotestotouristsandforeignersvisitingIndia.

ThechallengeistodesignawebapplicationbasedonYOLOv5 modeltodetectIndiancurrencynotesaccuratelyandprovide optimizedandefficientresults,bothonlaptopandmobile.

Therestofthepaperisorganizedasfollows.First,reviewof related work is briefly discussed in Section II, followed by methodology in Section III. The experimental results are presented, followed by conclusion and future scope are presentedinSection1V.

2. LITERATURE REVIEW

Shenmei Luo and Wenbin Zheng (2021) proposed a table detectionmethodforacademicpaperbasedonYOLOv5(You OnlyLookOncev5)wheredetectionaccuracyandspeedof

competitive

observedtobesuperior

Sagaret.al(2021)designedasystemfordetectionofIndian currenciesthataidsvisuallyimpairedpeopletorecognizeand readoutthepossibleIndianpapercurrencieswith79.83% accuracy[4].TheproposedmodelisbasedonYOLOv5,where itcomparestheefficiencyofYOLOv5withCNNalgorithmfor currencydetection.

Sai Nadh et. al (2020) has developed a module for blind peopleanddesignmobileapplicationforvisuallyimpaired Indiancitizenstorecognizecurrencyvaluewhenheldnear androidphonetopredictthecurrencyvaluesothatcurrency canberecognizedcorrectly[7].

Shreyaet.al(2021)designedamobileapplicationforVisually Impaired Persons Blind Assist [8] based on web technologiestoscancurrencynoteusingdevice’scameraand getitsdenominationusingavoiceassistant.

Raghad Raied Mahmood et. al proposed real time Iraqi currencynotedetectionsystemthataidsvisuallyimpaired people using YOLO v3 and gTTS to obtain audio output. customIraqibanknotedatasetfordetectionandrecognition ofbanknotes.TheIraqinotesweredetectedinashorttime withhighmAPof97.405%[9]

MansiMahendruandSanjayKumarDubey(2021)proposed systemforvisuallyimpairedtodetectmultiple objectsand promptvoicetoalertpersonstatingnearandfartherobjects aroundthemusinggTTSandTensorFlow[10].Acomparison betweenYOLOandYOLO_v3forobjectdetectionwastested under same criteria based on measures like accuracy and modelperformance.

3. METHODOLOGY

Theexperimentalprocedureisdividedinto YOLOv5Model, EvaluationParametersandWebappDesign.

3.1 YOLO v5 Model

Themodelisselectedfromultralyticsrepository,whichisthe official YOLOv5 repository on GitHub. The repo is cloned along with installation of dependencies. Later, the configuration (yaml) files are customized by providing the classnamesfrom₹10to₹2000.TheYOLOv5modelchosen comprisesof283convolutionallayers.

DatasetandExperimentalEnvironment:

Currencynotesusedintheexperimentarecollected from sources like banks and shops. The dataset comprisesofoldnotesof₹10,₹20andnewnotesof ₹50, ₹100, ₹200, ₹500 and ₹2000 images (in .jpg format), in equal proportions. The images data comprise200imagesperclass,whicharecapturedon differentbackgroundsanddifferentvisualsettings.

Dataset Augmentation: These 1400 images are then augmented using rotation, blur and shift transformation techniques to generate dataset with 5600images.

Dataset Annotation: These 5600 images are then manuallyannotatedusingMakeSenseannotatortool. The annotations are then exported in YOLO format andstoredinlabelsdirectory

Split the entire datasetalong with theirlabels in 70 30 split.Thetrainingdatasetcomprises3920imagesand theirlabels.Remaining1680imagesalongwiththeir annotationscomprisethevalidationdataset.

Mount the dataset onto Google Drive. Connect to GPU runtimeinGoogleColaboratory.

Setting up the config files: The yaml files (custom_train andcustom_data)thatcontainmodelparametersare modified accordingly for seven classes. The class namesalongwithtotalclassesaresetaccordingly.The paths for train and validation dataset are provided. Thesefilesmustbepresenttotrainthedataset.

ClonetheYOLOv5repositoryintheIPYNBfileandinstall requireddependencies.Therunningenvironmentfor thismodelis CUDA:0(TeslaT4,15110MiB)(2CPUs, 12.7 GB RAM, 39.9/78.2 GB disk). Development packagesincludetorch1.10.0andPython3.8.

Trainthemodel:Theimagesizeissetto416.Themodel epochsaresetto30andbatchsizeissetto32.Setthe directorypathfortraindatasetandconfigurationfiles. Then, run the python command to train the entire datasetusingYOLOv5model.Theevaluationmetrics are Precision, Recall and mean Average Precision (mAP).Thelossescalculatedforbox,objectandclass foreachepoch.

Fig -1:Modelstoringtraindatalabels

Storethebestmodelweightsin.ptformat.

Inference the model against test data: The model is inferencedusingbest.ptasmodelweightandsetting thedirectorypathoftestdata.Thetestdatacontains 100 images of all classes and video file containing currencynoteimageframes.

3.2 Evaluation Parameters

Theevaluationmetricsusedtoanalysetheresultsinclude Precision,recallandmAP.Highervaluesofrecall,precision andmAP(meanAveragePrecision)arepreferredtodetect thecurrencynotesaccurately.Theothermetricsincludebox loss,objlossandclsloss.

InaconfusionmatrixwithTruePositive(TP),TrueNegative (TN), False Positive (FP) and False Negative (FN), the evaluation metrics Precision, recall and F1 score can be calculated.

Recall: Recall determines correctly identified positives amongtotalidentifiedpositives[11]

Fig. -2: ConfusionMatrixoftraindata

Precision:Precisiondeterminescorrectlyidentifiedpositives amongtotaltruepositives[11].

mAP (mean Average Precision): mAPrepresentsmeanof average accuracy in recognition of currency labels. mAP evaluatescomprehensivedetectionaccuracy[11]

mAP @0.5 indicates the precision recall area that lies abovethethreshold0.5.

Model Losses: There are three losses for every epoch, namelyboxloss,classificationlossandobjectloss.

Box loss: Itrepresentshowwellthealgorithmlocatesobject centerandpredictboundingboxes[12]

Objectness or obj Loss: Theprobabilitythatobjectexistsin proposedregionofinterest[12]

Classification Loss orclsLoss:Classificationlossrepresents howwellthealgorithmpredictsclassofgivenobjectcorrectly [12]

The evaluation metrics along with model losses for each epochareshowninFig3.

Fig. -3: Lossesandevaluationmetricsfor30epochson traindata

Precision Recall mAP

0.998 0.997 0.968

0.997 1.00 0.967

0.998 0.996 0.973

0.997 0.998 0.971

1.00 0.997 0.969

0.986 0.976 0.963

0.973 0.941 0.961

3.3 Web Application

Thewebappdesignissubdividedintofront endandback end design. The web application detects Indian notes with accuracy >90% and provides speech out as audio file in EnglishandHindilanguages.

Front end design: Infrontend,webpageisformattedand designed using web technologies. The webpage layout is designedusingHTML.Then,buttonsareprovidedtoupload andsendimagestotheserver.Thesebuttonsarestyledusing CSSandtheyforwardimagetoAPIfromlocalsystem.CSSis usedtostylewebpage.JavaScriptmakesthepageresponsive tobothmobileandlaptop.

Back end design: It involves object detection of currency notes.Back endserverisbuiltusingFlaskinpython[13].The servergeneratesroutesfordifferentwebpagesandAPIcall. Whenanimageissentbyuser,usingSendButton,theAjax callismadeandpre trainedmodelrunsonthesentimage. The obtained results comprise bounding box and its probabilityalongwithclasslabel.TheseresultsfromAPIcall areforwardedbacktorenderthewebpage.Then,thelabels are generated for the sent image. The speech out files are producedusinggTTSinback end.Finally,usingplaybutton, theusercanheartheaudiogeneratedfileofdetectedlabelof thesentimage,bothinEnglishandHindilanguages[14]

4. RESULTS

4.1 YOLOv5 Model

TheYOLOv5detectedresultsfortestdataimages(singleand multipleclass)arepresentedintabularformat.

Table 2: TestdataresultsforproposedYOLOv5model

Denomin ation Notes correctly detected (outof10)

Highest Bounding box probability

Least Bounding box probability

8 0.96 0.82

9 0.95 0.93

9 0.95 0.83

10 0.92 0.84

9 0.96 0.83

8 0.96 0.87

7 0.93 0.80

The model was tested against images with 3 classes and4 classes, each containing 10 images. The model detected 8 correctlyin3classesand7correctlyin4classes.

Fig 4: WebApplicationDesignonLaptop

Fig 5: WebApplicationDesignonMobile

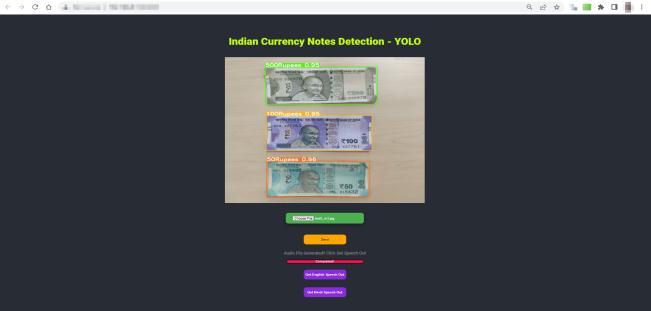

4.2 Web Application

The designed web app detects currency note images with bounding box probability greater than 0.90. The results inferencedagainsttestdatacontainingsingleclassareshown inFig7,8,11,12and13.Theresultsformultipleclassimages areshowninFig9,10and14.

resultsobtainedonlaptopforsingleclassandmulti class currencynoteimagesareshownbelow.

5. CONCLUSION AND FUTURE SCOPE

boundingboxprobability.Thewebappprovidesspeech outfilesofdetectedcurrencynotelabelsinEnglishaswellas

modelcanbeextendedtodetectforeigncurrencynotes. OpticalCharacterRecognition(OCR)canbeintroducedinthe systemtoenhancethemodelperformance.

REFERENCES

[1] J.G.ShanahanandL.Dai,“Realtimeobjectdetection via deep learning based pipelines,” Int. Conf. Inf. Knowl. Manag. Proc., pp. 2977 2978, 2019, doi: 10.1145/3357384.3360320.

[2] World Health Organization;, “Blindness and vision impairment: WHO Factsheet ,” World Heal. Organ., vol.11,no.2018,pp.1 5,2021,[Online].Available: https://www.who.int/news room/fact sheets/detail/blindness and visual impairment.

[3] P. Ackland, S. Resnikoff, and R. Bourne, “World blindness and visual impairment: Despite many successes,theproblemisgrowing,” Community Eye Heal. J.,vol.30,no.100,pp.71 73,2018.

[4] S.D.Achar,C.ShankarSingh,C.S.SumanthRao,K. Pavana Narayana, and A. Dasare, “Indian Currency RecognitionSystemUsingCNNandComparisonwith YOLOv5,” 2021 IEEE Int. Conf. Mob. Networks Wirel. Commun. ICMNWC 2021, pp. 1 6, 2021, doi: 10.1109/ICMNWC52512.2021.9688513.

[5] H.Hassanpour,A.Yaseri,andG.Ardeshiri,“Feature extractionforpapercurrencyrecognition,” 2007 9th Int. Symp. Signal Process. its Appl. ISSPA 2007, Proc., pp.7 10,2007,doi:10.1109/ISSPA.2007.4555366.

[6] S.LuoandW.Zheng,“You Only Look Once v5Based TableDetectionforAcademicPapers,” 2021Int.Conf. Digit. Soc. Intell. Syst. DSInS 2021, pp. 53 56, 2021, doi:10.1109/DSInS54396.2021.9670600

[7] K.K.S.N.Reddy,C.Yashwanth,S.H.Kvs,P.A.T.V. Sai, and S. Khetarpaul, “Object and Currency Detection with Audio Feedback for Visually Impaired,” 2020 IEEE Reg. 10 Symp. TENSYMP 2020,

no. June, pp. 1152 1155, 2020, doi: 10.1109/TENSYMP50017.2020.9230687.

[8] P. Shreya, N. Shreyas, D. Pushya, and N. Uma MaheswarReddy,“BLINDASSIST :AOneStopMobile Application for the Visually Impaired,” 2021 IEEE Pune Sect. Int. Conf. PuneCon 2021,pp.1 4,2021,doi: 10.1109/PuneCon52575.2021.9686476.

[9] R. R. M. Et al., “Currency Detection for Visually ImpairedIraqiBanknoteasaStudyCase,” Turkish J. Comput. Math. Educ.,vol. 12,no. 6,pp.2940 2948, 2021,doi:10.17762/turcomat.v12i6.6078.

[10] M. Mahendru and S. K. Dubey, “Real time object detection with audio feedback using Yolo vs. Yolo_V3,” Proc. Conflu. 2021 11th Int. Conf. Cloud Comput. Data Sci. Eng., pp. 734 740, 2021, doi: 10.1109/Confluence51648.2021.9377064.

[11]

R.Padilla,S.L.Netto,andE.A.B.DaSilva,“ASurvey on Performance Metrics for Object Detection Algorithms,” Int. Conf. Syst. Signals, Image Process., vol. 2020 July, pp. 237 242, 2020, doi: 10.1109/IWSSIP48289.2020.9145130.

[12] M. Kasper Eulaers, N. Hahn, P. E. Kummervold, S. Berger, T. Sebulonsen, and Ø. Myrland, “Short communication: Detecting heavy goods vehicles in rest areas in winter conditions using YOLOv5,” Algorithms, vol. 14, no. 4, 2021, doi: 10.3390/a14040114.

[13] R.Swami,S.Khurana,S.Singh,S.Thakur,andP.K.R. Sajjala, “Indian Currency Classification Using Deep Learning Techniques,” 2022 12th Int. Conf. Cloud Comput. Data Sci. Eng., pp. 567 572, 2022, doi: 10.1109/confluence52989.2022.9734149.

[14] R.C.Joshi,S.Yadav,andM.K.Dutta,“YOLO v3Based Currency Detection and Recognition System for VisuallyImpairedPersons,” 2020 Int. Conf. Contemp. Comput. Appl. IC3A 2020, pp. 280 285, 2020, doi: 10.1109/IC3A48958.2020.233314.