International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

Ramprasad Chinthekindi1 , Shyam Burkule2 , Senthilbharanidhar Boganavijayakumar3

Abstract - The proliferation of artificial intelligence (AI) and machine learning (ML) applications has greatly intensified the requirements for storage systems. These systems must now facilitate high-speed and quick access to vast datasets. When evaluating storage options for AI/ML applications, the most important factors to consider are performance, scalability, and efficiency. Optimal performance requires achieving a high rate of input/output operationsandminimizingthetime delay,which isessential for efficiently managing massive quantities of data and meeting real-time processing requirements. The primary objective of this study is to investigate storage solutions for AI/MLworkloads, specifically evaluating their performance, scalability, and efficiency. The study utilizes a comprehensive literature review methodology. This study examined a total of 20 papers published between the years 2018 and 2024. Data is gathered from several web databases. This paper provides an in-depth examination of modern storage solutions specifically built for AI/ML workloads. It covers distributed file systems, object storage, and specialized block storage systems that are optimized to enhance the performance of AI/ML models. The study also examines the incorporation of sophisticated storage capabilities, such as automated data tiering, in-storage processing, and hardware accelerations, which play a crucial role in improving data access speeds and processing efficiency. This study's findings not only emphasize the present condition of storage technologies in aiding advanced AI/ML environments, but also propose future avenues for innovation in storage solutions to more effectively address the changing requirements of the AI/ML community. This study offers a fundamental reference for enhancingthestorageinfrastructurerequiredforthe future generationofintelligentapplications

Key Words: AI; ML; Scalability; Efficiency; Performance; Storage systems; Throughput; Latency; AI/ML Environments

In the ever-evolving field of AI and ML, the pursuit of efficient storage solutions is crucial for harnessing the complete capabilities of data-driven advancements [1]. This assessment aims to thoroughly analyse the performance, scalability, and efficiency of storage solutions designed specifically for AI/ML workloads. In a context where there is a significant increase in data

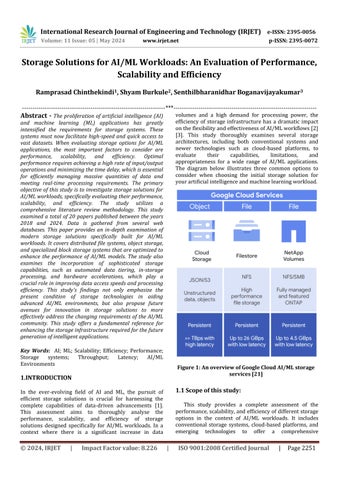

volumes and a high demand for processing power, the efficiency of storage infrastructure has a dramatic impact ontheflexibilityandeffectivenessofAI/MLworkflows[2] [3]. This study thoroughly examines several storage architectures, including both conventional systems and newer technologies such as cloud-based platforms, to evaluate their capabilities, limitations, and appropriateness for a wide range of AI/ML applications. The diagram below illustrates three common options to consider when choosing the initial storage solution for yourartificialintelligenceandmachinelearningworkload.

This study provides a complete assessment of the performance,scalability,andefficiencyofdifferentstorage options in the context of AI/ML workloads. It includes conventional storage systems, cloud-based platforms, and emerging technologies to offer a comprehensive

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

knowledge of their appropriateness and influence on AI/MLapplications.

1.2 Objectives of this study:

To examine the current storage solutions for AI/ML workloadsthatmaximizemodelperformance.

To assess storage systems' performance, including I/O throughput, latency, and scalability, while considering AI/ML training and inference phases' specific access patterns and data management needs.

To study the integration of sophisticated storage technologies that improve data access and processing performance.

To examine how new technologies, enhance storage system flexibility and performance for AI/ML workloads. To discusses how storage design affects system efficiency and the pros and cons of different storage designs

1.3 Research Questions:

Table -1: Relevant publications were identified from internet repositories in this study.

DIGITAL LIBRARY URL

Scopus https://www.scopus.com/sources.uri? zone=TopNavBar&origin=searchbasic

Semantic Scholar https://www.semanticscholar.org/

IEEEXplore https://ieeexplore.ieee.org/Xplore/ home.jsp

ScienceDirect https://www.sciencedirect.com/ Springer https://link.springer.com/ Webof Science https://wosjournal.com/

PubMed https://pubmed.ncbi.nlm.nih.gov/

1. How can AI/ML storage solutions optimize model performance andWhat metrics are essential for adapting access patterns and data management duringtrainingandinferencephases?

2. How do advanced storage technologies improve data access and processing in AI/ML workflows andWhat new technologies are emerging to improve storage system flexibility and performance for AI/ML workloads?

3. Whataretheimplicationsofstoragedesignchoiceson system efficiency, and what are the trade-offs associated with different storage designs in the contextofAI/MLworkloads?

2. BACKGROUND OF THE STUDY:

TherapidgrowthofAIandML inrecentyearshasgreatly transformedvariousindustries.Thishasledtoasignificant need for storage systems that can efficiently handle the

massive amounts of data required for these technologies. As artificial intelligence and machine learning algorithms getmoreadvancedandrequirelargeramountsofdata,the effectiveness of storage infrastructure becomes a crucial factor in determining performance, scalability, and efficiency [4]. Nevertheless, conventional storage systems sometimes encounter difficulties in meeting the distinct requirements imposed by AI/ML workloads, which are defined by the need for high-speed data access, quick retrievaltimes,andeffortlessscalability[5][6].Inorderto tackle these difficulties, researchers and professionals in the industry have been investigating a wide range of storage options, including distributed file systems, cloudbased platforms, and specialized hardware accelerators. This study seeks to explore the complex relationship between storage technologies and AI/ML workflows. It aims to provide a thorough assessment of their performance, scalability, and efficiency. The goal is to provide valuable insights for stakeholders and decisionmakers in designing storage infrastructure that is specifically optimized for the demanding requirements of AI/MLworkloads.

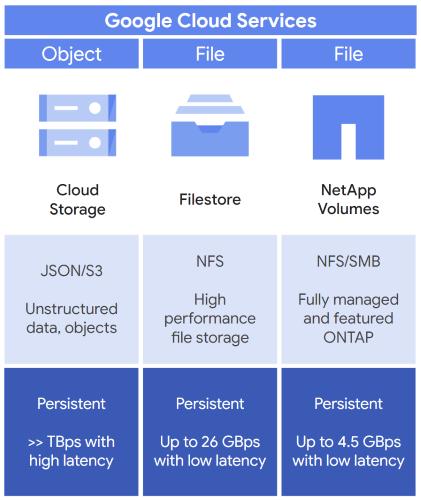

The PRISMA technique will be utilized to conduct a systematic literature evaluation on storage solutions for AI/ML workloads, focusing on evaluating their performance, scalability, and efficiency. The methodology involves creating research questions, devising a comprehensive search strategy, choosing relevant studies based on specific inclusion and exclusion criteria, extracting data from the selected studies, evaluating the quality of the studies, synthesizing the data, and presenting the results following PRISMA guidelines. This systematic approach ensures that the process of finding, evaluating, and integrating existing literature is clear, accurate, and reliable. Ultimately, it provides valuable insightsonthestoragesolutionsforAI/MLworkloads.

Search strings are precise combinations of keywords or phrases employed to obtain pertinent information from search engines or databases. Search strings are carefully designedtocatchimportantfeaturesofthesubjectmatter for the topic "Storage Solutions for AI/ML Workloads: An Evaluation of Performance, Scalability, and Efficiency." These strings are optimized for effectively searching throughextensiveinternetmaterialoracademicdatabases tolocateresourcesrelevanttoassessingtheperformance, scalability, and efficiency of storage solutions for AI/ML workloads. The purpose of these search strings is to identifyarticles,researchpapers,ordiscussionsthatfocus on optimizing storage infrastructure for artificial intelligence and machine learning applications. They achieve this by including terms like "performance evaluation," "scalability assessment," "efficiency analysis," andspecificreferencesto AI/MLworkloads.Everystringis

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

meticulously designed to focus on distinct aspects of the issue, enabling scholars to investigate several dimensions of the subject matter and acquire valuable insights from numerousviewpoints.

The following table presents the potential criteria for inclusion and exclusion in this systematic literature reviewontheinvestigationofperformanceandscalability instoragesolutionsforAI/MLworkloads:

Table 2: Inclusion/Exclusion Criteria

Criteria Inclusion Exclusion

Typeof Study AcademicResearch papersandReview articles

Topic Relevance Studiesthat investigatethe performance, scalability,and efficiencyofstorage solutionsforAI/ML workloads

Editorials, articlesand opinions

Studiesunrelated tothe performance, scalability,and efficiencyof storagesolutions forAI/ML workloads

Publication date Studiespublished between20182024 Studiesbeyond andafter20182024

Language English Non-English studies

Subject Area AI/MLworkloads; storagesolutions; scalability assessment; efficiencyanalysis; performance evaluation Irrelevant Subjects

Access Availability Openaccessstudies Studiesbehind paywallsor lackingaccess

Peer –Review Peer-reviewed studies Non-peerreviewedstudies

Thisflowdiagram(Figurebelow)illustratestheprocessof selectingarticlesfortheSLRduringaliteraturesearchand isbasedonthePRISMAFlowDiagram.

2: Literature search for SLR publications (based on PRISMA flow diagram).

AI/ML workloads encompass the computing tasks and proceduresrequiredfortraining,deploying,andexecuting machine learning models and algorithms. These workloads involve a wide variety of tasks, including as data pre-processing, model training, inference, and continuous learning [7]. AI/ML workloads can be effectivelyaddressedbyutilizingstoragesystemsthatare specifically designed to prioritize high-throughput data access, minimize latency in data retrieval, and seamlessly scale to meet the changing requirements of machine learning applications. These technologies include distributed file systems, object storage, and cloud-based platforms. They are optimized to provide the best performance, scalability, and efficiency in handling and analysingdataformachinelearningtasks[8].

4.1 Contemporary storage solutions for AI/ML workloads:

Modern storage solutions designed for AI/ML workloads consist of many technologies, each catering to specific needs in order to enhance the performance of machine learning models. Distributed file systems, such as Hadoop Distributed File System (HDFS) and Apache Distributed Log, are specifically engineered to effectively store and handle substantial amounts of data across numerous

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

nodes in a distributed computing environment. These systems possess fault tolerance, scalability, and parallel processingcapabilities,whichmakethemverysuitablefor managing the extensive datasets commonly associated withAI/MLjobs.Distributedfilesystemsfacilitateparallel computation and enhance the speed of data retrieval and model training procedures by distributing data across numerous nodes. Object storage, such as Amazon S3 (Simple Storage Service) and Google Cloud Storage, offers a scalable and resilient storage solution for unstructured data, which is commonly seen in AI/ML processes [9]. Object storage systems categorize data into individual objects, each assigned a distinct identification, enabling efficient retrieval and administration of various data formats, such as photos, audio files, and text documents. This architecture is especially advantageous for AI/ML applications that require the handling and analysis of substantial amounts of multimedia data. It allows for effortless integration with machine learning frameworks andtools[10].

Specialized block storage solutions, such as NVMe SSDs (Non-Volatile Memory Express/Solid-state Drive) and high-performance storage arrays, are specifically engineered to provide AI/ML applications with fast data access and processing by offering low-latency and highbandwidth storage. NVMe SSDs utilize flash memory technology to deliver very high storage performance, minimizing data access latency and expediting model training durations [11]. Advanced storage arrays, which include features such as tiered storage, caching methods, and data deduplication, enhance data access and throughput for AI/ML applications. This ensures optimal use of storage resources and improves overall system performance.So, modern storage solutions designed for AI/ML workloads, such as distributed file systems, object storage, and specialized block storage systems, provide clear benefits in enhancing the efficiency of machine learning models. These solutions offer a storage infrastructure that can handle large amounts of data in AI/ML workflows. This allows organizations to efficiently manageandprocessdata,leadingtofastermodeltraining, quickerinferencetimes,andimprovedoverallefficiencyin AI/MLdeployments[11].

4.2 Storage solutions for AI/ML workloads across critical performance metrics:

To properly assess storage systems designed for AI/ML workloads, it is necessary to have a comprehensive understanding of the specific access patterns and data management requirements that are typical throughout AI/ML training and inference phases. This evaluation shouldconsiderimportantperformanceindicatorssuchas I/Othroughput,latency,andscalability.

I/O Throughput: Optimalinput/output(I/O)throughputis crucialforeffectivelyperformingdatareadingandwriting operationsinthecontextofAI/MLworkloads.Distributed file systems are highly efficient in achieving high data throughputbydistributingdataovernumerousnodesand enabling parallel access Block storage systems that are specifically designed for a particular purpose, particularly those that utilize NVMe SSDs, exhibit outstanding throughputperformance[12].

Latency: Minimizing latency is crucial for reducing the time required to access and process data, which in turn leads to shorter model training cycles and enhanced realtime inference performance. Distributed file systems, while their advantages in parallel computing, can face latency problems when accessing data spread over multiple nodes. Specialized block storage solutions, specifically NVMe SSDs, are highly effective in providing storage access with extremely low latency, making them perfectforAI/MLworkloadsthatrequireminimaldelay.

Scalability: Scalability is of utmost importance in order to handle the increasing amounts of data and processing requirements that are inherent in AI/ML workloads. Distributed file systems and object storage systems possess an innate ability to scale, allowing for effortless expansion of store capacity and processing capabilities to accommodatechangingneeds[13].

AutomatedDataTiering: Itdynamicallyseparatesdatainto storage tiers by usage and access frequency. NVMe SSDs and DRAM are used for high-performance storage, whereas HDDs and cloud storage are used for low-cost, high-capacity storage. This optimization allows AI/ML applications to access frequently used data with low latency while tiering less-used data to cheaper storage alternatives[14].

Hardware Accelerations: Specialized computing systems builtfor parallel processing tasksareknown ashardware accelerators, and examples include GPUs (Graphics Processing Units) and FPGAs (Field-Programmable Gate Arrays). By taking on computational burdens from conventional CPUs, GPUs and FPGAs play a crucial role in speeding up model training and inference operations in the context of AI/ML workloads. Many machine learning algorithms rely on these accelerators for their superior performance in matrix calculations and neural network operations. AI/ML workloads can take use of GPUs and FPGAs' parallel processing capabilities to obtain considerable performance benefits and better processing efficiency by directly integrating them into the compute nodesorstoragearchitecture[15].

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

EmergingtechnologieslikeNVMeoverFabrics(NVMe-oF) and software-defined storage (SDS) architectures are transforming the flexibility and performance of storage systems that handle AI/ML applications. NVMe-oF expandsthefunctionalitiesofNVMestoragedevicesovera network fabric, allowing distributed compute nodes to have fast and efficient access to storage resources with little delay and high data transfer rates. This technology eradicates the limitations commonly associated with conventional storage methods,enablingAI/ML workloads toeffortlesslyretrieveandanalysedata withunparalleled velocityandeffectiveness[16].SDSarchitecturesseparate storage software from the hardware it runs on, allowing for flexible and software-controlled management of storage resources. SDS architectures simplify storage provisioning, data placement, and resource scaling by abstracting storage management processes and offering centralized orchestration capabilities. This improves the agility and scalability of storage infrastructure for AI/ML applications. NVMe-oF and SDS architectures enable enterprises to construct adaptable, high-performance storage systems that are specifically designed for AI/ML workloads.Thisallowsforfastermodeltraining,improved inference times, and more efficiency in AI/ML deployments[16].

4.4

of Storage design choices on the overall system efficiency:

The design choices made for storage have significant consequences for the overall efficiency of the system, including characteristics such as energy usage and costeffectiveness, which are essential for scalable AI/ML deployments. An ideal storage design achieves a compromise between performance requirements and energyeconomy,bydecreasingpowerconsumptionwhile still meeting computing demands. Organizations can reduce the environmental impact and operational expensesofAI/MLworkloadsbychoosingenergy-efficient storage components and applying power management measures [17]. Furthermore, the evaluation of costeffectiveness in storage design entails analysing the overall cost of ownership (TCO), which includes not only initialchargesforhardwareandsoftwarebutalsoongoing maintenance, upgrades, and scalability. By utilizing costefficient storage solutions like cloud-based storage platforms or open-source software-defined storage, enterprises can attain scalability and affordability in AI/ML deployments while maintaining optimal performance and dependability. In the end, the strategic decisions made in storage design are crucial for maximizingsystemefficiency,allowing forscalableAI/ML deployments that are both environmentally friendly and economicallyfeasible[17][18].

4.5 Strengths and limitations of various storage architectures AI/ML projects:

Differentstoragearchitecturespossessuniqueadvantages and encounter certain constraints when utilized in AI/ML projects. Distributed file systems (DFS) offer excellent scalabilityandfaulttolerance,makingthemwell-suitedfor managinghugeAI/MLdatasets.However,theircomplexity and possible overhead can present difficulties. Object storage is highly scalable and cost-effective, making it suitable for handling various data kinds commonly found in AI/ML [19]. However, it may experience increased latency and complexity while accessing data. Specialized blockstorageoptionssuchasNVMeSSDsofferexceptional performance and consistent latency, which are essential for AI/ML applications. However, they can be expensive and have limited scalability. Emerging technologies like NVMeover Fabricsand Software-DefinedStorage provide enhanced performance and flexibility. However, their complexity and compatibility problems might pose challenges [20]. Practitioners can optimize the speed of AI/ML projects and minimize constraints by carefully considering the specific requirements and trade-offs of eachstoragearchitecture.

Finally, the assessment of storage solutions for AI/ML workloads highlights the crucial significance of maintaining a harmonious equilibrium between performance, scalability, and efficiency in order to fulfil the varied requirements of machine learning projects. By comprehending the advantages and constraints of different storage frameworks, individuals involved can make well-informed choices to enhance infrastructure design and effectively meet the changing demands of AI/MLworkflows.

[1] A. Christidis, S. Moschoyiannis, C.-H. Hsu, and R. Davies,“Enablingserverlessdeploymentoflarge-scale AI workloads,” IEEE Access, vol. 8, pp. 70150–70161, 2020.

[2] A.Christidis,R.Davies,andS.Moschoyiannis,“Serving machine learning workloads in resource constrained environments: A serverless deployment example,” in2019 IEEE 12th Conference on Service-Oriented ComputingandApplications(SOCA),2019.

[3] I.Baldini et al.,“Serverlesscomputing:Current trends and open problems,” in Research Advances in Cloud Computing, Singapore: Springer Singapore, 2017, pp. 1–20

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

[4] R. Mayer and H. A. Jacobsen, “Scalable deep learning ondistributedinfrastructures:Challenges,techniques, and tools,”ACM Computing Surveys (CSUR), vol. 53, pp.1–37,2020.

[5] D.Buniatyan,“Hyper:Distributedcloudprocessingfor large-scale deep learning tasks,” in2019 Computer ScienceandInformationTechnologies(CSIT),2019.

[6] Z. Cao, S. Dong, S. Vemuri, and D. H. Du, “Characterizing, modeling, and benchmarking RocksDBKey-Value workloads at facebook,”18th USENIX Conference on File and Storage Technologies, vol.20,pp.209–223,2020.

[7] A. Nasariet al., “Benchmarking the performance of accelerators on national cyberinfrastructure resources for artificial intelligence/machine learning workloads,” inPractice and Experience in Advanced ResearchComputing,2022,pp.1–9

[8] D. Golubovic and R. Rocha, “Training and Serving ML workloads with Kubeflow at CERN,”EPJ Web Conf., vol.251,p.02067,2021.

[9] S. S. Gill et al., “AI for next generation computing: Emerging trends and future directions,” Internet of Things,vol.19,no.100514,p.100514,2022.

[10] S. S. Gillet al.,Modern computing: Vision and challenges.TelematicsandInformaticsReports.2024.

[11] D. Inupakutika, B. Davis, Q. Yang, D. Kim, and D. Akopian, “Quantifying performance gains of GPUDirect storage,” in2022 IEEE International Conference on Networking, Architecture and Storage (NAS),2022.

[12] J. Ejarque et al., “Enabling dynamic and intelligent workflows for HPC, data analytics, and AI convergence,” FutureGener.Comput.Syst.,vol.134,pp. 414–429,2022.

[13] P.Rościszewski,A.Krzywaniak,S.Iserte,K.Rojek,and P. Gepner, “Optimizing throughput of Seq2Seq model training on the IPU platform for AI-accelerated CFD simulations,”FutureGener.Comput.Syst.,vol.147,pp. 149–162,2023.

[14] G. Singh, “Designing, modeling, and optimizing dataintensivecomputingsystems,”arXiv[cs.AR],2022.

[15] J. L. Bezet al., “Access patterns and performance behaviors of multi-layer supercomputer I/O subsystemsunderproductionload,”inProceedingsof the 31st International Symposium on HighPerformance Parallel and Distributed Computing, 2022.

[16] A.S.George,P.B.Srikaanth, V.Sujatha,andT.Baskar, “Flash Fast: Unleashing Performance with NVMe Technology,”Partners Universal International ResearchJournal,vol.2,no.3,pp.71–81,2023.

[17] M. Zhao and L. Wei, “Optimizing Resource Allocation in Cloud Computing Environments using AI,”Asian AmericanResearchLettersJournal,no.2,2024.

[18] T. Ahmad, R. Madonski, D. Zhang, C. Huang, and A. Mujeeb, “Data-driven probabilistic machine learning in sustainable smart energy/smart energy systems: Key developments, challenges, and future research opportunities in the context of smart grid paradigm,”Renew. Sustain. Energy Rev., vol. 160, no. 112128,p.112128,2022.

[19] R. I. Mukhamedievet al., “Review of artificial intelligence and machine learning technologies: Classification, restrictions, opportunities and challenges,”Mathematics, vol. 10, no. 15, p. 2552, 2022.

[20] I. Antonopouloset al., “Artificial intelligence and machine learning approaches to energy demand-side response: A systematic review,”Renew. Sustain. EnergyRev.,vol.130,no.109899,p.109899,2020.

[21] D. Hildebrand, S. Derrington, R. Hendricks “Design storage for AI and ML workloads” in Google Cloud, 2024, url: https://cloud.google.com/architecture/aiml/storage-for-ai-ml