Advance Vehicle Advanced Driver Assistance Systems: Working & Features

ADAS A Path towards Intelligent Vehicles

SHREYAS KANKORIYA, MANOJKUMAR KHATAL

1SN. DESIGN ENGINEER, TATA TECHNOLOGIES, PUNE, MAHARASTRA, INDIA

2 PROJECT MANAGER, TATA TECHNOLOGIES, PUNE, MAHARASTRA, INDIA

Abstract - Intelligent connected cars (ICVs) are expected to improve transportation in the near future, making it safer, cleaner, and more comfortable for passengers. Even though many ICV prototypes have been created to demonstrate the notion of autonomous driving and the viability of perfecting business effectiveness, there is still a long way to go before high-position ICVs are produced in large quantities. The goal of this study is to provide an overview of key technologies needed for future ICVs from both the current state of the art and future perspectives. Reviewing every affiliated workshop and predicting their future perspectives is a taxing effort, especially for such a complicated and diverse field of research.

Advanced driver-assistance systems (ADASs) have become a salient feature for safety in ultramodern vehicles. They'realso a crucial underpinning technology in arising independent vehicles. State-of-the-artADASsareprimarilyvisiongrounded, colorful type of features for partner. Automatic Emergency Braking (AEB) and other advanced- seeing technologies are also getting popular. In this composition, this composition is organized to overview the ICV key technologies or Features of ADAS. We bandy approaches used for vision- grounded recognition and detector emulsion in ADAS results. We also punctuate benefits for the coming generation of ADASs.

This abecedarian work explains in detail systems for active safety and motorist backing, considering both their structure and their function. These include the well- known standard systems similar as Electronic Stability Control (ESC) or Adaptive voyage Control (ACC), Omni View( Bird eye View), Head- up display. But it includes also new systems for guarding collisions protection, for changing the lane, or for accessible parking.

The paper aims at giving a complete picture fastening on the entire Features. First, it describes the factors, which are necessary for backing systems, similar as detectors, and control rudiments. also, it explains crucial features for the stoner-friendly design of mortal- machine interfaces between motorist and backing system.

Keywords: Intelligent connected vehicles (ICVs), Lidar, ADAS level, Automatic Emergency Braking, Adaptive cruise control, Drowsiness, Lean Assistant, Head-Up display,OmniView

1. INTRODUCTION

Oneofthebiggestsocioeconomicissuesintheworldtodayis theamountoftrafficaccidents.TheWHOestimatesthateach year, up to 50 million people are injured and 1.2 million people are murdered. If the automatic systems were employedtoassisthumans duringbraking, manyof these accidents may have been prevented. Although Advanced Drive Assistance Systems (ADAS) cannot totally prevent accidents, they can better protect us from some human causes,andthemajorityoftrafficaccidentsaretheresultof humanerror.Wecouldalterdriverbehaviour,takevehiclerelatedactions,andimplementroadinfrastructure-related actionstoreducetrafficaccidents.Makingtheswitchfrom passive to active safety measures is another strategy. Airbags,thedesignofthevehicle'sbody,seatbelts,andhead restraints are examples of passive safety measures. Electronicstabilitycontrol(ESC),anti-lockbrakingsystems (ABS),andotherAdvancedDriverAssistanceSystems,such as intersection collision avoidance (ICA) and line maintaining assistant, are examples of active safety measures(LKA).WecannowdeployICT(Informationand Communication Technology) based ADAS systems, which constantly help drivers with their driving jobs, to prevent accidents.Thedocumentprovidesa summary ofthemost widely used and selected technology and components for intelligent transportation systems. This review only highlights a few exemplary instances of the available technologiesratherthanexploringallpotentialchoices.

Table -1: Summaryoflevelsofdrivingautomationforonroad

drivingtaskevenwhenenhancedbywarningorinterventionsystems

Thedrivingmodespecificexcutionbyadriverassistancesystemofeither steeringandacceleration/decelerationusinginformationaboutthedriving environmentandwiththeexpectationthatthehumandriverperformallremining

Thedrivingmodespecificexcutionbyoneormoredriverassistancesystemof bothsteeringandacceleration/decelerationusinginformationaboutthedriving environmentandwiththeexpectationthatthehumandriverperformallremining aspectofthedynamicdrivingtask

Thedrivingmodeperformancebyanautomateddrivingsystemofallaspectof thedynamicdrivingtaskwiththeexpectationthatthehumandriverwillrespond appropriatelytoarequesttointervene

4 Highly Automation Thedrivingmodeperformancebyanautomateddrivingsystemofallaspectof thedynamicdrivingtaskevenifhumandriverdoesrespondappropriatelytoa requesttointervene

Thefulltimeperformancebyanautomateddrivingsystemofallaspectofthe dynamicdrivingtaskundreallroadwayandenvironmentconditionthatcanbe

Thebelowtablegivesabriefoverviewofalreadyintroduced driver assistance systems (for both passenger and commercialvehicles)andofsystemswhichareonthewayto enterthemarket.

Thebelowlongfeatureslistofadvanced driverassistance systems, or ADAS as it is called in the automotive industry.ADAS is an umbrella term. Its individual technologiesaresmallautonomoussystems.Takentogether, ADAS is essentially a self-driving system, but it is not promotedassuchbecauseofregulatoryreasons.

Theglobalengineers’associationSAEhaslistedsixlevelsof autonomy from level zero – for no autonomy, just mechanicalvehicles–tolevel5,whichisfullself-drivingor autonomous capabilities. ADAS systems fit into various levels of autonomy – depends on how many of those individualelementsarecontainedwithinthecar.

2. OVERVIEW OF AUTOMOTIVE SYSTEM SAFETY

Securityincarframeworkshasbeenacentralissuesincethe beginning of on-street vehicles. A few original equipment manufacturers(OEMs)haveendeavoredtoresolvethisissue by creating different security frameworks to safeguard inhabitants inside a vehicle as well as forestall wounds to individuals outside the vehicle. These frameworks are basicallyarrangedintotwokinds: (1) passive (or reactive) and (2) active (or proactive). Passive wellbeing frameworkssafeguardvehicletenantsfromwoundsafteran accident, e.g., safety belts, air packs, and cushioned dashboards.Becauseofareliableshopperinterestformore securevehicles,latentwellbeingframeworksthathavebeen under persistent improvement for a long time have been increased by dynamic security frameworks, which lookto keep an accident from happening by and large. Dynamic frameworks are one of the primary areas of interest and haveseensignificantdevelopmentinthepresentvehicles. Instances of such frameworks incorporate path keeping, programmed slowing down, and versatile voyage control. These frameworks are regularly known as ADASs and are turning out to be progressively famous as a way for car makers to separate their contributions while advancing shopperwellbeing.

Modern-dayADASsarealsocrucialtechnologiestorealize autonomous vehicles. Still, several challenges with the design,implementation,andoperationofADASsremainto beovercome.Someofthesechallengesincludeminimizing energyconsumption,reducingresponselatency,adaptingto changing weather conditions, and security. In this composition,wegiveasynopsisofthegeographyofADAS explorationanddevelopmenttoaddressthesechallenges.

3. WORKING OF ADAS

ADASemployscamera-basedsensorstoassistthedriverin becomingmorealerttothedrivingenvironment.Motorcars arethefoundationofthefutureofmobile-connectedbias, with significant progress in driverless vehicles. SoCs, or systems- on-a-chip, is a collection of chips used to apply independentoperationresults.Thesechipslinkdetectorsto selectors via interfaces and high- performance electronic controlunits(ECUs).

ADASisincorporatedintotheoriginaldesignofmanylatemodelautomobilesandisupgradedwhennewmodelsand featuresareintroducedbyautomakers.Inordertoprovide beneficialsecuritymechanisms,thesystemsmakeuseofa varietyofdatainputs.Acollectionofhigh-qualitydetector systems that match or exceed human vision is known as machineimaging.Thisincludesreal-timedata,whichisone of these data sources, 360-degree content, 3D object resolution,andgoodvisibilityinadverselightingandrainfall conditions.

Similartoadaptivecruisecontrol,whichadjustsavehicle's speedtomaintainasafedistancefromthevehicleinfront, ADASalsoincludespropulsioncapabilities.Moreimportant ADASfeaturescanmanagesteeringandpropulsionwithout thedriver'sprimaryinterventionincertainsituations,such as an interstate trip or stop-and-go business. Typically referredtoasLevel2+activesafetysystems,thesesystems include some of the most extensive features currently available.

The potential for ADAS to save lives may be significantly increased by these characteristics. For instance, the InsuranceInstituteforHighwaySafetyfoundthatforward collisionadvisingsystemsreducedfront-to-rearcollisionsby 27%whentheyincludedtheabilitytostopautonomously. Additionally, backing accidents are reduced by 17% and 78%respectivelybyautomatedrearbrakingandrearview cameras.

3.1 Building Blocks for Automated Driving: Key Technologies

Inordertoimprovesecurityandspeedofresponse,ADAS technologywasdevelopedthroughpossibleearlywarning automated alarm systems. These technologies have been developedtoautomateandimprovedrivingproceduresin ordertoaddressmortalmiscalculationsandrelateddriving behaviorsbecausethemajorityofroadaccidentsarecaused byhumanerror.Byloweringtheoverallnumberofbusiness accidents,thesesafetysystemsaimtoimproveroadsafety andlessenthenumberofinjuries.

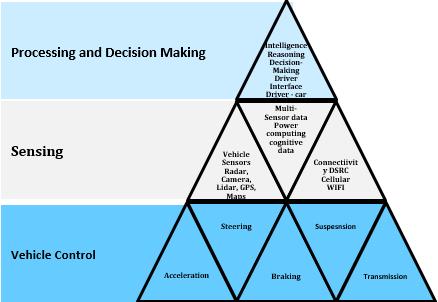

ThebuildingblocksforautomateddrivingareshowninFig. 3. They constitute three layers covering vehicle control (layer 1), sensing (layer 2), and processing and decisionmaking(layer3).

Thisindicatesthatinordertomeetever-higherperformance standardswhilesimultaneouslyloweringpowerandspace requirements, hardware designers employ process nodes thatarebecomingincreasinglysophisticated.

Otherthanthemainvehicleplatform,therearemoreinputs thatcanbegatheredfromsourceslikeothervehicles(V2V) or vehicle-to-infrastructure (V2X) like Wi-Fi. ADAS will continue to link to wireless networks in the coming generations using V2V and V2X data in order to provide moresafetyandfinancialbenefit.

Usingthesevisualprocessingabilities,annotationservices forADAShelpvisionsystemssurroundingthevehicleseeit inside a safe bubble against motorist error, road obstructions, other cars, and pedestrians. ADAS feeds recognition and tracking information to on-board safety systems in order to identify motorist somnolence, lane departure warning, collision avoidance, and defensive measures to prevent accidents and improve the driving experience.

Vehicles capable of (highly) automated driving are controlledagentsintegratingenvironmentperceptionand modelling,localizationandmapgeneration,pathplanning, anddecision-making.

Theblock“EnvironmentPerceptionandModelling”provides areal-timemodelofthesurroundingenvironment

3.2 Type of ADAS System

ADASsystemdividedintotwotype:-

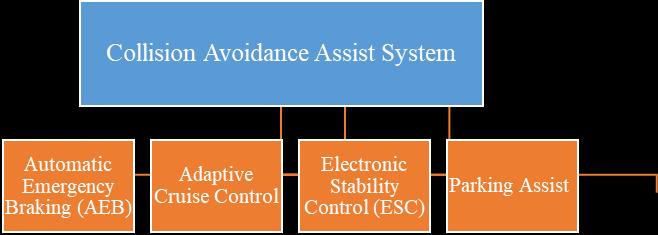

circumstances. Automatic emergency braking (AEB) recognizesanimpendingcollisionandautomaticallyapplies the brakes. Adaptive cruise control (ACC), lane-keeping assist(LKA),lanecentering (LC),andtrafficjamassistare examplesoffunctionalfeatures.

The Active ADAS System automatically modifies the host car'sspeedfromitspre-setlevelifaslowervehicleisinits path(asinregularcruisecontrol).Thecarisautomatically accompaniedbyLKAandLCtokeepitinsidethelanelines. Businessjamassistcombinesadaptivecruisecontrol(ACC) and lane centering for use in commercial traffic scenarios (LC).Theseautomatedcomponentsprovidethebasisofcars thatarepartiallyorcompletelyautonomous.

a.) Passive ADAS System:-

InapassiveADASsystem,thecomputerwarnsthedriverof a dangerous situation despite the presence of any or all detectors.Inordertoavoidanaccidentbroughtonbythis circumstance,thedrivermustchangelanes.

Sirens, flashing lights, and, in some cases, another auto (blind spot detection) that previously took tactile input, similartoasteeringwheelthatvibratestowarnthedriverof thezonetheyareentering,aretypicalwarningsystems.The driverreceivescrucialinformationthatenableshimtomake stylishdecisionswhiledriving.Throughamortal-machine interface (MMI), passive ADAS provides real-time information about the driving terrain as well as warnings aboutpotentialdangers.

Therearethreemodesofdatatransmission:haptic,auditory, andvisualsensesthearchitectureofADASdatapresentation has traditionally made extensive use of visual and audio warnings. Visual cues are easy to understand and can be used to send colorful messages that use colour and emblematic information. The most common method for disseminating information is this one. Warnings can be displayedvisuallyonavehicle'scentrepanelordashboard. However, doing so may result in motorist attention gaps knownas"eye-off-road."

Examples:-

Back-upCamera.

ESC-ElectronicStabilityControl.

TCS-TractionControlSystem:Incorporatesaspectsof both ABS and ESC above, to assist the driver in maintainingadequatetractionwhennegotiatingturns andcurves.

b.) Active ADAS System:-

IntheseADASsystems,thevehicleactivelybehaves.Thecar may operate independently to avert worst-case

Example:-

AdaptiveCruiseControl

LaneKeepingAssistandLaneCentering

3.3 Importance of ADAS

3.3.1 Automates the enhancement of safety systems

The number of drivers increases as safety initiatives are automatedimplementedandupgraded.Bywarningdrivers ofimplicitproblemsortakingcontrolofthevehicletoavoid them,ADASaimstopreventcollisions.

3.3.2 Actions adaptive features

The number of drivers increases as safety initiatives are automatedimplementedandupgraded.Bywarningdrivers ofimplicitproblemsortakingcontrolofthevehicletoavoid them,ADASaimstopreventcollisions.

3.3.3 Helps in the perception of traffic context

Thecentreofthetraffic-driver-vehiclecycleisthemotorist. The traffic situation is fed into the motorist perception system,whichthenactsasamotivatorforthedriver'sintent. Theintentionconclusionsystemwillthusbeimprovedby knowingthecurrenttrafficscript.

3.3.4 Understands and analyses driving behaviour

Driveractionslikechecking theirmirrors beforechanging lanesarethemostimportantsignals.Beforechanginglanes, thedrivermustgothroughaseriesofcheckstomakesure theyarefullyinformed.Therefore,drivinggestureanalysisis necessary to ascertain the driver's intent. In order to anticipateadriver'slane-changingintention,itisessentialto comprehendthehumanintentionprocess,includinghowthe intentionisgeneratedanditstriggers.Thenatureofdriver intentionisthefirstissuethatneedstobeaddressed.

3.3.5 Provides solutions for predictive maintenance

Bycombiningpallcomputing,edgecomputing,sensordata collection,andanalytics,predictivetechnologyanalysesrisks andtransmitsdataviathecloudtonotifycustomersofany vehicle issues. The fuel level, tire pressure, engine status, navigationroute,speed,andtemperatureareallmonitored byin-vehiclesensorstoalertthedriverandensuresafety.By anticipating and prioritizing performance goals, many conservationissuescanbeavoidedbeforetime.

4. FEATURE OF ADAS

Significantautomotivesafetyimprovementsinthehistory (e.g.,shatter-resistantglass,three-pointseatbelts,airbags) werepassivesafetymeasuresdesignedtominimizeinjury duringanaccident.Today,ADASsystemsactivelyimprove safety with the help ofembedded visionby reducing the occurrenceofaccidentsandinjurytooccupants.

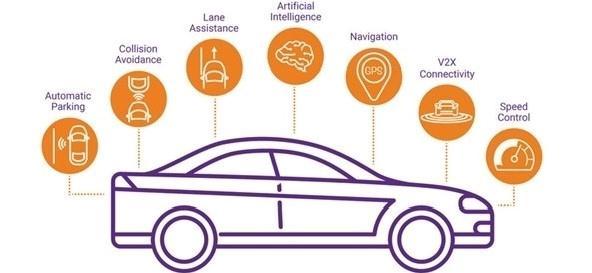

Some of the most common ADAS Features are:

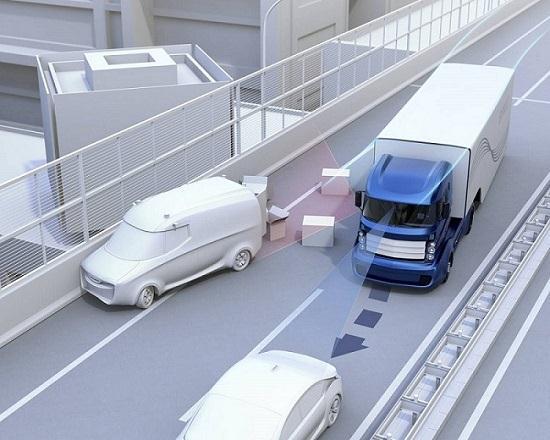

4.1 COLLISION AVOIDANCE

Fig -4:FeatureinthevehicleofADAS

A new AI function that uses detector emulsion to identify andreuseobjectsisincorporatedintothevehicle'scameras. With the assistance of image recognition software, ultrasounddetectors,LIDAR,andRADAR,detectoremulsion combines large quantities of data in a manner that is analogoustohowthehumanbrainprocessesinformation.A humandrivercouldneverphysicallyrespondasquicklyas thistechnologycan.Itisabletoevaluatewhatisshownona streamingvideotapeinrealtime,determinehowtorespond, andevaluatethevideotapeitself.

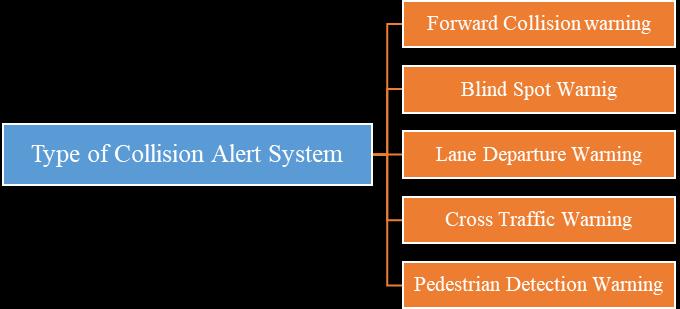

ADAS feature are divided into four category and each category. The below hierarchy show all the type of features: -

Automaticbrakingandcollisionavoidancearebeginningto be incorporated into ADASs. A safety system called a collision avoidance system helps drivers avoid imminent collisions and lowers the risk of them happening. This is accomplished by combining a number of previously mentioned features, such as distance estimation, vehicle detection, and object tracking. A vehicle can predict a collision based on this combination of data and prevent it fromoccurringbybrakingorevensteeringoutoftheway. Technologiesandsensorslikeradar,lasers,cameras,GPS, and artificial intelligence are used in collision avoidance systems.Some collisionavoidancesystemsadvise oralert the driver, while others override the driver to help them avoidcollisionsandreducerisk.

4.1.1 AUTOMATIC EMERGENCY BRAKING (AEB)

Sensors are used by automatic emergency braking to determinewhetherthedriverisabouttohitanothervehicle orsomethingelseontheroad.Thisapplicationcanmeasure thedistancebetweennearbyvehiclesandnotifythedriverof anydanger.Preventivesafetymeasuresliketighteningseat belts, slowing down, and adaptive steering can be implementedbysomeexigencybrakingsystemstoavoida collision.

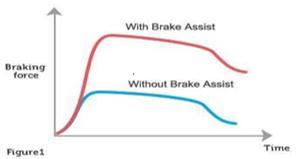

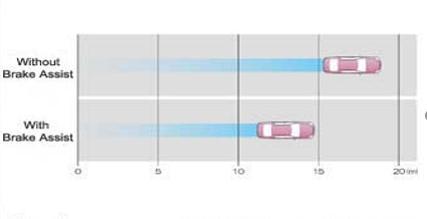

THE ACRONYM EBA OR BA STAND: -

EBAisshortforEmergencyBrakeAssist,andBAisjustshort forBrakeAssist.Bothtermsarereferredtointhesameway. Despite the fact that they are virtually identical, some manufacturersfavoroneovertheother.Itisa technology thataidsthedriverinreducingtheoverallstoppingdistance andisanactivevehiclesafetyfeature.

TheEBAisamechanismthathelpswithbrakingwhenit's needed. However, it only works in emergency braking situationsanddoesnotalwaysassistwithbraking.Whena driverisunabletoapplyfullbrakingforce,EBAisinplaceto ensurethatthevehiclecomestoastopasquicklyaspossible andtoprovideassistance.

They typically fall into two categories: mechanical and electronic. The underlying technology they use to operate distinguishesthetwoliesfromoneanother.UsinganECU, anelectronicbrakeassistpredictsanemergencysituation based on the current threshold, the pedal speed, brake pressure,andotherfactors.Electroniccalculationsareused.

TYPE OF THE EMERGENCY BRAKE ASSIST SYSTEM:-

a. ELECTRONIC EMERGENCY BRAKE LIGHT:-

Even when other vehicles or bad weather obscure the driver'sview,italertsthemtoahardbrakingeventinfront. Additionally,itmakesitpossibleforavehicletobroadcasta self-generatedemergencybrakeeventtoothervehiclesin thevicinity.

c. PEDESTRIAN

Through visual and audible alert as well as automatic full braking,theEBA-Pedestriansystempreventcollisionwith pedestrian

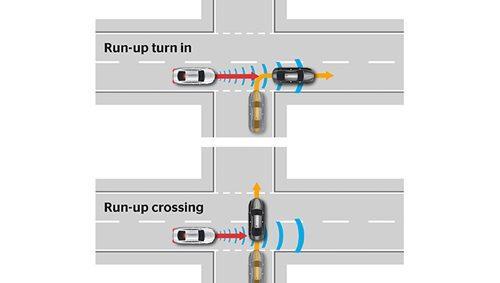

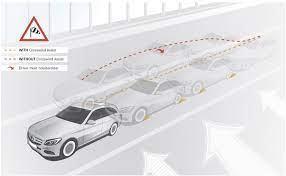

b. CROSS TRAFFIC: -

Cross Traffic provides motorists in critical situations with assistancefromparticipantsincrossingtraffic.Ifnecessary, thefunctioninterveneswithfullbrakingandissuesavisual oraudiblealert.

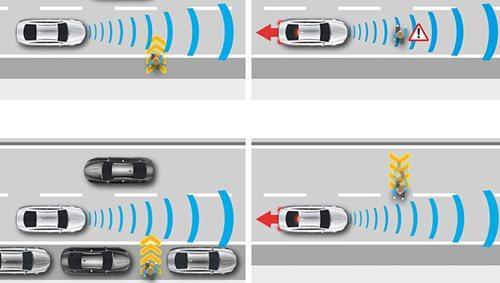

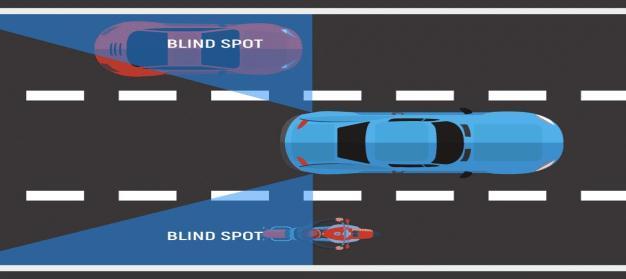

4.2 BLIND SPOT MONITORING

Sensors are used by blind spot detection systems to give drivers important information that would be hard or impossible to get otherwise. When the driver attempts to enteranengagedlane,forexample,certainsystemssound an alarm when they detect an object in the driver's blind spot.

Asobservedintheprecedingchapters,thedevelopmentof ADAS has involved enhancing road safety, guaranteeing vehicle dependability, and assisting drivers in preventing accidents. Studies have concentrated in particular on lane changeassistance,keepinganeyeontheblindspotareathat driverscannotseeintheiroutsidemirrors.Visualandaudio alertswarnthedriveraboutthepossibilityofcollidingwith anapproachingvehicleifthesystemidentifiesonedoingso inthedangerzone.

The system becomes more complicated when a camera is installedbeneaththeside-viewmirror:Differentelements, suchascameraangle,perspectivedeformation,andcamera vibration,havebeentakenintoconsiderationbecausethey mayaffectsystemperformance,andtheoperabilityscenario isnotstatic.Additionally,theacquiredimagesare rotated wheneverthecamerarollangleisnotnull.

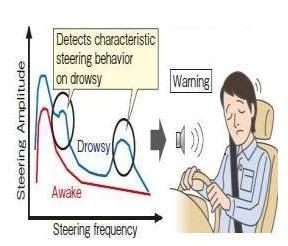

4.3 DRIVER DROWSINESS DETECTION

Driver drowsiness detection alerts drivers when they are fallingasleepordistractedontheroad.Thereareanumber ofwaystodeterminewhetheradriverislosingfocus.Inone instance, sensors can examine the driver's heart rate and head movement to determine whether they indicate sluggishness.Similartothelanedetectionwarningsignals, othersystemsissuedriveralerts.

Various technologies may be used to try to detect driver drowsiness

A. DRIVER EYE/FACE MONITORING

Itrequiresoneofthecameraswatchingthedriver'sface.

B. VEHICLE POSITION IN LANE MONITORING

Itusesthelane-monitoringcamera.

C. PHYSIOLOGICAL MEASUREMENT

Itrequiresbodysensorsformeasurementofparameterslike brainactivity,heartrate,skinconductance,muscleactivity.

D. STEERING PATTERN MONITORING

Primarily uses the steering input from electric power steeringsystem.

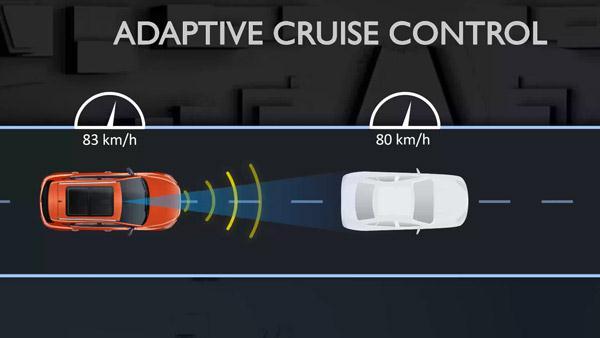

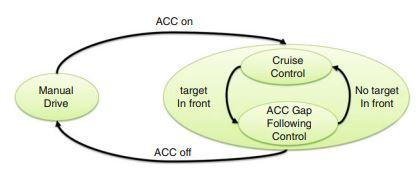

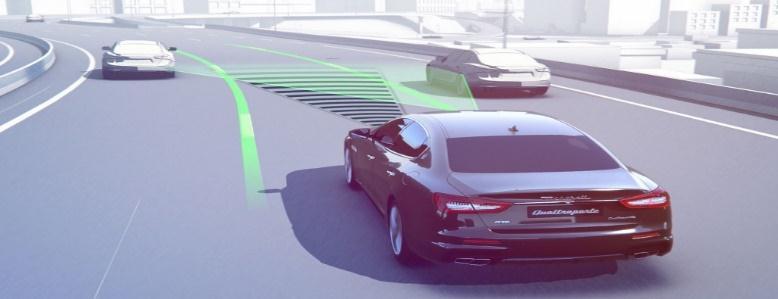

4.4 ADAPTIVE CRUISE CONTROL

Adaptive cruise control (ACC) is especially useful on the highway, where drivers sometimes have trouble keeping track of their own speed and that of other vehicles for an extendedperiodoftime.Dependingonthebehaviorofother objectsintheimmediatearea,advancedcruisecontrolcan automaticallyaccelerate,slowdown,andsometimesstopthe vehicle. A vehicle's velocity and distance from the vehicle ahead can be maintained by ACC. Based on the distance betweenthevehicleinfrontofitandthevehicleinfrontofit, ACCcanbrakeoraccelerateautomatically.Withstopandgo features, ACC systems can stop completely and accelerate back tothesetspeed.Becauseitonlycontrolsyourspeed andthedistancebetweenyouandthecarinfrontofyou,this systemstillrequiresanalertdrivertopayattentiontotheir surroundings.

An ACC/CACC system's typical operation. The subject vehicle,avehiclewithanACCorCACCsystem,isfollowing itspredecessor.Figure>depictstheACCsystem'smodeof operationtransition.17.Aswithconventionalcruisecontrol, thesystem will control the vehicle'sspeed when no other vehicleisinthewaywhenitisturnedon.Thesystemwill adjust the vehicle's speed to maintain the driver-set gap basedonmeasurementsfromtherangesensor,suchasthe relative distance dr and relative velocity (vr), without the driverhavingtocontrolthesystem.Byeitherturningoffthe ACCsystemorusingthebrake/throttlepedaltooverridethe ACC system's commands, the driver can take over longitudinalcontrol.Awirelesscommunicationlinkbetween

atleasttwovehiclesisrequiredforthedataexchangeina CACCsystem,asshownin(Fig.16).

You can get into and out of parallel and perpendicular parkingspaceswithParkAssist'sassistance.ParkAssistuses sensorsallaroundyourvehicletomeasurepotentialparking spacesasyoudrivebyonceyouturniton.

ACC mode will be activated if the vehicle in front of the following vehicle is not a CACC antedating vehicle (i.e., a vehiclewithwirelesscommunication).Thecontrollerenters CACC mode whenever the vehicle directly in front of the CACCprecedingvehicleisidentified.The"TargetID"mode maps the position of each vehicle that is directly communicating with the CACC subject vehicle and determines,forinstance,whichcommunicationdatasluiceis coming from the immediate antedating vehicle that the range sensor has detected. For their small two-car CACC team,2010,astraightforwardpatternrecognitionalgorithm wasimplemented.However,it'spossiblethatthealgorithm won't work well with multiple cars in multiple lanes. A positioningsystemthatisabletoaccuratelypinpointvehicle positionsdowntothelanepositionisnecessaryforamore generalsolution.

4.5 AUTOMATIC PARKING

Autonomous parking assists drivers in knowing when to turnthesteeringwheelandcometoastopinordertoavoid blind spots. When compared to vehicles with traditional side-view mirrors, those with rear view cameras have a superiorviewoftheirsurroundings.Insomecases,parking systems are able to complete the parking process automaticallywithoutthedriver'sassistancebycombining theinputofmultiplesensors.

Fig-19: ParkingAssist

Itpromptsyoutostop,releasethesteeringwheel,andselect reversegearonceithasdetectedaspacethatisatleast20% largerthanyourvehicle.Afterthat,asyoureverse,ittakes overthesteering.

Still,ifyoutrytosteeryourself,thesystemstopsworking.It automaticallysteersclearofthingsandothercars,butyou should always be on the lookout for children and other animalsinthearea.Youcandriveasslowlyasyouwantand stop whenever you need to because the accelerator and brakesareunderyourcontrol.

4.6 CROSSWIND STABILIZATION

Thevehicleissupportedinneutralizingstrongcrosswinds bythisrelativelynewADASfeature.Thissystem'ssensors areabletodetectexcessivevehiclepressurewhiledriving and apply brakes to the wheels that are impacted by crosswinddisturbance.

CWSusessensorsforyawrate,lateralacceleration,steering angle,andvelocitytofigureouthowmuchhelptogivethe driver in different situations, like when they're going differentspeedsorturning. CWSisabletoproperlyassist thedriverinagivensituationbyimplementingthereadings fromforcesensorsbyutilizingvariousvehiclecomponents likethebrakes,differentials,andsuspension.

4.7 LANE ASSISTANCE

Automaticbrakingandcollisionavoidancearebeginningto be incorporated into ADASs. This is accomplished by combininganumberofthepreviouslymentionedfeatures, such as distance estimation, vehicle detection, and object tracking. A vehicle can predict a collision based on this combination of data and prevent it from occurring by brakingorevensteeringoutoftheway.

Fig -21: Theobjectlanedetection

4.8 HEADS-UP DISPLAY

Informationisdisplayedpreciselywhereyouneedit,inline ofsight,onaHead-UpDisplay(HUD).Withouthavingtolook down at the instrument cluster or the secondary display, drivershaveaccesstoall ofthenecessaryinformation for navigation,includingspeed,warningsignals,andindicator arrows.

Currently, HUDs are used to display selected instrument clusterdata.Thismayincludethecurrentspeed,pertinent traffic signs, warning lights, blinkers in use, navigation arrows,andagreatdealmore,dependingontheconcept.As a result, the HUD serves as a sensory filter and has advantages that will lead to its growing popularity. ExpandingthecurrentHUD'sfieldofvisiontomakemore room for important content is one example of ongoing developmentwork.TheHUDstillhasalongwaytogobefore it reaches its full potential. The human-machine interface (HMI) ought to be optimized specifically for brand-new driverassistancesystemsinordertolessentheamountof work required of drivers and improve road safety. Additionally,theHUD'sabilitytoshortentheamountoftime spent"lookingaway"isasignificantbenefithere.

Benefits of HUD:-

TheHUDisprimarilyanactivesafetypoint,asitdoesnot requirethedrivertolookdownataconventionaldisplay panel. HUDs today can also project information like navigationguidance, cautionlightsand enginerevs,thus considerablylimitingthenumberoftimesamotoristneeds totaketheireyesofftheroad.Thepointalsohasacertain sense-goodandtech-smartfactorgoingforit.

4.9 NAVIGATION SYSTEM

To provide motorists with current traffic and navigation information,makeuseofdigitalmappingtoolsliketheglobal positioningsystem(GPS)andtrafficmessagechannel(TMC). Anautomotivenavigationsystemcansendandreceivedata signalsfromsatellitesaboutthevehicle'scurrentpositionin relationtoitssurroundingsthroughanembeddedreceiver.

In order to assist drivers in following a route while maintainingtheirfocusontheroad,autonavigationsystems providevoiceandon-screeninstructions.Somenavigation systemshavetheabilitytoshowprecisetrafficdataand,if necessary,plananewroutetogetaroundtrafficjams.

4.10 NIGHT VISION

Driversareabletoseethingsatnightthatwouldotherwise be difficult or impossible to see thanks to night vision systems. Night vision executions are carried out in two groups. Passive night vision systems rely on the thermal energy provided by BUS, automobiles, living things, and other objects, whereas active night vision systems emit infraredlight.

This up and coming ADAS include, with expanded dependabilityandlowerpeacefulness,givescorrespondence betweenthevehicleanddifferentvehiclesorwalkers,byand

large related to as V2X. Today, a great many vehicles interface with cell networks for continuous route. This application will improve current methods and the mobile network to improve situational awareness, control or suggestspeedadjustmentstoaccommodatebusinesstraffic, and provide real-time updates to GPS maps. Supporting over-the-air (OTA) software updates for the increasingly numeroussoftware-drivenautomotivesystems including map updates, bug fixes, security updates, and more requiresV2X.

“Modern vehicles are becoming increasingly connected with a lot of different systems, such as Wi-Fi, near-field communication, and V2X”

4.12 GLARE-FREE HIGH BEAM AND PIXEL LIGHT

Usingsensors,glare-freehighbeamandpixellightsadjustto thedarknessandthevehicle'ssurroundingswithoutcausing trafficapproachingfrombehind.Inordertopreventother roadusersfrombeingtemporarilydazed,thisnewheadlight applicationdetectsthelightsofother vehicles and directs thevehicle'slightsdownward.

4.13 ADAPTIVE LIGHT CONTROL

The vehicle's headlights are adapted to external lighting conditions by adaptive light control. Depending on the darkness and surroundings of the vehicle, it alters the strength,direction,androtationoftheheadlights.

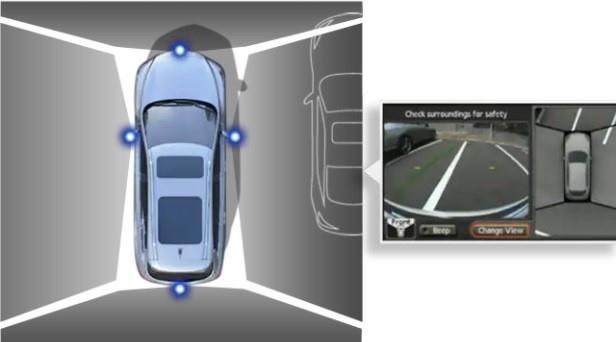

4.14 BIRD'S EYE VIEW OR OMNI VIEW

TheadjuncttechnologyknownasOmni-viewisintendedto assistdriversinparkingtheirvehiclesinconfinedspaces.In the early days of vehicle parking assistants, proximity sensorsorasinglerear-viewcamerawereusedtoidentify potentialobstaclesandprovidedriverswithasoundalarm orvideofromtherear.

There are four wide-field cameras in a typical Omni-view system:oneinthevehicle'sfront,oneinthevehicle'srear, oneintheleftrearviewwindow,andoneintherightoutside window.Theentireareaaroundthevehicleiscoveredbythe fourcameras.Throughimagefusion,deformationcorrection, and protuberance transformation, the system creates a bird's-eyeviewoftheroadinfrontofthevehicle.Theinput andoutputofatypicalOmni-viewproductaredepictedin theimagesbelow.

Ifyoutookyourdriver'stestandhadtoparallelparkacar, youmayrememberhowterrifyingitwas.Parallelparkinga vehicleinthepresenceofothersissomethingthatmanyof us simply do not want to do. It ranks right up there with publicspeaking.Ever.Itrequiresjudgmentandabilitiesthat many people appear to lack, possibly due to evolutionary reasons.

Whentryingtoparkabigcarinasmallspaceparalleltothe curb,manypeoplehaveundoubtedlythought, "I sure wish I could see everything from above, like birds could see it." Well,aswasoncesaidsowisely,seekandyouwillfind.The abilitytoviewaparkingsituationfromabovehasbecome possiblethankstocomputertechnology.

Althoughthetypicalconfigurationconsistsoffourcameras, surround-viewcamerasystemscanemployasmanyassix cameras. One is usually in the grille, which is in the front. Two wide-angle cameras are mounted in the areas of the exteriorrearviewmirrors,andafourthcameraismounted atthevehicle'srearandservesastheback-upcamera.Sideview camerasaheadofthe front wheelsare added bysixcamerasystems,allowingthevehicletoshowdriverswhatis ontheothersideofwallsandothervehicles.

5. CONCLUSION AND THE FUTURE POLICY OF ICV

We provided a comprehensive overview of the ADAS's workings,varioustypes,andvariants,aswellasanoverview of its features and variants, in this article. Based on the varioussafetyapplications,wediscussedADASfeatures.The significanceofadvancedcommunicationsystemslike V2X and sensor fusion techniques for upcoming autonomous vehicleswasalsodiscussed.

Thepurposeofthispaperistoprovideanoverviewofkey ADAStechnologies,includingEEAsensortechnology,aswell astheirfuturetrends.Thereisasignificantgapthatneedsto be filled before high-level ICVs can be produced in mass quantities, despite the fact that numerous demonstration ICVs have been developed to demonstrate the idea of autonomousdrivingandthepossibilityofimprovingtraffic efficiencybasedonICVs.Therootcausesofthisgap,aswell asthemostimportanttechnologiesneededtocloseit.This perspectivemayofferauniqueperspectiveonthestateof ICVdevelopmentatthepresenttime.Thisstudycombined thetwoperspectiveswiththegoalofassistingresearchers

fromindustryandroboticstocollaboratemoreeffectively andcomprehendeachother'swork.

In conclusion, intelligent algorithms and vehicle platform technological advancements are required for mass productionofICVsorADAS.Domain-basedandcentralized architecturesarethemostpromisingapproachestovehicle platform development for the next generation of EEA, offering significantly increased communication and computationcapacity.Thevehicleisexpectedtoincorporate AE and multicore heterogeneous computation platforms quickly.Thefuturisticvehicleplatformoughttobeoutfitted with cutting-edge sensors in addition to its robust architecture.Thesesensorsoughttobeabletogatherdata andproducerecognitionresultsbyincorporatingperception algorithms.Inordertointegratethesensorhardwareand perceptionalgorithms,numerousbusinessesandacademic institutions are already cooperating. Mobileye, which has incorporated vision algorithms into a sensor product, is a successfulexample.

6. REFERENCES

1) VipinKumarKukkala(vipin.kukkala@colostate.edu)isa Ph.D. student in the Electrical and Computer EngineeringDepartmentat ColoradoStateUniversity, FortCollins.

2) JordanTunnell(Jordantunnell@gmail.com)ispursuing his M.S. degree in the Electrical and Computer EngineeringDepartmentat ColoradoStateUniversity, FortCollins.

3) SudeepPasricha (sudeep@colostate.edu) isa Monfort andRockwell-AndersonprofessorintheElectricaland Computer Engineering Department at Colorado State University,FortCollins.

4) ThomasBradley(thomas.bradley@colostate.edu)isan associate professor of mechanical engineering at ColoradoStateUniversity,FortCollins.

5) WorldHealthOrganization.(2015).Globalstatusreport on road safe-ty. WHO. Geneva, Switzerland. [Online] Available:http://www.who.int/violence injury_prevention/road_safety_status/2015/en/

6) AssociationforSafeInternationalRoadTravel.(2018). Annualglobalroadcrashstatistics.ASIRT. Potomac, Maryland. [Online]. Available: http://asirt.org/initiatives/in-forming-roadusers/road-safety-facts/road-crash-statistics

7) B. Markwalter, “The path to driverless cars,” IEEE Consum.Electron.Mag.,vol.6,no.2,pp.125–126,2017

8) Cunningham. (2014, May 31). US requiring back-up camerasincarsby2018.Roadshow.[Online].Available:

www.cnet.com/news/u-s-requiring-back-up-camerasin-cars-by-2018/

9) I.Gat,M.Benadyi,andA.Shashua,“Amonocularvision advance warning system for the automotive aftermarket,”SAE,Warrendale,PA,Tech.Rep.2005-011470,2005.

10) S.Saponara.(2016).HardwareacceleratorIPcoresfor real time radar and camera-based ADAS. J. Real-Time Image Processing. [Online]. pp. 1–18. Available: https://doi.org/10.1007/s11554-016-0657-0

11) K.Koscher,A.Czeskis,F.Roesner,S.Patel,T.Kohno,S. Checko-way, D. McCoy, B. Kantor, D. Anderson, H. Shacham,andS.Savage,“Experimentalsecurityanalysis ofamodernautomobile,”inProc.IEEESecurityPrivacy, 2010,pp.447–462.

12) S. Thrun, M. Montemerlo, H. Dahlkamp, D. Stavens, A. Aron, J. Diebel, P. Fong, J. Gale, M. Halpenny, G. Hoffmann,andK.Lau,“Stan-ley:Therobotthatwonthe DARPAgrandchallenge,”J.FieldRobotics,vol.23,no.9, pp.661–692,Sept.2006.

13) E.Guizzo,“HowGoogle’sself-drivingcarworks,”IEEE Spectr.,vol.18,no.7,pp.1132–1141,2011.

14) MarkVollrath; Schleicher, Susanne; Gelau, Christhard (19December2010)."TheinfluenceofCruiseControl andAdaptiveCruiseControl ondrivingbehaviour – A driving simulator study".Accident Analysis & Prevention.43(3):1134–1139

15) Martinelli, Nancy S.; Seoane, Richard (1999-03-19). "Automotive night vision system".Thermosense XXI International Society for Optics and Photonics.3700: 343–346.

16) Kobbert,J;Kosmas,K;Khanh,TQ(2018-11-14)."Glarefree high beam optimization based on country road traffic simulation".Lighting Research & Technology.51(6):922–936.

17) Hilf, Klaus-Dieter; Matheis, Ingo; Magus, Jakob; Rauh, Jochen (2010-07-01)."Automated simulation of scenarios to guide the development of a crosswind stabilizationfunction" IFACProceedingsVolumes.6th IFACSymposiumonAdvancesinAutomotiveControl

18) Tech,CarBike(2015-05-06)."WhatisCross-wind?How CrosswindAssistWorks?"

19) SAEOn-RoadAutomatedVehicleStandardsCommittee. Taxonomyanddefinitionsfortermsrelatedtoon-road motorvehicleautomateddrivingsystems.SAEStandard J,2014,3016:1–16

20) UrmsonC,AnhaltiJ,BagnellD,etal.Autonomousdriving inurbanenvironments:Bossandtheurbanchallenge.J FieldRobotics,2008,25:425–466

21) Bacha A, Bauman C, Faruque R, et al. Odin: Team VictorTango’s entry in the DARPA urban challenge. J FieldRobotics,2008,25:467–492

22) Schumm,T.;Worzischek,R.:SerienfertigungvonHeadup-Displays (Series production of headup displays). ATZproduktion4(2011),No.4,pp.32–37.

23) Hirano Y. Integrated vehicle control of an in-wheelmotorvehicletooptimizevehicledynamicsandenergy consumption. In: 2012 10th World Congress on IntelligentControlandAutomation.Beijing,China:IEEE, 2012.2335

2339

24) PeiX,ZhouY,ShengZ.Torqueripplesuppressionofa new in-wheel motor based on quantum genetic algorithm. In: 23rd International Conference on MechatronicsandMachineVisioninPractice.Nanjing, China:IEEE,2016.1–6

25) ThrunS,MontemerloM,DahlkampH,etal.Stanley:The robot that won the DARPA grand challenge. J Field Robotics,2006,23:661–692

26) SivaramanS,TrivediM.Lookingatvehiclesontheroad: Asurveyofvision-basedvehicledetection,tracking,and behavioranalysis.IEEETransIntellTranspSyst,2013, 14:1773–1795

27) Gwon G P, Hur W S, Kim S W, et al. Generation of a preciseandefficientlane-levelroadmapforintelligent vehicle systems. IEEE Trans Veh Technol, 2017, 66: 4517–4533

28) Khodayari A, Ghaffari A, Ameli S, et al. A historical review on lateral and longitudinal control of autonomous vehicle motions. In: 2nd International Conference on Mechanical and Electrical Technology. Singapore:IEEE,2010.421–429

29) Souissi O, Benatitalilah R, Duvivier D, et al. Path planning: A 2013 survey. In: Proceedings of 2013 InternationalConferenceonIndustrialEngineeringand SystemsManagement.Rabat:IEEE,2013.

30) KatoS,TakeuchiE,IshiguroY,etal.Anopenapproachto autonomousvehicles.IEEEMicro,2015,35:60–68

31) GeigerA,LauerM,MoosmannF,etal.TeamAnnieWay’s entrytothe2011grandcooperativedrivingchallenge. IEEETransIntellTranspSystem,2012,13:1008–1017

32) UrmsonC,AnhaltJ,BagnellD,etal.Autonomousdriving inurbanenvironments:Bossandtheurbanchallenge.J FieldRobotics,2008,25:425–466

33) Leonard J, How J, Teller S, et al. A perception-driven autonomousurbanvehicle. JFieldRobotics,2008,25: 727–774

34) Levinson J, Askeland J, Becker J, et al. Towards fully autonomousdriving:Systemsandalgorithms.In:2011 IEEEIntelligentVehiclesSymposium(IV).Baden-Baden: IEEE,2011.163–168

35) Montemerlo M, Becker J, Bhat S, et al. Junior: The Stanfordentryintheurbanchallenge.JFieldRobotics, 2008,25:569–597

36) Bacha A, Bauman C, Faruque R, et al. Odin: Team VictorTango’s entry in the DARPA urban challenge. J FieldRobotics,2008,25:467–492

37) MerrillGP.TheFirstOneHundredYearsofAmerican Geology.NewYork:HafnerPublishingCompany,1924 13KurzweilR,RichterR,KurzweilR,etal.TheAgeof IntelligentMachines.Cambridge,MA:MITPress,1990

38) GrimesDM,JonesTO.Automotiveradar:Abriefreview. Prof.IEEE,1974,62:804–822

39) Tsugawa S. Vision-based vehicles in Japan: Machine visionsystemsanddrivingcontrolsystems.IEEETrans IndElectron,1994,41:398–405

40) DickmannsED,GraefeV.Dynamicmonocularmachine vision.MachineVisApps,1988,1:223–240

41) Leighty R D. DARPA ALV (autonomous land vehicle) summary. Report No. ETL-R-085. Army Engineer TopographicLabsFortBelvoirVA,1986

42) SchwarzB.Mappingtheworldin3D.NatPhoton,2010, 4:429–43019TurkMA,MorgenthalerDG,GrembanK D, et al. VITS-A vision system for autonomous land vehicle navigation. IEEE Trans Pattern Anal Machine Intell,1988,10:342–361

43) Lowrie J W, Thomas M, Gremban K, et al. The autonomous land vehicle (ALV) preliminary roadfollowing demonstration. In: Intelligent Robots and ComputerVisionIV.Cambridge,1985.336–351:

44) Barabba V, Huber C, Cooke F, et al. A multimethod approachforcreatingnewbusinessmodels:TheGeneral MotorsOnStarproject.Interfaces,2002,32:20–34

45) IEEE 802.11 Working Group. Part 11-Wireless LAN mediumaccesscontrol(MAC)andphysicallayer(PHY) specifications:Higherspeedphysicallayerextensionin the2.4GHzband.ANSI/IEEEStd802.11,1999

46) MontemerloM,ThrunS,DahlkampH,etal.Winningthe DARPA grand challenge with an AI robot. In: The

National Conference on Artificial Intelligence. Boston, 2006.982–987

47) UrmsonC,RagusaC,RayD,etal.Arobustapproachto high-speednavigationforunrehearseddesertterrain.J FieldRobotics,2006,23:467

508

48) Jung I K, Lacroix S. High resolution terrain mapping usinglowaltitudeaerialstereoimagery.In:Proceeding oftheNinthIEEEInternationalConferenceonComputer Vision.Nice,2003.946

49) ChenM,LiuY.Recognitionandextractionhighprecision digital road map. In: International Conference on Information Technology: Coding and Computing (ITCC'05)-Volume II. Las Vegas, NV: IEEE, 2005. 129

134

50) CoyerU,SchomerusJ,MosebachH,etal.Generatinghigh precisionmapsforadvancedguidancesupport.In:2008 IEEEIntelligentVehiclesSymposium.Eindhoven:IEEE, 2008.871–876

51) BojarskiM,Del Testa D, DworakowshiD, etal.End to end learning for self-driving cars. arXiv:1604.07316, 2016

52) XuH,GaoY,YuF,etal.End-to-endlearningofdriving modelsfromlarge-scalevideodatasets.ArXiv:preprint, 2017,http://openaccess.