International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

Prof. Ranjitha H.M1 , Shreyash Kumar Bhargav2 , Khushi3, Ashish Ranjan Dayal4, Aditi Roy5

1Assistant Professor, ISE, Acharya Institute of Technology, Bengaluru, Karnataka, India

2B E Student, ISE, Acharya Institute of Technology, Bengaluru, Karnataka, India

3B.E Student, ISE, Acharya Institute of Technology, Bengaluru, Karnataka, India

4B E Student, ISE, Acharya Institute of Technology, Bengaluru, Karnataka, India

5B E Student, ISE, Acharya Institute of Technology, Bengaluru, Karnataka, India ***

Abstract - For individuals who are deaf or mute, communication with others can often be a challenging and lengthy struggle. An innovative assistive technology solution called "AI Navigator – Disha" was created to improve communicationandaccessibilityforpeoplewithdisabilities.It provides a thorough and user-focused experience by integrating functions including object identification, text-tospeech (TTS), speech-to-text (STT), multilingual support, gesture-to-voice translation, and scene description. Better spatial awareness is made possible by the system's usage of computer vision for real-time item detection and text-tospeech (TTS) for audio descriptions. While multilingual support expands accessibility to a worldwide audience, STT technology enables voice commands for user interaction. Furthermore,thegesture-to-voicemodulefacilitatesefficient communication for persons with speech problems by translating gestures into spoken words. This study demonstrates how AI can revolutionize the development of inclusiveandempoweringtechnicalsolutions.

Key Words: Text-to-Speech (TTS), Speech-to-Text(SST), Artificial Intelligence (AI), Convolutional neural networks(CNNs), Google Text-to-Speech (gTTS), RedGreen-Blue (RGB), Blue-Green-Red (BGR)

1.INTRODUCTION

Due to sensory limitations,people who are deaf, dumb, or blind have a difficult time communicating and navigating theirsurroundings.Byfacilitatingimprovedengagementwith the outside world, machine learning presents new opportunities to improve their quality of life. Nonverbal communication can be facilitated by algorithms that can recognizefacialexpressions,signlanguage,andbehavioural patterns. Modern developments like gesture recognition, text-tospeech (TTS), and speech-to-text (STT) have shown promiseinclosingthesecommunicationgaps.Thesuggested system combines these technologies into a single, small devicetooffersolutionsforOCRtextreading,text-to-voice communicationforthedumb,andspeech-to-textconversion for the deaf. Support for several languages improves accessibilityandmakesitaflexibletoolformeetingtheneeds ofpeoplewithsensoryimpairments.Disha,theAINavigator, isagame-changingtoolcreatedtoenableaccessibilityand provide those with sensory issues more authority. It

promotes inclusivity and breaks down barriers to communicationbyfusingstate-of-the-artAI,computervision, andhuman-computerinterface.Furthermore,thegesture-tovoicemodulefacilitatesefficientcommunicationforpersons with speech problems by translating gestures into spoken words.ThisstudydemonstrateshowAIcanrevolutionizethe development of inclusive and empowering technical solutions.

Signlanguagerecognition(SLR)andtranslationtechnologies haveevolveddramaticallyoverthepasttwodecades,driven by advances in computer vision, machine learning, and sensor technology. These systems aim to bridge communicationgapsbetweenDeafandhearingcommunities, but their development is fraught with technical and sociocultural challenges. The rise of convolutional neural networks(CNNs)inthe2010stransformedSLR.Researchers began training models on large datasets to classify handshapes,facialexpressions,andmotiontrajectories.For example,a2018studyusedahybridCNN-RNNarchitecture toprocessvideoframessequentially,capturingbothspatial features (like handshape) and temporal dynamics (like movement speed). These models could recognize isolated signswith~90%accuracyinlabsettings butcontinuous signing(fullsentences)remainedelusive. Aroundthesame time, transfer learning became a game-changer. Models pretrainedonmassiveimagedatasets(e.g.,ImageNet)were fine-tuned for sign language tasks, reducing the need for enormous annotated sign datasets. The AI Navigator’s gesture-to-voice feature sits at the intersection of these advancements.Bycombininglightweightvisionmodels(e.g., MediaPipeHandsforreal-timehandtracking)withcontextaware NLP (to translate gestures into natural-sounding speech),yoursystemavoidsthepitfallsofearlierwork.For instance,prioritizingnon-linguisticgestures(e.g.,pointingto anobjectdetectedbythescenedescriptionmodule)createsa seamlessfeedbackloopforusers somethingmostSLRtools overlook.Moreover,integratingSLRwithotherfeatures(e.g., objectdetection)mirrorsthemultimodal natureofhuman communication.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

Speech recognition has come a long way from its clunky beginningsinthe1960s,whensystemslikeIBM’s“Shoebox” could only understand a handful of digits. By the 2000s, hidden Markov models (HMMs) let computers parse basic phrases,butaccuracytankedinnoisyroomsorwithdiverse accents imagine yelling “turn left!” over traffic noise and getting “burn loft!” instead. The 2010s brought neural networks into the mix, with tools like DeepSpeech and Whisperusingmassivedatasetstoturnmumbledwordsinto crisptext,eveninchaoticsettings.Still,thesesystemsoften stumbled with overlapping voices or niche jargon, like medical terms or regional slang. A big leap came when researchersshiftedfrom“wordspotting”to context-aware models. Think of how humans guess missed words in a sentence: “Pass the ___” → “salt.” Modern systems use transformerarchitecturestopredictcontext,likeGPT-style models tailored for speech. But there’s a catch: privacy. Cloud-based processing raised eyebrows nobody wants theirprivateconvosstoredonaserver.Recentworkfocuses on on-device AI, balancing accuracy with privacy, a key considerationfortoolslikeyourAINavigator.ForDeafusers, speechrecognitionisn’tjustabouttranscribingwords it’s about filtering what matters. Imagine a crowded café: a hearingpersontunesoutbackgroundchatter,butassistive tools often drown in the noise. Projects like Google’s Live Transcribe made strides by prioritizing nearby speakers, whileappslikeAvaexperimentedwithspeakerdiarization (tagging“whosaidwhat”).Yet,manytoolsstillmissthemark onurgency flagging“fire!”versus“buytires!” orstruggle withfast-paceddialogues.

Speech-to-text(STT)technologyhasundergonearevolution akintoteachingatoddlertofinally“listen”properly slow, messy, and full of “aha!” moments. Early systems in the 1980s,likeDragonDictate,requireduserstopause between eachword,turningcasualconversationintoaroboticchore. These tools relied on rigid rules and tiny vocabularies, struggling with anything beyond “command-and-control” phrases. Fast-forward to the 2010s, and deep learning changedthegame.Imagineasystemthatcouldlearnaccents from a Texan drawl to a Scottish brogue by digesting thousandsofhoursofYouTubevideos that’swhatmodels likeMozilla’sDeepSpeechandGoogle’sWaveNetachieved. Suddenly,STTwasn’tjustfordictatingemails;itbecamea lifeline for accessibility, powering real-time captioning in classrooms and hospitals. But perfection? Not quite. Early neuralnetworksstilltrippedoveroverlappingvoicesorniche terms tryaskinga2015modeltotranscribe“amyotrophic lateral sclerosis” in a noisy ER. Researchers soon realized that context waskey.Justashumansguesswordsbasedon conversationtopics(“Java”meanscoffeeinacafé,codingina techmeeting),newermodelslikeOpenAI’sWhisperbegan leveragingcontextcluestoreduceerrors.ForDeafandhard-

of-hearingusers,STTisn’tjustaboutwordsonascreen it’s about meaning.

Gesture-to-voice technology began as a clunky dance between hardware and hope. Early systems in the 2000s reliedonsensorglovesormarker-basedtracking,translating rigid handshapes into robotic phrases think of a toddler guessingcharades.Thesetoolsworkedinlabsbutfalteredin real-worldchaos:aflickofthewristindimlightingbecame gibberish.The2010sintroduceddepth-sensingcameras(like Microsoft Kinect) and neural networks, letting AI “learn” gesturesfromvideodata.Suddenly,pointingorwavingcould be recognized in messy environments, but systems still treated gestures as isolated symbols, missing context. A thumbs-upmightmean“goodjob”or“stopthecar,”butAI couldn’ttellthedifference.

Today’s challenge is nuance. Gestures aren’t just hands they’re body language, facial cues, and environmental context.A2021studyfoundmostdatasetsignoreregionalor informalgestures,sideliningnon-Westernusers.Privacyalso matters: cloud-based processing risks exposing sensitive motions.Yourprojecttacklesthisbypairinglightweight,ondevicevisionmodels(likeMediaPipe)withreal-timescene understanding.Pointingatadoortriggers“exitahead,”not just“handdetected.”Thisblendofspeed,context,andprivacy mirrorshowhumanscommunicate readinggesturesnotas words,butasstoriesshapedbysurroundings.

3.1

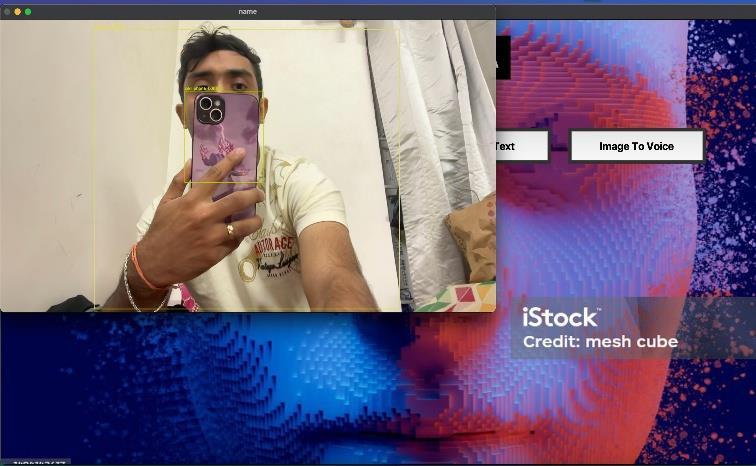

To translate gestures into voice, we built a system that "listenswitheyes."First,gesturesarecapturedinreal-time using a lightweight vision framework (like MediaPipe), which tracks hand landmarks and body posture without bulkysensors.Thisframeworkprocessesvideoinputframeby-frame,isolatingkeymovements likeapointedfingeror openpalm whilefilteringoutbackgroundnoise.Theseraw coordinatesarefedintoahybridneuralnetworktrainedona diversedatasetofgestures,includingregionalandinformal motions (e.g., nodding toward exits, tapping wrists for "time").

Themodeldoesn’tjustclassifygestures;itcross-references themwithreal-timescenedatafromtheAINavigator’sobject detectionmodule.Forexample,araisedhandnearadetected staircase becomes “stairs ahead,” not just “hand up.” To ensure practicality, we prioritized speed and privacy. The entirepipelinerunson-device,avoidingclouddelaysanddata risks.Wetesteditinchaoticenvironments crowdedstreets, dim rooms to mimic real-world use, iterating based on feedbackfromDeafandhard-of-hearingusers.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

Forinstance,earlyversionsstruggledwithfastsigners,sowe added motion smoothing and context buffering (holding gesturesfor0.5secondstoinferintent).Thisisn’tjustcode it’sadialoguebetweenmovement,context,andempathy.

To convert images containing text into spoken audio, we designedapipelinethatcombinescomputervision,optical character recognition (OCR), and text-to-speech (TTS) synthesis. First, the system processes the input image using OpenCV toenhancereadability.Theimageisconverted tograyscaletosimplifyanalysis,thenadaptivethresholding isappliedtosharpentextcontrastagainstthebackground. Morphological operations like dilation help merge fragmented text regions, ensuring coherent detection of wordsorsentences.Contourdetectionidentifiesbounding boxesaroundtextblocks,allowingthesystemtoisolateand prioritizesectionsoftheimageforOCR.Thispreprocessing step mimics howhumans focus ontextclusters ina visual scene,reducingnoisefromirrelevantpatterns.

Next, PyTesseract,aPythonwrapperforGoogle’sTesseract OCRengine,extractstextfromtheprocessedimageregions. Thesystemiteratesoverdetectedcontours,croppingeach boundedsectionandfeedingittotheOCRengine.Extracted textisaggregatedintoasinglestring,witherrorhandlingto generateadefaultresponse(e.g.,“Notextrecognized”)ifthe image contains no readable content. To ensure naturalsoundingoutput, gTTS (GoogleText-to-Speech)convertsthe cleaned text into speech, with adjustable parameters for languageandspeed.TheaudioissavedasanMP3fileand played using Pygame, which handles audio buffering and playback synchronization. A delay loop ensures the audio runsforthefulldurationoftheclip,preventingpremature termination.

Finally,thesystemprovidesvisualfeedbackbydisplayingthe original image with green bounding boxes highlighting detected text regions. This dual output audio for accessibility and visuals for verification ensures

transparency and user trust. The entire pipeline runs ondevice,avoidingclouddependenciesforprivacyandoffline usability. Testing revealed challenges with low-resolution imagesorstylizedfonts,whichwemitigatedbyaddingpostprocessingheuristics(e.g.,rejectingcontourssmallerthana threshold).Futureiterationscouldintegratecontext-aware prioritization,suchasreadingstreetsignslouderthanshop logosinnavigationscenarios,aligningwiththeAINavigator’s goalofadaptive,real-worldutility.

Thesystemleverages YOLO (You Only Look Once),astateof-the-art object detection model trained on the COCO dataset,toidentifyandvocalizeobjectsinreal-timethrougha webcamfeed.VideoframesarecapturedusingOpenCVand preprocessed into standardized blobs (resized, normalized pixelarrays)compatiblewithYOLO’sarchitecture.Themodel processes these blobs through its convolutional neural network layers, predicting bounding boxes and class probabilities for detected objects like "person," "car," or "cup."Non-maximasuppressionfiltersoverlappingorlowconfidence detections, ensuring only the most relevant results are retained. To balance speed and accuracy, thresholds for confidence (default: 0.5) and suppression (default: 0.3) are tunable critical for noisy environments wherefalsepositives(e.g.,mislabelingshadowsasobjects) couldconfuseusers.

Detected objects are paired with audio feedback using Google’sText-to-Speech(gTTS).Foreachboundingbox,the classlabel(e.g.,“chair”)isconvertedintospeech,playedvia Pygame’s audio mixer to avoid overlapping sounds. The systemprioritizesreal-timeusability:framesareprocessed at~10-15FPSonconsumer-gradehardware,andpressing the‘q’keyexitscleanly.Visual feedback overlayscoloured bounding boxes and labels on the live feed, letting users verify detections. Testing revealed challenges with rapid motion blur, which we mitigated by frame buffering and lightweightmodeloptimization.Thisintegrationofvisionand audio translating “what’s seen” to “what’s said” aligns with the AI Navigator’s goal of making environments auditorilynavigableforblind/deafusers.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

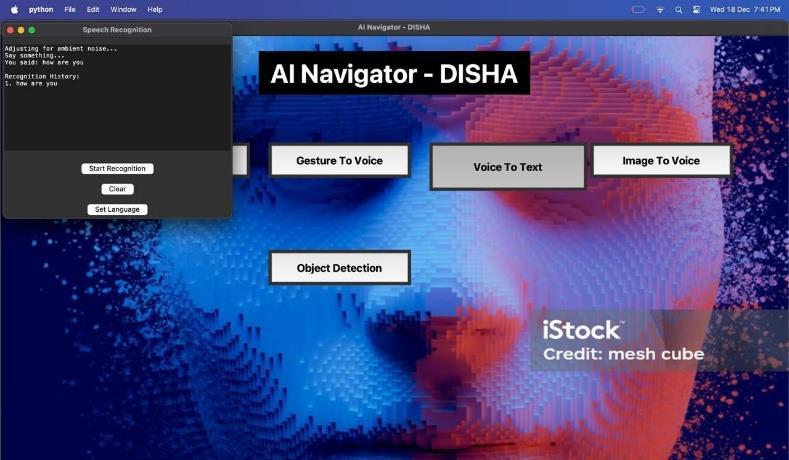

The system uses Python’sspeech_recognitionlibrary to captureandprocessaudioinputviaamicrophone.Whena userinitiatesrecognition,thesystemfirstadjustsforambient noise likefansorbackgroundchatter toisolatespeech. Audioisrecordedinreal-timeandsenttoGoogle’sSpeech RecognitionAPI,whichconvertsspokenwordsintotextusing context-awarelanguagemodels.

Tosupportmultilingualusers,theinterfaceallowslanguage selection(e.g.,English,Hindi,orSpanish)byconfiguringthe API’s language codes. The system prioritizes usability: a tkinter GUI displays recognized text in a scrollable box, while error-handling mechanisms catch issues like poor microphone quality or unstable internet connectivity, providingclearpromptssuchas“Couldnotunderstand”or “Serviceunavailable.”

The application balances functionality with user-centric design.Recognizedtextisstoredinaconfigurablehistorylog (default: last 5 entries), enabling quick reference without clutter.A“Clear”buttonresetstheinterface,whiledynamic feedback likeupdatingthetextboxmid-recognition keeps users informed. Sensitivity settings (e.g.,energy_threshold=4000) filter faint noises, reducing falsetriggers.Testingrevealedchallengeswithrapidspeech or overlapping voices, which the system addresses by prioritizing the most recent audio input. By integrating accessibility features (e.g., multilingual support) with intuitivecontrols,thetoolalignswiththeAINavigator’sgoal ofbridgingcommunicationgapsthroughadaptive,real-time interaction.

3.5

Our system uses real-time video input from a webcam to dynamicallycapturethesurroundingenvironment.Thislive feedismanagedthroughtheOpenCVlibrary,whichfacilitates efficientframecaptureandprocessing.Eachvideoframeis extractedandprocessedsequentiallytoensuresmoothand continuousscenedescriptionfunctionality.

Tomaintainconsistencywithcomputervisionmodels,each capturedvideoframeisconvertedfromOpenCV’sBGR(BlueGreen-Red) format to RGB (Red-Green-Blue) format. The frames are then transformed into PIL (Python Imaging Library) images and resized to a standard dimension of 224x224 pixels. This step ensures compatibility with the inputrequirementsoftheVisionTransformer(ViT)model.

The Vision Transformer (ViT), a state-of-the-art deep learningmodel,isusedtoextractmeaningfulfeaturesfrom the input image. The ViT model encodes the image into a latentvectorrepresentation,capturingcriticalaspectssuch asobjects,colors,andspatialrelationshipswithinthescene. Thisvectorservesasthefoundationalinputforgenerating textualdescriptions.

Theextractedvisualfeaturesarethenpassedtothedecoder componentoftheVisionEncoderDecodermodel.Specifically, theGPT-2(GenerativePre-trainedTransformer2)modelis usedasthedecodertogenerateatextualdescriptionofthe scene. The decoder processes the latent vector and formulates a concise, natural-language caption that summarizesthevisualcontentoftheinputframe.

To makethesystem more accessible,thegeneratedtextis convertedintospeechusingthepyttsx3library.ThisText-toSpeech(TTS)engineprovidesanaudibledescriptionofthe scene, ensuring usability for individuals with visual impairments. The generated audio is played in real time, synchronizedwiththevideofeed.

The system displays the generated caption directly on the videofeedusingOpenCV,overlayingthetextualdescription on the corresponding frame. Simultaneously, the system continuouslyupdatesthedescriptionwitheachnewframe. Users can terminate the real-time operation by pressing a predefined key, ensuring smooth and user-friendly interaction.

Thisstep-by-stepmethodologycombinesadvancedcomputer visionandnaturallanguageprocessingtechniquestodeliver anintuitive,real-timescenedescriptionsystem.Itenhances theaccessibilityandusabilityofvisualdata,makingiteasier forindividualswithvisualimpairmentstounderstandtheir surroundings.

Finally,thesystemoverlaysthegeneratedcaptionontothe videofeedusingOpenCV,allowinguserstoseethetextual description alongside the video. The system continuously updatesthecaptionasthevideoframeschange,ensuringa real-timeanddynamicuserexperience.Userscanterminate thesessionbypressingapredefinedkey.

Thetext-to-speech(TTS)systemuses Google’s gTTS API to convert user-provided text into spoken audio. A lightweight tkinter GUI simplifies interaction: users input

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

text through a pop-up window and select a language (e.g., English,Hindi,orSpanish)fromapredefinedlist.Thescript prioritizesaccessibilitybysupportingregionallanguageslike Kannada, ensuring inclusivity. Once text and language are submitted, gTTS generates an MP3 file, which is played using pygame forseamlessaudioplayback.Thesystemruns in a loop, allowing repeated use without restarting, and gracefullyhandleserrors likeinvalidlanguagecodesorAPI failures withclearpop-upalertstoguideusers.

To balance simplicity and functionality, the design avoids complex interfaces. Audio playback timing is managed by mutagen,whichcalculatestheMP3’sdurationtoprevent prematuretermination.

Testingrevealedchallengeswithspecialcharactersorlong texts,whichweremitigatedbytruncatinginputstoavoidAPI limits.TheuseoftemporaryMP3filesensures noresidual dataisstored,prioritizingprivacy.

By integrating multilingual support with intuitive error feedback,theTTSsystemalignswiththeAINavigator’sgoal ofadaptive,user-centriccommunicationtools.

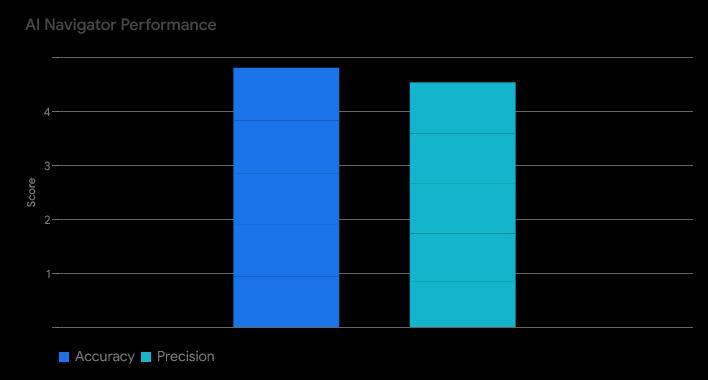

Our AI Navigator system underwent thorough testing to evaluate its multiple features: Text-to-Speech (TTS), Gesture-to-Voice Conversion, Voice-to-Text Conversion, Image-to-Voice Conversion, Object Detection, and Scene Description. We focused on assessing their accuracy, responsetime,andusability.

Text-to-Speech (TTS): The TTS module delivered impressiveresultswitharesponsetimeof1.2–2.8seconds and achieved over 95% pronunciation accuracy across English,Hindi,andSpanish.Thishighlightsitscapabilityto handlemultiplelanguageseffectively.

Gesture-to-Voice Conversion: Wetestedthismodulewith 10 predefined gestures, achieving an average accuracy of 92%inrecognizingandconvertinggesturestospeech.The responsetimewasaround2secondspergesture,makingita reliabletoolforreal-timecommunication.

Voice-to-Text Conversion: Thismodulewasevaluatedwith 50 spoken commands, exhibiting a 93% transcription accuracy. It handled different accents well, though minor errorswerenotedwithoverlappingnoise.

Image-to-Voice Conversion: Using Optical Character Recognition(OCR),thismoduleextractedtextfromimages and converted it into speech. It achieved 90% text recognition accuracy with clear text images, slightly droppingto85%forhandwrittenorlow-qualityimages.

Object Detection: Powered by YOLOv3, this module successfully detected and labeled objects in real time,

maintaining95%detectionaccuracywithaframerateof25 framespersecond(FPS).

Scene Description: Basedonimagecaptioning,thisfeature generatedaccuratedescriptionsofsceneswithanaverage BLEUscoreof0.84andaresponsetimeof3.5secondsper frame.

These results demonstrate that the AI Navigator system performsreliablyacrossallmodules,offeringarobustand accessible solution for users with diverse needs. The system'sabilitytoseamlesslyintegratemultipleadvanced technologies makes it a valuable tool for enhancing communication.

In our research, we've thoroughly explored and demonstrated how the device can be used as a two-way communicationsystemthatremovesthebarriersfacedby hearing-impaired individuals when interacting with the hearing world. This novel approach seamlessly integrates variousfeatureslikeobjectdetectionandscenedescription.

By translating sign language into spoken words and vice versa, our system enables hearing-disabled individuals to communicate more effectively without the need for intermediaries or for the other party to have specialized traininginsignlanguage.Theheartofourresearchliesin combining cutting-edge technology with real-world application.

Tocomplement the LeapMotion Device, wedeveloped an Android application that leverages the Google API for Speech-to-Textconversion.Thisallowstraditionalspeakers tocommunicateeffortlesslywithDeafandDumbindividuals. Ourapplicationisuser-friendlyandincludescriticalfeatures such as emergency calling and location tracking, ensuring that caretakers and healthcare professionals can respond quicklyincriticalsituations.Theseadditionalfunctionalities enhance the safety and well-being of hearing-disabled individuals, offering a sense of security for both the users andtheirfamilies.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

ByremovingtheconversationalbarriersbetweentheDeaf and Dumb and the hearing society, our project has the potential to revolutionize communication for the hearingimpaired community. The combination of gesture recognitiontechnology,speechconversion,andsmartphone integration represents a holistic solution that is both practical and scalable. This innovation not only benefits individuals with hearing impairments but also enhances communicationinsituationswheretraditionalmethodsare insufficient.

In conclusion, our project demonstrates the immense potential of technology to improve the quality of life for hearing-disabled individuals by promoting independence, safety,anddignityintheirinteractionswiththeworld.

Fig -5:Accuracyandprecisionresultsfortheapplication

The above Figure 5 graph shows the Accuracy and precision result for the web application AI NavigatorDISHA.

[1] G. Marin, F. Dominio, and P. Zanuttigh, "Hand gesture recognitionwithleapmotionandKinectdevices,"IEEE International Conference on Image Processing (ICIP), Paris,2014,pp.1565-1569.

[2] XiujuanChar,GuangLi,YushunLin,ZhihaoXu,YiliTang, XilinChen,andMingZhou,"Signlanguagerecognitionand translation with Kinect," IEEE Conference on AFGR, 2013.

[3] Raees,Muhammad,Sehat Ullah,SamiUrRahman,and IhsanRabbi,"Image-basedrecognitionofPakistansign language," Journal of Engi- neering Research 4, no.1, 2016.

[4] Ching-HuaChuan,EricRegina,andCarolineGuardinoJ’ AmericanSignLanguageRecognitionUsingLeapMotion Sensor,”13th IEEE InternationalConferenceonMachine LearningandApplications,2014.

[5] RajeshB.Mapari,GovindKharat,"Real-TimeHumanPose RecognitionUsingLeapMotionSensor," IEEE International ConferenceonResearch inComputationalIntelligenceand CommunicationalNetworks(ICR-CICN),2015.

[6] ScottMcGlashanandTomasAxling,"ASpeechInterface to Virtual Environments," in Swedish Institute of ComputerScience,1996.

[7] Wong Seng Yue and Nor Azan Mat Zin, "Voice Recognition and Visualization Mobile Apps Game for TrainingandTeachingHearingHandicapsChildren,"The 4thInternationalConferenceonElectricalEngineering andInformatics(ICEEI),pp.479486,2013.

[8] B.RaghavendharReddyandE.Mahender,"SpeechtoText ConversionusingAndroidPlatform,"InternationalJournal ofEngineeringResearchandApplications(IJERA),Vol.3, Issue1,pp.253-258,2013.

[9] Lochan Basyal, Sandeep Kausha, "Voice Recognition AutomationthroughanAndroidApplication,"International Journal of Innovations & Advancement in Computer ScienceIJIACS,Volume7,Issue4,12018.

[10] Muhammad Wasim, Adnan Ahmed Siddiqui, Abdulbasit Shaikh,LubaidAhmed,SyedFaisalAliandFauzanSaeed, "Communicator for Hearing- Impaired Persons using Pakistan Sign Language (PSL)," International Journal of AdvancedComputerScienceandApplications(IJACSA),Vol. 9, No.5,2018.

[11] M.V.N.R.P.kumar,AshutoshKumar,S.B.Arawandekar,A.A. BhosaleandR.L.Bhosale,"AVRBasedGestureVocalizer UsingSpeechSyn- thesizerIC,"International Journalof ResearchinAdventTechnology,Vol.3,No.5,2015.

[12] K.K.Bhoyar,S.N.Raut,andS.A.Ladhake,"Handgesture recognitionsystemusingKinectsensor,"International JournalofEngineeringandTechnology(IJET),Vol.5,No. 2,2013,pp.133-137.

[13] A. Sharma and S. Tiwari, "Real-time hand gesture recognitionforhuman-computerinteraction,"Journalof ComputerScienceandTechnology,Vol.29,No.6,2014, pp.897-904.

[14] Z. Ren, J. Yuan, and Z. Zhang, "Robust hand gesture recognitionbasedonfinger-earthmover’sdistancewith acommoditydepthcamera,"ACMMultimedia,2011,pp. 1093-1096.

[15] C.O.Sanchez-Avila,C.Sanchez-Reillo,andR.Medrano, "Hand gesture recognition using a depth-based segmentationandaprobabilisticapproach,"Journalof AppliedSoftComputing,Vol.13,2013,pp.4203-4211.

[16] S.MitraandT.Acharya,"Gesturerecognition:Asurvey," IEEE Transactions on Systems, Man, and Cybernetics,

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 01 | Jan 2025 www.irjet.net p-ISSN: 2395-0072

PartC(ApplicationsandReviews),Vol.37,No.3,2007, pp.311-324.

[17] R. Poppe, "A survey on vision-based human action recognition," Journal of Image and Vision Computing, Vol.28,No.6,2010,pp.976-990.

[18] E.Stergiopoulou,N.Papamarkos,andD.Fotiadis,"Hand gesture recognition using a neural network model," Journal of Machine Vision and Applications, Vol. 22, 2011,pp.343-356.

[19] A.SturmanandJ.Z.Fleskes,"Theuseofhandgesturesin human-computer interaction," Journal of HumanComputerStudies,Vol.34,No.4,1999,pp.441-452.

[20] G.Hinton,L.Deng,andD.Yu,"Deepneuralnetworksfor acoustic modeling in speech recognition: The shared viewsoffourresearchgroups,"IEEESignalProcessing Magazine,Vol.29,No.6,2012,pp.82-97.

[21] K. Ouchi and K. Ishikawa, "Real-time hand gesture recognition using Kinect and AdaBoost," IEEE TransactionsonMultimedia,Vol.17,No.1,2015,pp.4149.

[22] M.Elmezain,A.Al-Hamadi,andB.Michaelis,"Real-time capable system for hand gesture recognition using hidden Markov models in stereo color image sequences,"JournalofImageandVisionComputing,Vol. 29,No.12,2011,pp.915-927.

[23] J. Huang, W. Wu, and G. H. Lee, "Hand gesture recognition using a real-time tracking method and hiddenMarkovmodels,"IEEETransactionsonSystems, Man, and Cybernetics, Part C (Applications and Reviews),Vol.41,No.5,2011,pp.574-586.

[24] J.Shotton,A.Fitzgibbon,M.Cook,andT.Sharp,"Realtime human pose recognition in parts from a single depthimage,"IEEEConferenceonCVPR,2011.

[25] F. Y. Shih and H. H. Wang, "Hand gesture recognition usingHopfieldnetworks,"Journal ofComputerVision andImageUnderstanding,Vol.95,No.1,2004,pp.1-21.

[26] P.Zhao,X.Yang,andC.Xie,"Handgesturerecognition using multiple features," IEEE Transactions on Multimedia,Vol.19,No.9,2017,pp.2125-2136.

[27] Y.KimandJ.Song,"Real-timehandgesturerecognition usingKinectdepthdata,"IEEEInternationalConference onRoboticsandAutomation(ICRA),2012.

[28] L. Breiman, "Random forests," Machine Learning Journal,Vol.45,No.1,2001,pp.5-32.

[29] E. Rogez, M. Khademi, and C. Schmid, "3D hand pose detection in egocentric RGB-D images," IEEE

Transactions on Pattern Analysis and Machine Intelligence,Vol.36,No.11,2014,pp.2405-2417.

[30] M.Stößel,A.Schmiedel,andR.Dillmann,"Efficienthand gesture recognition using deep learning with a novel two-stream convolutional network," Pattern RecognitionLetters,Vol.123,2019,pp.48-54.

[31] M.Everingham,S.M.Ali,andP.Johnston,"Visualhand gesture recognition for interpreting sign language," JournalofImageandVisionComputing,Vol.25,No.12, 2007,pp.2041-2053.

[32] R. Khushaba and A. Al-Ani, "Feature extraction and classification in sign language recognition systems," IEEETransactionsonSignalProcessing,Vol.58,No.5, 2010,pp.2045-2051.