International Journal of

ISSN: 2252-8814

ISSN: 2252-8814

Advances in Applied Sciences (IJAAS) is a peer-reviewed and open access journal dedicated to publish significant research findings in the field of applied and theoretical sciences. The journal is designed to serve researchers, developers, professionals, graduate students and others interested in state-of-the art research activities in applied science areas, which cover topics including: chemistry, physics, materials, nanoscience andnanotechnology,mathematics,statistics, geologyand earthsciences.

Editor-in-Chief: Qing Wang, National Instituteof Advanced Industrial Science and Technology (AIST), Japan

Co-Editor-in-Chief:

Chen-Yuan Chen, National Pingtung University of Education, Taiwan, Provinceof China

Bensafi Abd-El-Hamid, Abou BekrBelkaid University of Tlemcen, Algeria Guangming Yao, Clarkson University, United States

HabibollaLatifizadeh, Shiraz (SUTECH) University, Iran, Islamic Republic of EL Mahdi Ahmed Haroun, University of Bahri, Sudan

Published by:

Institute of Advanced Engineering and Science (IAES)

Website: http://iaescore.com/journals/index.php/IJAAS/ Email: info@iaesjournal.com, IJAAS@iaesjournal.com

International Journal of Advances in Applied Sciences (IJAAS) is an interdisciplinary journal that publishes material on all aspects of applied and theoretical sciences. The journal encompasses a variety of topics, including chemistry, physics, materials, nanoscience and nanotechnology, mathematics, statistics, geologyand earth sciences.

Submission of a manuscript implies that it contains original work and has not been published or submitted for publication elsewhere. It also implies the transfer of the copyright from the author to thepublisher. Authors should includepermission to reproduceany previously published material.

For information on Ethics in publishing and Ethical guidelines for journal publication (including the necessity to avoid plagiarism and duplicate publication) see http://www.iaescore.com/journals/index.php/IJAAS/about/editorialPolicies#sectionPolicies

You must prepareand submit your papers as word document (DOC or DOCX). For moredetailed instructionsand IJAAS templateplease takea look and download at: http://www.iaescore.com/journals/index.php/IJAAS/about/submissions#onlineSubmissions The manuscript will be subjected to a full review procedure and the decision whether to accept it will betaken by the Editor based on the reviews.

Manuscript must be submitted through our on-linesystem: http://www.iaescore.com/journals/index.php/IJAAS/

Once a manuscript has successfully been submitted via the online submission system authors may track thestatus of their manuscript usingtheonlinesubmission system.

International Journal of

Study of Absorption Loss Effects on Acoustic Wave Propagation in Shallow Water Using Different Empirical Models

Yasin Yousif Al-Alaboosi, Jenan Abdulkhalq Al-Aboosi

Data Mining Techniques for Providing Network Security through Intrusion Detection Systems: a Survey

Prabhu Kavin B, Ganapathy S

Software System Package Dependencies and Visualization of Internal Structure

Ahmad Abdul Qadir Alrababah

Data Partitioning in Mongo DB with Cloud Aakanksha Jumle, Swati Ahirrao

Graph Based Workload Driven Partitioning System by using MongoDB Arvind Sahu, Swati Ahirrao

A Fusion Based Visibility Enhancement of Single Underwater Hazy Image Samarth Borkar, Sanjiv V. Bonde

Optimal Bidding and Generation Scheduling of Utilities Participating in Single Side Auction Markets Including Ancillary Services B. Rampriya

Workload Aware Incremental Repartitioning of NoSQL for Online Transactional Processing Applications Anagha Bhunje, Swati Ahirrao

A Novel CAZAC Sequence Based Timing Synchronization Scheme for OFDM System

Anuja Das, Biswajit Mohanty, Benudhar Sahu 66-72

An Improved Greedy Parameter Stateless Routing In Vehicular Ad Hoc Network

Kavita Kavita, Neera Batra, Rishi Pal Singh 73-77

(Continued on next page)

Responsibility of the contents rests upon the authors and not upon the publisher or editors

SLIC Superpixel Based Self Organizing Maps Algorithm for Segmentation of Microarray Images

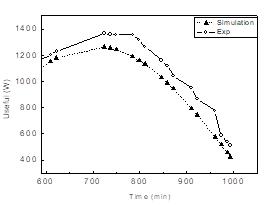

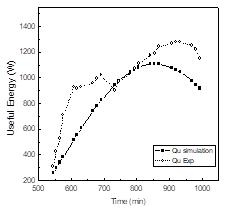

Durga Prasad Kondisetty, Mohammed Ali HussainExperimental and Modeling Dynamic Study of the Indirect Solar Water Heater: Application to Rabat Morocco

78-85

Ouhammou Badr, Azeddine Frimane, Aggour Mohammed, Brahim Daouchi, Abdellah Bah, Halima Kazdaba 86-96

International Journal of Advances in Applied Sciences (IJAAS) Vol. 7, No. 1, March 2018, pp. 1~6

ISSN: 2252-8814, DOI: 10.11591/ijaas v7.i1 pp1-6

Yasin Yousif Al-Alaboosi1, Jenan Abdulkhalq Al-Aboosi2

1Faculty of Electrical Engineering, Universiti Teknologi Malaysia, 81310, Johor, Malaysia

2Faculty of Engineering, University of Mustansiriyah, Baghdad, Iraq

Article Info

Article history:

Received Apr 20, 2017

Revised Feb 23, 2018

Accepted May 27, 2018

Keywords:

Acoustic wave

Absorption

Boric acid Frequency

ABSTRACT

Efficient underwater acoustic communication and target locating systems require detailed study of acoustic wave propagation in the sea. Many investigators have studied the absorption of acoustic waves in ocean water and formulated empirical equations such as Thorp’s formula, Schulkin and Marsh model and Fisher and Simmons formula. The Fisher and Simmons formula found the effect associated with the relaxation of boric acid on absorption and provided a more detailed form of absorption coefficient which varies with frequency. However, no simulation model has made for the underwater acoustic propagation using these models. This paper reports the comparative study of acoustic wave absorption carried out by means of modeling in MATLAB. The results of simulation have been evaluated using measured data collected at Desaru beach on the eastern shore of Johor in Malaysia. The model has been used to determine sound absorption for given values of depth (D), salinity (S), temperature (T), pH, and acoustic wave transmitter frequency (f). From the results a suitable range, depth and frequency can be found to obtain best propagation link with low absorption loss

Copyright © 2018 Institute of Advanced Engineering and Science All rights reserved

Corresponding Author:

Yasin Yousif Al-Alaboosi, Faculty of Electrical Engineering, Universiti Teknologi Malaysia, 81310, Johor, Malaysia

Email: alaboosiyasin@gmail.com

Increased interest in defense applications, off-shore oil industry, and other commercial operations provides a motivation for research in signal processing for the underwater environment. In the underwater environment, acoustics waves are more practical for applications such as navigation, communication, and other wireless applications due to the high attenuation rate of electromagnetic waves. Acoustic propagation is characterized by three major factors: attenuation that increases with signal frequency, time- varying multipath propagation, and low speed of sound (1500 m/s) [1].

The fading in underwater environment depends on the distance, frequency and sensors location, and can be divided into long term fading and short term fading. Long term fading caused by sound propagation is effected by spreading loss and absorption loss which is a function of range and frequency. Short term fading that composed of multipath and Doppler spread which is a random function of distance and time.

As the attenuation of sound in the ocean is frequency dependent, the ocean acts as a low-pass filter for ambient noise and the underwater systems operate at low frequencies, for example, on the order of tens of kHz [1]. No two deployment regions within the ocean with have the same depths ranging from tens of meters to a few kilometers with node placement that varies from one network to another [2].

Journal homepage: http://iaescore.com/online/index.php/IJAAS

Acoustic energy can be reflected and scattered from both the surface and bottom of the ocean as shown in Figure 1., permitting the possibility of more than one transmission path for a single signal [1, 2]. The phenomenon is known as multipath fading. The overlapping of consecutive pulses in digital communication results in intersymbol interference (ISI) that increases the bit-error rate in the received data [3-5]. Therefore, underwater data communication links generally support low data rates [1, 3]. This study aims to show the effects of absorption loss in propagation link between the transmitter and receiver

2.1. Sound Speed

The speed of sound in seawater is a fundamental oceanographic variable that determines the behavior of sound propagation in the ocean. Many empirical formulas have been developed over the years for calculating sound speed using values of water temperature, salinity, and pressure/depth. A simplified expression for the sound speed was given by Medwin [6]:

�� (1)

where c is the speed of sound in seawater, T is the water temperature (in degrees Celsius), S is the salinity (in parts per thousand) and d is the depth (in meters).

2.2. Total path loss

An acoustic signal underwater experiences attenuation due to spreading and absorption. Path loss is the measure of the lost signal intensity from the projector to the hydrophone. Spreading loss is due to the expanding area that the sound signal encompasses as it geometrically spreads outward from the source [7].

���������������������� (��) = �� ∗ 10 log(��) ���� (2)

where R is the range in meters and k is the spreading factor. When the medium in which signal transmission occurs is unbounded, the spreading is spherical and the spreading factor �� = 2; whereas in bounded spreading, it is considered as cylindrical �� = 1. In practice, a spreading factor of �� = 1.5 is often considered [2]

The absorption loss is a representation of the energy loss in form of heat due to viscous friction and ionic relaxation that occur as the wave generated by an acoustic signal propagates outwards; this loss varies linearly with range as follows [7]: ������������������������ (��, �� ) = 10 log���(�� )� ∗ �� ���� (3)

where �� is range in kilometres and �� (��) is the absorption coefficient. The absorption coefficient using different emperical models will be discussed in next section.

Total path loss is the combined contribution of both the spreading and absorption losses [1]

IJAAS Vol. 7, No. 1, March 2018: 1 – 6

The acoustic energy of a sound wave propagating in the ocean is partly:

a. absorbed, i.e. the energy is transformed into heat. b. lost due to sound scattering by inhomogeneities.

On the basis of extensive laboratory and field experiments the following empirical formulae for attenuation coefficient in sea water have been derived. There are three formulaes: Thorp’s formula, SchulkinMarsh model and Fisher-Simmons formula and the frequency band for each one as shown in Figure 2.

R. E. Francois and G. R. Garrison [11] have formulated the equation for the sound absorption in the frequency range 400Hz to 1MHz which includes the contribution of Boric Acid, Magnesium Sulfate and Pure water. The results given by this equation are very close to the practical results.

Figure 2 Diagram indicating empirical formulae for different frequency domains

The absorption coefficient for frequency range 100 Hz to 3 kHz can be expressed empirically using Thorp’s formula [2, 8] which defines α [dB/m] as a function of f [kHz]

The absorption coefficient for frequency range 3 kHz to 500 kHz can be expressed empirically using Schulkin and Marsh model [2,8] which defines α [dB/m] as a function of f [kHz]

(f)

where A and B are constants A = 2.34. 10 6 , B = 3.38. 10 6 , P is the hydrostatic pressure [kg/cm3], fT = 21 9 10[6 � 1520 T+273�] [kHz] is the relaxation frequency.

An alternative expression for the absorption coefficient α(f) [dB/m] is given by the Fisher and Simmons formula in range of frequency 10 kHz- 1 MHz [9] α(f) = � A

where A1 , A2 and A3 are the coefficients represent the effects of temperature, while theP1 , P2 and P3 coefficients represent ocean depth (pressure) and f1 , f2 represent the relaxation frequencies of Boric acid and (MgSO4) molecules [9] As a result, the absorption coefficient is proportional to the operating frequency. 1. Boric acid B(OH)3 ��1 = 8.686 �� . 100 78��ℎ 5

��1 = 1

��1 = 2 8

�� 35 104 1245 ��+273

2. Magnesium Sulphate MgSO4

��2 = 21.44. �� �� . (1 + 0.025��) ��2 = 1 1.37. 10 4 �������� + 6.2.

��2 = 8 17 (108 1990 (�� 273) ) 1 + 0 0018(�� 35)

3. Pure water H2O

= �

=

with f in [kHz], T in [°C], S in [ppt]. And where �������� , pH and c denote the depth in [m], the pH-value and the sound speed in [m/s] respectively.

R. E. Francois and G. R. Garrison [11] have formulated the equation for the sound absorption in the frequency range 400Hz to 1MHz which includes the contribution of Boric Acid, Magnesium Sulfate and Pure water. The results given by this equation are very close to the practical results.

A general diagram showing the variation of α(f) using Francois and G. R. Garrison formula with the three regions of Boric acid, B(OH)3, Magnesium sulphate, MgSO4 and Pure water, H2O is depicted in Figure 4. It can be observed that for the Boric acid region, Attenuation is proportional to �� 2 And for the regions Magnesium sulphate and pure water also Attenuation is proportional to �� 2 . In the transition domains it is proportional to �� .

With fixed the range at 1000 m, the path loss is proportional to the operating frequency and it is increased when the operating frequency increased and the contribution of the absorption term is less significant than the spreading term as shown in Figure 5.

Figure 6 and Figure 7 with fixed the range at 10Km and 100 Km respectively. As range increases and the absorption term begins to dominate, any variations in �� also becomes more significant. For data communication, the changes in the attenuation due to signal frequency are particularly important as the use of higher frequencies will potentially provide higher data rates.

The total path loss versus the range at different frequencies shown in Figure 8. The path losses Increase significantly at high frequency compared with low frequency. Therefore, the attenuation of sound in the sea is frequency dependent, the sea acts as a low-pass filter.

Vol. 7, No. 1, March 2018: 1 – 6

4. General diagram indicating the three regions of B(OH)3, MgSO4 and H2O

5. Total path losses as a function of frequency with range 1 Km

6 Total path losses as a function of frequency with range 10 Km

Study of Absorption Loss Effects on Acoustic Wave Propagation in Shallow (Yasin Yousif Al-Alaboosi)

Figure7 Total path losses as a function of frequency with range 10 Km

Figure 8. Total path losses as a function of range

In this paper, the losses due to phenomena of acoustic wave propagation and absorption have been studied. The absorption coefficients in dB/km vs signal frequency for Francois and G. R. Garrison formula shows that in general �� increases with increasing frequency at any fixed temperature and depth. For very short-range communication, the contribution of the absorption term is less significant than the spreading term. As range increases, the absorption term begins to dominate, any variations in �� also becomes more significant. For data communication, the changes in the attenuation due to signal frequency are particularly important as the use of higher frequencies will potentially provide higher data rates. Nevertheless, no other type of radiation can compete with low-frequency sound waves to communicate over great distances in oceanic environment.

[1] G. Burrowes and J. Y. Khan, Short-range underwater acoustic communication networks: INTECH Open Access Publisher, 2011.

[2] T. Melodia, H. Kulhandjian, L.-C. Kuo, and E. Demirors, "Advances in underwater acoustic networking," Mobile Ad Hoc Networking: Cutting Edge Directions, pp. 804-852, 2013.

[3] B. Borowski, "Characterization of a very shallow water acoustic communication channel," in Proceedings of MTS/IEEE OCEANS, 2009.

[4] P. S. Naidu, Sensor array signal processing: CRC press, 2009.

[5] E. An, "Underwater Channel Modeling for Sonar Applications," MSc Thesis, The Graduate School of Natural and Applied Sciences of Middle East Technical University, 2011.

[6] H. Medwin and C. S. Clay, Fundamentals of acoustical oceanography: Academic Press, 1997.

[7] F. De Rango, F. Veltri, and P. Fazio, "A multipath fading channel model for underwater shallow acoustic communications," in Communications (ICC), 2012 IEEE International Conference on, 2012, pp. 3811-3815.

[8] W. H. Thorp, "Analytic Description of the Low Frequency Attenuation Coefficient," The Journal of the Acoustical Society of America, vol. 42, pp. 270-270, 1967.

[9] F. Fisher and V. Simmons, "Sound absorption in sea water," The Journal of the Acoustical Society of America, vol. 62, pp. 558-564, 1977.

IJAAS Vol. 7, No. 1, March 2018: 1 – 6

International Journal of Advances in Applied Sciences (IJAAS)

Vol. 7, No. 1, March 2018, pp. 7~12

ISSN: 2252-8814, DOI: 10.11591/ijaas.v7.i1.pp7-12

Article Info

Article history:

Received Jun 1, 2017

Revised Jan 5, 2018

Accepted Feb 11, 2018

Keywords:

Artificial intelligence

Classification

Clustering

Data mining

Intrusion detection system

Soft computing

Corresponding Author:

Prabhu Kavin B,

ABSTRACT

Intrusion Detection Systems are playing major role in network security in this internet world. Many researchers have been introduced number of intrusion detection systems in the past. Even though, no system was detected all kind of attacks and achieved better detection accuracy. Most of the intrusion detection systems are used data mining techniques such as clustering, outlier detection, classification, classification through learning techniques. Most of the researchers have been applied soft computing techniques for making effective decision over the network dataset for enhancing the detection accuracy in Intrusion Detection System. Few researchers also applied artificial intelligence techniques along with data mining algorithms for making dynamic decision. This paper discusses about the number of intrusion detection systems that are proposed for providing network security. Finally, comparative analysis made between the existing systems and suggested some new ideas for enhancing the performance of the existing systems

Copyright © 2018 Institute of Advanced Engineering and Science All rights reserved

School of Computing Science and Engineering, VIT University-Chennai Campus, Chennai, 600 127-India

Email: lsntl@ccu.edu.tw

Now a day’s internet has become a part of our life. There are many significant losses in present internet-based information processing systems. So, the importance of the information security has been increased. The one and only basic motto of the information security system is to developed information defensive system to which are secured from an unjustified access, revelation, interference and alteration. Moreover, the risks were related to the confidentiality, probity and availability will have been minimized. The internet-based attacks were identified and blocked using different systems that have been designed in the past. The Intrusion detection system (IDS) is one of the most important sys tems among them because they resist external attacks effectively. Moreover, the IDS act as the wall of defense to the computer systems over the attack on the internet. The traditional firewall detects the intrusion on the system but the IDS performance is much better than the firewalls performance. Usually the behavior of the intruders is different from the normal behavior of the legal user, depending upon the behavior the assumption is made and the intrusion detection is done [1]. The computer system files, calls, logs, and the network events are monitored by the IDS to identify the threats on the hosts of the computer. By monitoring the network pockets, the abnormal behavior is detected. The attack pattern is known by finding the possible attack signatures and comparing them. By the known attack signature, the threats are detected easily by the system where as it cannot detect the unknown attacks [2].

An intelligent IDS acts flexible to increase the accuracy rate of the detection. Intelligent IDS are nothing but intelligent computer programs that are located in host or network. By firing the rules of inference

Journal homepage: http://iaescore.com/online/index.php/IJAAS

and also by learning the environment, the actions to be performed are computed by the intelligent IDS on that environment [1]. The regular network service is disrupted by transmitting the large amount of data to execute a lower level denial of service attacks. To cause a denial of service attack to the user, the receiver’s network connectivity was overwhelmed by creating a specific service request or by sending a large amount of data. The initiation of attack was done by a single sender or the compromised hosts by the attacker and from the latter variant will identify the Distributed Denial of Service (DDoS) [3]. The IDS work same as the Transparent Intrusion Detection System (TIDS) and for the non-distributed attacks the functionality to prevent the attack are provided. The scalability of the traffic processor is achieved by the load balancing algorithm and the system security is achieved by the transparency of nodes. The methodology of anomalybased attack detection is used in high speed network to detect DDoS attacks, in this method the SDN components are coupled with traffic processor [3].

Among many cyber threats Botnet attack was one the most severe cyber threat. In this attack botmaster is a controlling computer that compromised and remote controlled. Huge numbers of bots were spread over the internet and the botmaster uses the botnet by maintaining under its control. The botnet was used for various purposes by the botmaster, in that few are launching and performing of distributed cyber attacks and computational tasks. The IDS built for botnets are rule based and performance dependant. By examining the network traffic and comparing with known botnet signature the botnet was found in a rulebased botnet IDS. However, keeping these rules updated in the increasing network traffic is more tedious, difficult and time-consuming [4]. Machine-learning (ML) technique is a technique used to automate botnet detection process. From previously known attack signatures a model was built by the learning system. The features like flexibility, adaptability and automated-learning ability of ML is significantly better than the rule-based IDSs. High computational cost is needed for the machine learning based approaches [4].

In this paper, we have discussed about the various types of Intrusion Detection Systems which are used data mining techniques. Rest of this paper is organized as follows: Section 2 provides the related works in this direction. Section 3 shows the comparative analysis. Section 4 suggests new ideas to improve the performance of the existing systems. Section 5 concludes the paper.

2. RELATED

This section is classified into two major subsections for feature selection and classification techniques which are proposed in this direction in the past

2.1. Related Works on Feature Selection Methods

Feature selection was the most famous technique for dimensionality reduction. In this the relevant features is of detected and the irrelevant ones are discarded [5]. From the entire dataset the process of selecting a feature subset for further processing was proceeded in feature selection [6]. Feature selection methods are classified into two types, individual evaluation and subset evaluation. According to their degrees of importance feature ranking methods estimate features and allot weights for them. In contrast, build on a some search method subset evaluation methods select candidate feature [4].Feature selection methods is divided into three methods they are wrappers methods, filters methods and embedded methods [5] An intelligent conditional random field-based feature selection algorithm has been proposed in [7] for effective feature selection. This will be helpful for improving the classification accuracy.

In wrapper method optimization of a predictor is involved as a segment of the selection process, where as in filter method selection the features with self determination of any predictor by relying on the general characteristics of the training data is done. In embedded methods for classification machine learning models was generally used, and then the classifier algorithm builds an optimal subset or ranking features [5] Wrappers method and embedded method tried to perform better but having the risk of over fitting when the sample size is small and being very time consuming. On the other hand, filter method was more suitable for large datasets and much faster. Comparing with wrappers and embedded methods filters were implemented easily and has better scale up than those methods. Filter can be able to use as a preprocessing step prior trying to other complex feature selection methods. The two metrics of the filter methods in classification problems are correlation and mutual information, along with some other metrics of the filter method like error probability, probabilistic distance, entropy or consistency [5] In wrapper approach based on specified learning algorithm it selects a feature subset with a higher prediction performance. In embedded as similar as wrapper approach during the learning process of a specified learning algorithm it selects the best feature subset. In the filter approach the feature subset is chosen from the original feature space according to pre-specified evaluation criterions subset using only the dataset. In hybrid approach combining the advantages of the wrapper approach and the filter approach it uses the individualistic criterion and a learning algorithm to rate the candidate feature subsets [8]

IJAAS Vol. 7, No. 1, March 2018: 7 – 12

In high dimensional applications feature selection is very much important. From the number of original features, the feature selection was the combinatorial problem and found the optimal subset was NP-hard. While facing imbalanced data sets feature selection is very much helpful [9]. Rough set-based approach uses attribute dependency to take away the feature selection, which was important. The dependency measure that was necessary for the calculation of the positive region but while calculating it was an extravagant task [6]. Depend on the particle swarm optimization (PSO) and rough sets, the positive regionbased approach has been presented. It is a superintended combined feature selection algorithm and by using the conventional dependency, fitness function was measured for each particle is evaluated. The algorithms figure-out the strength of the selected feature with various consolidations by selecting an attribute with a higher dependency value. If the particle's fitness value is higher than the previous best value within the current swarm (pbest), then the particle value is the current best (gbest). Then its fitness was compared with the population's overall previous best fitness. The article fitness which is better will be at the position of best feature subset. The particle velocities were updated at the last. The dependency of the decision attributes which was on the conditional attributes was calculated by positive region based dependency measure and only because of bottleneck for large datasets it is suitable only for smaller ones [6]

Incremental feature selection algorithm (IFSA) is mainly designed for the purpose of subset feature selection. The starting point is the original feature subset P, in an incremental manner the new dependency function was calculated and required feature subsets are checked. P is the new feature subset if the dependency function P is equal to the feature subset if not it computes a new feature subset. The gradually selected significant features were added to the feature subset. Finally, by removing the redundant features the optimal output is ensured. Then again, the algorithm used the positive region-based dependency measure, and to make it unsuitable for large datasets [6]. Fish Swarm algorithm was started with an initial population (swarm) of fish for searching the food. Here every candidate solution is represented b y a fish. The swarm changes their position and communicates with each other in searching of the best local position and the best global positions. When a fish achieved maximum strength, it loses its normal quality after obtaining the Reduct rough set. After all of the fishes have lost it normal quality the next iteration starts. After the similar feature reduct was obtained under three consecutive iterations or the largest iteration condition was reached, then the algorithm halts. Then equivalent rough set-based dependency measure was used in this algorithm and it suffers from the same problem of the large datasets performance degradation [6]

Correlation-based Feature Selection is a multivariate subset filter algorithm. A search algorithm united with an estimation function that was used to evaluate the benefit of feature subsets. The implementation of CFS used the forward best first search as its searching algorithm. Best first search is one of the artificial intelligence search scenario in which backtracking was allowed along with the search path. By making some limited adjustment to the current feature subset it moves through the search space. This algorithm can backtrack to the earlier subset when the explored path looks unexciting and advance the search from there on. Then the search halted, if five successive fully expanded the subsets shows no development over the present best subset [5].

The objective of SRFS is to find the feature subset S with the size d, which contains the representative features, in which both the labeled and unlabeled dataset are exploiting. In this the feature relevance is classified in to three disjoint categories: strongly relevant, weakly relevant and irrelevant features [10-12]. A strong relevant feature was always basic for an optimal or suboptimal feature subset. If the strong relevant feature is evacuated, using the feature subset the classification ability is directly influenced. Except for an optimal or suboptimal feature subset at certain conditions, a weak relevant feature is not always necessary. Irrelevant feature it only enlarges search space and makes the problem more complex, and it doesn't provide any information to improve the prediction accuracy so it is not necessary at any time. Hence all features of strongly relevant and subset features of weakly relevant and no irrelevant features should be included by the optimal feature subset. An in addition supervised feature selection method that uses the bilateral information between feature and class that tend to find the optimal or suboptimal features over fitted to the labeled data, when a small number of labeled data are available. In this case, data mitigation may be able to occur in this problem on using unlabeled data. Therefore, relevance gain considering feature relevance in unlabeled dataset, and propose a new framework for feature selection on removing the irrelevant and redundant features called as Semi-supervised Representatives Feature Selection algorithm is defined. SRFS is a semi-supervised filter feature selection based on the Markov blanket [8]

2.2.

The combined response composed by the multiple classifiers into a single response was the ensemble classifier. Even though many ensemble techniques exist, for a particular dataset it was hard to found suitable ensemble configuration. Ensemble classifiers are used to maximize the certainty of several classification tasks. Many methods have been proposed, with mean combiner, max combiner, median

combiner, majority voting and weighed majority voting (WMV) whereas the individual classifiers can be connected using any one of these methods [13]. To solve classification and regression problems support vector machines (SVM) is an effective technique. SVM was the implementation of Vapnik’s Structural Risk Minimization (SRM) principle which has comparatively low generalization error and does not suffer much from over fitting to the training dataset. When a model performs poor and not located in the training set then it was said to be over fit and has high generalization error [13]. Recently a significant attention was attracted by the multi-label classification, which was motivated by more number of applications. Example include text categorization, image classification, video classification, music categorization, gene and protein function prediction, medical diagnosis, chemical analysis, social network mining and direct marketing and many more examples found. To improve the classification performance by the utilization of label dependencies was the key problem in multi-label learning and how it is motivated by which number of multi-label algorithm that have been proposed in recent years (for extensive comparison of several methods). The progress in the MLC in recent time was summarized. Feature space Dimensionality reduction, i.e. reducing the dimensionality of the vector x is one of the trending challenges in MLC. The dimensionality of feature space can be very large and this issue in practical applications is very important [14]. Many intelligent intrusion detection systems have been discussed in [1] and also briefly described the usage of artificial intelligence and soft computing techniques for providing network security. Moreover, a new intelligent agent based Multiclass Support Vector Machine algorithm which is the combination of intelligent agent, decision tree and clustering is also proposed and implemented. They proved their system was better when compared with other existing systems. Recently, temporal features are also incorporated with fuzzy logic for making decision dynamically [15]. They achieved better classification accuracy over the real time data sets.

Clustering techniques are very useful for enhancing the classification accuracy. Many clustering algorithms have been used in various intrusion detection systems in the past for achieving better performance. Clustering techniques are useful in both datasets such as network trace data and bench mark dataset for making effective grouping [16], [17]. Outlier detection is also useful for identifying the unrelated users in a network. This outlier detection technique is used for identifying the outliers in a network. It can be applied in real network scenario and both datasets such as network trace dataset and the benchmark dataset. Moreover, soft computing techniques are used in these two approaches for making final decisions over the datasets. The existing works [18], [19] achieved better detection accuracy.

Most of the Intrusion Detection Systems have been used data mining techniques such as Clustering, Outlier detection, Classification and data preprocessing. Here, data preprocessing techniques are used to enhance the classification accuracy. Feature selection methods are used to reduce the classification time. This paper describes various types of feature selection which are proposed in this direction in the past. The average performance of the existing classification algorithms is 94% and it has improved into 96% when applied data preprocessing. In addition, the average detection accuracy is reached to 99% when used clustering or outlier detection techniques. Table 1 shows the performance comparative analysis

Table 1 Comparative Analysis

1 Srinivas Mukkamala et al [20]

2 Ganapathy et al [21]

3 Ganapathy et al [1]

4 Soo-YeonJi et al [2]

5 Omar Y. Al-Jarrah et al [4]

6 Abdulla Amin Aburomman et al [5]

7 Abdulla Amin Aburomman et al [5]

8 VinodkumarDehariya et al [16]

9 UjjwalMaulik et al [16]

10 ChenjieGu et al [16]

11 Ganapathy et al [11]

12 J. Ross Quinlan et al [12]

13 Ernst Kretschmann et al [16]

14 GuoliJi et al [18]

15 Ganapathy et al [15]

16 Ganapathy et al [19]

IJAAS Vol. 7, No. 1, March 2018: 7 – 12

From Table 1, it can be seen that the performance of the method RDPLM perform well than the existing methods and the existing classifier SVM achieved very less detection accuracy than others. This is due to the use of various combinations of methods and the use of intelligent agents.

Figure 1 demonstrates the performance analysis in graph between the top five methods which are proposed in the past by various researchers. Here, we have considered the same set of records for conducting experiments for finding the classification accuracy. Classification accuracy of various methods is considered for comparative analysis.

From figure 1, it can be observed that the performance of the method RDPLM is performed well when it is compared with existing methods. Moreover, the IGA-NWFCM method achieves very less detection accuracy than the other existing algorithms which are considered for comparative analysis

The performance of the existing systems can be improved by the introduction of intelligent agents and soft computing techniques like fuzzy logic, neural network and genetic algorithms for effective decision over the dataset. In this fast world, time and space are also very important to take effective decision. Finally, can introduce a new system which contains new intelligent agents, neural network for training, effective spatio-fuzzy temporal based data preprocessing method and fuzzy temporal rules can be used for making effective decision and also can detect attackers effectively. This combination is able to provide better performance.

5. CONCLUSION

An effective survey made in the direction of data mining technique-based intrusion detection systems. Many feature selection methods have been discussed in this paper and their importance are highlighted. Classification, Clustering and outlier detection techniques are explained in this paper and also explained how much it is helpful for enhancing the performance. Finally, suggestion also proposed in this paper based on the comparative analysis of the existing systems

[1]. S. Ganapathy, K. Kulothungan, S. Muthurajkumar,M. Vijayalakshmi, P.Yogesh, A.Kannan, “Intelligent feature selection and classification techniques for intrusion detection in networks : a survey”, EURASIP Wireless Journal of Communications and Networking, vol. 2013, pp. 1–16, 2013.

[2]. S. Y. Ji, B. K. Jeong, S. Choi, and D. H. Jeong, “A multi-level intrusion detection method for abnormal network behaviors,” J. Netw. Comput. Appl., vol. 62, pp. 9–17, 2016.

[3]. O. Joldzic, Z. Djuric, and P. Vuletic, “A transparent and scalable anomaly-based DoS detection method,” Comput. Networks, vol. 104, pp. 27–42, 2016.

[4]. O. Y. Al-Jarrah, O. Alhussein, P. D. Yoo, S. Muhaidat, K. Taha, and K. Kim, “Data Randomization and ClusterBased Partitioning for Botnet Intrusion Detection,” IEEE Trans. Cybern., vol. 46, no. 8, pp. 1796–1806, 2016.

[5]. A. A. Aburomman and M. Bin Ibne Reaz, “A novel SVM-kNN-PSO ensemble method for intrusion detection system,” Appl. Soft Comput. J., vol. 38, pp. 360–372, 2016.

[6]. P. Teisseyre, “Neurocomputing Feature ranking for multi-label classi fi cation using Markov networks,” vol. 205, pp. 439–454, 2016.

[7]. S Ganapathy, P Vijayakumar, P Yogesh, A Kannan, “An Intelligent CRF Based Feature Selection for Effective Intrusion Detection”, International Arab Journal of Information Technology, vol. 16, no. 2, 2016.

[8]. V. Bolón-Canedo n, I. Porto-Díaz, N. Sánchez-Maroño, A. Alonso-Betanzos, “A framework for cost-based feature selection,” Pattern Recognition, Elsevier, vol. 47,pp. 2481–726, 2014.

[9]. M. S. Raza and U. Qamar, “An incremental dependency calculation technique for feature selection using rough sets,” Inf. Sci. (Ny)., vol. 343–344, pp. 41–65, 2016.

[10]. L. Yu, H. Liu, “Efficient feature selection via analysis of relevance and redundancy”, The Journal of Machine Learning Research, vol.5, pp. 1205–1224, 2004.

[11]. G. H. John, R. Kohavi, K. Pfleger, et al., “Irrelevant features and the sub-set selection problem”, in: Machine Learning: Proceedings of the Eleventh International Conference, pp. 121–129, 1994.

[12]. B. Grechuk, A. Molyboha, M. Zabarankin, “Maximum entropy principle with general deviation measures”, Mathematics of Operations Research, vol.34, no. 2, pp. 445–467, 2009.

[13]. Q. Li, Z. Sun, Z. Lin, and R. He, “Author ’ s Accepted Manuscript Transformation Invariant Subspace Clustering Reference : To appear in : Pattern Recognition,” 2016.

[14]. S. Maldonado, R. Weber, and F. Famili, “Feature selection for high-dimensional class-imbalanced data sets using Support Vector Machines,” Inf. Sci. (Ny)., vol. 286, pp. 228–246, 2014.

[15]. S Ganapathy, R Sethukkarasi, P Yogesh, P Vijayakumar, A Kannan, “An intelligent temporal pattern classification system using fuzzy temporal rules and particle swarm optimization”, Sadhana, vol. 39, no. 2, pp. 283-302, 2014.

[16]. S Ganapathy, K Kulothungan, P Yogesh, A Kannan, “A Novel Weighted Fuzzy C–Means Clustering Based on Immune Genetic Algorithm for Intrusion Detection”, Procedia Engineering, vol. 38, pp. 1750-1757, 2012.

[17]. K Kulothungan, S Ganapathy, S Indra Gandhi, P Yogesh, A Kannan, “Intelligent secured fault tolerant routing in wireless sensor networks using clustering approach”, International Journal of Soft Computing, vol. 6, no. 5, pp. 210-215, 2011.

[18]. S.Ganapathy, N.Jaisankar, P.Yogesh, A.Kannan, “ An Intelligent System for Intrusion Detection using Outlier Detection”, 2011 International Conference on Recent Trends in Information Technology (ICRTIT), pp. 119-123, 2011.

[19]. N Jaisankar, S Ganapathy, P Yogesh, A Kannan, K Anand, “An intelligent agent based intrusion detection system using fuzzy rough set based outlier detection”, Soft Computing Techniques in Vision Science, pp. 147-153, 2012.

[20]. A. H. Sung and S. Mukkamala, “Identifying Important Features for Intrusion Detection Using Support Vector Machines and Neural Networks", Department of Computer Science New Mexico Institute of Mining and Technology, pp. 3–10, 2003.

[21]. S. Ganapathy, P. Yogesh, and A. Kannan, “Intelligent Agent-Based Intrusion Detection System Using Enhanced Multiclass SVM,” vol. 2012, 2012.

IJAAS Vol. 7, No. 1, March 2018: 7 – 12

International Journal of Advances in Applied Sciences (IJAAS)

Vol. 7, No. 1, March 2018, pp. 13~20

ISSN: 2252-8814, DOI: 10.11591/ijaas v7.i1 pp13-20

Article Info

Article history:

Received May 22, 2017

Revised Dec 20, 2017

Accepted Feb 11, 2018

Keywords:

Dependency

Package

Reverse engineering

Software architecture

Software visualization

Corresponding Author:

ABSTRACT

This manuscript discusses the visualization methods of software systems architecture with composition of reverse engineering tools and restoration of software systems architecture. The visualization methods and analysis of dependencies in software packages are written in Java. To use this performance graph, it needs to describe the relationships between classes inside the analyzed packages and between classes of different packages. In the manuscript also described the possibility of tools to provide the infrastructure for subsequent detection and error correction design in software systems and its refactoring

Copyright © 2018 Institute of Advanced Engineering and Science

All rights reserved

Ahmad Abdul Qadir Alrababah, Faculty of Computing and Information Technology in Rabigh, King Abdulaziz University, Rabigh 21911, Kingdom of Saudi Arabia. Email: aaahmad13@kau.edu.sa

1. INTRODUCTION

The task of reverse engineering in program system is very important in the development of a software system using libraries with source codes. Building and visualizing the UML model for the newly developed Program system [1], and for the libraries it uses, greatly simplifies the understanding of their structure and functionality [2-3], the choice of the required version and developer of the library. Ways construction and visualization of the software system model were considered in previous works of the author [4].

The size of information obtained in solving these problems can be too large for their perception by the user, reception and visualization of all relationships can require too much time [5-6]. Therefore, visualization of the software system is only necessary for the most significant part of its architecture. For the constructed UML-model it is necessary to calculate and visualize the values of object-oriented metrics allowing evaluating the design of qualified systems [2], [5], [7]. In previous works, the methods of visualizing the system architecture and results of quality visualization were measured using Object-Oriented metrics [8-9]. Also an overview and analysis of object-oriented metrics was made, the simplest objectoriented metrics for analysis and design of individual classes, and then it was considered the class - structure metrics, allowing assessing the quality of the design in the class structure [10-11].

This article discusses system visualization with using matrices of incoming and outgoing packet dependencies, allowing analyzing existing dependencies between classes within a package, and between classes of different packages. Obtaining such Information allows us to understand the reason for the emergence of dependencies between packages that determine architecture of the system, and also if necessary refactoring systems [12], [13], [11].

Journal homepage: http://iaescore.com/online/index.php/IJAAS

2.1. Problems in Understanding Structure And Dependence Packages

The structuring of complex software systems by packages can be affected by a variety of factors. Packages can identify system code modules that will be used to propagate the system. Packages may reflect the ownership of the program code obtained from external developers. Packages can reflect the organizational structure of the team that developed the system, and the architecture of the system or the partitioning of the system into levels. At the same time, the correct structuring system should minimize dependencies between packets.

Errors in designing of system packages structure often affect the system as well. A recursive dependency of a package on other packages requires loading the code of these packets into memory devices with limited resources. To solve this problem it is necessary to apply package restructuring and to identify the package classes that have the maximum number of incoming and outgoing relationships to classes of other packages, also to determine the possibility of class moving to an external package that minimizes the dependency between packets.

Correspondingly important is the analysis of the relationships between the classes located inside the packages. Minimization dependencies between classes of large packages will allow you to restructure the package, breaking it into several smaller packages. Total number of dependencies between packages of the system in this case can be decreased. To solve the problems of package restructuring as special visualization packages, allowing analyzing in detail the dependencies between packages and classes of packages. In previous works it was examined the analysis and visualization of dependencies of packets using matrices of the structure in the reverse engineering tool. This method of analyzing system packages allows simplifying the system structuring to levels and simplifying the extraction of the system architecture. To analyze and visualize the relationships between pair's packages of the system, a detailed visualization of the dependency matrix cells was used, showing relationships between a pair of packets represented by this cell. However, often the information in the matrix cells of structural dependencies is not enough. To remove the cyclic dependencies between packets and reducing the number of relationships between systems packages, matrices can be useful, showing the reasons for the dependency of the package with all other packages of the system. To understand the interrelationships of packages, an essential visualization of packet metrics can help. Visualization of package nesting and the impact of such nesting on the software system architecture were discussed. Visualization and analysis of packet coupling, as well as joint the use of packets by classes was considered. Analysis of software system architecture with the help of matrices of structural dependencies is considered in the work.

2.2. Visualization for Understanding the Role of The Package in the System

2.2.1. Choosing the Way to Visualize the Package

Although the visualization of all the relationships of the package may need to show a very large amount related to the information package, however, it should simplify the analysis of the package. For visualization graphs, the most widespread are the visualization in the form of nodes and edges between nodes, and also visualization in the form of matrices. As was noted in [14 ], the representation in the form of knots and edges is easier to read and intuitively understood with a small number of nodes and edges in the graph. But the matrix representation has no problems associated with crossing the edges of the graph and superimposing nodes graph with a large number of connections between the bonds. Therefore, the matrix representation is more suitable for visualization of complex graphs.

2.2.2. Basic Principles of Package Visualization

For the detailed package visualization regardless of the graph dependency complexity offered, use the matrix representation of the graph. The package is represented by a rectangle, whose sides form contact areas called surfaces. Each row / column represents the inner class of the analyzed package or the class of the external package with which interacts inner class of the analyzed package. The surface has a heading representing the relationship between the inner classes of the packet under consideration, and the body representing the interaction of internal classes for the analyzed package with external classes. To represent incoming and outgoing dependencies of the package are used separate types of packages.

Consider the package dependency matrix in more detail. Figure 1 shows an example of visualization packages and their dependencies using nodes and edges. The P1 package shown in this figure will be then represented by the matrices of the incoming and outgoing dependencies of this package.

A group of matrix rows related to a single packet form the surface of the bag. The first surface, related to the packet under consideration, is the package header. The E1 class refers to the inner classes C1 and D1. In classes B1, H1, I1, and F1, no classes of the P1 package refer, because there are no completed cells in the corresponding rows of these classes. The classes from the surface of the packet P3 is referenced from the packet P1 under consideration. These are classes A3, B3 and C3. The surface of the packet P3 is located in the matrix above the surfaces of the packages P2 and P4, since it includes more classes than th e surfaces of packages P2 and P4.

To order the columns, surfaces and lines in surfaces, a single rule is used. Closer to the header are the surfaces packages that have the most links. Inside the surface closer to the header there are those classes on which the most links from the classes of the package considered. The background color brightness for the class name specified for the referring class shows how many links comes from the referring class in the cell of the column, in the package represented by the cell surface. Dark cell has more links. Both the horizontal position of the class and its brightness represent the number of Links. However, the position shows the number of references for the entire matrix, and the brightness for a particular surface of the matrix. To separate the classes represented in the matrix into categories, the class color can be used. Matrix color might be used to separate the classes of the classes that have links and do not have a link. Non-referenced classes are painted in lighter colors, referencing in a darker color. In the matrix body, it is possible to allocate color packets and classes that are not included in the analyzed application. For example, in this way, classes can be painted in packages from libraries received from external developers. Consider now the package matrix showing the incoming dependencies of the package. For this purpose, a similar matrix with slight differences: the surfaces of the matrix of incoming dependencies are located horizontally. Thus, it will be easier to distinguish between matrices of incoming and outgoing dependencies, if they are located on the screen side by side

3.1. Analysis of the Packet Structure with the Matrix Package

Now we illustrate the use of the matrix package to study the matrix structure and examine package dependencies. To analyze the packet structure of a matrix package, it is a necessitating for selecting/marking classes or packages (surfaces representing package). When we select a class, the class nodes and associated links are colored red. Also, the most happens when the class is marked with the specified color at the request of the instrument user. Selecting/marking a surface means that all relationships are selected/ marked in the same way which enters the package represented by this surface. Figure 2 shows the matrix of output dependencies of the protocols package with the class which selected in the matrix. The red color in Figure 2 shows both the HTTP socket class in the first line of the first column, and classes to which it refers (the second column of the matrix of output dependencies). An example of marking classes in the matrix of outgoing dependencies of the network kernel package is shown in the figure 3. Blue in Figure 3 is the socks socket class, green–class Internet configuration, and the crimson color-the class password. Also, in Figures 2 and 3 were the surface of the packages is marked with an orange color as network kernel, and the surface of the protocols package is marked in yellow. Blue color is marked classes that do not belong to the application being analyzed (classes from external libraries).

Figure 2. Visualization of the selected class HTTP socket in matrix package protocols

Figure 3. Classes in the matrix of output dependencies of the network kernel package

Packet classes and surfaces are represented in the dependency matrices in a compact form. More Detailed about the class or package appears as a tooltip, as shown in Figure 4. The user tool can filter information with the displayed matrix of the packet. It is possible to display links referring only to the analyzed application, or to the described group packages. After excluding all classes of libraries used, the array of incoming dependencies of the package protocols takes a compact view, as shown in Figure 5

4. A pop-up tip for the class HTTP Socket in matrix outgoing dependencies of the packet network kernel

5. The matrix of incoming dependencies of the Protocol package after filtering the classes

The user can also use the filter to remove classes of unrelated relationships with external class packages or hide in the header of the matrix package, concentrating on analysis only dependencies between classes of different packages.

3.2. Analysis of the Package Using a Matrix of Output Package Dependencies

Consider how the matrix of outgoing dependencies can be used for packet analysis. Such a quick look through the matrix on the "draft" package allows evaluating with the implementation of the package.

3.3. Analysis of Large Packages

Consider packages that have a large size matrix of outgoing dependencies. The reasons for this may be different. Figure 6 shows three packets with a large packet matrix. The HTML parser entities package has a large number of its own classes, because it has a large Header. On the other hand, the remote directory and protocols packages have a large matrix, because contains a large number of references to classes in other packages (a large body) with a relatively small header of the matrix. A large number of matrix surfaces

characterize closely coupled packages. Thus, the last two packages have a strong connection with their external environment.

3.4. Small Packages with Complex Implementation

The TelNetWordNet class, shown in Figure 7, has only four classes of its own.

7. TelNetWordNet-a small package with a complex implementation

In addition, the package has a large matrix body and a large number of matrix surfaces. Based on this, we can conclude that loading this small package into memory will also require loading the large number code of their packages. This may lead to problems on devices with a small memory. The remote directory class also has a small number of classes. However, its implementation is much more complicated Implementation of the TelNetWordNet class, since the dependencies of its classes are distributed among the larger number of outer classes and surfaces.

3.5. Sparse Packages

The package Html Parser Entities in Figure 6 and the package TelNetWordNet in Figure 7 have sparse Headers. This means that the coupling between classes inside these packages is small. It is possible that they are candidates for the decomposition of these packages (Distribution of package classes for other packages). At the same time, it can be noted that the package Html Parser Entities has not only a sparse title, but also a sparse body. For this reason its decomposition is more probable.

3.6.

The URL packet shown in Figure 8 has a large number of outgoing dependencies filled nodes. However, Figure 8 also shows that the URL package has many references to external packages in the body. Here, it's more important to link to classes of external packages than to classes inside packages

3.7. The Choice of the Position of the Class

Using the outbound dependency matrix, you can easily find classes for which you have unsuccessfully selected containing their package. So shown by the crimson rectangle in Figure 9, the password class does not have neither incoming nor outgoing dependencies within the header of the NetworkKernel matrix. Thus, the identified class becomes a candidate for moving it into packages; with classes which it has such dependencies. Moving a class to a using package will increase the cohesion of both packages.

Figure 9. An incorrect position selection of the password class in the network kernel package

3.8. Analysis of the Package Using the Matrix of Incoming Dependencies

The incoming dependencies matrix shows how the package is used by other application packages. When analysis of packages is using such matrices, you can identify, for example, packet templates.

3.9. Leaf Packages and Insulated Bags

Figure 10 shows the mail reader filters package list referenced by only one package mail reader. Also, using the matrix of incoming dependencies of the package, it is easy to identify in the system such fully isolated packages, as shown in Figure 10 of the Squeak Page package.

3.10.

To illustrate coupled Packages, consider the matrix of incoming dependencies of the kernel package, shown in Figure 11. The classes that have the most links are classes that are in the body packets with large surfaces, like socket and net name resolver located in the top two rows of the matrix. However, the string for the net name resolver class is darker than the string of the socket class. This means that the net name resolver class has more internal incoming dependencies than the socket class. And the socket class has more incoming external dependencies, since the brightness is represented by the number of references to the package classes in the body of the packet.

3.11. Related Packages

To assess the impact of a change in one package on another package, it is often necessary to identify system closely related packages. A sign of the close cohesion of packets is a large surface package in the body of the packet required, close to the header of the packet. Example, closely related packets are shown in Figure 10 with the matrix of incoming dependencies for the package mail reader filter. Closely related package, in accordance with the above criteria is a mail reader package. Another example of close bundling of packets is shown in the Figure 11. The Protocols package is closely related to the Kernel package. Changes in the Kernel package will significantly affect to classes in the protocols package.

3.12. Kernel Packages

When analyzing a software system, it is important to identify packages that define the core of the system. These are packages from which depends on most other packages of the system. Figure 12 shows two packages of URL and protocols that are such a kernel. Identify the kernel packages of the system might also be by the largest number of surfaces in the matrix of incoming dependencies for these packages.

The manuscript discusses the analysis and visualization of dependencies between classes in software systems packages written in Java language. This task is very important for restoration architecture of the software system when solving the problem of reverse engineering. The manuscript considers methods for visualizing such dependencies using a matrix representation of a graph describing the relationships between the classes within the parsed package, and the relationships between the classes of the different packages. Showing use this method to visualize the dependencies between packages in the inverse tool design and restoration of architecture. This tool is based on the UML modeling language and it is implemented as an extension of the eclipse environment. The manuscript is a continuation of the publication cycle on software engineering and application of the modeling language UML.

I thank King Abdulaziz University-KSA for providing me with needed resources for carrying out this work.

[1] S. Ducasse, M. Lanza, L. Ponisio, Butterflies: “A visual approach to Characterize packages, in: Proceedings of the 11th IEEE International Software Metrics Symposium (METRICS'05)”. IEEE Computer Society. pp. 70-77, 2005.

[2] Colin Ware, Information Visualization, Third Edition: Perception for Design (Interactive Technologies) 3rd Edition, Morgan Kaufmann, 2012.

[3] Dhanji R. Prasanna, “Dependency Injection: With Examples in Java, Ruby, and C#” Manning Publications. 2009.

[4] Saikat Das Gupta, Rabindra Mukhopadhyay, Krishna C. Baranwal, Anil K. Bhowmick, Reverse Engineering of Rubber Products: Concepts, Tools, and Techniques. CRC Press, 2013.

[5] Stephanie D. H. Evergreen, Effective Data Visualization: The Right Chart for the Right Data 1st Edition SAGE Publications, Inc, 2016.

[6] H. Abdeen, S. Ducasse, D. Pollet, I. Alloui, Package fingerprints: A visual summary of package Interface usage, Inf. Softw. Technol. 52 (12) 1312-1330, 2010.

[7] Bruce Dang, Alexandre Gazet, Elias Bachaalany, Sebastien Josse, Practical Reverse Engineering: x86, x64, ARM, Windows Kernel, Reversing Tools, and Obfuscation 1st Edition. Wiley, 2014.

[8] Addy Osmani, Learning JavaScript Design Patterns: A JavaScript and jQuery Developer's Guide, O'Reilly Media, 2012.

[9] H. Abdeen, I. Alloui, S. Ducasse, D. Pollet, M. “Suen, Package reference fingerprint: a rich and Compact visualization to understand package relationships”, in: Europe an Conference on Software Maintenance and Reengineering (CSMR), IEEE Computer Society Press, pp.213-222, 2008.

[10] Vinesh Raja and Kiran J. Fernandes, Reverse Engineering: An Industrial Perspective (Springer Series in Advanced Manufacturing), Springer; Softcover reprint of hardcover, 2010.

[11] M. Ghoniem, J. D. Fekete, P. Castagliola, “A comparison of the read ability of graphs using Node-link and matrix-based representations”, in: Proceedings of the IEEE Symposium Information Visualization, INFOVIS'04, IEEE Computer Society, Washington, DC, USA, Pp.17-24, 2004.

[12] M. Lungu, M. Lanza, T. Girba. “Package patterns for visual architecture recovery”, in: Proceedings of CSMR 2006 (10th European Conference on Software Maintenance and Reengineering). IEEE Computer Society Press Los Alamitos, CA, pp. 185-196, 2006.

[13] Kyran Dale, Data Visualization with Python and JavaScript: Scrape, Clean, Explore & Transform Your Data 1st Edition, O'Reilly Media, 2016.

[14] N. Sangal, E. Jordan, V. Sinha, D. Jackson. “Using dependent models to Manage complex software architecture”, in: Proceedings of OOPSLA'05, pp. 67-176, 2005

IJAAS Vol. 7, No. 1, March 2018: 13 – 20

International Journal of Advances in Applied Sciences (IJAAS)

Vol. 7, No. 1, March 2018, pp. 21~28

ISSN: 2252-8814, DOI: 10.11591/ijaas.v7.i1.pp21-28

Article Info

Article history:

Received May 23, 2017

Revised Dec 27, 2017

Accepted Feb 18, 2018

Keywords:

Data partitioning

Distributed transaction

Perofrmance

Scalable Workload-Driven

TPC-C benchmark

Corresponding Author:

Aakanksha Jumle,

ABSTRACT

Cloud computing offers various and useful services like IAAS, PAAS SAAS for deploying the applications at low cost. Making it available anytime anywhere with the expectation to be it scalable and consistent. One of the technique to improve the scalability is Data partitioning. The alive techniques which are used are not that capable to track the data access pattern. This paper implements the scalable workload-driven technique for polishing the scalability of web applications. The experiments are carried out over cloud using NoSQL data store MongoDB to scale out. This approach offers low response time, high throughput and less number of distributed transaction. The result of partitioning technique is conducted and evaluated using TPC-C benchmark

Copyright © 2018 Institute of Advanced Engineering and Science All rights reserved

Computer Science Symbiosis Institute of Technology, Lavale, Pune, India.

Email: aakanksha.jumle@sitpune.edu.in

In present world, there is huge widening of data due to storage, transfer, sharing of structured and unstructured data which inundates to business. E-commerce sites and application produce huge and complex data which is termed as Big Data. It is mature term that evoke large amount of unstructured, semi -structured and structured data. The cloud computing furnish with the stable platform for vital, economical and efficient organisation of data for operating it.

In order to handle and store these huge data, a large database is needed. To cope up with largescale data management system (DBMS) would not support the system. Relational databases were not capable with the scale and swiftness challenges that face modern applications, nowhere they built to take benefit of the commodity storage and computing the power available currently.

NoSQL is called as Not only SQL as it partially supports SQL. These data stores are rapidly used in Big Data and in many web applications. NoSQL is basically useful for the data which is unstructured to store. Unstructured data is growing rapidly than structured data and does not fit the relational schemas of RDBMS. Hence the NoSQL [1] data stores get introduced with high availability, high scalability and its consistency. NoSQL database is widely used to process heavy data and web application. Nowadays most of the companies are shifting to NoSQL database [1-3] for their flexibility and ability to scale out, to handle bulky unstructured data in contrast with relational database. NoSQL cloud data stores are developed that are document store, Key-value, column family, graph database, etc. NoSQL data stores comprise its advantages for coping with the vast load of data with the aid of scale out applications. The techniques which are in use are classified into static [4-5] and dynamic partitioning [6] systems. In static partitions, the related data item are put on single partition for accessing the data, and once the partitions formed do not change further. The advantage of static partition creation, no migration of data is done so as the cost of data migration is negligible.

Journal homepage: http://iaescore.com/online/index.php/IJAAS

The dynamic partition system, the partitions are formed dynamically in which the partitions changes frequently so as to reduce the distributed transaction. As the partitions changes, the chances of migrating the data is high so as the cost of migration.

Taking into consideration of the pros and cons of the static and dynamic partitioning systems, scalable workload driven data partitioning technique is derived. The main aim of this techniques is to reduce the distributed transaction, making the database scalable and also the performance the application to get improved. The scalable algorithm tracks the data access pattern that is which warehouse is supplying to which other requested warehouse and also the transaction logs are analysed. The proposed system frames, the partition which are formed uses NoSQL database that is MongoDB using this scalable workload-driven technique which fall neither under static nor dynamic system. The transaction logs and data access patter are monitored and partitions are formed periodically

The essential contributions of this paper are structured as follows:

a. The design of scalable workload-driven partitioning [2] which are stand on data access pattern and traces the logs, are studied and implemented in MongoDB by forming 5 partitions.

b. The TPC-C 9 tables are mapped into different 9 collections in MongoDB and transaction are carried out on 5 partitions which are statically placed. This static approach increases the distributed transaction and the performance of the application is decrease.

c. The TPC-C 9 tables are then mapped into a single collection, the scalable workload-driven technique is used to partition the data across the 5 partition and transactions are carried over those partitions. These will reduce the distributed transaction. The performance in the terms of response time is low and throughput of the system is high as compared to above case.

d. The results of both above case are taken on local machine and also on EC2 instance to check the performance over the cloud.

The rest of this paper is as follows. The section 2 is the background of the paper consist of the related work done by the researches are explained in brief. The section 3 describes the central idea of the work done which includes design of the scalable workload driven algorithm is described. Also, the architecture of the proposed system. Mapping of the TPC-C tables into MongoDB collections are explained in the section 4. Following section 5, with the implementation of the work. Section 6 states the results of the work done. Finally, section Conclusion, finalize the paper.

Data partitioning means physically partitioning the database which will help to scale out the database to get available all the time. A lot of work is done on the metrics for data partitioning to give the high performance of the application to be scalable and restrict the transactions on a single partition. Some of them are listed below.

The prototype is built with benchmark tool TPC-C which uses OLTP transaction for web applications. These OLTP transaction requires quick response from the applications in recent times. TPC-C benchmark is a popular benchmark which is an Online Transaction processing workload for estimating the performance on different hardware and software configuration.

The originator Sudipto Das open up with the technique ElasTraS [4] which express Schema level partitioning for gaining scalability. The intent behind schema level partitioning is to collect alike data into the same partition, as the transactions only access the data which is needed from a large database. A major goal of ElasTraS is to have elasticity and also to reduce cost operation of the system during failure.

The author Cralo Curino has put forward, Schism: A Workload-Driven Approach to Database Replication and Partitioning [7] to improve the scalability of shared nothing distributed databases. It intends to deprecate the distributed transactions while making balanced partitions. For transactional loads graph partitioning technique is used to balance the data. Data items which are accessed in graph partitioning by the transactions are kept on a single partition.

J. Baker et al., presented Megastore [5] in which data is partitioned into a compilation of entity groups. An entity group is a selection of related data items and is put on a single node so that the data items required for enhancing the approach are accessed from a single node. Megastore aims to make the system to have: Megastore provides synchronous replication but delays the transaction.

The author Xiaoyan Wang has presented, Automatic Data Distribution in Large-scale OLTP Applications [8]. The data is divided into two categories original data and incremental data. For original data that is old data, BEA (Bond Energy Algorithm) is applied on it and for incremental data that is progressive data, online partitioning will be invoked where partitions are formed on the base of kNN (k-Nearest Neighbour) clustering algorithm. Data placements allocate these data to the partitions by genetic algorithm.

IJAAS Vol. 7, No. 1, March 2018: 21 – 28

The author Francisco Cruz put forward Table Splitting Technique [1] which considers the system workload. A relevant splitting point is the point that split the region into two new regions with likely loads. The split key search algorithm satisfies the above statement. The algorithm estimates the splitting point when it receives the key from the first request of each region. For each request, if the split key differs then algorithm changes the splitting point.

The author Curino, suggested the Relational Cloud [9] in, which scalability is reached with the workload-aware approach termed as graph partitioning. In graph partitioning, the data items, which are frequently accessed by the transactions are kept on a single partition. Graph-based partitioning method is used to spread large databases across many machines for scalability. The notion of adjustable privacy showing the use of different levels of encryption layered can enable SQL queries to be processed over encrypted data.

The author Miguel Liroz-Gistau [6] has proposed a divergent way of dynamic partitioning technique called DynPart and DynPartGroup algorithm in Dynamic Workload-Based Partitioning Algorithms for Continuously Growing Databases, for efficient data partitioning for incremental data. The problem with static partitioning is that each time a new set of data arrives and the partitioning is redone from s cratch.

The authors Brian Sauer and Wei Hao have presented [10] a different way of data partition using the data mining techniques. It is the methodology for NoSQL database partitioning which depends on data clustering of database log files. The new algorithm has been built to overcome k-means issue that is the detection of oddly shaped data, by using minimum spanning tree which is effective than k-means

3.1. Design of Scalable Workload Driven Partitioning in Mongodb

The proposed system considers the mapping of TPC-C schema into MongoDB collections for the improvement of the performance. In this partitioning strategy, transaction logs and data access pattern are monitored. The data access pattern are analysed such as which warehouse is more prone to supply the requested warehouse. That means when customer place an order, and that order is satisfied by warehouse present on a partition but the item is out of stock and that transaction is fulfilled by another war ehouse from another partition. This behaviour of serving the requested warehouse is tracked and patterns are formed. Based on these two factors the partitions are formed.

3.2. Scalable Workload Driven Partitioning Algorithm

The architecture of scalable workload driven algorithm [2] gives overview of the project. The database which needs to be partitioned contains data items of local and remote warehouses in which local house will represent requested warehouse and the remote warehouse will represent the supplier warehouse. The algorithm is then applied on the database and shards are formed. Hence which will restrict the transaction to a single partition and the performance and the throughput of the application will increase. The algorithm is neither static nor dynamic, it lies between them and partitions are restructured as per need, by referring the transaction logs and access patterny

3.3. Definitions of Terms In The Algorithm

3.3.1. Load

The load of the partition [2] which is calculated in the algorithm, interprets the number of transactions executed on the each warehouse, and the total load of the partition is calculated by adding the load on each warehouse. The mean of the load is calculated to perform standard deviation on the partition which will define how much is the division of the load from the average load of the partition.

3.3.2.

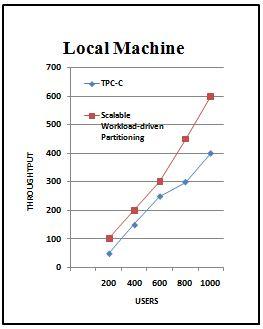

The association of the partition [2] is calculated in algorithm, interprets the number of local transaction and distributed transaction executed on the partition. Local transaction means the transaction which are fulfilled by the requested warehouse only and the distributed transaction means the request is fulfilled by the supplier warehouse where the requested warehouse was out of stock. For example, the customer is requesting data on w1 warehouse of partition A but as there is no stock, the request is completed buy another w two warehouse of partition B.