Augmented Reality Framework for Interactive Learning (ARFIL)

Faculty Advisor: Uzo N. Osuno, Adjunct Associate Professor in Computer Science

Author: Diana Bayandina, Computer Science Department, Fred DeMatties School of Engineering and Applied Science, Hofstra University

Abstract

The Augmented Reality Framework for Interactive Learning (ARFIL) introduces a scalable, modular platform designed to transform traditional educational materials into immersive, interactive experiences. Developed in Unity and powered by Vuforia, ARFIL enhances student engagement by dynamically linking physical materials with responsive 3D content. The framework is architected for extensibility across disciplines, demonstrated through a human anatomy module featuring real-time model recognition and user interaction via custom C# logic. Designed with future AI integration in mind, ARFIL sets the foundation for intelligent, personalized learning systems that adapt to individual learners in real time.

Introduction

Traditional educational media often rely on static visuals that fail to convey dynamic structures or support learner interaction. Augmented Reality (AR) bridges this gap by allowing digital content to be overlaid onto physical materials, fostering richer conceptual understanding. ARFIL proposes a modular, extensible approach to AR-based instruction, enabling educators to pair image targets with interactive, discipline-specific 3D content. This project explores ARFIL's design principles, technical implementation, and roadmap toward intelligent, AI-driven education platforms.

Contribution

This project delivers a reusable AR architecture engineered for cross-disciplinary deployment. At its core is a modular system that links physical image targets with digital 3D assets in real time, enabling seamless integration of interactive content across subjects. ARFIL introduces a custom interaction engine that supports user-driven model manipulation and rotation. By decoupling recognition, content management, and interaction into distinct architectural layers, the framework promotes rapid extensibility, facilitating the easy addition of new educational modules without the need for foundational redesign. ARFIL includes built-in pathways for AI-driven personalization through data logging and interaction tracking.
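The data logging and interaction tracking mentioned above could take many forms, and the source does not describe the actual implementation. The following is a minimal, engine-agnostic Python sketch of one possibility. The `InteractionEvent` and `InteractionLog` names, the field names, and the action strings are all hypothetical, and the project's real code is written in C# inside Unity.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class InteractionEvent:
    """One logged learner interaction (hypothetical schema)."""
    timestamp: float  # seconds since the session started
    module: str       # e.g. "heart"
    action: str       # e.g. "target_found" or "tap"


class InteractionLog:
    """Collects events in memory so they can be summarized or exported."""

    def __init__(self) -> None:
        self.events: List[InteractionEvent] = []

    def record(self, timestamp: float, module: str, action: str) -> None:
        self.events.append(InteractionEvent(timestamp, module, action))

    def counts_by_action(self) -> Counter:
        """Count how often each action occurred."""
        return Counter(event.action for event in self.events)

    def to_json_lines(self) -> str:
        """Serialize the log as one JSON object per line."""
        return "\n".join(json.dumps(asdict(event)) for event in self.events)


# Illustrative session: the values are made up, not recorded data.
log = InteractionLog()
log.record(0.0, "heart", "target_found")
log.record(1.5, "heart", "tap")
log.record(4.0, "heart", "tap")
summary = log.counts_by_action()
```

A per-action summary like `summary` is the kind of signal a later personalization layer could consume, for example to tell whether a learner is actively exploring a model.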

Methodology

The development of ARFIL involved the integration of multiple software layers within the Unity engine, combining real-time image recognition via Vuforia, interactive logic based on C# scripting, and performance-aware rendering. This section highlights the technical pipeline, showcases the working AR module in use, and presents empirical data collected during runtime testing. Together, these components form a cohesive framework that prioritizes modularity, responsiveness, and educational adaptability.

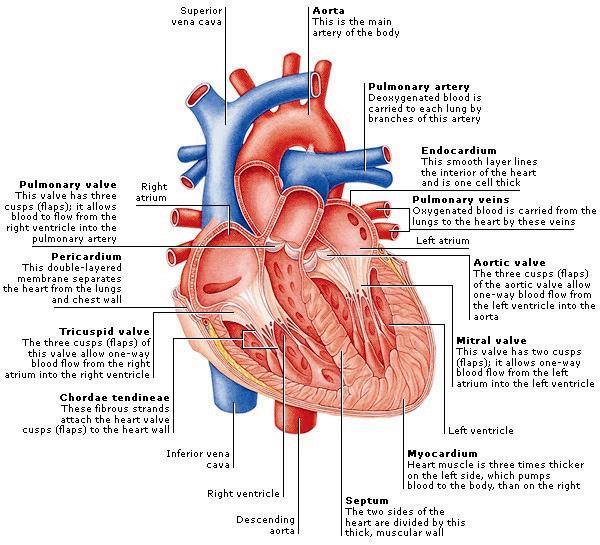

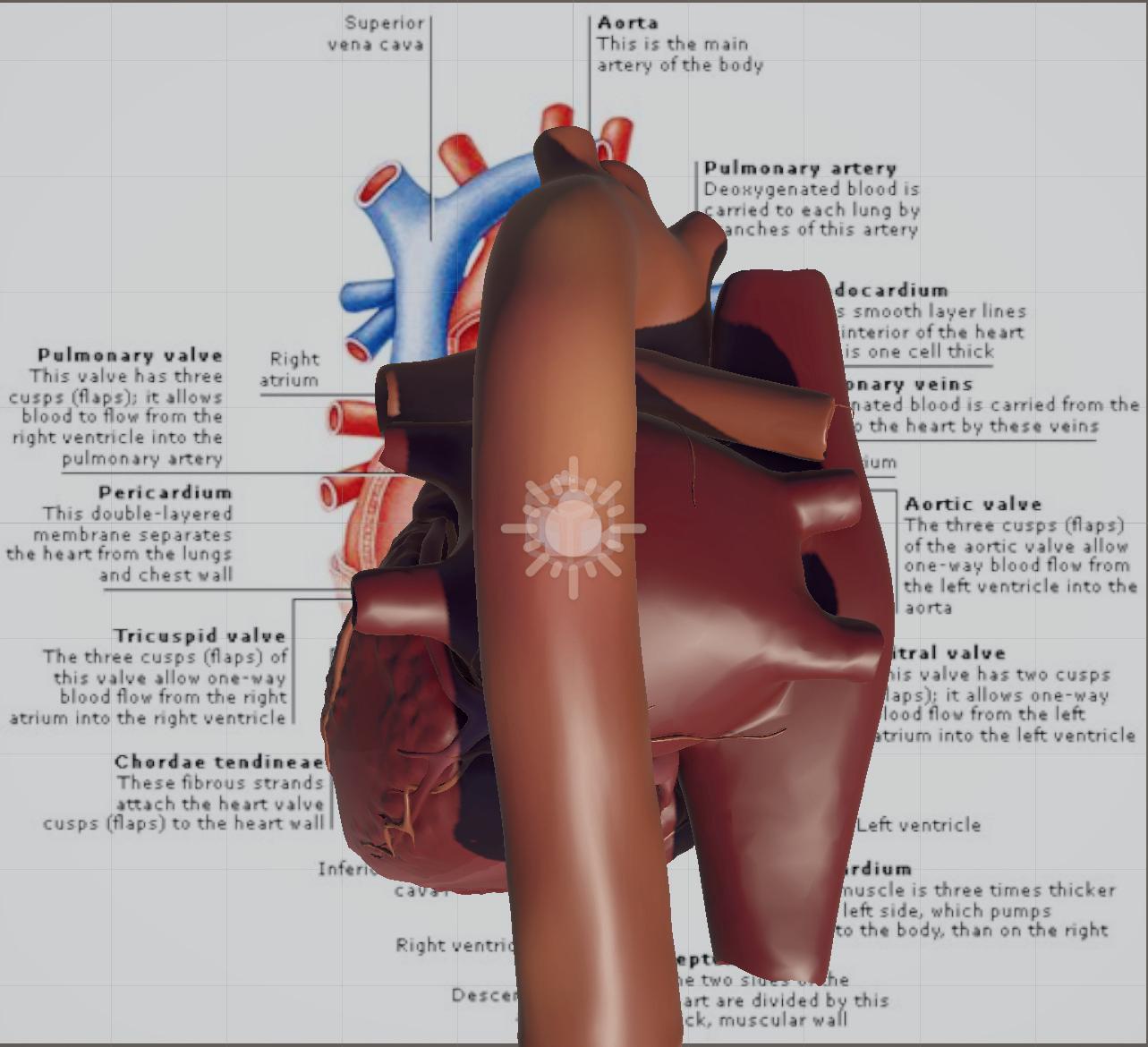

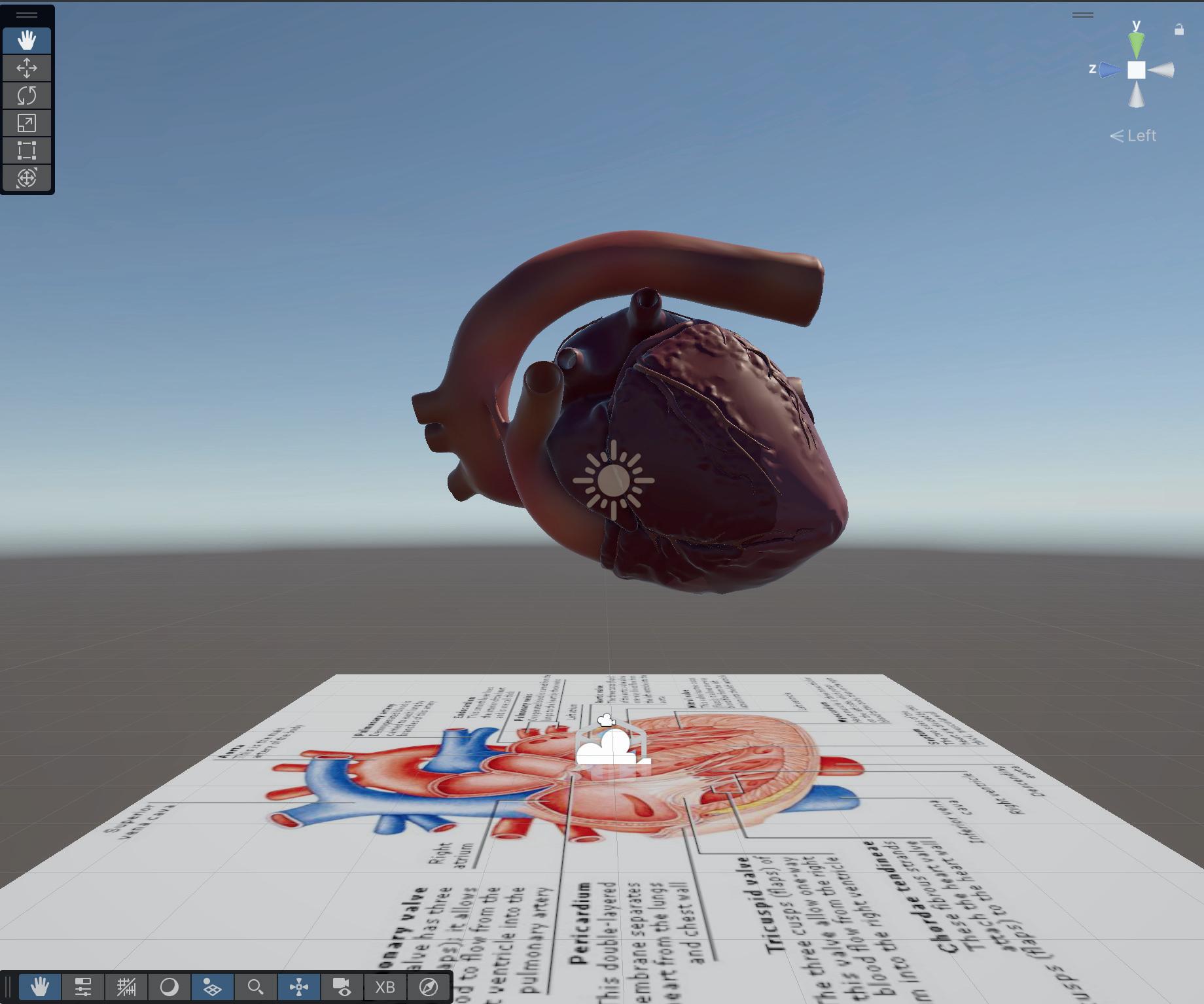

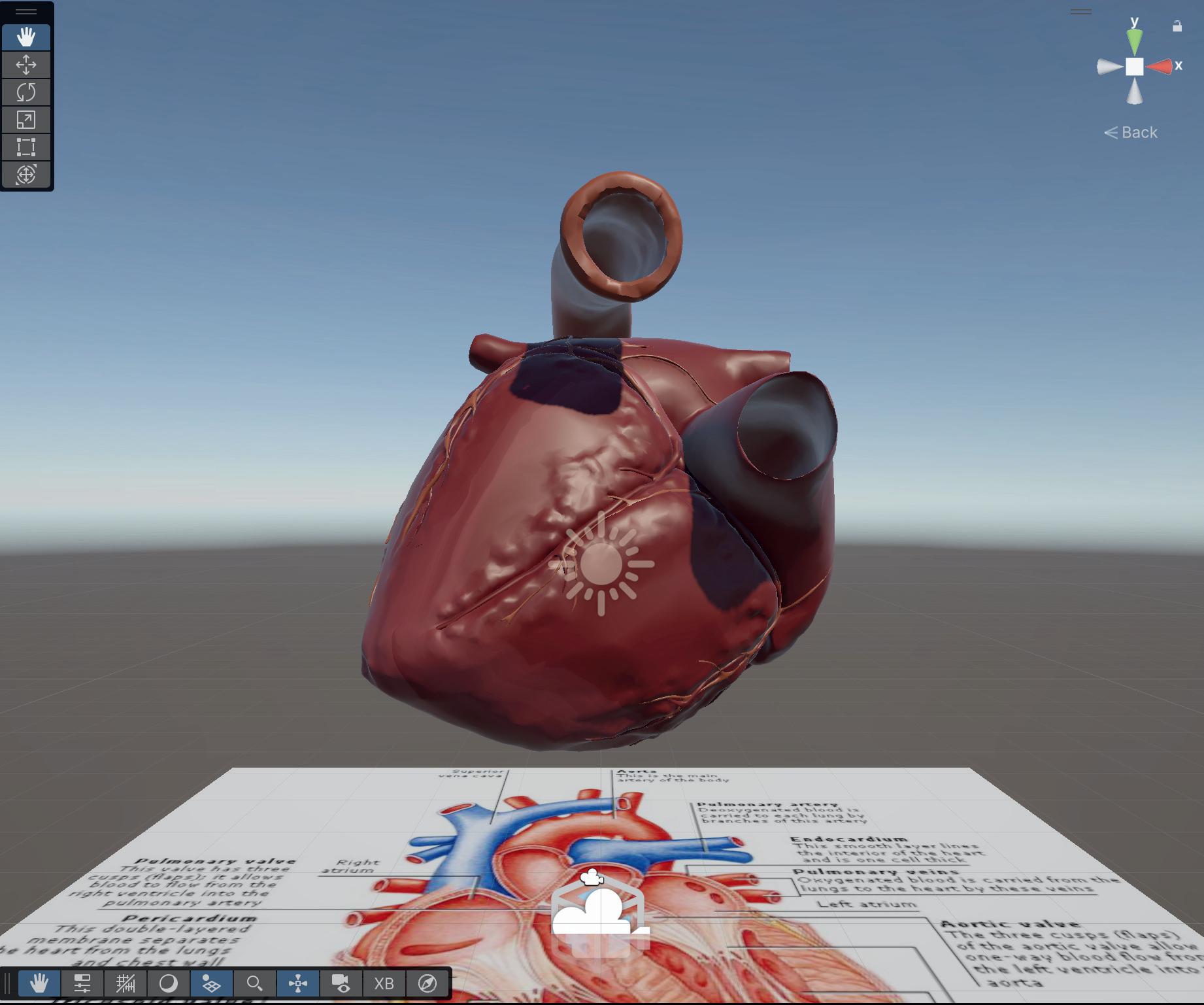

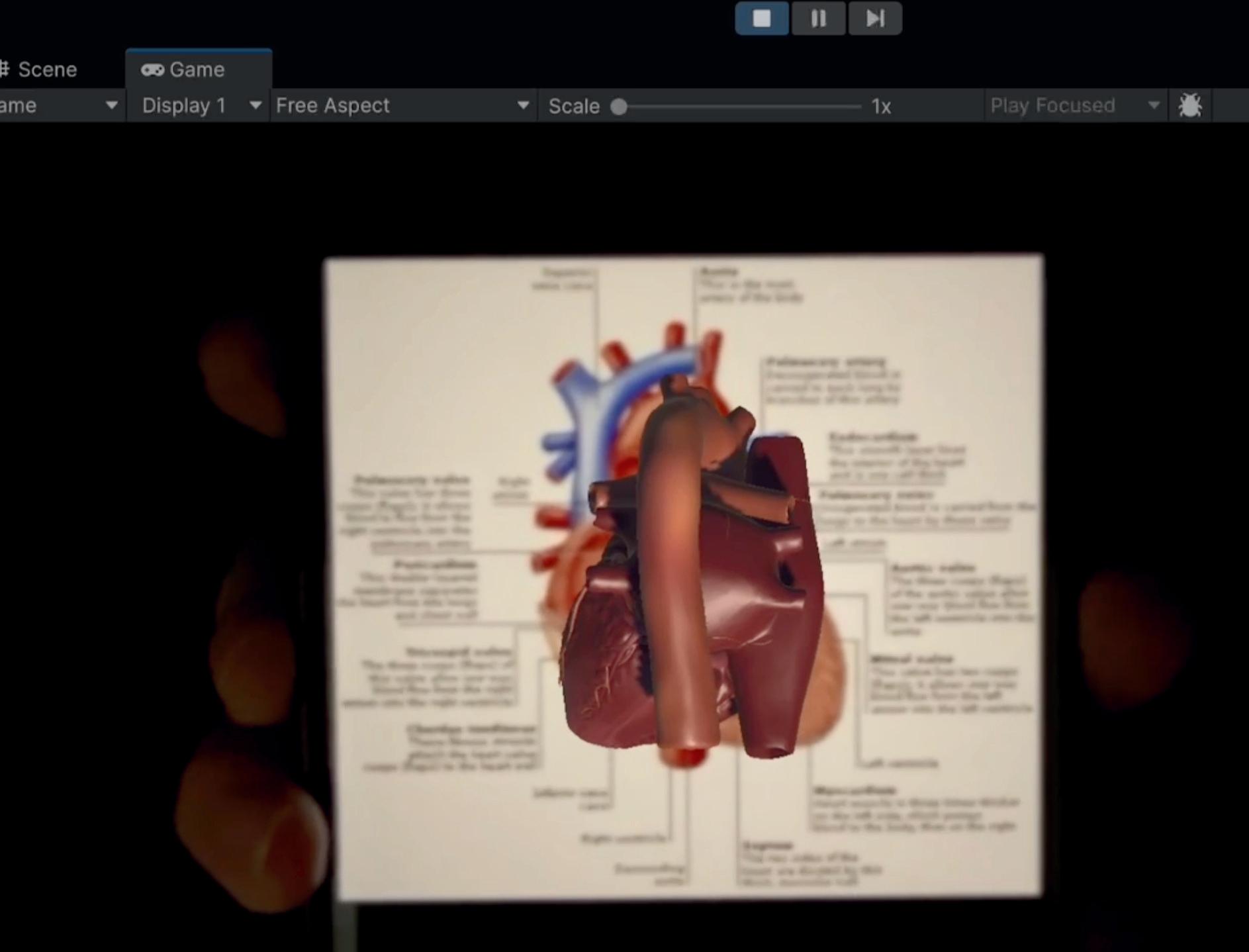

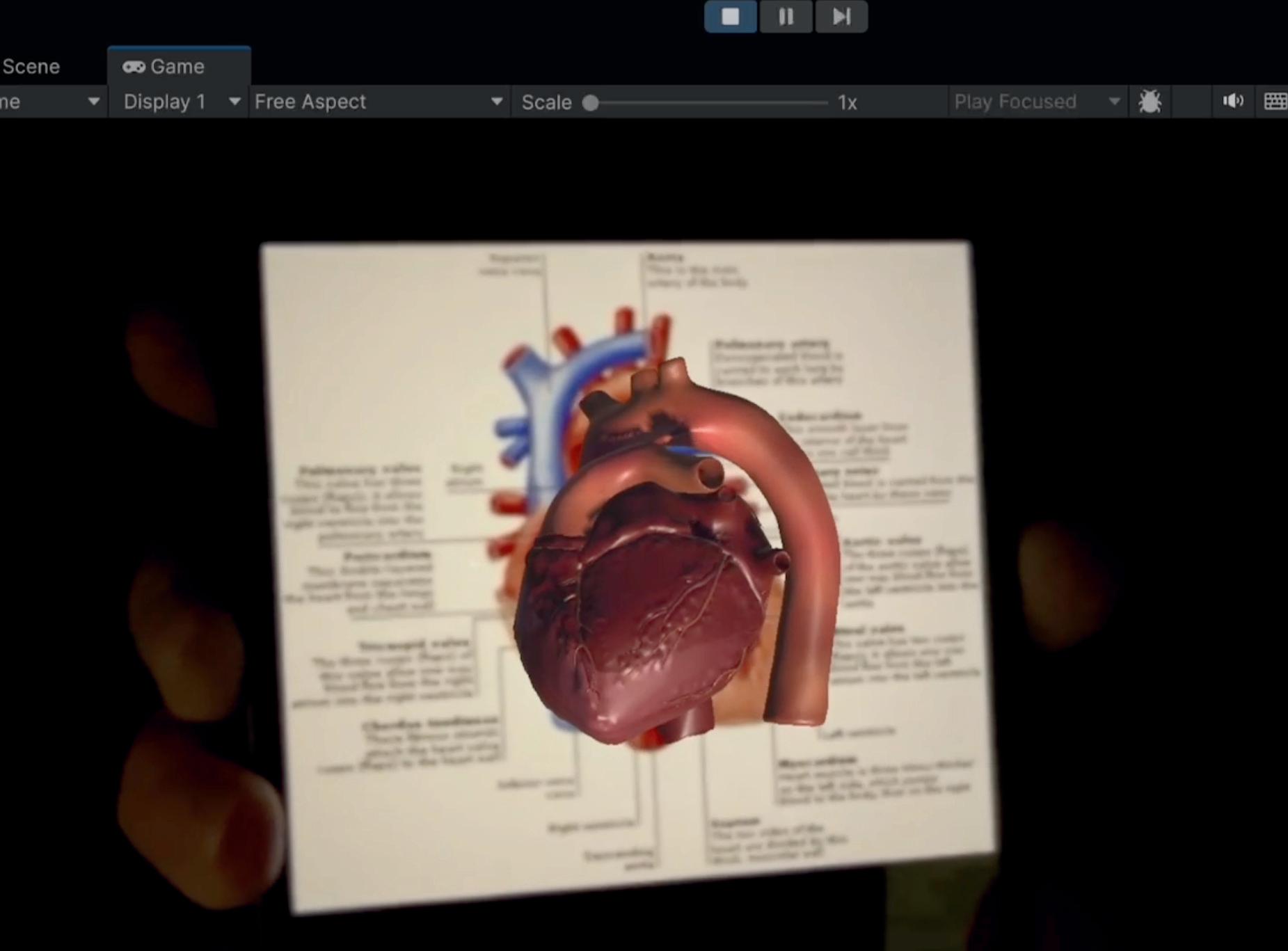

The ARFIL prototype features a human heart module designed to demonstrate the full interactive pipeline. Upon detecting a printed image target, the system renders a 3D anatomical heart model in real time, anchored to the physical surface. Users tap or click the model to toggle its rotation, simulating tactile examination. The interaction logic is written in C# and runs in Unity’s real-time rendering environment, demonstrating the system’s support for spatial learning.
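The tap-to-toggle behaviour can be illustrated without the engine. The sketch below is an engine-agnostic Python model, not the project's C# script. The `RotationToggle` class, its method names, and the 45 degrees-per-second speed are assumptions made for illustration. In Unity, the equivalent logic would typically run once per frame with the frame's elapsed time.

```python
from dataclasses import dataclass


@dataclass
class RotationToggle:
    """Engine-agnostic model of a tap-to-toggle rotation behaviour."""
    degrees_per_second: float = 45.0  # assumed speed, not from the source
    rotating: bool = False
    angle: float = 0.0                # current angle in degrees, in [0, 360)

    def on_tap(self) -> None:
        """Flip rotation on or off, as a tap or click would."""
        self.rotating = not self.rotating

    def update(self, dt: float) -> None:
        """Advance the angle by one frame lasting dt seconds."""
        if self.rotating:
            self.angle = (self.angle + self.degrees_per_second * dt) % 360.0


heart = RotationToggle()
heart.update(1.0)  # not rotating yet, so the angle stays at 0
heart.on_tap()     # first tap starts the rotation
heart.update(2.0)  # 2 s at 45 deg/s advances the angle to 90 degrees
heart.on_tap()     # second tap stops the rotation
heart.update(5.0)  # angle is unchanged while stopped
```

Scaling the step by the elapsed time keeps the rotation speed independent of frame rate, which matters on devices that cannot hold a steady frame rate.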

System Design

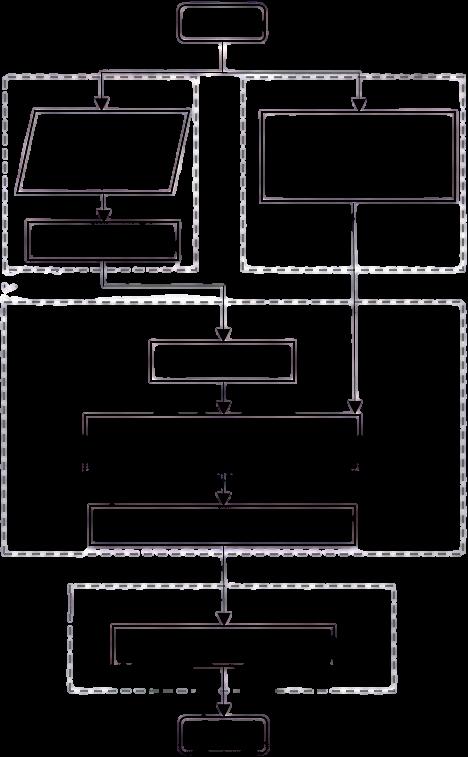

The ARFIL system is architected with a modular structure that separates recognition, content management, and user interaction into distinct components. The core controller (ARFILManager) orchestrates the flow of data and operations across these subsystems. Recognition is triggered by Vuforia’s image tracking, followed by model loading and positioning via AssetLoader, and interaction logic through RotationEngine. This architecture enables extensibility and reuse across subject-specific modules.
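The separation of concerns described above can be sketched in a few lines. The component names `ARFILManager`, `AssetLoader`, and `RotationEngine` come from the system design. Every method name, the target and model identifiers, and the event-style interface are assumptions for illustration. This is an engine-agnostic Python model: in the real system the recognition event comes from Vuforia's image tracking, and here it is simulated by calling `on_target_found` directly.

```python
from typing import Dict, Optional


class AssetLoader:
    """Content management: maps image-target names to model identifiers."""

    def __init__(self) -> None:
        self._models: Dict[str, str] = {}

    def register(self, target_name: str, model_id: str) -> None:
        self._models[target_name] = model_id

    def load(self, target_name: str) -> Optional[str]:
        """Return the model for a target, or None if none is registered."""
        return self._models.get(target_name)


class RotationEngine:
    """Interaction: tracks whether each attached model is rotating."""

    def __init__(self) -> None:
        self._rotating: Dict[str, bool] = {}

    def attach(self, model_id: str) -> None:
        self._rotating.setdefault(model_id, False)

    def toggle(self, model_id: str) -> bool:
        """Flip the rotation state and return the new state."""
        self._rotating[model_id] = not self._rotating.get(model_id, False)
        return self._rotating[model_id]


class ARFILManager:
    """Core controller: routes recognition events to the other layers."""

    def __init__(self, loader: AssetLoader, engine: RotationEngine) -> None:
        self.loader = loader
        self.engine = engine
        self.active_model: Optional[str] = None

    def on_target_found(self, target_name: str) -> Optional[str]:
        model_id = self.loader.load(target_name)
        if model_id is not None:
            self.engine.attach(model_id)
            self.active_model = model_id
        return model_id

    def on_target_lost(self) -> None:
        self.active_model = None

    def on_tap(self) -> Optional[bool]:
        """Toggle rotation of the visible model, if there is one."""
        if self.active_model is None:
            return None
        return self.engine.toggle(self.active_model)


loader = AssetLoader()
loader.register("heart_diagram", "heart_model")  # hypothetical identifiers
manager = ARFILManager(loader, RotationEngine())
unknown = manager.on_target_found("unregistered_page")  # no model registered
shown = manager.on_target_found("heart_diagram")
first_tap = manager.on_tap()   # rotation switched on
second_tap = manager.on_tap()  # rotation switched off
```

Under this arrangement, adding a new subject module amounts to one more `register` call, and neither the manager nor the rotation logic has to change.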

AR in Education

The ARFIL framework is intended to bridge the gap between physical study materials and immersive digital content, enhancing student engagement through interactive visualization. In disciplines like biology and chemistry, where spatial structures are central to comprehension, ARFIL enables learners to interact with complex 3D models in real time. By anchoring virtual content to printed references, the system supports intuitive learning, encouraging exploration and reinforcing conceptual understanding through multisensory engagement. Beyond the core interaction model, the framework supports modular content replacement, making it adaptable across grade levels and disciplines.

Modular Architecture

Each subsystem in ARFIL is designed for modularity and future extensibility, supporting clean integration of AI, analytics, and interaction logic within a real-time AR environment.

Human Heart Module

The initial ARFIL module visualizes the human heart using a textured 3D model anchored to a printed anatomical diagram. Users can rotate the model with a tap or click, simulating physical examination. This module demonstrates ARFIL’s interactive core and sets the foundation for domain-specific extensions.

The development pipeline diagram shows the full workflow of the ARFIL application: image marker registration and 3D model creation, followed by integration in Unity, and concluding with deployment on mobile devices. The workflow reflects a modular, scalable approach designed for educational AR content delivery.

Strategic Value

As educational institutions increasingly adopt digital learning technologies, frameworks like ARFIL offer scalable, cost-effective solutions for content delivery that go beyond passive video or slideshow formats. By combining real-time interaction, hardware accessibility, and the potential for AI integration, ARFIL stands as a forward-compatible platform that can support diverse learning styles, improve accessibility for under-resourced classrooms, and serve as a foundation for next-generation educational technologies. As AR adoption grows, ARFIL can serve as a model for future platforms that merge pedagogical theory with scalable technical implementation.

Results

The ARFIL prototype successfully demonstrates real-time image recognition and interactive model rendering using Unity and the Vuforia SDK. When a printed image of the human heart is recognized by the system, a detailed 3D model is projected into the physical space, anchored with sub-second latency. Interaction is handled through a lightweight toggle mechanism that allows the user to control rotation with a simple click, simulating a physical manipulation of the model. The application maintains a stable runtime frame rate of approximately 58 FPS on mid-range hardware and exhibits smooth rendering transitions even under moderate lighting and angle variance.
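For context, a frame rate of about 58 FPS corresponds to a per-frame budget of roughly 1000 / 58 ≈ 17.2 ms. The source does not say how the frame rate was measured. The Python sketch below shows one common approach, averaging over per-frame durations. The function names are hypothetical and the sample frame times are invented for illustration, not measurements from the prototype.

```python
from typing import Sequence


def average_fps(frame_times: Sequence[float]) -> float:
    """Average frames per second from per-frame durations in seconds."""
    if not frame_times:
        raise ValueError("need at least one frame time")
    total = sum(frame_times)
    if total <= 0:
        raise ValueError("frame times must sum to a positive duration")
    return len(frame_times) / total


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


budget = frame_budget_ms(58.0)  # about 17.24 ms per frame

# Invented sample durations (seconds), NOT data from the ARFIL prototype.
sample_frame_times = [0.017, 0.018, 0.017, 0.017]
fps = average_fps(sample_frame_times)
```

Dividing the frame count by the total elapsed time gives the true average rate, whereas averaging instantaneous FPS values would overweight the fastest frames.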

Demo

A live demonstration of ARFIL’s heart module was developed using Unity and Vuforia. Users can scan a printed target image to trigger real-time 3D model rendering and interactive rotation.

Future Work

Implement AI-based learner analytics for personalized model difficulty and navigation.

Expand content library to additional subjects (e.g., DNA, Solar System, Cell Anatomy).

Integrate gesture and voice interaction via Unity ML-Agents or TensorFlow.

Evaluate pedagogical impact through formal user testing with educators and students.

References

1. Azuma, R. T. (1997). A survey of augmented reality. Presence, 6(4), 355–385.
2. Billinghurst, M., & Dünser, A. (2012). Augmented reality in education. New horizons for learning, 28(2).
3. Unity Technologies + Vuforia Developer Portal. (2023).