Traditionally, the year in our industry begins with a trade fair highlight: Photonics West in San Francisco. More than 1,500 exhibitors from the machine vision and photonics industries show their innovations, framed by an extensive conference program. This is reason enough for us to focus on this event in this first issue of inspect America this year.

The preliminary report on Photonics West is not the only thing worth reading for visitors. The application articles and technical papers are also particularly exciting in this context: they show the companies’ products in action. Last but not least, at the end of this issue you will find product information that gives you a few impressions of what you can take a closer look at during Photonics West. To make it easier for you to find the respective company, the booth number is included for all exhibitors.

I hope you have a great time at Photonics West if you are going. And in any case, I hope you enjoy reading this first issue of inspect America in the new year.

David Löh Editor-in-Chief of inspect dloeh@wiley.com

PS: Please let me know how you liked this issue. Write to me at: dloeh@wiley.com

News

SPIE Photonics West 2025: A preview

“Bringing Together India’s Machine Vision Industry” Interview with Raghava Kashyapa, Co-Founder of Indian Machine Vision Association (IMVA)

Automation Industry: Will it Withstand Recession Also in 2025? State of Manufacturing Automation ahead of Trump 2.0 + 9 Implications for the Industry

1.7 Million Pins per Day: Checking the Vehicle Electronics Application of laser scanners in the automotive Industry

Liquid Lens Makes Compact Vision System Highly Flexible A complete control-integrated solution for image acquisition

Precise Measurement and Analysis of the Smallest Details Diverse 2D/3D machine vision in macro- and microscopy

Deep OCR on the Edge: No Buzzword Bingo, but Real Customer Benefit Optical character recognition with AI support

AI and Optics: Working Hand in Hand for Enhanced Quality Control Potential of artificial intelligence in machine vision

Manufacturing Data Platform Showcases Smart Factory Automation Increasing Efficiency in Production

Gobo technology improves 3D metrology Ultra-fast and -robust 3D sensing

Do I Really Need Machine Vision? Selecting Vision Systems for Cobot-Based Quality Applications

On the track of corrosion using machine learning Evaluate corrosion test panels rapidly and objectively

Products & Technologies

Optical Performance: high transmission and superior out-of-band blocking for maximum contrast

StablEDGE® Technology: superior wavelength control at any angle or lens field of view

Unmatched Durability: durable coatings designed to withstand harsh environments

Exceptional Quality: 100% tested and inspected to ensure surface quality exceeds industry standards

Product Availability: same-day shipping on over 3,000 mounted and unmounted filters

Association A3 appoints new Executive Vice President

cutive team. His responsibilities include driving strategic initiatives, enhancing collaboration, and increasing member value to further A3’s mission.

President Jeff Burnstein emphasised the importance of Shikany’s promotion in light of the organisation’s rapid growth. Over the past five years, A3 has doubled its team, established the ‘Automate’ event as the leading annual robotics and automation event in North America, and expanded internationally to Mexico and India. Shikany’s leadership in internal operations enables Burnstein to focus on long-term strategies.

Shikany, who has been with A3 since 2012, led the rebranding initiative that unified four organisations under the A3 umbrella. In his previous role, he drove membership growth and expanded A3’s global reach to over 1,300 member companies.

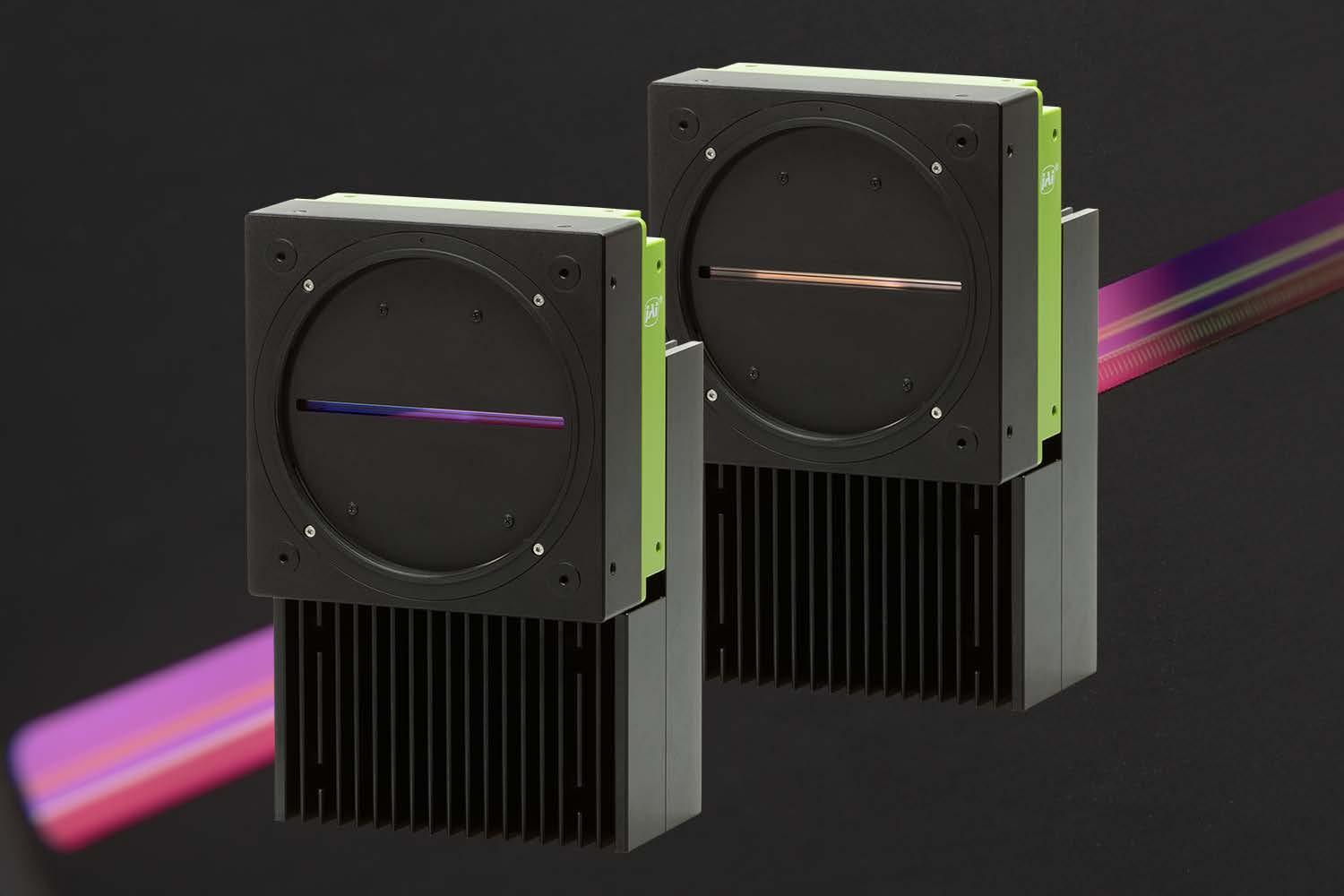

SW-16000 - 16K-megapixel

Trilinear: 16384 x 3 (100 kHz)

Mono: 16384 x 1 (277 kHz)

5.0 µm x 5.0 µm

8/10/12-bit

CXP-12

SW-2005 - 2K-megapixel

Trilinear: 2048 x 3 (44 kHz)

Mono: 2048 x 3 (172 kHz)

7.0 µm x 7.0 µm

8/10/12-bit

CXP-6, 5GigE

Headwall Group has acquired Austrian EVK DI Kerschhaggl. Headwall, a provider of spectral imaging solutions and optical components, is a portfolio company of the investment firm Arsenal Capital Partners. EVK specializes in industrial sensor-based sorting and inspection systems.

This acquisition is intended to diversify Headwall’s product portfolio and strengthen its presence in the machine vision market. EVK’s hyperspectral and inductive sensor technologies, as well as its expertise in data analysis, complement Headwall’s existing products. By combining their technologies and expertise, both companies aim to provide enhanced solutions and expand their market position. EVK’s integration will enable Headwall Group to expand its market presence and capture new growth opportunities in the machine vision and remote sensing markets. At Photonics West: Booth 5423

This year again, SPIE welcomes 47 Members as new Fellows of the Society. They join their peers in being honored for their excellent technical achievements, as well as for their substantial service to the optics and photonics community and to SPIE. Fellows are members of the society who have made significant scientific and technical contributions in the multidisciplinary fields of optics, photonics, and imaging. Since the society’s inception in 1955, more than 1,800 SPIE members have become fellows.

The inductees this year represent high-profile leaders in academia, industry, and government, many of whom are prominent in their support of the optics and photonics community and mentorship of others. The 2025 fellows cohort includes Jeremy Bos, an associate professor in the department of electrical and computer engineering at Michigan Technological University; Sona Hosseini, a research and instrument scientist in the planetary science section of the California Institute of Technology’s Jet Propulsion Laboratory; Martin Ettenberg, founder and CTO of Princeton Infrared Technologies; Mikhail Kats, a professor in the department of electrical and computer engineering at the University of Wisconsin-Madison and an active photonics-community advocate; Darren Roblyer, an associate professor at Boston University in the departments of biomedical engineering and electrical and computer engineering and editor-in-chief of the SPIE journal Biophotonics Discovery; Katie Schwertz, a senior manager at Edmund Optics and recipient of the 2024 SPIE President’s Award; Tsu-Te Judith Su, an associate professor of biomedical engineering and optical sciences at Wyant College of Optical Sciences; and Jess Wade, Royal Society University Research Fellow and lecturer in functional materials at Imperial College London and chair of the SPIE Equity, Diversity, and Inclusion Committee.

New Fellows are acknowledged during the SPIE symposium of their choice throughout the year. The complete list of the 2025 SPIE Fellows is available online.

The PI Group announced that Beate van Loo-Born has taken over the position of Chief Financial Officer (CFO) in the company as of January 1, 2025. Together with Markus Spanner, Chief Executive Officer (CEO), and Florian Geistdörfer, Chief Operating Officer (COO), she completes PI’s management team, which will focus on implementing the strategic vision and promoting sustainable and healthy growth in the new year.

In her role as CFO, Beate van Loo-Born is responsible for numerous business areas such as Finance & Controlling, Treasury, Risk Management, Legal, Compliance, Supply Chain Management, Business Architecture, Sustainability and Human Resources. In this position, she will play a decisive role in promoting operational excellence and the long-term success of the company.

At Photonics West: Booth 3517

•10GigE in BaseT/RJ45 POE & SFP+ fiber

•29 x 29mm

•4.8W POE & 3W SFP+

•Auto-negotiation to 10G, 5G, 2.5G, 1G

•Full Featured

•Zero copy and GPU Direct

•Pricing From 550 €

1GigE, 2.5GigE, 5GigE, 10GigE, 25GigE, 100GigE cameras from the world leader of high-speed cameras

Zebra Technologies is acquiring Photoneo, a developer and manufacturer of 3D machine vision solutions. This acquisition is intended to further strengthen Zebra’s presence in the growing 3D machine vision segment. By combining Photoneo’s 3D machine vision solutions with Zebra’s advanced sensors, multi-vendor software and AI-based image processing, customers will benefit even more from the portfolio, which covers demanding use cases such as bin picking, depalletizing, digital twins and object inspection in automotive manufacturing and logistics.

Photoneo’s intelligent sensors are particularly effective in the vision-guided robotic (VGR) segment, offering faster, more accurate and more robust solutions through parallel structured light technology. The acquisition complements Zebra’s existing portfolio, which was expanded by the acquisition of Matrox Imaging in 2022. Zebra expects the transaction to close in the first quarter of 2025. Financial details will not be disclosed.

The strategic acquisition is intended to strengthen the company’s position in the optics and photonics industry

Edmund Optics has acquired the high-tech company Son-x. The Aachen-based company, a spin-off of the Fraunhofer Institute, is a pioneer in the ultrasound-assisted production of precision optics. By integrating Son-x’s technology, Edmund Optics can now process highly complex surfaces with multiple axes, from micro-components to large-format workpieces.

CEO Marisa Edmund emphasizes that this partnership advances the mission to provide leading high-precision optical solutions. Dr. Olaf Dambon of Son-x is excited about the new opportunities provided by Edmund Optics’ global reach. The acquisition underscores Edmund Optics’ long-term vision to expand its innovation leadership and better serve customers worldwide.

Networking, new products, and Nobel laureates complement the broad technical program and four exhibitions during an exciting week in optics and photonics.

Soon SPIE Photonics West 2025 will open its doors. The largest annual conference and exhibition in optics and photonics will run from 25 to 30 January at the Moscone Center in San Francisco. The event brings together researchers, innovators, engineers, and business leaders from across the globe for an exciting week of research-sharing, collaboration opportunities, and exchanges. This year, the week will include 4,500-plus technical presentations across over 100 technical conferences, as well as showcasing more than 1,200 companies in four focused exhibitions.

SPIE Photonics West has four major application areas:

Bios highlights new research in biophotonics, biomedical optics, and imaging for diagnostics and therapeutics;

Lase focuses on the laser industry and its diverse applications;

Opto covers optoelectronics, photonic materials, and optical devices; and

Quantum West features quantum 2.0 technologies from quantum sensing and information systems to quantum enabled materials and devices and quantum biology. Quantum West also includes a Quantum West Business Summit highlighting the innovations that are moving quantum technologies to market.

Photonics West, the largest annual conference and exhibition in optics and photonics, will run from 25 to 30 January at the Moscone Center in San Francisco. This year, more than 1,200 companies in four focused exhibitions will show their innovations.

The Bios Expo, featuring technologies in biomedical optics and healthcare applications, runs 25-26 January. The AR|VR|MR Expo, which focuses on augmented, virtual, and mixed reality and the vital role that optics and photonics play in hardware and headset development, takes place on 28-29 January. Quantum West Expo, 28-29 January, will showcase the latest quantum-enabled and -enabling technologies. The Photonics West Exhibition, running 28-30 January, encompasses the latest innovations from laser manufacturers and suppliers as well as other novel optics and photonics devices, components, systems, and services.

Interview with Raghava Kashyapa, Co-Founder of Indian Machine Vision Association (IMVA)

The Indian Machine Vision Association (IMVA) unites India’s machine vision industry to foster growth and innovation. In this interview, Raghava Kashyapa, co-founder of IMVA, shares insights on IMVA’s mission to support businesses, educate professionals, and navigate industry challenges with a vision for a tech-driven future.

inspect: Why was the Indian Machine Vision Association (IMVA) founded?

Raghava Kashyapa: The IMVA was created to unite India’s machine vision industry. By bringing together companies, professionals, and researchers, we aim to share knowledge and address common challenges. With India’s growing need for machine vision technologies, a unified association helps streamline efforts and promote industry growth.

inspect: What is the aim of the IMVA?

Kashyapa: Our main goal is to promote the use and development of machine vision technology in India. We support businesses, educate professionals, and connect industry players. We also advocate for the industry’s interests in government policies and regulations.

inspect: Who are the founding members?

Kashyapa: The founding members include professionals and companies from various parts of the machine vision ecosystem, such as system integrators, technology providers, academics, and manufacturers. The idea was initiated by myself and Ron Müller (of Vision Markets), recognizing the need for such an association. An initial meeting in April 2024 in Bangalore with 12 attendees validated this idea, followed by a meeting at the Automate show in Mumbai, where we opened it up for 25 charter members. Since then, a subset of the charter members has been diligently working on creating the charter and organizational structure, which we hope to establish by Q1 of 2025. We expect to have 100 additional members in the next 12-18 months.

inspect: What requirements must a company fulfill in order to become a member?

Kashyapa: Membership is open to both domestic and international organizations involved in the machine vision ecosystem, whether through technology, research, or application. We have different membership categories: Corporate Members: Companies involved in machine vision. A subset, known as charter members, initially came together to form the association. Corporate members are further distinguished by whether they are domestic or international (based on majority ownership).

March 12, 2025: Robotics Day

Robots have become an integral part of industry, and this will also be the case in small and medium-sized enterprises and the skilled trades in a few years’ time. Our webinars show you the best way to enter the world of robotics and why now is the right time.

April 16, 2025: Metrology & Precision Manufacturing

High quality standards and the optimization of production efficiency are at the heart of modern manufacturing processes. Both are crucial for manufacturers to remain competitive. This event therefore revolves around measurement technology, production software and machine vision – in short: technologies that help to optimize your production.

Do you have expert knowledge that you would like to share?

June 4, 2025: Machine Vision, Robotics, and AI combined

Only robots with the ability to see can perform complex tasks such as bin picking or handling unsorted objects on conveyor belts. Cameras provide this sense of sight. In combination with artificial intelligence, the range of applications is immense.

Do you want to speak on a big stage on relevant industry topics?

June 25, 2025: Panel discussion: What were the biggest trends at Automate?

At Automate, the largest automation trade fair in North America, numerous innovative products were once again on display or even presented to the public for the first time. The expert panel discusses the highlights and technology trends that emerged at the trade fair.

Do you have an exciting innovation that you would like to present to your target group?

Then the digital events from inspect and messtec drives Automation are just right for you. They allow you to reach over 200,000 machine vision users and integrators, engineers, automation specialists and machine builders worldwide.

Interested? Then get in touch with us.

In addition to these events, we will also be happy to plan an individual webinar with you at a time that best suits your marketing plan.

Sylvia Heider Media Consultant Tel.: +49 6201 606 589 sheider@wiley.com

Birdie Ghiglione Sales Development Manager Tel.: +1 206 677 5962 bghiglione@wiley.com

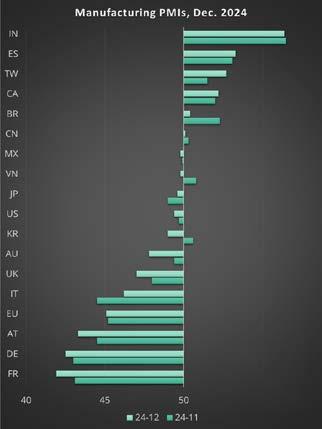

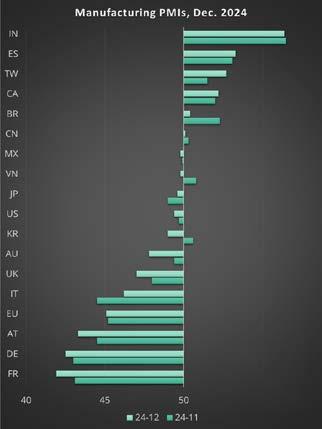

The latest Manufacturing PMI data for December highlights a mix of resilience and challenges across key global economies. This creates a compelling scenario for machine vision suppliers to refine their regional sales and marketing strategies.

In North America, the United States reports a marginal decline, reversing the steep M-PMI improvement seen since September. With Trump 2.0 and unforeseen policy changes looming, manufacturers’ sentiment retreats from the 50-point line. Canada keeps rising, signaling steady expansion. After the recent resignation of Prime Minister Trudeau, the economic climate in Canada is expected to brighten. Mexico sees a minor decline of 0.1 points, hovering close to the neutral line. For the first time, we have included Brazil in our monitoring, as it is becoming increasingly important as a target market for manufacturing automation. While its M-PMI decreases significantly by 1.9 points, it remains above 50, indicating growth at a slower pace. India and Taiwan deliver the strongest optimism in the Asian region. We will see how China’s new initiative to hand out Chinese ID cards to Taiwanese citizens will weigh on the economic climate of the island. South Korea experiences a sharp decline of 1.6 points, slipping into contraction from mounting pressures of local rivals on its manufacturing sector, while manufacturers in Japan showed less pessimism in December.

Manufacturing PMI readings of selected target countries for machine vision suppliers from last month, December 2024, compared to the previous month, November 2024

The EU’s manufacturing industry remains unchanged in its expectation of a worsening recession, with France and Germany in particular dragging down the community.

These trends emphasize the contrasting trajectories of global economies. Emerging markets like India and Taiwan lead the charge in recovery, while developed markets confront persistent challenges.

Purchasing Managers’ Index and PMI are either trademarks or registered trademarks of S&P Global Inc or licensed to S&P Global Inc and/or its affiliates.

What exactly Trump will do during his second term is not yet clear at the time of writing. But some possible consequences for the (machine vision) industry can be deduced from his announcements, such as the import tariffs. This list explains a few of them. So, it can be expected to...

▪ attract investments into building manufacturing capacity on US territory, especially for high-value goods, to avoid tariffs, creating business opportunities for the machine vision industry.

▪ release held-back investment activity due to the resolved uncertainty from the election.

▪ decelerate the cooling of US inflation, keeping interest rates attractively high for capital, hence strengthening the US Dollar valuation, which finally invokes a price disadvantage for US players and an advantage for European and Asian players in global markets.

▪ accelerate the ongoing relocation of production capacity out of China to India, SEA, and North America to avoid tariffs and corresponding disadvantages on the US market. Equipping these green-field factories offers big opportunities for non-Chinese machine vision players and Chinese players who are not government-subsidized.

▪ weaken the direct market access of Chinese machine vision players in the US.

▪ amplify the adoption of ABC (All But China) policies of US importers, restricting the use of Chinese-owned components in acquired equipment.

▪ lead to intensified business and sales activities of Chinese players in their remaining addressable markets in Europe, the Middle East, SEA, and Latin America.

▪ force European countries to invest heavily in their own military strength, providing growth opportunities for machine vision in military equipment and its manufacturing.

▪ command the EU and especially the Euro-zone to reform its conditions as a business location, foster the European market, and unite its economic forces to ensure access to required commodities, raw materials, semiconductors, technologies, and low-cost energy, creating business perspectives for machine vision and all automation providers.

Recession in many manufacturing industries, US trade tariffs and expected countermeasures, commoditization of standard components, sales and price pressure from Chinese players, ongoing military conflicts, continued market consolidation through corporate acquisitions – formerly growth-spoiled Vision players have seen better market conditions.

Nonetheless, as elaborated above, there are still plenty of geographic regions and industry sectors with strong demand for machine vision components, integration services, and turnkey solutions. Hence, our preliminary forecast of the 2025 market value of machine vision components indicates growth in the range of 5.0 to 7.5 percent. Our machine vision Market Report is built upon over 130 indicators, input from our network of 600 industry insiders, and findings plus ground truth information from our signature consulting services. It provides detailed analyses of the machine vision market size and its trends by product type, region, industry, and combinations of such.

Ronald Müller CEO and Founder of Vision Markets

This pin inspection is carried out in continuous operation on up to 40 machines simultaneously, which means that more than 1.7 million pins can be inspected every day.

Checking connector pins in cars is a real Sisyphean task – impossible for humans. A laser scanner combined with powerful image processing software can do the job. This ensures the quality of millions of pins every day.

The automotive industry has developed rapidly in recent years. Nowadays, almost every function in a vehicle is electronically controlled, from window operation to automatic light control. Electromobility in particular is playing an increasingly important role: in large markets such as China, electric vehicles now account for around 51 percent of cars sold, underlining the rapidly growing transition to electromobility. In the US, too, the proportion of e-cars is now around 8-9 percent, a significant increase that illustrates the growing acceptance and spread of this technology. This technological evolution has led to an increasing demand for high-precision electronic connectors, which act as the backbone of the vehicle control system. A modern car contains up to 5,000 different connectors, totaling around 100,000 pins. These connections are essential for the functionality of vehicles, especially in the area of electronics and automation. Each of these pins must therefore undergo a strict quality check before installation, as even a single defective pin can cause the entire electronic system to fail.

This is where the expertise of Aku Automation from Germany comes in. As a system integrator for machine vision solutions, the company has developed an application in collaboration with the northern German company AT Sensors that enables precise inspection of pins. This inspection is carried out in continuous operation on up to 40 machines simultaneously, which means that more than 1.7 million pins can be inspected every day.
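To put the headline figure into perspective, the short calculation below breaks the daily total down to a single machine. It is only a rough sketch: the two input numbers come from the article, while round-the-clock operation and an even split across all 40 machines are assumptions made for illustration.

```python
# Figures quoted in the article
PINS_PER_DAY = 1_700_000
MACHINES = 40

# Assumption for illustration: machines run 24 hours a day
SECONDS_PER_DAY = 24 * 60 * 60


def per_machine_throughput(pins_per_day, machines):
    """Return (pins per machine per day, pins per machine per second)."""
    per_machine_day = pins_per_day / machines
    per_machine_second = per_machine_day / SECONDS_PER_DAY
    return per_machine_day, per_machine_second


per_day, per_second = per_machine_throughput(PINS_PER_DAY, MACHINES)
print(f"{per_day:,.0f} pins per machine per day")
print(f"{per_second:.2f} pins per machine per second")
```

Under these assumptions, each machine checks roughly one pin every two seconds on average; with fewer machines running or shorter shifts, the required rate per machine rises accordingly.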

The biggest challenge when testing the pins is to ensure that they function perfectly even after assembly under a wide range of external influences, such as vibrations or moisture. The solution lies in the use of the 3D dual-head sensors from AT’s XCS series. These sensors make it possible to scan the pins of electronic connectors simultaneously from two sides, thus avoiding shadowing (occlusion) and achieving a high precision.

The 3D dual-head sensors from AT’s XCS series scan the pins of electronic connectors simultaneously from two sides, thus avoiding shadowing (occlusion) and achieving a high precision.

The sensor is also characterized by its optimized laser and a field of view of 53 millimeters. The XCS series laser offers a uniform line thickness along the entire laser line, which is achieved by the optics in the laser projector. This homogeneity of the line thickness allows for high-precision scanning of small structures, regardless of whether the object to be inspected is in the center or at the edge of the line. This is a decisive advantage for the quality control of pins, as an exceptionally high level of accuracy and repeatability is achieved.

Another feature of the XCS sensors is the Clean Beam technology developed by AT. This function protects the laser from external interference factors, such as optical anomalies, and ensures precise and focused laser projection. Clean Beam also enables a uniform intensity distribution, which leads to consistent and reliable results.

Dr. Athinodoros Klipfel, Head of Sales at AT, highlights the features of the free AT software, which supports very detailed data output with features such as Multipart and Multipeak. Multipart enables the simultaneous output of up to ten different image features, while Multipeak allows highly reflective materials to be scanned without disturbing reflections.

Right from the start, Aku Automation focused on innovation when developing a reliable solution for inspecting electronic connectors. An experienced team of developers worked intensively for over six months to realize the optimal design of the application. The challenge was to put together all the necessary components for a reliable system, program user-friendly software and integrate the output of quality data. To do this, Aku Automation relies on image processing software called Aku Visionmanager, which then evaluates the data using AI-supported deep learning algorithms. This enables customers to read out their data in a simple and uncomplicated way.

“Our priorities during development were a reliable quality procedure and ease of use for the customer. We therefore designed the application in such a way that it can be used for a wide range of electronic connector types and has a high multiplier effect,” explains Boris Gierszewski, Managing Director of Aku Automation.

The connector test developed by Aku Automation and AT is now used by numerous well-known car manufacturers. The reject rate of defective pins always remains below one percent.

AUTHOR Nina Claaßen Head of Marketing

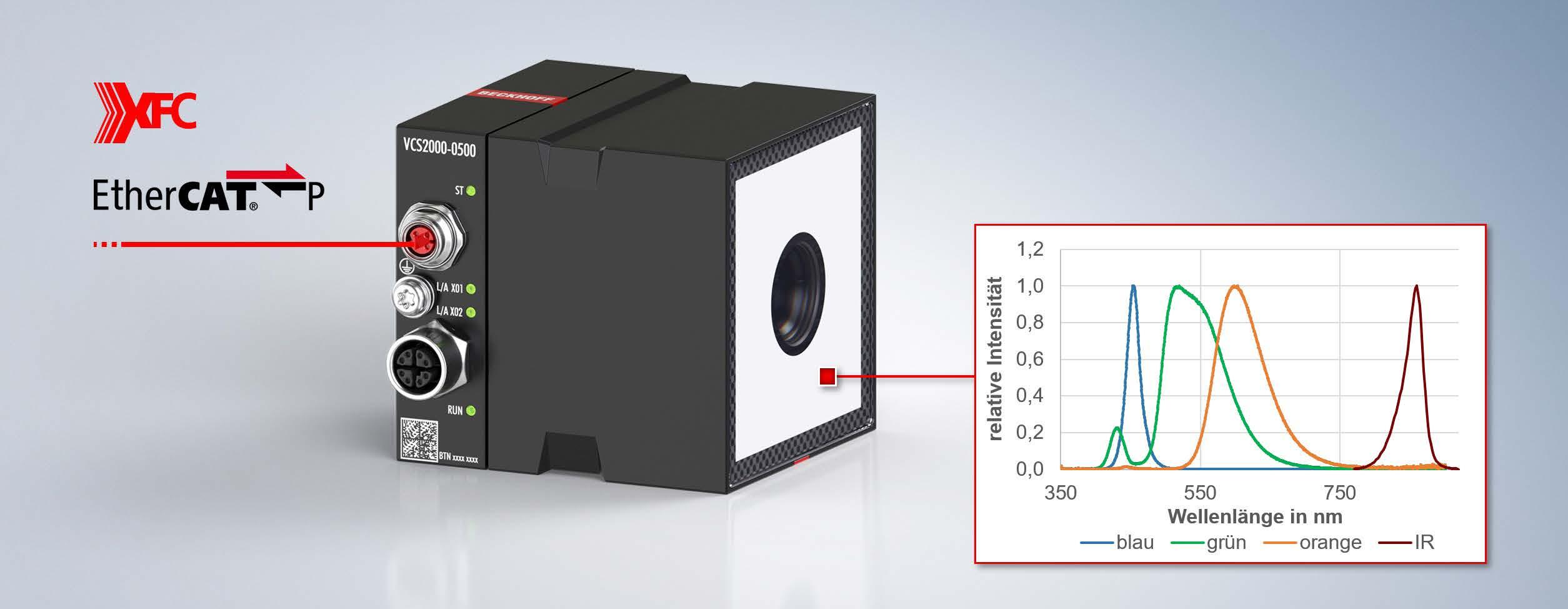

The compact camera system from a German machine vision and control provider consists of a camera, lighting and focusable optics with liquid lens. It significantly reduces the effort required for assembly and commissioning and is particularly suitable for applications with variable component heights, for example in logistics, due to its focus adjustment during operation. In addition, this Ethercat-capable unit offers all the advantages of direct and precisely synchronized control integration.

Beckhoff’s Vision Unit Illuminated (VUI) is a compact standalone vision system completed by a liquid lens. All the VUI’s functional components are encapsulated in a visually appealing anodized aluminum housing unit with an IP65/67 protection rating, making this product an all-in-one solution. For image acquisition, the PLC controls the settings via Ethercat with a high level of precision and in exact synchronization with all processes in the application during runtime. Focusing in real time is facilitated by a robust liquid lens that can be used in any position. It also offers shock and vibration resistance in addition to a long service life on account of the high number of cycles. The light color as well as the intensity and length of the light pulse or the parameters of the camera for image acquisition can also be controlled via Ethercat.

The VUI is available with a standard camera, currently ranging from 2.3 to 5.0 MP, in both monochrome and color versions. The IP65/67 housing unit has a low mounted height, thanks to the side-facing plugs. Multi-color lighting with four light colors ensures flexible contrast adjustment during runtime. The visible light colors are generated via blue LED semiconductors to ensure optimal temperature stability and complete coverage of the visible light spectrum. The green and red-orange spectral range is converted via phosphors. Mixing the individual color channels produces spectrally resolved white light (Ra >80).

With a minimum working distance of just 40 mm, the VUI is also suitable for short working distances and confined installation spaces. The light distribution of the illumination is optimized to ensure homogeneous illumination of the object at all times. This means that the system can be used easily and flexibly throughout the respective system or application, thanks to electronic focusing.

The Vision Unit Illuminated with an automatically focusable liquid lens is versatile and can also be used in confined spaces.

The liquid lens does not have any mechanical moving parts. The unit is calibrated at the factory so that focus adjustment can be performed with a high degree of accuracy using real dimensions. The optimal choice and combination of lens position, aperture, and resulting depth of field ensures that the focus can be reliably adjusted at distances ranging from 10 to 2,000 mm, i.e., even beyond the optimal distance range for the illumination. Temperature-related changes in refractive power are reliably compensated by means of continuous temperature measurement and a corresponding mathematical model.

Because of the central data evaluation in the control system and simultaneous access to machine parameters, the control system can automatically adjust the focus at any time. This makes it easier, for example, for non-specialists to set the system up, which is often an important factor in view of the increasing shortage of skilled workers. In addition, prior height measurement and pre-setting the focus position in relation to the object according to the height measurement is a common usage scenario for logistics applications, for example. If the object position is unclear, the automatic focus adjustment helps to create an evaluable image using a series of images with different focal adjustments.
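The idea of finding a usable image from a series of images with different focal adjustments can be sketched as a simple focus sweep. The example below is not the vendor’s algorithm; it is a minimal illustration that assumes a gradient-energy sharpness score and uses hypothetical names (`sharpness`, `best_focus`) and tiny hand-made grayscale images in place of real camera frames.

```python
def sharpness(image):
    """Gradient energy: sum of squared differences between neighbouring pixels.

    Sharper images have stronger edges and therefore a higher score.
    """
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                total += (image[r][c + 1] - image[r][c]) ** 2
            if r + 1 < rows:
                total += (image[r + 1][c] - image[r][c]) ** 2
    return total


def best_focus(sweep):
    """sweep: list of (focus_distance_mm, image). Returns the sharpest distance."""
    return max(sweep, key=lambda item: sharpness(item[1]))[0]


# Toy sweep (hypothetical data): the same edge imaged at three focus settings
blurred = [[0, 64, 128, 192, 255]] * 3   # edge smeared over the whole row
sharp = [[0, 0, 0, 255, 255]] * 3        # crisp edge
soft = [[0, 0, 128, 255, 255]] * 3       # slightly smeared edge
sweep = [(100, blurred), (150, sharp), (200, soft)]

print(best_focus(sweep))
```

In a real system the sweep would be driven by the controller and the score computed on a region of interest; other focus measures, such as the variance of a Laplacian-filtered image, are equally common choices.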

The Ethercat performance factor

Ethercat communication technology ensures high-precision synchronization of image acquisition with all other machine processes and also provides the basis for fast, deterministic camera parameter switching through parameterization via Ethercat. Users also benefit from the Ethercat P one-cable solution, which combines communication and power in a 4-wire standard Ethernet cable. This means that the VUI can be supplied with the required voltage and data via a single cable.

All parameters for the camera, illumination, and even the focus of the liquid lens can easily be adapted to changing products (e.g. recipe-based products) during runtime thanks to ultra-fast Ethercat communication. Image acquisition can even be synchronized with high accuracy above the clock accuracy of the fieldbus using a highly precise timestamp. The image data is transmitted via the 2.5 Gbit/s port. The vision unit is compatible with numerous image processing applications as transmission takes place via Ethernet or the UDP protocol.

Super depth of field through focus stacking with up to 250 focus positions combined to a 2D/3D image provides a very extensive expansion of analysis options compared to classic 2D machine vision.

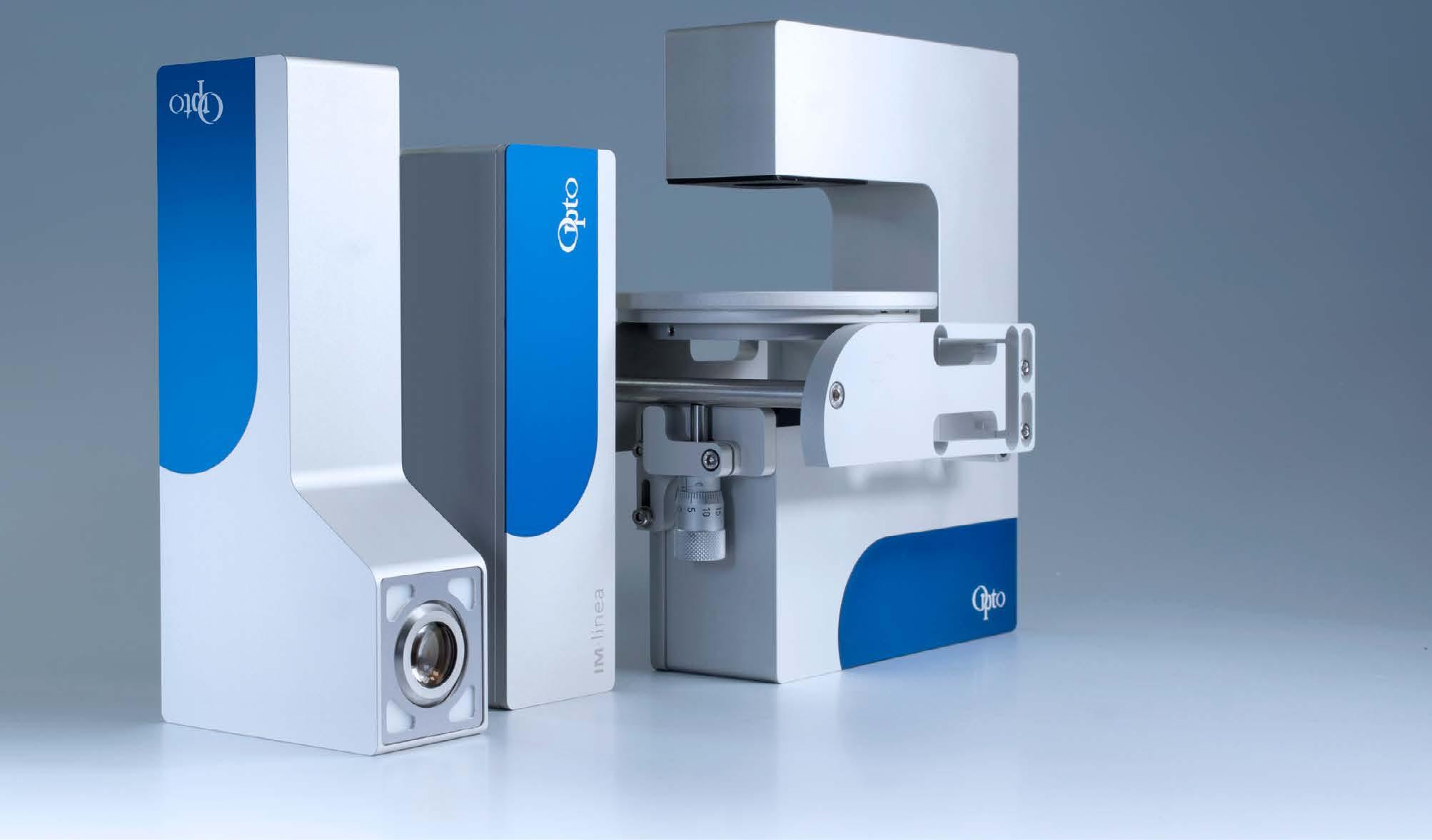

Deltapix’s modular inspection systems and the new Deltapix Insight 7.5 software offer ease of use for a wide range of micro- and macroscopic applications. High image quality increases the speed and accuracy of inspections, analyses, measurements and detailed visual documentation. The fully automatic 3D stitching option with motorized XYZ axes allows for large-area stitching and precise capturing of height information with an accuracy of less than one micron.

With the new stand for digital microscopes and the very precisely controlled XY stage, plus the function of precise tilting, there are many more possibilities in 3D topography. In addition, there is an integrated controller for easy installation and anti-vibration feet.

Such a digital microscope is used, for example, in industrial quality laboratories, but also in medical or biological applications. Typical measurement tasks relate to the measurement of lengths, distances, diameters and angles as well as to step heights, volumes and surface texture.

The microscope software suite for precise measurement, analysis and control of microscopes, cameras, motorized stages and other connected devices comes with a modern and intuitive user interface which is easy to operate. It comprises a basic package, which is included free of charge with most Deltapix cameras, and many optional modules. These modules provide extensive functionality for specific applications. The measurements and analyses can be easily documented using individual reports in both Excel and PDF format or exported as images and CSV files.

The particularly high-quality digital microscopes offer extremely reliable 2D and 3D measurements as well as information on surface roughness (ISO 25178-2:2012) for many industries and research laboratories.

With the limited depth of field of a single image in classical machine vision, important features can only be extracted to a limited extent. The super depth of field of the true-color images obtained with focus stacking also yields a lot of information for exact 2D/3D analyses.
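Focus stacking, as mentioned for the super-depth-of-field images, can be sketched as follows: for every pixel, pick the slice of the focus series in which that pixel is sharpest, copy its value into the fused image, and record the slice's focus position as the height. This Python sketch is an illustration under assumptions. The contrast measure and all names are hypothetical, and it is not the Deltapix algorithm.

```python
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[float]]  # grayscale image as rows of pixel values


def local_contrast(img: Image, r: int, c: int) -> float:
    """Absolute 4-neighbour Laplacian at (r, c) with border clamping."""
    rows, cols = len(img), len(img[0])
    up = img[max(r - 1, 0)][c]
    down = img[min(r + 1, rows - 1)][c]
    left = img[r][max(c - 1, 0)]
    right = img[r][min(c + 1, cols - 1)]
    return abs(up + down + left + right - 4 * img[r][c])


def focus_stack(stack: Sequence[Image], z_positions: Sequence[float]
                ) -> Tuple[List[List[float]], List[List[float]]]:
    """Return (all-in-focus image, height map) from a focus series."""
    rows, cols = len(stack[0]), len(stack[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    height = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Index of the slice in which this pixel shows the most contrast.
            best = max(range(len(stack)),
                       key=lambda i: local_contrast(stack[i], r, c))
            fused[r][c] = stack[best][r][c]
            height[r][c] = z_positions[best]
    return fused, height


# Synthetic two-slice series: texture is sharp on the left in slice 0
# and on the right in slice 1; the out-of-focus half is flat gray.
left_sharp = [[float((r + c) % 2) if c < 2 else 0.5 for c in range(4)]
              for r in range(4)]
right_sharp = [[0.5 if c < 2 else float((r + c) % 2) for c in range(4)]
               for r in range(4)]
fused, height = focus_stack([left_sharp, right_sharp], z_positions=[0.0, 25.0])
```

Production software typically smooths the per-pixel decision and interpolates between slices to refine the height; the sketch keeps only the core selection step.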

This software allows for the calibration of multiple cameras and microscopes individually. Settings such as the exposure time and depth of field can be defined for each objective individually which makes the handling of different setups very fast and simple. The software suite is optimized for Deltapix cameras which cover a wide range of microscopy applications (up to 20 MP, standard, SWIR and UV) but also supports some third-party cameras.

The most important modules are:

Insight Basic Plus: Extended focus and exposure functions and much more

3D topography: Allows for 3D measurement and 2D profile extraction to measure height, depth, angles and distances

3D stitching: Stitching of images for seamless extension of the 3D view up to a size of 16,000 x 16,000 pixels

Roughness measurement: Accurate quantification of surface texture, both areal and profile, in accordance with the latest ISO standards ISO 25178 and ISO 21920

Video recording: Capturing and documentation of processes in either real time or time lapse

Segmentation, counting and multi-phase analysis: Advanced metallographic tools such as particle segmentation and counting combined with reporting and automated batch handling of multiple samples

Automation: Comprehensive control of the microscope, lighting systems and motorized stages

Converting text from camera images into machine-readable data is anything but trivial. If an ‘O’ becomes a ‘0’ or an ‘A’ becomes a ‘4’, this quickly leads to misunderstandings and errors. At best this is unpleasant, at worst costly, which makes it all the more important to choose an OCR system that is as powerful as possible. A prototype clearly demonstrates how, thanks to the collaboration between two highly specialised companies, very reliable and fast text recognition with AI can be implemented directly on a camera in the future.

The combination of Denknet OCR and industrial cameras from IDS Imaging Development Systems scores particularly well in terms of the simplicity with which an OCR application can be set up and used.

The demo system is the result of IDS’s combined expertise in the field of industrial cameras and Denkweit’s AI algorithms. “At product level, we are demonstrating the combination of our intelligent IDS NXT malibu industrial camera and the Deep OCR solution with Denknet,” explains Patrick Schick, Product Owner at IDS. Thanks to an Ambarella SoC (System on Chip) integrated into the camera, it is possible to perform AI-based text and character recognition directly on the camera. As soon as the product is ready for series production, customers will be able to use the technology ‘out of the box’ without having to connect an industrial PC.

He continues: “The trend is towards making AI smaller, faster and cheaper to implement. Our demo system achieves speeds in the low millisecond range both when searching and finding the text blocks and when reading the text. The prototype shows how high-quality OCR can be performed quickly on a small embedded device.”

Optical character recognition is still one of the most difficult disciplines for machine intelligence in image processing. The sheer diversity of possible characters and of the methods used to apply them to a wide variety of surfaces gives an idea of the challenges involved. Converting such complex visual data into clear, structured texts is no easy task – especially when dirt, reflections or material shape errors also come into play. Overlapping or incomplete characters, as well as a generally low pixel resolution of the image data, can mean that characters cannot be reliably distinguished from one another by man or machine.

This is precisely where Denknet, the AI vision solution for customised image analyses, can help. In addition to leading AI technology, users have access to an extremely high-performance and constantly evolving OCR model. Thanks to advanced deep learning algorithms, characters can be accurately identified and interpreted even under difficult conditions. It doesn’t matter whether Denknet has to deal with distorted fonts, low-contrast surfaces or different lighting conditions – the system works with high precision. It can read text from a variety of surfaces, including packaging materials, labels and documentation.


In real-world applications, the single-device solution can, for example, help to make the handling of goods in the production process more reliable. Processes such as sorting, inventory management and shipment tracking can also benefit from automatic text recognition ‘on the edge’.

Thanks to the deep learning algorithms, characters can be identified and interpreted accurately even under difficult conditions. It doesn’t matter whether the Denknet AI is dealing with distorted fonts, different lighting conditions or low-contrast surfaces, as shown here on the tire sidewall.

Another positive aspect of a good OCR model is its ability to recognise not only individual characters, but also the relationships between them – in the case of character sequences, such as serial numbers or words – and to take this knowledge into account when recognising characters. The better the OCR can predict subsequent characters and weight the reading result accordingly, the more robustly and precisely it can solve special applications.
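How knowledge about a character sequence can settle an ambiguous reading is shown in the following Python sketch. It uses a deliberately simple stand-in, a fixed serial-number format, rather than a learned sequence model; the function names, the pattern syntax and the confidence values are invented for illustration and say nothing about how Denknet works internally.

```python
from typing import Dict, List


def fits(ch: str, expected: str) -> bool:
    """'A' expects a letter, '9' expects a digit, anything else is free."""
    if expected == "A":
        return ch.isalpha()
    if expected == "9":
        return ch.isdigit()
    return True


def read_with_format(candidates: List[Dict[str, float]], pattern: str) -> str:
    """Pick, per position, the best-scoring candidate that fits the format."""
    chars = []
    for scores, expected in zip(candidates, pattern):
        valid = {ch: s for ch, s in scores.items() if fits(ch, expected)}
        pool = valid or scores  # fall back to raw scores if nothing fits
        chars.append(max(pool, key=pool.get))
    return "".join(chars)


# Invented per-character confidences for a serial number "AO07".
candidates = [
    {"A": 0.55, "4": 0.45},
    {"O": 0.60, "0": 0.40},
    {"O": 0.55, "0": 0.45},
    {"7": 0.90, "T": 0.10},
]

naive = "".join(max(scores, key=scores.get) for scores in candidates)
constrained = read_with_format(candidates, "AA99")
```

Reading each character in isolation yields `AOO7`, while the format constraint turns the third character into a zero. A trained model can learn such dependencies from data instead of relying on a hand-written pattern.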

Continuous optimisation for future-proof processes

Denknet OCR is designed to learn and adapt over time, improving accuracy with each iteration. This means that Denknet OCR continues to evolve without the need for constant manual updates. All development steps are strictly versioned so that application developments can fall back on defined versions. However, you also have the option of updating to a new, improved version to ensure versatile and robust reading at all times.

For quality assurance purposes, the performance and reproducibility of the trained networks can be tested and verified in a quality centre against sample data sets before a production system is updated with new software.

But what if a character cannot be recognised after all?

Then it is important that fine-tuning is not simply a matter of ‘re-learning’ the network. Consider, for example, that the OCR model has been trained with 2 million images and the user now wants to teach the OCR model something new with a few images of their own. What weighting should be given to such information in the model in order to make a difference but not change everything? This is exactly where a lot of expertise is required from the provider to expand the AI in such a way that the previous stable recognitions are not negatively affected by such an adjustment.

Read any text reliably with Denknet OCR

An example: For some reason, an OCR has problems with numbers and the user only annotates numbers during the training process, never letters. The aim is to use intelligent ‘knowledge backup’ to prevent this network from only being able to successfully read numbers at some point because it thinks it doesn’t need to read letters.

The Denk Vision AI Hub therefore generates suitable artificial data for all new image data when fine-tuning the Denknet OCR in order to further train and weight the network to the right extent. This prevents the OCR from losing its previous capabilities, no matter how long it continues to be trained. At the same time, ‘retraining’ remains easy for the user of the Vision AI Hub and fast and performant thanks to cloud-based training in the background. In the best-case scenario, the basic skills of the OCR are so good that users no longer need to retrain at all.
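The idea of padding a few new user images with additional data so that old capabilities are not lost resembles what is generally known as rehearsal or replay in machine learning. The Python sketch below illustrates only that general technique. How the Denk Vision AI Hub actually generates and weights its artificial data is not described here, so the function name, the replay ratio and the sample data are assumptions.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[str, str]  # (image identifier, label text)


def build_finetune_set(new_samples: Sequence[Sample],
                       replay_pool: Sequence[Sample],
                       replay_per_new: int = 3,
                       seed: int = 0) -> List[Sample]:
    """Mix each new sample with several replay samples, then shuffle."""
    rng = random.Random(seed)
    n_replay = min(len(replay_pool), replay_per_new * len(new_samples))
    mixed = list(new_samples) + rng.sample(list(replay_pool), n_replay)
    rng.shuffle(mixed)
    return mixed


# The user annotates digits only (invented examples) ...
new_samples = [("user_001", "4711"), ("user_002", "0815")]
# ... while the replay pool still covers letters (invented examples).
replay_pool = [(f"synthetic_{i:03d}", label) for i, label in enumerate(
    ["ABC", "XYZ", "A1B2", "QRS", "LMN", "DEF", "GHI", "JKL"])]

finetune_set = build_finetune_set(new_samples, replay_pool)
```

With a ratio of three replay samples per new sample, the digit-only annotations make up a quarter of the fine-tuning set, so letters remain represented in every training round.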

Lowering costs while increasing product quality: AI-based pattern recognition and analysis is becoming a game changer in manufacturing quality control. This is especially true when combined with optical techniques such as polarization imaging and infrared hyperspectral analysis.

Optical methods with computer-assisted image analysis play a key role in automating quality control.

The integration of AI into industrial image processing is currently revolutionizing this field. This shift is driven by clear advantages: in conjunction with recent advancements in optics, such as IR hyperspectral analysis and polarization imaging, significant cost and time savings can be achieved compared to traditional methods. Another major benefit of AI-supported imaging systems is their precision. These systems can detect even the smallest deviations from target specifications – anomalies that are often missed by conventional methods.

While traditional machine vision in quality control identifies only existing defects, AI-enabled systems can go further, predicting potential future issues by analyzing large data sets and generating timely alerts. These insights can be integrated into production planning and management systems, enabling not only product improvements but also minimizing equipment downtime. This, in turn, extends the lifespan of production systems.

The combination of AI and advanced optical methods is improving quality control, offering significant cost and time savings.


The collected data also supports companies in making their production processes more flexible and reducing manufacturing costs – an especially critical advantage for high-wage countries like Germany in the global marketplace.

The success of these systems depends on the careful alignment of optical system components, including lighting, lens selection, and image sensors, with the AI software. For instance, when inspecting large objects like injection-molded or stamped parts, the seamless interaction of components significantly enhances the performance of neural networks. Similarly, for processes with long or extended cycles, AI excels by analyzing data patterns to deliver highly accurate results in a short amount of time.

Among the technologies providing competitive advantages in AI-assisted optical systems for automated production processes are spectral imaging and polarization optics.

Spectral imaging leverages the unique reflection and transmission properties of materials across the electromagnetic spectrum for identification and measurement. This approach is particularly effective in the shortwave infrared (SWIR) range, where materials often transition between reflective and transparent states. This capability enables exceptional analytical insights. However, the technique requires high-quality optics with advanced features such as chromatic correction, excellent stray light management, and high transmission efficiency.

Recent advances in sensor technology further enhance the utility of spectral imaging. Modern SWIR sensors, particularly those based on indium gallium arsenide (InGaAs), offer both high sensitivity and exceptional resolution. Their compact size and low energy consumption make them suitable for mobile applications and allow for the development of space-efficient devices.

Polarization imaging is another innovative technique for optical quality control. It exploits the property of electromagnetic waves – including light – to oscillate within a specific plane of polarization. Using polarizers, polarized lighting, and specialized polarizing filters, this method provides insights into defects or inclusions in glass or transparent plastics that are otherwise invisible. These insights help identify stresses within materials under inspection. Additionally, polarization imaging opens up valuable new possibilities for surface inspections.
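One common way to quantify polarization is to measure the intensity behind linear polarizers at 0°, 45°, 90° and 135° and to derive the linear Stokes parameters from these four values. The Python sketch below shows this standard calculation. The four-angle setup is an assumption chosen for illustration and is not stated in the article; the function names are hypothetical.

```python
import math
from typing import Tuple


def stokes_from_four_angles(i0: float, i45: float, i90: float,
                            i135: float) -> Tuple[float, float, float]:
    """Linear Stokes parameters S0, S1, S2 from four polarizer angles."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # +45 degrees vs. -45 degrees
    return s0, s1, s2


def dolp_aolp(i0: float, i45: float, i90: float,
              i135: float) -> Tuple[float, float]:
    """Degree (0..1) and angle (radians) of linear polarization."""
    s0, s1, s2 = stokes_from_four_angles(i0, i45, i90, i135)
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aolp = 0.5 * math.atan2(s2, s1)
    return dolp, aolp


# Fully horizontally polarized light versus unpolarized light.
polarized = dolp_aolp(1.0, 0.5, 0.0, 0.5)
unpolarized = dolp_aolp(0.5, 0.5, 0.5, 0.5)
```

Evaluated per pixel, local changes in the degree or angle of polarization can point to stress or inclusions in transparent parts that a plain intensity image does not show.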

The combination of AI and optical methods also delivers another critical advantage: time savings. In industrial production, short cycle times and efficient processes are vital competitive factors that drive higher productivity. Traditional methods often require separate testing procedures for material properties, dimensional accuracy, and surface characteristics. Multi-aperture lenses, however, allow for these inspections to be consolidated. These lenses simultaneously supply multiple image sensors with data from various spectral ranges, enabling comprehensive quality checks in a single pass.

Similarly, multi-measurement-point lenses enable the integration of multiple measurement points into a single testing station using only one camera sensor. This reduces the number of cameras and sensors required for quality control, simplifying integration into existing production lines.

At Photonics West: Booth 1649 (Stand of Precisioneers Group)

AUTHOR Andreas Wegner-Berndt Managing Director at Vision & Control

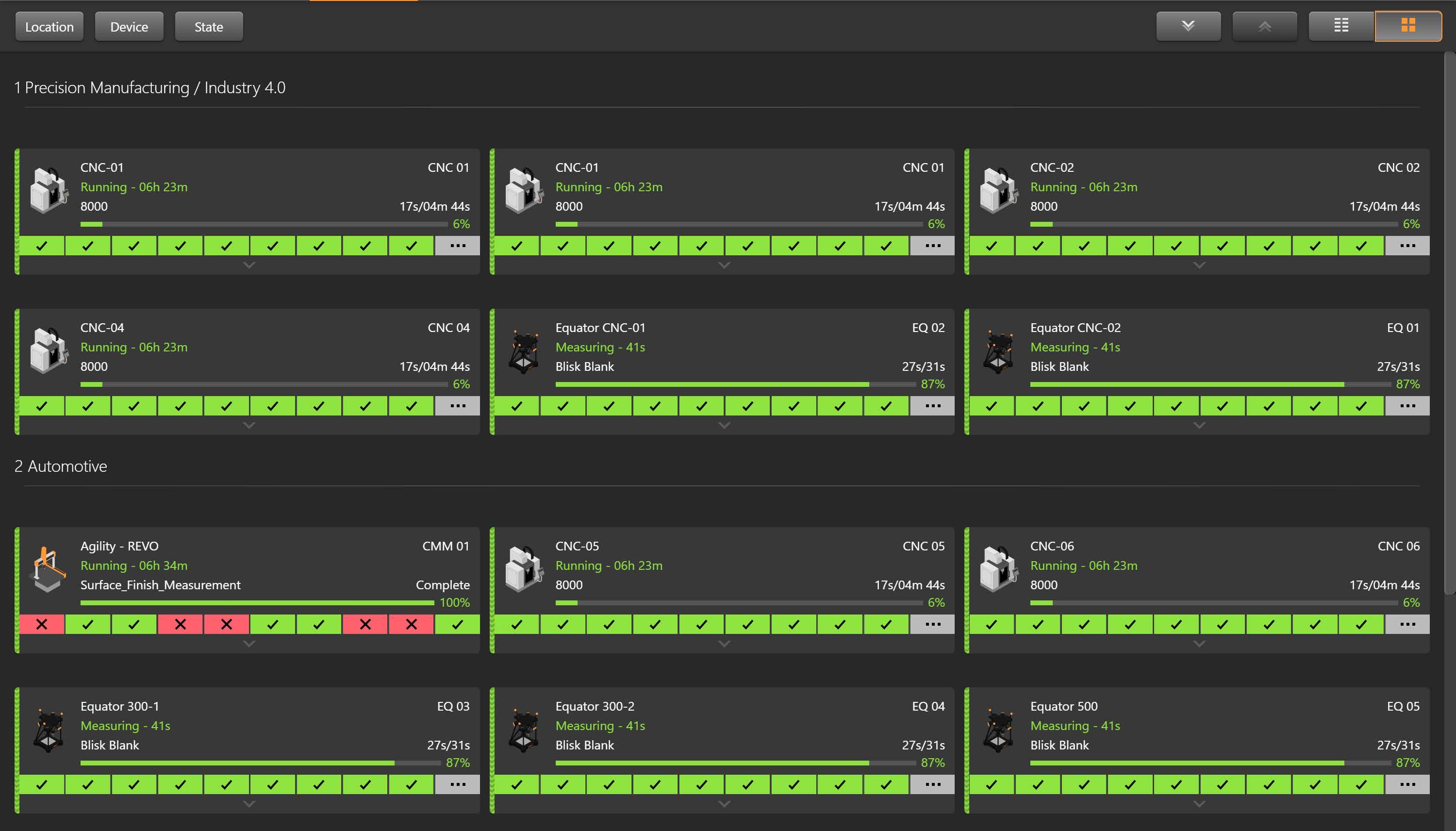

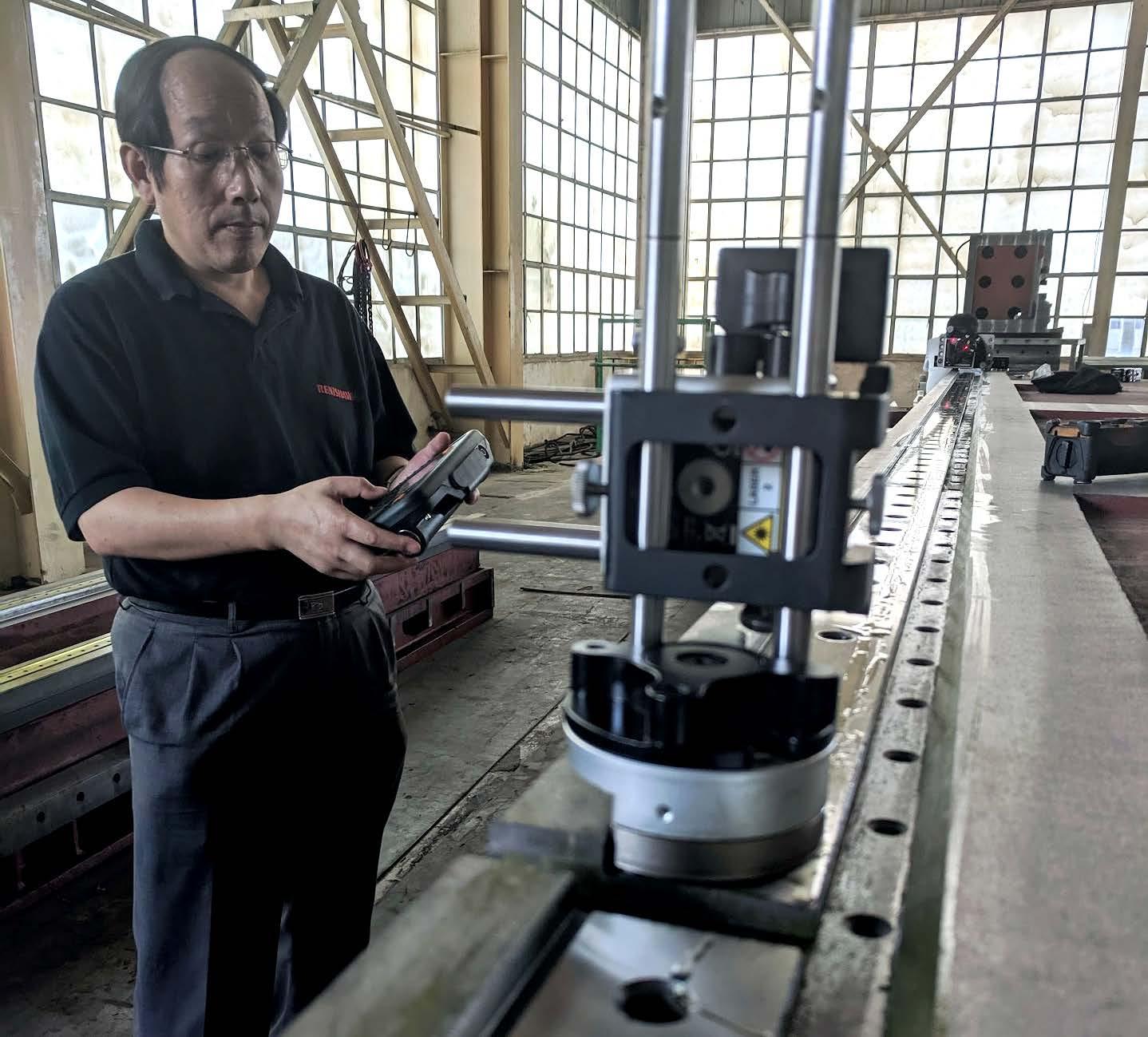

The Renishaw Central platform provides machine shop connectivity, consistency, control, and confidence. It allows manufacturers to harness end-to-end process data and use it to develop a robust factory-wide system architecture.

Renishaw Central collects and provides visibility of machining process data across the factory for insights at the point of manufacture.

Global engineering technologies company, Renishaw, demonstrated its new manufacturing connectivity and data platform at IMTS 2024: Renishaw Central. It’s a data-driven solution designed to transform the productivity, capability, and efficiency of manufacturing operations. Bringing the power of connectivity to the machine shop floor, the system collects accurate, actionable data on machines, parts, and processes from across the factory, and presents it centrally for insight and interrogation at the point of manufacture.

The platform enables the user to monitor and update machining and quality control systems. Manufacturing process parameters can also be updated using its unique and patented Intelligent Process Control (IPC) software functionality. The ability to predict, identify and correct process errors before they happen supports increasingly automated solutions and processes for long-term productivity, capability, and efficiency gains.

“The Renishaw Central concept was born out of our own need to digitalize, visualize and control the manufacturing and measurement processes within our own production facilities. We wanted to reduce assumptions when problem solving, and facilitate the adoption of automated process control,” said Guy Brown, Renishaw Central Development Manager. “Because we live and breathe many of the same challenges faced by our customers, we’re confident that we’ve created a digital solution capable of driving actionable data across machining shop floors everywhere.”

With Renishaw Central, manufacturers can check the performance of devices on the shop floor, understand device utilization, and examine and validate part quality.

Renishaw is a supplier of measuring and manufacturing systems. Its products give high accuracy and precision, gathering data to provide customers and end users with traceability and confidence in what they’re making.

It is a global business, with over 5,000 employees located in the 36 countries where it has wholly owned subsidiary operations. The majority of R&D work takes place in the UK, with the largest manufacturing sites located in the UK, Ireland and India.

For the year ended June 2023 Renishaw recorded sales of £688.6 million of which 95 percent was due to exports. The company’s largest markets are China, USA, Japan and Germany.

Renishaw Uses Process Automation Technologies in its Own Production

Renishaw has used process automation technologies in the manufacture of its own products for over 30 years. During its in-house design and development, the Renishaw Central platform was deployed in the UK at Renishaw’s own low-volume high-variety manufacturing facilities at Miskin and Stonehouse. 69 machines were connected to Renishaw Central, and both sites subsequently reported a reduction in unplanned machine downtime caused by automation system stoppages.

Collaboration with those working directly with Renishaw Central in the machine shop, including production engineers, and maintenance and operations staff, allowed Renishaw to engineer a product that solves real-world problems. “Our original aim for Renishaw Central was to introduce further automation for lathes using our IPC technology, and this is progressing well. But an unexpected and positive outcome has been Renishaw Central’s ability to highlight and rank unplanned stoppages of our automation systems,” said Guy. “Analysis of this information has guided remedial actions, leading to a 69 percent reduction in automation stoppages and significant improvements in utilization.”
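Ranking unplanned stoppages and expressing an improvement as a percentage are simple calculations, sketched below in Python. The event log, the cause names and the before/after figures are invented purely for illustration; they are not Renishaw data, and the functions do not represent the Renishaw Central API.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Stoppage = Tuple[str, float]  # (cause, downtime in minutes)


def rank_stoppages(events: Iterable[Stoppage]) -> List[Tuple[str, float]]:
    """Sum the downtime per cause and sort with the largest total first."""
    totals: Dict[str, float] = defaultdict(float)
    for cause, minutes in events:
        totals[cause] += minutes
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def reduction_percent(before: float, after: float) -> float:
    """Relative reduction from 'before' to 'after' in percent."""
    return 100.0 * (before - after) / before


# Invented example log of automation stoppages.
events = [
    ("gripper fault", 12.0),
    ("pallet misload", 30.0),
    ("gripper fault", 25.0),
    ("door interlock", 8.0),
]
ranking = rank_stoppages(events)
```

In this made-up log the gripper fault leads the ranking with 37 minutes, so it would be the first candidate for remedial action.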

A global selection of pilot customers who trialled Renishaw Central also confirmed that access to standardized end-to-end data provided an insight into their processes that has allowed them to improve manufacturing performance.

Renishaw Central collects and provides visibility of machining process data across the factory for insights at the point of manufacture. With Renishaw Central, manufacturers can check the performance of devices on the shop floor, understand device utilization, and examine and validate part quality. User-friendly dashboards show live device data. Data can be passed into industry-leading tools such as Microsoft Power BI via APIs. Data analytics can then be used for in-process control applications and continuous improvement.

At Photonics West: Booth 3575

CONTACTS

Renishaw, Inc., West Dundee, IL, USA

March 12, 2025: Robotics Day

Robots have become an integral part of industry, and this will also be the case in small and medium-sized enterprises and the skilled trades in a few years’ time. Our webinars show you the best way to enter the world of robotics and why now is the right time.

April 16, 2025: Metrology & Precision Manufacturing

High quality standards and the optimization of production efficiency are at the heart of modern manufacturing processes. Both are crucial for manufacturers to remain competitive. This event therefore revolves around measurement technology, production software and machine vision – in short: technologies that help to optimize your production.

June 4, 2025: Machine Vision, Robotics, and AI combined

Only robots with the ability to see can perform complex tasks such as bin picking or handling unsorted objects on conveyor belts. Cameras provide this sense of sight. In combination with artificial intelligence, the range of applications is immense.

June 25, 2025: Panel discussion: What were the biggest trends at Automate?

At Automate, the largest automation trade fair in North America, numerous innovative products were once again on display or even presented to the public for the first time. The expert panel discusses the highlights and technology trends that emerged at the trade fair.

Do you have expert knowledge that you would like to share?

Do you want to speak on a big stage on relevant industry topics?

Do you have an exciting innovation that you would like to present to your target group?

Then the digital events from inspect and messtec drives Automation are just right for you. They allow you to reach over 200,000 machine vision users and integrators, engineers, automation specialists and machine builders worldwide.

Interested? Then get in touch with us.

In addition to these events, we will also be happy to plan an individual webinar with you at a time that best suits your marketing plan.

Sylvia Heider Media Consultant Tel.: +49 6201 606 589 sheider@wiley.com

Birdie Ghiglione Sales Development Manager Tel.: +1 206 677 5962 bghiglione@wiley.com

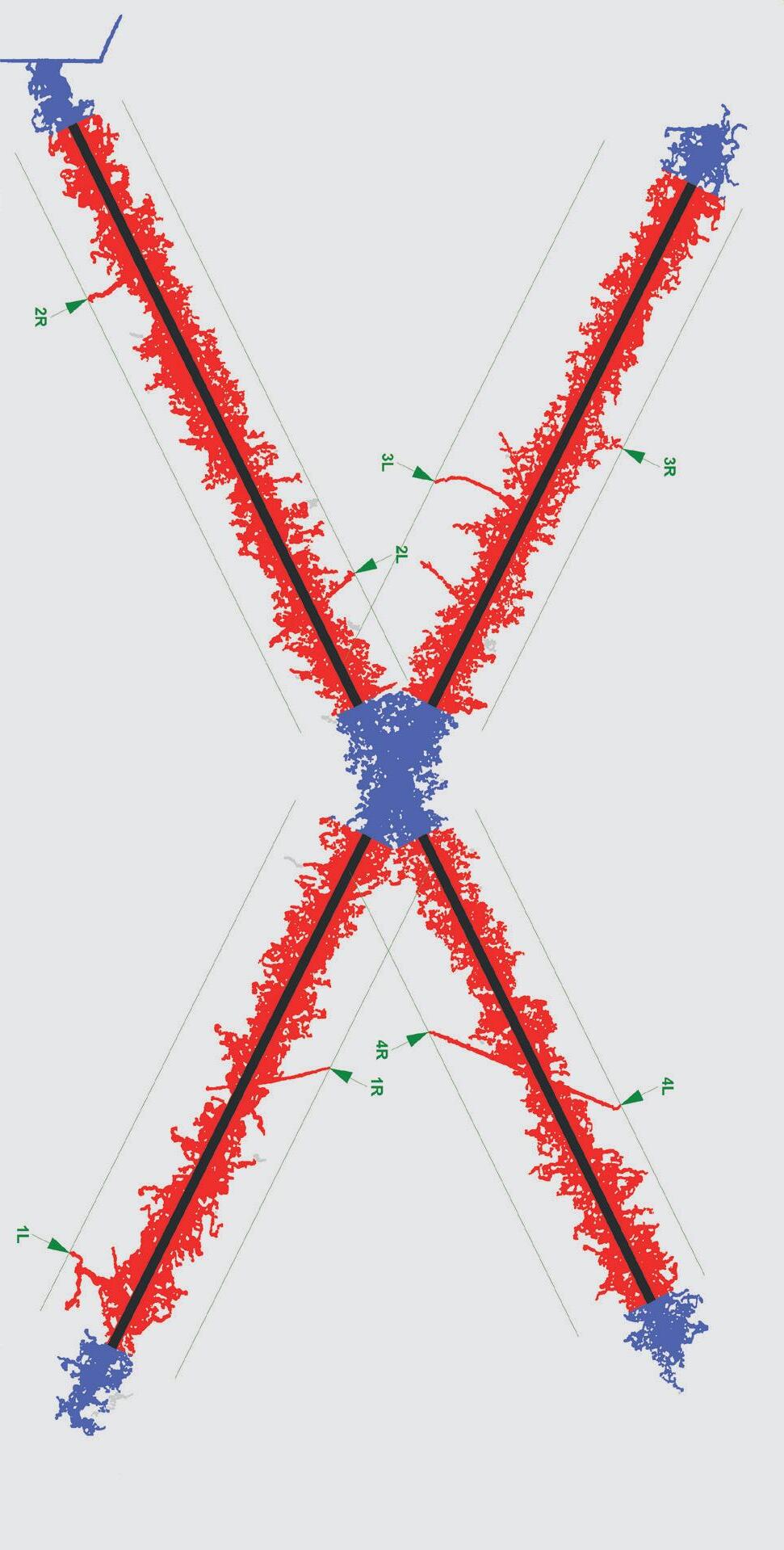

Researchers are using the principle of Gobo projection to set new standards in 3D metrology. Their technology surpasses previous systems in speed, accuracy, and robustness. It improves processes in various industries, including automotive, safety, shipbuilding, and aviation. In a joint project, advanced measurement systems were developed for use in safety tests.

Whether it’s monitoring industrial manufacturing processes, capturing people or vehicles, or in medical or sports applications: acquiring 3D data continues to grow in importance. The more dynamic a situation is, i.e., the faster an object or person moves during the measurement process, the tougher the demands on the measurement method. Conventional techniques quickly reach their limits: in vehicle crash tests, for example, objects must be marked in advance using special markers. However, this is not possible for all objects.

Test setup on the sled test bench with goCRASH3D: at test start (left picture) and during the test (right picture)

For more than ten years, researchers at Fraunhofer IOF have been working with Volkswagen to overcome this challenge. They have developed a special measurement system for high-speed 3D data acquisition without prior preparation of the object. Their sensor essentially consists of two high-speed cameras and a pattern projector. Because only high-performance cameras are commercially available, the researchers in Jena, Germany, have developed a novel high-speed projection method: the so-called Gobo projection of aperiodic sinusoidal patterns.

How Gobo technology works

Gobo stands for “GOes Before Optics” and refers to the mask in a kind of slide projector. The Fraunhofer researchers have advanced this special technique, which originates from stagecraft. In their Gobo projector, a disk with an irregular fringe pattern rotates in front of a powerful light source. This generates a time-varying, nonperiodic sine pattern that is projected onto the object to be measured. At the same time, two high-speed cameras observe the scene from different angles. The pattern sequence allows the pixels in the images from the two cameras to be matched unambiguously, and these matches can then be used to calculate the 3D coordinates of the object points.
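Because the projected pattern changes from frame to frame, every object point sees its own brightness sequence over time. One common way to find corresponding pixels in two cameras is to compare these temporal sequences with a normalized cross-correlation. The Python sketch below illustrates that idea; it assumes this matching criterion for illustration and is not a description of the Fraunhofer IOF software. All names and values are hypothetical.

```python
import math
from typing import Sequence


def temporal_ncc(a: Sequence[float], b: Sequence[float]) -> float:
    """Normalized cross-correlation of two brightness sequences (-1..1)."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0


def best_match(pixel_sequence: Sequence[float],
               candidate_sequences: Sequence[Sequence[float]]) -> int:
    """Index of the candidate pixel whose sequence correlates best."""
    return max(range(len(candidate_sequences)),
               key=lambda i: temporal_ncc(pixel_sequence,
                                          candidate_sequences[i]))


# Brightness of one pixel in camera 1 over five pattern frames (invented).
pixel_cam1 = [0.1, 0.9, 0.4, 0.7, 0.2]

# Candidate pixels in camera 2; candidate 1 sees the same pattern sequence
# with a different gain and offset, as a different viewing angle might cause.
candidates_cam2 = [
    [0.5, 0.5, 0.6, 0.4, 0.5],
    [0.25, 0.65, 0.40, 0.55, 0.30],
    [0.9, 0.1, 0.6, 0.3, 0.8],
]
match_index = best_match(pixel_cam1, candidates_cam2)
```

The correlation ignores gain and offset differences between the cameras, which is why candidate 1 is selected. Once a match is found, the 3D point follows from triangulation with the calibrated camera geometry.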

In cooperation with Volkswagen, Fraunhofer IOF has created two measurement systems that use Gobo technology: Gospe3D and Gocrash3D. The goal of the Gospe3D system, developed in 2016, was to track the deployment of an unmodified airbag from series production over time. At full resolution, the system generates up to 1,200 independent 3D data sets per second, each with one million 3D points. The speed can be further increased by using partially overlapping image stacks and a reduced resolution, which has made it possible to measure up to 50,000 3D images per second. Such high-speed measurements allow for capturing even the fastest movements in three dimensions and in detail. This enables the user to detect potential for optimization in airbag development at an early stage on the component test stands and to optimize the simulation forecasts of the tests in a systematic way. Due to the flexibility of the projection principle, Gospe3D can be adapted to a wide range of measurement tasks. In addition to various airbag tests, it has already been used by Volkswagen for pedestrian protection tests, among other things.

The tests carried out with the Gospe3D were conducted on separate test stands outside the vehicle. A few years ago, the researchers began developing a 3D sensor that can actively ride along in a crash test, survive the crash without damage, and three-dimensionally measure movements or deformations in the vehicle interior. Their efforts resulted in Gocrash3D, an ultra-robust 3D projection system that can measure accurately even at accelerations of up to 60 g and generates up to 1,200 3D images per second, each with up to 250,000 3D points. With a measuring field of up to 0.7 m × 0.7 m, the new sensor allows for capturing areas in the vehicle interior, such as the footwell, whose movements or deformations could, thus far, not be measured or monitored without specially applied markers. Gocrash3D was first used in 2023 by Volkswagen. As it can be seamlessly and easily integrated into the test setups, Gocrash3D offers new possibilities for safety analyses. For other applications, the system can be customized to the measurement task. For example, Volkswagen plans to combine several systems to fully capture objects from all sides and further optimize simulation forecasts. Yet the potential of the novel technology ranges from industrial manufacturing and production to quality assurance in toolmaking or mechanical engineering, as well as in medicine and forensics.

At SPIE Photonics West, Gocrash3D will be exhibited at the German Pavilion in Hall F, Booth 4205.


AUTHORS

Dr. Stefan Heist

Kevin Srokos

Fraunhofer IOF

Frank Scherwenke

Dr. Karsten Raguse Volkswagen AG, Vehicle Safety –Testing Technology

CONTACTS

Fraunhofer Institute for Applied Optics and Precision Engineering IOF, Jena, Germany

Dr. Martin Landmann Fraunhofer IOF

Tel: +49 3641 807-253

Email: martin.landmann@iof.fraunhofer.de

Frank Scherwenke Volkswagen AG

Email: frank.scherwenke@volkswagen.de

Dr. Karsten Raguse Volkswagen AG

Email: karsten.raguse@volkswagen.de

Integrating vision systems with cobots can boost quality and inspection tasks. Basic 2D cameras handle simple tasks, while advanced vision systems are needed for complex precision work. Users have to consider the necessity of vision, application type, lighting, 2D vs. 3D cameras, precision, and cycle time. Radio frequency-based systems offer an alternative for some tasks.

Integrating vision with your collaborative robots (or ‘cobots’) can improve quality and inspection processes, but it’s not a one-size-fits-all process. For example, detecting whether a product has a sticker indicating that it has passed inspection is the type of simple application that can be performed effectively by low-cost, basic 2D camera-based systems. Meanwhile, a cobot working on printed circuit boards (PCBs) on an electronics assembly line might use machine vision to perform tasks with demanding precision requirements, such as inspecting the quality of solder joints post-assembly or locating the position of components on a PCB.

The wide range of vision solutions for cobots can make the process daunting, so consider the following questions before you talk to your integrator:

1 | Do I need vision?

Simple sorting and detection applications can often be carried out using traditional sensors and fixtures. Similarly, palletizing wizards for cobots make it easy to program a cobot to pick up items placed in a grid pattern on a peg board. Applications that almost always require vision include quality inspection tasks and safety.

DCL Logistics – a 3PL fulfillment center in California – deployed a UR10e cobot from Universal Robots equipped with a Datalogic vision camera. When a product is picked, the camera checks the SKU and sends a ‘Pass’ or ‘Fail’ command to the UR10e cobot. If there is an error, the cobot places the product in a reject bin.
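The pass/fail logic of such a SKU check can be sketched in a few lines of Python. The function names, the normalization of the scanned code and the destination labels are assumptions made for illustration; they do not describe the Datalogic or Universal Robots interfaces.

```python
from typing import Optional


def check_pick(expected_sku: str, scanned_sku: Optional[str]) -> str:
    """Return 'Pass' if the scanned SKU matches the order line, else 'Fail'."""
    if scanned_sku is None:  # camera could not read a code
        return "Fail"
    same = scanned_sku.strip().upper() == expected_sku.strip().upper()
    return "Pass" if same else "Fail"


def placement_for(result: str) -> str:
    """Destination the cobot should move the product to (hypothetical names)."""
    return "order_tote" if result == "Pass" else "reject_bin"


result_ok = check_pick("SKU-1042", " sku-1042 ")
result_wrong = check_pick("SKU-1042", "SKU-2077")
result_unread = check_pick("SKU-1042", None)
```

Treating an unreadable code as a failure keeps unverified products out of the order, at the cost of a few extra items in the reject bin.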

Universal Robots’ (UR) UR+ ecosystem contains a range of vision solutions developed by partners designed to integrate seamlessly with UR cobot arms. Here, UR+ partner Photoneo’s 3D Meshing with Motioncam3D color is shown mounted on a UR cobot to enable fast 3D object model creation for manufacturing validation, quality control and further processing.

2 | What application am I looking at?

Vision-based applications fall into three main categories: location (with path planning), inspection, and safety. In part location applications, object recognition and pose estimation capabilities are required. In quality inspection tasks, when the detection of minute artifacts is required, you’ll need high-res cameras with advanced image processing. Safety applications require real-time processing capabilities, tracking functionality, and robust object detection.

3 | What are my lighting requirements?

Some vision systems need consistent, high-contrast lighting conditions to perform effectively. Others provide their own scene illumination. Similarly, some vision systems cope with variable lighting conditions better than others. Ask your integrator whether changes in ambient light levels over the course of a day will impact application performance.

4 | Do I need a 2D or 3D camera?

2D robotic vision excels at non-complex barcode reading, label orientation and printing verification applications. 2D cameras can only provide length and width information – not depth – limiting the range and number of applications they can perform.

3D cameras are designed for applications requiring detailed information regarding an object’s precise location, size, depth, rotational and surface angles, volume, degrees of flatness, and more. Inspection applications requiring minute surface defect detection require 3D camera systems.

5 | What are my application’s precision requirements?

Microchip manufacturing and precision assembly call for advanced vision systems with high-res cameras and advanced image processing. Vision applications with lenient tolerances can be performed by low-cost vision systems, but talk to your integrator about your application’s accuracy, repeatability, and tolerance requirements.

6 | What is the cycle time?

Even in high-speed applications, capturing an image and processing the data takes time. Factor this into your cycle time considerations.
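A simple time budget makes the effect of vision on cycle time tangible. The Python sketch below adds up exposure, image transfer and processing time and shows how much of it can be hidden behind robot motion. All numbers and function names are invented for illustration and need to be replaced by measured values from the actual application.

```python
def vision_time_ms(exposure_ms: float, transfer_ms: float,
                   processing_ms: float) -> float:
    """Time from trigger to result for one image."""
    return exposure_ms + transfer_ms + processing_ms


def total_cycle_ms(robot_motion_ms: float, vision_ms: float,
                   overlap_ms: float = 0.0) -> float:
    """Cycle time when 'overlap_ms' of the vision time runs during motion."""
    return robot_motion_ms + max(vision_ms - overlap_ms, 0.0)


def parts_per_minute(cycle_ms: float) -> float:
    """Throughput for a given cycle time."""
    return 60_000.0 / cycle_ms


# Invented example values.
vision_ms = vision_time_ms(exposure_ms=5.0, transfer_ms=20.0,
                           processing_ms=75.0)
sequential_cycle = total_cycle_ms(robot_motion_ms=1900.0, vision_ms=vision_ms)
overlapped_cycle = total_cycle_ms(robot_motion_ms=1900.0, vision_ms=vision_ms,
                                  overlap_ms=100.0)
throughput = parts_per_minute(sequential_cycle)
```

In this example the vision step adds 100 ms to a 1,900 ms motion cycle, which yields 30 parts per minute. If the image can be processed while the robot is already moving, the vision time disappears from the cycle entirely.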

A radio frequency-based alternative to vision

Laser, radio frequency, and radar technologies are also powerful machine-vision options for robotic applications. Laser systems, including LiDAR, provide precise distance measurements and high-resolution 3D imaging, ideal for navigation and obstacle avoidance, such as in autonomous mobile robots. Radio frequency (RF) based sensors enable robots to detect objects through electromagnetic waves, offering advantages in low-visibility environments. Radar technology, utilizing radio waves, excels in long-range detection and is robust in varying weather conditions, making it useful for outdoor and industrial robotic applications. Each of these technologies enhances the perception of robotic devices, allowing for more advanced decision-making and autonomy in complex, dynamic environments.

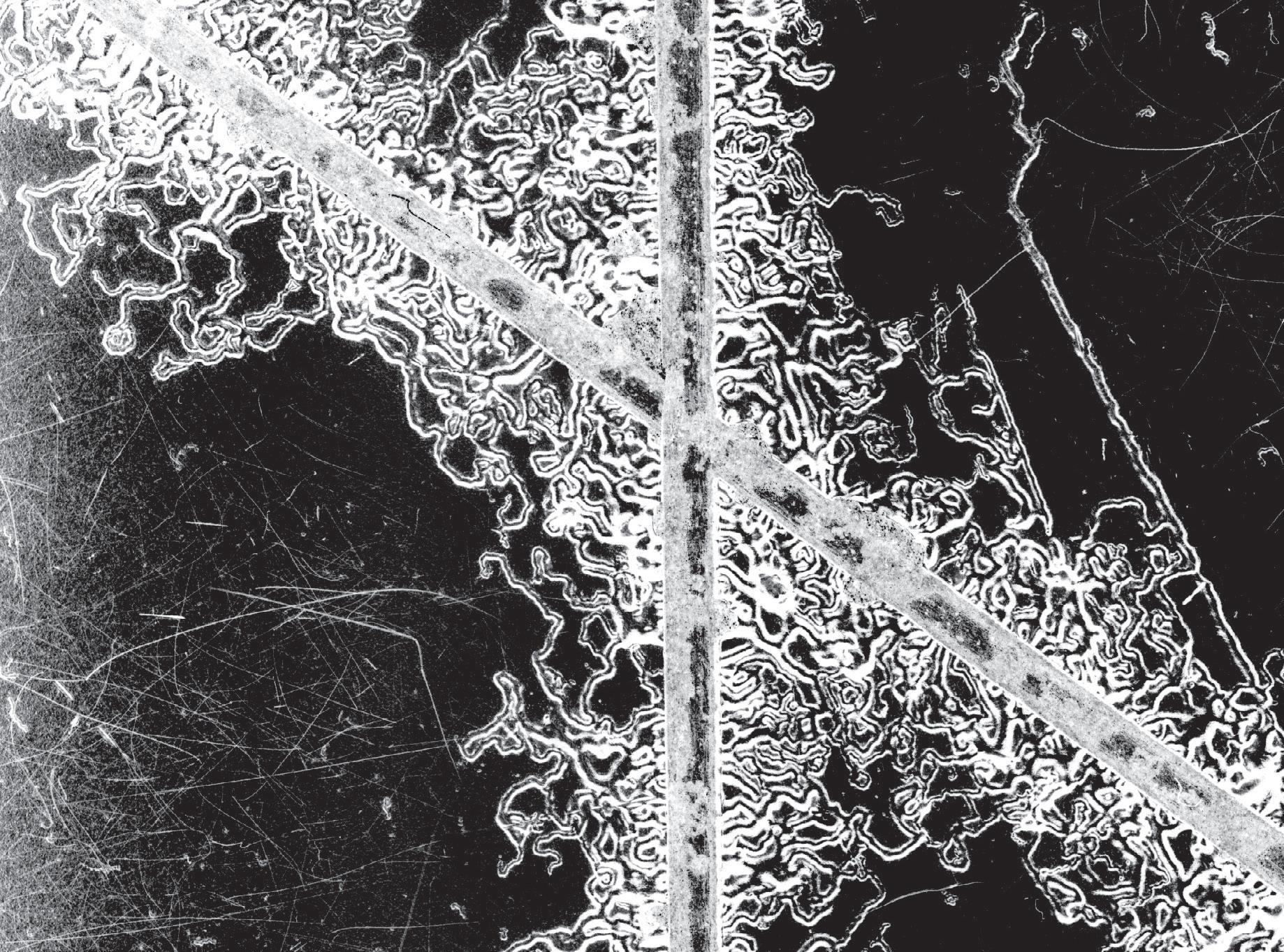

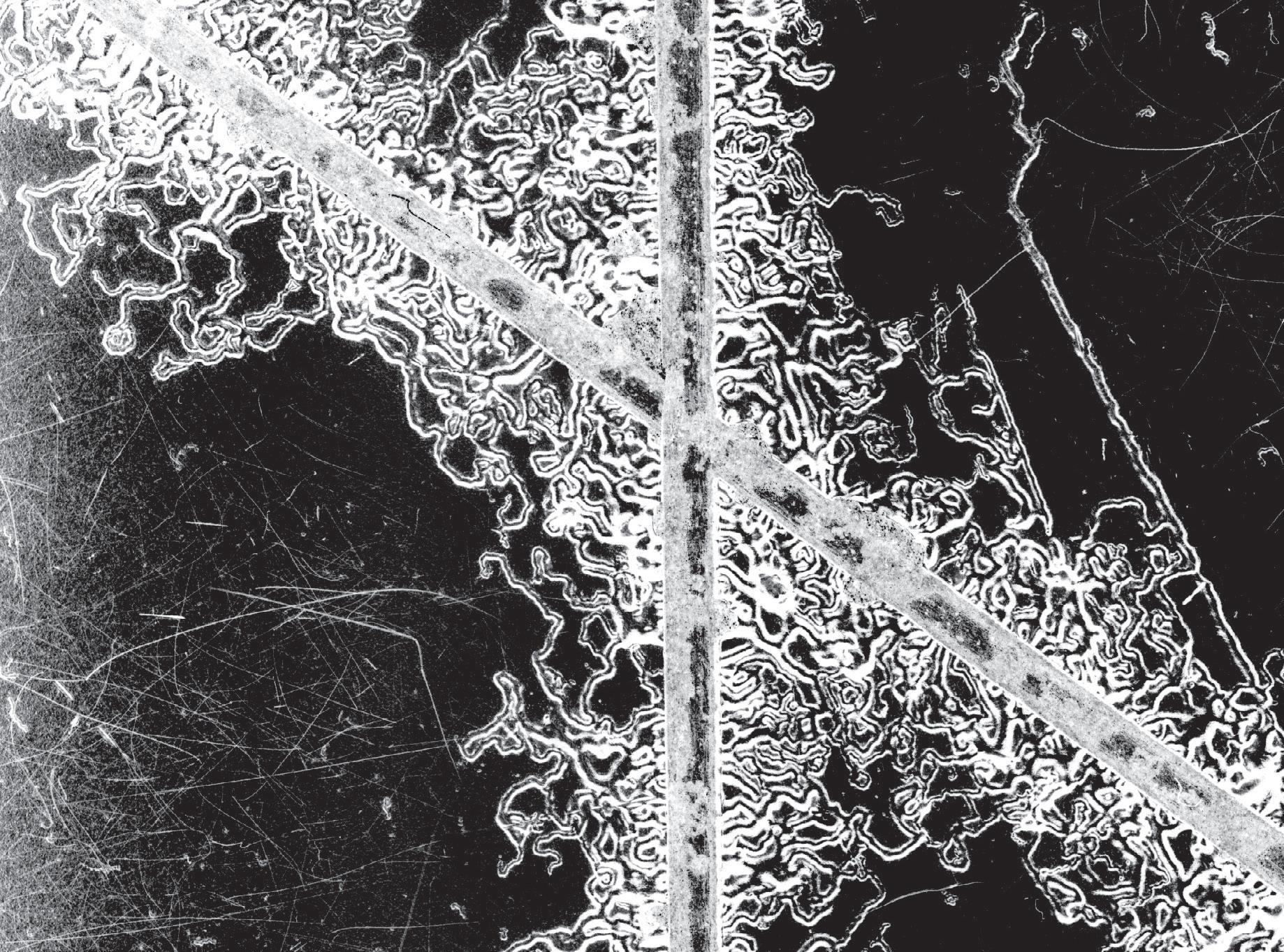

The “Corrosion Inspector” scans a standardized test plate in 1.2 seconds with a resolution of 22 µm per pixel. It uses machine learning to improve corrosion detection for automatic evaluation.

To ensure the quality of corrosion protection for coated components, numerous test panels are produced and evaluated. The manual and visual evaluation is time-consuming, error-prone, and subjective. An automated inspection system has been developed to streamline this process, delivering digital results within 5 to 10 seconds. Through enhancement using machine learning, even challenging samples can be efficiently and accurately evaluated.

During the development of coating systems with improved corrosion protection and as part of the quality assurance of coated components, a large number of test panels are produced. These coated test panels are scribed and weathered in special climatic chambers. Until now, it has been customary to evaluate the corrosion phenomena caused in this way manually and visually – a very laborious task that is time-intensive, error-prone and subjective. A magnifying glass with an integrated scale is often used as an aid.

Schäfter+Kirchhoff have developed the “Corrosion Inspector” to speed up and objectify the evaluation of filiform and other corrosion phenomena on test panels. The fast automatic or semiautomatic evaluation and the generation of digital results contribute to a considerable rationalization of the testing effort. The test methods are non-contact and do not require partial destruction of the samples.

Evaluation of filiform corrosion on different scribe patterns. The infiltration area, the average infiltration width, length and number of filaments and many more are measured.

The system scans a standardized test panel in 1.2 s and delivers a very high-contrast image with a resolution of 0.022 mm per pixel. When using the automatic procedure, the evaluation of a panel, including documentation and image storage, is completed in 5 to 10 seconds.
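To get a feel for the image size behind these figures, the following rough sketch converts a panel size into pixel dimensions at 0.022 mm per pixel and estimates the pixel rate for a 1.2 s scan. It assumes that the scan covers exactly the maximum panel size of 4 x 8 in mentioned at the end of this article; the actual scan area of the device may differ, and the function names are purely illustrative.

```python
MM_PER_INCH = 25.4


def scan_dimensions(width_in, height_in, mm_per_pixel=0.022):
    """Return (width_px, height_px) for a panel of the given size in inches."""
    width_px = round(width_in * MM_PER_INCH / mm_per_pixel)
    height_px = round(height_in * MM_PER_INCH / mm_per_pixel)
    return width_px, height_px


def pixel_rate_mpx_per_s(width_px, height_px, scan_time_s=1.2):
    """Return the average pixel rate in megapixels per second."""
    return width_px * height_px / scan_time_s / 1e6


# Assumed scan area: a full 4 x 8 in panel.
w, h = scan_dimensions(4, 8)
print(w, h, round(pixel_rate_mpx_per_s(w, h), 1))
```

Under these assumptions a single scan is roughly 4,600 by 9,200 pixels, which illustrates why manual inspection with a magnifying glass is hard to match in coverage.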

In addition to the time savings, the system is characterized by the variety of evaluation methods. The software supports the following standards:

▪ Filiform corrosion according to ISO 21227-4

▪ Counting, length measurement and statistics of filaments

▪ Delamination and corrosion degree according to ISO 4628-8

▪ Cross-cut characteristic value according to ISO 2409

▪ Degree of blistering according to ISO 4628-2

▪ Multi-impact test according to ISO 20567-1

Filiform corrosion is a type of corrosion that progresses in filaments under a coating and is usually due to localized damage to the coating.

The Skan-CI software automatically detects the scribe position, length and width. The following are measured:

▪ the infiltration area

▪ average infiltration width

▪ length of filaments, and statistics such as

▪ mean value and median of filament lengths

▪ most frequent filament length

▪ number of filaments per cm scribe length
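The filament statistics listed above can be illustrated with a short sketch. It is not the Skan-CI implementation; the input list of filament lengths, the scribe length and the bin width used to find the most frequent length are all assumptions made for illustration.

```python
from statistics import mean, median, multimode


def filament_statistics(lengths_mm, scribe_length_cm, bin_mm=0.5):
    """Summarize filament lengths measured along one scribe.

    lengths_mm and scribe_length_cm are hypothetical inputs; bin_mm is an
    assumed bin width so that a "most frequent length" exists for
    real-valued measurements.
    """
    if not lengths_mm or scribe_length_cm <= 0:
        raise ValueError("need at least one filament and a positive scribe length")
    # Group lengths into bins of bin_mm before looking for the mode.
    binned = [round(length / bin_mm) * bin_mm for length in lengths_mm]
    return {
        "count": len(lengths_mm),
        "mean_mm": mean(lengths_mm),
        "median_mm": median(lengths_mm),
        "most_frequent_mm": min(multimode(binned)),  # smallest bin on ties
        "filaments_per_cm": len(lengths_mm) / scribe_length_cm,
    }


stats = filament_statistics([1.0, 1.5, 1.5, 2.0, 4.0], scribe_length_cm=10)
print(stats)
```

Reporting the median alongside the mean is useful here because a single long filament, such as the 4.0 mm value in the example, pulls the mean upward.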

True color imaging allows the system to separate red rust, delamination and coating. This enables the automatic detection and measurement of the corresponding surfaces. Corrosion and delamination are measured according to ISO 4628-8.

Cross-cut test: The cross-cut test is an international standard for estimating the resistance of a coating to separation from the substrate when a continuous grid is cut into the coating down to the substrate. The properties measured by this empirical method depend, amongst other things, on the adhesion of the coating to the previous layer or to the substrate.

The system automatically recognizes the removed (partial) surfaces in a very short time and immediately displays the result as the sum of the removed surfaces and also the so-called cross-cut value Gt.
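How a removed area could be turned into a cross-cut value can be sketched as follows. The class limits used here are an assumption based on the commonly quoted classification table of ISO 2409 (classes 0 to 5) and should be checked against the standard itself; the function names and inputs are hypothetical and do not describe the internals of the Skan-CI software.

```python
def removed_area_percent(removed_areas_mm2, lattice_area_mm2):
    """Return the removed share of the cross-cut lattice area in percent."""
    if lattice_area_mm2 <= 0:
        raise ValueError("lattice area must be positive")
    return 100.0 * sum(removed_areas_mm2) / lattice_area_mm2


def cross_cut_class(percent_removed):
    """Map the removed area share (percent) to a cross-cut class 0 to 5.

    Limits are assumed from the commonly quoted ISO 2409 table:
    0 % -> 0, up to 5 % -> 1, up to 15 % -> 2, up to 35 % -> 3,
    up to 65 % -> 4, anything above -> 5.
    """
    if not 0 <= percent_removed <= 100:
        raise ValueError("percentage must be between 0 and 100")
    if percent_removed == 0:
        return 0
    for upper_limit, cls in [(5, 1), (15, 2), (35, 3), (65, 4)]:
        if percent_removed <= upper_limit:
            return cls
    return 5


share = removed_area_percent([1.2, 0.8, 1.0], lattice_area_mm2=25.0)
print(share, cross_cut_class(share))
```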

In reality, the corrosion test panels are not always perfectly prepared. Structures and scratches in the background often directly interfere with the automatic detection of corrosion. The latest software version includes a feature that uses a trainable machine-learning algorithm for separating actual corrosion and background effects.

As an example, consider a scribed panel that has been exposed to a salt spray test. The task is to measure the size and the expansion of blisters caused by infiltrated corrosion close to the scribes. Due to the aggressive environment, the image has a difficult background with visual smears and structures. In some cases, the blisters are barely distinguishable from the background.

Step 1: Annotations: The operator simply marks typical structures of interest such as background, blister and scribe areas in user-defined colors.

Step 2: Training the algorithm: Once trained on these annotations, the algorithm assigns the background, the blisters and the scribes.

Step 3: Measurement: The software measures the expansion and the size of the blisters.

Once the algorithm has been trained, subsequent panels are processed with it.
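The annotate, train and measure workflow can be sketched in a few lines. The article does not say which algorithm the software uses, so the example below substitutes a deliberately simple nearest-mean color classifier as a stand-in; the class names, pixel values and function names are hypothetical, and only the 0.022 mm per pixel resolution is taken from the text.

```python
def train(annotations):
    """Compute one mean RGB color per class from operator-marked pixels.

    annotations maps a class name to a list of (r, g, b) tuples.
    """
    centroids = {}
    for label, pixels in annotations.items():
        if not pixels:
            raise ValueError(f"no annotated pixels for class {label!r}")
        centroids[label] = tuple(
            sum(p[c] for p in pixels) / len(pixels) for c in range(3)
        )
    return centroids


def classify(pixel, centroids):
    """Assign a pixel to the class with the nearest mean color."""
    def squared_distance(label):
        return sum((pixel[c] - centroids[label][c]) ** 2 for c in range(3))
    return min(centroids, key=squared_distance)


def blister_area_mm2(image, centroids, mm_per_pixel=0.022):
    """Measure the total area of pixels classified as blister."""
    count = sum(
        1 for row in image for px in row if classify(px, centroids) == "blister"
    )
    return count * mm_per_pixel ** 2


# Step 1: annotations (made-up sample colors for each class)
annotations = {
    "background": [(200, 200, 200), (210, 205, 195)],
    "blister": [(120, 80, 60), (130, 90, 70)],
    "scribe": [(30, 30, 30), (40, 35, 35)],
}
# Step 2: training
model = train(annotations)
# Step 3: measurement on a tiny 2 x 2 test image
image = [[(205, 200, 198), (125, 85, 66)], [(35, 32, 31), (128, 88, 64)]]
print(blister_area_mm2(image, model))
```

A color-only classifier like this would struggle with exactly the smeared backgrounds described above; practical systems typically add texture or neighborhood features, which is beyond this sketch.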

The “Corrosion Inspector” is used to rapidly and objectively evaluate filiform and other corrosion phenomena on coated test panels up to a size of 4 x 8 in. The automatic evaluation of corrosion is carried out according to various international standards. Even difficult samples can be evaluated using machine learning algorithms.

At Photonics West: Booth 3456

KnowItAll provides top-tier software to identify, analyze, and manage your spectral data. Leveraging Wiley’s renowned spectral data collections, it offers unparalleled solutions for fast, reliable analysis. The new KnowItAll 2025 continues to innovate with more tools to automate and streamline analysis.

High-Speed, SWIR, and Event-Based Cameras at SPIE Photonics West

Automation Camera for Process Control, Monitoring, and Quality Assurance

High-Speed 16K Color Trilinear and Monochrome Line

Metrology for XR Experience

Windows for LWIR Imaging

Cameras with Raspberry Pi 5

CCD-in-CMOS Technology for Ultra-High Speed Imaging

Lucid will showcase its latest camera innovations and advanced sensing technologies at SPIE Photonics West, the leading optics and photonics event, taking place from January 28–30, 2025, in San Francisco, California.

Triton10 – High-Speed 10GigE Camera: The Triton10 is a compact IP67-rated 10GigE camera designed for demanding industrial environments. Its 44 x 44 x 82 mm enclosure provides dust and water protection, Power over Ethernet (PoE), and 17-pin M12 GPIO connectivity. With RDMA support, it enables reliable, high-speed data transfer by streaming image data directly to main memory, bypassing the CPU and operating system.

Atlas25 – High-Bandwidth 25GigE Camera: The Atlas25 delivers high throughput over a 25GigE interface with RDMA support. Paired with Sony’s 4th generation Pregius S CMOS sensors, it offers a 17-pin M12 GPIO and an integrated optical transceiver for a direct LC connection – reducing costs and simplifying installation. By leveraging RDMA, the Atlas25 streams image data directly to the PC’s main memory.

Triton2 – Event-Based Camera: The Triton2 EVS is a 2.5GigE event-based camera powered by Sony’s IMX636/IMX637 vision sensor and supported by LUCID’s Arena® SDK and PROPHESEE’s Metavision® SDK. It offers exceptional performance for motion analysis, object tracking, vibration monitoring, and autonomous driving. Its innovative design ensures lower power consumption and provides greater flexibility for diverse machine vision applications.

Triton2 with High-Resolution SenSWIR Sensors: The Triton2 SWIR camera series features Sony’s advanced IMX992 and IMX993 SenSWIR sensors, delivering superior imaging in the short-wavelength infrared spectrum. With resolutions of 5.2 MP (IMX992) and 3.2 MP (IMX993), these cameras are ideal for applications requiring precise imaging across diverse lighting conditions.

Stop by Lucid’s booth 129 at Photonics West for live demonstrations of the latest camera technologies. To schedule a meeting with a sales representative, write to sales@thinklucid.com.

Flir announced the Flir A6301, a sensitive, cooled midwave thermal imaging camera for 24/7 inspection and automation applications. The A6301 enhances process control and quality assurance in manufacturing by capturing high-speed product movement with up to 20 times less motion blur, ensuring precise temperature readings. It detects small thermal variations and measures temperatures on moving products, aiding machine builders and integrators in challenging machine vision applications. The camera boosts throughput, reduces disruptions, and minimizes downtime. The A6301 is designed with industry-standard interfaces, commands, and connectors, making implementation easy. It features a long-life cooler to reduce maintenance costs and is Flir’s smallest commercial cooled camera, facilitating system integration. When combined with additional sensors and advanced machine learning methods, the Flir A6301 supports smarter, more efficient processes. At Photonics West: Booth 159


Registration deadline: February 14, 2025

The inspect award 2025 offers more categories to give more new products the opportunity to win an award.

Emergent Vision Technologies introduces Eros, a small low-power 10GigE camera. Initially launched as a 5GigE series, Eros was demonstrated at Vision 2024 in Stuttgart, Germany, as a 29 x 29 mm 10GigE camera with BaseT/RJ45 PoE and SFP+ fiber options, consuming 4.8 W with PoE, 4 W without PoE, and 3 W on SFP+ fiber.

These cameras feature Gen4 Sony Pregius S sensors, offering frame rates from 35 to 1586 fps. Additionally, Eros includes models with Sony’s SenSWIR CMOS sensors for SWIR imaging. Eros cameras are also available in polarized sensor models and an ultraviolet model. They support zero-copy imaging and GPUDirect, providing cost-effective solutions for multi-camera applications.

At Photonics West: Booth 2132

JAI introduces two new high-speed line scan cameras in its Sweep Series: the 16K color trilinear SW-16000TL-CXP4A and the monochrome SW-16000M-CXP4A. These cameras feature 16K resolution with large 5 μm square pixels, and a CoaXPress 2.0 interface providing high data throughput of 50 Gbps. The trilinear color model has separate lines for red, green, and blue channels, ensuring precise color reproduction, and includes advanced algorithms for sub-pixel spatial compensation. The cameras are suitable for high-speed applications such as battery, flat panel display, and PCB inspection, with the color model achieving scan rates up to 100 kHz and the monochrome model up to 277 kHz. Larger pixels enhance light sensitivity, and FPGA-based 2x1 horizontal pixel binning further improves performance in low-light environments. The CoaXPress v2.0 interface supports robust features like triggering and long cable lengths. Additional features include versatile GPIO and trigger options, region-of-interest, individual RGB gain and exposure control, and various image processing capabilities. The cameras come with an M95 lens mount and a heat sink for effective heat dissipation.
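A quick back-of-the-envelope check shows how the quoted scan rates relate to the 50 Gbps interface. The sketch below assumes that 16K means 16,384 pixels per line, that each sample is 8 bits deep, and that protocol overhead is ignored; none of these details are stated in the text, and the function name is illustrative.

```python
def line_scan_throughput_gbps(pixels_per_line, lines_per_second,
                              channels=1, bits_per_sample=8):
    """Return the raw image data rate of a line scan camera in Gbit/s."""
    bits_per_second = (
        pixels_per_line * channels * lines_per_second * bits_per_sample
    )
    return bits_per_second / 1e9


PIXELS_16K = 16_384  # assumption: "16K" taken as 16 x 1024 pixels

mono = line_scan_throughput_gbps(PIXELS_16K, 277_000)               # monochrome
color = line_scan_throughput_gbps(PIXELS_16K, 100_000, channels=3)  # trilinear
print(round(mono, 2), round(color, 2))
```

Under these assumptions both models produce raw data rates in the high 30s of Gbps, which fits within the 50 Gbps interface and suggests why the three-channel color model runs at a lower maximum line rate than the monochrome one.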

The latest version of Renishaw’s Carto software (v4.12) includes analysis-based data stitch functionality for long axis measurements using the XK10 alignment laser system. The data stitch function in Carto Explore has been developed to reduce noise on long axis measurements which are often affected by air turbulence. By breaking this measurement down into smaller sections and stitching them together, the environmental effects on each section can be reduced, leading to an increase in the accuracy of the overall measurement.

Renishaw’s Carto software, a digital ecosystem for the company’s laser calibration products, includes three applications. These are Capture for collecting laser measurement data, Explore for powerful analysis to international standards, and Compensate for quick and easy error correction. At Photonics West: Booth 3575

At SPIE AR|VR|MR 2025 and Photonics West 2025 in San Francisco, Instrument Systems will present its high-end measuring instruments for optical quality assurance of AR/VR/MR devices along the entire production chain.

At SPIE AR|VR|MR, the new LumiTop 5300 AR/VR will be presented at booth 6302. This 2D luminance and color measurement camera with 24 MP resolution and straight lens is particularly well-suited for testing AR/VR/MR display modules before installation in the headset. It complements the proven LumiTop 4000 AR/VR with a periscope lens.

Also new is the TOP 300 AR/VR, an optical probe for fast and easy quality testing of headset modules for luminance and color in production and development. It imitates the human eye and is connected directly to a CAS series spectroradiometer.

Additionally on display will be the LumiTop X150, an ultra-high-resolution luminance and color measurement camera developed for quality control of OLED and MicroLED displays at pixel level. At Photonics West: Booth 4205

Silwir Silicon Protective Windows from Midwest Optical Systems (Midopt) are a cost-effective and readily available solution – compared to germanium – for Long-Wave Infrared (LWIR) thermal imaging from 8 to 12 µm. They feature a Diamond-Like Carbon (DLC) coating for durability and a broadband anti-reflection (BBAR) coating for high transmission.

Silwir Protective Windows can withstand harsh environments and temperatures up to 200 °C, are suitable for weight-sensitive applications, and adhere to MIL-F-48616 and MIL-C-48497C standards for severe abrasion resistance. With a Knoop Hardness of 1150, they’re low density, robust, lightweight and less prone to breakage.

At Photonics West: Booth 2461