Embedded Vision Summit: Where Engineers Connect Directly
Interview about Smart Cameras: Fast Time to Market with Low Hardware Costs


Nowadays, everyone is talking about artificial intelligence, even your own grandmother or hairdresser. Personally, I am undecided whether this hype serves our cause, because it pushes economically and socially relevant AI projects, or whether it distracts from the real problems and is therefore counterproductive, because AI is often used just to do “something with AI”. That inevitably goes wrong, and the mood can then suddenly turn against any kind of AI. With these thoughts in mind, I asked ChatGPT about the most important developments in AI and embedded vision over the last 365 days. Yes, I admit it: I was increasingly desperate for a hook for this editorial. And, well, out came a (predictable) list of areas where technology has made progress: more efficient chips, improved algorithms, expanded application areas, and so on. However, one point caught my attention: standardization and open source. ChatGPT writes verbatim: “Overall, standardization and open source tools help to accelerate the development of embedded vision applications, reduce costs and improve quality by leveraging best practices and shared resources.”

I couldn’t have summarized it any better. And it’s great that this realization has also reached generative AI. Because that means it is mentioned on enough websites, relevant portals and technical articles that it has become a natural part of embedded vision.

This gives me hope that, just as there are standards such as GenICam and open source software libraries such as OpenCV, there will soon be something similar for AI. Firstly, this is the only way that users can reasonably compare different AI solutions (transparency, explainability and quality), and secondly, it is the only way that AI systems can be replaced or updated once technological progress has overtaken a formerly highly innovative solution (robustness, security). Several institutions are currently working at political and technical levels to develop ISO standards and the like. Let’s hope that they succeed internationally and that no isolated solutions emerge that make cooperation between North American and European companies more difficult.

PS: Do you have any questions, suggestions or criticism? Please feel free to write to me at dloeh@wiley.com

David Löh, Editor-in-Chief of inspect

With the complete takeover of the distributor Phase 1 Technology, Stemmer Imaging will have its own location in the USA for the first time. Having already been active outside Europe in Mexico and elsewhere in Latin America, Stemmer Imaging is now targeting the North American market with this step. The company is also responding to requests from important existing customers for a physical presence in the USA. At the same time, Stemmer Imaging expects above-average growth rates there in both industrial and non-industrial application areas. It therefore views Phase 1 Technology as a targeted platform investment for expanding business with existing customers and acquiring new ones. The 100 percent acquisition is expected to be completed in the second quarter of 2024.

ABB opened its remodeled North American robotics headquarters and manufacturing facility in Auburn Hills, Michigan, in mid-March. The expansion, worth approximately 20 million US dollars, reaffirms ABB’s commitment to long-term growth in robotics in the US, where ABB management expects annual growth of 8 percent between 2023 and 2026. The company is also expanding its robotics and automation capabilities there and creating 72 additional jobs. This is ABB’s third robot factory expansion, after China and Europe, and reflects the company’s efforts to increase local production.

The expansion of the Auburn Hills robotics plant, which opened in 2015, reflects the company’s focus on the US market and its commitment to further investment in Michigan. It was supported by a 450,000 dollar performance-based grant from the Michigan Business Development Program. ABB capitalizes on the region’s unique concentration of skilled workers and gives people without prior experience or a degree access to training in the skills they need for a successful career in the robotics and automation industry. ABB Robotics has invested a total of 30 million dollars in four US locations since 2019. The latest investment in Auburn Hills is part of ABB’s previously announced 170 million dollar investment in its electrification and automation businesses in the US.

Hexagon’s Manufacturing Intelligence division and Arch Motorcycle, a US-based manufacturer of high-performance motorcycles, have entered into a partnership. The aim of the collaboration is to make quality control easier for Arch during development and production and thus give its customers an exceptional riding experience. Arch combines craftsmanship and engineering with precision technologies to maximize the performance potential of its motorcycles. By fully digitalizing quality processes with modern measurement technology throughout development and production, the partnership helps the motorcycle manufacturer meet its high quality requirements. The Hexagon equipment used by the manufacturing team includes an easy-to-use portable measuring arm (Absolute Arm) with the state-of-the-art AS1 laser scanner, which allows even inexperienced users to enter measurements directly into the associated software.

The 3D laser scanner provides a guided workflow that helps users visualize 3D models and guides them in successfully capturing …


The imec Innovation Award 2024 goes to Lisa Su, Chair and CEO of AMD. The prize recognizes Dr. Su’s contributions to promoting innovation in high-performance and adaptive computing. Since its launch in 2016, the imec Innovation Award has become a hallmark for recognizing pioneers in the semiconductor industry. Previous recipients include Dr. Gordon Moore and Bill Gates.

“I am honored to receive this year’s Innovation Award and to be recognized by Imec for pushing the boundaries of computing. I am incredibly proud of what we have accomplished together at AMD and am even more excited about the possibilities that lie ahead of us to develop innovations that can help solve the world’s most important challenges,” said Lisa Su.

The awards ceremony will take place at ITF World (May 21–22, 2024), where over 2,000 leaders will gather at the Queen Elisabeth Hall in Antwerp, Belgium.

Prohawk AI receives Nvidia Preferred Partner status

Prohawk Technology Group (Prohawk AI) announced that it has been selected as a Preferred partner within the Nvidia Partner Network (NPN). NPN brings together a community of technology leaders that collaborate with Nvidia to develop and deliver state-of-the-art solutions in various industries, including artificial intelligence (AI), deep learning, and high-performance computing. Achieving Preferred partner status underscores Prohawk AI’s expertise and dedication in harnessing Nvidia accelerated computing to enhance video analytics and computer vision applications. The company was previously a member of Nvidia Inception.

Prohawk AI’s patented technology transforms video in real time, on a pixel-by-pixel basis, overcoming environmental obstacles such as rain, snow, sand and pollution, and converting nighttime and other hazardous conditions to unobstructed daytime safety levels with unmatched visual clarity.

The technology is embedded across Nvidia’s platforms, including Nvidia Metropolis (vision AI), Nvidia Jetson (edge AI apps), and Nvidia Holoscan (real-time AI-enabled sensor processing and healthcare) and Prohawk is currently working to integrate it into the Nvidia Isaac (robotics) platform. The Preferred partner status highlights Prohawk AI’s commitment to excellence in leveraging Nvidia’s cutting-edge technologies to deliver innovative computer vision solutions to its customers across multiple industries worldwide.

Braemac has signed a distribution agreement with Efinix. The aim of this strategic collaboration is to strengthen sales channels for Field Programmable Gate Array (FPGA) solutions for markets in the USA and Southeast Asia. Combining Efinix’s technologies with Braemac’s demand generation capabilities and market reach, this partnership is designed to provide additional value to customers in these regions.

Interview with Jeff Bier, Founder and CEO of Edge AI and Vision Alliance

Get a sneak peek at what’s coming up at Embedded Vision Summit 2024. Jeff Bier discusses the exciting lineup, including groundbreaking presentations about embedded vision, panel discussions on AI and edge computing, and more than 75 exhibitors showcasing cutting-edge machine vision technologies.

inspect: What highlights can visitors expect this year?

Jeff Bier: Attendees can look forward to a program packed with exciting speakers and topics, a bustling show floor with more than 75 exhibitors, a Deep Dive Session hosted by Qualcomm, a live start-up competition and a fascinating keynote address.

Our keynote session, “Learning to Understand Our Multimodal World with Minimal Supervision,” will be presented by Professor Yong Jae Lee of the University of Wisconsin-Madison. He’ll be sharing his groundbreaking research on creating deep learning models that comprehend the world with minimal task-specific training, and the benefits of using images and text, as well as other modalities like video, audio and LiDAR.

We’ll also be hosting a panel on generative AI’s impact on edge AI and machine perception, a kind of part two of last year’s session, a recording of which is available. Industry leaders will lend their perspective to questions like: How do recent advancements in generative AI alter the landscape for discriminative AI models, like those in machine perception? Can generative AI eliminate the need for extensive hand-labeled training data and expedite the fusion of diverse data types? With generative models boasting more than 100 billion parameters, can they be deployed at the edge?

Two General Sessions are also on the agenda. The first is “Scaling Vision-Based Edge AI Solutions: From Prototype to Global Deployment,” given by Maurits Kaptein of Network Optix, covering how to overcome the networking, fleet management, visualization and monetization challenges that come with scaling a global vision solution. The second will be given by Jilei Hou of Qualcomm. Jilei will lay out the case for why large generative models must be deployed on edge devices, and Qualcomm’s vision for how we can overcome the key challenges standing in the way of doing so.


We’re also looking forward to the annual Women in Vision Reception—an event that brings together women working in computer vision and edge AI to meet, network and share ideas.

inspect: What is new compared to the Embedded Vision Summit 2023?

Jeff: Like in years past, we categorize the sessions at the Summit into four tracks: Enabling Technologies, Technical Insights, Fundamentals and Business Insights. Some of the most interesting themes covered in the tracks are:

From model to product: Almost every product incorporating AI or vision starts with an AI model. And the latest models tend to get all the buzz: whose object recognition model is fastest, that sort of thing. But the industry has learned over the last few years that you need much more than a model to have an actual product. For example, there’s non-neural-network computer vision and image processing to be done. There’s dealing with model updates. There’s real-world performance to be monitored, measured and fed back to the product team. This year we’ve got a number of great talks focused on what it takes to bring AI-based products to market.

Generative AI’s impact on edge AI and machine perception: The integration of generative AI into edge AI and machine perception systems holds promise for improving adaptability, accuracy, robustness and efficiency across a wide range of applications, advancing the capabilities of edge devices to process and understand complex real-world data. Many of our speakers and exhibitors will be focusing on how things like vision language models and large multimodal models work, and how they’ve been using these new techniques.

Transitioning computing from the cloud to the edge: As the demand for real-time, real-world AI applications continues to rise, the shift from cloud computing to edge deployment is gaining momentum. This transition offers numerous advantages: reduced bandwidth and latency, improved economics, increased reliability, and enhanced privacy and security. By bringing AI capabilities closer to the point of use, companies can unlock new opportunities for innovation and deliver more seamless user experiences.

inspect: How many exhibitors will be on site? What will visitors see here?

Jeff: Every year, the Technology Exhibits are my favorite part of the event; there’s this sense of excitement and innovation. We’ll be hosting more than 75 sponsors and exhibitors at this year’s Summit. Every building-block technology will be represented: sensors, lenses and camera modules, processors and accelerators, software tools and libraries, it’s all there. Attendees can expect to see demos of processors, algorithms, software, sensors, development tools, services and more, all enabling vision- and AI-based capabilities that can be deployed to solve real-world problems.

As an engineer, the thing I love about it is that it’s one of the few places where you can talk directly with the engineers who designed the things you’re looking at, and get your questions answered in a candid fashion.

inspect: Why should you visit the Embedded Vision Summit 2024?

Jeff: From the very first Summit, we’ve prioritized the best content, contacts and technology. That tradition continues 13 years later—let me break it down a little more:

▪ Best content: Our attendees get insights from people who are expert practitioners in the industry and who have proven track records in computer vision and perceptual AI. They not only use this technology, but they live and breathe it.

▪ Best contacts: We attract top-notch attendees and exhibitors. That gives attendees the opportunity to meet more game-changing partners and contacts in one place.

▪ Best technology: We are dedicated to making sure we and our exhibitors bring the most relevant technologies that will help our attendees reach their goals.

Author

David Löh Editor-in-Chief of inspect

Embedded Vision Summit

Date: May 21–23, 2024

Venue: Santa Clara Convention Center


This year’s Embedded Vision Summit in Santa Clara, California, features around 70 exhibitors and once again nearly 100 presentations. As usual, the supporting program is diverse and extensive. And a few special features are also included.

On May 21, 2024, the Embedded Vision Summit kicks off in Santa Clara, California. The afternoon of the first day is traditionally reserved for the Deep Dive session. This year, the topic is “Accelerating Model Deployment with Qualcomm AI Hub,” presented by Qualcomm. In this workshop, Qualcomm will address common challenges faced by developers migrating AI workloads from workstations to edge devices. Qualcomm aims to ease the transition to the edge by supporting familiar frameworks and data types. In addition, Qualcomm offers a range of tools to help advanced developers optimize the performance and power consumption of their AI applications.

Program and Exhibition of Embedded Vision Summit 2024

On the remaining two days, everything revolves around the exhibition, with around 70 companies this time, and the program with nearly 100 presentations. A highlight here is the keynote by Yong Jae Lee, Associate Professor at the Department of Computer Sciences at the University of Wisconsin-Madison. He will speak about “Learning to Understand Our Multimodal World with Minimal Supervision”.

When and Where

• May 21–23, 2024

• Santa Clara Convention Center, 5001 Great America Parkway, Santa Clara, California 95054

Opening Hours:

Tuesday, May 23, 2023: 9:30 a.m. to 8:00 p.m.

Tuesday, May 24, 2023: 9:30 a.m. to 6:00 p.m.

The rest of the talks cover a very broad range of topics. For example, Maurits Kaptein, Chief Data Scientist at Network Optix and Professor at Eindhoven University of Technology, Netherlands, will talk about “Scaling Vision-Based Edge AI Solutions: From Prototype to Global Deployment”. Other sessions cover efficient data handling, introductions to the use of AI, image processing for object detection and much more. The full program with abstracts is available on the Summit website.

Two other special features in the Embedded Vision Summit program are the “Vision Tank Start-Up Competition” (the competitors’ video pitches, some of them very elaborate, can be viewed online) and the “Women in Vision Reception.” The reception will take place on Wednesday, May 22 at 6:30 p.m. after the presentations.

Inspect talked with Magalie Haida from Automation Technology about the development of embedded vision in machine vision. She explained the company’s approach, including its focus on the US market and the significance of its embedded vision solutions such as the IRSX-I thermal camera. Haida also provides insights into potential applications and the technological advancements driving Automation Technology’s future plans, including a new camera series in thermal embedded vision.

inspect: Embedded vision is considered one of the growth areas of industrial machine vision. How is this area developing at Automation Technology?

Magalie Haida: We use embedded vision at several stages of the image processing chain. In our 3D sensors, for example, embedded vision already begins on the sensor chip. With the IRSX-I camera, on the other hand, the entire software concept can be described as embedded vision, as the complete machine vision solution runs on the camera in addition to the image pre-processing. We are therefore observing strong growth in various areas of embedded vision – from on-chip processing to smart camera solutions.

inspect: How important is the US business to the parent company in northern Germany?

Haida: The US business and growth in the North American market are hugely important to the parent company in northern Germany. The US office is AT’s first subsidiary of its kind, and there were focused investments to ramp up the team quickly. Choosing the US as the first expansion location followed research and careful consideration. It was determined that the available market and the appreciation for German engineering were so high that it was a logical step. It was also understood that doing business effectively in North America requires local team members. This is not just to address communication and time zone needs but also to provide the full customer experience.

inspect: How is the US business developing?

Haida: Even with the market in several industries still being a bit down, our business in the US is developing really well. Over the last year we have had several potential customers testing and evaluating our sensors for new systems. The feedback has been fantastic on the performance and reliability, and we are entering the next stages of system development.

inspect: What are the major areas of focus?

Haida: Now that we have a great, complementary team, our major focus is on making our name and values better known in our target applications. We have some exciting marketing initiatives coming out this year, including some great video content. We will also expand our presence at trade shows and continue to add more informative content to our new website.

inspect: With the IRSX-I infrared camera, Automation Technology also offers a device with integrated image processing hardware. Why is AT focusing on embedded vision here instead of separating acquisition and processing?

Haida: With the embedded vision approach, we enable our customers to achieve a fast time-to-market with low hardware costs and no software development effort. We are also convinced that established IoT interfaces such as OPC-UA, MQTT and fieldbus protocols such as Ethernet/IP and Profinet allow embedded vision devices to be efficiently integrated into customer applications. In our opinion, embedded vision solutions are therefore the method of choice, especially in distributed applications.

inspect: What are the benefits of the smart infrared camera for embedded vision?

Haida: The IRSX-I infrared camera from AT completely redefines the benchmark for efficiency and integration in embedded vision systems by being designed as a complete all-in-one solution. Without any external hardware, the IRSX-I records measurement data, processes it and evaluates it directly on the camera. The evaluation and analysis are carried out quickly and effectively using a measurement plan (job) stored in the camera, which can be configured with the web-based job editor. Furthermore, integration into existing system landscapes is child’s play thanks to the standardized GenICam interface and IoT protocols such as Modbus or a REST API, which makes the camera particularly attractive for a wide range of applications. Camera parameters are configured effortlessly via a web-based interface, so no additional software is required.

inspect: In which applications is this smart camera mainly used?

Haida: Thanks to the unique adaptability and flexibility of the IRSX-I, as well as its reliable industrial suitability, it can be used in a wide range of embedded vision applications, especially for temperature monitoring or quality control. One application for which the smart infrared camera from AT is particularly suitable, for example, is flare monitoring. This application involves monitoring an industrial pilot flame to make sure it keeps burning, so that no harmful gases are released into the atmosphere. Originally, companies installed temperature sensors at the edge of the chimney for flare monitoring. With the IRSX-I, however, this is no longer necessary, as the infrared camera can monitor the flare remotely and, in the event of a failure, sends a signal directly to the PLC control system thanks to its smart features. The situation is similar with the temperature monitoring of transformer stations. If irregularities occur here, the smart IRSX-I immediately triggers an alarm so that fires don’t start in the first place.
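Flare monitoring of this kind can be pictured as a threshold check with a debounce. The following Python sketch is a hypothetical illustration only, not the IRSX-I job editor or any AT interface: it assumes each thermal frame arrives as a 2D list of temperatures in °C, and the region of interest, the 300 °C flame threshold and the number of consecutive cold frames are invented parameters.

```python
from dataclasses import dataclass, field


@dataclass
class FlareMonitor:
    """Hypothetical flare-out detector; thresholds and ROI are assumptions."""
    min_flame_temp_c: float = 300.0  # assumed: below this, the flame counts as out
    frames_to_alarm: int = 5         # assumed: consecutive cold frames before alarm
    roi: tuple[int, int, int, int] = (0, 0, 4, 4)  # (row0, col0, row1, col1), end exclusive
    _cold_frames: int = field(default=0, init=False)

    def update(self, frame: list[list[float]]) -> bool:
        """Feed one thermal frame; return True when an alarm should be raised."""
        r0, c0, r1, c1 = self.roi
        # Hottest pixel inside the region of interest around the pilot flame.
        peak = max(max(row[c0:c1]) for row in frame[r0:r1])
        if peak < self.min_flame_temp_c:
            self._cold_frames += 1
        else:
            self._cold_frames = 0  # flame seen again: reset the debounce counter
        # In a real system, a True result would be forwarded to the PLC
        # (e.g. over a fieldbus); that step is not modeled here.
        return self._cold_frames >= self.frames_to_alarm


monitor = FlareMonitor(frames_to_alarm=3)
hot = [[650.0] * 4 for _ in range(4)]
cold = [[40.0] * 4 for _ in range(4)]
print([monitor.update(f) for f in [hot, cold, cold, cold]])  # [False, False, False, True]
```

Requiring several consecutive cold frames is one simple way to avoid false alarms caused by a single flickering frame; the actual values would have to be tuned to the installation.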

inspect: Where do you see the greatest development potential (application-related) for embedded vision?

Haida: With thermal cameras, we see the greatest potential in cloud-based thermal applications for condition monitoring of industrial equipment, early fire detection in storage areas, battery assembly and storage, and classic applications in the steel and petrochemical industries.

inspect: Where do you see the greatest technological potential?

Haida: We see the greatest potential above all in the consistent use and implementation of the IoT standards mentioned above, both in embedded devices and in higher-level applications. There is a clear trend towards using embedded devices in the form of cloud solutions in distributed applications.

inspect: What can we expect from AT in this respect?

Haida: We are continuously working on expanding our IoT platforms to better support established standard protocols and further expand the range of compatible third-party solutions.

inspect: What new products will AT be presenting this year?

Haida: We will see new releases of our IRSX-I apps and also plan to launch a new camera series focused on thermal embedded vision.

AUTHOR David Löh Editor-in-Chief of inspect

Not just a winter wonderland:


Ice dams on the roof can cause heavy damage to buildings in snowy regions. Heat cables prevent them, but waste terawatt-hours of energy when used permanently. Now there is an innovative camera module that monitors the snow and ice level on the roof so that it is melted only when necessary, saving up to 90 percent of the energy and reducing CO2 emissions accordingly.

In regions with a harsh winter climate, heavy snow and ice layers accumulated on roofs can cause substantial damage to buildings. The nightmare of many homeowners and facility managers is the so-called ice dam. The accumulation of ice at the edge of a roof not only causes icicles but also creates a dam that prevents water from properly flowing down the drain when the softer snow layers melt. The trapped water flushes back up beneath the shingles and enters the attic, causing unhealthy mold, costly damage, and potential electrical safety hazards.

To avoid that, North American roofs in snowy regions are often equipped with so-called heat cables. These electric heating cables laid along the edge of the roof melt the snow and ice to prevent the formation of a dam. While this is an effective way to protect the property, it is extremely inefficient from an energy consumption perspective, because the cables operate blindly regardless of the snow and ice level. The heat cables could be switched off 90 percent of the time, but they are not. Building owners waste large amounts of money on their power bills, and the wasted energy is an unnecessary burden on the environment and the climate. Thermometer-based systems attempt to reduce waste but can only make assumptions based on the weather conditions. They don’t accurately assess the actual ice formations on the roof.

A US company teamed up with Maxlab, a Canada-based computer vision solution provider, to solve this issue using machine vision technology. The system consists of one or several camera modules (depending on the size of the roof) that monitor the level of snow and ice at the edge of the roof and trigger the heat cables only when necessary. The camera uploads the captured image data to the cloud via a smart hub for processing. The software not only recognizes the formation of an ice dam but also combines the image data with weather data to optimize the operation of the heat cables even further. The heat cables are switched on when necessary and otherwise remain off, which is most of the time.
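How image analysis and weather data might be combined into a switching decision can be sketched as a simple rule. This Python example is hypothetical and not Maxlab’s or its client’s software: the `RoofObservation` fields, the 5 cm snow threshold and the freeze-thaw heuristic are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class RoofObservation:
    """Hypothetical inputs; field names and units are assumptions."""
    ice_at_edge: bool           # result of the (assumed) image analysis
    snow_depth_cm: float        # estimated snow depth near the roof edge
    air_temp_c: float           # current air temperature from weather data
    forecast_max_temp_c: float  # forecast daytime maximum from weather data


def heat_cables_on(obs: RoofObservation, melt_risk_snow_cm: float = 5.0) -> bool:
    """Hypothetical rule: heat only when an ice dam is forming or likely."""
    # Ice already visible at the roof edge: melt it before a dam builds up.
    if obs.ice_at_edge:
        return True
    # Snow on the roof plus an expected freeze-thaw cycle: meltwater may
    # refreeze at the cold roof edge, so heating pre-emptively is justified.
    freeze_thaw = obs.air_temp_c <= 0.0 and obs.forecast_max_temp_c > 0.0
    if obs.snow_depth_cm >= melt_risk_snow_cm and freeze_thaw:
        return True
    # Otherwise keep the cables off, which should be the common case.
    return False


print(heat_cables_on(RoofObservation(False, 20.0, -10.0, -5.0)))  # False
print(heat_cables_on(RoofObservation(False, 20.0, -3.0, 2.0)))    # True
```

Defaulting to "off" and switching on only for specific, observed conditions mirrors the idea in the article that the cables should stay off most of the time.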

“The camera module developed had to fulfill demanding requirements to operate under such tough conditions”, explains Constantin Malynin, co-founder of Maxlab. Each module consists of two full-HD cameras to provide a panoramic view of the roof. The image sensors are infrared-sensitive and, thanks to the built-in infrared light, can operate in low light during long winter nights and even when the camera itself is covered with snow.

Like the heat cables, the camera module is permanently installed on the roof. That means that it must withstand both the low temperatures of the winter and the summer heat. The module is designed to operate in a temperature range from -40 to 80 °C (-40 to 176 °F).

The housing is UV-resistant and IP68 waterproof to withstand weather conditions. It is also designed to withstand the weight of a person mistakenly stepping on it during installation and maintenance.

Another special feature of Maxlab’s camera module is its extremely low power consumption. To simplify installation on the roof, the camera is not connected to a power supply. It runs on a battery that works in tandem with a solar panel. Since the whole module will typically be covered with snow for weeks, one battery charge ensures the system is self-sufficient for the whole winter. Maxlab developed not only the camera module but also the hub that connects the system to the cloud.

The development of the camera module took nine months from the first sketch to the first manufacturing batch. This was made possible by Maxlab’s unique Tokay camera platform. Tokay is a modular embedded vision platform that allows Maxlab’s engineers to develop custom solutions from pre-existing building blocks. “This modular platform approach is the key to our ability to deliver reliable solutions such as the rooftop camera within a short time frame”, explains Malynin.

Maxlab supervised the manufacturing ramp-up during the first year before handing it over to its client. The system has now been sold to thousands of homes and facilities across the United States.

Maxlab’s client estimates that thanks to this solution, heat cables can be safely turned off more than 90 percent of the time, saving the owner 100 to 400 dollars in energy costs per month, depending on the size of the property. The system also relieves the power grid when relief is needed most. Finally, it saves an estimated 500 to 1,000 kg of CO2 (about 1,100 to 2,200 lb) per month for an average American home.
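To show how a 90 percent off-time translates into monthly savings, here is a back-of-the-envelope calculation in Python. The cable power (3 kW), electricity price (0.15 dollars per kWh) and 30-day month are hypothetical inputs, not figures from Maxlab or its client.

```python
def monthly_savings_usd(cable_kw: float, price_per_kwh: float,
                        off_fraction: float, days: int = 30) -> float:
    """Savings versus running the heat cables around the clock (hypothetical inputs)."""
    always_on_kwh = cable_kw * 24 * days      # energy if the cables never switch off
    saved_kwh = always_on_kwh * off_fraction  # energy avoided while switched off
    return saved_kwh * price_per_kwh


# Hypothetical example: 3 kW of cable, 0.15 USD/kWh, off 90 percent of the time.
print(round(monthly_savings_usd(3.0, 0.15, 0.9), 2))  # 291.6
```

With these assumed inputs, the result lands inside the 100 to 400 dollar range quoted above, but the real figure depends entirely on cable length, power rating and local electricity prices.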

AUTHOR Jean-Philippe Roman Vision Markets

Ximea

In an interview with inspect, Sam Seidman-Eisler discusses Ximea’s XIX platform, highlighting its unique features and applications. He talks about the platform’s modular design and its impact on various industries, and gives insights into the future of embedded vision technology.

inspect: What role does embedded vision play for Ximea?

Sam Seidman-Eisler: We see embedded vision as a key industry for growth and innovation. For embedded vision applications, we have developed our unique line of PCI Express cameras. These are quite different from typical USB or GigE cameras in that they connect directly to the motherboard of the computing platform and deliver data directly to RAM.

Our embedded vision cameras play a key role for OEM customers who design their systems from the ground up and can tap directly into the computing platform. In addition to OEM customers, researchers and single-camera users who want to get the highest performance out of their cameras can greatly benefit from the bandwidth of our embedded vision PCIe cameras. Finally, to me the most exciting role of embedded vision is integrating multiple cameras into one vision system.

inspect: With XIX, Ximea has its own platform for embedded vision in its portfolio. What are its key features?

Sam: Most cameras are limited by the bandwidth of the connection, not by the data throughput limit of the sensor itself. With the bandwidth capabilities of PCI Express, this is not the case, and we are able to offer unparalleled sensor performance. In addition to high data transfer speeds, we move the computing platform outside the camera, allowing for some of the most compact sensor modules on the market.
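Sam’s point that the link, not the sensor, is usually the bottleneck can be checked with simple arithmetic: the raw sensor output is width × height × bit depth × frame rate. The Python sketch below compares that figure with nominal link capacities. The capacities are assumptions for illustration (line-encoding overhead only, protocol overhead ignored), and the 4096 x 3000, 10-bit, 100 fps sensor is hypothetical, not a specific Ximea model.

```python
# Nominal payload capacity in Gbit/s after line encoding only; protocol
# overhead is ignored, so real-world throughput is lower (assumed values).
LINK_CAPACITY_GBPS = {
    "GigE": 1.0,
    "USB 3.0 (5 Gbit/s, 8b/10b)": 5.0 * 8 / 10,
    "PCIe Gen3 x4 (8 GT/s per lane, 128b/130b)": 4 * 8.0 * 128 / 130,
}


def sensor_rate_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Raw sensor output rate in Gbit/s (uncompressed, no overhead)."""
    return width * height * bits_per_pixel * fps / 1e9


def links_that_fit(rate_gbps: float) -> list[str]:
    """Names of the links whose nominal capacity can carry the given rate."""
    return [name for name, cap in LINK_CAPACITY_GBPS.items() if cap >= rate_gbps]


if __name__ == "__main__":
    rate = sensor_rate_gbps(4096, 3000, 10, 100)  # hypothetical sensor
    print(f"{rate:.2f} Gbit/s -> {links_that_fit(rate)}")
```

For this hypothetical sensor the raw rate is about 12.3 Gbit/s, which only the PCIe entry can carry; over GigE the same sensor would be limited to roughly 8 fps.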

Very importantly, our design is modular, allowing the use of multi-camera switches to seamlessly connect up to 12 cameras to one PCIe port on a host computer. With a wide range of resolution, sensor size, and frame rate options available, we have camera models for even the most demanding applications!
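Connecting up to 12 cameras to one PCIe port means the cameras share the host link. A rough Python sketch of that trade-off, assuming the upstream bandwidth is split evenly and ignoring protocol overhead; the upstream capacity and camera parameters in the example are hypothetical values, not XIX specifications:

```python
def max_fps_per_camera(upstream_gbps: float, n_cameras: int,
                       width: int, height: int, bits_per_pixel: int) -> float:
    """Highest frame rate each camera can sustain when n cameras share one
    upstream link equally (uncompressed frames, overhead ignored)."""
    if n_cameras < 1:
        raise ValueError("need at least one camera")
    bits_per_frame = width * height * bits_per_pixel
    return upstream_gbps * 1e9 / (n_cameras * bits_per_frame)


if __name__ == "__main__":
    # Hypothetical: 12 cameras, 4096 x 3000 pixels, 8 bit, sharing ~31.5 Gbit/s
    print(round(max_fps_per_camera(31.5, 12, 4096, 3000, 8), 1))
```

In this hypothetical setup each camera could sustain roughly 27 fps; halving the number of active cameras doubles that figure.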

inspect: How does the XIX platform differ from competing products?

Sam: Compared to other cameras on the market, our XIX cameras are faster, smaller, and lighter, and they come standard with an excellent API and support package. But it is the PCI Express interface that really sets the XIX platform apart from similar cameras. The PCIe interface allows us to maximize the performance of any given sensor. Our cameras are certainly not the right fit for every customer, but if you find your imaging solution underperforming, our embedded XIX cameras may be the answer.

inspect: Is there an application focus for embedded systems from Ximea? Which one?

Sam: There are several applications where embedded systems can really enhance the solution. Any system where multiple cameras are needed is the perfect opportunity to utilize our embedded vision platform. This includes 3D and 4D volume capture, motion capture, performance capture, photogrammetry, and many more.

Additionally, applications such as robotics and automation which require a compact, low latency, high resolution, and fast frame transfer rate camera are ideal for the XIX embedded system.

One final application to mention is instrumentation, that is, applications where you are building a device. With the release of our new Nvidia Jetson carrier board, you can easily connect up to three cameras to a Jetson module, making a compact multi-camera solution easier than ever to create!

inspect: What new features and/or products will there be in the XIX series?

Sam: The latest and greatest from our XIX series includes the Nvidia Jetson carrier board and our uniquely compact detachable sensor head cameras.

The detachable sensor head models allow the sensor head to be disconnected from the controlling electronics so that it fits into tight spaces. Additionally, with the sensor head detached, the controlling electronics can heat up while the sensor itself stays cool, which improves the sensor’s noise performance.

With the release of faster and higher-resolution sensors, such as new models from GPixel and Sony, bandwidth requirements are only going up. Because PCI Express is fully backward compatible and each new PCIe generation increases bandwidth, our XIX series can easily keep up with increasing sensor performance without making our current models obsolete!
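The backward-compatibility argument rests on how PCIe links negotiate: both ends train to the highest generation and the widest link width they have in common. The Python sketch below encodes the per-lane transfer rates and line encodings of PCIe generations 1 to 5 and estimates usable link bandwidth. It ignores packet and protocol overhead, so real throughput is lower, and the x4 camera link in the example is an assumption, not an XIX specification.

```python
# Per-lane transfer rate (GT/s) and line encoding (payload bits, total bits)
PCIE_GENERATIONS = {
    1: (2.5, 8, 10),
    2: (5.0, 8, 10),
    3: (8.0, 128, 130),
    4: (16.0, 128, 130),
    5: (32.0, 128, 130),
}


def per_lane_gbps(gen: int) -> float:
    """Usable bit rate of one lane after line encoding (protocol overhead ignored)."""
    rate_gt_s, payload_bits, total_bits = PCIE_GENERATIONS[gen]
    return rate_gt_s * payload_bits / total_bits


def negotiated_gbps(device_gen: int, host_gen: int,
                    device_lanes: int, host_lanes: int) -> float:
    """A link trains to the highest generation and widest width both ends support."""
    return per_lane_gbps(min(device_gen, host_gen)) * min(device_lanes, host_lanes)


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        print(f"Gen{gen}: {per_lane_gbps(gen):.2f} Gbit/s per lane")
    # Hypothetical Gen3 x4 camera in a Gen5 x16 slot: the link runs at Gen3 x4
    print(f"{negotiated_gbps(3, 5, 4, 16):.1f} Gbit/s")
```

An older camera keeps working in a newer host at its own speed, while a newer camera in an older slot falls back to the older generation’s rate; each generation step roughly doubles the available bandwidth per lane.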

inspect: Where do you see the biggest growth potential for embedded vision?

Sam: I see the biggest growth in the performance capture market. We recently exhibited at the Game Developers Conference, where our hardware was embraced by the gaming/simulation industry. With the increasing popularity of augmented reality and virtual reality, the need for realistic virtual worlds is paramount. This is the perfect application for our embedded vision cameras, which deliver high-quality, high-speed data to machine learning and AI applications that create ultra-realistic virtual worlds.

inspect: How do you think the machine vision industry will develop in the USA this year and next? And why?

Sam: I am incredibly optimistic about the future of the machine vision industry. At every trade show we attend, we seem to see more exhibitors and attendees, and the breadth of applications seems limitless.

As computing power increases and AI/machine learning gets better, the power of machine vision is increasing dramatically. I see this leading to increased demand for data output from machine vision manufacturers. We are well positioned with our XIX cameras to meet this demand.

It is awesome to see all the unique and innovative applications in which our cameras are used, and I am very excited to see where our future customers will take them. Most of these applications I cannot even dream of at this moment, but I know the opportunities are out there for our cameras to enhance the machine vision world.

CONTACTS

Ximea GmbH, Münster, Germany

MVTec Software, an international manufacturer of machine vision software, exhibited again this year at Automate in Chicago. The software company from Munich presented its product portfolio and the latest technologies.

“Automate is a very important trade fair for us, because this is where we meet visitors from all areas of automation. We see machine vision as the eye of production and therefore a key component of automation. At the trade fair, we want to show the fundamental advantages of machine vision and how our software products enable customers to profit from them,” explained Dr. Olaf Munkelt, Managing Director of MVTec.

Focus on battery production and semiconductor manufacturing

Machine vision can be used in almost every industry. With very high speed and accuracy and 24/7 availability, the technology offers advantages that are particularly needed in battery production and semiconductor manufacturing. Large manufacturing plants are currently being built in these sectors in the USA, which is why machine vision is so important there: it plays a key role in production. At the trade fair booth, MVTec demonstrated how machine vision, as the “eye of production”, optimizes and automates processes. The company offers a wide range of functionality based on deep learning and classic machine vision, which customers can use to implement their applications flexibly and efficiently.

MVTec has been present in the USA since 2007

MVTec has been represented in the USA with its own subsidiary since 2007. Since then, MVTec has grown steadily and has positioned itself as a reliable partner in the North American machine vision industry. “Our goal is to provide our customers with the best possible machine vision software at all times,” says Heiko Eisele, President of MVTec USA, and continues: “For us, this means that we maintain a very close relationship with our customers through our local service and actively support them in creating solutions based on Halcon, Merlic and the Deep Learning Tool. We are also actively expanding our presence in the North American market by continuously hiring employees for our Boston office.”

MVTec Software GmbH, Munich, Germany

Teledyne’s Integrated Vision Solutions (IVS) concept stands for a new approach to embedded vision and is based on the Canadian manufacturer’s machine vision portfolio.

Teledyne’s machine vision portfolio is unique and includes its own sensor production, a full array of machine vision cameras, image sensors, AI-enabled vision systems, software, and many other components. This broad range of products and systems is the foundation of the new IVS concept, as Dr. Martin Klenke, Director Business Development at Teledyne, explains: “The idea behind IVS is to work closely with customers and fully understand their requirements and constraints. Based on this close collaboration and the findings from these discussions, we then determine the key factors necessary to meet their needs, both technical and economic. In close consultation, we then put together a machine vision system that exactly solves the task at hand.

For that, we can access Teledyne’s entire arsenal of imaging technology and bundle a perfect high-performance combination of components with the appropriate optical and electronic elements, and suitable software to enable simple and targeted integration.”

IVS is not one defined product, but a vision platform adapted by knowledgeable imaging professionals. The focus is on solutions that can meet many levels of performance, from simple embedded systems to complex turnkey solutions. Based on the company’s established software tools, Teledyne’s imaging experts support users in adapting the required software to the specific purpose.

Dr. Martin Klenke, Director Business Development at Teledyne Imaging

“With this approach, our customers are able to focus entirely on their core competencies, e.g., quality assurance or process optimization, without having to worry about the vision part,” emphasizes Dr. Klenke. “Our IVS strategy focuses in particular on smart systems that offer high resolution, high speed or even 3D capabilities together with integrated data processing. This may include AI technologies, if required.”

According to Dr. Klenke, a key advantage for IVS users is that all needed components are usually available from the same producer. However, since IVS is an open framework system, the use of third-party hardware and software is also possible, when required.

“In contrast to classic embedded vision systems, IVS does not target given standard components where application programming is left to the user, but specifically provides the constellation that enables seamless integration into customer systems or processes,” says Dr. Klenke. “This includes the possibility of integrating customer software on the system. The result is often an autonomously operating, compact, high-performance vision system that may have to be designed completely differently from one built for the same application for another customer.”

According to Dr. Klenke, the typical Teledyne imaging offering has tended to be horizontal, leaving specific application adaptation to the customer. “With IVS, we have expanded our existing machine vision portfolio to include an additional vertical offering for users dealing with a specific challenge in numerous fields of application. We are currently looking at projects for quality assurance tasks in the automotive industry to improve component control, as well as novel integrated logistics scanners and various autonomous monitoring tasks in industrial environments.”

There is also strong demand for ‘fused imaging’ systems, in which different types of cameras are integrated into one device to deliver, for example, automated AI-assisted object recognition with thermographic or 3D support. Dr. Klenke therefore sees fields of application for the IVS approach in areas such as automation, transportation, logistics, traffic, and optical sorting, among others, and even in complex applications that require radar, lidar or X-ray sensors to be added to an IVS system.

According to Dr. Klenke, another important general aspect is convenience, i.e., the combination of simple integration, replaceability, short setup times and autonomous, simple operation. Its importance must be determined precisely for each application, but the IVS concept allows this criterion to be met even in high-bandwidth applications.

Teledyne Imaging, Ottawa, Canada

Volkswagen Nutzfahrzeuge (VWN) has been manufacturing vans, family vans and motorhomes at its traditional site in Hanover for more than 65 years. The ID. Buzz is now the first fully electric vehicle to be produced at the site. Before the so-called marriage, i.e. the joining of body and drivetrain, a 3D camera system checks all components.

Inspecting each individual vehicle before the so-called “marriage”, i.e. the joining of the body and powertrain, is an important step in ensuring the quality of the vehicles produced. This is where the Clearspace 3D camera system from Vision Machine Technic Bildverarbeitungssysteme (VMT) is used, enabling precise and fast inspection of all components. The system can scan every detail of the chassis and detect irregularities or defects before the vehicle enters the next production step, the marriage.

To avoid time-consuming production disruptions caused by foreign objects, VMT has developed Clearspace 3D, a 3D vision solution that reliably detects even the smallest foreign objects. Like many other vision solutions from VMT, the machine vision system is designed to work both with VMT’s own 3D sensors, such as Deepscan, and with 3D sensors from other manufacturers and technologies, such as laser triangulation sensors. Choosing the right sensor technology is an essential first step toward a solution that meets all process requirements.

In the case of the battery housing inspection at Volkswagen Nutzfahrzeuge (VWN), preliminary tests carried out by VMT in its own test laboratories showed that laser triangulation sensors from Wenglor fully met the customer’s requirements. During concept development, it was decided to use sensors of the highest performance level (WLML series) to achieve optimum results. These are always aligned in pairs facing opposite directions so that one side of the battery cover can be scanned without undercuts. A total of four Wenglor triangulation sensors are therefore used to capture 100 percent of both sides of the cover.

The measurement results are evaluated during operation in the VMT MSS (multi-sensor system) software platform, which offers numerous configuration, evaluation, analysis and connectivity options. In the application implemented in Hanover, a two-stage evaluation process first detects larger foreign objects with dimensions of ten millimeters or more and then, in a downstream fine detection step, objects with dimensions of five millimeters or more. One of the strengths of the machine vision solution is that this grid can be scaled in both directions, depending on the sensors selected, so that both very large and microscopically small objects can be detected reliably in the same way. Overall, Clearspace 3D achieves high image quality and accuracy, reliably detecting objects such as screws, nuts, splinters or other small parts, as well as forgotten tools, and thus helps to avoid disruptions in the assembly process.
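The two-stage logic described above can be illustrated on a height map: compare the measured heights with a reference, group deviating cells into connected blobs, and classify each blob by its extent against the 10 mm and 5 mm limits from the text. This Python sketch is a generic illustration of that idea, not VMT’s MSS algorithm; the height tolerance, the 4-connected blob grouping, and the use of the larger bounding-box side as the object size are all assumptions.

```python
from collections import deque

Grid = list[list[float]]


def find_blobs(mask: list[list[bool]]) -> list[list[tuple[int, int]]]:
    """Group True cells into 4-connected blobs using breadth-first search."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, blob = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs


def extent_mm(blob: list[tuple[int, int]], spacing_mm: float) -> float:
    """Larger side of the blob's bounding box, in millimeters."""
    ys = [y for y, _ in blob]
    xs = [x for _, x in blob]
    return max(max(ys) - min(ys) + 1, max(xs) - min(xs) + 1) * spacing_mm


def two_stage_check(height: Grid, reference: Grid, tol_mm: float, spacing_mm: float,
                    coarse_mm: float = 10.0, fine_mm: float = 5.0):
    """Stage 1 flags blobs >= coarse_mm; stage 2 flags the remaining blobs >= fine_mm."""
    mask = [[abs(h - ref) > tol_mm for h, ref in zip(h_row, r_row)]
            for h_row, r_row in zip(height, reference)]
    sizes = [extent_mm(b, spacing_mm) for b in find_blobs(mask)]
    coarse = [s for s in sizes if s >= coarse_mm]            # stage 1
    fine = [s for s in sizes if fine_mm <= s < coarse_mm]    # stage 2
    return coarse, fine
```

Changing `spacing_mm`, `coarse_mm` and `fine_mm` mirrors the text’s point that the detection grid can be scaled in both directions depending on the sensors selected.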

In the project successfully implemented at VW Commercial Vehicles, the top of the battery is scanned with up to 14 million pixels in the form of a 3D point cloud made up of four individual scans, with a depth resolution of 200 µm and a lateral resolution of 300 µm. The triangulation sensors have enough light output to receive sufficient diffusely reflected light from the top of the object for evaluation, compensate for extraneous light and thus ensure high image quality and measurement accuracy. Based on the experience gained from this project, VMT selected a scanning frequency of 555 Hz to achieve the optimum scanning result, even though the sensors would allow significantly higher scanning rates. All scanners run synchronized via an encoder signal so that fluctuations in the speed of the linear axis have no effect on the quality of the point cloud. As a result, the individual scans are always equidistant.
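Encoder-synchronized triggering is what keeps the profiles equidistant: the sensor fires on position, not on time. A minimal Python simulation, assuming a trigger every fixed number of encoder counts; the counts-per-profile value and the 0.3 mm profile pitch are hypothetical, while 555 Hz is the frequency from the text. The second function shows the constraint this creates: the axis must not move faster than profile pitch times scan frequency, or triggers would arrive faster than the sensors can scan.

```python
def trigger_counts(encoder_samples: list[int], counts_per_profile: int) -> list[int]:
    """Encoder positions at which a profile is triggered.

    Samples are monotonically increasing encoder counts taken while the
    axis speed varies; triggers still fall on every multiple of
    counts_per_profile, so the profiles stay equidistant.
    """
    triggers = []
    next_trigger = counts_per_profile
    for count in encoder_samples:
        while count >= next_trigger:
            triggers.append(next_trigger)
            next_trigger += counts_per_profile
    return triggers


def max_axis_speed_mm_s(profile_pitch_mm: float, scan_hz: float) -> float:
    """Fastest axis speed at which every position trigger can still be served."""
    return profile_pitch_mm * scan_hz


if __name__ == "__main__":
    # Axis speeds up and slows down, yet profiles stay 5 counts apart
    print(trigger_counts([0, 3, 4, 10, 11, 20], counts_per_profile=5))  # [5, 10, 15, 20]
    print(max_axis_speed_mm_s(0.3, 555))  # hypothetical 0.3 mm pitch at 555 Hz
```

With the hypothetical 0.3 mm pitch, a 555 Hz scan rate would permit roughly 166 mm/s of axis travel.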

The versatility of the VMT solutions is also demonstrated by the fact that, in addition to geometric detection with the laser sensors, other quality features are also monitored with classic machine vision in the sense of a traditional set-up check. To this end, 20 high-resolution area scan cameras are used in the same system to check the presence and positioning of other add-on parts for a successful joining process, particularly in the area of the front and rear axles. In detail, this involves monitoring the installation situation of add-on parts, measuring the position of suspension struts and bearings and checking the routing of high-voltage cables. Only when Clearspace 3D and the classic inspection solution give their OK is the chassis transported to the next station.

AUTHOR Joachim Kutschka Head of Sales, Marketing and Product Management at VMT

CONTACTS

VMT Vision Machine Technic Bildverarbeitungssysteme GmbH, Mannheim, Germany

Incoming goods, material flow, order picking, storage, shipping – these are processes that have to be coordinated in intralogistics. A suitable machine vision concept can help system operators save time and reduce susceptibility to errors. Fitted with decentralized installation technology, highly automated company departments achieve entirely new levels of efficiency, which can also benefit other sectors.

The number of sensors and actuators in cutting-edge logistics centers is constantly on the rise. This in turn requires continuous data exchange between the control system and the machines and systems. With its decentralized installation solutions based on the plug-and-play principle, Murrelektronik has struck a chord with its customers.

The advantages are clear – components such as IP67 I/O modules can be fitted directly to the machine or system in the field using pre-assembled plug-in connectors (M8 or M12). In this way, all sensors and actuators can be connected to pluggable I/O modules in protection class IP67, simply and without any wiring errors. That’s plug-and-play.

Compared to point-to-point wiring, this cuts the installation time significantly. Instead of laying lots of individual lines to the control cabinet, two lines are enough for fieldbus or Ethernet systems – one each for power and communication. As a result, there is also no need for time-consuming installation work in the control cabinet, such as stripping, fitting wire end ferrules, and connecting terminals.

Quick and easy installation frees up valuable capacity. Even planning an upgrade or expansion, from procurement to commissioning, is extremely time-consuming. A decentralized I/O concept based on standardized modules and plug-in connectors creates a time advantage. What’s more, IP67 modules such as a hybrid switch also deliver diagnostic data on voltage and current for each port via a web interface. LEDs on the module provide initial visual indications for error diagnosis. The diagnostic data can then be transmitted to higher-level cloud systems in a straightforward, targeted way, meaning service technicians have access to all key information at any time from any place and can respond rapidly when an error occurs. This increases machine availability and shortens costly downtime for maintenance.

Fundamentally, a machine vision system consists of sensors, cameras and lighting for illuminating and recording the objects, plus an industrial PC for processing the data. High currents of up to 16 A can be supplied using L-coded M12 plug-in connectors. Alongside the Ethernet protocols Profinet, Ethernet/IP, and Ethercat, fieldbus modules such as MVK Pro and Impact67 Pro can be used independently of the fieldbus via OPC UA, MQTT, or a JSON REST API.
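Per-port voltage and current diagnostics lend themselves to simple automated checks once the data reaches a higher-level system. The Python sketch below parses a JSON document and flags ports outside their limits. The payload layout and field names are hypothetical (they are not taken from any Murrelektronik interface), and the 24 V ±10 % window and 4 A current limit are assumed example thresholds.

```python
import json

# Hypothetical payload, e.g. as a device's REST API might return it
SAMPLE_PAYLOAD = """
{"ports": [
  {"port": 1, "voltage_v": 24.1, "current_a": 1.8},
  {"port": 2, "voltage_v": 20.9, "current_a": 0.9},
  {"port": 3, "voltage_v": 24.0, "current_a": 4.5}
]}
"""


def check_ports(payload: str, v_min: float = 21.6, v_max: float = 26.4,
                i_max: float = 4.0) -> list[tuple[int, str]]:
    """Return (port, problem) pairs for ports outside the assumed limits."""
    problems = []
    for entry in json.loads(payload)["ports"]:
        port = entry["port"]
        if entry["voltage_v"] < v_min:
            problems.append((port, "undervoltage"))
        elif entry["voltage_v"] > v_max:
            problems.append((port, "overvoltage"))
        if entry["current_a"] > i_max:
            problems.append((port, "overcurrent"))
    return problems


if __name__ == "__main__":
    print(check_ports(SAMPLE_PAYLOAD))  # [(2, 'undervoltage'), (3, 'overcurrent')]
```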

Machine vision applications can be adapted quickly to changed conditions with specialist products for the decentralized installation concept. A typical example is a multi-reader scan tunnel, in which machine vision sensors scan and photograph a product from every side so that it can be sorted and forwarded to the correct place. The data is processed by an industrial PC and compared with the expected information. Murrelektronik has developed an I/O solution for this application that can be connected to the sensors via plug-and-play. At its core is the Xelity Hybrid Switch, through which up to four cameras can be connected per switch. Two M12 ports supply power to the cameras and enable communication. Even larger applications with high energy needs and multi-camera applications can be implemented with ease.
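The comparison step in a multi-reader scan tunnel can be sketched as a consensus check: collect the codes read by all readers, accept a product only if the readers agree, and look up its destination. The Python example below is a hypothetical illustration; the destination table stands in for whatever host system supplies the expected information, and the reject-on-conflict rule is an assumption.

```python
from typing import Optional


def route_product(reads: list[Optional[str]], destinations: dict[str, str],
                  reject: str = "reject") -> str:
    """Pick the destination for one product from all reader results.

    reads holds one entry per reader (None = no read). The product is routed
    only if at least one reader succeeded and all successful reads agree.
    """
    codes = {code for code in reads if code is not None}
    if len(codes) != 1:
        return reject  # no read at all, or conflicting reads
    return destinations.get(codes.pop(), reject)  # unknown code -> reject


if __name__ == "__main__":
    table = {"4006381333931": "lane 3"}  # hypothetical expected data
    print(route_product(["4006381333931", None, "4006381333931"], table))  # lane 3
```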

Logically, the next step would be to do away with the control cabinet entirely and implement the automation functions in the field in line with specific requirements. With Vario-X, Murrelektronik has developed just such a system. It consists of a platform plus a hardware module, which takes over some of the functions of a control cabinet and brings them directly to the machine. Power supply, control systems, switches, safety technology, and I/O modules are installed in a robust, waterproof and dust-proof housing. The sensors and actuator systems in the field become even more flexible as a result.

Ultimately, though, it is not a specific product that is decisive when it comes to creating an efficient installation solution. Instead, it is the fundamental idea on which decentralized automation concepts are built – simplifying, modularizing, transferring to the field, and combining technologies. Furthermore, the IO-Link communication standard ensures transparency for the linking of data – from sensor to cloud.

AUTHORS

Simon Knapp

Solution Manager Machine Vision at Murrelektronik

Louis Stadel

Industry Development Manager Logistics at Murrelektronik

CONTACTS

Murrelektronik GmbH, Oppenweiler, Germany

The initial devices in the Versal Series Gen 2 portfolio build upon the first generation with new AI Engines that are expected to deliver up to 3x higher TOPS per watt than first-generation Versal AI Edge Series devices, while new high-performance integrated Arm CPUs are expected to offer up to 10x more scalar compute than first-generation Versal AI Edge and Prime Series devices.

“The demand for AI-enabled embedded applications is exploding and driving the need for solutions that bring together multiple compute engines on a single chip for the most efficient end-to-end acceleration within the power and area constraints of embedded systems,” said Salil Raje, senior vice president and general manager, Adaptive and Embedded Computing Group, AMD. “Backed by over 40 years of adaptive computing leadership in high-security, high-reliability, long-lifecycle, and safety-critical applications, these latest generation Versal devices offer high compute efficiency and performance on a single architecture that scales from the low-end to high-end.”

AMD has expanded its Versal adaptive system-on-chip (SoC) portfolio with the new Versal AI Edge Series Gen 2 and Versal Prime Series Gen 2 adaptive SoCs, which bring preprocessing, AI inference, and postprocessing together in a single device, delivering end-to-end acceleration for AI-driven embedded systems.

Balancing performance, power, area, together with advanced functional safety and security, Versal Series Gen 2 devices deliver new capabilities and features that enable the design of high-performance, edge-optimized products for the automotive, aerospace and defense, industrial, vision, healthcare, broadcast, and pro AV markets.

To meet the complex processing needs of real-world systems, AMD Versal AI Edge Series Gen 2 devices incorporate an optimal mix of processors for all three phases of AI-driven embedded system acceleration:

Preprocessing: FPGA programmable logic fabric for real-time preprocessing, with unparalleled flexibility to connect to a wide range of sensors and implement high-throughput, low-latency data-processing pipelines

AI inference: An array of vector processors in the form of next-gen AI Engines for efficient AI inference

Postprocessing: Arm CPU cores providing the postprocessing power needed for complex decision-making and control for safety-critical applications
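The three-phase split can be pictured as a processing chain in which each stage maps to a different processor type on the device. The Python sketch below only models the data flow; the normalization, the averaging "model" and the threshold are placeholders, and nothing here uses AMD’s Vitis or Versal tooling.

```python
from typing import Iterable, List


def preprocess(frame: List[int]) -> List[float]:
    """Phase 1 (programmable logic on the device): normalize 8-bit pixels to [0, 1]."""
    return [p / 255 for p in frame]


def infer(features: List[float]) -> float:
    """Phase 2 (AI Engines on the device): placeholder model returning a score."""
    return sum(features) / len(features)


def postprocess(score: float, threshold: float = 0.5) -> str:
    """Phase 3 (Arm CPUs on the device): turn the score into a control decision."""
    return "alarm" if score > threshold else "ok"


def run_pipeline(frames: Iterable[List[int]]) -> List[str]:
    """Push every frame through all three phases in order."""
    return [postprocess(infer(preprocess(f))) for f in frames]


if __name__ == "__main__":
    print(run_pipeline([[255, 255], [0, 0]]))  # ['alarm', 'ok']
```

In a multi-chip design, each hand-off in this chain would be a chip-to-chip transfer, which is the motivation for putting all three phases on a single device.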

This single-chip intelligence can eliminate the need to build multi-chip processing solutions, resulting in smaller, more efficient embedded AI systems with the potential for shorter time-to-market.

The Versal Series Gen 2 provides end-to-end acceleration for traditional, non-AI-based embedded systems by combining programmable logic for sensor processing with high-performance embedded Arm CPUs. Designed to offer up to 10x more scalar compute than the first generation, these devices can efficiently handle sensor processing and complex scalar workloads.

With new hard IP for high-throughput video processing, including up to 8K multi-channel workflows, Versal Prime Gen 2 devices are ideally suited for applications such as ultra-high-definition (UHD) video streaming and recording, industrial PCs, and flight computers.

Subaru Corporation has selected Versal AI Edge Series Gen 2 devices for the company’s next-generation advanced driver-assistance system (ADAS) vision system, known as Eyesight. This system is integrated into select Subaru car models to enable advanced safety features, including adaptive cruise control, lane-keep assist, and pre-collision braking.

“Subaru has selected Versal AI Edge Series Gen 2 to deliver the next generation of automotive AI performance and safety for future Eyesight-equipped vehicles,” said Satoshi Katahira, General Manager, Advanced Integration System Department & ADAS Development Department, Engineering Division at Subaru. “Versal AI Edge Gen 2 devices are designed to provide the AI inference performance, ultra-low latency, and functional safety capabilities required to put cutting-edge AI-based safety features in the hands of drivers.”

Versal AI Edge Series Gen 2 Samples Available in the First Half of 2025

Designers can get started today with AMD Versal AI Edge Series Gen 2 and Versal Prime Series Gen 2 early access documentation, as well as first-generation Versal evaluation kits and design tools. AMD expects Versal Series Gen 2 silicon samples to be available in the first half of 2025, followed by evaluation kits and System-on-Module samples in mid-2025, and production silicon in late 2025.

CONTACTS

AMD, Santa Clara, CA, USA

Sensor for the Electronics Industry Automation Technology

X

Intelligent industrial camera with 4K streaming IDS

Modular 10GigE

Embedded Vision Platform

Gidel

X

AI Edge Computing Solutions on Display Cincoze

X

Real-time evaluation of multiple high-end cameras

N.A.T. Europe

Image processing with FPGAs Vision Components

AI Accelerator

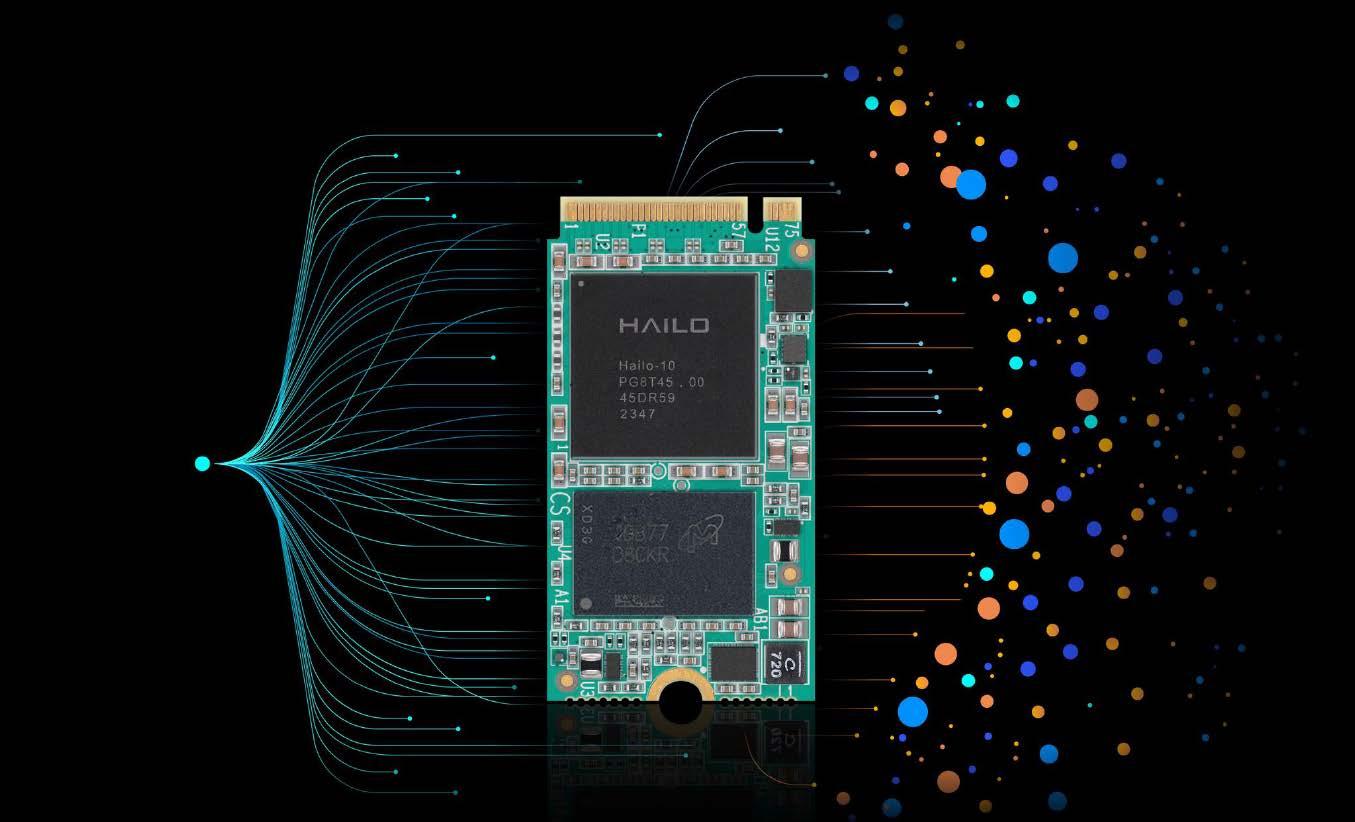

Bringing Generative AI to Edge Devices Hailo

Infrared camera with new microscope optics Optris

New cameras with Pregius sensor Ximea

IP67 Ethernet switch for communication and power Murrelektronik

Unlocking and Delivering Mainstream Edge Intelligence Innovation Efinix

Software with AI Training Tool Teledyne Dalsa

3D Scanner With Proprietary Sensor Chip Automation Technology

With its new 3D sensor from the recently developed XCS series, AT – Automation Technology is launching a product onto the market especially for high-performance applications in the electronics industry. The decisive arguments for this are above all its optimized laser and its very small field of view of up to 2.08 in (53 mm). The most important feature of the XCS series laser is the homogeneous thickness along the laser line thanks to the laser projector’s special optics. The homogeneous line thickness enables precise scanning of the smallest structures, regardless of whether the object to be scanned is in the middle or at the edge of the line. The particular benefit for the customer: the development of inspection applications with high repeatability and exceptional accuracy, which means a real breakthrough in terms of precision for the inspection of ball grid arrays (BGAs), for example.

IDS is expanding its product line for intelligent image processing and is bringing a new IDS NXT malibu camera onto the market. It enables AI-based image processing, video compression and streaming in full 4K sensor resolution at 30 fps – directly in and out of the camera. The 8 MP sensor IMX678 is part of the Starvis 2 series from Sony. It ensures impressive image quality even in low light and twilight conditions.

IDS NXT malibu is able to perform AI-based image analyses independently and deliver the results as a live overlay in compressed video streams via RTSP (Real Time Streaming Protocol). Hidden inside is a special SoC (system-on-a-chip) from Ambarella, whose chips are known from action cameras. An ISP with helpful automatic features such as brightness, noise and color correction ensures optimum image quality at all times. The new 8 MP camera complements the recently launched camera variant with the 5 MP onsemi sensor AR0521.

To coincide with the market launch of the new model, IDS has also published a new software release. Users now also have the option of displaying live images from the IDS NXT malibu camera models via an MJPEG-compressed HTTP stream. This enables visualization in any web browser without additional software or plug-ins.
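An MJPEG-over-HTTP stream is, at its core, a sequence of complete JPEG images, each starting with the SOI marker (0xFF 0xD8) and ending with the EOI marker (0xFF 0xD9). The Python sketch below pulls frames out of a buffered byte stream by scanning for these markers. It is a simplification: a robust client would parse the multipart boundaries (and Content-Length headers where present) instead, since embedded thumbnails can carry their own markers. No IDS-specific URL or API is assumed.

```python
def extract_jpeg_frames(buffer: bytes) -> tuple[list[bytes], bytes]:
    """Split complete JPEG frames out of a buffered MJPEG byte stream.

    Returns (frames, remainder); the remainder holds an incomplete frame
    that should be prepended to the next chunk read from the connection.
    Simplification: a marker split across two chunks is not handled.
    """
    soi, eoi = b"\xff\xd8", b"\xff\xd9"
    frames = []
    start = buffer.find(soi)
    while start != -1:
        end = buffer.find(eoi, start + 2)
        if end == -1:
            return frames, buffer[start:]  # frame not finished yet
        frames.append(buffer[start:end + 2])
        start = buffer.find(soi, end + 2)
    return frames, b""
```

Each extracted frame can then be handed to any JPEG decoder; a web browser does the equivalent work internally when it displays the stream.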

With the MO2X microscope optics with 2x magnification, the PI 640i infrared camera from Optris is now able to capture infrared images of even complex structures.

An exact temperature measurement requires 4 x 4 pixels (the measurement field of view, MFOV), so objects as small as 34 µm can now be measured. This means that even tiny structures can be analyzed at chip level. The thermal resolution of 80 mK is a very good value for this optic. The new optics work at a distance of 15 mm from the object being measured.

As the optics of the PI series infrared cameras can be exchanged easily, the system can be used flexibly for various measurement tasks. Together with the supplied high-quality microscope stand with fine adjustment, microelectronic assemblies can be inspected very easily. The maximum resolution of the infrared camera is 640 x 480 pixels at a frame rate of 32 Hz; at 125 Hz, the PI 640i still delivers 640 x 120 pixels.
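The Optris figures can be cross-checked with a short calculation. Assuming the 34 µm minimum object corresponds exactly to the 4-pixel MFOV, each pixel covers 8.5 µm on the object, which gives the derived full-frame field of view below; that field of view is an inference, not an Optris specification. Both frame-rate modes also move nearly the same number of pixels per second, which is consistent with (though not proof of) a windowed readout at 125 Hz.

```python
MFOV_PIXELS = 4            # pixels per side needed for an exact measurement (text)
SMALLEST_OBJECT_UM = 34.0  # smallest measurable object with MO2X optics (text)


def pixel_footprint_um() -> float:
    """Object-side size of one pixel, assuming 34 um == exactly 4 pixels."""
    return SMALLEST_OBJECT_UM / MFOV_PIXELS


def field_of_view_mm(columns: int, rows: int, footprint_um: float) -> tuple[float, float]:
    """Object-side field of view for a given pixel footprint."""
    return columns * footprint_um / 1000, rows * footprint_um / 1000


def pixel_rate(columns: int, rows: int, fps: int) -> int:
    """Pixels read out per second."""
    return columns * rows * fps


if __name__ == "__main__":
    fp = pixel_footprint_um()
    print(fp)                                # 8.5
    print(field_of_view_mm(640, 480, fp))    # approx. (5.44, 4.08)
    print(pixel_rate(640, 480, 32), pixel_rate(640, 120, 125))  # 9830400 9600000
```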

The license-free analysis software PIX Connect is included in the scope of delivery; alternatively, a complete SDK is also available.

The xiMU camera series from Ximea is engineered for the smallest possible volume and weight, using an advanced high-density manufacturing and assembly process. The new models in the xiMU camera line are equipped with Sony’s Pregius sensors as well as the latest sensors from onsemi.

The initial lineup includes camera models with IMX568 and IMX675 from Sony’s 3rd Generation Pregius family and AR2020 from onsemi.

The Sony and onsemi CMOS sensors offer outstanding properties across a resolution range from 5 MPix to almost 20 MPix.

The sensors excel in different parameters: each was selected for being remarkable in a specific area and is suited to particular applications.

All sensors are small enough to fit inside the miniature cameras, with pixel sizes as small as 1.4 μm, which is unique and ideal for certain use cases.

At the Embedded World show in Nuremberg, Germany, Gidel demonstrated that high-resolution, high-speed, and/or multi-camera imaging with high-bandwidth camera interfaces such as 10GigE can be achieved with compact, low-power embedded systems. There, Gidel presented its modular embedded vision platforms, consisting of FantoVision edge computers and Gidel’s unique image-processing and compression libraries for real-time optimization of the image stream on the frame grabber’s FPGA.

Thanks to Gidel’s high-bandwidth camera interface technology, FantoVision edge computers allow system developers to design embedded vision applications on an Nvidia Jetson SoM with high-resolution and/or high-speed cameras. The platform offers various hardware options. Camera interfaces include two 10GigE ports, 4x PoCXP-12, or Camera Link. Users can also choose from different FPGA chips for image pre-processing and various Nvidia Jetson SoMs (Xavier NX, Orin NX 16GB).

At Automate 2024, Cincoze displayed its range of industrial embedded computing products under the theme “Comprehensive AI Edge Computing Solutions”. The products covered the full spectrum of industrial application environments in four dedicated zones: Rugged Embedded Computers, Industrial Panel PCs & Monitors, Embedded GPU Computers, and New Products. Cincoze’s Rugged Computing – Diamond line for harsh industrial environments was also on display. The seven computers in the range offer wide-temperature and wide-voltage operation and industrial-grade protection, while the models differ in performance, expandability, size, power efficiency and industry certifications. Every model supports additional I/O and functions through Cincoze’s exclusive modular expansion technology, giving high application flexibility.

At Embedded World in Nuremberg, Germany, N.A.T. Europe presented its new NAT Vision platform for the first time. NAT Vision is a real-time-capable, FPGA-based vision platform for high-resolution, high-end cameras, and it is based on the MicroTCA standard. It uses AMD (formerly Xilinx) Zynq UltraScale+ MPSoC FPGA technology to process and evaluate the data from high-performance video cameras. With NAT Vision, developers and system integrators can process image data streams from multiple high-resolution, high-end cameras in real time on a single consolidated platform. Compared with the usual PC-based vision platforms, NAT Vision stands out through its faster, more energy-efficient FPGA technology. This technology can be adapted modularly to individual processing tasks and to a wide variety of transmission protocols as required. Thanks to NAT Vision’s scalability, up to twelve FPGA cards can be combined in a single system. The system is suitable for connecting cameras with data rates of up to 100 Gbit/s (100GbE).

In industrial machine vision, the requirements for precision, speed and flexibility are increasing rapidly. Murrelektronik aims to keep up with this demand with its new Xelity Hybrid PN switch. Housed in a robust IP67 enclosure, the switch transmits data at up to 1 Gbit/s between network devices while also supplying them with sufficient power. With six Gigabit Ethernet ports and four NEC Class 2 power ports, users can connect up to four devices such as cameras, light sources or barcode readers in a decentralized installation. If more devices need to be added, multiple switches can be daisy-chained without compromising data transfer performance.

At Embedded World in Nuremberg, Germany, Vision Components showcased innovations for fast and easy embedded vision integration. These ranged from the first MIPI camera with the IMX900 image sensor for industrial applications to an update of the VC Power SoM FPGA accelerator. This tiny board enables efficient real-time image processing and can be integrated quickly and easily into mainboard designs. It handles barcode reading and 3D stereo image capture at up to 120 Hz. VC Picosmart, billed as the world’s smallest embedded vision system, and the VC Picosmart 3D profile sensor based on it were also on show at the trade fair.

Efinix’s high-performance Titanium Ti375 FPGA is now shipping in sample quantities to early-access customers. Titanium FPGAs are manufactured in an advanced 16 nm process node. They deliver performance and efficiency through high density, low power and high compute in small package footprints, with capacities ranging from 35,000 to 1 million logic elements. The Ti375 provides 375K logic elements and the following features:

- a hardened quad-core RISC-V block running at over 1 GHz, with a Linux-capable MMU, an FPU and custom instruction capability;
- quad SerDes transceivers supporting raw data rates of up to 16 Gbps with multiple protocols, including PCIe 4.0, Ethernet SGMII and Ethernet 10GBase-KR, as well as PMA Direct mode;
- dual embedded PCIe Gen4 x4 controllers supporting root complex and endpoint modes with SR-IOV (Single Root I/O Virtualization);
- dual hardened LPDDR4/4X DRAM controllers for high-bandwidth access to external memory.

The Titanium Ti375 FPGA solution enables a wide variety of applications spanning machine vision, video bridges, automotive and communications, to name a few.

With its VC MIPI Ecosystem, Vision Components demonstrates how perfectly matched components enable the fast, simple and cost-effective integration of image processing. The VC MIPI IMX900 MIPI camera is a new addition to this modular system. The ultra-compact global shutter camera module with a sensor diagonal of just 5.8 mm delivers high image quality even in low light conditions and has a high dynamic range extending into the infrared range. With a resolution of 3.2 megapixels, it can be used universally, whether for navigating autonomous robots, recognizing barcodes or in quality assurance.
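The quoted 5.8 mm sensor diagonal and the 3.2-megapixel resolution together allow a rough estimate of the pixel pitch. Below is a minimal Python sketch. It assumes a 4:3 aspect ratio and square pixels, neither of which is stated in the text:

```python
import math


def pixel_pitch_um(diagonal_mm: float, megapixels: float,
                   aspect_w: float = 4, aspect_h: float = 3) -> float:
    """Estimate pixel pitch in micrometres from diagonal and pixel count.

    Assumes square pixels and the given aspect ratio (4:3 by default).
    """
    pixels = megapixels * 1e6
    # Solve width * height = pixels with width / height = aspect_w / aspect_h.
    width_px = math.sqrt(pixels * aspect_w / aspect_h)
    height_px = math.sqrt(pixels * aspect_h / aspect_w)
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_mm * 1000 / diagonal_px


pitch = pixel_pitch_um(5.8, 3.2)
print(f"estimated pixel pitch: {pitch:.2f} um")
```

Under these assumptions, the estimate comes out at roughly 2.2 to 2.3 µm. A different aspect ratio would shift the result somewhat.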

Avnet introduces the new SMARC module family MSC SM2S-IMX95, which is based on the i.MX 95 application processor from NXP Semiconductors. The module complies with the SMARC 2.1.1 standard and enables easy integration with SMARC baseboards.

Combining powerful multi-core computing and immersive 3D graphics, NXP’s i.MX 95 family is also the first i.MX application processor family to feature NXP’s eIQ Neutron Neural Processing Unit (NPU) and a new image signal processor (ISP) developed by NXP. Together, these support the development of powerful future edge platforms.

“The new SMARC module family is ideal for applications that require a small form factor computing platform with high performance, graphics, video, AI capabilities and a rich selection of I/Os,” says Thomas Staudinger, President, Avnet Embedded. “These applications include industrial, medical, smart home and building automation with displays and fast networking, industrial transportation and grid infrastructure.”

Hailo announced the introduction of its Hailo-10 high-performance generative AI (GenAI) accelerators, which usher in an era in which users can own and run GenAI applications locally without registering for cloud-based GenAI services.

The new Hailo-10 GenAI accelerator enables a broad spectrum of applications. According to Hailo, it also maintains the company’s leadership in both performance-to-cost ratio and performance-to-power-consumption ratio. Hailo-10 uses the same comprehensive software suite as the Hailo-8 AI accelerators and the Hailo-15 AI vision processors, enabling seamless integration of AI capabilities across multiple edge devices and platforms.

Enabling GenAI at the edge ensures continuous access to GenAI services, regardless of network connectivity; obviates network latency concerns, which can otherwise impact GenAI performance; promotes privacy by keeping personal information anonymized and enhances sustainability by reducing reliance on the substantial processing power of cloud data centers.

Teledyne Dalsa announces the latest version of its image processing software Sapera. This release includes enhanced versions of the graphical AI training tool Astrocyte 1.50 and the image processing and AI libraries Sapera Processing 9.50.

Teledyne Dalsa’s Sapera vision software provides field-proven image capture, control, image processing and artificial intelligence capabilities to design, develop and deploy powerful vision applications. Examples include surface inspection of metal plates, localization and identification of hardware parts, plastic sorting and circuit board inspection.

“This new version of Sapera image processing software offers a variety of new and improved features that enable flexible design and accelerated execution of AI-enabled applications,” says Bruno Menard, Software Director at Teledyne DALSA. “The key features of this release include the rotated object detection algorithm, which outputs position and orientation at the time of inference to achieve higher precision in target object and defect detection.”
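Rotated object detection reports an orientation in addition to a position. The Python sketch below illustrates why this improves precision. It uses a common generic parametrization in which a box is given by its centre, width, height and angle. This parametrization is an assumption for illustration and does not reflect the internal representation or API of Sapera Processing. The code converts the box to its corner points and compares its area with that of the smallest axis-aligned box that encloses it.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Corners of a w x h box centred at (cx, cy), rotated by angle_deg.

    Rotation is counter-clockwise in a y-up coordinate system; in image
    coordinates (y pointing down) the same angle appears clockwise.
    """
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    corners = []
    for dx, dy in ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)):
        # Rotate the offset from the centre, then translate back.
        corners.append((cx + dx * cos_a - dy * sin_a,
                        cy + dx * sin_a + dy * cos_a))
    return corners


def axis_aligned_area(corners):
    """Area of the smallest axis-aligned box containing all corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# A thin 10 x 1 part lying at 45 degrees.
corners = rotated_box_corners(0.0, 0.0, 10.0, 1.0, 45.0)
print(f"rotated box area:      {10.0 * 1.0:.1f}")
print(f"axis-aligned box area: {axis_aligned_area(corners):.1f}")
```

For a thin part lying at 45 degrees, the axis-aligned box covers about six times the area of the part’s own rotated box, so most of it is background. An oriented box avoids this.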

Automation Technology has recently developed its own sensor chip. The fast 3D scans are made possible by on-chip processing in the CMOS chip. The chip recognizes the laser line and compresses it losslessly using intelligent algorithms, so that only relevant data is transmitted. As a result, the C6 3070 sensor achieves what the company describes as an unmatched 3D profile pixel rate of up to 128 megapixels per second, which corresponds to 128 million 3D points per second.

The particular benefit for customers is the profile speed of up to 204 kHz. The so-called Warp speed enables up to ten times higher measurement speeds and therefore ten times faster 3D scans than with conventional sensors, which opens up completely new possibilities for the modular compact sensor series (MCS) from the North German technology company. The MCS is based on a modular system with different sensor, laser and link modules and can be put together individually thanks to the flexible configuration of triangulation angle and working distance, thus achieving a scan width, measuring accuracy and measuring speed optimized for the application.
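The two headline figures can be related with a little arithmetic. Below is a minimal Python sketch. It assumes that the maximum point rate and the maximum profile rate can be reached at the same time, which the text does not state. The 1 m scan length and the 0.1 mm profile spacing are hypothetical values. The one-tenth profile rate for a conventional sensor is likewise hypothetical and is derived from the “up to ten times” claim.

```python
POINT_RATE_PER_S = 128e6      # stated maximum: 3D points per second
PROFILE_RATE_HZ = 204e3       # stated maximum: profiles per second

# Points per profile if both maxima applied simultaneously (assumption).
points_per_profile = POINT_RATE_PER_S / PROFILE_RATE_HZ


def scan_time_s(length_mm: float, spacing_mm: float, profile_rate_hz: float) -> float:
    """Time to scan an object of length_mm with one profile every spacing_mm."""
    profiles = length_mm / spacing_mm
    return profiles / profile_rate_hz


# Hypothetical part: 1 m long, one profile every 0.1 mm (10,000 profiles).
fast = scan_time_s(1000, 0.1, PROFILE_RATE_HZ)
conventional = scan_time_s(1000, 0.1, PROFILE_RATE_HZ / 10)
print(f"points per profile: {points_per_profile:.0f}")
print(f"scan time at 204 kHz: {fast * 1000:.0f} ms, at 20.4 kHz: {conventional * 1000:.0f} ms")
```

Under these assumptions, each profile carries roughly 627 points. The hypothetical 1 m part is scanned in about 49 ms instead of about 490 ms.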

Published by Wiley-VCH GmbH

Boschstraße 12

69469 Weinheim, Germany

Tel.: +49/6201/606-0

Managing Directors

Dr. Guido F. Herrmann, Sabine Haag

Publishing Director

Steffen Ebert

Product Management

Anke Grytzka-Weinhold

Tel.: +49/6201/606-456

agrytzka@wiley.com

Editor-in-Chief

David Löh

Tel.: +49/6201/606-771

david.loeh@wiley.com

Editorial

Andreas Grösslein

Tel.: +49/6201/606-718

andreas.groesslein@wiley.com

Technical Editor

Sybille Lepper

Tel.: +49/6201/606-105

sybille.lepper@wiley.com

Commercial Manager

Jörg Wüllner

Tel.: +49/6201/606-748

jwuellner@wiley.com

Sales Representatives

Martin Fettig

Tel.: +49/721/14508044

m.fettig@das-medienquartier.de

Design

Maria Ender

mender@wiley.com

Specially identified contributions are the responsibility of the author. Manuscripts should be addressed to the editorial office. We assume no liability for unsolicited, submitted manuscripts. Reproduction, including excerpts, is permitted only with the permission of the editorial office and with citation of the source.

The publishing house is granted the exclusive right, with regard to space, time and content, to use the works/editorial contributions in unchanged or edited form for any and all purposes any number of times itself, or to transfer the rights for use to other organizations in which it holds partnership interests, as well as to third parties. This right of use relates to print as well as electronic media, including the Internet, as well as databases/data carriers of any kind.

Material in advertisements and promotional features may be considered to represent the views of the advertisers and promoters.

All names, designations or signs in this issue, whether referred to and/or shown, may be trade names of their respective owners.