Welcome to the August 2025 issue of

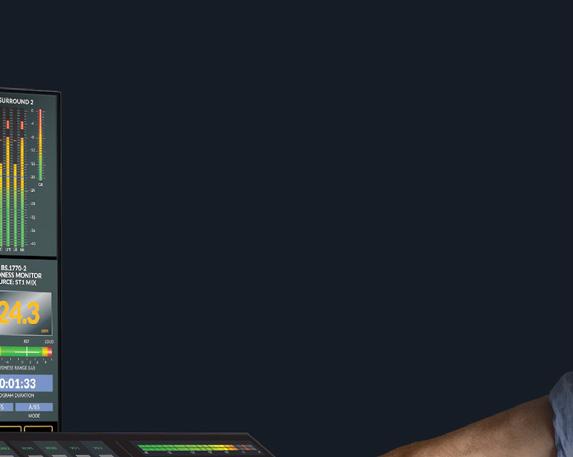

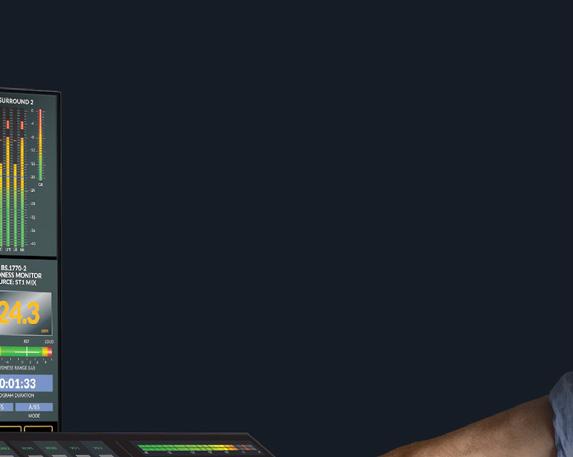

Make quick, precise audio adjustments from anywhere, any time. Complement your news automation system with this virtual mixer.

Adjust the occasional audio level with the Virtual Strata mixer as an extension of your production automation system. Mix feeds and manage the entire audio production with all the mix-minus, automixing, control and routing features you need from your touchscreen monitor or tablet. Fits in any broadcast environment as an AES67/SMPTE 2110-compatible, WheatNet-IP audio networked mixer console surface.

Connect with your Wheatstone Sales Engineer: call +1 252-638-7000 or email sales@wheatstone.com

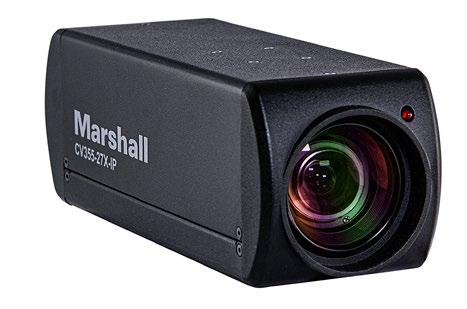


Does this year really mark 100 years since the invention of television?

This was the question I posed to Michael Crimp, CEO of the IBC, during a recent press conference previewing next month’s IBC Show in Amsterdam.

“We were looking around to see when everyone else was celebrating it, and most people seem to be celebrating it this year,” he said, adding that the show will feature a major exhibit devoted to television’s first century.

“But we’ll also be looking forward to the next 100 years and we’ll be working with young people who will be going around IBC, and they will be creating content about what the next 100 years of television will look like for them,” Crimp added.

Unlike the telephone, which has its Alexander Graham Bell, and the light bulb, which has its Thomas Edison, television has no single “father,” or at least no historical figure known to most schoolchildren. The closest we have is two fathers: John Logie Baird in the U.K. and Philo T. Farnsworth in the U.S.

I asked two experts on the history of television, Mark Schubin and former TV Tech Technology Editor James O’Neal, about the IBC’s claim, and they concurred that technically, yes, 1925 does mark the first time television was demonstrated.

“If by ‘TV,’ you mean recognizable images of a human face, the date is good,” Mark said. “On Oct. 2, 1925, John Logie Baird first saw the recognizable features of the head of a ventriloquist dummy, ‘Stookie Bill,’ at 22 Frith St. in London’s Soho district.”

So far, so good, but Mark adds an asterisk: “But on June 14, 1923, in a building on Dupont Circle in Washington D.C., SMPTE founder Charles Francis Jenkins demonstrated live-motion wireless images to officials. Unfortunately, they were just silhouettes.”

James, who has an encyclopedic knowledge of the history of television, mentioned that Reginald Fessenden, who claimed to have made the first live radio broadcast of speech and music on Christmas Eve in 1906, filed a patent that described the early workings of television in 1922.

In June, a group of television history aficionados gathered at Dupont Circle in Washington, D.C., to honor Charles Francis Jenkins (second from left), considered one of the “founders” of television.

“I also have a nagging suspicion that Fessenden may have actually built a working television system, as he includes a great detail of information about the light valve’s construction—much more so than I’ve even seen in a patent or patent application,” James said. “The big problem I have with assigning priority to Fessenden is that he liked to blow his own horn. He was not one to hide his accomplishments ‘under a bushel.’ ”

So, to summarize, Logie Baird conducted the first private demo of a mechanical television system in 1925; the first public demonstration of TV came the next year (also in the U.K.); and Farnsworth is credited with introducing the electronic version of television in the U.S. when he successfully transmitted the first electronic television image—a straight line—on Sept. 7, 1927.

All arguments aside, if you’re heading to IBC next month, be sure to check out the exhibit on television’s centennial and learn more about what’s to come.

Vol. 43 No. 8 | August 2025

FOLLOW US www.tvtech.com twitter.com/tvtechnology

CONTENT

Content Director

Tom Butts, tom.butts@futurenet.com

Content Manager

Michael Demenchuk, michael.demenchuk@futurenet

Senior Content Producer

George Winslow, george.winslow@futurenet.com

Contributors: Gary Arlen, James Careless, Fred Dawson, Kevin Hilton and Phil Rhodes

Production Managers: Heather Tatrow, Nicole Schilling

Art Directors: Cliff Newman, Steven Mumby

ADVERTISING SALES

Managing Vice President of Sales, B2B Tech

Adam Goldstein, adam.goldstein@futurenet.com

Publisher, TV Tech/TVBEurope Joe Palombo, joseph.palombo@futurenet.com

SUBSCRIBER CUSTOMER SERVICE

To subscribe, change your address, or check on your current account status, go to www.tvtechnology.com and click on About Us, email futureplc@computerfulfillment.com, call 888-266-5828, or write P.O. Box 8692, Lowell, MA 01853.

LICENSING/REPRINTS/PERMISSIONS

TV Technology is available for licensing. Contact the Licensing team to discuss partnership opportunities. Head of Print Licensing Rachel Shaw licensing@futurenet.com

MANAGEMENT

SVP, MD, B2B: Amanda Darman-Allen

VP, Global Head of Content, B2B: Carmel King

MD, Content, Broadcast Tech: Paul McLane

Global Head of Sales, Future B2B: Tom Sikes

VP, Global Head of Strategy & Ops, B2B: Allison Markert

VP, Product & Marketing, B2B: Andrew Buchholz

Head of Production US & UK: Mark Constance

Head of Design, B2B: Nicole Cobban

FUTURE

West 42nd Street, 7th Floor, New York, NY 10036

Tom Butts

Content Director

tom.butts@futurenet.com

a licence to publish your submission in whole or in part in any/all issues and/or editions of publications, in any format published worldwide and on associated websites, social media channels and associated products. Any material you submit is sent at your own risk and, although every care is taken, neither Future nor its employees, agents, subcontractors or licensees shall be liable for loss or damage. We assume all unsolicited material is for publication unless otherwise stated, and reserve the right to edit, amend, adapt all submissions.

Please Recycle. We are committed to only using magazine paper which is derived from responsibly managed, certified forestry and chlorine-free manufacture. The paper in this magazine was sourced and produced from sustainable managed forests, conforming to strict environmental and socioeconomic standards.

Two-thirds of broadcast engineers reaped the benefits of a pay raise within the last year.

That’s according to the Society of Broadcast Engineers’ annual compensation survey, which is used to determine salary levels and benefits among broadcast and media technology engineers. It’s the 10th year the SBE has conducted the report.

The survey indicated most engineers are between the ages of 50 and 70, many have more than 30 years of experience and, most importantly from the society’s perspective, its certification program continues to pay off.

The SBE shared a sampling of the annual survey’s findings with TV Tech’s sister publication, Radio World.

It was conducted between April 1 and May 15 with approximately 270 qualified respondents ranging in age from 20 to over 70, with the most common age group in their 50s.

Approximately 44% of those surveyed worked in radio, while 37% were in TV. Respondents held a wide range of experience. Many have been in the industry for more than 30 years, with a concentration in the 11-to-40-year range.

Around two-thirds of respondents reported receiving a salary increase in the last year. For those who received a raise, around 35% said they received a 3% raise.

The survey demonstrated the benefit of an SBE certification, which approximately 57% of respondents held. For a radio engineer, the average salary with a certification was approximately $88,000, compared to $80,000 without. For those respondents who indicated that they performed both radio and television engineering—about 23% of those surveyed—there was a $20,000 increase with a certification versus without.

Other notable certifications held by respondents included CompTIA, with approximately 12%, and Dante/Audinate, with about 8%. Approximately 17% of respondents held no professional certifications.

All responses remained anonymous, the society said, but demographic data collected included market size, job category and title, age, years in the industry, salary and benefits received.

The complete results are available free to society members and the survey is available for purchase by others through the SBE Bookstore. The society has around 4,000 members in 117 chapters in 26 countries worldwide.

President Donald Trump has signed a bill that will end federal funding for the Corporation for Public Broadcasting at the end of October, following Congress’ approval last month.

The end of funding could sound the death knell for PBS and NPR stations in rural areas, advocates warned.

“This elimination of federal funding—over 70% would go to local stations—will decimate public media and force many local stations to go dark, cutting off essential service to communities that rely on them—many of which have no other access to free, locally controlled media—especially those communities in rural areas,” America’s Public TV Stations CEO Kate Riley said in a statement. “The proponents of this legislation were myopically focused on defunding NPR and PBS, but what this bill will actually do is devastate independent local stations—some of which are not even members of NPR and PBS and don’t air their programming.”

PBS President Paula Kerger concurred. “These cuts will significantly impact all of our stations, but will be especially devastating to smaller stations and those serving large rural areas,” she said. “Many of our stations which provide access to free unique local programming and emergency alerts will now be forced to make hard decisions in the weeks and months ahead.”

CPB funding makes up approximately 15-18% of PBS’s annual operating budget. Without the funding, PBS, which has 350 stations nationwide, estimates that roughly 15% of its stations will be unable to operate.

Olivia Trusty, the new FCC Commissioner appointed by Trump, acknowledged “the long-standing role that the Corporation for Public Broadcasting has played in supporting educational and cultural programming across the country, particularly in rural and underserved areas,” but added that defunding CPB “presents an opportunity for innovation, partnerships and more localized decision-making.”

“As a regulator, I will continue to support policies that promote access and competition in media, without presupposing that one model of funding or content creation should be immune from public scrutiny or reform,” she added.

I’ve competed against him. I’ve worked for him. I’ve crossed paths with him at numerous industry events, and I’ve even interviewed him a time or two. Now it is time to say goodbye to him as he retires from ATSC as its vice president of standards development after 25 years with the organization.

Of course, I’m referring to Jerry Whitaker, one of the true gentlemen of the industry.

Kurz

Going to work full-time for KCRA radio in Sacramento, Calif., at the age of 19, Jerry worked as a morning news editor and later as a producer. “But my real interest was in engineering,” he said, so Jerry got his FCC First Class License and found a job as an engineer at an AM station in Eureka, Calif., market 183, in the late 1970s.

By 1983, Jerry joined Broadcast Engineering magazine as radio editor. Eventually, he became editorial director of BE and was then promoted to associate publisher.

One of his favorite magazine memories occurred at the Fall SMPTE Conference in Los Angeles when, as the newly minted radio editor, he visited Solid State Logic’s booth with the magazine publisher.

“We sat down at this SSL board with [the late] Doug Dickey,” he recalled. “It was 8 feet long—just enormous. And I’m used to a radio board, which if it had 10 channels was a lot. He’s telling me about this, and here’s the send, and here’s the return. And I’m thinking, ‘what the heck is this,’ but I managed to bluff my way through and say: ‘Mm-hmm, I see.’ ”

But if you do something long enough, you learn along the way, especially if you write articles read by broadcast engineers. That’s doubly so, if you’re writing and editing technical tomes, which Whitaker has done in spades.

Over his career, he has written or edited some 50 books and counts the 2,500-page “The Electronics Handbook,” published by CRC Press in 1996, as his most important work.

In 2000, he joined ATSC as technical director, a title which the organization changed a few years later to vice president of standards development. In that role, he has helped shepherd standards development, including the industry’s most significant effort since A/53 (ATSC 1.0) was published in 1995, namely the ATSC 3.0 suite of standards.

As he prepares to depart at the end of August, the industry awaits what the FCC will decide to do about sunsetting ATSC 1.0 to make way for 3.0. Sitting in the seat he has occupied for a quarter century, Jerry seemed to be the perfect person to ask about how the industry and standards body made the decision to develop a non-backward-compatible TV transmission standard—one of the biggest hurdles the industry faces in a 1.0 sunset.

ATSC put out a public call seeking input on what the new broadcast standard should be, he said. “People presented their ideas on what was possible today [at that time] and what was possible tomorrow. That detailed report led to ATSC 3.0.

“We realized clearly that if we’re going to develop a new television system, it needs to offer benefits that the current system simply cannot, and so it needs to be a pull—consumers need to want to have it. That’s what we set out to develop.”

What are his plans post-ATSC? “Well, I have hobbies as you can see,” Jerry said during our Zoom interview as he sat amidst the vintage radio gear he has restored.

“When I was in Eureka, I rebuilt the AM station and put the FM, KPDJ-FM, on the air, Class C from a construction permit. Thanks to eBay and a lot of repair work, I’ve collected pretty much everything we had there down to the automation system and the carousel cart machines and all of that fun stuff.

“So, I’ll keep up with that and keep trying to come up with answers to the question I get from my family members, which is: ‘Why, dad? Why would you do this?’ ”

Dan Whealy has acquired Heartland Video Systems and will be its new owner and president, the systems integrator said. Dennis Klas, former president and owner, will stay on through mid-2026 to support Whealy and ensure a smooth and seamless handover, the company said.

“It is an incredible honor to lead Heartland Video Systems into its next chapter,” Whealy said in a statement.

“As a long-time former customer and current employee, I developed a deep respect for the legacy built by Dennis and the HVS team—one rooted not only in visionary leadership and integrity, but in a culture of outstanding ethics, transparency, trust, dedication and service. That culture is what has made HVS so special to its employees, customers and partners over the past three decades—something that is very near and dear to my heart to preserve going forward.”

The company, a premier video systems integrator, consulting firm, and manufacturer of the Videstra MicroLocal IP camera management solution, said all key staff members, corporate structure and customer-focused sales and support will remain in place.

Whealy brings to his new role 21 years of experience in the broadcast industry, with a well-rounded background in both radio and television and a deep understanding of all facets of broadcast technology. His career has spanned roles from hands-on engineering to executive leadership, including time at Quincy Media and, more recently, as chief technology officer at Allen Media Broadcasting (AMB).

By Fred Dawson

Notwithstanding ongoing tariff-related economic uncertainty, U.S. TV broadcasters found plenty of developments at the midway point of 2025 to justify surprisingly optimistic commentary about both their short- and long-term prospects.

Of course, with the tariff threat escalating as this article went to press, there was no way to count on the first half of the year as an indicator of what’s in store for the larger economy. But at least industry results to date were cause for relief compared to what might have been.

While TV station and network owners attested to an overall softness in the local ad market stemming from the tariff situation, outlooks for the rest of the year were buoyed by results exceeding expectations, including the fact that year-over-year ad revenue declines were relatively mild, in the range of 5-10%+, which improved on the usual fluctuations following a national election year.

Addressing analysts in May, major TV station groups predicted continuing gains from technology-driven cost efficiencies, expanded local news coverage through digital outlets, the return of many major sports teams to local over-the-air distribution and a strong pace in distribution contract renewals. In comments that typified the industry state of mind, E.W. Scripps Co. President and CEO Adam Symson touted an outlook based on Q1 results that “beat expectations across the board due to strength in network revenue, especially for connected TV, and due to strong expense control across the enterprise.”

Looking at the tariff situation, Nexstar Media Group Chairman and CEO Perry Sook contends, at least in Nexstar’s case, there is little cause for concern. As to whether tariffs might result in ad sales “falling off the cliff,” Sook says, “The answer is no.” While uncertainties might cause some hesitation in ad buys among automakers and other sellers of goods that could face higher tariffs, “only about 40% of our nonpolitical ad revenue is tied to goods-based businesses that could be impacted by tariffs,” he says.

Tegna, in a release describing Q1 performance that exceeded expectations, said the results, with just a 5% ad revenue drop from the politically charged 2024 ad pace, showed the company’s “resilience despite facing macroeconomic headwinds.” Amid anticipated ongoing softness in the overall local ad market, the growth rate on the digital side was making a big difference, leaving the company “in a strong position to drive profitable digital growth through 2025 and beyond,” Tegna Chief Financial Officer Julie Heskett says.

How all of this plays with investors remains to be seen. But Justin Nielson, principal analyst and head of S&P Global Market Intelligence Kagan, suggests a few silver linings in the many clouds overhanging the broadcast TV industry haven’t changed his group’s conservative outlook on the sector’s long-term prospects. Accounting for election-related ups and downs in ad-sales cycles, S&P Kagan projects a 0.5% compound annual growth rate (CAGR) for TV-station ad revenue through 2035, with a 1.3% CAGR for core local spot ads and 2% CAGR for streamed video and website ads slightly outweighing a 4.1% decline in the national spot CAGR.

These projections account for the many upsides cited by station group executives, Nielson says, including likely consolidation, strong sports schedules, digital content expansion, NextGen TV and other factors. But in each case, he says, there are trends that appear to set limits on their potential.

For example, as station groups emphasize the work they’ve done to provide more local news coverage through digital outlets, Nielson says S&P Kagan has seen “a bit of a plateau in terms of what stations are putting online.” When it comes to winning viewers who stream content to connected TV sets through station streaming services or FAST channels, local broadcasters are up against a “lot of competition,” he adds.

Such hard-nosed reality checks on industry optimism make clear there’s another way to look at things, but, given the volatility intrinsic to every trend line, it’s never been harder to judge where things are going. Beyond broadcasters’ take on what’s happening, there’s ample evidence for “the momentum shifting in favor of broadcasters,” as Nexstar President and Chief Operating Officer Mike Baird puts it.

One clue in that vein can be found in NathansonMoffett’s newly released Q1 Cord Cutting Monitor, which tracks pay TV industry trends with a direct bearing on the broadcast TV segment. Notably, “we’ve now had three consecutive quarters of improvement in the decline rate of traditional Pay TV,” co-authors Craig Moffett, Robert Fishman and Michael Nathanson report. “That’s the first time we’ve been able to say that since the decline began.”

Near-term considerations aside, the cause repeatedly cited by broadcasters for renewed optimism about the future is their confidence they’ll be getting regulatory relief on ownership caps, ATSC 3.0 spectrum availability and other issues. In June, the FCC asked for public comments (due this month) on a 2017 NPRM on updating station ownership caps, something group executives have been pushing for since even before Brendan Carr took the FCC chairmanship.

“I’d think a NPRM is the most likely way to kick off a revision of rules, local and national, as they relate to ownership,” Nexstar CEO Sook said during the company’s Q1 call in May. “I’d think that would be one of the first moves [FCC] chairman [Brendan] Carr would make.”

Optimism about what’s in store in Washington is fueled by a fact noted by Sook, who’s also the current chairman of the NAB’s joint board of directors: “This is the first time in history that the entire board voted unanimously that the national cap elimination and in-market ownership restrictions be eliminated,” he says, adding that it’s certainly “impactful at the regulatory agencies and on Capitol Hill that the industry is speaking in one voice as it relates to this issue.”

Of course, any big, bottom-line impacts resulting from regulatory changes won’t be felt until new rules go into effect in 2026 or beyond. But, as Tegna CEO Mike Steib notes, there’s a lot about what’s already in play on the efficiency side of improving performance that will move to center stage when the next round of consolidation kicks in.

“A real driver of value in the consolidation opportunity is around local costs,” Steib says. Noting what consolidation would mean in contrast to “three, four, five, six TV stations performing the same tasks in a market,” he adds, “If you look at the potential sort of back-office and support takeout costs across markets and across the country, it’s many billions of dollars of potential savings for the ecosystem.”

It’s clear that broadcasters are putting real money behind these ambitions. Uniformly, suppliers we queried for this article sounded more upbeat than they did at NAB Show in April, when everyone was concerned about how looming tariffs and general economic conditions would affect buying decisions.

So far, they said, tariffs have been a nonissue in their sales performance, though they acknowledge that could change. But with industry investments in operational efficiency, digital development and advanced advertising solutions surging, they say they are confident 2025 will turn out to be a good year.

One bird’s-eye view from the cloud perspective on industry transformation comes from Chris Blandy, director of strategic business development for M&E and games and sports at Amazon Web Services (AWS). AWS is seeing “continued momentum across the media and entertainment industry as companies migrate their existing archives and new workloads to the cloud, while also exploring new generative AI tools to support their productions and create more personalized experiences for audiences,” Blandy says.

Business for Bitmovin, long a leading beneficiary of the video streaming revolution, continues to surge as ever more service providers look to consolidate and streamline video processing.

“It’s all about efficiency,” Bitmovin Co-founder and CEO Stefan Lederer says. “We’re seeing customers really take a hard look at their technology stacks to unlock cost savings through smarter, more streamlined workflows. That’s true across our entire product portfolio, from better encoding efficiency and CDN savings, to switching to commercial platforms or analytics tools like ours that are built to drive efficiency and results.”

As a result, Lederer says: “We’re on track to double our growth goal for this year. By the end of the first half, we had already achieved the full-growth target originally set for 2025.”

The trends are just as impactful for longtime traditional TV providers who have evolved their technologies to fit the new market dynamics. Noting that Appear is building on a 46% revenue increase in 2024, Vice President of Marketing Matthew Williams-Neale echoed the common refrain.

“Broadcasters and media operators remain focused on deploying technologies that boost efficiency, support distributed production, and enhance audience engagement,” Williams-Neale says. “We see this reflected in strong customer interest around hybrid workflows, cloud migration and open software ecosystems.” ●

• Switch, extend Video, Keyboard and Mouse

• Support for Video, Audio (analog and digital), IR & RS-232

• USB 1.1 (HID), USB 2.0 for touchscreens, tablets, USB drives

• Supports HDMI, DVI, DP++, VGA, resolution up to 4K60

• Low latency (2 ms), and fast computer switching (2 sec)

• Supports up to four head computers and consoles

• Receivers with built-in scaler to match the output displays

• Control up to four consoles from a single keyboard & mouse

• Runs on inexpensive GigE networks - POE support

Available as single/dual Tx/Rx modules and open cards

APPLICATIONS

• Production

• Mobile Trucks

• Postproduction

• Command and Control

• Universities

SUPPORTS

• DaVinci Resolve Advanced Panel

• Stream Deck

• NewTek 3Play

• American Museum of Natural History

• FEMA

• Wells Fargo

• ZDF - World Cup

American broadcasters face a choice

Preston Padden is a former broadcast executive at INTV, Fox, ABC and Disney.

The press is filled with stories predicting the demise of linear broadcast television. And certainly, it is true that we face many obstacles, including streaming services taking our viewers and advertisers, cable cord-cutting eroding our retransmission revenues and our own networks climbing on the streaming bandwagon.

But what if there was a new broadcast standard that held the promise of connecting broadcasters not only to television receivers, but also to 5G wireless smartphones and tablets, opening a whole new market to our transmission? The good news is that there is such a standard and it is called 5G Broadcast. And because 5G Broadcast (unlike ATSC 3.0) was adopted as part of the 3GPP 5G standard, it holds the key to our future. All we have to do is join large portions of the world in adopting it.

I have the greatest respect for my longtime friend Mark Aitken, who has advocated with great skill to try to make ATSC 3.0 the American standard for next-gen TV. And I have great admiration for Sinclair and its principal, David Smith. David is probably the only station group owner who has seated himself at a bench and actually built a UHF transmitter.

Let me state upfront that no one is paying me to write this article and that I do not own a single share of stock in Verizon, AT&T, T-Mobile, mobile chip makers or phone manufacturing companies. My investments are in boring municipal bonds with no relation whatsoever to the television or telecom industries.

The broadcasting industry is transitioning from ATSC 1.0 to a next-generation standard. The two principal choices available to us are ATSC 3.0 and 5G Broadcast. An international standards body called 3GPP sets the standards for all cellular devices in the world, and all of the major cellular device manufacturers (Apple, Samsung, etc.) build their devices to comply with 3GPP. 3GPP-compliant devices receive only signals that are part of the 3GPP family of 5G global standards, meaning that broadcasters who transition to the 5G Broadcast standard will be able to transmit directly to the hundreds of millions of next-generation 5G smartphones and tablets.

By contrast, ATSC 3.0 is not a part of the 3GPP family of cellular standards and therefore cannot and will not be able to be received by smartphones and tablets compliant with the 3GPP standards. For this reason, Sinclair and others tried diligently to get ATSC 3.0 approved by 3GPP as part of its standards. Ultimately, 3GPP refused to incorporate ATSC 3.0 into its standard.

LTE-based 5G Broadcast is better-suited for integration with 3GPP modems because it reuses nearly all existing LTE/5G components and hardware, whereas ATSC 3.0 requires different implementations across critical building blocks.

So far, public and private broadcast operators in Germany, France, Italy, Austria, Estonia, Spain and the Czech Republic have come forward and announced their intentions to deploy the 5G Broadcast standard. And there is continuing interest in 5G Broadcast in Malaysia, China and Brazil, with active trials and evaluation of the technology. With several large countries committing to 5G Broadcast, I expect TV-set manufacturers to incorporate 5G Broadcast receivers to meet marketplace demand—no government mandates necessary.

Because of trial broadcasts around the world—including a Federal Communications Commission-approved trial by low-power station WWOO in the Boston market—we don’t need to speculate about whether 5G Broadcast can be received by 5G smartphones.

So American full-power TV broadcasters face the following choice: Do you want to transition to a next-gen standard that broadens your market to include reception by 5G cellular devices, or do you want to transition to a standard that cannot be received by those devices?

The question answers itself.

Advocates for ATSC 3.0 try mightily to think of applications that could make up for their standard’s lack of access to 3GPP cellular devices. They argue that car manufacturers will go to the expense of adding ATSC 3.0 receivers to their cars to receive software downloads. But since all cars (even my low-tech minivan) already have 5G transceivers that serve that function, that seems very unlikely. Or they argue that an ATSC 3.0-based new GPS system will be the key to our future. That seems a real stretch, and certainly no substitute for gaining access to 3GPP cellular devices.

So why is Sinclair pushing so hard for ATSC 3.0? The simple answer is that they have a conflict—not bad or evil—just a conflict. Sinclair owns a vast portion of the intellectual property that makes up the ATSC 3.0 standard. That means that they stand to reap a fortune in royalties if American full-power broadcasters adopt ATSC 3.0. All other TV broadcasters can make their choice without being burdened by that conflict!

In my opinion the only thing that can save broadcasting from extinction is to transition to 5G Broadcast and transmit directly to both TV receivers and 3GPP cellular devices and thereby join the mobile future.

All we have to do is do it! ●

ATSC 3.0 isn’t just a standard; it’s a system designed to serve the public, empower broadcasters and evolve with technology

Mark Aitken is senior vice president at Sinclair Broadcast Group and president of ONE Media.

Bold claims are easy to make, especially from seasoned showmen.

But if you’re going to listen to them, it’s best to keep one hand on your wallet. Advocates of 5G Broadcast like to make impressive claims about what 5G Broadcast is and what it can do. So, to avoid confusion, before discussing the relative merits of NextGen TV (or ATSC 3.0) and 5G Broadcast, it’s important to clarify one thing that 5G Broadcast is not.

First and foremost, it is not a near-term path for broadcasters to get their signals into mobile devices. The 5G Broadcast Barkers make much of the fact that 5G Broadcast is already a 3GPP standard. But what they don’t tell you is that this isn’t self-executing. Currently, no consumer device can receive 5G Broadcast signals. Not one.

To actually get a 5G Broadcast signal into a phone in a consumer’s hands, manufacturers would need to agree to install broadcast band antennas and new radio frequency filtering and front ends in mobile devices, which is exactly what they would need to do to get ATSC 3.0 signals into a phone in a consumer’s hands. One difference? India’s mobile manufacturers are already supporting ATSC 3.0 phones. Right now. As you read this.

In any event, the standardization of the next ATSC 3.0 release, currently referred to as “Broadcast to Everything (B2X),” will accelerate the availability of 3.0 receivers in mobile devices. B2X is a backwards-compatible evolution of ATSC 3.0 that harmonizes with 3GPP standards—including Release 17 and anticipated extensions—providing a true path toward converged broadcast-broadband delivery without abandoning the robust ATSC 3.0 foundation.

So if 5G Broadcast doesn’t offer a faster path to getting broadcast to mobile, what does it offer?

Well, for one thing, 5G Broadcast offers measurably inferior performance. This is partly because it’s not even really 5G. It’s 4G/LTE. In fact, I don’t know why I’m even calling it “5G” Broadcast at this point.

You don’t need to take my word for 4G Broadcast’s technical inferiority. Last summer, Brazil engaged in a lengthy, thoughtful process involving extensive laboratory and field testing to select the ATSC 3.0 physical layer as the over-the-air transmission system for the country’s upgrade to next-generation terrestrial broadcast services.

In response to its original call for proposals, Brazil’s Fórum do Sistema Brasileiro de TV Digital Terrestre (SBTVD) received 31 responses from 21 different organizations worldwide, resulting in 30 candidate technologies. These included four over-the-air physical layer candidate technologies: Advanced ISDB-T, ATSC 3.0, 5G Broadcast, and DTMB-A, all of which were subjected to both laboratory and field testing.

The full results of the first rounds of lab tests are available at https://forumsbtvd.org.br/ under the TV 3.0 Project tab. SBTVD determined that it was necessary to conduct further lab and field testing between the top two candidate standards, Advanced ISDB-T and ATSC 3.0, before making a final recommendation. The full results of the final round of lab tests are also available at the URL referenced above.

Here’s the short version of the lab results: ATSC 3.0 outperformed every other candidate standard and was unanimously recommended by SBTVD. ATSC 3.0 demonstrated greater spectral efficiency, with higher throughput for both fixed indoor reception and high-speed mobile reception. (Somehow the so-called 5G Broadcast advocates never mention that their mobile standard doesn’t work well with devices that are actually mobile.)

But for our purposes today, it’s particularly worth noting that 4G Broadcast didn’t even make the cut for the final evaluation in field testing. That’s right—5G Broadcast advocates are trying to convince broadcasters to adopt an also-ran technology as the future of the industry.

It’s misleading to suggest broadcast television could become instantly scalable just by virtue of being “part of 3GPP.” The broadcast mode of 5G—FeMBMS or 5G Broadcast—is not implemented in those hundreds of millions of phones. It’s a separate mode, with its own antenna, filtering, LNA, silicon and other required components and software stack—none of which is found in current consumer devices.

ATSC 3.0 has so much more to offer and is so much further ahead in the game at this point. Broadcasters are already using ATSC 3.0 to deliver superior pictures and sound to viewers. Broadcasters have spent years working on ATSC 3.0, developing features like broadcast applications that allow broadcasters deploying NextGen TV to offer new interactive features and benefits, such as enhanced content, program restart, hyperlocal weather and programmatic advertising.

These applications also create an easy pathway to extending content created for digital platforms into the broadcast experience. And broadcasters are already developing new business models that will allow the industry to diversify revenue streams and thrive in the decades ahead.

The future of broadcast isn’t about shiny distractions—it’s about illumination. ATSC 3.0 isn’t just a standard, it’s a system designed to serve the public, empower broadcasters and evolve with technology. It’s on the air. It’s in consumer devices. It works. And if you attended the recent ATSC Next Gen Broadcast Conference … it’s all about mobile! While others chase hypotheticals, ATSC 3.0 delivers real value today—and lights the path to tomorrow. ●

Broadcasters seek out hybrid approaches to monitoring and QC-ing cloud-based media

By Kevin Hilton

In today’s data-based, IP broadcast world, the days of technicians in white coats bench-testing cameras and microphones using specialized test and measurement (T&M) devices such as oscilloscopes, signal generators and spectrum analyzers would appear to be very much in the past. This is increasingly the case due to a major adoption of software for both setting up and troubleshooting equipment and, perhaps more significantly, monitoring channel output for quality control (QC) and regulatory compliance.

The two areas remain very distinct but not entirely separate, with T&M playing a role in compliance through specific test equipment and techniques. “As broadcasters migrate to IP-based environments, HDR workflows, UHD/8K and cloud operations, packet loss, jitter and PTP [precision time protocol] timing become both a T&M and a QC concern,” says Kevin Salvidge, sales engineering and technical marketing manager at Leader Electronics of Europe.

“Software-based solutions are establishing themselves in cloud-native QC platforms and are widely used in file-based and OTT workflows,” he says. “The adoption of ST 2110 has seen the introduction of software-based waveform and vectorscope monitors, but there are still a number of features only hardware solutions can provide, including latency and PTP timing. Because of this, most broadcasters today are looking for a hybrid approach.”
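For readers who want to see what a PTP timing measurement involves, the sketch below works through the delay request-response arithmetic that PTP (IEEE 1588) is built on. It is an illustration only, not any vendor's implementation: the function name and the sample timestamps are hypothetical, and the calculation assumes the network delay is the same in both directions. The result is only as good as the four timestamps fed into it, so how precisely they are captured matters.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate clock offset and one-way path delay from one PTP exchange.

    t1: time the leader sends Sync (leader clock)
    t2: time the follower receives Sync (follower clock)
    t3: time the follower sends Delay_Req (follower clock)
    t4: time the leader receives Delay_Req (leader clock)
    Assumes a symmetric path; all values share one unit (e.g. nanoseconds).
    """
    leader_to_follower = t2 - t1   # path delay plus clock offset
    follower_to_leader = t4 - t3   # path delay minus clock offset
    offset = (leader_to_follower - follower_to_leader) / 2
    delay = (leader_to_follower + follower_to_leader) / 2
    return offset, delay


# Hypothetical exchange: follower clock runs 100 units ahead, path delay is 50.
offset, delay = ptp_offset_and_delay(t1=1000, t2=1150, t3=1200, t4=1150)
print(offset, delay)
```

An error in any one of the four timestamps feeds straight into the offset estimate, which is why the point at which they are captured is so important.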

Even though software is offering new ways of doing established jobs, it is not changing how broadcasters approach T&M and QC, as Matthew Driscoll, vice president of product management at Telestream, observes: “The team looking at camera shading and SRT [Secure Reliable Transport]/Zixi delivery to a cloud workflow are very different than the folks doing the QC on what is streamed to a subscriber. The fact that things are migrating to software, the cloud or hybrid workflows doesn’t matter in the sense that you’re doing the same jobs, just in a different location.”

Driscoll’s colleague Ravi McArthur, product manager for Telestream’s Qualify automated QC-in-the-cloud system, says the aim is to allow users to work where they want to be, rather than dictating how to use the technology. This has resulted in Qualify now being made available for on-prem operation.

“In the cloud, you effectively get infinite horizontal scaling, which is a big bonus for people with vast and wide media supply chains,” he says. “But sometimes, depending on the kind of resolutions and bit rates people are dealing with, they want their software on-prem near high-speed storage so they can process files as quickly as possible.”

In the view of Ashish Basu, executive vice president of worldwide sales and business development at Interra Systems, the situation has “changed for good,” with software and cloud now “almost everywhere” in T&M and QC.

“Broadcasters are saying they’re increasing the level of automation in checking audio-video quality as much as they can,” he says. “But that is not necessarily aligned with the compliance side. Some of the most advanced broadcasting organizations may have no interest in looking at captioning QC because it’s not mandated in their specific geographic region. We see that variation, but otherwise broadcasters are still interested in delivering pristine content.”


What is clear is that the way broadcasters are approaching T&M and QC is driven by new distribution and platform technologies, Triveni Digital President and CEO Mark Simpson says.

“A key trend is the increasing diversity and complexity of the service being delivered,” he says. “ATSC 3.0 is a more complex standard than what came before, not only in the technical underpinnings but also because more services are being deployed. It is something we feel should have a more integrated, comprehensive approach rather than a lot of different subsystems.”

On the compliance side, Mediaproxy has been completely software-based since it was founded in 2001. “There are virtually no hardware solutions used for QC and compliance anymore,” CEO Erik Otto says. “There’s probably still a lot of hardware involved in T&M, but I’m sure they will have to change as well.”

Even so, Otto adds, there may still be a requirement for dedicated physical devices when it comes to detecting faults such as jitter. “When you deal with packets of data, you need to detect jitter properly for correct synchronization of audio and video streams,” he explains. “To do that you need a clock, so technically, a piece of hardware to give the necessary accuracy.”
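To make Otto's point concrete, here is a minimal sketch of one common way to put a number on packet jitter: the running interarrival jitter estimate described in RFC 3550 for RTP streams. It is offered as an illustration rather than as Mediaproxy's method. The function name and the sample timestamps are hypothetical, and the receive times are assumed to come from a trustworthy local clock, which is exactly the dependency Otto describes.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate in the style of RFC 3550, section 6.4.1.

    send_times: sender timestamps carried in each packet
    recv_times: arrival times stamped by the receiver's local clock
    Both lists use the same unit (e.g. milliseconds) and the same order.
    """
    if len(send_times) != len(recv_times):
        raise ValueError("timestamp lists must be the same length")
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Difference in transit time between consecutive packets.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        # Smooth with a gain of 1/16 so a single outlier does not dominate.
        jitter += (abs(d) - jitter) / 16.0
    return jitter


# Hypothetical stream sent every 20 ms; the third packet arrives 5 ms late.
print(interarrival_jitter([0, 20, 40], [5, 25, 50]))
```

Because the estimate is built entirely from differences between arrival timestamps, any inaccuracy in the receiving clock shows up directly in the reported jitter.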

While a physical timepiece might appear to be irreplaceable, even this area could eventually follow the software trend due to that inevitable game-changer, artificial intelligence (AI). “With AI being everywhere, we are putting it throughout many of our products where the idea of a clock seems like a skeuomorphism,” Telestream’s McArthur says. “We’re very focused on practical AI and are responding to customer requests for features including clock and slate reading, contextual analysis and object detection.”

Interra has been working with AI and machine learning for three to four years, says Ashish Basu, and has incorporated both into many of its products.

“We use them in areas like video signal quality or degradation measurements,” he says. “However, we do not say we are an AI company or that we produce AI products. But there is space where AI can make a significant difference for our customers.”

Triveni has had early versions of AI in its products from the beginning because they were rules-based, Simpson says: “AI is a kind of rules-driven technology and it’s getting more sophisticated. Over time, we’ll see an increasing use of AI techniques—for example, our monitoring system with quality scoring for the feeds based on a number of observations.”

Otto has a different take on how AI could possibly change T&M, or, ultimately, the need for it: “Problems with satellite links, 4/5G, internet connections with terrestrial transmitters and networks in general can’t be controlled or predicted,” he says. “Technically, everything else—software and hardware—is in everyone’s control. Because AI is probably better at producing better code and outcomes, it could, in the long term, shrink the need for T&M.” ●

By James E. O’Neal

The public’s interest in viewing distant “breaking news” events was stoked more than 100 years ago by the French Pathé motion picture company, with that organization producing and distributing the first “newsreels” to European movie houses in 1909 and in the United States two years later.

These short presentations of major news developments allowed audiences to experience such events in greater detail than the still images that appeared in newspapers and magazines. By the 1930s, a few specialty movie houses catered to serious “news junkies” by offering only newsreels running on a continuous basis, a precursor to the 24/7 schedule initiated by CNN in 1980.

Television news coverage, and the immediacy that comes with it, ultimately spelled the death knell of the theatrical newsreel, with the final installment coming from British Movietone News in May 1979 (U.S. newsreel production had ceased some 12 years earlier).

With this background, and the rise of numerous successful television entities that do nothing but broadcast news, it’s interesting to trace the evolution of “on the scene” television newsgathering and the technological developments that have now made it possible to capture and transmit live video from anywhere in the world.

After a century, it’s sometimes a bit difficult to establish the true origin of many technologies and events—witness the competing claims for the invention of the telephone, radio, aviation and others. However, a very strong claim for priority in ENG exists for Schenectady, N.Y., TV station WRGB, which at the time of the history-making event operated under radio station WGY’s call sign.

WGY was owned by General Electric, and its wizard of combined electrical and mechanical engineering, Ernst Alexanderson, had begun experimentation with television around 1926. He succeeded in developing a 48-line mechanical system, and with it, broadcast the first televised drama, “The Queen’s Messenger,” in September 1928.

“Ground zero” for ENG appears to be “on-the-scene” coverage in August 1928 of New York Gov. Al Smith announcing his candidacy for U.S. president. The event was televised over General Electric’s Schenectady station, WGY. Ernst Alexanderson (right), who headed up GE’s early television research and was responsible for the live event coverage, is seen here with assistant Ray Kell, examining a 48-line mechanical scanner used in his TV broadcasting initiative.

Alexanderson had also devised a portable version of his equipment, and in the previous month transported it to the New York statehouse in Albany to televise Gov. Al Smith as he accepted his nomination as the Democratic presidential candidate on Aug. 22.

This first-ever bit of televised “breaking news” was reported to have been clearly received on the handful of television receivers that existed then. (Alexanderson later continued his television research independently of WGY, with the experimental call sign W2XCW. This pioneering station later became WRGB.)


Claims might be made by fledgling U.K. and German television operations in connection with the televised coverage of horse racing and the 1936 Berlin Summer Olympic Games, but these are better characterized as live coverage of sporting events, not breaking news.

A priority claim for the first electronically televised breaking event has to go to NBC and its 1939 experimental television station, W2XBS (later WNBT and now WNBC), which in November 1938 broadcast live the burning of an abandoned New York City building, and a few months later, covered the opening of the New York World’s Fair, with President Franklin Roosevelt, New York Mayor Fiorello La Guardia and RCA President and CEO David Sarnoff all appearing before the camera.

However, it was not until the end of World War II in 1945 that television began to be taken seriously by the general public and receivers started appearing in homes in substantial numbers. During this period, early TV broadcasters began to emulate their radio counterparts by increasingly taking programming out of the studio and into real-world environments, including live coverage of breaking news.

Credit for the first live coverage of a breaking “hard” story by television likely goes to Los Angeles station KTLA, which, not long after exchanging its experimental call sign W6XYZ for the commercial call, took its mobile setup to the scene of a massive explosion at a city electroplating plant. The Feb. 20, 1947, incident killed 17 people and damaged 11 nearby buildings beyond repair. KTLA microwaved video from the scene to its transmitter location atop Mount Wilson for retransmission to the few hundred TV sets then in use in Los Angeles.

KTLA also gets the honors for the first continuous television coverage of an evolving event—the attempted rescue of a 3-year-old girl who had fallen into an abandoned well. The station’s coverage of this 1949 event spanned nearly two days in April.

KTLA also wins—hands down—the honors for development and deployment in 1958 of the first helicopter television broadcasting platform, the “Telecopter,” which was the brainchild of the station’s chief engineer, John Silva.

Silva first experimented with a “tethered” configuration for video delivery, and with the success of this arrangement shifted to a “wireless” version, employing a special mobile microwave transmitter and non-directional antenna package built by General Electric.

The antenna was hinged so as not to impede helicopter landing operations, stowed horizontally until the aircraft was in flight. It was then dropped to a vertical position beneath the craft, radiating equally well in all directions. Signals could be easily tracked from an elevated receiving dish.

Electronic television’s first generation of vehicles for “outside broadcasts” reflected the bulk and weight of the vacuum tube-driven cameras, monitors and support gear they had to carry. While this late-1940s-vintage 17,500-pound Dumont “Telecruiser” was capable of capturing and relaying video back to the studio, its requirements for external power and special parking arrangements were not conducive to coverage of “breaking news.”

On Feb. 20, 1947, a massive explosion leveled a Los Angeles electroplating plant. The blast, which killed 17 people and destroyed a number of nearby buildings, occurred less than a month after W6XYZ had been commercially relicensed as KTLA. The station provided on-the-scene coverage of the aftermath.

In addition to providing some of the very first televised on-the-scene coverage of unfolding news events, Los Angeles station KTLA also was the first to provide a bird’s eye view of such events via their “Telecopter,” which launched in 1958. In this photo, a special-made non-directional microwave transmitting antenna is about to be installed on the Bell 47G-2 helicopter leased by the station prior to its deployment.

(As an historical note, Silva was not the first to transmit video from an aerial platform. Near the end of World War II, the military experimented with drone operation using early RCA camera gear, and in 1955, NBC used a Goodyear blimp to transmit portions of the Jan. 1 Tournament of Roses parade; however, Silva was the first to deploy a helicopter in an ENG application.)

KTLA was certainly not alone in devising methodology for airing news events as they happened. By the late 1940s, RCA was offering a two-camera “remote” unit in its catalog of TV-specific equipment, and a number of stations either purchased such ready-made products or “rolled their own” in an attempt to supplement studio programming.

And while these smaller mobile video origination platforms could be used in coverage of a scheduled news event, spontaneity was not their strong suit, as external electrical power was required, necessitating the pre-ordering of a power company “drop,” or running heavy cables to a suitable AC source.

However, by 1952, engineers at Washington, D.C.’s CBS affiliate, WTOP-TV (now WUSA), overcame this limitation with the creation of a one-camera vehicle (a modified passenger car) for event coverage. (As little documentation survives, it’s possible that the network may have been involved in the project, as CBS was then part-owner of WTOP-TV.)

A surviving photo (on the cover) shows the unit in operation, with CBS newsman Walter Cronkite describing a news event as technicians operate an RCA camera and microwave transmitter. Power demands for this gear would have been minimal compared to those required by vans with multiple cameras, monitors and other origination equipment, and could have been met by an auxiliary AC generator driven by the car’s engine.

Despite the efforts of station and network engineering teams in devising smaller and less power-consuming mobile video origination platforms, throughout most of the first three postwar decades, the favored methodology for capturing most news events for television broadcast remained the 16-millimeter motion picture camera, due to its small form factor, ease of handling and portability. ●

(The next part of this series will examine the initial attempts at breaking away from news film as solid-state equipment began to replace vacuum tube-driven broadcast gear.)

By James Careless

No matter how good your current gear is, there’s always new equipment that’s better, lighter and easier to use in the field. Today’s gear for news production is lighter and more compact yet more powerful than ever, thanks to an improved form factor and faster processing.

The quest to improve video transmissions from the field is never-ending and Teradek, a Videndum plc brand, has taken a step forward with its new Prism Mobile 5G cellular bonding unit, the lightest 5G-enabled camera-back video encoder on the market, according to Derek Nickell, Teradek’s technical product manager.

“The system is designed to provide the best possible cellular connectivity for live video streaming without compromising on size and weight, allowing field operators to stay agile for longer while live streaming over public and private networks,” he says.

Nickell says Prism Mobile enables camera operators to stream HD and 4K video over cellular networks and Starlink with latency rates as low as 250 milliseconds. It also allows them to securely share encrypted, color-accurate live video feeds and recordings with stakeholders globally and expedite postproduction with several natively integrated camera-to-cloud platforms such as Frame.io and Sony C3 Portal.

This last capability lets editors access camera proxies as soon as they’re captured, and allows marketing teams to post highlights and playbacks in near-real time.

As anyone who’s ever shot video knows, a lightweight yet solid camera head and tripod is your best friend in the field. The Sachtler Ace XL Mk II fluid head and flowtech tripod combination is worth inviting into the friend zone. Designed to handle payloads weighing up to 17.6 pounds, the 3.75-pound Ace XL Mk II head adds minimal weight to a tripod, yet can handle most of today’s video cameras. (The Ace XL Flowtech 1016MS tripod weighs 11.7 pounds.)

According to Sachtler Senior Product Manager Barbara Jaumann, the Ace XL Mk II head/flowtech tripod combination was designed in collaboration with TV crews to meet their demands for robust, lightweight, and quick-to-deploy equipment.

“One of its standout features is the placement of brake levers at the top of the tripod,” she says. “This design allows for exceptionally fast setup—just flip the levers and lift the tripod in a single motion. This top-mounted lever system also makes height adjustments quick and easy, even when working solo, such as adapting to different interview subjects on the fly.”

Another key focus in the Ace XL Mk II’s development was ergonomic portability, Jaumann adds. “The tripod is not only quick to deploy but also simple to fold down, with integrated magnets that keep it securely closed during transport,” she says.

Lighting can be a real challenge in field video shoots, which is why Litepanels has introduced the Astra IP line of weatherproof portable LED light panels. The lineup includes the Astra IP Half, 1x1 and 2x1 units, all with an IP65 rating that ensures protection against rain, dust, snow and humidity.

These bicolor LED panels offer a color temperature range of 2,700 to 6,500 kelvin and feature high color accuracy, with CRI/TLCI ratings around 95. They deliver a focused 30-degree beam angle, provide flicker-free dimming and support both Bluetooth and optional DMX control, with an onboard LCD for easy adjustments.

“The standout feature of the Astra IP is its IP65-rated weather resistance, making it ideal for use in challenging outdoor environments where crews often face unpredictable weather such as rain, dust, or snow,” Michael Herbert, head of product management at Litepanels, says. “In terms of performance, the lights are exceptionally bright. The 1x1 model delivers around 3,000 lux, while the 2x1 reaches up to 5,500 lux at 3 meters, ensuring strong illumination in any setting.”

Designed with portability in mind, each Astra IP unit is lightweight, ranging from 6.8 to 15 pounds, which makes the panels easy to carry and set up on the go. Each unit comes with an integrated power supply, and users can choose from optional battery plates including Gold Mount, V-Mount, and BP-U for greater flexibility. Available in Duo and Trio configurations, Astra IP Travel Kits pack multiple Litepanels, battery brackets and support stands into durable Pelican cases for convenient transport and rapid on-set setup.

These days, many camera operators are using SLR-styled mirrorless digital cameras to shoot stills and video content in the field. Manfrotto is addressing their need for a single compact support solution with the new ONE Hybrid Tripod.

Weighing from 6.9 pounds, “the ONE Hybrid tripod includes XTEND, a patented mechanism that allows all leg sections to be deployed simultaneously in a single action for fast setup and quick adjustment on the fly with no downtime,” says Sofia Braccio, Manfrotto’s senior product manager, video. “It features a modular sliding center column with a removable lower section for ground-level shots and an integrated leveling mechanism for quick framing. The column also features a Q90 mechanism that allows shifts from vertical to horizontal instantly, enhancing the variety of shots possible.”

The ONE Hybrid Tripod also comes with XCHANGE, Manfrotto’s new quick-release system that allows users to swap heads, sliders and accessories in seconds. “When paired with the new XCHANGE-ready 500X Fluid Video Head, the ONE Tripod becomes a professional system ready for modern content requirements,” Braccio said. “It includes a Fluid Drag System (FDS) on both pan and tilt, a selectable Counterbalance System optimized at 5.3 pounds and a hinged camera plate, making it easy to switch between horizontal and vertical orientations when supporting social content is required.”

Sachtler’s Ace XL Mk II head/flowtech tripod combination was designed in collaboration with TV crews to meet their demands for robust, lightweight, and quick-to-deploy equipment.

Shotoku Broadcast Systems has recently combined its TG-27 pan and tilt head with the company’s TR-HP Hot Panel control panel into a powerful package.

“Together, they offer a lightweight and flexible system that’s ideal for mobile production environments such as news, sports or events,” James Eddershaw, the company’s managing director, says. “The TG-27’s compact design and high payload capacity make it suitable for a variety of camera types—including ENG models and small teleprompters—while the TR-HP enables intuitive, direct control without the need for a full-size panel. This combination delivers Shotoku’s trademark precision and reliability in a footprint that suits fast-moving news teams, pop-up studio setups and remote production workflows.” ●

Remodeling in the cloud is allowing platform providers to offer true AI services

Cloud service providers are expanding and broadening their business, and especially their service offerings, as everything seems to be moving toward AI. To help accomplish this without rebuilding or replacing existing infrastructures, the “AI cloud” has emerged as a service offering that leverages some of the new and advanced telecom technologies the cable industry has been utilizing for a few years now.

Wondering how new service providers, including the cable industry, are effectively linking AI into cloud computing? This article will explain some of those new advances and how they are being melded into the cloud.

Don’t be surprised if, in the next five years or sooner, we start to see AI and/or cloud computing services being added to our cable systems for consumers, home and business. You’ve likely seen TV ads that exemplify the foundation of such services and may not even realize it is “already” happening.

For the future, one approach that will be needed and will become of value to users is the integration of AI tool sets and their models into cloud infrastructures for tasks such as (home or business) automation, model training and data analysis. Such prospects will involve cloud platforms that leverage AI-specific resources and, in turn, can help streamline the AI development life cycle and deployment. Cloud becomes a very significant part of this AI movement.

Several new steps and approaches will provide unique opportunities for the more traditional segments of the media and technology industries and include some of the following issues, changes and conclusions.

Cloud service providers are beginning to change their physical data and transmission centers. They are investing heavily in AI topologies for end users and those with business-related requirements in such areas as operations, research and development. As the major cable providers continue to add new service segments (e.g., business connectivity and cellular communications), there is a growing need for these media providers to edge towards becoming cloud vendors that will, in turn, provide user access to emerging AI tools and technologies.

These providers will need to expand and provide sufficient compute power and resources, leading to the building of newer and larger data centers with the specifics and needs for AI applications. This further need for more power to support cooling and servers will force these providers to new locations, such as more rural areas where power is affordable and space is not at a premium. Connectivity to end users (customers) will further require additional high-speed (fiber optic) services which, in turn, means a change in physical distribution platforms.

Cloud AI platforms are becoming increasingly important, and we now see them expanding focus on their core competencies.

Regardless of today’s core functions, data centers will soon need to be capable of providing new insights employing systems such as virtual cable modem termination systems (vCMTS) and Distributed Access Architecture (DAA). vCMTS is a software-based CMTS, while DAA decentralizes the cable network by moving functions from the headend to the edge of the network, closer to the customer. The vCMTS replaces the traditional, hardware-based CMTS with a software platform running on servers and includes more centralized management and control of the network (Fig. 1).

Some of the early advances made by cable operators and vendors in virtualizing the industry’s access networks and expanding their capacity could become a hybridized means for multipurpose cloud providers who are increasingly moving to virtualize hybrid fiber-coax (HFC) access networks as these next-gen technologies emerge.

Such a change is critical to meeting the challenges of deploying and managing emerging GenAI solutions, and to the mass data-distribution industry’s long-term health and competitive prospects. Cable service providers are continually adopting new systems technology, such as AI, to mitigate failures. For example, one methodology limits exposure to no more than a single fiber-deep node issue per 40 customers. By contrast, a legacy cable hub population of 20,000 to 30,000 customers using a traditional CMTS was the expectation less than a decade ago.

In another way, the models being used by the cable industry are taking key access network functions out of the traditional cable headend and placing them in software running “at the edge” on much simpler and less costly commercial off-the-shelf (COTS) servers. Despite a slow start, cable operators are increasingly moving to virtualize their hybrid fiber-coax (HFC) access networks as the next-gen AI technology emerges as critical to the industry’s long-term health and competitive prospects (Fig. 2).

Benefits for operators include addressing the future needs to meet the capacity and connectivity challenges of a new decade. Whether the systems are to be used for traditional media distribution or as an augmentation to offerings that include “smart features” driven under the “AI umbrella,” speed, efficiency and continual access will be essential to the overall success of the mainstream media and cable solutions providers.

Additional practical requirements and capabilities for cloud platforms headed toward AI services include:

Scalability: To rapidly and efficiently—based on fluctuating workloads—expand services without overspending on resources.

Speed and Agility: Cloud-managed abilities to provide rapid deployment and development capacities, allowing for accelerated time-to-market, especially for AI applications.

Data Management Enhancement: Cloud platforms must offer robust and stabilized data storage and compute management capabilities. For AI applications, this means the cloud provider must be able to securely store, process and deliver data to customers in large volumes, especially when offering AI model training and insight.
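As a rough illustration of the scalability requirement, the Python sketch below sizes a pool of servers to a fluctuating workload. The function name, the requests-per-second figures and the 70% utilization target are all assumptions made for this example, not values from any particular cloud provider.

```python
import math


def instances_needed(load_rps: float,
                     capacity_rps_per_instance: float,
                     target_utilization: float = 0.7,
                     min_instances: int = 1,
                     max_instances: int = 100) -> int:
    """Instances required to carry load_rps while keeping each one near the target utilization."""
    if capacity_rps_per_instance <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and utilization in (0, 1]")
    raw = math.ceil(load_rps / (capacity_rps_per_instance * target_utilization))
    # Clamp so the pool never drops to zero and never exceeds the budget ceiling.
    return max(min_instances, min(max_instances, raw))


# Hypothetical workload samples over a day, in requests per second.
for load in (50, 400, 2_500, 900):
    print(load, "->", instances_needed(load, capacity_rps_per_instance=100))
```

The upper clamp is what keeps expansion from turning into overspending; the lower clamp keeps the service reachable when demand falls away.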

Fig. 2: Moving from traditional hybrid fiber coaxial to virtual cable-modem termination systems (vCMTS) and Distributed Access Architecture (DAA). Note that little change is seen initially (two dotted sections above) until migrating to remote physical layer (PHY) shelf vCMTS (non-dotted section). Diagram adapted from an NCTA technical paper prepared for SCTE19 ISBE.

Cloud AI platforms must further be able to offer “pretraining” AI models for concepts such as image recognition, large language models (LLMs) and speech-to-text/text-to-speech translation.

Process automation and optimization is a normal and routine process for AI solution systems; thus, the AI cloud platform providers are tailoring their systems to help streamline tasks that have semi-consistent applications (such as delivery of services or products and order entry prediction requirements). Optimization of these tasks, in turn, will improve accuracy and reduce costs across myriad common business functions.

AI-cloud platforms may also be used to improve personalized customer interactions, enable better, more efficient customer service and streamline workflows. We’ve already recognized that the “bot” is the new customer service representative! Last but not least, AI-cloud platforms should offer robust security features. Real-time threat detection and (automatic) compliance monitoring will aid businesses not only in protecting their sensitive information, but also in ensuring customers are clear of potential bad actors, both of which may be essential to meet regulatory requirements.

AI-cloud platforms are becoming increasingly important, and we now see them expanding focus on their core competencies. Businesses (including media-centric enterprises) that must interact publicly can now outsource AI infrastructures and management to their cloud providers as part of a regular service offering. This dramatically changes the core capabilities and pushes new strategic initiatives without a need to retool and invest in additional secondary services outside their core directives. ●

Karl Paulsen is a retired CTO and a frequent contributor to TV Tech. You can reach him at TV Tech or karl@ivideoserver.tv.

A recurring theme in this column is that change is the only constant in media technology and now we’re entering yet another inflection point. For the past two or three years, the conversation has been dominated by generative artificial intelligence (GenAI), large language models, synthetic media and the promise (and peril) of machines that can create. But a new concept is emerging to take center stage: Agentic AI.

This shift is more than just a buzzword swap. It represents new thinking in how we consider automation and interaction. Where GenAI focused on content generation, Agentic AI is about delegation and communication. While the technology is still maturing, the trajectory is clear: agents are coming.

Before we go further into the world of agentic systems, it’s worth stepping back to clarify what we mean when we talk about “AI.” AI is not a single technology; it’s a spectrum of capabilities, each suited to different kinds of problems.

Over the past few years, the spotlight has been on generative systems: models that can produce text, images, audio or video based on patterns learned from large datasets. Attention is shifting toward something more dynamic: Agentic AI. These are systems that don’t just respond; they act. They can pursue goals, make decisions and interact with other systems or agents on behalf of a user.

A key distinction between AI and traditional automation lies in determinism: Traditional automation excels in deterministic environments, where inputs and outputs are well-defined and predictable. Think of a transcoding pipeline or a playout automation system. These are engineered for consistency and reliability.

AI, by contrast, thrives in nondeterministic contexts, where inputs may be ambiguous, incomplete or constantly changing, and where outputs are not always binary or fixed. This makes AI especially useful in areas like content personalization, natural language interaction or adaptive media workflows, where flexibility and learning are more valuable than rigid rules.

As we move into the agentic era, this distinction becomes even more important. We’re building systems that can operate in the gray areas, where human judgment used to be the only option. This is a significant evolution in how we think about automation. These agents may be powered by generative models, but they go beyond them by incorporating memory (context), planning and the ability to interact with other systems or agents. In some cases, they may even act without direct prompting based on what they know about your goals.

The key point is this: Agents are not just tools. They are actors in a system, capable of making decisions, forming strategies and interacting with other agents in ways that mirror human delegation.

To understand how agentic systems might reshape the media landscape, it helps to visualize the ecosystem they could create. That’s where the Agentic Model for media comes in.

At the center of the model is “Agentic Discovery and Communication.” This is the core function that ties everything together: the ability of agents to find, filter, personalize and exchange content on behalf of their human or organizational counterparts. This is a foundational concept: the emergence of a general agent communications plane, a layer that sits “above” the internet as we know it today. This plane would allow agents to interact, negotiate and transact with one another directly, without requiring constant human mediation.

Some envision a future where this agentic layer becomes the dominant interface for digital interaction—potentially superseding the traditional web. In such a world, websites and apps may become secondary to the agents that navigate the digital world on our behalf.

Surrounding this core are four key roles:

• A Creator Agent might help manage rights, optimize distribution or assist in content creation or personalization;

• A Brand Agent could autonomously place ads for a brand, negotiate campaign terms or monitor performance;

• A Personal Curator Agent would act on behalf of the consumer, filtering content, managing preferences and even negotiating access or pricing; and

• An Influencer Agent represents any entity granted the authority to shape or guide the behavior of other agents. This could include institutions, communities, regulatory bodies or even parents wishing to influence the curator agents of their children.
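To make these roles a little more concrete, here is a minimal Python sketch of how a Personal Curator Agent might filter offers while honoring constraints set by an Influencer Agent, such as a parent. Every class, field and value below is hypothetical and chosen only for illustration; no real agent framework or protocol is implied.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ContentItem:
    """An offer that a Creator or Brand Agent might publish (hypothetical shape)."""
    title: str
    topics: frozenset[str]
    price: float
    rating: str  # e.g. "G", "PG", "R"


@dataclass
class InfluencerPolicy:
    """Constraints an Influencer Agent imposes on a curator."""
    allowed_ratings: set[str]


@dataclass
class PersonalCuratorAgent:
    """Acts for one consumer: filters offers by interest, price and imposed policies."""
    interests: set[str]
    max_price: float
    policies: list[InfluencerPolicy] = field(default_factory=list)

    def accepts(self, item: ContentItem) -> bool:
        # Influencer constraints are checked first; any violation rejects the item.
        if any(item.rating not in p.allowed_ratings for p in self.policies):
            return False
        if item.price > self.max_price:
            return False
        # Keep only items that overlap the consumer's stated interests.
        return bool(item.topics & self.interests)

    def curate(self, offers: list[ContentItem]) -> list[ContentItem]:
        return [item for item in offers if self.accepts(item)]


offers = [
    ContentItem("Tour documentary", frozenset({"music"}), 4.99, "PG"),
    ContentItem("Late-night horror", frozenset({"horror", "music"}), 2.99, "R"),
    ContentItem("Premium concert stream", frozenset({"music"}), 29.99, "PG"),
    ContentItem("Cooking show", frozenset({"food"}), 0.0, "G"),
]
curator = PersonalCuratorAgent(
    interests={"music"},
    max_price=10.0,
    policies=[InfluencerPolicy(allowed_ratings={"G", "PG"})],
)
picks = [item.title for item in curator.curate(offers)]
print(picks)
```

A real agent would negotiate price and access rather than simply reject offers, but even this toy filter shows where control sits: with the party the agent represents, not with the platform.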

What emerges from this model is a vision of a media ecosystem where agents mediate nearly every interaction. It’s a shift from a platform-centric media model to an agent-centric one, where the locus of control moves closer to the individual or organization being represented.

Among the most transformative elements of the Agentic Model is the Personal Curator Agent—a digital representative that acts on behalf of an individual consumer. This agent doesn’t just recommend content: it negotiates access, filters noise, adapts to evolving preferences and potentially even manages subscriptions or monetization decisions. It becomes, in effect, a media concierge—one that knows your tastes, your values and your boundaries.

The need for such a capability has never been more urgent. We are rapidly approaching, if not already living in, what some have called the “dead internet,” a digital landscape increasingly saturated with AI-generated content, synthetic engagement and algorithmically amplified noise. In this environment, the content signal-to-noise ratio is getting worse. We will need agents to sift through the junk and identify what truly matters.

For a personal agent to be effective, it must have access to a rich and continuous stream of behavioral, contextual and preference data. That data might come from viewing history, social interactions, biometric signals or even inferred emotional states. In today’s media environment, much of that data is collected and controlled by platforms. But in an agentic future, the balance of power could shift—from platforms to people.

The pace of technological innovation often outstrips the pace of business model evolution. We’ve seen this before: file-based workflows were technically feasible long before they were widely adopted. Cloud infrastructure was ready years before media companies trusted it with their core operations. Even streaming, now ubiquitous, took more than a decade to become mainstream.

The core technologies (autonomous agents, large-scale models, distributed orchestration) are already emerging. But the real constraint isn’t technical; it’s organizational, economic and cultural. Business models will need to adapt. Rights frameworks will need to evolve. Standards for agent behavior, identity and trust will need to be developed and adopted. And perhaps most importantly, people will need time to adjust to the idea of delegating meaningful decisions to machines. It’s a decade-long transformation, at minimum.

For media professionals, the message is clear: don’t wait for the future to arrive—start preparing for it now. Begin experimenting with agentic workflows. Rethink how your content is discovered, curated and monetized. Invest in data quality, interoperability and flexible infrastructure. And most importantly, stay curious. ●

John Footen is a managing director who leads Deloitte Consulting LLP’s media technology and operations practice. He can be reached via TV Tech.

Sébastien Paquet

Cinematographer

LOS ANGELES—As Korn’s cinematographer for the past 20 years, I’ve worked closely with the band to capture everything from music videos and studio sessions to global tours. When it came time to document their 30th anniversary show, I wanted to do something we’d never done before, something that matched the scale and energy of the band’s legacy while pushing the boundaries of how a concert can be filmed.

The idea? Invite the fans to shoot it.

Korn has always embraced technology and they’re constantly looking for ways to connect with fans on a deeper level. So, when I pitched the idea of using the Blackmagic Camera mobile app to let fans become part of the film crew, the band was totally on board.

One of the things I appreciate most about Blackmagic Camera is that it lowers the barrier to entry. Picking up a professional camera for the first time can be overwhelming; all the buttons, menus and controls can feel completely foreign if you haven’t worked in production before. But your phone? That’s something you’re already on constantly.

Blackmagic Camera adds Blackmagic Design’s digital film camera controls and image processing to iPhone, iPad and Android phones and tablets, so anyone can create the same cinematic look as a Hollywood feature film from their smart device. It has color space directly integrated to preserve the dynamic range, and when footage goes to post, it is consistent with footage shot on any other Blackmagic Design camera. This was crucial for consistency between fan footage and mine, as I was shooting on a Blackmagic Pocket Cinema Camera 6K Pro.

We blasted the opportunity out on social media, inviting the first 200 fans who signed up to act as filmmakers for the night. Each downloaded the app, which is free for anyone, with a custom preset we provided to ensure a uniform recording format and upload settings. Time-code syncing was as simple as using each phone’s time of day.

DaVinci Resolve’s cloud-syncing capabilities made the phone-to-Blackmagic Cloud workflow seamless and hands-off for the fans. As fans were shooting, clips were uploading to the cloud right away. In another use case, the editor could have been working on the footage moments after it was filmed.

duction team and another for editorial. This structure gave us creative flexibility while keeping everything secure and accessible.

The footage we got was unlike anything I’ve ever shot. We had fans filming their journeys to the concert, the energy in the crowd and the raw chaos of the mosh pit. Some of the most striking moments were from right inside that pit: immersive, sweaty and emotional. It’s a perspective I could never have achieved with a traditional rig, nor would I want to disrupt those moments by pointing a professional camera in someone’s face. But thanks to Blackmagic Camera, we had hundreds of lenses capturing