AI Revolution in Radio & Streaming

July 2025 eBook www.radioworld.com

FOLLOW US

www.twitter.com/radioworld_news

www.facebook.com/RadioWorldMagazine www.linkedin.com/company/radio-world-futureplc

CONTENT

Managing Director, Content & Editor in Chief Paul J. McLane, paul.mclane@futurenet.com, 845-414-6105

Assistant Editor & SmartBrief Editor Elle Kehres, elle.kehres@futurenet.com

Content Producer Nick Langan, nicholas.langan@futurenet.com

Technical Advisors W.C. “Cris” Alexander, Thomas R. McGinley, Doug Irwin

Contributors: David Bialik, John Bisset, Edwin Bukont, James Careless, Ken Deutsch, Mark Durenberger, Charles Fitch, Donna Halper, Alan Jurison, Paul Kaminski, John Kean, Larry Langford, Mark Lapidus, Michael LeClair, Frank McCoy, Jim Peck, Mark Persons, Stephen M. Poole, James O’Neal, T. Carter Ross, John Schneider, Gregg Skall, Dan Slentz, Dennis Sloatman, Randy Stine, Tom Vernon, Jennifer Waits, Steve Walker, Chris Wygal

Production Manager Nicole Schilling

Art Editor Olivia Thomson

ADVERTISING SALES

Senior Business Director & Publisher, Radio World John Casey, john.casey@futurenet.com, 845-678-3839

Advertising EMEA Raffaella Calabrese, raffaella.calabrese@futurenet.com, +39-320-891-1938

SUBSCRIBER CUSTOMER SERVICE

To subscribe, change your address, or check on your current account status, go to www.radioworld.com and click on Subscribe, email futureplc@computerfulfillment.com, call 888-266-5828, or write P.O. Box 1051, Lowell, MA 01853.

LICENSING/REPRINTS/PERMISSIONS

Radio World is available for licensing. Contact the Licensing team to discuss partnership opportunities. Head of Print Licensing Rachel Shaw licensing@futurenet.com

MANAGEMENT

SVP, MD, B2B Amanda Darman-Allen

VP, Global Head of Content, B2B Carmel King

MD, Content, Broadcast Tech Paul J. McLane

Global Head of Sales, Future B2B Tom Sikes

Managing VP of Sales, B2B Tech Adam Goldstein

VP, Global Head of Strategy & Ops, B2B Allison Markert

VP, Product & Marketing, B2B Andrew Buchholz

Head of Production US & UK Mark Constance

Head of Design, B2B Nicole Cobban

FUTURE US, INC.

Future US LLC, 130 West 42nd Street, 7th Floor, New York, NY 10036

All contents ©Future US, Inc. or published under licence. All rights reserved. No part of this magazine may be used, stored, transmitted or reproduced in any way without the prior written permission of the publisher. Future Publishing Limited (company number 02008885) is registered in England and Wales. Registered office: Quay House, The Ambury, Bath BA1 1UA. All information contained in this publication is for information only and is, as far as we are aware, correct at the time of going to press. Future cannot accept any responsibility for errors or inaccuracies in such information. You are advised to contact manufacturers and retailers directly with regard to the price of products/services referred to in this publication. Apps and websites mentioned in this publication are not under our control. We are not responsible for their contents or any other changes or updates to them. This magazine is fully independent and not affiliated in any way with the companies mentioned herein.

If you submit material to us, you warrant that you own the material and/or have the necessary rights/permissions to supply the material and you automatically grant Future and its licensees a licence to publish your submission in whole or in part in any/all issues and/or editions of publications, in any format published worldwide and on associated websites, social media channels and associated products. Any material you submit is sent at your own risk and, although every care is taken, neither Future nor its employees, agents, subcontractors or licensees shall be liable for loss or damage. We assume all unsolicited material is for publication unless otherwise stated, and reserve the right to edit, amend, adapt all submissions.

Radio World (ISSN: 0274-8541) is published bi-weekly by Future US, Inc., 130 West 42nd Street, 7th Floor, New York, NY 10036. Phone: (978) 667-0352. Periodicals postage rates are paid at New York, NY and additional mailing offices. POSTMASTER: Send address changes to Radio World, PO Box 1051, Lowell, MA 01853.

A revolution in progress

What’s actually happening in the marketplace?

Paul McLane Editor in Chief

Two years ago it dawned on radio broadcasters that artificial intelligence technologies could change our workflows drastically. Have they? How deeply have AI tools — generative and otherwise — penetrated radio’s workflows? What have early radio adopters learned and how has their thinking about AI evolved as a result? Which companies are offering AI-based tech? Which tools have found the most uptake, and what further changes can we expect to see?

This Radio World ebook explores these questions. You will find case studies about how AI is being used at companies like iHeartMedia, Saga Communications, Beasley Media Group, Connoisseur Media, SiriusXM and multiple local broadcast groups.

You’ll read thoughtful comments from radio veterans like Sean Ross and Jerry Del Colliano.

And you’ll learn the perspectives of our tech sponsors Broadcast Bionics, CGI, CreativeReady, ENCO, Waymark and WorldCast Systems, all of which offer products that have put AI to use. Their support makes this ebook possible.

THIS ISSUE

Chris Brunt: “Broadcasters must prepare to win the AI race”

Ken Frommert: AI tools can automate, personalize and enable

Nicolas Boulay: AI delivers operational efficiency and sustainability

Jamie Aplin: “I knew we were on to something huge”

Michael Thielen: Trust is so important to the broadcast industry

Justin Chase: Beasley finds ample uses for AI tools

Dan McQuillin: How radio wins the arms race for attention

Hayden Gilmer: AI now can help radio compete in video advertising

Case studies in the use of AI

Nicole Starrett: AI plays a role in FM antenna modeling

Jerry Del Colliano: AI is quietly taking over music, radio and streaming

More News From Around the World of AI

AI Revolution in Radio & Streaming

Broadcasters must prepare to “win the AI race”

Chris Brunt says AI agents and other tools can drive revenue growth for radio

Writer

The AI evolution in radio and streaming is moving quickly. As radio broadcasters expand their strategies, we asked Chris Brunt, director of AI, digital and revenue generation at Jacobs Media, about recent developments.

Brunt writes a Jacobs Media blog about digital tools including the uses of AI in a radio environment.

Present day, how are AI technologies altering broadcast workflows?

Chris Brunt: Few technologies have taken hold as quickly as AI.

Eighteen months ago, when talking with broadcasters, I would ask who’s using it and around a quarter of the participants’ hands would go up. Today, it’s an overwhelming majority, regardless of market size.

We’ve seen massive uptake in text-generation tools to knock out promo and advertising copy. Many television and radio groups have AI tools for generating audio and video ads, and those tools are being used extensively.

The applications seem endless. Which AI tools have found the most uptake by broadcasters?

Brunt: Most of the AI use around stations is ChatGPT for text generation, including writing proposals and advertising copy. In a world where there’s more work to be done by fewer people, broadcasters are using AI shortcuts to get better work faster.

How are broadcasters using AI to bolster revenue, and how much of an impact is it making?

Brunt: The biggest change is AI-generated advertisements. These sound and look great.

Using AI for upgrading scripts and producing specs is a great first step for broadcasters. Many salespeople are now quickly generating spec spots and including them in their pitches, and increasing their closes because of it. Broadcasters are also using those AI tools to sell across digital audio platforms.

What have early radio adopters learned in the past two and a half years?

Brunt: As AI tools have become more powerful and less clunky, the first adopters are discovering new ways and new prompts to enhance the work at their stations. A year ago, getting a really good output from AI engines required a really good prompt. Today, these engines create good text, audio and images without having to meticulously craft a prompt. AI hallucinations are dramatically less frequent as well.

What specific types of AI are broadcasters using?

Brunt: A majority of broadcasters who are using AI are using the off-the-shelf tools offered by the tech giants, such as ChatGPT, Gemini and Copilot.

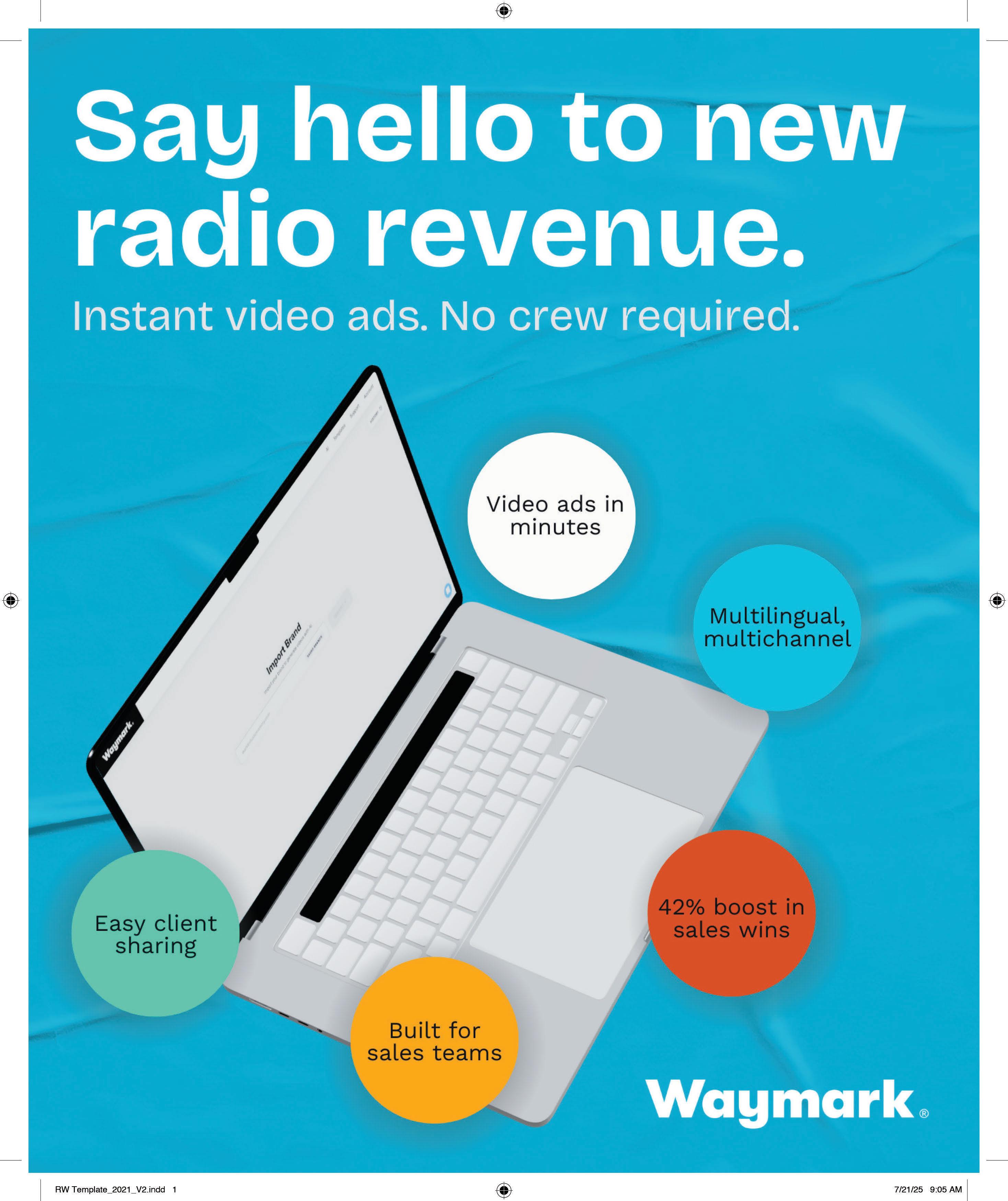

For specific station tasks, ElevenLabs is getting a lot of use on the audio side. Audacy put out a press release on their use of the tool last year, and Waymark is used by many broadcasters for video ad generation.

Chris Brunt with a friend outside the CES Show.

Randy J. Stine

The author is Radio World’s lead news contributor and a longtime broadcast journalist.

Are radio broadcasters changing how they think of their product thanks to AI? For instance, you wrote in a recent blog that the integration of tools like Operator from OpenAI could help stations evolve into fully interactive hubs, “blending human creativity with AI-powered energy and accessibility.” Describe that transition.

Brunt: Most AI tools today are designed for single, one-and-done tasks, such as generating a script, rewriting a paragraph or summarizing a meeting. Useful, but limited in scope. What’s emerging now is a more advanced capability: AI agents that can handle entire workflows, not just isolated actions.

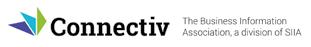

Operator is a project revealed by OpenAI earlier this year. Unlike task-based tools, Operator is designed to plan, coordinate and execute multi-step projects with minimal human input. It can take a goal, break it down into tasks and use other AI tools or APIs to complete each step. It’s essentially a digital project manager.

Broadcasting tech companies are starting to take notice. Some are already exploring how these agent-based systems can be integrated into their platforms to automate complex processes. Instead of just speeding up one task, these AI agents could eventually optimize entire operations, fundamentally shifting how stations manage workflows and deliver programming to audiences and campaigns to clients.

You’ve also said that the tone around AI has changed in broadcast circles. How so?

Brunt: The panic has subsided as broadcasters see what AI can do to help them. Broadcasters, both radio and TV, are in a people-focused industry where there are fewer people to do the work. Using AI tools makes them more efficient and allows them to spend more time on what’s important: focusing on and interacting with viewers and listeners.

Alpha Media recently pulled the plug on AI Ashley in Portland. Why are broadcasters seemingly hesitant to use AI jocks but seem to be embracing it for audio production needs?

Brunt: Media companies have looked at the research. At this point, radio listeners overwhelmingly want to listen to actual humans and not AI-created content. Could listeners tell the difference between live, voice-tracked and AI-generated? I’m not sure. But there is inherent trust between the audience and the people behind the microphones.

Our Christian Music Broadcasters Techsurvey in the fall showed that 91% of Christian radio listeners feel it’s very or somewhat important for stations to be up front with their audiences about AI usage. We rarely see a result that unanimous.

What’s next? Can you identify trends you expect to see?

Brunt: We’re seeing existing products getting AI embedded into the next versions coming to market, and that will continue.

The next wave of AI will be all about hyper-personalized content. Think about an AI that scans your social profiles such as Instagram likes, TikTok skips and even your dating app swipes. It then uses that data to generate a custom podcast made just for you, filled with exactly the kind of content you crave, hosted by someone who you find easy on the eyes.

That level of tailored media isn’t here yet, but it’s not science fiction. It’s already being discussed inside tech and entertainment companies. The race is on to build AI that curates your entire experience.

OpenAI’s Sam Altman, Yash Kumar, Casey Chu and Reiichiro Nakano introduced Operator in a YouTube video earlier this year. Watch it here.

Credit: YouTube/OpenAI

AI tools can automate, personalize and enable

“We have only really just begun to explore the interactive possibilities”

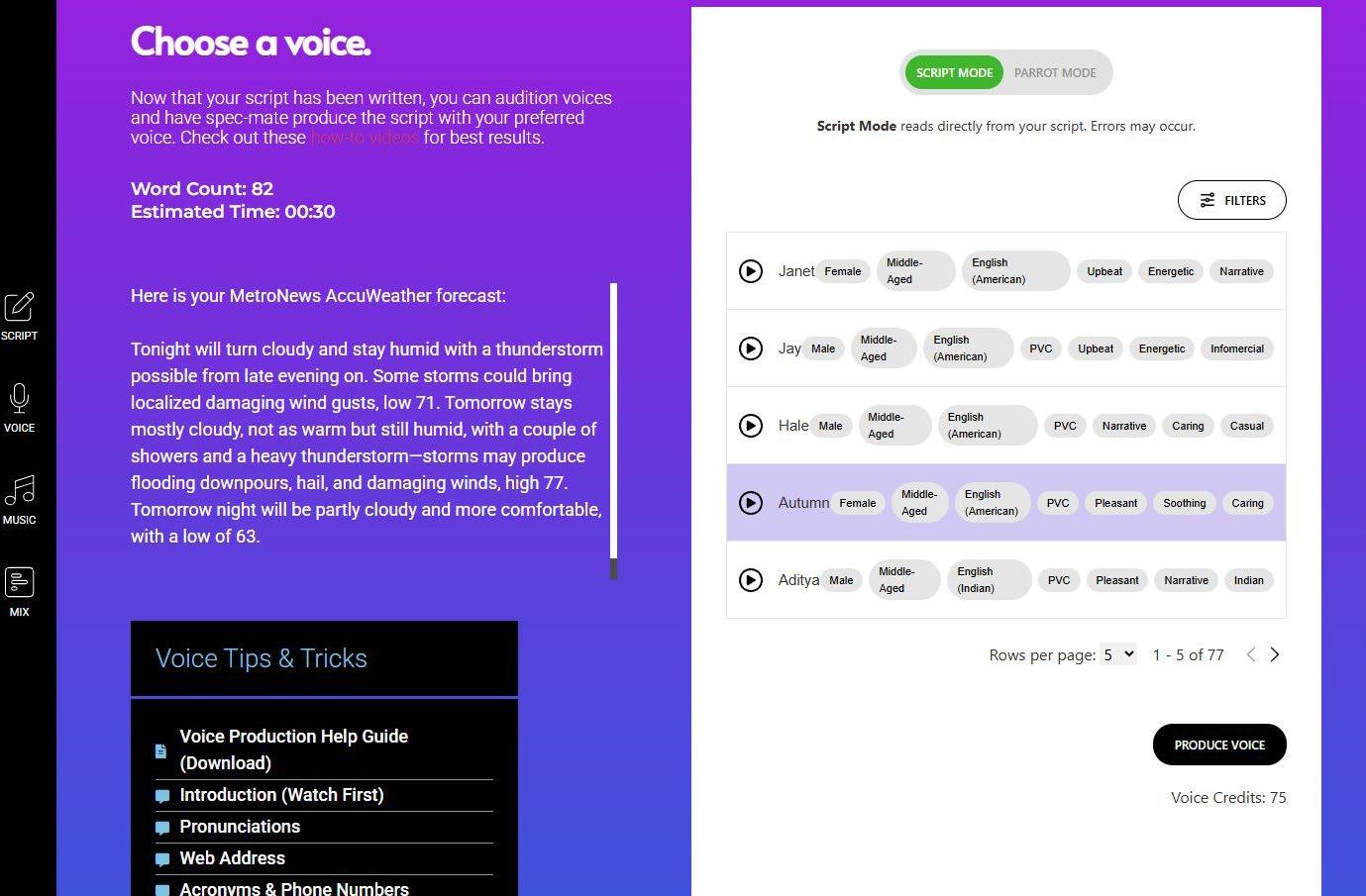

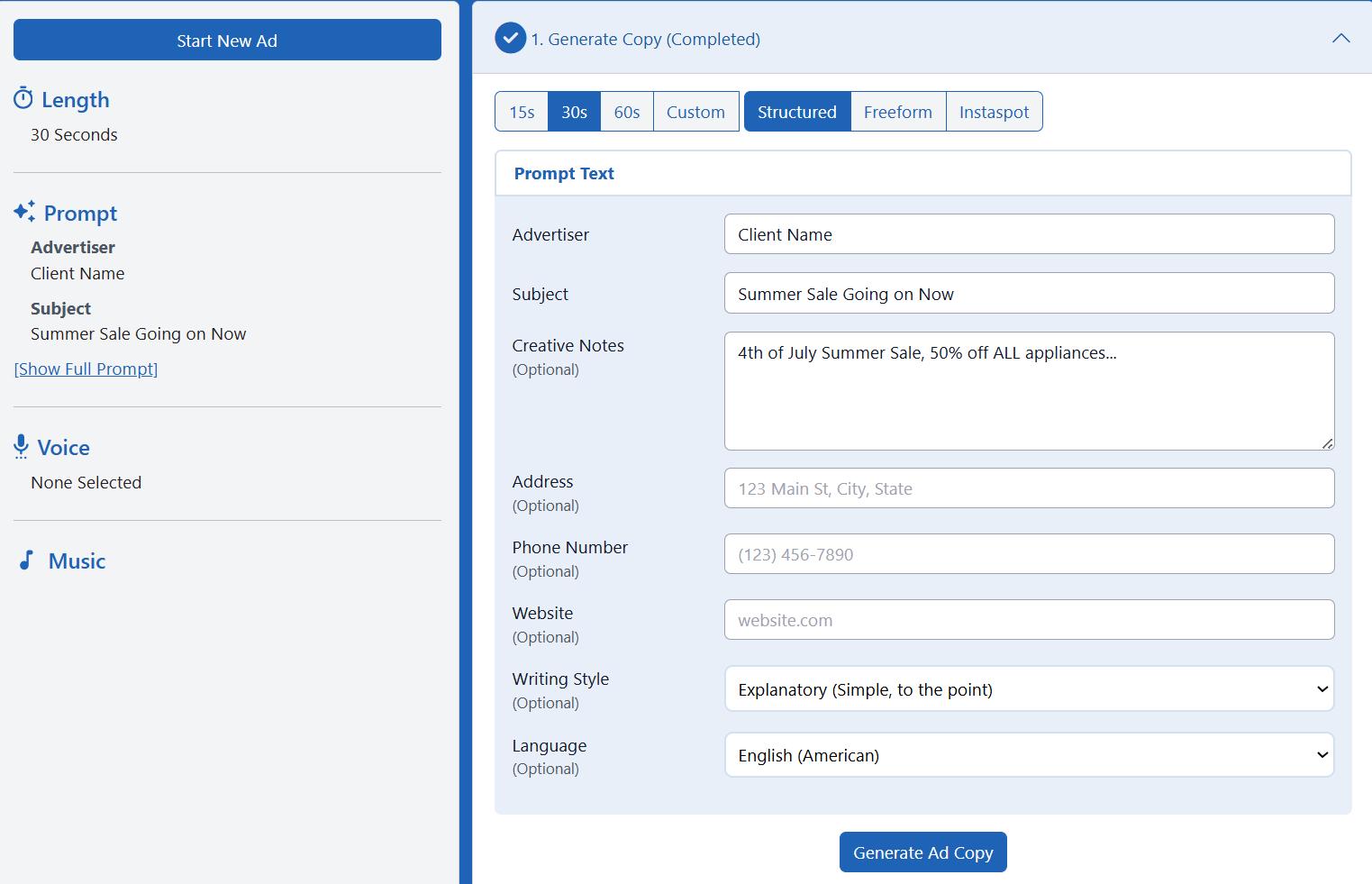

Offered by ENCO and its partners, SPECai is an ad creation platform that allows a broadcast sales team to create localized spec ads on demand while sitting with clients.

Ken Frommert is ENCO’s president.

When generative AI burst into our general awareness more than two years ago, our industry realized that it could change radio workflows drastically. Has that come to pass?

Ken Frommert: The promise of AI has always centered on working smarter — enhancing efficiency, streamlining workflows and unlocking new creative possibilities.

At ENCO, we embraced this potential early on, previewing the foundations of SPECai and aiTrack at NAB Show 2023. Since then, we’ve demonstrated how generative AI can be seamlessly integrated into broadcast operations to automate routine tasks, personalize content and enable talent to focus on what they do best.

Much like we did with the launch of DAD over 30 years ago, we’re once again helping broadcasters evolve, this time with AI tools that are practical, reliable and built for the unique demands of radio. The result is not just operational efficiency, but entirely new ways to engage audiences and deliver content.

Potential applications for AI seem almost endless. In what areas of radio are ENCO’s tools finding the most uptake?

Frommert: SPECai has been a runaway success. Its impact was immediate, which sent a message that instant spec spot creation was precisely what broadcasters needed for their ad sales efforts.

Along with our partners at Benztown and Compass Media Networks, we designed SPECai to essentially become part of the ad sales team. It was a toolset that account executives could quickly learn to use, and one that management could see immediate value in. The ability to create scripts and build ads with voice and music directly in front of the client, and invite the client’s input, set the tone for AI acceptance in radio.

What kind of queries or concerns do you get?

Frommert: One of the most common questions about SPECai is: “Can we use these spots on-air?” And that’s exactly the reaction we hoped for. SPECai was designed to create high-quality spec spots that not only impress clients but also sound broadcast-ready.

While its main purpose is to help sales teams quickly generate custom ads for client pitches, many stations do end up airing them — sometimes with minor tweaks or added branding. We built SPECai with professional voice models, music beds and copywriting logic that reflect the tone and pacing of real radio ads. So yes, the quality is there, and in some cases, stations are confidently putting them on-air.

All users react with amazement at the speed and flexibility of using SPECai. When they see how fast they can turn around a polished spot, it really clicks.

What are the models your tools are based on?

Frommert: SPECai is built on top of advanced large language models, like those from OpenAI, which we’ve customized for the specific needs of radio advertising.

Ken Frommert

We’ve layered in broadcast-specific logic, voice model selection, custom music bed integration from Benztown, and ad structure templates to ensure the output matches the tone, timing, and flow of real spec spots.

While the core language model does the heavy lifting for natural-sounding copy, the magic comes from how we’ve tuned it for radio workflows.

How do you keep products current with evolving technology and cultural differences?

Frommert: ENCO’s products are continuously updated with the latest terminology, industry trends and linguistic data through large language models. We also gather real-world feedback from users across diverse industries and regions to ensure our solutions stay accurate, culturally relevant and aligned with emerging technologies.

This is especially important with products like SPECai and aiTrack, as this agile approach allows us to deliver AI tools that evolve in real-time with the world around them.

How will AI technologies evolve further in the coming years?

Frommert: Content generation will be explosive, as will dynamic insertions. That means automatic hyperlocal content creation with real-time insertion and personalized content creation for each listener.

This is precisely what we are achieving with aiTrack in its formative stages, which we continue to enhance over time to improve performance — same as we have with SPECai. We have only really just begun to explore the interactive possibilities of AI, and we believe the ability to personalize content for each listener will only serve to grow the radio audience both online and over the air.

Catching Up With NRG Media

Last year, Radio World readers enjoyed a case study about how NRG Media was using AI tools to serve listeners in its seven Midwest markets. (Find that here.)

We checked back in with Erica Dreyer, director of integrated media. She said NRG’s use of AI has grown in scope and confidence.

“More teams are incorporating AI into their daily work, across both creative and operational tasks. From idea generation and voice cloning to meeting transcription and data analysis, these tools open up new creative possibilities and help us bring ideas to life in ways that weren’t practical before. … It’s not replacing our thinking, it’s helping us bring better ideas to life with more speed, variety and clarity.”

Production teams are using voice models to add variety to spec spots and ads. Sales teams are using AI to research prospects more efficiently and speed the creation of visuals and sales materials. Dreyer uses Fathom to record Zoom calls, summarize key points and search across meetings by keyword.

“What started as a few isolated use cases is now a distributed practice across departments.”

For example, KFMW(FM)’s synthetic talent AI Tori, built with Futuri AudioAI, now is doing weekend mornings as well as overnights. And she has a sponsor.

Programmer Russ Mottla said, “The client loves Tori and has sponsored her weekend shows, where Tori gives clever sponsorship announcements like: ‘I’m AI Tori and today’s show is sponsored by Midwest Shooting. Guns won’t fill the emptiness in your soul — you will also need some ammo!’”

Waymark is used for generating video spec spots; quite a few end up being aired. Meanwhile, markets across NRG use CreativeReady’s Spec-Mate AI.

Production Director Austin Michael likes that he can read a spot the way he wants it done, upload it and change it to one or more different voices. “There are about 10 spots airing on our stations right now that are female voices, but I, a guy, read them. There are spots that have four or five characters, all different voices, ages and genders, each voiced by me.”

Integrated Media Specialist Jeff Ulrich uses Perplexity Pro and ChatGPT side by side. He uses them to research prospects, gather insights quickly and tailor materials for client conversations. He generates voiceover scripts, visual personas and ideas for proposals. He uses Otter.ai for calls and meetings, and he produces AI voiceovers using Fish Audio. He also can animate still photos for AI-generated video using tools like Google Veo 2.

Said Erica Dreyer, “What continues to impress me most is how our teams across markets are exploring these tools in ways that make sense for them … Everyone’s experimenting, learning and finding what sticks.”

You can read our full interview at http://radioworld.com, keyword Dreyer.

AI delivers operational efficiency and sustainability

WorldCast Systems has been deploying the technology since 2018

The involvement of WorldCast Systems with AI goes back at least seven years, longer than that of most manufacturers, as Radio World has reported.

Nicolas Boulay is co-president of WorldCast Group.

Nicolas, can you start by reminding us of how the company started in AI?

Nicolas Boulay: WorldCast Systems introduced artificial intelligence to FM broadcasting in 2018 with SmartFM, the first AI-driven technology designed specifically for FM radio.

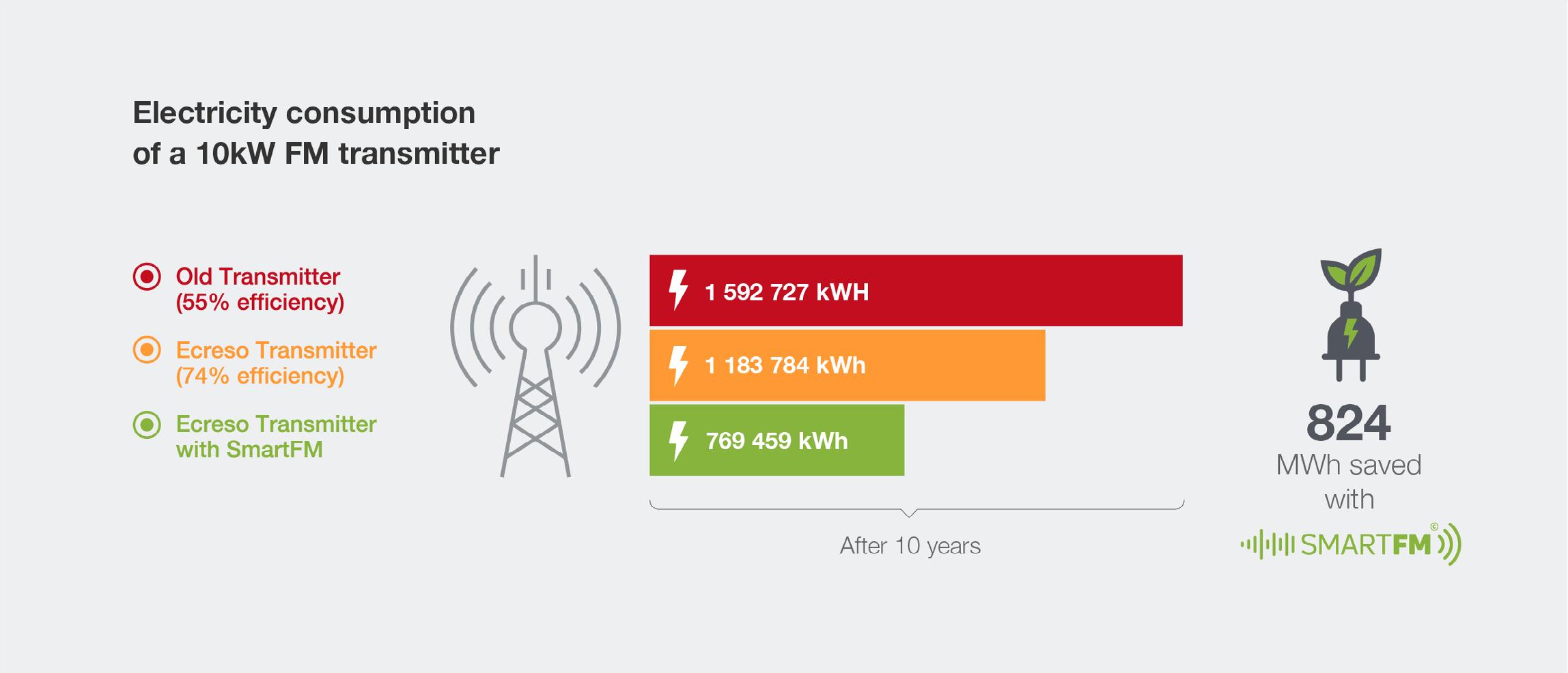

After three years of R&D and field testing, SmartFM was launched to optimize transmitter efficiency without compromising audio quality. It uses a combination of statistical, probabilistic and deterministic models to analyze audio content and dynamically adjust power usage, enabling broadcasters to reduce energy consumption by up to 40 percent.

This leads to reduced electricity costs, lower CO₂ emissions and extended transmitter lifespan due to decreased thermal stress. The technology is compatible with all Ecreso FM transmitters delivered since 2010, making it accessible through software upgrades.

Beyond Uplink in Germany and implementations in Morocco, SmartFM has been adopted by public broadcasters such as Deutschland Radio, RBB, WDR and NDR. By the end of 2022, approximately 800 FM transmitters in Germany were equipped with SmartFM, marking a significant step toward decarbonizing FM broadcasting in the country.

What has SmartFM technology allowed your users to do, that they could not do otherwise?

Boulay: It has allowed them to meet their company sustainability goals and reduce the operational costs of transmission, including electricity costs for the transmitters and also for the cooling system, as the heat to dissipate falls by around 20 percent.

With SmartFM, broadcasters have been able to drastically reduce the operational costs for their Ecreso transmitter. As we know, most of the transmitters on the market have high efficiency, 70 percent to 76 percent, and it is no longer possible to increase it further as we have reached the limit of the components.

With SmartFM, broadcasters benefit from additional energy savings by adjusting the power of the transmitter based on the robustness of the audio content. On average, we observed a reduction of 20 to 30 percent in consumption, which cannot be obtained without SmartFM.

Transmitters remain one of the most consuming products in the radio broadcasting chain. These savings

“ The broadcast industry is at a tipping point where AI will converge with virtualization and cloud infrastructure.

Left Nicolas Boulay

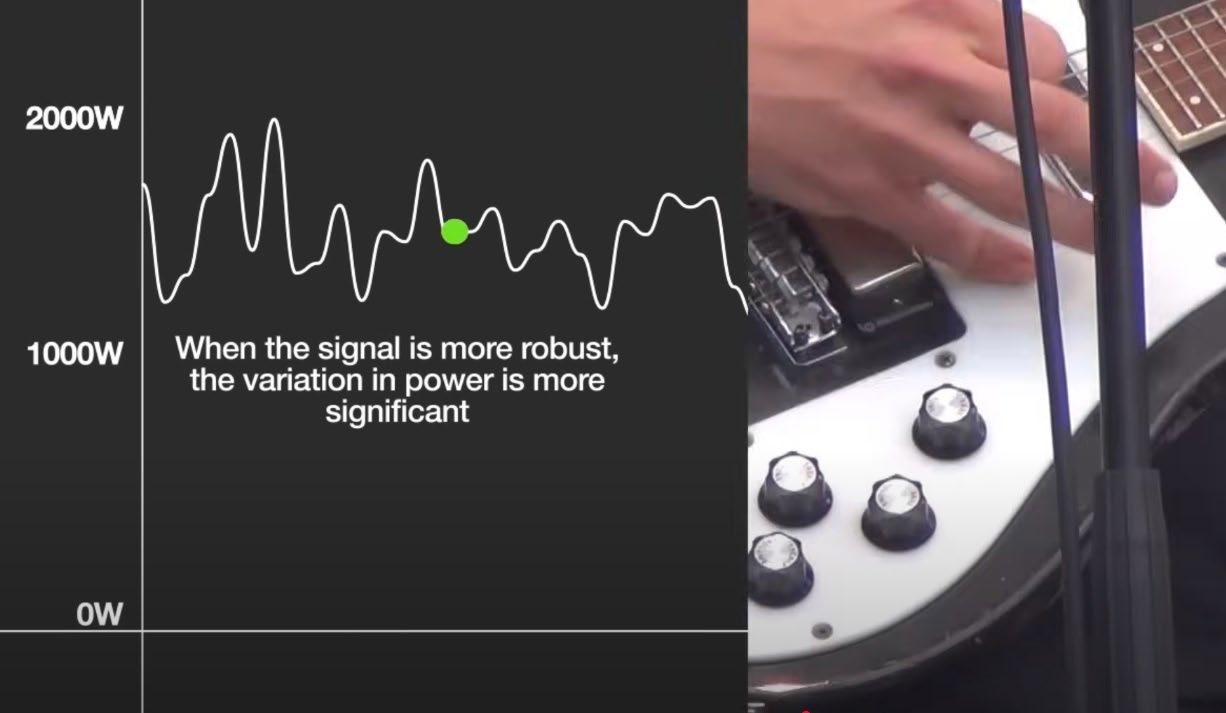

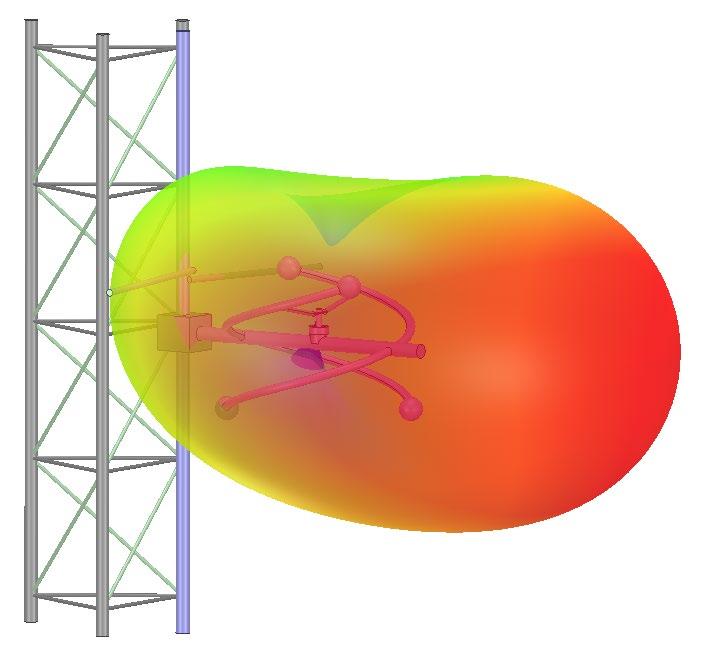

Right This image is from an explainer video that you can access here. The inner circle shows dynamic changes in transmitter power (top line) and electricity savings (bottom). On the main screen, power is at 733 watts for a 1 kW transmitter; it varies in the video down to 677 watts.

AI Revolution in Radio & Streaming

make a real difference for broadcasters.

In addition to the direct impact on the transmitter consumption, we noted a positive side effect on the cooling system consumption. As there is less heat to dissipate, the cooling system also runs at a lower rate, resulting in lower electricity consumption.

The second big advantage of SmartFM for our customers was the impact on their sustainability goals. Most companies have set very ambitious emissions targets and are even targeting net zero. Transitioning to SmartFM is an additional step in this direction.
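To put the efficiency and savings figures quoted above into rough numbers, here is a minimal Python sketch. It is an illustration only, not WorldCast's method: it assumes the 70 to 76 percent figure is overall mains-to-RF efficiency, that the 20 to 30 percent reduction applies uniformly to mains consumption, and that the transmitter runs around the clock. The function name and the 1 kW example are hypothetical.

```python
from dataclasses import dataclass

# Assumption: the transmitter is on air 24 hours a day, all year.
HOURS_PER_YEAR = 24 * 365


@dataclass
class SavingsEstimate:
    baseline_kwh: float  # yearly mains energy without any power adaptation
    saved_kwh: float     # yearly mains energy avoided at the given reduction


def estimate_annual_savings(rf_power_kw: float,
                            efficiency: float,
                            reduction: float) -> SavingsEstimate:
    """Rough yearly energy estimate for one FM transmitter.

    rf_power_kw: nominal RF output power in kilowatts.
    efficiency:  assumed mains-to-RF efficiency as a fraction (e.g. 0.73).
    reduction:   assumed fractional cut in mains consumption (e.g. 0.25).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    mains_kw = rf_power_kw / efficiency          # power drawn from the grid
    baseline_kwh = mains_kw * HOURS_PER_YEAR
    return SavingsEstimate(baseline_kwh, baseline_kwh * reduction)


if __name__ == "__main__":
    # Hypothetical 1 kW transmitter across the ranges quoted in the interview.
    for efficiency in (0.70, 0.76):
        for reduction in (0.20, 0.30):
            est = estimate_annual_savings(1.0, efficiency, reduction)
            print(f"efficiency={efficiency:.0%} reduction={reduction:.0%} "
                  f"baseline={est.baseline_kwh:,.0f} kWh "
                  f"saved={est.saved_kwh:,.0f} kWh")
```

The estimate leaves out the cooling system, which the interview says also draws less, so real-world savings could differ from what this simple model suggests.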

We’ve reported on notable installations of SmartFM at Uplink in Germany and a number of uses in Morocco. Are there other notable projects we should know about?

Boulay: We also have an interesting project with Adventist World Radio, who deployed radio worldwide, especially in Africa. They used SmartFM to reduce their electricity consumption, sometimes related to diesel generators and solar plants.

Some other customers are starting the deployment of SmartFM for their existing Ecreso transmitters but we cannot reveal their names so far.

Output power adapts in real time to audio content, which is the horizontal axis. This image is from the explainer video.

When ChatGPT came out it dawned on the radio industry broadly that generative artificial intelligence technologies could change our workflows drastically. Has it happened?

Boulay: Yes, the realization that generative AI could transform our industry has indeed led to profound changes, though the pace and scope vary depending on the segment.

For content creators, the impact is most visible: Radio stations are experimenting with AI-generated voiceovers, text-to-speech news bulletins and even virtual DJs. Generative AI is streamlining scripting, translation and personalization, enabling broadcasters to do more with fewer resources — especially valuable for community radios or smaller teams.

At WorldCast Systems, our focus is on the infrastructure side, where AI plays a crucial role in operational efficiency and sustainability. While not generative in the creative sense, our SmartFM technology uses intelligent algorithms to optimize transmitter power consumption based on real-time audio content, significantly reducing energy usage without sacrificing quality.

In the future, we foresee generative AI also supporting proactive diagnostics and automated response systems across our monitoring and network management platforms, enhancing reliability and reducing the need for human intervention in repetitive, time-critical operations. In short: Yes, the change is underway, and we are only scratching the surface of what’s possible.

David Houzé told us last year that AI also is used, or could be used, by WorldCast in fault detection and automatic correction on equipment of the chain. Can you expand on that?

Boulay: The difficulty with AI is to use it for useful and relevant purposes. If it’s used to surf the latest trend, it’s useless and dangerous. So, every time we use AI, we keep this in mind: Is it 100 percent reliable, is it relevant in terms of use, and does it add value for the end-user?

If the answer is yes to all these questions, then we can make use of AI. As David mentioned, we are indeed working in two directions: combining multiple factors to characterize future behavior, and combining multiple measures to characterize QoS, or quality of service. This is progressing well, but at this stage we’re still in the development phase, and I don’t want to give away all our secrets.

Is WorldCast deploying AI in products other than your FM transmitters? How?

Boulay: Transmitters are really the products that have benefited most from developments around AI. For the other ranges, this is less the case, except for our supervision and measurement tools, where we’re thinking about using AI to improve data processing and provide our customers with ever more efficient and relevant results. But at this stage, I can’t say any more.

What else should we know about the state of AI, at WorldCast or more generally?

Boulay: AI in broadcasting is no longer a concept; it’s becoming a foundation. At WorldCast Systems, we see AI not as a buzzword but as a set of practical tools to enhance the resilience, efficiency and intelligence of broadcast networks.

We are currently investing in AI across three main dimensions: in energy optimization, through technologies like SmartFM; in operational intelligence, with AI-assisted fault detection and automated responses; and in infrastructure management, via smarter supervision and telemetry tools like our Kybio platform, capable of learning from behavior patterns to anticipate incidents.

More broadly, the broadcast industry is at a tipping point where AI will converge with virtualization and cloud infrastructure. The result will be flexible, cost-effective and highly automated radio networks — especially crucial for operators managing large-scale or remote deployments.

But we must also approach AI responsibly. Human oversight remains essential, especially in safety-critical systems. Our goal is to use AI to empower — not replace — broadcast professionals, by giving them predictive insight, automation, and peace of mind.

I can add that we’re using AI more and more in every department to be more efficient by saving time on “secondary” tasks, so that we have more time to devote to tasks that require more thought. It’s really good for our engineers, but also for our designers and communicators.

Of course, we must always be vigilant about the results given by these tools. It’s essential to bear in mind that AI makes proposals and doesn’t deliver absolute truths ... but it’s becoming so powerful that it’s hard not to get carried away.

“I knew we were on to something huge”

Aplin says the impact of AI has been even greater than expected

Jamie Aplin is founder and CEO of CreativeReady. Founded in 2013, it describes its products as “your ultimate toolkit for radio advertising success.”

Have the predictions about the impact of generative AI in radio been met?

Aplin: I would say the impact has been even greater than expected. Generative AI has completely transformed how radio groups approach production, especially when it comes to creating spec spots and commercials. The amount of time they’re saving was simply unheard of until now.

I can still remember the first time, almost two years ago, when a producer told me, “What used to take me 45 minutes, now takes me 3.” That’s when I knew we were onto something huge. We weren’t just improving their workflow, we were changing the entire process.

Which CreativeReady products and services use AI-based technologies?

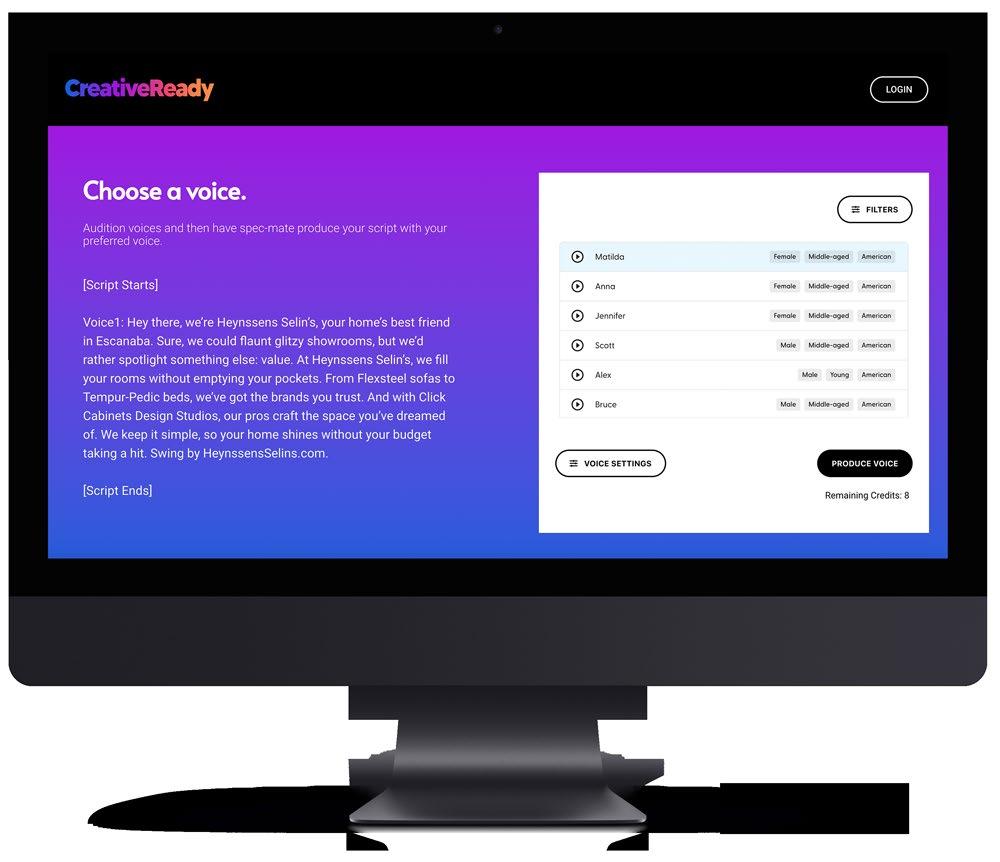

Aplin: Our primary AI-powered product is Spec-Mate. It uses advanced AI to help build your script, voice and produce customized radio commercials, dramatically reducing production time while helping teams create high-quality spots faster and more efficiently.

We’re also expanding AI into the music side of our business. We’re developing AI-generated “Singables,” aka jingles, along with audio logos and sonic identifiers. Since musical branding is what CreativeReady is best known for, bringing AI into this space is a natural next step for us.

How do people pay for them?

Aplin: Spec-Mate is available through a simple monthly subscription. There are no hidden fees or confusing licensing terms, just clear, predictable pricing that gives users access to all of Spec-Mate’s features. It’s scalable too. This way you’re only paying for what you need.

We’ve intentionally designed our pricing to fit broadcasters of all sizes. Whether you’re a single standalone station or part of a large group with over 100 markets, there’s a subscription tier that works. Our goal is to make these time-saving tools accessible to every team that needs them regardless of size or budget.

Where are your tools finding the most uptake?

Aplin: The most significant is in commercial production. AI is being used every day by sales teams and producers to quickly generate spec spots and even broadcast-ready commercials. It takes care of the repetitive, time-consuming parts of the process, allowing creative teams to focus on strategy, storytelling and building client relationships.

While our focus is on radio advertising and creative production, we’re seeing AI adoption across the board: sales prospecting, social content creation, podcast editing, even video and design. The potential really is quite broad, but for us, the biggest impact so far has been where stations feel the daily pressure: fast, high-quality production.

Your website states that you’ve combined the speed of AI with the creativity of human copywriters. What is the human aspect?

Aplin: This is what gives Spec-Mate its edge. While the AI handles speed and scale, the creative thinking behind each commercial comes from our experienced, professional copywriters. They’ve developed the voice, tone and structure that guide Spec-Mate’s output, ensuring the results feel human, not robotic.

Below: Jamie Aplin

This is a key difference between Spec-Mate and most other AI spot builders. We don’t expect AI to be creative; it isn’t. But it is fast. So we’ve combined the best of both worlds: human creativity and AI efficiency. That’s what allows Spec-Mate to generate creative, client-specific commercials in seconds without sacrificing quality.

What questions or concerns do you get from users interested in your tools?

Aplin: The most common are around creative control and authenticity, things like “Will this sound robotic?” or “Will it feel generic?” We address that by showing users how Spec-Mate works.

It’s not a one-size-fits-all output generator. It’s built with customization and flexibility in mind, so users can shape the tone, style and messaging to suit each client. Once people hear how natural and creative the results can be, those concerns usually fade quickly.

Another concern we hear is around job security. There’s no sugarcoating it: AI is going to change roles in this industry. But it won’t replace the people who are willing to learn and adapt. If anything, it gives them a major edge.

My biggest encouragement is to lean into it. Learn the tools, understand how they work, and use them to get more done in less time. Spec-Mate was designed to help your production and sales staff, not replace them.

What are the underlying models?

Aplin: Spec-Mate is powered by large language models or LLMs, similar to ChatGPT but fine-tuned for radio advertising. These models are designed to understand the unique structure, tone and pacing of radio ads. And they continue to evolve to keep up with industry trends.

On the voice side, we’re working with the industry’s top AI voice providers. Truth is, most AI tools are working with the same foundational tech. What sets Spec-Mate apart is our user interface/experience. We were intentional about designing a UI/UX that’s simple, intuitive and fast, qualities that have always defined our brand. Based on feedback from our users, we’ve nailed that balance better than most.

How will AI technologies evolve further?

Aplin: From where I sit in the creative and audio production space, we’ll continue to see big improvements in voice synthesis, especially around emotional nuance and realism. That means more natural-sounding commercials. We’ll see AI tools become even more responsive to user intent, making it easier to generate highly personalized audio quickly.

As for other areas of radio, I can’t speak with authority, but if there’s time to be saved or workflows to streamline, someone’s either building or about to build a tool to do just that. The momentum behind AI in this industry isn’t slowing down, it’s accelerating. The key is figuring out how to integrate it in a way that supports your team.

What else should we know?

Aplin: I’ll say it again, AI in radio isn’t about replacing people, it’s about helping them do their jobs better. It takes the repetitive, time-consuming tasks off your plate so you can focus on what really matters: creative thinking, strategy and building strong client relationships.

That said, the technology is moving fast, and it’s not slowing down. I’m not suggesting we throw all caution to the wind, but I do recommend taking an informed, practical look at what AI can do for you and your team. You can set the pace. You can set the boundaries. But digging your heels in and saying “no” isn’t really an option anymore. The opportunities are here; it’s just a matter of whether or not you’re willing to explore them with an open mind.


Above: A CreativeReady user screen.

Trust is so important to the broadcast industry

AI tools should be used responsibly and with your station’s reputation in mind

Michael Thielen is vice president, consulting services at CGI. He is responsible for the dira radio broadcasting playout and production solution. He also is involved in the company’s AI and non-linear planning and production workflows.

How are artificial intelligence technologies being deployed for radio at CGI?

Thielen: We offer several relevant products. The first is dira, where AI is used in the management of audio and other files. It improves the work of journalists in creating audio material that plays on the air. Another is OpenMedia, a newsroom system that journalists use to gather information. AI is used for researching material, looking at the wires, scrolling through the internet for material and then writing text.

A premise of this ebook is that radio awoke to the potential of AI when ChatGPT hit the marketplace. How would you assess the impact of AI in radio so far?

Thielen: ChatGPT of course was the first big breakthrough of generative AI, to be used for generating content and helping solve problems in the production process.

We were using AI prior to that for purposes such as speech-to-text transcription. That was a small revolution in itself, making the content of archives more accessible. In the past, users had to transcribe material manually; they can now search for material much more easily.

But generative AI truly is a revolution in the market, opening so many possibilities for journalists and creative people. It can help me generate content in various languages, it can help me generate an article with a different kind of style or tone — it brings so many opportunities.


Below: Michael Thielen


Can you expand on the uses with dira?

Thielen: AI is used for example in automatic audio enhancement, so journalists can take problematic audio material and make it sound much better through an automated process.

We also can offer artificial voices, and the user can generate a fully artificial presenter, or clone voices from presenters. This can be helpful, say, if there’s an important traffic problem, a public health announcement or news about a fire. You might not have a presenter in the studio, but with the AI tool you can bring these stories to the listener with synthesized voices.

We’re also testing the ability to generate different types of content based on the format and tone of a given station. Is the presenter a serious person, or should they have a cheerful tone as with a magazine show? Commercial stations in particular may be interested in these kinds of options.

We also partner with a company that does live transcriptions. Deaf people normally may not be able to listen to you, but they’re still interested in your news and content. Now you can offer live subtitling for your radio program, which is great for accessibility. In fact you can offer the transcription in multiple languages.

Also, these transcription services generate tons and tons of text, which we store in our dira system. This creates a time-stamped record of what happened on the radio.

AI then can be used to generate analytics about what has been played. You might use this for music reporting or for analyzing your advertising content or the news stories that have aired. And you can analyze your competitors’ programs as well as your own, without paying a marketing agency to have students sit down and transcribe programs.

It’s fascinating what the technologies can do. At the same time, personally I sometimes want to step back and ask about unintended consequences of AI. For instance, when you look at web pages, at TikTok and other social media, you see a lot of AI-generated content, and much of it is not labeled as such. What’s real and what’s not? People tend to believe what they see, but with current models of AI, it’s very hard to know whether a video or photo is real.

I mention this because trust is so important to us in the broadcast industry. These are questions broadcasters must weigh too.

Are there obstacles or blockers that broadcasters should keep in mind?

Thielen: Users certainly should be aware of privacy compliance. This is not unique to AI, but it involves questions for instance of where content is stored in the cloud. Are data protection rights being adhered to? If I’m using not my own language model but something provided by big tech companies, what really happens with the data I use? What kind of training might it be used for? For broadcasters, how do you ensure that the language models are not trained into becoming biased or unethical?

Other thoughts?

Thielen: Our aim in implementing AI in our radio production suite is to help build workflows that ease the work of the journalist or content creator so they can be more effective and work better and more quickly. We want to help get rid of boring — call them stupid — tasks that can be automated or done easily by AI. We’re not a company that’s just looking to bring the cheapest solution to the market.

Tools like ChatGPT are great for building text and suggesting content. But in our way of thinking, everything that’s done with AI should go through the eyes of a real person who will read and approve it. We think it’s important that broadcasters continue to be seen as trusted advisors, as trustworthy people. For many of our customers, their brands are among their most important business assets. They need to protect that reputation, and they can only do it if there is a human in the loop.

Beasley finds ample uses for AI tools

The media group has expanded its AI integration significantly

Justin Chase is chief content officer of Beasley Media Group, which has 55 radio stations in 10 markets and offers capabilities in audio technology, podcasting, ecommerce and events. It reaches some 20 million consumers on a weekly basis.

When ChatGPT came out, our industry saw that generative AI technologies could change our workflows drastically. Has this happened?

Justin Chase: Over the past two and a half years, we’ve discovered a wide range of opportunities to integrate AI across our operations. From streamlining content creation to enhancing our commercial production workflow, AI has proven to be a valuable tool.

In parallel, we’ve also taken the opportunity to optimize existing legacy systems, such as WideOrbit and Selector, by unlocking underutilized capabilities and aligning them with today’s landscape.

Your company has been willing to jump in and explore new tech over time. How has it approached AI?

Chase: Caroline Beasley and our executive leadership team have consistently been forward-thinking when it comes to emerging technologies, and AI is no exception. From the outset, we formed a dedicated AI working group to explore potential use cases. Today, that effort has expanded significantly as AI discussions and experimentation are now integrated into leadership conversations across all departments.

The potential applications seem almost endless. How is Beasley using AI-based tools?

Chase: We initially focused on audio production, partnering with Benztown and SpecAi for commercial and spec spot creation. Since then, our AI integration has expanded significantly.

We now use AI to generate digital written and video content, and we’ve been piloting Futuri’s AI-powered DJ in non-peak hours on a couple of stations in Charlotte. In other markets, we’re testing the Super Hi-Fi platform for cloud-based programming.

On the business side, our finance team has adopted a new accounting platform that leverages AI to automate and optimize workflow. Additionally, we provide our leaders with access to an internal ChatGPT tool, which has become part of their daily workflow.

You mentioned radio spec spots.

Chase: Yes, we’ve successfully implemented SpecAi for generating spec spots. The tool has delivered good results and has become a reliable part of our commercial production process.

Beasley deployed the Super Hi-Fi platform on a 50 kW FM in Florida. What were the benefits?

Chase: That’s correct, we’ve been testing Super Hi-Fi at WPBB in Tampa and have since expanded to a couple of additional stations to better understand its adaptability across different formats.

If the trials deliver positive results, we could see benefits like automated cloud-based music log generation and a reduced reliance on large physical studio spaces with greater flexibility in operations.

What does AI do in that station’s workflow, and how would you characterize the success?

Chase: At this stage, AI is primarily being used to enhance efficiency and reduce repetitive or time-consuming tasks. Importantly, we view AI as a support tool, not a replacement for human oversight.

For example, while AI may assist with content creation, such as articles for our digital platforms, everything is still reviewed by one of our amazing staff members to ensure quality, accuracy and brand alignment.

We’re very pleased with the outcomes of many of our AI initiatives so far. They’ve delivered tangible benefits and sparked valuable learning experiences. Some are still in the experimental phase, but the overall direction has been promising, and we remain excited about what’s ahead.

Does Beasley use cloned or synthetic voices on the air?

Chase: We do use synthetic voices from time to time, particularly those provided by SpecAi and Futuri, in select situations. These tools have proven useful for supplementing traditional voice work in specific scenarios.

Above: Justin Chase

How radio wins the arms race for attention

It’s a case of authenticity vs. AI

Writer: Dan McQuillin, Managing Director, Broadcast Bionics

In the AI era of synthetic voices and algorithmic content, one thing stands out above all else: authentic human connection. And that’s where radio wins.

We’re no longer just competing for audiences and attention with other stations, or even AI clones of traditional radio formats. Today, we’re up against hyperpersonalized chatbots and adaptive content engines that learn from every interaction. They’re designed to sound personal but are optimized for addiction and attention instead of connection and community.

At Broadcast Bionics, we believe the future of radio lies in amplifying human creativity, not automating it away. That’s why we focus on Augmented Intelligence: smart tools that support broadcasters instead of replacing them. Even the most creative workflows are typically around 80% process and only 20% creativity, so Bionics builds tools that strip away the 80%, freeing humans to focus on the creative 20%, not the other way around.

Nothing Bionics is developing is ever generative or artificial, because while AI can stream endless silos of personalized content, radio offers something profoundly different: a shared experience, built on real-time conversation, unpredictable interaction and genuine audience participation.

Radio doesn’t just broadcast; it brings together everyone listening to the same show at the same time. In this new landscape, connection and engagement aren’t features; they’re our compelling competitive edge. By drawing listeners in and making them part of the show, we create community and deliver something no machine ever can.

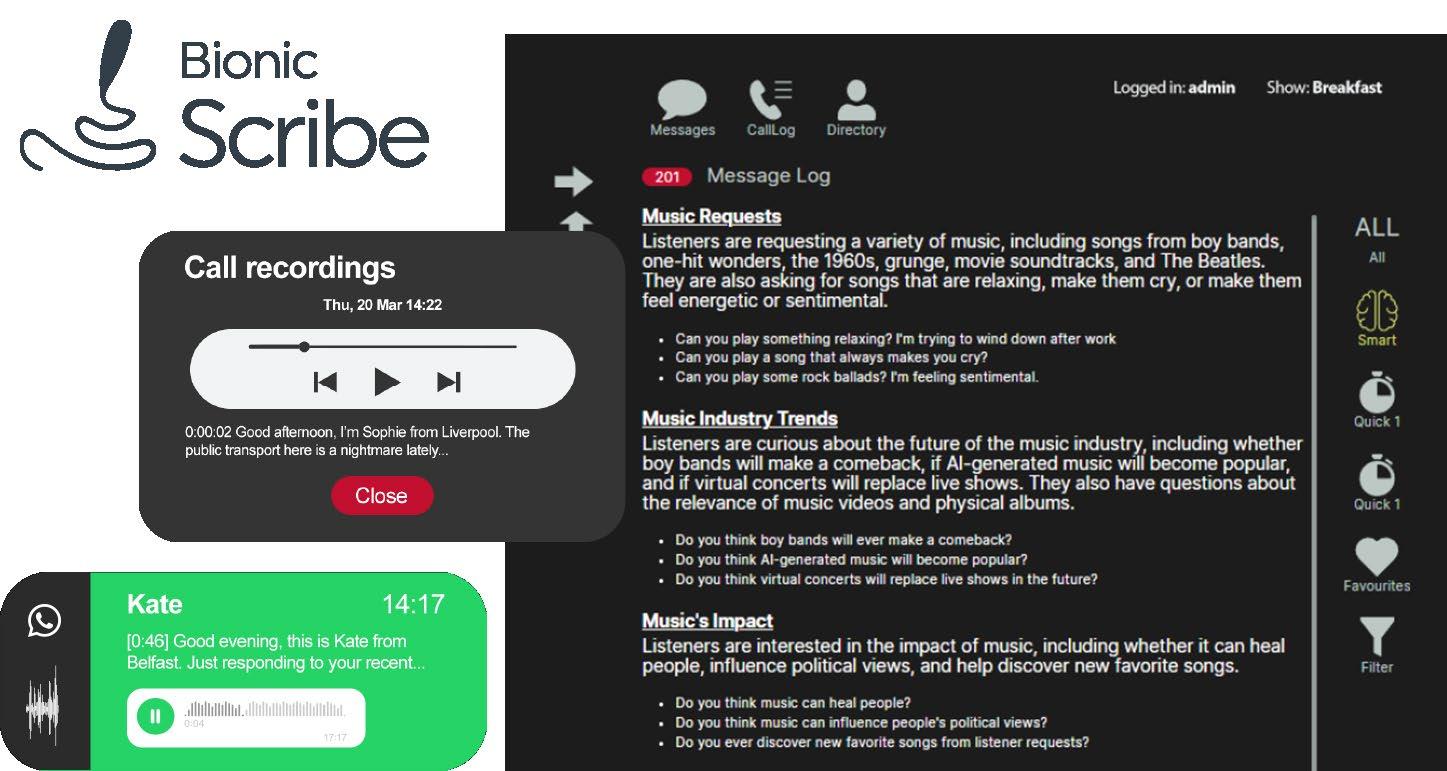

Our solutions like BionicScribe help producers and presenters keep pace in real time, transcribing, summarizing and surfacing the best moments from live calls, WhatsApp messages and social media interaction. It’s not about replacing talent, it’s about giving them superpowers so they can respond faster, and engage more deeply.

One of the greatest challenges broadcasters face is the sheer speed at which AI tools and technologies are evolving. Traditional studio technology and workflows, which have remained largely unchanged for decades, are falling further behind every day.

MOR>, our Multi Object Recorder, bridges that gap by allowing existing broadcast studios to seamlessly integrate emerging technologies without replacing hardware or retraining staff. MOR> brings agility to legacy infrastructure, enabling broadcasters to innovate at the pace of AI.

All Bionics tools are built specifically for broadcasters: secure, on-premise and optimized for media content. Unlike generic AI platforms, Bionics’ solutions are deeply integrated into broadcast workflows, respecting privacy, editorial control, compliance and the fast-paced nature of live production. This means stations can adopt cutting-edge technology with confidence, without compromising their values or their content.

This is how we cut through all the noise in the arms race for attention — by empowering human teams to do what only they can: connect, empathize, surprise, inspire. The more synthetic the world becomes, the more valuable authentic human presence becomes.

The temptation is to use AI to compete on cost. But audiences aren’t crying out for more content, they’re longing for authenticity. For trusted voices, meaningful stories and communities they can belong to. What radio offers audiences is not just information, but invitation: to belong, to hear and to be heard.

In a world of limitless content, we need radio because we need each other more than ever.

AI now can help radio compete in video advertising

Stations that master this are capturing ad dollars that used to go elsewhere

Writer: Hayden Gilmer, VP of Revenue, Waymark

Two years ago, radio had an AI awakening. Station managers, sales directors and creative teams suddenly found themselves staring at the kind of technology that promised to transform everything from writing ad copy to automatic voice synthesis.

The questions were immediate: Would this actually work? Could AI maintain the quality and authenticity that radio demands? And perhaps most importantly: What would it mean for them?

Today, we’re starting to see answers. The sharpest stations aren’t just experimenting anymore. They’re using AI to fundamentally expand their operations and compete in ways that were impossible even 24 months ago.

The spec game

Below: An example of a video spot created for Lightning Digital with Waymark’s AI technology.

For decades, radio sales operated under a constant constraint: Creative took time … days, sometimes weeks, depending on production schedules and creative availability. Sales teams learned to pitch conceptually, relying on words and existing samples to paint a picture of what a campaign might look like.

AI has blown up that model entirely.

Sales teams can now generate complete commercials with logos, footage, voiceover, music and professional animation in under five minutes. Not rough drafts or placeholder content — broadcast-ready commercials that clients can see, approve and air immediately.

The impact on sales cycles has been dramatic. Stations report that prospects who might have taken weeks to make decisions are now closing deals in days. The reason isn’t just efficiency; it’s psychology. When a local restaurant owner sees or hears their storefront in a polished 30-second spot, with their name and a professional voiceover plugging their weekend specials, that’s when the conversation shifts from hypothetical to immediate.

Why video matters

Here’s where it gets interesting for radio specifically: AI isn’t just making audio production faster. It’s giving radio stations the ability to compete in video advertising for the first time.

Video advertising is the fastest-growing format in the industry, but many radio stations have treated it like someone else’s territory. The production costs, technical requirements, and creative expertise needed to produce quality video content have simply been too high for most local and regional stations to justify.

AI-powered video generation changes that calculation completely. Stations can now walk into advertiser meetings with comprehensive multimedia strategies. They’re not just selling 15- and 30-second radio spots anymore. They’re presenting coordinated campaigns that include on-air spots, streaming video ads, and social media content, all effortlessly generated from the same source material.

This represents a fundamental shift in how radio stations can position themselves in the advertising ecosystem. Instead of competing solely on reach and frequency metrics, they can offer the kind of full-service creative capabilities that advertisers previously had to source from multiple vendors, or just do without entirely.

The quality question

To be transparent, the early skepticism about AI-generated content wasn’t unfounded. The first iterations often produced generic, sometimes bizarrely flawed, and obviously synthetic content that simply didn’t live up to established standards.

But the current generation of AI tools has largely evolved beyond these problems, particularly when deployed thoughtfully. The key insight that successful stations have discovered is that AI works best under human oversight as a complement to creativity, not a wholesale replacement for it.

The most effective implementations we’ve seen involve sales teams and creative directors who understand how to prompt AI systems effectively, how to incorporate their local market knowledge and approaches, and how to maintain brand consistency across automatically generated content.

This requires training and process development, but stations that invest in building these capabilities are seeing dramatic returns. They’re able to produce significantly more creative content without expanding their teams, respond to last-minute advertiser requests that would have been impossible to fulfill previously, and offer services that differentiate them from competitors who are still operating under limiting traditional constraints.

Numbers don’t lie

The business impact here extends far beyond faster production times. Stations using AI video creation report tangible improvements in their spec-to-close conversion rates. The ability to show real, produced content rather than simply describe concepts has proven to be a powerful new capability.

More importantly, many stations are discovering that video-enabled sales pitches allow them to compete for budgets they were simply excluded from before. Local businesses that might have allocated ad dollars to digital agencies or larger multimedia platforms now have reason to keep those budgets with their local radio partners.

This is particularly significant in smaller markets, where local businesses often have few marketing resources and even less time. These owners prefer working with a single, trusted vendor who can manage multiple aspects of their advertising strategy for them. That’s an ideal scenario for a local radio team.

What’s next

The trajectory of AI development suggests several emerging opportunities for radio stations willing to stay ahead of the curve:

1. Robust, purpose-built platforms are becoming advanced enough to turn a single creative brief into cohesive campaign content for on-air, streaming, social media and digital display advertising simultaneously.

2. A new wave of self-service platforms allows advertisers to create their own content while maintaining station branding and quality standards. This creates opportunities for new revenue streams while reducing the direct labor required for smaller accounts.

3. Rapid turnaround times are an asset. With instant production, business owners who need eleventh-hour marketing are now viable prospects and fast-converting revenue generators.

The strategic imperative

Radio has always been adaptable. The industry has survived the transition from AM to FM, embraced digital streaming, and found ways to remain relevant in an increasingly fragmented media world.

AI video represents the next phase. At Waymark, we’ve seen the proof firsthand. Our partner stations are mastering cutting-edge tech like our ad creator, and in doing so they aren’t just improving their operational efficiency. They’re expanding their addressable market and positioning themselves as first movers and full-service marketing partners rather than single-channel vendors.

The technology is here. The tools work.

The next step? Take the leap and capture the competitive advantage that AI-powered evolution provides.

Case studies in the use of AI

How companies like iHeartMedia, Saga and SiriusXM are using the technologies

Writer: Paul McLane, Editor in Chief

We went looking for more examples of how AI is being deployed at radio and audio companies. We discovered it being used by radio companies, large and small, for purposes such as language translation; commercial and spec-spot creation; on-air voice imaging; creation of text, audio and video; audio processing; and yes, for on-air hosting.

Here are examples. And the article concludes with questions from a radio consultant who feels somewhat ambivalent about the topic.

Found in Translation at iHeartMedia

iHeartMedia — the largest podcast publisher, according to Podtrac — is using AI to bring podcasts to a global audience by streaming them in new languages, accessible on iHeartRadio, Apple Podcasts and other platforms.

Translated episodes began rolling out in June and will be available in Spanish, French, Arabic, Portuguese, Hindi and Mandarin, with plans to expand to more shows and languages. Shows include titles like “Revisionist History with Malcolm Gladwell,” “Stuff They Don’t Want You to Know,” “Betrayal,” “The Girlfriends” and “Murder 101.”

Translations had begun into Spanish on 11 podcasts, listed on a dedicated web page.

iHeartMedia quoted Jay Shetty, the host of “On Purpose,” saying, “One of the questions I get asked most is, ‘When will the podcast be in Spanish? When will it be in Hindi?’”

The program uses SpeechLab, a platform that provides speech-to-speech translation. SpeechLab was created with incubation support from Andrew Ng’s venture studio AI Fund; it is led by entrepreneur Seamus McAteer as CEO.

The platform replicates original speakers or matches native voices; the company promises “nuanced, emotionally resonant vocals that seamlessly uphold your brand’s unique integrity.”

Disclaimers in each episode and in show descriptions let the listener know the show is translated using AI technology. The podcast providers or partners participate in profits from the translated versions.

Conal Byrne, CEO of iHeartMedia Digital Audio Group, said podcast listenership continues to rise, notably in regions such as Latin America, Europe, India and other parts of Asia. The company also said this is part of its goal to make podcasting more accessible and inclusive.

Describing the development process to the website Digiday, Will Pearson, the president of iHeartPodcasts, said SpeechLab cloned host voices from snippets of their shows, then refined the audio to translate it while preserving tone and personality. Clips were reviewed by podcast teams at iHeartMedia, who worked with the podcast creators and with human teams of native speakers to review the snippets.

“The podcast network would share feedback with the SpeechLab’s team,” Digiday reported. “For example, one host who has a slight accent had some words mistranslated. iHeartMedia also tested out the translations with groups of listeners who were native speakers.”

Pearson told Digiday the technology had advanced significantly in the last 12 to 18 months. “There’s a nuance of language … you want the conversational flow to feel right — more for the chat shows than the narrative style shows. When Jay Shetty delivers his content, he has his own delivery. We want to make sure it captures that essence as much as possible.”

A Measured Approach at WVRC Media

WVRC Media in Charleston, W.Va., uses Spec-Mate, an AI platform from CreativeReady, for generating voice tracks and commercial scripts.

“After completing internal training, I was onboarded to support both scheduled and on-demand needs,” said Dale Cooper, director of operations for Charleston.

“It provides a range of voice options, from character and ethnic accents to standard broadcast-style announcer tones, so we can produce reads without booking a studio or arranging outside talent.”

Cooper said the staff can now produce and revise promos entirely in-house and much more quickly.

“Instead of scheduling studio time, I can generate script reads or cloned voices directly from my workstation. This is particularly useful for updates that need to go on air the same day. It also helps fill gaps in our talent lineup — for example, when we need a second voice for a two-person spot but don’t have staff available.”

How realistic are the voices?

“There is a bit of an art to the clone voices to make them hit right. You have to play around with it to find the right talent and the right voice for a pro result. The pure AI reads are a variable. Inflection is still a bit of a roulette. There is some inconsistency — a weakness across most AIs — where a convention for copywriting that gets results one time will generate odd pronunciations or variations the next.”

Cooper suggests that providers of AI tools create a centrally maintained update log or user forum. Voice options change over time, so having a clear record of additions or removals — plus shared user tips — would help teams integrate new features more smoothly, he said.

Above: Jay Shetty’s podcast is among those being translated into multiple languages.

WVRC’s four divisions encompass 33 radio stations, a statewide news network, a video production arm and a digital marketing division. Beyond Spec-Mate, the organization is using targeted AI tools for specific tasks, such as Zapier for process automation and Adobe’s AI features in Creative Cloud for visual work. It does not use AI voices outside of promos and spots.

“We have taken a measured approach to large-language models and personality-driven interfaces, prioritizing stability and consistency on air,” Cooper said.

AI Gives His Radio Selling a Boost

Adam Perrotti is an account executive at Connoisseur Media, based in Milford, Conn. He consults with a variety of businesses on their ad and marketing campaigns.

He frequently uses ENCO’s SpecAI tool, saying it helps him keep his pitches fresh and allows him to customize for each client or prospect.

“I use this in a variety of ways depending on the client/prospect and the stage in the sales process,” he said.

“One of the most common purposes is for when I am cold-calling businesses or walking into their storefront in hopes of getting in front of the owner or decision-maker, in most cases just trying to trigger a meeting to talk about their business and their marketing goals.

“But in order to create that excitement about radio, what better way than playing their commercial for them? Especially when it’s one that relates to a current sale that they have going on or focusing on a specific product/service that they’re pushing.”

Perrotti has found himself doing more research prior to his cold calls or walk-ins, to create a more accurate spec commercial.

“Usually I’m using SpecAI to create a custom commercial for a prospect based on research from their website or social media accounts, linking the commercial to something that aligns with what they’re currently doing.”

SpecAI helps him write the commercial in seconds, then helps to produce it, adding a voiceover and background music, giving it a professional sound.

Perrotti came to the radio industry in 2023. He liked writing commercial copy but it kept him from tasks like prospecting and meeting with clients.

Being able to create multiple versions of a spec spot within seconds frees his schedule. He likes that he can create them while sitting in the prospect’s parking lot before he walks in.

“Many of these tools were just being introduced, which to my benefit allowed me to only understand a work environment with access to tools like these, so I quickly adapted.”

More broadly he’ll use ChatGPT to identify trends or news stories in specific industries, for instance by asking AI, “What’s trending in the auto dealership industry?” It helps him understand a business and prompts meaningful conversations.

“I will also use ChatGPT to brainstorm and come up with creative ideas. I will ask for marketing campaign ideas with a specific theme.”

His advice to peers is not to be intimidated by AI.

“It is constantly evolving and can be one of our strongest selling tools for many reasons. I think that the more we begin to embrace AI as a tool, the more efficient we will become as marketing consultants for new and current clients.”

Above A user screen in ENCO SpecAI.

Left Adam Perrotti

Saga Deploys AI for VO

Saga Communications will use an AI-based service for audio imaging on its 113 stations, as first reported in a news story from Barrett Media.

“The company is utilizing a third-party solution that will consist of voices from real humans who have trained AI programs to replicate their voices for usage in a variety of ways,” it reported.

Saga CEO Chris Forgy told Barrett that while this means the company won’t use imaging voice talents, the change will help it retain several employees.

“When we started this process, we said we wanted to be prudent and control our expenses,” Forgy said. “And we didn’t want to cut people. … The savings we were able to generate by going through this other way of doing station imaging — utilizing AI — saved about 10 people who otherwise would have lost their jobs as a result of the expense reductions we wanted to put in place.”

Radio World followed up with Forgy. He told us that Saga is using ElevenLabs technology for the imaging.

“We have an in-house spec AI production service. In fact, our in-house solution has enabled us to utilize it on two new Hispanic music stations called La Pantera in Asheville, N.C., and Clarksville, Tenn.,” he said.

“We can read a commercial in English and then have it translated in the same voice into Spanish into the correct dialect etc.”

He said Saga plans to use AI in areas like broadcast and digital log reconciliation, and to create online news content that is localized by its human staff, “all designed to make all of us more efficient.”

Barrett quoted the CEO promising that AI will “never replace our on-air personalities, period.”

Forgy told Radio World in our followup, “There is no Saga asset that is more important and vital to our success than the assets who walk out the door every day at 5 p.m. Our people are what make us hyper-local in our markets. They are the ones who connect with listeners, raise money after a disaster or for the local children’s hospital, and help clean up after horrendous weather, fire etc. In my opinion, AI is not a replacement for on-air talent.”

SiriusXM Uses Narrativ

Advertisers that work with SiriusXM Media will now be able to choose AI-generated voice replicas, through its in-house creative agency, to create audio ad creative at scale.

SiriusXM Media is the sales organization for SiriusXM, Pandora and multiple streaming and podcast networks. In June it announced an agreement with Narrativ, which works with talent to digitize the voices of voiceover actors and make them available for licensing.

Advertisers will access the tools via a self-service creative tool called AdMaker.

Human actors who opt in to the platform will earn fees. “[T]his solution will offer them the opportunity to earn additional income through the licensing of their voice replicas while maintaining full ownership and control over voice usage, including the ability to approve or reject every ad using their voice replica,” according to the announcement.

Above Chris Forgy (via LinkedIn)

Right Lizzie Collins

Lizzie Collins, SiriusXM Media’s senior vice president, B2B marketing and ad innovation, said, “We can meet growing advertiser demands for more personalized, professionally produced audio creative at scale — something particularly challenging for smaller brands due to traditionally high costs, complexity and limited flexibility in production processes.”

She gave the example of a grocery store that wants to produce hundreds, or even thousands, of versions of an ad, each with a different call to action or a different location it wants to promote.

“Realistically, voice actors don’t want to spend eight hours a day for two weeks straight recording 4,000 location tags. This initiative allows us to make the re-editing process much more efficient for the voice actor, and much easier for the brand.”


Collins said AI and ML have been part of the company’s business for years.

“Pandora has long been a pioneer in this space, with its algorithmically driven radio and the innovative Music Genome Project. Today, across SiriusXM, we’re using AI in different ways to make it easier for people to find and interact with our business and our content, from personalization to customer agents to, in our advertising business, optimizing ad delivery to the right listener or bringing these AI voice replicas into ad creative.”

A Helping Hand in Hutchinson

Family-owned Ad Astra Radio, headquartered in Hutchinson, Kan., is the third-largest radio company based in that state, serving listeners on a network of 11 local stations.

It is using AI Content Helper Pro, a tool from Skyrocket Radio that can create story content, titles or images.

“Part of my job is posting stories and press releases on our company’s website,” said Digital Content Director Brenna Eller.

“Sometimes the stories I get don’t come with a headline or image. When I have busy days where I get multiple press releases or stories to post and the first paragraph of the story isn’t really giving me the full overview, the Content Helper Pro helps to quickly scan and highlight key information and helps to generate a title or at least ideas for a title.

“It can also help produce an image for my story if I don’t have one already. And at the correct size that our website uses, which is nice.”

Have they experienced any bumps?

“With any AI, you always have to tweak things to make the content fit your voice best,” Eller said.

“I’ve found myself just rewriting the suggestions a lot because there are words I would never use. I have also found that when using the image generator, you have to be very specific with the details you need or you’ll get something a bit too abstract.”

She noted that Skyrocket offers a voiceover tool, but the company doesn’t use AI voices on the air.

“AI is definitely on our radar because it is everywhere, but we are first and foremost about our local communities. And they want to hear from the people that they know and trust.”

Left Brenna Eller

Left An image generated by AI Content Helper Pro.

Using AI to Enhance Audio

Technology supplier 2wcom Systems uses AI to enhance cloud-based audio productions.

It offers a remote production solution called up2talk that was designed to deliver high-quality audio across various devices; it enables users to join virtual studios through their browsers. Its underlying MoIN software is an AoIP codec that operates in Docker containers. The company has since expanded up2talk to support video features.

Now an AI-supported feature allows users of up2talk to improve previously recorded audio with a click.

“This can resolve challenges like the ‘cocktail party problem’ or background disturbances such as sirens or crowd noise that may affect the speaker’s clarity,” said the company’s Markus Drews.

“The AI handles noise removal, echo cancellation, speaker separation and voice enhancement, making post-production much faster and more efficient.”


More broadly, Drews said that now that the initial hype around AI has settled, radio is enjoying concrete productivity gains.

“Some radio stations are using AI to manage audio scheduling, detect audio errors in real time and even improve overall sound quality with tools like our AI-based audio enhancement. That said, there are still many areas where AI isn’t the silver bullet. Ultimately, it’s people who take on the most critical roles: bringing creativity, intuition and judgment to the table.”

He believes the next big step will be broader integration, making AI-powered tools more accessible and seamless for broadcasters. “Whether it’s automating routine production tasks, improving real-time decision-making, or enhancing listener experiences, we’re only at the beginning of what’s possible with AI in this space.”

An AI Host in Afternoon Drive

Nelonen Media is a Finnish commercial media company owned by Sanoma Media Finland, part of the Sanoma group. It’s an entertainment house with a portfolio of video, audio and event content, including television channels like Nelonen, Jim, Liv and Hero, as well as the streaming service Ruutu.

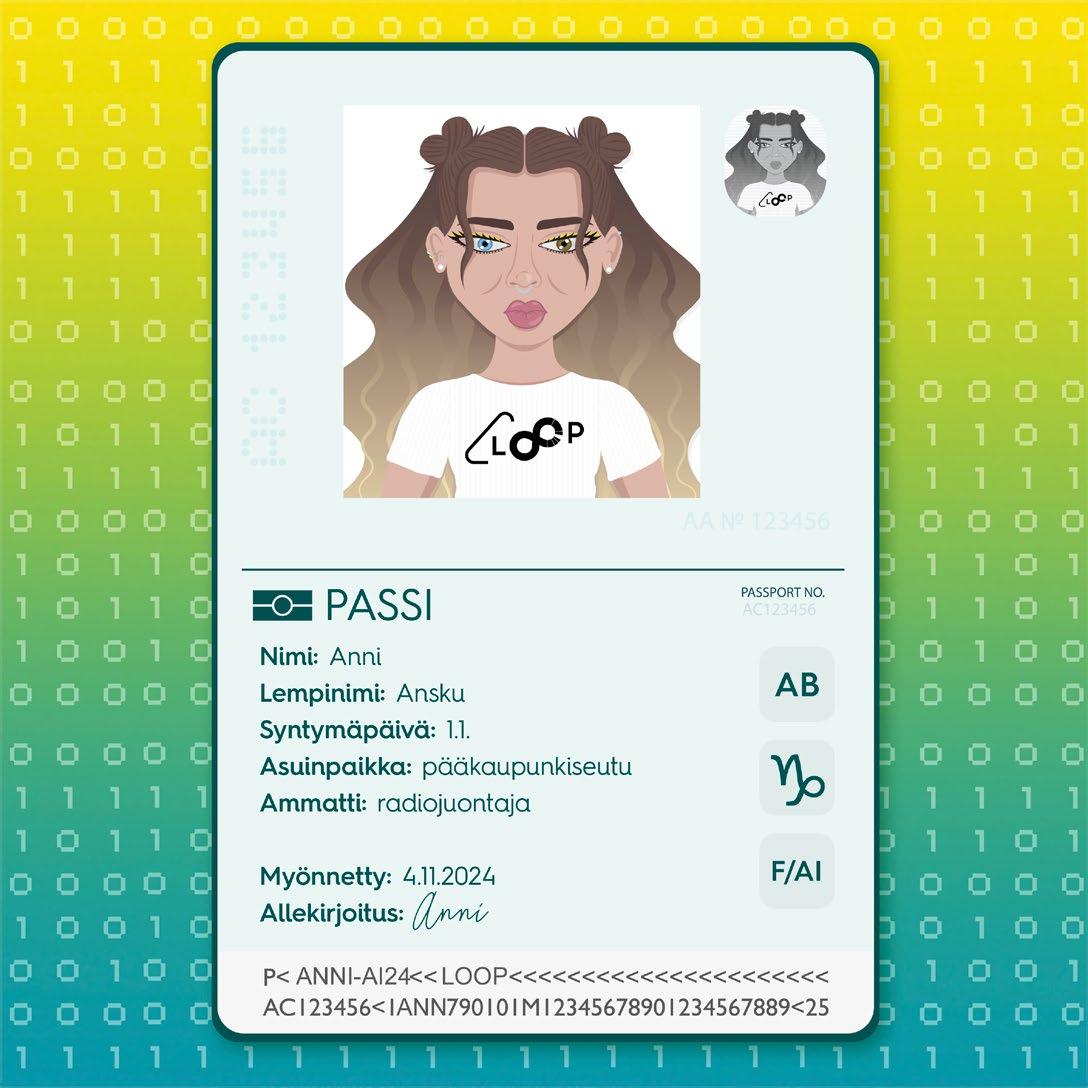

Since November, its national FM station Loop has featured a virtual AI-assisted host named Anni in the weekday afternoon slot from 3 to 7 p.m., using technology from Radio.Cloud.

Anni introduces songs and segments. Her voice is based on broadcasts by personality and Loop/HitMix program director Satu Kotonen. (The name “Anni” is derived from Kotonen’s middle name.)

“The voice model was trained using authentic on-air material, preserving the tone, energy and presence of a live host,” said Sami Virtanen, a content insight strategist at Nelonen.

“As Satu puts it, ‘My idea of a good radio voice is authenticity, clarity and presence.’”

Above Markus Drews at an IBC show.

Loop reaches approximately 300,000 weekly listeners, primarily women 20–34. “It’s an audience that’s both receptive to and familiar with AI-driven experiences,” Virtanen said.

“Anni doesn’t replace existing talent but enhances programming in a previously unhosted time slot. This project reflects our broader approach: using AI to enrich content where it adds value, not to compete with human creativity.”

Virtanen’s role focuses on understanding how audiences engage with the organization’s audio content. He also chairs Nelonen’s AI group for TV and radio, and is a member of Sanoma’s Generative AI working group.

“Our workflow has evolved significantly with the introduction of the AI-driven host,” he told Radio World.

“We’ve established a process where all AI-generated content is reviewed by a human before it goes on air. This means our program director now has a new responsibility: reviewing and editing the AI’s scripts in advance — something quite unusual in radio, where program directors typically don’t pre-approve presenter scripts.”

He said the most intensive phase was training Anni to speak.

“While creating the voice clone was relatively quick, developing the right tone of voice took much longer. We also encountered new challenges, such as artist names with special characters, which the AI struggled to pronounce correctly. As a result, our audio producer now also handles prompt engineering — adding pronunciation guidance into the system when needed.”
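For readers curious what “adding pronunciation guidance” might look like in practice, here is a minimal sketch in Python. It is not Nelonen’s or Radio.Cloud’s actual method; it simply assumes a hand-maintained lookup table that rewrites hard-to-read artist names into a spoken-form spelling before a script reaches the voice model. The table entries and the function name `apply_pronunciations` are illustrative assumptions.

```python
import re

# Hypothetical pronunciation table: written form -> spoken-form spelling.
# The entries are examples only, not taken from any station's system.
PRONUNCIATIONS = {
    "P!nk": "Pink",
    "Ke$ha": "Kesha",
    "CHVRCHES": "Churches",
}


def apply_pronunciations(script: str, table: dict[str, str]) -> str:
    """Replace each listed name in the script with its spoken-form spelling."""
    if not table:
        return script
    # Longest names first, so a short entry never matches inside a longer one.
    names = sorted(table, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(name) for name in names))
    # A single pass avoids re-replacing text that was already substituted.
    return pattern.sub(lambda match: table[match.group(0)], script)


print(apply_pronunciations("Next up, P!nk and Ke$ha.", PRONUNCIATIONS))
```

A table like this keeps the fixes in one place, so a producer can add a new name without touching the rest of the workflow.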

The organization also uses AI in its weather coverage.

“We are using Microsoft Azure GPT-4o to generate local weather reports from data provided by the Finnish Meteorological Institute. These forecasts are read by a lifelike synthetic voice, created using Custom Neural Voice (CNV),” he said.

The automated system allows Nelonen to produce localized forecasts across 26 regions.

“To develop the AI weather service, we had to overcome several challenges. One issue was that every forecast had to be the same length so that they would fit into the live national news broadcast. We solved this issue by designing each local weather report to consist of only five sentences, with each sentence defined by a strict prompt structure.

“We also noticed some pronunciation issues, which may have been due to the fact that Finnish is not widely spoken and there is consequently less testing with Finnish voice.”
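The fixed-length approach Virtanen describes can be pictured with a short Python sketch. This is an illustration under stated assumptions, not Nelonen’s actual prompt or code: the five sentence topics in `SENTENCE_SLOTS`, the prompt wording and the helper names are all hypothetical, and no call to any AI service is made. The sketch builds a prompt that assigns one topic per sentence, then checks that a returned report really contains five sentences before it would be passed on for voicing.

```python
import re

# Hypothetical topics, one per sentence; the article says only that each
# report has five sentences, each defined by a strict prompt structure.
SENTENCE_SLOTS = [
    "current conditions",
    "temperature range",
    "wind",
    "precipitation",
    "outlook for tomorrow",
]


def build_prompt(region: str, data: dict) -> str:
    """Assemble a prompt that assigns one topic to each numbered sentence."""
    lines = [
        f"Write a weather report for {region} "
        f"in exactly {len(SENTENCE_SLOTS)} sentences."
    ]
    for number, topic in enumerate(SENTENCE_SLOTS, start=1):
        lines.append(f"Sentence {number}: {topic}.")
    lines.append(f"Data: {data}")
    return "\n".join(lines)


def count_sentences(text: str) -> int:
    """Count sentences by splitting after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return len([part for part in parts if part])


def is_valid_report(text: str) -> bool:
    """Accept a report only if it has the expected number of sentences."""
    return count_sentences(text) == len(SENTENCE_SLOTS)


print(build_prompt("Helsinki", {"temp_c": 18, "wind_ms": 4}))
```

A length check of this kind is one simple way to catch a report that runs long before it reaches a fixed slot in a live broadcast.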

In addition, AI is being integrated into editorial and operational workflows. In radio and podcasting, they use AI for interview prep, where it helps generate questions based on guest profiles and current topics. Transcription tools convert spoken interviews into text, speeding the editing and repurposing process.

And Nelonen is running multiple text-to-speech pilots.

“For example, our podcast platform Supla features ‘audio articles’ where listeners can hear Sanoma’s print journalism read aloud by synthetic voice,” he said.

“And this is the first summer when we are using AI voice for radio news. Now the summer holidays don’t affect our news service.”