A shared future of innovation

How broadcast and AV continue to benefit from their ongoing convergence

Earlier this summer, IBC CEO Mike Crimp told me that one of the aims for this year’s show is to “enable companies that haven’t traditionally exhibited at the show to enter the media and entertainment market”.

This is something we’re seeing more of with the ongoing convergence between the pro AV and broadcast industries. Thanks to broadcasters moving towards a more IT-based infrastructure, IP adoption is continuing at pace, driven by SMPTE ST 2110 as well as IPMX, NDI and NMOS. On the pro AV side, integrators are becoming more ambitious, employing what would be traditional broadcast cameras, switchers, graphics etc.

In this special publication from TVBEurope and Installation, you’ll find features exploring the ongoing coming together of technology for the two industries, as well as a special Q&A with industry experts exploring key trends and technologies reshaping workflows. Plus, we hear from key figures who will be at IBC Show about what they’re looking forward to seeing in Amsterdam.

The TVBEurope and Installation teams will be on-site at IBC and are looking forward to hearing about all the latest developments in both broadcast and pro AV. See you in Amsterdam!

JENNY PRIESTLEY, CONTENT DIRECTOR, TVBEUROPE jenny.priestley@futurenet.com

While the AV broadcast market presents new opportunities for traditional media tech vendors, pro AV companies are also finding their tools increasingly adopted by broadcasters and studios, finds Robert Ambrose, co-founder, Caretta Research

The discovery, registration and connection management aspects of the Networked Media Open Standards initiative are now well-established—but there’s much more to NMOS than that, writes David Davies

We speak to industry experts to explore the key trends and technologies reshaping workflows

Jacqueline Bierhorst, president, WorldDAB, looks forward to demonstrating the new Automatic Safety Alerts (ASA) broadcast on DAB+ during IBC2025

Steve Reynolds, chairman of the board of AIMS, is ready for more meaningful conversations about IP

X.com: TVBEUROPE / Facebook: TVBEUROPE1 / Bluesky: TVBEUROPE

CONTENT

Content Directors: Jenny Priestley jenny.priestley@futurenet.com

Rob Lane rob.lane@futurenet.com

Senior Content Writer: Matthew Corrigan matthew.corrigan@futurenet.com

Graphic Designers: Cliff Newman, Steve Mumby

Production Manager: Nicole Schilling

Contributors: David Davies, Ken Dunn

Publisher TVBEurope/TV Tech, B2B Tech: Joseph Palombo joseph.palombo@futurenet.com

Account Director: Hayley Brailey-Woolfson hayley.braileywoolfson@futurenet.com

SUBSCRIBER CUSTOMER SERVICE

To subscribe, change your address, or check on your current account status, go to www.tvbeurope.com/subscribe

ARCHIVES

Digital editions of the magazine are available to view on ISSUU.com. Recent back issues of the printed edition may be available; please contact customerservice@futurenet.com for more information.

TVBE is available for licensing. Contact the Licensing team to discuss partnership opportunities. Head of Print Licensing Rachel Shaw licensing@futurenet.com

SVP, MD, B2B Amanda Darman-Allen

VP, Global Head of Content, B2B Carmel King

MD, Content, Broadcast Tech Paul McLane

Global Head of Sales, B2B Tom Sikes

Managing VP of Sales, B2B Tech Adam Goldstein

VP, Global Head of Strategy & Ops, B2B Allison Markert

VP, Product & Marketing, B2B Andrew Buchholz

Head of Production US & UK Mark Constance

Head of Design, B2B Nicole Cobban

Chris Neto, head of emerging markets at Midwich, is looking forward to attending his first IBC Show and asking: what’s next?

WHAT ARE YOU MOST LOOKING FORWARD TO AT IBC2025?

What I’m most looking forward to at IBC2025 is twofold: meaningful connections and unexpected discoveries. After years of attending NAB Show, I’ve seen firsthand the power of in-person conversations. IBC takes that to a global level, bringing together voices from regions where we’re actively expanding. It’s a chance to listen, learn, and understand the specific challenges and opportunities our partners face, and how Midwich can help them grow. I’m also eager to explore the quieter corners of the show floor, the places where innovation often hides. Whether it’s a start-up or an under-the-radar brand, I’m always on the lookout for that one solution that tackles a real-world problem in a bold, creative way. Some of the biggest industry shifts begin in those back halls.

WHAT’S YOUR USUAL TRADE SHOW ROUTINE?

My trade show routine kicks off early, typically with a 5am alarm. That quiet time before the show starts is my reset button. It’s when I gather my thoughts, get focused, squeeze in a workout, and mentally gear up for the full-throttle day ahead. Once the doors open, it’s nonstop. I’m logging 30,000+ steps as I move through the halls visiting Midwich brands, supporting our vendors and partners, and scouting out solutions that align with the needs of our emerging markets. Throughout the day, I carve out time to connect with media outlets, online AV communities, and industry voices that help shape the conversation beyond the show floor. I’m constantly scanning for what’s next: genuine innovation that solves real-world problems, scales across verticals, and adds value to both integrators and end users. But the real magic happens after hours. My evenings are packed with deeper conversations, team dinners, and those spontaneous meet-ups that turn into long-term partnerships or spark new ideas. The technology might draw us to these shows, but it’s the people, the connections, the stories, the shared passion, that make it all meaningful. That’s what keeps me coming back to industry trade shows.

The pandemic flipped a major switch: end users turned on their UC cameras and realised “good enough” wasn’t good enough anymore. That shift created a ripple effect across pro AV and broadcast. Suddenly, expectations changed. People now want studio-quality video and broadcast-level audio in everyday spaces, whether it’s a boardroom, classroom, or virtual event. At IBC, I’m hoping to see clear synergies where pro AV is borrowing from broadcast’s playbook: better storytelling tools, more dynamic camera systems, and AV over IP infrastructures that allow for scalable, flexible deployments. AV over IP is no longer a future concept; it’s a common language both industries are speaking fluently. Audio is another big crossover. Broadcast has always prioritised intelligibility, and now it’s critical in AV, especially with the rise of AI. Transcription, real-time translation, and voice control all depend on clear, isolated audio. Without it, you’re not unlocking AI’s full potential. It’s not about merging industries; it’s about learning from each other to deliver higher-quality, more intelligent experiences.

HOW DOES THIS YEAR’S SHOW REFLECT THE AREAS YOU’RE MOST INTERESTED IN?

This year’s IBC speaks directly to where my AV interests converge: enterprise broadcast, live production, and emerging tech. What really stands out is the emphasis on IP-based workflows and immersive storytelling tools. These aren’t just trends in broadcast; they’re actively reshaping how we approach AV integration across corporate, education, and live event environments. IBC showcases a clear shift in how content is delivered and how audiences engage. I’m looking for solutions that move beyond traditional broadcast tools and are built for flexibility, scalability, and real-time interaction. That’s critical for AV professionals like me who are helping design systems to support hybrid experiences, multi-platform streaming, and dynamic control environments.

WHY HAVE YOU DECIDED TO ATTEND IBC FOR THE FIRST TIME?

As someone who’s been to countless NAB Shows, I’ve seen how the broadcast and AV industries continue to converge, especially when it comes to IP workflows, live production, and immersive experiences. I decided to attend IBC for the first time because I wanted to see that convergence from a global lens. IBC in Amsterdam isn’t just another trade show; it’s a melting pot of innovation from Europe, Asia, and beyond. I’ve seen what NAB does well. Now I want to understand where IBC excels and how that can inform how we deliver solutions globally for Midwich. And to be honest, if we’re helping build systems and strategies for clients who think globally, then we need to think that way too.

While the AV broadcast market presents new opportunities for traditional media tech vendors, pro AV companies are also finding their tools increasingly adopted by broadcasters and studios, finds Robert Ambrose, co-founder, Caretta Research

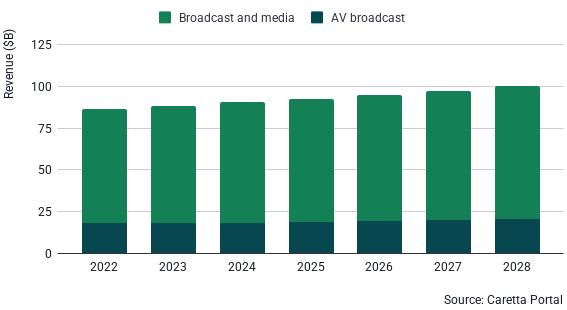

The market for AV broadcast now accounts for 20 per cent of the overall broadcast and media technology market, and is forecast to exceed $20 billion by 2027.

It’s not surprising then that the most commonly-asked questions we’ve had at Caretta Research from technology vendors over the past year are, ‘how can we address these adjacent markets, what’s the potential market size, and where’s the best place to focus?’ (fig 1)

We define the ‘AV broadcast’ market as sales of professional media technology and services into new markets like corporate video, sports, events and venues.

But it’s a two-way street. The market beyond traditional broadcast and media companies creates a valuable opportunity for vendors able to address it with the right products and go-to-market strategy. At the same time, vendors with their roots in the pro AV market are increasingly finding customers among established broadcasters and studios for tools like SaaS MAM, PTZ cameras and software-based production tools, often at much lower price points.

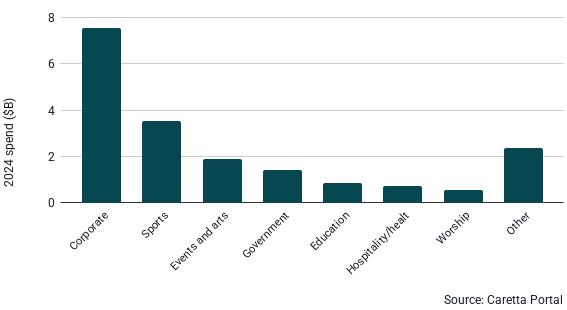

Within AV broadcast, corporate buyers (everything from banks to software developers to retailers) account for nearly half of the market. Video has quickly become an essential and integral part of business, with the key use cases including:

• Enhancing meeting room tech, for example, with multi-PTZ camera and mic setups;

• Producing high-impact internal and external company events, from town halls to product announcements and investor events;

• Creating marketing videos for social media platforms or digital signage displays;

• Delivering compelling product videos for e-commerce.

Sports is the next biggest segment, driven by clubs, leagues and federations producing and distributing their own content—making full use of unexploited rights and the rising importance of direct-to-fan content and engagement, plus the importance of in-stadium content.

Events and performing arts venues and organisations create the third largest market segment. After that, it’s government, education, hospitality, healthcare and houses of worship that make up the addressable market for AV broadcast. (fig 2)

AV BROADCAST SPENDING PATTERNS

By far the biggest spend in AV broadcast is on services. Over half of it goes on external rental of production resources for the many corporate customers that have infrequent video production needs, or that recognise the importance of hiring experienced staff for important events. Other product segments that over-index for investment by AV broadcast buyers compared with traditional broadcast buyers include content acquisition (cameras and lighting), live production, sports tech and video data and analytics. (fig 3)

AV BROADCAST USE CASES AND PRODUCTS

Drill down further, and investment priorities and AV broadcast use cases vary between buyers:

• Corporate tech buyers are more likely to buy content acquisition, production and post services to produce internal and external corporate comms and events.

• Sports buyers tend to buy live production services and sports data, with the focus on delivering direct-to-fan content and fan engagement.

• Events and performing arts organisations are investing in professional services, live production and post-production for producing and distributing event-based content.

We’ve identified four main hurdles for AV broadcast buyers and media technology vendors looking to sell to them.

1. Pro AV buyers have an information gap: Video technology isn’t usually a core business for pro AV buyers, with organisations having a diverse range of experience within their AV teams. Many decision makers are more familiar with pro AV-focused tools than those offered by broadcast and media vendors.

2. Media tech vendors don’t always fully understand the new market: The pro AV market has different workflows, requirements and budgets from broadcast and media. For example, many buyers demand tools that are easily used by those inexperienced with video production. Access to reliable data revealing exactly which types of buyers are investing in which tools for which workflows is essential for vendors wanting to successfully sell to pro AV users. Different products, specs, positioning, and (lower) pricing are commonly needed.

3. Routes to market are not the same for all buyer types: While broadcast and media technology sales are typically direct, or via dedicated broadcast system integrators, channels to market in pro AV are far more complex. Tier 1 buyers may still engage directly with vendors, while distributors, resellers and installers all play a vital role for the majority of other corporate buyers. Understanding the channels and creating the right partnerships is crucial for AV broadcast buyers and vendors.

4. Marketing language doesn’t translate from broadcast and media to pro AV: Buyers at broadcasters tend to focus on performance, specs and ROI of the production tools in their own right, while pro AV buyers are much more focused on the business outcomes their video tools are enabling. They are far more concerned about ensuring their meetings flow smoothly, and products, or the CEO, are on screen reliably, and the corporate reputation is maintained.

Messaging for a broadcaster might be: “With Native IP, SMPTE ST 2110 delivers output directly from the camera for highest picture quality and operational agility…” While messaging that resonates with pro AV buyers is more likely to be: “Make your boardroom meetings, internal comms and external presentations more dynamic…”

At Caretta Research, we treat the media and broadcast market and the AV broadcast market as a continuum. In Caretta Portal we now carry comprehensive data for technology buyers from across all industries, and track deployments and market sizing for products with a broadcast heritage alongside those coming from the pro AV world. But the go-to-market strategy for vendors and the buying approach for technology users are very different. Success in pro AV depends on understanding those market dynamics: new competitors, more complex sales channels, different messaging and price points, and new types of decision makers.

The Pierra Menta is an international ski mountaineering competition held every March since 1986 in Arêches-Beaufort in Savoie, France, on the slopes of the Grand Mont in the heart of the Beaufortain region. The course covers a total of 10,000 metres of elevation gain over four days, and over 600 elite athletes compete in teams of two in one of the world’s most demanding and exhilarating ski mountaineering races. The Pierra Menta is a stage of “La Grande Course”.

HighLive is a videographers’ collective specialising in mountain productions. For over 20 years, they have been making films for television and digital on the Pierra Menta. The idea of a live broadcast of this legendary race was born three years ago. HighLive and Favoriz joined forces to meet the challenge.

France-based Favoriz Production is an agile audiovisual production company delivering complete technical support for live events, corporate film production, and television content. Known for its innovative approach to filming in remote and outdoor environments, Favoriz specialises in covering high-impact mountain events.

“Having already worked with Haivision solutions on several projects in similarly challenging environments, we were very positive that we could provide viewers with exciting footage of the Pierra Menta competition. Haivision technology is extremely agile and the powerful cellular bonding features of its mobile contribution devices allowed us to deliver reliable and dynamic footage to viewers in one of the most remote locations in the country,” says Mickaël Favard, director, Favoriz Production.

Pierra Menta’s challenging vertical course is considered one of the most demanding events of its kind, both for athletes and the broadcast team. Given the extremely remote nature of the race environment, getting the necessary broadcast equipment and team members in place was a massive logistical challenge. There were several major obstacles for the Favoriz team to overcome, including:

• The need to build and transport their own mobile broadcast infrastructure to contribute live video throughout the course, as a traditional fixed set-up was not feasible due to the lack of accessibility in the mountain terrain.

• A very limited and unstable cellular connection: above 2,000 metres, the public cellular network no longer functions.

• The geographical challenges of sending return video feeds from the mountain to an OB van in Arêches-Beaufort and a production facility in Grenoble.

Production company Favoriz needed a way to provide coverage of the climb and descent with a flexible and reliable workflow that could deal with these remote and extreme conditions.

The Favoriz team built a versatile and agile broadcast workflow consisting of Haivision mobile video solutions deployed throughout the course. This enabled them to capture the emotion of the participants, with dynamic angles from up close on the mountain and dramatic perspectives from the air. Combined, these created exhilarating footage of the competition.

Two Haivision Pro mobile video transmitters were attached to JVC cameras on the ground, along with four roaming mobile devices with the Haivision MoJoPro smartphone camera app installed to follow the racers throughout the course. This gave viewers captivating views of the skiers and the competition, immersing them in the struggle of the climb and descent.

The compact and ultra-portable Haivision Air mobile video transmitter was attached to a drone to capture unique aerial footage of the course. Two additional drones leveraged mobile devices and MoJoPro for supplementary aerial coverage under 2,000 metres. Smartphones with the MoJoPro camera app were also deployed in the Fanzone to capture live fan reactions and on a motorcycle for action shots of the competition.

Video from sources under 2,000 metres used Haivision’s Safe Streams Transport (SST) protocol to transport video over bonded cellular networks. To transmit video above an altitude of 2,000 metres from the helicopter, Favoriz leveraged Starlink satellite technology and the Haivision Pro’s video streaming technology to send signals from the extreme elevation down to its endpoint.
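The altitude-based split described above can be pictured as a simple routing rule. The following is only an illustrative sketch, not Favoriz’s or Haivision’s actual logic: the `Source` type, the `choose_uplink` function, the uplink labels and the example altitudes are all hypothetical. Only the 2,000-metre threshold and the two uplink types come from the text.

```python
from dataclasses import dataclass

# Threshold taken from the article: the public cellular network is
# reported to stop working above 2,000 metres.
CELLULAR_CEILING_M = 2000


@dataclass
class Source:
    name: str          # hypothetical label for a camera position
    altitude_m: float  # altitude of the source in metres


def choose_uplink(source: Source) -> str:
    """Return the uplink a source would use under the article's rule."""
    if source.altitude_m > CELLULAR_CEILING_M:
        # No usable public cellular coverage: fall back to satellite.
        return "starlink"
    # Cellular coverage available: bond the networks and use SST.
    return "bonded-cellular-sst"


if __name__ == "__main__":
    # Example altitudes are invented for illustration.
    for src in (Source("fanzone-phone", 1100), Source("helicopter", 2600)):
        print(src.name, "->", choose_uplink(src))
```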

Video streams were sent to two Haivision StreamHub receivers located in a production facility in Grenoble and an OB truck in Arêches-Beaufort for post-production and distribution.

Leveraging Haivision's mobile contribution solutions, Favoriz delivered high-definition video of the competition to a global audience powered by:

• Ultra-reliable and ultra-low latency video contribution in a difficult and unpredictable environment

• An agile and flexible deployment of mobile solutions across a geographically diverse, multi-day course

• Robust cellular bonding capabilities from Haivision Pro mobile video transmitters with public cellular networks at lower altitudes and Starlink connections at higher elevations

Favoriz successfully produced an immersive experience of the Pierra Menta with a broadcast-quality and cost-effective live production. Despite the remote and extremely elevated area, Haivision’s agile ecosystem of mobile video contribution solutions was able to deliver an exciting viewing experience to the audience.

The discovery, registration and connection management aspects of the Networked Media Open Standards initiative are now well-established—but there’s much more to NMOS than that, writes David Davies

The last time TVBEurope checked in for a full update on NMOS was in early 2024, when parent organisation AMWA had just released details of the NMOS Control specifications. Collectively, NMOS Control Architecture (MS-05-01), NMOS Control Framework (MS-05-02), NMOS Control Protocol (IS-12) and NMOS Control Feature Sets Register meant that NMOS was now able to deliver a full IP control plane solution with operational control, device configuration, monitoring and more.

Since then, AMWA has opted to separate the NMOS specifications into two main branches: NMOS Connect and NMOS Control. The former includes the two specifications that had previously dominated the discussion of NMOS: IS-04 for discovery and registration and IS-05 for device connection management. They dominated in large part because they also provide the discovery and connection management parts of SMPTE ST 2110, the group of standards for IP network media that have been widely adopted in broadcast. But now with NMOS Control, which includes the aforementioned control plane specs, awareness of NMOS’ full scope of capabilities is growing.

Cristian Recoseanu, development tech lead at broadcast automation, playout and management solutions company Pebble, has been a key participant in the NMOS project for many years, and remarks: “The discovery and connection management solutions have enabled the discovery of ST 2110 equipment on an IP infrastructure, to see it in an NMOS registry, and then make connections to it. That is essentially the capabilities that you get with the NMOS IS-04 and IS-05 specifications. But since they were published, the number of specifications and areas we have been working on has increased exponentially, and many more people should be made aware of that.”
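To make the “see it in a registry, then connect to it” flow more concrete, here is a minimal Python sketch of the two requests a controller would typically prepare: an IS-04 Query API lookup of senders, and an IS-05 request that stages and activates a connection on a receiver. The host names, the API versions (v1.3 and v1.1) and the helper function names are assumptions for illustration; a real controller would also need to send the requests, handle errors and fetch the sender’s SDP file.

```python
import json

# Assumed API versions; real devices advertise the versions they support.
QUERY_VERSION = "v1.3"
CONNECTION_VERSION = "v1.1"


def senders_query_url(registry_base: str) -> str:
    """URL of the IS-04 Query API resource listing registered senders."""
    return f"{registry_base.rstrip('/')}/x-nmos/query/{QUERY_VERSION}/senders"


def staged_receiver_url(node_base: str, receiver_id: str) -> str:
    """URL of the IS-05 'staged' endpoint for a single receiver."""
    return (
        f"{node_base.rstrip('/')}/x-nmos/connection/{CONNECTION_VERSION}"
        f"/single/receivers/{receiver_id}/staged"
    )


def connect_request(sender_id: str, sdp: str) -> dict:
    """Body of an IS-05 PATCH that connects a receiver to a sender at once."""
    return {
        "sender_id": sender_id,
        "master_enable": True,
        "activation": {"mode": "activate_immediate"},
        "transport_file": {"type": "application/sdp", "data": sdp},
    }


if __name__ == "__main__":
    # Hypothetical hosts and IDs, used only to show the shape of the calls.
    print("GET", senders_query_url("http://registry.example"))
    print("PATCH", staged_receiver_url("http://node.example", "receiver-uuid"))
    print(json.dumps(connect_request("sender-uuid", "v=0 ..."), indent=2))
```

Building the URL and body as plain functions keeps the sketch independent of any particular HTTP client.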

For example, Recoseanu points to two work-in-progress ‘best common practices’ (BCPs). BCP-008-01 and BCP-008-02 contain standard methods of monitoring the statuses of receivers and senders, respectively, providing users with standard models, guidance, expectations and requirements for minimum status reporting. By allowing broadcast engineers to ascertain the health of critical parts of the network, it becomes much easier to debug problems and identify potential weaknesses.

The two specifications also exemplify the NMOS teams’ ongoing commitment to outreach, which involves speaking to end-users to determine their precise requirements. “As with previous specifications developed by AMWA, we spoke extensively to end-users, who had largely been faced with not having technical specs in this area, and they suggested the minimum requirements they would be happy to have, which would help them solve 95 per cent of the problems they face. We then had to work really hard on the modelling framework and develop the specifications in such a way that they respected the requirements and ‘red lines’ outlined by the users.”

Recoseanu adds that the receiver/sender status specifications are now in “their final phases of development; we’re working on the test implementations and expect to be able to publish them soon.” There is also a white paper related to the development of BCP-008-01 and BCP-008-02 that provides useful “use cases and scenarios”.

Across its specifications, NMOS effectively addresses many of the requirements pertaining to the present-day broadcast ecosystem. For instance, the current geopolitical outlook (characterised by profound global instability, rising numbers of cyberattacks, and threats to broadcast organisations) means that many content services are reviewing their security infrastructures. One of the earlier BCP specifications, BCP-003-01, documents best practice for secure transport related to NMOS API communications. Recognising that a secure control plane and sufficient encryption are essential, BCP-003-01 (and related specs BCP-003-02 and BCP-003-03) allows for interoperability using widely adopted technologies, such as TLS 1.2 or better for HTTP and WebSocket messages.
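As an illustration of what “TLS 1.2 or better” means on the client side, the sketch below uses Python’s standard `ssl` module to build a context that refuses older protocol versions. It covers only the minimum-version point mentioned in the text; the function name is hypothetical, and BCP-003-01’s other provisions are not modelled here.

```python
import ssl


def nmos_client_context() -> ssl.SSLContext:
    """TLS client context that rejects anything older than TLS 1.2."""
    # The default context verifies server certificates and host names.
    ctx = ssl.create_default_context()
    # Enforce the "TLS 1.2 or better" floor explicitly.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


if __name__ == "__main__":
    ctx = nmos_client_context()
    print("Minimum TLS version:", ctx.minimum_version.name)
```

A context like this can be passed to an HTTPS client for API calls; WebSocket libraries generally accept an `SSLContext` in a similar way, though the exact parameter depends on the library.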

Security and encryption will continue to be high priorities as the NMOS specifications develop, underlining the fact that, for broadcasters and other media organisations, the preservation of their content is always paramount.

Meanwhile, NMOS continues to raise awareness of NMOS Control. Whilst this aspect has undoubtedly contributed to the present sense of momentum around the standard, there is also a recognition by AMWA proponents that the best kind of awareness revolves around practical demonstrations and hands-on experience. Therefore, demos and training about NMOS Control are set to play a decisive role in the next phase of NMOS.

Stefan Ledergerber is founder and owner of Simplexity, a company providing consulting and training on audio/video over IP systems, and a contributor to standards initiatives including AES67, SMPTE ST 2110 and NMOS. “I think a lot of people already have a strong theoretical knowledge of NMOS, but now they are increasingly beginning to convert that into practical experience, including via the growing number of training sessions being organised by AMWA.”

The annual calendar of trade shows and industry events remains crucial to spreading the word about NMOS. In addition, Recoseanu and Ledergerber highlight the role of the IPMX (Internet Protocol Media Experience) open standards, which are growing in popularity and build upon some existing specs and standards, including NMOS, ST 2110 and AES67, to offer an approach to IP networking that is especially relevant to pro AV applications, with additional provisions for control, copy protection, connection management and security.

“It's definitely an exciting time for NMOS as these specifications begin to be more widely understood and implemented, due to their inclusion in ST 2110 and IPMX,” adds Ledergerber. “NMOS is out there in the world, delivering for a wide range of customers, and some very exciting times lie ahead.”

For more information on NMOS, including videos about individual specifications and the overall project, please visit https://www.amwa.tv/

As demand for high-quality, low-latency video and audio continues to rise across corporate, education, and entertainment sectors, the pro AV market is undergoing rapid transformation. Organisations are increasingly looking for flexible, scalable solutions that match the sophistication of traditional broadcast infrastructure while offering the ease of integration and agility of IP networks.

At the forefront of this evolution is EvertzAV, the pro AV division of Evertz, which has leveraged decades of broadcast innovation to build the next generation of AV over IP (AVoIP) solutions. EvertzAV has extended its industry-leading Software Defined Video Networking (SDVN) platform into the pro AV space with the introduction of IPMX-ready products. These additions bridge the gap between broadcast and pro AV workflows, delivering professional-grade audio and video with the flexibility and interoperability needed for modern environments.

IPMX (Internet Protocol Media Experience) is quickly becoming the standard for interoperable AVoIP solutions. Developed as an open specification based on the proven SMPTE ST 2110 standard, IPMX is tailored to meet the unique needs of the pro AV sector. It enables unified, scalable, and non-proprietary AV workflows across diverse systems and use cases.

“IPMX represents a transformative leap forward in AV-over-IP,” says Paulo Francisco, vice president of engineering at EvertzAV. “We are fully committed to advancing the adoption of IPMX through innovation and rigorous testing to ensure robust, real-world interoperability. As we look ahead, our roadmap includes expanding our IPMX-ready product line to support both pro AV and broadcast markets with reliable, standards-based solutions.”

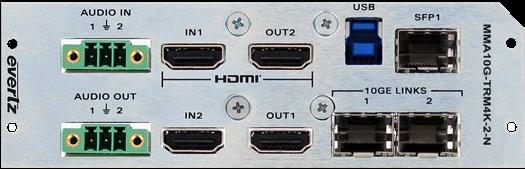

EvertzAV’s new MMA25G line of gateways is purpose-built to support UHD (4K60/4:4:4) media workflows. These high-performance devices offer:

• On-the-fly JPEG XS and RAW processing for pristine image quality

• Configurable transport with dual or single 25GbE (with full redundancy)

• Up to dual IP encode and decode, depending on the model

• Live transcoding for backward compatibility with MMA10G

• Seamless interoperability with SMPTE ST 2110-20/-22 and full alignment with IPMX standards

With live transcoding capabilities, MMA25G allows operators to ingest RAW UHD streams, encode to JPEG XS, and distribute signals across MMA10G and other IPMX-ready systems. This seamless compatibility removes the friction often encountered when integrating next-generation systems with existing infrastructure.
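A rough calculation shows why compression matters when a UHD signal has to cross from 25GbE to 10GbE equipment. The sketch below estimates the active-picture bit rate of an uncompressed 4K60 4:4:4 stream. The 3840x2160 raster and 10-bit sample depth are assumptions (the text does not state them), and the figure ignores blanking and packet overhead, so it is indicative only.

```python
def active_video_gbps(width: int, height: int, fps: int,
                      bits_per_sample: int, samples_per_pixel: int = 3) -> float:
    """Active-picture bit rate in Gb/s (no blanking, no packet overhead)."""
    bits_per_second = width * height * fps * bits_per_sample * samples_per_pixel
    return bits_per_second / 1e9


if __name__ == "__main__":
    # Assumed format: 3840x2160, 60 fps, 4:4:4 (three samples per pixel), 10-bit.
    rate = active_video_gbps(3840, 2160, 60, 10)
    print(f"Uncompressed UHD 4:4:4: {rate:.2f} Gb/s")
    print("Fits a 25 Gb/s link:", rate < 25)
    print("Fits a 10 Gb/s link:", rate < 10)
```

Under these assumptions the stream is roughly 14.9 Gb/s, which fits within a nominal 25 Gb/s link but not a 10 Gb/s one. That is consistent with encoding to JPEG XS before handing the signal to 10GbE devices.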

MAGNUM-OS, Evertz’s powerful orchestration, monitoring, and control platform, is both the NMOS Registry and Controller for the MMA25G product line. MAGNUM-OS provides centralised, secure, and intuitive management across MMA25G, MMA10G, and third-party devices, simplifying operations and enhancing reliability for complex AVoIP workflows.

Francisco adds, “Under MAGNUM-OS control, the MMA25G family delivers a truly unified experience, bridging broadcast and pro AV through a common, standards-based platform.”

Building on the trusted MMA10G legacy, EvertzAV’s IPMX-ready MMA25G family reflects the company’s commitment to driving open standards and innovation. As an active member of the Alliance for IP Media Solutions (AIMS) and a contributor to the IPMX standard, Evertz continues to lead the way in enabling interoperable, future-ready AV ecosystems for every industry.

Visit https://av.evertz.com

The worlds of pro AV and broadcast are undergoing a significant transformation, driven by shared technological advancements and evolving audience demands. As the lines continue to blur, a new landscape for content creation and distribution is emerging. We speak to industry experts to explore the key trends and technologies reshaping workflows

ANDY BELLAMY, technical director, EMEA, AJA Video Systems: AI is looming large in media and entertainment (M&E). It’s a fast-growing space that AJA is tracking. We’re hearing of AI being deployed to help accelerate everything from common editing applications to logging clips and film and TV restoration.

IP and virtualisation are also topics of conversation right now, both introducing new possibilities for M&E and pro AV workflows. IP is ushering in the next era of connectivity for production and post, with SMPTE ST 2110 quickly gaining favour for its ability to transmit uncompressed audio and video. At the same time, the virtualisation of traditional hardware within a computing framework is opening new doors, helping to reduce the physical footprint of productions.

Also, the further democratisation of high-end production tools is fuelling the continued growth of the creator economy. Technology is advancing so rapidly and becoming so accessible that nearly anyone with an iPhone and an editing app can create and stream compelling content in no time. As a result, a whole new world of visual storytelling is making its way to audiences that might never have seen it before.

JOHN HICKEY, senior director, R&D and KVM systems, Black Box: The media and entertainment industry is being reshaped by hybrid workflows, cloud migration, growing demands for remote and virtual access, and increased experimentation with AI. Content providers are navigating challenges around latency, scalability, and security while responding to the shift toward distributed teams and virtualised environments.

There’s a strong push for flexible infrastructure that supports both physical and cloud-based systems. Control systems are evolving to enable centralised, software-defined oversight, which supports realtime responsiveness and efficient collaboration across geographies. These trends are fostering more agile operations, paving the way for a smarter and more sustainable production environment.

PAULO FRANCISCO, vice president of engineering, EvertzAV: Several key trends are currently shaping the media and entertainment industry, including content creation, content distribution, and industry consolidation. These developments are driving the rapid adoption of IP-based and cloud-enabled workflows, enabling greater scalability and flexibility across organisations.

In terms of content creation, audiences are demanding more personalised experiences—ranging from live sporting events to podcasts. This is accelerating the need for resilient, modular, IP-based infrastructures that support hybrid cloud and remote production workflows. Such setups allow for real-time collaboration over both managed and unmanaged networks, from virtually anywhere.

Streaming and direct-to-consumer models are empowering content creators to reach audiences on any device, at any time. At the same time, growing expectations for seamless, always-on content delivery are shifting the industry’s focus toward disaster recovery, business continuity, and infrastructure upgradability.

Finally, personalisation, advanced analytics, and hybrid skill development have become critical. Users are increasingly seeking more control and tailored experiences, whether through AV systems or broadcast solutions, making adaptability and audience insight essential for success.

MARK HORCHLER, marketing director, products and solutions, Haivision: Broadcasters are under pressure to deliver high-quality content faster and more efficiently. Technologies such as remote production, IP transition, 5G, and cloud-based workflows are enabling more flexible, scalable, and reliable live production workflows, bringing broadcasters more ways to produce engaging content.

A good example of this is the widespread adoption of the SRT (Secure Reliable Transport) protocol, originally developed and open-sourced by Haivision. SRT enables low-latency, high-quality video streaming over unpredictable networks, including the public internet, allowing broadcasters to cost-effectively transport live content from virtually anywhere with enhanced reliability and security, which is critical in today’s remote and hybrid production environments.

DAN PISARSKI, CTO, LiveU: The broadcast media landscape is undergoing a profound transformation, driven by the rapid adoption of digital platforms and cutting-edge technologies like AI and 5G. This has reshaped consumer media consumption–from more ‘traditional’ formats like linear TV to formats such as podcasts, social media platforms and ‘social video’ (like YouTube and Twitch). This has brought in new names and personalities as sources of both news and entertainment, but it is also an opportunity for traditional media producers who can adapt to creating content tailored to these formats, particularly for younger audiences. We’re also seeing the digital democratisation of broadcasting, which is levelling the playing field, particularly in sports. The surge in online viewership and widespread access to digital platforms has given lower-division clubs and niche sports the tools to deliver broadcast-grade content at a fraction of traditional costs.

Leveraging remote, on-site, or fully cloud-based production workflows, these entities can scale their operations efficiently without sacrificing quality. IP-based production technologies enable even the smallest teams to engage directly with digitally native audiences, particularly Gen Z and millennials, who crave authentic and personalised narratives. This shift isn’t just about accessibility, it’s about strategic transformation, allowing clubs and teams to grow their reach, deepen fan engagement, and build sustainable digital content ecosystems.

ANTHONY ZUYDERHOFF, executive director global sales, Riedel Communications: The media and entertainment industry is steadily shifting toward more agile, efficient, and distributed production models.

A major trend is the move from traditional, proprietary hardware to software-defined workflows built on commercial off-the-shelf (COTS) systems. This enables teams to produce from virtually anywhere, with remote and hybrid models gaining wider adoption. Driving these changes is also a clear need to ‘do more with less.’ As demand for content grows across platforms and formats, broadcasters and AV professionals are turning to scalable, modular, and IP-based systems that reduce infrastructure requirements while maintaining high quality and increasing operational and financial flexibility.

Altogether, these developments signal a deeper shift toward IT-centric production environments—where software, virtualisation, and distributed tools are key for building flexible, cost-effective, and future-ready workflows.

Andy Bellamy: Both industries are drawing inspiration from one another, with AV professionals borrowing broadcast technologies and strategies to uplevel the quality of content they can deliver to audiences, and broadcasters taking lessons out of the pro AV playbook to make their content more immersive.

4K, 8K, and HDR technologies are providing new ways for both industries to entertain and engage audiences with greater visual appeal. Digital signage is one area where this development is evident. Nearly anywhere you go today, you can find a digital display, be it a roadside billboard, EV charging station, the underground or airport, a restaurant, the gym, etc.

Given the high resolutions and graphics capabilities of modern displays, more brands are leveraging them to share broadcast-quality imagery and video with audiences. We’re also beginning to see more broadcasters embrace virtual and augmented reality technologies, which have proven popular in AV for some time and similarly require higher-resolution content development.

Fibre technology has also become an important consideration across both industries, as it can help feed the content that reaches these displays, with support for large raster sizes and high resolutions. Then, there is IP, which is also bringing broadcast and AV together, though approaches vary. Whereas a broadcast facility may adopt SMPTE ST 2110, an OB truck, House of Worship, or high school may opt for NDI or Dante. Both industries, however, are turning more to IP for greater long-term scalability, with many taking a hybrid approach that requires a solution for bridging between the worlds of baseband and IP.

Camera and streaming equipment accessibility in recent years is also playing a role in this convergence. More corporations, universities, concerts and sports venues, and other AV environments are investing in broadcast-like facilities and production studios to create and deliver content to audiences that rivals that of film and broadcast. At the same time, some broadcasters are experimenting with less-expensive prosumer cameras previously used in AV environments where budgets ran tight. With broadcast and pro AV now both capable of supporting higher resolutions and colour spaces, the sky is really the limit. I expect to see production quality continue to increase and 4K and HDR soon become the norm across both industries, providing more vivid, detailed, and engaging content.

John Hickey: The convergence is being driven by IP-based control systems, virtualisation, and remote access technologies. Solutions like Black Box’s Emerald IP KVM allow access to both physical and virtual machines across LAN, WAN, and internet, supporting workflows in both broadcast and pro AV environments. These technologies eliminate the need for proprietary matrix switches and cabling, enabling high-performance control from virtually any location, which is crucial for hybrid operations. Enhanced interoperability, smart multiview interfaces, and API-driven automation also reduce friction between traditionally separate industries by offering scalable, flexible, and cost-efficient integration.

Paulo Francisco: The emergence of IPMX (Internet Protocol Media Experience) in the professional AV space is a major driver of convergence between broadcast and pro AV workflows. As a suite of open standards and specifications, IPMX builds on the media and entertainment industry’s ongoing transition to IP using SMPTE ST 2110. It allows the pro AV market to leverage broadcast-quality technologies, such as cameras, switchers, and routing infrastructure, for a wide range of applications, including (but not limited to) corporate live production, command centres, and hybrid event environments.

Mark Horchler: The growing use of IP-based video and cloud technology is bringing pro AV and broadcast workflows closer together. Technologies like SRT, ST 2110 and NDI enable the secure, low-latency delivery of high-quality video over IP networks, including local area networks (LAN) or the internet, making it easier for AV teams to utilise broadcast equipment and apply high levels of reliability and performance to corporate, education, and other types of live video productions and use cases.

Dan Pisarski: The epicentre of this convergence is the cloud. Pro AV was naturally faster to embrace a number of cloud-centric techniques, such as video contribution over unreliable internet, cloud distribution, and cloud production. As these techniques have migrated to pro broadcast, many of the technologies that underpin them came along with the migration, including technologies such as NDI and SRT, and specific product examples such as StreamDeck. In some cases, these technologies were better positioned to serve broadcast needs than other broadcast standards and technologies–a good example would be NDI’s use in cloud private networks, a place SMPTE ST 2110 was not ready to play.

Anthony Zuyderhoff: The convergence between pro AV and broadcast is being driven by shared adoption of software-defined tools, IT-centric infrastructure, and distributed workflows. As pro AV applications expand from in-room experiences to global distribution–think concerts, corporate events, or second-screen experiences streamed to remote audiences–the technology requirements start to resemble those of traditional broadcast.

At the same time, both industries are aligning more closely with the IT world, with cloud, IP protocols, and scalable networked systems providing the flexibility and versatility required. As the traditional boundaries between AV and broadcast blur, it is not just a merging of tools–it is a convergence of workflows, expectations, and the broader digital ecosystem.

HOW DO YOU SEE THE ABOVE INFLUENCING CONTENT PRODUCTION ACROSS BOTH INDUSTRIES?

John Hickey: Content production is becoming more agile, collaborative, and geographically distributed. IP-native systems allow teams to access, monitor, and manage content in real time, regardless of location, which facilitates faster production cycles, streamlined post-production, and greater adaptability in live environments. This also enables smarter workflows, as operators can share virtual machines, reduce infrastructure overhead, and manage different sources from a single workspace.

The result is a significant boost in operational efficiency and scalability, enabling production environments to evolve with less disruption and more creative freedom.

Paulo Francisco: With IPMX as an open standard for pro AV— designed to be compatible with SMPTE ST 2110, used in broadcast facilities—professional-grade workflows are becoming more accessible and flexible for the pro AV community. In both AV and broadcast environments, teams can now create, route, and distribute content from virtually anywhere, to any device or platform.

Whether it’s a global live stream or a corporate town hall, the traditional boundaries between broadcast studios and AV environments are dissolving—empowering more users to produce high-impact, professional content with ease.

Mark Horchler: We’re seeing AV and broadcast workflows converge in meaningful ways, especially in how content is captured and delivered. AV teams are adopting broadcast-level tools to elevate the quality of live events, while broadcasters are embracing the flexibility of AV workflows by using software, cloud, and IP video technologies to streamline production.

For example, broadcasters are increasingly using cloud platforms to manage live contribution remotely, a capability long utilised in AV environments through software-based and remotely operated systems. This convergence has led to the rise of mobile, cloud-enabled, and hybrid production models that prioritise speed, scalability, and efficiency.

Dan Pisarski: The overall trend of media outlets broadcasting on social media, phone viewership replacing large screens, and pro AV-originated technologies used for production (such as cloud video switching and vision mixing) has sometimes led to the conclusion that ‘quality matters less,’ but this is not quite correct. In fact, there is an overall trend towards high-quality video on all these platforms–YouTube supported 4K video long before some broadcast outlets used it, 1080p is the de facto standard and interlacing is never used, etc.

However, these technologies do allow for a type of production that some broadcast tech is not easily adapted to–a more personal and opinionated type of production. Hand in hand with this style of production are techniques like wireless contribution (particularly over bonded cellular), use of body-worn cameras and drones, and use of long, relatively static shots. When you compare some trends in broadcast tech such as 2110, 25 or 100 Gbps networking, and vision mixing in hardware, you start to see the mismatch between the direction production is going and what some broadcast tech considered “important problems to solve”.

John Hickey: The convergence could become near-complete, with pro AV and broadcast professionals relying on a unified, software-defined control infrastructure. The need for specialised, industry-specific hardware is diminishing as IP and virtualisation take precedence. Given common goals such as low latency, high resolution and remote operation, shared tools and practices will increasingly dominate, fostering interoperability, standardisation, and cross-industry collaboration.

Paulo Francisco: From a technological standpoint, the convergence between broadcast and pro AV will be significant. An increasing number of pro AV applications now demand the same level of technology used in broadcast, where the reliable delivery of high-quality video and audio to a global audience is no longer optional—it’s a core requirement.

That said, it’s important to recognise that pro AV users are fundamentally different from broadcast engineers. They expect greater simplicity, intuitive interfaces, and more cost-effective solutions. While the underlying technologies will converge—enabling interoperability and unlocking creative potential across enterprise environments—the final product offerings must be tailored to the unique needs and expectations of each market.

Dan Pisarski: There will always be a role for ‘broadcast tech.’ There’s a popular saying that, ‘They’re never going to use X at the Super Bowl,’ where ‘X’ is your pick of pro AV tech. Additionally, while viewership is shifting, it will not ‘go to zero’ on more traditionally produced and distributed programmes. But, the trend has not slowed yet—if anything, in 2025, it is accelerating.

This convergence is driven primarily by the widespread adoption of IP-based workflows. IP technology enables scalable, flexible, and interoperable deployments across different environments, from live television studios, live music and corporate events to digital signage networks.

Both industries now leverage IP-powered remote production and control systems, which facilitate distributed operations, reduce onsite personnel requirements, and support collaborative workflows. The use of common protocols, such as NDI, creates common ground for toolsets, skill sets and platforms across broadcast and pro AV. This is producing a new era of converged systems that combine the reliability and quality standards of broadcast with the flexibility and scalability demanded by modern AV applications.

John Hickey: Broadcast technology is setting a new standard for quality, reliability, and control within pro AV. Innovations such as low-latency IP KVM, centralised management, and smart multiview receivers are enabling pro AV environments to scale more efficiently, support distributed teams, and deliver higher-fidelity outputs. Technologies developed for live production and playout are now powering control rooms in transportation, health care, security, and corporate AV, where responsiveness and uptime are critical. As pro AV borrows more from broadcast, we’re seeing more streamlined workflows, more sophisticated control, and better user experiences across sectors.

Paulo Francisco: The role of broadcast technology leaders can—and should—be significant. With SMPTE ST 2110 serving as the foundation for IPMX, companies like EvertzAV, which bring decades of experience deploying broadcast solutions, are uniquely positioned to lead this transition. That deep expertise provides a valuable head start in understanding the needs and challenges of the pro AV market, along with proven, reliable approaches to addressing them.

Mark Horchler: Broadcast technology is playing an increasingly important role in the pro AV space, particularly as expectations for video quality, reliability, and low-latency performance rise. Whether for public events, internal communications, or education, AV teams are now aiming for a high-quality and broadcast-quality experience from any type of viewing device. As a result, there's greater demand for secure and reliable video-contribution solutions. By deploying scalable and flexible live broadcast tools, AV professionals can produce and deliver great content with maximum impact.

Dan Pisarski: One area where the flow of ideas has gone in the other direction is around the use of ‘cinematic’ styles of camera and camera lens. The ‘live cinema’ look has rapidly gained popularity in sports production and has begun to bleed into things like long-form social video. For example, some ‘In Real Life’ streamers on Twitch use cinema-like lenses on DSLR cameras (on gimbal mounts) to achieve depth of field and cinema-like framing. There will certainly be other examples where good ideas from both sides get cherry-picked by the other.

AWARE OF ON THE TOPIC OF

Andy Bellamy: While bringing broadcast and pro AV technologies closer together is revealing incredible new opportunities, interoperability challenges remain a blocker to true convergence. Facilities and professionals on both sides must manage and work with an ever-growing range of technologies and standards, and it’s important to be able to leverage the right standard within the appropriate technological framework.

While there are tools to help bridge the gaps, a lot of technological workarounds are often required. Both broadcast and pro AV share a strong technological foundation, which is a good start, but broadcast standards need to better reflect the needs of AV for continued advancement.

John Hickey: Long-term, sustainability and energy efficiency will play a greater role in infrastructure decisions, especially as virtualised workflows reduce physical hardware needs and end users become more conscious of their environmental impact. Cybersecurity is another key consideration for the media and entertainment industry; companies need to protect their content and infrastructure and must investigate which practices and technologies are optimal for their specific needs.

Dan Pisarski: An important question when looking at the mix of technologies is around latency and expectations of latency. Broadly generalising, a lot of pro AV tech, as well as general cloud video tech and ground-to-cloud solutions, rely on the compressed domain, which always involves higher latency than uncompressed. Technology is rapidly evolving to help combat this, and it is already clear that technology that helps eliminate or reduce latency–such as the emerging Media over QUIC protocol–will be adopted faster than technologies that don’t improve total latency. Another area is security.

Perhaps somewhat ironically, pro AV tech and general ‘internet video’ technology have had to focus on this area because the very nature of sending anything over the internet demands a lot of security. Some broadcast tech has assumed closed ecosystems and private networks and is now playing catch-up on security needs.

Spedeworth TV is a leading motorsport VoD platform, delivering thrilling live and on-demand coverage of Hot Rod, Stock Car and Banger Racing events every weekend throughout the racing season. As the appetite for high-quality, dependable live streams has grown, especially during major international events like the recent National Hot Rods World Championship, the platform sought a more agile, scalable remote production solution to support its evolving needs.

While the platform boasts a passionate and growing fanbase, Spedeworth TV faced mounting challenges in scaling its live streaming infrastructure. The existing setup was increasingly unable to meet the demand for richer, more flexible content. Motorsport audiences now expect dynamic multi-angle views, instant replays, curated highlights, and behind-the-scenes access, all of which require a more robust production workflow.

In early 2025, Spedeworth TV turned to LiveU to revolutionise its live production infrastructure, enabling a massive step up in live coverage capacity. Building on a successful test run, Spedeworth TV deployed several LiveU LU300S units alongside LiveU Studio, the company’s cloud-native production service, as part of a lightweight cloud production setup. This powerful combination enables seamless live and on-demand streaming across its own digital platforms, as well as Apple TV, Roku, Amazon Firestick, and more.

The new LiveU lightweight production solution means that Spedeworth TV can now deliver live streams from any racetrack, at any time, with fewer resources, increasing engagement rates by 100 per cent. The system supports a multi-camera setup, capturing everything from fixed trackside cameras to roaming operators, aerial drone footage, and immersive onboard views–all synchronised and mixed using LiveU Studio at Summer Isle Films’ production facility in Suffolk, UK.

LiveU Studio’s Audio Connect tool keeps producers and camera operators connected in real time, ensuring smooth communication across sprawling race venues, enabling field equipment, production teams and mobile users with LiveU Control+ to all communicate within a single audio space. Instant replays and highlights packages are pulled directly from the ISO system, delivering adrenaline-pumping moments to fans faster than ever.

The cloud-based solution also unlocks new revenue streams by enabling Spedeworth TV to insert targeted ads and customise broadcast schedules, choosing which events to stream live. The result is a more efficient, scalable production workflow that keeps motorsport fans worldwide revved up with unparalleled access to the sport.

Tom Newman, Summer Isle Films’ creative director, says, “The LiveU solution has really accelerated what we're able to do, making it affordable to produce and watch. We can create a fantastic live viewing experience, which captures the atmosphere of the race.”

Newman also explains the LiveU solution has simplified Spedeworth TV’s workflow. “Prior to adopting LiveU’s cloud production solution, we had to spend money on bringing a team in to manage the live streams. It was a more complicated setup. When I spoke with the UK sales team, they told me about LiveU Studio cloud production and I knew very quickly that my crew, who are full-time editors, would pick up the workflow fast.

“It gives our team full control of the production; they can be more creative and they have the capability to explore new revenue generators. For example, for the big live streams like the recent National Hot Rods World Championship, we can approach businesses for sponsorship. Opportunities like this gain interest, and these impacts and successes are big wins for us.”

Newman mentions that while the production team utilises most of LiveU Studio’s tools, a few features truly stand out. “Before we go live now, we can package up content including behind-the-scenes footage such as pit interviews with drivers and send that out to our social media platforms, encouraging people to subscribe to watch the race. That’s an instant spike. We can do highlights, replays and onboards using mobile phones cable-tied to the roll cages of cars.”

He concludes, “Recently, while I was working away on another job, I noticed our sales light up. I realised the team had posted a feed straight out to Facebook. The power of being able to do things very quickly to increase sales is really good.”

By turbocharging its live streaming with LiveU’s cutting-edge cloud production technology, Spedeworth TV has transformed how it delivers motorsport content, going from a handful of live events to a full-throttle, flexible broadcast powerhouse that captures the thrill of racing for motorsports fans.

Visit LiveU at IBC2025, Stand 7.C19

Now that the fundamentals of IP networking have been established and accepted, a greater focus on network control & management is emerging – with the broadcast/pro AV convergence on course to be a major beneficiary, writes Ken Dunn

Over the past ten years, in particular, IP-based networking has become a default standard in pro AV as well as in broadcast. Thanks to technologies such as Dante, NDI and the ST 2110 standards suite, there are now plenty of tried-and-trusted approaches to moving audio, video and data over IP.

But despite these obvious advances, there has been a continued suggestion for some time that we might still be missing one or two pieces of the puzzle. In particular, end-users and integrators have wondered how best to control, monitor and manage IP networks so that they are secure, flexible, and as conducive as possible to stress-free operation.

Fortunately, there are several major initiatives – some already coming to fruition – that address these issues and, in effect, promise to close the circle for even the most demanding of IP-based operations. In this article, we’ll look at recent developments in NMOS and IPMX with the associations involved in defining–and refining–them, and consider the impact they might have on the IP installations of the future.

Networked Media Open Specifications (NMOS) – which provides discovery, registration, connection and device management capabilities within ST 2110, and is also part of IPMX – is the family name for specifications produced by the Advanced Media Workflow Association (AMWA) related to networked media for professional applications. In light of NMOS’ recent announcement of a “full control plane solution”, Installation asked Cristian Recoseanu–a leading developer for NMOS for many years, as well as development tech lead at broadcast automation, playout and management solutions company Pebble–if it would be fair to say that control and connection/device management were areas perceived as being not fully covered by previous standards projects.

“I think that whenever a very interesting product or feature is created, the almost immediate question is: ‘That’s incredible, now how can I make it actually do exactly what I need it to do for my use case?’” he says. “We can identify this pattern in transport technologies as well, where data plane is always associated with a counterpart control plane model to support this necessary adaptability and customisation required in real deployments and operational uses. ST 2110 went through the same process and, on its journey to make the transition to IP networked environments using standard and interoperable means, it inevitably reached the point where the question around control plane became loud and relevant.

“Carrying on using proprietary fragmented solutions in the control plane had the potential to undermine the philosophy and approaches taken in the data plane, so the emergence of the AMWA NMOS space was both timely and an extremely good fit with what had been developed in the data plane side–only now creating a control plane based on open and free-to-use interoperable specifications, using technologies underpinning the internet.”

The most urgent requirements of emerging IP streams were addressed with two specifications: IS-04: Discovery & Registration, which provides a means to advertise and discover media entities and signals a shift from “static and fixed configuration to more dynamic and reactive workflows”; and IS-05: Device Connection Management, which makes it possible to configure stream-emitting and stream-consuming devices and to facilitate connections between them over an IP network. More recently, the NMOS control plane solution has grown the ecosystem with specifications such as IS-12: Control Protocol, BCP-008-01: Receiver Status and BCP-008-02: Sender Status.
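As a rough illustration of how the two specifications divide the work, the Python sketch below builds the requests a controller might issue: an IS-04 Query API lookup to list senders, then an IS-05 update to a receiver's staged parameters to connect it to a chosen sender. The host names, IDs, API versions and helper names are assumptions made for illustration, and nothing is sent over the network.

```python
import json

QUERY_API = "x-nmos/query/v1.3"            # assumed IS-04 Query API version
CONNECTION_API = "x-nmos/connection/v1.1"  # assumed IS-05 Connection API version


def query_url(registry: str, resource: str) -> str:
    """URL for listing one resource type (e.g. senders) held by a registry."""
    return f"http://{registry}/{QUERY_API}/{resource}"


def staged_url(node: str, receiver_id: str) -> str:
    """URL of an IS-05 receiver's staged parameters on a node."""
    return f"http://{node}/{CONNECTION_API}/single/receivers/{receiver_id}/staged"


def connect_request(sender_id: str, sdp: str) -> str:
    """JSON body asking a receiver to subscribe to a sender immediately."""
    body = {
        "sender_id": sender_id,
        "master_enable": True,
        "activation": {"mode": "activate_immediate"},
        "transport_file": {"type": "application/sdp", "data": sdp},
    }
    return json.dumps(body, indent=2)


if __name__ == "__main__":
    # Hypothetical hosts and IDs, for illustration only.
    print("GET  ", query_url("registry.example:8080", "senders"))
    print("PATCH", staged_url("node.example", "receiver-uuid"))
    print(connect_request("sender-uuid", "v=0"))
```

In a real deployment the controller would read the sender's SDP transport file from the sender itself and send the PATCH with an HTTP client; the sketch stops at building the messages so that the split between discovery (IS-04) and connection (IS-05) stays visible.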

“Parameter control across best-effort networks, with a mix of hardware and software from different vendors, is a genuinely hard challenge. There’s a reason no single solution has gained universal traction yet”

ANDREW STARKS

“These establish interoperable standard best practices in the areas of device parameter control and monitoring in a way which leverages all the existing layers while enabling strong synergies by reusing existing technologies and shared models in addressing a wide variety of use cases,” says Recoseanu. “From a user perspective you can discover media nodes, establish stream connections between them and control their various processing functions and monitor any relevant status parameters which indicate health issues impacting your workflows.”

Considering how the control plane innovations might impact upon pro AV, Recoseanu notes “an expansion in the form of IPMX”, with IPMX devices being native NMOS devices and able to be discovered and connected in the same way as ST 2110 NMOS devices. He adds: “Furthermore, I see the IPMX space fully embracing the NMOS control layer and imbuing devices with abilities to control them and monitor them, fully leveraging the ecosystem and its relevant pieces and synergies.”

Moreover, there are already a number of activities looking in-depth at use cases, some of which seem destined to support the broadcast/pro AV convergence that has been much-discussed in recent years. One example noted by Recoseanu is how IPMX devices can advertise stream issues caused by HDCP through the monitoring patterns that have already been established.

“All of this will create a convergence of broadcast and pro AV devices which can interoperate and give end users unprecedented freedom and flexibility in choosing the best of breed devices for their workflows at different cost levels,” says Recoseanu. “NMOS control has been extremely well-received by vendors, end users and integrators and, with the expansion of IPMX and the advent of Media Exchange Layer (MXL), interest in open, free-to-use interoperable solutions in this space will only continue to grow as demands and requirements from future dynamic media facilities start to trickle down to the most appropriate layer.”

Created by AIMS (the Alliance for IP Media Solutions), IPMX builds upon existing work from other organisations–such as VSF, ST 2110 from SMPTE, and NMOS from AMWA–to provide a set of open standards and specifications geared towards the requirements of the pro AV market.

Enabling the carriage of compressed and uncompressed video, audio and data over IP networks, IPMX also offers provisions for control, copy protection, connection management and security that are deemed more applicable to pro AV customers and environments.

Asked to outline the main control/management capabilities included in IPMX not in ST 2110, Andrew Starks–marketing work group chair at AIMS, as well as director of product marketing at AIMS–responds: “ST 2110 does not define a control or management layer. The JT-NM TR-1001-1 architecture fills this gap by recommending the use of AMWA NMOS APIs, and IPMX follows that model – while introducing stricter and broader requirements tailored to pro AV.”

More specifically, IPMX mandates support for the aforementioned NMOS IS-04 (discovery and registration) and IS-05 and IS-11 (connection management), and mandates support for both mDNS for ad hoc deployments and DNS-SD for managed systems. These requirements–defined in VSF TR-10-8–support “essential day-to-day functionality and simplify system integration, key expectations in pro AV environments”.
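To make the division of labour concrete, here is a minimal sketch of what a controller might prepare when it uses IS-04 to list senders and IS-05 to connect a receiver. The host names, the API versions (v1.3 and v1.1) and the helper function names are assumptions chosen for illustration; the code only builds the URLs and the request body and does not contact any device.

```python
import json

# Hypothetical hosts; a real controller would learn these via DNS-SD or mDNS.
QUERY_API = "http://registry.example.com/x-nmos/query/v1.3"
CONNECTION_API = "http://receiver.example.com/x-nmos/connection/v1.1"


def senders_url(query_api: str) -> str:
    """IS-04 Query API resource that lists the senders known to a registry."""
    return f"{query_api}/senders"


def staged_url(connection_api: str, receiver_id: str) -> str:
    """IS-05 resource where a controller stages a receiver's connection."""
    return f"{connection_api}/single/receivers/{receiver_id}/staged"


def connect_request(sender_id: str, sdp: str) -> str:
    """JSON body for an IS-05 PATCH that activates the connection at once."""
    body = {
        "sender_id": sender_id,
        "master_enable": True,
        "activation": {"mode": "activate_immediate"},
        "transport_file": {"type": "application/sdp", "data": sdp},
    }
    return json.dumps(body, indent=2)


if __name__ == "__main__":
    print("GET  ", senders_url(QUERY_API))
    print("PATCH", staged_url(CONNECTION_API, "example-receiver-id"))
    print(connect_request("example-sender-id", "v=0"))
```

In this sketch the discovery step answers "what is on the network?", while the connection step tells one receiver which sender to subscribe to; the two APIs stay independent of each other.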

Starks highlights the Extended Display Identification Data (EDID)-style connection management addressed through IS-11: “Unlike ST 2110 workflows–where receivers must precisely match the source format–pro AV environments require dynamic negotiation between sources and displays. IS-11 supports this by effectively tunnelling EDID information, allowing controllers to ensure that sources send formats compatible with connected displays.”

The inclusion of IS-11 may also benefit workflows in live production. For example, in systems depending solely on IS-05, stream management is out of scope. But with IS-11, operators can define and enforce the house format, adding an extra layer of control when required.
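The kind of negotiation described here can be illustrated with a short sketch. It is not the IS-11 API: the `Format` type, the `negotiate` function and the optional `house_format` argument are hypothetical names, and the logic simply picks the best format that a source and every connected display have in common.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Format:
    width: int
    height: int
    fps: int


def negotiate(
    source_formats: Iterable[Format],
    displays: List[Iterable[Format]],
    house_format: Optional[Format] = None,
) -> Optional[Format]:
    """Pick a format the source can send and every display can show.

    If a house format is given, it is enforced: it is returned when all
    parties support it, otherwise negotiation fails with None.
    """
    common = set(source_formats)
    for supported in displays:
        common &= set(supported)
    if house_format is not None:
        return house_format if house_format in common else None
    if not common:
        return None
    # Prefer the most pixels, then the highest frame rate.
    return max(common, key=lambda f: (f.width * f.height, f.fps, f.width))


if __name__ == "__main__":
    uhd60 = Format(3840, 2160, 60)
    hd60 = Format(1920, 1080, 60)
    hd720 = Format(1280, 720, 60)
    laptop = [uhd60, hd60, hd720]
    projector = [hd60, hd720]
    panel = [uhd60, hd60]
    print(negotiate(laptop, [projector, panel]))
```

With a laptop that can send three formats, a projector limited to HD and a UHD panel, the sketch settles on 1920x1080 at 60fps, the best format all three support. Passing `house_format` models the live production case in which an operator pins the facility to a single format.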

In addition to the above, other NMOS specifications such as IS-12 (system parameters) exist and are fully compatible with IPMX-compliant devices. For instance, IS-12 furnishes users with a standardised way to expose and control system-level settings including network configuration and hostname.

“While not currently required by IPMX, it represents an important step toward broader interoperability,” says Starks. “Devices that do support it provide a standardised way to manage system-level parameters such as network settings and hostnames, which can be especially useful in installed environments where consistent configuration across devices is critical.”

He adds that device configuration is another active area of investigation, with “several candidate approaches under discussion”.

In terms of specific AV applications to which these control and management capabilities are particularly relevant, Starks pinpoints an extensive range, including conference rooms and corporate AV systems: “In these environments, IT staff expect systems to auto-discover devices and ‘just work’ with minimal set-up. IPMX’s use of mDNS enables plug-and-play installation for ad-hoc rooms, while DNS-SD supports enterprise-level systems with centralised management. IS-11 ensures that laptops and other sources automatically send formats compatible with connected displays or projectors, eliminating user frustration and support calls.”

Other applications include digital signage networks involving one-to-many distribution across mixed displays–with IS-11 allowing “a controller to manage format compatibility across multiple endpoints dynamically”–and higher education and campus-wide AV: “Universities often deploy distributed AV systems across multiple buildings with diverse device types and inconsistent network environments. The ability for IPMX devices to operate in both registry and ad hoc modes (via NMOS + mDNS/DNS-SD) allows flexible deployment based on infrastructure maturity.”

There is surely no danger of contradiction when Starks concludes: “This is not a trivial space–parameter control across best-effort networks, with a mix of hardware and software from different vendors, is a genuinely hard challenge. There’s a reason no single solution has gained universal traction yet. For IPMX, we’re taking a deliberate approach. We want to ensure that whatever mechanism we standardise is capable, future-proof, and practical for the real-world complexity of pro AV systems.”

In short, this area remains a work in progress, as one would expect. But the steady and methodical approach that has characterised the story of AVoIP for the past decade has surely proven to be the correct one–over and over again.

It’s unlikely that, back in the early 2010s, many observers would have predicted the scale or speed of adoption that has taken place through pro AV, broadcast and beyond. That is testament to an extraordinary amount of hard work by many people across the industry – but also to the recognition that open standards are integral to delivering universal improvements. With that now firmly established, the experience of system integrators and end users will only be improved further with the developments that reach fruition in the next few years.

This article is also available to read off-page at www.installationinternational.com/avoip/missing-pieces-taking-control-of-avoip

Rapid change has become the default in technology. With artificial intelligence (AI), for example, changes can sometimes be so extreme that they even surprise experts in their own field. Against this fevered backdrop, the broadcast industry can sometimes feel as if it has a more conservative approach to change.

This apparent contradiction in a technology-dependent industry is understandable because broadcast demands both innovation and absolute dependability, which are sometimes seen as opposites in the early stages of implementation.

Fortunately, robust and reliable technology from Sony and others has made new live production paradigms not only feasible but compelling, too, and not just because of economic efficiency. This technology also means we can feel confident that we can adapt to future changes and innovations quickly, efficiently, and without (too much) disruption. Sony has made professional digital media products since the breakthrough PCM-3324 digital audio multitrack recorder launched in 1982, and the professional DAT format in 1987. Digital Betacam (DigiBeta) arrived in 1993, and media production has been almost exclusively digital since then. The company’s research and innovation, which began more than four decades ago, form the backdrop to today’s extraordinary pace of progress.

Flexible, agile and scalable software architectures for broadcasters–we will use the term Software Defined Broadcast (SDB)–are seen as the next wave of innovation in broadcast, following the industry’s successful adoption of IP-based video transport.

The aim is to evolve previously hardware-based processors into containerised software applications. This approach will replicate existing processes and add new ones in a safe and manageable software environment that is compatible with IT industry software principles. The technique allows users to exchange media essence data between applications running on commodity IT infrastructure which can be deployed on-premises, or in the cloud for greater scalability and geographical independence.

In practical terms, with the right orchestration tools, you can reconfigure an entire facility in seconds, produce complex live broadcasts remotely, scale resources on demand, and manage entire–sometimes global–operations.

An overriding requirement for SDB to be successful is interoperability. It should allow both synchronous and asynchronous data processing, be able to accept any input, deliver to any output, and self-sufficiently process content on demand and without hitting internal limits. It must also allow external products and services to ‘plug in’ without issues, so that broadcasters can choose the best products and services for their circumstances. SDB has the inherent flexibility to adapt and flourish in almost any future media environment.

Sony has been a foundational player in the shift from analogue to digital, from SDI to IP video and audio transport, and is now poised to embrace the next stage: Software-Defined Broadcast. SDB is an exciting new frontier that is paving the way for nearly total convergence between IT and media.

Sony’s active involvement in the ST 2110 uncompressed Video-over-IP standard has brought it to production-readiness. Broadcasters can now choose an all-IP (or hybrid) architecture for new and existing facilities.

The next stage moves beyond synchronous IP transport and routing towards processing media essence in software. In everyday terms, SDB can process media essence in addition to merely moving it. It borrows techniques from High Performance Computing (HPC) to create a shared memory architecture called a ‘Fabric’ that allows efficient data transfer between data centre components. With a shared memory fabric at its core, the architecture becomes an easier on-ramp to adopting AI in the future. It makes the essence data readily accessible in the right format for AI processing to provide real-time analysis, management insights and enhanced services.

Despite the looming demise of Moore’s Law-type acceleration, multicore processors and continuous architectural innovation have kept up the pace.

Video is intrinsically suited for parallel processing and, therefore, a perfect match for GPUs, the rise of which has made Nvidia a multi-trillion-dollar company. Driven in recent years by the off-the-scale demands of AI, GPUs have brought ease and fluency to video processing at a reasonably affordable cost.

Today, the technology landscape is an impressive wide-angle vista of choices for broadcasters as they explore future workflows and production platforms.

The cloud has great powers to transcend geography and to allow virtually infinite (and almost instantaneous) scalability. It is made up of buildings with racks of computers. These are essentially the same computers as you could have on your premises - and you can indeed have a private cloud on your own premises.

While the above is important context, it doesn’t specifically address the idea of an open, real-time media processing environment. But the EBU has proposed the Dynamic Media Facility reference architecture where modular Software Media Functions (SMFs) replace dedicated hardware with flexible and interoperable containerised software modules.

At the core is the Media Exchange Layer (MXL), which enables frictionless real-time in-memory exchange of video, audio and timed metadata between media functions, overseen by provisioning, control, and security layers.

In April 2025, The Linux Foundation, in collaboration with the European Broadcasting Union and the North American Broadcasters Association, announced a project to develop the Media Exchange Layer as an open-source project. The intention was to allow diverse industry vendors to participate with their products while ensuring compatibility with other software contributions.

The MXL is significant because it can be thought of as a high performance, low-latency transport for sharing media between the SMFs. Crucially, it is asynchronous relative to incoming sources and the speed of local hardware processors.
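The idea of asynchronous, in-memory exchange can be pictured with a toy ring buffer. This is a sketch of the general technique only, not the MXL API or its data layout: the `GrainRing` class, its method names and the notion of an indexed "grain" (for example, one video frame) are assumptions made for illustration, and the writer is assumed to write indices in order.

```python
from typing import Optional


class GrainRing:
    """Fixed-size in-memory ring of media grains, addressed by index."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.slots = [None] * capacity
        self.head = -1  # index of the newest grain written so far

    def write(self, index: int, payload: bytes) -> None:
        """Store a grain, overwriting the oldest slot once the ring is full."""
        self.slots[index % self.capacity] = payload
        self.head = max(self.head, index)

    def read(self, index: int) -> Optional[bytes]:
        """Return a grain, or None if it is not yet written or already gone."""
        if index > self.head or index <= self.head - self.capacity:
            return None
        return self.slots[index % self.capacity]


if __name__ == "__main__":
    ring = GrainRing(capacity=4)
    for i in range(6):  # the writer runs at its own pace
        ring.write(i, f"grain-{i}".encode())
    print(ring.read(5))  # a reader that keeps up gets the newest grain
    print(ring.read(2))  # a slower reader can still fetch an older one
    print(ring.read(1))  # too old: that slot has been overwritten
    print(ring.read(6))  # not produced yet
```

The point of the design is that the writer never waits for readers and each reader asks for grains by index at its own pace, which is one way to decouple software functions from the timing of incoming sources.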

What the MXL offers is real-time performance, guaranteed by design. It’s a compelling prospect because it promises economically constrained broadcasters more for their shrinking budgets.

Dedicated real-time hardware is often more expensive than software-only systems.

Manufacturers can now “virtualise” the essence of some of their hardware products as software that can run either in the cloud or on-prem, potentially on lower-cost COTS (Commercial-off-the-Shelf) hardware. Let’s look at some of the specific advantages of this approach. First, flexibility. Viewer habits and choices can change more rapidly than ever due to social media, and a software architecture provides the flexibility to adapt quickly enough, dynamically allocating resources as needed, with new workflows spun up almost instantaneously.

And then there’s interoperability. This is the opposite of the traditional “locked in” philosophy we’re more used to. While it will take time for manufacturers and developers to adapt to this new mindset, there are huge practical advantages, and multiple revenue models. Suppliers will have lower manufacturing and transport costs and be more sustainable. For broadcasters, costs can be proportional to the demands of individual productions.

The biggest benefit may be the ability to collaborate with fellow manufacturers, who may even be competitors. No single company has a monopoly on innovation, but the traditional method of operating in a competitive environment means that competing entities are often reluctant to work together.

MXL levels the playing field and implicitly welcomes collaboration. A codec company can work alongside a production switcher supplier. The customer has the reassurance that they can always choose the “best of breed” processes for their bespoke workflow.

Sony embraces the principles of DMF and MXL. While other manufacturers may want to maintain their “walled garden” approach and merely establish connectivity with the DMF, Sony believes in open architectures.

Nevion, a subsidiary of Sony, already has an accomplished orchestration layer, and Sony itself has software-based live production switchers, while its Hawkeye Replay servers are also compatible, all using cloud-native techniques.

The shape of the future is already apparent: it is exponential, and likely to be accelerated by AI. Dynamic, software-defined media facilities are arguably the most viable approach to the coming years, given the absolute need for flexibility and robustness.

Working together and enforcing rigorous interoperability will help to make this possible.

Software-defined media facilities will change the face of production and broadcasting through geographical independence, effortless remote production and virtually instantaneous configurability, and the entire system will be ready to adapt to whatever form the future of broadcasting takes.

There’s never been a better time to evaluate the latest developments in dynamic, software-defined media technology.

HOW LONG HAVE YOU BEEN GOING TO IBC?

I was delighted when in 1992 IBC moved from Brighton in the UK to my home city of Amsterdam and have made the effort to be there most years ever since.

WHAT’S YOUR TYPICAL IBC ROUTINE: WHAT ARE YOU THERE TO SEE/LEARN? WHO ARE YOU THERE TO MEET?