THE MONOPOLY TAX

Alternatives to Articulate

FOREVER IN THE GROOVE

A lifetime of learning

LEARNING TO LEARN

Stop ignoring the science

HOW I CHEATED MY COMPLIANCE COURSE

THRILL OF THE CHASE

The pursuit of knowledge or the pursuit of prizes?

It’s issue 11 of Dirtyword, which means we’ve been publishing for 2 whole years! And yes, I realise that as a bi-monthly magazine, 2 years should really bring us to issue 12, but what can I say? All those little delays really add up…

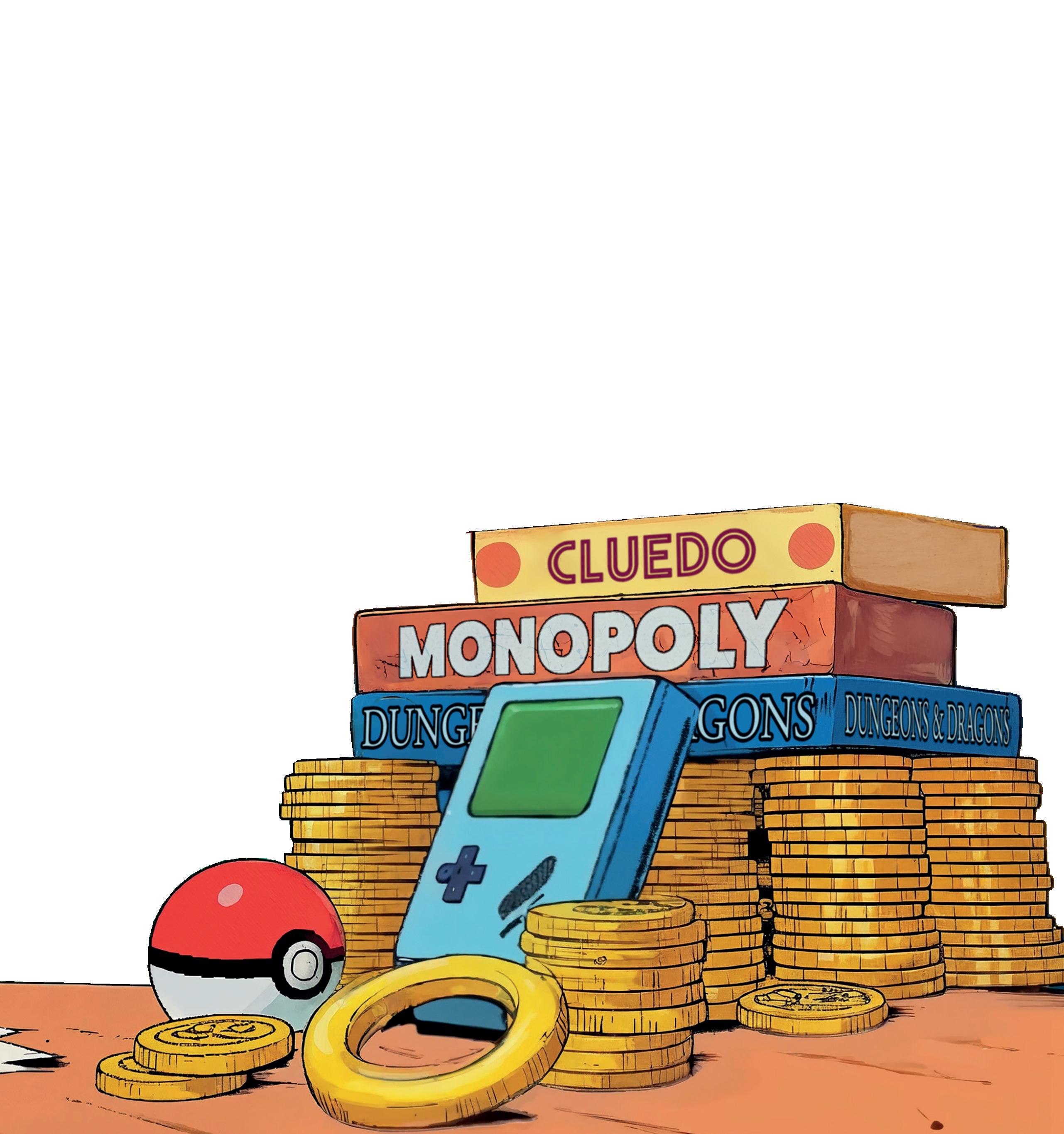

This issue is channelling a strong gaming theme: Dr Ace Hulus delivers a Pokémon-style analysis of gamification, ID Atlas’ Mike Stein explores authoring alternatives to the monopoly of Articulate, Lewis Carr defends against agentic AI cheats, and I virtually dress up as Sonic the Hedgehog to pit the pursuit of knowledge against the pursuit of rewards.

Google recently “discovered” the concept of how we all need to “learn to learn” and announced it to the world. Which is weird because Learning Pirate’s Lauren Waldman has been beating that drum for years, so she’s here to lay it all out and let us know where we’ve been going wrong.

Another buzzword (buzzphrase?) that’s been trending over the last year is Critical Thinking: how we all need to do more of it before AI turns us into mindless drones. It sounds simple, but Kritikos’ Joe Chislett writes that critical thinking skills alone won’t save us.

Dynamic’s Tom Foulkes finds himself stranded on an island of dinosaurs as he pleads with us not to introduce new ideas and technology just because we can - we need to stop and think about whether we should.

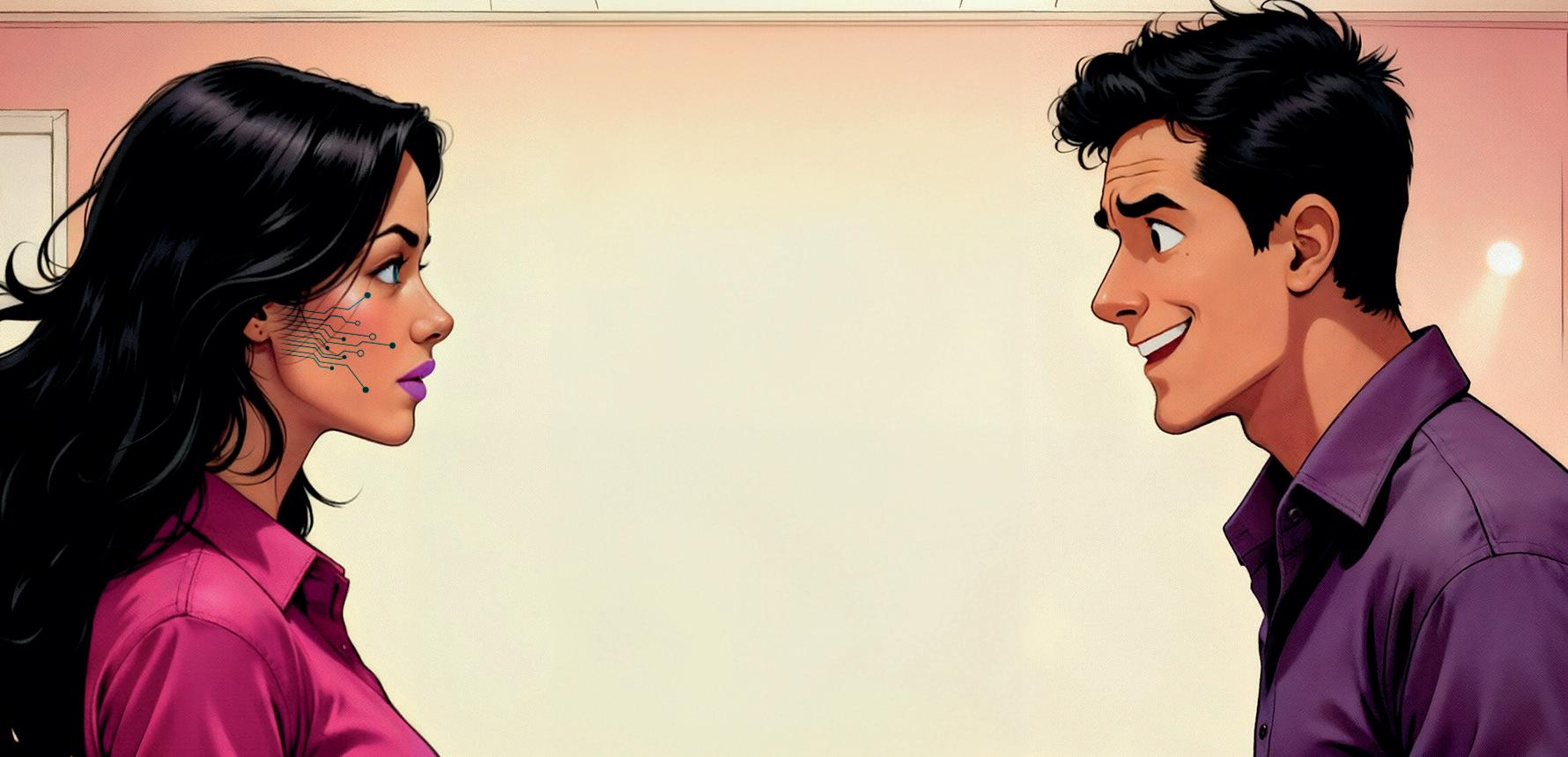

Plus we have Instructional Designer, Lisa Emmington, reflecting on a lifetime of learning, eLearning Developer, Carolyn Colon, investigating the need for human/AI symbiosis, and Whisper struggling to explain her role in the industry.

Enjoy!

Mark

Dirtyword - The E-Learning Magazine issue 11. Dirtyword is published 6 times a year and is available direct to your inbox by signing up at dirtywordmag.com or via issuu.com/dirtyword. For all enquiries, contact info@dirtywordmag.com. All content is © copyright Dirtyword - The E-Learning Magazine 2025 and reuse of the content is permitted only with the permission of the publisher. In all cases, reuse must be acknowledged as follows: “Reused with permission from Dirtyword - The E-Learning Magazine.” The opinions expressed in this publication are those of the authors and do not necessarily represent those of the publisher.

Dr Ace Hulus writes that when it comes to L&D borrowing practices from the gaming industry, the motto is “Don’t Gotta Catch ‘Em All”

Lauren Waldman explains why L&D’s neglect is costing us people, progress, and potential

Whisper finds herself battling against her own job title.

Lewis Carr unveils his plan to combat learner misuse of agentic AI in online training.

Mark Gash questions whether gamification is less about the pursuit of knowledge and more about the pursuit of rewards.

We interview Mike Stein, Director of Design at ID Atlas, as he Articulates eLearning Development Pain Points.

Tom Foulkes channels Jurassic Park’s Dr. Ian Malcolm and wonders: are we so preoccupied with whether or not we can that we aren’t stopping to think whether we should?

Joe Chislett explains why critical thinking skills alone aren’t enough to save us.

Instructional Designer, Lisa Emmington, puts lifelong learning on repeat.

Carolyn Colon explores why AI-driven learning still struggles with the human signal.

Dr Ace Hulus writes that when it comes to L&D borrowing practices from the gaming industry, the motto is “Don’t Gotta Catch ‘Em All”

Gamification in learning is evolving, with engagement techniques becoming more closely aligned with those of the gaming industry. But whilst adopting ideas from gaming might seem like a winning strategy, not every approach is suitable, especially in an ethical sense, when it comes to learning.

Like a child’s first game of Pokémon, it’s easy to get carried away with a “Gotta catch ‘em all” mentality to collecting every unique gaming-industry approach for your L&D content. But as seasoned players know, not every cute creature is worth adding to your party, and some of them can evolve into monsters with unforeseen and negative consequences for your learners.

Professor Oak’s Warning: Not Every Practice is Worth Catching

In the original Pokémon game, Professor Oak was the player’s guide, introducing you to the world of pocket monsters. And just as he cautioned that tall grass can be treacherous without proper preparation, the gaming industry’s rapid evolution has given rise to rather wild practices that learning developers shouldn’t adopt without careful consideration. Whilst Pokémon’s motto may be ‘Gotta Catch ‘Em All,’ the gaming industry requires a rather different approach: Don’t Gotta Catch ‘Em All.

When borrowing techniques from gaming, there may be some that appear like harmless Pokémon, designed to boost learner engagement, but could actually be malicious. The bad guys of Pokémon, Team Rocket, would often use these tactics, deploying schemes that on the surface may appear beneficial, but ultimately undermine the very learning communities we are trying to support.

Loot boxes might look as tempting as capturing a fiery flying Charizard, but they’re more like a shape-changing Ditto. They appear valuable, but may also have a negative impact. Loot boxes in gaming are often tied to monetisation - players pay a nominal amount of money to unlock a hidden reward. The monetisation of learners via this technique isn’t yet as prevalent, but the risks are similar to those in gaming.

These randomised reward systems can trigger addictive behaviours by exploiting the brain’s reward centres, especially in the younger generation. The unpredictability of the reward can cause learners to become fixated on the ‘game’ rather than the learning itself.

Trainer’s Choice:

Avoid random reward mechanics that distract from the learning process. Instead, provide transparent progression paths and rewards that are clearly tied to specific, meaningful achievements. No false promises, no hidden surprises, just honest and valuable learning.

Team Rocket was famous for employing deceptive techniques to steal Pokémon, and unfortunately, some gaming companies use similar deception to obtain players’ data. Personal details, gaming patterns, and biometric identifiers are being collected without obtaining appropriate consent or implementing adequate security measures.

Companies treating player privacy like a Pokémon they can trade without permission isn’t in the spirit of fair play, and learning organisations should leave this strategy well alone. Instead, they should mirror the respect Pokémon trainers show for their Pokémon by respecting the digital autonomy of their learners.

Inclusivity in the gaming community has not yet evolved significantly. Numerous online platforms currently mirror the initial phases of Pokémon contests, presenting competitive settings that appear to cater exclusively to specific “types.” Unfortunately, even online learning communities can be plagued with toxicity; different personalities, skills, and ideals can turn knowledge forums into Pokémon-style battlegrounds. It takes a strategic approach to break up these battles and instead promote a friendly, collaborative environment.

Inclusion Evolution Chain

Effective community management does not involve censorship. The goal is to create environments where everyone can have a rewarding experience, regardless of their origins, self-perception, or level of expertise. Envision it as the management of Pokémon Centres: protected areas where one can recover and gear up for the next challenge.

In Pokémon, the Elite Four are a group of highly skilled Pokémon trainers who are considered the strongest trainers in a region, with each member specialising in a specific Pokémon type.

Similarly, key principles of ethical gaming serve as the definitive measure of the industry’s development and should be considered when borrowing techniques for learning:

Transparency Champion

Promote transparent communication regarding monetisation, data utilisation, and gamification mechanics.

Accessibility Master

Accommodate users of all skill levels. Just as Pokémon introduced features like colourblind-friendly options, learning should be designed with accessibility in mind.

Sustainability Sage

Environmental responsibility in learning development and distribution. The Pokémon world teaches us about balance in nature. The industry should adopt a similar approach.

Labour Guardian

Fair working conditions for developers. Even Professor Oak had assistants, and he treated them well. Crunch culture in gaming is like forcing Pokémon to battle until exhaustion. It’s harmful and ultimately counterproductive. Treat your learning development team with respect!

AI has impacted both the gaming and learning industries, much like encountering a legendary Pokémon. It is extraordinarily powerful, but it needs responsible handling. Developers must ensure that the AI systems implemented for behaviour analysis,

Master Ball Moment

The most ethical applications of AI improve the learner’s experience while preserving their autonomy. These tools aid users in their progress, rather than imperceptibly influencing their decisions.

The Pokémon League of Stakeholders

Creating ethical learning standards, whilst borrowing from gaming, requires bringing together different types of people:

Learners: The heart of every Pokémon journey

Developers: The Pokémon trainers

Educators: The Professor Oaks, sharing knowledge

Mental Health Professionals: The Nurse Joy-style healing communities

Regulators: The Pokémon League officials, ensuring fair play

Gym Badge Challenge: Building Your Ethical Framework

Each ethical principle is like earning a gym badge in Pokémon. It’s a mark of progress toward becoming a Pokémon Master of learning development:

Transparency Badge: Clear, honest communication with learners

Privacy Badge: Robust data protection and user consent

Inclusion Badge: Welcoming communities for all

Accessibility Badge: Gamification for everyone

Sustainability Badge: Environmentally conscious development

Fairness Badge: Ethical reward and labour practices

Innovation Badge: Responsible AI and tech use

Community Badge: Healthy online environments

Team Up: Cross-Industry Collaboration

Just as Pokémon trainers often team up for double battles, L&D can learn from other sectors facing similar ethical challenges. Strategies can be found within the technology sector’s user privacy practices, the entertainment industry’s content rating systems, and healthcare’s patient protection protocols.

A true Pokémon Master respects different regions and their unique customs. Similarly, ethical frameworks must consider diverse cultural perspectives on learning, gaming, competition, and digital interaction. What works in one town might not work in another. Global ethics require regional sensitivity and adaptation.

Call to Action: Join the Elite Trainers of Ethical Gamification

The learning industry stands at a crossroads. Much like a trainer choosing their starter Pokémon, our current choices will define whether we become exemplary leaders for our learning communities or succumb to the challenges that have affected other sectors.

For Learning Developers:

Start Your Journey: Implement transparent practices immediately

Evolve Your Approach: Invest in inclusive design and accessibility features

Master Your Craft: Establish clear ethical guidelines for AI implementation

Become a Champion: Lead by example in labour practices and environmental responsibility

For Learners and L&D Communities:

Use Your Voice: Support companies that prioritise ethical practices

Share Your Experience: Report problematic practices and celebrate positive changes

Join the League: Participate in discussions about ethics and industry standards

For Educators and Researchers:

Prove Your Knowledge: Continue researching the impacts of gamification on players, learners and society

Train Future Leaders: Integrate ethics into gamified learning development and computer science curricula

Bridge Learning Communities: Facilitate conversations between industry, academia, and players

For Policymakers and Regulators:

Set the Rules: Develop thoughtful regulations that protect learners without stifling innovation

Support Research: Fund studies on gamification’s social and psychological impacts

Foster Collaboration: Create spaces for multi-stakeholder dialogue

Both the gaming and L&D industries require advocates who will champion ethical practices. Together, we can catch the practices worth keeping and release those that undermine our learning communities back into the wild.

Remember: when adopting practices from gaming, you don’t gotta catch ‘em all. You gotta catch the right ones.

Because every great trainer knows, it’s not just about catching Pokémon. It’s about caring for the ones you choose to journey with.

Dr Asegul (Ace) Hulus is an Assistant Professor, Lecturer, Researcher and Author in Computing, and a regular contributor to Dirtyword.

Learn more about her work and connect with her here: https://www.linkedin.com/in/asegulhulus/

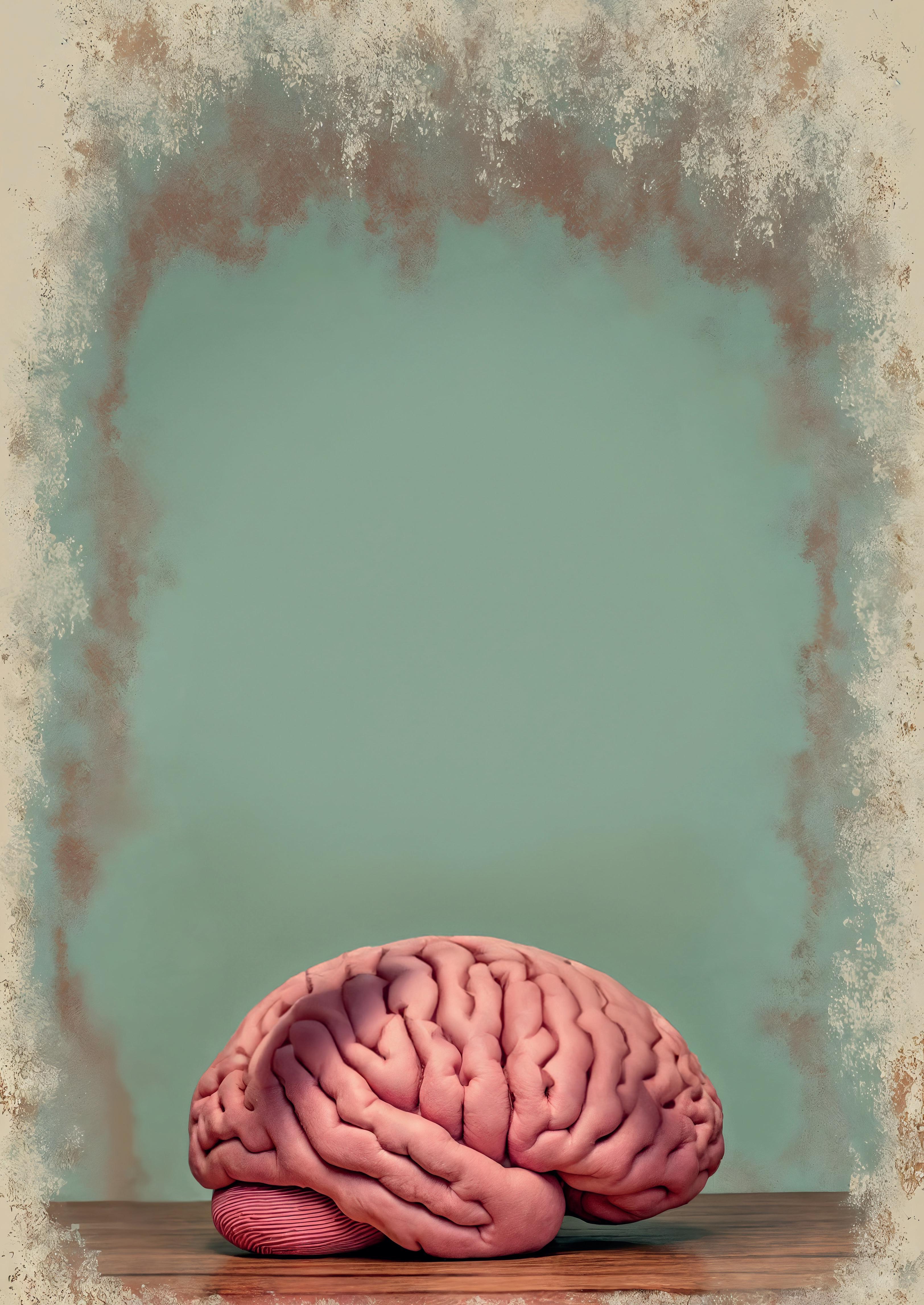

Lauren Waldman explains why L&D’s neglect is costing us people, progress, and potential

We’re humans. Learning is what we do. From the moment we’re born, we’re wired to learn. Babies don’t take a course in “learning how to learn.” They just do it. And yet, somewhere between childhood curiosity and corporate life, we stopped upgrading our ability to learn - better.

Instead of building capacity in ourselves and in our organizations, the learning and development industry has spent decades chasing shiny tools, trendy buzzwords, and quick-fix programs. The results?

Mass layoffs and redundancies as “learning” departments are deemed expendable.

Brilliant leaders walking away, exhausted from fighting battles they shouldn’t have to fight.

Entire workforces losing faith in the learning function because they see no impact.

This isn’t just disappointing. It’s negligent.

Learning professionals are charged with enabling human growth, adaptation, and performance. Yet as an industry, we’ve largely failed to do our due diligence on the one thing that underpins all of this: how learning actually works in the human brain.

For years, cognitive science and neuroscience research has been shouting the same message: Humans can absolutely learn more effectively when we align with how memory, attention, and motivation systems in the brain operate.

We know how retrieval practice strengthens memory.

We know how interleaving boosts transfer.

We know how habits are formed and sustained.

We know how emotion drives attention and how attention drives encoding.

This isn’t theory. This is evidence.

And yet, how many organizations systematically build these findings into their learning strategies? How many CLOs can confidently say their programs are designed with cognitive science at the core, not bolted on after the fact?

The answer: not enough. Not nearly enough.

When an industry ignores its own mandate, the cracks show up everywhere:

Mass layoffs. When L&D can’t demonstrate real capability building, they’re the first on the chopping block. It’s easier to cut “training budgets” than to admit we built the wrong programs.

Leadership exodus. Great leaders who care deeply about learning are leaving the field because they feel stuck, boxed in by outdated models, bureaucratic inertia, or executives who don’t see impact.

Disillusioned employees. Workers stop trusting the learning function when what’s offered feels irrelevant, uninspiring, or ineffective. The message becomes clear: learning here is compliance, not growth.

The tragedy? None of this is inevitable.

We Have the Science. We Just Don’t Use It.

In the last two decades, cognitive neuroscience has exploded with insights that could transform how organizations learn. We understand more about:

Memory systems. The hippocampus, prefrontal cortex, and basal ganglia each play roles in encoding, retrieval, and habit. We know strategies to strengthen those processes.

Attention networks. The brain’s dorsal and ventral attention systems literally govern what we notice and what we miss. This is why “engagement” isn’t fluff, it’s biology.

Metacognition. Humans can learn to monitor and regulate their own performance when they’re given the tools and space to practice it.

This isn’t magic. It’s cognitive science.

And yet, instead of embedding these principles into the DNA of learning design, the industry too often runs to the next LMS upgrade, the next gamified app, the next branded framework. We chase the external fix while underestimating the internal capability.

I’ve seen what happens when we stop bypassing the science and actually join forces with the brain.

People remember more - because retrieval, spacing, and feedback are baked into design.

Leaders feel empowered - because they finally see evidence that learning works.

Organizations build true adaptability - because they’re not just filling gaps, they’re building capability for change.

The difference is night and day. And it’s what this industry should have been doing all along.

We can’t afford to keep underestimating humans. Not in a world that demands agility, creativity, and constant adaptation.

That means:

STOP treating “learning how to learn” as a shiny new trend - it’s the foundation.

START embedding science into every program, not as garnish but as the main course.

INVEST in upskilling your own people, not just delivering to others.

Because here’s the truth: if we don’t rebuild learning functions on a scientific foundation, we’ll keep repeating the cycle - layoffs, redundancies, lost leaders, and lost faith.

But if we do? We unlock the very thing we’ve been chasing all along: humans capable of growth, organizations capable of resilience, and an industry capable of leading rather than lagging.

I’ve waited more than a decade for this moment - for “learning how to learn” to stop being a fringe conversation and start being taken seriously.

Yes, it’s frustrating that it took a tech giant to declare it a trend for people to pay attention. But frustration aside, I am wildly optimistic. Because now the door is cracked open.

The question is: will we walk through it? Or will we let history repeat itself one more time?

It’s time to stop chasing shiny objects and start building real human capability.

The brain is not a bonus feature. It’s the seat that’s been empty for far too long.

Lauren Waldman, Founder of Learning Pirate Inc., is a globally recognized learning scientist driving the evolution of how organizations approach learning and development. With four certifications in neuroscience - from Harvard, Duke, Johns Hopkins, and the University of Michigan - Lauren translates cutting-edge brain science into strategies that transform the way learning is designed, delivered, and absorbed. Connect with her here: https://www.linkedin.com/in/lauren-waldman-4666bab/

So what do you do?

L&D - Learning and Development.

Ahh... HR, right?

No, I’m an Instructional Designer.

A what?

I work in e-learning.

Urgh.

Sigh... Every time.

“How I cheated my compliance course” - Sheila from Accounts

Lewis Carr unveils his plan to combat learner misuse of agentic AI in online training.

Meet Sheila from Accounts.

She’s loyal and she works hard, and every year she logs into Moodle to complete her mandatory training. GDPR, anti-money laundering, nut allergy awareness. You name it, she aces it.

She clicks Next through 140 PowerPoint slides, answers a ten-question quiz, watches a video and prints her certificate. Box ticked. Compliance achieved. Wham, bam, thank you ma’am.

Now she can get back to her real job. Or, more realistically, she gets her AI agent to answer her emails and transcribe her meeting notes, all while she’s out walking the dog (sorry… I mean “working from home”).

Because yes, Sheila has adopted AI in her workflow. More importantly, Sheila has learnt to use agentic AI.

Using an AI-powered browser such as Comet, she can instruct it to log in to Moodle, open the course, read the slides, pass the quiz, and even mark the course as complete.

Agentic AI is a godsend for busy workers like Sheila, but a nightmare for learning developers like me.

There’s very little I can do to stop her because the old model is broken. But I have a plan (of sorts).

For 20 years, corporate e-learning has been built around content delivery and compliance tracking.

“Here are some slides. Click Next. Answer a quiz. Download a certificate.”

It worked (sort of) when the main threat was someone skipping the video. But now, that entire model has collapsed.

“We Can’t Stop Them” isn’t a strategy; it’s a surrender. I’m a badass Moodle developer, and there are some nerdy tricks I could build into the LMS:

I could randomise DOM elements so every “Next” button has a different selector and the AI can’t find the same thing twice. I could add micro-delays and scroll tracking so the course only unlocks after real human-style interactions. I could build behavioural analytics that flag robotic click patterns or impossible completion speeds. I could even hook into Moodle’s logs to create an “AI suspicion index” that alerts trainers to suspiciously efficient learners.

If I wanted to get really creative, I could write a custom availability plugin that randomises learning paths so the content flow changes every session, the kind of madness that AI hates and that would freak poor Sheila out.
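To make the “AI suspicion index” idea a little more concrete, here is a minimal sketch, written in Python for readability rather than as Moodle PHP. Everything in it is an assumption for illustration: the `Attempt` record, the `suspicion_index` function, the equal weighting of the two signals, and the idea that click timestamps have already been pulled out of the LMS logs. It combines two of the signals mentioned above, impossible completion speed and robotically even click timing, into a single score between 0 and 1.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Attempt:
    """Hypothetical record of one learner's run through a course."""
    learner: str
    click_times: list[float]      # seconds since the course was opened
    min_expected_seconds: float   # fastest plausible human completion time


def suspicion_index(attempt: Attempt) -> float:
    """Return a score from 0 (looks human) to 1 (looks automated)."""
    if attempt.min_expected_seconds <= 0:
        raise ValueError("min_expected_seconds must be positive")

    times = sorted(attempt.click_times)
    if len(times) < 3:
        return 0.0  # too little data to judge either signal

    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    total = times[-1] - times[0]

    # Speed signal: near 1 when the course was finished almost instantly,
    # 0 once the learner took at least the minimum expected time.
    speed = max(0.0, 1.0 - total / attempt.min_expected_seconds)

    # Regularity signal: humans click at uneven intervals, scripts often do not.
    # A coefficient of variation near 0 means suspiciously even spacing.
    average_gap = mean(gaps)
    variation = pstdev(gaps) / average_gap if average_gap > 0 else 0.0
    regularity = max(0.0, 1.0 - variation)

    # Equal weighting is an arbitrary starting point, not a tuned value.
    return round(0.5 * speed + 0.5 * regularity, 3)


if __name__ == "__main__":
    bot = Attempt("agent", [i * 2.0 for i in range(10)], 600.0)
    human = Attempt("sheila", [0, 45, 130, 150, 400, 610, 700], 600.0)
    for attempt in (bot, human):
        print(attempt.learner, suspicion_index(attempt))
```

A score like this would only ever be a prompt for a trainer to take a closer look. A fast, methodical human could score high, and an agent that adds random pauses could score low.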

But would that slow Sheila’s AI down? Maybe. For a week.

But the moment I build a wall, Sheila will find a taller AI ladder to climb over it. So yes, I can make it trickier for Sheila, but I’ll never beat the bots at the code level alone. So I need to look at this differently.

The real fix is to outdesign and outcode. The future of learning platforms isn’t just about locking things down; it’s about making learning unoutsourcable (if that’s even a word).

That means designing experiences an AI would struggle to fake. (And I’m not saying AI couldn’t eventually work around it, but let’s at least make it harder for Sheila.)

Ask Sheila to reflect on a real incident in her workplace. Have her record a 60-second video explaining what she’d do if she noticed a suspicious invoice. I can do this by building new tools and creating better content with better tools, so we create a cultural shift.

Sure, she could read a ChatGPT-generated script off camera, but only if she had time to write the prompt and prep the response. Not if we fire random questions at her in short succession. Or grill Sheila on the spot, in the office, catching her off guard in the corridor on the way to the loo. I’m on to you, Sheila.

AI can’t walk around the office. It can’t reflect on your company’s culture. And it can’t prove Sheila understands the why behind the policy.

Some people suggest we just proctor online training, i.e. we force Sheila to take her anti-money-laundering module under webcam surveillance.

Aside from being wildly impractical (and creepy), it doesn’t solve the problem.

Are you really going to monitor every employee’s SCORM habits? And what if Sheila’s AI agent just runs on a second machine while she stares blankly at the camera?

The goal of our training was never to verify presence, it was to ensure engagement. So maybe I build more engaging tools in Moodle that make learning.... fun?

The fix isn’t just technical, it’s pedagogical too. Even with all my mad Moodle tricks, the real answer still lies in course design. But not just telling teachers and instructors to change the way they assess - give them the tools to build courses that assess differently, or deliver learning in new, creative ways.

Instead of pushing content at learners, we should be creating experiences that connect to real-world situations and capture personal context or examples. Courses should conclude with meaningful reflection, not just a quiz score.

That’s how we make it easier to learn than to cheat.

I am actively looking at technical code solutions, but I’m also looking at how I blend learning experiences that are human, contextual, and impossible to outsource. If I am to change a broken model, I need to write a brand new one.

Lewis Carr is an Innovation and Product Director, and all-round Moodle Wizard. Connect with Lewis here: https://www.linkedin.com/in/lewiscarrlearning/

Mark Gash wonders whether gamification has made e-learning less about the pursuit of knowledge and more about the pursuit of rewards.

Back when I were a lad… yes it’s one of those articles. This time, I’m examining video games and exploring an annoying modern trend that seems to have been adopted by e-learning without much thought, as I attempt to define the difference between intrinsic and extrinsic motivation.

By today’s standards, I am not a gamer. I grew up playing Sonic the Hedgehog on Sega Mega Drive, Tomb Raider on PlayStation, Pokémon on Game Boy and Ninja Turtles in the local arcade. I’ve never crafted a mine, been called for duty, nor been bestowed with the title of God of War. And I’ve definitely never crushed any candy. Candy Crush, in my opinion, was the tipping point for games. It opened them up to the masses, using a system that was easy to learn, hard to master and filled with hollow rewards for those willing to put in the time, effort and cash to progress through the stages. I once caught my mother-in-law, who has never picked up a game controller in her life, paying 79p to advance to the next level on Candy Crush on her phone.

Before this tipping point, people who played games enjoyed playing the game - they weren’t in it for the badge at the end. After hours of practice, it was the thrill of finally learning how to make Lara Croft leap across the chasm without falling to her death. Playing games was about the challenge, the discovery, the mastery of a new skill. Now, it feels like we’re just running on a treadmill, waiting for the next loot box to drop, the next cosmetic skin to unlock, or the next achievement notification to ping.

Intrinsic vs extrinsic motivation.

Intrinsic motivation is the pure, unadulterated joy of the activity itself. It’s the feeling of flow when you’re so engrossed in a game that you lose track of time. It’s the satisfaction of figuring out a complex puzzle or finally defeating that boss you’ve been grinding away at. The reward isn’t the shiny sword you get at the end; the reward is the act of playing the game, of mastering the challenge, of experiencing the story. You play because you want to, not because you have to.

Extrinsic motivation, on the other hand, is all about the external rewards. It’s the leaderboard ranking, the high score, the digital badge, and the certificate you can print out and pin to your wall. These are the carrots dangled at the end of the stick. They’re great for getting people started, but they can be a dangerous crutch. When the reward becomes the sole focus, the activity itself becomes a chore. You’re not playing the game for the joy of it; you’re playing it for the prize. You’re not learning for the sake of knowledge; you’re learning for the certificate. Or worse, just to get to the end.

In many modern games, the “fun” part - the actual gameplay - is often a repetitive, grindy loop designed to get you to the next unlock. The core experience is devalued in favour of the dopamine hit of a new acquisition. As a player, you’re no longer the hero hedgehog; you’re the mouse in the Skinner box, pushing a button for a pellet.

So what’s this got to do with e-learning?

In a word: Gamification. The clunky phrase every course developer uses to sell their skills to a client. When used properly, gamification of e-learning is a fantastic approach, but it’s often a case of putting the cart before the horse. We see e-learning modules laden with points, badges, and leaderboards. And for a while, it works. The learner is motivated to click through the slides, answer the quizzes, and “win” the course. But this is classic extrinsic motivation - learners are chasing the badge, not the knowledge.

But what happens when the badges run out? What happens when the extrinsic rewards lose their novelty? The learner, who has been conditioned to learn for the prize, now finds the activity itself to be a dry, unrewarding slog. The moment they get that final tick box or certificate, the learning stops. There’s no intrinsic love for the subject matter, no enduring curiosity. It was just a transaction.

The goal of effective e-learning - the kind that truly sticks - should be to cultivate intrinsic motivation. The learning experience itself should be the reward. The “game” of e-learning should be so engaging, so relevant, and so well-designed, that the learner forgets they’re even “learning.”

We need to design experiences where the challenge is the reward and the learner feels a sense of accomplishment from solving a problem, not from getting a good score.

Create learning where discovery is the prize, where the learner is driven by curiosity, by the desire to uncover new information or master a new skill.

And build journeys where relevance is the win, so the learner sees a direct, immediate benefit to their life or work from the knowledge they’re acquiring: that in itself is the greatest reward.

So, let’s stop Candy-Crushing our courses and making learners push a button for a pellet. Instead, how about we start designing experiences where the journey is the destination, where the game is the prize, and where the penny-drop moment of learning clicking in the brain is more valuable than any digital badge. Real winners know that e-learning isn’t about how many rings, coins or certificates you get at the end; it’s the knowledge you keep long after the course is over.

Up, Down, Left, Right, A + Start. IYKYK.

Mark Gash Writer. Designer. AI Image Prompter.

Connect with him here: https://www.linkedin.com/in/markgash/

We

You’ve spent 20 minutes fighting an interaction that should have taken two…again. Your project timeline is already tight, and now you’re behind. You glance at the clock; it’s past 6 PM, and you’re still in the office because a button trigger won’t fire. Tomorrow’s client review is looming, and you haven’t even touched slide 47. You close the software, reopen it, and hope this time it works. It doesn’t. Was this really the software you spent weeks convincing IT and procurement to let you install?

This isn’t just a bad day; it’s a symptom of a rigged game. For over a decade, one player — Articulate Storyline — has taken over every property on the board: from corporate compliance to non-profit onboarding. As the undisputed industry standard, we’re all paying rent to play their game.

This dominance has consequences beyond market share. Businesses post jobs demanding “Storyline skills” (often exclusively), leaving new designers wondering, “Am I even a real ID if I don’t use it?”

And it’s easy to see why: Storyline is powerful, established, and its PowerPoint-inspired, slide-based editing makes picking up the software easy. But that same familiarity hides real costs. We’ve been sold the “blank canvas” as the epitome of creative freedom, but it’s an illusion. The tool has already made many critical decisions for us, encouraging designers to build what the tool does best rather than what might be most effective.

But the game is changing. A new generation of cloud-based competitors is here. They are building their own infrastructure, their own properties, and in many cases, their rent is significantly cheaper.

Dirtyword has long tracked the shifts and frictions within the eLearning development space. When Mike Stein and his team at ID Atlas, an instructional design agency with over a decade of experience using dominant tools like Storyline and Captivate, decided to stop wondering if the development pain was necessary, we paid attention.

Mike Stein, Director of Design at ID Atlas, launched an ambitious, independent project: Articulating eLearning Development Pain Points. The goal was to scientifically measure the experience across leading cloud-based authoring tools to quantify the cost of tool dominance.

To do this, ID Atlas designed a single, comprehensive course with all assets pre-built. They then pressure-tested 10 web-based platforms in an authoring tool build-off, tracking every click, minute, and keystroke to quantify what Stein calls the “monopoly tax.” Is a 6-hour build in Storyline truly providing four times the value of a 90-minute build in a modern alternative? That’s what this project was determined to find out.

Dirtyword sat down with Mike Stein of ID Atlas to learn more about this project and the key findings.

Dirtyword: Mike, you make a bold claim about the Articulate monopoly and the tax we pay to use Storyline. This research project is your evidence; but how do you objectively measure a subjective experience? What was your scientific methodology for turning friction and frustration into hard data?

Mike Stein: That’s the challenge, right? How do you objectively measure a feeling? Maybe that’s one of the reasons this type of research hasn’t really been attempted before.

I’m a 10-year veteran of Storyline and Captivate. My own bias, and the industry’s reliance on these tools, is the problem. We had to create a system that could challenge the ‘standard’ on neutral ground, free from both our own assumptions and any outside influence. This was an entirely independent, unsponsored project. We were not paid by any of these vendors; we just wanted real answers. To do that, we had to separate the art of instructional design from the friction of development.

First, we designed a single, comprehensive course storyboard: our control. Then, we pre-built every single asset. The script, images, audio files, and videos were finalized before the clock started on development. This step isolated the design phase completely, ensuring we were only measuring the development experience.

Then we ran the builds. We gave 10 of the top web-based platforms the same storyboard, same assets, and the same goal: build the exact same course. This was a pure pressure test of efficiency. We recorded the build for timing and used tracking software to capture the quantitative data: every click, every keystroke, and every scroll.

But metrics don’t tell the whole story. They track effort, not friction. That’s why we added a second layer: structured qualitative data. After each build, the developer (myself and another designer on my team) completed a detailed intake survey. We captured those immediate, raw feelings: the wow moments, the points of friction, and the features that felt like we were fighting the tool. That qualitative data served as the basis for the 1-5 star ratings in each sub-category, and we considered it relevant when it was corroborated across these surveys and backed by the hard data.

Finally, we addressed the new user problem. We intentionally went into most of these tools blind to capture that initial learning curve. However, after the build, we did deep-dive research to see if a hidden feature or an expert-level workflow would have solved our pain points. So while the quantitative questions measured our personal experience, the final ratings reflect a tool’s full capability, not just a first impression.

This four-part system (the standardized course, the quantitative metrics, the qualitative debrief, and the post-build research) is how we turned a subjective feel into an objective, data-backed score.

DW: Let’s talk about that monopoly tax you mentioned. It’s not just referring to the pricing model; your data shows the industry standard (Storyline) took 6 hours to build, while you were able to build the same course in less than 1 hour with some of the cloud tools. Is the 6-hour product really not better? Or, to use the metaphor, are we just comparing a Bentley to a Buick?

MS: That’s the central question we wanted to answer with this project. Yes, in a vacuum, the 6-hour Storyline course is objectively better. We were able to use our full toolbox of tricks: custom layers, easter eggs, background music, and complete control of the media. It’s a very sleek final product that we’d be proud to deliver to a client. In contrast, every cloud tool we tested forced some kind of compromise. We couldn’t rebuild every interaction 1-to-1 and we had to re-think our design to fit scrolling, block-based platforms.

But, let’s look at the numbers. The Storyline build took 6 hours. The average build time for the cloud tools was about 90 minutes. The fastest builds took under an hour. That’s a 4x to 7x difference in development time.

So, this begs the real ROI question: Is the Storyline product four times better? Is the learning four times more engaging? Will the learner remember four times as much? When you look at the side-by-side comparisons in our project showcase, it’s pretty clear the answer is no. The final products are shockingly similar.

And it’s not just more time; it was far more work. Our tracking software showed the 6-hour Storyline build required 12,192 clicks and 10,474 keys. The 50-minute iSpring Pages build took only 1,471 clicks and 1,411 keys. That’s roughly 8 times the physical effort to develop an equivalent course. That is the monopoly tax quantified.
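As a rough check on that arithmetic, the click and keystroke counts quoted above can be run through a few lines of Python. This is a minimal sketch, not ID Atlas’s tracking method; the function name `effort_ratio` and the dictionary layout are illustrative assumptions, while the counts themselves come from the interview.

```python
# Click and keystroke counts quoted in the interview.
storyline = {"clicks": 12_192, "keys": 10_474}
ispring_pages = {"clicks": 1_471, "keys": 1_411}


def effort_ratio(baseline, alternative):
    """Return per-metric and combined ratios of baseline effort to alternative effort."""
    ratios = {name: baseline[name] / alternative[name] for name in baseline}
    # "combined" treats every click and every keystroke as one action.
    ratios["combined"] = sum(baseline.values()) / sum(alternative.values())
    return ratios


ratios = effort_ratio(storyline, ispring_pages)
for name, value in ratios.items():
    print(f"{name}: {value:.1f}x")
```

Clicks alone come out a little above 8x and keystrokes a little below, so the combined figure lands just under 8x.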

We assume the 6-hour build is a Bentley, and that the high price and high effort are just the cost of luxury and power. But the data shows it’s often just an older, less efficient model we’re paying a premium for out of habit. Meanwhile, the modern cloud tools get you to the same destination in a fraction of the time and don’t demand eight times the effort to turn the key.

DW: So, what’s the alternative? For freelancers, a free tool like Coassemble or a low-cost option like Genially seems like a no-brainer. But for large organizations, the $1,500 for Storyline or even $10,000 for the premium Lectora bundle is monopoly money. How much does price really matter when selecting a tool?

MS: For freelancers and small businesses, the sticker price is a huge factor. The good news is, the rent is significantly cheaper on these cloud properties. You have Coassemble’s free forever plan for single users. You have Genially’s low-cost entry point at $10 a month. Even some of the most popular tools, like Parta, iSpring Pages, and Evolve, are less than half the annual cost of the Articulate suite, so you definitely have options. For this group, the value is obvious: you can get a modern, capable tool for a fraction of the price.

But for big organizations, you’re right, the license fee is a rounding error. The real cost, the one they’re ignoring, is the developer’s salary. This is the monopoly tax in another form. Is your $60/hour developer spending 6 hours on a build that should take 1.5? That’s a massive, quantifiable ROI drain that dwarfs the annual cost of the software.
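To put numbers on that comparison, here is a minimal sketch using only figures mentioned in the interview: the $60/hour rate, the 6-hour and 1.5-hour builds, and the $1,500 Storyline price from the question. The constant and function names are illustrative, and the sketch assumes every build is comparable to the test course, which real projects may not be.

```python
import math

HOURLY_RATE = 60.0      # developer rate used in the interview, USD per hour
STORYLINE_HOURS = 6.0   # Storyline build time from the study
CLOUD_HOURS = 1.5       # average cloud-tool build time from the study
LICENSE_COST = 1500.0   # Storyline price quoted in the question, USD


def build_cost(hours, rate=HOURLY_RATE):
    """Labor cost of a single build."""
    return hours * rate


# Extra labor spent on each course built in the slower tool.
extra_per_build = build_cost(STORYLINE_HOURS) - build_cost(CLOUD_HOURS)

# Number of builds after which the extra labor matches or exceeds the license fee.
builds_to_exceed_license = math.ceil(LICENSE_COST / extra_per_build)

print(f"Extra labor per build: ${extra_per_build:.0f}")
print(f"Builds until extra labor exceeds the license fee: {builds_to_exceed_license}")
```

Under these assumptions the extra labor passes the license fee within a handful of builds, which is the sense in which the fee is a rounding error.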

DW: So if the real cost is the developer’s time, are we still just stuck choosing between speed and power?

MS: That’s the main trade-off we found, and it’s where the qualitative data is so important. You have tools like iSpring, 7taps, and Coassemble that are incredibly fast to build in. However, the trade-off is that you’re creatively locked in. The tool controls a lot of the design. On the other hand, you have tools like Parta and Genially that give you that blank canvas freedom, but come with a steeper learning curve and demand the time needed for that extra customization.

DW: But doesn’t a suite like Articulate 360 solve that problem? It gives you both Rise for speed and Storyline for power. Isn’t that the ideal solution?

MS: You might think so, but it’s an inflexible, all-or-nothing bundle; you’re paying for Storyline whether you need it or not. This is where a competitor like iSpring offers a more flexible model. They have a similar ecosystem with a powerful PowerPoint add-in and their cloud-based Pages tool, but they allow you to buy Pages separately for a much lower price. Articulate’s model forces you to buy the whole bundle. It’s less of a bonus and more of a strategy to ensure every user pays the full subscription price.

But even beyond that, what our data really revealed is that Speed vs. Power is too simple. The most important factor for ROI is long-term workflow efficiency. For example, Rise is fast for a first draft, but its workflow for reorganizing that course is terrible, which kills your ROI on iterations and maintenance. In contrast, Parta has a slow learning curve and requires some initial up-front investment to get going, but once you’re over that hill, its powerful reusable templates and real-time collaboration create long-term efficiencies for a team. It’s important to evaluate not just the cost of the first build, but the total cost of ownership.

And not all power tools are created equal. Lectora, for example, was a complete outlier. It represents the worst of both worlds: the high-effort, high-learning-curve of a power tool, but with a dated, clunky, and buggy interface that makes it less efficient and more frustrating than even the Storyline baseline. Despite Lectora being fully accessible from the web browser, if the choice is between Lectora and Storyline, Storyline wins every time; even if I have to buy a Windows laptop to use it.

In the end it comes down to what you value and what makes the most difference in your workflow. That’s why we put together the Priority Ranking tool. Are you willing to put up with a frustrating UI (like Chameleon) to get more built-in interactions? Is best-in-class accessibility (like isEazy) more important than a smooth workflow? These are the real factors, and they’re all qualitative. The Priority Ranker on our site lets you weigh those trade-offs and find the tool that best fits your needs.

DW: You’ve established that some power tools like Lectora are a bad investment in time and effort. But a high learning curve isn’t the only way a tool might send you to development jail. Your scorecards show big splits on what many would consider professional, must-have features. What are the real dealbreakers that send a tool straight to the bottom of the recommendation list, regardless of its other strengths?

MS: This was one of the most frustrating parts of the research. Some tools had such standout features that it genuinely made it fun to build in, but then they’d mess it up with something that’s often non-negotiable for certain clients or industries.

The most obvious one is Accessibility. You have tools like isEazy, Evolve, and Rise that have made it a core, best-in-class strength. isEazy’s Accessible Mode, for example, is a game-changer that ensures compliance with almost no extra developer effort by automatically creating a separate, equivalent accessible experience for all of their built-in interactions, including scenarios and games.

Then, you have tools that are otherwise fantastic, like iSpring Pages, that fail on the most basic accessibility features. Despite having a super streamlined editor where you could just copy and paste images, drag and drop to resize columns, and easily select and reorganize groups of elements, iSpring Pages has no alt-text, no captioning, and isn’t fully keyboard navigable. In 2025, that’s simply not acceptable.

The second major dealbreaker is the Review Workflow. There’s the friction of stakeholder access which can be a huge pain point. Some tools, like Rise and isEazy, get this right: you send a single shareable link, the reviewer types their name, and they’re in. That’s it. But other platforms, like Evolve, iSpring, or Chameleon Creator, force your non-technical SME, who just needs to provide a final signoff of an otherwise completed module, to create an account. Telling a busy VP they have to create and manage another password just to provide feedback once is a significant, unnecessary barrier.

We also found that many tools, like Rise (Review 360) and Lectora (ReviewLink), force you into a two-app process. The comments live in a separate web application, so you’re constantly switching between the editor and the review site to see what you need to fix. Whereas the best-in-class tools, like Parta, Evolve, and isEazy, pipe the comments directly into the editor so you can see the feedback right on the section you’re working on. Parta and Evolve also let reviewers pin a comment to a specific element (this exact button, this exact text box), whereas others work on the page level. With page-level comments, the SME has to write, “in the third paragraph, the second word is wrong,” which is just the old spreadsheet problem in a prettier interface.

And the third major dealbreaker is mobile responsiveness. Mobile-friendly has become a meaningless marketing term. Our research found there are really three distinct approaches on the market.

You have the Scalers, like Storyline and Genially. They don’t reflow content; they just shrink the entire desktop slide to fit the phone screen. While technically visible, it’s often unusable. Text becomes unreadable and buttons are too small to tap. The only real workaround is to create two separate, dedicated projects: one horizontal for desktop and one vertical for mobile, which is a substantial duplication of effort.

Then, you have the Automatics, like Rise, Evolve, and isEazy. They do a fantastic job of fluidly reflowing content into a clean, single-column view that works great. The trade-off is that you get no manual control. If the automatic reflow stacks two images on top of each other in a way you don’t like, you just have to live with it.

Finally, you have the granular approach, which we saw in Parta. It gives you total manual control to reorder, resize, or even hide elements specifically for the mobile view. It’s more work, but it’s the only way to achieve a truly custom, mobile-first design. This is a critical distinction that most buyers won’t see in a sales demo.

DW: We’ve covered the dealbreakers that can send a tool to jail. But what about Free Parking? Were there any standout features you found that offered unexpected, high-value bonuses?

MS: Absolutely. This is where you see the real innovation and personality of these tools. Some of the standout features weren’t always big-ticket items, but the small things that solve major developer pain points.

The most obvious was the feel of iSpring Pages. We gave its Developer Experience a 5/5. It was just a joy to use: fast, fluid, and intuitive, like editing an interactive Google Doc. While it failed on critical features like accessibility, its core editing experience, combined with its powerful in-tool image editor, was a huge bonus.

Another one was Parta’s error-recovery system. It has a Recycle Bin and per-block version history. That’s an incredible safety net that gives you total confidence to experiment, knowing you can never truly break your project, especially on a large team.

We also saw huge value-adds in specific media tools. Genially, for example, has in-tool audio recording and trimming, a rare combination that saves a ton of time when working with narration; plus a solid built-in media and template library to get projects off the ground.

Then there were innovative approaches to delivery. Tools like Coassemble and 7taps excel at this. They aren’t just SCORM-export tools; they are built to meet learners where they are. They generate simple, shareable links and have their own powerful analytics to track users via email or integrations, all without forcing a learner into an LMS. For anyone without a traditional LMS, that’s a significant bonus.

These are the kinds of features that don’t show up on a standard must-have checklist but make a real difference in the day-to-day quality of life for a developer.

DW: You’ve quantified the monopoly tax in thousands of manual clicks and keystrokes. Many of the tools you reviewed, like isEazy and Rise, are heavily pushing AI as the solution. Based on your tests, is AI just a marketing gimmick, or is it a genuine Get Out of Jail Free card for development friction?

MS: Right now, it’s a bit of both, but its potential to completely change the game is undeniable. We saw AI being implemented in a few distinct ways.

Let’s start with the most frustrating use of AI: as an intrusive upsell. We saw this most egregiously in Articulate Rise. Even if you’re on the non-AI plan, the interface still defaults to the AI Generation tab every time you add an audio block. This purposefully adds an extra click to your workflow just to get to the basic Upload or Record tabs. It’s a frustrating, ad-like experience that adds friction inside a premium product. It feels particularly sleazy because Rise is the only platform in our review that sells AI as a separate, costly add-on, while every other competitor is embracing AI and including it as a standard value-add to their subscription.

Where it starts to get more promising is AI as a content assistant. We saw this everywhere, used for different tasks. For media generation, isEazy offers AI-generated voiceovers, avatars, and images, and Genially can create images from prompts. 7taps has full Synthesia-powered AI avatars to generate video for building scenarios. Parta lets you plug in your own API key to generate text from your preferred LLM, while Genially and isEazy can generate quiz questions.

The most ambitious use is AI for full-course drafting. Coassemble has an AI-driven drafter for quick starts, and isEazy can generate an entire course from a PowerPoint or build a full editable branching scenario for you. However, we weren’t thoroughly impressed with any fully AI-generated course draft and hit a significant bug in isEazy’s AI-assisted branching that forced us to rebuild it manually. So as an assistant, it’s promising but not yet a perfect, reliable shortcut.

But the real game-changer is AI as the developer. This is where AI completely removes the monopoly tax on complex, high-effort builds. We’re already seeing this with tools like Canva AI that can generate full-blown HTML code from a single prompt. For this project, interactions could be vibe coded in minutes and you just paste them in. This is why Storyline’s ROI is collapsing. Its main defense (the power to build custom interactions) is no longer a defensible moat. AI will do that heavy lifting, and the tools that win will be the ones that let us integrate that code seamlessly.

DW: So after all the data, all the clicks, and all the frustration... just give it to us straight. What’s the best tool for a designer or L&D manager who’s ready to stop paying the monopoly tax?

MS: At the end of the day, the research showed us that there is no single best tool. No platform got it 100% right, and there are always trade-offs you’ll have to make.

Genially, for example, is a creative powerhouse; its media and interactivity scored a 20/20 in our rubric. But its responsive design capabilities (a 2/5) might be a dealbreaker for anyone who needs their courses to run beautifully on all devices. Then there’s iSpring Pages, which had the single best Developer Experience (a 5/5) of any tool we tested; that editor was just so easy to use. But its accessibility score (a 1/5) and review workflow (a 2/5) make it professionally unusable for us. It’s a beautiful racecar with no seatbelts.

This is what led us to change our development approach at ID Atlas. We have been phasing out Storyline as our default tool for a few months now. If a client insists on it, we will absolutely build in it; but now we go into that conversation armed with data. We show them the 4x-8x development tax in time and effort and let them make an informed decision.

For most of our new projects, we’ve transitioned to Parta. Not because it’s perfect, but because it hits our sweet spot at half the price: it gives us the deep visual and responsive control we need in a fast, fully collaborative, cloud-based package. But we’re flexible. We also use Genially for highly visual slide-based projects and Evolve when accessibility is the top priority. We’d happily use iSpring or Coassemble if a project’s needs (like raw speed or a free plan) made sense.

DW: So the big takeaway isn’t to pick the best tool, but to have a bigger, more flexible toolbox?

MS: Exactly. The real winner isn’t any single platform, it’s the approach. Stop defaulting to Storyline because

We know the biggest barrier for large companies is the perceived friction of transitioning a huge library of legacy courses. But our data shows this barrier may be an illusion. If you have your assets (even from a published course where you don’t have the source file) you can rebuild a 15-minute module in a tool like iSpring or Coassemble in less than an hour. If you have a library of 100 courses, you’re looking at 100-200 hours of work depending on the platform. It’s not nothing, but it’s a manageable, one-time cost to escape a permanent tax on all future development.

That’s why we didn’t just publish a static report. We’ve made everything public at: https://www.idatlas.org/blog/elearning-pain-points

It’s a complete, interactive dashboard with five tabs. You can see the Overall Ratings and click any category to read our full qualitative reviews. You can see the Development Metrics tab for the raw data: the 23,000-action Storyline build versus the 6,500-action Parta build. You can use the Tool Comparison radar chart to see head-to-head. And you can use the Project Showcase to compare the final Bentley and Buick builds side-by-side for yourself.

Maybe the most useful section to readers is the Priority Ranking tab. Go there, move the sliders for what you actually care about, whether it’s Accessibility, Collaboration, or Developer Experience, and the dashboard will instantly re-rank all 10 platforms based on your specific needs and show you the matches and conflicts in your priorities.
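The interview does not describe how the dashboard actually computes its rankings, so the following is only a sketch of one plausible approach: a weighted average of 1-5 category scores, with the slider positions acting as weights. The tool names, scores, and the `rank_tools` function are all placeholders invented for illustration, not results from the study.

```python
def rank_tools(scores, weights):
    """Rank tools by the weighted average of their 1-5 category scores."""
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("at least one priority needs a non-zero weight")
    ranked = []
    for tool, categories in scores.items():
        weighted = sum(categories[c] * w for c, w in weights.items()) / total_weight
        ranked.append((tool, round(weighted, 2)))
    # Highest weighted score first.
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Placeholder tools and scores, NOT figures from the ID Atlas rubric.
scores = {
    "Tool A": {"accessibility": 5, "collaboration": 3, "developer_experience": 3},
    "Tool B": {"accessibility": 1, "collaboration": 2, "developer_experience": 5},
}

# Two hypothetical slider settings.
accessibility_first = rank_tools(
    scores, {"accessibility": 5, "collaboration": 1, "developer_experience": 1}
)
speed_first = rank_tools(
    scores, {"accessibility": 0, "collaboration": 1, "developer_experience": 5}
)

print(accessibility_first)
print(speed_first)
```

The same two tools swap places depending on the weights, which is the kind of trade-off the Priority Ranking tab is meant to surface.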

But our research is just the start. It’s also an invitation. We’re actively welcoming peer review. Our entire 20-category rubric and all our project assets are available on the site under a Creative Commons license. We want other designers to use this framework, run their own tests, and challenge our findings.

On the site, you’ll find a Peer Review Toolkit with the complete storyboard and all the assets. You can run your own build, track your time and effort metrics, and then submit your results directly into our Evaluation Form. This form will guide you through the full 20-category rubric and help us build a shared, data-driven understanding of our tools.

Our ultimate hope is that this framework serves as a valuable, living tool for the entire eLearning industry. We want to help all of us make better decisions; not just about the software we buy, but about the designs we’re creating with these tools.

Mike Stein is a professional problem solver, accessibility specialist, and Director of Design at ID Atlas.

Connect with him here: https://www.linkedin.com/in/mikesteindesign/

Tom Foulkes channels Jurassic Park’s Dr. Ian Malcolm and wonders, when it comes to new tech, are we so preoccupied with whether or not we can, we aren’t stopping to think whether we should?

Imagine for a moment that it’s 1975, and you’ve just been hired by a new start-up called InGen. Your boss, billionaire philanthropist John Hammond, has asked you to help him clone extinct dinosaurs, ready to show them off to crowds of punters in his visionary theme park, Jurassic Park, some years later.

Sounds like a good idea, right? A great use of time, money, and tech? And the payoff… unimaginably awesome! What could possibly go wrong?

Now let’s imagine you ask, “Hang on a minute, John? Why do you want to bring dinosaurs back to life? Won’t that be incredibly dangerous for the human race?” only to be met with a casual, “Why not? We’re doing it because we can…”

Would you be 100% satisfied with that answer?

No? Then why would we settle for that same ‘Why not?’ argument when using technologies or formats in digital learning without taking the time to check they meet the specific needs of the requirement?

Now, I’m not saying that the stakes are quite as high in elearning (although you could argue, if the training is on health, safety or something dangerous, you wouldn’t need a triceratops in the room to understand that lives could be at risk if the elearning doesn’t land). But it is really important to question ‘why?’ when faced with a new requirement. That means digging deeper into why this is a requirement (what’s causing the problem or gap) and whether the suggested approach is truly fit for purpose.

All of this will help you to achieve a bigger, more thought-through impact on your learners and organisation, improving the effectiveness, quality, and value of your courses.

We’ve all encountered an alpha stakeholder who’s keen to dictate exactly what they want. Maybe they’re fixated on a shiny trend from years ago. Maybe they’re overly excited about using that new equipment that cost half their yearly budget. Or maybe, just maybe, they’ve put the work in to understand what they’re trying to achieve and the best way to achieve it. My point is, until you ask them, how are you meant to know?

If, when you do ask them, “Okay, what are you trying to achieve? What’s the overarching goal here? Why do you think this is the best way to go?” they struggle to explain their reasoning or focus more on the technical outputs of creating a course itself (e.g., ‘we need a VR module!’ or ‘it has to be a slick animation,’ rather than ‘we need to reduce errors by 15%’ or ‘increase sales by X%’), it’s your job to make them understand the potential issues associated with this approach.

What I’m getting at here is simple: without a clear understanding of what they’re trying to change or achieve, there’s no clear argument for any one approach over another, and no way to predict which will have the best results for the business. This, of course, could also mean a lack of any positive impact at all.

So, if someone asks you to revamp their data protection course, for example, ask them a few probing questions like, “Why is this a business priority? Is it compliance or have there been incidents?” to discover the driving factors behind their requirement.

And don’t stop there; if there have been data protection incidents, ask, “Why do people keep making the same mistakes? What protections have you got in place to prevent issues like this?” to further explore the problem.

From here, you might discover that people are consistently falling for sophisticated scam emails. Maybe a full data protection course revamp isn’t actually a good use of budget or time.

Instead, “Could a targeted piece of microlearning help to reduce incidents, while also saving on training time?”

This is just one example of how asking the right questions can improve the validity and effectiveness of your design decisions. You might end up going in the same or a similar direction as your initial thoughts, but you’ll do so with purpose and intent.

So, next time you’re approached with a request to build, remember:

• Always ask “Why?”

• You’re solving problems, not building courses.

• Form follows function; find the need before choosing the approach.

• There is no one ‘right way’ to approach the solution.

Following these simple tips will help keep your elearning from harking back to the Cretaceous period, while contributing to the impact you need to achieve.

And, let’s be plain, you do NEED to achieve something. Otherwise you’re not delivering value on any level – not for your learners, not for your organisation, not even for yourself.

Oh, and also, don’t reanimate any dinosaurs… that should be a given!

Tom Foulkes is a Certified Digital Learning Professional (CDLP) and Learning Experience Consultant (LExC) at Dynamic.

Connect with him here: https://www.linkedin.com/in/tomfoulkes-a69483178/

Over the last year there has been a clamour for ‘critical thinking’ skills. As someone who has both taught and assessed these, and who has a business devoted to ‘Empowering human thinking’, you might think I’d leap on that bandwagon. But I’m reluctant to do so. Not because critical thinking skills are not important: they are. But because they are only part of the picture. When it comes to really empowering your thinking, they are useless without a set of other skills or abilities alongside them.

I will set aside the question of what we mean exactly by “critical thinking” skills. This is a whole problem area in itself, as the phrase can range from the trivially banal (don’t take things at face value) to quite technical rules about logic, arguments, and the use of evidence. I will assume for now that there is a set of skills that most people would agree fall under this banner, such as being able to distinguish different types of claim and to consider the evidence base for them, or the quality of the reasons or arguments you are given for accepting them.

So why are these skills not any good by themselves?

This is where two facts come in: one about people, and one about the context they think in.

The first fact to take on board is that humans are not entirely logical. The idea that you can simply show people what a valid argument looks like, or explain the difference between, say, evidence and anecdote, and then expect that people will suddenly transform the way they think and behave is naïve to say the least. Better, some argue, to stick to gut instinct, stories, emotions to get your points across.

Humans are tribal, storytelling creatures, and there is no point trying to get them to respond to logic and reasons. Except this isn’t true either. People do respond to reasons and arguments. It’s just they also respond to feelings and emotions.

The truth is that we are a mixture of things. Of the inquisitive, the instinctive, the logical, the imaginative, the intuitive; the head and the heart. And all these things – these different thoughts and emotions, the more and the less conscious – are going on nearly all the time.

The second fact is that we do not think in a vacuum, but amongst other people. People who may or may not think differently. We live in a shared social world, with shared as well as conflicting desires, goals and values. We are also embodied beings, who experience the world through our senses – as well as through the language and behaviour of others. What all this means is that sharing ideas, sharing information, claims, arguments, viewpoints, is as much a process of negotiation as it is persuasion.

The reason why people find it hard to accept counter-evidence is not because they are unaware of the rules of evidence, but because it comes up against other, psychological or sociological facts to do with our self-esteem, social standing or reputation.

These two facts may seem totally obvious, but from my experience, they get forgotten by the people who write the critical thinking textbooks (and I should know, having contributed to them myself).

So what do we need to do?

The simple answer is that critical thinking skills are necessary, but not sufficient; and that in order to have any actual impact on the world, they are one of a set of 4 conditions that we need to meet.

Humans, as we have acknowledged, are a blend of things – of thoughts, sensations, emotions, instincts and desires. Most of the time these things go on out of our control. We don’t choose the thoughts or feelings we have, or the particular ideas that pop into our heads.

However, we can choose the ones we want to take forward. This requires us to make a judgment. Judgment is the heart of critical thinking. We don’t need to think critically unless a judgment is required; but judgments are required; and to make them we need to think critically.

But it is not enough. Critical thinking can help us evaluate ideas, but it can’t give us ideas in the first place. For this we need creativity. (That’s if we want to have some autonomy, some input; obviously we can just put forward the ideas that ChatGPT provides for us.) But this is not a problem, since creativity is a naturally human trait.

Any idea that we have that is not purely logical is, by definition, creative, since it is not something that could have been deduced from what went into forming it. We need creative thinking alongside critical thinking. To make an analogy, critical thinking without creativity is like a car without an engine. Creativity without critical thinking is like a car without an engine or brakes.

Also, this idea that being logical or rational means drawing careful conclusions, never going beyond the evidence – this isn’t how we think. It isn’t even necessarily what good, logical thinking looks like. When scientists solve problems, they jump to conclusions, then test them.

Even if you can think up ideas and select which ones are worth pursuing, and have good reasons for your selection, it is no use if those ideas stay inside your head. You need to have the confidence to share them with other people. And yet it’s no good being able to put forward and argue for your own ideas if all you do is alienate other people.

So you need to be able to share your ideas effectively, and this requires a whole separate set of skills. (Since people are not purely logical and rational, it is not enough just to give them, for instance, reasons and evidence for why you are right and they are wrong.)

Even if you are able to think critically and creatively, to have ideas and be able to select the best ones, and be able to justify those choices – and to frame them in ways that will land with others – it’s no good if it takes you forever to come up with them. We are embodied beings, in a real, physical world, where actions are needed, and often where swift, decisive action is rewarded.

This doesn’t mean that you give up on thinking critically. It just means that there is a time and a place for it, and that it needs to be done in a way where the outcome is not just more thinking. Fortunately, this is where some of the other, faster-thinking features of human cognition come into play: the intuition, the instinct. The trick is to know when to listen to these, and when it’s time to maybe put on the brakes.

In conclusion: human thinking is way more than just critical thinking, but critical thinking is a necessary component.

The trick is how to do it effectively, to balance it with the other aspects of our thinking.

In particular, we need to meet the 4 conditions outlined above. Only then can our thinking be truly empowered.

Joe Chislett provides training on How to Maximise Your Thinking. He is a lifelong creative writer, an author on critical thinking, and the founder of KRITIKOS. Connect with him here:

https://www.linkedin.com/in/joe-chislett-64a41a142

Learning Pool Acquires Global Authoring Tool Elucidat and LXP WorkRamp

Learning Pool, a major global provider of learning technology, announced two significant acquisitions in October 2025: the cloud-based authoring platform Elucidat and the Learning Experience Platform (LXP) WorkRamp. These strategic moves are designed to create a single, comprehensive ecosystem that simplifies the creation, management, and delivery of learning content for global enterprise clients, positioning Learning Pool as a market powerhouse.

The acquisitions allow Learning Pool to offer a deeper, more integrated suite of solutions, combining its existing LMS and LXP offerings with best-in-class authoring capabilities for rapid content production. This consolidation reflects a clear market trend: providers are striving to own the entire learning lifecycle, offering organisations an all-in-one solution for skills development, from AI-assisted content creation to delivery and performance tracking.

Titus Learning Acquires Moodle Specialist adaptiVLE to Boost Open-Source Innovation

Titus Learning, a global Moodle Partner specialising in open-source digital learning solutions, announced the acquisition of UK-based adaptiVLE in September 2025.

This strategic move consolidates expertise in Moodle development and support, allowing Titus Learning to significantly enhance its capacity for delivering dynamic, scalable, and tailored learning solutions for its multinational client base.

The acquisition is particularly impactful for the open-source community, as adaptiVLE brings deep specialist knowledge in Moodle development and a strong client portfolio, including major organisations and global retail brands. Titus Learning co-founders emphasised that integrating the adaptiVLE team will enable faster innovation and allow the combined entity to push the boundaries of what is possible within the widely used Moodle platform.

QA, a leading global technology skills provider, announced a pioneering partnership with Microsoft in October 2025 to embed Microsoft Copilot AI learning directly into all of its apprenticeship programs. This initiative is a direct response to the urgent need for a workforce fluent in Generative AI tools, ensuring that professionals entering the market possess the immediate, practical skills required by AI-driven businesses worldwide.

The integration provides apprentices with formal, structured training on utilising AI assistants for business functions, from data analysis to content generation. The company stated this is part of its commitment to future-proofing skills and empowering a global workforce to harness the transformative potential of AI, signalling a critical shift towards mandating AI literacy in foundational professional training.

On November 13, 2025, Kyndryl, a major provider of mission-critical enterprise technology services, announced the launch of new advisory and implementation services focused on Agentic AI.

Agentic AI refers to sophisticated AI systems that can autonomously manage complex, multi-step tasks - a capability that is set to redefine job roles and workflows across industries globally.

The company stated that the new services will guide organisations through the organisational change management necessary to scale Agentic AI effectively. This move is significant for e-learning as it highlights that corporate training is urgently required not just to teach employees about AI, but to prepare the entire workforce for a future where intelligent agents handle routine tasks, shifting human roles toward strategic oversight and complex problem-solving.

The AI skills intelligence platform, Helix by 5app, saw significant functional updates throughout October 2025, moving beyond its initial launch phase with a focus on delivering granular, in-the-flow-of-work insights. The platform, which uses AI to measure soft skills (such as communication and leadership) from passive signals in meeting platforms like Zoom and Microsoft Teams, released enhancements for reporting and personalised coaching integration.

Specifically, the October releases included new features that directly link Helix feedback to personalised coaching actions, such as a “Coach me on this” direct link to the platform’s AI tutor agent, VeeCoach. Furthermore, administrators gained additional permissions for controlling access to Helix data, reflecting an industry trend towards treating AI-derived skills data as a critical, high-value asset that requires stringent governance and precise delivery to L&D stakeholders.

Lifelong learning. It’s like sending all your vinyl to the charity shop in the 1990s, then regretting it 20 years later. Life is full of lessons, and for me, they arrived in unexpected shapes and seasons; from a dodgy car deal in a pub car park to becoming a Microsoft Certified Trainer, from teaching reluctant teenagers to mentoring the next generation of instructional designers.

I was reminded of this recently when a letter dropped through my door about a company pension scheme I joined at 20. I wasn’t in the job long, but now, as I turn 60 this December, it means I’ll receive a tidy little tax-free bonus and a monthly income that will just about cover my fuel costs. Nearly-60-year-old me is very happy that 20-year-old Lisa made that decision!

But if you’d asked that younger version of me what 60 would look like, she’d never have imagined this. Back then, 60 was the retirement age for women. And yet, here I am, still freelancing, still learning, still curious, with no intention of stopping anytime soon.

At 20, I had just learned to drive. With my boyfriend (now husband of 36 years), I’d bought a car, a house in a new city, and started a new job. The car was my first real-life education: don’t buy cash-in-hand from a stranger in a pub car park. It led to a crash course in buying parts from scrapyards, using jump leads, and how to report it stolen when it went for regular outings with joy riders.

Navigation was the next classroom. Milton Keynes, with its grid roads and endless roundabouts, was a maze. I taped a Post-it map to my steering wheel, flipping it upside down to find my way home. I got lost, often, but I adapted. Over time, the city became home, and I learned to trust my sense of direction.

The job that funded that pension letter wasn’t glamorous. I’d abandoned teacher training after three days, realising I’d made the wrong choice. I landed a secretarial role instead. One day, I attended a training course and had a lightbulb moment: this is what I want to do!

Around the same time, shiny little Apple Mac IIs appeared on our desks with almost no instructions. I was captivated. I tinkered, experimented, and before long, became the go-to tech helper in the office. That was the spark: I wanted to train people in IT.

But enthusiasm alone doesn’t get you hired.

Enter the YTS scheme, the government initiative that took on inexperienced trainers for a pittance. My baptism of fire was teaching 16-year-olds who didn’t want to be there. It was tough, but two years later, I had experience under my belt and doors began to open.

Commercial IT training was no easier. If I wasn’t in front of a class, I was preparing for the next one. But the grind paid off. Within a few years, I became a Microsoft Certified Trainer and a Certified Lotus Engineer. By 29, I’d met my goal of going freelance before 30, by just four months.

Freelancing opened new doors. While working with a client, I stumbled into the emerging world of eLearning. What started with curiosity soon became a passion. I pursued it seriously, earning a TAP Diploma in eLearning from The Training Foundation and a Professional Certificate in eLearning from the University of Chester. Two decades later, I’ve designed countless programmes, courses, and resources and I’m still learning every day.

Today, my journey has shifted again. I’m working with a business coach to move into mentoring new instructional designers and supporting small businesses with eLearning tools. Outside work, I started dance lessons in my 40s, dog training with my two spaniels in my 50s and was signed by a later life model agency last year.

The data backs up my instincts. The IMF’s World Economic Outlook (April 2025) found that a 70-year-old in 2022 had the same cognitive health score as a 53-year-old in 2000. The Learning and Work Institute’s Adult Participation in Learning Survey 2024 revealed that more than half of UK adults (52%) are either currently learning or have done so in the last three years.

The message is clear: we don’t stop because we get old, we get old because we stop.

A note to my 20-year-old self

If I could go back, I’d tell 20-year-old Lisa not to worry so much. It really does work out. I’d tell her to embrace each lesson, whether it’s how to bump start a car, how to survive a room full of reluctant teens, or how to reinvent yourself at 40, 50, or 60. And I’d add one last piece of advice: hang on to that vinyl collection, you’ll still be adding to it when you’re 60!

Lisa Emmington is an instructional designer, eLearning developer, dog lover, dancer and later life model. Not necessarily in that order.

LinkedIn: https://www.linkedin.com/in/lisaemmington

Carolyn Colon explores why AI-driven learning still struggles with the human signal.

AI can now predict which course module I should take next. It can even serve it to me “just in time,” tailored to my skill profile. But here’s the paradox: AI can’t predict what motivates me to stay, grow, and contribute.

In the last year, eLearning vendors have flooded the market with promises of hyper-personalized, AI-driven learning. It sounds like a dream: adaptive content that feels tailor-made, “Netflix-style” recommendations for upskilling, and predictive analytics that anticipate skills gaps before they even appear.

But beneath the hype, decision-makers are quietly asking: Is this scalable - or just another shiny object? And more importantly: Does personalization improve performance, retention, or culture?

The uncomfortable answer: Not always.

Let’s start with the obvious: AI-driven personalization is big business.

• According to Deloitte’s 2024 AI in the Workplace Report, 62% of L&D leaders have already piloted adaptive learning platforms (Deloitte, 2024a).

• Gartner’s Hype Cycle for Learning Technologies (2024) places AI personalization at the “peak of inflated expectations.”

• LinkedIn’s Workplace Learning Report (2024) identifies “AI-powered learning” as the #1 priority for L&D investment.

The promise is clear: AI can analyze learner data and build custom paths faster than any human designer. It promises scale, efficiency, and flexibility. And yet, many organizations discover that their “personalized” programs still fall flat. Completion rates stall. Engagement metrics hover. Culture doesn’t shift.

Why? Because AI personalizes content, not context.