Post production is one of the areas of our industry that most readily integrates with cloud production standards, adapts to remote collaborative techniques and takes on tasks based on artificial intelligence. We wanted to take a closer look at this area to find out what advances and bets are being made by two of the UK’s major post houses. We talked to The Finish Line and Evolutions. Inside this magazine, you will discover how important the adoption of cutting-edge technology is for them, both to expand the possibilities of content creation and to take care of their employees.

Within this interest in content creators in the UK, we show you in this issue of TM Broadcast International the particular case of Second Home Studios, an animation studio that has specialized in the creation of content through stop-motion techniques. This method, highly based on the creation of content, scenes and characters manually in a fully artisanal way, also has a lot to evolve thanks to technology.

In our tour of the UK, we have traveled to Scotland to find out first-hand what has brought Kelvin Hall to the attention of the international broadcast industry. The latest of the production centers developed by BBC Studioworks has been built on the most solid broadcast technology in the Scottish city of Glasgow. Don’t miss out on all its features; it has a lot to offer.

In an amazing geographical leap, we travel to New Zealand to meet Aaron Morton, one of the members of the prominent cinematography team involved in the first season of “The Lord Of The Rings: The Rings Of Power.” This is, to date, the most expensive series in history. We spoke to this professional to find out why, as well as to provide you with all the details and technical challenges of its visual production.

The productions we have already mentioned in this editorial, both film and television, are still based on workflows over traditional connections. Why do people still choose to renovate and build on these techniques? How can companies that offer solutions to the industry help to speed up this transition? Pebble and Zixi answer us.

The Finish Line is a post-production company that has recently been awarded as the best TV-related site to work at in the whole of the UK.

Evolutions is a British post-production company that has reached third place in the TV Producers Poll in Televisual’s Top UK Post Houses ranking.

The constant changes in the possibilities of content creation, as well as the new media needs to properly manage them throughout the various stages of the creation process, are key aspects in our audiovisual environment.

Technology inside Kelvin Hall

Grass Valley recently announced a new member to the Grass Valley Alliance. It is PlayBox Technology, a specialist in building OTT solutions for broadcasters and content owners. The company has agreed to join the alliance by making its OTT Stream CMS products and AVOD solutions available on the Agile Media Processing Platform (AMPP).

AMPP, designed by Grass Valley, is a SaaS platform for live production, management, and distribution that connects the entire content production and distribution chain.

“By including the PlayBox OTT Stream CMS as part of the GV Alliance, we can offer our clients the best in cloud-based solutions for their OTT and Ad Server workflows,” said Phillip Neighbour, PlayBox Technology’s COO.

“OTT systems provide broadcasters and content owners with numerous benefits, including wider reach, greater flexibility and control, personalized experiences, valuable insights, and cost savings. We are excited to offer our customers this opportunity to expand their operations and enhance their audiences’ viewing experiences.”

“As the Media and Entertainment industry transitions to more flexible, cost-effective workflows, we need well-integrated solutions across the production chain,” said Chris Merrill, Director of Strategic Marketing for Grass Valley. “By bringing PlayBox into the GV Alliance, we’re removing the expense and work of integrating systems from different vendors. Grass Valley and PlayBox are doing all the system validation so that our joint customers can be confident of getting a pre-vetted system that works from day one.”

AJA has recently announced that its Developer Partner, Time in Pixels, has delivered Nobe OmniScope support for the company’s desktop and mobile products.

Time in Pixels specializes in bringing information into the color workflow on any production. Founder Tomasz Huczek developed Nobe OmniScope, a software-based suite of scopes that helps creative professionals analyze a broad range of video sources and imagery with standard post production tools.

The AJA products already compatible with Nobe OmniScope are KONA 4, Io XT, U-TAP and T-TAP Pro. KONA 5 and Io X3 integrations are planned to support future customer demand.

Blackmagic Design announces the release of the Studio Camera 6K Pro. The camera solution features an EF lens mount, a larger 6K sensor for improved colorimetry and fine detail handling, ND filters and built in live streaming via Ethernet or mobile data. Advanced features include talkback, tally, camera control, built in color corrector, Blackmagic RAW recording to USB disks.

Simultaneously, the Australian company has announced the release of Blackmagic Studio Camera 4K Pro G2.

The 6K Pro camera is designed in a carbon fiber reinforced polycarbonate body which includes a 7″ HDR viewfinder. Advanced features include talkback, tally, camera control, built in color corrector, Blackmagic RAW recording to USB disks, and live streaming.

At a location outside the studio, the camera generates an H.264 HD live stream that is sent over the internet back to the studio. Only an internet connection via fiber, 4G or 5G is needed. No modem is required: users only need to connect their smartphone to the camera to give it a connection.

The sensors included in these cameras are combined with Blackmagic’s proprietary color technology, and the color corrector can be controlled from the switcher. The sensor offers 13 stops of dynamic range and an ISO of up to 25,600. All models support 23.98 to 60 fps.

With EF or MFT lens mount support, the cameras can be used with a wide range of photographic lenses.

The Blackmagic Studio Camera 4K Pro G2 and 6K Pro models have SDI connections that include talkback. The connector is built into the side of the camera and supports standard 5 pin XLR broadcast headsets. Other inputs and outputs are 12G-SDI, 10GBASE-T Ethernet, talkback and balanced XLR audio inputs. The 10G Ethernet allows all video, tally, talkback and camera power via a single connection.

Ateme announces that its 5G media streaming solution is now integrated into Amazon Web Services’ AWS Wavelength 5G Multi-access Edge Computing (MEC) infrastructure.

AWS Wavelength embeds AWS compute and storage services within 5G networks, providing mobile edge computing infrastructure for developing, deploying, and scaling ultra-low-latency applications.

5G MEC (Multi-access Edge Computing) is a network architecture that enables the deployment of applications at the edges of a 5G cellular network.

The integration was tested in a Wavelength zone within a tier-one operator’s network. This development enables the deployment of a complete 5G streaming and monetization platform including encoding, packaging, CDN and dynamic ad insertion (DAI) in a 5G MEC architecture.

Williams Tovar, 5G Media Streaming Solutions Director, Ateme, said, “After all the talk about 5G, the reality is even more exciting as it opens up innovative opportunities such as immersive content delivery in venues, and AR and VR content offerings that will be game-changing for service and content providers. AWS has an excellent solution with a global footprint, so we are excited to integrate our media streaming solutions into the AWS Wavelength 5G MEC architecture to create the first complete, low-latency streaming solution at the edge.”

During the first days of March, Olympic Broadcasting Services (OBS) held its usual meeting with the Media Rights-Holders (MRHs) for the Olympic Winter Games Milano Cortina 2026. This was an opportunity for the attendees to meet in person and visit selected competition venues and the International Broadcast Centre (IBC), while discussing the progress of preparations for the Games and the development of the broadcast operations and coverage plan.

The Milano Cortina 2026 Winter Olympic Games, after 20 years, are returning to Italy and will be held over an area of more than 22,000 square kilometers. This project involves two cities, Milan and Cortina, working in collaboration with the support of two regions, Lombardy and Veneto, as well as two autonomous provinces, Trento and Bolzano, to create an unforgettable event.

The broadcasters, in addition to visiting the facilities where some of the events will be held, will also visit the Milano Convention Centre, which will be transformed into the Main Media Centre (MMC) for the upcoming Games. During the Games, it will house the International Broadcast Centre (IBC), which will offer the OBS and the MRHs access to technical and production facilities, as well as various offices and services.

“The International Broadcast Centre for the Olympic Winter Games Milano Cortina 2026 will find its home in the MiCo exhibition complex, which stands out as one of the finest venues ever selected for this purpose,” explained Sotiris Salamouris, OBS Chief Technical Officer. “As the host broadcaster of the Games, OBS is thrilled to establish its base of operations there, and the Media Rights-Holders share our enthusiasm. A venue of such high quality is an essential component for ensuring the absolute success of the event. Broadcasters and content creators will have access to the best facilities for producing exceptional coverage of the Milano Cortina 2026 Olympics.”

OBS updated the broadcasters on its plans to showcase the world’s top athletes. The organization intends to provide MRHs with greater access to the athletes, including more behind-the-scenes content pre- and post-competition to enhance their multiplatform coverage of the Games. Furthermore, OBS provided them with a detailed technical framework that aims to facilitate their operations on-site and for their remote teams. As part of this framework, broadcasters will be able to rely on an extended range of cloud-based solutions.

Culture Club celebrated the 40th anniversary of the triple platinum album Colour by Numbers with a one-off concert in California on February 25. The band’s fans were able to enjoy it all over the world because it was streamed using Caton’s Live Stage solution.

Monogram Media and Entertainment captured the entire show in 4K Ultra HD, including a short set by Berlin before Culture Club performed for close to two hours. Live Stage ensured distribution of the show from the production output to every venue around the world. Many markets, including Japan, received the feed live, while others, like Europe, had a time-delayed stream.

“We are really excited that the technology has finally arrived which allows us to live stream our music around the world, giving our fans a great experience, nearly as good as being there in person,” said Boy George, Culture Club’s singer.

The tool makes it possible to live stream the event with digital cinema quality and low latency. This performance is obtained with AI-based intelligent routing from Caton. The cloud architecture, known as C3, uses smart traffic-engineering algorithms to generate multiple routes with self-optimising capabilities between the source and destination.

“With Caton Live Stage, we’re changing the paradigm for digital cinema, broadcast, and OTT. Our AI smart traffic technology and completely autonomous switching ensure users are guaranteed complete reliability and real theatrical quality over commodity business broadband,” said Gerald Wong, Senior Vice President at Caton Technology. “The system is easy for the cinema or venue to deploy, with the decoder configured remotely, making it a simple plug-and-play solution. This is a game-changer for the entertainment industry, providing venues with a new way to offer high-quality music and sports to audiences and operators with valuable sources of revenue.”

V-Nova has announced its collaboration with Globo Mediatech Lab in the delivery of an LCEVC-enhanced stream for the 2023 Carnival in São Paulo and Rio de Janeiro, Brazil.

The live stream was configured in conjunction with existing AVC/H.264 transmission to demonstrate the benefits of MPEG-5 LCEVC in live streaming applications.

The streaming trial featured a MainConcept LCEVC-enhanced H.264 live encoder creating a ladder of 6 profiles peaking at 1080p29.97 @ 4500 kbps. This was compared to the incumbent AVC/H.264-only channel of 8 profiles, where the top profile was 1080p29.97 @ 5500 kbps.

The stream used HLS with 6-second TS segments and was delivered via AWS CloudFront. Playback was available on a demonstration website using Shaka Player v4.3, which natively includes an LCEVC decoding option.

The LCEVC-enhanced H.264 delivered a high quality output with a ~40% bandwidth saving compared to the existing H.264-only stream.
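As a rough illustration of the figures quoted for the trial, the following Python sketch compares only the top rung of each ladder and estimates the media payload of one 6-second HLS segment at those bitrates. It assumes constant bitrate and ignores container overhead, and the function names are illustrative. The overall ~40% saving reported for the trial cannot be derived from these two numbers alone, so it is not reproduced here.

```python
# Figures quoted in the article: top rung of each ladder, in kbps.
LCEVC_TOP_KBPS = 4500   # LCEVC-enhanced H.264, 1080p29.97
AVC_TOP_KBPS = 5500     # incumbent H.264-only channel, 1080p29.97
SEGMENT_SECONDS = 6     # HLS TS segment duration


def top_rung_saving(enhanced_kbps: float, baseline_kbps: float) -> float:
    """Fractional bitrate reduction of the enhanced rung versus the baseline."""
    return (baseline_kbps - enhanced_kbps) / baseline_kbps


def segment_megabytes(kbps: float, seconds: float) -> float:
    """Approximate payload of one segment in megabytes (1 MB = 10**6 bytes).

    Assumes constant bitrate and ignores transport stream overhead.
    """
    return kbps * 1000 * seconds / 8 / 1_000_000


if __name__ == "__main__":
    saving = top_rung_saving(LCEVC_TOP_KBPS, AVC_TOP_KBPS)
    print(f"top-rung bitrate saving: {saving:.1%}")
    print(f"LCEVC segment: {segment_megabytes(LCEVC_TOP_KBPS, SEGMENT_SECONDS):.3f} MB")
    print(f"AVC segment:   {segment_megabytes(AVC_TOP_KBPS, SEGMENT_SECONDS):.3f} MB")
```

On the top rung alone the reduction is about 18%, which suggests the larger reported saving reflects how the whole ladder behaved in delivery rather than the peak profile.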

Guido Meardi, CEO at V-Nova, said, “The Carnival is as beautiful to watch as it is challenging to compress at high quality. We are proud of our collaboration with Globo, which proves the tangible benefits of LCEVC for enhancing resolution and picture quality in large-scale live events while improving efficiency in both the CDN and backend. Critically, these real-life trials demonstrate that this new technology can be deployed today with broad encoder and decoder support available.”

Deacon Johnson, SVP & General Manager at MainConcept said, “We are pleased to continue the work we started in 2022 with V-Nova and Globo. MainConcept values our partnerships with industry leaders, such as V-Nova and Globo, who share our commitment to the quality, reliability, and performance we demand in our products.”

ATG Danmon has recently shared one of its TV and radio system modernisation projects for a London-based university.

The company has partnered with the university’s management in selecting new elements of the system and advising on how best to integrate these into the creative workflow. At this university, students can learn about film, fine art and media, digital arts and communications, games design and animation, music, theatre and dance, creative writing, cultural and heritage studies, journalism, advertising and performing arts.

Among new additions to the video infrastructure are a UHD video production switcher with NDI IP connectivity as well as 6G-SDI and HDMI interfaces. The system is capable of supporting up to 10 wired or wireless cameras plus three graphics tracks. It also incorporates storage for up to 20 hours of broadcast quality video and audio. Creative facilities include live switching, chroma key compositing and text management plus the ability to perform live streaming.

Also integrated are UHD monitors plus an IP-interfaced 40-input digital audio production console. This is augmented by intercoms between studio and production gallery plus wireless microphones and wireless in-ear monitors.

The radio hub is equipped with an HD-SDI production switcher including 4 SDI and 10 NDI inputs. The switcher sources from 16 1080p NDI-interfaced remote pan/tilt/zoom cameras. Audio is handled through a 12-channel digital mixer with 16 audio-over-IP channels accessible via two Ethernet ports or 64 MADI inputs via fibre.

Red Bee Media has recently announced that it has signed a multi-year agreement with Radio-télévision Belge de la Communauté Française (RTBF), the public broadcaster for the French-speaking community in Belgium.

The objective is to power its recently relaunched Auvio platform through Red Bee Media’s managed OTT services with live TV and radio, Audio on Demand (AOD), and Video on Demand (VOD) capabilities.

As part of the project, Red Bee Media is partnering with Dotscreen, a multiscreen user interface, app design and development agency, to improve Auvio’s user experience with UI solutions.

RTBF was looking to revamp its streaming platform and deliver new capabilities while ensuring integration with its existing technology infrastructure. It needed a partner to provide a managed solution with end-to-end responsibility for delivery, storage, and management.

Harnessing a range of OTT capabilities via the Red Bee Pulse service, RTBF delivers live and on-demand content to Auvio users on a wide variety of online platforms and applications, including web browsers, iOS mobile, Android mobile, Tizen, and soon Android TV, LG smart TVs, Sony PlayStation and Apple TV. Using Red Bee Media’s Software Development Kit (SDK), Dotscreen refreshed all Auvio user interfaces across end-user devices.

“Live streaming and top-quality video and audio content are at the core of our digital offering. With Red Bee Media, we achieved record-breaking audience levels on our streaming service during the 2022 FIFA World Cup,” says Cecile Gonfroid, Technology Director, RTBF.

“Red Bee Media’s proven broadcast-grade solution for live and on-demand streaming, combined with Dotscreen’s intuitive and user-centric frontend design proposition, perfectly aligned with what we were looking for. We’re excited to continue innovating and have the right partners to implement our vision.”

Ad Insertion Platform (AIP) and Tiledmedia announced a partnership agreement to insert overlay ads into the video content of ad-supported video-on-demand (AVOD) and free ad-supported streaming TV (FAST) streams.

The idea is to reduce the number of traditional ad breaks without sacrificing monetization, by replacing them with overlay ads. Users can keep watching their content while a suitable ad appears in a corner of the screen or in a configurable location thanks to Tiledmedia’s Multiview technology.

The insertion workflow is automated via the Ad Insertion Platform’s SSAI technology, both for on-demand and live video. Ad overlay inventory is inserted automatically into the tech stack.

“Personalised Multiview Advertising is a game-changer in the video streaming industry,” said Frits Klok, CEO of Tiledmedia. “Our partnership with AIP has resulted in an innovative solution that combines the power of Tiledmedia’s Multiview streaming with AIP’s personalised advertising expertise. This advanced advertising solution provides a new revenue stream for service providers while offering a more engaging and interactive experience to customers.”

Laurent Potesta, CEO, Ad Insertion Platform, added: “Automated non-interruptive advertising creates a great complementary option for brands to connect with audiences nowadays with a high level of viewer acceptance. Without interrupting the viewer experience, an advertiser can contextually target its audience in an innovative and non-intrusive way.”

MultiChoice Group owns Showmax, a streaming service with a footprint in 50 markets in sub-Saharan Africa. The group has recently reached an agreement under which it will relaunch the platform, powered by NBCUniversal’s Peacock technology, along with world-class content from NBCUniversal and Sky. The relaunch will build on Showmax’s success to date and aims to create the leading streaming service in Africa.

Showmax subscribers will have access to a premium content portfolio, bringing African audiences the best of British and international programming.

The service will combine MultiChoice’s accelerating investment in local content, content licensed from NBCUniversal and Sky, third party content from HBO, Warner Brothers International, Sony and others, as well as live English Premier League (EPL) football.

“We launched Showmax as the first African streaming service in 2015 and are extremely proud of its success to date. This agreement represents a great opportunity for our Showmax team to scale even greater heights by working with a leading global player in Comcast and its subsidiaries,” said Calvo Mawela, Chief Executive Officer of MultiChoice. “The new business venture deepens an already strong relationship and builds on the Sky Glass technology partnership that we announced in September last year. We believe we are extremely well positioned to create a winning platform going forward.”

Matt Strauss, Chairman, Direct-to-Consumer & International, NBCUniversal, added, “This partnership is an incredible opportunity to further scale the global presence of Peacock’s world-class streaming technology, as well as to introduce millions of new customers to extensive premium content from NBCUniversal and Sky’s stellar entertainment brands.”

Chicken Soup for the Soul Entertainment Inc. is a US-based content provider and KC Global Media is a multichannel and network entertainment operator from Asia. The two companies recently entered into an agreement with the goal of expanding internationally. They will do so with free ad-supported streaming TV (FAST) and ad-supported video-on-demand (AVOD). The companies plan to launch additional FAST channels and license AVOD rights in 2023.

This strategic agreement strengthens Chicken Soup for the Soul Entertainment’s international presence as it joins KC Global Media’s portfolio, through AVOD licensing and the launch of FAST Channels in Asia.

Andy Kaplan, Co-Founder and Chairman of KC Global Media, said, “As we head into 2023, one of our key strategic goals is to increase our volume and diversity of premium content in our current portfolio. Chicken Soup for the Soul Entertainment is one of the largest content providers, and this strategic partnership gives us an edge as we provide more value to our partners and affiliates in the region. This, in turn, will also enable us to reach out to new audiences and new territories and create more opportunities around the world as we continue to grow our business with our affiliates and streaming platforms.”

“One of our areas of focus in 2023 is to grow the availability of our owned content globally – and monetize it in every way possible,” said Elana Sofko, Chief Strategy Officer of Chicken Soup for the Soul Entertainment. “We always seek to work with prominent media companies already successful in the markets we are entering. KC Global Media is a company with deep relationships throughout Asia, and we know we can continue to scale our international business together.”

The Finish Line is a post-production company that has recently been awarded as the best TV-related site to work at in the whole of the UK. Despite what we might traditionally think, the company does not provide entertainment spaces in the office, nor does it have luxurious and comfortable post-production facilities in its work center. This company, made up of almost 30 high-profile professional creatives, deserves this title because it cares for the worker above all else.

In an industry where times (and budgets) are getting smaller and smaller, and workloads bigger and bigger, The Finish Line, led by our interviewee Zeb Chadfield, has managed to ensure that the people in its charge are not overwhelmed by 12-hour workdays, can enjoy their private lives and, at the same time, get a high-level job done. Read on to discover that technology has played a big part in this ability.

By Javier Tena

I was working at a large London post-production company when I suffered a mental breakdown. What led me there had to do with the fact that budgets were getting tighter and tighter, and that meant doing the same tasks in less time. In addition, as we were cutting back on investment, we were also cutting back on the tools we had at our disposal to get the job done. No time or training was ever invested in our development as creatives; we were just ordered to get the job done. I worked a minimum of 14 hours a day, and very often 17 or more.

This caused me to start to think more about how post production was done and whether there was potentially a healthier and better way to do it. I never stopped loving post, but the approach was making it impossible to do work to my standards and to have a life outside of work. I should be able to be a passionate creative person, but also have friends, a family and other things that give my life value. This was the idea.

With a lot of thought, I realized that apart from talent, the only thing a creative like me needs is to have the best software and hardware available. The first thing to invest in was a high quality grade one reference monitor and with that I started my career again, by myself, because that was The Finish Line at the beginning: me trying to get my health back while trying to limit my work load.

is today?

It was just me and now, nearly 12 years on, it’s just under 30 people. We are well known for our healthy approach to post production, putting our talent first and looking after them so they can do their best work. We have been recognised as the best place to work in TV in the UK in 2023 and also received other awards for leadership.

We handle end-to-end post production services, but our main services are picture finishing and delivery. We have the largest team of Finishing Artists in the country and work to deliver to the highest standards, so we are very focused on technology like HDR with Dolby Vision and high-end mastering monitors like our favourites from Flanders Scientific, which allow us to deliver up to 4,000-nit HDR masters.

On the side of the tools we use, the truth is that everything has slowly evolved to make Blackmagic’s DaVinci Resolve the most complete tool in picture finishing. So we don’t jump from one software to another as much anymore. Resolve, in its development, became more accessible and they have continued to add more features to make it much more useful.

The combination of high-end color management tools, with capabilities accelerated by neural engines and integrated with Dolby Vision, Dolby Atmos and all the best mastering formats, offers the best solutions for us at this very moment. Being able to work with end-to-end camera originals and devise intelligent workflows with Dolby Vision has allowed us to make a big name for ourselves in the HDR finishing and delivery space.

How much added value does technology bring to The Finish Line?

Technology is very important to us, but we look at it through a different lens. We look for technologies that make life easier for our employees so they can spend more time with their loved ones, or that allow us to improve the quality of the images we produce. It’s often both: the tools and technology that allow us to have a better, more balanced life also allow us to do higher quality work.

Is it really possible to get the best results in your work and, at the same time, promote a healthy way of working among employees? Because, it really seems impossible!

Yes, it is. We decided to start planning projects around 8-hour workdays: more, shorter days rather than a few excessively long ones. That was the first thing we did. It’s hard to believe, but the truth is that grading shifts are often around 12 hours. And that’s really inefficient, because with so many hours spent in the suite, there comes a point where you don’t think clearly and you end up needing even more than 12 hours. Then you come in the next day and have to do it again. That can only last so long.

Sometimes the shorter workdays are difficult to maintain. In fact, more than once with certain projects, we suddenly see that the time planned was not enough to get the job done, often due to assets coming in late from one place or another. When we see that happening we sit down with the team and rework the costs and schedule to keep things moving forward in a healthy way. If we are up against a broadcast date or time, that can mean throwing a lot more resources at it to get it on air, and then we need to look at what could have been done differently. This is a management failure and we need to be sure to learn from it so it can be avoided in future.

In addition to time management and organization, we have also devoted special attention to technology. We have observed its evolution and have adopted new technology always keeping in mind how we can make it possible for our employees to spend more time with their loved ones while improving the quality of the work.

Going deeper into this topic of technology and its evolution, do you think that the evolution of technology has taken into account this very human factor?

I think for the most part yes. The question is whether companies will decide to use it to improve the lives of the people they work with or for profit. Probably the majority of businesses will favor profit.

But it’s very important to look at technical evolution with a people-centric lens. Because no matter how smart the tools are, you will always need to combine them with creative talent to get the most out of them. I’m of the opinion that an AI can get the job done for you, but if you really want to get the best result, you’ll need a person as well as the AI.

So, technology is allowing us to do more with less effort. For example, Resolve has an AI-based depth tool. It will create a depth map based on an algorithm. You can use it to change the focus of the image or even to make a text appear behind someone, or use the object recognition to select a person or a car and use it to blur or obscure something. Before these tools we could spend a day or two rotoscoping. Now, all of a sudden, it’s done. In fact, in addition to the way technology gives us the ability to do more things with less effort, it also frees up talent, because it is no longer necessary for one of our artists to spend three days doing those minor jobs. They can focus on adding more value to the project and really harnessing their creativity.

What are the technological challenges you usually face in your projects?

The main challenge for us with the kind of projects we are involved in, especially UHD and HDR, is that everything revolves around the speed of storage and computers. Also, it doesn’t help getting stuck waiting on master deliveries for final GFX or archive. However, these are things that are beyond our control. Regarding the former, as long as the equipment is up to par, higher resolutions are less of a problem, so we invest a lot in fast storage and systems.

Developing workflows in which we don’t have to convert files to lots of different formats or move them between different tools is essential to be able to work efficiently. Equally important is working with camera originals and having them available at all times. For example, our editorial team can conform all media through DaVinci Resolve, whether they are working in Final Cut, Resolve, Avid, Premiere, Lightworks or any other program. Our conforming process, regardless of how you edit, takes between 10 and 15 minutes, which is a huge difference when compared to the alternatives.

In our case, instead of having to generate different files in different phases, we can work with a nested version of the timeline, i.e. work with a reduced version of the timeline, but still containing everything within it. That means we can do reversioning, remastering or international versioning at the same time. In fact, we do quality control and fixes on that original timeline, so everything we correct is corrected automatically within all the other versions.
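The propagation described above can be pictured with a small Python sketch. It assumes only that each version holds a reference to the original timeline rather than a copy. The class and field names are hypothetical and say nothing about how DaVinci Resolve models timelines internally.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Union


@dataclass
class Clip:
    """A single shot; fixes made during QC are recorded on the clip itself."""
    name: str
    fixes: list[str] = field(default_factory=list)


@dataclass
class Timeline:
    """A timeline whose items are clips or other timelines, held by reference."""
    name: str
    items: list[Union[Clip, Timeline]] = field(default_factory=list)

    def flatten(self) -> list[Clip]:
        """Return every clip in playback order, expanding nested timelines."""
        clips: list[Clip] = []
        for item in self.items:
            if isinstance(item, Timeline):
                clips.extend(item.flatten())
            else:
                clips.append(item)
        return clips


if __name__ == "__main__":
    # Hypothetical project: one original timeline nested inside two versions.
    master = Timeline("master", [Clip("shot_010"), Clip("shot_020")])
    uk = Timeline("uk_broadcast", [master])
    intl = Timeline("international", [master, Clip("textless_end_card")])

    # A QC fix is applied once, on the original timeline only.
    master.items[0].fixes.append("dead pixel paint-out")

    for version in (uk, intl):
        print(version.name, [(c.name, c.fixes) for c in version.flatten()])
```

Because both versions point at the same original object, the fix shows up in each of them without being applied again.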

You mentioned that you develop your content in HDR, how do you manage the different copies in different color standards?

Dolby Vision is the best for that. If you treat your HDR master as primary, you can generate any version, even a theatrical version with Dolby Vision. It’s fantastic. We just have to finish a piece of content in the correct HDR standard and then we can output it into any deliverable format. It’s an automated process, really, where you just have to do minor manual adjustments to make sure everything comes out as intended. If you’ve done a good HDR finish, the treatment you’ll have to give to the SDR version will be minimal. However, if the HDR finishing was not good, the automatic conversion process will not produce a great result. We do a visual pass to see if there are any strange flashes or cuts, correct what needs to be corrected and that’s it. As mentioned earlier, using this Dolby technique, we don’t have to generate different grade passes for each version. It is enough to do the primary version and use the metadata method to generate what’s needed for the different formats; the quality it produces is very good. I think aesthetically it’s even better than if we did each pass manually, though this is a personal opinion.

Remote production techniques, as well as post-production, have changed the landscape of our industry. They became commonplace during the COVID-19 pandemic. What workflows did you modify then? How did the technology help you adapt them? Which workflows have remained today?

We developed and started running virtual edit suites back in 2014, so fortunately we were well positioned for the remote working approach. The main thing that changed over the past three years is that clients’ understanding of this shifted, and it’s more common for production companies to take us up on lower rates for remote work because they know it can be done and trust us to deliver to the highest standards.

Regarding tools, which ones do you use to solve the challenges associated with remote post-production?

We rely on LucidLink. It works like a shared storage system within a traditional facility, but it’s cloud-based, and it’s very capable. You can even insert a change into a file, and it will only upload the changed bit of data inside the file.
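The idea of uploading only the changed part of a file can be illustrated with a minimal sketch. It is not LucidLink's implementation, which is not described in this interview; the fixed block size and the function names `block_hashes` and `changed_blocks` are assumptions made purely for illustration.

```python
import hashlib

BLOCK_SIZE = 4  # hypothetical and deliberately tiny so the demo is readable


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Split the data into fixed-size blocks and hash each block."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the indices of blocks in `new` that differ from `old` or are new."""
    old_hashes = block_hashes(old, block_size)
    new_hashes = block_hashes(new, block_size)
    return [
        index
        for index, digest in enumerate(new_hashes)
        if index >= len(old_hashes) or digest != old_hashes[index]
    ]


# Only the block containing the edit would need to be uploaded again.
original = b"AAAABBBBCCCC"
edited = b"AAAAXBBBCCCC"
print(changed_blocks(original, edited))
```

In this toy case only one of the three blocks differs, so a block-aware system would transfer a third of the file rather than all of it.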

On the other hand, regarding shared editing, our team members can work in shared timelines. To do this, we use the features provided by DaVinci Resolve. To give you an idea of its capabilities, if one of our colorists is working on a clip, it locks that clip for everyone else so you can have multiple colorists on the same timeline.

In addition, Blackmagic has now released cloud libraries with Blackmagic IDs. It gives us the ability to work from multiple locations on a shared project and timeline.

We can divide up the tasks as much as required. One person can be subtitling, others working on color, etc. It allows us to be much quicker when required on a fast turnaround project.

I think we’re in a space right now where there are not going to be big technology leaps. AI and ML-based tools will continue to enable more functionality, but ultimately the revolutions are inspired by users and consumer product demands. The next big thing may be just around the corner, but there is nothing obvious that is going to be a systemic change in the way things are done in the short term.

However, I do think the industry is going to change. A lot of creatives are now in a pretty strong position to say what they want to do. In that sense, what you see is that there’s little interest in going back to big facilities. In fact, we all proved that you can do the same work from home. So they’re in the position of saying “I’m going to do it from home. If you don’t want me to do it at home, then I’ll work another job.”

The question here is clear, then: will the traditional facilities vanish? I think they probably won’t. There will be a need for creative hubs where the different parts of the production teams come together from time to time. But traditional facilities, whose viability is based on providing as many services as possible under one roof, might become more of a WeWork-type model, where you can hire the right sort of creative space but all the resources are virtualised. This is already changing to a scenario where more and more professionals are working in a distributed way and you will get a better result by working with specialists in each field. Producers choose who they would like to work with at any given time and it won’t matter where they are. Even in the highest level productions, which always prefer that everyone works from the same systems for security reasons, they can already make that system adapt to the distributed production models we are talking about. So, hypothetically, a large streaming service would just give limited access to specific tools to various creatives, wherever they may be, to do their bit of the job, and then revoke access when that bit is done. Componentized talent services, perhaps?

Actually, the only thing holding back all this development is the mindset of the people making the decisions.

One hundred percent. When I set up the company, it was designed exactly the way it works now, but no one was willing to work that way when we tried to sell it to them. We ended up pretending that we were doing everything the same as everyone else, but in the background we were relying on all the virtualized systems like edit suites, cloud technology and glacier storage.

To conclude, will we come to rely solely on machine learning and artificial intelligence for post-production?

I am a bit of an optimist. I do think it will just create more content rather than reducing jobs. And I believe this because I maintain that the best content is created by the best talent and the best technology when combined. AI can write a perfect text, a text that gives you what you are looking for, but it doesn’t give that text your personality based on your life experience. That’s the gold dust you need to sprinkle on top and that’s why cooperation between these tools and human talent will always be necessary to elevate the creative output to the highest level.

Post-production with an eye on the future

Evolutions is a British post-production company that has reached third place in the TV Producers Poll in Televisual’s Top UK Post Houses ranking. We spoke with Will Blanchard, Head of Engineering at Evolutions Bristol, to find out what this post house is focusing on among the technologies currently being developed in the industry. You will learn in detail what this company is emphasizing in terms of investment and infrastructure for remote post-production workflows, cloud and artificial intelligence.

How was Evolutions born? How has the company grown from what it was to what it is today?

Evolutions launched following a management buyout from ITN in 1994. With a broadcaster’s attitude to technical standards and an independent facility’s approach to client care, Evolutions quickly became one of the most popular and successful post production companies in London.

Another management buyout in 2004 was the precursor to a period of growth and expansion, leading, in March 2006, to the acquisition of Nats Post Production, another of Soho’s most popular full-service post houses. 2008 saw continued growth with the opening of a further Soho facility in Great Pulteney Street, and in June 2013 Evolutions opened its first regional facility in the UK in the heart of Bristol’s media district.

In April 2014, a brand-new, purpose-built facility in Sheraton Street, in central Soho, was opened. Further expansion in September 2015 led to the opening of Berwick Street, a beautiful 30-suite facility in the heart of Soho.

Evolutions is now one of the largest independent, full-service post production companies in the UK across its two city sites. Evolutions Bristol has now been part of the South West’s production and post-production community for ten years, and has grown from a small facility with one Colourist, one Dubbing Mixer and a handful of offline suites to one of the region’s most respected HETV facilities offering creative talent. We have a credit list ranging from blue chip natural history to drama and animation.

In 2023 Evolutions were ranked 3rd in the TV Producers Poll in Televisual’s Top UK Post Houses.

Their services encompass workflow consultancy including complex VFX work, in-facility, remote and hybrid offlines, UHD HDR Baselight grading, Flame Online suites and Dolby Atmos Audio.

How much added value does technology bring to Evolutions?

This is a tricky question to answer. In terms of value specific to Evolutions we don’t believe we will stand out because of our technology. We believe that it is assumed that every post production facility can obtain the same technology, so the way we stand out is by being operationally and creatively excellent. That said, obviously we wouldn’t be able to function without technology. It’s difficult to put a value on it, though.

What are the house specialties?

High end television (HETV) post-production in natural history, documentary, drama, etc.

What recent projects has the company been involved in?

I would highlight titles such as “Epic Adventures” (Wildstar, Disney); “Serengeti S1,2,3” (John Downer, BBC/Discovery); “Lloyd of the Flies” (Aardman/CITV); “The Doghouse” (FiveMile Films, Ch4); and “Gangs of Baboons” (True to Nature, Sky Nature).

What have been the most challenging and why? What has technology brought you to solve these challenges?

“Gangs of Baboons” was our first UHD HDR delivery that included sound in Dolby Atmos. We upgraded one of our 5.1 audio suites to accommodate 7.1.4 Dolby Atmos. The most challenging aspect of this was to meet the Dolby specification for speaker placement. We already had 5.1 but some of those speakers needed to move slightly and we needed to move the listening position. Then, obviously, we had to add more speakers. Once the monitoring was all in place we upgraded the audio IO to an Avid MTRX with suitable option cards to connect to a Dolby RMU, to be able to process and render out Dolby Atmos content.

We have also bought in Autodesk’s Flame product to overcome some of the colour pipeline issues we were having in picture finishing.

Technology is evolving very fast. Postproduction techniques are not far behind. What are the evolving trends in technology that Evolutions has identified and is betting on?

In general, everyone seems to be centralising compute power. Whether or not it’s cloud, data centre or on premise MCR, moving everything centrally solves a few challenges and opens up other technology opportunities. Having all the compute in one place can make some of the remote solutions easier to manage. It also helps if you want to start virtualizing workstations and servers. We have embraced virtualization. For us, the main advantage is security, backup and recovery.

In terms of production, we are seeing increasing engagement with VFX. This is often handled by outside companies, as the production company usually has a preferred supplier (although Earth is our in-house visual effects arm). The challenge is that, with UHD HDR, even sending small clips/plates to a visual effects studio can increase the amount of data that needs to be transferred. Along with the files being bigger, the amount of VFX work per episode has increased. We have expanded our finishing support department and increased the number of workstations and network speed to enable them to process plates and shape visual effects more efficiently.

HDR and UHD (4K), or later (such as 8K), have already become a production standard. How has Evolutions adapted to the demands of these new formats?

There are a few aspects that are challenging when moving to the high-end workflows.

The obvious one is that everything needs to be bigger and faster. Storage needs to be bigger and faster, networks need more bandwidth, workstations need more power. These are all the obvious challenges anyone needs to overcome. In the natural history space we have a fairly unique challenge, as the amount of footage captured by a production is massive. Due to the nature of the production stage it is not easy to predict how much footage will come to us. But with an average shooting ratio of around 400:1 we end up with a lot of data, in the region of 1-2PB per series. The time from receiving the first rushes to delivering the final programme can sometimes be measured in years, so we need to take that into consideration when specifying storage and workflow.
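As a rough illustration of how a shooting ratio turns into storage, here is a minimal sketch. Only the 400:1 ratio and the 1-2PB range come from the interview; the series length (300 finished minutes) and the average camera data rate (500 GB per hour) are hypothetical values chosen for the example.

```python
def footage_hours(finished_minutes: float, shooting_ratio: float) -> float:
    """Hours of rushes implied by a finished running time and a shooting ratio."""
    return finished_minutes * shooting_ratio / 60


def volume_pb(hours: float, gb_per_hour: float) -> float:
    """Storage in decimal petabytes for a given amount of footage."""
    return hours * gb_per_hour / 1_000_000  # 1 PB = 1,000,000 GB


# Hypothetical series: 6 episodes of 50 minutes, shot at 400:1.
hours = footage_hours(finished_minutes=300, shooting_ratio=400)
print(hours, volume_pb(hours, gb_per_hour=500))
```

With these assumed inputs the sketch gives 2,000 hours of rushes and 1 PB of source material, which sits at the lower end of the range quoted above.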

We have put in data centre grade storage with a 100G network backbone, which should future proof us for a time. We are also in R&D on the next generation of storage infrastructure, looking to make things more efficient, cost effective and faster.

Archive is another issue we face. When creating multiple copies for archive, 1PB of source material can quickly become 3PB of data to write, which is a huge amount. We have had to double our LTO capacity as well as move up a generation in drives/tapes to accommodate faster speeds and higher capacity.
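The archive arithmetic can be sketched as follows. The 1PB source and three copies come from the answer above; the tape capacity (18 TB), drive speed (400 MB/s) and number of drives (4) are assumed placeholder values, roughly in line with nominal figures for a recent LTO generation, and are not taken from the interview.

```python
import math


def tapes_needed(data_tb: float, copies: int, tape_capacity_tb: float) -> int:
    """Number of tapes required to hold every archive copy."""
    return math.ceil(data_tb * copies / tape_capacity_tb)


def write_days(data_tb: float, copies: int, drive_mb_per_s: float, drives: int) -> float:
    """Days of continuous writing, assuming every drive sustains its nominal speed."""
    total_mb = data_tb * copies * 1_000_000  # 1 TB = 1,000,000 MB
    seconds = total_mb / (drive_mb_per_s * drives)
    return seconds / 86_400


# 1 PB of source material (1,000 TB) archived as three copies.
print(tapes_needed(1000, 3, tape_capacity_tb=18))
print(round(write_days(1000, 3, drive_mb_per_s=400, drives=4), 1))
```

Real-world figures will be lower than this ideal case, since drives rarely sustain their nominal speed for weeks and verification passes add time.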

The HDR colour pipeline is infinitely more detailed than SDR. Even at delivery stage there are many choices of specification. This is dictated by the delivery platform. Therefore we must be geared up to deal with every possible iteration. It’s fairly standard for delivery versions to enter the 10s (per episode), including HD SDR, UHD SDR and UHD HDR, and within the HDR deliveries sometimes multiple different colour spaces for each delivery. Gone are the days when Rec. 709 was the only flavour you had to worry about. From a workflow perspective, we work with a master version of a programme that can deliver all the different ‘flavours’ at the end of the creative process. This has meant we don’t have to repeat work in different colour spaces. Along with the workflow we have also had to accommodate a lot more HDR monitoring so more people can be involved in the process along the way. We now accommodate UHD HDR monitoring in all finishing stages, grade, online and audio (Dolby Atmos), as well as for the technical support teams that assist all these processes.

We have added Autodesk Flame to our picture finishing tools. This enables us to utilise a completely native workflow throughout, allowing us to preserve the highest quality images.

We have also added MTI’s Cortex platform which does a number of things but helps us with QC and delivery of UHD HDR content.

Remote production techniques, as well as post-production, have changed the landscape of our industry. They became commonplace during the COVID-19 pandemic. What workflows did you modify then? How did the technology help you adapt them? Which workflows have remained today?

We have decided to move a lot of infrastructure to a data centre. This not only gives us some benefits in terms of scale, but allows us to share work efficiently between our physical sites in Bristol and London. Clients have become used to working from home, which has meant that anyone’s geographical location is fairly irrelevant. That’s such a good thing, allowing production to put together really strong teams, using talent they might not have used before as they might have been too far away from the rest of the production. Even though a lot of people are ‘back in the office’, remote technology is still very much needed. I think a real positive to come out of the pandemic is that we are now set up to facilitate this flexible working, which allows production to draw on talent they might not have been able to use previously. Not only can clients work from home, but a Bristol-based production can have editors working on the same project in our London facility, and vice versa. So we can accommodate distributed production teams in a way that suits them. We manage to do this through our high-speed network infrastructure between sites, as well as equipment in our data centre.

We also have systems to be able to review and sign off content remotely, again from home or sitting in an edit suite in London reviewing work (live) that is happening in Bristol. This helps clients with distributed teams be more efficient with time, and reduce their carbon footprint by limiting the amount of travel needed.

What capabilities does the introduction of the cloud bring to your workflows, and do you see it as an advantage?

Cloud technologies are interesting from a technology point of view. Obviously the most attractive thing is the fast scale and de-scale, allowing companies to accept work beyond their physical technology infrastructure. The challenge we face is the cost. Dealing with the amount of data we do, the ingress, egress and storage charges make the cost prohibitive. Each project we work on now commonly goes into PB of storage, with a project lifespan of 1-3 years, taking the bill for storage alone into the millions, whereas buying the physical storage costs a tenth of that. Even when you take into consideration power and space, it’s still not attractive in that domain.

If we do manage to get over the cost hurdle, we also have to consider getting uncompressed video at 12bit 4:4:4 into monitors in our finishing suites. To make best use of cloud infrastructure we have to put the compute element of our system in with the storage to give us the best efficiencies and performance. While lots of software manufacturers are doing a lot to make their products work well in the cloud, there are still some challenges to overcome on the video monitoring side. Lots of people are using NDI successfully but we want to aim for the highest quality possible. Currently we are viewing UHD HDR 12bit 4:4:4 in our finishing suites; to move to a compressed video format like NDI would be a step back in my opinion. While we have adopted NDI elsewhere, in our finishing suites I don’t think it’s appropriate. SMPTE 2110 may provide the answer, but this, along with the storage costs and other technical challenges, has led us to keep an eye on the cloud technology space while stopping us from jumping in with both feet at this stage.

We use the cloud in other ways, often with 3rd party SaaS platforms for some data transfers into and out of our on-premises infrastructure.

We also (being an Albert affiliate) consider the environmental impact of using cloud infrastructure. All of this comes into our decision making when deciding on how to deploy technology, including cloud infrastructure.

What are the next steps Evolutions will take and what technology will it rely on to take them?

Moving into the future we are looking at our infrastructure and how we best plan for change. The only thing certain in technology is that it will change. The goalposts for our clients will continue to change. So whatever you plan for today may become redundant tomorrow. We want to create a flexible infrastructure that can be scaled and accommodate a number of scenarios. More specifically we’re investigating technologies to centralise compute power. This helps solve a lot of challenges.

What do you think could be the next big technological revolution in the post-production industry?

We are starting to use more and more AI tools. I think there is a space for AI to add value to post production in providing higher quality conversion and manipulation. We deal with a lot of upscaling and standards conversion for archive footage, which sometimes has to sit, creatively, amongst newly shot UHD HDR footage. We already have some high end software tools and hardware for this. Last year we started using AMD’s Threadripper platform for noise reduction (in picture), which increased the speed of processing around eightfold. So we have really powerful hardware. The next step for us is to see what value content-based AI can give us. We occasionally come across footage that will cause a traditional algorithm-based process to fall over and create unwanted artifacts. I’m really excited to see what will happen when the process moves over to content-based processing.

The constant changes in the possibilities of content creation, as well as the new media required to manage them properly throughout the various stages of the creation process, are key aspects in our audiovisual environment.

We are now used to handling virtually all of our information in digital media: from a simple text or voice message to the large volumes of information that quality images require, especially when it comes to audiovisual content.

From the standpoint of a generic user, disregarding everything that is behind such storage is the usual thing. But from a professional point of view, it is part of our responsibility to know as much as possible in regard to everything that is related to our tools so as to make, as always, the most of them.

When discussing “media storage” or more specifically in our sector “audiovisual content storage”, we do not intend to delve into brands or models, but into the qualities and features of said storage, highlighting those that are critical for our creation process, from camera capture to broadcasting and long-term archiving.

It is not an exact figure, but at the beginning of this 21st century it was already estimated that in a little more than a decade of digital photography more photos had been lost due to technical or human failures of any kind than all the photos that had been created for more than a century since the invention of chemical photography. This is something that cannot happen to us.

But it is not simply about not losing information; it is about being able to record and retrieve it in the most efficient and reliable way at each and every one of the different stages and situations throughout the creation process. So, let’s review the most important aspects for each of those stages, hoping that no one will be surprised by the large differences that exist in the needs for handling the same content throughout the whole process.

Let’s start by clearly setting our scenario by establishing the large sections, because we must not limit ourselves exclusively to the media, but also consider “what”, “when” and “where” for our contents. Naturally, other questions such as “depends”, “what for”, etc. will arise later on, and this will lead us to make the right decisions.

Dealing with “what” is very simple: what are we going to save? Given our audiovisual creation environment, we are going to focus only on the aspects related to our AV contents, that is, the file (or set of coherent files) that saves the image, the sound and the associated metadata that allow us to correctly manage our recordings.

As for “when”, we will consider four major stages in the creation process: capture, while recording is taking place; handling, which normally involves all processes related to ingestion, editing and post-production; broadcast, when it is made available to our viewers; and archiving, when it is saved for later use. Each particular stage involves very different needs.

“Where” is precisely the medium that will store our contents; it will not always be a physical medium.

“Depends” will almost invariably be the right answer to all the “what for’s” that we will be facing. These “what for’s” will be the goals, the purposes for which the recording and/or archiving and/or broadcast is made. Different “what for’s” of the same content will determine very different storage needs.

We will begin by explaining the two main features of storage systems, since these will be what will most frequently determine the ideal media in each particular circumstance.

The first and most significant feature is capacity, measured in Giga-, Tera- or Petabytes, and it is the easiest one to grasp: it is the size of the warehouse and determines the amount of information that it will be possible to save, their respective factors being of the order of 10⁹, 10¹² and 10¹⁵. It is important to remember that if we refer to an order of 10³ in computer terms (1 kilobyte) we are really talking about 1,024 bytes, 10⁶ (1 Megabyte) in the same terms = 1,024² = 1,048,576 bytes, and so on.
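The gap between decimal and binary units can be made concrete with a small sketch. The binary unit names (KiB, MiB, GiB, TiB, PiB) are the IEC names for the 1,024-based multiples and do not appear in the text above; the `convert` helper is a hypothetical name used only for this example.

```python
# Decimal (powers of 10) and binary (powers of 2) byte multiples.
DECIMAL = {"kB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12, "PB": 10**15}
BINARY = {"KiB": 2**10, "MiB": 2**20, "GiB": 2**30, "TiB": 2**40, "PiB": 2**50}


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a quantity between any two of the units defined above."""
    units = {**DECIMAL, **BINARY}
    return value * units[from_unit] / units[to_unit]


# A drive sold as "1 TB" (decimal) holds noticeably less than 1 TiB (binary).
print(round(convert(1, "TB", "TiB"), 4))
```

The difference grows with each step up: about 2.4% at the kilobyte level and roughly 9% at the terabyte level, which is worth remembering when sizing storage.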

Next comes speed. But let’s slow down now because there is more than one speed or rate of transfer. Usually, we talk about speed figures of the order of MB/s (Mega means 10⁶). In addition, we must take into account that writing speed is usually lower than reading speed, and that these speeds are not the same for a small block of data as for a continuous flow (precisely that is our need!). We must also take into account access speed, or the time it takes to find the data sought, and the most delicate task: making sure whether we are handling data in MB/s (megabytes) or Mb/s (megabits): 1 MB/s = 8 Mb/s!
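A short sketch of the megabit/megabyte conversion and how it affects the choice of media. The 20% headroom and the example figures (a 400 Mb/s codec, cards sustaining 90 and 30 MB/s) are assumptions for illustration only.

```python
def megabits_to_megabytes(mbit_per_s: float) -> float:
    """Convert Mb/s (megabits) to MB/s (megabytes): 8 bits per byte."""
    return mbit_per_s / 8


def card_is_fast_enough(codec_mbit_per_s: float,
                        card_sustained_write_mb_per_s: float,
                        headroom: float = 1.2) -> bool:
    """Check a card's sustained write speed against a codec bit rate plus headroom."""
    required = megabits_to_megabytes(codec_mbit_per_s) * headroom
    return card_sustained_write_mb_per_s >= required


print(megabits_to_megabytes(400))
print(card_is_fast_enough(400, 90), card_is_fast_enough(400, 30))
```

Confusing the two units leads to an error of a factor of eight, which is the difference between a card that records reliably and one that drops frames.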

In our case, the speed that really interests us will always be the sustained speed, whether for reading or writing. But be careful not to confuse bit rate (transfer rate) with frame rate (the frames per second, or fps, in our recordings), although a higher frame rate will always require higher bit rates and more storage space.
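The relationship between frame rate and bit rate is easiest to see for uncompressed video. The sketch below is a simplification under stated assumptions: it counts active picture samples only and ignores blanking, audio and container overhead. The function name and the example format (UHD, 12-bit, 4:4:4) are chosen for illustration.

```python
# Average number of samples stored per pixel for common chroma subsampling schemes.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def uncompressed_bits_per_second(width: int, height: int, bit_depth: int,
                                 subsampling: str, fps: float) -> float:
    """Active-picture data rate of uncompressed video, in bits per second."""
    return width * height * SAMPLES_PER_PIXEL[subsampling] * bit_depth * fps


rate_25 = uncompressed_bits_per_second(3840, 2160, 12, "4:4:4", 25)
rate_50 = uncompressed_bits_per_second(3840, 2160, 12, "4:4:4", 50)
print(rate_25 / 1e9, rate_50 / 1e9)  # data rates in Gb/s
```

Doubling the frame rate doubles the bit rate, and therefore both the sustained speed the storage must deliver and the space each minute of material occupies.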

Having explained all this beforehand, it is now possible to start exploring possibilities.

The capture stage seems relatively simple, since the internal media is always dictated by the camera. While today there are a lot of cameras that already use memory cards of standardized formats such as SD, XQD or the new CFexpress (types A and B), there is still a good amount of equipment in service that only supports proprietary media such as Panasonic P2, Sony SxS and even optical disks. Not to mention that it is common to use external recorders such as those from Atomos or Blackmagic, among others.

The necessary storage capacity will be based on the resolution, frame rate and codec chosen, but you do not need a calculator: manufacturers usually offer quite reliable figures for the various recording times possible in each combination, as well as the minimum writing speed necessary to ensure correct recording. In fact, it is often the case that the cameras themselves do not allow you to select formats that are not supported by the internal card.
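For cases where the manufacturer's table is not at hand, the recording time can be estimated from card capacity and codec bit rate. This is a minimal sketch with hypothetical example values (a 128 GB card, 100 and 200 Mb/s codecs); it ignores file-system overhead and audio tracks.

```python
def recording_minutes(card_gb: float, bitrate_mbit_per_s: float) -> float:
    """Approximate recording time for a card of `card_gb` decimal gigabytes."""
    card_megabits = card_gb * 1000 * 8  # GB -> MB -> megabits
    return card_megabits / bitrate_mbit_per_s / 60


print(round(recording_minutes(128, 100), 1))
print(round(recording_minutes(128, 200), 1))
```

Doubling the bit rate halves the recording time, so a codec or frame-rate change on set directly changes how many cards are needed.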

That is where external recorders come into play, which in turn also use cards and/or disks that must also meet the necessary capacity and speed requirements for our shooting needs. But pay a lot of attention here, because under the same physical card shape, especially in SD media, it is possible to find a huge variety of speeds, a factor that is usually directly related to the enormous range of prices for the same capacity. Categories C (class) U (UHS) and V (video) give an idea of performance.
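The speed categories printed on SD cards each guarantee a minimum sustained write speed. The sketch below lists those minimums as defined in the SD specification and filters them against a required rate; the `suitable_classes` helper and the example rate of 50 MB/s are assumptions for illustration.

```python
# Minimum sustained sequential write speed, in MB/s, guaranteed by each marking.
MIN_SUSTAINED_WRITE_MB_S = {
    "C2": 2, "C4": 4, "C6": 6, "C10": 10,            # Speed Class
    "U1": 10, "U3": 30,                              # UHS Speed Class
    "V6": 6, "V10": 10, "V30": 30, "V60": 60, "V90": 90,  # Video Speed Class
}


def suitable_classes(required_mb_per_s: float) -> list[str]:
    """Markings whose guaranteed minimum meets the required sustained rate."""
    return [
        name
        for name, speed in MIN_SUSTAINED_WRITE_MB_S.items()
        if speed >= required_mb_per_s
    ]


print(suitable_classes(50))
```

Note that the large "up to" figure printed on many cards is a peak read speed; only these class markings describe the sustained writing that video recording depends on.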

Although we have used the word disks, at this stage mechanical hard disks are no longer normally used, but SSD type, that is solid state hard disks. They are actually large memory cards that for all intents and purposes behave like a hard disk, but with the advantages of being much faster, not having moving parts and consuming much less energy. And they also exist with a very wide range of speeds.

The impact of each of the factors that affect the volume of information generated, normally referred to as “the weight” of a file, is very different: quite proportional and predictable if we consider resolution (HD vs UHD), frame rate (24/25/29.97 vs 48/50/59.94) and chroma subsampling (4:2:0 vs 4:4:4), but enormous among very diverse codecs (AVCHD vs H.265 vs RAW).

Let us get a little closer to the extremes to illustrate the breadth of ranges that can be handled nowadays: from about 16 GB per hour for HD 4:2:0 in compressed formats suitable for editing (AVCHD type), to more than 18 GB per minute for 4K RAW 4:4:4:4 (ARRI Alexa) with the maximum possible information for the most delicate and demanding tasks.
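To compare the two extremes on the same scale, the figures can be converted to bit rates. This sketch reads both quantities as gigabytes (an assumption about the units), and the function names are hypothetical.

```python
def gb_per_hour_to_mbit_per_s(gb_per_hour: float) -> float:
    """Average bit rate in Mb/s for a volume given in decimal GB per hour."""
    return gb_per_hour * 8000 / 3600


def gb_per_minute_to_mbit_per_s(gb_per_minute: float) -> float:
    """Average bit rate in Mb/s for a volume given in decimal GB per minute."""
    return gb_per_minute * 8000 / 60


light = gb_per_hour_to_mbit_per_s(16)    # compressed HD example
heavy = gb_per_minute_to_mbit_per_s(18)  # 4K RAW example
print(round(light, 1), heavy, round(heavy / light, 1))
```

Under that reading, the heavy format generates data more than sixty times faster than the light one, which is why the same storage cannot sensibly serve both.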

And as if the possibilities were not wide enough, we start with the “depends”. Because in addition to the original recording, depending on the criticality of the work we will need a backup as soon as possible; depending on the immediacy required, content must be available in another place almost simultaneously; and depending on the type of project we will have to approach its treatment in very different ways.

Which leads us to stage two -the so-called handling- that encompasses everything related to ingestion, editing, composition, color grading, audio mixing, mastering, etc., where needs begin to multiply and diversify.

We start from the simplest end, where the same person films and edits, connecting the original media to the computer, with the necessary caution of first transferring the content to a first medium such as the computer disk, and then making another copy on an external disk or cloud service before proceeding to edit. Because we already know what can happen when we rush into editing on the original media from the camera or recorder…
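The copy-before-editing habit can be sketched as a small script that copies a clip to two destinations and confirms each copy with a checksum. The function names and the use of SHA-256 are assumptions for this example; dedicated offload tools do the same job with more safeguards.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large media files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verified_copy(source, destinations) -> str:
    """Copy `source` into each destination folder and verify every copy."""
    source = Path(source)
    expected = sha256_of(source)
    for folder in destinations:
        folder = Path(folder)
        folder.mkdir(parents=True, exist_ok=True)
        target = folder / source.name
        shutil.copy2(source, target)  # copy2 also preserves timestamps
        if sha256_of(target) != expected:
            raise IOError(f"Checksum mismatch for {target}")
    return expected
```

A call such as `verified_copy("A001C001.mov", ["/work/project", "/backup/project"])` (hypothetical paths) returns the checksum only if both copies match the original, at which point the card can be set aside and editing can start on the working copy.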

The computer in general, and especially its disk, must meet the necessary requirements so that all the filmed material flows smoothly in its original quality.

As the project grows, either due to volume or complexity, it will be necessary to have a NAS storage system capable of responding to all our needs. And these will no longer just be those of playing a single piece of AV content; it may be necessary instead to move several streams of different AV content simultaneously. Again, both capacity and speed of the relevant medium will be the key factors to take into account, with different manufacturers offering different solutions in terms of speed, performance, scalability, etc.

As our project continues to grow or becomes sophisticated, the shared storage system will need to be sized proportionately to maintain sufficient capacity and speed to provide performance for a growing team of people working simultaneously on the same project or in the same organization, both by ingesting new content and by using existing content. At this point we must already add the concurrency factor, that is, how many simultaneous accesses we will need to provide support to.
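The concurrency factor can be turned into a first sizing estimate. This is a rough sketch: the per-stream rate, streams per user and the 50% headroom are hypothetical inputs, and real systems also depend on the read/write mix and the access pattern.

```python
def required_throughput_mb_s(users: int, streams_per_user: int,
                             stream_mb_per_s: float, headroom: float = 1.5) -> float:
    """Sustained throughput the shared storage should deliver, in MB/s."""
    return users * streams_per_user * stream_mb_per_s * headroom


def max_users(storage_mb_per_s: float, streams_per_user: int,
              stream_mb_per_s: float, headroom: float = 1.5) -> int:
    """How many simultaneous users a given storage throughput can support."""
    per_user = streams_per_user * stream_mb_per_s * headroom
    return int(storage_mb_per_s // per_user)


# 12 editors, each playing 2 streams of 50 MB/s.
print(required_throughput_mb_s(12, 2, 50))
print(max_users(2000, 2, 50))
```

Run in both directions, the same arithmetic answers either "what storage do we need for this team?" or "how far can the team grow on the storage we have?".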

Regardless of the size of the team, and depending on the criticality of the materials, it may be advisable to adopt a RAID configuration for our shared media that makes it possible to increase capacity, improve access times and, above all, protect against and recover from potential data losses. The various possible configurations for RAID systems allow these different functionalities by combining different techniques.
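The trade-off between capacity and protection in the common RAID levels can be summarised in a short sketch. The levels shown (0, 1, 5, 6 and 10) are the standard ones and are not named in the text above; the function name and the example array of eight 16 TB disks are assumptions.

```python
def raid_usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity of an array of identical disks for common RAID levels."""
    if level == "0":   # striping only: full capacity, no protection
        return disks * disk_tb
    if level == "1":   # mirroring: every disk holds the same data
        return disk_tb
    if level == "5":   # striping with one disk's worth of parity
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_tb
    if level == "6":   # striping with two disks' worth of parity
        if disks < 4:
            raise ValueError("RAID 6 needs at least 4 disks")
        return (disks - 2) * disk_tb
    if level == "10":  # striped mirrors: half the raw capacity
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, at least 4")
        return disks // 2 * disk_tb
    raise ValueError(f"Unsupported RAID level: {level}")


for level in ("0", "5", "6", "10"):
    print(level, raid_usable_tb(level, disks=8, disk_tb=16))
```

Moving from RAID 0 to RAID 6 on the same disks gives up a quarter of the space in this example in exchange for surviving two simultaneous disk failures; RAID is protection against disk failure, not a substitute for a backup.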

It must also be considered at this stage, although it always “depends” on the project, that not all processes will be linked or sequential. Thus, stages such as color grading, soundtrack or so many others, may have their own needs for access to storage on the same materials.

There will be a turning point in growth, different for each organization, where it becomes more interesting to have media storage -or part of it- managed by a cloud service. In this case, the decision will not be made simply for reasons of capacity or speed. Factors such as team dispersion, security, accessibility, confidentiality, and profitability, among others, will be the real keys for the final decision.

In short, in this handling stage is where we will normally find the highest demands around capacity and speed requirements simultaneously, in addition to reading/writing concurrency to properly manage our AV contents.

This stage ends when we have already composed the final piece, which almost always becomes a single file ready to be enjoyed. And this brings us to our third stage: broadcast.

Nowadays we must take into account the very different broadcast possibilities: from a sequential programming schedule in traditional broadcast channels, to on-demand content. In any case, by this stage the volume of information to be handled has been considerably reduced. We no longer have dozens of materials to compose for which we need the highest possible quality to handle them in the best conditions.

The codecs available make it possible to apply significant compression without visible loss of quality, since the material will not undergo subsequent modifications, so the files are much lighter and easier to handle. Even so, a sufficient storage volume is needed to keep all of our contents, along with the right access speed to serve them on demand if we offer such a service.

In this case, we must bear in mind that we may be required to send the same file at different times to multiple destinations, and to do so through networks over which we have hardly any control, so it will be important to size the file appropriately to ensure that the transfer is carried out as smoothly as possible.
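The arithmetic behind that sizing can be sketched in a few lines. This is only a back-of-the-envelope model under stated assumptions: decimal units (1 GB = 8,000 megabits), a constant average bitrate, and an `efficiency` factor of 0.8 chosen arbitrarily to stand in for protocol overhead and congestion on a network we do not control. The function names are hypothetical.

```python
# Back-of-the-envelope sizing for a finished programme file and the time
# needed to deliver it. Decimal units: 1 GB = 8,000 megabits.

def file_size_gb(bitrate_mbps: float, duration_s: float) -> float:
    """Approximate file size in GB for a constant average bitrate."""
    return bitrate_mbps * duration_s / 8_000


def transfer_seconds(size_gb: float, link_mbps: float,
                     efficiency: float = 0.8) -> float:
    """Estimated transfer time in seconds over a link of the given nominal
    speed, where only a fraction `efficiency` of it is usable in practice."""
    if link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    return size_gb * 8_000 / (link_mbps * efficiency)


if __name__ == "__main__":
    # Hypothetical one-hour programme encoded at 15 Mbit/s, sent over a
    # nominal 100 Mbit/s connection.
    size = file_size_gb(bitrate_mbps=15.0, duration_s=3600)
    seconds = transfer_seconds(size, link_mbps=100.0)
    print(f"{size:.2f} GB, about {seconds / 60:.1f} minutes per destination")
```

Because the same file may go to several destinations, the per-destination time multiplies quickly, which is one reason a lighter delivery encode matters at this stage.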

In short, at this stage special care must be taken over access and read performance, while capacity will be determined only by the amount of content in our catalog, or simply by the schedule to be broadcast if we are only considering linear broadcasting. Common sense also suggests that this will be a different platform from the one used in the manipulation phase. Here, NaaS (Network as a Service) formats currently prevail, as they have sufficient capacity to be the most profitable investment for a good number of content broadcasters. Profitability will not always be the decisive factor, however, and in other circumstances or for certain organizations, internal management of this content will be preferred.

On reaching the next stage, long-term storage, the requirements are reversed. Capacity rather than speed will be the decisive factor: taking a few seconds to find the content is no longer critical, but having enough space is. The criterion of using a new platform, separate from the previous ones, still holds for this storage. By analogy, this would be the library in the basement.

But all of this leads to yet another “depends”. What do we want to store this time? All the raw footage at maximum quality for future re-editing? Only the final piece? Even if it is only the final piece, we must preserve our master file at the highest possible quality, and not only a version that is good enough for broadcasting, because we know from experience that the capabilities of all systems increase exponentially over time.

There is no single solution that will cater to all our needs. Instead, “scalability”, “flexibility” and “adaptability” are the most important notions to bear in mind when designing and managing our storage system. Going back to the beginning: “What for?” may be the key question to keep in mind at each of the stages.

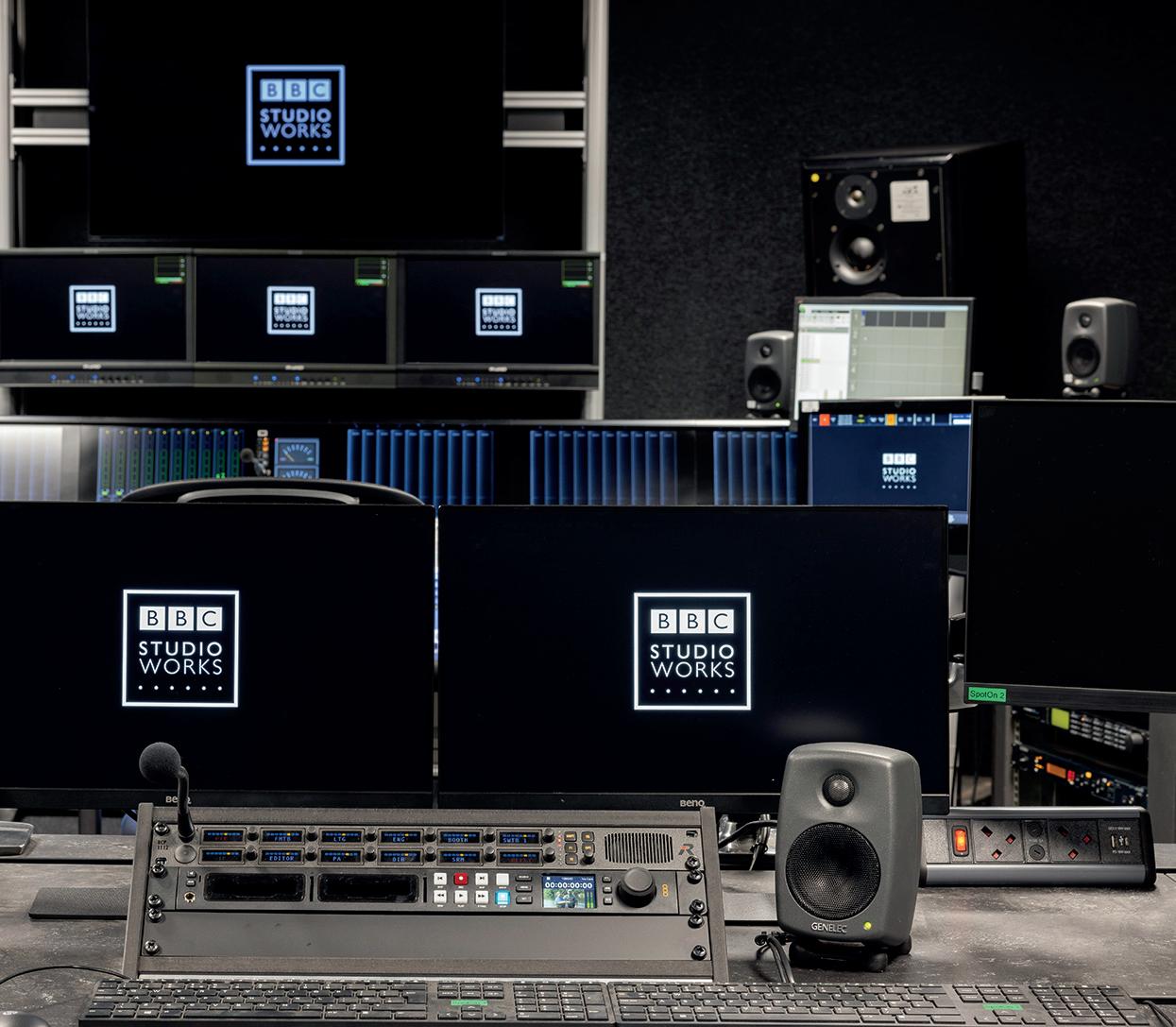

BBC Studioworks’ latest step to create a complete TV production ecosystem in the UK

BBC Studioworks is one of the UK’s leading providers of television studio infrastructure and services. As part of its ambitions to create a nationwide television production ecosystem, it opened the Kelvin Hall studio in Glasgow in mid-2022.

This facility, built on the pillars of sustainability and the best production technology available, has been the talk of the industry for the past six months. Has it been developed on IP infrastructure? Can it accommodate virtual production techniques? What picture standard does it work on? We put all these questions to David Simms, Communications Manager, and Stuart Guinea, Studio Manager, of BBC Studioworks. Here are all the details.

David Simms: BBC Studioworks is a commercial subsidiary of the BBC providing studios and post production services to all the major UK TV broadcasters and production companies, including the BBC, ITV, Sky, Channel 4, Channel 5, Netflix, Banijay and Hat Trick Productions.

Located across sites in London (Television Centre in White City, BBC Elstree Centre, Elstree Studios) and in Glasgow (Kelvin Hall), our facilities are home to some of the UK’s most watched and loved television shows.

Some of our credits are “Good Morning Britain”, “Lorraine”, “This Morning”, “Loose Women”, “The Jonathan Ross Show”, “Saturday Night Takeaway” and “The Chase” for ITV. For ITV2 we can highlight “Love Island Aftersun” and “CelebAbility”. For Channel 4 we produce “Sunday Brunch” and “The Lateish Show with Mo Gilligan”. For Sky we make “A League of Their Own” and “The Russell Howard Hour”. “The Crown” and “Crazy Delicious” are among the content we produce for Netflix. And for the BBC we create “The Graham Norton Show”, “Pointless”, “Strictly Come Dancing” and “EastEnders”.

Kelvin Hall is one of your latest projects. What were the reasons for its construction and what objectives did you intend to achieve with it?

David Simms: Kelvin Hall is a 10,500 sq. ft. studio. It opened on September 30, 2022 and its construction was co-funded by the Scottish government and Glasgow City Council, which together invested £11.9 million. Glasgow City Council owns the building.

The studio has been designed to host all kinds of productions. Sustainability was one of the main pillars of its development. To meet these obligations, we brought new life to an existing building that had fallen into disuse.

Other facts that make it a sustainable project are that it uses 100% renewable energy and has been designed with LED lights.

Kelvin Hall responds to our ambition to open more studios throughout the UK. We did our research across major UK cities, and the studio at Kelvin Hall comes in direct response to a growing demand from producers and industry bodies to make more TV shows in Scotland, as well as a gap in the Scottish market for more Multi Camera Television (MCTV) studios to meet that demand.

Our objectives with Kelvin Hall were to boost Scotland’s capacity to produce multi-genre TV productions and, in doing so, to help fuel demand for Scotland’s creative workforce and economy.

After six months, have expectations been met?

David Simms: Six months since opening the studio at Kelvin Hall, we have facilitated over 40 episodes of television across two shows (BBC Two’s “Bridge of Lies”, produced by STV Studios, and BBC One’s “Frankie Boyle’s New World Order”, produced by Zeppotron) and created job opportunities for local production and technical staff and freelancers (six permanent staff and an average of 100 people working on a studio record day).

We have also helped to support the next generation of talent to join the TV industry: we launched a Multi Camera TV Conversion Programme in collaboration with the NFTS and Screen Scotland that trained 12 trainees, who are now being offered paid work placements at Kelvin Hall.

We understand the importance and necessity of inspiring, nurturing and developing the next generation of production talent, so collaborating with NFTS Scotland and Creative Scotland to offer entry points for young Scottish people to the industry was a priority for us when opening Kelvin Hall.

With regard to nurturing future local talent, we aim to forge an industry collaboration, supported by Screen Scotland, that routinely invests in and delivers initiatives to create a sustainable talent pipeline and meet the growing demand for skills and people.

The creative sector in Glasgow flourishes, built on a reputation of having the best talent that can be sourced locally. We want to increasingly deliver on this ambition over the next five years.

Kelvin Hall is said to be integrated with state-of-the-art technology and has been built to be consistent with the other BBC studios. How did you achieve this?

Stuart Guinea: The BBC Studioworks project team that managed the construction and installation of Kelvin Hall consisted of a group of our in-house experts who had previously project managed the remodelling and technical fit-out of our facilities at the Television Centre in London between 2013 and 2017, as well as our move to the two Elstree sites in 2013.

One of the key decisions made in the design of the Kelvin Hall studio was the commitment to install the infrastructure for LED lighting. This not only reduces the carbon footprint of the studio operation, but it also puts us at the forefront of the change to LED lighting in multicamera TV Studios.

Unlike our facilities in London where our project teams had to work to specific space limitations in existing buildings, at Kelvin Hall we had the fortune of building a brand-new studio facility from scratch in a cavernous space. We took advantage of this to maximise our spaces, ensuring the control rooms and galleries were amply sized: these technical spaces are now the largest across our entire footprint, allowing room for possible tech expansion in the future.

The biggest challenge for the whole project was timescale. There are always small delays in every building project and, although we had planned for these, we were also working towards the deadline of our first confirmed client production series at the end of September, so the pressure was on to deliver on time.

It is also important how the connections between equipment and devices within Kelvin Hall and even between other studios are developed. Designing them over IP is the big challenge and promises many capabilities. What is the situation in Kelvin Hall with respect to this technology? And in other studios developed by BBC Studioworks?

David Simms: After extensive research, we decided to implement a 3G (non-IP) solution for Kelvin Hall.

This was the more cost-effective route for our business model and is already known to our clients, as this is the tried and tested solution that we have in our London facilities.

The core infrastructure at Kelvin Hall is all 4K ready, so we can accommodate a 4K production in the future.

What are Kelvin Hall’s virtual production capabilities? LED or Green Screen, which has been your bet?

Stuart Guinea: Our studio at Kelvin Hall is a large flexible space (10,500 sq. ft.). We have integrated hoists, data and power distributed throughout our overhead grid, and the studio floor is paintable so it can be matched to any green screen system. We can therefore accommodate both LED and green screen for VR.

Focusing on the technology, which manufacturers have you relied on to develop the Kelvin Hall installations?

Cameras, vision mixers and monitors

• Six Sony HDC-3200 studio cameras. The 3200 is Sony’s latest model, which has a native UHD 4K image sensor and can easily be upgraded to UHD.

• Sony XVS7000 vision switcher, LMD and A9 OLED monitors for control room monitoring.

Hardwired and radio communications systems

• 32 Bolero radio beltpacks with the distributed Artist fibre-based intercom platform and, for external comms, VOIP codecs.

• A SAM Sirius routing platform solution to support the most challenging applications in a live production environment and to ensure easy adoption of future technology innovations.

Audio

• Studer Vista X large-scale audio processing solution that provides pristine sound for broadcast.

• Calrec Type R grams mixing desk. A super-sized grams desk providing ample space for the operation of the Type R desk and associated devices, such as Spot On instant replay machines.

• A Reaper multitrack recording server.

Lighting

• ETC Ion XE20 lighting desk and an ETC DMXLan lighting network.

• 108 lighting bars with a mix of 16A and 32A outlets (if tungsten is required).

• 48 Desisti F10 Vari-White Fresnels.

• 24 ETC Source 4 Series 3 Lustr X8.

Another major focus has been on sustainability awareness. In practical terms, how can broadcast technology reduce its impact on the environment?

David Simms: We were fortunate enough to find an area within Kelvin Hall that was already part of a wider redevelopment initiative at the site - the studio repurposes a previously derelict section of a historically important building. And as there is no gas plant, all electricity is sourced from renewable sources.

The studio has been designed without dimmers to encourage LED and low energy lighting technology. The reduced heat generated by the low energy lighting has enabled the use of air-source heat pump technology for heating and cooling, and the ventilation plant has class-leading efficiency using heat recovery systems.

For the past three years we have proudly held the Carbon Trust Standard for Zero Waste to Landfill accreditation at our Television Centre facility, and we are currently working on achieving this for Kelvin Hall too. The Zero Waste to Landfill goal was achieved through a combination of reducing, reusing and recycling initiatives to reduce environmental impact. Specific waste contractors have been procured to ensure diversion from landfill and an onsite waste disposal system, incorporating eight different streams, has been implemented to encourage responsible waste disposal and reduce cross contamination.

We are also a founding collaborator of BAFTA albert’s Studio Sustainability Standard, a scheme to help studios measure and reduce the environmental impact of their facilities.

“What VR offers creators and consumers still seems to be in the Commodore 64 phase of its evolution. I think it still has much more to come.”

With this statement, Chris Randall, head of the Birmingham-based Second Home animation studio, points us toward a really promising future. His hands, and those of his team, have developed a multitude of projects ranging from bringing stories to life through handmade models captured with stop-motion techniques, to the creation of virtual worlds consumable through immersive technologies. Here’s what an animation craftsman has to say about the cutting-edge technologies that will inevitably revolutionize his profession.

Second Home Studios came about as a happy accident. I’ve always loved visual effects and animation. I cut my teeth as a film Clapper Loader whilst at University working with the BBC VFX Unit (when it existed) on shows like “Red Dwarf” and helping to blow up Starbug in my Uni holidays. Great fun. I learned a lot and had a brilliant mentor in the form of DoP Peter Tyler.

I moved back to Birmingham, worked in theatre for a bit, then started working in Central Television’s Broadcast Design Department as a Rostrum Cameraman. I’ve always been a keen modelmaker, so I started teaching myself stop-motion in my lunch hours. Soon after, CiTV commandeered me to make promos for their children’s content. My first proper body of work for broadcast won World Gold at Promax and I started to take it more seriously.

Around this time I was asked by an old colleague in theatre to create a film to cover a scene change for The Wizard of Oz. It was a two-minute tornado sequence and encompassed much of what I’d previously learned on both sides of the camera while working as a Clapper Loader.