A new chapter for Italy’s Rai:

Exclusive interview with the executive technical leadership to explore its technological horizon

STREAMING Rakuten TV goes all-in on FAST

INDUSTRY VOICES

Simen K. Frostad, Chairman of Bridge Technologies

5TH EDITION

Rai is entering a new chapter. The retirement of its CTO, Stefano Ciccotti—a story first reported exclusively by TM Broadcast—opens a new scenario for the Italian public broadcaster. As of the publication date of this issue, Rai has not officially confirmed how its final organizational structure will look, although internal sources consulted by this magazine indicate that the corporation’s various business areas already operate with a high degree of autonomy. As such, the CTO role has gradually adopted a more institutional profile.

In other words, Rai’s renewal strategy remains firmly in place. The Italian broadcaster is currently undergoing an ambitious technological transformation, with the goal of evolving from a traditional broadcasting model to a media company—mirroring the direction taken by other key players in the industry.

The roadmap, which spans several years and involves a multi-million-euro investment, aims to establish a fully IP-based infrastructure. The transition is expected to be completed within the next four to five years.

Editor in chief

Javier de Martín editor@tmbroadcast.com

Creative Direction

Mercedes González mercedes.gonzalez@tmbroadcast.com

Chief Editor

Daniel Esparza press@tmbroadcast.com

Editorial Staff

Bárbara Ausín

Carlos Serrano

This is therefore an ideal moment to take a close look at Rai’s current status and future technological horizon. To that end, we sat down for an exclusive interview with Rai’s top technical leadership, led by Director of Technology Ubaldo Toni, to whom we extend our sincere thanks for coordinating this in-depth conversation with his team.

Also in this issue, we present the third installment of our Industry Voices section. This time, we speak with Simen K. Frostad, Chairman of Bridge Technologies, an industry veteran and pioneer in the implementation of IP workflows.

It’s worth highlighting how the generous participation of each guest in this section—openly discussing key market trends and strategic directions—is turning Industry Voices into a privileged space for reflection on the present and future of the broadcast industry. Our sincere thanks to everyone making this possible.

All this and much more… only in TM Broadcast.

Key account manager

Patricia Pérez ppt@tmbroadcast.com

Administration

Laura de Diego administration@tmbroadcast.com

TM Broadcast International #143 July 2025

Published in Spain ISSN: 2659-5966

TM Broadcast International is a magazine published by Daró Media Group SL Centro Empresarial Tartessos Calle Pollensa 2, oficina 14 28290 Las Rozas (Madrid), Spain Phone +34 91 640 46 43

Exclusive interview with the executive technical leadership to explore its technological horizon

Rai’s Director of Technology, Ubaldo Toni, together with his team, answers all our questions. We review the current technological status of Italy’s public broadcaster—now undergoing a deep transformation—and its main investment priorities. The interview also offers broader insights into the future of the broadcast industry.

Rakuten TV goes all-in on FAST

We interview Rakuten TV’s Chief Product Officer, Sidharth Jayant, to explore the company’s strategy and most notable technological implementations.

INDUSTRY VOICES

Simen K. Frostad, Chairman of Bridge Technologies:

“We need today tools that help non-experts understand what’s going on”

Much more than a buzzword

This new trend in the sector is here to stay. The various players in cinema and broadcast, including camera and optical manufacturers, must understand each other in order to generate professional partnerships with guarantees for success.

TM BROADCAST AWARDS

5th Edition of the TM Broadcast

TM BROADCAST SPAIN has held the fifth edition of these awards, now an undisputed benchmark in the country’s broadcast industry due to their rigor and independence.

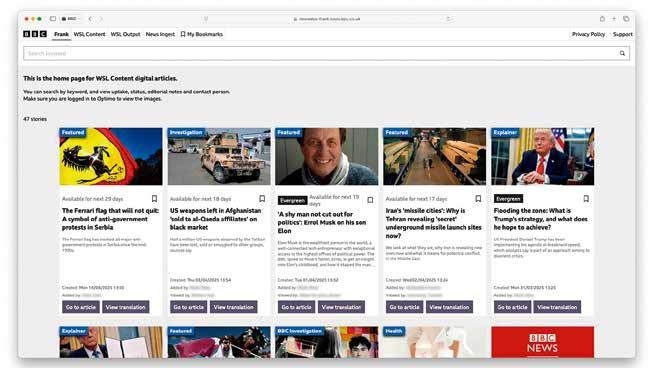

Rhodri Talfan Davies, BBC Executive Sponsor of Gen AI, has announced that the BBC will launch new generative AI pilots to support its production processes. According to a statement, the corporation now plans to test two pilots publicly: ‘At a glance’ summaries and BBC Style Assist.

The BBC has been working on this project over the last 18 months. It has also published updates on its official website that set out its approach to AI and what it is doing with it.

‘At a glance’ summaries

The aim is to make journalism more accessible by using GenAI to help journalists create ‘At a glance’ summaries of longer news articles.

“We’re going to look at whether adding an AI-assisted bullet point summary box on selected articles helps us engage readers and make complex stories more accessible to users”, explains Talfan Davies.

The project aims to give journalists full control: they use a single, approved prompt to generate the summary, then review and edit the output before publication.

“Journalists will continue to review and edit every summary before it’s published, ensuring editorial standards are maintained. We will also make clear to the audience where AI has been used as part of our commitment to transparency”, he adds.

BBC Style Assist

‘Style Assist’ is designed to explore how GenAI could help the BBC’s news journalists adapt and reformat stories so that they match the BBC ‘house style’ for online news.

Every day, the BBC receives hundreds of stories from the Local Democracy Reporting Service (LDRS), a public service news partnership funded by the BBC, but provided by the local news sector in the UK.

The intention is to help journalists reformat LDRS stories quickly and efficiently, reducing the production time required to publish them.

How it works

“BBC Style Assist uses a BBC-trained Large Language Model (LLM) which has been developed by our Research and Development team. It’s a smart AI system that has ‘read’ thousands of BBC articles so it can help amend text quickly to match our own house style”, details Talfan Davies.

Changes are coming to the BBC’s technical leadership. Its CTO, Peter O’Kane, has announced that he will step down from the role at the end of the year, after 15 years at the public broadcaster. “This wasn’t an easy decision — working with you has been one of the most rewarding and inspiring parts of my career,” he said in a message shared on his LinkedIn profile.

In the post, Peter O’Kane reflected on some of the milestones achieved during his time at the BBC. “I’m incredibly proud of what we’ve achieved together: from modernising our technology landscape and embracing the cloud, to keeping the BBC on-air and online through some of the most challenging moments in recent history.”

He also highlighted the professionalism of his team. “What I’ll remember most, though, is the people. Your dedication, ingenuity, and resilience have inspired me every day.”

O’Kane stated that he will remain in the role until the completion of a key project focused on technological resilience and expressed confidence in ensuring a smooth transition. “Thank you for everything — for your trust, your talent, and for making this such a meaningful chapter in my life,” he concluded.

The Italian broadcaster is currently undergoing an ambitious technological transformation

By Daniel Esparza

End of an era at RAI. Italy’s public broadcaster—one of the most prominent in Europe—bids farewell to its Chief Technology Officer, Stefano Ciccotti, who is retiring as of July 1. The news has been confirmed exclusively to TM BROADCAST by internal sources within the organization.

So far, RAI has not issued any official statement nor clarified who will succeed Ciccotti in the role. It’s worth noting, however, that due to the vast scope of its operations, the public corporation’s various business units already enjoy significant operational autonomy.

RAI is currently immersed in an ambitious technological transformation plan, aiming to shift from a traditional broadcaster to a media company—echoing a trend followed by other media players. The core goal of this transition is to establish a fully IP-based infrastructure.

Born in Rome in 1960, Stefano Ciccotti has been closely tied to RAI for nearly four decades. He was appointed CTO in October 2017. Upon taking on the role, he gave an exclusive interview to TM BROADCAST, where he shared his initial thoughts and outlined the challenges ahead.

Ciccotti holds a degree in Electronic Engineering and joined RAI’s Technical Department in 1987, where he held several positions in the following years. In 1995, he moved to Omnitel Pronto Italia, and a year later, joined Telecom Italia Mobile. In 1997, he relocated to Vienna, where he was appointed Deputy Technical Director of Mobilkom Austria AG.

He returned to RAI in 1998 as Director of the newly created Broadcasting and Transmission Division. From this position, he led the transformation of RAI’s plant engineering area into a joint-stock company, founding RAI Way S.p.A. This company, part of the RAI Group, is responsible for planning, implementing, and managing telecommunications and broadcasting networks and services for both RAI and external clients.

Ciccotti served as CEO of RAI Way from 2000 to 2017 and was also its President between 2001 and 2004. During his tenure, he oversaw the company’s IPO, which was completed in 2014 with its listing on the Mercato Telematico Azionario. In October 2017, he returned to RAI to take up the role of Chief Technology Officer.

Warner Bros. Discovery has announced a new partnership with the Legends Team Cup to broadcast the new international tennis tour, in which ATP Tour players compete in three teams across the US and Europe, according to a company statement.

WBD Sports will offer live coverage of all seven tournaments, held across a range of locations in 2025, on its streaming platforms, including HBO Max and discovery+. The inaugural Legends Team Cup event will take place on 14–16 August 2025 in Europe. Throughout the 2025 calendar, the tour will also visit Asia, the Americas and the Middle East.

A Legends Team Cup highlights show will also be shown on Eurosport across Europe, and TNT Sports in the UK and Ireland following each event.

Across the 2025 calendar, the tour will visit locations in North America, Europe and Asia, with dates and venues to be revealed later.

Each of the seven tournaments spans three days, with two teams competing in two singles matches and one doubles match per day. The teams, who have a combined 503 ATP Tour titles across singles and doubles, are competing to lift the Björn Borg Trophy, named in honour of the tournament patron, with a total prize pool of $12 million on offer.

The competition is made up of three teams, each spearheaded by one of three captains: Carlos Moya, James Blake and Mark Philippoussis.

Players competing on the tour include Dominic Thiem, Sam Querrey, Jo-Wilfried Tsonga, Feliciano Lopez and Diego Schwartzman. The tour also welcomes players transitioning from the ATP Tour, such as Richard Gasquet, who last month played his final professional match at Roland-Garros, losing to Jannik Sinner in the second round.

Scott Young, EVP at WBD Sports Europe, affirmed: “We’re thrilled to bring the Legends Team Cup to millions of viewers across Europe. This innovative tournament brings together some of the most iconic names in tennis, which will deliver unforgettable moments for fans in some stunning locations across the globe. The fast-paced, unique format of two sets of four games in the competition makes it a unique proposition for fans to watch”.

“Adding the Legends Team Cup demonstrates our commitment to showcasing innovative sporting formats across the world and we can’t wait to see how some of these tennis greats extend their legacy”.

Marten Hedlund, president of Legends Team Cup, added: “Partnering with Warner Bros. Discovery marks a major milestone for the Legends Team Cup, as we’re now able to showcase our tour to millions of people around the world”.

“I’ve long had the belief that there should be more opportunities for tennis players to extend their careers once they retire from the ATP Tour, and that is what we are providing with the Legends Team Cup. This is a new chapter in the history of tennis, one where legends can continue to inspire, compete and connect with fans worldwide”.

RTL Group has announced that it has signed a definitive agreement to acquire Sky Deutschland (DACH). The transaction brings together two of the most recognisable media brands in the DACH region, creating an entertainment business with around 11.5 million paying subscribers.

Together, the business is well-positioned to meet evolving consumer demands and compete with global streamers. The transaction combines Sky’s premium sports rights – including Bundesliga, DFB-Pokal, Premier League and Formula 1 – with RTL’s leading entertainment and news brands across RTL+, free-to-air and pay TV. It also unites the fastest growing streaming offers in the German market, RTL+ and WOW. The transaction, which has been approved by the Board of Directors of RTL Group, is subject to regulatory approvals.

€150 million deal

According to the agreement, RTL Group will fully acquire Sky’s businesses in Germany, Austria and Switzerland, including customer relationships in Luxembourg, Liechtenstein and South Tyrol, on a cash-free and debt-free basis. The purchase price consists of €150 million in cash and a variable consideration linked to RTL Group’s share price performance. The variable consideration can be triggered by Comcast, Sky’s parent company, at any time within five years after closing, provided that RTL Group’s share price exceeds €41. The variable consideration is capped at €70 per share or €377 million. RTL Group has the right to settle the variable consideration in RTL Group shares or cash or a combination of both. RTL Group is considering buying treasury shares to be in a position to settle the variable consideration fully or partly in shares.

The combined business will offer a broader and more compelling German-language content portfolio for consumers across the DACH region. Viewers will benefit from expanded access to premium live sports, entertainment and news across RTL+, Sky, WOW and RTL’s free-to-air channels. By bringing together the strengths of RTL and Sky, the combined company will be able to compete against global streaming platforms. The transaction is expected to generate €250 million in annual synergies within three years, mostly cost synergies across all categories.

Under a separate trademark license agreement, RTL will have the right to use the Sky brand in the DACH region (Germany, Austria, Switzerland), Luxembourg, Liechtenstein and South Tyrol. RTL will acquire Sky Deutschland’s streaming brand “WOW” as part of the transaction.

Barny Mills, Sky Deutschland CEO, will continue to lead the Sky Deutschland business until the transaction is completed. Stephan Schmitter will stay in his current role as CEO of RTL Deutschland until closing of the transaction and then lead the combined company. RTL Deutschland will remain headquartered in Cologne and Sky Deutschland in Munich.

The two businesses will operate independently until 2026

The combined company’s pro-forma revenue for 2024 was €4.6 billion, with approximately 45 per cent of the total coming from subscriptions. RTL Group’s pro-forma revenue for 2024 was €8.2 billion, more than 30 per cent higher than RTL Group’s reported consolidated revenue for 2024 (€6.25 billion). The acquisition of Sky Deutschland is the largest transaction for RTL Group since its inception in 2000. The two businesses will continue to operate independently until regulatory approvals are obtained, which are expected in 2026.

Thomas Rabe, CEO of RTL Group, said: “The combination of RTL and Sky is transformational for RTL Group. It will bring together two of the most powerful entertainment and sports brands in Europe and create a unique video proposition across free TV, pay TV and streaming. It will boost our streaming business, with a total of around 11.5 million paying subscribers, further diversify our revenue streams and make us even more attractive for creative talent, rights holders and business partners.”

America’s younger and older generations spend less time watching TV than any other group. While Gen X (1965-1980) and Millennials (1981-1996) prefer ad-supported subscription streaming (AVOD) over FAST channels, Gen Z (1997-2012) is more likely to fragment its consumption on smaller screens, according to the latest release published by DASH in partnership with NORC at the University of Chicago.

The ARF DASH TV Universe Study shows how Americans connect to TV, how they consume it today and how that is changing across generations. According to the study, the habits of each age bracket are shaped by the technologies they grew up with and the values they share.

Although Boomers (those born in the late 1940s through late 1950s) remain loyal to more familiar viewing patterns (54%), they are also starting to experiment with new platforms (49%). The report’s analysis suggests that this could be because FAST more closely resembles the channel-surfing experience they are used to. Also, many of those channels feature programming that was current when Boomers were growing up, and it is free, like TV used to be when they were younger.

The opposite happens with other generations.

The “parent” generations, Gen X and Millennials, are the ones who consume the most TV, especially AVOD. According to the study’s data, Gen X shows a 69% preference for streaming services and 61% for FAST channels.

Millennials are the main consumers of paid content (73%) and the second most frequent users of traditional TV (58%), after Gen X.

Radio 47, one of Kenya’s Swahili-language radio stations, operated by Cape Media Ltd., has unveiled Africa’s first fully IP-based, automated hybrid broadcast facility. Located in Nairobi, the installation aims to set a new benchmark in the modernization of media infrastructure on the continent. The project was planned and executed by Kigali-based Mediacity Ads Ltd., a specialist in IP broadcast systems and Lawo’s regional partner, according to a statement.

The new facility integrates radio, television, live streaming, and remote production into a unified, flexible system, using broadcast technology from manufacturers including Lawo (Germany), MultiCam Systems (France), AVT Audio Video Technologies (Germany), Voceware, Cleanfeed, Genelec, Telos Alliance, PTZ Optics, OptiSign, and others.

Central to the installation is a fully IP-native broadcast infrastructure built on Lawo’s crystal broadcast consoles, Power Core DSP engine, and Ravenna/AES67-based audio-over-IP networking. The integrated Lawo VisTool GUI software provides customizable touchscreen interfaces for control and visualization of sources, meters, and routing. The new workflow allows remote control and monitoring of all broadcast operations.

Each of the three new on-air studios at Radio 47 features a Lawo crystal console, chosen for its form factor, operation, and integration with IP workflows. The consoles are networked to a centralized Lawo Power Core, a modular IP I/O and mixing engine designed to manage extensive audio routing, DSP processing, and multiple studio operations from a single location.

The Power Core is equipped with modular I/O cards that handle analog, AES3, Dante, and MADI formats. Cape Media needed flexibility to future-proof its investment while still accommodating external sources and OB workflows. The system’s modular design also allows for easy expansion and remote integration, whether for regional studio feeds, mobile devices, or external contributors.

All studios, edit rooms, and control points are connected via an AES67-compliant IP backbone, with support for Ravenna networking. This infrastructure is intended to enable real-time, lossless audio transmission and complete operational freedom: feeds, phone calls, or streams can be routed anywhere in the facility.

“We wanted more than another radio station. We wanted to build the future of African media—an infrastructure that removes traditional barriers, empowers creativity in the young generation, and embraces tomorrow’s technology today”, explained Simon Gisharu, Chairman, Cape Media Ltd.

“This wasn’t just an upgrade”, added Fred Martin Kiwalabye, Project Manager and Head Technical Engineer at Mediacity Ads Ltd. “It was a reinvention of how media can work—cleaner, smarter, faster, and future-proof. Powered by Lawo and supported by visionary leadership, we’ve redefined the possibilities”.

Riedel Communications has announced the launch of RefSuite, a new Managed Technology solution tailored to professional sports workflows. RefSuite combines hardware, software, cloud services, and 24/7 remote operations into one ecosystem that aims to transform refereeing, coaching, and broadcast operations across live sports environments, according to a company statement.

Developed in close partnership with referees and industry stakeholders, and built on the expertise of Riedel’s engineering team, RefSuite brings together five tightly integrated modules: RefCam, RefBox, RefComms, CoachComms, and RefCloud. From head-mounted referee cameras and FIFA Quality-certified Video Assistant Referee (VAR) systems to Bolero S-based wireless comms and cloud-based media management, RefSuite aims to enable coordination, performance, and decision-making while delivering previously unseen, immersive broadcast perspectives.

“RefSuite is the culmination of years of field experience, product innovation, and direct collaboration with the world’s leading sports organizations”, explained Lutz Rathmann, CEO Managed Technology, Riedel Communications. “With each module already proven in high-profile deployments worldwide, RefSuite represents a decisive move away from fragmented solutions, delivering a cohesive, future-ready platform that empowers officiating, coaching, and broadcast teams alike”.

RefSuite’s modular design is intended to offer better scalability and flexibility, adapting to each client’s requirements and scale. RefCam delivers stabilized, broadcast-grade referee POV video, while the RefBox VAR review system offers intuitive, synchronous control of all video sources for instant analysis, playback, and clip export. RefComms and CoachComms provide encrypted, low-latency referee and team communication, complemented by the RefCloud media cloud as a central hub for secure media storage, review, and collaboration.

The ecosystem can be extended with Riedel’s Easy5G Private 5G network solution, offering data transmission for full-pitch RefCam video streaming or remote RefBox review workflows. Combined with optional centralized monitoring and support via Riedel’s Remote Operations Center (ROC), this Managed Technology offering aims to deliver turnkey deployment and operational peace of mind.

“RefSuite represents our vision of seamless delivery — a unified technology and service model designed to meet the demanding needs of international federations, leagues, broadcasters, and production partners”, added Marc Schneider, Executive Director Global Events, Riedel Communications. “This is just the beginning: RefSuite is the first step in a new generation of integrated ecosystems that will unlock new capabilities for remote production, performance analysis, and immersive fan experiences — shaping the future of motorsport, maritime, and live production environments alike”.

IBC2025 is poised to lead the global conversation on media and entertainment (M&E) innovation when it brings the industry’s creative, technology, and business communities to the RAI Amsterdam on 12-15 September. Industry players from 170 countries will converge on this year’s show to connect, collaborate and unlock business opportunities – with a sharpened focus on transformative technology and real-world application. IBC2025 introduces Future Tech, a dynamic hub of emerging technologies, collaborative projects and next-gen talent taking up all of Hall 14.

“Shaping the future of media and entertainment worldwide is more than our theme – it’s our mission,” said Michael Crimp, IBC’s CEO. “IBC2025 is where the brightest minds from the international M&E community explore the ideas and technologies reshaping our industry. Future Tech brings that innovation into focus – from AI-powered personalisation and cloud-native workflows to content provenance and private 5G networks, visitors can see the future being defined and built in real time.”

Pioneering advances in areas such as AI, virtual production, interactive media, sustainable technology, and immersive experiences will drive visitors’ show journeys throughout IBC2025, but these technologies take centre stage in Future Tech.

Those exploring Hall 14 will see how AI is moving from the conceptual to the practical, spanning live production automation, generative content tools, and new uses just being introduced. They will also get the opportunity to delve into the virtual world of LED backdrops, augmented reality and real-time VFX, with exhibitors including Microsoft, Mo-Sys, 3Play, CaptionHub, Deepdub, Files.com, Monks (formerly Media Monks), Tata Communications, Veritone, and Ultra HD Forum.

Offering a curated blend of visionary showcases, hands-on demos, and thought leadership, Future Tech will also feature:

› Accelerator Innovation Zone: Where nine cutting-edge Proof of Concept (PoC) projects fast-track solutions to some of the industry’s toughest practical challenges through media owner and tech vendor collaboration.

› Future Tech Stage: Packed with keynotes, panels and live demos – including the Accelerator PoCs – and sponsored by Microsoft, this stage will feature industry pioneers previewing the technologies set to transform content creation and delivery.

› IBC Hackfest x Google Cloud: A high-intensity, two-day hackathon with digital innovators, tech entrepreneurs, software developers, creatives and engineers tackling real M&E challenges using Gemini AI and more.

› Google AI Penalty Challenge: This immersive interactive football-fan experience employs more than 15 integrated technologies to showcase AI-driven decision-making in sports performance.

› 100 Years of Television: Celebrating a century of innovation since the first TV picture was demonstrated by John Logie Baird at Strathclyde University in 1925, this exhibit not only looks back but offers a glimpse into what the next 100 years might bring.

› Networking Hub: From stand-up breakfasts and informal lunches to curated meetups and evening DJ sets, this buzzing hub offers a relaxed space for media and tech professionals to connect.

Future Tech also plays host to the growing IBC Talent Programme, supported by partners such as Rise, Rise Academy, Host Broadcasting Services (HBS), Gals N Gear, and Media Entertainment Talent Manifesto. Running on Friday 12 September, the programme presents a wide range of sessions and speakers covering everything from mentoring and career pathways to practical skills, diversity and inclusion.

Exhibitor innovation everywhere

Innovation is by no means restricted to Hall 14. Groundbreaking advances in everything from production and transmission to cameras and lighting to streaming and cloud services will be spotlighted on stands across the show – including exhibitor-driven presentations, demos and panels on the two Content Everywhere stages and the Showcase Theatre.

Companies returning to the show to meet, collaborate and showcase innovations include Adobe, Amazon Web Services, Ateliere, Avid, Blackmagic, Canon, EVS, Grass Valley, Panasonic, Riedel, Ross Video, Samsung, Sony and Zattoo. New exhibitors include Baron Weather, Cachefly, Files.com, Momento, NewBlue, OTT Solutions, Plain X, Raysync, and Remotly. The amount of space booked by exhibitors for IBC2025 has now passed 44,000 square metres, with over 1,100 exhibitors booked so far.

Steve Connolly, Director at IBC, notes: “IBC2025 is set to be our most transformative edition yet – a show where innovation is embedded across the entire experience. From world-first product launches to pioneering conference insights, we’re bringing together the technologies, people, and ideas that are redefining what’s possible in M&E.”

Deep dive at the IBC Conference

This year’s IBC Conference, the exclusive knowledge and networking experience, is running from 12-14 September. It brings together a broad mix of industry leaders and innovators to address topics such as: the business of TV and the search for sustainable growth across new platforms; live sports and real-time experiences; personalised advertising and the future of commercial models; and discovery and prominence – how to ensure content is surfaced in a crowded landscape.

This year’s conference will once again deliver visionary speakers who will cover business-critical challenges around technology and advertising models. AI also features heavily across the programme, examining not just its creative potential but how it can drive efficiency, enhance personalisation, and support real-world editorial workflows.

Grass Valley and Daktronics have announced a technology partnership that unites Grass Valley’s live production with Daktronics’ LED displays. Together, they aim to deliver fully integrated solutions that enhance content creation, production efficiency, and real-time presentation for sports and entertainment venues, according to a statement from Grass Valley.

“This partnership with Grass Valley represents a powerful alignment of complementary technologies”, affirmed Brad Wiemann, Interim CEO and President of Daktronics. “Together, we’re helping venues deliver fully immersive, end-to-end experiences that transform how fans engage with live events”.

“Joining forces with Daktronics allows us to push the boundaries of what’s possible in live production and venue presentation”, added Jon Wilson, CEO of Grass Valley. “With two of the industry’s leading brands, we’re excited about what this means for the future of integrated, scalable solutions in the world’s top venues”.

Several joint projects are already underway, applying the technical integration of Daktronics’ display and control + Camino with Grass Valley’s IP-based live production tools.

Daktronics and Grass Valley will showcase this partnership at the upcoming IDEA Conference, taking place in Boston from July 13 to 16, 2025.

Telos Alliance has announced that its Axia Altus SE virtual mixing console is now shipping. Like the original software implementation of Altus, Altus SE aims to offer a full-function browser-based mixer for remote events and contributors, allow easy deployment of temporary studios, and serve as a new option for disaster recovery sites, according to a company statement.

As part of the company’s newly introduced Studio Essentials family of products, Altus SE is built on the same fanless hardware as the Telos VX Duo broadcast phone system.

It is designed to be smaller and to operate more quietly. It includes 8 faders and can be expanded to up to 24 faders in 4-fader increments via buyout-style licenses.
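The expansion path described above comes down to simple arithmetic. The following is a minimal sketch, assuming each buyout-style license adds exactly one 4-fader step; the function names are hypothetical and illustrative only, and do not reflect any Telos Alliance software or licensing API.

```python
# Figures taken from the announcement: 8 faders included, up to 24, in 4-fader steps.
BASE_FADERS = 8
MAX_FADERS = 24
FADERS_PER_LICENSE = 4  # assumption: one license = one 4-fader increment


def valid_fader_counts() -> list[int]:
    """Return every fader count reachable from the base unit."""
    return list(range(BASE_FADERS, MAX_FADERS + 1, FADERS_PER_LICENSE))


def licenses_needed(target_faders: int) -> int:
    """Return how many expansion licenses a target fader count would require."""
    if target_faders not in valid_fader_counts():
        raise ValueError(f"{target_faders} faders is not a reachable configuration")
    return (target_faders - BASE_FADERS) // FADERS_PER_LICENSE


if __name__ == "__main__":
    for count in valid_fader_counts():
        print(f"{count} faders -> {licenses_needed(count)} expansion license(s)")
```

Under that assumption, a fully expanded 24-fader unit would need four expansion licenses on top of the base configuration.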

“The reaction to Altus SE in our booth at the 2025 NAB Show was overwhelmingly positive”, affirmed Cam Eicher, Telos Alliance VP of Worldwide Sales. “The idea of having the same features and flexibility as our software-based Altus virtual mixing console, but with the convenience of an easy-to-deploy dedicated hardware appliance, really resonated with everyone at the show. We’re happy to share that Altus SE is in stock and ready to ship”.

LiveU has announced the completion of the European FIDAL/B5VideoNet (B5GVN) project, which is co-funded by the 6G Smart Networks and Services. The project aimed to demonstrate advancements in remote video production workflows using multi-link, multi-slice 5G bonding for single and multi-cam transmission and feeds back to the receiver. LiveU conducted tests and trials using its multi-camera LU800 PRO units and Xtend connectivity solution with beyond 5G (B5G) capabilities, as it has claimed in a statement.

As part of this project, LiveU focused on three remote production use cases:

› Cloud Remote Production – leveraging slicing configurations to try to guarantee bandwidth and latency for cloud-based broadcast workflows.

› Edge-Based Production – integrating mobile edge computing within an operator infrastructure (like a private cloud).

› On-Site Remote Production with Cloud-based Solutions – testing uplink/downlink slicing configurations for production workflows using LiveU’s Mobile Receiver, Xtend and LU-Link together with the LiveU Ingest automatic recording and story metadata tagging solution.

The trials took place at the University of Patras (UoP) B5G testbed in Greece. All three scenarios had the objective of exploring the benefits of multi-link, multi-slice bonding for broadcast-grade video transmission, a mechanism to guarantee bandwidth, latency, or error-rate parameters for specific SIMs across the 5G infrastructure. This proved to be important under network load and congestion conditions.

The trials included various configurations of Guaranteed Bit Rate (GBR) and Non-Guaranteed Bit Rate (Non-GBR) slices, both single-slice and multi-slice. Upon deployment in commercial networks, this technology would enable broadcasters and content creators to match service levels to their production requirements, resources and budgets. For example, higher-bandwidth GBR slices could be reserved where necessary, while more economical GBR slices, or even Non-GBR slices, could be relied upon for less critical traffic or to supplement bandwidth where needed (budget permitting).
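As a rough illustration of this kind of matching, the Python sketch below assigns each feed to the cheapest slice that satisfies its bitrate and criticality. The slice names, bitrates and relative costs are hypothetical values chosen for the example; they are not figures from LiveU or from the trials.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    name: str
    guaranteed: bool   # True for GBR, False for Non-GBR
    mbps: float        # hypothetical bitrate the slice offers
    cost: float        # hypothetical relative cost per hour


@dataclass(frozen=True)
class Feed:
    name: str
    mbps: float        # bitrate the feed needs
    critical: bool     # critical feeds are only placed on GBR slices


# Hypothetical catalogue, not taken from the trials.
SLICES = [
    Slice("gbr-high", True, 40.0, 10.0),
    Slice("gbr-economy", True, 15.0, 4.0),
    Slice("non-gbr", False, 20.0, 1.0),
]


def pick_slice(feed, slices=SLICES):
    """Return the cheapest slice that meets the feed's needs, or None."""
    candidates = [
        s for s in slices
        if s.mbps >= feed.mbps and (s.guaranteed or not feed.critical)
    ]
    return min(candidates, key=lambda s: s.cost, default=None)


if __name__ == "__main__":
    for feed in (Feed("main-cam", 30.0, True), Feed("return-video", 8.0, False)):
        chosen = pick_slice(feed)
        print(feed.name, "->", chosen.name if chosen else "no slice fits")
```

In this toy catalogue the critical main camera lands on the high-bandwidth GBR slice, while the less critical return feed falls back to the cheaper Non-GBR slice.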

Another ‘B5G-towards 6G’ technology explored was Network Exposure APIs for network resource allocation. The project laid the foundation for real-time video contribution service ordering, allowing LiveU and similar applications to request slices based on event timing and location. This technology (and related use cases) allows cellular operators to provide services on an ‘as needed’ basis rather than ‘everywhere all the time’.

Canal+ has used the new Blackmagic URSA Cine Immersive camera and DaVinci Resolve Studio to film and finish its latest sports documentary about MotoGP, which is intended for viewing on Apple Vision Pro. The project is part of a new generation of immersive workflows for capture, postproduction and viewing on Apple Vision Pro and will be available exclusively on the Canal+ app in September 2025, as Blackmagic has claimed in a statement.

Produced in collaboration with MotoGP and Apple, the documentary follows world champion Johann Zarco and his team during their dramatic home victory at the French Grand Prix in Le Mans.

It was captured using the URSA Cine Immersive camera with dual 8160 x 7200 (58.7 Megapixel) sensors at 90fps, delivering 3D immersive cinema content to a single file mixed with Apple Spatial Audio. The MotoGP sports experience places viewers in the heart of the action, from the pit lane and paddock to the podium.

“MotoGP is made for this format”, said Etienne Pidoux at Canal+.

“You feel the raw speed, and you see details you’d otherwise miss on a flat screen. It puts you closer to the machines and the team than ever before”.

To place the viewer at the center of the action, Canal+ deployed multiple URSA Cine Immersive cameras. “We had two cameras on pedestals and one on a Steadicam”, explained Pierre Maillat of Canal+. “The idea was to be able to swap quickly between Steadicam and fixed setups depending on what was happening in the moment”. “The Steadicam setup was extremely valuable”, noted Pidoux. “It made us more reactive in a fast-changing environment and gave us more agility while filming”.

“Immersive video changes how you shoot”, added Pidoux. “You plan more, shoot less, and you rethink composition because of the 180-degree view, especially in tight or crowded spaces like the pit lane”. Lighting was also a consideration inside the team garages. “We added some extra light to compensate for the 90 frames per second stereoscopic capture”.

Each camera was paired with an ambisonic microphone to capture first-order spatial audio; the ambisonic mics were supplemented by discrete microphones for interviews and other critical sound sources. “We recorded in ambisonics Format A for the immersive mix and channel-based for other sources”, Maillat noted. “Everything was timecoded wirelessly and synced on both the cameras and the external recorders”.

A portable production cart with a Mac Studio running DaVinci Resolve Studio, alongside an Apple Vision Pro, was set up trackside to monitor and test shots in context. “This approach allowed us to check the content right after shooting and helped us verify framing while still on location”, said Maillat.

Canal+ had a second Mac Studio running DaVinci Resolve Studio and an Apple Vision Pro set up at the hotel in Le Mans to handle media offload and backups. With 8TB of internal storage, recording directly to the Media Module, the crew could film more than two hours of 8K stereoscopic 3D immersive footage on the track without needing to change cards.
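The figures quoted for the camera can be put side by side with a few lines of arithmetic. The Python sketch below derives the per-sensor pixel count, the combined pixel throughput of both sensors, and an upper bound on the average recording data rate. It treats 8TB as 8 × 10^12 bytes and "more than two hours" as exactly two hours; both are simplifying assumptions made for this example.

```python
WIDTH, HEIGHT = 8160, 7200      # per-sensor resolution quoted in the article
FPS = 90                        # frames per second
SENSORS = 2                     # one sensor per eye

STORAGE_BYTES = 8 * 10**12      # assumption: 8 TB counted in decimal units
RECORD_SECONDS = 2 * 60 * 60    # assumption: exactly two hours of footage


def megapixels_per_sensor():
    """Pixel count of one sensor, in megapixels."""
    return WIDTH * HEIGHT / 1e6


def pixels_per_second():
    """Pixels captured per second across both sensors."""
    return WIDTH * HEIGHT * FPS * SENSORS


def max_average_rate_gb_per_s():
    """Upper bound on the average data rate if 8 TB holds two hours."""
    return STORAGE_BYTES / RECORD_SECONDS / 1e9


if __name__ == "__main__":
    print(f"{megapixels_per_sensor():.2f} MP per sensor")
    print(f"{pixels_per_second() / 1e9:.2f} gigapixels per second, both eyes")
    print(f"at most about {max_average_rate_gb_per_s():.2f} GB/s on average")
```

Because the crew could record for more than two hours, the real average rate sits below this bound.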

Postproduction took place in Paris, where Canal+ used a Mac Studio running DaVinci Resolve Studio for editing, color grading, and audio mixing. “We could even preview the stereoscopic timeline directly in Apple Vision Pro, crucial for immersive grading”, explained Maillat.

Spatial Audio was mixed using DaVinci Resolve Studio’s Fairlight. “Initially, we planned to use a different digital audio workstation (DAW), but DaVinci Resolve Studio and Fairlight was the platform that gave us both creative flexibility and the high-quality deliverables for Apple Vision Pro”, explained Maillat.

“Filming with the URSA Cine Immersive camera and viewing it in Apple Vision Pro, we found incredible moments we’d normally treat as background”, Pidoux concluded. “Cleaning the track, helmet close ups, the crowd, they all become part of the experience”.

LYNX Technik has announced an executive leadership transition, effective July 1, 2025. Markus Motzko has been appointed Chief Executive Officer and Vincent Noyer will take on the role of Chief Technology Officer, as the company has claimed in a statement.

Founder and longtime CEO Winfried Deckelmann will transition out of daily operations and continue to support the company as a member of the Supervisory Board, where he will serve as Chairman.

“Markus and Vincent represent the perfect balance of operational excellence and visionary thinking”, said Winfried Deckelmann. “They have both demonstrated a deep understanding of our technology and our customers’ needs. I have full confidence in their ability to guide LYNX Technik into its next chapter while honoring the principles that have shaped our company since the beginning”.

Dr. Markus Motzko brings experience in production processes, manufacturing optimization, and technical leadership. Since joining LYNX Technik, he has led production and administration, focusing on operational efficiency and long-term growth. Prior to joining the company, Markus held roles in the industrial and medical semiconductor sectors, managing product development, supply chain operations, and customer engagement.

“I’m honored to help lead LYNX Technik through its next phase”, affirmed Markus Motzko. “The company has a strong reputation for engineering excellence and customer-focused innovation. I look forward to building on that legacy with Vincent and our talented global team”.

Vincent Noyer, previously Director of Product Marketing, assumes the CTO role with over two decades of experience in the broadcast and live sports industries. Before LYNX Technik, Vincent held senior positions at Ross Video, where he helped drive the PIERO Sports Graphics solution.

“LYNX Technik has always been about delivering smart, dependable tools that broadcast and AV professionals can rely on”, concluded Vincent Noyer.

“As CTO, my focus is on continuing that tradition, developing technology that addresses real-world challenges and evolves with our customers’ needs. We will keep refining our solutions to make them even more efficient and aligned with the way people work with content.”

Exclusive interview with the executive technical leadership to explore its technological horizon

Rai’s Director of Technology, Ubaldo Toni, together with his team, answers all our questions. We review the current technological status of Italy’s public broadcaster (now undergoing a deep transformation) and its main investment priorities. The interview also offers broader insights into the future of the broadcast industry.

By Daniel Esparza

A change of cycle at Rai. The retirement of its CTO, Stefano Ciccotti (a story exclusively reported by TM BROADCAST), opens a new chapter for the Italian public broadcaster. As of the publication date of this issue, Rai has not officially confirmed how its final organizational structure will look. It’s worth noting, in any case, that the various business units within the public corporation already enjoy a considerable degree of operational autonomy, owing to the vast scope of its activities. Over the years, the CTO position has taken on a more institutional character within the company.

This makes it an ideal moment to take stock of Rai’s current situation and to explore the key elements of its technological outlook for the years ahead. Helping us in this task is Ubaldo Toni, Director of Technology, who has brought together his senior technical leadership team to exclusively answer all our questions.

This article is part of a series exploring the main public broadcasters across Europe. In recent issues, we have focused on the Nordic countries, featuring interviews with the technical heads of Sweden’s SVT, Denmark’s TV 2, Iceland’s RÚV, and Norway’s NRK. As a side note, Ubaldo Toni mentioned that he had read these pieces and that the ambitious upgrades carried out by SVT and TV 2, both of which he personally visited, once served as a source of inspiration for Rai.

In addition to Ubaldo Toni, the interview also includes contributions from Nino Garaio, Head of Digital Production Systems; Fabio DiGiglio, Deputy Head of Digital Production Systems; Stefano Marchetti, Head of Platform Engineering; Guglielmo Trentalange, Head of Studio Networking Systems; Andrea Menozzi, Head of Infrastructure Engineering; and Riccardo Rombaldoni, Head of Content Production Engineering.

As for Rai’s general organizational structure, it is important to highlight that the “Direzione Tecnologie” led by Toni is primarily focused on television production systems and media-related IT infrastructure. The scope of this interview has therefore been limited to that area. Other domains are managed by separate business units: “Reti e Piattaforme” oversees OTT services, non-linear media, and DVB-S/DVB-T distribution; “ICT” handles corporate-wide IT and networking outside the media domain; CRITS (“Centro Ricerche Innovazione Tecnologica e Sperimentazione”) is responsible for R&D activities; and “Direzione Produzione TV” manages the operational aspects tied to major events such as the Sanremo Festival.

With four production centers, 17 regional offices, around 2,000 journalists, and dozens of linear channels, Rai stands as one of Europe’s leading broadcasters. It also holds an extensive content archive, dating back to 1924 for radio and 1954 for television. Currently, the corporation is undergoing a major transformation. “We’re shifting from broadcaster to media company so we are developing a multi-year, multi-million-euro innovation plan to support this transition,” explains Ubaldo Toni. “We are steadily moving toward a fully IT- and IP-based architecture, though completing the full transition will take another four to five years.”

Have you implemented any recent technological innovations or upgrades that you would like to highlight?

In recent years, we completed the renewal of the production infrastructure of one of our major Rai Production Centres in Rome (Studi Nomentano). This facility includes six medium- to large-sized studios (ranging from 380 to 1,500 sqm) dedicated to entertainment programs, five control rooms equipped with HD/UHD-12G-ready technology, a central SMPTE 2110 IP-based routing system, post-production facilities with centralized storage, and new LED-based lighting systems that have reduced energy consumption by 80%.

We also recently completed a full overhaul of our national news production systems, transitioning from a traditional IT environment to a private cloud. This cloud infrastructure features state-of-the-art technology, both at the application level (using Avid MediaCentral Cloud UX) and at the hardware layer, which is built on Dell and VMware hyperconverged virtualization. As a result, we’ve reduced energy consumption by 60%, while continuing to serve over 800 journalists, who now benefit from fully effective remote working capabilities.

What is your current technological landscape when it comes to the transition from SDI to IP on the production side?

We began introducing SMPTE 2110 IP technology where we saw clear benefits, not simply to follow industry trends. In the live production area, we didn’t find compelling technical or economic reasons to be early adopters of the 2110 standard. As a result, most of our newer live production systems are based on 12G-SDI UHD/HDR, while SMPTE 2110 is used primarily to optimize signal distribution and enable shared use of resources across different studios and control rooms. We believe this hybrid approach has been the best choice in terms of reliability, sustainability, investment efficiency, maintenance costs, and operational effectiveness.

Rai has been a pioneer in adopting UHD. Could you summarize your current position and the main challenges you foresee in this area?

On the production side, we have primarily invested in OB vans, as they are typically used for premium events that may be sold or distributed internationally, where UHD quality is often required. Our long-term infrastructure projects are also designed with UHD support in mind.

However, a large-scale shift to UHD would involve significantly higher costs (for example, storage requirements can increase fourfold), so we enable full UHD functionality only where and when it is truly needed. We particularly value flexible licensing models (e.g., for high-end cameras) that allow UHD capabilities to be activated on a usage basis.
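The fourfold figure is consistent with simple pixel arithmetic. The Python sketch below compares UHD (3840 × 2160) with HD (1920 × 1080) and scales a hypothetical HD storage figure accordingly. It assumes the same codec, frame rate and bits per pixel for both formats, which real workflows may not share.

```python
HD = (1920, 1080)
UHD = (3840, 2160)


def pixel_ratio(a, b):
    """How many times more pixels per frame format a has than format b."""
    return (a[0] * a[1]) / (b[0] * b[1])


def scaled_storage_tb(hd_storage_tb):
    """Estimate UHD storage from an HD figure, assuming storage scales with pixel count."""
    return hd_storage_tb * pixel_ratio(UHD, HD)


if __name__ == "__main__":
    print(pixel_ratio(UHD, HD))        # UHD has four times the pixels of HD
    print(scaled_storage_tb(100.0))    # hypothetical 100 TB HD archive
```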

On the distribution side, Rai launched its 4K channel in 2016, broadcasting free-to-air on the Tivùsat digital satellite platform. The channel is used to air UHD versions of major live events (such as the Opening of the Holy Door) and other premium content. These broadcasts are simulcast with HD versions on our premium HD channels and are also available via streaming on HbbTV-compatible devices.

In relation to this, could you provide an overview of the current state of the transition to UHD in the Italian television landscape?

UHD is steadily gaining ground in high-end production, particularly for premium events and some scripted content.

However, its broader adoption is still limited by distribution challenges. In Italy, DTT remains the primary delivery platform, and even with T2-HEVC, there isn’t sufficient bandwidth to support UHD broadcasts terrestrially. Satellite is also used, but its reach is limited. Broadband distribution remains costly due to high CDN expenses, and fiber-to-the-home coverage is still not widespread across the country. At this stage, large-scale investments in UHD are not justified by the potential for additional revenue.

Are there any interesting projects or upgrades in the near future that you can share with us?

We’ve just entered the executive phase of the Rai Sport digitization project. The goal is to connect our sports channels and newsrooms in Rome and Milan to the existing Avid infrastructure, while integrating EVS equipment to create a fully streamlined workflow for event coverage and sports news production. The project is expected to be fully implemented within two years.

In addition, we are about to launch a proof of concept (POC) for studio automation in our regional news operations.

In news or sports production, graphics are a key element. In this regard, how do you approach this area, and to what extent are you using immersive technologies or augmented reality?

We are currently using immersive technologies and augmented reality in several studios in Milan, primarily for sports programming, as well as in Rome for other popular TV programs and documentaries. We have also planned further investments in this area to expand and enhance our capabilities.

I’d like to know a bit more about the key elements of your studios and facilities. Have you recently acquired any significant equipment? Which manufacturers do you usually rely on?

We have recently carried out significant upgrades in our TV studios through public tenders for key technologies. This year, we plan to sign several framework contracts for equipment such as UHD video switchers, studio cameras and lenses, PTZ cameras, ENG cameras, and broadcast monitors.

On the infrastructure and control side, we typically rely on both Utah and Riedel’s MediorNet distributed video routing systems, as well as the Lawo VSM broadcast control system. We are also planning investments in technologies aimed at significantly reducing operational and energy costs, including LED walls, LED floor systems, LED lighting, and, last but not least, virtual set systems.

Among the events Rai has recently covered, could you share a specific success story you’re particularly proud of, one that stood out due to the challenges it involved?

I guess “Sanremo Festival 4K” and the “Eurovision Song Contest” in Turin, but as this question relates to operational events managed by a different business unit, I may not be able to answer.

What would you say is the current state of 5G adoption in the Italian broadcast industry and within your workflows?

We are running extensive tests, using either public networks or private “5G bubbles” at some events. This is still an R&D matter for us, so Rai CRITS (Centro Ricerche ed Innovazione Tecnologica) should be contacted for detailed information.

How are you adopting artificial intelligence and process automation in your workflows?

How do you see its influence in the near future?

AI has recently been adopted to enhance processes related to media accessibility. It is currently used to assist with batch preparation of subtitles and is being tested for automatic transcription of live events. More broadly, we see AI as increasingly valuable in improving the performance of production tools, which in turn will help boost overall productivity.

What is your level of confidence in and adoption of cloud technologies compared to on-premise systems?

On-premises systems, while requiring a significant upfront investment, offer predictable costs and greater control — which is essential for a public broadcaster like Rai, given our strict compliance and security requirements.

That said, it’s clear that cloud technologies offer unique advantages, particularly in terms of flexibility and scalability.

We see a hybrid approach as the most effective strategy — one that leverages the strengths of both cloud and on-premises solutions to address specific business needs.

For example, we see clear value in beginning to support Rai’s program delivery partners with ingest and QC file services configured in the cloud. This allows us to reduce feedback latency regarding the technical compliance of media assets and to scale delivery operations during peak periods throughout the year.

However, we have no plans to move our program archive to the cloud. Too many questions remain open around content rights, security, and content management — particularly when relying, even partially, on major providers such as AWS or Azure.

This also ties into the broader issue of the digital divide. A cloud-based platform requires stable and high-speed network connectivity, especially for post-production workflows. Unfortunately, that level of connectivity is not always guaranteed, particularly in scenarios where cloud processing would be most beneficial, such as special outdoor productions or major sporting events like the Giro d’Italia cycling race.

What is your vision for the transition of the broadcast industry to a software-based infrastructure?

The transition to software-based infrastructures is progressing rapidly, driven by advances in artificial intelligence, cloud computing, and IP-based workflows. Our objective is to harness the potential of software to achieve more flexible, scalable, and efficient operations.

At the same time, it’s essential to maintain the reliability and security that have traditionally characterized broadcast environments.

An equally important focus for us is the training of engineers. Their skillsets must evolve to meet the growing complexity involved in managing and administering software-based systems.

Would you like to add anything else?

An equally important focus for us is comprehensive training across all levels, from project and system design to operations and maintenance. As we adopt more complex, software-driven infrastructures, it’s critical that all teams involved are well-prepared before systems go live. Engineers must understand not only the underlying architecture, but also how to configure, operate, and troubleshoot new technologies effectively.

Likewise, operators need to adapt to evolving workflows, and maintenance personnel must be equipped to handle updates, integration issues, and long-term support. In our view, investing in training ahead of final release is not optional — it’s a key success factor for ensuring reliability, minimizing downtime, and securing a smooth transition to next-generation broadcast systems.

Technology is rapidly and relentlessly transforming professions and duties. A change of mindset is needed, from the old way of “creating” television to the new one, involving not just the engineering side but also operations, professional training and human resources.

This applies to media companies but also to suppliers, who find themselves facing the same challenges, including the recruitment of new talent and experienced professionals in the sector.

That old “niche” made of companies and technology suppliers is disappearing and we can see on the horizon, even in the media/broadcast world, the big IT market players such as Amazon, Microsoft, Huawei, Nvidia and Meta, with their impressive economic power and resources.

Rai, as a public service broadcaster, faces an additional layer of complexity in this context: it is subject to the “Italian procurement code”, under which it must plan its technological investments with extreme caution and precision, in a complex and extremely competitive environment in Italy and Europe.

We interview Rakuten TV’s Chief Product Officer, Sidharth Jayant, to explore the company’s strategy and most notable technological implementations

The FAST (Free Ad-supported Streaming Television) phenomenon has become one of the hottest trends in the industry, and it seems we’re only at the beginning. “What we’re seeing now is that a large part of the industry still hasn’t fully embraced FAST”, Sidharth Jayant, Chief Product Officer at Rakuten TV, tells TM BROADCAST.

This streaming platform played a pioneering role in this vertical, launching its first FAST channels back in 2020. “We were one of the first European companies to do so at scale, across multiple countries,” emphasizes Sidharth Jayant.

By Daniel Esparza

“Over the past five years, these channels have gained incredible traction for us. They’ve become a key growth driver—so much so that continuing to invest in them was a no-brainer.”

Today, Rakuten TV owns and operates hundreds of FAST channels.

“Some TV manufacturers were ahead of the curve and adopted it early on, but now more and more are jumping on the FAST bandwagon,” continues Rakuten TV’s CPO. “On the telecom side, especially in Europe, there’s still a lot of room for growth. Not enough telcos have adopted FAST yet.”

Sidharth Jayant has helped expand the company’s service from two to more than 40 markets across Europe during his ten years at Rakuten TV. Among the most notable recent technological implementations, Jayant is particularly proud of Rakuten TV Enterprise, a newly created business line that is already fully operational. This solution is designed to enable content owners and distributors to easily launch and monetize their FAST channels and video-centric apps.

In this interview, we explore all the key aspects of this new unit and also review Rakuten TV’s overall strategy, its use of artificial intelligence, its vision of the FAST universe, and its future plans, among other topics.

What would you say are the main technical challenges Rakuten TV is currently facing?

If we take a step back, we’ve been running this service for over 15 years now, across Europe. The platform operates under two business models: a transactional one—where users can rent or buy movies—and a free one, supported by advertising. This includes both on-demand content and FAST (Free Ad-Supported TV) channels.

One of the key technical challenges is managing the diversity of devices our service runs on. Smart TVs or CTVs, as we call them, are especially important to us. They offer the best environment for watching long-form content like movies. People sit down in their living rooms, in front of high-quality screens, and engage for longer sessions.

From a technical perspective, it’s crucial to understand that each TV manufacturer is different. The operating system may vary, the video player might be different, and even the specs required to build the application can change.

So the first challenge is embracing that diversity and building a service that not only supports it, but actually thrives in it, delivering the best possible experience for each type of device. And the same applies to smaller screens, web platforms, and consoles. Each platform involves a different usage context, but the goal is always the same: delivering a great playback experience.

The second challenge I’d highlight is the development of a new business line, which we call Rakuten TV Enterprise. Over the years, we’ve developed a lot of great technology for our own app, and now we’re at a point where we can offer those same tools to partners and clients.

These might be content owners wanting to launch a channel or an app, or telcos looking for a transactional store or FAST channels. We can build the full service for them. We’ve been developing this for the past couple of years, and it’s now ready. In fact, many clients are already using it.

So this has been another major challenge: running a successful B2C service while also creating and scaling a new B2B line. Thankfully, we’ve been able to leverage the tools we originally built for ourselves. Still, it’s been demanding from a technical point of view, especially when it comes to prioritizing developments and maintaining high quality for two very different customer bases. One is B2C, the other B2B, and their needs are completely different. Those are the two main challenges that come to mind, though of course there are more.

Before we go into more detail about that new business line, I’d like to ask: what strategies do you generally follow to monetize your services?

Let’s start with the ongoing B2C service. As I mentioned earlier, we offer movies to rent or to buy. This allows us to provide newer content from the biggest Hollywood studios, something that’s not always available on other streaming platforms, because they work under different models. So, the first part of the strategy is offering premium, up-to-date content.

The second part is simplifying the rental and purchase experience as much as possible. That includes integrating local payment methods, which are very important in each market. For example, in Poland, Blik is widely used. In Sweden, Swish is very popular. In some countries, PayPal is more common. And of course, credit cards are standard. So we try to make the process as smooth and user-friendly as we can, tailored to each region.

The other key pillar is advertising. We aim to build an ad experience that isn’t intrusive and that respects user preferences. Ads need to blend in and out of the content seamlessly. If you’re watching a movie or a TV show, the ad should appear at just the right moment, so we can monetize your viewing without disrupting the experience.

Then, when it comes to the enterprise side, the monetization model changes completely. Depending on the kind of service we’re offering, we might use a fixed-fee approach, or explore more innovative models—like helping our partners monetize their inventory, rather than charging them a large upfront cost. It really depends on the use case, but overall, it’s a very exciting area for us right now.

How do you handle content storage from a technical standpoint?

How much do you rely on the cloud, and in which cases do you make use of it?

We definitely take advantage of cloud storage solutions. It’s pretty basic for us at this point; we rely on it heavily. We use different storage classes so we can optimize both performance and cost. And we also apply retention policies to remove content that’s no longer in use. So broadly speaking, yes, it’s a cloud-based approach.
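A minimal Python sketch of how storage classes and a retention policy might interact is shown here. The class names, day thresholds and the `Asset` structure are hypothetical illustrations, not Rakuten TV’s actual configuration or any cloud provider’s API.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical thresholds, in days since the asset was last accessed.
WARM_AFTER_DAYS = 30
COLD_AFTER_DAYS = 180
DELETE_AFTER_DAYS = 365


@dataclass(frozen=True)
class Asset:
    name: str
    last_accessed: date
    in_catalogue: bool   # True while the title is still published


def plan_action(asset, today):
    """Return 'hot', 'warm', 'cold' or 'delete' for one asset."""
    idle_days = (today - asset.last_accessed).days
    if not asset.in_catalogue and idle_days >= DELETE_AFTER_DAYS:
        return "delete"      # retention policy: content no longer in use
    if idle_days >= COLD_AFTER_DAYS:
        return "cold"        # cheapest class, slowest retrieval
    if idle_days >= WARM_AFTER_DAYS:
        return "warm"
    return "hot"             # fastest class for frequently played titles


if __name__ == "__main__":
    today = date(2025, 7, 1)
    assets = [
        Asset("new-release", today - timedelta(days=3), True),
        Asset("back-catalogue", today - timedelta(days=200), True),
        Asset("expired-title", today - timedelta(days=400), False),
    ]
    for asset in assets:
        print(asset.name, "->", plan_action(asset, today))
```

The design choice in this sketch is that deletion requires both conditions (out of the catalogue and idle for a long period), so a rarely watched but still published title is moved to cheaper storage rather than removed.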

I’d also like to speak about artificial intelligence. To what extent have you implemented AI in your workflows—like subtitling, personalized recommendations, and so on?

There’s a major focus—not just from us, but from Rakuten as a group—on making AI our ally. Let’s put it that way.

So more than just at a local level, it’s a strategic priority at the group level, both in Japan and across Rakuten International. We’ve been provided with internal AI tools, but it goes beyond just having access to them. There’s constant encouragement and training to actually use them. The idea is that we all need to become comfortable with AI and use it to boost our productivity. At the engineering level, for example, our team uses GitHub Copilot, and we’re already seeing improvements in productivity—not just here in Europe, but across the company.

Beyond that, several AI-driven solutions are being developed with the goal of increasing efficiency. One area is localization. You mentioned subtitling, but localization goes further than that. Since our service runs across Europe, we need to adapt everything to different languages. Most of our markets are localized, and every time we roll out a new feature, it needs to come with the right copy in each language. So we’re looking at how AI can help us improve that process.

Of course, we’re also exploring how to enhance subtitling itself. And because we license a lot of content, we’re in constant dialogue with studios to understand the possibilities in this space. That will take some time, but it’s part of the roadmap.

In addition, there are initiatives at the group level focused on improving customer service—for example, by equipping support teams with AI agents that can assist them in their daily work. So there are several fronts where AI is already in use, and many more where it’s being actively developed.

Let’s go back to the new business line you mentioned earlier, Rakuten TV Enterprise. Could you go into more detail about it and the technical challenges it involved?

Yes, definitely. This is one of our main technological innovations. Until last year, when we built our own FAST channels, we relied on external technology providers. That meant using third-party solutions for CDN, playout technology, scheduling and ad insertion engines. But we have a large number of owned-and-operated channels across Europe, more than 100 actually, and they’re distributed on many platforms.

Over the past year and a half or so, we’ve developed our own technology stack to support all of this internally. Today, all of our FAST channels are powered 100% by Rakuten TV’s own technology.

We’ve built our own scheduler, so our in-house programming team can manage content without relying on any third-party tools. Our playback experts developed the playout system as well. For ad insertion, we still use external components, but we adapt and integrate them into our own system. And we continue to use our existing CDN network, which now also supports the enterprise offering.

What’s most important is that everything we’ve built is designed so that third-party partners—content owners or service providers—can adopt and use it easily. So it’s not just for us internally; it’s become the foundation for an entirely new line of business. This is the core of what we call Rakuten TV Enterprise. And it’s gaining a lot of traction. We’re seeing strong interest and onboarding a growing number of clients.

In terms of trends, how do you assess the evolution of FAST models in today’s media landscape—and how do you expect it will evolve in the future?

To talk about the future, I think it’s worth taking a quick look back as well. We launched our first FAST channels in March 2020, if I’m not mistaken. We were one of the first European companies to do so at scale, across multiple countries.

Over the past five years, these channels have gained incredible traction for us. They’ve become a key growth driver—so much so that continuing to invest in them was a no-brainer.

What we’re seeing now is that a large part of the industry still hasn’t fully embraced FAST. Some TV manufacturers were ahead of the curve and adopted it early on, but now more and more are jumping on the FAST bandwagon. On the telecom side, especially in Europe, there’s still a lot of room for growth. Not enough telcos have adopted FAST yet.

That said, many of our current and potential partners are talking to us about launching their own FAST services. We believe content owners are increasingly seeing the value—it helps them reach incremental audiences. And we expect more broadcasters will step into the FAST space as well.

So, yes, we anticipate continued growth—not just in adoption and distribution, but also in the evolution of FAST formats. We believe in it strongly.


The most recent development is Rakuten TV Enterprise, as I mentioned earlier. But as with any product, it’s never really “finished”: it keeps evolving.

What we’re already seeing is that new formats are being requested for FAST playback. Not many yet, but depending on the market, there are new or additional formats being asked for. At the same time, more manufacturers and telcos are joining the distribution side. And when you connect your channels to different platforms, it’s not just a simple copy-paste—it requires tailored integration every time. So we expect more of that.

Within the FAST space—and beyond it—our tools will continue to be adopted by more and more clients. And in this context, our “users” are actually other companies. That’s a different approach to product development, one where feedback from the clients plays a key role.

So, on a quarterly basis, we expect to roll out new features and improvements to the tools we’re offering in the market. Advertising will be at the core of all this. You’ll see new ad formats coming from us, and much greater signal transparency, meaning advertisers will clearly understand the kind of inventory they’re targeting.

For end users, we’ll offer more control over preferences. Recognized CMPs (Consent Management Platforms) will be supported across all platforms, so users feel confident that their privacy is respected.

And finally, we’ll keep working on making advertising feel even more integrated. Ads will blend more smoothly into the content stream, helping users stay engaged. We believe even the ad experience itself will become more immersive and enjoyable over time.


“Today we need tools that help non-experts understand what’s going on”

SIMEN K. FROSTAD

We interview this industry veteran to gain his market perspective. IP, the dominance of OTT platforms, the new production models emerging in sports broadcasting, and the expansion of software over hardware are among the key topics addressed in this conversation.

By Daniel Esparza

Simen K. Frostad has a rare talent for explaining complex matters in a way that makes them seem simple. Perhaps that’s why he’s been able to clearly identify a growing market trend in today’s fast-paced technological landscape: the need to create accessible tools. As he puts it: “What we need today are tools that help non-experts understand what’s going on. That’s absolutely critical. Across IT, telecom, and the broader IP space, we’re seeing fewer people with in-depth knowledge. That means our tools must do more—providing deeper insights and even suggestions for how to fix problems.”

Co-founder of Bridge Technologies in 2004 and currently the company’s Chairman, Simen K. Frostad joins the Industry Voices section to share his seasoned market vision with TM BROADCAST readers.

A pioneer in recognizing the advantages of IP, a technology that has transformed the industry from top to bottom, he is convinced that the sector must fully embrace the shift to IT environments. Doing so, he stresses, requires a joint effort from network and video departments, which often struggle to communicate due to their different technical languages.

“A core part of our work today is enabling teams to immediately determine if a problem is transport-related or production-related, so the appropriate experts can step in and resolve it”

The dominance of OTT platforms, the new production models emerging in sports broadcasting, and the expansion of software over hardware are also key topics in this conversation.

We founded the company in 2004, driven by a strong interest in IP-based technologies. But the story actually begins earlier, back in 1999, when we created a Scandinavian IP-based contribution network. At the time, IP technology was still in its infancy, so we were definitely early adopters.

“IT teams are incredibly vigilant and have powerful orchestration tools that make operations much more efficient than traditional broadcast workflows,” says Simen K. Frostad. At the same time, he sends a message to the IT world: “It needs to be more time-sensitive and better understand the critical nature of real-time broadcast.”

We had a great start thanks to substantial support from Cisco, and we developed a network that transported TS-based MPEG-2 at what was then considered high bandwidth, 50 megabits per second, with low latency over an IP/MPLS network.

That experience gave us deep insight into IP, and we quickly became enthusiastic advocates for it.

I also received a lot of support from Tandberg Television, which was a major player in broadcast systems at that time. I got to work closely with some of their top engineers, some of whom developed Tandberg’s very first IP interfaces. So, when Tandberg decided in 2004 to relocate much of its R&D from Norway to the UK, many engineers weren’t keen on moving abroad. That situation helped spark the creation of Bridge Technologies. We saw a clear need to improve visibility and understanding of packet transport, because with IP, a lot happens inside the switch, and it’s not always obvious how packets are actually flowing or how minor configuration changes can have a major impact.

NOC – BROADCAST & OTT SERVICES

“The remote aspect is transforming the way production is done. We’ve already seen it happening in major sports events over the past two years, and we’re going to see even more of it in the next two”

Broadcast media, in particular, is unforgiving. If you start losing packets, the result is visible artifacts in the picture. The problem is that an artifact caused by an encoder issue looks exactly the same as one caused by packet loss in transport. So our first mission was to create tools that could help distinguish between those two root causes—whether the issue was in the production chain or in the transport layer.

That remains a core part of our work today: enabling teams to immediately determine if a problem is transport-related or production-related, so the appropriate experts can step in and resolve it.
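One common transport-layer signal for this kind of triage is the continuity counter carried in every MPEG transport stream packet header: a 4-bit value that increments per PID for each packet with payload. A gap in the sequence points to packets lost in transport, while a clean sequence with visible artifacts points back toward the production chain. The sketch below is a minimal illustration under simplifying assumptions (byte-aligned input, no handling of permitted duplicate packets or the discontinuity indicator). The function names are hypothetical, and it is not a description of how Bridge Technologies' products work.

```python
from collections import defaultdict

TS_PACKET_SIZE = 188   # fixed MPEG-TS packet length in bytes
SYNC_BYTE = 0x47       # first byte of every TS packet
NULL_PID = 0x1FFF      # stuffing packets, counter not meaningful


def count_continuity_errors(stream: bytes) -> dict[int, int]:
    """Count continuity-counter gaps per PID in a byte-aligned TS buffer."""
    last_cc: dict[int, int] = {}
    errors: dict[int, int] = defaultdict(int)
    for offset in range(0, len(stream) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        packet = stream[offset:offset + TS_PACKET_SIZE]
        if packet[0] != SYNC_BYTE:
            continue  # misaligned; a real tool would resynchronise
        pid = ((packet[1] & 0x1F) << 8) | packet[2]
        has_payload = bool(packet[3] & 0x10)  # adaptation_field_control payload bit
        cc = packet[3] & 0x0F
        if pid == NULL_PID or not has_payload:
            continue  # the counter only advances on packets carrying payload
        if pid in last_cc and cc != (last_cc[pid] + 1) % 16:
            errors[pid] += 1  # gap in the sequence: likely transport loss
        last_cc[pid] = cc
    return dict(errors)


def make_packet(pid: int, cc: int) -> bytes:
    """Build a minimal payload-only TS packet (helper for the example)."""
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10 | (cc & 0x0F)])
    return header + bytes(TS_PACKET_SIZE - 4)
```

Feeding the function a buffer built with `make_packet` in which one counter value is skipped should report a single error for that PID, while an unbroken sequence reports none.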

One of our main strategic goals is to create tools that are accessible to non-experts—people who may not fully understand how these complex systems work, but who need enough clarity to identify issues and bring in the right teams to fix them. Even in production environments with incredibly talented engineers, many don’t have deep IP expertise. Conversely, IT departments that support parts of production often don’t understand media transport. So, we aim to bridge that gap—translating advanced metrics into insights that can be understood and acted upon by non-specialists.

That brings me to one of my questions: the convergence between video and IT departments. What would you say are the main challenges in making these two areas work together?

Well, first of all, these two groups have grown up quite differently. The IT department is full of clever people who understand a lot about scaling, processing, and systems. But traditionally, they’ve focused on supplying their own set of tools and capabilities within a service-based framework. Telecommunications people, on the other hand, have tended to embed a lot of intelligence directly into the network.

So even though they share common goals, their approaches—and their language—are different. That creates a bit of friction. The world is moving more and more towards IT because it’s such a vast and well-developed industry, and that means broadcasters have to learn how to communicate better with IT professionals.

The challenge is that the IT world can be slow to adapt to the specific needs of broadcast. In high-end broadcasting, there’s no room for packet loss. If you’re delivering low-latency services, there’s no error correction fast enough to compensate for lost packets. So, while I have a lot of respect for the IT industry, I do think it needs to be more time-sensitive and better understand the critical nature of real-time broadcast.

That said, the broadcast industry can also learn a great deal from IT. IT teams are incredibly vigilant and have powerful orchestration tools that make operations much more efficient than traditional broadcast workflows. So yes, we’re heading toward a more IT-centric world, and IT will continue to dominate—but both sides have something valuable to offer.

“IP is embedded in every aspect of broadcast. There’s no avoiding it. Even in SDI environments, you’ll find devices controlled via IP, so a solid understanding of IP is essential”

“What’s coming next, in my view, is the expansion of networks not just to transport data, but also to support things like memory sharing and server clustering”

You mentioned founding Bridge Technologies back in 2004. How would you assess how IP technology has evolved since then?