
5.1.4 Geodatabase Design

indexes can be calculated infrequently, whereas data that is dynamic or actively being edited should have the indexes recalculated with each database compression.

For some data, indexes can be rebuilt on a predictable schedule, while other data is cyclical, such as land use data that is generated only infrequently. While data is being actively edited, indexes should be rebuilt perhaps several times per week, with each reconcile, post, and compression.
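The cadence described above can be expressed as a small decision rule. The sketch below is illustrative only: the three `EditPattern` labels, the `Dataset` record, and the 180-day ceiling for static data are hypothetical assumptions, not settings from ArcGIS or from the City's environment.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import Optional


class EditPattern(Enum):
    """Hypothetical labels for how a dataset changes over time."""
    STATIC = "static"      # rarely changes
    CYCLICAL = "cyclical"  # reloaded on a cycle, e.g. land use data
    ACTIVE = "active"      # under active editing


@dataclass
class Dataset:
    name: str
    pattern: EditPattern
    last_index_rebuild: date
    last_bulk_load: Optional[date] = None  # only used for CYCLICAL data


# Assumed ceiling for static data; tune to the City's own environment.
STATIC_REBUILD_INTERVAL = timedelta(days=180)


def index_rebuild_due(ds: Dataset, today: date, maintenance_ran_today: bool) -> bool:
    """Return True if this dataset's indexes should be rebuilt today."""
    if ds.pattern is EditPattern.ACTIVE:
        # Actively edited data: rebuild with every reconcile/post and compression.
        return maintenance_ran_today
    if ds.pattern is EditPattern.CYCLICAL:
        # Cyclical data: rebuild once after each new load.
        return ds.last_bulk_load is not None and ds.last_bulk_load > ds.last_index_rebuild
    # Static data: rebuild on a long, fixed interval.
    return today - ds.last_index_rebuild >= STATIC_REBUILD_INTERVAL


today = date(2024, 3, 1)
parcels = Dataset("Parcels", EditPattern.ACTIVE, date(2024, 2, 27))
land_use = Dataset("LandUse", EditPattern.CYCLICAL, date(2023, 12, 1), last_bulk_load=date(2024, 2, 15))
boundaries = Dataset("Boundaries", EditPattern.STATIC, date(2023, 12, 1))
print(index_rebuild_due(parcels, today, maintenance_ran_today=True))      # True
print(index_rebuild_due(land_use, today, maintenance_ran_today=False))    # True
print(index_rebuild_due(boundaries, today, maintenance_ran_today=False))  # False (91 days)
```

Keeping the rule data-driven means a dataset can move from "active" to "static" after a project ends without changing the maintenance scripts themselves.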

Statistics: Statistics, like indexes, improve query performance by giving the database the information it needs to determine the most efficient execution plan for a given query. Statistics also follow the same dynamics as indexes: the more frequently the data is edited, the more regularly statistics should be updated. And, as with indexes, updating statistics can be scheduled alongside a database compression.
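Why stale statistics hurt can be shown with a deliberately simplified cost model. This is a toy, not how any particular DBMS optimizer works: the cost weights, the uniform-distribution estimate, and the `ColumnStats` record are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Illustrative cost weights (assumptions, not any DBMS's real values).
SEQ_ROW_COST = 1.0     # reading one row during a sequential full scan
RANDOM_ROW_COST = 4.0  # fetching one row through an index (random I/O)


@dataclass
class ColumnStats:
    row_count: int        # rows in the table, as last recorded
    distinct_values: int  # distinct values in the filtered column, as last recorded


def estimate_matches(stats: ColumnStats) -> float:
    # Uniform-distribution estimate for an equality filter "column = value".
    return stats.row_count / max(stats.distinct_values, 1)


def choose_plan(stats: ColumnStats) -> str:
    """Pick whichever plan looks cheaper according to the recorded statistics."""
    full_scan = stats.row_count * SEQ_ROW_COST
    index_seek = estimate_matches(stats) * RANDOM_ROW_COST
    return "index seek" if index_seek < full_scan else "full scan"


# Statistics recorded when the table was first loaded vs. after months of edits.
stale = ColumnStats(row_count=1_000, distinct_values=2)
fresh = ColumnStats(row_count=200_000, distinct_values=5_000)
print(choose_plan(stale))  # full scan
print(choose_plan(fresh))  # index seek
```

With the stale numbers the model keeps choosing a full scan even though the table has since grown to the point where the index is far cheaper; refreshing statistics is what lets the planner notice.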

Compression: Database compression is a process that effectively cleans up the geodatabase. Following a typical reconcile and post of versioned data, statistics would be updated and the compression then performed, which moves edits from the delta tables (which track all changes in any version) into the base tables where possible. As a result, the geodatabase is both cleaned up and, in many cases, significantly reduced in size, both of which improve performance.

As with rebuilding indexes and updating statistics, the frequency of geodatabase compressions is determined largely by how dynamic the data is; because compression can improve performance significantly, frequent compressions are recommended. For data that changes periodically, such as quarterly updates, compression would simply follow the same schedule.
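The compression step described above can be modeled in a few lines. This is a toy under a strong simplifying assumption: all states form one linear lineage, so a version sees every edit whose state id is at or below its own. Esri's real state tree branches, stores edits in separate adds and deletes tables, and compress also removes unreferenced states; the class and version names below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Edit:
    state_id: int                # state in which the edit was made
    op: str                      # "add" (adds table) or "delete" (deletes table)
    row_id: int
    value: Optional[str] = None


def apply(rows: dict, edits: list) -> None:
    """Apply edits in state order; sorted() is stable, so an update stored as
    delete-then-add within one state keeps its order."""
    for e in sorted(edits, key=lambda e: e.state_id):
        if e.op == "delete":
            rows.pop(e.row_id, None)
        else:
            rows[e.row_id] = e.value


@dataclass
class ToyVersionedTable:
    base: dict = field(default_factory=dict)      # base table: row_id -> value
    delta: list = field(default_factory=list)     # pending adds/deletes
    versions: dict = field(default_factory=dict)  # version name -> state id

    def read(self, version: str) -> dict:
        """A version's view: the base table plus every edit up to its state."""
        rows = dict(self.base)
        apply(rows, [e for e in self.delta if e.state_id <= self.versions[version]])
        return rows

    def compress(self) -> int:
        """Move edits that every version can see into the base table."""
        common = min(self.versions.values())
        shared = [e for e in self.delta if e.state_id <= common]
        apply(self.base, shared)
        self.delta = [e for e in self.delta if e.state_id > common]
        return len(shared)


t = ToyVersionedTable(base={1: "R-1"}, versions={"DEFAULT": 0, "editor1": 0})
t.delta += [Edit(1, "delete", 1), Edit(1, "add", 1, "C-2"), Edit(2, "add", 2, "R-1")]
t.versions["DEFAULT"] = 2  # states 1-2 have been posted to DEFAULT
t.versions["editor1"] = 1  # editor1 still references state 1
before = t.read("DEFAULT")
print(t.compress())                               # 2
print(t.read("DEFAULT") == before, len(t.delta))  # True 1
```

Only state 1 is common to both versions, so only its two edits move into the base table; the state 2 edit stays in the delta until `editor1` catches up. This is why versions left unreconciled for long periods limit how much a compress can clean up.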

5.1.4 Geodatabase Design

Challenges arise in managing schema changes while publishing data for web and mobile use and performing regular maintenance activities. Once the City has consolidated its two current systems into a single enterprise architecture, the City should consider establishing a publication instance and a production instance of the EGDB. With automation, this dual-instance setup would have little to no impact on the day-to-day administration and maintenance of the EGDB while providing complete, real-time control over schema changes and version maintenance activities.

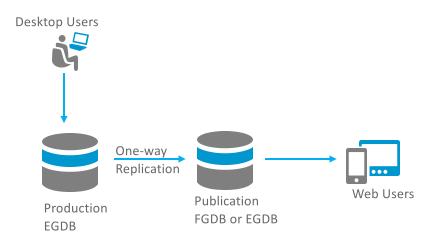

The graphic below illustrates the concept of a production geodatabase and a publication geodatabase for web applications. Since the publication geodatabase is read-only, it could be a file geodatabase (FGDB) kept current through automated one-way replication from the production environment at a regular, timed interval.

Production and Publication GDBs

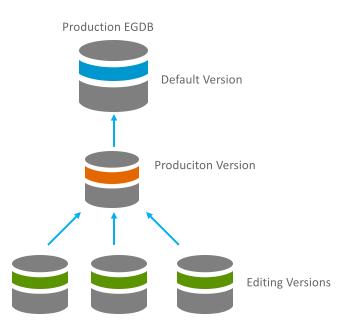
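The one-way, timed refresh pattern shown in the graphic can be sketched as follows. In ArcGIS this would normally be handled by geodatabase replication (a one-way replica with scheduled synchronization) rather than custom code; the dictionaries standing in for feature classes, the `PublicationCopy` class, and the one-hour interval are hypothetical.

```python
from datetime import datetime, timedelta
from types import MappingProxyType
from typing import Optional


def diff(source: dict, target: dict):
    """Changes needed to make target match source: (upserts, deletes)."""
    upserts = {k: v for k, v in source.items() if k not in target or target[k] != v}
    deletes = [k for k in target if k not in source]
    return upserts, deletes


class PublicationCopy:
    """Read-only copy that is changed only by one-way sync from production."""

    def __init__(self) -> None:
        self._rows: dict = {}
        self.last_sync: Optional[datetime] = None

    @property
    def rows(self):
        # Web/mobile consumers get a read-only view; writes raise TypeError.
        return MappingProxyType(self._rows)

    def sync_due(self, now: datetime, interval: timedelta) -> bool:
        return self.last_sync is None or now - self.last_sync >= interval

    def sync_from(self, production: dict, now: datetime):
        """One-way: production -> publication. Never writes back to production."""
        upserts, deletes = diff(production, self._rows)
        self._rows.update(upserts)
        for k in deletes:
            del self._rows[k]
        self.last_sync = now
        return len(upserts), len(deletes)


production = {101: "Main St", 102: "Oak Ave"}
publication = PublicationCopy()
interval = timedelta(hours=1)  # hypothetical refresh interval
t0 = datetime(2024, 3, 1, 6, 0)
print(publication.sync_from(production, t0))  # (2, 0)
production[102] = "Oak Avenue"
del production[101]
production[103] = "Elm St"
print(publication.sync_due(t0 + timedelta(minutes=30), interval))  # False
print(publication.sync_from(production, t0 + interval))           # (2, 1)
```

Because the publication side only ever receives changes, schema work and version maintenance in production never have to wait on locks held by web or mobile readers.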

The GIS Technical team would then establish versions for internal maintenance. This graphic illustrates a versioned approach with the production EGDB. It is recommended to establish a production-level version where all QA/QC takes place prior to reconciling and posting to the Default version. As the City implements versioning, it will be necessary to understand the impact that any third-party solutions might have on the EGDB and its schema. This may require the City to establish a separate, isolated data model for those solutions.

Production EGDB Version Tree
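A toy model of this version tree (Default, a production-level QA/QC version, and editor versions beneath it) makes the reconcile-before-post rule concrete. It is an illustration only: real ArcGIS reconcile offers conflict-resolution options (such as favoring the edit or target version) instead of simply failing, and the version names and the `promote_qa` gate are hypothetical.

```python
class ConflictError(Exception):
    """Raised when a row was changed differently in a version and its parent."""


class Version:
    """Toy version: its own view of the rows plus a snapshot of the parent
    taken at the last reconcile (the common ancestor)."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.rows = dict(parent.rows) if parent else {}
        self.base = dict(self.rows)

    def edit(self, row_id, value):
        self.rows[row_id] = value

    def reconcile(self):
        """Pull in parent changes made since the last reconcile."""
        parent = self.parent.rows
        changed = [k for k in set(parent) | set(self.base) if parent.get(k) != self.base.get(k)]
        # A conflict: both sides changed the same row to different values.
        conflicts = [k for k in changed if self.rows.get(k) not in (self.base.get(k), parent.get(k))]
        if conflicts:
            raise ConflictError(f"{self.name}: rows {sorted(conflicts)} edited in both versions")
        for k in changed:
            if k in parent:
                self.rows[k] = parent[k]
            else:
                self.rows.pop(k, None)  # parent deleted the row
        self.base = dict(parent)

    def post(self):
        """Push reconciled edits to the parent version."""
        if self.base != self.parent.rows:
            raise RuntimeError(f"{self.name}: reconcile before posting")
        self.parent.rows = dict(self.rows)
        self.base = dict(self.rows)


def promote_qa(qa, checks):
    """Hypothetical QA/QC gate: reconcile with DEFAULT, run checks, post if clean."""
    qa.reconcile()
    failures = [name for name, check in checks.items() if not check(qa.rows)]
    if not failures:
        qa.post()
    return failures


default = Version("DEFAULT")
default.rows = {1: "Parcel A"}
qa = Version("QA", default)      # production-level QA/QC version
editor = Version("Editor1", qa)
editor.edit(2, "Parcel B")
editor.reconcile()
editor.post()                    # edits land in QA, not yet in DEFAULT
print(default.rows)              # {1: 'Parcel A'}
print(promote_qa(qa, {"no_blank_names": lambda rows: all(rows.values())}))  # []
print(default.rows)              # {1: 'Parcel A', 2: 'Parcel B'}
```

Editors never post directly to Default, so a failed QA/QC check leaves Default untouched while the problem is fixed in the QA version.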

When implementing a versioned EGDB, it is important to follow these best practices:

✓ Increase the frequency of updating statistics on tables
✓ Rebuild indexes on tables regularly
✓ Plan parent-child version relationships carefully
✓ Compress the geodatabase often
✓ Monitor system resources
✓ Automate reconcile and post routines
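Several of these practices can be automated with a single ordered routine. The sketch below is a minimal runner, assuming steps in the order this section describes (reconcile and post, update statistics, compress), with index rebuilds placed after compression as an assumption. The steps here are placeholders; in an ArcGIS deployment each would call a geoprocessing tool such as `arcpy.management.ReconcileVersions`, `arcpy.management.AnalyzeDatasets`, `arcpy.management.Compress`, or `arcpy.management.RebuildIndexes`, with arguments that depend on the City's connection files and are therefore left out.

```python
import logging
import time
from typing import Callable, Dict, List, Tuple

log = logging.getLogger("egdb_maintenance")

Step = Tuple[str, Callable[[], None]]


def run_maintenance(steps: List[Step]) -> Dict[str, float]:
    """Run maintenance steps in order, recording how long each takes.
    The first failure is logged and re-raised, so later steps are skipped."""
    timings: Dict[str, float] = {}
    for name, step in steps:
        start = time.perf_counter()
        try:
            step()
        except Exception:
            log.exception("Step %r failed; remaining steps skipped", name)
            raise
        timings[name] = time.perf_counter() - start
        log.info("%s finished in %.2f s", name, timings[name])
    return timings


# Placeholder steps; in practice each would call the matching geoprocessing tool.
calls = []
nightly = [
    ("reconcile and post", lambda: calls.append("reconcile/post")),
    ("update statistics", lambda: calls.append("statistics")),
    ("compress", lambda: calls.append("compress")),
    ("rebuild indexes", lambda: calls.append("indexes")),
]
logging.basicConfig(level=logging.INFO)
timings = run_maintenance(nightly)
print(calls)  # ['reconcile/post', 'statistics', 'compress', 'indexes']
```

Stopping on the first failure avoids, for example, compressing after a reconcile that did not complete. The recorded step durations give a rough trend to watch over time, though they are no substitute for monitoring server resources directly.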

Esri has recently introduced the concept of branch versioning in order to support a services-oriented architecture for multiuser editing. It builds upon the well-established capabilities of traditional versioning. The new Utility Network Management extension recommended earlier in this document requires implementation of branch versioning. It does not support traditional