Inside the new brain of oil and gas

When operations begin to think

Culture before code

When data meets conscience

Safety with a sixth sense

When machines learn to decide

CEO Adam Soroka

Editor Mark Venables

Editor’s Letter

Artificial intelligence is no longer a distant ambition. It is now embedded within the daily workings of the energy industry, shaping how assets are managed, risks are mitigated and decisions are made. Machines that once executed instructions are beginning to interpret intent, blending analytical precision with a growing sense of operational awareness.

Across upstream, midstream and downstream operations, intelligence is moving closer to the process. Algorithms stabilise well pressure, refineries learn to optimise yields and emissions simultaneously, and predictive models anticipate failures before they occur. The result is a subtle but profound shift: human expertise is no longer working beside technology but through it.

This edition explores that transformation through seven detailed features. It begins with retrieval-augmented generation and agentic systems that bridge the gap between information and action, creating knowledge frameworks that support real-time decision-making. It then examines how AI is enhancing downstream operations, turning refineries into responsive, data-driven ecosystems. Further articles explore the cultural dimension of adoption (leadership, training and trust) alongside the technology’s impact on supply chains, where predictive analytics and causal reasoning are redefining resilience.

Autonomy forms a central thread. AI is already making independent decisions in drilling, production and maintenance, but true autonomy demands governance, explainability and accountability. Responsible deployment is now as much about ethics as engineering. Another feature addresses that responsibility directly, examining how data quality, transparency and privacy determine whether AI remains a force for progress or becomes a source of risk.

Safety provides the final focus. From wearable sensors and computer-vision systems to predictive environmental monitoring, AI is expanding human vigilance across the workplace. It detects anomalies, learns from every incident and helps protect both people and the planet. In the process, health, safety and environment are becoming proactive rather than reactive disciplines.

All these themes converge at The Future AI Conference in Abu Dhabi. The event brings together engineers, policymakers and innovators to discuss how intelligence can be embedded responsibly throughout the energy value chain. The dialogue has moved beyond what AI can do toward how it should behave: how to balance autonomy with oversight, and how to ensure that progress is built on transparency, trust and integrity.

Artificial intelligence is redefining operational excellence. The measure of success will not be the scale of adoption but the quality of understanding, the ability to align digital intelligence with human purpose. The future of energy will be guided by machines that think, but it will be defined by the people who decide how they are allowed to think.

Mark Venables, Editor, Oil & Gas Technology

When operations begin to think

Artificial intelligence is changing how industrial systems perceive, decide and act. The next stage of that evolution is autonomy: operations that run with minimal intervention but maximum accountability. In oil and gas, the challenge is not whether AI can operate alone, but how to ensure it should.

The refinery that thinks

Refineries are evolving into living systems of intelligence. Artificial intelligence is beginning to occupy the space between control logic and human judgement, connecting data that once stood apart and turning operations into continuous learning environments. In the process, it is redefining what it means for a refinery to think for itself.

Balancing the invisible network

Supply chains are the nervous system of oil and gas, connecting production with people, plants and markets. Artificial intelligence is now bringing foresight and adaptability to that system, transforming supply chains from static operations into intelligent networks capable of learning from every movement.

Inside the new brain of oil and gas


Safety with a sixth sense

Artificial intelligence is transforming how risk is recognised, prevented and managed across the oil and gas sector. By combining real-time data with contextual awareness, AI systems are not replacing human vigilance; they are extending it.

The oil and gas industry is entering an era where machines are no longer passive components of production, but active participants in their own performance. Artificial intelligence and digital twin technology are merging to create self-aware assets, systems that observe, learn, and evolve throughout their lifecycle.

Culture before code

Artificial intelligence promises to make oil and gas operations faster, safer and smarter, but success depends on the people who use it. Building a user-first culture turns data and algorithms into something far more powerful: collective intelligence.

When data meets conscience

Artificial intelligence has become the engine of industrial progress, but it also carries a mirror. Every new algorithm reflects the values, priorities and biases of those who create and use it. For oil and gas companies, which operate under intense public scrutiny and complex regulation, the question is not only what AI can do but how it behaves while doing it.

Final Word: When machines learn to decide


Common Model


BKOAI’s Common Model keeps asset and connectivity definitions consistent by instantiating all changes simultaneously across the enterprise.

This ensures that all modifications are implemented accurately, consistently, and in a timely fashion and are immediately available for use by every application.

The Common Model leverages a message broker architecture, a knowledge graph, and a low-code canvas interface to create a unified, always-accurate operational backbone.

Core Functionality of the Common Model

Drag-and-drop interface builds asset models and relationships on a visual canvas.

Auto-configures asset structures in AVEVA PI, Seeq, and other connected systems in real time.

Synchronizes structured data to analytics, dashboards and business tools from a single source of truth.

Drives enterprise-wide consistency, enabling faster decision-making and AI-driven improvements.

Deep Search Agent


At BKOAI, we engineer collaborative multi-agent systems that unify computer vision (CV), natural language processing (NLP), retrieval-augmented generation (RAG) and symbolic reasoning to help industrial organisations accelerate compliance, capture expertise and drive smarter decisions.

Our MCP and A2A integrations enable AI agents to work together, turning static engineering data into dynamic operational intelligence.

By connecting simulation, process, and field data, we turn fragmented industrial knowledge into predictive, actionable insights that drive continuous improvement.

Our Core Solutions: Deep Search for Industrial AI

Built on MCP–A2A architecture, collaborative AI systems combine CV, NLP, RAG, and symbolic reasoning to enable industrial automation and compliance.

Engineering files, process data, and control systems are connected into a unified, searchable intelligence layer.

Simulation models integrate with real-time production data to deliver predictive, performance-driven decision support.

Static industrial workflows evolve into dynamic, data-driven intelligence that enhances reliability and decision-making.

Learn more and explore full use cases at www.bkoai.com

Follow BKOAI on LinkedIn: /company/bkoai

Subscribe to our newsletter BKOAI Snapshot

When operations begin to think

Artificial intelligence is changing how industrial systems perceive, decide and act. The next stage of that evolution is autonomy: operations that run with minimal intervention but maximum accountability. In oil and gas, the challenge is not whether AI can operate alone, but how to ensure it should.

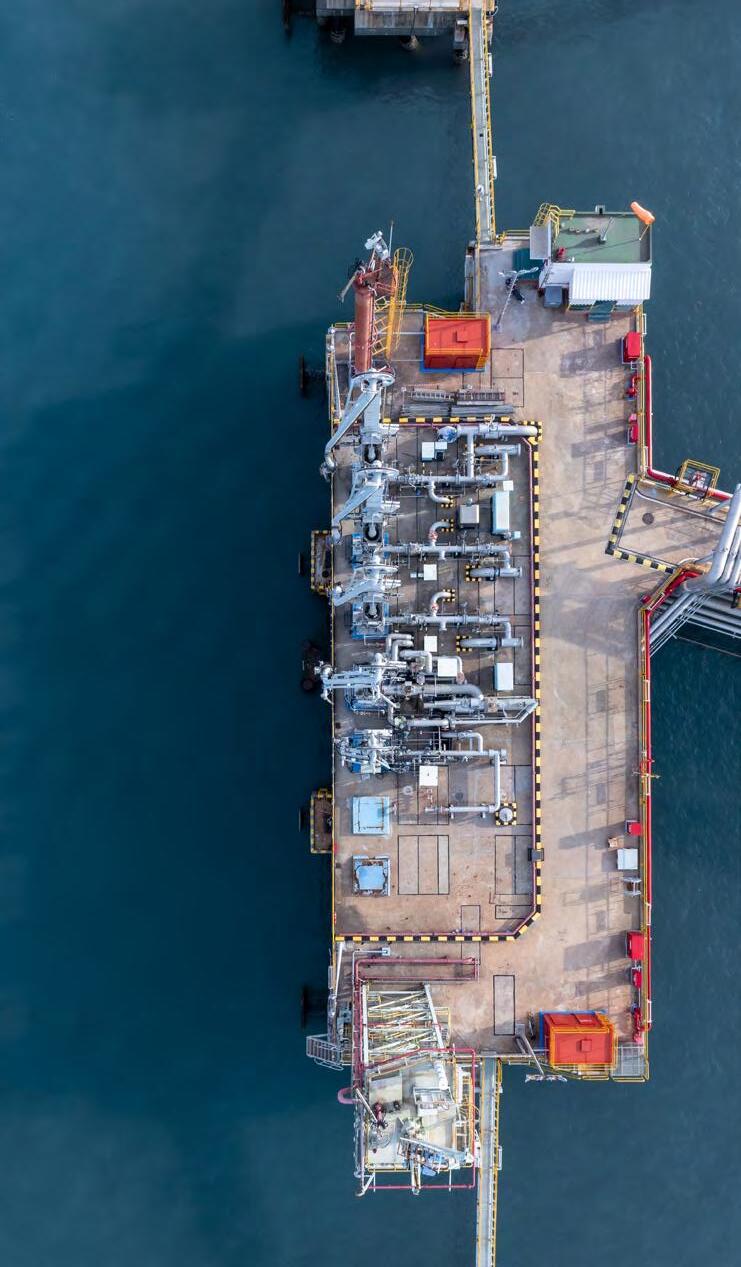

The modern production facility hums with an intensity that never fades. On offshore platforms and in refineries, hundreds of processes operate simultaneously, each dependent on precise timing and balance. For decades, these systems have relied on automation: control logic programmed by humans and adjusted within strict parameters. Yet even the most sophisticated automation remains reactive, responding to inputs rather than anticipating them.

Artificial intelligence is altering that rhythm. With models capable of reasoning, forecasting and adaptation, machines are starting to participate in decision-making. Pumps and compressors can adjust their own operation to maintain stability. Control systems can evaluate risk and choose corrective actions faster than human operators. This transition marks the border between automation and autonomy, a frontier that promises efficiency but demands trust.

Autonomy is not a single leap. It is a gradual layering of intelligence, governance and experience. As oil and gas companies move toward self-regulating systems, they are discovering that the most critical question is not how to make machines think, but how to make them think safely.

From automation to autonomy

Industrial autonomy emerges in phases. Automation handles repeatable tasks based on fixed logic. Optimisation introduces machine learning to improve efficiency within known boundaries. True autonomy adds reasoning, the ability to understand goals, evaluate options and act without direct instruction.

In upstream production, this evolution is visible in well control systems that adapt to changing reservoir conditions. A generation ago, pressure and flow management required constant human adjustment. Now, AI models can balance these variables autonomously, adjusting choke valves and injection rates to maintain production targets without breaching safety limits.
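The idea of adjusting a choke "without breaching safety limits" is easier to see in code. The minimal Python sketch below uses a simple proportional rule in place of the AI model. The `Envelope` limits, the gain and the assumption that opening the choke raises production are all illustrative. What matters is the pattern: propose a change, then clamp both its size and its range before anything reaches the valve.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    """Hypothetical operating limits for one well (values are illustrative)."""
    min_opening: float = 0.10  # choke opening as a fraction of fully open
    max_opening: float = 0.90
    max_step: float = 0.05     # largest change permitted in one control cycle


def propose_choke_opening(opening: float, rate: float, target: float,
                          env: Envelope, gain: float = 0.001) -> float:
    """Move the choke toward a production target without leaving the envelope.

    A proportional rule stands in for the AI model: the gap between target and
    measured rate sets the desired change, which is then limited in size
    (max_step) and in range (min_opening..max_opening).
    """
    desired_change = gain * (target - rate)
    step = max(-env.max_step, min(env.max_step, desired_change))
    return max(env.min_opening, min(env.max_opening, opening + step))


# Rate is 100 units below target: the desired change of 0.1 is capped at max_step.
print(propose_choke_opening(0.50, rate=900.0, target=1000.0, env=Envelope()))
```

The proposal can come from any model. Keeping the clamping step separate from it means the safety envelope can be reviewed and certified independently of the learning component.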

Downstream, refineries are using autonomous optimisation to control hydrogen plants, heat exchangers and distillation columns. The AI analyses process data, learns from each cycle and recalibrates in real time. Engineers no longer spend hours interpreting graphs; they supervise a system that interprets itself.

The concept of autonomy extends beyond process control. It encompasses logistics, maintenance and even energy distribution: every function where sensing, decision-making and action form a continuous loop. The aim is not to remove humans but to elevate them from operators to orchestrators.

The power behind the intelligence

Autonomous operations demand enormous computational resources. The systems must process very large volumes of data with low latency, running complex models continuously. Advances in edge computing, distributed cloud architectures and high-performance processors have made this possible.

Quantum computing, though still emerging, offers a glimpse of the next frontier. It could eventually revolutionise optimisation problems such as refinery scheduling or pipeline flow balancing. Classical computers search such problems one candidate at a time or through heuristics. Quantum algorithms exploit superposition and interference instead, and for certain classes of problem they may find good solutions far faster than the hours or days that classical methods can require.

The collaboration between AI and quantum technologies could eventually allow near-instantaneous response to multi-variable scenarios, a capability critical to fully autonomous systems. For now, the emphasis remains on creating scalable architectures where computing power grows alongside capability.

Many operators are adopting hybrid environments that combine local edge devices for latency-sensitive decisions with cloud infrastructure for large-scale learning.

Decision-making in real time

Autonomy begins when AI transitions from recommendation to action. Agentic AI, systems that act toward defined goals, makes this possible. These agents monitor their environment, interpret changes, and take initiative within predefined safety and compliance boundaries.
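The monitor, interpret and act cycle with predefined boundaries can be sketched as a loop. A policy proposes an action, and a separate guardrail check decides whether it may be executed. Anything outside the boundary is escalated to a human rather than acted on. Everything here is hypothetical: the `Action` and `Guardrails` types, the pump-speed tag and the linear `example_policy`, which stands in for a learned model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass(frozen=True)
class Action:
    name: str        # hypothetical control handle, e.g. "pump_speed_rpm"
    setpoint: float


@dataclass
class Guardrails:
    """Predefined boundaries: which actions are allowed and over what range."""
    allowed: dict[str, tuple[float, float]]

    def permits(self, action: Action) -> bool:
        bounds = self.allowed.get(action.name)
        return bounds is not None and bounds[0] <= action.setpoint <= bounds[1]


@dataclass
class Agent:
    policy: Callable[[dict[str, float]], Action]  # observation -> proposed action
    guardrails: Guardrails
    escalations: list[Action] = field(default_factory=list)

    def step(self, observation: dict[str, float]) -> Optional[Action]:
        """Monitor, interpret, then act only inside the boundaries."""
        proposal = self.policy(observation)
        if self.guardrails.permits(proposal):
            return proposal                # would be written to the control system
        self.escalations.append(proposal)  # outside the boundary: refer to a human
        return None


def example_policy(obs: dict[str, float]) -> Action:
    # Hypothetical rule standing in for a learned model: raise pump speed
    # as discharge pressure falls below 50 bar.
    return Action("pump_speed_rpm", 3000.0 + 10.0 * (50.0 - obs["pressure_bar"]))


example_agent = Agent(example_policy, Guardrails({"pump_speed_rpm": (2500.0, 3500.0)}))
```

Keeping the guardrails outside the policy means a retrained model cannot quietly widen its own authority.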

In subsea operations, agentic systems are being tested to manage pumping and separation units kilometres below the surface. They analyse pressure, flow and temperature data, adjusting performance in real time to prevent hydrate formation and other flow-assurance issues. Each action feeds back into the model, refining its future responses.

Onshore, autonomous pipeline control is becoming increasingly practical. AI can detect anomalies in flow or pressure and initiate corrective measures before human operators intervene. Reinforcement learning enables the system to test its own decisions in digital twin environments, learning what works without jeopardising safety.
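Reinforcement learning in a twin can be illustrated with the simplest possible learner. The sketch uses an epsilon-greedy action-value method that tries candidate valve adjustments against a simulated environment. The `twin_response` function is a hypothetical stand-in for a physics-based pipeline model, and its reward shape (best near 0.3) is invented for the example. Production systems would use much richer states and algorithms.

```python
import random


def twin_response(action: float, rng: random.Random) -> float:
    """Hypothetical digital-twin stand-in: reward peaks for an adjustment near 0.3,
    with a little measurement noise. A real twin would simulate the pipeline."""
    return -abs(action - 0.3) + rng.gauss(0.0, 0.02)


def learn_in_twin(actions, episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy value learning: try actions in simulation, keep running
    average rewards, and mostly exploit the best estimate so far."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in actions}
    count = {a: 0 for a in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            a = rng.choice(actions)                  # explore
        else:
            a = max(actions, key=value.__getitem__)  # exploit
        r = twin_response(a, rng)
        count[a] += 1
        value[a] += (r - value[a]) / count[a]        # incremental mean of rewards
    return max(actions, key=value.__getitem__), value


best_action, estimates = learn_in_twin([0.0, 0.1, 0.2, 0.3, 0.4, 0.5])
```

Every trial runs in simulation, so exploratory and poor actions cost nothing on the real pipeline.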

The key lies in governance. Every action must be transparent, logged and reversible. Engineers need to understand why a model chose a particular path. Autonomy without explainability is a liability; autonomy with traceability is a strength.
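One way to make every action logged and reversible is to route set-point changes through a journal. The journal records the prior value before applying the new one. The sketch assumes a simple in-memory dictionary of set-points and a hypothetical tag name. A real system would write to the control system and to durable, tamper-evident storage.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class JournalEntry:
    tag: str        # hypothetical control tag, e.g. "PIC-101.SP"
    before: float
    after: float
    reason: str
    timestamp: str


@dataclass
class ActionJournal:
    """Applies set-point changes so that each one is logged and can be undone."""
    setpoints: dict[str, float]
    log: list[JournalEntry] = field(default_factory=list, init=False)    # audit trail
    _undo: list[JournalEntry] = field(default_factory=list, init=False)  # reversible

    def _record(self, tag: str, before: float, after: float, reason: str) -> JournalEntry:
        entry = JournalEntry(tag, before, after, reason,
                             datetime.now(timezone.utc).isoformat())
        self.log.append(entry)
        self.setpoints[tag] = after
        return entry

    def apply(self, tag: str, value: float, reason: str) -> None:
        self._undo.append(self._record(tag, self.setpoints[tag], value, reason))

    def rollback(self) -> None:
        """Reverse the most recent change; the reversal is itself logged."""
        last = self._undo.pop()
        self._record(last.tag, last.after, last.before, f"rollback: {last.reason}")


journal = ActionJournal({"PIC-101.SP": 12.0})
journal.apply("PIC-101.SP", 12.5, "compensate for falling feed temperature")
journal.rollback()
```

The log is append-only, so a rollback adds a record instead of erasing one. Investigators can still see that the change happened.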

Predictive becomes prescriptive

Predictive analytics has long been a hallmark of digital transformation, allowing engineers to anticipate failures and plan interventions. In autonomous systems, prediction becomes prescription. Instead of issuing warnings, the system takes the appropriate action.

A compressor exhibiting early signs of imbalance no longer waits for human confirmation. The AI correlates vibration patterns, calculates the probability of mechanical failure and adjusts load distribution to prevent damage. In refineries, process control models can automatically alter furnace temperatures or flow rates to prevent downstream bottlenecks.
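The compressor example can be sketched in two steps. First, score failure probability from vibration features. Second, shift load away from the at-risk machine. The logistic coefficients, the 0.5 threshold, the 70% derating and the tags K-101 to K-103 are hypothetical. The sketch also ignores the capacity limits that a real load-sharing controller must respect.

```python
import math


def failure_probability(vibration_mm_s: float, trend_mm_s_per_day: float) -> float:
    """Logistic score from two vibration features.

    The coefficients are illustrative placeholders; a real model would be
    fitted to the fleet's own failure history.
    """
    z = -6.0 + 0.8 * vibration_mm_s + 4.0 * trend_mm_s_per_day
    return 1.0 / (1.0 + math.exp(-z))


def redistribute_load(loads, probs, threshold=0.5, derate=0.7):
    """Derate machines whose failure probability exceeds `threshold` and move the
    shed load to healthy machines, keeping total throughput unchanged."""
    at_risk = [k for k in loads if probs[k] > threshold]
    healthy = [k for k in loads if probs[k] <= threshold]
    new = dict(loads)
    if not at_risk or not healthy:
        return new  # nothing to do, or nowhere to move load: escalate instead
    shed = 0.0
    for k in at_risk:
        new[k] = loads[k] * derate
        shed += loads[k] - new[k]
    healthy_total = sum(loads[k] for k in healthy)
    for k in healthy:
        share = loads[k] / healthy_total if healthy_total else 1.0 / len(healthy)
        new[k] = loads[k] + shed * share
    return new


loads = {"K-101": 10.0, "K-102": 10.0, "K-103": 10.0}  # hypothetical loads, MW
probs = {"K-101": failure_probability(7.1, 0.3),      # elevated and rising
         "K-102": failure_probability(2.0, 0.0),
         "K-103": failure_probability(2.2, 0.0)}
new_loads = redistribute_load(loads, probs)
```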

The efficiency gains are substantial, but so is the complexity. Prescriptive action requires not only accurate data but contextual understanding. A model must know the difference between a transient anomaly and a genuine fault, and that understanding depends on rigorous training and continuous feedback.
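A common, simple way to separate a transient anomaly from a persistent fault is a persistence check. The signal must stay beyond its limit for several consecutive samples before the system acts. The sketch assumes a single scalar signal, and the limit and sample count are illustrative values that would be tuned per asset. Learned models draw on far more context, but the persistence idea carries over.

```python
class PersistenceFilter:
    """Report a fault only after `required_samples` consecutive readings above
    `limit`; shorter excursions are treated as transients."""

    def __init__(self, limit: float, required_samples: int):
        self.limit = limit
        self.required_samples = required_samples
        self.consecutive = 0

    def update(self, value: float) -> bool:
        # Count consecutive exceedances; any reading back within the limit resets.
        self.consecutive = self.consecutive + 1 if value > self.limit else 0
        return self.consecutive >= self.required_samples


def classify(readings, limit, required_samples):
    f = PersistenceFilter(limit, required_samples)
    return [f.update(r) for r in readings]


flags = classify([6.5, 7.4, 6.8, 7.2, 7.5, 7.9], limit=7.0, required_samples=3)
```

The single spike at 7.4 is ignored. Only the third consecutive reading above 7.0 raises a fault.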

No autonomous system should begin life in the field. Digital twins provide the proving ground. These virtual replicas of physical assets allow AI models to learn, test and validate decisions safely. Before deployment, an autonomous well-control algorithm can run thousands of simulated pressure fluctuations, refining its responses. A refinery twin can model how changes in feedstock quality affect optimal operating conditions. The digital twin becomes both classroom and laboratory, where every mistake is a lesson rather than a cost.
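Running thousands of simulated pressure fluctuations amounts to Monte Carlo testing. The sketch below uses a deliberately crude first-order pressure model as a hypothetical stand-in for a digital twin. It compares candidate controller gains by how often each one lets pressure breach a limit. The set-point, limit, noise level and gains are all assumptions.

```python
import random


def simulate_episode(gain: float, rng: random.Random, steps: int = 200,
                     setpoint: float = 100.0, limit: float = 110.0) -> bool:
    """One run of a toy pressure process under random disturbances.

    Returns True if pressure ever exceeds the safety limit. The first-order
    dynamics are a deliberately simple stand-in for a real digital twin.
    """
    pressure = setpoint
    for _ in range(steps):
        correction = -gain * (pressure - setpoint)    # control policy under test
        pressure += correction + rng.gauss(0.0, 1.5)  # plus a random disturbance
        if pressure > limit:
            return True
    return False


def breach_rate(gain: float, episodes: int = 1000, seed: int = 42) -> float:
    """Fraction of simulated episodes in which the limit was breached."""
    rng = random.Random(seed)
    return sum(simulate_episode(gain, rng) for _ in range(episodes)) / episodes


# Screen candidate gains in simulation before any of them reaches the field.
candidates = [0.02, 0.1, 0.5]
best_gain = min(candidates, key=breach_rate)
```

In this toy model the stiffest gain wins. A real twin would also score valve wear, oscillation and process constraints, so the choice would be less one-sided.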

This stage also provides insight into explainability. Engineers can interrogate the AI’s reasoning within the simulation, asking why it made certain adjustments and how it ranked risk. When models graduate to the real world, their decision logic is already understood and trusted.

Scaling autonomy responsibly

Deploying autonomous systems across an enterprise requires more than technical readiness. It demands organisational maturity. Companies must establish frameworks that define accountability, risk tolerance and ethical boundaries.

Data quality is the foundation. Without accurate, timely and secure information, autonomy collapses into chaos. Many operators are now investing in comprehensive data governance programmes, auditing sensor networks, validating datasets and standardising metadata. These efforts ensure that every autonomous decision is based on verifiable truth.
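Auditing sensor networks and standardising metadata often starts with automated checks like the ones below. Each tag carries standard metadata (unit, valid range and maximum age), and every reading is tested against it before a model may use it. The `TagSpec` fields, the tag name and the limits are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class TagSpec:
    """Standardised metadata for one sensor tag (names and limits are illustrative)."""
    name: str
    unit: str
    low: float
    high: float
    max_age: timedelta


def validate(spec: TagSpec, value: Optional[float], timestamp: datetime,
             now: datetime) -> list[str]:
    """Return data-quality issues; an empty list means the reading passes."""
    issues = []
    if value is None:
        issues.append("missing value")
    elif not spec.low <= value <= spec.high:
        issues.append(f"out of range [{spec.low}, {spec.high}] {spec.unit}")
    if now - timestamp > spec.max_age:
        issues.append("stale")
    return issues


spec = TagSpec("TI-204", "degC", -20.0, 450.0, timedelta(minutes=5))
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
```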

Cybersecurity becomes equally critical. As AI systems gain control authority, they become attractive targets. Segmented networks, zero-trust architectures and continuous monitoring are no longer optional; they are prerequisites.

Scaling also means harmonising standards across regions and disciplines. Offshore platforms, onshore plants and remote terminals often operate under different systems. Integration requires a shared digital language: common protocols for communication, decision thresholds and emergency handover.

Autonomous operations change what it means to be in control. Instead of monitoring every variable, humans now oversee the decision framework itself. The model operates within boundaries set by engineers, who act as mentors rather than masters.

Training reflects this shift. Operators learn to interpret AI rationale, assess risk levels and step in when confidence scores fall below thresholds. The relationship becomes symbiotic. Humans provide ethical and contextual oversight; machines provide precision and speed.
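Stepping in when confidence scores fall below thresholds can be expressed as a small routing rule. The two thresholds and the three outcomes are assumptions chosen for illustration. The rule is also only as good as the calibration of the model's confidence estimates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    action: str
    confidence: float  # model's confidence in [0, 1]; assumed to be calibrated


def route(rec: Recommendation, act_threshold: float = 0.9,
          advise_threshold: float = 0.6) -> str:
    """Decide who acts on a recommendation."""
    if rec.confidence >= act_threshold:
        return "execute"       # system acts; operator watches (human-on-the-loop)
    if rec.confidence >= advise_threshold:
        return "ask_operator"  # show the rationale and wait for approval
    return "hand_over"         # confidence too low: operator takes control
```

In practice, engineers would set the thresholds per operation and review them as part of governance.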

The concept of human-on-the-loop, where oversight remains active even when intervention is minimal, captures this partnership. Removing people entirely from critical operations remains a distant and controversial goal. Most companies prefer to pursue supervised autonomy, where systems act independently but under continuous observation. This approach balances innovation with reassurance.

Building trust through transparency

Trust is the currency of autonomy. Engineers must believe that systems will act predictably; management must believe they will act responsibly. Transparency is the mechanism that earns both.

Best practice now includes full documentation of model architecture, data lineage and decision pathways. Each action is recorded with contextual metadata: what data triggered it, which algorithms executed it, and what outcome followed. This level of traceability mirrors process safety documentation and satisfies regulatory scrutiny.
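A decision record has to answer three questions: what data triggered the action, which algorithm executed it and what outcome followed. The sketch below shows one possible shape for such a record. The field names, model identifier and values are hypothetical. Serialising to JSON with sorted keys is one simple way to produce records that diff cleanly and can be archived alongside process safety documentation.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


@dataclass
class DecisionRecord:
    """One autonomous action plus the context an auditor would need.
    Field names are hypothetical and follow the three questions in the text."""
    action: str
    triggering_data: dict[str, float]  # what data triggered it
    model_id: str                      # which algorithm executed it
    model_version: str
    confidence: float
    timestamp: str
    outcome: str = "pending"           # what outcome followed, updated later

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


record = DecisionRecord(
    action="reduce K-101 load to 70%",
    triggering_data={"K-101.vib_mm_s": 7.1, "K-101.vib_trend": 0.3},
    model_id="compressor-health",
    model_version="2.3.0",
    confidence=0.91,
    timestamp="2024-01-01T12:00:00+00:00",
)
record.outcome = "vibration returned below alarm level"  # illustrative follow-up
```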

Explainability also extends to human communication. Visual interfaces show operators why AI decisions are made, not just what they are. Confidence metrics and causal reasoning graphs replace black-box outputs. The goal is to make autonomy visible rather than mysterious.

Governance and ethics

As autonomy grows, so does the ethical responsibility of its creators and users. Systems that act on their own must be bound by principles that prioritise safety, transparency and accountability. Many organisations are establishing dedicated AI governance boards, combining engineers, ethicists and legal experts. Their role is to evaluate risk before deployment, ensuring that autonomous systems align with corporate values and industry standards.

Testing protocols are being updated to reflect this shift. Where mechanical systems were once certified through physical inspection, AI systems now require algorithmic auditing. Validation must prove not only functionality but fairness, ensuring that the system does not prioritise efficiency at the expense of safety or environmental compliance.

The path to full autonomy

Complete autonomy remains an aspirational goal. Environmental variability, regulatory complexity and human psychology all impose limits. Yet each incremental step toward autonomy brings value.

Semi-autonomous drilling systems already manage bit pressure and rotation speed without direct oversight. Autonomous aerial drones perform inspections of flare stacks and pipelines, transmitting data to maintenance models that plan interventions automatically. These developments represent progress toward the self-regulating facility, a vision in which continuous learning governs every process.

The endpoint is not the absence of people but the elevation of their role. When machines handle execution, humans focus on creativity, strategy and stewardship. Autonomy becomes a partnership between human judgement and machine consistency.

No autonomous system can be perfect. The measure of maturity is how organisations respond when the unexpected occurs. Incident reviews must extend beyond mechanical root causes to include algorithmic behaviour.

When an autonomous process makes an incorrect decision, engineers analyse its decision pathway: what data it received, how it interpreted context, and why it chose the action it did. This analysis feeds back into model retraining, closing the loop between failure and improvement.

Establishing a culture where these reviews are routine rather than punitive encourages openness. Autonomy thrives in environments where mistakes are understood, not hidden.

Best practices for adoption

Successful implementation of autonomous operations in oil and gas follows several recurring principles. First, start with simulation. Every autonomous system should be trained and tested in a digital twin environment before physical deployment.

Second, maintain supervision. Even mature models require human oversight, particularly in safety-critical operations.

Third, document everything. From data provenance to decision logs, traceability underpins both trust and compliance.

Fourth, scale incrementally. Deploy autonomy where data is richest and consequences are manageable, expanding only after performance and governance prove reliable.

Finally, embed ethics. Design decision boundaries that prioritise human safety and environmental protection above productivity metrics.

These principles are emerging as the informal code of conduct for autonomous industrial intelligence. They ensure that as systems gain capability, they also gain accountability.

The future of operational intelligence

Autonomy changes the relationship between industry and information. Data no longer informs decisions; it becomes the decision-maker. The refinery, pipeline or production facility evolves into a self-regulating organism where physical processes and digital cognition operate as one.

In the long term, autonomous operations could enable entirely new forms of resilience. Systems will adapt to feedstock variation, equipment degradation and environmental change without external input. They will share data across networks, learning collectively rather than individually.

Yet even as the technology advances, the principle remains constant: human purpose must define machine behaviour. The goal of autonomy is not independence but interdependence, a collaboration where each side amplifies the other’s strength.

The oil and gas industry stands on the edge of this transformation. Those who move forward with caution, clarity and conviction will define the blueprint for intelligent operations worldwide. The machines may act, but the responsibility will always belong to the people who built them.

The refinery that thinks

Refineries are evolving into living systems of intelligence. Artificial intelligence is beginning to occupy the space between control logic and human judgement, connecting data that once stood apart and turning operations into continuous learning environments. In the process, it is redefining what it means for a refinery to think for itself.

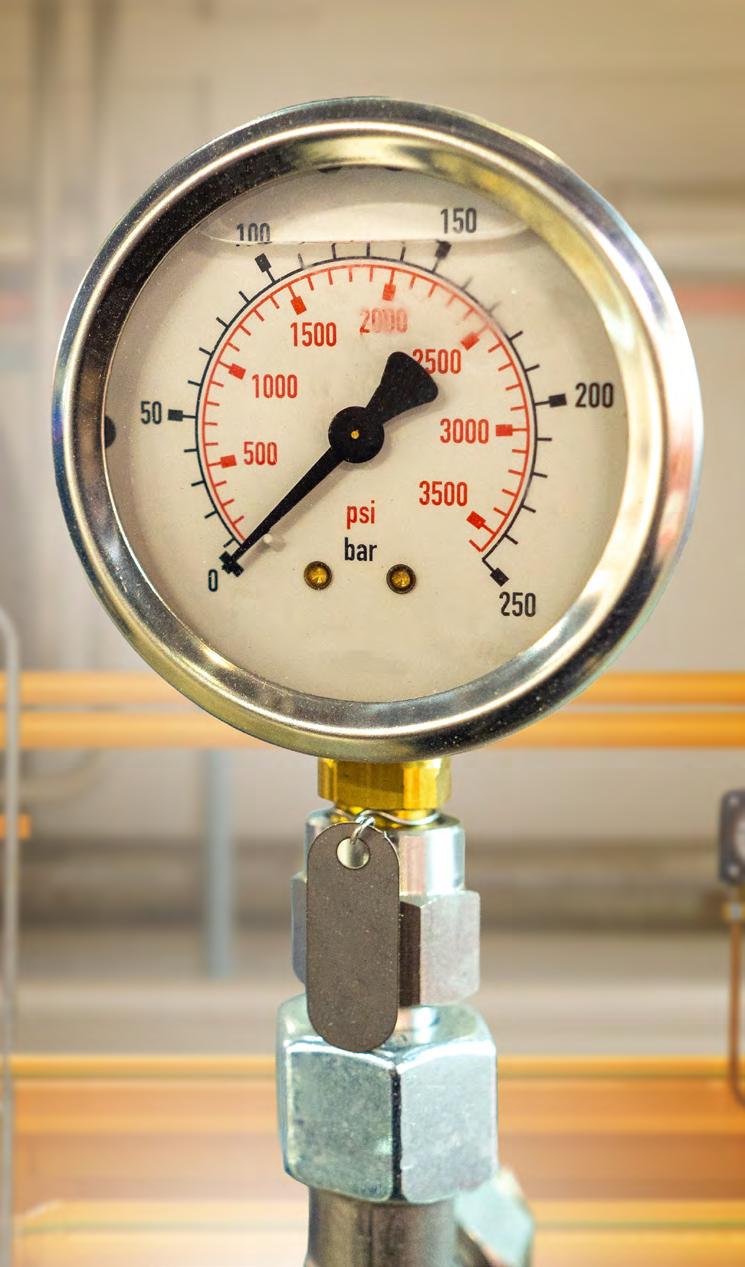

Inside a refinery control room, the rhythm never stops. Temperatures, pressures and flow rates scroll across a dozen monitors, each line telling a story of heat exchange, reaction, or separation. Operators read these stories the way pilots read instruments, translating numbers into instinct. Yet even the most seasoned engineer knows that data can deceive. Small anomalies are easily missed, correlations take hours to confirm, and by the time the cause is known the cost has already been counted.

The modern refinery generates more data in a day than early facilities did in a year. Machine learning, physics-based modelling and symbolic reasoning now converge to interpret this deluge. Together they allow systems to see the refinery not as isolated loops of automation, but as an interconnected organism. Hybrid AI models combine empirical sensor data with first-principles physics, creating representations that understand both cause and consequence.

This change is not theoretical. In distillation and hydrocracking units, AI systems already adjust control parameters based on predictive feedback. They learn how feedstock composition, ambient conditions and catalyst performance interact, optimising throughput without breaching safety or energy constraints. The shift is from monitoring to reasoning.
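Hybrid models of this kind are often built as a physics baseline plus a data-driven correction for what the physics misses. The sketch assumes a heat-exchanger duty calculated as Q = m·cp·ΔT and an unmodelled heat loss that depends on ambient temperature. The data are synthetic. A linear least-squares correction is the simplest possible choice and stands in for whatever learner a real system would use.

```python
import numpy as np


def physics_duty_kw(flow_kg_s, cp_kj_per_kg_k, delta_t_k):
    """First-principles heat duty, Q = m_dot * cp * dT, in kW."""
    return flow_kg_s * cp_kj_per_kg_k * delta_t_k


def fit_residual(features, observed, physics):
    """Least-squares fit of what the physics misses: linear in features plus a bias."""
    X = np.column_stack([features, np.ones(len(observed))])
    coef, *_ = np.linalg.lstsq(X, observed - physics, rcond=None)
    return coef


def hybrid_predict(features, physics, coef):
    """Physics baseline plus the learned correction."""
    X = np.column_stack([features, np.ones(len(physics))])
    return physics + X @ coef


# Synthetic example: an unmodelled heat loss that grows as ambient temperature falls.
rng = np.random.default_rng(0)
ambient_c = rng.uniform(5.0, 35.0, 50)
flow = rng.uniform(8.0, 12.0, 50)
physics = physics_duty_kw(flow, 2.2, 40.0)
observed = physics - (0.5 * (35.0 - ambient_c) + 3.0)
coef = fit_residual(ambient_c, observed, physics)
```

The physics term carries the cause-and-effect structure, and the learned term absorbs only the gap. A model built this way tends to behave more sensibly outside the training data than a purely empirical fit.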

The industrial field worker

Down on the process floor, the field operator remains central to that reasoning loop. Machine learning does not replace experience; it amplifies it. Equipped with wearable sensors and handheld devices, today’s operators can read live vibration patterns from rotating equipment or detect fugitive emissions before they become incidents.

When a pump bearing begins to deteriorate, an AI model running on a mobile platform can recognise the spectral signature within seconds. The system cross-references maintenance history, estimates remaining life and recommends the optimal time for repair. The operator receives the alert, verifies the condition visually, and records the outcome, which in turn becomes new training data.

This interplay between human observation and algorithmic inference is closing the gap between the control room and the field. It ensures that insight flows both ways. As digital twins evolve to mirror every asset, the boundaries between design, operation and maintenance start to blur. The refinery becomes a single environment of shared intelligence rather than a collection of disciplines.
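Recognising a bearing's spectral signature typically means comparing the vibration energy near a known defect frequency with the rest of the spectrum. The sketch uses the standard ball-pass frequency for the outer race, BPFO = (n/2)·f_r·(1 − (d/D)·cos φ). Here n is the number of rolling elements, f_r the shaft frequency, d the ball diameter, D the pitch diameter and φ the contact angle. The bearing geometry, the signals and the alarm threshold are all hypothetical.

```python
import numpy as np


def bpfo_hz(shaft_hz, n_balls, ball_d_mm, pitch_d_mm, contact_angle_rad=0.0):
    """Ball-pass frequency, outer race: a classic bearing defect frequency."""
    return 0.5 * n_balls * shaft_hz * (1.0 - ball_d_mm / pitch_d_mm * np.cos(contact_angle_rad))


def band_energy_fraction(signal, fs_hz, centre_hz, half_width_hz=2.0):
    """Share of Hann-windowed spectral energy within +/- half_width_hz of centre_hz."""
    spectrum = np.abs(np.fft.rfft(signal * np.hanning(len(signal)))) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs_hz)
    band = np.abs(freqs - centre_hz) <= half_width_hz
    return spectrum[band].sum() / spectrum.sum()


# Synthetic 1 s vibration records; bearing geometry is hypothetical.
fs = 5000.0
t = np.arange(5000) / fs
shaft = 29.5
defect = bpfo_hz(shaft, n_balls=8, ball_d_mm=7.9, pitch_d_mm=34.5)
rng = np.random.default_rng(1)
healthy = np.sin(2 * np.pi * shaft * t) + 0.05 * rng.standard_normal(t.size)
faulty = healthy + 0.5 * np.sin(2 * np.pi * defect * t)

ALARM_FRACTION = 0.05  # hypothetical alarm threshold
alarm = band_energy_fraction(faulty, fs, defect) > ALARM_FRACTION
```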

Data challenges in downstream operations

No refinery begins its AI journey with clean data. Decades of incremental upgrades have left most plants with a patchwork of systems and incompatible tags. Laboratory information sits in one silo, inspection reports in another, and process historians in yet another. Integrating this data is the first and most difficult step.

Operators are now investing heavily in data quality programmes that standardise naming conventions and align time stamps across control systems. Unified data models are replacing spreadsheets as the lingua franca of collaboration. The aim is not to collect more data but to connect what already exists.
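Aligning time stamps usually means pairing each laboratory result with the historian reading nearest in time, and refusing the match if the gap is too large. The sketch below does this with the standard library's `bisect` module. pandas users would typically reach for `pandas.merge_asof` with `direction="nearest"` and a `tolerance`. The tags, values and five-minute tolerance are hypothetical, and both series are assumed to use the same time zone.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def align_nearest(left, right, tolerance):
    """Attach to each (time, value) in `left` the `right` value with the nearest
    timestamp, if it lies within `tolerance`; otherwise attach None.
    Both inputs must be sorted by time and use the same time zone."""
    right_times = [t for t, _ in right]
    aligned = []
    for t, v in left:
        i = bisect_left(right_times, t)
        neighbours = [j for j in (i - 1, i) if 0 <= j < len(right)]
        best = min(neighbours, key=lambda j: abs(right_times[j] - t), default=None)
        if best is not None and abs(right_times[best] - t) <= tolerance:
            aligned.append((t, v, right[best][1]))
        else:
            aligned.append((t, v, None))
    return aligned


day = datetime(2024, 1, 1)
lab_density = [(day.replace(hour=8, minute=3), 0.842),
               (day.replace(hour=10, minute=47), 0.845)]
historian_temp_c = [(day.replace(hour=8, minute=0), 351.2),
                    (day.replace(hour=8, minute=5), 352.0),
                    (day.replace(hour=10, minute=0), 349.8)]
aligned = align_nearest(lab_density, historian_temp_c, timedelta(minutes=5))
```

The 10:47 sample stays unmatched rather than being paired with a reading 47 minutes old. A silent bad match is worse than an explicit gap.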

This discipline pays off quickly. Once data from maintenance, quality control and process analytics is interoperable, AI can uncover relationships that manual analysis could never reveal. It might discover that a subtle temperature fluctuation in a heat-exchanger loop predicts fouling weeks before performance degrades, or that specific vibration harmonics correlate with catalyst poisoning in a reactor feed pump. These connections form the raw material from which predictive intelligence grows.

Seeing asset health clearly

Every refinery depends on the reliability of its mechanical assets. Pumps, compressors, valves and heat exchangers represent the heartbeat of production, and each carries the risk of unplanned downtime. AI is now extending the visibility of maintenance teams far beyond the reach of traditional monitoring.

Causal AI models, which identify not just correlation but underlying mechanism, are being used to forecast failures with greater accuracy. In crude distillation units, these systems track how process variability affects exchanger fouling and energy efficiency. In fluid catalytic cracking units, they evaluate vibration and acoustic signatures to warn of rotating-equipment imbalance. These insights feed directly into risk-based inspection frameworks. Maintenance is no longer purely scheduled but condition-driven, aligning manpower and resources with actual need. The result is not only reduced downtime but safer operation. A machine that tells you why it might fail also helps you prevent it.
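Feeding condition-based failure estimates into risk-based inspection can be sketched as ranking assets by risk. Risk is taken here as probability of failure multiplied by consequence of failure, the basic idea behind frameworks such as API RP 580/581. The asset tags, probabilities and costs are hypothetical. Real RBI methods use far more detailed consequence modelling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    tag: str
    failure_probability: float  # e.g. annual probability from a condition model
    consequence_cost: float     # estimated cost if it fails (hypothetical units)

    @property
    def risk(self) -> float:
        return self.failure_probability * self.consequence_cost


def prioritise(assets, inspection_slots: int):
    """Highest-risk assets first, truncated to the inspections available."""
    ranked = sorted(assets, key=lambda a: a.risk, reverse=True)
    return [a.tag for a in ranked[:inspection_slots]]


assets = [
    Asset("P-101", 0.30, 200_000),
    Asset("E-201", 0.05, 2_000_000),
    Asset("C-301", 0.10, 500_000),
    Asset("V-401", 0.02, 100_000),
]
plan = prioritise(assets, inspection_slots=2)
```

A low-probability failure with a severe consequence (E-201) outranks a likelier but cheaper one. That is why risk, rather than probability alone, drives the plan.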

Investment and justification

Refineries are capital-intensive ecosystems, and any innovation must prove its worth against strict financial metrics. AI does not escape that scrutiny. The question has shifted from “Can we use AI?” to “Where does AI deliver measurable value?”

Executives justify investment by tracing the impact from algorithm to outcome: lower energy use, fewer shutdowns, extended asset life. In hydrogen plants and reformers, AI-driven control has already demonstrated measurable gains in fuel efficiency. In wastewater treatment sections, predictive analytics reduce chemical usage while maintaining compliance.

The journey toward autonomy, however, remains incremental. Refineries rarely leap from manual to autonomous operation. They begin with decision support, with AI offering recommendations subject to human approval, then evolve toward partial self-control as confidence grows. Each stage builds a stronger business case for the next.

When AI learns to forecast

Predictive maintenance is only the beginning. Advanced analytics are now penetrating the heart of process forecasting, from feedstock blending to product quality prediction. Reinforcement learning algorithms simulate thousands of process scenarios, identifying the control strategies that maximise yield and minimise emissions.

For example, in catalytic reforming, AI models adjust hydrogen recycle ratios and furnace temperatures in response to changing naphtha composition. The system learns through continuous reward feedback, balancing octane enhancement against energy cost. Such models run parallel to the distributed control system, offering advisory set-points that operators can accept or override.
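The reward that balances octane enhancement against energy cost, and the accept-or-override step, can be sketched as follows. This is not reinforcement learning itself. Hypothetical surrogate functions for octane gain and energy cost stand in for what a learned model would estimate, and naphtha composition is left out. The advisory set-point is simply the candidate with the highest reward. The energy weight of 4.0 and the temperature range are illustrative.

```python
def octane_gain(furnace_c: float) -> float:
    """Hypothetical surrogate: octane improvement vs reactor inlet temperature."""
    d = furnace_c - 480.0
    return 0.08 * d - 0.0008 * d * d


def energy_cost(furnace_c: float) -> float:
    """Hypothetical surrogate: furnace energy-cost index vs temperature."""
    return 1.0 + 0.01 * (furnace_c - 480.0)


def reward(furnace_c: float, energy_weight: float = 4.0) -> float:
    """Balance octane enhancement against energy cost."""
    return octane_gain(furnace_c) - energy_weight * energy_cost(furnace_c)


def advisory_setpoint(candidates) -> float:
    """Best candidate under the current reward; shown to operators, not written to the DCS."""
    return max(candidates, key=reward)


def final_setpoint(advised: float, operator_override=None) -> float:
    """The operator either accepts the advice or overrides it."""
    return advised if operator_override is None else operator_override


advice = advisory_setpoint(range(480, 531, 5))
```

A reinforcement learning agent would try to maximise the same kind of reward over time. Keeping the final write behind `final_setpoint` preserves the operator's authority however the advice is produced.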

The long-term goal is adaptive optimisation: refineries that learn as they operate, improving with every cycle rather than degrading between turnarounds. This principle marks the boundary between automation and genuine operational intelligence.

The language of machines and people

Large language models are finding their place within this environment, not as creative writers but as interpreters of complexity. They translate technical data into human understanding. Engineers can now ask, “What caused the pressure surge in the hydrocracker feed line yesterday?” and receive a coherent, referenced answer drawn from logs, historian data and maintenance notes.

These conversational interfaces sit atop retrieval-augmented systems designed to keep answers factually grounded. The model is meant to retrieve, analyse and explain rather than invent. For training and compliance, this is invaluable. New staff can access decades of operational knowledge without searching through binders or databases. Experienced engineers gain a faster route to insight during critical decision windows.

The challenge is control. Language models must be fenced by access rights, data-privacy rules and strict prompt design to prevent leakage of sensitive information. Done correctly, they become a trusted colleague: fluent, transparent and tireless.
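Grounding and fencing can be sketched in a few lines. Filter documents by the user's access rights before retrieval, rank what remains against the question, and build a prompt that instructs the model to answer only from cited sources. Word overlap stands in for embedding search, and the document IDs and roles are hypothetical. The call to the language model itself is omitted.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Doc], role: str, k: int = 2) -> list[Doc]:
    """Apply access rights first, then rank the visible documents by word overlap."""
    q = tokens(query)
    visible = [d for d in docs if role in d.allowed_roles]
    ranked = sorted(visible, key=lambda d: len(q & tokens(d.text)), reverse=True)
    return [d for d in ranked[:k] if q & tokens(d.text)]


def build_prompt(query: str, passages: list[Doc]) -> str:
    """Ground the model in retrieved text and ask for citations by document ID."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in passages)
    return ("Answer using only the sources below and cite them by ID. "
            "If they do not contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {query}")


DOCS = [
    Doc("LOG-0412", "Hydrocracker feed line pressure surge at 14:20 after feed pump P-3101 trip",
        frozenset({"operator", "engineer"})),
    Doc("MNT-0877", "P-3101 seal replaced; pump restarted and feed pressure stabilised",
        frozenset({"operator", "engineer"})),
    Doc("HR-0091", "Shift roster and contractor rates for hydrocracker feed team",
        frozenset({"hr"})),
]
QUESTION = "What caused the pressure surge in the hydrocracker feed line yesterday?"
prompt = build_prompt(QUESTION, retrieve(QUESTION, DOCS, role="operator"))
```

Filtering happens before ranking, not after generation, so restricted text never reaches the model's context at all.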

The human factor

Technology alone cannot transform a refinery. Culture determines whether innovation takes root. Many organisations are finding that AI success depends less on algorithms and more on people’s willingness to work differently.

Trust is the first hurdle. Operators accustomed to deterministic systems often find probabilistic reasoning uncomfortable. Training therefore focuses on interpretation: understanding what confidence levels mean, how models learn, and why occasional error is part of improvement. When teams see AI as a tool that learns with them, resistance fades.

Collaboration is the second hurdle. Data scientists, process engineers and reliability specialists speak different technical languages. The rise of AI is forcing them into the same conversation. Cross-functional teams are becoming standard in refineries that embrace digitalisation. The result is not only better models but stronger operational cohesion.

Safety and the AI frontier

No discussion of downstream innovation can ignore safety. As AI assumes a larger role in control and maintenance, the potential consequences of error increase. The industry’s long history of process-safety management provides a framework for addressing this.

Every AI intervention must be validated, logged and auditable. Explainability is no longer a theoretical concern but a regulatory one. If a model recommends a valve closure that prevents an incident, investigators must later be able to trace the reasoning behind that recommendation. Transparency underpins accountability.

Some refineries are already using AI to simulate safety scenarios that would be impossible to test physically. By combining digital twins with reinforcement learning, they explore how control systems respond to complex upsets, and the results feed directly into hazard-and-operability studies. In this way, AI not only manages safety but helps design it.

The energy intensity of refining makes efficiency synonymous with sustainability. AI now plays a central role in optimising steam networks, heat integration and flare management. Models trained on historical energy data identify inefficiencies invisible to manual analysis.

In one refinery example, AI analysis of steam-trap operation guided optimisations that reduced fuel consumption by several percentage points: small in isolation, substantial at scale. Similar approaches are improving flare-gas recovery and emissions monitoring, aligning operations with tightening environmental standards.

Sustainability is no longer a separate objective but a performance metric embedded in every algorithm. The smarter the refinery becomes, the cleaner and more efficient it tends to be.

From automation to autonomy

Look closely at a modern refinery and the signs of autonomy are already there. Intelligent valves adjust themselves to preserve energy efficiency, compressors schedule their own inspections, and control systems learn from every cycle. The evolution is gradual but unmistakable.

Autonomy does not mean removing humans; it means elevating them. Engineers will increasingly oversee networks of learning systems rather than individual assets. Their expertise will guide policy and ethics as much as process control.

The refinery that thinks for itself is not a futuristic vision. It is the logical outcome of decades of digitalisation and data stewardship finally connected by artificial intelligence. Every sensor, every historian, every operator note contributes to a larger conversation, a dialogue between people and machines that never truly ends.

Artificial intelligence in refining is not a single breakthrough but a series of subtle improvements that accumulate into transformation. It turns historical data into foresight, operational feedback into learning, and complexity into clarity. The smart refinery does not rely on luck or experience alone. It reasons, anticipates and adapts. It uses every byte of data to make better decisions and to explain those decisions in human terms. That transparency will be its defining feature.

In time, refineries will be judged not only by throughput or yield but by how intelligently they respond to change. Those that master the dialogue between algorithm and operator will set the pace for a new era of downstream performance, one where intelligence is built into the infrastructure itself and knowledge never sleeps.


Balancing the invisible network

Supply chains are the nervous system of oil and gas, connecting production with people, plants and markets. Artificial intelligence is now bringing foresight and adaptability to that system, transforming supply chains from static operations into intelligent networks capable of learning from every movement.

Oil and gas supply chains stretch across continents, linking remote production sites to refineries, storage terminals and global markets. They depend on a choreography of vessels, pipelines, suppliers and warehouses, each constrained by time, regulation and geography. The pandemic revealed how delicate that choreography had become. Even minor disruptions echoed through the system, exposing how little visibility operators truly had beyond their immediate assets.

AI is beginning to restore balance by transforming supply chains into predictive ecosystems. Instead of reacting to shortages or delays, companies are using models that simulate entire networks, anticipate pressure points and propose corrective actions before disruptions occur. The shift is not simply digitalisation but cognition: the supply chain as an intelligent entity that senses, reasons and adapts.

Learning from the real world

Reinforcement learning, the branch of AI that improves through interaction with its environment, is proving particularly relevant. In traditional optimisation, systems are told what to optimise (cost, time or throughput) and compute the best static answer. Reinforcement learning, by contrast, learns dynamically. It runs thousands of simulated supply scenarios, measures the outcome of each decision and adjusts its strategy accordingly. For an oil major managing fleets of LNG carriers or chemical tankers, this learning process reveals how schedules and routes respond to changing weather, port congestion or demand fluctuations.

Each iteration makes the model more resilient. The algorithm learns when to reroute, when to hold inventory and when to release capacity. Over time, these digital experiments inform real-world decisions, allowing human planners to test possibilities without physical risk.
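The learning loop described above can be illustrated with tabular Q-learning on a deliberately tiny, hypothetical routing problem. The two congestion states, the two actions and the cost figures in `COSTS` are invented for illustration; a real fleet model would train against a high-fidelity simulator with far richer state.

```python
import random

# Hypothetical toy model. State: port congestion (0 = low, 1 = high).
# Action: 0 = keep the scheduled route, 1 = reroute via an alternate terminal.
COSTS = {(0, 0): 1.0, (0, 1): 2.0, (1, 0): 5.0, (1, 1): 2.0}  # illustrative cost units


def step(state: int, action: int, rng: random.Random) -> tuple[float, int]:
    """Return (reward, next_state); congestion evolves at random, independent of the action."""
    reward = -COSTS[(state, action)]
    next_state = 1 if rng.random() < 0.5 else 0
    return reward, next_state


def train(steps: int = 20_000, alpha: float = 0.1, gamma: float = 0.5,
          epsilon: float = 0.2, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]  # q[state][action]
    state = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(2)                         # explore
        else:
            action = max((0, 1), key=lambda a: q[state][a])   # exploit
        reward, next_state = step(state, action, rng)
        # Move the estimate toward reward plus discounted best future value
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
    return q


q = train()
policy = {s: max((0, 1), key=lambda a: q[s][a]) for s in (0, 1)}
print(policy)  # {0: 0, 1: 1}: keep the route when congestion is low, reroute when high
```

Nobody tells the agent the rule "reroute when congested"; it emerges from measured outcomes, which is the sense in which the text says the algorithm learns when to reroute.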

The value lies not in automation alone but in discovery. Reinforcement learning exposes inefficiencies that human intuition might overlook, identifying trade-offs between cost and reliability that redefine supply-chain strategy.

Predictive visibility

Predictive analytics now underpin every layer of supply-chain planning, from drilling consumables to finished products. The difference lies in scope. Earlier systems relied on historical averages; today’s AI-driven platforms integrate live data from IoT sensors, supplier portals, satellite tracking and market feeds.

A refinery, for example, can forecast catalyst demand by combining production data, turnaround schedules and vendor performance metrics. The model not only predicts when materials will run low but assesses the probability of delivery delays based on supplier history and route conditions. These insights flow directly into procurement systems, triggering early orders or reallocating stock from nearby facilities.
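The reorder logic can be sketched as a risk-adjusted reorder point, in which the expected lead time is stretched by the supplier's historical probability and size of delay. The function names, tonnage units and safety-stock figure are hypothetical assumptions, not a description of any particular procurement system.

```python
def delay_stats(days_late_history: list[float]) -> tuple[float, float]:
    """Probability that a delivery is late, and the mean delay when it is."""
    if not days_late_history:
        return 0.0, 0.0
    late = [d for d in days_late_history if d > 0]
    p_delay = len(late) / len(days_late_history)
    mean_delay = sum(late) / len(late) if late else 0.0
    return p_delay, mean_delay


def should_reorder(stock_t: float, daily_use_t: float, lead_time_days: float,
                   p_delay: float, mean_delay_days: float, safety_stock_t: float) -> bool:
    """Order now if stock will not cover forecast demand over the risk-adjusted lead time."""
    expected_lead_days = lead_time_days + p_delay * mean_delay_days
    return stock_t < daily_use_t * expected_lead_days + safety_stock_t


# Hypothetical catalyst data: days late on the supplier's last five deliveries
p, delay = delay_stats([0, 0, 3, 0, 5])
print(p, delay, should_reorder(25.0, 2.0, 10.0, p, delay, 5.0))  # 0.4 4.0 True
```

Here a nominal 10-day lead time becomes an expected 11.6 days, so 25 tonnes in stock falls short of the 28.2 tonnes needed to cover demand plus safety stock.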

For operators responsible for spare parts in offshore environments, predictive modelling reduces the risk of critical equipment downtime. By analysing usage patterns and maintenance logs, AI can forecast which components are likely to fail within the next operating cycle, ensuring that replacements arrive before they are needed. The result is a quieter, more continuous rhythm of logistics that matches the industry’s appetite for reliability.

Data as a single language

Effective prediction depends on shared understanding. Most oil and gas supply chains still operate through a patchwork of enterprise resource planning systems, vendor spreadsheets and manual reconciliations. AI demands coherence. Data harmonisation efforts are now underway across the sector, aligning taxonomies and integrating legacy databases into unified platforms. These foundations allow AI to map relationships across the value chain: how a refinery’s output affects marine fuel supply, how maintenance schedules influence spare-part demand, or how geopolitical shifts ripple through freight rates.

Creating this digital backbone is often more complex than the AI itself. Yet once established, it transforms visibility into insight. The network stops behaving like a series of silos and starts functioning as a single living structure.

Causal reasoning in action

Causal AI takes this intelligence further by moving beyond correlation. It seeks to understand why events occur and how interventions will influence outcomes. In inventory management, this means the difference between knowing that stockouts follow shipping delays and understanding which upstream decisions caused those delays.

A logistics manager can ask the model: “If we change supplier lead times by two days, how does that affect on-site inventory resilience?”

The system tests the scenario across simulated conditions, producing an evidence-based answer. Over time, causal models help managers redesign the system itself: optimising reorder points, adjusting batch sizes and balancing cost against continuity.
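A manager's what-if question can be approximated by intervening on a simulator: hold everything else fixed, change the lead time and compare outcomes. This sketch is a Monte Carlo inventory simulation, not a causal model learned from data, and its demand range, reorder point, order quantity and lead times are all hypothetical.

```python
import random


def stockout_rate(lead_time_days: int, days: int = 365, runs: int = 200,
                  reorder_point: int = 40, order_qty: int = 60, seed: int = 1) -> float:
    """Fraction of simulated days with unmet demand under a fixed reorder-point policy."""
    rng = random.Random(seed)
    short_days = 0
    for _ in range(runs):
        stock, pipeline = 80, []  # pipeline holds the arrival day of each open order
        for day in range(days):
            stock += order_qty * pipeline.count(day)        # receive deliveries due today
            pipeline = [d for d in pipeline if d > day]
            demand = rng.randint(2, 6)                       # hypothetical daily usage
            if demand > stock:
                short_days += 1
            stock = max(stock - demand, 0)
            if stock <= reorder_point and not pipeline:
                jitter = rng.randint(0, 3)                   # supplier variability in days
                pipeline.append(day + lead_time_days + jitter)
    return short_days / (days * runs)


base = stockout_rate(lead_time_days=7)
slower = stockout_rate(lead_time_days=9)  # intervention: lead time +2 days
print(f"baseline {base:.2%}, with +2 days {slower:.2%}")
```

Using the same seed for both runs reduces, though does not eliminate, the influence of sampling noise on the comparison.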

In complex facilities such as LNG terminals or refineries, causal reasoning also supports sustainability goals. Models can identify how storage temperature, energy use and emissions interact, guiding decisions that reduce both waste and carbon footprint. This ability to trace consequence as well as correlation makes causal AI a powerful tool for responsible logistics.

The warehouse that thinks

Warehousing in the oil and gas sector often spans continents. From regional hubs supplying offshore platforms to massive storage yards holding valves, turbines and spare modules, the scale is immense. Traditional management relies on static databases updated by hand. AI introduces situational awareness. Computer-vision systems integrated with drones and sensors continuously scan stockyards, tracking parts and verifying condition. Predictive algorithms determine optimal storage locations based on demand frequency and size constraints. Reinforcement learning refines those layouts by measuring retrieval efficiency over time, gradually reorganising facilities without human instruction.

In large distribution centres, AI-controlled automated guided vehicles now move materials using routes optimised by machine learning. Each trip informs the next, minimising energy use and congestion. The result is not full automation but augmented supervision, a partnership between human logistics teams and digital counterparts that learn from each other’s performance.

The supplier relationship redefined

Procurement has long been measured by price and delivery, but AI is expanding that view. Supplier performance is now evaluated through multi-dimensional metrics: reliability, sustainability, responsiveness and risk exposure.

Machine-learning models analyse historical purchasing data, invoice timelines and quality reports to rate supplier resilience. During volatile market periods, these models highlight potential vulnerabilities long before they become critical. For example, an algorithm may flag that a supplier’s average delivery time has lengthened steadily over six months, correlating with regional labour shortages. The insight prompts early dialogue rather than last-minute crisis management.
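Flagging a steadily lengthening delivery time can be as simple as fitting a least-squares trend line to monthly averages. The threshold of half a day per month and the sample history are hypothetical; a production model would also weigh seasonality and external signals such as the regional labour data mentioned above.

```python
def slope(values: list[float]) -> float:
    """Ordinary least-squares slope of values against their index (month number)."""
    n = len(values)
    if n < 2:
        return 0.0  # no trend can be estimated from fewer than two points
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def flag_supplier(monthly_avg_delivery_days: list[float],
                  threshold_days_per_month: float = 0.5) -> bool:
    """Flag a supplier whose delivery time is lengthening faster than the threshold."""
    return slope(monthly_avg_delivery_days) > threshold_days_per_month


history = [12.0, 12.5, 13.4, 14.1, 14.8, 15.9]  # six months, hypothetical
print(round(slope(history), 2), flag_supplier(history))  # 0.77 True
```

A rising slope is a prompt for the early dialogue the text describes, not proof of a cause.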

AI also supports dynamic procurement, adjusting sourcing strategies as market conditions shift. In the chemicals segment, where feedstock prices fluctuate daily, reinforcement-learning models simulate contract options, advising on the balance between spot buying and long-term agreements. Procurement teams gain the flexibility to act rather than react.

Interconnected risk management

Risk in supply chains is rarely linear. A single weather event can affect shipping schedules, refinery production and downstream distribution simultaneously. Traditional contingency planning struggles with such complexity. AI thrives on it.

By combining predictive, causal and simulation models, operators can create digital replicas of their supply networks. These replicas, or supply-chain twins, evaluate how shocks propagate through the system and test resilience strategies in real time.

During hurricane season, for instance, an AI twin can simulate port closures and model the impact on refinery feedstock inventory. It might suggest pre-positioning shipments at alternate terminals or adjusting production rates to avoid overstocking. Each test improves preparedness. Over time, the system learns which interventions deliver the best balance of cost and continuity.

Sustainability and efficiency

Supply-chain optimisation also supports the industry’s sustainability commitments. AI-driven route planning reduces fuel consumption in transport fleets by analysing historical shipping paths against weather and current data. Predictive loading models cut waste by aligning cargo capacity with demand, while warehouse optimisation reduces idle energy use.

In procurement, algorithms evaluate suppliers on environmental, social and governance (ESG) performance, supporting corporate transparency. As regulators tighten reporting requirements, these automated assessments help ensure compliance without excessive manual auditing.

The integration of sustainability data into supply-chain models transforms ethical responsibility into operational advantage. A supply network that consumes less and wastes less is inherently more resilient.

Despite its sophistication, AI remains a tool. The real transformation comes from how people use it. Supply-chain managers must evolve from overseers of process to curators of intelligence. Their expertise is essential in framing the questions that AI answers.

Cultural adaptation follows the same pattern seen elsewhere in digital transformation. Success depends on collaboration between planners, data scientists and operations staff. Each group sees a different part of the network; together they define its whole. As trust in AI grows, decisions shift from reaction to orchestration, with humans guiding the rhythm and machines maintaining tempo.

From reaction to prediction

The shift from reactive to predictive logistics marks a profound turning point for oil and gas. For decades, the industry has relied on experience and contingency to manage uncertainty. Now, with AI, that uncertainty becomes a resource: data to be mined, learned from and mitigated. Supply chains that once broke under stress are starting to bend intelligently. The technology does not remove volatility; it teaches the system how to live with it. Reinforcement learning brings agility, causal AI brings understanding, and predictive analytics bring foresight. Together they create an operational intelligence capable of maintaining balance across a volatile world.

The invisible network that moves materials and products across the globe is beginning to think for itself. Each shipment, invoice and sensor reading becomes part of a learning loop that strengthens the next decision. The supply chain evolves from a linear process into an adaptive infrastructure, capable of anticipating change rather than absorbing it. In this evolution, success will depend not on how much AI is deployed but on how intelligently it is used. The most advanced systems are those where technology and human experience share the same rhythm, data informing judgement, judgement guiding data.

Oil and gas has always depended on coordination. With AI, that coordination is becoming cognition. The industry’s future supply chains will not only move faster but think faster, balancing the unseen forces that connect every well, refinery and terminal in the global flow of energy.

Inside the new brain of oil and gas

For decades, oil and gas companies have gathered oceans of data yet still struggled to see the full picture. Retrieval-augmented generation and agentic AI are beginning to change that, giving machines the ability to reason, explain and act. In control rooms and refineries, a new kind of intelligence is starting to emerge, one that learns, contextualises and collaborates rather than merely computes.

Walk into any modern control room and the glow of monitors never fades. Pressure, temperature and vibration readings roll endlessly across the screens. The irony is that the more data engineers collect, the harder it becomes to know what truly matters.

Oil and gas operations have never lacked information; they have lacked interpretation. Traditional analytics can flag a deviation or plot a trend, but they often leave people guessing why that deviation occurred. Retrieval-augmented generation, or RAG, begins to bridge that gap. Instead of relying solely on pre-trained statistical patterns, a RAG model can reach back into verified archives (maintenance logs, incident reports, drilling notes) and pull that evidence into its reasoning.

In practice, that means a digital assistant supporting a subsea engineer can explain the cause of an unexpected pressure drop by referencing comparable events from similar wells. The answer is grounded in documented precedent rather than guessed from statistical patterns alone. For a sector where each decision carries financial and safety implications, that grounding matters more than speed or novelty.
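The retrieve-then-answer pattern behind RAG can be sketched in a few lines. To stay self-contained, this version scores records with word-count cosine similarity; production systems typically use learned embeddings and a vector database, and the final call to a language model is omitted. The record IDs and texts are hypothetical.

```python
import math
import re
from collections import Counter


def vectorise(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, records: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k records most similar to the query."""
    q = vectorise(query)
    ranked = sorted(records, key=lambda rid: cosine(q, vectorise(records[rid])), reverse=True)
    return ranked[:k]


def grounded_prompt(query: str, records: dict[str, str], k: int = 2) -> str:
    """Build a prompt that confines the model to the retrieved, cited records."""
    context = "\n".join(f"[{rid}] {records[rid]}" for rid in retrieve(query, records, k))
    return f"Answer using only the records below and cite their IDs.\n{context}\nQuestion: {query}"


records = {
    "WELL-A-2019": "Pressure drop on subsea well after hydrate formation in the flowline",
    "WELL-B-2021": "Pressure drop on subsea well traced to choke valve erosion",
    "PUMP-7-2022": "Pump vibration alarm caused by bearing wear",
}
print(grounded_prompt("Why was there an unexpected pressure drop on the subsea well?", records))
```

Requiring the model to cite record IDs is what lets an engineer trace each statement in the answer back to a source.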

Making data speak

Every refinery, LNG plant and offshore platform already runs on a patchwork of dashboards. They tell operators what is happening, but rarely why it is happening or what to do next. Engineers still spend hours cross-checking SCADA feeds with spreadsheets, inspection logs and handwritten notes to reach a conclusion that might have been obvious had the information been connected.

RAG systems change the rhythm of that work. They allow engineers to query their environment in plain language: “Why did the compressor trip yesterday?” or “Show me similar vibration patterns over the past six months.” The model retrieves the relevant history, combines it with live data and produces an explanation that can be verified. The insight is no longer buried in a database; it sits on the screen, ready for scrutiny.

In downstream operations, where every minute of unplanned downtime costs money, this kind of reasoning can cut straight through the noise. A refinery shift supervisor can ask about a temperature anomaly in the catalytic cracker and receive a contextual answer supported by maintenance records. Decisions become faster, but they also become clearer, traceable and easier to defend.

Balancing capability with control

Building the architecture to support such intelligence is far from trivial. Oil and gas companies are learning to walk a fine line between centralised capability and local autonomy. With too much control from headquarters, offshore crews lose agility. With too little, the company risks data chaos.

The emerging solution is a hybrid one. Central teams curate and train the core models, ensuring data quality and compliance, while individual sites host smaller instances of those models for rapid inference. A remote platform in the North Sea, for example, can process sensor data locally to maintain real-time responsiveness yet still feed its learnings back to the central knowledge base.

This balance between independence and governance is not simply a technical design; it is cultural. Engineers who have spent decades trusting their own experience must learn to trust a system that interprets for them. Governance frameworks, audit trails and model registries are becoming the new scaffolding for that trust. Every generated insight must be traceable to a data source. Without that, even the most advanced model will never earn its place in the operations hierarchy.

Cost, complexity and justification

AI in oil and gas carries an unavoidable price tag. Training large models demands significant computing power, often across secure cloud and on-premise environments. The smartest approach, many have found, is to start small and scale only where value is proven.

A single predictive-maintenance project on a fleet of pumps can demonstrate the principle: if it reduces downtime by measurable margins, the business case for expansion follows naturally. Incremental adoption also helps contain risk. Once the underlying data pipelines are tested and security certified, replication across other assets becomes faster and cheaper.

Executives are no longer asking whether AI can work; they are asking where it works best and how to measure return on investment without losing control of cost or compliance.

Agents that think ahead

Agentic AI takes the conversation a step further. If RAG helps a model reason, agents give it purpose. These systems do not wait for prompts; they pursue objectives within defined boundaries.

Consider the pumping systems on an offshore platform. An agentic AI monitors flow rates, pressures and vibration data. When it detects early signs of cavitation, it does more than raise an alarm. It consults historical patterns, evaluates potential causes and suggests corrective actions, sometimes scheduling the intervention itself. The engineer remains in the loop, but the cognitive load is dramatically reduced.
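One pass of such an agent might look like the sketch below: detect a cavitation signature, diagnose a likely cause, look up the fix that worked previously and hand the proposal to an engineer for approval. All thresholds, the diagnostic rule and the `PRECEDENTS` table are hypothetical placeholders, not engineering guidance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    suction_pressure_bar: float
    vibration_mm_s: float
    flow_m3_h: float


# Hypothetical precedent library: suspected cause -> corrective action that worked before
PRECEDENTS = {
    "low suction pressure": "Raise suction vessel level or reduce pump speed",
    "blocked strainer": "Schedule strainer inspection and cleaning",
}


def cavitation_suspected(r: Reading, min_suction_bar: float = 1.5,
                         max_vibration_mm_s: float = 7.0) -> bool:
    """Hypothetical signature: suction pressure low while vibration is elevated."""
    return r.suction_pressure_bar < min_suction_bar and r.vibration_mm_s > max_vibration_mm_s


def diagnose(r: Reading, design_flow_m3_h: float) -> str:
    """Crude illustrative rule: flow well below design suggests an upstream restriction."""
    return "blocked strainer" if r.flow_m3_h < 0.8 * design_flow_m3_h else "low suction pressure"


def agent_step(r: Reading, design_flow_m3_h: float) -> Optional[dict]:
    """One monitoring cycle: detect, diagnose, consult precedent, then propose for approval."""
    if not cavitation_suspected(r):
        return None
    cause = diagnose(r, design_flow_m3_h)
    return {
        "suspected_cause": cause,
        "proposed_action": PRECEDENTS[cause],
        "requires_engineer_approval": True,  # the engineer stays in the loop
    }


print(agent_step(Reading(1.1, 9.2, 70.0), design_flow_m3_h=100.0))
```

The agent proposes rather than acts; whether it may schedule work itself is a governance decision encoded by flags such as `requires_engineer_approval`.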

The same logic applies to midstream pipelines. Intelligent agents can balance throughput and pressure while accounting for temperature fluctuations and equipment age. They operate continuously, refining their models with every cycle. In a world where a minor leak can escalate into an environmental incident, foresight becomes the ultimate safeguard.

Building the knowledge base

None of this works without a solid foundation of knowledge. Oil and gas companies hold decades of institutional memory: safety bulletins, design documents, incident analyses, and handwritten shift reports. The challenge lies in turning that mountain of information into structured knowledge.

Creating domain-specific knowledge bases is now an essential discipline. It involves tagging and relating data so that machines can recognise not just words but meaning. A RAG system drawing on such a base can connect a maintenance note about “valve seat erosion” with inspection photos and pressure data from the same period, producing an explanation no single dataset could offer.

Integration with live sensor feeds completes the loop. On an LNG train, for instance, combining compressor telemetry with archived maintenance data allows the model to forecast degradation more accurately. It is not merely predicting; it is reasoning from precedent. That distinction is the difference between a clever algorithm and a reliable colleague.

Digital twins that learn

Digital twins have become a familiar concept, yet most still behave like sophisticated simulators rather than collaborators. Agentic behaviour changes that. In pipeline operations, an agentic digital twin can run continuous comparisons between predicted and actual flow profiles. When it spots divergence, it investigates possible causes (temperature gradients, valve friction or even suspected deposits) and recommends a course of action. In refineries, similar twins model catalytic reactors or heat-exchange networks, updating themselves as new sensor data arrives.
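The divergence check at the heart of such a twin can be sketched as a persistence test on the relative error between predicted and measured flow. The twin's physics model is out of scope here, so the predicted profile is simply passed in; the 5 per cent tolerance and three-sample persistence are hypothetical tuning values.

```python
def divergence_alerts(predicted: list[float], actual: list[float],
                      tolerance: float = 0.05, persistence: int = 3) -> list[int]:
    """Return sample indices where relative error has exceeded `tolerance`
    for `persistence` consecutive samples (one alert per sustained episode)."""
    alerts, run = [], 0
    for i, (p, a) in enumerate(zip(predicted, actual)):
        # Relative error; fall back to absolute error if the prediction is zero
        err = abs(a - p) / abs(p) if p else abs(a)
        run = run + 1 if err > tolerance else 0
        if run == persistence:
            alerts.append(i)
    return alerts


predicted = [100.0] * 8
actual = [101.0, 99.0, 107.0, 108.0, 109.0, 100.0, 106.0, 107.0]
print(divergence_alerts(predicted, actual))  # [4]
```

Requiring persistence stops the twin from launching an investigation on every noisy sample; the brief excursion at the end of the series never lasts long enough to trigger one.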

Over time, these twins begin to exhibit something close to learning. They remember which adjustments worked and which did not. Each recommendation feeds into the next iteration, sharpening accuracy. The system becomes self-improving rather than merely self-monitoring.

The promise is clear, but so is the danger. The more a twin acts on its own, the greater the need for transparency. Engineers must see the reasoning chain behind every recommendation. The aim is not to surrender control but to share it intelligently.

Trust, bias and the human touch

Bias in industrial AI rarely stems from ideology; it comes from imbalance. A model trained mainly on data from one region or equipment type can misjudge conditions elsewhere. For global operators with assets from the Arctic to the Gulf, this is a genuine concern.

Regular validation and retraining are therefore critical. Feedback loops allow engineers to confirm or correct AI-generated actions, turning every decision into new training data. The process is slow, but it builds trust: the most valuable currency in the relationship between people and machines.

Regulators are watching closely too. When an AI system contributes to an operational decision, its rationale must be recorded. Future audits may treat algorithmic reasoning the same way they treat maintenance records today. Documentation will not disappear in the age of autonomy; it will simply describe a different kind of worker.

People still matter

Despite the progress, no one inside the industry believes AI will replace human judgement. Oil and gas remains a domain where experience, instinct and accountability matter as much as mathematics. What changes is the focus of that expertise.

Engineers are learning to guide AI rather than outthink it. They need to know how to interrogate a model’s assumptions, spot inconsistencies and interpret probabilistic answers. Training programmes are beginning to reflect this shift, emphasising digital literacy alongside traditional process knowledge.

A new generation of engineers is emerging, people fluent in both thermodynamics and data governance. They may never crawl inside a separator vessel, but they will understand how a digital twin interprets one.

The rhythm of continuous intelligence

What was once post-event reporting is now becoming live reasoning. Retrieval-augmented generation and agentic AI turn static data into a living conversation between machines and people.

On offshore rigs, RAG systems can weave together sensor readings, weather data and drilling logs to anticipate well stability issues. In downstream plants, digital twins can adjust energy consumption in response to demand and feedstock variations. Across the midstream network, intelligent agents evaluate corrosion risk and reroute flow before conditions deteriorate.

The common thread is continuity. Insights no longer arrive as monthly reports but as constant, explainable guidance. Decisions become part of an ongoing dialogue rather than a series of isolated interventions.

Trustworthy autonomy

The next decade of digital transformation in oil and gas will be defined by one principle: trustworthy autonomy. Machines will be expected to act, but also to justify those actions in language people can understand.

Retrieval-augmented generation ensures that the reasoning stays tethered to verified data. Agentic systems ensure that those insights turn into action. Together they create a feedback loop where data becomes understanding and understanding becomes operation.

Firms that master this cycle will move beyond automation toward genuine cognitive operations, where intelligence is not something that happens in a data centre but something that lives within the process itself. The payoff will not only be efficiency but resilience: a workforce that thinks faster because its machines finally know how to explain themselves.


Safety with a sixth sense

Artificial intelligence is transforming how risk is recognised, prevented and managed across the oil and gas sector. By combining real-time data with contextual awareness, AI systems are not replacing human vigilance; they are extending it.

Health, safety and environmental management has always been a discipline built on vigilance. Operators watch for signs of failure, supervisors look for unsafe behaviour, and engineers design systems to keep both people and equipment out of danger. That vigilance has limits. Fatigue, distraction and information overload erode human perception over time. Artificial intelligence is emerging as a partner that never blinks.

The technology’s impact is already visible across upstream and downstream operations. Algorithms analyse thousands of sensor readings to detect the earliest trace of mechanical distress. Computer vision monitors confined spaces and high-risk areas where humans cannot always see. Predictive models map risk probabilities that shift hour by hour with weather, pressure or process changes. Safety, once reactive, is becoming anticipatory.

Yet the promise of AI in HSE is not automation for its own sake. It is about preserving human capability: using machines to extend the reach of awareness, to detect patterns invisible to the eye and to connect fragments of data into a coherent warning before harm occurs.

The anatomy of predictive safety

Traditional safety systems rely on static thresholds: a temperature limit, a vibration range, a pressure cutoff. They work well for known conditions but struggle with the dynamic complexity of modern operations. AI alters that by learning the shape of normality and detecting when reality drifts from it. In rotating equipment, vibration patterns can be as distinctive as fingerprints. Machine learning models trained on years of data learn those signatures and recognise anomalies long before they trigger alarms.

When combined with acoustic sensors, they can even detect the faint resonance of a bearing beginning to fail. These insights feed into maintenance scheduling, preventing incidents that once seemed unpredictable.
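Learning the shape of normality can be illustrated in its simplest form: fit a baseline from readings taken during healthy operation, then flag values that sit many standard deviations away. Real systems usually model many features jointly; the vibration values, the four-sigma limit and the static alarm limit below are hypothetical.

```python
import statistics

STATIC_ALARM_MM_S = 4.5  # hypothetical fixed alarm threshold, for comparison


def fit_baseline(normal_readings: list[float]) -> tuple[float, float]:
    """Learn mean and standard deviation from readings taken during healthy operation."""
    return statistics.fmean(normal_readings), statistics.stdev(normal_readings)


def is_anomalous(reading: float, baseline: tuple[float, float], z_limit: float = 4.0) -> bool:
    """Flag a reading more than z_limit standard deviations from the learned mean."""
    mean, std = baseline
    return abs(reading - mean) / std > z_limit


# Hypothetical vibration velocity readings (mm/s) from a healthy pump
baseline = fit_baseline([2.0, 2.1, 1.9, 2.05, 1.95, 2.0, 2.1, 1.9])
reading = 2.6
print(is_anomalous(reading, baseline), reading > STATIC_ALARM_MM_S)  # True False
```

The learned baseline flags 2.6 mm/s as abnormal for this machine while the fixed alarm stays silent, which is the early-warning gap the text describes.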

The same principles apply to process safety. A refinery’s distributed control system may generate thousands of data points every second, too many for human operators to interpret meaningfully. AI filters and contextualises this torrent, identifying subtle correlations that hint at emerging hazards: pressure deviations coupled with temperature fluctuations, or flow inconsistencies that could signal fouling or blockage. The result is a predictive capability that shifts safety from hindsight to foresight.

Seeing risk in real time

Vision-based AI is reshaping situational awareness on worksites. Cameras equipped with deep-learning models can identify unsafe acts or conditions: an unfastened harness, an obstructed fire exit or a worker entering a restricted zone. Unlike static surveillance, these systems interpret context rather than merely detect movement. They can tell the difference between a person walking and a person falling.

On offshore platforms, where confined spaces and variable lighting challenge human observation, AI-driven video analytics provide an extra layer of protection. When integrated with wearable sensors, they form a continuous safety envelope around every individual. If a worker collapses, deviates from routine, or fails to respond to prompts, alerts reach the control room within seconds.

This technology does not replace supervision; it reinforces it. Safety officers still investigate and intervene, but AI helps ensure that no critical moment goes unseen. The real transformation lies in speed: the seconds saved between incident and response.

Wearable intelligence

The fusion of AI and wearable technology is creating a new class of personal safety systems. Smart helmets, wristbands and badges equipped with accelerometers, GPS and biometric sensors monitor fatigue, heat stress and posture. Data from these devices flows into AI models that interpret not just single readings but patterns over time.

If a worker’s motion slows, body temperature rises and heart rate spikes, the system may predict the onset of heat exhaustion. A gentle vibration or audible signal reminds them to rest or hydrate, while supervisors receive alerts to adjust workload or rotation. Over longer periods, aggregated data helps managers redesign schedules and workflows to reduce exposure risk.
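A pattern-over-time rule of the kind described can be sketched by comparing the start and end of a short window of wearable samples. Every threshold here is a hypothetical placeholder rather than occupational-health guidance, and a real system would more likely use a trained model. Because the function needs only local samples, it could run on the device itself and transmit nothing but the alert.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    steps_per_min: float
    skin_temp_c: float
    heart_rate_bpm: float


def heat_stress_warning(window: list[Sample], cadence_drop: float = 0.3,
                        temp_rise_c: float = 1.0, hr_limit_bpm: float = 150.0) -> bool:
    """Warn only when motion slows, temperature rises and heart rate is high together."""
    if len(window) < 2:
        return False  # a trend needs at least two samples
    first, last = window[0], window[-1]
    slowing = last.steps_per_min < (1 - cadence_drop) * first.steps_per_min
    warming = last.skin_temp_c - first.skin_temp_c >= temp_rise_c
    racing = last.heart_rate_bpm > hr_limit_bpm
    return slowing and warming and racing


window = [Sample(100, 34.0, 110), Sample(80, 34.6, 135), Sample(60, 35.2, 158)]
print(heat_stress_warning(window))  # True
```

Requiring all three signals together trades some sensitivity for fewer false alarms, which matters if workers are to trust the prompts they receive.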

In hazardous environments such as hydrogen plants or gas terminals, wearables can detect abnormal gas concentrations or radiation levels, triggering automatic evacuation protocols. Combined with geolocation data, these devices let emergency coordinators track personnel movements and ensure everyone is accounted for.

The digital twin of safety

Digital twins are now being adapted for HSE, offering a dynamic mirror of facility operations that integrates real-time safety data. In this virtual environment, engineers can test how process changes affect risk levels or simulate emergency scenarios without endangering personnel. During a maintenance turnaround, for instance, the digital twin can visualise work permits, equipment status and crowding in each zone. If the model predicts congestion that might compromise safe evacuation, schedules are rearranged before the work begins.
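A minimal version of the congestion check can be sketched as a headcount comparison. The example assumes each active work permit records a zone and a crew size, and that each zone has a known safe evacuation capacity; both inputs and the 80 per cent planning margin are hypothetical. A real safety twin would model movement and timing, not just totals.

```python
from collections import Counter


def congested_zones(permits: list[tuple[str, int]],
                    capacity: dict[str, int],
                    planning_margin: float = 0.8) -> list[str]:
    """Return zones where planned headcount exceeds a safe share of capacity.

    permits: (zone, crew_size) for every work permit active in the same shift.
    capacity: number of people each zone can evacuate safely (assumed input).
    planning_margin: fraction of capacity used as the planning limit (assumed).
    """
    headcount = Counter()
    for zone, crew in permits:
        headcount[zone] += crew
    return sorted(zone for zone, people in headcount.items()
                  if people > planning_margin * capacity[zone])


permits = [("A", 6), ("A", 5), ("B", 3)]
print(congested_zones(permits, {"A": 12, "B": 10}))  # ['A']
```

Zone A is flagged because two crews together (11 people) exceed 80 per cent of its capacity (9.6), so one of those jobs would be rescheduled before work starts.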

In downstream plants, safety twins connect process data with environmental monitoring, tracking emission levels and identifying potential breaches before they occur. AI analyses sensor networks and simulation outputs to ensure compliance, guiding decisions that protect both workers and the surrounding environment.

Learning from every incident

AI thrives on feedback. Every near miss, inspection record and safety report becomes part of a growing dataset that teaches the system what risk looks like. Natural language processing tools can read unstructured text from incident descriptions, extracting root causes and correlating them with operating conditions. Over time, patterns emerge that humans might never notice: recurrent combinations of shift length, weather and maintenance backlog that increase incident likelihood.
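To make the idea concrete, the sketch below tags free-text incident reports with root causes and then compares how often a cause appears on normal versus long shifts. It deliberately uses a hypothetical keyword map as a stand-in for a trained language model, and the 10-hour cut-off for a "long" shift is an assumption. The point is the shape of the pipeline, text to labels to correlation with conditions, not the tagging method.

```python
from collections import defaultdict

# Hypothetical keyword map standing in for a trained language model.
ROOT_CAUSE_KEYWORDS = {
    "fatigue": ("tired", "fatigue", "long shift"),
    "housekeeping": ("slipped", "tripped", "clutter"),
    "equipment": ("valve", "leak", "failure"),
}


def tag_root_causes(text: str) -> set[str]:
    """Label a free-text incident description with candidate root causes."""
    lowered = text.lower()
    return {cause for cause, words in ROOT_CAUSE_KEYWORDS.items()
            if any(word in lowered for word in words)}


def rate_by_shift_band(incidents: list[tuple[str, float]], cause: str,
                       long_shift_hours: float = 10.0) -> dict[str, float]:
    """Share of reports tagged with `cause`, split into normal and long shifts."""
    counts = defaultdict(lambda: [0, 0])  # band -> [tagged reports, all reports]
    for text, hours in incidents:
        band = "long" if hours > long_shift_hours else "normal"
        counts[band][1] += 1
        if cause in tag_root_causes(text):
            counts[band][0] += 1
    return {band: tagged / total for band, (tagged, total) in counts.items()}


reports = [
    ("Worker slipped near clutter on deck", 8),
    ("Operator tired after long shift, missed a step", 12),
    ("Felt fatigue and dropped a tool", 11),
    ("Valve leak during startup", 9),
]
print(rate_by_shift_band(reports, "fatigue"))  # {'normal': 0.0, 'long': 1.0}
```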

Companies are beginning to use this insight to adjust procedures and training programmes. If the data shows that a certain type of work consistently carries a higher probability of minor injury after long shifts, managers can intervene proactively. Instead of simply counting incidents, organisations begin to understand why they happen.

The same technologies that improve safety can also create discomfort. Cameras, wearables and data analytics inevitably capture personal information. Workers may fear surveillance rather than protection. Responsible deployment depends on transparency and consent. Employees must understand what is being monitored, why it matters and how data will be used. Clear boundaries prevent overreach. AI should evaluate risk, not behaviour unrelated to safety. Some companies are forming ethics committees that include workforce representatives to review new monitoring systems before adoption. Privacy-preserving techniques such as edge processing, where data is analysed on the device rather than in the cloud, reduce exposure. An algorithm can detect fatigue without transmitting biometric data offsite. Striking the balance between vigilance and dignity is part of the evolution of digital safety culture.
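The edge-processing principle can be illustrated in a few lines. In this sketch, the function runs on the wearable, consumes raw heartbeat (RR) intervals, and returns only a one-field alert payload, so the biometric series itself never leaves the device. It assumes, for illustration, that a sustained drop in heart-rate variability (measured as RMSSD, a standard HRV metric) relative to the wearer's own baseline is a usable fatigue proxy; the 0.6 ratio is a hypothetical threshold, not a validated cut-off.

```python
import math


def rmssd(rr_intervals_ms: list[float]) -> float:
    """Root mean square of successive differences between heartbeats, in ms."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def on_device_fatigue_alert(rr_intervals_ms: list[float],
                            personal_baseline_ms: float,
                            drop_ratio: float = 0.6) -> dict[str, bool]:
    """Intended to run on the wearable itself.

    The raw RR intervals are consumed here and never returned; only a one-field
    alert payload is produced for transmission. The 0.6 ratio is an
    illustrative threshold, not a validated clinical cut-off.
    """
    fatigued = rmssd(rr_intervals_ms) < drop_ratio * personal_baseline_ms
    return {"fatigue_alert": fatigued}


print(on_device_fatigue_alert([800, 810, 805, 812, 808], personal_baseline_ms=40.0))
# {'fatigue_alert': True}
```

Comparing against a personal baseline rather than a population norm also limits what the payload reveals: the control room learns that an alert fired, not what the worker's physiology looks like.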

AI and environmental stewardship

Health and safety do not end at the site perimeter. Environmental protection has become inseparable from operational integrity, and AI is increasingly being used to manage emissions, waste and ecological impact.

At upstream facilities, predictive analytics integrate weather data with emissions models to forecast dispersion patterns, allowing operators to adjust output or flare rates in advance. In downstream operations, AI tracks wastewater composition and temperature, identifying deviations that might breach discharge limits.

The same technologies that prevent accidents can also prevent environmental harm. A leak detected early is both a safety victory and a sustainability success. As regulation tightens, these capabilities provide not only compliance but reputation resilience.

Safety has always been a cultural challenge as much as a technical one. AI introduces another dimension to that culture. Machines may identify risk, but humans must decide how to act on it. Training therefore shifts from memorising procedures to interpreting digital insight.

Supervisors learn to translate AI outputs into field actions, balancing predictive recommendations against experience. When a model predicts fatigue risk, the response is not purely algorithmic; it involves empathy, communication and leadership. The most advanced organisations are incorporating AI literacy into safety induction programmes, ensuring that every worker understands both the value and the limits of intelligent systems.

This understanding builds trust. Workers who see AI as a partner in protection rather than an instrument of surveillance are more likely to engage with it honestly, report anomalies and contribute data that makes the system stronger.

A system that learns and adapts

One of AI’s defining strengths is its ability to evolve. Unlike static safety protocols, intelligent systems learn continuously from new data. Each alert, incident or false alarm becomes feedback that sharpens precision. Over time, the system adapts to the unique rhythms of each facility: the equipment it runs, the climate it operates in and the people it protects.

This adaptability also changes how safety management is measured. Instead of focusing solely on lagging indicators such as lost-time injuries, organisations can monitor leading indicators generated by the AI, such as the frequency of predictive alerts, response times and near-miss avoidance. The result is a living safety framework that adjusts with the operation itself.
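The three leading indicators named above can be computed directly from an alert log. The sketch assumes a hypothetical record format in which each alert carries the time it fired, the time someone acknowledged it (or nothing, if no one did), and a post-review judgement of whether it averted a near miss. How "near-miss avoidance" is defined in practice will vary by operator; here it is simply the share of alerts judged to have prevented one.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Alert:
    """A predictive safety alert (hypothetical record format)."""
    raised_min: float                  # minutes into the period when the alert fired
    acknowledged_min: Optional[float]  # when someone responded, None if never
    averted_near_miss: bool            # post-review judgement: did it prevent a near miss?


def leading_indicators(alerts: list[Alert], period_hours: float) -> dict[str, float]:
    """Summarise alert frequency, response time and near-miss avoidance."""
    response_times = [a.acknowledged_min - a.raised_min
                      for a in alerts if a.acknowledged_min is not None]
    return {
        "alerts_per_hour": len(alerts) / period_hours,
        "median_response_min": median(response_times) if response_times else float("nan"),
        "near_miss_avoidance_rate": (sum(a.averted_near_miss for a in alerts) / len(alerts)
                                     if alerts else 0.0),
    }


log = [Alert(0, 2, True), Alert(30, 35, False), Alert(60, None, False), Alert(90, 93, True)]
print(leading_indicators(log, period_hours=2.0))
# {'alerts_per_hour': 2.0, 'median_response_min': 3, 'near_miss_avoidance_rate': 0.5}
```

The median is used for response time so that one unusually slow acknowledgement does not dominate the indicator.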

The ultimate test of any safety system is its performance during crisis. AI can play a critical role in emergency response by integrating multiple streams of information (personnel location, equipment status, weather and evacuation routes) into a single operational picture.

During an offshore incident, for example, AI-powered analytics can track every worker’s position through wearable tags, identify blocked exits using camera feeds, and suggest alternative evacuation paths in real time. Decision-makers gain situational awareness that no human team could compile manually.
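Suggesting an alternative evacuation path is, at its core, a shortest-path search over a map of the facility with blocked locations removed. The sketch below uses Dijkstra's algorithm on a hypothetical graph of locations joined by walking distances in metres; the location names, distances and the idea that camera analytics supply the `blocked` set are all assumptions for illustration.

```python
import heapq
from typing import Optional


def evacuation_route(graph: dict[str, dict[str, float]], start: str,
                     muster_points: set[str],
                     blocked: set[str]) -> Optional[list[str]]:
    """Shortest walking route from `start` to any muster point, avoiding blocked nodes.

    graph maps each location to its neighbours and walking distances in metres.
    blocked holds locations reported impassable (for example by camera analytics).
    """
    if start in blocked:
        return None
    queue = [(0.0, start, [start])]  # (distance so far, location, path taken)
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node in visited:
            continue
        visited.add(node)
        if node in muster_points:
            return path  # first muster point popped is the nearest reachable one
        for nxt, step in graph.get(node, {}).items():
            if nxt not in visited and nxt not in blocked:
                heapq.heappush(queue, (dist + step, nxt, path + [nxt]))
    return None  # no safe route: escalate to the emergency team


deck_graph = {
    "deck": {"stairA": 20, "stairB": 35},
    "stairA": {"lifeboat1": 15},
    "stairB": {"lifeboat2": 10},
    "lifeboat1": {},
    "lifeboat2": {},
}
print(evacuation_route(deck_graph, "deck", {"lifeboat1", "lifeboat2"}, blocked={"stairA"}))
# ['deck', 'stairB', 'lifeboat2']
```

Re-running the search whenever the blocked set changes is what turns a static muster plan into real-time guidance.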

Post-incident, the same system analyses timelines and communications, helping investigators reconstruct events and identify procedural gaps. Each emergency becomes a source of knowledge that strengthens the next response.

Towards proactive protection

The convergence of AI, connectivity and sensing is redefining what safety means in industrial environments. Risk management is no longer confined to checklists and compliance audits; it has become a continuous, intelligent dialogue between machines and people.

The human role remains indispensable. Technology can see patterns and anticipate danger, but only people can interpret context and apply judgement. The future of HSE lies in this partnership: a blend of digital vigilance and human care.

The industry’s next generation of safety excellence will depend not on stricter rules or faster reactions but on foresight. AI gives oil and gas companies that foresight, allowing them to recognise hazards before they appear and to respond with precision when they do. In that sense, artificial intelligence is not replacing the human instinct for protection; it is amplifying it, ensuring that every decision, every moment of awareness, and every precaution is one step ahead of harm.

The asset that knows itself

The oil and gas industry is entering an era where machines are no longer passive components of production, but active participants in their own performance. Artificial intelligence and digital twin technology are merging to create self-aware assets: systems that observe, learn and evolve throughout their lifecycle.

Every machine has always told a story. Pressure, vibration, temperature and flow have been the vocabulary of engineering for more than a century. Operators learned to read those signals like a second language, translating data into instinct. Yet that language has always been one-sided. The equipment could indicate what was happening, but never why. That gap is beginning to close. Artificial intelligence has given industrial assets a new form of self-expression. With the help of embedded analytics, sensor fusion and digital twins, machinery can now analyse its own behaviour and predict what it needs before failure occurs.

The relationship between humans and equipment is being rewritten: machines are no longer silent witnesses to their condition but articulate participants in it.

From reactive maintenance to cognitive awareness

For decades, asset management was a cycle of reaction. Components were inspected, repaired and replaced on fixed schedules or after failure. Preventive maintenance reduced risk but often at the expense of efficiency; parts were changed because they might fail, not because they would. Predictive maintenance introduced data and probability into the process, but it still relied on human interpretation.

The next stage is cognitive maintenance: assets that understand themselves well enough to anticipate, diagnose and even resolve issues autonomously. A compressor train equipped with AI-driven analytics can detect a pattern of vibration that suggests rotor imbalance. It cross-references that signature against historical data, simulates future outcomes in its digital twin and, if the prediction confirms deterioration, initiates load redistribution or shutdown sequences automatically.

What distinguishes this from earlier automation is the depth of reasoning. The system is not following a script; it is making a decision based on context. Every event feeds back into the model, refining its understanding of what “normal” looks like and adjusting its definition as the equipment ages.
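The notion of a "normal" that shifts as equipment ages can be sketched with an exponentially weighted baseline. In this illustration, readings that fit the baseline are folded into it, so slow wear moves the definition of normal, while readings far outside it are held back and only trigger a recommendation when they persist. The class and function names, the smoothing factor, the 4-sigma limit and the three-reading persistence rule are all hypothetical choices; the historical cross-referencing and twin simulation described above are not modelled here.

```python
class AdaptiveBaseline:
    """Exponentially weighted estimate of 'normal' vibration that drifts as the asset ages.

    alpha sets how quickly the baseline follows slow change (assumed value);
    min_std stops the baseline from becoming unrealistically tight.
    """

    def __init__(self, mean: float, var: float, alpha: float = 0.05, min_std: float = 1e-3):
        self.mean = mean
        self.var = var
        self.alpha = alpha
        self.min_std = min_std

    def score(self, x: float) -> float:
        """Standard score of a reading against the current baseline."""
        return (x - self.mean) / max(self.var ** 0.5, self.min_std)

    def update(self, x: float) -> None:
        """Fold a reading judged normal into the EWMA mean and variance."""
        diff = x - self.mean
        step = self.alpha * diff
        self.mean += step
        self.var = (1 - self.alpha) * (self.var + diff * step)


def monitor(readings: list[float], baseline: AdaptiveBaseline,
            z_limit: float = 4.0, persistence: int = 3):
    """Return the index at which to recommend action (e.g. load redistribution), else None.

    Action requires `persistence` consecutive out-of-baseline readings, so a single
    spike is ignored. Only in-baseline readings update the notion of 'normal'.
    """
    consecutive = 0
    for i, x in enumerate(readings):
        if abs(baseline.score(x)) > z_limit:
            consecutive += 1
            if consecutive >= persistence:
                return i
        else:
            consecutive = 0
            baseline.update(x)
    return None


vib = [2.0, 2.05, 1.95, 2.02, 3.0, 3.1, 3.2, 3.3]  # mm/s RMS, illustrative values
print(monitor(vib, AdaptiveBaseline(mean=2.0, var=0.01)))  # 6
```

Excluding anomalous readings from the update is the design choice that matters: without it, a developing fault would gradually teach the baseline that the fault is normal.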

The rise of the cognitive asset

In oil and gas operations, the complexity of assets has always mirrored the complexity of the environment. Offshore platforms, refineries and LNG terminals contain thousands of interdependent components, each operating under stress. Human oversight remains essential, but scale limits visibility. AI offers a way to extend that oversight beyond human endurance.

The cognitive asset emerges from three converging technologies: pervasive sensing, advanced analytics and the digital twin. Together they create a loop of perception, interpretation and action. Sensors provide the raw perception, analytics convert it into insight, and the twin becomes the context through which that insight is tested.

A subsea pump, for example, may host hundreds of sensors monitoring flow, vibration and thermal signatures. Its digital twin runs continuously onshore, fed by that data in real time. The AI analyses behaviour under changing conditions, recognising anomalies invisible to conventional monitoring. When performance begins to drift, the twin tests alternative operating modes to identify corrective actions, which are then applied remotely.
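The step where the twin "tests alternative operating modes" can be reduced to a simple search: run each candidate through the model, discard any that breach a limit, and keep the best of the rest. In this sketch the digital twin is replaced by a made-up surrogate function, and the candidate parameters (speed and discharge pressure), the efficiency curve and the vibration limit are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Mode:
    """A candidate operating point (hypothetical parameters)."""
    speed_rpm: float
    discharge_bar: float


def choose_corrective_mode(candidates: list[Mode],
                           simulate: Callable[[Mode], tuple[float, float]],
                           vibration_limit: float) -> Optional[Mode]:
    """Test each candidate in the twin and keep the most efficient safe one.

    simulate(mode) stands in for a digital-twin run and returns
    (predicted_efficiency, predicted_vibration).
    """
    feasible = []
    for mode in candidates:
        efficiency, vibration = simulate(mode)
        if vibration <= vibration_limit:
            feasible.append((efficiency, mode))
    return max(feasible, key=lambda pair: pair[0])[1] if feasible else None


def toy_twin(mode: Mode) -> tuple[float, float]:
    """Made-up surrogate: efficiency peaks at 3000 rpm, vibration rises with load."""
    efficiency = 0.9 - 0.1 * ((mode.speed_rpm - 3000) / 1000) ** 2
    vibration = 1.0 + mode.speed_rpm / 1000 + mode.discharge_bar / 100
    return efficiency, vibration


options = [Mode(2800, 50), Mode(3000, 50), Mode(3400, 50)]
print(choose_corrective_mode(options, toy_twin, vibration_limit=4.6))
# Mode(speed_rpm=3000, discharge_bar=50)
```

Returning `None` when no candidate is safe leaves the decision with engineers rather than forcing the least-bad option onto the asset.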

The machine, in effect, develops a sense of self. It knows its baseline performance, understands its operating limits, and learns to stay within them.

Learning through simulation

The digital twin is more than a visualisation tool; it is the asset’s memory and imagination combined. Every operational state, every anomaly and every intervention becomes part of its knowledge base. Over time, it accumulates enough experience to run predictive scenarios that go far beyond traditional engineering models.

In one Middle Eastern refinery, the digital twin of a catalytic cracking unit now simulates micro-variations in catalyst density to predict how efficiency will respond to different feedstock qualities. The AI learns which variables most influence yield and adjusts control strategies accordingly. Engineers review these recommendations before they are implemented, but the process runs continuously, with the plant learning from itself at every turn.

This iterative loop of observation, simulation and correction represents the shift from descriptive to generative maintenance. Instead of merely reporting conditions, the twin proposes and tests new operating strategies. The asset, in effect, becomes an experiment in permanent progress.

Contextual intelligence

The value of self-aware assets lies not only in what they understand about themselves, but in how they relate to their environment. A compressor does not operate in isolation; its performance affects and is affected by everything around it: pipelines, turbines, process controls and ambient conditions.

AI models trained across networks of assets can identify relationships that transcend the boundaries of equipment. If two pumps consistently show correlated vibration patterns, the system can infer mechanical coupling or shared stress factors. It may also detect environmental influences, such as temperature gradients, fluctuating gas compositions or power supply irregularities, that would be invisible when each machine is considered separately.
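A first pass at spotting "correlated vibration patterns" across a fleet is a pairwise correlation screen. The sketch computes the Pearson coefficient for every pair of assets and reports pairs above a threshold. The asset names, readings and the 0.8 threshold are hypothetical, and a strong correlation is only a prompt for engineers to look for coupling or a shared cause; it does not establish either on its own.

```python
from itertools import combinations
from statistics import mean


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is flat."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def correlated_pairs(signals: dict[str, list[float]], threshold: float = 0.8):
    """List asset pairs whose vibration histories move together strongly."""
    pairs = []
    for a, b in combinations(sorted(signals), 2):
        r = pearson(signals[a], signals[b])
        if abs(r) >= threshold:
            pairs.append((a, b, round(r, 3)))
    return pairs


vibration = {
    "pumpA": [1.0, 1.2, 1.1, 1.5, 1.3],
    "pumpB": [2.0, 2.4, 2.2, 3.0, 2.6],
    "pumpC": [0.5, 0.4, 0.6, 0.5, 0.4],
}
print(correlated_pairs(vibration))  # [('pumpA', 'pumpB', 1.0)]
```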

This ability to interpret context transforms maintenance into a system-level discipline. Rather than fixing individual machines, operators manage ecosystems of interdependent assets that collectively optimise themselves.

Human oversight in a learning environment

As assets become more self-sufficient, the engineer’s role evolves. The skill set shifts from manual troubleshooting to strategic supervision. Understanding how a machine thinks becomes as important as understanding how it works.

Training programmes are adapting accordingly. Engineers learn to interrogate AI systems: to question their reasoning, validate their predictions and adjust their learning parameters. The work becomes less about wrench and gauge, more about data literacy and critical analysis.

The challenge is psychological as much as technical. Trust must be earned. The first time a system prevents a failure by acting on its own prediction, confidence grows. The first time it errs, that trust is tested. Successful adoption therefore depends on transparency: the ability for humans to see how and why the machine arrived at its decision. Explainability becomes part of the maintenance manual. When an AI flags a risk, it must also show the data and logic that justify it. Only then can engineers calibrate their response.
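One simple way to make a risk flag carry its own justification is to use a model whose score decomposes into per-input contributions. The sketch below uses a weighted sum for that reason; the feature names and weights are hypothetical, and many deployed systems use more complex models with separate attribution methods. What it shows is the output shape engineers need: the decision, the score and the ranked evidence behind it.

```python
# Hypothetical feature weights for an interpretable (linear) risk score.
WEIGHTS = {"vibration_z": 0.5, "bearing_temp_z": 0.3, "hours_since_service_k": 0.2}


def explain_risk(features: dict[str, float], threshold: float = 1.0) -> dict:
    """Score risk and return the per-feature evidence behind the score."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = sum(contributions.values())
    evidence = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {"flagged": score >= threshold, "score": round(score, 3), "evidence": evidence}


report = explain_risk({"vibration_z": 2.0, "bearing_temp_z": 1.0, "hours_since_service_k": 0.5})
print(report["flagged"], report["score"], report["evidence"][0])
# True 1.4 ('vibration_z', 1.0)
```

Here an engineer can see at once that the flag is driven mainly by vibration rather than bearing temperature or service interval, and decide whether that matches what is seen in the field.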

Sustainability through intelligence

Cognitive assets also hold the key to sustainability. By constantly monitoring performance and adapting to changing conditions, they reduce waste, emissions and energy consumption.

At a gas compression facility, AI-driven control systems have reduced flaring events by learning the interplay between temperature, pressure and compressor load. In refineries, similar systems fine-tune heater operation to maintain efficiency while cutting excess fuel use. Even small optimisations multiply when applied across fleets of assets, translating into significant environmental and financial benefits.