Autonomy Requires More Than Just Data

Edge Computing Powers Oil Efficiency

Rethinking Safety In A Connected World

AI Is Now Critical In Oil And Gas

Building Trust In Digital Operations

Managing Director

Adam Soroka

Editor in Chief

Mark Venables

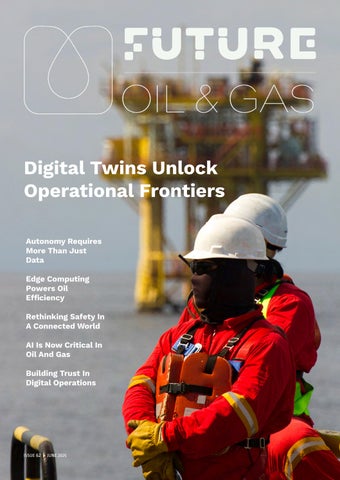

There was a time when the term ‘digital twin’ sounded like a marketing gimmick, an impressive label for something vaguely virtual, indefinitely postponed, and questionably useful. That time is over. The stories in this issue leave no doubt: digital twins are no longer a futuristic concept for oil and gas. They are the defining feature of a new operational paradigm.

What has changed is not just the maturity of the technology, but the clarity of its purpose. Early digital twin efforts often suffered from an obsession with visual fidelity and data completeness. The thinking seemed to be that if we could replicate every bolt, pump, and pipe in perfect 3D, the value would emerge on its own. But replication is not transformation. The real power of a digital twin lies not in its ability to mirror the physical world but in its ability to interpret it, making operational complexity comprehensible and actionable.

This issue examines that shift in thinking from several angles. On one hand, we explore how digital twins have become integral to managing asset performance, safety, and emissions in real time. They are no longer isolated dashboards or high-end simulators. They are operational engines, living, learning systems that evolve with the asset, absorbing data from sensors, logs, documents, and human input. From upstream reservoir management to downstream logistics, twins are reshaping decision-making across the value chain.

But just as important as the technological evolution is the human one. As we highlight in this edition, the digital twin must serve people, not just machines. A model that satisfies control systems but ignores the technician at 2am or the graduate engineer on their first offshore assignment has failed its most important test. We must build systems that empower users with role-specific insight, contextual navigation, and actionable knowledge, because in oil and gas, performance and safety still depend on the quality of human judgement.

That means designing digital twins not as monoliths, but as dynamic interfaces that grow with the organisation. It means investing in architecture that prioritises flexibility over perfection and traceability over data hoarding. It means recognising that the real return on a digital twin is not a visualisation; it is a decision made faster, a repair made earlier, a risk understood before it escalates.

Perhaps most critically, it means reframing the digital twin as a long-term asset in its own right, a digital spine connecting people, systems, and strategy. It is not a one-off project but a continuous capability. Every time an operator tags an insight, every time a process is simulated, every time a decision is logged, the twin becomes more useful and more trusted.

This issue of Future Oil and Gas is a snapshot of that evolution. It shows what is possible when we stop asking whether digital twins are ready for the industry and start asking whether the industry is ready for the scale of change they make possible.

Welcome to the twin-enabled future.

Mark Venables, Editor, Future Oil and Gas

Driven by best-in-class health and safety standards, Suncor is committed to transforming its operations across its diverse business units. A key element of this transformation involved optimizing Control of Work processes such as permit-to-work, hot work, and isolation management/LOTO. To support this initiative, Suncor selected Enablon Control of Work as part of its broader digital transformation strategy. Standardizing these critical processes not only helped meet rigorous health and safety standards but also improved efficiency and provided frontline workers with better access to essential information directly in the field.

Data-driven decision-making is transforming oil and gas, but success depends as much on people and processes as on technology. As autonomous operations mature, companies must align culture, connectivity, and computing to achieve real value.

Oil and gas companies are under pressure to reduce costs, operate more safely, and deliver on sustainability promises. Edge computing offers a powerful tool to help meet these challenges by enabling real-time data insights in even the harshest operational environments.

As the oil and gas sector confronts disruption on multiple fronts, digital twins are emerging as the key to reducing downtime, enhancing safety, and optimising asset performance across complex operations. By mirroring real-world systems with real-time data and simulation, they are reshaping how companies manage infrastructure, streamline operations, and respond to volatility.

AI is redefining the role of digital twins from isolated replicas to intelligent, interconnected agents. As oil and gas operations become more complex, AI-enabled twins are shifting from visualisation tools to real-time decision-makers that support safety, sustainability, and system-wide optimisation.

Senior leaders in oil and gas face mounting pressure to extend asset life, enhance cyber resilience and cut emissions. Industrial autonomous operations promise to deliver gains across all three, but require a fundamental rethink of how data, systems and people work together.

Decarbonization of the UK’s energy system requires increased interaction and cooperation between all energy stakeholders. Therefore, alongside electrification, digitalization remains a key enabler in the pathway towards net zero against a backdrop of volatility, unpredictability, and complexity.

From dynamic PHAs to live barrier management, Ruben Mejias of Enablon outlines how integrated platforms are helping energy and petrochemical operators reduce incidents, cut emissions, and stay compliant. As safety, productivity, and sustainability converge, the industry must rethink how risk is managed in real time.

Data handover failures in oil and gas projects are still far too common, often undermining the performance of even the most sophisticated digital assets. By appointing a Main Digital Contractor, operators can embed data integrity from the outset and ensure the transition from project to operations is seamless, efficient and value-driven.

Murray Callander, CEO of Eigen, reflects on 25 years of digital transformation in oil and gas, from floppy disks and Excel hacks to cloud-native deployments and AI-powered workflows. He explores the cultural, technological, and organisational shifts that have shaped the digital oilfield, and the lessons that still need learning.

Real-world applications and use cases have emerged beyond the buzz and hype of AI. Natural language processing (NLP), large language models (LLM), hybrid machine learning (ML), generative and agentic AI, all represent a litany of transformational technologies.

In the oil and gas sector, performance is not merely a concept, it is a calculation. As the industry convenes in Aberdeen for the Future of Oil and Gas 2025, the dialogue is shifting from theoretical innovation to proven transformation in the field. As Katarzyna Chrzan, Senior Marketing Manager at Cohesive, explains, the question is no longer whether we should modernise, but how we can do so in a way that delivers measurable, scalable impact.

Digital twins are becoming essential for operational insight in oil and gas, but a narrow focus on assets and automation risks missing the bigger picture. To deliver real value, twins must serve the connected worker as much as they serve the control system.

For too long, the oil and gas sector has treated artificial intelligence as a future consideration, something to be explored in labs and pilot projects, rather than deployed at scale.

Data-driven decision-making is transforming oil and gas, but success depends as much on people and processes as on technology. As autonomous operations mature, companies must align culture, connectivity, and computing to achieve real value.

There is no shortcut to autonomous operations. The journey is as much about shifting attitudes as adopting technologies. While the sector increasingly invests in AI, robotics, and edge computing, IDC’s Research Manager for Operational Technology, Carlos Gonzalez, argues that none of it matters without cultural commitment.

“IDC believes it is essential that there is complete buy-in from the top down when we address any sort of digital transformation strategy,” Gonzalez explains. “If you do not have the people on the ground floor willing to undergo training or adopt new tools, or people at the C-suite level willing to invest time, money, resources, and also their patience, you are not going to see a high level of success.”

Oil and gas operators are already under pressure to modernise legacy assets and future-proof remote sites. But without a workforce that understands and supports the shift, even the most advanced systems fall short. Leaders who succeed tend to be those that foster a shared belief that transformation is necessary, not optional.

Many executives mistakenly see autonomous operations as a linear technology upgrade. In practice, it is circular and continuous, with data as both input and output. AI and machine learning depend on data to function, but autonomy also generates new data, fuelling real-time optimisation, predictive maintenance, and strategic scaling.

“Data is fuel for autonomous operations. Without data, there is no way they can proceed,” Gonzalez states. “The cloud, in IDC’s perspective, is one of those inevitable tools that companies will need to embrace in some capacity if they want to scale and deploy data at a larger level.”

For distributed operations typical in oil and gas, spanning onshore, offshore, subsea, and desert environments, the cloud is not merely a storage mechanism. It is the connective tissue that allows insights to be shared across facilities and functions. However, its effectiveness depends on how well organisations democratise access to data internally. Cloud-native strategies that enable secure, scalable, and decentralised decision-making are increasingly the differentiator between cautious adopters and digital leaders.

If cloud is the brain, edge is the reflexes. Autonomous systems thrive on responsiveness, but latency kills performance in real-time scenarios. That is why edge computing is now a priority in environments where decisions must be taken faster than a human can act.

“Edge devices are where the operation meets the cloud. If we have learned anything along this industry 4.0 journey, it is that edge computing is beneficial,” Gonzalez explains. “It helps with what data gets transmitted to the cloud. It is not a data dump. You are being very effective in how you communicate and transform your data.”

The distinction between data collection and data utility becomes vital here. Advanced sensor technologies, combined with edge computing, enable only the most relevant, compressed, or contextualised information to reach the cloud. This ensures agility without overwhelming storage or bandwidth, critical in harsh or bandwidth-constrained environments such as offshore rigs or remote gas processing plants.
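One common way to forward only relevant readings from an edge device is a deadband filter, which suppresses samples that have not moved meaningfully since the last transmitted value. The sketch below is illustrative only: the `Reading` type, the `deadband_filter` function, the pressure values, and the 1.0 threshold are all assumptions made for the example, not details from the article or from any particular edge platform.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass
class Reading:
    """A single sensor sample (hypothetical structure for illustration)."""
    timestamp: float  # seconds since the start of the sample window
    value: float      # e.g. a pressure reading; the unit is illustrative


def deadband_filter(readings: Iterable[Reading], deadband: float) -> Iterator[Reading]:
    """Yield only readings that differ from the last forwarded value by more than `deadband`."""
    last_sent: Optional[float] = None
    for reading in readings:
        if last_sent is None or abs(reading.value - last_sent) > deadband:
            last_sent = reading.value
            yield reading  # this sample would be transmitted to the cloud


# Six raw samples; small fluctuations around the last sent value are suppressed.
samples = [Reading(float(t), v) for t, v in enumerate([100.0, 100.1, 100.2, 101.5, 101.6, 99.0])]
forwarded = list(deadband_filter(samples, deadband=1.0))
print([r.value for r in forwarded])  # [100.0, 101.5, 99.0]
```

In this toy case three of six samples are forwarded. A real deployment would tune the deadband per sensor and would usually add a periodic heartbeat so that a flat signal is not mistaken for a dead one.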

Technology adoption is never purely technical. Gonzalez stresses that digital transformation is ultimately a human process, and the biggest bottleneck is rarely the system, it is the skills gap.

“Among the leaders that believe digital technology gives them an edge, 36% of them have a culture of buy-in. They have an understanding that this is where we need to invest, and they are all behind it,” he says. “At the end of the day, you are introducing all this technology, but if the human workers and employees are not fully invested, the success rate is diminished.”

According to IDC’s Future of Operations survey, nearly half of respondents cite education and training as the biggest challenge in deploying digital technology. This is closely followed by recruitment and retention. As experienced workers retire, their knowledge departs with them, leaving a vacuum that new tools are only beginning to fill.

AI, machine learning, and generative tools like ChatGPT are being integrated into training environments. Virtual reality enables immersive instruction, while augmented reality connects younger engineers with senior specialists from miles away. These tools do not replace human insight, but they can retain, replicate, and relay it more efficiently than traditional handovers or static documentation ever could.

Remote operations are a proving ground

Autonomous operations are not an abstract goal. In sectors where access is limited and conditions are extreme, they are already essential. Oil and gas, like agriculture, covers vast and often hazardous territories. Travel time, safety risks, and environmental exposure make remote operations a practical imperative, not a future aspiration.

“Oil and gas have several sites that are only accessible by helicopter or some sort of hazardous mode of travel,” Gonzalez points out. “By setting up remote operations and letting them run autonomously, it is essential for companies to maintain connectivity and safety. These are the organisations leading the way in wireless networks and distributed data strategies.”

Remote operations depend on robust connectivity, but also on automation maturity. AI must be able to make and adapt decisions without human input in real time. In oil and gas, where a minor delay can cascade into major losses or safety risks, this capability is not merely valuable, it is existential.

As these systems scale, edge computing allows decision-making to stay localised, while cloud infrastructure ensures strategic alignment across geographies. The result is a distributed model of autonomy, where data, decisions, and deployment are harmonised rather than centralised.

The future is flexible and federated

The post-pandemic industrial world no longer tolerates rigidity. Market fluctuations, regulatory shifts, and geopolitical risks have all exposed the fragility of inflexible systems. The modern expectation, from both customers and shareholders, is for resilience and responsiveness, not just efficiency.

“Making advanced decisions in real time that are flexible, being able to respond—that is the goal of autonomous operation,” Gonzalez says. “AI, cloud technology, edge devices—these are all driven by data, and it is about using that data to make decisions in real time.”

This vision of autonomy is not about removing humans from the loop. It is about putting people in the right part of the loop, where creativity, judgment, and oversight are most valuable, and automating the rest. It is a model of human-machine collaboration that prioritises speed, safety, and sustainability.

For oil and gas, the implications are significant. Sites become safer by removing personnel from hazardous zones. Operations become more efficient by minimising manual interventions. And organisations become more adaptive by embedding intelligence at every level, from the field device to the executive dashboard.

Tomorrow’s leaders are building today’s infrastructure

The technologies of autonomy are already available. AI, robotics, cloud, and edge computing are no longer experimental. But the companies realising value are those that understand the full equation: data plus culture plus connectivity equals autonomy.

Gonzalez is clear about the stakes. “Tomorrow’s success will be artificial and machine learning driven. These tools are going to help with upskilling, with training, with assisted programming, and with the real-time adjustments that need to happen in operations.”

Executives in oil and gas now face a strategic choice. Continue to see digitalisation as a bolt-on initiative, or embrace it as the foundation of operational resilience and innovation. Autonomous operations are not the end goal. They are the infrastructure for the next era of industrial competitiveness.


As the oil and gas sector confronts disruption on multiple fronts, digital twins are emerging as the key to reducing downtime, enhancing safety, and optimising asset performance across complex operations. By mirroring real-world systems with real-time data and simulation, they are reshaping how companies manage infrastructure, streamline operations, and respond to volatility.

The oil and gas industry is undergoing a structural recalibration. Long defined by boom-and-bust commodity cycles and complex physical infrastructure, operators are now required to navigate persistent volatility, decarbonisation targets, and investor demands for leaner, more transparent operations. The appetite for short-term gain has been replaced by a need for long-term adaptability, built on foundations of operational intelligence, real-time data, and advanced analytics.

This environment leaves little room for reactive, siloed approaches. Operational resilience is no longer a back-office conversation, it is a boardroom imperative. Digital twins, once experimental and confined to high-fidelity modelling of isolated components, have evolved into strategic enablers that support enterprise-wide transformation. By creating dynamic, virtual replicas of physical assets, systems, and processes, digital twins provide operators with a continuous feedback loop between real-world operations and digital simulation.

This capability changes not only how assets are maintained and operated but also how decisions are made. It removes the guesswork from complex optimisation problems, allowing multidisciplinary teams to test scenarios, forecast risks, and simulate improvements before making physical changes on the ground. In an industry where decisions carry significant safety, environmental, and financial consequences, that precision is vital.

A fully functioning digital twin is far more than a visual model. It is a dynamic system that integrates sensor data, engineering documentation, operational parameters, and real-time performance metrics. It incorporates the digital DNA of an asset (geometry, process flows, material properties, and control logic) alongside real-time feeds from IoT devices, distributed control systems (DCS), and historian databases. It also contains the operational memory of the asset, including maintenance records, incident histories, and contextual information such as environmental constraints or safety-critical tolerances.

This convergence of static and dynamic data creates a system that does not just reflect reality but learns from it. The digital twin evolves alongside the physical asset, ingesting new data, recalibrating predictions, and refining simulations over time. It becomes not only a single source of truth but also a system of insight.

The digital twin’s value is unlocked when this insight is made actionable. Engineers must be able to query the twin for diagnostics, operators must receive timely alerts, and executives must gain dashboards that support capital allocation and strategy development. This requires a robust infrastructure of data standardisation, integration middleware, cloud platforms, and user-friendly visualisation tools. Without these, the digital twin remains a passive model. With them, it becomes an operational command centre.

In the context of asset performance, the benefits of a digital twin are immediate and tangible. Predictive maintenance, energy efficiency, and throughput optimisation can all be managed with greater precision and lower cost. Real-time condition monitoring enables earlier detection of degradation, allowing planned interventions rather than emergency repairs. Heat exchangers, pumps, and compressors, assets that traditionally fail without warning, can now be monitored for subtle patterns of failure behaviour, informed by years of historical data and advanced analytics.

This has measurable impacts on OPEX and CAPEX. Maintenance budgets can be allocated based on condition rather than calendar. Spare part inventories can be calibrated to actual failure probabilities. High-value assets can remain in service longer without compromising safety or performance. In complex environments such as offshore platforms, this also reduces the risk exposure of personnel, who are no longer dispatched reactively but based on data-driven risk profiles.

What differentiates digital twins from conventional monitoring is the ability to simulate potential interventions in advance. Before a valve is replaced, engineers can run a simulation within the twin to understand the impact on process dynamics, energy use, and system balance. This accelerates root cause analysis and shortens the time from problem detection to resolution.

While rotating equipment and surface assets are natural candidates for twin implementation, some of the most valuable applications occur upstream, within reservoir modelling and production planning. By integrating geophysical models, well logs, flow simulations, and historical production data, operators can develop digital twins of reservoirs that continuously adapt as new data is captured. This enhances the accuracy of forecasts, optimises field development plans, and supports adaptive reservoir management.

The ability to run synthetic simulations, testing different injection rates, lift strategies, or well trajectories within a digital twin environment, means decisions are no longer constrained by static assumptions. Machine learning models can identify early signs of water breakthrough or pressure anomalies, suggesting new workover strategies or real-time choke management.

For brownfield assets, this capability enables enhanced recovery at minimal additional cost. For greenfield projects, it ensures that development decisions are informed by a living model, not a one-time simulation. In both cases, production efficiency improves, and environmental impact is reduced.

Safety and risk management are embedded in every layer of oil and gas operations. Digital twins provide a powerful platform for managing these obligations more proactively. They allow safety systems to be stress-tested in silico, revealing interlocks that might fail under pressure or alarms that might conflict in cascade scenarios. Operators can visualise the sequence of events that would lead to loss of containment or equipment failure and adjust control logic accordingly.

Real-time monitoring of safety-critical parameters, such as gas concentrations, vibration thresholds, or pressure limits, allows the digital twin to serve as a predictive risk engine. Rather than responding to breached limits, operators can act on trend data that shows a deviation is emerging. This enhances compliance with regulatory frameworks while also reducing downtime due to false alarms or over-engineered trip points.
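Acting on an emerging deviation rather than a breached limit can be as simple as fitting a trend to recent samples and estimating when it would reach the limit. The following is a minimal sketch under stated assumptions: the function name `time_to_limit`, the straight-line model, and the sample values are hypothetical and chosen for illustration, and real safety systems would use validated models and engineering review rather than a bare linear fit.

```python
from typing import Optional, Sequence


def time_to_limit(times: Sequence[float], values: Sequence[float], limit: float) -> Optional[float]:
    """Fit a least-squares line to (time, value) samples and estimate how long
    after the last sample the fitted trend reaches `limit`.

    Returns None when the trend is flat or falling, i.e. not approaching
    an upper limit.
    """
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired samples")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    var_t = sum((t - mean_t) ** 2 for t in times)
    if var_t == 0:
        raise ValueError("timestamps must not all be identical")
    slope = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values)) / var_t
    if slope <= 0:
        return None
    intercept = mean_v - slope * mean_t
    crossing_time = (limit - intercept) / slope
    # Clamp at zero: the fitted line may already be at or above the limit.
    return max(0.0, crossing_time - times[-1])


# Illustrative vibration readings rising by 0.5 units per hour toward a limit of 7.0.
hours = [0.0, 1.0, 2.0, 3.0, 4.0]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0]
print(time_to_limit(hours, vibration, limit=7.0))  # 6.0
```

The estimate gives operators a lead time to plan an intervention; it says nothing about the cause of the drift, which still requires diagnosis.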

Scenario planning is another key benefit. Entire facilities can be subjected to virtual emergency drills, with process upsets, electrical failures, or blowdown events simulated in full. This improves not only system design but also human readiness, as operators are trained in digital replicas that reflect the actual layout and behaviour of their plant.

Supply chains that see around corners

Beyond the plant boundary, digital twins are helping operators better understand and manage their supply chains. By modelling the flow of goods, spare parts, chemicals, and products from source to site and beyond, companies gain visibility into bottlenecks, inefficiencies, and vulnerabilities.

These models incorporate data from suppliers, logistics providers, and internal ERP systems, allowing simulations that account for lead times, regional risks, weather events, and demand fluctuations. What emerges is a predictive model that can anticipate delays and recommend countermeasures before they impact production schedules.

This is particularly valuable for LNG operations, where supply chains are long, constrained, and capital-intensive. Being able to model storage constraints, shipping delays, or feedstock quality variations in a single digital environment supports more agile decision-making and reduces the bullwhip effects that plague energy logistics.

Human insight through simulation and training

Digital twins are not simply operational tools. They are also learning environments. In an industry facing a widening skills gap, the ability to train personnel using live data and real-world scenarios is invaluable. Operators can rehearse startup, shutdown, and emergency procedures in a safe, controlled setting. Technicians can visualise system layouts and maintenance workflows before setting foot on site.

This improves not only knowledge transfer but also confidence. By simulating infrequent or hazardous events, digital twins accelerate learning and reduce the risk of human error. Simulation data can be used to benchmark competence and identify training needs, ensuring that workforce development is continuous and data-informed.

Scaling across the enterprise

The true impact of digital twins emerges when they are scaled from point solutions to platform capabilities. This requires standardisation of data formats, alignment of engineering practices, and cultural shifts toward data-driven operations. It also requires investment in the supporting infrastructure: edge devices, connectivity, cloud platforms, and cybersecurity.

Companies that succeed in this transition are those that treat the digital twin not as a product, but as an evolving digital asset. Each new project adds fidelity. Each integration expands capability. Each user interaction generates insight. Over time, the digital twin becomes the digital spine of the enterprise—connecting planning to execution, engineering to operations, and people to processes.

The oil and gas industry is often characterised as slow to adopt change. Yet, when the stakes are clear and the value is demonstrable, it has shown an ability to move fast and decisively. Digital twins represent one of those inflection points, a convergence of technologies that offers a fundamentally different way of operating.

They do not replace human expertise but augment it. They do not eliminate risk but make it visible and manageable. They do not promise perfection but deliver improvement at scale. For leaders tasked with steering their organisations through a period of profound transition, the digital twin is not just a tool. It is a lens, a way of seeing operations as they are, understanding them as they behave, and improving them as they evolve.

That perspective, more than any single metric or application, is the real value of the digital twin. It is not a mirror of the present. It is a preview of what is possible.

Oil and gas companies are under pressure to reduce costs, operate more safely, and deliver on sustainability promises. Edge computing offers a powerful tool to help meet these challenges by enabling real-time data insights in even the harshest operational environments.

Few sectors generate as much data under such extreme conditions as oil and gas. Remote assets such as offshore platforms, pipelines and liquefied natural gas (LNG) plants rely on thousands of sensors.

Yet most of that data remains unused or is delayed by transmission bottlenecks and bandwidth limits. In many cases, valuable insights are simply lost.

Edge computing provides a way to reverse that trend. By processing data locally, on or near the asset itself, operators gain the ability to act on information in real time without relying on distant cloud infrastructure. This is particularly important in environments with limited connectivity or high latency.

“The industry has long been rich in data but poor in insight,” explains Miran Gilmore, Senior Consultant at STL Partners. “By moving compute resources closer to where data is generated, edge infrastructure allows operators to extract meaning and value from information that was previously wasted or delayed. It is about turning raw data into decisions.”

Edge is not just about speed. It enables safer operations by flagging anomalies before they become incidents and supports more efficient production through continuous feedback and adjustment. The shift from reactive decision-making to predictive optimisation has become a strategic imperative.

Traditional IT infrastructure is rarely designed for the harsh realities of oilfield operations. Edge architecture must contend with heat, vibration, corrosion and isolation, all while operating reliably and autonomously.

“Latency is not just a technical inconvenience,” Gilmore adds. “In upstream production or gas processing, a few seconds can be the difference between early intervention and equipment failure. Edge computing makes it possible to act on those early signals without waiting for a round trip to the cloud.”

For an LNG plant, where downtime can cost upwards of $25 million per day, these delays matter. Edge allows equipment monitoring, process control and predictive diagnostics to take place on site, dramatically reducing the time to detect and respond.

There are also economic benefits. A single oil platform can generate over a terabyte of data each day, but transmitting this data via satellite is both slow and costly. By analysing it at the edge, network traffic and associated costs are reduced.
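To put the terabyte-per-day figure in network terms, the arithmetic below converts a daily data volume into the sustained link rate needed to transmit it. The decimal definition of a terabyte (10^12 bytes) and the 95% reduction from edge processing are assumptions made purely for illustration; the article gives no reduction figure.

```python
BYTES_PER_TERABYTE = 10**12        # decimal terabyte (assumption)
SECONDS_PER_DAY = 24 * 60 * 60


def sustained_mbps(terabytes_per_day: float) -> float:
    """Average link rate in megabits per second needed to move a daily volume."""
    bits_per_day = terabytes_per_day * BYTES_PER_TERABYTE * 8
    return bits_per_day / SECONDS_PER_DAY / 1e6


raw_mbps = sustained_mbps(1.0)
# Hypothetical: edge analytics forwards only 5% of the raw volume.
reduced_mbps = sustained_mbps(1.0 * 0.05)

print(f"raw: {raw_mbps:.1f} Mbit/s, after edge filtering: {reduced_mbps:.1f} Mbit/s")
# raw: 92.6 Mbit/s, after edge filtering: 4.6 Mbit/s
```

Sending one terabyte per day therefore requires roughly 93 Mbit/s around the clock before any protocol overhead, which illustrates why shipping raw data over a satellite link is slow and costly.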

One of the clearest benefits of edge computing is improved condition monitoring. As sensors become cheaper and more capable, they are being deployed across critical systems, from compressors and turbines to subsea valves and wellheads.

“There is a growing maturity in the ecosystem,” says Gilmore. “With more compute power available on site, operators can now run real-time analytics to detect unusual behaviour, isolate faults and even trigger automated responses.”

That enables predictive maintenance to be embedded within operations. Rather than responding to alarms or periodic inspections, operators can anticipate failures, schedule maintenance efficiently, and reduce unplanned downtime.
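As a rough illustration of the kind of real-time analytics that can run on site, the sketch below flags readings that deviate sharply from a rolling baseline using a z-score test. The class name `RollingAnomalyDetector`, the window size, the threshold of three standard deviations, and the sample data are all assumptions for the example; production systems typically rely on richer, equipment-specific models.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flag values far from the mean of a rolling window of recent normal readings."""

    def __init__(self, window: int = 20, threshold: float = 3.0) -> None:
        if window < 2:
            raise ValueError("window must hold at least two samples")
        self.window = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current baseline."""
        is_anomaly = False
        if len(self.window) == self.window.maxlen:
            baseline = mean(self.window)
            spread = stdev(self.window)
            if spread > 0 and abs(value - baseline) > self.threshold * spread:
                is_anomaly = True
        if not is_anomaly:
            # Only normal readings update the baseline, so a spike does not mask later ones.
            self.window.append(value)
        return is_anomaly


# Illustrative vibration amplitudes with one obvious spike at index 10.
readings = [1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 5.0, 1.0]
detector = RollingAnomalyDetector(window=10, threshold=3.0)
flagged = [i for i, value in enumerate(readings) if detector.update(value)]
print(flagged)  # [10]
```

No reading is flagged until the window is full, so the detector needs a warm-up period of normal operation before it can raise alerts.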

The improvements go beyond cost and efficiency. Edge analytics contribute to safer working conditions by identifying issues early and reducing the need for manual intervention in hazardous areas. They also provide a buffer for critical infrastructure, protecting against cascading failures.

Integration and governance remain key challenges

Despite the advantages, integrating edge solutions is not straightforward. Oil and gas remains a conservative industry, with significant investment in legacy systems and established workflows. There is also a persistent gap between IT and operational technology (OT) environments.

Gilmore warns that adoption will require more than new equipment. “Edge is not just a technical bolt-on. It demands changes in how data is managed, how teams collaborate, and how decisions are made. That means rethinking budgets, processes and even training.”

Skills are a major barrier. Many field personnel are unfamiliar with edge architecture, while data scientists and engineers often lack domain experience in oil and gas operations. Closing this gap will be critical to scaling successful deployments.

There are also unresolved questions around data ownership. As machine learning models are increasingly embedded at the edge, it is not always clear who owns the insights, or the value they create. Collaboration between operators and vendors will need clearer governance frameworks.

Edge computing also has a role to play in sustainability. Real-time monitoring of flaring, emissions, and energy consumption enables operators to measure and improve environmental performance. In remote or off-grid areas, edge systems can support integration with renewable energy and microgrid infrastructure.

“Edge provides the visibility and responsiveness needed to operate more sustainably,” says Gilmore. “It supports compliance and reporting, but more importantly, it enables better control over environmental impact.”

As the energy transition accelerates, edge technology may prove essential for balancing new demands with existing assets. By enabling smarter, cleaner operations, it supports both short-term performance and long-term strategic goals.

The oil and gas sector is under no illusions about the complexity of adopting new technologies at scale. Edge computing will not replace cloud infrastructure or traditional SCADA systems overnight. But it offers a complementary layer of intelligence, one that is increasingly vital.

“There is growing interest and real investment happening,” Gilmore concludes. “But edge will only deliver value if it is embedded into how companies operate day to day. It must be treated as a strategic capability, not a peripheral experiment.”

As the industry continues to navigate volatile markets, operational risk, and environmental scrutiny, edge computing offers a rare combination of agility, insight and resilience. For forward-looking operators, it could prove to be one of the most important enablers of digital transformation over the coming decade.

AI is redefining the role of digital twins from isolated replicas to intelligent, interconnected agents. As oil and gas operations become more complex, AI-enabled twins are shifting from visualisation tools to real-time decision-makers that support safety, sustainability, and system-wide optimisation.

Digital twins have long played a vital role in asset-heavy industries, enabling engineers to monitor performance, simulate conditions, and predict maintenance needs. But the traditional twin has always been tethered to a specific function or dataset. A model of a single compressor, for example, might help identify performance anomalies, but it cannot contextualise what those anomalies mean across an entire platform or pipeline. That is where artificial intelligence is changing the game.

Rick Standish, Executive Industry Consultant at Hexagon Asset Lifecycle Intelligence, has witnessed the evolution of digital twins over more than two decades. The technology has matured, but the turning point now lies in AI’s ability to deepen, scale, and interconnect twin functionality across assets and systems. “Initially, digital twins were primarily used for monitoring and simulation of a physical asset and focused on a specific business interest,” he explains. “Today, we are really seeing the beginning of a transformative shift. Thanks to the ever-growing availability of real-time and retrofitted sensor data, more powerful generative AI models, and deep learning analytics, digital twins are becoming more proactive and increasingly autonomous.”

This progression is not just about technical capacity. It is about realigning how twins function in the wider decision-making environment. Instead of mirroring an individual pump, AI-powered twins are now being designed to simulate and optimise process-wide behaviour, integrating structured and unstructured data from engineering documents, maintenance records, and sensor feeds. That, says Standish, gives operators a real opportunity to simulate and optimise entire workflows, so long as they can trust the intelligence behind the insights.

The issue of trust is not a peripheral concern. As twins become more intelligent, the consequences of acting on flawed data become more significant. Martin Bergman, Lead Strategist for AI and Enabling Technologies at Hexagon, emphasises the foundational role of data quality in digital twin success. “An impactful and trust-driven digital twin relies on a solid, up-to-date data foundation,” he says. “If the data does not represent the status quo of the physical asset, it is of little value.”

According to Bergman, AI can help address this by ingesting and contextualising previously inaccessible information. Around 90 per cent of industrial data is unstructured, buried in PDFs, diagrams, logs, and handwritten records. AI, especially large language models, is enabling companies to turn this material into structured, machine-readable context. This is not a one-off project. “We are now seeing the move from project-based contextualisation to continuous services that keep the twin up to date in real time,” he explains. “That makes the digital twin a living, trustworthy source of truth.”

The importance of this shift is compounded by the fact that many assets, especially offshore, change ownership over their lifetime. The result is a patchwork of engineering records, maintenance systems, and data gaps. AI-powered contextualisation creates consistency where previously there was confusion, enabling what Standish describes as “a consistent record of the asset’s engineering data” that can then be used for process safety and asset integrity management.

Importantly, contextualised data is not simply about locating the right document or verifying a specification. It also underpins the ability to automate verification processes across entire data ecosystems. With the correct contextual layer, companies can run large-scale consistency checks between technical drawings, P&IDs, and maintenance logs to verify accuracy, identify discrepancies, and trigger corrective workflows before those inconsistencies manifest as operational risks.
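To make the idea of a cross-source consistency check concrete, the sketch below compares equipment attributes held in two sources, tag by tag, and lists anything missing or mismatched. It is illustrative only: the tag names, fields and values are hypothetical, and it assumes the contextualisation step has already extracted the P&ID and maintenance data into simple dictionaries.

```python
def find_discrepancies(pid_tags, maintenance_records):
    """Compare equipment attributes recorded in two sources, tag by tag."""
    issues = []
    for tag in sorted(set(pid_tags) | set(maintenance_records)):
        drawing = pid_tags.get(tag)
        record = maintenance_records.get(tag)
        if drawing is None:
            issues.append((tag, "missing from P&ID"))
        elif record is None:
            issues.append((tag, "missing from maintenance log"))
        else:
            # Only compare fields that both sources actually record.
            for field in sorted(set(drawing) & set(record)):
                if drawing[field] != record[field]:
                    issues.append(
                        (tag, f"{field} mismatch: {drawing[field]} vs {record[field]}")
                    )
    return issues


# Hypothetical extracted data, invented for illustration.
pid_tags = {
    "PSV-101": {"set_pressure_bar": 12.0},
    "P-201": {"rated_power_kw": 75},
}
maintenance_records = {
    "PSV-101": {"set_pressure_bar": 10.5},
    "P-305": {"rated_power_kw": 30},
}

issues = find_discrepancies(pid_tags, maintenance_records)
for tag, problem in issues:
    print(tag, "->", problem)
```

Each entry in the resulting list could then feed a corrective workflow, such as a review task assigned to the responsible engineer.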

Once trusted data is in place, the next step is making it actionable. Today’s digital twins do not simply serve up insights; they support decision-making through interfaces that resemble consumer AI. Natural language interaction is becoming a core capability, allowing engineers to ask complex questions about the twin’s behaviour or the asset’s condition without navigating through multiple dashboards.

Bergman outlines a three-tier evolution of this capability. The first is the AI intern, searching, summarising, and organising relevant data. The second is the AI engineer, assisting in analysis, running root cause assessments, and suggesting optimisations. The third is the AI agent, an autonomous participant in operational workflows that can communicate with other digital systems, such as ERP or safety monitoring tools. “The idea is that users no longer need to go into multiple subsystems,” he explains. “Instead, agents communicate with each other to surface insights or initiate tasks. It is about turning passive data into intelligent action.”

What differentiates this new generation of tools from earlier dashboard-style solutions is their interactivity and their autonomy. Instead of waiting for human queries, these agents can trigger alerts or suggest optimisations based on observed changes in behaviour, asset conditions, or operational context. This moves the model from a reactive posture to a proactive one, anticipating faults, analysing scenarios, and even suggesting economic trade-offs between maintenance timing, resource allocation, and production targets.

One of the most promising outcomes of this shift is the ability to align digital twin capabilities with broader corporate priorities, especially sustainability. Historically, digital twins have been perceived as efficiency tools: reducing downtime, extending equipment life, and streamlining maintenance. Now, AI integration is allowing them to support ESG goals in a far more tangible way.

For example, AI-enhanced twins can monitor and simulate emissions, identify energy inefficiencies, and even help with regulatory reporting. “We are moving into a phase where digital twins are critical to environmental compliance,” says Standish. “Whether it is reducing fugitive emissions with sensor analytics or using AI-powered monitoring for carbon capture and storage, these are becoming core operational capabilities.”

This is especially relevant for offshore platforms where the stakes for both environmental performance and uptime are high. One operator, Standish explains, used a digital twin to analyse vibration and temperature trends from gas compressors and predict early bearing wear. The AI model forecast failure within weeks, triggering a proactive intervention that avoided unplanned shutdown. At the same time, remote teams accessed the same cloud-based twin to simulate outcomes and validate next steps.
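The operator's actual model is not described, but the basic idea of forecasting when a worsening trend will reach an alarm limit can be sketched with a simple straight-line fit. The example below is a deliberately simplified stand-in: it uses ordinary least squares in place of an AI model, and the function name, readings and threshold are hypothetical.

```python
def days_until_threshold(days, values, threshold):
    """Fit a least-squares line to the trend and extrapolate to the alarm limit."""
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    if slope <= 0:
        return None  # no upward trend, so no projected crossing
    crossing_day = (threshold - intercept) / slope
    # Report the time remaining after the most recent measurement.
    return max(0.0, crossing_day - days[-1])


# Hypothetical daily bearing vibration readings (mm/s), invented for illustration.
days = [0, 1, 2, 3, 4]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0]
remaining = days_until_threshold(days, vibration, threshold=7.0)
print(remaining)
```

With these invented figures the trend rises by 0.5 mm/s per day, so the limit of 7.0 mm/s is projected to be reached six days after the last reading. Real bearing degradation is rarely linear, which is one reason operators turn to models that combine vibration and temperature data.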

As operators take on growing regulatory and shareholder pressure to decarbonise operations, the role of AI will only become more integral. Bergman sees a future where digital twins can simulate energy consumption across an entire network of facilities, model the effect of switching to alternative fuels, or predict how adjusting operating parameters could reduce carbon intensity without sacrificing throughput. This is not science fiction; it is already beginning to happen in pilot projects across the sector.

Scaling responsibly and avoiding the AI lock-in trap

Despite the clear potential, success is not guaranteed. Digital twins fail more often from governance issues than technical ones. Both Bergman and Standish caution that early-stage projects can fall into the trap of overpromising, underdelivering, or building models that are not scalable. “The first question companies should ask is not what technology they need, but what outcome they want to achieve,” says Bergman. “Digital twins must solve specific problems or unlock specific value, or they will not be used.”

He also stresses the need for architectural flexibility. AI tools and models are evolving rapidly, and solutions that look promising today may be outdated within a year. “You cannot afford to be pinned to a single ecosystem,” he says. “Stay agnostic. Choose architectures that allow you to plug in best-in-class tools as they emerge.”

Standish highlights the importance of involving users at every stage. “Digital transformation is not just about deploying technology, it is about ensuring people understand it, are not threatened by it, and know how to use it to improve their work,” he says. “We need to bring operations, engineering, and IT together from day one.”

A paradigm shift for human-machine collaboration

Looking ahead, both experts are convinced that the next phase of industrial digitalisation will involve far deeper collaboration between human users and intelligent systems. Digital twins are becoming not just smarter, but more empathetic, designed to reflect how people actually work, think, and make decisions under pressure.

In high-stakes environments like offshore oil platforms, the ability to quickly simulate a ‘what if’ scenario or ask a digital assistant to identify failure correlations across a complex dataset is more than a convenience. It is a necessity. With labour shortages growing and operational complexity increasing, AI-powered twins offer a way to capture institutional knowledge, reduce risk, and deliver decisions that are traceable and explainable.

Bergman puts it simply: “If we can make digital twins easier to use, more trustworthy, and more responsive, they will not just be used by data scientists or engineers. They will be used by everyone who needs to make fast, reliable decisions about the assets they are responsible for.”

The implication is clear. As AI becomes embedded in the DNA of digital twins, the boundaries between data, insight, and action will blur. And for operators seeking to remain competitive while navigating energy transition, economic volatility, and operational risk, that convergence may be the most valuable transformation of all.

Senior leaders in oil and gas face mounting pressure to extend asset life, enhance cyber resilience and cut emissions. Industrial autonomous operations promise to deliver gains across all three, but require a fundamental rethink of how data, systems and people work together.

Even before oil and gas executives reach for AI-enabled tools, they are being forced to answer more pressing operational questions. How can they improve asset utilisation without triggering downtime? Which legacy infrastructure must be upgraded to meet new emissions targets? And what happens when their most experienced engineers retire, taking decades of domain knowledge with them?

According to Sunil Pandita, Vice President and General Manager for Connected Cyber and Industrial at Honeywell, these questions are not isolated. They all stem from a confluence of pressures shaping the sector. Global macroeconomic uncertainty, the energy transition, infrastructure modernisation, and cybersecurity are now interdependent problems, and demand an equally integrated response. That response, Pandita argues, is industrial autonomous operations.

The convergence of four pressures

Each of these challenges is well known individually. Many organisations are deferring investment due to interest rate volatility and inflationary pressure, choosing instead to sweat existing assets. But this introduces new problems around ageing equipment and unplanned downtime, which, according to Honeywell research, affects around 10 per cent of turnover across the industry.

The second pressure comes from sustainability mandates. “Electrification and emissions reduction are non-negotiable,” Pandita explains. “We are seeing a rise in Chief Sustainability Officers at the most senior levels. That is a clear signal that net-zero is no longer a reporting obligation. It is a strategic priority, with operational consequences.”

The third challenge relates to infrastructure, both physical and digital. “The real challenge is not just adding more sensors or connecting machines to the cloud,” Pandita continues. “It is extracting intelligence from that data at the right time, in the right context, and making it useful to the people who need it most. With advances in edge computing, machine learning, and GenAI, we can now separate signal from noise at scale.”

The final pressure is cybersecurity, where the stakes have never been higher. Honeywell estimates that 80 per cent of industrial organisations have experienced a cyberattack in the past year, with nearly half resulting in operational downtime. OT cybersecurity has become a board-level concern. But the response cannot be limited to perimeter defences alone. It must be embedded in every layer of the industrial technology stack, from sensor to cloud. “Industrial autonomous operations offer a systemic answer to all four pressures,” Pandita adds. “But autonomy is not just about technology. It is about rearchitecting how data, applications and people interact, across the entire asset lifecycle.”

For oil and gas, the traditional use of AI has been rooted in machine learning models built on structured data: vibration signals, flow rates, temperature curves. That approach still has value, but Pandita believes the real transformation lies in AI’s ability to mine unstructured data, much of it previously untapped.

“We are talking about AI that can read equipment manuals, maintenance logs, video feeds, shift reports,” Pandita explains. “That is the unstructured layer, and GenAI is surfacing insights from it that were simply inaccessible before. We call this AI 3.0, intelligent context at scale.”

In practical terms, that opens entirely new use cases. A technician can ask a digital assistant how to safely isolate a valve based on hundreds of past procedures. A field engineer can receive real-time guidance drawn from manuals and prior incident reports, without leaving the worksite. Search becomes intelligent, decision-making becomes faster, and experience is no longer a bottleneck.

According to Honeywell’s estimates, these developments could contribute to a 26 per cent increase in industrial GDP by 2030, largely driven by the fusion of domain knowledge with generative AI.

The shift to industrial autonomy also changes the role of digital twins. Once used primarily for training or design validation, they are now becoming operational systems in their own right. “We use high-fidelity digital twins not just for simulation, but for real-time optimisation,” Pandita explains. “It is the same digital twin used to train an operator that is now running online, monitoring live plant data, testing scenarios, and advising on optimal process adjustments.”

This is not theoretical. Honeywell has already deployed operational twins that can analyse flow conditions, test what-if cases during upsets, and identify pathways to achieve maximum throughput with minimal emissions. These models can balance performance across competing objectives (production volume, cost, energy consumption, and environmental compliance) in real time.

What makes this possible is not just the model itself, but the cloud-native architecture that supports it. “The digital twin lives in the cloud, it is fed by edge data, and it is accessed remotely,” Pandita continues. “This enables collaboration between experts located anywhere, supporting faster response and broader knowledge sharing.”

Autonomy does not mean removing people. It means augmenting them with better tools, insights, and interfaces. That philosophy applies not only to operations, but to workforce development.

There are two major challenges. First, the sector’s most experienced professionals are nearing retirement. Second, their successors learn differently. Traditional training rooms and static manuals are no longer effective. “New operators want learning to be mobile, on-demand, and contextual,” Pandita says. “We have built training platforms that allow them to access immersive simulations on a tablet, on a bus, or at home. They can replay actual plant operations, pause, rewind, and experiment in a safe environment.”

AR and VR have become essential to this shift. But just as important is the ability to capture expert knowledge from the existing workforce before it is lost. Honeywell does this by recording workflows and integrating them into digital twins, preserving expertise in a format that is interactive and persistent.

It is not only trainees who benefit. These tools empower internal teams to troubleshoot, collaborate, and adapt more effectively. When data is organised, accessible, and contextualised, it becomes an equaliser. “Technology becomes the co-pilot,” Pandita explains. “It does not replace human judgement, but it supports it with real-time advice and richer data sets. And increasingly, that advice includes insights from unstructured sources, videos, logs, and emails, that were invisible before.”

Many executives fall into the trap of thinking that more data equals more value. But Pandita cautions that the goal is not simply to increase volume. It is to deliver relevance.

“When we deploy new platforms, it is common to discover problems the business did not even know existed,” Pandita says. “Machine number five has been underperforming for six months, but nobody noticed because there was no insight. Once data is captured and analysed, inefficiencies become visible.”

The value then comes from turning that visibility into action. Whether it is predictive maintenance, dynamic scheduling, or process reconfiguration, the system must be able to recommend and support decisions across departments and functions. “Connecting existing applications through APIs is one way we do this,” Pandita adds. “It allows insights from one domain, such as asset health, to inform another, like supply chain or production planning.”

In this sense, the autonomy is not only in machines, but in the information itself. When insights move freely between systems and stakeholders, they accelerate alignment, reduce conflict, and enable faster course correction.

Despite the technical complexity, the deployment of industrial autonomous operations hinges on trust. Systems must be explainable, resilient, and secure. That means embedding cybersecurity at every level and ensuring human oversight is never removed from the loop.

“Cyber risk is not just a control system problem anymore,” Pandita says. “It is a business continuity risk. The more connected we become, the more important it is to ensure every device, every edge node, every cloud instance is protected.”

That is why Honeywell builds security into the architecture from the outset, not as an add-on. And it is also why autonomy must remain transparent. Decision logic should be traceable, assumptions documented, and human operators empowered to intervene when needed.

“Ultimately, industrial autonomy is not a technology project,” Pandita concludes. “It is a leadership decision. It requires a clear vision for how your organisation wants to work, how it values data, and how it empowers people.”

Those who succeed will not only run safer and more efficient operations. They will shape the future of the energy transition itself, one informed decision, one intelligent system, and one empowered team at a time.


From dynamic PHAs to live barrier management, Ruben Mejias, Senior Technology Sales Support Specialist, Wolters Kluwer Enablon outlines how integrated platforms are helping energy and petrochemical operators reduce incidents, cut emissions, and stay compliant. As safety, productivity, and sustainability converge, the industry must rethink how risk is managed in real time.

To start off, please tell us a little more about Enablon and what you do.

Wolters Kluwer Enablon provides industry leaders across complex, hazardous industries with a fully integrated platform that supports them in managing risk, driving operational excellence and boosting productivity across their entire value chain – from digital permit to work to incident management, and from interactive bowties to process safety management. In my role as Senior Technology Sales Support Specialist, I support clients in implementing advanced software solutions that enhance risk management and elevate safety standards in their organizations.

In your opinion, what are the key challenges faced by your target audience in the energy and petrochemical industries?

One of the key challenges is maintaining robust process safety management (PSM) in increasingly complex and high-risk operating environments. As facilities age and operational demands rise, companies must rigorously manage hazards associated with hazardous substances and extreme operating conditions to prevent catastrophic incidents. Ensuring compliance with evolving regulations, integrating digital monitoring technologies, and fostering a strong safety culture across all levels of the organization are essential but resource-intensive tasks.

I understand that Enablon covers quite an expansive range of solutions offered for the energy and petrochemicals industries. Could you tell us a little more about this?

Within our platform, we have designed our interconnected solutions to support three core goals: increasing safety, boosting productivity, and maintaining compliance. The Enablon platform is tailored to the maturity level and the expectations of our customers. We support a wide range of use cases – from digital Permit to Work for a single refinery, all the way to a fully integrated PSM, EHS and sustainability management system for an entire enterprise. Whether our customers need to view live barrier health information in dynamic bowties that deliver data for predictive risk in their plant visualization, or want to manage all process safety incidents across operations, this is all made possible by our core data platform, which uses more than two decades of expertise to operationalize all this risk data into useful outcomes. This has led to our customers achieving stunning results, from reducing incidents by 94%, to reducing maintenance backlogs by 60%, to preventing thousands of tonnes of CO2 releases.

Why is dynamic management of barriers and safeguards critical?

Organizations that don’t use dynamic barrier management must understand that all barriers can potentially degrade over time, and they might not be aware of the status of their safety critical controls and the cumulative risk associated with their degradation. Dynamic barrier management software provides real-time management and continually informs users of the current state of risk, including whether there is barrier degradation. It gathers data and provides analysis that helps organizations make informed decisions.
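As a simplified illustration of what tracking barrier status per hazard might involve, the sketch below groups barriers by the hazard they protect against and lists those that are no longer healthy. It is not based on the Enablon platform or its data model: the function, field names, status labels and example barriers are all assumptions made for illustration.

```python
from collections import defaultdict


def summarise_barrier_health(barriers):
    """Group barriers by hazard and report which ones are degraded or failed."""
    summary = defaultdict(lambda: {"total": 0, "impaired": []})
    for barrier in barriers:
        entry = summary[barrier["hazard"]]
        entry["total"] += 1
        # Anything other than "healthy" counts towards cumulative degradation.
        if barrier["status"] != "healthy":
            entry["impaired"].append(barrier["name"])
    return dict(summary)


# Hypothetical barrier register, invented for illustration.
barriers = [
    {"hazard": "Loss of containment", "name": "Pressure relief valve", "status": "healthy"},
    {"hazard": "Loss of containment", "name": "Gas detection", "status": "degraded"},
    {"hazard": "Loss of containment", "name": "Emergency shutdown", "status": "failed"},
    {"hazard": "Dropped object", "name": "Lifting plan", "status": "healthy"},
]

health = summarise_barrier_health(barriers)
for hazard, entry in health.items():
    print(hazard, f"{len(entry['impaired'])}/{entry['total']} impaired", entry["impaired"])
```

In this invented register, two of the three barriers against loss of containment are impaired, which is the kind of cumulative picture that a single barrier inspection report would not reveal on its own.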

What is the main problem with PHAs today?

Traditionally, process hazard analysis (PHA) has been an activity documented on paper or through a standalone solution, which is typically built, managed, and accessed only by a select few within a company. The problem is that PHAs are static documents, and workers in the field may find it challenging or time-consuming to make operational decisions based on extensive and static PHA studies. Most organizations only review and update PHAs based on schedules or when an incident or event demands a review of existing hazards and controls.

What should companies be aware of regarding the management of safeguards and dynamic PHAs?

A holistic approach is needed to manage risk effectively and remain compliant, safe and competitive. Risk data should be easily accessible and visible to key decision makers so they can prioritize and take appropriate actions. A best-in-class Process Safety Management system can address that issue because it fully integrates PHA studies, risk databases, and control of work software. It also provides a single platform for complete visibility and can be easily updated.

What are some changes in trends that you have observed?

I am seeing a strong push towards consolidation and connection, in both people and tech. People expect a stronger connection to the work they do and the planet they inhabit, and technology is expected to work seamlessly to support both company and workforce goals. I see these as positive trends, which are driving more harmonious development and more holistic innovation across safety, productivity and sustainability.

What are some of the benefits that your clients have seen from working with Enablon?

Prioritize long-term vision. Even in a rapidly changing landscape, a well-supported long-term strategy always wins over ad-hoc solutions. Increased safety will generally support increased productivity (and thus increased value), and sustainability will drive a better future for all of us.

Murray Callander, CEO of Eigen, reflects on 25 years of digital transformation in oil and gas, from floppy disks and Excel hacks to cloud-native deployments and AI-powered workflows. He explores the cultural, technological, and organisational shifts that have shaped the digital oilfield, and the lessons that still need learning.

Let’s start with your background. Can you tell us a bit about yourself and your journey in the industry? Where were you when the digital oilfield revolution began over 25 years ago?

Well, I started working in oil and gas right at the very end of 1999. I graduated from university in July 1999 and began working in September with Foster Wheeler Energy Limited. Back then, everything was incredibly manual; I remember being given a calculation pad and strict instructions on numbering and initialling every page for traceability – everything was done on paper. My desk didn’t even have a computer on it in 2000. I got my first desktop later that year for specific tasks like air dispersion modelling. Most work, however, was still on paper, like marking up huge P&IDs laid out on desks. I was given a massive stack of paper to check in two weeks, and that was actually the trigger for me to learn to program. I figured automating it would be faster than doing it by hand. I managed to get a digital copy on a floppy disk and taught myself Visual Basic, wrote an algorithm, and finished the check in about nine days. From then on, I used code more and more, and I saw everyone eventually getting computers and then laptops on their desks. By 2005, I was working at Halliburton in a consultancy called Granherne and was invited onto one of the early digital projects with BP because I was seen as the “engineer that could do digital”. I was linking simulations with Excel and automating reports to speed up concept studies.

Looking back, what were the biggest challenges in those early digital oilfield initiatives?

Looking back, especially at those BP projects we did in the Caspian starting in 2005, the biggest challenges were definitely cyber security, networking, and infrastructure. It was all so new. There wasn’t a standard way to connect control systems to data historians and then to business systems. We had to work extensively with others to figure out and establish secure templates for these multi-tier architectures.

What limitations did you face back then — technologically, organizationally, and from a management perspective?

We faced limitations across the board. Technologically, a big one was the sheer speed and rate of change in IT technology compared to the very slow, deliberate pace of major oil and gas projects. We had to lock in technology decisions early on, like the version of Windows (I think it was XP initially), which would often be obsolete by the time the platform was actually live. We simply couldn’t afford to keep up with those rapid IT changes within the structure of a major project.

Organizationally, there was a significant disconnect between the IT world and the operational technology (OT) world. These are two vastly different worlds with very different domain expertise and ways of working. The IT world often moved extremely quickly, seeing mistakes as relatively cheap and technology as almost disposable. This clashed with the careful, slow process of major projects where mistakes are very costly. There was a real cultural mismatch. Often, IT didn’t understand what the business or users needed, leading to them optimizing their own world potentially at the expense of operations. This sometimes pushed intelligent engineers back to simple tools like Excel rather than engaging with IT, which they saw as complicated. Central IT structures weren’t always compatible with the needs of major projects either.

From a management perspective, a key challenge was the lack of understanding between specialist areas. It was hard for those outside of IT to grasp why digital projects took so long. There was also a major misunderstanding about the difference between a minimum viable product (MVP) or proof of concept and a full enterprise-grade, supported product. People would see a simple proof of concept working and think the project was done, not realizing the ten times more work required to make it robust, secure, and supported for industrial operations. This made getting proper funding and schedules challenging. There was also perhaps an expectation, influenced by consumer software and open source, that digital solutions should be free or cheap, not recognizing the significant costs involved in enterprise software development and customization for unique company challenges.

Fast-forward to today — how has the digital oilfield landscape evolved over the past 25 years? What’s truly different now?

The landscape has evolved dramatically. A huge shift happened around 2008 with the iPhone, which accelerated changes in behaviour and technology adoption. Now, we have a new generation that grew up with technology always available, so they have higher expectations and are comfortable using it. What’s truly different? Firstly, the amount of data we generate is enormous now, partly because projects are designed with digital tools in mind and are better instrumented. We can handle and store much more data. Cloud systems have been a game changer, allowing us to manage vast amounts of data and make it far more accessible. Remote access, which was a major headache early on, is now much easier and more secure from anywhere on any device, thanks to cloud technology.

Secondly, the quality and availability of web technology have improved immensely. We have standardized browsers and excellent open-source libraries that make developing secure apps with good user experiences much quicker than coding everything from scratch. Finally, infrastructure as code is a major shift. Instead of installing on physical servers, we use code and pipelines to build and deploy applications rapidly into virtual environments. Deployment speed is huge, and it’s incredibly flexible: we can spin up environments quickly and even rebuild everything from code in minutes if there’s a security issue.

AI is transforming industries across the board. In your view, how is AI shaping or accelerating digitalisation in oil and gas?

AI is definitely another game changer. There’s a huge expectation around it, but I think the reality, like with any tool, will be a bit different from the initial hype. It will provide value in ways we might not have thought of, but perhaps not in others we initially expected. I see it primarily as an extremely valuable tool for improving efficiency. I use it a lot as an assistant, and it makes me and my team more efficient. However, it’s not at a level where it can replace people because it makes mistakes. It can also be misused if relied on blindly. If we’re going to trust AI outputs, there’s a huge responsibility on us to verify them. It needs to be explainable and auditable. While autonomous operation might be a future possibility, I think we’ll see AI used in an assistive way much more in the near term. It will take significant time, checking, verification, and legal frameworks to build the trust needed for full autonomous operation, similar to how we still have pilots and train drivers even though the technology for automation exists. So, it will accelerate things, but likely with humans remaining in the loop for a long time.

Based on your experience, what are the top three lessons you’ve learned from implementing digital

Based on my experience, here are the top three lessons: Get into the detail early. Concepts are great, but you need to start looking at real data, real systems, how things connect, the infrastructure, data structures, and what the users actually need as soon as possible.

Focus relentlessly on the user. You need a laser focus on delivering value to the user. It might feel boring or too simple, like just reducing clicks, but if it makes their daily work easier and they are delighted, it will be adopted and successful. Trying to force adoption rarely works.

Break the project down into manageable steps. Industrial digital projects are often unique, so you can’t cost them like building a standard house. Cut them into small, costable, deliverable steps. Use a phased approach – discovery, de-risking, and several delivery stages – to get something working early, verify it with users, and then add the enterprise features. This minimizes rework and keeps the organization on board. Trying a big bang approach is very difficult and risky.


Building on those lessons learned, the key success factors are really about breaking projects down into stages and critically, making sure all your stakeholders are on board. You need to communicate clearly what you’re delivering and give users the chance to provide input as early as possible. This is crucial because rework is one of the most expensive things in digital projects. Getting feedback early helps avoid misunderstandings where you deliver exactly what was asked for, only to find out it wasn’t actually what was needed. By showing users what’s possible early on, they can better define a scope that they will be delighted with at the end.

digital transformation across operations and design

Augmented engineering applications and services to fundamentally improve the way you work through intelligent, data-driven approaches

In the oil and gas sector, performance is not merely a concept; it is a calculation. As the industry convenes in Aberdeen for the Future of Oil and Gas 2025, the dialogue is shifting from theoretical innovation to proven transformation in the field. As Katarzyna Chrzan, Senior Marketing Manager at Cohesive, explains, the question is no longer whether we should modernise, but how we can do so in a way that delivers measurable, scalable impact.

Despite decades of investment in SCADA, DCS, EAM, and ERP systems, many oil and gas operators still lack a unified, real-time perspective on asset performance. Data silos between operational technology (OT) and enterprise IT environments continue to obscure visibility, delay decision-making, and restrict proactive action.

The result? Reactive maintenance, inefficient planning, and missed opportunities for optimisation.

This is where a comprehensive Asset Performance Management (APM) strategy becomes vital. It’s not simply about deploying a platform; it’s about creating an integrated ecosystem that connects data, people, and processes.

Delivering Tangible Value Through Integrated Asset Strategies:

• Advisory services to evaluate the current digital landscape, identify integration gaps, and define a roadmap for a unified APM architecture aligned with business objectives.

• Data services to classify, standardise, and integrate fragmented asset data across systems, transforming it into actionable intelligence for real-time monitoring and advanced decision support.

• Technology platforms such as the IBM Maximo Application Suite (MAS), which serve as unified solutions that integrate data from SCADA, DCS, EAM, and ERP systems into a single operational layer, eliminating silos and enabling predictive insights at scale.

Together, these capabilities establish the foundation for scalable, insight-driven asset strategies that go beyond dashboards and deliver measurable impact in the field.

An example of this comes from Scotia Gas Network (SGN), a leading UK gas distributor. By unifying operations in a single Maximo system, SGN cut infrastructure complexity, removed fragile custom integrations, and reduced maintenance costs. The transition was completed with zero downtime for thousands of users—proving that large-scale digital transformation can be seamless and effective. The unified platform now enables shared communication, decision-making, and reporting across the organization.

Inconsistent failure coding and reliability data across onshore, offshore, and regional sites continue to hinder effective root cause analysis and benchmarking. Without a unified taxonomy, continuous improvement efforts often rely on assumptions rather than evidence. Aligning asset hierarchies with ISO 14224 and harmonising historical failure data unlocks analytics-ready datasets, enabling root cause analysis (RCA), MTBF/MTTR tracking, and global reliability benchmarking. This creates a solid foundation for consistent, data-driven decision-making across the enterprise.
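To make the MTBF/MTTR point concrete, here is a minimal Python sketch. It assumes failure events have already been harmonised into a common record holding an asset tag, a failure time, and a restore time. The record layout, field names, and sample values are illustrative assumptions; they are not taken from ISO 14224 or from any Maximo schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class FailureEvent:
    """One harmonised failure record (hypothetical layout)."""
    asset: str
    failed_at: datetime
    restored_at: datetime


def mtbf_mttr_hours(events, period_start, period_end):
    """Return (MTBF, MTTR) in hours for one asset over an observation period.

    MTTR = total repair time / number of failures
    MTBF = operating time (period minus repair time) / number of failures
    """
    if not events:
        raise ValueError("need at least one failure event")
    period_h = (period_end - period_start).total_seconds() / 3600
    repair_h = sum(
        (e.restored_at - e.failed_at).total_seconds() / 3600 for e in events
    )
    n = len(events)
    return (period_h - repair_h) / n, repair_h / n


# Illustrative data: two failures of one pump during a 30-day window.
events = [
    FailureEvent("P-101", datetime(2025, 1, 5, 8), datetime(2025, 1, 5, 20)),
    FailureEvent("P-101", datetime(2025, 1, 20, 0), datetime(2025, 1, 21, 12)),
]
mtbf, mttr = mtbf_mttr_hours(events, datetime(2025, 1, 1), datetime(2025, 1, 31))
print(f"MTBF = {mtbf:.0f} h, MTTR = {mttr:.0f} h")  # MTBF = 336 h, MTTR = 24 h
```

The calculation only gives comparable numbers across sites if every site records failure and restore times against the same definitions, which is the practical reason a shared taxonomy has to come first.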

At the same time, operators face growing pressure from ageing workforces and rising labour costs, particularly in remote or offshore environments where skilled labour is scarce. AI-powered workforce analytics and intelligent scheduling tools help organisations optimise resource allocation, identify skill gaps, and support long-term workforce planning. The goal isn’t just to reduce OPEX; it’s to embed resilience and agility into day-to-day operations.

In high-risk industrial environments, safety and operational continuity go hand in hand. Yet many oil and gas operators still rely on manual or fragmented systems to manage critical activities such as shutdowns, commissioning, and high-risk maintenance. This not only increases exposure to incidents but also limits the ability to respond quickly and confidently when it matters most. Digital tools are transforming how operators plan and execute complex operations.

A real-world example shows the value of this approach:

This case comes from the gas-to-power sector, where liquefied natural gas (LNG) is converted into electricity. At a major power plant in Indonesia, Cohesive implemented a digital Permit-to-Work (PTW) system in IBM Maximo during the commissioning of a new combined cycle gas turbine (CCGT) unit. The high-risk “first fire” phase, when the plant begins generating power, was managed without a single incident or minute of downtime.

The system ensured consistent safety procedures, real-time permit tracking, and better coordination—critical in such environments. It also supported the plant’s sustainability goals by reducing the risk of unplanned emissions and reinforcing its reputation as a responsible employer.

Many facilities still rely on fixed-interval or reactive maintenance—even when condition monitoring tools are available. This results in unnecessary interventions, unplanned outages, and increased lifecycle costs.
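As a rough illustration of the alternative, the Python sketch below raises a maintenance trigger when the rolling average of a condition reading crosses a limit, rather than on a calendar interval. It is a simple threshold rule, not a predictive model. The vibration values, window size, and limit are invented for the example; real limits would come from the equipment vendor or site standards.

```python
from collections import deque


def first_alert_index(readings, window=3, limit=7.0):
    """Return the index at which the rolling mean first exceeds `limit`.

    Returns None if the limit is never exceeded. Averaging over a window
    avoids raising a work order on a single noisy reading.
    """
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > limit:
            return i
    return None


# Hypothetical vibration velocity readings (mm/s), one per day.
vibration = [4.0, 4.5, 4.0, 5.0, 6.5, 7.5, 8.0, 9.0]
print(first_alert_index(vibration))  # 6
```

With these sample readings the trigger fires on the seventh day, when the three-day average first rises above the limit, so the intervention follows the condition of the asset instead of a fixed schedule.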

In the UK, the number of active production hubs could fall dramatically, from 46 today to just 4 by 2040. But this trajectory is not set in stone. With the right digital strategies, including AI-powered predictive maintenance, operators can extend the productive life of existing assets, reduce unplanned downtime, and extract more value from mature fields, delaying decommissioning and improving return on investment.

The future of oil and gas is not solely about technology—it’s about transformation that delivers in the field. By addressing deep operational challenges with AI-powered solutions, the industry can move from fragmented data to unified intelligence, from reactive maintenance to predictive strategy, and from complexity to clarity.

The UK’s North Sea sector is entering a new phase. While the government has not formally banned new oil and gas exploration licences, recent policy direction—particularly through the Great British Energy Act 2025—signals a strategic shift away from fossil fuel expansion and towards clean energy investment. In this context, the ability to safely and efficiently operate mature infrastructure is essential—not only to maintain domestic energy supply, but also to buy time for the build-out of low-carbon alternatives.

In this evolving landscape, AI and Asset Performance Management are more than tools for operational efficiency—they are strategic enablers. By extending asset life, improving reliability, and creating a digital foundation for future repurposing, operators can not only maximise today’s output, but also prepare infrastructure to support tomorrow’s low-carbon energy systems.

Some operators are already on this journey. For the rest, the time to begin is now.

www.cohesivesolutions.com
www.ibm.com/products/maximo

Decarbonization of the UK’s energy system requires increased interaction and cooperation between all energy stakeholders.

Therefore, alongside electrification, digitalization remains a key enabler in the pathway towards net zero against a backdrop of volatility, unpredictability, and complexity.

In the UK’s first Energy Digitalisation Strategy, the Government set out how only a digitalized energy system can withstand the distributed nature of low-carbon technologies connecting to the grid in the coming years. A number of subsequent use cases - many now being realized as actual projects - are emerging across the UK energy sector. For example, the National Energy System Operator (NESO) is leading on the Virtual Energy System, which aims to enable an ecosystem of connected digital twins. However, introducing digital technology, enabling data sharing, and increasing automation inherently introduce additional risk into safety-critical systems.

How can the energy industry adopt digital technology, deploy sensor systems, share data and enable the introduction of computational models (including artificial intelligence and digital twins) to command their assets and be confident in their safe, secure and economical operation in addition to meeting the legal and regulatory requirements?

A substantial green prize lies ahead for the UK as it decarbonizes its economy.

As we enter 2025, the energy industry stands at a pivotal moment in its history. Despite global economic headwinds and geopolitical uncertainties, we’ve witnessed unprecedented momentum in clean energy deployment, and DNV forecasts that the world will hit peak emissions this year.

The UK’s energy landscape is transforming rapidly, with record-breaking renewable energy generation and falling costs of clean technologies setting new benchmarks for what is possible. The past year has demonstrated the UK’s potential on this transition journey. We’ve seen wind power generation reach historic highs, battery storage capacity expand significantly and Electric Vehicle (EV) adoption show signs of recovery. However, these achievements, while significant, are merely the initial steps toward the UK’s 2050 net-zero goal.

The ambitious new transition pathway mapped out by the UK government includes three critical milestones that will shape our journey towards net zero. DNV’s UK Energy Transition Outlook 2025 reveals a mixed picture of progress and challenges against these three targets:

• First, by 2030, we expect to see a dramatic expansion of clean power infrastructure, with installed renewable capacity forecast to double, reducing our reliance on gas to less than 12%, but falling just short of the Clean Power 2030 target of 95%.

• Second, DNV’s forecast of a 35% reduction of today’s emissions by 2035 highlights that progress is being made, but that the UK will still fall short of its new Nationally Determined Contributions (NDC) target of at least 81%.

• Third, our forecast for 2050 sees the UK successfully decoupling economic growth from energy consumption, reducing the UK’s energy demand by 25% and emissions by 82% compared with 1990 levels. But clearly the UK is not reaching net zero.