Liquid Cooling AI

The world’s first 160 µm bend-insensitive fibre – higher capacity, smaller footprint

Unlock record cable density for today’s space-constrained ducts, buildings, and data centres with BendBright XS 160 µm single-mode fibre.

By shrinking the coating while maintaining a 125 µm glass diameter and full G.652/G.657.A2 compliance, it delivers faster, more cost-effective deployments that don’t disrupt established field practices.

Enables slimmer, lighter, high-count cables that install faster and travel further when blown

Packs dramatically more fibres into the same pathway thanks to a >50% cross-sectional area reduction versus 250 µm fibres

Ensures full backward-compatibility with legacy single-mode fibres for seamless splicing and upgrades

Provides superior bend performance and mechanical reliability with Prysmian’s ColorLockXS coating system

Ideal for FTTH/X access, metro densification, and hyperscale/data centre interconnects where every millimetre counts
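To make the ">50%" figure concrete: a coated fibre’s cross-section scales with the square of its outer diameter, so the saving follows from the two coating diameters alone. The sketch below treats each fibre as a solid circle and ignores packing geometry, buffer tubes, and strength members, so the density gain it prints is an idealised upper bound rather than a cable specification.

```python
import math


def coated_area_mm2(diameter_um: float) -> float:
    """Cross-sectional area of a coated fibre, modelled as a solid circle, in mm²."""
    radius_mm = diameter_um / 2 / 1000
    return math.pi * radius_mm ** 2


def area_reduction(new_um: float, old_um: float) -> float:
    """Fractional reduction in cross-sectional area going from old_um to new_um."""
    return 1 - coated_area_mm2(new_um) / coated_area_mm2(old_um)


reduction = area_reduction(160, 250)   # equivalent to 1 - (160/250)^2
ideal_gain = 1 / (1 - reduction)       # fibres per unit area, ignoring packing losses
print(f"Area reduction: {reduction:.1%}")              # Area reduction: 59.0%
print(f"Idealised density gain: {ideal_gain:.2f}x")    # Idealised density gain: 2.44x
```

In a real cable the achievable gain is lower than this idealised figure, because round fibres cannot tile a duct perfectly and the cable structure takes up space of its own.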

Ready to boost capacity and shrink your network footprint?

Connect with our experts, request samples, or download the BendBright XS 160 µm technical data today.

Welcome to this latest edition of DCNN! As we settle into the long winter nights and begin to unwind for the festive period, it’s clear that the pace of development in the data centre world is doing anything but. Whilst thoughts shift to relaxation and time with loved ones, we simultaneously find ourselves navigating new challenges on a global scale.

For instance, in Europe, we see the stark impact of how heavily the continent depends on foreign – particularly American – hyperscalers. Recent outages across global cloud infrastructure highlight this best: when services hosted on AWS or safeguarded by Cloudflare go down, the disruption to European public services, factories, and more is immediate. In response to this increasingly uneasy reality, characterised by a distinct lack of data sovereignty, European nations are finally beginning to take action, but how swift and how effective this will prove remains to be seen.

As we look to the new year, it’s essential for all stakeholders to remain agile and informed amid seemingly incessant global change, including influences from well beyond the tech space. More generally, as is clear from the features in this issue, we can at least rest assured that the coming year promises much transformation.

I hope you enjoy the edition!

Joe

GROUP EDITOR: SIMON ROWLEY

T: 01634 673163

E: simon@allthingsmedialtd.com

ASSISTANT EDITOR: JOE PECK

T: 01634 673163

E: joe@allthingsmedialtd.com

ADVERTISEMENT MANAGER: NEIL COSHAN

T: 01634 673163

E: neil@allthingsmedialtd.com

SALES DIRECTOR: KELLY BYNE

T: 01634 673163

E: kelly@allthingsmedialtd.com

STUDIO: MARK WELLER

T: 01634 673163

E: mark@allthingsmedialtd.com

MANAGING DIRECTOR: IAN KITCHENER

T: 01634 673163

E: ian@allthingsmedialtd.com

CEO: DAVID KITCHENER

T: 01634 673163

E: david@allthingsmedialtd.com

Steven Carlini of Schneider Electric outlines how new collaborations with NVIDIA and ecosystem partners are accelerating AI-ready power, cooling, and digital-twin capabilities

DCNN speaks with Jens Holzhammer of Panduit about the company’s approach to ongoing supply pressures, rising power densities, and more

Mike Hellers of LINX and Mike Hoy of Pulsant explore how Scotland’s reliance on distant internet hubs undermines performance, security, and sustainability

Joe from DCNN reports back from Schneider Electric’s recently held Innovation Summit in Copenhagen

Chris Cutler of Riello explores how using SiC semiconductors could help the next generation of modular UPS systems ensure operators can balance reliability with sustainability

Karl Bateson of Centiel reflects on the pressures created by rapid, AI-driven development and how UPS strategies are evolving to keep pace

Ricardo de Azevedo of ON.energy explains why traditional UPS designs are falling short as AI campuses overwhelm utility systems

Domagoj Talapko of ABB details how new UPS strategies are helping meet growing power and reliability requirements

Dennis Mattoon of TCG explores how trusted computing standards aim to protect facilities against increasingly sophisticated hardware and firmware attacks

Cory Hawkvelt of NexGen Cloud outlines how sovereignty, regulation, and hardware-level security are reshaping the safeguards required for mission-critical AI workloads

Brian Higgins of Group 77 examines how AI, cyber-physical convergence, and rising environmental pressures are reshaping safety and security across modern data centres

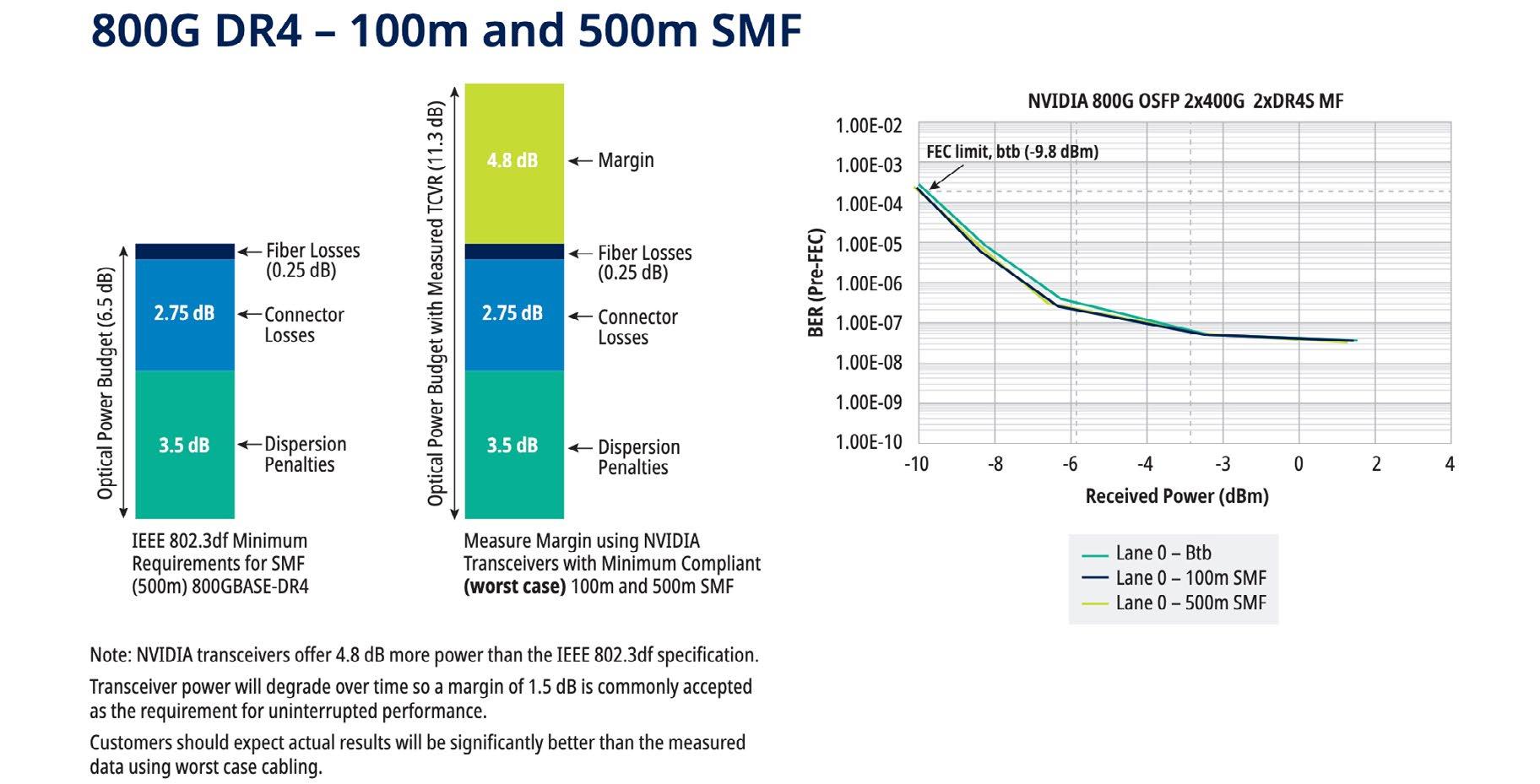

Michael Akinla of Panduit outlines how standards-aligned fibre design helps AI SuperPods manage rising MPO density and prepare for rapid transitions in Ethernet speeds

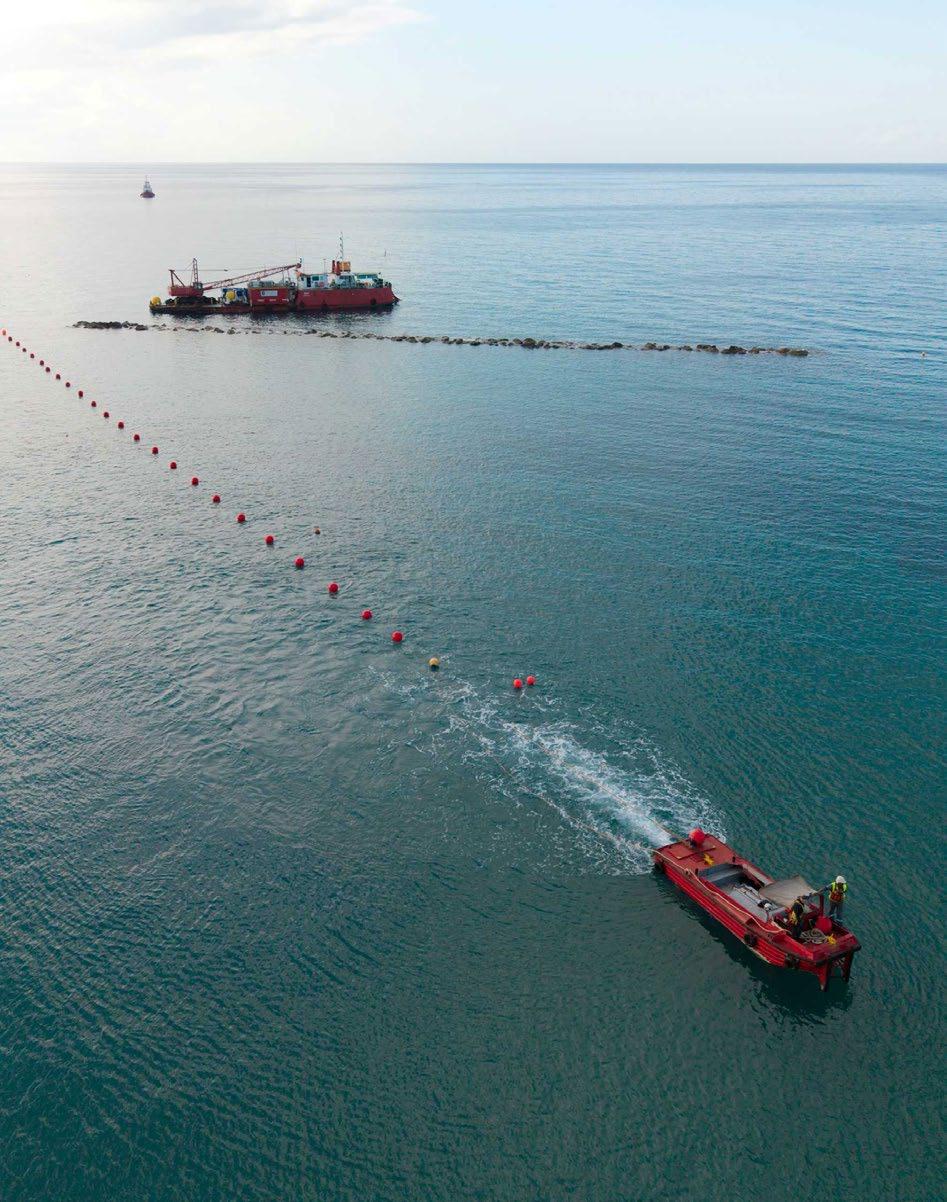

Amanda Springmann of Prysmian explains how reduced-diameter single-mode fibre and high-density cable designs are helping operators keep pace with rapidly escalating optical demands

Carson Joye of AFL examines the ultra-high-density fibre innovations reshaping hyperscale and long-haul networks

Advertorial

Baudouin explores how a century of engineering expertise translates into gensets built for the uncompromising demands of modern data centres

Edge Computing

Niklas Lindqvist of Onnec outlines the design principles that ensure edge facilities can meet rising AI-driven performance and efficiency demands

Carsten Ludwig of R&M suggests how IMDCs can deliver the energy efficiency needed to support high-density AI workloads

Arturo Di Filippi of Vertiv explains how modular, grid-interactive power systems are redefining efficiency and resilience in an era of constrained energy supply

Stephen Yates of IT Cleaning outlines the relationship between cleanliness, airflow dynamics, and energy efficiency in data centres

Bonus Feature

Ian Lovell of Reconext analyses how shifting risk perceptions, tighter power constraints, and proven recovery methods are reshaping end-of-life policy inside modern data centres

Show Previews: DCW London

Equinix has acquired an 85-acre site in Hertfordshire for a planned data centre campus, announcing a £3.9 billion investment expected to deliver more than 250 MW of capacity. The company says the development will support organisations across sectors such as healthcare, public services, finance, manufacturing, and entertainment, and forms part of wider ambitions around UK sovereign AI capability.

The site, formerly known as DC01UK, is forecast to create around 2,500 construction jobs and more than 200 permanent roles. KPMG estimates the project could generate up to £3 billion in annual Gross Value Added during construction, and £260 million once operational.

Equinix says it will work with local partners on education, skills, and environmental initiatives, and plans to retain over half the land as open space, introduce ecological habitats, and use dry cooling to support at least a 10% biodiversity net gain. The campus will also be designed to enable future heat reuse.

Equinix, equinix.com

atNorth, an operator of sustainable data centres, has agreed a partnership with Vestforbrænding, Denmark’s largest waste-to-energy company, to supply excess heat from its forthcoming DEN01 data centre campus into the district heating network serving Greater Copenhagen.

DEN01, a 22.5 MW site in Ballerup, is scheduled to open in early 2026. Through the collaboration, warm water generated as a by-product of direct liquid cooling will be transferred into Vestforbrænding’s network from 2028. The recovered heat is expected to support the heating of more than 8,000 homes, reducing energy consumption for local central heating and lowering emissions for both organisations.

Steen Neuchs Vedel (pictured right), CEO of Vestforbrænding, says, “For many years, we have talked about surplus heat from data centres being part of the future. Now, the future is here.”

Eyjólfur Magnús Kristinsson (pictured left), CEO at atNorth, adds, “As the demand for AI-ready digital infrastructure continues to increase, it is imperative that data centre companies scale in a responsible way.”

atNorth, atnorth.com

AVK, a supplier of data centre power systems in Europe, and Rolls-Royce Power Systems have announced a new multi-year capacity framework as an addition to their established System Integrator Agreement.

The new partnership cements closer collaboration between the two companies, with a focus on increasing industrial capacity for genset orders whilst accelerating joint innovation across the data centre and critical power markets. It comes 12 months after AVK announced a record-breaking year of sales, with 2024 seeing AVK deliver its 500th mtu generator from Rolls-Royce.

Under the new Memorandum of Understanding, their relationship moves on to become a strategically integrated, longer-term alliance. The framework formalises a five-year capacity partnership, with Rolls-Royce increasing supply and AVK committing to order volume.

A parallel six-year master framework designates AVK as the exclusive System Integrator for mtu generator sets across the UK and Ireland until 2031.

AVK, avk-seg.com

The London Internet Exchange (LINX) has deployed its 25th 400GE port across its global network, a significant milestone that reflects the wider industry’s growth.

The first 400GE port was provisioned in 2021, with demand for ultra-high bandwidth connectivity surging since then, driven by the exponential growth in cloud services, video streaming, gaming, and AI workloads. A single 400GE connection delivers four times the capacity of a standard 100GE port, enabling networks to consolidate traffic, reduce operational complexity, and build in resilience.

LINX has invested heavily in Nokia’s next-generation hardware and optical technologies to enable 400GE delivery across its interconnection ecosystems in London and Manchester.

Jennifer Holmes, CEO of LINX, comments, “Reaching 25 active 400GE ports is a testament to the evolving needs of our members and the strength of our technical infrastructure.”

LINX, linx.net

AECOM has been selected by Nostrum Data Centers to lead the design and construction management of a new data centre in Badajoz, Spain. With an investment exceeding €1.9 billion (£1.6 billion), ‘Nostrum Evergreen’ is one of Spain’s most ambitious digital infrastructure projects, with capacity expected to reach 500 megawatts.

The first phase includes the design and construction of data halls and critical operational infrastructure, with an initial capacity of 150 megawatts electrical (MWe). The second phase, scheduled to begin in early 2029, will allow the site to reach 300 MWe, and the complex’s design will enable scalability up to 500 MWe.

“This data centre in Badajoz will have a total capacity similar to the combined capacity of all current operational data centres in Spain,” says Gabriel Nebreda (pictured left), CEO of Nostrum Group.

The project expects to obtain its building permit by mid-2026 and has already secured electrical capacity and more than 200,000 m² of ready-to-build industrial land.

AECOM, aecom.com

Oxford Instruments NanoScience, a UK provider of cryogenic systems for quantum computing and materials research, has supplied one of its advanced Cryofree dilution refrigerators, the ProteoxLX, to Oxford Quantum Circuits’ (OQC) newly launched quantum-AI data centre in New York.

As the first facility designed to co-locate quantum computing and classical AI infrastructure at scale, the centre will use the ProteoxLX’s cryogenic capabilities to support OQC’s next-generation quantum processors, helping to advance the development of quantum-enabled AI applications.

The announcement follows the opening of OQC’s New York-based quantum-AI data centre, powered by NVIDIA CPU and GPU Superchips. Within OQC’s logical-era quantum computer, OQC GENESIS, the ProteoxLX provides the ultra-low temperature environment needed to operate its 16 logical qubits, enabling over 1,000 quantum operations.

Matthew Martin, Managing Director at Oxford Instruments NanoScience, says, “We’re proud to support OQC in building the infrastructure that will define the next generation of computing.”

Oxford Instruments NanoScience, nanoscience.oxinst.com

Steven Carlini, Chief Advocate, Data Center and AI at Schneider Electric, outlines how new collaborations with NVIDIA and ecosystem partners are accelerating AI-ready power, cooling, and digital-twin capabilities for gigawatt-scale deployments.

The compute requirements of AI reasoning and other inference workloads are outpacing traditional data centre designs, creating an urgent need for high-density power and advanced cooling. Schneider Electric is collaborating on the newly announced NVIDIA Omniverse DSX Blueprint for multi-generation, gigawatt-scale build-outs, which uses NVIDIA Omniverse libraries and SDKs and aims to set a new standard for AI infrastructure.

As the world’s leading provider of power distribution and liquid cooling solutions for data centres and AI factories, Schneider Electric is working with NVIDIA and other ecosystem partners at the AI Factory Research Center in Virginia to develop next-generation, AI-ready infrastructure faster and with greater efficiency and performance.

These are the most recent highlights of Schneider Electric’s innovation and capability commitments to the partnership:

Earlier this year, Schneider Electric shared plans to invest more than $700 million (£525 million) in its US operations between now and 2027. These planned investments are intended to support national efforts to strengthen energy infrastructure in response to growing demand across data centres, utilities, manufacturing, and energy sectors – particularly as AI adoption accelerates. Building on investments made in 2023 and 2024 to reinforce its North American supply chain, the initiative includes potential manufacturing expansions and workforce development. These efforts reflect strong customer demand for solutions that improve energy efficiency, scale industrial automation, and enhance grid reliability.

Together, these plans are designed to enable innovation, especially through new reference designs developed in collaboration with NVIDIA and the integration of Schneider Electric’s digital twin ecosystem.

In September, Schneider Electric announced new reference designs developed with NVIDIA that significantly accelerate time to deployment and aid operators as they adopt AI-ready infrastructure solutions.

The first reference design delivers one of the industry’s first critical frameworks for integrated power management and liquid cooling control systems – including Motivair by Schneider Electric liquid cooling technologies – and enables seamless management of complex AI infrastructure components. It includes interoperability with NVIDIA Mission Control, NVIDIA’s AI factory operations and orchestration software, which provides cluster and workload management features. The control systems reference design can also be used with Schneider Electric’s data centre reference designs for NVIDIA Grace Blackwell systems, enabling operators to keep pace with the latest advances in accelerated computing while retaining seamless control of their power and liquid cooling systems.

The second reference design focuses on deploying AI infrastructure for AI factories of up to 142 kW per rack, specifically NVIDIA GB300 NVL72 racks, in a single data hall. Created to provide a framework for the next-generation NVIDIA Blackwell Ultra architecture, the reference design covers four technical areas: facility power, facility cooling, IT space, and lifecycle software. The design is available in configurations for both American National Standards Institute (ANSI) and International Electrotechnical Commission (IEC) standards.
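For a sense of scale, the GB300 NVL72 houses 72 GPUs per rack, so 142 kW averages out to roughly 2 kW per GPU slot once CPUs, switches, and other rack components are included. The sketch below does that division and totals the IT load for a hall; the 100-rack hall is a hypothetical figure chosen for illustration, not part of Schneider Electric’s reference design.

```python
GPUS_PER_RACK = 72      # GB300 NVL72: 72 GPUs per rack
RACK_POWER_KW = 142.0   # upper rack density cited for the second reference design


def power_per_gpu_slot_kw(rack_kw: float, gpus: int) -> float:
    """Average rack power per GPU slot (includes CPUs, switches, etc., not the GPU alone)."""
    return rack_kw / gpus


def hall_it_load_mw(racks: int, rack_kw: float) -> float:
    """Total IT load in MW for a hall of identical racks running at full rack density."""
    return racks * rack_kw / 1000


print(f"{power_per_gpu_slot_kw(RACK_POWER_KW, GPUS_PER_RACK):.2f} kW per GPU slot")    # 1.97
print(f"{hall_it_load_mw(100, RACK_POWER_KW):.1f} MW for a hypothetical 100-rack hall")  # 14.2
```

This counts IT load only; facility power and cooling plant sit on top of it, which is why the reference design treats them as separate technical areas.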

Leveraging the NVIDIA Omniverse Blueprint for AI factory digital twins, Schneider Electric and ETAP enable the development of digital twins that bring together multiple inputs for mechanical, thermal, networking, and electrical systems to simulate how an AI factory operates. The collaboration is set to transform AI factory design and operations by providing enhanced insight into and control over electrical systems and power requirements, presenting an opportunity for significant gains in efficiency and reliability.

Schneider Electric has built on this virtual modelling capability by also enabling enterprises of the future to optimise the electrical infrastructure that supports top-tier accelerated compute environments.

Collaboration between ETAP and NVIDIA introduces a ‘Grid to Chip’ approach that addresses the critical challenges of power management, performance optimisation, and energy efficiency in the era of AI. Currently, data centre operators can estimate average power consumption at the rack level, but ETAP’s new digital twin aims to model dynamic load behaviour more precisely at the chip level, improving power system design and energy efficiency.
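The gap between rack-level averages and chip-level dynamics can be illustrated with a toy model: if every accelerator in a rack alternates between a high-power compute phase and a low-power phase in lockstep, the average hides a large, fast swing that the power system must still absorb. The wattages, phase length, and duty cycle below are hypothetical values, and this is a simple square-wave illustration, not ETAP’s digital twin or any NVIDIA power profile.

```python
from statistics import mean


def chip_trace(idle_w: float, peak_w: float, period: int, duty: float, steps: int) -> list[float]:
    """Hypothetical square-wave power trace: peak_w for `duty` of each period, idle_w otherwise."""
    high_steps = int(period * duty)
    return [peak_w if (t % period) < high_steps else idle_w for t in range(steps)]


def rack_trace(chips: int, trace: list[float]) -> list[float]:
    """Rack draw when every chip follows the same trace in lockstep (worst case for swings)."""
    return [chips * p for p in trace]


trace = chip_trace(idle_w=300.0, peak_w=1200.0, period=10, duty=0.6, steps=1000)
rack = rack_trace(72, trace)
avg_kw, peak_kw, low_kw = mean(rack) / 1000, max(rack) / 1000, min(rack) / 1000
print(f"average {avg_kw:.1f} kW, peak {peak_kw:.1f} kW, swing {peak_kw - low_kw:.1f} kW")
# average 60.5 kW, peak 86.4 kW, swing 64.8 kW
```

A design sized only to the 60.5 kW average would miss the 86.4 kW peaks and the repeated 64.8 kW step changes, which is the kind of behaviour chip-level modelling is meant to expose.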

This collaborative effort highlights the commitment of both ETAP and NVIDIA to driving innovation in the data centre sector, empowering businesses to optimise their operations and effectively manage the challenges associated with AI workloads. The collaboration aims to enhance data centre efficiency while also improving grid reliability and performance.

These innovations underscore Schneider Electric’s commitment to unlocking the future of AI by pairing its data centre expertise with NVIDIA-accelerated platforms. Together, we’re helping customers overcome infrastructure limits and scale efficiently and at speed. The progression of advanced, future-forward AI infrastructure doesn’t stop here.

Schneider Electric, se.com

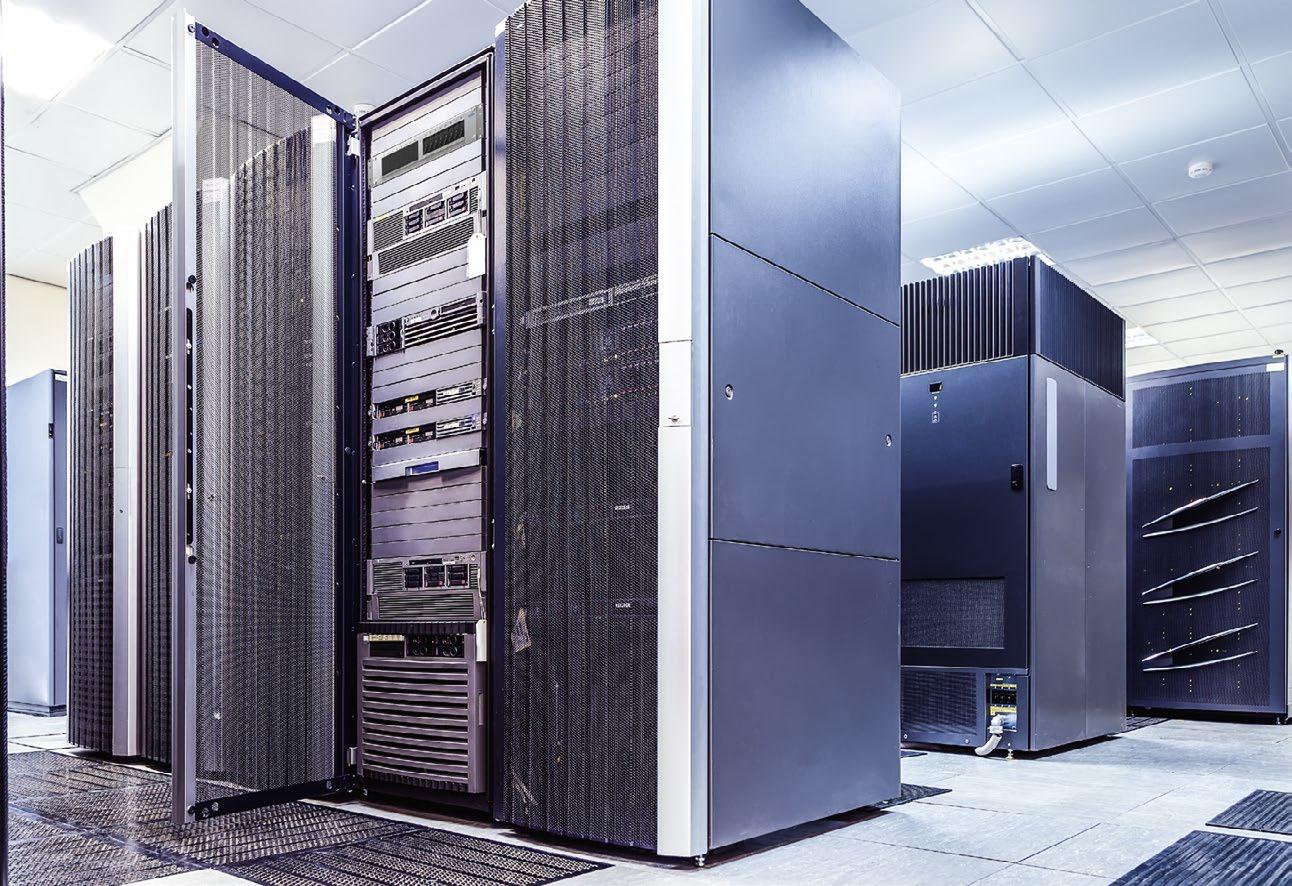

Data centres face growing challenges, such as rising power demands, labour shortages, and the rapid growth of AI workloads. Traditional approaches are often too slow, too costly, and unsustainable where speed, efficiency, and scalability are required.

R&M addresses this with modular, ready-to-use solutions. These support key areas including servers and storage, computing rooms, meet-me rooms, and interconnects.

Scan to contact us.

Reichle & De-Massari UK Ltd.

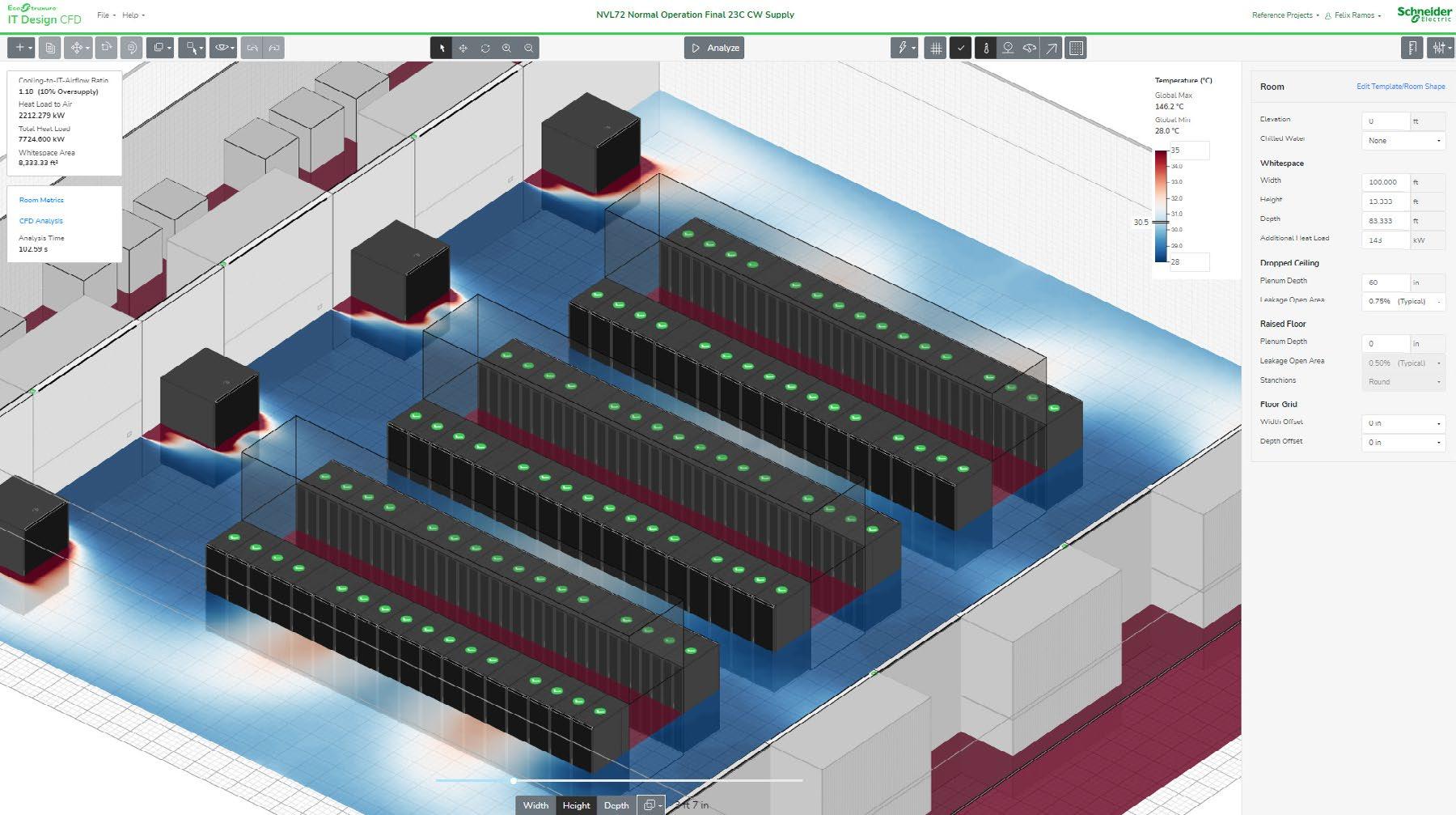

Optimising cooling and energy consumption requires an understanding of airflow patterns in the data center whitespace, which can only be predicted by and visualised with the science of computational fluid dynamics (CFD).

Now, for the first time, the technology Schneider Electric uses to design robust and efficient data centers is available to everyone.

• Physics-based predictive analyses

• Follows ASHRAE guidelines for data center modeling

• Designed for any skill level, from salespeople to consulting engineers

• Browser-based technology, with no special hardware requirements

• High-accuracy analyses delivered in seconds or minutes, not hours

• Supported by 20+ years of research and dozens of patents and technical publications

Equipment Models – Easily choose from a range of data center equipment models, from racks to coolers to floor tiles.

CFD Analysis – The fastest full-physics solver in the industry, delivering results in seconds or minutes, not hours.

Cooling Check – At-a-glance performance of all IT racks and coolers.

Visualisation Planes and Streamlines – Visualise airflow patterns, temperatures, and more.

Reference Designs – Quickly start your design from pre-built templates.

Cooling Analysis Report – Generate a comprehensive report of your data center with one click.

IT Airflow Effectiveness and Cooler Airflow Efficiency – Industry-leading metrics guide you to optimise airflow.

Room and Equipment Attributes – Intuitive settings for key room and equipment properties.

Earlier this month, Joe from DCNN sat down with Jens Holzhammer, SVP and Managing Director EMEA at Panduit, in the stunning setting of the Schlosshotel Kronberg on the outskirts of Frankfurt, to find out about his company’s approach to ongoing supply pressures, rising power densities, and more.

Joe: Thank you for speaking with us today, Jens. To start, could you tell us about your role at Panduit?

Jens: I joined the organisation pretty much a year ago now. The role essentially encompasses the whole commercial organisation for the EMEA theatre, and that starts with sales and ends with the operational side of things. Being an American company, we are matrixed, so there are heavy links into manufacturing as well as logistics and the supply chain. My role covers the whole strategic ‘go-to-market’ side of things right down to the very day-to-day operational aspects of making customers happy, customer acquisition, and partner management.

Joe: What are some of the key challenges you’re facing at the moment?

Jens: There is a huge demand for data-centre-related products in the market. Demand currently far outweighs supply, and it’s not only us who are impacted by that, but also other vendors in the data centre market. Anything that goes into high-density fibre cable connectivity – including ducting for all the power and fibre cables, as well as copper cable – is in high demand. There is not enough supply in the market, and therefore everyone is struggling a little bit. It’s a nice challenge – or a luxury problem – to have.

Additionally, as many companies and countries are investing in electrical infrastructure, the energy transition, and data centres and AI, it is important to strike a balance – given the available resources – and focus on the right markets at the right time.

Joe: What are the biggest trends and developments you’re seeing at Panduit currently?

Jens: The coming together of AI, increasing power consumption, and the energy transition – this all forms an area where the various divisions within our business are overlapping and interconnected. You have the white space and the grey space in the data centre, and in the grey space you have a lot of infrastructure installation applications where our industrial electrical business is coming in nicely. Thanks to our broad portfolio of solutions, we can offer system integrators and end customers alike better combinations for efficient and effective electrical and network infrastructure.

Another trend, given all the ongoing geopolitical and macroeconomic developments globally, including with tariffs, is that we see a strong need to further localise the supply chain. We produce in Romania, for example, and we are going to increase the number of products manufactured there. Rather than sourcing everything from America, we are relocating capabilities into EMEA.

More generally, we have identified strong pockets of growth in geographical areas which we want to concentrate more on; the Nordics are certainly one of these because a lot of data centre activities are now moving to the north from the more traditional spaces. Then there are other regions, such as Saudi Arabia, where we are also currently investing heavily.

Joe: What are some of the most pressing demands from your data centre customers?

Jens: Definitely the on-demand availability of products and, therefore for us, the localisation of the supply chain. Let me give you an example: right now, there is very high demand for fibre optic cable guides, which we normally produce in our North American plants. We now see opportunities to manufacture such important components for AI data centres in Europe as well.

Joe: How have infrastructure requirements evolved, especially with the rise of AI workloads requiring higher power densities?

Jens: We are now talking about 100 kilowatts plus of power per rack. There’s even talk of some early developments of one-megawatt racks, which is crazy. With tonnes of GPUs going in there, you can imagine the power requirement and the heat dissipation. Cooling is certainly an area where the industry has an increasing challenge.
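To put the heat-dissipation point in numbers, the standard heat balance Q = ṁ·c_p·ΔT gives the coolant flow needed to carry a rack’s heat away. The sketch below assumes that all rack heat goes into water with a 10 K temperature rise; in practice part of the load is air-cooled and real coolants (such as glycol mixes) have different properties, so treat the output as an order-of-magnitude guide.

```python
WATER_CP_J_PER_KG_K = 4186.0   # approximate specific heat of water
WATER_DENSITY_KG_PER_L = 1.0   # approximate; glycol mixes and warm water differ


def coolant_flow_lpm(heat_w: float, delta_t_k: float) -> float:
    """Flow (litres/minute) needed to carry heat_w with a coolant temperature rise of delta_t_k.

    From Q = m_dot * c_p * dT, so m_dot = Q / (c_p * dT).
    """
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60


for rack_kw in (100, 1000):
    print(f"{rack_kw} kW rack, 10 K rise: {coolant_flow_lpm(rack_kw * 1000, 10):.0f} L/min")
# 100 kW rack, 10 K rise: 143 L/min
# 1000 kW rack, 10 K rise: 1433 L/min
```

Because flow scales linearly with heat load, a one-megawatt rack needs ten times the flow of a 100 kW rack at the same temperature rise, which is why liquid cooling dominates these discussions.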

Not just this, but I was talking to a professor recently and they are currently looking into using data centres for renewable or semi-renewable heating purposes – taking the heat that is created within the data centre environment and using that as a source for district heating of households. There’s a development in former East Germany, to give you one example, which is going to be a big one.

Joe: With Europe’s sustainability targets, how is Panduit helping reduce its overall environmental impact?

Jens: We are particularly challenged in Europe regarding regulations and laws. The company itself is quite involved in the sustainability space and we are EcoVadis Silver awarded, meaning we are in the top 15% of assessed companies globally for sustainability. Thanks to the commitment of our Executive Chairman, we consider this to be a holistic approach to environmental stewardship, ranging from sustainable manufacturing processes and energy-saving projects in our plants to the use of environmentally friendly materials in our products. We recently launched an eco-friendly cable tie that is made from 100% waste materials and meets our quality standard for nylon 6.6.

Joe: Looking ahead, how do you see the future of your market developing?

Jens: According to some of our market ecosystem partners – and we work with many of the large logistics and distribution companies – there is no other company like Panduit that offers such a wide and deep portfolio when it comes to data centre applications, commercial network infrastructures, or industrial electrical infrastructures. For the foreseeable future – the next five years or so – we see a strong, unprecedented, continuous demand on the data centre side and we are very geared up for it. There are more opportunities than challenges.

Panduit, panduit.com

Subscribe free today to receive your digital issue of Data Centre & Network News, along with our weekly newsletters featuring the latest breaking news and exclusive offers from industry leaders.

Mike Hellers, Product Development Manager at LINX, and Mike Hoy, Chief Technology Officer at Pulsant, explore how Scotland’s reliance on distant internet hubs undermines performance, security, and sustainability.

Imagine sending a message to someone next door, only for it to be routed via London or Amsterdam first.

That’s what happens to much of Scotland’s internet traffic today. Data often leaves the country before reaching its destination, creating unnecessary delays and higher costs, and raising serious questions about privacy, resilience, and digital sovereignty.

Hosting and routing traffic within Scotland is the missing piece needed to unlock faster speeds, stronger security, and greater economic inclusion.

Most Scottish internet traffic is still routed via major hubs in London, Manchester, or Continental Europe. This leads to slower performance for consumers and real business consequences for organisations, especially in rural regions where only half of homes currently have gigabit-capable broadband. Longer data journeys also consume more energy, enlarge carbon footprints, and increase vulnerability to faults or cyberattacks.
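The cost of such a detour can be estimated from propagation delay alone. Light in silica fibre travels at roughly c/1.47, which gives the common rule of thumb of about 5 µs per kilometre. In the sketch below, both path lengths are hypothetical (a short local hand-off versus an assumed 650 km route down to London and back north again), and queuing and equipment delays are ignored.

```python
FIBRE_US_PER_KM = 5.0  # rule of thumb: light in silica fibre travels at roughly c / 1.47


def rtt_ms(one_way_path_km: float) -> float:
    """Round-trip propagation delay in ms; ignores queuing, serialisation and equipment delay."""
    return 2 * one_way_path_km * FIBRE_US_PER_KM / 1000


local_km = 20             # hypothetical: both networks meet at a local Scottish exchange
via_london_km = 2 * 650   # hypothetical: ~650 km down to London, then back north
extra_ms = rtt_ms(via_london_km) - rtt_ms(local_km)
print(f"Extra round-trip delay from the detour: {extra_ms:.1f} ms")  # 12.8 ms
```

Real routes add switching and queuing delay on top of this, so the propagation figure is a floor on what the detour costs rather than an estimate of measured latency.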

The infrastructure already exists. Data centres such as Pulsant’s, where LINX first operated in Scotland, prove that local routing is viable. However, wider adoption requires collaboration between industry and government to promote regional peering and keep traffic in-country. This is crucial as AI adoption surges, creating a sharp rise in data storage, processing, and streaming demands.

Many organisations assume using a Scottish data centre guarantees data sovereignty; it doesn’t. Data may still cross borders during updates, backups, or when using cloud services. For highly regulated sectors like finance and healthcare, this lack of transparency poses legal and ethical risks.

These sectors need more than storage assurances; they need visibility and control over where data travels.

By enabling clearer routing policies, public-private collaboration, and greater transparency over data flows, Scotland can boost performance, protect sensitive information, and support a greener, fairer digital economy. The infrastructure is here. Now it’s time to keep more data in Scotland, where it belongs.

LINX, linx.net | Pulsant, pulsant.com

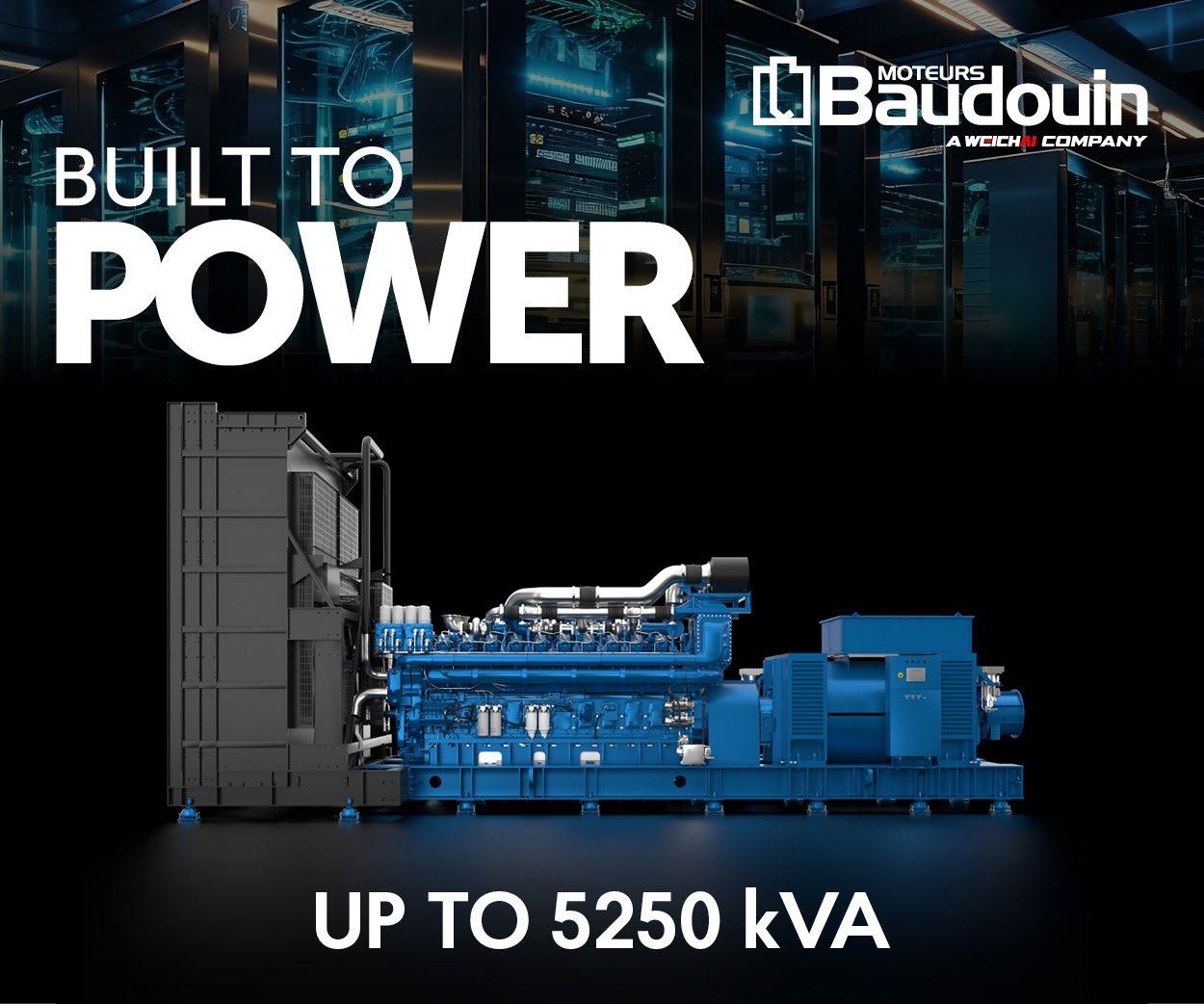

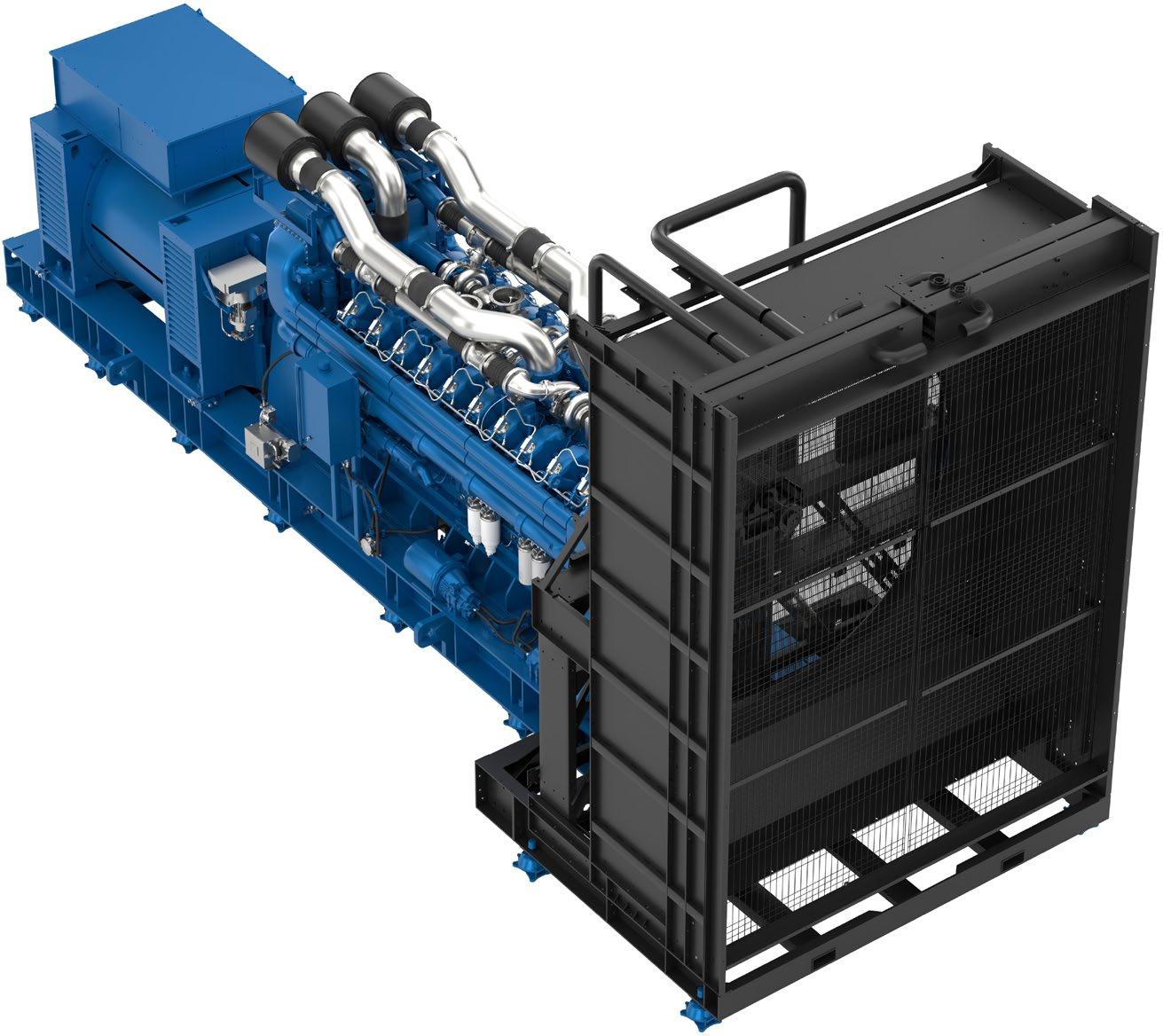

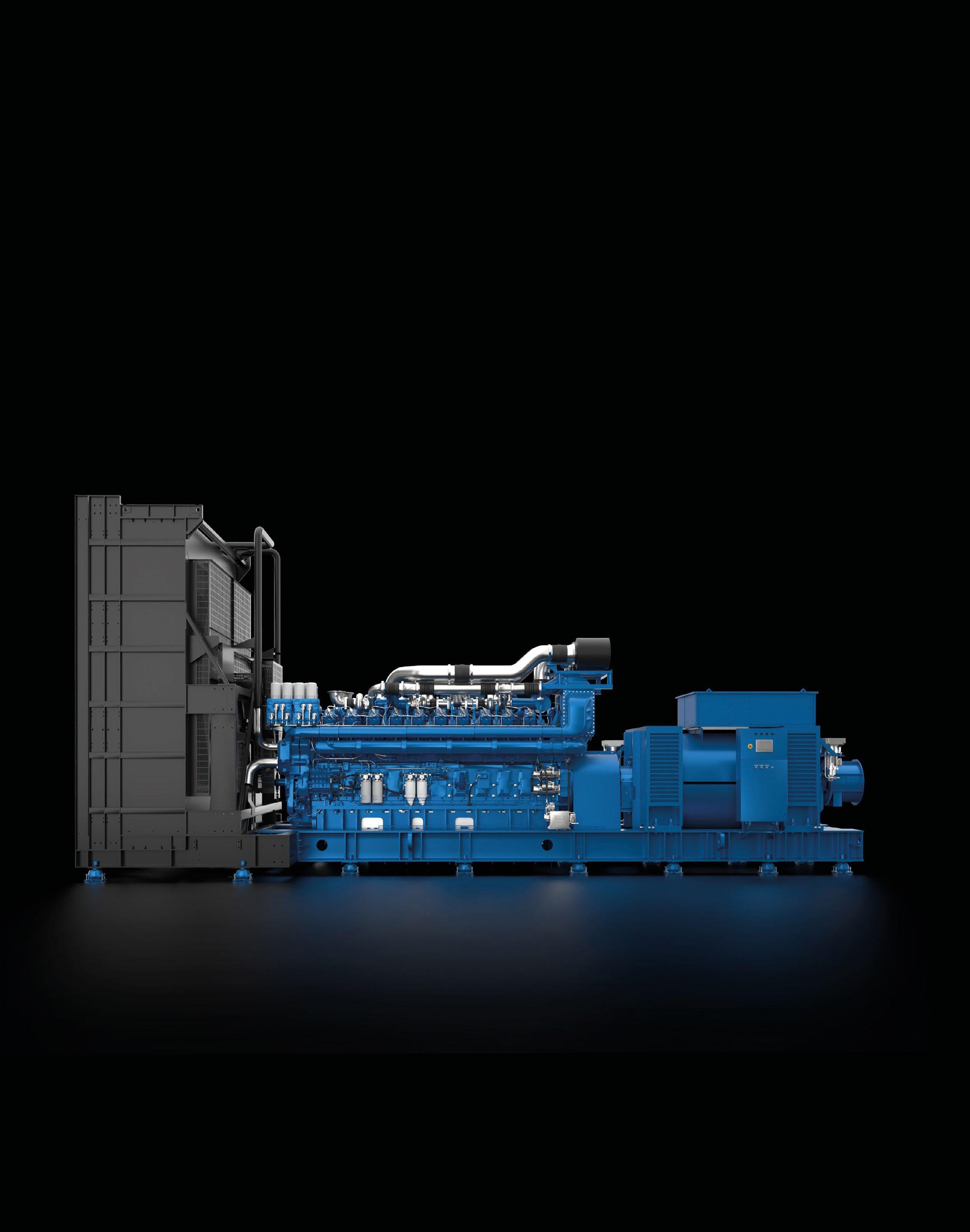

Baudouin explores how a century of engineering expertise translates into gensets built for the uncompromising demands of modern data centres.

As the global data centre landscape accelerates, power continuity has become the ultimate benchmark of operational excellence. With workloads increasing exponentially and uptime measured in milliseconds, the demand for high-performance, mission-critical backup power has never been greater.

For over a century, Baudouin has been engineering power solutions that keep essential infrastructure running without interruption. Today, the company is redefining backup generation for the digital age – delivering diesel gensets purpose-built for data centre applications where reliability, performance, and responsiveness are non-negotiable.

In a data centre, every second counts. Grid fluctuations or even brief power drops can lead to costly service disruption. Baudouin gensets are designed to guarantee seamless continuity between mains and generator supply, ensuring instant start-up and stable voltage and frequency under the most demanding load conditions.

Built for Tier III and Tier IV installations, Baudouin generator sets combine outstanding transient performance, high load acceptance, and redundant start capability. Fully compliant with ISO 8528-5 performance class G3 and pre-approved by the Uptime Institute, they deliver the responsiveness that operators require to maintain uninterrupted operations.

Baudouin’s data centre range covers outputs from 2,000 to 5,250 kVA, offering class-leading power density that enables smaller footprints and simplified integration. Each unit is equipped with intelligent electronic fuel injection, advanced turbocharging, and high-efficiency intercooling for optimal response and durability. A reinforced H-Beam chassis ensures structural rigidity, while optimised NVH architecture minimises vibration and noise. Every genset is also HVO-ready, supporting the use of renewable diesel fuels that reduce carbon emissions without compromising performance – aligning reliability with sustainability.
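Genset ratings quoted in kVA describe apparent power; the real power available to the load depends on the power factor. Diesel gensets are commonly rated at a power factor of 0.8, which the sketch below assumes; the actual figure for any Baudouin model should be taken from its datasheet.

```python
GENSET_POWER_FACTOR = 0.8  # common rating convention for diesel gensets; check the datasheet


def kva_to_kwe(kva: float, power_factor: float = GENSET_POWER_FACTOR) -> float:
    """Real electrical output (kWe) from an apparent-power rating (kVA)."""
    return kva * power_factor


for kva in (2000, 5250):  # ends of the range quoted for Baudouin's data centre gensets
    print(f"{kva} kVA ≈ {kva_to_kwe(kva):.0f} kWe at power factor {GENSET_POWER_FACTOR}")
# 2000 kVA ≈ 1600 kWe at power factor 0.8
# 5250 kVA ≈ 4200 kWe at power factor 0.8
```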

Beyond technical specifications, Baudouin differentiates itself through agility. Its production capability allows short delivery times – under six months for certain configurations – helping operators overcome supply-chain bottlenecks and grid-connection delays.

Each system is engineered for flexibility, capable of customisation to meet specific site conditions or hybrid configurations, from single-unit deployments to multi-MW clusters. Combined with a worldwide network of service partners and technical support, Baudouin ensures reliability extends far beyond installation day.

As data becomes the world’s most critical resource, the systems that protect it must be uncompromising. Baudouin’s century of mechanical expertise, combined with continuous innovation in power generation, delivers one clear promise: reliability at the speed of data.

Baudouin — Built to Last

Baudouin, baudouin.com

Chris Cutler, Business Development Manager – Data Centres at Riello UPS, explores how using silicon carbide (SiC) semiconductors could help the next generation of modular UPS systems ensure data centre operators can balance reliability with sustainability.

The data centre landscape is undergoing unprecedented transformation, with the seemingly unstoppable growth of AI and hyperscale computing leading to rising demand and rack densities. High energy costs are compounding these pressures, along with the rapidly fluctuating and unpredictable load profiles associated with AI applications. These factors pose huge challenges to traditional UPS architectures as manufacturers need to balance the primary purpose of an uninterruptible power supply – namely to guarantee resilience and reliability – with the sector’s desire for ever more sustainable solutions.

Historically, uninterruptible power supplies have been manufactured using Insulated Gate Bipolar Transistors (IGBTs), a well-established, proven, and cost-effective technology.

Over recent years, incremental design changes – such as the evolution from two-level to three-level inverter architectures or changes to filter materials – have helped UPS manufacturers keep pushing efficiency ratings upwards.

But how can we answer the industry’s call for even higher efficiency? That’s where the potential of silicon carbide (SiC) semiconductors comes in.

Silicon carbide isn’t a new technology, of course; it is already widely adopted in the electric vehicle industry. For UPS manufacturers, however, it offers several inherent advantages over silicon-based IGBTs:

• Higher efficiency and reduced switching losses – SiC components exhibit lower electrical resistance and switch with smaller losses, reducing the energy wasted as heat and helping to maximise the overall efficiency of the UPS.

• Increased power density – The technology enables higher power density, making it possible to design more compact and lightweight UPS systems without compromising on overall power capacity.

• Increased thermal stability – SiC can operate at higher temperatures than IGBTs, giving a broader operational range and reduced cooling demands. IGBTs, by contrast, dissipate more energy and so require larger heat sinks.

• Enhanced frequency response – SiC’s faster switching results in a more responsive UPS, which is crucial for handling the rapidly fluctuating load conditions found in modern data centres, particularly those running AI applications.

• Durability – The robustness of SiC and its ability to withstand high surge currents or voltage spikes reduce overall wear and tear, extending component and UPS lifecycles and reducing maintenance needs.

Now, we do need to acknowledge that silicon carbide components take four to six times as much energy to manufacture as their silicon equivalents. This inevitably increases production costs and the CO2 generated during manufacture. That being said, these penalties are more than offset by the energy saved over the lifecycle of the UPS.

Riello UPS embraced the untapped potential of SiC with our Multi Power2 range. These modular UPS systems come in two configurations: MP2 (300, 500, and 600 kW versions) and Scalable M2S (1,000, 1,250, and 1,600 kW versions).

Both options are based on high-density 3U 67 kW power modules. Thanks to the use of silicon carbide components, these modules can achieve ultra-high efficiency of 98.1%. This significantly reduces a data centre’s energy consumption and running costs.
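To see what 98.1% means in practice, the sketch below converts an efficiency figure into the power lost inside the UPS and the energy lost per year. It assumes a constant 1 MW load running all year and a single efficiency value; real UPS efficiency varies with load level and operating mode, and the 96% comparison point is our own illustrative assumption, not a Riello figure.

```python
HOURS_PER_YEAR = 8760


def ups_losses_kw(output_kw: float, efficiency: float) -> float:
    """Power dissipated inside the UPS.

    Efficiency is output / input, so input = output / efficiency and the
    losses are the difference between input and output.
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in the range (0, 1]")
    return output_kw / efficiency - output_kw


def annual_loss_mwh(output_kw: float, efficiency: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Energy lost per year, assuming the same load for every hour."""
    return ups_losses_kw(output_kw, efficiency) * hours / 1000.0


if __name__ == "__main__":
    load_kw = 1000.0  # hypothetical steady 1 MW IT load
    # 98.1% is the module efficiency quoted above; 96% is an assumed comparison.
    for eta in (0.981, 0.96):
        print(f"{eta:.1%}: {ups_losses_kw(load_kw, eta):.1f} kW lost, "
              f"{annual_loss_mwh(load_kw, eta):.0f} MWh per year")
```

Under these assumptions the gap is roughly 22 kW of continuous loss, or about 195 MWh a year, before counting the cooling needed to remove that heat.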

The positive characteristics of SiC also shine through in the UPS’s ability to handle rapidly fluctuating AI loads and its overall robustness.

For example, with a typical UPS, you’ll need to replace the capacitors every five to seven years; that’s potentially two or three times over a 15-year lifespan. But with the durability of SiC, it is realistic to go through the entire lifespan without replacing the capacitors at all, cutting maintenance and disposal costs.

Don’t forget the broader benefits of modular UPS too, namely ‘pay-as-you-grow’ scalability that allows a data centre to add extra modules or cabinets as and when its load requirements change, as well as hot-swappable modules that ensure zero-downtime maintenance.
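Pay-as-you-grow sizing can be sketched as a simple calculation: how many power modules are needed to carry a given load with one spare module (N+1). The 67 kW module rating comes from the article; the load steps are hypothetical, and the sketch ignores cabinet limits, derating, and power factor, all of which vary by product.

```python
import math

MODULE_KW = 67.0  # rating of one 3U power module, as quoted in the article


def modules_needed(load_kw: float, spare_modules: int = 1) -> int:
    """Modules required to carry load_kw with spare_modules of redundancy (N+x)."""
    if load_kw <= 0:
        return 0
    return math.ceil(load_kw / MODULE_KW) + spare_modules


def growth_plan(load_steps_kw):
    """For each forecast load: (load, total modules installed, modules added)."""
    installed = 0
    plan = []
    for load in load_steps_kw:
        required = max(installed, modules_needed(load))  # never remove modules
        plan.append((load, required, required - installed))
        installed = required
    return plan


if __name__ == "__main__":
    # Hypothetical load growth from 400 kW to 1 MW.
    for load, total, added in growth_plan([400, 700, 1000]):
        print(f"{load:>5} kW: {total} modules installed (+{added})")
```

Because modules are added only when the forecast load demands them, capital spend tracks actual growth rather than day-one nameplate capacity.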

A modular UPS made with SiC components will deliver data centres significant cost, efficiency, and carbon emissions savings compared to both legacy monolithic UPS and IGBT-based modular solutions.

Take Example 1, which replaces a monolithic 1 MW N+1 UPS (made up of 3 x 12-pulse 600 kVA, 0.9 pf UPS units) with a 1,250 kW Multi Power2 Scalable M2S:

• Total annual energy cost savings = £95,759

• Overall total annual cost savings = £117,544

• Total annual CO2 savings = 148.7 tonnes

• Total 15-year cost savings = £2,353,655

• Total 15-year CO2 savings = 1,702.8 tonnes

Or in Example 2, here are the savings provided by a 1,250 kW M2S at 1 MW load versus a UPS comprising 3 x 400 kVA modular units:

• Total annual energy cost savings = £51,099

• Overall total annual cost savings = £53,839

• Total annual CO2 savings = 79.4 tonnes

• Total 15-year cost savings = £1,078,068

• Total 15-year CO2 savings = 908.7 tonnes

If you add in factors such as the longer-lived components and not having to regularly swap out capacitors, as highlighted previously, a typical data centre could save anywhere from £80,000 to £120,000 in maintenance costs alone over the lifespan of a UPS manufactured using SiC.

Although SiC-based UPSs may come at a higher initial cost than traditional IGBT-based units, their lower cooling requirements, reduced energy consumption, and longer operational life ultimately deliver a better total cost of ownership (TCO).

And while it is by no means a silver bullet, silicon carbide will likely become the go-to choice to help UPS manufacturers meet the needs of modern data centres’ ongoing demand for higher efficiency power infrastructure.

Riello UPS, riello-ups.com

RELIABLE BACKUP POWER SOLUTIONS FOR DATA CENTRES

The 20M55 Generator Set boasts an industry-leading output of 5250 kVA, making it one of the highest-rated generator sets available worldwide. Engineered for optimal performance in demanding data centre environments.

PRE-APPROVED UPTIME INSTITUTE RATINGS

ISO 8528-5 LOAD ACCEPTANCE PERFORMANCE | BEST LEAD TIME

Karl

AI is driving growth in the data centre market like we have never seen before. It reminds me of the early 1990s during the telecom and internet boom, or even the Gold Rush of 1849. There is a race to capitalise on the opportunities AI offers.

Every week we are seeing news of another data centre development: There is one in Middlesbrough said to be taking over the former ICI works site, another at the site of an old power station, and a further development in Scotland, to name a few. Developers are already publicising these developments to attract speculative customers. In the US, they are even repurposing former nuclear power stations and, because data centres need strong connectivity and power connections, these sites will work well. Serious players are even building their own power stations to feed their AI data centres.

That all being said, the rise of AI has also created some volatility. As I write, the US stock market is recovering from losses last week, when AI stocks dropped amid worries about how AI companies are funded.

In the UK, cost concerns centre on energy prices. A 20–100-megawatt data centre project will require significant energy to power it. Liquid cooling for 100 kW racks means water consumption is also a concern. The UK Government is funding some data centre projects that, as a society, we eventually pay for through taxation.
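To put that scale in perspective, a rough ceiling on annual consumption assumes the facility draws its full rated power every hour of the year (8,760 hours). Real facilities run below nameplate, so treat this as an upper bound rather than an estimate:

```latex
E_{\text{year}} = P \times 8{,}760\ \text{h}
\quad\Rightarrow\quad
20\ \text{MW} \times 8{,}760\ \text{h} = 175.2\ \text{GWh},
\qquad
100\ \text{MW} \times 8{,}760\ \text{h} = 876\ \text{GWh}
```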

The question of how to feed a gigawatt data centre remains. In Dublin, we have already seen a spotlight fall on energy consumption and water consumption, as well as the resulting restriction of new builds.

Because of the high cost of energy and real estate in the UK, we are seeing further data centre growth abroad. At Centiel, for instance, we are currently working on a 100-megawatt operation in the Americas.

From our perspective, as a manufacturer of UPS systems, the challenge lies in deploying huge amounts of kit ready for installation. Most data centres of this size will be deployed in phases, but it still comes down to how quickly production can be ramped up and fitted in with the rest of the production schedule.

For Centiel, our modular production makes this more straightforward: because we are not producing multiple UPS models, we can offer short lead times. Nevertheless, to cover their bases, we are still seeing customers pre-purchase UPS systems ahead of time, mindful that other manufacturers may not be able to keep up with demand.

Suddenly, the key question is how rapidly the equipment can be delivered – again, reminiscent of the telecom/internet boom of the 1990s.

The other trend I am seeing with large data centre installations is the use of lithium-ion batteries to support UPS systems. For short-duration runtimes, lithium-ion is smaller and lighter than traditional VRLA batteries, and it lends itself more readily to grid support. Different customers have different requirements, but many are looking for short-duration autonomy: just enough power protection to get the generators going and protect the load.
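A rough way to size that short-duration autonomy is to work out the energy the load needs while the generators start, then allow for depth of discharge, conversion losses, and ageing. Every number here (1 MW load, five-minute runtime, 80% depth of discharge, 95% conversion efficiency, 25% ageing margin) is a hypothetical placeholder, not a Centiel recommendation.

```python
def energy_needed_kwh(load_kw: float, runtime_min: float) -> float:
    """Energy delivered to the load over the required runtime."""
    return load_kw * runtime_min / 60.0


def battery_nameplate_kwh(load_kw: float, runtime_min: float,
                          depth_of_discharge: float = 0.8,
                          conversion_eff: float = 0.95,
                          ageing_margin: float = 1.25) -> float:
    """Nameplate capacity after allowing for usable depth of discharge,
    conversion losses, and capacity fade over the battery's life."""
    usable_fraction = depth_of_discharge * conversion_eff
    return energy_needed_kwh(load_kw, runtime_min) / usable_fraction * ageing_margin


def discharge_c_rate(load_kw: float, nameplate_kwh: float,
                     conversion_eff: float = 0.95) -> float:
    """Battery-side power as a multiple of capacity (1C = full capacity in 1 h)."""
    return load_kw / conversion_eff / nameplate_kwh


if __name__ == "__main__":
    capacity = battery_nameplate_kwh(1000.0, 5.0)
    print(f"nameplate: {capacity:.0f} kWh, "
          f"discharge rate: {discharge_c_rate(1000.0, capacity):.1f}C")
```

With runtimes this short, the required discharge rate (here roughly 7.7C) rather than the energy figure often decides the battery selection, so both checks matter.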

Some are installing lithium-ion with a view towards future grid support and bi-directional energy storage to save costs – or to harness renewable energy sources like wind and solar in the future – but I have yet to see this in action in earnest. Lithium-ion remains expensive, but the good news is that its costs are coming down as more systems are deployed.

Nickel-zinc is now also an option for battery storage to support UPS systems. Although expensive, the chemistry is inert and safe. Where US insurers are driving the need to house lithium battery storage outside the building envelope, at some point the cost of the additional building to house it will cancel out the price premium of nickel-zinc.

At Centiel, our UPS technology is AI data centre ready. The modular nature of our high-availability systems also means we can easily absorb increased production into our planning capability to ensure systems are deployed rapidly.

In the rush to build AI data centres, only time will tell how we can ensure they have enough energy to feed them. As for the UPS supply, we are ready.

Centiel, centiel.com

Environmental monitoring experts and the AKCP partner for the UK & Eire.

Contact us for a FREE site survey or online demo to learn more about our industry leading environmental monitoring solutions with Ethernet and WiFi connectivity, over 20 sensor options for temperature, humidity, water leakage, airflow, AC and DC power, a 5-year warranty, and automated email and SMS text alerts.

projects@serverroomenvironments.co.uk

Ricardo de Azevedo, Chief Technology Officer at ON.energy, explains why traditional UPS designs are falling short as AI campuses overwhelm utility systems.

AI data centres have quickly become the largest and fastest-ramping electrical loads ever connected to the grid. Multi-hundred-megawatt to gigawatt campuses, operating at high load factors and expanding continuously, are pushing utility systems beyond their limits. Fast load ramps and poor ride-through behaviour destabilise substations and feeders, degrade power quality, and can trigger protective trips that ripple across the network.

Put simply: The grid wasn’t designed for this kind of tightly packed, highly dynamic demand. These conditions have the potential to shift costs and reliability risks to other customers and, in extreme cases, can even contribute to blackouts.

In the USA, the Electric Reliability Council of Texas (ERCOT), the independent system operator that manages most of the state’s power grid, created the Large Electronic Load (LEL) framework to address this new class of demand. It treats major AI and industrial facilities as grid-sensitive resources, requiring them to demonstrate controlled behaviour during voltage disturbances and recovery events.

The LEL standard requires four things:

• First, ride-through – Large loads must stay connected through prescribed high- and low-voltage events (HVRT/LVRT) and avoid abrupt megawatt drops.

• Second, disciplined ramps – The point of interconnection (POI) profile must be rate-limited (typically to 20% per minute), both in modelling and in operation.

• Third, power quality and stability – Projects must analyse and mitigate harmonics, flicker, and control interactions, backed by credible dynamic models.

• Fourth, evidence – ERCOT verifies behaviour through Model Quality Tests (MQT) to ensure PSCAD or PSSE models match actual POI performance.
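The ramp requirement can be illustrated with a simple rate limiter that caps how fast the profile seen at the POI may change. The sketch assumes the 20% per minute limit applies to the facility’s rated load and that demand is sampled once per second; ERCOT’s actual rules and measurement windows are more detailed, so treat this as an illustration of the idea only.

```python
def rate_limit(demand_mw, rated_mw, max_frac_per_min=0.20, step_s=1.0):
    """Limit how fast the POI profile can move towards the requested demand.

    demand_mw: facility demand sampled every step_s seconds.
    Returns the rate-limited POI profile, same length as demand_mw.
    """
    if not demand_mw:
        return []
    # Largest change allowed per sample, e.g. 20%/min of 100 MW = 1/3 MW per second.
    max_step = rated_mw * max_frac_per_min * step_s / 60.0
    poi = [demand_mw[0]]  # assume the facility starts in steady state
    for demand in demand_mw[1:]:
        delta = demand - poi[-1]
        delta = max(-max_step, min(max_step, delta))  # clamp ramp-up and ramp-down
        poi.append(poi[-1] + delta)
    return poi


if __name__ == "__main__":
    # Hypothetical 100 MW campus whose demand jumps from 50 MW to 100 MW.
    demand = [50.0] + [100.0] * 200
    poi = rate_limit(demand, rated_mw=100.0)
    print(f"after 60 s: {poi[60]:.1f} MW, after 150 s: {poi[150]:.1f} MW")
```

The limiter only shapes what the POI sees. The shortfall or surplus between actual demand and the limited profile still has to be supplied or absorbed by something on site, or the load itself has to be held back.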

These expectations are bringing rigour to how large loads connect, but they’re also exposing the weak points of today’s standard data centre and industrial power designs.

At the heart of the issue lies the traditional uninterruptible power supply (UPS). Originally designed to protect IT systems, legacy UPS systems can react poorly to grid events. When upstream voltage sags, they often transfer off the grid, instantly dropping load at the POI. To ERCOT, that looks like a sudden load drop, exactly the opposite of the desired ride-through requirements and controlled ramps.

Attempts to compensate with parallel batteries or microgrids don’t actually address the problem. A parallel-connected BESS doesn’t sit in the current path to the facility, so it can’t filter upstream transients or guarantee ride-through for downstream equipment.

Microgrids and hybrid generator systems face similar limitations. Turbines and engine-gensets have mechanical ramp constraints; they cannot follow the fast load steps common in AI and compute clusters. Unbuffered load swings cause frequency deviations, torque oscillations, and trips.

Beyond technical performance, the interconnection process has become a roadblock. Every new large load arrives with a unique electrical design, forcing utilities and regulators to repeat the same protection studies and dynamic modelling from scratch. PSCAD and PSSE reviews pile up in queues already stretched thin by staff shortages and record application volumes.

The absence of a standard, verifiable behaviour envelope is slowing interconnection down. What’s missing? A common technical definition for ‘grid-safe’ operation that sets clear ramp limits, voltage and frequency ride-through windows, harmonic boundaries aligned with IEEE 519, and auditable model evidence. Without that, system operators are left to treat each load as a one-off.
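One part of that envelope, harmonic distortion, reduces to a standard calculation: total harmonic distortion (THD) is the root-sum-square of the harmonic magnitudes divided by the fundamental. The sketch below checks measured voltage harmonics against the voltage distortion limits as we understand them from IEEE 519-2014; the limit table should be confirmed against the standard itself, and current distortion (TDD) limits, which depend on the short-circuit ratio at the point of common coupling, are not modelled.

```python
import math

# (upper bound of bus voltage in kV, max individual harmonic %, max THD %),
# as we read the voltage distortion limits in IEEE 519-2014. Verify before use.
VOLTAGE_LIMITS = [
    (1.0, 5.0, 8.0),
    (69.0, 3.0, 5.0),
    (161.0, 1.5, 2.5),
    (math.inf, 1.0, 1.5),
]


def thd_percent(harmonics):
    """harmonics maps harmonic order h -> RMS magnitude; h = 1 is the fundamental."""
    fundamental = harmonics[1]
    distortion = math.sqrt(sum(v ** 2 for h, v in harmonics.items() if h >= 2))
    return 100.0 * distortion / fundamental


def check_voltage_distortion(bus_kv, harmonics):
    """Compare measured harmonics with the limit band for this bus voltage."""
    _, individual_limit, thd_limit = next(
        band for band in VOLTAGE_LIMITS if bus_kv <= band[0])
    fundamental = harmonics[1]
    worst_individual = max(
        (100.0 * v / fundamental for h, v in harmonics.items() if h >= 2),
        default=0.0)
    thd = thd_percent(harmonics)
    return {
        "thd_pct": thd,
        "thd_ok": thd <= thd_limit,
        "individual_ok": worst_individual <= individual_limit,
    }


if __name__ == "__main__":
    # Hypothetical 33 kV measurement, magnitudes in per-unit of the fundamental.
    print(check_voltage_distortion(33.0, {1: 1.0, 5: 0.025, 7: 0.02, 11: 0.01}))
```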

Whether interconnecting under ERCOT’s LEL rules or operating off-grid, the underlying physics are the same. Fast, deep load swings will continue to collide with systems designed for gradual change. A practical design must stay connected through voltage events, rate-limit what the POI or generator sees, filter transients and harmonics at the facility boundary, and prove all of this with credible, validated models.

ON.energy’s AI UPS is built to meet these challenges. Installed in series at medium voltage, it forms a controlled electrical buffer between the grid or generator and the whole facility. It maintains ride-through under HVRT and LVRT conditions, enforces the 20%-per-minute ramp profile, filters transients and harmonics before they propagate either way, and provides validated PSCAD models that are designed to meet ERCOT’s Model Quality Tests.
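Conceptually, a series buffer lets the upstream source follow a rate-limited profile while on-site energy storage supplies or absorbs the difference. The sketch below models only that energy balance. It is a hypothetical illustration, not ON.energy’s control scheme, and it ignores conversion losses, converter power limits, voltage ride-through, and recharging the buffer to a target state of charge.

```python
from dataclasses import dataclass, field


@dataclass
class BufferTrace:
    upstream_mw: list = field(default_factory=list)  # what the grid or generator sees
    storage_mw: list = field(default_factory=list)   # + discharging, - charging
    soc_mwh: list = field(default_factory=list)      # stored energy after each step


def simulate_series_buffer(load_mw, rated_mw, capacity_mwh, soc0_mwh,
                           max_frac_per_min=0.20, step_s=1.0):
    """Upstream ramps at most max_frac_per_min of rated_mw per minute;
    storage covers the gap between the facility load and the upstream supply."""
    max_step = rated_mw * max_frac_per_min * step_s / 60.0
    hours_per_step = step_s / 3600.0
    upstream, soc = load_mw[0], soc0_mwh  # assume steady state at the start
    trace = BufferTrace()
    for load in load_mw:
        upstream += max(-max_step, min(max_step, load - upstream))
        storage = load - upstream  # positive: buffer supplies the load
        soc -= storage * hours_per_step
        if not 0.0 <= soc <= capacity_mwh:
            raise RuntimeError("buffer exhausted: storage too small for this profile")
        trace.upstream_mw.append(upstream)
        trace.storage_mw.append(storage)
        trace.soc_mwh.append(soc)
    return trace


if __name__ == "__main__":
    # Hypothetical: a 100 MW facility drops from 80 MW to 20 MW when a job stops.
    trace = simulate_series_buffer([80.0] + [20.0] * 300, rated_mw=100.0,
                                   capacity_mwh=10.0, soc0_mwh=5.0)
    print(f"energy absorbed: {trace.soc_mwh[-1] - 5.0:.2f} MWh, "
          f"upstream settles at {trace.upstream_mw[-1]:.1f} MW")
```

In this example the upstream source sees a smooth three-minute ramp-down instead of an instantaneous 60 MW drop, at the cost of roughly 1.5 MWh absorbed by the buffer.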

By addressing the behaviour at the electrical interface rather than relying on protection workarounds, this approach creates a predictable, verifiable, and repeatable way to make large electronic loads grid-safe and generator-stable.

Artificial Intelligence (AI) - Friend or Foe of Sustainable Data Centers?

Data centers are getting bigger, denser, and more power-hungry than ever. The rapid emergence and growth of artificial intelligence (AI) only accelerates this process. However, AI could also be an enormously powerful tool to improve the energy efficiency of data centers, enabling them to operate far more sustainably than they do today. This creates a kind of AI energy infrastructure paradox, posing the question: Is AI a friend or foe of data centers’ sustainability?

In this Technical Brief, Hitachi Energy explores:

• The factors that are driving the rapid growth in data center energy demand,

• Steps taken to mitigate fast-growing power consumption trends, and

• The role that AI could play in the future evolution of both data center management and the clean energy transition.

Domagoj Talapko, Business Development Manager at ABB Electrification, explains how new UPS strategies are helping meet growing power and reliability requirements.

The growth of artificial intelligence (AI) workloads is changing data centre power requirements. Industry forecasts indicate that generative AI could require more than 100 GW of power by 2028, up from around 10 GW in 2024. This shift reflects the fundamental difference between AI and traditional computing workloads.
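The scale of that forecast is easier to grasp as a growth rate. Our own arithmetic on the quoted figures (not part of the forecast itself) gives the implied compound annual growth rate over the four years from 2024 to 2028:

```latex
\text{CAGR} = \left(\frac{100\ \text{GW}}{10\ \text{GW}}\right)^{1/4} - 1
            = 10^{1/4} - 1 \approx 0.78
```

In other words, demand would need to grow by roughly 78% a year, doubling about every 14 months.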

AI workloads using GPUs consume more power than traditional CPU-based computing. Where a large data centre might once have operated at 30 MW, current AI-focused builds target 150 MW, with future facilities planned at 500 MW. Some facilities now exceed one gigawatt in total capacity.

The rack density is also higher. While traditional data centres operate at power densities of 5–10 kW per rack, AI facilities can operate at 30–50 kW per rack. Some high-performance computing clusters are already exceeding 100 kW per rack and one megawatt per rack is on the horizon.

Workloads are different too. AI can generate sudden, unpredictable power spikes, which require fast, resilient UPS systems and flexible distribution.

The top priority remains reliability. AI training runs can take weeks or months, and a single power interruption can result in massive computational losses and costs.

Power density management comes a close second. Concentrating so much power in small footprints creates thermal and electrical challenges that traditional solutions were not designed to handle.

Scalability is the X factor. With AI infrastructure growing exponentially, power protection systems must be able to scale while maintaining the availability of ongoing operations.

Recent deployments demonstrate how closer collaboration is driving innovation that addresses these priorities.

In the US, for example, Applied Digital has expanded its partnership with ABB for its Polaris Forge 2 campus in North Dakota, a 300-megawatt facility phased across two 150-megawatt buildings scheduled to come online in 2026 and 2027. The collaboration differs from conventional projects in structure: Rather than completing design work before engaging with equipment suppliers, ABB and Applied Digital teams collaborated from the start alongside architects and other engineers. This not only enabled them to introduce a medium-voltage architecture with MV UPS and power distribution, but also gave them the opportunity to optimise technical requirements from the outset.

AI data centres consume significant amounts of energy, so every efficiency improvement translates into valuable cost and carbon reductions. Modern UPS systems can achieve efficiency levels of 96–99% in eco-mode or online mode, compared with 85–92% for older technologies.

Beyond the UPS itself, intelligent power distribution systems minimise conversion losses, while advanced cooling integration optimises the entire thermal management system. Predictive analytics identify inefficiencies before they impact operations, allowing operators to maintain peak performance across the infrastructure.

Organisations planning new AI data centres should start with scalability and flexibility as core design principles. AI technology is evolving, and power infrastructure must be able to adapt.

Modular, scalable solutions allow growth without major overhauls. Systems with proven reliability in high-density applications reduce risk during critical deployments. The technical and strategic decisions that shape long-term performance require understanding both electrical engineering and AI application requirements. Our team at ABB provides frameworks and case studies from successful deployments to support this planning process.

ABB, abb.com

Joe from DCNN recently attended Schneider’s flagship event in Denmark, where the company hosted a series of keynote sessions, press briefings, and technical deep-dives. As part of his trip, Joe also interviewed Steven Carlini, Chief Advocate, Data Center and AI at Schneider Electric. Here’s our round-up of how it all unfolded.

Last October, I was invited to Copenhagen for Schneider Electric’s annual Innovation Summit, a two-day gathering held at the heart of Denmark’s energy ecosystem. The event brought together more than 5,000 professionals, policymakers, and C-suite leaders to explore the future of energy and digital infrastructure, and this scale was certainly felt within the bustling, lively atmosphere around the Bella Center.

In his opening keynote, CEO Olivier Blum (pictured above) took to the stage to outline a vision for energy that is “intelligent, resilient, and sustainable.” The emphasis was clear, setting the tone for the Summit as a whole: Electrification, automation, AI, and digitalisation are reshaping how we build and operate data centres and wider industrial ecosystems.

Across the main stage, breakout sessions, and exhibition hall, speakers explored how Schneider’s EcoStruxure and partner-driven solutions are enabling smarter, more efficient power and cooling infrastructures for today’s most demanding workloads. A highlight was the discussion around AI-ready data centre architectures and the concept of so-called “AI factories” that couple massive GPU clusters with advanced thermal management.

Beyond just data centres, Copenhagen was alive with conversations on decarbonisation, digital twins, grid edge resilience, and the power of ecosystems in accelerating sustainable transformation. The innovation hub and exhibition gave us hands-on access to the latest electrification platforms, automation tools, and AI-enabled operational technologies, making complex tech tangible for all attendees from Schneider’s various markets.

As part of my visit, I was able to spend some time with Schneider’s Steven Carlini to unpack what the prevalent themes from the event mean for data centre operators and to discuss the technologies shaping the industry’s future. Here are the key moments from our conversation:

Joe: Thank you for taking the time to speak with us today, Steven. How are you finding the Innovation Summit so far?

Steven: It’s been great! It’s been interesting to get not just the data centre perspective, but the ‘entire perspective’ of all the different businesses [that Schneider works with]. I think Schneider goes out of its way to try to balance it all.

But regarding the event overall, I’m just shocked at how many people are here and the engagement of them all. It’s not like they’re ‘just here’; they’re very, very engaged. There’s lots of good discussion going on.

Joe: Have there been any standout discussions or points that you’ve picked up on?

Steven: I’ve had a few customer meetings and one of the customers was talking about different architectures for data centres: adding batteries, eliminating generators, and moving workloads around. That was one of the more interesting things.

The NVIDIA people that were here are trying to get a handle on the different CDUs and how to deploy the different versions. They were wondering about the one-and-a-half-megawatt CDU and what CDU they’ll use for their next generation – developing larger CDUs to run the bigger pods, or multiple pods.

It’s also amazing how many people already know about this 800-volt power that we announced. We’ve been talking about liquid cooling for a long time and that’s finally starting to happen, but 800 volts is kind of new, and a lot of people already knew that was going on.

Joe: I noticed sustainability has come up a lot throughout this event. Do you think there’s pressure to decarbonise as quickly as possible, or is it more about thinking long term?

Steven: Two years ago, I would say that was the focus. When we had this conference back then, we were talking more about sustainability. This time, we’re talking more about the AI race. There is still a need to decarbonise, and a lot of companies are focused on that, but there are a lot of companies that are not like they were before. There were a lot of data centres which were actually holding up construction because they didn’t have the approved green solutions. Now you don’t see that anymore. It’s “how do we deploy as fast as possible?” We’re seeing things like bridge power, which is power we can deploy now that may not be as sustainable or as carbon-free as we want, but we can use it for now until we get other things in place. You’re starting to see this kind of ‘downplaying’ of net zero commitments.

Joe: Looking to the future, what excites you the most about where we’re heading in the data centre world?

Steven: The move to SMRs – a solution that runs on recycled uranium – is really exciting. The thing that was always going to hold it up was there wasn’t a refurbishment plant for this uranium, and you must refurbish it before you can use it. But OKLO is now building one of those, spending $1.6 billion (£1.2 billion). I think we’re going to have at least 50 SMRs in operation by 2030; Google has an agreement with NuScale [and] the data centre companies are dying for it. In data centre alley, they just can’t get any more power into the locations they want. To build a substation or a high voltage line [with traditional power plants] could take up to 15 years, but SMRs could be the golden ticket.

It’s an exciting time. We went through decades of almost nothing, and now it all comes at once!

Schneider Electric, se.com

Dennis Mattoon, Co-Chair of the Trusted Computing Group’s (TCG) Data Centre Work Group, explores how trusted computing standards aim to protect facilities against increasingly sophisticated hardware and firmware attacks.

Across the globe, data centre buildouts continue to increase in both pace and scale. Within the United States, regulations are being de-emphasised in order to expand the use of federal and brownfield sites for data centre projects across the country. At the same time, projects such as ‘Stargate’ – a private-sector-led venture which will invest approximately $500 billion (£378 billion) in capital expenditure to develop new data centres and create 100,000 jobs over the next four years – continue to be announced and actioned.

A similar picture is being painted across Europe, with cities such as London and Frankfurt emerging as major data centre hubs. In fact, the number of data centres within the UK is set to increase by almost a fifth in the coming years, in part due to the surging demand from artificial intelligence and cloud computing. As data centre projects continue to be rolled out, it’s essential that security doesn’t become an afterthought in the race to get these facilities up and running.

It’s clear that data centres will continue to play a crucial role in business operations across the globe, and it’s for this reason that they remain prime targets for cybercriminals. Essentially, as the number of data centres across the world grows, so too does the threat landscape. There is a range of attacks that hackers can level against data centres. These include Distributed Denial of Service (DDoS) attacks, where a hacker floods servers with massive amounts of irrelevant traffic in order to overwhelm resources and render services unavailable to authorised users. If they are able to gain access to critical data centre infrastructure, attackers can also encrypt files and hold an operator to ransom in exchange for the decryption key, while application-layer attacks that exploit vulnerabilities such as SQL injection are also commonplace.

In June 2025, internet security provider Cloudflare announced it had blocked the largest DDoS attack in recorded history, with one of its clients hit by a massive cyberattack that saw its IP address flooded with 7.3 Tbps of junk traffic. This was followed by an even larger 22.2 Tbps attack in September. The attackers exploited the User Datagram Protocol (UDP), which allowed them to send traffic to all ports of their target, overwhelming the company’s resources. While not explicitly reported as an attack on a data centre, it illustrates how network infrastructure associated with co-location and data centre services can be significantly hampered by this type of attack.

To overcome these threats and mitigate any downtime, it’s essential that operators are given the tools to enhance the security measures found within their facilities. This is where the latest computing standards and specifications that embrace trusted computing can make a difference.

For example, operators can use a Trusted Platform Module (TPM), which provides a hardware-based Root-of-Trust (RoT) for data centre servers. Integrated within their infrastructure, a TPM can help prevent malicious actors from compromising systems at the hardware and firmware level, where software alone can’t provide protection.

During start-up, each stage of the boot code is measured and the measurement is recorded in the TPM; these measurements can then be compared with a known, trusted configuration. If unauthorised changes are discovered, the boot process can be halted to prevent malicious software from loading.
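The measure-and-compare step relies on the TPM’s extend operation: a register cannot be written directly, only extended, so each new value is a hash of the previous value and the latest measurement. The sketch below simulates that arithmetic in software for a SHA-256 register, following the TPM 2.0 extend definition as we understand it. The component names are hypothetical, and a real deployment would talk to a TPM through a TSS library and its boot event log rather than hashing images in application code.

```python
import hashlib
import hmac

PCR_SIZE = 32  # SHA-256 register size in bytes; registers start as zeros at reset


def extend(pcr: bytes, measurement_digest: bytes) -> bytes:
    """Extend a register: new value = SHA-256(old value || measurement digest)."""
    return hashlib.sha256(pcr + measurement_digest).digest()


def measure_boot_chain(components) -> bytes:
    """Measure each boot component in order and fold it into one register value."""
    pcr = bytes(PCR_SIZE)
    for image in components:
        pcr = extend(pcr, hashlib.sha256(image).digest())
    return pcr


def boot_allowed(components, golden: bytes) -> bool:
    """Compare the measured value with a known-good ('golden') value."""
    return hmac.compare_digest(measure_boot_chain(components), golden)


if __name__ == "__main__":
    # Hypothetical boot chain; in practice these would be firmware and OS images.
    trusted = [b"firmware-v1", b"bootloader-v1", b"kernel-v1"]
    golden = measure_boot_chain(trusted)
    tampered = [b"firmware-v1", b"bootloader-EVIL", b"kernel-v1"]
    print("trusted chain allowed:", boot_allowed(trusted, golden))
    print("tampered chain allowed:", boot_allowed(tampered, golden))
```

Because the final value depends on every measurement and on their order, changing, dropping, or reordering any component produces a different result. This is also why tampering with the measurements on their way to the TPM is such a serious threat.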

The TPM also provides a secure environment for the generation, storage, and management of the cryptographic keys used for data encryption. However, this RoT isn’t a one-size-fits-all solution to data centre security: An attacker may still be able to place an interposer between the Central Processing Unit (CPU) and the TPM and, by taking control of the legitimate signalling between the two, cause significant damage.

In fact, interposers can even inject their own boot code into the CPU and wield an authorisation key to fool a remote verifier and make the TPM attest the integrity of fraudulent information. This allows them to snoop, suppress, and modify vital signals and measurements in order to access and exploit secrets and information from within the data centre and weaponise it against the operator.

To this end, organisations such as the Trusted Computing Group (TCG) are actively working to establish additional trust within the systems and components found in data centres, focusing primarily on protective measures against potential interposers within a system. A number of different attacks are being assessed in order to devise ways to avoid or mitigate them. These include feeding compromised boot code to a CPU, impersonating a CPU to a TPM, suppressing or injecting false measurements to a legitimate TPM, and redirecting legitimate measurements to an attacker-controlled TPM.

As a result, a TPM will be able to use precise measurements to protect the resources and communications of the CPU to which it is bound. It will also be able to attest to a verifier both those measurements and that they came from the correct CPU instance.

Protecting data centres against attackers who clear Platform Configuration Registers (PCRs) in the TPM through false assertions is also in scope going forward. These measures will enable operators to trust that the components and hardware so vital to their operations are working as they should, without fear that they may be weaponised by an attacker.

TCG, trustedcomputinggroup.org

Cory Hawkvelt, Chief Product & Technology Officer at NexGen Cloud, outlines how sovereignty, regulation, and hardware-level security are reshaping the safeguards required for mission-critical AI workloads.

AI has become one of the most demanding and sensitive workloads to run inside modern data centres. What started as experimental pilots has quickly evolved into serious national infrastructure, from healthcare diagnostics and fraud detection to defence planning and secure communications. The UK Ministry of Defence’s recent investment in sovereign cloud services reflects this shift. AI is no longer a novelty; it is an operational dependency, and with that comes an entirely different set of security expectations.

Many organisations now recognise that the risks attached to AI do not resemble those of traditional IT systems. It is not just about preventing a server breach or limiting access to sensitive files; AI systems can be tampered with in more subtle ways. Inputs can be manipulated. Models can be influenced to behave in unpredictable ways. Logs and prompts can reveal far more about an organisation’s operations than teams often realise. As a result, the data centre is becoming a critical line of defence, not an afterthought.

The four principal risks to AI security stem from users, lack of sovereignty, regulatory compliance, and the failure to secure the infrastructure at a hardware level.

One of the most overlooked challenges is how easily AI can expose an organisation's internal logic. A prompt might seem harmless, but the model's output can unintentionally reveal strategies, processes, or decision rules that would never be written down in a document. This is why shadow AI, the use of AI tools without the organisation's approval or oversight, has become a growing problem.

Employees use public tools without understanding the exposure risk. This includes acts such as pasting proprietary source code or error logs into AI coding assistants, which can inadvertently leak intellectual property to external servers, or uploading sensitive data such as customer records, financial reports, and internal strategy decks to unapproved AI analytics or data visualisation tools for pattern detection or summarisation. Each of these can fatally compromise an organisation’s IT integrity and lead to major and expensive problems.

According to IBM, these user errors are now adding hundreds of thousands of pounds to the average cost of a breach. It is a reminder that AI security is as much about context as it is about data.

A second major driver behind the renewed focus on security is the question of who ultimately has access to AI workloads. The term 'sovereignty' is frequently used, but it is often misunderstood. It is easy to assume that if data sits on a server in the UK or in the EU, then it is protected by the laws of that region. In reality, the legal jurisdiction of the cloud provider matters as much as the location of the hardware. If the operator is owned by a parent company based overseas, particularly in a country with far-reaching data access legislation, that ownership creates a potential exposure point. For sectors such as defence, healthcare, financial services, and critical infrastructure, this is becoming a major procurement concern rather than a theoretical risk.

Data centre operators are now being asked questions that did not arise a few years ago. Customers want clarity on where operational control resides, who can access the management plane, and whether the provider is subject to foreign legal obligations. They want to know that sensitive AI workloads are not only physically local, but are also governed locally. This shift is creating new expectations for the industry and reshaping how data centres position themselves in national and regional digital strategies.

A related challenge is the accelerating pace of AI regulation. The UK is exploring a flexible, principles-based model, whilst the EU is taking a stricter, prescriptive approach. Other regions are somewhere in the middle. For global organisations, this creates a confusing and sometimes contradictory landscape.

The data centre layer can remove much of that complexity. When an infrastructure provider builds regulatory alignment into the environment itself, it gives customers a level of confidence that would be difficult to achieve independently. Rather than bolting governance onto an AI system after it is deployed, teams can work within clear, compliant boundaries from the start.

Governments need to take action to ensure compliance with the most stringent standards for AI infrastructure development, as well as its use.

Security is the final piece of this puzzle, and it demands a broader view than traditional data protection. AI models do not simply store information; they also process it. They process intent, assumptions, and internal business logic. If an attacker can see those prompts or influence them, they can shape decisions in ways that are not always obvious until damage is done. This is why secure AI infrastructure must offer more than encryption, firewalls, and monitoring; it must provide isolation at the hardware level, strict access control, continuous behavioural monitoring, and clear auditability. The goal is to protect not only the data, but also the decision-making process that relies on it.

Industry standards, such as SOC 2 and ISO 27001, provide organisations with a helpful baseline. However, in environments where AI plays a mission-critical role, the requirements often extend far beyond these benchmarks. Many of the organisations we work with require infrastructure that aligns directly with their regulatory frameworks, whether that is GDPR, HIPAA, or defence-grade guidelines. The ability to design environments around these expectations from the outset has become one of the most critical factors in the safe adoption of AI.

What becomes clear from speaking with enterprises across Europe is that the most significant barrier to secure AI is rarely the model itself; it is uncertainty about the platform on which it runs. The organisations that move fastest are usually the ones that have the most confidence in their infrastructure. Those that move slowest are often held back by unresolved questions about sovereignty, compliance, and control.

As AI becomes more deeply embedded in national and enterprise systems, the data centre community has a central role to play.

Safe AI will depend on environments that are transparent, sovereign, resilient, and built with security as a design principle rather than an optional extra.

The direction of travel is obvious. The future of AI will be defined by trust, not just innovation, and trust begins long before a model is trained; it starts with the infrastructure it relies on and the people responsible for keeping that infrastructure safe.

NexGen Cloud, nexgencloud.com

In July 2024, a lightning arrester failure in Northern Virginia, USA, triggered a massive 1,500MW load transfer across 70 data centres, which handle over 70% of global internet traffic.

The result? No customer outages, but a cascade of grid instability and unexpected generator behaviour that exposed critical vulnerabilities in power resilience.

Powerside’s latest whitepaper – entitled Data Centre Load Transfer Event – Critical Insights from Power Quality Monitoring – delivers a technical case study from this unprecedented event, revealing:

• Why identical voltage disturbances led to vastly different data centre responses
• How power quality monitoring helped decode complex grid interactions
• What this means for future-proofing infrastructure in 'Data Centre Alley' and beyond

Whether you are managing mission-critical infrastructure or advising on grid stability, this is essential reading.


Brian Higgins, Founder of Group 77 – a security company that provides a full range of security assessment, planning, training, and consulting services – examines how AI, cyber-physical convergence, and rising environmental pressures are reshaping safety and security across modern data centres.

Data centres have quietly become some of the most critical – and most risk-exposed – facilities on the planet. As AI and cloud workloads surge, operators are being pushed to rethink how they protect people, infrastructure, and the surrounding environment. Safety and security are no longer “support functions”; they’re central to uptime, compliance, and brand reputation.

Modern data centres are moving away from siloed cameras and badge readers towards integrated, analytics-rich platforms. Recent industry reports highlight how AI, biometrics, and advanced video analytics are being utilised to transition from reactive incident response to predictive risk management, enabling the identification of unusual behaviour, tailgating, or abnormal patterns in real time.

Among the key trends in data centre security is the use of biometric and multifactor access control at every layer (perimeter, building, data hall, cage) to combat credential theft and social engineering. Security cameras are now leveraging AI-enabled video analytics that can spot loitering, piggybacking through secure doors, or vehicles stopping where they shouldn’t be, and automatically notifying security operations.

These tools also contribute to enterprise-wide risk management, generating operational data used for capacity planning, SLA reporting, and incident forensics. However, these AI models require tuning, governance, and strong privacy controls, especially where biometrics are in play.
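The piggybacking detection described above ultimately reduces to comparing how many people passed through a secure door with how many valid credentials were presented. The Python sketch below shows that core rule in its simplest form. It is a deliberately minimal illustration, not how any commercial analytics product works: the `DoorCycle` record, its field names, and the idea that a video-analytics line counter supplies `people_counted` are all hypothetical assumptions.

```python
from dataclasses import dataclass

@dataclass
class DoorCycle:
    """One open/close cycle of a secure door (hypothetical record format)."""
    door: str
    badge_reads: int     # valid credentials presented during this cycle
    people_counted: int  # people seen passing, e.g. by a video-analytics line counter

def piggyback_alerts(cycles):
    """Return cycles in which more people passed than credentials were presented."""
    return [c for c in cycles if c.people_counted > c.badge_reads]

cycles = [
    DoorCycle("data-hall-2", badge_reads=1, people_counted=1),
    DoorCycle("data-hall-2", badge_reads=1, people_counted=2),  # one person followed
    DoorCycle("cage-14", badge_reads=2, people_counted=2),
]
for alert in piggyback_alerts(cycles):
    extra = alert.people_counted - alert.badge_reads
    print(f"{alert.door}: {extra} unbadged entrant(s), notify security operations")
```

In practice, the hard part is producing a reliable `people_counted` value, which is where the tuning and governance of the AI models come in; the comparison itself stays simple.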

Historically, facilities teams managed locks and doors, while IT oversaw cameras, access control, networks, and servers. That division of responsibility is disappearing.

Cloud-connected HVAC, power management, BMS, and physical security systems have expanded the attack surface, making tight coordination between cyber and physical security essential. Organisations now distribute responsibilities across multiple roles, reflecting the shift from a physical-security focus to one centred on cyber and network security. Over the past decade, these domains have continued to converge towards unified, enterprise-wide security risk management.

Yet, cyber and physical security remain distinct disciplines requiring different expertise. A coordinated, cross-functional team with clearly defined complementary roles is the most effective model.

Joint cyber-physical security operations centres (SOCs), where building alarms, OT events, and cyber alerts are monitored together, allow security personnel to correlate anomalies quickly. For example, if a door-forced-open alarm in a remote data hall correlates with network anomalies on the same VLAN, the SOC can escalate the event as a potential blended intrusion rather than treating them as unrelated alerts.
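The door-alarm example above can be expressed as a simple correlation rule: join physical alarms to network anomalies through a shared location key and a time window. The Python sketch below is one minimal way to do that, assuming a hypothetical `HALL_TO_VLAN` mapping from each data hall to the VLAN serving it and an arbitrary ten-minute window. Real SOC platforms use far richer correlation logic; this only illustrates the principle.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical mapping from each data hall to the VLAN serving it.
HALL_TO_VLAN = {"hall-7": 120, "hall-8": 130}

@dataclass
class PhysicalAlarm:
    kind: str          # e.g. "door-forced-open"
    hall: str
    time: datetime

@dataclass
class NetworkAnomaly:
    kind: str          # e.g. "new-mac-address"
    vlan: int
    time: datetime

def correlate(alarms, anomalies, window=timedelta(minutes=10)):
    """Return (alarm, [anomalies]) pairs that share a VLAN and fall within `window`."""
    blended = []
    for alarm in alarms:
        vlan = HALL_TO_VLAN.get(alarm.hall)  # None if the hall is unmapped
        related = [a for a in anomalies
                   if a.vlan == vlan and abs(a.time - alarm.time) <= window]
        if related:
            blended.append((alarm, related))  # escalate as a potential blended intrusion
    return blended

t0 = datetime(2025, 1, 1, 2, 0)
alarms = [PhysicalAlarm("door-forced-open", "hall-7", t0)]
anomalies = [
    NetworkAnomaly("new-mac-address", 120, t0 + timedelta(minutes=3)),
    NetworkAnomaly("port-scan", 130, t0 + timedelta(minutes=2)),      # different VLAN
    NetworkAnomaly("new-mac-address", 120, t0 + timedelta(hours=2)),  # outside window
]
for alarm, related in correlate(alarms, anomalies):
    print(alarm.kind, "->", [a.kind for a in related])
```

Only the anomaly on the hall's own VLAN and inside the window is linked to the alarm; the other two remain separate events. The window length and the location mapping are the design choices that most affect false positives.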

Security teams should treat converged threats, such as a physical intruder planting a hardware implant or a cyberattack disabling cooling systems, as a single domain. Zero-trust principles should also extend into the physical realm, including network segmentation for security devices, strong identity controls for contractors, and strict governance over remote access to OT and BMS systems. Integrating all aspects of security into a unified programme eliminates duplicated spending, improves response times, and closes exploitable gaps.

To support 24/7 AI and cloud workloads, operators are aggressively adopting lithium-ion (Li-ion) battery systems and large-scale battery energy storage systems (BESS) that, while being more efficient, also introduce fire and explosion hazards. Updated fire and electrical codes now require enhanced gas detection, ventilation, sprinkler coverage, blast mitigation, and risk assessments tailored to specific chemistries. High-profile Li-ion incidents have pushed regulators and insurers to scrutinise battery safety strategies.

Specialist fire engineers and risk consultants design performance-based fire protection using advanced modelling for gas release and deflagration scenarios. Vendors that can certify Li-ion solutions to the latest codes and provide integrated gas detection, off-gassing management, and remote monitoring should be prioritised. Data centres face challenges navigating evolving standards, managing permitting delays, and ensuring first responders understand the unique behaviour of Li-ion fires.

Inside the white space, risks extend beyond tripping hazards. Technicians face high noise levels, ergonomic strain, chemical exposures, and electrical hazards. Industry guidance stresses the need for robust ‘Environmental, Health, and Safety’ programmes tailored to data centres.

Best practices include formalised lockout/tagout and arc-flash programmes, especially as power densities climb and more facilities adopt medium-voltage distribution. Design projects should prioritise ergonomics, incorporating lifting aids and workflow-friendly layouts.

Private security recognises the need to address psychological safety and fatigue in 24/7 operations. With AI tools emerging, integrating EHS data into the same dashboards used for operational monitoring ensures near-misses and unsafe conditions are treated as critical signals. Mobile apps for incident reporting, sensor-based monitoring of environmental conditions, and AI-assisted analysis of near-miss data can help predict where injuries are likely.

Data centres consume roughly 4% of US electricity, and AI growth may double that by 2030. This brings climate and reputational risk as well as direct safety implications for surrounding communities through air pollution, water use, and diesel emissions.

Regulators, investors, and NGOs are increasingly linking ESG performance to safety expectations. Environmental reports now scrutinise generator emissions, cooling water discharge, and fire-suppression chemicals. Health-impact studies have quantified billions in public-health costs associated with data-centre-related power generation, pushing operators towards cleaner power, advanced filtration, and better community engagement.

Some hyperscalers position renewable energy and high-efficiency hardware as both sustainability and resilience measures.

Security professionals can leverage this focus by participating directly in site selection and environmental impact assessments, ensuring resilience and community-risk factors are built into early design. Data centre designs should consider low-global-warming-potential suppression agents, better containment of runoff, and more efficient cooling methods such as liquid cooling.

All of this complexity amplifies a familiar problem: a shortage of skilled people. Research shows persistent labour gaps, particularly in roles requiring both IT and physical security expertise.