Table of Contents

Introduction

A five-year journey

Facing uncertainty through trust

Workstream 1: Focus on the people, embracing security by design

1. Cultivate an inclusive workforce

2. Design for decision-making

3. Adapt to Risk and Redesign Processes for Procurement

4. Engage your Community

5. Create a Space for People to Work Together: Working Group Cyber-Fusion

6. Conduct TableTops - engaging leadership to propel strategies

7. Rethink Information Security Training - engaging everyone in keeping us secure

8. Celebrate & Advocate: Cybersecurity Awareness Month and Data Privacy Day

9. Establish toolkits for Trust Builders

10. Lean into NIST Frameworks to help with wayfinding.

11. Treat Privacy as a Practice

Workstream 2: Engineering and Technology

12. Be Proactive in the Management of Networks

13. Modernize Identity

14. Push Towards Passwordless

15. Recommit to Good Things

16. Experiment toward a federated future

17. Take entrepreneurial action

Looking Forward

A final word (why we should be incredibly optimistic)

References


Human machines

By John Paul ‘JP’ Alejandro

Our bodies were designed to be like a machine cogs and gears that grind to produce ideas and innovation in this world

Humans were the first to fabricate setting foot to mankind’s greatest achievements like landing on the moon deep into the black abyss of space a seemingly impossible frontier our limbs move in confidence through lifeless gravity Wrought up from the inventions that we built to accompany us

Because there is nothing more Human than hoping and dreaming

Technology is created through our hopes and dreams it is an extension of who we are as people and how far we want to go forward we build artificial machines as intricate as our bodies to conquer the mundaneness of life to define life in our existence by finding the rewards in solving the unknown Despite our ingenuity, we have come to acknowledge the limits of our existence:

Climate Change

Disease

Overpopulation

and again, we stand at the frontier of impossible obstacles as Human as we are we imagine a better world for everyone like the first time we thought to use flint to create fire we use our hands to spark the fire in our will so we create machines that reflect the expansiveness of the human mind this artificial intelligence processes information faster against the ticking down of the clock to help us focus on being more human than ever to dream bigger things to advance our people forward and hoping for a change that can secure a place in the future

I put trust in knowing that nothing can replace us for as long as we stay at the font of our consciousness our machines built from our humanity can build the foundations of excellence for human kind.

John Paul Alejandro danced to a spoken-word poem, which compared the human body to that of a computer. His synchronous movements and words depicted how the human body is an orchestra of simple and complex systems and processes running simultaneously, much like machines that process vast amounts of data in milliseconds.

Photo credit: Mike Sanchez, ASU


Introduction

Times of change are exciting opportunities to shape our future, and as you read this, we find ourselves right in an incredible moment. It is a powerful time to leverage technology to create empowering learning experiences that enable those around us to craft their futures. Transformative technology moments allow us to shape the things to come and establish new ways of collaborating and solving complex challenges. Critically, we have the opportunity to model what trustworthy educational digital experiences should be.

What is digital trust?

Digital Trust is where we build trusted digital experiences for our university communities, creating safe and secure platforms from which learners can reinvent themselves, push forward our understanding of the world around us, and shape the solutions of tomorrow. Each day, we support resilient digital spaces available for anyone who wants to learn from across the globe. We are creating digital experiences that need to be trustworthy, where data is secure, private, and respected.

We are embracing new learning technologies and paradigms at a time when our learners have robust expectations of what a digital experience should look and feel like, expectations set by seamless streaming networks like Netflix, immediate and entertaining environments like TikTok, and engaging environments like Discord and Twitch.

People visiting ASU’s campuses may have arrived in an autonomous vehicle, operating between Tempe, Downtown Phoenix and the Skysong Innovation Center, powered by AI and offering them a comfortable and private drive across town. Remarkably, in the Fall of 2024, we will welcome students who started their first year in high school during a global pandemic, which has since reshaped how we work, learn and collaborate.

Cybersecurity & privacy

These changes in work and learning have transformed our digital trust landscape - with privacy and cybersecurity at the top of our minds. “Cybersecurity as a Core Competency” is #1 in the 2024 Educause Top 10, showcasing higher education’s challenges with cyber threats. Our cyber attack surface has shifted and accelerated, and the situation is worse in education than in any other sector: our environments are complex, our resourcing is tight, and our data is attractive. In 2023, we saw our university peers faced with tough scenarios, like taking their entire campus off the internet (University of Michigan Public Affairs, 2023) on the day before fall started, or finding that decades of financial aid application data had been stolen (University of Minnesota, 2023).

Education is one of the most attacked sectors. Over the last couple of years, getting cyber insurance coverage has become increasingly complex, forcing many states to consider alternative forms of self-insurance. Meanwhile, regulatory requirements are ratcheting up, with new GLBA Safeguards rules and States seeking privacy legislation for their citizens as we navigate the complexity of federal approaches.

Social media shifts

In 2023, many universities found themselves navigating TikTok bans and new concerns about managing social media tools on their campus. As technology leaders, we want to ensure we meet learners where they are, foster creativity, entrepreneurship, and innovation, and develop new approaches to protect our learners’ data.

Generative AI has entered the building

While cyber and privacy sands are shifting, a massive shift is happening as we learn to leverage generative AI. In the summer of 2022, enthusiasts sat anxiously awaiting invitations to OpenAI’s DALL-E and ChatGPT. They featured trickles of clever artist renderings of AI-facilitated imagery, sparking joy and creativity while introducing serious conversations about the future of intellectual property, the role of creators, and their futures.

By finals of the Fall of 2022, teachers and faculty all over the country may have thought they were experiencing a Christmas miracle, as students who had struggled with writing assignments found their voices and turned in incredibly cogent essays! By January of 2023, ASU set up an AI Taskforce, moving quickly to embrace an incredibly powerful learning tool and looking to support faculty in pivoting assessment approaches to leverage new capabilities.

Throughout 2023, teams rallied across the campus to discuss digital trust, academic integrity, and the exciting opportunities for the future of our work and learning, culminating in launching an AI Acceleration team under the leadership of Executive Director Elizabeth Reilly and communities of practice managed by Senior Director of Learning Experience Allison Hall, who is bridging intrepid efforts across the campus.

Looking ahead

What an exciting moment to be in higher education! It’s full of uncertainty, threats we do not understand, and a complex landscape of both technology and policy. All true! Each aspect of uncertainty offers a unique opportunity to face these challenges head-on. Each presents an opportunity to rethink our practices and policies and find pragmatic ways to shape an AI-empowered future.

We have an unprecedented opportunity to learn and shape this future together. Where should we start? Let’s look back at the last five years and then ahead to key milestones along the path.

Dr. Donna K. Kidwell, Chief Information Security and Digital Trust Officer at Arizona State University

ASU student Valkyrie Yao shared a performance dance that she choreographed to showcase the digital fatigue that is often associated with the constant use of our devices. As she ended the performance, she unraveled her scarf, symbolizing the reminder to be fully present in our physical world.


Photo credit: Mike Sanchez, ASU

A five-year journey

The future feels urgent. Typically, the future feels like it is on a far horizon, waiting for us to make our way towards it. But with the advent of generative AI, it is clear that the future can happen while we await it. As a history major who found herself honored to serve in protecting an incredibly innovative public university, I appreciate taking a moment to understand the paths we have traveled to help illuminate the road ahead.

Over five years ago, I joined ASU as the Chief Technology Officer (CTO) of EdPlus. This extraordinary team wakes each morning to create and sustain the amazing ASU Online phenomenon. When I joined, we had more than 40,000 students and new programs rolling out at an incredible pace. Today, ASU Online serves more than 90,000 students, offering over 300 degrees and programs. As CTO, I had the joy of leading teams that rearchitected their technology stack to support this tremendous growth of students and to lay the foundations of what, over the coming years, will be an ever more personalized experience that respects where the students are in their journeys. Much of this work included the incredible work of others across ASU, who champion student experience and are mavens of modern data strategies. As the teams grew to have sophisticated data strategies, we found robust insights into our students’ digital experiences.

What could we do? What should we do?

We found ourselves asking what we could do and what we should do. We sought approaches that respected our learners, treated data as precious, helped us make informed decisions and empowered them on their journeys. This required deeper and more meaningful conversations across the campus. The technology stacks supporting ASU Online are interwoven with those at Enterprise Technology and the Provost’s office, which we call Academic Enterprise here at ASU. Technology on a modern campus is highly distributed, with each college and business unit working to ensure our processes meet learners where they are and are designed to do that at scale. It is not an easy task, as distributed technology struggles under the weight of maintenance and technical debt, not to mention the complexity of modern-day cybersecurity challenges. It can also create innovation eddies.

A river forms an eddy in the path of obstruction, creating a wonderful spot for entering swimmers, adventuring paddlers, and fly fishers puzzling where to meet their next trout. On our campuses, we need to solve local challenges while seeking technology designs that ensure our insights and opportunities serve to address both those local needs and help us shape what the university of the future should be. We want to keep the innovation near where it solves problems, allowing insights and innovation to hop the eddies.

Resilience in the face of global events

Much of this work happened in the context of a global pandemic when, suddenly, all of us were looking at the tools in front of us and leveraging them to respond to the stress and uncertainty facing everyone. ASU teams sprang into action, not only pivoting our delivery and services but extending what we knew to help schools and universities worldwide adapt. Where we could, our teams leaned in to help and share our work with others, to offer as much as we could, and to ensure students could come out the other side of the pandemic better positioned to face any challenge they may have.

CISO Evolution: Chief Digital Trust Officer

Mid-pandemic, the opportunity came to re-craft the Chief Information Security Officer (CISO) role. Rather than pick up with the traditional model, we created a new title - Chief Information Security and Digital Trust Officer. More than a CISO 2.0, my role recognizes that security is a foundational requirement for privacy, digital agency, transparency and trust. As part of an alignment working group, my role creates a structure for building consensus, collaborating on work, and, more critically, co-designing new processes with leaders across campus. For example, it gives me mechanisms for partnering on workforce initiatives with colleges, advanced network strategies with my research colleagues, building new cookie consent mechanisms across ASU Online and various business units, designing new approaches to risk management in partnership with business and finance and EdPlus, and co-designing intellectual property guidelines for exciting new generative AI tools.

Over the past three years, this work has helped clarify what builds ‘digital trust’ and which work needs prioritization to reduce risk. CISOs sit as risk-reducing cost centers, with seven minutes in a board presentation to make a persuasive case for what they need to protect the enterprise diligently. We can certainly avoid risk, but the path to innovation is in taking risks: embracing them with a keen sense of how to mitigate the challenges and become stronger as we push ahead. ‘Antifragile’ captures this sense - to respond to adversity, learn through it, and improve.


Facing uncertainty through trust

Cyber threats have evolved to be faster, more intelligent and exceedingly sophisticated. Strengthening partnerships is essential to forge connections across campuses, throughout higher education institutions, and with vendors and third-party collaborators supporting our collective cybersecurity journey.

2020 - 2023

Evolving cyber threats

The cybersecurity landscape has shifted massively. In 2020, during the throes of the pandemic, the SolarWinds cyber attack changed how we think about cyber attacks. Until that moment, most of us considered our own perimeters and technology stacks, with a growing understanding that we should be adopting ‘zero-trust’ mindsets, moving away from assuming our environments are Ivory Towers with moats and towards a model of testing the trustworthiness of everyone and everything that enters our environments. Suddenly, that world was not limited to ‘our’ technology but showed immediate vulnerability to the vendors we entrusted with parts of our operations and businesses. Supply chain attacks went from a concept broadly understood in award-winning business schools to something we needed to wake up and face, actively seeking vulnerabilities. SolarWinds showed the immediate attack landscape of 18,000 victims, all infiltrated through a trusted vendor (U.S. Government Accountability Office, 2021).

Zero-day Attacks Change the Game

Later, in late 2021, we were shown that zero-days were another critical vulnerability. Log4J, an exceedingly useful and inconspicuous logging tool, was revealed to have a critical vulnerability that, if left unfixed, would allow attackers to break in, steal passwords, extract data, and install malicious software (Cybersecurity & Infrastructure Security Agency, 2022). Suddenly, across our campuses, we were rallying to find all the systems using Log4J, with trusted vendors frantically patching and teams sending updates to custom software designed on campus. Often, this software was developed by people within colleges who had since graduated and left their code to carry on important tasks.

This combination of zero-days and supply chain hit higher ed in a perfect storm through the vulnerability in MOVEit, a standard tool many companies use to move data securely. Within 48 hours of the public recognition of a zero-day vulnerability, hackers had moved to nab data from trusted vendors across education. Pension funds and healthcare companies were hit, and most companies were combing through to see if MOVEit was part of their ecosystem. We had a startling wake-up call with the theft of data from the National Student Clearinghouse, a trusted entity that is a warehouse of the data of students across the country from over 3,600 institutions. Fortunately for most, the student data taken wasn’t sensitive - but how close was the bullet? How do we build secure and resilient systems knowing that the new attacks include combinations of zero-days in our environment and the environments of the vendors we rely on?

2023 - Now

AI challenges

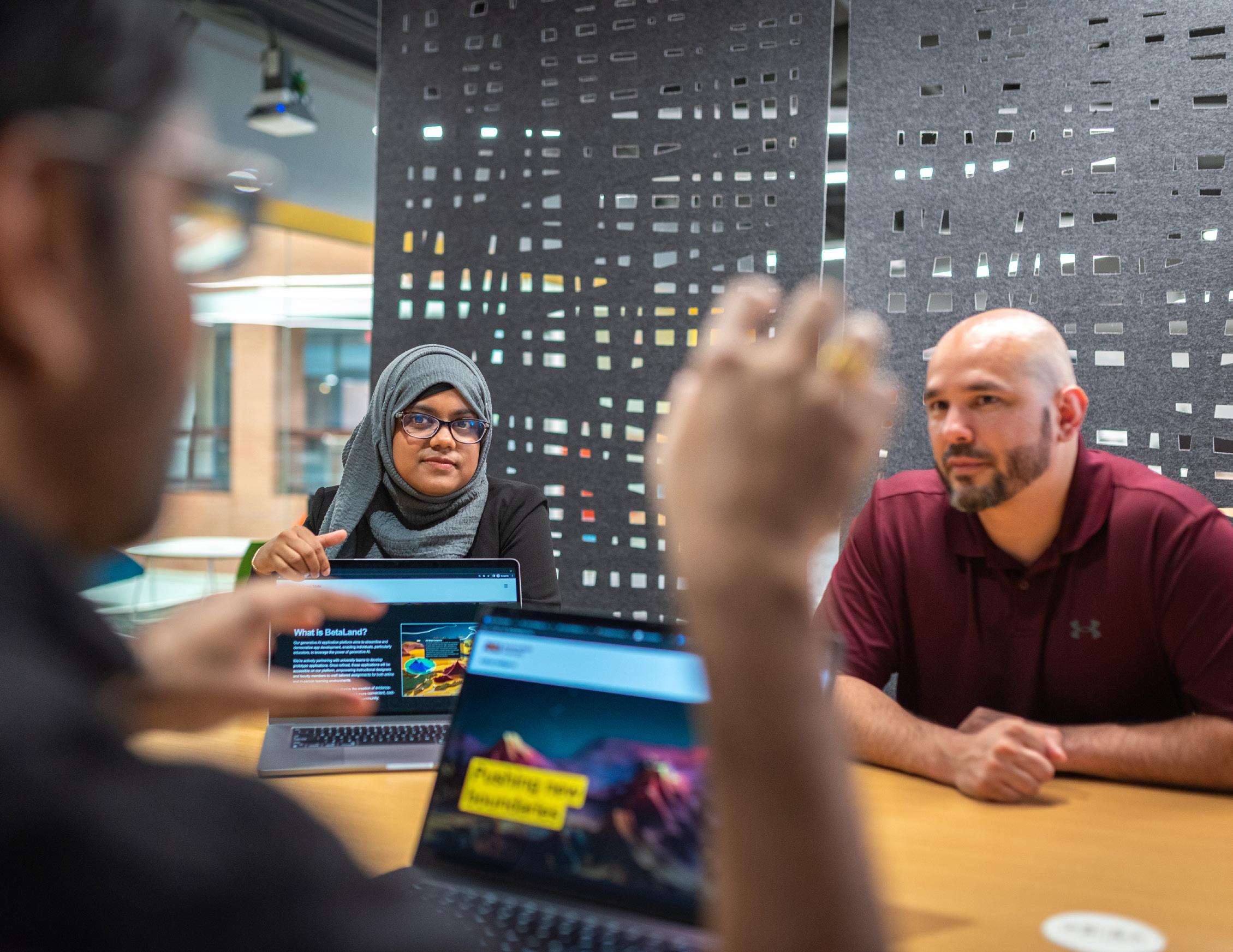

One of the more fascinating changes in the past year is the advent of generative AI. Over the past year, we watched a groundswell of creative and productive use cases emerge. In the Summer of 2022, enthusiasts waited patiently for beta access to tools like DALL-E. This allowed for experimenting, exchanging findings, and contemplating potential applications and the creative ramifications for our future endeavors.

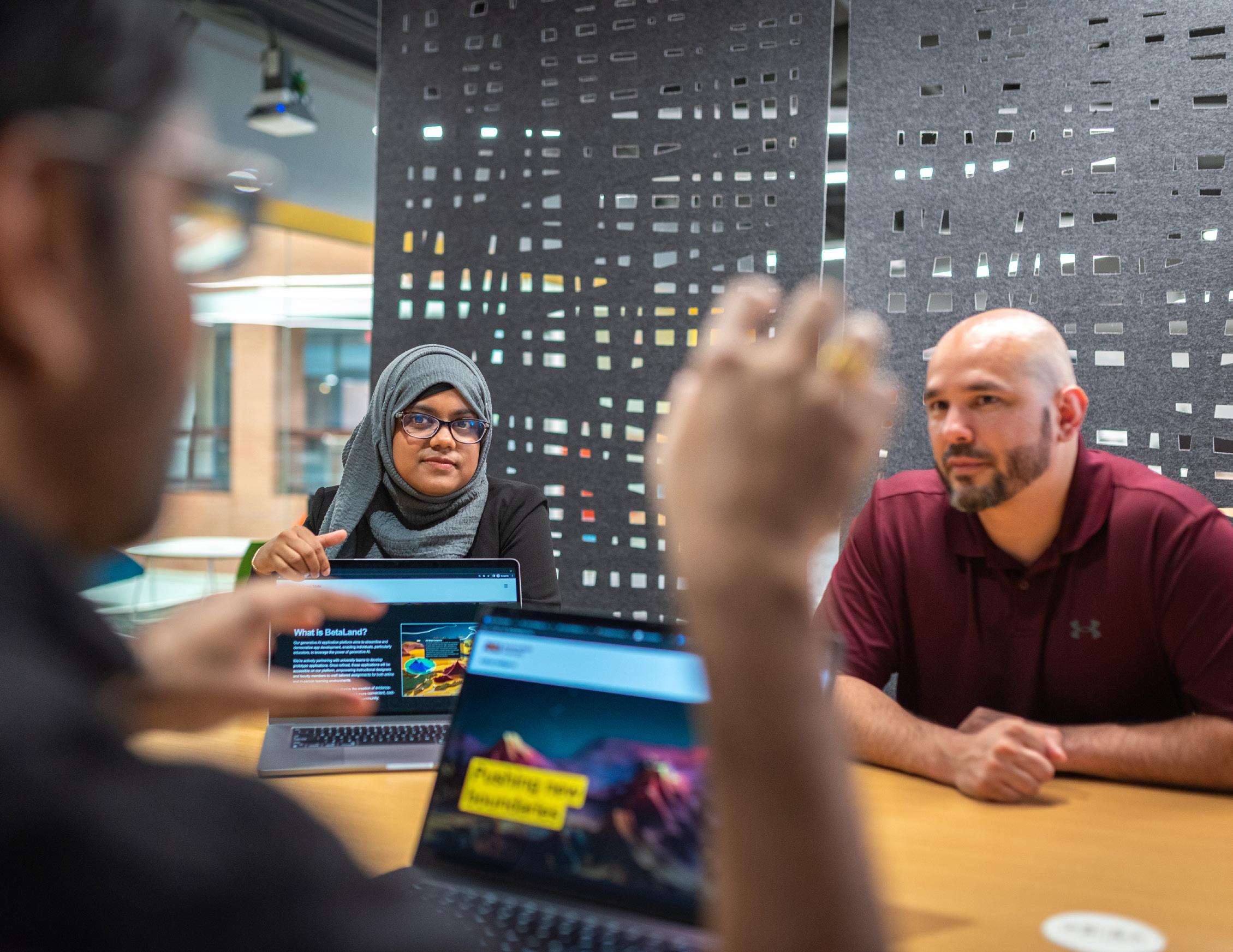

By January 2023, ASU’s Provost called together a Task Force 50+ strong, representing faculty, researchers, administrators, and legal counsel, all curious about how to navigate the practice of using AI broadly across our enterprise. Our journey began with the exploration of academic integrity challenges. It became evident from the outset that reevaluating assessment methods and comprehending how learners demonstrate mastery of subjects, particularly in the presence of powerful yet fallible AI companions, was imperative.

We mobilized our learning design and technical teams to partner with the campus in workshops. We recognized that the pivot to embrace AI was an intensive effort and required teams to support our faculty. We proactively ran new tools through security and privacy reviews, not waiting for requests through traditional procurement channels but recognizing that we would want to adopt a tool quickly. I worked with our attorneys at the Office of General Counsel and leadership to develop privacy and intellectual property guidelines.

By August, Elizabeth Reilly, Executive Director of AI Acceleration, set up a team that pulled together technical talent from across engineering and data teams. These leaders have been working on chatbots and mobile apps for the past few years and understanding how to take action from data insights. They are creating a secure space for ASU’s community to experiment and learn with AI in a platform coined BetaLand.

Allison Hall, Senior Director of Learning Experience, leads convening and defining work for everything from ethics to practical uses in courses. Her work ensures we’re supporting the faculty and identifying which innovations have the opportunity to lift efforts across the campus. This work is evolving rapidly, and it is a lovely example of how we’ve put in place mechanisms to learn quickly across the campus, support one another, and learn about best practices and guardrails as we enter the days of accelerating AI.

We are crossing a threshold into a new age. We have reshaped how and where we work. Meanwhile, our adversaries have become adept at exploiting our complex and distributed nature. What tools and actions do we need for the work ahead of us?


Phishy emails look a lot less suspicious

These vulnerabilities are technical, but attackers are also getting far better at social engineering. In 2023, we saw highly sophisticated phishing attacks through emails, SMS, QR codes, or any mechanism allowing users to enter their credentials. These are increasingly clever, even finding ways to circumvent multi-factor authentication (if you didn’t ask Duo for a nudge, don’t agree to one!). We’re seeing highly personalized messages, and our teams have done AI Red Teaming to demonstrate how effective generative AI can be for bad actors. As a university, we promote and encourage the dissemination of the innovative work happening across campus. This makes it easier to generate phishing efforts that are timed with benefits enrollment on campus, offer job placements for students, or promise grant opportunities for faculty. These tactics have made it easier to steal credentials, giving attackers access to a precious .edu email address, one of our most trusted assets. They are insidious, coming to our inboxes from esteemed .edu email addresses and looking like they were meant for you and related to your work.

Whoa there! Be careful with that IP, cowboy!

We are in the early days of navigating intellectual property in an age of powerful generative AI. Carefully read the fine print when working with an AI-fueled tool. Unless it is evident that the tool is protecting your IP, assume it is not.

Copyright

Many legal cases are coming before the courts to clarify the world of copyright for works created with generative AI. Copyright is an intellectual property right granted to humans, so we are still working to understand what copyrights are available to a human working with an AI. Use tools that help you track your intellectual contributions through version and source control so that you can demonstrate what can be attributed directly to your work.

Additional resources on AI guidelines can be found at ai.asu.edu.

Patents and Trade Secrets

Patents or trade secrets may protect your work, and in both cases, you will want to be thoughtful about sharing your work in public, which can jeopardize your ability to protect it. Public LLMs may use your input in their training. Instead, work with models that guarantee that they do not train on your work.

With the technology and business of cyber threats evolving, our best response will be to strengthen our forces and work together to combat them.


Two work streams with calls to action

Workstream 1: Focus on the people

1. Cultivate an inclusive workforce

2. Design for decision-making

3. Adapt to Risk and Redesign Processes for Procurement

4. Engage your Community

5. Create a Space for People to Work Together: Working Group Cyber-Fusion

6. Conduct TableTops - engaging leadership to propel strategies

7. Rethink Information Security Training - engaging everyone in keeping us secure

8. Celebrate & Advocate: Cybersecurity Awareness Month and Data Privacy Day

9. Establish toolkits for Trust Builders

10. Lean into NIST Frameworks to help with wayfinding.

11. Treat Privacy as a Practice

systems that too often act as sieves for our precious data.

Privacy by design

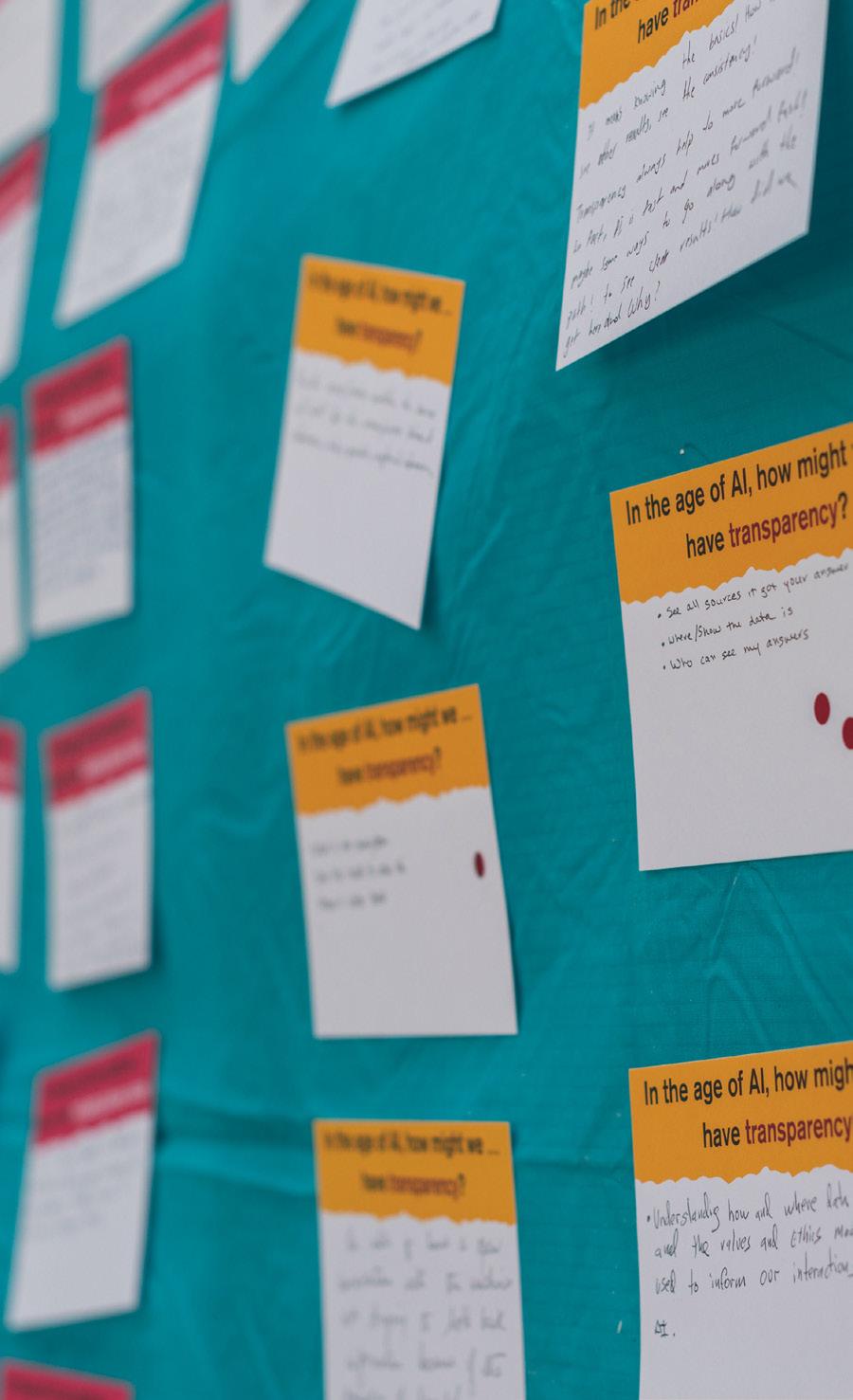

Some will argue that privacy is gone and could only exist in a simpler, less data-connected world. We discover something entirely different when we talk to students (which we often do during our annual Data Privacy Day and the Digital Trust Summit). Our students articulate a less cavalier and more nuanced approach to privacy - navigating complex online social identities and actively engaging and critiquing new environments where they can thrive as creators.

Security by design

Secure by design is gaining traction, with great work from the Cybersecurity and Infrastructure Security Agency (CISA).

Security by design starts with an assumption that we do not leave security as a job that learners, or anyone else, attend to on top of or as an afterthought to working with our systems. Instead, security by design assumes we consider and design in collaboration with the people who will use our systems and not place the burden solely on them.

anti-fragile approach assumes that we will create CVEs in our work and encounter them with vendors, as we saw with MOVEit.

A security-by-design process works to address CVEs quickly. At ASU, we have revamped our IT vulnerabilities process to offer guidance on prioritizing incoming CVEs. Our development teams have internalized security to actively respond quickly, and in cases like AI development, we have created AI Red Teams. Rather than avoiding the threats, we hold ourselves accountable for fostering a robust learning community. For example, our enterprise teams have a slack channel that serves as a Security and Compliance Guild, where over 100 technologists surface findings, debate approaches, and actively collaborate on security issues.

For decades, pursuing innovative technology experiences has been driven by the opportunity to do something new. In most cases, this can be found in new approaches that advance how we get things done and solve key problems in the way we do them and, in some cases, by creating something entirely new. This aspiring and restless approach has made great opportunities and progress. Still, it has unwittingly created the groundwork for vulnerabilities and

Security by design requires transparency and the willingness to shift our gaze beyond traditional metrics. Today, we build our risk practices around the ability to identify and address vulnerabilities leveraging a published list of Common Vulnerabilities and Exposures (CVE). Rather than expecting to avoid these, we should gauge our metrics around how quickly we can identify, analyze and address them. An

12
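The idea of gauging metrics around how quickly CVEs are identified, analyzed and addressed can be made concrete with a small calculation. The following is a minimal sketch and not a description of ASU's actual vulnerability process: the `CveRecord` fields, the `response_metrics` helper, and the sample identifiers and timestamps are all hypothetical, chosen only to illustrate the arithmetic.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class CveRecord:
    cve_id: str           # hypothetical identifier, for illustration only
    published: datetime   # when the vulnerability was publicly listed
    identified: datetime  # when it was found in our own environment
    remediated: datetime  # when a fix or mitigation was in place


def hours_between(start: datetime, end: datetime) -> float:
    """Elapsed time between two timestamps, in hours."""
    return (end - start).total_seconds() / 3600


def response_metrics(records):
    """Average speed of identification and remediation across records."""
    return {
        "mean_hours_to_identify": mean(
            hours_between(r.published, r.identified) for r in records
        ),
        "mean_hours_to_remediate": mean(
            hours_between(r.identified, r.remediated) for r in records
        ),
    }


# Made-up sample data: two vulnerabilities with different response times.
records = [
    CveRecord("EXAMPLE-0001", datetime(2024, 1, 1, 8),
              datetime(2024, 1, 1, 20), datetime(2024, 1, 3, 20)),
    CveRecord("EXAMPLE-0002", datetime(2024, 2, 1, 8),
              datetime(2024, 2, 2, 8), datetime(2024, 2, 3, 8)),
]

metrics = response_metrics(records)
print(metrics)
```

Tracking averages like these over time, rather than counting how many CVEs appeared, is one way a team might see whether its response is actually getting faster.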

1. Cultivate an inclusive workforce

We must cultivate a diverse and trust-focused workforce. Part of this work focused on rethinking how our cybersecurity professionals work and relate to the rest of the organization. Philip Kobezak, Deputy CISO, brought 20 years of experience at Virginia Tech and helped us design working teams for the key practices within cybersecurity: blue teams, risk management, identity and access. He also designed an accompanying cybersecurity professionals framework that helped argue for salary adjustments and normalized the profession across ASU. We could bring our Security Operations Center (SOC) in-house with that in place. By releasing a vendor and redirecting the funds, we were able to hire more

redesign allowed us to reshape our workforce in partnership with the W.P. Carey School of Business and create meaningful apprenticeships for 12 full-time employees through a robust AZNext program. AZNext is a partnership with the State of Arizona that provides career pathways for talented, underemployed individuals. Candidates for AZNext do not have college degrees and aspire to chart new paths.

We welcome these incredible “upskillers” for a one-year apprenticeship and embed them in our teams. They are fully salaried, giving them access to ASU’s tuition benefit, so if they want to pursue a degree, they can! They have been the single change-making force to help us tackle challenges like vulnerability management at scale across the campus. They have also completely changed the face of our cyber teams,

technologists or cyber professionals before. I’m incredibly impressed with their commitment and work, and I look forward to where they take us and where they go as their careers advance in the coming years.

2. Design for decision-making

Over the past three years, our teams have taken great strides in designing structures for privacy and data governance that lay the foundation for tackling challenges that simply cannot be addressed across silos of excellence. New teams have been formed, including a privacy and data governance task force. This is critical as our privacy landscape grows more complicated daily while our commitment to being good stewards of student data deepens. This team brings together voices representing


3. Adapt to Risk and Redesign Processes for Procurement

With new attacks finding ways to infiltrate through vendors and supply chains, now is an ideal time to gather in working sessions to rethink and redesign business processes and the technologies that support them. Now is the time to spin up projects that gather together leading players from cybersecurity, IT, procurement and your attorney from the Office of General Counsel. Share what works and what does not, and map out your current state. From there, you can identify where to improve, what does not serve you anymore, and what new processes you can create together.

4. Engage your Community

The challenges of trust are very human, and it is in working collaboratively that we can build trust together. Cybersecurity professionals have deep and complex expertise and work alongside other technical teams with engineering and design prowess, creating powerful learning experiences of the future: virtual reality biology labs and virtual tutors. To have a solid digital trust practice, we need intentional ways for these professionals to create common approaches. At ASU, we’ve done that through four approaches to engagement: working groups for practitioners, tabletops for leaders within and across units, redesigned information security training for internal audiences, and events for our broader community.

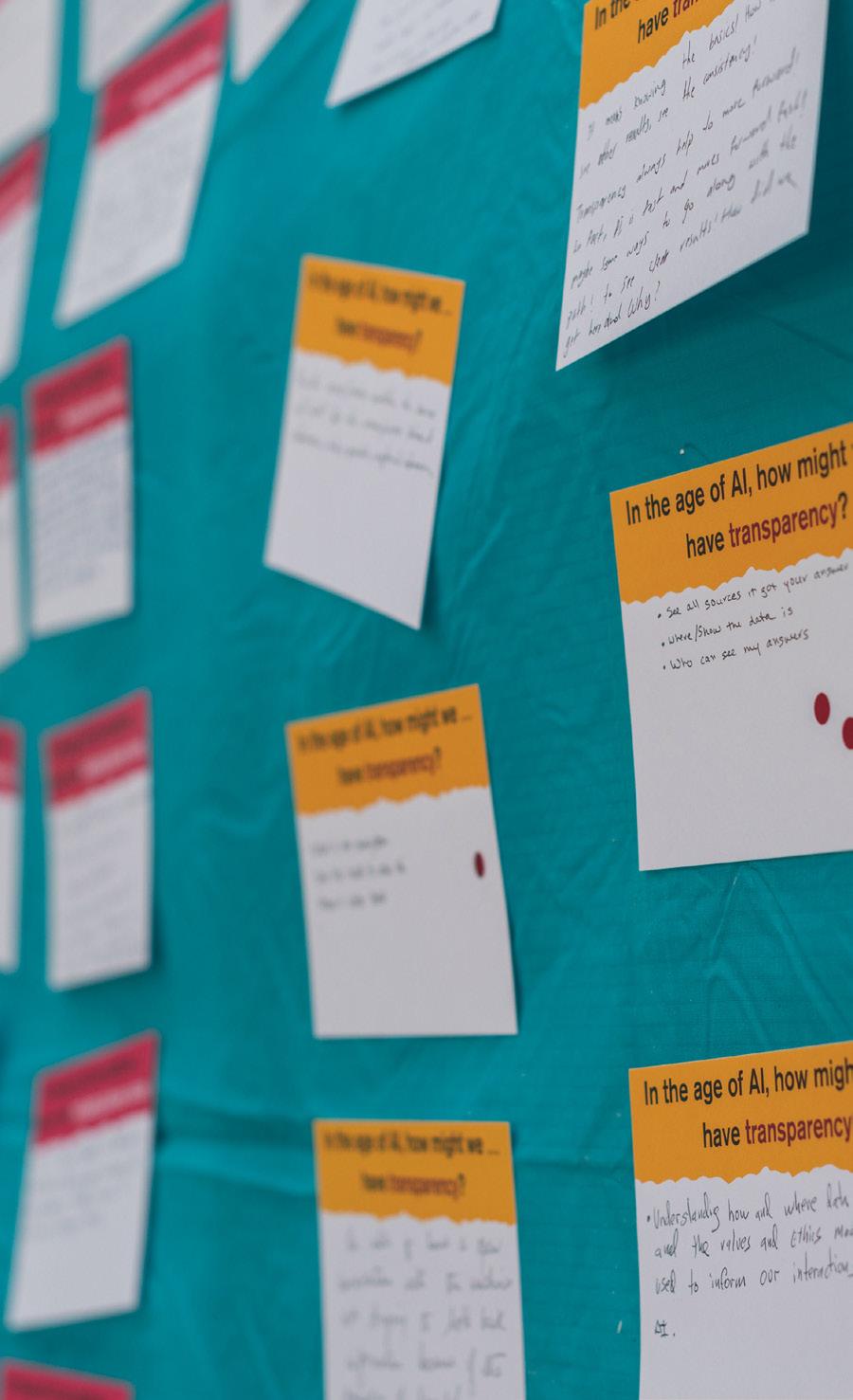

Workshop at the 2023 Digital Trust Summit

5. Create a Space for People to Work Together: Working Group Cyber-Fusion

Combating cyber threats and strengthening our cyber responses require engaging across the campus. Individuals across our campus have taken accountability for protecting one another, and we saw that we could recognize this effort by formally creating a working group called Cyber-Fusion (WG-CF). This working group gives leadership across the campus a trusted network of skilled professionals and a team that can recommend new processes and tools. They gather to work on these challenges, giving us critical insights into the realities of managing technology across the campus. They are in the foxholes of innovation: managing the complexity of endpoints on a campus that celebrates bringing your own device, problem-solving for faculty seeking to create the best digital experience in a classroom, and supporting researchers working with sensitive data (from working to help the most vulnerable in our communities to pushing the boundaries of science with protected health data). WG-CF creates a working space for improving our IT standards, sharing approaches for meeting compliance requirements, and learning from one another. Critically, it also creates a mechanism for threat intelligence and rapid response during active threats. When a new critical vulnerability is discovered, individuals across campus rally to quickly address it.

Forty percent of ransomware attacks in higher education begin with an exploited vulnerability (Mahendru, 2023). Hackers recognize that such exploits are the fastest and most lucrative form of attack. When the MOVEit vulnerability was discovered, the cybercriminal gang CL0P quickly moved to seize the opportunity. As of January 2024, 93,345,243 individuals from 2,729 organizations (Simas, 2024) have been part of the MOVEit attack.

As mentioned previously, in May of 2023, teams across the university were activated. While we did not find any use of MOVEit in our environment, a few of our third-party vendors were hit. The most direct exploit for education was the attack on the National Student Clearinghouse (National Student Clearinghouse, 2023), the nation’s hub for transcript data, serving more than 4,000 educational institutions. It took less than 48 hours for CL0P to discover and steal the data, creating months of forensics and remediation efforts. ASU did not lose any sensitive student data in MOVEit, but we learned two important things:

1. Implement stronger threat intelligence and response across business owners on campus.

Hackers are exploiting vulnerabilities in the software that our vendors use, and we are heavily dependent on vendors. We need stronger threat intelligence to respond, and that means we need to activate the people on campus who own these vendor relationships. For MOVEit, that was our Registrar, the steward of our transcript data. They knew how to reach out to the cyber teams, but we recognize that this is not the case on many campuses, which creates a lag in activating the cyber team to help. Since MOVEit, we’ve engaged our colleagues in Procurement, Risk Management, and the Office of General Counsel so that we can quickly discover and connect to the business leaders on campus when a vendor they work with is attacked. Our WG-CF helps us learn of threats and hear the impact on folks across campus so we can move quickly.

2. Good habits are worth forming: revisiting the configuration management database (CMDB).

We’re doubling down on the work to improve this database, which, despite its pedantic and not terribly useful name, is a wonderful source for identifying and protecting the critical systems used across the campus. A strong CMDB is a well-known best practice, but it is challenging to maintain in a distributed environment where there is no single owner of such a system. WG-CF plays a key role in identifying which systems should be in the CMDB and what information should be stored there about each system, helping us understand the actual value of each system to the business. The group will also help us orchestrate and automate the workflows around the CMDB, making it not just a database but a hub for monitoring our environment and activating our response to threats.
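To make the two lessons concrete, here is a minimal sketch of how a CMDB record could tie a system to its vendor and business owner, so that a vendor incident quickly surfaces who to contact. The field names, the criticality scale, and every record below are illustrative assumptions, not a description of ASU's actual CMDB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CmdbEntry:
    """One system tracked in a hypothetical CMDB."""
    system: str
    vendor: str
    business_owner: str  # campus unit that owns the vendor relationship
    data_steward: str    # who to notify when data may be exposed
    criticality: int     # assumed scale: 1 (low) to 5 (mission critical)


def owners_to_notify(entries, vendor):
    """Return the entries that depend on a vendor, most critical first."""
    hits = [e for e in entries if e.vendor.lower() == vendor.lower()]
    return sorted(hits, key=lambda e: e.criticality, reverse=True)


# Invented records for illustration only.
CMDB = [
    CmdbEntry("Transcript exchange", "ExampleTransfer Inc.", "Registrar", "Registrar", 5),
    CmdbEntry("Event ticketing", "ExampleTickets LLC", "Athletics", "Athletics IT", 2),
    CmdbEntry("Alumni mailing", "ExampleTransfer Inc.", "Alumni Relations", "Advancement IT", 3),
]

for entry in owners_to_notify(CMDB, "exampletransfer inc."):
    print(f"{entry.business_owner}: {entry.system} (criticality {entry.criticality})")
```

Keeping the business owner on the record itself is the design choice that matters here: the lookup works even when the people who hold the vendor relationship sit outside the central technology team.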

6. Conduct TableTops - engaging leadership to propel strategies

TableTops are a standard best practice for bringing together a team that may not typically work together to think through a scenario and identify actions that would improve the response should a similar scenario arise. As with the CMDB and vulnerability management, we can approach these creatively, forging partnerships and lively interaction. At ASU, we see tabletops at two levels: strategic tabletops that engage across leadership and help teams advocate for advances in our work, and tactical tabletops that engage the teams that would directly mitigate and resolve incidents.

Key elements of a good tabletop strategy:

1. Like most exercises, tabletops should be part of a regular cadence and practice. They should not be injected as an interruption in the normal course of daily business but used as a strategy for helping teams identify and prioritize work and as a tool to surface larger initiatives that require greater coordination and buy-in across campus.

2. Both strategic and tactical tabletops include similar roles, highlighting the importance of inclusion and community. Technical leaders are present, of course, and serve to offer the scenario and its challenges to a wide variety of stakeholders, such as:

• University executives who will be making key decisions impacting learners, faculty, researchers, staff and the community,

• Administrative and academic leaders who will be managing the experience across the campus community,

• Media relations professionals who will be addressing the concerns and questions of the community,

• Legal professionals who will be helping to navigate a complex terrain of compliance and regulatory actions,

• Risk and compliance professionals who will be interfacing with cyber insurance, state/federal agencies and other external groups,

• Law enforcement and state and federal agency representatives who will be assisting along the way.

3. All tabletops are rooted in scenarios chosen to illuminate the greatest area of risk and impact. Selecting a situation that surfaces a significant risk with a poignant impact helps rally teams and advocate for the work required. It also helps inform others across the campus so they can appreciate the problems that the operational teams face. The tactical tabletops can flush out the risk at the unit level; for example, units with strong online programs face different threats than units developing the future of clinical healthcare.

4. The tactical tabletops feed into the strategic ones, allowing us to run tactical tabletops each semester or year that inform strategic executive tabletops every two to three years. Over time, these offer a roadmap of activities that strengthen the entire campus.

In 2024, we will share a CISO’s Guide to University TableTops alongside Player Handbooks and other resources to help your teams conduct engaging tabletops. This resource can be found on GetProtected.asu.edu.

7. Rethink Information Security Training - engaging everyone in keeping us secure

We are in an age where cyber-discernment is a crucial skill in our personal and professional lives. Every page we view, every click, every piece of data we enter into a field (or that is generated without our knowledge as we go) is a point of discernment, which is simply overwhelming if we don’t distill our approach. We need engaging and informative ways of helping our communities develop this discernment and of highlighting actions we can reasonably practice.

ASU has a deep commitment to engaging learners. We are beginning to understand how to do that for our community regarding cybersecurity and privacy training. This training is critical, and we cannot assume that compliance training alone will solve the challenge. Given the sophistication of social engineering, our best mechanism to combat it is engaging with users to help them understand how to avoid falling into phishing traps and how to identify new tactics.

In the last two years alone, we have renovated our cyber training. The revamped training was designed in collaboration with people across the campus: champions for student data, FERPA and HIPAA data stewards and people passionate about security and privacy. The result is a more engaging model that paves the way for a future that will enable role-based training, making it easy to discover the guidelines and practices required for different types of work across our enterprise.

In the coming years, our teams will work towards:

• Ensuring that educational vignettes, stories, and videos are discoverable where our audience will most likely need them.

• Building on the great work in the past year to create more engaging training and ensuring that the training is accessible to all our stakeholders.

• Coordinating learning activities into events such as Cybersecurity Awareness Month and Data Privacy Day.

• Identifying new mechanisms for ensuring that we are compliant and that people take our training, with incentives and, when needed, enforcement models that do not create an undue tax on support services.

• Looking to see where our efforts are most effective and finding ways to boost those efforts so we can directly combat the high percentage of threats in things like compromised credentials.

In late 2024, we will launch an educational series for Safety and Trust in AI. This course is designed to help the community make better decisions to protect privacy and intellectual property when many of our daily tools leverage generative AI.

8. Celebrate & Advocate: Cybersecurity Awareness Month and Data Privacy Day

Two national events anchor our community efforts: Cybersecurity Awareness Month in October and Data Privacy Day in January. By leaning into these nationally recognized campaigns, we join the many organizations exploring these themes and engaging with our communities across campus.

Raise Awareness for Cybersecurity

Engage the Campus in Issues of Trust: Digital Trust Summits

We convened our first Digital Trust Summit during the pandemic of 2020, bringing cyber, privacy and future-thinking technologies together. Over the past three years, these discussions have featured security executives talking to our growing community about how Waymo is reshaping transportation through autonomous vehicles, cyborg futurists talking about how we are creating digital environments of the future, and FBI drone experts discussing the incredible considerations of drone technology.

We’ve commissioned students to participate, who brought provocative and compelling expressions of trust in the way only a young creator can. The event allows us to see Digital Trust expressed by engineering students, design students, faculty experts, and local and state partners.

Advocate for Privacy during Data Privacy Day

Each January 28th, in association with the National Cyber Security Alliance (NCSA), ASU celebrates Data Privacy Day, an international effort that encourages individuals and businesses to be aware of their digital privacy. By convening privacy experts from across the campus and beyond, we invite the community to tackle privacy challenges.

9. Establish toolkits for Trust Builders

We need practical tools to help us get our bearings: Where are we? Where do we want to go?

To align teams and set milestones for the work ahead, benchmark where you are today and where you want to go. ASU will launch our Digital Trust Framework in the coming year, starting with a renewed IT Risk Assessment. We have aligned this work to the National Institute of Standards and Technology (NIST) Cybersecurity and Privacy Frameworks. This will allow our units to understand where they are today and give us a baseline for the health of security and privacy on campus.

The framework will also recognize that controls are only part of our security posture. It will celebrate the activities we can all do to keep us safe:

• Taking our cybersecurity training,

• Actively patching and managing vulnerabilities,

• Assisting in protecting our managed devices,

• Engaging in working groups to make our standards and practices stronger,

• Joining in our events and activities across campus.

Earlier, we looked at the 40% of ransomware attacks in higher education that stem from an exploited vulnerability. Another 56% were from compromised credentials, phishing, or malicious emails. We can fight most attacks through our diligence (Sophos, 2023).

10. Lean into NIST Frameworks to help with wayfinding.

As we gain a greater self-awareness of where we are on campus, the framework will help us with wayfinding - where do we need to go? Our teams will use the framework to chart milestones for improvement over the next few years. We’ll be able to navigate the need to comply with NIST 800-171 (Ross et al., 2020) and, in the future, Cybersecurity Maturity Model Certification. It will help pinpoint where we need to collaborate to unify controls across the campus and help drive business cases for where Enterprise Technology should invest in shared capabilities. It will help clarify where a unit needs more stringent controls. It will give us a grounding source of truth as we understand evolving needs that intersect with regulatory compliance, such as HIPAA for our health data and the Gramm-Leach-Bliley Act for financial data.
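A baseline becomes a roadmap once each unit can see its largest gaps. The sketch below is one hedged way to picture that step: it compares a unit's current self-assessment with its target across the five functions of the NIST Cybersecurity Framework 1.1. The 0-4 maturity scale and all scores are invented for illustration and are not part of ASU's framework.

```python
# The five function names come from the NIST Cybersecurity Framework 1.1.
CSF_FUNCTIONS = ["Identify", "Protect", "Detect", "Respond", "Recover"]


def gap_report(current, target):
    """Return (function, gap) pairs sorted by largest gap first.

    Scores use a hypothetical 0-4 maturity scale; a function at or above
    its target reports a gap of 0.
    """
    gaps = [(fn, max(target[fn] - current[fn], 0)) for fn in CSF_FUNCTIONS]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)


# Invented self-assessment for a single unit.
current = {"Identify": 2, "Protect": 3, "Detect": 1, "Respond": 2, "Recover": 2}
target = {"Identify": 3, "Protect": 3, "Detect": 3, "Respond": 3, "Recover": 2}

for function, gap in gap_report(current, target):
    print(f"{function:<9} gap={gap}")
```

Sorting by gap gives a unit a first draft of its milestones: the functions at the top of the list are where collaboration or shared capabilities are likely to matter most.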

11. Treat Privacy as a Practice

One of the more considerable challenges we face in areas such as privacy is deciding where to invest our talent and funds in the face of uncertain and evolving risks.

We need solid data paired with an understanding of the external landscape to help us determine which efforts to prioritize. This will require intentional discovery work to help us gauge where we are and to:

• Prioritize backlogs of work,

• Improve contract language for information security terms and data processing agreements to handle the advancing use of AI across many tools, and

• Address gaps in cookie consent and consent management.

Our privacy team, led by Ben Archer, diligently helps us understand the quickly moving privacy landscape, tracking policy and regulation across the US and globally. We need processes to translate insights into decision-making. As new regulations come out, our teams continuously monitor the privacy landscape to understand how to deploy new privacy practices quickly:

The General Data Protection Regulation (GDPR) took effect in May 2018. Since then, ASU has organized to handle the critical, if complex, data privacy work through the Privacy and Data Governance Advisory Committee. Founded in 2023 from a discovery and design process with leaders across campus, this group puts privacy at the center of governance. It ensures our data governance and management efforts are informed and aligned.

The Digital Trust community at ASU also shares intelligence on recent legal cases and policy shifts. This ensures that, as decisions are made, we learn quickly how to put policy into practice.

The seven principles of Privacy by Design (Cavoukian, n.d.) have stood as foundational considerations in our work. As we move into increasingly interesting and complex privacy terrain, we must advance our tools and approaches.

Double down on the proper handling of data

In 2024, ASU will launch its new data handling standard: an interactive web tool designed to help people make informed decisions about handling and managing the vast array of data found across the campus.

Trail guides for privacy journeys

A privacy journey walks a team through the privacy considerations for every aspect of their work and data flow. By observing someone’s experience, we can identify where their data will be shared, who it will be shared with, and how it will be handled along the way. This process can help us engage with our campus stakeholders and identify key technology and process support they will need to practice privacy by design.


Workstream 2: Engineering and Technology

Architecting for a trustworthy

Cybersecurity provides a foundation for creating value - a resilient, safe, and secure platform on which our digital experiences thrive. Rather than relegate cybersecurity to a technical and risk reduction role, Digital Trust lets us focus on the opportunity to transform what we do and create value. It asks us what risk-taking we can enable if we consider privacy and security part of our work’s DNA. Let’s explore the opportunity to rethink three cornerstones: our networks, the identities using them, and the design of systems folks access from them.

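12. Be Proactive in the Management of Networks

13. Modernize Identity

14. Push Towards Passwordless

15. Recommit to Good Things

16. Experiment toward a federated future

17. Take entrepreneurial action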

12. Be Proactive in the Management of Networks

Universities have always been a gathering place for curious minds, and our technology infrastructure needs to be designed to support both traditional activities and exciting ones for the future. A visiting researcher from another university may have guest credentials and log in on a personal device to the eduroam network. A parent might be accessing a guest network from a mobile device in a dorm, checking in on the FERPA-approved parent portal for their child. A resident of the Mirabella Retirement Community may be enjoying moments on campus at the ASU library or getting technology support.

To do this, we need new ways of securing our network, managing identities and designing the digital experiences created by the university. Cybersecurity professionals use the term ‘zero-trust’ to capture a concept key to how our modern environments work. Technically, starting from a place of ‘zero-trust’ allows us to match who is accessing our environments with which devices they are using and what they want to access. Trust can be spun up as a match of the person, their device, and their goal. In a true zero-trust environment, trust isn’t assumed but configured on the fly.

The history of university networking is rich, starting with ARPANET’s originating nodes in 1969. As modern-day networking evolves, we can rethink the models established in the 1980s and 1990s, where, as research needs grew, we would set up infrastructure appropriate to whatever we were adding into the mix. The result is often a network management approach of tending a garden of firewalls without an overarching design, where the skill set and know-how behind each garden are ad hoc and based on tradition rather than design. Modern networking approaches allow us to rethink this, from the hardware choices to VPN approaches.

ASU is advancing this work and recognizes that this challenge must be faced through collaborations across campus. Firewall strategies and network approaches of the past were reactions to business growth where decisions were made quickly and locally. The challenge is moving from heritage decisions and technical debt to a designed approach that allows us to lean into enterprise architectures without mandating centralized control. The work we’re embarking on requires a concerted strategy that weaves the knowledge of cybersecurity professionals with our network engineers and enterprise architects - all working towards a trajectory set by our teaching and research aspirations.
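The zero-trust idea described in this section, trust as a match of the person, their device, and their goal, can be pictured as a small decision function. This is a simplified sketch under stated assumptions: the role names, resources, and policy table are hypothetical, and a real deployment would draw these signals from identity, device-management, and network systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    role: str             # who is asking, e.g. "visiting_researcher"
    device_managed: bool  # whether the device is university-managed
    resource: str         # what they want to reach


# Hypothetical policy: resource -> (roles allowed, managed device required).
POLICY = {
    "guest_wifi": ({"guest", "parent", "visiting_researcher", "student"}, False),
    "parent_portal": ({"parent"}, False),
    "research_data": ({"researcher", "visiting_researcher"}, True),
}


def is_allowed(request):
    """Grant access only when person, device and goal all match the policy.

    Nothing is trusted by default: an unknown resource or a mismatch on any
    of the three signals results in a denial.
    """
    rule = POLICY.get(request.resource)
    if rule is None:
        return False
    allowed_roles, needs_managed_device = rule
    if request.role not in allowed_roles:
        return False
    if needs_managed_device and not request.device_managed:
        return False
    return True


# A parent on a personal phone reaches the parent portal.
print(is_allowed(AccessRequest("parent", False, "parent_portal")))
# A visiting researcher on a personal laptop does not reach research data.
print(is_allowed(AccessRequest("visiting_researcher", False, "research_data")))
```

The default-deny branch is the point of the sketch: access is granted per request rather than inherited from being on a particular network segment.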


13. Modernize Identity

The examples above highlight the myriad identities the university serves: applicants and degree-seeking students, their parents, faculty, researchers, visiting researchers, research collaborators, trusted third-party vendors, alums, and the list goes on! A person may hold several of these identities simultaneously or shift among them throughout their life. ASU manages nearly 2 million identities, and we expect the sun never to set on a Sun Devil. Your relationship with the university shifts as your life and work shift, and our systems must respect your identity throughout. A robust and modern identity approach is critical to our ability to manage privacy concerns, and it is a challenging task across a broad and highly aspirational community.

ASU is working towards a modern approach:

• Distributed identities with digital wallets and verified credentials: Through our work with the Trusted Learner Network, we are collaborating with other institutions to build an identity that the individual owns. This work requires us to look at challenges around disclosure, content and custodianship, all critical issues we need to have elegant solutions for today.

• Identity management: Most individuals think of their ‘identity’ on a system as a ‘username’ and recognize it as the name they use to log into an environment. The work behind making that a seamless and intelligent identity is far more complex. Modern systems need to navigate everything from respecting a person’s lived name (the name they are known by may not be the same as prior university experiences) to managing the resources they should have access to. Those may shift depending on the nature of the work they hope to accomplish (e.g., a parent getting a professional credential while supporting their undergraduate child and high-school child who may be applying).

New technologies offer unique opportunities, such as identity graphs, that can help us with the nuance of a person’s identity. In combination with a zero-trust approach, we can begin to see a future that is on a not-so-distant horizon, where your access to suitable university systems is powered by an understanding of who you are, what you’d like to do and our appreciation of how best to facilitate that experience.
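One way to picture a person who holds several identities at once, and whose identities shift over time, is a single record with dated affiliations. The sketch below is illustrative only: the class names, role labels, and sample person are assumptions and do not describe ASU's identity systems.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Affiliation:
    role: str
    start: date
    end: Optional[date] = None  # None means the affiliation is still active

    def active_on(self, day):
        return self.start <= day and (self.end is None or day <= self.end)


@dataclass
class Identity:
    lived_name: str
    affiliations: list = field(default_factory=list)

    def roles_on(self, day):
        """Return every role the person holds on a given day, sorted."""
        return sorted(a.role for a in self.affiliations if a.active_on(day))


# Invented example: a parent who is also earning a professional credential.
person = Identity("Alex Rivera", [
    Affiliation("alum", date(2005, 5, 12)),
    Affiliation("parent", date(2022, 8, 18)),
    Affiliation("credential_learner", date(2023, 1, 9), date(2023, 12, 15)),
])

print(person.roles_on(date(2023, 6, 1)))
print(person.roles_on(date(2024, 6, 1)))
```

Modeling roles as dated affiliations on one identity, rather than as separate accounts, is what lets access follow the person as their relationship with the university changes.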

“The Sun Never Sets on a Sun Devil”

- Donna Kidwell, Chief Information Security and Digital Trust Officer


14. Push Towards Passwordless

A student comes to university to learn many things, but new passwords should not be one of them. Our teams have worked to eliminate the need to choose a new password periodically. NIST guidance recognizes this, advising that we should not ask people to routinely change secrets that they have to memorize. Doing this was harder than it may sound, and the teams worked on multiple threads to roll out this modern strategy.

Today, when someone establishes an ASU password, we will check it against known exposed lists and ensure it is robust. Once established, we will only ask the user to choose a new one if we find evidence that it has been compromised. The road to a future without passwords lies ahead, and in the meantime, we will continue to ensure the password selected is rigorous, secure, and not a hassle.
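The policy above has two parts: screen a password when it is chosen, and ask for a new one only on evidence of compromise. A minimal sketch follows. The minimum length, the function names, and the tiny in-memory "exposed list" are assumptions for illustration; SHA-1 appears only as a lookup key because breach corpora are commonly distributed as SHA-1 digests, and it is not a suitable way to store passwords.

```python
import hashlib

MIN_LENGTH = 12  # hypothetical minimum; not a stated ASU requirement


def sha1_hex(password):
    """Digest used only to look a password up in an exposed list."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()


def screen_new_password(password, exposed_hashes):
    """Return a list of problems; an empty list means the password is accepted."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append("too short")
    if sha1_hex(password) in exposed_hashes:
        problems.append("found in a known exposed list")
    return problems


def needs_reset(password, exposed_hashes):
    """Checked at sign-in: ask for a new password only on evidence of exposure."""
    return sha1_hex(password) in exposed_hashes


# Stand-in for a breach corpus, stored as SHA-1 digests.
EXPOSED = {sha1_hex(p) for p in ["password123456", "qwertyuiop123"]}

print(screen_new_password("password123456", EXPOSED))
print(screen_new_password("correct horse battery staple", EXPOSED))
```

Note what is absent: there is no expiry date anywhere in the sketch. A password stays valid until the exposure check says otherwise.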

15. Recommit to Good Things

Sometimes, one of the simplest things you can do is recognize a good practice and commit to intentionally making it even better. Most universities have tools such as a CMDB. Similar tools lie in ticketing and tracking systems (ServiceNow and Salesforce). Revisiting such tools across teams allows us to harmonize processes and find points of automation. Determine which of these systems works for you, and identify places where you could improve how they work and integrate in the next 3-6 months.


Kudos for Multi-factor

Many of us have spent the past few years rolling out and managing new approaches to multi-factor authentication (MFA). Keep heartened on that journey! If you need to prioritize your efforts, let compliance and regulatory frameworks help you. The new Safeguards Rule under the Gramm-Leach-Bliley Act (GLBA), best practices for FERPA, and the stewardship of Health Insurance Portability and Accountability Act (HIPAA) data can help you build a roadmap with partners on campus and ensure a robust MFA approach.


Looking Forward

We can position ourselves for the future with a solid grounding in community and technology. Looking ahead, embracing AI, experimenting with new federated technology models, and adopting an entrepreneurial mindset will ensure we are anticipating what’s ahead.

16. Experiment toward a federated future

Universities are navigating their own evolution of distributed vs. centralized technology teams, and we are not alone. Web3 brought experimentation and scrutiny to new technologies that push against centralized models. Non-Fungible Tokens (NFTs) sought to solve for digital provenance and create new means to disrupt models but were dominated by modern-day tulip crazes and new-fashioned intermediaries, not so much removing the middle as demonstrating that we can shift who controls the middle. Blockchain efforts have proven attractive for some use cases where the features of decentralization, distributed ownership, and security are paramount, such as digital wallets (Leow et al., 2023). As new technologies for trust mature, it is crucial to find new technical opportunities and models for governance.

Be Authentic, Honor Provenance

An interesting model for federation is emerging in social media, where the winds are quickly shifting. The days of Twitter (currently known as “X”) streams and corporate algorithms, informed by who we know and interact with, are passing, making way for new models of online social gathering. Our learners are flocking to new models, such as Discord, where they can foster self-governing communities and create, share, and amplify content in new ways. New federated models are emerging, promised by Threads and Bluesky and tested over the past years at Mastodon, that allow you to determine where and how to feed information across communities and that experiment with marketplaces of algorithms. New protocols that rethink how we consider authenticity and provenance are emerging. Authenticity is critical in an age when it is easy to generate powerful content, assisted by AI and ever more potent creative tools. Is the content you are seeing what it appears to be, or is it a clever deepfake?

Generative AI has made it incredibly easy to take content and spin up derivatives, testing the edges of fair use and what we find acceptable. Content creators are facing content scammers profiting from their work and creativity. Kyle Hill, an award-winning science creator, has a lively discussion of this (Hill, 2024), highlighting the challenge facing advocates of engaging science and weather content.

Provenance asks where and from whom content originates. By design, data passes through the internet without the overhead of metadata on its origins. In the early days of the internet, this may have allowed us to address bandwidth challenges, but as with many choices, one that made sense in the beginning leaves us with challenges today.

How do we know from whom and where a digital asset originated? NFTs experimented with mechanisms for proving digital ownership in artwork. Still, we are a long way from scalable solutions that are easy to use and make sense across the diversity of content. How about verification and knowing that content is coming from a genuine source? We are seeing early experiments (Bluesky, n.d.) lean into time-tested trusted mechanisms for verification such as using a web address, where a journalist verifies their authenticity through their newspaper’s web address (The New York Times (@nytimes.com), n.d.), or a public official confirms through their .gov address (Senator Ron Wyden (@wyden.senate.gov), n.d.), rather than paying a fee to a private company run amok under a megalomaniac entrepreneur.

As universities, this should sound like a call to action - who are the champions and role models for concepts around attribution? What can we do to promote healthy practices of authenticity and provenance?
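For readers curious how verification through a web address can work, here is a minimal sketch. It assumes the mechanism used by the AT Protocol, on which Bluesky is built: the owner of a domain publishes the account's decentralized identifier (DID) either in a DNS TXT record or at a well-known HTTPS path, and a handle counts as verified when the published DID matches the account. The sketch performs no network lookup, and the handle and DID shown are invented.

```python
def well_known_url(handle):
    """HTTPS location where a domain can publish the DID it vouches for."""
    return f"https://{handle}/.well-known/atproto-did"


def dns_record_name(handle):
    """Name of the DNS TXT record that can carry the same claim."""
    return f"_atproto.{handle}"


def did_from_txt(value):
    """Extract the DID from a TXT record value such as 'did=did:plc:abc123'."""
    prefix = "did="
    value = value.strip().strip('"')
    return value[len(prefix):] if value.startswith(prefix) else None


def handle_is_verified(account_did, txt_value):
    """The handle is verified when the domain's record names the account's DID."""
    return did_from_txt(txt_value) == account_did


# Invented handle and DID for illustration.
print(dns_record_name("news.example.edu"))
print(handle_is_verified("did:plc:example123", '"did=did:plc:example123"'))
```

The appeal of this design is that trust rests on something institutions already control and the public already recognizes: their domain name.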

17. Take entrepreneurial action

As university technologists, we commit daily to ensuring technology works, informs and serves our communities. We have the opportunity to do this inside an institution whose very role in society is to push the boundaries of knowledge, create new discoveries, and nurture the minds that will solve complex challenges and craft our future. Each day, insights and lessons from our work should inform the road ahead, and the university offers a perfect space for experimenting together on future models for learning, discovery, and societal impact.

In 1980, the Bayh-Dole Act paved the way for universities to be innovative and entrepreneurial, setting the stage for universities to take active roles in ensuring that they not only discover knowledge but partner with others to ensure that discovery can take root in the world around them. Technology transfer activities gave universities a path to entrepreneurial action, with an emphasis on collaborating with small businesses. Over 40 years later, it has proven effective in creating over $1.3 trillion in economic growth and 4.2 million jobs (Copan, 2020). Technology transfer, while effective, is one of many means of being entrepreneurial on campus. A university campus is naturally generative and creative, and it is time to seriously advance how the university considers entrepreneurship.

The Trusted Learner Network

A lovely example is the work with the Trusted Learner Network, which started as a collaborative initiative between Enterprise Technology, EdPlus and the Provost’s Office. Kate Giovacchini, Executive Director of Trust Technologies, has been leading teams innovating to change how we think about digital credentials. They have been leaning strongly into new technologies that could shape a future where learners own their data and institutions act as stewards and validators. In April 2024, the Trusted Learner Network (Trusted Learner Network, n.d.) will celebrate its five-year gathering, bringing together colleagues from community colleges, universities, and K-12, alongside industry and non-profit partners, to form new governance models while innovating on new technical approaches.

Learning along the way

A few lessons emerge from this work:

• Bringing entrepreneurship in-house is challenging and requires committed leadership that actively listens to colleagues across campus and among other institutions and partners.

• Universities have a unique opportunity to leverage our place - as a trusted institution that issues demonstrations of accomplishment, as the stewards of those whose digital records may be vulnerable, and as partners to K-12 and community colleges.

• This work requires investment in great people and technology, with a commitment from university leadership, who also recognize the opportunity to attract funding from strategic partners.

• The university has long been shown to be a good place to pilot new ventures, with ready access to students and the vibrant cities where students live. Access to students should not be where our role ends; instead, we can actively design and conduct pilots that would be challenging for small businesses or distracting for large enterprises. We can provide the fabric for innovating where the work is complex and challenging, but the opportunities are truly transformational.


A final word (why we should be incredibly optimistic)

We have covered a lot of territory and reflected on hard work while casting our eyes on the work to come. We have the privilege of working for an extraordinary institution, a public university committed to a mission of access, creating research of shared value, and with a direct responsibility for our communities. Universities have been here since the 12th Century, creating new knowledge that is the foundation for things that are often surprising and sometimes startling to us.

Each day, we are creating experiences that establish digital trust. Each project has the opportunity to explore what digital trust should feel like and how it manifests itself. We see it in small interactions, data shown at the right time to offer insights, and interfaces inviting exploration and active decision-making.

Wouldn’t you trust an institution more if it:

• Knew your name as you present it, tying back your history to names you had in prior chapters while respecting who you are now? Lived names that honor how you present yourself to the world as you learn and grow.

Wouldn’t you trust a success partner more if it:

• Understood from insights collected across many other students where the most precarious success moments were, and offered resources and support to guide you through those without sounding like a scolding nanny?

Wouldn’t you trust a learning partner more if it:

• Opened up vast resources in delightful ways so you could discover and explore, inviting you in with access to digital libraries, interactive notebooks, and collaborative creation spaces?

Wouldn’t you trust a career partner more if it:

• Supported portfolios that help showcase the work you are proudest of, alongside the context of the skills (both technical and interpersonal) you used to create them? What if the university could issue and validate your work, grounding your efforts with an authority that universities have been committed to for hundreds of years?

We are looking through an incredible window of opportunity to shape the future: one that is welcoming, resilient and committed to learning together.

We have unprecedented tools to leverage: mature tools to discover, share, iterate and improve upon each other’s work, alongside international dialogue around the opportunities and approaches afforded by AI.

The days ahead promise to give us many opportunities to create new models for digital trust, and I look forward to co-creating them with you!

“No, there is too much. Let me sum up.”

-Inigo Montoya, The Princess Bride


Donna Kidwell is ASU’s Chief Information Security and Digital Trust Officer, joining the University Technology Office in November 2020.

In her most recent role as Chief Technology Officer for EdPlus, Donna partnered on digital trust initiatives. For example, Kidwell helped develop the Trusted Learner Network (TLN), which takes a tiered approach to explore new and emerging “technologies of trust,” including identity management, a trusted learner record, reverse transfer with community colleges and course credit exchange. Kidwell will continue to lead the TLN and many other trust initiatives in her new role.

As CISDTO, Donna has stewardship of the portfolio of both internal and external facing technical and socio-technical elements reflecting the full breadth of the university’s digital infrastructure as well as the ongoing need to educate the campus community.

Donna Kidwell received her doctorate at the Ecole de Management in Grenoble, France, and her Master’s in Science and Technology Commercialization at The University of Texas at Austin. She led the development of The Innovation Readiness Series™, an online course developed to help global innovators. Her research interests include innovation and commercialization.

She has worked globally to encourage economic development through science and innovations.

Prior to her work with UT Austin, Donna was a software consultant and developed custom database applications and eLearning environments for companies such as Exxon, Agilent, and Keller Williams Realty International. She is passionate about creating action-based online systems to educate and help others achieve their goals.

You can contact Donna at donna.kidwell@asu.edu


References

Photo credit: Mike Sanchez, Photojournalist, ASU

Special thank you to the members of the AI in Digital Trust Working Group at Arizona State University

August 2023 Data incident, U-M Public Affairs. (n.d.). https://publicaffairs.vpcomm.umich.edu/key-issues/august-2023-data-incident/

Bluesky, How to set your domain as your handle. (n.d.). https://bsky.social/about/blog/4-28-2023-domain-handle-tutorial

Cavoukian, A. & Information & Privacy Commissioner, Ontario, Canada. (n.d.). Privacy by design. https://privacy.ucsc.edu/resources/privacy-by-design---foundational-principles.pdf

Copan, W. (2020). Reflections on the impacts of the Bayh-Dole Act for U.S. innovation, on the occasion of the 40th anniversary of this landmark legislation. https://ipwatchdog.com/2020/11/02/reflections-on-the-impacts-of-the-bayh-dole-act-for-us-innovation-on-the-occasion-of-the-40th-anniversary-of-this-landmarklegislation/id=126980/

Data incident. (2023). University of Minnesota System. https://system.umn.edu/data-incident

Arizona State University. (2023). Facts and figures. https://www.asu.edu/about/facts-and-figures

Hill, Kyle. (2024). Suing YouTube’s science scammers [Video]. YouTube. https://www.youtube.com/watch?v=ZMfk-zP4xr0

Leow, Litan, Farahmand, Valdes. (2023). Hype Cycle for Blockchain and Web3, 2023. https://www.gartner.com/en/documents/4594099

Mahendru, P. (2023, July 19). The State of Ransomware in Education 2023. Sophos News. https://news.sophos.com/en-us/2023/07/20/the-state-of-ransomware-in-education-2023/

National Student Clearinghouse. (2023). https://alert.studentclearinghouse.org/

Policy and resources, Artificial Intelligence. (2023). https://ai.asu.edu/policy-and-resources

Ross, R., Pillitteri, V., Dempsey, K., Riddle, M. A., & Guissanie, G. (2020). Protecting controlled unclassified information in nonfederal systems and organizations. https://doi.org/10.6028/nist.sp.800-171r2

Senator Ron Wyden (@wyden.senate.gov). (n.d.). Bluesky Social. https://bsky.app/profile/wyden.senate.gov

Simas, Z. (2024, March 7). Unpacking the MOVEIT breach: Statistics and analysis. Emsisoft | Cybersecurity Blog. https://www.emsisoft.com/en/blog/44123/unpacking-the-moveit-breach-statistics-and-analysis/

SolarWinds Cyberattack Demands Significant Federal and Private-Sector Response (infographic). (2023, September 27). U.S. GAO. https://www.gao.gov/blog/solarwinds-cyberattack-demands-significant-federal-and-private-sector-response-infographic

Trusted Learner Network. (n.d.). https://tln.asu.edu/

The New York Times (@nytimes.com). (n.d.). Bluesky Social. https://bsky.app/profile/nytimes.com


GetProtected getprotected.asu.edu/