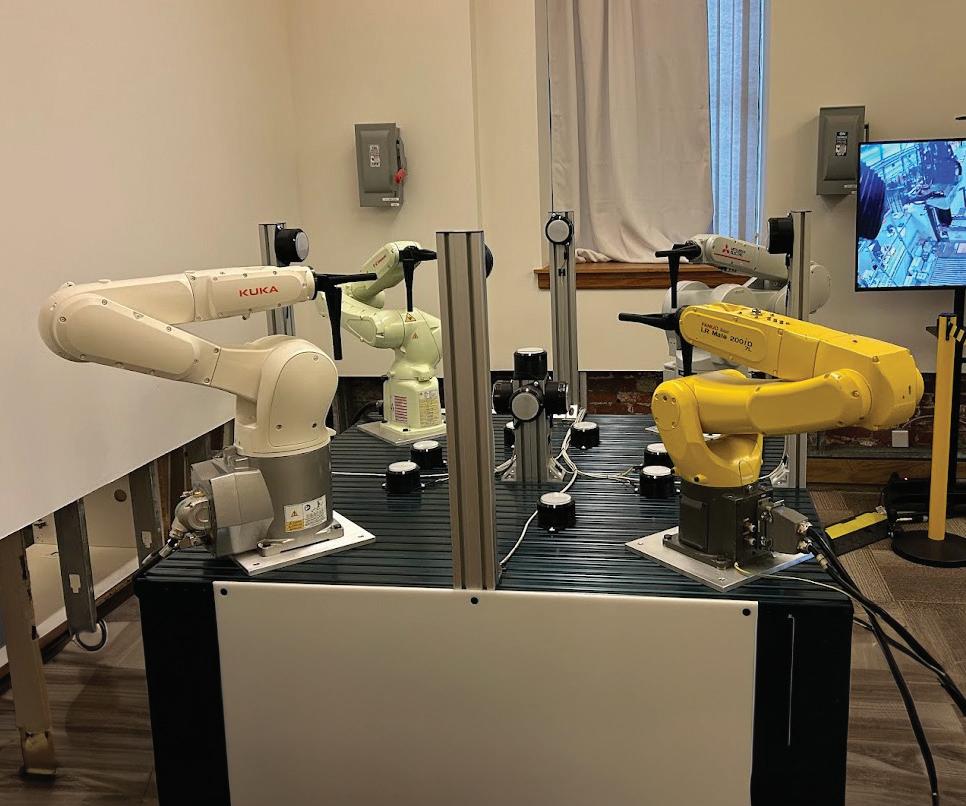

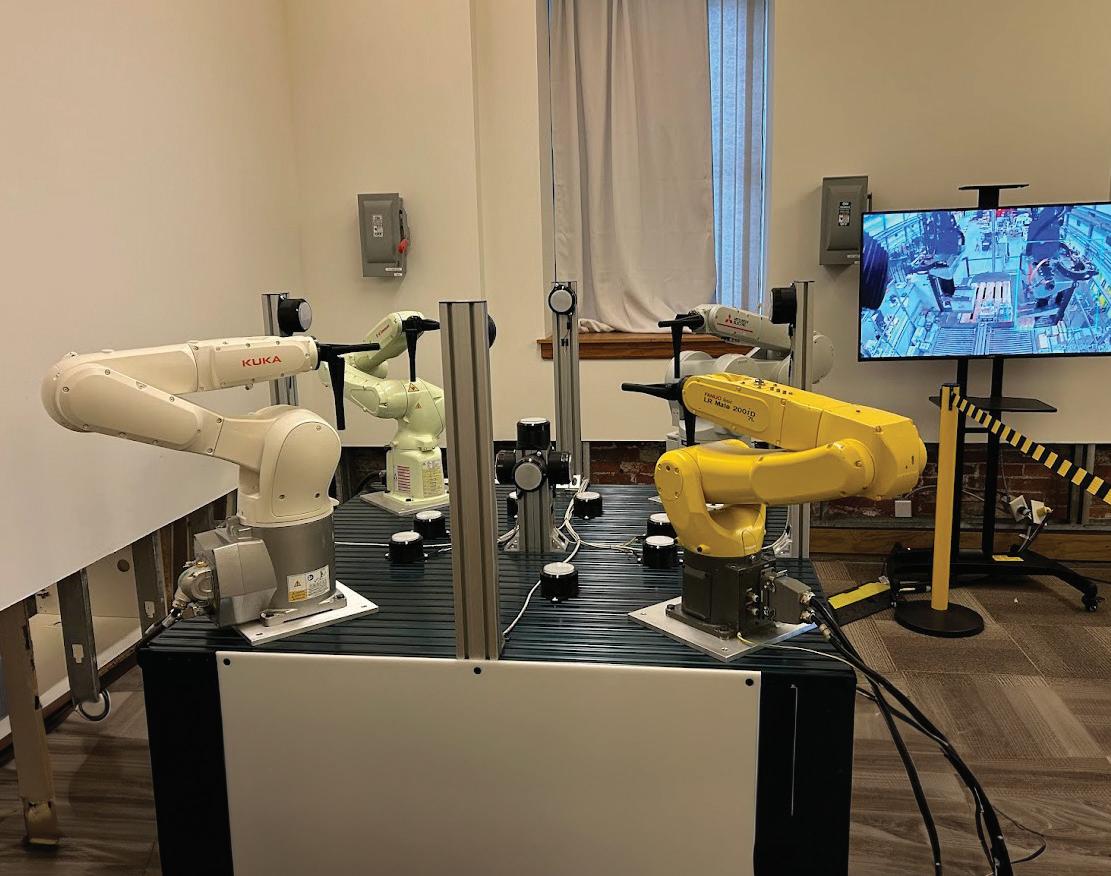

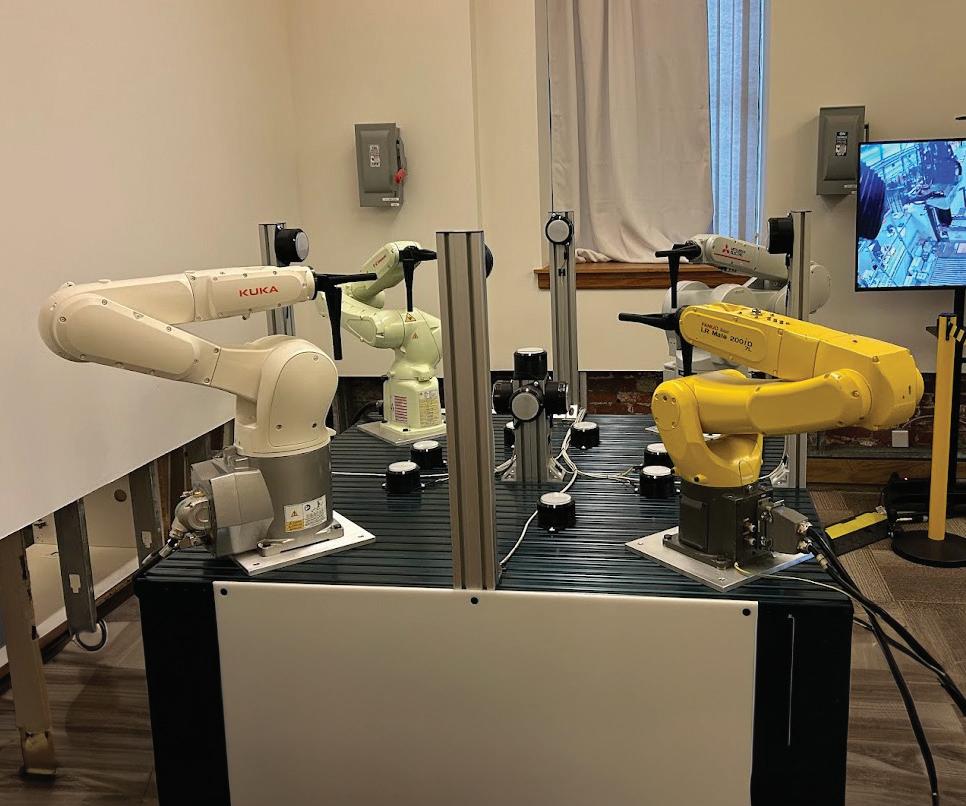

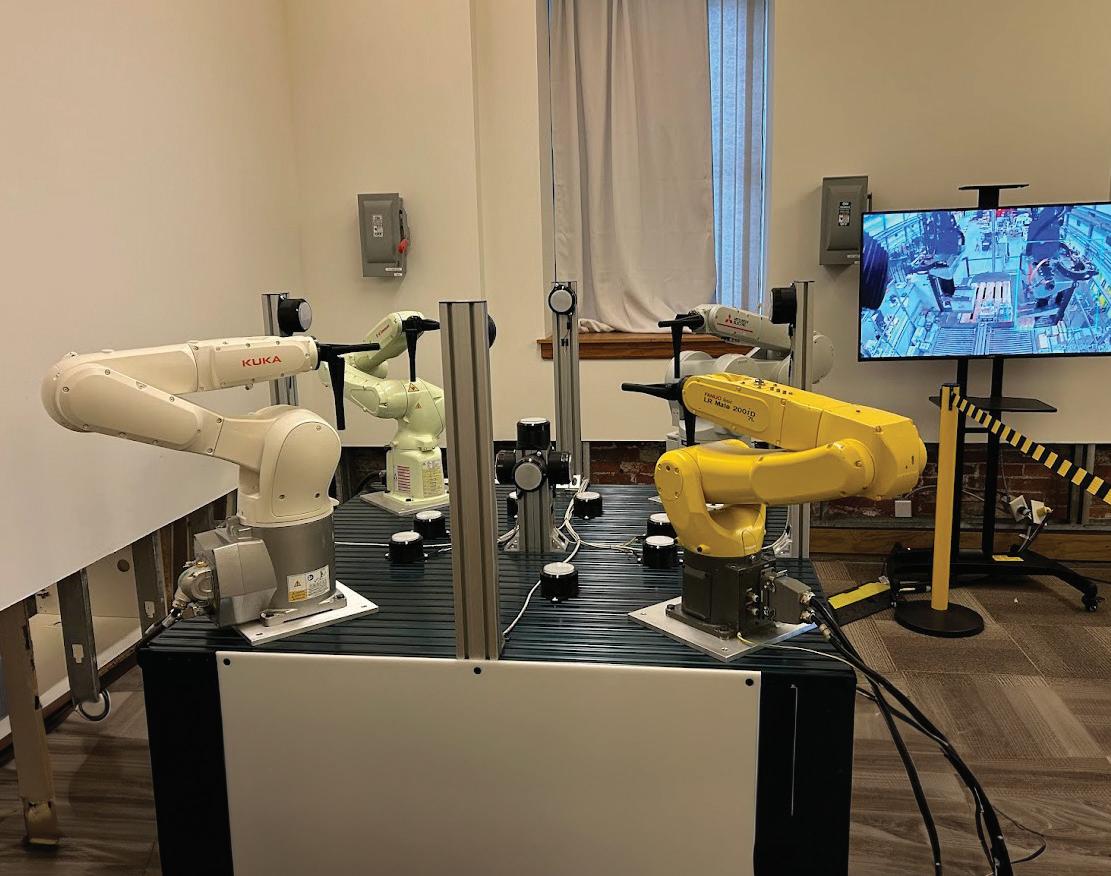

Realtime Robotics demonstrates a multirobot workcell during Mitsubishi Electric’s visit.

| Eugene Demaitre


As factories and warehouses look to automate more of their operations, they need confidence that multiple robots can conduct complex tasks repeatedly, reliably, and safely. Realtime Robotics has developed hardware-agnostic software to run and coordinate industrial workcells smoothly without error or collision.

“The lack of coordination on the fly is a key reason why we don’t see multiple robots in many applications today, even in machine tending, where multiple arms could be useful,” said Peter Howard, CEO of Realtime Robotics (RTR). “We’re planning with Mitsubishi Electric to put our motion planner into its CNC controller.”

The company recently received strategic investment from Mitsubishi Electric Corp. as part of its ongoing Series B round. Realtime Robotics said it plans to use the funding to continue scaling and refining its motion-planning optimization and runtime systems.

In June, a high-ranking delegation from Mitsubishi Electric Corp. (MELCO) visited Realtime Robotics to celebrate the companies’ collaboration. RTR demonstrated a workcell with four robot arms from different vendors, including Mitsubishi, that was able to optimize motion as desired in seconds.

“Mitsubishi Electric is a multibusiness conglomerate, a technology leader, and one of the leading suppliers of factory automation products worldwide,” said Dr. Toshie Takeuchi, executive officer and group president for factory automation systems at Mitsubishi. “I see this partnership as the perfect point where experience meets innovation to create value for our customers, stakeholders, and society.”

She and Howard answered the following questions from The Robot Report:


Mitsubishi Electric, Realtime Robotics integrate technologies

How is Realtime Robotics’ motion-optimization software unique? How will it help Mitsubishi Electric’s customers?

Takeuchi: Realtime Robotics’ software is unique in many ways. It starts with the ability to do collision-free motion planning. From there, motion planning in single-robot cells as well as multirobot cells can be automatically optimized for cycle time.

Our customers will benefit from optimized cycle times, which improve production efficiency, and from a reduction in the engineering effort required for equipment design.

Howard: Typically, to provide access for multiple tools at once, you need an interlocked sequence, which loses time. According to the IFR [which recognized the company for its “choreography” tool], up to 70% of the cost of a robot is in programming it.

With RapidPlan, we automatically tune for fixed applications, saving time. Our cloud service can consume files and send back an optimized motion plan, enabling hundreds of thousands of motions in a couple of hours. It’s like Google Maps for industrial robots.
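A little arithmetic shows why an interlocked sequence loses time. The sketch below is a hypothetical example and not RapidPlan’s algorithm. The arm names, move durations and conflict pairs are all invented. An interlocked cell lets only one arm into the shared space at a time, so its cycle is the sum of all moves. A planner that knows which moves would actually collide only has to serialize those pairs.

```python
# Hypothetical move durations (seconds) for four arms working at one shared fixture.
MOVES = {"arm_a": 2.0, "arm_b": 1.5, "arm_c": 2.5, "arm_d": 1.0}

# Hypothetical pairs of moves whose swept volumes would collide if run at the same time.
CONFLICTS = {("arm_a", "arm_c")}


def interlocked_cycle(moves):
    """Interlocked sequence: one arm at a time, so the cycle is the sum of all moves."""
    return sum(moves.values())


def in_conflict(a, b, conflicts):
    """Conflicts are symmetric: (a, b) and (b, a) mean the same thing."""
    return (a, b) in conflicts or (b, a) in conflicts


def coordinated_cycle(moves, conflicts):
    """Greedy schedule: start each move at t=0 unless it conflicts with an
    already-scheduled move, in which case wait until all such moves have finished."""
    finish = {}
    for arm, duration in moves.items():
        start = max(
            (finish[other] for other in finish if in_conflict(arm, other, conflicts)),
            default=0.0,
        )
        finish[arm] = start + duration
    return max(finish.values())


print(interlocked_cycle(MOVES))             # 7.0
print(coordinated_cycle(MOVES, CONFLICTS))  # 4.5
```

A real planner also has to find the collision-free paths themselves and choose the order of moves. This greedy version shows only why knowing the actual conflicts, rather than locking out the whole shared space, shortens the cycle.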

Does Mitsubishi have a timeframe in mind for integrating Realtime’s technology into its controls for factory automation (FA)? When will they be available?

Takeuchi: We are starting by integrating RTR’s motion-planning and optimization technology into our 3D simulator to significantly improve equipment and system design.

Our plan is to incorporate this technology into our FA control systems, including PLCs and CNCs, and this integration is currently under development and testing, with a launch expected soon.

Howard: We’re currently validating and characterizing remote optimization with customers. We’re also doing longevity testing here at our headquarters.

In the demo cell, you couldn’t easily program 1.7 million options for four different arms, but RapidPlan automates motion planning and calculates space reservations to avoid obstacles in real time. We do point-to-point, integrated spline-based movement.

Toyota asked us for a 16-arm cell to test spot welding, and we can add a second controller for an adjacent cell. We can currently control up to 12 robots for welding high and low on an auto body.
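Howard says RapidPlan calculates space reservations. The sketch below illustrates the general idea of voxel-based space reservation and is not Realtime Robotics’ implementation. It makes several simplifying assumptions:

- Each arm’s planned tool path is a straight line, sampled and snapped to a grid of 5 cm voxels (units assumed to be meters). A real planner would voxelize the swept volume of the whole arm.
- A move is granted only if none of its voxels are held by another robot.

`voxelize_segment` and `ReservationTable` are hypothetical names.

```python
import math


def voxelize_segment(p0, p1, voxel=0.05, samples=50):
    """Approximate the space a straight tool path p0 -> p1 passes through by
    sampling points along it and snapping each to a grid cell of size `voxel`.
    Hypothetical simplification: a real planner would voxelize the whole arm."""
    cells = set()
    for i in range(samples + 1):
        t = i / samples
        x, y, z = (a + t * (b - a) for a, b in zip(p0, p1))
        cells.add((math.floor(x / voxel), math.floor(y / voxel), math.floor(z / voxel)))
    return cells


class ReservationTable:
    """Tracks which robot currently owns each voxel."""

    def __init__(self):
        self.owner = {}  # voxel -> robot id

    def try_reserve(self, robot, cells):
        # Refuse the whole move if any voxel is held by a different robot.
        if any(self.owner.get(c, robot) != robot for c in cells):
            return False
        for c in cells:
            self.owner[c] = robot
        return True

    def release(self, robot):
        # Free every voxel held by `robot` once its move has finished.
        self.owner = {c: r for c, r in self.owner.items() if r != robot}


table = ReservationTable()
path1 = voxelize_segment((0.0, 0.0, 0.0), (0.5, 0.0, 0.0))
path2 = voxelize_segment((0.25, -0.25, 0.0), (0.25, 0.25, 0.0))  # crosses path1
path3 = voxelize_segment((0.0, 1.0, 0.0), (0.5, 1.0, 0.0))      # well clear of both
print(table.try_reserve("arm1", path1))  # True
print(table.try_reserve("arm2", path2))  # False: overlaps arm1's reservation
print(table.try_reserve("arm3", path3))  # True
table.release("arm1")
print(table.try_reserve("arm2", path2))  # True once arm1 has finished
```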

Mitsubishi Electric recently launched the RV-35/50/80 FR industrial robots — are they designed to work with Realtime’s technology?

Takeuchi: Yes, they are. Our robots are developed on the same platform, which integrates seamlessly with RTR’s technology.


Howard: For example, Sony uses Mitsubishi robots to manufacture 2-cm parts, and we can get down to submillimeter accuracy if it’s a known object with a CAD file.

Cobots are fine for larger objects and voxels, but users must still conduct safety assessments.

RTR optimizes motion for multiple applications

What sorts of applications or use cases do Mitsubishi and Realtime expect to benefit from closer coordination among robots?

Takeuchi: Our interactions with customers, and our understanding of their needs, suggest that almost all manufacturing sites continuously need to increase production, efficiency, profitability, and sustainability.

With our collaboration, we can reduce the robots’ cycle time, hence increasing efficiency. Multirobot applications can collaborate seamlessly, increasing throughput and optimizing floor space.

By implementing collision-free motion planning, we help our customers reduce the potential for collisions, thereby reducing losses and improving overall performance.

Howard: It’s all about shortening cycle times and avoiding collisions. In Europe, energy efficiency is increasingly a priority, and in Japan, floor space is at a premium, but throughput is still the most important.

Our mission is to make automation simpler to program. For customers like Mitsubishi, Toyota, and Siemens, the hardware has to be industrial-grade, and so does the software. We talk to all the OEMs and have close relationships with the major robot suppliers.

This is ideal for use cases such as gluing, deburring, welding, and assembly. RapidSense can also be helpful in mixed-case palletizing. For mobile manipulation, RTR’s software could plan for the motion of both the AMR [autonomous mobile robot] and the arm.

Mitsubishi strengthens partnership

Do you expect that the addition of a member to Realtime Robotics’ board of directors will help it jointly plan future products with Mitsubishi Electric?

Takeuchi: Yes. Since our initial investment in Realtime Robotics, we have both benefited from this partnership. We look forward to integrating the Realtime Robotics technology into our portfolio of products to continue enhancing our next-gen products with advanced features and scalability.

Howard: RTR has been working with Mitsubishi since 2018, so it’s our longest customer and partner. We have other investors, but our relationship with Mitsubishi is more holistic, broader, and deeper.

We’ve seen a lot of Mitsubishi Electric’s team as we create our products, and we look forward to reaching the next steps to market together. RR

• Bore sizes up to 1-3/4” and 45mm

• Torque up to 1150 in-lbs (130 Nm)

• Reduced vibration due to a balanced design

• Double disc for increased misalignment

• Bore sizes up to 1-3/4” and 45mm

• Torque up to 1300 in-lbs (152 Nm)

• Reduced vibration due to a balanced design

• Highest torsional stiffness

• Maximum mounting flexibility with drilled holes

• Most secure mounting connection with threaded holes

• Bore sizes from 3/8”-2” and 10-50mm

• Carefully made by Ruland in aluminum, steel, and stainless steel

• Widest selection of standard inch-to-metric bores with or without keyways

• Supplied with proprietary Nypatch® anti-vibration hardware

• One-piece style for easy installation

• Two-piece style with a balanced design

The Robot Report recently spoke with Ph.D. student Cheng Chi about his research at Stanford University and recent publications about using diffusion AI models for robotics applications. He also discussed the recent universal manipulation interface, or UMI gripper, project, which demonstrates the capabilities of diffusion model robotics.

The UMI gripper was part of his Ph.D. thesis work, and he has open-sourced the gripper design and all of the code so that others can continue to help evolve the AI diffusion policy work.

AI innovation accelerates

How did you get your start in robotics?

I worked in the robotics industry for a while, starting at the autonomous vehicle company Nuro, where I was doing localization and mapping.

And then I applied for my Ph.D. program and ended up with my advisor Shuran Song. We were both at Columbia University when I started my Ph.D., and then last year, she moved to Stanford to become full-time faculty, and I moved [to Stanford] with her.

For my Ph.D. research, I started as a classical robotics researcher, and then I started working with machine learning, specifically for perception. Then, in early 2022, diffusion models started to work for image generation. That’s when DALL-E 2 came out, and that’s also when Stable Diffusion came out.

The Universal Manipulation Interface demonstrates diffusion models for robotics.

| Cheng Chi, Stanford University

MIKE OITZMAN • SENIOR EDITOR

I realized the specific ways in which diffusion models could be formulated to solve a couple of really big problems for robotics, in terms of end-to-end learning and in the actual representation for robotics.

So, I wrote one of the first papers that brought the diffusion model into robotics, which is called Diffusion Policy. That was my project before the UMI project, and I think it’s the foundation of why the UMI gripper works. There’s a paradigm shift happening. My project was one part of it, but other robotics research projects are also starting to work.
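Diffusion Policy generates robot actions by starting from random noise and denoising it step by step. At each step, a neural network conditioned on camera observations predicts the noise to remove.

The sketch below shows only that sampling loop, using the DDPM update rule of Ho et al. (2020), on a deliberately tiny problem. The “action” is a single number. Its target distribution is assumed to be a known Gaussian N(mu, s²), so the noise predictor can be written in closed form instead of being learned.

This is not Chi’s code. `target_for`, the schedule and the numbers are hypothetical. In a real policy, a trained network would replace `predict_noise`, and the output would be a sequence of actions rather than one scalar.

```python
import math
import random

# Standard DDPM linear noise schedule (assumed): T steps, betas from 1e-4 to 0.02.
T = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS = []
_running = 1.0
for _a in ALPHAS:
    _running *= _a
    ALPHA_BARS.append(_running)  # cumulative product of alphas up to step t


def predict_noise(x_t, t, mu, s):
    """Exact E[noise | x_t] when clean actions are N(mu, s^2).
    Stand-in for the trained, observation-conditioned network."""
    ab = ALPHA_BARS[t]
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * mu) / (ab * s * s + 1.0 - ab)


def sample_action(mu, s, rng):
    """DDPM reverse process: start from pure noise and denoise for T steps."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps = predict_noise(x, t, mu, s)
        x = (x - BETAS[t] / math.sqrt(1.0 - ALPHA_BARS[t]) * eps) / math.sqrt(ALPHAS[t])
        if t > 0:
            x += math.sqrt(BETAS[t]) * rng.gauss(0.0, 1.0)  # no fresh noise on the final step
    return x


def target_for(observation):
    """Hypothetical: the observation decides where the action distribution is centered."""
    return 0.5 * observation, 0.2


rng = random.Random(0)
mu, s = target_for(1.6)  # mu = 0.8, s = 0.2
samples = [sample_action(mu, s, rng) for _ in range(400)]
print(sum(samples) / len(samples))  # close to 0.8
```

The same loop works unchanged if `predict_noise` is swapped for a learned model. The learned model is where the camera images would enter.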

A lot has changed in the past few years. Is artificial intelligence innovation accelerating?

Yes, exactly. I experienced it firsthand in academia. Imitation learning was the dumbest thing possible you could do for machine learning with robotics. It’s like, you teleoperate the robot to collect data, and the data pairs images with the corresponding actions.
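In its simplest form, the teleoperation paradigm Chi describes is ordinary supervised learning: record (observation, action) pairs from demonstrations, then regress actions on observations. The sketch below uses a one-dimensional stand-in for the camera image (the block’s offset from its goal) and fits a linear policy by least squares. Both choices are assumptions for illustration, and the demo numbers are invented.

```python
def fit_linear_policy(observations, actions):
    """Behavior cloning by least squares: fit action = w * observation + b."""
    n = len(observations)
    mean_o = sum(observations) / n
    mean_a = sum(actions) / n
    cov = sum((o - mean_o) * (a - mean_a) for o, a in zip(observations, actions))
    var = sum((o - mean_o) ** 2 for o in observations)
    w = cov / var
    b = mean_a - w * mean_o

    def policy(observation):
        return w * observation + b

    return policy, w, b


# Hypothetical teleoperated demos: observation = block offset from goal (m),
# action = commanded push (m). The operator pushes back about half the offset.
demo_obs = [-0.4, -0.2, 0.0, 0.2, 0.4]
demo_act = [0.2, 0.1, 0.0, -0.1, -0.2]

policy, w, b = fit_linear_policy(demo_obs, demo_act)
print(round(w, 3))            # -0.5
print(round(policy(0.3), 3))  # -0.15
```

Diffusion Policy replaces the linear model with a diffusion model over action sequences. The underlying data is still demonstrations of observations paired with actions.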

In class, we were taught that people had proved that this paradigm of imitation learning, or behavior cloning, doesn’t work. People proved that errors grow exponentially. And that’s why you need reinforcement learning and all the other methods that can address these limitations.

The first diffusion policy research at Columbia was to push a T into position on a table.

| Cheng Chi

But fortunately, I wasn’t paying too much attention in class. So I just went to the lab and tried it, and it worked surprisingly well. I wrote the code and applied the diffusion model to this, and on my first task, it just worked. I said, “That’s too easy. That’s not worth a paper.”

I kept adding more tasks, such as online benchmarks, trying to break the algorithm so that I could find a smart angle from which to improve on this dumb idea and get a paper. But no matter how many things I added, it just refused to break.

There are simulation benchmarks online. I used four different benchmarks and tried to find an angle to break it so that I could write a better paper, but it just didn’t break. Our baseline performance was 50% to 60%, and after applying the diffusion model, it was about 95%. So it was a big jump on these benchmarks. That’s the moment I realized that maybe there’s something big happening here.

How did those findings lead to published research?

That summer, I interned at Toyota Research Institute, and that’s where I started doing real-world experiments using a UR5 [cobot] to push a block into a location. It turned out that this worked really well on the first try.

Normally, you need a lot of tuning to get something to work. But this was different.


When I tried to perturb the system, it just kept pushing it back to its original place.

That paper got published, and I think it’s my proudest work. I made the paper open-source and open-sourced all the code, because the results were so good that I was worried people were not going to believe them. As it turned out, it’s not a coincidence: other people can reproduce my results and also get very good performance.

I realized that now there’s a paradigm shift. Before [this UMI Gripper research], I needed to engineer a separate perception system, planning system, and then a control system. But now I can combine all of them with a single neural network.

The most important thing is that it’s agnostic to tasks. With the same robot, I can just collect a different data set and train a model with a different data set, and it will just do the different tasks.

Obviously, collecting the data set is painful, as I need to do a task 100 to 300 times for one environment to get it to work. But in reality, it’s maybe one afternoon’s worth of work. By comparison, tuning a sim-to-real transfer algorithm takes me a few months, so this is a big improvement.

UMI Gripper training ‘all about the data’

When you’re training the system for the UMI Gripper, you’re just using the vision feedback and nothing else?

Just the cameras and the end effector pose of the robot — that’s it. We had two cameras: one side camera that was mounted onto the table, and the other one on the wrist.
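At each timestep, Chi’s setup records one frame from the table-mounted side camera, one frame from the wrist camera, and the robot’s end-effector pose. The sketch below shows one hypothetical way to turn such a demonstration into training samples. The following details are all assumptions for illustration, not the actual UMI data format:

- the field names
- the seven-number pose (position plus quaternion)
- the two-step observation history
- the use of the next end-effector pose as the action target

```python
from dataclasses import dataclass
from typing import List, Tuple

Pose = Tuple[float, float, float, float, float, float, float]  # x, y, z, qx, qy, qz, qw (assumed)


@dataclass
class Step:
    side_image: List[List[int]]   # frame from the table-mounted camera (placeholder pixels)
    wrist_image: List[List[int]]  # frame from the wrist camera
    ee_pose: Pose                 # end-effector pose at this timestep


def make_training_samples(episode: List[Step], history: int = 2):
    """Pair the last `history` observations with the next end-effector pose as the target."""
    samples = []
    for t in range(history - 1, len(episode) - 1):
        window = episode[t - history + 1 : t + 1]
        obs = [(s.side_image, s.wrist_image, s.ee_pose) for s in window]
        target = episode[t + 1].ee_pose
        samples.append((obs, target))
    return samples


blank = [[0] * 4 for _ in range(4)]  # tiny placeholder image
episode = [Step(blank, blank, (0.1 * i, 0.0, 0.2, 0.0, 0.0, 0.0, 1.0)) for i in range(5)]
samples = make_training_samples(episode)
print(len(samples))  # 3
```

Swapping tasks, in this framing, means recording new episodes and nothing else. The code that builds samples stays the same, which matches Chi’s point that the data changed while the code did not.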

That was the original algorithm at the time, and I could change to another task, use the same algorithm, and it would just work. This was a big, big difference. Previously, we could only afford one or two tasks per paper because it was so time-consuming to set up a new task.

| Cheng Chi

But with this paradigm, I can pump out a new task in a few days. It’s a really big difference. That’s also the moment I realized that the key trend is that it’s all about data now. After training more tasks, I realized that my code hadn’t changed in a few months.

The only thing that changed was the data, and whenever the robot doesn’t work, it’s not the code, it’s the data. So when I just add more data, it works better.

That prompted me to think that we are entering the same paradigm as other AI fields. For example, large language models and vision models started in a small-data regime in 2015, but now, with a huge amount of internet data, they work like magic.

The algorithm doesn’t change that much. The only things that changed are the scale of training and maybe the size of the models, and that makes me feel like robotics may be about to enter that regime soon.

Can these different AI models be stacked like Lego building blocks to build more sophisticated systems?

I believe in big models, but I think they might not be the same thing as you imagine, like Lego blocks. I suspect that the way you build AI for robotics will be that you take whatever tasks you want to do, collect a whole bunch of data for the task, run that through a model, and then get something you can use.

If you have a whole bunch of these different types of data sets, you can combine them to train an even bigger model. You can call that a foundation model, and you can adapt it to whatever use case. You’re using data, not building blocks, and not code.

That’s my expectation of how this will evolve.

But simultaneously, there’s a problem here. I think the robotics industry was tailored toward the assumption that robots are precise, repeatable, and predictable. But they’re not adaptable. So the entire robotics industry is geared towards vertical end-use cases optimized for these properties.

Robots powered by AI, by contrast, will have a different set of properties. They won’t be good at being precise, reliable, or repeatable, but they will be good at generalizing to unseen environments. So you need to find specific use cases where it’s okay if you fail maybe 0.1% of the time.
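A quick calculation makes a 0.1% failure rate easier to judge. The sketch assumes, hypothetically, that each cycle fails independently with probability p. Under that assumption, the expected number of failures in n cycles is p·n, and the chance of at least one failure is 1 − (1 − p)^n.

```python
def expected_failures(p_fail, cycles):
    """Mean number of failed cycles, assuming independent cycles."""
    return p_fail * cycles


def prob_at_least_one_failure(p_fail, cycles):
    # Independent cycles assumed: P(no failure in n cycles) = (1 - p)^n.
    return 1.0 - (1.0 - p_fail) ** cycles


for cycles in (100, 1_000, 10_000):
    print(cycles, expected_failures(0.001, cycles), prob_at_least_one_failure(0.001, cycles))
```

At 0.1%, a cell running 1,000 cycles expects about one failure and has roughly a 63% chance of seeing at least one. That is why such use cases have to tolerate occasional misses.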

Robots in industry must be safe 100% of the time. What do you think the solution is to this requirement?

I think if you want to deploy robots in use cases where safety is critical, you either need a classical system or a shell that protects the AI system, so that when something goes wrong, there is a guaranteed worst-case behavior that keeps anything bad from actually happening.
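The protective shell Chi describes can be pictured as a thin classical layer between the learned policy and the motors. The version below is hypothetical and one-dimensional, and `Envelope` and `safety_shell` are illustrative names. The shell does three things:

- rejects non-finite commands by holding position
- limits how far a single control step may move
- clamps the result to fixed workspace bounds

```python
import math
from dataclasses import dataclass


@dataclass
class Envelope:
    x_min: float     # workspace lower bound (m), hypothetical
    x_max: float     # workspace upper bound (m), hypothetical
    max_step: float  # largest allowed change per control tick (m)


def safety_shell(proposed, current, env):
    """Filter a learned policy's position command through fixed classical limits."""
    if not math.isfinite(proposed):
        return current  # NaN or infinite output: hold position
    # Limit the step size so a bad command cannot cause a jump.
    step = max(-env.max_step, min(env.max_step, proposed - current))
    # Keep the result inside the workspace bounds.
    return max(env.x_min, min(env.x_max, current + step))


env = Envelope(x_min=-1.0, x_max=1.0, max_step=0.1)
print(safety_shell(0.5, 0.0, env))           # 0.1: step limited
print(safety_shell(5.0, 0.95, env))          # 1.0: workspace bound
print(safety_shell(float("nan"), 0.3, env))  # 0.3: held in place
```

The policy can output anything, but what reaches the actuator always stays within limits that are simple enough to verify by hand.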

Or you design the hardware so that it is [inherently] safe. Hardware is simple. Industrial robots, for example, don’t rely that much on perception. They have expensive motors, gearboxes, and harmonic drives to make a really precise and very stiff mechanism. RR

Siemens Industry is expanding its 1FK7 servomotor family with the introduction of a new high-inertia style. The higher rotor inertia of this design makes the control response of the new 1FK7-HI servomotors robust and suitable for high- and variable-load inertia applications.

Siemens said these self-cooled 1FK7-HI servomotors provide stall torque in the 3 Nm to 20 Nm range. They are offered in IP64 or IP65 with IP67 flange degree of protection, with selectable options for plain or keyed shaft, holding brake, and 22-bit incremental or absolute encoders, as well as 18 color options.
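For a sense of scale, assume “22-bit” means 2^22 distinct positions per motor revolution (an assumption about how the figure is counted). The angular resolution then works out as follows:

```python
def encoder_resolution(bits):
    """Counts per revolution and the angle between adjacent counts."""
    counts = 2 ** bits
    degrees = 360.0 / counts
    arcseconds = degrees * 3600.0
    return counts, degrees, arcseconds


counts, degrees, arcsec = encoder_resolution(22)
print(counts)            # 4194304
print(round(arcsec, 3))  # 0.309
```

That is about 0.3 arcseconds per count at the motor shaft, before any gearing.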

A mechanical decoupler between the motor and encoder shaft protects the encoder from mechanical vibrations, providing a long service life.

In cases where the encoder needs to be exchanged, the device automatically aligns the encoder signal to the rotor pole position, enabling encoders to be changed in the field in less than five minutes.

These new Siemens 1FK7-HI servomotors also feature the unique Drive-Cliq serial bus and electronic nameplate recognition, allowing virtual plug-n-play operation when paired to the Sinamics S drive platform.

All servomotors in this new line are also configured to interface with Siemens Sinumerik CNC technology for machine tool applications and the motion controller Simotion for general motion control use.

Selecting the proper motor to suit the application is facilitated by the Siemens Sizer toolbox and compatible 3D CAD model-generating CADCreator package. RR

With today’s increasing demand for automation and robotics in various industries, engineers are challenged to design unique and innovative machines that set them apart from their competitors. Within motion control systems, flexible integration, space saving, and light weight are the key requirements for designing a successful mechanism.

Canon’s new high-torque-density, compact, and lightweight DC brushless servo motors are well suited to innovative designs. Our custom capabilities can help optimize your next innovative design.

We are committed to providing technological advantages for your success.

3300 North First Street San Jose, CA, 95134

408-468-2320 www.usa.canon.com

At CGI we serve a wide array of industries including medical, robotics, aerospace, defense, semiconductor, industrial automation, motion control, and many others. Our core business is manufacturing precision motion control solutions.

CGI’s diverse customer base and wide range of applications have earned us a reputation for quality, reliability, and flexibility. One of the distinct competitive advantages we are able to provide our customers is an engineering team that is knowledgeable and easy to work with. CGI is certified to ISO 9001 and ISO 13485 quality management systems. In addition, we are FDA and AS9100 compliant. Our unique quality control environment is woven into the fabric of our manufacturing facility. We work daily with customers who demand both precision and rapid turnarounds.

The SHA-IDT Series is a family of compact actuators that deliver high torque with exceptional accuracy and repeatability. These hollow shaft servo actuators feature Harmonic Drive® precision strain wave gears combined with a brushless servomotor, a brake, two magnetic absolute encoders and an integrated servo drive with CANopen® communication. This revolutionary product eliminates the need for an external drive and greatly simplifies cabling yet delivers high-positional accuracy and torsional stiffness in a compact housing.

The market demands specific robots for industrial and service applications. Together, our customers and the maxon specialists define and develop the required joint drives. A customized drive system with high power density and competitive safety functions make our drives the perfect solution for applications in robotics. Our drives are perfectly suited for wherever extreme precision and the highest quality standards are needed and where compromises cannot be tolerated.

Features at a Glance

• Continuous (RMS) torque: 34 Nm

• Repeatable peak torque: 57 Nm

• Hollow shaft: 20 mm

• Zero-backlash gear

• Dual-encoder with absolute output encoder

• EtherCAT position controller

• 2.5 kg

• Design Configuration: motor, gear, brake, encoder and controller

maxon 125 Dever Drive Taunton, MA 02780

www.maxongroup.us | 508-677-0520

Why accept a standard product for your custom application? NEWT is committed to being the premier manufacturer of choice for customers requiring specialty wire, cable and extruded tubing to meet existing and emerging worldwide markets. Our custom products and solutions are not only engineered to the exacting specifications of our customers, but designed to perform under the harsh conditions of today’s advanced manufacturing processes. Cables we specialize in are LITZ, multi-conductor cables, hybrid configurations, coaxial, twin axial, miniature and micro-miniature coaxial cables, ultra flexible, high flex life, low/high temperature cables, braids, and a variety of proprietary cable designs. Contact us today and let us help you dream beyond today’s technology and achieve the impossible.

New England Wire Technologies www.newenglandwire.com 603.838.6624

Renishaw’s FORTiS™ delivers superior repeatability, reduced hysteresis, and improved measurement performance due to an innovative non-contact mechanical design that does not require a mechanical guidance carriage. It provides high resistance to the ingress of liquids and solid debris contaminants. It features an extruded enclosure with longitudinally attached interlocking lip seals and sealed end caps. The readhead body is joined to a sealed optical unit by a blade, which travels through the lip seals along the length of the encoder. Linear axis movement causes the readhead and optics to traverse the encoder’s absolute scale (which is fixed to the inside of the enclosure), without mechanical contact.

Renishaw, Inc.

1001 Wesemann Dr. West Dundee, IL, 60118

Phone: 847-286-9953 email: usa@renishaw.com website: www.renishaw.com