SPECTRA

Michaelmas 2025

Social Media’s Control p26

Hydrogen Cars Behaviourism

What Happened? p29

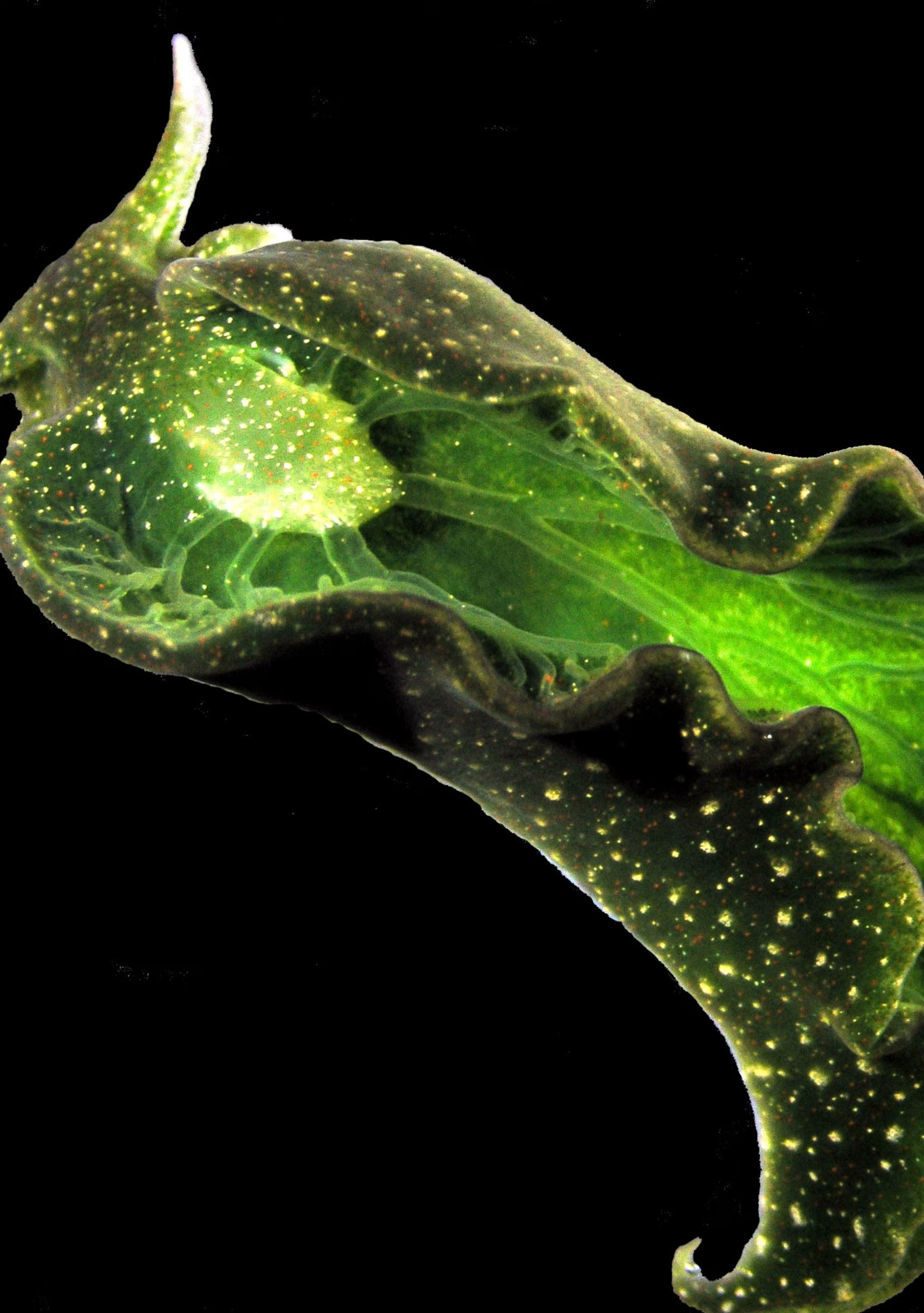

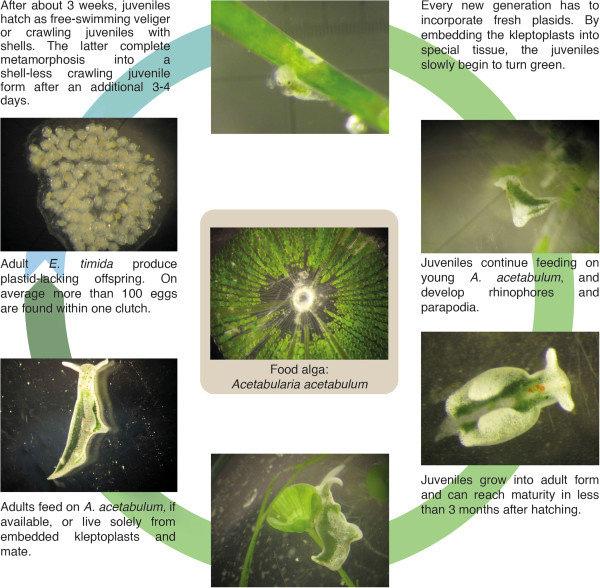

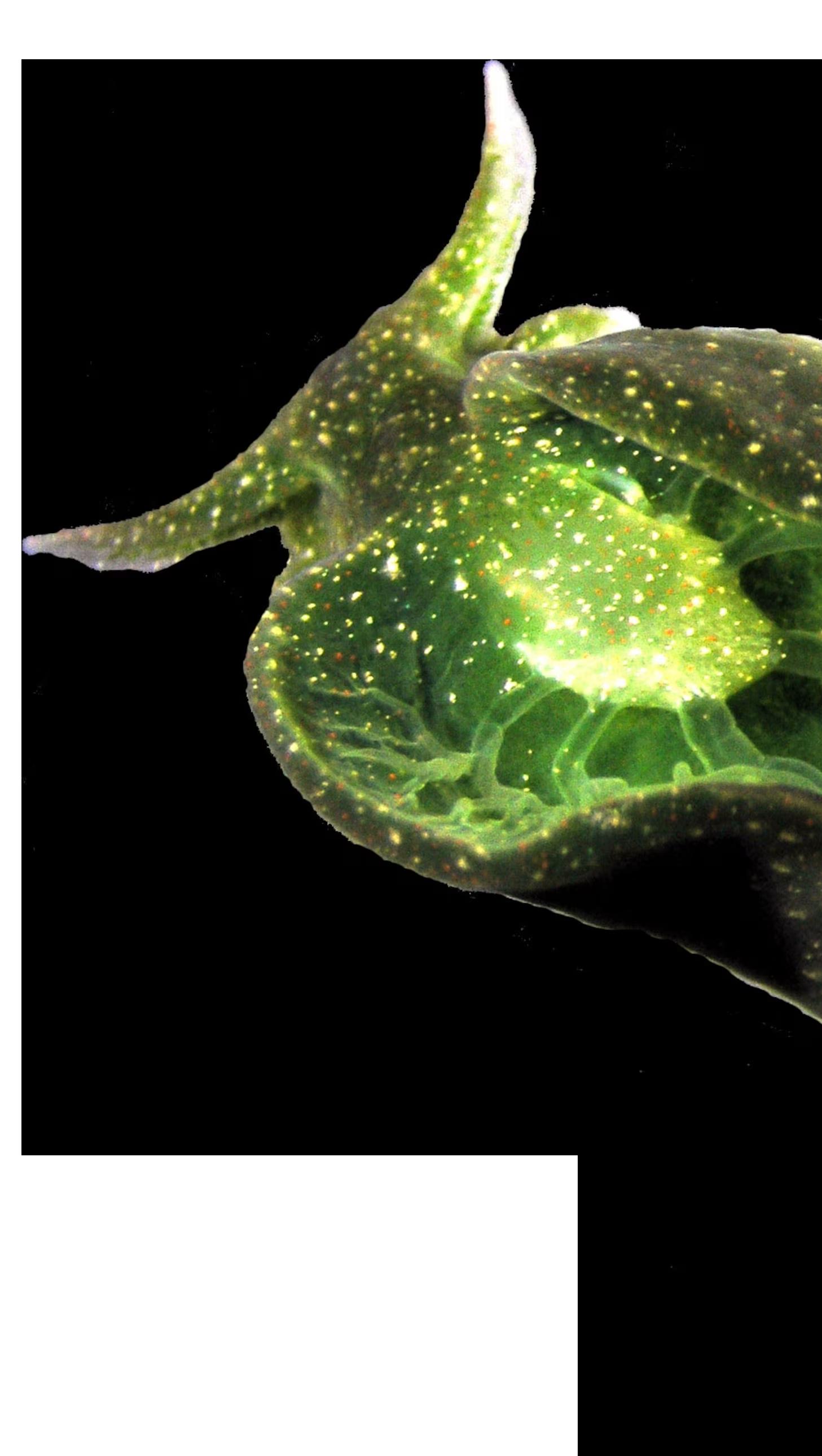

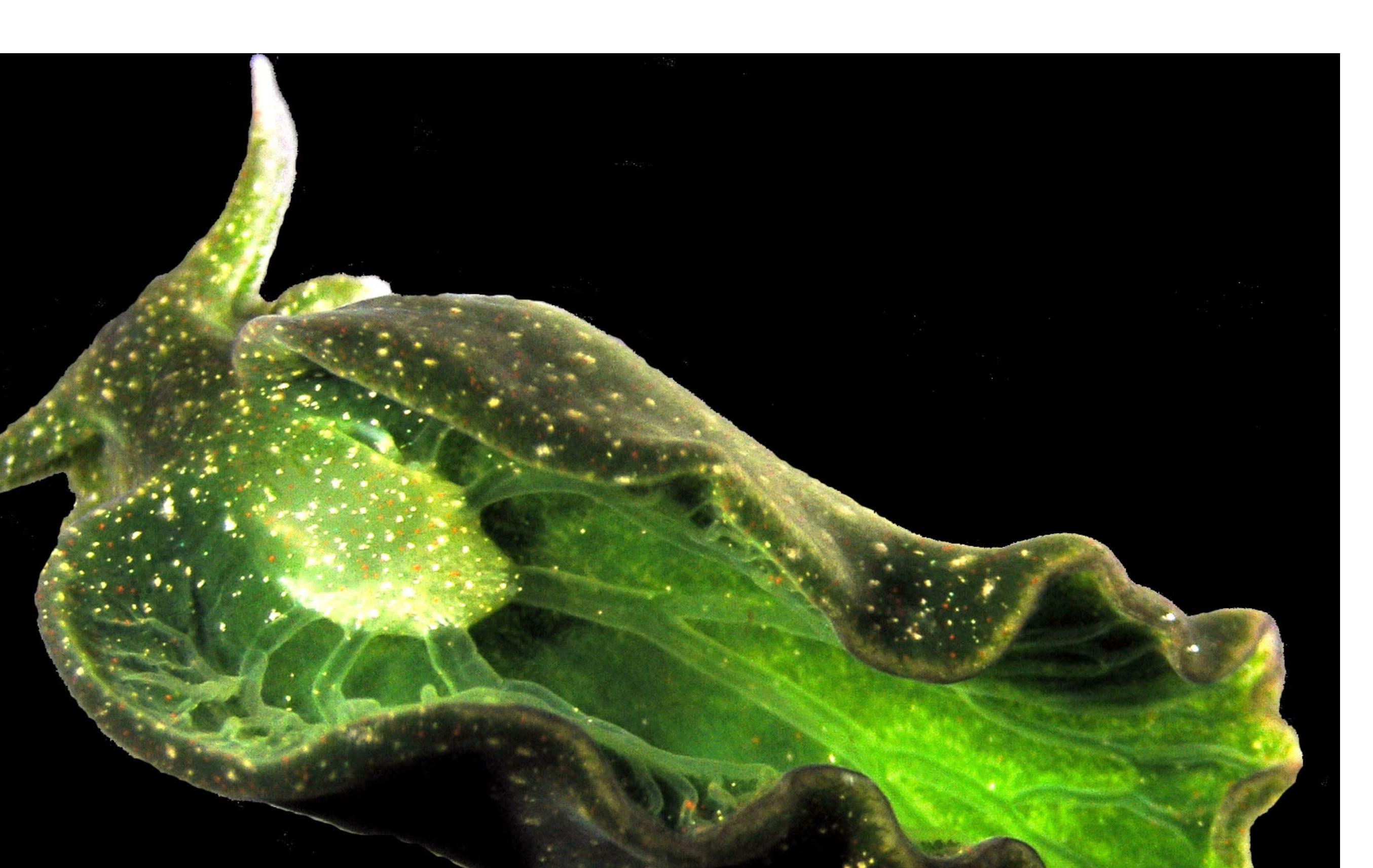

Living off the Sun

The solar powered sea slug p67

EARTH LIFE BEYOND

THE FERMI PARADOX What’s really out there?

EDITORIAL

Hello, and welcome to this year’s issue of Spectra.

In an age where headlines are dominated by artificial intelligence, climate breakthroughs, and the constant redefinition of the limits of human knowledge, this issue looks to the future of science while revisiting its theories and its past. We explore everything from the creation of synthetic elements that stretch the boundaries of the periodic table to self-healing materials that could revolutionise everyday life. We look into space, meet immortal animals, and question how science and society shape one another in a world that is progressing faster than ever. There are words from plenty of young researchers and passionate thinkers, proving that science isn’t just something done in labs: it is being lived, questioned, and celebrated by students.

HUMAN HEALTH & THE BRAIN

P11 Past, Present and Future of Robotic Surgery

P14 Alcohol Related Neurological Diseases

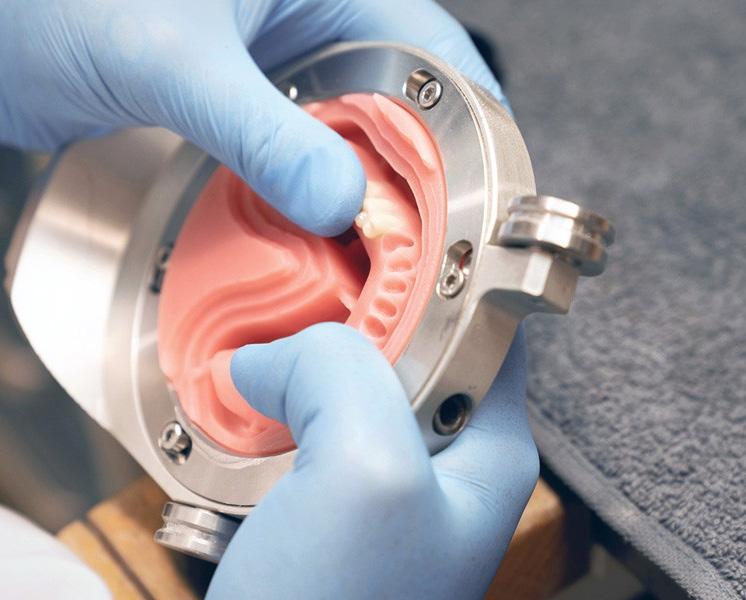

P42 Materials of Denture

P56 The Complex Biology of Epigenetic Modifications

EVOLUTION AND THE NATURAL WORLD

P8 Parasites

P67 The Solar Powered Sea Slug

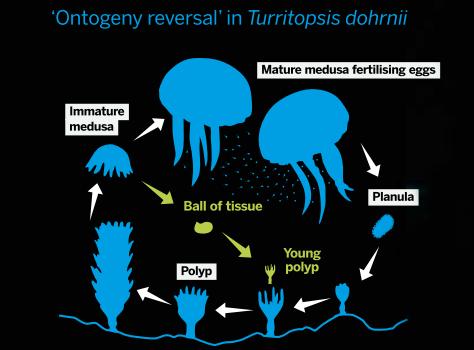

P62 The Immortal Jellyfish

TECHNOLOGY & ENGINEERING FRONTIERS

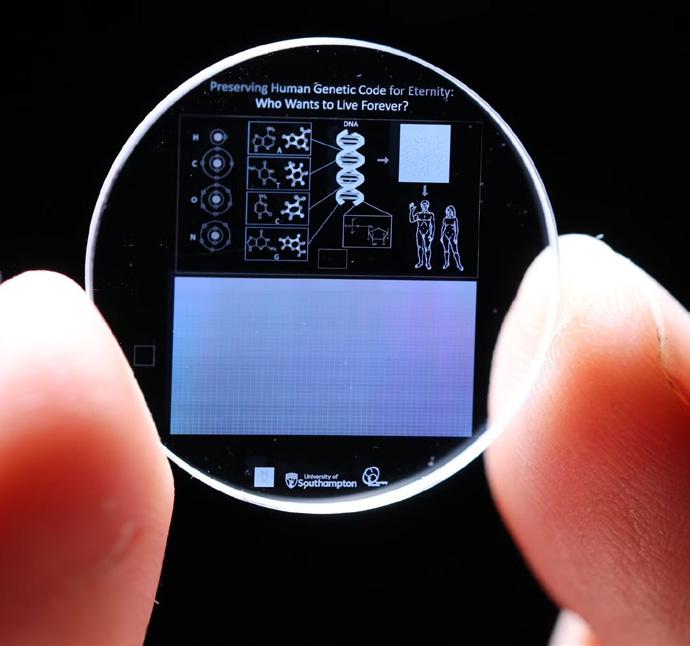

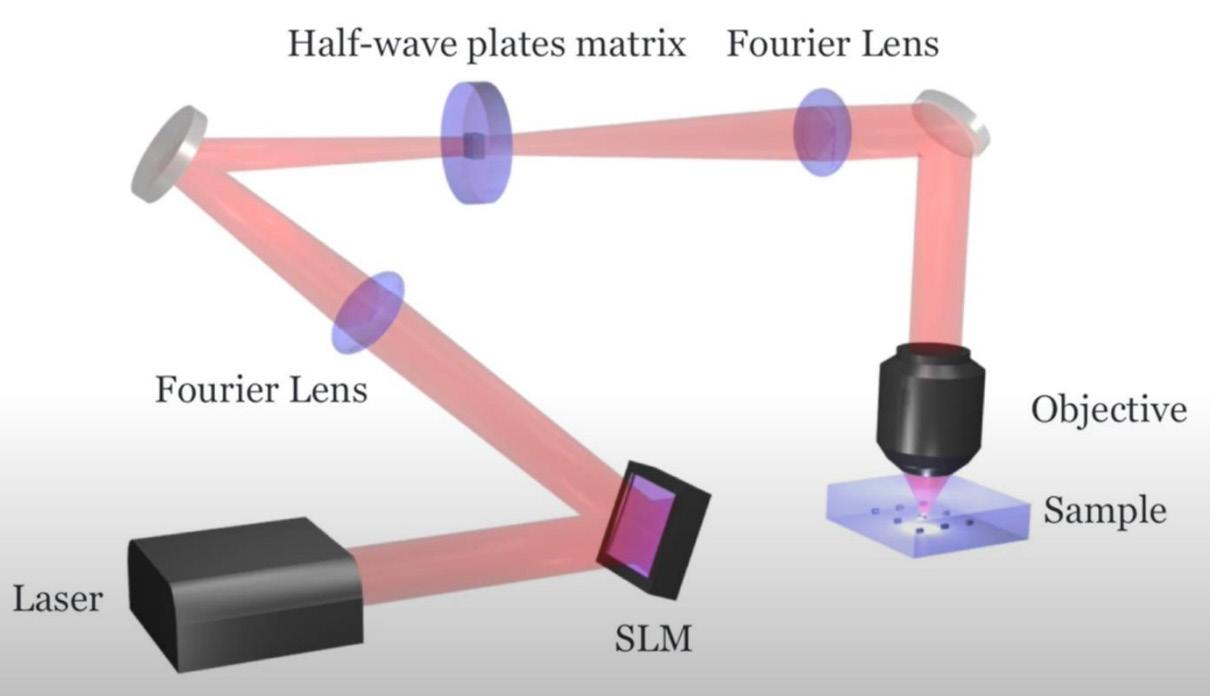

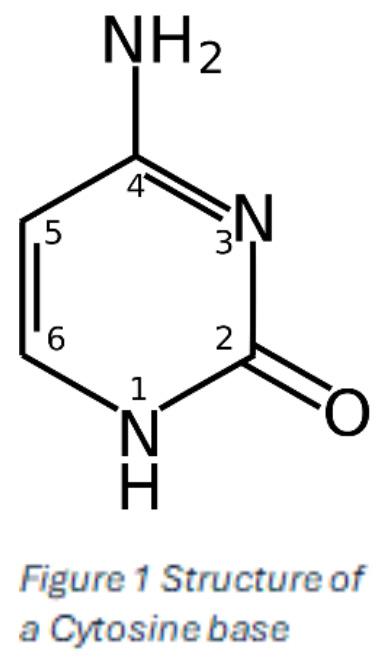

P54 Superman Memory Crystal

P75 Self Healing Polymers

P4 3D Printing

P29 Hydrogen

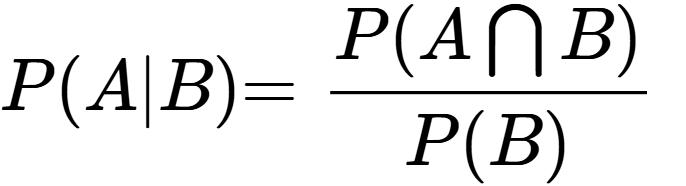

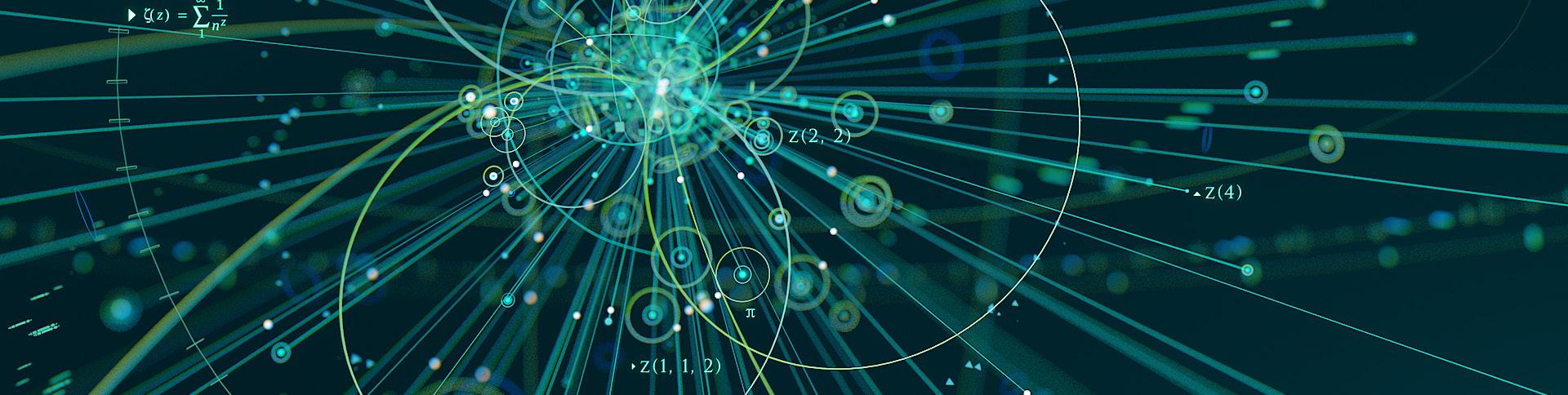

DATA, AI & MATHEMATICAL THINKING

P22 Natural Language Processing

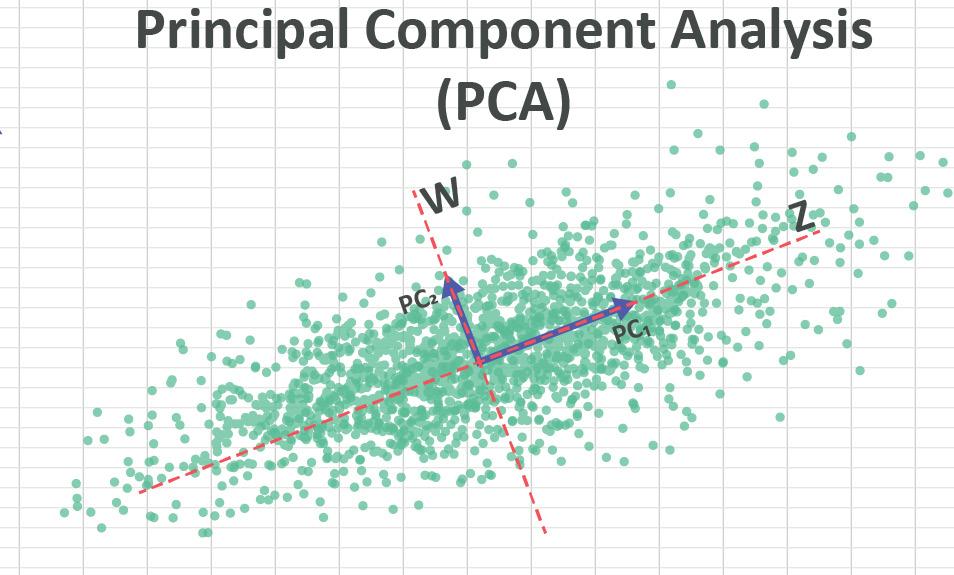

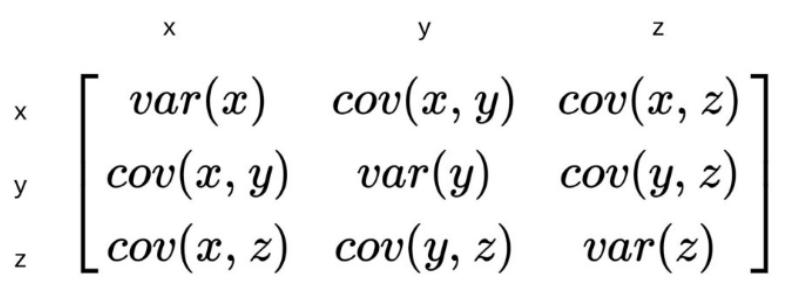

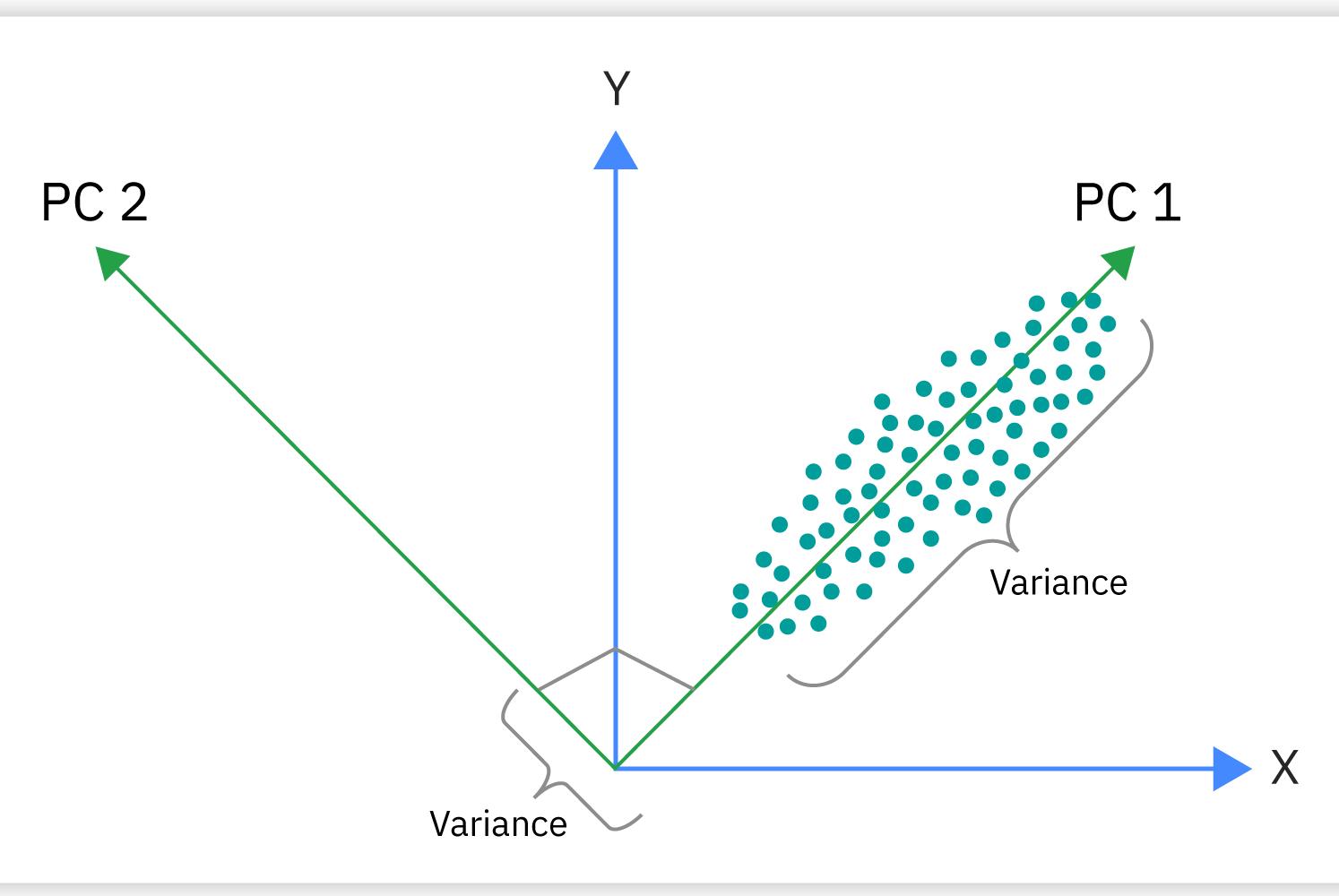

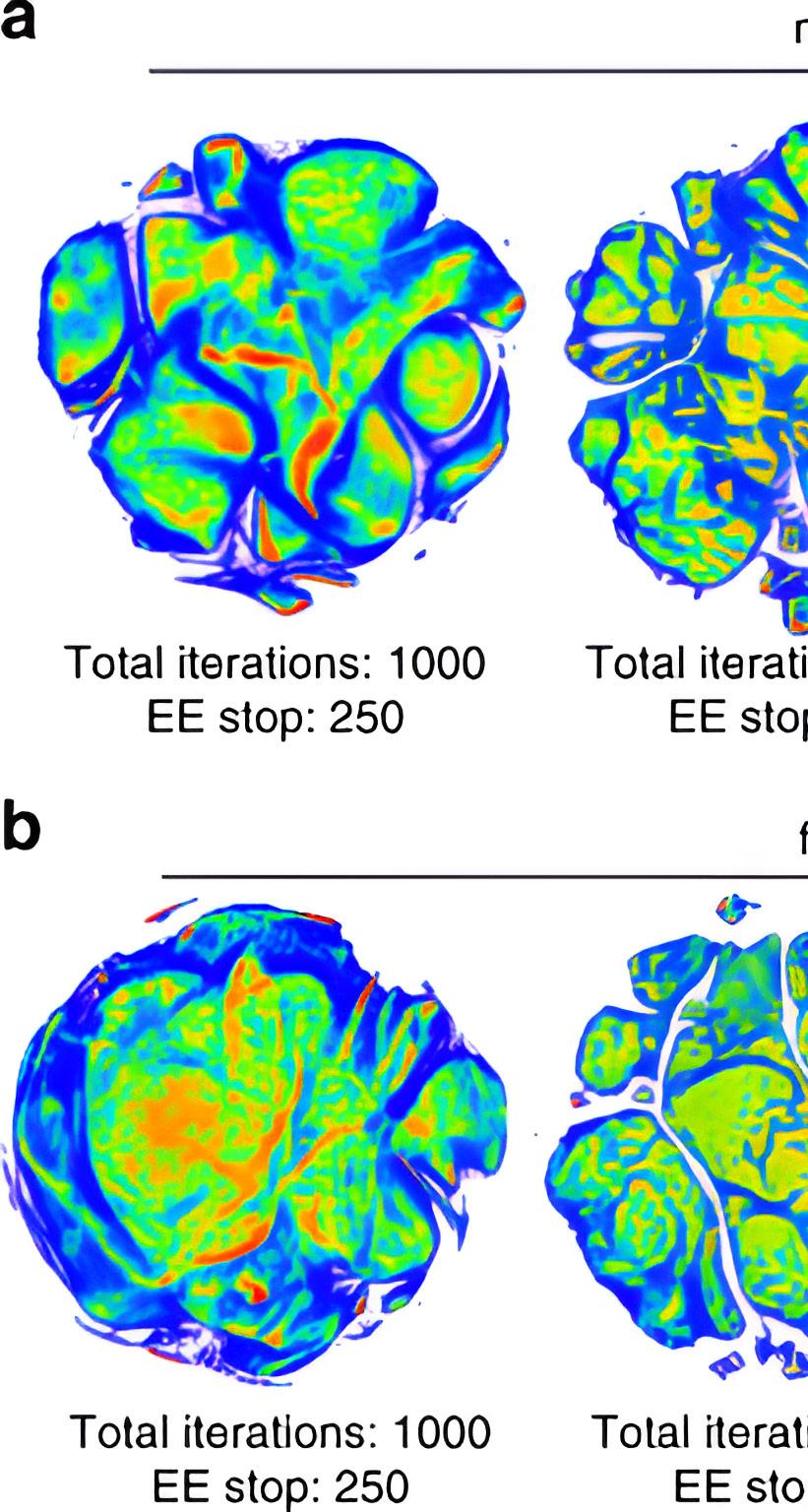

P44 Mathematical Approaches for Reducing Data Dimensionality

P32 Introduction to Projective Geometry

SPACE, PHYSICS AND THE UNIVERSE

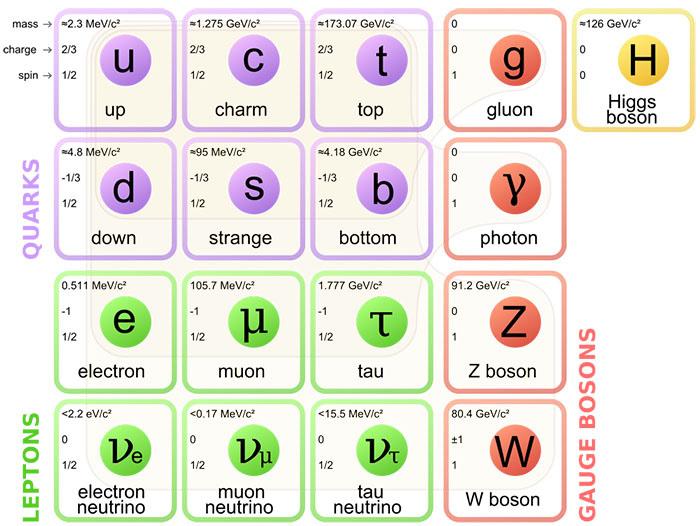

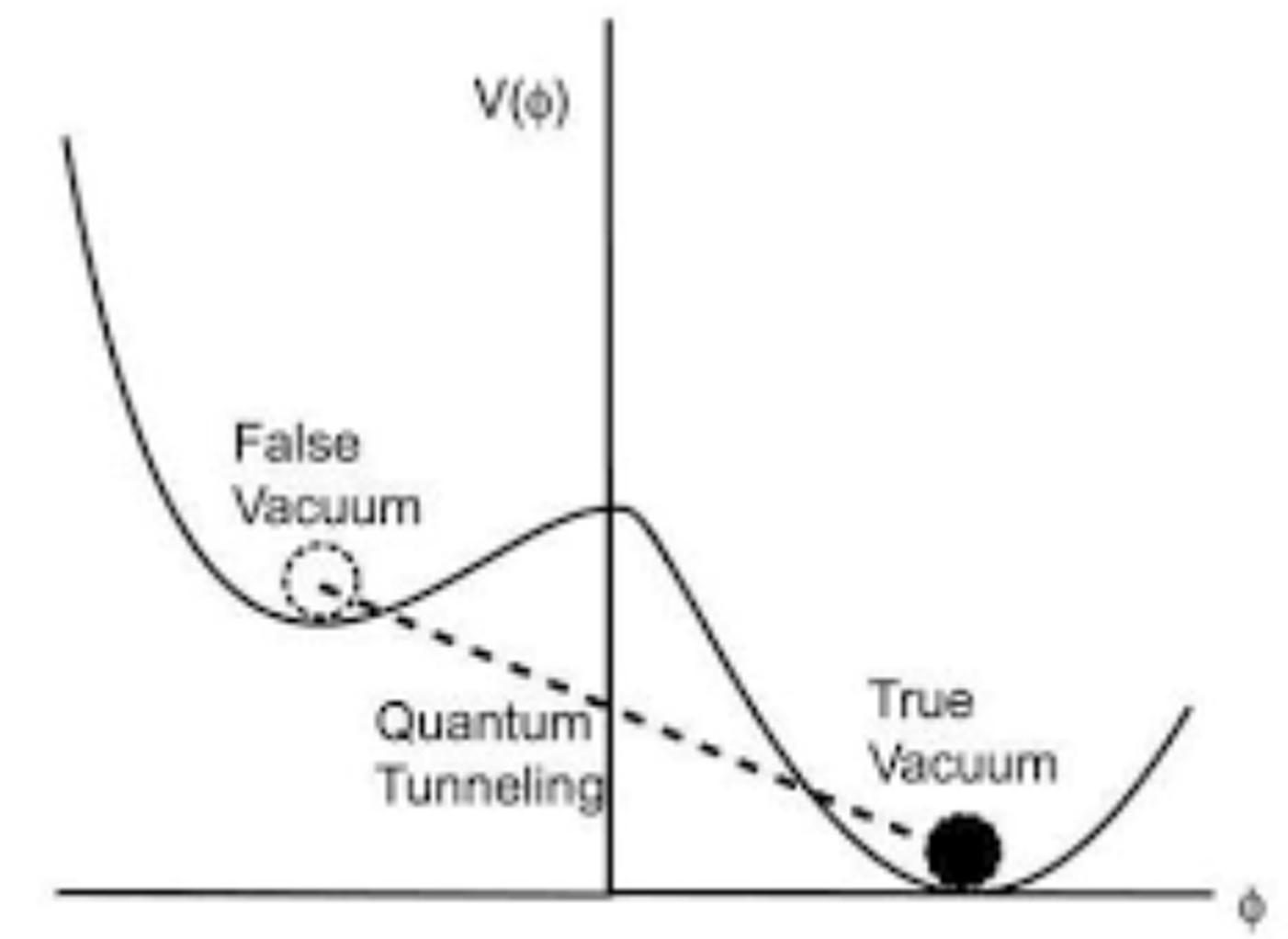

P52 False Vacuum Theory

P58 The Fermi Paradox

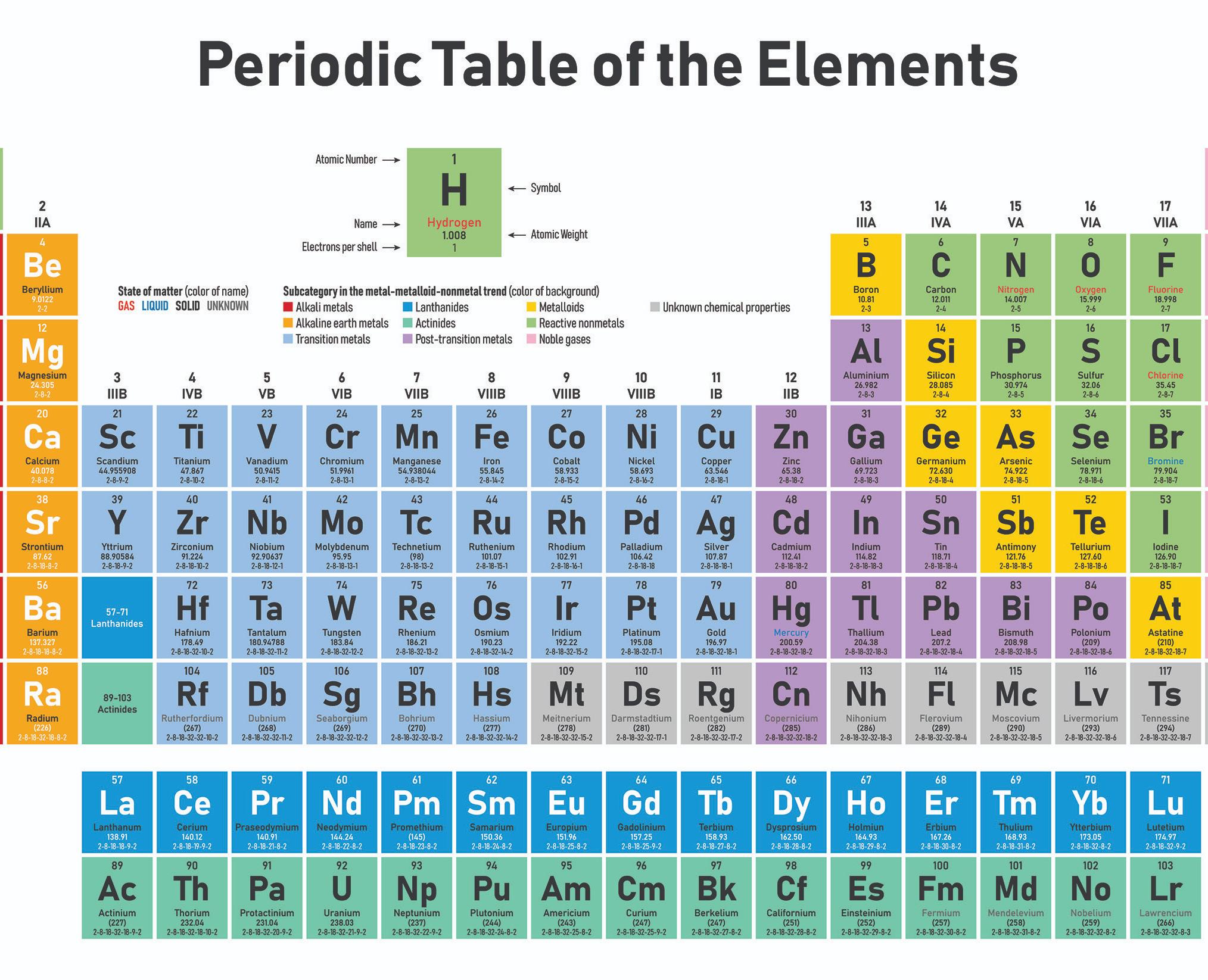

P65 The Periodic Table

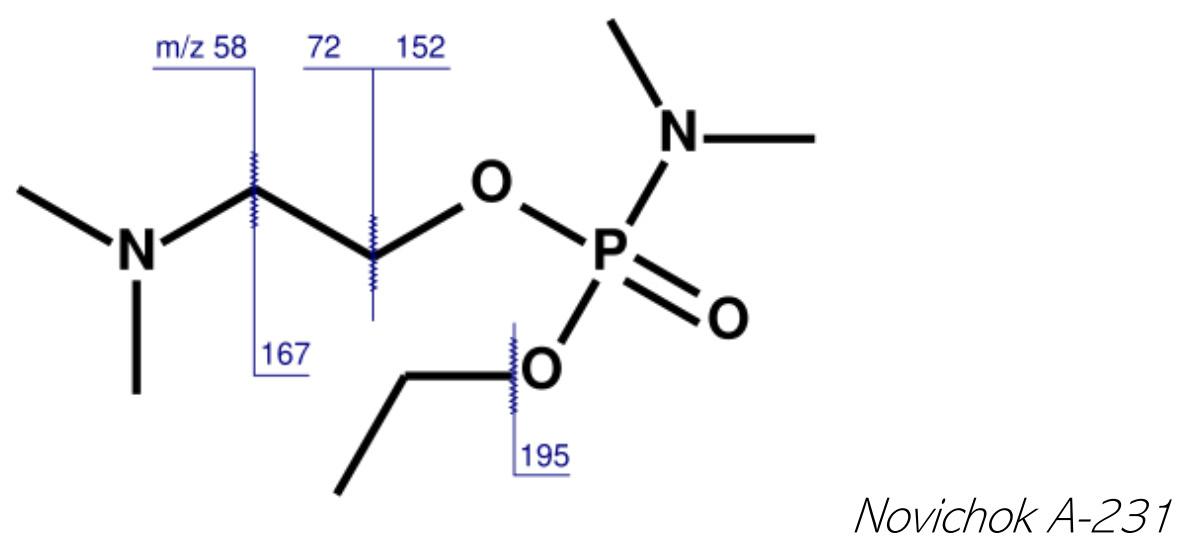

P48 Nerve Agents

P70 Pushing the boundaries of the Periodic Table

P26 Fluid Dynamics and Aerospace

3D PRINTING Could it revolutionise the future of design and manufacture?

Words: Sammy Winson

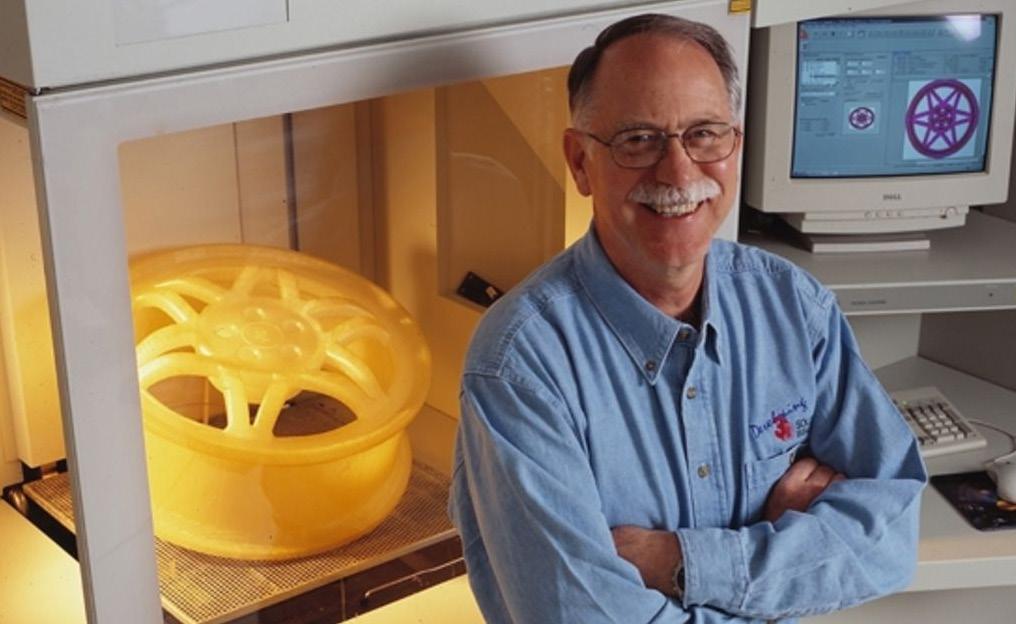

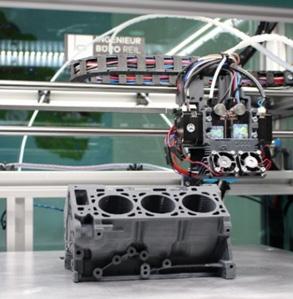

Over the last few decades, 3D printers have developed rapidly, allowing for finer detail on prints, stronger materials, faster print times, and a multitude of other innovative features. The future of 3D printing looks very promising, with new commercially and industrially available printers being launched, each with new features. Before we explore the finer details of 3D printers and their

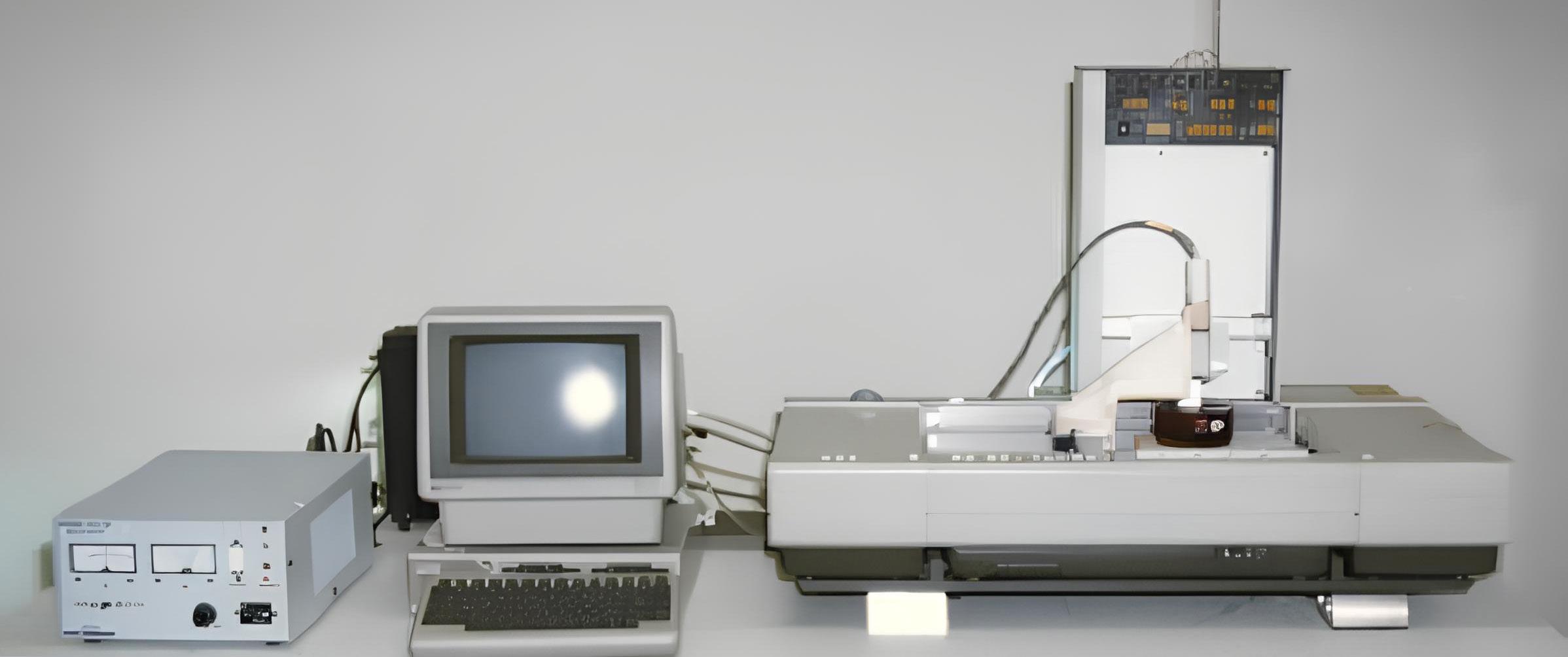

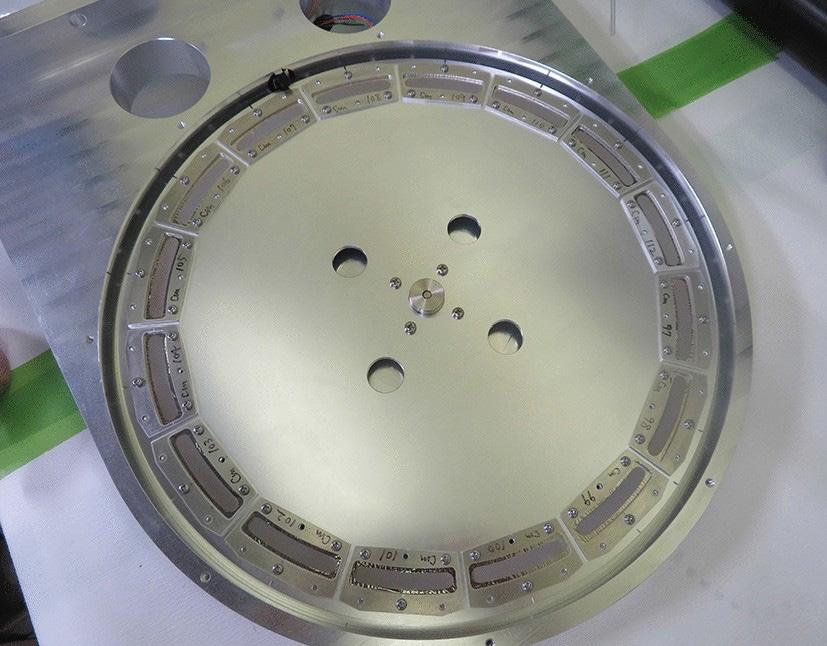

design, we need to understand the history behind them. The idea of additive manufacturing emerged in the early 1980s, when the first 3D printer concept was created in the form of a Stereolithography Apparatus (SLA) printer, which uses a guided laser to cure liquid resin layer by layer into a three-dimensional object (see above). This was followed by the invention of the Fused Deposition Modelling (FDM) printer. This is now the most commonly used type of 3D printer, and it works by depositing layers of polymer, such as PLA or PETG, on top of each other to create a three-dimensional object.

Rapid Prototyping & Iterative design

3D printers are now used frequently for rapid prototyping and iterative design, most often FDM printers, because they are faster, cheaper, and generally create stronger parts than SLA or other printing methods. Rapid prototyping is the continuous cycle of prototyping, testing, and analysing a product under development in order to improve its design step by step. This method eliminates most issues with products and allows for cheap and easy design iterations. Iterative design is the process of gradually improving a design over many models in order to reach a final product. 3D printing allows for quick iteration of design, decreasing the time and cost of developing products.

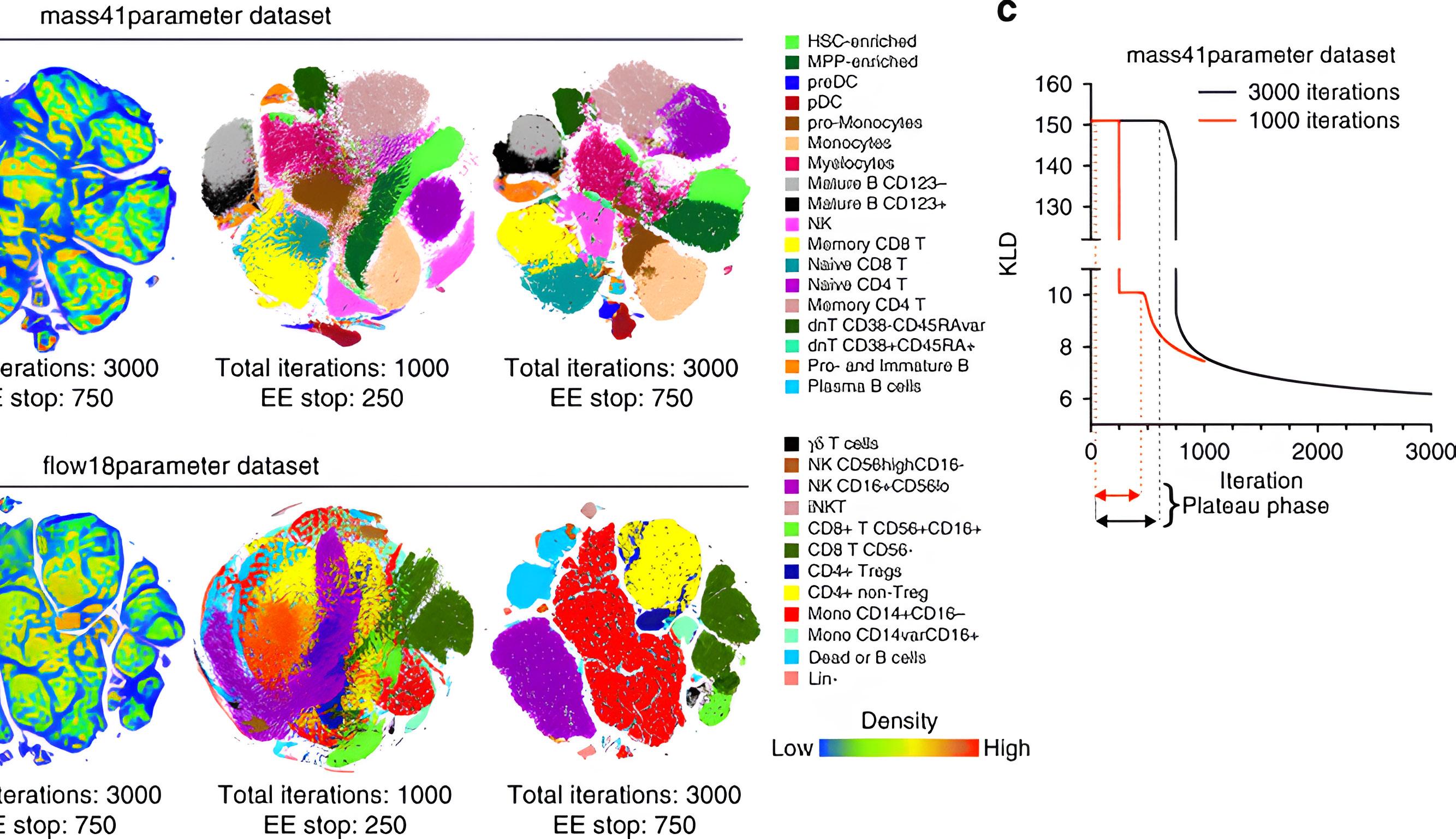

Use of consumer level 3D printers

As 3D printers have developed, their price has fallen as a result of mass production and optimised design. Companies such as Bambu Lab, Creality and Elegoo have revolutionised consumer-level 3D printing by releasing various affordable and easy-to-use printers. Over time, the price of these printers has dropped dramatically, from over £2000 for a 3D printer in 2014 to under £200 for a high-quality, entry-level printer in 2024. 3D printers are fantastic for anyone who loves to design products and create projects, as they allow for fast iterative design, with a smaller print usually taking only a few hours. I can personally attest to 3D printing at home, having designed several of my own products, iteratively designed parts for projects, and printed functional parts for use around the house, including gutter brackets and other helpful items. There is the occasional print failure, which can arise from various issues, but most are easy to correct. The industry is growing rapidly, with 3D printers becoming increasingly common in homes due to falling prices, increasing quality and faster print times.
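To put the price figures quoted above in perspective, here is a rough sketch of the yearly price change they imply. It assumes, purely for illustration, that the price fell by the same percentage every year between 2014 and 2024; real prices will not have followed such a smooth curve, and the function name is made up for this example.

```python
def annual_price_change(start_price, end_price, years):
    """Constant yearly fractional change that takes start_price to end_price.

    Assumes the same percentage change every year (an illustrative
    simplification, not a claim about how prices actually moved).
    """
    return (end_price / start_price) ** (1 / years) - 1


# Figures quoted in the article: about £2000 in 2014, about £200 in 2024.
rate = annual_price_change(2000, 200, 10)
print(f"{rate:.1%}")  # prints "-20.6%"
```

Under that assumption, a tenfold drop over ten years works out at roughly a fifth off the price each year.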

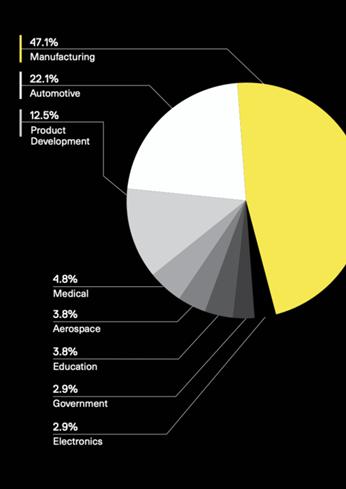

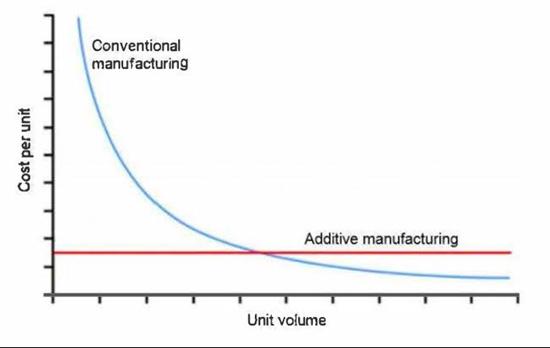

Use of 3D printers in industrial design and manufacture

Although 3D printers are not yet widely used in manufacturing, they are becoming an increasingly popular alternative to other methods such as injection moulding. Many innovative industrial 3D printers have been introduced over the last few years, with significant benefits over home-use machines, including very high speeds, large build volumes, and the ability to print with new materials. These industrial printers use different technologies from consumer-level printers, which primarily use FDM and SLA. 3D printers are no longer limited to generic polymers such as PLA: both industrial and many consumer-level printers can now print a variety of materials, including modern materials such as carbon-reinforced polymer and flexible materials such as TPU. Some industrial printers use newer methods such as Selective Laser Melting (SLM) and metal Fused Filament Fabrication (FFF) to print metals such as titanium, steel, and aluminium, although this process is currently slow and expensive. Industrial printers have high start-up costs, although these are falling over time. In the future, 3D printing is expected to grow rapidly in popularity among manufacturers as the technology develops. It suits the Just In Time (JIT) manufacturing strategy, which aims to fulfil orders as they come in, because custom, unique objects can be created completely from scratch.

The future of 3D printing

The future of additive manufacturing looks very promising. Companies are entering the market at a rapid pace, releasing high-quality, affordable 3D printers for both consumers and industrial clients. New materials such as carbon-reinforced polymer are under development, bringing strength comparable to metal. Industrial printers are becoming more prominent in the design and manufacture of products. Materials are becoming stronger and cheaper, and print times are decreasing rapidly. Over the last 10 years, average print time has dropped significantly thanks to new technologies, and that trend is expected to continue. One drawback of 3D printing, the need for supports under overhanging sections, is expected to become less of an issue with new methods such as non-planar printing and new build plates with better adhesion. Methods of automating 3D printing have been developed. Although they are not yet prominent in the industry, they could allow for continuous manufacture of parts once a functional automated printer is available. New monitoring tools such as cameras and artificial intelligence (AI) allow print failures to be detected automatically, or manually by the user via apps. Consumer-level printers have brought very simple software such as Bambu Studio and Bambu Handy, allowing people with little printing experience to print products easily. Build volumes have increased significantly, allowing larger objects to be printed, although these require more filament and time. The innovation of the last few decades has also enabled medical applications of 3D printing, including cheaper and lighter prosthetics, and this use is expected to continue and improve in the future.

In conclusion, 3D printing and the additive manufacturing industry have grown rapidly over the last 40 years and are expected to continue this momentum as new technologies are developed, including innovative printers with higher build volumes, faster speeds and higher quality, new materials that improve durability and strength while remaining affordable, and new applications of 3D printing in manufacturing. 3D printing can be used to rapidly develop new products by iterative design, while also being able to continuously manufacture products on a large scale for any use case. Fully unique and custom products can be created, with no extra cost to create a new shape, unlike injection moulding, which requires expensive moulds. Overall, 3D printing has greatly impacted the design industry over the last 40 years and has begun to enter product manufacturing, a trend that will continue as technologies develop. It is safe to say that 3D printing will revolutionise the future of design and manufacture around the world. ~

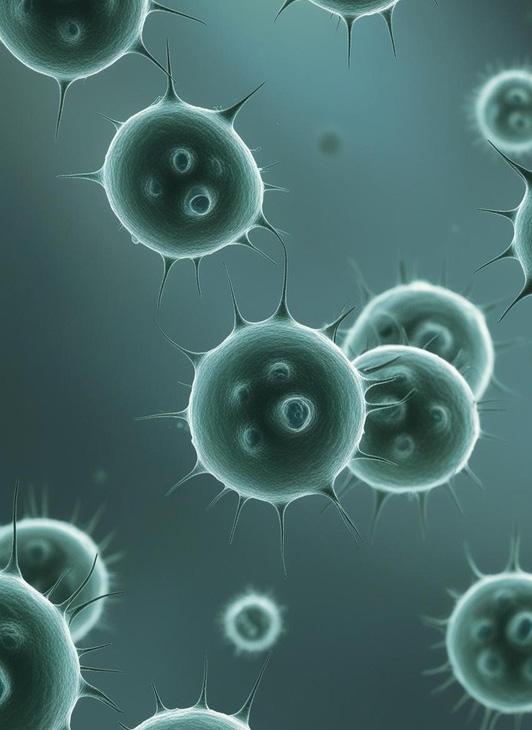

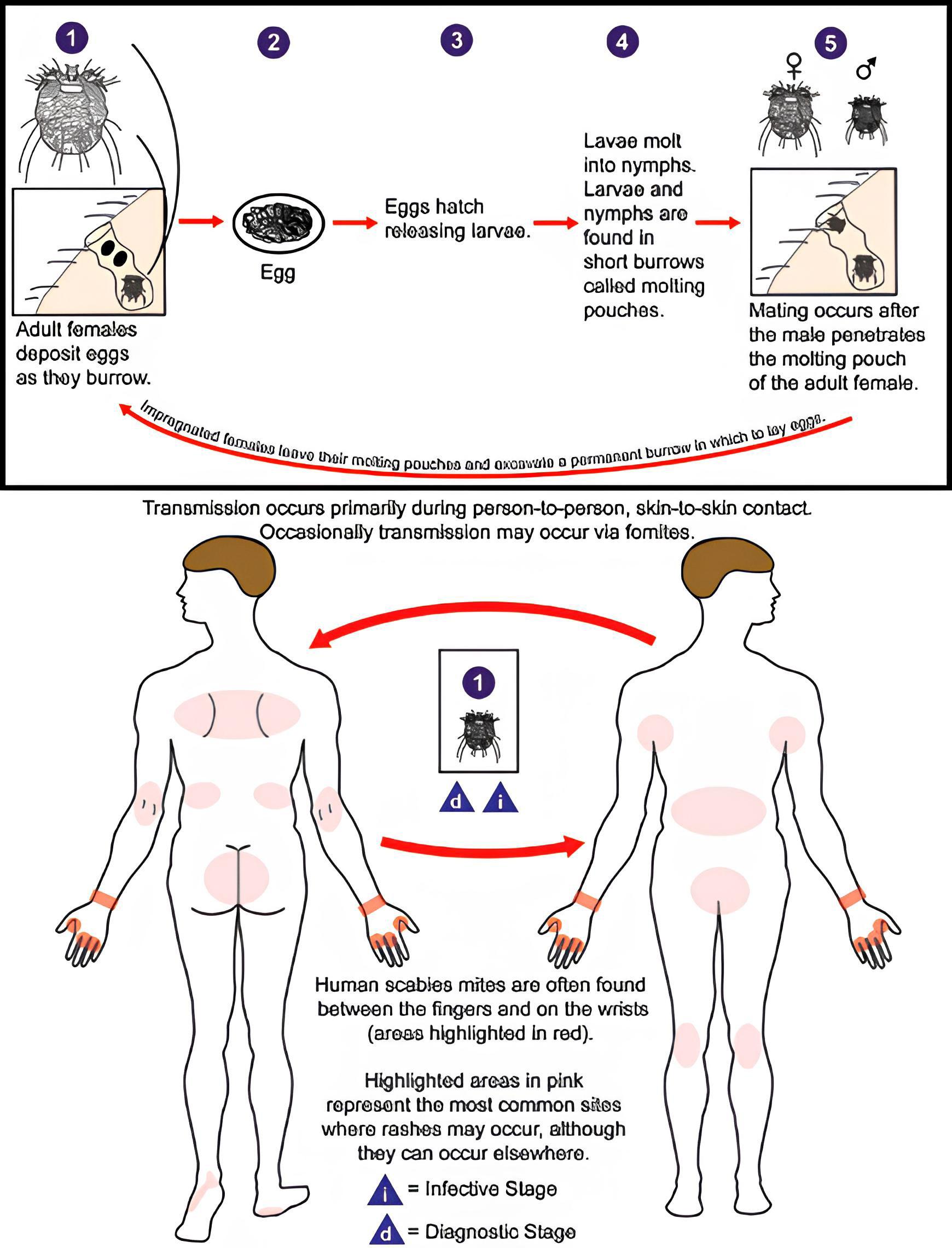

PARASITES The “Invisible” Partners in Life

Biological Features of Parasites

The biological success of parasites stems from four principal characteristics. Through these adaptations, parasites have maintained evolutionary success across countless generations and ecosystems.

Attachment and Feeding Structures: These specialised anatomical adaptations, such as hooks, suckers, or stylets, facilitate a secure attachment to the host and ensure efficient nutrient extraction.

Rapid Reproduction and Genetic Flexibility: High fecundity and genetic adaptability allow parasites to persist despite environmental pressures and host defenses.

Immune Modulation: Parasites can manipulate host immune responses through a variety of strategies, including the secretion of immunomodulatory molecules, the mimicry of host antigens, or the induction of regulatory immune cells (Maizels and McSorley, 2014).

Complex Life Histories: Many parasites, particularly helminths and protozoa, require different hosts for various developmental stages. This dependency creates intricate ecological webs and drives host evolution.

Parasites have long been perceived as malevolent agents of disease and suffering, featuring prominently in historical records of plagues, famines, and societal collapse. Diseases such as malaria, schistosomiasis, and trypanosomiasis have shaped human populations and public health policies for centuries. Yet, recent research challenges this negative narrative. Parasites are increasingly understood not only as pathogens but as essential regulators of ecosystems, immune systems, and even potential tools for medical innovation. This article explores the biological nature of parasites, their ecological and immunological importance, as well as their emerging applications in medicine.

Parasites are organisms that live on or within a host organism, obtaining nutrients at the host’s expense and often causing harm (Gadallah, n.d.). They vary widely, from unicellular protozoa such as Plasmodium, to complex multicellular helminths like tapeworms and nematodes, and ectoparasites such as ticks and lice. Parasites have evolved remarkable survival strategies. They often display sophisticated mechanisms for attachment, such as the scolex of tapeworms or the hooked mouthparts of lice, which enable them to remain embedded within or upon the host. Furthermore, many parasites have complex life cycles involving multiple hosts, maximising their chances of transmission and survival. The malaria parasite, which alternates between mosquitoes and humans to complete its development, is a clear example (Meekums et al., 2015).

Perhaps most impressively, parasites are excellent at immune evasion. For instance, Trypanosoma brucei, the causative agent of African sleeping sickness, undergoes antigenic variation by altering its surface glycoproteins to escape immune surveillance (Kocahan et al., 2019).

The Importance of Parasites in Human Health

While parasites are often associated with morbidity and mortality, their role in human health is more nuanced. Chronic exposure to parasitic organisms during evolution has profoundly shaped the human immune system. Parasitic infections typically induce a regulated immune response characterised by the expansion of regulatory T cells (Tregs) and the suppression of inflammatory responses. This modulation helps prevent overactive immune responses, reducing the risk of autoimmune diseases like type 1 diabetes, multiple sclerosis, and inflammatory bowel disease (Maizels and McSorley, 2014). In addition, intestinal helminths interact with gut microbiota, influencing bacterial composition and promoting gut health (Anthony et al., 2007). Finally, the hygiene hypothesis observes that the decline in parasitic infections in industrialised societies correlates with increased incidences of allergies and asthma, and proposes that early parasitic exposure may calibrate the immune system towards tolerance rather than hypersensitivity (Maizels and McSorley, 2014). Hence, parasites have played a vital role not merely as pathogens but also as architects of immune balance.

Medical Uses of Parasites

Recent medical research highlights several ways in which parasites — or their biological mechanisms — can be harnessed therapeutically:

Helminth Therapy: Clinical trials have explored the intentional introduction of helminths, such as Trichuris suis ova, into patients with autoimmune diseases. Helminth-derived molecules can promote the development of regulatory immune cells and mitigate conditions like Crohn’s disease (a chronic inflammatory condition that can affect any part of the gastrointestinal (GI) tract from the mouth to the anus) and multiple sclerosis (a chronic autoimmune disease that affects the central nervous system, specifically the brain and spinal cord) (Maizels and McSorley, 2014; Kocahan et al., 2019).

Cancer Immunotherapy: Studies have revealed that parasitic infections can provoke strong type 1 immune responses, which are crucial for anti-tumor activity. Toxoplasma gondii has been investigated for its ability to stimulate the immune system to recognise and destroy cancer cells (Wang et al., 2023).

Vaccines and Immunomodulation: Understanding parasite immune evasion strategies informs vaccine design, especially through the study of innate lymphoid cells (ILCs) and pattern recognition receptors like Toll-like receptors (TLRs), which are pivotal in early immune activation (Maizels and McSorley, 2014).

These findings offer new avenues for therapies that are less invasive and potentially more natural than current synthetic pharmaceutical approaches.

The Role of Parasites in Nature

In ecosystems, parasites play a crucial role in maintaining balance and driving evolution. They act as natural population regulators by selectively targeting weaker or overabundant species, preventing any single species from dominating the ecosystem. This promotes biodiversity and strengthens ecosystems by fostering competition and resilience. For instance, parasites like ticks and lice can reduce overpopulated deer or rodent populations, indirectly supporting predators by ensuring a stable food web (Meekums et al., 2015). Moreover, host-parasite dynamics drive evolutionary adaptations on both sides. This ongoing co-evolution fosters genetic diversity and resilience across species. Furthermore, certain parasites can alter host behaviours to ensure their transmission. For example, the Ophiocordyceps fungus turns ants into “zombies,” directing them to spread fungal spores before they die. Similarly, Toxoplasma gondii reduces rodents’ fear of feline predators, which enhances the likelihood of completing its life cycle in a cat host (Gadallah, n.d.). Parasites are thus integral to ecological stability and evolutionary innovation.

Parasites are far more than just agents of disease. From their biological complexities to their roles in medicine and ecosystems, they have significant impacts on life. While their harmful effects must be recognised, their benefits and contributions should not be overlooked. By understanding parasites, we gain insights into evolution, health, and the interconnectedness of all living organisms. Nature’s balance, after all, often relies on even its most unexpected participants. ~

The Past, Present, and Future of Robotic Surgery

Words: Cyril Sze Chau Leung

Over the last few decades, many industries have developed rapidly and seen massive changes to their structures. The medical world is no exception, and developments across the field of medicine continue today. In this article, we’ll dive into a biography of robotic surgery, one of the most cutting-edge medical technologies of the modern era.

Overview

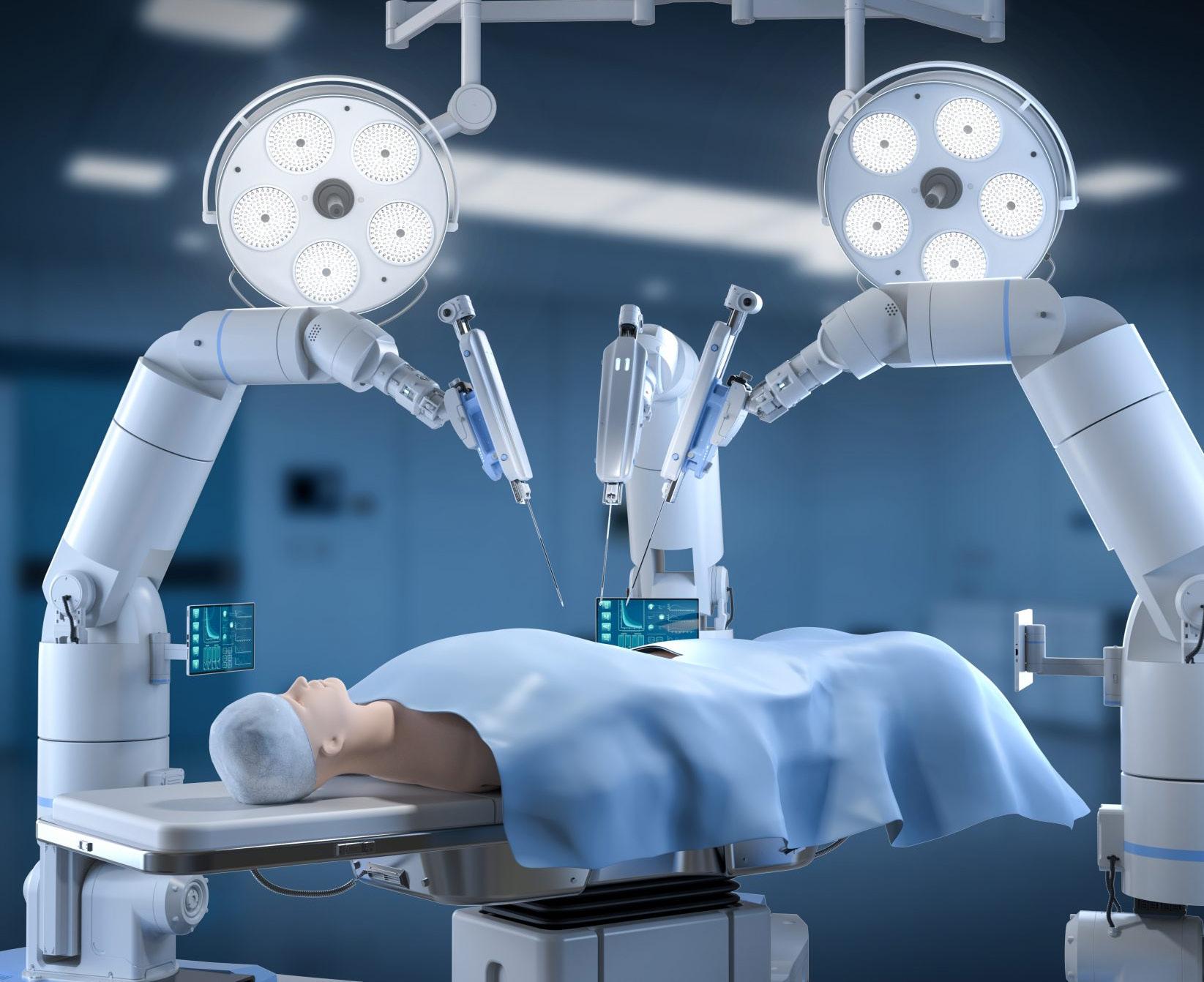

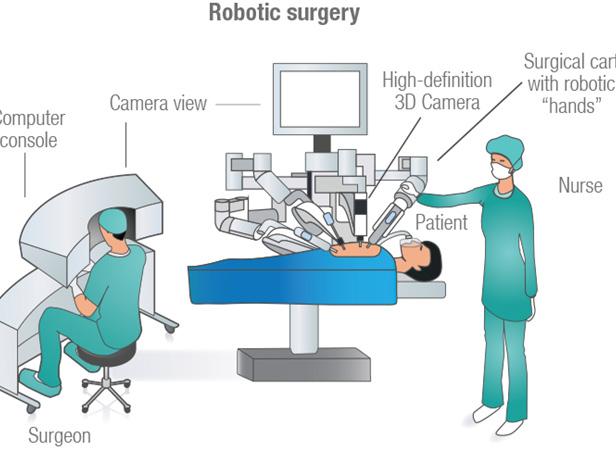

Robotic surgery, or robotic-assisted surgery, is a method that allows doctors to perform many types of complex procedures with better precision, flexibility, and control than conventional techniques. Typically, robotic surgery is performed through minimally invasive incisions, also known as keyhole surgery, which has become the preferred choice of many surgeons over the last 20 years (Mayo Clinic, no date). The most widely used robotic surgical systems have several main components, including a patient-side cart with mechanical arms attached to it, a console from which the operating surgeon controls the mechanical arms, and a vision system that provides the surgeon with a high-definition, magnified 3D view of the surgical site (Mayo Clinic, no date).

The Procedure

(Cleveland Clinic, 2024)

1. The surgeon first makes small keyhole incisions on the patient. These incisions are known as ports.

2. The robotic arms attached to the patient-side cart are inserted through these ports.

3. Each robotic arm may have a camera or surgical instrument attached to it.

4. The camera provides a high-definition, magnified 3D view of the surgical site and relays this back to a 3D vision system at the console for the surgeon to see.

5. The surgeon uses joystick-like controls and pedals to control the robotic arms.

6. An assistant (usually a nurse) is also present to change any medical instruments.

Benefits

(Cleveland Clinic, 2024)

Improved precision: robotic devices (mechanical arms) are more precise than a surgeon’s hand, which allows for better access to hard-to-reach places and eliminates the effect of a surgeon’s hand tremor.

Better visualisation of the surgical site, due to the magnification of the camera, which is displayed on the console.

Shorter hospital stay: robotic surgery is usually minimally invasive, so incisions are smaller and there is less tissue damage.

Less scarring: scars may cause psychological effects such as self-consciousness, as some individuals may find a scar to be ‘unattractive’.

Less risk of infection and blood loss, due to smaller incisions.

Risks

Human error whilst operating the technology: robotic surgery is a relatively new technology and not many surgeons are experienced with it. Some medical schools are putting a larger emphasis on robotic training, but whether all medical schools will follow is unknown (Cleveland Clinic, 2024).

Mechanical failure: while highly unlikely, the mechanical components of the system, such as the arms, instruments, or cameras, could potentially fail. Therefore, specialists are always on site in the operating room to handle these extremely rare events (Cleveland Clinic, 2024).

Electrical arcing: unintentional burns may occur when current from the cauterising device leaves the robotic arm and is misdirected at surrounding tissue. However, newer, improved robotic systems such as the da Vinci Xi offer warnings when there is a risk of arcing (Cleveland Clinic, 2024).

Common risks associated with surgery:

Pneumonia due to anaesthesia (as stomach acid enters the lungs), allergic reactions to medication, infection, bleeding, and breathing problems.

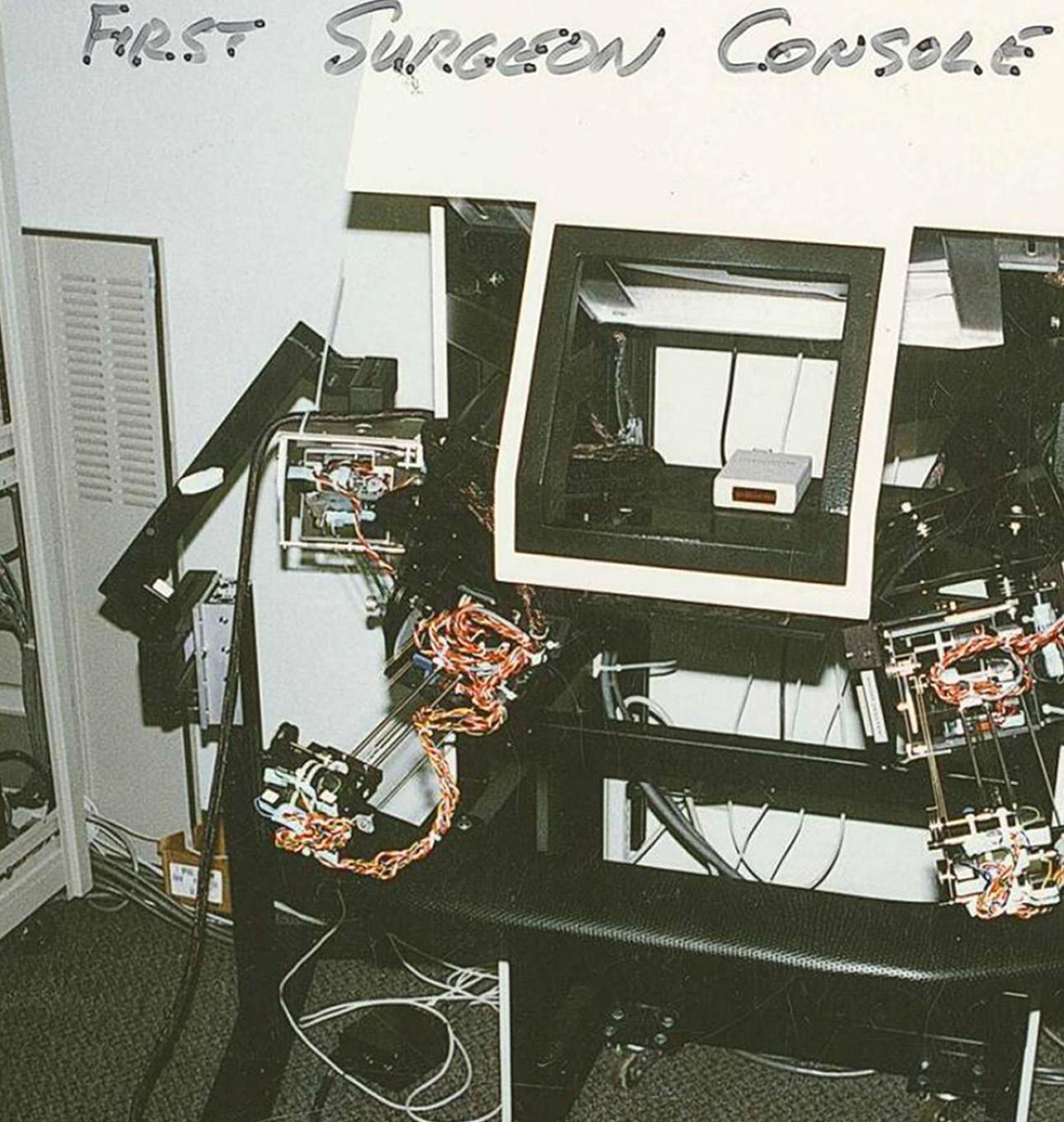

PAST

The idea of using robots in surgery had already been theorised by 1967, but it wasn’t until 1985 that the first robot, the PUMA 200, was used to perform a brain biopsy (the removal and examination of tissue to test for signs or causes of disease). In the following years, robots in surgery became more and more common, with robots such as ROBODOC assisting in orthopaedic surgery. Eventually, in 1996, the first completely robotic-dependent surgical system, ZEUS, was created by Computer Motion. This system was the first in which a doctor would control mechanical arms from behind a screen to operate on the patient (Morell et al., 2021).

PRESENT

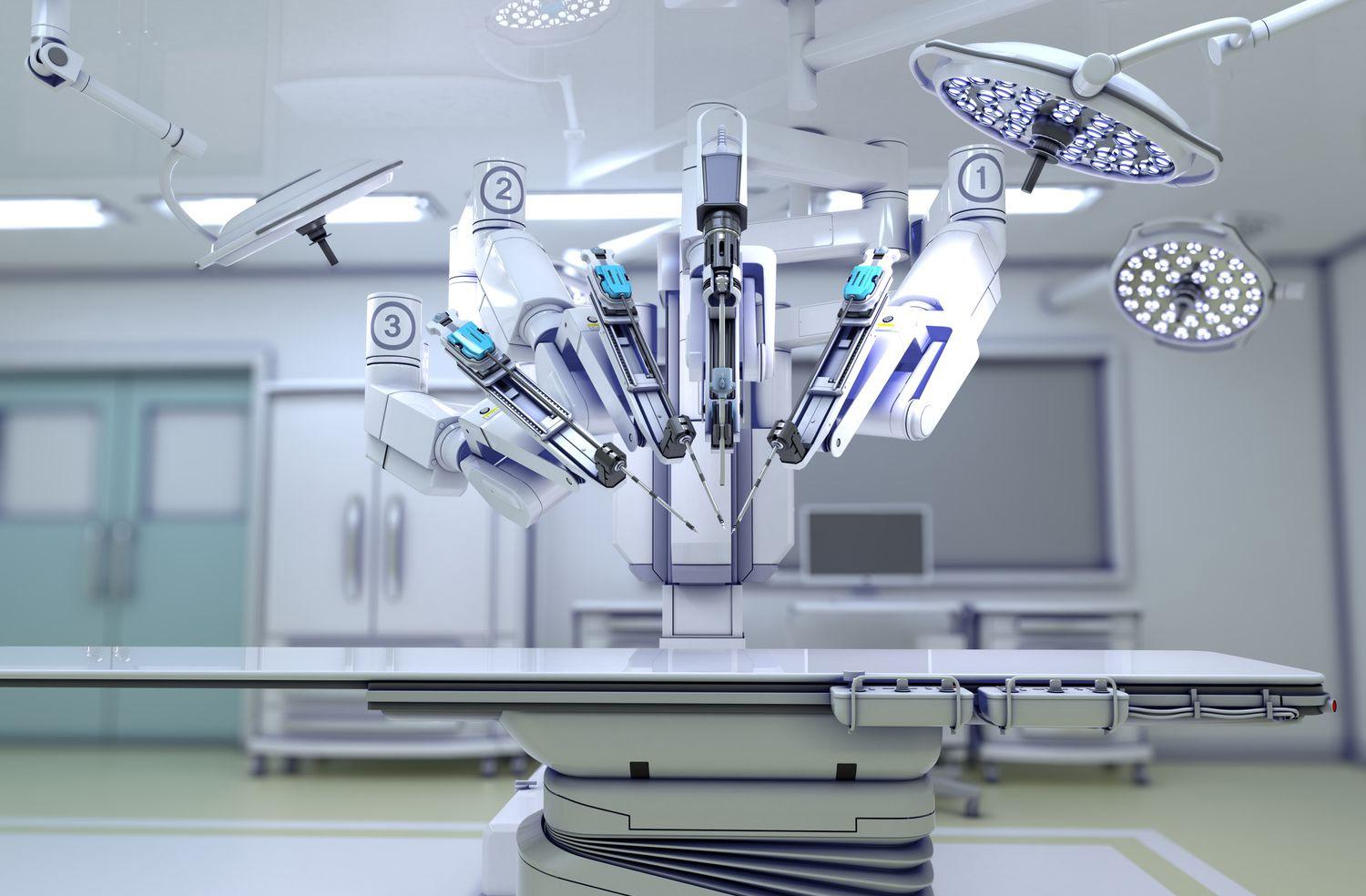

In 2000, the da Vinci system was cleared for use by the FDA (Food and Drug Administration) in the US. Today, the da Vinci system is the spearhead of robotic surgery, with 60,000 trained surgeons and more than 10 million completed procedures. It can be used in many surgical specialties, including cardiothoracic surgery, general surgery, neurological surgery, and many more. Over its millions of operations, robotic surgery has shown a favourable prognosis (the likely outcome of a medical procedure), with a 95% success rate. Since the da Vinci system was first used, many newer da Vinci-class systems with better technology and more advanced instruments have come into use.

FUTURE

For now, a surgeon is still required to operate a robotic surgical system, but when will robotic-assisted surgery become robotic-performed surgery? While we could theoretically pass the role of decision-making entirely to AI (Artificial Intelligence), the question of “What happens if something goes wrong?” will always linger when depending entirely on robots, especially in a matter as delicate as surgery. The future of surgery performed entirely by robots will depend on patients’ trust and willingness (Morell et al., 2021).

In my opinion, robotic surgery will, at least in the coming years, still have humans in control, if only in a supervisory role, standing by in the event of an unlikely emergency. ~

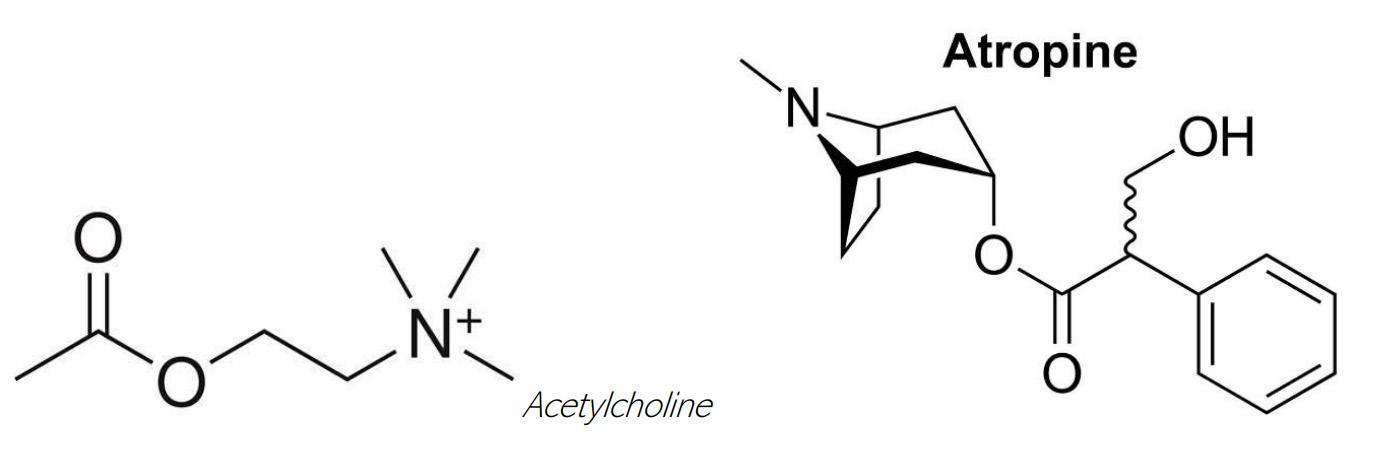

Alcohol-Related Neurological Diseases:

Are you “Nervous” about having a drink?

Alcohol is thought to have first been produced around 7000 BCE in China, and it has long been embedded in societies across different cultural backgrounds. If you enjoy TV and movies, you may have noticed how characters typically end up suffering from memory blackouts and terrible headaches after a night of heavy drinking at the bar. Whilst these masquerade as trivial occurrences, they may be symptoms of a more serious problem: Alcohol-Related Neurological Diseases, conditions of the central and peripheral nervous systems caused by the intake of alcohol. It is important, however, to first understand the relationship between alcohol and the brain before diving into the topic.

In Latino culture, drinking is often associated with masculinity, leading to high levels of alcohol consumption. This shows how drinking can be considered a social norm in countries and societies where alcohol is often incorporated in celebrations and acts as a social lubricant. Alcohol is a distilled or fermented drink that contains ethanol, and it is a well-known depressant of the brain, as well as a toxic and psychoactive substance. It mainly targets the central nervous system (CNS: the brain and spinal cord), which is responsible for receiving, perceiving, and processing general sensory information whilst also generating responses. The brain is the most delicate organ within the body, comprising parts such as the cerebellum, frontal lobes, hippocampus, thalamus, and medulla, which play key roles in maintaining internal conditions, decision-making, forming thoughts and memories, muscle control, and many more vital processes. Alcohol damages nerve cells and interferes with communication pathways in the brain, severely undermining the CNS’s ability to work and reducing the brain’s effectiveness.

Wernicke-Korsakoff Syndrome

Of all alcohol-related neurological diseases, one of the most dangerous is Wernicke-Korsakoff Syndrome (WKS). While it is considered one condition, it is separated into two stages: Wernicke’s Encephalopathy and Korsakoff’s Psychosis. Wernicke’s Encephalopathy is an acute-onset, severe brain disorder that can be reversed with early diagnosis and treatment. Symptoms include confusion, lack of muscle coordination, hypothermia, vision problems (for instance nystagmus and double vision), and coma. If not treated promptly, however, it can progress into Korsakoff’s Psychosis, which is an irreversible condition. Individuals with the disease often present with memory impairments such as anterograde amnesia, hallucinations, repetitive speech and actions, emotional apathy, confabulation, and problems with decision-making. In people with WKS, there is permanent brain damage across a variety of brain regions, notably the thalamus, hippocampus, hypothalamus, and cerebellum, which impairs crucial functions and processes such as vision, movement, speech, memory, and sleep. WKS is caused by a lack of thiamine (Vitamin B1). Alcoholism is the leading cause, as it reduces thiamine absorption in the intestines, but those who are malnourished, undergo kidney dialysis, suffer from colon or gastric cancer, or have AIDS are also at risk. The disease is clinically diagnosed based on the patient’s medical history, electrocardiograms (EKG), and magnetic resonance imaging (MRI) and computed tomography (CT) scans of the brain. Treatment includes intravenous administration of thiamine and glucose, oral supplements, and memory therapies. Serious cases may require intensive residential care.

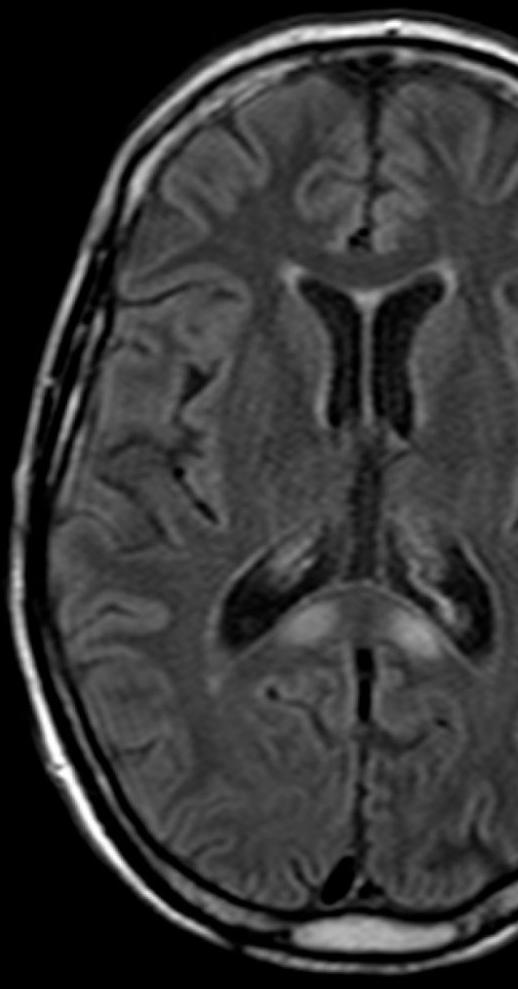

Marchiafava-Bignami Disease (MBD)

Marchiafava-Bignami Disease (MBD) is a rare CNS disorder associated with chronic alcoholism, characterised by demyelination of the corpus callosum, which can extend into the hemispheric white matter, internal capsule, and middle cerebellar peduncle. In rarer cases, Morel Laminar Sclerosis is visible. The disease is caused by a deficiency in the B vitamins, and males between the ages of 40 and 60 are most affected. The tempo of onset and clinical presentation of this disease vary, and are often limited to non-specific features (motor or cognitive disturbances). Other symptoms are seizures, stupor or coma, dementia (acute, subacute, or chronic), psychiatric disturbances, aphasia, apraxia, hemiparesis, and signs of interhemispheric disconnection. To diagnose MBD, toxicology screening, CT scans, complete blood count, and serum measurements are used, but MRI scans are currently the best diagnostic tool. To treat MBD, vitamin B complex is administered, and thiamine, cobalamin, and folate supplements are also given intravenously for management. Still, some patients do not recover and die from MBD.

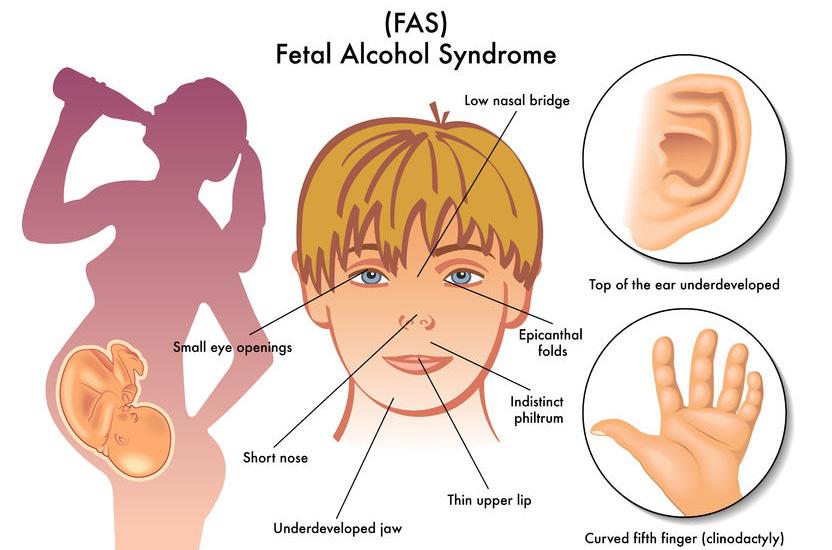

Foetal Alcohol Syndrome

The devastating effects of alcohol are not limited to drinkers: they also reach unborn babies. Foetal Alcohol Syndrome (FAS) is a severe form of foetal alcohol spectrum disorder (FASD), which is caused by alcohol consumption during pregnancy. As foetuses cannot process alcohol like adults, alcohol is more concentrated and prevents nutrition and oxygen from reaching vital organs. Children affected often present with specific facial features, impaired vision and hearing, smaller head and brain size, slow physical growth, poor coordination and balance, and changes in how the heart, bones, and kidneys develop. They may also have poor judgement, a short attention span, a lack of time awareness, issues with controlling emotions, large mood swings, challenges in social interactions, and problems with managing life skills. Diagnosis of FAS requires monitoring of the symptoms mentioned above, and whilst there is no treatment, it can be prevented by stopping alcohol intake if there is any possibility of pregnancy and by contacting intervention services.

Alcoholic Neuropathy and Alcoholic Myopathy

Alcoholic Neuropathy is a form of nerve damage affecting both the central and peripheral nervous systems. It is typically permanent, as alcohol induces structural changes in the nerves. Nutritional deficiencies, particularly in thiamine, niacin, pyridoxine, folate, and vitamin E, exacerbate the condition. Common symptoms include dysesthesias (burning, tingling, and prickling sensations), muscle spasms, weakness, sexual dysfunction, gastrointestinal disturbances (nausea and vomiting), impaired speech, movement disorders, and dysphagia (difficulty swallowing). Treatment focuses on rehabilitation, alcohol abstinence, and nutritional support, especially through supplementation with B-complex vitamins.

Alcoholic Myopathy is a progressive muscle disease caused by excessive alcohol consumption and manifests in two forms: acute and chronic.

Acute alcoholic myopathy is typically triggered by binge drinking and resolves within one to two weeks. However, it may cause rhabdomyolysis, a serious condition where muscle breakdown products enter the bloodstream, potentially leading to kidney failure.

Chronic alcoholic myopathy results from long-term alcohol abuse, often affecting muscles in the hips and shoulders. Recovery can take several months after cessation of alcohol intake.

Symptoms include muscle atrophy, weakness, cramps, stiffness, fatigue, and dark-coloured urine. Unlike alcoholic neuropathy, alcoholic myopathy is generally reversible with appropriate intervention.

Both conditions share similar diagnostic approaches, including neurological examinations, blood tests, toxicology screening, and electromyography (EMG).

Alcohol Withdrawal Syndrome (AWS)

Alcohol Withdrawal Syndrome (AWS) affects individuals who abruptly stop or significantly reduce alcohol intake after prolonged use. Symptoms typically emerge within hours to a few days and include tremors, nausea, vomiting, anxiety, tachycardia (increased heart rate), sweating, headaches, insomnia, and vivid nightmares.

In severe cases, Delirium Tremens (DT) may develop, characterized by profound confusion, agitation, fever, seizures, hallucinations (tactile, auditory, and visual), and rapid breathing. Diagnosis relies on toxicology screening and physical assessments, such as monitoring for heart arrhythmias and dehydration.

Treatment involves administering benzodiazepines (e.g., chlordiazepoxide, lorazepam, alprazolam) and may be conducted at home or in a hospital depending on severity. Patients are also encouraged to engage in counselling to support long-term recovery.

Preventing Alcohol Misuse:

Alcohol Misuse refers to harmful or dependent drinking patterns, typically defined as consuming more than 14 units of alcohol per week (1 unit = 10 mL of pure alcohol).
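As a rough illustration of the unit definition above, the number of units in a drink can be estimated from its volume and strength. The sketch below assumes the usual rule that units = volume in mL × ABV % ÷ 1000; the function name and the example week of drinks are hypothetical.

```python
def alcohol_units(volume_ml: float, abv_percent: float) -> float:
    """Units of alcohol in a drink, where 1 unit = 10 mL of pure alcohol."""
    pure_alcohol_ml = volume_ml * abv_percent / 100
    return pure_alcohol_ml / 10


WEEKLY_LIMIT_UNITS = 14  # threshold quoted in the text

# Hypothetical week: five pints (568 mL each) of 4% beer
weekly_units = 5 * alcohol_units(568, 4.0)
print(round(weekly_units, 1), weekly_units > WEEKLY_LIMIT_UNITS)
```

In this made-up example the weekly total stays below the 14-unit threshold.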

At a global policy level, the World Health Organization’s SAFER initiative recommends five evidence-based strategies:

Strengthen restrictions on alcohol availability

Advance and enforce drink-driving countermeasures

Facilitate access to screening, brief interventions, and treatment

Enforce bans or comprehensive restrictions on alcohol advertising, sponsorship, and promotion

Raise alcohol prices via excise taxes and pricing policies

On an individual level, prevention begins with setting realistic personal goals to reduce consumption, using digital reminders, and replacing drinking habits with healthier alternatives such as exercise or social engagement. Maintaining open conversations with trusted individuals can also help promote accountability.

According to the World Health Organization, the European Region (9.2 litres per capita) and the Region of the Americas (7.5 litres per capita) report the highest levels of alcohol consumption globally. In 2019, 52% of men and 35% of women worldwide were regular drinkers, and approximately 7% of individuals over 15 years old were affected by Alcohol Use Disorder (AUD)—with 209 million diagnosed with alcohol dependence.

Alcohol misuse is responsible for around 3.3 million deaths annually, accounting for 6% of all global mortality. In addition to the disorders discussed above, alcohol is also linked to conditions such as alcohol-induced cerebellar degeneration, dementia, and other cognitive impairments.

While moderate alcohol consumption may offer some cardiovascular benefits, it is essential to recognize and avoid the risks of excessive drinking, which can lead to severe, sometimes fatal, neurological consequences. ~

Antidepressants Uncovered

The science, myths and future of mental health treatment

Words - Anish Thayalan

Imagine waking up every day, on repeat, feeling like a rain cloud is constantly following you, draining every bit of joy and hope from you. For approximately 280 million people worldwide, this is a common feeling. Depression is a condition which has ravaged the lives of countless people. Enter antidepressants: small, seemingly insignificant pills which have ignited numerous debates amongst scientists, saved lives, and given rise to some of the biggest misconceptions in the neurological field. Despite being among the most prescribed medications in the world, there are still many misunderstandings about what they do and how they do it. The most controversial of these is the idea that depression is simply caused by a chemical imbalance, in particular low serotonin. To tackle these myths, I will be exploring three sections in this article: what antidepressants are, a famous example and how it works, and the myths surrounding depression and antidepressants.

What are antidepressants?

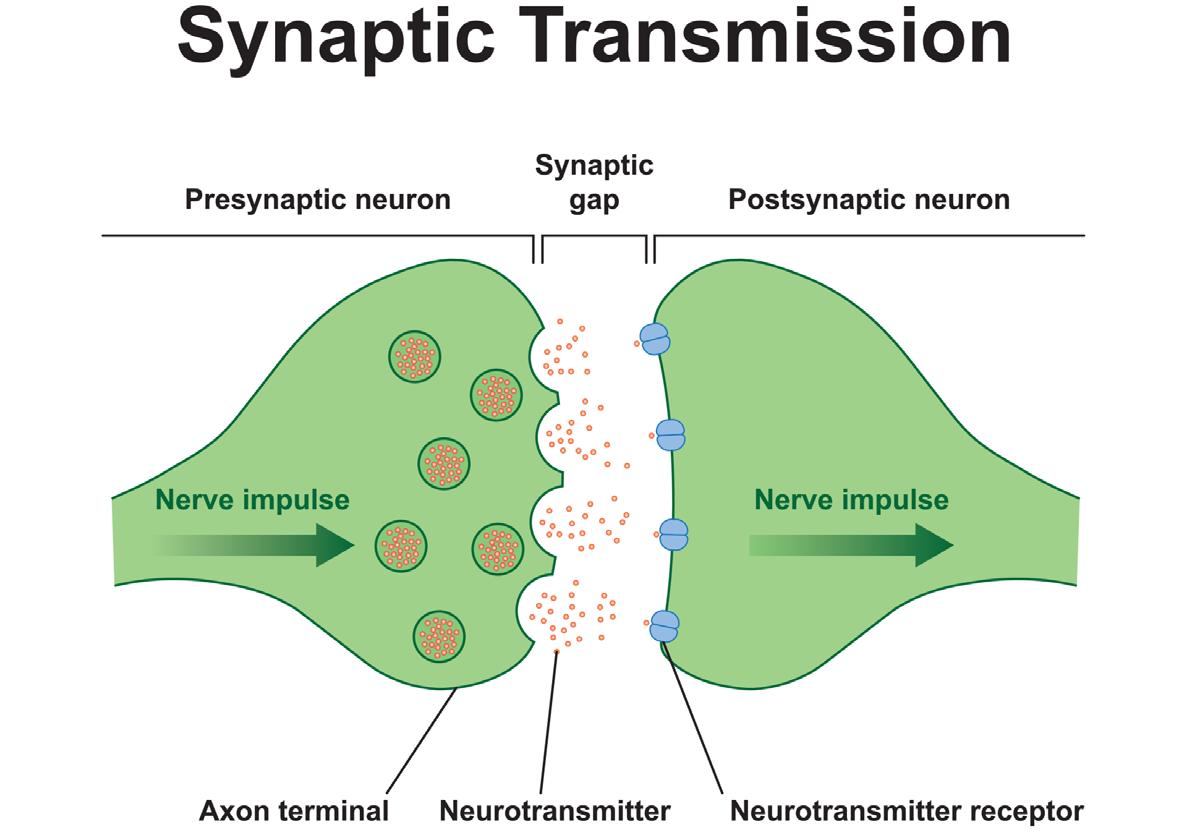

What Happens at The Synapse?, Picture

Antidepressants are a class of medications created to relieve the symptoms of depression and other similar disorders. They do this by targeting chemical signalling in the brain; in particular, antidepressants commonly affect neurotransmitters such as serotonin, norepinephrine and dopamine. These chemicals serve as messengers across the synapses between neurons and help to control mood.

As seen above, these neurotransmitters cause nerve impulses to be carried along the neuron, which results in feelings or muscle contractions. In the case of depression, I will be looking at the feelings that occur due to these neurotransmitters. In individuals with depression, the processes controlling neurotransmitter release and reuptake may be dysregulated, which may lead to cognitive disruption. Antidepressants work by helping to restore these processes to normal. However, it is incorrect to assume that low levels of these neurotransmitters are therefore the problem.

Here are two common types of antidepressants:

Selective serotonin reuptake inhibitors (SSRIs) – these increase serotonin levels in the brain by blocking its reabsorption into the neuron, which allows the transmitter to send signals for longer.

Serotonin norepinephrine reuptake inhibitors (SNRIs) – these do the same thing as an SSRI but also target another neurotransmitter, norepinephrine.

However, as mentioned before, an antidepressant does more than just boost neurotransmitter levels: it also works by enhancing the brain’s neuroplasticity. Neuroplasticity is the ability of the brain to adapt by forming new connections and, in some regions, new neurons. It occurs naturally within the brain, for example when a new memory is made. Depression is often linked to low neuroplasticity in areas such as the hippocampus; according to the National Institutes of Health, many people with depression have a smaller hippocampus.

(1) Antidepressants work by promoting neuroplasticity, resulting in increased brain health and connectivity.

A famous example and how it works

One of the most used antidepressants, with approximately 14.8% of sales, is fluoxetine, more widely known as Prozac. It is an SSRI, and in this section we will be looking at what it does and how it affects neurotransmitters.

Prozac (Fluoxetine) | Side Effects, Dosage, Uses & Interactions, Picture

We touched on neuroplasticity in the sections above: it refers to how the brain can form new neural connections and strengthen existing ones. Prozac has been shown to promote neurogenesis. In a paper published in the National Library of Medicine by David Samuels and co-authors, studies conducted on animal models showed that Prozac increased the expression of brain-derived neurotrophic factor (BDNF), a protein that promotes the growth of neurons. Low levels of this protein have been linked to reduced neuroplasticity. However, Prozac does not cause an immediate change, even though it causes serotonin levels to increase within the first few hours of taking the drug. The effects typically take a couple of weeks to appear. This supports the idea that depression is not simply due to low serotonin levels. Prozac only starts to work properly after a few weeks because neuroplasticity takes time to occur. It also increases the levels of BDNF, which allows new neurons to grow and join existing neural pathways. These changes are gradual, which shows that the benefit of Prozac builds up over time rather than appearing suddenly. By promoting neuroplasticity, Prozac not only alleviates the immediate symptoms of depression but also helps the patient recover from longer-term damage to the brain.

and antidepressants

Antidepressants have come far in the last 30 years, but there are still various misconceptions about the illness and how the drugs work.

One of the key myths is that depression is simply caused by low serotonin; this idea is far too simple to capture the complexity of the issue. Key evidence against it comes from a 2022 review published in Molecular Psychiatry, which analysed many different studies and concluded that the evidence supporting a link between depression and low serotonin was not sufficient.

Another common myth is that depression is not a real illness. However, scientific studies on donated brains clearly show a biological element to depression, as evidenced by the smaller hippocampus and underdeveloped prefrontal cortex in people with depression. This does not mean that depression is purely genetic, as people who have experienced significant trauma can have changes to their brain due to its neuroplastic nature.

Finally, one of the most prominent beliefs is that antidepressants are one-size-fits-all. In fact, many of these drugs only work for about 50% of users; Prozac, for example, reports only a 54% success rate for major depressive disorder. Myths about the causes of depression persist because of their simplicity. In the early days of diagnosing people with depression, the serotonin trend was easy to spot, and pharmaceutical companies relied on this idea for marketing. Phrases such as ‘chemical imbalance’ became the norm and encouraged treatment, but they also caused confusion and oversimplification of a very complex issue.

Antidepressants have come a very long way in improving mental health treatment but it is important to remember that they are not magic pills that make you happy as some may think. As we develop our understanding of how the brain works, we can revolutionise the future of antidepressants and make new drugs that help even more people. Many may ask what is the purpose of creating new drugs when we already have many that work, the answer to this is: to help everyone living with this problem to find the relief they deserve. ~

Natural Language Processing (NLP)

How do computers understand us?

Words - Seb Past

An overview of NLP

NLP is a branch of artificial intelligence and computer science that “allows computers to process and respond to written and spoken language in a way that mirrors human ability” (Britannica). The fields of computational linguistics, statistics and machine learning (specifically, ‘deep learning’) are crucial in making NLP models work.

Nowadays, we can see the wide-ranging presence of NLP in everyday life. Apple’s ‘Siri’, Amazon’s ‘Alexa’, customer service chatbots on various websites, and the (in)famous ChatGPT all make use of some NLP model that acts as the bridge between the human on one side and the breadth of (some kind of) knowledge/information/data on the other. However, the usefulness of NLP doesn’t end at communication between e.g. a chatbot and a human. For example, NLP is extensively used in email applications for spam filtering and fraud detection purposes. As a result, its importance can clearly be seen, especially in the modern era when these kinds of phishing attempts are becoming increasingly more common.

Furthermore, one crucial task that NLP is often used for is “sentiment analysis”, in which a computer system is able to detect the tone of a given piece of text. Consequently, NLP can be used for applications such as monitoring brand sentiment and public opinion online regarding various issues.

But how does it all work?

A typical NLP model

It is worth noting that most processes/tasks listed below are possible only through the use of machine learning approaches. This is where a program is trained on a large set of data to recognise certain patterns of that data set using different machine learning algorithms – for instance, the ability to divide a string of words in an audio clip into individual words for analysis. I will not make machine/deep learning a focus point but understanding and awareness of this general idea is all that is required for the purposes of the rest of this article.

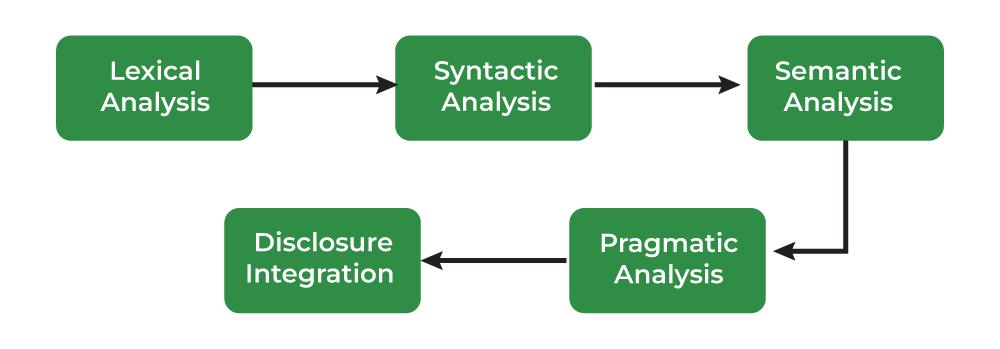

NLP usually consists of some form of the following steps (diagram provided by geeksforgeeks):

Step 1: Lexical (and morphological) analysis

The focus in this step is breaking down text into the smallest units possible for easier analysis and use later.

Text/speech processing

If an audio clip has been provided (e.g. through Siri), speech recognition, the ability to determine the textual representation of a given sound clip of speech, must be performed. Furthermore, speech segmentation is a necessary sub-task of speech recognition that allows for a string of words in a clip to be separated out for analysis.

Once the speech has been processed, or if a chunk of text has been provided, then word segmentation or tokenization must happen – this is the process of dividing a given piece of text into individual words called tokens. Tokenization results in the generation of a word index, in which each token/word is mapped to a numerical value (often in a dictionary format, as shown below). This task also produces tokenized text in which words are represented as numerical tokens (from the above word index) for use in deep learning methods – a specific type of machine learning that utilises artificial neural networks and multiple layers of processing to “extract progressively higher level features from data” (Oxford Dictionary) – for later processes. The next sub-task that must be performed on both text chunks and speech clips is lemmatization. This is when inflectional endings of a word are removed and the base dictionary form – known as a lemma – of a word is returned. For instance, ‘running’ would be converted into ‘run’, and ‘better’ would turn into ‘good’. This is in order to make processing of the text easier for the model.
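The tokenization, word index and lemmatization ideas described here can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the splitting rule and the tiny `LEMMAS` lookup table are simplifying assumptions, whereas real NLP systems rely on much larger dictionaries or trained models.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens (a deliberately simple rule)."""
    return re.findall(r"[a-z']+", text.lower())


def build_word_index(tokens: list[str]) -> dict[str, int]:
    """Map each distinct token to an integer, in order of first appearance."""
    index: dict[str, int] = {}
    for token in tokens:
        if token not in index:
            index[token] = len(index) + 1  # start at 1; 0 is often kept for padding
    return index


# Toy lemma lookup (hypothetical): just the two examples from the text.
LEMMAS = {"running": "run", "better": "good"}


def lemmatize(token: str) -> str:
    """Return the dictionary form of a token, or the token itself if unknown."""
    return LEMMAS.get(token, token)


tokens = tokenize("The cat saw the dog")
word_index = build_word_index(tokens)
encoded = [word_index[t] for t in tokens]

print(word_index)  # {'the': 1, 'cat': 2, 'saw': 3, 'dog': 4}
print(encoded)     # [1, 2, 3, 1, 4]
print([lemmatize(t) for t in ["running", "better", "cat"]])  # ['run', 'good', 'cat']
```

Note how the repeated word 'the' maps to the same number both times: the tokenized text is simply the original sentence rewritten using the word index.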

Another common process involves stopword removal, in which common words without significant meaning are removed to sufficiently ‘clean’ the given text for easier analysis – this often includes words such as ‘a’, ‘the’, ‘and’, etc.
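Stopword removal is one of the simplest steps to sketch. The stopword list below is a tiny illustrative sample, not a standard list; NLP libraries ship much longer ones.

```python
# A tiny, illustrative stopword list (an assumption for this sketch).
STOPWORDS = {"a", "an", "the", "and", "of", "to", "is"}


def remove_stopwords(tokens: list[str]) -> list[str]:
    """Keep only the tokens that are not in the stopword list."""
    return [t for t in tokens if t.lower() not in STOPWORDS]


print(remove_stopwords(["the", "cat", "and", "the", "dog", "sat"]))
# ['cat', 'dog', 'sat']
```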

Morphological analysis

The next sub-task of Step 1 involves dividing individual words up into the smallest possible units that still carry some meaning – also known as morphemes.

Morphological segmentation, in which words are separated out into individual morphemes, is done to make later (machine learning) processes easier.

This task first involves identifying the types of morphemes in a given word: a free morpheme is a part of a word/text that would make sense on its own – for example, the word ‘chair’ is a free morpheme. The other type of morpheme is known as a bound morpheme. This is a part of a text/word that would not make sense on its own and would need to be attached to free morphemes to convey any kind of meaning. For instance, the suffix (and bound morpheme) ‘-ing’ needs to be connected to a free morpheme, ‘cook’, to form a sensical word, ‘cooking’.
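To make the free/bound distinction concrete, here is a minimal sketch of morphological segmentation by suffix stripping. The two small lookup tables are hypothetical and far from complete; as noted earlier, practical systems typically learn this behaviour from data.

```python
# Hypothetical lookup tables for the sketch.
FREE_MORPHEMES = {"cook", "chair", "walk", "play"}
BOUND_SUFFIXES = ["ing", "ed", "s"]


def segment(word: str) -> list[str]:
    """Split a word into a free morpheme plus a bound suffix where possible."""
    if word in FREE_MORPHEMES:
        return [word]
    for suffix in BOUND_SUFFIXES:
        stem = word[: -len(suffix)]
        if word.endswith(suffix) and stem in FREE_MORPHEMES:
            return [stem, "-" + suffix]
    return [word]  # unknown word: leave it unsegmented


print(segment("cooking"))  # ['cook', '-ing']
print(segment("chair"))    # ['chair']
```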

Step 2: Syntactic analysis

The primary aim of this phase is to identify the structure and grammar of sentences in a given piece of text.

Part-of-speech tagging

This crucial sub-task involves identifying a word in a sentence as performing a specific part of speech (POS), which commonly include the likes of verbs, nouns, adjectives, etc.

However, a common difficulty with this stage is the fact that many words can have multiple possible parts of speech that they can serve as.

Take the word ‘fine’ as an example. Someone could say that they are ‘doing fine today’ (i.e. doing well), but you could also describe a piece of thread as ‘fine’ (i.e. thin). To overcome this, aforementioned deep learning programs are trained to identify the surrounding context of words in order to identify the best possible match.

Grammar/syntax checking

In order to make sure that the given text is error-free, a program may compare given sentences against standard grammar rules for a language using the part-of-speech tagging process described above.

This sub-task is especially useful for applications such as machine translation and sentiment analysis (the ability to detect the tone of a piece of text).

Sentence breaking

Locating the boundaries of sentences – often marked by periods or other punctuation marks – within a given chunk of text is particularly useful for Step 4 (Discourse integration) in terms of finding relationships between various sentences (once again, in order to help the program identify the context surrounding a word or sentence).
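A very simple form of sentence breaking can be sketched with a regular expression that splits after sentence-ending punctuation. This rule is an assumption made for illustration: it would wrongly split after abbreviations such as 'e.g.', which is one reason real systems use trained models.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Split after '.', '!' or '?' when the mark is followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


print(split_sentences("Daisy was ready to leave. She picked up her bag and left."))
# ['Daisy was ready to leave.', 'She picked up her bag and left.']
```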

Step 3: Semantic analysis

This stage focuses on deciphering the context of individual words.

Entity identification

This process consists of two smaller sub-tasks: named entity recognition and entity linking – an entity in a piece of writing is the name of a person, place, company, etc.

Named entity recognition (NER) is simply identifying these entities within a text. This may involve having the NLP program search the internet for a matching Wikipedia article, for example. Entity linking is a necessary subtask of the above. Many words can refer to many entities – the word ‘Paris’ in the name ‘Paris Hilton’ could refer to the capital of France or a person’s first name. In these cases, aforementioned deep learning algorithms are used to derive the correct entity from the context of the given sentence.

Relational semantics (semantics of individual sentences)

This process mainly involves a sub-task known as relationship extraction, in which relationships are identified amongst named entities – e.g. who is whose brother/wife/husband/etc.

Word-sense disambiguation (WSD)

Many words can have multiple meanings – this process focuses on determining which meaning fits the given context best, once again, with the help of machine learning programs that have already been trained to do this specific task.

Step 4: Discourse integration

In this phase, relationships between sentences in a text are evaluated to further derive context.

Coreference resolution

Anaphora resolution is the commonly used method for this task. Put simply, it involves matching up pronouns with the nouns or names to which they refer. Consider the sentences, ‘Daisy was ready to leave. She picked up her bag and left’. Using the above process, we would be able to appreciate the fact that ‘she’ and ‘her’ in the second sentence are referring to ‘Daisy’ from the first sentence.
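The Daisy example can be reproduced with a toy rule: link each pronoun to the most recently mentioned name. The name and pronoun tables below are hypothetical, and this is only a sketch of the idea; real anaphora resolution has to handle several candidate names, gender, number and much more.

```python
# Hypothetical lookup tables for the sketch.
KNOWN_NAMES = {"Daisy"}
PRONOUNS = {"she", "her"}


def resolve_pronouns(sentences: list[str]) -> list[tuple[str, str]]:
    """Pair each pronoun with the most recently mentioned known name."""
    last_name = None
    resolved = []
    for sentence in sentences:
        for word in sentence.rstrip(".").split():
            if word in KNOWN_NAMES:
                last_name = word
            elif word.lower() in PRONOUNS and last_name:
                resolved.append((word, last_name))
    return resolved


print(resolve_pronouns(["Daisy was ready to leave.", "She picked up her bag and left."]))
# [('She', 'Daisy'), ('her', 'Daisy')]
```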

Discourse analysis

This process simply determines the types of speech acts in a given piece of text – e.g. whether a sentence is a yes-no question, a statement, an assertion, etc.

Topic segmentation and recognition

In this sub-task we separate a given chunk of text into different sections based on common topics identified in these segments.

Step 5: Pragmatic analysis

Finally, the NLP model shifts the focus to understanding intentions behind word choices – i.e. the inferred meaning of a text rather than what has literally been written.

For example, suppose that someone said, ‘What time do you call this?’

This particular phrase could be interpreted very differently depending on the tone and the context of the situation – it could be an angry teacher questioning/mocking a student for being late (i.e. a serious tone), or it could be a friend jokingly remarking about you being late to a get-together. This stage is crucial for sentiment analysis and, as is especially relevant to the present day, chatbots such as ChatGPT in terms of being able to ‘converse’ realistically with the human on the other end.

Uses of NLP

After looking at all of those technical aspects, it’s time to look at the bigger picture: what is this actually used for?

We have touched upon a few of these already, but some real-world use cases include:

Sentiment analysis (as mentioned above - the ability to detect the tone of a piece of text as sad/angry/serious etc., which would be very useful for e.g. a company trying to quickly get the general feelings of customers in their reviews without having to read them all)

Grammatical error correction (very useful for programs such as Word and email services)

Machine translation (NLP is used in e.g. Google Translate to effectively understand what has been inputted)

Question answering (as in ChatGPT or a customer-service chatbot)

Text-to-image generation

Text-to-scene generation (i.e. creating a 3D model)

Text-to-video generation

Fraud prevention and spam detection in email applications
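To give a flavour of the first use case in the list, sentiment analysis, here is a minimal word-counting sketch. The two word lists are tiny, hypothetical examples; modern systems use trained models, which cope far better with context and sarcasm than this approach can.

```python
# Tiny, hypothetical sentiment word lists for the sketch.
POSITIVE = {"great", "love", "excellent", "good"}
NEGATIVE = {"bad", "terrible", "hate", "slow"}


def sentiment(text: str) -> str:
    """Label text by counting positive and negative words."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the camera is excellent!"))  # positive
print(sentiment("Terrible battery and slow delivery."))          # negative
print(sentiment("It arrived on Tuesday."))                       # neutral
```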

Conclusion

Whilst NLP is truly fascinating, it is not without its challenges, one of the biggest being ambiguity in human language. The varying meanings that words and sentences can have in many different contexts means that the correct interpretation relies on a very accurate and well-trained model, which is quite difficult.

In addition to technical challenges, there are ethical ones, too. Depending on the training data provided to NLP models, biases could be developed and perpetuated, which could lead to unwanted and, in some cases, potentially discriminatory outcomes (e.g. in the hiring process for a company).

Fundamentally, however, what we have so far in terms of NLP technologies is nothing short of extraordinary and only the beginning of what will undoubtedly be an AI-, and, by extension, NLP-dominated next few decades globally. I believe we will see many more ground-breaking technologies and applications realised in the future that will further help in increasing computers’ ability to understand mankind and, thereby, help us accomplish great things. ~

on the aerospace industry

From Navier-Stokes equation to turbulence in planes

Introduction

Most of us will have experienced turbulence at least once in our lives, whether on an aircraft or in a boat. The loosely used term “turbulence” comes from fluid dynamics, the study of how liquids and gases move in response to the external forces exerted upon them. Fluid flow has a myriad of applications, ranging from maximising efficiency in air conditioning units to modelling blood circulation to inform the design of medical devices. Fluid motion can exhibit either laminar flow (a smooth, regular path of gas or liquid) or turbulent flow (regions of fluid moving irregularly with colliding paths). We will start by discussing a simplified version of the Navier-Stokes equation: Euler’s equation.

Words - Mark Tang

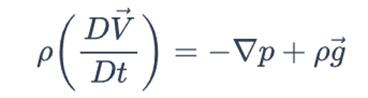

Euler’s equation

This equation is derived from Newton’s second law of motion and describes the motion of inviscid fluids (ideal fluids with zero viscosity). You have probably come across Newton’s second law, which states that the acceleration of an object is directly proportional to the net force and inversely proportional to its mass: F = ma. Euler’s equation describes how pressure, density and gravitational forces cause fluids to accelerate. To understand it better, we need a basic grasp of Bernoulli’s principle, which states that an increase in a fluid’s speed occurs simultaneously with a decrease in its pressure or potential energy. This is important because it helps to generate lift, the force of flight caused by a pressure imbalance that pushes the airplane upwards. To relate this more explicitly to Bernoulli’s principle, the air moving over the curved upper surface of the wing travels faster, leading to a lower pressure than the slower-moving air on the flat underside of the wing. Two conservation laws are crucial for Euler’s equation: conservation of mass and conservation of momentum. Finally, an understanding of fluid flow is useful. Euler’s equation assumes that the fluid is homogeneous and incompressible (meaning mass density is constant) and that the flow is continuous and steady (it does not vary with time). The effect of pressure and velocity can be seen from the left-hand side of the equation, where DV/Dt represents the change in fluid velocity with respect to time. The ΔP term represents pressure variation and is crucial, as fluid naturally moves from a region of higher pressure to one of lower pressure; this affects fluid motion and velocity patterns. The following section provides an insight into the practical applications of Euler’s equation.
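For reference, a commonly quoted form of Euler’s momentum equation for an inviscid fluid is sketched below, together with Bernoulli’s relation along a streamline. The symbols are assumptions chosen to match the description above (ρ for density, V for velocity, P for pressure, g for gravitational acceleration, h for height); the figure in this article may use slightly different notation.

```latex
% Euler's momentum equation (inviscid flow)
\rho \frac{D\mathbf{V}}{Dt} = -\nabla P + \rho\,\mathbf{g}

% Bernoulli's relation (steady, incompressible, inviscid flow along a streamline)
P + \tfrac{1}{2}\rho V^{2} + \rho g h = \text{constant}
```

The second line shows the trade-off described in the text: where the speed V is higher, the pressure P must be lower for the total to stay constant.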

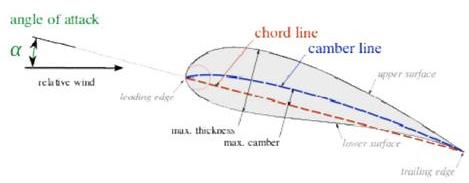

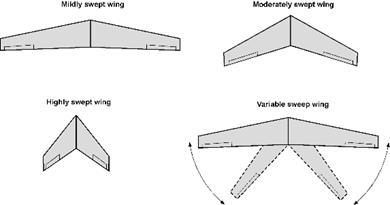

Practical applications of Euler’s Equation

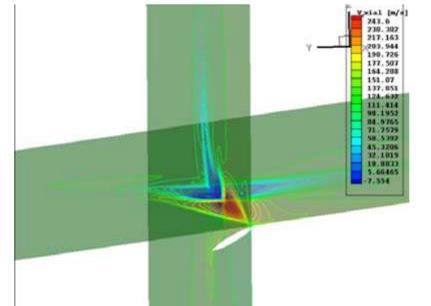

To start with, one application of Euler’s equation is analysing problems in flight dynamics. It can be used to simulate fluid flow over aircraft bodies, improve the aerodynamics of airplanes, optimise fuel consumption and even determine aircraft stability. With the advancement of airplanes, more extreme manoeuvres at higher angles of attack are performed. The angle of attack is the angle at which the relative wind meets an aerofoil. An Euler solver (a computational tool that analyses fluid dynamics using the Euler equations) can be applied to both steady flows (constant flight conditions) and unsteady flows. Specifically, we will be looking at the determination of stability derivatives and flow at high angles of attack in steady flow. Put simply, I will be looking at how aerodynamic forces and moments change when airplanes fly at different angles in constant flight conditions. In highly manoeuvrable aircraft, vortex lift (the method by which highly swept wings produce lift at high angles of attack) allows for rapid turns and faster take-off. The Euler solver is able to predict the positions of the vortex by analysing the flow pattern to determine vortex creation and strength. The stability of these vortex systems can also be analysed. Determining the position of the vortex is crucial in flight dynamics and structural analysis. Axial velocity helps to indicate the stability of a vortex: a symmetrical axial velocity with a clear peak at the vortex centre (as seen by the red section) indicates stability. Therefore, changes in axial velocity impact the lift and stability of the aircraft. This is relevant because flying at high angles of attack in particular can lead to vortices of different strength and position, which affects stability. Euler’s equation requires significantly less computational power than the Navier-Stokes equation and still has good levels of accuracy. It is also important to note that Euler’s equation is better suited to analysing supersonic aerodynamics, where viscosity is negligible.

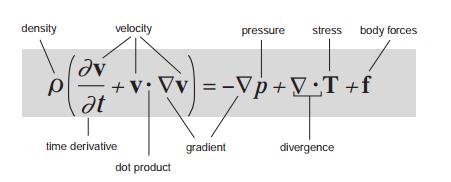

Navier-Stokes equation

Previously, Euler’s equation was described as a way to model fluid dynamics; however, its main limitation is that it excludes viscosity by assuming inviscid fluids. Viscosity is important as it describes the internal friction experienced by a fluid, which influences energy dissipation and transitions between laminar and turbulent flow. The Navier-Stokes equation is similar to Euler’s equation in that the laws of conservation of mass and momentum are both important. Because solving the Navier-Stokes equation directly is often computationally infeasible, the Reynolds-averaged Navier-Stokes (RANS) equation is commonly used. Here, fluid motion is broken down into two parts: the mean flow, which is the overall behaviour of the fluid, and the fluctuations happening within the fluid. Mathematically, this is written using the Reynolds decomposition u = ū + u′, where ū is the mean velocity and u′ is the fluctuating component. Substituting this in and then time-averaging leads to the RANS equation. RANS eliminates the need to calculate the instantaneous flow field; however, it introduces the Reynolds stresses, an additional term which captures turbulence. The Reynolds stress tensor is a consequence of the Reynolds decomposition and averaging process used in deriving the RANS equations. The Reynolds stresses represent how turbulence impacts the overall fluid flow without calculating every specific eddy or swirl in the fluid. Applications of the RANS equation include aeroacoustics, where turbulence modelling can inform how much noise is generated by different aircraft engines.
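For readers who would like to see the mathematics, the Reynolds decomposition and one standard textbook form of the RANS momentum equation are sketched below. This assumes an incompressible fluid with no body forces, and the index notation (i, j), density ρ and viscosity μ are assumptions introduced here rather than taken from the article.

```latex
% Reynolds decomposition: mean plus fluctuation, where the fluctuation averages to zero
u_i = \bar{u}_i + u_i', \qquad \overline{u_i'} = 0

% RANS momentum equation (incompressible, no body forces)
\rho \left( \frac{\partial \bar{u}_i}{\partial t}
          + \bar{u}_j \frac{\partial \bar{u}_i}{\partial x_j} \right)
  = -\frac{\partial \bar{p}}{\partial x_i}
    + \mu \frac{\partial^{2} \bar{u}_i}{\partial x_j \, \partial x_j}
    - \frac{\partial}{\partial x_j} \left( \rho \, \overline{u_i' u_j'} \right)
```

The final term contains the Reynolds stresses: it is the only place where the fluctuations still appear after averaging, which is why it has to be supplied by a turbulence model.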

HYDROGEN CARS

What happened?

Words - Ethan MacPherson

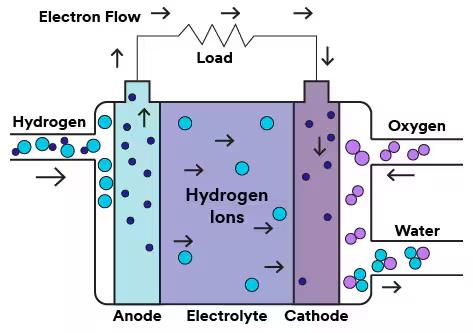

Remember a few years back when everyone was talking about hydrogen cars being the future? Cars like the Hyundai Nexo and Toyota Mirai were all the rage in mainstream media, being praised as alternatives to dirty petrol cars in the same breath as battery-powered cars. But what happened? Why did battery cars succeed while hydrogen cars faded away?

How Do They Work?

Firstly, in order to understand why they failed, we need to understand how they work.

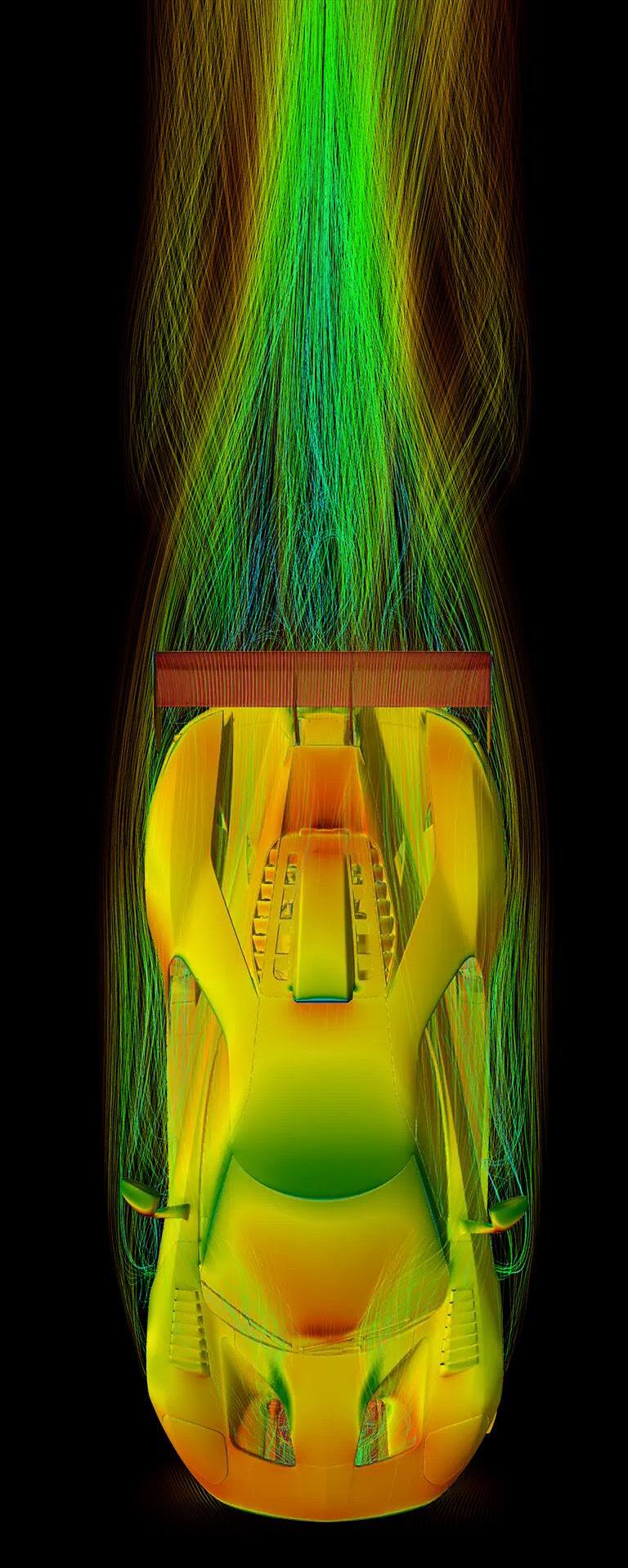

A hydrogen fuel cell turns the energy stored in hydrogen molecules into electricity. It does this by using two electrodes, a negative anode and a positive cathode, that surround an electrolyte. Fuel cells work in the opposite way to electrolysers. In an electrolysis reaction, a compound is broken down into its separate elements by putting in energy, but in a hydrogen fuel cell, energy is released by combining hydrogen and oxygen to make water. Hydrogen, stored when the car is fuelled up, is supplied to the anode, and oxygen is drawn from the air to the cathode. Hydrogen loses electrons at the anode, oxygen gains electrons at the cathode, and the ions move through the electrolyte to form water at one of the electrodes, depending on the type of fuel cell. This is the equation:

2H2 + O2 → 2H2O

The type of fuel cell Toyota uses in the Mirai is the fuel cell we will be looking at – the PEM fuel cell. (See bottom right)

PEM fuel cells (proton-exchange membrane fuel cells) are the main type of fuel cell used in the transport industry. They operate at low temperatures while having high power density and quick start-up times, making them ideal for use in cars. In PEM cells, the hydrogen is oxidised at the anode with the aid of a catalyst, usually platinum on carbon supports that expose it to the reactant, and the electrons lost travel through the external load circuit. The hydrogen ions then move through the electrolyte membrane to form water at the oxygen side, where the oxygen has been reduced with the help of the platinum catalyst and the electrons from the hydrogen. The current flowing through the external circuit provides the energy used to make the car move.
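The two electrode reactions in a PEM cell can be written as half-equations. These are the standard textbook forms rather than something taken from the article’s diagram; adding them together gives the overall equation quoted earlier.

```latex
\text{Anode (oxidation):}   \quad 2\,\mathrm{H_2} \rightarrow 4\,\mathrm{H^+} + 4\,e^-

\text{Cathode (reduction):} \quad \mathrm{O_2} + 4\,\mathrm{H^+} + 4\,e^- \rightarrow 2\,\mathrm{H_2O}

\text{Overall:}             \quad 2\,\mathrm{H_2} + \mathrm{O_2} \rightarrow 2\,\mathrm{H_2O}
```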

So, Why Don’t We Use Them?

Why aren’t hydrogen fuel cells used in cars? Well, there are many reasons. Despite advantages such as short refuel times (the Mirai takes 4 minutes to fill up enough for 400 miles, says Jean-Michel Billig, Stellantis CTO) and longer range, research into these cars has stagnated. There are three main reasons for this:

Infrastructure

Simply put, the infrastructure just isn’t there for hydrogen cars. As of December 2023, there were “16 operational hydrogen fuel stations across the UK” (Pulse Energy), and that number was going down due to low demand. If you can’t refuel a car, you can’t buy it, and nobody wants to make a car that nobody is buying.

Electric vehicles have overcome this issue over time, with 72,594 chargers across the country as of November 2024 (according to Zapmap), but this required heavy investment from the government and companies to jumpstart the infrastructure, which isn’t likely for hydrogen vehicles.

Production cost of hydrogen

Hydrogen production is also energy intensive. It is mainly produced by natural gas reforming, in which high-temperature steam is used to produce hydrogen from methane (steam-methane reforming), and the resulting carbon monoxide is then reacted with more steam over a catalyst to form carbon dioxide and hydrogen (water-gas shift).

Steam-methane reforming:

CH4 + H2O (+ heat) → CO + 3H2

Water-gas shift:

CO + H2O → CO2 + H2 (+ small amount of heat)

This process emits carbon dioxide, which isn’t great for the environment. This counteracts the “greenness” of using hydrogen in order to not emit carbon dioxide whilst driving.
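The two equations above can be used to estimate how much carbon dioxide this route releases. Adding them gives one molecule of CO2 for every four molecules of H2. The short calculation below turns that into kilograms, using approximate molar masses (assumed values: 44.01 g/mol for CO2 and 2.016 g/mol for H2). It is a stoichiometric lower bound only, since it ignores any fuel burned to supply the heat.

```python
M_CO2 = 44.01  # g/mol, approximate molar mass of carbon dioxide
M_H2 = 2.016   # g/mol, approximate molar mass of hydrogen gas


def co2_per_kg_hydrogen() -> float:
    """Kilograms of CO2 released per kilogram of H2, from stoichiometry alone."""
    mol_h2 = 3 + 1  # 3 from steam-methane reforming + 1 from the water-gas shift
    mol_co2 = 1     # 1 from the water-gas shift
    return (mol_co2 * M_CO2) / (mol_h2 * M_H2)


print(round(co2_per_kg_hydrogen(), 2))  # 5.46
```

So even before counting the energy input, every kilogram of hydrogen made this way comes with roughly five and a half kilograms of carbon dioxide.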

Although there is a green way to produce hydrogen (through electrolysis), it’s very energy inefficient and is not widely available.

Storage of the hydrogen is also expensive, as hydrogen needs high pressure tanks as a gas (350-700 bar) or very cold temperatures as a liquid (-253°C) and requires isolation due to its reactivity.

Battery-powered vehicles are better

Unfortunately, battery-powered vehicles are better than fuel-cell vehicles in the personal vehicle industry. Battery technology has just gotten better and better over time, with a more diverse range, better infrastructure, and lower prices, which makes them more attractive to consumers and producers. The development of these vehicles has just snowballed the industry into taking over the mainstream personal vehicle market, due to the economies of scale and regulatory incentives. It’s also far easier to just convert electricity into battery power than to produce, store, and transport hydrogen.

The future for hydrogen powered vehicles doesn’t look too bright overall. Electric battery vehicles took the market by storm, leaving the hydrogen fuel cell technology in the personal vehicle industry in the dust. Hydrogen fuel cell cars haven’t gotten the same type of love as battery powered cars, and now that there is a cleaner alternative to petrol and diesel cars, the development of the hydrogen fuel cell for use in cars has stagnated, and they remain a thing of the past.

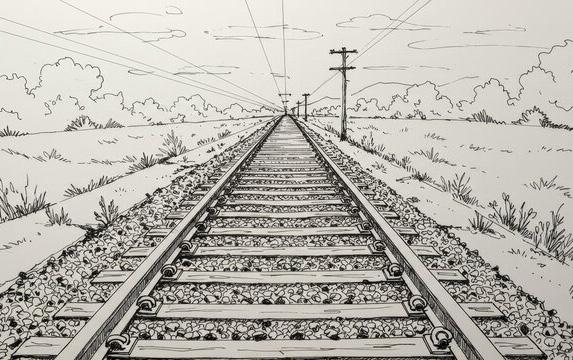

Introduction to Projective Geometry

Words - Forrest Zhu

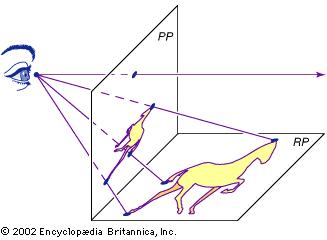

Geometry is a branch of mathematics that deals with the properties of figures in the plane or in space. The most common type of geometry, taught in every school, is known as Euclidean geometry, which is the x-y plane or x-y-z space that we are all very familiar with. It is based on the premise that space is flat, and it includes measurements like length and angle. I am sure that we all remember the equation for the distance between two points, or the gradients of perpendicular lines. But projective geometry is a type of non-Euclidean geometry. It has a completely different view of space, so we can forget everything we learned about geometry and start from the basics again. Imagine looking at a picture. The picture itself is a 2D plane with lengths and angles all distorted from the original view, but we can still immediately recognise the geometrical structure of the 3D space. How is this possible? It must be because there are geometrical properties that remain unchanged through the process. The picture can be seen as a projection of the original view, and projective geometry is the study of properties that are invariant under projections. Artists call it perspective: everything looks small in the distance.

Projective Transformations

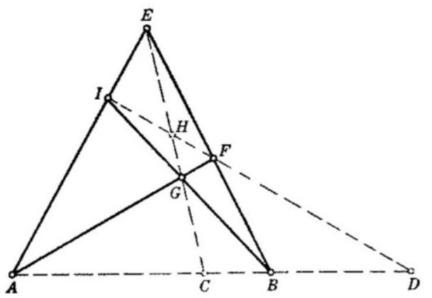

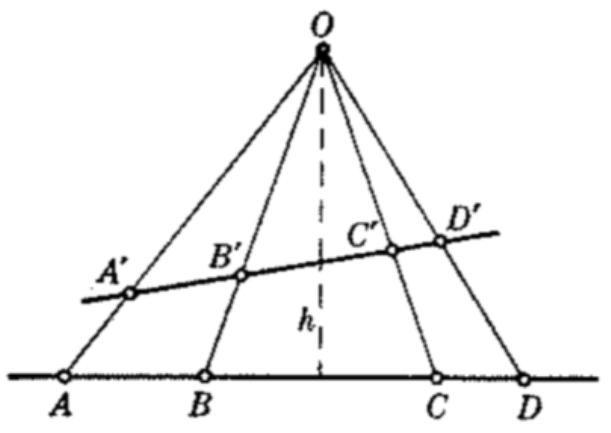

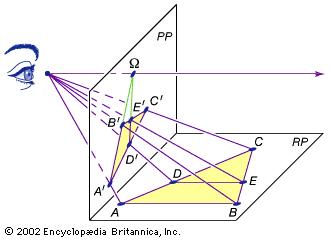

Projective transformations allow us to project points from one plane to another. If there is a point P on plane pi and you want to project it onto plane pi’, choose any point in space, point O, and draw a line through O and P. The intersection of line OP and plane pi’, point P’, is the projection of point P on plane pi’. Similarly, because a line is a collection of points, lines can also be projected. Note that the planes do not need to be parallel to each other, although they are depicted as such in the diagram. This is basically how you take pictures: points in space are projected onto the plane of the camera. Remember from school that parallel lines never intersect each other? Well, here they do. Parallel lines are seen to intersect at a point infinitely far away. So point O can also be infinitely far away, in which case the projection rays are parallel to each other, and that is called a parallel projection.
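The construction above can be sketched in a few lines of Python. This is only an illustration: the function name `project_point`, the description of a plane by a point and a normal vector, and the example coordinates are all assumptions made here, not part of the article.

```python
def project_point(centre, point, plane_point, plane_normal):
    """Project `point` from `centre` onto a plane.

    The plane is described (an assumption of this sketch) by one point
    lying on it and a normal vector. Returns the intersection of the
    line through `centre` and `point` with that plane.
    """
    # Direction of the line from the centre through the point
    direction = [p - c for p, c in zip(point, centre)]
    denom = sum(n * d for n, d in zip(plane_normal, direction))
    if abs(denom) < 1e-12:
        # The line never meets the plane (its image is "at infinity")
        raise ValueError("line is parallel to the target plane")
    # Solve normal . (centre + t * direction - plane_point) = 0 for t
    t = sum(n * (q - c) for n, q, c in zip(plane_normal, plane_point, centre)) / denom
    return tuple(c + t * d for c, d in zip(centre, direction))


# Hypothetical example: centre at the origin, P on the plane z = 1,
# projected onto the parallel plane z = 3.
image = project_point((0, 0, 0), (1, 2, 1), (0, 0, 3), (0, 0, 1))
print(image)
```

With parallel planes, as in this example, the image is simply a scaled copy of the original point; tilting the target plane (changing the normal) distorts lengths and angles instead.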

When the cross ratio of four points is −1, that is, when CA/CB = −DA/DB, points C and D divide the segment AB internally and externally in the same ratio, and C and D are said to divide the segment AB harmonically. For this to happen, point C must lie between A and B and point D outside AB. An important consequence is that if point D is at infinity, point C is the midpoint of AB. A complete quadrilateral can be used to generate points with cross ratio −1. A complete quadrilateral is a figure formed by any four straight lines, no three of which pass through the same point, like the figure here. If we draw all three diagonals, the four points on each diagonal have a cross ratio of −1. To prove this, we simply observe that projecting from point E gives x = (IFHD) = (ABCD), while projecting from point G gives x = (IFHD) = (BACD). So (ABCD) = (BACD). Since (ABCD) = 1/(BACD), we have the equation x = 1/x, so x = ±1. Because point C is between A and B, the cross ratio must be negative, so x = −1. Similarly, x = −1 for the other two diagonals as well.
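A quick numerical check may help. The positions below are made up for illustration: take A, B, C, D on a number line at 0, 6, 4 and 12, and read CA as the directed length from C to A, so CA = A − C. Using the cross ratio x = (CA/CB) / (DA/DB):

$$\frac{CA}{CB} = \frac{0-4}{6-4} = -2, \qquad \frac{DA}{DB} = \frac{0-12}{6-12} = 2, \qquad x = \frac{-2}{2} = -1$$

Here C divides AB internally in the ratio 2:1 and D divides it externally in the same ratio 2:1, which is exactly the harmonic situation described above.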

Cross Ratio

But what is it that does not change under a projective transformation? If you looked closely at the diagram above, you might have noticed that CA/CB = C’A’/C’B’: the ratio CA/CB has stayed constant after the projection. If you did see that, congratulations, you have just discovered the invariance of the cross ratio. The cross ratio is defined as x = (CA/CB) / (DA/DB), where A, B, C, D are points on a line and direction matters; for convenience I will denote it x = (ABCD). It is constant for any four points undergoing a projective transformation. This can be proven simply by using the formula for the area of a triangle. We can find the area of a triangle in two ways: ½ × base × height, or ½ab sin C. So, for triangles OCA, OCB, ODA, ODB we have:
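One way to write the area argument out is sketched below. The symbol h, the perpendicular distance from O to the line through A, B, C and D, is introduced here for convenience, and lengths and angles are taken as directed (signed). Each triangle has the same height h, so equating the two area formulas gives:

$$\tfrac12\,h\cdot CA = \tfrac12\,OC\cdot OA\,\sin\angle COA, \qquad \tfrac12\,h\cdot CB = \tfrac12\,OC\cdot OB\,\sin\angle COB$$

$$\tfrac12\,h\cdot DA = \tfrac12\,OD\cdot OA\,\sin\angle DOA, \qquad \tfrac12\,h\cdot DB = \tfrac12\,OD\cdot OB\,\sin\angle DOB$$

Dividing these in pairs, the lengths h, OA, OB, OC and OD all cancel:

$$x = \frac{CA/CB}{DA/DB} = \frac{\sin\angle COA\,/\,\sin\angle COB}{\sin\angle DOA\,/\,\sin\angle DOB}$$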

We can see that the cross ratio depends only on the angles at O. A projection from O sends each point along the same ray to its image, so those angles are unchanged, and the cross ratio must therefore be constant too. For the earlier case with only three points, since the planes are parallel, we can say that point D is at infinity, so DA/DB = 1 and the cross ratio becomes CA/CB. When you think about it, in a picture all the objects are scaled down by a certain ratio, and that is the idea.
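The invariance can also be checked numerically. The sketch below works in 2D for simplicity: four points on one line are projected from a centre onto a second, non-parallel line, and the cross ratio is computed before and after. The function names and the example coordinates are assumptions made for illustration.

```python
def cross_ratio(a, b, c, d):
    """Cross ratio (ABCD) = (CA/CB) / (DA/DB) for signed positions on one line."""
    return ((a - c) / (b - c)) / ((a - d) / (b - d))


def cross2(u, v):
    """2D cross product u x v (a signed area)."""
    return u[0] * v[1] - u[1] * v[0]


def project_to_line(centre, point, line_point, line_dir):
    """Project `point` from `centre` onto the line line_point + s * line_dir.

    Returns the signed position s of the image along the target line.
    """
    ray = (point[0] - centre[0], point[1] - centre[1])
    offset = (line_point[0] - centre[0], line_point[1] - centre[1])
    denom = cross2(ray, line_dir)
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the target line")
    return cross2(offset, ray) / denom


# Hypothetical example data: A, B, C, D sit on the line y = 1 at these x values
centre = (0.0, 0.0)
xs = (-1.0, 2.0, 0.0, 5.0)
# Target line passes through (0, 3) with direction (1, 1), so it is tilted
images = [project_to_line(centre, (x, 1.0), (0.0, 3.0), (1.0, 1.0)) for x in xs]

before = cross_ratio(*xs)
after = cross_ratio(*images)
print(before, after)
```

The individual ratios such as CA/CB change because the target line is tilted, but the two printed cross ratios agree.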

Beyond

Projective geometry is another way of representing the world, like a more generalised version of Euclidean space. The above are only the basics. There is so much more to projective geometry: Desargues’ Theorem, Pascal’s Theorem, Brianchon’s Theorem, representing conic curves, and hyperboloids. But this margin is too narrow to contain them.

BEHAVIOURISM

Words - Jimi Ikumawoyi

Control is an important theme in the world we live in today, but many of us are not aware of the ways in which every major institution, from the government, to your school, to multibillion-dollar companies, controls us both in our day-to-day lives and on a large scale. When we look into the details of this, the revelations will shock you. In this article we will delve into Behaviourism, a far-reaching concept that disrupted and revolutionised the field of psychology, quickly becoming the dominant school of thought and even a philosophical doctrine. Its applications are already prevalent in today’s society, in areas including technology, education, law enforcement and general control of the masses. So continue reading to find out how and why humans are made to act a certain way without even knowing it.

What is Behaviourism?

First, we need to understand what Behaviourism is. Conceived by founding father John B. Watson, who first set it out in 1913 and expanded on it in his 1924 book Behaviorism, Behaviourism can be simply defined as the theory that human or animal behaviour is based on conditioning, rather than thoughts or feelings (Cambridge Dictionary, 2019). The ‘conditioning’ mentioned here refers to operant and classical conditioning, the two different methods of controlling behaviour. Ivan Pavlov, a Russian physiologist (1849–1936), published work on classical conditioning, in which an organism exposed to a stimulus displays a reflex (say, dogs salivating at the sight of food). Once that stimulus had been associated with a new stimulus (the ringing of a bell before the food was shown), the same reflex could be displayed in response to the new stimulus alone, even after the original stimulus was removed (ringing the bell would still make the dogs salivate, even if no food was brought).

John B. Watson

John B. Watson claimed that the study of the mind, prevalent at the time, was fundamentally impossible, and that only observable behaviour can be studied, and thus controlled by deriving insights from the data. His work on Behaviourism influenced the way psychological experiments are carried out today, the way behavioural therapy is delivered, classroom teaching, and the study of environmental influences on human behaviour. Watson once claimed that if he were given a dozen healthy infants to raise in his own specified environment, he could raise each one to be any type of specialist he might fancy, whether a doctor, a lawyer, or even a thief, although he admitted he was ‘going beyond the facts’ with this claim.

Watson wasn’t a stranger to controversy. The credibility of his theories was criticised by R. Dale Nance (1970) on many grounds, one being that the theories were founded on Watson’s tough upbringing on a farm in South Carolina with no father, which caused a rude awakening to the world and the loss of his childhood. This, the argument goes, influenced his treatment of children as young adults and left him with little understanding of the nature of an unaffected child or the intricacies of child-rearing. One experiment which aroused significant controversy around him was the ‘Little Albert’ experiment, an unethical study which set out to prove classical conditioning. Watson and his assistant Rayner were able to condition a 9-month-old infant (named ‘Little Albert’) to be afraid of a white rat.

Initially, the boy wasn’t afraid, but they began clanging an iron rod whenever they showed him the rat. The noise of the iron rod, a negative stimulus, frightened Albert and made him cry. Over time, the two stimuli (the rat and the noise of the iron rod) became associated with each other, so that when the rat was shown to the infant on its own, he immediately cried. This was widely considered a successful proof of classical conditioning in humans, and has been cemented in scientific history. However, Watson did not have time to decondition the child, as Albert was taken out of town immediately afterwards. This, together with the later discovery that Albert was mentally disabled, raised many ethical questions in the scientific community, causing people to question the integrity of the results and stirring up controversy.

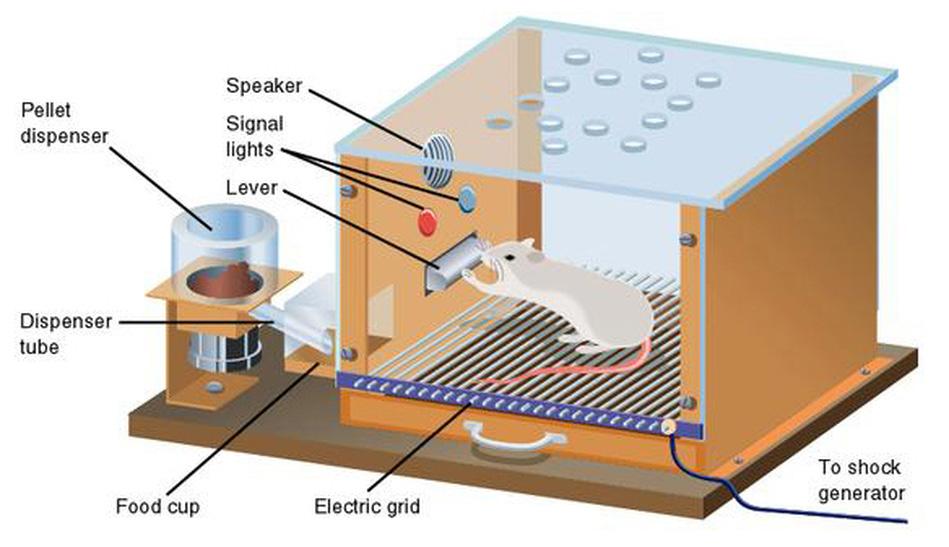

B.F. Skinner

B.F. Skinner, often cited as the most influential psychologist of the 20th century and a pioneer of Behaviourism alongside Pavlov and Watson, developed his own type of behaviourism called ‘Radical Behaviourism’. It made a key distinction between two kinds of behaviour: respondent behaviours, which are displayed in response to certain stimuli, and operant behaviours, which are displayed intentionally as a result of consequences (positive and negative reinforcement). For example, if a button only dispenses food while a sound is playing, and a rat learns to press it only while the sound plays, that is operant conditioning. Skinner carried out most of his work in what he called a ‘Skinner Box’, a box used to record the behaviour of an organism experiencing operant conditioning (see above).

Now that we’ve touched on the basics, how does this apply to us? We begin by looking at technology, specifically social media, the most relevant and perhaps most disturbing use of behaviourism. Social media applications such as Snapchat, TikTok and Instagram all have the same two purposes. The first is to give you a platform to connect with others and communicate with your social circle. The second, however, is to ward off any possibility of you ever leaving the app, and to consume as much of your time as humanly possible.

How Social Media Controls You

How do they do this? The first way is by using a psychological phenomenon called herding. This is when, instead of acting perfectly rationally and solely on the information available to them, individuals act based on the actions of others, often following large groups in making the same decisions because of the innate human desire to be part of a community. An example is buying a trending jacket, rather than the one you liked the look of, in order to fit in with social norms. On social media, this translates into staying on the site for long periods of time simply because your friends are on there and active, so you feel the need to stay caught up due to FOMO, or the Fear Of Missing Out. It also drives traffic to certain types of content: some videos will have many likes and others significantly fewer despite being similar, and the user will tend to like the video with more likes subconsciously, even if they prefer the one with fewer. This is a prime example of herding, and all of the 50 people I interviewed reported that they had experienced this effect before, due to the desire to feel part of a group.

The second way is through a form of physical conditioning. When you scroll, chances are you are sitting and therefore sedentary, so your body begins winding down as you spend time inactive. This has major implications, because even when you get bored of social media, or consider leaving to do something else, chances are that something requires energy, energy you now don’t have because of the amount of time you have spent sedentary and staring at a screen. As a result, you remain on the app, or you leave it but are now so mentally and physically drained that you do absolutely nothing productive, since that would require brainpower or effort.

The third way is by exploiting your nature. Platforms like X are notorious for this, designing algorithms that deliberately promote outrageous content that is overwhelmingly offensive or shocking to you. This elicits a much more passionate response, retaining your attention for longer periods of time and sending you down rabbit holes, arguing with people who you think are wrong or with posts claiming things that simply aren’t true. Such posts are known as ‘ragebait’, made purely to troll you and get a reaction out of you.

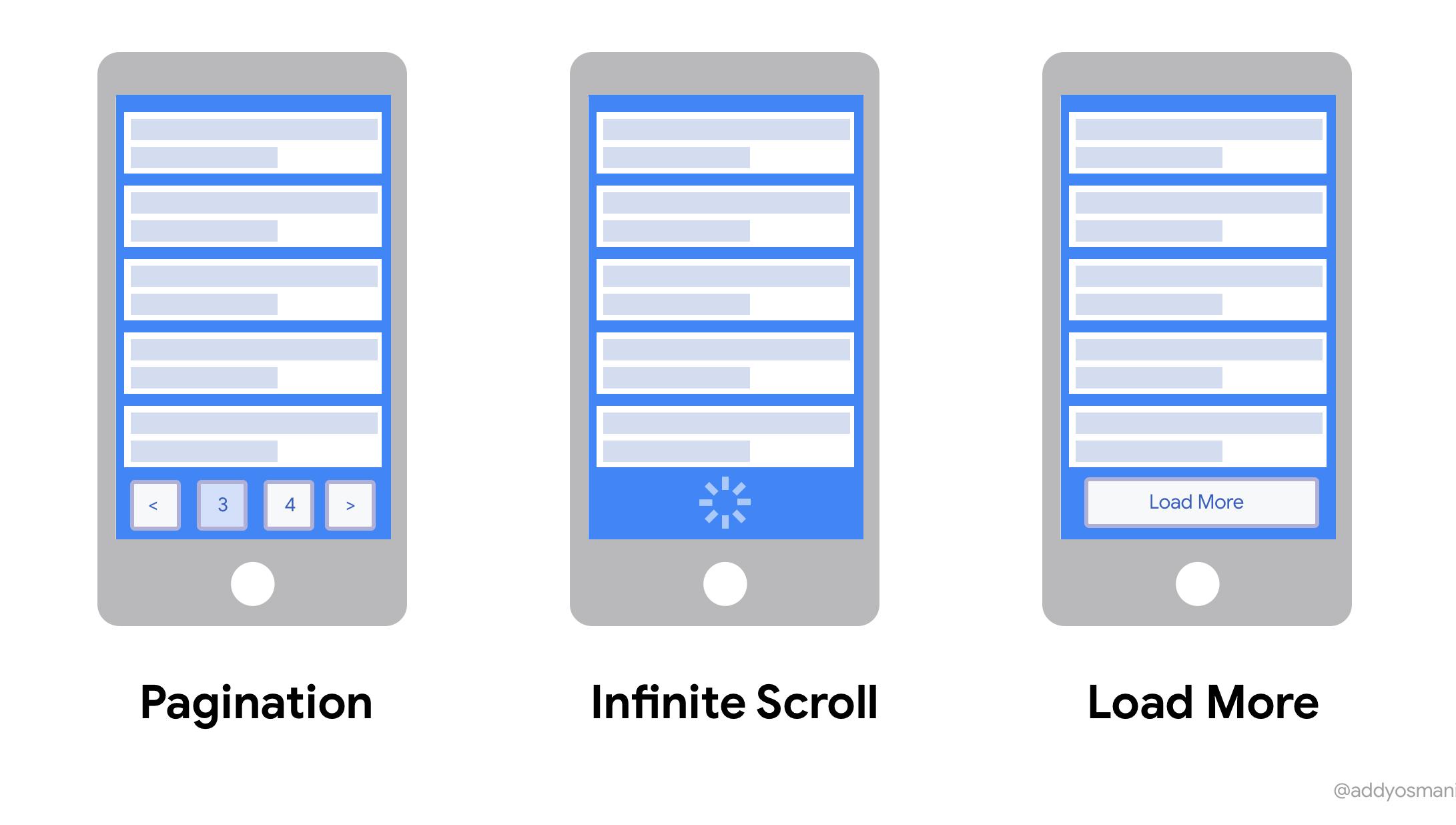

The final way is through the use of scrolling. You will notice that almost all platforms have adopted scrolling as their main feature (think Instagram Reels, TikTok, Snapchat Spotlight). This is by far the most powerful form of psychological manipulation these platforms use. A major way companies keep you present on their apps or websites is through an emphasis on good UX. UX is short for user experience and refers to a design focus on the way a user interacts with an interface. That experience must be seamless, so that at any point the easiest option for the user is to remain on the site or app.

Therefore, when designer Aza Raskin developed the concept of infinite scrolling, it revolutionised the world of technology forever. It works by greatly reducing the effort of staying on social media: rather than clicking on each page and waiting for it to load, which can take a while and becomes tedious with poor Wi-Fi, you can scroll down with the mere swipe of a finger and virtually no effort, and your brain is instantly rewarded with a rush of dopamine, the chemical of desire, as you experience another funny meme. There is no friction whatsoever. However, as you overload your nucleus accumbens (a region of the basal forebrain responsible for converting motivation to action) with large quantities of dopamine, it becomes desensitised over time, requiring larger and more frequent doses of dopamine for the very same reward. You thus develop a short attention span and addictive behaviour towards social media, as you need more frequent and more potent doses to achieve the same proverbial ‘high’.

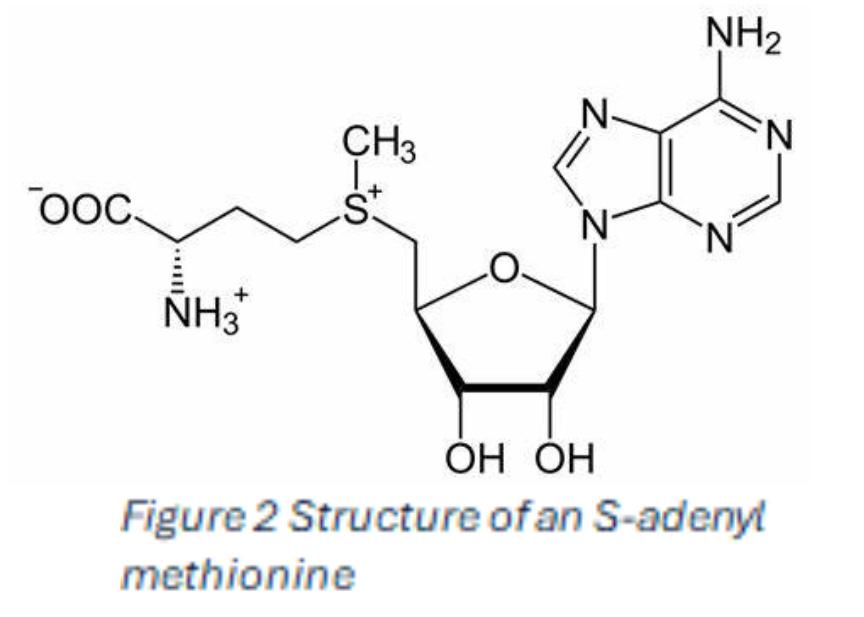

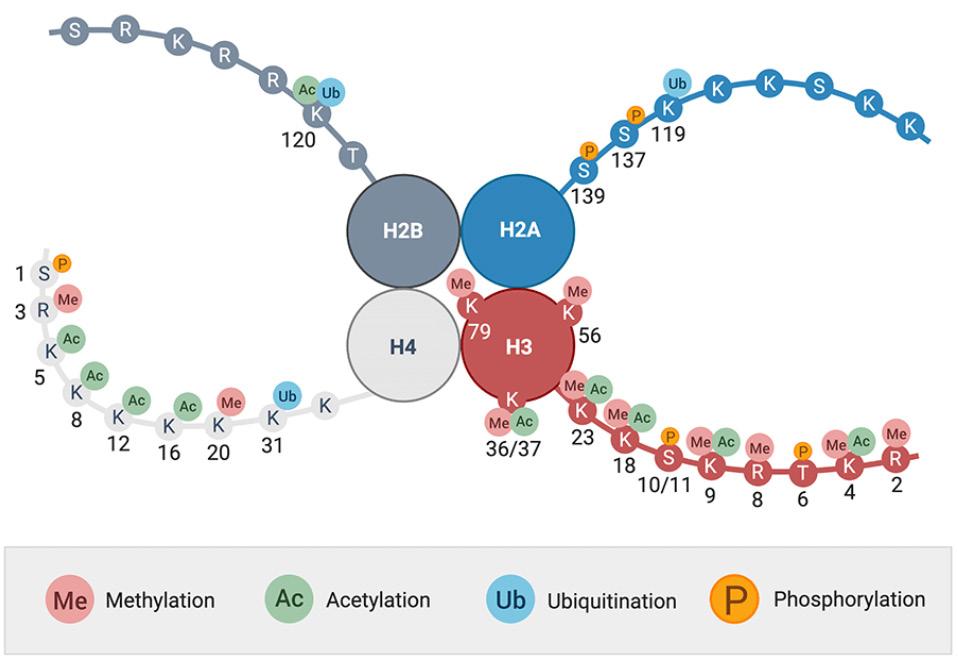

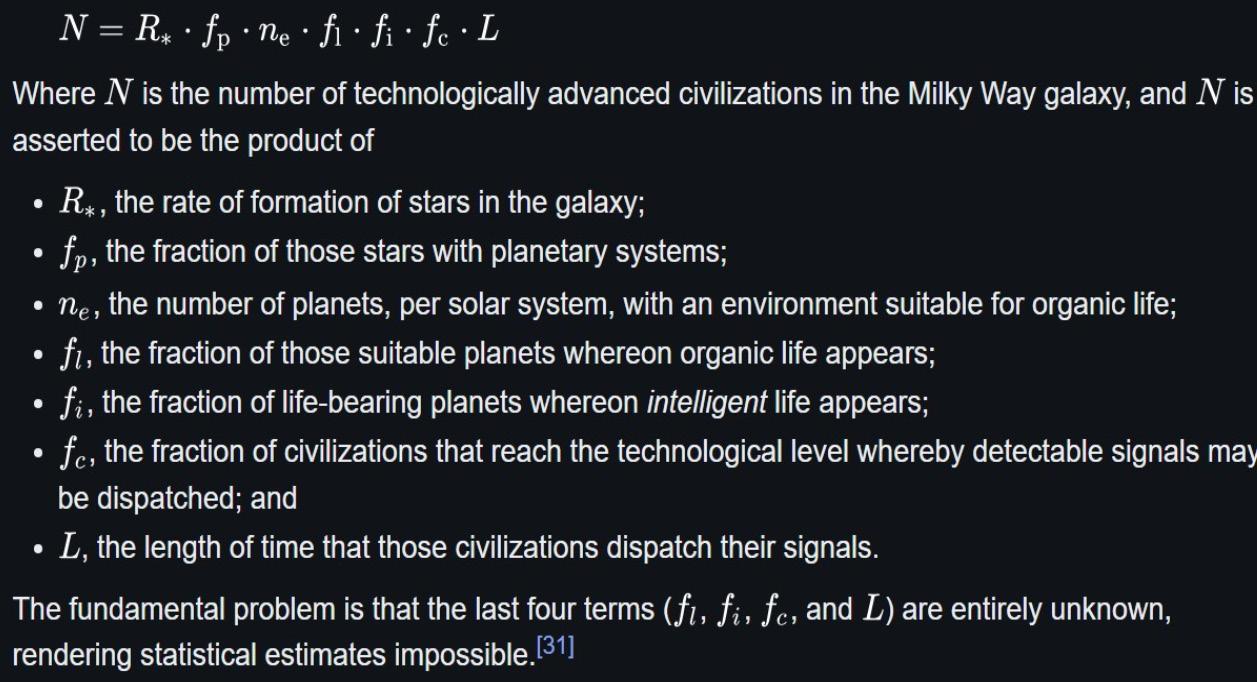

The results of infinite scrolling were so adverse that Raskin co-founded the Center for Humane Technology, a non-profit organisation that focuses on holding leaders accountable for, and addressing, the consequences of technology, alerting people to the