DISSERTATION:

THE SUITABILITY OF INTRODUCING THE CONCEPT OF AGENT-BASED EMERGENCE BEYOND A THEORETICAL FRAMEWORK

Jeremy Paton

3-23-2018

Word Count: 8855

Module: AR602 Dissertation

Supervisor: Tim Ireland


In the last decade emergence has surfaced as a topic of significant attention within the theoretical framework of architectural study. However, the concept of emergence is not novel; it has a multifaceted history and varied denotations. Through a literature review of the historical study of the phenomenon, this paper establishes a definition of the term and traces its origin. It also investigates some of the historical driving forces that have propelled the study of emergence into architectural theory.

To centre the paper within the scope of architecture, the investigation focuses on how emergence-based design has been adopted as a design method within the theoretical framework of architecture, using agent-based emergent systems as a process to produce original design. This is conducted through the qualitative assessment of two case studies which examine the application of an agent-based combinatorial-emergent approach to design.

Through this investigation into emergence, several questions are considered: the role of the architect as the designer, the suitability of emergence within the practice of architecture, and the potential of an emergence-based approach to design to influence the future of the profession.

In the last decade emergence has arisen as a topic of significant attention within the theoretical framework of architectural study. Yet the concept of emergence is not novel – having been studied for well over a century – and it has a multifaceted history and varied denotations (Abbott 2006). This paper first traces the literature on the history of the phenomenon of emergence within the sciences and philosophy, thereby establishing a comprehensive definition of the origin of the term. The purpose of this definition is to establish the classifications and characteristics of emergent systems, and to identify examples of agent-based emergent systems, both natural and artificial.

The driving forces which have led to the investigation of emergence within the theoretical framework of architectural study, and how emergence has been incorporated into the design methodology within this academic field, are then examined. Essential to this investigation is how the introduction of computation affected the study of emergence.

A clear distinction between emergent systems and self-organising systems is then drawn, as this distinction is critical in evaluating a system’s capacity to produce combinatoric novelty (Wolf and Holvoet 2005).

This is followed by a qualitative analysis of two case studies of agent-based combinatorial-emergent software, both of which I developed using Processing (a Java-based programming language) and which demonstrate theoretical applications for such programs in the field of architecture. Both applications are based on flocking systems in which each agent can exhibit individual behaviours.
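The case-study code itself is not reproduced in this section. As a rough illustration only, the following is a minimal flocking sketch in plain Java rather than Processing, loosely after Craig Reynolds’ well-known “boids” rules of separation, alignment and cohesion. The class name `FlockSketch`, the neighbourhood radius, the speed limit and the weight ranges are all hypothetical; giving each agent its own weights is simply one way of letting agents exhibit individual behaviours.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Hypothetical boids-style flocking sketch (not the case-study code).
// Each agent carries its own rule weights, so agents can behave individually.
public class FlockSketch {

    static final double RADIUS = 10.0;    // neighbourhood radius (assumed)
    static final double MAX_SPEED = 2.0;  // speed limit per step (assumed)

    static class Agent {
        double x, y, vx, vy;
        final double sepW, aliW, cohW;    // individual separation / alignment / cohesion weights
        Agent(double x, double y, double vx, double vy, double sepW, double aliW, double cohW) {
            this.x = x; this.y = y; this.vx = vx; this.vy = vy;
            this.sepW = sepW; this.aliW = aliW; this.cohW = cohW;
        }
    }

    static List<Agent> randomFlock(int n, long seed) {
        Random rng = new Random(seed);
        List<Agent> flock = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            flock.add(new Agent(rng.nextDouble() * 20, rng.nextDouble() * 20,
                    rng.nextDouble() * 2 - 1, rng.nextDouble() * 2 - 1,
                    0.5 + rng.nextDouble(), 0.05 + 0.1 * rng.nextDouble(), 0.005 + 0.01 * rng.nextDouble()));
        }
        return flock;
    }

    // One synchronous update: every agent reacts only to neighbours within RADIUS.
    static void step(List<Agent> flock) {
        double[][] next = new double[flock.size()][2];
        for (int i = 0; i < flock.size(); i++) {
            Agent a = flock.get(i);
            double sx = 0, sy = 0, ax = 0, ay = 0, cx = 0, cy = 0;
            int n = 0;
            for (Agent o : flock) {
                double dx = o.x - a.x, dy = o.y - a.y, d = Math.hypot(dx, dy);
                if (o == a || d == 0 || d > RADIUS) continue;
                sx -= dx / (d * d); sy -= dy / (d * d);   // separation: steer away, harder when close
                ax += o.vx; ay += o.vy;                   // alignment: match neighbours' velocity
                cx += o.x; cy += o.y;                     // cohesion: move towards neighbours' centre
                n++;
            }
            double vx = a.vx, vy = a.vy;
            if (n > 0) {
                vx += a.sepW * sx + a.aliW * (ax / n - a.vx) + a.cohW * (cx / n - a.x);
                vy += a.sepW * sy + a.aliW * (ay / n - a.vy) + a.cohW * (cy / n - a.y);
            }
            double speed = Math.hypot(vx, vy);
            if (speed > MAX_SPEED) { vx *= MAX_SPEED / speed; vy *= MAX_SPEED / speed; }
            next[i][0] = vx; next[i][1] = vy;
        }
        for (int i = 0; i < flock.size(); i++) {
            Agent a = flock.get(i);
            a.vx = next[i][0]; a.vy = next[i][1];
            a.x += a.vx; a.y += a.vy;
        }
    }

    // Global order measure: 1.0 when all headings agree, near 0 when they cancel out.
    static double alignment(List<Agent> flock) {
        double ux = 0, uy = 0;
        for (Agent a : flock) {
            double s = Math.hypot(a.vx, a.vy);
            if (s > 0) { ux += a.vx / s; uy += a.vy / s; }
        }
        return Math.hypot(ux, uy) / flock.size();
    }

    public static void main(String[] args) {
        List<Agent> flock = randomFlock(30, 1L);
        System.out.printf("alignment before: %.2f%n", alignment(flock));
        for (int t = 0; t < 200; t++) step(flock);
        System.out.printf("alignment after:  %.2f%n", alignment(flock));
    }
}
```

Nothing in `step()` refers to the flock as a whole: each agent reads only its neighbours, and `alignment()` merely measures from outside whatever collective heading arises.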

Through a qualitative assessment of the potential of agent-based emergent design to amplify human creativity within the scope of architecture, and to produce autonomous artificial systems that generate novel combinations, several questions arise: the role of the architect as the designer, the applicability of emergence within the practice of architecture, and the effect that adopting an emergence-based approach to design may have on the future of the profession. These are addressed in the form of a speculative discussion.

A general philosophical description of emergence is often presented as the phenomenon whereby the interactions among simple delocalised entities result in the creation of larger, more complex entities or behaviours. These resulting properties, however, exhibit characteristics which their originating entities do not. Emergence can therefore be described as the appearance of novel entities which could not have been predetermined from what came before. In his essay Emergence and Creativity, Peter Cariani (2008) directly relates an emergent system to an “autopoiesis[1] of neuronal signals”.

An example of emergence can be found in ant colonies. A colony is able to construct complex ventilated structures, cultivate fungus farms, tend aphid ‘cattle’ and defend itself from threats, yet the individual ants which comprise the colony have limited intelligence and cannot achieve these activities individually. How does such collective complexity and intelligence emerge from simple, non-intelligent elements? In the case of a colony, order is established out of chaos by each individual following a simple rule set. Ant colonies often distribute jobs such as gathering, building, defending and caretaking, yet no individual element within the colony orchestrates this distribution (see figures 1 and 2). Rather, each ant emits pheromones which neighbouring ants use to identify that ant’s present job within the colony. If the colony is attacked and loses gatherers, the remaining ants will encounter fewer ants emitting ‘gather’ pheromones. When an individual ant has not encountered a critical number of gatherers, it may switch to gathering, re-establishing a balance of jobs, and thus order, within the colony. In this example, the interactions of each ant are relatively random and there is no central command dictating which ants a single ant will encounter, yet by following a basic decentralised rule set, the characteristics of a colony emerge out of the chaos of the individuals.
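The job-switching rule described above can be sketched as a small simulation. This is a hypothetical model in plain Java, not a description of real ant biology: the number of encounters per step, the threshold and the switching probability are invented parameters, and pheromone detection is reduced to an ant learning the job of each nestmate it meets. As a further assumption, the rule stated for gatherers is applied to every job, so an ant may switch to whichever job it has encountered too rarely.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Hypothetical model of decentralised job allocation in an ant colony.
// Jobs: 0 = gather, 1 = build, 2 = defend, 3 = care. All parameters are assumptions.
public class ColonySketch {

    static final int JOBS = 4;
    static final int ENCOUNTERS = 10;    // nestmates met per step
    static final int THRESHOLD = 1;      // "critical number" of encounters with a job
    static final double SWITCH_P = 0.1;  // chance of acting on a shortfall

    final List<Integer> ants = new ArrayList<>();  // each entry is one ant's current job
    final Random rng;

    ColonySketch(int antsPerJob, long seed) {
        rng = new Random(seed);
        for (int job = 0; job < JOBS; job++)
            for (int i = 0; i < antsPerJob; i++) ants.add(job);
    }

    // Observer-only census; the ants themselves never see this number.
    int count(int job) {
        int n = 0;
        for (int a : ants) if (a == job) n++;
        return n;
    }

    // An attack removes up to 'losses' ants currently doing 'job'.
    void attack(int job, int losses) {
        for (int i = ants.size() - 1; i >= 0 && losses > 0; i--) {
            if (ants.get(i) == job) { ants.remove(i); losses--; }
        }
    }

    // One step: each ant meets random nestmates and may switch to the job it met least.
    void step() {
        List<Integer> next = new ArrayList<>(ants);
        for (int i = 0; i < ants.size(); i++) {
            int[] met = new int[JOBS];
            for (int e = 0; e < ENCOUNTERS; e++) {
                int j = rng.nextInt(ants.size() - 1);
                if (j >= i) j++;                      // never "meet" yourself
                met[ants.get(j)]++;
            }
            int scarce = 0;
            for (int job = 1; job < JOBS; job++) if (met[job] < met[scarce]) scarce = job;
            if (met[scarce] < THRESHOLD && scarce != ants.get(i) && rng.nextDouble() < SWITCH_P) {
                next.set(i, scarce);
            }
        }
        ants.clear();
        ants.addAll(next);
    }

    public static void main(String[] args) {
        ColonySketch colony = new ColonySketch(50, 3L);
        colony.attack(0, 40);
        System.out.println("gatherers after attack: " + colony.count(0));
        for (int s = 0; s < 30; s++) colony.step();
        System.out.println("gatherers 30 steps later: " + colony.count(0));
    }
}
```

The `count()` method is used only by the observer; no ant ever reads colony-wide totals, so any recovery in the number of gatherers arises solely from local encounters.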

Another, more elemental, example of emergence is organic life. Living entities are an emergent property of the interactions between lifeless atoms, which collectively form molecules that support organic protein structures which, in turn, comprise the elements of a cell. A structure with unique characteristics emerges as a result of the complex interactions of billions of atoms.

Other examples of emergence include the murmuration movements of a flock of starlings or nations which emerge from the collective interactions of people in geographic areas.

An important distinction to establish at the outset is that between ‘resultant’ and ‘emergent’. In 1875, more than a century ago, the English philosopher G. H. Lewes made such a distinction with regard to compounds formed during chemical reactions. Lewes (cited in Wolf and Holvoet 2005, p.2) states:

…although each effect is the resultant of its components, we cannot always trace the steps of the process, so as to see in the product the mode of operation of each factor. In the latter case, I propose to call the effect an emergent… (italics added).

Therefore, by tracing only the underlying components of a system and their local properties, an observer cannot arrive at a pre-determined notion of the outcome without engaging in the process itself.

Philip Anderson’s landmark article More is Different, published in 1972, argues that the theory of emergence is useful in countering what he describes as the constructionist hypothesis, namely that the “ability to reduce everything to simple fundamental laws… [implies] the ability to start [from] those laws and reconstruct the universe” (Abbott 2006, p. 14). He proceeds to state that “The whole becomes not only more than but very different from the sum of its parts.”

This notion of the ‘whole before its parts’ and of ‘Gestalt’ – a system comprised of individual elements arranged in such a unified manner that it reads as a whole, and which cannot be interpreted merely as a sum of these individual elements – can be traced within Western philosophy as far back as the ancient Greeks (Wolf and Holvoet 2005). Yet both the ‘whole before its parts’ and ‘Gestalt’ presuppose an existing entity; emergence, by comparison, is not pre-determined but is a dynamical system[2] which unfolds over time.

1 A self-referential system in which all the elements are interlinked, so that an outside influence affecting a single element propagates change or a response throughout all elements within the system. An autopoietic system is also considered to be capable of reproducing and maintaining itself. The term was introduced in 1972 by the Chilean biologists Humberto Maturana and Francisco Varela. An example of autopoiesis is the self-maintaining chemistry of living cells.

Some fifty years before Anderson’s article, Lewes’ terminology of emergence was adopted during the 1920s by a quasi-movement in the sciences, philosophy and theology referred to as emergent evolutionism or proto-emergentism. During this period the contested definition of emergence was predominantly used to counter reductionism, a philosophical construct similar to Anderson’s constructionist hypothesis. Whilst emergent evolutionism struggled to explain its processes, a second movement, referred to as neo-emergence or complexity theory, attempted to address this lack of understanding (Wolf and Holvoet 2005). Complexity theory is an interdisciplinary theory, predominantly studied in the sciences and mathematics, which developed from systems theory in the 1960s and comprises the study of complex systems (Grobman 2005). The concept of emergence was useful within complexity theory for understanding uncertainty and non-linearity, and the study of emergence within complex systems also assisted advances in cybernetics, evolutionary biology and artificial intelligence. This study was influenced by four central schools of research, outlined by Wolf and Holvoet (2005):

1. Complex adaptive systems theory: defined emergence as the patterns perceptible at the macro-scale that are produced by the interactions of agents at the micro-scale.

2. Nonlinear dynamical systems theory and chaos theory: established the model of attractors – a state or pattern of behaviour towards which a complex system evolves[3].

3. The synergetics school: the study of emergence within physical systems.

4. Far-from-equilibrium thermodynamics: a branch of thermodynamics, introduced by the chemist Ilya Prigogine, which embraces the concepts of discontinuity and states of flux. It refers to emergent phenomena as dissipative structures, which maintain their order by continuously dissipating energy, and so exporting entropy, to their surroundings, and which require far-from-equilibrium conditions to occur.
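The attractors of item 2, and in particular the strange attractor mentioned in footnote 3, can be made concrete with the Lorenz system, a standard textbook example of a strange attractor that is not drawn from the sources cited here. The sketch integrates it with a simple forward-Euler step; the step size and the two starting points are assumptions chosen for illustration. Two trajectories that begin almost identically should soon diverge (local instability), yet both remain confined to the same bounded region (global stability).

```java
// Hypothetical illustration of a strange attractor: the Lorenz system,
// integrated with a simple forward-Euler step (classic parameter values).
public class LorenzSketch {

    static final double SIGMA = 10.0, RHO = 28.0, BETA = 8.0 / 3.0;
    static final double DT = 0.005;  // integration step (assumed)

    // Advances the state {x, y, z} in place by 'steps' Euler steps.
    static void run(double[] s, int steps) {
        for (int i = 0; i < steps; i++) {
            double dx = SIGMA * (s[1] - s[0]);
            double dy = s[0] * (RHO - s[2]) - s[1];
            double dz = s[0] * s[1] - BETA * s[2];
            s[0] += DT * dx;
            s[1] += DT * dy;
            s[2] += DT * dz;
        }
    }

    static double distance(double[] a, double[] b) {
        return Math.sqrt(Math.pow(a[0] - b[0], 2) + Math.pow(a[1] - b[1], 2) + Math.pow(a[2] - b[2], 2));
    }

    public static void main(String[] args) {
        double[] a = {1.0, 1.0, 1.0};
        double[] b = {1.0 + 1e-9, 1.0, 1.0};   // differs by one part in a billion
        for (int k = 0; k <= 10; k++) {
            System.out.printf("t = %4.1f   separation = %.3e   a = (%6.2f, %6.2f, %6.2f)%n",
                    k * 5.0, distance(a, b), a[0], a[1], a[2]);
            run(a, 1000);   // 1000 steps of 0.005 = 5 time units
            run(b, 1000);
        }
    }
}
```

The separation should grow roughly exponentially until it is comparable to the size of the attractor itself, while the coordinates stay within a bounded region: locally unstable yet globally stable.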

A more complete study of emergence is beyond the scope of this paper. However, the multi-disciplinary nature of its study helps to explain complex behaviours ranging from biological systems to weather patterns and crowd dynamics. At this point it is possible to consider some of the driving forces which led to the investigation of emergence within the field of architectural theory. Since Anderson viewed his departure from the prevailing reductionist theory as radical, he thought it necessary to reaffirm his adherence to reductionism, stating:

[The] workings of all the animate and inanimate matter of which we have any detailed knowledge are all … controlled by the same set of fundamental laws [of physics].… [W]e must all start with reductionism, which I fully accept. (Abbott 2006, p.15)

The reductionist program, within the field of physics, is the search for the common source of all explanations. While reductionism does not preclude the presence of what we term emergent phenomena, it does require that those phenomena can be understood entirely in terms of the processes from which they are composed. Reductionist understanding therefore differs substantially from emergentism, which holds that what emerges in a complex system is greater than, and fundamentally different from, the sum of the processes from which it originates (Cohen and Axelrod 2014).

2 A system in which a procedure describes the time dependence of a point in its ambient space.

3 The philosopher of science David Newman identifies one such attractor, the so-called strange attractor, as a genuine emergent phenomenon (Newman 1996). A strange attractor is characterised as locally unstable yet globally stable (Grebogi, Ott, and Yorke 1987).

Figure 3: Leonardo da Vinci, Vitruvian Man. Pen, ink, watercolour and metal point on paper, 343 × 245 mm. Gallerie dell’Accademia, Venice.

Reductionism has parallels with the theories of Logical Positivism – a philosophical system which only recognises that which can be scientifically verified, or which is capable of logical or mathematical proof. A shift away from an architecture founded on principles of embodied experience, such as the concept of Umwelt (used to describe an organism’s perception of the world) or the relations of proportion derived from the Vitruvian Man, can be identified as early as the seventeenth century, in the wake of the Renaissance (see figure 3). The Renaissance and the century that followed were a period of significant scientific discovery, and architects of the time were adopting new theories and technologies. One example can be found in the provocative treatise Ordonnance des cinq espèces de colonnes selon la méthode des anciens (1683), published by the architect Claude Perrault. Perrault’s treatise marked a departure from the established body metaphors, which governed many of the principles of architecture, towards a “scientific” rationale. Perrault investigated ancient and Renaissance architecture in order to verify or disprove the Vitruvian principles governing proportion, and he discovered inconsistencies in the use of these principles and little correlation with the proportions prescribed by the Vitruvian Man. He concluded that these rules of proportion generated an “arbitrary beauty” established on “fancy” and cultural “taste” rather than on scientifically verifiable truth.
Perrault also rejected established methods of optical correction, including entasis,[4] because they privileged fallible human perception above verifiable mathematics (Hight 2008). In his book Architectural Principles in the Age of Cybernetics, Christopher Hight refers to Architecture and the Crisis of Modern Science by the architectural historian Alberto Pérez-Gómez, who also collaborated on the English translation of Perrault’s Ordonnance. In that work, Pérez-Gómez proposes that Perrault’s Ordonnance was a turning point in architectural history. Hight (2008, p.23) quotes Pérez-Gómez’s description of it as:

…a monumentally tragic marker of when “architectural proportion lost, for the first time, in an explicit way, its character as a transcendental link between microcosm and macrocosm.”

Perrault’s work influenced architectural theory by reducing the universe to a set of verifiable and pre-determined outcomes based on the absolute laws governing it, such as gravity. The new architectural theories rejected established frameworks, such as the metaphorical and the metaphysical, along with any erroneous outcome, in favour of rationalism and ideal formalism (Hight 2008). Emerging architectural theories were therefore to be rooted in the mathematical rigour which the Renaissance had established. Later, Logical Positivism would replace man and God as the explanation of the known universe, with science as the new order of the universe. As a result, science, empirical evidence and Logical Positivism would heavily influence architecture. Pérez-Gómez postulated that Perrault’s text has continued to influence architecture through to modernity, regardless of its empirical accuracy (Hight 2008).

The influence of positivism on the Bauhaus can be traced back, to before the school’s inception in 1919, to the writings and teachings of the architect Jean-Nicolas-Louis Durand. Durand wrote extensively on the topic of functionalism, and Pérez-Gómez suggests that his influence gave rise to an architectural form condensed to basic symbols of technological, utilitarian value (Hight 2008). Logical Positivism[5] was formulated by the Vienna Circle, a group formed in the 1920s, after the publication of Principia Mathematica, and composed primarily of outsiders to philosophy, including mathematicians, physicists and sociologists. The publication of Principia Mathematica by Alfred North Whitehead and Bertrand Russell, beginning in 1910, provided a precedent for many early twentieth-century scientific and mathematical theories. At this time, scientists commonly considered nature to be a predictable entity owing to its adherence to numerous sets of laws and equations. They assumed that, with sufficient knowledge and enough data, the future state of the universe could be predicted, as the universe could be reduced to a set of physical laws (Pham 2011). It was not until advances in fields such as far-from-equilibrium thermodynamics that this perception of nature shifted (Galison 1990).

4 A method in architecture which applies a convex curve to a surface for aesthetic purposes. It is commonly used in certain orders of Classical columns in an attempt to correct the optical illusion that a straight column curves inwards, which gives rise to a sense of weakness.

The aim of the Vienna Circle was to establish principles of logic and science. The group’s ideas were introduced at the Bauhaus in 1929 through the lectures of Rudolf Carnap, a leading member of the Vienna Circle. The Wittgenstein Haus, completed in 1928, also influenced the school’s curriculum, with both ignoring notions of metaphysics[6] and decoration in favour of a perceived contemporary scientism uniting all aspects of life. Galison (1990, p.710) states:

Both enterprises sought to instantiate a modernism emphasizing what I will call "transparent construction," a manifest building up from simple elements to all higher forms that would, by virtue of the systematic constructional program itself, guarantee the exclusion of the decorative, mystical, or metaphysical.

The promotion of Logical Positivism encouraged rationalism and steered towards a universal truth system such as the Principia Mathematica. The Bauhaus became a research centre for the application of Logical Positivism in design and, along with Durand’s functionalism, profoundly influenced a generation of architects and subsequent architectural styles such as the International Style (Ireland and Zaroukas 2015). Even after the school’s closure under the Nazi regime and the establishment of a New Bauhaus in Chicago, the new school imported the Vienna Circle’s Logical Positivism as a core component of its design program (Hight 2008). The influence of Logical Positivism on the Bauhaus emphasised an approach to design which favoured logical rule-based procedures such as “if/then”, “or” and “and”. The approach required empirical knowledge, isolating it from uncertainty and from elements of chance or flux. Galison (1990, p.711) describes how:

…the logical positivists sought to ground a "scientific," anti-philosophical philosophy that would set all reliable knowledge on strong foundations and isolate it from the unreliable

5 Logical Positivism is a theory within Epistemology that developed out of Positivism and advocated for a systematic reduction of all human knowledge to logical and scientific foundations. Therefore, a statement is meaningful only if it is either purely formal (essentially, mathematics and logic) or solvable through empirical verification.

6 A branch of philosophy which studies the first principles of abstract concepts such as being, knowing, identity, time, and space.

With a focus on rule-based logical systems, the school’s curriculum promoted a new anti-aesthetic that eliminated decoration in favour of design centred on functionality. This was achieved by using scientific methodologies to combine simple geometric forms with primary colours (Galison 1990). The resulting architecture became a product of a technological world view (Hight 2008). It can be argued that Logical Positivism and the influence of the Bauhaus on architecture constituted a form of reductionist theory, as both required a universal truth system in which the whole, or future state, could be reduced to the sum of its parts. Hight (2008, p.25) argues that:

[i]f ornament was seen as meaningless supplement, it became appropriated by the most decadent formalisms of the Beaux-Arts. In response, early modernism rejected “form” and “style” in favour of what appeared to be more objective criteria. The literal construction, its function and its structure, were thought to lead to a more rational, objective ground free from subjective license.

The influence of the Bauhaus, Durand, the Vienna Circle and Logical Positivism resulted in a modernist architecture which followed formalist and rationalist principles. These principles were rooted in the certainty and computability which science provided, and central to them was the notion of an axiomatic system such as the Principia Mathematica. Solvability, or computability, has been regarded as an inherent characteristic of the known universe; examples include the biological and learned algorithms which guide human and animal behaviour, the natural laws whose computability enables human life, and natural quantifiable constants such as π and e (Cooper 2012). Principia Mathematica aided the mathematician David Hilbert in his attempt to develop a mathematical program which would ensure mathematics’ resistance to paradox and inconsistency. Hilbert’s program (Zach 2005) included:

• Completeness: every mathematical statement can either be proved or disproved.

• Consistency: no contradiction can arise within the formalism of mathematics, so a statement cannot be both proved and disproved.

• Conservation: any result about ‘real objects’ obtained using reasoning about ‘ideal objects’ (such as an uncountable set) must be provable without using ‘ideal objects’.

• Decidability: there must be an algorithm for deciding the truth or falsity of any mathematical statement.

This adherence to any axiomatic system which attempted to be a universal truth system was, however, questioned as early as the formation of the Vienna Circle itself. The Austrian-born mathematician and philosopher Kurt Friedrich Gödel published two incompleteness theorems in 1931. Gödel questioned the Principia Mathematica, along with any such universal truth system, and proved definitively that no consistent axiomatic system capable of expressing basic arithmetic can also be complete (Ireland and Zaroukas 2015). Gödel’s incompleteness theorems set in motion a cataclysmic shift within the sciences, as they undermined both notions of predetermination within complex systems and reductionist principles.

Gödel’s theorems were followed shortly after by Alan Turing’s idea of a Universal Turing Machine, which he published in 1937 as part of his proof that Hilbert’s decision problem (the Entscheidungsproblem) cannot be solved. A Universal Turing Machine is a device with the capacity to simulate any other computing machine, given a suitable input. At the time it was a purely theoretical construct, consisting of a ‘description’ of the machine to be simulated along with an input, both stored on tape. Of key importance, Turing’s work demonstrated the existence of undecidable problems: decision problems for which it is provably impossible to build a single algorithm that always leads to a correct yes-or-no answer, the best-known example being the halting problem. This is of key importance to both natural and artificial systems, as it implies that there are problems which cannot be solved in advance and which require a guess or choice to be made. On the basis of this undecidability, Turing concluded that any substantial mathematical theory was undecidable, as it contained an incomputable set of theorems (Cooper 2012).
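The idea of a machine ‘description’ plus an input can be sketched as follows. This is a hypothetical Java interpreter, not a Universal Turing Machine in the strict sense (which would itself be a Turing machine), and the rule format, the state names `start` and `halt`, and the two example machines are illustrative assumptions. One interpreter runs any transition table it is given. The `maxSteps` bound reflects the undecidability described above: when `run()` gives up, it cannot say whether the machine would eventually have halted.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical interpreter in the spirit of a universal machine: the machine
// "description" (a transition table) is data, and one interpreter runs any of them.
public class TuringSketch {

    // In a given state, reading a symbol: write 'write', move head by 'move', enter 'next'.
    static final class Rule {
        final char write; final int move; final String next;
        Rule(char write, int move, String next) { this.write = write; this.move = move; this.next = next; }
    }

    // Runs a description on an input tape ('_' is blank), starting in state "start".
    // Returns the final tape if the machine reaches "halt" within maxSteps, else null.
    // null only means "not halted yet": no general procedure can decide halting.
    static String run(Map<String, Rule> rules, String input, int head, int maxSteps) {
        Map<Integer, Character> tape = new HashMap<>();
        for (int i = 0; i < input.length(); i++) tape.put(i, input.charAt(i));
        String state = "start";
        for (int step = 0; step < maxSteps && !state.equals("halt"); step++) {
            char symbol = tape.getOrDefault(head, '_');
            Rule r = rules.get(state + "," + symbol);
            if (r == null) throw new IllegalStateException("no rule for " + state + "," + symbol);
            tape.put(head, r.write);
            head += r.move;
            state = r.next;
        }
        return state.equals("halt") ? render(tape) : null;
    }

    static String render(Map<Integer, Character> tape) {
        if (tape.isEmpty()) return "";
        int lo = Collections.min(tape.keySet()), hi = Collections.max(tape.keySet());
        StringBuilder sb = new StringBuilder();
        for (int i = lo; i <= hi; i++) sb.append(tape.getOrDefault(i, '_'));
        return sb.toString().replaceAll("^_+|_+$", "");  // trim blanks at both ends
    }

    // Example description: add 1 to a binary number, head starting on the last digit.
    static Map<String, Rule> incrementer() {
        Map<String, Rule> m = new HashMap<>();
        m.put("start,1", new Rule('0', -1, "start"));  // 1 + carry = 0, carry moves left
        m.put("start,0", new Rule('1', -1, "halt"));   // absorb the carry
        m.put("start,_", new Rule('1', -1, "halt"));   // carry past the leftmost digit
        return m;
    }

    // Example description that never halts: it walks right forever.
    static Map<String, Rule> wanderer() {
        Map<String, Rule> m = new HashMap<>();
        for (char c : new char[] {'0', '1', '_'}) m.put("start," + c, new Rule(c, +1, "start"));
        return m;
    }

    public static void main(String[] args) {
        System.out.println(run(incrementer(), "1011", 3, 100));  // prints 1100
        System.out.println(run(wanderer(), "1", 0, 1000));       // prints null (gave up)
    }
}
```

Raising `maxSteps` would not help in general: for some descriptions no finite bound distinguishes “slow” from “never”, which is exactly what the halting problem asserts.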

The outcome of Gödel’s theorems and Turing’s Universal Turing Machine was to invalidate Hilbert’s program, the Principia Mathematica and the possibility that an axiomatic system could be complete, consistent and decidable (Ireland and Zaroukas 2015). This concluded some fifty years of attempts to build a set of axioms sufficient for all mathematics and demonstrated the limits of formalist thinking.

Turing was also fascinated by the phenomenon of emergence within nature and by morphogenesis – the biological process, arising through cellular growth and differentiation within an organism, which causes the organism to develop its form. During Turing’s teenage years, “British emergentists” such as C. D. Broad, Samuel Alexander and C. Lloyd Morgan were at the height of their careers, and Cooper (2012) suggests that Turing was even interested in adding “computational convergence” to Darwin’s theory.

Given Modernism’s adherence to a formalist and rationalised dictum of the perceived world, the invalidity of axiomatic systems also implied the invalidity of an architecture which rejected notions of uncertainty or chaos. No longer could the whole explain the parts, nor the parts explain the whole; only by following a process to its end could one gain an understanding of the microcosm and macrocosm. This suggested a new architecture: one that neither based design principally on the human body, as a return to the Vitruvian Man would, nor excluded scientific rigour, but instead embraced a new methodology, tackling the design of space and function through the investigation of the relations between interconnected elements. Hight (2008, pp. 9–10) comments on Norbert Wiener’s role as a founder of an emerging interdisciplinary research project titled Cybernetics:

Norbert Wiener replaced the architectural body metaphor by describing human experience as a whirlpool, a pattern, a momentarily stable system within a vast flowing ocean of information. “Life,” in this cybernetic framework, is an emergent epiphenomena produced by feedback loops, codes, and informational errors (mutations); bodies became performative containers for the transmission and transformation of this semiotic code across generations.

The word cybernetics derives from the Greek cybernēticḗ, meaning “governance”; it is related to cybernáō, meaning to steer or navigate, and cybérnēsis, meaning “government”. Cybernetics began in the 1940s as an interdisciplinary study of systems which operate through delocalised collective control and communication, and its focus was not limited to organic systems but extended to machines. In the 21st century, the term is often related to the control of any system through technology. Cyberneticists study a broad range of topics, including cognition, adaptation, social control, emergence, communication and connectivity. Cybernetics distinguishes its epistemology, however, by abstracting the communication and control generated through a system’s internal feedback processes from any specific organism, device or social organisation.

Cybernetics represented a shift towards logics of control which had their roots in Gödel’s and Turing’s proofs, and common threads can now be seen between cybernetics and emergence, as both relate to the small-scale interactions of elements which bring about larger entities or characteristics.

When trying to establish why emergence has proliferated within the theoretical framework of architectural study in the last twenty years, it is beneficial to look to precedent movements of the late 20th century, particularly post-structuralism, which, in terms of its architectural influence, sought the possibility of a post-humanist approach to design. Post-structuralism considered it impossible to establish knowledge either through structuralism’s empirical reductionist principles or through phenomenology’s experiential methodology. Post-structuralism, however, subverted this impossibility by treating it not as a failure but as something to be embraced. It argued that the world cannot be viewed in black and white, as true or false in the structuralist manner, but rather as a set of grey areas in between what is known. Space should not be bound by definitions such as building/landscape, inside/outside or floor/wall. Post-structuralism sought to achieve this by expanding architectural thought through new scientific discourses such as chaos theory, complexity theory, genetic engineering and, more recently, ecology and emergence. These new scientific fields appeared to provide an alternative to the antiquated, literal body metaphor, treating the ‘body’ instead as a metaphysical figure, or metaphor, for the relationship between culture, the subject, the body and the environment (Hight 2008). Post-structuralism seemingly represented a return to Pérez-Gómez’s desire for an architecture with a transcendental link between microcosm and macrocosm.

Furthermore, post-structuralist theory, new scientific paradigms and computer-aided design have been disseminated into architectural schools since the late 20th century through influential exhibitions, such as the infamous 1988 Deconstructivist Architecture exhibition at New York’s Museum of Modern Art, and through a new wave of journals such as AD, Assemblage and ANY. These journals provided a platform for established figures such as K. Michael Hays, Peter Eisenman and Diana Agrest, as well as for the emerging careers of Zaha Hadid, UNStudio, Diller + Scofidio and Greg Lynn (Hight 2008).

Within the framework of computational multi-agent applications there are two key concepts to consider: emergence and self-organisation. The two are often present together in complex systems, yet are often mistakenly considered synonymous. Consequently, the literature on multi-agent applications and its working examples have generated confusion regarding the meaning of each term. Furthermore, it may be assumed that a system which demonstrates characteristics of self-organisation is therefore a working example of an emergent system. It is thus important to start by establishing clear distinctions between emergence and self-organisation. This distinction is aided by the writings of Wolf and Holvoet (2005), who explain that the two phenomena are distinct, each able to exist without the other, yet can co-exist in a dynamical system.

Initially, emergence was defined as the phenomenon whereby global, colony-like behaviour arises through the interactions of delocalised entities. This definition, however, is too vague to separate emergence from self-organisation. A system which exhibits emergence should be evaluated both at the macro-scale, at which the system is considered as a unified entity, and at the micro-scale, at which it is considered in terms of the individual entities that form it. The use of the term emergence to describe a system’s global behaviour must satisfy two conditions:

• The global behaviour is determined through the actions of the delocalised entities, and

• The global behaviour cannot be determined from the characteristics of a single entity.

Wolf and Holvoet (2005, p.3) propose a working definition of emergence as:

A system exhibits emergence when there are coherent emergents at the macro-level that dynamically arise from the interactions between the parts at the micro-level. Such emergents are novel w.r.t. the individual parts of the system

Within this definition there are two critical components to address. The first is the interaction between the micro-level parts of a system: within an emergent system the individual parts require some capacity to pass information between each other, and this passing of information provides a mechanism for behavioural relations between the parts. The second is the concept of an ‘emergent’, the result of the process of emergence, which includes, but is not limited to, properties, behaviours, structures and patterns. Wolf and Holvoet (2005) propose that an emergent system requires the following characteristics: a micro-macro effect, radical novelty, coherence, interacting parts, dynamism, decentralised control, a two-way link, and robustness and flexibility.

On an intuitive level, self-organization refers to exactly what is suggested: systems that appear to organize themselves without external direction, manipulation, or control (Beth and Dempster 1998, p. 41).

Whilst the above definition aids in describing a self-organising system, it does little to differentiate it from an emergent system. In chemical reactions self-organisation is often defined as self-assembly, yet within computer science swarm systems use self-organisation to produce global emergent behaviour. The term ‘self-organisation’ first appeared in 1947, in a paper by W. Ross Ashby in which he proposed that organisation refers to the functional dependence of a system’s future state on its present state and on any existing external inputs (Wolf and Holvoet 2005). Wolf and Holvoet (2005) identify the following characteristics as essential to describing a self-organising system: increase in order, autonomy, adaptability to changes, and being dynamical. Self-organisation is considered an essential characteristic within multi-agent systems, where the organisational aspect describes the system’s capacity to model cooperation or group behaviours and therefore to increase the system’s order.

Wolf and Holvoet (2005, p.7) propose a working definition for self-organisation as:

Self-organisation is a dynamical and adaptive process where systems acquire and maintain structure themselves, without external control

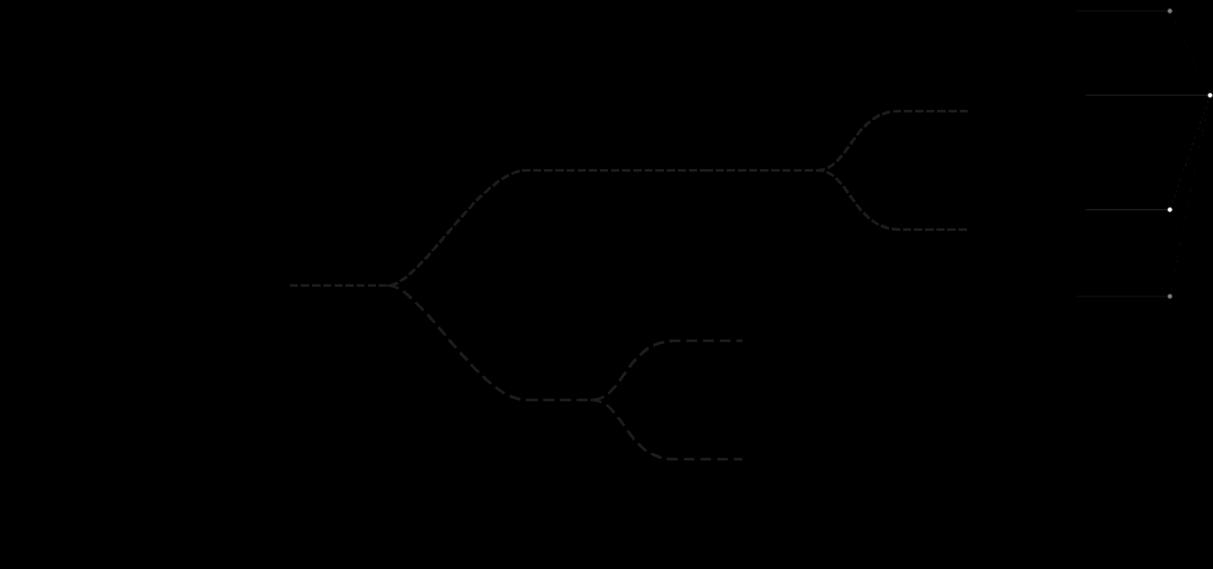

When reviewing the characteristics provided for each phenomenon by Wolf and Holvoet, the differences between them become clearer. The first key difference is an emergent system’s reliance on a bidirectional link between the macro- and micro-levels, which is not present in self-organising systems. A rudimentary model of a self-organising system can be constructed as an algorithm such as the following growth algorithm (see figure 4). The algorithm is structured as a hierarchy of objects:[7] a tree (container object), which stores a list of branches (agent objects) and a list of foods (particle objects) (see figure 5).

Figure 5: a diagram illustrating the logical hierarchical structure of the algorithm’s individual elements and the routes which pass information between these elements.

Figure 6: sample of code detailing the creation of a new branch, with the randomisation of its direction highlighted.

Figure 4 shows three sets of growth simulations; within each set the value of one parameter has been altered. Each run of the algorithm begins with a single starting branch agent and a predetermined number of food particles. Each food particle is assigned a random velocity, which governs its movement within a sphere. A new branch is created when the most recent branch is within range of a food particle. The new branch is given its own random direction and stores a reference to the branch it is connected to as its parent. The growth algorithm demonstrates an increase in order; autonomy is partially provided through each branch’s randomisation of its own direction; and the system is inherently dynamical, as order and change occur over time.

7 Used in computer science, within class-based object-oriented programming, to refer to a unique instance of a class. A class can define a combination of variables, functions and data structures, which each object created from it then carries.
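The growth algorithm described above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not the code shown in figure 6: the classes `Tree`, `Branch` and `Food` mirror the container, agent and particle objects of figure 5, while the parameter values (`SPHERE_RADIUS`, `GROWTH_RANGE`, `BRANCH_LENGTH`), the bounce that keeps food inside the sphere and the `simulate` helper are hypothetical.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical parameters: the values used for figure 4 are not given in the text.
SPHERE_RADIUS = 100.0   # food particles move inside this sphere
GROWTH_RANGE = 15.0     # food this close to the newest branch triggers growth
BRANCH_LENGTH = 5.0


def random_direction(rng):
    """Uniformly random unit vector (normalised Gaussian sample)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        n = math.sqrt(sum(c * c for c in v))
        if n > 1e-9:
            return [c / n for c in v]


@dataclass
class Food:  # particle object
    position: list
    velocity: list

    def move(self):
        self.position = [p + v for p, v in zip(self.position, self.velocity)]
        if math.dist(self.position, (0.0, 0.0, 0.0)) > SPHERE_RADIUS:
            self.velocity = [-v for v in self.velocity]  # bounce back inside


@dataclass
class Branch:  # agent object
    position: list
    parent: Optional["Branch"] = None


@dataclass
class Tree:  # container object
    branches: list = field(default_factory=list)
    foods: list = field(default_factory=list)

    def step(self, rng):
        for food in self.foods:
            food.move()
        newest = self.branches[-1]
        # Food only motivates growth; the direction is chosen by the branch itself.
        if any(math.dist(newest.position, f.position) < GROWTH_RANGE for f in self.foods):
            d = random_direction(rng)
            tip = [p + BRANCH_LENGTH * c for p, c in zip(newest.position, d)]
            self.branches.append(Branch(tip, parent=newest))


def simulate(n_food=50, steps=200, seed=1):
    rng = random.Random(seed)
    foods = [Food([rng.uniform(-50, 50) for _ in range(3)],
                  [rng.uniform(-1, 1) for _ in range(3)]) for _ in range(n_food)]
    tree = Tree(branches=[Branch([0.0, 0.0, 0.0])], foods=foods)
    for _ in range(steps):
        tree.step(rng)
    return tree
```

Calling `simulate()` returns a tree whose branches each reference their parent. Nothing in `Tree.step` reads the shape of the tree as a whole: each new branch depends only on the newest branch and the food positions.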

A question arises over whether the food particles act as a global control system for the growth. Although the food particles provide a motivation for growth, the direction of growth is randomised internally (see figure 6); the effect is one where the food particles influence the growth but do not govern its structure or order. Wolf and Holvoet (2005, p.8) describe this condition as follows:

input is still possible as long as the inputs are no control instructions from outside the system. In other words, normal data input flows are allowed but the decision on what to do next should be made completely inside the system

This growth algorithm also demonstrates a system which lacks a ‘two-way’ link between macro-level and micro-level behaviour. Each branch’s interactions with a single previous branch and a single food particle govern the developing global structure, yet the global structure plays no role in affecting these processes. Furthermore, the system lacks interacting parts, as each branch is influenced only by the branch generated before it.

Figure 7: Diagrammatic illustration of systems which incorporate emergence and/or self-organisation: (a) self-organisation without emergence; (b) emergence without self-organisation; (c) a combination of emergence and self-organisation.

The algorithm above illustrates a system which conforms to the characteristics of a self-organising system and lacks the characteristics of an emergent system. Conversely, an emergent system can exist without self-organisation, for example if there is no statistical increase in complexity over time. Figure 7 illustrates how emergence and self-organisation can be combined in a single system. What becomes interesting is that self-organisation in such a system can be considered an emergent property of the macro-micro interactions of the system; yet, as no rule governs the order of operations, it can equally be considered that self-organisation leads to emergent properties. When dealing with complex multi-agent systems (which are explored in the following chapter), combining emergence and self-organisation is recommended, as a global rule set governing such a system directly would have too many possibilities to account for. Coherent behaviour at the global level therefore has to arise through self-organisation.

Up to this point, this paper has established characteristics of emergence and self-organisation, yet has only demonstrated a system which makes use of self-organisation. I have shown how fields of study emerging in the late twentieth century, such as cybernetics and post-structuralism, have influenced architects to explore new design methodologies based on behaviour and on how organisation and structure emerge over time through the interactions of smaller entities. Their investigations have led architects to use complex systems to model crowd behaviours and the processes behind morphogenesis as a new methodology for design. To explore the potential of using emergence within the design process, two case studies will be discussed, both of which make use of autonomous agents.

Before discussing an agent-based emergent design system, at least two terms must be clearly defined: the first is agent and the second is emergent. The previous chapters have broadly covered the history and characteristics of emergence, yet the term ‘agent’ remains undefined. Within the field of computing an agent is an entity which requires autonomy, hence the term autonomous agent. In a computer program an agent is a segment of code which finds its own data and generates a choice of action (Coates 2010). Autonomy implies some semblance of free will, in which the agent’s actions are not determined by a global plan or a designated leader. One can liken an agent to an individual ant within a colony. Within an autonomous agent system, an agent’s autonomy is often expressed through movement, yet autonomy need not be limited to movement. An autonomous agent system is one in which the forces for change are generated internally rather than caused by an external factor (Shiffman 2012). Daniel Shiffman describes three key components of autonomous agents in his book The Nature of Code. These components are essential when coding such a system:

• An agent cannot perceive its entire environment: like us, an agent can perceive its immediate environment, yet it should not be aware of activities outside its local domain. Examples of an agent’s local domain include a search radius, a field of view or a classification type.

• An agent has no leader: whilst this is not strictly a requirement of every agent-based system, if we want a complex multi-agent system to abide by the rules of an emergent system, then its behaviour should be governed by agent-to-agent interactions.

• An agent itself processes its perceivable environment and calculates an action: an agent requires a rule set of behaviours, which it can choose to use in response to changes it perceives within its local domain. An example of a behavioural action is a seek action, which the agent uses to move towards a desired target if that target is within its local domain. In the case of an agent which moves, these actions are described as forces.[8]
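The seek action and the notion of a force can be illustrated with the steering formula popularised by Craig Reynolds and used throughout The Nature of Code: the desired velocity points straight at the target at maximum speed, and the steering force is the desired velocity minus the current velocity, capped at a maximum force. The sketch below is a hedged Python rendering using plain tuples as vectors; the parameter values, and the `perception` radius standing in for the agent’s local domain, are assumptions.

```python
import math


def limit(v, max_mag):
    """Scale vector v down to max_mag if it is longer than that."""
    mag = math.hypot(*v)
    if mag > max_mag:
        return tuple(c * max_mag / mag for c in v)
    return tuple(v)


def seek(position, velocity, target, max_speed=2.0, max_force=0.1, perception=50.0):
    """Steering force pulling an agent towards a target inside its local domain."""
    offset = tuple(t - p for t, p in zip(target, position))
    dist = math.hypot(*offset)
    if dist == 0 or dist > perception:
        # Target reached, or outside the agent's local domain: no force.
        return (0.0,) * len(position)
    desired = tuple(c / dist * max_speed for c in offset)   # full speed at the target
    steer = tuple(d - v for d, v in zip(desired, velocity))  # correction to current velocity
    return limit(steer, max_force)
```

For example, an agent at rest at the origin seeking a target at (10, 0) receives a force of (0.1, 0.0): the full correction of (2, 0) is capped at `max_force`. Adding this small force to the velocity every frame is what gives the movement its gradual, steering quality.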

Figure 7: Right: illustration of a computational emergent flocking algorithm.

8 Within a computer program a force is represented as a vector, which combines a direction (a line pointing from the origin of a Cartesian coordinate system to another point) and a magnitude (the line’s length).

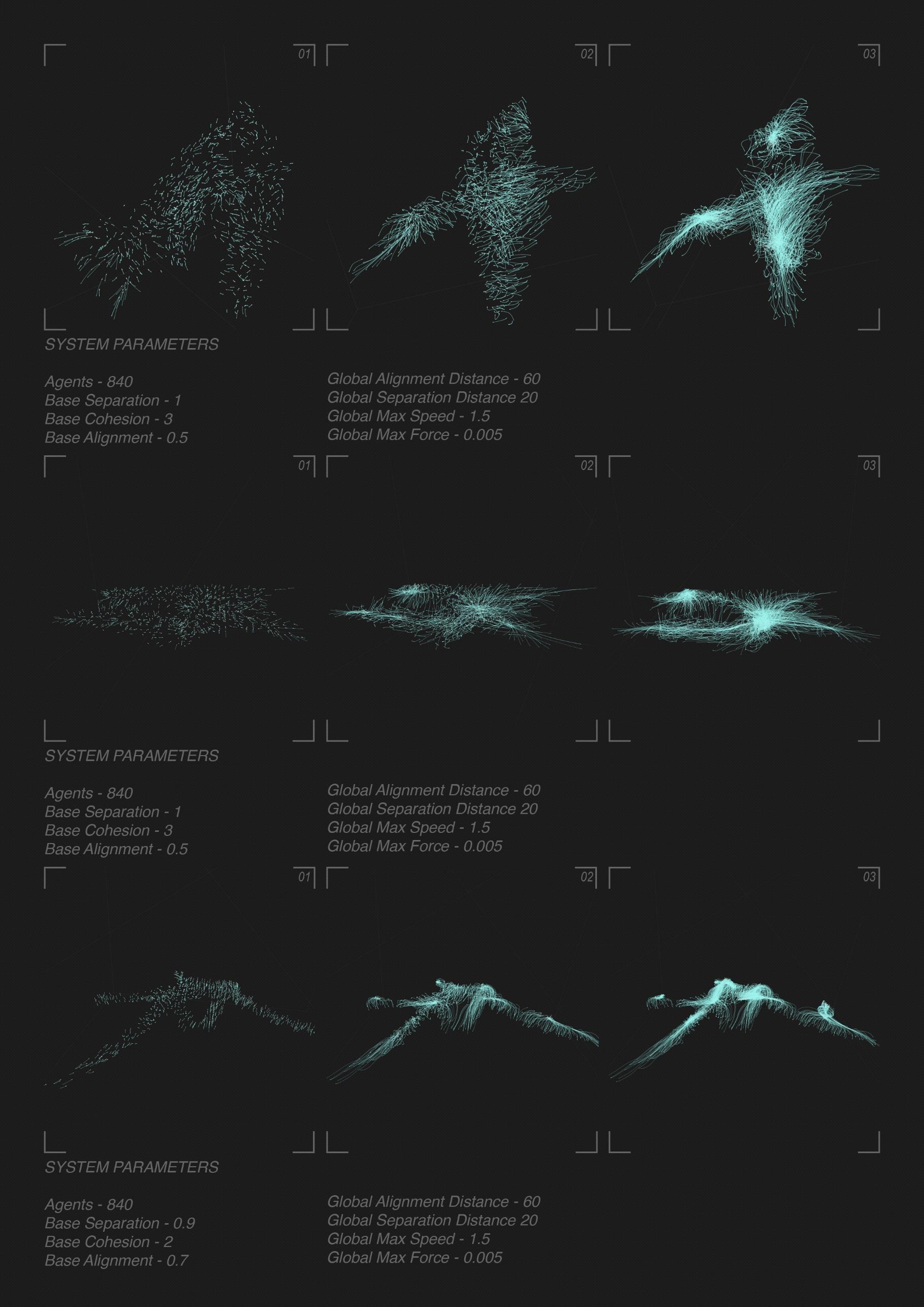

This agent-based system was developed during the first year of my MArch course. The system makes use of a flocking algorithm, most often associated with the study of bird formations such as a murmuration of starlings, yet it can also be used to explore the creative macro-patterns often found within naturally occurring structures which arise through group behaviours (micro-processes) (Cariani 2008). The approach can be considered a bottom-up process towards design, as the agent-to-agent behaviours are used as a method to draw an emergent spatial configuration. Within this project I was interested in exploring forms which could express visual characteristics similar to those of the Northern Lights. The results were then exported to CAD software (Rhino) for evaluation and alteration to develop a tailored final form (see figure 8).

Figure 9: a diagram illustrating the logical hierarchical structure of the algorithm’s individual elements. To connect the two structures an intermediary is required, known as a function, which contains a set of code that evaluates its inputs, if they exist, and returns a result.

The following case study’s algorithm was also structured as a hierarchy of objects, yet in this case the algorithm was significantly more complex, as there are two separate yet interconnected hierarchies, illustrated in figure 9. This algorithm incorporated a data input which was used to pass a three-dimensional structural analysis of a pre-given voxelised form. This data included a vector field describing the compression and tension stress lines of the form (see figure 10). Each vector is stored within a voxel object. Similar to the previous algorithm’s food particles, these vectors are used to influence a change in the behaviour of the agents but do not represent an instruction set for the behavioural change of an agent or group of agents. As the agents explore their environment, three behavioural rules, separation, alignment and cohesion, are used to create the flocking characteristics (see figure 11). These rules are stored as weighted values unique to each agent; by doing so, each agent has true agency (autonomy) within the system. An agent’s behavioural weightings are further influenced by the structural data stored within the voxel nearest to that agent.
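The flocking behaviour described above can be sketched as follows. This is a hedged illustration rather than the case study’s code: the three rules follow the usual separation, alignment and cohesion formulation, each agent carries its own weights, and the voxel field is a hypothetical dictionary mapping integer grid indices to stress vectors. The text does not specify how the structural data altered the weightings, so letting the local stress magnitude scale only the alignment weight is an assumption; it biases an agent’s behaviour without ever giving it a direction to follow.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical constants; the case study's actual values are not documented.
MAX_SPEED, MAX_FORCE, RADIUS, VOXEL_SIZE = 2.0, 0.05, 10.0, 5.0


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def mag(a):
    return math.sqrt(sum(x * x for x in a))


def mean(vs):
    return scale(tuple(map(sum, zip(*vs))), 1.0 / len(vs))


def limit(a, m):
    n = mag(a)
    return scale(a, m / n) if n > m else a


def nearest_voxel_vector(voxels, pos):
    """Stress vector of the voxel whose centre (on a regular grid) is nearest pos."""
    key = tuple(round(c / VOXEL_SIZE) for c in pos)
    return voxels.get(key, (0.0, 0.0, 0.0))


@dataclass
class Agent:
    pos: tuple
    vel: tuple
    w_sep: float  # weights are unique to each agent
    w_ali: float
    w_coh: float

    def steer(self, desired):
        # Reynolds-style steering: desired velocity minus current velocity, capped.
        n = mag(desired)
        if n == 0:
            return (0.0, 0.0, 0.0)
        return limit(sub(scale(desired, MAX_SPEED / n), self.vel), MAX_FORCE)

    def flock_force(self, agents, voxels):
        local = [o for o in agents if o is not self and mag(sub(o.pos, self.pos)) < RADIUS]
        if not local:
            return (0.0, 0.0, 0.0)
        away = (0.0, 0.0, 0.0)  # separation: closer neighbours push harder
        for o in local:
            d = sub(self.pos, o.pos)
            away = add(away, scale(d, 1.0 / max(mag(d), 1e-6) ** 2))
        sep = self.steer(away)
        ali = self.steer(mean([o.vel for o in local]))
        coh = self.steer(sub(mean([o.pos for o in local]), self.pos))
        # Assumption: stress magnitude only modulates the alignment weight.
        w_ali = self.w_ali * (1.0 + mag(nearest_voxel_vector(voxels, self.pos)))
        return add(add(scale(sep, self.w_sep), scale(ali, w_ali)), scale(coh, self.w_coh))


def step(agents, voxels):
    # Compute every force first so all agents react to the same snapshot.
    forces = [a.flock_force(agents, voxels) for a in agents]
    for a, f in zip(agents, forces):
        a.vel = limit(add(a.vel, f), MAX_SPEED)
        a.pos = add(a.pos, a.vel)


def make_flock(n=40, seed=0):
    rng = random.Random(seed)
    return [Agent(pos=tuple(rng.uniform(-20, 20) for _ in range(3)),
                  vel=tuple(rng.uniform(-1, 1) for _ in range(3)),
                  w_sep=rng.uniform(1.0, 2.0),
                  w_ali=rng.uniform(0.5, 1.5),
                  w_coh=rng.uniform(0.5, 1.5))
            for _ in range(n)]
```

Because each neighbour list is rebuilt on every call to `step`, removing an agent between steps simply shrinks the lists of the agents around it; no special handling is needed.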

This system’s capacity to form emergent behaviour can be evaluated using the characteristics of emergence established in the previous chapter. Within the previous self-organising algorithm the lack of a two-way link between the macro-level and micro-level was identified; within this algorithm, however, each agent has the capacity to communicate with any other agent within its local domain, in this case a set of neighbouring agents which is constantly reassessed. Thus, the emergent global configuration is a by-product of the micro-macro effect, whilst the global configuration inherently affects the micro-level behaviours by keeping each agent’s set of neighbours in a state of flux, thereby giving rise to new agent-to-agent behaviour. Essentially, there is a feedback loop between the individual agents and the global configuration of the agents. Another key characteristic which this system exhibits is flexibility and robustness: no single agent is central to the formation of the global configuration, and any agent can be removed from the system without causing damage. Therefore, whilst the system’s performance may decrease, the degradation will be graceful, avoiding a sudden loss of function (Wolf and Holvoet 2005). Furthermore, characteristics of self-organisation can also be identified within this system: there is an increase in order through a statistical increase in complexity over time, and the configuration of the agents does not form through randomness. Each agent exhibits autonomy, as discussed earlier, and finally the system demonstrates adaptability, or robustness, with regard to changes within its environment, including, but not limited to, discovering new agents.

Figure 13: a diagram illustrating the logical hierarchical structure of the individual elements of case study two’s algorithm. Within this algorithm there are three separate structures (Flock, Grid and Activity); therefore, multiple functions are used to pass information between these structures as necessary. Furthermore, the Flock structure comprises two substructures (Agent and Zone).

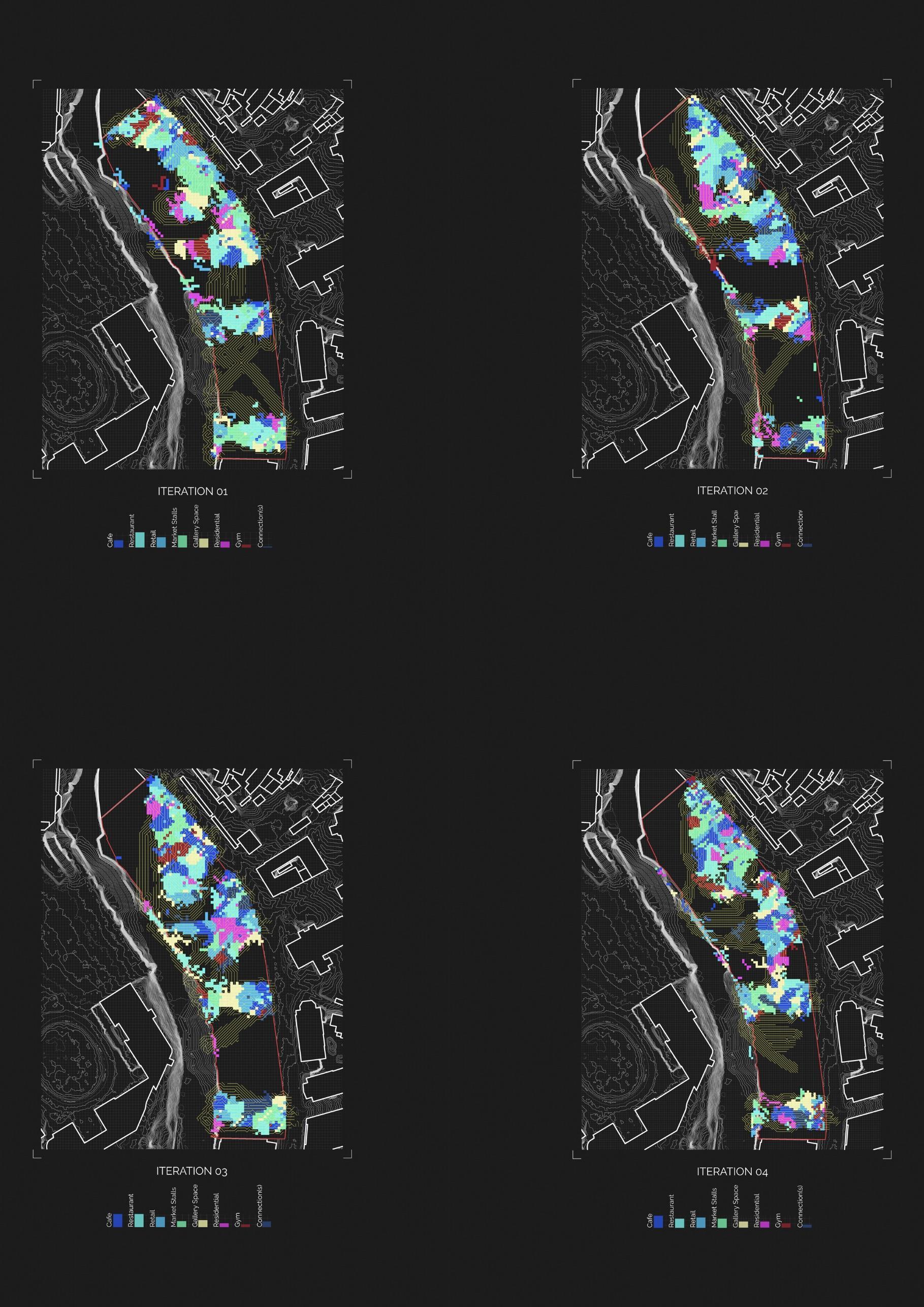

Case study two also explores an agent-based algorithm. However, in this example the collective movement of the agents no longer produces an artefact which literally represents their movement as trails. Rather, the agent-to-agent movement or ‘behaviour’ is used to develop an emergent urban-organisational system, which I used as part of the foundation of my design module during the second year of my MArch course. When considering our own perception of space, communication and interaction form the foundation of our understanding of, and capacity to influence change within, the space around us. This case study considers the possibility of a master plan for a site which develops through such interactions (see figure 12).

Whilst this algorithm inherently relies on the principles of a flocking algorithm (see figure 11), the aim here was to produce a model of possible human movements and interactions on a site. The emergent properties of this system are further developed by providing each agent with a randomised number of activities which it desires to achieve whilst exploring the site, referred to as the agent’s itinerary. The possible range of activities is set up within an input file and includes, but is not limited to, getting a coffee, seeing an exhibition, shopping at a retailer or dining at a restaurant; this list could be expanded indefinitely. An agent’s itinerary, its interaction with the site itself (represented as a grid of cells) and its interaction with neighbouring agents are combined to distribute potential spatial types and functions over the site. The distribution is partly an emergent phenomenon of the flocking behaviour of the agents, yet also a result of their shared activities. To determine shared activities, each agent uses a topological search to build a list (array) of the agents within its local domain, based on a search radius (see figure 14). Using this search, the system can compare an agent’s itinerary against the itineraries of all other agents within its local domain and average their desires to find a common activity. At this point the agent, along with its local agents, deploys this common activity to the site cell which the agent occupies, and the common activity is then removed from the agent’s itinerary.

One last characteristic of the system is an agent’s capacity to query the cell it currently occupies for its type; in doing so the agents are able to distinguish between unexplored regions and regions with an existing function. Whilst doing so an agent also queries the eight cells neighbouring an unexplored cell, and if a common neighbouring function is discovered and the agent’s itinerary includes this activity, the unexplored cell which the agent occupies is set to this common function. In doing so the emergent distribution of functions over the site can reveal common regions of similarity whilst retaining unique and unpredictable spatial scenarios, such as residential spaces within public spaces. The distribution of functions over the site can be classified as an emerging global configuration which arises through the combination of agent-to-agent behaviours, and it illustrates the two-way link characteristic of an emergent system discussed previously. Furthermore, once an agent’s itinerary is depleted the agent is removed from the system, and whenever an agent is removed a random number of new agents is added back; the rate of removal and the size of the population are therefore in a state of flux and cannot be predetermined. Here the system demonstrates Wolf and Holvoet’s (2005) characteristic of robustness and flexibility within an emergent system: the destruction of a single element (agent) does not cause a failure within the system, as no single agent has centralised control over its operation.
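The itinerary and cell rules of case study two can be sketched in Python as follows. The data layout (agents as dictionaries with `pos` and `itinerary`, the site as a dictionary from cell indices to functions) and the names `visit`, `common_activity`, `moore_majority` and `new_agent` are hypothetical. Several interpretive assumptions are made: ‘averaging’ the desires of local agents is read as choosing the activity on the agent’s itinerary that the most neighbours share; agents write only to unexplored cells; the neighbouring-function rule is checked before the shared-activity rule; and the local domain is a plain search radius.

```python
import math
import random
from collections import Counter

# Hypothetical activity list; the case study read its activities from an input file.
ACTIVITIES = ["coffee", "exhibition", "retail", "dining", "residential"]


def new_agent(pos, rng=random):
    """Agent with an itinerary of a random number of distinct activities."""
    return {"pos": pos, "itinerary": rng.sample(ACTIVITIES, rng.randint(1, len(ACTIVITIES)))}


def cell_of(pos, cell_size=1.0):
    """Grid cell (column, row) that a 2D position falls in."""
    return (math.floor(pos[0] / cell_size), math.floor(pos[1] / cell_size))


def local_agents(agent, agents, radius):
    """Agents inside this agent's search radius (its local domain)."""
    return [o for o in agents if o is not agent and math.dist(o["pos"], agent["pos"]) <= radius]


def common_activity(agent, local):
    """Activity on this agent's itinerary shared by the most local agents, if any."""
    counts = {act: sum(act in o["itinerary"] for o in local) for act in agent["itinerary"]}
    best = max(counts, key=counts.get, default=None)
    return best if best is not None and counts[best] > 0 else None


def moore_majority(grid, cell):
    """Most frequent function among the eight cells surrounding `cell`."""
    i, j = cell
    found = [grid.get((i + di, j + dj))
             for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]
    counts = Counter(f for f in found if f is not None)
    return counts.most_common(1)[0][0] if counts else None


def visit(agent, agents, grid, radius=5.0, cell_size=1.0):
    """One agent's turn: assign a function to its cell if the cell is unexplored."""
    cell = cell_of(agent["pos"], cell_size)
    if grid.get(cell) is not None:
        return  # the cell already has a function
    inherited = moore_majority(grid, cell)
    if inherited is not None and inherited in agent["itinerary"]:
        grid[cell] = inherited  # join the surrounding function
        return
    shared = common_activity(agent, local_agents(agent, agents, radius))
    if shared is not None:
        grid[cell] = shared
        agent["itinerary"].remove(shared)  # this desire has now been satisfied
```

In `visit`, an unexplored cell first tries to join the majority function of its eight neighbours (the Moore neighbourhood), and only otherwise receives the activity shared with nearby agents, which is then struck off the agent’s itinerary. An agent whose itinerary becomes empty would be the one removed and replaced in the process described above.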

In the qualitative assessment of each case study, both systems demonstrate emergent characteristics as defined by Wolf and Holvoet (2005), yet whether they exhibit the property of radical novelty remains inconclusive. To tackle this characteristic, this paper now examines one of the two branches of emergent systems outlined by Peter Cariani. According to Cariani (2008, p.1):

Emergence entails the creation of something fundamentally new – something that could [not] have been foreseen in terms of what came before. Emergence = fundamental novelty. A new winning lottery number or a new record temperature on a given day do not rise to this standard. On the other hand, the appearance of self-producing, self-sustaining, living organisms on the earth was clearly an emergent event

Cariani’s statement, along with Wolf and Holvoet’s property of radical novelty, throws a metaphorical spanner in the works of computational emergent systems such as the case studies previously discussed. As the designer of an autonomous agent system, one must define a set of parts (agents) and rules (behaviours such as flocking), and iteratively combine these parts and rules to form new aggregations or artefacts. Cariani defines these building blocks (code) as the “primitives” of the system, and their combinations define the possibilities of that system (Cariani 2008). An agent-based system’s inherent realm of possibilities may be monumentally large, like the set of possible winning lottery numbers, yet these possibilities are finite and, furthermore, each one already exists within the given combinatorial rules, even if the designer has yet to stumble upon it as a result. This raises the question of whether such systems are in fact emergent systems at all. However, Cariani offers a solution to this question by proposing a variation of emergence referred to as combinatoric emergence (see figure 15). Combinatoric emergence provides a method to generate what Cariani defines as “emergent novelty”. Lloyd Morgan, however, defines this as a resultant property of novel combination (Cariani 2008). This also suggests that such systems cannot be considered to achieve creativity themselves, but are an extension of the creativity of the designer. Thus, the authorship of the outputs produced through such systems is not ambiguous: the designer maintains complete ownership of, and responsibility for, the outputs.
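Cariani’s point that a combinatorial system can only produce what its primitives already allow can be made concrete by counting. If a site of n cells can take one of k functions per cell, the system’s possibility space holds exactly k^n layouts: enormous, but finite and fixed in advance. The sketch below assumes four hypothetical activities plus an ‘unexplored’ state, so k = 5; it counts final layouts only and ignores the process that reaches them.

```python
from itertools import product

# Hypothetical primitives: four activities plus None for an unexplored cell.
FUNCTIONS = ("coffee", "exhibition", "retail", "dining", None)


def space_size(n_primitives, n_cells):
    """Number of distinct site layouts when each cell takes one of n_primitives values."""
    return n_primitives ** n_cells


def enumerate_space(primitives, n_cells):
    """Every layout the system could ever output for a site of n_cells cells."""
    return set(product(primitives, repeat=n_cells))
```

A 3 × 3 site already admits `space_size(5, 9)` = 1,953,125 layouts, and a 50 × 50 site admits a number with 1,748 digits. Any output of a run, however surprising to the designer, is one member of this pre-existing set.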

Both case studies illustrate how the adoption of an agent-based emergent system within the design process can benefit the designer through the rapid production of a variety of unique, yet hypothetical, outputs. The form of the outputs can vary significantly, from abstract forms to more pragmatic plan-like representations. The outputs can assist in developing an architecture which explores unusual spatial compositions, free of the biases and prejudices which govern the intuitive knowledge of the designer. Such systems therefore have the capacity to provoke, question and expand the understood limitations of a designer’s creativity by suggesting hypothetical scenarios which the designer could not have predicted or perceived, and so would not have considered. Such software tools can be considered an augmentation of a designer’s sensory capacity, as suggested by Cariani (2008, p.3), who states:

By interposing such devices between our own senses and the world, we can change the relationship of our internal sensory states vis-à-vis the world. In effect we are temporally changing the external semantics of our sensory organs to enable us to access new aspects of our environment.

Within architecture the conception of emergence, as a methodology used to develop design, has a degree of utility as a heuristic for creativity (Cariani 2008). Combinatorial emergence, achieved through digital computational tools such as an agent-based system, is intrinsically compatible with the way architects structure space, as a combination of smaller parts assembled to form larger structures, and it offers an approach for generating variety from relatively simple, primitive parts. The outputs can be assessed by a designer for suitability, yet they can exhibit peculiar and unforeseen formal properties. The criteria for judgement can be purely subjective, based on the designer’s aesthetic affection or utilitarian goals, or more objective, based on numerical fitness or structural integrity. The role of the contemporary architect can thus resemble that of a computer programmer or system designer who incorporates elements of philosophy and biology to develop systems which produce hypothetical outputs based on behavioural models or morphogenesis.

When considering how such systems could be extended beyond architectural theory to benefit architecture in practice, it is essential to understand their limitations. In doing so it may be possible to incorporate such programs in practice without concern over their systemic inability to produce working and complete architectural outputs. Furthermore, as these systems often lack the capacity to resolve all contextual, regulatory and structural details, they should only provide a layer of ‘new’ information to the designer. It is possible at this point to combine the generative outputs of an agent-based system with the intuitive knowledge of a designer and to feed the result back into the system. This feedback loop between a combinatorial emergent system and a human designer can facilitate both the generation of novel outcomes and outcomes which better articulate the pragmatic requirements of a design project in practice (see figure 16). When attempting to implement an emergent-based approach in practice, one could consider the outputs of these programs as a layer of conceptual work which proposes hypothetical responses that are implicitly incomplete and irregular, yet which aid in expanding and challenging our intuitive understanding of a design. Similarities to such a process can be found in many architects’ sketches for a design; examples include the paintings by Zaha Hadid which formed part of her creative process. For Hadid, painting was a tool for architectonic exploration; she stated:

I was very fascinated by abstraction and how it really could lead to abstracting plans, moving away from certain dogmas about what architecture is (Vicanova, 2018, p.13).

Hadid’s paintings often contain little pragmatic detail about how they could be translated into a fully rationalised building; instead they remain an abstract layer within the design process which provokes discourse. An example of such a work by Hadid is her painting for the MAXXI Museum in Rome (see figure 17).

The practice of architecture presents a challenge in that buildings are by their very nature unchanging and non-adaptive, and so are often considered fixed elements in our environment. Additionally, the prevailing process which leads to a finalised design often requires the creation of a master plan which can be passed to all individuals involved in the construction of a building. This process has inherently favoured a top-down approach towards design, in which the macrocosm is defined at the beginning, as is often exemplified in contemporary residential master-plan development. The microcosm, often an individual’s relationship with their inhabited spaces, is therefore regularly neglected. Emergence suggests a paradigm shift within practice that is larger than simply incorporating novel computational tools: an explicit alteration to the processes that inform design.

Extending the principles of an emergent process as an approach towards design beyond architectural theory places certain requirements on architects, such as extending their formal knowledge into multiple disciplines. It furthermore requires recognition within the profession that buildings should no longer be conceived as singular fixed bodies but rather as dynamic and complex energy and material systems, which have a life span and cohabit within an environment of other buildings and beings that influence their use and formation.

A possible method to achieve this, within the present requirements of practice, is to implement a feedback loop between human intuition and a generative emergent system during the design process. This would provide the capacity to simulate a design which emerges as a result of ‘human-like’ behaviour and interaction with our environment over time. This is similar to the process by which some cities develop, wherein no formal master plan dictates the global configuration, yet the interactions of multiple smaller entities over time produce a functioning system.

However, emergence as a strategy for design still requires a designer’s intuitive knowledge in order to complete a design to specification. By incorporating emergent processes as a tool within the practice of architecture, new perspectives, interpretations or paradigms can appear as architects discover new ways of perceiving the environment. Whilst practices may traditionally include a programmer within a design team to assist with coding, computational tools are evidently an intrinsic means of exploring emergent processes, and a working knowledge of how to develop such tools is beneficial both for understanding their suitability to a given task and for adapting a system to produce a more coherent outcome with regard to the unique specifications of a project. Therefore, to promote a shift towards using emergent processes within design in practice, it is essential that architects be taught programming within their studies as a new methodology for design.

Abbott, R. (2006). Emergence explained: Abstractions: Getting epiphenomena to do real work. Complexity, 12(1), 13-26.

Cariani, P. (2008). Emergence and Creativity. In: Emoção Art.ficial 4.0: Emergência [Exhibition volume]. São Paulo, Brazil: Itaú Cultural, pp. 20-42. [Online] Available from: http://www.cariani.com/CarianiNewWebsite/Publications_files/CarianiItauCultural2008Emergence.pdf [Accessed 20 November 2017].

Grobman, G.G.M. (2005). Complexity Theory: a new way to look at organizational change. Public Administration Quarterly, (29), 350-382.

Wolf, T. De and Holvoet, T. (2005). Emergence Versus Self-Organisation: Different Concepts but Promising When Combined. In: Engineering Self-Organising Systems (ESOA 2004), Lecture Notes in Computer Science, pp. 1–15.

Zaroukas, E. and Ireland, T. (2015). Actuating Auto(poiesis). Systema, 3(2), 34-55.

Abbott, R. (2006). Emergence explained: Abstractions: Getting epiphenomena to do real work. Complexity, 12(1), 13-26.

Galison, P. (1990). Aufbau/Bauhaus: Logical Positivism and Architectural Modernism. Critical Inquiry, 16(4), 709-752.

Pham, T. (2011). Thermodynamics of Far-from-Equilibrium Systems: A Shift in Perception of Nature. AME 36099 - Directed Readings.

Celani, G. and Vaz, C. (2012). CAD Scripting and Visual Programming Languages for Implementing Computational Design Concepts: A Comparison from a Pedagogical Point of View. International Journal of Architectural Computing, 10(1), 121-137.

Newman, D. (1996). Emergence and Strange Attractors. Philosophy of Science, 63(2), 245-261.

Cooper, S. (2012). Incomputability after Alan Turing. Notices of the American Mathematical Society, 59(06), p.776.

Grebogi, C., Ott, E. and Yorke, J. (1987). Chaos, Strange Attractors, and Fractal Basin Boundaries in Nonlinear Dynamics. Science, 238(4827), 632-638.

Zach, R. (2005). Hilbert's Program Then and Now. ArXiv Mathematics e-prints [Online], math/0508572. Available from: https://arxiv.org/abs/math/0508572 [Accessed 20 February 2018].

Hight, C. (2008). Architectural Principles in the Age of Cybernetics. 2nd ed. Routledge.

Cohen, M. and Axelrod, R. (2014). Harnessing complexity: organizational implications of a scientific frontier. London: Free Press.

Coates, P. (2010). Programming.architecture. London: Routledge.

Shiffman, D. (2012). The Nature of Code. [S.l.]: D. Shiffman.

Beth, M. and Dempster, L. (1998). A Self-organizing Systems Perspective On Planning For Sustainability. Master of Environmental Studies in Planning. University of Waterloo.

Video

The Coding Train. (2017). 10.1: Introduction to Neural Networks - The Nature of Code [Online]. [Accessed 20 February 2018]. Available from: https://www.youtube.com/watch?v=XJ7HLz9VYz0

The Coding Train. (2015). 6: Autonomous Agents - The Nature of Code [Online]. [Accessed 06 December 2017]. Available from: https://www.youtube.com/watch?v=JIz2L4tn5kM&list=PLRqwXV7Uu6YHt0dtyf4uiw8tKOxQLvlW

Websites

Vicanova, N. (2018). ZAHA HADID ON KAZIMIR MALEVICH. [online] Issuu. Available at: https://issuu.com/nelavicanova/docs/vicanova-nela-kniha [Accessed 16 Mar. 2018].

Cover Paton, J. (2018).

Figures 1–2, 4–16 Paton, J. (2018).

Figure 3 Viatour, L. (2018). Da Vinci Vitruve Luc Viatour. [photograph]. Available online: https://upload.wikimedia.org/wikipedia/commons/2/22/Da_Vinci_Vitruve_Luc_Viatour.jpg

Figure 17 Santibañez, D. (2016). The Creative Process of Zaha Hadid, As Revealed Through Her Paintings. [online] ArchDaily. Available at: https://www.archdaily.com/798362/the-creative-process-of-zaha-hadid-as-revealed-through-her-paintings [Accessed 16 Mar. 2018].