NCURA MAGAZINE


Navigating the Grant Submission Process in the Time of Artificial Intelligence By Brandi Stephens, Vinita Bharat, and Liz Seckel

Your Next Research Assistant Might Be a Chatbot By Robert Pilgram and Dustin Schwartz


Embracing the Crossroads: How New Research Administrators Can Lead the Way in AI Adoption

By Kathleen Halley-Octa and Kari Woodrum

Transforming Research Administration with Artificial Intelligence: Creating New Efficiencies and Supporting Data-Driven Decision Making

By David Richardson and David Smelser

AI: Research Administrators Meeting the Moment

Have You Forgotten How to Fly? By Lamar Oglesby

How USC Harnessed AI to Transform Sponsored Contracts Data into Strategic Insight By Lumi Bakos, Emily Devereux, and Alex Teghipco

Navigating a Shifting Landscape: Institutional Strategies in Response to Federal Research Policy Changes

By Michelle D. Christy

Working at a Predominantly Undergraduate Institution (PUI) or Other Small Research Administration (RA) Office? Say Hello to Your New AI Coworker By Claudia Scholz

Beyond Personal Productivity: Scaling Artificial Intelligence for Institutional Research Administration Workflows

Barrie D. Robison, Nathan Layman, Jason Cahoon, Luke Sheneman, Sylvia Bradshaw, Katie Gomez, Nathan

Research Administration in the Middle East and North Africa: Artificial Intelligence and the Future of Research Administration:

IN THIS ISSUE: Artificial Intelligence is no longer theoretical—it’s here, and it’s transforming research administration. This issue of NCURA Magazine explores how AI tools are reshaping our work across multiple dimensions, from grant development to compliance oversight, and strategic planning.

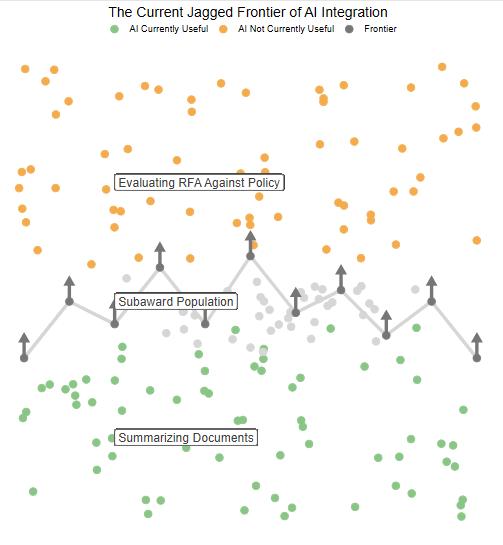

To begin, Brandi Stephens, Vinita Bharat, and Liz Seckel, in “Navigating the Grant Submission Process in the Time of Artificial Intelligence,” examine how AI can enhance the grant writing process. They highlight ways AI can support editing, simulate proposal reviews, and expand access for applicants with limited resources—while also cautioning against overreliance, especially when it comes to interpreting eligibility or compliance criteria.

Building on this foundation, Robert Pilgram and Dustin Schwartz explore the role of conversational AI in “Your Next Research Assistant Might Be a Chatbot.” Their article describes how chatbots trained on institutional policies can efficiently answer repetitive questions, streamline proposal support, and empower junior faculty with timely guidance— offering a glimpse into the future of scalable, user-centered administrative assistance.

Lori Ann Schultz’s “AI: Research Administrators Meeting the Moment” adds a call to action. She underscores how research administrators can harness generative AI to automate transactional tasks, freeing up time for more strategic support of researchers. Schultz emphasizes that the adoption of AI doesn’t diminish our role—it expands it, allowing professionals to lead with insight, creativity, and purpose.

Finally, David Richardson and David Smelser’s “Transforming Research Administration with Artificial Intelligence” provides a strategic lens. They explore how predictive analytics, automated compliance monitoring, and AI-powered dashboards can help institutions make smarter decisions, reduce risk, and better allocate resources.

Together, these articles make a compelling case: AI isn’t replacing research administrators; it’s empowering them. By offloading routine tasks and uncovering deeper insights, AI allows professionals to focus on high-value, strategic contributions. AI: The Next Frontier offers a roadmap for navigating this pivotal transformation.

Author’s Note: In the spirit of embracing AI, the introduction to this issue was written with the assistance of ChatGPT.

Kathleen Halley-Octa, MA, CRA, is a Co-Editor for NCURA Magazine and serves as a Manager with Attain Partners. She has worked in research administration for more than a decade and has experience at both the central and departmental level. She can be reached at kmhalleyocta@attainpartners.com.

SENIOR EDITOR

Tanta Myles

Georgia Tech

CO-EDITORS

Tolise Dailey

Georgetown University School of Medicine

Kathleen Halley-Octa

Attain Partners

Martin Williams

Vaughn College of Aeronautics and Technology

CONTRIBUTING EDITORS

Career Development

Lamar Oglesby

Rutgers University

Robyn Remotigue

University of North Texas HSC at Fort Worth

Clinical/Medical

Christina Stanger

MedStar Health Research Institute

Collaborators

Anthony Beckman

University of Rochester

Lisa Mosley

Yale University

Compliance

Jeff Seo

Northeastern University

Stacy Pritt

Texas A&M University System

Contracting

Beth Kingsley

Yale University

Laura Letbetter

Georgia Tech

Departmental Research Administration

Kelly Andringa

University of Iowa College of Medicine

Jennifer Cory

Stanford University

Diversity, Equity, & Inclusion

Sheleza Mohamed

American Heart Association

Laneika Musalini

Metropolitan State University of Denver

Financial Research Administration

Erin Bailey

University at Buffalo Clinical and Translational Science Institute

Brian Miller

Emory University

Global - Africa

Josephine Amuron

African Center for Global Health and Social Transformation

Global - Asia Pacific

Lisa Kennedy

University of Queensland

Global - Europe

Joey Gaynor

Trinity College Dublin

Kirsi Reyes-Anastacio

University of Helsinki

EXECUTIVE EDITOR

Marc Schiffman

NCURA

COPY EDITORS

Beth Jager

Claremont McKenna College

Jeanne Kisacky

Cornell University

Paulo Loonin

Duke University School of Medicine

Robin Ruetenik

University of Iowa

Global - Middle East

Reem Younis

United Arab Emirates Ministry of Education

Global - U.S.

MC Gaisbauer

University of California-San Francisco

Christopher Medalis

School for International Training

Pre-Award

Wendy Powers

University of Maine

Trisha Southergill

Colorado State University

Predominantly Undergraduate Institutions

Magui Cardona

University of Baltimore

Michelle Gooding

Frederick Community College

Research Development

Camille Coley

University of San Francisco

Self-Care

Rashonda Harris

Johns Hopkins University

Kim Moreland

University of Wisconsin - Madison

Senior Administrator

Lisa Nichols

University of Notre Dame

Lindsey Spangler

Duke University School of Medicine

Spotlight on Research

Derek Brown

Stanford University

Systems/Data/Intelligence

Thomas Spencer

University of Texas Rio Grande Valley

Dan Harmon

University of Illinois Urbana-Champaign

Training Tips

Helene Brazier-Mitouart

Weill Cornell Medicine

Work Smart

Hagan Walker

Prisma Health

Claire Stam

Prisma Health

Young Professionals

Carol Bitzinger

Ohio State University

Katie Gomez Freeman

Southern Utah University

By Denise Moody, NCURA President

Welcome to our August issue recognizing the future of AI: The Next Frontier. At the time of this issue, NCURA will be hosting its Third Annual AI Symposium in Washington, DC on August 9th. Program Chairs Nancy Lewis, Lori Ann Schultz, and Thomas Spencer have put together an extremely robust program that highlights the impact of AI on our research administration profession, research community, and sponsors. This symposium precedes NCURA’s 67th Annual Meeting of the membership with the theme Forward Focused: Priming for Change, further demonstrating NCURA’s commitment to being strategic and forward-looking.

In keeping with the Magazine theme, I asked ChatGPT what it means to consider AI: The Next Frontier in relation to our profession. The response emphasized “recognizing the transformative potential that AI has to improve various aspects of research management and administration” with key areas including “enhanced efficiency, data analysis, grant management, predictive analytics, improved communication, and ethical considerations and compliance”. Throughout this year’s NCURA webinar series on the Changing Federal Landscape, it has often been said that, with continuously increasing regulatory changes and compliance requirements combined with institutional budget constraints during 2025, NCURA’s members are faced with doing more work with fewer resources. Utilizing AI to reduce our own administrative burden while enhancing our ability to comply is needed now more than ever.

During this challenging year, NCURA continues to focus on our mission to support and advance the research administration profession. In addition to our publicly available offerings (webinars, Changing Federal Landscape Collaborate Community, and monthly Career Center), NCURA’s Bridge Fund was launched (www.ncura.edu/MembershipVolunteering/Volunteering/NCURABridgeFund.aspx). This Fund was initiated by our 2025 Pre-Award Research Administration Conference keynote speaker, Dr. Andrea Hollingsworth, who donated her speaker fees to assist research administrators whose jobs were impacted due to funding changes. The Fund offers training, coaching, and a free year of NCURA membership to help individuals transition into new roles and provide them with the skills and resources needed for a successful job search. It is offered to research administrators or federal government employees whose activities are related to the administration of sponsored programs in areas of grants management, policy and administration.


In September, NCURA’s current and future national and regional leaders will attend a leadership convention to develop a strategic “roadmap” on membership engagement and the “next frontier.” Information has been gathered over the past few months from several stakeholders on assessing trends and shifts in volunteer engagement, volunteer structure and support benefits and challenges, and ways to envision an optimal future of volunteer engagement.

As we navigate these challenging times together, let’s continue to uplift one another and foster our sense of community. I am grateful for the resilience, collaboration, and even good humor in stressful times that NCURA members continue to provide each other. We have the power to shape our experiences and support one another through every challenge. Thank you for your commitment to our mission, and I encourage everyone to take care of yourselves and each other.

Most Sincerely,

By Brandi Stephens, Vinita Bharat, and Liz Seckel

Since the public release of ChatGPT, artificial intelligence (AI) has rapidly become integrated into nearly every aspect of our daily lives, including academic grant writing. An increasing number of the principal investigators (PIs) we support have been asking us how they can use AI to aid in the grant writing process. As professionals who support scientific development, we believe writing is a deeply personal process and that the final product is best when imbued with the ideas, style, and personality of the writer. The iterative process of drafting and refining one’s work also helps develop scientific writing skills (Quitadamo and Kurtz, 2007), which are essential for a successful long-term career in academia. However, we also believe that PIs and research administrators can benefit immensely from including AI in this process, especially as the algorithms powering these systems improve and become widely available.

Here are five ways that AI can support research administrators and their PIs in the grant application process.

1 Check sponsor and institutional guidelines regarding AI use. Before using AI, you should always check with the sponsor and your institution to ensure AI is allowed and, if so, note any restrictions regarding its use and disclosure. For example, the NIH issued a policy on July 17th, 2025 (NOT-OD-25-132) that prohibits applications that are substantially developed with AI. Whether the sponsor requests this or not, we recommend disclosing any AI usage in grant applications. If there are any questions about the policy or if one is not available, reach out to the program officer or your institutional official for clarification.

2 Consider data storage and privacy settings. All publicly available AI chatbots, including ChatGPT, save the prompts and conversations to ‘improve’ their algorithms. Research proposals often contain highly sensitive information, from initial drafts through final submission. Putting any of this precious text into a public chatbot runs the risk of the AI incorporating the ideas and approach into its model. The AI could then suggest these ideas to other users, including competitors! Check if your institution has a preferred, secure AI platform that does not save inputted prompts and text. If so, that is the only AI platform you and your PIs should use. If not, reach out to your institutional officials and make them aware of the need for such guidance and recommendations. AI use will only increase in the future, and institutions need to adapt, or else risk the potential security concerns that could result from improper use of AI. It’s also important to remind PIs that even metadata, such as file names or associated researcher names, may be inadvertently exposed in public AI tools.

3 Use it to edit text. AI can enhance text editing processes, improving readability by simplifying technical terms and jargon-laden paragraphs, correcting grammar, and refining sentence structure. You and your PIs can leverage AI to maintain consistency and clarity throughout the application, ensuring that the final document is coherent and professional.

Careful, though—one major disadvantage of using AI is its tendency to generate false information (known as “hallucinations”, to use the technical term), which can lead to factual errors and the spreading of misinformation. The risk of AI-generated text being copied verbatim from existing sources without any proper attribution or permission can lead to major research integrity and privacy concerns. Not to mention embarrassment and loss of professional reputation. Furthermore, relying on the automation of AI-generated content will hinder applicants from developing the grant writing skills needed for a long-term career in academia.

On the topic of hallucinations: we strongly caution against using AI to identify eligibility criteria, required documents or sections, and compliance risks at this point, as tempting as it may be. When we experimented and asked AI to generate a list of required documents for a grant, it took us more time to check the list for accuracy than it would have taken to make it ourselves. AI is programmed to sound convincing; it is not programmed for accuracy. Until the accuracy of the generated content from AI improves, we believe it is too error-prone for these critical tasks. As research administrators know, a small administrative error can lead to the entire grant being rejected.


4 Use AI to review the grant proposal. AI can roleplay as a grant reviewer, which can be especially helpful for those with limited resources or time. Just as with human reviewers, AI performs best when provided detailed instructions on what to focus on in a review. An AI prompt should include who you are (e.g., Assistant Professor), what you are applying for (e.g., American Heart Association Career Development Award), the specific task (e.g., give feedback on how well the text aligns with the American Heart Association mission), and any relevant context (e.g., the American Heart Association mission). Give additional instructions to further refine its feedback. Keep in mind that although AI can be a valuable tool, we still recommend seeking feedback from human reviewers. Additionally, you can ask AI to mimic specific review criteria from funding agencies, such as significance, innovation, or approach.
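For offices that reuse the same review request across many applicants, the four prompt elements described above (who you are, what you are applying for, the task, and the context) can be assembled from a small template. The following is a minimal sketch, not a prescribed method: the function name `build_review_prompt` and its parameters are hypothetical, and the resulting text would simply be pasted into whichever institution-approved AI tool is in use.

```python
def build_review_prompt(role, opportunity, task, context, extra_instructions=()):
    """Assemble a reviewer-roleplay prompt from the four elements named above.

    All names here are illustrative assumptions, not part of any AI tool's API.
    """
    lines = [
        f"I am a(n) {role} applying for the {opportunity}.",
        "Act as a grant reviewer for this funding opportunity.",
        f"Task: {task}",
        f"Context: {context}",
    ]
    # Optional refinements, e.g. sponsor review criteria to mimic.
    lines.extend(f"Additional instruction: {item}" for item in extra_instructions)
    return "\n".join(lines)


prompt = build_review_prompt(
    role="Assistant Professor",
    opportunity="American Heart Association Career Development Award",
    task="Give feedback on how well the text aligns with the American Heart Association mission.",
    context="[paste the sponsor's mission statement here]",
    extra_instructions=["Comment separately on significance, innovation, and approach."],
)
print(prompt)
```

Keeping the elements as separate arguments makes it easy to swap the sponsor or the review criteria without rewriting the whole prompt. Human review of the feedback is still recommended.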


5 Use AI to level the playing field. AI holds significant promise in democratizing the grant writing and development process by providing accessible, low-cost (or free) grant writing aids to applicants without access to dedicated grant writing support. This could especially benefit writers whose preferred language is not English and who face additional challenges from language barriers. Research shows that non-native English speakers often invest significantly more time and effort in conducting scientific activities than native English speakers, which can impede their career advancement (Amano et al., 2023; Berdejo-Espinola & Amano, 2023). By leveraging AI, we can help level the playing field and enhance participation for all researchers.

As AI continues to improve, we invite our audience to reflect on the pain points and opportunities within their work and explore how they could incorporate AI to help improve efficiency and decrease administrative burden. We have recently published a paper (Seckel, Stephens & Rodriguez, 2024) and GitHub repository to collate and curate resources on this topic, and we invite you to browse and contribute as you work on your next grant submission. We also published a handout highlighting all the key points for using AI in grant submissions (Stephens, Seckel & Bharat, 2025), which can be shared as a resource with grant writers and research administrators.

The best way to learn how to use AI for grant submissions is through exploration and experimentation. Generative AI is here to stay, and the sooner we get acquainted with its advantages and disadvantages, the faster we can unlock and harness its potential for research administration. We encourage research offices to begin piloting AI tools in safe, guided ways, and to share lessons learned with the broader community.

References

Amano, T., Ramírez-Castañeda, V., Berdejo-Espinola, V., Borokini, I., Chowdhury, S., Golivets, M., González-Trujillo, J.D., Montaño-Centellas, F., Paudel, K., White, R.L., & Veríssimo, D. (2023). The manifold costs of being a non-native English speaker in science. PLOS Biology, 21(7), e3002184.

Berdejo-Espinola, V., & Amano, T. (2023). AI tools can improve equity in science. Science, 379(6636), 991.

Quitadamo, I.J., & Kurtz, M.J. (2007). Learning to improve: Using writing to increase critical thinking performance in general education biology. CBE Life Sciences Education, 6(2), 140–154. https://doi.org/10.1187/cbe.06-11-0203 PMID: 17548876

Responsibly Use AI to Develop More Competitive Grants. Version 1. Stanford Digital Repository. Available at https://purl.stanford.edu/vn273fj8262/version/1. https://doi.org/10.25740/vn273fj8262

Seckel, E., Stephens, B. Y., & Rodriguez, F. (2024). Ten simple rules to leverage large language models for getting grants. PLOS Computational Biology, 20(3), e1011863.

Acknowledgements: The authors are grateful to Carolyn Trietsch, Carlos Perez, Jennifer Nguyen, and Sarah Yeats Patrick for their invaluable feedback.

Brandi Stephens, PhD, is a Research Development Strategist for Stanford’s Division of Cardiovascular Medicine and a trained physiologist in biomedical research. She combines her love for science with her passion for grant writing to support clinical fellows, postdocs, and junior faculty in developing competitive grant applications and maximizing funding success. Brandi can be reached at bstep@stanford.edu.

Vinita Bharat, PhD, is the Assistant Director of Science Communication & Research Development Training at Stanford University. Leveraging her 14+ years of research training, she provides personalized grantsmanship advice, leads grant writing bootcamps, and supports early-career researchers, empowering them to design their grant journeys for research excellence and enjoy the process. Vinita can be reached at vbharat@stanford.edu.

Liz Seckel is a Director of Strategic Research Development at Stanford, where she provides individualized grantsmanship advice to all tiers of trainees and faculty. She has helped raise nearly $100 million to advance health equity and received several awards for her scientific work as well as her commitment to philanthropy. Liz can be reached at eseckel@stanford.edu.

National Council of University Research Administrators

• Collaborate: NCURA’s Professional Networking Platform, including discussion boards and libraries sorted by topical area

• Automatic membership in your geographic region

• NCURA’s Resource Center: your source for the best of NCURA’s Magazine, Journal, YouTube Tuesday and Podcast resources, segmented into 8 topical areas

• NCURA Magazine: new issues published six times per year, and all past issues available online

• Sample Policies & Procedures

• NCURA Magazine’s e-Xtra and YouTube Tuesday delivered to your inbox each week

• Special member pricing on all education and products

• Podcasts and session recordings from our national conferences

• Access to and free postings to NCURA’s Career Center

• Leadership and Volunteer Opportunities

Diane Hillebrand, Assistant Director, Research & Sponsored Program Development, University of North Dakota, has been elected to the position of Vice President/President-Elect of NCURA. A dedicated NCURA member for more than 25 years, Diane currently serves as NCURA Secretary and as a faculty member for the Departmental Research Administration workshop. Her service includes roles on the Board of Directors, Chair and member of the Professional Development Committee, Co-Chair of the Pre-Award Research Administration Conference, and Region IV Chair. Diane’s contributions have been recognized with NCURA’s Julia Jacobsen Distinguished Service Award and the Region IV Distinguished Service Award. She is also a graduate of NCURA’s Executive Leadership Program. Upon her election, Diane shared: “I am truly honored and grateful to give back to NCURA by serving in this role. I am deeply committed to membership engagement, believe strongly in the research administration profession and am excited to collaborate with all stakeholders. I wholeheartedly support the future of NCURA and LOVE supporting research…together with my NCURA family. #ResearchImprovesYourLife.”

Tricia Callahan, Interim Director of Research Training, Office of Research Administration, Emory University, has been elected to the position of Secretary of NCURA. As an involved NCURA member for more than 25 years, she has served on the Board of Directors, as Chair of the Select Committee on Peer Programs/Peer Review, as Co-Chair of the Pre-Award Research Administration Conference, and as a member of both the Nominating & Leadership Development Committee and Professional Development Committee. Tricia currently serves as a faculty member for the Level I: Fundamentals of Sponsored Projects Administration workshop and as an NCURA Peer Reviewer. Tricia has been honored as an NCURA Distinguished Educator and a recipient of NCURA’s Julia Jacobsen Distinguished Service Award. She is also a graduate of NCURA’s Executive Leadership Program. Upon her election, Tricia shared: “I am excited and honored to have been elected as NCURA’s next Secretary. I look forward to working closely with my fellow officers, the dedicated staff, and the entire NCURA community to advance our shared mission of promoting excellence in research administration.”

Eva Björndal, Director of Research Award Administration (Pre- and Post-Award), King’s College London, has been elected to the position of Treasurer-Elect of NCURA. Throughout her 15 years of NCURA membership and service, Eva has served on the Board of Directors, as Chair of Region VIII, and Co-Chair of the Annual Meeting. Eva has served on several committees, including the Professional Development Committee, Nominating & Leadership Development Committee, and the Select Committee on Global Affairs. Eva has also received the NCURA Distinguished Educator designation and is a graduate of NCURA’s Executive Leadership Program. On being elected to this position, Eva shared: “It is an honor to be elected as the Treasurer-Elect of NCURA. I really look forward contributing to NCURA’s continued success and serving the membership in alignment with the organization’s mission and values.”

Tanya Blackwell, Grants and Contracts Supervisor, School of Medicine, Seattle Children’s Research Institute, has been elected to the position of At-Large Board Member. Tanya has spent her 12 years of membership in various roles of service, including as Chair of the Select Committee of Diversity, Equity, and Inclusion, as Chair of the Education Scholarship Fund Select Committee, and a member of the Professional Development Committee. Tanya has also served on the program committees for the Annual Meeting, Financial Research Administration Conference, and Pre-Award Research Administration Conference. She is also a graduate of NCURA’s Executive Leadership Program. On being elected to this position, Tanya shared: “Being elected to NCURA’s Board of Directors is an honor and responsibility that I do not take lightly, as I have witnessed first-hand how impactful these roles are to the future of our organization. Working alongside the other Board members, I look forward to serving NCURA’s membership while upholding our mission, core values, and commitment to diversity, equity, and inclusion. While these are uncertain times for us all, I am certain of our collective ability to advance the profession of research administration.”

Ashley Stahle, Associate Director, Sponsored Programs, Office of Sponsored Programs, Colorado State University, has been elected to the position of At-Large Board Member. As a member since 2015, Ashley has served on the Board of Directors, as a member of the Professional Development Committee, and as Chair of Region VII. Ashley has served on the program committees for the Pre-Award Research Administration Conference and the Financial Research Administration Conference. Ashley is currently serving as the Co-Chair for the 2026 Financial Research Administration Conference. On being elected to this position, Ashley shared: “I’m truly honored and excited to have been elected to serve on the NCURA Board of Directors. Returning to the Board is a meaningful opportunity to continue supporting the incredible work NCURA does for our profession. I’m grateful for the trust and support of my colleagues and all who voted—thank you! I look forward to collaborating with fellow Board members, NCURA staff, and our amazing community to advance the mission of this organization and the field of research administration.”

Hillebrand will take office January 1, 2026, for one year after which she will succeed to a one-year term as President of NCURA. Callahan will take office on January 1, 2026, and will serve a two-year term. Björndal will become Treasurer-Elect on January 1, 2026, and will serve for one year after which she will succeed to a two-year term as Treasurer. Both Blackwell and Stahle will begin serving January 1, 2026, for a two-year term.

By Robert Pilgrim and Dustin Schwartz

When NCURA held its 1st Annual AI Symposium in August 2023, most attendees had heard of ChatGPT, but few had used it. In just 18 months, generative AI has transformed from a curiosity to a critical asset. In another 18 months, it could become a standard part of every workflow, as familiar and necessary as Microsoft Word or email.

Generative AI can rapidly process vast amounts of content, identifying connections across documents. This means a staff member with basic knowledge of research administration and a well-trained AI could outperform peers who do not use AI. For research administrators, some immediate benefits include:

• Responding to repetitive faculty questions

• Reducing the time spent interpreting sponsor guidelines

• Creating and managing internal knowledge bases

• Assisting with report drafting and compliance summaries

A knowledge worker is someone whose primary job involves the creation, processing, and application of information, rather than physical labor. Faculty members, research administrators, analysts, and compliance officers fall into this category. Over the past few decades, tools such as the internet, email, and Google Search have become indispensable to knowledge workers. Today, a new tool is entering this essential toolkit: generative AI.

Just as we cannot imagine functioning without web search, those who embrace generative AI will soon feel the same way about their AI assistants. These tools enable knowledge workers to efficiently digest information across hundreds of documents in seconds, identify key points, suggest actions, and automate repeatable tasks. More importantly, these tools can elevate the contributions of junior or less experienced staff, offering them real-time access to expert-level guidance.

Generative AI enables interaction through plain English. Platforms such as ChatGPT, Claude, Copilot, and Perplexity allow users to upload documents, “chat” to these documents, and receive insights. You can build context-specific tools to help:

• Answer guidelines, policies, and procedure questions

• Guide researchers in preparing bios

• Review and improve draft proposals

• Summarize compliance requirements

• Answer common questions from departments or units

These solutions can be made more effective by uploading sponsor guidelines such as the NSF Proposal & Award Policies & Procedures Guide (PAPPG), which AI can reference for detailed responses.

In April 2025, a leaked internal memo from Shopify, a $129 billion global company, included details that may be a signal of things to come. It made one thing unmistakably clear: artificial intelligence is not optional. According to the memo, AI adoption is now a baseline expectation for every employee at every level, without exception (Louise, 2025). Some of the key points in the memo could be a powerful signal of what’s coming for the rest of us:

• AI is part of every workflow

• AI integration will be evaluated during annual reviews

• Before requesting resources, teams must justify why AI can’t meet their needs

Figure 1. Academia is typically slower to adapt than industry to new technology

Institutions must adopt AI now to remain competitive and resource-efficient. While private sector organizations resemble agile speedboats, universities are more like oil tankers, difficult to steer but incredibly powerful once moving in the right direction. By empowering frontline administrators with AI, institutions can begin the long but necessary shift toward operational agility.

A Common Use Case

The Problem

Example: Proposal submissions are increasing, especially from junior faculty and interdisciplinary collaborations. Many of these users are unfamiliar with proposal procedures and submission systems. Staff are inundated with redundant questions and often refer to lengthy PDFs or SharePoint pages that few people read.

The Solution

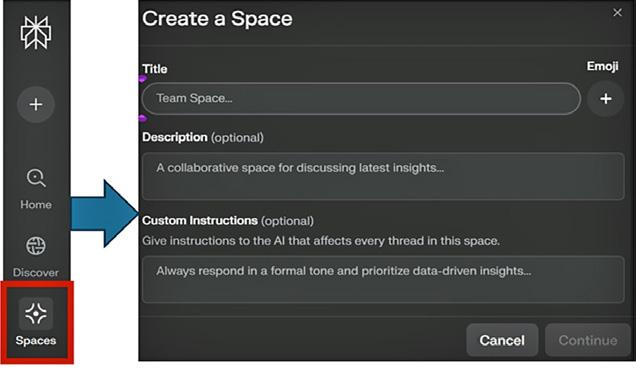

Setting Up and Deploying Your Chatbot

Use a platform such as Perplexity to get started. It doesn’t require an account to test or share bots so you can get up and running in minutes.

A chatbot trained on institutional workflows and public documents can answer common questions around proposals, submissions, and compliance. It can offer links to the correct templates, point out which training is required, and even quiz users on whether their project is eligible for submission under a particular program.

Training Your AI Assistant

Think of AI as a new hire. They may be technically skilled but need training in your institution’s specifics. Build a knowledge base using:

• Public-facing documents. Looking over your website is a good place to start.

• Internal procedures (if safe to share)

• Living Q&A documents added by staff

Maintain a central, editable file (e.g., Microsoft Word, Google Docs) to track new questions and answers. Update the chatbot weekly using this file. Assign someone to curate content and ensure consistency. This ensures the bot reflects new practices and avoids confusion from outdated answers.
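For teams that keep the living Q&A file in a structured form, the weekly update can be partly scripted. The following is a minimal sketch, not a feature of any particular chatbot platform: the `QAEntry` fields, the matching rule in `normalize`, and the plain-text output layout are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class QAEntry:
    """One question-and-answer pair from the staff-maintained file (hypothetical shape)."""
    question: str
    answer: str
    updated: date


def normalize(question: str) -> str:
    """Lowercase, collapse whitespace, and drop trailing '?' so near-identical questions match."""
    return " ".join(question.lower().split()).rstrip("?")


def merge_entries(existing: list[QAEntry], new: list[QAEntry]) -> list[QAEntry]:
    """Keep one entry per question, preferring the most recently updated answer."""
    latest: dict[str, QAEntry] = {}
    for entry in existing + new:
        key = normalize(entry.question)
        current = latest.get(key)
        if current is None or entry.updated >= current.updated:
            latest[key] = entry
    return sorted(latest.values(), key=lambda e: normalize(e.question))


def to_upload_text(entries: list[QAEntry]) -> str:
    """Render the merged knowledge base as plain text that can be saved and uploaded."""
    blocks = [
        f"Q: {e.question}\nA: {e.answer}\n(Last updated: {e.updated.isoformat()})"
        for e in entries
    ]
    return "\n\n".join(blocks)
```

The person assigned to curate content would still review the merged file before uploading it, since the script only removes duplicate questions and cannot judge whether an answer is still correct.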

Basic setup steps:

1. Title & Description: Choose a meaningful name and purpose.

2. Custom Instructions (Prompt Engineering): Specify the bot’s tone, user level, and what to do when unsure.

3. Upload Files: Documents such as policy guides.

4. Add Public Links: Include institutional and sponsor websites that are open access.

Example Custom Instructions: “You are an expert Research Administrator at an R1 institution. You are responsible for helping faculty navigate complex proposal processes, ensuring compliance with federal guidelines (e.g., NSF PAPPG), and improving proposal quality across your institution. Your tone should be clear, professional, and supportive. If you don’t know the answer, suggest a next step or resource. Prioritize concise, policy-aligned answers and clarify ambiguous questions through follow-up prompts. Assume your users are new faculty with limited grant writing experience. Use the supplied files and links to inform your responses. If relevant information can be found in those files, provide a summary and guide users to the appropriate section or document.”
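If you maintain more than one bot, it can help to assemble custom instructions from a few named parts so that tone, audience, and fallback behavior stay consistent. The sketch below is one hypothetical way to do that; `BotProfile` and `build_instructions` are illustrative names, and the resulting text would simply be pasted into whatever instructions field your platform provides.

```python
from dataclasses import dataclass


@dataclass
class BotProfile:
    """The pieces of a custom-instruction prompt (hypothetical structure)."""
    role: str       # who the bot should act as
    audience: str   # who it is talking to
    tone: str       # how it should sound
    fallback: str   # what to do when it does not know the answer


def build_instructions(profile: BotProfile) -> str:
    """Combine the profile fields into a single instruction paragraph."""
    parts = [
        f"You are {profile.role}.",
        f"Assume your users are {profile.audience}.",
        f"Your tone should be {profile.tone}.",
        f"If you don't know the answer, {profile.fallback}.",
        "Use the supplied files and links to inform your responses.",
    ]
    return " ".join(parts)


junior_faculty_bot = BotProfile(
    role="an expert Research Administrator at an R1 institution",
    audience="new faculty with limited grant writing experience",
    tone="clear, professional, and supportive",
    fallback="suggest a next step or resource",
)
print(build_instructions(junior_faculty_bot))
```

Keeping the parts separate makes it easy to reuse the same role and fallback wording while changing only the audience as the bot's scope expands.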

Start small. Target junior faculty. They ask predictable, high-impact questions. As the bot gains value, expand its scope.

Imagine a scenario where:

• AI scans recent publications/proposals and awards and aligns faculty interests with new funding solicitations

• A second AI drafts proposal templates tailored to those opportunities

• A third AI evaluates the drafts using sponsor-specific rubrics, suggesting improvements

• The process iterates until a strong draft is ready for human review

All of this can happen in minutes, not weeks. The implications are massive: greater speed, increased quality, and more time for strategic work by human staff.
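The draft, evaluate, and revise cycle in this scenario can be sketched as a simple loop. This is an illustration under stated assumptions, not a description of any existing product: the `evaluate` and `revise` functions stand in for AI calls, and the toy versions here only check for three hypothetical section headings so the example can run on its own.

```python
from typing import Callable

# Hypothetical rubric: section headings a sponsor might require.
REQUIRED_SECTIONS = ["Project Summary", "Budget Justification", "Data Management Plan"]


def refine_draft(
    draft: str,
    evaluate: Callable[[str], tuple[float, list[str]]],
    revise: Callable[[str, list[str]], str],
    target_score: float = 0.8,
    max_rounds: int = 5,
) -> tuple[str, float, int]:
    """Revise a draft until it meets the target score or the round limit is reached."""
    score, suggestions = evaluate(draft)
    rounds = 0
    while score < target_score and rounds < max_rounds:
        draft = revise(draft, suggestions)
        score, suggestions = evaluate(draft)
        rounds += 1
    return draft, score, rounds


def toy_evaluate(draft: str) -> tuple[float, list[str]]:
    """Stand-in evaluator: score is the share of required sections present."""
    missing = [s for s in REQUIRED_SECTIONS if s not in draft]
    return 1 - len(missing) / len(REQUIRED_SECTIONS), missing


def toy_revise(draft: str, suggestions: list[str]) -> str:
    """Stand-in reviser: add a placeholder for the first missing section."""
    return draft + f"\n\n{suggestions[0]}\n[to be written]"


final_draft, final_score, rounds_used = refine_draft(
    "Project Summary\nOur project studies...", toy_evaluate, toy_revise, target_score=1.0
)
```

The round limit matters: without it, a draft that never reaches the target score would loop indefinitely, and the point of the process is to hand a reasonable draft to a human reviewer.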

It may take many years of training before we have a knowledgeable, competent human research administrator. When this person leaves or retires, the process must begin again, and knowledge must constantly be updated as new regulations and guidelines are released. Unlike human knowledge, which cannot be transferred directly between people, a digital AI solution can be copied, pasted, modified, and merged in seconds for virtually zero cost.

This opens many opportunities for those in research administration to become significantly more efficient, effective, and productive without writing a single line of code, something that would have been difficult or even impossible just 24 months ago. By using publicly available data and a few straightforward steps, you can create a helpful digital assistant tailored to your team’s specific needs. Don’t get left behind: you can start building AI solutions for free today with public data!

Reference

Louise, N. (2025, April 8). Shopify CEO Tobi Lütke confirms leaked internal memo on social media about “hiring AI before humans.” Tech Startups. https://techstartups.com/2025/04/07/shopify-ceo-tobi-lutke-confirms-leaked-internal-memo-on-social-media-about-hiring-ai-before-humans/

Robert Pilgrim, PhD, is the Associate Director for Data Strategy & Insights, Division of Research & Innovation, University of Arkansas. Robert helps support the research analytics projects on campus and has a keen interest in the emerging field of AI and its application to research administration. He can be reached at rpilgrim@uark.edu.

Dustin Schwartz is the Electronic Research Administration Manager, Division of Research & Innovation, University of Arkansas. Dustin specializes in leveraging technology to solve problems and recently helped lead the implementation of an AI-powered faculty-solicitation matching platform for our researchers. He can be reached at djschwar@uark.edu.

Standard, Expedited, or Exempt: Can ChatGPT Determine IRB Review Category?

Emmett Lombard, Gannon University

Exploring Marginality, Isolation, and Perceived Mattering Among Research Administrators

Denis Schulz, California State University San Marcos, Karen Gaudreault, University of New Mexico, and Ruby Lynch-Arroyo, University of Texas at El Paso

A Case Study of Research Administrator Perceptions of Job Satisfaction in a Central Research Administration Unit at a Private University

Noelle Strom, University of Denver

Research Security and the Cost of Compliance: Phase I Report

Council on Government Relations

BOOK REVIEW: The SAGE Handbook for Research Management, 1st ed.

Chloe Brown, Texas State University

BOOK REVIEW: The Mentor’s Guide: Five Steps to Build a Successful Mentor Program, 2nd ed.

Clinton Patterson, Texas A&M University

The Research Management Review invites authors to submit article proposals. The online journal publishes a wide variety of scholarly articles intended to advance the profession of research administration. Authors can submit manuscripts on diverse topics.

www.ncura.edu/Publications/ResearchManagementReview.aspx

Research compliance and funding management are critical, but complex. Artificial intelligence can ease the burden and enhance institutional outcomes.

• Complex administrative processes

• Labor-intensive documentation

• Time-consuming risk detection and recordkeeping

• High-effort funding and collaborator identification

• Inefficient award portfolio management

• Data-heavy tasks like reporting and setup

• Smart summaries: Provides tailored guidance based on policies or project terms

• Proactive monitoring: Flags risk and potential violations

• Auto-filled forms: Uses your systems to complete submissions faster

• Collaboration matching: Connects researchers with shared goals

• Proposal tools: Recommends funding, compiles award info

• Workflow automation: Accelerates award setup and reporting

Let AI power your research compliance and operations.

Connect with our team to learn more.

go.hcg.com/research-enterprise

In June, the NCURA Board of Directors convened virtually for our regularly scheduled board meeting. President Denise Moody officially called the meeting to order and opened with thoughtful welcoming remarks. She encouraged all members to consider attending the upcoming 3rd Annual AI Symposium, a key initiative chaired by Nancy Lewis, Lori Schultz, and Thomas Spencer. Denise expressed her sincere appreciation for the Board members, recognizing their ongoing dedication and service to NCURA, particularly in light of the ongoing pressures and challenges affecting our profession and institutions.

Denise provided an update on the upcoming NCURA Regional Leadership Conference, which will take place in September. In preparation for the event, leaders from regional groups as well as national committees were asked to reflect on and respond to a series of strategic questions. These questions closely align with those posed by past President Kris Monahan during her term, continuing a thoughtful and intentional dialogue about the future direction and priorities of the organization.

The Board recognized and extended gratitude to Dr. Andrea Hollingsworth, keynote speaker at the 2025 PRA Conference, whose donation of her speaker fees inspired the creation of the NCURA Bridge Fund. Kris Monahan played a key role in drafting the initial vision for the fund, and thanks to the swift efforts of NCURA staff, the initiative has been brought to life and is already making a positive impact.

In addition to regular committee reports, a number of important action items were presented by several select and standing committees for Board discussion and approval. The following items were reviewed and approved:

■ Nominating and Leadership Development Standing Committee – Three Distinguished Educator nominations

■ Professional Development Committee – Six Traveling Workshop Faculty nominations and the nomination of a Co-Editor for NCURA Magazine

■ Select Committee on Global Affairs – Nominations for six Global Fellowships

Vice President Shannon Sutton provided an update on the 67th Annual Meeting (AM67). Shannon shared details highlighting the wide range of opportunities for professional networking, high-quality educational sessions, and member engagement. Alongside her co-chairs, Candice Ferguson and Kathy Durben, Shannon and the full program committee worked diligently to deliver an exceptional conference experience for all attendees.

As students return to campus and the Fall semester begins, I want to take a moment to extend my warmest wishes to our entire NCURA community. May this new academic year be filled with growth, connection, and success for you and your teams.

If you have any questions, comments, or concerns, please feel free to reach out to any member of the Board of Directors or NCURA staff.

Kay Gilstrap, CRA, is the NCURA Treasurer and serves as Director for Research Internal Controls and Policy Development at Georgia State University. She can be reached at kgilstrap@gsu.edu.

BOARD OF DIRECTORS

President

Denise Moody

Lundquist Institute for Biomedical Innovation

Vice President

Shannon Sutton

Georgia State University

Immediate Past President

Kris Monahan

Harvard University

Treasurer

Kay Gilstrap

Georgia State University

Secretary

Diane Hillebrand

University of North Dakota

Executive Director

Kathleen M. Larmett

National Council of University Research Administrators

Eva Björndal

King’s College London

Natalie Buys

University of Colorado Anschutz Medical Campus

Jennifer Cory

Stanford University

Jill Frankenfield

University of Maryland, Baltimore

Katy Gathron

MD Anderson Cancer Center

Melanie Hebl

University of Wisconsin-Madison

Katherine Kissmann

Texas A&M University

Rosemary Madnick

Lundquist Institute for Biomedical Innovation

Danielle McElwain

University of South Carolina

Nicole Nichols

Washington University in St. Louis

Scott Niles

Georgia Institute of Technology

Lamar Oglesby

Rutgers University

Geraldine Pierre

Boston Children’s Hospital

Lori Ann Schultz

Colorado State University

Thomas Spencer

University of Texas Rio Grande Valley

By Kathleen Halley-Octa and Kari Woodrum

In the ever-evolving landscape of research administration, artificial intelligence (AI) presents both challenges and opportunities. As new research administrators, your fresh perspective, digital fluency, and adaptability uniquely position you to help shape how we integrate these technologies into our daily work.

Understanding the AI Landscape

AI is no longer confined to science fiction or specialized computer labs. Today, AI tools appear in our inboxes, spreadsheets, budgeting tools, and compliance platforms. At its core, AI refers to systems trained to perform tasks that traditionally require human input, such as interpreting language, detecting anomalies, or generating reports. Machine learning and natural language processing allow these systems to learn from patterns and interact more naturally with users. The emergence of generative AI, such as ChatGPT or DALL-E, adds the ability to produce original text, images, and even financial forecasts. For instance, some research administrators are using these tools to help draft budget justifications, generate proposal summaries, or brainstorm outreach language for broader impacts.

But not all AI outputs are created equal. Review them critically so you can recognize results that miss the nuance or context a human would provide.

The Critical Role of Human Oversight

AI can process massive amounts of data and identify patterns, but it can’t always understand context or anticipate ethical implications. For instance, an AI tool might flag a travel expense as unallowable when, in reality, it’s a grant-approved expenditure tied closely to the scope of work approved by the sponsor. Without human review, these tools can cause confusion or frustration and lead to incorrect reporting.

This is why critical thinking remains central to the research administrator’s role. Your ability to ask questions, interpret results, and apply institutional knowledge makes AI effective, not vice versa.

AI is already being used across several areas of financial research administration to streamline tasks and support decision-making. While these tools are not magic solutions, they offer real efficiencies when paired with critical human oversight. Below are some specific, real-world applications where AI is already making a difference:

1. Expense Auditing and Anomaly Detection: Tools like AppZen use AI to audit expense reports automatically. These systems scan receipts, flag potential policy violations, and detect patterns that may signal errors or fraud.

2. Monitoring Spending Trends and Forecasting: Platforms such as Anodot apply machine learning to financial data to identify outliers or unexpected shifts in spending behavior. While these tools don’t replace budgeting expertise, they can highlight areas that merit further review, such as a sudden increase in subaward payments or travel costs.

3. Budgeting and Financial Planning: Enterprise systems like Workday Adaptive Planning incorporate AI capabilities for financial forecasting and scenario modeling. This helps post-award teams assess how changes in funding levels or spending rates could impact the overall budget without manually recalculating spreadsheets.

4. Travel and Expense Automation: Products such as SAP Concur use AI to streamline travel and expense management. The system can read and categorize receipts using optical character recognition (OCR), match them to trips, and flag duplicate or out-of-policy charges.

5. Spend Analysis and Procurement Optimization: Coupa is another real-world tool that leverages AI to analyze institutional purchasing data. It can identify vendors with high error rates, suggest more cost-effective options, and monitor invoice processing trends for signs of inefficiency or risk.

While these tools can automate repetitive processes and surface insights faster than a manual review, they aren’t perfect. Anomalies flagged by AI still require human interpretation to determine if action is needed.
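To make the idea of flagging anomalies concrete, here is a minimal sketch of one common statistical approach, the modified z-score based on the median. It is not how any of the products named above work internally; the function name, the threshold of 3.5, and the sample figures are assumptions chosen for illustration.

```python
from statistics import median


def flag_outliers(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return the positions of amounts that sit unusually far from the median.

    Uses the modified z-score: 0.6745 * |x - median| / MAD, where MAD is the
    median absolute deviation. Items above the threshold are flagged for a
    person to review; nothing is rejected automatically.
    """
    if not amounts:
        return []
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # All values are (nearly) identical, so there is no spread to compare against.
        return []
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical monthly travel charges on one award.
monthly_travel = [1200.0, 1150.0, 1300.0, 1250.0, 1180.0, 9800.0]
needs_review = flag_outliers(monthly_travel)
```

In this made-up data, only the last month is flagged. Whether that charge is an error or an approved conference trip is exactly the judgment the research administrator still has to make.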

As a research administrator, your role is to bring context to the machine’s output, especially when understanding grant-specific stipulations or institutional policies. These tools don’t remove responsibility; they redistribute it. You’re still the expert—AI helps you move faster and focus your expertise where it matters most.

AI’s Limitations: Know What to Watch For

It’s equally important to understand what AI can’t do, at least not reliably. Current AI systems are prone to a few well-known issues:

• Hallucinations – AI sometimes invents facts that sound plausible but aren’t true, such as citing a grant policy that doesn’t exist.

• Randomness – The same prompt can yield different outputs each time it is run. For example, if you ask an AI tool to draft a cost-share justification several times, you may receive different versions, some of which contain conflicting information.

• Bias – AI learns from the data it’s trained on. If that data is biased, so are the results. For example, an AI tool may suggest preferred vendors for lab supplies but consistently deprioritize minority-owned businesses, reflecting biased training data from past procurement trends.

• Struggles with Negatives – AI often misinterprets statements with “not” or “except.” For example, if you prompt AI to list expenses not allowed under participant support costs, it may return a list of allowed expenses instead, leading to confusion and possible noncompliance if not caught.

• Lack of Context – It can’t always tell when something that looks incorrect is valid in a particular situation. For instance, AI flags a late subcontract payment as an error, unaware that it was intentionally delayed due to a pending IRB approval, something a human would recognize as a valid exception.

This is where your analytical skills come in. Every AI-generated output should be reviewed critically—ideally with a process that includes validation, ethical review, and a clear understanding of institutional guidelines. Think of AI as a first draft, not a final answer—your expertise ensures accuracy, context, and alignment with sponsor and institutional expectations.

How You Can Help Your Team Use AI Wisely

As someone early in your research administration career, you may already feel more comfortable with new technologies than some of your colleagues. That’s a strength you can bring to your organization. Here are a few ways to be a change leader:

• Model Curiosity, Not Certainty: Show that you’re experimenting thoughtfully with AI tools. Share both successes and things that didn’t work.

• Host Informal Demos: Offer your team short, informal “AI 101” sessions. Demonstrate how you used a tool to generate a report, visualize data, or double-check a budget narrative.

• Promote Ethical Use: Talk openly about AI limitations and privacy concerns. Remind colleagues to avoid feeding tools sensitive or proprietary data and never rely on AI alone for compliance tasks.

• Be Aware of Institutional Restrictions: Many universities have implemented policies that restrict or prohibit the use of generative AI tools with certain types of data—especially sensitive, confidential, or proprietary grant information. These limitations are often driven by concerns around data security, privacy, and vendor compliance. Before using AI tools in your workflow, check your institution’s guidelines and approved tools list to ensure you’re in compliance.

• Encourage Prompt Engineering: Share tips for writing better prompts: be specific, provide context, and ask follow-up questions. Show how small changes in phrasing can improve AI results.

• Start with Small Wins: Look for low-stakes ways to test AI in your day-to-day work—such as summarizing meeting notes or refining a budget narrative. Small successes can build your confidence and spark ideas to share with others.

One helpful way to think about AI is to treat it like an intern: eager, fast, and surprisingly capable—but still in need of guidance and oversight. The more clearly you explain what you need and provide feedback, the better the results. Framing it this way can help you use AI more effectively and build confidence as you explore its potential.

Ethical Questions Every Research Administrator Should Ask

Ethics have always been foundational to research administration, from ensuring compliance to safeguarding integrity in proposal review and financial stewardship. As we integrate AI into our workflows, these responsibilities don’t go away; in fact, they become even more critical. AI introduces new questions about data use, accountability, and transparency that require the same level of thoughtfulness and scrutiny we already apply to sponsor regulations and institutional policies.

As we bring AI further into our daily work, we also have to ask the tough questions:

• Is the data we’re using fair and complete?

• Who is accountable when an AI system makes a mistake?

• Are we transparent with researchers, sponsors, and leadership about how we’re using AI?

• How do we ensure confidentiality, especially when tools process sensitive grant data?

These questions won’t always have simple answers, but asking them keeps ethics at the forefront. In a field where trust, compliance, and integrity are essential, introducing AI means those principles matter even more. By staying curious and cautious, you can help ensure that AI enhances—not compromises—the ethical standards research administration is built on.

The future of research administration is likely to include deeper AI integration—predictive financial modeling, automated reporting, and even AI-assisted peer review. As a new research administrator, you are in a unique position. You can help your organization explore AI in thoughtful, practical ways while fostering a culture of critical engagement. Whether you’re reconciling budgets, reviewing subawards, or drafting compliance language, AI can be a powerful co-pilot—if you remain in the driver’s seat. So, explore. Question. Share what you learn.

The tools will continue to evolve, and so will your skills. Staying informed, asking good questions, and engaging in open dialogue with colleagues will help you use AI wisely and confidently. You don’t have to have all the answers to lead the way, just a willingness to explore, learn, and share. The crossroads of AI and critical thinking is where the future of research administration begins, and you’re already standing at the intersection.

Kathleen Halley-Octa is a Manager with Attain Partners’ Research practice. She has 15 years of experience in research administration, with expertise in pre-award operations, training development, and change management. Kathleen focuses on eRA system implementations, organizational assessments, and business process improvement. She can be reached at kmhalleyocta@attainpartners.com.

Kari Woodrum is a Grants and Contracts Assistant with the Prairie Research Institute at the University of Illinois, Urbana-Champaign. In this role, she supports researchers throughout the full life cycle of grants and contracts, including budget development, proposal preparation and submission, financial monitoring, and compliance with sponsor and institutional requirements. She can be reached at kwoodrum@illinois.edu.

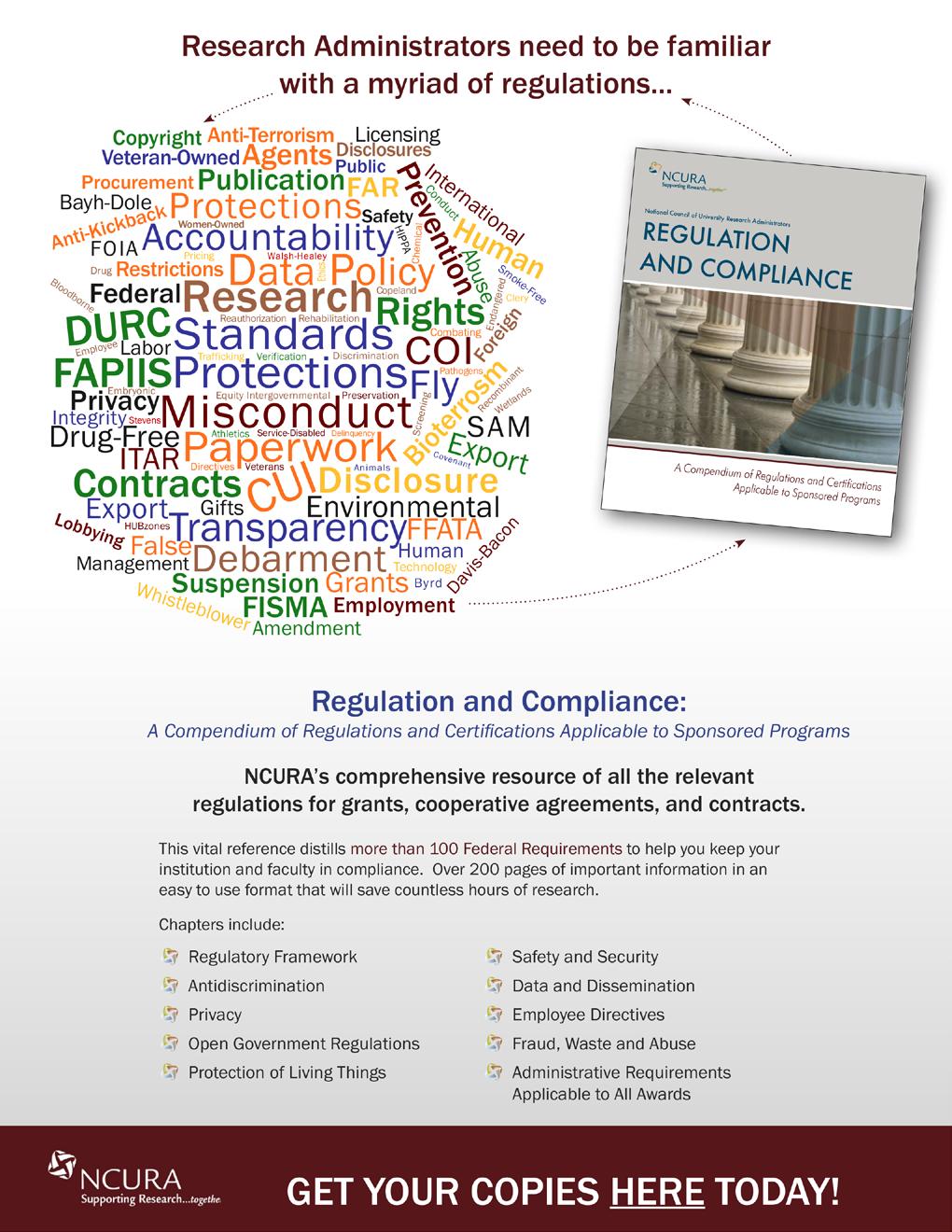

This handy reference includes the regulatory updates issued April 2024, OMB FAQS and Key Revisions issued January 2025. Bulk Pricing Available. Order Yours!

By David Richardson and David Smelser

One cannot ignore the words “artificial intelligence,” for they are everywhere, from the barrage of news stories and advertisements to promises and wild rumors. AI is increasingly becoming a part of our day-to-day lives at home and at the office. In a recent office-wide survey on the use of AI at the University of Illinois at Urbana-Champaign Sponsored Programs Administration, nearly all respondents indicated some daily use of AI for editing, drafting emails, researching information, and generating ideas. With the inevitability that AI will become an integral part of your work, it is best to be knowledgeable about it so you can better understand its capabilities, limitations, and how it will influence your job.

While we cannot predict the future, we agree that the future of research administration involves artificial intelligence. We believe the advances in artificial intelligence will permit it to autonomously perform work that is rote, allowing us time to focus on work that is more meaningful, with more substantive contributions to the research enterprise. As an example, take the task of writing an article for NCURA Magazine. Everyone knows it is easier to respond to someone else’s draft than it is to start from scratch. We provided the following prompt (words in italics below) to Microsoft Copilot to generate a draft.

*Write a 2.5 page article on Research Administration and how the use of Artificial Intelligence could be useful in transforming the field of Research Administration, creating new efficiencies, and supporting data-driven decision making.*

Transforming Research Administration with Artificial Intelligence: Creating New Efficiencies and Supporting Data-Driven Decision Making

Research administration is a critical component of the research ecosystem, encompassing the management of research projects, funding, compliance, and reporting. As the volume and complexity of research activities continue to grow, the need for efficient and effective research administration has become more pronounced. Artificial Intelligence (AI) offers promising solutions to transform the field of research administration, creating new efficiencies and supporting data-driven decision making.

Research administration involves a wide range of activities, including grant management, compliance monitoring, financial oversight, and reporting. Administrators are responsible for ensuring that research projects are conducted in accordance with regulatory requirements, institutional policies, and funding agency guidelines. They also play a crucial role in managing budgets, tracking expenditures, and ensuring that research outcomes are accurately reported.

Given the multifaceted nature of research administration, administrators often face challenges related to data management, compliance, and resource allocation. Traditional methods of managing these tasks can be time-consuming and prone to errors, leading to inefficiencies and potential compliance issues. This is where AI can make a significant impact.

AI technologies, such as machine learning, natural language processing, and robotic process automation, have the potential to revolutionize research administration. By automating routine tasks, enhancing data analysis, and providing predictive insights, AI can help administrators streamline their workflows and make more informed decisions.

One of the most immediate benefits of AI in research administration is the automation of routine tasks. For example, AI-powered tools can automate the process of grant application review, reducing the time and effort required to evaluate proposals. These tools can analyze large volumes of text, identify key information, and flag potential issues, allowing administrators to focus on more strategic activities.

Similarly, AI can automate compliance monitoring by continuously scanning research activities for potential violations of regulatory requirements. This can help institutions stay ahead of compliance issues and reduce the risk of penalties.

Research administration involves the management of vast amounts of data, from financial records to research outputs. AI can enhance data analysis by identifying patterns and trends that may not be immediately apparent to human administrators. For instance, machine learning algorithms can analyze historical funding data to identify factors that contribute to successful grant applications. This information can be used to guide future funding strategies and improve the chances of securing grants.

AI can also support data-driven decision making by providing real-time insights into research performance. For example, AI-powered dashboards can visualize key performance indicators, such as publication rates, citation counts, and funding levels, allowing administrators to monitor progress and make informed decisions about resource allocation.

One of the most powerful applications of AI in research administration is its ability to provide predictive insights. By analyzing historical data and identifying patterns, AI can predict future trends and outcomes. For example, AI can forecast funding availability based on historical trends and current economic conditions, helping institutions plan their research budgets more effectively.

AI can also predict potential compliance issues by analyzing patterns of past violations. This can help administrators proactively address compliance risks and implement preventive measures.

While the potential benefits of AI in research administration are significant, there are also challenges and considerations to keep in mind. One of the primary challenges is the need for high-quality data. AI algorithms rely on large volumes of accurate and relevant data to generate meaningful insights. Institutions must invest in robust data management practices to ensure that their data is reliable and up-to-date.

Another consideration is the ethical use of AI. Administrators must ensure that AI tools are used in a transparent and accountable manner, with appropriate safeguards to protect privacy and prevent bias. This includes regularly auditing AI algorithms to ensure that they are functioning as intended and do not perpetuate existing inequalities.

AI has the potential to transform research administration by automating routine tasks, enhancing data analysis, and providing predictive insights. By leveraging AI technologies, research administrators can create new efficiencies, support data-driven decision making, and ultimately improve the management of research activities. However, it is essential to address the challenges and considerations associated with AI implementation to ensure that its benefits are realized in a responsible and ethical manner.

As the field of research administration continues to evolve, AI will undoubtedly play an increasingly important role in shaping its future. Institutions that embrace AI technologies and invest in the necessary infrastructure and training will be well-positioned to navigate the complexities of modern research administration and achieve their research goals.

Various AI models have made significant improvements in the last few years, however in its current state AI is not ready to assume full control over the entire sponsored project lifecycle. While Copilot produced a realistic draft, it is not perfect and should be validated by subject matter experts (SMEs) to ensure AI’s end product is accurate and reasonable. As SMEs, we take the following exceptions to Copilot’s draft:

1. AI’s predictive capabilities are subject to accurate and relevant data, especially if the data needed to forecast future actions is contained in a local or closed environment. However, not even the best AI predictive models would have predicted what has happened in research administration over the past six months. Some AI models may see things faster than humans, but humans can see things AI can’t.

2. We liked how it mentioned institutions willing to invest in AI, but we think getting institutions to implement AI remains a major challenge. For example, the University of Tennessee seems to have a willingness with a dedicated Associate Vice Chancellor and Director of the AI Tennessee Initiative, but it will take time and visionary directives to push AI adoption across the campus’ research administration community.

3. We advocate for you to invest your time, dollars, and energy in creating an AI environment that is supplemental and supportive of existing workflows and software. We encourage NCURA to continue to advocate for AI-focused sessions and specialized forums that showcase success stories across the sponsored lifecycle.

We look forward to the incorporation of AI-driven tools to improve our research administration operations, and we look forward to recreating this article as the evolution of AI changes our profession for the better!

Dave Richardson is the Executive Associate Vice Chancellor for Research and Innovation at the University of Illinois, Urbana-Champaign. He can be reached at daverich@illinois.edu.

David Smelser is the Senior Director of Innovation & Optimization at the University of Tennessee, Knoxville. He can be reached at dsmelser@utk.edu.


The research management ecosystem is complex and diverse with multiple stakeholders supporting the research infrastructure.

NCURA’s comprehensive resource can help to support your team and partner offices across your institution. It covers the full range of issues impacting the grant lifecycle with more than 20 chapters including:

• Research Compliance

• Subawards

• Audits

• Export Controls

• Administering Contracts

• Sponsored Research Operations Assessment

• Pre-Award Administration

• Intellectual Property & Data Rights

• F&A Costs

• Regulatory Environment

• Communications

• Organizational Models

• Post-Award Administration

• Special Issues for Academic Medical Centers

• Special Issues for Predominantly Undergraduate Institutions (PUIs)

• Training & Education

• Staff and Leadership Development

• 1100 index references

• 40 articles added over the past year

• 21 chapters

• Updated 4 times a year

• 1 low price

With today’s remote work environment, this comprehensive PDF resource can be shared with your institution colleagues.

Recent Articles Include:

•Evaluating the Impact of Internal Submission Deadline Policy on Grant Proposal Success

•Preparing for and Surviving an Audit: Helpful Tips

•When Did You Say Your Proposal Was Due? Working on Short Deadlines

•Defining and Documenting Financial Compliance for Complex Costs

•Troublesome Clauses: What to Look for and How to Resolve Them

•Rewards of a Two-Part Subrecipient Risk Assessment

•Proposal Resubmission: Overcoming Rejection

• Mitigating Audit Risk at Small Institutions

•Fundamentals of Federal Contract Negotiation

•Managing Foreign Subawards from Proposal to Closeout

•How to Reduce Administrative Burden in Effort Reporting

•Strategies for Increasing Indirect Cost Recovery with Non-Federal Sponsors

•A Guide to Industry-University Cooperative Research Centers (IUCRCs)

• Building Research Administration Community Through Service

•Turning Off Turnover - The Use of a Progression Plan to Attract and Retain Employees

By Lori Ann Schultz

For the last 70 years, research administrators have been behind the scenes of every major scientific and technological development. We have worked with our researchers in support of life-saving medical interventions, missions to space, and the sequencing of the human genome. We have the curiosity to understand complex problems, the courage to navigate uncertainties and changing times, and the commitment to stay focused, even when the work is complex or unseen.

In the past five years, in particular, the work of research administrators has helped researchers navigate unprecedented events, such as the COVID-19 pandemic and the current state of the federal research ecosystem. We have proven our ability to adapt and to keep providing service, support, and advice to researchers when everything around them is changing. We can adopt that spirit to provide guidance, model best practices, and capitalize on the growth of generative AI tools and the possibilities they represent for our work.

Research administrators can utilize generative AI tools today in several ways to streamline day-to-day operations and support research within their organizations. We can use free versions of these tools without compromising regulated data, privacy, or business-sensitive information. There are even more options to use generative AI tools when our organizations are involved in setting guidelines, operating AI tools locally, and providing governance. The following is a list of examples of things research administrators can start doing today, as well as things that require institutional or organizational buy-in to help transform the way we provide service throughout the research administration lifecycle. These examples are not a replacement for the people who work in our field. Our expertise is needed to vet the output of these tools and understand the work deeply enough to use the tools effectively. Generative AI tools are innovative resources.

Proposal Development & Submission

•Proposal review checklists against a published solicitation

•Polishing language on budget justifications, letters of support

Receipt of Award/Negotiation

•Award summary sheet for PI/Co-PIs

•Suggested language in the negotiation process

Award Management

•Project management timelines

•Calendar for project milestones & deadlines

Compliance

•Draft data management plans

•Identify compliance requirements by sponsor

Training & Staff Development

•User aids for proposal submission systems

•Training calendars for staff development

•Policy drafting

The list above is not exhaustive. The people working in AI in research administration are already devising more innovative and unique applications of AI to support the research mission. It’s what we do.

Generative AI tools have the potential to automate routine transactional activities, allowing us to spend more time on priorities such as supporting researchers. Freeing up time will enable us to help our researchers effectively utilize AI in their work. We can work to advise our leadership on how this technology transforms our work. It also means we can provide more meaningful development and opportunities for the staff we are hiring, developing, and promoting.

Checking proposal documents against sponsor requirements

Matching proposal narrative to the Request for Proposals

Matching opportunities to relevant faculty

Automate process for PI acceptance of award terms

Contract triage & fallback positions for common “troublesome” clauses

Automated invoicing/financial reporting

Analysis of spending: burn rates, allowable costs, alert for prior approval requirements

IRB protocol triage

IACUC protocol triage

Workload analysis & benchmarking

Chatbot for body of research knowledge

The use of AI tools is simply the next challenge, one we can meet head-on, and one that doesn’t have to create a crisis. Let’s meet it like we have other challenges. This is our moment.

Keep an eye on the Federal Demonstration Partnership (FDP) as they work to include AI solutions to reduce administrative burden. Please get in touch with Lori Schultz at lori.schultz@colostate.edu if you’d like to learn more.

Lori Ann M. Schultz is the Assistant Vice President, Research Administration at Colorado State University. She loves to write and did not use a generative AI tool to write this article. She can be reached at lori.schultz@colostate.edu.

By Lamar Oglesby

There’s a moment in the movie Hook when a Lost Boy gently studies Peter Banning, a grown-up Peter Pan who no longer remembers who he was or the beloved abilities he once had, like flying, fighting, and crowing. And while flying is certainly a fantastic skill, in the story, it’s not really about flying. It’s about wonder, and the slow, quiet erosion of joyful thinking under the weight of deadlines, regulations, structures, processes, and spreadsheets. In a world of rapid adoption of artificial intelligence (AI), it’s also about how readily and easily we trade the complex for automation, and how our sense of purpose begins to shrink under the pressure of dashboards, AI scripts, and efficiency metrics.

In research administration, it seems that we are now being tempted to let the machine think for us. To let algorithms solve problems we once approached with intuition, collaboration, and deep institutional memory. But this profession, as demanding as it is, was never meant to be robotic: “When AI tools take over these tasks, individuals may become less proficient in developing and applying their own problem-solving strategies, leading to a decline in cognitive flexibility and creativity” (Gerlich, 2025). Research administration is a human craft. One that calls on our imagination to solve the unsolvable, to guide faculty through chaos, to find beauty in budgets, and meaning in compliance.

So the question isn’t just for Peter Pan. It’s for us, for you—the analysts, the specialists, the directors, the grant whisperers: Have you forgotten how to fly?

In the age of AI, forgetting cuts even deeper. As the world advances, it’s natural to lose touch with the ways we used to engage in our work. Colleagues often keep relics of yesteryear’s work tools on their desks as nostalgic pieces of art, such as old Mac or IBM monitors or printing calculators. But this is different, and it feels accelerated. We live in a world where thinking itself is being outsourced. In research administration, where complexity and nuance have always demanded both precision and creativity, we’re watching a quiet shift unfold. Tools once designed to support insight now begin to replace it.

Bots can generate answers and prompts before we reflect and wonder. The voice of the machine grows louder than the whisper of our inner curiosity. And in that space between speed and silence, many of us have unknowingly let go of something essential. Not just our imagination, but the joy of solving messy problems, the satisfaction of navigating ambiguity, the art of human judgment. AI can optimize, but it cannot wonder. What I’m suggesting is that perhaps the greatest risk in over-relying on it isn’t error alone, but forgetting that the greatest attribute of a research administrator is knowing how to fly.

“You can’t use up creativity. The more you use, the more you have.”

Creativity is not a finite resource; it’s a muscle that is developed through resistance and nurture. Like any muscle, it atrophies when unused. AI tempts us to skip the warm-up, to outsource the effort. But doing so robs us of the chance to grow. We rob ourselves of the chance to stretch, to think through the nuance, to grow sharper in our judgment and bolder in our solutions. The work may be hard, but it’s in the doing, in the creative strain of navigating a budget crisis, rewriting a compliance memo, or writing a difficult but necessary email that we build our professional strength.

AI can assist and support our profession in ways beyond human ability, but it cannot replace the instincts we refine through repetition, the insights we earn through struggle, or the creative courage it takes to lead in uncertainty. If we forget to use our muscles, we may wake up one day brilliant at automation, with impressive productivity and efficiency measures, but out of shape for the very work that makes this profession matter.

There was a time when creativity felt like a storm, wild, disordered, and alive. You could feel it in a room full of people brainstorming with no agenda other than the pursuit of something new. One of the most electric moments I’ve experienced recently came during a brainstorming session. Five professionals and scholars from various walks of life, equipped with a whiteboard and dry-erase markers, were challenged to devise a theoretical model for virtuality. There were no slides, no formal structure, no laptops, and no easy access to ChatGPT. Just an open invitation to think dangerously, collaboratively, and without filters. It reminded us what true creative energy feels like: chaotic, uncertain, frustrating, and ultimately thrilling.

But in too many spaces, the storm has gone quiet and AI is right there, always ready with an answer, always eager to help, like Rosie from The Jetsons turned into a well-meaning but overbearing helicopter parent. History, and our favorite cartoons, have reminded us that convenience comes with a cost. Why chase the muse when a machine can deliver five ideas in five seconds? Why struggle with a question when an algorithm offers instant resolution? We may think we’re being more creative, but we’re outsourcing the very struggle that gives creativity its soul, humans their ability to create, and research administrators their ability to fly.

Embrace the storm in all its glory, inconvenience, and its deeply human experience where imagination lives. Creativity demands friction. It thrives in uncertainty. You know exactly what that feels like, don’t you? When it feels impossible to meet the deadlines, answer the questions, and make it work under the most unreasonable timeline and circumstances. But you figured it out. You always have. There’s power in that process, but it needs space to stumble, to question, to be wrong. Many researchers have argued that pressure is an effective enabler or exhibitor of creativity (Gupta, 2015). In a study conducted by Robert Eisenberger and Justin Aselage, the authors reported that “performance pressure was positively related to creativity…” (Eisenberger and Aselage, 2009). We haven’t stopped creating; we are becoming too quiet about it. We’ve mistaken silence for efficiency and automation for insight. The storm is the gift. The wind is sudden and unpredictable, and the conditions may not offer visibility or comfort. Pressure is the altitude, and flight is impossible without pressure. That’s just…physics.

This is not an ANTI-AI article. Relearning how to fly doesn’t mean abandoning technology; it means reclaiming our humanity within it. It means choosing to feel something in the act of creating. To struggle. To doubt. To surprise ourselves. As Dr. Rajiv Nag so eloquently stated to Cohort 8 during his Qualitative Inquiry Methods course, “Sure, use AI, it is here in the room with us right now, but trust yourselves, and don’t be so quick to relinquish your power over to it. Yes, [this] (learning, completing a doctorate) is hard, and it will be challenging, but you can do it. As so many others have. Trust that you can do it too!”

So next time you reach for ChatGPT or Copilot to serve as a launchpad for ideas, consider starting it yourself instead. It might not be as fast or polished, but it might be yours. It might be the first step toward remembering who you are and what you’re capable of. Flight is not a gift; it’s a memory, and memories can be rekindled. All it takes is one wild, wonderful, imperfect thought.

Let Us Not Forget

AI is not the villain. It’s a powerful and beautiful tool. I remind you that it’s a two-way mirror reflecting how magical and powerful the human mind can be, while simultaneously reflecting how much of that power we’re willing to surrender in exchange for speed and ease.