Dear Reader,

The selection of research in this journal contributes to historical memory by illuminating lesser-known narratives and presenting arguments that arise from new perceptions of underlying contexts. This is the most extensive historical project many of us have conducted, and we are grateful to have had such an opportunity in high school. Our work has also laid the foundations for the editorial boards and issues in years to come.

Each of the contained papers bridges disciplines to provide perspectives that contribute to a more comprehensive understanding of past figures, events, and regions. As our school's name suggests, many of us have taken a historical approach to scientific subjects, while others have taken an empirical approach to history.

The journal’s name, Quetzalcoatl, was proposed by our advisor and instructor, Dr. Marcelo Aranda, and inspired by a family painting in his childhood home. The cover, which resembles the painting, depicts the Aztec god Quetzalcoatl (“Feathered Serpent”). The deity’s importance to Latin American tradition reflects the cultural connections that inspired many of our chosen topics. Quetzalcoatl is also the god of knowledge and learning, and this publication is our contribution to that pursuit: as editors and researchers, we have conducted interviews, peer-reviewed our draft research, presented our findings orally, and finally merged the results into one extensive body of knowledge.

We would like to acknowledge Dr. Marcelo Aranda for his guidance and flexibility while we pursued the topics that excited our curiosity, and the Chair of Humanities at NCSSM-Morganton, Dr. Sarah Booker, for granting our request to publish and for laying out the logistics to achieve this goal. The Research in Humanities class and editorial board have put significant effort into composing this journal (so much so that our work warranted homemade cookies and celebratory trips for tacos).

In your hands is the first NCSSM-Morganton Humanities Research Journal, which we hope provokes new questions and draws further attention in historical scholarship to the subjects it contains.

Sincerely,

The Research in Humanities

Founding Editorial Board:

Erin Collins

Sydney Covington

Kimberly Gómez-González

Nitya Kapilavayi

Rucha Padhye

Armed Spaniards approached the hundreds of Aztecs who had gathered to greet them. After a series of performances and ceremonies, the Aztecs led the Spanish across a lengthy bridge. Moctezuma, the ruler of the Aztec empire, and Hernán Cortés, a Spanish conquistador, were suddenly only a couple of meters apart when Cortés dismounted from his horse. Cortés approached Moctezuma and moved to embrace him, marking the first direct meeting between the Spanish commander and the Aztec ruler. Between them stood Malinche.1 She would come to be known as his interpreter, advisor, lover, mother of his child, and his greatest asset in the conquest of the Aztec empire.

Before her life took an extraordinary turn, Malinche was on track to fulfill her role in society as a woman. In Aztec society, the birth of a child was celebrated throughout the empire. As the baby left the mother's body, the midwife released a series of battle cries in praise of the mother’s strength and endurance in labor. The midwife would then tell the newborn of its good fortune in belonging to its family and assure it that a man or woman had many duties, but that stewardship of the empire and devotion to the gods would take priority for as long as they lived. Afterward, the midwife conducted the bathing ceremony in the presence of the child’s extended family. She laid a basin of water on a reed mat and surrounded it with miniature tools appropriate to the baby’s sex and the family’s station and profession. For example, a boy whom the priests reading the tonalamatl had destined for the army would be laid with a small bow and arrow on top of an amaranth-dough tortilla representing a warrior’s shield. A female baby would be laid with a tiny skirt together with spinning instruments to represent a woman’s work.2

1 Bernal Diaz, “Historia Verdadera,” American Historical Association, accessed November 14, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/historia-verdadera.

The Aztec empire placed a great deal of importance on education. At around the age of three, daughters were taught to grind maize and to make tortillas. At the same age, boys began fetching and carrying water. When boys reached the age of six or seven years, they began to assist fathers, learning to fish and gather reeds. During early adolescence, children of commoners went to the telpochcalli (‘young person’s house’). At these institutions, girls and boys were taught separately. However, they were both taught history, public speaking, dancing, and singing and received religious instruction. Boys also underwent rigorous military training, while girls learned to serve in the temples of the city. The Aztec education system emphasized a young person’s responsibilities: he or she was encouraged to find fulfillment in serving the gods, the state, the calpulli (‘family homes’), and the family’s livelihood. Disparities in education were largely based on socioeconomic status. For example, the sons and daughters of the nobility were educated separately in establishments called calmecac. Children of nobility would learn military theory, agriculture and horticulture, astronomy, history, and the skills of architecture and arithmetic in addition to the topics commoners learned. They would also be instructed in religious rituals, learning how to follow the sacred calendar, interpret the tonalamatl, and understand the organization of the main festivals of the Aztec religious year.3

Within the domestic spaces of the empire, women would educate their daughters on the social etiquette specific to their gender in preparation for courtship and marriage. For example, a mother would teach her daughter that when she met a man on a path, she should turn away and present her back to him so that he could pass easily and undisturbed.4 Once young people reached their late teens, professional matchmakers would pair young men with their chosen young women. The matchmaker presented the case on four consecutive days, after which the woman’s parents were expected to announce whether they accepted the marriage offer. Marriage was every young Aztec girl’s dream and her only option in life if she wished to be respected. The marriage ceremony was held in the house of the bride’s father. The mother of the bride spoke to her new son-in-law, asking him to remember that he owed his wife a duty of love, hard work, and self-sacrificing attention. After the wedding, the new husband and wife lived in the house or group of houses occupied by the bride’s family for about six or seven years. The husband’s new in-laws benefited from his labor throughout this time and, indeed, by custom could evict him from their home compound if he refused to work.5

2 Inga Clendinnen, Aztecs: An Interpretation (Cambridge: Cambridge University Press, 1991).

3 Ibid.

After marriage, the wife was responsible for going to the market to negotiate the prices of goods and for maintaining the household, in addition to participating in social events. If the marriage did not work out, either party could petition the court to have it annulled and separate from the other.6 Younger children would typically remain with the mother, while older children tended to go with the parent of the same sex. Men could divorce their wives if they proved unable to have children or failed in duties such as preparing their husbands’ evening baths. Women of this period enjoyed a fair degree of independence and agency because they lived in smaller communities, which had less need for strict specialization of the sexes.7

Born Malinalli but called Malintzin in Nahuatl, and ultimately known as Malinche to the Spaniards, Malinche was reborn when she was baptized and received the name Doña Marina around 1519.8 According to caciques, or native chiefs, near Coatzacoalcos, Malinche was raised by her two parents, who were themselves caciques. After her father died when she was young, her mother gave her away to people from Xicalango so that her half-brother would be the sole heir of the estate.9 Bernal Diaz recounts this episode in detail in his Historia verdadera de la conquista de la Nueva España (The True History of the Conquest of New Spain). According to Diaz, her mother “gave the little girl to some Indians from Xicalango at night so no one would see them, and told people that she had died.” While living among the peoples of Xicalango and Tabasco, Malinche learned a Mayan language, a skill that would later make her invaluable to Spanish expansion. In 1519, when she was in her late teens, Malinche and nineteen other native women were given to the Spaniards by the Chontal, a Maya people of Tabasco, in an attempt to secure peace. The Spaniards first assigned Malinche to the conquistador Alonso Hernández de Portocarrero as a servant and mistress; once they recognized the ease with which she communicated, she was sent to work with Hernán Cortés.10

4 Ibid.

5 Inga Clendinnen, “Wives,” Aztecs: An Interpretation (Cambridge: Cambridge University Press, 1991).

6 Ibid.

7 Charles Phillips, “Aztecs,” in The Complete Illustrated History of the Aztec & Maya: The Definitive Chronicle of the Ancient People of Central America & Mexico--Including the Aztec, Maya, Olmec, Mixtec, Toltec & Zapotec (London: Hermes House, 2010), 349.

8 Bernal Diaz, “Historia Verdadera,” American Historical Association, accessed November 14, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/historia-verdadera.

Diaz states, “... they were baptized, and the name Doña Marina was given to one Indian woman, who was given to us and who truly was a great Cacique…[She was among] the first women to become Christians in New Spain.” When the conquistadors discovered the ease with which she communicated, they paired her with Gerónimo de Aguilar, a Spaniard who spoke Maya, to translate for Cortés: Malinche rendered Nahuatl into Maya, and Aguilar rendered Maya into Spanish. Aguilar had been stranded on the coast of the Yucatán for eight years before Cortés rescued him and brought him into his company. La Malinche quickly picked up Spanish and arguably overshadowed Aguilar. Diaz would later write that “...Doña Marina had shown herself to be such an excellent woman and fine interpreter throughout the wars in New Spain, Tlaxcala, and Mexico, as I shall show later on, Cortés always took her with him…And Doña Marina was a person of the greatest importance, and was obeyed without question by the Indians throughout New Spain.”11 Because she had become an invaluable member of the expedition, the Spaniards called her Doña Marina as a sign of respect, and the natives added the honorific suffix -tzin, calling her Malintzin. The Spaniards in turn misheard Malintzin as Malinche.

9 Oswaldo Estrada, Troubled Memories: Iconic Mexican Women and the Traps of Representation (State University of New York Press, 2019).

10 Bernal Diaz, “Historia Verdadera,” American Historical Association, accessed November 14, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/historia-verdadera.

11 Ibid.

La Malinche began her involvement with Spanish expansion as an understated intermediary, a role crucial to every growing empire’s development. On the Spanish side, the ruler in this case was Charles V, who was both Holy Roman Emperor and King of Spain at the time of Hernán Cortés and La Malinche’s partnership. On the other side, the ruler was Moctezuma, emperor of the Aztec empire. An example of a regional leader in this instance was Hernán Cortés, who acted in the name of Charles V and led other Spaniards in the conquest of the Aztec empire. Intermediaries such as La Malinche could be translators, advisors, or essentially anyone native to the desired territory. Intermediaries were used to connect with natives and to gain their trust through the intermediary’s own social connections.12 To ensure their reliance on and devotion to the empire, slaves and other people of low socioeconomic status were often chosen as intermediaries.13 Because of this, and because of her linguistic abilities, La Malinche was the perfect candidate to be an ally to the Spanish crown. Regardless of the strategy with which they were used, and despite how an empire viewed the natives, all empires used intermediaries in one form or another because of their effectiveness in advancing expansion.

Fulfilling her role as an intermediary, La Malinche was particularly useful in gaining native allies to fight for the Spaniards against the Aztec empire. Malinche stood alongside Cortés in Cempoala when the Mexica sent tax collectors who warned the Cempoalans against receiving the Spaniards. Malinche identified the tensions between the Cempoalans and the Mexica, and Cortés offered to protect the Cempoalans, many of whom accompanied him as he marched toward Tenochtitlan. At the same time, he released the Mexica tax collectors he was holding, telling them that he hoped Moctezuma was well and that he eagerly awaited visiting him.14 This allowed Cortés to play both sides until he could determine whom to trust to help him conquer the land.

Because Cortés depended so heavily on Malinche, as images in the Florentine Codex and caciques’ accounts show, natives called him Señor Malinche, or even just Malinche, as if he and his interpreter were one.15 Cortés had earlier gained prominence in the Spanish conquest of Cuba, which earned him support for a westward expedition in 1519, after rumors of gold reached the Spanish from lands ruled by the Aztec empire in what is now Mexico. He was appointed head of an expedition of eleven ships and five hundred men. Curiously, Cortés mentions La Malinche only twice in the letters he routinely sent to the King during the prolonged expedition. In 1520 he wrote, “my interpreter, who is an Indian woman,” and in 1526 he wrote, “Marina, who traveled always in my company after she had been given to me as a present.”16 Native allies, who had weapons, knew the land, and knew the weaknesses of the Mexica, were an integral part of the conquest. La Malinche was Cortés’ only means of communicating with these communities, so it can be argued that without her interpretation, the Spanish would have been unable to conquer the Aztec empire. Yet the Spanish crown seldom acknowledged or honored its Indigenous allies. Instead, Cortés was rewarded with fame and fortune in Spain, while the Mexica placed sole responsibility for the conquest and its massacres on Malinche.

12 Jane Burbank and Frederick Cooper, “The Empire Effect,” Public Culture 24, no. 2 (2012): 239–47, https://doi.org/10.1215/08992363-1535480.

13 Ibid.

14 “Divisions and Conflicts between the Tlaxacalans and the Mexicas,” American Historical Association, accessed November 14, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/narrative-overviews/divisions-and-conflicts-between-the-tlaxacalans-and-the-mexicas; Bernal Diaz, “Historia Verdadera,” American Historical Association, accessed November 14, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/historia-verdadera.

Upon the Spaniards’ arrival at Tenochtitlan, the capital of the Aztec empire, Moctezuma gave an elaborate and honorific speech to his visitors. Like the speeches given to ambassadors, it referred to the visitors as “my child,” a form of address that conveyed respect, especially in elite Aztec circles. Malinche, the only Nahuatl speaker among the conquistadors, chose to speak bluntly and directly, omitting any honorific titles.17 We will never know whether Moctezuma said something that provoked this act of resistance. Shortly thereafter, however, Cortés reported to Charles V that the conquest of the inhabitants of Mesoamerica had begun. Under Spanish law, the Spanish King had no right to demand that foreign peoples become subjects of the Spanish empire, but he did have the power to take control over rebels against the Spanish crown. By documenting the Aztecs’ initial alliance with the Spanish, Cortés ensured that they could later be identified as rebels and ‘rightfully’ overthrown.18 This theory is further supported by how late the detailed accounts of the meeting appeared: an account of Cortés’s meeting with Moctezuma written by his chaplain, Francisco López de Gómara, was not published until 1552, and the first Indigenous account did not appear until the later 1550s. Bernal Diaz, a Spanish conquistador and close colleague of Cortés, wrote about how the Aztecs viewed the myths associated with them. When the Spanish heard stories that the Aztec ruler was an almighty god, Moctezuma, according to Diaz, dismissed them by saying, “You must take them as a joke, as I take the story of your thunders and your lightnings.”19 This misconception may have led the Spanish to view the Aztec empire as a threat and to begin justifying its colonization.

15 Oswaldo Estrada, Troubled Memories: Iconic Mexican Women and the Traps of Representation (State University of New York Press, 2019).

16 Hernán Cortés, “Letters from Hernán Cortés,” American Historical Association, accessed November 15, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/letters-from-hernan-cortes.

17 Camilla Townsend, “Malintzin’s Choices: An Indian Woman in the Conquest of Mexico,” Internet Archive, January 1, 1970, https://archive.org/details/malintzinschoice0000town.

After the conquest of the Aztec empire, La Malinche bore Hernán Cortés a son out of wedlock. Known as Martin Cortés, or El Mestizo, he is often described as the first mestizo, an individual of both European and Indigenous descent. While the genuine nature of their relationship is unclear, she went on to live with him for a time in a very lavish home. Although he lived with multiple concubines, their long-standing proximity supports the claim that they had a close relationship. This lasted until her skills were no longer needed and she was married off to a colleague of Cortés. Shortly thereafter, Cortés moved back to Spain with Martin by his side; according to Diaz, Martin would later become a Commander of the Order of Santiago.20 According to Bernal Diaz, La Malinche openly declared how fortunate she was to be a Christian woman working for Hernán Cortés, saying “Even if they were to make her mistress of all the provinces of New Spain…she would refuse the honor, for she would rather serve her husband and Cortés than anything else in the world.”21

During the early stages of the conversion of New Spain, the Spanish dedicated themselves to destroying temples, idols, and symbols of Indigenous religions. This included closing religious schools, murdering priests, and disposing of evidence of religious human sacrifice. In 1525, missionaries were sent to carry out systematic evangelization. During this same period, cathedrals were built atop temples, and thousands of Indigenous people were baptized.22 Some of these missionaries dedicated themselves to studying the languages and religions of the Aztecs in order to communicate better. Among them was Fray Bernardino de Sahagún, who arrived in 1529 and is often called history’s first anthropologist. He compiled information about the conquest gathered from native students and elders into the Florentine Codex, or General History of the Things of New Spain. While working to convert the natives to Christianity, he gained a comprehensive understanding of the ways of the Aztec people, and the resulting work is accompanied by 2,468 images that uniquely blend European and Nahua styles of drawing.23 Diaz later writes, “…with Aguilar as interpreter, [they shared] many good things about our holy faith to the twenty Indians [women], who had been given us, telling them not to believe in the Idols, which they had been trusted in, for they were evil things and not gods, and that they should offer no more sacrifices to them for they would lead them astray, but that they should worship our Lord Jesus Christ.”24 This passage refers to the first twenty women given to the Spaniards by the Indigenous peoples of Tabasco, Malinche among them.

18 Octavio Paz, Sor Juana, Or, The Traps of Faith (Cambridge, MA: Harvard University Press, 1988).

19 Bernal Diaz, “Historia Verdadera,” American Historical Association, accessed November 14, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/historia-verdadera.

20 Matthew Restall, Seven Myths of the Spanish Conquest (New York: Oxford University Press, 2021).

21 Oswaldo Estrada, Troubled Memories: Iconic Mexican Women and the Traps of Representation (State University of New York Press, 2019).

A stark opposition between the roles assigned to the two sexes was completely alien to Aztec beliefs. This is especially evident in Aztec religion. Xochiquetzal, the deity of sexual love, artistry, and delight, has historically been depicted as having sexual relationships with multiple deities. Deities with close connections to nature were particularly androgynous and could be depicted as either male or female. For example, Tlaltecuhtli, or ‘Earth Lord,’ and the Earth Monster were deities often depicted as male at some times and female at others. Furthermore, some deities were depicted as gender-twinned pairs, such as Xochiquetzal and Xochipilli, and Chalchiuhtlicue and Tlaloc. The maize deity was depicted as having a life cycle resembling a human’s and changed sex over the course of the year. Xilonen, the deity of the young maize, embodied the slender cob, milky kernels, and long, silky tassels, and could become either a feminine or a masculine version of Centeotl. Young Lord Maize Cob, muscular and hardened like the mature cob, was depicted mainly as male.25

22 Margarita Zires, “Los Mitos de La Virgen de Guadalupe. Su Proceso de Construcción y ...,” JSTOR, 1994, https://www.jstor.org/stable/1051899.

23 Bernardino de Sahagún, “General History of the Things of New Spain: The Florentine Codex,” The Library of Congress, accessed November 14, 2023, https://www.loc.gov/item/2021667837/.

24 Bernal Diaz, “Historia Verdadera,” American Historical Association, accessed November 14, 2023, https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/historia-verdadera.

Queerness among the gods was not uncommon, with female deities shown as lovers even more often than as sisters. Greater value was placed on the social division of roles than on the sexual relationship. The war god Huitzilopochtli is said to have had intimate relations with his mother, Coatlicue, and with his sister and adversary, Coyolxauhqui. The intensity of their battle came from their relationship as siblings, not as sexual antagonists. The notion of a ‘war between the sexes’ and of violent sexual acts, now deeply ingrained in Mexican society, has no counterpart among the gods the Aztecs worshipped for hundreds of years.26 Attitudes toward androgyny within the Aztec empire are difficult to determine. At birth, anatomically ambiguous individuals were not identified as such. Cross-dressing in the Aztec empire, moreover, appears to have reinforced the contrast between male and female rather than blurring femininity and masculinity. While homosexuality was recognized, some scholars believe that it was largely deplored. This was especially so for homosexual women, whose sexuality was abused through prostitution.27

Religion remains an integral part of Mexican life because it is bound up with the birth of the Mexican nation. Celebrating the Virgin Mary has become a national project that facilitated the spread of Christianity and transcends the colonial era, the war of independence, the Mexican Revolution, and the long twentieth century of church-state tensions, social activism, Marxism, economic development, human rights, and liberation theology. Media regarding Malinche’s relationship to Christianity were published to improve relations between the Catholic Church and Mexico and to enhance the administration’s image with foreign lenders and private investors, whose support would be crucial in a neoliberal era. Diplomatic relations between Mexico and the Vatican began in 1992 and have continued to the present day, producing many projects and investments in the country.28

The association of certain Aztec religious traditions with Catholic ones was permitted as a means of easing the transition to Catholicism.29 For example, a variety of gods and goddesses were associated with saints. Most notably, the goddess Tonantzin was associated with La Virgen de Guadalupe, the Virgin Mary.30 The Aztecs believed their gods had led them to found their new city at a lake where an eagle would stand on a cactus with a snake in its beak. There, in 1325, they founded Tenochtitlan on an island in a large salt lake in the Valley of Mexico, the site of present-day Mexico City. This story is still reflected in Mexico’s flag today. However, to foster spiritual and national unity and to move away from ‘demonic’ Aztec traditions, the story of the apparition of the Virgin Mary at Tepeyac was born and popularized by Fray Alonso de Montúfar. The Virgin Mary known to Spanish settlers belonged to stories set in Europe. In contrast, the stories told to Indigenous people during this transitional period were quite different, since a Virgin tied to Europe would have represented a foreign land.

25 Inga Clendinnen, “Wives,” Aztecs: An Interpretation (Cambridge: Cambridge University Press, 1991).

26 Ibid.

27 Ibid.

28 Ibid.

29 Margarita Zires, “Los Mitos de La Virgen de Guadalupe. Su Proceso de Construcción y ...,” JSTOR, 1994, https://www.jstor.org/stable/1051899.

Before religious conversion, no particular social capital was attached to celibacy. Interestingly, sex workers were respected and could be hired only by Aztec warriors of high rank, after a generous payment to the owner of these “state-controlled brothels or ‘Houses of Joy.’” Nor were they cut off from female society; instead, they worked alongside other women in the market and were highly sought-after curers. It was not uncommon for men and women to be celibate as a devotion to their religion or even to a particular deity. Spiritual purity was not based solely on virginity, as a ruler’s words to his young virgin daughter show: “There is still jade in your heart, turquoise. It is still fresh, it has not been spoiled… nothing has twisted precious green stone,” and it is “still virgin, pure, undefiled.”31 This supports the idea that purity for Aztec women rested on more than their sexual activity. By contrast, the Roman Catholic Church has always idealized the Virgin Mary for her chastity, motherly nature, and selflessness. Once Aztec society had been converted, La Malinche came to be seen as embodying the opposite traits, and the hatred already brewing toward her helped embed sexist ideologies in the Mexican psyche.

Modern scholarship has recognized La Virgen, La Malinche, and Sor Juana Ines de la Cruz for their contributions to what we know today as the Mexican consciousness. La Virgen de Guadalupe is seen as the first archetype of Mexican women; she is regarded as subordinate to the patriarchy, sacrificing herself for the salvation of humanity through her son.

30 Irene Lara, “Goddess of the Américas in the Decolonial Imaginary: Beyond the Virtuous Virgen/Pagan Puta Dichotomy,” JSTOR, 2008, https://www.jstor.org/stable/20459183.

31 Inga Clendinnen, “Wives,” Aztecs: An Interpretation (Cambridge: Cambridge University Press, 1991).

La Malinche is seen as the second archetype: the young Indigenous woman whose collaboration and personal involvement with Hernán Cortés is blamed for the empire’s demise. As scholars have interpreted her story over the centuries, she has been described in many ways. In non-Indigenous stories, she is portrayed as La Chingada Madre, or “the fucked mother,” a view held in multiple communities that depicts her as a hypersexual being. Telenovelas such as La Rosa de Guadalupe reinforce these perspectives, presenting steamy sexual encounters as a form of agency. However, various other renditions of Malinche’s story depict her with Spanish conquistadors, losing her virginity, enduring painful sex, and professing her undying love. These versions attribute her participation to blind love for the conquistadors, almost making her innocent by virtue of her endless devotion to these men. In Indigenous stories, Malinche is depicted as a political agent of change. Regardless of whether what she did was right or moral, these storytellers recognize the agency Malinche demonstrated through her work as an intermediary. This comparison is critical because it reflects how women’s agency is viewed in both Indigenous and non-Indigenous communities. According to Bernal Diaz, La Malinche said that she would give up the entire world just to serve her husband and Hernán Cortés. It could also be theorized that her participation in the conquest of the Mexica was a means of transgressing her marginal situation as a slave and thus becoming an indispensable woman in the conquest of Mexico. Regardless, we can neither confirm nor deny that she enjoyed her sexual encounters with any of these conquistadors, including Hernán Cortés, as we have no firsthand account from her on the matter.

While many consider the origins of what we now know as Mexico to lie in the Aztec empire, others trace them to the birth of La Malinche’s son with Hernán Cortés. In Masculinidad, Imperio y Modernidad en Cartas de Relación de Hernán Cortés, Rubén Medina explores the relationship between masculinity and imperialism. Given the stark contrast between the gender roles of Europe and those of the Aztec empire, the need to spread traditional Christian gender roles acted as a motivation to conquer Mesoamerica. La Malinche and Cortés’ son, of both Indigenous and European descent, is considered the first mestizo. In Martyrs of Miscegenation: Racial and National Identities in Nineteenth-Century Mexico, Lee Skinner explores the relationship between racial and national identities. These identities were social structures directly threatened by La Malinche, Hernán Cortés, and her mestizo son, creating uncertainty about the future. Hatred toward La Malinche, coupled with efforts to convert the Indigenous to Christianity, created Marianismo and subsequently Malinchismo and its dyadic relationship to Machismo. As the antithesis of La Virgen de Guadalupe, La Malinche has had a substantial negative impact on the perception of women.

The third archetype is Sor Juana Inés de la Cruz, the young mestiza scholar who openly defied gender stereotypes but constantly grappled with society’s expectations of her as a woman of the veil. Sor Juana Inés de la Cruz was the illegitimate daughter of Creole Spanish parents from the pueblo of Chimalhuacan, near the Valley of Mexico. Recognized as a child prodigy, she went to live with relatives in the capital at the age of eight. Her beauty, her wit, her skill at poetry, and her remarkable knowledge of books and ideas made her an instant celebrity at court. When she was fifteen, the admiring viceroy and his wife sent her before a panel of learned professors at the University of Mexico, which at the time admitted only men. The professors examined her orally on subjects ranging from physics and mathematics to theology and philosophy, but they failed to stump her. Before her sixteenth birthday, she entered a Carmelite convent, soon afterward joining the Jeronymite Order, where she remained as a nun for the rest of her life. Because she held a “total disinclination to marriage,” Sor Juana chose this conventional path as a way to remain faithful to her religion and pursue her passion for study.32

While marriage or religious seclusion was common for Colonial Spanish women, misogyny still ran rampant in the clergy throughout the seventeenth century. Women were seen as daughters of Eve, temptresses who invited sin and damnation. It was in these spaces that Sor Juana wrote the majority of her works. During her time at the convent, she stayed connected to literary friends and scientists in the vibrant city of Mexico and even abroad until 1694, when she gave away her books and her scientific and musical instruments and wrote out, in her own blood, an unqualified renunciation of her learning: “I Sor Juana Ines de la Cruz, the worst in the world…. Christ Jesus came into the world to save sinners, of whom I am the worst of all.” Shortly thereafter, she died while caring for fellow sisters who had contracted smallpox.33

While non-fictional works give us a clearer sense of men’s perception of women, women often used fiction as a safer medium through which to spread their political thought. Sor Juana’s style of writing was reflective of her time: she regularly used the ornate, obscure styles then in vogue, as seen in her Christmas carols, morality plays, and allegorical pieces. Although her subjects were not necessarily a demonstration of rebellion, she held beliefs that pushed against conventional boundaries on women’s lives and spiritual activity. Her works include El Divino Narciso, Los Empeños de una Casa, and La Respuesta. As an archetype of Mexican feminist literature, Sor Juana left works that have been instrumental in understanding the internal struggle of mestizas. El Divino Narciso is a humorous play based on the story of the Holy Trinity. Many writers of Baroque Mexico wrote stories surrounding the Holy Trinity, but Sor Juana’s rendition, like many of her works, was influenced by her political and religious beliefs. Los Empeños de una Casa is another example of her writing being used to spread political messages. Although this play follows two couples who continually pine for one another, Sor Juana uses its humor to critique gender roles and social dynamics.

32 William B. Taylor, Kenneth Mills, and Sandra Lauderdale Graham, Colonial Latin America: A Documentary History (Vancouver: Crane Library at the University of British Columbia, 2009).

33 William B. Taylor, Kenneth Mills, and Sandra Lauderdale Graham, Colonial Latin America: A Documentary History (Vancouver: Crane Library at the University of British Columbia, 2009).

However, La Respuesta is thought to be her most iconic contribution to feminist Mexican Baroque literature. Responding to a letter that a bishop had written under a feminine pen name, Sor Juana recounts her internal struggle with her identity as an intellectual and a woman of the cloth. Sor Juana Inés de la Cruz exemplifies the women who grew up in this society and faced an internal struggle between their Indigeneity and the religion so deeply embedded in it.

Within the hallowed halls of mestizo family households, “Hija de tu Madre!” is yelled at a person exhibiting undesirable traits. In English, the phrase translates to “daughter of your mother!” While the phrase always names the “madre,” or mother, “hija” may be exchanged for “hijo,” so that either form emphasizes the individual’s relation to the mother. Similarly, the term “malinchista” has been associated with the feminine. Derived from “La Malinche,” it means “a person who adopts foreign values, assimilates to a foreign culture, or serves foreign interests” and is essentially used to insult someone as a traitor to their people.34 The term reflects how the Mexica came to regard La Malinche after the Spanish conquest. The ‘a’ at the end of ‘malinchista’ genders the term, permanently associating the ‘betrayal’ of the Aztec empire with women and implying that betrayal of the highest degree is a feminine trait. Marianismo is defined as “an idealized traditional feminine gender role characterized by submissiveness, selflessness, chastity, hyper femininity, and acceptance of machismo in males.”35 The term is rooted in ‘Maria,’ a reference to La Virgen Maria, the Virgin Mary, and it values the qualities of the Virgin that the Catholic Church deems desirable. Machismo is rooted in the word ‘macho,’ meaning male, and is defined as “a set of expectations for males in a culture where they exert dominance and superiority over women.” Marianismo and Machismo have a dyadic relationship, essentially upholding one another.36 For example, without Marianismo there cannot be Machismo, since men need women to be submissive in order to be superior. As a result, both sexes are forced to follow the gender roles society has predestined for them.

I still hear the words echoing in my head as I am transported to my childhood home, tears blurring my vision as a broken vase lies at my feet. As I grew older, I came to associate my mother’s traits with what is wrong with me as a person. As I have grown into myself and my femininity, I reflect on this quote from Bonnie Burstow: “Often father and daughter look down on mother (woman) together. They exchange meaningful glances when she misses a point. They agree that she is not as bright as they are, cannot reason as they do. This collusion does not save the daughter from the mother’s fate.”37 For so long, women have rejected their likeness to their mothers and perpetuated injustice by upholding the patriarchy, white settler colonialism, and greater systems of oppression. My cheeks are stained in empathy for the fate of my mother, La Malinche, La Virgen de Guadalupe, and Sor Juana Inés de la Cruz.

34 Inga Clendinnen, “Wives,” in Aztecs: An Interpretation (Cambridge: Cambridge University Press, 1991); Mary Louise Pratt, “‘Yo Soy La Malinche’: Chicana Writers and the Poetics of Ethnonationalism,” Callaloo 16, no. 4 (1993): 859–73, https://doi.org/10.2307/2932214.

35 Emma Garcia, “Blending the Gender Binary: The Machismo-Marianismo Dyad as a Coping Mechanism,” Digital Commons, 2021, https://digitalcommons.iwu.edu/cgi/viewcontent.cgi?article=1023&context=phil_honproj.

36 Julia L. Perilla, “Domestic Violence as a Human Rights Issue: The Case of Immigrant Latinos,” Hispanic Journal of Behavioral Sciences 21, no. 2 (1999): 107–33, https://doi.org/10.1177/0739986399212001.

37 Bonnie Burstow, Radical Feminist Therapy: Working in the Context of Violence (Newbury Park: Sage Publications, 1992).

Barrientos, Joaquín Álvarez. “El Modelo Femenino En La Novela Española Del Siglo XVIII.” Hispanic Review 63, no. 1 (1995): 1–18. https://doi.org/10.2307/474375.

Bernardino de Sahagún. “General History of the Things of New Spain: The Florentine Codex.” The Library of Congress. Accessed November 14, 2023. https://www.loc.gov/item/2021667837/.

Burstow, Bonnie. Radical Feminist Therapy: Working in the Context of Violence. Newbury Park: Sage Publications, 1992.

Ceballos, Miriam. “Machismo: A Culturally Constructed Concept.” California State University, 2013. https://scholarworks.calstate.edu/downloads/rj430597t.

Clendinnen, Inga. “Wives.” In Aztecs: An Interpretation. Cambridge: Cambridge University Press, 1991.

Cortés, Hernán. “Cartas de Relación.” Internet Archive, 1519. https://archive.org/details/cartasderelacion0000cort.

Cortés, Hernán. “Letters from Hernán Cortés.” American Historical Association. Accessed November 15, 2023. https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/letters-from-hernan-cortes.

Diaz, Bernal. “Historia Verdadera: AHA.” Historia Verdadera | AHA. Accessed November 14, 2023. https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/historia-verdadera.

“Divisions and Conflicts between the Tlaxacalans and the Mexicas: AHA.” Divisions and Conflicts between the Tlaxacalans and the Mexicas | AHA. Accessed November 14, 2023. https://www.historians.org/teaching-and-learning/teaching-resources-for-historians/teaching-and-learning-in-the-digital-age/the-history-of-the-americas/the-conquest-of-mexico/narrative-overviews/divisions-and-conflicts-between-the-tlaxacalans-and-the-mexicas.

Estrada, Oswaldo. Troubled Memories: Iconic Mexican Women and the Traps of Representation. State University of New York Press, 2019.

Garcia, Emma. “Blending the Gender Binary: The Machismo-Marianismo Dyad as a Coping Mechanism.” Digital Commons, 2021. https://digitalcommons.iwu.edu/cgi/viewcontent.cgi?article=1023&context=phil_honproj.

Hind, Emily. “The Sor Juana Archetype in Recent Works by Mexican Women Writers.” Hispanófila, no. 141 (2004): 89–103. http://www.jstor.org/stable/43807179.

Lara, Irene. “Goddess of the Américas in the Decolonial Imaginary: Beyond the Virtuous Virgen/Pagan Puta Dichotomy.” Feminist Studies 34, no. 1/2 (2008): 99–127. http://www.jstor.org/stable/20459183.

Medina, Rubén. “Masculinidad, Imperio y Modernidad En Cartas de Relación de Hernán Cortés.” Hispanic Review 72, no. 4 (2004): 469–89. http://www.jstor.org/stable/3247142.

Paz, Octavio. Sor Juana, Or, The Traps of Faith. Cambridge, MA: Harvard University Press, 1988.

Perilla, Julia L. “Domestic Violence as a Human Rights Issue: The Case of Immigrant Latinos.” Hispanic Journal of Behavioral Sciences 21, no. 2 (1999): 107–33. https://doi.org/10.1177/0739986399212001.

Phillips, Charles. “Aztecs.” Essay in The Complete Illustrated History of the Aztec & Maya: The Definitive Chronicle of the Ancient People of Central America & Mexico--Including the Aztec, Maya, Olmec, Mixtec, Toltec & Zapotec, 349. London: Hermes House, 2010.

Pratt, Mary Louise. “‘Yo Soy La Malinche’: Chicana Writers and the Poetics of Ethnonationalism.” Callaloo 16, no. 4 (1993): 859–73. https://doi.org/10.2307/2932214.

Restall, Matthew. Seven Myths of the Spanish Conquest. New York, N.Y: Oxford University Press, 2021.

Skinner, Lee. “Martyrs of Miscegenation: Racial and National Identities in Nineteenth ...” JSTOR, May 2001. https://scholarship.claremont.edu/cgi/viewcontent.cgi?article=1364&context=cmc_fac_pub.

Taylor, William B., Kenneth Mills, and Sandra Lauderdale Graham. Colonial Latin America: A Documentary History. Vancouver: Crane Library at the University of British Columbia, 2009.

Townsend, Camilla. “Malintzin’s Choices: An Indian Woman in the Conquest of Mexico.” Internet Archive, January 1, 1970. https://archive.org/details/malintzinschoice0000town.

Zires, Margarita. “Los Mitos de La Virgen de Guadalupe. Su Proceso de Construcción y Reinterpretación En El México Pasado y Contemporáneo.” Mexican Studies/Estudios Mexicanos 10, no. 2 (1994): 281–313. https://doi.org/10.2307/1051899.

Logan Reich

Since ancient times, Jewish communities have repeatedly been persecuted and expelled from many of the places they settled. This continued during the Spanish Inquisition: in 1492, the Spanish crown signed the Alhambra Decree to “Banish… Jews from [their] kingdoms” (The Alhambra Decree), scattering Jewish communities across the Atlantic. Spain took action to enforce the “separation of the said Jews in all the cities, towns, and villages of our kingdoms and lordships.” The decree removed Jews from across Spain in the name of preventing people from “Judaiz[ing] and apostatiz[ing] [the] holy Catholic faith.” Separated from their homes and spaces, many communities lost access to their culture, tradition, and faith. Because Spain’s intentions were rooted in the Catholic Church’s goal of spreading Catholicism, the influence of these actions extended beyond the Alhambra Decree. The persecution of Jewish communities continued in 1654, when the Portuguese crown reclaimed the small portion of Brazil in which Jews had settled and displaced them once again. Other Jews had found space in different areas across Europe, but many were barred from full citizenship and freedoms. Many Jews died in pogroms, and religious tolerance was rare throughout the continent.

Eventually, the Sephardim, Jews with lineage in North Africa and the Iberian Peninsula, came to the colonies, where their initial goal was not to grow the largest Jewish community in the world but to be safe in their new homes. These Jews experienced acculturation: “the cultural modification of an individual, group, or people by adapting to or borrowing traits from another culture” (Merriam-Webster). Unlike assimilation, acculturation allowed the Jewish community to become part of a larger society without sacrificing what made them Jewish.

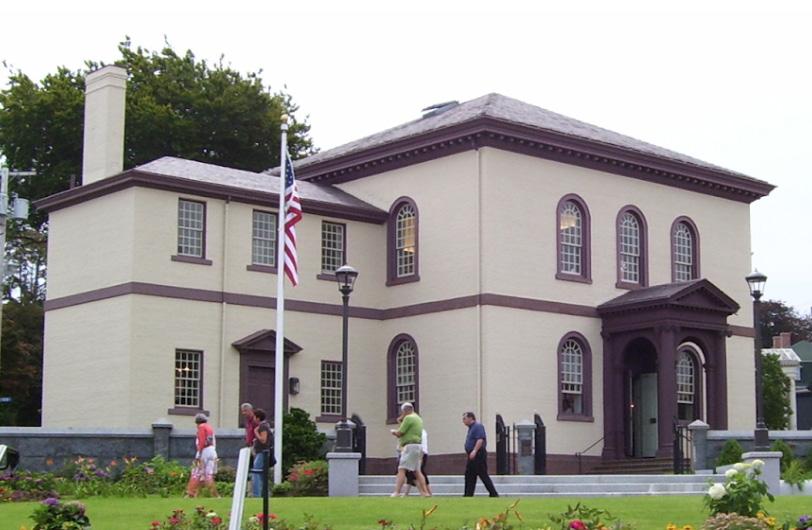

This essay focuses on Newport, a historically significant American Jewish community. As one of the oldest Jewish communities in the country, Newport exemplified American Jewry for generations.

The primary sources engaged throughout the essay highlight the history of Rhode Island’s establishment and the interactions of the Jewish community in Newport. The Rhode Island Parliamentary Patent established religious tolerance in Rhode Island in 1643 (Charles), and George Washington’s letter of 1790 bookends this period by marking the community’s acceptance (Washington). Jonathan Beecher Field’s Errands Into the Metropolis, a study of New England dissidents, provides further background on Rhode Island’s colonial history (Field). Secondary sources on Jewish settlement in Newport supply information on population numbers, occupations, and background. Daniel Ackermann’s “Acceptance and Assimilation” provides context and details on the integration process Jews underwent in Newport (Ackermann). George Goodwin and Ellen Smith’s The Jews of Rhode Island continues this account, quantifying the community’s establishment and tracing a longer timeline of Jews in Newport (Goodwin, Smith). All of these sources explain how Jews acculturated, but they offer little architectural context. The remaining sources address Jewish architecture. In their respective works, “Sephardic Sacred Space in Colonial America” and “Jewish Religious Architecture,” Laura Leibman and Steven Fine discuss the origins of the Touro synagogue’s architecture. They mostly examine architectural components that were prominent in Europe or emphasized in Touro. However, these sources lack a holistic examination of how concepts of Jewish identity relate to the architecture of these synagogues. Using architecture to trace the establishment of an American Jewish identity helps tell this community’s story.

Through religion and culture, the Jewish people upheld their values and priorities as they acculturated into Rhode Island. While adapting to the norms that American colonists and countrymen expected of the new community, the early Jews of Newport maintained what made them distinguishably Jewish and found a place in the larger life of the city. By examining the Jewish community’s interactions with other religious groups in Rhode Island, as well as the architecture of Jewish spaces of the time, we can understand how they established themselves while maintaining their identities.

In 1658, the first Jews settled in the young colony of Rhode Island in hopes that the emerging trade city of Newport would offer them the religious protection they sought. They quickly found their footing despite the challenges one might expect them to face. The Jews’ reason for going to Newport was the same reason Rhode Island was founded in the first place: religion. In 1636, Roger Williams founded Rhode Island on the principle of religious tolerance after the Massachusetts Bay Colony “ordered his immediate arrest” (Field 29) for his vocal dissent over the role Massachusetts played in religion. Just seven years later, in 1643, King Charles I signed the parliamentary patent that established Rhode Island as a colony (Parliamentary Patent, 1643). The charter states that the laws in Providence Plantations would be similar to those in England “so far as the nature and constitution of the place will admit.” This clause meant that Rhode Island’s laws had to resemble England’s, but where the colony’s principles differed from England’s intentions, the colonists had liberty. That liberty extended to religion in the Rhode Island Colony. This provision set Rhode Island apart from the other colonies, leaving room for it to offer the tolerance that Jews needed. Newport welcomed Jews, as well as other religious minorities, to make Rhode Island their home.

The community that Jews built in Newport quickly became strong. In 1677, they built a cemetery to establish themselves in the city. The cemetery was simply constructed and resembled Newport’s Christian cemeteries. It was also similar to Sephardic cemeteries back in Europe (Leibman 22). The cemetery’s construction indicated Jewish integration into Newport, but nothing about the architecture of its entrance or layout can be identified as influenced by the colonists. As the community settled in, it gained footing and integrated into the economy fairly quickly. In 1686, Rhode Island agreed to let the Jewish community conduct business with full protection of the law as “resident strangers” (Touro). Throughout this period, the community continued to grow, congregating in the homes of Jewish leaders throughout Newport, until it reached its “golden age” in the 1740s. Its members took advantage of these liberties to work as traders, merchants, and farmers; by 1740, Jews made up about 10 percent of Newport’s mercantile population (Goodwin 2). This growth meant the community needed not only a cemetery but a synagogue as well.

In 1759, the Touro synagogue, the second oldest synagogue in the United States, was completed and became home to the two hundred Jews living in Newport. Whether through ritual, the passing down of cultural traditions, or discussion of issues pertinent to Jewish people, synagogues have historically served as both meeting places and houses of worship. The synagogue was integral to the Jewish community because it served as the home base for those establishing themselves in this new location, while also being the most prominent visual representation of who Jews were to the larger community. The following paragraphs expand on why the synagogue in Newport was so integral to this process.

In Europe, Sephardic synagogues constructed around the time that Jews began leaving for the Americas shared common features. Aside from their architecture, the buildings themselves were often located in predominantly Jewish areas of their towns rather than integrated into the rest of the community, but Newport diverged from this pattern. The Touro Synagogue’s location in the middle of town, near the water and not far from the Jewish cemetery, indicates that the Jewish people had become a part of the community in Newport (Ackermann 200). Its central location set the synagogue apart from many of Newport’s churches at the time and reflects the deep respect and strong relationships the Jews of Newport had built with the larger community.

The theme of integration continued in the architectural features of the Touro synagogue. When distinguishing features incorporated because of broader influences in Newport from those that were traditionally built, context plays a large role. On Touro’s facade, the first defining feature is the high arched windows. Sephardic European synagogues often had tall arches, either as windows or in the interior supports of the buildings (Fine 224). These can be seen on the exterior of the Portuguese Synagogue in Amsterdam (Figure 1) and in the view of the building’s interior (Figure 2). The high arches can be attributed to the influence of the architecture surrounding Jewish communities, but they were prominent in the majority of European synagogues. With no religious significance, they were simply a cultural norm among the Jews of Europe. The prominence of arches in European synagogues could be attributed to the fact that stained glass was very rarely found in Sephardic synagogues. Whether due to religious tradition or the cost of materials, elaborate glass mosaics were rarely found in European Sephardic spaces, so it would make sense that the arches served as an alternative to that art. The way Touro worked these arches into its facade sums up what Jewish acculturation meant at the time: a balancing act between conforming to the standards of the community and maintaining individual tradition. Touro’s windows are still arched, but instead of long, stretching panes, they are the same size as those of the neighboring buildings, with a curve on top. This is seen in Figures 3 and 4, where the fronts of the buildings are almost identical, including the arched windows. Unlike many churches, Touro has no outstanding detail that labels it as a religious building; its exterior bore no Hebrew lettering and no name.

The next feature is the mechitza, the partition separating men and women during ritual, which was incorporated into the sanctuary. The tradition in the Sephardic community was to build a balcony overlooking the central prayer space on the ground floor. Women prayed from the balcony while men prayed on the floor of the sanctuary. The mechitza in Touro was, in principle, similar to those in other Sephardic synagogues; however, its balcony space was much smaller than in many of its European counterparts (Ackermann 198). Figure 2 shows two rows of balcony seats in a seemingly larger sanctuary, while the Touro synagogue has a narrow upper space with room for only one row of seats. This could indicate shifting ritual priorities as the community settled into a new environment with different religious influences. Because the surrounding branches of Christianity were less ritually focused, it would make sense for some practices within Judaism to lose prominence. It could also simply have been a matter of the space available for the synagogue on the property, as seen in Figure 3.

Turning to the sanctuary, the central location of ritual in Judaism, the preservation of its layout further supports this argument. The floor plan and layout of the synagogue were virtually unchanged from what was traditional in Europe. The bimah, a platform from which prayers are chanted and ritual is led, sits in the middle of the sanctuary and plays a central role in the orientation of the space. Traditionally, the bimah’s placement followed a “Divine ratio” that divided the synagogue in a 37:100 proportion. Jewish law did not require this, but in the common arrangement, the thirty-seven part lay behind the central bimah and the one-hundred part lay between the bimah and the ark where the Torah was housed. This ratio was found in the Amsterdam Portuguese Synagogue and London’s Bevis Marks Synagogue, among many other synagogues constructed throughout Europe in the seventeenth and eighteenth centuries (Leibman 27). That the synagogue’s appearance changed while its central space remained the same is a testament to how the Jewish community acculturated.

The Jewish community in Newport built relationships with other religious groups, with effects on people throughout Newport and beyond. The Seventh Day Baptist Meeting House, one of the oldest surviving Baptist churches in the country, sits next door to the Touro Synagogue. Notably, the meeting house’s front awning looks extremely similar to Touro’s, and the two buildings’ shapes are almost identical. These similarities indicate some interaction between the groups, and the meeting house’s interior balcony, which directly mirrors the mechitza inside the Touro synagogue, further suggests that these communities interacted both socially and ritually. Although the synagogue was built after the meeting house, their geographic proximity and range of architectural similarities suggest that each strongly influenced the other’s construction. The Jewish community, although small, branched out to establish relations and build bonds that persisted through any tension that might have existed between it and other religious groups.

One of the Newport Jewish community’s most notable interactions was its correspondence with President George Washington. Like many religious organizations throughout the United States, the Touro synagogue’s congregation wrote a letter congratulating the President on his election and his new role in leading the country. In his response, he placed heavy emphasis on religious freedom, writing that “It is now no more that toleration is spoken of, as if it was by the indulgence of one class of people, that another enjoyed the exercise of their inherent natural rights” (Washington). On the surface, the letter signified executive support for expanded religious freedom. Its deeper significance is that in so many of the countries from which Jews had been expelled, strong Jewish communities had no connection to those in power. In his letter, George Washington affirmed that the “Government of the United States… gives to bigotry no sanction, to persecution no assistance” (Washington). This shows that George Washington himself supported Jewish safety and culture within the United States.

The Jews of Newport, Rhode Island may have traveled a long road to get there, but their resilience in establishing themselves in the groundwork of the colony, and in establishing the larger Jewish community within the framework of this country, is well documented. As their culture evolved and they built their structures, their story took visible form. This group of two hundred Jews balanced the task of preserving their ritual and culture while becoming a genuine part of the society they joined. While documents quantify the ways in which the Jewish people acculturated into the colony, the synagogue they left behind is much more than the facade of a building on the corner of Touro Street: it tells the story of so many.

Bibliography

“Acculturation Definition & Meaning.” Merriam-Webster, 6 November 2023, https://www.merriam-webster.com/dictionary/acculturation. Accessed 27 November 2023.

Ackermann, Daniel Kurt. “Acceptance and Assimilation.” The Magazine Antiques, vol. 183, no. 1, Jan. 2016, pp. 196–201. EBSCOhost, research.ebsco.com/linkprocessor/plink?id=81dc46af-e82d-3215-84d9-07de31e72e8c.

Charles I, King. Parliamentary Patent, 1643. 1643, https://docs.sos.ri.gov/documents/civicsandeducation/teacherresources/Parliamentary-Patent.pdf.

Everett. “History.” Touro Synagogue, https://tourosynagogue.org/history/. Accessed 5 December 2023.

Field, Jonathan Beecher. Errands Into the Metropolis: New England Dissidents in Revolutionary London. Dartmouth College Press, 2012. Accessed 30 November 2023.

Finch, John. “Seventh Day Baptist Meeting House Exterior.” Trace Your Past, 2018, www.traceyourpast.com/locations/rhode-island.

Fine, Steven, and Ronnie Perelis. Jewish Religious Architecture: From Biblical Israel to Modern Judaism. Brill, 2020. pp. 221-237. EBSCOhost, research.ebsco.com/linkprocessor/plink?id=1e1104c5-3fd9-3282-83a8-e90c6d12c8d1.

Goodwin, George M., and Ellen Smith, editors. The Jews of Rhode Island. Brandeis University Press, 2004. Accessed 8 November 2023, TheJewsofRhodeIsland.pdf (brandeis.edu).

Hoberman, Michael. New Israel / New England: Jews and Puritans in Early America. University of Massachusetts Press, 2011. ProQuest Ebook Central, https://ebookcentral-proquest-com.proxy216.nclive.org/lib/ncssm/detail.action?docID=4532908.

Leibman, Laura. “Sephardic Sacred Space in Colonial America.” Jewish History, vol. 25, no. 1, 2011, pp. 13-41. ProQuest, https://www.proquest.com/scholarly-journals/sephardic-sacred-space-colonial-america/docview/824413491/se-2, doi:https://doi.org/10.1007/s10835-010-9126-7.

Queen Isabella I, and King Ferdinand II. Alhambra Decree. 31 March 1492, https://www.fau.edu/artsandletters/pjhr/chhre/pdf/hh-alhambra-1492-english.pdf.

Veenhuysen, Jan. “Interieur van de Portugese Synagoge Aan de Houtgracht Te Amsterdam.” Rijksmuseum, 2010, www.rijksmuseum.nl/en/collection/RP-P-AO-24-28.

Washington, George. Letter from George Washington to the Hebrew Congregation at Newport. 1790, https://teachingamericanhistory.org/document/letter-to-the-hebrew-congregation-at-newport/.

William, Ridden. “Portuguese Synagogue.” Flickr, Yahoo!, 17 Apr. 2024, www.flickr.com/photos/97844767@N00/8780652959.

Yasmine, Sarah. “Touro Synagogue Newport Rhode Island 1.” Wikimedia Commons, commons.wikimedia.org/w/index.php?curid=18002825. Accessed 17 Apr. 2024.

Sugar is a word used frequently in today’s society. Because some type of sugar is in almost every food and drink we consume, its effects need to be discussed so that the public understands what it is ingesting. The same thinking extends to learning the history of sugar and how it affected the world. This history matters because, not surprisingly, the world would be different if sugar had never been discovered, and the impacts of sugar are more significant and far-reaching than one might believe. Since its discovery, sugar has left its mark on the world in a variety of ways. Many of these impacts still resonate today, yet two major developments that followed sugar’s introduction into everyday life stand out. When sugar was introduced to the New World, it brought with it a distinctive form of the plantation system and gave rise to the West African Slave Trade, two developments that heavily influenced the history and future of the world.

Even though the major changes for sugar did not occur until it was brought to the New World, the craving for sugar began long before the first sugar plantations appeared in the Caribbean in the fifteenth century. In fact, sugar was first refined into the granules so sought after today about 3,000 years ago. Sugar was first processed in Asia, and at Jundi Shapur, an Iranian university, “one of the most important seminars the world has ever seen” took place (Rook). There, “...Greek, Christian, Jewish and Persian scholars gathered in around 600 A.D. and wrote about a powerful Indian medicine, and how to crystallise it” (Rook). Around this time, Hippocratic medicine was the dominant medical model in Europe. It focused on keeping the body in balance, largely through diet, and sugar was considered an aid for such ailments because it helped with stomach-related problems and dietary issues. Sugar was also thought to relieve coughs and sore throats, so many doctors of the time used it. As sugar became more popular in Europe, however, it changed from a purely medicinal substance into a spice and a sweetener for newly discovered foods.

The shift in the reasons for demanding sugar came with changes in the European diet. After the Crusades, Europeans began to change how they ate; most importantly, they wanted more sugar. New foods were being discovered, namely coffee, and Europeans wanted to sweeten this newfound commodity. This renewed yearning made sugar a highly sought-after product, and many planters took notice. In fact, “Beginning in the twelfth century, Venetians established sites of sugar production in the Mediterranean” (Kernan). However, although Europeans now had sugar-making facilities close to home, “they were limited to small quantities due to climate, space, and labor” (Kernan). With sugar production now much closer to home for most Europeans, prices fell, allowing more people to buy the sweet treat. Yet as more people tried sugar, demand increased further, and new production facilities continued to be built along the Mediterranean to meet it. Eventually, there was not enough space for more plantations, so a new place had to be found to grow the crop. Additionally, the Mediterranean climate was not ideal for sugarcane: in this subtropical region, the crop required far more labor than usual for irrigation and fertilization. At the end of the fifteenth century, however, the discovery of a new continent changed the sugar industry. The discovery of America transformed sugar from a scarce treat reserved for the wealthy into the most exported commodity of the Caribbean within a few decades. Demand for sugar kept growing, and during the fifteenth century the leading nations of Europe were competing for dominance. These powers believed that investing in sugar would help them become the dominant force in Europe, with Prince Henry of Portugal stating that “sugar production held the key to success for his Atlantic acquisitions” (Hancock).
Portugal and Spain were the first countries to establish sugar plantations on the Atlantic islands off the coast of Africa, and these plantations quickly became profitable. One instance is the Portuguese island of Madeira, where “the first sugar was refined…in 1432, and by 1460 the island was the world’s largest sugar producer” (Hancock). In a span of just 28 years, sugar had changed from a substance only for the wealthy into one of the most influential cash crops in the world. After Madeira, sugar plantations were established on the Caribbean islands, and sugar became their main export. Sugar production and export made the islands, and therefore the colonizing European nations, very wealthy, so they continued to expand the industry by making it more cost-efficient. The sugar industry achieved this efficiency through two world-changing practices: the plantation system and the Transatlantic slave trade.

When the Caribbean islands were first colonized, European settlers grew sugar on relatively small estates. Over time, however, these fields could not keep up with the ever-increasing demand, and the plantation system emerged. Although the plantation system existed before it was applied to the sugar estates, its introduction into the Caribbean brought changes that affected the future of agricultural practices. It quickly grew in popularity and lasted for centuries, and it was distinctive in that it introduced monoculture, the large-scale cultivation of a single crop, to the Caribbean. The plantation system also required each island to become essentially self-sustaining. Sugarcane is highly perishable, spoiling just days after it is harvested. Furthermore, given the sheer amount of cane the fields produced, there was no time to ship it elsewhere for processing, so all refining had to be done on the islands. This raised the cost of founding and running a sugar plantation, as “the cost of building plantation works to process the sugar put the financial endeavour beyond the reach of all but the wealthiest merchants or landowners” (“Sugar Plantations”). Because each island needed its own processing facilities, costs became prohibitive for most of the smaller planters who had established their own plantations. As a result, the larger plantations run by wealthier planters began to take over. In effect, the demand for sugar concentrated the industry in the hands of a few wealthy planters, the only ones able to afford the initial costs of establishing a sugar plantation.
Yet the rising costs of sugar plantations not only weeded out the small planters but also pushed the wealthier ones to cut expenses elsewhere. The planters found those savings in their workforce.

When colonists from Europe first came to the Americas to establish sugar farms, they relied on indentured servants or, if the island was already inhabited, on the native population. However, the planters were dissatisfied with this labor force. Indentured servants were expensive to maintain, and they gained their freedom after five to seven years, along with land upon their release. This not only forced the planters to replace their workforce frequently but also turned former servants into an obstacle to expansion, both physically and economically, as they became competitors after their release. Enslaving the native population did not work either, as the harsh labor conditions caused many of them to die. Seeing this, the planters sought laborers they considered suited to the tropical environment and the harsh work of the plantations, and they turned to Africans. The planters thought that “Africans would be more suited to the conditions…as the climate resembled that [of] the climate of their homeland in West Africa” (“Slavery in the Caribbean”). The plantation owners further noted that “Enslaved Africans were also much less expensive to maintain than indentured European servants or paid wage labourers” (“Slavery in the Caribbean”). In the planters’ view, the West Africans were better fitted for the work than the previous laborers, so they kept importing ever-increasing numbers of enslaved people. Thus began the West African Slave Trade, which spanned centuries and displaced millions of Africans from their homes. The importation of enslaved people into the Americas continued as the sugar industry expanded, and of the total number transported, “some 40 per cent of enslaved Africans were shipped to the Caribbean Islands, which, in the seventeenth century… [became] the principal market for enslaved labour” (Sherman-Peter). Though enslaved people were shipped throughout the Americas, the Caribbean was the primary destination, as its sugar plantations continued to expand in size, number, and efficiency. The introduction of slavery into the Caribbean also created the Triangular Trade, which involved shipping enslaved people from Africa to the Caribbean, shipping sugar from the Caribbean to other American colonies to be distilled into rum, and shipping that rum back to Africa to trade for more enslaved people.
Sugar and rum were also shipped to other markets, but the Triangular Trade sustained the sugar industry for many years. Beyond its economic role, the introduction of enslaved Africans in America led to long-term issues of race and white supremacy in the United States.

Slavery has existed throughout most of human history, so using enslaved people to work fields in the Caribbean was nothing new. However, something made slavery in the New World unique: it became based on race. European colonists viewed Africans as an inferior people because of their skin color, and they believed slavery was the Africans’ natural state. Brenda Wilson captures this when she writes that “ideas about white supremacy and inferiority of non-whites justified Black African enslavement and exploitation for the production of the luxury of sugar…” (4). This racial hierarchy was most prevalent in the United States, although slavery there had been disputed since its earliest use, even before the country became a sovereign nation. Arguments over the morality of slavery grew so heated that civil war broke out in the United States in 1861, and they continued until the passage of the Thirteenth Amendment, which abolished slavery throughout the United States. However, even after the Civil War, the ideology of white supremacy and Black inferiority continued to dominate the Jim Crow South for nearly 100 years. A century after emancipation, Black Americans finally gained greater rights through the Civil Rights Movement of the 1960s. Yet today, around 60 years later, Black Americans still face prejudice solely because of their race. Around 160 years have passed since slavery was abolished in the United States, but its effects are still widely felt. It has taken, and will continue to take, the efforts of many people to combat these persistent prejudices. All of these issues stemmed from “the production of the luxury of sugar” (Wilson). It is difficult to imagine that a sweet treat such as sugar could cause such turmoil and violence in history, yet the seemingly innocent granule has given rise to numerous societal problems regarding race. Moreover, the rise of sugar not only caused racial violence and turmoil but also introduced environmental problems through the practices of the plantation system and their resemblance to modern factories.

Demand for sugar kept growing, and this unceasing appetite forced plantations to become more efficient. In fact, “By the 1700s, sugar was the most important internationally traded commodity and was responsible for a third of the whole European economy” (Hancock). Sugar had become one of the most sought-after commodities in the world, and its value as a cash crop never diminished. As a result, plantations kept improving their production and processing so that they could export as much sugar as possible. The greatest gains in efficiency came with the Industrial Revolution. Mechanization dramatically increased productivity around the world, and sugar plantations were no exception. In Cuba, for example, “in 1827 only 2.5 percent of the 1,000 ingenios [sugar mills] in Cuba were steam-powered…yet they accounted for 15 percent of the island’s sugar crop” (Tomich); in other words, roughly 25 steam-powered mills produced 15 percent of the island’s sugar. With industrialization, sugar plantations could export more sugar and earn greater profits. Through mechanization, however, the plantations began to resemble the factories that emerged during the Industrial Revolution. This raises the question of whether sugar plantations were antecedents of mechanized factories.