DISCOVER

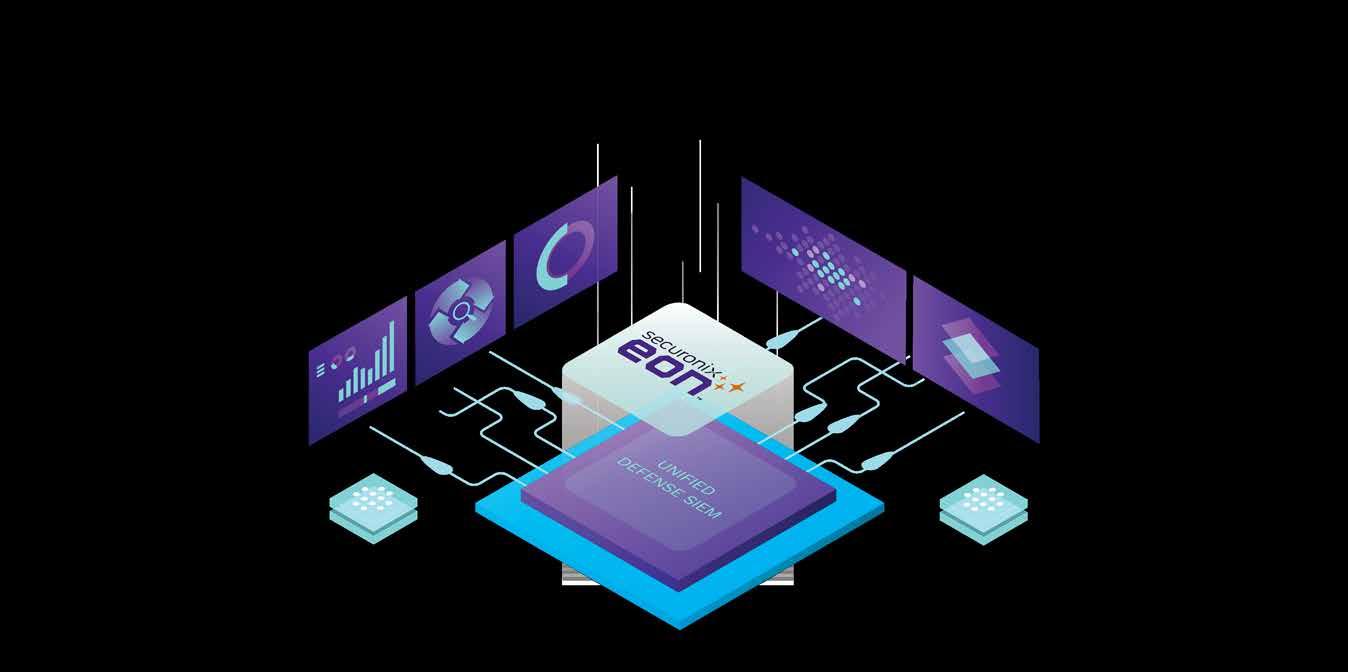

Combat Advanced Attacks with Speed, Precision, and Efficacy

Maximize Efficiency and Reduce Alert Fatigue

Navigate the Complex Regulatory and Compliance Landscape

Securonix is pushing forward in its mission to secure the world by staying ahead of cyber threats. Securonix EON provides organizations with the first and only AI-Reinforced threat detection, investigation and response (TDIR) solution built with a cybersecurity mesh architecture on a highly scalable data cloud. The innovative cloud-native solution delivers a frictionless CyberOps experience and enables organizations to scale up their security operations and keep up with evolving threats. For more information, visit www.securonix.com or follow us on LinkedIn and Twitter.

CXO DX / JUNE 2025

FROM PILOTS TO PRODUCTION

Enterprises are rapidly adopting new technologies such as GenAI, data streaming, and digital twins, which are becoming central to how businesses operate, compete, and scale.

This month’s cover story on Omnix highlights significant trends in AI adoption and how the company is addressing the challenges enterprises face in achieving ROI from AI deployments. With a three-pronged approach that includes AI monetization, conversational AI, and engineering-led digital transformation, Omnix is helping enterprises move from fragmented pilots to AI-first architectures. Among other highlights, CIO perspectives in the feature on enterprise GenAI adoption reveal that GenAI strategies are increasingly aligned with business KPIs, whether through copilots for internal automation or fine-tuned models tailored to domain-specific tasks.

The Confluent interviews featured in this issue highlight that real-time data infrastructure is the backbone of any scalable AI strategy. Data streaming is not just replacing legacy data lakes and warehouses; it is unifying them. From Flink-powered stream processing to Tableflow’s seamless data lake integration, Confluent is enabling organizations to act on data at the speed of business.

Equally critical is cyber readiness in every enterprise approach to technology adoption. Positive Technologies is taking an ecosystem view of security by building cyber talent pipelines, embedding practical training into university curricula, and advancing cybersecurity sovereignty through strategic regional partnerships.

Across these conversations, one thing stands out: technology adoption in the enterprise is moving fast but with purpose. It’s not just about scaling tools but about enhancing outcomes.

There is also a growing emphasis on interoperability and flexibility. Enterprises aren’t looking for siloed solutions; they want platforms that integrate across cloud, edge, and on-prem environments and that can meet evolving compliance mandates while staying agile enough to support new AI workloads.

R. Narayan
Editor in Chief, CXO DX

RAMAN NARAYAN

Co-Founder & Editor in Chief narayan@leapmediallc.com Mob: +971-55-7802403

Sunil Kumar Designer

SAUMYADEEP HALDER

Co-Founder & MD saumyadeep@leapmediallc.com Mob: +971-54-4458401

Nihal Shetty Webmaster

MALLIKA REGO

Co-Founder & Director Client Solutions mallika@leapmediallc.com Mob: +971-50-2489676

Fred Crehan, Area Vice President Growth Markets at Confluent, discusses regional momentum, cloud flexibility, and why streaming data is essential for AI success.

Waqas Butt, Group Head of ICT & AI, Alpha Dhabi Holding PJSC, advocates moving beyond fragmented AI experiments to strategic AI-first integration in the enterprise.

Omnix is helping enterprises unlock value from AI, data, and digital twin ecosystems across verticals.

Yuliya Danchina, Customer and Partner Training Director and Head of Positive Education at Positive Technologies, discusses how the company is helping build a global cybersecurity talent ecosystem.

Jose Thomas Menacherry, Managing Director of Bulwark Technologies, provides insights into the company’s strategies and initiatives in the ever-evolving cybersecurity landscape.

Yazen Rahmeh, Cybersecurity Expert at SearchInform, highlights the most important competencies of Data Loss Prevention (DLP) systems.

As generative AI gains momentum across industries, enterprise leaders are shifting focus from experimentation to scalable, compliant deployment.

Yevhen Zuhrer, Head of Sales and Business Development, Syteca, shares how the company is redefining privileged access and user activity monitoring for modern enterprises.

Antoinette Hodes, Evangelist & Global Solution Architect, Office of the CTO at Check Point Software, discusses the rising threat of exploited edge devices in cybersecurity.

Shaun Clowes, Chief Product Officer of Confluent, explains how the company is redefining data architecture for the AI era with innovations like Flink, Tableflow, and a governance-first approach.

Moty Cohen, Director of EMEA at Vicarius, discusses the company’s innovative approach to vulnerability and patch management.

Biju Unni, Vice President at Cloud Box Technologies, says that evolving cyberthreat complexities demand a new kind of leadership.

The international cybersecurity festival Positive Hack Days, hosted by Positive Technologies, emphasized the need for cooperation as the basis

Bart Lenaerts, Senior Product Marketing Manager, Infoblox, writes about how adversaries innovate with GenAI and the case for predictive intelligence.

CISCO JOINS STARGATE UAE INITIATIVE

Cisco to collaborate with G42, OpenAI, Oracle, NVIDIA and SoftBank Group to power AI innovation and infrastructure development in the recently announced UAE-US AI Campus in Abu Dhabi

Cisco has announced the signing of a Memorandum of Understanding (MoU) to join the Stargate UAE consortium as a preferred technology partner. The strategic MoU, signed by Cisco’s Chair and Chief Executive Officer Chuck Robbins together with the other consortium partners, G42, OpenAI, Oracle, NVIDIA and SoftBank Group, envisions the construction of an AI data center in Abu Dhabi with a target capacity of 1 GW, with an initial 200 MW capacity to be delivered in 2026.

As a partner in this initiative, Cisco will provide advanced networking, security and observability solutions to accelerate the deployment of next-generation AI compute clusters.

“With the right infrastructure in place, AI can transform data into insights that empower every organization to innovate faster, tackle complex challenges, and deliver tangible outcomes,” said Chuck Robbins, Cisco Chair and CEO. “Cisco is proud to join this consortium to harness the power of AI and deliver the infrastructure that will enable tomorrow’s breakthroughs.”

This announcement follows Robbins’ recent visit to Bahrain, Saudi Arabia, Qatar, and the UAE, where Cisco announced a series of strategic initiatives across all phases of the AI transformation in the region. These new initiatives bring Cisco’s trusted technology to the region’s AI infrastructure buildouts, leveraging the company’s deep expertise in networking and security together with longstanding regional partnerships. By fostering the development of secure, AI-powered digital infrastructure and collaborating with key partners, the company is delivering world-class, trusted technology to the region.

IBM UNVEILS HYBRID CAPABILITIES TO SCALE ENTERPRISE AI

Agent Catalog in watsonx Orchestrate to simplify access to 150+ agents and pre-built tools

At its annual THINK event, IBM unveiled new hybrid technologies designed to break down longstanding barriers to scaling enterprise AI, enabling businesses to build and deploy AI agents with their own enterprise data.

IBM estimates that over one billion apps will emerge by 2028, putting pressure on businesses to scale across increasingly fragmented environments. While AI investments are accelerating, only 25% of initiatives meet ROI expectations, according to a new IBM CEO study, prompting IBM to offer hybrid technologies, agent capabilities, and deep industry expertise from IBM Consulting to help businesses operationalize AI.

"The era of AI experimentation is over. Today's competitive advantage comes from purpose-built AI integration that drives measurable business outcomes," said Arvind Krishna, Chairman and CEO, IBM. "IBM is equipping enterprises with hybrid technologies that cut through complexity and accelerate production-ready AI implementations."

IBM is providing a suite of enterprise-ready agent capabilities in watsonx Orchestrate to help businesses put agents into action, including pre-built domain-specific agents for areas such as human resources, sales, and procurement, as well as utility agents. It also facilitates integration with over 80 business applications, including Salesforce, Workday, and SAP, while providing comprehensive agent lifecycle management. IBM is also introducing the new Agent Catalog in watsonx Orchestrate to simplify access to 150+ agents and pre-built tools from both IBM and its wide ecosystem of partners.

The enhanced watsonx.data now supports unstructured data, offering up to 40% more accurate AI agent performance than traditional methods. New tools include watsonx.data integration (cross-format orchestration), watsonx.data intelligence (insight extraction), and content-aware storage (CAS) on IBM Fusion for real-time data processing.

Arvind Krishna Chairman and CEO, IBM

IBM is also expanding its capabilities through its acquisition of DataStax and integration with Meta’s Llama Stack, reinforcing its leadership in generative AI openness and scalability.

IBM's new content-aware storage (CAS) capability is now available as a service on IBM Fusion.

IBM is launching IBM LinuxONE 5, its most secure and performant Linux platform for data, applications, and trusted AI, capable of processing up to 450 billion AI inference operations per day.

ZOHO LAUNCHES AI-POWERED ULAA ENTERPRISE BROWSER

AI-powered enterprise browser offers proactive phishing protection, security, and centralised control for organisations

Zoho announced the launch of Ulaa Enterprise, a new secure enterprise browser designed to help businesses in the Middle East and North Africa (MENA) region enhance their cybersecurity posture amid escalating threats—particularly phishing attacks, which have emerged as the region’s most pervasive cybersecurity concern.

As MENA organisations embrace cloud solutions, the browser has become the primary workspace and the largest attack surface. Ulaa Enterprise secures this critical access point by embedding protection directly into the browser, eliminating the need for complex third-party tools or virtual environments. The browser’s AI capabilities, powered by Zoho’s proprietary AI engine Zia, provide an additional layer of intelligent protection. Equipped with an integrated ZeroPhish system, it analyses URLs and web page behaviour in real time to detect and block phishing attempts before users even interact with malicious links. Zia also categorises and filters unsafe web content automatically, creating a safer and more compliant browsing experience without disrupting employee productivity.

“It’s uncommon for businesses to consider investing in paid browsers as part of their security strategy. However, with the sharp rise in cyberattacks across the MENA region, particularly those stemming from unsafe and unsecured browsing, this mindset is shifting. Ulaa Enterprise was built specifically for organisations that want to strengthen their first line of defence, enhance cybersecurity hygiene, and safeguard both their data and their customers’ trust,” said Saran B Paramasivam, Regional Director MEA, Zoho.

Ulaa Enterprise offers IT and security teams complete visibility and granular control over browser activity. Administrators can centrally define security policies, restrict downloads and extensions, monitor user behaviour, and enforce rules across different departments or user groups, all from a single console. Built-in data loss prevention measures ensure that sensitive information cannot be shared, copied, or downloaded without authorisation, while detailed audit logs and real-time monitoring allow teams to act quickly and decisively against potential threats.

SOPHOS TO LAUNCH NEW DATA CENTER IN THE UAE

Strategic investment strengthens local data sovereignty, performance, and partner enablement in support of the UAE’s digital transformation goals

Sophos, a global leader in innovative security solutions for defeating cyberattacks, has announced plans to launch a new data center in the UAE by year end. The expansion is part of Sophos’ broader regional investment strategy and reinforces its commitment to supporting the UAE’s vision of becoming a global digital hub, while enabling local organizations to benefit from enhanced performance, data sovereignty, and regulatory compliance.

Hosted on Amazon Web Services (AWS) infrastructure within the UAE, the data center will power Sophos’ advanced, cloud-native security solutions, bringing improved performance, regulatory compliance, and data sovereignty to organizations across the region.

“This launch reflects our mission to defend organizations of all sizes against inevitable cyberattacks with unmatched expertise and adaptive defenses,” said Gerard Allison, senior vice president EMEA Sales at Sophos. “By bringing local infrastructure to the UAE, we’re delivering on our vision of enabling every organization to achieve superior cybersecurity outcomes. This expansion supports our strategy of democratizing, leveraging AI and automation, and empowering our partners to scale securely.”

Key Benefits for Local Customers:

• Enhanced Data Sovereignty: Local hosting ensures adherence to national and sector-specific regulations, which is critical for industries like government, healthcare, and finance.

• Improved Performance: Reduced latency delivers faster responsiveness for cloud-based services, including Sophos Central.

• Enterprise and Public Sector Readiness: Built to meet the high standards of mission-critical environments with advanced security and operational resilience.

The new data center enables Sophos’ regional partners to deliver in-region data hosting to their customers, enabling them to meet local compliance demands while improving service delivery. It also reflects Sophos’ ongoing commitment to empowering partners with the tools, performance, and innovation needed to succeed in an evolving threat landscape.

Gerard Allison

Senior VP EMEA Sales, Sophos

Saran B Paramasivam

Regional Director MEA, Zoho

FINESSE PARTNERS WITH SECURITI

Partnership will seek to bolster data security and AI governance

Finesse, a leader in digital transformation and the AI revolution, has announced a strategic partnership with Securiti, a pioneer of the Data+AI Command Center.

The partnership brings together Finesse’s proven expertise in deploying transformative digital solutions with Securiti’s groundbreaking technologies in managing sensitive data, offering a sophisticated blend of capabilities designed to address the multifaceted demands of today’s cybersecurity landscape. By integrating Securiti’s innovative AI-driven data privacy and security solutions into Cyberhub’s comprehensive portfolio, Finesse is set to equip organizations with advanced tools to protect their critical information across both structured and unstructured data environments.

Finesse’s Cyberhub already boasts an impressive suite of solutions, including its industry-leading Cognitive Security Operations Center (CSOC), automated incident response frameworks, AI-driven Zero Trust architecture, and governance frameworks for generative AI. With the addition of Securiti’s advanced platforms, such as Data Vault, Data Leakage Protection, and privacy-compliance automation, the enhanced Cyberhub offering will empower businesses to deploy proactive, scalable, and highly intelligent cybersecurity strategies.

Megha Shastri, Vice President – Enterprise Accounts, Finesse, said, “Together with Securiti, we will address a critical set of challenges that organizations face in securing sensitive data across modern, distributed environments, especially in the cloud. This partnership will empower our customers with continuous visibility and control over sensitive data, reducing security risks, and ensuring compliance across environments.”

“Organizations today face a dual imperative: to innovate rapidly with AI and cloud technologies while simultaneously maintaining stringent data security and privacy controls,” said Tahir Latif, Chief Privacy Officer (META) at Securiti. “Through this partnership, we are enabling enterprises to automate the discovery, classification, and protection of sensitive data at scale, providing the foundational intelligence that downstream security and AI governance solutions rely on.”

Securiti’s unique ability to combine robust data visibility and controls with AI intelligence aligns seamlessly with Cyberhub’s mission to ensure businesses maintain visibility and control over their digital assets while staying ahead of emerging threats. This integration also enables enterprises to achieve and sustain compliance with international privacy regulations, such as GDPR, CCPA, and similar standards, through automation and data-driven insights.

GOOGLE CLOUD SUMMIT DOHA CELEBRATES THE SECOND ANNIVERSARY OF ITS LOCAL CLOUD REGION

Second annual Summit showcases AI advancements and customer success

Google Cloud hosted its second annual Google Cloud Summit in Doha, held under the patronage of His Excellency Mohammed bin Ali bin Mohammed Al Mannai, Minister of Communications and Information Technology, bringing together over 1,500 industry leaders, developers, and IT professionals. The Summit marked two years of the Google Cloud Doha region empowering local innovation and explored the latest advancements in AI, data analytics, and cloud technologies. The event featured significant discussions and announcements on strategic collaborations.

The Google Cloud Summit Doha showcased the vibrant tech ecosystem in Qatar and Google Cloud’s commitment to it. Attendees experienced keynotes from Google Cloud executives and Qatari leaders, gained insights into transformative AI technologies like Gemini, AI Agents, and NotebookLM, heard compelling customer success stories, and participated in deep-dive sessions on data management and cybersecurity. This ongoing partnership with Qatar is poised to play a vital role in building a resilient, secure, and digitally advanced ecosystem in the nation.

Sami Al Shammari, Assistant Undersecretary for Infrastructure and Operations Affairs at MCIT, stated: "Our collaboration with Google Cloud has served as a key enabler in Qatar’s journey toward a knowledge-based, innovation-driven economy. Since the launch of the Doha cloud region two years ago, this collaboration has yielded tangible outcomes that directly support the objectives of Qatar National Vision 2030, particularly in enhancing digital infrastructure, delivering scalable and secure government services, and building a future-ready digital workforce."

The Summit also highlighted how a diverse range of leading Qatari organizations are leveraging Google Cloud for their transformation journeys. These include Al Jazeera Media Network (AJMN), Aspire, beIN MEDIA GROUP, Media City Qatar, Ministry of Endowment & Islamic Affairs (Awqaf), Ministry of Labour, Ooredoo Group, Ooredoo Qatar, Qatar Airways, Qatar Foundation Pre-University Education, Qatar Free Zones Authority (QFZA), Qatar Insurance Company (QIC), Snoonu, University of Doha for Science and Technology (UDST) among many others who are driving innovation across various sectors.

TITAN DATA SOLUTIONS AND NEXSAN SIGN DISTRIBUTION AGREEMENT FOR MENA

This agreement will expand access to Nexsan’s high-performance, secure, and scalable storage infrastructure across the MENA region

Titan Data Solutions, a specialist distributor for server and storage solutions, has signed a distribution agreement with Nexsan, a global leader in enterprise-class storage solutions. The partnership will expand access to Nexsan’s high-performance, secure, and scalable storage infrastructure across the Middle East and North Africa (MENA), supporting the region’s rapid investment in AI and advanced computing technologies.

As governments and enterprises across MENA accelerate their digital transformation agendas, investment in artificial intelligence, machine learning, and high-performance computing is surging. According to a recent Deloitte report, businesses in the region face mounting pressure to adopt AI and ramp up investments while a critical disconnect exists between their ambitious goals and the availability of the necessary infrastructure.

“This agreement with Nexsan is a key milestone in our MENA strategy,” said Anand Chakravarthi, Regional Director, Titan Data Solutions. “Businesses across the region are eager to harness the power of AI and advanced computing, but success depends on a strong foundation, particularly when it comes to secure, reliable, and high-performance storage. That’s exactly where Nexsan delivers.”

Nexsan offers a broad portfolio of storage solutions designed to meet modern data challenges, including:

• High-capacity, high-throughput storage for large-scale, critical workloads

• Secure archiving for compliance, data sovereignty, and long-term retention

• Cost-effective, high-performance storage solutions for data centers and edge deployments

• Hybrid and unified storage that integrates seamlessly with existing infrastructure, enhanced by S3 edge caching for optimized data delivery and reduced cloud costs

• Advanced storage for Digital Video Surveillance (DVS) and CCTV, delivering scalable, secure storage for critical video data

Anand Chakravarthi, Regional Director, Titan Data Solutions

“We are thrilled to expand our partnership with Titan Data Solutions into the MENA region,” said Adrian Hedges, Regional Sales Director, Nexsan. “Nexsan’s technologies are designed for environments where rapid data growth, high-performance demands, and reliability are non-negotiable. By joining forces with Titan, we’re committed to bringing our technologies to more partners across the region, helping them bridge the infrastructure gap and unlock the full potential of their data.”

MANAGEENGINE ENHANCES UNIFIED PAM WITH NATIVE INTELLIGENCE AND ADVANCED AUTOMATION

The Company's Unified PAM Platform, PAM360, Now Offers AI-Governed Cloud Access Policies and Qntrl-Powered Task Automation for Identity-Centric Routines

ManageEngine, a division of Zoho Corporation and a leading provider of enterprise IT management solutions, announced that it has added AI-powered enhancements, featuring intelligent least privilege access and risk remediation policy recommendations, to its privileged access management platform, PAM360. A new privileged task automation module enabled by Qntrl, Zoho’s unified workflow orchestration platform, has also been introduced. Together, these capabilities help enterprises automate enterprise-wide administrative routines, enforce least privilege at scale with intelligent, context-aware controls, and reduce security risks through automated remediation.

AI-Governed Least Privilege Access

Traditional PAM models, which rely on static policies and manual processes, often operate without sufficient context. This can result in excessive permissions, entitlement drift, and configuration errors. To address these challenges, organizations should adopt an adaptive, context-driven approach to privileged access management, one that leverages AI to enable dynamic, risk-based access control. In fact, according to ManageEngine's 2024 Identity Security Insights, 68% of respondents are looking for AI-driven improvements in risk-based access control.

"Today’s hybrid, multi-cloud environments have led to an explosion of human and non-human identities, creating complex access workflows and rampant privilege sprawl. To tackle this, organizations require dynamic policies that can intelligently enforce the principle of least privilege across their identity stack. With the AI-driven CIEM module in PAM360, IT security teams can now generate intelligent least privilege policies, proactively flag risky entitlements and automate remediation, helping enterprises close critical identity security gaps before they’re exploited," said Ramanathan Kannabiran, director of product management at ManageEngine.

Ramanathan Kannabiran, Director of Product Management, ManageEngine

PAM360’s CIEM module now features AI-generated least privilege policies, automated remediation of shadow admin risks and real-time access and session summaries. These AI-driven capabilities help organizations proactively tackle access sprawl and misconfigurations in hybrid environments with minimal manual effort.

IFS LAUNCHES NEXUS BLACK

IFS Nexus Black to co-create solutions at the forefront of Industrial AI, rapidly delivering tangible business outcomes at scale

IFS, the leading provider of enterprise cloud and Industrial AI software, announced the launch of IFS Nexus Black, a strategic innovation program to expedite high-impact AI adoption for industrial organizations. Nexus Black provides a credible alternative to legacy software vendors by delivering bespoke solutions at pace with guaranteed industrial scalability and security.

Nexus Black combines advanced AI technologies, deep industrial context and a dedicated delivery team, partnering with customers to tackle bespoke, complex challenges in asset-intensive industries. Built on the foundation of IFS.ai, Nexus Black enables rapid development and deployment of AI capabilities to turn bold ideas into tangible outcomes in a matter of weeks.

The Nexus Black offering to customers comprises:

• Agile, sprint-based co-creation and prototyping. A proven co-development model that is safe, scalable and fast

• Structured four-phase model: Problem Definition; Proof of Value; Accelerated Development; Digital Continuity

• Access to dedicated AI engineers, domain experts, and solution architects, with deep expertise in industrial contexts and enterprise architecture

• Collaboration on agentic AI and contextual intelligence with industrial scalability

Nexus Black turns intelligence into applied impact thanks to IFS’s deep industry footprint and proximity to rich industrial asset data, combining context and AI to bring trusted contextual AI into live operations, quickly and securely.

“Too many businesses are stuck choosing between inflexible enterprise tools or niche AI vendors with no roadmap to scale. Nexus Black changes that,” said Mark Moffat, CEO of IFS. “Nexus Black is IFS’s commitment to rapid, high-impact AI innovation for leading industrial organizations. It combines the agility of a start-up with the industrial context, security and delivery strength IFS is known for. It’s how we help our customers leap ahead - not just catch up.”

Nexus Black enables customers to access capabilities ahead of general launch and directly engage in the creation process. Through a co-investment model, customers gain a fast-mover advantage in their industries and influence solutions that enhance their agility. Initial use cases include predictive maintenance, manufacturing scheduling optimization, AI copilots for service and sales, and intelligent automation for finance and supply chain.

REDINGTON OFFERS ILLUMIO SEGMENTATION TO STOP BREACHES

Illumio segmentation proactively protects critical assets, contains attacks, and enhances cyber resilience

Redington, a leading technology aggregator and innovation powerhouse across emerging markets, announced a new distribution partnership with Illumio, the breach containment company. The partnership will see Redington work with Illumio to evolve its channel strategy, drive partner enablement, and accelerate go-to-market momentum for Illumio Segmentation, helping organizations across the region reduce risk, contain attacks, and stop cyberattacks from turning into cyber disasters.

Despite record spending on cybersecurity, the volume, cost, and impact of cyberattacks continue to rise. Ransomware and other threats bypass perimeter defenses, with attackers exploiting vulnerabilities in hybrid and multi-cloud environments to move across networks and reach critical data, assets, and infrastructure.

Illumio Segmentation proactively protects critical assets, contains attacks, and enhances cyber resilience. By applying the principles of Zero Trust to stop lateral movement across multi-cloud and hybrid infrastructure, it enables organizations to protect critical resources and prevent the spread of cyberattacks.

“Our partnership with Illumio reflects Redington’s continued commitment to bringing the most advanced and relevant cybersecurity solutions to our partners and customers,” said Dharshana Kosgalage, Executive Vice President, Technology Solutions Group, Redington. “In today’s threat landscape, Zero Trust Segmentation is no longer optional; it’s essential. Through our extensive channel ecosystem, we will accelerate access to this critical technology, enabling partners to drive real cyber resilience for their customers.”

Recognized as a leader in The Forrester Wave: Microsegmentation Solutions, Q3 2024 report, Illumio Segmentation is proven to strengthen cyber resilience and reduce the impact of attacks. A Forrester Total Economic Impact™ report shows Illumio reduces the blast radius of attacks by 66%, saving $1.8 million in decreased risk exposure.

“Breaches today are inevitable, but disasters don’t have to be,” said Sam Tayan, Director of Sales for Middle East, Turkey and Africa (META) at Illumio. “Illumio Segmentation provides a simple and effective way to contain threats, minimize risk, and build resilience, so that organizations can thrive without fear of cyber disasters. We’re thrilled to partner with Redington to jointly deliver value to customers and empower them to stay agile in the face of today’s cyberthreats.”

AI FALLING SHORT IN CUSTOMER SERVICE, FINDS SERVICENOW’S SURVEY

54% of UAE consumers say failure to understand emotional cues is more of an AI than human trait, pushing AI to evolve beyond automation

ServiceNow has released the ServiceNow Consumer Voice Report 2025. Now in its third year, the report, which surveyed 17,000 adults across 13 countries in EMEA, including 1,000 in the UAE, explores consumer expectations of AI’s role in customer experience (CX).

Need to put the EQ in AI

Despite rapid advancements in AI and its widespread use in customer service, UAE consumers overwhelmingly (at least 68%) prefer to interact with people for customer support. Based on the findings of the research, this can be attributed to the perceived lack of AI’s general emotional intelligence (EQ). More than half (54%) of UAE consumers say that failing to understand emotional cues is more of an AI trait than human; 51% feel agents having a limited understanding of context is more likely to be AI; and an equal number (51%) say misunderstanding slang, idioms and informal language is more likely AI. Meanwhile, nearly two thirds (64%) of UAE consumers feel repetitive or scripted responses are more of an AI trait.

“The key takeaway for business leaders is that AI can no longer be just another customer service tool – it has to be an essential partner to the human agent. The future of customer relationships now lies at the intersection of AI and emotional intelligence (EQ). Consumers no longer want AI that just gets the job done; they want AI that understands them,” commented William O’Neill, Area VP, UAE at ServiceNow.

High Stakes, Low Trust

The report also highlights a clear AI trust gap, particularly for urgent or complex requests. UAE consumers embrace AI for speed and convenience in low-risk/routine tasks — 23% of UAE consumers trust an AI chatbot for scheduling a car service appointment and 24% say they are happy to use an AI chatbot for tracking a lost or delayed package. However, when it comes to more sensitive or urgent tasks, consumer confidence in AI drops. Only 13% would trust AI to dispute a suspicious transaction on their bank account with 43% instead preferring to handle this in-person. Similarly, when it comes to troubleshooting a home internet issue, only 20% of consumers across the Emirates are happy to rely on an AI chatbot, with 50% preferring to troubleshoot the issue with someone on the phone.

Humans and AI

For all the frustrations with AI, with almost half (47%) of UAE consumers saying their customer service interactions with AI chatbots have not met their expectations, the research suggests that consumers consider AI crucial for organizations looking to deliver exceptional customer experiences.

William O’Neill Area VP UAE, ServiceNow

For one, in addition to seamless service (90%), quick response times (89%) and accurate information (88%), more than three quarters (76%) of UAE consumers expect the organizations they deal with to provide a good chatbot service. Perhaps more interestingly, 85% of consumers across the Emirates expect the option of self-service problem solving, which points to the need for organizations to integrate AI insights and data analysis into service channels to anticipate customer needs before they arise.

“While AI in customer service is currently falling short of consumer expectations, it is not failing. Rather, it is evolving. There is an opportunity for businesses to refine AI by empowering it with the right information, making it more adaptive, emotionally aware, and seamlessly integrated with human agents to take/recommend the next best action and deliver unparalleled customer relationships,” added O’Neill. “Consumers do not want less AI – they want AI that works smarter. By understanding the biggest pain points, companies can make AI a trusted ally rather than a frustrating barrier.”

GBM ENHANCES CYBERSECURITY AT PRIME HEALTH

The collaboration involves 24x7 threat monitoring, detection and response, technology enablement, advanced incident response, healthcare-specific threat intelligence, brand protection, and security posture improvement

Gulf Business Machines (GBM), a leading end-to-end digital solutions provider, has entered a strategic partnership with PRIME Health to deliver a comprehensive managed detection and response (MDR) service designed to enhance cybersecurity across the healthcare provider’s ecosystem.

Through this collaboration, GBM will deliver around-the-clock threat monitoring, rapid detection, and swift incident response to protect PRIME Health’s digital environment. At the core of the collaboration are fully managed Security Information and Event Management (SIEM) and Security Orchestration, Automation, and Response (SOAR) platforms, empowering security teams with automated workflows, real-time insights, and a unified dashboard for enhanced visibility and faster decision-making.

In addition, PRIME Health will leverage GBM’s expert Digital Forensics and Incident Response (DFIR) team to rapidly investigate and contain threats, while gaining access to contextual, healthcare-specific threat intelligence. Furthermore, the partnership will help safeguard the healthcare provider’s digital reputation by proactively monitoring for misuse, impersonation, and threats targeting the brand.

Beyond day-to-day operations, GBM will also provide ongoing support to strengthen PRIME Health’s cybersecurity posture, ensuring continuous alignment with best practices, regulatory compliance, and evolving threat landscapes.

Driven by a strong commitment to technological advancement in healthcare, the UAE's digital health market is projected to reach US$2.65 billion by 2030. The collaboration further taps into this opportunity by proactively identifying and mitigating cyber threats targeting patient data and medical devices, thereby contributing to safeguarding the critical healthcare ecosystem in the UAE.

Jaleel Rahiman, IT Director at PRIME Health, said, “At PRIME Health, we recognize that cybersecurity is essential for ensuring operational resilience, safeguarding patient data, and preserving the trust of every individual who walks through our doors. As part of our continued pursuit of excellence, we were looking for a globally recognized provider that could deliver top-grade cybersecurity while securing our complex environment end to end so that our internal teams are free to focus on innovation and care delivery. This initiative reflects our promise of ‘Personalised Care, Personally’—where every patient interaction is built on a foundation of trust and data confidentiality. With its proven reputation, strong partnerships, and local presence in the UAE, GBM is our trusted partner in supporting our security goals and compliance roadmap.”

Ossama El Samadoni, General Manager of GBM Dubai, said, “Our MDR service will enable PRIME Health to ensure uninterrupted care delivery while staying ahead of cyber threats. Our multi-layered collaboration reflects our shared commitment to building a secure and resilient healthcare environment. We are delighted to help PRIME Health demonstrate leadership in secure digital transformation, build patient trust by proactively defending sensitive health data, and strengthen their reputation as a security-conscious, future-ready healthcare provider.”

PRIME Health is one of the UAE’s most trusted healthcare providers, known for its commitment to medical excellence and patient-centric care. With a portfolio that includes Prime Hospital, Prime Medical Centers, Premier Diagnostic Center, Medi Prime Pharmacies, and specialized services such as Home Care and Corporate Medical Services, the company plays a vital role in the UAE’s healthcare landscape. The group’s leadership continues to drive innovation across clinical, operational, and digital domains.

The increased use of technology in the Middle East and North Africa has created a rich playground for cybercrimes. With sophisticated and powerful cyberattacks compromising businesses at an unprecedented rate, redesigning security for the digital-first world has become a key priority for organizations, with revenue in the region’s cybersecurity market projected to reach US$4.63 billion this year.

Jaleel Rahiman IT Director, PRIME Health

Ossama El Samadoni General Manager, GBM Dubai

NUTANIX AND PURE STORAGE PARTNER TO DELIVER NEW INTEGRATED SOLUTION FOR MISSION-CRITICAL WORKLOADS

Combined benefits of Nutanix Cloud Platform with Pure Storage FlashArray to provide flexibility, security, and scale with the high performance needed for the most demanding environments

Nutanix, a leader in hybrid multicloud computing, and Pure Storage, an IT pioneer that delivers the world’s most advanced data storage platform and services, announced a partnership aimed at providing a deeply integrated solution that will allow customers to seamlessly deploy and manage virtual workloads on a scalable modern infrastructure.

This integrated solution comes at a pivotal time for customers, as the evolution of the virtualization market is top of mind. IT leaders are focused on helping their organizations keep pace with the rapidly changing technology landscape while simultaneously driving greater operational effectiveness. Gartner predicts that “by 2028, cost concerns will drive 70% of enterprise-scale VMware customers to migrate 50% of their virtual workloads.”

With this collaboration, the Nutanix Cloud Infrastructure solution, powered by the Nutanix AHV hypervisor along with Nutanix Flow virtual networking and security, will integrate with Pure Storage FlashArray over NVMe/TCP to deliver a customer experience uniquely designed for high-demand data workloads, including AI.

Key Benefits:

• Scalable, Modern Infrastructure - This partnership will provide customers with access to high-performance, flexible, and efficient full-stack infrastructure to power their most business-critical workloads through the simplicity and agility of Nutanix Cloud Infrastructure for virtual compute, and the consistency, scalability, and performance density of Pure Storage all-flash systems.

• Built-in Cyber Resilience - Customers will be able to strengthen their end-to-end cyber-resilience posture by leveraging native Nutanix capabilities, such as Flow micro-segmentation and disaster recovery orchestration, alongside Pure Storage FlashArray capabilities, such as data-at-rest encryption and SafeMode.

• Freedom of Choice - Customers want agility and control of their mission-critical environments. The combination of Nutanix and Pure Storage will offer a resilient and easy-to-use alternative to existing market options.

“We’re thrilled to see Nutanix and Pure Storage joining forces. Their collective expertise, innovative technologies, and shared commitment to reliability and performance will deliver a compelling solution that directly addresses critical needs in the market,” said Anthony Jackman, Chief Innovation Officer at Expedient. “Expedient is proud to be an early design partner, collaborating closely with both companies to ensure this solution elevates the quality of service we deliver, ultimately enhancing the value and experience for our clients nationwide.”

“This new solution will help Nutanix and Pure Storage reach more customers together and help them better manage and modernize their mission-critical applications,” said Tarkan Maner, Chief Commercial Officer at Nutanix. “Our integrated solution will be ideally suited for companies with storage-rich environments looking for choices in modernization.”

“With more than 13,500 global customers, I’m hearing more than ever that organizations of all shapes and sizes have a growing need for efficient, flexible, and high-performance solutions that can also scale to support their most critical, data-intensive applications,” said Maciej Kranz, General Manager, Enterprise at Pure Storage. “Nutanix and Pure Storage are both known for pushing the boundaries of traditional infrastructure, driving innovation, and enabling unmatched agility. With this easy-to-manage solution, our joint customers will have the power of a virtual infrastructure that’s truly built for change.”

Maciej Kranz General Manager, Enterprise, Pure Storage

This solution will be supported on major server hardware partners that currently support Pure Storage FlashArray, including Cisco, Dell, HPE, Lenovo and Supermicro, for both existing and new deployments.

Additionally, Cisco and Pure Storage are expanding their partnership of more than 60 FlashStack validated designs to include Nutanix in the portfolio - further simplifying full-stack delivery.

The solution is currently under development and is expected to be in early access by the summer of 2025 and generally available at the end of this calendar year through both Nutanix and Pure Storage channel partners.

Tarkan Maner Chief Commercial Officer, Nutanix

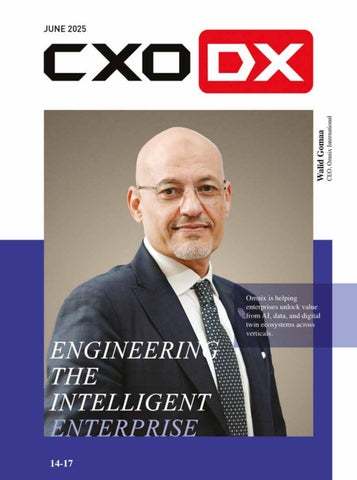

ENGINEERING THE INTELLIGENT ENTERPRISE

Omnix is helping enterprises unlock value from AI, data, and digital twin ecosystems across verticals.

With a strong legacy of over 30 years of operations, Omnix offers proven capabilities across both engineering and ICT, positioning itself as a strategic enabler of transformation and bringing a pragmatic, grounded approach to the complex landscape of enterprise digitalization. This has earned the company the trust of a growing client base that includes several of the region’s leading enterprise players. And now Omnix is sharpening its focus on how enterprises deploy AI, implement digital twins, and manage data across their digital transformation journeys.

Walid Gomaa, CEO of Omnix International, says, “What we're really focusing on right now is staying in tune with the evolving market landscape—keeping up with what’s happening out there, particularly in the realm of AI. While AI has been a dominant theme of discussion for some time now, if we look at the ground reality in terms of adoption and implementation, it’s still not where we would like it to be.”

He mentions there are several reasons for this. One is that many organizations still approach AI with a trial-and-error mindset, which, while not wrong, often lacks a clear path or outcome. The business use cases are frequently unclear, and ROI is rarely well-calculated or articulated. These gaps end up delaying or derailing the actual execution of AI systems and solutions.

“To address these challenges, we’ve been working on a few key areas. The first is from a structural perspective—how we approach AI in a way that’s practical and outcome-focused. We’ve realized that customers need help in the early stages of the AI cycle. So we work closely with them—right from the beginning—to define use cases. This involves engaging both IT teams and business users to bridge the common gap that exists between them. We sit with them, collaborate on real, practical use cases, and ensure the business side is involved from the outset.”

Often in traditional IT-led implementations, the IT team builds a solution and hands it over to the business, expecting adoption. But because the business users weren’t part of the process, the feedback is often negative.

Walid Gomaa CEO, Omnix International

“We are actively breaking that cycle. We’ve created a specific service offering to do just that, and we call it ‘AI Monetization Service’. It’s focused on clearly defining the use case, establishing a strong business case, and aligning the entire effort across IT and business functions,” says Walid.

One example is AI-led computer vision. Omnix has implemented several use cases utilizing computer vision, which is rapidly becoming one of the most widely used technologies in the AI space.

“We have worked on face recognition, people counting, occupancy management, and predictive analytics in specific industry scenarios. These use cases are real, tangible, and are already making an impact,” Walid adds.

Another major area of focus for Omnix is conversational AI, which is becoming increasingly important for enhancing interactions between employees and their organizations, citizens and government, and customers and enterprises.

“Improving the user experience is a key goal here. We’re not just talking about basic chatbots. We’re looking at full conversational ecosystems that incorporate natural language processing, chatbots, and LLM integration to ensure responses are accurate, contextual, and aligned with enterprise data. The conversational AI push is about more than convenience. It’s about meaningful engagement, and we see significant potential both internally for employee support systems and externally for customer interactions,” says Walid.

Alongside AI, data is a priority for Omnix, as structured, clean, and accurate data is a prerequisite for successful AI systems.

“We have taken a firm position on helping customers across the entire data lifecycle. This includes everything from data definition and cataloging, all the way to ETL (Extract, Transform, Load) processes, building data lakes, and progressing into the analytics phase. Without proper data management at the foundation, any AI initiative is likely to fail or underperform. That’s why our efforts in this area are comprehensive. We don’t just clean up data but help build the infrastructure to manage it going forward. It’s a very intentional effort to strengthen the data backbone of every organization we work with,” elaborates Walid.

The third key area, and one that sets Omnix apart, is the company’s strong engineering heritage.

“At Omnix, we carry two types of DNA. One is digital, and the other is engineering. And we still maintain a strong hold in engineering services. That includes everything from training to building information management (BIM) services to product lifecycle management, and project delivery solutions.”

Now, Omnix has extended this even further with 3D scanning and asset digitalization. This capability allows Omnix to scan and digitize physical environments, from university campuses to industrial facilities, and convert them into interactive, data-rich models. This is the foundation of the company’s digital twin offerings.

“With 3D scanning, we can not only visualize the asset but also tag and map individual components including furniture, equipment, infrastructure. Then we attach IoT devices to these assets. That’s when the digital twin really comes to life. We can track real-time data, control environments remotely, perform predictive maintenance, and simulate future states. It’s a powerful way to deliver value, especially in sectors like oil and gas, education, and manufacturing.”

Digital twins - use cases and delivery processes

Omnix takes a phased approach to digital twin implementations, treating each one as a process with multiple stages. For instance, Omnix executed a project for a university in Abu Dhabi with the goal of digitally mapping the entire campus. Omnix started with a complete scan using 3D laser scanners and drones, capturing the full physical environment, including buildings, interiors, and assets.

“Once the scanning was complete, we took all that input, what we call point cloud data, and began transforming it into a 3D digital model. We use platforms like Unity for this stage, which allows us to build interactive visualizations. The final output is a walkable 3D representation of the entire site. You can do virtual walkthroughs, examine specific areas, and interact with objects,” says Walid.

But the team didn’t stop at visual modelling; it extended the process to asset tagging.

“Because we were scanning everything in detail, we also conducted asset tagging. That means every chair, table, screen, or device we captured is logged as an individual asset within the system. That’s what we refer to as digitization—taking the physical and converting it into digital with intelligence attached.”

From there, the next logical step is IoT integration. Once assets are tagged, IoT sensors and devices are attached to them. This enables live streaming of information such as energy consumption, equipment status, or environmental metrics (temperature, humidity, occupancy), which makes it possible to monitor and control systems remotely. For example, operations such as switching lights on or off, tracking environmental conditions, or scheduling maintenance can be executed from a centralized system.

“This is what brings the digital twin to life. It’s not just about having a visual replica of your environment; it’s about having a functional, responsive digital interface tied to your physical operations. We’ve applied this model in oil and gas, in petrochemical facilities, and other industrial settings, where the stakes are high and the environments complex,” says Walid.


In such deployments, Omnix uses hardware such as physical scanners, drones, and laser devices to capture spatial and environmental data. That raw data is then processed into point clouds, which are further modeled into 3D environments using software like Unity. From there, the system becomes interactive and is often accessed through dashboard views.

“These dashboards are customized depending on the use case. For a manufacturing plant, it might show machine temperature or failure warnings. For a building, it could reflect energy usage or air quality. And once you’ve got enough historical data coming in, you can go a step further into predictive analytics. For example, if three specific sensors trigger together consistently before a fault, we can pre-emptively alert the team that a breakdown may occur within 24 hours or 3 days, depending on the pattern,” says Walid.
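Walid’s example of predictive alerting, where a possible breakdown is flagged when specific sensors consistently trigger together, can be sketched as a sliding-window co-occurrence check. This is a minimal illustration only, assuming the sensor pattern, the time window, and the lead time have already been learned from historical fault data; `SensorEvent`, `co_triggered`, and `fault_alert` are hypothetical names, not part of any Omnix product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorEvent:
    # Hypothetical event record: which sensor fired, and when (in seconds).
    sensor_id: str
    timestamp: float


def co_triggered(events, pattern, window_s):
    """Return True if every sensor in `pattern` fired within some span of `window_s` seconds."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    counts = {}  # sensor_id -> number of its events inside the current window
    start = 0
    for ev in ordered:
        counts[ev.sensor_id] = counts.get(ev.sensor_id, 0) + 1
        # Drop events from the left until the window spans at most window_s.
        while ev.timestamp - ordered[start].timestamp > window_s:
            old = ordered[start].sensor_id
            counts[old] -= 1
            if counts[old] == 0:
                del counts[old]
            start += 1
        # Alert condition: all sensors of the learned pattern are in the window.
        if set(pattern).issubset(counts):
            return True
    return False


def fault_alert(events, pattern, window_s, lead_time_h):
    """Hypothetical alert rule: warn if the learned pattern appears in live data."""
    if co_triggered(events, pattern, window_s):
        return (f"Possible fault within {lead_time_h} h: "
                f"sensors {sorted(pattern)} triggered together")
    return None
```

In a real deployment, the pattern and the lead time (24 hours or 3 days, in Walid’s example) would come from analysing historical fault records; the window check only decides when that learned pattern is present in live sensor data.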

Access to this information is managed securely. Omnix works with clients to define user roles and privileges, from data scientists to general staff, and implement access controls. This ensures the right people have the right access, aligned with enterprise policies. Omnix is thus offering the infrastructure layer, both hardware and software, to support real-time, intelligent, and secure data-driven operations.

“From defining the use case, to building the system, to implementing it in the live environment, it’s a collaborative journey. And this is a core part of our digital transformation offering. Some clients define transformation only as process digitization. But we go beyond that. For us, it includes data readiness, digital twins, AI use cases, and conversational interfaces, all integrated based on the organization’s priorities,” says Walid.

Defining AI use cases via a consultative approach

AI is increasingly seen as the entry point for many conversations around digital transformation. Everyone wants AI, but the challenge is to find relevant use cases aligned with the business processes of the organization.

“When we engage with clients, we emphasize: AI by itself is not the solution. It's a tool. The real value comes from how well it is aligned with the organization's business objectives. We handle these conversations by breaking AI down into practical use cases. For some clients, that might mean starting with conversational AI to improve customer service. For others, it could be computer vision to manage footfall or safety compliance. In some cases, it's predictive analytics to anticipate operational failures. The idea is to identify the use case that will have the highest immediate impact on the organization. Interestingly, many clients, especially in the government, are now under pressure to implement AI. Some come to us with a mandate that they need to implement five use cases by year-end. But when we ask if they have defined those use cases, often the answer is no.”

Omnix, therefore, guides these customers through a gap analysis, helping them define and prioritize the most relevant use cases, and then proceed from there.

“It's not about implementing everything at once—that's not realistic. What we recommend is: start with two or three use cases, build the data models, execute, learn, and then scale. Because every implementation will require adjustments—whether it's internal processes, team reskilling, or new hires like data scientists and engineers.”

Sometimes, what the customer thought was an AI problem turns out to be a workflow or automation issue. In such instances, Omnix solves the problem with a low-code automation platform; that is still transformation, but it doesn’t have to involve an AI model. In some cases, Omnix enhances the use case with NLP capabilities, so there is still an AI element involved.

“But the point is: it's about solving the real problem, not forcing AI where it’s not needed,” Walid adds.

He also explains where agentic AI fits in.

“People often ask how it ties into conversational AI, and the answer is—it’s the next layer. Conversational AI is essentially the front-end interface, where users interact via chat, voice, or app. Behind the scenes, agentic AI kicks in, orchestrating multiple tasks simultaneously. Think of it this way: when a user makes a request, an agentic system deploys multiple specialized agents. One might fetch data from a database. Another could trigger a backend workflow. A third might perform reasoning or offer recommendations based on patterns. These agents collaborate in real time, but the user only experiences a seamless front-end interaction. That’s where real intelligence and value generation happens. It’s not just about responding to a question. It’s about reasoning, synthesizing, and even anticipating— pulling from multiple systems, understanding context, and delivering outcomes.”
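The orchestration pattern Walid describes, in which a single front-end request fans out to several specialized agents working concurrently, can be sketched with Python’s `asyncio`. This is an illustrative sketch under stated assumptions: the three agents below are hypothetical placeholders that only simulate a database lookup, a backend workflow trigger, and a reasoning step; a real agentic system would call actual data sources, workflow engines, and models.

```python
import asyncio


async def data_agent(request: str) -> dict:
    # Hypothetical stand-in for an agent that fetches data from a database.
    await asyncio.sleep(0.01)
    return {"data": f"records for '{request}'"}


async def workflow_agent(request: str) -> dict:
    # Hypothetical stand-in for an agent that triggers a backend workflow.
    await asyncio.sleep(0.01)
    return {"workflow": "ticket-created"}


async def reasoning_agent(request: str) -> dict:
    # Hypothetical stand-in for an agent that offers a recommendation.
    await asyncio.sleep(0.01)
    return {"recommendation": "escalate if unresolved in 24h"}


async def orchestrate(request: str) -> dict:
    # Run all agents concurrently, then merge their partial results.
    results = await asyncio.gather(
        data_agent(request), workflow_agent(request), reasoning_agent(request)
    )
    merged: dict = {}
    for part in results:
        merged.update(part)
    return merged


def handle_chat_message(message: str) -> str:
    # The conversational front end: the user sees a single combined reply.
    result = asyncio.run(orchestrate(message))
    return "; ".join(f"{key}: {value}" for key, value in result.items())
```

Running the agents with `asyncio.gather` mirrors the idea that the agents collaborate in parallel while the user experiences one seamless interaction through `handle_chat_message`.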

H.O.T. systems and AI at the edge

One of the most impactful innovations Omnix has developed over the years is H.O.T. systems, short for Hardware Optimized Technology.

“These were born out of a very real pain point we observed among engineers and designers. When using high-performance visualization or design software, they were spending an excessive amount of time simply waiting, waiting for models to open, waiting for renders to complete, waiting for data to save. So we asked ourselves: How can we optimize the hardware path to boost performance? It’s not just about throwing in a powerful GPU or the latest CPU. It’s about understanding how the software interacts with memory, disk, bus speeds, and graphics rendering and streamlining that entire workflow. That’s what our HOT systems do,” says Walid.

Omnix has created its own intellectual property around performance optimization, enabling users to experience up to 30–35% better performance than standard machines with similar specs. The solution has already been deployed at more than 500 client sites across the UAE and other Gulf countries, and Walid claims that these systems consistently outperform traditional configurations.

The emphasis is on ensuring better productivity.

Walid elaborates, “If you’re wasting 20% of your time waiting for your machine to respond, then you’re losing 20% of your salary—or rather, the organization is. When we present this to CFOs or operations heads, the value becomes very clear: investing in better systems equals better ROI on human capital.”
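Walid’s productivity argument reduces to simple arithmetic: the share of paid time spent waiting translates directly into salary cost. The sketch below is a simplified model under two assumptions: waiting time is treated as fully unproductive, and the salary, waiting fractions, and team size are hypothetical inputs rather than Omnix figures.

```python
def idle_cost(annual_salary: float, waiting_fraction: float) -> float:
    """Salary paid for time spent waiting on the machine (assumed fully unproductive)."""
    if not 0.0 <= waiting_fraction <= 1.0:
        raise ValueError("waiting_fraction must be between 0 and 1")
    return annual_salary * waiting_fraction


def recovered_value(annual_salary: float, waiting_before: float,
                    waiting_after: float, headcount: int = 1) -> float:
    """Salary value recovered across a team when waiting time drops."""
    per_person = (idle_cost(annual_salary, waiting_before)
                  - idle_cost(annual_salary, waiting_after))
    return headcount * per_person
```

For example, with a hypothetical annual salary of 100,000, waiting 20% of the time costs 20,000 per person; cutting waiting to 10% across a hypothetical team of 50 engineers recovers 500,000 a year in salary value.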

Omnix is now adapting these HOT systems to support edge AI workloads. Walid says this is where the market is headed, with more and more AI processing happening at the edge: on laptops, mobile devices, even field-deployed sensors. To do this effectively, those endpoints need to be powerful and optimized for local AI execution. That’s the next focus for Omnix.

Omnix is also exploring how these systems can support LLMs and agentic models locally, without relying on the cloud for every transaction.

“This makes AI more accessible, responsive, and secure—especially for sectors like defense, healthcare, and manufacturing where data sovereignty is critical,” Walid adds.

Omnix offers both on-premises and cloud deployments. It has partnered with HyperFusion, which allows it to offer GPU-based development and test environments in the cloud.

He elaborates, “Let’s say a customer wants to experiment with a use case—they’re not ready to commit to buying infrastructure yet. We create a GPU-powered sandbox environment for development and testing. Once the use case is validated, the client has two choices: either they move to on-prem hardware for full deployment, or they continue using the hosted environment in the cloud. It’s flexible and scalable. And this isn’t limited to AI. These environments can support simulation workloads, 3D rendering, digital twin environments, and even high-performance computing (HPC).”

What really makes Omnix unique is the company’s dual DNA. On one hand, it has deep roots in engineering and AEC (architecture, engineering, construction); it is a long-time Autodesk partner and continues to support the full ecosystem around BIM, digital twins, and wearables.

On the other hand, Omnix also has an equally strong digital technology capability.

“We’re known for our work in low-code/no-code platforms, application modernization, data engineering, and smart infrastructure. Our teams execute on both fronts, and more importantly, they collaborate across disciplines,” adds Walid.

In an era of growing convergence, this twin legacy gives Omnix a clear advantage. For instance, a customer working on a construction project might need a custom application, while a client deploying a digital twin might need data integration and dashboarding. With its combined engineering and digital expertise, Omnix is best placed to address such requirements through a unified approach.

What’s next for Omnix

Omnix is looking at further growth not just within the UAE, but across the broader GCC region.

“We’re placing a significant strategic focus on Saudi Arabia right now. The momentum there is incredible, and we’re expanding our salesforce and technical teams on the ground to meet growing demand. But it doesn’t stop there. We’re also actively pursuing expansion in Oman, Kuwait, and Qatar,” says Walid.

While the UAE and Saudi Arabia have been at the forefront of adopting new technologies, every country in the region is launching its own vision plans with clear digital strategies backed by mandates, funding, and national-level objectives.

“We’ve started to see Kuwait, Qatar, and Oman take bold steps forward, setting their own transformation agendas. And that creates a massive opportunity for organizations like us who are equipped to execute. The dynamics have shifted. It’s no longer about trying to convince clients to digitize. In many cases, the governments themselves are mandating transformation. This changes the nature of the conversation. Clients now come to us with clear directives: ‘We have to implement this by year-end.’ There’s less resistance. The budget is there. The timelines are defined. What they need is a partner who can deliver across use cases and domains,” says Walid.

Omnix today has a core workforce of about 250 employees and, including outsourced and managed services staff, manages over 1,300 professionals across the region. Omnix has physical offices in five countries: the UAE, Saudi Arabia, Qatar, Oman, and Kuwait.

However, the goal at Omnix isn’t just to grow headcount or geographic footprint. The focus is on building a strong, cross-functional team of people who understand engineering, digital transformation, AI, and smart infrastructure, and who can come together to deliver holistic outcomes for customers. This is what is driving Omnix forward.

RIGHT-SIZING ENTERPRISE GENAI

As generative AI gains momentum across industries, enterprise leaders are shifting focus from experimentation to scalable, compliant deployment.

As generative AI (GenAI) matures, the enterprise world is rapidly transitioning from cautious exploration to confident deployment. Across industries and regions, business leaders are recognizing that GenAI is not just a technological experiment—it is an enabler of strategic value, competitive differentiation, and operational efficiency. In the Middle East in particular, a wave of national AI strategies and private-sector investments is accelerating this shift.

Aligning Capability with Context

For enterprises to realize value from GenAI, the starting point is right-sizing—adapting AI capabilities to meet organizational needs without overburdening resources or compromising compliance.

“Right-sizing GenAI is the process of aligning AI capabilities with the unique strategic, operational, and risk context of the enterprise,” says Dr. Ali Katkahda, Group CIO of Depa United Group. “It depends largely on the organization's digital maturity, regulatory environment, leadership priorities, and industry dynamics.” He emphasizes that in sectors where IT has traditionally played a support role, GenAI offers a rare opportunity to reposition it as a driver of value, especially when quick-win use cases such as copilots for employee inquiries or inventory lookups can create fast, tangible impact.

Sudhir Kumaran, Director IT in Aviation sector, offers a complementary perspective. He says, “The objective is to strike a balance between performance, cost-efficiency, risk management, and regulatory compliance. From a strategic standpoint, it involves matching the model’s capabilities to specific use cases— avoiding the inefficiency of using large-scale models for simple tasks, or the inaccuracy that can come from underpowered models for complex problems.”

The consensus seems to be that right-sizing is an ongoing process, requiring continuous optimization of latency, cost, and operational overhead as business needs evolve.

Foundation Model or Fine-Tuned LLM?

When it comes to choosing between general-purpose foundation models and fine-tuned large language models (LLMs), the decision has to be taken in the context of the company’s requirements. “The decision hinges on business goals, risk tolerance, and regulatory obligations,” says Dr. Katkahda. “General-purpose models like ChatGPT or Gemini offer speed and flexibility, but they are not always suitable for regulated industries or data-sensitive use cases.”

Kumaran adds, “Foundation models are great for broad, multimodal tasks and fast deployment scenarios, especially where time-to-market matters. But fine-tuned models excel in use cases that require domain-specific accuracy, data governance, and compliance, such as legal analysis, healthcare diagnostics, or financial operations.”

While foundation models offer convenience through API access, they become cost-intensive at scale. Fine-tuned models, although resource-intensive initially, often offer better long-term value for repetitive, high-precision tasks. The decision ultimately depends on the complexity of the use case, the sensitivity of the data, and the need for internal control.
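The cost trade-off can be made concrete with a simple break-even calculation. Assume an API-hosted foundation model costs c_api per request, while a fine-tuned model costs a one-off F to build plus c_ft per request to run. The two totals are equal at N* = F / (c_api - c_ft) requests; beyond that volume the fine-tuned route is cheaper on unit cost alone. The figures below are hypothetical, and the model deliberately ignores retraining, staffing and infrastructure overheads.

```python
def break_even_requests(fixed_cost: float,
                        api_cost_per_request: float,
                        fine_tuned_cost_per_request: float) -> float:
    """Volume N where fixed_cost + fine_tuned_cost * N == api_cost * N."""
    saving_per_request = api_cost_per_request - fine_tuned_cost_per_request
    if saving_per_request <= 0:
        # Fine-tuning never pays back on unit cost alone.
        return float("inf")
    return fixed_cost / saving_per_request


# Hypothetical figures, for illustration only.
n = break_even_requests(fixed_cost=50_000,
                        api_cost_per_request=0.02,
                        fine_tuned_cost_per_request=0.005)
print(round(n))  # 3333333
```

This is why repetitive, high-volume tasks tend to favour fine-tuning, while occasional or exploratory use usually stays below the break-even point.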

Choosing the Right Model

Dr. Katkahda outlines four primary criteria for choosing between pre-trained and fine-tuned models: data sensitivity, domain specificity, latency and performance requirements, and cost-to-ROI balance. For highly sensitive applications or those involving proprietary data, internal fine-tuning on open-source models is the preferred route. It not only improves performance but also enhances control over data residency and auditability.

Kumaran concurs, emphasizing that enterprises dealing with niche domains, such as aviation compliance or financial risk, require custom-trained models to avoid generic and potentially misleading outputs. “Pre-trained models are effective for non-specialized, public-facing tasks. But when it comes to regulated or high-stakes domains, customization is not optional but essential.”

Deployment Challenges

Despite the excitement surrounding GenAI, its enterprise deployment comes with real challenges.

“Data readiness is a major hurdle,” says Dr. Katkahda. “Legacy systems, unstructured datasets, and fragmented sources impede model training and degrade output quality.” He also notes the shortage of GenAI-skilled professionals in the region, the ambiguity around AI liability and explainability, and the infrastructure costs associated with GPUs and orchestration platforms.

Kumaran points out the difficulties in integrating GenAI into legacy enterprise systems and workflows. “It’s not just a technical deployment; it’s a cultural and procedural shift. Internal resistance, lack of governance frameworks, and inconsistent data policies can all slow down GenAI adoption.”

For both leaders, one solution lies in strong change management and governance. Enterprises need clear internal policies around AI ethics, data classification, model monitoring, and responsible use, especially as regulatory pressure builds.

Data Sovereignty and Model Strategy

Regulatory requirements are not just constraints; they’re now shaping AI strategies from the ground up.

“In both the UAE and KSA, data sovereignty laws require critical data to remain within national borders,” says Dr. Katkahda. “This mandates localized model hosting, strict access controls, and often excludes reliance on global public APIs.” His organization’s GenAI strategy incorporates role-based access, encryption, audit trails, and jurisdiction-aware governance mechanisms.
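Several of the controls Dr. Katkahda lists can be combined into a single gate placed in front of every model call. The following is a minimal sketch under stated assumptions: the dataset, role and region names are hypothetical, it checks only role-based access and data residency, and it records each decision in an audit trail. Encryption and key management are outside its scope.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Dataset:
    name: str
    residency: str                # jurisdiction the data must stay in, e.g. "AE" or "SA"
    allowed_roles: frozenset[str]


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, user: str, dataset: str, allowed: bool, reason: str) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "dataset": dataset,
            "allowed": allowed,
            "reason": reason,
        })


def can_send_to_model(user: str, role: str, dataset: Dataset,
                      model_region: str, audit: AuditLog) -> bool:
    """Permit a model call only for an allowed role and an in-country model host."""
    if role not in dataset.allowed_roles:
        allowed, reason = False, "role not permitted"
    elif model_region != dataset.residency:
        allowed, reason = False, "model hosted outside the data's jurisdiction"
    else:
        allowed, reason = True, "ok"
    audit.record(user, dataset.name, allowed, reason)  # every decision is logged
    return allowed


# Hypothetical usage.
hr_records = Dataset("hr_records", residency="AE", allowed_roles=frozenset({"hr_analyst"}))
audit = AuditLog()
print(can_send_to_model("user-1", "hr_analyst", hr_records, "AE", audit))  # True
print(can_send_to_model("user-1", "hr_analyst", hr_records, "US", audit))  # False
print(len(audit.entries))  # 2
```

Logging denials as well as approvals is what makes the trail useful for the explainability and traceability requirements regulators increasingly expect.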

Kumaran agrees, noting that for public entities or sectors governed by civil aviation or financial regulations, using external APIs introduces unacceptable risks. “In regulated environments, explainability is not a luxury; rather, it’s a necessity. We must be able to trace how a GenAI model arrived at a decision, especially if it influences operational outcomes.”

Regional Outlook

The Middle East is entering a high-growth phase for GenAI, and we could see a more rapid pace of deployments.

Dr. Katkahda says, “The UAE’s AI market is projected to grow from USD 3.47 billion in 2024 to USD 46.33 billion by 2030. We’ve seen a clear shift from experimentation to deployment across sectors.”
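For context, the projection quoted above implies a compound annual growth rate of roughly 54% over the six years, using CAGR = (end / start)^(1 / years) - 1:

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# USD 3.47 billion in 2024 to USD 46.33 billion in 2030, as quoted above.
rate = cagr(3.47, 46.33, 2030 - 2024)
print(f"{rate:.1%}")  # 54.0%
```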

Kumaran concurs, and he adds, “Countries like the UAE and Saudi Arabia are at the forefront of AI adoption. With strong government backing, public-private partnerships, and focused upskilling programs, the pace of GenAI implementation is accelerating sharply.”

This optimism is supported by significant infrastructure developments, including a forecasted 6+ GW of datacenter capacity in the region by 2030, and a 344% year-over-year increase in GenAI course enrollments. Industries such as media, healthcare, manufacturing, and banking are leading adoption, with use cases ranging from cybersecurity to predictive maintenance and customer service automation.

GenAI in the enterprise is no longer experimental; it is becoming operational.

CIOs are at the forefront of this shift, navigating a complex landscape of performance optimization, regulatory compliance, and organizational change. Organizations looking to kickstart their GenAI journeys need to start with value-aligned use cases, invest in governance and skills, and build AI strategies that are both scalable and sovereign. In summary, GenAI is about building smarter, more adaptive enterprises for the future.


DATA STREAMING IN MODERN ENTERPRISES

As enterprises shift toward real-time intelligence, data streaming is emerging as a core enabler of agility, AI, and unified data management. Shaun Clowes, Chief Product Officer of Confluent, explains how the company is redefining data architecture for the AI era with innovations like Flink, Tableflow, and a governance-first approach.


How do you define the total addressable market for data streaming, and how widespread is its adoption?

We estimate the overall addressable market for streaming platforms at around $100 billion. Over 150,000 organizations are using Apache Kafka in some form, and Confluent serves more than 6,000 enterprise customers globally. These organizations use data streaming not just to transport data but to unify and simplify their data architecture, replacing a range of fragmented tools—from data lakes and warehouses to event brokers and integration software.

Can you elaborate on the origin story of Confluent and Kafka?

Kafka was created at LinkedIn about 13 years ago to solve the challenge of real-time data synchronization across multiple systems. The company needed a way to build AI and ML capabilities on top of a seamless data pipeline. Confluent was founded shortly after to commercialize and evolve Kafka, starting with on-prem deployments, then a cloud-native version, and now an elastic, scalable platform with built-in stream processing, governance, and integration features.
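The synchronization problem Kafka addressed is often explained through an append-only log that many systems read independently, each tracking its own position (offset) in the log. The toy in-memory version below illustrates only that idea; it is not Kafka's API, and the consumer group names are hypothetical.

```python
class Log:
    """Toy append-only log; each consumer group keeps its own read offset."""

    def __init__(self) -> None:
        self._records: list = []
        self._offsets: dict[str, int] = {}  # consumer group -> next offset to read

    def append(self, record) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset assigned to the new record

    def poll(self, group: str, max_records: int = 10) -> list:
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)  # advance only this group's offset
        return batch


log = Log()
log.append({"member": 1, "event": "profile_updated"})
log.append({"member": 2, "event": "connection_added"})
print(log.poll("search-index"))        # both records
print(log.poll("recommendations", 1))  # first record only; reads independently
print(log.poll("search-index"))        # [] because this group is caught up
```

Because records are never removed when read, a new downstream system can start from offset 0 and catch up without affecting the others.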

How does Confluent position itself in the competitive landscape?

Kafka was the first true streaming architecture at internet scale. While other streaming tools exist, including some cloud-native and open-source alternatives, Confluent remains the only truly integrated platform that works across on-prem, cloud, and multi-cloud environments. Most enterprise customers operate in hybrid setups, and Confluent uniquely supports that complexity with a unified, vendor-agnostic solution.

Can your solution integrate with existing, diverse enterprise architectures?

Absolutely. Our set of connectors, called "Connect," enables customers to plug Confluent into a wide range of applications, databases, and infrastructure. Streaming doesn’t replace all legacy systems at once; it’s additive. Organizations can start small and gradually expand their use of streaming to unlock more value from their data.

How does data streaming relate to broader data management efforts?

Confluent approaches data from the perspective of motion and meaning, not just storage. Unlike traditional storage-led data management solutions, we manage the shape, movement, and governance of data in real time. Our approach ensures that the quality and compliance of data are enforced from the moment it is created, minimizing downstream errors and regulatory risks.
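Enforcing quality “from the moment it is created” generally means validating each record against an agreed schema before it enters a stream, rather than cleaning it downstream. A minimal sketch of that pattern follows, with a hypothetical customs-declaration schema and a plain list standing in for a stream; it is not Confluent's implementation.

```python
from typing import Any

# Hypothetical contract for a customs-declaration stream: field name -> expected type.
SCHEMA: dict[str, type] = {"declaration_id": str, "country": str, "value_usd": float}


class SchemaViolation(ValueError):
    pass


def validate(record: dict[str, Any], schema: dict[str, type]) -> dict[str, Any]:
    """Reject records with missing fields or wrong types; extra fields are allowed."""
    missing = schema.keys() - record.keys()
    if missing:
        raise SchemaViolation(f"missing fields: {sorted(missing)}")
    for name, expected in schema.items():
        if not isinstance(record[name], expected):
            raise SchemaViolation(f"{name} should be {expected.__name__}")
    return record


def produce(stream: list, record: dict[str, Any]) -> None:
    """Append to the stream only after validation succeeds."""
    stream.append(validate(record, SCHEMA))


stream: list = []
produce(stream, {"declaration_id": "D-1", "country": "AE", "value_usd": 1200.0})
try:
    produce(stream, {"declaration_id": "D-2", "country": "SA"})
except SchemaViolation as err:
    print(err)  # missing fields: ['value_usd']
print(len(stream))  # 1
```

Rejecting bad records at the producer means every downstream consumer sees the same validated data, instead of each one repeating its own cleanup.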

Can you elaborate on governance as a priority?

Yes, governance is critical to making data reusable. We’ve layered governance tools on top of our streaming foundation to provide lineage, quality, metadata, and access controls. This ensures that data is not only real-time but also trusted and compliant—particularly important for regulated sectors.

Why is unifying stream and batch processing significant?

All data is created in real time, but legacy systems forced us into batch processing because real-time movement was too hard. This created inefficiencies and data inconsistencies. Our Tableflow feature bridges this gap by allowing real-time data to be queried like a table in a data lake, while still being used in real-time applications. It enables consistent, reliable data for both real-time and analytical use cases.
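The idea that a stream can be “queried like a table” rests on what is often called stream-table duality: replaying a keyed changelog and keeping the latest value per key yields a table. The sketch below shows that folding step in plain Python with hypothetical flight-status events; it is a conceptual illustration, not how Tableflow is implemented.

```python
def materialize(changelog):
    """Fold a keyed changelog into a table: keep the latest value per key.

    A value of None acts as a tombstone and deletes the key.
    """
    table = {}
    for key, value in changelog:
        if value is None:
            table.pop(key, None)
        else:
            table[key] = value
    return table


# Hypothetical flight-status events, in arrival order.
events = [
    ("flight-101", {"status": "scheduled"}),
    ("flight-202", {"status": "scheduled"}),
    ("flight-101", {"status": "delayed"}),
    ("flight-202", None),
]
print(materialize(events))  # {'flight-101': {'status': 'delayed'}}
```

Real-time applications can react to each event as it arrives, while analytical queries read the materialized table; both are derived from the same events, which is what keeps them consistent.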

What are some public sector or government use cases for your platform?

Use cases in government range from real-time customs data and import/export tracking to social security claim verification and citizen authorization. The common theme is the need for data to move instantly, reliably, and across siloed systems—something that streaming platforms are uniquely capable of addressing.

Is the platform customizable for specific verticals or processes?

We provide a generic data streaming platform that is used by developers and technical teams across industries. While we offer patterns and examples, our strength lies in flexibility. Our platform supports many of the world’s largest banks, airlines, and telecom companies who build highly customized real-time experiences on top of our architecture.

What’s the significance of recent announcements related to Flink and Tableflow?

Flink allows users to run SQL-like queries on streaming data as if it were static. With snapshot queries, you can experiment with historical data and then deploy those queries into real-time production environments—enabling faster development cycles and better data-driven experiences. Tableflow, meanwhile, lets streaming data appear as tables in data lakes, ensuring that real-time and batch data are aligned.
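The workflow Clowes describes, developing a query against a historical snapshot and then running the same logic continuously, can be sketched with a Python generator that works on both bounded and unbounded inputs. This is plain Python standing in for Flink SQL, not Flink's API; the event data and the `live_feed` source are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def running_totals(events: Iterable[tuple[str, float]]) -> Iterator[dict[str, float]]:
    """Continuous query: emit the updated total per key after every event."""
    totals: defaultdict[str, float] = defaultdict(float)
    for key, amount in events:
        totals[key] += amount
        yield dict(totals)


# 1) Develop against a bounded historical snapshot and keep the final result.
history = [("AE", 10.0), ("SA", 5.0), ("AE", 2.5)]
*_, final = running_totals(history)
print(final)  # {'AE': 12.5, 'SA': 5.0}


# 2) Run the same query over a live, potentially unbounded source.
def live_feed():
    """Hypothetical stand-in for a real stream consumer."""
    yield ("SA", 1.0)
    yield ("AE", 4.0)


for update in running_totals(live_feed()):
    print(update)
```

Keeping one definition of the query for both modes is the point: what was tested on history is exactly what runs in production.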

Are these features immediately available to customers?

Yes. Our business model is consumption-based. All customers have access to the unified platform and can choose which services to use. Some are charged per use, like Flink jobs, but there are no separate licenses required. It’s flexible and on-demand.

What kind of customers does Confluent serve—SMBs or large enterprises?

We serve all customer types, from small and medium businesses to Fortune 500 firms. The same platform powers both ends of the spectrum. Customers can choose between self-managed deployments or our managed cloud service, depending on their needs.

What can customers expect from Confluent over the next year?

We’re working to further unify batch and stream processing. This means customers will be able to run real-time and historical queries on a single platform, integrate their data with all major SaaS and enterprise tools, and benefit from deeper analytics integrations. Our goal is to make data usable, consistent, and available wherever it’s needed, in real time or in retrospect.

"While other streaming tools exist, including some cloud-native and open-source alternatives, Confluent remains the only truly integrated platform that works across on-prem, cloud, and multi-cloud environments. Most enterprise customers operate in hybrid setups, and Confluent uniquely supports that complexity with a unified, vendor-agnostic solution"

DATA STREAMING OUTLOOK IN THE MIDDLE EAST

With AI adoption surging across the Middle East, Confluent is positioning its data streaming platform as a foundation for real-time, intelligent enterprises. In this interview, Fred Crehan, Area Vice President, Growth Markets at Confluent, discusses regional momentum, cloud flexibility, and why streaming data is essential for AI success.

Can you discuss Confluent’s growth and presence in the Middle East?

We officially launched our Middle East operations shortly after the pandemic, around 2021, and have since set up our regional base in Dubai Media City. The region has been fantastic for us — we’re growing steadily, acquiring new customers across verticals, and building strong partner relationships. Given the recent strategic announcements in Saudi Arabia and the UAE, especially around AI and data infrastructure, we see this region as a priority for sustained investment.

Which verticals are seeing the most traction for Confluent’s platform in the region?

Confluent, built around Apache Kafka, has wide appeal across industries. Kafka is one of the most adopted open-source technologies globally, and we’re seeing strong interest from banking, finance, retail, travel, telecom, and even government sectors. Any enterprise that wants to build a strategic edge with real-time data is a potential fit for us, and that’s practically every modern organization today.

What do your latest product announcements mean for customers in the Middle East?