ARCHES AND LAND BRIDGES AND PILES OF ROCK by Lydia Conklin

when I moved to New York for graduate school, and encountered wealthy Manhattanites who appeared never to have met a black person before in their lives.

I’d spent that week at home showing off the city as I knew it to my creative team: my school, my favorite bookstore, my childhood home. But in the days following the incident at the cafe, I would catch myself doing a double take every time I sat down next to a white person at a restaurant. Each time someone in front of me didn’t hold a door open or failed to make eye contact, I would wonder: Did every white person harbor secret neo-Nazi tendencies? I’d had black and white friends my whole life, and had learned to move more or less seamlessly between both worlds. Now, I could tell, that no longer mattered. The rules had changed, and the game was no longer one I felt equipped to play.

In truth, I’d started to feel this way the month before, in October 2016, on an earlier research trip we’d taken to Memphis, where I’d visited the National Civil Rights Museum at the Lorraine Motel. Next to well-known resistance figures like Martin Luther King Jr. and Rosa Parks, exhibits highlighted the work of Fannie Lou Hamer, Diane Nash, and Claudette Colvin, less famous activists who nearly gave their lives to change racist laws. The museum was full of oral histories and life-sized recreations of landmark events in the Civil Rights movement. Hearing the Freedom Fighters’ voices and seeing what they’d been up against brought home to me the extraordinary value and difficulty of their achievements.

Back then, the nation was still at the height of the frenzy that surrounded Trump’s presidential campaign. I moved, most of the time, in a cloud of cynicism and frustration. I remember wondering if the generation of activists that had risen to take the place of people like Hamer and Nash would achieve what its forebears had. Was it still possible to effect change in the real world? More and more it seemed that the answer was no, that the people who tried wound up speaking in echo chambers on Twitter and Facebook to those who already agreed with them, while protests became sensationalist stories feeding the news cycle.

I also suspected that I was part of the problem: as a prep school student in Houston, and later as a student at Brown, I’d learned to validate everyone’s feelings, to ask questions instead of making demands, to make sure everyone around me felt safe and secure enough to voice their opinions. When I wanted to express displeasure or disdain, I couched it in sarcasm or irony, using coded attempts at shaming my opposition rather than outright argument. It was this same irony I heard every day in the voices of political pundits on MSNBC and in the op-ed pages of the New York Times and the Washington Post. I’d been indoctrinated so deeply in this mode of communication that I no longer knew how to fight back any other way. The same went, it sometimes seemed, inexcusably, for our chosen leaders on the left.

Irony, Jonathan Lear writes in his book of lectures on the subject, A Case for Irony, is “a form of denial with the purpose to minimize the importance of the object.” Sarcasm, meanwhile, “is a form of aggressive discharge.” Neither works as a weapon against an enemy that doesn’t care whether its opposition is trying to minimize it or not. The left’s sardonic criticism of Trump’s buffoonery only strengthened old-guard conservatives’ faith in his ability to lead. It’s impossible to fight an enemy you don’t know, and Democrats and Republicans didn’t seem to be speaking, even remotely, the same language. The day I visited the Civil Rights museum also happened to be the day Trump won, and I remember feeling shocked when all my latent suspicions appeared suddenly to have been justified. But then, in the months that followed, I discovered I’d been wrong.

The problem wasn’t just that the left was deploying irony as a failed weapon. It was that the far right’s use of that same weapon had, at the same time, turned out to be wildly successful. In forums and on social media, white nationalist rabble-rousers used comic cynicism and sarcasm to turn people dabbling in white supremacist ideas into full-blown devotees of the neo-Nazi movement.

Movement leader Richard Spencer popularized the phrase “alt-right” to make his ideals more palatable to mainstream journalists, but the term itself actually comprises several disparate groups. In a study of the far right’s manipulation of the media, Alice Marwick and Rebecca Lewis describe both “an aggressive trolling culture […] that loathes establishment liberalism and conservatism, embraces irony and in-jokes, and uses extreme speech to provoke anger in others,” and “a loosely affiliated aggregation of blogs, forums, podcasts, and Twitter personalities united by a hatred of liberalism, feminism, and multiculturalism.”

Some groups espouse anti-Semitic and racist views, while others favor anti-feminist or anti-Islam platforms instead. Figuring out who adheres to which aspect of the movement — or who is taking it seriously at all — can be difficult because so much of it depends on ironic posturing. Determining whether a person is sarcastic or sincere online can often be impossible without some sort of referent. Adherents to the movement gleefully exploit the confusion engendered by this phenomenon “to spread white supremacist thought, Islamophobia, and misogyny through irony and knowledge of internet culture,” Marwick and Lewis write.

The Daily Stormer, which The Atlantic called “arguably the leading hate site on the internet,” featured text that mimicked the caustic tone of gossip sites like Gawker. Its founder, Andrew Anglin, according to reporter Luke O’Brien, drew inspiration from both Hitler’s Mein Kampf and Saul Alinsky’s Rules for Radicals. (“One Alinsky rule in particular stuck with Anglin,” O’Brien writes: “Ridicule is man’s most potent weapon.”) When GoDaddy stopped hosting the site, Anglin reportedly wrote that he’d been joking all along. His was an “[i]ronic Nazism disguised as real Nazism disguised as Ironic Nazism.”

It’s important not to underestimate how much this combination of irony, internet culture, and far-right politics appeals to reporters. In an interview for Vice News conducted at a conference hosted by the National Policy Institute, Spencer’s nonprofit, reporter Elle Reeve calls Spencer out as a fraud, accusing him of having accomplished nothing more than “[figuring] out how to manipulate journalists’ fixation on Twitter.” Spencer doesn’t disagree. “We understand PR,” he answers, after pointing out that she is interviewing him at that very moment. “We understand how to manipulate journalists.” Even journalists who dislike writing about people like Spencer and Anglin have done so because not writing about them means not talking about the problems they represent — yet another irony of the movement, and further evidence of the ways in which they’ve deployed social media’s predilection for ironic reversals to their advantage.

In her book Kill All Normies: Online Culture Wars from 4Chan and Tumblr to Trump and the Alt-Right, Angela Nagle points to Harambe, a gorilla at the Cincinnati Zoo who was shot and killed after a boy fell into his habitat. The event sparked widespread outrage on social media, which then became the object of derision and mockery among critics who accused the outraged of virtue signaling. Ultimately, the far right co-opted pictures of Harambe to troll liberals and left-leaning celebrities, until the famous dead gorilla transformed, somehow, into a symbol of white nationalism.

“Given the Harambe meme became a favorite of alt-right abusers,” Nagle writes,

was it then just old-fashioned racism dressed up as Internet-savvy satire, as it appealed most to those seeking to mock liberal sensitivities? Or was it a clever parody of the inane hysteria and faux-politics of liberal Internet-culture? Do those involved in such memes any longer know what motivated them and if they themselves are being ironic or not? Is it possible that they are both ironic parodists and earnest actors in a media phenomenon at the same time?

This is another way in which the white nationalist movement weaponizes irony — by tangling its critics up so hopelessly in language that it sometimes seems as if words aren’t up to the task of describing it at all. In an article about internet-speak for The Baffler in 2013, Evgeny Morozov wrote, “Old trusted words no longer mean what they used to mean; often, they don’t mean anything at all. Our language, much like everything else these days, has been hacked.” The far right picked up on the ways in which language can be warped, distorted, and rendered free of meaning on the internet through irony, and, rather than writing a think piece about it, exploited this weakness merrily to its benefit.

Back in Houston, the morning after the Confederate flag incident, I went back to Black Hole. There was Thomas, standing in front of me in line. He ordered a coffee from the girl behind the counter and I planted myself squarely in his path. I met his eyes and kept my gaze neutral. He tossed me an expression I can only describe as a smirk and headed through the glass doors of the cafe, toward the Infiniti SUV he’d double-parked in the coffee shop’s cramped lot. The cashier waved as he left, and told him to have a nice day. Once he was gone, I fantasized about outing him to her, just like I’d fantasized about confronting him the night before. Instead, I paid for my coffee, left the cafe, and walked down Montrose Boulevard toward the city’s museum district, which was less than a mile away.

The Rothko Chapel, situated alone on a grassy block near Houston’s Menil Collection, has eight concrete walls and a polished concrete floor. What light does get in comes through a partially covered skylight set into the pointed ceiling. On each wall is a triptych of Rothko canvases, all painted black, though each panel is painted differently. Some of them feature wild, hurried brushstrokes, and others look solid, as if the layer of black paint was applied all at once. The paintings absorb all light and sound, and the silence inside the chapel when it is empty is not like any I have ever known. Set in the center of the room are comfortable wooden pews; sitting on them can feel, depending on one’s mood, like looking through rows of windows into deep space or like sitting in an empty room bordered on all sides by locked doors. Inside the chapel, my breathing slowed. I sat on one of the pews and thought about Thomas driving his Infiniti SUV around the city, double-parking in tiny cafe parking lots, going to classes at the University of Houston, still wishing he’d become a filmmaker, and, when no one was watching, terrorizing people who didn’t look like him. Meanwhile, in the weeks since Trump’s election, I’d called my congresspeople, donated to the ACLU, and protested in the streets, and still, I’d never felt so powerless, so certain that nothing I could ever do would begin to be enough.

I took a brochure from a stack on a table near the door and sat down on a bench outside to read. Past visitors to the chapel included Nelson Mandela, Rigoberta Menchú, Desmond Tutu, and the Dalai Lama. The chapel itself had been built to serve as an environment in which those who fought against injustice could be recognized and rewarded. For years, the Óscar Romero awards, honoring heroic human rights activity, had been presented there. It occurred to me that with his paintings Rothko had managed to say something without words that was, nevertheless, universally understood. His art existed outside language, functioning as a wordless inciting force for change.

The choral piece I had come to Houston to research was called dreams of the new world. It follows the course of the western frontier from Memphis to Houston to Los Angeles, stretching from a period just after the Civil War to today. The night before, while Thomas listened, my team and I had been discussing Robert Church, a freed slave who became a millionaire in Memphis during the Reconstruction era. A respected entrepreneur, Church helped Memphis become a hub of black artistic and commercial activity, and his granddaughter, Sara Roberta Church, had been the first black woman in Memphis elected to public office. The Church family was also instrumental in helping African-American people get and keep the right to vote.

I wondered if it wasn’t this conversation Thomas had been retaliating against, if his antagonism hadn’t been a direct response to the story we were trying to tell. While it was true that Thomas was out there in the streets, spreading his message, it was also true that we were fighting back against him and his kind with what tools we had. I imagined the Los Angeles Master Chorale singing louder and louder, making their voices heard over the din of people like him.

Our research trip ended. We all left Houston and went back to our respective homes. In February 2017, I started volunteering with students at a high school in South Los Angeles, mostly first-generation Americans whose parents were Mexican and Latin American immigrants. I constantly worried about what would happen to my students and their families under the Trump regime. One afternoon, I was driving home from class, stuck on the 101 freeway, when I looked up. Someone had painted IMPEACH in 10-foot-high black letters on a white wall looking out over the traffic. The letters were clumsy, poorly drawn but legible, the black paint bleeding into the white cinderblock wall. In their ugliness, they overtook the landscape, and became, for a few minutes, the only thing I could see.

While I watched, men in hard hats and construction uniforms used paint rollers to erase the word and make the wall white again. Even after the wall got its final coat of paint, I still thought about the message whenever I passed that particular stretch of the 101, imagining the work it must have taken an amateur graffiti artist to put the letters there in the dark. I bet the thousands of people who take that freeway to work each day do too. What it came to represent for me was not just a statement of revolution and resistance, but a hope and a knowledge and an understanding, a continuation of what I’d started to figure out in the Rothko Chapel.

If a group’s only MO is destruction, it stands to reason that eventually it will destroy itself. What The New York Times calls the “alt-right” isn’t a monolith, but a swarm of tribes with different agendas, some of which radically oppose one another. Ultimately, it’s not difficult to imagine them all simply tearing each other apart as the entire movement implodes. Until that point, I wonder if we shouldn’t broaden our perspective. We should work on undermining not only the white supremacist agenda, but also the cultural environment that helped it thrive and grow in the first place. Irony was an integral part of that cultural environment. I wonder then if sincerity is the last radical act left. Perhaps it is time to say what we mean.

This may be difficult in a conversation that so often takes place online. The left has, historically, held up social media as a catalyst for change, but, by this point, the right has caught on to that potential and run with it, with the president himself adopting Twitter as his primary vehicle of communication. While social media platforms have served as a galvanizing force for women of color and others whose voices the mainstream media tends to ignore, they also tend to reward extremes, making political movements easy to derail by those who know how to game them (something Russia’s interference in the 2016 presidential election more than proves). The primary goal of Google, Facebook, and Twitter is to capture users’ attention for as long as possible; how that attention gets captured — whether through hate speech, racist memes, far-right propaganda, or progressive discourse — has, for years, been none of their concern.

And yet, once upon a time, social media was supposed to save us. In Kill All Normies, for example, Nagle recounts how Twitter placed itself at the center of the “Arab Spring,” when activists in Africa and the Middle East reportedly used the platform to organize collectively and bring down oppressive regimes. By the time those revolutions gave way to civil war, widespread violence, and, in some cases, the return to power of the same military dictatorships that had been overthrown, Twitter had removed itself from the conversation. Nagle lists the fawning paeans published about social media over the course of the movement, including Heather Brooke’s The Revolution Will Be Digitised: Dispatches From the Information War and Paul Mason’s Why It’s Kicking Off Everywhere. She cites Occupy as a similar example.

Twitter and Facebook have unquestionably failed the left. The conviction that these companies are supposed to operate in the service of some ultimate humanitarian good reminds me now of the way certain techies fetishize Elon Musk’s plan to save humanity by moving to Mars. There, rather than succumbing to the planet’s toxic atmosphere and lack of natural resources, humans will somehow build a lasting, utopian society where we don’t repeat any of the same mistakes we’ve made here. It also calls to mind the reverence for futurist Ray Kurzweil and his theory of an eventual human merger with machines that may make us all-knowing and immortal. This future, which he calls the Singularity, is (much like the Rapture) always approaching but never quite here.

I wonder if rather than looking to technology to fix us at some point in the future, it might not make sense to look at where it has brought us. I also wonder whether it might not be the work unlikely to make any kind of splash online — odd non-denominational chapels featuring priceless works of art, huge, un-Instagrammable protest murals, new classical music performances, and other work viewers have to engage with sincerely and in real life to experience — that we need most right now.

In that same vein, what might it mean to gaze clear-eyed at the far-right agenda, admit that we are both frightened by it and ashamed of the role liberal complacency played in its rise, and start building our weapons from there, rather than denying what can’t be denied and minimizing what can’t be minimized, and thus forfeiting the fight altogether? I’m not suggesting the left abandon cynicism, only that we stop affording it a weight it can’t carry under the current administration. Leaving knee-jerk irony and the rewards it offers behind might be difficult at first. But it might also be worth it, if it means we get to take back the actual world, and build in it something new.

EARLY PASTORAL

SHARON OLDS

I did not push my baby brother in, when he fell into the swimming pool in the woods at our father’s parents’, I was not even near by, he was talking to the baby on the surface of the water, who was talking to him when he tipped over and went in, as if back into the sky from which he had descended on the last day that I was my mother’s baby. They say he went straight to the bottom of the handmade clay pool, and when my mother got to the edge he was lying near the drain, smiling. She dove in, in her clothes, like a bird in its feathers, I wish I had seen that, Olympus moment in my mom’s life, she brought him and his smile up into the air again. Sometimes that pool was dry, like a pit a child might be put in, like the one Joseph was put in, when they took from him his coat of many colors, the most beautiful thing in the world, like being your mother’s baby -- you were magic, then, and not again. And something took him and threw him back where he had come from, and she dove from heaven into the deep to recover her heart’s desire. Then the pool was drained, no more guppying about, like a tailless boy, a pole-less tad, but just the mountain sun, around the baked and cracking rim, with clay ducks hissing with dryness, and if you were invited you could go into the bush of thorns and find a massive blackberry and put it in your mouth and slowly crush it, all for yourself, your solitary voluptuous mouth.

THEME PSALM

SHARON OLDS

There were corn-cob salt and pepper shakers, and corn-cob napkin racks, and corn-cob tea cosies, and picture frames -- the cabin where my father’s parents lived was chowdered with knickknacks in the shape of ears of corn, because their name was Cobb. Part of me, the Cobb part, liked it, there was a theme to things, we were linked to a real vegetable, an archaic being like an ancestor, a totem of golden nourishment, we were kin to a pattern, a grid, a lovely mounded and depressed order -- and part of me had seen my mother’s lip curl, when she saw the cornsome bric-a-brac. The kernels of his genes seemed to displease her -- I think she saw them in me, my DNA a maize half hers, half wrong. But I loved to run my thumb over the abacus glaze of chromosomal grains -- to know I was my father, in a way my mother was not, she was a Cobb in name only. And it was a sight to see my mother say my father’s sister’s name, to see her hold herself above such folly, she pronounced it like a sentence on Cobbs: Cornelia.

PASADENA ODE

SHARON OLDS

(for my mother)

When I drove into your home town, for the first time, a big pine-cone hurtled down in front of the hood! I parked and retrieved it, the stomen tip green and wet. An hour later, I realized that you had never once thrown anything at me. And, as days passed, the Ponderosa oval opened, its bracts stretched apart, and their pairs of wings on top dried and lifted. Thank you for every spoon, and fork, and knife, and saucer, and cup. Thank you for keeping the air between us kempt, empty, aeolian. Never a stick, or a perfume bottle, or pinking shears -- as if you were saving an inheritance of untainted objects to pass down to me. You know why I’m still writing you, don’t you. I miss you unspeakably, as I have since nine months after I was born, when you first threw something at me while keeping hold of it -- then threw it again, and again and again -- when you can throw the same thing over and over, it’s as if you have a magic power, an always replenishable instrument. Of course if you had let go of the big beaver-tail hairbrush -- if it had been aimed at my head -- I would have had it! I’m letting you have it, here, casting a line out, to catch you, then coming back, then casting one out, to bind you to me, flinging this flurry of make-a-wish milkweed.

STEPHANIE WASHBURN, HERE ABOUT 3, 2013, FOUND PHOTOGRAPH AND SCRUB BRUSH, 5.5 X 7.5 INCHES

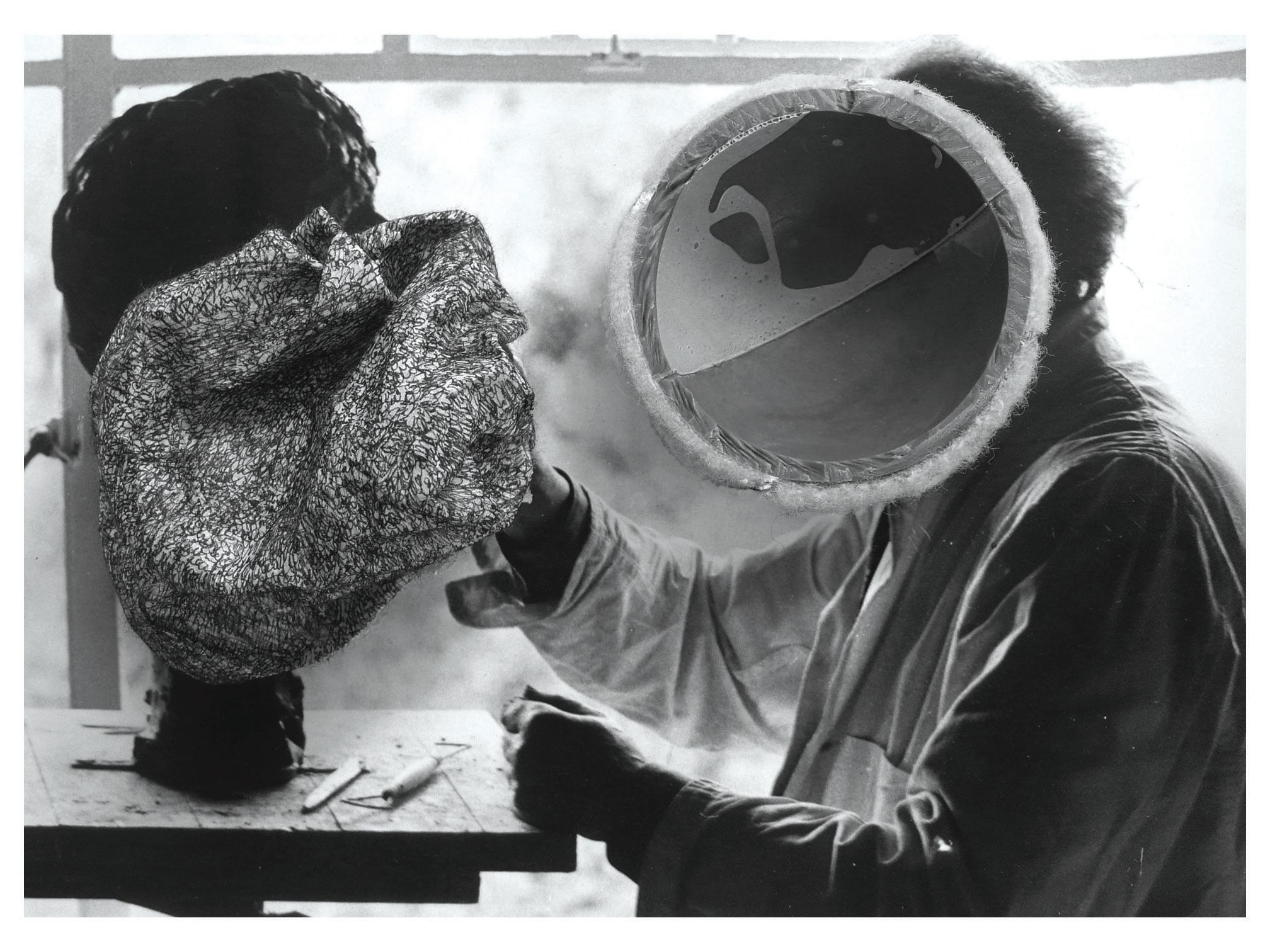

MARTIN KERSELS, FAT IGGY 1, 2008, BLACK AND WHITE C-PRINT, 13 X 19 INCHES ©MARTIN KERSELS / COURTESY OF THE ARTIST AND MITCHELL-INNES & NASH, NY

BINGESPEAK

ALEXANDER STERN

In a little-watched 1947 comedy, The Sin of Harold Diddlebock, directed by Preston Sturges, the title character, an accountant, takes shelter against the bureaucratic drudgery of his existence with a literal wall of clichés. Diddlebock covers every inch of the space next to his desk with wooden tiles engraved with cautious slogans like “Success is just around the corner” and “Look before you leap.” Head down, green visor shielding him from the light, he passes the years under the aegis of cliché, until one day he’s fired, forced to pry the clichés off his wall one by one, and face the world — that is, Sturges’s screwball world — as it is.

The French word “cliché,” as Sturges must have known, originally referred to a metal cast from which engravings could be made. Diddlebock’s tiles point to one of the primary virtues of cliché: it is reliable, readymade language to calm our nerves and get us through the day. Clichés assure us that this has all happened before — in fact, it’s happened so often that there’s a word, phrase, or saying for it.

This points to one of the less remarked upon uses of language: the way it can, rather than interpret the world or make it available, cover it up. As George Orwell and Hannah Arendt recognized, this can be socially and politically dangerous. Arendt’s Eichmann spoke almost exclusively in clichés, which, she wrote, “have the socially recognizable function of protecting us against reality.” And, referring to go-to political phrases like “free peoples of the world” and “stand shoulder to shoulder,” Orwell wrote:

A speaker who uses that kind of phraseology has gone some distance towards turning himself into a machine. The appropriate noises are coming out of his larynx, but his brain is not involved as it would be if he were choosing his words for himself. If the speech he is making is one that he is accustomed to make over and over again, he may be almost unconscious of what he is saying, as one is when one utters the responses in church. And this reduced state of consciousness, if not indispensable, is at any rate favorable to political conformity.

Orwell’s critique of political language was grounded in a belief that thinking and language are intertwined. Words, in his view, are not just vehicles for thoughts but make them possible, condition them, and can therefore distort them. Where words stagnate thought does too. Thus, ultimate control in 1984 is control over the dictionary. “Every year fewer and fewer words, and the range of consciousness always a little smaller.”

Orwell worried not just about the insipid thinking conditioned and expressed by cliché, but also the damaging policies justified by euphemism and inflated, bureaucratic language in both totalitarian and democratic countries. “Such phraseology,” he wrote — as “pacification” or “transfer of population” — “is needed if one wants to name things without calling up mental pictures of them.”

In our democracies words may not mask mass murder or mass theft, but they do become a means of skirting or skewing difficult issues. Instead of talking about the newest scheme to pay fewer workers less money, “job creators” talk about “disruption.” Instead of talking about the end of fairly compensated and dignified manual labor, politicians and journalists talk about the “challenges of the global economy.” Instead of talking about a campaign of secret assassinations that kill innocent civilians, military leaders talk about the “drone program” and its “collateral damage.”

The problem is not that these phrases are meaningless or can’t be useful, but, like Diddlebock’s desperate clichés, they direct our thinking along a predetermined track that avoids unpleasant details. They take us away from the concrete and into their own abstracted realities, where policies can be contemplated and justified without reference to their real consequences.

Even when we set out to challenge these policies, as soon as we adopt their prefab language we channel our thoughts into streams of recognizable opinion. The “existing dialect,” as Orwell puts it, “come[s] rushing in” to “do the job for you.” Language, in these cases, “speaks us,” to borrow a phrase from Heidegger. Our ideas sink under the weight of their own vocabulary into a swamp of jargon and cant.

Of course politics is far from the only domain where language can serve to protect us from reality. There are many unsightly truths we screen with chatter. But how exactly is language capable of this? How do words come to forestall thought?

I.

The tendency of abstracted and clichéd political language to hide the phenomena to which it refers is not unique. All language is reductive, at least to a degree. In order to say anything at all of reality, language must distill and thereby deform the world’s infinite complexity into finite words. Our names bring objects into view, but never fully and always at a price. Before the term “mansplaining,” for example, the frustration a woman felt before a yammering male egotist might have remained vague, isolated, impossible to articulate, unnameable (and all the more frustrating therefore). With the term, the egotist can be classed under a broader phenomenon, which can then be further articulated, explained, argued over, denied, et cetera. The word opens up a part, however small, of the world. (This domain, unconscious micro-behavior and its power dynamics, has received concentrated semantic attention of late: “microaggression,” “manspreading,” “power poses,” “implicit bias”.)

But, like all language, the word is doomed to fall short of the individual cases. It will fit some instances better than others, and, applied too broadly, it will run the risk of distortion. Like pop stars and pop songs, words tend to devolve, at varying speeds, from breakthrough to banality, from innovative truth to insipid generalization we can scarcely bear to listen to. As words age, we tend to forget the work they did to wrench open a door into the world in the first place. In certain cases, this means the door effectively closes, sealing off the reality behind the word, even as it names it.

When a word is new, the reality it opens up is too, and it remains fluid to us. We can still experience the poetry of the word — we can see the reality as the coiner of the word might have. We can also see how the word approaches its object, and for that matter that it does approach the object from a certain perspective. But over time, the door can close and leave us only with a word, one that was never capable of completely capturing the thing to begin with.

Nietzsche, for example, rejected the notion that the German word for snake, Schlange, could live up to its object, since it “refers only to its winding [schlingen means “to wind”] movements and could just as easily refer to a worm.” The idea that a medium evolving in such a partial, anthropocentric, and haphazard manner might be able to speak truth about the world, when it could only ever take a sliver of its objects into account, struck him as laughable. He famously wrote:

What is truth? A mobile army of metaphors, metonymies, anthropomorphisms — in short a sum of human relations, which are poetically and rhetorically intensified, passed down, and embellished and which, after long use, strike a people as firm, canonical, and binding.

Language is made up of countless corrupted, abused, misused, retooled, co-opted, and eroded figures of speech. It is a museum of disfigured insights (arrayed alongside trendy innovations) that eventually congeal into the kind of insipid prattle we consider suitable for stating facts about the world.

Still, we may not want to go as far as Nietzsche in denying language its pretension to objective truth simply because of its unseemly evolution. Language, in a certain sense, needs to be disfigured. In order to function at all, it must be disengaged and its origins forgotten. If using words constantly required the kind of poetic awareness and insight with which Adam named the animals — or anything approaching it — we’d be in perpetual thrall to the singularity of creation. We might have that kind of truth, but not the useful facts we need to manage our calendars and pay our bills. If the word for snake really did measure up to its object, it would be no less unique than an individual snake — more like a work of art than a word — and conceptually useless. Poetry, like nature, must die so we can put it to use.

II.

Take the phrase the “mouth of the river” — an example Elizabeth Barry discusses in a book on Samuel Beckett’s use of cliché. We certainly don’t have an anatomical mouth in mind anymore when we use that phrase. The analogy that gave the river its mouth is, in this sense, no longer active; it has died and, in death, become an unremarkable, unconscious, and useful part of our language.

This kind of metaphor death is typical of, perhaps even central to, the history of language. As Nietzsche’s remarks on the word “snake” suggest, our sense that when we use “mouth” to refer to the cavity between our lips we are speaking more literally than when we use the phrase “mouth of a river” is something of an illusion. It is a product of time and amnesia, rather than some hard and fast distinction between the literal and the figurative. The oral “mouth” was once figurative too; it has just been dead longer.

Clichés, as Orwell writes of them, are one step closer to life than river “mouths.” They are “dying,” he says. They are not quite dead, since they still function as metaphors, but neither are they quite alive, since they are far from vibrant figurative characterizations of an object. Orwell’s examples include “toe the line,” “fishing in troubled waters,” “hotbed,” “swan song.”

These metaphors are dying because we use them without seeing them. We don’t see, except under special conditions, the line to be toed, the unhatched chickens we should resist counting, the dead horse we may have been flogging. We may even use these phrases without understanding the metaphor behind them. Thus, Orwell points out, we sometimes find the phrase spelled “tow the line.”

But they haven’t become ordinary words, like the river’s “mouth.” We’re much more aware of their metaphorical character and they are much more easily brought back to life. They remain metaphorical even if their metaphors usually lie dormant. This gives them the peculiar ability to express thoughts that haven’t, strictly speaking, been thought. We don’t so much use this kind of language as submit to it.

This in-between character is what leaves cliché susceptible to the uses of ideology and self-deception. We come to use cliché as if it were ordinary language with obvious, unproblematic meanings, but in reality the language remains a particular and often skewed or softened interpretation of the phenomena.

In politics and marketing, spin-doctors invent zombie language like this in a relatively transparent way — phrases that present themselves as names when they really serve to pre-interpret an issue. “Entitlement programs.” “A more connected world.” These phrases answer a question before it is asked; they try to forestall alternative ways of looking at what is referred to. Cliché is the bane of journalism not just for stylistic reasons, but also because it can betray a news story’s pretense of objectivity, revealing its tendency to insinuate its own view into the reader’s mind.

Euphemistic clichés are particularly good at preventing us from thinking about what they designate. Sometimes this is harmless: we go from “deadborn” to “stillborn.” Sometimes it isn’t. We go, as George Carlin documented, from “shell shock” to “battle fatigue” to “posttraumatic stress disorder,” to simply “PTSD.” By the time we’re done, the horrors of war have been replaced by the jargon of a car manual, and a social pathology is made into an individual one. To awaken the death and mutilation beneath the phrase takes an effort most users of the phrase won’t make.

This is how a stylistic failure can pass into an ethical one. Language stands in the way of a clear view into an object. The particular gets buried beneath its name.

We might broaden the definition of cliché here to include not just particular overused, unoriginal phrases and words, but a tendency in language in general to get stuck in this coma between life and death. Cliché is neither useful, “dead” literal language that we use unthinkingly to get us through the day, nor vibrant, living language meant to name something new or interpret something old in a new way. This latter type of language figures or articulates its object, translating something in the world into words, modeling reality in language and making it available to us in a way it wasn’t before. The former, “dead” language need only point to its object.

The trouble with cliché is that it plays dead. We use it as dead language, simply pointing at the world, when it is really figuring it in a particular way. It is language that has ceased to seem figurative without ceasing to figure, and it is this that accounts for its enormous usefulness in covering up the truth or reinforcing ideology. In cliché, blatant distortions wear the guise of mundane realities. In the process, it is not just language that is deadened, but experience itself.

III.

I want to suggest now that in popular language today irony has become clichéd. Consider “binge-watching.” The phrase might seem too new to really qualify as a cliché. But, in our ever-accelerating culture a clever tweet can become a hackneyed phrase with a single heave of the internet. In “binge-watching” what has become clichéd is not a metaphor, but a particular tone — irony. “Binge-watching” is of course formed on the model of “binge-drinking” and “binge-eating.” According to the OED, our sense of “binge” comes from a word originally meaning “to soak.” It has been used as slang to refer to getting drunk (“soaked”) since the 19th century. A Glossary of Northamptonshire words and phrases from 1854 helpfully reports that “a man goes to the alehouse to get a good binge, or to binge himself.” In the early 20th century the word was extended to cover any kind of spree, and even briefly, as a verb, to mean “pep up” as in “be encouraging to the others and binge them up.”

Like most everything else, binging started to become medicalized in the 1950s. “Binge” was used in clinical contexts as a prefix to “eating” and “drinking,” and both concepts underwent diagnostic refinement in the second half of the century. Binge-eating first appeared in the DSM in 1987. Binge-drinking is now defined by the NIH as five drinks for men and four for women in a two-hour period.

By the time “binge-watch” was coined and popularized (Collins Dictionary’s 2015 word of the year), the phrase “binge-drinking” had moved from the emergency room to the dorm room. The binge-drinkers themselves now refer to binge-drinking as “binge-drinking” while they’re binge-drinking. In a way, they’re taking the word back to its slang origins, but, in the meantime, it has accumulated some serious baggage.

The usage is ironic, since when the binge-drinkers call binge-drinking “binge-drinking,” they don’t mean it literally, the way the nurse does in the hospital the next morning. Take this example from Twitter. “I’m so blessed to formally announce that I will continue my career of binge drinking and self loathing at Louisiana state university!” The tweet is a familiar kind of self-deprecating irony that aims, most of all, to express self-awareness.

It elevates the user above the concept of binge-drinking. If I actually had a problem with binge-drinking, the writer suggests, would I be talking about it like this? By ironically calling binge-drinking “binge-drinking,” binge-drinkers deflect anxiety that they might have a problem with binge-drinking. They preempt criticism or concern by seeming to confess everything up front.

The phenomenon is part of a larger incursion of the language of pathology into everyday life, where it has, in many contexts, replaced the language of morality. Disease is no longer just for sick people. Where we might have once worried about doing wrong, we now worry about being ill. One result is that a wealth of clinical language enters our everyday lexicon.

These words are often not said with the clinical definition in mind. “I’m addicted to Breaking Bad/donuts/Zumba.” “I’m so OCD/ADD/anorexic.” “I’m such an alcoholic/a hoarder.” “I was stalking you on Facebook and I noticed…” They are uttered half-ironically to assure you that the utterer is aware that his or her behavior lies on a pathological continuum. But that very awareness is marshaled here as evidence that the problem is under control. It would only really be a problem if I didn’t recognize it, if I were in denial.

This is a kind of automatic version of the talking cure. If I’m not embarrassed to call my binge-drinking “binge-drinking,” it must mean it’s not a problem. In effect, we hide these problems in plain sight, admitting to them so readily, with just the right dose of irony, that