International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

K. D.

Abstract- A significant communication divide separates users of sign language from those who do not, often leading to the social and professional isolation of the deaf and hard-of-hearing community. This gap is widened by a shortage of real-time, portable, and affordable translation technologies. This project introduces a system engineered to overcome these barriers by translating sign language into text or speech, and vice versa, in real time. The primary aim is to facilitate seamless communication between signers and non-signers. By doing so, the project anticipates fostering greater inclusivity, enhancing independence for deaf individuals, and enabling their full participation in all social and professional environments.

Keywords sign language recognition, computer vision, deep learning, human-computer interaction, continuous SLR, transformers, accessibility technology Introduction

Communicationisafundamentalpillarofhumansociety.Forthedeafandhard-of-hearingcommunity,signlanguage a visual language using hand gestures, facial expressions, and body movements is a primary means of communication. However,asignificantcommunicationgapexistsbetweensignlanguageusersandthosewhodonotknowsignlanguage. This barrier can lead to social and professional exclusion, limiting opportunities in education, workplaces, and daily life The reliance on human interpreters is not always feasible or accessible, highlighting an urgent need for an affordable, portable, and accurate technological solution. AI-powered Sign Language Recognition (SLR) systems aim to address this need directly. A Live Sign Language Translator captures gestures in real-time using a standard camera, employing computervision,machinelearning,andnaturallanguageprocessingtorecognizeandtranslatethem.Thegoalistocreate aseamless,two-waycommunicationtoolthatconvertssignlanguageintotextorspeech,andcanalsoconvertspeechinto sign language representations. Such a system promotes greater accessibility and independence for its users. Beyond accessibility,thisprojecthighlightshowAIcanbeappliedforsocialgood,promotinginclusivityandequalparticipationin society. It can be used in schools to support deaf students, in hospitals to assist communication between doctors and patients, and in workplaces to create inclusive environments. Moreover, as wearable and mobile devices evolve, such translators can become portable and user-friendly, making them widely accessible. By eliminating communication barriers, it empowers individuals with hearing impairments to interact freely, gain equal opportunities, and live more independently. This project demonstrates the true potential of AI when applied to human needs, combining technology withcompassionforabettersociety.Thispaperpresentsacomprehensivereviewofthestate-of-the-artinAI-basedSLR Weprovideastructuredoverviewofthetechnologicalevolution,methodologies,andpersistentchallengesinthefield.The key contributions of this review are: 1.A detailed survey of modern deep learning architectures for SLR. 2.An analysis of the role of datasets and the critical bottlenecks related to data scarcity. 3.A thorough discussion of the open challenges defined by the core problem statement. 4.An outlook on future research directions required to build a user-friendly and effectivesystemwithlowlatencythatrequiresnospecialhardwarelikeglovesorsensor.

Thecentral problemisthelack ofimmediateandaccessiblecommunication betweensignersandnon-signers. Thisleads toseveralinterconnectedissues:

A. Social and Professional Exclusion: Communicationbarriersareaprimarycauseofexclusionforthedeafcommunity.

B. Interpreter Dependency: The reliance on human interpreters is a significant bottleneck, as they are not always availableoraffordable.

C. Technological Gaps: Existingtranslationtoolsareoftenexpensive,notportable,orlackreal-timeaccuracy

D. Linguistic Barriers: Inconsistenciesacrossregionalsignlanguagescanleadtomisscommunicationandlearningsign languagepresentstimeandresourceconstraintsfornon-signers.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

Toaddressthis,theprimaryobjectiveistodevelopareal-timesignlanguagetranslationsystemusingAI.Thekeygoalsfor suchasystemareto:

1) Accuratelyrecognizehandgesturesandfacialexpressions.

2) Translaterecognizedsignsintotextandspeechoutput.

3) Achievehighaccuracyandlowlatencyforsmooth,instantconversation.

4) Ensurethesystemisuser-friendlyandworkswithstandardcameraswithoutextrahardware

III. BACKGROUND: THE LINGUISTICS OF SIGN LANGUAGE

Beforedelvingintothetechnicalmethodologies,itisessentialtounderstandthelinguisticpropertiesofsignlanguage, asthesepropertiesdefinethecorechallengesforanyautomatedrecognitionsystem.Unlikespokenlanguages,whichare sequentialandauditory,signlanguagesarevisual-spatialandcanconveyinformationinparallel.

IV. LITERATURE REVIEW

Ref No.

Author(s) & Year

1) Fang, Co, and Zhang (2018)

Title/ Focus

Deep ASL – Infrared-Based Sentence-LevelTranslation

Key Contribution/ Relevance

DeepASL,asystemusinginfrared sensing plus a hierarchical bidirectional RNN and CTC framework, achieving ~945% accuracy on sentence- level American Sign Language (ASL) translation pioneering nonintrusive,ubiquitoustranslation.

2) Shahin&Ismail(2024) TransformerModelsinSign Language Machine Translation(SLMT) surveyed the evolution from rulebased methods to deep-learning transformerarchitecturesforSLMT, offering a taxonomy and noting transformers outperform previous models in tasks like gloss-to-text translation.

3) Tanetal(2024) Comprehensive Deep Learning Study of Sign LanguageProcessing landscape recognition,translation, generation highlighting limitations of gloss-based approaches,datasetinconsistencies, and evaluation challenges, while emphasizing the emerging role of LargeLanguageModels(LLMs)

4) Abroadreview(2024) Machine Learning with Gesture, Face, and Lip Detection sign language interpretation systemsfromML,computervision, and animation perspectives includinggesture,facialexpression, and lip reading and discussed real-worlddeployedmobileapps

5) Kimetal(2024) Gloss-Free Translation via MultimodalLLMs novel gloss-free SLT framework using large multimodal language models (MLLMs) to describe sign components, aligning them with spokensentences achievingstateof-the-art performance on benchmarkslikePHOENIX14T.

6) Kapiletal(2025) Real-Time Multimodal a real-time multimodal translator combining speech recognition, Media Pipe hand tracking, and

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

TranslatorPrototype Google Translate API demonstrating practical bridge between spoken, written, and signedformats.

7) Leppetal.(2025)

8) Kouremenos & Ntalianis(2025)

Community-Centered Sign Language Machine Translation the lack of meaningful user (especially Deaf community) involvement in SLMT R&D, urging more inclusive and representative developmentpractices.

GLaM-Sign: Multimodal GreekAccessibilityDataset

9) ElvinLalsiembulHmar, Bornali Gogoi, Nelson R. Varte (IJERT, Aug 2025)

Sign Language Recognition (SLR); static, continuous, translation tasks, plus architecturesetc.

GLaM-Sign, a multimodal dataset combiningaudio,video,textual,and GreekSignLanguagetranslationsto aid real-time translation with applications in tourism, education, andhealthcare.

highlights attention models, glossfree systems, neuromorphic hardware;identifieschallengeslike signer variability, linguistic complexity; suggests future directions like edge-optimized architectures, inclusive datasets, explainableAI

10) Hanna M. Dostal, JessicaA.Scott,Marissa D. Chappell, Christopher Black (Behavioural Sciences, Aug2025)

11) RefiaDaya, Santiago BerrezuetaGuzman, Stefan Wanger(2025)

12) Hanaa ZainEldin, SamahA.Gamel,Fatma M. Talaat, Mansourah Aljohani, Nadiah A. Baghdadi,AmerMalki, Mahmoud Badawy, Mostafa A. Elhosseini (2025)

13) Jalindar Nivrutti Ekatpure, Divya Bhagvat, Shaikh Aman Hashim,SaniyaRashid, Siddhesh Bhanudas Thombare(2025)

14) The Review of Socionetwork Strategies (2025)

A Scoping Review of LiteracyInterventionsUsing Signed Languages for School-AgeDeafStudents

Virtual Reality in Sign Language Education: Opportunities, Challenges, andtheRoadAhead

Found that integrating signed language into literacy instruction improves access and outcomes; identified gaps (e.g. fewer studies with large samples, underrepresentation in certain regions; variablemethodologies).

gesture recognition + real-time feedback,interactiveenvironments, gamification, personalization, inclusivity; constraints: hardware, accuracy, inclusivity of design; suggests actionable recommendations.

A Survey of Advancements inReal-TimeSignLanguage Translators: Integration withIoTTechnology

ASurveyonSignLanguage Translation Systems: BridgingGestures,Text,and Audio for Enhanced Communication

A Critical Study of Recent Deep Learning-Based Continuous Sign Language Recognition(Review)

LooksatAI/ML/DLapproachesin thesesystems;discusseschallenges like latency, device constraints, varied sign language vocabularies, real-worlddeployment.

Categorizes existing systems; discusseschallengesinrecognition accuracy, usability, accessibility; explores approaches and suggests futurelinestoimprovetranslation quality.

Emphasizes issues like sign boundary detection, temporal segmentation, the need for more realistic datasets, better modelling of non-manual cues (facial, body posture)etc.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

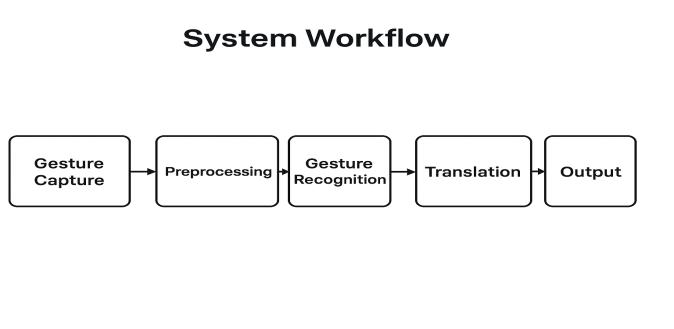

1) System Work flow :

As illustrated in the general architecture, a typical SLR system operates as follows:

a) Gesture Capture:Astandardwebcamormobilecameracapturesalivevideostreamoftheuser'sgestures.

b)Preprocessing:Individual framesare extracted fromthe video. Techniquesareappliedtoreduce background noise andnormalizetheimagesforconsistentanalysis.

c) Gesture Recognition:AnAI/MLmodelprocessesthepreprocessedframes.Thismodel,oftenaCNNoraspecialized frameworklikeMediaPipeHandTracking,detectskeyhandlandmarksandclassifiesthegestureasaspecificsign.

d) Translation: Therecognizedsignorsequenceofsignsismappedtoitscorrespondingwordorphrase.

e) Output: Thetranslatedtextisdisplayedon-screenandsimultaneouslyconvertedintoaudiblespeech.

2) Machine Learning Models:

Thecoreoftherecognitionenginecanbeimplementedusingseveralapproaches,rangingfromsimpletocomplex:

a) Rule-Based Mapping: Thismethodinvolvesadirectmappingofarecognizedwordorlettertoapre-storedimage orGIFofthecorrespondingsign.Whilesimpletoimplement,thisapproachisnotscalableforalargevocabulary.

b) CNN for Isolated Signs: For recognizing individual alphabet signs (A-Z) or static gestures, a Convolutional Neural Network(CNN)ishighlyeffective.TheCNNistrainedtoclassifyimagesofhandshapesintodistinctcategories

c) CNN + RNN for Sequences:Torecognizeshortsignsequencesordynamicgestures,ahybridmodelisoftenused.A CNNextractsspatialfeaturesfromeachframe,andaRecurrentNeuralNetwork(RNN),suchasanLSTMorGRU,processes thesequenceofthesefeaturestounderstandthetemporalpattern.

d) Transformer-Based Models: For the highly complex task of continuous sign language translation, Transformer modelshavebecomethestate-of-the-art.AsnotedbyHadfield&Bowden,transformersshowstronggains,althoughthey stillfacechallengeswithnon-manualfeaturesandsignergeneralization.

Asigniscomposedofseveralfundamentalparameters,oftenreferredtoasphonemesinspokenlanguage.Theprimary componentsare:

A. Hand shape: Thespecificconfigurationofthefingersandthumb.

B. Orientation:Thedirectionthepalmisfacing

C. Location:Theplaceonthebodyorinthesigningspacewherethesignisproduced

D. Movement:Thetrajectoryandactionofthehands.

E. Non-Manual Features (NMFs): Thesearecrucialforconveyinggrammaticalinformation,syntax,andemotion.They include:

1) Facial Expressions: Eyebrowmovements,forinstance,candistinguishastatementfromaquestion.

2) Mouth Morphemes: Specificmouthshapescanmodifythemeaningofamanualsign.

3) Body Posture and Head Tilt:Thesecanindicatetense,subject-objectrelationships,orreportedspeech.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

The parallel and multi-channel nature of these components makes vision-based SLR exceptionally challenging. A robust systemmust notonlytrack thehands butalsoperceive andinterpretsubtlechangesinthesigner'sfaceandupper body simultaneously.

Here are some notable datasets available for ISL recognition research:

A. INCLUDE Dataset: Developed by researchers at IIIT-Delhi, this is one of the most significant ISL datasets. It features continuous sentences signed by multiple native signers. The dataset is particularly valuable for its focus on daily conversationandincludesannotationsforgloss,part-of-speechtags,andcorrespondingHinditranslations.

B. IIT Madras Indian Sign Language Dataset: Thisisalarge-scaledatasetfocusingonisolatedsigns.Itcontainsthousands ofvideosofcommonISLwordssignedbymultipleindividuals,makingitsuitablefortrainingmodelsforisolatedSLR tasks.

C. BITS Pilani ISL Dataset: This collection focuses on static alphabet signs and numbers. It's often used for building foundationalmodelsthatcanrecognizefingerspelling,whichisacrucialcomponentofsignlanguage.

D. Jadavpur University School of Education Technology (JU-SET) Dataset: This dataset includes a vocabulary of isolated wordscommonlyusedinacademicandclassroomsettings,aimingtobridgecommunicationgapsineducation.

Despite significant progress, several major challenges prevent SLR systems from achieving the fluency required for widespreaduse.

1) Co-articulation and Segmentation: In continuous signing, the boundaries between signs are blurred. Identifying whereonesignendsandanotherbeginsisextremelydifficult.

2) Non-Manual Features (NMFs): Asnotedintheliterature,facialexpressionsandbodyposturearevitalgrammatical components but are often weakly modeled or ignored. Accurately recognizing and integrating these features is a key hurdle.

3) Signer Independence: Models often fail to generalize to new users whose signing style differs from the training data. Thisissueof"signergeneralization"isapersistentweakpoint.

4) Data Scarcity and Diversity: The lack of large, varied, and well-annotated datasets remains the single biggest bottleneck.Existingcorporaareoftenlimitedindomain,numberofsigners,orenvironmentalconditions.

5) Scalability: Expandingthesystem’svocabularytothousandsofsignsisanon-trivialtask.Ascalablearchitectureis neededtoallowfortheadditionofmoresignsovertimewithoutacompleteredesign.

The future of SLR research lies in addressing these challenges. The work done on this project, including the preparationofa datasetwithalphabetsigns,common phrases, andGIFsofsignactions,servesasa foundation. The next stepsalignwiththebroadergoalsinthefield:

A. Model Training and UI Development: The immediate work involves training a robust machine learning model and developingasimple,interactiveuserinterfacefortwo-waycommunication.

B. Advanced Multi-modal Fusion: Future models must effectively integrate features from the hands, face, and body to capturethefulllinguisticrichnessofsignlanguage.

C. Self-Supervised Learning: To combat data scarcity, self-supervised techniques can be used to learn powerful visual representationsfromlargeamountsofunlabeledvideodata.

D. Personalization: Creating models that can adapt to an individual user's signing style would significantly improve usabilityandaccuracy.

E. Community-Centric Development: It is paramount that development is done in collaboration with the Deaf community toensurethefinalproductisuseful,respectful,andtrulymeetstheirneeds.

Arealsignlanguage,however,hasamuchlargerlexicon,akintoaspokenlanguage.Scalingmodelstohandletensof Thousands of signs is a significant challenge due to the "curse of dimensionality" and the increased risk of confusion betweenvisuallysimilarsigns.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

AI-basedSignLanguageRecognitionhasmaderemarkablestrides,movingfromconcepttoincreasinglyviableprototypes. The goal is a portable, cost-effective, and user- friendly system that bridges the communication gap for deaf and mute individuals. By leveraging modern AI models like signer variability, and the complexity of language itself remain. Future workmustfocusoncreatingmorerobustandgeneralizablemodels,expandingdatasets,andensuringthatthetechnology isdeveloped ethicallyandinclusively.Theultimatevisionistofosterseamlesscommunication,therebypromoting equal participationandgreaterindependencefortheDeafcommunityinallaspectsoflife.

1) Aggarwal,J.K.,&Ryoo,M.S.(2011).Humanactivityanalysis:Areview.ACMComputingSurveys(CSUR),43(3),143. DOI:https://doi.org/10.1145/1922649.1922653

2) Cheok,M.J.,Omar,Z.,&Jaward,M.H.(2019).Areviewofhandgestureandsignlanguagerecognitiontechniques. International Journal of Machine Learning and Cybernetics, 10(1), 131-153. DOI: https://doi.org/10.1007/s13042-0170705-5

3) Koller, O. (2020). Quantitative survey of the state of the art in sign language recognition. arXiv preprint arXiv:2008.09918. arXiv:https://arxiv.org/abs/2008.09918

4) Rastgoo, R., Kiani, K., & Escalera, S. (2021). Sign language recognition: A review of the state of the art. Expert SystemswithApplications,164,114044. DOI:https://doi.org/10.1016/j.eswa.2020.114044

5) Bragg, D., Koller, O., Bellard, M., et al. (2019). Sign language recognition, generation, and translation: An interdisciplinary perspective. In The 21st International ACM SIGACCESS Conference on Computers and Accessibility (pp. 16-31). DOI:https://doi.org/10.1145/3308561.3353774

6) Pigou, L., Van Herreweghe, M., & Dambre, J. (2015). Sign language recognition using deep convolutional neural networks. In European Conference on Computer Vision Workshops (pp. 573-586). DOI: https://doi.org/10.1007/978-3319-25958-1_41

7) Ji, S., Xu, W., Yang, M., & Yu, K. (2013). 3D convolutional neural networks for human action recognition. IEEE transactionsonpatternanalysisandmachineintelligence,35(1),221-231.DOI:https://doi.org/10.1109/TPAMI.2012.59

8) Koller, O., Zargaran, S., Ney, H., & Bowden, R. (2016). Deep sign: A vision-based statistical framework for sign languagerecognition.International Journal ofComputer Vision, 119(3),279-299. DOI: https://doi.org/10.1007/s11263016-0901-y

9) Cui, R., Liu, H., & Zhang, C. (2017). Recurrent convolutional neural networks for continuous sign language recognition by staged optimization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp.1612-1621). DOI:https://doi.org/10.1109/CVPR.2017.653

10) Guo, D., Zhou, W., Li, H., & Wang, M. (2018). Online sign language recognition with hierarchical-LSTM. In Proceedings of the 26th ACM international conference on Multimedia (pp. 710-718). DOI: https://doi.org/10.1145/3240508.3240632

11) Li, D., Rodriguez, M., Yu, X., & Li, H. (2020). Word-level deep sign language recognition from video: A new largescale dataset and methods. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (pp. 1448-1457). DOI:https://doi.org/10.1109/WACV45572.2020.9093463

12) Konstantinidis,D., Dimitropoulos,K., &Daras, P.(2018).A deeplearningapproachforsignlanguage recognition usingspatiotemporaldata.In2018IEEEInternationalConferenceonImageProcessing(ICIP)(pp.3673-3677). DOI: https://doi.org/10.1109/ICIP.2018.8451152

13) Camgoz, N. C., Kındıroğlu, K. A., Sener, O., O'Reilly, A., & Koller, O. (2020). Sign language transformers: A new architecture for word-level and continuous sign language recognition. arXiv preprint arXiv:2010.03870. arXiv: https://arxiv.org/abs/2010.03870

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

14) Pu, J., Zhou, W., & Li, H. (2019). Iterative alignment network for continuous sign language recognition. In ProceedingsoftheIEEE/CVFconferenceoncomputervisionandpatternrecognition(pp.4175-4184). DOI:https://doi.org/10.1109/CVPR.2019.00430

15) Yin, K., & Read, J. (2020). Better sign language translation with spatial-temporal multi-cue transformers. arXiv preprintarXiv:2006.00288. arXiv:https://arxiv.org/abs/2006.00288

16) Camgoz, N. C., Sener, O., O'Reilly, A., Hadfield, S., & Bowden, R. (2018). Neural sign language translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 7784-7793). DOI: https://doi.org/10.1109/CVPR.2018.00812

17) Zhou,H.,Zhou,W.,&Li,H.(2021).Improvingsignlanguagetranslationwithcomplexspatial-temporalmodeling. IEEETransactionsonMultimedia,24,1478-1489. DOI: https://doi.org/10.1109/TMM.2021.3065406

18) Joze, H. R. V., & Koller, O. (2019). Handling scarce data in 3d human pose estimation for sign language recognition.In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops DOI: https://doi.org/10.1109/ICCVW.2019.00331

19) Jiang,S.,Sun,B.,Wang,L.,Liu,W.,Han,Y.,&Liu,J.(2021).Skeleton-awaremulti-modalsignlanguagerecognition. In Proceedings of the 29th ACM International Conference onMultimedia (pp. 4500-4508). DOI: https://doi.org/10.1145/3474085.3475306

20)

21) Tunga, A., Sinclair, D., & Collomosse, J. (2021). Pose-based sign language recognition using graph convolutional networks.InProceedingsoftheIEEE/CVFConferenceonComputerVisionandPatternRecognitionWorkshops(CVPRW). DOI:https://doi.org/10.1109/CVPRW53098.2021.00234

22) Vassiliou, C., Vourssollouki, A., & Potamianos, G. (2021). Graph-based spatiotemporal feature learning for sign languagerecognition.In2021IEEEInternationalConferenceonImageProcessing(ICIP)(pp.3174-3178). DOI:https://doi.org/10.1109/ICIP42928.2021.9506450

23) Forster, J., Schmidt, C., Koller, O., Zini, M., & Ney, H. (2012). RWTH-PHOENIX-Weather: A large vocabulary sign languagerecognitionandtranslationcorpus.InLREC. Link: http://www.lrecconf.org/proceedings/lrec2012/pdf/138_Paper

24) Camgoz, N. C., Hadfield, S., Koller, O., & Bowden, R. (2018). The PHOENIX-Weather 2014 T: A large-scale parallel corpus for German sign language to German text translation. In Proceedings of the LREC 2018. Link:http://www.lrecconf.org/proceedings/lrec2018/pdf/317.pdf (NoDOIassigned)

25) Joze,H.R.V.,&Koller,O.(2018).MS-ASL:Alarge-scaledatasetandbenchmarkforunderstandingamericansign language.arXivpreprintarXiv:1812.01053.arXiv:https://arxiv.org/abs/1812.01053

26) Li, D., op den Akker, R., & van der Loo, M. P. J. (2020). WLASL: A large-scale word-level american sign language video dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (pp. 944-945). DOI:https://doi.org/10.1109/CVPRW50498.2020.00473

27) Stoll, S., Camgoz, N. C., Hadfield, S., & Bowden, R. (2018). Sign language production using neural machine translation and generative adversarial networks. In British Machine Vision Conference (BMVC).Link: https://bmvc2018.org/contents/papers/0656.pdf (NoDOIassigned)

28) Saunders, B., Camgoz, N. C., & Bowden, R. (2020). Progressive transformers for end-to-end sign language production.InEuropeanConferenceonComputerVision(pp.732-749).DOI:https://doi.org/10.1007/978-3-030-585808_43

29) Zelinka,P.,&Kanis,J.(2020).Neuralsignlanguagesynthesis:Asystematicreview.ComputerScienceReview,38, 100299.DOI:https://doi.org/10.1016/j.cosrev.2020.100299

2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified Journal | Page428

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

30) Shi,B.,Oliveira,G.L.,&Davis,L.S.(2018).Americansignlanguagefingerspellingrecognitionfromvideowithdeep neural networks. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018) (pp. 211-218). DOI:https://doi.org/10.1109/FG.2018.00040

31) Koller, O., Ney, H., & Bowden, R. (2015). Deep learning of mouth shapes for sign language. In Proceedings of the IEEEInternationalConferenceonComputerVisionWorkshops(pp.1-9).DOI:https://doi.org/10.1109/ICCVW.2015.11

32) Al-Hammadi,M.,Muhammad,G.,Abdul,W.,etal.(2020).Deeplearning-basedapproachforsignlanguagegesture recognition with efficient hand gesture representation. IEEE Access, 8, 79545-79561.DOI: https://doi.org/10.1109/ACCESS.2020.2990520

33) Ong, S. C., & Ranganath, S. (2005). Automatic sign language analysis: a survey and the future beyond lexical meaning. IEEE transactions on pattern analysis and machine intelligence, 27(6), 873-891.DOI: https://doi.org/10.1109/TPAMI.2005.112

34) Fang, B., Coquin, D., Chen, L., & Li, J. (2017). A real-time sign language recognition system using data gloves and camera. In 2017 10th international congress on image and signal processing, biomedical engineering and informatics (CISP-BMEI)(pp.1-6). DOI:https://doi.org/10.1109/CISP-BMEI.2017.830206

35) Lee,C.,&Xu,Y.(2017).Onlinegesturerecognitionwithawearablesensor.IEEEPervasiveComputing,16(2),6474. DOI:https://doi.org/10.1109/MPRV.2017.29

36) Moryossef,A.,Tsochantaridis,I.,Dikshtein,J.,etal.(2021).Dataaugmentationforsignlanguageglosstranslation. arXivpreprintarXiv:2104.05837. arXiv:https://arxiv.org/abs/2104.05837

37) Adarkwah,C.C.,&J,J.D.(2021).Areviewonvision-basedsignlanguageclassificationusingdeeplearning.Journal ofEngineeringResearchandReports,20(1),1-22.DOI:https://doi.org/10.9734/jerr/2021/v20i117289

38) Ko, D. H., & Kim, Y. H. (2019). A brief review of the sign language system. Electronics, 8(7), 777. DOI: https://doi.org/10.3390/electronics8070777

39) DeCoster,M.,D'hondt, T.,VanHerreweghe,M., &Dambre, J.(2020).Signlanguage recognition withtransformer networks.arXivpreprintarXiv:2003.01633.arXiv:https://arxiv.org/abs/2003.01633

40) Cheng,K.,Yang,Z.,Chen,Q.,&Zhang,Y.(2020).Fullyconvolutionalnetworkwithsequence-to-sequencelearning for sign language recognition. Multimedia Tools and Applications, 79(3), 2005-2020.DOI: https://doi.org/10.1007/s11042-019-08204-6

41) Hu, H., Cui, Y., & Zhang, B. (2021). Sign language recognition with temporal-spatial feature fusion. Neurocomputing,442,164-173.DOI:https://doi.org/10.1016/j.neucom.2021.01.125

BIOGRAPHIES

CHINMAY R PATHAK

Pursuing B Tech final year in Artificial Intelligence And Data Science at K D K College of Engineering, Nagpur

GUNMAY A KHARABE

Pursuing B Tech final year in Artificial Intelligence And Data Science at K. D. K.College of Engineering, Nagpur

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072 © 2025, IRJET | Impact Factor value: 8.315 | ISO 9001:2008 Certified

RUSHIKESH H BHISE

Pursuing B Tech final year in Artificial Intelligence And Data Science at K D K College of Engineering, Nagpur

SHISHIR K. MANE

Pursuing B. Tech final year in Artificial Intelligence And Data Science at K D K College of Engineering, Nagpur