International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 07 | July 2022 www.irjet.net p-ISSN: 2395-0072

SUMAIYA1, ANUSHA R PRASAD2, SUKRUTH M3, VARSHA R4, VARSHITH S5

1Professor, Dept. of Computer Science & Engineering, Maharaja Institute Of Technology Thandavapura, Karnataka, India

2-5 Students, Dept. of Computer Science & Engineering, Maharaja Institute Of Technology Thandavapura, Karnataka, India

Abstract: The literature contains many proposed solutions for automatic sign language recognition. However, ArSL (Arabic Sign Language), unlike ASL (American Sign Language), has not received much attention from the research community. In this paper, we propose a new system that does not require a deaf person to wear inconvenient devices such as gloves, which simplifies hand recognition. The system is based on gestures extracted from 2D images. The Scale Invariant Features Transform (SIFT) technique is employed for this task because it extracts invariant features that are robust to rotation and occlusion. In addition, the Linear Discriminant Analysis (LDA) technique is employed to address the dimensionality problem of the extracted feature vectors and to increase the separability between classes, thus increasing the accuracy of the system. The Support Vector Machine (SVM), k-Nearest Neighbor (kNN), and minimum distance classifiers are used to identify the Arabic sign characters. Experiments conducted to test the performance of the proposed system showed an accuracy of around 99%. The experiments also showed that the proposed system is robust against rotation, achieving an identification rate of about 99%. Moreover, the evaluation showed that the system is comparable to related work.

Key Words: SIFT, LDA, KNN, ARSL.

1. INTRODUCTION

A sign language is a collection of gestures, movements, postures, and facial expressions that correspond to letters and words in natural languages. There should therefore be a way for non-deaf people to recognize the language of the deaf (i.e., sign language). This process is known as sign language recognition. The aim of sign language recognition is to provide an accurate and convenient mechanism to transcribe sign gestures into meaningful text or speech, so that communication between the deaf and the hearing society can easily take place. To achieve this aim, many proposals have attempted to create fully automated systems or Human Computer Interaction (HCI) systems that facilitate interaction between deaf and non-deaf people. There are two main categories of gesture recognition: glove-based systems and vision-based systems. In glove-based systems, electromechanical devices are used to collect data about the deaf person's gestures. With these systems, the deaf person must wear a wired glove connected to many sensors that capture the gestures of the person's hand.

1.1 PROBLEM DEFINITION

The traditional existing systems for sign language recognition are based mainly on hand recognition techniques, which are useful for communicating words between hearing people and deaf people. The combined use of sign and behavior signals in our project helps in identifying what the person is trying to communicate. This is also helpful in security-related fields. Based on various signs, we can communicate with the person more accurately using many instance learning algorithms. Using hand recognition, we can communicate with a deaf or speech-impaired person and display whether the gesture is a letter, a word, or an attempt to convey something else. This software system is designed to recognize the hand from its gesture. The system then computes various hand parameters of the person's gesture. After identifying and recognizing these parameters, the system compares them with known gestures for human communication. Based on this static gesture, the system concludes the person's communication state.

1.2 OBJECTIVE

The main objective of our project is to make the communication experience as complete as possible for both hearing and deaf people. The work is presented for an Indian regional language, Kannada: the goal is to develop a system for automatic translation of static gestures of alphabets in Kannada sign language. The signs of a deaf individual can be recognized and translated into the Kannada language for the benefit of deaf and speech-impaired people.

1.3 SCOPE

Communication forms a very important and basic aspect of our lives. Whatever we do and whatever we say reflects some of our communication, though perhaps not directly. To understand the fundamental behavior of a human, we need to analyze this communication through hand gestures, also called the affect data. This data can be a sign, an image, etc. Using this communicational data for recognizing gestures also forms an interdisciplinary field called Affective Computing. This paper summarizes the previous work done in the field of gesture recognition based on various sign models and computational approaches.

2. LITERATURE SURVEY

[A] Title: New Methodology for Translation of Static Sign Symbol to Words in Kannada Language.

Authors: Ramesh M. Kagalkar, Nagaraj H.N. Publication Journal & Year: IRJET, 2020.

Summary: In this paper, the goal is to develop a system for automatic translation of static gestures of alphabets in Kannada sign language. It maps letters, words and expressions of a particular language to a collection of hand gestures, enabling an individual to communicate by using hand gestures instead of speaking. A system capable of recognizing sign symbols may be used as a means of communication with hard-of-hearing people.

[B] Title: Sign Language Recognition Using Machine Learning Algorithm.

Authors: Prof. Radha S. Shirbhate, Mr. Vedant D. Shinde. Publication Journal & Year: IJASRT, 2020.

Summary: Hand gestures differ from one person to another in shape and orientation; therefore, a problem of linearity arises. Recent systems have come up with various methods and algorithms to address this problem. Algorithms such as K-Nearest Neighbors (KNN) and multi-class Support Vector Machine (SVM), as well as experiments using hand gloves, were previously used to decode hand gesture movements. In this paper, a comparison between KNN, SVM, and CNN algorithms is carried out to see which algorithm offers the best accuracy. Approximately 29,000 images were split into test and train data and preprocessed to fit the KNN, SVM, and CNN models, which achieved accuracies of 93.83%, 88.89%, and 98.49% respectively.

[C] Title: Sign Language Recognition Using Deep Learning On Static Gesture Images.

Authors: Aditya Das, Shantanu. Publication Journal & Year: 2019

Summary: The image dataset used consists of static sign gestures captured on an RGB camera. Preprocessing was performed on the images, which then served as cleaned input. The paper presents results obtained by retraining and testing this sign gesture dataset on a convolutional neural network model using Inception v3. The model consists of multiple convolution filters that are applied to the same input. The validation accuracy obtained was above 90%. The paper also reviews the various attempts that have been made at sign language detection using machine learning and depth data of images. It takes stock of the various challenges posed in tackling such a problem and outlines future scope as well.

[D] Title: Indian Sign Language Recognition Based Classification Technique.

Authors: Joyeeta Singha, Karen Das. Publication Journal & Year: IEEE, 2019.

Summary: Hand gesture recognition (HGR) has become a very important research topic. This paper deals with the classification of single- and double-handed Indian sign language gestures using a machine learning algorithm implemented in MATLAB, with 92-100% accuracy.

[E] Title: Indian Sign Language Gesture Recognition using Image Processing and Deep Learning.

Authors: Vishnu Sai Y, Rathna G N. Publication Journal & Year: IJSCSEIT, 2018.

Summary: Speech-impaired people use hand-based gestures to communicate. Unfortunately, the overwhelming majority of people are not aware of the semantics of these gestures. To bridge this gap, we propose a real-time hand gesture recognition system based on the data captured by the Microsoft Kinect RGB-D camera. Given that there is no one-to-one mapping between the pixels of the depth camera and the RGB camera, we used computer vision techniques such as 3D construction and transformation. After achieving one-to-one mapping, the hand gestures were segmented from the background. Convolutional Neural Networks (CNNs) were used for training 36 static gestures relating to Indian Sign Language (ISL) alphabets and numbers. The model achieved an accuracy of 98.81% on training using 45,000 RGB images and 45,000 depth images. Further, convolutional LSTMs were used for training 10 ISL dynamic word gestures, and an accuracy of 99.08% was obtained by training on 1080 videos. The model showed accurate real-time performance on prediction of ISL static gestures, leaving scope for further research on sentence formation through gestures. The model also showed competitive adaptability to American Sign Language (ASL) gestures: when the ISL model's weights were transfer-learned to ASL, it achieved 97.71% accuracy.

3. EXISTING SYSTEM

Indian Sign Language lags behind its American counterpart because research in this field is hampered by the lack of standard datasets. Unlike American Sign Language, which uses a single hand for making gestures, Indian Sign Language is subject to regional variation and has multiple signs for the same character.


4. PROPOSED SYSTEM

The training set consists of 70% of the aggregate data, and the remaining 30% is used for testing. We concentrate on developing ISL recognition along with an Indian regional language, Kannada. Kannada Sign Language (KSL) uses a single hand for text recognition. The Swaragalu (vowels) of KSL are under research and implementation. Implementing both Swaragalu and Vyanjanagalu (consonants), we implement a total of 49 Varnamale letters and aim to recognise them with 80-90% accuracy.
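The 70/30 partition described above can be sketched as follows. This is a minimal illustration that assumes a simple random shuffle; the paper does not state whether the split is stratified per letter. The `split_dataset` helper, the seed, and the placeholder dataset of 10 images per letter are hypothetical.

```python
import random


def split_dataset(samples, train_fraction=0.7, seed=0):
    """Shuffle the samples and split them into training and testing lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical dataset: (image_id, letter_index) pairs, 49 letters x 10 images.
dataset = [(f"img_{label}_{i}", label) for label in range(49) for i in range(10)]
train, test = split_dataset(dataset)
print(len(train), len(test))
```

A stratified split (shuffling within each letter before cutting) would keep every letter represented in both sets, which may matter when only a few images per letter are available.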

5.1 Scale Invariant Features Transform (SIFT)

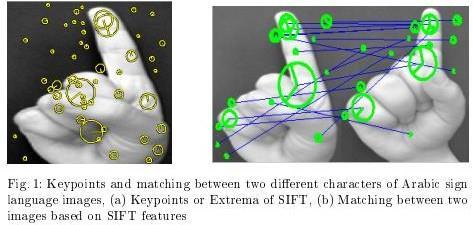

The SIFT algorithm takes an image and transforms it into a collection of local feature vectors. Each of these feature vectors is intended to be distinctive and invariant to any scaling, rotation or translation of the image.

In the orientation assignment step, a histogram of local gradient orientations is formed around each keypoint. We locate the highest peak in this histogram and use this peak and any other local peak to create a keypoint with that orientation. Some points will be assigned multiple orientations if there are multiple peaks of similar magnitude. The assigned orientation, location and scale for each keypoint enable SIFT features to be robust to rotation, scale and translation.
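The peak selection described above can be illustrated with a short sketch. It assumes a 36-bin histogram and accepts secondary peaks that reach 80% of the highest peak, the values used in Lowe's original SIFT description; the paper itself does not state them. The function names and the sample gradients are hypothetical.

```python
def orientation_histogram(magnitudes, angles_deg, num_bins=36):
    """Accumulate gradient magnitudes into an orientation histogram."""
    hist = [0.0] * num_bins
    bin_width = 360.0 / num_bins
    for mag, ang in zip(magnitudes, angles_deg):
        hist[int((ang % 360.0) // bin_width) % num_bins] += mag
    return hist


def dominant_orientations(hist, peak_ratio=0.8):
    """Return bin-centre angles of the highest peak and of every other local
    peak whose height is at least peak_ratio times the highest peak."""
    n = len(hist)
    top = max(hist)
    bin_width = 360.0 / n
    orientations = []
    for i, value in enumerate(hist):
        left, right = hist[(i - 1) % n], hist[(i + 1) % n]  # circular neighbours
        if value > left and value > right and value >= peak_ratio * top:
            orientations.append((i + 0.5) * bin_width)
    return orientations


# Hypothetical gradients: two strong directions and one weak one.
magnitudes = [1.0, 1.0, 1.0, 0.9, 0.9, 0.9, 0.2]
angles = [44, 45, 46, 200, 201, 202, 300]
peaks = dominant_orientations(orientation_histogram(magnitudes, angles))
print(peaks)
```

With these sample values the keypoint receives two orientations, because the second peak is close in height to the first, while the weak direction near 300 degrees is ignored.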

5.2 Descriptor Computation

In this stage, the goal is to create a descriptor for the patch that is compact, highly distinctive and robust to changes in illumination and camera viewpoint. The image gradient magnitudes and orientations are sampled around the keypoint location.
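A simplified sketch of this stage is given below. It samples gradient magnitudes and orientations in a square patch around the keypoint, accumulates them into a single 8-bin histogram, and normalises the result to unit length. This is a deliberate simplification: the standard SIFT descriptor uses a 4x4 grid of such histograms (128 values) with Gaussian weighting. The function names, the patch radius, and the test image are hypothetical.

```python
import math


def gradient(image, r, c):
    """Central-difference gradient magnitude and orientation (degrees) at (r, c)."""
    dx = image[r][c + 1] - image[r][c - 1]
    dy = image[r + 1][c] - image[r - 1][c]
    return math.hypot(dx, dy), math.degrees(math.atan2(dy, dx)) % 360.0


def patch_descriptor(image, row, col, radius=2, num_bins=8):
    """Single-cell orientation histogram around (row, col), unit-normalised."""
    hist = [0.0] * num_bins
    bin_width = 360.0 / num_bins
    for r in range(row - radius, row + radius + 1):
        for c in range(col - radius, col + radius + 1):
            mag, ang = gradient(image, r, c)
            hist[int(ang // bin_width) % num_bins] += mag
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm > 0 else hist


# Hypothetical 8x8 image: intensity increases from left to right.
ramp = [[float(c) for c in range(8)] for _ in range(8)]
desc = patch_descriptor(ramp, 4, 4)

# The same scene with higher contrast and a brightness offset.
bright = [[3.0 * v + 10.0 for v in row] for row in ramp]
desc_bright = patch_descriptor(bright, 4, 4)
print(desc == desc_bright)
```

Using gradients removes a constant brightness offset, and the unit-length normalisation removes a uniform contrast change, which is one reason such descriptors tolerate illumination changes.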

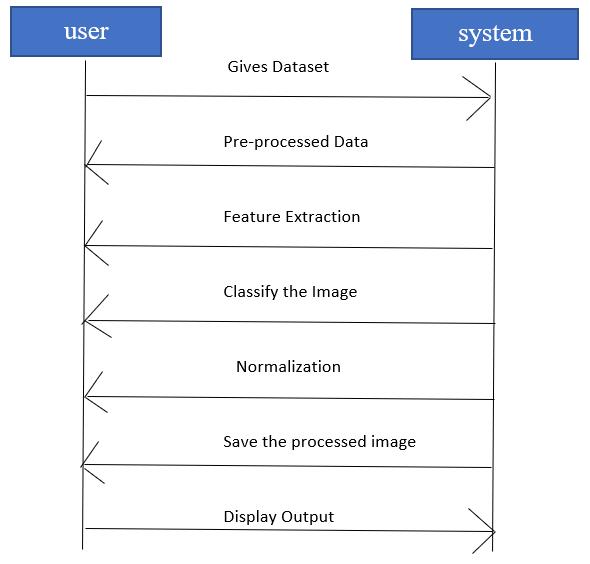

Fig. 1: Sequence Diagram

5. METHODOLOGY

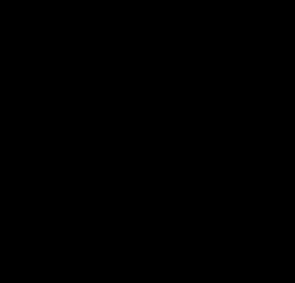

Fig. 2: Flow Chart

The number of features depends on image content, size, and the choice of various parameters such as patch size, number of angles and bins, and peak threshold. These parameters are briefly described below. The peak threshold (PeakThr) parameter determines the dimension of the feature vectors because PeakThr represents the amount of contrast required to extract a keypoint. The optimum value of PeakThr is 0.0 because as the value of PeakThr increases, more keypoints are eliminated and the number of features decreases.

The patch size (Psize) parameter is used to extract features at different granularities. Increasing the patch size decreases the dimension of the feature vector.
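The effect of PeakThr on the number of keypoints can be illustrated with a small sketch. It assumes that each candidate keypoint carries a contrast value and that candidates whose absolute contrast falls below the threshold are discarded; the `filter_keypoints` helper and the candidate values are hypothetical, not taken from the paper.

```python
def filter_keypoints(candidates, peak_thr):
    """Keep candidate keypoints whose absolute contrast is at least peak_thr."""
    return [kp for kp in candidates if abs(kp["contrast"]) >= peak_thr]


# Hypothetical candidate keypoints with their contrast values.
candidates = [
    {"x": 12, "y": 40, "contrast": 0.01},
    {"x": 55, "y": 18, "contrast": -0.03},
    {"x": 31, "y": 77, "contrast": 0.06},
    {"x": 90, "y": 64, "contrast": 0.02},
    {"x": 70, "y": 25, "contrast": -0.015},
]

counts = [len(filter_keypoints(candidates, thr)) for thr in (0.0, 0.02, 0.05)]
print(counts)
```

With a threshold of 0.0 every candidate is kept, and the count can only shrink as the threshold grows, which matches the behaviour described for PeakThr.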

5.3 Linear Discriminant Analysis (LDA)

LDA is one of the best-known dimensionality reduction methods used in machine learning. LDA attempts to find a linear combination of features that separates two or more classes.
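As a minimal sketch of this idea, the two-class case (Fisher's linear discriminant) can be written for 2-D feature vectors in plain Python. The projection direction is w = Sw^-1 (mu_a - mu_b), where Sw is the within-class scatter matrix. This is only an illustration under those restrictions: a multi-class problem such as letter recognition would instead solve a generalised eigenvalue problem over the between-class and within-class scatter matrices. All names and sample points are hypothetical.

```python
def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def scatter(vectors, mu):
    """2x2 scatter matrix: sum of outer products of the centred vectors."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for v in vectors:
        d = [v[0] - mu[0], v[1] - mu[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_direction(class_a, class_b):
    """Fisher's discriminant direction w = Sw^-1 (mu_a - mu_b) for 2-D data."""
    mu_a, mu_b = mean(class_a), mean(class_b)
    sa, sb = scatter(class_a, mu_a), scatter(class_b, mu_b)
    sw = [[sa[i][j] + sb[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]  # assumed non-zero here
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [mu_a[0] - mu_b[0], mu_a[1] - mu_b[1]]
    return [inv[0][0] * diff[0] + inv[0][1] * diff[1],
            inv[1][0] * diff[0] + inv[1][1] * diff[1]]


def project(vectors, w):
    """Reduce each 2-D vector to one dimension by projecting onto w."""
    return [v[0] * w[0] + v[1] * w[1] for v in vectors]


# Hypothetical 2-D features for two classes.
class_a = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]
class_b = [(6.0, 5.0), (7.0, 8.0), (8.0, 6.0), (7.0, 6.0)]
w = fisher_direction(class_a, class_b)
proj_a, proj_b = project(class_a, w), project(class_b, w)
print(min(proj_a) > max(proj_b))
```

After projection each sample is described by a single number instead of two, and the two classes occupy disjoint intervals on that axis.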

5.4 Classifiers

In this paper, we have applied three classifiers to assess their performance with our approach. An overview of these classifiers is given below:

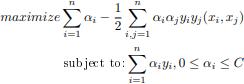

5.4.1 Support Vector Machine (SVM)

SVM is one of the classifiers that deals with the problem of high-dimensional datasets and gives very good results. SVM tries to find an optimal hyperplane separating two classes based on the training cases.

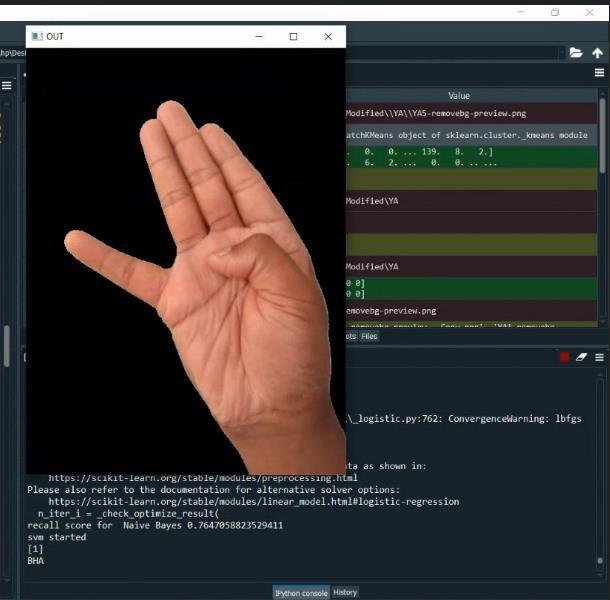

Geometrically, the SVM modeling algorithm finds an optimal hyperplane with the maximal margin to separate two classes, which requires solving a constrained optimization problem.

5.4.3 The Proposed ArSL Recognition System

The output of the SIFT algorithm is a set of high-dimensional feature vectors for each image. Processing these high-dimensional vectors takes more CPU time. We therefore need to reduce the dimensions of the features by applying a dimensionality reduction method such as LDA, which is one of the most suitable methods for reducing the dimensions and increasing the separation between different classes. LDA also decreases the distance between objects belonging to the same class. Feature extraction and reduction are performed in both the training and testing phases. The matching or classification of new images is only done in the testing phase.

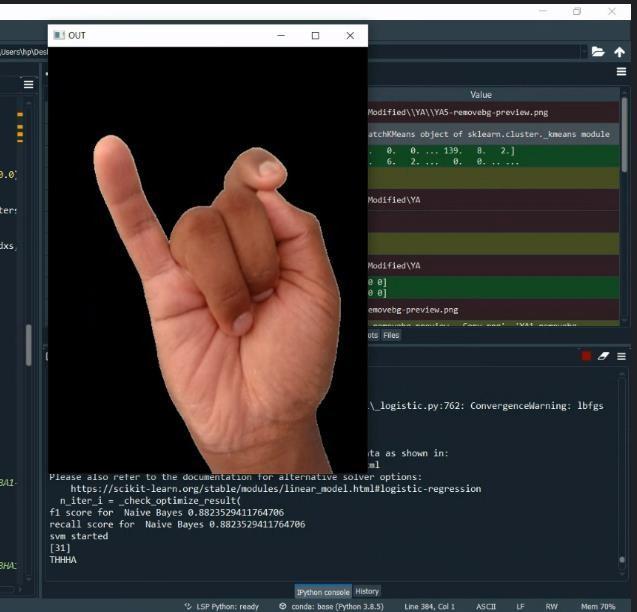

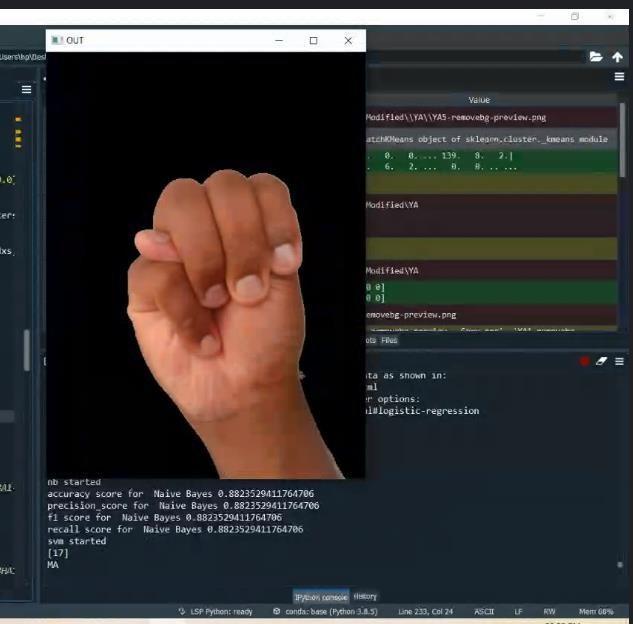

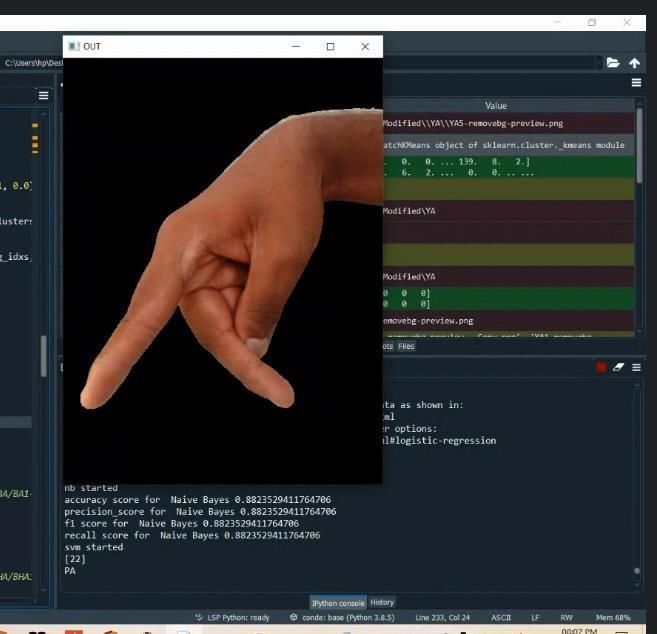

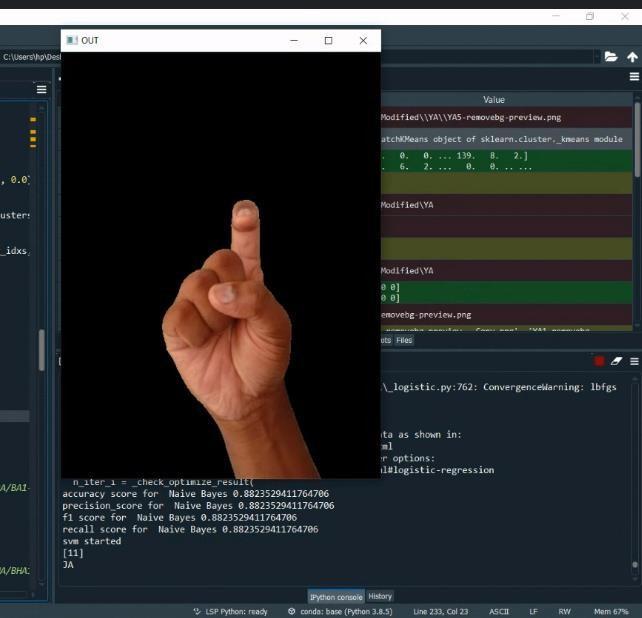

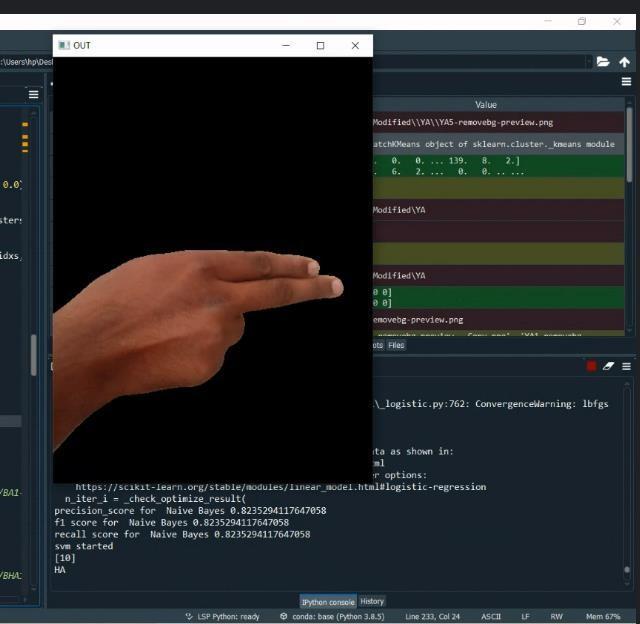

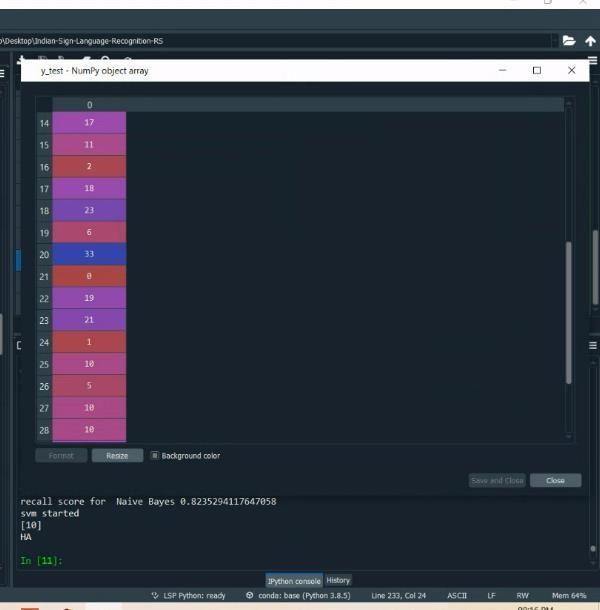

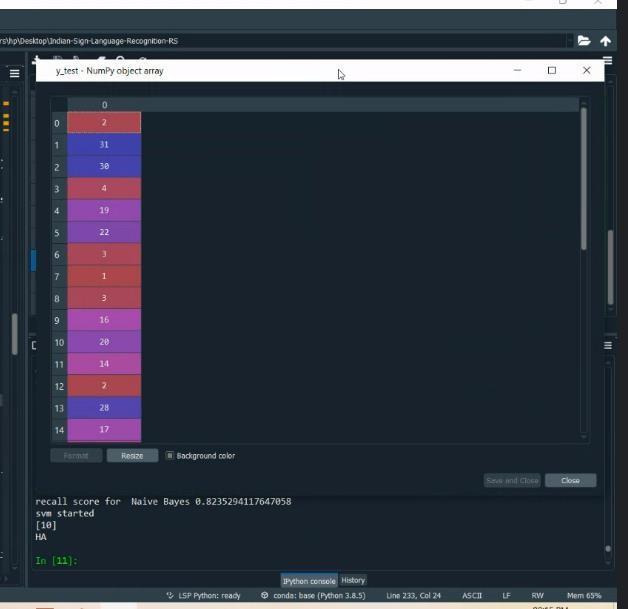

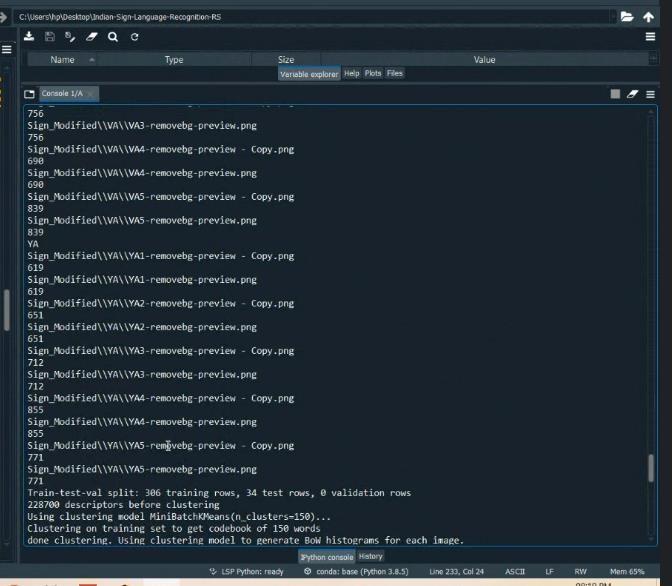

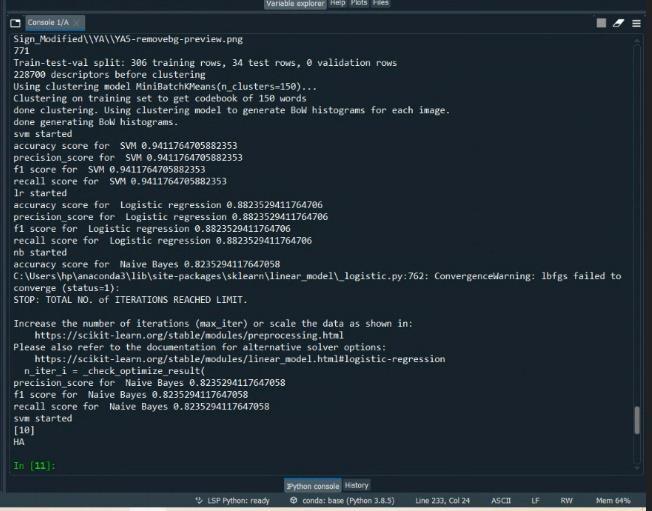

6. RESULTS

Following are the screenshots of the interface and output of the proposed system.

In the SVM optimization problem of Section 5.4.1, αi is the weight assigned to the training sample xi (if αi > 0, then xi is called a support vector); C is a regularization parameter used to find a trade-off between the training accuracy and the model complexity so that a superior generalization capability can be achieved; and K is a kernel function, which is used to measure the similarity between two samples.
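The optimization problem itself is not reproduced in the text. A standard soft-margin dual formulation that is consistent with the symbols αi, C and K defined above would be the following; the class labels y_i ∈ {−1, +1}, the number of training samples n, and the bias b are notational assumptions that do not appear in the original.

$$
\max_{\alpha}\ \sum_{i=1}^{n} \alpha_i \;-\; \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j\, y_i y_j\, K(x_i, x_j)
\qquad \text{subject to} \qquad \sum_{i=1}^{n} \alpha_i y_i = 0, \quad 0 \le \alpha_i \le C .
$$

Under the same assumptions, a new sample x would be classified by

$$
f(x) = \operatorname{sign}\!\left( \sum_{i=1}^{n} \alpha_i\, y_i\, K(x_i, x) + b \right),
$$

so only the support vectors (samples with αi > 0) contribute to the decision.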

5.4.2 Nearest-Neighbor Classifier

The nearest neighbor or minimum distance classifier is one of the oldest known classifiers. Its idea is extremely simple, as it does not require learning. Despite its simplicity, the nearest neighbor approach has been successful in a large number of classification and regression problems. To classify an object I, one first finds its closest neighbor Xi among all the training objects X and then assigns to the unknown object the label Yi of Xi. The nearest neighbor classifier works very well in low dimensions. The distance can, in general, be any metric; the standard Euclidean distance is the most common choice.
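The classification rule above can be sketched in a few lines. This is a minimal illustration using the Euclidean distance; the function name, the 2-D training vectors and the letter labels are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math


def nearest_neighbor(query, train_vectors, train_labels):
    """Return the label of the training vector closest to the query."""
    best_index = min(range(len(train_vectors)),
                     key=lambda i: math.dist(query, train_vectors[i]))
    return train_labels[best_index]


# Hypothetical reduced feature vectors for two letters.
train_vectors = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0), (6.0, 5.0)]
train_labels = ["a", "a", "b", "b"]

label_1 = nearest_neighbor((4.5, 4.0), train_vectors, train_labels)
label_2 = nearest_neighbor((0.4, 0.2), train_vectors, train_labels)
print(label_1, label_2)
```

No training step is needed: the stored training vectors are the model, so the cost is paid at query time, when the distance to every training vector is computed.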


7. CONCLUSION

In this paper, we have proposed a system for ArSL recognition based on gestures extracted from Arabic sign images. We have used the SIFT technique to extract these features, as it extracts invariant features that are robust to rotation and occlusion. The LDA technique is then used to address the dimensionality problem of the extracted feature vectors and to increase the separability between classes, thus increasing the accuracy of our system. In our proposed system, we have used three classifiers: SVM, k-NN, and minimum distance. The experimental results showed that our system achieved an accuracy of around 98.9%. The results also showed that our approach is robust against rotation, achieving an identification rate of nearly 99%. In the case of image occlusion (about 60% of the image size), our approach achieved an accuracy of approximately 50%. In our future work, we are going to find a way to improve the results of our system in the case of image occlusion and also increase the size of the dataset to check its scalability. We will also try to identify characters from video frames and then try to implement a real-time ArSL system. We can develop a model for KSL word-level and sentence-level recognition. This will require a system that can detect changes with respect to the temporal space. We can develop a complete product that will help speech- and hearing-impaired people and thereby reduce the communication gap. We will try to recognize signs that include motion. Moreover, we will focus on converting the sequence of gestures into text, i.e. words and sentences, and then converting it into speech that can be heard.


BIOGRAPHIES

Sumaiya, Assistant Professor, Dept. of CS&E, MITT

Anusha R Prasad, 8th sem, Dept. of CS&E, MITT

Sukruth M, 8th sem, Dept. of CS&E, MITT

Varsha R, 8th sem, Dept. of CS&E, MITT

Varshith S, 8th sem, Dept. of CS&E, MITT

2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal | Page 866