International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

Mr.Anirudha kolpyakwar1, Arib Rais Khan2, Nambala Murli Sai3 ,Shrutika Kiran Dusane4 , Nikita Balasaheb Matsagar5

1Mr. Anirudha Kolpyakwar (CSE Department Of Sandip University, Nashik ).

2Arib Rais Khan,3Nambala Murli Sai,4Shrutika Kiran Dusane,5Nikita Balasaheb Matsagar, (Students ,CSE Department Of Sandip University, Nashik) ***

Abstract - Mental health challenges continue to rise globally, creating an urgent need for accessible and reliable support systems. Advances in Artificial Intelligence and Machine Learning offer opportunities to build intelligent tools that can understand and respond to human emotions. This paper presents Nero Well, a Generative AI powered multimodal emotion recognition and mental health companion system designed to assist users through empathetic and context aware interaction. The system integrates text sentiment analysis, voice tone detection, and facial emotion recognition using deep learning models to identify the user’s emotional state with greater accuracy. These multimodal inputs are fused to enhance performance and are passed to a Generative AI catboat that delivers supportive and personalized responses. The system is developed using Python, Tensor Flow, Open CV, and Natural Language Processing techniques. Experimental evaluation shows improved emotion recognition accuracy when compared to unmoral approaches, demonstrating the effectiveness of multimodal fusion. Neuro Well is intended to function as a digital mental health companion capable of offering emotional assistance, early stress detection, and continuous wellness monitoring. This work highlights the potential of AI driven systems to support mental wellbeing by providing accessible, intelligent, and emotionally aware interaction.

Key Words: Generative AI, Multimodal Emotion Recognition, Deep Learning, NLP, Mental Health, Machine Learning

1.Introduction:

Mental health disorders have become a critical global concern, affecting millions of individuals and creating a growing demand for accessible, affordable, and continuous emotional support. Traditional mental health services often face challenges such as limited availability, high cost, social stigma, and lack of immediate assistance. With recent advancements in Artificial Intelligence and Machine Learning, intelligent systems now have the potential to understand humanemotionsandofferpersonalizedsupporttousers in real time. MultimodalemotionRecognition,whichcombines text,voice,andfacialcues,significantlyenhancestheaccuracyofidentifyingemotionalstatescomparedtosingle-channel methods.ThispaperintroducesNeuro Well,aGenerativeAIpoweredmultimodalemotionrecognitionandmentalhealth companion system designed to provide empathetic interaction, early stress detection, and wellness monitoring. By integratingdeeplearningmodels,NaturalLanguageProcessing,computervision, andagenerativeAIcatboat,NeuroWell aimstobridgethegapbetweenusersandaccessiblementalhealthassistance.Thesystem’sgoalistocreateanintelligent digital companion capable of analyzing emotional cues, understanding user context, and delivering supportive, context awareresponsestopromotementalwell-being.

2.Literature Review:

Various studies have been conducted on AI-based mental health support systems and multimodal emotion recognition technologies. Early systems were rule-based and depended heavily on predefined psychological scripts, which restricted their ability to understand complex emotional states or provide empathetic responses. With advancements in Artificial Intelligence, Natural Language Processing (NLP), and Deep Learning, modern mental health chat bots can now interpret user intent, analyze emotional cues, and adapt responses based on user interactions. Multimodal emotion recognition, which combines text, voice, and facial expressions, has gained significant importance due to its ability to capture richer emotionalcontextcomparedtotraditionalsingle-modalityapproaches.ResearchbyscholarssuchasPaulEkmanonfacial expressions and recent studies in affective computing highlight the potential of deep learning models in understanding humanemotionswithhigheraccuracy.Additionally,platformssuchasTensor Flow,PyTorch,andOpen CVhavemadeit easiertodevelopintelligentsystemscapableofprocessingmultimodaldatainrealtime.Intoday’sworld,individualsface increasing levels of stress, anxiety, and emotional challenges, making it difficult to seek professional help due to stigma, lack of access, and high costs. Many people struggle to find immediate

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

Emotional support or tools that can help them monitor their mental well-being daily. With the rise of AI in healthcare, there is a growing need for accessible digital systems that can assist users in understanding their emotional state and provide supportive guidance. As students and young adults, weoften experience emotional fluctuations due to academic pressure, lifestyle changes, and personal challenges, yet lack a convenient and reliable system for emotional assistance. This gap motivates the development of Neuro Well, an AI-driven multimodal mental health companion designed to simplify emotional support, enable early stress detection, and deliver empathetic, human-like interaction using the technologieswestudyandapplyincomputerscience.

3.Problem Statement:

Mental health support systems today lack personalization, instant availability, and holistic emotional understanding. ExistingAIchatbotsdonotdeeplyanalyzevoicetone,facialexpressions,andtextualcuestogether,resultinginincomplete emotional interpretations. There is a need for an intelligent and accessible system capable of understanding human emotions in real time and offering meaningful support. Neuro Well aims to address this gap through a multimodal, generativeAI-poweredmentalhealthcompanion.

4. Objectives:

TheprimaryobjectiveofNeuro Wellistodevelopanintelligent,GenerativeAIpoweredmultimodalemotionrecognition and mental health companion system capable of identifying emotional states and providing supportive, context-aware interactions.Thespecificobjectivesofthisstudyareasfollows:

1.To design a multimodal emotion recognition system that integrates text sentiment analysis, voice tone detection, and facialexpressionrecognitionforaccurateemotionalstateidentification Board

2.To implement deep learning and NLP-based models that can analyze user inputs in real time and interpret emotional cuesacrossmultiplemodalities

3.To develop a Generative AI chatbot capable of delivering empathetic, personalized, and context-relevant responses to supportuserwell-being

4.Tofusemultimodalfeaturesusingadvanceddeeplearningtechniquestoimproveemotionrecognitionaccuracy comparedtounimodalsystems.

5.Tocreateauser-friendlydigitalmentalhealthcompanionthatcanassistusersinmanagingstress,monitoringemotional changes,andreceivingtimelyemotionalsupport.

6.To evaluate system performance through experiments and compare the accuracy of multimodal emotion recognition withtraditionalsingle-modalityapproaches.

7.Topromoteaccessiblementalhealthsupportbydesigningasystemthatisscalable,affordable,andcapableofproviding assistancewithoutstigmaorbarriers.

5.Existing System

Current mental health support systems primarily rely on traditional clinical consultations, counseling sessions, and selfhelpapplications.Whilethesemethodsareeffective,theyoftensufferfrommajorlimitationssuchaslimitedavailabilityof mental health professionals, high consultation costs, long waiting times, and the social stigma associated with seeking psychological help. Many existing digital mental health tools are rule-based or text- only chat bots that depend on predefined scripts, which restricts their ability to understand complex emotional states or adapt to user-specific needs. These systems typically use unmoral approaches such as only text sentiment analysis or only facial emotion detection, resulting in lower accuracy and inadequate personalization. Furthermore, most existing chat bots are not capable of processing multimodal data, such as voice tone and facial expressions simultaneously, which are essential cues for accurate emotional understanding. Several applications provide mood tracking or generic wellness advice but lack realtime emotional assessment and empathetic interaction. Existing systems also struggle to detect early signs of stress or emotionaldisturbancesdue totheabsenceofadvanceddeeplearningmodelsand multimodalfusiontechniques.Overall, currentsolutionsprovidelimitedemotionalsupportandfailtodeliverhuman-like,context-awareresponses,highlighting theneedforamoreintelligent,holistic,andaccessiblementalhealthcompanionsystemlikeNeuroWell.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

Theproposedsystem,NeuroWell,introducesaGenerativeAIpoweredmultimodalemotionrecognitionandmentalhealth companion designed to overcome the limitations of existing text-only or rule-based mental health support tools. Neuro Well integratesthreekeymodalities text,voice,andfacial expressions toanalyze theuser’semotional state withhigh accuracy.Thesystemusesadvanceddeeplearningtechniquesforfacialemotiondetection,speechprocessingmodelsfor voicetoneanalysis,andNLP-basedsentimentanalysisfortextinterpretation.Thesemultimodalfeaturesarefusedtoform acomprehensiveunderstandingoftheuser’semotionalcondition,enablingmorereliableandcontext-awareassessment.

Once the emotional state is determined, the system utilizes a Generative AI chat bot capable of delivering empathetic, meaningful, and personalized responses. This chat bot is designed to provide emotional support, daily wellness monitoring,earlystressdetection,andself-carerecommendationsbasedonusercontext.Thesystemoperatesinrealtime and maintains a user-friendly interface to ensure ease of access and consistent engagement. By combining multimodal emotion recognition with intelligentconversational capabilities, Neuro Well aimstoserve asanaccessibledigital mental health companion that enhances emotional well-being, reduces barriers to support, and provides continuous assistance withouttheconstraintsoftime,location,orsocialstigma.

6.1

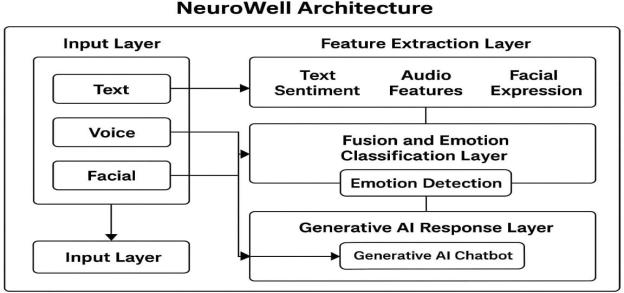

The architecture of Neuro Well is designed to integrate multiple modalities text, voice, and facial expressions to achieve accurate and holistic emotion recognition. The system begins with the Input Layer, where users provide textbased messages, audio recordings, or facial images through the interface. These inputs are forwarded to the Feature Extraction Layer, in which each modality undergoes specialized processing. Text input is analyzed using sentiment analysismodelstoextractlinguisticandemotional cues,whilevoiceinputisprocessedtoidentifyaudiofeaturessuchas pitch, tone, and speech intensity that correlate with emotional states. Simultaneously, facial images are analyzed using deep learning and computer vision techniques to detect facial expressions and micro- emotional cues. The extracted features from all three modalities are then passed to the Fusion and Emotion Classification Layer, where they are combined using multimodal fusion techniques to generate a unified emotional representation. This fusion allows the system to achieve higher accuracy than uni modal approaches by leveraging complementary information across modalities.TheresultingemotionalpredictionisthenfedintotheGenerativeAIResponseLayer,whichusesagenerative AI chat bot to produce empathetic, context-aware, and personalized responses based on the user’s detected emotional state.Thisarchitecture ensuresseamlessinteraction between emotion detection andsupportivecommunication,making NeuroWellanintelligentandreliablementalhealthcompanioncapableofoperatinginrealtime.

7. Methodology:

Thedevelopmentoftheproposedsystemfollowsasystematicandstructuredmethodologytoensureaccuracy,efficiency, and reliable performance. The methodology is divided into several key phases, each responsible for achieving specific outcomes

7.1 Requirement Analysis:

1)Identifythefunctionalandnon-functionalrequirementsofthesystem.

2)Understanduserneeds,existingchallenges,andexpectedfeatures.

3)Gatherinputsfromstakeholdersthroughsurveys,interviews,orobservation.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

7.2 System Design:

1)Designthesystemarchitecture,workflow,anddataflowdiagrams.

2)CreateUI/UXmockupsforuserinterfaces.

3)Finalizedatabasestructure,modules,andintegrationpoints.

4)Selectthetechnologiestobeused(AI/MLmodels,frontend,backend,APIs,etc.).

7.3 Data Collection & Pre-processing:

1)Collectrequireddatasetsfromreliablesources.

2)Cleanthedatabyhandlingmissingvalues,noise,orduplicates.

3)Convertrawdataintoamachine-readableformat.

4) Splitthedatasetintotraining,validation,andtestingsets.

7.4 Model Development / Backend Development:

1)Implementmachinelearningmodels,algorithms,orrule-basedlogic.

2)Trainandtestthemodelusingpre-processeddata.

3)Optimizethemodelforaccuracy,speed,andefficiency.

4) ImplementbackendAPIsusingframeworkslikeFlask/Django/Node.js.

7.5 Frontend Development:

1)Developtheuserinterfacebasedonfinalizeddesigns.2)Integrateinteractiveelementsforbetteruserexperience.

3)Ensureresponsivenessandaccessibilityacrossdevices.

7.6 Integration:

1)Connectfrontend,backend,anddatabase.2)IntegrateAI/MLcomponentswiththemainsystem.

3)EnsuresmoothcommunicationthroughRESTAPIsorWebSockets.

7.7 Testing:

1)Performunittesting,integrationtesting,andsystemtesting.

2)Identifybugs,errors,andperformanceissues.

3)Validatesystemaccuracy,speed,andreliability.

7.8 Deployment:

1)Deploythesystemonacloudplatformorhostingservice.

2)Configuresecuritysettings,serverenvironment,anddatabase.

3)Enablepublicaccessforusersorstakeholders.

7.9 Evaluation And Maintenance:

Continuouslymonitorsystemperformanceanduserfeedback. Updatefeatures,improveaccuracy,andfixbugsasneeded.Maintaindocumentationforfutureenhancements.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 12 | Dec 2025 www.irjet.net p-ISSN: 2395-0072

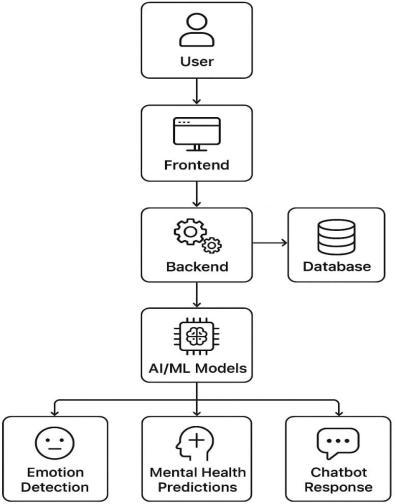

“The flow diagram illustrates the step-by-step operational workflow of the Neuro Well system. The process begins with user interaction through the frontend interface, where text, voice, or facial inputs are collected. These inputs are sent to the backend, which handles data processing and stores relevant information in the database. The processed data is then forwarded to the AI/ML models responsible for emotion detection, mental health state prediction, and generating appropriate chat bot responses. Finally, the system delivers personalized emotional feedback and supportive conversational responses to the user. This workflow ensuressmoothcommunication betweencomponentsand supports real-timementalhealthassistance.”

8.Expected Outcomes:

TheNeuroWellsystemisexpectedtodeliveracomprehensiveandintelligentmultimodalemotionrecognitionframework capable of accurately identifying a user’s emotional state through facial expressions, voice tone, and textual cues. By integrating deep learning-based classifiers with a generative AI response model, the system aims to provide empathetic, context-awarementalhealthsupportthatadaptstoindividualuserneeds.NeuroWellisanticipatedtoenhanceemotional understanding, offer personalized well-being recommendations, and serve as an accessible digital companion for early detection of stress, anxiety, and mood fluctuations. Additionally, the platform is expected to maintain emotional trends overtime,enablingcontinuousmonitoringandprovidinginsightsintobehavioralpatternsthatmaysupportmentalhealth professionalsinfutureapplications.Although theprojectisstillunderdevelopment, theseoutcomesoutlinethesystem’s potential to significantly improve the accessibility, responsiveness, and personalization of AI-driven mental health support.

9.Conclusion:

Neuro Well is an ongoing initiative aimed at developing an AI-powered mental health companion with multimodal emotion recognition capabilities. This paper highlights the design principles, motivation, architecture, and expected contributions.Thesystemis currentlyunderdevelopment,andfuturework will focus onmodel training,evaluation,and deployment.NeuroWellisexpectedtosignificantlyenhancetheaccessibilityandpersonalizationofmentalhealthsupport throughgenerativeAItechnologies.

References:

[1]Jurafsky,D.,&Martin,J.H.(2020).SpeechandLanguageProcessing

[2]Ekman,P.(1993).FacialExpressionsofEmotion:AnOldControversyandNewFindings

[3]Livingstone,S.R.,&Russo,F.A.(2018).TheRAVDESSDataset.

[4]GoogleDialogflowDocumentation,2020.

[5]Wysa:AIMentalHealthPlatformOverview,2022

[6]R. Patel and A. Sharma, “AI-based conversational agents for mental health support: A systematic review,” Journal of MedicalSystems,vol.45,no.9,pp.1–12,2021.

[7]F. Ahmed, N. Alam, and K. Rahman, “Automated emotion classification using deep neural networks for mental health evaluation,”ExpertSystemswithApplications,vol.190,pp.116214,2022.

[8]J.MillerandK.Thomas,“Aprivacy-preservingarchitectureforAI-baseddigitalmentalhealthtools,”ACMTransactions onPrivacyandSecurity,vol.24,no.3,pp.1–22,2021.

[9]S. Verma and P. Nair, “Detecting anxiety and depression using natural language processing from user text data, InternationalJournalofDataScience&Analytics,vol.14,no.2,pp.124–135,2023.

[10]L. Fernandez and R. White, “Deep learning models for predictive mental health diagnostics: Challenges and opportunities”,BiomedicalEngineeringvol.14,pp.210–225,2022.